Instances and Images Guide

Managing Instances and Images

Abstract

Preface

Red Hat OpenStack Platform (RHOSP) provides the foundation to build a private or public Infrastructure-as-a-Service (IaaS) cloud on top of Red Hat Enterprise Linux. It offers a massively scalable, fault-tolerant platform for the development of cloud-enabled workloads.

This guide describes procedures for creating and managing images and instances. It also covers the procedure for configuring storage for instances in Red Hat OpenStack Platform.

You can manage the cloud using either the OpenStack dashboard or the command-line clients. Most procedures can be carried out using either method; some of the more advanced procedures can only be executed on the command line. This guide provides procedures for the dashboard where possible.

For the complete suite of documentation for Red Hat OpenStack Platform, see Red Hat OpenStack Platform Documentation Suite.

Chapter 1. Image Service

This chapter discusses the steps you can follow to manage images and storage in Red Hat OpenStack Platform.

A virtual machine image is a file that contains a virtual disk with a bootable operating system installed on it. Virtual machine images are supported in different formats. The following formats are available on Red Hat OpenStack Platform:

- RAW - Unstructured disk image format.

- QCOW2 - Disk format supported by the QEMU emulator. This format includes QCOW2v3 (sometimes referred to as QCOW3), which requires QEMU 1.1 or higher.

- ISO - Sector-by-sector copy of the data on a disk, stored in a binary file.

- AKI - Indicates an Amazon Kernel Image.

- AMI - Indicates an Amazon Machine Image.

- ARI - Indicates an Amazon RAMDisk Image.

- VDI - Disk format supported by the VirtualBox virtual machine monitor and the QEMU emulator.

- VHD - Common disk format used by virtual machine monitors from VMware, VirtualBox, and others.

- VMDK - Disk format supported by many common virtual machine monitors.

Although ISO is not normally considered a virtual machine image format, ISOs contain bootable file systems with an installed operating system, so you can treat them the same way as other virtual machine image files.

To download the official Red Hat Enterprise Linux cloud images, your account must have a valid Red Hat Enterprise Linux subscription:

You will be prompted to enter your Red Hat account credentials if you are not logged in to the Customer Portal.

1.1. Understanding the Image Service

The following notable OpenStack Image service (glance) features are available.

1.1.1. Image Signing and Verification

Image signing and verification protects image integrity and authenticity by enabling deployers to sign images and save the signatures and public key certificates as image properties.

By taking advantage of this feature, you can:

- Sign an image using your private key and upload the image, the signature, and a reference to your public key certificate (the verification metadata). The Image service then verifies that the signature is valid.

- Create an image in the Compute service, have the Compute service sign the image, and upload the image and its verification metadata. The Image service again verifies that the signature is valid.

- Request a signed image in the Compute service. The Image service provides the image and its verification metadata, allowing the Compute service to validate the image before booting it.

For information on image signing and verification, refer to the Validate Glance Images chapter of the Manage Secrets with OpenStack Key Manager Guide.

1.1.2. Image conversion

Image conversion converts images by calling the task API while importing an image.

As part of the import workflow, a plugin provides the image conversion. This plugin can be activated or deactivated based on the deployer configuration. Therefore, the deployer needs to specify the preferred format of images for the deployment.

Internally, the Image service receives the bits of the image in a particular format and stores them in a temporary location. The plugin is then triggered to convert the image to the target format, and the converted image is moved to its final destination. When the task is finished, the temporary location is deleted. As a result, the Image service does not retain the format that was uploaded initially.

For more information about image conversion, see Enabling image conversion.

The conversion can be triggered only when importing an image. It does not run when uploading an image. For example:

1.1.3. Image Introspection

Every image format comes with a set of metadata embedded inside the image itself. For example, a stream optimized vmdk would contain the following parameters:

By introspecting this vmdk, you can determine that the disk_type is streamOptimized and the adapter_type is buslogic. These metadata parameters are useful for the consumer of the image. In Compute, the workflow to instantiate a streamOptimized disk is different from the one to instantiate a flat disk. Image introspection extracts this metadata. You can achieve image introspection by calling the task API while importing the image. An administrator can override metadata settings.
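To illustrate the kind of metadata that introspection extracts, the following Python sketch parses the text descriptor of a VMDK file. It is a minimal illustration under stated assumptions, not the Image service implementation: the sample descriptor, the parse_vmdk_descriptor helper, and the mapping of the descriptor keys createType and ddb.adapterType to the disk_type and adapter_type names used above are all chosen for this example.

```python
import re

# Hypothetical sample of a VMDK text descriptor (illustration only).
SAMPLE_DESCRIPTOR = '''\
# Disk DescriptorFile
version=1
createType="streamOptimized"

# The Disk Data Base
ddb.adapterType = "buslogic"
'''

# Assumed mapping from descriptor keys to the property names used in this guide.
KEY_MAP = {"createType": "disk_type", "ddb.adapterType": "adapter_type"}


def parse_vmdk_descriptor(text):
    """Return a dict of the mapped metadata properties found in the descriptor."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = re.match(r'^([\w.]+)\s*=\s*"?([^"]*)"?$', line)
        if match and match.group(1) in KEY_MAP:
            props[KEY_MAP[match.group(1)]] = match.group(2)
    return props


print(parse_vmdk_descriptor(SAMPLE_DESCRIPTOR))
# {'disk_type': 'streamOptimized', 'adapter_type': 'buslogic'}
```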

1.1.4. Interoperable Image Import

The OpenStack Image service provides two methods for importing images using the interoperable image import workflow:

- web-download (default) for importing images from a URI, and

- glance-direct for importing from a local file system.

1.1.5. Improving Scalability with Image Service Caching

Use the glance-api caching mechanism to store copies of images on your local machine and retrieve them automatically to improve scalability. With Image service caching, the glance-api can run on multiple hosts. This means that it does not need to retrieve the same image from back-end storage multiple times. Image service caching does not affect any Image service operations.

To configure Image service caching with TripleO heat templates, complete the following steps.

Procedure

In an environment file, set the value of the GlanceCacheEnabled parameter to true, which automatically sets the flavor value to keystone+cachemanagement in the glance-api.conf heat template:

    parameter_defaults:
      GlanceCacheEnabled: true

Include the environment file in the openstack overcloud deploy command when you redeploy the overcloud.

1.2. Manage Images

The OpenStack Image service (glance) provides discovery, registration, and delivery services for disk and server images. It provides the ability to copy or snapshot a server image, and immediately store it away. Stored images can be used as a template to get new servers up and running quickly and more consistently than installing a server operating system and individually configuring services.

1.2.1. Creating an Image

This section provides you with the steps to manually create OpenStack-compatible images in the QCOW2 format using Red Hat Enterprise Linux 7 ISO files, Red Hat Enterprise Linux 6 ISO files, or Windows ISO files.

1.2.1.1. Use a KVM Guest Image With Red Hat OpenStack Platform

You can use a ready RHEL KVM guest QCOW2 image:

These images are configured with cloud-init and must take advantage of ec2-compatible metadata services for provisioning SSH keys in order to function properly.

Ready Windows KVM guest QCOW2 images are not available.

For the KVM guest images:

- The root account in the image is disabled, but sudo access is granted to a special user named cloud-user.

- There is no root password set for this image.

The root password is locked in /etc/shadow by placing !! in the second field.
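The locked state can be recognized from the second colon-separated field of a shadow entry. The following Python sketch checks a single entry passed in as a string; the is_password_locked helper and both sample entries are hypothetical and are not taken from a real system.

```python
def is_password_locked(shadow_line):
    """Return True if the password field (the second field) starts with '!'."""
    fields = shadow_line.rstrip("\n").split(":")
    if len(fields) < 2:
        raise ValueError("not a valid shadow entry")
    return fields[1].startswith("!")


# Sample entries for illustration only.
locked = "root:!!:18000:0:99999:7:::"
unlocked = "cloud-user:$6$examplesalt$examplehash:18000:0:99999:7:::"

print(is_password_locked(locked))    # True
print(is_password_locked(unlocked))  # False
```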

For an OpenStack instance, it is recommended that you generate an SSH key pair from the OpenStack dashboard or command line and use that key pair to perform SSH public key authentication to the instance as root.

When the instance is launched, this public key will be injected to it. You can then authenticate using the private key downloaded while creating the keypair.

If you do not want to use keypairs, you can use the admin password that has been set using the Inject an admin Password Into an Instance procedure.

If you want to create custom Red Hat Enterprise Linux or Windows images, see Create a Red Hat Enterprise Linux 7 Image, Create a Red Hat Enterprise Linux 6 Image, or Create a Windows Image.

1.2.1.2. Create Custom Red Hat Enterprise Linux or Windows Images

Prerequisites:

- Linux host machine to create an image. This can be any machine on which you can install and run the Linux packages.

- libvirt, virt-manager (run command dnf groupinstall -y @virtualization). This installs all packages necessary for creating a guest operating system.

- Libguestfs tools (run command dnf install -y libguestfs-tools-c). This installs a set of tools for accessing and modifying virtual machine images.

- A Red Hat Enterprise Linux 7 or 6 ISO file (see RHEL 7.2 Binary DVD or RHEL 6.8 Binary DVD) or a Windows ISO file. If you do not have a Windows ISO file, visit the Microsoft TechNet Evaluation Center and download an evaluation image.

- Text editor, if you want to change the kickstart files (RHEL only).

If you install the libguestfs-tools package on the undercloud, disable iscsid.socket to avoid port conflicts with the tripleo_iscsid service on the undercloud:

    $ sudo systemctl disable --now iscsid.socket

In the following procedures, all commands with the [root@host]# prompt should be run on your host machine.

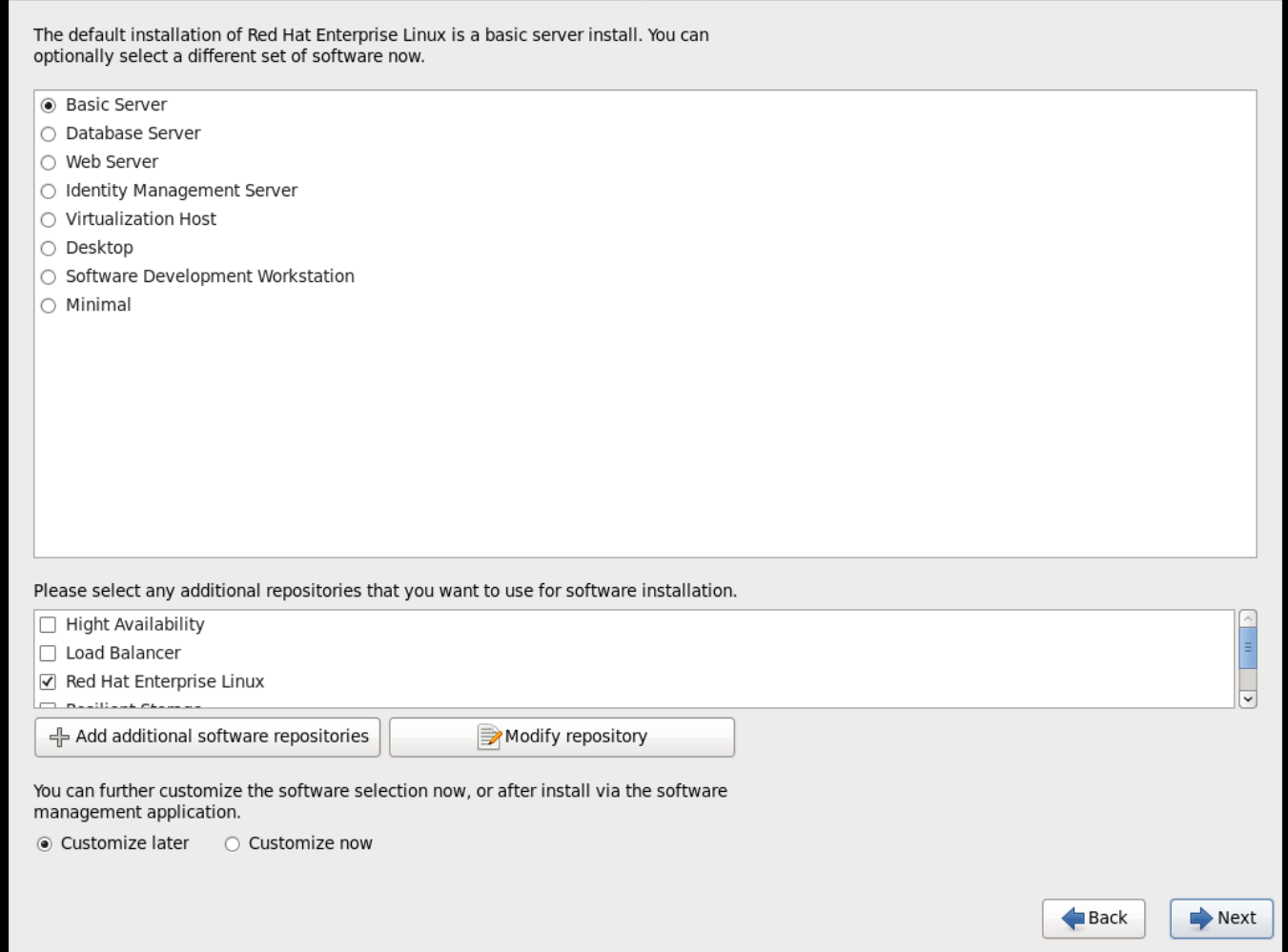

1.2.1.2.1. Create a Red Hat Enterprise Linux 7 Image

This section provides you with the steps to manually create an OpenStack-compatible image in the QCOW2 format using a Red Hat Enterprise Linux 7 ISO file.

Start the installation using virt-install as shown below:

This launches an instance and starts the installation process.

Note: If the instance does not launch automatically, run the virt-viewer command to view the console:

    [root@host]# virt-viewer rhel7

Set up the virtual machine as follows:

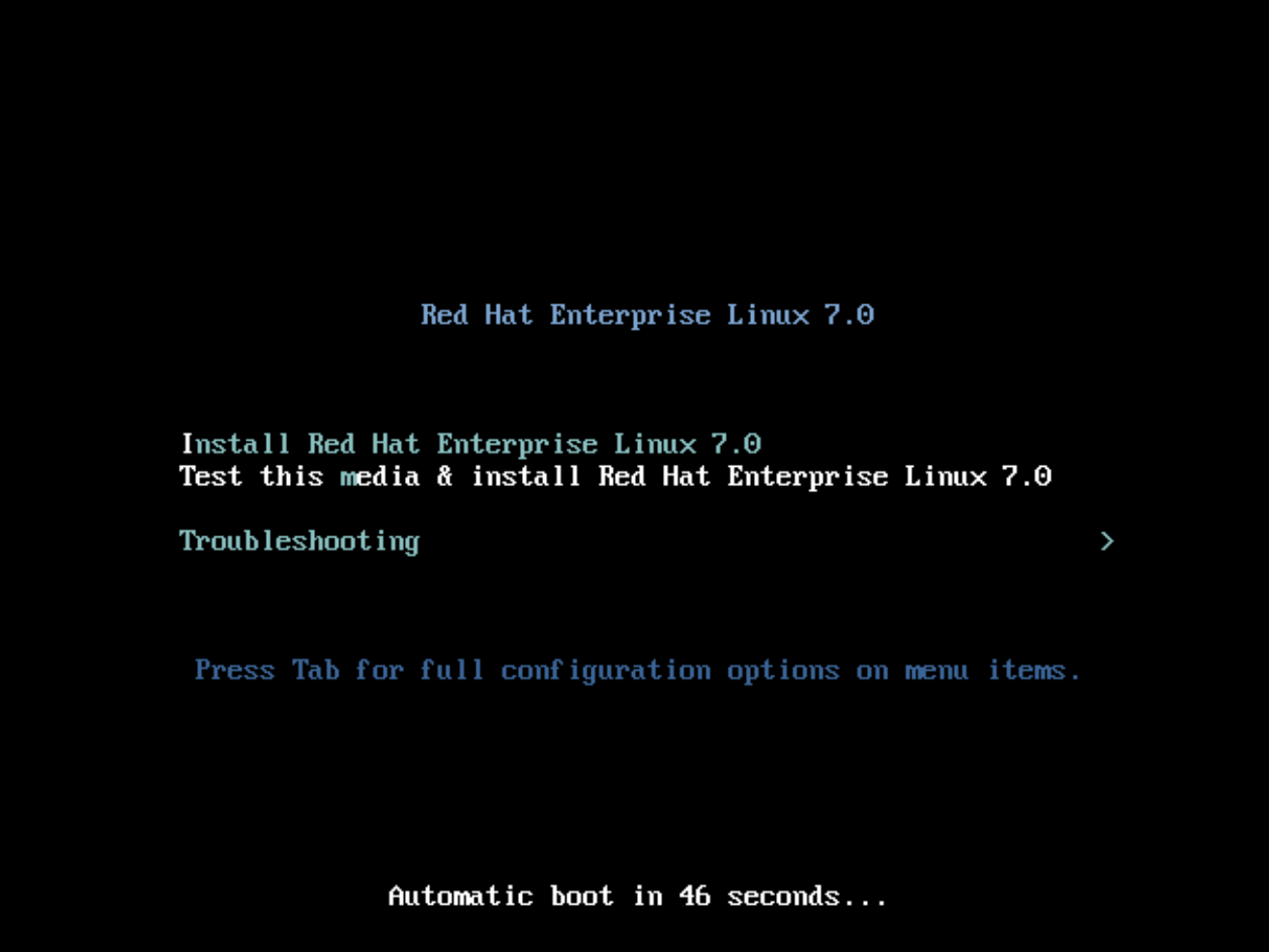

-

At the initial Installer boot menu, choose the

Install Red Hat Enterprise Linux 7.X option.

- Choose the appropriate Language and Keyboard options.

-

When prompted about which type of devices your installation uses, choose Auto-detected installation media.

-

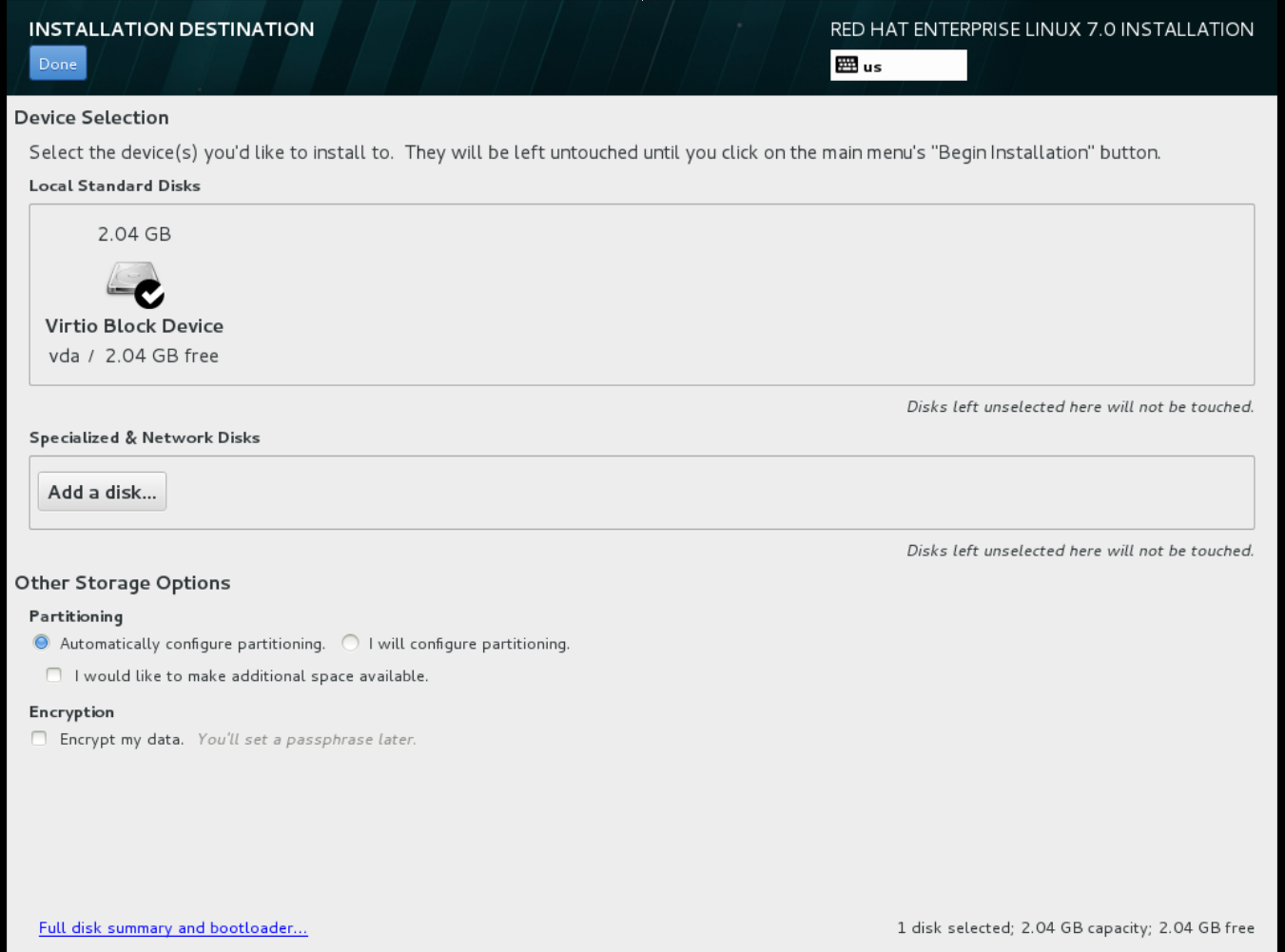

When prompted about which type of installation destination, choose Local Standard Disks.

For other storage options, choose Automatically configure partitioning.

For other storage options, choose Automatically configure partitioning.

- For software selection, choose Minimal Install.

-

For network and host name, choose

eth0for network and choose ahostnamefor your device. The default host name islocalhost.localdomain. -

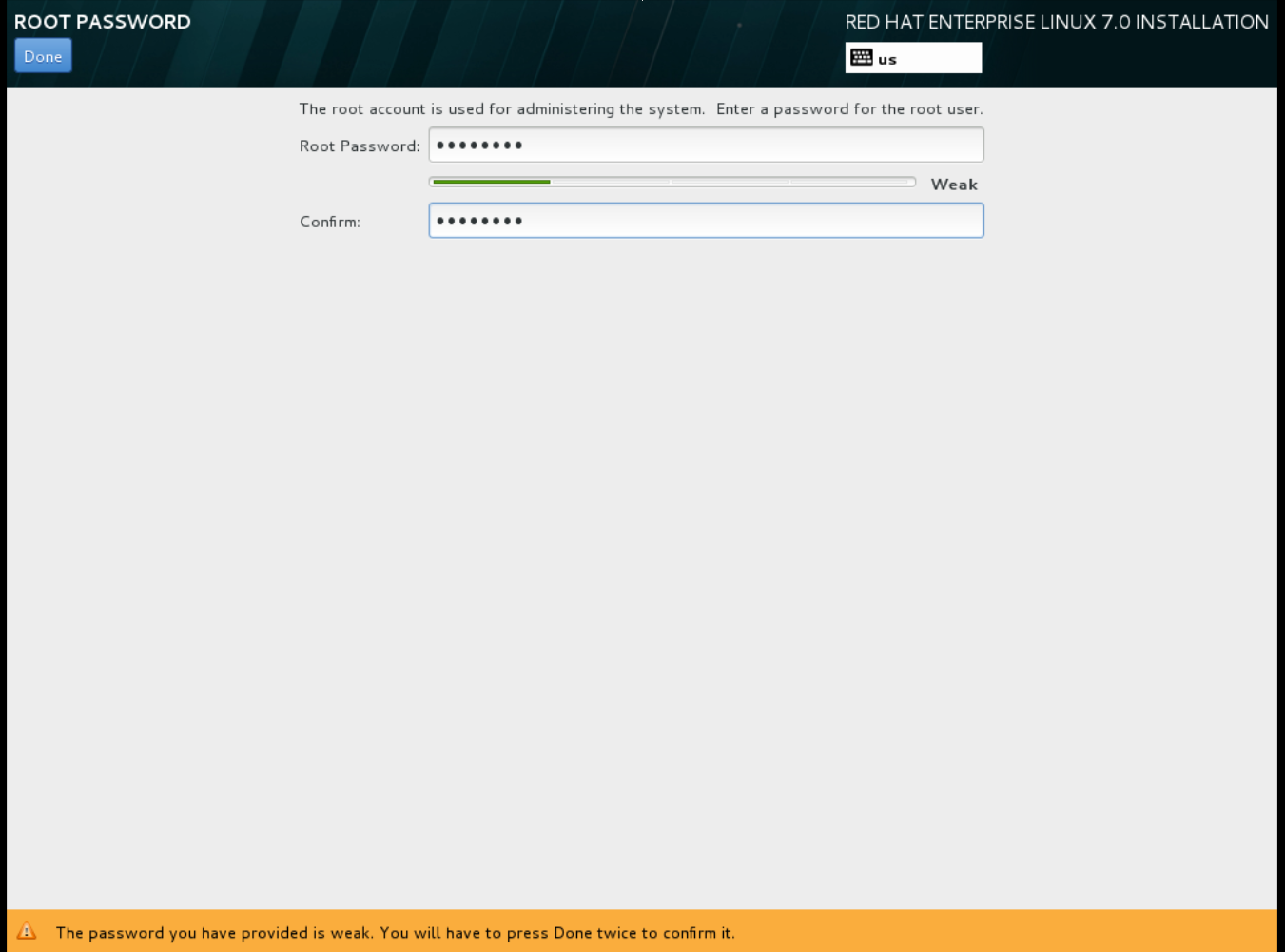

Choose the

rootpassword. The installation process completes and the Complete! screen appears.

The installation process completes and the Complete! screen appears.

-

At the initial Installer boot menu, choose the

- After the installation is complete, reboot the instance and log in as the root user.

Update the /etc/sysconfig/network-scripts/ifcfg-eth0 file so it only contains the following values:

    TYPE=Ethernet
    DEVICE=eth0
    ONBOOT=yes
    BOOTPROTO=dhcp
    NM_CONTROLLED=no

- Reboot the machine.

Register the machine with the Content Delivery Network:

    # sudo subscription-manager register
    # sudo subscription-manager attach --pool=Valid-Pool-Number-123456
    # sudo subscription-manager repos --enable=rhel-7-server-rpms

Update the system:

    # yum -y update

Install the cloud-init packages:

    # yum install -y cloud-utils-growpart cloud-init

Edit the /etc/cloud/cloud.cfg configuration file and under cloud_init_modules add:

    - resolv-conf

The resolv-conf option automatically configures the resolv.conf file when an instance boots for the first time. This file contains information related to the instance such as nameservers, domain, and other options.

Add the following line to /etc/sysconfig/network to avoid problems accessing the EC2 metadata service:

    NOZEROCONF=yes

To ensure the console messages appear in the Log tab on the dashboard and the nova console-log output, add the following boot option to the /etc/default/grub file:

    GRUB_CMDLINE_LINUX_DEFAULT="console=tty0 console=ttyS0,115200n8"

Run the grub2-mkconfig command:

    # grub2-mkconfig -o /boot/grub2/grub.cfg

The output is as follows:

Unregister the virtual machine so that the resulting image does not contain the same subscription details for every instance cloned from it:

    # subscription-manager repos --disable=*
    # subscription-manager unregister
    # yum clean all

Power off the instance:

    # poweroff

Reset and clean the image using the virt-sysprep command so it can be used to create instances without issues:

    [root@host]# virt-sysprep -d rhel7

Reduce image size using the virt-sparsify command. This command converts any free space within the disk image back to free space within the host:

    [root@host]# virt-sparsify --compress /tmp/rhel7.qcow2 rhel7-cloud.qcow2

This creates a new rhel7-cloud.qcow2 file in the location from where the command is run.

The rhel7-cloud.qcow2 image file is ready to be uploaded to the Image service. For more information on uploading this image to your OpenStack deployment using the dashboard, see Upload an Image.

1.2.1.2.2. Create a Red Hat Enterprise Linux 6 Image

This section provides you with the steps to manually create an OpenStack-compatible image in the QCOW2 format using a Red Hat Enterprise Linux 6 ISO file.

Start the installation using virt-install:

This launches an instance and starts the installation process.

Note: If the instance does not launch automatically, run the virt-viewer command to view the console:

    [root@host]# virt-viewer rhel6

Set up the virtual machine as follows:

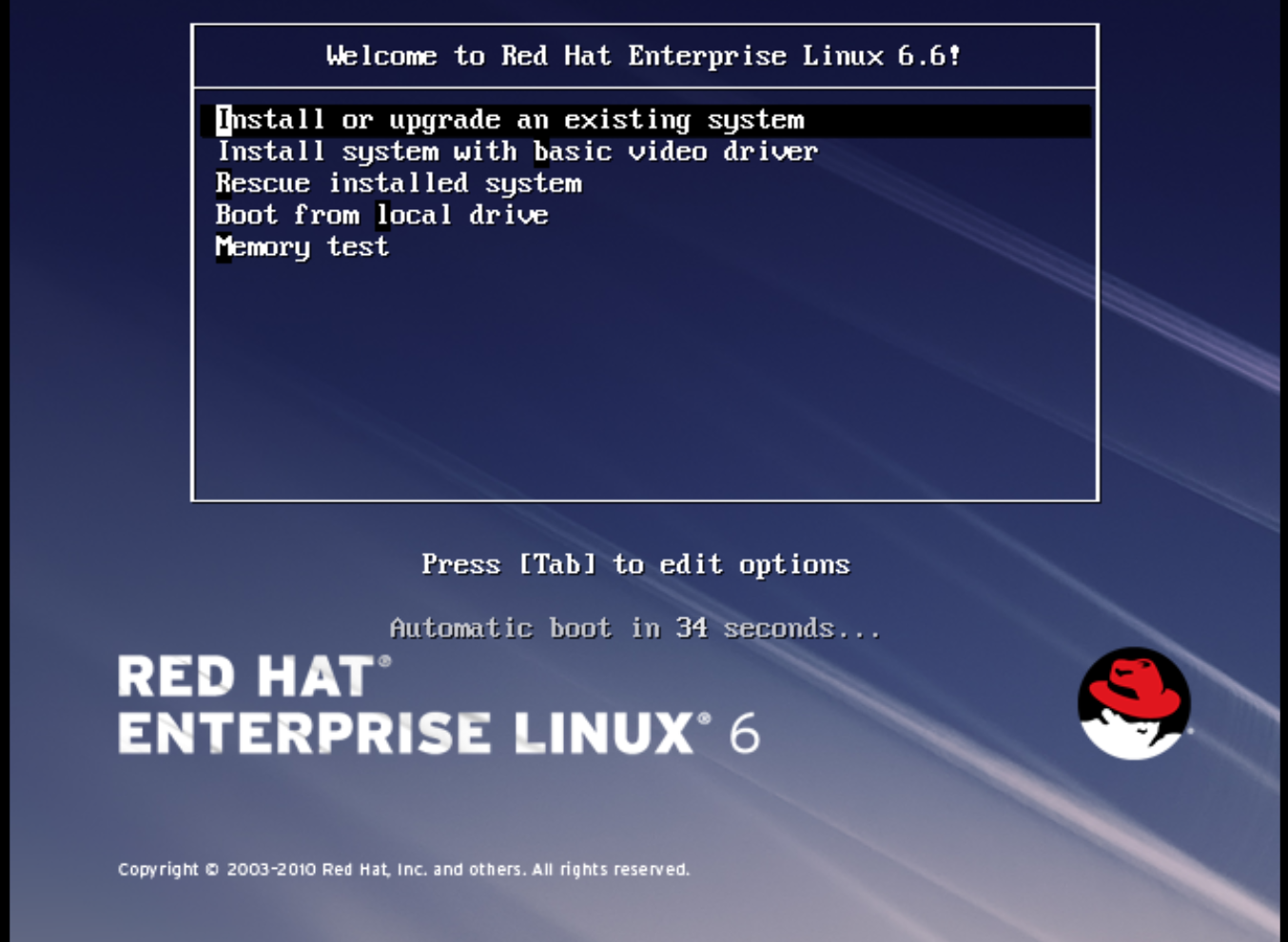

At the initial Installer boot menu, choose the Install or upgrade an existing system option.

Step through the installation prompts. Accept the defaults.

Step through the installation prompts. Accept the defaults.

The installer checks for the disc and lets you decide whether you want to test your installation media before installation. Select OK to run the test or Skip to proceed without testing.

- Choose the appropriate Language and Keyboard options.

-

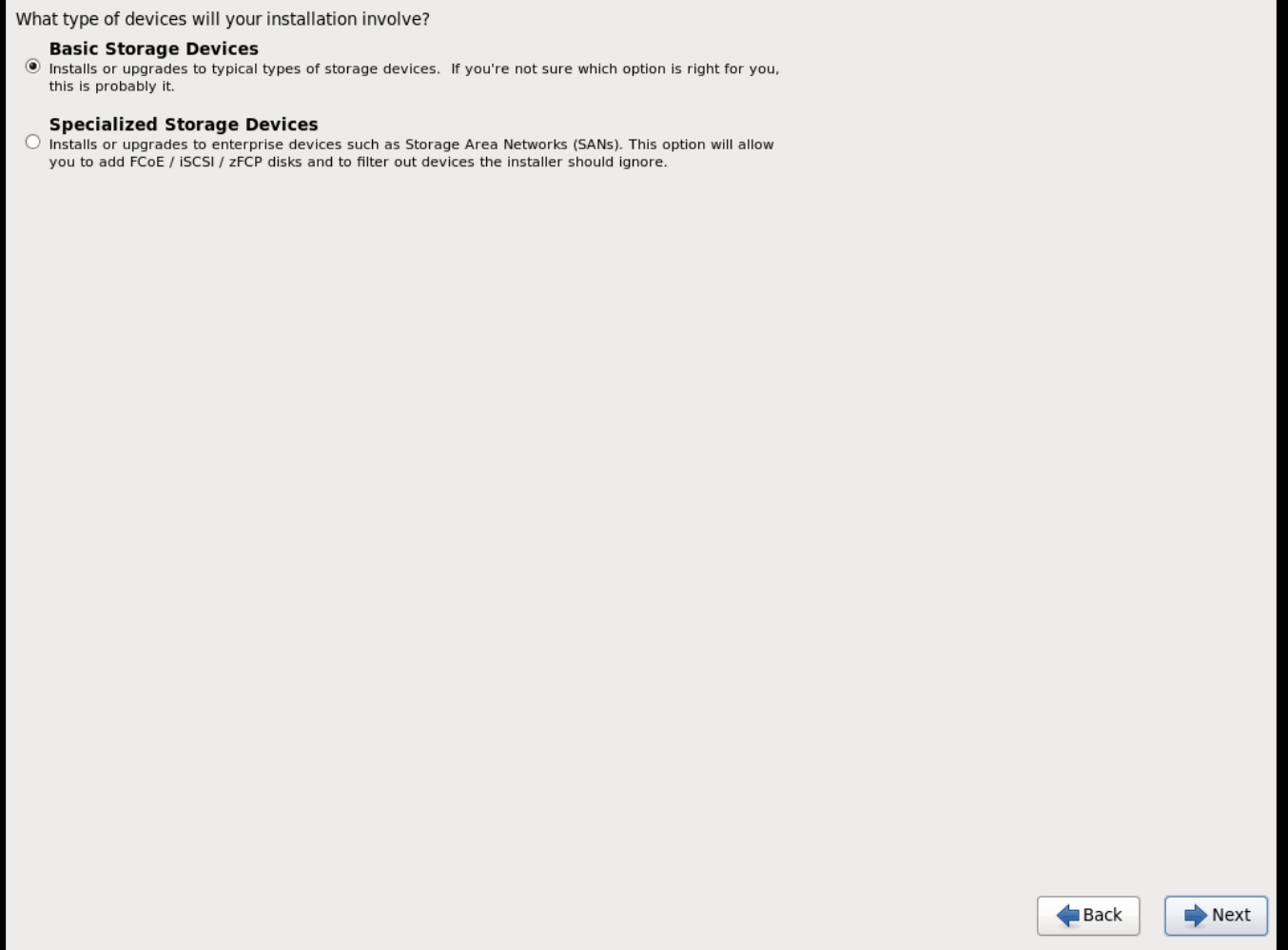

When prompted about which type of devices your installation uses, choose Basic Storage Devices.

-

Choose a

hostnamefor your device. The default host name islocalhost.localdomain. -

Set timezone and

rootpassword. -

Based on the space on the disk, choose the type of installation.

-

Choose the Basic Server install, which installs an SSH server.

- The installation process completes and Congratulations, your Red Hat Enterprise Linux installation is complete screen appears.

- Reboot the instance and log in as the root user.

Update the /etc/sysconfig/network-scripts/ifcfg-eth0 file so it only contains the following values:

    TYPE=Ethernet
    DEVICE=eth0
    ONBOOT=yes
    BOOTPROTO=dhcp
    NM_CONTROLLED=no

- Reboot the machine.

Register the machine with the Content Delivery Network:

    # sudo subscription-manager register
    # sudo subscription-manager attach --pool=Valid-Pool-Number-123456
    # sudo subscription-manager repos --enable=rhel-6-server-rpms

Update the system:

    # yum -y update

Install the cloud-init packages:

    # yum install -y cloud-utils-growpart cloud-init

Edit the /etc/cloud/cloud.cfg configuration file and under cloud_init_modules add:

    - resolv-conf

The resolv-conf option automatically configures the resolv.conf configuration file when an instance boots for the first time. This file contains information related to the instance such as nameservers, domain, and other options.

To prevent network issues, create the /etc/udev/rules.d/75-persistent-net-generator.rules file as follows:

    # echo "#" > /etc/udev/rules.d/75-persistent-net-generator.rules

This prevents the /etc/udev/rules.d/70-persistent-net.rules file from being created. If /etc/udev/rules.d/70-persistent-net.rules is created, networking may not function properly when booting from snapshots (the network interface is created as "eth1" rather than "eth0" and no IP address is assigned).

Add the following line to /etc/sysconfig/network to avoid problems accessing the EC2 metadata service:

    NOZEROCONF=yes

To ensure the console messages appear in the Log tab on the dashboard and the nova console-log output, add the following boot option to the /etc/grub.conf file:

    console=tty0 console=ttyS0,115200n8

Unregister the virtual machine so that the resulting image does not contain the same subscription details for every instance cloned from it:

    # subscription-manager repos --disable=*
    # subscription-manager unregister
    # yum clean all

Power off the instance:

    # poweroff

Reset and clean the image using the virt-sysprep command so it can be used to create instances without issues:

    [root@host]# virt-sysprep -d rhel6

Reduce image size using the virt-sparsify command. This command converts any free space within the disk image back to free space within the host:

    [root@host]# virt-sparsify --compress rhel6.qcow2 rhel6-cloud.qcow2

This creates a new rhel6-cloud.qcow2 file in the location from where the command is run.

Note: You will need to manually resize the partitions of instances based on the image in accordance with the disk space in the flavor that is applied to the instance.

The rhel6-cloud.qcow2 image file is ready to be uploaded to the Image service. For more information on uploading this image to your OpenStack deployment using the dashboard, see Upload an Image.

1.2.1.2.3. Create a Windows Image

This section provides you with the steps to manually create an OpenStack-compatible image in the QCOW2 format using a Windows ISO file.

Start the installation using virt-install as shown below:

Replace the values of the virt-install parameters as follows:

- name: the name that the Windows guest should have.

- size: disk size in GB.

- path: the path to the Windows installation ISO file.

- RAM: the requested amount of RAM in MB.

Note: The --os-type=windows parameter ensures that the clock is set up correctly for the Windows guest, and enables its Hyper-V enlightenment features.

Note that virt-install saves the guest image as /var/lib/libvirt/images/name.qcow2 by default. If you want to keep the guest image elsewhere, change the parameter of the --disk option as follows:

    --disk path=filename,size=size

Replace filename with the name of the file which should store the guest image (and optionally its path); for example, path=win8.qcow2,size=8 creates an 8 GB file named win8.qcow2 in the current working directory.

Tip: If the guest does not launch automatically, run the virt-viewer command to view the console:

    [root@host]# virt-viewer name

- Installation of Windows systems is beyond the scope of this document. For instructions on how to install Windows, see the relevant Microsoft documentation.

- To allow the newly installed Windows system to use the virtualized hardware, you may need to install virtio drivers in it. To do so, first install the virtio-win package on the host system. This package contains the virtio ISO image, which is to be attached as a CD-ROM drive to the Windows guest. See Chapter 8. KVM Para-virtualized (virtio) Drivers in the Virtualization Deployment and Administration Guide for detailed instructions on how to install the virtio-win package, add the virtio ISO image to the guest, and install the virtio drivers.

- To complete the setup, download and execute Cloudbase-Init on the Windows system. At the end of the installation of Cloudbase-Init, select the Run Sysprep and Shutdown check boxes. The Sysprep tool makes the guest unique by generating an OS ID, which is used by certain Microsoft services.

Important: Red Hat does not provide technical support for Cloudbase-Init. If you encounter an issue, contact Cloudbase Solutions.

When the Windows system shuts down, the name.qcow2 image file is ready to be uploaded to the Image service. For more information on uploading this image to your OpenStack deployment using the dashboard or the command line, see Upload an Image.

1.2.1.3. Use libosinfo

Image Service (glance) can process libosinfo data for images, making it easier to configure the optimal virtual hardware for an instance. This can be done by adding the libosinfo-formatted operating system name to the glance image.

This example specifies that the image with ID 654dbfd5-5c01-411f-8599-a27bd344d79b uses the libosinfo value of rhel7.2:

    $ openstack image set 654dbfd5-5c01-411f-8599-a27bd344d79b --property os_name=rhel7.2

As a result, Compute will supply virtual hardware optimized for rhel7.2 whenever an instance is built using the 654dbfd5-5c01-411f-8599-a27bd344d79b image.

Note: For a complete list of libosinfo values, refer to the libosinfo project: https://gitlab.com/libosinfo/osinfo-db/tree/master/data/os

1.2.2. Upload an Image

- In the dashboard, select Project > Compute > Images.

- Click Create Image.

- Fill out the values, and click Create Image when finished.

| Field | Notes |
|---|---|
| Name | Name for the image. The name must be unique within the project. |
| Description | Brief description to identify the image. |
| Image Source | Image source: Image Location or Image File. Based on your selection, the next field is displayed. |
| Image Location or Image File | |
| Format | Image format (for example, qcow2). |
| Architecture | Image architecture. For example, use i686 for a 32-bit architecture or x86_64 for a 64-bit architecture. |
| Minimum Disk (GB) | Minimum disk size required to boot the image. If this field is not specified, the default value is 0 (no minimum). |
| Minimum RAM (MB) | Minimum memory size required to boot the image. If this field is not specified, the default value is 0 (no minimum). |
| Public | If selected, makes the image public to all users with access to the project. |
| Protected | If selected, ensures only users with specific permissions can delete this image. |
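The Minimum Disk and Minimum RAM fields can be read as lower bounds on the resources an instance needs to boot the image. The following Python sketch shows one way to reason about them; the flavor_meets_image_minimums helper is hypothetical, and comparing the minimums against a flavor's disk and RAM is an assumption made for illustration rather than a description of the service's internal checks.

```python
def flavor_meets_image_minimums(flavor_disk_gb, flavor_ram_mb,
                                min_disk_gb=0, min_ram_mb=0):
    """Return True if the flavor is at least as large as the image minimums.

    A minimum of 0 means "no minimum", so any flavor satisfies it.
    """
    return flavor_disk_gb >= min_disk_gb and flavor_ram_mb >= min_ram_mb


# No minimums set on the image: any flavor qualifies.
print(flavor_meets_image_minimums(20, 2048))                  # True
# Image requires 20 GB of disk, flavor offers only 10 GB.
print(flavor_meets_image_minimums(10, 2048, min_disk_gb=20))  # False
```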

When the image has been successfully uploaded, its status changes to active, which indicates that the image is available for use. The Image service can handle even large images that take longer to upload than the lifetime of the Identity service token that was used when the upload was initiated. This is possible because the Image service first creates a trust with the Identity service so that a new token can be obtained and used when the upload is complete and the status of the image is to be updated.

You can also use the glance image-create command with the property option to upload an image. More values are available on the command line. For a complete listing, see Image Configuration Parameters.

1.2.3. Update an Image

- In the dashboard, select Project > Compute > Images.

- Click Edit Image from the dropdown list.

Note: The Edit Image option is available only when you log in as an admin user. When you log in as a demo user, you have the option to Launch an instance or Create Volume.

- Update the fields and click Update Image when finished. You can update the following values: name, description, kernel ID, ramdisk ID, architecture, format, minimum disk, minimum RAM, public, protected.

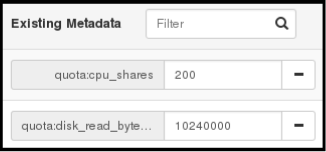

- Click the drop-down menu and select Update Metadata option.

- Specify metadata by adding items from the left column to the right one. In the left column, there are metadata definitions from the Image Service Metadata Catalog. Select Other to add metadata with the key of your choice and click Save when finished.

You can also use the glance image-update command with the property option to update an image. More values are available on the command line; for a complete listing, see Image Configuration Parameters.

1.2.4. Import an Image

You can import images into the Image service (glance) using web-download to import an image from a URI and glance-direct to import an image from a local file system. Both options are enabled by default.

Import methods are configured by the cloud administrator. Run the glance import-info command to list available import options.

1.2.4.1. Import from a Remote URI

You can use the web-download method to copy an image from a remote URI.

Create an image and specify the URI of the image to import:

    glance image-create --uri <URI>

You can monitor the image’s availability using the glance image-show <image-ID> command, where the ID is the one provided during image creation.

The Image service web-download method uses a two-stage process to perform the import. First, it creates an image record. Second, it retrieves the image from the specified URI. This method provides a more secure way to import images than the deprecated copy-from method used in Image API v1.

The URI is subject to optional blacklist and whitelist filtering as described in the Advanced Overcloud Customization Guide.

The Image Property Injection plugin may inject metadata properties to the image as described in the Advanced Overcloud Customization Guide. These injected properties determine which compute nodes the image instances are launched on.

1.2.4.2. Import from a Local Volume

The glance-direct method creates an image record, which generates an image ID. Once the image is uploaded to the service from a local volume, it is stored in a staging area and is made active after it passes any configured checks. The glance-direct method requires a shared staging area when used in a highly available (HA) configuration.

Image uploads using the glance-direct method fail in an HA environment if a common staging area is not present. In an HA active-active environment, API calls are distributed to the glance controllers. The download API call could be sent to a different controller than the API call to upload the image. For more information about configuring the staging area, refer to the Storage Configuration section in the Advanced Overcloud Customization Guide.

The glance-direct method uses three different calls to import an image:

- glance image-create

- glance image-stage

- glance image-import

You can use the glance image-create-via-import command to perform all three of these calls in one command. In the example below, uppercase words should be replaced with the appropriate options.

    glance image-create-via-import --container-format FORMAT --disk-format DISKFORMAT --name NAME --file /PATH/TO/IMAGE

Once the image moves from the staging area to the back end location, the image is listed. However, it may take some time for the image to become active.
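If you script the single-command import, it can help to assemble the arguments in one place. The following Python sketch builds the argument list for the command shown above. The build_import_command helper, the image name, and the file path are hypothetical; qcow2 and bare are used here only as sample values for the disk and container formats.

```python
import shlex


def build_import_command(name, image_path, disk_format, container_format):
    """Return the argument list for glance image-create-via-import."""
    return [
        "glance", "image-create-via-import",
        "--container-format", container_format,
        "--disk-format", disk_format,
        "--name", name,
        "--file", image_path,
    ]


# Sample values for illustration only.
command = build_import_command("rhel7-cloud", "/tmp/rhel7-cloud.qcow2",
                               "qcow2", "bare")
print(shlex.join(command))
```

Keeping the command as a list avoids shell quoting problems if the image name or path contains spaces; the list can be passed to subprocess.run(command, check=True) on a host where the glance client is installed and configured.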

You can monitor the image’s availability using the glance image-show <image-ID> command where the ID is the one provided during image creation.
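Monitoring can also be scripted as a polling loop that waits for the active status. The following Python sketch shows one possible shape for such a loop. The get_status argument is a hypothetical callable that you supply (for example, a wrapper that runs glance image-show and extracts the status field); it is not part of any OpenStack client. The intermediate status values in the usage example are sample data.

```python
import time


def wait_until_active(get_status, image_id, interval=5.0, max_checks=60,
                      sleep=time.sleep):
    """Poll get_status(image_id) until it returns 'active'.

    Returns the number of checks made; raises TimeoutError if the image
    is still not active after max_checks checks.
    """
    for check in range(1, max_checks + 1):
        if get_status(image_id) == "active":
            return check
        sleep(interval)
    raise TimeoutError(f"image {image_id} not active after {max_checks} checks")


# Usage with a stand-in status source (sample data, no OpenStack calls).
_statuses = iter(["queued", "importing", "active"])
checks = wait_until_active(lambda _id: next(_statuses), "example-id",
                           sleep=lambda seconds: None)
print(checks)  # 3
```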

1.2.5. Delete an Image

- In the dashboard, select Project > Compute > Images.

- Select the image you want to delete and click Delete Images.

1.2.6. Hide or Unhide an Image

You can hide public images from the normal listings presented to users. For instance, you can hide obsolete CentOS 7 images and show only the latest version to simplify the user experience. Users can still discover and use hidden images.

To hide an image:

    glance image-update <image-id> --hidden 'true'

To create a hidden image, add the --hidden argument to the glance image-create command.

To unhide an image:

    glance image-update <image-id> --hidden 'false'

1.2.8. Enabling image conversion

With the GlanceImageImportPlugins parameter enabled, you can upload a QCOW2 image, and the Image service will convert it to RAW.

Image conversion is automatically enabled when you use Red Hat Ceph Storage RBD to store images and boot Nova instances.

To enable image conversion, create an environment file that contains the following parameter value and include the new environment file with the -e option in the openstack overcloud deploy command:

    parameter_defaults:
      GlanceImageImportPlugins: 'image_conversion'

1.2.9. Converting an image to RAW format

Red Hat Ceph Storage can store, but does not support using, QCOW2 images to host virtual machine (VM) disks.

When you upload a QCOW2 image and create a VM from it, the compute node downloads the image, converts the image to RAW, and uploads it back into Ceph, which can then use it. This process affects the time it takes to create VMs, especially during parallel VM creation.

For example, when you create multiple VMs simultaneously, uploading the converted image to the Ceph cluster may impact already running workloads. The upload process can starve those workloads of IOPS and impede storage responsiveness.

To boot VMs in Ceph more efficiently (ephemeral back end or boot from volume), the glance image format must be RAW.

Converting an image to RAW may yield an image that is larger in size than the original QCOW2 image file. Run the following command before the conversion to determine the final RAW image size:

qemu-img info <image>.qcow2

To convert an image from QCOW2 to RAW format, do the following:

qemu-img convert -p -f qcow2 -O raw <original qcow2 image>.qcow2 <new raw image>.raw
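The size check before conversion can also be scripted. The following is a minimal sketch, assuming that qemu-img info is run with its --output=json option and that the report contains the virtual-size and actual-size fields. The function name and the sample values are hypothetical and are not part of the product.

```python
import json


def raw_size_estimate(info_json: str) -> dict:
    """Summarize sizes from the JSON report of `qemu-img info --output=json`."""
    info = json.loads(info_json)
    virtual = info["virtual-size"]       # bytes the guest sees; length of a RAW copy
    actual = info.get("actual-size", 0)  # bytes the source file currently uses on disk
    return {
        "format": info.get("format"),
        "raw_bytes": virtual,
        "growth_bytes": max(virtual - actual, 0),
    }


# Hypothetical report for a 10 GiB QCOW2 image that uses 1 GiB on disk.
sample = json.dumps({
    "format": "qcow2",
    "virtual-size": 10 * 1024**3,
    "actual-size": 1 * 1024**3,
})
print(raw_size_estimate(sample))
```

In practice you would pass the captured output of the command instead of the sample string. The growth figure is an upper bound, because a RAW file can be stored sparsely on some file systems.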

1.2.9.1. Configuring Image Service to accept RAW and ISO only

Optionally, to configure the Image Service to accept only RAW and ISO image formats, deploy using an additional environment file that contains the following:

    parameter_defaults:
      ExtraConfig:
        glance::config::api_config:
          image_format/disk_formats:
            value: "raw,iso"

1.2.10. Storing an image in RAW format

With the GlanceImageImportPlugins parameter enabled, run the following command to store a previously created image in RAW format:

- For --name, replace NAME with the name of the image; this is the name that will appear in glance image-list.

- For --uri, replace http://server/image.qcow2 with the location and file name of the QCOW2 image.

This command example creates the image record and imports it by using the web-download method. The glance-api downloads the image from the --uri location during the import process. If web-download is not available, glanceclient cannot automatically download the image data. Run the glance import-info command to list the available image import methods.

Chapter 2. Configuring the Compute (nova) service

Use environment files to customize the Compute (nova) service. Puppet generates and stores this configuration in the /var/lib/config-data/puppet-generated/<nova_container>/etc/nova/nova.conf file. Use the following configuration methods to customize the Compute service configuration:

Heat parameters - as detailed in the Compute (nova) Parameters section in the Overcloud Parameters guide. For example:

Puppet parameters - as defined in /etc/puppet/modules/nova/manifests/*:

    parameter_defaults:
      ComputeExtraConfig:
        nova::compute::force_raw_images: True

Note: Only use this method if an equivalent heat parameter does not exist.

Manual hieradata overrides - for customizing parameters when no heat or Puppet parameter exists. For example, the following sets the disk_allocation_ratio in the [DEFAULT] section on the Compute role:

    parameter_defaults:
      ComputeExtraConfig:
        nova::config::nova_config:
          DEFAULT/disk_allocation_ratio:
            value: '2.0'

If a heat parameter exists, it must be used instead of the Puppet parameter; if a Puppet parameter exists, but not a heat parameter, then the Puppet parameter must be used instead of the manual override method. The manual override method must only be used if there is no equivalent heat or Puppet parameter.
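The order of preference described above can be summarized as a small decision function. This is only an illustrative sketch; the function name and its boolean inputs are hypothetical, and you still need to check the parameter references to learn which parameters exist.

```python
def choose_config_method(has_heat_parameter: bool, has_puppet_parameter: bool) -> str:
    """Return the configuration method to use, in the documented order of preference."""
    if has_heat_parameter:
        # A heat parameter always takes precedence.
        return "heat parameter"
    if has_puppet_parameter:
        # Use Puppet only when no equivalent heat parameter exists.
        return "puppet parameter"
    # Last resort: neither a heat nor a Puppet parameter exists.
    return "manual hieradata override"


print(choose_config_method(has_heat_parameter=False, has_puppet_parameter=True))
```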

Follow the guidance in Identifying Parameters to Modify to determine if a heat or Puppet parameter is available for customizing a particular configuration.

See Parameters in the Advanced Overcloud Customization guide for further details on configuring overcloud services.

2.1. Configuring memory for overallocation

When you use memory overcommit (NovaRAMAllocationRatio >= 1.0), you need to deploy your overcloud with enough swap space to support the allocation ratio.

If your NovaRAMAllocationRatio parameter is set to < 1, follow the RHEL recommendations for swap size. For more information, see Recommended system swap space in the RHEL Managing Storage Devices guide.

Prerequisites

- You have calculated the swap size your node requires. For more information, see Section 2.3, “Calculating swap size”.

Procedure

- Copy the /usr/share/openstack-tripleo-heat-templates/environments/enable-swap.yaml file to your environment file directory:

    $ cp /usr/share/openstack-tripleo-heat-templates/environments/enable-swap.yaml /home/stack/templates/enable-swap.yaml

- Configure the swap size by adding the following parameters to your enable-swap.yaml file:

    parameter_defaults:
      swap_size_megabytes: <swap size in MB>
      swap_path: <full path to location of swap, default: /swap>

- To apply this configuration, add the enable-swap.yaml environment file to the stack with your other environment files and deploy the overcloud:

    (undercloud) $ openstack overcloud deploy --templates \
      -e [your environment files] \
      -e /home/stack/templates/enable-swap.yaml

2.2. Calculating reserved host memory on Compute nodes

To determine the total amount of RAM to reserve for host processes, you need to allocate enough memory for each of the following:

- The resources that run on the node. For instance, an OSD consumes 3 GB of memory.

- The emulator overhead required to virtualize instances on a host.

- The hypervisor for each instance.

After you calculate the additional demands on memory, use the following formula to help you determine the amount of memory to reserve for host processes on each node:

NovaReservedHostMemory = total_RAM - ( (vm_no * (avg_instance_size + overhead)) + (resource1 * resource_ram) + (resource_n * resource_ram))

- Replace vm_no with the number of instances.

- Replace avg_instance_size with the average amount of memory each instance can use.

- Replace overhead with the hypervisor overhead required for each instance.

- Replace resource1 with the number of a resource type on the node.

- Replace resource_ram with the amount of RAM each resource of this type requires.
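The formula above can be checked with a short calculation. The following sketch applies the formula exactly as written. The function name and all input values are hypothetical, apart from the 3 GB per OSD figure mentioned earlier in this section.

```python
def reserved_host_memory_mb(total_ram_mb, vm_no, avg_instance_size_mb,
                            overhead_mb, resources):
    """Apply the NovaReservedHostMemory formula; all values are in MB.

    `resources` is a list of (count, ram_per_resource_mb) pairs,
    one pair for each resource type that runs on the node.
    """
    instance_ram = vm_no * (avg_instance_size_mb + overhead_mb)
    resource_ram = sum(count * ram_mb for count, ram_mb in resources)
    return total_ram_mb - (instance_ram + resource_ram)


# Hypothetical node: 64 GiB RAM, 20 instances of 2 GiB each with 512 MB
# overhead per instance, and 2 OSDs at 3 GB (3072 MB) each.
example = reserved_host_memory_mb(65536, 20, 2048, 512, [(2, 3072)])
print(example)  # 65536 - (51200 + 6144) = 8192
```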

2.3. Calculating swap size

The allocated swap size must be large enough to handle any memory overcommit. You can use the following formulas to calculate the swap size your node requires:

- overcommit_ratio = NovaRAMAllocationRatio - 1

- Minimum swap size (MB) = (total_RAM * overcommit_ratio) + RHEL_min_swap

- Recommended (maximum) swap size (MB) = total_RAM * (overcommit_ratio + percentage_of_RAM_to_use_for_swap)

The percentage_of_RAM_to_use_for_swap variable creates a buffer to account for QEMU overhead and any other resources consumed by the operating system or host services.

For instance, to use 25% of the available RAM for swap, with 64GB total RAM, and NovaRAMAllocationRatio set to 1:

- Recommended (maximum) swap size = 64000 MB * (0 + 0.25) = 16000 MB

For information on how to calculate the NovaReservedHostMemory value, see Section 2.2, “Calculating reserved host memory on Compute nodes”.

For information on how to determine the RHEL_min_swap value, see Recommended system swap space in the RHEL Managing Storage Devices guide.
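The swap size formulas in this section can be expressed as a short calculation. This is a sketch only: the function names are hypothetical, and the RHEL_min_swap value of 4096 MB in the second call is an assumed placeholder that you must replace with the value from the RHEL recommendations.

```python
def overcommit_ratio(nova_ram_allocation_ratio):
    """overcommit_ratio = NovaRAMAllocationRatio - 1"""
    return nova_ram_allocation_ratio - 1


def minimum_swap_mb(total_ram_mb, nova_ram_allocation_ratio, rhel_min_swap_mb):
    """Minimum swap size (MB) = (total_RAM * overcommit_ratio) + RHEL_min_swap"""
    return total_ram_mb * overcommit_ratio(nova_ram_allocation_ratio) + rhel_min_swap_mb


def recommended_swap_mb(total_ram_mb, nova_ram_allocation_ratio, swap_fraction):
    """Recommended (maximum) swap size (MB) =
    total_RAM * (overcommit_ratio + percentage_of_RAM_to_use_for_swap)"""
    return total_ram_mb * (overcommit_ratio(nova_ram_allocation_ratio) + swap_fraction)


# Example from this section: 64000 MB RAM, allocation ratio 1, 25% of RAM for swap.
print(recommended_swap_mb(64000, 1.0, 0.25))  # 16000.0

# Hypothetical overcommitted node: ratio 1.5 with an assumed RHEL_min_swap of 4096 MB.
print(minimum_swap_mb(64000, 1.5, 4096))      # 36096.0
```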

Chapter 3. Configure OpenStack Compute Storage

This chapter describes the architecture for the back-end storage of images in OpenStack Compute (nova), and provides basic configuration options.

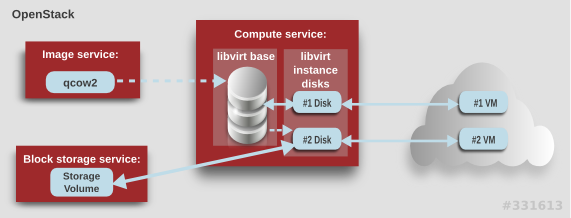

3.1. Architecture Overview

In Red Hat OpenStack Platform, the OpenStack Compute service uses the KVM hypervisor to execute compute workloads. The libvirt driver handles all interactions with KVM, and enables the creation of virtual machines.

Two types of libvirt storage must be considered for Compute:

- Base image, which is a cached and formatted copy of the Image service image.

- Instance disk, which is created using the libvirt base and is the back end for the virtual machine instance. Instance disk data can be stored either in Compute’s ephemeral storage (using the libvirt base) or in persistent storage (for example, using Block Storage).

The steps that Compute takes to create a virtual machine instance are:

- Cache the Image service’s backing image as the libvirt base.

- Convert the base image to the raw format (if configured).

- Resize the base image to match the VM’s flavor specifications.

- Use the base image to create the libvirt instance disk.

In the diagram above, the #1 instance disk uses ephemeral storage; the #2 disk uses a block-storage volume.

Ephemeral storage is an empty, unformatted, additional disk available to an instance. This storage value is defined by the instance flavor. The value provided by the user must be less than or equal to the ephemeral value defined for the flavor. The default value is 0, meaning no ephemeral storage is created.

The ephemeral disk appears in the same way as a plugged-in hard drive or thumb drive. It is available as a block device which you can check using the lsblk command. You can format it, mount it, and use it however you normally would a block device. There is no way to preserve or reference that disk beyond the instance it is attached to.

A block storage volume is persistent storage that is available to an instance regardless of the state of the running instance.

3.2. Configuration

You can configure performance tuning and security for your virtual disks by customizing the Compute (nova) configuration files. Compute is configured in custom environment files and heat templates using the parameters detailed in the Compute (nova) Parameters section in the Overcloud Parameters guide. This configuration is generated and stored in the /var/lib/config-data/puppet-generated/<nova_container>/etc/nova/nova.conf file, as detailed in the following table.

| Section | Parameter | Description | Default |
|---|---|---|---|
| [DEFAULT] | | Whether to convert a Converting the base to raw uses more space for any image that could have been used directly by the hypervisor (for example, a qcow2 image). If you have a system with slower I/O or less available space, you might want to specify false, trading the higher CPU requirements of compression for that of minimized input bandwidth. Raw base images are always used with | |
| [DEFAULT] | | Whether to use CoW (Copy on Write) images for | true |
| [DEFAULT] | | Preallocation mode for Even when not using CoW instance disks, the copy each VM gets is sparse and so the VM may fail unexpectedly at run time with ENOSPC. By running | none |
| [DEFAULT] | | Whether to enable direct resizing of the base image by accessing the image over a block device (boolean). This is only necessary for images with older versions of Because this parameter enables the direct mounting of images which might otherwise be disabled for security reasons, it is not enabled by default. | |
| [DEFAULT] | | The default format that is used for a new ephemeral volume. Value can be: | |
| [DEFAULT] | | Number of seconds to wait between runs of the image cache manager, which impacts base caching on libvirt compute nodes. This period is used in the auto removal of unused cached images (see | |
| [DEFAULT] | | Whether to enable the automatic removal of unused base images (checked every | |
| [DEFAULT] | | How old an unused base image must be before being removed from the | |
| [ | | Image type to use for | |

Chapter 4. Virtual Machine Instances

OpenStack Compute is the central component that provides virtual machines on demand. Compute interacts with the Identity service for authentication, Image service for images (used to launch instances), and the dashboard service for the user and administrative interface.

Red Hat OpenStack Platform allows you to easily manage virtual machine instances in the cloud. The Compute service creates, schedules, and manages instances, and exposes this functionality to other OpenStack components. This chapter discusses these procedures along with procedures to add components like key pairs, security groups, host aggregates and flavors. The term instance is used by OpenStack to mean a virtual machine instance.

4.1. Manage Instances

Before you can create an instance, you need to ensure certain other OpenStack components (for example, a network, key pair and an image or a volume as the boot source) are available for the instance.

This section discusses the procedures to add these components and to create and manage an instance. Managing an instance includes updating it, logging in to it, viewing how instances are being used, and resizing or deleting them.

4.1.1. Add Components

Use the following sections to create a network, key pair and upload an image or volume source. These components are used in the creation of an instance and are not available by default. You will also need to create a new security group to allow SSH access to the user.

- In the dashboard, select Project.

- Select Network > Networks, and ensure there is a private network to which you can attach the new instance (to create a network, see Create a Network section in the Networking Guide).

- Select Compute > Access & Security > Key Pairs, and ensure there is a key pair (to create a key pair, see Section 4.2.1.1, “Create a Key Pair”).

Ensure that you have either an image or a volume that can be used as a boot source:

- To view boot-source images, select the Images tab (to create an image, see Section 1.2.1, “Creating an Image”).

- To view boot-source volumes, select the Volumes tab (to create a volume, see Create a Volume in the Storage Guide).

- Select Compute > Access & Security > Security Groups, and ensure you have created a security group rule (to create a security group, see Project Security Management in the Users and Identity Management Guide).

4.1.2. Launch an Instance

Launch one or more instances from the dashboard.

By default, the Launch Instance form is used to launch instances. However, you can also enable a Launch Instance wizard that simplifies the steps required. For more information, see Appendix B, Enabling the Launch Instance Wizard.

- In the dashboard, select Project > Compute > Instances.

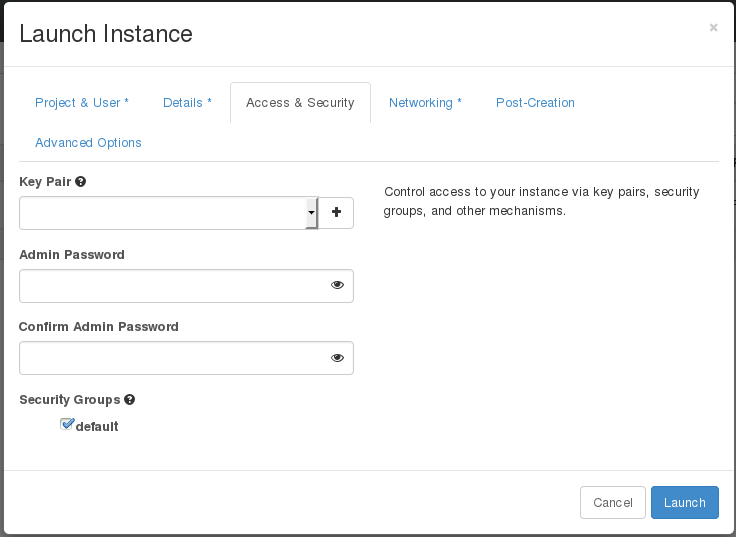

- Click Launch Instance.

- Fill out the fields (those marked with '* ' are required), and click Launch.

One or more instances are created, and launched based on the options provided.

4.1.2.1. Launch Instance Options

The following table outlines the options available when launching a new instance using the Launch Instance form. The same options are also available in the Launch instance wizard.

| Tab | Field | Notes |
|---|---|---|
| Project and User | Project | Select the project from the dropdown list. |
| | User | Select the user from the dropdown list. |
| Details | Availability Zone | Zones are logical groupings of cloud resources in which your instance can be placed. If you are unsure, use the default zone (for more information, see Section 4.4, “Manage Host Aggregates”). |
| | Instance Name | A name to identify your instance. |
| | Flavor | The flavor determines what resources the instance is given (for example, memory). For default flavor allocations and information on creating new flavors, see Section 4.3, “Manage Flavors”. |
| | Instance Count | The number of instances to create with these parameters. "1" is preselected. |
| | Instance Boot Source | Depending on the item selected, new fields are displayed allowing you to select the source: |
| Access and Security | Key Pair | The specified key pair is injected into the instance and is used to remotely access the instance using SSH (if neither direct login information nor a static key pair is provided). Usually one key pair per project is created. |
| | Security Groups | Security groups contain firewall rules which filter the type and direction of the instance’s network traffic (for more information on configuring groups, see Project Security Management in the Users and Identity Management Guide). |
| Networking | Selected Networks | You must select at least one network. Instances are typically assigned to a private network, and then later given a floating IP address to enable external access. |
| Post-Creation | Customization Script Source | You can provide either a set of commands or a script file, which will run after the instance is booted (for example, to set the instance host name or a user password). If Direct Input is selected, write your commands in the Script Data field; otherwise, specify your script file. Note: Any script that starts with #cloud-config is interpreted as using the cloud-config syntax (for information on the syntax, see http://cloudinit.readthedocs.org/en/latest/topics/examples.html). |
| Advanced Options | Disk Partition | By default, the instance is built as a single partition and dynamically resized as needed. However, you can choose to manually configure the partitions yourself. |
| | Configuration Drive | If selected, OpenStack writes metadata to a read-only configuration drive that is attached to the instance when it boots (instead of to Compute’s metadata service). After the instance has booted, you can mount this drive to view its contents (enables you to provide files to the instance). |

4.1.4. Resize an Instance

To resize an instance (memory or CPU count), you must select a new flavor for the instance that has the right capacity. If you are increasing the size, remember to first ensure that the host has enough space.

- Ensure communication between hosts by setting up each host with SSH key authentication so that Compute can use SSH to move disks to other hosts (for example, compute nodes can share the same SSH key).

- Enable resizing on the original host by setting the allow_resize_to_same_host parameter to "True" in your Compute environment file.

  Note: The allow_resize_to_same_host parameter does not resize the instance on the same host. Even if the parameter equals "True" on all Compute nodes, the scheduler does not force the instance to resize on the same host. This is the expected behavior.

- In the dashboard, select Project > Compute > Instances.

- Click the instance’s Actions arrow, and select Resize Instance.

- Select a new flavor in the New Flavor field.

If you want to manually partition the instance when it launches (results in a faster build time):

- Select Advanced Options.

- In the Disk Partition field, select Manual.

- Click Resize.

4.1.5. Connect to an Instance

This section discusses the different methods you can use to access an instance console using the dashboard or the command-line interface. You can also directly connect to an instance’s serial port allowing you to debug even if the network connection fails.

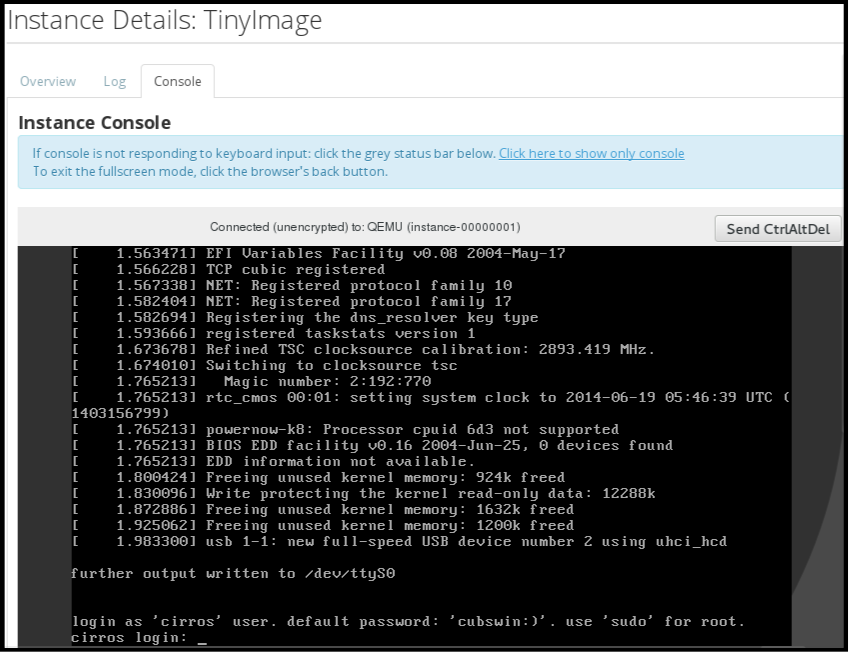

4.1.5.1. Access an Instance Console using the Dashboard

The console gives you direct access to your instance from within the dashboard.

- In the dashboard, select Compute > Instances.

- Click the instance’s More button and select Console.

- Log in using the image’s user name and password (for example, a CirrOS image uses cirros/cubswin:)).

4.1.5.2. Directly Connect to a VNC Console

You can directly access an instance’s VNC console using a URL returned by the nova get-vnc-console command.

- Browser

  To obtain a browser URL, use:

    $ nova get-vnc-console INSTANCE_ID novnc

- Java Client

  To obtain a Java-client URL, use:

    $ nova get-vnc-console INSTANCE_ID xvpvnc

nova-xvpvncviewer provides a simple example of a Java client. To download the client, use:

    # git clone https://github.com/cloudbuilders/nova-xvpvncviewer
    # cd nova-xvpvncviewer/viewer
    # make

Run the viewer with the instance’s Java-client URL:

    # java -jar VncViewer.jar URL

This tool is provided only for customer convenience, and is not officially supported by Red Hat.

4.1.6. View Instance Usage

The following usage statistics are available:

Per Project

To view instance usage per project, select Project > Compute > Overview. A usage summary is immediately displayed for all project instances.

You can also view statistics for a specific period of time by specifying the date range and clicking Submit.

Per Hypervisor

If logged in as an administrator, you can also view information for all projects. Click Admin > System and select one of the tabs. For example, the Resource Usage tab offers a way to view reports for a distinct time period. You might also click Hypervisors to view your current vCPU, memory, or disk statistics.

Note: The vCPU Usage value (x of y) reflects the number of total vCPUs of all virtual machines (x) and the total number of hypervisor cores (y).

4.1.7. Delete an Instance

- In the dashboard, select Project > Compute > Instances, and select your instance.

- Click Terminate Instance.

Deleting an instance does not delete its attached volumes; you must do this separately (see Delete a Volume in the Storage Guide).

4.1.8. Manage Multiple Instances at Once

If you need to start multiple instances at the same time (for example, those that were down for compute or controller maintenance) you can do so easily at Project > Compute > Instances:

- Click the check boxes in the first column for the instances that you want to start. If you want to select all of the instances, click the check box in the first row in the table.

- Click More Actions above the table and select Start Instances.

Similarly, you can shut off or soft reboot multiple instances by selecting the respective actions.

4.2. Manage Instance Security

You can manage access to an instance by assigning it the correct security group (set of firewall rules) and key pair (enables SSH user access). Further, you can assign a floating IP address to an instance to enable external network access. The sections below outline how to create and manage key pairs, security groups, and floating IP addresses, and how to log in to an instance using SSH. There is also a procedure for injecting an admin password into an instance.

For information on managing security groups, see Project Security Management in the Users and Identity Management Guide.

4.2.1. Manage Key Pairs

Key pairs provide SSH access to the instances. Each time a key pair is generated, its certificate is downloaded to the local machine and can be distributed to users. Typically, one key pair is created for each project (and used for multiple instances).

You can also import an existing key pair into OpenStack.

4.2.1.1. Create a Key Pair

- In the dashboard, select Project > Compute > Access & Security.

- On the Key Pairs tab, click Create Key Pair.

- Specify a name in the Key Pair Name field, and click Create Key Pair.

When the key pair is created, a key pair file is automatically downloaded through the browser. Save this file for later connections from external machines. For command-line SSH connections, you can load this file into SSH by executing:

ssh-add ~/.ssh/os-key.pem

4.2.1.2. Import a Key Pair

- In the dashboard, select Project > Compute > Access & Security.

- On the Key Pairs tab, click Import Key Pair.

- Specify a name in the Key Pair Name field, and copy and paste the contents of your public key into the Public Key field.

- Click Import Key Pair.

4.2.1.3. Delete a Key Pair

- In the dashboard, select Project > Compute > Access & Security.

- On the Key Pairs tab, click the key’s Delete Key Pair button.

4.2.2. Create a Security Group

Security groups are sets of IP filter rules that can be assigned to project instances, and which define networking access to the instance. Security groups are project-specific; project members can edit the default rules for their security group and add new rule sets.

- In the dashboard, select the Project tab, and click Compute > Access & Security.

- On the Security Groups tab, click + Create Security Group.

- Provide a name and description for the group, and click Create Security Group.

For more information on managing project security, see Project Security Management in the Users and Identity Management Guide.

4.2.3. Create, Assign, and Release Floating IP Addresses

By default, an instance is given an internal IP address when it is first created. However, you can enable access through the public network by creating and assigning a floating IP address (external address). You can change an instance’s associated IP address regardless of the instance’s state.

Projects have a limited range of floating IP addresses that can be used (by default, the limit is 50), so you should release these addresses for reuse when they are no longer needed. Floating IP addresses can only be allocated from an existing floating IP pool; see Create Floating IP Pools in the Networking Guide.

4.2.3.1. Allocate a Floating IP to the Project

- In the dashboard, select Project > Compute > Access & Security.

- On the Floating IPs tab, click Allocate IP to Project.

- Select a network from which to allocate the IP address in the Pool field.

- Click Allocate IP.

4.2.3.2. Assign a Floating IP

- In the dashboard, select Project > Compute > Access & Security.

- On the Floating IPs tab, click the address' Associate button.

- Select the address to be assigned in the IP address field.

  Note: If no addresses are available, you can click the + button to create a new address.

- Select the instance to be associated in the Port to be Associated field. An instance can only be associated with one floating IP address.

- Click Associate.

4.2.3.3. Release a Floating IP

- In the dashboard, select Project > Compute > Access & Security.

- On the Floating IPs tab, click the address' menu arrow (next to the Associate/Disassociate button).

- Select Release Floating IP.

4.2.4. Log in to an Instance

Prerequisites:

- Ensure that the instance’s security group has an SSH rule (see Project Security Management in the Users and Identity Management Guide).

- Ensure the instance has a floating IP address (external address) assigned to it (see Section 4.2.3, “Create, Assign, and Release Floating IP Addresses”).

- Obtain the instance’s key-pair certificate. The certificate is downloaded when the key pair is created; if you did not create the key pair yourself, ask your administrator (see Section 4.2.1, “Manage Key Pairs”).

To first load the key pair file into SSH, and then use ssh without naming it:

- Change the permissions of the generated key-pair certificate.

    $ chmod 600 os-key.pem

- Check whether ssh-agent is already running:

    # ps -ef | grep ssh-agent

- If not already running, start it up with:

    # eval `ssh-agent`

- On your local machine, load the key-pair certificate into SSH. For example:

    $ ssh-add ~/.ssh/os-key.pem

- You can now SSH into the instance with the user supplied by the image.

The following example command shows how to SSH into the Red Hat Enterprise Linux guest image with the user cloud-user:

    $ ssh cloud-user@192.0.2.24

You can also use the certificate directly. For example:

    $ ssh -i /myDir/os-key.pem cloud-user@192.0.2.24

4.2.5. Inject an admin Password Into an Instance

You can inject an admin (root) password into an instance using the following procedure.

- In the /etc/openstack-dashboard/local_settings file, set the can_set_password parameter value to True.

    can_set_password: True

- Set the inject_password parameter to "True" in your Compute environment file.

    inject_password=true

- Restart the Compute service.

    # service nova-compute restart

When you use the nova boot command to launch a new instance, the output of the command displays an adminPass parameter. You can use this password to log into the instance as the root user.

The Compute service overwrites the password value in the /etc/shadow file for the root user. This procedure can also be used to activate the root account for the KVM guest images. For more information on how to use KVM guest images, see Section 1.2.1.1, “Use a KVM Guest Image With Red Hat OpenStack Platform”

You can also set a custom password from the dashboard. To enable this, run the following command after you have set can_set_password parameter to true.

systemctl restart httpd.service


The newly added admin password fields are as follows:

These fields can be used when you launch or rebuild an instance.

4.3. Manage Flavors

Each created instance is given a flavor (resource template), which determines the instance’s size and capacity. Flavors can also specify secondary ephemeral storage, swap disk, metadata to restrict usage, or special project access (none of the default flavors have these additional attributes defined).

| Name | vCPUs | RAM | Root Disk Size |

|---|---|---|---|

| m1.tiny | 1 | 512 MB | 1 GB |

| m1.small | 1 | 2048 MB | 20 GB |

| m1.medium | 2 | 4096 MB | 40 GB |

| m1.large | 4 | 8192 MB | 80 GB |

| m1.xlarge | 8 | 16384 MB | 160 GB |

The majority of end users will be able to use the default flavors. However, you can create and manage specialized flavors. For example, you can:

- Change default memory and capacity to suit the underlying hardware needs.

- Add metadata to force a specific I/O rate for the instance or to match a host aggregate.

Behavior set using image properties overrides behavior set using flavors (for more information, see Section 1.2, “Manage Images”).

4.3.1. Update Configuration Permissions

By default, only administrators can create flavors or view the complete flavor list (select Admin > System > Flavors). To allow all users to configure flavors, specify the following in the /etc/nova/policy.json file (nova-api server):

"compute_extension:flavormanage": "",

4.3.2. Create a Flavor

- As an admin user in the dashboard, select Admin > System > Flavors.

- Click Create Flavor, and specify the following fields:

Table 4.4. Flavor Options

| Tab | Field | Description |
|---|---|---|
| Flavor Information | Name | Unique name. |
| | ID | Unique ID. The default value, auto, generates a UUID4 value, but you can also manually specify an integer or UUID4 value. |
| | VCPUs | Number of virtual CPUs. |
| | RAM (MB) | Memory (in megabytes). |
| | Root Disk (GB) | Ephemeral disk size (in gigabytes); to use the native image size, specify 0. This disk is not used if Instance Boot Source=Boot from Volume. |
| | Ephemeral Disk (GB) | Secondary ephemeral disk size (in gigabytes) available to an instance. This disk is destroyed when an instance is deleted. The default value is 0, which implies that no ephemeral disk is created. |
| | Swap Disk (MB) | Swap disk size (in megabytes). |
| Flavor Access | Selected Projects | Projects which can use the flavor. If no projects are selected, all projects have access (Public=Yes). |

- Click Create Flavor.
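The flavor options can be pictured as a small record. The following Python sketch is illustrative only: the FlavorSpec class and its field names are hypothetical and do not belong to any OpenStack client library, and the zero default for swap is an assumption. It mirrors three behaviors described for the dashboard fields: an ID of auto becomes a generated UUID4, a root disk of 0 stands for the native image size, and a flavor with no selected projects is public.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class FlavorSpec:
    """Hypothetical record mirroring the dashboard flavor fields."""
    name: str
    vcpus: int
    ram_mb: int
    root_disk_gb: int = 0        # 0 stands for "use the native image size"
    ephemeral_disk_gb: int = 0   # 0 means no secondary ephemeral disk
    swap_disk_mb: int = 0        # assumed default, not stated in the guide
    flavor_id: str = "auto"      # "auto" is replaced by a generated UUID4
    selected_projects: list = field(default_factory=list)

    def __post_init__(self):
        if self.vcpus < 1 or self.ram_mb < 1:
            raise ValueError("vcpus and ram_mb must be positive")
        if self.flavor_id == "auto":
            self.flavor_id = str(uuid.uuid4())

    @property
    def is_public(self) -> bool:
        # With no projects selected, all projects have access (Public=Yes).
        return not self.selected_projects


flavor = FlavorSpec(name="m1.custom", vcpus=2, ram_mb=4096, root_disk_gb=40)
print(flavor.flavor_id, flavor.is_public)
```

Passing selected_projects=["demo"] in this sketch makes is_public return False, which corresponds to restricting the flavor to the listed projects.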

4.3.3. Update General Attributes

- As an admin user in the dashboard, select Admin > System > Flavors.

- Click the flavor’s Edit Flavor button.

- Update the values, and click Save.

4.3.4. Update Flavor Metadata

In addition to editing general attributes, you can add metadata to a flavor (extra_specs), which can help fine-tune instance usage. For example, you might want to set the maximum-allowed bandwidth or disk writes.

- Pre-defined keys determine hardware support or quotas. Pre-defined keys are limited by the hypervisor you are using (for libvirt, see Table 4.5, “Libvirt Metadata”).

- Both pre-defined and user-defined keys can determine instance scheduling. For example, you might specify SpecialComp=True; any instance with this flavor can then only run in a host aggregate with the same key-value combination in its metadata (see Section 4.4, “Manage Host Aggregates”).
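The key-value matching described above can be sketched in a few lines of Python. This is a simplified illustration, not the Compute scheduler's actual filter code: the function name aggregate_accepts and the aggregate names are hypothetical, and the sketch only models exact string equality between flavor keys and aggregate metadata.

```python
def aggregate_accepts(flavor_extra_specs: dict, aggregate_metadata: dict) -> bool:
    """Return True if every flavor key-value pair also appears in the aggregate metadata."""
    return all(
        aggregate_metadata.get(key) == value
        for key, value in flavor_extra_specs.items()
    )


# Flavor metadata from the example in the text.
flavor_extra_specs = {"SpecialComp": "True"}

# Hypothetical aggregates and their metadata.
aggregates = {
    "special-hosts": {"SpecialComp": "True"},
    "general-hosts": {},
}

eligible = [
    name for name, metadata in aggregates.items()
    if aggregate_accepts(flavor_extra_specs, metadata)
]
print(eligible)  # ['special-hosts']
```

Only the aggregate that carries the same SpecialComp=True pair remains eligible for instances that use this flavor.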

4.3.4.1. View Metadata

- As an admin user in the dashboard, select Admin > System > Flavors.

- Click the flavor’s Metadata link (Yes or No). All current values are listed on the right-hand side under Existing Metadata.

4.3.4.2. Add Metadata

You specify a flavor’s metadata using a key/value pair.

- As an admin user in the dashboard, select Admin > System > Flavors.

- Click the flavor’s Metadata link (Yes or No). All current values are listed on the right-hand side under Existing Metadata.

- Under Available Metadata, click on the Other field, and specify the key you want to add (see Table 4.5, “Libvirt Metadata”).

- Click the + button; you can now view the new key under Existing Metadata.

- Fill in the key’s value in its right-hand field.

- When finished with adding key-value pairs, click Save.

Table 4.5. Libvirt Metadata

| Key | Description |
|---|---|
| | Action that configures support limits per instance. Valid actions are: Example: |
| | Definition of NUMA topology for the instance. For flavors whose RAM and vCPU allocations are larger than the size of NUMA nodes in the compute hosts, defining NUMA topology enables hosts to better utilize NUMA and improve performance of the guest OS. NUMA definitions defined through the flavor override image definitions. Valid definitions are: Note: If the values of Example when the instance has 8 vCPUs and 4 GB RAM: The scheduler looks for a host with 2 NUMA nodes with the ability to run 6 CPUs + 3072 MB, or 3 GB, of RAM on one node, and 2 CPUs + 1024 MB, or 1 GB, of RAM on another node. If a host has a single NUMA node with capability to run 8 CPUs and 4 GB of RAM, it will not be considered a valid match. |
| | An instance watchdog device can be used to trigger an action if the instance somehow fails (or hangs). Valid actions are: Example: |
| | You can use this parameter to specify the NUMA affinity policy for PCI passthrough devices and SR-IOV interfaces. Set to one of the following valid values: Example: |
| | A random-number generator device can be added to an instance using its image properties (see If the device has been added, valid actions are: Example: |
| | Maximum permitted RAM to be allowed for video devices (in MB). Example: |
| | Enforcing limit for the instance. Valid options are: Example: In addition, the VMware driver supports the following quota options, which control upper and lower limits for CPUs, RAM, disks, and networks, as well as shares, which can be used to control relative allocation of available resources among tenants: |
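The NUMA example in the table (an instance with 8 vCPUs and 4 GB RAM, split into one cell of 6 CPUs and 3072 MB and one cell of 2 CPUs and 1024 MB) can be checked with a small Python sketch. This is a simplified model under stated assumptions, not the scheduler's real placement logic: the function host_fits is hypothetical, the host capacities are invented for illustration, and each guest cell is assumed to need its own host NUMA node.

```python
from itertools import permutations


def host_fits(guest_cells, host_nodes):
    """Check whether each guest NUMA cell can be placed on a distinct host node.

    Both arguments are lists of (cpus, ram_mb) tuples.
    """
    if len(host_nodes) < len(guest_cells):
        return False
    for candidate in permutations(host_nodes, len(guest_cells)):
        if all(
            node_cpus >= cpus and node_ram >= ram
            for (cpus, ram), (node_cpus, node_ram) in zip(guest_cells, candidate)
        ):
            return True
    return False


# Guest cells from the example: 6 CPUs + 3072 MB and 2 CPUs + 1024 MB.
guest = [(6, 3072), (2, 1024)]

# Hypothetical hosts: one with two NUMA nodes, one with a single node.
two_node_host = [(8, 4096), (8, 4096)]
single_node_host = [(8, 4096)]

print(host_fits(guest, two_node_host))     # True
print(host_fits(guest, single_node_host))  # False
```

The single-node host is rejected even though its total capacity covers 8 CPUs and 4 GB of RAM, which matches the behavior described in the table.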

4.4. Manage Host Aggregates

A single Compute deployment can be partitioned into logical groups for performance or administrative purposes. OpenStack uses the following terms:

Host aggregates - A host aggregate creates logical units in an OpenStack deployment by grouping together hosts. Aggregates are assigned Compute hosts and associated metadata; a host can be in more than one host aggregate. Only administrators can see or create host aggregates.

An aggregate’s metadata is commonly used to provide information for use with the Compute scheduler (for example, limiting specific flavors or images to a subset of hosts). Metadata specified in a host aggregate will limit the use of that host to any instance that has the same metadata specified in its flavor.

Administrators can use host aggregates to handle load balancing, enforce physical isolation (or redundancy), group servers with common attributes, or separate out classes of hardware. When you create an aggregate, a zone name must be specified, and it is this name which is presented to the end user.

Availability zones - An availability zone is the end-user view of a host aggregate. An end user cannot view which hosts make up the zone, nor see the zone’s metadata; the user can only see the zone’s name.

End users can be directed to use specific zones which have been configured with certain capabilities or within certain areas.
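The relationship between the two terms can be sketched as a small data model. The following Python example is an illustration only: the HostAggregate class, the aggregate names, the host names, and the zone names are all hypothetical. It shows a host that belongs to more than one aggregate, and the reduced view that an end user gets (zone names only, with no hosts or metadata).

```python
from dataclasses import dataclass, field


@dataclass
class HostAggregate:
    """Hypothetical administrator-side view of a host aggregate."""
    name: str
    availability_zone: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


def zones_visible_to_end_user(aggregates):
    """End users see zone names only, never the hosts or the metadata."""
    return sorted({agg.availability_zone for agg in aggregates})


aggregates = [
    HostAggregate("ssd-hosts", "zone-a", {"compute-0", "compute-1"}, {"ssd": "true"}),
    HostAggregate("large-ram-hosts", "zone-a", {"compute-1"}),
    HostAggregate("backup-hosts", "zone-b", {"compute-2"}),
]

# A host can be in more than one host aggregate.
shared = aggregates[0].hosts & aggregates[1].hosts
print(shared)                                 # {'compute-1'}
print(zones_visible_to_end_user(aggregates))  # ['zone-a', 'zone-b']
```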

4.4.1. Enable Host Aggregate Scheduling

By default, host-aggregate metadata is not used to filter instance usage. You must update the Compute scheduler’s configuration to enable metadata usage:

- Open your Compute environment file.

- Add the following values to the NovaSchedulerDefaultFilters parameter, if they are not already present:

  - AggregateInstanceExtraSpecsFilter for host aggregate metadata.

    Note: Scoped specifications must be used for setting flavor extra_specs when specifying both AggregateInstanceExtraSpecsFilter and ComputeCapabilitiesFilter filters as values of the same NovaSchedulerDefaultFilters parameter, otherwise the ComputeCapabilitiesFilter will fail to select a suitable host. For details on the namespaces to use to scope the flavor extra_specs keys for these filters, see Table 4.7, “Scheduling Filters”.

  - AvailabilityZoneFilter for availability zone host specification when launching an instance.

- Save the configuration file.

- Deploy the overcloud.
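The "if they are not already present" condition amounts to an idempotent merge of two filter lists. The Python sketch below illustrates that logic only; the helper ensure_filters is hypothetical, it does not read or write the environment file, and the starting list is assumed to be the default filter set listed in Section 4.5.1.

```python
def ensure_filters(current, required):
    """Append each required filter that is missing, preserving the existing order."""
    merged = list(current)
    for name in required:
        if name not in merged:
            merged.append(name)
    return merged


# Assumed starting value: the default filters listed in Section 4.5.1.
current = [
    "RetryFilter",
    "AvailabilityZoneFilter",
    "ComputeFilter",
    "ComputeCapabilitiesFilter",
    "ImagePropertiesFilter",
    "ServerGroupAntiAffinityFilter",
    "ServerGroupAffinityFilter",
]
required = ["AggregateInstanceExtraSpecsFilter", "AvailabilityZoneFilter"]

updated = ensure_filters(current, required)
print(updated[-1])   # AggregateInstanceExtraSpecsFilter
print(len(updated))  # 8
```

Running the merge a second time on the result changes nothing, because both required filters are then already present.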

4.4.2. View Availability Zones or Host Aggregates

As an admin user in the dashboard, select Admin > System > Host Aggregates. All currently defined aggregates are listed in the Host Aggregates section; all zones are in the Availability Zones section.

4.4.3. Add a Host Aggregate

- As an admin user in the dashboard, select Admin > System > Host Aggregates. All currently defined aggregates are listed in the Host Aggregates section.

- Click Create Host Aggregate.

- Add a name for the aggregate in the Name field, and a name by which the end user should see it in the Availability Zone field.

- Click Manage Hosts within Aggregate.

- Select a host for use by clicking its + icon.

- Click Create Host Aggregate.

4.4.4. Update a Host Aggregate

- As an admin user in the dashboard, select Admin > System > Host Aggregates. All currently defined aggregates are listed in the Host Aggregates section.

To update the aggregate’s Name or Availability Zone:

- Click the aggregate’s Edit Host Aggregate button.

- Update the Name or Availability Zone field, and click Save.

To update the aggregate’s Assigned hosts:

- Click the aggregate’s arrow icon under Actions.

- Click Manage Hosts.

- Change a host’s assignment by clicking its + or - icon.

- When finished, click Save.

To update the aggregate’s Metadata:

- Click the aggregate’s arrow icon under Actions.

- Click the Update Metadata button. All current values are listed on the right-hand side under Existing Metadata.

- Under Available Metadata, click on the Other field, and specify the key you want to add. Use predefined keys (see Table 4.6, “Host Aggregate Metadata”) or add your own (which will only be valid if exactly the same key is set in an instance’s flavor).

- Click the + button; you can now view the new key under Existing Metadata.

  Note: Remove a key by clicking its - icon.

- Click Save.

Table 4.6. Host Aggregate Metadata

| Key | Description |
|---|---|
| filter_tenant_id | If specified, the aggregate only hosts this tenant (project). Depends on the AggregateMultiTenancyIsolation filter being set for the Compute scheduler. |

4.4.5. Delete a Host Aggregate

- As an admin user in the dashboard, select Admin > System > Host Aggregates. All currently defined aggregates are listed in the Host Aggregates section.

Remove all assigned hosts from the aggregate:

- Click the aggregate’s arrow icon under Actions.

- Click Manage Hosts.

- Remove all hosts by clicking their - icon.

- When finished, click Save.

- Click the aggregate’s arrow icon under Actions.

- Click Delete Host Aggregate in this and the next dialog screen.

4.5. Schedule Hosts

The Compute scheduling service determines on which host, or host aggregate, to place an instance. As an administrator, you can influence where the scheduler places an instance. For example, you might want to limit scheduling to hosts in a certain group or with the right RAM.

You can configure the following components:

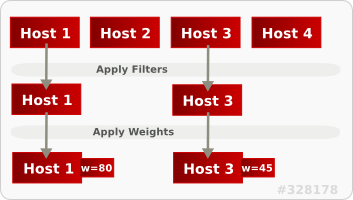

- Filters - Determine the initial set of hosts on which an instance might be placed (see Section 4.5.1, “Configure Scheduling Filters”).

- Weights - When filtering is complete, the resulting set of hosts is prioritized using the weighting system. The highest weight has the highest priority (see Section 4.5.2, “Configure Scheduling Weights”).

- Scheduler service - There are a number of configuration options in the /var/lib/config-data/puppet-generated/<nova_container>/etc/nova/nova.conf file (on the scheduler host), which determine how the scheduler executes its tasks, and handles weights and filters.

- Placement service - Specify the traits an instance requires a host to have, such as the type of storage disk, or the Intel CPU instruction set extension (see Section 4.5.3, “Configure Placement Service Traits”).

In the following diagram, both host 1 and 3 are eligible after filtering. Host 1 has the highest weight and therefore has the highest priority for scheduling.
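The filter-then-weigh sequence can be sketched in Python. This is a minimal illustration under stated assumptions, not the Compute scheduler's implementation: the schedule function, the host records, and the two filters and single weigher are hypothetical stand-ins chosen so that host 1 and host 3 pass filtering and host 1 ranks first.

```python
def schedule(hosts, filters, weighers):
    """Filter hosts, then return the survivors ordered by descending total weight."""
    eligible = [h for h in hosts if all(f(h) for f in filters)]
    return sorted(
        eligible,
        key=lambda h: sum(w(h) for w in weighers),
        reverse=True,
    )


# Hypothetical host records.
hosts = [
    {"name": "host1", "enabled": True, "free_ram_mb": 16384},
    {"name": "host2", "enabled": False, "free_ram_mb": 32768},
    {"name": "host3", "enabled": True, "free_ram_mb": 8192},
]

# Filters decide which hosts are eligible at all.
filters = [
    lambda h: h["enabled"],
    lambda h: h["free_ram_mb"] >= 4096,
]

# Weighers rank the eligible hosts; here, more free RAM means a higher weight.
weighers = [lambda h: h["free_ram_mb"]]

ranked = schedule(hosts, filters, weighers)
print([h["name"] for h in ranked])  # ['host1', 'host3']
```

Host 2 has the most free RAM but never reaches the weighting step, because a filter removes it first. Weights only order the hosts that every filter has passed.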

4.5.1. Configure Scheduling Filters

Use the NovaSchedulerDefaultFilters parameter in your Compute environment file to define the filters that the scheduler applies. You can add or remove filters.

The default configuration runs the following filters in the scheduler:

- RetryFilter

- AvailabilityZoneFilter

- ComputeFilter

- ComputeCapabilitiesFilter

- ImagePropertiesFilter

- ServerGroupAntiAffinityFilter

- ServerGroupAffinityFilter

Some filters use information in parameters passed to the instance in:

- The nova boot command.

- The instance’s flavor (see Section 4.3.4, “Update Flavor Metadata”).

- The instance’s image (see Appendix A, Image Configuration Parameters).

All available filters are listed in the following table.

| Filter | Description |

|---|---|

|

| Only passes hosts in host aggregates whose metadata matches the instance’s image metadata; only valid if a host aggregate is specified for the instance. For more information, see Section 1.2.1, “Creating an Image”. |

|

| Metadata in the host aggregate must match the instance’s flavor metadata. For more information, see Section 4.3.4, “Update Flavor Metadata”. |

|

This filter can only be specified in the same

| |

|

|

A host with the specified Note The tenant can still place instances on other hosts. |

|

| Passes all available hosts (however, does not disable other filters). |

|

| Filters using the instance’s specified availability zone. |

|

|

Ensures Compute metadata is read correctly. Anything before the |

|

| Passes only hosts that are operational and enabled. |

|

|

Enables an instance to build on a host that is different from one or more specified hosts. Specify |

|

| Only passes hosts that match the instance’s image properties. For more information, see Section 1.2.1, “Creating an Image”. |

|

|

Passes only isolated hosts running isolated images that are specified using |

|

| Recognises and uses an instance’s custom JSON filters:

|

|

The filter is specified as a query hint in the

| |

|

| Filters out hosts with unavailable metrics. |

|