Bare Metal Provisioning

Install, Configure, and Use the Bare Metal Service (Ironic)

Abstract

Preface

This document provides instructions for installing and configuring the Bare Metal service (ironic) in the overcloud, and using the service to provision and manage physical machines for end users.

The Bare Metal service components are also used by the Red Hat OpenStack Platform director, as part of the undercloud, to provision and manage the bare metal nodes that make up the OpenStack environment (the overcloud). For more information about how the director uses the Bare Metal service, see the Director Installation and Usage guide.

Chapter 1. About the Bare Metal Service

The OpenStack Bare Metal service (ironic) provides the components required to provision and manage physical machines for end users. The Bare Metal service in the overcloud interacts with the following OpenStack services:

- OpenStack Compute (nova) provides scheduling, tenant quotas, IP assignment, and a user-facing API for virtual machine instance management, while the Bare Metal service provides the administrative API for hardware management.

- OpenStack Identity (keystone) provides request authentication and assists the Bare Metal service in locating other OpenStack services.

- OpenStack Image service (glance) manages images and image metadata.

- OpenStack Networking (neutron) provides DHCP and network configuration.

- OpenStack Object Storage (swift) is used by certain drivers to expose temporary URLs to images.

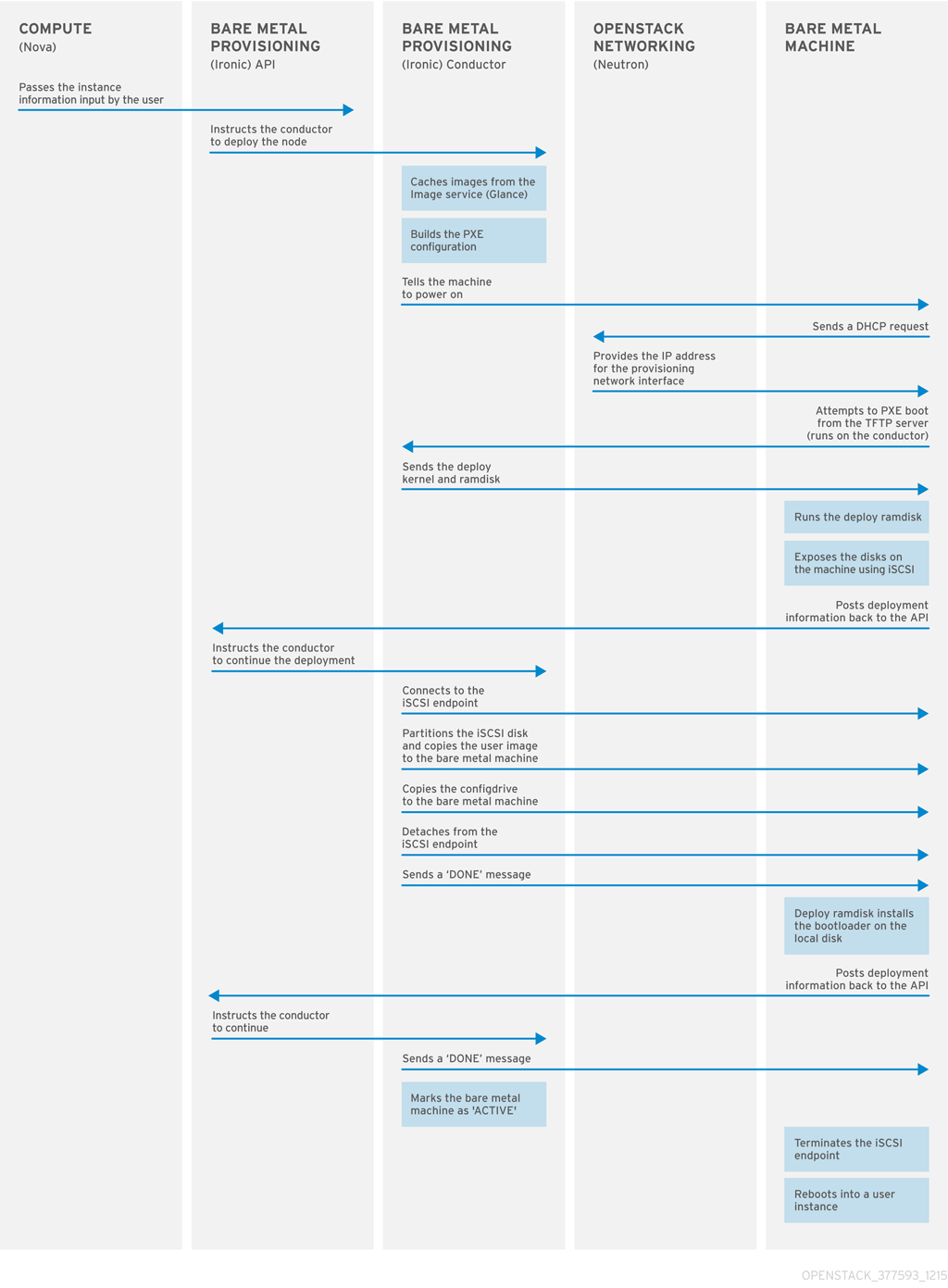

The Bare Metal service uses iPXE to provision physical machines. The following diagram outlines how the OpenStack services interact during the provisioning process when a user launches a new machine with the default drivers.

Chapter 2. Planning for Bare Metal Provisioning

This chapter outlines the requirements for configuring the Bare Metal service, including installation assumptions, hardware requirements, and networking requirements.

2.1. Installation Assumptions

This guide assumes that you have installed the director on the undercloud node, and are ready to install the Bare Metal service along with the rest of the overcloud. For more information on installing the director, see Installing the Undercloud.

The Bare Metal service in the overcloud is designed for a trusted tenant environment, as the bare metal nodes have direct access to the control plane network of your OpenStack installation. If you implement a custom composable network for Ironic services in the overcloud, users do not need to access the control plane.

2.2. Hardware Requirements

Overcloud Requirements

The hardware requirements for an overcloud with the Bare Metal service are the same as for the standard overcloud. For more information, see Overcloud Requirements in the Director Installation and Usage guide.

Bare Metal Machine Requirements

The hardware requirements for bare metal machines that will be provisioned vary depending on the operating system you are installing.

- For Red Hat Enterprise Linux 8, see the Red Hat Enterprise Linux 8 Performing a standard RHEL installation guide.

- For Red Hat Enterprise Linux 7, see the Red Hat Enterprise Linux 7 Installation Guide.

- For Red Hat Enterprise Linux 6, see the Red Hat Enterprise Linux 6 Installation Guide.

All bare metal machines that you want to provision require the following:

- A NIC to connect to the bare metal network.

- A power management interface (for example, IPMI) connected to a network reachable from the ironic-conductor service. By default, ironic-conductor runs on all of the controller nodes, unless you are using composable roles and running ironic-conductor elsewhere.

- PXE boot on the bare metal network. Disable PXE boot on all other NICs in the deployment.

2.3. Networking requirements

The bare metal network:

This is a private network that the Bare Metal service uses for the following operations:

- The provisioning and management of bare metal machines on the overcloud.

- Cleaning bare metal nodes before and between deployments.

- Tenant access to the bare metal nodes.

The bare metal network provides DHCP and PXE boot functions to discover bare metal systems. This network must use a native VLAN on a trunked interface so that the Bare Metal service can serve PXE boot and DHCP requests.

You can configure the bare metal network in two ways:

- Use a flat bare metal network for Ironic Conductor services. This network must route to the Ironic services on the control plane. If you define an isolated bare metal network, the bare metal nodes cannot PXE boot.

- Use a custom composable network to implement Ironic services in the overcloud.


Network tagging:

- The control plane network (the director’s provisioning network) is always untagged.

- The bare metal network must be untagged for provisioning, and must also have access to the Ironic API.

- Other networks may be tagged.

Overcloud controllers:

The controller nodes with the Bare Metal service must have access to the bare metal network.

Bare metal nodes:

The NIC which the bare metal node is configured to PXE-boot from must have access to the bare metal network.

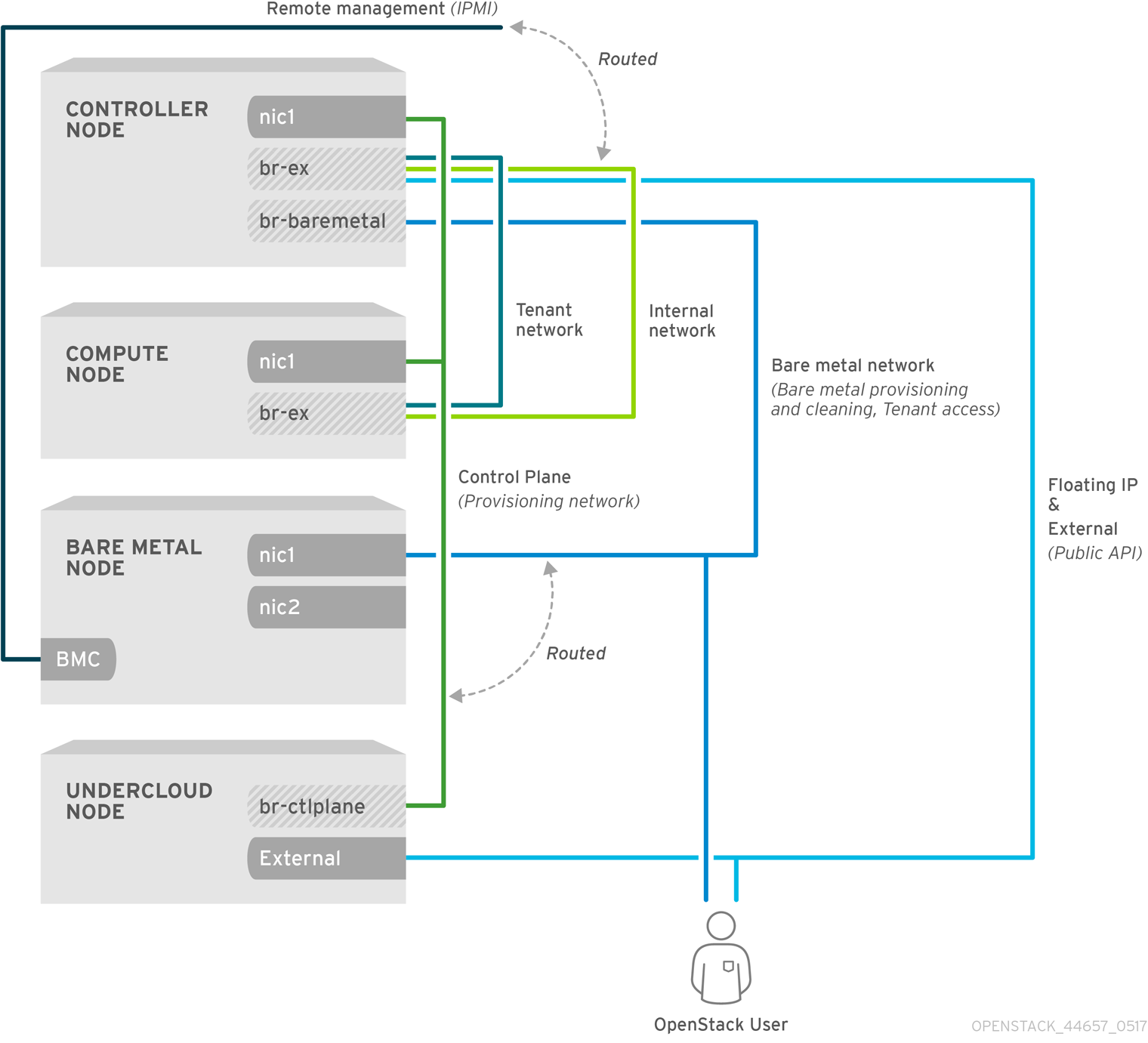

2.3.1. The Default Bare Metal Network

In this architecture, the bare metal network is separated from the control plane network. The bare metal network is a flat network that also acts as the tenant network.

- The bare metal network is created by the OpenStack operator. This network requires a route to the director provisioning network.

- Ironic users have access to the public OpenStack APIs, and to the bare metal network. Since the bare metal network is routed to the director’s provisioning network, users also have indirect access to the control plane.

- Ironic uses the bare metal network for node cleaning.

Default bare metal network architecture diagram

2.3.2. The Custom Composable Network

In this architecture, the bare metal network is a custom composable network that does not have access to the control plane. Creating this network might be preferable if you want to limit access to the control plane.

- The custom composable bare metal network is created by the OpenStack operator.

- Ironic users have access to the public OpenStack APIs, and to the custom composable bare metal network.

- Ironic uses the custom composable bare metal network for node cleaning.

Chapter 3. Deploying an Overcloud with the Bare Metal Service

For full details about overcloud deployment with the director, see Director Installation and Usage. This chapter covers only the deployment steps specific to ironic.

3.1. Creating the Ironic template

Use an environment file to deploy the overcloud with the Bare Metal service enabled. A template is located on the director node at /usr/share/openstack-tripleo-heat-templates/environments/services/ironic-overcloud.yaml.

Filling in the template

Additional configuration can be specified either in the provided template or in an additional YAML file, for example ~/templates/ironic.yaml.

- For a hybrid deployment with both bare metal and virtual instances, you must add AggregateInstanceExtraSpecsFilter to the list of NovaSchedulerDefaultFilters. If you have not set NovaSchedulerDefaultFilters anywhere, you can do so in ironic.yaml. For an example, see Section 3.4, “Example Templates”.

  Note: If you are using SR-IOV, NovaSchedulerDefaultFilters is already set in tripleo-heat-templates/environments/neutron-sriov.yaml. Append AggregateInstanceExtraSpecsFilter to this list.

- The type of cleaning that occurs before and between deployments is set by IronicCleaningDiskErase. By default, deployment/ironic/ironic-conductor-container-puppet.yaml sets this to ‘full’. Setting it to ‘metadata’ can substantially speed up the process, because it cleans only the partition table. However, the deployment is then less secure in a multi-tenant environment, so use this setting only in a trusted tenant environment.

- You can add drivers with the IronicEnabledHardwareTypes parameter. By default, ipmi and redfish are enabled.

For a full list of configuration parameters, see Bare Metal in the Overcloud Parameters guide.

3.2. Configuring the undercloud for bare metal provisioning over IPv6

This feature is available in this release as a Technology Preview, and therefore is not fully supported by Red Hat. It should only be used for testing, and should not be deployed in a production environment. For more information about Technology Preview features, see Scope of Coverage Details.

If you have IPv6 nodes and infrastructure, you can configure the undercloud and the provisioning network to use IPv6 instead of IPv4 so that director can provision and deploy Red Hat OpenStack Platform onto IPv6 nodes. However, there are some considerations:

- Stateful DHCPv6 is available only with a limited set of UEFI firmware. For more information, see Bugzilla #1575026.

- Dual stack IPv4/6 is not available.

- Tempest validations might not perform correctly.

- IPv4 to IPv6 migration is not available during upgrades.

Modify the undercloud.conf file to enable IPv6 provisioning in Red Hat OpenStack Platform.

Prerequisites

- An IPv6 address on the undercloud. For more information, see Configuring an IPv6 address on the undercloud in the IPv6 Networking for the Overcloud guide.

Procedure

- Copy the sample undercloud.conf file, or modify your existing undercloud.conf file.

- Set the following parameter values in the undercloud.conf file:

  - Set ipv6_address_mode to dhcpv6-stateless, or to dhcpv6-stateful if your NIC supports stateful DHCPv6 with Red Hat OpenStack Platform. For more information about stateful DHCPv6 availability, see Bugzilla #1575026.

  - Set enable_routed_networks to true if you do not want the undercloud to create a router on the provisioning network. In this case, the data center router must provide router advertisements. Otherwise, set this value to false.

  - Set local_ip to the IPv6 address of the undercloud.

  - Use IPv6 addressing for the undercloud interface parameters undercloud_public_host and undercloud_admin_host.

  - In the [ctlplane-subnet] section, use IPv6 addressing in the following parameters:

    - cidr
    - dhcp_start
    - dhcp_end
    - gateway
    - inspection_iprange

  - In the [ctlplane-subnet] section, set an IPv6 nameserver for the subnet in the dns_nameservers parameter.
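The settings above can be collected into one fragment. The following POSIX shell function is a minimal sketch that prints an undercloud.conf fragment from environment variables. The variable names (LOCAL_IP, PUBLIC_HOST, ADMIN_HOST, and so on) and the function name are hypothetical; only the undercloud.conf keys come from this procedure. The sketch defaults enable_routed_networks to false, so set ENABLE_ROUTED_NETWORKS=true if the data center router provides router advertisements.

```shell
#!/bin/sh
# Sketch: print an undercloud.conf fragment for IPv6 provisioning.
# The environment variable names are hypothetical; the keys match the
# undercloud.conf parameters listed in the procedure.
render_undercloud_ipv6() {
    local var val
    # Refuse to render a partial fragment if any required value is missing.
    for var in LOCAL_IP PUBLIC_HOST ADMIN_HOST CIDR DHCP_START DHCP_END \
               GATEWAY INSPECTION_START INSPECTION_END DNS; do
        eval "val=\${$var:-}"
        if [ -z "$val" ]; then
            echo "render_undercloud_ipv6: $var is not set" >&2
            return 1
        fi
    done
    cat <<EOF
[DEFAULT]
ipv6_address_mode = ${IPV6_ADDRESS_MODE:-dhcpv6-stateless}
enable_routed_networks = ${ENABLE_ROUTED_NETWORKS:-false}
local_ip = $LOCAL_IP
undercloud_public_host = $PUBLIC_HOST
undercloud_admin_host = $ADMIN_HOST

[ctlplane-subnet]
cidr = $CIDR
dhcp_start = $DHCP_START
dhcp_end = $DHCP_END
gateway = $GATEWAY
inspection_iprange = $INSPECTION_START,$INSPECTION_END
dns_nameservers = $DNS
EOF
}
```

Any example addresses you try this with should come from your own IPv6 plan; the 2001:db8::/32 prefix is reserved for documentation and is not routable. Merge the printed fragment into your undercloud.conf rather than replacing the whole file.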

3.3. Network Configuration

If you use the default flat bare metal network, you must create a bridge br-baremetal for ironic to use. You can specify this in an additional template:

~/templates/network-environment.yaml

parameter_defaults:
  NeutronBridgeMappings: datacentre:br-ex,baremetal:br-baremetal
  NeutronFlatNetworks: datacentre,baremetal

You can configure this bridge either in the provisioning network (control plane) of the controllers, so that you can reuse this network as the bare metal network, or in a dedicated network. The configuration requirements are the same; however, the bare metal network cannot be VLAN-tagged, because it is used for provisioning.

~/templates/nic-configs/controller.yaml

The Bare Metal service in the overcloud is designed for a trusted tenant environment, as the bare metal nodes have direct access to the control plane network of your OpenStack installation.
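The physical network name in NeutronBridgeMappings is the name that you later pass to --provider-physical-network when you create the flat bare metal network in Section 4.1.1. As a hedged illustration, this small shell function (the name is hypothetical) looks up the bridge for a given physical network in a mappings string, so that you can confirm the two names agree before deploying.

```shell
#!/bin/sh
# Print the bridge mapped to a physical network in a NeutronBridgeMappings
# value such as "datacentre:br-ex,baremetal:br-baremetal".
# Usage: bridge_for_physnet MAPPINGS PHYSNET   (fails if PHYSNET is unmapped)
bridge_for_physnet() {
    local physnet="$2" old_ifs="$IFS" pair
    # Split the comma-separated mappings into positional parameters.
    IFS=,
    set -- $1
    IFS="$old_ifs"
    for pair in "$@"; do
        # Each pair is PHYSNET:BRIDGE; compare the part before the first colon.
        if [ "${pair%%:*}" = "$physnet" ]; then
            printf '%s\n' "${pair#*:}"
            return 0
        fi
    done
    return 1
}
```

For example, bridge_for_physnet "datacentre:br-ex,baremetal:br-baremetal" baremetal prints br-baremetal.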

3.3.1. Configuring a custom IPv4 provisioning network

The default flat provisioning network can introduce security concerns in a customer environment, because a tenant can interfere with the undercloud network. To prevent this risk, you can configure a custom composable bare metal provisioning network for ironic services that does not have access to the control plane:

- Configure the shell to access Identity as the administrative user:

$ source ~/stackrc

- Copy the network_data.yaml file:

(undercloud) [stack@host01 ~]$ cp /usr/share/openstack-tripleo-heat-templates/network_data.yaml .

- Edit the new network_data.yaml file and add a new network for IPv4 overcloud provisioning.

- Update the network-environment.yaml and nic-configs/controller.yaml files to use the new network.

  - In the network-environment.yaml file, remap Ironic networks:

    ServiceNetMap:
      IronicApiNetwork: oc_provisioning
      IronicNetwork: oc_provisioning

  - In the nic-configs/controller.yaml file, add an interface and necessary parameters.

- Copy the roles_data.yaml file:

(undercloud) [stack@host01 ~]$ cp /usr/share/openstack-tripleo-heat-templates/roles_data.yaml .

- Edit the new roles_data.yaml file and add the new network for the controller:

networks:
  ...
  OcProvisioning:
    subnet: oc_provisioning_subnet

- Include the new network_data.yaml and roles_data.yaml files in the deploy command:

-n /home/stack/network_data.yaml \
-r /home/stack/roles_data.yaml \
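As a sketch of what the new network_data.yaml entry might contain, the following function prints an OcProvisioning entry. The key names (name, name_lower, vip, vlan, ip_subnet, allocation_pools) are assumed from the TripleO network_data.yaml format, so compare them with the existing entries in the file that you copied. The name_lower value oc_provisioning matches the ServiceNetMap mapping above; the VLAN ID, subnet, and pool values are yours to supply, and the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: print a hypothetical network_data.yaml entry for OcProvisioning.
# Key names are assumed from the TripleO network_data.yaml format; compare
# them with the existing entries in your copy of network_data.yaml.
# Usage: render_oc_provisioning VLAN_ID SUBNET_CIDR POOL_START POOL_END
render_oc_provisioning() {
    local vlan="$1" subnet="$2" pool_start="$3" pool_end="$4"
    case $vlan in
        ''|*[!0-9]*) echo "render_oc_provisioning: VLAN ID must be numeric" >&2; return 1 ;;
    esac
    if [ "$vlan" -lt 1 ] || [ "$vlan" -gt 4094 ]; then
        echo "render_oc_provisioning: VLAN ID must be 1-4094" >&2
        return 1
    fi
    cat <<EOF
- name: OcProvisioning
  name_lower: oc_provisioning
  vip: true
  vlan: $vlan
  ip_subnet: '$subnet'
  allocation_pools: [{'start': '$pool_start', 'end': '$pool_end'}]
EOF
}
```

The VLAN ID must match the --provider-segment value that you use when you create the provisioning network after deployment (205 in Section 4.1.2).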

3.3.2. Configuring a custom IPv6 provisioning network

This feature is available in this release as a Technology Preview, and therefore is not fully supported by Red Hat. It should only be used for testing, and should not be deployed in a production environment. For more information about Technology Preview features, see Scope of Coverage Details.

Create a custom IPv6 provisioning network to provision and deploy the overcloud over IPv6.

Procedure

- Configure the shell to access Identity as the administrative user:

$ source ~/stackrc

- Copy the network_data.yaml file:

$ cp /usr/share/openstack-tripleo-heat-templates/network_data.yaml .

- Edit the new network_data.yaml file and add a new network for overcloud provisioning:

  - Replace $IPV6_ADDRESS with the IPv6 address of your IPv6 subnet.
  - Replace $IPV6_MASK with the IPv6 network mask for your IPv6 subnet.
  - Replace $IPV6_START_ADDRESS and $IPV6_END_ADDRESS with the IPv6 range that you want to use for address allocation.
  - Replace $IPV6_GW_ADDRESS with the IPv6 address of your gateway.

- Create a new file network-environment.yaml and define IPv6 settings for the provisioning network:

$ touch /home/stack/network-environment.yaml

  - Remap the ironic networks to use the new IPv6 provisioning network:

    ServiceNetMap:
      IronicApiNetwork: oc_provisioning_ipv6
      IronicNetwork: oc_provisioning_ipv6

  - Set the IronicIpVersion parameter to 6:

    parameter_defaults:
      IronicIpVersion: 6

  - Set the RabbitIPv6, MysqlIPv6, and RedisIPv6 parameters to True:

    parameter_defaults:
      RabbitIPv6: True
      MysqlIPv6: True
      RedisIPv6: True

  - Set the ControlPlaneSubnetCidr parameter to the subnet IPv6 mask length for the provisioning network:

    parameter_defaults:
      ControlPlaneSubnetCidr: '64'

  - Set the ControlPlaneDefaultRoute parameter to the IPv6 address of the gateway router for the provisioning network:

    parameter_defaults:
      ControlPlaneDefaultRoute: <ipv6-address>

- Add an interface and necessary parameters to the nic-configs/controller.yaml file.

- Copy the roles_data.yaml file:

(undercloud) [stack@host01 ~]$ cp /usr/share/openstack-tripleo-heat-templates/roles_data.yaml .

- Edit the new roles_data.yaml file and add the new network for the controller:

networks:
  ...
  - OcProvisioningIPv6

When you deploy the overcloud, include the new network_data.yaml and roles_data.yaml files in the deployment command with the -n and -r options, and the network-environment.yaml file with the -e option:

For more information about IPv6 network configuration, see Configuring the network in the IPv6 Networking for the Overcloud guide.
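Each step above adds its own parameter_defaults block, and YAML does not merge repeated keys, so pasting several separate parameter_defaults blocks into one file can cause earlier settings to be lost. The following sketch writes all of these settings under a single parameter_defaults key. It assumes that ServiceNetMap also belongs under parameter_defaults, like the other parameters; the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: print network-environment.yaml for the IPv6 provisioning network,
# with every setting from this procedure under one parameter_defaults key.
# Usage: render_ipv6_network_env PREFIX_LENGTH GATEWAY_IPV6_ADDRESS
render_ipv6_network_env() {
    local prefixlen="$1" gateway="$2"
    case $prefixlen in
        ''|*[!0-9]*) echo "render_ipv6_network_env: prefix length must be numeric" >&2; return 1 ;;
    esac
    if [ "$prefixlen" -lt 1 ] || [ "$prefixlen" -gt 128 ]; then
        echo "render_ipv6_network_env: prefix length must be 1-128" >&2
        return 1
    fi
    # Crude sanity check only: an IPv6 address always contains a colon.
    case $gateway in
        *:*) ;;
        *) echo "render_ipv6_network_env: gateway is not an IPv6 address" >&2; return 1 ;;
    esac
    cat <<EOF
parameter_defaults:
  ServiceNetMap:
    IronicApiNetwork: oc_provisioning_ipv6
    IronicNetwork: oc_provisioning_ipv6
  IronicIpVersion: 6
  RabbitIPv6: True
  MysqlIPv6: True
  RedisIPv6: True
  ControlPlaneSubnetCidr: '$prefixlen'
  ControlPlaneDefaultRoute: $gateway
EOF
}
```

For example, redirect the output of render_ipv6_network_env 64 followed by your gateway address into /home/stack/network-environment.yaml, then pass that file with -e.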

3.4. Example Templates

The following is an example template file. This file might not meet the requirements of your environment. Before using this example, ensure that it does not interfere with any existing configuration in your environment.

~/templates/ironic.yaml

In this example:

- The AggregateInstanceExtraSpecsFilter allows both virtual and bare metal instances, for a hybrid deployment.

- Disk cleaning that is done before and between deployments erases only the partition table (metadata).
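As a hedged sketch of a file along the lines of this example, the following function prints a ~/templates/ironic.yaml that appends AggregateInstanceExtraSpecsFilter to a filter list that you supply and sets IronicCleaningDiskErase. The function name is hypothetical, and the sketch does not know your default scheduler filters: take the input list from your environment (for example, the list already set in neutron-sriov.yaml). It also assumes that NovaSchedulerDefaultFilters accepts a YAML list.

```shell
#!/bin/sh
# Sketch: print a hypothetical ~/templates/ironic.yaml for a hybrid deployment.
# $1: comma-separated NovaSchedulerDefaultFilters taken from your environment
# $2: IronicCleaningDiskErase value, either "full" or "metadata"
render_ironic_env() {
    local filters="$1" erase="$2" old_ifs="$IFS" f
    case $erase in
        full|metadata) ;;
        *) echo "render_ironic_env: erase mode must be full or metadata" >&2; return 1 ;;
    esac
    # Append the aggregate filter unless the list already contains it.
    case ",$filters," in
        *,AggregateInstanceExtraSpecsFilter,*) ;;
        *) filters="${filters:+$filters,}AggregateInstanceExtraSpecsFilter" ;;
    esac
    printf 'parameter_defaults:\n'
    printf '  NovaSchedulerDefaultFilters:\n'
    # Split the comma-separated list and emit one YAML list item per filter.
    IFS=,
    for f in $filters; do
        printf '    - %s\n' "$f"
    done
    IFS="$old_ifs"
    printf '  IronicCleaningDiskErase: %s\n' "$erase"
}
```

Review the printed file against your existing configuration before you include it with -e; the metadata setting is suitable only for a trusted tenant environment.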

3.5. Enabling Ironic Introspection in the Overcloud

To enable Bare Metal introspection, include both of the following files in the deploy command:

- For deployments using OVN:
  - ironic-overcloud.yaml
  - ironic-inspector.yaml

- For deployments using OVS:
  - ironic.yaml
  - ironic-inspector.yaml

You can find these files in the /usr/share/openstack-tripleo-heat-templates/environments/services directory. Use the following example to include configuration details for the ironic inspector that correspond to your environment:

parameter_defaults:
  IronicInspectorSubnets:
    - ip_range: 192.168.101.201,192.168.101.250
  IPAImageURLs: '["http://192.168.24.1:8088/agent.kernel", "http://192.168.24.1:8088/agent.ramdisk"]'
  IronicInspectorInterface: 'br-baremetal'

IronicInspectorSubnets

This parameter can contain multiple ranges and works with both spine and leaf.

IPAImageURLs

This parameter contains details about the IPA kernel and ramdisk. In most cases, you can use the same images that you use on the undercloud. If you omit this parameter, place alternative images on each controller.

IronicInspectorInterface

Use this parameter to specify the bare metal network interface.
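IronicInspectorSubnets ranges are written as START,END, and a swapped or mistyped address is easy to miss before deployment. The following POSIX shell sketch checks one ip_range value; it handles IPv4 only, and the function names are hypothetical.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer; fail on malformed input.
ip_to_int() {
    local old_ifs="$IFS" octet
    case $1 in ''|*[!0-9.]*|.*|*.) return 1 ;; esac
    IFS=.
    set -- $1
    IFS="$old_ifs"
    [ $# -eq 4 ] || return 1
    for octet in "$@"; do
        # Reject empty octets and leading zeros, which shells may read as octal.
        case $octet in ''|0?*) return 1 ;; esac
        [ "$octet" -le 255 ] || return 1
    done
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Check an ip_range value such as "192.168.101.201,192.168.101.250":
# both ends must be valid and the start must not come after the end.
check_ip_range() {
    local start end s e
    case $1 in
        *,*) ;;
        *) echo "check_ip_range: expected START,END" >&2; return 1 ;;
    esac
    start=${1%%,*}
    end=${1#*,}
    s=$(ip_to_int "$start") || { echo "check_ip_range: bad start address $start" >&2; return 1; }
    e=$(ip_to_int "$end") || { echo "check_ip_range: bad end address $end" >&2; return 1; }
    if [ "$s" -gt "$e" ]; then
        echo "check_ip_range: $start is after $end" >&2
        return 1
    fi
}
```

Run check_ip_range once for each ip_range entry that you list under IronicInspectorSubnets.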

If you use a composable Ironic or IronicConductor role, you must include the IronicInspector service in the Ironic role in your roles file.

ServicesDefault:
  - OS::TripleO::Services::IronicInspector

3.6. Deploying the Overcloud

To enable the Bare Metal service, include your ironic environment files with the -e option when deploying or redeploying the overcloud, along with the rest of your overcloud configuration.

For example:

For more information about deploying the overcloud, see Deployment command options and Including Environment Files in Overcloud Creation in the Director Installation and Usage guide.

For more information about deploying the overcloud over IPv6, see Setting up your environment and Creating the overcloud in the IPv6 Networking for the Overcloud guide.
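As a hedged sketch, the following function assembles an openstack overcloud deploy command from your existing options and then adds the ironic environment files named in this chapter; with DRY_RUN set, it only prints the command. The function name, the DRY_RUN convention, and the choice of files are assumptions: pass every option and environment file that your deployment already uses. Environment files that appear later on the command line take precedence, which is why the sketch adds the ironic files last.

```shell
#!/bin/sh
# Sketch: build an overcloud deploy command that adds the ironic environment
# files after your existing options. Set DRY_RUN=1 to print instead of run.
# Usage: deploy_overcloud_with_ironic [your existing deploy options...]
deploy_overcloud_with_ironic() {
    set -- openstack overcloud deploy --templates "$@" \
        -e /usr/share/openstack-tripleo-heat-templates/environments/services/ironic-overcloud.yaml \
        -e "$HOME/templates/ironic.yaml"
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

Running it once with DRY_RUN=1 lets you review the full command line before starting a deployment.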

3.7. Testing the Bare Metal Service

You can use the OpenStack Integration Test Suite to validate your Red Hat OpenStack deployment. For more information, see the OpenStack Integration Test Suite Guide.

Additional Ways to Verify the Bare Metal Service:

- Configure the shell to access Identity as the administrative user:

$ source ~/overcloudrc

- Check that the nova-compute service is running on the controller nodes:

$ openstack compute service list -c Binary -c Host -c Status

- If you have changed the default ironic drivers, ensure that the required drivers are enabled:

$ openstack baremetal driver list

- Ensure that the ironic endpoints are listed:

$ openstack catalog list
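To script the nova-compute check, you can ask the client for plain values with -f value and filter the rows. The following sketch reads that output on standard input; the function name is hypothetical, and it assumes that the columns arrive in the order requested with -c (Binary, Host, Status).

```shell
#!/bin/sh
# Read lines of "Binary Host Status" from stdin, as printed by:
#   openstack compute service list -f value -c Binary -c Host -c Status
# Succeed only if at least one nova-compute service is listed and every
# nova-compute service reports the status "enabled".
check_nova_compute() {
    awk '
        $1 == "nova-compute" {
            found = 1
            if ($3 != "enabled") {
                print "nova-compute on " $2 " is " $3 > "/dev/stderr"
                bad = 1
            }
        }
        END { exit (found && !bad) ? 0 : 1 }
    '
}
```

For example, pipe openstack compute service list -f value -c Binary -c Host -c Status into check_nova_compute and check its exit status.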

Chapter 4. Configuring for the Bare Metal Service After Deployment

This section describes the steps necessary to configure your overcloud after deployment.

4.1. Configuring OpenStack Networking

Configure OpenStack Networking to communicate with the Bare Metal service for DHCP, PXE boot, and other requirements. You can configure the bare metal network in two ways:

- Use a flat bare metal network for Ironic Conductor services. This network must route to the Ironic services on the control plane network.

- Use a custom composable network to implement Ironic services in the overcloud.

Follow the procedures in this section to configure OpenStack Networking for a single flat network for provisioning onto bare metal, or to configure a new composable network that does not rely on an unused isolated network or a flat network. The configuration uses the ML2 plug-in and the Open vSwitch agent.

Perform all steps in the following procedure on the server that hosts the OpenStack Networking service, while logged in as the root user.

4.1.1. Configuring OpenStack Networking to Communicate with the Bare Metal Service on a flat Bare Metal Network

- Configure the shell to access Identity as the administrative user:

$ source ~/overcloudrc

- Create the flat network over which to provision bare metal instances:

$ openstack network create \
  --provider-network-type flat \
  --provider-physical-network baremetal \
  --share NETWORK_NAME

Replace NETWORK_NAME with a name for this network. The name of the physical network over which the virtual network is implemented (in this case baremetal) was set earlier in the ~/templates/network-environment.yaml file, with the parameter NeutronBridgeMappings.

- Create the subnet on the flat network.

Replace the following values:

- Replace SUBNET_NAME with a name for the subnet.

- Replace NETWORK_NAME with the name of the provisioning network that you created in the previous step.

- Replace NETWORK_CIDR with the Classless Inter-Domain Routing (CIDR) representation of the block of IP addresses that the subnet represents. The block of IP addresses specified by the range starting with START_IP and ending with END_IP must fall within the block of IP addresses specified by NETWORK_CIDR.

- Replace GATEWAY_IP with the IP address or host name of the router interface that acts as the gateway for the new subnet. This address must be within the block of IP addresses specified by NETWORK_CIDR, but outside of the block of IP addresses specified by the range starting with START_IP and ending with END_IP.

- Replace START_IP with the IP address that denotes the start of the range of IP addresses within the new subnet from which IP addresses are allocated.

- Replace END_IP with the IP address that denotes the end of the range of IP addresses within the new subnet from which IP addresses are allocated.

- Create a router for the network and subnet to ensure that the OpenStack Networking service serves metadata requests:

$ openstack router create ROUTER_NAME

Replace ROUTER_NAME with a name for the router.

- Attach the subnet to the new router:

$ openstack router add subnet ROUTER_NAME BAREMETAL_SUBNET

Replace ROUTER_NAME with the name of your router and BAREMETAL_SUBNET with the ID or name of the subnet that you created previously. This allows the metadata requests from cloud-init to be served and the node to be configured.
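The subnet placeholder rules in this procedure are easy to get wrong: START_IP, END_IP, and GATEWAY_IP must all fall inside NETWORK_CIDR, and GATEWAY_IP must sit outside the START_IP to END_IP range. The following POSIX shell sketch checks those rules for IPv4 before you create the subnet; the function names are hypothetical, and ip_to_int accepts only plain dotted-quad addresses.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer; fail on malformed input.
ip_to_int() {
    local old_ifs="$IFS" octet
    case $1 in ''|*[!0-9.]*|.*|*.) return 1 ;; esac
    IFS=.
    set -- $1
    IFS="$old_ifs"
    [ $# -eq 4 ] || return 1
    for octet in "$@"; do
        case $octet in ''|0?*) return 1 ;; esac
        [ "$octet" -le 255 ] || return 1
    done
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Usage: check_subnet_plan NETWORK_CIDR GATEWAY_IP START_IP END_IP
check_subnet_plan() {
    local cidr="$1" prefix mask n g s e v
    prefix=${cidr#*/}
    case $prefix in ''|*[!0-9]*|0?*) echo "check_subnet_plan: bad CIDR $cidr" >&2; return 1 ;; esac
    [ "$prefix" -le 32 ] || { echo "check_subnet_plan: bad prefix /$prefix" >&2; return 1; }
    n=$(ip_to_int "${cidr%/*}") || { echo "check_subnet_plan: bad CIDR $cidr" >&2; return 1; }
    g=$(ip_to_int "$2") && s=$(ip_to_int "$3") && e=$(ip_to_int "$4") ||
        { echo "check_subnet_plan: bad address" >&2; return 1; }
    # Netmask with the top $prefix bits set, as a 32-bit integer.
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    for v in "$g" "$s" "$e"; do
        if [ $(( v & mask )) -ne $(( n & mask )) ]; then
            echo "check_subnet_plan: an address is outside $cidr" >&2
            return 1
        fi
    done
    if [ "$s" -gt "$e" ]; then
        echo "check_subnet_plan: START_IP is after END_IP" >&2
        return 1
    fi
    if [ "$g" -ge "$s" ] && [ "$g" -le "$e" ]; then
        echo "check_subnet_plan: GATEWAY_IP must be outside START_IP..END_IP" >&2
        return 1
    fi
}
```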

4.1.2. Configuring OpenStack Networking to Communicate with the Bare Metal Service on a Custom Composable Bare Metal Network

- Create a VLAN network with a VLAN ID that matches the OcProvisioning network that you create during deployment. Name the new network provisioning to match the default name of the cleaning network.

(overcloud) [stack@host01 ~]$ openstack network create \
  --share \
  --provider-network-type vlan \
  --provider-physical-network datacentre \
  --provider-segment 205 provisioning

- If the name of the overcloud network is not provisioning, log in to the controller, change the cleaning network name in the ironic.conf file, and restart the ironic_conductor container:

[heat-admin@overcloud-controller-0 ~]$ sudo vi /var/lib/config-data/puppet-generated/ironic/etc/ironic/ironic.conf
[heat-admin@overcloud-controller-0 ~]$ sudo podman restart ironic_conductor

4.2. Configuring Node Cleaning

By default, the Bare Metal service is set to use a network named provisioning for node cleaning. However, network names are not unique in OpenStack Networking, so it is possible for a tenant to create a network with the same name, causing a conflict with the Bare Metal service. Therefore, it is recommended to use the network UUID instead.

Configure cleaning by providing the provider network UUID on the controller running the Bare Metal service:

~/templates/ironic.yaml

parameter_defaults:
  IronicCleaningNetwork: UUID

Replace UUID with the UUID of the bare metal network that you created in the previous steps.

You can find the UUID with the openstack network show command:

$ openstack network show NETWORK_NAME -f value -c id

Note: You must perform this configuration after the initial overcloud deployment, because the UUID for the network is not available beforehand.

- Apply the changes by redeploying the overcloud with the openstack overcloud deploy command as described in Section 3.6, “Deploying the Overcloud”.

- Alternatively, to apply the change without redeploying, uncomment the following line in the ironic.conf file and replace <None> with the UUID of the bare metal network:

cleaning_network = <None>

Then restart the Bare Metal service:

# systemctl restart openstack-ironic-conductor.service

Redeploying the overcloud with openstack overcloud deploy reverts any manual changes, so ensure that you have added the cleaning configuration to ~/templates/ironic.yaml (described in the previous step) before you next use the openstack overcloud deploy command.
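Because IronicCleaningNetwork takes the network UUID rather than its name, a small guard can stop a network name from being written into ironic.yaml by mistake. The following sketch validates the value and prints the parameter_defaults block; the function name is hypothetical, and it accepts only the lowercase, hyphenated UUID form that OpenStack Networking uses for network IDs.

```shell
#!/bin/sh
# Sketch: print the IronicCleaningNetwork setting for ~/templates/ironic.yaml,
# refusing anything that does not look like a network UUID (for example, a
# network name such as "provisioning").
render_cleaning_env() {
    if ! printf '%s\n' "$1" |
        grep -Eqx '[0-9a-f]{8}-([0-9a-f]{4}-){3}[0-9a-f]{12}'; then
        echo "render_cleaning_env: not a network UUID: $1" >&2
        return 1
    fi
    printf 'parameter_defaults:\n  IronicCleaningNetwork: %s\n' "$1"
}
```

For example, pass it the output of openstack network show NETWORK_NAME -f value -c id. If ironic.yaml already has a parameter_defaults section, copy only the IronicCleaningNetwork line into that section.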

4.2.1. Manual Node Cleaning

To initiate node cleaning manually, the node must be in the manageable state.

Node cleaning has two modes:

Metadata only clean - Removes partitions from all disks on a given node. This is a faster clean cycle, but less secure, because it erases only partition tables. Use this mode only in trusted tenant environments.

Full clean - Removes all data from all disks, using either ATA secure erase or shredding. This can take several hours to complete.

To initiate a metadata clean:

$ openstack baremetal node clean UUID \
  --clean-steps '[{"interface": "deploy", "step": "erase_devices_metadata"}]'

To initiate a full clean:

$ openstack baremetal node clean UUID \
  --clean-steps '[{"interface": "deploy", "step": "erase_devices"}]'

Replace UUID with the UUID of the node that you want to clean.

After a successful cleaning, the node state returns to manageable. If the state is clean failed, inspect the last_error field for the cause of failure.
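The two clean modes differ only in the clean step name. As a hedged sketch, the following functions map a mode to the --clean-steps JSON used above and run the clean command, or only print it when DRY_RUN is set. The function names and the DRY_RUN convention are hypothetical, and the node must already be in the manageable state.

```shell
#!/bin/sh
# Map a clean mode to the --clean-steps JSON for the deploy interface.
clean_steps_json() {
    local step
    case $1 in
        metadata) step=erase_devices_metadata ;;
        full)     step=erase_devices ;;
        *) echo "clean_steps_json: mode must be metadata or full" >&2; return 1 ;;
    esac
    printf '[{"interface": "deploy", "step": "%s"}]\n' "$step"
}

# Usage: clean_node NODE_UUID metadata|full
# With DRY_RUN set, print the command (with the JSON single-quoted) instead.
clean_node() {
    local node="$1" steps
    steps=$(clean_steps_json "$2") || return 1
    if [ -n "${DRY_RUN:-}" ]; then
        printf "openstack baremetal node clean %s --clean-steps '%s'\n" "$node" "$steps"
    else
        openstack baremetal node clean "$node" --clean-steps "$steps"
    fi
}
```

After cleaning, openstack baremetal node show UUID -f value -c provision_state shows the resulting state, and -c last_error shows the failure reason if the state is clean failed.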

4.3. Creating the Bare Metal Flavor

You must create a flavor to use as a part of the deployment. The specifications (memory, CPU, and disk) of this flavor must be equal to or less than the hardware specifications of your bare metal node.

- Configure the shell to access Identity as the administrative user:

$ source ~/overcloudrc

- List existing flavors:

$ openstack flavor list

- Create a new flavor for the Bare Metal service:

$ openstack flavor create \
  --id auto --ram RAM \
  --vcpus VCPU --disk DISK \
  --property baremetal=true \
  --public baremetal

Replace RAM with the amount of memory in MB, VCPU with the number of vCPUs, and DISK with the disk storage value in GB. The property baremetal is used to distinguish bare metal from virtual instances.

- Verify that the new flavor is created with the correct values:

$ openstack flavor list
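The flavor must not request more memory, vCPUs, or disk than the node provides. The following sketch compares the two sets of numbers; the function name is hypothetical. It assumes that you read the node values from the memory_mb, cpus, and local_gb node properties (for example, with openstack baremetal node show NODE -f value -c properties), which introspection typically populates, and that the flavor values use the same units as openstack flavor create (RAM in MB, disk in GB).

```shell
#!/bin/sh
# Usage: flavor_fits_node FLAVOR_RAM_MB FLAVOR_VCPUS FLAVOR_DISK_GB \
#                         NODE_MEMORY_MB NODE_CPUS NODE_LOCAL_GB
# Succeeds only if every flavor value is less than or equal to the node value.
flavor_fits_node() {
    local arg
    if [ $# -ne 6 ]; then
        echo "flavor_fits_node: expected 6 numeric arguments" >&2
        return 1
    fi
    for arg in "$@"; do
        case $arg in
            ''|*[!0-9]*) echo "flavor_fits_node: not a number: $arg" >&2; return 1 ;;
        esac
    done
    [ "$1" -le "$4" ] && [ "$2" -le "$5" ] && [ "$3" -le "$6" ]
}
```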

4.4. Creating the Bare Metal Images

The deployment requires two sets of images:

- The deploy image is used by the Bare Metal service to boot the bare metal node and copy a user image onto the bare metal node. The deploy image consists of the kernel image and the ramdisk image.

- The user image is the image deployed onto the bare metal node. The user image also has a kernel image and ramdisk image, but additionally, the user image contains a main image. The main image is either a root partition, or a whole-disk image.

  - A whole-disk image is an image that contains the partition table and boot loader. The Bare Metal service does not control the subsequent reboot of a node deployed with a whole-disk image as the node supports localboot.

  - A root partition image contains only the root partition of the operating system. If you use a root partition, after the deploy image is loaded into the Image service, you can set the deploy image as the node boot image in the node properties. A subsequent reboot of the node uses netboot to pull down the user image.

The examples in this section use a root partition image to provision bare metal nodes.

4.4.1. Preparing the Deploy Images

You do not have to create the deploy image, because it was already used when the undercloud deployed the overcloud. The deploy image consists of two images, the kernel image and the ramdisk image:


/tftpboot/agent.kernel

/tftpboot/agent.ramdisk

These images are often in the home directory, unless you have deleted them, or unpacked them elsewhere. If they are not in the home directory, and you still have the rhosp-director-images-ipa package installed, these images are in the /usr/share/rhosp-director-images/ironic-python-agent*.tar file.

Extract the images and upload them to the Image service:
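One way to perform this step is sketched below under stated assumptions. The hypothetical function upload_deploy_images uploads a kernel and a ramdisk with openstack image create, using the aki and ari formats and the names bm-deploy-kernel and bm-deploy-ramdisk that later steps look up. It defaults to the /tftpboot paths listed above; if you extract the ironic-python-agent tar file instead (for example with tar -xf), pass the extracted kernel and ramdisk paths, and check the file names inside the archive yourself. The OPENSTACK variable is an illustrative override that lets you test the function with a stub command.

```shell
# Hypothetical helper: upload the deploy kernel and ramdisk to the Image
# service under the names bm-deploy-kernel and bm-deploy-ramdisk.
# OPENSTACK may name a stub command for testing; it defaults to openstack.
# Usage: upload_deploy_images [KERNEL_PATH] [RAMDISK_PATH]
upload_deploy_images() {
  local kernel=${1:-/tftpboot/agent.kernel}
  local ramdisk=${2:-/tftpboot/agent.ramdisk}
  local f
  for f in "$kernel" "$ramdisk"; do
    if [ ! -f "$f" ]; then
      echo "deploy image not found: $f" >&2
      return 1
    fi
  done
  # Kernel images use the aki container and disk formats.
  ${OPENSTACK:-openstack} image create --public \
    --container-format aki --disk-format aki \
    --file "$kernel" bm-deploy-kernel || return 1
  # Ramdisk images use the ari container and disk formats.
  ${OPENSTACK:-openstack} image create --public \
    --container-format ari --disk-format ari \
    --file "$ramdisk" bm-deploy-ramdisk
}
```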

4.4.2. Preparing the User Image

The final image that you need is the user image that will be deployed on the bare metal node. User images also have a kernel and ramdisk, along with a main image. Before you build these images, configure the disk image environment variables to suit your requirements.

4.4.3. Disk image environment variables

As a part of the disk image building process, the director requires a base image and registration details to obtain packages for the new overcloud image. Define these attributes with the following Linux environment variables.

The image building process temporarily registers the image with a Red Hat subscription and unregisters the system when the image building process completes.

To build a disk image, set Linux environment variables that suit your environment and requirements:

- DIB_LOCAL_IMAGE: Sets the local image that you want to use as the basis for your whole disk image.

- REG_ACTIVATION_KEY: Use an activation key instead of login details as part of the registration process.

- REG_AUTO_ATTACH: Defines whether to attach the most compatible subscription automatically.

- REG_BASE_URL: The base URL of the content delivery server that contains packages for the image. The default Customer Portal Subscription Management process uses https://cdn.redhat.com. If you use a Red Hat Satellite 6 server, set this parameter to the base URL of your Satellite server.

- REG_ENVIRONMENT: Registers to an environment within an organization.

- REG_METHOD: Sets the method of registration. Use portal to register a system to the Red Hat Customer Portal. Use satellite to register a system with Red Hat Satellite 6.

- REG_ORG: The organization where you want to register the images.

- REG_POOL_ID: The pool ID of the product subscription information.

- REG_PASSWORD: Sets the password for the user account that registers the image.

- REG_REPOS: A comma-separated string of repository names. Each repository in this string is enabled through subscription-manager.

- REG_SAT_URL: The base URL of the Satellite server to register overcloud nodes. Use the Satellite HTTP URL and not the HTTPS URL for this parameter. For example, use http://satellite.example.com and not https://satellite.example.com.

- REG_SERVER_URL: Sets the host name of the subscription service to use. The default host name is for the Red Hat Customer Portal at subscription.rhn.redhat.com. If you use a Red Hat Satellite 6 server, set this parameter to the host name of your Satellite server.

- REG_USER: Sets the user name for the account that registers the image.
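Before you start a long image build, you can check the variables against the rules stated in this list. The following hypothetical function, check_dib_env, is a minimal sketch: it confirms that DIB_LOCAL_IMAGE names an existing file, that REG_METHOD (if set) is portal or satellite, that REG_SAT_URL uses http:// when REG_METHOD is satellite, and that REG_REPOS has no empty entries. It does not contact any subscription service, and it does not check which other variables your registration method needs.

```shell
# Hypothetical pre-flight check for the disk image environment variables.
# It enforces only rules stated in this section and contacts no service.
check_dib_env() {
  # DIB_LOCAL_IMAGE must name an existing base image file.
  if [ -z "${DIB_LOCAL_IMAGE:-}" ] || [ ! -f "$DIB_LOCAL_IMAGE" ]; then
    echo "DIB_LOCAL_IMAGE is unset or is not a file" >&2
    return 1
  fi
  case "${REG_METHOD:-}" in
    ''|portal) ;;   # no registration, or Red Hat Customer Portal
    satellite)
      # REG_SAT_URL must be the Satellite HTTP URL, not the HTTPS URL.
      case "${REG_SAT_URL:-}" in
        http://*) ;;
        *) echo "REG_SAT_URL must start with http://" >&2; return 1 ;;
      esac ;;
    *) echo "REG_METHOD must be portal or satellite" >&2; return 1 ;;
  esac
  # REG_REPOS is a comma-separated list; reject empty repository names.
  if [ -n "${REG_REPOS:-}" ]; then
    case ",$REG_REPOS," in
      *,,*) echo "REG_REPOS contains an empty repository name" >&2; return 1 ;;
    esac
  fi
  return 0
}
```

For example: DIB_LOCAL_IMAGE=rhel-8.0-x86_64-kvm.qcow2 REG_METHOD=portal check_dib_env.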

4.4.4. Installing the User Image

- Download the Red Hat Enterprise Linux KVM guest image from the Customer Portal (requires login).

Define DIB_LOCAL_IMAGE as the downloaded image:

$ export DIB_LOCAL_IMAGE=rhel-8.0-x86_64-kvm.qcow2

Set your registration information. If you use Red Hat Customer Portal, you must configure the following information:

If you use Red Hat Satellite, you must configure the following information:

If you have any offline repositories, you can define DIB_YUM_REPO_CONF as local repository configuration:

$ export DIB_YUM_REPO_CONF=<path-to-local-repository-config-file>

Create the user images using the diskimage-builder tool:

$ disk-image-create rhel8 baremetal -o rhel-image

This command extracts the kernel as rhel-image.vmlinuz and initial ramdisk as rhel-image.initrd.

Upload the images to the Image service:
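The upload is sketched below under assumptions that you should verify against your environment. The hypothetical function upload_user_image uploads rhel-image.vmlinuz and rhel-image.initrd as user-image-vmlinuz and user-image-initrd (the names used in the deploy prerequisites), then uploads the main image as user-image with kernel_id and ramdisk_id properties that point at them. It assumes that disk-image-create also wrote the root partition image as rhel-image.qcow2; check the file name that your build produced. OPENSTACK is an illustrative override for testing with a stub.

```shell
# Hypothetical helper: upload the user kernel, ramdisk, and root partition
# image, and link the partition image to its kernel and ramdisk through the
# kernel_id and ramdisk_id image properties.
# Assumption: the partition image file is PREFIX.qcow2.
# Usage: upload_user_image [PREFIX]
upload_user_image() {
  local prefix=${1:-rhel-image}
  local kernel_id ramdisk_id
  kernel_id=$(${OPENSTACK:-openstack} image create --public \
    --container-format aki --disk-format aki \
    --file "$prefix.vmlinuz" -f value -c id user-image-vmlinuz) || return 1
  ramdisk_id=$(${OPENSTACK:-openstack} image create --public \
    --container-format ari --disk-format ari \
    --file "$prefix.initrd" -f value -c id user-image-initrd) || return 1
  ${OPENSTACK:-openstack} image create --public \
    --container-format bare --disk-format qcow2 \
    --property kernel_id="$kernel_id" \
    --property ramdisk_id="$ramdisk_id" \
    --file "$prefix.qcow2" user-image
}
```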

4.5. Configuring Deploy Interfaces

When provisioning bare metal nodes, the Bare Metal service (ironic) on the overcloud writes a base operating system image to the disk on the bare metal node. By default, the deploy interface mounts the image on an iSCSI mount and then copies the image to disk on each node. Alternatively, you can use direct deploy, which writes disk images from an HTTP location directly to disk on bare metal nodes.

4.5.1. Understanding the deploy process

Deploy interfaces have a critical role in the provisioning process. Deploy interfaces orchestrate the deployment and define the mechanism for transferring the image to the target disk.

Prerequisites

- Dependent packages configured on the Bare Metal service nodes that run ironic-conductor.

- OpenStack Compute (nova) must be configured to use the Bare Metal service endpoint.

- Flavors must be created for the available hardware, and nova must boot the new node from the correct flavor.

- Images must be available in Glance:

  - bm-deploy-kernel

  - bm-deploy-ramdisk

  - user-image

  - user-image-vmlinuz

  - user-image-initrd

- Hardware to enroll with the Ironic API service.
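A quick way to confirm the image prerequisites is to look up each name in the Image service. This is a minimal sketch with a hypothetical function name; it assumes that you uploaded the images under exactly the names listed above, and that OPENSTACK (an illustrative override for testing) is unset or names the openstack client.

```shell
# Hypothetical pre-flight check: report each required image as found or
# missing, and return 1 if any image is missing.
check_glance_images() {
  local missing=0 name
  for name in bm-deploy-kernel bm-deploy-ramdisk user-image \
              user-image-vmlinuz user-image-initrd; do
    if ${OPENSTACK:-openstack} image show "$name" -f value -c id >/dev/null 2>&1; then
      echo "found:   $name"
    else
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```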

Workflow

Use the following example workflow to understand the standard deploy process. Depending on the ironic driver interfaces that you use, some of the steps might differ:

- The Nova scheduler receives a boot instance request from the Nova API.

- The Nova scheduler identifies the relevant hypervisor and identifies the target physical node.

- The Nova compute manager claims the resources of the selected hypervisor.

- The Nova compute manager creates unbound tenant virtual interfaces (VIFs) in the Networking service according to the network interfaces that the nova boot request specifies.

- Nova compute invokes driver.spawn from the Nova compute virt layer to create a spawn task that contains all of the necessary information. During the spawn process, the virt driver completes the following steps:

  - Updates the target ironic node with information about the deploy image, instance UUID, requested capabilities, and flavor properties.

  - Calls the ironic API to validate the power and deploy interfaces of the target node.

  - Attaches the VIFs to the node. Each neutron port can be attached to any ironic port or port group. Port groups have higher priority than ports.

  - Generates a config drive.

- The Nova ironic virt driver issues a deploy request with the Ironic API to the Ironic conductor that services the bare metal node.

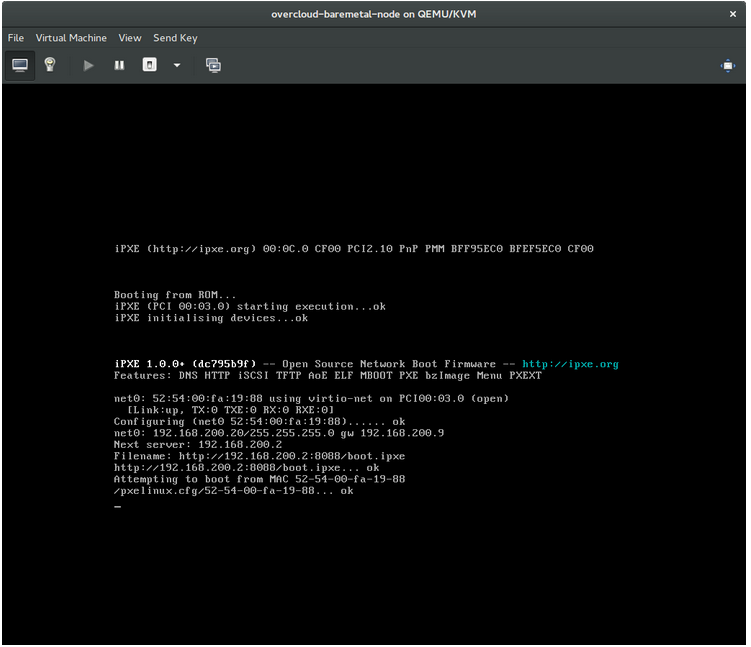

- Virtual interfaces are plugged in and the Neutron API updates DHCP to configure PXE/TFTP options.

- The ironic node boot interface prepares (i)PXE configuration and caches the deploy kernel and ramdisk.

- The ironic node management interface issues commands to enable network boot of the node.

- The ironic node deploy interface caches the instance image, kernel, and ramdisk, if necessary.

- The ironic node power interface instructs the node to power on.

- The node boots the deploy ramdisk.

- With iSCSI deployment, the conductor copies the image over iSCSI to the physical node. With direct deployment, the deploy ramdisk downloads the image from a temporary URL. This URL must be a Swift API-compatible object store or an HTTP URL.

- The node boot interface switches PXE configuration to refer to instance images and instructs the ramdisk agent to soft power off the node. If the soft power off fails, the bare metal node is powered off with IPMI/BMC.

- The deploy interface instructs the network interface to remove any provisioning ports, binds the tenant ports to the node, and powers the node on.

The provisioning state of the new bare metal node is now active.
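Because these steps run asynchronously, scripts usually poll the node until it reaches active. The following hypothetical helper is a minimal sketch: it reads provision_state with openstack baremetal node show, succeeds when the node reaches the target state (active by default), fails on any state that ends in "failed" (such as deploy failed), and stops after a timeout. The default timeout and polling interval are arbitrary assumptions; use a positive interval in real use. OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: poll a node until it reaches TARGET, enters a
# "... failed" state, or TIMEOUT seconds have passed.
# Usage: wait_for_provision_state NODE [TARGET] [TIMEOUT] [INTERVAL]
wait_for_provision_state() {
  local node=$1 target=${2:-active} timeout=${3:-1800} interval=${4:-15}
  local waited=0 state
  while :; do
    state=$(${OPENSTACK:-openstack} baremetal node show "$node" \
      -f value -c provision_state) || return 1
    case "$state" in
      "$target")
        echo "$node reached $target"
        return 0 ;;
      *failed)
        # For example "deploy failed"; the last_error field explains why.
        echo "$node entered '$state'" >&2
        return 1 ;;
    esac
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $node (last state: $state)" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```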

4.5.2. Configuring the direct deploy interface on the overcloud

The iSCSI deploy interface is the default deploy interface. However, you can enable the direct deploy interface to download an image from an HTTP location to the target disk.

The overcloud node memory tmpfs must have at least 8 GB of RAM.

Procedure

Create or modify a custom environment file /home/stack/templates/direct_deploy.yaml and specify the IronicEnabledDeployInterfaces and the IronicDefaultDeployInterface parameters.

parameter_defaults:
  IronicEnabledDeployInterfaces: direct
  IronicDefaultDeployInterface: direct

If you register your nodes with iscsi, retain the iscsi value in the IronicEnabledDeployInterfaces parameter:

parameter_defaults:
  IronicEnabledDeployInterfaces: direct,iscsi
  IronicDefaultDeployInterface: direct

By default, the Bare Metal service (ironic) agent on each node obtains the image stored in the Object Storage service (swift) through an HTTP link. Alternatively, ironic can stream this image directly to the node through the ironic-conductor HTTP server. To change the service that provides the image, set IronicImageDownloadSource to http in the /home/stack/templates/direct_deploy.yaml file:

parameter_defaults:
  IronicEnabledDeployInterfaces: direct
  IronicDefaultDeployInterface: direct
  IronicImageDownloadSource: http

Include the custom environment with your overcloud deployment:

Wait until deployment completes.
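The environment file variants above differ in only two settings, so they can be generated instead of edited by hand. The hypothetical function below is a minimal sketch that prints the parameter_defaults section: --keep-iscsi adds iscsi to IronicEnabledDeployInterfaces, and --http-download sets IronicImageDownloadSource to http. These option names belong to this sketch only, not to any Red Hat tool.

```shell
# Hypothetical generator for the direct deploy environment file.
# Usage: write_direct_deploy_env [--keep-iscsi] [--http-download] > FILE
write_direct_deploy_env() {
  local enabled=direct download_source=
  while [ "$#" -gt 0 ]; do
    case "$1" in
      --keep-iscsi) enabled=direct,iscsi ;;     # nodes registered with iscsi
      --http-download) download_source=http ;;  # stream from ironic-conductor
      *) echo "unknown option: $1" >&2; return 2 ;;
    esac
    shift
  done
  printf 'parameter_defaults:\n'
  printf '  IronicEnabledDeployInterfaces: %s\n' "$enabled"
  printf '  IronicDefaultDeployInterface: direct\n'
  if [ -n "$download_source" ]; then
    printf '  IronicImageDownloadSource: %s\n' "$download_source"
  fi
}
```

For example, write_direct_deploy_env --keep-iscsi > /home/stack/templates/direct_deploy.yaml, then pass the file to your overcloud deployment command with -e /home/stack/templates/direct_deploy.yaml, together with the environment files that you already use.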

If you did not specify IronicDefaultDeployInterface, or if you want to use a different deploy interface, specify the deploy interface when you create or update a node:

$ openstack baremetal node create --driver ipmi --deploy-interface direct

$ openstack baremetal node set <NODE> --deploy-interface direct

4.6. Adding Physical Machines as Bare Metal Nodes

There are two methods to enroll a bare metal node:

- Prepare an inventory file with the node details, import the file into the Bare Metal service, and make the nodes available.

- Register a physical machine as a bare metal node, then manually add its hardware details and create ports for each of its Ethernet MAC addresses. These steps can be performed on any node which has your overcloudrc file.

Both methods are detailed in this section.

After enrolling the physical machines, Compute is not immediately notified of new resources, because Compute’s resource tracker synchronizes periodically. Changes will be visible after the next periodic task is run. This value, scheduler_driver_task_period, can be updated in /etc/nova/nova.conf. The default period is 60 seconds.

4.6.1. Enrolling a Bare Metal Node With an Inventory File

Create a file overcloud-nodes.yaml, including the node details. You can enroll multiple nodes with one file.

Replace the following values:

- <IPMI_IP> with the address of the Bare Metal controller.

- <USER> with your username.

- <PASSWORD> with your password.

- <CPU_COUNT> with the number of CPUs.

- <CPU_ARCHITECTURE> with the type of architecture of the CPUs.

- <MEMORY> with the amount of memory in MiB.

- <ROOT_DISK> with the size of the root disk in GiB.

- <MAC_ADDRESS> with the MAC address of the NIC used to PXE boot.

You must include root_device only if the machine has multiple disks. Replace <SERIAL> with the serial number of the disk that you want to use for deployment.
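The following sketch shows one possible layout for overcloud-nodes.yaml, under stated assumptions. The hypothetical function node_entry prints one node in the nested layout that openstack baremetal create is assumed to accept (a nodes list whose entries contain driver_info, properties, and ports), filled in from the placeholders described above. The ipmi_address, ipmi_username, and ipmi_password keys are the connection fields of the ipmi driver. Values are not quoted or escaped, so quote any password that contains YAML special characters.

```shell
# Hypothetical generator for one node entry in overcloud-nodes.yaml.
# Print "nodes:" once, then call node_entry once per node.
# Usage: node_entry NAME IPMI_IP USER PASSWORD CPU_COUNT CPU_ARCH \
#                   MEMORY_MIB ROOT_DISK_GIB MAC_ADDRESS [SERIAL]
node_entry() {
  if [ "$#" -lt 9 ]; then
    echo "node_entry: expected at least 9 arguments" >&2
    return 2
  fi
  cat <<EOF
  - name: $1
    driver: ipmi
    driver_info:
      ipmi_address: $2
      ipmi_username: $3
      ipmi_password: $4
    properties:
      cpus: $5
      cpu_arch: $6
      memory_mb: $7
      local_gb: $8
EOF
  # root_device is needed only when the machine has multiple disks.
  if [ -n "${10:-}" ]; then
    cat <<EOF
      root_device:
        serial: ${10}
EOF
  fi
  cat <<EOF
    ports:
      - address: $9
EOF
}
```

For example: { echo 'nodes:'; node_entry node0 192.0.2.10 admin PASSWORD 8 x86_64 16384 100 52:54:00:aa:bb:cc; } > overcloud-nodes.yaml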

Configure the shell to use Identity as the administrative user:

$ source ~/overcloudrc

Import the inventory file into ironic:

$ openstack baremetal create overcloud-nodes.yaml

The nodes are now in the enroll state.

Specify the deploy kernel and deploy ramdisk on each node:

$ openstack baremetal node set NODE_UUID \
  --driver-info deploy_kernel=KERNEL_UUID \
  --driver-info deploy_ramdisk=INITRAMFS_UUID

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

Replace KERNEL_UUID with the unique identifier for the kernel deploy image that was uploaded to the Image service. Find this value with the following command:

$ openstack image show bm-deploy-kernel -f value -c id

Replace INITRAMFS_UUID with the unique identifier for the ramdisk image that was uploaded to the Image service. Find this value with the following command:

$ openstack image show bm-deploy-ramdisk -f value -c id
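The lookup and the node update can be combined so that you do not copy UUIDs by hand. This minimal sketch defines a hypothetical function, set_deploy_images, that resolves bm-deploy-kernel and bm-deploy-ramdisk to their IDs once and sets deploy_kernel and deploy_ramdisk on each node passed to it. OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: look up the deploy image IDs by name and set them on
# every node given as an argument.
# Usage: set_deploy_images NODE [NODE...]
set_deploy_images() {
  local kernel_id ramdisk_id node
  kernel_id=$(${OPENSTACK:-openstack} image show bm-deploy-kernel -f value -c id) || return 1
  ramdisk_id=$(${OPENSTACK:-openstack} image show bm-deploy-ramdisk -f value -c id) || return 1
  for node in "$@"; do
    ${OPENSTACK:-openstack} baremetal node set "$node" \
      --driver-info deploy_kernel="$kernel_id" \
      --driver-info deploy_ramdisk="$ramdisk_id" || return 1
  done
}
```

To update every node that is still in the enroll state, you might run: set_deploy_images $(openstack baremetal node list --provision-state enroll -f value -c UUID)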

Set the node’s provisioning state to available:

$ openstack baremetal node manage NODE_UUID
$ openstack baremetal node provide NODE_UUID

The Bare Metal service cleans the node if you enabled node cleaning.

Check that the nodes were successfully enrolled:

$ openstack baremetal node list

There may be a delay between enrolling a node and its state being shown.
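When you enroll several nodes from one file, the manage and provide steps can be looped. The following is a minimal sketch with a hypothetical function name. It assumes that your client supports the --wait option on the provision-state commands, which keeps provide from running while the node is still being verified after manage; the 600-second limit is an arbitrary choice. OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: move every node in the enroll state to manageable,
# then request the transition to available (cleaning runs if enabled).
provide_enrolled_nodes() {
  local nodes node
  nodes=$(${OPENSTACK:-openstack} baremetal node list \
    --provision-state enroll -f value -c UUID) || return 1
  for node in $nodes; do
    # Wait for manageable before calling provide.
    ${OPENSTACK:-openstack} baremetal node manage "$node" --wait 600 || return 1
    ${OPENSTACK:-openstack} baremetal node provide "$node" || return 1
  done
}
```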

4.6.2. Enrolling a Bare Metal Node Manually

Configure the shell to use Identity as the administrative user:

$ source ~/overcloudrc

Add a new node:

$ openstack baremetal node create --driver ipmi --name NAME

To create a node, you must specify the driver name. This example uses ipmi. To use a different driver, you must enable the driver by setting the IronicEnabledHardwareTypes parameter. For more information on supported drivers, see Appendix A, Bare Metal Drivers.

Important: Note the unique identifier for the node.

Update the node driver information to allow the Bare Metal service to manage the node:

$ openstack baremetal node set NODE_UUID \
  --driver-info PROPERTY=VALUE \
  --driver-info PROPERTY=VALUE

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace PROPERTY with a required property returned by the openstack baremetal driver property list command for your driver, for example openstack baremetal driver property list ipmi.

- Replace VALUE with a valid value for that property.

Specify the deploy kernel and deploy ramdisk for the node driver:

$ openstack baremetal node set NODE_UUID \
  --driver-info deploy_kernel=KERNEL_UUID \
  --driver-info deploy_ramdisk=INITRAMFS_UUID

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace KERNEL_UUID with the unique identifier for the .kernel image that was uploaded to the Image service.

- Replace INITRAMFS_UUID with the unique identifier for the .initramfs image that was uploaded to the Image service.

Update the node’s properties to match the hardware specifications on the node:

$ openstack baremetal node set NODE_UUID \
  --property cpus=CPU \
  --property memory_mb=RAM_MB \
  --property local_gb=DISK_GB \
  --property cpu_arch=ARCH

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace CPU with the number of CPUs.

- Replace RAM_MB with the RAM (in MB).

- Replace DISK_GB with the disk size (in GB).

- Replace ARCH with the architecture type.

OPTIONAL: Configure the node to reboot after initial deployment from a local boot loader installed on the node’s disk, instead of using PXE from ironic-conductor. You must also set the local boot capability on the flavor used to provision the node. To enable local boot, the image used to deploy the node must contain grub2. Configure local boot:

$ openstack baremetal node set NODE_UUID \
  --property capabilities="boot_option:local"

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

Inform the Bare Metal service of the node’s network card by creating a port with the MAC address of the NIC on the provisioning network:

$ openstack baremetal port create --node NODE_UUID MAC_ADDRESS

Replace NODE_UUID with the unique identifier for the node. Replace MAC_ADDRESS with the MAC address of the NIC used to PXE boot.

If you have multiple disks, set the root device hints. This informs the deploy ramdisk which disk it should use for deployment.

$ openstack baremetal node set NODE_UUID \
  --property root_device='{"PROPERTY": "VALUE"}'

Replace the following values:

- Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name.

- Replace PROPERTY and VALUE with details about the disk that you want to use for deployment, for example root_device='{"size": 128}'. The following properties are supported:

  - model (String): Device identifier.

  - vendor (String): Device vendor.

  - serial (String): Disk serial number.

  - hctl (String): Host:Channel:Target:Lun for SCSI.

  - size (Integer): Size of the device in GB.

  - wwn (String): Unique storage identifier.

  - wwn_with_extension (String): Unique storage identifier with the vendor extension appended.

  - wwn_vendor_extension (String): Unique vendor storage identifier.

  - rotational (Boolean): True for a rotational device (HDD), otherwise false (SSD).

  - name (String): The name of the device, for example: /dev/sdb1. Use this property only for devices with persistent names.

  Note: If you specify more than one property, the device must match all of those properties.
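Quoting the JSON by hand is error-prone, and the property types differ (size is an integer, rotational is a boolean). The hypothetical helper root_device_hint below is a minimal sketch that builds the hint from KEY=VALUE pairs, accepts only the properties listed above, and rejects malformed size and rotational values. It does not escape quotation marks inside values.

```shell
# Hypothetical helper: build a root_device hint from KEY=VALUE pairs.
# size is emitted as a JSON integer and rotational as a JSON boolean; the
# other supported properties are emitted as JSON strings (not escaped).
# Usage: root_device_hint KEY=VALUE [KEY=VALUE...]
root_device_hint() {
  local json="" pair key value item
  for pair in "$@"; do
    key=${pair%%=*}
    value=${pair#*=}
    case "$key" in
      size)
        case "$value" in
          ''|*[!0-9]*) echo "size must be an integer: $value" >&2; return 1 ;;
        esac
        item="\"size\": $value" ;;
      rotational)
        case "$value" in
          true|false) item="\"rotational\": $value" ;;
          *) echo "rotational must be true or false" >&2; return 1 ;;
        esac ;;
      model|vendor|serial|hctl|wwn|wwn_with_extension|wwn_vendor_extension|name)
        item="\"$key\": \"$value\"" ;;
      *) echo "unsupported root device property: $key" >&2; return 1 ;;
    esac
    # Join items with ", " (no separator before the first item).
    json=${json:+$json, }$item
  done
  printf '{%s}\n' "$json"
}
```

For example: openstack baremetal node set NODE_UUID --property root_device="$(root_device_hint serial=SERIAL size=128)"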

Validate the configuration of the node:

$ openstack baremetal node validate NODE_UUID

Replace NODE_UUID with the unique identifier for the node. Alternatively, use the node’s logical name. The output of the openstack baremetal node validate command should report either True or None for each interface. Interfaces marked None are those that you have not configured, or those that are not supported for your driver.

Note: Interfaces may fail validation due to missing 'ramdisk', 'kernel', and 'image_source' parameters. This result is expected, because the Compute service populates those missing parameters at the beginning of the deployment process.
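To review validation results in a script, you can read the value-formatted output one interface per line. The hypothetical function check_node_validation below is a minimal sketch: it lists interfaces that report None and fails if any interface reports False, printing the reason so that you can decide whether it is only the expected missing ramdisk, kernel, or image_source values. The Interface, Result, and Reason column names are assumed to match the table that the command prints; OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: summarize "openstack baremetal node validate" output.
# Returns 1 if any interface reports False; None results are only listed.
check_node_validation() {
  local node=$1 out iface result reason failed=0
  out=$(${OPENSTACK:-openstack} baremetal node validate "$node" \
    -f value -c Interface -c Result -c Reason) || return 1
  # Each line reads: INTERFACE RESULT REASON...
  while read -r iface result reason; do
    [ -n "$iface" ] || continue
    case "$result" in
      True) ;;
      None) echo "not configured or unsupported: $iface" ;;
      *) echo "FAILED: $iface: $reason"; failed=1 ;;
    esac
  done <<EOF
$out
EOF
  return "$failed"
}
```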

4.7. Configuring Redfish virtual media boot

This feature is available in this release as a Technology Preview, and therefore is not fully supported by Red Hat. It should only be used for testing, and should not be deployed in a production environment. For more information about Technology Preview features, see Scope of Coverage Details.

You can use Redfish virtual media boot to supply a boot image to the Baseboard Management Controller (BMC) of a node so that the BMC can insert the image into one of the virtual drives. The node can then boot from the virtual drive into the operating system that exists in the image.

Redfish hardware types support booting deploy, rescue, and user images over virtual media. The Bare Metal service (ironic) uses kernel and ramdisk images associated with a node to build bootable ISO images for UEFI or BIOS boot modes when the node is deployed. The major advantage of virtual media boot is that you can eliminate the TFTP image transfer phase of PXE and use HTTP GET, or other methods, instead.

4.7.1. Deploying a bare metal server with Redfish virtual media boot


To boot a node with the redfish hardware type over virtual media, set the boot interface to redfish-virtual-media and, for UEFI nodes, define the EFI System Partition (ESP) image. Then configure an enrolled node to use Redfish virtual media boot.

Prerequisites

- Redfish driver enabled in the enabled_hardware_types parameter in the undercloud.conf file.

- A bare metal node registered and enrolled.

- IPA and instance images in the Image Service (glance).

- For UEFI nodes, you must also have an EFI system partition image (ESP) available in the Image Service (glance).

- A bare metal flavor.

- A network for cleaning and provisioning.

- Sushy library installed:

  $ sudo yum install sushy

Procedure

Set the Bare Metal service (ironic) boot interface to redfish-virtual-media:

$ openstack baremetal node set --boot-interface redfish-virtual-media $NODE_NAME

Replace $NODE_NAME with the name of the node.

For UEFI nodes, set the boot mode to uefi:

$ openstack baremetal node set --property capabilities="boot_mode:uefi" $NODE_NAME

Replace $NODE_NAME with the name of the node.

Note: For BIOS nodes, do not complete this step.

For UEFI nodes, define the EFI System Partition (ESP) image:

$ openstack baremetal node set --driver-info bootloader=$ESP $NODE_NAME

Replace $ESP with the glance image UUID or URL for the ESP image, and replace $NODE_NAME with the name of the node.

Note: For BIOS nodes, do not complete this step.

Create a port on the bare metal node and associate the port with the MAC address of the NIC on the bare metal node:

$ openstack baremetal port create --pxe-enabled True --node $UUID $MAC_ADDRESS

Replace $UUID with the UUID of the bare metal node, and replace $MAC_ADDRESS with the MAC address of the NIC on the bare metal node.

Create the new bare metal server:

$ openstack server create \
  --flavor baremetal \
  --image $IMAGE \
  --network $NETWORK \
  test_instance

Replace $IMAGE and $NETWORK with the names of the image and network that you want to use.
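The node-side steps of this procedure can be grouped in one function. The following minimal sketch uses a hypothetical name and takes the node UUID (the port create step expects a UUID), a MAC address, and, for UEFI nodes only, the ESP image UUID or URL; BIOS nodes omit the third argument and skip the boot mode and bootloader steps. Setting capabilities this way replaces the node's whole capabilities string, so include any other capabilities, such as boot_option:local, that the node needs. OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: configure an enrolled redfish node for virtual media
# boot and create its PXE-enabled port.
# Usage: configure_redfish_vmedia NODE_UUID MAC_ADDRESS [ESP]
configure_redfish_vmedia() {
  if [ "$#" -lt 2 ]; then
    echo "usage: configure_redfish_vmedia NODE_UUID MAC_ADDRESS [ESP]" >&2
    return 2
  fi
  local node=$1 mac=$2 esp=${3:-}
  ${OPENSTACK:-openstack} baremetal node set \
    --boot-interface redfish-virtual-media "$node" || return 1
  if [ -n "$esp" ]; then
    # UEFI only: set the boot mode and the EFI System Partition image.
    ${OPENSTACK:-openstack} baremetal node set \
      --property capabilities="boot_mode:uefi" "$node" || return 1
    ${OPENSTACK:-openstack} baremetal node set \
      --driver-info bootloader="$esp" "$node" || return 1
  fi
  ${OPENSTACK:-openstack} baremetal port create --pxe-enabled True \
    --node "$node" "$mac"
}
```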

4.8. Using Host Aggregates to Separate Physical and Virtual Machine Provisioning

OpenStack Compute uses host aggregates to partition availability zones, and group together nodes with specific shared properties. When an instance is provisioned, Compute’s scheduler compares properties on the flavor with the properties assigned to host aggregates, and ensures that the instance is provisioned in the correct aggregate and on the correct host: either on a physical machine or as a virtual machine.

Complete the steps in this section to perform the following operations:

- Add the property baremetal to your flavors, setting it to either true or false.

- Create separate host aggregates for bare metal hosts and compute nodes with a matching baremetal property. Nodes grouped into an aggregate inherit this property.

Creating a Host Aggregate

Set the baremetal property to true on the baremetal flavor.

$ openstack flavor set baremetal --property baremetal=true

Set the baremetal property to false on the flavors used for virtual instances.

$ openstack flavor set FLAVOR_NAME --property baremetal=false

Create a host aggregate called baremetal-hosts:

$ openstack aggregate create --property baremetal=true baremetal-hosts

Add each controller node to the baremetal-hosts aggregate:

$ openstack aggregate add host baremetal-hosts HOSTNAME

Note: If you have created a composable role with the NovaIronic service, add all the nodes with this service to the baremetal-hosts aggregate. By default, only the controller nodes have the NovaIronic service.

Create a host aggregate called virtual-hosts:

$ openstack aggregate create --property baremetal=false virtual-hosts

Add each compute node to the virtual-hosts aggregate:

$ openstack aggregate add host virtual-hosts HOSTNAME

If you did not add the following Compute scheduler filter when deploying the overcloud, add it now to the existing list under scheduler_default_filters in /etc/nova/nova.conf:

AggregateInstanceExtraSpecsFilter
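The flavor and aggregate steps, and the filter list edit, are sketched below as two hypothetical functions. setup_baremetal_aggregates takes a space-separated list of hosts that run the NovaIronic service, a space-separated list of compute hosts, and the names of your virtual instance flavors. add_aggregate_filter prints a scheduler_default_filters value with AggregateInstanceExtraSpecsFilter appended if it is missing; it only transforms a string and does not edit /etc/nova/nova.conf. OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: tag flavors and build the two host aggregates.
# Usage: setup_baremetal_aggregates "BM_HOSTS" "VIRT_HOSTS" [VIRT_FLAVOR...]
setup_baremetal_aggregates() {
  if [ "$#" -lt 2 ]; then
    echo "usage: setup_baremetal_aggregates BM_HOSTS VIRT_HOSTS [FLAVOR...]" >&2
    return 2
  fi
  local bm_hosts=$1 virt_hosts=$2 host flavor
  shift 2
  ${OPENSTACK:-openstack} flavor set baremetal --property baremetal=true || return 1
  for flavor in "$@"; do
    ${OPENSTACK:-openstack} flavor set "$flavor" --property baremetal=false || return 1
  done
  ${OPENSTACK:-openstack} aggregate create --property baremetal=true baremetal-hosts || return 1
  # Host lists are split on whitespace on purpose.
  for host in $bm_hosts; do
    ${OPENSTACK:-openstack} aggregate add host baremetal-hosts "$host" || return 1
  done
  ${OPENSTACK:-openstack} aggregate create --property baremetal=false virtual-hosts || return 1
  for host in $virt_hosts; do
    ${OPENSTACK:-openstack} aggregate add host virtual-hosts "$host" || return 1
  done
}

# Hypothetical helper: append AggregateInstanceExtraSpecsFilter to a
# comma-separated filter list unless it is already present. Spaces are removed.
add_aggregate_filter() {
  local list
  list=$(printf '%s' "$1" | tr -d ' ')
  case ",$list," in
    *,AggregateInstanceExtraSpecsFilter,*) printf '%s\n' "$list" ;;
    *) printf '%s\n' "${list:+$list,}AggregateInstanceExtraSpecsFilter" ;;
  esac
}
```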

Chapter 5. Administering Bare Metal Nodes

This chapter describes how to provision a physical machine on an enrolled bare metal node. Instances can be launched either from the command line or from the OpenStack dashboard.

5.1. Launching an Instance Using the Command Line Interface

Use the openstack command line interface to deploy a bare metal instance.

Deploying an Instance on the Command Line

Configure the shell to access Identity as the administrative user:

$ source ~/overcloudrc

Deploy the instance:

$ openstack server create \
  --nic net-id=NETWORK_UUID \
  --flavor baremetal \
  --image IMAGE_UUID \
  INSTANCE_NAME

Replace the following values:

- Replace NETWORK_UUID with the unique identifier for the network that was created for use with the Bare Metal service.

- Replace IMAGE_UUID with the unique identifier for the disk image that was uploaded to the Image service.

- Replace INSTANCE_NAME with a name for the bare metal instance.

To assign the instance to a security group, include --security-group SECURITY_GROUP, replacing SECURITY_GROUP with the name of the security group. Repeat this option to add the instance to multiple groups. For more information on security group management, see the Users and Identity Management Guide.

Check the status of the instance:

$ openstack server list --name INSTANCE_NAME
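The deployment and the status check can be combined by using the --wait option of openstack server create, which blocks until the build finishes. The following hypothetical function is a minimal sketch that adds one --security-group option per group name passed after the instance name. OPENSTACK is an illustrative override for testing.

```shell
# Hypothetical helper: launch a bare metal instance and wait for the build.
# Usage: launch_baremetal_instance NETWORK_UUID IMAGE_UUID NAME [SECURITY_GROUP...]
launch_baremetal_instance() {
  if [ "$#" -lt 3 ]; then
    echo "usage: launch_baremetal_instance NETWORK_UUID IMAGE_UUID NAME [SECURITY_GROUP...]" >&2
    return 2
  fi
  local net=$1 image=$2 name=$3 group
  shift 3
  # Rewrite the remaining arguments in place as --security-group GROUP pairs.
  for group in "$@"; do
    set -- "$@" --security-group "$group"
    shift
  done
  ${OPENSTACK:-openstack} server create --wait \
    --nic net-id="$net" --flavor baremetal --image "$image" \
    "$@" "$name"
}
```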

5.2. Launch an Instance Using the Dashboard

Use the dashboard graphical user interface to deploy a bare metal instance.

Deploying an Instance in the Dashboard

- Log in to the dashboard at http[s]://DASHBOARD_IP/dashboard.

- Click Project > Compute > Instances.

- Click Launch Instance.

  - In the Details tab, specify the Instance Name and select 1 for Count.

  - In the Source tab, select an Image from Select Boot Source, then click the + (plus) symbol to select an operating system disk image. The chosen image moves to Allocated.

  - In the Flavor tab, select baremetal.

  - In the Networks tab, use the + (plus) and - (minus) buttons to move required networks from Available to Allocated. Ensure that the shared network created for the Bare Metal service is selected here.

  - If you want to assign the instance to a security group, in the Security Groups tab, use the arrow to move the group to Allocated.

- Click Launch Instance.

5.3. Configure Port Groups in the Bare Metal Provisioning Service

Port group functionality for bare metal nodes is available in this release as a Technology Preview, and therefore is not fully supported by Red Hat. It should be used only for testing, and should not be deployed in a production environment. For more information about Technology Preview features, see Scope of Coverage Details.

Port groups (bonds) provide a method to aggregate multiple network interfaces into a single ‘bonded’ interface. Port group configuration always takes precedence over an individual port configuration.

If a port group has a physical network, then all the ports in that port group should have the same physical network. The Bare Metal Provisioning service supports configuration of port groups in the instances using configdrive.

Bare Metal Provisioning service API version 1.26 supports port group configuration.

5.3.1. Configure the Switches

To configure port groups in a Bare Metal Provisioning deployment, you must configure the port groups on the switches manually. Ensure that the mode and properties on the switch correspond to the mode and properties on the bare metal side, because the naming can vary on the switch.

You cannot use port groups for provisioning and cleaning if you need to boot a deployment using iPXE.

Port group fallback allows all the ports in a port group to fall back to individual switch ports when a connection fails. Depending on whether a switch supports port group fallback, use the --support-standalone-ports or the --unsupport-standalone-ports option.

5.3.2. Configure Port Groups in the Bare Metal Provisioning Service

Create a port group by specifying the node to which it belongs, its name, address, mode, properties, and whether it supports fallback to standalone ports:

openstack baremetal port group create --node NODE_UUID --name NAME --address MAC_ADDRESS --mode MODE --property miimon=100 --property xmit_hash_policy="layer2+3" --support-standalone-ports
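If you create port groups for many nodes, you might generate this command from a script. The following Python sketch is one way to do that, assuming that the openstack client is on the PATH; the helper names are hypothetical, and the node UUID, name, MAC address, and mode values are placeholders that you supply. It uses only the flags shown in the command above, and passing an argument list to subprocess avoids shell quoting problems with values such as layer2+3.

```python
import shlex
import subprocess


def port_group_create_args(node_uuid, name, mac_address, mode,
                           properties, standalone_ports=True):
    """Build the argv for `openstack baremetal port group create`.

    Hypothetical helper: the flags mirror the documented command, and the
    values are placeholders supplied by the caller.
    """
    args = ["openstack", "baremetal", "port", "group", "create",
            "--node", node_uuid,
            "--name", name,
            "--address", mac_address,
            "--mode", mode]
    for key, value in properties.items():
        # Each bonding property becomes its own --property KEY=VALUE pair.
        args += ["--property", f"{key}={value}"]
    # Pick the fallback option that matches what the switch supports.
    args.append("--support-standalone-ports" if standalone_ports
                else "--unsupport-standalone-ports")
    return args


def create_port_group(**kwargs):
    # Runs the client without a shell, so no extra quoting is needed.
    return subprocess.run(port_group_create_args(**kwargs), check=True)


if __name__ == "__main__":
    # Placeholder values; replace them with the values for your node.
    argv = port_group_create_args(
        node_uuid="NODE_UUID", name="NAME", mac_address="MAC_ADDRESS",
        mode="MODE",
        properties={"miimon": 100, "xmit_hash_policy": "layer2+3"})
    print(shlex.join(argv))  # inspect the command before running it
```

Printing the command with shlex.join first lets you review it before calling create_port_group.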

You can also update a port group by using the openstack baremetal port group set command.

If you do not specify an address, the deployed instance port group address is the same as the OpenStack Networking port address. The port group is not configured if the neutron port is not attached.

During interface attachment, port groups have a higher priority than ports, so they are used first. Currently, it is not possible to specify whether a port group or a port is desired in an interface attachment request. Port groups that do not have any ports are ignored.
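The attachment order described above can be modeled with a short Python sketch. This is a simplified illustration, not the Bare Metal service's implementation: the Port and PortGroup classes and the select_interface function are hypothetical. It encodes the two rules stated here (port groups are tried before ports, and port groups without ports are ignored), plus one assumption that this section does not state: ports that belong to a port group are not offered individually. Other matching criteria, such as physical networks, are not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Port:
    uuid: str
    vif: Optional[str] = None      # VIF already attached, if any


@dataclass
class PortGroup:
    uuid: str
    ports: List[Port] = field(default_factory=list)
    vif: Optional[str] = None


def select_interface(port_groups, ports):
    """Return the UUID of the first free interface for a new VIF, or None."""
    # Rule 1: port groups have priority; empty port groups are ignored.
    for group in port_groups:
        if group.ports and group.vif is None:
            return group.uuid
    # Assumption: member ports of a port group are not used on their own.
    grouped = {p.uuid for g in port_groups for p in g.ports}
    for port in ports:
        if port.uuid not in grouped and port.vif is None:
            return port.uuid
    return None
```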

Note: Port groups must be configured manually in standalone mode, either in the image or by generating the configdrive and adding it to the node’s instance_info. Ensure that you have cloud-init version 0.7.7 or later for the port group configuration to work.

Associate a port with a port group:

During port creation:

openstack baremetal port create --node NODE_UUID --address MAC_ADDRESS --port-group test

During port update:

openstack baremetal port set PORT_UUID --port-group PORT_GROUP_UUID


Boot an instance by providing an image that has cloud-init or supports bonding.

To check if the port group has been configured properly, run the following command on the instance:

cat /proc/net/bonding/bondX

Here, X is a number that cloud-init generates for each configured port group, starting with 0 and incremented by one for each additional port group.
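If you check several bonds, you can summarize the bondX files instead of reading them by eye. The following Python sketch assumes the usual layout of the Linux bonding driver's status file (a "Bonding Mode:" line, the bond's own "MII Status:" line, then a "Slave Interface:" line followed by an "MII Status:" line for each member interface). The function names are hypothetical, and any sample text you test it with is illustrative rather than captured from a real node.

```python
import glob
import os


def parse_bonding_status(text):
    """Summarize the text of one /proc/net/bonding/bondX file.

    Returns the bonding mode, the bond's own MII status, and a mapping of
    each member (slave) interface to its MII status.
    """
    summary = {"mode": None, "mii_status": None, "slaves": {}}
    current = None  # member interface whose block is being read
    for raw in text.splitlines():
        key, sep, value = raw.partition(":")
        if not sep:
            continue  # skip blank lines and lines without a key
        key, value = key.strip(), value.strip()
        if key == "Bonding Mode":
            summary["mode"] = value
        elif key == "Slave Interface":
            current = value
            summary["slaves"][current] = None
        elif key == "MII Status":
            if current is None:
                summary["mii_status"] = value   # status of the bond itself
            else:
                summary["slaves"][current] = value
    return summary


def all_bonds(root="/proc/net/bonding"):
    """Parse every bondX file under ``root`` (bond0, bond1, ...)."""
    bonds = {}
    for path in sorted(glob.glob(os.path.join(root, "bond*"))):
        with open(path) as handle:
            bonds[os.path.basename(path)] = parse_bonding_status(handle.read())
    return bonds
```

A bond whose member interfaces all report "up" is a reasonable first indication that the port group configuration was applied.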

5.4. Determining the Host to IP Address Mapping

Use the following commands to determine which IP addresses are assigned to which host and also to which bare metal node.

You can use these commands to determine the host-to-IP mapping from the undercloud without accessing the hosts directly.
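As an illustration of what such a mapping looks like, the following Python sketch assumes that you have the mapping as /etc/hosts-style lines (an IP address followed by one or more host names). It builds a lookup table and filters it for one host. The function names and the host names in any sample input are hypothetical; this is not the output format of a specific command.

```python
def parse_hosts(text):
    """Map each host name to its IP addresses from /etc/hosts-style text."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments and blanks
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, []).append(ip)
    return mapping


def filter_host(mapping, pattern):
    """Return only the entries whose host name contains ``pattern``."""
    return {name: ips for name, ips in mapping.items() if pattern in name}
```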

To filter a particular host, run the following command:

To map the hosts to bare metal nodes, run the following command:

5.5. Attaching and Detaching a Virtual Network Interface

The Bare Metal Provisioning service has an API to manage the mapping between virtual network interfaces (for example, the ones used in the OpenStack Networking service) and physical network interfaces (NICs). You can configure these interfaces for each Bare Metal Provisioning node, which allows you to set the virtual network interface (VIF) to physical network interface (PIF) mapping logic by using the openstack baremetal node vif* commands.

The following example procedure describes the steps to attach and detach VIFs.

List the VIF IDs currently connected to the bare metal node:

openstack baremetal node vif list baremetal-0

After the VIF is attached, the Bare Metal service updates the virtual port in the OpenStack Networking service with the actual MAC address of the physical port.

This can be checked using the following command:

Create a new port on the network where you created the baremetal-0 node:

openstack port create --network baremetal --fixed-ip ip-address=192.168.24.24 baremetal-0-extra

Remove a port from the instance:

openstack server remove port overcloud-baremetal-0 4475bc5a-6f6e-466d-bcb6-6c2dce0fba16

Check that the IP address no longer exists on the list:

openstack server list

Check if there are VIFs attached to the node:

openstack baremetal node vif list baremetal-0
openstack port list

Add the newly created port:

openstack server add port overcloud-baremetal-0 baremetal-0-extra

Verify that the new IP address shows the new port:

Check if the VIF ID is the UUID of the new port:

Check if the OpenStack Networking port MAC address is updated and matches one of the Bare Metal service ports:

Reboot the bare metal node so that it recognizes the new IP address:

openstack server reboot overcloud-baremetal-0

After detaching or attaching interfaces, the bare metal OS removes, adds, or modifies the network interfaces that have changed. When you replace a port, a DHCP request obtains the new IP address, but this may take some time because the old DHCP lease is still valid. The simplest way to initiate these changes immediately is to reboot the bare metal host.
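The MAC address check in the procedure above compares one OpenStack Networking port with the Bare Metal service ports of the node. The following Python sketch shows that comparison, assuming that you have already collected the MAC addresses (for example, from the output of openstack port show and openstack baremetal port list). The function names are hypothetical. MAC addresses are normalized first because different tools may print them in different letter case or with different separators.

```python
import re


def normalize_mac(mac):
    """Return a MAC address as lowercase, colon-separated hex pairs."""
    digits = re.sub(r"[^0-9a-fA-F]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def vif_mac_matches(neutron_mac, baremetal_macs):
    """True if the Networking port MAC matches one of the node's ports."""
    wanted = normalize_mac(neutron_mac)
    return any(normalize_mac(mac) == wanted for mac in baremetal_macs)
```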

5.6. Configuring Notifications for the Bare Metal Service

You can configure the Bare Metal service to emit notifications for different events that occur within the service. External services can use these notifications for billing purposes, monitoring a data store, and so on. This section describes how to enable these notifications.

To enable notifications for the Bare Metal service, you must set the following options in your ironic.conf configuration file:

- The notification_level option in the [DEFAULT] section determines the minimum priority level for which notifications are sent. You can set this option to debug, info, warning, error, or critical. If the option is set to warning, all notifications with priority level warning, error, or critical are sent, but not notifications with priority level debug or info. If this option is not set, no notifications are sent. The priority level of each available notification is documented below.
- The transport_url option in the [oslo_messaging_notifications] section determines the message bus used when sending notifications. If this option is not set, the default transport used for RPC is used.
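To illustrate both options, the following Python sketch reads a minimal ironic.conf fragment with configparser and applies the minimum-priority rule described above. The transport_url value is a hypothetical placeholder; substitute the message bus URL of your deployment. The should_emit function is also hypothetical and only mirrors the documented filtering behavior, not the service's code.

```python
import configparser

# Hypothetical ironic.conf fragment; the transport URL is a placeholder.
SAMPLE_CONF = """
[DEFAULT]
notification_level = warning

[oslo_messaging_notifications]
transport_url = rabbit://user:password@controller:5672/
"""

# Notification priority levels in increasing order of severity.
LEVELS = ["debug", "info", "warning", "error", "critical"]


def should_emit(conf, priority):
    """Return True if a notification of ``priority`` would be sent."""
    minimum = conf["DEFAULT"].get("notification_level")
    if minimum is None:
        return False  # notification_level unset: no notifications are sent
    return LEVELS.index(priority) >= LEVELS.index(minimum)


# interpolation=None keeps characters in URLs from being interpreted.
conf = configparser.ConfigParser(interpolation=None)
conf.read_string(SAMPLE_CONF)
```

With notification_level set to warning, should_emit returns False for debug and info and True for warning, error, and critical.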

All notifications are emitted on the ironic_versioned_notifications topic in the message bus. Generally, each type of message that traverses the message bus is associated with a topic that describes the contents of the message.

Notifications can be lost, and there is no guarantee that a notification will make it across the message bus to the end user.

5.7. Configuring Automatic Power Fault Recovery

Each node in the Bare Metal service (ironic) has a string field, fault, that records power, cleaning, and rescue abort failures. The field can have the following values:

| Fault | Description |
|---|---|
| power failure | The node is in maintenance mode due to power sync failures that exceed the maximum number of retries. |
| clean failure | The node is in maintenance mode due to the failure of a cleaning operation. |
| rescue abort failure | The node is in maintenance mode due to the failure of a cleaning operation during rescue abort. |
| none | There is no fault present. |

The conductor checks the value of this field periodically. If the conductor detects a power failure state and can successfully restore power to the node, it removes the node from maintenance mode and restores it to operation.

If the operator places a node in maintenance mode manually, the conductor does not automatically remove the node from maintenance mode.

The default interval is 300 seconds. However, you can configure this interval with director by using the following hieradata key:

ironic::conductor::power_failure_recovery_interval

To disable automatic power fault recovery, set the value to 0.
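The recovery rules above can be summarized in a small Python sketch. It is a simplified model, not the conductor's code: the Node class, the recover_power_faults function, and the power_restored callback are hypothetical. Only the behavior described in this section is modeled, and the interval argument is used only to decide whether the check is enabled; scheduling is not modeled.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional

POWER_FAILURE = "power failure"


@dataclass
class Node:
    name: str
    maintenance: bool = False
    fault: Optional[str] = None   # None when there is no fault


def recover_power_faults(nodes: Iterable[Node],
                         power_restored: Callable[[Node], bool],
                         interval: int = 300) -> List[str]:
    """Run one pass of the periodic check; return names of recovered nodes.

    An interval of 0 disables automatic power fault recovery.
    """
    if interval == 0:
        return []
    recovered = []
    for node in nodes:
        # Only nodes in maintenance because of a power failure qualify;
        # manual maintenance (no fault) and other faults are left alone.
        if node.maintenance and node.fault == POWER_FAILURE:
            if power_restored(node):
                node.maintenance = False
                node.fault = None
                recovered.append(node.name)
    return recovered
```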

5.8. Introspecting Overcloud Nodes

You can perform introspection of overcloud nodes to collect the hardware specifications of the nodes.

Source the rc file:

source ~/overcloudrc

Run the introspection command:

openstack baremetal introspection start [--wait] <NODENAME>

Replace <NODENAME> with the name of the node that you want to inspect.

Check the introspection status:

openstack baremetal introspection status <NODENAME>

Replace <NODENAME> with the name of the node.
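The --wait option blocks until introspection finishes. If you start introspection without --wait and poll the status yourself, the loop looks roughly like the following Python sketch. It assumes that each status check yields a mapping with finished and error keys; verify the actual columns that openstack baremetal introspection status prints in your release. The get_status callable is a hypothetical stand-in for running that command.

```python
import time
from typing import Callable, Mapping


def wait_for_introspection(get_status: Callable[[], Mapping],
                           timeout: float = 1800,
                           poll_interval: float = 10,
                           sleep: Callable[[float], None] = time.sleep):
    """Poll until introspection finishes; raise on error or timeout."""
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status.get("finished"):
            # A finished introspection can still have failed.
            if status.get("error"):
                raise RuntimeError(f"introspection failed: {status['error']}")
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("introspection did not finish in time")
        sleep(poll_interval)
```

Injecting the sleep function keeps the loop easy to test without waiting.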

Chapter 6. Booting from cinder volumes

This section contains information on creating and connecting volumes created in OpenStack Block Storage (cinder) to bare metal instances created with OpenStack Bare Metal (ironic).

6.1. Cinder volume boot for bare metal nodes

You can boot bare metal nodes from a block storage device that is stored in OpenStack Block Storage (cinder). OpenStack Bare Metal (ironic) connects bare metal nodes to volumes through an iSCSI interface.

Ironic enables this feature during the overcloud deployment. However, consider the following conditions prior to deployment:

- The overcloud requires the cinder iSCSI backend to be enabled. Set the CinderEnableIscsiBackend heat parameter to true during overcloud deployment.
- You cannot use the cinder volume boot feature with a Red Hat Ceph Storage backend.
- You must set the rd.iscsi.firmware=1 kernel parameter on the boot disk.
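To confirm that a booted node actually received the kernel parameter, you can inspect its kernel command line. The following Python sketch assumes that it runs on the booted node, where /proc/cmdline is readable; the function names are hypothetical.

```python
import shlex


def has_kernel_param(cmdline, name, value):
    """Return True if ``name=value`` appears on the kernel command line."""
    for token in shlex.split(cmdline):
        key, sep, val = token.partition("=")
        if key == name and sep and val == value:
            return True
    return False


def iscsi_firmware_enabled(path="/proc/cmdline"):
    # Check for the rd.iscsi.firmware=1 parameter required for volume boot.
    with open(path) as handle:
        return has_kernel_param(handle.read(), "rd.iscsi.firmware", "1")
```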

6.2. Configuring nodes for cinder volume boot

You must configure certain options for each bare metal node to successfully boot from a cinder volume.

Procedure

Log in to the undercloud as the stack user.

Source the overcloud credentials:

source ~/overcloudrc

Set the iscsi_boot capability to true and the storage-interface to cinder for the selected node:

openstack baremetal node set --property capabilities=iscsi_boot:true --storage-interface cinder <NODEID>

Replace <NODEID> with the ID of the chosen node.

Create an iSCSI connector for the node:

openstack baremetal volume connector create --node <NODEID> --type iqn --connector-id iqn.2010-10.org.openstack.node<NUM>

The connector ID for each node must be unique. In the example, the connector is iqn.2010-10.org.openstack.node<NUM>, where <NUM> is an incremented number for each node.
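Because each connector ID must be unique, it can help to generate the commands for all nodes at once. The following Python sketch follows the iqn.2010-10.org.openstack.node<NUM> pattern from the example above and builds one volume connector create argument list per node. The function name and the starting number are assumptions, and the node IDs you pass in are placeholders.

```python
def connector_commands(node_ids, prefix="iqn.2010-10.org.openstack.node",
                       start=1):
    """Return one `volume connector create` argv per node, with unique IQNs."""
    if len(set(node_ids)) != len(node_ids):
        raise ValueError("each node must appear only once")
    commands = []
    for num, node_id in enumerate(node_ids, start=start):
        iqn = f"{prefix}{num}"  # incremented number keeps each ID unique
        commands.append(["openstack", "baremetal", "volume", "connector",
                         "create", "--node", node_id, "--type", "iqn",
                         "--connector-id", iqn])
    return commands
```

You can pass each returned list to subprocess.run, or join it with shlex.join to review the commands first.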

6.3. Configuring iSCSI kernel parameters on the boot disk

You must enable iSCSI booting in the kernel of the image. To accomplish this, mount the QCOW2 image and enable the iSCSI components on the image.

Prerequisites

Download a Red Hat Enterprise Linux QCOW2 image and copy it to the /home/stack/ directory on the undercloud. You can download Red Hat Enterprise Linux KVM images in QCOW2 format from the following pages:

Procedure

Log in to the undercloud as the stack user.

Mount the QCOW2 image and access it as the root user:

Load the