Networking Guide

An advanced guide to Red Hat OpenStack Platform Networking

Abstract

Preface

The OpenStack Networking service (codename neutron) is the software-defined networking component of Red Hat OpenStack Platform 16.0.

Software-defined networking (SDN)

Network administrators can use software-defined networking (SDN) to manage network services through abstraction of lower-level functionality. While server workloads have been migrated into virtual environments, they are still just servers that look for a network connection to send and receive data. SDN meets this need by moving networking equipment (such as routers and switches) into the same virtualized space. If you are already familiar with basic networking concepts, then it is easy to consider that these physical networking concepts have now been virtualized, just like the servers that they connect.

Topics covered in this book

- Preface - Offers a brief definition of software-defined networking (SDN).

Part 1 - Covers common administrative tasks and basic troubleshooting steps:

- Adding and removing network resources

- Troubleshooting basic networks

- Troubleshooting project networks

Part 2 - Contains cookbook-style scenarios for advanced Red Hat OpenStack Platform Networking features, including:

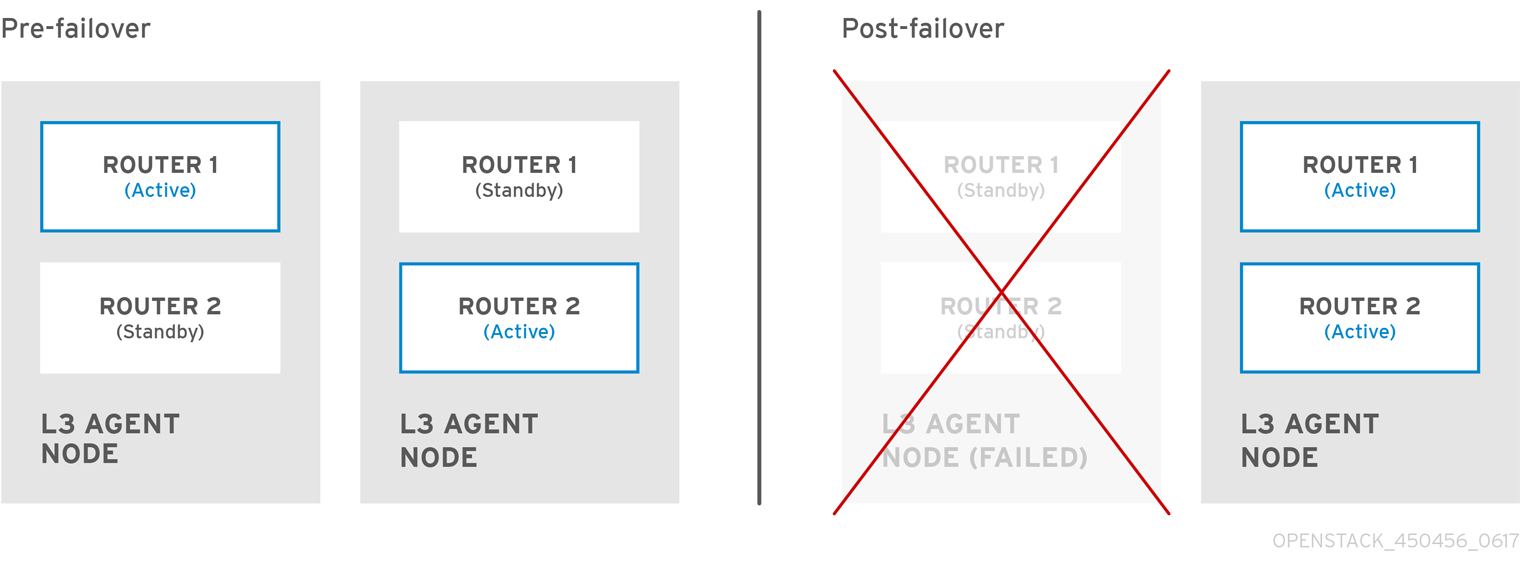

- Configuring Layer 3 High Availability for virtual routers

- Configuring DVR and other networking features

Chapter 1. Networking overview

1.1. How networking works

The term networking refers to the act of moving information from one computer to another. At the most basic level, this is performed by running a cable between two machines, each with network interface cards (NICs) installed. In the OSI networking model, the cable represents layer 1.

Now, if you want more than two computers to get involved in the conversation, you would need to scale out this configuration by adding a device called a switch. Enterprise switches have multiple Ethernet ports where you can connect additional machines. A network of multiple machines is called a Local Area Network (LAN).

Because they increase complexity, switches represent another layer of the OSI model, layer 2. Each NIC has a unique MAC address assigned to the hardware, and this address enables machines connected to the same switch to find each other. The switch maintains a list of which MAC addresses are plugged into which ports, so that when one computer attempts to send data to another, the switch knows where both are situated. The switch adjusts the entries in its CAM (Content Addressable Memory) table, which tracks the MAC-address-to-port mappings.
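The MAC learning behavior described above can be sketched in a few lines of Python. This is a simplified model, not how any particular switch is implemented; the class name, port numbers, and MAC addresses are hypothetical.

```python
class LearningSwitch:
    """Toy layer 2 switch that learns which MAC address sits behind which port."""

    def __init__(self, port_count):
        self.ports = range(1, port_count + 1)
        self.cam = {}  # MAC address -> port number

    def receive(self, in_port, src_mac, dst_mac):
        """Learn the sender's port, then return the ports the frame is sent out of."""
        self.cam[src_mac] = in_port
        if dst_mac in self.cam:
            return [self.cam[dst_mac]]
        # Unknown destination: flood to every port except the one it arrived on.
        return [p for p in self.ports if p != in_port]


switch = LearningSwitch(port_count=4)
first = switch.receive(1, "52:54:00:aa:00:01", "52:54:00:aa:00:02")
reply = switch.receive(2, "52:54:00:aa:00:02", "52:54:00:aa:00:01")
print(first)  # flooded: [2, 3, 4]
print(reply)  # forwarded to the learned port: [1]
```

The first frame is flooded because the destination is not yet in the table; the reply is delivered to a single port because the switch learned the first sender's location.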

1.1.1. VLANs

You can use VLANs to segment network traffic for computers running on the same switch. This means that you can logically divide your switch by configuring the ports to be members of different networks — they are basically mini-LANs that you can use to separate traffic for security reasons.

For example, if your switch has 24 ports in total, you can assign ports 1-6 to VLAN200, and ports 7-18 to VLAN201. As a result, computers connected to VLAN200 are completely separate from those on VLAN201; they cannot communicate directly, and if they wanted to, the traffic must pass through a router as if they were two separate physical switches. Firewalls can also be useful for governing which VLANs can communicate with each other.
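As a rough illustration of the port assignment in this example, the following Python sketch models which ports can exchange frames directly. The function name is hypothetical, and ports 19-24 are assumed to be unassigned.

```python
# Port-to-VLAN assignment from the example above; ports 19-24 are left unassigned.
PORT_VLANS = {port: 200 for port in range(1, 7)}
PORT_VLANS.update({port: 201 for port in range(7, 19)})


def same_vlan(port_a, port_b):
    """Return True if both ports belong to the same VLAN and can exchange frames directly."""
    vlan_a = PORT_VLANS.get(port_a)
    vlan_b = PORT_VLANS.get(port_b)
    return vlan_a is not None and vlan_a == vlan_b


print(same_vlan(2, 5))   # True: both ports are in VLAN200
print(same_vlan(2, 10))  # False: traffic would need to pass through a router
```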

1.2. Connecting two LANs together

If you have two LANs running on two separate switches, and you want them to share information with each other, you have two options for configuring this communication:

Use 802.1Q VLAN tagging to configure a single VLAN that spans across both physical switches:

You must connect one end of a network cable to a port on one switch, connect the other end to a port on the other switch, and then configure these ports as 802.1Q tagged ports (sometimes known as trunk ports). These two switches act as one big logical switch, and the connected computers can find each other.

The downside to this option is scalability. You can only daisy-chain a limited number of switches until overhead becomes an issue.

Obtain a router and use cables to connect it to each switch:

The router is aware of the networks configured on both switches. Each end of the cable plugged into the switch receives an IP address, known as the default gateway for that network. A default gateway defines the destination where traffic is sent when it is clear that the destination machine is not on the same LAN as the source machine. By establishing a default gateway, each computer can send traffic to other computers without knowing specific information about the destination. Each computer sends traffic to the default gateway, and the router determines which destination computer receives the traffic. Routing works on layer 3 of the OSI model, and is where the familiar concepts like IP addresses and subnets operate.
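The default gateway decision that each computer makes can be sketched with the Python standard library. This is a minimal model under assumed addresses; the function name and the IP addresses are illustrative only.

```python
import ipaddress


def next_hop(src_interface, destination, default_gateway):
    """Return the address a host sends a packet to for the given destination."""
    local_network = ipaddress.ip_interface(src_interface).network
    dest = ipaddress.ip_address(destination)
    if dest in local_network:
        return dest  # same LAN: deliver directly
    return ipaddress.ip_address(default_gateway)  # different LAN: hand off to the router


print(next_hop("192.168.100.10/24", "192.168.100.20", "192.168.100.1"))  # 192.168.100.20
print(next_hop("192.168.100.10/24", "192.168.200.20", "192.168.100.1"))  # 192.168.100.1
```

The sending host needs only its own address, its subnet mask, and the gateway address; it does not need any knowledge of the remote network.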

1.2.1. Firewalls

Firewalls can filter traffic across multiple OSI layers, including layer 7 (for inspecting actual content). Firewalls are often situated in the same network segments as routers, where they govern the traffic moving between all the networks. Firewalls refer to a predefined set of rules that prescribe which traffic can enter a network. These rules can become very granular, for example:

"Servers on VLAN200 may only communicate with computers on VLAN201, and only on a Thursday afternoon, and only if they are sending encrypted web traffic (HTTPS) in one direction".

To help enforce these rules, some firewalls also perform Deep Packet Inspection (DPI) at layers 5-7, whereby they examine the contents of packets to ensure that the packets are legitimate. Hackers can exfiltrate data by having the traffic masquerade as something it is not. DPI is one of the means that you can use to mitigate that threat.

1.3. Working with OpenStack Networking (neutron)

These same networking concepts apply in OpenStack, where they are known as Software-defined networking (SDN). The OpenStack Networking (neutron) component provides the API for virtual networking capabilities, and includes switches, routers, and firewalls. The virtual network infrastructure allows your instances to communicate with each other and also externally using the physical network. The Open vSwitch bridge allocates virtual ports to instances, and can span across the network infrastructure to the physical network for incoming and outgoing traffic.

1.4. Working with CIDR format

IP addresses are generally first allocated in blocks of subnets. For example, the IP address range 192.168.100.0 - 192.168.100.255 with a subnet mask of 255.255.255.0 allows for 254 IP addresses (the first and last addresses are reserved).

These subnets can be represented in a number of ways:

Common usage:

Subnet addresses are traditionally displayed using the network address accompanied by the subnet mask:

- Network Address: 192.168.100.0

- Subnet mask: 255.255.255.0

CIDR format:

The subnet mask is shortened into its total number of active bits.

For example, in 192.168.100.0/24, /24 is a shortened representation of 255.255.255.0, and is the total number of bits set to 1 when the mask is converted to binary.

Also, CIDR format can be used in ifcfg-xxx scripts instead of the NETMASK value:

#NETMASK=255.255.255.0

PREFIX=24
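The relationship between the subnet mask and the CIDR prefix can be checked with the Python standard library `ipaddress` module. This is an illustrative sketch that reuses the example network from this section.

```python
import ipaddress

# The same network, written with a dotted subnet mask.
network = ipaddress.ip_network("192.168.100.0/255.255.255.0")

print(network)                                # 192.168.100.0/24
print(network.prefixlen)                      # 24
print(bin(int(network.netmask)).count("1"))   # 24 bits set to 1 in the mask

# hosts() leaves out the first (network) and last (broadcast) addresses.
usable_hosts = list(network.hosts())
print(len(usable_hosts))                      # 254
print(usable_hosts[0], usable_hosts[-1])      # 192.168.100.1 192.168.100.254
```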

Chapter 2. OpenStack networking concepts

OpenStack Networking has system services to manage core services such as routing, DHCP, and metadata. Together, these services are included in the concept of the Controller node, which is a conceptual role assigned to a physical server. A physical server is typically assigned the role of Network node and dedicated to the task of managing Layer 3 routing for network traffic to and from instances. In OpenStack Networking, you can have multiple physical hosts performing this role, allowing for redundant service in the event of hardware failure. For more information, see the chapter on Layer 3 High Availability.

Red Hat OpenStack Platform 11 added support for composable roles, allowing you to separate network services into a custom role. However, for simplicity, this guide assumes that a deployment uses the default controller role.

2.1. Installing OpenStack Networking (neutron)

The OpenStack Networking component is installed as part of a Red Hat OpenStack Platform director deployment. For more information about director deployment, see Director Installation and Usage.

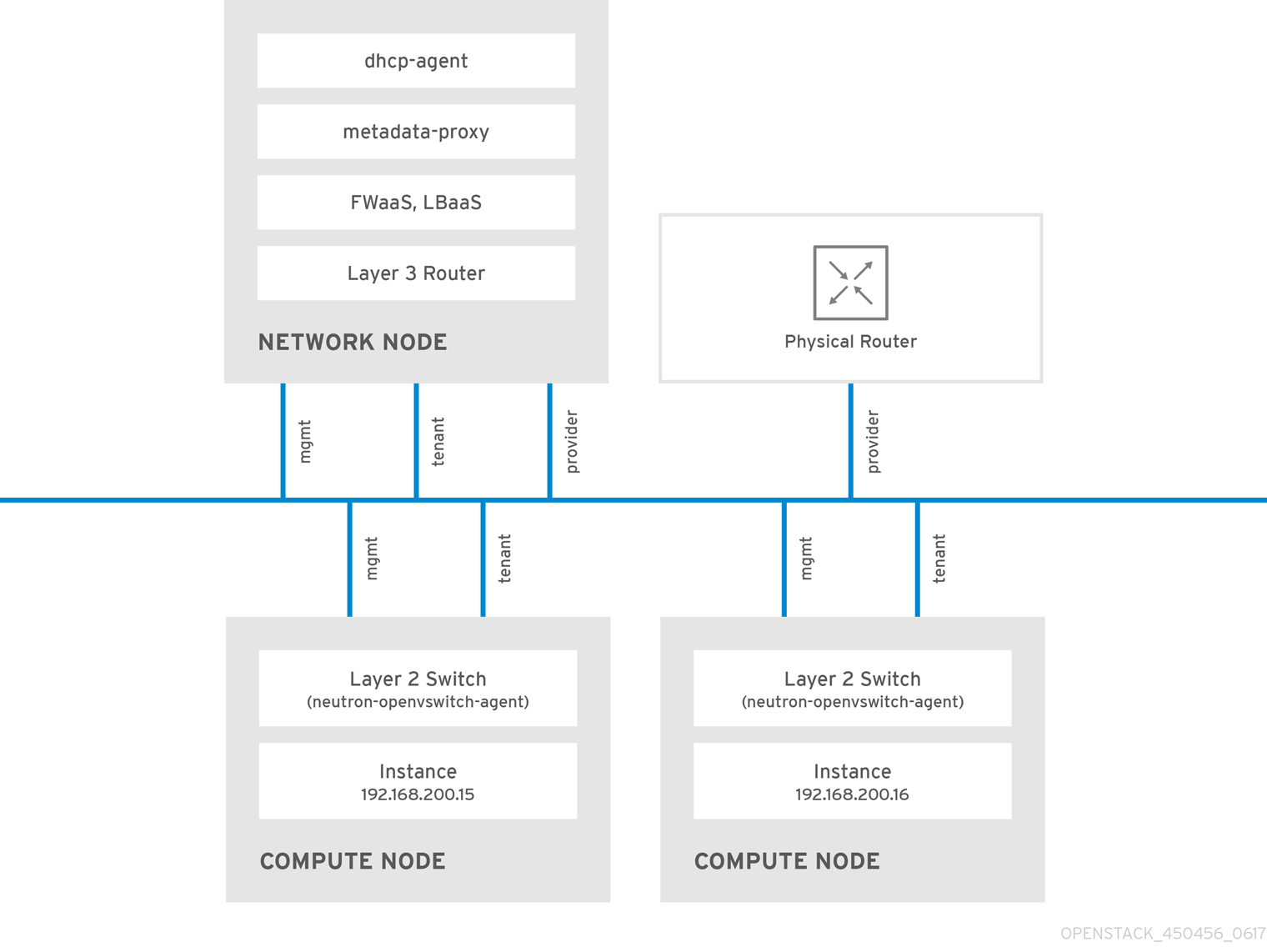

2.2. OpenStack Networking diagram

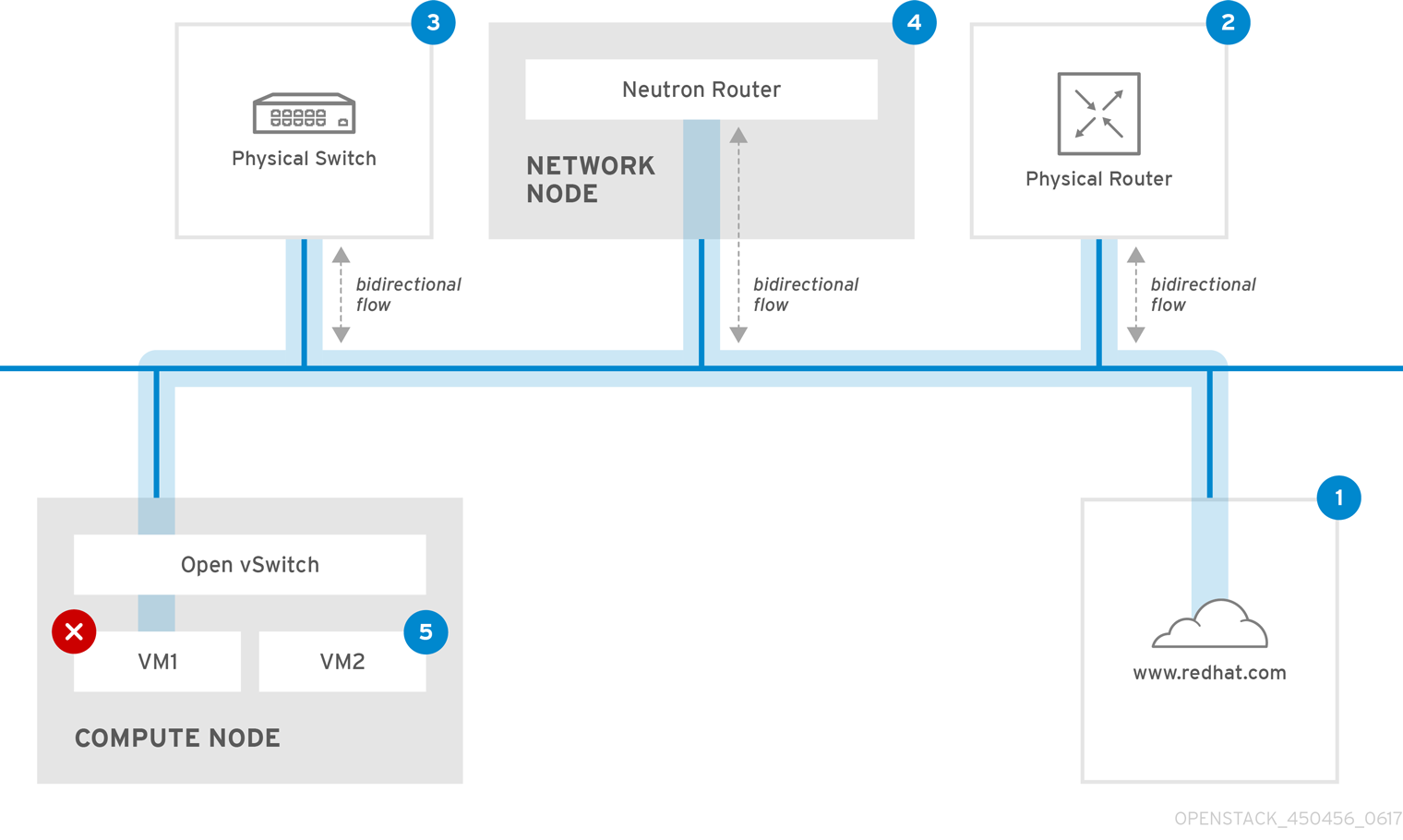

This diagram depicts a sample OpenStack Networking deployment, with a dedicated OpenStack Networking node performing layer 3 routing and DHCP, and running the advanced services Firewall as a Service (FWaaS) and Load Balancing as a Service (LBaaS). Two Compute nodes run the Open vSwitch agent (openvswitch-agent) and have two physical network cards each, one for project traffic, and another for management connectivity. The OpenStack Networking node has a third network card specifically for provider traffic.

2.3. Security groups

Security groups and rules filter the type and direction of network traffic that neutron ports send and receive. This provides an additional layer of security to complement any firewall rules present on the compute instance. The security group is a container object with one or more security rules. A single security group can manage traffic to multiple compute instances.

Ports created for floating IP addresses, OpenStack Networking LBaaS VIPs, and instances are associated with a security group. If you do not specify a security group, then the port is associated with the default security group. By default, this group drops all inbound traffic and allows all outbound traffic. However, traffic flows between instances that are members of the default security group, because the group has a remote group ID that points to itself.

To change the filtering behavior of the default security group, you can add security rules to the group, or create entirely new security groups.
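The behavior of the default security group can be modeled with a short Python sketch. This is a deliberately simplified model that considers only remote group membership, not protocols or ports; the class and function names are hypothetical and are not part of the OpenStack Networking API.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityGroup:
    name: str
    # Names of security groups whose members may send inbound traffic.
    allowed_remote_groups: set = field(default_factory=set)


def inbound_allowed(group, sender_groups):
    """Inbound traffic is dropped unless the sender is in an allowed remote group."""
    return bool(group.allowed_remote_groups & set(sender_groups))


# The default group references itself as the remote group.
default = SecurityGroup("default", allowed_remote_groups={"default"})

print(inbound_allowed(default, ["default"]))  # True: sender is also in the default group
print(inbound_allowed(default, []))           # False: sender is outside OpenStack
print(inbound_allowed(default, ["web"]))      # False: sender is in a different group
```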

2.4. Open vSwitch

Open vSwitch (OVS) is a software-defined networking (SDN) virtual switch similar to the Linux software bridge. OVS provides switching services to virtualized networks with support for industry standard NetFlow, OpenFlow, and sFlow. OVS can also integrate with physical switches using layer 2 features, such as STP, LACP, and 802.1Q VLAN tagging. Open vSwitch version 1.11.0-1.el6 or later also supports tunneling with VXLAN and GRE.

For more information about network interface bonds, see the Network Interface Bonding chapter of the Advanced Overcloud Customization guide.

To mitigate the risk of network loops in OVS, only a single interface or a single bond can be a member of a given bridge. If you require multiple bonds or interfaces, you can configure multiple bridges.

2.5. Modular layer 2 (ML2) networking

ML2 is the OpenStack Networking core plug-in introduced in the OpenStack Havana release. Superseding the previous model of monolithic plug-ins, the ML2 modular design enables the concurrent operation of mixed network technologies. The monolithic Open vSwitch and Linux Bridge plug-ins have been deprecated and removed; their functionality is now implemented by ML2 mechanism drivers.

ML2 is the default OpenStack Networking plug-in, with OVN configured as the default mechanism driver.

2.5.1. The reasoning behind ML2

Previously, OpenStack Networking deployments could use only the plug-in selected at implementation time. For example, a deployment running the Open vSwitch (OVS) plug-in was required to use the OVS plug-in exclusively. The monolithic plug-in did not support the simultaneous use of another plug-in such as linuxbridge. This limitation made it difficult to meet the needs of environments with heterogeneous requirements.

2.5.2. ML2 network types

Multiple network segment types can be operated concurrently. In addition, these network segments can interconnect using ML2 support for multi-segmented networks. Ports are automatically bound to the segment with connectivity; it is not necessary to bind ports to a specific segment. Depending on the mechanism driver, ML2 supports the following network segment types:

- flat

- GRE

- local

- VLAN

- VXLAN

- Geneve

Enable Type drivers in the ML2 section of the ml2_conf.ini file. For example:

[ml2]

type_drivers = local,flat,vlan,gre,vxlan,geneve

2.5.3. ML2 mechanism drivers

Plug-ins are now implemented as mechanisms with a common code base. This approach enables code reuse and eliminates much of the complexity around code maintenance and testing.

For the list of supported mechanism drivers, see Release Notes.

The default mechanism driver is OVN. Mechanism drivers are enabled in the ML2 section of the ml2_conf.ini file. For example:

[ml2]

mechanism_drivers = ovn

Red Hat OpenStack Platform director manages these settings. Do not change them manually.

2.6. ML2 type and mechanism driver compatibility

| Mechanism driver / Type driver | flat | gre | vlan | vxlan | geneve |
|---|---|---|---|---|---|
| ovn | yes | no | yes | no | yes |
| openvswitch | yes | yes | yes | yes | no |
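The compatibility matrix above can be expressed as a small lookup, which is one way to check a planned type_drivers value before deployment. This is an illustrative sketch; the function name is hypothetical, and the data covers only the type drivers listed in the table.

```python
# Type drivers marked "yes" for each mechanism driver in the table above.
COMPATIBLE_TYPE_DRIVERS = {
    "ovn": {"flat", "vlan", "geneve"},
    "openvswitch": {"flat", "gre", "vlan", "vxlan"},
}


def unsupported_type_drivers(mechanism_driver, type_drivers):
    """Return the requested type drivers that the mechanism driver does not support, sorted."""
    requested = {name.strip() for name in type_drivers.split(",") if name.strip()}
    return sorted(requested - COMPATIBLE_TYPE_DRIVERS[mechanism_driver])


print(unsupported_type_drivers("ovn", "flat,vlan,gre,vxlan,geneve"))  # ['gre', 'vxlan']
print(unsupported_type_drivers("openvswitch", "flat,vlan,vxlan"))     # []
```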

2.7. Limits of the ML2/OVN mechanism driver

2.7.1. No supported ML2/OVS to ML2/OVN migration method in this release

This release of the Red Hat OpenStack Platform (RHOSP) does not provide a supported migration from the ML2/OVS mechanism driver to the ML2/OVN mechanism driver. This RHOSP release does not support the OpenStack community migration strategy. Migration support is planned for a future RHOSP release.

To track the progress of migration support, see https://bugzilla.redhat.com/show_bug.cgi?id=1862888.

2.7.2. ML2/OVS features not yet supported by ML2/OVN

| Feature | Notes | Track this Feature |
|---|---|---|
| Fragmentation / Jumbo Frames | OVN does not yet support sending ICMP "fragmentation needed" packets. Larger ICMP/UDP packets that require fragmentation do not work with ML2/OVN as they would with the ML2/OVS driver implementation. TCP traffic is handled by maximum segment size (MSS) clamping. | https://bugzilla.redhat.com/show_bug.cgi?id=1547074 (ovn-network) https://bugzilla.redhat.com/show_bug.cgi?id=1702331 (Core ovn) |
| Port Forwarding | OVN does not support port forwarding. | https://bugzilla.redhat.com/show_bug.cgi?id=1654608 https://blueprints.launchpad.net/neutron/+spec/port-forwarding |
| Security Groups Logging API | ML2/OVN does not provide a log file that logs security group events such as an instance trying to execute restricted operations or access restricted ports in remote servers. | |
| Multicast | When using ML2/OVN as the integration bridge, multicast traffic is treated as broadcast traffic. The integration bridge operates in FLOW mode, so IGMP snooping is not available. To support this, core OVN must support IGMP snooping. | |
| SR-IOV | Presently, SR-IOV only works with the neutron DHCP agent deployed. | |
| Provisioning Baremetal Machines with OVN DHCP | The built-in DHCP server on OVN presently cannot provision baremetal nodes. It cannot serve DHCP for the provisioning networks. Chainbooting iPXE requires tagging. | |
| OVS_DPDK | OVS_DPDK is presently not supported with OVN. | |

2.8. Using the ML2/OVS mechanism driver instead of the default ML2/OVN driver

If your application requires the ML2/OVS mechanism driver, you can deploy the overcloud with the environment file neutron-ovs.yaml, which disables the default ML2/OVN mechanism driver and enables ML2/OVS.

2.8.1. Using ML2/OVS in a new RHOSP 16.0 deployment

In the overcloud deployment command, include the environment file neutron-ovs.yaml as shown in the following example.

-e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml

For more information about using environment files, see Including Environment Files in Overcloud Creation in the Advanced Overcloud Customization guide.

2.8.2. Upgrading from ML2/OVS in a previous RHOSP to ML2/OVS in RHOSP 16.0

To keep using ML2/OVS after an upgrade from a previous version of RHOSP that uses ML2/OVS, follow Red Hat’s upgrade procedure as documented, and do not perform the ML2/OVS-to-ML2/OVN migration.

The upgrade procedure includes adding -e /usr/share/openstack-tripleo-heat-templates/environments/services/neutron-ovs.yaml to the overcloud deployment command.

2.9. Configuring the L2 population driver

The L2 Population driver enables broadcast, multicast, and unicast traffic to scale out on large overlay networks. By default, Open vSwitch GRE and VXLAN replicate broadcasts to every agent, including those that do not host the destination network. This design requires the acceptance of significant network and processing overhead. The alternative design introduced by the L2 Population driver implements a partial mesh for ARP resolution and MAC learning traffic; it also creates tunnels for a particular network only between the nodes that host the network. This traffic is sent only to the necessary agent by encapsulating it as a targeted unicast.
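The difference between the two designs can be illustrated with a toy Python model. The agent names, network names, and placement below are hypothetical, and the model ignores the tunnel mechanics entirely; it only shows which agents receive a broadcast in each case.

```python
# Hypothetical placement: which networks have ports on which agents (hosts).
NETWORKS_ON_AGENT = {
    "compute-0": {"net-a", "net-b"},
    "compute-1": {"net-a"},
    "compute-2": {"net-b"},
    "compute-3": {"net-c"},
}


def broadcast_targets(source_agent, network, l2_population):
    """Return the agents that receive a broadcast sent on `network` from `source_agent`."""
    others = [agent for agent in NETWORKS_ON_AGENT if agent != source_agent]
    if not l2_population:
        return sorted(others)  # replicated to every other agent
    # With L2 population, only agents that host the network are targeted.
    return sorted(a for a in others if network in NETWORKS_ON_AGENT[a])


print(broadcast_targets("compute-0", "net-a", l2_population=False))
# ['compute-1', 'compute-2', 'compute-3']
print(broadcast_targets("compute-0", "net-a", l2_population=True))
# ['compute-1']
```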

To enable the L2 Population driver, complete the following steps:

1. Enable the L2 population driver by adding it to the list of mechanism drivers. You must also enable at least one tunneling driver: GRE, VXLAN, or both. Add the appropriate configuration options to the ml2_conf.ini file:

[ml2]

type_drivers = local,flat,vlan,gre,vxlan,geneve

mechanism_drivers = l2population

Neutron’s Linux Bridge ML2 driver and agent were deprecated in Red Hat OpenStack Platform 11. The Open vSwitch (OVS) plug-in is the OpenStack Platform director default, and is recommended by Red Hat for general usage.

2. Enable L2 population in the openvswitch_agent.ini file. Enable it on each node that contains the L2 agent:


[agent]

l2_population = True

To install ARP reply flows, configure the arp_responder flag:

[agent]

l2_population = True

arp_responder = True

2.10. OpenStack Networking services

By default, Red Hat OpenStack Platform includes components that integrate with the ML2 and Open vSwitch plugin to provide networking functionality in your deployment:

2.10.1. L3 agent

The L3 agent is part of the openstack-neutron package. Use network namespaces to provide each project with its own isolated layer 3 routers, which direct traffic and provide gateway services for the layer 2 networks. The L3 agent assists with managing these routers. The nodes that host the L3 agent must not have a manually-configured IP address on a network interface that is connected to an external network. Instead there must be a range of IP addresses from the external network that are available for use by OpenStack Networking. Neutron assigns these IP addresses to the routers that provide the link between the internal and external networks. The IP range that you select must be large enough to provide a unique IP address for each router in the deployment as well as each floating IP.
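The sizing requirement for the external IP range can be checked with simple arithmetic. The following Python sketch assumes that each router and each floating IP consumes exactly one external address; the function names and the pool boundaries are illustrative.

```python
import ipaddress


def pool_size(start, end):
    """Number of addresses in an inclusive allocation pool."""
    return int(ipaddress.ip_address(end)) - int(ipaddress.ip_address(start)) + 1


def pool_is_large_enough(start, end, routers, floating_ips):
    """Each router gateway and each floating IP consumes one external address."""
    return pool_size(start, end) >= routers + floating_ips


print(pool_size("192.168.100.20", "192.168.100.100"))                     # 81
print(pool_is_large_enough("192.168.100.20", "192.168.100.100", 10, 60))  # True
print(pool_is_large_enough("192.168.100.20", "192.168.100.100", 10, 80))  # False
```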

2.10.2. DHCP agent

The OpenStack Networking DHCP agent manages the network namespaces that are spawned for each project subnet to act as a DHCP server. Each namespace runs a dnsmasq process that can allocate IP addresses to virtual machines on the network. If the agent is enabled and running when a subnet is created, then by default that subnet has DHCP enabled.

2.10.3. Open vSwitch agent

The Open vSwitch (OVS) neutron plug-in uses its own agent, which runs on each node and manages the OVS bridges. The ML2 plugin integrates with a dedicated agent to manage L2 networks. By default, Red Hat OpenStack Platform uses ovs-agent, which builds overlay networks using OVS bridges.

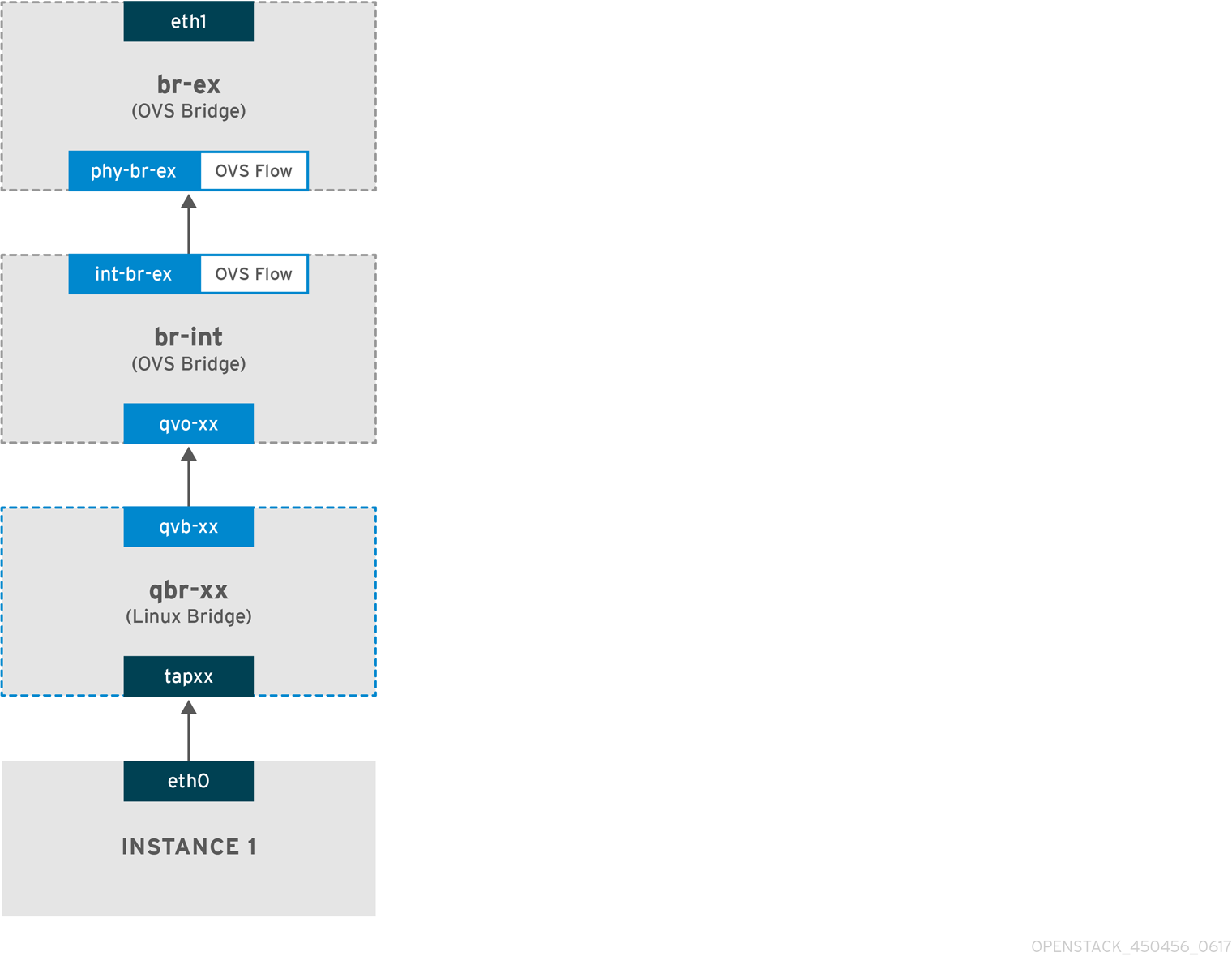

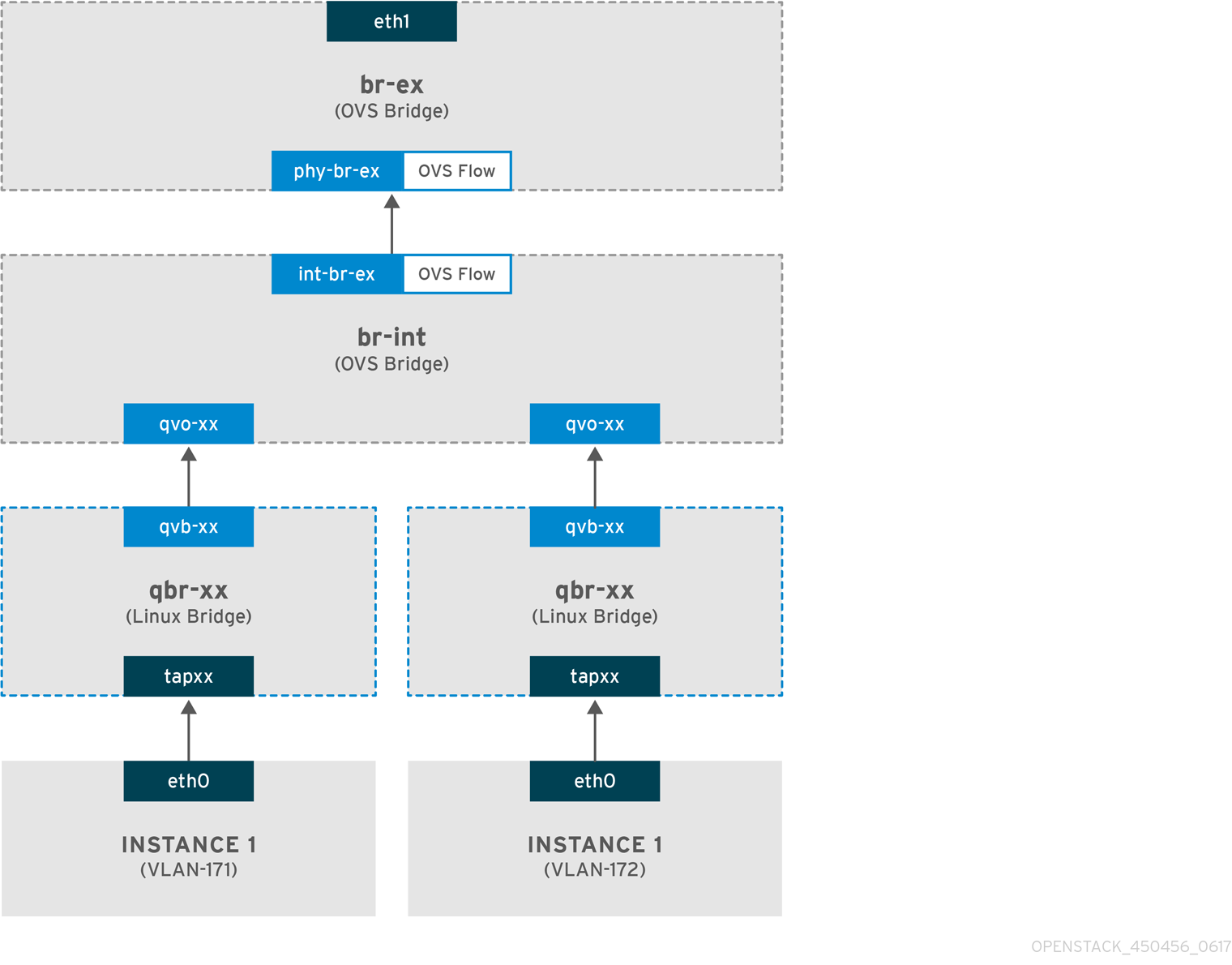

2.11. Project and provider networks

The following diagram presents an overview of the project and provider network types, and illustrates how they interact within the overall OpenStack Networking topology:

2.11.1. Project networks

Users create project networks for connectivity within projects. Project networks are fully isolated by default and are not shared with other projects. OpenStack Networking supports a range of project network types:

- Flat - All instances reside on the same network, which can also be shared with the hosts. No VLAN tagging or other network segregation occurs.

- VLAN - OpenStack Networking allows users to create multiple provider or project networks using VLAN IDs (802.1Q tagged) that correspond to VLANs present in the physical network. This allows instances to communicate with each other across the environment. They can also communicate with dedicated servers, firewalls, load balancers and other network infrastructure on the same layer 2 VLAN.

- VXLAN and GRE tunnels - VXLAN and GRE use network overlays to support private communication between instances. An OpenStack Networking router is required to enable traffic to traverse outside of the GRE or VXLAN project network. A router is also required to connect directly-connected project networks with external networks, including the Internet; the router provides the ability to connect to instances directly from an external network using floating IP addresses. VXLAN and GRE type drivers are compatible with the ML2/OVS mechanism driver.

- GENEVE tunnels - GENEVE recognizes and accommodates the changing capabilities and needs of different devices in network virtualization. It provides a framework for tunneling rather than being prescriptive about the entire system. GENEVE flexibly defines the content of the metadata that is added during encapsulation, and tries to adapt to various virtualization scenarios. It uses UDP as its transport protocol and is dynamic in size using extensible option headers. GENEVE supports unicast, multicast, and broadcast. The GENEVE type driver is compatible with the ML2/OVN mechanism driver.

You can configure QoS policies for project networks. For more information, see Chapter 10, Configuring Quality of Service (QoS) policies.

2.11.2. Provider networks

The OpenStack administrator creates provider networks. Provider networks map directly to an existing physical network in the data center. Useful network types in this category include flat (untagged) and VLAN (802.1Q tagged). You can also share provider networks among projects as part of the network creation process.

2.11.2.1. Flat provider networks

You can use flat provider networks to connect instances directly to the external network. This is useful if you have multiple physical networks (for example, physnet1 and physnet2) and separate physical interfaces (eth0 → physnet1 and eth1 → physnet2), and intend to connect each Compute and Network node to those external networks. To use multiple vlan-tagged interfaces on a single interface to connect to multiple provider networks, see Section 7.3, “Using VLAN provider networks”.

2.11.2.2. Configuring networking for Controller nodes

1. Edit /etc/neutron/plugin.ini (symbolic link to /etc/neutron/plugins/ml2/ml2_conf.ini) to add flat to the existing list of values and set flat_networks to *. For example:


type_drivers = vxlan,flat

flat_networks =*

2. Create an external network as a flat network and associate it with the configured physical_network. Configure it as a shared network (using --share) to let other users create instances that connect to the external network directly.

openstack network create --share --provider-network-type flat --provider-physical-network physnet1 --external public01


3. Create a subnet using the openstack subnet create command, or the dashboard. For example:

openstack subnet create --no-dhcp --allocation-pool start=192.168.100.20,end=192.168.100.100 --gateway 192.168.100.1 --network public01 public_subnet


4. Restart the neutron-server service to apply the change:

systemctl restart tripleo_neutron_api
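The settings from step 1 can be sanity-checked with the Python standard library `configparser` module. The fragment below is a hypothetical sample rather than a complete ml2_conf.ini, and the section names (`[ml2]` and `[ml2_type_flat]`) are assumed from upstream ML2 conventions, because the example in this procedure does not show them.

```python
import configparser

# Hypothetical ml2_conf.ini fragment; section names are an assumption.
SAMPLE = """
[ml2]
type_drivers = vxlan,flat

[ml2_type_flat]
flat_networks = *
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE)

type_drivers = [d.strip() for d in config["ml2"]["type_drivers"].split(",")]
flat_networks = config["ml2_type_flat"]["flat_networks"].strip()

print("flat" in type_drivers)  # True: the flat type driver is enabled
print(flat_networks == "*")    # True: any physical network name is accepted
```

To check a real deployment, you could replace `read_string(SAMPLE)` with `config.read()` and the path to the file on the Controller node.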

2.11.2.3. Configuring networking for the Network and Compute nodes

Complete the following steps on the Network node and Compute nodes to connect the nodes to the external network, and allow instances to communicate directly with the external network.

1. Create an external network bridge (br-ex) and add an associated port (eth1) to it:

Create the external bridge in /etc/sysconfig/network-scripts/ifcfg-br-ex:

In /etc/sysconfig/network-scripts/ifcfg-eth1, configure eth1 to connect to br-ex:

Reboot the node or restart the network service for the changes to take effect.

2. Configure physical networks in /etc/neutron/plugins/ml2/openvswitch_agent.ini and map bridges to the physical network:

bridge_mappings = physnet1:br-ex

For more information on bridge mappings, see Chapter 11, Configuring bridge mappings.

3. Restart the neutron-openvswitch-agent service on both the network and compute nodes to apply the changes:

systemctl restart neutron-openvswitch-agent
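Step 1 of this procedure refers to two ifcfg files without showing their content. The following Python sketch renders one plausible set of values for each file. The settings are assumptions based on the ifcfg keys commonly used with Open vSwitch network scripts, not values taken from this guide, so verify them against your environment before use. The `render_ifcfg` helper is hypothetical.

```python
def render_ifcfg(settings):
    """Render a dict of settings as KEY=value lines for an ifcfg file."""
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


# Assumed settings for an OVS bridge and its member port; adjust to your environment.
bridge = {"DEVICE": "br-ex", "TYPE": "OVSBridge", "DEVICETYPE": "ovs",
          "ONBOOT": "yes", "NM_CONTROLLED": "no", "BOOTPROTO": "none"}
port = {"DEVICE": "eth1", "TYPE": "OVSPort", "DEVICETYPE": "ovs",
        "OVS_BRIDGE": "br-ex", "ONBOOT": "yes", "NM_CONTROLLED": "no",
        "BOOTPROTO": "none"}

print(render_ifcfg(bridge))  # candidate content for ifcfg-br-ex
print(render_ifcfg(port))    # candidate content for ifcfg-eth1
```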

2.11.2.4. Configuring the Network node

1. Set external_network_bridge = to an empty value in /etc/neutron/l3_agent.ini:

Setting external_network_bridge = to an empty value allows multiple external network bridges. OpenStack Networking creates a patch from each bridge to br-int.

external_network_bridge =


2. Restart neutron-l3-agent for the changes to take effect.

systemctl restart neutron-l3-agent


If there are multiple flat provider networks, then each of them must have a separate physical interface and bridge to connect them to the external network. Configure the ifcfg-* scripts appropriately and use a comma-separated list for each network when specifying the mappings in the bridge_mappings option. For more information on bridge mappings, see Chapter 11, Configuring bridge mappings.
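A comma-separated bridge_mappings value can be validated before it is written to the agent configuration. The following Python sketch is a hypothetical helper, not part of neutron; it enforces the one-bridge-per-physical-network rule described above. The second bridge name, br-ex2, is an illustrative assumption.

```python
def parse_bridge_mappings(value):
    """Parse 'physnet1:br-ex,physnet2:br-ex2' into {'physnet1': 'br-ex', ...}."""
    mappings = {}
    for entry in value.split(","):
        physnet, sep, bridge = entry.strip().partition(":")
        if not sep or not physnet or not bridge:
            raise ValueError(f"invalid mapping entry: {entry!r}")
        if physnet in mappings:
            raise ValueError(f"physical network listed twice: {physnet}")
        if bridge in mappings.values():
            raise ValueError(f"bridge mapped twice: {bridge}")
        mappings[physnet] = bridge
    return mappings


print(parse_bridge_mappings("physnet1:br-ex,physnet2:br-ex2"))
# {'physnet1': 'br-ex', 'physnet2': 'br-ex2'}
```

A value that maps two physical networks to the same bridge, such as `physnet1:br-ex,physnet2:br-ex`, raises a `ValueError` in this sketch.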

2.12. Layer 2 and layer 3 networking

When designing your virtual network, anticipate where the majority of traffic is going to be sent. Network traffic moves faster within the same logical network, rather than between multiple logical networks. This is because traffic between logical networks (using different subnets) must pass through a router, resulting in additional latency.

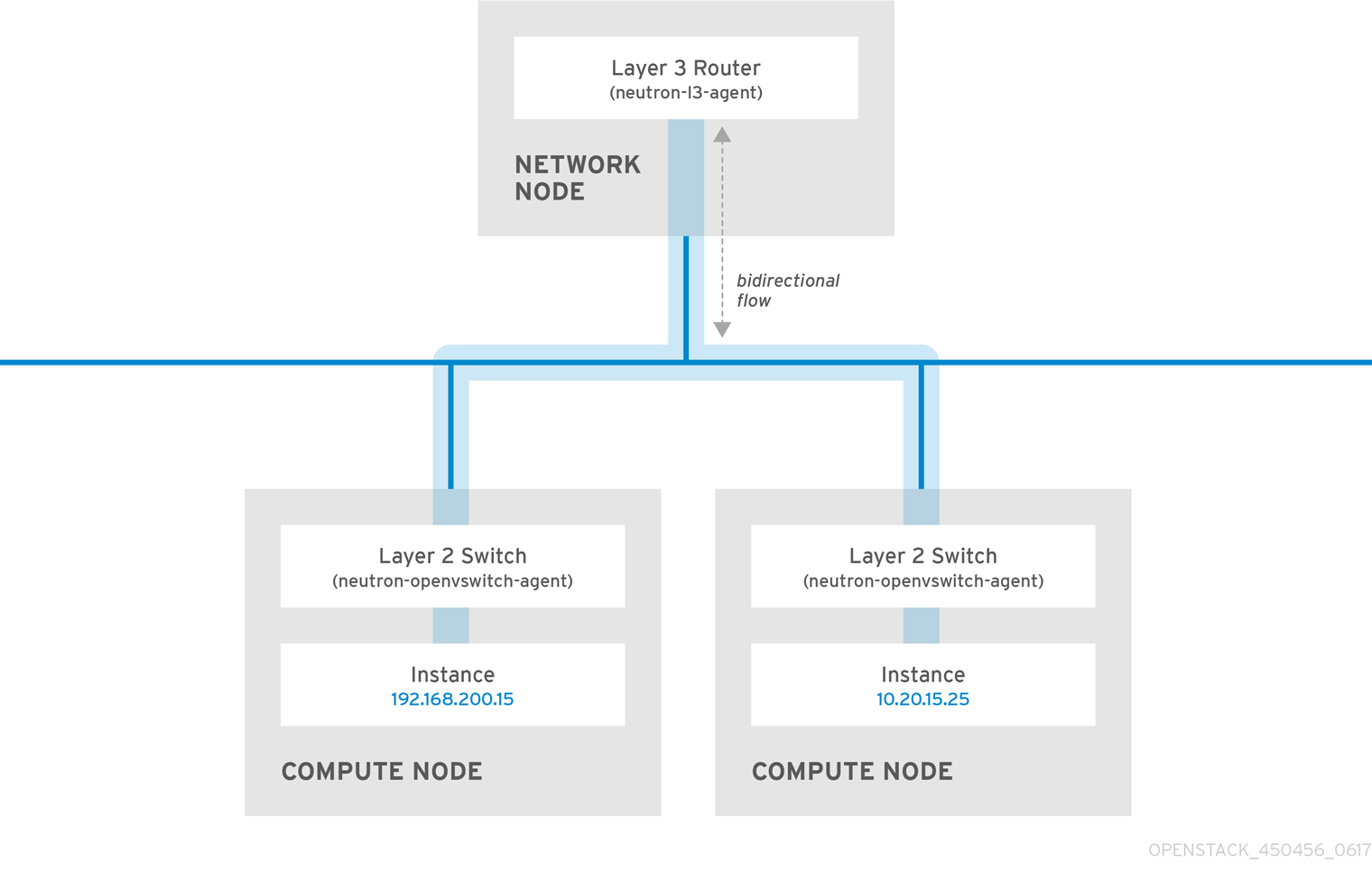

Consider the diagram below which has network traffic flowing between instances on separate VLANs:

Even a high performance hardware router adds latency to this configuration.

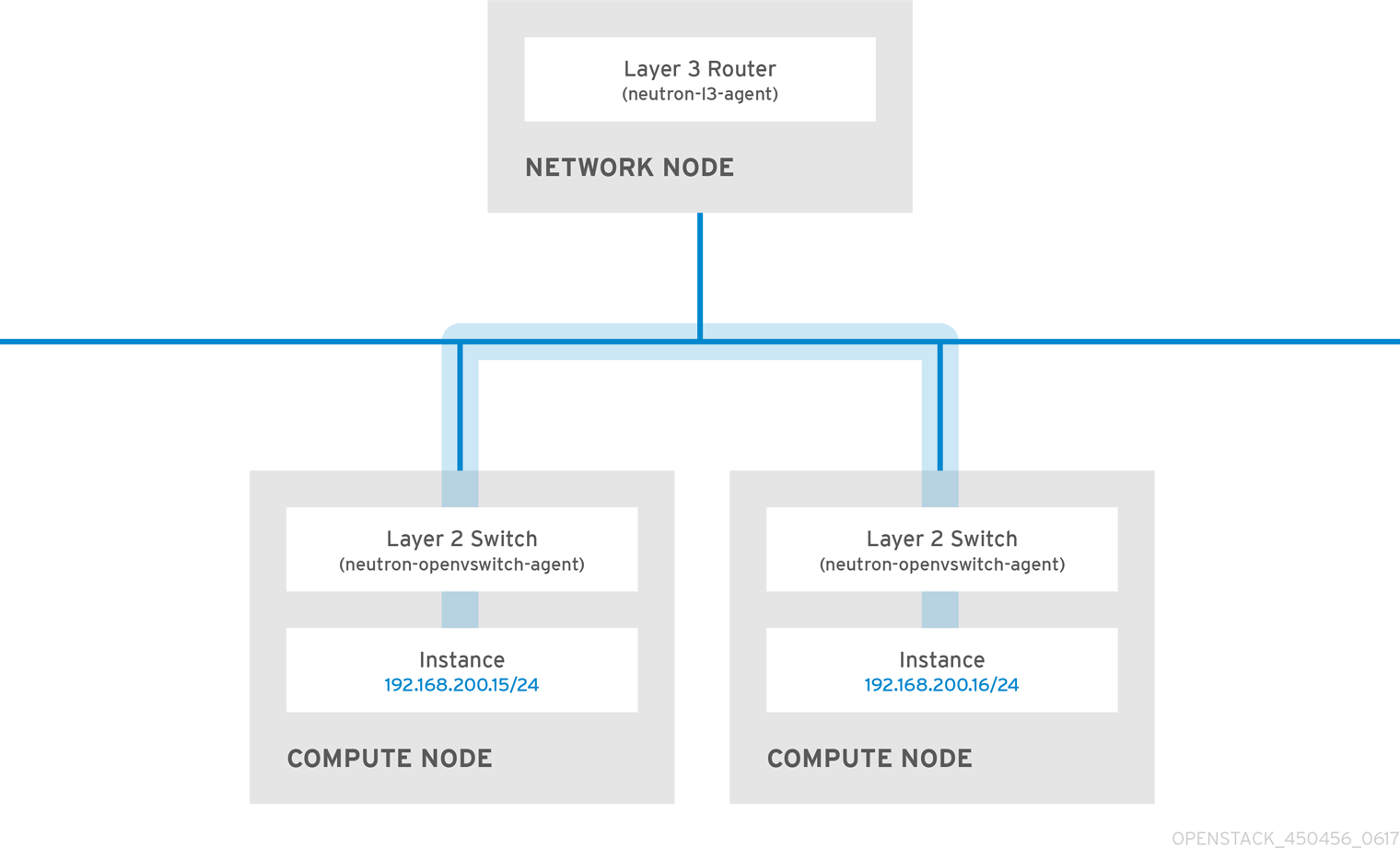

2.12.1. Use switching where possible

Because switching occurs at a lower level of the network (layer 2) it can function faster than the routing that occurs at layer 3. Design as few hops as possible between systems that communicate frequently. For example, the following diagram depicts a switched network that spans two physical nodes, allowing the two instances to communicate directly without using a router for navigation first. Note that the instances now share the same subnet, to indicate that they are on the same logical network:

To allow instances on separate nodes to communicate as if they are on the same logical network, use an encapsulation tunnel such as VXLAN or GRE. Red Hat recommends adjusting the MTU size from end-to-end to accommodate the additional bits required for the tunnel header, otherwise network performance can be negatively impacted as a result of fragmentation. For more information, see Configure MTU Settings.
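The effect of the tunnel header on MTU can be estimated with simple arithmetic. The overhead values in the following Python sketch are typical figures for an IPv4 underlay and are assumptions, not values taken from this guide; confirm them for your encapsulation and IP version.

```python
# Typical encapsulation overhead in bytes over an IPv4 underlay (assumed values).
OVERHEAD_BYTES = {"vxlan": 50, "gre": 42}


def instance_mtu(physical_mtu, tunnel_type):
    """Largest MTU an instance can use without fragmenting on the underlay."""
    return physical_mtu - OVERHEAD_BYTES[tunnel_type]


def required_physical_mtu(target_instance_mtu, tunnel_type):
    """Underlay MTU needed to carry the given instance MTU."""
    return target_instance_mtu + OVERHEAD_BYTES[tunnel_type]


print(instance_mtu(1500, "vxlan"))           # 1450
print(instance_mtu(1500, "gre"))             # 1458
print(required_physical_mtu(1500, "vxlan"))  # 1550
```

Under these assumptions, either the instances use a reduced MTU, or every device on the physical path is configured with a larger MTU.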

You can further improve the performance of VXLAN tunneling by using supported hardware that features VXLAN offload capabilities. The full list is available here: https://access.redhat.com/articles/1390483

Part I. Common Tasks

Covers common administrative tasks and basic troubleshooting steps.

Chapter 3. Common administrative networking tasks

OpenStack Networking (neutron) is the software-defined networking component of Red Hat OpenStack Platform. The virtual network infrastructure enables connectivity between instances and the physical external network.

This section contains information about common administration tasks, such as adding and removing subnets and routers to suit your Red Hat OpenStack Platform deployment.

3.1. Creating a network

Create a network so that your instances can communicate with each other and receive IP addresses using DHCP. You can also integrate a network with external networks in your Red Hat OpenStack Platform deployment, or elsewhere, such as the physical network. This integration allows your instances to communicate with outside systems. For more information, see Bridge the physical network.

When creating networks, it is important to know that networks can host multiple subnets. This is useful if you intend to host distinctly different systems in the same network, and prefer a measure of isolation between them. For example, you can designate that only webserver traffic is present on one subnet, while database traffic traverses another. Subnets are isolated from each other, and any instance that needs to communicate with another subnet must have its traffic directed by a router. Consider placing systems that exchange a high volume of traffic in the same subnet, so that they do not require routing, and can avoid the subsequent latency and load.

- In the dashboard, select Project > Network > Networks.

Click +Create Network and specify the following values:

| Field | Description |
|---|---|
| Network Name | Descriptive name, based on the role that the network will perform. If you are integrating the network with an external VLAN, consider appending the VLAN ID number to the name. For example, webservers_122, if you are hosting HTTP web servers in this subnet, and your VLAN tag is 122. Or you might use internal-only if you intend to keep the network traffic private, and not integrate the network with an external network. |
| Admin State | Controls whether the network is immediately available. Use this field to create the network in a Down state, where it is logically present but inactive. This is useful if you do not intend to enter the network into production immediately. |

Click the Next button, and specify the following values in the Subnet tab:

| Field | Description |
|---|---|
| Create Subnet | Determines whether to create a subnet. For example, you might not want to create a subnet if you intend to keep this network as a placeholder without network connectivity. |
| Subnet Name | Enter a descriptive name for the subnet. |
| Network Address | Enter the address in CIDR format, which contains the IP address range and subnet mask in one value. To determine the address, calculate the number of bits masked in the subnet mask and append that value to the IP address range. For example, the subnet mask 255.255.255.0 has 24 masked bits. To use this mask with the IPv4 address range 192.168.122.0, specify the address 192.168.122.0/24. |
| IP Version | Specifies the internet protocol version, where valid types are IPv4 or IPv6. The IP address range in the Network Address field must match whichever version you select. |
| Gateway IP | IP address of the router interface for your default gateway. This address is the next hop for routing any traffic destined for an external location, and must be within the range that you specify in the Network Address field. For example, if your CIDR network address is 192.168.122.0/24, then your default gateway is likely to be 192.168.122.1. |
| Disable Gateway | Disables forwarding and isolates the subnet. |

Click Next to specify DHCP options:

- Enable DHCP - Enables DHCP services for this subnet. You can use DHCP to automate the distribution of IP settings to your instances.

- IPv6 Address Configuration Modes - If you create an IPv6 network, you must specify how to allocate IPv6 addresses and additional information:

- No Options Specified - Select this option if you want to set IP addresses manually, or if you use a non OpenStack-aware method for address allocation.

- SLAAC (Stateless Address Autoconfiguration) - Instances generate IPv6 addresses based on Router Advertisement (RA) messages sent from the OpenStack Networking router. Use this configuration to create an OpenStack Networking subnet with ra_mode set to slaac and address_mode set to slaac.

- DHCPv6 stateful - Instances receive IPv6 addresses as well as additional options (for example, DNS) from the OpenStack Networking DHCPv6 service. Use this configuration to create a subnet with ra_mode set to dhcpv6-stateful and address_mode set to dhcpv6-stateful.

- DHCPv6 stateless - Instances generate IPv6 addresses based on Router Advertisement (RA) messages sent from the OpenStack Networking router. Additional options (for example, DNS) are allocated from the OpenStack Networking DHCPv6 service. Use this configuration to create a subnet with ra_mode set to dhcpv6-stateless and address_mode set to dhcpv6-stateless.

- Allocation Pools - Range of IP addresses that you want DHCP to assign. For example, the value 192.168.22.100,192.168.22.100 considers all IP addresses in that range as available for allocation.

- DNS Name Servers - IP addresses of the DNS servers available on the network. DHCP distributes these addresses to the instances for name resolution.

- Host Routes - Static host routes. First, specify the destination network in CIDR format, followed by the next hop that you want to use for routing (for example, 192.168.23.0/24, 10.1.31.1). Provide this value if you need to distribute static routes to instances.

Click Create.

You can view the complete network in the Networks tab. You can also click Edit to change any options as needed. When you create instances, you can now configure them to use the subnet of this network, and they receive any specified DHCP options.
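Before you enter an allocation pool, it can help to confirm how many addresses the range covers and whether it fits the subnet. The following is a minimal sketch using the Python standard library. The range 192.168.22.100 to 192.168.22.150, the subnet 192.168.22.0/24, and the gateway 192.168.22.1 are hypothetical values chosen for illustration, and the rule that the pool should not contain the gateway address is an assumption of this sketch.

```python
import ipaddress


def pool_size(start, end):
    """Count the addresses in an inclusive start-end allocation pool."""
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if last < first:
        raise ValueError("pool end precedes pool start")
    return int(last) - int(first) + 1


def pool_fits(start, end, cidr, gateway):
    """Check that the pool lies inside the subnet and skips the gateway."""
    network = ipaddress.ip_network(cidr)
    first, last, gw = (ipaddress.ip_address(a) for a in (start, end, gateway))
    inside = first in network and last in network
    gateway_clear = not (first <= gw <= last)
    return inside and gateway_clear


# Hypothetical values for illustration only.
print(pool_size("192.168.22.100", "192.168.22.150"))
print(pool_fits("192.168.22.100", "192.168.22.150", "192.168.22.0/24", "192.168.22.1"))
```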

3.2. Creating an advanced network

Advanced network options are available for administrators, when creating a network from the Admin view. Use these options to specify projects and to define the network type that you want to use.

To create an advanced network, complete the following steps:

- In the dashboard, select Admin > Networks > Create Network > Project.

- Use the Project drop-down list to select the project that you want to host the new network.

Review the options in Provider Network Type:

- Local - Traffic remains on the local Compute host and is effectively isolated from any external networks.

- Flat - Traffic remains on a single network and can also be shared with the host. No VLAN tagging or other network segregation takes place.

- VLAN - Create a network using a VLAN ID that corresponds to a VLAN present in the physical network. This option allows instances to communicate with systems on the same layer 2 VLAN.

- GRE - Use a network overlay that spans multiple nodes for private communication between instances. Traffic egressing the overlay must be routed.

- VXLAN - Similar to GRE, and uses a network overlay to span multiple nodes for private communication between instances. Traffic egressing the overlay must be routed.

Click Create Network.

Review the Project Network Topology to validate that the network has been successfully created.

3.3. Adding network routing

To allow traffic to be routed to and from your new network, you must add its subnet as an interface to an existing virtual router:

- In the dashboard, select Project > Network > Routers.

Select your virtual router name in the Routers list, and click Add Interface.

In the Subnet list, select the name of your new subnet. You can optionally specify an IP address for the interface in this field.

Click Add Interface.

Instances on your network can now communicate with systems outside the subnet.

3.4. Deleting a network

There are occasions where it becomes necessary to delete a network that was previously created, perhaps as housekeeping or as part of a decommissioning process. You must first remove or detach any interfaces where the network is still in use, before you can successfully delete a network.

To delete a network in your project, together with any dependent interfaces, complete the following steps:

In the dashboard, select Project > Network > Networks.

Remove all router interfaces associated with the target network subnets.

To remove an interface, find the ID number of the network that you want to delete by clicking on your target network in the Networks list, and looking at the ID field. All the subnets associated with the network share this value in the Network ID field.

Navigate to Project > Network > Routers, click the name of your virtual router in the Routers list, and locate the interface attached to the subnet that you want to delete.

You can distinguish this subnet from the other subnets by the IP address that served as the gateway IP. You can further validate the distinction by ensuring that the network ID of the interface matches the ID that you noted in the previous step.

- Click the Delete Interface button for the interface that you want to delete.

- Select Project > Network > Networks, and click the name of your network.

Click the Delete Subnet button for the subnet that you want to delete.

Note: If you are still unable to remove the subnet at this point, ensure that it is not being used by any instances.

- Select Project > Network > Networks, and select the network you would like to delete.

- Click Delete Networks.

3.5. Purging the networking for a project

Use the neutron purge command to delete all neutron resources that belong to a particular project.

For example, to purge the neutron resources of the test-project project prior to deletion, run the following commands:

3.6. Working with subnets

Use subnets to grant network connectivity to instances. Each instance is assigned to a subnet as part of the instance creation process, therefore it’s important to consider proper placement of instances to best accommodate their connectivity requirements.

You can create subnets only in pre-existing networks. Remember that project networks in OpenStack Networking can host multiple subnets. This is useful if you intend to host distinctly different systems in the same network, and prefer a measure of isolation between them.

For example, you can designate that only webserver traffic is present on one subnet, while database traffic traverses another.

Subnets are isolated from each other, and any instance that wants to communicate with another subnet must have its traffic directed by a router. Therefore, you can lessen network latency and load by grouping systems that exchange a high volume of traffic in the same subnet.
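To decide whether traffic between two systems stays within a subnet or must pass through a router, you can compare the destination address with the source subnet. The following is a minimal sketch using the Python standard library; the function name `needs_router` and the example addresses are hypothetical.

```python
import ipaddress


def needs_router(dst_ip, src_cidr):
    """Return True if dst_ip is outside the source subnet and must be routed."""
    return ipaddress.ip_address(dst_ip) not in ipaddress.ip_network(src_cidr)


# Hypothetical layout: web servers in 192.168.122.0/24, databases in 192.168.123.0/24.
print(needs_router("192.168.122.50", "192.168.122.0/24"))  # same subnet
print(needs_router("192.168.123.20", "192.168.122.0/24"))  # different subnet
```

Systems for which this check returns False communicate without a router hop, which is the case that the grouping advice above aims for.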

3.6.1. Creating a subnet

To create a subnet, follow these steps:

- In the dashboard, select Project > Network > Networks, and click the name of your network in the Networks view.

Click Create Subnet, and specify the following values:

Subnet Name

Descriptive subnet name.

Network Address

Address in CIDR format, which contains the IP address range and subnet mask in one value. To determine the CIDR address, calculate the number of bits masked in the subnet mask and append that value to the IP address range. For example, the subnet mask 255.255.255.0 has 24 masked bits. To use this mask with the IPv4 address range 192.168.122.0, specify the address 192.168.122.0/24.

IP Version

Internet protocol version, where valid types are IPv4 or IPv6. The IP address range in the Network Address field must match whichever protocol version you select.

Gateway IP

IP address of the router interface for your default gateway. This address is the next hop for routing any traffic destined for an external location, and must be within the range that you specify in the Network Address field. For example, if your CIDR network address is 192.168.122.0/24, then your default gateway is likely to be 192.168.122.1.

Disable Gateway

Disables forwarding and isolates the subnet.

Click Next to specify DHCP options:

- Enable DHCP - Enables DHCP services for this subnet. You can use DHCP to automate the distribution of IP settings to your instances.

- IPv6 Address Configuration Modes - If you create an IPv6 network, you must specify how to allocate IPv6 addresses and additional information:

- No Options Specified - Select this option if you want to set IP addresses manually, or if you use a non OpenStack-aware method for address allocation.

- SLAAC (Stateless Address Autoconfiguration) - Instances generate IPv6 addresses based on Router Advertisement (RA) messages sent from the OpenStack Networking router. Use this configuration to create an OpenStack Networking subnet with ra_mode set to slaac and address_mode set to slaac.

- DHCPv6 stateful - Instances receive IPv6 addresses as well as additional options (for example, DNS) from the OpenStack Networking DHCPv6 service. Use this configuration to create a subnet with ra_mode set to dhcpv6-stateful and address_mode set to dhcpv6-stateful.

- DHCPv6 stateless - Instances generate IPv6 addresses based on Router Advertisement (RA) messages sent from the OpenStack Networking router. Additional options (for example, DNS) are allocated from the OpenStack Networking DHCPv6 service. Use this configuration to create a subnet with ra_mode set to dhcpv6-stateless and address_mode set to dhcpv6-stateless.

- Allocation Pools - Range of IP addresses that you want DHCP to assign. For example, the value 192.168.22.100,192.168.22.100 considers all IP addresses in that range as available for allocation.

- DNS Name Servers - IP addresses of the DNS servers available on the network. DHCP distributes these addresses to the instances for name resolution.

- Host Routes - Static host routes. First, specify the destination network in CIDR format, followed by the next hop that you want to use for routing (for example, 192.168.23.0/24, 10.1.31.1). Provide this value if you need to distribute static routes to instances.

Click Create.

You can view the subnet in the Subnets list. You can also click Edit to change any options as needed. When you create instances, you can now configure them to use this subnet, and they receive any specified DHCP options.
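The IPv6 configuration modes listed above each correspond to a pair of ra_mode and address_mode values. The following minimal sketch captures that mapping in Python and builds the matching arguments for a command-line subnet creation. The helper `ipv6_mode_args` is hypothetical, and the sketch assumes that your client exposes these settings as the `--ipv6-ra-mode` and `--ipv6-address-mode` options of `openstack subnet create`.

```python
# Dashboard mode name -> (ra_mode, address_mode), as described in the list above.
IPV6_MODES = {
    "SLAAC": ("slaac", "slaac"),
    "DHCPv6 stateful": ("dhcpv6-stateful", "dhcpv6-stateful"),
    "DHCPv6 stateless": ("dhcpv6-stateless", "dhcpv6-stateless"),
}


def ipv6_mode_args(mode):
    """Return command-line arguments for the given IPv6 configuration mode."""
    if mode == "No Options Specified":
        # Addresses are set manually, so neither attribute is passed.
        return []
    ra_mode, address_mode = IPV6_MODES[mode]
    return ["--ipv6-ra-mode", ra_mode, "--ipv6-address-mode", address_mode]


print(" ".join(ipv6_mode_args("DHCPv6 stateless")))
```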

3.7. Deleting a subnet

You can delete a subnet if it is no longer in use. However, if any instances are still configured to use the subnet, the deletion attempt fails and the dashboard displays an error message.

Complete the following steps to delete a specific subnet in a network:

- In the dashboard, select Project > Network > Networks.

- Click the name of your network.

- Select the target subnet, and click Delete Subnets.

3.8. Adding a router

OpenStack Networking provides routing services using an SDN-based virtual router. Routers are a requirement for your instances to communicate with external subnets, including those in the physical network. Routers and subnets connect using interfaces, with each subnet requiring its own interface to the router.

The default gateway of a router defines the next hop for any traffic received by the router. Its network is typically configured to route traffic to the external physical network using a virtual bridge.

To create a router, complete the following steps:

- In the dashboard, select Project > Network > Routers, and click Create Router.

- Enter a descriptive name for the new router, and click Create router.

- Click Set Gateway next to the entry for the new router in the Routers list.

- In the External Network list, specify the network that you want to receive traffic destined for an external location.

Click Set Gateway.

After you add a router, you must configure any subnets you have created to send traffic using this router. You do this by creating interfaces between the subnet and the router.

The default routes for subnets must not be overwritten. When the default route for a subnet is removed, the L3 agent automatically removes the corresponding route in the router namespace too, and network traffic cannot flow to and from the associated subnet. If the existing router namespace route has been removed, to fix this problem, perform these steps:

- Disassociate all floating IPs on the subnet.

- Detach the router from the subnet.

- Re-attach the router to the subnet.

- Re-attach all floating IPs.

3.9. Deleting a router

You can delete a router if it has no connected interfaces.

To remove its interfaces and delete a router, complete the following steps:

- In the dashboard, select Project > Network > Routers, and click the name of the router that you want to delete.

- Select the interfaces of type Internal Interface, and click Delete Interfaces.

- From the Routers list, select the target router and click Delete Routers.

3.10. Adding an interface

You can use interfaces to interconnect routers with subnets so that routers can direct any traffic that instances send to destinations outside of their immediate subnet.

To add a router interface and connect the new interface to a subnet, complete these steps:

This procedure uses the Network Topology feature. Using this feature, you can see a graphical representation of all your virtual routers and networks while you perform network management tasks.

- In the dashboard, select Project > Network > Network Topology.

- Locate the router that you want to manage, hover your mouse over it, and click Add Interface.

Specify the Subnet that you want to connect to the router.

You can also specify an IP address. The address is useful for testing and troubleshooting purposes, since a successful ping to this interface indicates that the traffic is routing as expected.

Click Add interface.

The Network Topology diagram automatically updates to reflect the new interface connection between the router and subnet.

3.11. Deleting an interface

You can remove an interface to a subnet if you no longer require the router to direct traffic for the subnet.

To delete an interface, complete the following steps:

- In the dashboard, select Project > Network > Routers.

- Click the name of the router that hosts the interface that you want to delete.

- Select the interface type (Internal Interface), and click Delete Interfaces.

3.12. Configuring IP addressing

Follow the procedures in this section to manage your IP address allocation in OpenStack Networking.

3.12.1. Creating floating IP pools

You can use floating IP addresses to direct ingress network traffic to your OpenStack instances. First, you must define a pool of validly routable external IP addresses, which you can then assign to instances dynamically. OpenStack Networking routes all incoming traffic destined for that floating IP to the instance that you associate with the floating IP.

OpenStack Networking allocates floating IP addresses to all projects (tenants) from the same IP ranges/CIDRs. As a result, all projects can consume floating IPs from every floating IP subnet. You can manage this behavior using quotas for specific projects. For example, you can set the default to 10 for ProjectA and ProjectB, while setting the quota for ProjectC to 0.

When you create an external subnet, you can also define the floating IP allocation pool. If the subnet hosts only floating IP addresses, consider disabling DHCP allocation with the --no-dhcp option in the openstack subnet create command:

# openstack subnet create --no-dhcp --allocation-pool start=IP_ADDRESS,end=IP_ADDRESS --gateway IP_ADDRESS --network NETWORK_NAME --subnet-range SUBNET_RANGE SUBNET_NAME

For example, the following command creates a subnet (here named public_subnet) on the public network:

# openstack subnet create --no-dhcp --allocation-pool start=192.168.100.20,end=192.168.100.100 --gateway 192.168.100.1 --network public --subnet-range 192.168.100.0/24 public_subnet

3.12.2. Assigning a specific floating IP

You can assign a specific floating IP address to an instance using the nova command.

# nova floating-ip-associate INSTANCE_NAME IP_ADDRESS

In this example, a floating IP address is allocated to an instance named corp-vm-01:

# nova floating-ip-associate corp-vm-01 192.168.100.20

3.12.3. Assigning a random floating IP

To dynamically allocate floating IP addresses to instances, complete these steps:

Enter the following openstack command:

# openstack floating ip create

In this example, you do not select a particular IP address, but instead request that OpenStack Networking allocate a floating IP address from the pool.

After you allocate the IP address, you can assign it to a particular instance.

Enter the following command to locate the port ID associated with your instance:

# openstack port list

(The port ID maps to the fixed IP address allocated to the instance.)

Associate the instance ID with the port ID of the instance:

# openstack server add floating ip INSTANCE_NAME_OR_ID FLOATING_IP_ADDRESS

For example:

# openstack server add floating ip VM1 172.24.4.225

Validate that you used the correct port ID for the instance by making sure that the MAC address (third column) matches the port on the instance.

3.13. Creating multiple floating IP pools

OpenStack Networking supports one floating IP pool for each L3 agent. Therefore, you must scale your L3 agents to create additional floating IP pools.

Make sure that in /var/lib/config-data/neutron/etc/neutron/neutron.conf the property handle_internal_only_routers is set to True for only one L3 agent in your environment. This option configures the L3 agent to manage only non-external routers.

3.14. Bridging the physical network

Bridge your virtual network to the physical network to enable connectivity to and from virtual instances.

In this procedure, the example physical interface, eth0, is mapped to the bridge, br-ex; the virtual bridge acts as the intermediary between the physical network and any virtual networks.

As a result, all traffic traversing eth0 uses the configured Open vSwitch to reach instances.

For more information, see Chapter 11, Configuring bridge mappings.

To map a physical NIC to the virtual Open vSwitch bridge, complete the following steps:

Open /etc/sysconfig/network-scripts/ifcfg-eth0 in a text editor, and update the following parameters with values appropriate for the network at your site:

- IPADDR

- NETMASK

- GATEWAY

- DNS1 (name server)

Open /etc/sysconfig/network-scripts/ifcfg-br-ex in a text editor and update the virtual bridge parameters with the IP address values that were previously allocated to eth0.

You can now assign floating IP addresses to instances and make them available to the physical network.

Chapter 4. Planning IP address usage

An OpenStack deployment can consume a larger number of IP addresses than might be expected. This section contains information about correctly anticipating the quantity of addresses that you require, and where the addresses are used in your environment.

4.1. VLAN planning

When you plan your Red Hat OpenStack Platform deployment, you start with a number of subnets, from which you allocate individual IP addresses. When you use multiple subnets you can segregate traffic between systems into VLANs.

For example, it is ideal that your management or API traffic is not on the same network as systems that serve web traffic. Traffic between VLANs travels through a router where you can implement firewalls to govern traffic flow.

You must plan your VLANs as part of your overall plan that includes traffic isolation, high availability, and IP address utilization for the various types of virtual networking resources in your deployment.

The maximum number of VLANs in a single network, or in one OVS agent for a network node, is 4094. In situations where you require more than the maximum number of VLANs, you can create several provider networks (VXLAN networks) and several network nodes, one per network. Each node can contain up to 4094 private networks.
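The limit of 4094 follows from the 12-bit VLAN ID field defined by IEEE 802.1Q, in which the values 0 and 4095 are reserved. The following minimal sketch shows the arithmetic and estimates how many network nodes a given number of networks requires, under the assumption stated above that each node can hold up to 4094 networks. The function name `network_nodes_needed` is hypothetical.

```python
import math

VLAN_ID_BITS = 12
# IDs 0 and 4095 are reserved, so they are excluded from the usable range.
USABLE_VLAN_IDS = 2**VLAN_ID_BITS - 2


def network_nodes_needed(network_count):
    """Estimate the number of network nodes needed for network_count networks."""
    return math.ceil(network_count / USABLE_VLAN_IDS)


print(USABLE_VLAN_IDS)
print(network_nodes_needed(10000))
```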

4.2. Types of network traffic

You can allocate separate VLANs for the different types of network traffic that you want to host. For example, you can have separate VLANs for each of these types of networks. Only the External network must be routable to the external physical network. In this release, director provides DHCP services.

You do not require all of the isolated VLANs in this section for every OpenStack deployment. For example, if your cloud users do not create ad hoc virtual networks on demand, then you may not require a project network. If you want each VM to connect directly to the same switch as any other physical system, connect your Compute nodes directly to a provider network and configure your instances to use that provider network directly.

- Provisioning network - This VLAN is dedicated to deploying new nodes using director over PXE boot. OpenStack Orchestration (heat) installs OpenStack onto the overcloud bare metal servers. These servers attach to the physical network to receive the platform installation image from the undercloud infrastructure.

- Internal API network - The OpenStack services use the Internal API network for communication, including API communication, RPC messages, and database communication. In addition, this network is used for operational messages between controller nodes. When planning your IP address allocation, note that each API service requires its own IP address. Specifically, you must plan IP addresses for each of the following services:

- vip-msg (amqp)

- vip-keystone-int

- vip-glance-int

- vip-cinder-int

- vip-nova-int

- vip-neutron-int

- vip-horizon-int

- vip-heat-int

- vip-ceilometer-int

- vip-swift-int

- vip-keystone-pub

- vip-glance-pub

- vip-cinder-pub

- vip-nova-pub

- vip-neutron-pub

- vip-horizon-pub

- vip-heat-pub

- vip-ceilometer-pub

- vip-swift-pub

When using High Availability, Pacemaker moves VIP addresses between the physical nodes.

- Storage - Block Storage, NFS, iSCSI, and other storage services. Isolate this network to separate physical Ethernet links for performance reasons.

- Storage Management - OpenStack Object Storage (swift) uses this network to synchronise data objects between participating replica nodes. The proxy service acts as the intermediary interface between user requests and the underlying storage layer. The proxy receives incoming requests and locates the necessary replica to retrieve the requested data. Services that use a Ceph back end connect over the Storage Management network, since they do not interact with Ceph directly but rather use the front end service. Note that the RBD driver is an exception; this traffic connects directly to Ceph.

- Project networks - Neutron provides each project with its own networks using either VLAN segregation (where each project network is a network VLAN), or tunneling using VXLAN or GRE. Network traffic is isolated within each project network. Each project network has an IP subnet associated with it, and multiple project networks may use the same addresses.

- External - The External network hosts the public API endpoints and connections to the Dashboard (horizon). You can also use this network for SNAT. In a production deployment, it is common to use a separate network for floating IP addresses and NAT.

- Provider networks - Use provider networks to attach instances to existing network infrastructure. You can use provider networks to map directly to an existing physical network in the data center, using flat networking or VLAN tags. This allows an instance to share the same layer-2 network as a system external to the OpenStack Networking infrastructure.

4.3. IP address consumption

The following systems consume IP addresses from your allocated range:

- Physical nodes - Each physical NIC requires one IP address. It is common practice to dedicate physical NICs to specific functions. For example, allocate management and NFS traffic to distinct physical NICs, sometimes with multiple NICs connecting across to different switches for redundancy purposes.

- Virtual IPs (VIPs) for High Availability - Plan to allocate between one and three VIPs for each network that controller nodes share.

4.4. Virtual networking

The following virtual resources consume IP addresses in OpenStack Networking. These resources are considered local to the cloud infrastructure, and do not need to be reachable by systems in the external physical network:

- Project networks - Each project network requires a subnet that it can use to allocate IP addresses to instances.

- Virtual routers - Each router interface plugging into a subnet requires one IP address. If you want to use DHCP, each router interface requires two IP addresses.

- Instances - Each instance requires an address from the project subnet that hosts the instance. If you require ingress traffic, you must allocate a floating IP address to the instance from the designated external network.

- Management traffic - Includes OpenStack Services and API traffic. All services share a small number of VIPs. API, RPC and database services communicate on the internal API VIP.

4.5. Example network plan

This example shows a number of networks that accommodate multiple subnets, with each subnet being assigned a range of IP addresses:

| Subnet name | Address range | Number of addresses | Subnet Mask |
|---|---|---|---|
| Provisioning network | 192.168.100.1 - 192.168.100.250 | 250 | 255.255.255.0 |
| Internal API network | 172.16.1.10 - 172.16.1.250 | 241 | 255.255.255.0 |
| Storage | 172.16.2.10 - 172.16.2.250 | 241 | 255.255.255.0 |
| Storage Management | 172.16.3.10 - 172.16.3.250 | 241 | 255.255.255.0 |
| Tenant network (GRE/VXLAN) | 172.16.4.10 - 172.16.4.250 | 241 | 255.255.255.0 |
| External network (incl. floating IPs) | 10.1.2.10 - 10.1.3.222 | 469 | 255.255.254.0 |
| Provider network (infrastructure) | 10.10.3.10 - 10.10.3.250 | 241 | 255.255.252.0 |
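The "Number of addresses" column in the table above counts every address from the first to the last in each range, inclusive. The following minimal sketch reproduces those counts with the Python standard library; the `PLAN` dictionary and the `count_addresses` helper are hypothetical names, and the ranges are copied from the table.

```python
import ipaddress

# Address ranges copied from the example network plan.
PLAN = {
    "Provisioning network": ("192.168.100.1", "192.168.100.250"),
    "Internal API network": ("172.16.1.10", "172.16.1.250"),
    "Storage": ("172.16.2.10", "172.16.2.250"),
    "Storage Management": ("172.16.3.10", "172.16.3.250"),
    "Tenant network (GRE/VXLAN)": ("172.16.4.10", "172.16.4.250"),
    "External network (incl. floating IPs)": ("10.1.2.10", "10.1.3.222"),
    "Provider network (infrastructure)": ("10.10.3.10", "10.10.3.250"),
}


def count_addresses(first, last):
    """Count the addresses in an inclusive range."""
    return int(ipaddress.ip_address(last)) - int(ipaddress.ip_address(first)) + 1


for name, (first, last) in PLAN.items():
    print(f"{name}: {count_addresses(first, last)}")
```

You can adapt the dictionary to your own plan to confirm that each range provides the number of addresses that you expect.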

Chapter 5. Reviewing OpenStack Networking router ports

Virtual routers in OpenStack Networking use ports to interconnect with subnets. You can review the state of these ports to determine whether they connect as expected.

5.1. Viewing current port status

Complete the following steps to list all of the ports that attach to a particular router and to retrieve the current state of a port (DOWN or ACTIVE):

1. To view all the ports that attach to the router named r1, run the following command:

# neutron router-port-list r1

2. To view the details of each port, run the following command. Include the port ID of the port that you want to view. The result includes the port status (for example, ACTIVE):

# openstack port show b58d26f0-cc03-43c1-ab23-ccdb1018252a

3. Perform step 2 for each port to retrieve its status.

Chapter 6. Troubleshooting provider networks

A deployment of virtual routers and switches, also known as software-defined networking (SDN), may seem to introduce complexity. However, the diagnostic process of troubleshooting network connectivity in OpenStack Networking is similar to the diagnostic process for physical networks. If you use VLANs, you can consider the virtual infrastructure as a trunked extension of the physical network, rather than a wholly separate environment.

6.1. Basic ping testing

The ping command is a useful tool for analyzing network connectivity problems. The results serve as a basic indicator of network connectivity, but might not entirely exclude all connectivity issues, such as a firewall blocking the actual application traffic. The ping command sends traffic to specific destinations, and then reports back whether the attempts were successful.

The ping command is an ICMP operation. To use ping, you must allow ICMP traffic to traverse any intermediary firewalls.

Ping tests are most useful when run from the machine experiencing network issues, so it may be necessary to connect to the command line via the VNC management console if the machine seems to be completely offline.

For example, the following ping test command validates multiple layers of network infrastructure in order to succeed; name resolution, IP routing, and network switching must all function correctly:

You can terminate the ping command with Ctrl-c, after which a summary of the results is presented. Zero percent packet loss indicates that the connection was stable and did not time out.

--- e1890.b.akamaiedge.net ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 13.461/13.498/13.541/0.100 ms
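If you collect ping results from many machines, you can extract the packet loss figure from the summary programmatically. The following is a minimal sketch that assumes the summary line has the same format as the sample above; other ping implementations can print different wording. The function name `parse_ping_summary` is hypothetical.

```python
import re

# Matches a summary line such as "3 packets transmitted, 3 received, 0% packet loss".
SUMMARY_PATTERN = re.compile(
    r"(\d+) packets transmitted, (\d+) received, (\d+(?:\.\d+)?)% packet loss"
)


def parse_ping_summary(output):
    """Extract transmitted, received, and loss percentage from ping output."""
    match = SUMMARY_PATTERN.search(output)
    if match is None:
        raise ValueError("no ping summary line found")
    return {
        "transmitted": int(match.group(1)),
        "received": int(match.group(2)),
        "loss_percent": float(match.group(3)),
    }


sample = """--- e1890.b.akamaiedge.net ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2003ms
rtt min/avg/max/mdev = 13.461/13.498/13.541/0.100 ms"""

stats = parse_ping_summary(sample)
print(stats)
```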

The results of a ping test can be very revealing, depending on which destination you test. For example, in the following diagram VM1 is experiencing some form of connectivity issue. The possible destinations are numbered in blue, and the conclusions drawn from a successful or failed result are presented:

1. The internet - A common first step is to send a ping test to an internet location, such as www.redhat.com.

- Success: This test indicates that all the various network points in between the machine and the internet are functioning correctly. This includes the virtual and physical network infrastructure.

- Failure: There are various ways in which a ping test to a distant internet location can fail. If other machines on your network are able to successfully ping the internet, that proves the internet connection is working, and the issue is likely within the configuration of the local machine.

2. Physical router - This is the router interface that the network administrator designates to direct traffic onward to external destinations.

- Success: Ping tests to the physical router can determine whether the local network and underlying switches are functioning. These packets do not traverse the router, so they do not prove whether there is a routing issue present on the default gateway.

- Failure: This indicates that the problem lies between VM1 and the default gateway. The router/switches might be down, or you may be using an incorrect default gateway. Compare the configuration with that on another server that you know is functioning correctly. Try pinging another server on the local network.

3. Neutron router - This is the virtual SDN (Software-defined Networking) router that Red Hat OpenStack Platform uses to direct the traffic of virtual machines.

- Success: The firewall is allowing ICMP traffic, and the Networking node is online.

- Failure: Confirm whether ICMP traffic is permitted in the security group of the instance. Check that the Networking node is online, confirm that all the required services are running, and review the L3 agent log (/var/log/neutron/l3-agent.log).

4. Physical switch - The physical switch manages traffic between nodes on the same physical network.

- Success: Traffic sent by a VM to the physical switch must pass through the virtual network infrastructure, indicating that this segment is functioning correctly.

- Failure: Check that the physical switch port is configured to trunk the required VLANs.

5. VM2 - Attempt to ping a VM on the same subnet, on the same Compute node.

- Success: The NIC driver and basic IP configuration on VM1 are functional.

- Failure: Validate the network configuration on VM1. Alternatively, the firewall on VM2 might be blocking ping traffic. Also verify the virtual switching configuration and review the Open vSwitch (or Linux Bridge) log files.
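The destination list above works as a lookup table: each failed ping points to a set of follow-up checks. The following Python sketch illustrates that idea only. The dictionary keys, the results data, and the failure_hints function are hypothetical, and the hints are condensed from the Failure bullets above.

```python
# Follow-up checks condensed from the "Failure" bullets above,
# keyed by ping destination.
FAILURE_HINTS = {
    "internet": "If other machines reach the internet, check the local machine configuration.",
    "physical router": "Check the switches and the default gateway setting.",
    "neutron router": "Check security group ICMP rules, the Networking node, and the L3 agent log.",
    "physical switch": "Check that the physical switch port trunks the required VLANs.",
    "VM2": "Check the VM1 network configuration, the VM2 firewall, and the virtual switch logs.",
}


def failure_hints(results):
    """Return one hint per destination whose ping test failed."""
    return [
        f"{destination}: {FAILURE_HINTS[destination]}"
        for destination, succeeded in results.items()
        if not succeeded
    ]


# Hypothetical results gathered from VM1: True means the ping succeeded.
results = {
    "internet": False,
    "physical router": False,
    "neutron router": False,
    "physical switch": True,
    "VM2": True,
}

for hint in failure_hints(results):
    print(hint)
```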

6.2. Troubleshooting VLAN networks

OpenStack Networking can trunk VLAN networks through to the SDN switches. Support for VLAN-tagged provider networks means that virtual instances can integrate with server subnets in the physical network.

To troubleshoot connectivity to a VLAN Provider network, complete these steps:

1. Ping the gateway with ping <gateway-IP-address>.

Consider this example, in which a network is created with these commands:

# openstack network create --provider-network-type vlan --provider-physical-network phy-eno1 --provider-segment 120 provider
# openstack subnet create --no-dhcp --allocation-pool start=192.168.120.1,end=192.168.120.153 --gateway 192.168.120.254 --network provider public_subnet

In this example, the gateway IP address is 192.168.120.254.

$ ping 192.168.120.254

2. If the ping fails, do the following:

- Confirm that you have network flow for the associated VLAN.

It is possible that the VLAN ID has not been set. In this example, OpenStack Networking is configured to trunk VLAN 120 to the provider network. (See --provider-segment 120 in the example in step 1.)

- Confirm the VLAN flow on the bridge interface using the command ovs-ofctl dump-flows <bridge-name>.

In this example the bridge is named br-ex:

# ovs-ofctl dump-flows br-ex
NXST_FLOW reply (xid=0x4):
 cookie=0x0, duration=987.521s, table=0, n_packets=67897, n_bytes=14065247, idle_age=0, priority=1 actions=NORMAL
 cookie=0x0, duration=986.979s, table=0, n_packets=8, n_bytes=648, idle_age=977, priority=2,in_port=12 actions=drop
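Flow dumps grow quickly on a busy bridge, so it can be useful to filter them. The following Python sketch parses the dump-flows output shown above and picks out flows that drop traffic or that reference a given VLAN ID. The function names are hypothetical. The VLAN check assumes that VLAN handling appears as a dl_vlan match field or a mod_vlan_vid action, which might not cover every flow layout in your deployment.

```python
SAMPLE_DUMP = """NXST_FLOW reply (xid=0x4):
 cookie=0x0, duration=987.521s, table=0, n_packets=67897, n_bytes=14065247, idle_age=0, priority=1 actions=NORMAL
 cookie=0x0, duration=986.979s, table=0, n_packets=8, n_bytes=648, idle_age=977, priority=2,in_port=12 actions=drop"""


def parse_flow(line):
    """Split one flow entry into its key=value fields plus an 'actions' string."""
    match_part, _, actions = line.strip().partition(" actions=")
    fields = {}
    for token in match_part.split(","):
        key, _, value = token.strip().partition("=")
        if key:
            fields[key] = value
    fields["actions"] = actions
    return fields


def parse_flows(dump):
    """Parse every line of a dump that contains a flow entry."""
    return [parse_flow(line) for line in dump.splitlines() if "actions=" in line]


def mentions_vlan(flow, vlan_id):
    """True if the flow matches on, or tags traffic with, the given VLAN ID."""
    return (
        flow.get("dl_vlan") == str(vlan_id)
        or f"mod_vlan_vid:{vlan_id}" in flow["actions"].split(",")
    )


flows = parse_flows(SAMPLE_DUMP)
dropped = [flow for flow in flows if flow["actions"] == "drop"]
vlan_120 = [flow for flow in flows if mentions_vlan(flow, 120)]

print("flows that drop traffic:", [flow.get("in_port") for flow in dropped])
print("flows that reference VLAN 120:", len(vlan_120))
```

In the sample output, no flow references VLAN 120, which is consistent with the possibility that the VLAN ID has not been set.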

6.2.1. Reviewing the VLAN configuration and log files

- OpenStack Networking (neutron) agents - Use the openstack network agent list command to verify that all agents are up and registered with the correct names.

- Review /var/log/containers/neutron/openvswitch-agent.log. This log should confirm that the creation process used the ovs-ofctl command to configure VLAN trunking.

- Validate external_network_bridge in the /etc/neutron/l3_agent.ini file. If there is a hardcoded value in the external_network_bridge parameter, you cannot use a provider network with the L3 agent, and you cannot create the necessary flows. The external_network_bridge value must be in the format `external_network_bridge = ""`.

- Check the network_vlan_ranges value in the /etc/neutron/plugin.ini file. For provider networks, do not specify the numeric VLAN ID. Specify IDs only when using VLAN isolated project networks.

- Validate the OVS agent configuration file bridge mappings, and confirm that the bridge mapped to phy-eno1 exists and is properly connected to eno1.
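The external_network_bridge check in the list above can be scripted. The following Python sketch uses the standard configparser module and treats both an empty value and an empty quoted string as valid. It assumes that the parameter lives in the [DEFAULT] section of l3_agent.ini. The function name is hypothetical, and the sample file contents are illustrative only.

```python
import configparser


def external_bridge_is_empty(ini_text):
    """Return True if external_network_bridge is unset, empty, or ""."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    # Assumption: the option is defined in the [DEFAULT] section.
    value = parser.get("DEFAULT", "external_network_bridge", fallback="")
    return value.strip().strip("\"'") == ""


# Illustrative file contents, not taken from a real deployment.
good = '[DEFAULT]\nexternal_network_bridge = ""\n'
bad = "[DEFAULT]\nexternal_network_bridge = br-ex\n"

print(external_bridge_is_empty(good))
print(external_bridge_is_empty(bad))
```

To check a real node, read the text of /etc/neutron/l3_agent.ini and pass it to the function.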

6.3. Troubleshooting from within project networks

In OpenStack Networking, all project traffic is contained within network namespaces so that projects can configure networks without interfering with each other. For example, network namespaces allow different projects to use the same subnet range, such as 192.168.1.0/24, without interference between them.

To begin troubleshooting a project network, first determine which network namespace contains the network:

1. List all the project networks using the openstack network list command.

In this example, examine the web-servers network. Make a note of the id value in the web-servers row (9cb32fe0-d7fb-432c-b116-f483c6497b08). This value is appended to the network namespace name, which helps you identify the namespace in the next step.

2. List all the network namespaces using the ip netns list command.

The output contains a namespace that matches the web-servers network id. In this example the namespace is qdhcp-9cb32fe0-d7fb-432c-b116-f483c6497b08.

3. Examine the configuration of the web-servers network by running commands within the namespace, prefixing the troubleshooting commands with ip netns exec <namespace>.
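The lookup described in these steps can be sketched in Python: match the network id against the namespace names, then build the prefixed command. The function names are hypothetical, and the namespace listing is an illustrative stand-in for real ip netns list output. The sketch only builds the argument list; it does not run anything.

```python
def find_namespaces(network_id, netns_output):
    """Return namespace names from 'ip netns list' output that end with network_id."""
    # Each line can carry a trailing "(id: N)" field, so keep only the first word.
    names = [line.split()[0] for line in netns_output.splitlines() if line.strip()]
    return [name for name in names if name.endswith(network_id)]


def netns_command(namespace, *command):
    """Build the argument list that runs a command inside a namespace."""
    return ["ip", "netns", "exec", namespace, *command]


# Illustrative listing; real output depends on your deployment.
listing = """qdhcp-9cb32fe0-d7fb-432c-b116-f483c6497b08 (id: 1)
qrouter-62ed467e-abae-4ab4-87f4-13a9937fbd6b (id: 0)"""

namespaces = find_namespaces("9cb32fe0-d7fb-432c-b116-f483c6497b08", listing)
print(namespaces)
print(netns_command(namespaces[0], "ip", "addr"))
```

You could pass the resulting list to subprocess.run on the node that hosts the namespace, with root privileges.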

6.3.1. Performing advanced ICMP testing within the namespace

1. Capture ICMP traffic using the tcpdump command:

# ip netns exec qrouter-62ed467e-abae-4ab4-87f4-13a9937fbd6b tcpdump -qnntpi any icmp

2. In a separate command line window, perform a ping test to an external network:

# ip netns exec qrouter-62ed467e-abae-4ab4-87f4-13a9937fbd6b ping www.redhat.com

3. In the terminal running the tcpdump session, observe detailed results of the ping test.

tcpdump: listening on any, link-type LINUX_SLL (Linux cooked), capture size 65535 bytes
IP (tos 0xc0, ttl 64, id 55447, offset 0, flags [none], proto ICMP (1), length 88)
    172.24.4.228 > 172.24.4.228: ICMP host 192.168.200.20 unreachable, length 68
    IP (tos 0x0, ttl 64, id 22976, offset 0, flags [DF], proto UDP (17), length 60)
    172.24.4.228.40278 > 192.168.200.21: [bad udp cksum 0xfa7b -> 0xe235!] UDP, length 32

When you perform a tcpdump analysis of traffic, you might observe the responding packets heading to the router interface rather than the instance. This is expected behavior, as the qrouter performs DNAT on the return packets.

Chapter 7. Connecting an instance to the physical network

This chapter contains information about using provider networks to connect instances directly to an external network.

7.1. Overview of the OpenStack Networking topology

OpenStack Networking (neutron) has two categories of services distributed across a number of node types.

- Neutron server - This service runs the OpenStack Networking API server, which provides the API for end-users and services to interact with OpenStack Networking. This server also integrates with the underlying database to store and retrieve project network, router, and loadbalancer details, among others.

- Neutron agents - These are the services that perform the network functions for OpenStack Networking:

  - neutron-dhcp-agent - manages DHCP IP addressing for project private networks.

  - neutron-l3-agent - performs layer 3 routing between project private networks, the external network, and others.

- Compute node - This node hosts the hypervisor that runs the virtual machines, also known as instances. A Compute node must be wired directly to the network in order to provide external connectivity for instances. This node is typically where the L2 agents run, such as neutron-openvswitch-agent.

7.1.1. Service placement

The OpenStack Networking services can either run together on the same physical server, or on separate dedicated servers, which are named according to their roles:

- Controller node - The server that runs the API service.

- Network node - The server that runs the OpenStack Networking agents.

- Compute node - The hypervisor server that hosts the instances.

The steps in this chapter apply to an environment that contains these three node types. If your deployment has both the Controller and Network node roles on the same physical node, then you must perform the steps from both sections on that server. This also applies for a High Availability (HA) environment, where all three nodes might be running the Controller node and Network node services with HA. As a result, you must complete the steps in sections applicable to Controller and Network nodes on all three nodes.

7.2. Using flat provider networks

The procedures in this section create flat provider networks that can connect instances directly to external networks. You would do this if you have multiple physical networks (for example, physnet1, physnet2) and separate physical interfaces (eth0 -> physnet1, and eth1 -> physnet2), and you need to connect each Compute node and Network node to those external networks.

If you want to connect multiple VLAN-tagged interfaces (on a single NIC) to multiple provider networks, see Section 7.3, “Using VLAN provider networks”.

7.2.1. Configuring the Controller nodes

1. Edit /etc/neutron/plugin.ini (which is symlinked to /etc/neutron/plugins/ml2/ml2_conf.ini), add flat to the existing list of type_drivers values, and set flat_networks to *:

type_drivers = vxlan,flat
flat_networks =*

2. Create a flat external network and associate it with the configured physical_network. Create this network as a shared network (--share) so that other users can connect their instances directly to it:

# openstack network create --share --provider-network-type flat --provider-physical-network physnet1 --external public01

3. Create a subnet within this external network using the openstack subnet create command, or the OpenStack Dashboard:

# openstack subnet create --dhcp --allocation-pool start=192.168.100.20,end=192.168.100.100 --gateway 192.168.100.1 --network public01 public_subnet

4. Restart the neutron-server service to apply this change:

# systemctl restart neutron-server.service
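Step 1 of this procedure can also be scripted. The following Python sketch adds flat to type_drivers and sets flat_networks to * by using the standard configparser module. It assumes that type_drivers belongs to the [ml2] section and flat_networks to the [ml2_type_flat] section, which this guide does not state. The function name enable_flat is hypothetical.

```python
import configparser
import io


def enable_flat(ini_text):
    """Return ini_text with the flat type driver enabled for all physical networks."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)

    # Assumption: type_drivers is defined in the [ml2] section.
    current = parser.get("ml2", "type_drivers", fallback="")
    drivers = [name.strip() for name in current.split(",") if name.strip()]
    if "flat" not in drivers:
        drivers.append("flat")
    if not parser.has_section("ml2"):
        parser.add_section("ml2")
    parser.set("ml2", "type_drivers", ",".join(drivers))

    # Assumption: flat_networks is defined in the [ml2_type_flat] section.
    if not parser.has_section("ml2_type_flat"):
        parser.add_section("ml2_type_flat")
    parser.set("ml2_type_flat", "flat_networks", "*")

    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


# Illustrative starting configuration.
original = "[ml2]\ntype_drivers = vxlan\n"
updated = enable_flat(original)
print(updated)
```

Note that configparser discards comments when it writes the file back, so on a production system you might prefer to edit the file by hand or with a tool that preserves comments.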

7.2.2. Configuring the Network node and Compute nodes

Complete the following steps on the Network node and the Compute nodes so that these nodes can connect to the external network, and can allow instances to communicate directly with the external network.

1. Create the Open vSwitch bridge and port. To create the external network bridge (br-ex) and add a corresponding port (eth1), edit the following interface configuration files:

i. Edit /etc/sysconfig/network-scripts/ifcfg-eth1:

ii. Edit /etc/sysconfig/network-scripts/ifcfg-br-ex:

2. Restart the network service to apply these changes:

# systemctl restart network.service

3. Configure the physical networks in /etc/neutron/plugins/ml2/openvswitch_agent.ini and map the bridge to the physical network:

bridge_mappings = physnet1:br-ex

For more information on configuring bridge_mappings, see Chapter 11, Configuring bridge mappings.

4. Restart the neutron-openvswitch-agent service on the Network and Compute nodes to apply these changes:

# systemctl restart neutron-openvswitch-agent
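Before you restart the agent, it can be worth checking that the bridge_mappings value from step 3 is well formed. The following Python sketch parses a comma-separated list of physnet:bridge pairs into a dictionary and rejects malformed or duplicate entries. The function name is hypothetical, and the duplicate check is an assumption about what you want to flag rather than a documented rule.

```python
def parse_bridge_mappings(value):
    """Parse 'physnet1:br-ex,physnet2:br-ex2' into {'physnet1': 'br-ex', ...}."""
    mappings = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        physnet, separator, bridge = entry.partition(":")
        physnet, bridge = physnet.strip(), bridge.strip()
        if not separator or not physnet or not bridge:
            raise ValueError(f"malformed mapping: {entry!r}")
        if physnet in mappings:
            raise ValueError(f"duplicate physical network: {physnet!r}")
        mappings[physnet] = bridge
    return mappings


print(parse_bridge_mappings("physnet1:br-ex"))
```

The physical network name in the mapping (physnet1 in this example) should match the value that you pass to --provider-physical-network when you create the provider network.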

7.2.3. Configuring the Network node

1. Set the external_network_bridge parameter to an empty value in /etc/neutron/l3_agent.ini to enable the use of external provider networks.

# Name of bridge used for external network traffic. This should be set to
# empty value for the linux bridge
external_network_bridge =

2. Restart neutron-l3-agent to apply these changes: