Manage Red Hat Quay

Preface

Once you have deployed a Red Hat Quay registry, there are many ways you can further configure and manage that deployment. Topics covered here include:

- Advanced Red Hat Quay configuration

- Setting notifications to alert you of a new Red Hat Quay release

- Securing connections with SSL/TLS certificates

- Directing action logs storage to Elasticsearch

- Configuring image security scanning with Clair

- Scanning pod images with the Container Security Operator

- Integrating Red Hat Quay into OpenShift Container Platform with the Quay Bridge Operator

- Mirroring images with repository mirroring

- Sharing Red Hat Quay images with a BitTorrent service

- Authenticating users with LDAP

- Enabling Quay for Prometheus and Grafana metrics

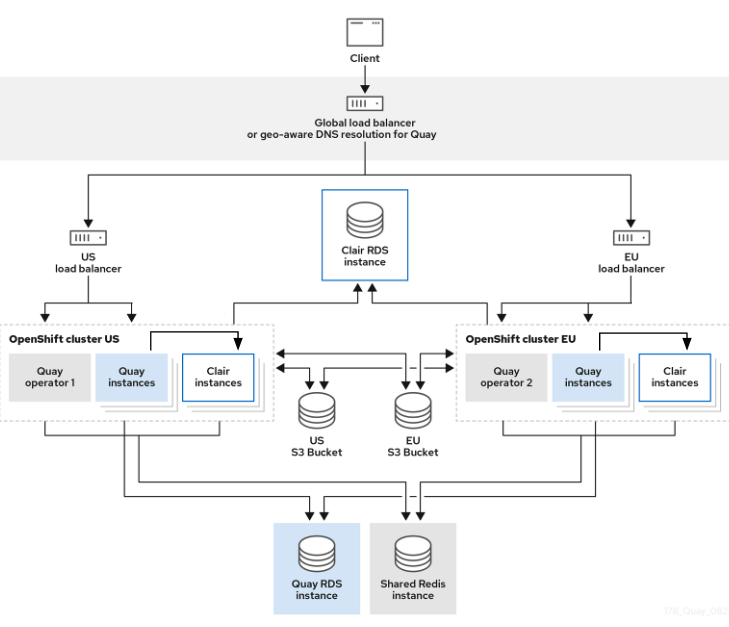

- Setting up geo-replication

- Troubleshooting Red Hat Quay

For a complete list of Red Hat Quay configuration fields, see the Configure Red Hat Quay page.

Chapter 1. Advanced Red Hat Quay configuration

You can configure your Red Hat Quay after initial deployment using one of the following interfaces:

- The Red Hat Quay Config Tool. With this tool, a web-based interface for configuring the Red Hat Quay cluster is provided when running the Quay container in config mode. This method is recommended for configuring the Red Hat Quay service.

- Editing the config.yaml. The config.yaml file contains most configuration information for the Red Hat Quay cluster. Editing the config.yaml file directly is possible, but it is only recommended for advanced tuning and performance features that are not available through the Config Tool.

- Red Hat Quay API. Some Red Hat Quay features can be configured through the API.

The content in this section describes how to use each of these interfaces and how to configure your deployment with advanced features.

1.1. Using Red Hat Quay Config Tool to modify Red Hat Quay

The Red Hat Quay Config Tool is made available by running a Quay container in config mode alongside the regular Red Hat Quay service.

Use the following sections to run the Config Tool from the Red Hat Quay Operator, or to run the Config Tool on host systems from the command line interface (CLI).

1.1.1. Running the Config Tool from the Red Hat Quay Operator

When running the Red Hat Quay Operator on OpenShift Container Platform, the Config Tool is readily available to use. Use the following procedure to access the Red Hat Quay Config Tool.

Prerequisites

- You have deployed the Red Hat Quay Operator on OpenShift Container Platform.

Procedure.

-

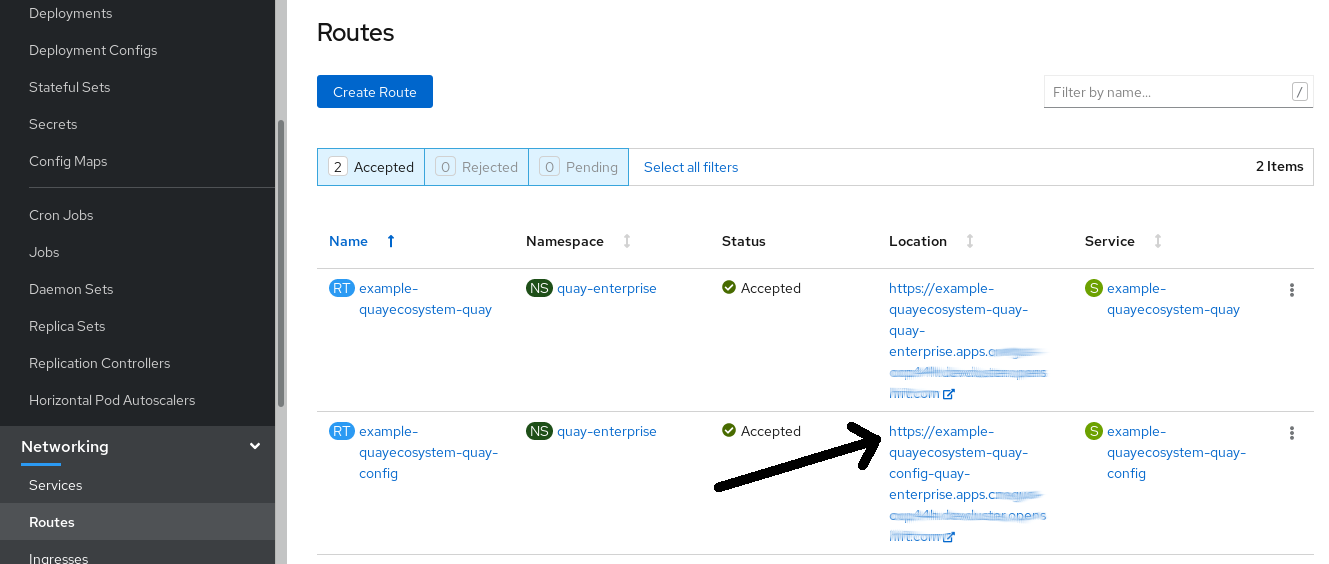

On the OpenShift console, select the Red Hat Quay project, for example,

quay-enterprise. In the navigation pane, select Networking → Routes. You should see routes to both the Red Hat Quay application and Config Tool, as shown in the following image:

-

Select the route to the Config Tool, for example,

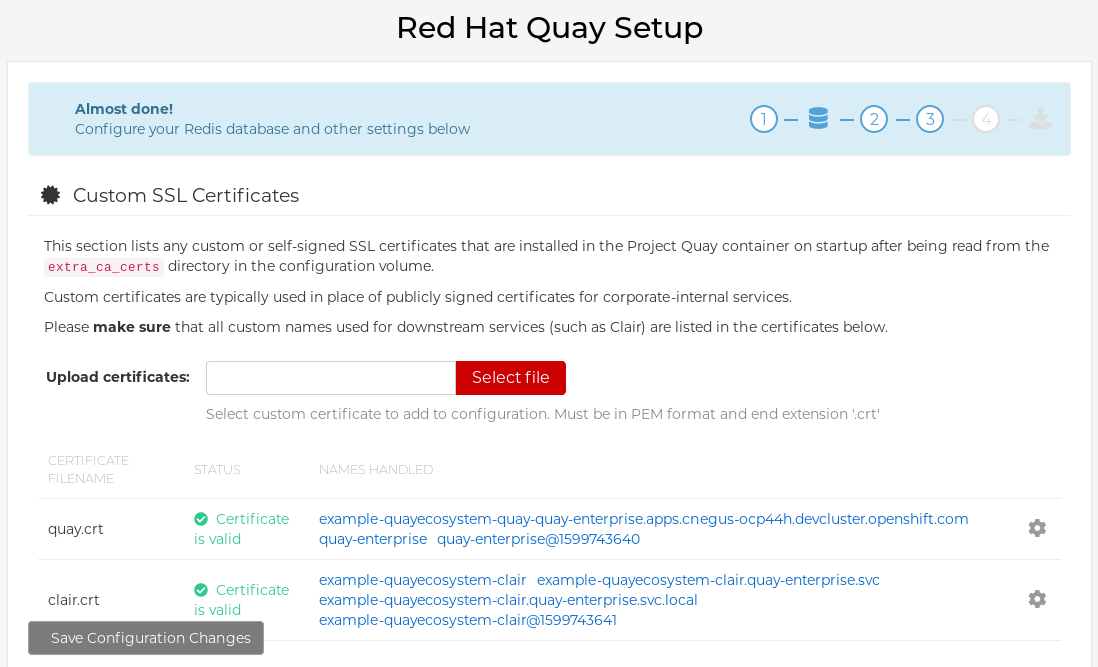

example-quayecosystem-quay-config. The Config Tool UI should open in your browser. Select Modify configuration for this cluster to bring up the Config Tool setup, for example:

- Make the desired changes, and then select Save Configuration Changes.

- Make any corrections needed by clicking Continue Editing, or, select Next to continue.

-

When prompted, select Download Configuration. This will download a tarball of your new

config.yaml, as well as any certificates and keys used with your Red Hat Quay setup. Theconfig.yamlcan be used to make advanced changes to your configuration or use as a future reference. - Select Go to deployment rollout → Populate the configuration to deployments. Wait for the Red Hat Quay pods to restart for the changes to take effect.

1.1.2. Running the Config Tool from the command line

If you are running Red Hat Quay from a host system, you can use the following procedure to make changes to your configuration after the initial deployment.

Prerequisites

- You have installed either podman or docker.

Procedure

- Start Red Hat Quay in configuration mode. On the first Quay node, enter the following command:

$ podman run --rm -it --name quay_config -p 8080:8080 -v path/to/config-bundle:/conf/stack registry.redhat.io/quay/quay-rhel8:v3.9.17 config <my_secret_password>

Note: To modify an existing config bundle, you can mount your configuration directory into the Quay container.

- When the Red Hat Quay configuration tool starts, open your browser and navigate to the URL and port used in your configuration file, for example, quay-server.example.com:8080.

- Enter your username and password.

- Modify your Red Hat Quay cluster as desired.

1.1.3. Deploying the config tool using TLS certificates

You can deploy the config tool with secured TLS certificates by passing environment variables to the container at runtime. This ensures that sensitive data, such as credentials for the database and storage backend, is protected.

The public and private keys must contain valid Subject Alternative Names (SANs) for the route that you deploy the config tool on.

The paths can be specified using CONFIG_TOOL_PRIVATE_KEY and CONFIG_TOOL_PUBLIC_KEY.

If you are running your deployment from a container, the CONFIG_TOOL_PRIVATE_KEY and CONFIG_TOOL_PUBLIC_KEY values are the locations of the certificates inside of the container. For example:
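As a minimal sketch of such an invocation, the following helper assembles a podman command that mounts a key pair into the container and points the two variables at the mounted paths. The host paths, the /tls mount point, the 7070 host port, and the helper name config_tool_tls_cmd are assumptions chosen for illustration; the image tag and the config argument follow the commands shown earlier in this chapter. The helper only prints the command so that you can review it before running it.

```shell
# Hypothetical helper: prints (does not run) a podman command that starts the
# Config Tool with a TLS key pair mounted into the container.
# Usage: config_tool_tls_cmd HOST_PRIVATE_KEY HOST_PUBLIC_KEY
config_tool_tls_cmd() {
  private_key_path="$1"   # key file on the host (assumed location)
  public_key_path="$2"    # certificate file on the host (assumed location)
  echo podman run --rm -it --name quay_config -p 7070:8080 \
    -v "${private_key_path}:/tls/localhost.key" \
    -v "${public_key_path}:/tls/localhost.crt" \
    -e CONFIG_TOOL_PRIVATE_KEY=/tls/localhost.key \
    -e CONFIG_TOOL_PUBLIC_KEY=/tls/localhost.crt \
    registry.redhat.io/quay/quay-rhel8:v3.9.17 config "<my_secret_password>"
}

# Print the command for a key pair stored under /path/to (placeholder paths).
config_tool_tls_cmd /path/to/ssl.key /path/to/ssl.cert
```

Note that both environment variables refer to paths inside the container (/tls/...), not to the host paths given to the -v options.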

1.2. Using the API to modify Red Hat Quay

See the Red Hat Quay API Guide for information on how to access the Red Hat Quay API.

1.3. Editing the config.yaml file to modify Red Hat Quay

Some advanced configuration features that are not available through the Config Tool can be implemented by editing the config.yaml file directly. Available settings are described in the Schema for Red Hat Quay configuration.

The following examples are settings you can change directly in the config.yaml file.

1.3.1. Add name and company to Red Hat Quay sign-in

By setting the following field, users are prompted for their name and company when they first sign in. This is an optional field, but it can provide you with extra data about your Red Hat Quay users.

---

FEATURE_USER_METADATA: true

---

1.3.2. Disable TLS Protocols

You can change the SSL_PROTOCOLS setting to remove SSL/TLS protocols that you do not want to support in your Red Hat Quay instance. For example, to remove TLS v1 support from the default SSL_PROTOCOLS: ['TLSv1','TLSv1.1','TLSv1.2'], change it to the following:

---

SSL_PROTOCOLS: ['TLSv1.1','TLSv1.2']

---

1.3.3. Rate limit API calls

Adding the FEATURE_RATE_LIMITS parameter to the config.yaml file causes nginx to limit certain API calls to 30 per second. If FEATURE_RATE_LIMITS is not set, API calls are limited to 300 per second, effectively making them unlimited.

Rate limiting is important when you must ensure that the available resources are not overwhelmed with traffic.

Some namespaces might require unlimited access, for example, if they are important to CI/CD and take priority. In that scenario, those namespaces can be listed in the config.yaml file using the NON_RATE_LIMITED_NAMESPACES field.
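The two settings above might be combined as in the following sketch, which writes a config.yaml fragment to a scratch file so that you can review it before merging it into your real configuration. The file name rate-limit-fragment.yaml and the namespace names ci-builds and release-pipeline are hypothetical.

```shell
# Sketch: write a config.yaml fragment that turns on rate limiting and
# exempts two namespaces. Both namespace names are hypothetical examples.
cat > rate-limit-fragment.yaml <<'EOF'
FEATURE_RATE_LIMITS: true
NON_RATE_LIMITED_NAMESPACES:
  - ci-builds
  - release-pipeline
EOF

# Show the fragment for review.
cat rate-limit-fragment.yaml
```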

1.3.4. Adjust database connection pooling

Red Hat Quay is composed of many different processes which all run within the same container. Many of these processes interact with the database.

With the DB_CONNECTION_POOLING parameter, each process that interacts with the database will contain a connection pool. These per-process connection pools are configured to maintain a maximum of 20 connections. When under heavy load, it is possible to fill the connection pool for every process within a Red Hat Quay container. Under certain deployments and loads, this might require analysis to ensure that Red Hat Quay does not exceed the database’s configured maximum connection count.

Over time, the connection pools will release idle connections. To release all connections immediately, Red Hat Quay must be restarted.
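The analysis mentioned above comes down to simple arithmetic: in the worst case, every pool in every process of every container is full. The following sketch computes that upper bound. Only the pool size of 20 comes from the text; the number of containers and the number of database-using processes per container are hypothetical inputs that you would replace with values observed in your own deployment.

```shell
# worst_case_db_connections CONTAINERS PROCESSES_PER_CONTAINER [POOL_SIZE]
# Upper bound on open connections if every per-process pool fills completely.
worst_case_db_connections() {
  containers="$1"
  processes="$2"
  pool_size="${3:-20}"   # 20 is the per-process maximum described above
  echo $(( containers * processes * pool_size ))
}

# Hypothetical deployment: 3 Quay containers, 30 database-using processes each.
worst_case_db_connections 3 30   # prints 1800
```

Compare the result with the maximum connection count configured on your database to judge whether the pool size needs to be lowered.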

Database connection pooling can be toggled by setting DB_CONNECTION_POOLING to true or false. For example:


---

DB_CONNECTION_POOLING: true

---

When DB_CONNECTION_POOLING is enabled, you can change the maximum size of the connection pool with the DB_CONNECTION_ARGS field in your config.yaml. For example:

---

DB_CONNECTION_ARGS:

  max_connections: 10

---

1.3.4.1. Database connection arguments

You can customize your Red Hat Quay database connection settings within the config.yaml file. These settings depend on your deployment’s database driver, for example, psycopg2 for Postgres and pymysql for MySQL. You can also pass in arguments used by Peewee’s connection pooling mechanism. For example:
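As an illustration of the pooling arguments, the following sketch writes a config.yaml fragment that sets the three keyword arguments accepted by Peewee’s pooled database classes. The numeric values and the file name db-connection-args-fragment.yaml are hypothetical; choose values that suit your database and workload.

```shell
# Sketch: a DB_CONNECTION_ARGS fragment using Peewee pool arguments.
# All values are placeholders, not recommendations.
cat > db-connection-args-fragment.yaml <<'EOF'
DB_CONNECTION_ARGS:
  max_connections: 10   # maximum size of each per-process pool
  stale_timeout: 300    # seconds a pooled connection may be used before it is recycled
  timeout: 30           # seconds to block waiting for a connection when the pool is full
EOF

# Show the fragment for review.
cat db-connection-args-fragment.yaml
```

These three arguments only have an effect when connection pooling is enabled.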

1.3.4.2. Database SSL configuration

Some key-value pairs defined under the DB_CONNECTION_ARGS field are generic, while others are specific to the database. In particular, SSL configuration depends on the database that you are deploying.

1.3.4.2.1. PostgreSQL SSL connection arguments

The following YAML shows a sample PostgreSQL SSL configuration:

---

DB_CONNECTION_ARGS:

  sslmode: verify-ca

  sslrootcert: /path/to/cacert

---

The sslmode parameter determines whether, or with what priority, a secure SSL TCP/IP connection will be negotiated with the server. There are six modes for the sslmode parameter:

- disable: Only try a non-SSL connection.

- allow: Try a non-SSL connection first. Upon failure, try an SSL connection.

- prefer: Default. Try an SSL connection first. Upon failure, try a non-SSL connection.

- require: Only try an SSL connection. If a root CA file is present, verify the connection in the same way as if verify-ca was specified.

- verify-ca: Only try an SSL connection, and verify that the server certificate is issued by a trusted certificate authority (CA).

- verify-full: Only try an SSL connection. Verify that the server certificate is issued by a trusted CA, and that the requested server host name matches that in the certificate.

For more information about the valid arguments for PostgreSQL, see Database Connection Control Functions.

1.3.4.2.2. MySQL SSL connection arguments

The following YAML shows a sample MySQL SSL configuration:

---

DB_CONNECTION_ARGS:

  ssl:

    ca: /path/to/cacert

---

For more information about the valid connection arguments for MySQL, see Connecting to the Server Using URI-Like Strings or Key-Value Pairs.

1.3.4.3. HTTP connection counts

You can specify the quantity of simultaneous HTTP connections using environment variables. The environment variables can be specified as a whole, or for a specific component. The default for each is 50 parallel connections per process. See the following example environment variables:
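The following sketch sets a general connection count and a component-specific one, and then shows which value wins. The per-component variable names (WORKER_CONNECTION_COUNT_REGISTRY, WORKER_CONNECTION_COUNT_WEB, WORKER_CONNECTION_COUNT_SECSCAN) are an assumption modeled on the WORKER_COUNT_* variables in this chapter, and the helper effective_connection_count is hypothetical: it only mirrors the documented precedence and is not Red Hat Quay code.

```shell
# General setting for all components, overridden for the registry only.
export WORKER_CONNECTION_COUNT=80
export WORKER_CONNECTION_COUNT_REGISTRY=120

# Hypothetical helper mirroring the documented precedence:
# component-specific value, then WORKER_CONNECTION_COUNT, then the default of 50.
effective_connection_count() {
  component="$1"   # REGISTRY, WEB, or SECSCAN
  eval "specific=\${WORKER_CONNECTION_COUNT_${component}:-}"
  echo "${specific:-${WORKER_CONNECTION_COUNT:-50}}"
}

effective_connection_count REGISTRY   # prints 120
effective_connection_count WEB        # prints 80
```

When Red Hat Quay runs in a container, such variables would typically be passed with the -e option of podman run rather than exported in a shell.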

Specifying a count for a specific component will override any value set in the WORKER_CONNECTION_COUNT configuration field.

1.3.4.4. Dynamic process counts

To estimate the quantity of dynamically sized processes, the following calculation is used by default.

Red Hat Quay queries the available CPU count from the entire machine. Any limits applied using Kubernetes or other non-virtualized mechanisms will not affect this behavior. Red Hat Quay makes its calculation based on the total number of processors on the node. The default values listed are targets only: the resulting count never exceeds the maximum or falls below the minimum.

Each of the following process quantities can be overridden using the environment variable specified below:

registry - Provides HTTP endpoints to handle registry actions

- minimum: 8

- maximum: 64

- default: $CPU_COUNT x 4

- environment variable: WORKER_COUNT_REGISTRY

web - Provides HTTP endpoints for the web-based interface

- minimum: 2

- maximum: 32

- default: $CPU_COUNT x 2

- environment variable: WORKER_COUNT_WEB

secscan - Interacts with Clair

- minimum: 2

- maximum: 4

- default: $CPU_COUNT x 2

- environment variable: WORKER_COUNT_SECSCAN
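The default calculation described above can be sketched as a multiply-then-clamp step. The helper name clamp_worker_count is hypothetical, and the use of getconf to read the processor count is an assumption for illustration; Red Hat Quay's own implementation may obtain the count differently.

```shell
# clamp_worker_count CPU_COUNT MULTIPLIER MINIMUM MAXIMUM
# Computes CPU_COUNT x MULTIPLIER and clamps it to [MINIMUM, MAXIMUM].
clamp_worker_count() {
  target=$(( $1 * $2 ))
  if [ "$target" -lt "$3" ]; then target="$3"; fi
  if [ "$target" -gt "$4" ]; then target="$4"; fi
  echo "$target"
}

# Processor count of the whole machine (falls back to 1 if getconf fails).
cpu_count="$(getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1)"

echo "registry: $(clamp_worker_count "$cpu_count" 4 8 64)"
echo "web:      $(clamp_worker_count "$cpu_count" 2 2 32)"
echo "secscan:  $(clamp_worker_count "$cpu_count" 2 2 4)"
```

For example, on a 4-CPU node the registry target is 16, while on a 1-CPU node it is raised to the minimum of 8, and on a 32-CPU node it is capped at 64.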

1.3.4.5. Environment variables

Red Hat Quay allows overriding default behavior using environment variables. The following table lists and describes each variable and the values they can expect.

| Variable | Description | Values |
|---|---|---|
| WORKER_COUNT_REGISTRY | Specifies the number of processes to handle registry requests within the container. | Integer between 8 and 64 |
| WORKER_COUNT_WEB | Specifies the number of processes to handle UI/Web requests within the container. | Integer between 2 and 32 |
| WORKER_COUNT_SECSCAN | Specifies the number of processes to handle Security Scanning (for example, Clair) integration within the container. | Integer. Because the Operator specifies 2 vCPUs for resource requests and limits, setting this value between 2 and 4 is safe. |
| DB_CONNECTION_POOLING | Toggle database connection pooling. | true or false |

1.3.4.6. Turning off connection pooling

Red Hat Quay deployments with a large amount of user activity can regularly hit the 2k maximum database connection limit. In these cases, connection pooling, which is enabled by default for Red Hat Quay, can cause database connection count to rise exponentially and require you to turn off connection pooling.

If turning off connection pooling is not enough to prevent hitting the 2k database connection limit, you need to take additional steps to deal with the problem. If this happens, you might need to increase the maximum database connections to better suit your workload.

Chapter 2. Using the configuration API

The configuration tool exposes 4 endpoints that can be used to build, validate, bundle and deploy a configuration. The config-tool API is documented at https://github.com/quay/config-tool/blob/master/pkg/lib/editor/API.md. In this section, you will see how to use the API to retrieve the current configuration and how to validate any changes you make.

2.1. Retrieving the default configuration

If you are running the configuration tool for the first time, and do not have an existing configuration, you can retrieve the default configuration. Start the container in config mode:


$ sudo podman run --rm -it --name quay_config \

-p 8080:8080 \

registry.redhat.io/quay/quay-rhel8:v3.9.17 config secret

Use the config endpoint of the configuration API to get the default:

$ curl -X GET -u quayconfig:secret http://quay-server:8080/api/v1/config | jq

The value returned is the default configuration in JSON format.

2.2. Retrieving the current configuration

If you have already configured and deployed the Quay registry, stop the container and restart it in configuration mode, loading the existing configuration as a volume:


$ sudo podman run --rm -it --name quay_config \

-p 8080:8080 \

-v $QUAY/config:/conf/stack:Z \

registry.redhat.io/quay/quay-rhel8:v3.9.17 config secret

Use the config endpoint of the API to get the current configuration:

$ curl -X GET -u quayconfig:secret http://quay-server:8080/api/v1/config | jq

The value returned is the current configuration in JSON format, including database and Redis configuration data.

2.3. Validating configuration using the API

You can validate a configuration by posting it to the config/validate endpoint:
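A request of this kind might be scripted as in the following sketch. It assumes that the endpoint lives at /api/v1/config/validate on the same host and port as the config endpoint used earlier, and that the request body wraps the configuration in a top-level "config.yaml" key; check both assumptions against the config-tool API documentation. The helper names build_validate_payload and validate_config are hypothetical, and the credentials are the ones used in the earlier examples.

```shell
# build_validate_payload FILE
# Wraps a JSON configuration file in the assumed request body structure.
build_validate_payload() {
  printf '{"config.yaml": %s}\n' "$(cat "$1")"
}

# validate_config FILE
# Posts the payload to the validate endpoint and prints the response.
validate_config() {
  build_validate_payload "$1" |
    curl -s -u quayconfig:secret \
      --header 'Content-Type: application/json' \
      --request POST --data @- \
      http://quay-server:8080/api/v1/config/validate
}

# Example (requires a running Config Tool), with config.json holding the
# configuration as JSON:
#   validate_config config.json | jq
```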

The returned value is an array containing the errors found in the configuration. If the configuration is valid, an empty array [] is returned.

2.4. Determining the required fields

You can determine the required fields by posting an empty configuration structure to the config/validate endpoint:

The value returned is an array indicating which fields are required:

Chapter 3. Getting Red Hat Quay release notifications

To keep up with the latest Red Hat Quay releases and other changes related to Red Hat Quay, you can sign up for update notifications on the Red Hat Customer Portal. After signing up, you will receive notifications letting you know when there is a new Red Hat Quay version, updated documentation, or other Red Hat Quay news.

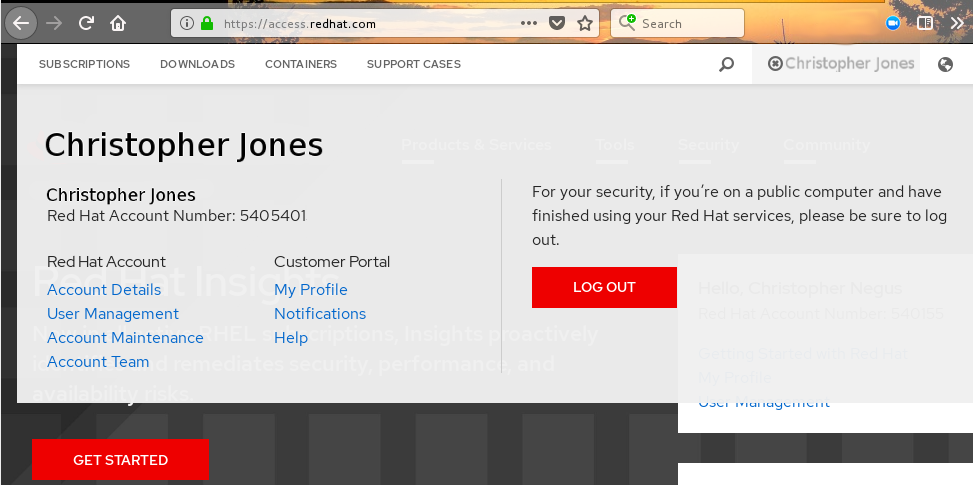

- Log into the Red Hat Customer Portal with your Red Hat customer account credentials.

-

Select your user name (upper-right corner) to see Red Hat Account and Customer Portal selections:

- Select Notifications. Your profile activity page appears.

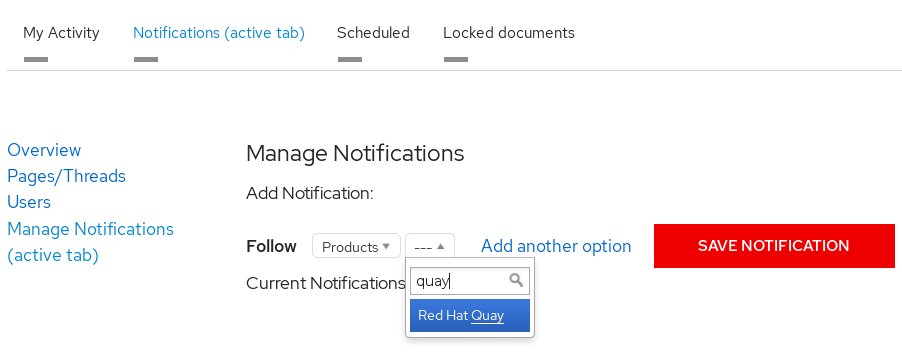

- Select the Notifications tab.

- Select Manage Notifications.

- Select Follow, then choose Products from the drop-down box.

-

From the drop-down box next to the Products, search for and select Red Hat Quay:

- Select the SAVE NOTIFICATION button. Going forward, you will receive notifications when there are changes to the Red Hat Quay product, such as a new release.

Chapter 4. Using SSL to protect connections to Red Hat Quay

4.1. Using SSL/TLS

To configure Red Hat Quay with a self-signed certificate, you must create a Certificate Authority (CA) and then generate the required key and certificate files.

The following examples assume you have configured the server hostname quay-server.example.com using DNS or another naming mechanism, such as adding an entry in your /etc/hosts file:

$ cat /etc/hosts

...

192.168.1.112 quay-server.example.com

4.2. Creating a certificate authority and signing a certificate

Use the following procedures to create a certificate file and a private key file named ssl.cert and ssl.key.

4.2.1. Creating a certificate authority

Use the following procedure to create a certificate authority (CA).

Procedure

- Generate the root CA key by entering the following command:

$ openssl genrsa -out rootCA.key 2048

- Generate the root CA certificate by entering the following command:

$ openssl req -x509 -new -nodes -key rootCA.key -sha256 -days 1024 -out rootCA.pem

- Enter the information that will be incorporated into your certificate request, including the server hostname.

4.2.2. Signing a certificate

Use the following procedure to sign a certificate.

Procedure

- Generate the server key by entering the following command:

$ openssl genrsa -out ssl.key 2048

- Generate a signing request by entering the following command:

$ openssl req -new -key ssl.key -out ssl.csr

- Enter the information that will be incorporated into your certificate request, including the server hostname.

- Create a configuration file named openssl.cnf that specifies the server hostname.

- Use the configuration file to generate the certificate ssl.cert:

$ openssl x509 -req -in ssl.csr -CA rootCA.pem -CAkey rootCA.key -CAcreateserial -out ssl.cert -days 356 -extensions v3_req -extfile openssl.cnf
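The openssl.cnf file used in this procedure is not reproduced here, so the following is a sketch of what such a file might contain rather than the exact file. It defines the v3_req extension section that the openssl x509 command refers to and lists the server hostname and IP address from the /etc/hosts example as Subject Alternative Names. The key usage values are an assumption; adjust them to your own policy.

```shell
# Sketch: write an openssl.cnf whose v3_req section carries the SANs for the
# example host. Hostname and IP come from the /etc/hosts example above.
cat > openssl.cnf <<'EOF'
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
DNS.1 = quay-server.example.com
IP.1 = 192.168.1.112
EOF

# After ssl.cert has been generated, the SANs can be inspected with:
#   openssl x509 -in ssl.cert -noout -text | grep -A1 'Subject Alternative Name'
```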

4.3. Configuring SSL using the command line interface

Use the following procedure to configure SSL/TLS using the command line interface.

Prerequisites

- You have created a certificate authority and signed the certificate.

Procedure

- Copy the certificate file and private key file to your configuration directory, ensuring they are named ssl.cert and ssl.key respectively:

$ cp ~/ssl.cert ~/ssl.key $QUAY/config

- Change into the $QUAY/config directory by entering the following command:

$ cd $QUAY/config

- Edit the config.yaml file and specify that you want Red Hat Quay to handle TLS/SSL:

...

SERVER_HOSTNAME: quay-server.example.com

...

PREFERRED_URL_SCHEME: https

...

- Optional: Append the contents of the rootCA.pem file to the end of the ssl.cert file by entering the following command:

$ cat rootCA.pem >> ssl.cert

- Stop the Quay container by entering the following command:

$ sudo podman stop quay

- Restart the registry by entering the following command:

$ sudo podman run -d --rm -p 80:8080 -p 443:8443 --name=quay -v $QUAY/config:/conf/stack:Z -v $QUAY/storage:/datastorage:Z registry.redhat.io/quay/quay-rhel8:v3.9.17

4.4. Configuring SSL/TLS using the Red Hat Quay UI

Use the following procedure to configure SSL/TLS using the Red Hat Quay UI.

To configure SSL using the command line interface, see "Configuring SSL/TLS using the command line interface".

Prerequisites

- You have created a certificate authority and signed the certificate.

Procedure

- Start the Quay container in configuration mode:

$ sudo podman run --rm -it --name quay_config -p 80:8080 -p 443:8443 registry.redhat.io/quay/quay-rhel8:v3.9.17 config secret

- In the Server Configuration section, select Red Hat Quay handles TLS for SSL/TLS. Upload the certificate file and private key file created earlier, ensuring that the Server Hostname matches the value used when the certificates were created.

- Validate and download the updated configuration.

- Stop the Quay container and then restart the registry.

4.5. Testing SSL configuration using the command line

Use the podman login command to attempt to log in to the Quay registry with SSL enabled:

$ sudo podman login quay-server.example.com

Username: quayadmin

Password:

Error: error authenticating creds for "quay-server.example.com": error pinging docker registry quay-server.example.com: Get "https://quay-server.example.com/v2/": x509: certificate signed by unknown authority

Podman does not trust self-signed certificates. As a workaround, use the --tls-verify option:

$ sudo podman login --tls-verify=false quay-server.example.com

Username: quayadmin

Password:

Login Succeeded!

Configuring Podman to trust the root Certificate Authority (CA) is covered in a subsequent section.

4.6. Testing SSL configuration using the browser

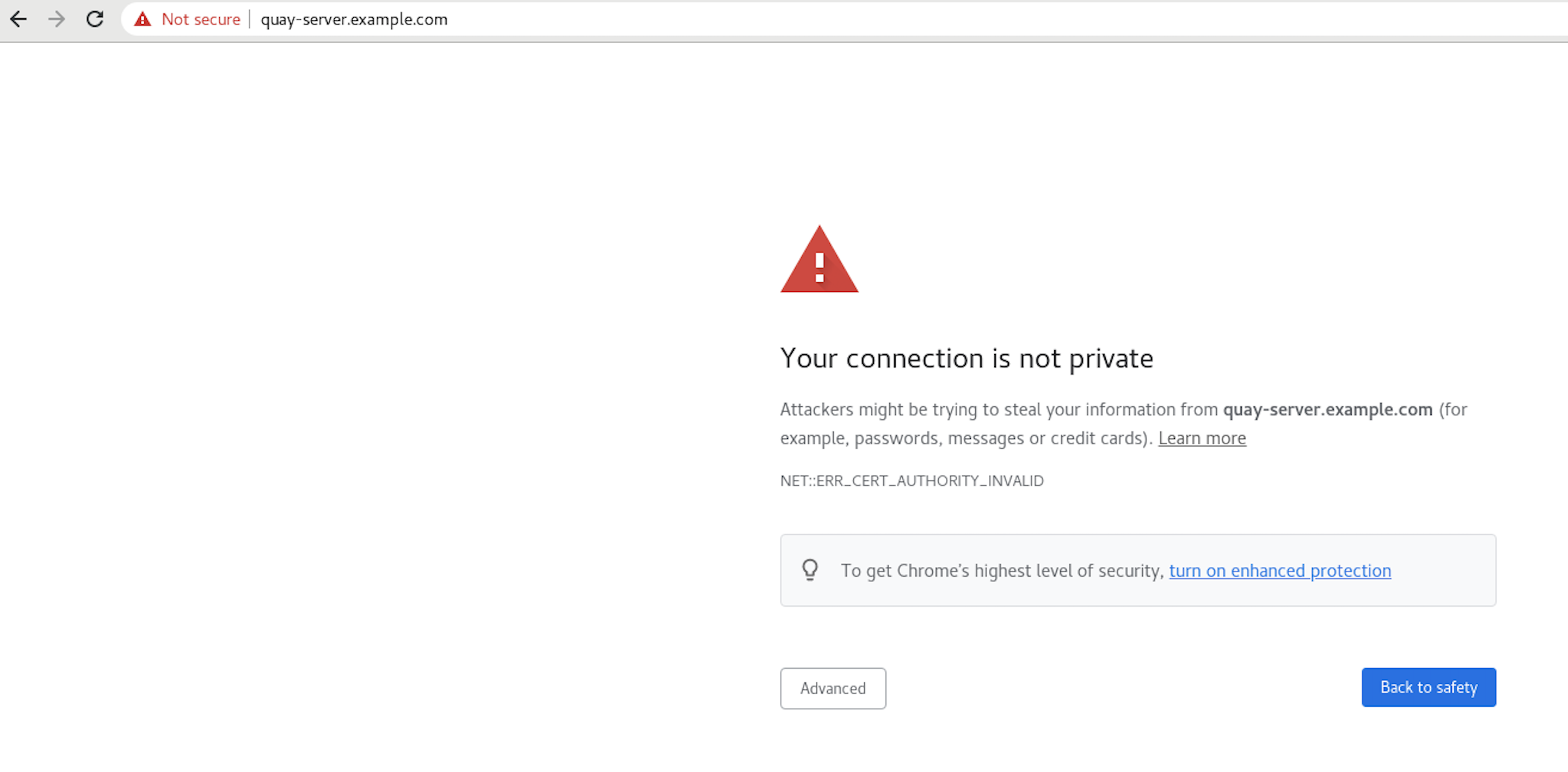

When you attempt to access the Quay registry, in this case, https://quay-server.example.com, the browser warns of the potential risk:

Proceed to the log in screen, and the browser will notify you that the connection is not secure:

Configuring the system to trust the root Certificate Authority (CA) is covered in the subsequent section.

4.7. Configuring podman to trust the Certificate Authority

Podman uses two paths to locate the CA file, namely, /etc/containers/certs.d/ and /etc/docker/certs.d/.

Copy the root CA file to one of these locations, with the exact path determined by the server hostname, and name the file ca.crt:

$ sudo cp rootCA.pem /etc/containers/certs.d/quay-server.example.com/ca.crt

Alternatively, if you are using Docker, you can copy the root CA file to the equivalent Docker directory:

$ sudo cp rootCA.pem /etc/docker/certs.d/quay-server.example.com/ca.crt
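The copy commands above assume that the per-registry directory already exists. As a minimal sketch, the following hypothetical helper creates the directory first and then installs the CA file under the expected name. The helper name install_registry_ca and its optional third argument (an alternative certificate root, useful for trying the helper outside /etc) are assumptions for illustration.

```shell
# install_registry_ca CA_FILE REGISTRY_HOST [CERTS_ROOT]
# Creates CERTS_ROOT/REGISTRY_HOST if needed and copies CA_FILE there as ca.crt.
install_registry_ca() {
  ca_file="$1"
  registry_host="$2"
  certs_root="${3:-/etc/containers/certs.d}"   # default Podman location
  mkdir -p "${certs_root}/${registry_host}" &&
    cp "$ca_file" "${certs_root}/${registry_host}/ca.crt"
}

# Example (run as root for the default location):
#   install_registry_ca rootCA.pem quay-server.example.com
# For Docker, pass /etc/docker/certs.d as the third argument.
```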

You should no longer need to use the --tls-verify=false option when logging in to the registry:

$ sudo podman login quay-server.example.com

Username: quayadmin

Password:

Login Succeeded!

4.8. Configuring the system to trust the certificate authority

Use the following procedure to configure your system to trust the certificate authority.

Procedure

- Enter the following command to copy the rootCA.pem file to the consolidated system-wide trust store:

$ sudo cp rootCA.pem /etc/pki/ca-trust/source/anchors/

- Enter the following command to update the system-wide trust store configuration:

$ sudo update-ca-trust extract

- Optional. You can use the trust list command to ensure that the Quay server has been configured:

$ trust list | grep quay

label: quay-server.example.com

Now, when you browse to the registry at https://quay-server.example.com, the lock icon shows that the connection is secure.

To remove the rootCA.pem file from system-wide trust, delete the file and update the configuration:

$ sudo rm /etc/pki/ca-trust/source/anchors/rootCA.pem

$ sudo update-ca-trust extract

$ trust list | grep quay

More information can be found in the RHEL 9 documentation in the chapter Using shared system certificates.

Chapter 5. Adding TLS Certificates to the Red Hat Quay Container

To add custom TLS certificates to Red Hat Quay, create a new directory named extra_ca_certs/ beneath the Red Hat Quay config directory. Copy any required site-specific TLS certificates to this new directory.

5.1. Add TLS certificates to Red Hat Quay

- View the certificate to be added to the container:

$ cat storage.crt

-----BEGIN CERTIFICATE-----

MIIDTTCCAjWgAwIBAgIJAMVr9ngjJhzbMA0GCSqGSIb3DQEBCwUAMD0xCzAJBgNV

[...]

-----END CERTIFICATE-----

- Create the certs directory and copy the certificate there.

- Obtain the Quay container’s CONTAINER ID with podman ps:

$ sudo podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS

5a3e82c4a75f <registry>/<repo>/quay:v3.9.17 "/sbin/my_init" 24 hours ago Up 18 hours 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp, 443/tcp grave_keller

- Restart the container with that ID:

$ sudo podman restart 5a3e82c4a75f

- Examine the certificate copied into the container namespace:

$ sudo podman exec -it 5a3e82c4a75f cat /etc/ssl/certs/storage.pem

-----BEGIN CERTIFICATE-----

MIIDTTCCAjWgAwIBAgIJAMVr9ngjJhzbMA0GCSqGSIb3DQEBCwUAMD0xCzAJBgNV
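The step that creates the certs directory and copies the certificate there can be sketched as follows. The helper name add_extra_ca_cert is hypothetical, and the configuration directory is passed as an argument because its location depends on your deployment ($QUAY/config in the earlier examples).

```shell
# add_extra_ca_cert CERT_FILE CONFIG_DIR
# Creates CONFIG_DIR/extra_ca_certs if needed and copies CERT_FILE into it.
add_extra_ca_cert() {
  cert_file="$1"
  config_dir="$2"
  mkdir -p "${config_dir}/extra_ca_certs" &&
    cp "$cert_file" "${config_dir}/extra_ca_certs/"
}

# Example, using the storage.crt certificate from this section:
#   add_extra_ca_cert storage.crt "$QUAY/config"
```

The container must be restarted afterwards, as shown in the procedure, for the new certificate to be picked up.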

5.2. Adding custom SSL/TLS certificates when Red Hat Quay is deployed on Kubernetes

When deployed on Kubernetes, Red Hat Quay mounts in a secret as a volume to store config assets. Currently, this breaks the upload certificate function of the superuser panel.

As a temporary workaround, base64 encoded certificates can be added to the secret after Red Hat Quay has been deployed.

Use the following procedure to add custom SSL/TLS certificates when Red Hat Quay is deployed on Kubernetes.

Prerequisites

- Red Hat Quay has been deployed.

- You have a custom ca.crt file.

Procedure

- Base64 encode the contents of an SSL/TLS certificate by entering the following command:

$ cat ca.crt | base64 -w 0

Example output

...c1psWGpqeGlPQmNEWkJPMjJ5d0pDemVnR2QNCnRsbW9JdEF4YnFSdVd3PT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

- Enter the following kubectl command to edit the quay-enterprise-config-secret file:

$ kubectl --namespace quay-enterprise edit secret/quay-enterprise-config-secret

- Add an entry for the certificate and paste the full base64 encoded string under the entry. For example:

custom-cert.crt: c1psWGpqeGlPQmNEWkJPMjJ5d0pDemVnR2QNCnRsbW9JdEF4YnFSdVd3PT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

- Use the kubectl delete command to remove all Red Hat Quay pods. For example:

$ kubectl delete pod quay-operator.v3.7.1-6f9d859bd-p5ftc quayregistry-clair-postgres-7487f5bd86-xnxpr quayregistry-quay-app-upgrade-xq2v6 quayregistry-quay-config-editor-6dfdcfc44f-hlvwm quayregistry-quay-database-859d5445ff-cqthr quayregistry-quay-redis-84f888776f-hhgms

Afterwards, the Red Hat Quay deployment automatically schedules replacement pods with the new certificate data.
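To reduce copy-and-paste errors when editing the secret, the encoded entry can be generated in one step. The following sketch prints a line in the same key: value form as the custom-cert.crt example above; the helper name secret_entry is hypothetical, and base64 -w 0 is the GNU coreutils form already used in this procedure.

```shell
# secret_entry KEY CERT_FILE
# Prints "KEY: <base64 of CERT_FILE>" on a single line, ready to paste into
# the secret opened with kubectl edit.
secret_entry() {
  printf '%s: %s\n' "$1" "$(base64 -w 0 < "$2")"
}

# Example:
#   secret_entry custom-cert.crt ca.crt
```

Decoding the value again with base64 -d should reproduce the original certificate file, which is a quick way to confirm that nothing was truncated.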

Chapter 6. Configuring action log storage for Elasticsearch and Splunk

By default, the previous three months of usage logs are stored in the Red Hat Quay database and exposed through the web UI on organization and repository levels. Appropriate administrative privileges are required to see log entries. For deployments with a large amount of logged operations, you can store the usage logs in Elasticsearch or Splunk instead of the Red Hat Quay database backend.

6.1. Configuring action log storage for Elasticsearch

To configure action log storage for Elasticsearch, you must provide your own Elasticsearch stack, as it is not included with Red Hat Quay as a customizable component.

Enabling Elasticsearch logging can be done during Red Hat Quay deployment or post-deployment using the configuration tool. The resulting configuration is stored in the config.yaml file. When configured, usage log access continues to be provided through the web UI for repositories and organizations.

Use the following procedure to configure action log storage for Elasticsearch:

Procedure

- Obtain an Elasticsearch account.

- Open the Red Hat Quay Config Tool (either during or after Red Hat Quay deployment).

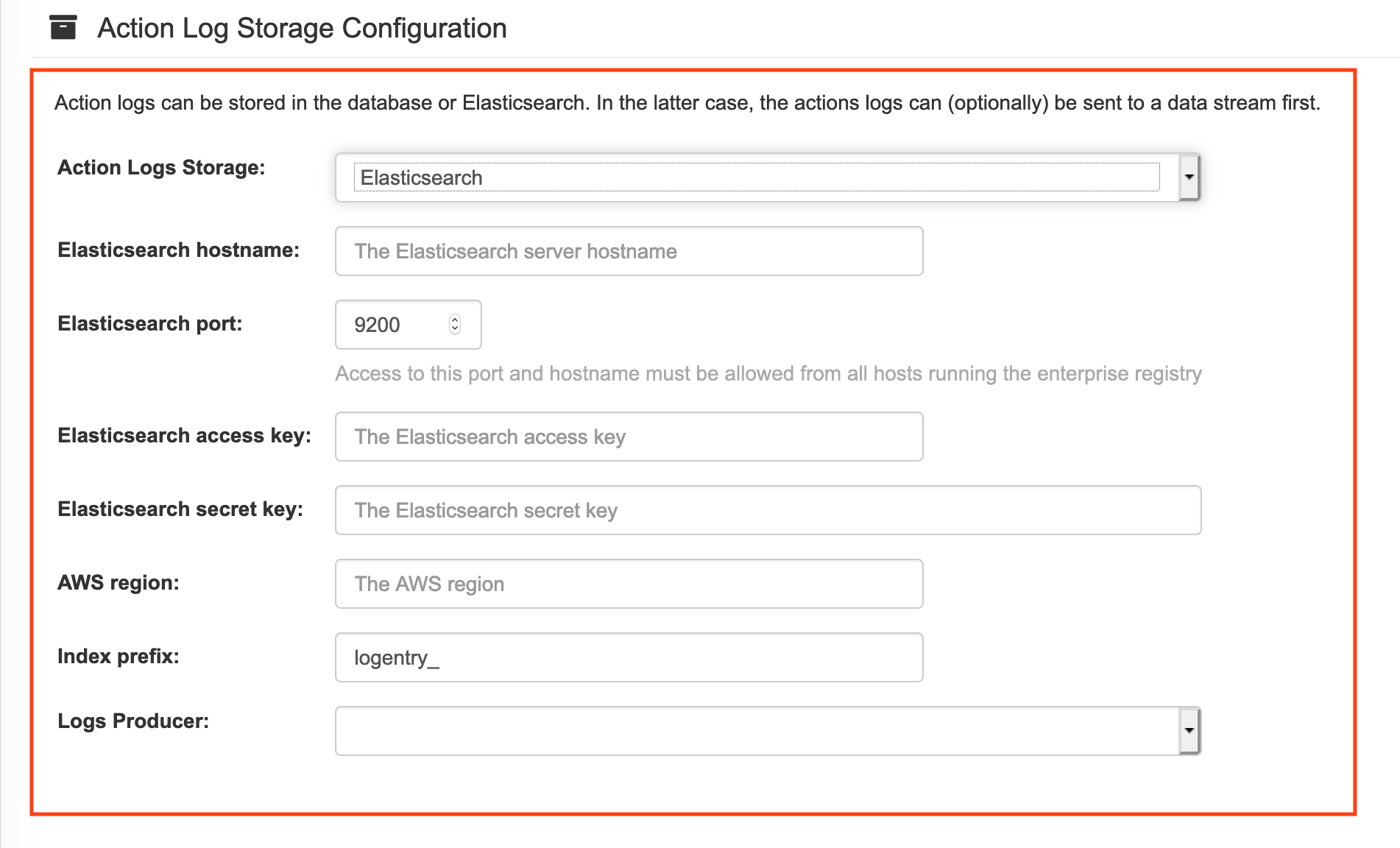

Scroll to the Action Log Storage Configuration setting and select Elasticsearch. The following figure shows the Elasticsearch settings that appear:

Fill in the following information for your Elasticsearch instance:

- Elasticsearch hostname: The hostname or IP address of the system providing the Elasticsearch service.

- Elasticsearch port: The port number providing the Elasticsearch service on the host you just entered. Note that the port must be accessible from all systems running the Red Hat Quay registry. The default is TCP port 9200.

- Elasticsearch access key: The access key needed to gain access to the Elasticsearch service, if required.

- Elasticsearch secret key: The secret key needed to gain access to the Elasticsearch service, if required.

- AWS region: If you are running on AWS, set the AWS region (otherwise, leave it blank).

- Index prefix: Choose a prefix to attach to log entries.

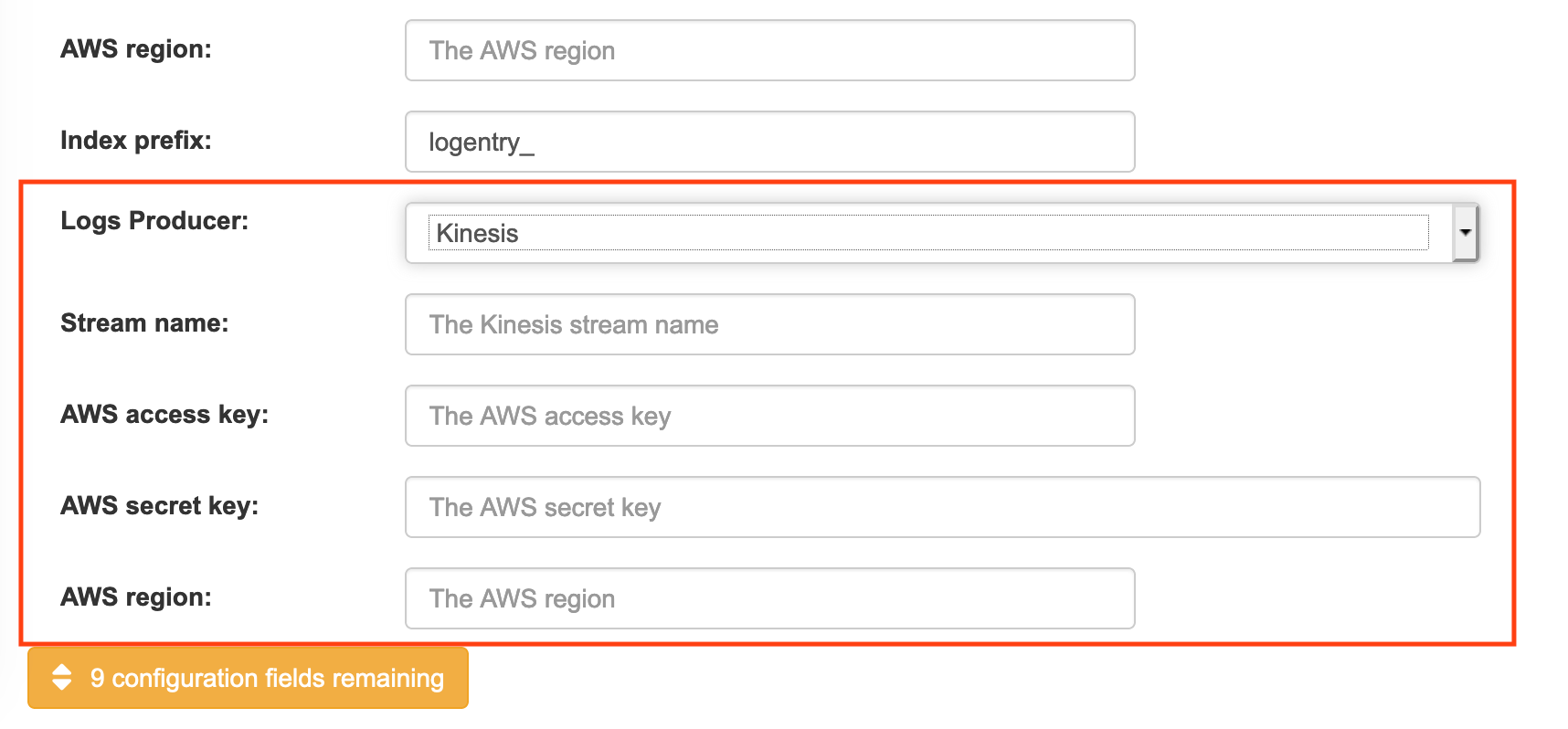

Logs Producer: Choose either Elasticsearch (default) or Kinesis to direct logs to an intermediate Kinesis stream on AWS. You need to set up your own pipeline to send logs from Kinesis to Elasticsearch (for example, Logstash). The following figure shows additional fields you would need to fill in for Kinesis:

If you chose Elasticsearch as the Logs Producer, no further configuration is needed. If you chose Kinesis, fill in the following:

- Stream name: The name of the Kinesis stream.

- AWS access key: The name of the AWS access key needed to gain access to the Kinesis stream, if required.

- AWS secret key: The name of the AWS secret key needed to gain access to the Kinesis stream, if required.

- AWS region: The AWS region.

- When you are done, save the configuration. The configuration tool checks your settings. If there is a problem connecting to the Elasticsearch or Kinesis services, you will see an error and have the opportunity to continue editing. Otherwise, logging will begin to be directed to your Elasticsearch configuration after the cluster restarts with the new configuration.
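The settings entered in the configuration tool end up in config.yaml. As a rough sketch of what that fragment might look like for the Elasticsearch producer, the following function prints an example. The key names (LOGS_MODEL, LOGS_MODEL_CONFIG, producer, elasticsearch_config, and the fields under it) are assumptions that are not stated in this section and should be checked against the Configure Red Hat Quay page. Values in angle brackets are placeholders.

```shell
# Sketch: print a config.yaml fragment for Elasticsearch action log storage.
# Key names are assumed, not taken from this section; <...> values are placeholders.
print_es_logs_config() {
  cat <<'EOF'
LOGS_MODEL: elasticsearch
LOGS_MODEL_CONFIG:
  producer: elasticsearch
  elasticsearch_config:
    host: <elasticsearch_hostname>
    port: 9200
    access_key: <access_key>
    secret_key: <secret_key>
    aws_region: <aws_region>
    index_prefix: <index_prefix>
EOF
}

print_es_logs_config
```

The port value 9200 is the default TCP port named in the field list above.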

6.2. Configuring action log storage for Splunk

Splunk is an alternative to Elasticsearch that can provide log analyses for your Red Hat Quay data.

Enabling Splunk logging can be done during Red Hat Quay deployment or post-deployment using the configuration tool. The resulting configuration is stored in the config.yaml file. When configured, usage log access continues to be provided through the Splunk web UI for repositories and organizations.

Use the following procedures to enable Splunk for your Red Hat Quay deployment.

6.2.1. Installing and creating a username for Splunk

Use the following procedure to install and create Splunk credentials.

Procedure

- Create a Splunk account by navigating to Splunk and entering the required credentials.

- Navigate to the Splunk Enterprise Free Trial page, select your platform and installation package, and then click Download Now.

- Install the Splunk software on your machine. When prompted, create a username, for example, splunk_admin, and a password.

- After creating a username and password, a localhost URL will be provided for your Splunk deployment, for example, http://<sample_url>.remote.csb:8000/. Open the URL in your preferred browser.

- Log in with the username and password you created during installation. You are directed to the Splunk UI.

6.2.2. Generating a Splunk token

Use one of the following procedures to create a bearer token for Splunk.

6.2.2.1. Generating a Splunk token using the Splunk UI

Use the following procedure to create a bearer token for Splunk using the Splunk UI.

Prerequisites

- You have installed Splunk and created a username.

Procedure

- On the Splunk UI, navigate to Settings → Tokens.

- Click Enable Token Authentication.

- Ensure that Token Authentication is enabled by clicking Token Settings and selecting Token Authentication if necessary.

- Optional: Set the expiration time for your token. The default is 30 days.

- Click Save.

- Click New Token.

- Enter information for User and Audience.

- Optional: Set the Expiration and Not Before information.

- Click Create. Your token appears in the Token box. Copy the token immediately.

Important

If you close out of the box before copying the token, you must create a new token. The token in its entirety is not available after closing the New Token window.

6.2.2.2. Generating a Splunk token using the CLI

Use the following procedure to create a bearer token for Splunk using the CLI.

Prerequisites

- You have installed Splunk and created a username.

Procedure

- In your CLI, enter the following curl command to enable token authentication, passing in your Splunk username and password:

$ curl -k -u <username>:<password> -X POST <scheme>://<host>:<port>/services/admin/token-auth/tokens_auth -d disabled=false

- Create a token by entering the following curl command, passing in your Splunk username and password:

$ curl -k -u <username>:<password> -X POST <scheme>://<host>:<port>/services/authorization/tokens?output_mode=json --data name=<username> --data audience=Users --data-urlencode expires_on=+30d

- Save the generated bearer token.

6.2.3. Configuring Red Hat Quay to use Splunk

Use the following procedure to configure Red Hat Quay to use Splunk.

Prerequisites

- You have installed Splunk and created a username.

- You have generated a Splunk bearer token.

Procedure

- Open your Red Hat Quay config.yaml file and add the following configuration fields:

- 1: String. The Splunk cluster endpoint.

- 2: Integer. The Splunk management cluster endpoint port. Differs from the Splunk GUI hosted port. Can be found on the Splunk UI under Settings → Server Settings → General Settings.

- 3: String. The generated bearer token for Splunk.

- 4: String. The URL scheme for accessing the Splunk service. If Splunk is configured to use TLS/SSL, this must be https.

- 5: Boolean. Whether to enable TLS/SSL. Defaults to True.

- 6: String. The Splunk index prefix. Can be a new or an existing index. Can be created from the Splunk UI.

- 7: String. The relative container path to a single .pem file containing a certificate authority (CA) for TLS/SSL validation.

- If you are configuring ssl_ca_path, you must configure the SSL/TLS certificate so that Red Hat Quay will trust it.

- If you are using a standalone deployment of Red Hat Quay, SSL/TLS certificates can be provided by placing the certificate file inside of the extra_ca_certs directory, or inside of the relative container path and specified by ssl_ca_path.

- If you are using the Red Hat Quay Operator, create a config bundle secret, including the certificate authority (CA) of the Splunk server. For example:

$ oc create secret generic --from-file config.yaml=./config_390.yaml --from-file extra_ca_cert_splunkserver.crt=./splunkserver.crt config-bundle-secret

Specify the conf/stack/extra_ca_certs/splunkserver.crt file in your config.yaml.
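To make the numbered field descriptions in this procedure easier to follow, the sketch below prints one possible layout of the Splunk section of config.yaml. Only ssl_ca_path and its path are named in this section. The other key names (LOGS_MODEL, LOGS_MODEL_CONFIG, producer, splunk_config, host, port, bearer_token, url_scheme, verify_ssl, index_prefix) are assumptions that should be confirmed against the Configure Red Hat Quay page. The port 8089 is Splunk's usual default management port and may differ in your deployment.

```shell
# Sketch: print a config.yaml fragment for Splunk action log storage.
# Key names other than ssl_ca_path are assumed; <...> values are placeholders.
print_splunk_logs_config() {
  cat <<'EOF'
LOGS_MODEL: splunk
LOGS_MODEL_CONFIG:
  producer: splunk
  splunk_config:
    host: <splunk_host>                     # 1: Splunk cluster endpoint
    port: 8089                              # 2: management port (assumed default)
    bearer_token: <bearer_token>            # 3: generated bearer token
    url_scheme: https                       # 4: must be https when TLS/SSL is used
    verify_ssl: true                        # 5: whether to enable TLS/SSL
    index_prefix: <splunk_log_index_name>   # 6: Splunk index prefix
    ssl_ca_path: conf/stack/extra_ca_certs/splunkserver.crt   # 7: CA .pem path
EOF
}

print_splunk_logs_config
```

The trailing comments map each line to the numbered descriptions earlier in the procedure.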

6.2.4. Creating an action log

Use the following procedure to create a user account that can forward action logs to Splunk.

You must use the Splunk UI to view Red Hat Quay action logs. At this time, viewing Splunk action logs on the Red Hat Quay Usage Logs page is unsupported, and returns the following message: Method not implemented. Splunk does not support log lookups.

Prerequisites

- You have installed Splunk and created a username.

- You have generated a Splunk bearer token.

- You have configured your Red Hat Quay config.yaml file to enable Splunk.

Procedure

- Log in to your Red Hat Quay deployment.

- Click on the name of the organization that you will use to create an action log for Splunk.

- In the navigation pane, click Robot Accounts → Create Robot Account.

- When prompted, enter a name for the robot account, for example, splunkrobotaccount, then click Create robot account.

- On your browser, open the Splunk UI.

- Click Search and Reporting.

- In the search bar, enter the name of your index, for example, <splunk_log_index_name>, and press Enter.

The search results populate on the Splunk UI, showing information like host, sourcetype, and so on. By clicking the > arrow, you can see metadata for the logs, such as the ip, JSON metadata, and account name.

Chapter 7. Clair for Red Hat Quay

Clair v4 (Clair) is an open source application that leverages static code analyses for parsing image content and reporting vulnerabilities affecting the content. Clair is packaged with Red Hat Quay and can be used in both standalone and Operator deployments. It can be run in highly scalable configurations, where components can be scaled separately as appropriate for enterprise environments.

7.1. Clair vulnerability databases

Clair uses the following vulnerability databases to report issues in your images:

- Ubuntu Oval database

- Debian Security Tracker

- Red Hat Enterprise Linux (RHEL) Oval database

- SUSE Oval database

- Oracle Oval database

- Alpine SecDB database

- VMware Photon OS database

- Amazon Web Services (AWS) UpdateInfo

- Open Source Vulnerability (OSV) Database

For information about how Clair does security mapping with the different databases, see Claircore Severity Mapping.

7.1.1. Information about Open Source Vulnerability (OSV) database for Clair

Open Source Vulnerability (OSV) is a vulnerability database and monitoring service that focuses on tracking and managing security vulnerabilities in open source software.

OSV provides a comprehensive and up-to-date database of known security vulnerabilities in open source projects. It covers a wide range of open source software, including libraries, frameworks, and other components that are used in software development. For a full list of included ecosystems, see defined ecosystems.

Clair also reports vulnerability and security information for golang, java, and ruby ecosystems through the Open Source Vulnerability (OSV) database.

By leveraging OSV, developers and organizations can proactively monitor and address security vulnerabilities in open source components that they use, which helps to reduce the risk of security breaches and data compromises in projects.

For more information about OSV, see the OSV website.

7.2. Setting up Clair on standalone Red Hat Quay deployments

For standalone Red Hat Quay deployments, you can set up Clair manually.

Procedure

In your Red Hat Quay installation directory, create a new directory for the Clair database data:

mkdir /home/<user-name>/quay-poc/postgres-clairv4

$ mkdir /home/<user-name>/quay-poc/postgres-clairv4Copy to Clipboard Copied! Toggle word wrap Toggle overflow Set the appropriate permissions for the

postgres-clairv4file by entering the following command:setfacl -m u:26:-wx /home/<user-name>/quay-poc/postgres-clairv4

$ setfacl -m u:26:-wx /home/<user-name>/quay-poc/postgres-clairv4Copy to Clipboard Copied! Toggle word wrap Toggle overflow Deploy a Clair Postgres database by entering the following command:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Install the Postgres

uuid-osspmodule for your Clair deployment:podman exec -it postgresql-clairv4 /bin/bash -c 'echo "CREATE EXTENSION IF NOT EXISTS \"uuid-ossp\"" | psql -d clair -U postgres'

$ podman exec -it postgresql-clairv4 /bin/bash -c 'echo "CREATE EXTENSION IF NOT EXISTS \"uuid-ossp\"" | psql -d clair -U postgres'Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

CREATE EXTENSION

CREATE EXTENSIONCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteClair requires the

uuid-osspextension to be added to its Postgres database. For users with proper privileges, creating the extension will automatically be added by Clair. If users do not have the proper privileges, the extension must be added before start Clair.If the extension is not present, the following error will be displayed when Clair attempts to start:

ERROR: Please load the "uuid-ossp" extension. (SQLSTATE 42501).Stop the

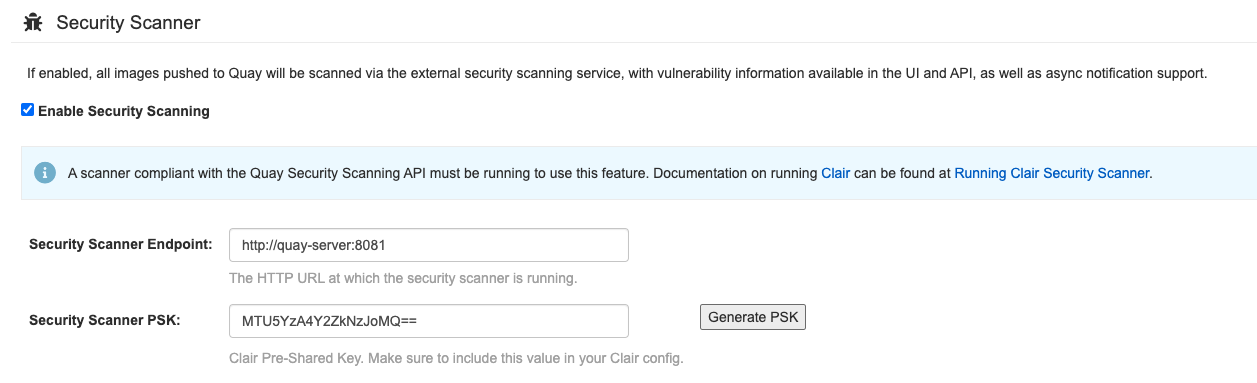

Quaycontainer if it is running and restart it in configuration mode, loading the existing configuration as a volume:sudo podman run --rm -it --name quay_config \ -p 80:8080 -p 443:8443 \ -v $QUAY/config:/conf/stack:Z \ registry.redhat.io/quay/quay-rhel8:{productminv} config secret$ sudo podman run --rm -it --name quay_config \ -p 80:8080 -p 443:8443 \ -v $QUAY/config:/conf/stack:Z \ registry.redhat.io/quay/quay-rhel8:{productminv} config secretCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Log in to the configuration tool and click Enable Security Scanning in the Security Scanner section of the UI.

-

Set the HTTP endpoint for Clair using a port that is not already in use on the

quay-serversystem, for example,8081. Create a pre-shared key (PSK) using the Generate PSK button.

Security Scanner UI

-

Validate and download the

config.yamlfile for Red Hat Quay, and then stop theQuaycontainer that is running the configuration editor. Extract the new configuration bundle into your Red Hat Quay installation directory, for example:

tar xvf quay-config.tar.gz -d /home/<user-name>/quay-poc/

$ tar xvf quay-config.tar.gz -d /home/<user-name>/quay-poc/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a folder for your Clair configuration file, for example:

mkdir /etc/opt/clairv4/config/

$ mkdir /etc/opt/clairv4/config/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Change into the Clair configuration folder:

cd /etc/opt/clairv4/config/

$ cd /etc/opt/clairv4/config/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a Clair configuration file, for example:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow For more information about Clair’s configuration format, see Clair configuration reference.

Start Clair by using the container image, mounting in the configuration from the file you created:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteRunning multiple Clair containers is also possible, but for deployment scenarios beyond a single container the use of a container orchestrator like Kubernetes or OpenShift Container Platform is strongly recommended.
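As a rough illustration of the Clair configuration file that the procedure above asks you to create, the sketch below prints a minimal layout. The port 8081, the database name clair, and the use of a pre-shared key come from the procedure. The key names (http_listen_addr, indexer, matcher, notifier, connstring, migrations, indexer_addr, auth.psk) reflect the Clair v4 configuration format as I understand it and should be verified against the Clair configuration reference. Every value in angle brackets is a placeholder.

```shell
# Sketch: print a minimal Clair config.yaml. Key names are assumptions to verify
# against the Clair configuration reference; <...> values are placeholders.
print_clair_config() {
  cat <<'EOF'
http_listen_addr: :8081
log_level: info
indexer:
  connstring: host=<db_host> port=<db_port> dbname=clair user=<db_user> password=<db_password> sslmode=disable
  migrations: true
matcher:
  connstring: host=<db_host> port=<db_port> dbname=clair user=<db_user> password=<db_password> sslmode=disable
  indexer_addr: clair-indexer
  migrations: true
notifier:
  connstring: host=<db_host> port=<db_port> dbname=clair user=<db_user> password=<db_password> sslmode=disable
  migrations: true
auth:
  psk:
    key: <psk_generated_in_config_tool>
    iss: ["quay"]
EOF
}

print_clair_config
```

The PSK must match the key generated with the Generate PSK button, otherwise Red Hat Quay and Clair cannot authenticate to each other.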

7.3. Clair on OpenShift Container Platform

To set up Clair v4 (Clair) on a Red Hat Quay deployment on OpenShift Container Platform, it is recommended to use the Red Hat Quay Operator. By default, the Red Hat Quay Operator will install or upgrade a Clair deployment along with your Red Hat Quay deployment and configure Clair automatically.

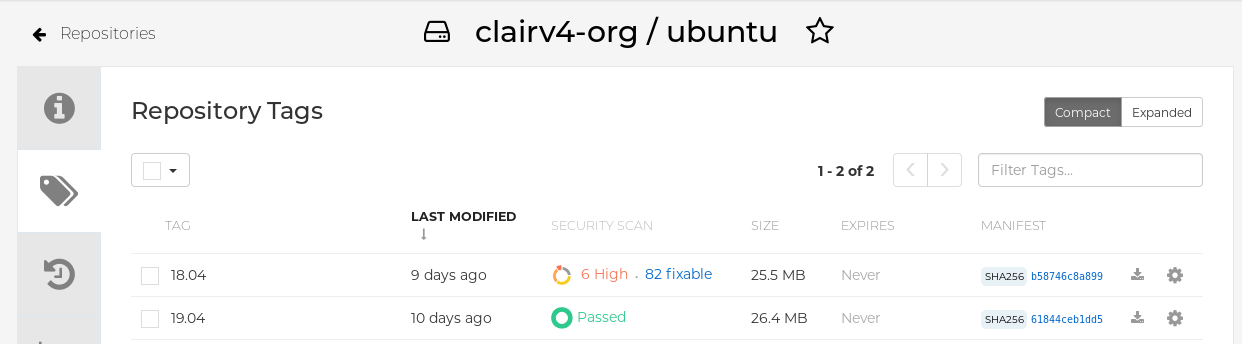

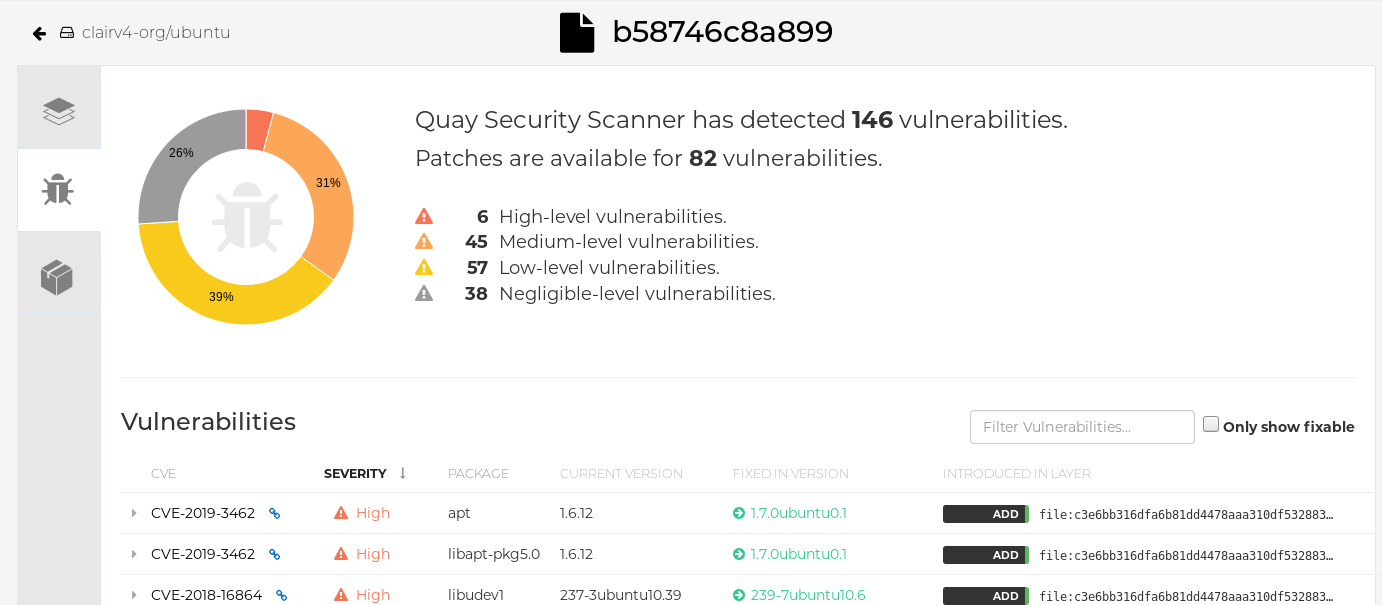

7.4. Testing Clair

Use the following procedure to test Clair on either a standalone Red Hat Quay deployment, or on an OpenShift Container Platform Operator-based deployment.

Prerequisites

- You have deployed the Clair container image.

Procedure

Pull a sample image by entering the following command:

podman pull ubuntu:20.04

$ podman pull ubuntu:20.04Copy to Clipboard Copied! Toggle word wrap Toggle overflow Tag the image to your registry by entering the following command:

sudo podman tag docker.io/library/ubuntu:20.04 <quay-server.example.com>/<user-name>/ubuntu:20.04

$ sudo podman tag docker.io/library/ubuntu:20.04 <quay-server.example.com>/<user-name>/ubuntu:20.04Copy to Clipboard Copied! Toggle word wrap Toggle overflow Push the image to your Red Hat Quay registry by entering the following command:

sudo podman push --tls-verify=false quay-server.example.com/quayadmin/ubuntu:20.04

$ sudo podman push --tls-verify=false quay-server.example.com/quayadmin/ubuntu:20.04Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Log in to your Red Hat Quay deployment through the UI.

- Click the repository name, for example, quayadmin/ubuntu.

In the navigation pane, click Tags.

Report summary

Click the image report, for example, 45 medium, to show a more detailed report:

Report details

Note

NoteIn some cases, Clair shows duplicate reports on images, for example,

ubi8/nodejs-12orubi8/nodejs-16. This occurs because vulnerabilities with same name are for different packages. This behavior is expected with Clair vulnerability reporting and will not be addressed as a bug.

Chapter 8. Repository mirroring

8.1. Repository mirroring

Red Hat Quay repository mirroring lets you mirror images from external container registries, or another local registry, into your Red Hat Quay cluster. Using repository mirroring, you can synchronize images to Red Hat Quay based on repository names and tags.

From your Red Hat Quay cluster with repository mirroring enabled, you can perform the following:

- Choose a repository from an external registry to mirror

- Add credentials to access the external registry

- Identify specific container image repository names and tags to sync

- Set intervals at which a repository is synced

- Check the current state of synchronization

To use the mirroring functionality, you need to perform the following actions:

- Enable repository mirroring in the Red Hat Quay configuration file

- Run a repository mirroring worker

- Create mirrored repositories

All repository mirroring configurations can be performed using the configuration tool UI or by the Red Hat Quay API.

8.2. Repository mirroring compared to geo-replication

Red Hat Quay geo-replication mirrors the entire image storage backend data between 2 or more different storage backends while the database is shared, for example, one Red Hat Quay registry with two different blob storage endpoints. The primary use cases for geo-replication include the following:

- Speeding up access to the binary blobs for geographically dispersed setups

- Guaranteeing that the image content is the same across regions

Repository mirroring synchronizes selected repositories, or subsets of repositories, from one registry to another. The registries are distinct, with each registry having a separate database and separate image storage.

The primary use cases for mirroring include the following:

- Independent registry deployments in different data centers or regions, where a certain subset of the overall content is supposed to be shared across the data centers and regions

- Automatic synchronization or mirroring of selected (allowlisted) upstream repositories from external registries into a local Red Hat Quay deployment

Repository mirroring and geo-replication can be used simultaneously.

| Feature / Capability | Geo-replication | Repository mirroring |
|---|---|---|
| What is the feature designed to do? | A shared, global registry | Distinct, different registries |
| What happens if replication or mirroring has not been completed yet? | The remote copy is used (slower) | No image is served |
| Is access to all storage backends in both regions required? | Yes (all Red Hat Quay nodes) | No (distinct storage) |
| Can users push images from both sites to the same repository? | Yes | No |
| Is all registry content and configuration identical across all regions (shared database)? | Yes | No |
| Can users select individual namespaces or repositories to be mirrored? | No | Yes |
| Can users apply filters to synchronization rules? | No | Yes |
| Are individual / different role-based access control configurations allowed in each region? | No | Yes |

8.3. Using repository mirroring

The following list shows features and limitations of Red Hat Quay repository mirroring:

- With repository mirroring, you can mirror an entire repository or selectively limit which images are synced. Filters can be based on a comma-separated list of tags, a range of tags, or other means of identifying tags through Unix shell-style wildcards. For more information, see the documentation for wildcards.

- When a repository is set as mirrored, you cannot manually add other images to that repository.

- Because the mirrored repository is based on the repository and tags you set, it will hold only the content represented by the repository and tag pair. For example, if you change the tag so that some images in the repository no longer match, those images will be deleted.

- Only the designated robot can push images to a mirrored repository, superseding any role-based access control permissions set on the repository.

- Mirroring can be configured to roll back on failure, or to run on a best-effort basis.

- With a mirrored repository, a user with read permissions can pull images from the repository but cannot push images to the repository.

- Changing settings on your mirrored repository can be performed in the Red Hat Quay user interface, using the Repositories → Mirrors tab for the mirrored repository you create.

- Images are synced at set intervals, but can also be synced on demand.

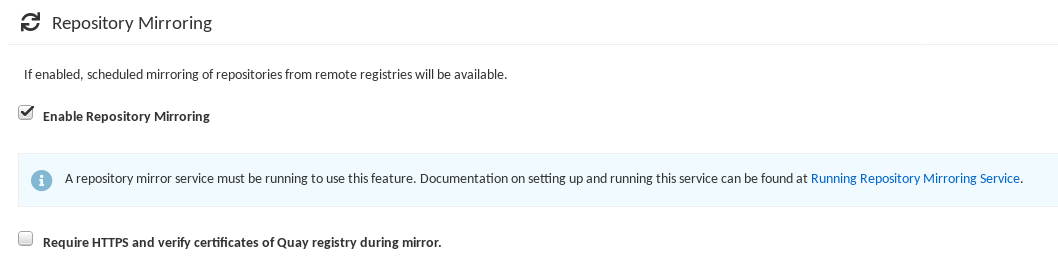

8.4. Mirroring configuration UI

Start the

Quaycontainer in configuration mode and select the Enable Repository Mirroring check box. If you want to require HTTPS communications and verify certificates during mirroring, select the HTTPS and cert verification check box.

-

Validate and download the

configurationfile, and then restart Quay in registry mode using the updated config file.

8.5. Mirroring configuration fields

| Field | Type | Description |
|---|---|---|
| FEATURE_REPO_MIRROR | Boolean | Enable or disable repository mirroring |
| REPO_MIRROR_INTERVAL | Number | The number of seconds between checking for repository mirror candidates |
| REPO_MIRROR_SERVER_HOSTNAME | String | Replaces the |
| REPO_MIRROR_TLS_VERIFY | Boolean | Require HTTPS and verify certificates of Quay registry during mirror. |
| REPO_MIRROR_ROLLBACK | Boolean | When set to Default: |
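As a small illustration of how the fields in this table might appear together in config.yaml, the sketch below prints an example fragment. The field names come from the table. The values are illustrative assumptions only (a 30-second interval, TLS verification on, rollback off) and are not documented defaults.

```shell
# Sketch: print a config.yaml fragment that enables repository mirroring.
# Field names are from the table above; the values are illustrative assumptions.
print_mirror_config() {
  cat <<'EOF'
FEATURE_REPO_MIRROR: true
REPO_MIRROR_INTERVAL: 30
REPO_MIRROR_TLS_VERIFY: true
REPO_MIRROR_ROLLBACK: false
EOF
}

print_mirror_config
```

REPO_MIRROR_SERVER_HOSTNAME is left out because it is only needed when the mirroring destination must be overridden.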

8.6. Mirroring worker

Use the following procedure to start the repository mirroring worker.

Procedure

- If you have not configured TLS communications using a /root/ca.crt certificate, enter the following command to start a Quay pod with the repomirror option:

$ sudo podman run -d --name mirroring-worker \
  -v $QUAY/config:/conf/stack:Z \
  registry.redhat.io/quay/quay-rhel8:v3.9.17 repomirror

- If you have configured TLS communications using a /root/ca.crt certificate, enter the following command to start the repository mirroring worker:

$ sudo podman run -d --name mirroring-worker \
  -v $QUAY/config:/conf/stack:Z \
  -v /root/ca.crt:/etc/pki/ca-trust/source/anchors/ca.crt:Z \
  registry.redhat.io/quay/quay-rhel8:v3.9.17 repomirror

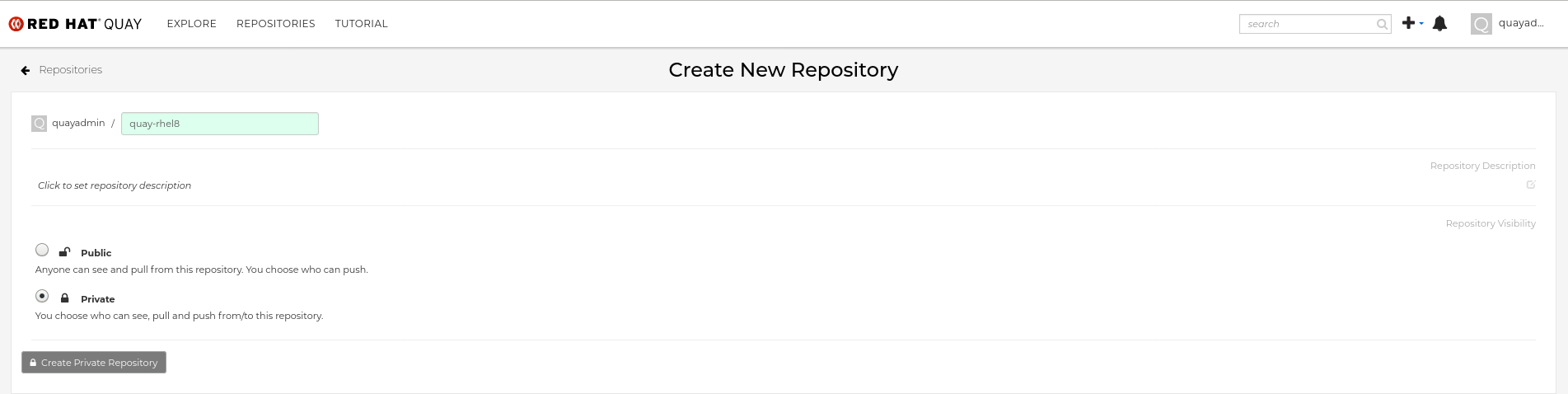

8.7. Creating a mirrored repository

When mirroring a repository from an external container registry, you must create a new private repository. Typically, the same name is used as the target repository, for example, quay-rhel8.

8.7.1. Repository mirroring settings

Use the following procedure to adjust the settings of your mirrored repository.

Prerequisites

- You have enabled repository mirroring in your Red Hat Quay configuration file.

- You have deployed a mirroring worker.

Procedure

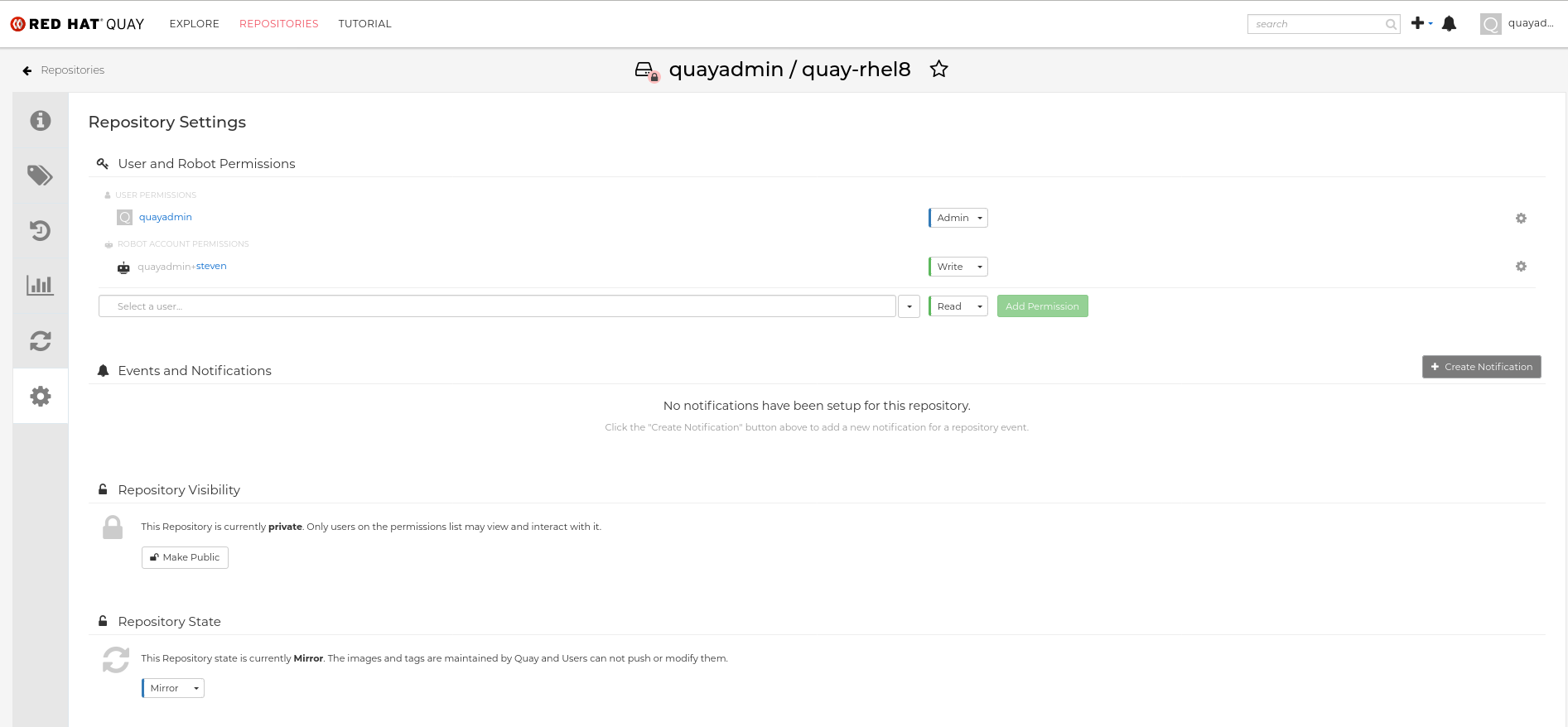

In the Settings tab, set the Repository State to

Mirror:

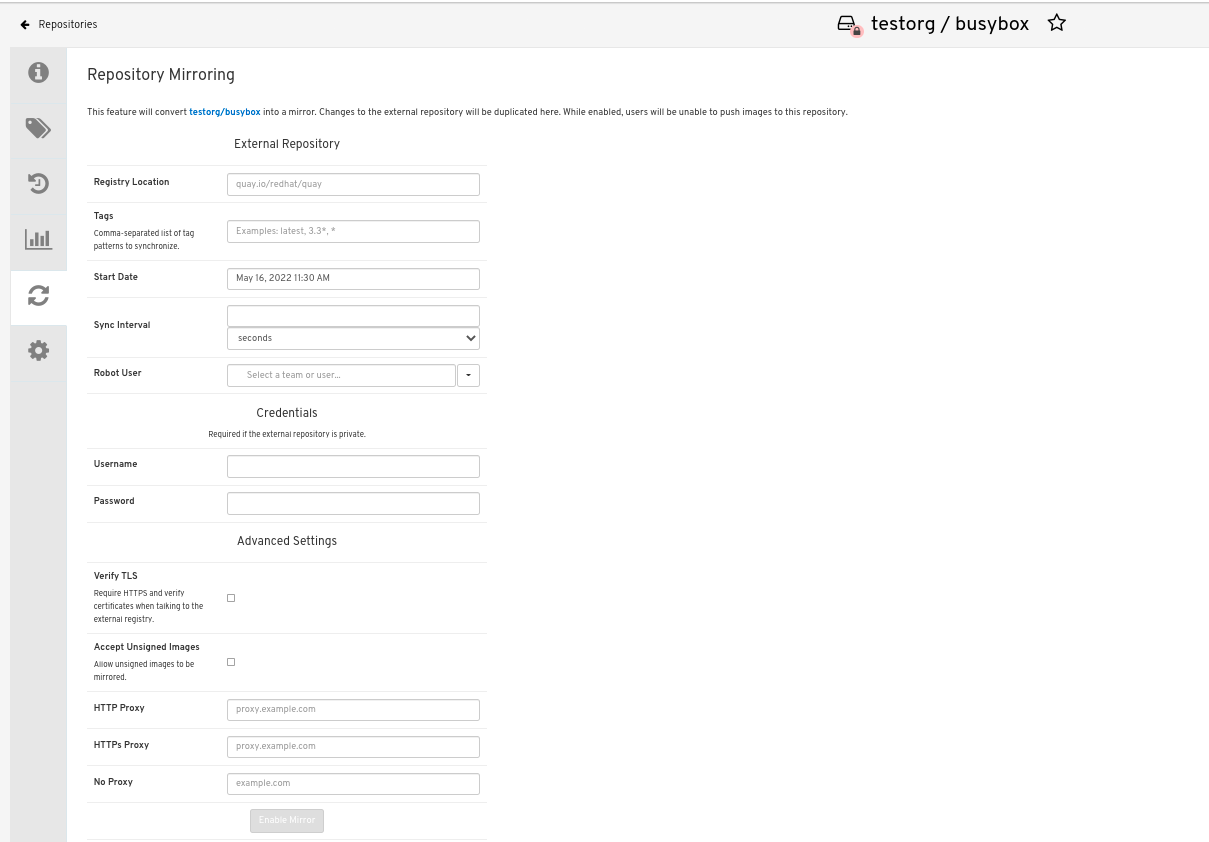

In the Mirror tab, enter the details for connecting to the external registry, along with the tags, scheduling and access information:

Enter the details as required in the following fields:

-

Registry Location: The external repository you want to mirror, for example,

registry.redhat.io/quay/quay-rhel8 - Tags: This field is required. You may enter a comma-separated list of individual tags or tag patterns. (See Tag Patterns section for details.)

- Start Date: The date on which mirroring begins. The current date and time is used by default.

- Sync Interval: Defaults to syncing every 24 hours. You can change that based on hours or days.

- Robot User: Create a new robot account or choose an existing robot account to do the mirroring.

- Username: The username for accessing the external registry holding the repository you are mirroring.

- Password: The password associated with the Username. Note that the password cannot include characters that require an escape character (\).


8.7.2. Advanced settings

In the Advanced Settings section, you can configure SSL/TLS and proxy with the following options:

- Verify TLS: Select this option if you want to require HTTPS and to verify certificates when communicating with the target remote registry.

- Accept Unsigned Images: Selecting this option allows unsigned images to be mirrored.

- HTTP Proxy: Identify the HTTP proxy server needed to access the remote site, if a proxy server is needed.

- HTTPS Proxy: Identify the HTTPS proxy server needed to access the remote site, if a proxy server is needed.

- No Proxy: List of locations that do not require proxy.

8.7.3. Synchronize now

Use the following procedure to initiate the mirroring operation.

Procedure

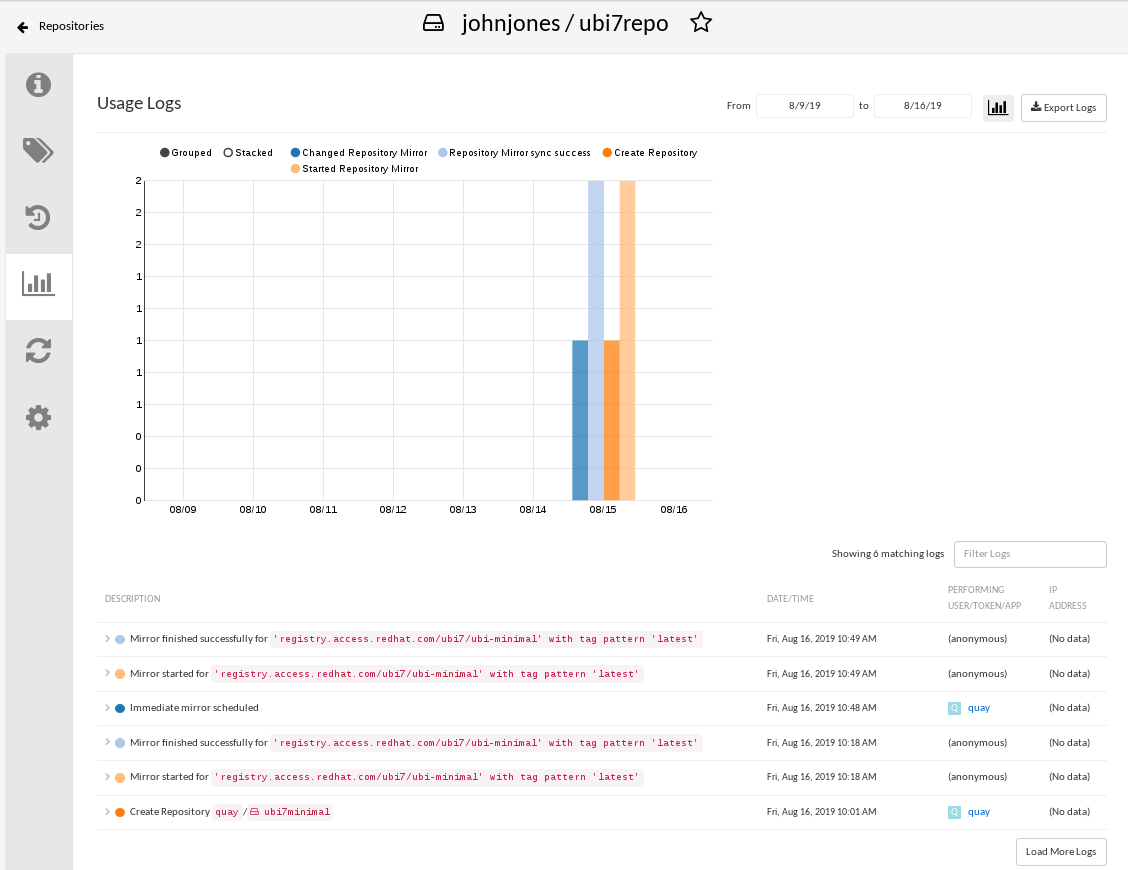

To perform an immediate mirroring operation, press the Sync Now button on the repository’s Mirroring tab. The logs are available on the Usage Logs tab:

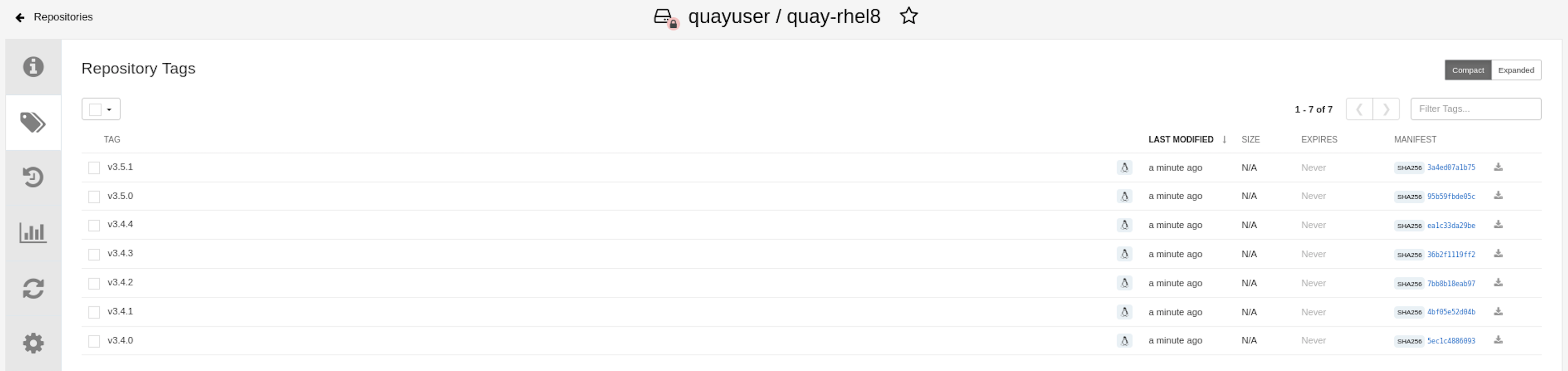

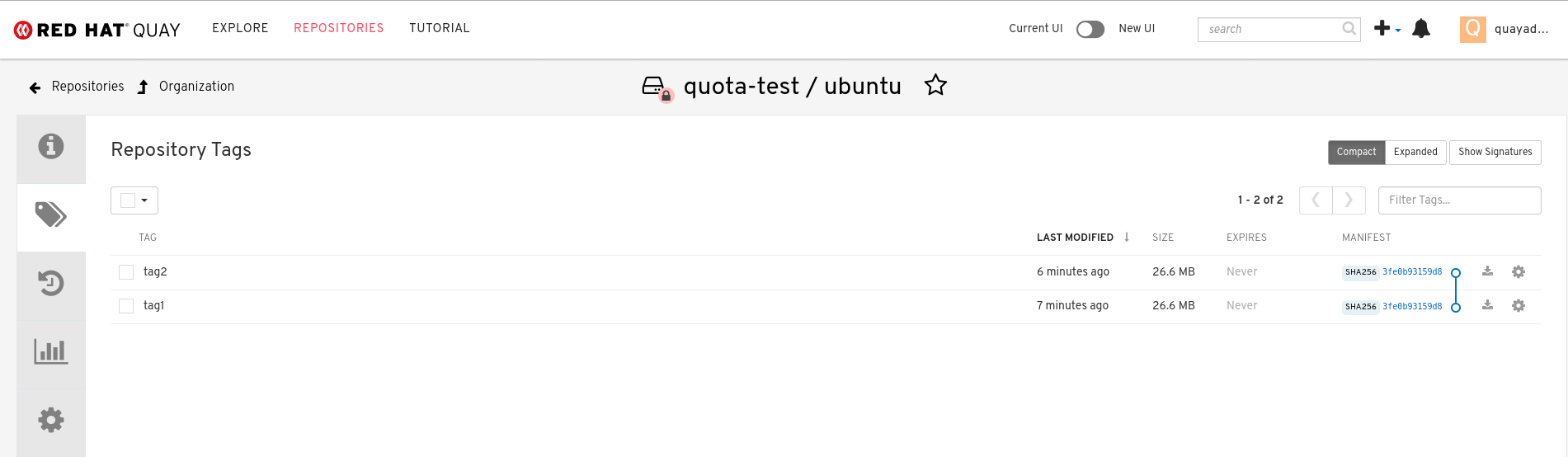

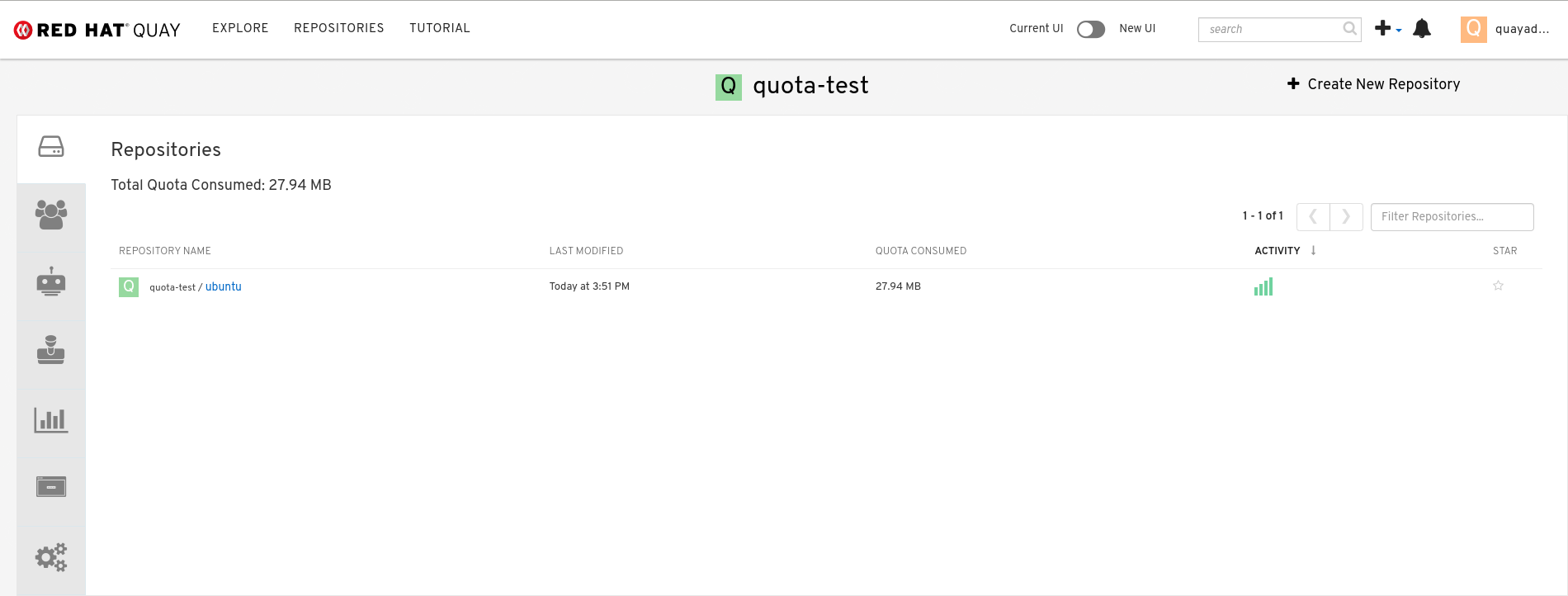

When the mirroring is complete, the images will appear in the Tags tab:

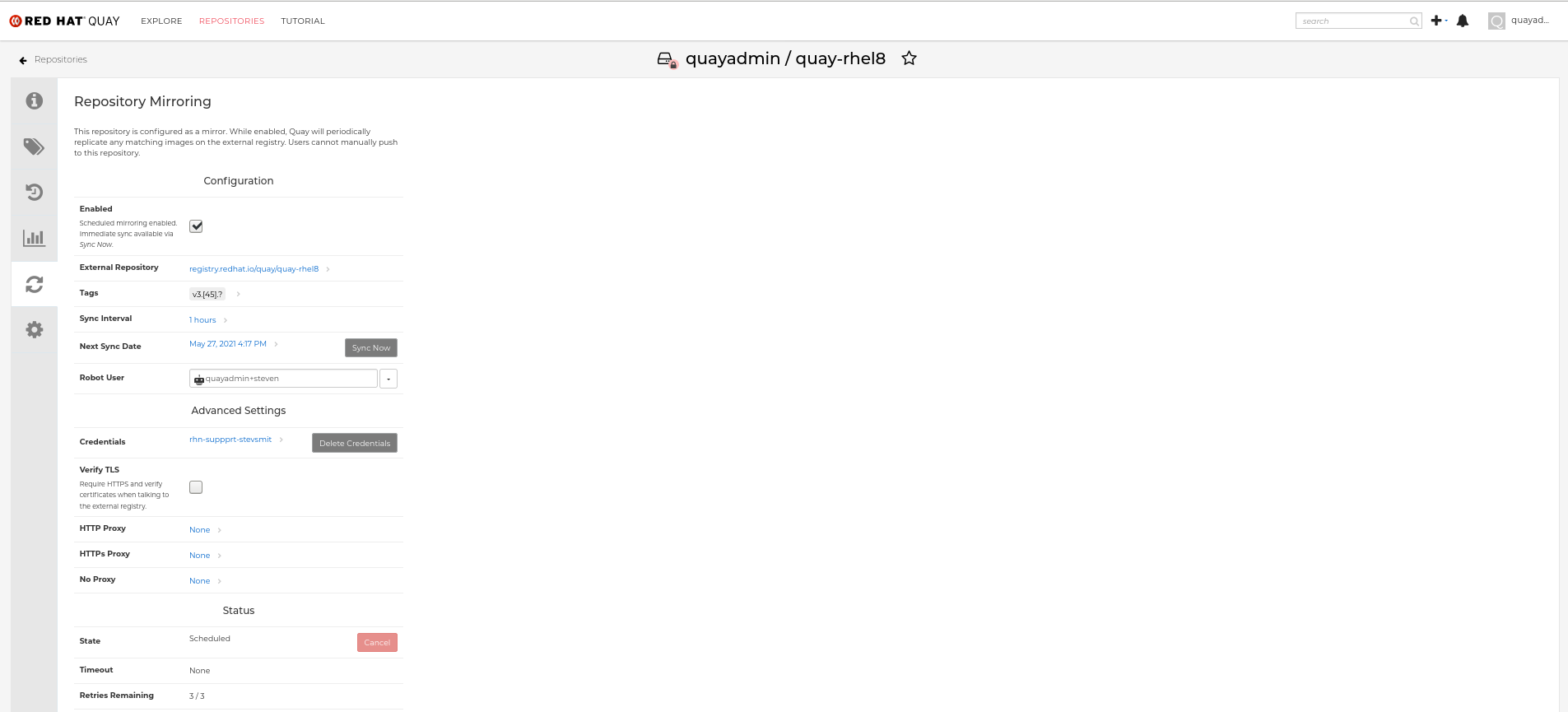

Below is an example of a completed Repository Mirroring screen:

8.8. Event notifications for mirroring

There are three notification events for repository mirroring:

- Repository Mirror Started

- Repository Mirror Success

- Repository Mirror Unsuccessful

The events can be configured inside of the Settings tab for each repository, and all existing notification methods such as email, Slack, Quay UI, and webhooks are supported.

8.9. Mirroring tag patterns

At least one tag must be entered. The following table references possible image tag patterns.

8.9.1. Pattern syntax

| Pattern | Description |
|---|---|
| * | Matches all characters |
| ? | Matches any single character |
| [seq] | Matches any character in seq |
| [!seq] | Matches any character not in seq |

8.9.2. Example tag patterns

| Example Pattern | Example Matches |
|---|---|
| v3* | v32, v3.1, v3.2, v3.2-4beta, v3.3 |
| v3.* | v3.1, v3.2, v3.2-4beta |
| v3.? | v3.1, v3.2, v3.3 |
| v3.[12] | v3.1, v3.2 |
| v3.[12]* | v3.1, v3.2, v3.2-4beta |
| v3.[!1]* | v3.2, v3.2-4beta, v3.3 |
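Because the patterns follow Unix shell-style wildcard rules, a candidate pattern can be tried against a list of tags locally before it is entered in the Tags field. The following sketch uses the shell's own case matching as an approximation. The helper names tag_matches and filter_tags are hypothetical, and Red Hat Quay's own matcher may differ in edge cases.

```shell
# tag_matches PATTERN TAG: succeeds when TAG matches the shell-style PATTERN.
tag_matches() {
  # $1 is deliberately unquoted so that case treats its value as a pattern.
  case "$2" in
    $1) return 0 ;;
    *)  return 1 ;;
  esac
}

# filter_tags PATTERN TAG...: prints the matching tags on one line, space-separated.
filter_tags() {
  pattern=$1
  shift
  matched=""
  for tag in "$@"; do
    if tag_matches "$pattern" "$tag"; then
      matched="${matched:+$matched }$tag"
    fi
  done
  printf '%s\n' "$matched"
}

# Try one of the example patterns against the example tags from the table.
filter_tags 'v3.[!1]*' v32 v3.1 v3.2 v3.2-4beta v3.3
```

With the tag list shown, the final command prints v3.2 v3.2-4beta v3.3, which agrees with the last row of the example table.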

8.10. Working with mirrored repositories

Once you have created a mirrored repository, there are several ways you can work with that repository. Select your mirrored repository from the Repositories page and do any of the following:

- Enable/disable the repository: Select the Mirroring button in the left column, then toggle the Enabled check box to enable or disable the repository temporarily.

- Check mirror logs: To make sure the mirrored repository is working properly, you can check the mirror logs. To do that, select the Usage Logs button in the left column. Here’s an example:

- Sync mirror now: To immediately sync the images in your repository, select the Sync Now button.

- Change credentials: To change the username and password, select DELETE from the Credentials line. Then select None and add the username and password needed to log into the external registry when prompted.

- Cancel mirroring: To stop mirroring, which keeps the current images available but stops new ones from being synced, select the CANCEL button.

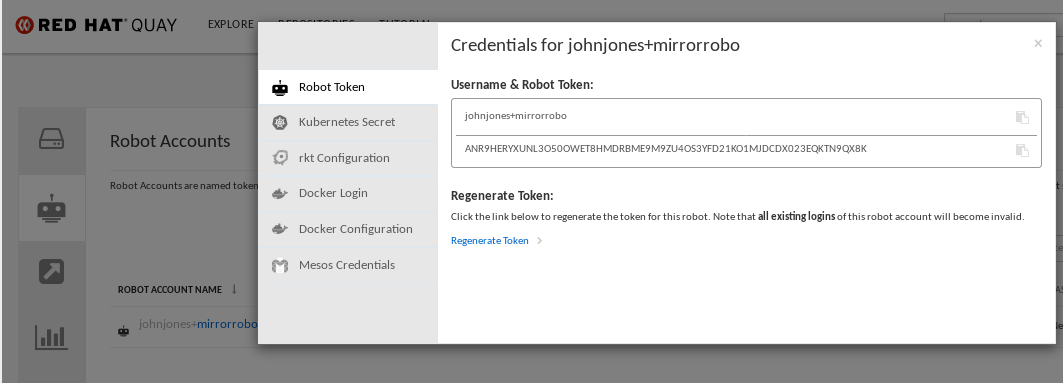

Set robot permissions: Red Hat Quay robot accounts are named tokens that hold credentials for accessing external repositories. By assigning credentials to a robot, that robot can be used across multiple mirrored repositories that need to access the same external registry.

You can assign an existing robot to a repository by going to Account Settings, then selecting the Robot Accounts icon in the left column. For the robot account, choose the link under the REPOSITORIES column. From the pop-up window, you can:

- Check which repositories are assigned to that robot.

-

Assign read, write or Admin privileges to that robot from the PERMISSION field shown in this figure:

Change robot credentials: Robots can hold credentials such as Kubernetes secrets, Docker login information, and Mesos bundles. To change robot credentials, select the Options gear on the robot’s account line on the Robot Accounts window and choose View Credentials. Add the appropriate credentials for the external repository the robot needs to access.

- Check and change general setting: Select the Settings button (gear icon) from the left column on the mirrored repository page. On the resulting page, you can change settings associated with the mirrored repository. In particular, you can change User and Robot Permissions, to specify exactly which users and robots can read from or write to the repo.

8.11. Repository mirroring recommendations

Best practices for repository mirroring include the following:

- Repository mirroring pods can run on any node. This means that you can run mirroring on nodes where Red Hat Quay is already running.

- Repository mirroring is scheduled in the database and runs in batches. As a result, mirror workers check each repository mirror configuration and read when the next sync is due. More mirror workers means more repositories can be mirrored at the same time. For example, running 10 mirror workers means that a user can run 10 mirroring operations in parallel. If a user only has 2 workers with 10 mirror configurations, only 2 operations can be performed at a time.

The optimal number of mirroring pods depends on the following conditions:

- The total number of repositories to be mirrored

- The number of images and tags in the repositories and the frequency of changes

- Parallel batching

For example, if a user is mirroring a repository that has 100 tags, the mirror will be completed by one worker. Users must consider how many repositories they want to mirror in parallel, and base the number of workers on that.

Multiple tags in the same repository cannot be mirrored in parallel.

Chapter 9. IPv6 and dual-stack deployments

Your standalone Red Hat Quay deployment can now be served in locations that only support IPv6, such as Telco and Edge environments. Support is also offered for dual-stack networking so your Red Hat Quay deployment can listen on IPv4 and IPv6 simultaneously.

For a list of known limitations, see IPv6 and dual-stack limitations.

9.1. Enabling the IPv6 protocol family

Use the following procedure to enable IPv6 support on your standalone Red Hat Quay deployment.

Prerequisites

- You have updated Red Hat Quay to 3.8.

- Your host and container software platform (Docker, Podman) must be configured to support IPv6.

Procedure

In your deployment’s config.yaml file, add the FEATURE_LISTEN_IP_VERSION parameter and set it to IPv6.

Start, or restart, your Red Hat Quay deployment.

Check that your deployment is listening to IPv6 by entering the following command:

$ curl <quay_endpoint>/health/instance

Example output:

{"data":{"services":{"auth":true,"database":true,"disk_space":true,"registry_gunicorn":true,"service_key":true,"web_gunicorn":true}},"status_code":200}

After enabling IPv6 in your deployment’s config.yaml, all Red Hat Quay features can be used as normal, so long as your environment is configured to use IPv6 and is not hindered by the current limitations.

If your environment is configured for IPv4, but the FEATURE_LISTEN_IP_VERSION configuration field is set to IPv6, Red Hat Quay will fail to deploy.
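As a rough illustration of the first step in this procedure, the relevant config.yaml entry might look like the following minimal sketch. Only the FEATURE_LISTEN_IP_VERSION line comes from this procedure; the rest of your existing config.yaml is assumed to stay unchanged.

```yaml
# Fragment of config.yaml (sketch). Keep all other existing fields as they are.
# Serve Red Hat Quay over IPv6 only.
FEATURE_LISTEN_IP_VERSION: IPv6
```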

9.2. Enabling the dual-stack protocol family

Use the following procedure to enable dual-stack (IPv4 and IPv6) support on your standalone Red Hat Quay deployment.

Prerequisites

- You have updated Red Hat Quay to 3.8.

- Your host and container software platform (Docker, Podman) must be configured to support IPv6.

Procedure

In your deployment’s config.yaml file, add the FEATURE_LISTEN_IP_VERSION parameter and set it to dual-stack.

Start, or restart, your Red Hat Quay deployment.

Check that your deployment is listening to both channels by entering the following command:

For IPv4, enter the following command:

$ curl --ipv4 <quay_endpoint>

Example output:

{"data":{"services":{"auth":true,"database":true,"disk_space":true,"registry_gunicorn":true,"service_key":true,"web_gunicorn":true}},"status_code":200}

For IPv6, enter the following command:

$ curl --ipv6 <quay_endpoint>

Example output:

{"data":{"services":{"auth":true,"database":true,"disk_space":true,"registry_gunicorn":true,"service_key":true,"web_gunicorn":true}},"status_code":200}

After enabling dual-stack in your deployment’s config.yaml, all Red Hat Quay features can be used as normal, so long as your environment is configured for dual-stack.
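For reference, a minimal sketch of the corresponding config.yaml entry for dual-stack, assuming the rest of the file is left as is:

```yaml
# Fragment of config.yaml (sketch). Keep all other existing fields as they are.
# Listen on IPv4 and IPv6 simultaneously.
FEATURE_LISTEN_IP_VERSION: dual-stack
```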

9.3. IPv6 and dual-stack limitations

Currently, attempting to configure your Red Hat Quay deployment with the common Azure Blob Storage configuration will not work on IPv6 single stack environments. Because the endpoint of Azure Blob Storage does not support IPv6, there is no workaround in place for this issue.

For more information, see PROJQUAY-4433.

Currently, attempting to configure your Red Hat Quay deployment with Amazon S3 CloudFront will not work on IPv6 single stack environments. Because the endpoint of Amazon S3 CloudFront does not support IPv6, there is no workaround in place for this issue.

For more information, see PROJQUAY-4470.

Chapter 10. LDAP Authentication Setup for Red Hat Quay

Lightweight Directory Access Protocol (LDAP) is an open, vendor-neutral, industry standard application protocol for accessing and maintaining distributed directory information services over an Internet Protocol (IP) network. Red Hat Quay supports using LDAP as an identity provider.

10.1. Considerations when enabling LDAP

Prior to enabling LDAP for your Red Hat Quay deployment, you should consider the following.

Existing Red Hat Quay deployments

Conflicts between usernames can arise when you enable LDAP for an existing Red Hat Quay deployment that already has users configured. For example, one user, alice, was manually created in Red Hat Quay prior to enabling LDAP. If the username alice also exists in the LDAP directory, Red Hat Quay automatically creates a new user, alice-1, when alice logs in for the first time using LDAP. Red Hat Quay then automatically maps the LDAP credentials to the alice account. For consistency reasons, this behavior might be undesirable for your Red Hat Quay deployment. It is recommended that you remove any potentially conflicting local account names from Red Hat Quay prior to enabling LDAP.

Manual User Creation and LDAP authentication

When Red Hat Quay is configured for LDAP, LDAP-authenticated users are automatically created in Red Hat Quay’s database on first log in, if the configuration option FEATURE_USER_CREATION is set to True. If this option is set to False, the automatic user creation for LDAP users fails, and the user is not allowed to log in. In this scenario, the superuser needs to create the desired user account first. Conversely, if FEATURE_USER_CREATION is set to True, this also means that a user can still create an account from the Red Hat Quay login screen, even if there is an equivalent user in LDAP.
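The behavior described above is controlled in config.yaml. The following fragment is a minimal sketch rather than a complete configuration. AUTHENTICATION_TYPE is not named in this section; it is included here on the assumption that it is the field that selects the identity provider.

```yaml
# Fragment of config.yaml (sketch).
AUTHENTICATION_TYPE: LDAP   # assumed field: selects LDAP as the identity provider
# true:  LDAP users are created in the Quay database on first login, and users
#        can also create accounts from the login screen.
# false: a superuser must create each account before that user can log in.
FEATURE_USER_CREATION: true
```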

10.2. Configuring LDAP for Red Hat Quay

Use the following procedure to configure LDAP for your Red Hat Quay deployment.

Procedure

You can use the Red Hat Quay config tool to configure LDAP.

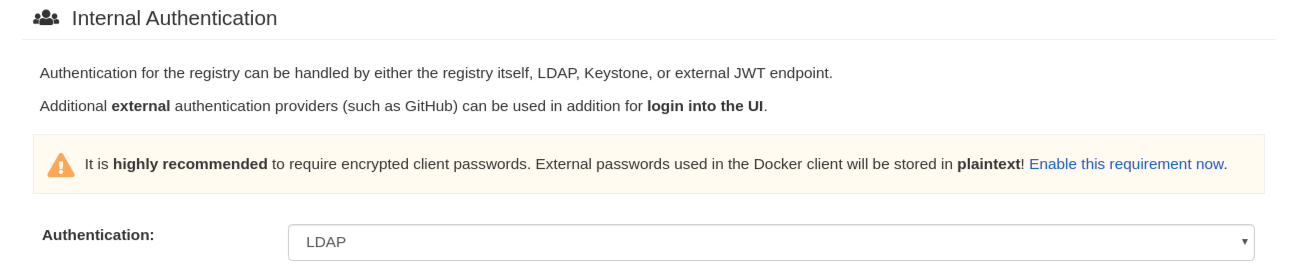

Using the Red Hat Quay config tool, locate the Authentication section. Select LDAP from the dropdown menu, and update the LDAP configuration fields as required.

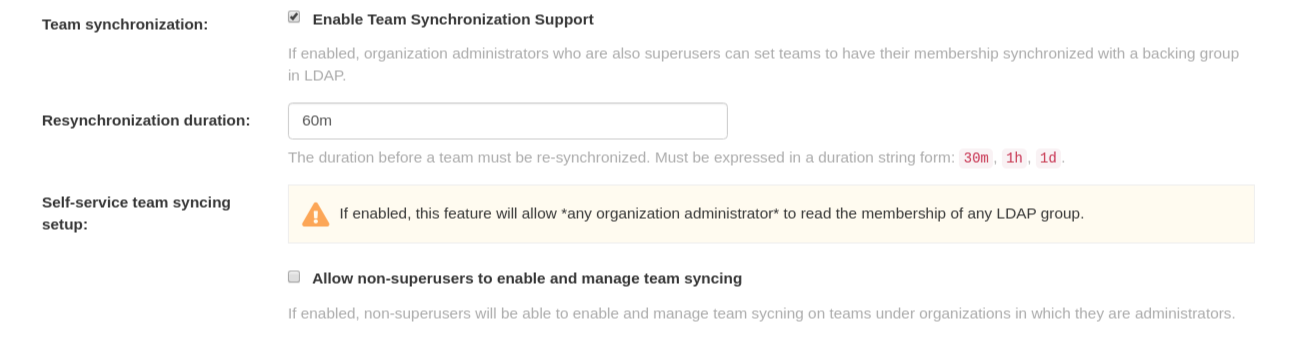

Optional. In the Team synchronization box, click Enable Team Synchronization Support. With team synchronization enabled, Red Hat Quay administrators who are also superusers can set teams to have their membership synchronized with a backing group in LDAP.

- For Resynchronization duration, enter 60m. This option sets the interval at which a team must be resynchronized. Set this field using a format similar to the following examples: 30m, 1h, 1d.

- Optional. For Self-service team syncing setup, you can click Allow non-superusers to enable and manage team syncing to give non-superusers the ability to enable and manage team syncing under the organizations that they administer.

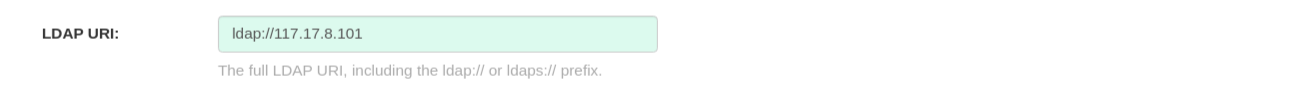

Locate the LDAP URI box and provide a full LDAP URI, including the ldap:// or ldaps:// prefix, for example, ldap://117.17.8.101.

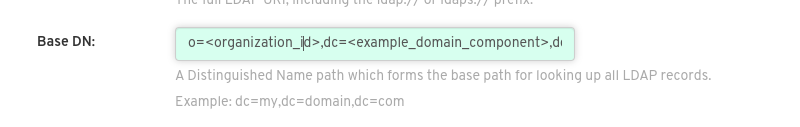

Under Base DN, provide a name which forms the base path for looking up all LDAP records, for example, o=<organization_id>,dc=<example_domain_component>,dc=com.

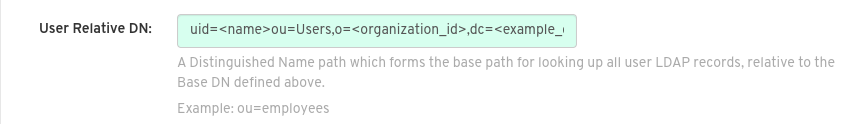

Under User Relative DN, provide a list of Distinguished Name paths, which form the secondary base paths for looking up all user LDAP records relative to the Base DN defined above. For example, uid=<name>,ou=Users,o=<organization_id>,dc=<example_domain_component>,dc=com. This path, or these paths, is tried if the user is not found through the primary relative DN.

Note

User Relative DN is relative to Base DN, for example, ou=Users and not ou=Users,dc=<example_domain_component>,dc=com.

Optional. Provide Secondary User Relative DNs if there are multiple Organizational Units where user objects are located. You can type in the Organizational Units and click Add to add multiple RDNs. For example, ou=Users,ou=NYC and ou=Users,ou=SFO.

The User Relative DN searches with subtree scope. For example, if your organization has Organizational Units NYC and SFO under the Users OU (that is, ou=SFO,ou=Users and ou=NYC,ou=Users), Red Hat Quay can authenticate users from both the NYC and SFO Organizational Units if the User Relative DN is set to Users (ou=Users).

Optional. Fill in the Additional User Filter Expression field for all user lookup queries if desired. Distinguished Names used in the filter must be complete, because the Base DN is not automatically added to this field. You must also wrap the text in parentheses, for example, (memberOf=cn=developers,ou=groups,dc=<example_domain_component>,dc=com).

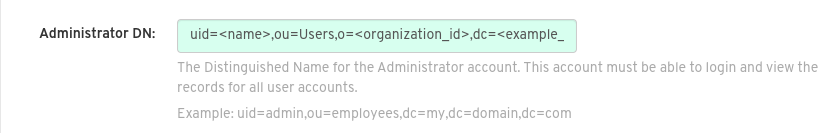

Fill in the Administrator DN field for the Red Hat Quay administrator account. This account must be able to log in and view the records for all user accounts. For example: uid=<name>,ou=Users,o=<organization_id>,dc=<example_domain_component>,dc=com.

Fill in the Administrator DN Password field. This is the password for the administrator distinguished name.

Important

The password for this field is stored in plaintext inside of the config.yaml file. Setting up a dedicated account or using a password hash is highly recommended.

Optional. Fill in the UID Attribute field. This is the name of the property field in the LDAP user records that stores your user’s username. Most commonly, uid is entered for this field. This field can be used to log into your Red Hat Quay deployment.

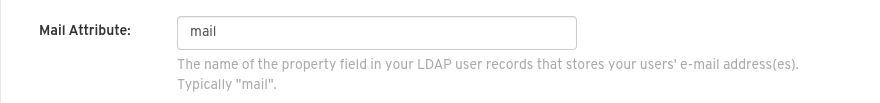

Optional. Fill in the Mail Attribute field. This is the name of the property field in your LDAP user records that stores your user’s e-mail addresses. Most commonly, mail is entered for this field. This field can be used to log into your Red Hat Quay deployment.

Note

- The username to log in must exist in the User Relative DN.

- If you are using Microsoft Active Directory to set up your LDAP deployment, you must use sAMAccountName for your UID attribute.

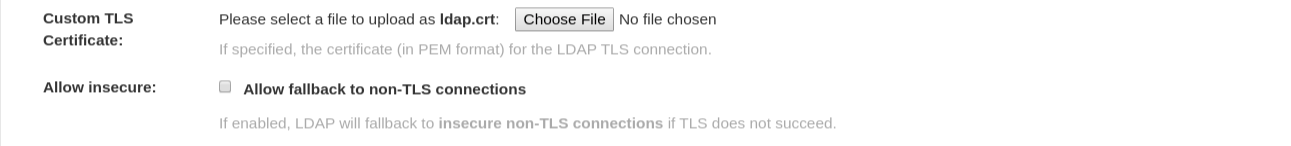

Optional. You can add a custom SSL/TLS certificate by clicking Choose File under the Custom TLS Certificate option. Additionally, you can enable fallbacks to insecure, non-TLS connections by checking the Allow fallback to non-TLS connections box.

If you upload an SSL/TLS certificate, you must provide an ldaps:// prefix, for example, LDAP_URI: ldaps://ldap_provider.example.org.

Alternatively, you can update your config.yaml file directly to include all relevant information.

After you have added all required LDAP fields, click the Save Configuration Changes button to validate the configuration. All validation must succeed before proceeding. Additional configuration can be performed by selecting the Continue Editing button.
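If you edit config.yaml directly, the fields set through the config tool in this procedure map onto entries similar to the following. This is a hedged sketch, not a verified configuration. Only LDAP_URI and LDAP_USER_FILTER are named in this guide; the other field names are assumptions based on the usual Red Hat Quay naming, and every value is a placeholder to replace with your own.

```yaml
# Fragment of config.yaml (sketch). All values are placeholders.
AUTHENTICATION_TYPE: LDAP
LDAP_URI: ldap://<ldap_host>
LDAP_BASE_DN:                     # Base DN, one component per list item
  - o=<organization_id>
  - dc=<example_domain_component>
  - dc=com
LDAP_USER_RDN:                    # User Relative DN, relative to the Base DN
  - ou=Users
LDAP_USER_FILTER: (memberOf=cn=developers,ou=groups,dc=<example_domain_component>,dc=com)
LDAP_ADMIN_DN: uid=<name>,ou=Users,o=<organization_id>,dc=<example_domain_component>,dc=com
LDAP_ADMIN_PASSWD: <password>     # stored in plaintext in this file
LDAP_UID_ATTR: uid
LDAP_EMAIL_ATTR: mail
LDAP_ALLOW_INSECURE_FALLBACK: false
# Optional team synchronization settings (assumed field names)
FEATURE_TEAM_SYNCING: true
TEAM_RESYNC_STALE_TIME: 60m
FEATURE_NONSUPERUSER_TEAM_SYNCING_SETUP: false
```

Check the field names against the Configure Red Hat Quay page for your release before relying on them.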

10.3. Enabling the LDAP_RESTRICTED_USER_FILTER configuration field

The LDAP_RESTRICTED_USER_FILTER configuration field is a subset of the LDAP_USER_FILTER configuration field. When configured, this option lets Red Hat Quay administrators configure LDAP users as restricted users when Red Hat Quay uses LDAP as its authentication provider.

Use the following procedure to enable LDAP restricted users on your Red Hat Quay deployment.

Prerequisites

- Your Red Hat Quay deployment uses LDAP as its authentication provider.

- You have configured the LDAP_USER_FILTER field in your config.yaml file.

Procedure

In your deployment’s config.yaml file, add the LDAP_RESTRICTED_USER_FILTER parameter and specify the group of restricted users, for example, members.

Start, or restart, your Red Hat Quay deployment.

After enabling the LDAP_RESTRICTED_USER_FILTER feature, your LDAP Red Hat Quay users are restricted from reading and writing content, and creating organizations.
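A minimal sketch of the resulting config.yaml entries follows. The filter values are hypothetical placeholders, not values taken from this guide, and AUTHENTICATION_TYPE is assumed to be the field that selects LDAP as the provider. Substitute an LDAP filter that matches your restricted group.

```yaml
# Fragment of config.yaml (sketch). Filter values are placeholders.
AUTHENTICATION_TYPE: LDAP
# Prerequisite: the general user filter is already configured.
LDAP_USER_FILTER: (<filterField>=<value>)
# Users matching this filter are treated as restricted users.
LDAP_RESTRICTED_USER_FILTER: (<filterField>=<value>)
```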

10.4. Enabling the LDAP_SUPERUSER_FILTER configuration field

With the LDAP_SUPERUSER_FILTER field configured, Red Hat Quay administrators can configure Lightweight Directory Access Protocol (LDAP) users as superusers if Red Hat Quay uses LDAP as its authentication provider.

Use the following procedure to enable LDAP superusers on your Red Hat Quay deployment.

Prerequisites

- Your Red Hat Quay deployment uses LDAP as its authentication provider.

-

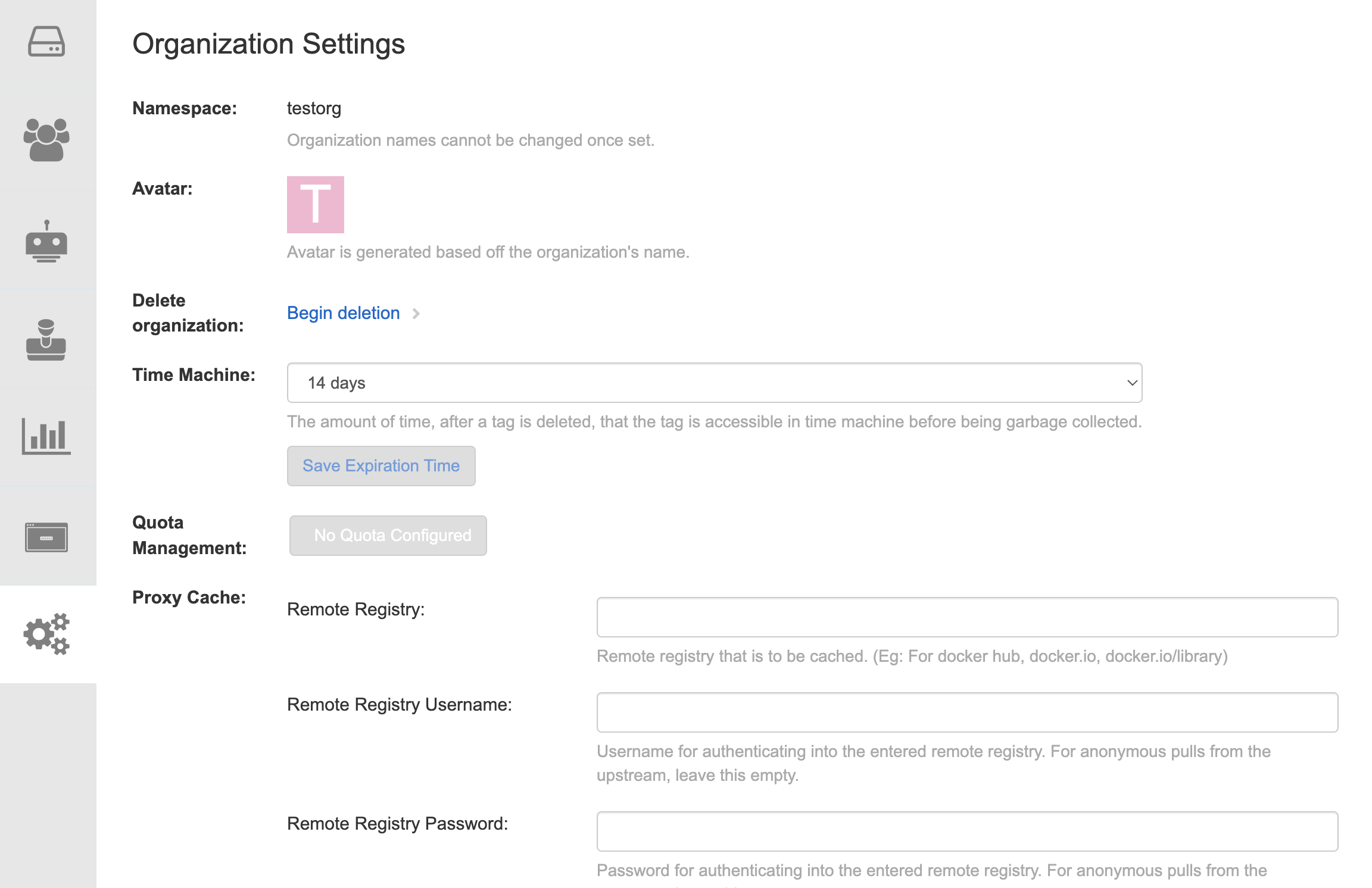

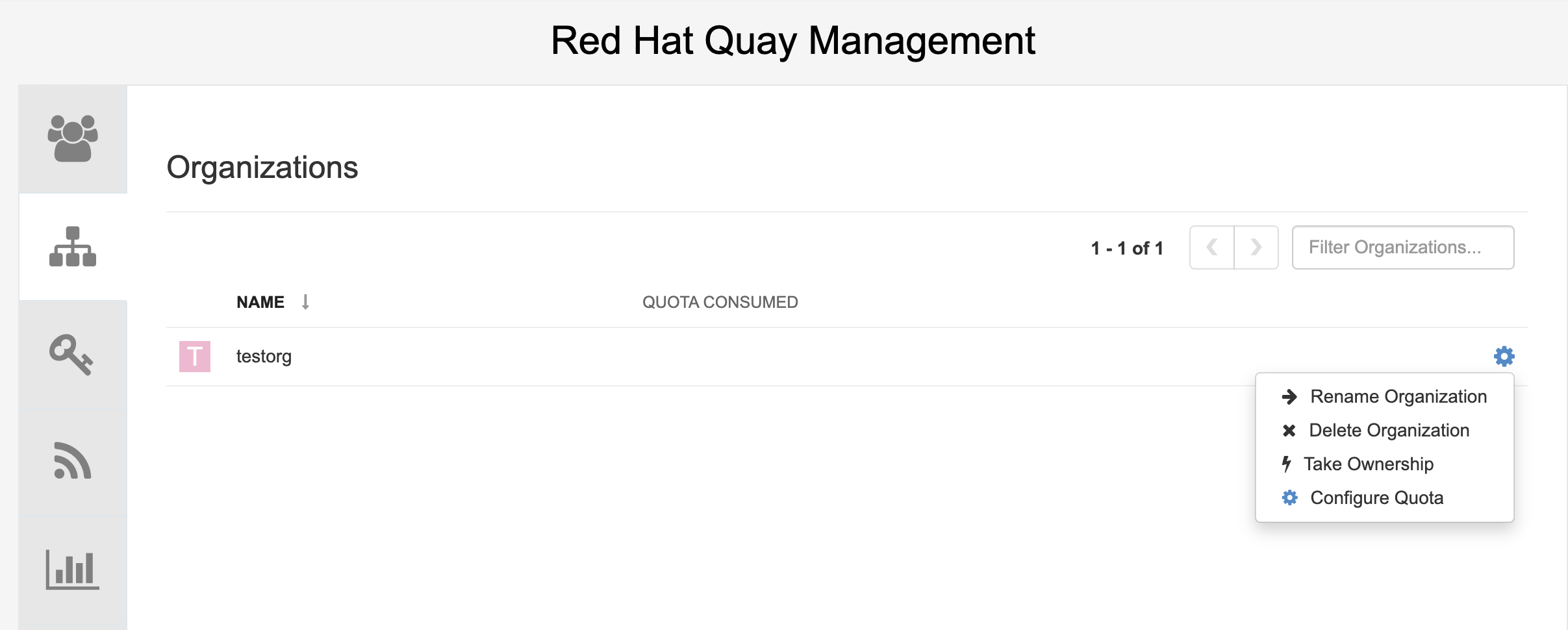

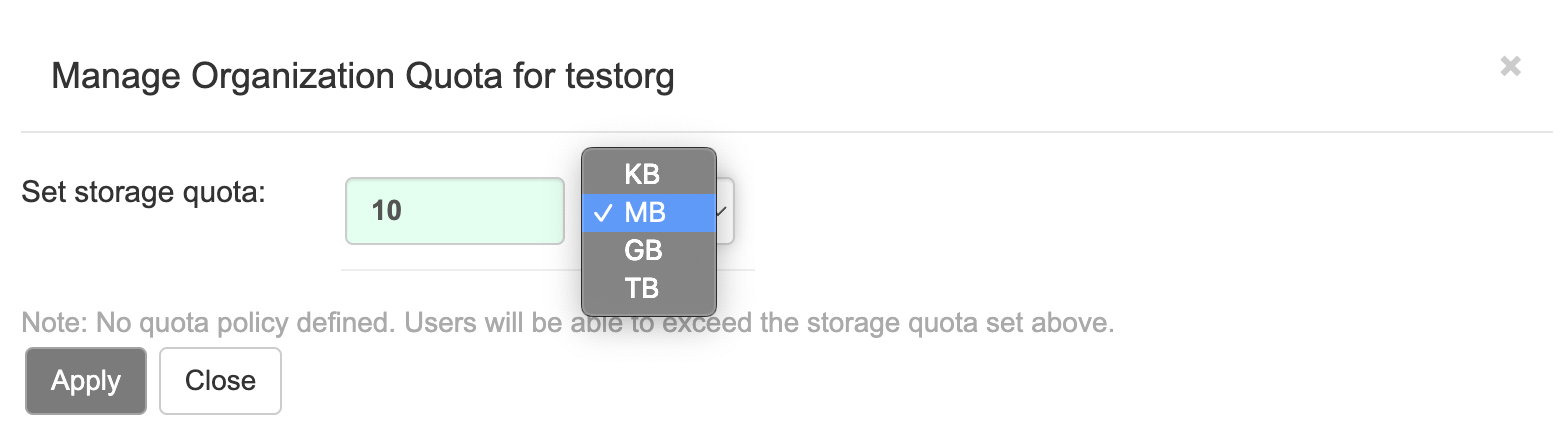

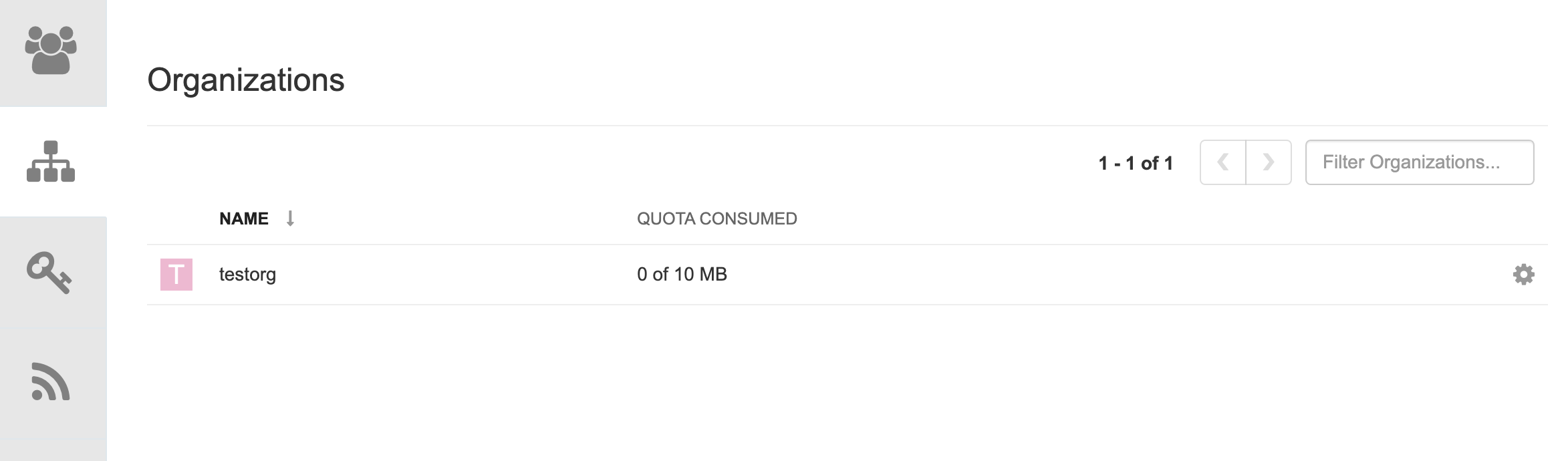

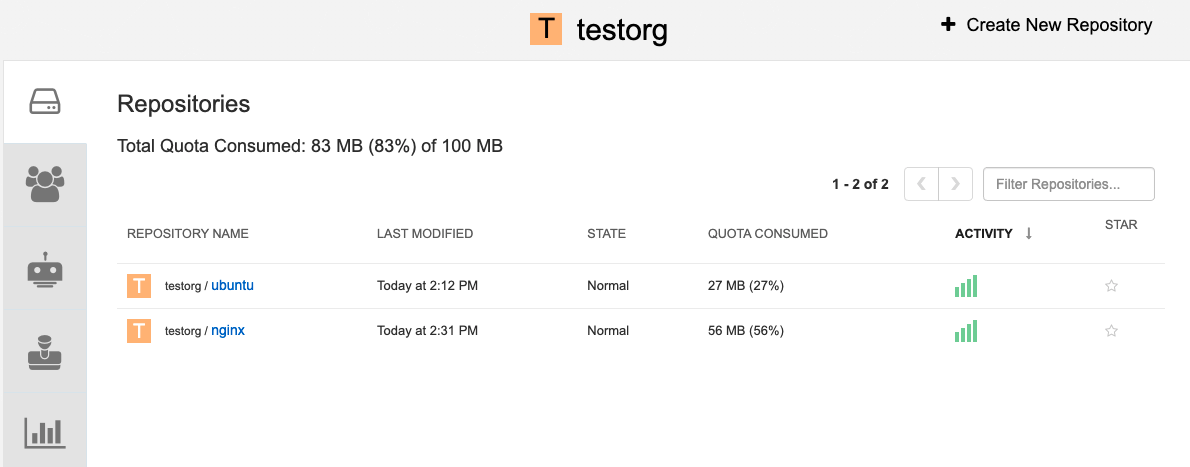

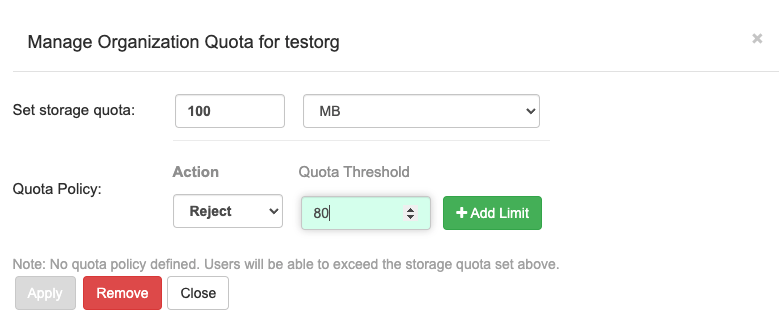

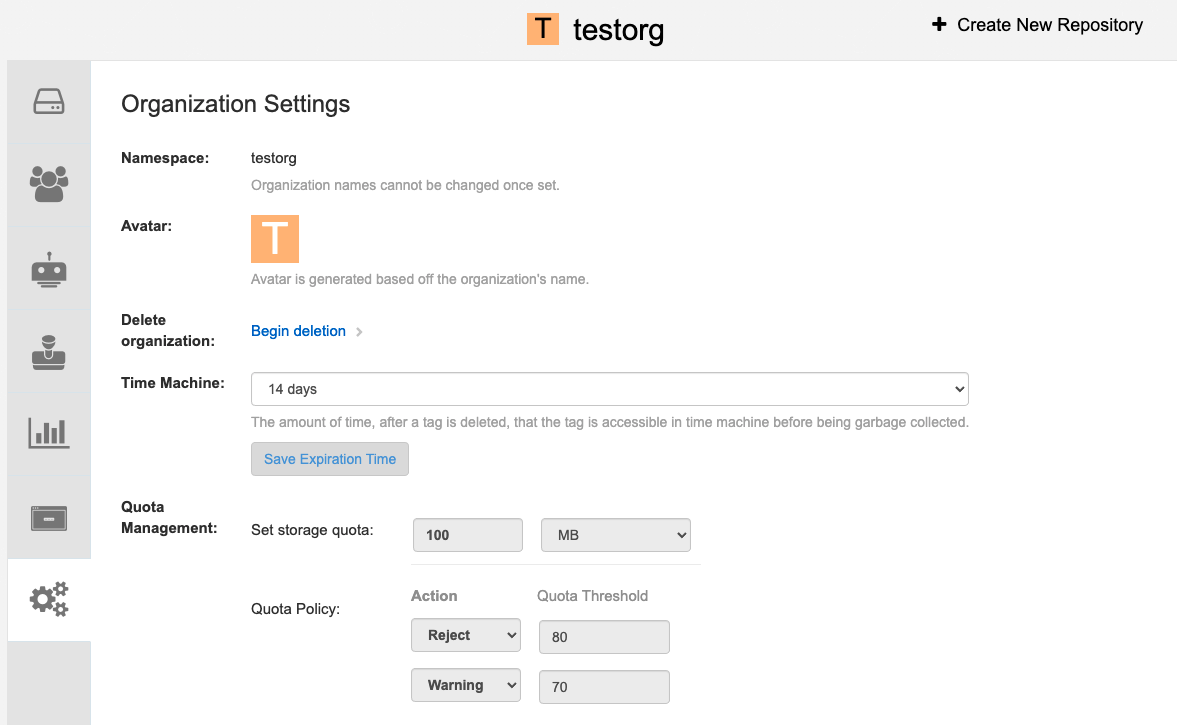

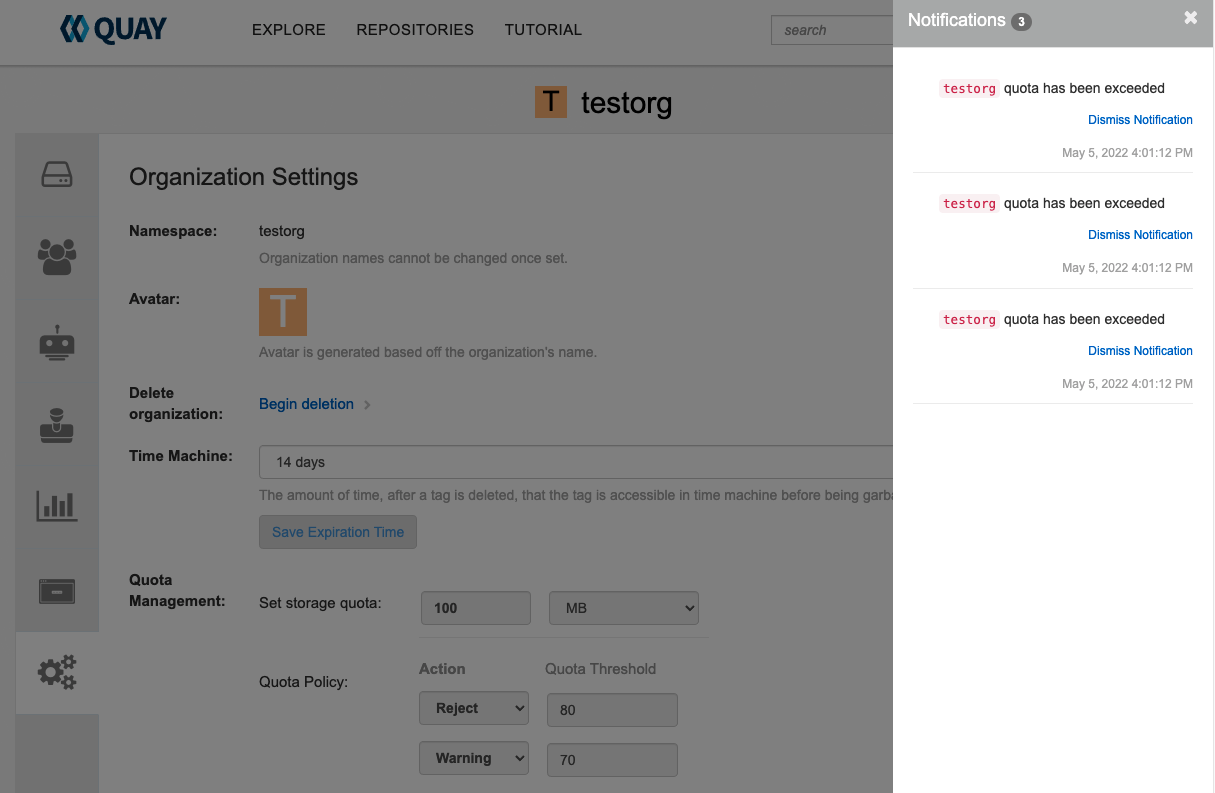

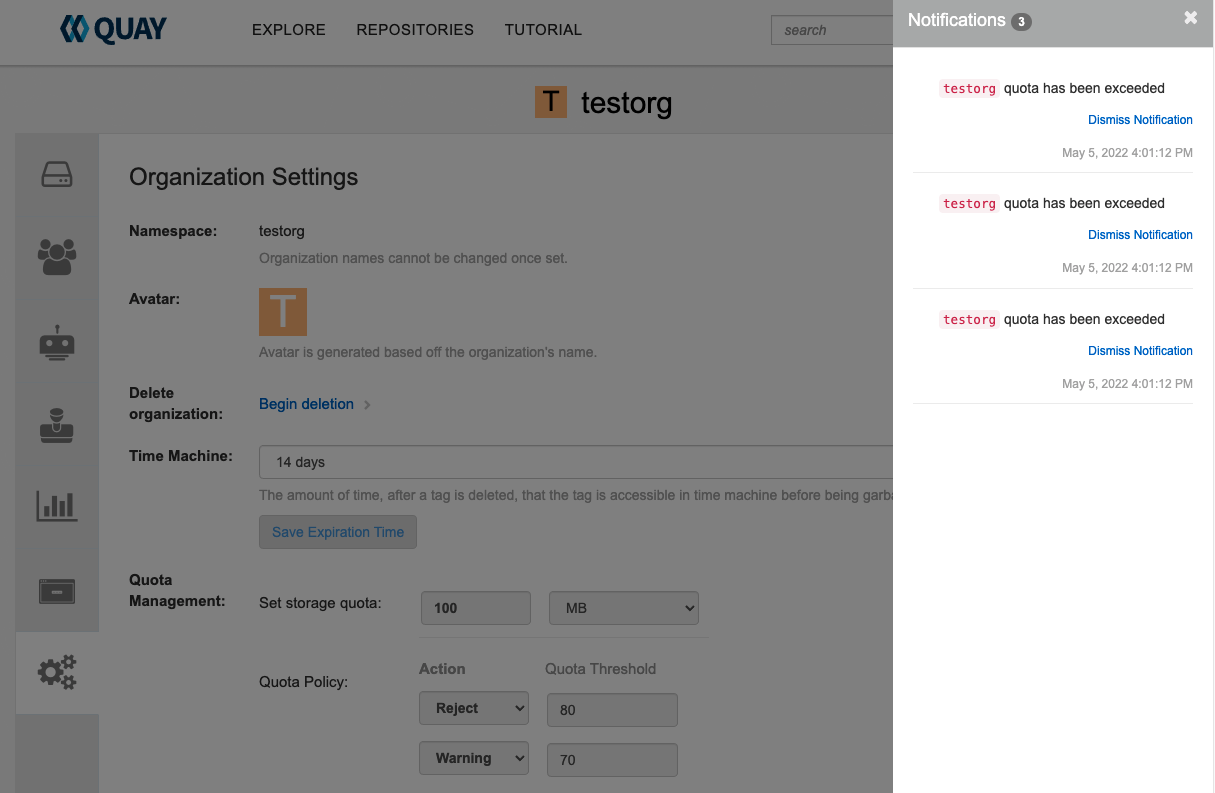

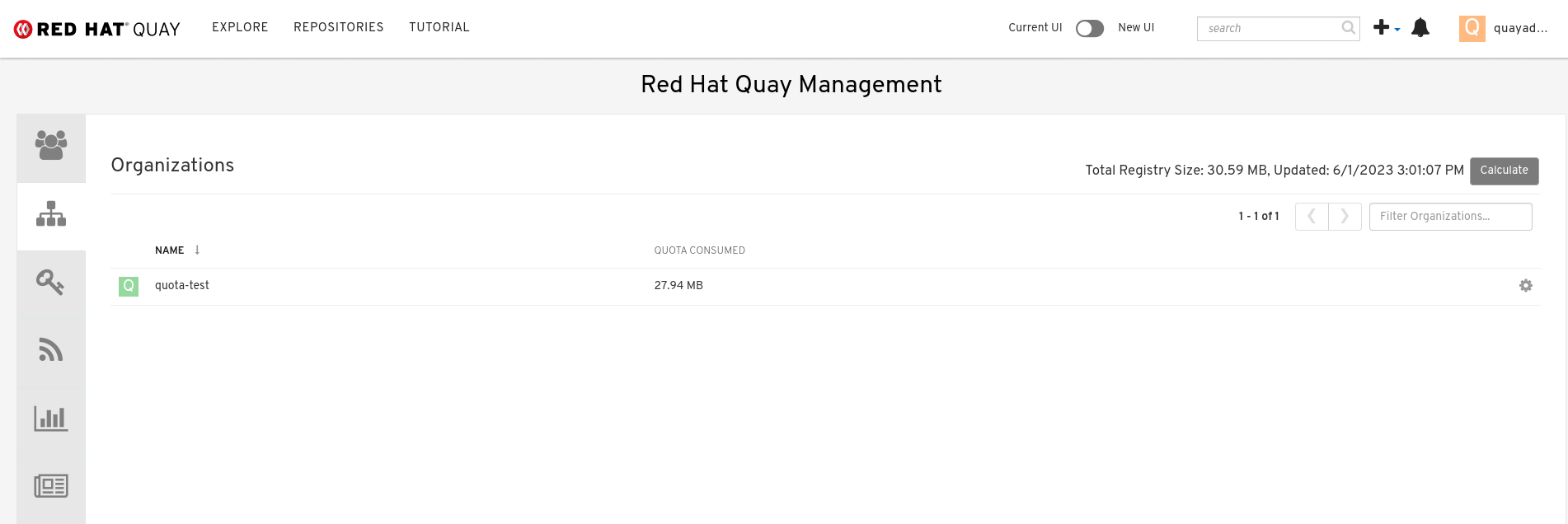

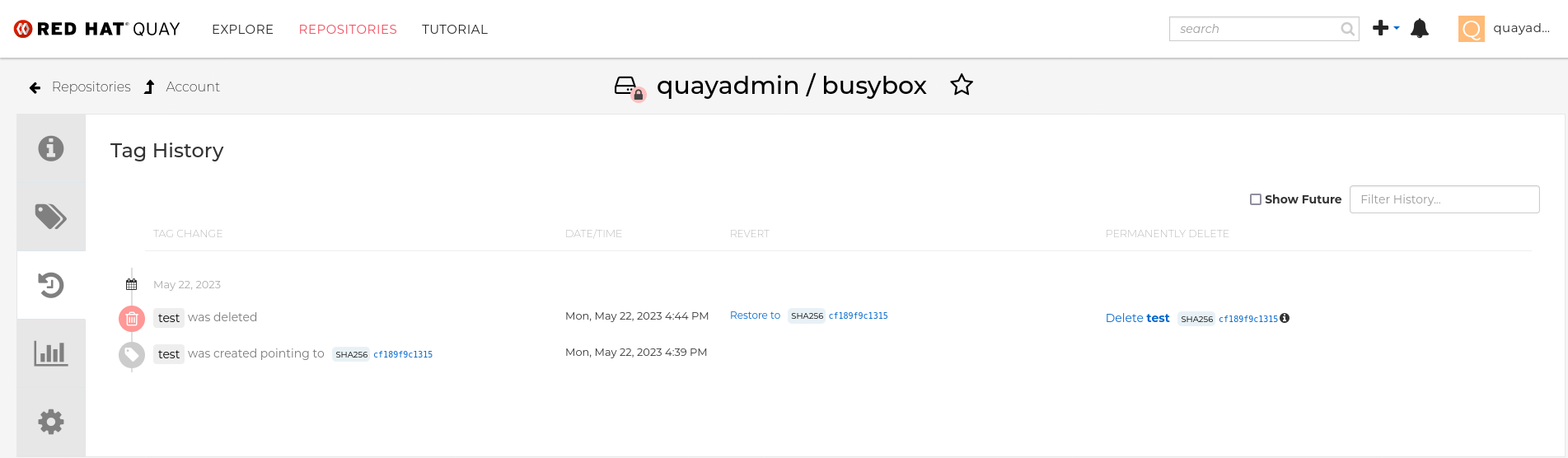

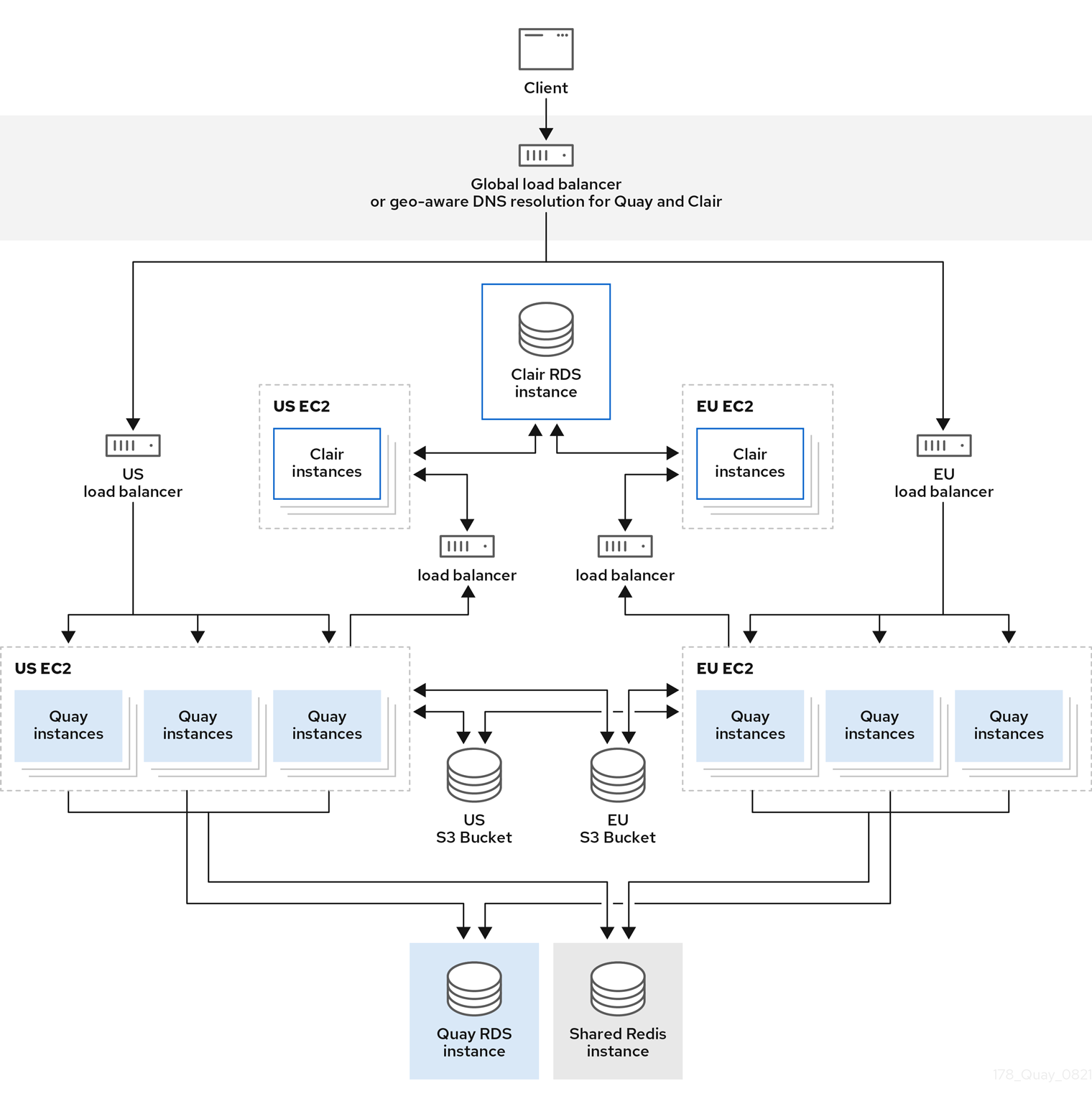

You have configured the