Provisioning Guide

A guide to provisioning physical and virtual hosts on Red Hat Satellite Servers.

Abstract

Chapter 1. Introduction

1.1. Provisioning Overview

Provisioning is a process that starts with a bare physical or virtual machine and ends with a fully configured, ready-to-use operating system. Using Red Hat Satellite, you can define and automate fine-grained provisioning for a large number of hosts.

There are many provisioning methods. For example, you can use Satellite Server’s integrated Capsule or an external Capsule Server to provision bare metal hosts using PXE based methods. You can also provision cloud instances from specific providers through their APIs. These provisioning methods are part of the Red Hat Satellite application life cycle to create, manage, and update hosts.

Red Hat Satellite has different methods for provisioning hosts:

- Bare Metal Provisioning

- Satellite provisions bare metal hosts primarily through PXE boot and MAC address identification. You can create host entries and specify the MAC address of the physical host to provision. You can also boot blank hosts to use Satellite’s discovery service, which creates a pool of ready-to-provision hosts.

- Cloud Providers

- Satellite connects to private and public cloud providers to provision instances of hosts from images that are stored in the cloud environment. This also includes selecting which hardware profile or flavor to use.

- Virtualization Infrastructure

- Satellite connects to virtualization infrastructure services such as Red Hat Virtualization and VMware to provision virtual machines from virtual image templates or using the same PXE-based boot methods as bare metal provisioning.

1.2. Network Boot Provisioning Workflow

PXE booting assumes that a host, either physical or virtual, is configured to boot from network as the first booting device, and from the hard drive as the second booting device.

The provisioning process follows a basic PXE workflow:

- You create a host and select a domain and subnet. Satellite requests an available IP address from the DHCP Capsule Server that is associated with the subnet or from the PostgreSQL database in Satellite. Satellite loads this IP address into the IP address field in the Create Host window. When you complete all the options for the new host, submit the new host request.

Depending on the configuration specifications of the host and its domain and subnet, Satellite creates the following settings:

- A DHCP record on Capsule Server that is associated with the subnet.

- A forward DNS record on Capsule Server that is associated with the domain.

- A reverse DNS record on the DNS Capsule Server that is associated with the subnet.

- PXELinux, Grub, Grub2, and iPXE configuration files for the host in the TFTP Capsule Server that is associated with the subnet.

- A Puppet certificate on the associated Puppet server.

- A realm on the associated identity server.

- The new host requests a DHCP reservation from the DHCP server.

- The DHCP server responds to the reservation request and returns TFTP next-server and filename options.

- The host requests the boot loader and menu from the TFTP server according to the PXELoader setting.

- A boot loader is returned over TFTP.

- The boot loader fetches configuration for the host through its provisioning interface MAC address.

- The boot loader fetches the operating system installer kernel, init RAM disk, and boot parameters.

- The installer requests the provisioning template from Satellite.

- Satellite renders the provision template and returns the result to the host.

- The installer performs installation of the operating system.

- The installer registers the host to Satellite using Red Hat Subscription Manager.

- The installer installs management tools such as katello-agent and puppet.

- The installer notifies Satellite of a successful build in the postinstall script.

- The PXE configuration files revert to a local boot template.

- The host reboots.

- The new host requests a DHCP reservation from the DHCP server.

- The DHCP server responds to the reservation request and returns TFTP next-server and filename options.

- The host requests the boot loader and menu from the TFTP server according to the PXELoader setting.

- A boot loader is returned over TFTP.

- The boot loader fetches the configuration for the host through its provisioning interface MAC address.

- The boot loader initiates boot from the local drive.

- If you configured the host to use any Puppet classes, the host configures itself using the modules.
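As an illustration of how a boot loader locates the host-specific configuration by MAC address, the following sketch derives the file name that PXELinux conventionally requests from the TFTP server. The function name pxelinux_config_name and the sample MAC address are hypothetical. Other boot loaders, such as Grub2 and iPXE, use their own naming schemes that are not shown here.

```shell
#!/usr/bin/env bash
# Print the PXELinux per-host configuration file name for a MAC address.
# PXELinux prefixes the ARP hardware type (01 for Ethernet) and expects
# lowercase hex digits separated by dashes.
pxelinux_config_name() {
  local mac="$1"
  # Lowercase the hex digits and replace colons with dashes.
  mac="$(printf '%s' "$mac" | tr 'A-F' 'a-f' | tr ':' '-')"
  printf 'pxelinux.cfg/01-%s\n' "$mac"
}

# Example with a hypothetical MAC address.
pxelinux_config_name "52:54:00:AB:CD:EF"
```

If a host does not pick up its configuration, comparing this name with the files under the TFTP root on the Capsule can help narrow down the problem.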

This workflow differs depending on custom options. For example:

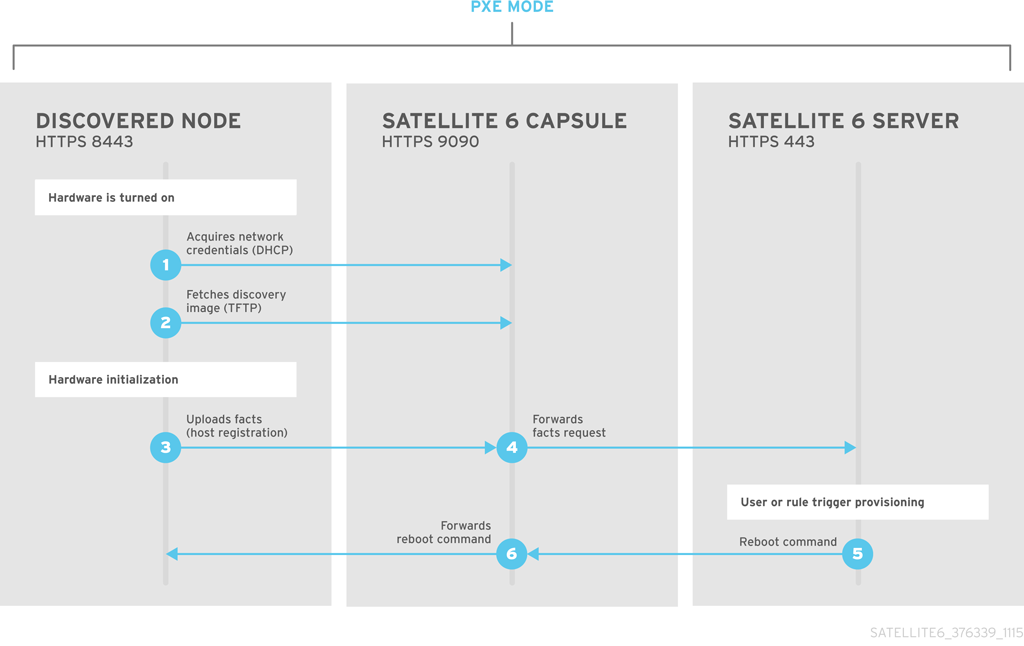

- Discovery

- If you use the discovery service, Satellite automatically detects the MAC address of the new host and restarts the host after you submit a request. Note that TCP port 8443 must be reachable by the Capsule to which the host is attached for Satellite to restart the host.

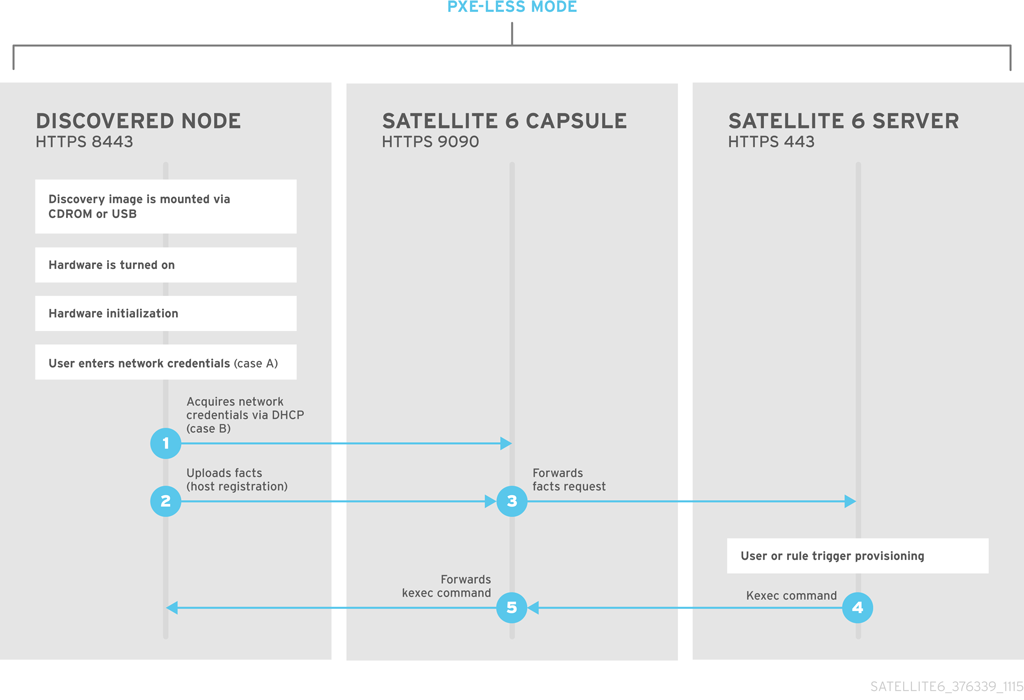

- PXE-less Provisioning

- After you submit a new host request, you must boot the specific host with the boot disk that you download from Satellite and transfer using a USB port of the host.

- Compute Resources

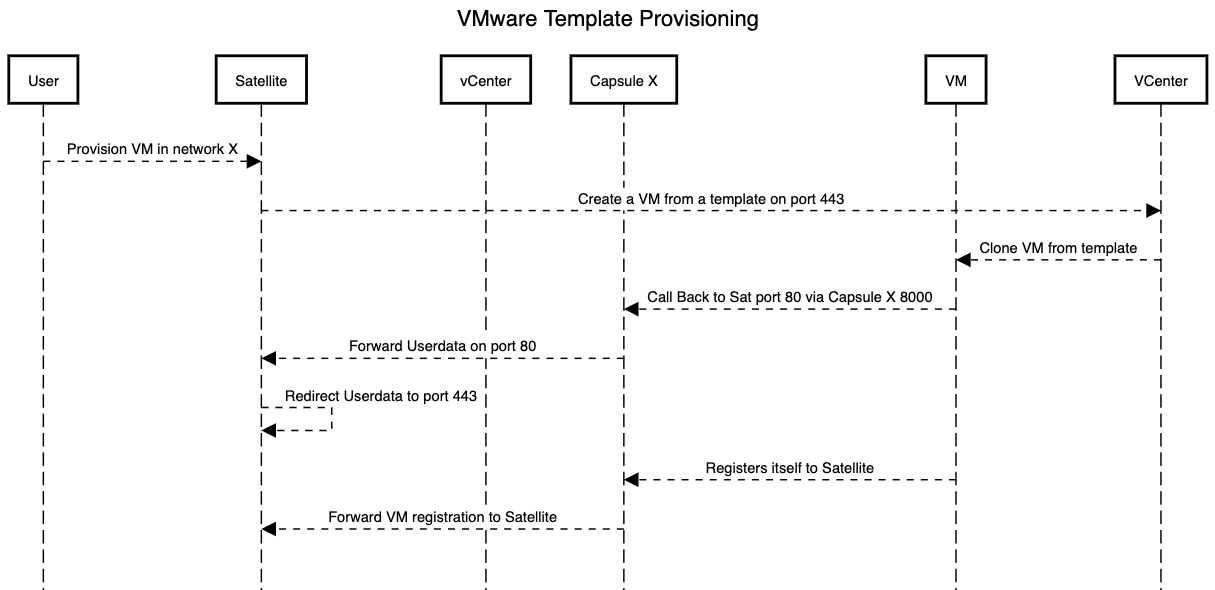

- Satellite creates the virtual machine and retrieves the MAC address and stores the MAC address in Satellite. If you use image-based provisioning, the host does not follow the standard PXE boot and operating system installation. The compute resource creates a copy of the image for the host to use. Depending on image settings in Satellite, seed data can be passed in for initial configuration, for example using cloud-init. Satellite can connect using SSH to the host and execute a template to finish the customization.

Note

By default, deleting the provisioned profile host from Satellite does not destroy the actual VM on the external compute resource. To destroy the VM when deleting the host entry on Satellite, navigate to Administer > Settings > Provisioning and configure this behavior using the destroy_vm_on_host_delete setting. If you do not destroy the associated VM and attempt to create a new VM with the same resource name later, it will fail because that VM name already exists in the external compute resource. You can still register the existing VM to Satellite using the standard host registration workflow you would use for any already provisioned host.
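The destroy_vm_on_host_delete setting can probably also be changed from the command line instead of the web UI. The following is a minimal sketch that assumes the hammer settings set command accepts this setting name. The wrapper function set_destroy_vm_on_host_delete is hypothetical and only adds input validation.

```shell
#!/usr/bin/env bash
# Toggle whether deleting a host in Satellite also destroys its VM on the
# compute resource. Pass "true" or "false".
set_destroy_vm_on_host_delete() {
  local value="$1"
  case "$value" in
    true|false) ;;
    *) echo "usage: set_destroy_vm_on_host_delete true|false" >&2; return 1 ;;
  esac
  # Assumption: the setting is exposed to hammer under the same name as in the web UI.
  hammer settings set --name destroy_vm_on_host_delete --value "$value"
}
```

For example, calling set_destroy_vm_on_host_delete true on Satellite Server would request that VMs are removed together with their host entries.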

Chapter 2. Configuring Provisioning Resources

2.1. Provisioning Contexts

A provisioning context is the combination of an organization and location that you specify for Satellite components. The organization and location that a component belongs to sets the ownership and access for that component.

Organizations divide Red Hat Satellite 6 components into logical groups based on ownership, purpose, content, security level, and other divisions. You can create and manage multiple organizations through Red Hat Satellite 6 and assign components to each individual organization. This ensures Satellite Server provisions hosts within a certain organization and only uses components that are assigned to that organization. For more information about organizations, see Managing Organizations in the Content Management Guide.

Locations function similarly to organizations. The difference is that locations are based on physical or geographical settings. Users can nest locations in a hierarchy. For more information about locations, see Managing Locations in the Content Management Guide.

2.2. Setting the Provisioning Context

When you set a provisioning context, you define which organization and location to use for provisioning hosts.

The organization and location menus are located in the menu bar, on the upper left of the Satellite web UI. If you have not selected an organization and location to use, the menu displays: Any Organization and Any Location.

Procedure

- Click Any Organization and select the organization.

- Click Any Location and select the location to use.

Each user can set their default provisioning context in their account settings. Click the user name in the upper right of the Satellite web UI and select My account to edit your user account settings.

CLI procedure

When using the CLI, include either --organization or --organization-label and --location or --location-id as an option. For example:

# hammer host list --organization "Default_Organization" --location "Default_Location"

This command outputs hosts allocated for the Default_Organization and Default_Location.
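To avoid repeating the context options on every command, hammer can store default parameter values for the current user. The following sketch assumes that the hammer defaults subcommand accepts organization and location as parameter names. The function name set_default_context is hypothetical.

```shell
#!/usr/bin/env bash
# Store a default organization and location for the current hammer user so
# that later commands can omit --organization and --location.
set_default_context() {
  local org="$1" loc="$2"
  hammer defaults add --param-name organization --param-value "$org"
  hammer defaults add --param-name location --param-value "$loc"
  # Show the stored defaults for confirmation.
  hammer defaults list
}
```

After calling set_default_context "Default_Organization" "Default_Location", a plain hammer host list should be limited to that context. Options passed explicitly on the command line still take precedence.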

2.3. Creating Operating Systems

An operating system is a collection of resources that define how Satellite Server installs a base operating system on a host. Operating system entries combine previously defined resources, such as installation media, partition tables, provisioning templates, and others.

Importing operating systems from Red Hat’s CDN creates new entries on the Hosts > Operating Systems page.

You can also add custom operating systems using the following procedure. To use the CLI instead of the web UI, see the CLI procedure.

Procedure

- In the Satellite web UI, navigate to Hosts > Operating systems and click New Operating system.

- In the Name field, enter a name to represent the operating system entry.

- In the Major field, enter the number that corresponds to the major version of the operating system.

- In the Minor field, enter the number that corresponds to the minor version of the operating system.

- In the Description field, enter a description of the operating system.

- From the Family list, select the operating system’s family.

- From the Root Password Hash list, select the encoding method for the root password.

- From the Architectures list, select the architectures that the operating system uses.

- Click the Partition table tab and select the possible partition tables that apply to this operating system.

- Optional: if you use non-Red Hat content, click the Installation Media tab and select the installation media that apply to this operating system. For more information, see Adding Installation Media to Satellite.

- Click the Templates tab and select a PXELinux template, a Provisioning template, and a Finish template for your operating system to use. You can select other templates, for example an iPXE template, if you plan to use iPXE for provisioning.

- Click Submit to save your operating system.

CLI procedure

Create the operating system using the hammer os create command:

# hammer os create --name "MyOS" \
--description "My_custom_operating_system" \
--major 7 --minor 3 --family "Redhat" --architectures "x86_64" \
--partition-tables "My_Partition" --media "Red_Hat" \
--provisioning-templates "My_Provisioning_Template"

2.4. Updating the Details of Multiple Operating Systems

Use this procedure to update the details of multiple operating systems. This example shows you how to assign each operating system a partition table called Kickstart default, a configuration template called Kickstart default PXELinux, and a provisioning template called Kickstart Default.

Procedure

On Satellite Server, run the following Bash script:

Display information about the updated operating system to verify that the operating system is updated correctly:

# hammer os info --id 1

2.5. Creating Architectures

An architecture in Satellite represents a logical grouping of hosts and operating systems. Architectures are created by Satellite automatically when hosts check in with Puppet. Basic i386 and x86_64 architectures are already preset in Satellite.

Use this procedure to create an architecture in Satellite.

Supported Architectures

Only Intel x86_64 architecture is supported for provisioning using PXE, Discovery, and boot disk. For more information, see the Red Hat Knowledgebase solution Supported architectures and provisioning scenarios in Satellite 6.

Procedure

- In the Satellite web UI, navigate to Hosts > Architectures and click Create Architecture.

- In the Name field, enter a name for the architecture.

- From the Operating Systems list, select an operating system. If none are available, you can create and assign them under Hosts > Operating Systems.

- Click Submit.

CLI procedure

Enter the hammer architecture create command to create an architecture. Specify its name and operating systems that include this architecture:

# hammer architecture create --name "Architecture_Name" \
--operatingsystems "os"

2.6. Creating Hardware Models

Use this procedure to create a hardware model in Satellite so that you can specify which hardware model a host uses.

Procedure

- In the Satellite web UI, navigate to Hosts > Hardware Models and click Create Model.

- In the Name field, enter a name for the hardware model.

- Optionally, in the Hardware Model and Vendor Class fields, you can enter corresponding information for your system.

- In the Info field, enter a description of the hardware model.

- Click Submit to save your hardware model.

CLI procedure

Create a hardware model using the hammer model create command. The only required parameter is --name. Optionally, enter the hardware model with the --hardware-model option, a vendor class with the --vendor-class option, and a description with the --info option:

# hammer model create --name "model_name" --info "description" \
--hardware-model "hardware_model" --vendor-class "vendor_class"

2.7. Using a Synced Kickstart Repository for a Host’s Operating System

Satellite contains a set of synchronized kickstart repositories that you use to install the provisioned host’s operating system. For more information about adding repositories, see Syncing Repositories in the Content Management Guide.

Use this procedure to set up a kickstart repository.

Prerequisites

You must enable both the BaseOS and AppStream Kickstart repositories before provisioning.

Procedure

- Add the synchronized kickstart repository that you want to use to the existing Content View, or create a new Content View and add the kickstart repository.

For Red Hat Enterprise Linux 8, ensure that you add both Red Hat Enterprise Linux 8 for x86_64 - AppStream Kickstart x86_64 8 and Red Hat Enterprise Linux 8 for x86_64 - BaseOS Kickstart x86_64 8 repositories.

If you use a disconnected environment, you must import the Kickstart repositories from a Red Hat Enterprise Linux binary DVD. For more information, see Importing Kickstart Repositories in the Content Management Guide.

- Publish a new version of the Content View where the kickstart repository is added and promote it to a required lifecycle environment. For more information, see Managing Content Views in the Content Management Guide.

- When you create a host, in the Operating System tab, for Media Selection, select the Synced Content check box.

To view the kickstart tree, enter the following command:

# hammer medium list --organization "your_organization"

2.8. Adding Installation Media to Satellite

Installation media are sources of packages that Satellite Server uses to install a base operating system on a machine from an external repository. You can use this parameter to install third-party content. Red Hat content is delivered through repository syncing instead.

Installation media must be in the format of an operating system installation tree, and must be accessible to the machine hosting the installer through an HTTP URL. You can view installation media by navigating to Hosts > Installation Media.

By default, Satellite includes installation media for some official Linux distributions. Note that some of those installation media are targeted for a specific version of an operating system. For example, CentOS mirror (7.x) must be used for CentOS 7 or earlier, and CentOS mirror (8.x) must be used for CentOS 8 or later.

If you want to improve download performance when using installation media to install operating systems on multiple hosts, you must modify the installation medium’s Path to point to the closest mirror or a local copy.

To use the CLI instead of the web UI, see the CLI procedure.

Procedure

- In the Satellite web UI, navigate to Hosts > Installation Media and click Create Medium.

- In the Name field, enter a name to represent the installation media entry.

- In the Path field, enter the URL or NFS share that contains the installation tree. You can use the following variables in the path to represent multiple different system architectures and versions:

- $arch - The system architecture.

- $version - The operating system version.

- $major - The operating system major version.

- $minor - The operating system minor version.

Example HTTP path:

http://download.example.com/centos/$version/Server/$arch/os/

Example NFS path:

nfs://download.example.com:/centos/$version/Server/$arch/os/

Synchronized content on Capsule Servers always uses an HTTP path. Capsule Server managed content does not support NFS paths.

- From the Operating system family list, select the distribution or family of the installation medium. For example, CentOS and Fedora are in the Red Hat family.

- Click the Organizations and Locations tabs to change the provisioning context. Satellite Server adds the installation medium to the set provisioning context.

- Click Submit to save your installation medium.
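To illustrate how the path variables from the procedure above resolve for a particular operating system, the following sketch imitates the substitution in plain Bash. It is not Satellite's own code. The function name expand_medium_path is hypothetical, and the rule that $version expands to major.minor when a minor version is set is an assumption.

```shell
#!/usr/bin/env bash
# Expand the $arch, $version, $major, and $minor placeholders in an
# installation medium path. For illustration only.
expand_medium_path() {
  local path="$1" major="$2" minor="$3" arch="$4"
  local version="$major"
  # Assumption: $version is "major.minor" when a minor version is set.
  if [ -n "$minor" ]; then
    version="$major.$minor"
  fi
  # Replace each literal placeholder with its value.
  path="${path//\$arch/$arch}"
  path="${path//\$version/$version}"
  path="${path//\$major/$major}"
  path="${path//\$minor/$minor}"
  printf '%s\n' "$path"
}

# Example: major version 7, minor version 3, x86_64 architecture.
expand_medium_path 'http://download.example.com/centos/$version/Server/$arch/os/' 7 3 x86_64
```

Note the single quotes around the path in the example. They prevent the shell from expanding $version and $arch before the path reaches the function, which is also why the hammer medium create example quotes its --path value the same way.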

CLI procedure

Create the installation medium using the hammer medium create command:

# hammer medium create --name "CustomOS" --os-family "Redhat" \
--path 'http://download.example.com/centos/$version/Server/$arch/os/' \
--organizations "My_Organization" --locations "My_Location"

2.9. Creating Partition Tables

A partition table is a type of template that defines the way Satellite Server configures the disks available on a new host. A Partition table uses the same ERB syntax as provisioning templates. Red Hat Satellite contains a set of default partition tables to use, including a Kickstart default. You can also edit partition table entries to configure the preferred partitioning scheme, or create a partition table entry and add it to the operating system entry.

To use the CLI instead of the web UI, see the CLI procedure.

Procedure

- In the Satellite web UI, navigate to Hosts > Partition Tables and click Create Partition Table.

- In the Name field, enter a name for the partition table.

- Select the Default check box if you want to set the template to automatically associate with new organizations or locations.

- Select the Snippet check box if you want to identify the template as a reusable snippet for other partition tables.

- From the Operating System Family list, select the distribution or family of the partitioning layout. For example, Red Hat Enterprise Linux, CentOS, and Fedora are in the Red Hat family.

- In the Template editor field, enter the layout for the disk partition. For example:

zerombr
clearpart --all --initlabel
autopart

You can also use the Template file browser to upload a template file.

The format of the layout must match that for the intended operating system. For example, Red Hat Enterprise Linux 7.2 requires a layout that matches a kickstart file.

- In the Audit Comment field, add a summary of changes to the partition layout.

- Click the Organizations and Locations tabs to add any other provisioning contexts that you want to associate with the partition table. Satellite adds the partition table to the current provisioning context.

- Click Submit to save your partition table.

CLI procedure

- Before you create a partition table with the CLI, create a plain text file that contains the partition layout. This example uses the ~/my-partition file.

- Create the partition table using the hammer partition-table create command:

# hammer partition-table create --name "My Partition" --snippet false \
--os-family Redhat --file ~/my-partition --organizations "My_Organization" \
--locations "My_Location"
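The layout file for the CLI procedure can be created with any text editor. As a convenience, the following sketch writes the simple layout shown in the web UI procedure to a file. The function name write_partition_layout is hypothetical, and the default target ~/my-partition matches the file name used in this example.

```shell
#!/usr/bin/env bash
# Write a simple Kickstart partition layout to the given file
# (default: ~/my-partition, the file name used in this example).
write_partition_layout() {
  local file="${1:-$HOME/my-partition}"
  # The quoted delimiter keeps the heredoc content literal.
  cat > "$file" <<'EOF'
zerombr
clearpart --all --initlabel
autopart
EOF
}
```

Call write_partition_layout without arguments before running hammer partition-table create with the --file ~/my-partition option.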

2.10. Dynamic Partition Example

Using an Anaconda kickstart template, the following section instructs Anaconda to erase the whole disk, automatically partition, enlarge one partition to maximum size, and then proceed to the next sequence of events in the provisioning process:

zerombr
clearpart --all --initlabel
autopart <%= host_param('autopart_options') %>

Dynamic partitioning is executed by the installation program. Therefore, you can write your own rules to specify how you want to partition disks according to runtime information from the node, for example, disk sizes, number of drives, vendor, or manufacturer.

If you want to provision servers and use dynamic partitioning, add the following example as a template. When the #Dynamic entry is included, the content of the template loads into a %pre shell scriptlet and creates a /tmp/diskpart.cfg that is then included into the Kickstart partitioning section.
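A dynamic partition table along these lines might look like the following sketch, which sizes the swap partition from the memory detected at installation time. The swap thresholds, partition sizes, file system type, and the helper name swap_size_mb are illustrative assumptions, not Red Hat recommendations. Adjust them to your environment before use.

```shell
#Dynamic (do not remove this line)

# Choose a swap size in MiB from the amount of RAM in MiB.
# The thresholds are illustrative only.
swap_size_mb() {
  local mem_mb="$1"
  if [ "$mem_mb" -lt 2048 ]; then
    echo $((mem_mb * 2))
  elif [ "$mem_mb" -lt 8192 ]; then
    echo "$mem_mb"
  else
    echo 8192
  fi
}

# Read total memory (reported in KiB) and convert it to MiB.
mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
swap_mb="$(swap_size_mb $((mem_kb / 1024)))"

# Write the partitioning commands that Kickstart includes later.
cat > /tmp/diskpart.cfg <<EOF
zerombr
clearpart --all --initlabel
part /boot --fstype ext4 --size 512 --asprimary
part swap --size $swap_mb
part / --fstype ext4 --size 1024 --grow
EOF
```

Because the script runs on the host during installation, it can inspect any runtime information that is available there, such as the disk list, instead of or in addition to memory size.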

2.11. Provisioning Templates

A provisioning template defines the way Satellite Server installs an operating system on a host.

Red Hat Satellite includes many template examples. In the Satellite web UI, navigate to Hosts > Provisioning templates to view them. You can create a template or clone a template and edit the clone. For help with templates, navigate to Hosts > Provisioning templates > Create Template > Help.

Templates accept the Embedded Ruby (ERB) syntax. For more information, see Template Writing Reference in Managing Hosts.

You can download provisioning templates. Before you can download the template, you must create a debug certificate. For more information, see Creating an Organization Debug Certificate in the Content Management Guide.

You can synchronize templates between Satellite Server and a Git repository or a local directory. For more information, see Synchronizing Templates Repositories in the Managing Hosts guide.

To view the history of changes applied to a template, navigate to Hosts > Provisioning templates, select one of the templates, and click History. Click Revert to override the content with the previous version. You can also revert to an earlier change. Click Show Diff to see information about a specific change:

- The Template Diff tab displays changes in the body of a provisioning template.

- The Details tab displays changes in the template description.

- The History tab displays the user who made a change to the template and date of the change.

2.12. Types of Provisioning Templates

There are various types of provisioning templates:

- Provision

- The main template for the provisioning process. For example, a kickstart template. For more information about kickstart template syntax, see the Kickstart Syntax Reference in the Red Hat Enterprise Linux 7 Installation Guide.

- PXELinux, PXEGrub, PXEGrub2

- PXE-based templates that deploy to the TFTP Capsule associated with a subnet to ensure that the host uses the installer with the correct kernel options. For BIOS provisioning, select the PXELinux template. For UEFI provisioning, select the PXEGrub2 template.

- Finish

- Post-configuration scripts to execute using an SSH connection when the main provisioning process completes. You can use Finish templates only for image-based provisioning in virtual or cloud environments that do not support user_data. Do not confuse an image with a Foreman discovery ISO, which is sometimes called a Foreman discovery image. An image in this context is an install image in a virtualized environment for easy deployment.

When a finish script successfully exits with the return code 0, Red Hat Satellite treats the code as a success and the host exits the build mode. Note that there are a few finish scripts with a build mode that uses a call back HTTP call. These scripts are not used for image-based provisioning, but for post configuration of operating-system installations such as Debian, Ubuntu, and BSD.

- user_data

- Post-configuration scripts for providers that accept custom data, also known as seed data. You can use the user_data template to provision virtual machines in cloud or virtualized environments only. This template does not require Satellite to be able to reach the host; the cloud or virtualization platform is responsible for delivering the data to the image.

Ensure that the image that you want to provision has the software to read the data installed and set to start during boot. For example, cloud-init, which expects YAML input, or ignition, which expects JSON input.

- cloud_init

- Some environments, such as VMware, either do not support custom data or have their own data format that limits what can be done during customization. In this case, you can configure a cloud-init client with the foreman plug-in, which attempts to download the template directly from Satellite over HTTP or HTTPS. This technique can be used in any environment, preferably virtualized.

Ensure that you meet the following requirements to use the cloud_init template:

- Ensure that the image that you want to provision has the software to read the data installed and set to start during boot.

- A provisioned host is able to reach Satellite from the IP address that matches the host’s provisioning interface IP.

Note that cloud-init does not work behind NAT.

- Bootdisk

- Templates for PXE-less boot methods.

- Kernel Execution (kexec)

- Kernel execution templates for PXE-less boot methods.

Note

Kernel Execution is a Technology Preview feature. Technology Preview features are not fully supported under Red Hat Subscription Service Level Agreements (SLAs), may not be functionally complete, and are not intended for production use. However, these features provide early access to upcoming product innovations, enabling customers to test functionality and provide feedback during the development process.

- Script

- An arbitrary script not used by default but useful for custom tasks.

- ZTP

- Zero Touch Provisioning templates.

- POAP

- PowerOn Auto Provisioning templates.

- iPXE

- Templates for iPXE or gPXE environments to use instead of PXELinux.

2.13. Creating Provisioning Templates

A provisioning template defines the way Satellite Server installs an operating system on a host. Use this procedure to create a new provisioning template.

Procedure

- In the Satellite web UI, navigate to Hosts > Provisioning Templates and click Create Template.

- In the Name field, enter a name for the provisioning template.

- Fill in the rest of the fields as required. The Help tab provides information about the template syntax and details the available functions, variables, and methods that can be called on different types of objects within the template.

CLI procedure

- Before you create a template with the CLI, create a plain text file that contains the template. This example uses the ~/my-template file.

- Create the template using the hammer template create command and specify the type with the --type option:

# hammer template create --name "My Provisioning Template" \
--file ~/my-template --type provision --organizations "My_Organization" \
--locations "My_Location"

2.14. Cloning Provisioning Templates

A provisioning template defines the way Satellite Server installs an operating system on a host. Use this procedure to clone a template and add your updates to the clone.

Procedure

- In the Satellite web UI, navigate to Hosts > Provisioning Templates and search for the template that you want to use.

- Click Clone to duplicate the template.

- In the Name field, enter a name for the provisioning template.

- Select the Default check box to set the template to associate automatically with new organizations or locations.

- In the Template editor field, enter the body of the provisioning template. You can also use the Template file browser to upload a template file.

- In the Audit Comment field, enter a summary of changes to the provisioning template for auditing purposes.

- Click the Type tab and if your template is a snippet, select the Snippet check box. A snippet is not a standalone provisioning template, but a part of a provisioning template that can be inserted into other provisioning templates.

- From the Type list, select the type of the template. For example, Provisioning template.

- Click the Association tab and from the Applicable Operating Systems list, select the names of the operating systems that you want to associate with the provisioning template.

- Optionally, click Add combination and select a host group from the Host Group list or an environment from the Environment list to associate the provisioning template with the host groups and environments.

- Click the Organizations and Locations tabs to add any additional contexts to the template.

- Click Submit to save your provisioning template.

2.15. Creating Compute Profiles

You can use compute profiles to predefine virtual machine hardware details such as CPUs, memory, and storage. A default installation of Red Hat Satellite contains three predefined profiles:

- 1-Small

- 2-Medium

- 3-Large

Procedure

- In the Satellite web UI, navigate to Infrastructure > Compute Profiles and click Create Compute Profile.

- In the Name field, enter a name for the profile.

- Click Submit. A new window opens with the name of the compute profile.

- In the new window, click the name of each compute resource and edit the attributes you want to set for this compute profile.

CLI procedure

The compute profile CLI commands are not yet implemented in Red Hat Satellite 6.10.

2.16. Setting a Default Encrypted Root Password for Hosts

If you do not want to set a plain text default root password for the hosts that you provision, you can use a default encrypted password.

Procedure

- Generate an encrypted password:

# python -c 'import crypt,getpass;pw=getpass.getpass(); print(crypt.crypt(pw)) if (pw==getpass.getpass("Confirm: ")) else exit()'

- Copy the password for later use.

- In the Satellite web UI, navigate to Administer > Settings.

- On the Settings page, select the Provisioning tab.

- In the Name column, navigate to Root password, and click Click to edit.

- Paste the encrypted password, and click Save.

2.17. Using noVNC to Access Virtual Machines

You can use your browser to access the VNC console of VMs created by Satellite.

Satellite supports using noVNC on the following virtualization platforms:

- VMware

- Libvirt

- Red Hat Virtualization

Prerequisites

- You must have a virtual machine created by Satellite.

- For existing virtual machines, ensure that the Display type in the Compute Resource settings is VNC.

- You must import the Katello root CA certificate into your Satellite Server. Adding a security exception in the browser is not enough for using noVNC. For more information, see the Installing the Katello Root CA Certificate section in the Administering Red Hat Satellite guide.

Procedure

- On the VM host system, configure the firewall to allow VNC service on ports 5900 to 5930:

On Red Hat Enterprise Linux 6:

# iptables -A INPUT -p tcp --dport 5900:5930 -j ACCEPT
# service iptables save

On Red Hat Enterprise Linux 7:

# firewall-cmd --add-port=5900-5930/tcp
# firewall-cmd --add-port=5900-5930/tcp --permanent

- In the Satellite web UI, navigate to Infrastructure > Compute Resources and select the name of a compute resource.

- In the Virtual Machines tab, select the name of a VM host. Ensure the machine is powered on and then select Console.

Chapter 3. Configuring Networking

Each provisioning type requires some network configuration. Use this chapter to configure network services in your integrated Capsule on Satellite Server.

New hosts must have access to your Capsule Server. Capsule Server can be either your integrated Capsule on Satellite Server or an external Capsule Server. You might want to provision hosts from an external Capsule Server when the hosts are on isolated networks and cannot connect to Satellite Server directly, or when the content is synchronized with Capsule Server. Provisioning using the external Capsule Server can save on network bandwidth.

Configuring Capsule Server has two basic requirements:

- Configuring network services. This includes:

- Content delivery services

- Network services (DHCP, DNS, and TFTP)

- Puppet configuration

- Defining network resource data in Satellite Server to help configure network interfaces on new hosts.

The following instructions also apply, with minor differences, to configuring standalone Capsules that manage a specific network. To configure Satellite to use external DHCP, DNS, and TFTP services, see Configuring External Services in Installing Satellite Server from a Connected Network.

3.1. Network Resources

Satellite contains networking resources that you must set up and configure to create a host. Satellite includes the following networking resources:

- Domain

- You must assign every host that is managed by Satellite to a domain. Using the domain, Satellite can manage A, AAAA, and PTR records. Even if you do not want Satellite to manage your DNS servers, you still must create and associate at least one domain. Domains are included in the naming conventions of Satellite hosts. For example, a host with the name test123 in the example.com domain has the fully qualified domain name test123.example.com.

- Subnet

- You must assign every host managed by Satellite to a subnet. Using subnets, Satellite can then manage IPv4 reservations. If there are no reservation integrations, you still must create and associate at least one subnet. When you manage a subnet in Satellite, you cannot create DHCP records for that subnet outside of Satellite. In Satellite, you can use IP Address Management (IPAM) to manage IP addresses with one of the following options:

- DHCP: DHCP Capsule manages the assignment of IP addresses by finding the next available IP address starting from the first address of the range and skipping all addresses that are reserved. Before assigning an IP address, Capsule sends ICMP and TCP pings to check whether the IP address is in use. Note that if a host is powered off, or has a firewall configured to block connections, Satellite falsely assumes that the IP address is available. Because of this limitation, use the DHCP option only with subnets that Satellite controls and that do not have any hosts created externally.

The Capsule DHCP module retains the offered IP addresses for a short period of time to prevent collisions during concurrent access, so some IP addresses in the IP range might remain temporarily unused.

- Internal DB: Satellite finds the next available IP address from the Subnet range by excluding all IP addresses from the Satellite database in sequence. The primary source of data is the database, not DHCP reservations. This IPAM is not safe when multiple hosts are being created in parallel; in that case, use DHCP or Random DB IPAM instead.

- Random DB: Satellite finds the next available IP address from the Subnet range by excluding all IP addresses from the Satellite database randomly. The primary source of data is the database, not DHCP reservations. This IPAM is safe to use with concurrent host creation as IP addresses are returned in random order, minimizing the chance of a conflict.

- EUI-64: Extended Unique Identifier (EUI) 64-bit IPv6 address generation, as per RFC 2373, derives the address from the 48-bit MAC address.

- External IPAM: Delegates IPAM to an external system through a Capsule feature. Satellite currently does not ship with any external IPAM implementations, but several plug-ins are in development.

- None: IP address for each host must be entered manually.

Options DHCP, Internal DB and Random DB can lead to DHCP conflicts on subnets with records created externally. These subnets must be under exclusive Satellite control.

For more information about adding a subnet, see Section 3.7, “Adding a Subnet to Satellite Server”.

- DHCP Ranges

- You can define the same DHCP range in Satellite Server for both discovered and provisioned systems, but use a separate range for each service within the same subnet.
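The difference between the Internal DB, Random DB, and EUI-64 IPAM options described under Subnet can be illustrated with a short Python sketch. This is not Satellite's implementation: the function names, the address range, and the MAC address are hypothetical, and the list of used addresses stands in for the Satellite database.

```python
import ipaddress
import random


def free_addresses(first, last, used):
    """Yield the addresses in [first, last] that are not in `used`, lowest first."""
    taken = {ipaddress.IPv4Address(a) for a in used}
    start = int(ipaddress.IPv4Address(first))
    end = int(ipaddress.IPv4Address(last))
    for value in range(start, end + 1):
        candidate = ipaddress.IPv4Address(value)
        if candidate not in taken:
            yield candidate


def internal_db(first, last, used):
    """Sequential pick: two parallel callers would receive the same address."""
    return next(free_addresses(first, last, used), None)


def random_db(first, last, used, rng=random):
    """Random pick: parallel callers are unlikely to receive the same address."""
    candidates = list(free_addresses(first, last, used))
    return rng.choice(candidates) if candidates else None


def eui64(prefix, mac):
    """Build an IPv6 address from a /64 prefix and a 48-bit MAC address."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("EUI-64 needs a /64 prefix")
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    octets[0] ^= 0x02  # flip the universal/local bit
    interface_id = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    return network[int.from_bytes(interface_id, "big")]


used = ["192.0.2.10", "192.0.2.11"]  # stand-in for addresses in the database
print(internal_db("192.0.2.10", "192.0.2.20", used))
print(random_db("192.0.2.10", "192.0.2.20", used))
print(eui64("2001:db8::/64", "52:54:00:12:34:56"))
```

The sequential pick is deterministic, which is why it is unsafe when hosts are created in parallel, while the random pick trades predictability for a lower chance of conflict.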

3.2. Satellite and DHCP Options

Satellite manages DHCP reservations through a DHCP Capsule. Satellite also sets the next-server and filename DHCP options.

The next-server option

The next-server option provides the IP address of the TFTP server to boot from. This option is not set by default and must be set for each TFTP Capsule. You can use the satellite-installer command with the --foreman-proxy-tftp-servername option to set the TFTP server in the /etc/foreman-proxy/settings.d/tftp.yml file:

# satellite-installer --foreman-proxy-tftp-servername 1.2.3.4

Each TFTP Capsule then reports this setting through the API and Satellite can retrieve the configuration information when it creates the DHCP record.

When the PXE loader is set to none, Satellite does not populate the next-server option into the DHCP record.

If the next-server option remains undefined, Satellite uses reverse DNS search to find a TFTP server address to assign, but you might encounter the following problems:

- DNS timeouts during provisioning

- Querying of incorrect DNS server. For example, authoritative rather than caching

- Errors about incorrect IP address for the TFTP server. For example, PTR record was invalid

If you encounter these problems, check the DNS setup on both Satellite and Capsule, specifically the PTR record resolution.

The filename option

The filename option contains the full path to the file that downloads and executes during provisioning. The PXE loader that you select for the host or host group defines which filename option to use. When the PXE loader is set to none, Satellite does not populate the filename option into the DHCP record. Depending on the PXE loader option, the filename changes as follows:

| PXE loader option | filename entry | Notes |
|---|---|---|
| PXELinux BIOS | | |
| PXELinux UEFI | | |
| iPXE Chain BIOS | | |
| PXEGrub2 UEFI | | x64 can differ depending on architecture |
| iPXE UEFI HTTP | | Requires the |
| Grub2 UEFI HTTP | | Requires the |

3.3. Troubleshooting DHCP Problems in Satellite

Satellite can manage an ISC DHCP server on an internal or external DHCP Capsule. Satellite can list, create, and delete DHCP reservations and leases. However, you might occasionally encounter the following problems.

Out of sync DHCP records

When an error occurs during DHCP orchestration, DHCP records in the Satellite database and the DHCP server might not match. To fix this, you must add missing DHCP records from the Satellite database to the DHCP server and then remove unwanted records from the DHCP server as per the following steps:

- To preview the DHCP records that are going to be added to the DHCP server, enter the following command:

# foreman-rake orchestration:dhcp:add_missing subnet_name=NAME

- If you are satisfied by the preview changes in the previous step, apply them by entering the above command with the perform=1 argument:

# foreman-rake orchestration:dhcp:add_missing subnet_name=NAME perform=1

- To keep DHCP records in Satellite and in the DHCP server synchronized, you can remove unwanted DHCP records from the DHCP server. Note that Satellite assumes that all managed DHCP servers do not contain third-party records, therefore, this step might delete those unexpected records. To preview what records are going to be removed from the DHCP server, enter the following command:

# foreman-rake orchestration:dhcp:remove_offending subnet_name=NAME

- If you are satisfied by the preview changes in the previous step, apply them by entering the above command with the perform=1 argument:

# foreman-rake orchestration:dhcp:remove_offending subnet_name=NAME perform=1
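Conceptually, the two rake tasks reconcile two sets of records. The following Python sketch models that idea with plain set differences. It is an illustration only, not the code behind foreman-rake: the function name and the (MAC address, IP address) record shape are assumptions made for the example.

```python
def plan_dhcp_sync(satellite_records, server_records):
    """Return (to_add, to_remove) for two collections of (mac, ip) tuples.

    to_add    models what add_missing would create on the DHCP server.
    to_remove models what remove_offending would delete from it.
    """
    satellite = set(satellite_records)
    server = set(server_records)
    to_add = sorted(satellite - server)
    to_remove = sorted(server - satellite)
    return to_add, to_remove


# Hypothetical data: one record is missing on the server, and one record on
# the server is unknown to Satellite (for example, a third-party record).
satellite_db = [
    ("52:54:00:aa:bb:01", "192.0.2.21"),
    ("52:54:00:aa:bb:02", "192.0.2.22"),
]
dhcp_server = [
    ("52:54:00:aa:bb:01", "192.0.2.21"),
    ("52:54:00:cc:dd:09", "192.0.2.99"),
]

to_add, to_remove = plan_dhcp_sync(satellite_db, dhcp_server)
print("would add:", to_add)
print("would remove:", to_remove)
```

Printing the plan before acting on it mirrors the preview step: the third-party record shows up in the removal list, which is the reason to review the preview before you apply the change with perform=1.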

PXE loader option change

When the PXE loader option is changed for an existing host, this causes a DHCP conflict. The only workaround is to overwrite the DHCP entry.

Incorrect permissions on DHCP files

An operating system update can update the dhcpd package. This causes the permissions of important directories and files to reset so that the DHCP Capsule cannot read the required information.

For more information, see DHCP error while provisioning host from Satellite server Error ERF12-6899 ProxyAPI::ProxyException: Unable to set DHCP entry RestClient::ResourceNotFound 404 Resource Not Found on Red Hat Knowledgebase.

Changing the DHCP Capsule entry

Satellite manages DHCP records only for hosts that are assigned to subnets with a DHCP Capsule set. If you create a host and then clear or change the DHCP Capsule, when you attempt to delete the host, the action fails.

If you create a host without setting the DHCP Capsule and then try to set the DHCP Capsule, this causes DHCP conflicts.

Deleted host entries in the dhcpd.leases file

Any changes to a DHCP lease are appended to the end of the dhcpd.leases file. Because entries are appended to the file, it is possible that two or more entries of the same lease can exist in the dhcpd.leases file at the same time. When there are two or more entries of the same lease, the last entry in the file takes precedence. Group, subgroup and host declarations in the lease file are processed in the same manner. If a lease is deleted, { deleted; } is appended to the declaration.
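The append-only behavior can be modeled by replaying the file from top to bottom and keeping only the last entry for each declaration. The following Python sketch does this for a simplified view of the file. The sample host names and addresses are hypothetical, and the regular expression is an assumption that handles only flat declarations whose closing brace starts a line.

```python
import re

# Matches "lease <ip> { ... }" and "host <name> { ... }" blocks whose closing
# brace starts a line. Simplified: nested braces are not handled.
DECLARATION = re.compile(
    r"^(lease|host)\s+(\S+)\s*\{(.*?)^\}", re.DOTALL | re.MULTILINE
)


def effective_declarations(text):
    """Return the declarations still in effect after replaying the file in order."""
    current = {}
    for kind, name, body in DECLARATION.findall(text):
        statements = [s.strip() for s in body.split(";") if s.strip()]
        if "deleted" in statements:
            # A later "deleted;" entry cancels any earlier entry.
            current.pop((kind, name), None)
        else:
            # A later entry replaces an earlier one for the same declaration.
            current[(kind, name)] = statements
    return current


SAMPLE = """\
host web01 {
  dynamic;
  hardware ethernet 52:54:00:aa:bb:01;
  fixed-address 192.0.2.21;
}
host web02 {
  dynamic;
  hardware ethernet 52:54:00:aa:bb:02;
  fixed-address 192.0.2.22;
}
host web02 {
  dynamic;
  hardware ethernet 52:54:00:aa:bb:02;
  fixed-address 192.0.2.32;
}
host web01 {
  dynamic;
  deleted;
}
"""

for (kind, name), statements in effective_declarations(SAMPLE).items():
    print(kind, name, statements)
```

In the sample, web01 is dropped because its last entry is marked deleted, and web02 keeps the address from its second entry.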

3.4. Prerequisites for Image Based Provisioning

Post-Boot Configuration Method

Images that use the finish post-boot configuration scripts require a managed DHCP server, such as Satellite’s integrated Capsule or an external Capsule. The host must be created with a subnet associated with a DHCP Capsule, and the IP address of the host must be a valid IP address from the DHCP range.

It is possible to use an external DHCP service, but IP addresses must be entered manually. The SSH credentials corresponding to the configuration in the image must be configured in Satellite to enable the post-boot configuration to be made.

Check the following items when troubleshooting a virtual machine booted from an image that depends on post-configuration scripts:

- The host has a subnet assigned in Satellite Server.

- The subnet has a DHCP Capsule assigned in Satellite Server.

- The host has a valid IP address assigned in Satellite Server.

- The IP address acquired by the virtual machine using DHCP matches the address configured in Satellite Server.

- The virtual machine created from an image responds to SSH requests.

- The virtual machine created from an image authorizes, over SSH, the user and password that are associated with the image being deployed.

- Satellite Server has access to the virtual machine via SSH keys. This is required for the virtual machine to receive post-configuration scripts from Satellite Server.

Pre-Boot Initialization Configuration Method

Images that use the cloud-init scripts require a DHCP server to avoid having to include the IP address in the image. A managed DHCP Capsule is preferred. The image must have the cloud-init service configured to start when the system boots and fetch a script or configuration data to use in completing the configuration.

Check the following items when troubleshooting a virtual machine booted from an image that depends on initialization scripts included in the image:

- There is a DHCP server on the subnet.

- The virtual machine has the cloud-init service installed and enabled.

For information about the differing levels of support for finish and cloud-init scripts in virtual-machine images, see the Red Hat Knowledgebase Solution What are the supported compute resources for the finish and cloud-init scripts on the Red Hat Customer Portal.

3.5. Configuring Network Services

Some provisioning methods use Capsule Server services. For example, a network might require Capsule Server to act as a DHCP server. A network can also use PXE boot services to install the operating system on new hosts. This requires configuring Capsule Server to use the main PXE boot services: DHCP, DNS, and TFTP.

Use the satellite-installer command with the options to configure these services on Satellite Server.

To configure these services on an external Capsule Server, run satellite-installer --scenario capsule.

Satellite Server uses eth0 for external communication, such as connecting to Red Hat’s CDN.

Procedure

To configure network services on Satellite’s integrated Capsule, complete the following steps:

- Enter the satellite-installer command to configure the required network services:

- Find Capsule Server that you configure:

# hammer proxy list

- Refresh features of Capsule Server to view the changes:

# hammer proxy refresh-features --name "satellite.example.com"

- Verify the services configured on Capsule Server:

# hammer proxy info --name "satellite.example.com"

3.5.1. Multiple Subnets or Domains via Installer

The satellite-installer options allow only for a single DHCP subnet or DNS domain. One way to define more than one subnet is by using a custom configuration file.

For every additional subnet or domain, create an entry in the /etc/foreman-installer/custom-hiera.yaml file:

Run satellite-installer to apply the changes, and verify that the /etc/dhcp/dhcpd.conf file contains the appropriate entries. You must then define the subnets in the Satellite database.

3.5.2. DHCP, DNS, and TFTP Options for Network Configuration

DHCP Options

- --foreman-proxy-dhcp

- Enables the DHCP service. You can set this option to true or false.

- --foreman-proxy-dhcp-managed

- Enables Foreman to manage the DHCP service. You can set this option to true or false.

- --foreman-proxy-dhcp-gateway

- The DHCP pool gateway. Set this to the address of the external gateway for hosts on your private network.

- --foreman-proxy-dhcp-interface

- Sets the interface for the DHCP service to listen for requests. Set this to eth1.

- --foreman-proxy-dhcp-nameservers

- Sets the addresses of the nameservers provided to clients through DHCP. Set this to the address for Satellite Server on eth1.

- --foreman-proxy-dhcp-range

- A space-separated DHCP pool range for Discovered and Unmanaged services.

- --foreman-proxy-dhcp-server

- Sets the address of the DHCP server to manage.

DNS Options

- --foreman-proxy-dns

- Enables DNS service. You can set this option to true or false.

- --foreman-proxy-dns-managed

- Enables Foreman to manage the DNS service. You can set this option to true or false.

- --foreman-proxy-dns-forwarders

- Sets the DNS forwarders. Set this to your DNS servers.

- --foreman-proxy-dns-interface

- Sets the interface to listen for DNS requests. Set this to eth1.

- --foreman-proxy-dns-reverse

- The DNS reverse zone name.

- --foreman-proxy-dns-server

- Sets the address of the DNS server to manage.

- --foreman-proxy-dns-zone

- Sets the DNS zone name.

TFTP Options

- --foreman-proxy-tftp

- Enables TFTP service. You can set this option to true or false.

- --foreman-proxy-tftp-managed

- Enables Foreman to manage the TFTP service. You can set this option to true or false.

- --foreman-proxy-tftp-servername

- Sets the TFTP server to use. Ensure that you use Capsule’s IP address.

Run satellite-installer --help to view more options related to DHCP, DNS, TFTP, and other Satellite Capsule services.
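To see how the options in this section combine into a single invocation, the following Python sketch assembles a satellite-installer command line from a dictionary and prints it without running it. The option names come from the lists above. The helper function, the 192.0.2.0/24 addresses, and the zone names are placeholders chosen for the example, so replace them with values from your own network.

```python
import shlex


def build_installer_command(options):
    """Turn {"dhcp": True, ...} into a satellite-installer argument list."""
    argv = ["satellite-installer"]
    for name, value in options.items():
        if isinstance(value, bool):
            value = "true" if value else "false"
        argv += [f"--foreman-proxy-{name}", str(value)]
    return argv


# Placeholder values for illustration only.
options = {
    "dhcp": True,
    "dhcp-managed": True,
    "dhcp-interface": "eth1",
    "dhcp-gateway": "192.0.2.1",
    "dhcp-nameservers": "192.0.2.2",
    "dhcp-range": "192.0.2.100 192.0.2.150",  # space-separated start and end
    "dns": True,
    "dns-managed": True,
    "dns-interface": "eth1",
    "dns-zone": "example.com",
    "dns-reverse": "2.0.192.in-addr.arpa",
    "tftp": True,
    "tftp-managed": True,
    "tftp-servername": "192.0.2.2",
}

# shlex.join quotes the space-separated range so the shell keeps it as a
# single argument.
print(shlex.join(build_installer_command(options)))
```

Keeping the values in one place makes it easier to review them before you run the installer, and to reuse the same set for an external Capsule.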

3.5.3. Using TFTP Services through NAT

You can use Satellite TFTP services through NAT. To do this, on all NAT routers or firewalls, you must enable a TFTP service on UDP port 69 and enable the TFTP state tracking feature. For more information, see the documentation for your NAT device.

Using NAT on Red Hat Enterprise Linux 7:

Use the following command to allow TFTP service on UDP port 69, load the kernel TFTP state tracking module, and make the changes persistent:

# firewall-cmd --add-service=tftp && firewall-cmd --runtime-to-permanent

For a NAT running on Red Hat Enterprise Linux 6:

- Configure the firewall to allow TFTP service on UDP port 69.

# iptables -A OUTPUT -i eth0 -p udp --sport 69 -m state \
--state ESTABLISHED -j ACCEPT
# service iptables save

- Load the ip_conntrack_tftp kernel TFTP state module. In the /etc/sysconfig/iptables-config file, locate IPTABLES_MODULES and add ip_conntrack_tftp as follows:

IPTABLES_MODULES="ip_conntrack_tftp"

3.6. Adding a Domain to Satellite Server

Satellite Server defines domain names for each host on the network. Satellite Server must have information about the domain and Capsule Server responsible for domain name assignment.

Checking for Existing Domains

Satellite Server might already have the relevant domain created as part of Satellite Server installation. Switch the context to Any Organization and Any Location then check the domain list to see if it exists.

DNS Server Configuration Considerations

During DNS record creation, Satellite performs DNS conflict lookups to verify that the host name is not in active use. This check runs against one of the following DNS servers:

- The system-wide resolver if Administer > Settings > Query local nameservers is set to true.

- The nameservers that are defined in the subnet associated with the host.

- The authoritative NS-Records that are queried from the SOA from the domain name associated with the host.

If you experience timeouts during DNS conflict resolution, check the following settings:

- The subnet nameservers must be reachable from Satellite Server.

- The domain name must have a Start of Authority (SOA) record available from Satellite Server.

- The system resolver in the /etc/resolv.conf file must have a valid and working configuration.

To use the CLI instead of the web UI, see the CLI procedure.

Procedure

To add a domain to Satellite, complete the following steps:

- In the Satellite web UI, navigate to Infrastructure > Domains and click Create Domain.

- In the DNS Domain field, enter the full DNS domain name.

- In the Fullname field, enter the plain text name of the domain.

- Click the Parameters tab and configure any domain level parameters to apply to hosts attached to this domain. For example, user defined Boolean or string parameters to use in templates.

- Click Add Parameter and fill in the Name and Value fields.

- Click the Locations tab, and add the location where the domain resides.

- Click the Organizations tab, and add the organization that the domain belongs to.

- Click Submit to save the changes.

CLI procedure

- Use the hammer domain create command to create a domain:

# hammer domain create --name "domain_name.com" \
--description "My example domain" --dns-id 1 \
--locations "My_Location" --organizations "My_Organization"

In this example, the --dns-id option uses 1, which is the ID of your integrated Capsule on Satellite Server.

3.7. Adding a Subnet to Satellite Server

You must add information for each of your subnets to Satellite Server because Satellite configures interfaces for new hosts. To configure interfaces, Satellite Server must have all the information about the network that connects these interfaces.

To use the CLI instead of the web UI, see the CLI procedure.

Procedure

- In the Satellite web UI, navigate to Infrastructure > Subnets, and in the Subnets window, click Create Subnet.

- In the Name field, enter a name for the subnet.

- In the Description field, enter a description for the subnet.

- In the Network address field, enter the network address for the subnet.

- In the Network prefix field, enter the network prefix for the subnet.

- In the Network mask field, enter the network mask for the subnet.

- In the Gateway address field, enter the external gateway for the subnet.

- In the Primary DNS server field, enter a primary DNS for the subnet.

- In the Secondary DNS server field, enter a secondary DNS for the subnet.

- From the IPAM list, select the method that you want to use for IP address management (IPAM). For more information about IPAM, see Section 3.1, “Network Resources”.

- Enter the information for the IPAM method that you select.

- Click the Remote Execution tab and select the Capsule that controls the remote execution.

- Click the Domains tab and select the domains that apply to this subnet.

- Click the Capsules tab and select the Capsule that applies to each service in the subnet, including DHCP, TFTP, and reverse DNS services.

- Click the Parameters tab and configure any subnet level parameters to apply to hosts attached to this subnet. For example, user defined Boolean or string parameters to use in templates.

- Click the Locations tab and select the locations that use this Capsule.

- Click the Organizations tab and select the organizations that use this Capsule.

- Click Submit to save the subnet information.
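The Network address, Network prefix, and Network mask fields in the procedure above describe the same subnet in different notations. If you start from CIDR notation, a short Python sketch with the standard ipaddress module can derive all three. The function name and the 192.0.2.0/24 network are placeholders for illustration.

```python
import ipaddress


def subnet_fields(cidr):
    """Derive the web UI field values from a CIDR string such as 192.0.2.0/24."""
    # strict=True rejects input with host bits set, for example 192.0.2.5/24.
    network = ipaddress.ip_network(cidr, strict=True)
    return {
        "Network address": str(network.network_address),
        "Network prefix": network.prefixlen,
        "Network mask": str(network.netmask),
    }


for field, value in subnet_fields("192.0.2.0/24").items():
    print(f"{field}: {value}")
```

Validating the input this way catches a common mistake, entering a host address where the network address is expected, before the values reach the form.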

CLI procedure

Create the subnet with the following command:


In this example, the --dhcp-id, --dns-id, and --tftp-id options use 1, which is the ID of the integrated Capsule in Satellite Server.

Chapter 4. Using Infoblox as DHCP and DNS Providers

You can use Capsule Server to connect to your Infoblox application to create and manage DHCP and DNS records, and to reserve IP addresses.

Infoblox integration requires NIOS 8.0 or higher and Satellite 6.10 or higher.

4.1. Limitations

All DHCP and DNS records can be managed only in a single Network or DNS view. After you install the Infoblox modules on Capsule and set up the view using the satellite-installer command, you cannot edit the view.

Capsule Server communicates with a single Infoblox node using the standard HTTPS web API. If you want to configure clustering and High Availability, make the configurations in Infoblox.

Hosting PXE-related files using Infoblox’s TFTP functionality is not supported. You must use Capsule as a TFTP server for PXE provisioning. For more information, see Chapter 3, Configuring Networking.

The Satellite IPAM feature cannot be integrated with Infoblox.

4.2. Prerequisites

You must have Infoblox account credentials to manage DHCP and DNS entries in Satellite.

Ensure that you have Infoblox administration roles with the names: DHCP Admin and DNS Admin.

The administration roles must have permissions or belong to an admin group that permits the accounts to perform tasks through the Infoblox API.

4.3. Installing the Infoblox CA Certificate on Capsule Server

You must install the Infoblox HTTPS CA certificate on the base system for all Capsules that you want to integrate with Infoblox applications.

You can download the certificate from the Infoblox web UI, or you can use the following OpenSSL commands to download the certificate:

# update-ca-trust enable
# openssl s_client -showcerts -connect infoblox.example.com:443 </dev/null | \
openssl x509 -text >/etc/pki/ca-trust/source/anchors/infoblox.crt
# update-ca-trust extract

- The infoblox.example.com entry must match the host name for the Infoblox application in the X509 certificate.

To test the CA certificate, use a CURL query:

# curl -u admin:password https://infoblox.example.com/wapi/v2.0/network

Example positive response:

Use the following Red Hat Knowledgebase article to install the certificate: How to install a CA certificate on Red Hat Enterprise Linux 6 / 7.

4.4. Installing the DHCP Infoblox module

Use this procedure to install the DHCP Infoblox module on Capsule. Note that you cannot manage records in separate views.

You can also install DHCP and DNS Infoblox modules simultaneously by combining this procedure and Section 4.5, “Installing the DNS Infoblox Module”.

DHCP Infoblox Record Type Considerations

Use only the --foreman-proxy-plugin-dhcp-infoblox-record-type fixedaddress option to configure the DHCP and DNS modules.

Configuring both DHCP and DNS Infoblox modules with the host record type setting causes DNS conflicts and is not supported. If you install the Infoblox module on Capsule Server with the --foreman-proxy-plugin-dhcp-infoblox-record-type option set to host, you must unset both DNS Capsule and Reverse DNS Capsule options because Infoblox does the DNS management itself. You cannot use the host option without creating conflicts and, for example, being unable to rename hosts in Satellite.

Procedure

To install the Infoblox module for DHCP, complete the following steps:

- On Capsule, enter the following command:

- In the Satellite web UI, navigate to Infrastructure > Capsules and select the Capsule with the Infoblox DHCP module and click Refresh.

- Ensure that the dhcp features are listed.

- For all domains managed through Infoblox, ensure that the DNS Capsule is set for that domain. To verify, in the Satellite web UI, navigate to Infrastructure > Domains, and inspect the settings of each domain.

- For all subnets managed through Infoblox, ensure that DHCP Capsule and Reverse DNS Capsule are set. To verify, in the Satellite web UI, navigate to Infrastructure > Subnets, and inspect the settings of each subnet.

4.5. Installing the DNS Infoblox Module

Use this procedure to install the DNS Infoblox module on Capsule. You can also install DHCP and DNS Infoblox modules simultaneously by combining this procedure and Section 4.4, “Installing the DHCP Infoblox module”.

DNS records are managed only in the default DNS view; it is not possible to specify which DNS view to use.

To install the DNS Infoblox module, complete the following steps:

- On Capsule, enter the following command to configure the Infoblox module:

Optionally, you can change the value of the --foreman-proxy-plugin-dns-infoblox-dns-view option to specify a DNS Infoblox view other than the default view.

- In the Satellite web UI, navigate to Infrastructure > Capsules and select the Capsule with the Infoblox DNS module and click Refresh.

- Ensure that the dns features are listed.

Chapter 5. Configuring iPXE to Reduce Provisioning Times

You can use Satellite to configure PXELinux to chainboot iPXE in BIOS mode and boot using the HTTP protocol if you have the following restrictions that prevent you from using PXE:

- A network with unmanaged DHCP servers.

- A PXE service that is blacklisted on your network or restricted by a firewall.

- An unreliable TFTP UDP-based protocol because of, for example, a low-bandwidth network.

For more information about iPXE support, see the Supported architectures for provisioning article.

iPXE Overview

iPXE is an open source network boot firmware. It provides a full PXE implementation enhanced with additional features, including booting from HTTP server. For more information, see ipxe.org.

There are three methods of using iPXE with Red Hat Satellite:

- Booting virtual machines using hypervisors that use iPXE as primary firmware.

- Using PXELinux through TFTP to chainload iPXE directly on bare metal hosts.

- Using PXELinux through UNDI, which uses HTTP to transfer the kernel and the initial RAM disk on bare-metal hosts.

Security Information

The iPXE binary in Red Hat Enterprise Linux is built without any security features. For this reason, you can only use HTTP, and cannot use HTTPS.

All security-related features of iPXE in Red Hat Enterprise Linux are not supported. For more information, see Red Hat Enterprise Linux HTTPS support in iPXE.

Prerequisites

Before you begin, ensure that the following conditions are met:

- A host exists on Red Hat Satellite to use.

- The MAC address of the provisioning interface matches the host configuration.

- The provisioning interface of the host has a valid DHCP reservation.

- The NIC is capable of PXE booting. For more information, see supported hardware on ipxe.org for a list of hardware drivers expected to work with an iPXE-based boot disk.

- The NIC is compatible with iPXE.

To prepare the iPXE environment, you must perform this procedure on all Capsules.

Procedure

- Enable the tftp and httpboot services:

# satellite-installer --foreman-proxy-httpboot true --foreman-proxy-tftp true

- Install the ipxe-bootimgs RPM package:

# yum install ipxe-bootimgs

- Copy the iPXE firmware with the Linux kernel header to the TFTP directory:

# cp /usr/share/ipxe/ipxe.lkrn /var/lib/tftpboot/

- Copy the UNDI iPXE firmware to the TFTP directory:

# cp /usr/share/ipxe/undionly.kpxe /var/lib/tftpboot/undionly-ipxe.0

- Correct the SELinux file contexts:

# restorecon -RvF /var/lib/tftpboot/

- Optionally, configure Foreman discovery. For more information, see Chapter 7, Configuring the Discovery Service.

- In the Satellite web UI, navigate to Administer > Settings, and click the Provisioning tab.

- Locate the Default PXE global template entry row and, in the Value column, change the value to discovery.
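Before moving on, the firmware copy steps in this procedure can be sanity-checked on each Capsule. The following is a minimal sketch, not a Satellite tool: the function name check_ipxe_files is hypothetical, and it only tests for the two file names that the copy steps create in the TFTP directory.

```shell
#!/bin/sh
# check_ipxe_files is a hypothetical helper, not part of Satellite.
# It reports whether the two firmware files copied in the procedure
# exist in the given TFTP directory (default: /var/lib/tftpboot).
check_ipxe_files() {
    tftp_dir="${1:-/var/lib/tftpboot}"
    missing=0
    for f in ipxe.lkrn undionly-ipxe.0; do
        if [ -f "$tftp_dir/$f" ]; then
            echo "found: $tftp_dir/$f"
        else
            echo "missing: $tftp_dir/$f"
            missing=1
        fi
    done
    # Non-zero exit status when at least one file is absent.
    return "$missing"
}
```

Calling check_ipxe_files with no argument checks /var/lib/tftpboot. The function does not verify SELinux contexts, so the restorecon step is still required.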

5.1. Booting virtual machines

Some virtualization hypervisors use iPXE as primary firmware for PXE booting. Because of this, you can boot virtual machines without TFTP and PXELinux.

Chainbooting virtual machine workflow

Using virtualization hypervisors removes the need for TFTP and PXELinux. It has the following workflow:

- Virtual machine starts

- iPXE retrieves the network credentials using DHCP

- iPXE retrieves the HTTP address using DHCP

- iPXE loads the iPXE bootstrap template from Capsule

- iPXE loads the iPXE template with MAC as a URL parameter from Capsule

- iPXE loads the kernel and initial RAM disk of the installer

Ensure that the hypervisor that you want to use supports iPXE. The following virtualization hypervisors support iPXE:

- libvirt

- Red Hat Virtualization

- RHEV

Configuring Satellite Server to use iPXE

You can use the default template to configure iPXE booting for hosts. If you want to change the default values in the template, clone the template and edit the clone.

Procedure

- In the Satellite web UI, navigate to Hosts > Provisioning Templates, enter Kickstart default iPXE and click Search.

- Optional: If you want to change the template, click Clone, enter a unique name, and click Submit.

- Click the name of the template you want to use.

- If you clone the template, you can make changes you require on the Template tab.

- Click the Association tab and select the operating systems that your host uses.

- Click the Locations tab and add the location where the host resides.

- Click the Organizations tab and add the organization that the host belongs to.

- Click Submit to save the changes.

- Navigate to Hosts > Operating systems and select the operating system of your host.

- Click the Templates tab.

- From the iPXE Template list, select the template you want to use.

- Click Submit to save the changes.

- Navigate to Hosts > All Hosts.

- In the Hosts page, select the host that you want to use.

- Select the Operating System tab.

- Set PXE Loader to iPXE Embedded or None.

- Select the Templates tab.

- From the iPXE template list, select Review to verify that the Kickstart default iPXE template is the correct template.

- Configure the dhcpd.conf file as follows:

if exists user-class and option user-class = "iPXE" {
  filename "http://capsule.example.com:8000/unattended/iPXE?bootstrap=1";
} # elseif existing statements if non-iPXE environment should be preserved

If you use an isolated network, use a Capsule Server URL with TCP port 8000, instead of the URL of Satellite Server.

Note

If you have changed the port using the --foreman-proxy-http-port installer option, use your custom port. You must update the /etc/dhcp/dhcpd.conf file after every upgrade.
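Because the file must be updated after every upgrade and the port can differ from 8000, it can help to generate the conditional rather than retype it. The following is a minimal sketch under stated assumptions: ipxe_dhcpd_fragment is a hypothetical helper name, and it prints only the conditional from this section for a given Capsule host name and port. It does not edit /etc/dhcp/dhcpd.conf.

```shell
#!/bin/sh
# ipxe_dhcpd_fragment is a hypothetical helper, not part of Satellite.
# It prints the dhcpd.conf conditional from this section for a given
# Capsule host name ($1) and HTTP port ($2, default 8000).
ipxe_dhcpd_fragment() {
    capsule_host="$1"
    http_port="${2:-8000}"
    printf 'if exists user-class and option user-class = "iPXE" {\n'
    printf '  filename "http://%s:%s/unattended/iPXE?bootstrap=1";\n' \
        "$capsule_host" "$http_port"
    printf '}\n'
}
```

For example, ipxe_dhcpd_fragment capsule.example.com prints the conditional with port 8000, and a second argument substitutes a custom port. Review the output and merge it by hand so that existing statements for non-iPXE clients are preserved.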

5.2. Chainbooting iPXE from PXELinux

Use this procedure to set up iPXE to use either a built-in driver for network communication or the UNDI interface. To use HTTP with iPXE, use the iPXE build with built-in drivers (ipxe.lkrn). Universal Network Device Interface (UNDI) is a minimalistic UDP/IP stack that implements a TFTP client but cannot support other protocols such as HTTP (undionly-ipxe.0). Load either the ipxe.lkrn or the undionly-ipxe.0 file, depending on the networking hardware capabilities and iPXE driver availability.
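The choice between the two firmware files can be written down as a small lookup. The following is an illustrative sketch only: the function name ipxe_firmware_for and the mode labels builtin and undi are hypothetical, while the file names are the ones used in this guide.

```shell
#!/bin/sh
# ipxe_firmware_for is a hypothetical helper, not part of Satellite.
# It maps a driver mode to the firmware file name used in this guide:
#   builtin -> iPXE build with built-in drivers
#   undi    -> iPXE build that relies on the UNDI interface
ipxe_firmware_for() {
    case "$1" in
        builtin) echo "ipxe.lkrn" ;;
        undi)    echo "undionly-ipxe.0" ;;
        *)       echo "unknown driver mode: $1" >&2; return 1 ;;
    esac
}
```

For example, ipxe_firmware_for undi prints undionly-ipxe.0. Which mode suits a given host still depends on whether iPXE has a driver for its NIC.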

Chainbooting iPXE directly or with UNDI workflow

- Host powers on

- PXE driver retrieves the network credentials using DHCP

- PXE driver retrieves the PXELinux firmware pxelinux.0 using TFTP

- PXELinux searches for the configuration file on the TFTP server

- PXELinux chainloads iPXE ipxe.lkrn or undionly-ipxe.0

- iPXE retrieves the network credentials using DHCP again

- iPXE retrieves the HTTP address using DHCP

- iPXE chainloads the iPXE template from the template Capsule

- iPXE loads the kernel and initial RAM disk of the installer

Configuring Red Hat Satellite Server to use iPXE

You can use the default template to configure iPXE booting for hosts. If you want to change the default values in the template, clone the template and edit the clone.

- In the Satellite web UI, navigate to Hosts > Provisioning Templates.

- Enter PXELinux chain iPXE to use ipxe.lkrn or, for BIOS systems, enter PXELinux chain iPXE UNDI to use undionly-ipxe.0, and click Search.

- Optional: If you want to change the template, click Clone, enter a unique name, and click Submit.

- Click the name of the template you want to use.

- If you clone the template, you can make changes you require on the Template tab.

- Click the Association tab and select the operating systems that your host uses.

- Click the Locations tab and add the location where the host resides.

- Click the Organizations tab and add the organization that the host belongs to.

- Click Submit to save the changes.

- In the Provisioning Templates page, enter Kickstart default iPXE into the search field and click Search.

- Optional: If you want to change the template, click Clone, enter a unique name, and click Submit.

- Click the name of the template you want to use.

- If you clone the template, you can make changes you require on the Template tab.

- Click the Association tab and associate the template with the operating system that your host uses.

- Click the Locations tab and add the location where the host resides.

- Click the Organizations tab and add the organization that the host belongs to.

- Click Submit to save the changes.

- Navigate to Hosts > Operating systems and select the operating system of your host.

- Click the Templates tab.

- From the PXELinux template list, select the template you want to use.

- From the iPXE template list, select the template you want to use.

- Click Submit to save the changes.

- Navigate to Hosts > All Hosts, and select the host you want to use.

- Select the Operating System tab.

- Set PXE Loader to PXELinux BIOS to chainboot iPXE via PXELinux, or to iPXE Chain BIOS to load undionly-ipxe.0 directly.

- Select the Templates tab, and from the PXELinux template list, select Review to verify the template is the correct template.

- From the iPXE template list, select Review to verify the template is the correct template. If there is no PXELinux entry, or you cannot find the new template, navigate to Hosts > All Hosts, and on your host, click Edit. Click the Operating system tab and click the Provisioning Template Resolve button to refresh the list of templates.

- Configure the dhcpd.conf file as follows:

if exists user-class and option user-class = "iPXE" {
  filename "http://capsule.example.com:8000/unattended/iPXE?bootstrap=1";
} # elseif existing statements if non-iPXE environment should be preserved

Note

If you have changed the port using the --foreman-proxy-http-port installer option, use your custom port. You must update the /etc/dhcp/dhcpd.conf file after every upgrade.

Chapter 6. Using PXE to Provision Hosts

There are three main ways to provision bare metal instances with Red Hat Satellite 6.10:

- Unattended Provisioning

- New hosts are identified by a MAC address and Satellite Server provisions the host using a PXE boot process.

- Unattended Provisioning with Discovery

- New hosts use PXE boot to load the Satellite Discovery service. This service identifies hardware information about the host and lists it as an available host to provision. For more information, see Chapter 7, Configuring the Discovery Service.

- PXE-less Provisioning with Discovery

- New hosts use an ISO boot disk that loads the Satellite Discovery service. This service identifies hardware information about the host and lists it as an available host to provision. For more information, see Section 7.7, “Implementing PXE-less Discovery”.

BIOS and UEFI Support

With Red Hat Satellite, you can perform both BIOS and UEFI based PXE provisioning.

Both BIOS and UEFI interfaces work as interpreters between the computer’s operating system and firmware, initializing the hardware components and starting the operating system at boot time.

For information about supported workflows, see Supported architectures and provisioning scenarios.

In Satellite provisioning, the PXE loader option defines the DHCP filename option to use during provisioning. For BIOS systems, use the PXELinux BIOS option to enable a provisioned node to download the pxelinux.0 file over TFTP. For UEFI systems, use the PXEGrub2 UEFI option to enable a TFTP client to download the grub2/grubx64.efi file.

For BIOS provisioning, you must associate a PXELinux template with the operating system.

For UEFI provisioning, you must associate a PXEGrub2 template with the operating system.

If you associate both PXELinux and PXEGrub2 templates, Satellite 6 can deploy configuration files for both on a TFTP server, so that you can switch between PXE loaders easily.
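The relationship between firmware type, PXE loader option, and the file downloaded over TFTP can be summarized as a lookup. The following is a minimal sketch: the function name pxe_loader_for and the bios and uefi labels are hypothetical, while the loader names and file names are those stated in this section.

```shell
#!/bin/sh
# pxe_loader_for is a hypothetical helper, not part of Satellite.
# For a firmware type (bios or uefi) it prints two tab-separated fields:
# the PXE loader option and the file that the host downloads over TFTP.
pxe_loader_for() {
    case "$1" in
        bios) printf '%s\t%s\n' "PXELinux BIOS" "pxelinux.0" ;;
        uefi) printf '%s\t%s\n' "PXEGrub2 UEFI" "grub2/grubx64.efi" ;;
        *)    echo "unknown firmware type: $1" >&2; return 1 ;;
    esac
}
```

For example, pxe_loader_for uefi prints PXEGrub2 UEFI and grub2/grubx64.efi separated by a tab. The matching template type (PXELinux for BIOS, PXEGrub2 for UEFI) must still be associated with the operating system.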

6.1. Prerequisites for Bare Metal Provisioning

The requirements for bare metal provisioning include:

- A Capsule Server managing the network for bare metal hosts. For unattended provisioning and discovery-based provisioning, Satellite Server requires PXE server settings.

For more information about networking requirements, see Chapter 3, Configuring Networking.

For more information about the Discovery service, see Chapter 7, Configuring the Discovery Service.

- A bare metal host or a blank VM.

- Synchronized content repositories for Red Hat Enterprise Linux. For more information, see Syncing Repositories in the Content Management Guide.

- An activation key for host registration. For more information, see Creating An Activation Key in the Content Management guide.

6.2. Configuring the Security Token Validity Duration

To adjust how long the security token remains valid, in the Satellite web UI, navigate to Administer > Settings, and click the Provisioning tab. Find the Token duration option, click the edit icon, and edit the duration, or enter 0 to disable token generation.

If token generation is disabled, an attacker can spoof a client IP address and download the Kickstart file from Satellite Server, including the encrypted root password.

6.3. Creating Hosts with Unattended Provisioning

Unattended provisioning is the simplest form of host provisioning. You enter the host details on Satellite Server and boot your host. Satellite Server automatically manages the PXE configuration, organizes networking services, and provides the operating system and configuration for the host.

This method of provisioning hosts uses minimal interaction during the process.

To use the CLI instead of the web UI, see the CLI procedure.

Procedure

- In the Satellite web UI, navigate to Hosts > Create Host.

- In the Name field, enter a name for the host.