Server Administration Guide

For Use with Red Hat Single Sign-On 7.2

Abstract

Chapter 1. Overview

Red Hat Single Sign-On is a single sign on solution for web apps and RESTful web services. The goal of Red Hat Single Sign-On is to make security simple so that it is easy for application developers to secure the apps and services they have deployed in their organization. Security features that developers normally have to write for themselves are provided out of the box and are easily tailorable to the individual requirements of your organization. Red Hat Single Sign-On provides customizable user interfaces for login, registration, administration, and account management. You can also use Red Hat Single Sign-On as an integration platform to hook it into existing LDAP and Active Directory servers. You can also delegate authentication to third party identity providers like Facebook and Google+.

1.1. Features

- Single-Sign On and Single-Sign Out for browser applications.

- OpenID Connect support.

- OAuth 2.0 support.

- SAML support.

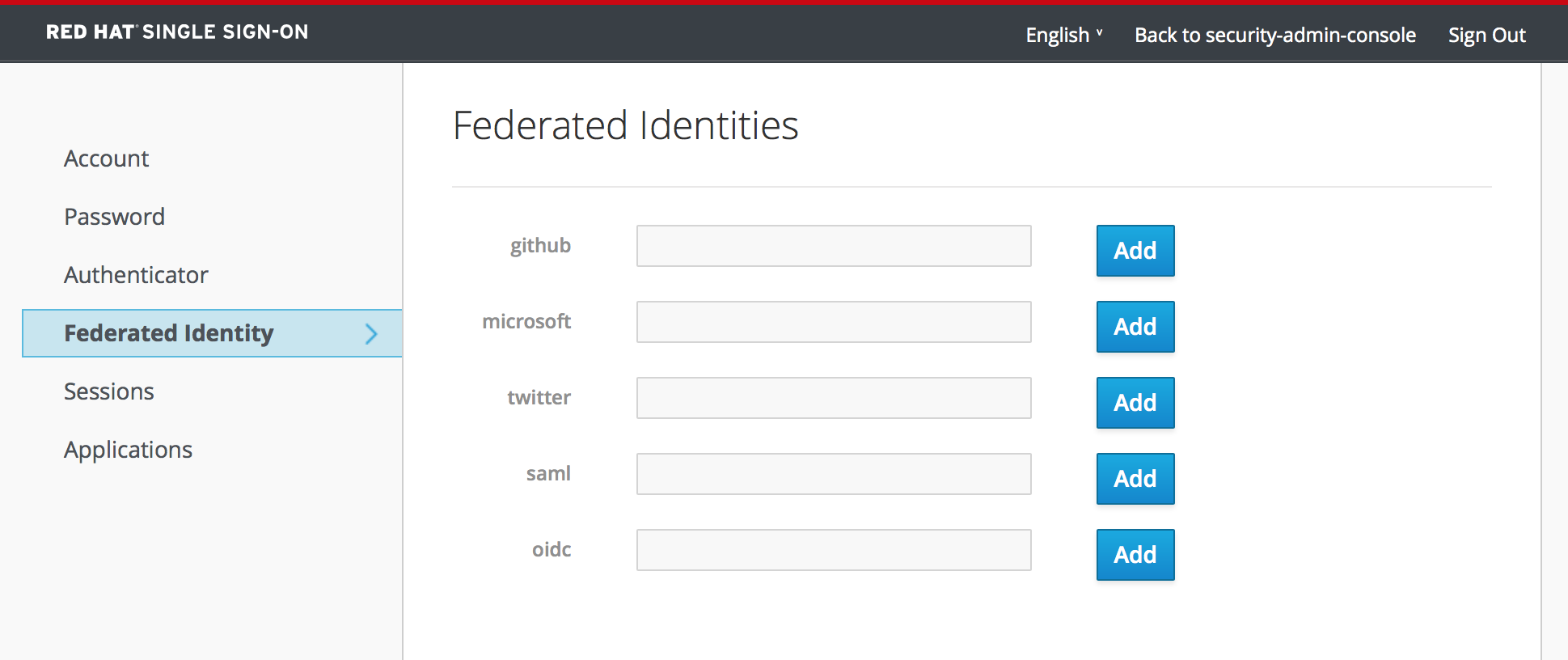

- Identity Brokering - Authenticate with external OpenID Connect or SAML Identity Providers.

- Social Login - Enable login with Google, GitHub, Facebook, Twitter, and other social networks.

- User Federation - Sync users from LDAP and Active Directory servers.

- Kerberos bridge - Automatically authenticate users that are logged-in to a Kerberos server.

- Admin Console for central management of users, roles, role mappings, clients and configuration.

- Account Management console that allows users to centrally manage their account.

- Theme support - Customize all user facing pages to integrate with your applications and branding.

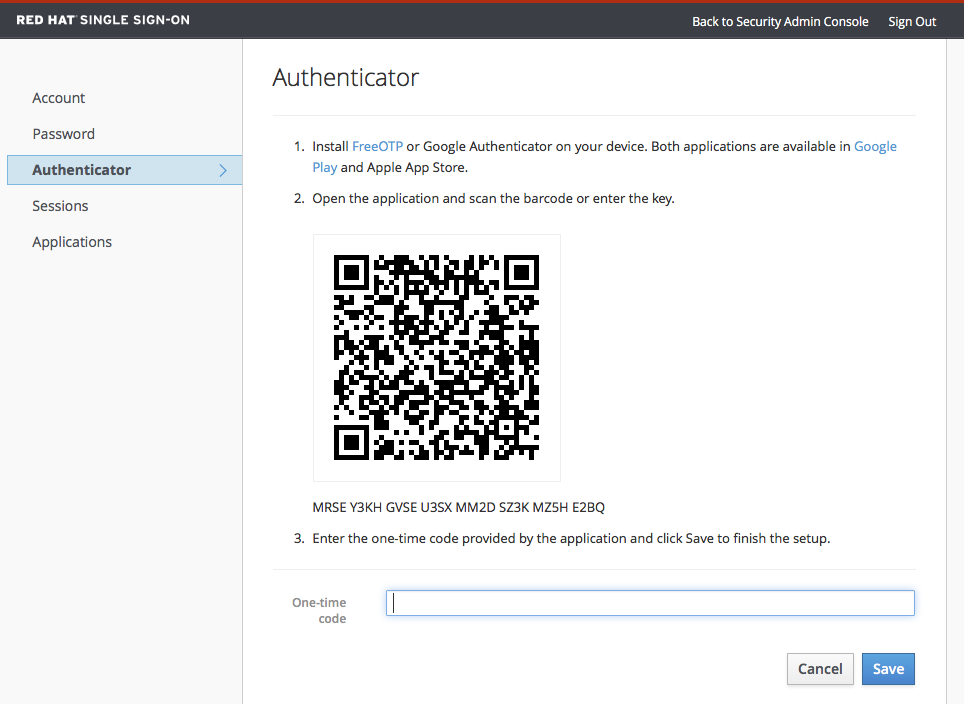

- Two-factor Authentication - Support for TOTP/HOTP via Google Authenticator or FreeOTP.

- Login flows - optional user self-registration, recover password, verify email, require password update, etc.

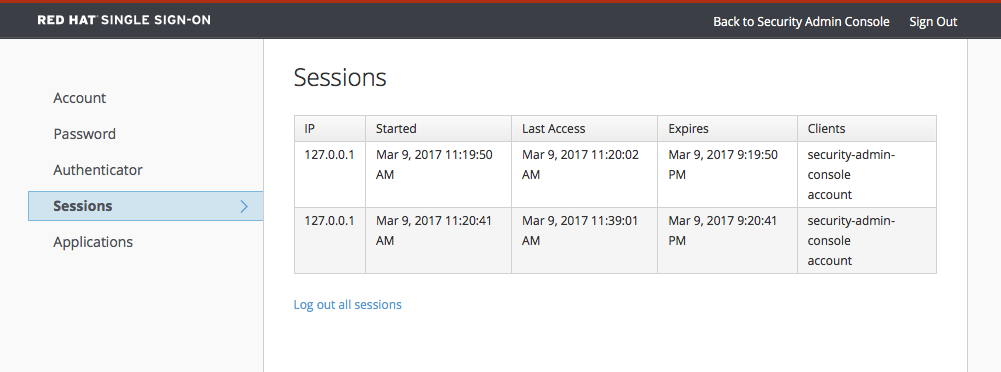

- Session management - Admins and users themselves can view and manage user sessions.

- Token mappers - Map user attributes, roles, etc. how you want into tokens and statements.

- Not-before revocation policies per realm, application and user.

- CORS support - Client adapters have built-in support for CORS.

- Client adapters for JavaScript applications, JBoss EAP, Fuse, etc.

- Supports any platform/language that has an OpenID Connect Resource Provider library or SAML 2.0 Service Provider library.

1.2. How Does Security Work?

Red Hat Single Sign-On is a separate server that you manage on your network. Applications are configured to point to and be secured by this server. Red Hat Single Sign-On uses open protocol standards like OpenID Connect or SAML 2.0 to secure your applications. Browser applications redirect a user’s browser from the application to the Red Hat Single Sign-On authentication server where they enter their credentials. This is important because users are completely isolated from applications and applications never see a user’s credentials. Applications instead are given an identity token or assertion that is cryptographically signed. These tokens can have identity information like username, address, email, and other profile data. They can also hold permission data so that applications can make authorization decisions. These tokens can also be used to make secure invocations on REST-based services.

1.3. Core Concepts and Terms

There are some key concepts and terms you should be aware of before attempting to use Red Hat Single Sign-On to secure your web applications and REST services.

- users

- Users are entities that are able to log into your system. They can have attributes associated with themselves like email, username, address, phone number, and birth day. They can be assigned group membership and have specific roles assigned to them.

- authentication

- The process of identifying and validating a user.

- authorization

- The process of granting access to a user.

- credentials

- Credentials are pieces of data that Red Hat Single Sign-On uses to verify the identity of a user. Some examples are passwords, one-time-passwords, digital certificates, or even fingerprints.

- roles

-

Roles identify a type or category of user.

Admin,user,manager, andemployeeare all typical roles that may exist in an organization. Applications often assign access and permissions to specific roles rather than individual users as dealing with users can be too fine grained and hard to manage. - user role mapping

- A user role mapping defines a mapping between a role and a user. A user can be associated with zero or more roles. This role mapping information can be encapsulated into tokens and assertions so that applications can decide access permissions on various resources they manage.

- composite roles

-

A composite role is a role that can be associated with other roles. For example a

superusercomposite role could be associated with thesales-adminandorder-entry-adminroles. If a user is mapped to thesuperuserrole they also inherit thesales-adminandorder-entry-adminroles. - groups

- Groups manage groups of users. Attributes can be defined for a group. You can map roles to a group as well. Users that become members of a group inherit the attributes and role mappings that group defines.

- realms

- A realm manages a set of users, credentials, roles, and groups. A user belongs to and logs into a realm. Realms are isolated from one another and can only manage and authenticate the users that they control.

- clients

- Clients are entities that can request Red Hat Single Sign-On to authenticate a user. Most often, clients are applications and services that want to use Red Hat Single Sign-On to secure themselves and provide a single sign-on solution. Clients can also be entities that just want to request identity information or an access token so that they can securely invoke other services on the network that are secured by Red Hat Single Sign-On.

- client adapters

- Client adapters are plugins that you install into your application environment to be able to communicate and be secured by Red Hat Single Sign-On. Red Hat Single Sign-On has a number of adapters for different platforms that you can download. There are also third-party adapters you can get for environments that we don’t cover.

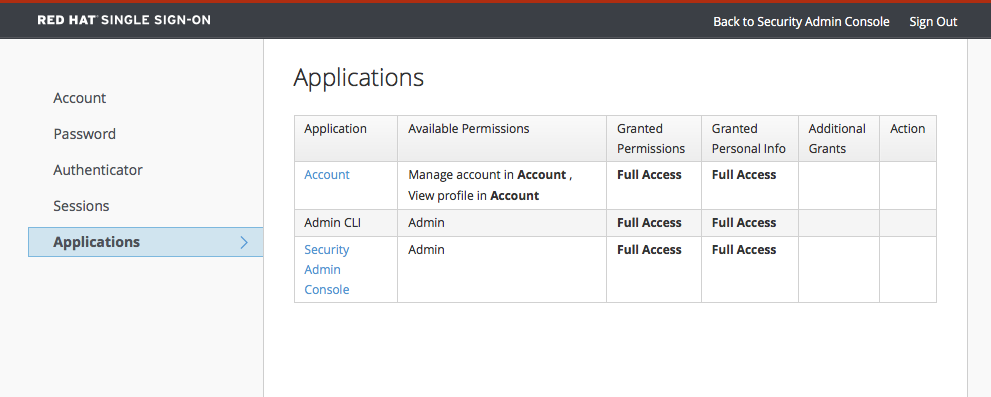

- consent

- Consent is when you as an admin want a user to give permission to a client before that client can participate in the authentication process. After a user provides their credentials, Red Hat Single Sign-On will pop up a screen identifying the client requesting a login and what identity information is requested of the user. User can decide whether or not to grant the request.

- client templates

- When a client is registered you need to enter configuration information about that client. It is often useful to store a template to make create new clients easier. Red Hat Single Sign-On provides the concept of a client template for this.

- client role

- Clients can define roles that are specific to them. This is basically a role namespace dedicated to the client.

- identity token

- A token that provides identity information about the user. Part of the OpenID Connect specification.

- access token

- A token that can be provided as part of an HTTP request that grants access to the service being invoked on. This is part of the OpenID Connect and OAuth 2.0 specification.

- assertion

- Information about a user. This usually pertains to an XML blob that is included in a SAML authentication response that provided identity metadata about an authenticated user.

- service account

- Each client has a built-in service account which allows it to obtain an access token.

- direct grant

- A way for a client to obtain an access token on behalf of a user via a REST invocation.

- protocol mappers

- For each client you can tailor what claims and assertions are stored in the OIDC token or SAML assertion. You do this per client by creating and configuring protocol mappers.

- session

- When a user logs in, a session is created to manage the login session. A session contains information like when the user logged in and what applications have participated within single-sign on during that session. Both admins and users can view session information.

- user federation provider

- Red Hat Single Sign-On can store and manage users. Often, companies already have LDAP or Active Directory services that store user and credential information. You can point Red Hat Single Sign-On to validate credentials from those external stores and pull in identity information.

- identity provider

- An identity provider (IDP) is a service that can authenticate a user. Red Hat Single Sign-On is an IDP.

- identity provider federation

- Red Hat Single Sign-On can be configured to delegate authentication to one or more IDPs. Social login via Facebook or Google+ is an example of identity provider federation. You can also hook Red Hat Single Sign-On to delegate authentication to any other OpenID Connect or SAML 2.0 IDP.

- identity provider mappers

- When doing IDP federation you can map incoming tokens and assertions to user and session attributes. This helps you propagate identity information from the external IDP to your client requesting authentication.

- required actions

-

Required actions are actions a user must perform during the authentication process. A user will not be able to complete the authentication process until these actions are complete. For example, an admin may schedule users to reset their passwords every month. An

update passwordrequired action would be set for all these users. - authentication flows

- Authentication flows are work flows a user must perform when interacting with certain aspects of the system. A login flow can define what credential types are required. A registration flow defines what profile information a user must enter and whether something like reCAPTCHA must be used to filter out bots. Credential reset flow defines what actions a user must do before they can reset their password.

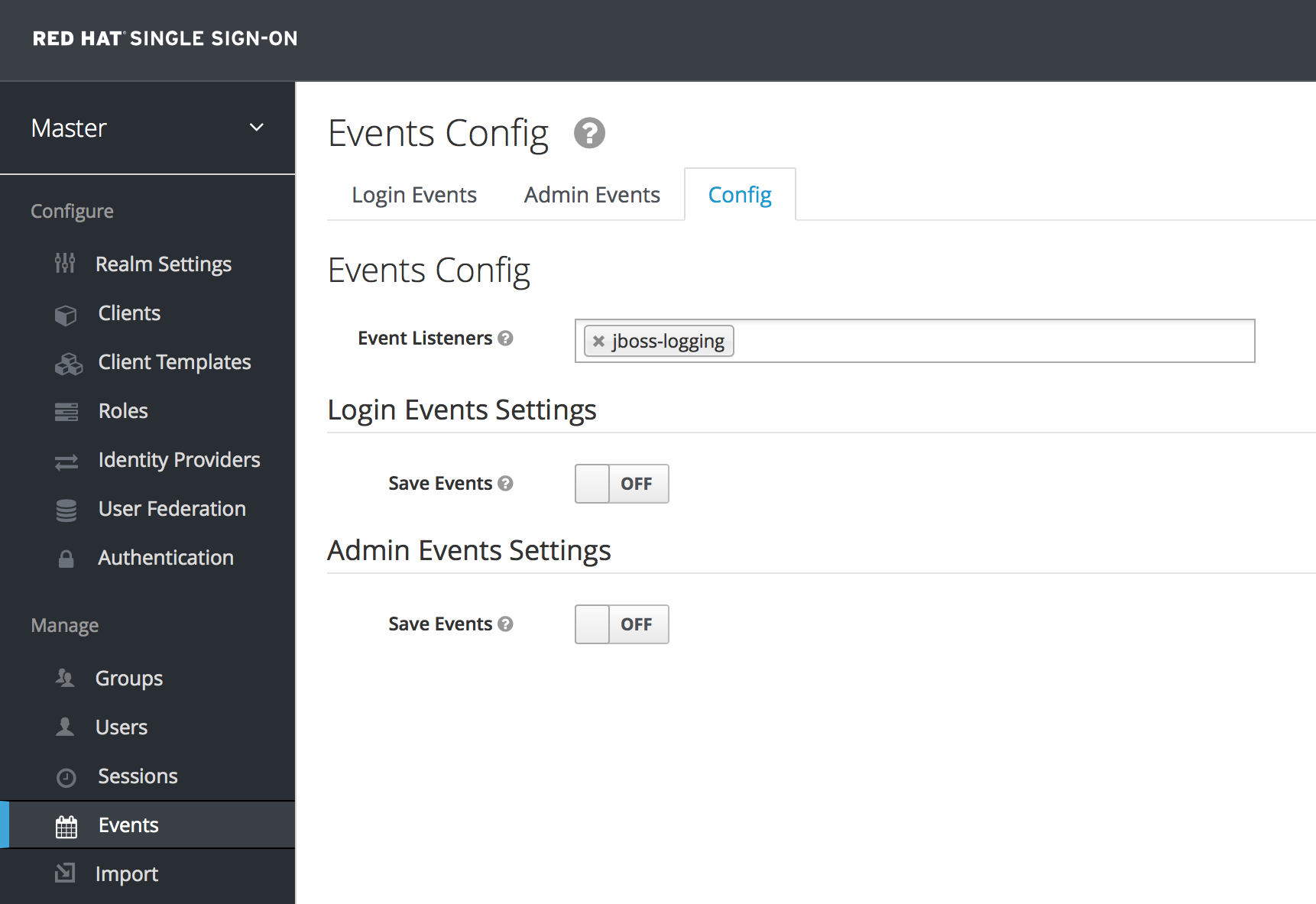

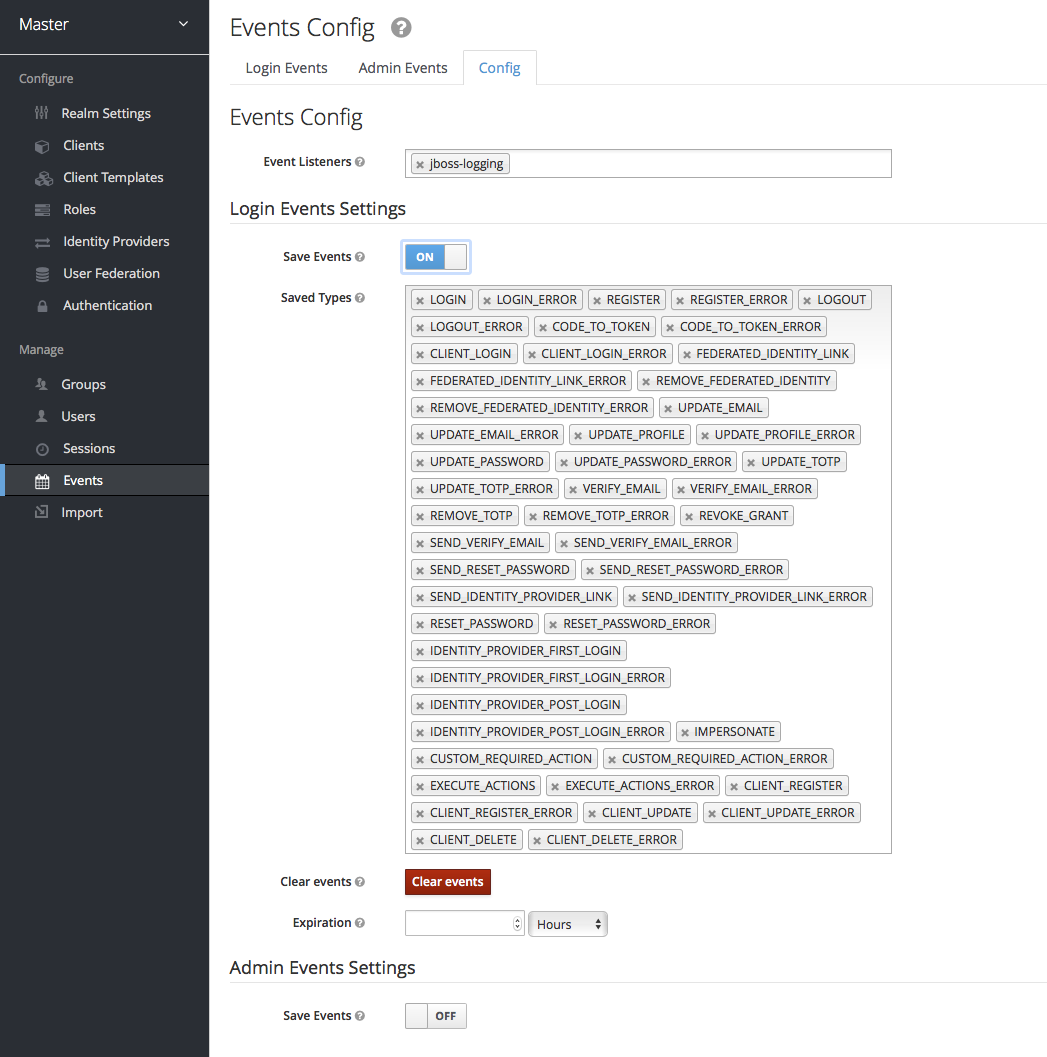

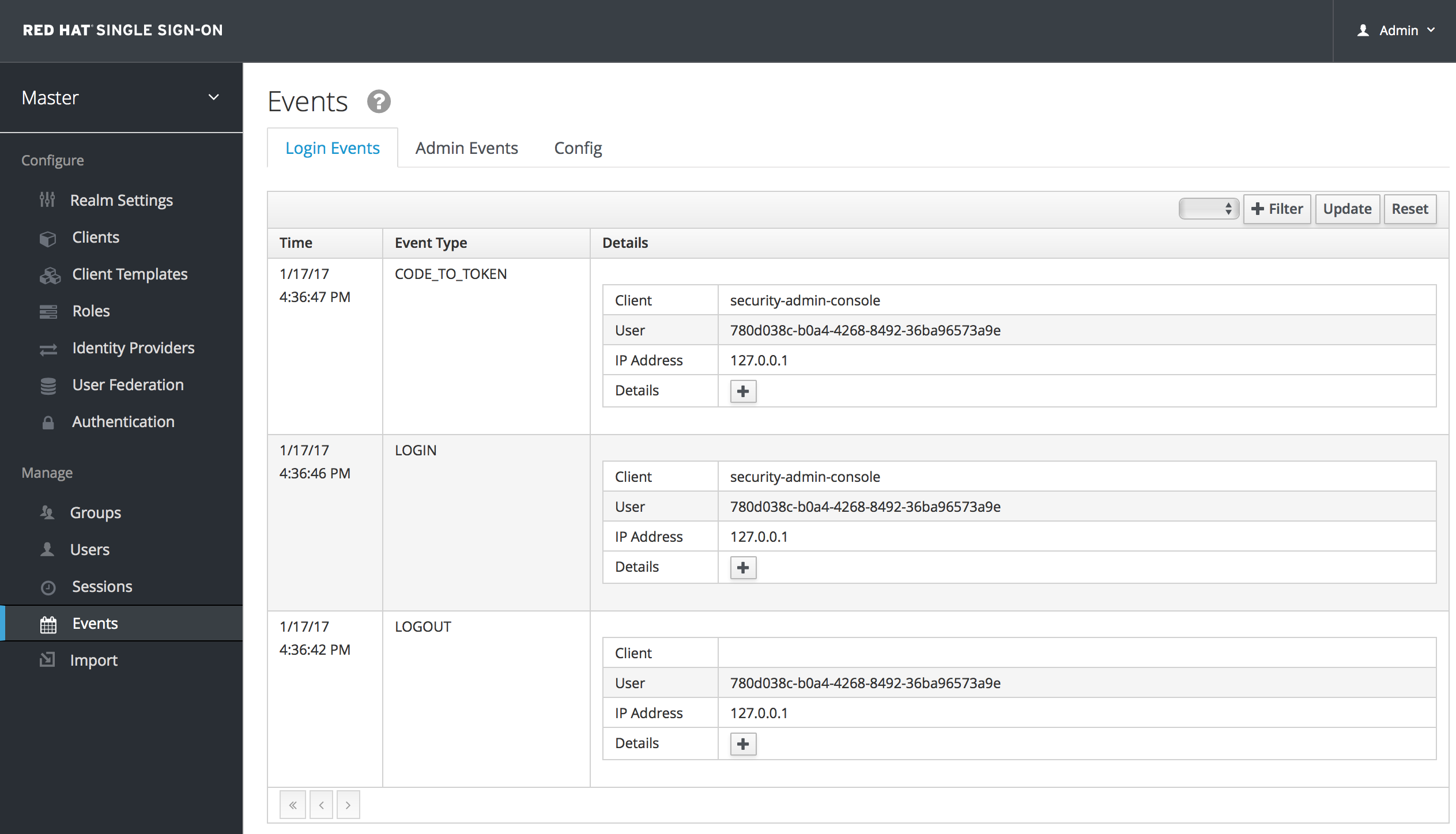

- events

- Events are audit streams that admins can view and hook into.

- themes

- Every screen provided by Red Hat Single Sign-On is backed by a theme. Themes define HTML templates and stylesheets which you can override as needed.

Chapter 2. Server Initialization

After performing all the installation and configuration tasks defined in the Server Installation and Configuration Guide, you will need to create an initial admin account. Red Hat Single Sign-On does not have any configured admin account out of the box. This account will allow you to create an admin that can log into the master realm’s administration console so that you can start creating realms, users and registering applications to be secured by Red Hat Single Sign-On.

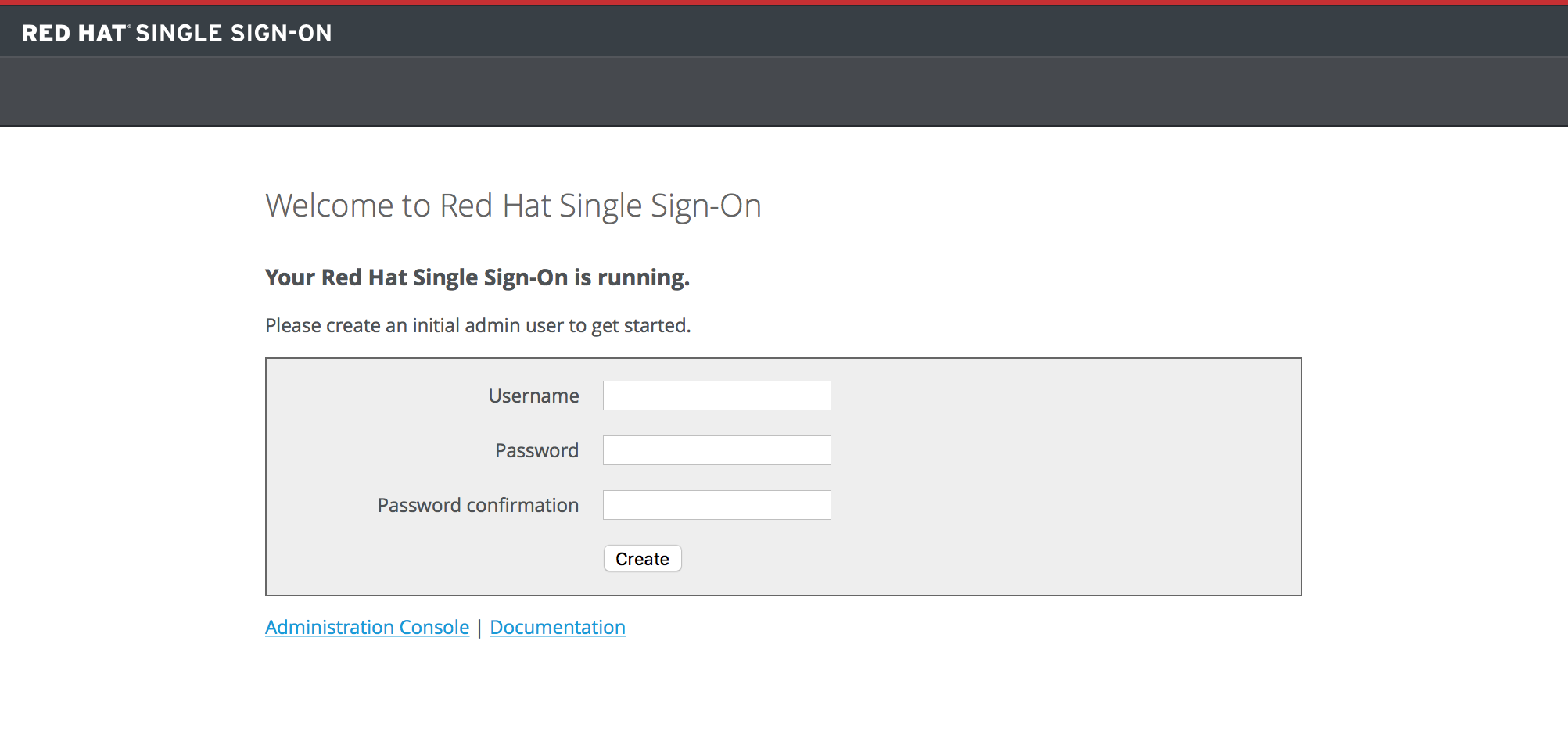

If your server is accessible from localhost, you can boot it up and create this admin user by going to the http://localhost:8080/auth URL.

Welcome Page

Simply specify the username and password you want for this initial admin.

If you cannot access the server via a localhost address, or just want to provision Red Hat Single Sign-On from the command line you can do this with the …/bin/add-user-keycloak script.

add-user-keycloak script

The parameters are a little different depending if you are using the standalone operation mode or domain operation mode. For standalone mode, here is how you use the script.

Linux/Unix

.../bin/add-user-keycloak.sh -r master -u <username> -p <password>

$ .../bin/add-user-keycloak.sh -r master -u <username> -p <password>Windows

> ...\bin\add-user-keycloak.bat -r master -u <username> -p <password>

> ...\bin\add-user-keycloak.bat -r master -u <username> -p <password>

For domain mode, you have to point the script to one of your server hosts using the -sc switch.

Linux/Unix

.../bin/add-user-keycloak.sh --sc domain/servers/server-one/configuration -r master -u <username> -p <password>

$ .../bin/add-user-keycloak.sh --sc domain/servers/server-one/configuration -r master -u <username> -p <password>Windows

> ...\bin\add-user-keycloak.bat --sc domain/servers/server-one/configuration -r master -u <username> -p <password>

> ...\bin\add-user-keycloak.bat --sc domain/servers/server-one/configuration -r master -u <username> -p <password>Chapter 3. Admin Console

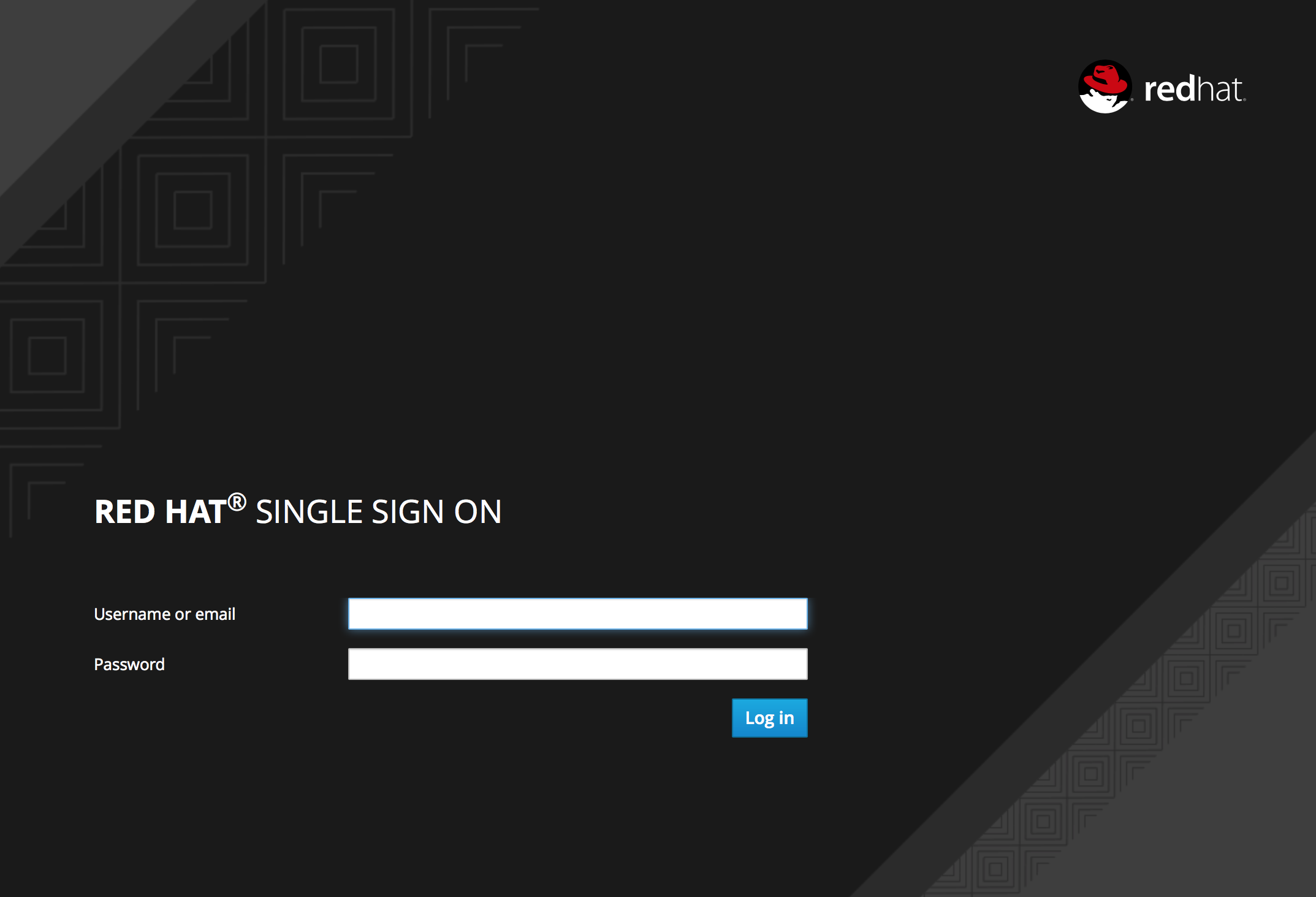

The bulk of your administrative tasks will be done through the Red Hat Single Sign-On Admin Console. You can go to the console url directly at http://localhost:8080/auth/admin/

Login Page

Enter the username and password you created on the Welcome Page or the add-user-keycloak script in the bin directory. This will bring you to the Red Hat Single Sign-On Admin Console.

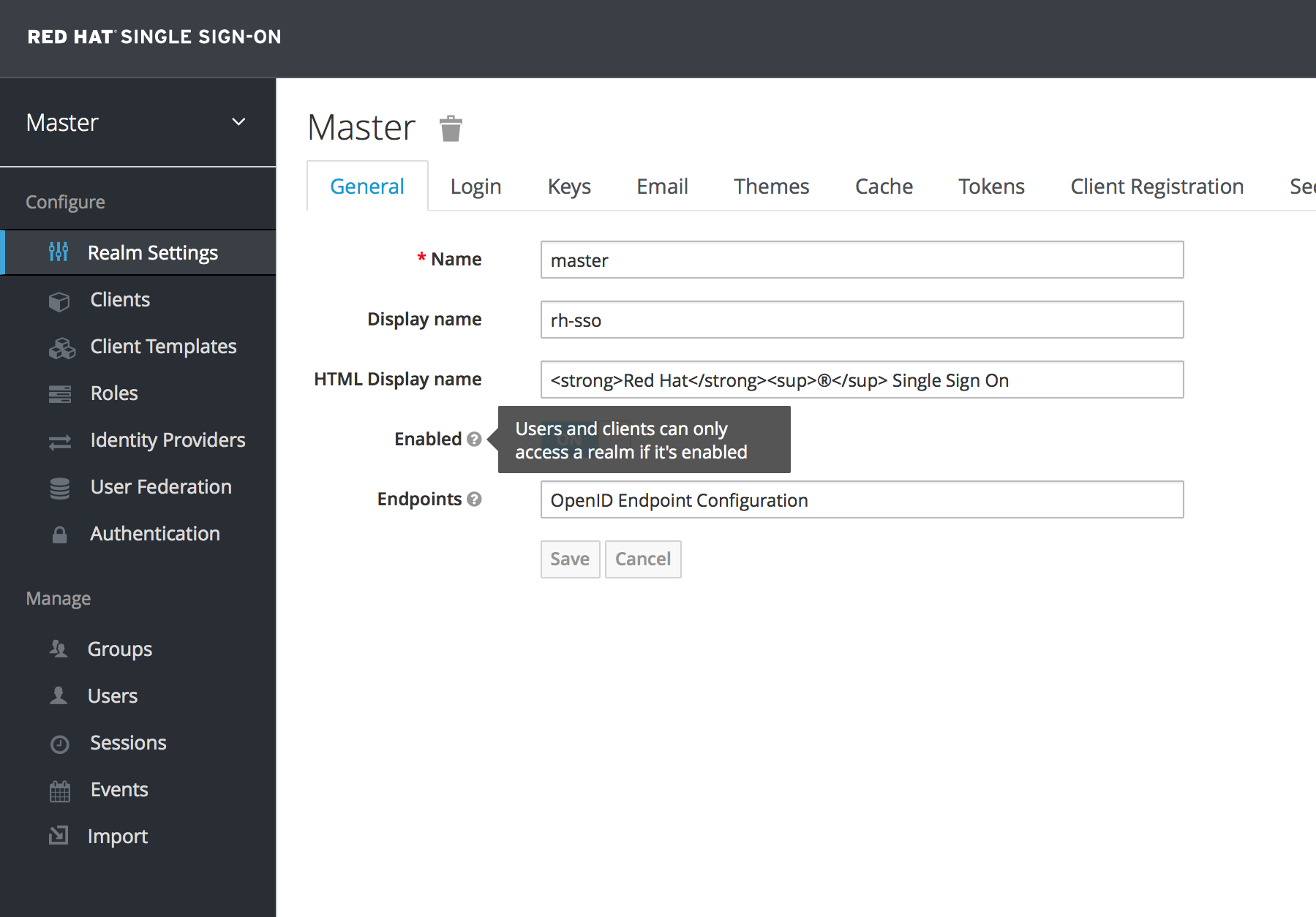

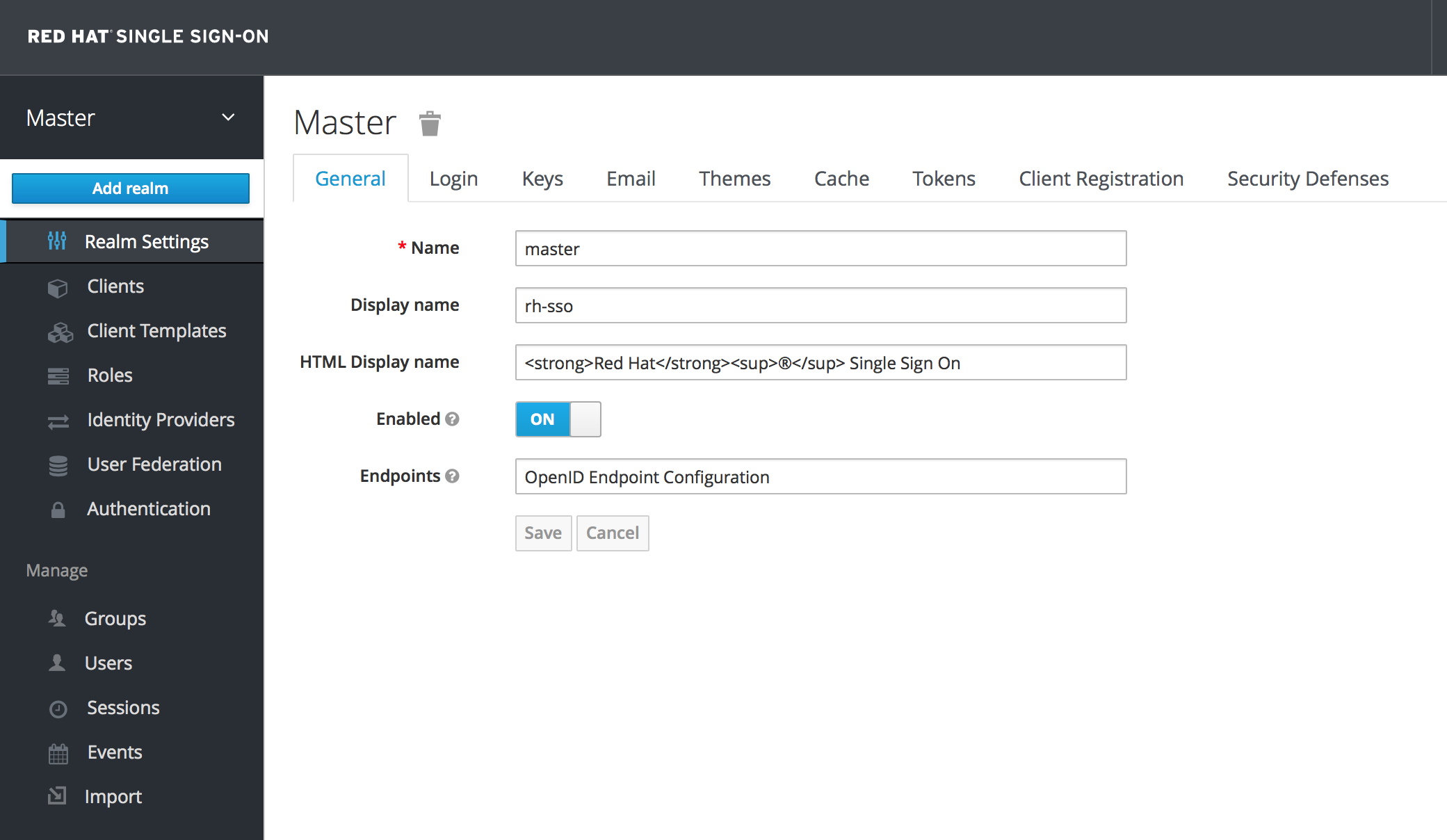

Admin Console

The left drop down menu allows you to pick a realm you want to manage or to create a new one. The right drop down menu allows you to view your user account or logout. If you are curious about a certain feature, button, or field within the Admin Console, simply hover your mouse over any question mark ? icon. This will pop up tooltip text to describe the area of the console you are interested in. The image above shows the tooltip in action.

3.1. The Master Realm

When you boot Red Hat Single Sign-On for the first time Red Hat Single Sign-On creates a pre-defined realm for you. This initial realm is the master realm. It is the highest level in the hierarchy of realms. Admin accounts in this realm have permissions to view and manage any other realm created on the server instance. When you define your initial admin account, you create an account in the master realm. Your initial login to the admin console will also be via the master realm.

We recommend that you do not use the master realm to manage the users and applications in your organization. Reserve use of the master realm for super admins to create and manage the realms in your system. Following this security model helps prevent accidental changes and follows the tradition of permitting user accounts access to only those privileges and powers necessary for the successful completion of their current task.

It is possible to disable the master realm and define admin accounts within each individual new realm you create. Each realm has its own dedicated Admin Console that you can log into with local accounts. This guide talks more about this in the Dedicated Realm Admin Consoles chapter.

3.2. Create a New Realm

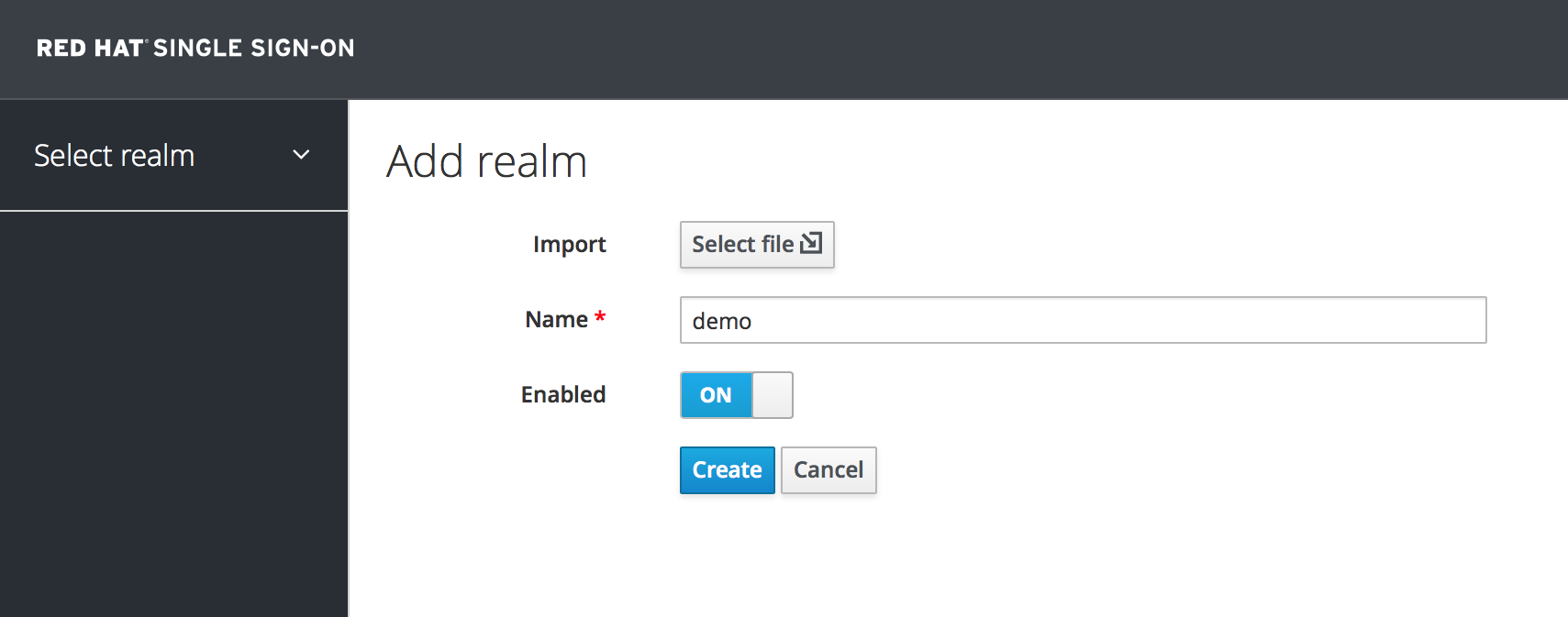

Creating a new realm is very simple. Mouse over the top left corner drop down menu that is titled with Master. If you are logged in the master realm this drop down menu lists all the realms created. The last entry of this drop down menu is always Add Realm. Click this to add a realm.

Add Realm Menu

This menu option will bring you to the Add Realm page. Specify the realm name you want to define and click the Create button. Alternatively you can import a JSON document that defines your new realm. We’ll go over this in more detail in the Export and Import chapter.

Create Realm

After creating the realm you are brought back to the main Admin Console page. The current realm will now be set to the realm you just created. You can switch between managing different realms by doing a mouse over on the top left corner drop down menu.

3.3. SSL Mode

Each realm has an SSL Mode associated with it. The SSL Mode defines the SSL/HTTPS requirements for interacting with the realm. Browsers and applications that interact with the realm must honor the SSL/HTTPS requirements defined by the SSL Mode or they will not be allowed to interact with the server.

Red Hat Single Sign-On is not set up by default to handle SSL/HTTPS. It is highly recommended that you either enable SSL on the Red Hat Single Sign-On server itself or on a reverse proxy in front of the Red Hat Single Sign-On server.

To configure the SSL Mode of your realm, you need to click on the Realm Settings left menu item and go to the Login tab.

Login Tab

The Require SSL option allows you to pick the SSL Mode you want. Here is an explanation of each mode:

- external requests

-

Users can interact with Red Hat Single Sign-On so long as they stick to private IP addresses like

localhost,127.0.0.1,10.0.x.x,192.168.x.x, and172..16.x.x. If you try to access Red Hat Single Sign-On from a non-private IP address you will get an error. - none

- Red Hat Single Sign-On does not require SSL. This should really only be used in development when you are playing around with things and don’t want to bother configuring SSL on your server.

- all requests

- Red Hat Single Sign-On requires SSL for all IP addresses.

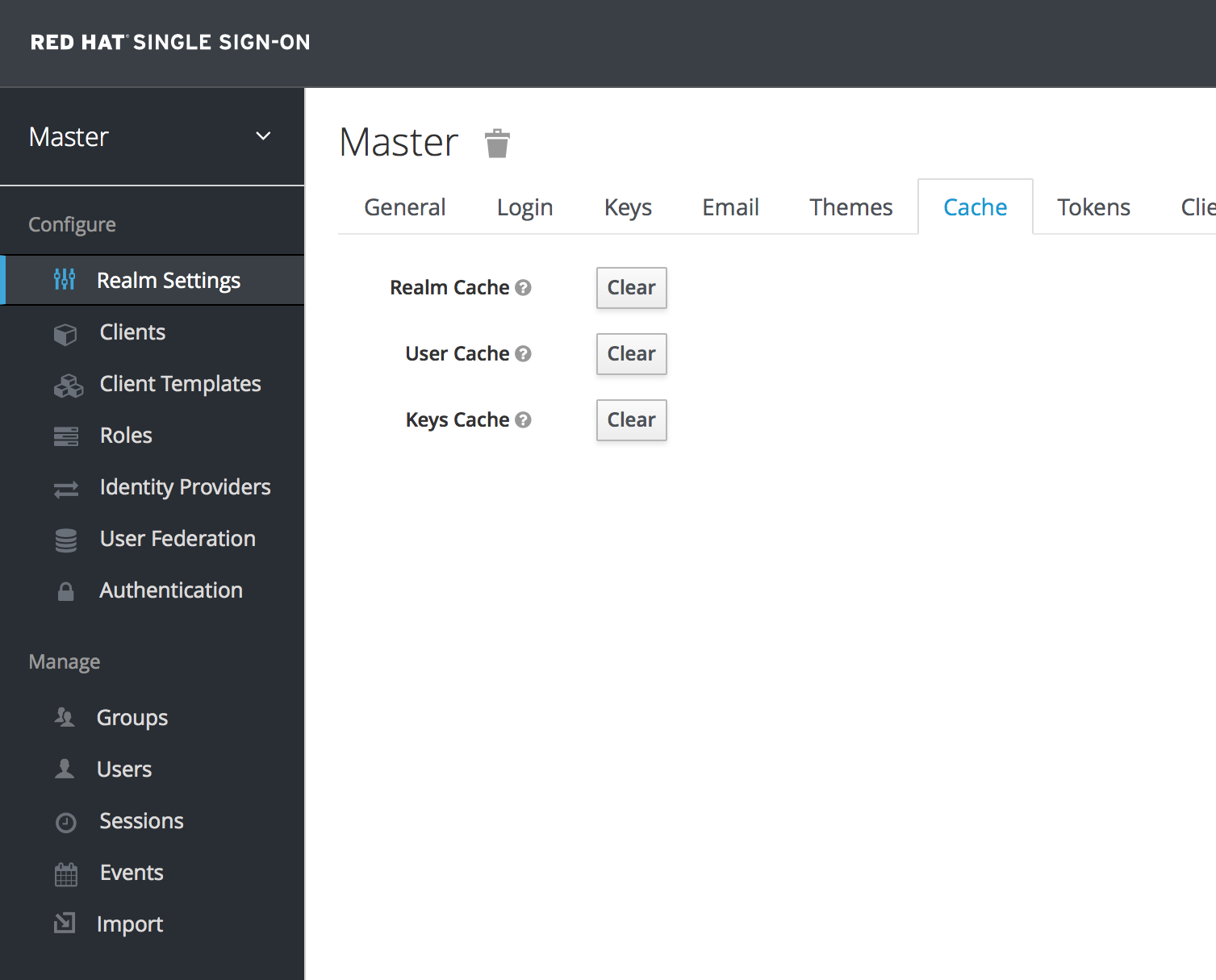

3.4. Clearing Server Caches

Red Hat Single Sign-On will cache everything it can in memory within the limits of your JVM and/or the limits you’ve configured it for. If the Red Hat Single Sign-On database is modified by a third party (i.e. a DBA) outside the scope of the server’s REST APIs or Admin Console there’s a chance parts of the in-memory cache may be stale. You can clear the realm cache, user cache or cache of external public keys (Public keys of external clients or Identity providers, which Red Hat Single Sign-On usually uses for verify signatures of particular external entity) from the Admin Console by going to the Realm Settings left menu item and the Cache tab.

Cache tab

Just click the clear button on the cache you want to evict.

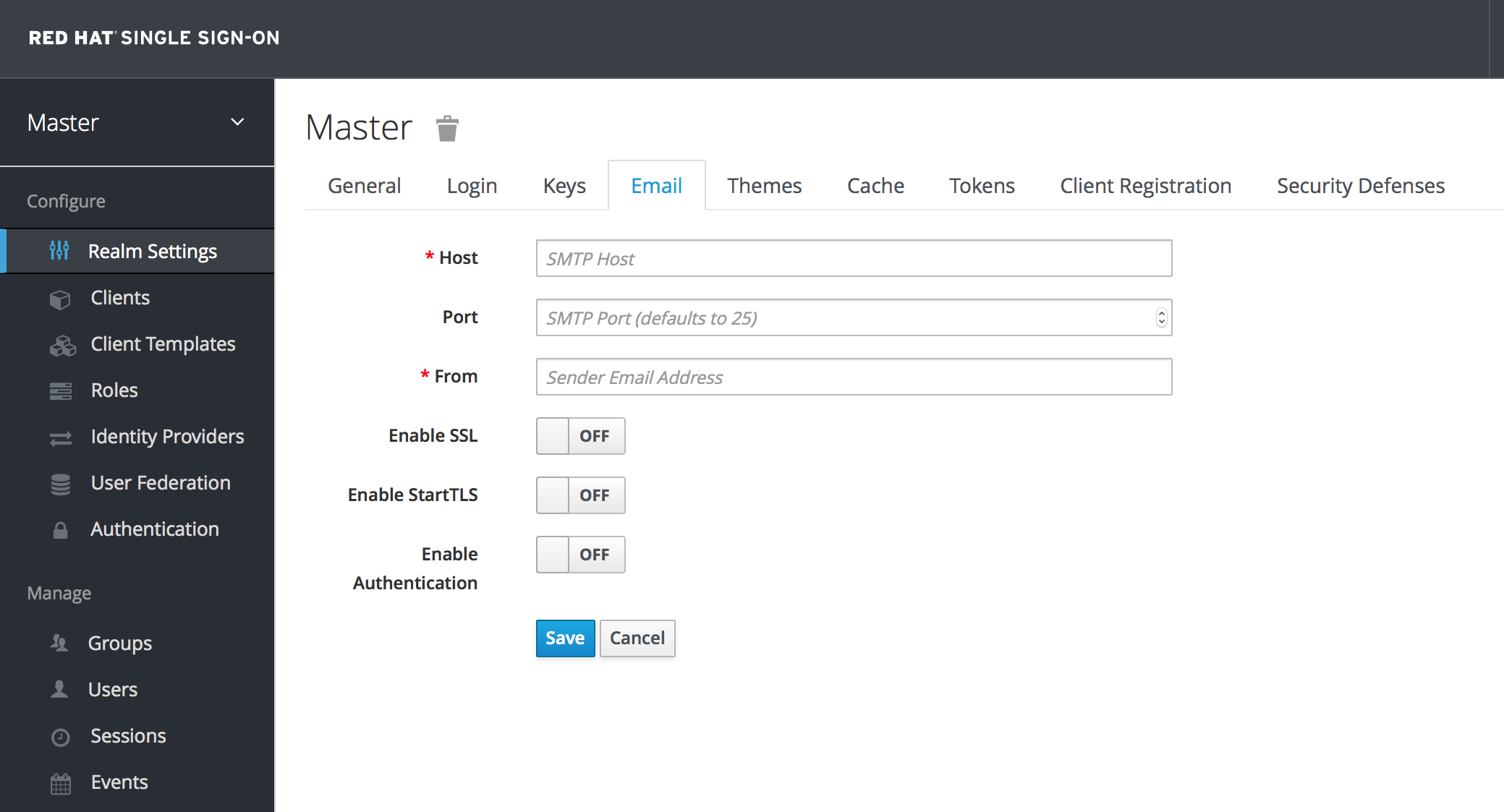

3.5. Email Settings

Red Hat Single Sign-On sends emails to users to verify their email address, when they forget their passwords, or when an admin needs to receive notifications about a server event. To enable Red Hat Single Sign-On to send emails you need to provide Red Hat Single Sign-On with your SMTP server settings. This is configured per realm. Go to the Realm Settings left menu item and click the Email tab.

Email Tab

- Host

-

Hostdenotes the SMTP server hostname used for sending emails. - Port

-

Portdenotes the SMTP server port. - From

-

Fromdenotes the address used for theFromSMTP-Header for the emails sent. - From Display Name

-

From Display Nameallows to configure an user friendly email address aliases (optional). If not set the plainFromemail address will be displayed in email clients. - Reply To

-

Reply Todenotes the address used for theReply-ToSMTP-Header for the mails sent (optional). If not set the plainFromemail address will be used. - Reply To Display Name

-

Reply To Display Nameallows to configure an user friendly email address aliases (optional). If not set the plainReply Toemail address will be displayed. - Envelope From

-

Envelope Fromdenotes the Bounce Address used for theReturn-PathSMTP-Header for the mails sent (optional).

As emails are used for recovering usernames and passwords it’s recommended to use SSL or TLS, especially if the SMTP server is on an external network. To enable SSL click on Enable SSL or to enable TLS click on Enable TLS. You will most likely also need to change the Port (the default port for SSL/TLS is 465).

If your SMTP server requires authentication click on Enable Authentication and insert the Username and Password.

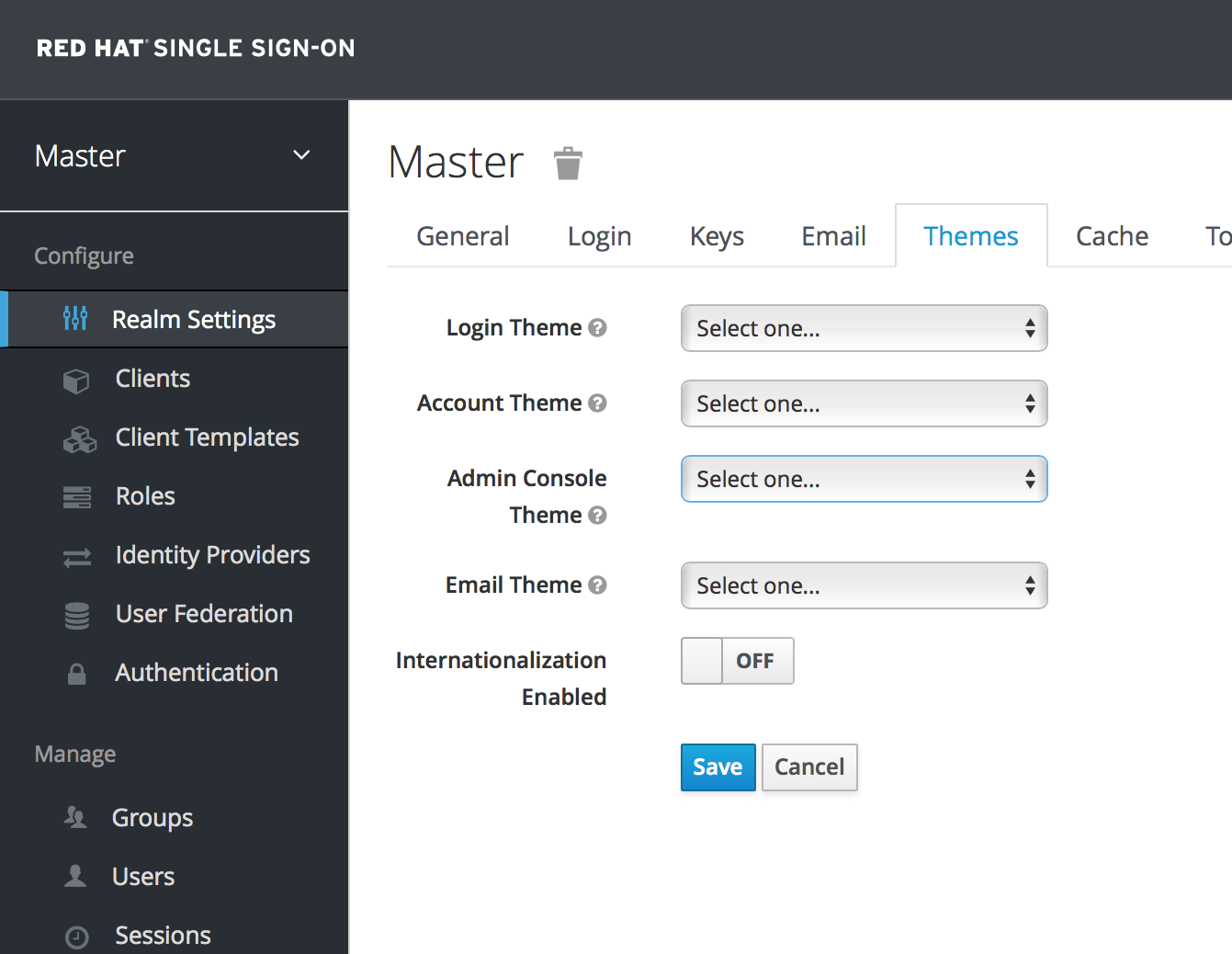

3.6. Themes and Internationalization

Themes allow you to change the look and feel of any UI in Red Hat Single Sign-On. Themes are configured per realm. To change a theme go to the Realm Settings left menu item and click on the Themes tab.

Themes Tab

Pick the theme you want for each UI category and click Save.

- Login Theme

- Username password entry, OTP entry, new user registration, and other similar screens related to login.

- Account Theme

- Each user has an User Account Management UI.

- Admin Console Theme

- The skin of the Red Hat Single Sign-On Admin Console.

- Email Theme

- Whenever Red Hat Single Sign-On has to send out an email, it uses templates defined in this theme to craft the email.

The Server Developer Guide goes into how to create a new themes or modify existing ones.

3.6.1. Internationalization

Every UI screen is internationalized in Red Hat Single Sign-On. The default language is English, but if you turn on the Internationalization switch on the Theme tab you can choose which locales you want to support and what the default locale will be. The next time a user logs in, they will be able to choose a language on the login page to use for the login screens, User Account Management UI, and Admin Console. The Server Developer Guide explains how you can offer additional languages.

Chapter 4. User Management

This section describes the administration functions for managing users.

4.1. Searching For Users

If you need to manage a specific user, click on Users in the left menu bar.

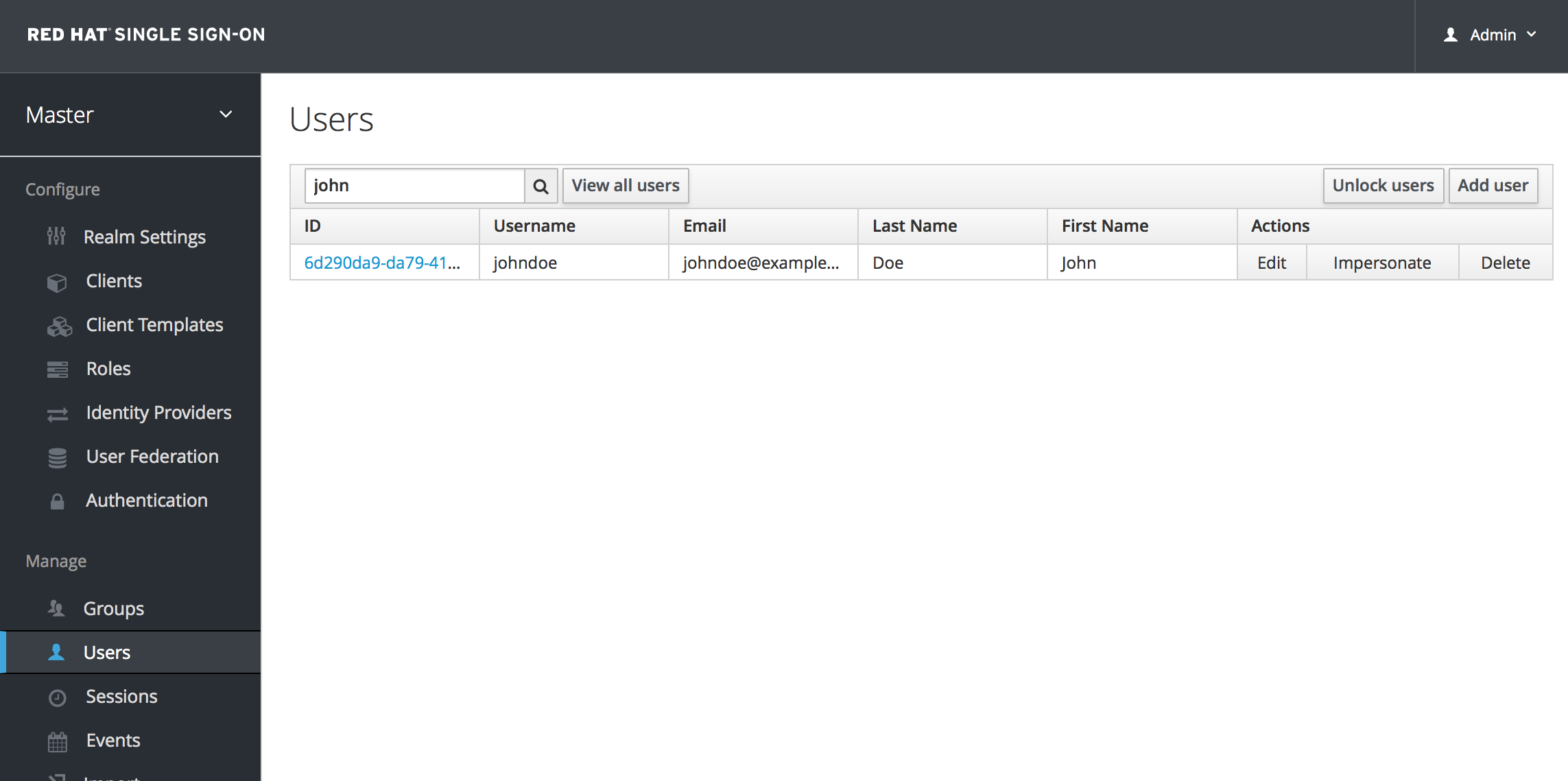

Users

This menu option brings you to the user list page. In the search box you can type in a full name, last name, or email address you want to search for in the user database. The query will bring up all users that match your criteria. The View all users button will list every user in the system. This will search just local Red Hat Single Sign-On database and not the federated database (ie. LDAP) because some backends like LDAP don’t have a way to page through users. So if you want the users from federated backend to be synced into Red Hat Single Sign-On database you need to either:

- Adjust search criteria. That will sync just the backend users matching the criteria into Red Hat Single Sign-On database.

-

Go to

User Federationtab and clickSync all usersorSync changed usersin the page with your federation provider.

See User Federation for more details.

4.2. Creating New Users

To create a user click on Users in the left menu bar.

Users

This menu option brings you to the user list page. On the right side of the empty user list, you should see an Add User button. Click that to start creating your new user.

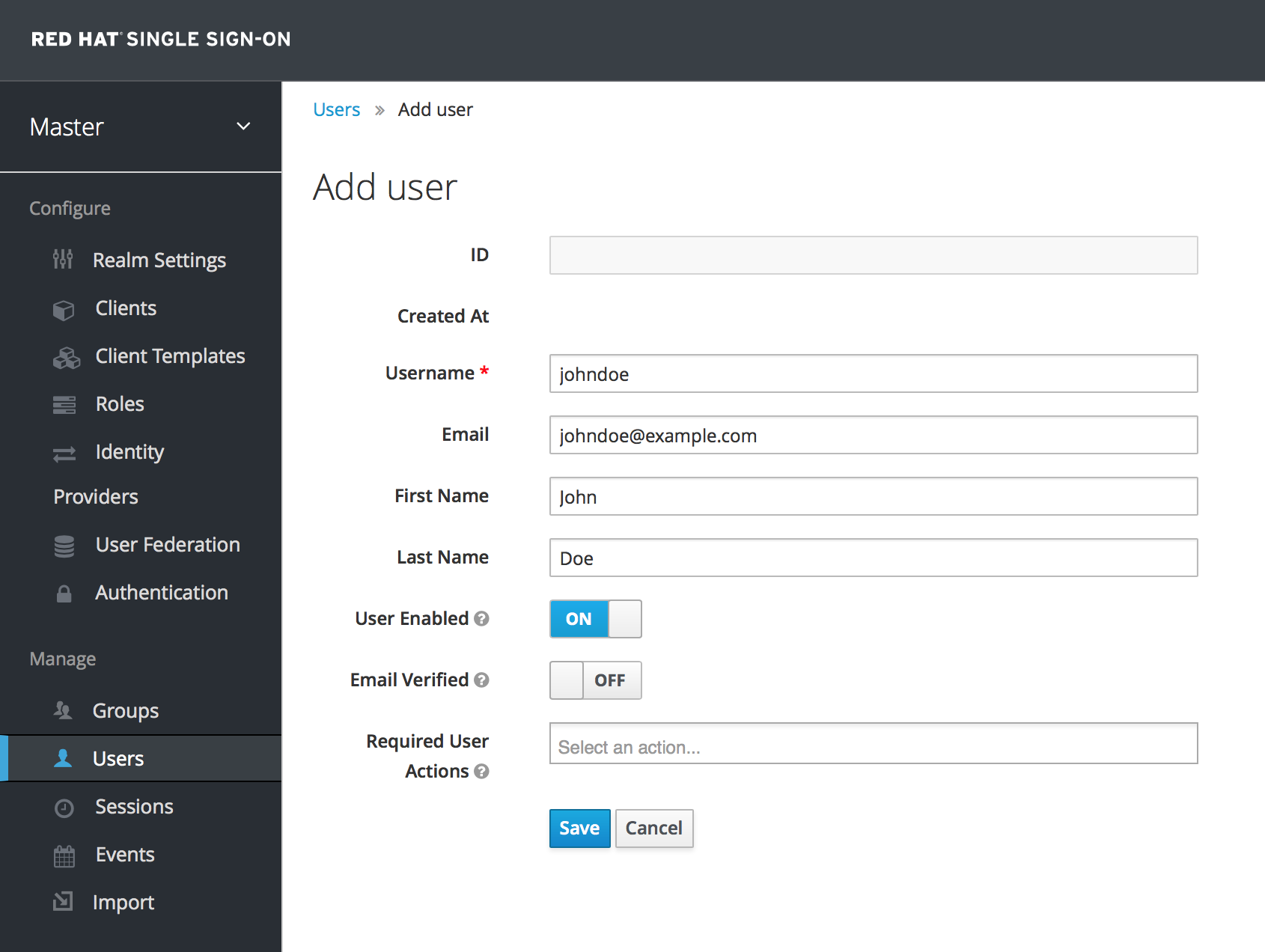

Add User

The only required field is Username. Click save. This will bring you to the management page for your new user.

4.3. User Attributes

Beyond basic user metadata like name and email, you can store arbitrary user attributes. Choose a user to manage then click on the Attributes tab.

Users

Enter in the attribute name and value in the empty fields and click the Add button next to it to add a new field. Note that any edits you make on this page will not be stored until you hit the Save button.

4.4. User Credentials

When viewing a user if you go to the Credentials tab you can manage a user’s credentials.

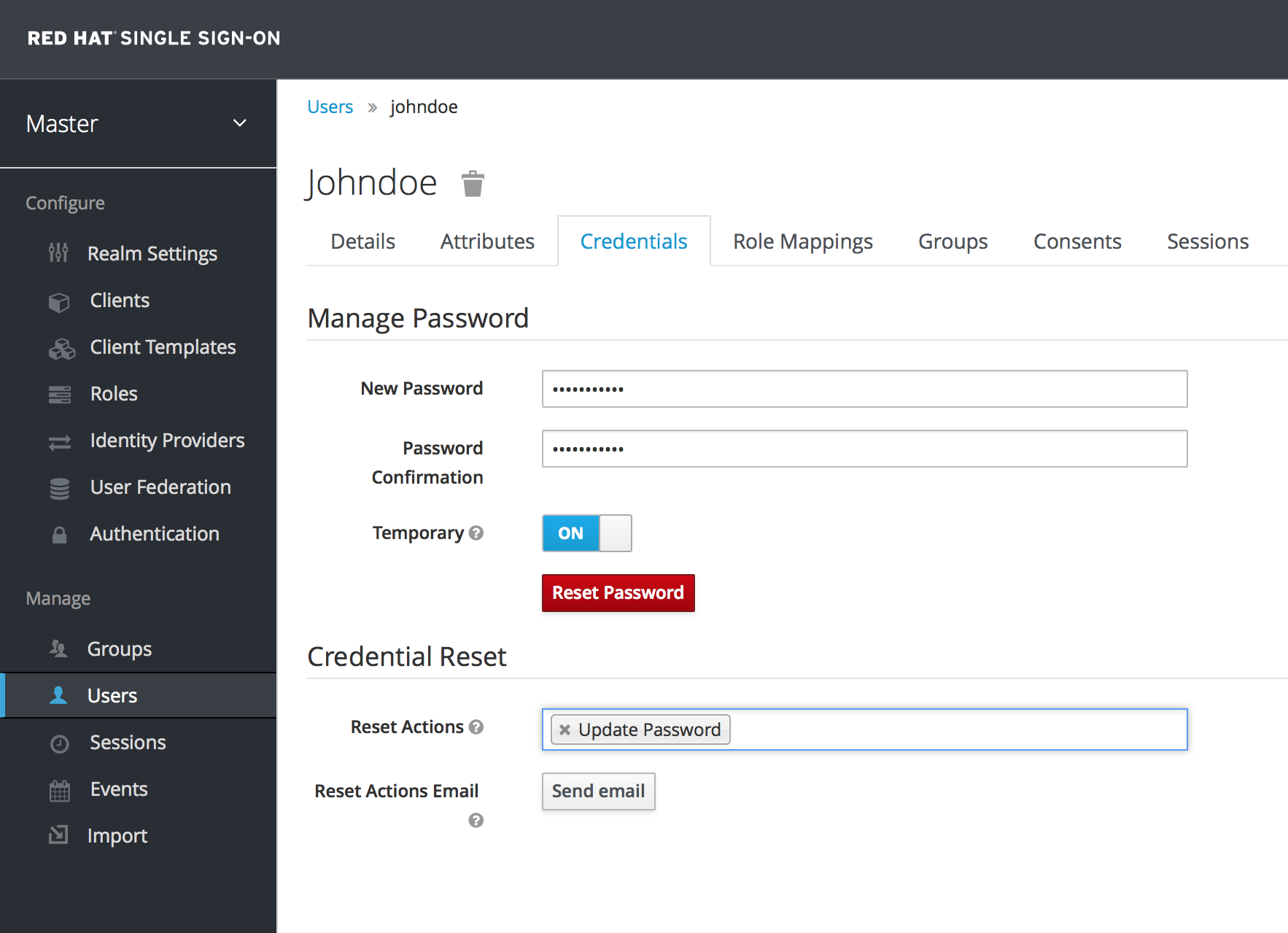

Credential Management

4.4.1. Changing Passwords

To change a user’s password, type in a new one. A Reset Password button will show up that you click after you’ve typed everything in. If the Temporary switch is on, this new password can only be used once and the user will be asked to change their password after they have logged in.

Alternatively, if you have email set up, you can send an email to the user that asks them to reset their password. Choose Update Password from the Reset Actions list box and click Send Email. You can optionally set the validity of the e-mail link which defaults to the one preset in Tokens tab in the realm settings. The sent email contains a link that will bring the user to the update password screen.

4.4.2. Changing OTPs

You cannot configure One-Time Passwords for a specific user within the Admin Console. This is the responsibility of the user. If the user has lost their OTP generator all you can do is disable OTP for them on the Credentials tab. If OTP is optional in your realm, the user will have to go to the User Account Management service to re-configure a new OTP generator. If OTP is required, then the user will be asked to re-configure a new OTP generator when they log in.

Like passwords, you can alternatively send an email to the user that will ask them to reset their OTP generator. Choose Configure OTP in the Reset Actions list box and click the Send Email button. The sent email contains a link that will bring the user to the OTP setup screen.

4.5. Required Actions

Required Actions are tasks that a user must finish before they are allowed to log in. A user must provide their credentials before required actions are executed. Once a required action is completed, the user will not have to perform the action again. Here are an explanation of some of the built-in required action types:

- Update Password

- When set, a user must change their password.

- Configure OTP

- When set, a user must configure a one-time password generator on their mobile device using either the Free OTP or Google Authenticator application.

- Verify Email

- When set, a user must verify that they have a valid email account. An email will be sent to the user with a link they have to click. Once this workflow is successfully completed, they will be allowed to log in.

- Update Profile

- This required action asks the user to update their profile information, i.e. their name, address, email, and/or phone number.

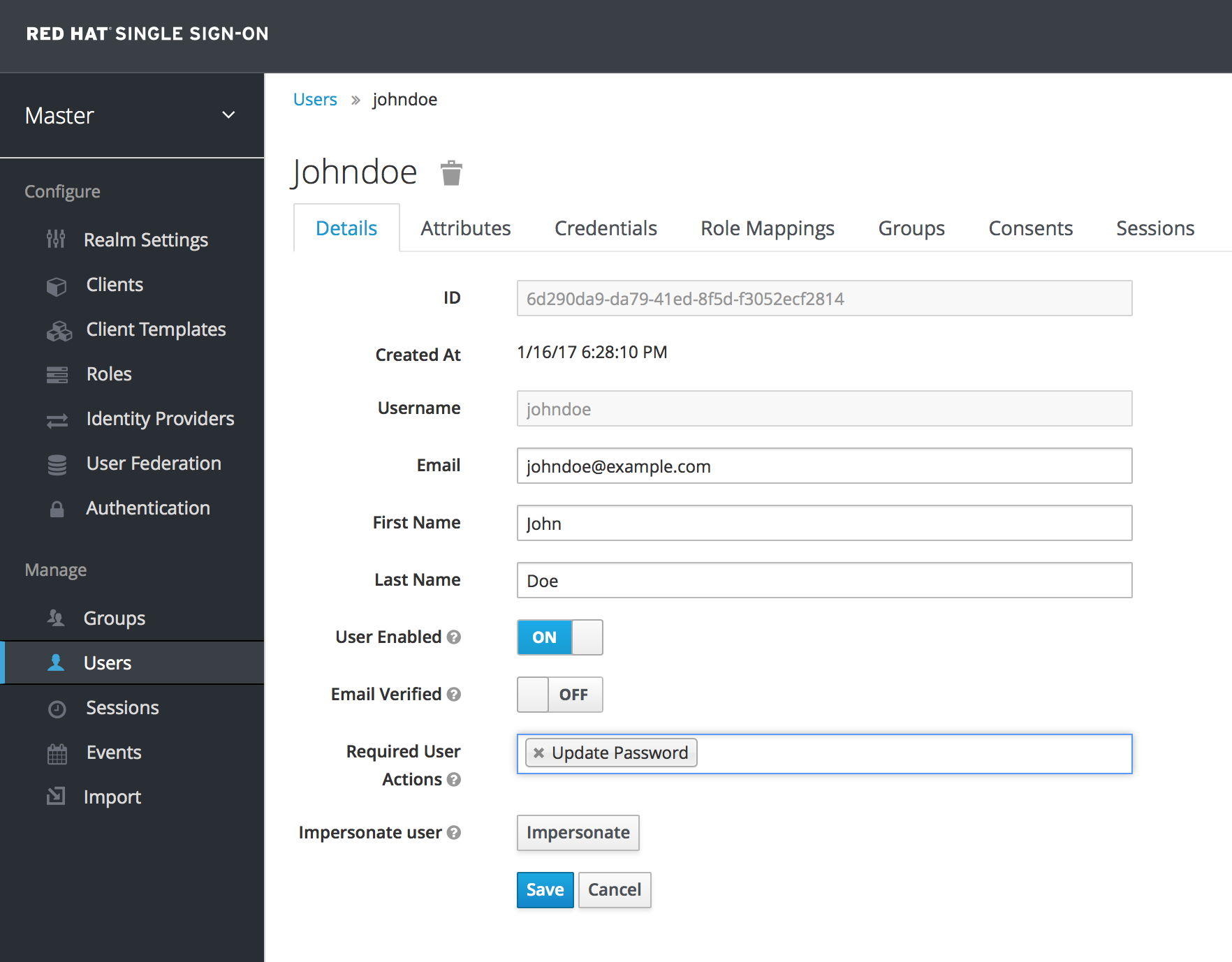

Admins can add required actions for each individual user within the user’s Details tab in the Admin Console.

Setting Required Action

In the Required User Actions list box, select all the actions you want to add to the account. If you want to remove one, click the X next to the action name. Also remember to click the Save button after you’ve decided what actions to add.

4.5.1. Default Required Actions

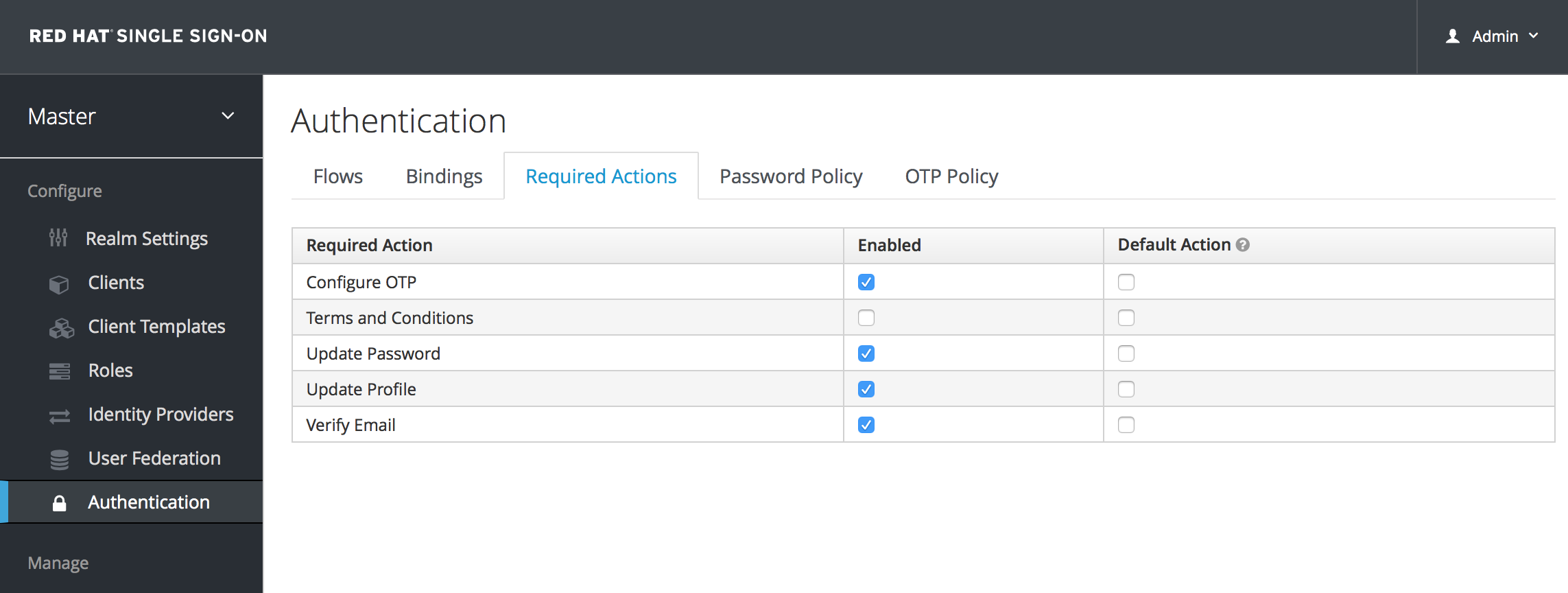

You can also specify required actions that will be added to an account whenever a new user is created, i.e. through the Add User button the user list screen, or via the user registration link on the login page. To specify the default required actions go to the Authentication left menu item and click on the Required Actions tab.

Default Required Actions

Simply click the checkbox in the Default Action column of the required actions that you want to be executed when a brand new user logs in.

4.5.2. Terms and Conditions

Many organizations have a requirement that when a new user logs in for the first time, they need to agree to the terms and conditions of the website. Red Hat Single Sign-On has this functionality implemented as a required action, but it requires some configuration. For one, you have to go to the Required Actions tab described earlier and enable the Terms and Conditions action. You must also edit the terms.ftl file in the base login theme. See the Server Developer Guide for more information on extending and creating themes.

4.6. Impersonation

It is often useful for an admin to impersonate a user. For example, a user may be experiencing a bug in one of your applications and an admin may want to impersonate the user to see if they can duplicate the problem. Admins with the appropriate permission can impersonate a user. There are two locations an admin can initiate impersonation. The first is on the Users list tab.

Users

You can see here that the admin has searched for jim. Next to Jim’s account you can see an impersonate button. Click that to impersonate the user.

Also, you can impersonate the user from the user Details tab.

User Details

Near the bottom of the page you can see the Impersonate button. Click that to impersonate the user.

When impersonating, if the admin and the user are in the same realm, then the admin will be logged out and automatically logged in as the user being impersonated. If the admin and user are not in the same realm, the admin will remain logged in, but additionally be logged in as the user in that user’s realm. In both cases, the browser will be redirected to the impersonated user’s User Account Management page.

Any user with the realm’s impersonation role can impersonate a user. Please see the Admin Console Access Control chapter for more details on assigning administration permissions.

4.7. User Registration

You can enable Red Hat Single Sign-On to allow user self registration. When enabled, the login page has a registration link the user can click on to create their new account.

When user self registration is enabled it is possible to use the registration form to detect valid usernames and emails. To prevent this enable reCAPTCHA Support.

Enabling registration is pretty simple. Go to the Realm Settings left menu and click it. Then go to the Login tab. There is a User Registration switch on this tab. Turn it on, then click the Save button.

Login Tab

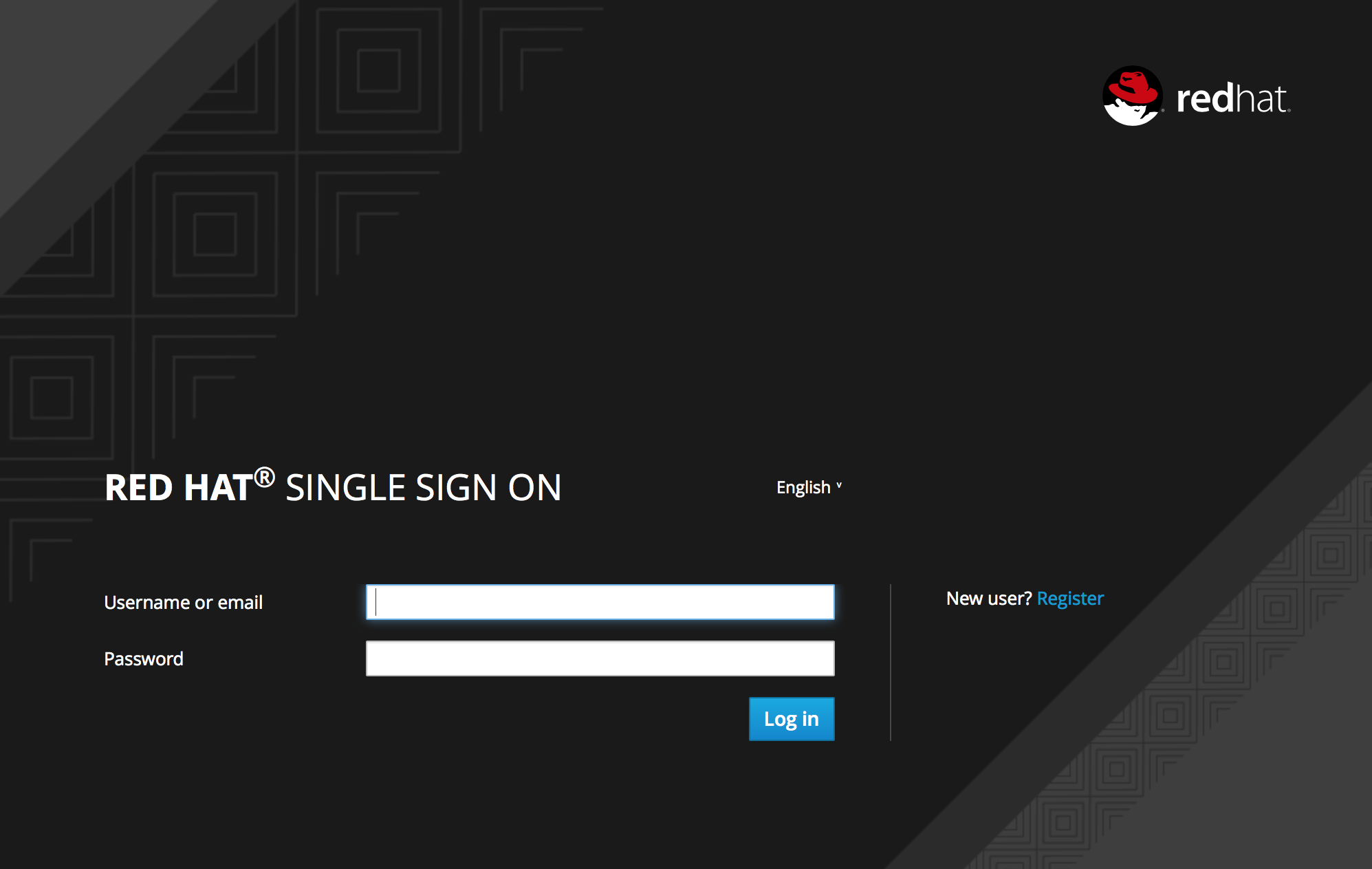

After you enable this setting, a Register link should show up on the login page.

Registration Link

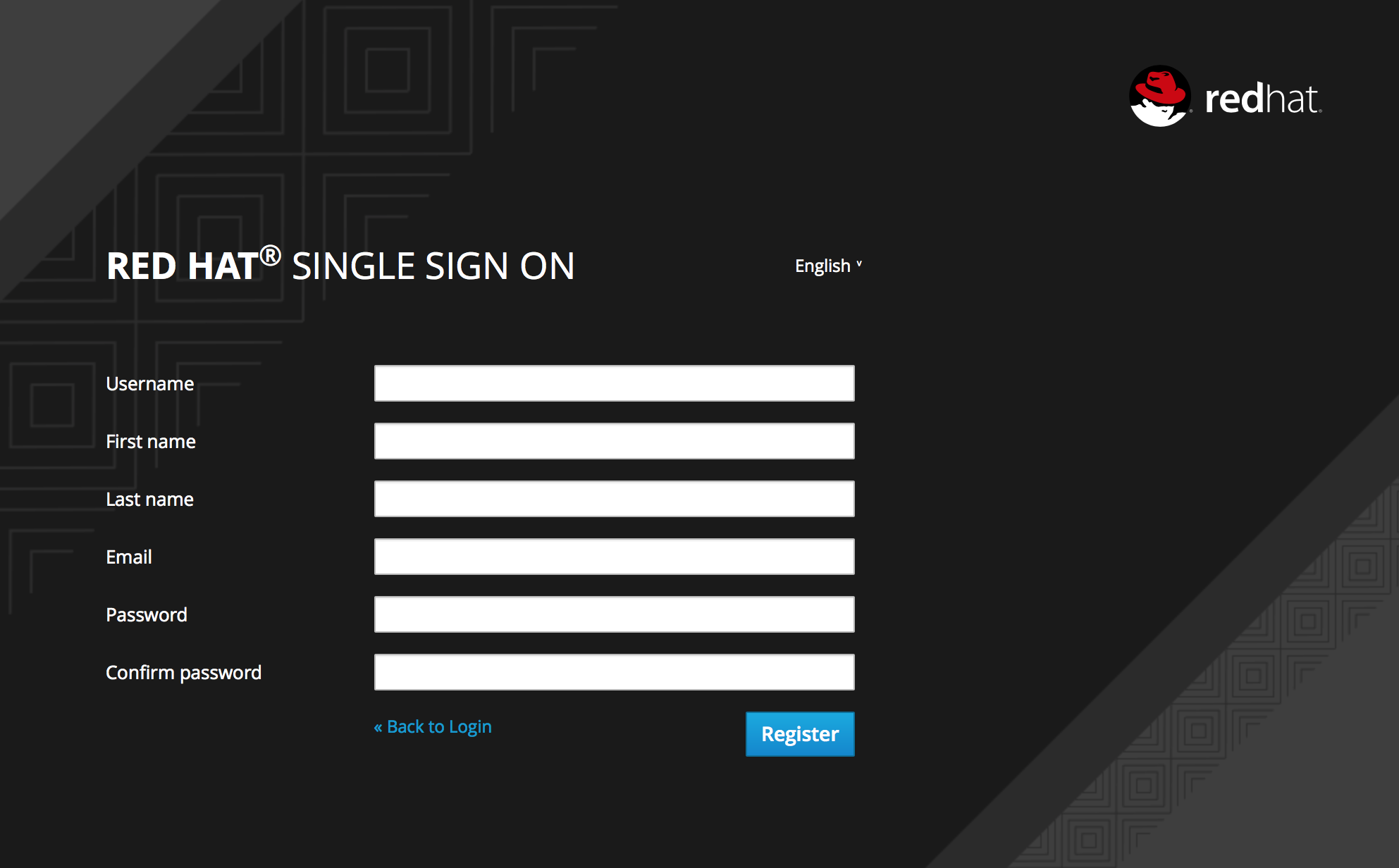

Clicking on this link will bring the user to the registration page where they have to enter in some user profile information and a new password.

Registration Form

You can change the look and feel of the registration form as well as removing or adding additional fields that must be entered. See the Server Developer Guide for more information.

4.7.1. reCAPTCHA Support

To safeguard registration against bots, Red Hat Single Sign-On has integration with Google reCAPTCHA. To enable this you need to first go to Google Recaptcha Website and create an API key so that you can get your reCAPTCHA site key and secret. (FYI, localhost works by default so you don’t have to specify a domain).

Next, there are a few steps you need to perform in the Red Hat Single Sign-On Admin Console. Click the Authentication left menu item and go to the Flows tab. Select the Registration flow from the drop down list on this page.

Registration Flow

Set the 'reCAPTCHA' requirement to Required by clicking the appropriate radio button. This will enable reCAPTCHA on the screen. Next, you have to enter in the reCAPTCHA site key and secret that you generated at the Google reCAPTCHA Website. Click on the 'Actions' button that is to the right of the reCAPTCHA flow entry, then "Config" link, and enter in the reCAPTCHA site key and secret on this config page.

Recaptcha Config Page

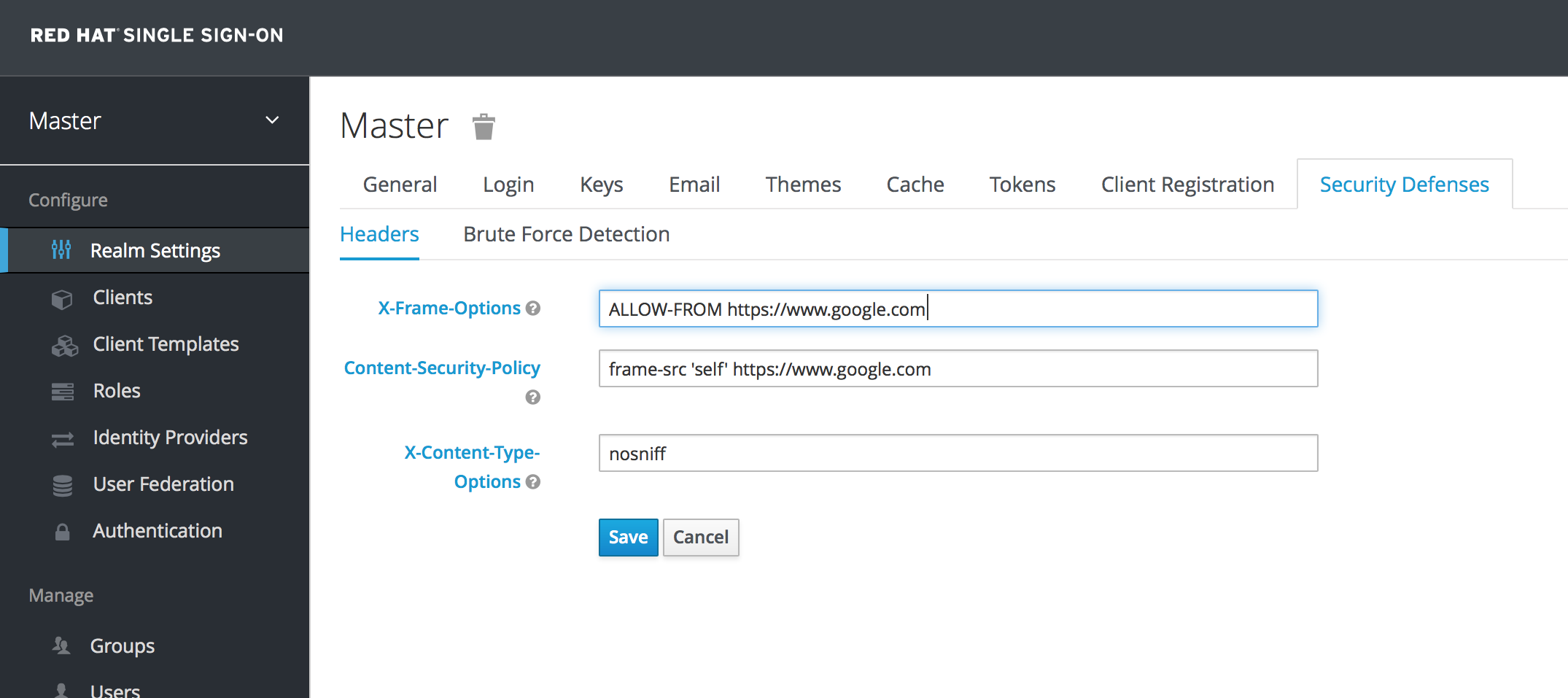

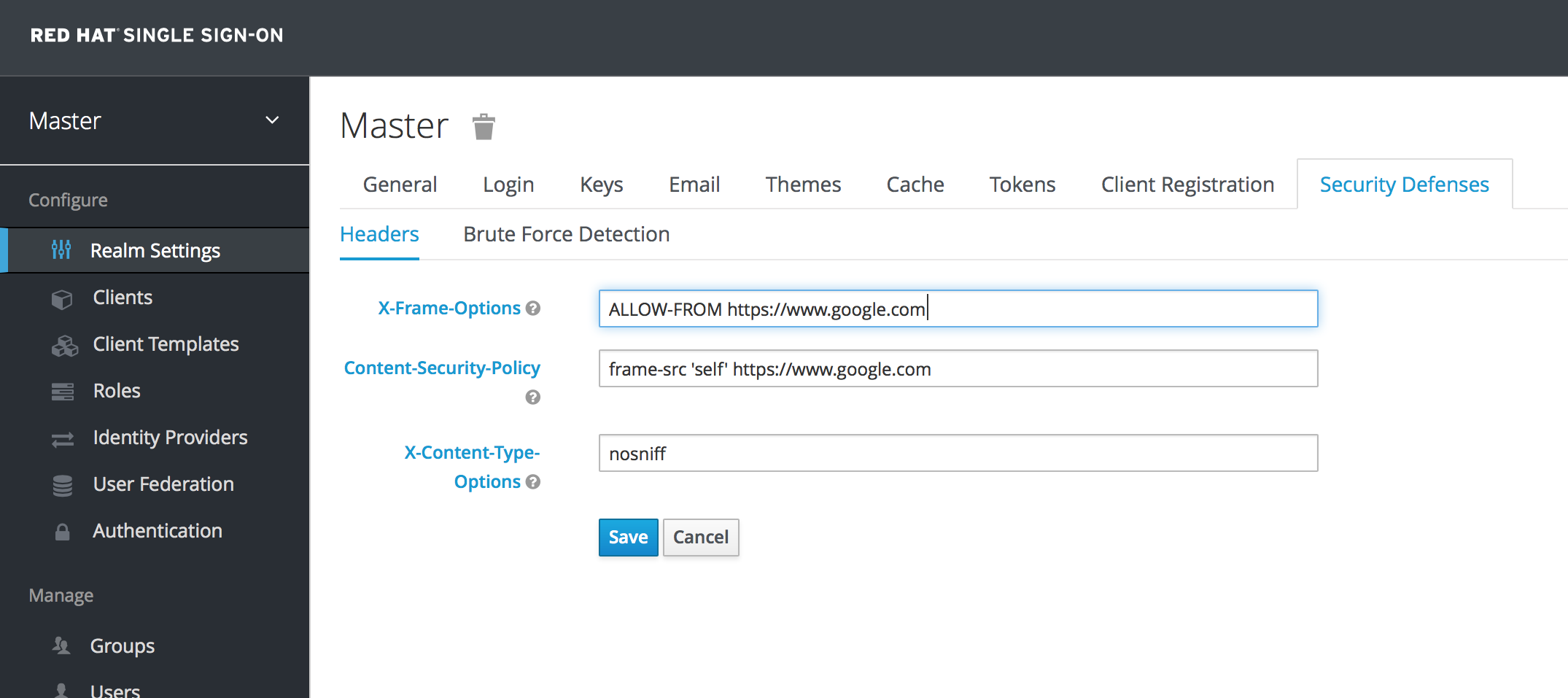

The final step you have to do is to change some default HTTP response headers that Red Hat Single Sign-On sets. Red Hat Single Sign-On will prevent a website from including any login page within an iframe. This is to prevent clickjacking attacks. You need to authorize Google to use the registration page within an iframe. Go to the Realm Settings left menu item and then go to the Security Defenses tab. You will need to add https://www.google.com to the values of both the X-Frame-Options and Content-Security-Policy headers.

Authorizing Iframes

Once you do this, reCAPTCHA should show up on your registration page. You may want to edit register.ftl in your login theme to muck around with the placement and styling of the reCAPTCHA button. See the Server Developer Guide for more information on extending and creating themes.

Chapter 5. Login Page Settings

There are several nice built-in login page features you can enable if you need the functionality.

5.1. Forgot Password

If you enable it, users are able to reset their credentials if they forget their password or lose their OTP generator. Go to the Realm Settings left menu item, and click on the Login tab. Switch on the Forgot Password switch.

Login Tab

A forgot password link will now show up on your login pages.

Forgot Password Link

Clicking on this link will bring the user to a page where they can enter in their username or email and receive an email with a link to reset their credentials.

Forgot Password Page

The text sent in the email is completely configurable. You just need to extend or edit the theme associated with it. See the Server Developer Guide for more information.

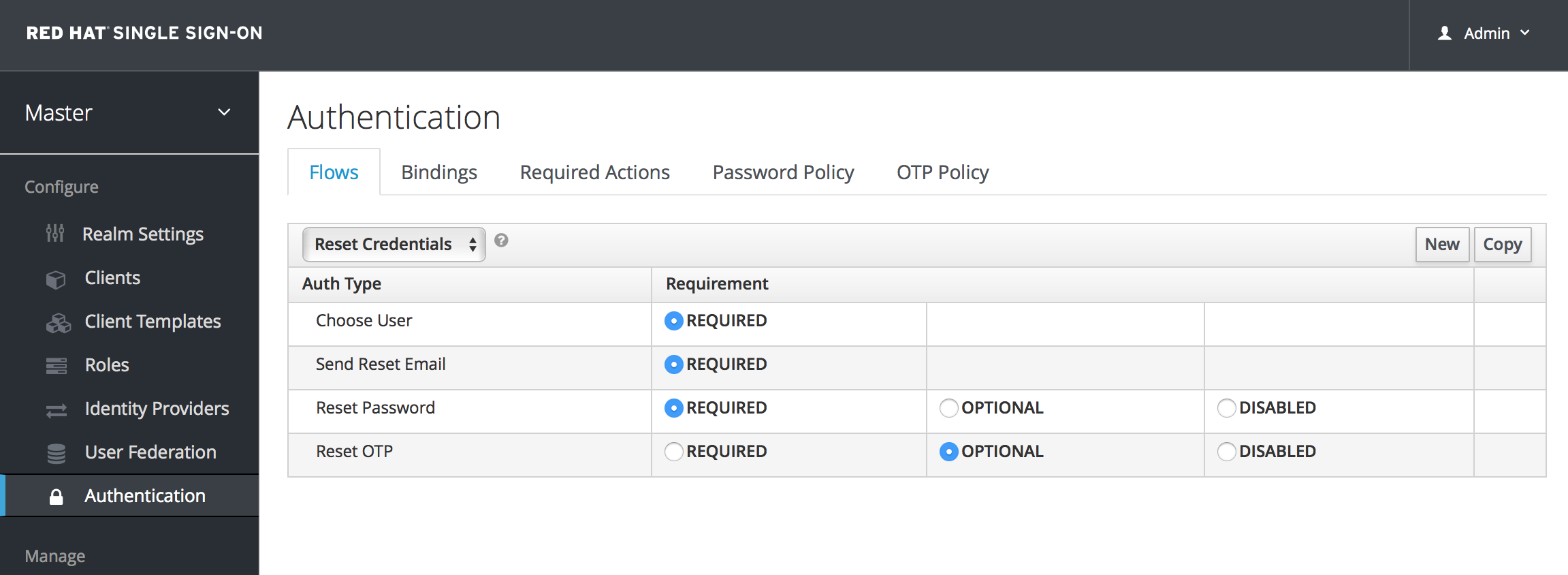

When the user clicks on the email link, they will be asked to update their password, and, if they have an OTP generator set up, they will also be asked to reconfigure this as well. Depending on the security requirements of your organization you may not want users to be able to reset their OTP generator through email. You can change this behavior by going to the Authentication left menu item, clicking on the Flows tab, and selecting the Reset Credentials flow:

Reset Credentials Flow

If you do not want OTP reset, then just chose the disabled radio button to the right of Reset OTP.

5.2. Remember Me

If a logged in user closes their browser, their session is destroyed and they will have to log in again. You can set things up so that if a user checks a remember me checkbox, they will remain logged in even if the browser is closed. This basically turns the login cookie from a session-only cookie to a persistence cookie.

To enable this feature go to Realm Settings left menu item and click on the Login tab and turn on the Remember Me switch:

Login Tab

Once you save this setting, a remember me checkbox will be displayed on the realm’s login page.

Remember Me

Chapter 6. Authentication

There are a few features you should be aware of when configuring authentication for your realm. Many organizations have strict password and OTP policies that you can enforce via settings in the Admin Console. You may or may not want to require different credential types for authentication. You may want to give users the option to login via Kerberos or disable or enable various built-in credential types. This chapter covers all of these topics.

6.1. Password Policies

Each new realm created has no password policies associated with it. Users can have as short, as long, as complex, as insecure a password, as they want. Simple settings are fine for development or learning Red Hat Single Sign-On, but unacceptable in production environments. Red Hat Single Sign-On has a rich set of password policies you can enable through the Admin Console.

Click on the Authentication left menu item and go to the Password Policy tab. Choose the policy you want to add in the right side drop down list box. This will add the policy in the table on the screen. Choose the parameters for the policy. Hit the Save button to store your changes.

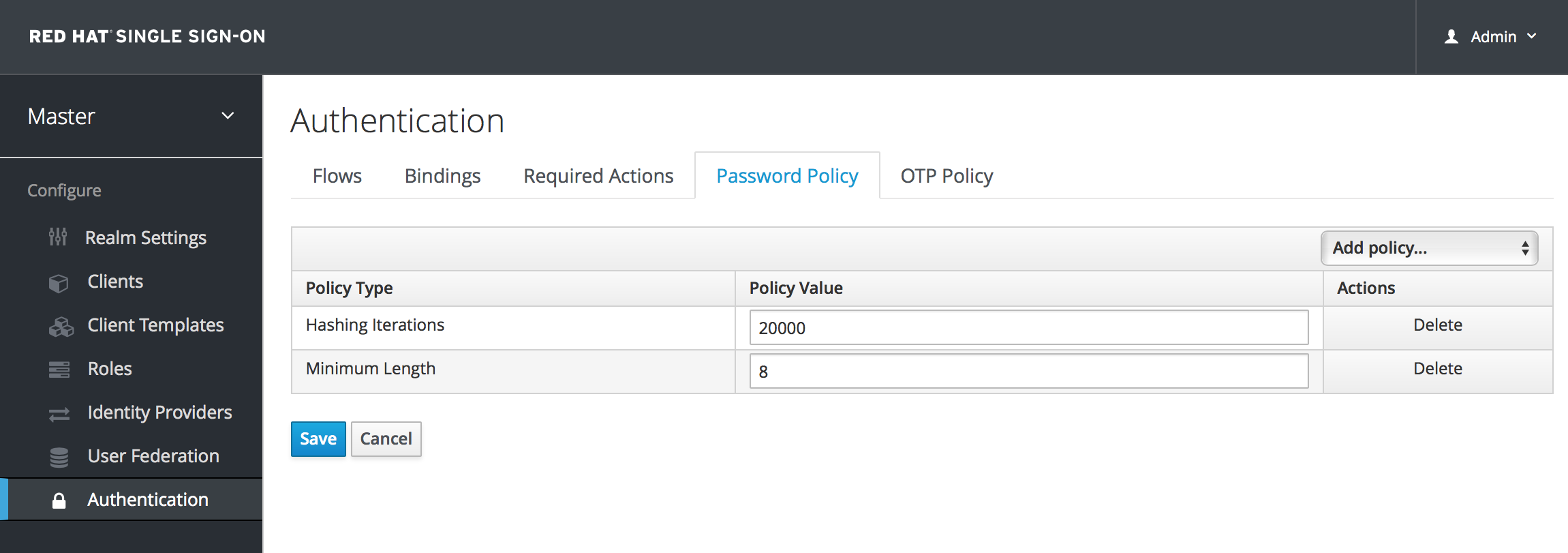

Password Policy

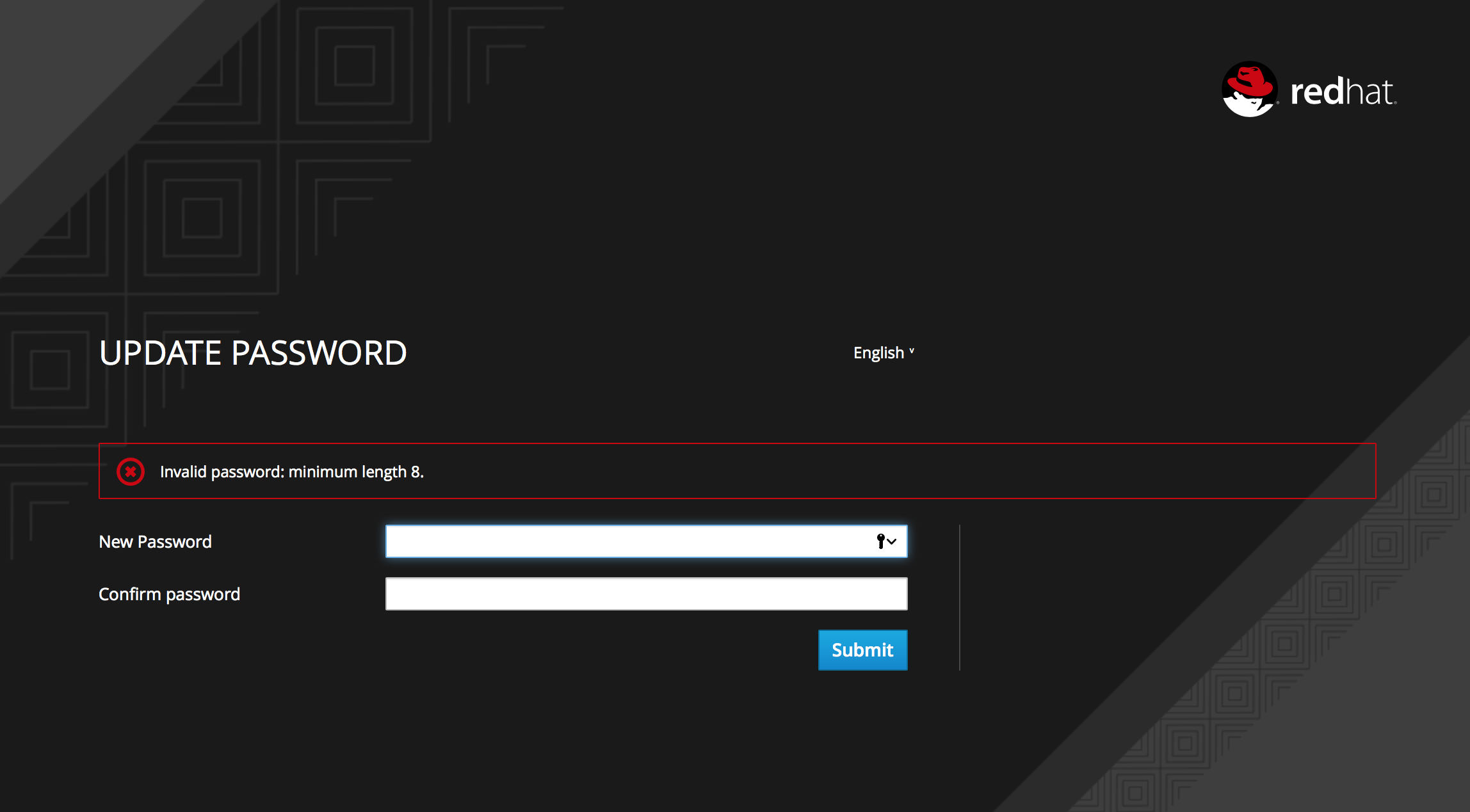

After saving your policy, user registration and the Update Password required action will enforce your new policy. An example of a user failing the policy check:

Failed Password Policy

If the password policy is updated, an Update Password action must be set for every user. An automatic trigger is scheduled as a future enhancement.

6.1.1. Password Policy Types

Here’s an explanation of each policy type:

- Hashing Algorithm

- Passwords are not stored as clear text. Instead they are hashed using standard hashing algorithms before they are stored or validated. Supported values are pbkdf2, pbkdf2-sha256 and pbkdf2-sha512.

- Hashing Iterations

- This value specifies the number of times a password will be hashed before it is stored or verified. The default value is 20,000. This hashing is done in the rare case that a hacker gets access to your password database. Once they have access to the database, they can reverse engineer user passwords. The industry recommended value for this parameter changes every year as CPU power improves. A higher hashing iteration value takes more CPU power for hashing, and can impact performance. You’ll have to weigh what is more important to you. Performance or protecting your passwords stores. There may be more cost effective ways of protecting your password stores.

- Digits

- The number of digits required to be in the password string.

- Lowercase Characters

- The number of lower case letters required to be in the password string.

- Uppercase Characters

- The number of upper case letters required to be in the password string.

- Special Characters

- The number of special characters like '?!#%$' required to be in the password string.

- Not Username

- When set, the password is not allowed to be the same as the username.

- Regular Expression

- Define one or more Perl regular expression patterns that passwords must match.

- Expire Password

- The number of days for which the password is valid. After the number of days has expired, the user is required to change their password.

- Not Recently Used

- This policy saves a history of previous passwords. The number of old passwords stored is configurable. When a user changes their password they cannot use any stored passwords.

- Password Blacklist

-

This policy checks if a given password is contained in a blacklist file, which is potentially a very large file. Password blacklists are UTF-8 plain-text files with Unix line endings where every line represents a blacklisted password. The file name of the blacklist file must be provided as the password policy value, e.g.

10_million_password_list_top_1000000.txt. Blacklist files are resolved against${jboss.server.data.dir}/password-blacklists/by default. This path can be customized via thekeycloak.password.blacklists.pathsystem property, or theblacklistsPathproperty of thepasswordBlacklistpolicy SPI configuration.

6.2. OTP Policies

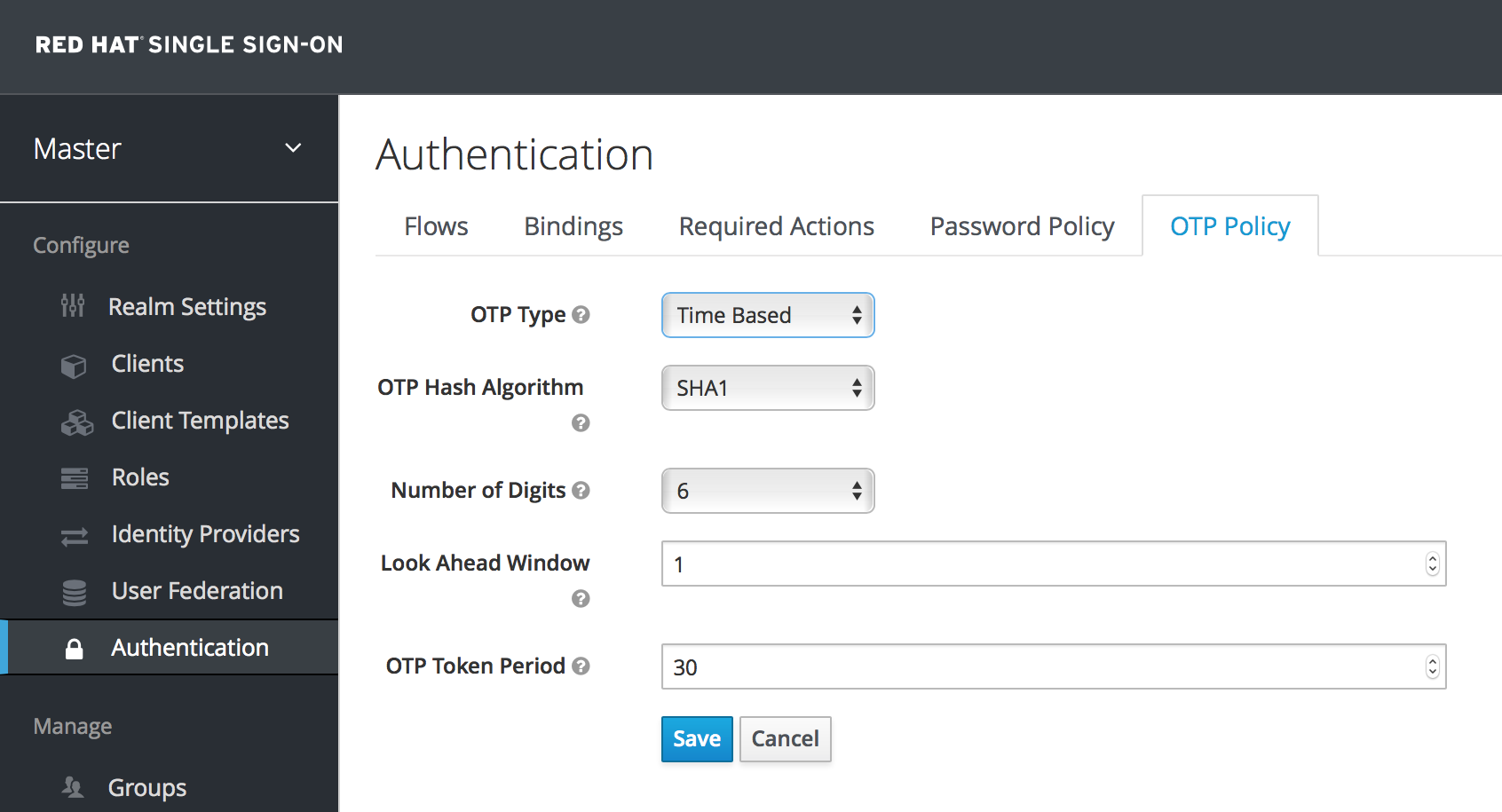

Red Hat Single Sign-On has a number of policies you can set up for your FreeOTP or Google Authenticator One-Time Password generator. Click on the Authentication left menu item and go to the OTP Policy tab.

OTP Policy

Any policies you set here will be used to validate one-time passwords. When configuring OTP, FreeOTP and Google Authenticator can scan a QR code that is generated on the OTP set up page that Red Hat Single Sign-On has. The bar code is also generated from information configured on the OTP Policy tab.

6.2.1. TOTP vs. HOTP

There are two different algorithms to choose from for your OTP generators. Time Based (TOTP) and Counter Based (HOTP). For TOTP, your token generator will hash the current time and a shared secret. The server validates the OTP by comparing the all hashes within a certain window of time to the submitted value. So, TOTPs are valid only for a short window of time (usually 30 seconds). For HOTP a shared counter is used instead of the current time. The server increments the counter with each successful OTP login. So, valid OTPs only change after a successful login.

TOTP is considered a little more secure because the matchable OTP is only valid for a short window of time while the OTP for HOTP can be valid for an indeterminate amount of time. HOTP is much more user friendly as the user won’t have to hurry to enter in their OTP before the time interval is up. With the way Red Hat Single Sign-On has implemented TOTP this distinction becomes a little more blurry. HOTP requires a database update every time the server wants to increment the counter. This can be a performance drain on the authentication server when there is heavy load. So, to provide a more efficient alternative, TOTP does not remember passwords used. This bypasses the need to do any DB updates, but the downside is that TOTPs can be re-used in the valid time interval. For future versions of Red Hat Single Sign-On it is planned that you will be able to configure whether TOTP checks older OTPs in the time interval.

6.2.2. TOTP Configuration Options

- OTP Hash Algorithm

- Default is SHA1, more secure options are SHA256 and SHA512.

- Number of Digits

- How many characters is the OTP? Short means more user friendly as it is less the user has to type. More means more security.

- Look Ahead Window

- How many intervals ahead should the server try and match the hash? This exists so just in case the clock of the TOTP generator or authentication server get out of sync. The default value of 1 is usually good enough. For example, if the time interval for a new token is every 30 seconds, the default value of 1 means that it will only accept valid tokens in that 30 second window. Each increment of this config value will increase the valid window by 30 seconds.

- OTP Token Period

- Time interval in seconds a new TOTP will be generated by the token generator. And, the time window the server is matching a hash.

6.2.3. HOTP Configuration Options

- OTP Hash Algorithm

- Default is SHA1, more secure options are SHA256 and SHA512.

- Number of Digits

- How many characters is the OTP? Short means more user friendly as it is less the user has to type. More means more security.

- Look Ahead Window

- How many counters ahead should the server try and match the hash? The default value is 1. This exists to cover the case where the user’s counter gets ahead of the server’s. This can often happen as users often increment the counter manually too many times by accident. This value really should be increased to a value of 10 or so.

- Initial Counter

- What is the value of the initial counter?

6.3. Authentication Flows

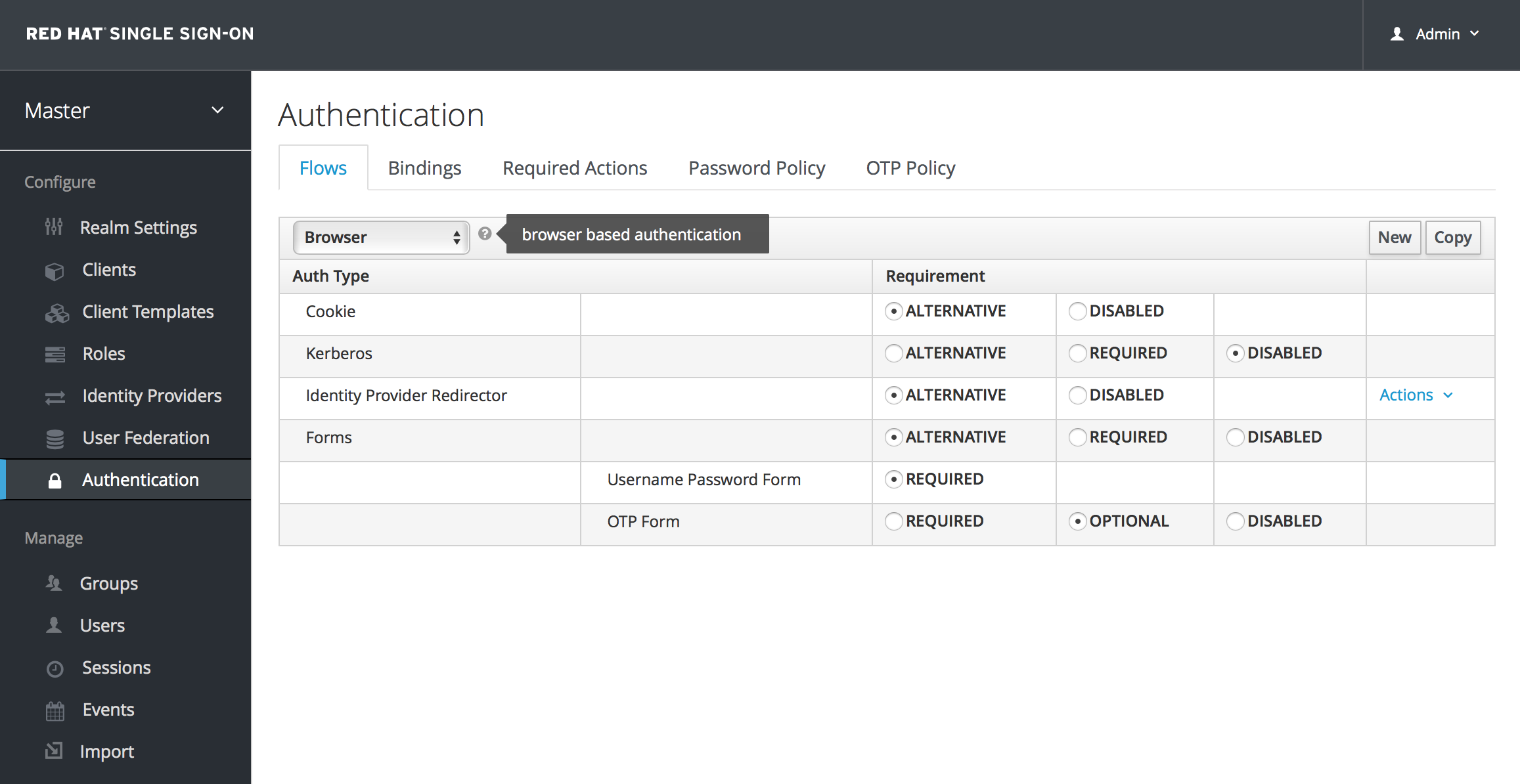

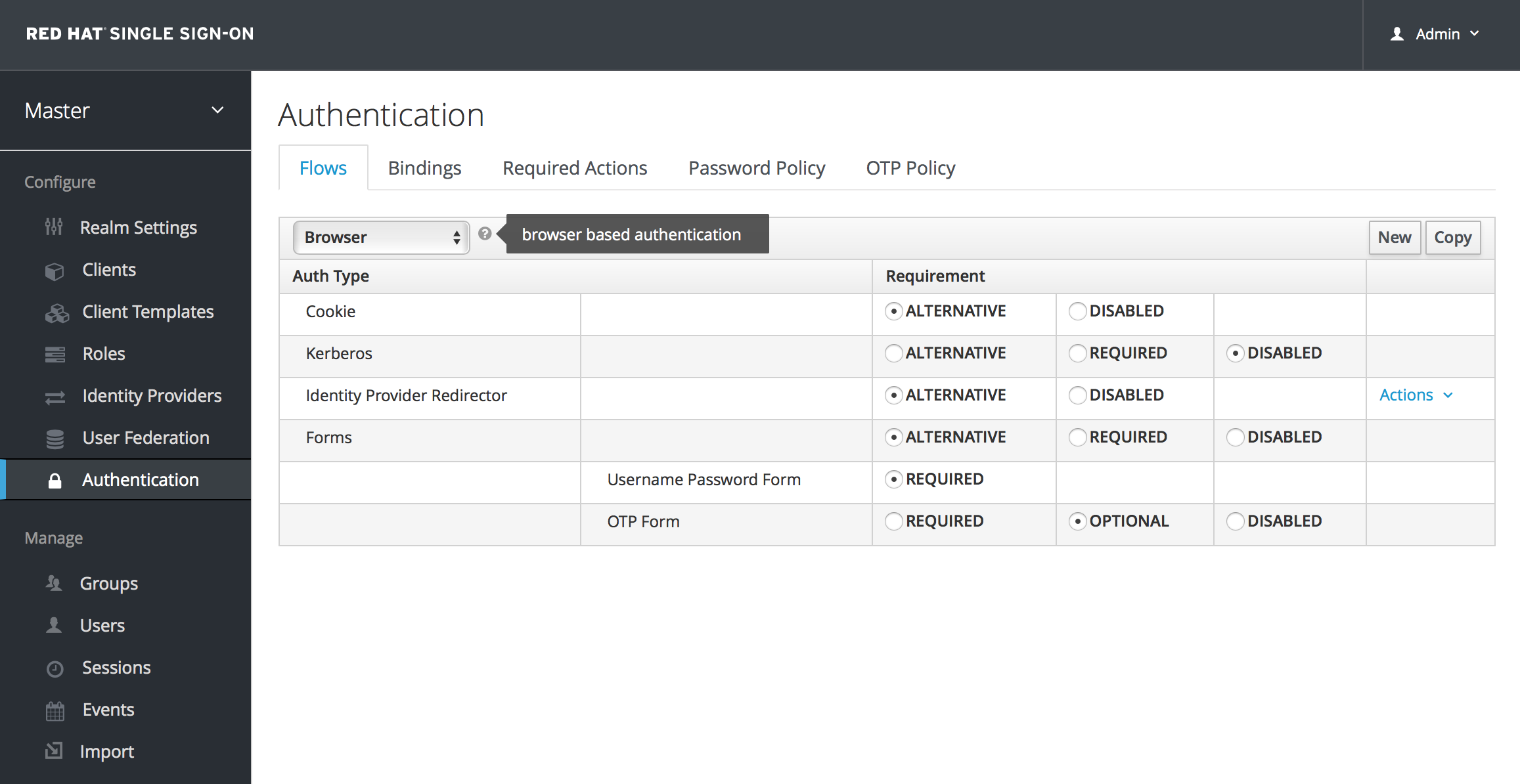

An authentication flow is a container for all authentications, screens, and actions that must happen during login, registration, and other Red Hat Single Sign-On workflows. If you go to the admin console Authentication left menu item and go to the Flows tab, you can view all the defined flows in the system and what actions and checks each flow requires. This section does a walk through of the browser login flow. In the left drop down list select browser to come to the screen shown below:

Browser Flow

If you hover over the tooltip (the tiny question mark) to the right of the flow selection list, this will describe what the flow is and does.

The Auth Type column is the name of authentication or action that will be executed. If an authentication is indented this means it is in a sub-flow and may or may not be executed depending on the behavior of its parent. The Requirement column is a set of radio buttons which define whether or not the action will execute. Let’s describe what each radio button means:

- Required

-

This authentication execution must execute successfully. If the user doesn’t have that type of authentication mechanism configured and there is a required action associated with that authentication type, then a required action will be attached to that account. For example, if you switch

OTP FormtoRequired, users that don’t have an OTP generator configured will be asked to do so. - Optional

- If the user has the authentication type configured, it will be executed. Otherwise, it will be ignored.

- Disabled

- If disabled, the authentication type is not executed.

- Alternative

- This means that at least one alternative authentication type must execute successfully at that level of the flow.

This is better described in an example. Let’s walk through the browser authentication flow.

-

The first authentication type is

Cookie. When a user successfully logs in for the first time, a session cookie is set. If this cookie has already been set, then this authentication type is successful. Since the cookie provider returned success and each execution at this level of the flow is alternative, no other execution is executed and this results in a successful login. - Next the flow looks at the Kerberos execution. This authenticator is disabled by default and will be skipped.

-

The next execution is a subflow called Forms. Since this subflow is marked as alternative it will not be executed if the

Cookieauthentication type passed. This subflow contains additional authentication type that needs to be executed. The executions for this subflow are loaded and the same processing logic occurs - The first execution in the Forms subflow is the Username Password Form. This authentication type renders the username and password page. It is marked as required so the user must enter in a valid username and password.

- The next execution is the OTP Form. This is marked as optional. If the user has OTP set up, then this authentication type must run and be successful. If the user doesn’t have OTP set up, this authentication type is ignored.

6.4. Kerberos

Red Hat Single Sign-On supports login with a Kerberos ticket through the SPNEGO protocol. SPNEGO (Simple and Protected GSSAPI Negotiation Mechanism) is used to authenticate transparently through the web browser after the user has been authenticated when logging-in his session. For non-web cases or when ticket is not available during login, Red Hat Single Sign-On also supports login with Kerberos username/password.

A typical use case for web authentication is the following:

- User logs into his desktop (Such as a Windows machine in Active Directory domain or Linux machine with Kerberos integration enabled).

- User then uses his browser (IE/Firefox/Chrome) to access a web application secured by Red Hat Single Sign-On.

- Application redirects to Red Hat Single Sign-On login.

-

Red Hat Single Sign-On renders HTML login screen together with status 401 and HTTP header

WWW-Authenticate: Negotiate -

In case that the browser has Kerberos ticket from desktop login, it transfers the desktop sign on information to the Red Hat Single Sign-On in header

Authorization: Negotiate 'spnego-token'. Otherwise it just displays the login screen. - Red Hat Single Sign-On validates token from the browser and authenticates the user. It provisions user data from LDAP (in case of LDAPFederationProvider with Kerberos authentication support) or let user to update his profile and prefill data (in case of KerberosFederationProvider).

- Red Hat Single Sign-On returns back to the application. Communication between Red Hat Single Sign-On and application happens through OpenID Connect or SAML messages. The fact that Red Hat Single Sign-On was authenticated through Kerberos is hidden from the application. So Red Hat Single Sign-On acts as broker to Kerberos/SPNEGO login.

For setup there are 3 main parts:

- Setup and configuration of Kerberos server (KDC)

- Setup and configuration of Red Hat Single Sign-On server

- Setup and configuration of client machines

6.4.1. Setup of Kerberos server

This is platform dependent. Exact steps depend on your OS and the Kerberos vendor you’re going to use. Consult Windows Active Directory, MIT Kerberos and your OS documentation for how exactly to setup and configure Kerberos server.

At least you will need to:

- Add some user principals to your Kerberos database. You can also integrate your Kerberos with LDAP, which means that user accounts will be provisioned from LDAP server.

Add service principal for "HTTP" service. For example if your Red Hat Single Sign-On server will be running on

www.mydomain.orgyou may need to add principalHTTP/www.mydomain.org@MYDOMAIN.ORGassuming that MYDOMAIN.ORG will be your Kerberos realm.For example on MIT Kerberos you can run a "kadmin" session. If you are on the same machine where is MIT Kerberos, you can simply use the command:

sudo kadmin.local

sudo kadmin.localThen add HTTP principal and export his key to a keytab file with the commands like:

addprinc -randkey HTTP/www.mydomain.org@MYDOMAIN.ORG ktadd -k /tmp/http.keytab HTTP/www.mydomain.org@MYDOMAIN.ORG

addprinc -randkey HTTP/www.mydomain.org@MYDOMAIN.ORG

ktadd -k /tmp/http.keytab HTTP/www.mydomain.org@MYDOMAIN.ORG

The Keytab file /tmp/http.keytab will need to be accessible on the host where Red Hat Single Sign-On server will be running.

6.4.2. Setup and configuration of Red Hat Single Sign-On server

You need to install a kerberos client on your machine. This is also platform dependent. If you are on Fedora, Ubuntu or RHEL, you can install the package freeipa-client, which contains a Kerberos client and several other utilities. Configure the kerberos client (on linux it’s in file /etc/krb5.conf ). You need to put your Kerberos realm and at least configure the HTTP domains your server will be running on. For the example realm MYDOMAIN.ORG you may configure the domain_realm section like this:

[domain_realm] .mydomain.org = MYDOMAIN.ORG mydomain.org = MYDOMAIN.ORG

[domain_realm]

.mydomain.org = MYDOMAIN.ORG

mydomain.org = MYDOMAIN.ORG

Next you need to export the keytab file with the HTTP principal and make sure the file is accessible to the process under which Red Hat Single Sign-On server is running. For production, it’s ideal if it’s readable just by this process and not by someone else. For the MIT Kerberos example above, we already exported keytab to /tmp/http.keytab . If your KDC and Red Hat Single Sign-On are running on same host, you have that file already available.

6.4.2.1. Enable SPNEGO Processing

Red Hat Single Sign-On does not have the SPNEGO protocol support turned on by default. So, you have to go to the browser flow and enable Kerberos.

Browser Flow

Switch the Kerberos requirement from disabled to either alternative or required. Alternative basically means that Kerberos is optional. If the user’s browser hasn’t been configured to work with SPNEGO/Kerberos, then Red Hat Single Sign-On will fall back to the regular login screens. If you set the requirement to required then all users must have Kerberos enabled for their browser.

6.4.2.2. Configure Kerberos User Storage Federation Provider

Now that the SPNEGO protocol is turned on at the authentication server, you’ll need to configure how Red Hat Single Sign-On interprets the Kerberos ticket. This is done through User Storage Federation. We have 2 different federation providers with Kerberos authentication support.

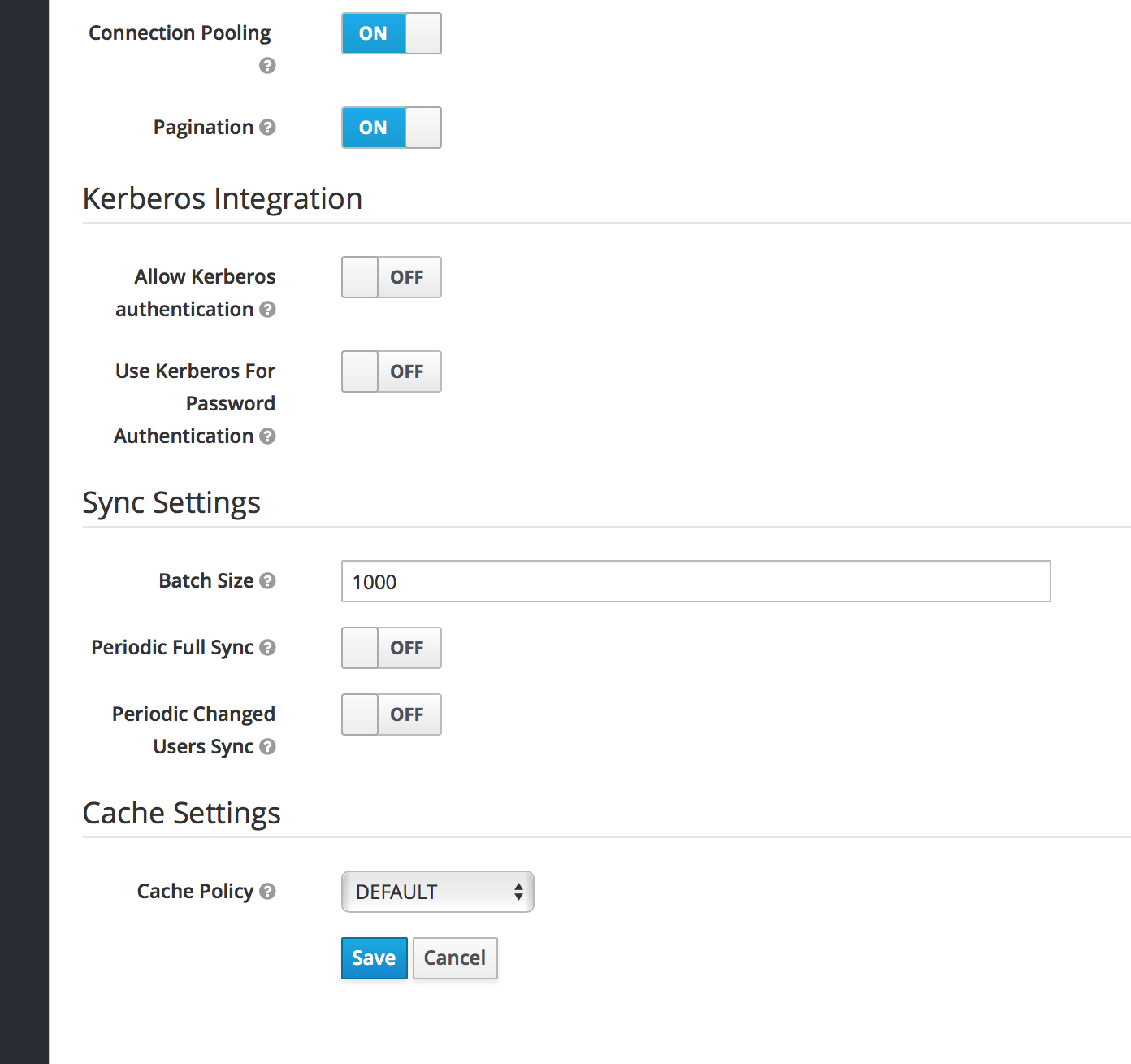

If you want to authenticate with Kerberos backed by an LDAP server, you have to first configure the LDAP Federation Provider. If you look at the configuration page for your LDAP provider you’ll see a Kerberos Integration section.

LDAP Kerberos Integration

Turning on the switch Allow Kerberos authentication will make Red Hat Single Sign-On use the Kerberos principal to lookup information about the user so that it can be imported into the Red Hat Single Sign-On environment.

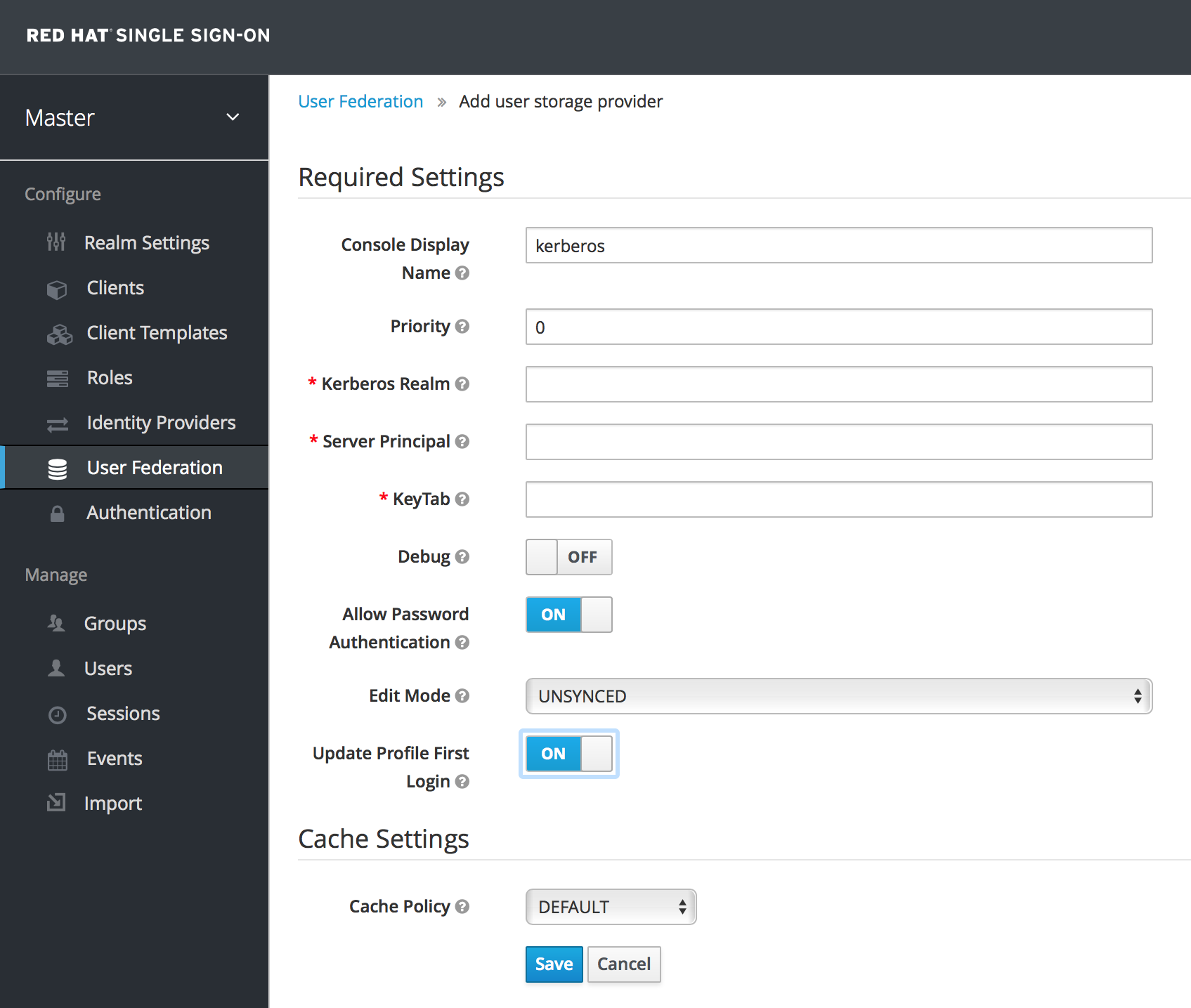

If your Kerberos solution is not backed by an LDAP server, you have to use the Kerberos User Storage Federation Provider. Go to the User Federation left menu item and select Kerberos from the Add provider select box.

Kerberos User Storage Provider

This provider parses the Kerberos ticket for simple principal information and does a small import into the local Red Hat Single Sign-On database. User profile information like first name, last name, and email are not provisioned.

6.4.3. Setup and configuration of client machines

Clients need to install kerberos client and setup krb5.conf as described above. Additionally they need to enable SPNEGO login support in their browser. See configuring Firefox for Kerberos if you are using that browser. URI .mydomain.org must be allowed in the network.negotiate-auth.trusted-uris config option.

In a Windows domain, clients usually don’t need to configure anything special as IE is already able to participate in SPNEGO authentication for the Windows domain.

6.4.4. Credential Delegation

Kerberos 5 supports the concept of credential delegation. In this scenario, your applications may want access to the Kerberos ticket so that they can re-use it to interact with other services secured by Kerberos. Since the SPNEGO protocol is processed in the Red Hat Single Sign-On server, you have to propagate the GSS credential to your application within the OpenID Connect token claim or a SAML assertion attribute that is transmitted to your application from the Red Hat Single Sign-On server. To have this claim inserted into the token or assertion, each application will need to enable the built-in protocol mapper called gss delegation credential. This is enabled in the Mappers tab of the application’s client page. See Protocol Mappers chapter for more details.

Applications will need to deserialize the claim it receives from Red Hat Single Sign-On before it can use it to make GSS calls against other services. Once you deserialize the credential from the access token to the GSSCredential object, the GSSContext will need to be created with this credential passed to the method GSSManager.createContext for example like this:

Note that you also need to configure forwardable kerberos tickets in krb5.conf file and add support for delegated credentials to your browser.

Credential delegation has some security implications so only use it if you really need it. It’s highly recommended to use it together with HTTPS. See for example this article for more details.

6.4.5. Cross-realm trust

In the Kerberos V5 protocol, the realm is a set of Kerberos principals defined in the Kerberos database (typically LDAP server). The Kerberos protocol has a concept of cross-realm trust. For example, if there are 2 kerberos realms A and B, the cross-realm trust will allow the users from realm A to access resources (services) of realm B. This means that realm B trusts the realm A.

Kerberos cross-realm trust

The Red Hat Single Sign-On server has support for cross-realm trust. There are few things which need to be done to achieve this:

-

Configure the Kerberos servers for the cross-realm trust. This step is dependent on the concrete Kerberos server implementations used. In general, it is needed to add the Kerberos principal

krbtgt/B@Ato both Kerberos databases of realm A and B. It is needed that this principal has same keys on both Kerberos realms. This is usually achieved when the principals have same password, key version number and there are same ciphers used in both realms. It is recommended to consult the Kerberos server documentation for more details.

The cross-realm trust is unidirectional by default. If you want bidirectional trust to have realm A also trust realm B, you must also add the principal krbtgt/A@B to both Kerberos databases. However, trust is transitive by default. If realm B trusts realm A and realm C trusts realm B, then realm C automatically trusts realm A without a need to have principal krbtgt/C@A available. Some additional configuration (for example capaths) may be needed to configure on Kerberos client side, so that the clients are able to find the trust path. Consult the Kerberos documentation for more details.

Configure Red Hat Single Sign-On server

-

If you use an LDAP storage provider with Kerberos support, you need to configure the server principal for realm B as in this example:

HTTP/mydomain.com@B. The LDAP server must be able to find the users from realm A if you want users from realm A to successfully authenticate to Red Hat Single Sign-On, as Red Hat Single Sign-On server must be able to do SPNEGO flow and then find the users. For example, kerberos principal userjohn@Amust be available as a user in the LDAP under an LDAP DN such asuid=john,ou=People,dc=example,dc=com. If you want both users from realm A and B to authenticate, you need to ensure that LDAP is able to find users from both realms A and B. We want to improve this limitation in future versions, so you can potentially create more separate LDAP providers for separate realms and ensure that SPNEGO works for both of them. -

If you use a Kerberos user storage provider (typically the Kerberos without LDAP integration), you need to configure the server principal as

HTTP/mydomain.com@Band users from both Kerberos realms A and B should be able to authenticate.

-

If you use an LDAP storage provider with Kerberos support, you need to configure the server principal for realm B as in this example:

For the Kerberos user storage provider, it is recommended that there are no conflicting users among kerberos realms. If conflicting users exist, they will be mapped to the same Red Hat Single Sign-On user. This is also something, which we want to improve in future versions and provide some more flexible mappings from Kerberos principals to Red Hat Single Sign-On usernames.

6.4.6. Troubleshooting

If you have issues, we recommend that you enable additional logging to debug the problem:

-

Enable

Debugflag in admin console for Kerberos or LDAP federation providers -

Enable TRACE logging for category

org.keycloakin logging section ofstandalone/configuration/standalone.xmlto receive more infostandalone/log/server.log -

Add system properties

-Dsun.security.krb5.debug=trueand-Dsun.security.spnego.debug=true

6.5. X.509 Client Certificate User Authentication

Red Hat Single Sign-On supports login with a X.509 client certificate if the server is configured for mutual SSL authentication.

A typical workflow is as follows:

- A client sends an authentication request over SSL/TLS channel

- During SSL/TLS handshake, the server and the client exchange their x.509/v3 certificates

- The container (WildFly) validates the certificate PKIX path and the certificate expiration

The x.509 client certificate authenticator validates the client certificate as follows:

- Optionally checks the certificate revocation status using CRL and/or CRL Distribution Points

- Optionally checks the Certificate revocation status using OCSP (Online Certificate Status Protocol)

- Optionally validates whether the key usage in the certificate matches the expected key usage

- Optionally validates whether the extended key usage in the certificate matches the expected extended key usage

- If any of the above checks fails, the x.509 authentication fails

- Otherwise, the authenticator extracts the certificate identity and maps it to an existing user

Once the certificate is mapped to an existing user, the behavior diverges depending on the authentication flow:

- In the Browser Flow, the server prompts the user to confirm identity or to ignore it and instead sign in with username/password

- In the case of the Direct Grant Flow, the server signs in the user

6.5.1. Features

- Supported Certificate Identity Sources

- Match SubjectDN using regular expression

- X500 Subject’s e-mail attribute

- X500 Subject’s e-mail from Subject Alternative Name Extension (RFC822Name General Name)

- X500 Subject’s Common Name attribute

- Match IssuerDN using regular expression

- X500 Issuer’s e-mail attribute

- X500 Issuer’s Common Name attribute

- Certificate Serial Number

- Regular Expressions

- The certificate identity can be extracted from either Subject DN or Issuer DN using a regular expression as a filter. For example, the regular expression below will match the e-mail attribute:

emailAddress=(.*?)(?:,|$)

emailAddress=(.*?)(?:,|$)

The regular expression filtering is applicable only if the Identity Source is set to either Match SubjectDN using regular expression or Match IssuerDN using regular expression.

- Mapping certificate identity to an existing user

-

The certificate identity mapping can be configured to map the extracted user identity to an existing user’s username or e-mail or to a custom attribute which value matches the certificate identity. For example, setting the

Identity sourceto Subject’s e-mail andUser mapping methodto Username or email will have the X.509 client certificate authenticator use the e-mail attribute in the certificate’s Subject DN as a search criteria to look up an existing user by username or by e-mail.

Please notice that if we disable Login with email at realm settings, the same rules will be applied to certificate authentication. In other words, users won’t be able to log in using e-mail attribute.

- Other Features: Extended Certificate Validation

- Revocation status checking using CRL

- Revocation status checking using CRL/Distribution Point

- Revocation status checking using OCSP/Responder URI

- Certificate KeyUsage validation

- Certificate ExtendedKeyUsage validation

6.5.2. Enable X.509 Client Certificate User Authentication

The following sections describe how to configure Wildfly/Undertow and the Red Hat Single Sign-On Server to enable X.509 client certificate authentication.

- Enable mutual SSL in WildFly

See Enable SSL and SSL for the instructions how to enable SSL in Wildfly.

- Open RHSSO_HOME/standalone/configuration/standalone.xml and add a new realm:

ssl/keystore-

The

sslelement contains thekeystoreelement that defines how to load the server public key pair from a JKS keystore ssl/keystore/path- A path to a JKS keystore

ssl/keystore/relative-to- Defines a path the keystore path is relative to

ssl/keystore/keystore-password- The password to open the keystore

ssl/keystore/alias(optional)- The alias of the entry in the keystore. Set it if the keystore contains multiple entries

ssl/keystore/key-password(optional)- The private key password, if different from the keystore password.

authentication/truststore- Defines how to load a trust store to verify the certificate presented by the remote side of the inbound/outgoing connection. Typically, the truststore contains a collection of trusted CA certificates.

authentication/truststore/path- A path to a JKS keystore that contains the certificates of the trusted CAs (certificate authorities)

authentication/truststore/relative-to- Defines a path the truststore path is relative to

authentication/truststore/keystore-password- The password to open the truststore

- Enable https listener

See HTTPS Listener for the instructions how to enable HTTPS in Wildfly.

- Add the <https-listener> element as shown below:

https-listener/security-realm- The value must match the name of the realm from the previous section

https-listener/verify-client-

If set to

REQUESTED, the server will optionally ask for a client certificate. Setting the attribute toREQUIREDwill have the server to refuse inbound connections if no client certificate has been provided.

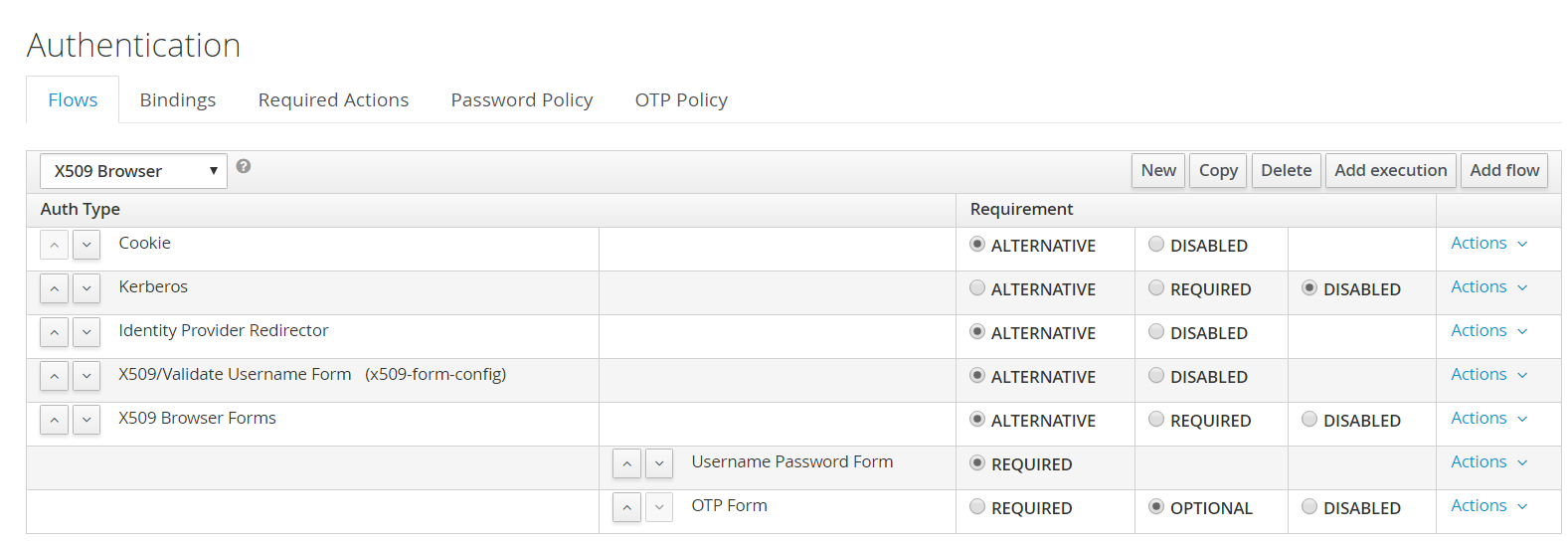

6.5.3. Adding X.509 Client Certificate Authentication to a Browser Flow

- Select a realm, click on Authentication link, select the "Browser" flow

- Make a copy of the built-in "Browser" flow. You may want to give the new flow a distinctive name, i.e. "X.509 Browser"

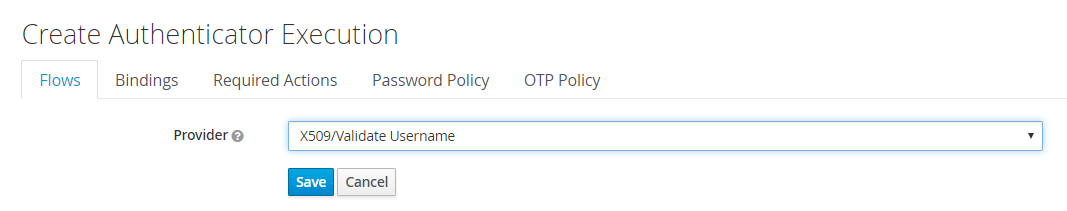

- Using the drop down, select the copied flow, and click on "Add Execution"

- Select "X509/Validate User Form" using the drop down and click on "Save"

- Using the up/down arrows, change the order of the "X509/Validate Username Form" by moving it above the "Browser Forms" execution, and set the requirement to "ALTERNATIVE"

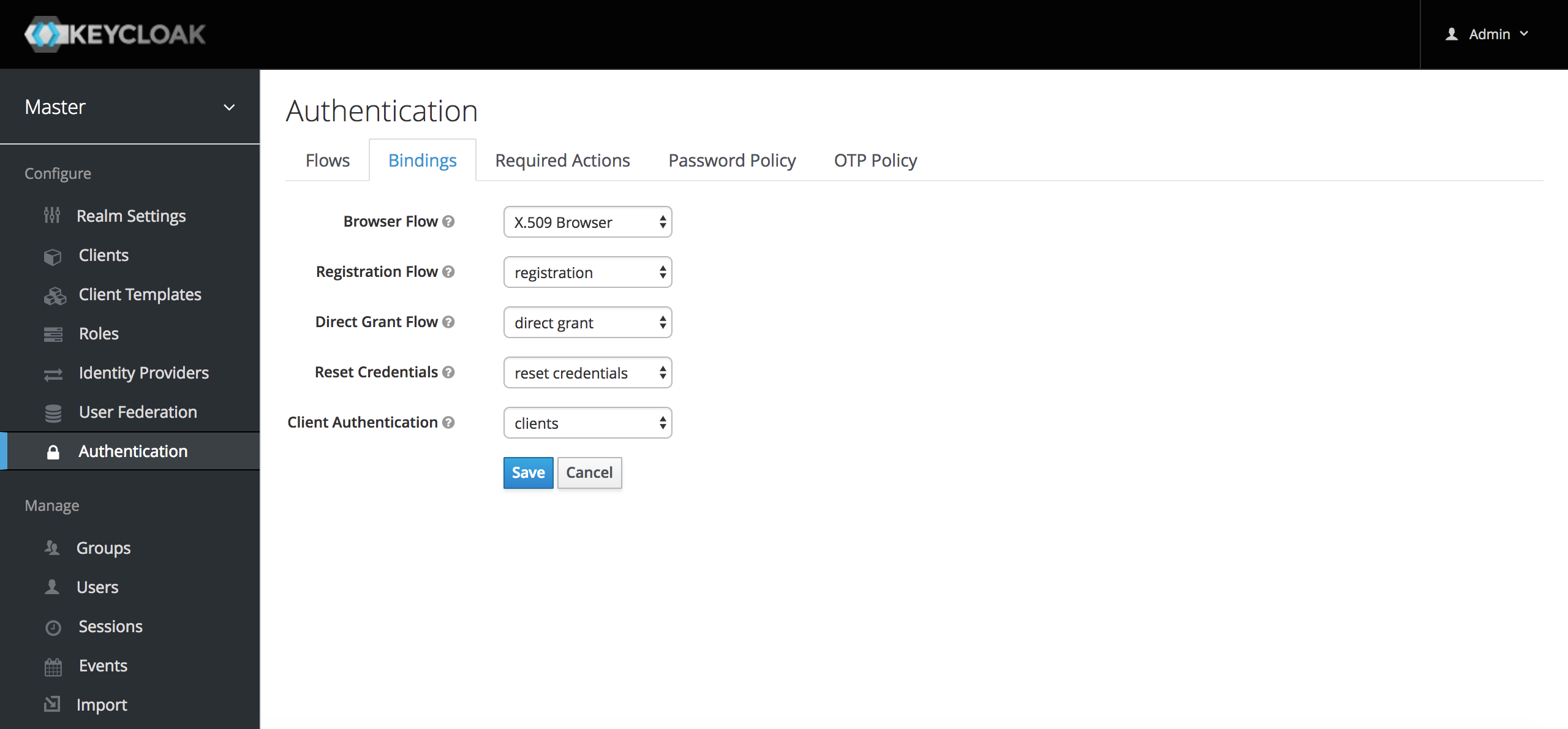

- Select the "Bindings" tab, find the drop down for "Browser Flow". Select the newly created X509 browser flow from the drop down and click on "Save".

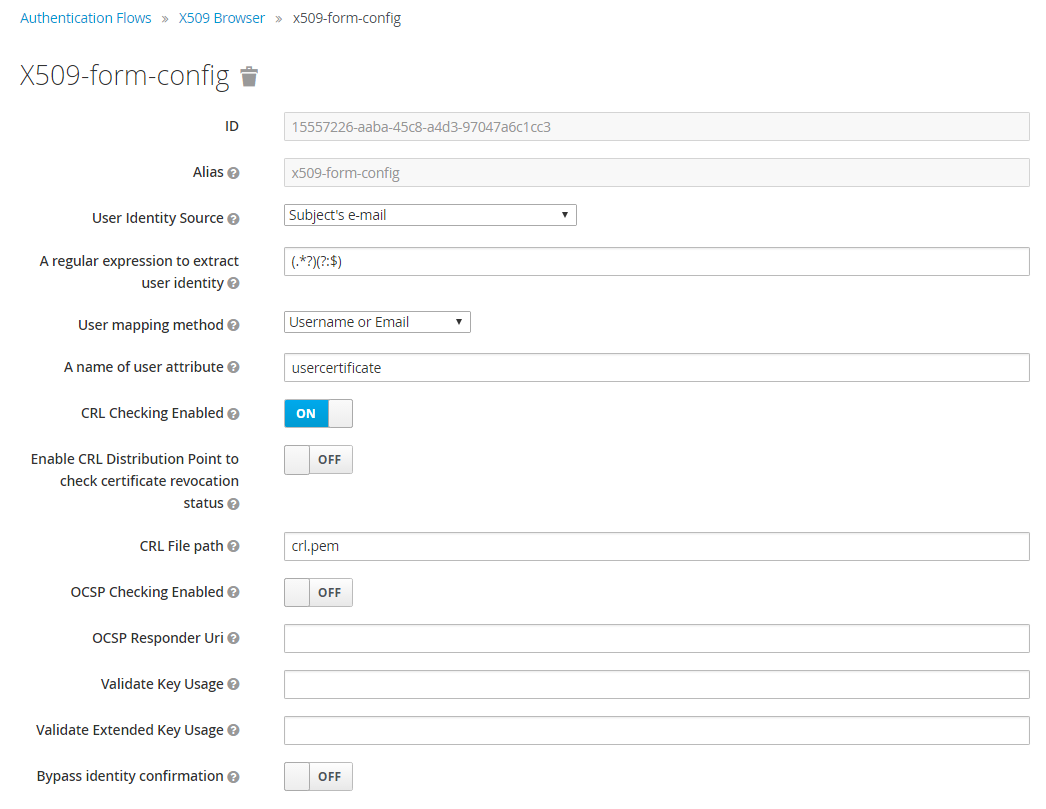

- Configuring X.509 Client Certificate Authentication

-

User Identity Source- Defines how to extract the user identity from a client certificate.

A regular expression(optional)- Defines a regular expression to use as a filter to extract the certificate identity. The regular expression must contain a single group.

User Mapping Method- Defines how to match the certificate identity to an existing user. Username or e-mail will search for an existing user by username or e-mail. Custom Attribute Mapper will search for an existing user with a custom attribute which value matches the certificate identity. The name of the custom attribute is configurable.

A name of user attribute(optional)- A custom attribute which value will be matched against the certificate identity.

CRL Checking Enabled(optional)- Defines whether to check the revocation status of the certificate using Certificate Revocation List.

Enable CRL Distribution Point to check certificate revocation status(optional)- Defines whether to use CDP to check the certificate revocation status. Most PKI authorities include CDP in their certificates.

CRL file path(optional)-

Defines a path to a file that contains a CRL list. The value must be a path to a valid file if

CRL Checking Enabledoption is turned on. OCSP Checking Enabled(optional)- Defines whether to check the certificate revocation status using Online Certificate Status Protocol.

OCSP Responder URI(optional)- Allows to override a value of the OCSP responder URI in the certificate.

Validate Key Usage(optional)- Verifies whether the certificate’s KeyUsage extension bits are set. For example, "digitalSignature,KeyEncipherment" will verify if bits 0 and 2 in the KeyUsage extension are asserted. Leave the parameter empty to disable the Key Usage validation. See RFC5280, Section-4.2.1.3. The server will raise an error only when flagged as critical by the issuing CA and there is a key usage extension mismatch.

Validate Extended Key Usage(optional)- Verifies one or more purposes as defined in the Extended Key Usage extension. See RFC5280, Section-4.2.1.12. Leave the parameter empty to disable the Extended Key Usage validation. The server will raise an error only when flagged as critical by the issuing CA and there is a key usage extension mismatch.

Bypass identity confirmation- If set, X.509 client certificate authentication will not prompt the user to confirm the certificate identity and will automatically sign in the user upon successful authentication.

6.5.4. Adding X.509 Client Certificate Authentication to a Direct Grant Flow

- Using Red Hat Single Sign-On admin console, click on "Authentication" and select the "Direct Grant" flow,

- Make a copy of the build-in "Direct Grant" flow. You may want to give the new flow a distinctive name, i.e. "X509 Direct Grant",

- Delete "Validate Username" and "Password" authenticators,

- Click on "Execution" and add "X509/Validate Username" and click on "Save" to add the execution step to the parent flow.

-

Change the

Requirementto REQUIRED.

- Set up the x509 authentication configuration by following the steps described earlier in the x.509 Browser Flow section.

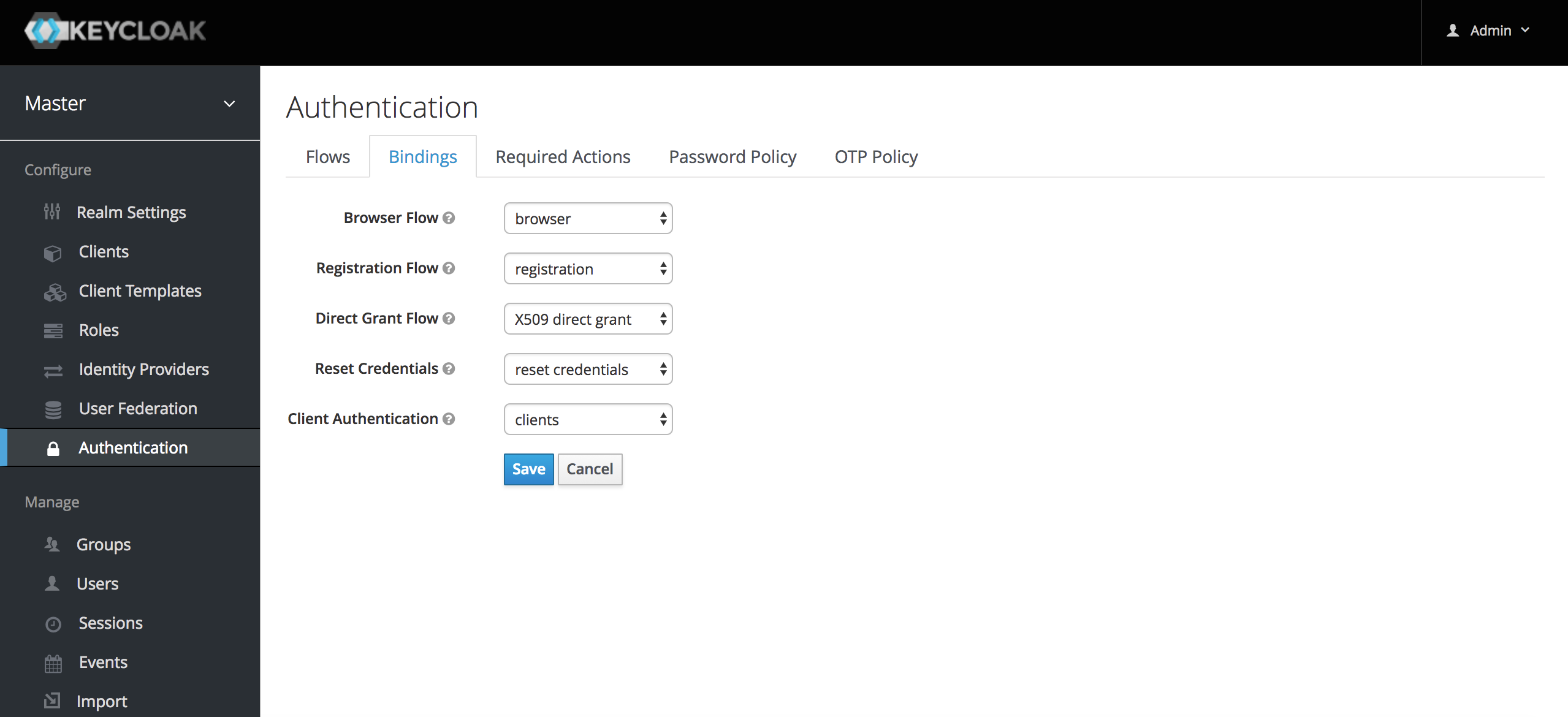

- Select the "Bindings" tab, find the drop down for "Direct Grant Flow". Select the newly created X509 direct grant flow from the drop down and click on "Save".

6.5.5. Client certificate lookup

When an HTTP request is sent directly to Red Hat Single Sign-On server, the JBoss EAP undertow subsystem will establish an SSL handshake and extract the client certificate. The client certificate will be then saved to the attribute javax.servlet.request.X509Certificate of the HTTP request, as specified in the servlet specification. The Red Hat Single Sign-On X509 authenticator will be then able to lookup the certificate from this attribute.

However, when the Red Hat Single Sign-On server listens to HTTP requests behind a load balancer or reverse proxy, it may be the proxy server which extracts the client certificate and establishes the mutual SSL connection. A reverse proxy usually puts the authenticated client certificate in the HTTP header of the underlying request and forwards it to the back end Red Hat Single Sign-On server. In this case, Red Hat Single Sign-On must be able to look up the X.509 certificate chain from the HTTP headers instead of from the attribute of HTTP request, as is done for Undertow.

If Red Hat Single Sign-On is behind a reverse proxy, you usually need to configure alternative provider of the x509cert-lookup SPI in RHSSO_HOME/standalone/configuration/standalone.xml. Along with the default provider, which looks up the certificate from the HTTP header, we also have two additional built-in providers: haproxy and apache, which are described next.

6.5.5.1. HAProxy certificate lookup provider

You can use this provider when your Red Hat Single Sign-On server is behind an HAProxy reverse proxy. Configure the server like this:

In this example configuration, the client certificate will be looked up from the HTTP header, SSL_CLIENT_CERT, and the other certificates from its chain will be looked up from HTTP headers like CERT_CHAIN_0 , CERT_CHAIN_1, …, CERT_CHAIN_9 . The attribute certificateChainLength is the maximum length of the chain, so the last one tried attribute would be CERT_CHAIN_9 .

Consult the HAProxy documentation for the details of how the HTTP Headers for the client certificate and client certificate chain can be configured and their proper names.

6.5.5.2. Apache certificate lookup provider

You can use this provider when your Red Hat Single Sign-On server is behind an Apache reverse proxy. Configure the server like this:

The configuration is same as for the haproxy provider. Consult the Apache documentation for the details of how the HTTP Headers for the client certificate and client certificate chain can be configured and their proper names.

6.5.5.3. Other reverse proxy implementations

We do not have built-in support for other reverse proxy implementations. However, it is possible that other reverse proxies can be made to behave in a similar way to apache or haproxy and that some of those providers can be used. If none of those works, you may need to create your own implementation of the org.keycloak.services.x509.X509ClientCertificateLookupFactory and org.keycloak.services.x509.X509ClientCertificateLookup provider. See the Server Developer Guide for the details on how to add your own provider.

6.5.6. Troubleshooting

- Direct Grant authentication with X.509

- The following template can be used to request a token using the Resource Owner Password Credentials Grant:

curl https://[host][:port]/auth/realms/master/protocol/openid-connect/token \

--insecure \

--data "grant_type=password&scope=openid profile&username=&password=&client_id=CLIENT_ID&client_secret=CLIENT_SECRET" \

-E /path/to/client_cert.crt \

--key /path/to/client_cert.key

$ curl https://[host][:port]/auth/realms/master/protocol/openid-connect/token \

--insecure \

--data "grant_type=password&scope=openid profile&username=&password=&client_id=CLIENT_ID&client_secret=CLIENT_SECRET" \

-E /path/to/client_cert.crt \

--key /path/to/client_cert.key[host][:port]- The host and the port number of a remote Red Hat Single Sign-On server that has been configured to allow users authenticate with x.509 client certificates using the Direct Grant Flow.

CLIENT_ID- A client id.

CLIENT_SECRET- For confidential clients, a client secret; otherwise, leave it empty.

client_cert.crt- A public key certificate that will be used to verify the identity of the client in mutual SSL authentication. The certificate should be in PEM format.

client_cert.key- A private key in the public key pair. Also expected in PEM format.

Chapter 7. SSO Protocols

The chapter gives a brief overview of the authentication protocols and how the Red Hat Single Sign-On authentication server and the applications it secures interact with these protocols.

7.1. OpenID Connect

OpenID Connect (OIDC) is an authentication protocol that is an extension of OAuth 2.0. While OAuth 2.0 is only a framework for building authorization protocols and is mainly incomplete, OIDC is a full-fledged authentication and authorization protocol. OIDC also makes heavy use of the Json Web Token (JWT) set of standards. These standards define an identity token JSON format and ways to digitally sign and encrypt that data in a compact and web-friendly way.

There are really two types of use cases when using OIDC. The first is an application that asks the Red Hat Single Sign-On server to authenticate a user for them. After a successful login, the application will receive an identity token and an access token. The identity token contains information about the user such as username, email, and other profile information. The access token is digitally signed by the realm and contains access information (like user role mappings) that the application can use to determine what resources the user is allowed to access on the application.

The second type of use cases is that of a client that wants to gain access to remote services. In this case, the client asks Red Hat Single Sign-On to obtain an access token it can use to invoke on other remote services on behalf of the user. Red Hat Single Sign-On authenticates the user then asks the user for consent to grant access to the client requesting it. The client then receives the access token. This access token is digitally signed by the realm. The client can make REST invocations on remote services using this access token. The REST service extracts the access token, verifies the signature of the token, then decides based on access information within the token whether or not to process the request.

7.1.1. OIDC Auth Flows

OIDC has different ways for a client or application to authenticate a user and receive an identity and access token. Which path you use depends greatly on the type of application or client requesting access. All of these flows are described in the OIDC and OAuth 2.0 specifications so only a brief overview will be provided here.

7.1.1.1. Authorization Code Flow

This is a browser-based protocol and it is what we recommend you use to authenticate and authorize browser-based applications. It makes heavy use of browser redirects to obtain an identity and access token. Here’s a brief summary:

- Browser visits application. The application notices the user is not logged in, so it redirects the browser to Red Hat Single Sign-On to be authenticated. The application passes along a callback URL (a redirect URL) as a query parameter in this browser redirect that Red Hat Single Sign-On will use when it finishes authentication.

- Red Hat Single Sign-On authenticates the user and creates a one-time, very short lived, temporary code. Red Hat Single Sign-On redirects back to the application using the callback URL provided earlier and additionally adds the temporary code as a query parameter in the callback URL.

- The application extracts the temporary code and makes a background out of band REST invocation to Red Hat Single Sign-On to exchange the code for an identity, access and refresh token. Once this temporary code has been used once to obtain the tokens, it can never be used again. This prevents potential replay attacks.

It is important to note that access tokens are usually short lived and often expired after only minutes. The additional refresh token that was transmitted by the login protocol allows the application to obtain a new access token after it expires. This refresh protocol is important in the situation of a compromised system. If access tokens are short lived, the whole system is only vulnerable to a stolen token for the lifetime of the access token. Future refresh token requests will fail if an admin has revoked access. This makes things more secure and more scalable.

Another important aspect of this flow is the concept of a public vs. a confidential client. Confidential clients are required to provide a client secret when they exchange the temporary codes for tokens. Public clients are not required to provide this client secret. Public clients are perfectly fine so long as HTTPS is strictly enforced and you are very strict about what redirect URIs are registered for the client. HTML5/JavaScript clients always have to be public clients because there is no way to transmit the client secret to them in a secure manner. Again, this is ok so long as you use HTTPS and strictly enforce redirect URI registration. This guide goes more detail into this in the Managing Clients chapter.

Red Hat Single Sign-On also supports the optional Proof Key for Code Exchange specification.

7.1.1.2. Implicit Flow

This is a browser-based protocol that is similar to Authorization Code Flow except there are fewer requests and no refresh tokens involved. We do not recommend this flow as there remains the possibility of access tokens being leaked in the browser history as tokens are transmitted via redirect URIs (see below). Also, since this flow doesn’t provide the client with a refresh token, access tokens would either have to be long-lived or users would have to re-authenticate when they expired. This flow is supported because it is in the OIDC and OAuth 2.0 specification. Here’s a brief summary of the protocol:

- Browser visits application. The application notices the user is not logged in, so it redirects the browser to Red Hat Single Sign-On to be authenticated. The application passes along a callback URL (a redirect URL) as a query parameter in this browser redirect that Red Hat Single Sign-On will use when it finishes authentication.

- Red Hat Single Sign-On authenticates the user and creates an identity and access token. Red Hat Single Sign-On redirects back to the application using the callback URL provided earlier and additionally adding the identity and access tokens as query parameters in the callback URL.

- The application extracts the identity and access tokens from the callback URL.

7.1.1.3. Resource Owner Password Credentials Grant (Direct Access Grants)

This is referred to in the Admin Console as Direct Access Grants. This is used by REST clients that want to obtain a token on behalf of a user. It is one HTTP POST request that contains the credentials of the user as well as the id of the client and the client’s secret (if it is a confidential client). The user’s credentials are sent within form parameters. The HTTP response contains identity, access, and refresh tokens.

7.1.1.4. Client Credentials Grant

This is also used by REST clients, but instead of obtaining a token that works on behalf of an external user, a token is created based on the metadata and permissions of a service account that is associated with the client. More info together with example is in Service Accounts chapter.

7.1.2. Red Hat Single Sign-On Server OIDC URI Endpoints

Here’s a list of OIDC endpoints that the Red Hat Single Sign-On publishes. These URLs are useful if you are using a non-Red Hat Single Sign-On client adapter to talk OIDC with the auth server. These are all relative URLs and the root of the URL being the HTTP(S) protocol, hostname, and usually path prefixed with /auth: i.e. https://localhost:8080/auth

- /realms/{realm-name}/protocol/openid-connect/token

- This is the URL endpoint for obtaining a temporary code in the Authorization Code Flow or for obtaining tokens via the Implicit Flow, Direct Grants, or Client Grants.

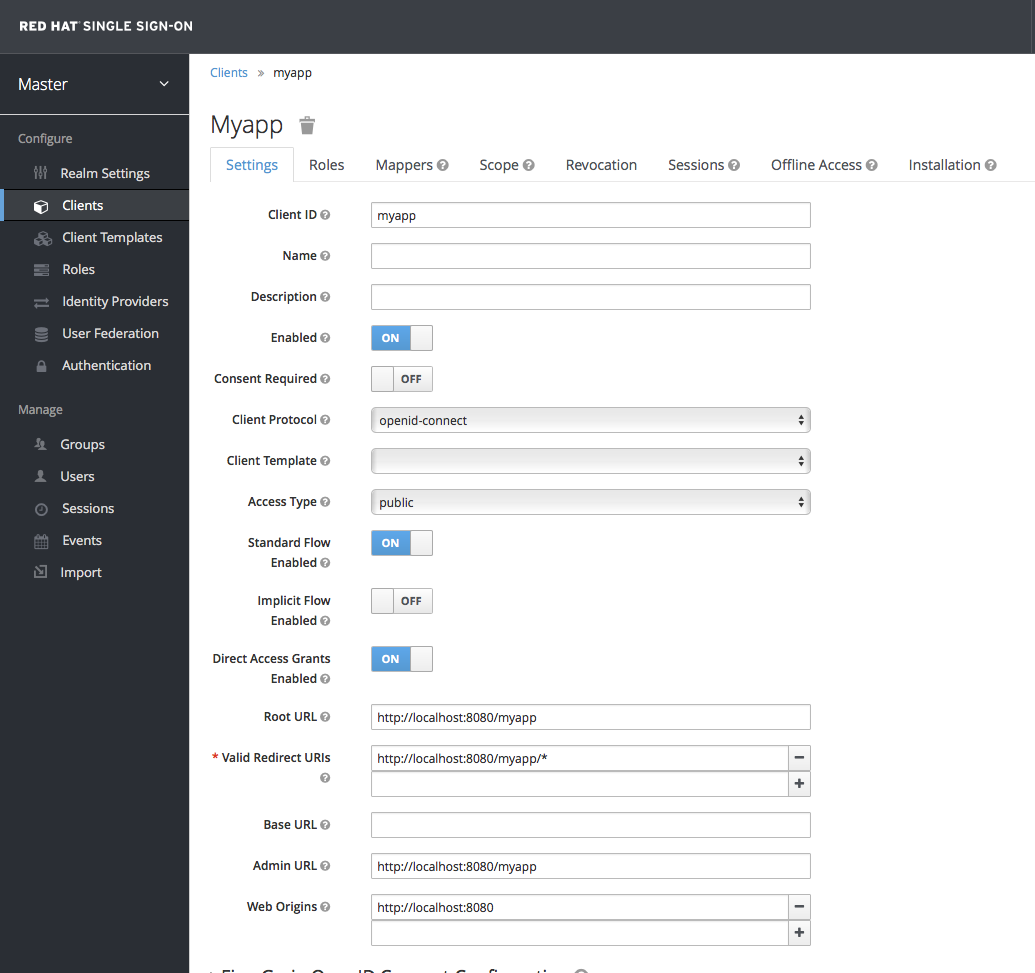

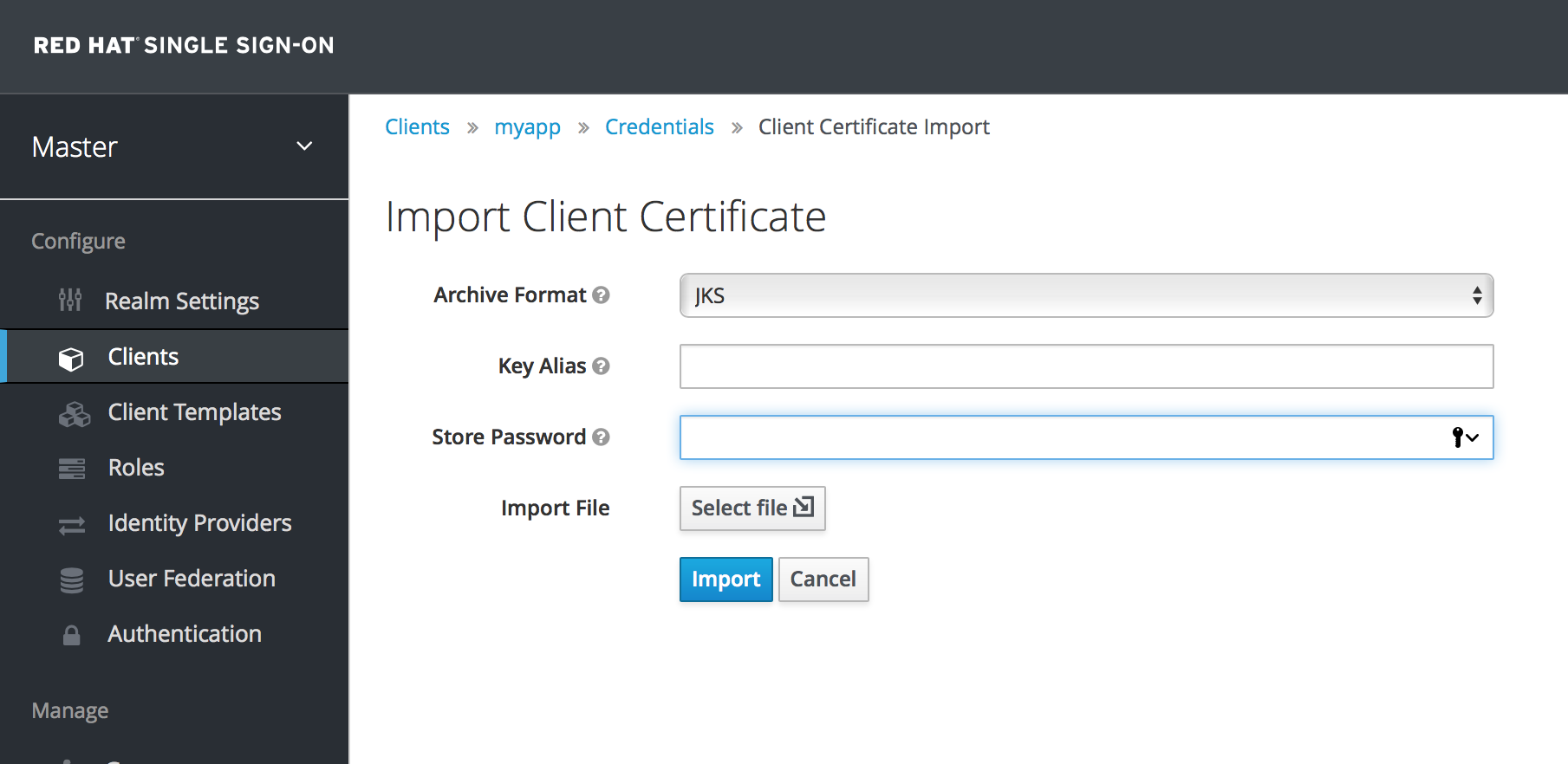

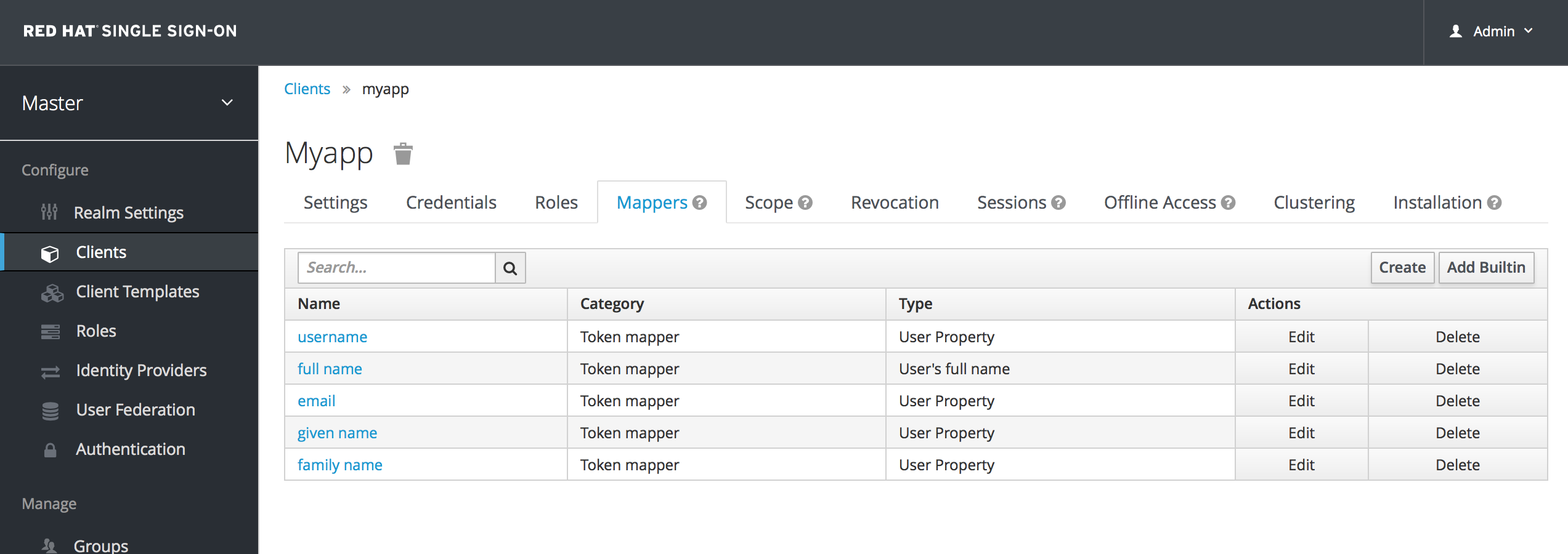

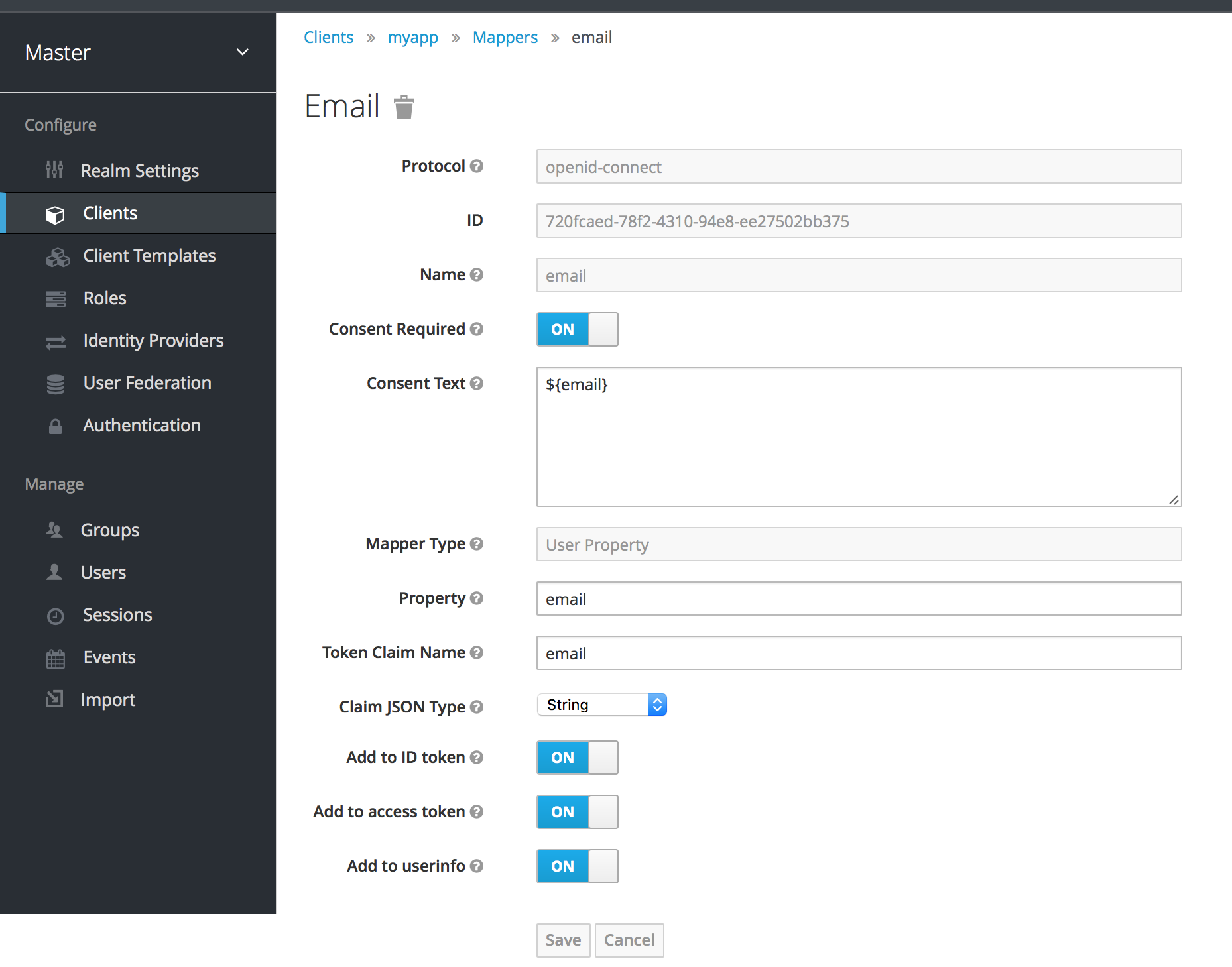

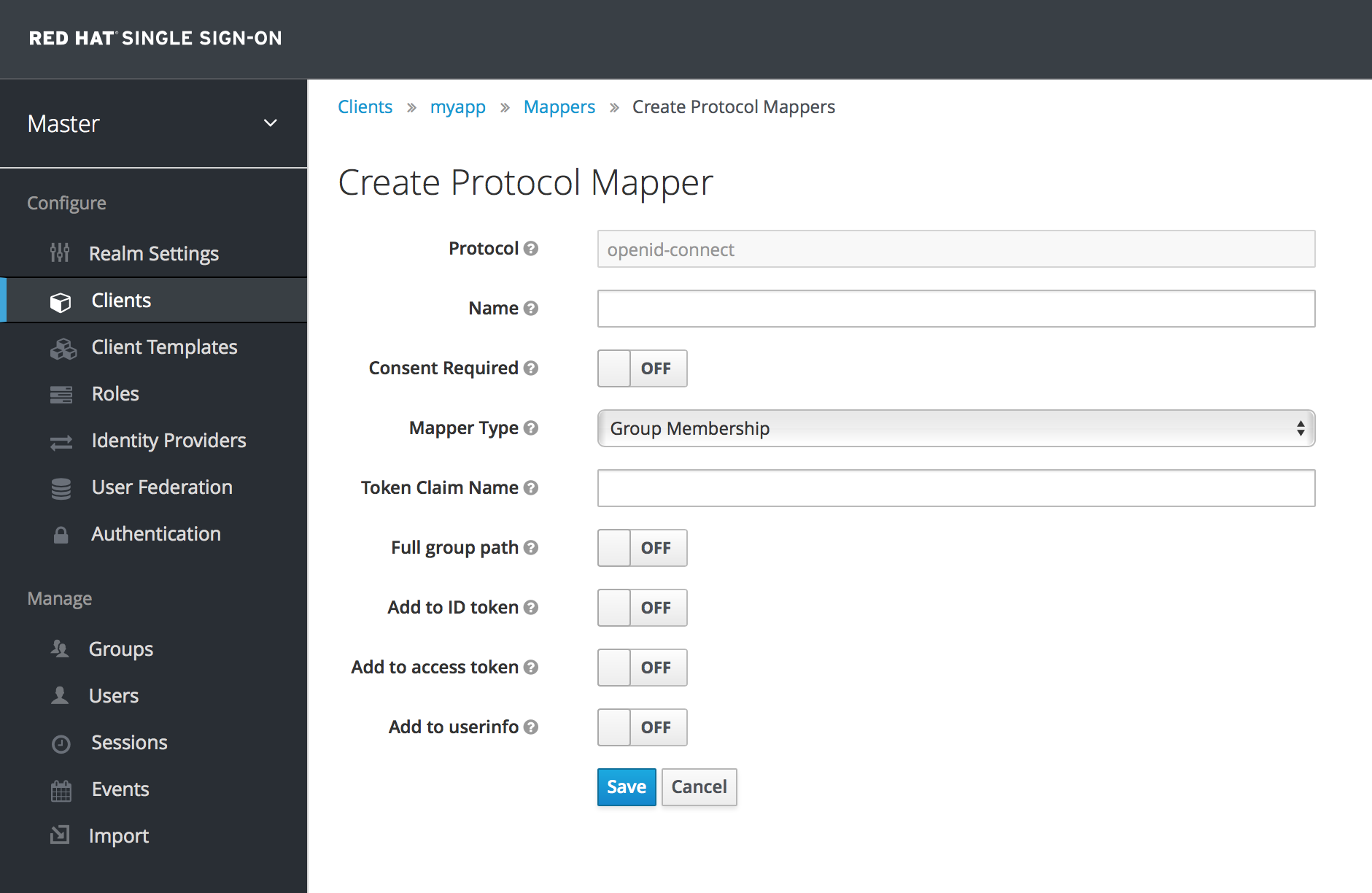

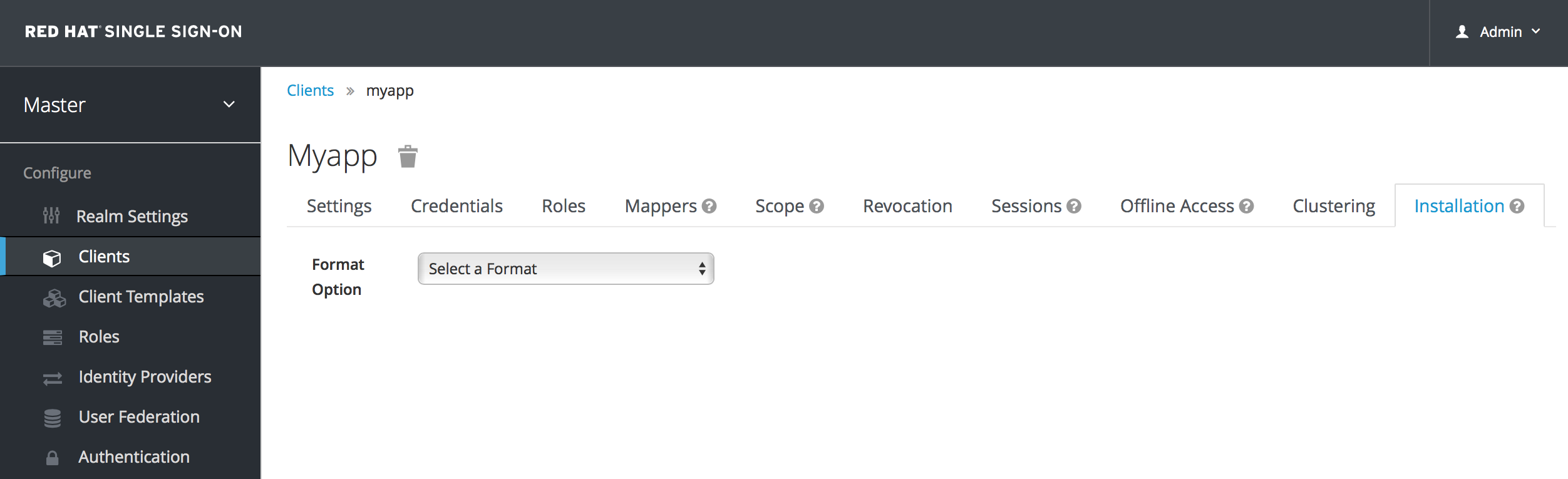

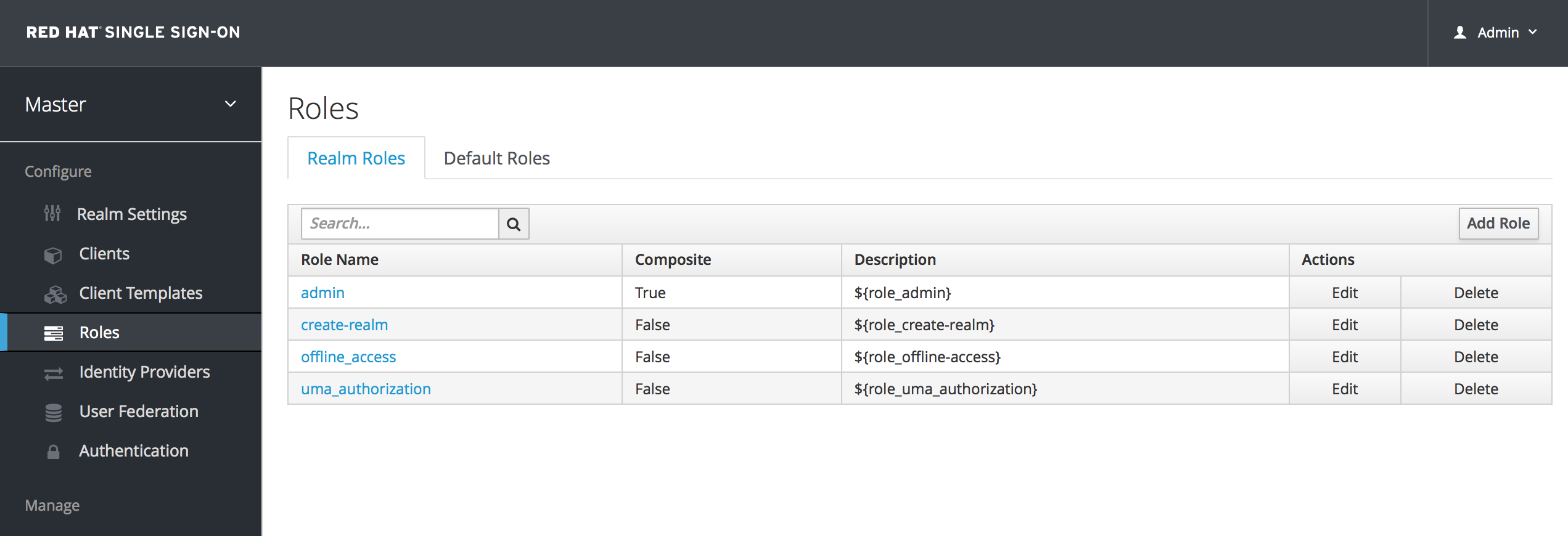

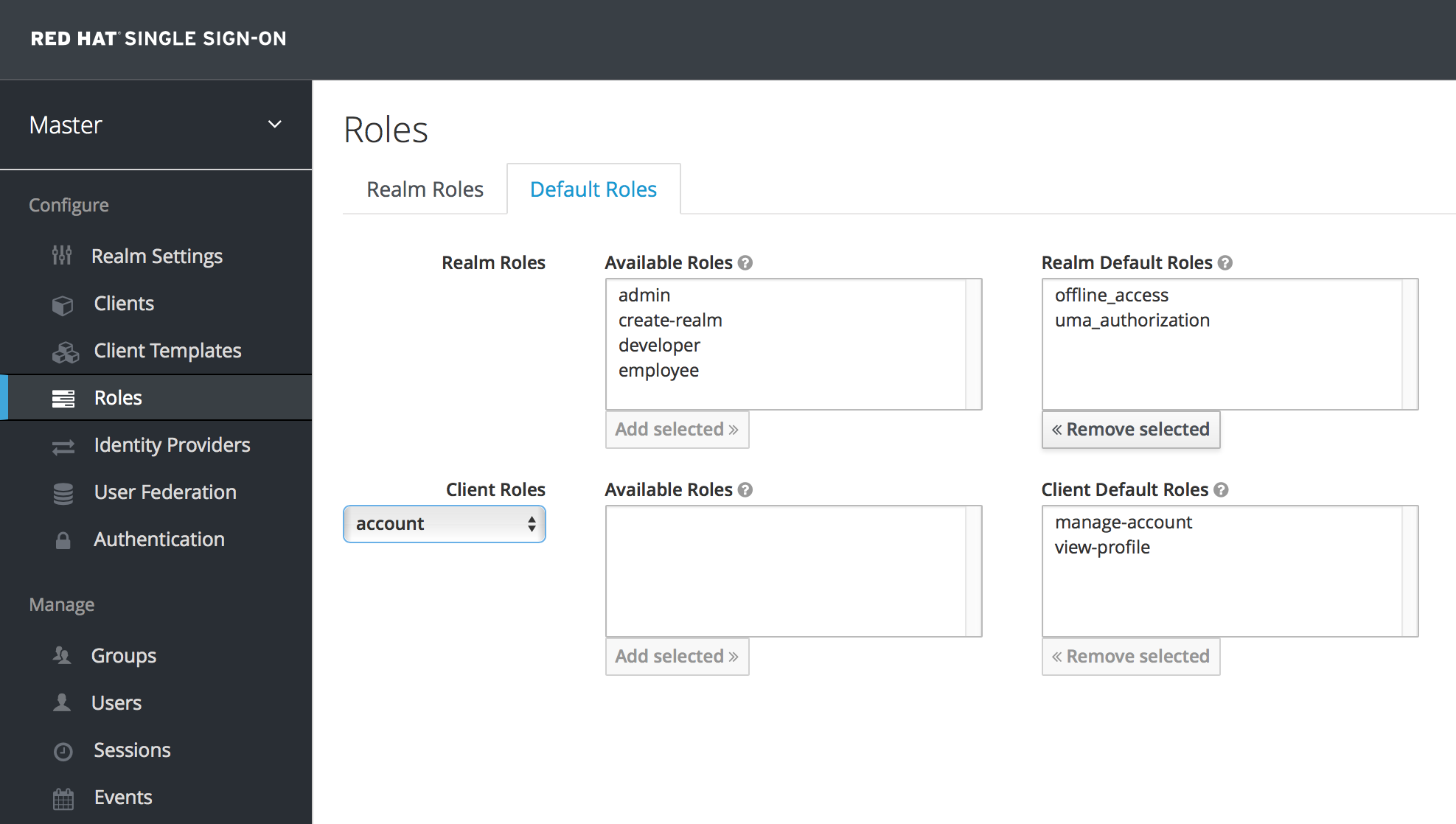

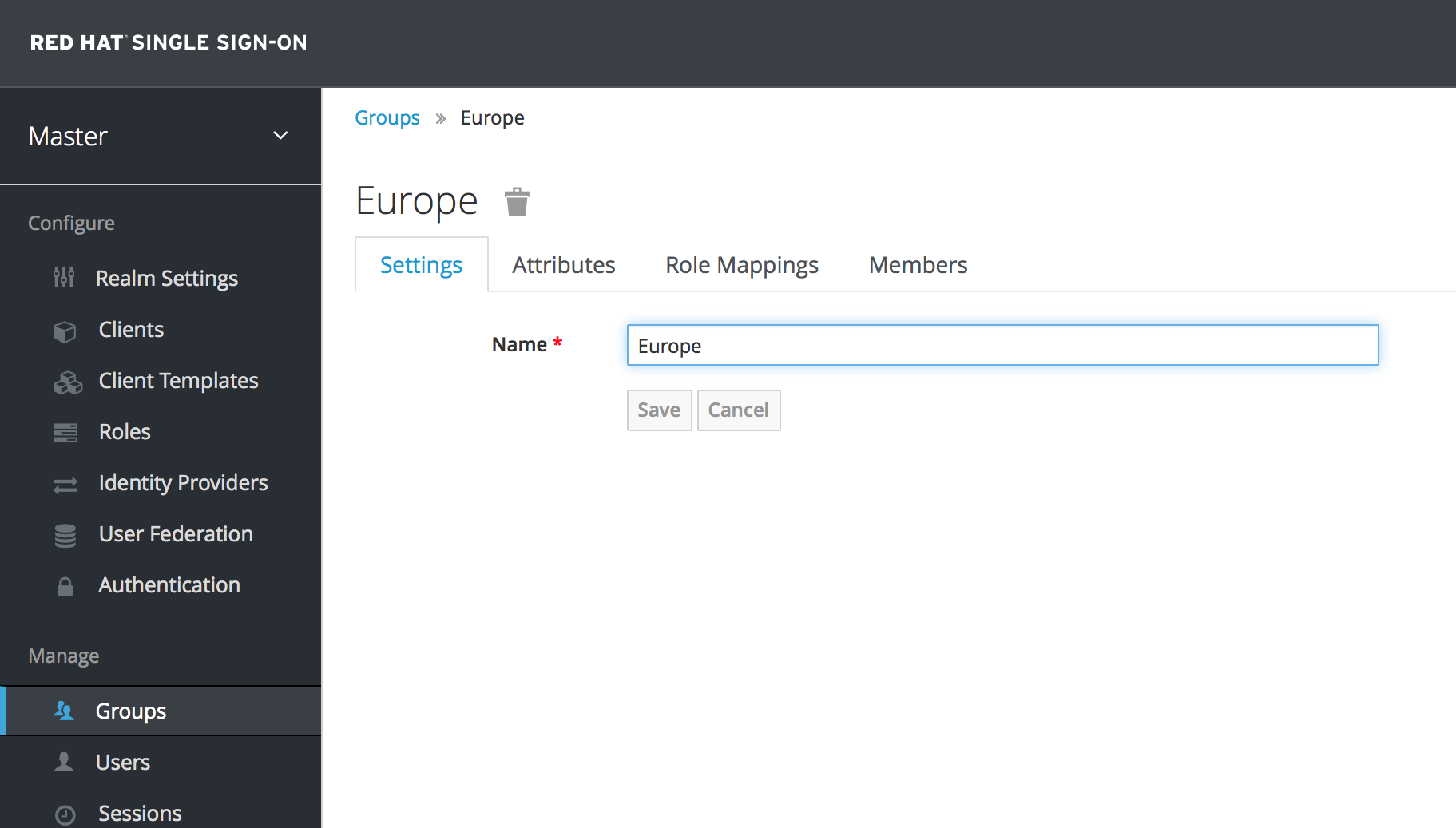

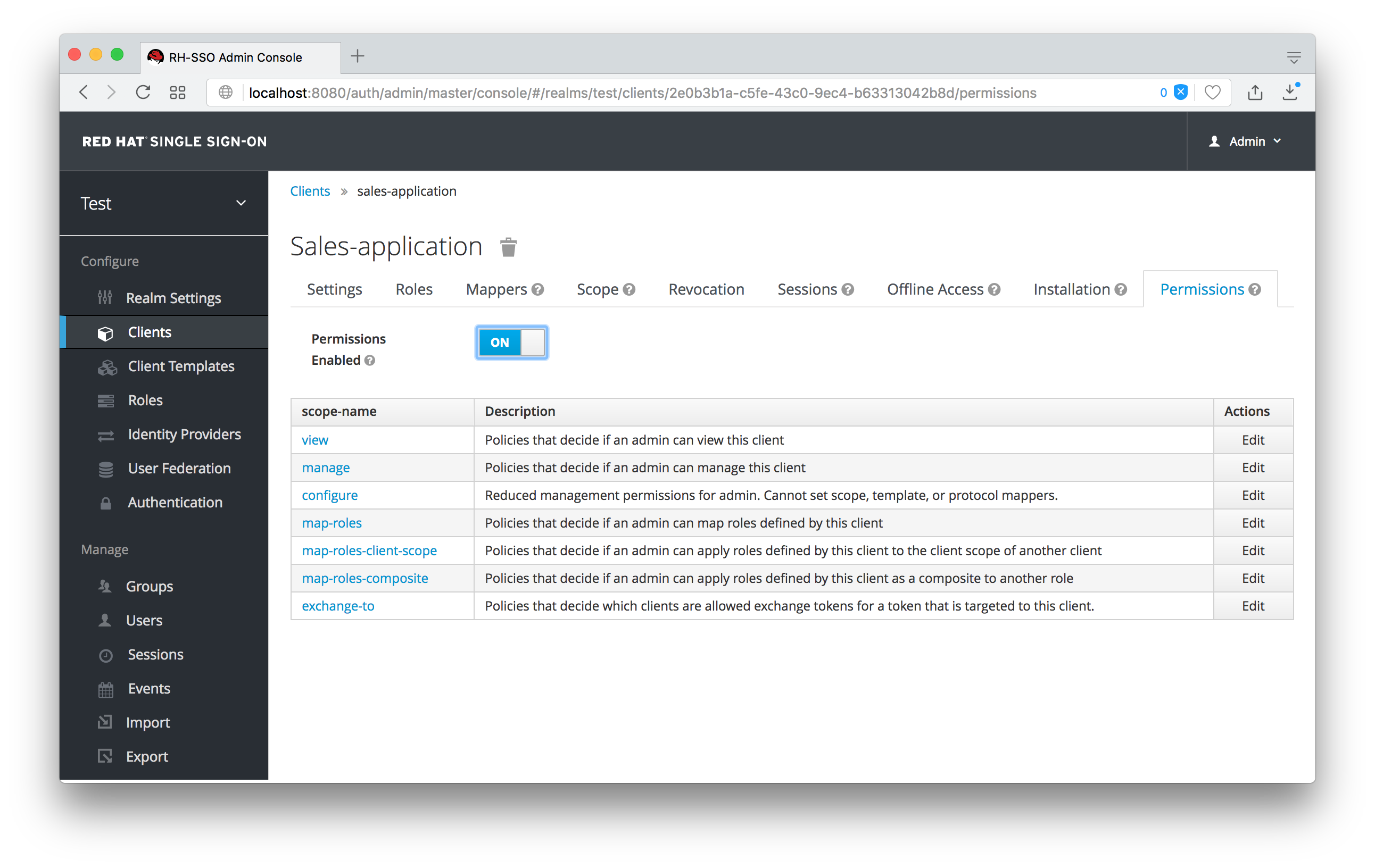

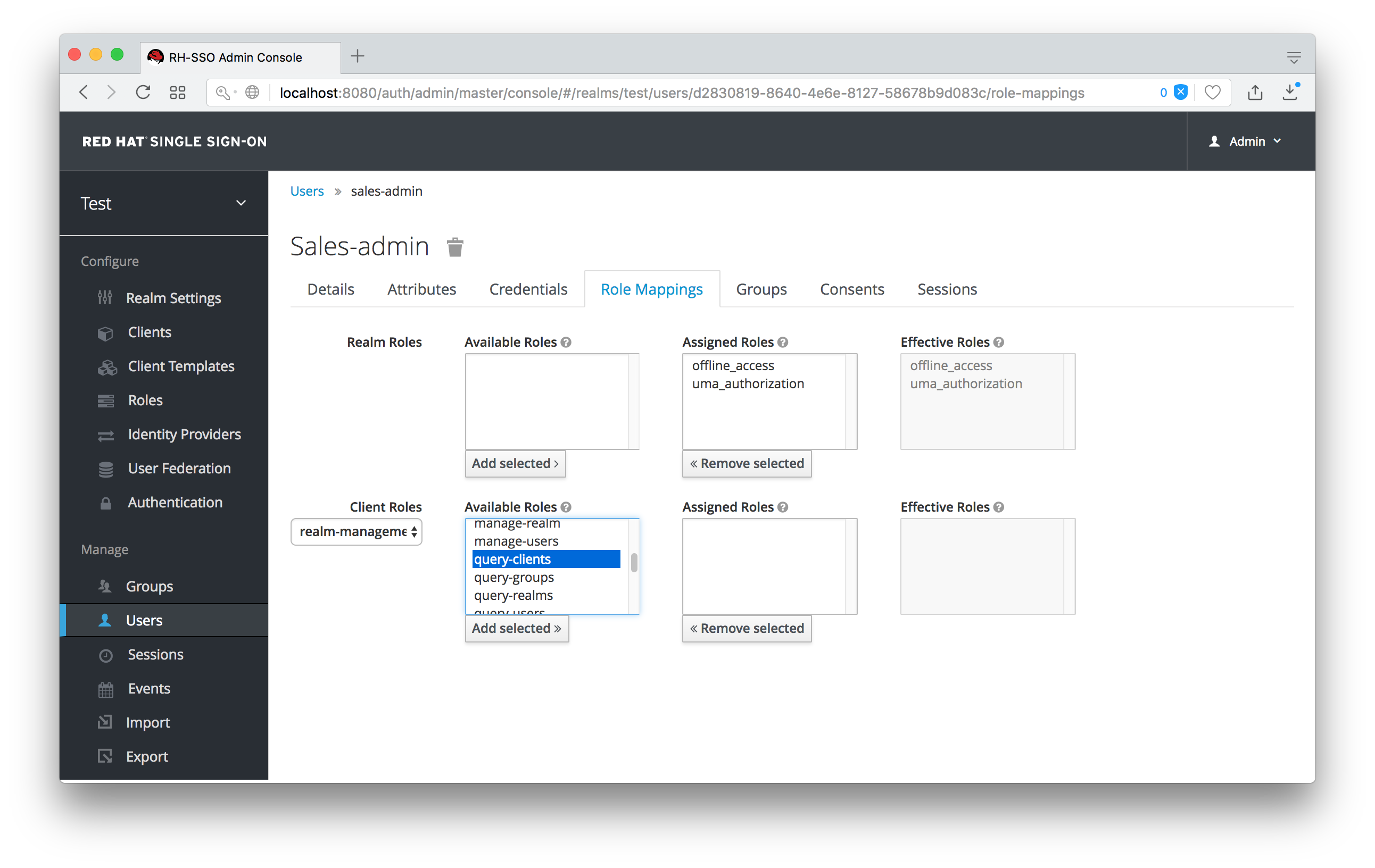

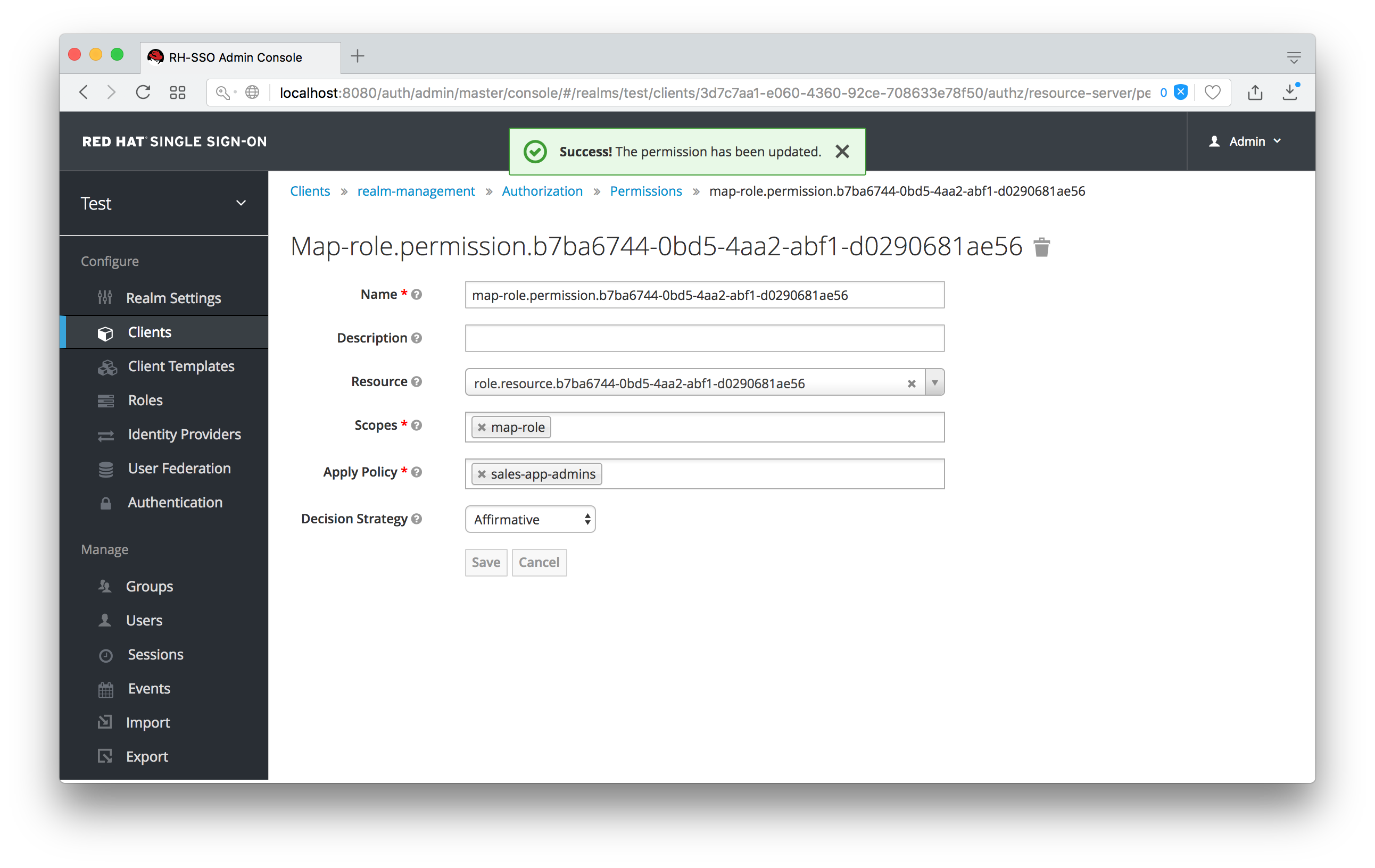

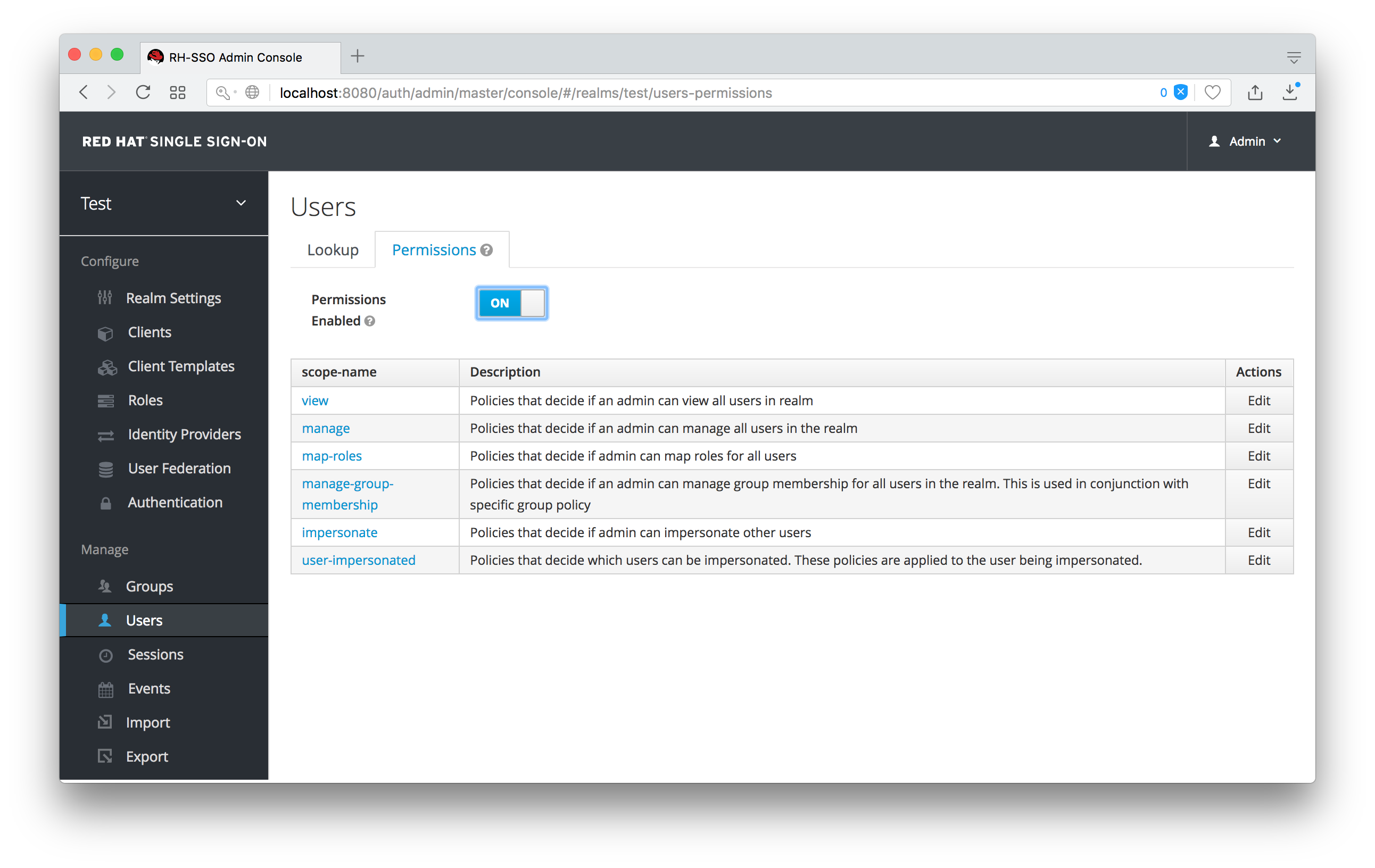

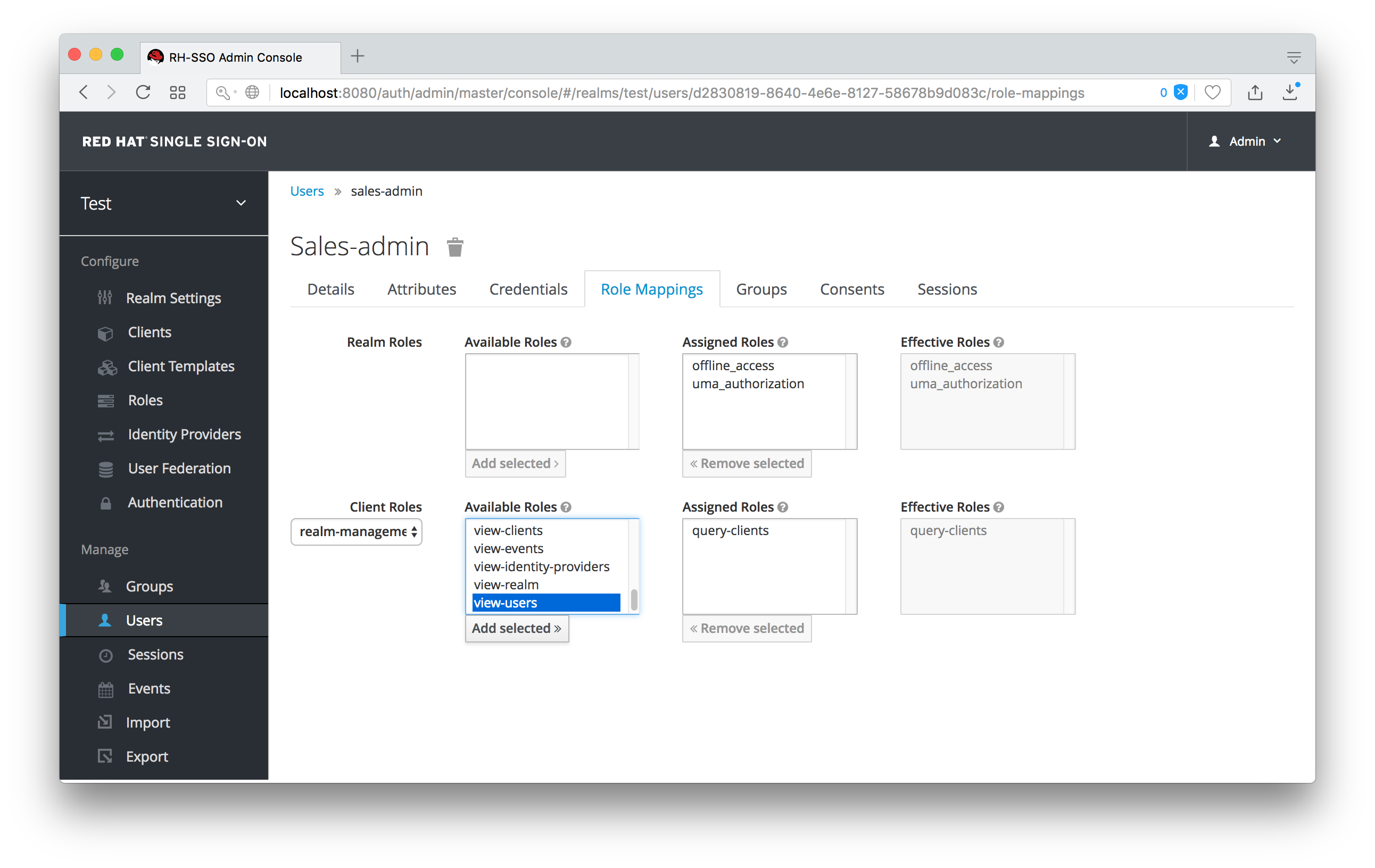

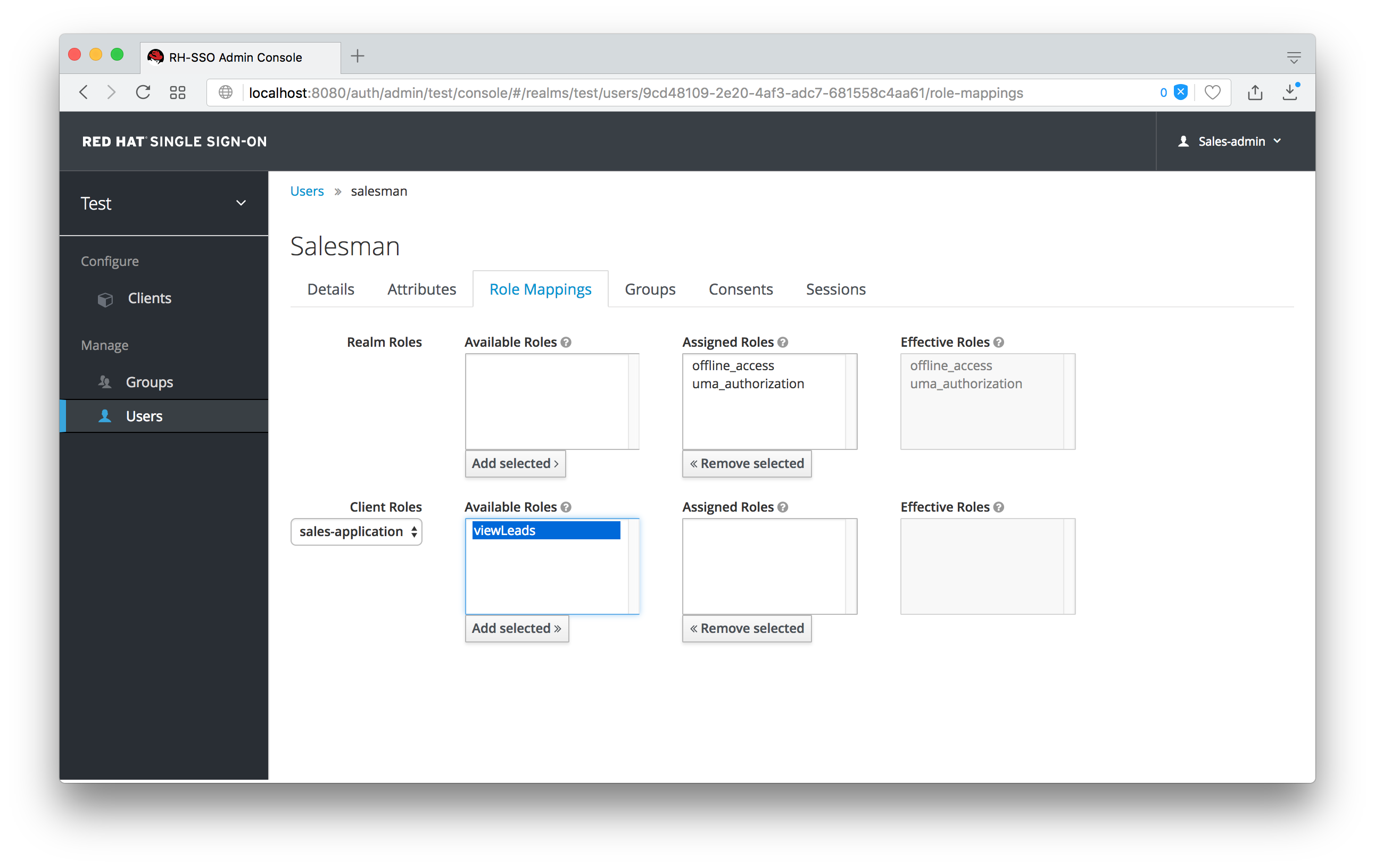

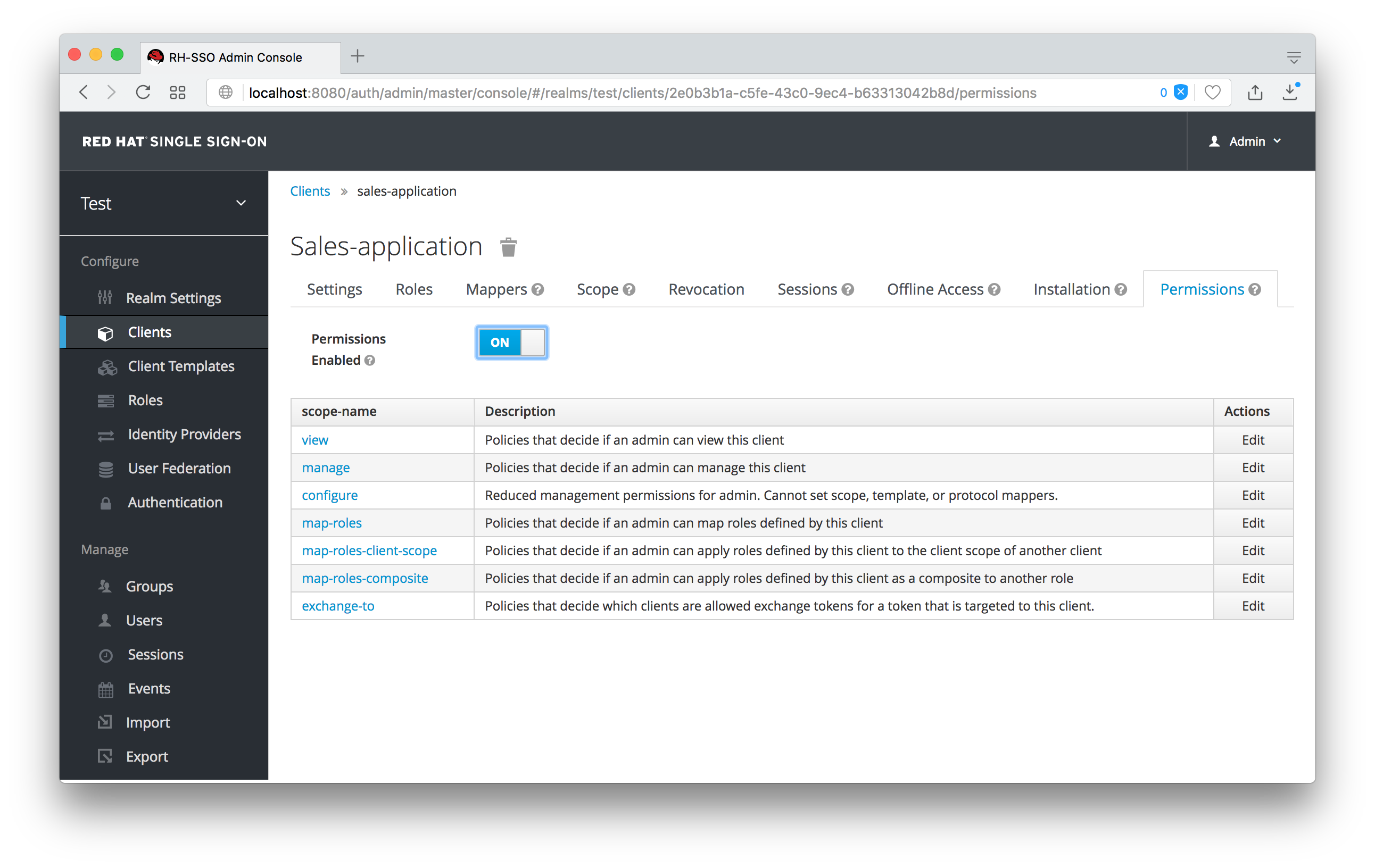

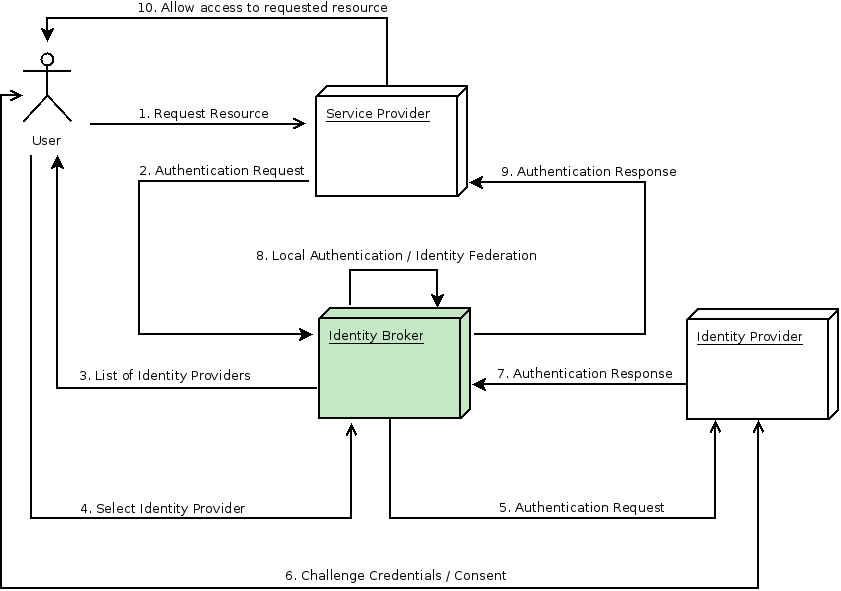

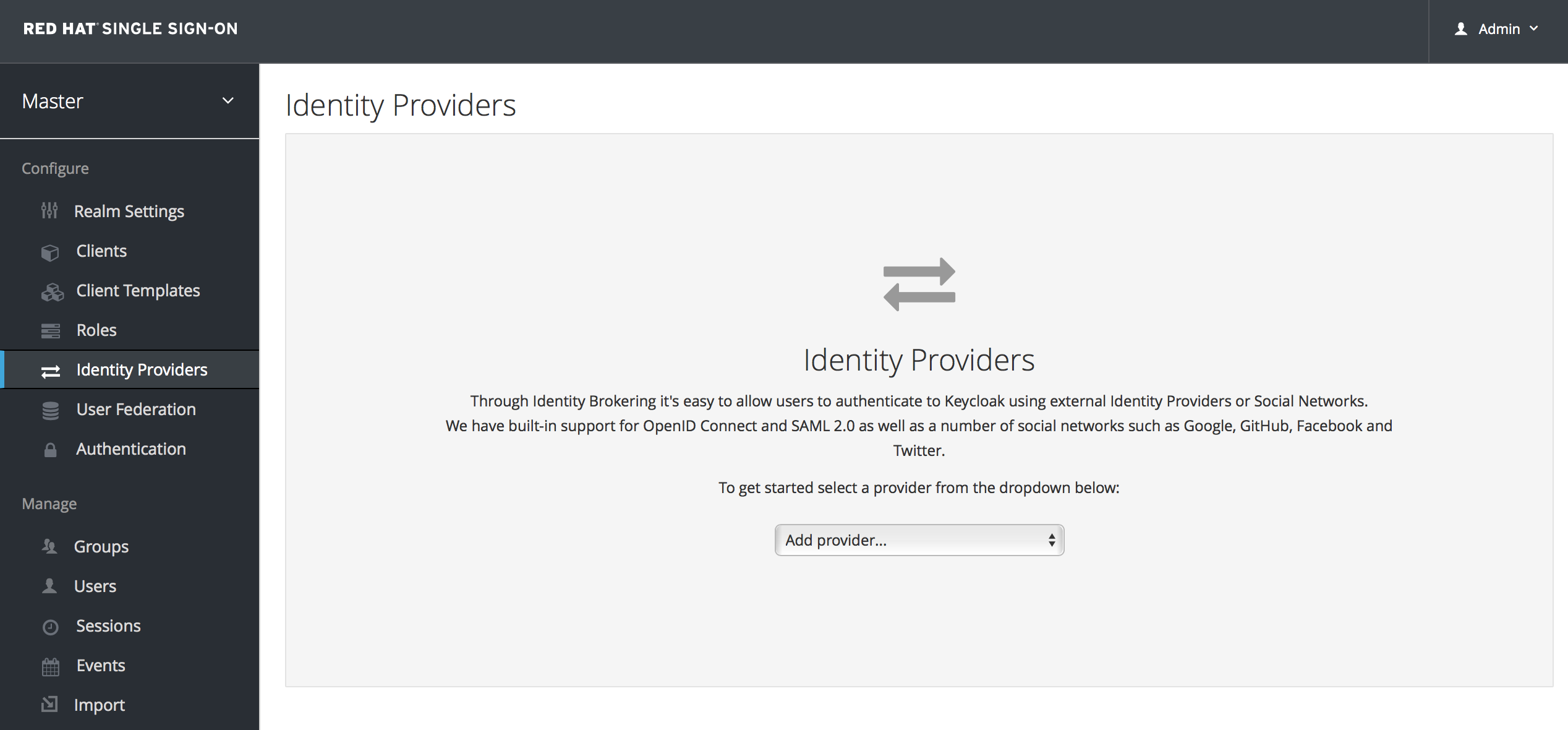

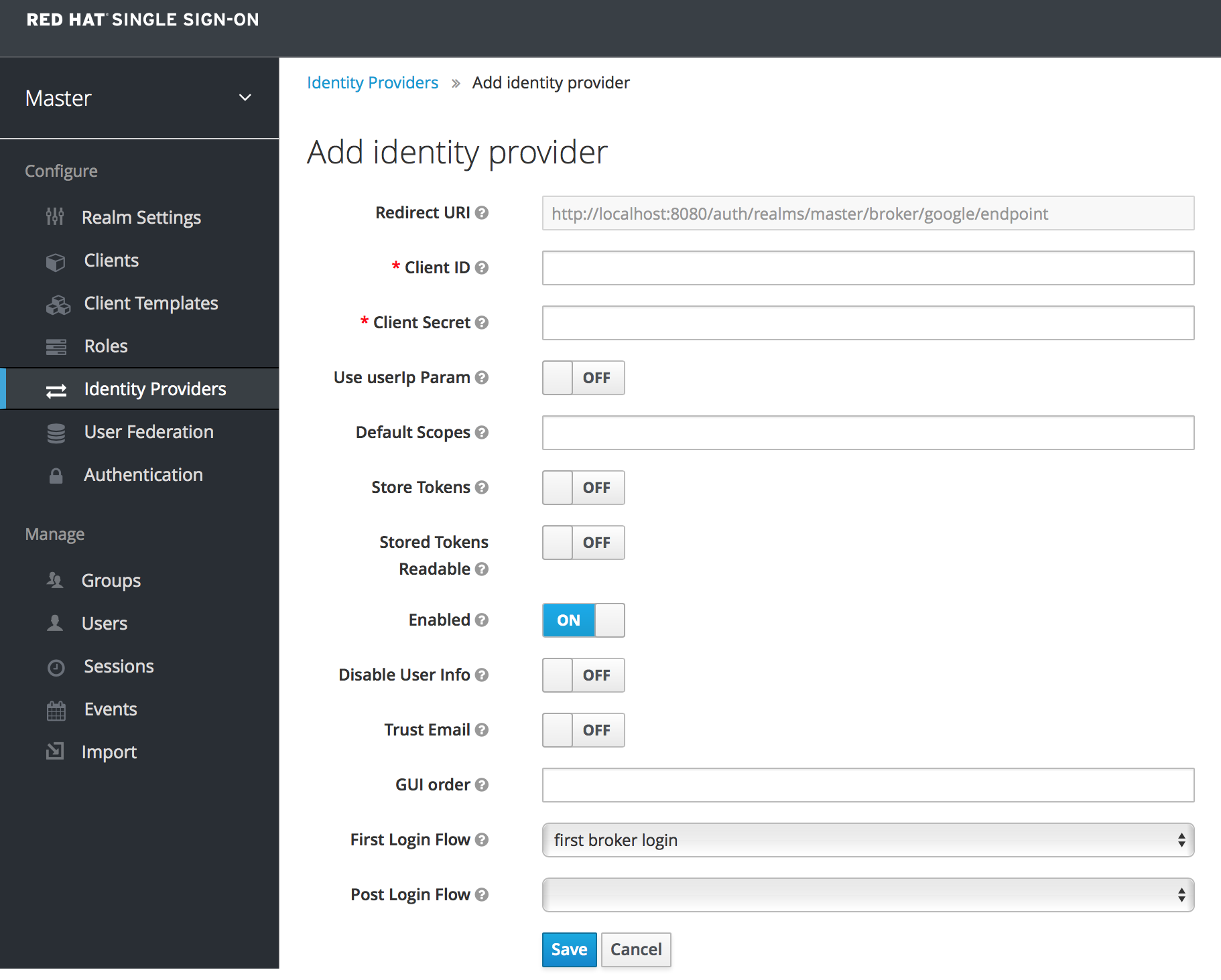

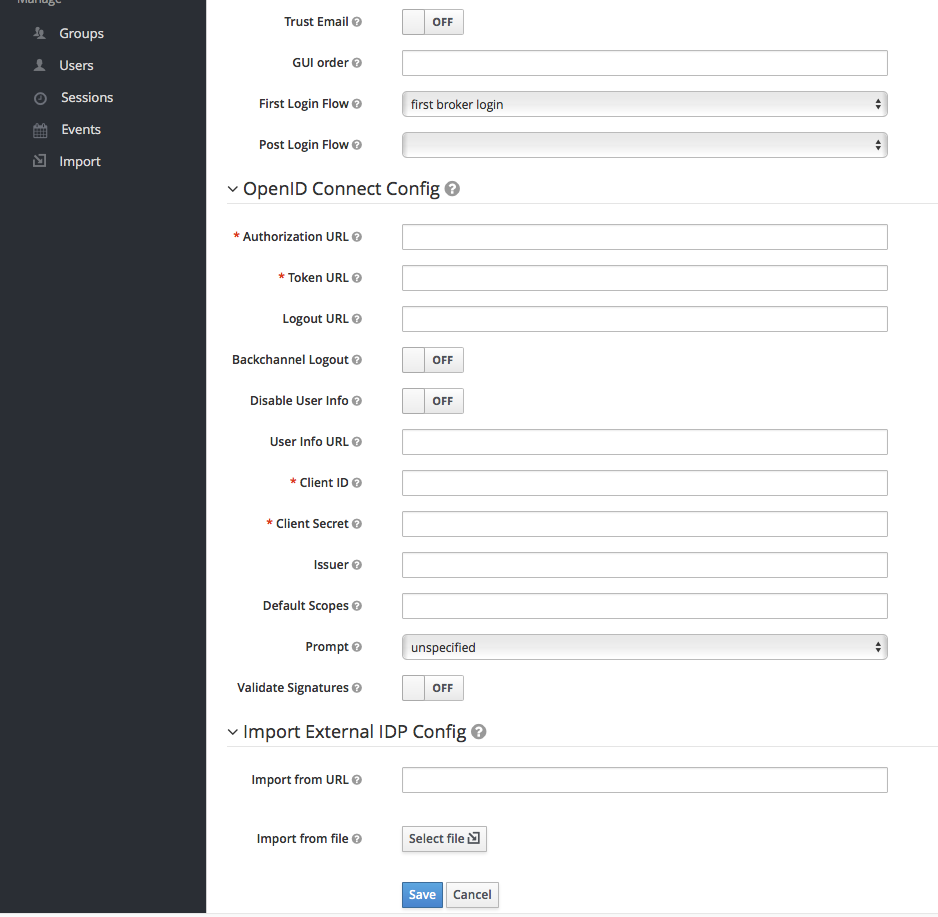

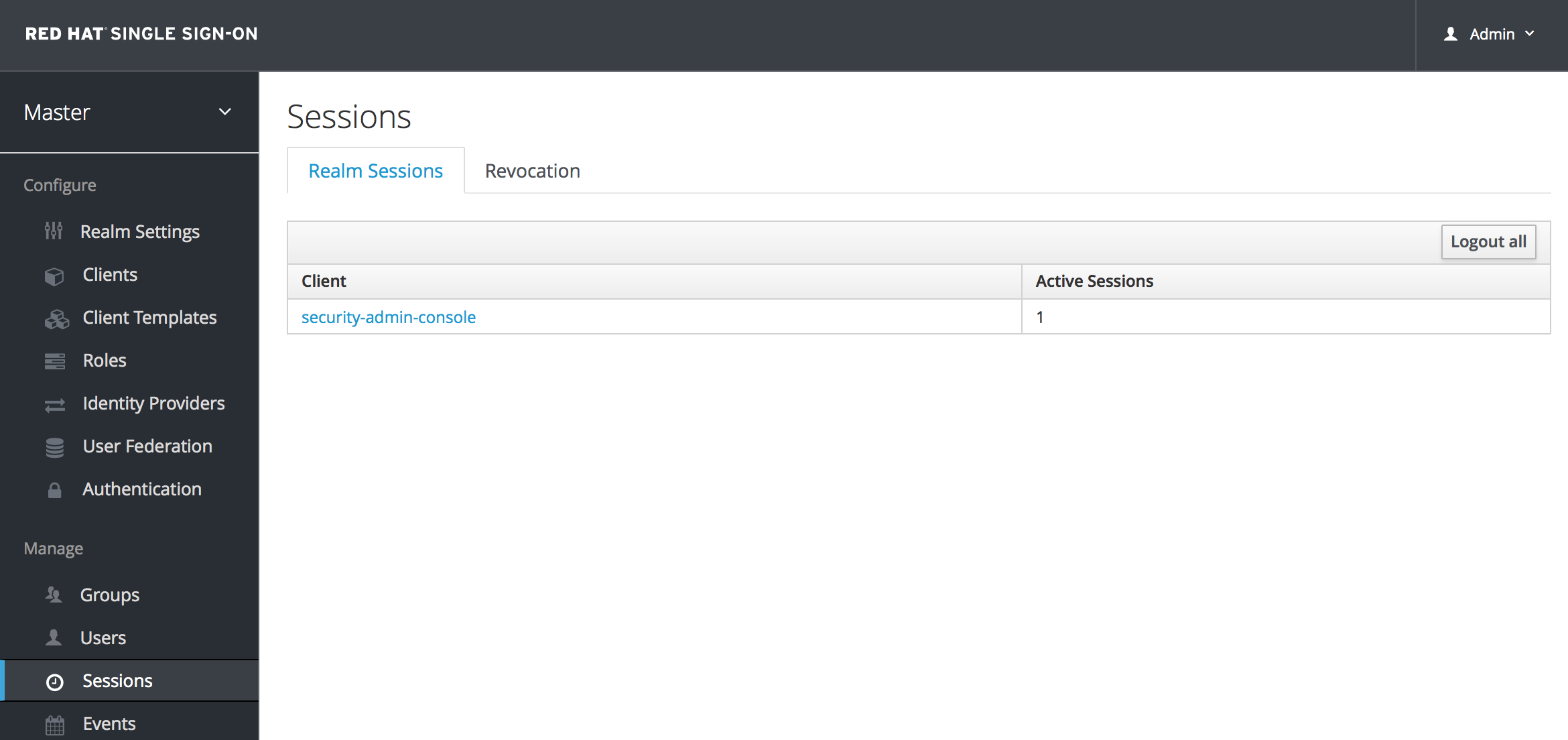

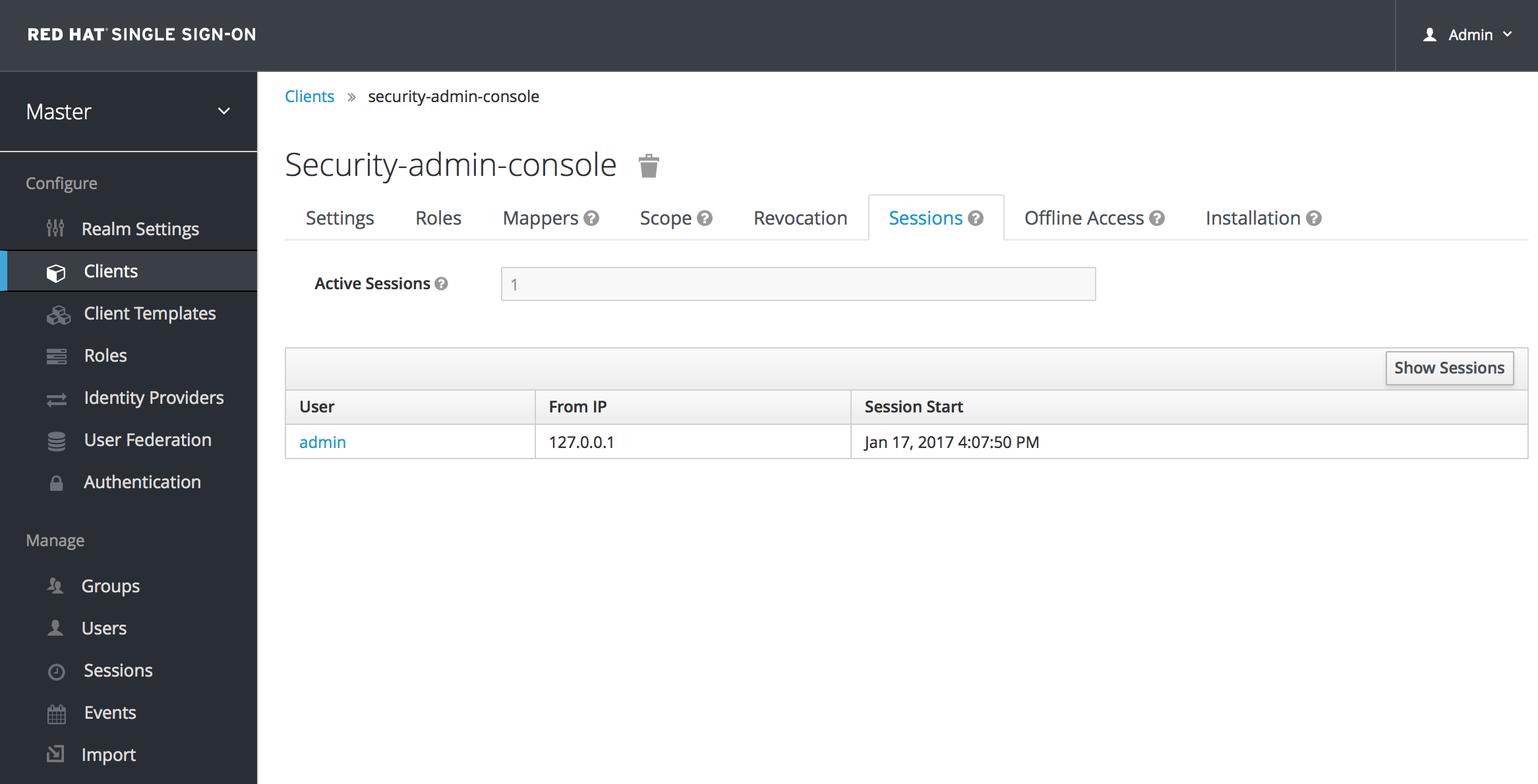

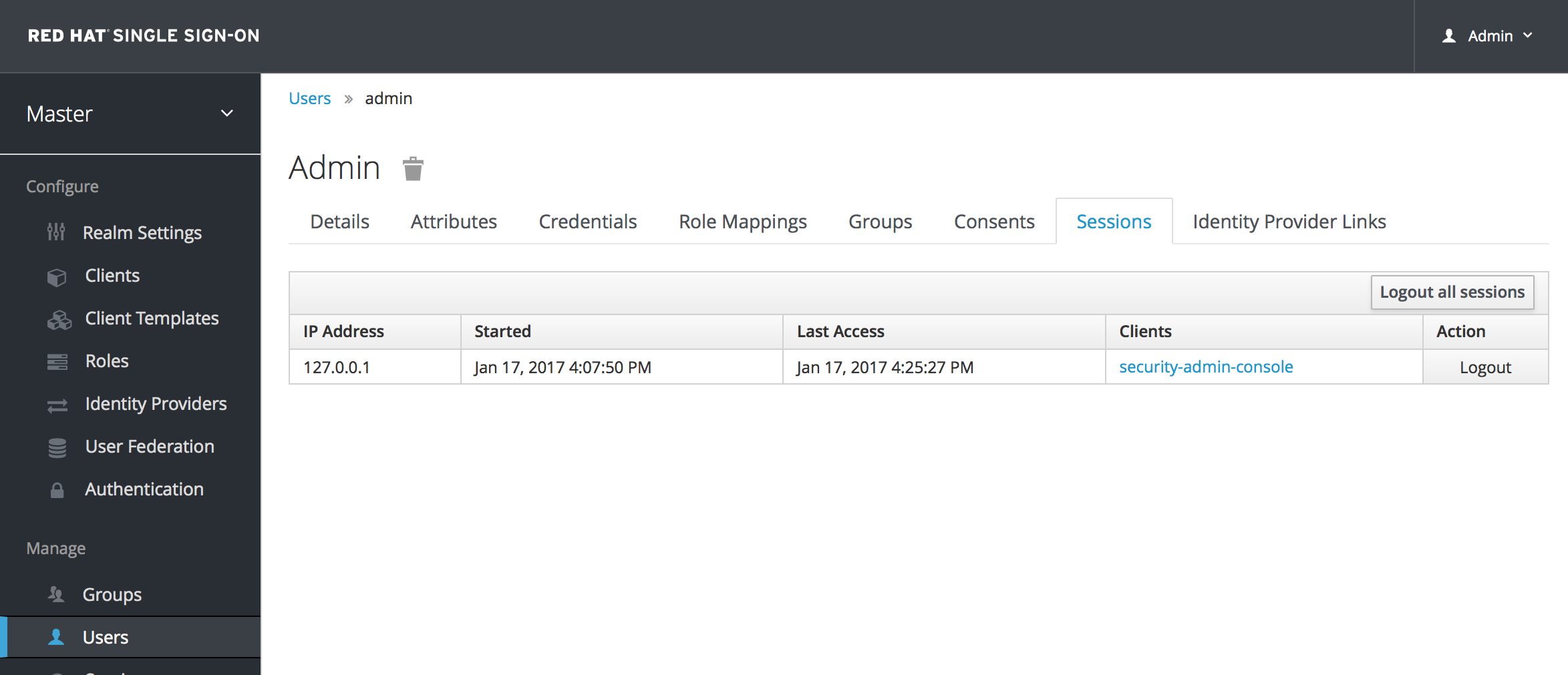

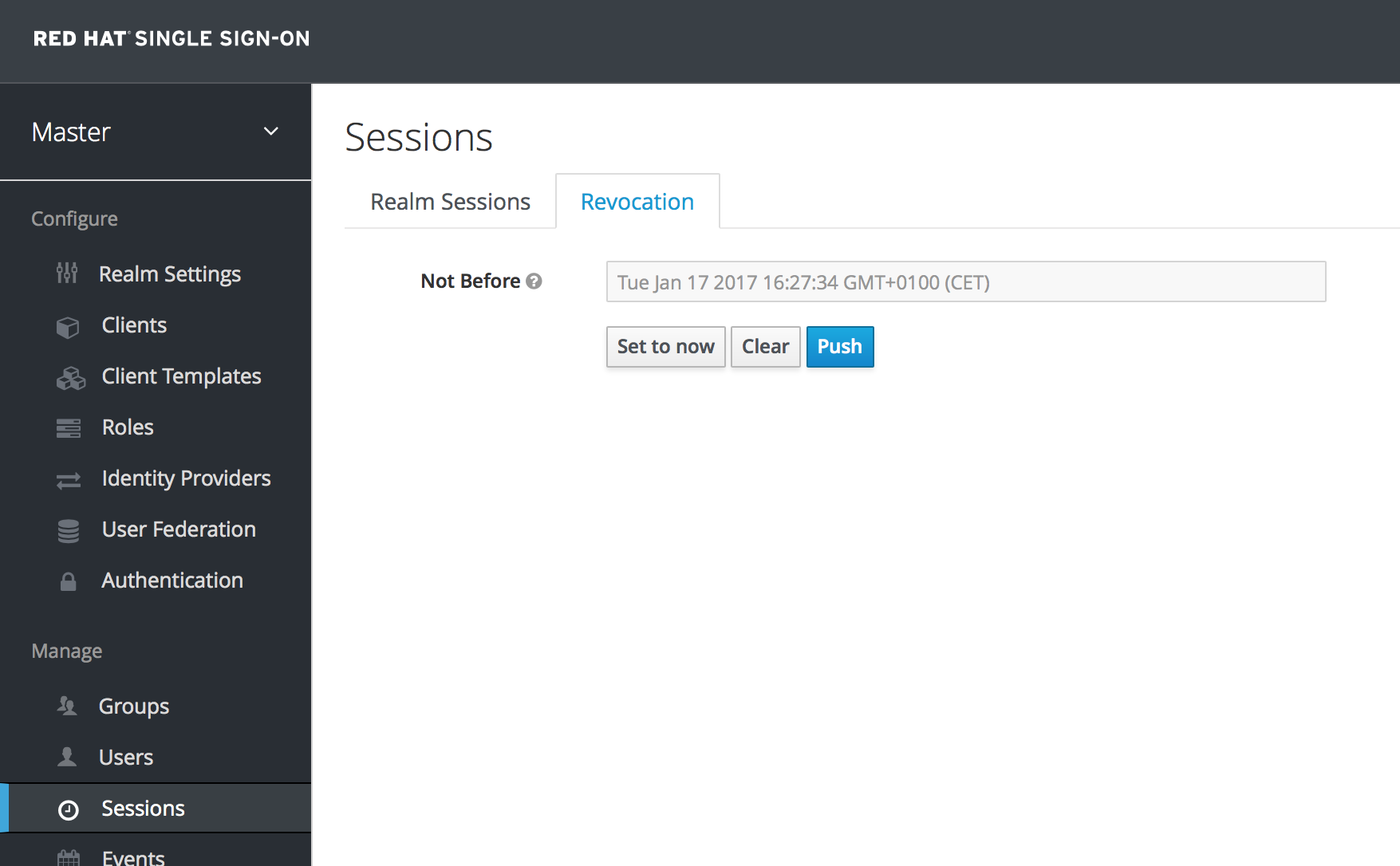

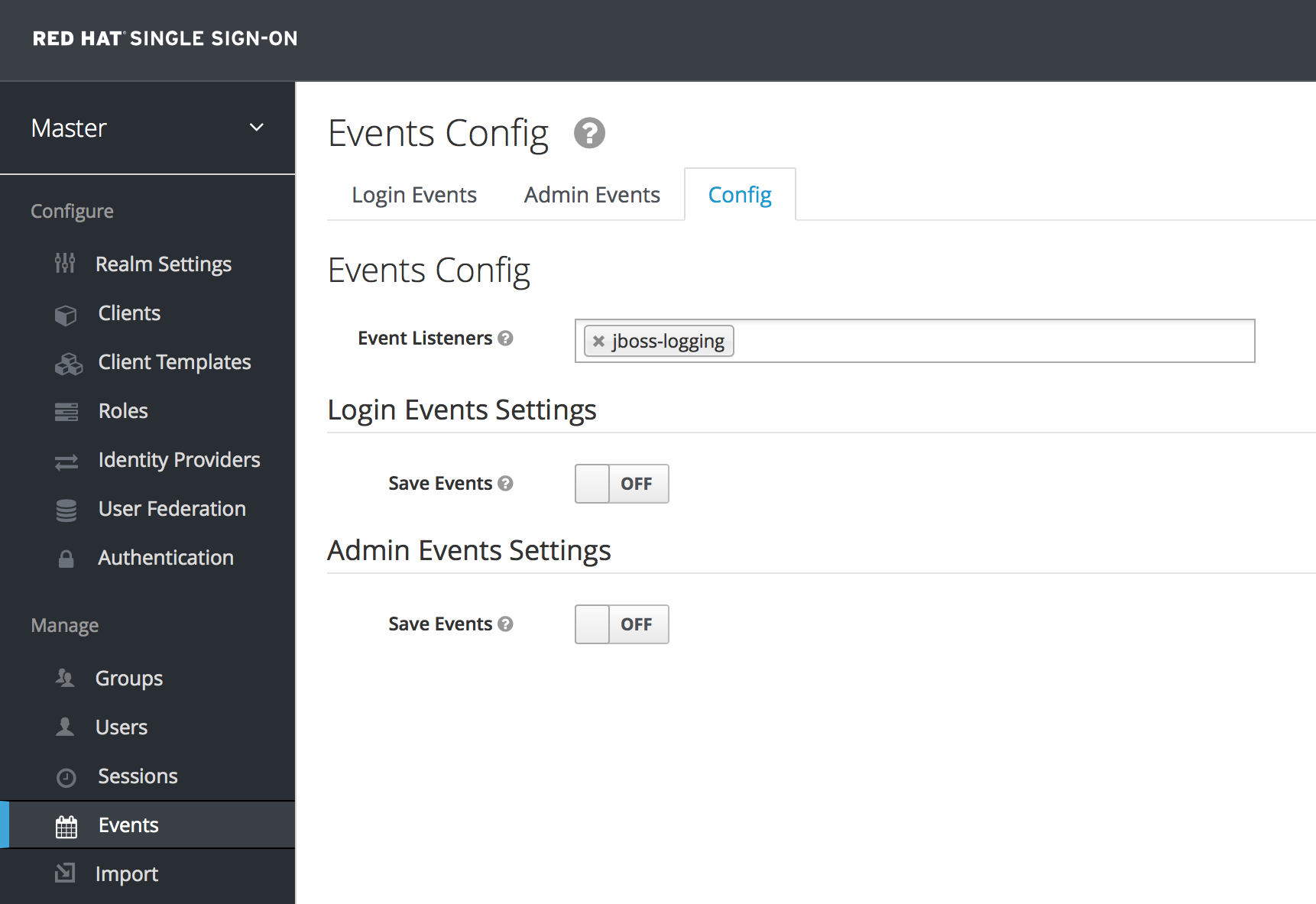

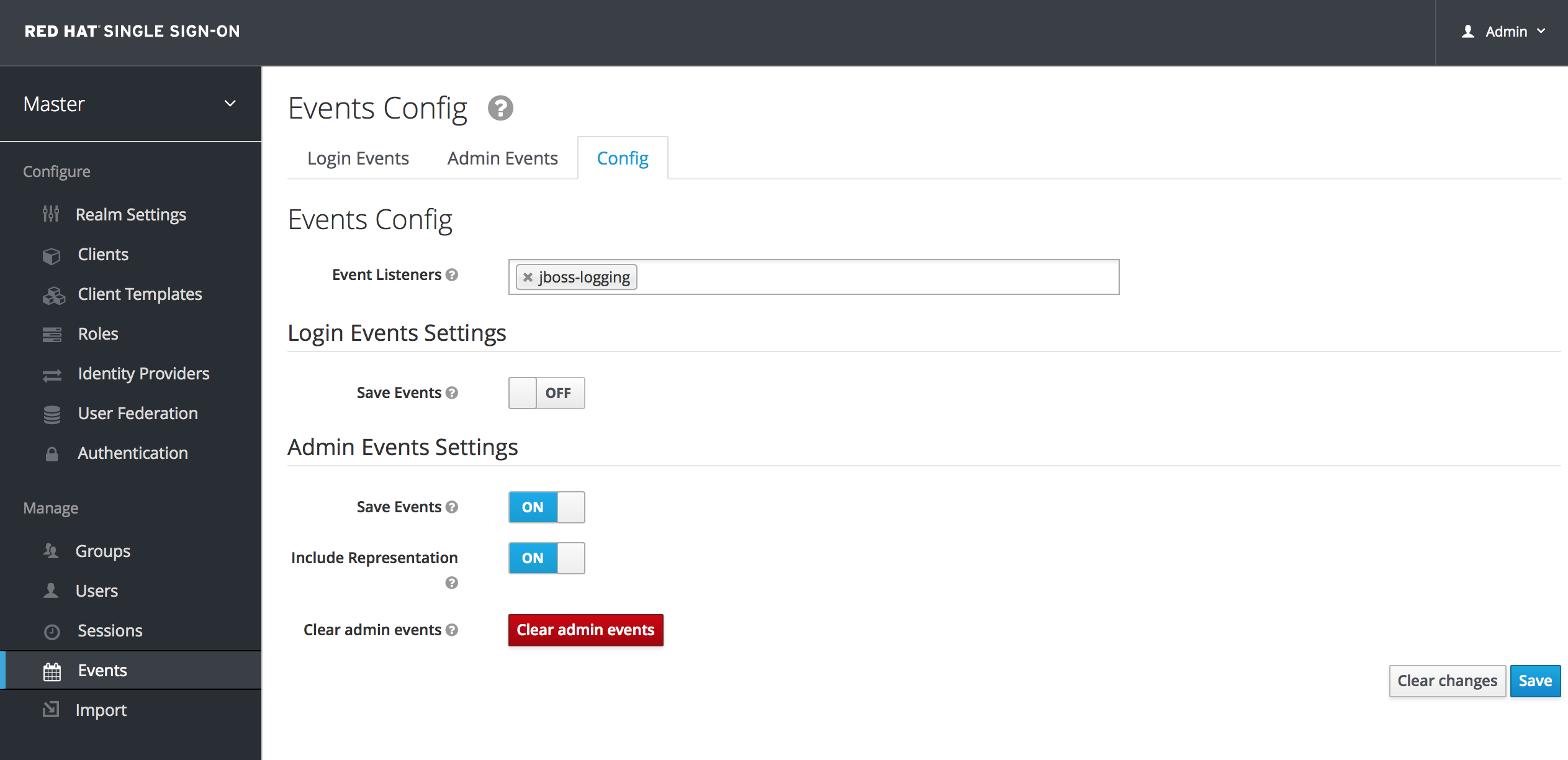

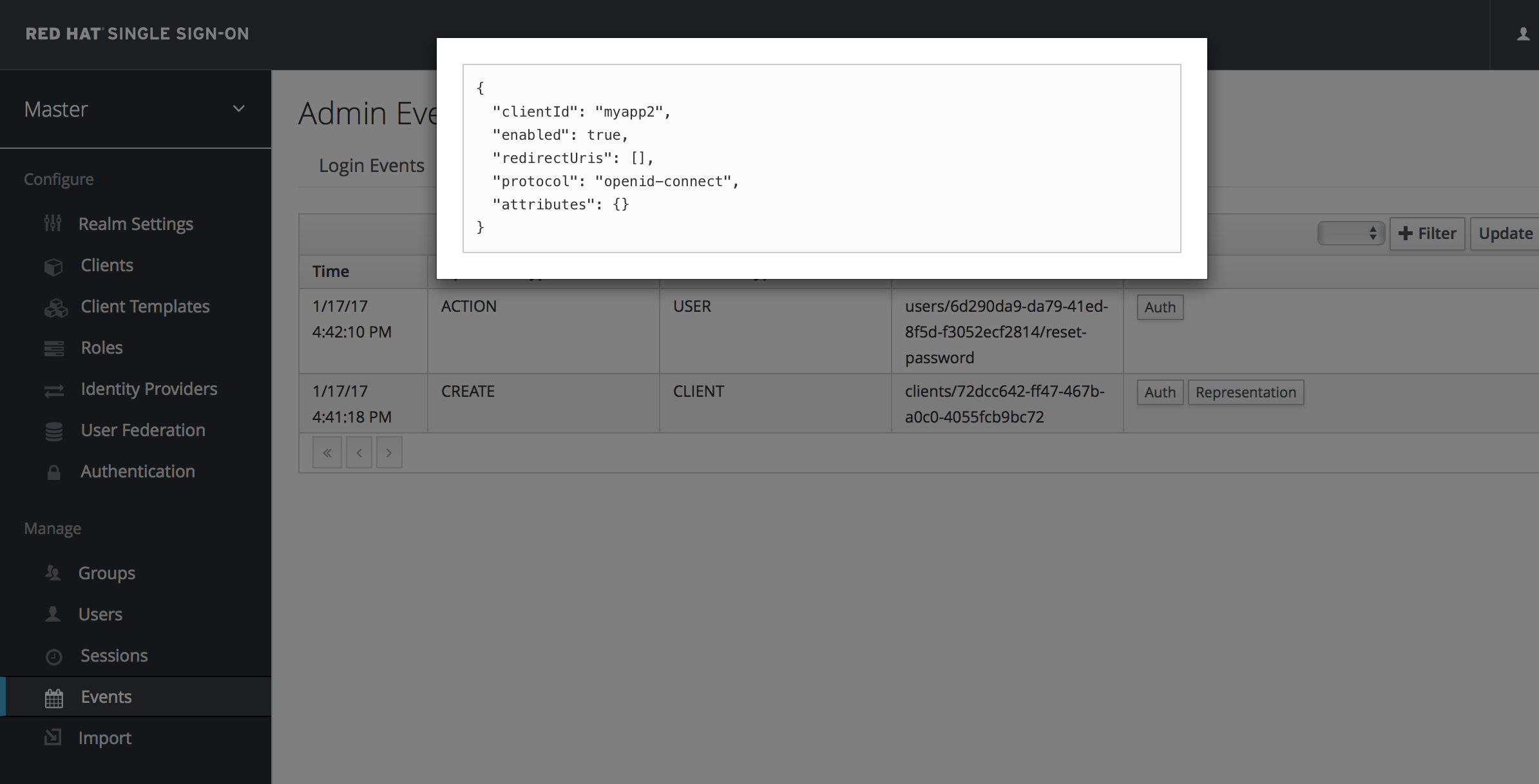

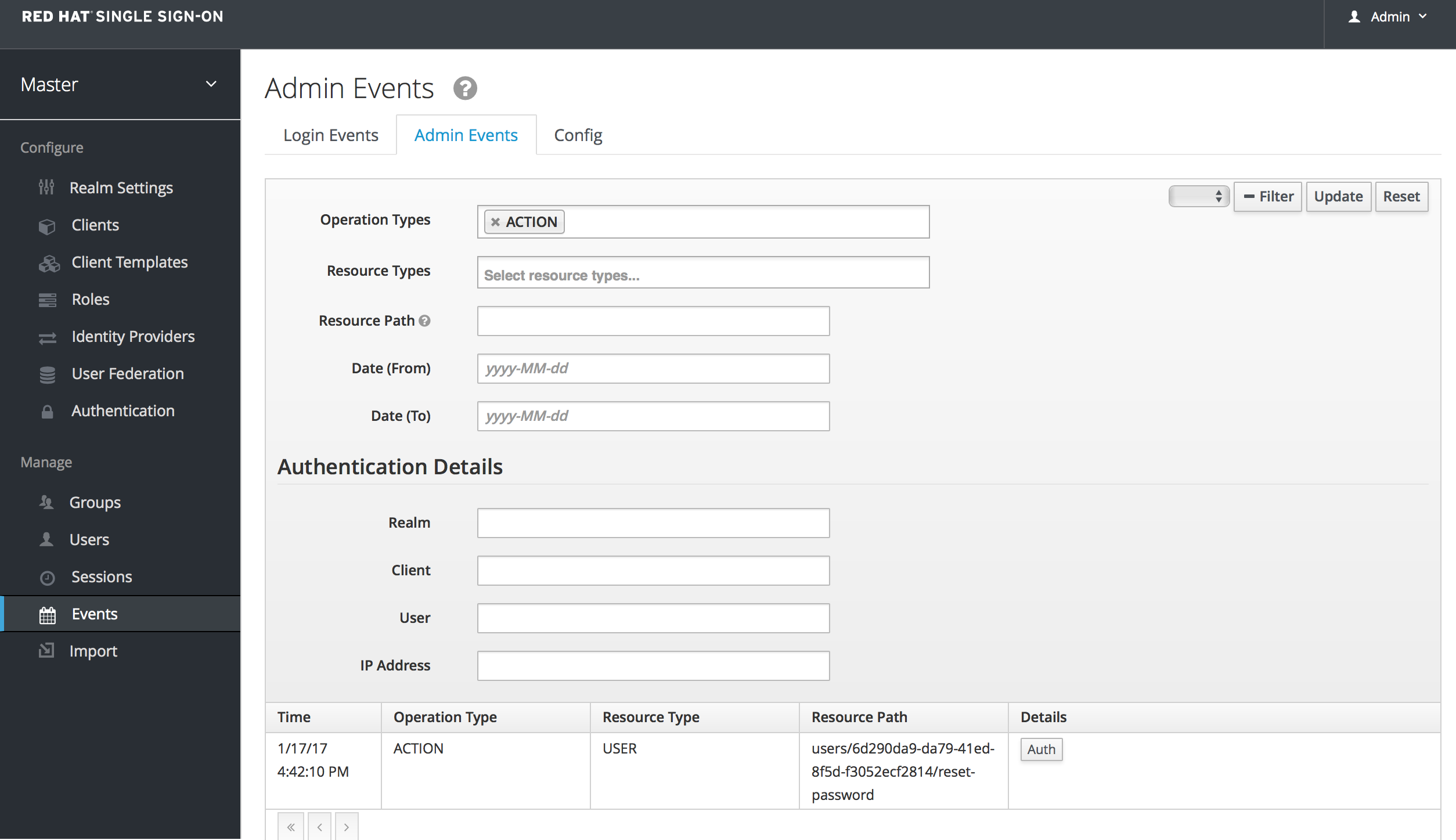

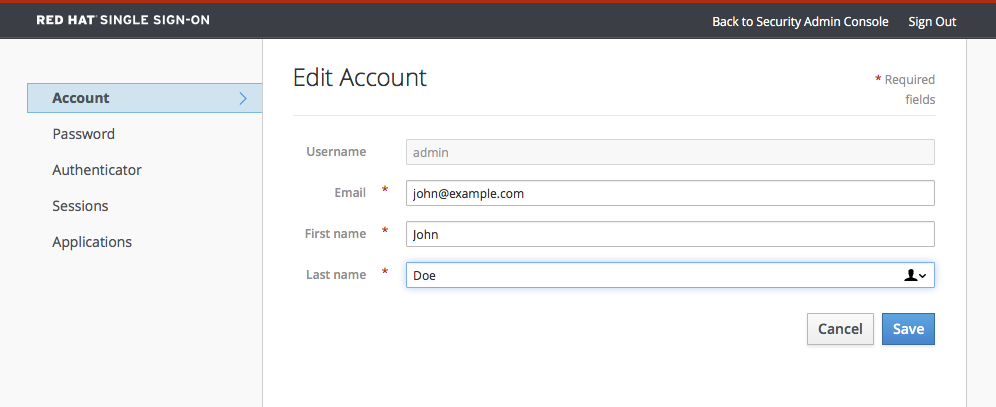

- /realms/{realm-name}/protocol/openid-connect/auth