Administration Guide

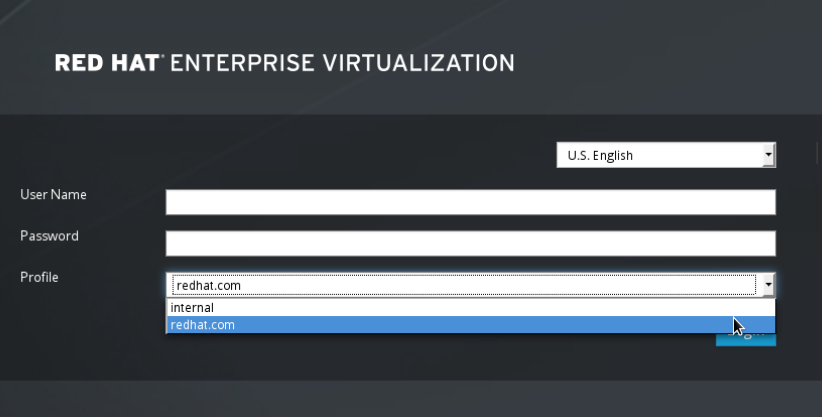

Administration Tasks in Red Hat Enterprise Virtualization

Abstract

Chapter 1. Administering and Maintaining the Red Hat Enterprise Virtualization Environment

- Managing physical and virtual resources such as hosts and virtual machines. This includes upgrading and adding hosts, importing domains, converting virtual machines created on foreign hypervisors, and managing virtual machine pools.

- Monitoring the overall system resources for potential problems such as extreme load on one of the hosts, insufficient memory or disk space, and taking any necessary actions (such as migrating virtual machines to other hosts to lessen the load or freeing resources by shutting down machines).

- Responding to the new requirements of virtual machines (for example, upgrading the operating system or allocating more memory).

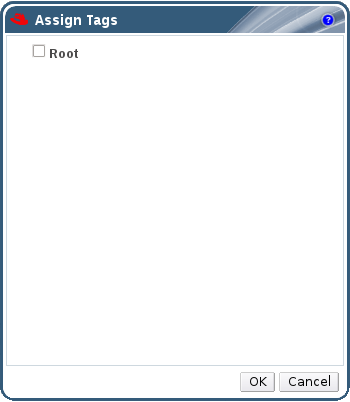

- Managing customized object properties using tags.

- Managing searches saved as public bookmarks.

- Managing user setup and setting permission levels.

- Troubleshooting for specific users or virtual machines for overall system functionality.

- Generating general and specific reports.

1.1. Global Configuration

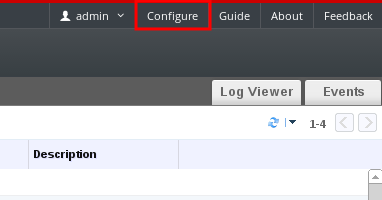

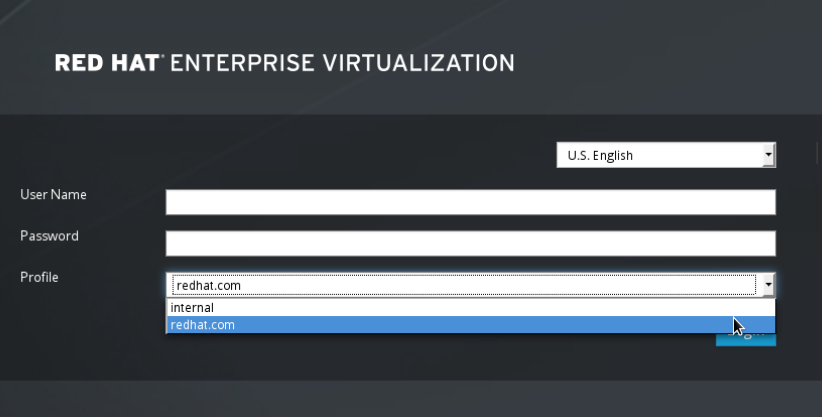

Figure 1.1. Accessing the Configure window

1.1.1. Roles

1.1.1.1. Creating a New Role

Procedure 1.1. Creating a New Role

- On the header bar, click the Configure button to open the Configure window. The window shows a list of default User and Administrator roles, and any custom roles.

- Click New. The New Role dialog box displays.

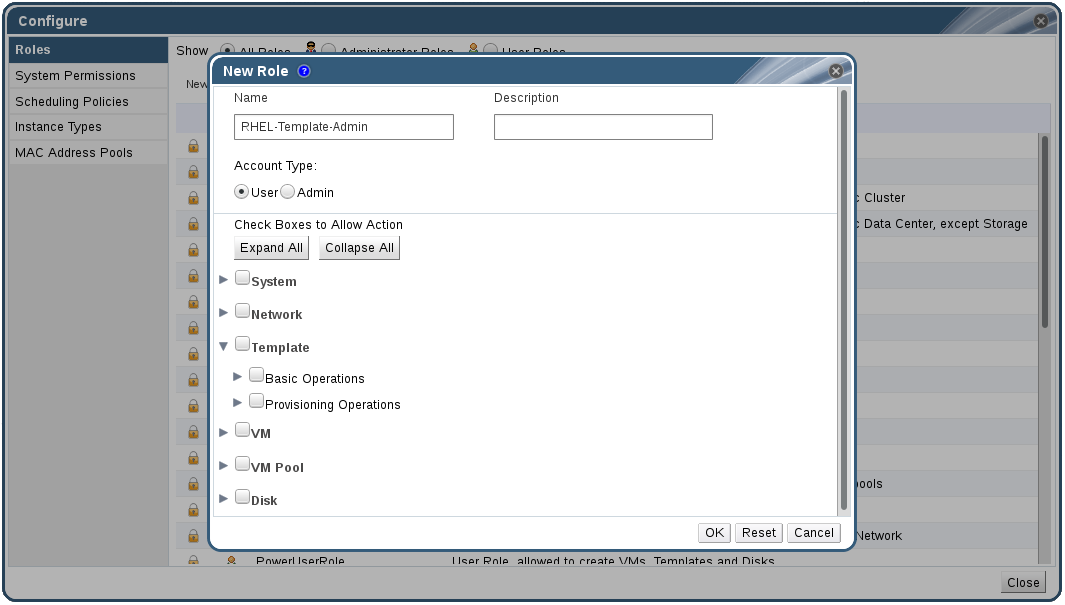

Figure 1.2. The New Role Dialog

- Enter the Name and Description of the new role.

- Select either Admin or User as the Account Type.

- Use the Expand All or Collapse All buttons to view more or fewer of the permissions for the listed objects in the Check Boxes to Allow Action list. You can also expand or collapse the options for each object.

- For each of the objects, select or clear the actions you wish to permit or deny for the role you are setting up.

- Click OK to apply the changes you have made. The new role displays on the list of roles.

1.1.1.2. Editing or Copying a Role

Procedure 1.2. Editing or Copying a Role

- On the header bar, click the Configure button to open the Configure window. The window shows a list of default User and Administrator roles, and any custom roles.

- Select the role you wish to change. Click Edit to open the Edit Role window, or click Copy to open the Copy Role window.

- If necessary, edit the Name and Description of the role.

- Use the Expand All or Collapse All buttons to view more or fewer of the permissions for the listed objects. You can also expand or collapse the options for each object.

- For each of the objects, select or clear the actions you wish to permit or deny for the role you are editing.

- Click OK to apply the changes you have made.

1.1.1.3. User Role and Authorization Examples

Example 1.1. Cluster Permissions

cluster called Accounts. She is assigned the ClusterAdmin role on the accounts cluster. This enables her to manage all virtual machines in the cluster, since the virtual machines are child objects of the cluster. Managing the virtual machines includes editing, adding, or removing virtual resources such as disks, and taking snapshots. It does not allow her to manage any resources outside this cluster. Because ClusterAdmin is an administrator role, it allows her to use the Administration Portal to manage these resources, but does not give her any access via the User Portal.

Example 1.2. VM PowerUser Permissions

johndesktop for him. John is assigned the UserVmManager role on the johndesktop virtual machine. This allows him to access this single virtual machine using the User Portal. Because he has UserVmManager permissions, he can modify the virtual machine and add resources to it, such as new virtual disks. Because UserVmManager is a user role, it does not allow him to use the Administration Portal.

Example 1.3. Data Center Power User Role Permissions

PowerUserRole permissions for the data center in which her new virtual machine will reside. This is because to create a new virtual machine, she needs to make changes to several components within the data center, including creating the virtual machine disk image in the storage domain.

DataCenterAdmin privileges to Penelope. As a PowerUser for a data center, Penelope can log in to the User Portal and perform virtual machine-specific actions on virtual machines within the data center. She cannot perform data center-level operations such as attaching hosts or storage to a data center.

Example 1.4. Network Administrator Permissions

NetworkAdmin privileges on the IT department's data center, she can add and remove networks in the data center, and attach and detach networks for all virtual machines belonging to the data center.

VnicProfileUser permissions and UserVmManager permissions for the virtual machines used by the internal training department. With these permissions, Pat can perform simple administrative tasks such as adding network interfaces onto virtual machines in the Extended tab of the User Portal. However, he does not have permissions to alter the networks for the hosts on which the virtual machines run, or the networks on the data center to which the virtual machines belong.

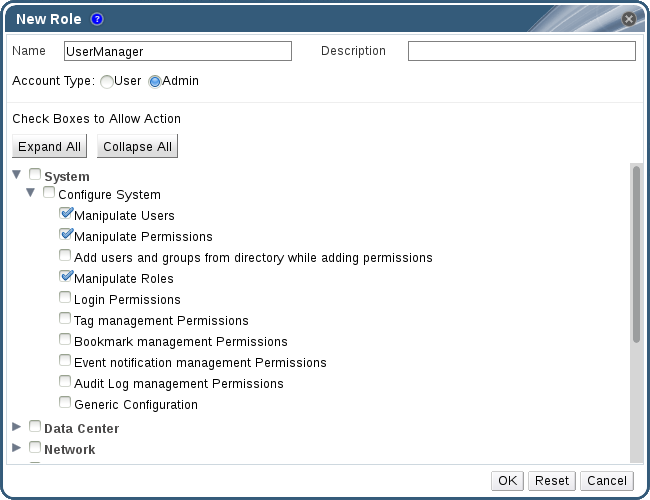

Example 1.5. Custom Role Permissions

Figure 1.3. UserManager Custom Role

System - the top level object of the hierarchy shown in Figure 1.3, “UserManager Custom Role”. This means they apply to all other objects in the system. The role is set to have an Account Type of Admin. This means that when she is assigned this role, Rachel can only use the Administration Portal, not the User Portal.

1.1.2. System Permissions

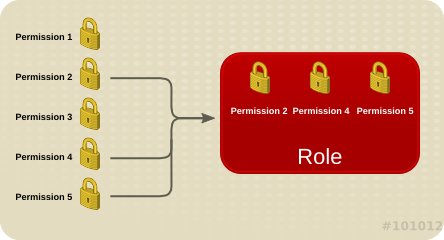

Figure 1.4. Permissions & Roles

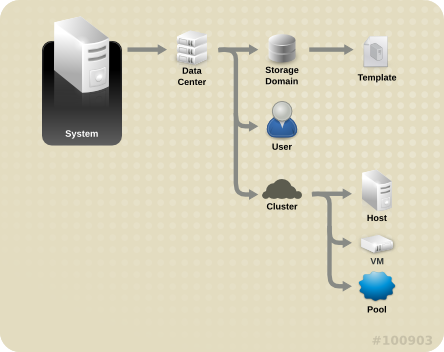

Figure 1.5. Red Hat Enterprise Virtualization Object Hierarchy

1.1.2.1. User Properties

1.1.2.2. User and Administrator Roles

- Administrator Role: Allows access to the Administration Portal for managing physical and virtual resources. An administrator role confers permissions for actions to be performed in the User Portal; however, it has no bearing on what a user can see in the User Portal.

- User Role: Allows access to the User Portal for managing and accessing virtual machines and templates. A user role determines what a user can see in the User Portal. Permissions granted to a user with an administrator role are reflected in the actions available to that user in the User Portal.

For example, if you have an administrator role on a cluster, you can manage all virtual machines in the cluster using the Administration Portal. However, you cannot access any of these virtual machines in the User Portal; this requires a user role.
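The inheritance behaviour described in this section (a role assigned on a cluster also applies to the virtual machines beneath it) can be modelled with a short sketch. This is an illustration only, not the Manager's implementation: the `Resource` and `Permissions` classes, the user name `alice`, and the object names other than the Accounts cluster are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)
class Resource:
    """A node in the object hierarchy (system, data center, cluster, VM)."""
    name: str
    parent: Optional["Resource"] = None


class Permissions:
    """Stores (user, role, resource) grants and resolves inheritance."""

    def __init__(self):
        self._grants = []

    def assign(self, user, role, resource):
        self._grants.append((user, role, resource))

    def roles_on(self, user, resource):
        """Return roles the user holds on the resource or any ancestor."""
        roles = set()
        node = resource
        while node is not None:
            roles.update(r for u, r, res in self._grants
                         if u == user and res is node)
            node = node.parent
        return roles


# Hypothetical hierarchy: System > data center > clusters > virtual machine.
system = Resource("System")
data_center = Resource("Default", system)
accounts = Resource("Accounts", data_center)
engineering = Resource("Engineering", data_center)
vm = Resource("vm01", accounts)

perms = Permissions()
perms.assign("alice", "ClusterAdmin", accounts)

print(perms.roles_on("alice", vm))           # role inherited from the cluster
print(perms.roles_on("alice", engineering))  # nothing outside the cluster
```

Walking up the parent chain keeps the grant list flat, which mirrors how a role assigned at the System level would apply to every object below it.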

1.1.2.3. User Roles Explained

| Role | Privileges | Notes |

|---|---|---|

| UserRole | Can access and use virtual machines and pools. | Can log in to the User Portal, use assigned virtual machines and pools, view virtual machine state and details. |

| PowerUserRole | Can create and manage virtual machines and templates. | Apply this role to a user for the whole environment with the Configure window, or for specific data centers or clusters. For example, if a PowerUserRole is applied on a data center level, the PowerUser can create virtual machines and templates in the data center. |

| UserVmManager | System administrator of a virtual machine. | Can manage virtual machines and create and use snapshots. A user who creates a virtual machine in the User Portal is automatically assigned the UserVmManager role on the machine. |

| UserTemplateBasedVm | Limited privileges to only use Templates. | Can use templates to create virtual machines. |

| DiskOperator | Virtual disk user. | Can use, view and edit virtual disks. Inherits permissions to use the virtual machine to which the virtual disk is attached. |

| VmCreator | Can create virtual machines in the User Portal. | This role is not applied to a specific virtual machine; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers or clusters. When applying this role to a cluster, you must also apply the DiskCreator role on an entire data center, or on specific storage domains. |

| TemplateCreator | Can create, edit, manage and remove virtual machine templates within assigned resources. | This role is not applied to a specific template; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers, clusters, or storage domains. |

| DiskCreator | Can create, edit, manage and remove virtual machine disks within assigned clusters or data centers. | This role is not applied to a specific virtual disk; apply this role to a user for the whole environment with the Configure window. Alternatively apply this role for specific data centers or storage domains. |

| TemplateOwner | Can edit and delete the template, assign and manage user permissions for the template. | This role is automatically assigned to the user who creates a template. Other users who do not have TemplateOwner permissions on a template cannot view or use the template. |

| VnicProfileUser | Logical network and network interface user for virtual machine and template. | Can attach or detach network interfaces from specific logical networks. |

1.1.2.4. Administrator Roles Explained

| Role | Privileges | Notes |

|---|---|---|

| SuperUser | System Administrator of the Red Hat Enterprise Virtualization environment. | Has full permissions across all objects and levels, can manage all objects across all data centers. |

| ClusterAdmin | Cluster Administrator. | Possesses administrative permissions for all objects underneath a specific cluster. |

| DataCenterAdmin | Data Center Administrator. | Possesses administrative permissions for all objects underneath a specific data center except for storage. |

Important

| Role | Privileges | Notes |

|---|---|---|

| TemplateAdmin | Administrator of a virtual machine template. | Can create, delete, and configure the storage domains and network details of templates, and move templates between domains. |

| StorageAdmin | Storage Administrator. | Can create, delete, configure, and manage an assigned storage domain. |

| HostAdmin | Host Administrator. | Can attach, remove, configure, and manage a specific host. |

| NetworkAdmin | Network Administrator. | Can configure and manage the network of a particular data center or cluster. A network administrator of a data center or cluster inherits network permissions for virtual pools within the cluster. |

| VmPoolAdmin | System Administrator of a virtual pool. | Can create, delete, and configure a virtual pool; assign and remove virtual pool users; and perform basic operations on a virtual machine in the pool. |

| GlusterAdmin | Gluster Storage Administrator. | Can create, delete, configure, and manage Gluster storage volumes. |

| VmImporterExporter | Import and export Administrator of a virtual machine. | Can import and export virtual machines. Able to view all virtual machines and templates exported by other users. |

1.1.3. Scheduling Policies

1.1.3.1. Creating a Scheduling Policy

Procedure 1.3. Creating a Scheduling Policy

- Click the Configure button in the header bar of the Administration Portal to open the Configure window.

- Click Scheduling Policies to view the scheduling policies tab.

- Click New to open the New Scheduling Policy window.

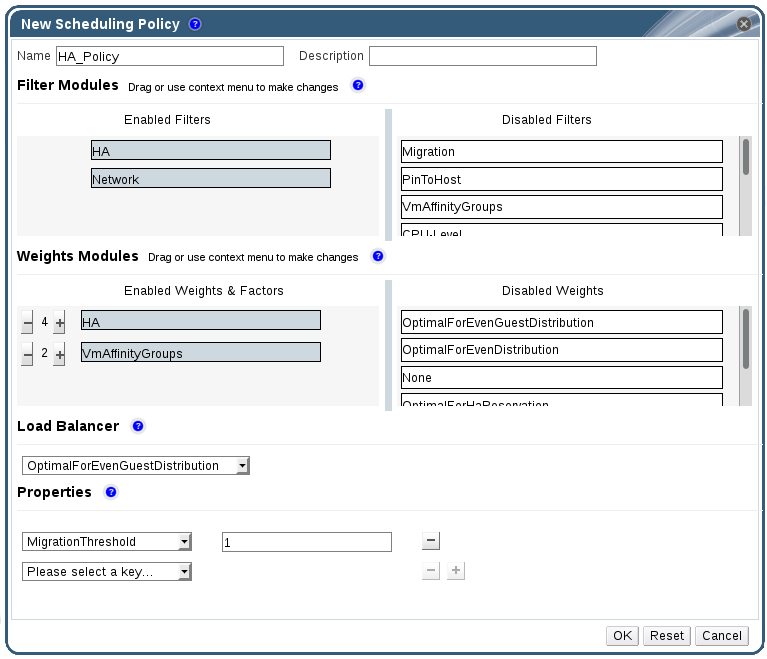

Figure 1.6. The New Scheduling Policy Window

- Enter a Name and Description for the scheduling policy.

- Configure filter modules:

- In the Filter Modules section, drag and drop the preferred filter modules to apply to the scheduling policy from the Disabled Filters section into the Enabled Filters section.

- Specific filter modules can also be set as First, to be given the highest priority, or Last, to be given the lowest priority, for basic optimization. To set the priority, right-click any filter module, hover the cursor over Position, and select First or Last.

- Configure weight modules:

- In the Weights Modules section, drag and drop the preferred weights modules to apply to the scheduling policy from the Disabled Weights section into the Enabled Weights & Factors section.

- Use the + and - buttons to the left of the enabled weight modules to increase or decrease the weight of those modules.

- Specify a load balancing policy:

- From the drop-down menu in the Load Balancer section, select the load balancing policy to apply to the scheduling policy.

- From the drop-down menu in the Properties section, select a load balancing property to apply to the scheduling policy and use the text field to the right of that property to specify a value.

- Use the + and - buttons to add or remove additional properties.

- Click OK.

1.1.3.2. Explanation of Settings in the New Scheduling Policy and Edit Scheduling Policy Window

| Field Name | Description |
|---|---|
| Name | The name of the scheduling policy. This is the name used to refer to the scheduling policy in the Red Hat Enterprise Virtualization Manager. |
| Description | A description of the scheduling policy. This field is recommended but not mandatory. |
| Filter Modules | A set of filters for controlling the hosts on which a virtual machine in a cluster can run. Enabling a filter will filter out hosts that do not meet the conditions specified by that filter, as outlined below: |
| Weights Modules | A set of weightings for controlling the relative priority of factors considered when determining the hosts in a cluster on which a virtual machine can run. |
| Load Balancer | This drop-down menu allows you to select a load balancing module to apply. Load balancing modules determine the logic used to migrate virtual machines from hosts experiencing high usage to hosts experiencing lower usage. |
| Properties | This drop-down menu allows you to add or remove properties for load balancing modules, and is only available when you have selected a load balancing module for the scheduling policy. No properties are defined by default, and the properties that are available are specific to the load balancing module that is selected. Use the + and - buttons to add or remove additional properties to or from the load balancing module. |
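How filter modules and weight modules combine can be sketched as follows. This is a simplified model, not the Manager's scheduler: the host attributes (`free_mem_mb`, `cpu_load`), the example filter and weight functions, and the rule that the lowest weighted total wins are all assumptions made for illustration.

```python
def pick_host(hosts, filters, weights):
    """Return the name of the best host, or None if all are filtered out.

    filters: predicates; a host must pass every enabled filter.
    weights: (score_function, factor) pairs; in this sketch the host
             with the lowest weighted total is chosen (an assumption).
    """
    candidates = [h for h in hosts if all(f(h) for f in filters)]
    if not candidates:
        return None

    def total(host):
        return sum(factor * fn(host) for fn, factor in weights)

    return min(candidates, key=total)["name"]


# Hypothetical hosts and modules.
hosts = [
    {"name": "host1", "free_mem_mb": 1024, "cpu_load": 20},
    {"name": "host2", "free_mem_mb": 8192, "cpu_load": 70},
    {"name": "host3", "free_mem_mb": 8192, "cpu_load": 30},
]


def enough_memory(host):
    return host["free_mem_mb"] >= 4096


def cpu_score(host):
    return host["cpu_load"]


def memory_score(host):
    return -host["free_mem_mb"] / 1024


best = pick_host(hosts, [enough_memory], [(cpu_score, 2), (memory_score, 1)])
print(best)
```

Raising the factor of a weight module, as the + button does in the window, makes that module's score count more heavily in the total.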

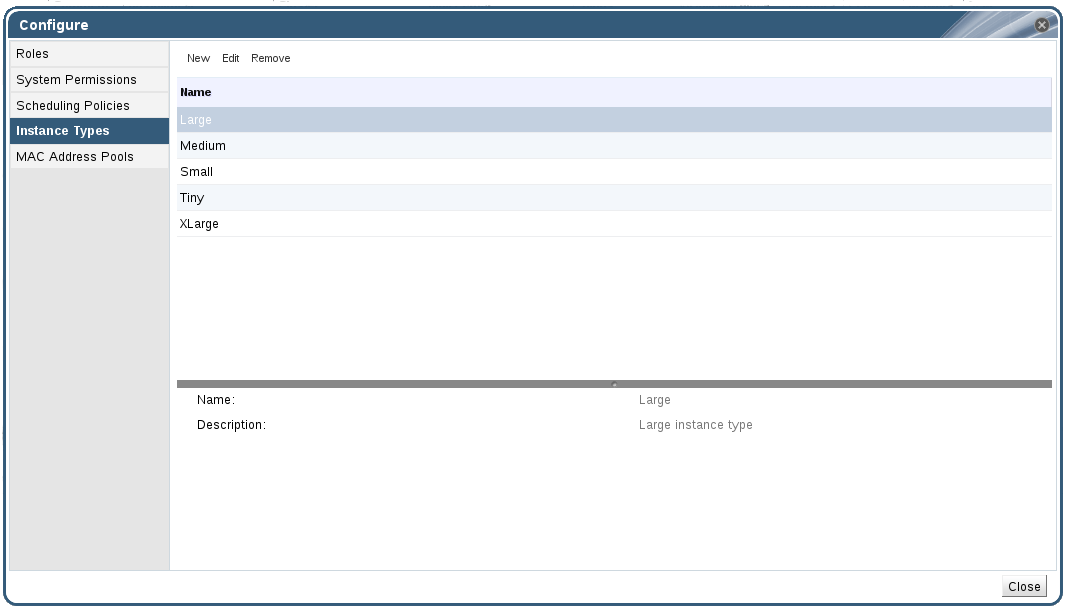

1.1.4. Instance Types

| Name | Memory | vCPUs |
|---|---|---|
| Tiny | 512 MB | 1 |
| Small | 2 GB | 1 |
| Medium | 4 GB | 2 |
| Large | 8 GB | 2 |
| XLarge | 16 GB | 4 |

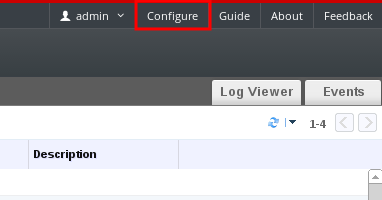

Figure 1.7. The Instance Types Tab

If the value of one of these fields is changed, the virtual machine will be detached from the instance type, changing to Custom, and the chain will appear broken. However, if the value is changed back, the chain will relink and the instance type will move back to the selected one.
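The detach and relink behaviour can be illustrated with a small sketch. It assumes, for simplicity, that memory and vCPUs are the only fields bound to an instance type; the values come from the predefined instance types table, and the function name is hypothetical.

```python
# Predefined instance types: name -> (memory in MB, vCPUs).
INSTANCE_TYPES = {
    "Tiny": (512, 1),
    "Small": (2048, 1),
    "Medium": (4096, 2),
    "Large": (8192, 2),
    "XLarge": (16384, 4),
}


def displayed_instance_type(selected, memory_mb, vcpus):
    """Return the selected type while the bound fields still match it,
    otherwise 'Custom' (the broken-chain state)."""
    if INSTANCE_TYPES[selected] == (memory_mb, vcpus):
        return selected
    return "Custom"


print(displayed_instance_type("Small", 2048, 1))  # fields match: linked
print(displayed_instance_type("Small", 4096, 1))  # memory changed: detached
print(displayed_instance_type("Small", 2048, 1))  # changed back: relinked
```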

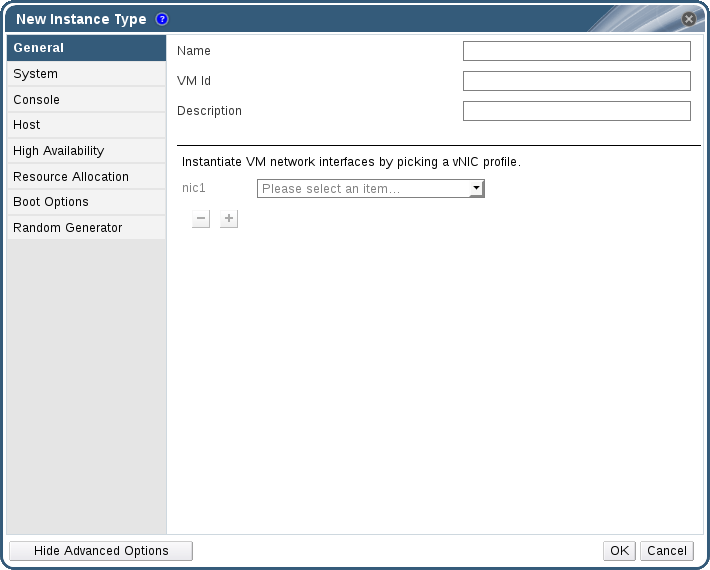

1.1.4.1. Creating Instance Types

Procedure 1.4. Creating an Instance Type

- On the header bar, click Configure.

- Click the Instance Types tab.

- Click New to open the New Instance Type window.

Figure 1.8. The New Instance Type Window

- Enter a Name and Description for the instance type.

- Click Show Advanced Options and configure the instance type's settings as required. The New Instance Type window shows the same settings as the New Virtual Machine window, limited to the relevant fields. See Explanation of Settings in the New Virtual Machine and Edit Virtual Machine Windows in the Virtual Machine Management Guide.

- Click OK.

1.1.4.2. Editing Instance Types

Procedure 1.5. Editing Instance Type Properties

- On the header bar, click Configure.

- Click the Instance Types tab.

- Select the instance type to be edited.

- Click Edit to open the Edit Instance Type window.

- Change the settings as required.

- Click OK.

1.1.4.3. Removing Instance Types

Procedure 1.6. Removing an Instance Type

- On the header bar, click Configure.

- Click the Instance Types tab.

- Select the instance type to be removed.

- Click Remove to open the Remove Instance Type window.

- If any virtual machines are based on the instance type to be removed, a warning window listing the attached virtual machines will appear. To continue removing the instance type, select the Approve Operation check box. Otherwise click Cancel.

- Click OK.

1.1.5. MAC Address Pools

1.1.5.1. Creating MAC Address Pools

Procedure 1.7. Creating a MAC Address Pool

- On the header bar, click the Configure button to open the Configure window.

- Click the MAC Address Pools tab.

- Click the New button to open the New MAC Address Pool window.

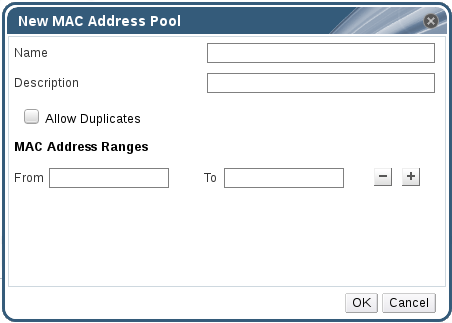

Figure 1.9. The New MAC Address Pool Window

- Enter the Name and Description of the new MAC address pool.

- Select the Allow Duplicates check box to allow a MAC address to be used multiple times in a pool. The MAC address pool will not automatically use a duplicate MAC address, but enabling the duplicates option means a user can manually use a duplicate MAC address.

Note

If one MAC address pool has duplicates disabled, and another has duplicates enabled, each MAC address can be used once in the pool with duplicates disabled but can be used multiple times in the pool with duplicates enabled.

- Enter the required MAC Address Ranges. To enter multiple ranges click the plus button next to the From and To fields.

- Click OK.
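The Allow Duplicates behaviour described in this procedure can be sketched as a small pool model: automatic allocation never hands out an address that is already in use, while a manual assignment may reuse one only when duplicates are allowed. The `MacPool` class, its method names, and the example address range are hypothetical and do not reflect the Manager's internal code.

```python
from collections import Counter


def mac_to_int(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' to an integer."""
    return int(mac.replace(":", ""), 16)


def int_to_mac(value):
    """Convert an integer back to colon-separated notation."""
    digits = format(value, "012x")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


class MacPool:
    def __init__(self, ranges, allow_duplicates=False):
        # ranges is a list of (from, to) pairs, both inclusive.
        self.ranges = [(mac_to_int(lo), mac_to_int(hi)) for lo, hi in ranges]
        self.allow_duplicates = allow_duplicates
        self.in_use = Counter()

    def allocate(self):
        """Automatic allocation: always returns an unused address."""
        for lo, hi in self.ranges:
            for value in range(lo, hi + 1):
                if self.in_use[value] == 0:
                    self.in_use[value] += 1
                    return int_to_mac(value)
        raise RuntimeError("no free MAC address left in the pool")

    def assign_manual(self, mac):
        """User-specified address: duplicates only if the pool allows them."""
        value = mac_to_int(mac)
        if self.in_use[value] > 0 and not self.allow_duplicates:
            raise ValueError(f"{mac} is already in use")
        self.in_use[value] += 1


pool = MacPool([("00:1a:4a:00:00:00", "00:1a:4a:00:00:01")],
               allow_duplicates=True)
first = pool.allocate()
pool.assign_manual(first)   # accepted because duplicates are allowed
second = pool.allocate()    # still skips the address already in use
print(first, second)
```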

1.1.5.2. Editing MAC Address Pools

Procedure 1.8. Editing MAC Address Pool Properties

- On the header bar, click the Configure button to open the Configure window.

- Click the MAC Address Pools tab.

- Select the MAC address pool to be edited.

- Click the Edit button to open the Edit MAC Address Pool window.

- Change the Name, Description, Allow Duplicates, and MAC Address Ranges fields as required.

Note

When a MAC address range is updated, the MAC addresses of existing NICs are not reassigned. MAC addresses that were already assigned, but are outside of the new MAC address range, are added as user-specified MAC addresses and are still tracked by that MAC address pool.

- Click OK.

1.1.5.3. Editing MAC Address Pool Permissions

Procedure 1.9. Editing MAC Address Pool Permissions

- On the header bar, click the Configure button to open the Configure window.

- Click the MAC Address Pools tab.

- Select the required MAC address pool.

- Edit the user permissions for the MAC address pool:

- To add user permissions to a MAC address pool:

- Click Add in the user permissions pane at the bottom of the Configure window.

- Search for and select the required users.

- Select the required role from the Role to Assign drop-down list.

- Click OK to add the user permissions.

- To remove user permissions from a MAC address pool:

- Select the user permission to be removed in the user permissions pane at the bottom of the Configure window.

- Click Remove to remove the user permissions.

1.1.5.4. Removing MAC Address Pools

Procedure 1.10. Removing a MAC Address Pool

- On the header bar, click the Configure button to open the Configure window.

- Click the MAC Address Pools tab.

- Select the MAC address pool to be removed.

- Click the Remove button to open the Remove MAC Address Pool window.

- Click OK.

Part I. Administering the Resources

Chapter 2. Quality of Service

2.1. Storage Quality of Service

2.1.1. Creating a Storage Quality of Service Entry

Procedure 2.1. Creating a Storage Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click QoS in the details pane.

- Click Storage.

- Click New.

- Enter a name for the quality of service entry in the QoS Name field.

- Enter a description for the quality of service entry in the Description field.

- Specify the throughput quality of service:

- Select the Throughput check box.

- Enter the maximum permitted total throughput in the Total field.

- Enter the maximum permitted throughput for read operations in the Read field.

- Enter the maximum permitted throughput for write operations in the Write field.

- Specify the input and output quality of service:

- Select the IOps check box.

- Enter the maximum permitted number of input and output operations per second in the Total field.

- Enter the maximum permitted number of input operations per second in the Read field.

- Enter the maximum permitted number of output operations per second in the Write field.

- Click OK.

2.1.2. Removing a Storage Quality of Service Entry

Procedure 2.2. Removing a Storage Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click QoS in the details pane.

- Click Storage.

- Select the storage quality of service entry to remove.

- Click Remove.

- Click OK when prompted.

[unlimited].

2.2. Virtual Machine Network Quality of Service

Important

2.2.1. Creating a Virtual Machine Network Quality of Service Entry

Procedure 2.3. Creating a Virtual Machine Network Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click the QoS tab in the details pane.

- Click VM Network.

- Click New.

- Enter a name for the virtual machine network quality of service entry in the Name field.

- Enter the limits for the Inbound and Outbound network traffic.

- Click OK.

2.2.2. Settings in the New Virtual Machine Network QoS and Edit Virtual Machine Network QoS Windows Explained

| Field Name | Description |
|---|---|
| Data Center | The data center to which the virtual machine network QoS policy is to be added. This field is configured automatically according to the selected data center. |
| Name | A name to represent the virtual machine network QoS policy within the Manager. |
| Inbound | The settings to be applied to inbound traffic. Select or clear the Inbound check box to enable or disable these settings. |
| Outbound | The settings to be applied to outbound traffic. Select or clear the Outbound check box to enable or disable these settings. |

2.2.3. Removing a Virtual Machine Network Quality of Service Entry

Procedure 2.4. Removing a Virtual Machine Network Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click the QoS tab in the details pane.

- Click VM Network.

- Select the virtual machine network quality of service entry to remove.

- Click Remove.

- Click OK when prompted.

2.3. Host Network Quality of Service

2.3.1. Creating a Host Network Quality of Service Entry

Procedure 2.5. Creating a Host Network Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click QoS in the details pane.

- Click Host Network.

- Click New.

- Enter a name for the quality of service entry in the QoS Name field.

- Enter a description for the quality of service entry in the Description field.

- Enter the desired values for Weighted Share, Rate Limit [Mbps], and Committed Rate [Mbps].

- Click OK.
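This guide does not spell out how Weighted Share is evaluated. The sketch below assumes a common interpretation: each network receives a fraction of the link equal to its share divided by the sum of all shares on that link, optionally capped by its Rate Limit. The function name, network names, and link speed are hypothetical.

```python
def outbound_allocation(link_mbps, networks):
    """networks maps a name to (weighted_share, rate_limit_mbps or None).

    Assumption: bandwidth is split in proportion to the weighted shares,
    then capped by each network's rate limit when one is set.
    """
    total_share = sum(share for share, _ in networks.values())
    result = {}
    for name, (share, rate_limit) in networks.items():
        mbps = link_mbps * share / total_share
        if rate_limit is not None:
            mbps = min(mbps, rate_limit)
        result[name] = mbps
    return result


allocation = outbound_allocation(
    1000,
    {"management": (1, None), "vm_traffic": (3, 500)},
)
print(allocation)
```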

2.3.2. Settings in the New Host Network Quality of Service and Edit Host Network Quality of Service Windows Explained

| Field Name | Description |
|---|---|
| Data Center | The data center to which the host network QoS policy is to be added. This field is configured automatically according to the selected data center. |
| QoS Name | A name to represent the host network QoS policy within the Manager. |
| Description | A description of the host network QoS policy. |
| Outbound | The settings to be applied to outbound traffic. |

2.3.3. Removing a Host Network Quality of Service Entry

Procedure 2.6. Removing a Host Network Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click the QoS tab in the details pane.

- Click Host Network.

- Select the network quality of service entry to remove.

- Click Remove.

- Click OK when prompted.

2.4. CPU Quality of Service

2.4.1. Creating a CPU Quality of Service Entry

Procedure 2.7. Creating a CPU Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click QoS in the details pane.

- Click CPU.

- Click New.

- Enter a name for the quality of service entry in the QoS Name field.

- Enter a description for the quality of service entry in the Description field.

- Enter the maximum processing capability the quality of service entry permits in the Limit field, as a percentage. Do not include the % symbol.

- Click OK.
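The Limit field expects a bare number. A script that prepares such values could validate them as in the sketch below; the function name and the accepted range of 1 to 100 are assumptions for illustration, not documented constraints.

```python
def parse_cpu_limit(text):
    """Parse a CPU QoS limit entered as a percentage without the % symbol."""
    value = text.strip()
    if not (value.isascii() and value.isdigit()):
        raise ValueError("enter digits only, without the % symbol")
    limit = int(value)
    if not 1 <= limit <= 100:  # assumed range for this sketch
        raise ValueError("limit must be between 1 and 100")
    return limit


print(parse_cpu_limit("50"))
```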

2.4.2. Removing a CPU Quality of Service Entry

Procedure 2.8. Removing a CPU Quality of Service Entry

- Click the Data Centers resource tab and select a data center.

- Click QoS in the details pane.

- Click CPU.

- Select the CPU quality of service entry to remove.

- Click Remove.

- Click OK when prompted.

[unlimited].

Chapter 3. Data Centers

3.1. Introduction to Data Centers

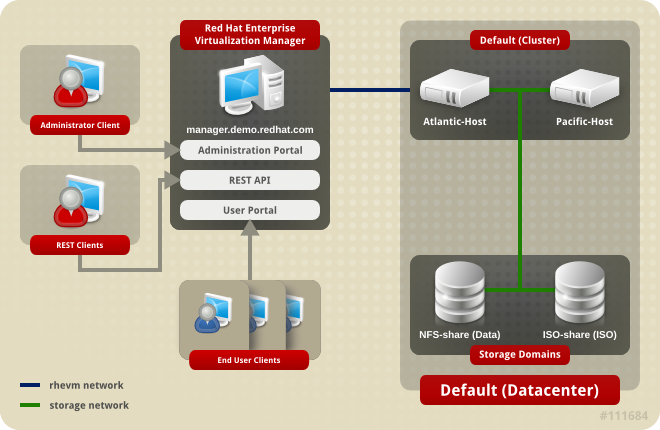

Figure 3.1. Data Centers

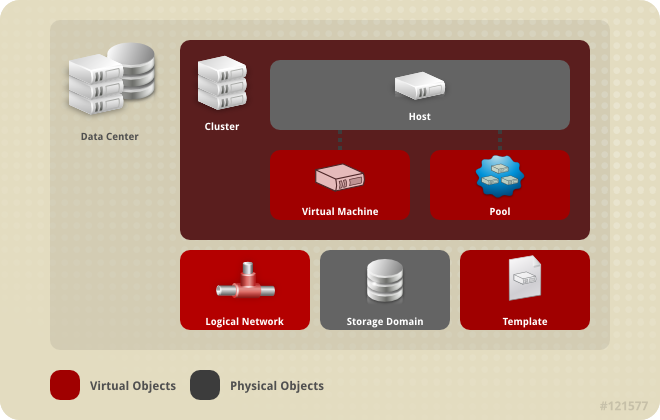

Figure 3.2. Data Center Objects

3.2. The Storage Pool Manager

3.3. SPM Priority

3.4. Using the Events Tab to Identify Problem Objects in Data Centers

3.5. Data Center Tasks

3.5.1. Creating a New Data Center

Note

Procedure 3.1. Creating a New Data Center

- Select the Data Centers resource tab to list all data centers in the results list.

- Click New to open the New Data Center window.

- Enter the Name and Description of the data center.

- Select the storage Type, Compatibility Version, and Quota Mode of the data center from the drop-down menus.

- Optionally, change the MAC address pool for the data center. The default MAC address pool is preselected. For more information on creating MAC address pools see Section 1.1.5, “MAC Address Pools”.

- Click the MAC Address Pool tab.

- Select the required MAC address pool from the MAC Address Pool drop-down list.

- Click OK to create the data center and open the New Data Center - Guide Me window.

- The Guide Me window lists the entities that need to be configured for the data center. Configure these entities or postpone configuration by clicking the Configure Later button; configuration can be resumed by selecting the data center and clicking the Guide Me button.

3.5.2. Explanation of Settings in the New Data Center and Edit Data Center Windows

| Field | Description/Action |
|---|---|
| Name | The name of the data center. This text field has a 40-character limit and must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores. |
| Description | The description of the data center. This field is recommended but not mandatory. |
| Type | The storage type. Choose one of the following: The type of data domain dictates the type of the data center and cannot be changed after creation without significant disruption. Multiple types of storage domains (iSCSI, NFS, FC, POSIX, and Gluster) can be added to the same data center, though local and shared domains cannot be mixed. |
| Compatibility Version | The version of Red Hat Enterprise Virtualization. Choose one of the following: |
| Quota Mode | Quota is a resource limitation tool provided with Red Hat Enterprise Virtualization. Choose one of: |
| MAC Address Pool | The MAC address pool of the data center. If no other MAC address pool is assigned, the default MAC address pool is used. For more information on MAC address pools see Section 1.1.5, “MAC Address Pools”. |
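The Name rule in the table above (at most 40 characters, unique, and made up of letters, numbers, hyphens, and underscores) can be checked before a data center is created. The following is a minimal sketch; the function name and the `existing_names` parameter are hypothetical.

```python
import re

# Letters, digits, hyphens, and underscores; 1 to 40 characters.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,40}")


def is_valid_data_center_name(name, existing_names=()):
    """Return True if the name satisfies the documented Name rule."""
    return bool(NAME_PATTERN.fullmatch(name)) and name not in existing_names


print(is_valid_data_center_name("Accounts_DC-01"))
print(is_valid_data_center_name("Accounts DC"))          # space not allowed
print(is_valid_data_center_name("Default", ["Default"]))  # not unique
```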

3.5.3. Re-Initializing a Data Center: Recovery Procedure

Procedure 3.2. Re-Initializing a Data Center

- Click the Data Centers resource tab and select the data center to re-initialize.

- Ensure that any storage domains attached to the data center are in maintenance mode.

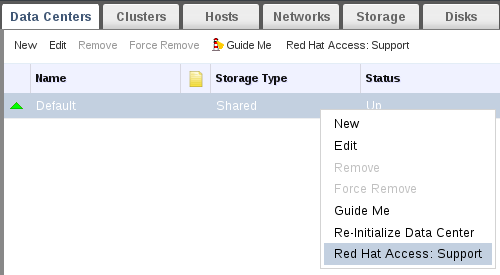

- Right-click the data center and select Re-Initialize Data Center from the drop-down menu to open the Data Center Re-Initialize window.

- The Data Center Re-Initialize window lists all available (detached; in maintenance mode) storage domains. Click the radio button for the storage domain you are adding to the data center.

- Select the Approve operation check box.

- Click OK to close the window and re-initialize the data center.

3.5.4. Removing a Data Center

Procedure 3.3. Removing a Data Center

- Ensure the storage domains attached to the data center are in maintenance mode.

- Click the Data Centers resource tab and select the data center to remove.

- Click Remove to open the Remove Data Center(s) confirmation window.

- Click OK.

3.5.5. Force Removing a Data Center

A data center becomes Non Responsive if the attached storage domain is corrupt or if the host becomes Non Responsive. You cannot Remove the data center under either circumstance.

Procedure 3.4. Force Removing a Data Center

- Click the Data Centers resource tab and select the data center to remove.

- Click Force Remove to open the Force Remove Data Center confirmation window.

- Select the Approve operation check box.

- Click OK.

3.5.6. Changing the Data Center Compatibility Version

Note

Procedure 3.5. Changing the Data Center Compatibility Version

- From the Administration Portal, click the Data Centers tab.

- Select the data center to change from the list displayed.

- Click Edit.

- Change the Compatibility Version to the desired value.

- Click OK to open the Change Data Center Compatibility Version confirmation window.

- Click OK to confirm.

Warning

3.6. Data Centers and Storage Domains

3.6.1. Attaching an Existing Data Domain to a Data Center

Procedure 3.6. Attaching an Existing Data Domain to a Data Center

- Click the Data Centers resource tab and select the appropriate data center.

- Select the Storage tab in the details pane to list the storage domains already attached to the data center.

- Click Attach Data to open the Attach Storage window.

- Select the check box for the data domain to attach to the data center. You can select multiple check boxes to attach multiple data domains.

- Click OK.

3.6.2. Attaching an Existing ISO Domain to a Data Center

Procedure 3.7. Attaching an Existing ISO Domain to a Data Center

- Click the Data Centers resource tab and select the appropriate data center.

- Select the Storage tab in the details pane to list the storage domains already attached to the data center.

- Click Attach ISO to open the Attach ISO Library window.

- Click the radio button for the appropriate ISO domain.

- Click OK.

3.6.3. Attaching an Existing Export Domain to a Data Center

Procedure 3.8. Attaching an Existing Export Domain to a Data Center

- Click the Data Centers resource tab and select the appropriate data center.

- Select the Storage tab in the details pane to list the storage domains already attached to the data center.

- Click Attach Export to open the Attach Export Domain window.

- Click the radio button for the appropriate export domain.

- Click OK.

3.6.4. Detaching a Storage Domain from a Data Center

Note

Procedure 3.9. Detaching a Storage Domain from a Data Center

- Click the Data Centers resource tab and select the appropriate data center.

- Select the Storage tab in the details pane to list the storage domains attached to the data center.

- Select the storage domain to detach. If the storage domain is Active, click Maintenance to open the Maintenance Storage Domain(s) confirmation window.

- Click OK to initiate maintenance mode.

- Click Detach to open the Detach Storage confirmation window.

- Click OK.
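The order of operations in this procedure (an Active domain must enter maintenance mode before it can be detached) can be expressed as a small state model. The enum and function below are hypothetical names used only to illustrate the sequence.

```python
from enum import Enum


class DomainStatus(Enum):
    ACTIVE = "Active"
    MAINTENANCE = "Maintenance"
    DETACHED = "Detached"


def detach_steps(status):
    """Return the actions needed to detach a domain in the given status."""
    steps = []
    if status is DomainStatus.ACTIVE:
        # An active domain has to be placed in maintenance mode first.
        steps.append("move to maintenance")
        status = DomainStatus.MAINTENANCE
    if status is DomainStatus.MAINTENANCE:
        steps.append("detach")
    return steps


print(detach_steps(DomainStatus.ACTIVE))
print(detach_steps(DomainStatus.MAINTENANCE))
```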

3.7. Data Centers and Permissions

3.7.1. Managing System Permissions for a Data Center

- Create and remove clusters associated with the data center.

- Add and remove hosts, virtual machines, and pools associated with the data center.

- Edit user permissions for virtual machines associated with the data center.

Note

3.7.2. Data Center Administrator Roles Explained

The table below describes the administrator roles and privileges applicable to data center administration.

| Role | Privileges | Notes |
|---|---|---|
| DataCenterAdmin | Data Center Administrator | Can use, create, delete, manage all physical and virtual resources within a specific data center except for storage, including clusters, hosts, templates and virtual machines. |
| NetworkAdmin | Network Administrator | Can configure and manage the network of a particular data center. A network administrator of a data center inherits network permissions for virtual machines within the data center as well. |

3.7.3. Assigning an Administrator or User Role to a Resource

Procedure 3.10. Assigning a Role to a Resource

- Use the resource tabs, tree mode, or the search function to find and select the resource in the results list.

- Click the tab in the details pane to list the assigned users, the user's role, and the inherited permissions for the selected resource.

- Click .

- Enter the name or user name of an existing user into the Search text box and click . Select a user from the resulting list of possible matches.

- Select a role from the Role to Assign: drop-down list.

- Click .

3.7.4. Removing an Administrator or User Role from a Resource

Procedure 3.11. Removing a Role from a Resource

- Use the resource tabs, tree mode, or the search function to find and select the resource in the results list.

- Click the tab in the details pane to list the assigned users, the user's role, and the inherited permissions for the selected resource.

- Select the user to remove from the resource.

- Click . The Remove Permission window opens to confirm permissions removal.

- Click .

Chapter 4. Clusters

4.1. Introduction to Clusters

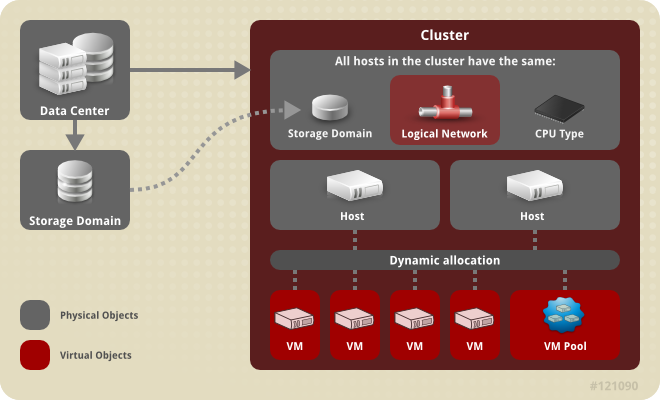

Figure 4.1. Cluster

4.2. Cluster Tasks

4.2.1. Creating a New Cluster

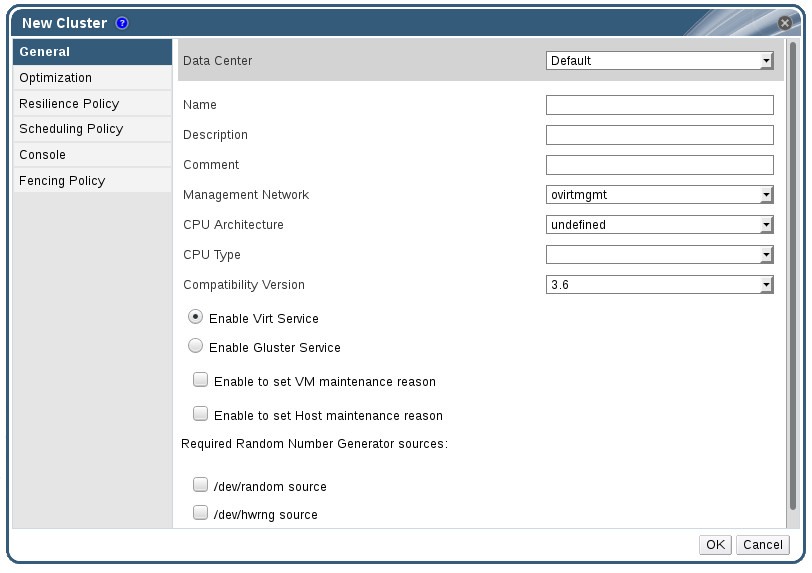

Procedure 4.1. Creating a New Cluster

- Select the Clusters resource tab.

- Click .

- Select the Data Center the cluster will belong to from the drop-down list.

- Enter the Name and Description of the cluster.

- Select a network from the Management Network drop-down list to assign the management network role.

- Select the CPU Architecture and CPU Type from the drop-down lists. The CPU processor family must match the minimum CPU processor type of the hosts you intend to attach to the cluster; otherwise, the hosts will be non-operational.

Note

For both Intel and AMD CPU types, the listed CPU models are in logical order from the oldest to the newest. If your cluster includes hosts with different CPU models, select the oldest CPU model. For more information on each CPU model, see https://access.redhat.com/solutions/634853.

- Select the Compatibility Version of the cluster from the drop-down list.

- Select either the Enable Virt Service or Enable Gluster Service radio button to define whether the cluster will be populated with virtual machine hosts or with Gluster-enabled nodes. Note that you cannot add Red Hat Enterprise Virtualization Hypervisor hosts to a Gluster-enabled cluster.

- Optionally select the Enable to set VM maintenance reason check box to enable an optional reason field when a virtual machine is shut down from the Manager, allowing the administrator to provide an explanation for the maintenance.

- Optionally select the Enable to set Host maintenance reason check box to enable an optional reason field when a host is placed into maintenance mode from the Manager, allowing the administrator to provide an explanation for the maintenance.

- Select either the /dev/random source (Linux-provided device) or /dev/hwrng source (external hardware device) check box to specify the random number generator device that all hosts in the cluster will use.

- Click the Optimization tab to select the memory page sharing threshold for the cluster, and optionally enable CPU thread handling and memory ballooning on the hosts in the cluster.

- Click the Resilience Policy tab to select the virtual machine migration policy.

- Click the Scheduling Policy tab to optionally configure a scheduling policy, configure scheduler optimization settings, enable trusted service for hosts in the cluster, enable HA Reservation, and add a custom serial number policy.

- Click the Console tab to optionally override the global SPICE proxy, if any, and specify the address of a SPICE proxy for hosts in the cluster.

- Click the Fencing policy tab to enable or disable fencing in the cluster, and select fencing options.

- Click to create the cluster and open the New Cluster - Guide Me window.

- The Guide Me window lists the entities that need to be configured for the cluster. Configure these entities or postpone configuration by clicking the button; configuration can be resumed by selecting the cluster and clicking the button.
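The note on CPU Type in the procedure above says to select the oldest CPU model when the hosts in a cluster differ. The following Python sketch illustrates that selection rule only. The model names and their ordering are placeholder assumptions, not the entries shown in the CPU Type drop-down list.

```python
def oldest_cpu_model(ordered_models, host_models):
    """Return the oldest CPU model found among the hosts.

    ordered_models: model names listed from oldest to newest, mirroring the
    order of the CPU Type drop-down list (placeholder names in this sketch).
    host_models: the CPU model of each host intended for the cluster.
    """
    if not host_models:
        raise ValueError("no hosts given")
    rank = {name: index for index, name in enumerate(ordered_models)}
    unknown = [model for model in host_models if model not in rank]
    if unknown:
        raise ValueError(f"unknown CPU models: {unknown}")
    # The lowest rank is the oldest model, which every newer host can match.
    return min(host_models, key=rank.__getitem__)


# Hypothetical ordering, oldest first.
MODELS = ["model-a", "model-b", "model-c"]
print(oldest_cpu_model(MODELS, ["model-c", "model-b", "model-c"]))  # model-b
```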

4.2.2. Explanation of Settings and Controls in the New Cluster and Edit Cluster Windows

4.2.2.1. General Cluster Settings Explained

Figure 4.2. New Cluster window

| Field | Description/Action |
|---|---|
| Data Center | The data center that will contain the cluster. The data center must be created before adding a cluster. |
| Name | The name of the cluster. This text field has a 40-character limit and must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores. |
| Description / Comment | The description of the cluster or additional notes. These fields are recommended but not mandatory. |
| Management Network | The logical network which will be assigned the management network role. The default is ovirtmgmt. On existing clusters, the management network can only be changed via the button in the Logical Networks tab in the details pane. |
| CPU Architecture | The CPU architecture of the cluster. Different CPU types are available depending on which CPU architecture is selected. |
| CPU Type | The CPU type of the cluster. Choose one of: |
| Compatibility Version | The version of Red Hat Enterprise Virtualization. Choose one of: |
| Enable Virt Service | If this radio button is selected, hosts in this cluster will be used to run virtual machines. |
| Enable Gluster Service | If this radio button is selected, hosts in this cluster will be used as Red Hat Gluster Storage Server nodes, and not for running virtual machines. You cannot add a Red Hat Enterprise Virtualization Hypervisor host to a cluster with this option enabled. |
| Import existing gluster configuration | This check box is only available if the Enable Gluster Service radio button is selected. This option allows you to import an existing Gluster-enabled cluster and all its attached hosts to Red Hat Enterprise Virtualization Manager. The following options are required for each host in the cluster that is being imported: |
| Enable to set VM maintenance reason | If this check box is selected, an optional reason field will appear when a virtual machine in the cluster is shut down from the Manager. This allows you to provide an explanation for the maintenance, which will appear in the logs and when the virtual machine is powered on again. |
| Enable to set Host maintenance reason | If this check box is selected, an optional reason field will appear when a host in the cluster is moved into maintenance mode from the Manager. This allows you to provide an explanation for the maintenance, which will appear in the logs and when the host is activated again. |
| Required Random Number Generator sources: | If one of the following check boxes is selected, all hosts in the cluster must have that device available. This enables passthrough of entropy from the random number generator device to virtual machines. |
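The naming rule for the Name field can be checked before a cluster is created. The following is a minimal Python sketch of the rule as the table states it (40-character limit, letters, numbers, hyphens, and underscores, unique name). The function name is hypothetical, and treating uniqueness as case-sensitive is an assumption.

```python
import re

# Letters, digits, hyphens, and underscores; 1 to 40 characters.
CLUSTER_NAME = re.compile(r"[A-Za-z0-9_-]{1,40}")


def is_valid_cluster_name(name, existing_names=()):
    """Check the character set, length, and uniqueness rules for a name.

    existing_names: names already in use (compared case-sensitively here,
    which is an assumption of this sketch).
    """
    return bool(CLUSTER_NAME.fullmatch(name)) and name not in existing_names


print(is_valid_cluster_name("prod_cluster-01"))          # True
print(is_valid_cluster_name("name with spaces"))         # False
print(is_valid_cluster_name("Default", {"Default"}))     # False
```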

4.2.2.2. Optimization Settings Explained

| Field | Description/Action |
|---|---|
| Memory Optimization | |
| CPU Threads | Selecting the Count Threads As Cores check box allows hosts to run virtual machines with a total number of processor cores greater than the number of cores in the host. The exposed host threads would be treated as cores which can be utilized by virtual machines. For example, a 24-core system with 2 threads per core (48 threads total) can run virtual machines with up to 48 cores each, and the algorithms to calculate host CPU load would compare load against twice as many potential utilized cores. |
| Memory Balloon | Selecting the Enable Memory Balloon Optimization check box enables memory overcommitment on virtual machines running on the hosts in this cluster. When this option is set, the Memory Overcommit Manager (MoM) will start ballooning where and when possible, with a limitation of the guaranteed memory size of every virtual machine. To have a balloon running, the virtual machine needs to have a balloon device with relevant drivers. Each virtual machine in cluster level 3.2 and higher includes a balloon device, unless specifically removed. Each host in this cluster receives a balloon policy update when its status changes to Up. If necessary, you can manually update the balloon policy on a host without having to change the status. See Section 4.2.5, “Updating the MoM Policy on Hosts in a Cluster”. It is important to understand that in some scenarios ballooning may collide with KSM. In such cases MoM will try to adjust the balloon size to minimize collisions. Additionally, in some scenarios ballooning may cause sub-optimal performance for a virtual machine. Administrators are advised to use ballooning optimization with caution. |
| KSM control | Selecting the Enable KSM check box enables MoM to run Kernel Same-page Merging (KSM) when necessary and when it can yield a memory saving benefit that outweighs its CPU cost. |
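The arithmetic behind the Count Threads As Cores example can be written out as a short Python sketch. The function name is hypothetical; the calculation only restates the 24-core example from the table.

```python
def usable_cores(physical_cores, threads_per_core, count_threads_as_cores):
    """Return the number of cores a host exposes to virtual machines.

    With Count Threads As Cores selected, every hardware thread is treated
    as a core; otherwise only physical cores are counted.
    """
    if count_threads_as_cores:
        return physical_cores * threads_per_core
    return physical_cores


# The example from the table: 24 cores with 2 threads per core.
print(usable_cores(24, 2, count_threads_as_cores=True))   # 48
print(usable_cores(24, 2, count_threads_as_cores=False))  # 24
```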

4.2.2.3. Resilience Policy Settings Explained

| State | Description |
|---|---|
| Non Operational | Non-operational hosts can be communicated with by the Manager, but have an incorrect configuration, for example a missing logical network. If a host becomes non-operational, the migration of virtual machines depends on the cluster resilience policy. |
| Non Responsive | Non-responsive hosts cannot be communicated with by the Manager. If a host becomes non-responsive, all virtual machines with high availability are restarted on a different host in the cluster. |

| Field | Description/Action |
|---|---|
| Migrate Virtual Machines | Migrates all virtual machines in order of their defined priority. |
| Migrate only Highly Available Virtual Machines | Migrates only highly available virtual machines to prevent overloading other hosts. |
| Do Not Migrate Virtual Machines | Prevents virtual machines from being migrated. |
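The three resilience policy options can be pictured as a filter over the virtual machines on a non-operational host. The Python sketch below is an illustration, not the Manager's implementation. The VM class is hypothetical, and two points are assumptions: a larger priority number migrates first, and the priority ordering also applies to the highly-available-only option.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    priority: int           # assumption: larger numbers migrate first
    highly_available: bool


def vms_to_migrate(vms, policy):
    """Return the virtual machines to migrate, in migration order."""
    if policy == "Do Not Migrate Virtual Machines":
        return []
    if policy == "Migrate only Highly Available Virtual Machines":
        candidates = [vm for vm in vms if vm.highly_available]
    elif policy == "Migrate Virtual Machines":
        candidates = list(vms)
    else:
        raise ValueError(f"unknown resilience policy: {policy!r}")
    return sorted(candidates, key=lambda vm: vm.priority, reverse=True)


vms = [VM("web", 1, False), VM("db", 3, True), VM("cache", 2, True)]
print([vm.name for vm in vms_to_migrate(vms, "Migrate Virtual Machines")])
```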

4.2.2.4. Scheduling Policy Settings Explained

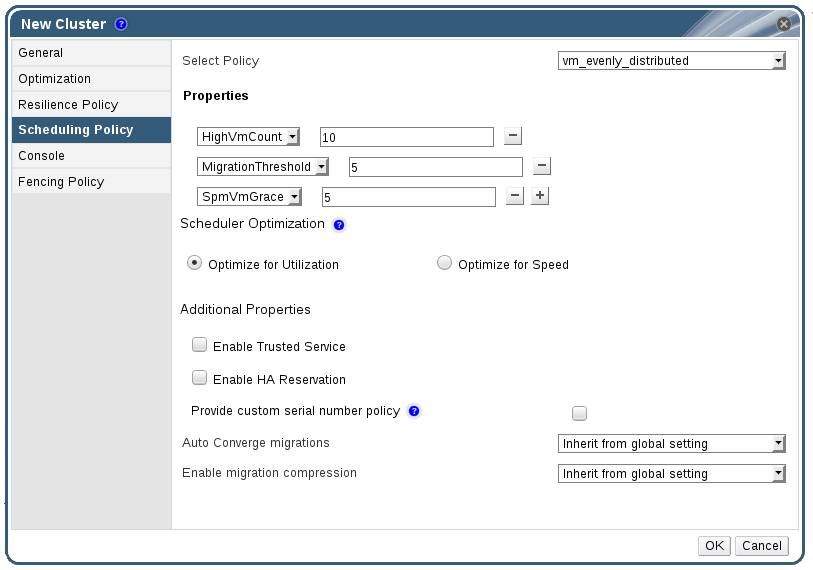

Figure 4.3. Scheduling Policy Settings: vm_evenly_distributed

| Field | Description/Action |
|---|---|
| Select Policy | Select a policy from the drop-down list. |
| Properties | The following properties appear depending on the selected policy, and can be edited if necessary: |
| Scheduler Optimization | Optimize scheduling for host weighing/ordering. |
| Enable Trusted Service | Enable integration with an OpenAttestation server. Before this can be enabled, use the engine-config tool to enter the OpenAttestation server's details. For more information, see Section 9.4, “Trusted Compute Pools”. |
| Enable HA Reservation | Enable the Manager to monitor cluster capacity for highly available virtual machines. The Manager ensures that appropriate capacity exists within a cluster for virtual machines designated as highly available to migrate in the event that their existing host fails unexpectedly. |
| Provide custom serial number policy | This check box allows you to specify a serial number policy for the virtual machines in the cluster. Select one of the following options: |
| Auto Converge migrations | This option allows you to set whether auto-convergence is used during live migration of virtual machines in the cluster. Large virtual machines with high workloads can dirty memory more quickly than the transfer rate achieved during live migration, and prevent the migration from converging. Auto-convergence capabilities in QEMU allow you to force convergence of virtual machine migrations. QEMU automatically detects a lack of convergence and triggers a throttle-down of the vCPUs on the virtual machines. Auto-convergence is disabled globally by default. |
| Enable migration compression | This option allows you to set whether migration compression is used during live migration of virtual machines in the cluster. This feature uses Xor Binary Zero Run-Length-Encoding to reduce virtual machine downtime and total live migration time for virtual machines running memory write-intensive workloads or for any application with a sparse memory update pattern. Migration compression is disabled globally by default. |

Messages such as mom.Controllers.Balloon - INFO Ballooning guest:half1 from 1096400 to 1991580 are logged to /var/log/vdsm/mom.log, the Memory Overcommit Manager log file.
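Ballooning activity can be extracted from the log with a small script. The Python sketch below matches only the message shape of the sample quoted above. Any text that precedes the message on a real log line (for example a timestamp) is ignored, and the function name is hypothetical.

```python
import re

# Matches the message shape of the sample ballooning log entry.
BALLOON_LINE = re.compile(
    r"mom\.Controllers\.Balloon - INFO Ballooning guest:(?P<guest>\S+) "
    r"from (?P<old>\d+) to (?P<new>\d+)"
)


def parse_balloon_event(line):
    """Return (guest, old_size, new_size), or None if the line does not match."""
    match = BALLOON_LINE.search(line)
    if match is None:
        return None
    return match["guest"], int(match["old"]), int(match["new"])


sample = "mom.Controllers.Balloon - INFO Ballooning guest:half1 from 1096400 to 1991580"
print(parse_balloon_event(sample))  # ('half1', 1096400, 1991580)
```

To scan the whole file, the same function can be applied to each line read from /var/log/vdsm/mom.log on the host.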

4.2.2.5. Cluster Console Settings Explained

| Field | Description/Action |
|---|---|
| Define SPICE Proxy for Cluster | Select this check box to enable overriding the SPICE proxy defined in global configuration. This feature is useful in a case where the user (who is, for example, connecting via the User Portal) is outside of the network where the hypervisors reside. |
| Overridden SPICE proxy address | The proxy by which the SPICE client will connect to virtual machines. The address must be in the following format: protocol://[host]:[port] |
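A proxy address can be checked against the protocol://[host]:[port] format before it is entered. The Python sketch below uses the standard library URL parser and verifies only that all three parts are present. Which protocols the Manager accepts is not stated here, so any scheme passes in this sketch, and the host name shown is a placeholder.

```python
from urllib.parse import urlsplit


def is_valid_proxy_address(address):
    """Return True if the address has a protocol, a host, and a numeric port."""
    try:
        parts = urlsplit(address)
        port = parts.port  # raises ValueError for a non-numeric or out-of-range port
    except ValueError:
        return False
    return bool(parts.scheme) and bool(parts.hostname) and port is not None


print(is_valid_proxy_address("http://proxy.example.com:3128"))  # True
print(is_valid_proxy_address("http://proxy.example.com"))       # False
```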

4.2.2.6. Fencing Policy Settings Explained

| Field | Description/Action |
|---|---|
| Enable fencing | Enables fencing on the cluster. Fencing is enabled by default, but can be disabled if required; for example, if temporary network issues are occurring or expected, administrators can disable fencing until diagnostics or maintenance activities are completed. Note that if fencing is disabled, highly available virtual machines running on non-responsive hosts will not be restarted elsewhere. |
| Skip fencing if host has live lease on storage | If this check box is selected, any hosts in the cluster that are Non Responsive and still connected to storage will not be fenced. |
| Skip fencing on cluster connectivity issues | If this check box is selected, fencing will be temporarily disabled if the percentage of hosts in the cluster that are experiencing connectivity issues is greater than or equal to the defined Threshold. The Threshold value is selected from the drop-down list; available values are 25, 50, 75, and 100. |
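The Threshold comparison for Skip fencing on cluster connectivity issues can be sketched in Python as follows. This is an illustration of the rule as stated in the table, not the Manager's code, and the function name is hypothetical.

```python
ALLOWED_THRESHOLDS = (25, 50, 75, 100)


def skip_fencing(hosts_with_issues, total_hosts, threshold):
    """Return True if fencing is temporarily disabled for the cluster.

    Fencing is skipped when the percentage of hosts with connectivity
    issues is greater than or equal to the threshold.
    """
    if threshold not in ALLOWED_THRESHOLDS:
        raise ValueError(f"threshold must be one of {ALLOWED_THRESHOLDS}")
    if total_hosts <= 0:
        raise ValueError("the cluster must contain at least one host")
    # Integer comparison avoids floating-point rounding at the boundary.
    return 100 * hosts_with_issues >= threshold * total_hosts


print(skip_fencing(2, 4, 50))  # True: 50% of hosts meet the 50% threshold
print(skip_fencing(1, 4, 50))  # False: 25% is below the threshold
```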

4.2.3. Editing a Resource

Edit the properties of a resource.

Procedure 4.2. Editing a Resource

- Use the resource tabs, tree mode, or the search function to find and select the resource in the results list.

- Click to open the Edit window.

- Change the necessary properties and click .

The new properties are saved to the resource. The Edit window will not close if a property field is invalid.

4.2.4. Setting Load and Power Management Policies for Hosts in a Cluster

Procedure 4.3. Setting Load and Power Management Policies for Hosts

- Use the resource tabs, tree mode, or the search function to find and select the cluster in the results list.

- Click to open the Edit Cluster window.

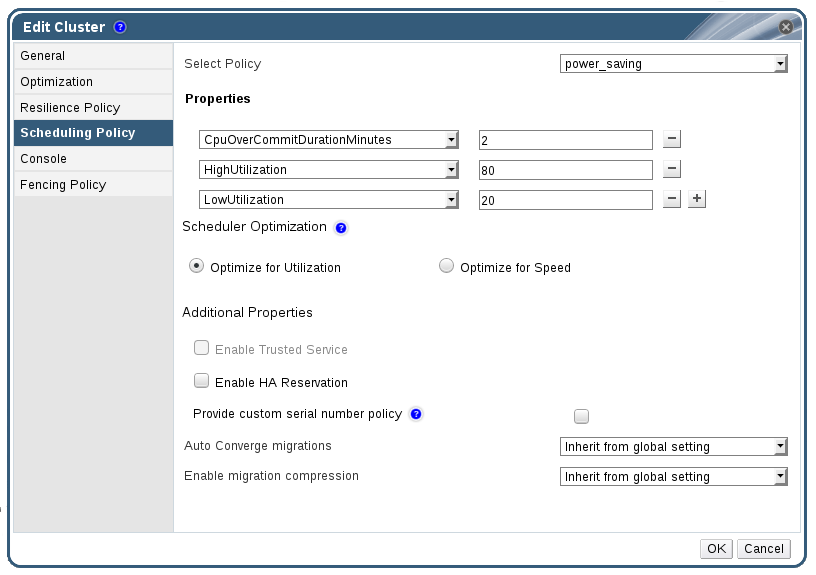

Figure 4.4. Edit Scheduling Policy

- Select one of the following policies:

  - none

  - vm_evenly_distributed

    - Set the maximum number of virtual machines that can run on each host in the HighVmCount field.

    - Define the maximum acceptable difference between the number of virtual machines on the most highly-utilized host and the number of virtual machines on the least-utilized host in the MigrationThreshold field.

    - Define the number of slots for virtual machines to be reserved on SPM hosts in the SpmVmGrace field.

  - evenly_distributed

    - Set the time (in minutes) that a host can run a CPU load outside of the defined utilization values before the scheduling policy takes action in the CpuOverCommitDurationMinutes field.

    - Enter the CPU utilization percentage at which virtual machines start migrating to other hosts in the HighUtilization field.

    - Enter the minimum required free memory in MB at which virtual machines start migrating to other hosts in the MinFreeMemoryForUnderUtilized field.

    - Enter the maximum required free memory in MB at which virtual machines start migrating to other hosts in the MaxFreeMemoryForOverUtilized field.

  - power_saving

    - Set the time (in minutes) that a host can run a CPU load outside of the defined utilization values before the scheduling policy takes action in the CpuOverCommitDurationMinutes field.

    - Enter the CPU utilization percentage below which the host will be considered under-utilized in the LowUtilization field.

    - Enter the CPU utilization percentage at which virtual machines start migrating to other hosts in the HighUtilization field.

    - Enter the minimum required free memory in MB at which virtual machines start migrating to other hosts in the MinFreeMemoryForUnderUtilized field.

    - Enter the maximum required free memory in MB at which virtual machines start migrating to other hosts in the MaxFreeMemoryForOverUtilized field.

- Choose one of the following as the Scheduler Optimization for the cluster:

  - Select Optimize for Utilization to include weight modules in scheduling to allow best selection.

  - Select Optimize for Speed to skip host weighting in cases where there are more than ten pending requests.

- If you are using an OpenAttestation server to verify your hosts, and have set up the server's details using the engine-config tool, select the Enable Trusted Service check box.

- Optionally select the Enable HA Reservation check box to enable the Manager to monitor cluster capacity for highly available virtual machines.

- Optionally select the Provide custom serial number policy check box to specify a serial number policy for the virtual machines in the cluster, and then select one of the following options:

  - Select Host ID to set the host's UUID as the virtual machine's serial number.

  - Select Vm ID to set the virtual machine's UUID as its serial number.

  - Select Custom serial number, and then specify a custom serial number in the text field.

- Click .
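The way the LowUtilization, HighUtilization, and CpuOverCommitDurationMinutes properties interact under the power_saving policy can be pictured with a short Python sketch. It is a simplified illustration, not the scheduler's implementation. It assumes one CPU sample per minute, requires the load to stay outside the limits for the whole duration, and treats the HighUtilization limit as inclusive. Memory properties are left out.

```python
def classify_host(cpu_samples, low_utilization, high_utilization, duration_minutes):
    """Classify a host from its recent CPU utilization percentages.

    cpu_samples: one utilization percentage per minute, most recent last
    (the sampling interval is an assumption of this sketch).
    """
    if duration_minutes < 1:
        raise ValueError("duration_minutes must be at least 1")
    if len(cpu_samples) < duration_minutes:
        return "normal"  # not enough history to act on
    window = cpu_samples[-duration_minutes:]
    if all(sample >= high_utilization for sample in window):
        return "over-utilized"
    if all(sample < low_utilization for sample in window):
        return "under-utilized"
    return "normal"


print(classify_host([85, 90, 95], 20, 80, 3))  # over-utilized
print(classify_host([10, 5, 15], 20, 80, 3))   # under-utilized
print(classify_host([85, 10, 90], 20, 80, 3))  # normal
```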

4.2.5. Updating the MoM Policy on Hosts in a Cluster

Procedure 4.4. Synchronizing MoM Policy on a Host

- Click the Clusters tab and select the cluster to which the host belongs.

- Click the Hosts tab in the details pane and select the host that requires an updated MoM policy.

- Click .

4.2.6. CPU Profiles

4.2.6.1. Creating a CPU Profile

Procedure 4.5. Creating a CPU Profile

- Click the Clusters resource tab and select a cluster.

- Click the CPU Profiles sub tab in the details pane.

- Click .

- Enter a name for the CPU profile in the Name field.

- Enter a description for the CPU profile in the Description field.

- Select the quality of service to apply to the CPU profile from the QoS list.

- Click .

4.2.6.2. Removing a CPU Profile

Procedure 4.6. Removing a CPU Profile

- Click the Clusters resource tab and select a cluster.

- Click the CPU Profiles sub tab in the details pane.

- Select the CPU profile to remove.

- Click .

- Click .

default CPU profile.

4.2.7. Importing an Existing Red Hat Gluster Storage Cluster

gluster peer status command is executed on that host through SSH, then displays a list of hosts that are a part of the cluster. You must manually verify the fingerprint of each host and provide passwords for them. You will not be able to import the cluster if one of the hosts in the cluster is down or unreachable. As the newly imported hosts do not have VDSM installed, the bootstrap script installs all the necessary VDSM packages on the hosts after they have been imported, and reboots them.

Important

Procedure 4.7. Importing an Existing Red Hat Gluster Storage Cluster to Red Hat Enterprise Virtualization Manager

- Select the Clusters resource tab to list all clusters in the results list.

- Click to open the New Cluster window.

- Select the Data Center the cluster will belong to from the drop-down menu.

- Enter the Name and Description of the cluster.

- Select the Enable Gluster Service radio button and the Import existing gluster configuration check box. The Import existing gluster configuration field is displayed only if you select the Enable Gluster Service radio button.

- In the Address field, enter the hostname or IP address of any server in the cluster. The host Fingerprint displays to ensure you are connecting with the correct host. If a host is unreachable or if there is a network error, the error Error in fetching fingerprint displays in the Fingerprint field.

- Enter the Root Password for the server, and click OK.

- The Add Hosts window opens, and a list of hosts that are a part of the cluster displays.

- For each host, enter the Name and the Root Password.

- If you wish to use the same password for all hosts, select the Use a Common Password check box to enter the password in the provided text field. Click Apply to set the entered password on all hosts. Make sure the fingerprints are valid and submit your changes by clicking .

4.2.8. Explanation of Settings in the Add Hosts Window

| Field | Description |
|---|---|
| Use a common password | Tick this check box to use the same password for all hosts belonging to the cluster. Enter the password in the Password field, then click the Apply button to set the password on all hosts. |
| Name | Enter the name of the host. |
| Hostname/IP | This field is automatically populated with the fully qualified domain name or IP of the host you provided in the New Cluster window. |
| Root Password | Enter a password in this field to use a different root password for each host. This field overrides the common password provided for all hosts in the cluster. |
| Fingerprint | The host fingerprint is displayed to ensure you are connecting with the correct host. This field is automatically populated with the fingerprint of the host you provided in the New Cluster window. |
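The precedence between the common password and a per-host Root Password can be illustrated with a small Python sketch. The function, the host names, and the password strings are placeholders. The sketch only models the override rule described in the table and is not how the Manager stores or handles credentials.

```python
def effective_root_passwords(per_host_passwords, common_password=None):
    """Return the root password that applies to each host.

    per_host_passwords: mapping of host name to its own Root Password entry,
    or None when the field is left empty. A per-host entry overrides the
    common password.
    """
    result = {}
    for host, own_password in per_host_passwords.items():
        password = own_password or common_password
        if not password:
            raise ValueError(f"no root password provided for host {host!r}")
        result[host] = password
    return result


# Placeholder host names and passwords.
hosts = {"gluster1": None, "gluster2": "host2-secret"}
print(effective_root_passwords(hosts, common_password="shared-secret"))
```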

4.2.9. Removing a Cluster

Move all hosts out of a cluster before removing it.

Note

Procedure 4.8. Removing a Cluster

- Use the resource tabs, tree mode, or the search function to find and select the cluster in the results list.

- Ensure there are no hosts in the cluster.

- Click to open the Remove Cluster(s) confirmation window.

- Click

The cluster is removed.

4.2.10. Changing the Cluster Compatibility Version

Note

Procedure 4.9. Changing the Cluster Compatibility Version

- From the Administration Portal, click the Clusters tab.

- Select the cluster to change from the list displayed.

- Click .

- Change the Compatibility Version to the desired value.

- Click to open the Change Cluster Compatibility Version confirmation window.

- Click to confirm.

Warning

4.3. Clusters and Permissions

4.3.1. Managing System Permissions for a Cluster

- Create and remove associated clusters.

- Add and remove hosts, virtual machines, and pools associated with the cluster.

- Edit user permissions for virtual machines associated with the cluster.

Note

4.3.2. Cluster Administrator Roles Explained

The table below describes the administrator roles and privileges applicable to cluster administration.

| Role | Privileges | Notes |
|---|---|---|
| ClusterAdmin | Cluster Administrator | Can use, create, delete, manage all physical and virtual resources in a specific cluster, including hosts, templates and virtual machines. Can configure network properties within the cluster such as designating display networks, or marking a network as required or non-required. However, a ClusterAdmin does not have permissions to attach or detach networks from a cluster; to do so, NetworkAdmin permissions are required. |
| NetworkAdmin | Network Administrator | Can configure and manage the network of a particular cluster. A network administrator of a cluster inherits network permissions for virtual machines within the cluster as well. |

4.3.3. Assigning an Administrator or User Role to a Resource

Procedure 4.10. Assigning a Role to a Resource

- Use the resource tabs, tree mode, or the search function to find and select the resource in the results list.

- Click the tab in the details pane to list the assigned users, the user's role, and the inherited permissions for the selected resource.

- Click .

- Enter the name or user name of an existing user into the Search text box and click . Select a user from the resulting list of possible matches.

- Select a role from the Role to Assign: drop-down list.

- Click .

4.3.4. Removing an Administrator or User Role from a Resource

Procedure 4.11. Removing a Role from a Resource

- Use the resource tabs, tree mode, or the search function to find and select the resource in the results list.

- Click the tab in the details pane to list the assigned users, the user's role, and the inherited permissions for the selected resource.

- Select the user to remove from the resource.

- Click . The Remove Permission window opens to confirm permissions removal.

- Click .

Chapter 5. Logical Networks

5.1. Logical Network Tasks

5.1.1. Using the Networks Tab

- Attaching or detaching the networks to clusters and hosts

- Removing network interfaces from virtual machines and templates

- Adding and removing permissions for users to access and manage networks

Warning

Important

- Directory Services

- DNS

- Storage

5.1.2. Creating a New Logical Network in a Data Center or Cluster

Procedure 5.1. Creating a New Logical Network in a Data Center or Cluster

- Click the Data Centers or Clusters resource tabs, and select a data center or cluster in the results list.

- Click the Logical Networks tab of the details pane to list the existing logical networks.

- From the Data Centers details pane, click to open the New Logical Network window.

- From the Clusters details pane, click to open the New Logical Network window.

- Enter a Name, Description, and Comment for the logical network.

- Optionally select the Create on external provider check box. Select the External Provider from the drop-down list and provide the IP address of the Physical Network. If Create on external provider is selected, the Network Label, VM Network, and MTU options are disabled.

- Enter a new label or select an existing label for the logical network in the Network Label text field.

- Optionally enable Enable VLAN tagging.

- Optionally disable VM Network.

- Set the MTU value to Default (1500) or Custom.

- From the Cluster tab, select the clusters to which the network will be assigned. You can also specify whether the logical network will be a required network.

- If Create on external provider is selected, the Subnet tab will be visible. From the Subnet tab, select the Create subnet and enter a Name, CIDR, and Gateway address, and select an IP Version for the subnet that the logical network will provide. You can also add DNS servers as required.

- From the vNIC Profiles tab, add vNIC profiles to the logical network as required.

- Click OK.

Note

5.1.3. Editing a Logical Network

Procedure 5.2. Editing a Logical Network

Important

- Click the Data Centers resource tab, and select the data center of the logical network in the results list.

- Click the Logical Networks tab in the details pane to list the logical networks in the data center.

- Select a logical network and click to open the Edit Logical Network window.

- Edit the necessary settings.

- Click OK to save the changes.

Note

5.1.4. Removing a Logical Network

ovirtmgmt management network.

Procedure 5.3. Removing Logical Networks

- Click the Data Centers resource tab, and select the data center of the logical network in the results list.

- Click the Logical Networks tab in the details pane to list the logical networks in the data center.

- Select a logical network and click to open the Remove Logical Network(s) window.

- Optionally, select the Remove external network(s) from the provider(s) as well check box to remove the logical network both from the Manager and from the external provider if the network is provided by an external provider.

- Click OK.

5.1.5. Viewing or Editing the Gateway for a Logical Network

Procedure 5.4. Viewing or Editing the Gateway for a Logical Network

- Click the Hosts resource tab, and select the desired host.

- Click the Network Interfaces tab in the details pane to list the network interfaces attached to the host and their configurations.

- Click the button to open the Setup Host Networks window.

- Hover your cursor over an assigned logical network and click the pencil icon to open the Edit Management Network window.

5.1.6. Explanation of Settings and Controls in the New Logical Network and Edit Logical Network Windows

5.1.6.1. Logical Network General Settings Explained

| Field Name | Description |
|---|---|
| Name | The name of the logical network. This text field has a 15-character limit and must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores. |
| Description | The description of the logical network. This text field has a 40-character limit. |
| Comment | A field for adding plain text, human-readable comments regarding the logical network. |
| Create on external provider | Allows you to create the logical network on an OpenStack Networking instance that has been added to the Manager as an external provider. External Provider - Allows you to select the external provider on which the logical network will be created. |
| Enable VLAN tagging | VLAN tagging is a security feature that gives all network traffic carried on the logical network a special characteristic. VLAN-tagged traffic cannot be read by interfaces that do not also have that characteristic. Use of VLANs on logical networks also allows a single network interface to be associated with multiple, differently VLAN-tagged logical networks. Enter a numeric value in the text entry field if VLAN tagging is enabled. |
| VM Network | Select this option if only virtual machines use this network. If the network is used for traffic that does not involve virtual machines, such as storage communications, do not select this check box. |
| MTU | Choose either Default, which sets the maximum transmission unit (MTU) to the value given in the parentheses, or Custom to set a custom MTU for the logical network. You can use this to match the MTU supported by your new logical network to the MTU supported by the hardware it interfaces with. Enter a numeric value in the text entry field if Custom is selected. |
| Network Label | Allows you to specify a new label for the network or select from existing labels already attached to host network interfaces. If you select an existing label, the logical network will be automatically assigned to all host network interfaces with that label. |
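The limits on the Name and Description fields can be checked together before the New Logical Network window is filled in. The Python sketch below reports each violated rule from the table. The function name and the wording of the messages are hypothetical, and the uniqueness requirement is not checked.

```python
import re

# Letters, digits, hyphens, and underscores only.
NAME_CHARACTERS = re.compile(r"[A-Za-z0-9_-]+")


def check_logical_network(name, description=""):
    """Return a list of problems; an empty list means both fields are acceptable."""
    problems = []
    if not NAME_CHARACTERS.fullmatch(name):
        problems.append("name: use only letters, numbers, hyphens, and underscores")
    if len(name) > 15:
        problems.append("name: longer than 15 characters")
    if len(description) > 40:
        problems.append("description: longer than 40 characters")
    return problems


print(check_logical_network("storage_net", "Dedicated storage traffic"))  # []
print(check_logical_network("a_very_long_network_name"))
```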

5.1.6.2. Logical Network Cluster Settings Explained

| Field Name | Description |
|---|---|
| Attach/Detach Network to/from Cluster(s) | Allows you to attach or detach the logical network from clusters in the data center and specify whether the logical network will be a required network for individual clusters. Name - the name of the cluster to which the settings will apply. This value cannot be edited. Attach All - Allows you to attach or detach the logical network to or from all clusters in the data center. Alternatively, select or clear the Attach check box next to the name of each cluster to attach or detach the logical network to or from a given cluster. Required All - Allows you to specify whether the logical network is a required network on all clusters. Alternatively, select or clear the Required check box next to the name of each cluster to specify whether the logical network is a required network for a given cluster. |

5.1.6.3. Logical Network vNIC Profiles Settings Explained

| Field Name | Description |
|---|---|
| vNIC Profiles | Allows you to specify one or more vNIC profiles for the logical network. You can add or remove a vNIC profile to or from the logical network by clicking the plus or minus button next to the vNIC profile. The first field is for entering a name for the vNIC profile.<br>Public - Allows you to specify whether the profile is available to all users.<br>QoS - Allows you to specify a network quality of service (QoS) profile for the vNIC profile. |

5.1.7. Designate a Specific Traffic Type for a Logical Network with the Manage Networks Window

Procedure 5.5. Specifying Traffic Types for Logical Networks

- Click the Clusters resource tab, and select a cluster from the results list.

- Select the Logical Networks tab in the details pane to list the logical networks assigned to the cluster.

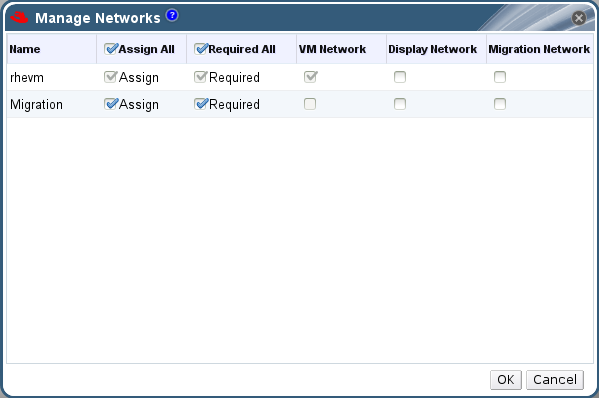

- Click to open the Manage Networks window.

Figure 5.1. Manage Networks

- Select the appropriate check boxes.

- Click OK to save the changes and close the window.


5.1.8. Explanation of Settings in the Manage Networks Window

| Field | Description/Action |
|---|---|
| Assign | Assigns the logical network to all hosts in the cluster. |
| Required | A network marked "required" must remain operational in order for the hosts associated with it to function properly. If a required network ceases to function, any hosts associated with it become non-operational. |
| VM Network | A logical network marked "VM Network" carries network traffic relevant to the virtual machine network. |
| Display Network | A logical network marked "Display Network" carries network traffic relevant to SPICE and to the virtual network controller. |
| Migration Network | A logical network marked "Migration Network" carries virtual machine and storage migration traffic. |
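The effect of the Required setting can be sketched as a small function. This is a simplified model under stated assumptions, not how the Manager evaluates host status: the function name and the shape of the input data are hypothetical.

```python
from typing import Dict, Iterable, List


def non_operational_hosts(
    host_networks: Dict[str, Dict[str, bool]],
    required_networks: Iterable[str],
) -> List[str]:
    """Return hosts that have at least one required network that is not functioning.

    host_networks maps a host name to {network name: True if functioning}.
    """
    required = set(required_networks)
    failed = []
    for host, networks in host_networks.items():
        # A host is affected only by required networks associated with it.
        if any(name in required and not working for name, working in networks.items()):
            failed.append(host)
    return sorted(failed)
```

In this model, a failed network that is not marked as required leaves the host operational, which matches the distinction the Required check box draws.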

5.1.9. Editing the Virtual Function Configuration on a NIC

Procedure 5.6. Editing the Virtual Function Configuration on a NIC

- Select an SR-IOV-capable host and click the Network Interfaces tab in the details pane.

- Click Setup Host Networks to open the Setup Host Networks window.

- Select an SR-IOV-capable NIC, marked with an icon, and click the pencil icon to open the Edit Virtual Functions (SR-IOV) configuration of NIC window.

- To edit the number of virtual functions, click the Number of VFs setting drop-down button and edit the Number of VFs text field.

Important

Changing the number of VFs will delete all previous VFs on the network interface before creating new VFs. This includes any VFs that have virtual machines directly attached.

- The All Networks check box is selected by default, allowing all networks to access the virtual functions. To specify the virtual networks allowed to access the virtual functions, select the Specific networks radio button to list all networks. You can then either select the check box for desired networks, or you can use the Labels text field to automatically select networks based on one or more network labels.

- Click OK to close the window. Note that the configuration changes will not take effect until you click the OK button in the Setup Host Networks window.

5.2. Virtual Network Interface Cards

5.2.1. vNIC Profile Overview

5.2.2. Creating or Editing a vNIC Profile


Procedure 5.7. Creating or editing a vNIC Profile

- Click the Networks resource tab, and select a logical network in the results list.

- Select the vNIC Profiles tab in the details pane. If you selected the logical network in tree mode, you can select the vNIC Profiles tab in the results list.

- Click New or Edit to open the VM Interface Profile window.

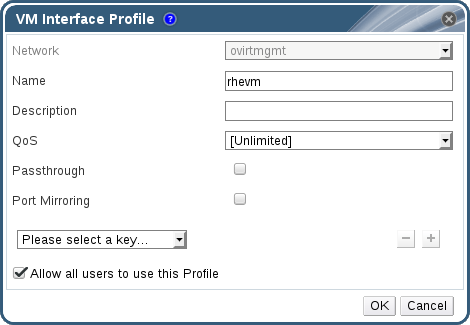

Figure 5.2. The VM Interface Profile window

- Enter the Name and Description of the profile.

- Select the relevant Quality of Service policy from the QoS list.

- Select the Passthrough check box to enable passthrough of the vNIC and allow direct device assignment of a virtual function. Enabling the passthrough property will disable QoS and port mirroring as these are not compatible. For more information on passthrough, see Section 5.2.4, “Enabling Passthrough on a vNIC Profile”.

- Use the Port Mirroring and Allow all users to use this Profile check boxes to toggle these options.

- Select a custom property from the custom properties list, which displays Please select a key... by default. Use the + and - buttons to add or remove custom properties.

- Click OK.


5.2.3. Explanation of Settings in the VM Interface Profile Window

| Field Name | Description |
|---|---|
| Network | A drop-down menu of the available networks to which the vNIC profile can be applied. |
| Name | The name of the vNIC profile. This must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores between 1 and 50 characters. |
| Description | The description of the vNIC profile. This field is recommended but not mandatory. |
| QoS | A drop-down menu of the available Network Quality of Service policies to apply to the vNIC profile. QoS policies regulate inbound and outbound network traffic of the vNIC. |
| Passthrough | A check box to toggle the passthrough property. Passthrough allows a vNIC to connect directly to a virtual function of a host NIC. The passthrough property cannot be edited if the vNIC profile is attached to a virtual machine.<br>Both QoS and port mirroring are disabled in the vNIC profile if passthrough is enabled. |
| Port Mirroring | A check box to toggle port mirroring. Port mirroring copies layer 3 network traffic on the logical network to a virtual interface on a virtual machine. It is not selected by default. For further details, see Port Mirroring in the Technical Reference. |
| Device Custom Properties | A drop-down menu to select available custom properties to apply to the vNIC profile. Use the + and - buttons to add and remove properties respectively. |
| Allow all users to use this Profile | A check box to toggle the availability of the profile to all users in the environment. It is selected by default. |
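The naming rule for the Name field can be checked before a profile is created. The following is a minimal sketch, assuming the rule is exactly as stated in the table; the function name is hypothetical, and the scope of uniqueness is left to the caller, who supplies the set of names already in use.

```python
import re
from typing import Iterable, List

# Letters, digits, hyphens, and underscores; 1 to 50 characters.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,50}")


def check_profile_name(name: str, existing_names: Iterable[str]) -> List[str]:
    """Return a list of problems with a proposed vNIC profile name (empty if none)."""
    problems = []
    if NAME_PATTERN.fullmatch(name) is None:
        problems.append(
            "name must be 1-50 characters: letters, numbers, hyphens, underscores"
        )
    if name in set(existing_names):
        problems.append("name is already in use")
    return problems
```

Returning a list of problems rather than a single boolean lets a calling script report every issue with a name in one pass.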

5.2.4. Enabling Passthrough on a vNIC Profile

Procedure 5.8. Enabling Passthrough

- Select a logical network from the Networks results list and click the vNIC Profiles tab in the details pane to list all vNIC profiles for that logical network.

- Click New to open the VM Interface Profile window.

- Enter the Name and Description of the profile.

- Select the Passthrough check box. This will disable QoS and Port Mirroring.

- If necessary, select a custom property from the custom properties list, which displays Please select a key... by default. Use the + and - buttons to add or remove custom properties.

- Click OK to save the profile and close the window.
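The interaction between passthrough, QoS, and port mirroring can be modeled as a small data structure. This is a sketch for illustration only: the class and field names are hypothetical and do not correspond to a Manager API.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class VnicProfileSettings:
    """Hypothetical in-memory model of the options in the VM Interface Profile window."""
    name: str
    passthrough: bool = False
    qos: Optional[str] = None
    port_mirroring: bool = False


def enable_passthrough(profile: VnicProfileSettings) -> VnicProfileSettings:
    """Return a copy with passthrough enabled and the incompatible options cleared."""
    return replace(profile, passthrough=True, qos=None, port_mirroring=False)


def is_consistent(profile: VnicProfileSettings) -> bool:
    """A passthrough profile must not carry a QoS policy or port mirroring."""
    if not profile.passthrough:
        return True
    return profile.qos is None and not profile.port_mirroring
```

Modeling the profile as an immutable value makes the side effect explicit: enabling passthrough yields a new profile without QoS or port mirroring, and the original settings are left untouched.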

5.2.5. Removing a vNIC Profile

Procedure 5.9. Removing a vNIC Profile

- Click the Networks resource tab, and select a logical network in the results list.

- Select the vNIC Profiles tab in the details pane to display available vNIC profiles. If you selected the logical network in tree mode, you can select the vNIC Profiles tab in the results list.

- Select one or more profiles and click Remove to open the Remove VM Interface Profile(s) window.

- Click OK to remove the profile and close the window.

5.2.6. Assigning Security Groups to vNIC Profiles

Note

The neutron security-group-list command lists the available security groups and their IDs:

neutron security-group-list

Procedure 5.10. Assigning Security Groups to vNIC Profiles

- Click the Networks tab and select a logical network from the results list.

- Click the vNIC Profiles tab in the details pane.

- Click New, or select an existing vNIC profile and click Edit, to open the VM Interface Profile window.

- From the custom properties drop-down list, select SecurityGroups. Leaving the custom property drop-down blank applies the default security settings, which permit all outbound traffic and intercommunication but deny all inbound traffic from outside of the default security group. Note that removing the SecurityGroups property later will not affect the applied security group.

- In the text field, enter the ID of the security group to attach to the vNIC profile.

- Click OK.

5.2.7. User Permissions for vNIC Profiles

Procedure 5.11. User Permissions for vNIC Profiles

- Click the Networks tab and select a logical network from the results list.

- Select the vNIC Profiles resource tab to display the vNIC profiles.

- Select the Permissions tab in the details pane to show the current user permissions for the profile.

- Use the Add button to open the Add Permission to User window, and the Remove button to open the Remove Permission window, to change user permissions for the vNIC profile.

5.2.8. Configuring vNIC Profiles for UCS Integration

The vdsm-hook-vmfex-dev hook allows virtual machines to connect to Cisco's UCS-defined port profiles by configuring the vNIC profile. The UCS-defined port profiles contain the properties and settings used to configure virtual interfaces in UCS. The vdsm-hook-vmfex-dev hook is installed by default with VDSM. See Appendix A, VDSM and Hooks for more information.


Procedure 5.12. Configuring the Custom Device Property

- On the Red Hat Enterprise Virtualization Manager, configure the vmfex custom property and set the cluster compatibility level using --cver.

engine-config -s CustomDeviceProperties='{type=interface;prop={vmfex=^[a-zA-Z0-9_.-]{2,32}$}}' --cver=3.6

- Verify that the vmfex custom device property was added.

engine-config -g CustomDeviceProperties

- Restart the engine.

service ovirt-engine restart
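The regular expression in the CustomDeviceProperties value constrains what can later be entered for the vmfex property. As a rough pre-check, the same pattern can be tested locally. The sketch below copies the pattern from the command above; the function name is hypothetical, and the check approximates the Manager's own validation rather than replacing it.

```python
import re

# Pattern copied from the CustomDeviceProperties value set with engine-config.
VMFEX_PATTERN = re.compile(r"^[a-zA-Z0-9_.-]{2,32}$")


def is_valid_port_profile_name(name: str) -> bool:
    """Return True if the whole string matches the vmfex property pattern."""
    # fullmatch rejects a trailing newline, which a bare "$" would tolerate.
    return VMFEX_PATTERN.fullmatch(name) is not None
```

Under this pattern a UCS port profile name must be 2 to 32 characters long and may contain only letters, digits, underscores, periods, and hyphens.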

Procedure 5.13. Configuring a vNIC Profile for UCS Integration

- Click the Networks resource tab, and select a logical network in the results list.

- Select the vNIC Profiles tab in the details pane. If you selected the logical network in tree mode, you can select the vNIC Profiles tab in the results list.

- Click New or Edit to open the VM Interface Profile window.

- Enter the Name and Description of the profile.

- Select the vmfex custom property from the custom properties list and enter the UCS port profile name.

- Click OK.

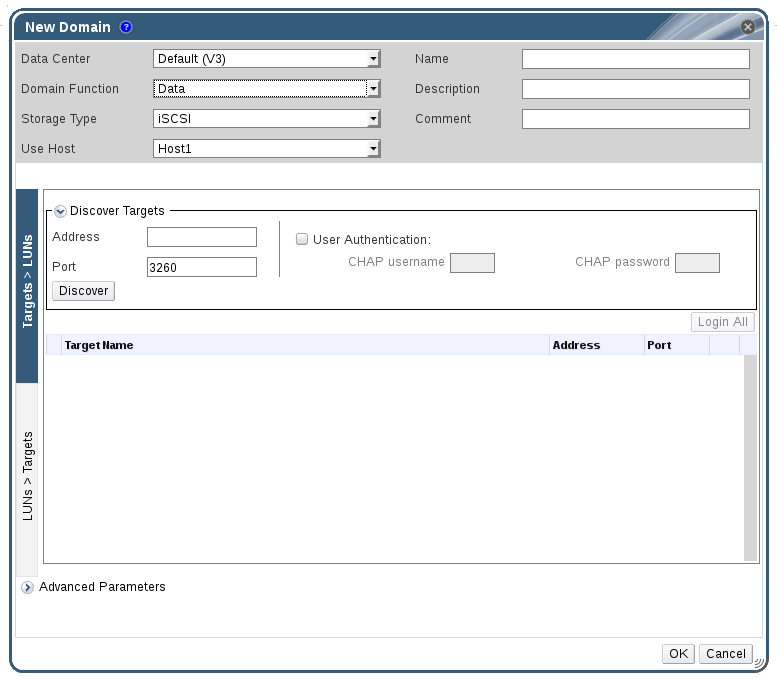

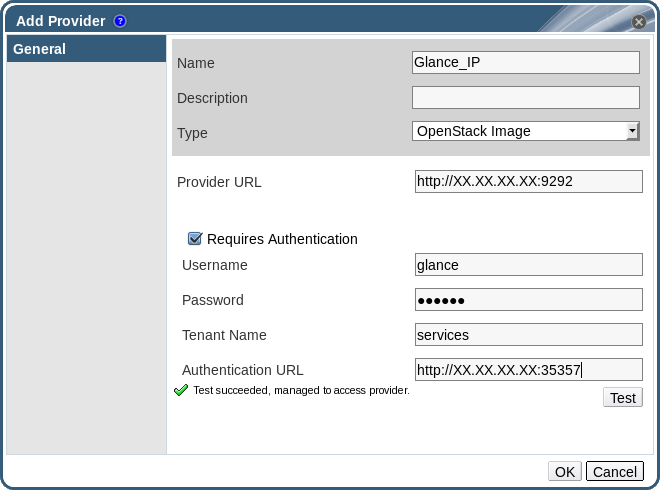

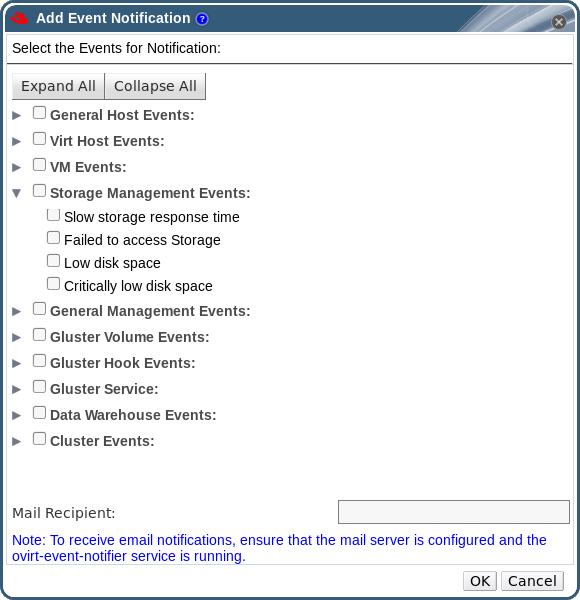

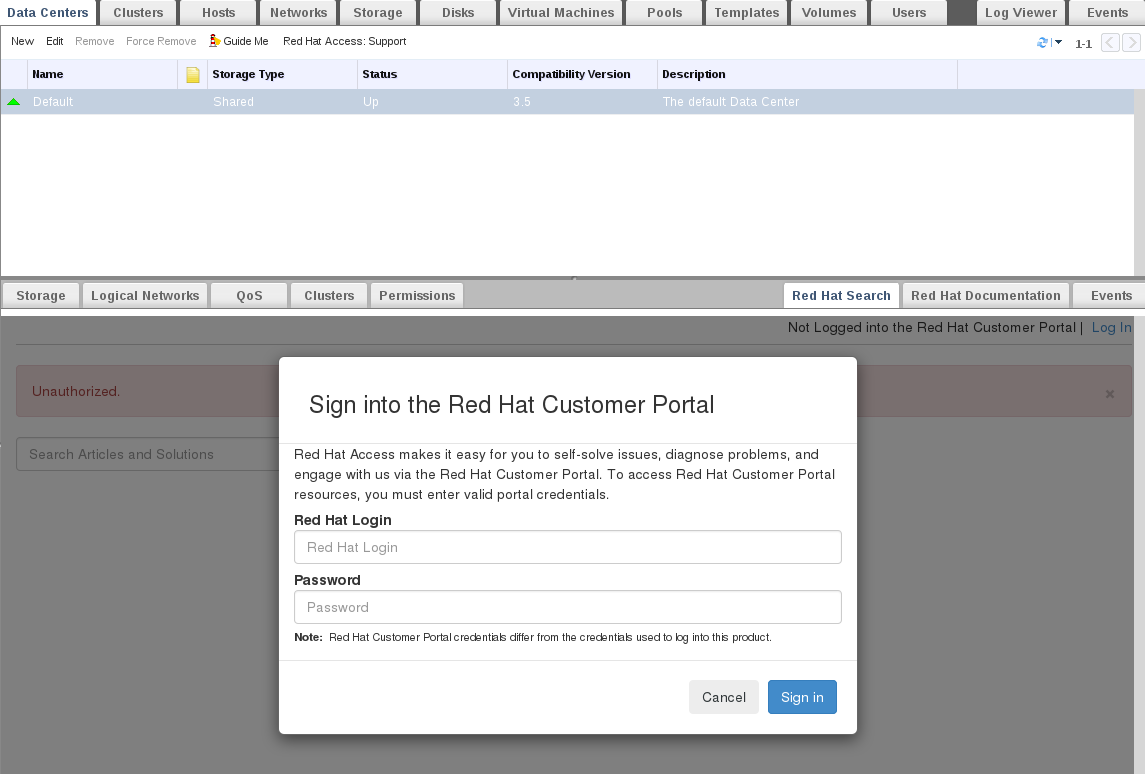

5.3. External Provider Networks