Virtual Machine Management Guide

Managing Virtual Machines in Red Hat Virtualization

Abstract

Chapter 1. Introduction

1.1. Audience


1.2. Supported Virtual Machine Operating Systems

1.3. Virtual Machine Performance Parameters

1.4. Installing Supporting Components on Client Machines

1.4.1. Installing Console Components

1.4.1.1. Installing Remote Viewer on Red Hat Enterprise Linux

Procedure 1.1. Installing Remote Viewer on Linux

- Install the virt-viewer package:

# yum install virt-viewer

- Restart your browser for the changes to take effect.

1.4.1.2. Installing Remote Viewer on Windows

Procedure 1.2. Installing Remote Viewer on Windows

- Open a web browser and download one of the following installers according to the architecture of your system.

- Virt Viewer for 32-bit Windows:

https://your-manager-fqdn/ovirt-engine/services/files/spice/virt-viewer-x86.msi

- Virt Viewer for 64-bit Windows:

https://your-manager-fqdn/ovirt-engine/services/files/spice/virt-viewer-x64.msi

- Open the folder where the file was saved.

- Double-click the file.

- Click Run if prompted by a security warning.

- Click Yes if prompted by User Account Control.
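When you stage the Remote Viewer installer for many Windows clients, the architecture choice in step 1 can be scripted. The following is a minimal Bash sketch, not a Red Hat-provided tool. The function name virt_viewer_url is hypothetical. It assumes the architecture names reported by the Windows PROCESSOR_ARCHITECTURE environment variable: x86 for 32-bit and AMD64 for 64-bit. It also assumes that only the two installers listed above exist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Virt Viewer installer URL for a given
# Manager FQDN and Windows architecture. Assumes only the x86 and x64
# installers listed in Procedure 1.2 are published by the Manager.
virt_viewer_url() {
    local fqdn="$1" arch="$2" suffix
    case "$arch" in
        x86|32) suffix="x86" ;;             # 32-bit Windows
        AMD64|amd64|x64|64) suffix="x64" ;; # 64-bit Windows
        *)
            echo "unsupported architecture: $arch" >&2
            return 1
            ;;
    esac
    printf 'https://%s/ovirt-engine/services/files/spice/virt-viewer-%s.msi\n' \
        "$fqdn" "$suffix"
}
```

For example, virt_viewer_url manager.example.com AMD64 prints the 64-bit installer URL. Here, manager.example.com is a placeholder for your Manager's FQDN.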

1.4.2. Installing usbdk on Windows

Procedure 1.3. Installing usbdk on Windows

- Open a web browser and download one of the following installers according to the architecture of your system.

- usbdk for 32-bit Windows:

https://your-manager-fqdn/ovirt-engine/services/files/spice/usbdk-x86.msi

- usbdk for 64-bit Windows:

https://your-manager-fqdn/ovirt-engine/services/files/spice/usbdk-x64.msi

- Open the folder where the file was saved.

- Double-click the file.

- Click Run if prompted by a security warning.

- Click Yes if prompted by User Account Control.
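If you distribute the installers from an internal file share, you could fetch all four files from Procedures 1.2 and 1.3 in one pass. This Bash sketch makes three assumptions. First, curl is installed on the staging host. Second, that host trusts the Manager's CA certificate; otherwise curl refuses the HTTPS connection. Third, the files are published at the URLs shown above. The function name fetch_spice_installers and the DRY_RUN variable are hypothetical. With DRY_RUN=1, the function only prints the curl commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: download the Virt Viewer and usbdk installers for
# both Windows architectures (Procedures 1.2 and 1.3) into one directory.
# Set DRY_RUN=1 to print the curl commands instead of running them.
fetch_spice_installers() {
    local fqdn="$1" dest="$2" pkg arch url
    local base="https://${fqdn}/ovirt-engine/services/files/spice"
    if [ "${DRY_RUN:-0}" != 1 ]; then
        mkdir -p "$dest" || return 1
    fi
    for pkg in virt-viewer usbdk; do
        for arch in x86 x64; do
            url="${base}/${pkg}-${arch}.msi"
            if [ "${DRY_RUN:-0}" = 1 ]; then
                printf '%s\n' "curl -fSL -o ${dest}/${pkg}-${arch}.msi ${url}"
            else
                # -f fails on HTTP errors, -S shows errors, -L follows redirects
                curl -fSL -o "${dest}/${pkg}-${arch}.msi" "$url" || return 1
            fi
        done
    done
}
```

Running DRY_RUN=1 fetch_spice_installers manager.example.com /srv/spice lets you review the four downloads before they happen. The host name and directory are placeholders.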

Chapter 2. Installing Linux Virtual Machines

- Create a blank virtual machine on which to install an operating system.

- Add a virtual disk for storage.

- Add a network interface to connect the virtual machine to the network.

- Install an operating system on the virtual machine. See your operating system's documentation for instructions.

- Red Hat Enterprise Linux 6: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/6/html/Installation_Guide/index.html

- Red Hat Enterprise Linux 7: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Installation_Guide/index.html

- Red Hat Enterprise Linux Atomic Host 7: https://access.redhat.com/documentation/en/red-hat-enterprise-linux-atomic-host/7/single/installation-and-configuration-guide/

- Register the virtual machine with the Content Delivery Network and subscribe to the relevant entitlements.

- Install guest agents and drivers for additional virtual machine functionality.

2.1. Creating a Linux Virtual Machine

Procedure 2.1. Creating Linux Virtual Machines

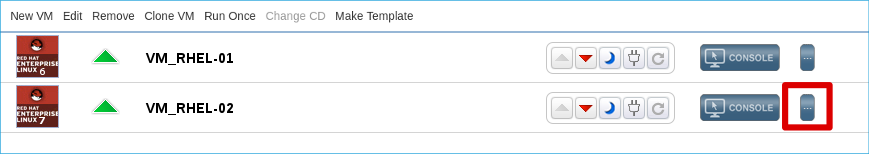

- Click the Virtual Machines tab.

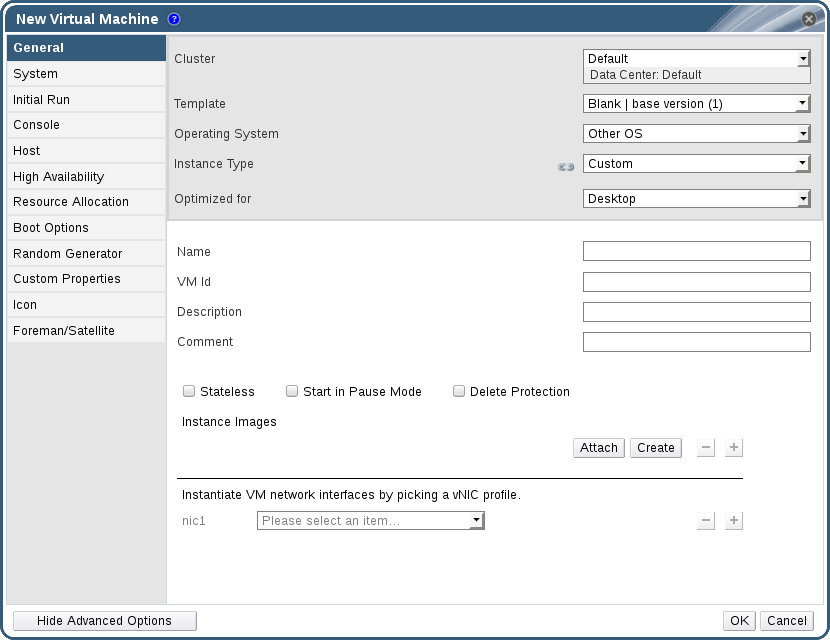

- Click the New button to open the New Virtual Machine window.

Figure 2.1. The New Virtual Machine Window

- Select a Linux variant from the Operating System drop-down list.

- Enter a Name for the virtual machine.

- Add storage to the virtual machine. Attach or create a virtual disk under Instance Images.

- Click Attach and select an existing virtual disk.

- Click Create and enter a Size(GB) and Alias for a new virtual disk. You can accept the default settings for all other fields, or change them if required. See Section A.3, “Explanation of Settings in the New Virtual Disk and Edit Virtual Disk Windows” for more details on the fields for all disk types.

- Connect the virtual machine to the network. Add a network interface by selecting a vNIC profile from the nic1 drop-down list at the bottom of the General tab.

- Specify the virtual machine's Memory Size on the System tab.

- Choose the First Device that the virtual machine will boot from on the Boot Options tab.

- You can accept the default settings for all other fields, or change them if required. For more details on all fields in the New Virtual Machine window, see Section A.1, “Explanation of Settings in the New Virtual Machine and Edit Virtual Machine Windows”.

- Click OK.

The new virtual machine is created and displays in the list of virtual machines with a status of Down. Before you can use this virtual machine, you must install an operating system and register with the Content Delivery Network.

2.2. Starting the Virtual Machine

2.2.1. Starting a Virtual Machine

Procedure 2.2. Starting Virtual Machines

- Click the Virtual Machines tab and select a virtual machine with a status of Down.

- Click the run button. Alternatively, right-click the virtual machine and select Run.

The status of the virtual machine changes to Up, and the operating system installation begins. Open a console to the virtual machine if one does not open automatically.


2.2.2. Opening a Console to a Virtual Machine

Procedure 2.3. Connecting to Virtual Machines

- Install Remote Viewer if it is not already installed. See Section 1.4.1, “Installing Console Components”.

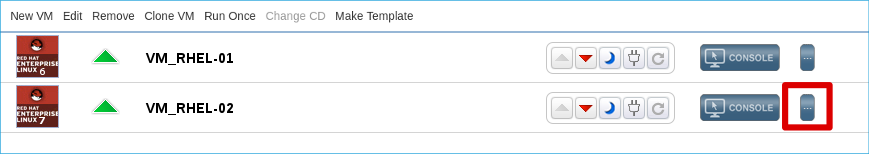

- Click the Virtual Machines tab and select a virtual machine.

- Click the console button or right-click the virtual machine and select Console. A console.vv file is downloaded. Open the file, and a console window opens automatically for the virtual machine.
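On a Linux client, you can also open the downloaded console.vv file from a terminal by passing it to remote-viewer, which the virt-viewer package installs. The following Bash sketch opens the most recently modified console*.vv file in a directory. The function name, the default ~/Downloads location, and the console*.vv pattern for repeated downloads are assumptions about your browser, not documented behavior. For brevity, the sketch parses ls output, so it assumes file names without newlines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: open the most recently downloaded console*.vv file
# with Remote Viewer. DRY_RUN=1 prints the command instead of running it.
open_latest_console() {
    local dir="${1:-$HOME/Downloads}" latest
    # ls -t sorts by modification time, newest first; keep only the first
    latest="$(ls -t "$dir"/console*.vv 2>/dev/null | head -n 1)"
    if [ -z "$latest" ]; then
        echo "no console*.vv file found in $dir" >&2
        return 1
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "remote-viewer $latest"
    else
        remote-viewer "$latest"
    fi
}
```

Calling open_latest_console with no arguments uses ~/Downloads. Pass a directory if your browser saves files elsewhere.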


2.2.3. Opening a Serial Console to a Virtual Machine

Important

engine-setup on new installations. The serial console relies on the ovirt-vmconsole and ovirt-vmconsole-proxy packages on the Manager, and on the ovirt-vmconsole and ovirt-vmconsole-host packages on virtualization hosts. These packages are installed by default on new installations. To install the packages on existing installations, reinstall the host. See Reinstalling Hosts in the Administration Guide.

Procedure 2.4. Connecting to a Virtual Machine Serial Console

- On the client machine from which you will access the virtual machine serial console, generate an SSH key pair. The Manager supports standard SSH key types. For example, generate an RSA key:

# ssh-keygen -t rsa -b 2048 -C "admin@internal" -f .ssh/serialconsolekey

This command generates a public key and a private key.

- In the Administration Portal or the User Portal, click the name of the signed-in user on the header bar, and then click Options to open the Edit Options window.

- In the User's Public Key text field, paste the public key of the client machine that will be used to access the serial console.

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- In the Console tab of the Edit Virtual Machine window, select the Enable VirtIO serial console check box.

- On the client machine, connect to the virtual machine's serial console:

- If a single virtual machine is available, this command connects the user to that virtual machine:

# ssh -t -p 2222 ovirt-vmconsole@MANAGER_IP
Red Hat Enterprise Linux Server release 6.7 (Santiago)
Kernel 2.6.32-573.3.1.el6.x86_64 on an x86_64
USER login:

If more than one virtual machine is available, this command lists the available virtual machines. Enter the number of the machine to which you want to connect, and press Enter.

- Alternatively, connect directly to a virtual machine using its unique identifier or its name:

# ssh -t -p 2222 ovirt-vmconsole@MANAGER_IP --vm-id vmid1

# ssh -t -p 2222 ovirt-vmconsole@MANAGER_IP --vm-name vm1
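To avoid retyping the port, user, and key on every connection, you could record them in your OpenSSH client configuration. This Bash sketch appends a Host entry. The alias rhv-serial and the function name are hypothetical. The sketch assumes the key pair from step 1 is stored as ~/.ssh/serialconsolekey. RequestTTY force is the ssh_config equivalent of the -t option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an OpenSSH client Host entry so that
# "ssh <alias>" reaches the Manager's serial console proxy on port 2222
# as ovirt-vmconsole, using the key pair generated in Procedure 2.4.
add_serial_console_host() {
    local host_alias="$1" manager="$2" key="$3"
    local config="${4:-$HOME/.ssh/config}"
    {
        printf 'Host %s\n' "$host_alias"
        printf '    HostName %s\n' "$manager"
        printf '    Port 2222\n'
        printf '    User ovirt-vmconsole\n'
        printf '    IdentityFile %s\n' "$key"
        # Always allocate a TTY, as "ssh -t" does, so the console is usable
        printf '    RequestTTY force\n'
    } >> "$config"
}
```

First run add_serial_console_host rhv-serial MANAGER_IP ~/.ssh/serialconsolekey. After that, ssh rhv-serial should behave like ssh -t -p 2222 ovirt-vmconsole@MANAGER_IP with that key, including listing the available virtual machines.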


2.2.4. Automatically Connecting to a Virtual Machine

Procedure 2.5. Automatically Connecting to a Virtual Machine

- Click the name of the signed-in user on the header bar then click Options to open the Edit Options window.

- Click the Connect Automatically check box.

- Click OK.

2.3. Subscribing to the Required Entitlements

Procedure 2.6. Subscribing to the Required Entitlements Using Subscription Manager

- Register your system with the Content Delivery Network, entering your Customer Portal user name and password when prompted:

# subscription-manager register

- Locate the relevant subscription pools and note down the pool identifiers:

# subscription-manager list --available

- Use the pool identifiers located in the previous step to attach the required entitlements:

# subscription-manager attach --pool=pool_id

- Disable all existing repositories:

# subscription-manager repos --disable=*

- When a system is subscribed to a subscription pool with multiple repositories, only the main repository is enabled by default. Others are available, but disabled. Enable any additional repositories:

# subscription-manager repos --enable=repository

- Ensure that all packages currently installed are up to date:

# yum update
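You can collect the subscription steps above into one reviewable script. This Bash sketch is an illustration, not a supported tool. The run and subscribe_guest function names and the DRY_RUN variable are hypothetical. You supply the pool ID and repository IDs from subscription-manager list --available. subscription-manager register still prompts for your Customer Portal credentials. The sketch quotes the wildcard in --disable='*' so the shell does not expand it against files in the current directory.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around Procedure 2.6. DRY_RUN=1 prints each
# command instead of running it, so the sequence can be reviewed first.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: subscribe_guest POOL_ID [REPOSITORY_ID ...]
subscribe_guest() {
    local pool_id="$1" repo
    shift
    run subscription-manager register || return 1
    run subscription-manager attach --pool="$pool_id" || return 1
    run subscription-manager repos --disable='*' || return 1
    for repo in "$@"; do
        run subscription-manager repos --enable="$repo" || return 1
    done
    run yum update
}
```

For example, DRY_RUN=1 subscribe_guest POOL_ID rhel-7-server-rh-common-rpms prints the commands. Without DRY_RUN, the function stops at the first command that fails.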

2.4. Installing Guest Agents and Drivers

2.4.1. Red Hat Virtualization Guest Agents and Drivers

| Driver | Description | Works on |
|---|---|---|
| virtio-net | Paravirtualized network driver that provides enhanced performance over emulated devices such as rtl. | Server and Desktop. |
| virtio-block | Paravirtualized HDD driver that offers increased I/O performance over emulated devices such as IDE by optimizing the coordination and communication between the guest and the hypervisor. The driver complements the software implementation of the virtio device used by the host to play the role of a hardware device. | Server and Desktop. |
| virtio-scsi | Paravirtualized SCSI HDD driver that offers similar functionality to the virtio-block device, with some additional enhancements. In particular, this driver supports adding hundreds of devices, and names devices using the standard SCSI device naming scheme. | Server and Desktop. |
| virtio-serial | Provides support for multiple serial ports. It enables fast communication between the guest and the host that does not depend on the network. This fast communication is required for the guest agents and for other features such as clipboard copy-paste between the guest and the host, and logging. | Server and Desktop. |
| virtio-balloon | Controls the amount of memory a guest actually accesses, which improves memory over-commitment. The balloon drivers are installed for future compatibility but are not used by default in Red Hat Virtualization. | Server and Desktop. |
| qxl | Paravirtualized display driver that reduces CPU usage on the host and provides better performance through reduced network bandwidth on most workloads. | Server and Desktop. |

| Guest agent/tool | Description | Works on |
|---|---|---|
| ovirt-guest-agent-common | Allows the Red Hat Virtualization Manager to receive guest internal events and information such as IP address and installed applications. Also allows the Manager to execute specific commands, such as shut down or reboot, on a guest. On Red Hat Enterprise Linux 6 and later guests, the ovirt-guest-agent-common package installs tuned on your virtual machine and configures it to use an optimized, virtualized-guest profile. | Server and Desktop. |
| spice-agent | The SPICE agent supports multiple monitors and is responsible for client-mouse-mode support, which provides a better user experience and improved responsiveness compared to QEMU emulation. Cursor capture is not needed in client-mouse-mode. The SPICE agent reduces bandwidth usage over a wide area network by reducing the display level: it lowers color depth and disables wallpaper, font smoothing, and animation. The SPICE agent enables clipboard support, allowing cut and paste operations for both text and images between client and guest, and automatic guest display configuration according to client-side settings. On Windows guests, the SPICE agent consists of vdservice and vdagent. | Server and Desktop. |
| rhev-sso | An agent that enables users to automatically log in to their virtual machines based on the credentials used to access the Red Hat Virtualization Manager. | Desktop. |

2.4.2. Installing the Guest Agents and Drivers on Red Hat Enterprise Linux

Procedure 2.7. Installing the Guest Agents and Drivers on Red Hat Enterprise Linux

- Log in to the Red Hat Enterprise Linux virtual machine.

- Enable the Red Hat Virtualization Agent repository:

- For Red Hat Enterprise Linux 6

# subscription-manager repos --enable=rhel-6-server-rhv-4-agent-rpms

- For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-rh-common-rpms

- Install the ovirt-guest-agent-common package and dependencies:

# yum install ovirt-guest-agent-common

- Start and enable the service:

- For Red Hat Enterprise Linux 6

# service ovirt-guest-agent start
# chkconfig ovirt-guest-agent on

- For Red Hat Enterprise Linux 7

# systemctl start ovirt-guest-agent.service
# systemctl enable ovirt-guest-agent.service

- Start and enable the qemu-ga service:

- For Red Hat Enterprise Linux 6

# service qemu-ga start
# chkconfig qemu-ga on

- For Red Hat Enterprise Linux 7

# systemctl start qemu-guest-agent.service
# systemctl enable qemu-guest-agent.service

The guest agent runs as a service called ovirt-guest-agent, which you can configure using the ovirt-guest-agent.conf configuration file in the /etc/ directory.
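Procedure 2.7 differs between Red Hat Enterprise Linux 6 and 7 only in the repository ID and the service commands, so a script can select the correct path by release. A minimal Bash sketch follows. The function names and the DRY_RUN variable are hypothetical. The major release number is passed in explicitly rather than detected.

```shell
#!/usr/bin/env bash
# Hypothetical helper that follows Procedure 2.7 for a given Red Hat
# Enterprise Linux major release (6 or 7). DRY_RUN=1 prints the commands.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

install_guest_agents() {
    local major="$1"
    case "$major" in
        6)
            run subscription-manager repos --enable=rhel-6-server-rhv-4-agent-rpms || return 1
            run yum install ovirt-guest-agent-common || return 1
            run service ovirt-guest-agent start && run chkconfig ovirt-guest-agent on || return 1
            run service qemu-ga start && run chkconfig qemu-ga on
            ;;
        7)
            run subscription-manager repos --enable=rhel-7-server-rh-common-rpms || return 1
            run yum install ovirt-guest-agent-common || return 1
            run systemctl start ovirt-guest-agent.service && run systemctl enable ovirt-guest-agent.service || return 1
            run systemctl start qemu-guest-agent.service && run systemctl enable qemu-guest-agent.service
            ;;
        *)
            echo "unsupported release: $major" >&2
            return 1
            ;;
    esac
}
```

If the %{rhel} RPM macro is defined on the guest, install_guest_agents "$(rpm -E '%{rhel}')" selects the matching branch. Review the commands with DRY_RUN=1 before running them.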

Chapter 3. Installing Windows Virtual Machines

- Create a blank virtual machine on which to install an operating system.

- Add a virtual disk for storage.

- Add a network interface to connect the virtual machine to the network.

- Attach the virtio-win.vfd diskette to the virtual machine so that VirtIO-optimized device drivers can be installed during the operating system installation.

- Install an operating system on the virtual machine. See your operating system's documentation for instructions.

- Install guest agents and drivers for additional virtual machine functionality.

3.1. Creating a Windows Virtual Machine

Procedure 3.1. Creating Windows Virtual Machines

- You can change the default virtual machine name length with the engine-config tool. Run the following command on the Manager machine:

# engine-config --set MaxVmNameLength=integer

- Click the Virtual Machines tab.

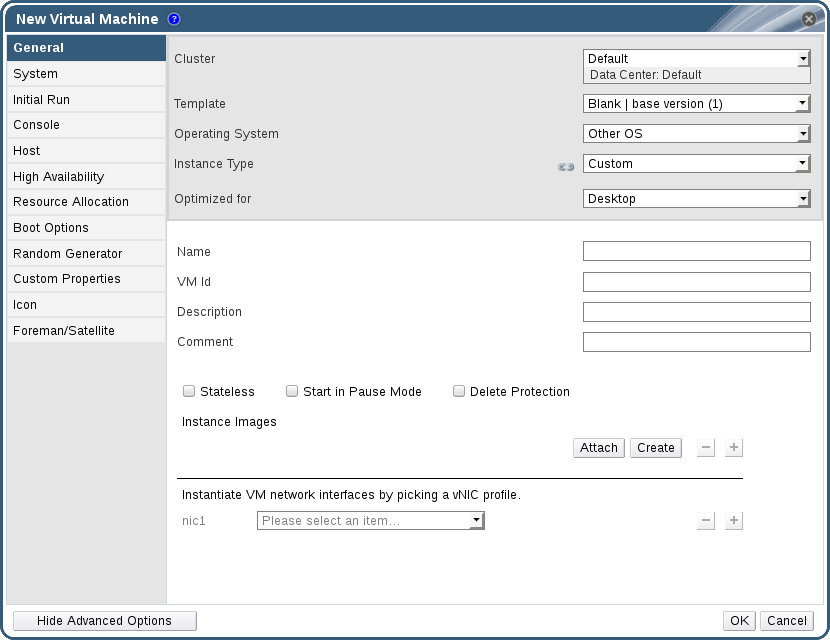

- Click New to open the New Virtual Machine window.

Figure 3.1. The New Virtual Machine Window

- Select a Windows variant from the Operating System drop-down list.

- Enter a Name for the virtual machine.

- Add storage to the virtual machine. Attach or create a virtual disk under Instance Images.

- Click Attach and select an existing virtual disk.

- Click Create and enter a Size(GB) and Alias for a new virtual disk. You can accept the default settings for all other fields, or change them if required. See Section A.3, “Explanation of Settings in the New Virtual Disk and Edit Virtual Disk Windows” for more details on the fields for all disk types.

- Connect the virtual machine to the network. Add a network interface by selecting a vNIC profile from the nic1 drop-down list at the bottom of the General tab.

- Specify the virtual machine's Memory Size on the System tab.

- Choose the First Device that the virtual machine will boot from on the Boot Options tab.

- You can accept the default settings for all other fields, or change them if required. For more details on all fields in the New Virtual Machine window, see Section A.1, “Explanation of Settings in the New Virtual Machine and Edit Virtual Machine Windows”.

- Click OK.

The new virtual machine is created and displays in the list of virtual machines with a status of Down. Before you can use this virtual machine, you must install an operating system and VirtIO-optimized disk and network drivers.
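You can wrap the MaxVmNameLength change from Procedure 3.1 so that the new value is validated and the current setting is shown first. This Bash sketch makes three assumptions. First, it runs as root on the Manager machine. Second, engine-config -g reads a key. Third, the new value takes effect only after the ovirt-engine service is restarted; verify this against your Manager version before relying on it. The function names and DRY_RUN are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper for the MaxVmNameLength setting in Procedure 3.1.
# Run on the Manager machine. DRY_RUN=1 prints the commands only.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

set_max_vm_name_length() {
    local len="$1"
    # Reject anything that is not a positive integer before touching the engine
    case "$len" in
        ''|*[!0-9]*) echo "not a positive integer: $len" >&2; return 1 ;;
    esac
    if [ "$len" -le 0 ]; then
        echo "not a positive integer: $len" >&2
        return 1
    fi
    run engine-config -g MaxVmNameLength || return 1     # show the current value
    run engine-config --set MaxVmNameLength="$len" || return 1
    # Assumption: engine-config changes are applied when the engine restarts
    run systemctl restart ovirt-engine.service
}
```

For example, DRY_RUN=1 set_max_vm_name_length 40 prints the three commands without changing anything.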

3.2. Starting the Virtual Machine Using the Run Once Option

3.2.1. Installing Windows on VirtIO-Optimized Hardware

You can install VirtIO-optimized device drivers during the Windows installation by attaching the virtio-win.vfd diskette to your virtual machine. These drivers provide a performance improvement over emulated device drivers.

This procedure assumes that you have added a Red Hat VirtIO network interface and a disk that uses the VirtIO interface to your virtual machine.

Note

The virtio-win.vfd diskette is placed automatically on ISO storage domains that are hosted on the Manager server. An administrator must manually upload it to other ISO storage domains using the engine-iso-uploader tool.
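For ISO storage domains that are not hosted on the Manager server, the manual upload could look like the following Bash sketch. It assumes the engine-iso-uploader list and upload subcommands with the --iso-domain option. You run it on the Manager machine, where the tool prompts for the admin password, and you pass the path to your copy of virtio-win.vfd. The domain name ISODomain and the function name are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: upload a file to a named ISO storage domain.
# DRY_RUN=1 prints the engine-iso-uploader commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

upload_to_iso_domain() {
    local domain="$1" file="$2"
    # Fail early with a clear message if the file path is wrong
    if [ ! -f "$file" ]; then
        echo "file not found: $file" >&2
        return 1
    fi
    run engine-iso-uploader list || return 1   # confirm the domain name
    run engine-iso-uploader --iso-domain="$domain" upload "$file"
}
```

The same helper could upload /usr/share/rhev-guest-tools-iso/rhev-tools-setup.iso, which is the path given in Section 3.3.2.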

Procedure 3.2. Installing VirtIO Drivers during Windows Installation

- Click the Virtual Machines tab and select a virtual machine.

- Click Run Once.

- Expand the Boot Options menu.

- Select the Attach Floppy check box, and select virtio-win.vfd from the drop-down list.

- Select the Attach CD check box, and select the required Windows ISO from the drop-down list.

- Move CD-ROM to the top of the Boot Sequence field.

- Configure the rest of your Run Once options as required. See Section A.5, “Explanation of Settings in the Run Once Window” for more details.

- Click OK.

The status of the virtual machine changes to Up, and the operating system installation begins. Open a console to the virtual machine if one does not open automatically.

During the Windows installation, load the drivers from the virtio-win.vfd diskette that was attached to your virtual machine as A:. For each supported virtual machine architecture and Windows version, the diskette contains a folder with optimized hardware device drivers.

3.2.2. Opening a Console to a Virtual Machine

Procedure 3.3. Connecting to Virtual Machines

- Install Remote Viewer if it is not already installed. See Section 1.4.1, “Installing Console Components”.

- Click the Virtual Machines tab and select a virtual machine.

- Click the console button or right-click the virtual machine and select Console.

- If the connection protocol is set to SPICE, a console window will automatically open for the virtual machine.

- If the connection protocol is set to VNC, a console.vv file is downloaded. Open the file, and a console window opens automatically for the virtual machine.


3.3. Installing Guest Agents and Drivers

3.3.1. Red Hat Virtualization Guest Agents and Drivers

| Driver | Description | Works on |
|---|---|---|
| virtio-net | Paravirtualized network driver that provides enhanced performance over emulated devices such as rtl. | Server and Desktop. |
| virtio-block | Paravirtualized HDD driver that offers increased I/O performance over emulated devices such as IDE by optimizing the coordination and communication between the guest and the hypervisor. The driver complements the software implementation of the virtio device used by the host to play the role of a hardware device. | Server and Desktop. |
| virtio-scsi | Paravirtualized SCSI HDD driver that offers similar functionality to the virtio-block device, with some additional enhancements. In particular, this driver supports adding hundreds of devices, and names devices using the standard SCSI device naming scheme. | Server and Desktop. |
| virtio-serial | Provides support for multiple serial ports. It enables fast communication between the guest and the host that does not depend on the network. This fast communication is required for the guest agents and for other features such as clipboard copy-paste between the guest and the host, and logging. | Server and Desktop. |
| virtio-balloon | Controls the amount of memory a guest actually accesses, which improves memory over-commitment. The balloon drivers are installed for future compatibility but are not used by default in Red Hat Virtualization. | Server and Desktop. |
| qxl | Paravirtualized display driver that reduces CPU usage on the host and provides better performance through reduced network bandwidth on most workloads. | Server and Desktop. |

| Guest agent/tool | Description | Works on |
|---|---|---|
| ovirt-guest-agent-common | Allows the Red Hat Virtualization Manager to receive guest internal events and information such as IP address and installed applications. Also allows the Manager to execute specific commands, such as shut down or reboot, on a guest. On Red Hat Enterprise Linux 6 and later guests, the ovirt-guest-agent-common package installs tuned on your virtual machine and configures it to use an optimized, virtualized-guest profile. | Server and Desktop. |
| spice-agent | The SPICE agent supports multiple monitors and is responsible for client-mouse-mode support, which provides a better user experience and improved responsiveness compared to QEMU emulation. Cursor capture is not needed in client-mouse-mode. The SPICE agent reduces bandwidth usage over a wide area network by reducing the display level: it lowers color depth and disables wallpaper, font smoothing, and animation. The SPICE agent enables clipboard support, allowing cut and paste operations for both text and images between client and guest, and automatic guest display configuration according to client-side settings. On Windows guests, the SPICE agent consists of vdservice and vdagent. | Server and Desktop. |
| rhev-sso | An agent that enables users to automatically log in to their virtual machines based on the credentials used to access the Red Hat Virtualization Manager. | Desktop. |

3.3.2. Installing the Guest Agents and Drivers on Windows

The Windows guest agents and drivers are shipped in the rhev-tools-setup.iso ISO file, which is provided by the rhev-guest-tools-iso package installed as a dependency of the Red Hat Virtualization Manager. This ISO file is located in /usr/share/rhev-guest-tools-iso/rhev-tools-setup.iso on the system on which the Red Hat Virtualization Manager is installed.

Note

The rhev-tools-setup.iso ISO file is automatically copied to the default ISO storage domain, if one exists, when you run engine-setup. Otherwise, you must upload it manually to an ISO storage domain.

Note

Updated versions of the rhev-tools-setup.iso ISO file must be manually attached to running Windows virtual machines to install updated versions of the tools and drivers. If the APT service is enabled on the virtual machines, the updated ISO file is attached automatically.

Note

You can pass the ISSILENTMODE and ISNOREBOOT parameters to RHEV-toolsSetup.exe. ISSILENTMODE installs the guest agents and drivers silently, and ISNOREBOOT prevents the machine from rebooting immediately after installation. You can then reboot the machine once the deployment process is complete. For example:

D:\RHEV-toolsSetup.exe ISSILENTMODE ISNOREBOOT

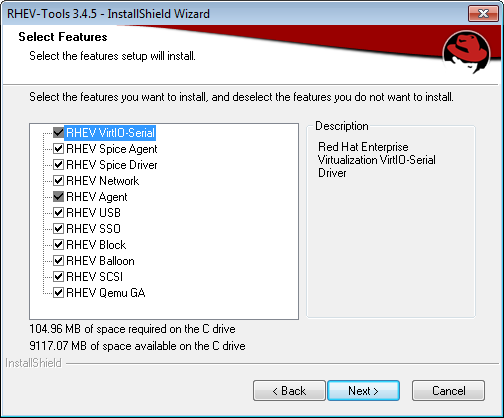

D:\RHEV-toolsSetup.exe ISSILENTMODE ISNOREBOOTProcedure 3.4. Installing the Guest Agents and Drivers on Windows

- Log in to the virtual machine.

- Select the CD Drive containing the rhev-tools-setup.iso file.

- Double-click RHEV-toolsSetup.

- Click Next at the welcome screen.

- Follow the prompts on the RHEV-Tools InstallShield Wizard window. Ensure all check boxes in the list of components are selected.

Figure 3.2. Selecting All Components of Red Hat Virtualization Tools for Installation

- Once installation is complete, select Yes, I want to restart my computer now and click Finish to apply the changes.

The guest agent runs as a service called RHEV Agent, which you can configure using the rhev-agent configuration file located in C:\Program Files\Redhat\RHEV\Drivers\Agent.

3.3.3. Automating Guest Additions on Windows Guests with Red Hat Virtualization Application Provisioning Tool (APT)

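The APT service installs updated guest agents and drivers on Windows virtual machines from the rhev-tools-setup.iso ISO file attached to the virtual machine.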

Procedure 3.5. Installing the APT Service on Windows

- Log in to the virtual machine.

- Select the CD Drive containing the rhev-tools-setup.iso file.

- Double-click RHEV-Application Provisioning Tool.

- Click Yes in the User Account Control window.

- Once installation is complete, ensure the Start RHEV-apt Service check box is selected in the RHEV-Application Provisioning Tool InstallShield Wizard window, and click Finish to apply the changes.

Note

If the Start RHEV-apt Service check box was selected, the service starts automatically. You can stop, start, or restart the service at any time using the Services window.

Chapter 4. Additional Configuration

4.1. Configuring Single Sign-On for Virtual Machines


4.1.1. Configuring Single Sign-On for Red Hat Enterprise Linux Virtual Machines Using IPA (IdM)


Procedure 4.1. Configuring Single Sign-On for Red Hat Enterprise Linux Virtual Machines

- Log in to the Red Hat Enterprise Linux virtual machine.

- Enable the required repository:

- For Red Hat Enterprise Linux 6

# subscription-manager repos --enable=rhel-6-server-rhv-4-agent-rpms

- For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-rh-common-rpms

- Download and install the guest agent packages:

# yum install ovirt-guest-agent-common

- Install the single sign-on packages:

# yum install ovirt-guest-agent-pam-module
# yum install ovirt-guest-agent-gdm-plugin

- Install the IPA packages:

# yum install ipa-client

- Run the following command and follow the prompts to configure ipa-client and join the virtual machine to the domain:

# ipa-client-install --permit --mkhomedir

Note

In environments that use DNS obfuscation, use the following command instead:

# ipa-client-install --domain=FQDN --server=FQDN

- For Red Hat Enterprise Linux 7.2 and later, run:

# authconfig --enablenis --update

Note

Red Hat Enterprise Linux 7.2 has a new version of the System Security Services Daemon (SSSD), which introduces configuration that is incompatible with the Red Hat Virtualization Manager guest agent single sign-on implementation. Running this command ensures that single sign-on works.

- Fetch the details of an IPA user:

# getent passwd IPA_user_name

This returns output similar to the following:

some-ipa-user:*:936600010:936600001::/home/some-ipa-user:/bin/sh

You will need this information in the next step to create a home directory for some-ipa-user.

- Set up a home directory for the IPA user:

- Create the new user's home directory:

# mkdir /home/some-ipa-user

- Give the new user ownership of the new user's home directory:

# chown 936600010:936600001 /home/some-ipa-user
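You can combine the getent, mkdir, and chown steps above so that the UID and GID come directly from the passwd entry. This avoids transcription mistakes in the long numeric IDs. This Bash sketch reads one passwd-format line (name:password:UID:GID:GECOS:home:shell) from standard input. The function names and DRY_RUN are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an IPA user's home directory using the UID,
# GID, and home path from a "getent passwd" line read on standard input.
# DRY_RUN=1 prints the commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

make_ipa_home() {
    local name pw uid gid gecos home shell id
    # Split one passwd-format line on ':' into its seven fields
    IFS=: read -r name pw uid gid gecos home shell || return 1
    for id in "$uid" "$gid"; do
        case "$id" in
            ''|*[!0-9]*) echo "malformed passwd entry: $name" >&2; return 1 ;;
        esac
    done
    if [ -z "$home" ]; then
        echo "no home directory in passwd entry: $name" >&2
        return 1
    fi
    run mkdir "$home" || return 1
    run chown "$uid:$gid" "$home"
}
```

For example: getent passwd some-ipa-user | make_ipa_home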

4.1.2. Configuring Single Sign-On for Red Hat Enterprise Linux Virtual Machines Using Active Directory


Procedure 4.2. Configuring Single Sign-On for Red Hat Enterprise Linux Virtual Machines

- Log in to the Red Hat Enterprise Linux virtual machine.

- Enable the Red Hat Virtualization Agent repository:

- For Red Hat Enterprise Linux 6

# subscription-manager repos --enable=rhel-6-server-rhv-4-agent-rpms

- For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-rh-common-rpms

- Download and install the guest agent packages:

# yum install ovirt-guest-agent-common

- Install the single sign-on packages:

# yum install ovirt-guest-agent-gdm-plugin

- Install the Samba client packages:

# yum install samba-client samba-winbind samba-winbind-clients

- On the virtual machine, modify the /etc/samba/smb.conf file to contain the following, replacing DOMAIN with the short domain name and REALM.LOCAL with the Active Directory realm:

- Join the virtual machine to the domain:

# net ads join -U user_name

- Start the winbind service and ensure it starts on boot:

- For Red Hat Enterprise Linux 6

# service winbind start
# chkconfig winbind on

- For Red Hat Enterprise Linux 7

# systemctl start winbind.service
# systemctl enable winbind.service

- Verify that the system can communicate with Active Directory:

- Verify that a trust relationship has been created:

# wbinfo -t

- Verify that you can list users:

# wbinfo -u

- Verify that you can list groups:

# wbinfo -g
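You can run the three wbinfo checks together so that a failure is easy to spot. This minimal Bash sketch uses a hypothetical function name. It assumes that wbinfo from samba-winbind-clients is on the PATH, treats a non-zero exit status as a failed check, and discards the command output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the trust, user, and group checks from
# Procedure 4.2 and report each result. Returns 1 if any check fails.
check_winbind() {
    local failed=0 flag
    for flag in -t -u -g; do
        if wbinfo "$flag" >/dev/null 2>&1; then
            echo "wbinfo $flag: OK"
        else
            echo "wbinfo $flag: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

If the trust check fails, the user and group listings usually cannot succeed either. In that case, review the domain join before anything else.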

- Configure the NSS and PAM stack:

- Open the Authentication Configuration window:

# authconfig-tui

- Select the Use Winbind check box, select Next and press Enter.

- Select the OK button and press Enter.

4.1.3. Configuring Single Sign-On for Windows Virtual Machines

The RHEV Guest Tools ISO file provides the guest agent required for single sign-on. If the RHEV-toolsSetup.iso image is not available in your ISO domain, contact your system administrator.

Procedure 4.3. Configuring Single Sign-On for Windows Virtual Machines

- Select the Windows virtual machine. Ensure the machine is powered up.

- Click Change CD.

- Select RHEV-toolsSetup.iso from the list of images.

- Click OK.

- Click the Console icon and log in to the virtual machine.

- On the virtual machine, locate the CD drive to access the contents of the guest tools ISO file and launch RHEV-ToolsSetup.exe. After the tools have been installed, you will be prompted to restart the machine to apply the changes.

4.1.4. Disabling Single Sign-On for Virtual Machines

Procedure 4.4. Disabling Single Sign-On for Virtual Machines

- Select a virtual machine and click Edit.

- Click the Console tab.

- Select the Disable Single Sign On check box.

- Click OK.

4.2. Configuring USB Devices

4.2.1. Using USB Devices on Virtual Machines

- Client

- Red Hat Enterprise Linux 7.1 and later

- Red Hat Enterprise Linux 6.0 and later

- Windows 10

- Windows 8

- Windows 7

- Windows Server 2008

- Windows Server 2008 R2

- Guest

- Red Hat Enterprise Linux 7.1 and later

- Red Hat Enterprise Linux 6.0 and later

- Windows 7

- Windows XP

- Windows Server 2008

4.2.2. Using USB Devices on a Windows Client

Procedure 4.5. Using USB Devices on a Windows Client

- After the usbdk driver has been installed, select a virtual machine that has been configured to use the SPICE protocol.

- Ensure USB support is set to Enabled:

- Click Edit.

- Click the Console tab.

- Select Enabled from the USB Support drop-down list.

- Click OK.

- Click the button and select the Enable USB Auto-Share check box.

- Start the virtual machine and click the button to connect to that virtual machine. When you plug your USB device into the client machine, it will automatically be redirected to appear on your guest machine.

4.2.3. Using USB Devices on a Red Hat Enterprise Linux Client

Procedure 4.6. Using USB Devices on a Red Hat Enterprise Linux Client

- Click the Virtual Machines tab and select a virtual machine that has been configured to use the SPICE protocol.

- Ensure USB support is set to Enabled:

- Click Edit.

- Click the Console tab.

- Select Enabled from the USB Support drop-down list.

- Click OK.

- Click the button and select the Enable USB Auto-Share check box.

- Start the virtual machine and click the button to connect to that virtual machine. When you plug your USB device into the client machine, it will automatically be redirected to appear on your guest machine.

4.3. Configuring Multiple Monitors

4.3.1. Configuring Multiple Displays for Red Hat Enterprise Linux Virtual Machines

- Start a SPICE session with the virtual machine.

- Open the View drop-down menu at the top of the SPICE client window.

- Open the Display menu.

- Click the name of a display to enable or disable that display.

Note

By default, Display 1 is the only display that is enabled on starting a SPICE session with a virtual machine. If no other displays are enabled, disabling this display will close the session.

4.3.2. Configuring Multiple Displays for Windows Virtual Machines

- Click the Virtual Machines tab and select a virtual machine.

- With the virtual machine in a powered-down state, click Edit.

- Click the Console tab.

- Select the number of displays from the Monitors drop-down list.

Note

This setting controls the maximum number of displays that can be enabled for the virtual machine. While the virtual machine is running, additional displays can be enabled up to this number.

- Click OK.

- Start a SPICE session with the virtual machine.

- Open the View drop-down menu at the top of the SPICE client window.

- Open the Display menu.

- Click the name of a display to enable or disable that display.

Note

By default, Display 1 is the only display that is enabled on starting a SPICE session with a virtual machine. If no other displays are enabled, disabling this display will close the session.

4.4. Configuring Console Options

4.4.1. Console Options

Simple Protocol for Independent Computing Environments (SPICE) is the recommended connection protocol for both Linux virtual machines and Windows virtual machines. To open a console to a virtual machine using SPICE, use Remote Viewer.

Virtual Network Computing (VNC) can be used to open consoles to both Linux virtual machines and Windows virtual machines. To open a console to a virtual machine using VNC, use Remote Viewer or a VNC client.

Remote Desktop Protocol (RDP) can only be used to open consoles to Windows virtual machines, and is only available when you access a virtual machine from a Windows machine on which Remote Desktop has been installed. Before you can connect to a Windows virtual machine using RDP, you must set up remote sharing on the virtual machine and configure the firewall to allow remote desktop connections.

4.4.1.1. Accessing Console Options

Procedure 4.7. Accessing Console Options

- Select a running virtual machine.

- Open the Console Options window.

- In the Administration Portal, right-click the virtual machine and click Console Options.

- In the User Portal, click the Edit Console Options button.

Figure 4.1. The User Portal Edit Console Options Button

4.4.1.2. SPICE Console Options

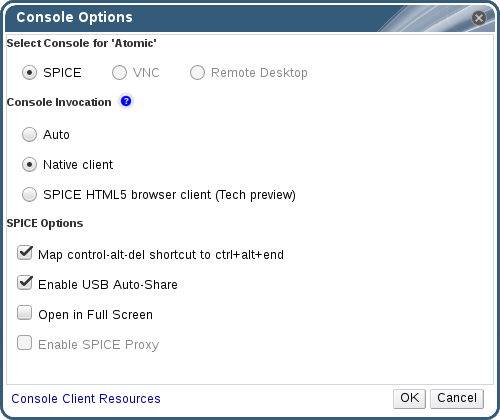

Figure 4.2. The Console Options window

Console Invocation

- Auto: The Manager automatically selects the method for invoking the console.

- Native client: When you connect to the console of the virtual machine, a file download dialog provides you with a file that opens a console to the virtual machine via Remote Viewer.

- SPICE HTML5 browser client (Tech preview): When you connect to the console of the virtual machine, a browser tab is opened that acts as the console.

SPICE Options

- Map control-alt-del shortcut to ctrl+alt+end: Select this check box to map the Ctrl+Alt+Del key combination to Ctrl+Alt+End inside the virtual machine.

- Enable USB Auto-Share: Select this check box to automatically redirect USB devices to the virtual machine. If this option is not selected, USB devices will connect to the client machine instead of the guest virtual machine. To use the USB device on the guest machine, manually enable it in the SPICE client menu.

- Open in Full Screen: Select this check box for the virtual machine console to automatically open in full screen when you connect to the virtual machine. Press SHIFT+F11 to toggle full screen mode on or off.

- Enable SPICE Proxy: Select this check box to enable the SPICE proxy.

- Enable WAN options: Select this check box to set the parameters WANDisableEffects and WANColorDepth to animation and 16 bits, respectively, on Windows virtual machines. Bandwidth in WAN environments is limited, and this option prevents certain Windows settings from consuming too much bandwidth.
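When the Native client invocation method is used, Remote Viewer reads its console settings from the downloaded console.vv file. The following Python sketch shows how the SPICE options above might be expressed in such a file. It is an illustration only: the function name is hypothetical, and the key names (secure-attention, enable-usb-autoshare, fullscreen, disable-effects, color-depth, delete-this-file) are assumed from the connection-file format described in the remote-viewer(1) man page rather than taken from this guide.

```python
import configparser
import io


def build_console_vv(host, port, *, title="Console", map_ctrl_alt_del=False,
                     usb_autoshare=False, fullscreen=False, wan_options=False):
    """Render a minimal [virt-viewer] connection file for a SPICE console.

    Key names are assumed from the remote-viewer(1) connection-file format;
    a file generated by the Manager contains additional connection settings.
    """
    # interpolation=None keeps values containing '%' (such as "%d" in titles) verbatim.
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # preserve key names exactly as written
    section = {
        "type": "spice",
        "host": host,
        "port": str(port),
        "title": title,
        "delete-this-file": "1",  # ask remote-viewer to delete the file after reading it
    }
    if map_ctrl_alt_del:
        # Assumed mapping: the client hotkey remote-viewer uses for the guest's
        # secure-attention (Ctrl+Alt+Del) sequence.
        section["secure-attention"] = "ctrl+alt+end"
    if usb_autoshare:
        section["enable-usb-autoshare"] = "1"
    if fullscreen:
        section["fullscreen"] = "1"
    if wan_options:
        # Assumed counterparts of WANDisableEffects=animation and WANColorDepth=16.
        section["disable-effects"] = "animation"
        section["color-depth"] = "16"
    cfg["virt-viewer"] = section
    buf = io.StringIO()
    cfg.write(buf, space_around_delimiters=False)
    return buf.getvalue()
```

For example, build_console_vv("host.example.com", 5900, fullscreen=True) returns the text of a .vv file. On its own it would not open a Manager-controlled console, because it omits the ticket (password) and TLS settings that a real connection needs.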

4.4.1.3. VNC Console Options

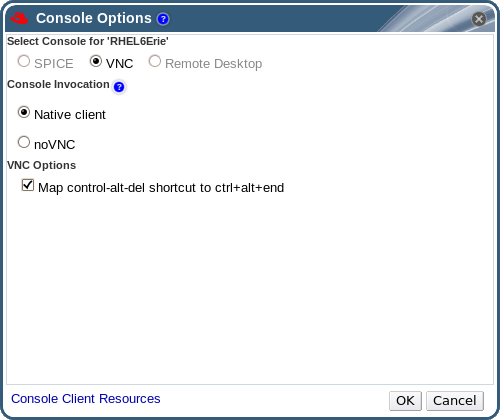

Figure 4.3. The Console Options window

Console Invocation

- Native Client: When you connect to the console of the virtual machine, a file download dialog provides you with a file that opens a console to the virtual machine via Remote Viewer.

- noVNC: When you connect to the console of the virtual machine, a browser tab is opened that acts as the console.

VNC Options

- Map control-alt-delete shortcut to ctrl+alt+end: Select this check box to map the Ctrl+Alt+Del key combination to Ctrl+Alt+End inside the virtual machine.

4.4.1.4. RDP Console Options

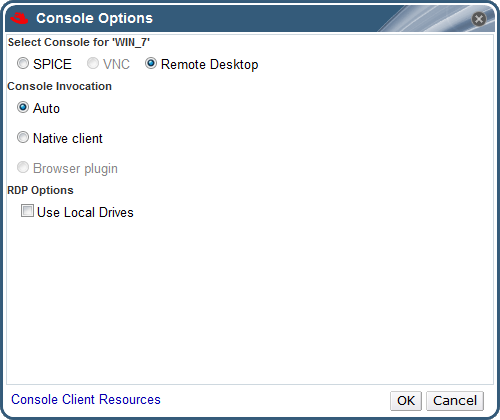

Figure 4.4. The Console Options window

Console Invocation

- Auto: The Manager automatically selects the method for invoking the console.

- Native client: When you connect to the console of the virtual machine, a file download dialog provides you with a file that opens a console to the virtual machine via Remote Desktop.

RDP Options

- Use Local Drives: Select this check box to make the drives on the client machine accessible on the guest virtual machine.

4.4.2. Remote Viewer Options

4.4.2.1. Remote Viewer Options

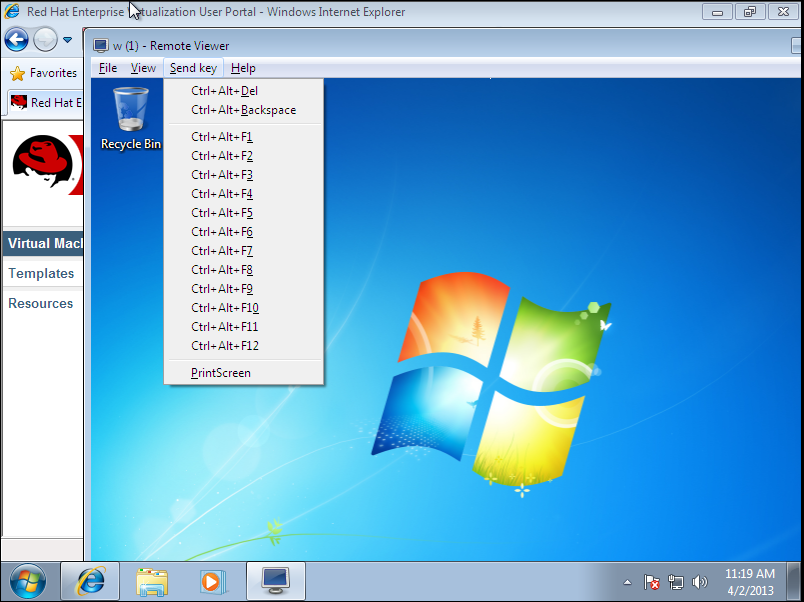

Figure 4.5. The Remote Viewer connection menu

| Option | Hotkey |
|---|---|
| File | |
| View | |
| Send key | |
| Help | The About entry displays the version details of Virtual Machine Viewer that you are using. |
| Release Cursor from Virtual Machine | SHIFT+F12 |

4.4.2.2. Remote Viewer Hotkeys

4.4.2.3. Manually Associating console.vv Files with Remote Viewer

If you are prompted to download a console.vv file when attempting to open a console to a virtual machine using the native client console option, and Remote Viewer is already installed, you can manually associate console.vv files with Remote Viewer so that Remote Viewer can automatically use those files to open consoles.

Procedure 4.8. Manually Associating console.vv Files with Remote Viewer

- Start the virtual machine.

- Open the Console Options window.

- In the Administration Portal, right-click the virtual machine and click Console Options.

- In the User Portal, click the Edit Console Options button.

Figure 4.6. The User Portal Edit Console Options Button

- Change the console invocation method to Native client and click OK.

- Attempt to open a console to the virtual machine, then click Save when prompted to open or save the console.vv file.

- Navigate to the location on your local machine where you saved the file.

- Double-click the console.vv file and select when prompted.

- In the Open with window, select and click the button.

- Navigate to the C:\Users\[user name]\AppData\Local\virt-viewer\bin directory and select remote-viewer.exe.

- Click and then click .

After you complete this procedure, Remote Viewer automatically uses the console.vv file that the Red Hat Virtualization Manager provides to open a console to that virtual machine, without prompting you to select the application to use.

4.5. Configuring a Watchdog

4.5.1. Adding a Watchdog Card to a Virtual Machine

Procedure 4.9. Adding Watchdog Cards to Virtual Machines

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the High Availability tab.

- Select the watchdog model to use from the Watchdog Model drop-down list.

- Select an action from the Watchdog Action drop-down list. This is the action that the virtual machine takes when the watchdog is triggered.

- Click OK.

4.5.2. Installing a Watchdog

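To activate a watchdog card attached to a virtual machine, install the watchdog package on that virtual machine and start the watchdog service.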

Procedure 4.10. Installing Watchdogs

- Log in to the virtual machine to which the watchdog card is attached.

- Install the watchdog package and dependencies:

# yum install watchdog

- Edit the /etc/watchdog.conf file and uncomment the following line:

watchdog-device = /dev/watchdog

- Save the changes.

- Start the watchdog service and ensure this service starts on boot:

- Red Hat Enterprise Linux 6:

# service watchdog start
# chkconfig watchdog on

- Red Hat Enterprise Linux 7:

# systemctl start watchdog.service
# systemctl enable watchdog.service
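If you prefer to script the edit step, the following Python sketch uncomments the watchdog-device line. It assumes the line is already present in the file and commented out with a leading #; the function names are hypothetical, and you still need to start and enable the watchdog service as described in the procedure.

```python
import re

# A commented-out setting such as "#watchdog-device = /dev/watchdog".
_COMMENTED_DEVICE = re.compile(r"^\s*#\s*(watchdog-device\s*=.*)$")


def enable_watchdog_device(conf_text):
    """Return conf_text with the first commented-out watchdog-device line uncommented."""
    lines = conf_text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        match = _COMMENTED_DEVICE.match(line.rstrip("\n"))
        if match:
            ending = "\n" if line.endswith("\n") else ""
            lines[i] = match.group(1) + ending
            break
    return "".join(lines)


def enable_in_file(path="/etc/watchdog.conf"):
    """Apply the edit in place (run as root on the virtual machine); return True if changed."""
    with open(path, encoding="utf-8") as handle:
        original = handle.read()
    updated = enable_watchdog_device(original)
    if updated != original:
        with open(path, "w", encoding="utf-8") as handle:
            handle.write(updated)
    return updated != original
```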

4.5.3. Confirming Watchdog Functionality

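Use the following procedure to confirm that a watchdog card attached to a virtual machine has been identified by the virtual machine and that the watchdog service is active.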

Procedure 4.11. Confirming Watchdog Functionality

- Log in to the virtual machine to which the watchdog card is attached.

- Confirm that the watchdog card has been identified by the virtual machine:

# lspci | grep -i watchdog

- Run one of the following commands to confirm that the watchdog is active:

- Trigger a kernel panic:

# echo c > /proc/sysrq-trigger

- Terminate the watchdog service:

# kill -9 $(pgrep -x watchdog)

4.5.4. Parameters for Watchdogs in watchdog.conf

The following table describes the options for configuring the watchdog service that are available in the /etc/watchdog.conf file. To configure an option, uncomment that option, save the changes, and restart the watchdog service.

Note

For more information on configuring the watchdog service and using the watchdog command, see the watchdog man page.

| Variable name | Default Value | Remarks |
|---|---|---|
| ping | N/A | An IP address that the watchdog attempts to ping to verify whether that address is reachable. You can specify multiple IP addresses by adding additional ping lines. |
| interface | N/A | A network interface that the watchdog monitors to verify the presence of network traffic. You can specify multiple network interfaces by adding additional interface lines. |
| file | /var/log/messages | A file on the local system that the watchdog monitors for changes. You can specify multiple files by adding additional file lines. |
| change | 1407 | The number of watchdog intervals after which the watchdog checks for changes to files. A change line must be specified on the line directly after each file line, and applies to the file line directly above that change line. |
| max-load-1 | 24 | The maximum average load that the virtual machine can sustain over a one-minute period. If this average is exceeded, the watchdog is triggered. A value of 0 disables this feature. |
| max-load-5 | 18 | The maximum average load that the virtual machine can sustain over a five-minute period. If this average is exceeded, the watchdog is triggered. A value of 0 disables this feature. By default, the value of this variable is set to approximately three quarters of max-load-1. |
| max-load-15 | 12 | The maximum average load that the virtual machine can sustain over a fifteen-minute period. If this average is exceeded, the watchdog is triggered. A value of 0 disables this feature. By default, the value of this variable is set to approximately one half of max-load-1. |
| min-memory | 1 | The minimum amount of virtual memory that must remain free on the virtual machine. This value is measured in pages. A value of 0 disables this feature. |
| repair-binary | /usr/sbin/repair | The path and file name of a binary file on the local system that is run when the watchdog is triggered. If the specified file resolves the issues preventing the watchdog from resetting the watchdog counter, the watchdog action is not triggered. |
| test-binary | N/A | The path and file name of a binary file on the local system that the watchdog attempts to run during each interval. A test binary allows you to specify a file for running user-defined tests. |
| test-timeout | N/A | The time limit, in seconds, for which user-defined tests can run. A value of 0 allows user-defined tests to continue for an unlimited duration. |
| temperature-device | N/A | The path and name of a device for checking the temperature of the machine on which the watchdog service is running. |
| max-temperature | 120 | The maximum allowed temperature for the machine on which the watchdog service is running. The machine is halted if this temperature is reached. Unit conversion is not taken into account, so you must specify a value that matches the watchdog card being used. |
| admin | root | The email address to which email notifications are sent. |
| interval | 10 | The interval, in seconds, between updates to the watchdog device. The watchdog device expects an update at least once every minute, and if there are no updates over a one-minute period, the watchdog is triggered. This one-minute period is hard-coded into the drivers for the watchdog device and cannot be configured. |
| logtick | 1 | When verbose logging is enabled for the watchdog service, the watchdog service periodically writes log messages to the local system. The logtick value represents the number of watchdog intervals after which a message is written. |
| realtime | yes | Specifies whether the watchdog is locked in memory. A value of yes locks the watchdog in memory so that it is not swapped out, while a value of no allows the watchdog to be swapped out of memory. If the watchdog is swapped out of memory and is not swapped back in before the watchdog counter reaches zero, the watchdog is triggered. |
| priority | 1 | The scheduling priority when the value of realtime is set to yes. |
| pidfile | /var/run/syslogd.pid | The path and file name of a PID file that the watchdog monitors to see if the corresponding process is still active. If the corresponding process is not active, the watchdog is triggered. |
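The pairing rule for file and change lines, and the relationship between the max-load defaults, are easy to get wrong when editing by hand. The following Python sketch (a hypothetical helper, not part of the watchdog package) parses the uncommented settings of a watchdog.conf file, reports file and change lines that are not adjacent as the table requires, and reproduces the approximate max-load-5 and max-load-15 defaults from max-load-1. It treats text after # as a comment and checks adjacency between settings only, ignoring any blank or comment lines in between.

```python
def parse_watchdog_conf(text):
    """Return (name, value) pairs for uncommented "name = value" lines, in file order."""
    pairs = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if "=" not in line:
            continue
        name, value = (part.strip() for part in line.split("=", 1))
        pairs.append((name, value))
    return pairs


def check_file_change_pairs(pairs):
    """List places where a file setting and its change setting are not adjacent."""
    problems = []
    for i, (name, _) in enumerate(pairs):
        prev_name = pairs[i - 1][0] if i > 0 else None
        next_name = pairs[i + 1][0] if i + 1 < len(pairs) else None
        if name == "file" and next_name != "change":
            problems.append(f"setting {i}: 'file' is not directly followed by 'change'")
        if name == "change" and prev_name != "file":
            problems.append(f"setting {i}: 'change' does not directly follow 'file'")
    return problems


def default_load_limits(max_load_1):
    """Approximate max-load-5 (about 3/4) and max-load-15 (about 1/2) defaults."""
    return round(max_load_1 * 3 / 4), round(max_load_1 / 2)
```

For example, default_load_limits(24) returns (18, 12), which matches the defaults listed in the table.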

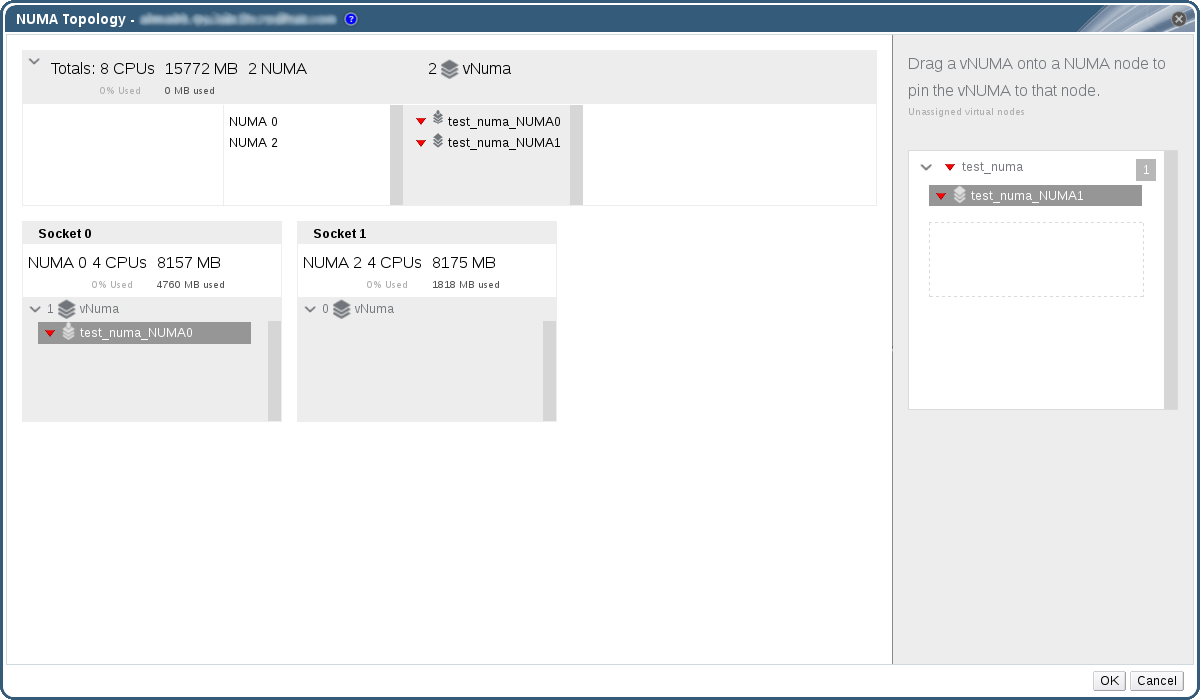

4.6. Configuring Virtual NUMA

To view the NUMA topology of a host, log in to the host and run numactl --hardware. The output of this command should show at least two NUMA nodes. You can also view the host's NUMA topology in the Administration Portal by selecting the host from the Hosts tab and clicking . This button is only available when the selected host has at least two NUMA nodes.
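To script this host check, you can parse the first line of the numactl --hardware output, which reports the number of available nodes (for example, available: 2 nodes (0-1)). The following Python sketch assumes that output format; the function names are hypothetical.

```python
import re
import subprocess

# First line of "numactl --hardware", e.g. "available: 2 nodes (0-1)".
_AVAILABLE = re.compile(r"^available:\s+(\d+)\s+nodes?\b", re.MULTILINE)


def numa_node_count(output):
    """Return the node count reported on the 'available:' line."""
    match = _AVAILABLE.search(output)
    if match is None:
        raise ValueError("no 'available:' line found in numactl output")
    return int(match.group(1))


def host_supports_virtual_numa(output):
    """Virtual NUMA configuration needs a host with at least two NUMA nodes."""
    return numa_node_count(output) >= 2


def read_local_topology():
    """Run numactl on this host (requires the numactl package)."""
    result = subprocess.run(["numactl", "--hardware"], capture_output=True,
                            text=True, check=True)
    return result.stdout
```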

Procedure 4.12. Configuring Virtual NUMA

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the Host tab.

- Select the Specific Host(s) radio button and select a host from the list. The selected host must have at least two NUMA nodes.

- Select Do not allow migration from the Migration Options drop-down list.

- Enter a number into the NUMA Node Count field to assign virtual NUMA nodes to the virtual machine.

- Select Strict, Preferred, or Interleave from the Tune Mode drop-down list. If the selected mode is Preferred, the NUMA Node Count must be set to 1.

- Click .

Figure 4.7. The NUMA Topology Window

- In the NUMA Topology window, click and drag virtual NUMA nodes from the box on the right to host NUMA nodes on the left as required, and click OK.

- Click OK.

4.7. Configuring Red Hat Satellite Errata Management for a Virtual Machine

- The host that the virtual machine runs on also needs to be configured to receive errata information from Satellite. See Configuring Satellite Errata Management for a Host in the Administration Guide for more information.

- The virtual machine must have the ovirt-guest-agent package installed. This package allows the virtual machine to report its host name to the Red Hat Virtualization Manager, which allows the Red Hat Satellite server to identify the virtual machine as a content host and report the applicable errata. For more information on installing the ovirt-guest-agent package, see Section 2.4.2, “Installing the Guest Agents and Drivers on Red Hat Enterprise Linux” for Red Hat Enterprise Linux virtual machines and Section 3.3.2, “Installing the Guest Agents and Drivers on Windows” for Windows virtual machines.

Procedure 4.13. Configuring Red Hat Satellite Errata Management

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the Foreman/Satellite tab.

- Select the required Satellite server from the Provider drop-down list.

- Click OK.

4.8. Configuring Headless Virtual Machines

Prerequisites

- If you are editing an existing virtual machine and the Red Hat Virtualization guest agent has not been installed, note the machine's IP address before selecting Headless Mode.

- Before running a virtual machine in headless mode, set the GRUB configuration for this machine to console mode; otherwise, the guest operating system's boot process will hang. To set console mode, comment out the splashimage flag in the GRUB menu configuration file, and make sure the serial and terminal lines shown below are present:

#splashimage=(hd0,0)/grub/splash.xpm.gz
serial --unit=0 --speed=9600 --parity=no --stop=1
terminal --timeout=2 serial
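On a Red Hat Enterprise Linux 6 guest, which uses GRUB Legacy, the menu configuration file is typically /boot/grub/grub.conf; confirm the path on your guest before editing it. The following Python sketch applies the splashimage change to the file's text and checks that the serial and terminal lines shown above are active. The function names are hypothetical, and the functions operate on a string so that you can review the result before writing it back.

```python
import re

# An active (uncommented) splashimage line, possibly indented with spaces or tabs.
_SPLASH = re.compile(r"^([ \t]*)(splashimage=)", re.MULTILINE)


def comment_out_splashimage(grub_conf):
    """Prefix every active splashimage line with '#'; other lines are unchanged."""
    return _SPLASH.sub(r"\1#\2", grub_conf)


def has_serial_console(grub_conf):
    """True if uncommented 'serial ...' and 'terminal ... serial' directives are present."""
    active = [line.strip() for line in grub_conf.splitlines()
              if line.strip() and not line.lstrip().startswith("#")]
    has_serial = any(line.startswith("serial ") for line in active)
    has_terminal = any(line.startswith("terminal ") and line.split()[-1] == "serial"
                       for line in active)
    return has_serial and has_terminal
```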

Procedure 4.14. Configuring a Headless Virtual Machine

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the Console tab.

- Select Headless Mode. All other fields in the Graphical Console section are disabled.

- Optionally, select Enable VirtIO serial console to enable communication with the virtual machine through a serial console. This is highly recommended.

- Reboot the virtual machine if it is running. See Section 6.3, “Rebooting a Virtual Machine”.

Chapter 5. Editing Virtual Machines

5.1. Editing Virtual Machine Properties

Procedure 5.1. Editing Virtual Machines

- Select the virtual machine to be edited.

- Click Edit.

- Change settings as required. Changes to the following settings are applied immediately:

- Name

- Description

- Comment

- Optimized for (Desktop/Server)

- Delete Protection

- Network Interfaces

- Memory Size (Edit this field to hot plug virtual memory. See Section 5.5, “Hot Plugging Virtual Memory”.)

- Virtual Sockets (Edit this field to hot plug CPUs. See Section 5.6, “Hot Plugging vCPUs”.)

- Use custom migration downtime

- Highly Available

- Priority for Run/Migration queue

- Disable strict user checking

- Icon

- Click OK.

- If the Next Start Configuration pop-up window appears, click .

Changes to all other settings are applied when you shut down and restart the virtual machine. Until then, the pending changes icon appears as a reminder of the pending changes.

5.2. Editing IO Threads

Procedure 5.2. Editing IO Threads

- Select the virtual machine to be edited.

- Click Edit.

- Click the Resource Allocation tab.

- Select the IO Threads Enabled check box. Red Hat recommends using the default number of IO threads, which is 1.

- Click OK.

- Click the Reboot icon to restart the virtual machine. If you increased the number of IO threads, you must reactivate the disks so that the disks are remapped according to the correct number of controllers:

- Click the Shutdown icon to stop the virtual machine.

- Click the Disks tab in the details pane.

- Select each disk and click Deactivate.

- Select each disk and click Activate.

- Click the Run icon to start the virtual machine.

Procedure 5.3. Viewing Disk Controller Assignment

- Log in to the host machine.

- Use the dumpxml command to view the mapping of disks to controllers:

# virsh -r dumpxml virtual_machine_name
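The dumpxml output is libvirt domain XML, so the disk-to-controller mapping can also be extracted programmatically. The following Python sketch assumes you saved the output to a file (for example, # virsh -r dumpxml virtual_machine_name > vm.xml) and that it follows libvirt's domain XML format: SCSI disks reference a controller index in an address element of type drive, and an IO thread can appear as an iothread attribute on a disk's or a controller's driver element. The function name is hypothetical, and the exact layout that Red Hat Virtualization generates may vary by version.

```python
import xml.etree.ElementTree as ET


def disk_controller_map(domain_xml):
    """Map each disk's target device to its bus, SCSI controller index, and IO thread."""
    root = ET.fromstring(domain_xml)
    devices = root.find("devices")
    if devices is None:
        return {}

    # IO thread assigned to each SCSI controller, keyed by controller index.
    controller_threads = {}
    for ctrl in devices.findall("controller[@type='scsi']"):
        driver = ctrl.find("driver")
        controller_threads[ctrl.get("index")] = (
            driver.get("iothread") if driver is not None else None)

    mapping = {}
    for disk in devices.findall("disk"):
        target = disk.find("target")
        if target is None:
            continue
        driver = disk.find("driver")
        iothread = driver.get("iothread") if driver is not None else None
        address = disk.find("address[@type='drive']")
        controller = address.get("controller") if address is not None else None
        if iothread is None and controller is not None:
            # A SCSI disk is served by the IO thread of the controller it is attached to.
            iothread = controller_threads.get(controller)
        mapping[target.get("dev")] = {
            "bus": target.get("bus"),
            "controller": controller,
            "iothread": iothread,
        }
    return mapping
```

For example, disk_controller_map(open("vm.xml").read()) returns a dictionary keyed by target device name, such as sda or vda.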

5.3. Network Interfaces

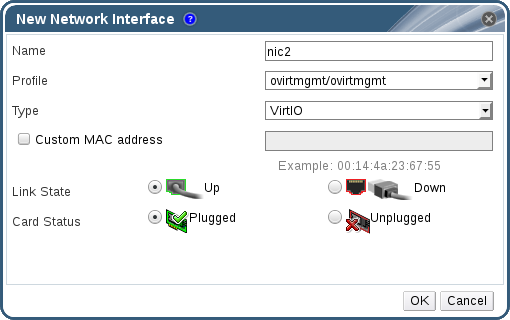

5.3.1. Adding a New Network Interface

Procedure 5.4. Adding Network Interfaces to Virtual Machines

- Click the Virtual Machines tab and select a virtual machine.

- Click the Network Interfaces tab in the details pane.

- Click New.

Figure 5.1. New Network Interface window

- Enter the Name of the network interface.

- Use the drop-down lists to select the Profile and the Type of the network interface. The Profile and Type drop-down lists are populated in accordance with the profiles and network types available to the cluster and the network interface cards available to the virtual machine.

- Select the Custom MAC address check box and enter a MAC address for the network interface card as required.

- Click OK.
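If you enter a custom MAC address, it must be a well-formed unicast address. The following Python sketch is a hypothetical pre-check based on general Ethernet addressing rules (six octets, with the multicast bit of the first octet clear); it does not reproduce the Manager's own validation and does not check for conflicts with addresses already in use.

```python
import re

_MAC = re.compile(r"[0-9A-Fa-f]{2}(?::[0-9A-Fa-f]{2}){5}")


def check_custom_mac(mac):
    """Return a list of problems with a MAC address intended for a virtual NIC."""
    if not _MAC.fullmatch(mac):
        return ["expected six colon-separated hexadecimal octets, such as 00:1a:4a:16:01:51"]
    problems = []
    first_octet = int(mac[:2], 16)
    if first_octet & 0x01:
        # The least significant bit of the first octet marks a group (multicast) address.
        problems.append("multicast bit is set; a NIC needs a unicast address")
    if int(mac.replace(":", ""), 16) == 0:
        problems.append("the all-zero address is not usable")
    return problems
```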

5.3.2. Editing a Network Interface

Procedure 5.5. Editing Network Interfaces

- Click the Virtual Machines tab and select a virtual machine.

- Click the Network Interfaces tab in the details pane and select the network interface to edit.

- Click Edit.

- Change settings as required. You can specify the Name, Profile, Type, and Custom MAC address. See Section 5.3.1, “Adding a New Network Interface”.

- Click OK.

5.3.3. Hot Plugging a Network Interface

Procedure 5.6. Hot Plugging Network Interfaces

- Click the Virtual Machines tab and select a virtual machine.

- Click the Network Interfaces tab in the details pane and select the network interface to hot plug.

- Click Edit.

- Set the Card Status to Plugged to enable the network interface, or set it to Unplugged to disable the network interface.

- Click OK.

5.3.4. Removing a Network Interface

Procedure 5.7. Removing Network Interfaces

- Click the Virtual Machines tab and select a virtual machine.

- Click the Network Interfaces tab in the details pane and select the network interface to remove.

- Click Remove.

- Click OK.

5.4. Virtual Disks

5.4.1. Adding a New Virtual Disk

Procedure 5.8. Adding Disks to Virtual Machines

- Click the Virtual Machines tab and select a virtual machine.

- Click the Disks tab in the details pane.

- Click New.

Figure 5.2. The New Virtual Disk Window

- Use the appropriate radio buttons to switch between Image, Direct LUN, and Cinder. Virtual disks added in the User Portal can only be Image disks. Direct LUN and Cinder disks can be added in the Administration Portal.

- Enter a Size(GB), Alias, and Description for the new disk.

- Use the drop-down lists and check boxes to configure the disk. See Section A.3, “Explanation of Settings in the New Virtual Disk and Edit Virtual Disk Windows” for more details on the fields for all disk types.

- Click OK.

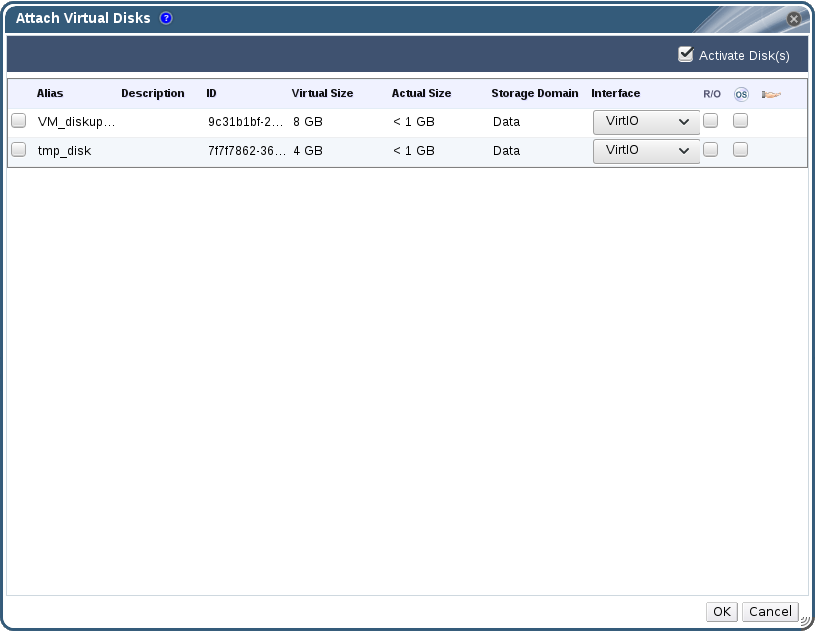

5.4.2. Attaching an Existing Disk to a Virtual Machine

Procedure 5.9. Attaching Virtual Disks to Virtual Machines

- Click the Virtual Machines tab and select a virtual machine.

- Click the Disks tab in the details pane.

- Click Attach.

Figure 5.3. The Attach Virtual Disks Window

- Select one or more virtual disks from the list of available disks and select the required interface from the Interface drop-down list.

- Click OK.

5.4.3. Extending the Available Size of a Virtual Disk

After extending a virtual disk, use the fdisk utility in the virtual machine to resize the partitions, and resize the file systems as required. See How to Resize a Partition using fdisk for more information.

Procedure 5.10. Extending the Available Size of Virtual Disks

- Click the Virtual Machines tab and select a virtual machine.

- Click the Disks tab in the details pane and select the disk to edit.

- Click Edit.

- Enter a value in the Extend size by(GB) field.

- Click OK.

The status of the target disk becomes locked for a short time, during which the drive is resized. When resizing is complete, the status of the drive becomes OK.

5.4.4. Hot Plugging a Virtual Disk

Procedure 5.11. Hot Plugging Virtual Disks

- Click the Virtual Machines tab and select a virtual machine.

- Click the Disks tab in the details pane and select the virtual disk to hot plug.

- Click Activate to enable the disk, or click Deactivate to disable the disk.

- Click OK.

5.4.5. Removing a Virtual Disk from a Virtual Machine

Procedure 5.12. Removing Virtual Disks From Virtual Machines

- Click the Virtual Machines tab and select a virtual machine.

- Click the Disks tab in the details pane and select the virtual disk to remove.

- Click Deactivate.

- Click OK.

- Click Remove.

- Optionally, select the Remove Permanently check box to completely remove the virtual disk from the environment. If you do not select this option (for example, because the disk is a shared disk), the virtual disk remains in the Disks resource tab.

- Click OK.

If Discard After Delete is enabled for the storage domain, a blkdiscard command is called on the logical volume when it is removed, and the underlying storage is notified that the blocks are free. See Setting Discard After Delete for a Storage Domain in the Administration Guide. A blkdiscard is also called on the logical volume when a virtual disk is removed if the virtual disk is attached to at least one virtual machine with the Enable Discard check box selected.

5.4.6. Importing a Disk Image from an Imported Storage Domain

Procedure 5.13. Importing a Disk Image

- Select a storage domain that has been imported into the data center.

- In the details pane, click Disk Import.

- Select one or more disk images and click Import to open the Import Disk(s) window.

- Select the appropriate Disk Profile for each disk.

- Click OK to import the selected disks.

5.4.7. Importing an Unregistered Disk Image from an Imported Storage Domain

Procedure 5.14. Importing a Disk Image

- Select a storage domain that has been imported into the data center.

- Right-click the storage domain and select Scan Disks so that the Manager can identify unregistered disks.

- In the details pane, click Disk Import.

- Select one or more disk images and click Import to open the Import Disk(s) window.

- Select the appropriate Disk Profile for each disk.

- Click OK to import the selected disks.

5.5. Hot Plugging Virtual Memory

Procedure 5.15. Hot Plugging Virtual Memory

- Click the Virtual Machines tab and select a running virtual machine.

- Click Edit.

- Click the System tab.

- Increase the Memory Size by entering the total amount required. Memory can be added in multiples of 256 MB. By default, the maximum memory allowed for the virtual machine is set to four times the specified memory size. Although this maximum value changes in the user interface, it is not hot plugged, and the pending changes icon appears. To avoid this, change the maximum memory back to its original value.

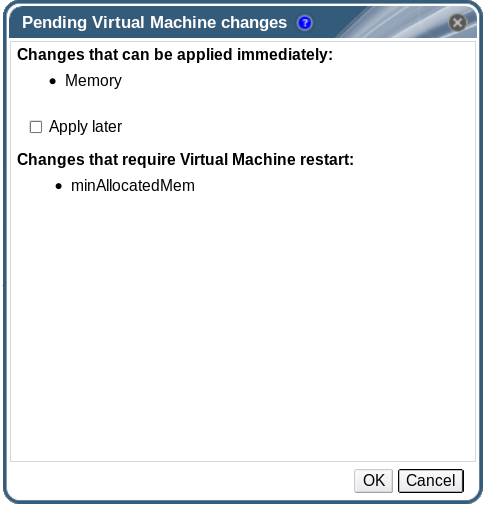

- Click OK. This action opens the Pending Virtual Machine changes window, because some values, such as maxMemorySizeMb and minAllocatedMem, will not change until the virtual machine is restarted. However, the hot plug action is triggered by the change to the Memory Size value, which can be applied immediately.

Figure 5.4. Hot Plug Virtual Memory

- Click OK.
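The arithmetic behind the Memory Size field can be sketched in Python as follows. The sketch assumes that the 256 MB rule applies to the amount being added (the difference between the new and the current Memory Size), and the function names are hypothetical.

```python
HOTPLUG_STEP_MB = 256  # memory can be added in multiples of 256 MB


def hotplug_increment_mb(current_mb, requested_total_mb):
    """Return the memory to be hot plugged, validating the 256 MB step rule."""
    added = requested_total_mb - current_mb
    if added <= 0:
        raise ValueError("the new Memory Size must be larger than the current one")
    if added % HOTPLUG_STEP_MB:
        raise ValueError(f"{added} MB is not a multiple of {HOTPLUG_STEP_MB} MB")
    return added


def smallest_valid_total_mb(current_mb, requested_total_mb):
    """Round a requested total up so that the added memory is a 256 MB multiple."""
    added = max(requested_total_mb - current_mb, HOTPLUG_STEP_MB)
    steps = -(-added // HOTPLUG_STEP_MB)  # ceiling division
    return current_mb + steps * HOTPLUG_STEP_MB


def default_max_memory_mb(memory_mb):
    """Default maximum memory: four times the specified memory size."""
    return 4 * memory_mb
```

For example, with 4096 MB currently assigned, a request for 5000 MB would need to be rounded up to 5120 MB, which hot plugs 1024 MB, and default_max_memory_mb(4096) returns 16384.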

5.6. Hot Plugging vCPUs

Important

- The virtual machine's Operating System must be explicitly set in the New Virtual Machine or Edit Virtual Machine window.

- The virtual machine's operating system must support CPU hot plug. See the table below for support details.

- Windows virtual machines must have the guest agents installed. See Section 3.3.2, “Installing the Guest Agents and Drivers on Windows”.

Procedure 5.16. Hot Plugging vCPUs

- Click the Virtual Machines tab and select a running virtual machine.

- Click Edit.

- Click the System tab.

- Change the value of Virtual Sockets as required.

- Click OK.

| Operating System | Version | Architecture | Hot Plug Supported | Hot Unplug Supported |
|---|---|---|---|---|
| Red Hat Enterprise Linux Atomic Host 7 | | x86 | Yes | Yes |
| Red Hat Enterprise Linux 6.3+ | | x86 | Yes | Yes |
| Red Hat Enterprise Linux 7.0+ | | x86 | Yes | Yes |
| Red Hat Enterprise Linux 7.3+ | | PPC64 | Yes | Yes |
| Microsoft Windows Server 2008 | All | x86 | No | No |
| Microsoft Windows Server 2008 | Standard, Enterprise | x64 | No | No |
| Microsoft Windows Server 2008 | Datacenter | x64 | Yes | No |
| Microsoft Windows Server 2008 R2 | All | x86 | No | No |
| Microsoft Windows Server 2008 R2 | Standard, Enterprise | x64 | No | No |
| Microsoft Windows Server 2008 R2 | Datacenter | x64 | Yes | No |
| Microsoft Windows Server 2012 | All | x64 | Yes | No |
| Microsoft Windows Server 2012 R2 | All | x64 | Yes | No |
| Microsoft Windows Server 2016 | Standard, Datacenter | x64 | Yes | No |
| Microsoft Windows 7 | All | x86 | No | No |
| Microsoft Windows 7 | Starter, Home, Home Premium, Professional | x64 | No | No |
| Microsoft Windows 7 | Enterprise, Ultimate | x64 | Yes | No |
| Microsoft Windows 8.x | All | x86 | Yes | No |
| Microsoft Windows 8.x | All | x64 | Yes | No |
| Microsoft Windows 10 | All | x86 | Yes | No |
| Microsoft Windows 10 | All | x64 | Yes | No |
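If you need to consult this table from a script (for example, before changing Virtual Sockets on many virtual machines), the rows can be transcribed into a lookup like the following Python sketch. The data structure and function name are hypothetical; the values are copied from the table above, an empty editions tuple stands for All or a blank Version cell, and version strings such as 6.3+ are matched literally rather than interpreted as ranges.

```python
# (operating system, editions, architecture, hot plug, hot unplug), copied from the table.
# An empty editions tuple means the row applies to every edition.
SUPPORT = [
    ("Red Hat Enterprise Linux Atomic Host 7", (), "x86", True, True),
    ("Red Hat Enterprise Linux 6.3+", (), "x86", True, True),
    ("Red Hat Enterprise Linux 7.0+", (), "x86", True, True),
    ("Red Hat Enterprise Linux 7.3+", (), "PPC64", True, True),
    ("Microsoft Windows Server 2008", (), "x86", False, False),
    ("Microsoft Windows Server 2008", ("Standard", "Enterprise"), "x64", False, False),
    ("Microsoft Windows Server 2008", ("Datacenter",), "x64", True, False),
    ("Microsoft Windows Server 2008 R2", (), "x86", False, False),
    ("Microsoft Windows Server 2008 R2", ("Standard", "Enterprise"), "x64", False, False),
    ("Microsoft Windows Server 2008 R2", ("Datacenter",), "x64", True, False),
    ("Microsoft Windows Server 2012", (), "x64", True, False),
    ("Microsoft Windows Server 2012 R2", (), "x64", True, False),
    ("Microsoft Windows Server 2016", ("Standard", "Datacenter"), "x64", True, False),
    ("Microsoft Windows 7", (), "x86", False, False),
    ("Microsoft Windows 7", ("Starter", "Home", "Home Premium", "Professional"), "x64",
     False, False),
    ("Microsoft Windows 7", ("Enterprise", "Ultimate"), "x64", True, False),
    ("Microsoft Windows 8.x", (), "x86", True, False),
    ("Microsoft Windows 8.x", (), "x64", True, False),
    ("Microsoft Windows 10", (), "x86", True, False),
    ("Microsoft Windows 10", (), "x64", True, False),
]


def vcpu_hotplug_support(os_name, arch, edition=None):
    """Return (hot_plug, hot_unplug) for the first matching row, or None if none matches."""
    for name, editions, row_arch, plug, unplug in SUPPORT:
        if name == os_name and row_arch == arch and (not editions or edition in editions):
            return plug, unplug
    return None
```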

5.7. Pinning a Virtual Machine to Multiple Hosts

Procedure 5.17. Pinning Virtual Machines to Multiple Hosts

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the Host tab.

- Select the Specific Host(s) radio button under Start Running On and select two or more hosts from the list.

- Select Do not allow migration from the Migration Options drop-down list.

- Click the High Availability tab.

- Select the Highly Available check box.

- Select Low, Medium, or High from the Priority drop-down list. When migration is triggered, a queue is created in which the high priority virtual machines are migrated first. If a cluster is running low on resources, only the high priority virtual machines are migrated.

- Click OK.
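The priority rule described in the steps above can be modeled as an ordered queue. The bash sketch below only illustrates that rule as stated here; it is not the Manager's scheduling code. The function name `order_migration_queue`, the `<vm-name> <priority>` input format, and the `--low-resources` flag are all hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical illustration of the rule stated above, not the Manager's code.
# Reads "<vm-name> <Low|Medium|High>" lines on stdin and prints VM names with
# High first, then Medium, then Low, keeping input order within each priority.
# With --low-resources, only High priority virtual machines are printed.
order_migration_queue() {
  local only_high=0 seq=0
  if [ "${1:-}" = "--low-resources" ]; then
    only_high=1
  fi
  while read -r name prio; do
    [ -n "$name" ] || continue
    case "$prio" in
      High)   rank=0 ;;
      Medium) rank=1 ;;
      Low)    rank=2 ;;
      *) echo "skipping $name: unknown priority '$prio'" >&2; continue ;;
    esac
    if [ "$only_high" -eq 1 ] && [ "$rank" -ne 0 ]; then
      continue
    fi
    # The sequence number keeps the sort stable within one priority level.
    seq=$((seq + 1))
    printf '%s %s %s\n' "$rank" "$seq" "$name"
  done | sort -k1,1n -k2,2n | cut -d' ' -f3-
}
```

For example, feeding `web1 Low`, `db1 High`, `app1 Medium`, and `db2 High` on separate lines prints `db1`, `db2`, `app1`, `web1`; with `--low-resources`, only `db1` and `db2` are printed.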

5.8. Changing the CD for a Virtual Machine


Procedure 5.18. Changing the CD for a Virtual Machine

- Click the Virtual Machines tab and select a running virtual machine.

- Click Change CD.

- Select an option from the drop-down list:

- Select an ISO file from the list to eject the CD currently accessible to the virtual machine and mount that ISO file as a CD.

- Select [Eject] from the list to eject the CD currently accessible to the virtual machine.

- Click OK.

5.9. Smart Card Authentication

Procedure 5.19. Enabling Smart Cards

- Ensure that the smart card hardware is plugged into the client machine and is installed according to the manufacturer's directions.

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the Console tab and select the Smartcard enabled check box.

- Click OK.

- Connect to the running virtual machine by clicking the Console icon. Smart card authentication is now passed from the client hardware to the virtual machine.


Procedure 5.20. Disabling Smart Cards

- Click the Virtual Machines tab and select a virtual machine.

- Click Edit.

- Click the Console tab, and clear the Smartcard enabled check box.

- Click OK.

Procedure 5.21. Configuring Client Systems for Smart Card Sharing

- Smart cards may require certain libraries in order to access their certificates. These libraries must be visible to the NSS library, which spice-gtk uses to provide the smart card to the guest. NSS expects the libraries to provide the PKCS #11 interface.

- Make sure that the architecture of the module matches the architecture of spice-gtk and remote-viewer. For instance, if only a 32-bit PKCS #11 library is available, you must install the 32-bit build of virt-viewer for smart cards to work.
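On a Red Hat Enterprise Linux client, one way to check the architecture match described above is to compare the ELF class (32-bit or 64-bit) of the PKCS #11 library with that of the remote-viewer binary. The bash sketch below reads the ELF header directly with `od`. The helper names `elf_class` and `check_arch_match` are hypothetical, and the library path you pass in is whatever your middleware vendor installs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "32" or "64" for an ELF file, based on the
# EI_CLASS byte at offset 4 of the ELF header (1 = 32-bit, 2 = 64-bit).
elf_class() {
  local magic class
  # The first four bytes of any ELF file are 0x7f 'E' 'L' 'F'.
  magic=$(od -An -tx1 -N4 "$1" 2>/dev/null | tr -d ' \n')
  if [ "$magic" != "7f454c46" ]; then
    echo "unknown"
    return 1
  fi
  class=$(od -An -tu1 -j4 -N1 "$1" | tr -d ' \n')
  case "$class" in
    1) echo 32 ;;
    2) echo 64 ;;
    *) echo "unknown"; return 1 ;;
  esac
}

# Hypothetical helper: compare a PKCS #11 library with remote-viewer
# (or with another binary given as the second argument).
check_arch_match() {
  local lib="$1" viewer="${2:-$(command -v remote-viewer)}"
  local lib_bits viewer_bits
  lib_bits=$(elf_class "$lib") || { echo "cannot read $lib" >&2; return 2; }
  viewer_bits=$(elf_class "$viewer") || { echo "cannot read $viewer" >&2; return 2; }
  if [ "$lib_bits" = "$viewer_bits" ]; then
    echo "match: ${lib_bits}-bit"
  else
    echo "mismatch: library ${lib_bits}-bit, viewer ${viewer_bits}-bit"
    return 1
  fi
}
```

Running `check_arch_match` with the path of your vendor's module prints either `match: 64-bit` (or `32-bit`) or a `mismatch` line naming both word sizes. This check does not apply to Windows clients, whose binaries are not ELF files.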

Procedure 5.22. Configuring RHEL clients with CoolKey Smart Card Middleware

- CoolKey Smart Card middleware is a part of Red Hat Enterprise Linux. Install the Smart card support group. If the Smart card support group is installed on a Red Hat Enterprise Linux system, smart cards are redirected to the guest when smart cards are enabled. The following command installs the Smart card support group:

# yum groupinstall "Smart card support"

Procedure 5.23. Configuring RHEL clients with Other Smart Card Middleware

- Register the library in the system's NSS database. Run the following command as root:

# modutil -dbdir /etc/pki/nssdb -add "module name" -libfile /path/to/library.so
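If you register modules from a script, a small wrapper can check that the library file exists before calling modutil, and then list the database contents with modutil's `-list` option so the new entry can be confirmed. This is a minimal bash sketch; the function name `register_pkcs11_module` and the `NSS_DB` and `DRY_RUN` variables are hypothetical conveniences, not modutil options.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the modutil command above. NSS_DB and DRY_RUN
# are illustration-only variables, not modutil settings.
NSS_DB="${NSS_DB:-/etc/pki/nssdb}"

register_pkcs11_module() {
  local name="$1" lib="$2"
  if [ -z "$name" ] || [ ! -f "$lib" ]; then
    echo "usage: register_pkcs11_module <module name> <existing library path>" >&2
    return 1
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Print the command that would run, in the same form as the procedure.
    printf 'modutil -dbdir %s -add "%s" -libfile %s\n' "$NSS_DB" "$name" "$lib"
    return 0
  fi
  modutil -dbdir "$NSS_DB" -add "$name" -libfile "$lib" || return
  # List the registered modules so the new entry can be confirmed.
  modutil -dbdir "$NSS_DB" -list
}
```

Run it as root. Setting `DRY_RUN=1` prints the modutil command instead of running it, which is a convenient way to review the arguments first.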

Procedure 5.24. Configuring Windows Clients

- Red Hat does not provide PKCS #11 support to Windows clients. Libraries that provide PKCS #11 support must be obtained from third parties. When such libraries are obtained, register them by running the following command as a user with elevated privileges:

modutil -dbdir %PROGRAMDATA%\pki\nssdb -add "module name" -libfile C:\Path\to\module.dll

Chapter 6. Administrative Tasks

6.1. Shutting Down a Virtual Machine

Procedure 6.1. Shutting Down a Virtual Machine

- Click the Virtual Machines tab and select a running virtual machine.

- Click the Shutdown button.

Alternatively, right-click the virtual machine and select Shutdown.

- Optionally, in the Administration Portal, enter a Reason for shutting down the virtual machine in the Shut down Virtual Machine(s) confirmation window. This lets you record an explanation for the shutdown, which appears in the logs and when the virtual machine is powered on again.

Note

The virtual machine shutdown Reason field appears only if it has been enabled in the cluster settings. For more information, see Explanation of Settings and Controls in the New Cluster and Edit Cluster Windows in the Administration Guide.

- Click OK in the Shut down Virtual Machine(s) confirmation window.

The Status of the virtual machine changes to Down.

6.2. Suspending a Virtual Machine

Procedure 6.2. Suspending a Virtual Machine

- Click the Virtual Machines tab and select a running virtual machine.

- Click the Suspend button.

Alternatively, right-click the virtual machine and select Suspend.

The Status of the virtual machine changes to Suspended.

6.3. Rebooting a Virtual Machine

Procedure 6.3. Rebooting a Virtual Machine

- Click the Virtual Machines tab and select a running virtual machine.

- Click the Reboot button.

Alternatively, right-click the virtual machine and select Reboot.

- Click OK in the Reboot Virtual Machine(s) confirmation window.

The Status of the virtual machine changes to Reboot In Progress before returning to Up.

6.4. Removing a Virtual Machine


Procedure 6.4. Removing Virtual Machines

- Click the Virtual Machines tab and select the virtual machine to remove.

- Click Remove.

- Optionally, select the Remove Disk(s) check box to remove the virtual disks attached to the virtual machine together with the virtual machine. If the Remove Disk(s) check box is cleared, then the virtual disks remain in the environment as floating disks.

- Click OK.

6.5. Cloning a Virtual Machine


Procedure 6.5. Cloning Virtual Machines

- Click the Virtual Machines tab and select the virtual machine to clone.

- Click Clone VM.

- Enter a Clone Name for the new virtual machine.

- Click OK.

6.6. Updating Virtual Machine Guest Agents and Drivers

6.6.1. Updating the Guest Agents and Drivers on Red Hat Enterprise Linux

Procedure 6.6. Updating the Guest Agents and Drivers on Red Hat Enterprise Linux

- Log in to the Red Hat Enterprise Linux virtual machine.

- Update the ovirt-guest-agent-common package:

# yum update ovirt-guest-agent-common

- Restart the service:

- For Red Hat Enterprise Linux 6:

# service ovirt-guest-agent restart

- For Red Hat Enterprise Linux 7:

# systemctl restart ovirt-guest-agent.service
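If you maintain a mix of Red Hat Enterprise Linux 6 and 7 guests, you may prefer a single script that picks the right restart command. The bash sketch below assumes that Red Hat Enterprise Linux 7 guests provide /etc/os-release and that Red Hat Enterprise Linux 6 guests only provide /etc/redhat-release; the helper names `agent_restart_cmd` and `rhel_major` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the ovirt-guest-agent restart command for the
# given RHEL major version, matching the two commands above.
agent_restart_cmd() {
  case "$1" in
    6) echo "service ovirt-guest-agent restart" ;;
    7) echo "systemctl restart ovirt-guest-agent.service" ;;
    *) echo "unsupported RHEL major version: $1" >&2; return 1 ;;
  esac
}

# Hypothetical helper: print the RHEL major version. RHEL 7 ships
# /etc/os-release; RHEL 6 does not, so fall back to /etc/redhat-release.
# Both paths can be overridden (first and second argument) for testing.
rhel_major() {
  local osrel="${1:-/etc/os-release}" rhrel="${2:-/etc/redhat-release}"
  if [ -r "$osrel" ]; then
    # Source in a subshell so the file's variables do not leak out.
    ( . "$osrel" && echo "${VERSION_ID%%.*}" )
  elif [ -r "$rhrel" ]; then
    # "... release 6.9 (Santiago)" -> "6"
    sed -n 's/.*release \([0-9][0-9]*\).*/\1/p' "$rhrel"
  else
    return 1
  fi
}
```

On the guest, as root, run `yum update ovirt-guest-agent-common` and then the command that `agent_restart_cmd "$(rhel_major)"` prints.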

6.6.2. Updating the Guest Agents and Drivers on Windows

Procedure 6.7. Updating the Guest Agents and Drivers on Windows

- On the Red Hat Virtualization Manager, update the Red Hat Virtualization Guest Tools to the latest version:

# yum update -y rhev-guest-tools-iso*

- Upload the ISO file to your ISO domain, replacing [ISODomain] with the name of your ISO domain:

engine-iso-uploader --iso-domain=[ISODomain] upload /usr/share/rhev-guest-tools-iso/rhev-tools-setup.iso

Note

The rhev-tools-setup.iso file is a symbolic link to the most recently updated ISO file. The link is automatically changed to point to the newest ISO file every time you update the rhev-guest-tools-iso package.

- In the Administration or User Portal, if the virtual machine is running, use the Change CD button to attach the latest rhev-tools-setup.iso file to each of your virtual machines. If the virtual machine is powered off, click the Run Once button and attach the ISO as a CD.

- Select the CD Drive containing the updated ISO and execute the RHEV-ToolsSetup.exe file.
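Because rhev-tools-setup.iso is a symbolic link, it can be useful to confirm which versioned ISO it resolves to before uploading. The following is a minimal bash sketch for the Manager machine, assuming GNU coreutils `readlink`. The function names `current_tools_iso` and `upload_tools_iso` and the `TOOLS_DIR` variable are hypothetical; the ISO domain name is still yours to supply, as in the procedure.

```bash
#!/usr/bin/env bash
# Directory from the upload command above; overridable for testing.
TOOLS_DIR="${TOOLS_DIR:-/usr/share/rhev-guest-tools-iso}"

# Hypothetical helper: print the file that the rhev-tools-setup.iso symbolic
# link currently resolves to (optionally in another directory).
current_tools_iso() {
  readlink -f "${1:-$TOOLS_DIR}/rhev-tools-setup.iso"
}

# Hypothetical helper: upload the current tools ISO to the named ISO domain,
# using the same engine-iso-uploader invocation as the procedure above.
upload_tools_iso() {
  local domain="$1"
  if [ -z "$domain" ]; then
    echo "usage: upload_tools_iso <ISO domain name>" >&2
    return 1
  fi
  echo "Uploading $(current_tools_iso) to ISO domain $domain"
  engine-iso-uploader --iso-domain="$domain" upload "$TOOLS_DIR/rhev-tools-setup.iso"
}
```

Run `current_tools_iso` after updating the rhev-guest-tools-iso package to see which ISO the link now points to, then `upload_tools_iso` with your ISO domain name.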

6.7. Viewing Red Hat Satellite Errata for a Virtual Machine

Procedure 6.8. Viewing Red Hat Satellite Errata

- Click the Virtual Machines tab and select a virtual machine.

- Click the Errata tab in the details pane.

6.8. Virtual Machines and Permissions

6.8.1. Managing System Permissions for a Virtual Machine

- Create, edit, and remove virtual machines.

- Run, suspend, shut down, and stop virtual machines.


6.8.2. Virtual Machines Administrator Roles Explained

| Role | Privileges | Notes |

|---|---|---|

| DataCenterAdmin | Data Center Administrator | Possesses administrative permissions for all objects underneath a specific data center except for storage. |

| ClusterAdmin | Cluster Administrator | Possesses administrative permissions for all objects underneath a specific cluster. |

| NetworkAdmin | Network Administrator | Possesses administrative permissions for all operations on a specific logical network. Can configure and manage networks attached to virtual machines. To configure port mirroring on a virtual machine network, apply the NetworkAdmin role on the network and the UserVmManager role on the virtual machine. |

6.8.3. Virtual Machine User Roles Explained

| Role | Privileges | Notes |

|---|---|---|

| UserRole | Can access and use virtual machines and pools. | Can log in to the User Portal and use virtual machines and pools. |

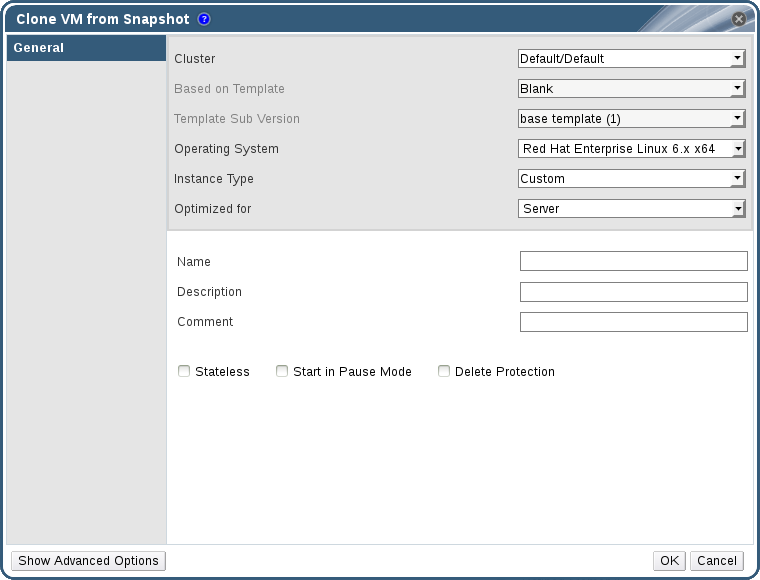

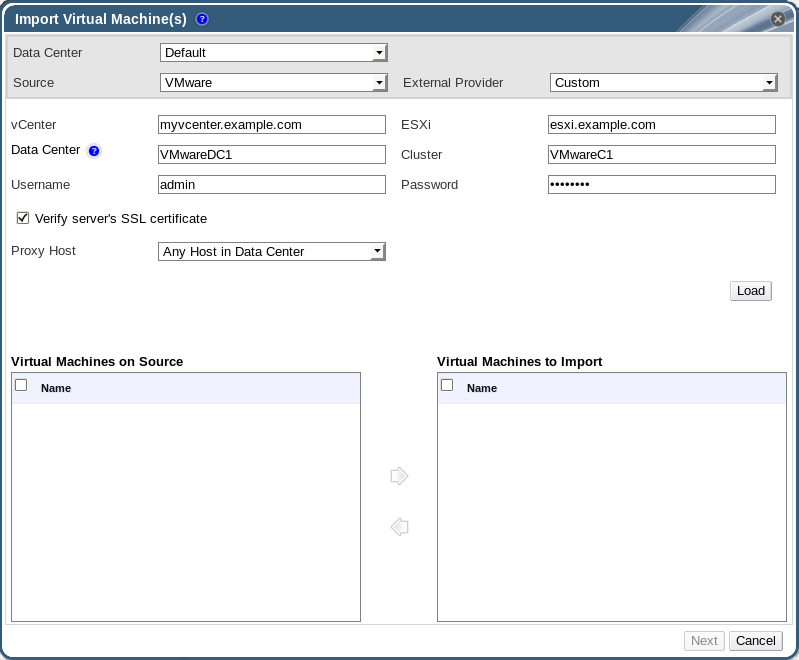

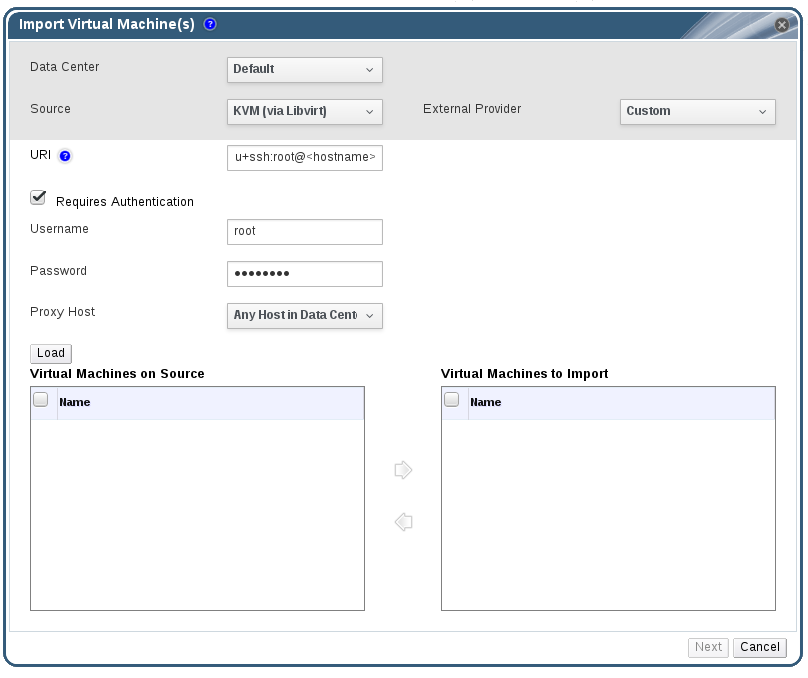

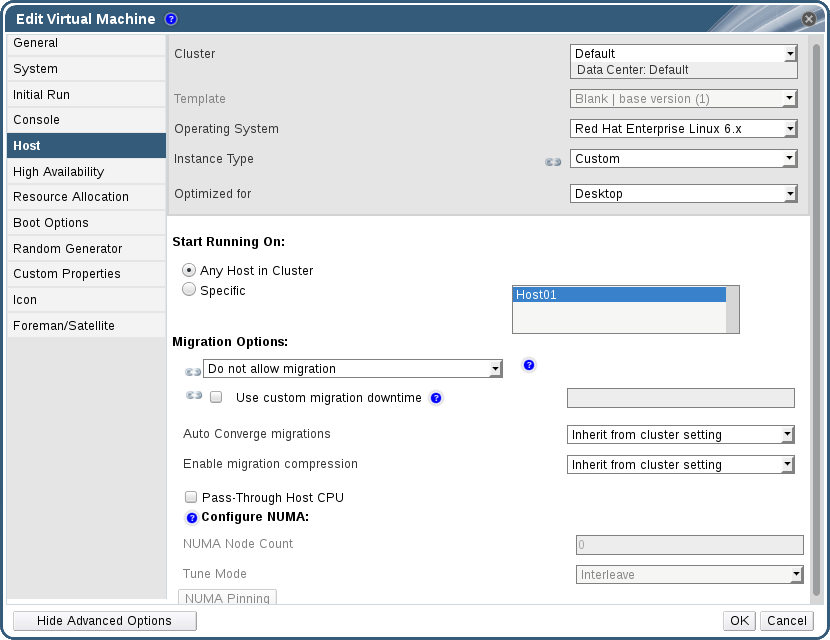

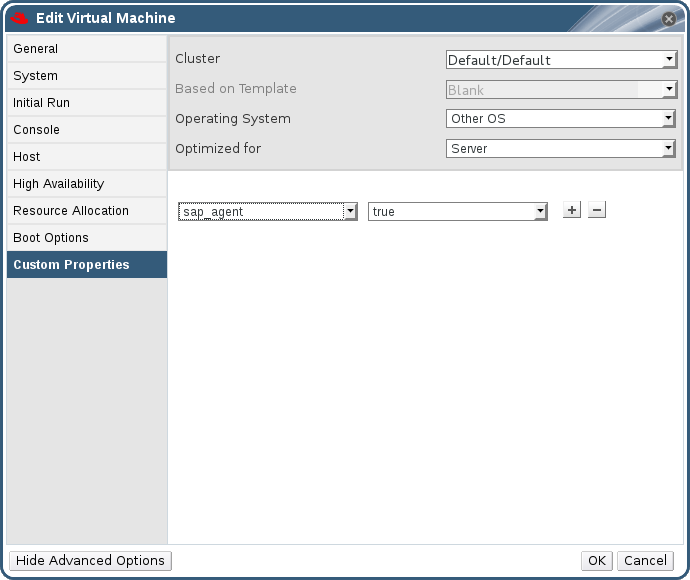

| PowerUserRole | Can create and manage virtual machines and templates. | Apply this role to a user for the whole environment with the Configure window, or for specific data centers or clusters. For example, if a PowerUserRole is applied on a data center level, the PowerUser can create virtual machines and templates in the data center. Having a PowerUserRole is equivalent to having the VmCreator, DiskCreator, and TemplateCreator roles. |