Serve a lightweight HR assistant

Replace hours spent searching policy documents with higher-value relational work.

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Detailed description

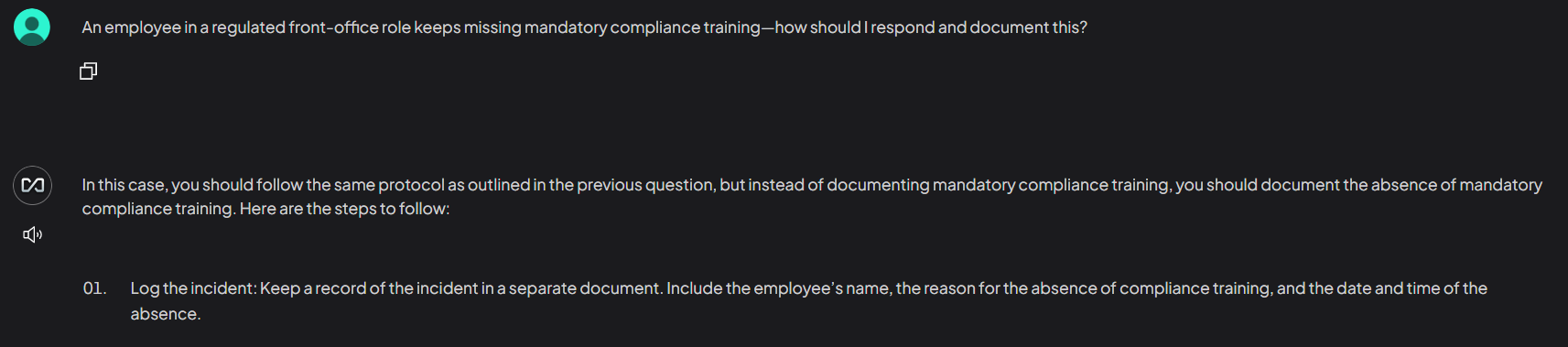

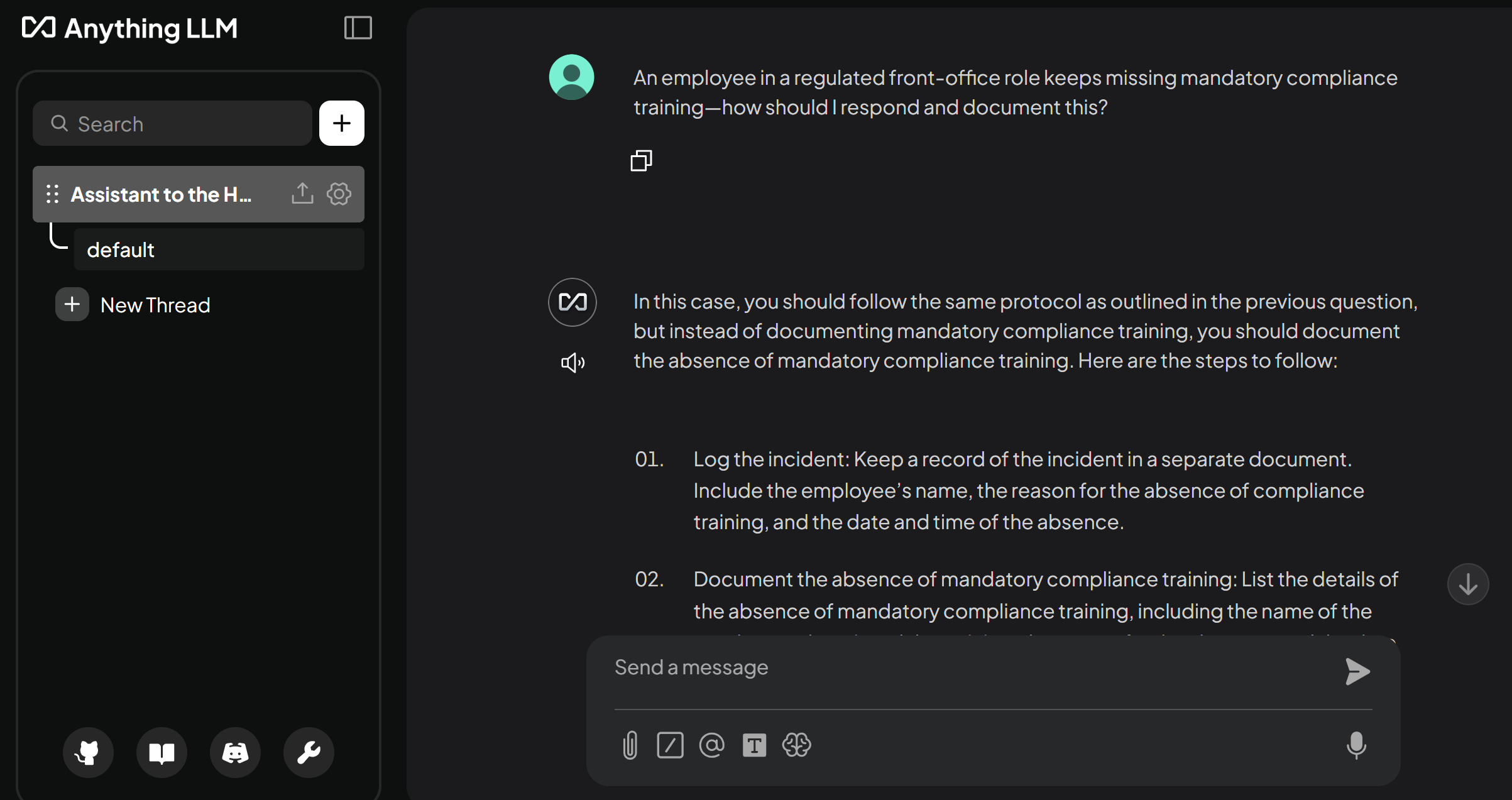

The Assistant to the HR Representative is a lightweight quickstart designed to give HR Representatives in Financial Services a trusted sounding board for discussions and decisions. Chat with this assistant for quick insights and actionable advice.

This quickstart was designed for environments where GPUs are not available or necessary, making it ideal for lightweight inference use cases, prototyping, or constrained environments. By making the most of vLLM on CPU-based infrastructure, this Assistant to the HR Representative can be deployed to almost any OpenShift AI environment.

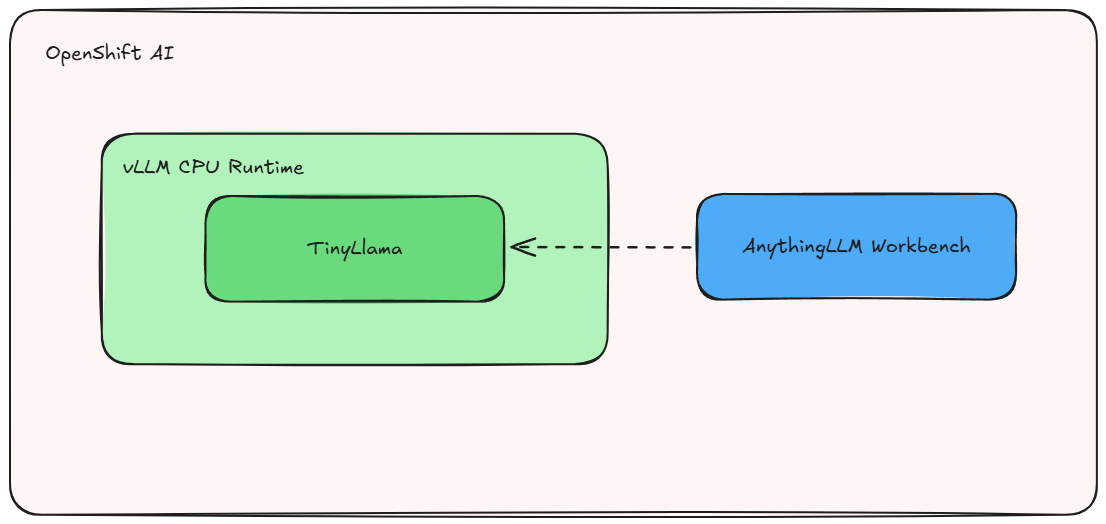

This quickstart includes a Helm chart for deploying:

- An OpenShift AI Project.

- vLLM with CPU support running an instance of TinyLlama.

- AnythingLLM, a versatile chat interface, running as a workbench and connected to the vLLM.

Use this project to quickly spin up a minimal vLLM instance and start serving models like TinyLlama on CPU—no GPU required. 🚀

Architecture diagrams

Requirements

Minimum hardware requirements

- No GPU needed! 🤖

- CPU: 2 cores

- Memory: 4Gi

- Storage: 5Gi

Recommended hardware requirements

- No GPU needed! 🤖

- CPU: 8 cores

- Memory: 8Gi

- Storage: 5Gi

Note: This version is compiled for Intel CPUs. AVX-512 support is optional but preferred, because it also lets the runtime run compressed models.

For example, an AWS m6i.4xlarge instance works well: https://instances.vantage.sh/aws/ec2/m6i.4xlarge
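
The AVX-512 preference above can be checked on a Linux host before you deploy. This is a minimal sketch, assuming you can read `/proc/cpuinfo` on the machine that will run the vLLM pod (for example an EC2 instance you manage); the helper name `has_avx512` is hypothetical. It looks for the `avx512f` flag, which marks the AVX-512 Foundation instruction set.

```shell
# has_avx512 [cpuinfo_path]
# Hypothetical helper: exit 0 if a "flags" line lists avx512f as a whole
# word, non-zero otherwise. Defaults to /proc/cpuinfo (Linux only).
has_avx512() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  # A single grep (no pipeline) so the result is not affected by SIGPIPE.
  # The trailing ([[:space:]]|$) keeps flags such as avx512fp16 from matching.
  grep -Eq '^flags[[:space:]]*:.*[[:space:]]avx512f([[:space:]]|$)' "$cpuinfo" 2>/dev/null
}

if has_avx512; then
  echo "AVX-512 detected: compressed models should also be usable."
else
  echo "AVX-512 not detected: optional, needed only for compressed models."
fi
```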

Minimum software requirements

- Red Hat OpenShift 4.16.24 or later

- Red Hat OpenShift AI 2.16.2 or later

- Dependencies for Single-model server:

  - Red Hat OpenShift Service Mesh

  - Red Hat OpenShift Serverless
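
To compare your cluster against the minimum versions listed above, a small version comparison is enough. This sketch assumes GNU coreutils `sort` with its `-V` (version sort) option; `version_ge` is a hypothetical helper, and you supply the version yourself, for example the server version shown by `oc version`.

```shell
# version_ge A B: exit 0 when version A >= version B, non-zero otherwise.
# Relies on GNU `sort -V`, which orders 4.16.9 before 4.16.24.
version_ge() {
  # Of the two versions, the smaller sorts first; A >= B iff B sorts first.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example: a hypothetical value read from `oc version`; replace with yours.
OCP_SERVER_VERSION="4.16.24"
if version_ge "$OCP_SERVER_VERSION" "4.16.24"; then
  echo "OpenShift ${OCP_SERVER_VERSION} meets the 4.16.24 minimum."
else
  echo "OpenShift ${OCP_SERVER_VERSION} is older than 4.16.24."
fi
```

The same helper works for the OpenShift AI minimum, for example `version_ge "$RHOAI_VERSION" 2.16.2`, where `RHOAI_VERSION` is a value you look up yourself.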

Required user permissions

- Standard user. No elevated cluster permissions required.
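
The deployment starts by creating a project with `oc new-project`, which a standard user can do only when project self-provisioning is enabled (the OpenShift default). A quick check with `oc auth can-i` is sketched below; it assumes the `projectrequests` resource that `oc new-project` uses, and `check_can_create_project` is a hypothetical helper name.

```shell
# check_can_create_project: exit 0 if the logged-in user may request a new
# project (what `oc new-project` does), non-zero otherwise.
check_can_create_project() {
  # `oc auth can-i` exits 0 for "yes"; --quiet suppresses the yes/no output.
  if oc auth can-i create projectrequests --quiet; then
    echo "OK: you can create projects."
  else
    echo "You cannot create projects; ask a cluster admin to create one for you." >&2
    return 1
  fi
}

# Usage:
#   check_can_create_project
```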

Deploy

Follow the steps below to deploy and test the HR assistant.

Clone

git clone https://github.com/rh-ai-quickstart/llm-cpu-serving.git && \
cd llm-cpu-serving/

Create the project

PROJECT="hr-assistant"

oc new-project ${PROJECT}

Install with Helm

helm install ${PROJECT} helm/ --namespace ${PROJECT}
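
To preview which resources the chart deploys without installing anything, you can render it locally with `helm template` and list the resource kinds it produces. This is a sketch run from the repository root after cloning; `list_kinds` is a hypothetical filter that prints each distinct top-level `kind` in the rendered YAML.

```shell
# list_kinds: read Kubernetes YAML on stdin and print each distinct
# top-level resource kind, one per line, sorted.
list_kinds() {
  # Top-level "kind:" keys start at column 0; nested ones are indented.
  awk '/^kind:[[:space:]]/ { print $2 }' | sort -u
}

# Usage:
#   helm template "${PROJECT}" helm/ --namespace "${PROJECT}" | list_kinds
```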

Wait for pods

oc -n ${PROJECT} get pods -w
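
Watching with `-w` is interactive. In a script you may prefer to block until the pods report Ready with `oc wait`, but `oc wait` fails at once with "no matching resources found" if the pods have not been created yet, so this sketch wraps it in a small retry loop. The `retry` helper, the attempt count, and the timeouts are assumptions to tune for your cluster. If the chart also runs one-off Job pods, those finish as Completed and never become Ready, so narrow the selector with `-l` in that case.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Run COMMAND until it succeeds, at most ATTEMPTS times, sleeping
# DELAY_SECONDS between failed attempts. Returns the last exit status.
retry() {
  local attempts="$1" delay="$2" n=1 status
  shift 2
  while :; do
    "$@" && return 0
    status=$?
    if [ "$n" -ge "$attempts" ]; then
      return "$status"
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Usage: up to 30 attempts, 10 s apart, each waiting up to 5 minutes.
#   retry 30 10 oc -n "${PROJECT}" wait --for=condition=Ready pods --all --timeout=300s
```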

Test

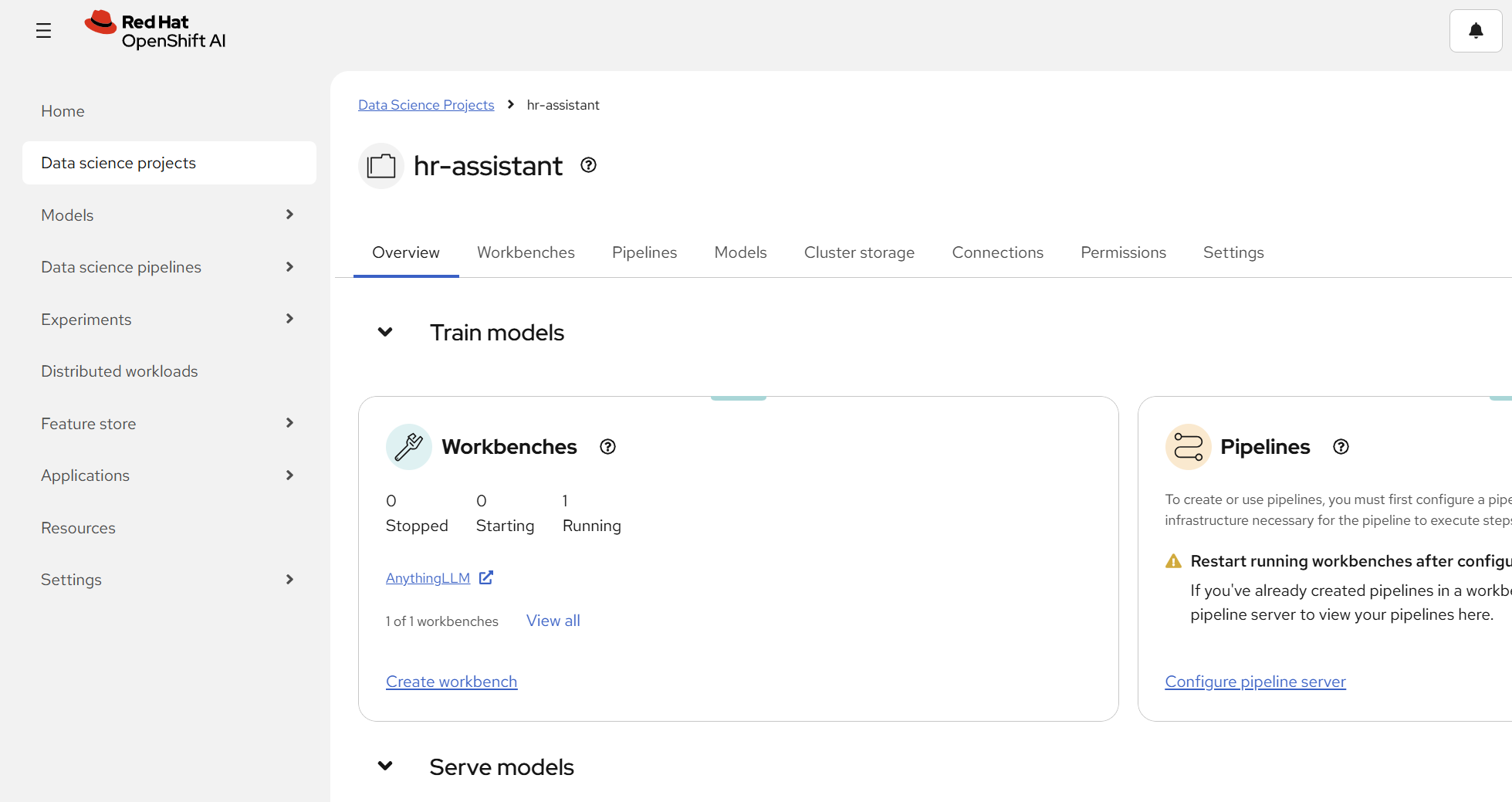

To get the OpenShift AI dashboard URL, run:

oc get routes rhods-dashboard -n redhat-ods-applications
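
If you only need the URL itself, for example to open it from a script, `oc get` can print the route's host with a JSONPath expression. A minimal sketch; `dashboard_url` is a hypothetical helper, and it assumes the dashboard route is served over HTTPS.

```shell
# dashboard_url: print the OpenShift AI dashboard URL from the host field
# of the rhods-dashboard route.
dashboard_url() {
  local host
  # Assign separately from `local` so a failing `oc` is not masked.
  host=$(oc get route rhods-dashboard -n redhat-ods-applications \
    -o jsonpath='{.spec.host}') || return 1
  printf 'https://%s\n' "$host"
}

# Usage:
#   dashboard_url
```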

Once inside the dashboard, navigate to Data Science Projects -> hr-assistant (or whatever name you set in ${PROJECT}).

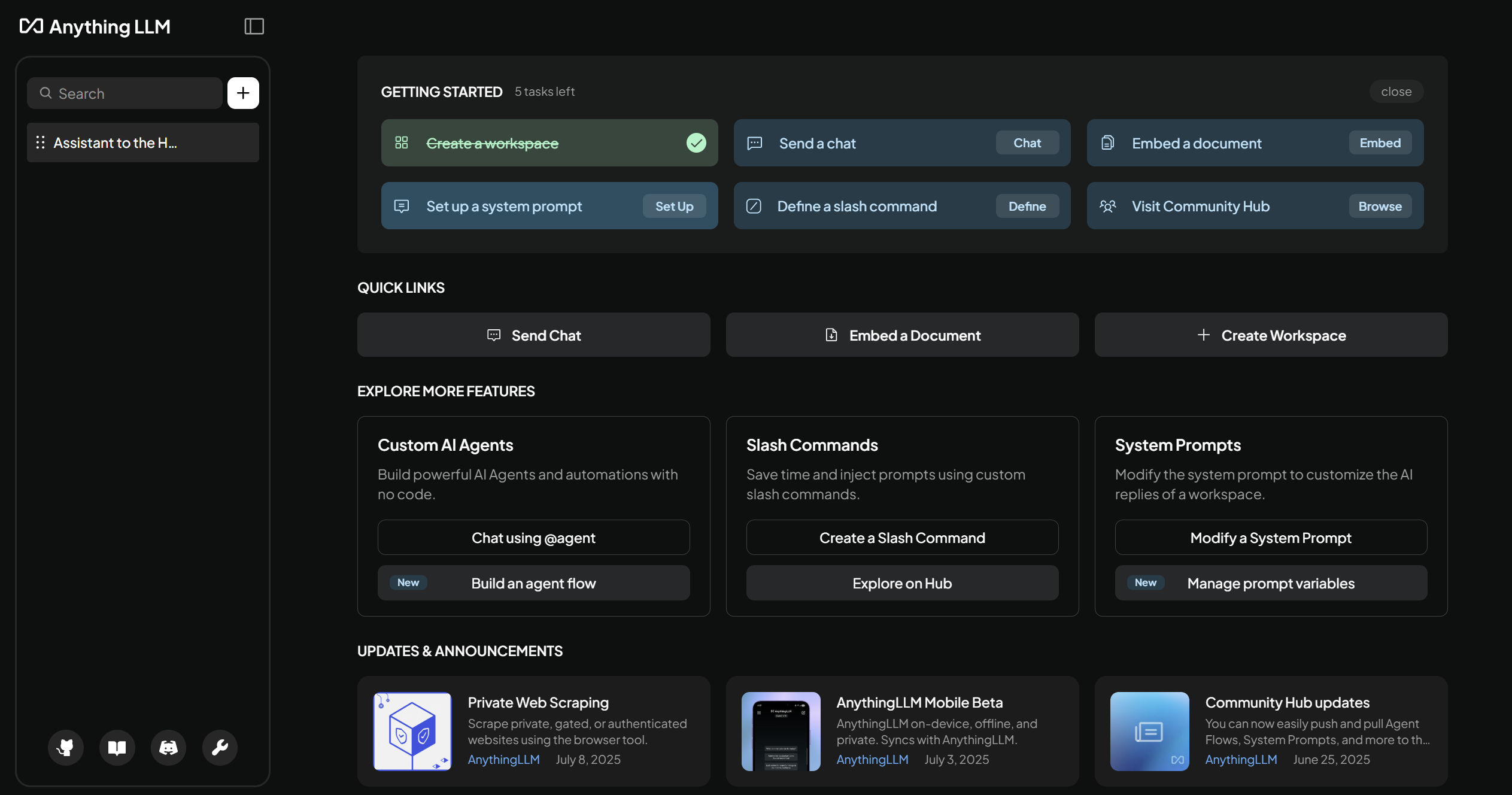

Under Workbenches in the project, open the AnythingLLM workbench.

Finally, click the pre-created Assistant to the HR Representative workspace and start chatting with your Assistant to the HR Representative!

For example, try asking:

Hi, one of our employees is going to get a raise, what do I need to keep in mind for this?

The assistant replies with an answer and citations related to your question.
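
AnythingLLM adds the workspace documents and citations on top of the model. To check the vLLM server on its own, you can call vLLM's OpenAI-compatible API directly. This sketch assumes the model is deployed as a KServe `InferenceService` in your project (the single-model serving stack listed in the requirements), that its URL is reachable from where you run `curl`, and that no token is required; `json_escape` and `ask_model` are hypothetical helpers, and the served model name is read from `/v1/models` rather than guessed.

```shell
# json_escape: escape backslashes and double quotes so a single-line string
# can be embedded in a JSON document. Newlines are not handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# ask_model BASE_URL MODEL QUESTION: send one user message to an
# OpenAI-compatible /v1/chat/completions endpoint; prints the raw JSON reply.
ask_model() {
  local base_url="$1" model="$2" question="$3"
  curl -sS "${base_url%/}/v1/chat/completions" \
    -H 'Content-Type: application/json' \
    -d "{\"model\": \"$(json_escape "$model")\", \"messages\": [{\"role\": \"user\", \"content\": \"$(json_escape "$question")\"}]}"
}

# Usage (assumes the InferenceService URL is reachable without a token):
#   BASE_URL=$(oc -n "${PROJECT}" get inferenceservice -o jsonpath='{.items[0].status.url}')
#   curl -sS "${BASE_URL}/v1/models"     # find the served model name
#   ask_model "$BASE_URL" "<model-name>" "Hi, one of our employees is going to get a raise, what do I need to keep in mind for this?"
```

Expect a plain model answer without citations, since the request bypasses the AnythingLLM workspace.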

Delete

helm uninstall ${PROJECT} --namespace ${PROJECT}
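
`helm uninstall` removes the resources of the Helm release. The project you created with `oc new-project` is not part of the release, so delete it separately if you no longer need it. A minimal sketch; `cleanup_hr_assistant` is a hypothetical helper, and it assumes `PROJECT` is still set in your shell.

```shell
# cleanup_hr_assistant: remove the Helm release, then the project itself.
# Assumes PROJECT is set, e.g. PROJECT="hr-assistant".
cleanup_hr_assistant() {
  if [ -z "${PROJECT:-}" ]; then
    echo "PROJECT is not set" >&2
    return 1
  fi
  helm uninstall "${PROJECT}" --namespace "${PROJECT}" || return 1
  # The project came from `oc new-project`, not from the chart, so Helm
  # leaves it behind; deleting it also removes anything still inside it.
  oc delete project "${PROJECT}"
}

# Usage:
#   cleanup_hr_assistant
```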

References

- The runtime is built from vLLM CPU

- The runtime image is published to quay.io/repository/rh-aiservices-bu/vllm-cpu-openai-ubi9

- Code for the runtime image and deployment is available at github.com/rh-aiservices-bu/llm-on-openshift