
Deployment Guide

Deployment, Configuration and Administration of Red Hat Enterprise Linux 6

Abstract

Part I. Basic System Configuration

Chapter 1. Keyboard Configuration

1.1. Changing the Keyboard Layout

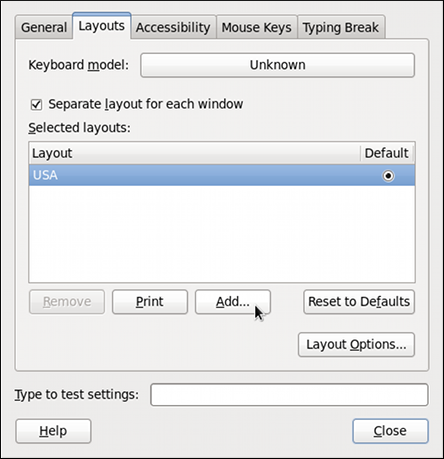

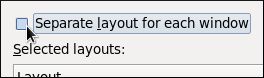

Figure 1.1. Keyboard Layout Preferences

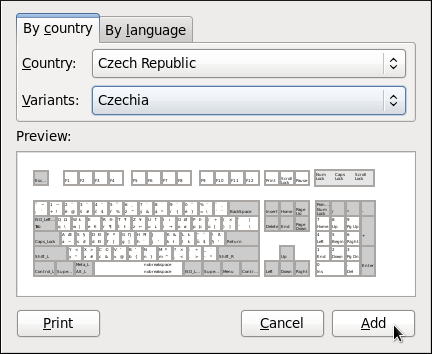

Figure 1.2. Choosing a layout

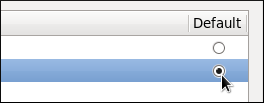

Figure 1.3. Selecting the default layout

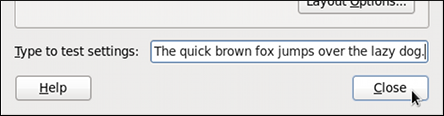

Figure 1.4. Testing the layout

Note

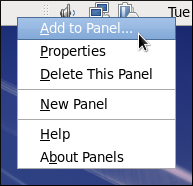

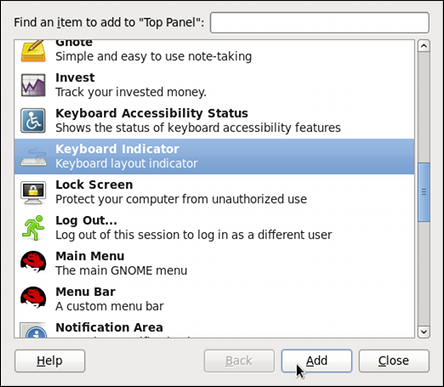

1.2. Adding the Keyboard Layout Indicator

Figure 1.5. Adding a new applet

Figure 1.6. Selecting the Keyboard Indicator

Figure 1.7. The Keyboard Indicator applet

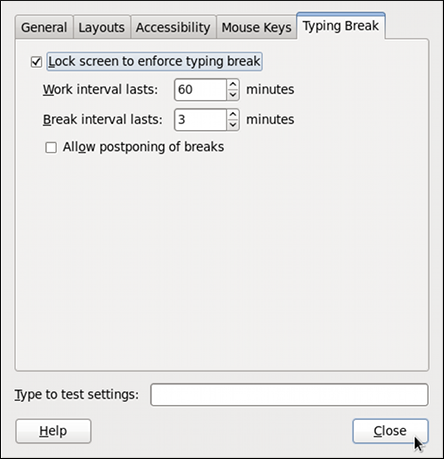

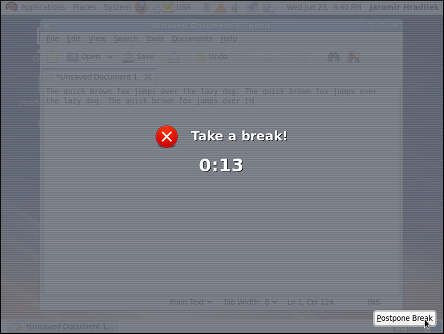

1.3. Setting Up a Typing Break

Figure 1.8. Typing Break Properties

Figure 1.9. Taking a break

Chapter 2. Date and Time Configuration

2.1. Date/Time Properties Tool

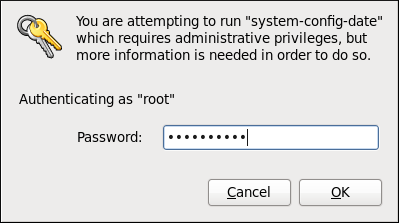

To start the tool, run the system-config-date command at a shell prompt (for example, xterm or GNOME Terminal). Unless you are already authenticated, you will be prompted to enter the superuser password.

Figure 2.1. Authentication Query

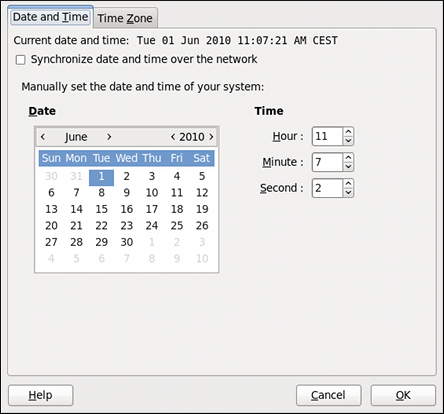

2.1.1. Date and Time Properties

Figure 2.2. Date and Time Properties

- Change the current date. Use the arrows to the left and right of the month and year to change the month and year respectively. Then click inside the calendar to select the day of the month.

- Change the current time. Use the up and down arrow buttons beside the Hour, Minute, and Second, or replace the values directly.

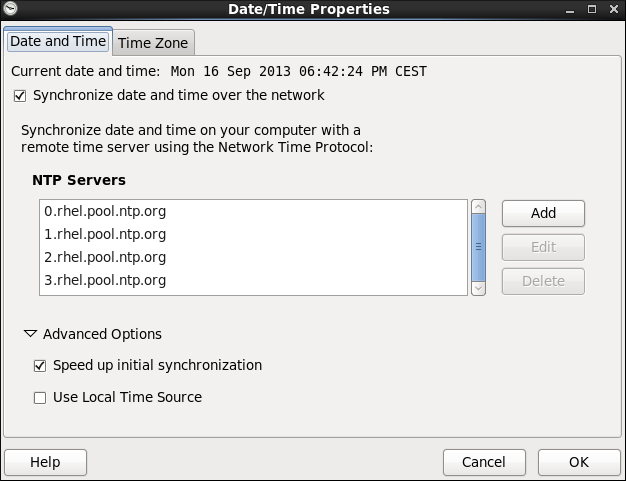

2.1.2. Network Time Protocol Properties

Figure 2.3. Network Time Protocol Properties

Note

2.1.3. Time Zone Properties

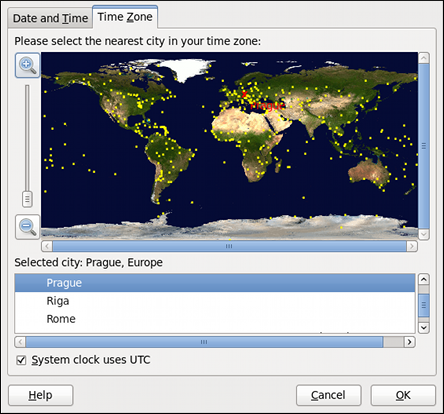

Figure 2.4. Time Zone Properties

- Using the interactive map. Click “zoom in” and “zoom out” buttons next to the map, or click on the map itself to zoom into the selected region. Then choose the city specific to your time zone. A red X appears and the time zone selection changes in the list below the map.

- Use the list below the map. To make the selection easier, cities and countries are grouped within their specific continents. Note that non-geographic time zones have also been added to address needs in the scientific community.

2.2. Command Line Configuration

~]$ su -

Password:

2.2.1. Date and Time Setup

The date command allows the superuser to set the system date and time manually:

- Change the current date. Type the command in the following form at a shell prompt, replacing YYYY with a four-digit year, MM with a two-digit month, and DD with a two-digit day of the month:

~]# date +%D -s YYYY-MM-DD

For example, to set the date to 2 June 2010, type:

~]# date +%D -s 2010-06-02

- Change the current time. Use the following command, where HH stands for an hour, MM is a minute, and SS is a second, all typed in a two-digit form:

~]# date +%T -s HH:MM:SS

If your system clock is set to use UTC (Coordinated Universal Time), add the following option:

~]# date +%T -s HH:MM:SS -u

For instance, to set the system clock to 11:26 PM using UTC, type:

~]# date +%T -s 23:26:00 -u
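Because date -s changes the clock immediately, it can be worth checking a value before passing it on. The following is a minimal sketch of such a check, assuming GNU date (for the -d option, which only parses a string and does not touch the clock). The function name valid_date is hypothetical and not part of this guide.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 is a real calendar date
# written exactly in the YYYY-MM-DD form.
# Assumes GNU date; -d parses the string without changing the clock.
valid_date() {
    # Round-trip the value: an invalid or differently formatted date
    # produces either no output or a string that differs from the input.
    [ "$(date -u -d "$1" +%Y-%m-%d 2>/dev/null)" = "$1" ]
}

if valid_date 2010-06-02; then
    echo "2010-06-02 is valid"
fi
```

Only a value that passes the check would then be handed to date -s by root.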

To display the current date and time, run date without any additional argument:

Example 2.1. Displaying the current date and time

~]$ date

Wed Jun 2 11:58:48 CEST 2010

2.2.2. Network Time Protocol Setup

- Firstly, check whether the selected NTP server is accessible:

~]# ntpdate -q server_address

For example:

~]# ntpdate -q 0.rhel.pool.ntp.org

- When you find a satisfactory server, run the ntpdate command followed by one or more server addresses:

~]# ntpdate server_address...

For instance:

~]# ntpdate 0.rhel.pool.ntp.org 1.rhel.pool.ntp.org

Unless an error message is displayed, the system time should now be set. You can check the current setting by typing date without any additional arguments as shown in Section 2.2.1, “Date and Time Setup”.

- In most cases, these steps are sufficient. Only if you really need one or more system services to always use the correct time, enable running ntpdate at boot time:

~]# chkconfig ntpdate on

For more information about system services and their setup, see Chapter 12, Services and Daemons.

Note

If the synchronization with the time server at boot time keeps failing, that is, you find a relevant error message in the /var/log/boot.log system log, try to add the following line to /etc/sysconfig/network:

NETWORKWAIT=1

- Open the NTP configuration file /etc/ntp.conf in a text editor such as vi or nano, or create a new one if it does not already exist:

~]# nano /etc/ntp.conf

- Now add or edit the list of public NTP servers. If you are using Red Hat Enterprise Linux 6, the file should already contain the following lines, but feel free to change or expand these according to your needs:

server 0.rhel.pool.ntp.org iburst

server 1.rhel.pool.ntp.org iburst

server 2.rhel.pool.ntp.org iburst

server 3.rhel.pool.ntp.org iburst

The iburst directive at the end of each line speeds up the initial synchronization. As of Red Hat Enterprise Linux 6.5 it is added by default. If you are upgrading from a previous minor release and your /etc/ntp.conf file has been modified, the upgrade to Red Hat Enterprise Linux 6.5 creates a new file, /etc/ntp.conf.rpmnew, and does not alter the existing /etc/ntp.conf file.

- Once you have the list of servers complete, in the same file, set the proper permissions, giving unrestricted access to localhost only:

restrict default kod nomodify notrap nopeer noquery

restrict -6 default kod nomodify notrap nopeer noquery

restrict 127.0.0.1

restrict -6 ::1

- Save all changes, exit the editor, and restart the NTP daemon:

~]# service ntpd restart

- Make sure that ntpd is started at boot time:

~]# chkconfig ntpd on
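After editing the configuration, it can be useful to see at a glance which servers the file actually names. The sketch below is one way to do that with awk; the function name list_ntp_servers is hypothetical, and the demonstration runs on a temporary sample file rather than the real /etc/ntp.conf.

```shell
#!/bin/sh
# Hypothetical helper: print the host named on every "server" line
# of an ntp.conf-style file. Commented-out lines are ignored because
# their first field is not exactly "server".
list_ntp_servers() {
    awk '$1 == "server" { print $2 }' "$1"
}

# Demonstrate on a temporary sample instead of the real /etc/ntp.conf.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# Public servers from the pool
server 0.rhel.pool.ntp.org iburst
server 1.rhel.pool.ntp.org iburst
restrict 127.0.0.1
EOF
list_ntp_servers "$sample"
rm -f "$sample"
```

On a real system the same function could be pointed at /etc/ntp.conf, which it only reads.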

Chapter 3. Managing Users and Groups

3.1. What Users and Groups Are

Note

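The reserved user and group IDs are documented in the setup package. To view the list, use this command:

cat /usr/share/doc/setup-2.8.14/uidgid

The minimum IDs assigned to new users and groups are set by the UID_MIN and GID_MIN directives in the /etc/login.defs file: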

[file contents truncated]

UID_MIN 5000

[file contents truncated]

GID_MIN 5000

[file contents truncated]
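To check which values are currently in effect, the directives can be read back from the file. The following sketch assumes the simple "NAME value" layout shown above; the function name login_def is hypothetical, and the demonstration uses a temporary sample file.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a directive (for example
# UID_MIN) from a login.defs-style file. Prints nothing if the
# directive is not set. Comment lines never match because their
# first field is not the directive name.
login_def() {
    awk -v key="$1" '$1 == key { print $2; exit }' "$2"
}

# Read-only usage on a real system would be:
#   login_def UID_MIN /etc/login.defs
sample=$(mktemp)
printf '%s\n' '# sample file' 'UID_MIN 5000' 'GID_MIN 5000' > "$sample"
echo "UID_MIN is $(login_def UID_MIN "$sample")"
rm -f "$sample"
```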

A user's primary group can be changed temporarily with the newgrp command, after which all newly created files are owned by the new group. A supplementary group grants its members access to the files owned by that group.

The owner of a file can be changed only by root, and access permissions can be changed by both the root user and the file owner.

The setting that determines which permissions are applied to a newly created file or directory is called a umask and can be configured in the /etc/bashrc file for all users, or in ~/.bashrc for each user individually. The configuration in ~/.bashrc overrides the configuration in /etc/bashrc. Additionally, the umask command overrides the default permissions for the duration of the shell session.
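The effect of a umask can be worked out with simple bit arithmetic. The sketch below assumes the common case in which a program requests mode 666 for a new regular file, and the umask then clears the bits it names. The function name file_mode_for_umask is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the mode a new regular file would get
# under the given umask, assuming the creating program requests 666.
file_mode_for_umask() {
    # The leading 0 makes the shell read the argument as octal.
    printf '%o\n' $(( 0666 & ~0$1 ))
}

file_mode_for_umask 022   # prints 644
file_mode_for_umask 027   # prints 640
```

Directories are typically requested with mode 777 instead, so the same umask of 022 would leave them at 755.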

Hashed passwords are stored in the /etc/shadow file, which is only readable by the root user. The file also stores information about password aging and policies for specific accounts. The default values for a newly created account are stored in the /etc/login.defs and /etc/default/useradd files. The Red Hat Enterprise Linux 6 Security Guide provides more security-related information about users and groups.

3.2. Managing Users via the User Manager Application

To start the User Manager application:

- From the toolbar, select → → .

- Or, type system-config-users at the shell prompt.

Note

root.

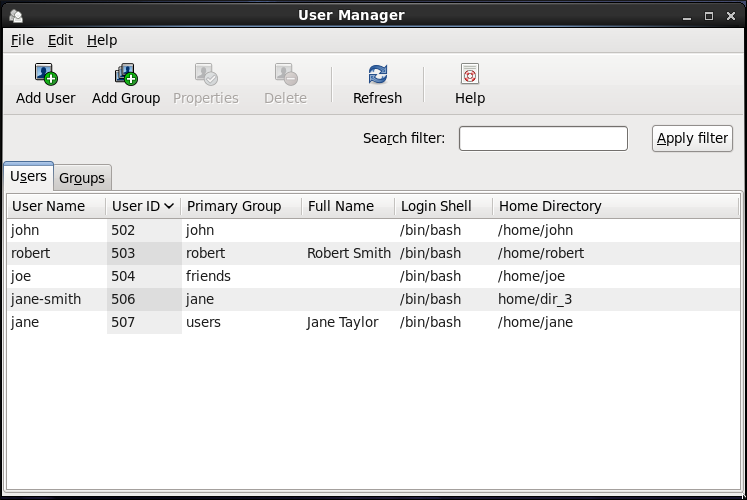

3.2.1. Viewing Users

Figure 3.1. Viewing Users

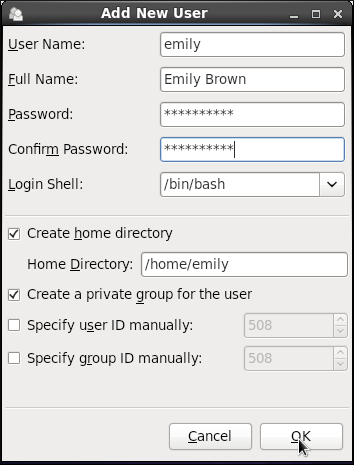

3.2.2. Adding a New User

- Click the button.

- Enter the user name and full name in the appropriate fields.

- Type the user's password in the Password and Confirm Password fields. The password must be at least six characters long.

Note

For safety reasons, choose a long password not based on a dictionary term; use a combination of letters, numbers, and special characters.

- Select a login shell for the user from the Login Shell drop-down list or accept the default value of .

- Clear the Create home directory check box if you choose not to create the home directory for a new user in /home/username/. You can also change this home directory by editing the content of the Home Directory text box. Note that when the home directory is created, default configuration files are copied into it from the /etc/skel/ directory.

- Clear the Create a private group for the user check box if you do not want a unique group with the same name as the user to be created. A User Private Group (UPG) is a group assigned to a user account to which that user exclusively belongs, and it is used for managing file permissions for individual users.

- Specify a user ID for the user by selecting Specify user ID manually. If the option is not selected, the next available user ID above 500 is assigned to the new user.

- Click the button to complete the process.

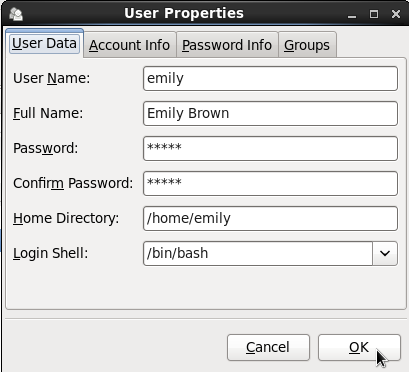

3.2.3. Modifying User Properties

- Select the user from the user list by clicking once on the user name.

- Click from the toolbar or choose → from the drop-down menu.

Figure 3.2. User Properties

- There are four tabs you can update to your preferences. When you have finished, click the button to save your changes.

3.3. Managing Groups via the User Manager Application

3.3.1. Viewing Groups

Figure 3.3. Viewing Groups

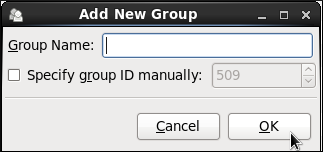

3.3.2. Adding a New Group

- Select from the User Manager toolbar:

Figure 3.4. New Group

- Type the name of the new group.

- Specify the group ID (GID) for the new group by checking the Specify group ID manually check box.

- Select the GID. Note that Red Hat Enterprise Linux also reserves group IDs lower than 500 for system groups.

- Click to create the group. The new group appears in the group list.

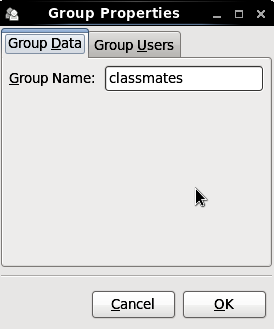

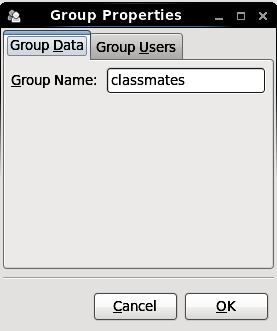

3.3.3. Modifying Group Properties

- Select the group from the group list by clicking on its name.

- Click from the toolbar or choose → from the drop-down menu.

Figure 3.5. Group Properties

- The Group Users tab displays the list of group members. Use this tab to add or remove users from the group. Click to save your changes.

3.4. Managing Users via Command-Line Tools

Users can also be managed with command-line tools such as useradd, usermod, userdel, or passwd. The files affected include /etc/passwd, which stores user account information, and /etc/shadow, which stores secure user account information.

3.4.1. Creating Users

The useradd utility creates new users and adds them to the system. Following the short procedure below, you will create a default user account with its UID, automatically create a home directory where default user settings will be stored, /home/username/, and set the default shell to /bin/bash.

- Run the following command at a shell prompt as root, substituting username with the name of your choice:

useradd username

- Unlock the account by setting a password to make it accessible. Type the password twice when the program prompts you to.

passwd username

Example 3.1. Creating a User with Default Settings

~]# useradd robert

~]# passwd robert

Changing password for user robert

New password:

Re-type new password:

passwd: all authentication tokens updated successfully.

The useradd robert command creates an account named robert. If you run cat /etc/passwd to view the content of the /etc/passwd file, you can learn more about the new user from the line displayed to you:

robert:x:502:502::/home/robert:/bin/bash

The user robert has been assigned a UID of 502, which reflects the rule that the default UID values from 0 to 499 are typically reserved for system accounts. The GID, the group ID of the User Private Group, equals the UID. The home directory is set to /home/robert and the login shell to /bin/bash. The letter x signals that shadow passwords are used and that the hashed password is stored in /etc/shadow.
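The seven colon-separated fields of such a line can also be split in a script. The following is a small sketch of that idea; the function name describe_passwd_entry and the variable names are hypothetical, and the input is the sample line from this section rather than a live system file.

```shell
#!/bin/sh
# Hypothetical helper: split one /etc/passwd-style line into its
# seven fields (name, password placeholder, UID, GID, comment,
# home directory, login shell) and print a short summary.
describe_passwd_entry() {
    printf '%s\n' "$1" | {
        # With IFS set to ":", empty fields such as a blank comment
        # are preserved rather than skipped.
        IFS=: read -r name password uid gid gecos home shell
        echo "user=$name uid=$uid gid=$gid home=$home shell=$shell"
    }
}

describe_passwd_entry 'robert:x:502:502::/home/robert:/bin/bash'
# prints: user=robert uid=502 gid=502 home=/home/robert shell=/bin/bash
```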

You can change the default setup by passing command-line options to useradd (see the useradd(8) man page for the whole list of options). As you can see from the basic syntax of the command, you can add one or more options:

useradd [option(s)] username

Use the -c option to specify, for example, the full name of the user when creating them. Use -c followed by a string, which adds a comment to the user:

useradd -c "string" username

Example 3.2. Specifying a User's Full Name when Creating a User

~]# useradd -c "Robert Smith" robert

~]# cat /etc/passwd

robert:x:502:502:Robert Smith:/home/robert:/bin/bash

A user account has been created with the user name robert, sometimes called the login name, and the full name Robert Smith.

If you do not want the default /home/username/ directory for the user account, set a different one with the -d option:

useradd -d home_directory username

Example 3.3. Adding a User with non-default Home Directory

~]# useradd -d /home/dir_1 robert

robert's home directory is now not the default /home/robert but /home/dir_1/.

If you do not want a home directory to be created for the user at all, run useradd with the -M option. However, when such a user logs into a system that has just booted and their home directory does not exist, their login directory will be the root directory. If such a user logs into a system using the su command, their login directory will be the current directory of the previous user.

useradd -M username

To copy the contents of a directory into the new user's /home directory while creating the user, use the -m and -k options together, followed by the path.

Example 3.4. Creating a User while Copying Contents to the Home Directory

The following command copies the contents of /dir_1 to /home/jane, which is the default home directory of a new user jane:

~]# useradd -m -k /dir_1 jane

You can also create a temporary account. With the useradd command, this means creating an account for a limited time only and disabling it on a given date. This is a useful setting because a forgotten account cannot remain a security risk after it expires. For this, use the -e option with the expiration date in the YYYY-MM-DD format.

Note

useradd -e YYYY-MM-DD username

Example 3.5. Setting the Account Expiration Date

~]# useradd -e 2015-11-05 emily

The account emily is created now and automatically disabled on 5 November 2015.

The user's login shell defaults to /bin/bash, but the -s option can set any other shell, for example ksh, csh, or tcsh.

useradd -s login_shell username

Example 3.6. Adding a User with Non-default Shell

~]# useradd -s /bin/ksh robert

This command creates the user robert with the /bin/ksh login shell.

The -r option creates a system account, which is an account intended for services and administrative tasks rather than for ordinary interactive users. Such accounts receive a UID lower than the value of UID_MIN defined in /etc/login.defs, while ordinary users receive UIDs of UID_MIN (typically 500) and above.

useradd -r username

3.4.2. Attaching New Users to Groups

The useradd command creates a User Private Group (UPG, a group assigned to a user account to which that user exclusively belongs) whenever a new user is added to the system and names the group after the user. For example, when the account robert is created, a UPG named robert is created at the same time, the only member of which is the user robert.

If you do not want to create a User Private Group for a user, run the useradd command with the following option:

useradd -N username

Instead of relying on the UPG, you can specify the user's group membership with the -g and -G options. While the -g option specifies the primary group membership, -G refers to supplementary groups into which the user is also included. The group names you specify must already exist on the system.

Example 3.7. Adding a User to a Group

~]# useradd -g "friends" -G "family,schoolmates" emily

The useradd -g "friends" -G "family,schoolmates" emily command creates the user emily with the primary group friends, as specified by the -g option. emily is also a member of the supplementary groups family and schoolmates.

To specify the supplementary groups of an existing user, use the usermod command with the -G option and a list of groups separated by commas, with no spaces. Note that without the -a option, this list replaces the user's current supplementary groups:

usermod -G group_1,group_2,group_3 username

3.4.3. Updating Users' Authentication

When you create an account with the basic useradd username command, the password is automatically set to never expire (see the /etc/shadow file).

To change this, use passwd, the standard utility for administering the /etc/passwd file. The syntax of the passwd command looks as follows:

passwd option(s) username

You can, for example, lock an account with the -l option. Locking makes the hashed password an invalid string by prefixing it with an exclamation mark (!). If you later find a reason to unlock the account, passwd has the reverse operation, -u. Only root can carry out these two operations.

passwd -l username

passwd -u username

Example 3.8. Unlocking a User Password

~]# passwd -l robert

Locking password for user robert.

passwd: Success

~]# passwd -u robert

passwd: Warning: unlocked password would be empty

passwd: Unsafe operation (use -f to force)

At first, the -l option locks robert's account password successfully. However, running the passwd -u command does not unlock the password because by default passwd refuses to create a passwordless account.

To make a user's password expire immediately, run passwd with the -e option. The user will be forced to change the password during the next login attempt:

passwd -e username

You can also set a password's minimum (the -n option) and maximum (the -x option) lifetimes. To warn the user before the password expires, use the -w option. Each of these options takes a number of days and can be used by root only.

Example 3.9. Adjusting Aging Data for User Passwords

~]# passwd -n 10 -x 60 -w 3 jane

With these settings, jane must wait at least 10 days between password changes, her password expires after 60 days, and she begins receiving warnings 3 days before it expires.

To verify the settings, use the -S option, which prints a short summary of the password status for a given account:

~]# passwd -S jane

jane LK 2014-07-22 10 60 3 -1 (Password locked.)
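The date on which such a password expires follows from the third field (date of last change) and the fifth field (maximum age in days) of this output. The sketch below computes it, assuming GNU date for the -d option; the function name password_expiry is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: given the date of the last password change
# (YYYY-MM-DD) and the maximum password age in days, print the date
# on which the password expires.
# Assumes GNU date, whose -d option accepts relative offsets.
password_expiry() {
    date -u -d "$1 +$2 days" +%Y-%m-%d
}

# Values taken from the sample passwd -S output for jane.
password_expiry 2014-07-22 60
```

This is only an estimate for reading the status line; the authoritative aging data remains in /etc/shadow.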

With the useradd command, you can also set the number of days after a password expires until the account is permanently disabled. Use the -f option to specify this number of days (a disabled account may still be unblocked by the system administrator). A value of 0 disables the account as soon as the password has expired, and a value of -1 disables the feature, that is, the user will have to change their password when it expires.

useradd -f number-of-days username

For more information on the passwd command, see the passwd(1) man page.

3.4.4. Modifying User Settings

To change the attributes of an existing user, use the usermod command. Its logic and syntax are the same as those of useradd:

usermod option(s) username

To change the user's user name, use the -l option with the new user name (or login).

Example 3.10. Changing User's Login

~]# usermod -l "emily-smith" emily

The -l option changes the name of the user from the login emily to the new login, emily-smith. Nothing else is changed. In particular, emily's home directory name (/home/emily) remains the same unless it is changed manually to reflect the new user name.

Note

Example 3.11. Changing User's UID and Home Directory

~]# usermod -u 699 -d /home/dir_2 robert

The command with the -u and -d options changes the settings of user robert. Now, his ID is 699 instead of 502, and his home directory is no longer /home/robert but /home/dir_2. The -a option is omitted here because usermod accepts it only together with -G.

With the usermod command you can also move the content of the user's home directory to a new location, or lock the account by locking its password.

Example 3.12. Changing User's

~]# usermod -m -d /home/dir_3 -L jane

In this sample command, the -m and -d options used together move the content of jane's home directory to the /home/dir_3 directory. The -L option locks access to jane's account by locking its password.

For the whole list of options to use with the usermod command, see the usermod(8) man page or run usermod --help on the command line.

3.4.5. Deleting Users

To delete a user account from the system, run the userdel command on the command line as root.

userdel username

Combining userdel with the -r option removes files in the user's home directory along with the home directory itself and the user's mail spool. Files located in other file systems have to be searched for and deleted manually.

userdel -r username

Note

The -r option is relatively safer, and thus recommended, compared to -f, which forces the removal of the user account even if the user is still logged in.

3.4.6. Displaying Comprehensive User Information

lslogins [OPTIONS]

See the lslogins(1) manual page or the output of the lslogins --help command for the complete list of available options and their usage.

The lslogins command without any options shows default information about all system and user accounts on the system: their UID, user name, and GECOS information, as well as information about the user's last login to the system, and whether their password is locked or login by password is disabled.

Example 3.13. Displaying basic information about all accounts on the system

~]# lslogins

UID USER PWD-LOCK PWD-DENY LAST-LOGIN GECOS

0 root 0 0 root

1 bin 0 1 bin

2 daemon 0 1 daemon

3 adm 0 1 adm

4 lp 0 1 lp

5 sync 0 1 sync

6 shutdown 0 1 Jul21/16:20 shutdown

7 halt 0 1 halt

8 mail 0 1 mail

10 uucp 0 1 uucp

11 operator 0 1 operator

12 games 0 1 games

13 gopher 0 1 gopher

14 ftp 0 1 FTP User

29 rpcuser 0 1 RPC Service User

32 rpc 0 1 Rpcbind Daemon

38 ntp 0 1

42 gdm 0 1

48 apache 0 1 Apache

68 haldaemon 0 1 HAL daemon

69 vcsa 0 1 virtual console memory owner

72 tcpdump 0 1

74 sshd 0 1 Privilege-separated SSH

81 dbus 0 1 System message bus

89 postfix 0 1

99 nobody 0 1 Nobody

113 usbmuxd 0 1 usbmuxd user

170 avahi-autoipd 0 1 Avahi IPv4LL Stack

173 abrt 0 1

497 pulse 0 1 PulseAudio System Daemon

498 saslauth 0 1 Saslauthd user

499 rtkit 0 1 RealtimeKit

500 jsmith 0 0 10:56:12 John Smith

501 jdoe 0 0 12:13:53 John Doe

502 esmith 0 0 12:59:05 Emily Smith

503 jeyre 0 0 12:22:14 Jane Eyre

65534 nfsnobody 0 1 Anonymous NFS User

To display detailed information about a single user, run the lslogins LOGIN command, where LOGIN is either a UID or a user name. The following example displays detailed information about John Doe's account and his activity on the system:

Example 3.14. Displaying detailed information about a single account

~]# lslogins jdoe

Username: jdoe

UID: 501

Gecos field: John Doe

Home directory: /home/jdoe

Shell: /bin/bash

No login: no

Password is locked: no

Password no required: no

Login by password disabled: no

Primary group: jdoe

GID: 501

Supplementary groups: users

Supplementary group IDs: 100

Last login: 12:13:53

Last terminal: pts/3

Last hostname: 192.168.100.1

Hushed: no

Password expiration warn interval: 7

Password changed: Aug01/02:00

Maximal change time: 99999

Password expiration: Sep01/02:00

Selinux context: unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

If you use the --logins=LOGIN option, you can display information about a group of accounts that are specified as a list of UIDs or user names. By specifying the --output=COLUMNS option, where COLUMNS is a list of available output parameters, you can customize the output of the lslogins command. For example, the following command shows login activity of the users root, jsmith, jdoe, and esmith:

Example 3.15. Displaying specific information about a group of users

~]# lslogins --logins=0,500,jdoe,esmith --output=UID,USER,LAST-LOGIN,LAST-TTY,FAILED-LOGIN,FAILED-TTY

UID USER LAST-LOGIN LAST-TTY FAILED-LOGIN FAILED-TTY

0 root

500 jsmith 10:56:12 pts/2

501 jdoe 12:13:53 pts/3

502 esmith 15:46:16 pts/3 15:46:09 ssh:notty

To display information about system accounts only, use the --system-accs option. To address user accounts, use the --user-accs option. For example, the following command displays information about supplementary groups and password expirations for all user accounts:

Example 3.16. Displaying information about supplementary groups and password expiration for all user accounts

~]# lslogins --user-accs --supp-groups --acc-expiration

UID USER GID GROUP SUPP-GIDS SUPP-GROUPS PWD-WARN PWD-MIN PWD-MAX PWD-CHANGE PWD-EXPIR

0 root 0 root 7 99999 Jul21/02:00

500 jsmith 500 jsmith 1000,100 staff,users 7 99999 Jul21/02:00

501 jdoe 501 jdoe 100 users 7 99999 Aug01/02:00 Sep01/02:00

502 esmith 502 esmith 100 users 7 99999 Aug01/02:00

503 jeyre 503 jeyre 1000,100 staff,users 7 99999 Jul28/02:00 Sep01/02:00

65534 nfsnobody 65534 nfsnobody Jul21/02:00

The ability to format the output of lslogins commands according to the user's needs makes lslogins an ideal tool to use in scripts and for automatic processing. For example, the following command returns a single string that represents the time and date of the last login. This string can be passed as input to another utility for further processing.

Example 3.17. Displaying a single piece of information without the heading

~]# lslogins --logins=jsmith --output=LAST-LOGIN --time-format=iso | tail -1

2014-08-06T10:56:12+0200

3.5. Managing Groups via Command-Line Tools

Groups can be managed with command-line tools such as groupadd, groupmod, groupdel, or gpasswd. The files affected include /etc/group, which stores group account information, and /etc/gshadow, which stores secure group account information.

3.5.1. Creating Groups

To add a new group to the system with default settings, run the groupadd command at the shell prompt as root.

groupadd group_name

Example 3.18. Creating a Group with Default Settings

~]# groupadd friends

The groupadd command creates a new group called friends. You can read more information about the group from the newly created line in the /etc/group file:

friends:x:30005:

The group friends is automatically assigned a unique GID (group ID) of 30005 and has no members yet. Optionally, you can set a password for a group by running gpasswd groupname.

Alternatively, you can run the command with options:

groupadd option(s) groupname

To specify the numerical GID of the group yourself, run the groupadd command with the -g option. Remember that this value must be unique (unless the -o option is used) and non-negative.

groupadd -g GID group_name

Example 3.19. Creating a Group with Specified GID

The command below creates a group named schoolmates and sets a GID of 60002 for it:

~]# groupadd -g 60002 schoolmates

When -g is used and the GID already exists, groupadd refuses to create another group with the existing GID. As a workaround, add the -f option, with which groupadd creates the group, but with a different GID.

groupadd -f -g GID group_name

To create a system group, add the -r option to the groupadd command. System groups are used for system purposes, which in practice means that the GID is allocated from 1 to 499 within the reserved range of 999.

groupadd -r group_name

For more information on groupadd, see the groupadd(8) man page.

3.5.2. Attaching Users to Groups

To add an existing user to a named group, use the gpasswd command.

gpasswd -a username which_group_to_edit

To remove a user from the named group, run:

gpasswd -d username which_group_to_edit

To set the list of group members, write the user names after the --members option, separated by commas with no spaces:

gpasswd --members username_1,username_2 which_group_to_edit

3.5.3. Updating Group Authentication

The gpasswd command administers the /etc/group and /etc/gshadow files. Note that this command works only if run by a group administrator.

Who is a group administrator? The root user can add group administrators with the gpasswd -A users groupname command, where users is a comma-separated list of existing users you want to be group administrators (with no spaces around the commas).
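When the user names come from a script, the comma-separated list that gpasswd -A and gpasswd --members expect can be built with a small helper. The sketch below is illustrative only: the function name join_users is hypothetical, and the gpasswd command is printed rather than executed.

```shell
#!/bin/sh
# Hypothetical helper: join its arguments with commas and no spaces,
# the list format used by gpasswd -A and gpasswd --members.
join_users() {
    # "$*" joins the arguments with the first character of IFS;
    # the subshell keeps the IFS change local to this function.
    ( IFS=,; printf '%s\n' "$*" )
}

users=$(join_users emily jane robert)
# Only printed here for review, not executed.
echo "gpasswd -A $users groupname"
```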

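To change a group's password, run the gpasswd command with the relevant group name. You will be prompted to type the new password of the group.

gpasswd groupname

Example 3.20. Changing a Group Password

~]# gpasswd crowd

Changing password for group crowd

New password:

Re-enter new password:

The password for the group crowd has been changed.

You can also remove the password from the named group by using the -r option.

gpasswd -r schoolmates

3.5.4. Modifying Group Settings

To change the definition of an existing group, use the groupmod command. Its logic and syntax are the same as those of groupadd:

groupmod option(s) groupname

To change the group ID of a given group, use the groupmod command in the following way:

groupmod -g GID_NEW which_group_to_edit

Note

To change the name of the group, run the following on the command line. The name of the group will be changed from groupname to new_groupname.

groupmod -n new_groupname groupname

Example 3.21. Changing a Group's Name

The following command changes the name of the group schoolmates to crowd: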

~]# groupmod -n crowd schoolmates

3.5.5. Deleting Groups

The groupdel command modifies the system account files, deleting all entries that refer to the group. The named group must exist when you execute this command.

groupdel groupname

3.6. Additional Resources

3.6.1. Installed Documentation

- chage(1) - A command to modify password aging policies and account expiration.

- gpasswd(1) - A command to administer the /etc/group file.

- groupadd(8) - A command to add groups.

- grpck(8) - A command to verify the /etc/group file.

- groupdel(8) - A command to remove groups.

- groupmod(8) - A command to modify group membership.

- pwck(8) - A command to verify the /etc/passwd and /etc/shadow files.

- pwconv(8) - A tool to convert standard passwords to shadow passwords.

- pwunconv(8) - A tool to convert shadow passwords to standard passwords.

- useradd(8) - A command to add users.

- userdel(8) - A command to remove users.

- usermod(8) - A command to modify users.

- group(5) - The file containing group information for the system.

- passwd(5) - The file containing user information for the system.

- shadow(5) - The file containing passwords and account expiration information for the system.

- login.defs(5) - The file containing shadow password suite configuration.

- useradd(8) - For /etc/default/useradd, see the section “Changing the default values” in the manual page.

Chapter 4. Gaining Privileges

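Accessing the system as root is potentially dangerous and can lead to widespread damage to the system and data. This chapter covers ways to gain administrative privileges using the su and sudo programs. These programs allow specific users to perform tasks which would normally be available only to the root user while maintaining a higher level of control and system security.

4.1. The su Command

When a user executes the su command, they are prompted for the root password and, after authentication, are given a root shell prompt.

Once logged in via the su command, the user is the root user and has absolute administrative access to the system[1]. In addition, once a user has become root, it is possible for them to use the su command to change to any other user on the system without being prompted for a password.

Because this program is so powerful, you may want to limit who has access to it. One of the simplest ways to do this is to add trusted users to the special administrative group called wheel. To do this, type the following command as root:

~]# usermod -a -G wheel username

In the previous command, replace username with the user name you want to add to the wheel group.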

- Click the menu on the Panel, point to and then click to display the User Manager. Alternatively, type the command system-config-users at a shell prompt.

- Click the Users tab, and select the required user in the list of users.

- Click on the toolbar to display the User Properties dialog box (or choose on the menu).

- Click the Groups tab, select the check box for the wheel group, and then click .

After you add the desired users to the wheel group, it is advisable to only allow these specific users to use the su command. To do this, you will need to edit the PAM configuration file for su: /etc/pam.d/su. Open this file in a text editor and remove the comment (#) from the following line:

#auth required pam_wheel.so use_uid

This change means that only members of the administrative group wheel can switch to another user using the su command.
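The same change can be previewed from a script before touching the real file. The sketch below prints a copy of a su PAM file with that one line uncommented; it writes to standard output only, the function name enable_pam_wheel is hypothetical, and the demonstration uses a temporary sample file rather than /etc/pam.d/su.

```shell
#!/bin/sh
# Hypothetical helper: print the given PAM file with the
# "auth required pam_wheel.so use_uid" line uncommented.
# The input file itself is not modified.
enable_pam_wheel() {
    # Matches only lines where "pam_wheel.so use_uid" appears
    # together, so a "pam_wheel.so trust use_uid" line stays commented.
    sed 's/^#\(auth.*pam_wheel\.so use_uid\)/\1/' "$1"
}

sample=$(mktemp)
printf '%s\n' \
    'auth sufficient pam_rootok.so' \
    '#auth sufficient pam_wheel.so trust use_uid' \
    '#auth required pam_wheel.so use_uid' > "$sample"
enable_pam_wheel "$sample"
rm -f "$sample"
```

Reviewing the printed result first makes it easier to confirm that only the intended line changes.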

Note

The root user is part of the wheel group by default.

4.2. The sudo Command

The sudo command offers another approach to giving users administrative access. When trusted users precede an administrative command with sudo, they are prompted for their own password. Then, when they have been authenticated and assuming that the command is permitted, the administrative command is executed as if they were the root user.

The basic format of the sudo command is as follows:

sudo <command>

In the above example, <command> would be replaced by a command normally reserved for the root user, such as mount.

The sudo command allows for a high degree of flexibility. For instance, only users listed in the /etc/sudoers configuration file are allowed to use the sudo command and the command is executed in the user's shell, not a root shell. This means the root shell can be completely disabled as shown in the Red Hat Enterprise Linux 6 Security Guide.

Each successful authentication using sudo is logged to the file /var/log/messages and the command issued along with the issuer's user name is logged to the file /var/log/secure. Should you require additional logging, use the pam_tty_audit module to enable TTY auditing for specified users by adding the following line to your /etc/pam.d/system-auth file:

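session required pam_tty_audit.so disable=<pattern> enable=<pattern>

Here, <pattern> is a comma-separated list of users, optionally using globs. For example, the following line enables TTY auditing for the root user and disables it for all other users:

session required pam_tty_audit.so disable=* enable=root

Another advantage of the sudo command is that an administrator can allow different users access to specific commands based on their needs.

Administrators wanting to edit the sudo configuration file, /etc/sudoers, should use the visudo command.

To give someone full administrative privileges, type visudo and add a line similar to the following in the user privilege specification section: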

juan ALL=(ALL) ALL

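This example states that the user, juan, can use sudo from any host and execute any command.

The example below illustrates the granularity possible when configuring sudo: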

%users localhost=/sbin/shutdown -h now

This example states that any member of the users group can issue the command /sbin/shutdown -h now as long as it is issued from the console.

The man page for sudoers has a detailed listing of options for this file.

Important

There are several potential risks to keep in mind when using the sudo command. You can avoid them by editing the /etc/sudoers configuration file using visudo as described above. Leaving the /etc/sudoers file in its default state gives every user in the wheel group unlimited root access.

- By default, sudo stores the sudoer's password for a five-minute timeout period. Any subsequent uses of the command during this period will not prompt the user for a password. This could be exploited by an attacker if the user leaves their workstation unattended and unlocked while still being logged in. This behavior can be changed by adding the following line to the /etc/sudoers file:

Defaults timestamp_timeout=<value>

where <value> is the desired timeout length in minutes. Setting the <value> to 0 causes sudo to require a password every time.

- If a sudoer's account is compromised, an attacker can use sudo to open a new shell with administrative privileges:

sudo /bin/bash

Opening a new shell as root in this or similar fashion gives the attacker administrative access for a theoretically unlimited amount of time, bypassing the timeout period specified in the /etc/sudoers file and never requiring the attacker to input a password for sudo again until the newly opened session is closed.

4.3. Additional Resources

Installed Documentation

- su(1) - the manual page for su provides information regarding the options available with this command.

- sudo(8) - the manual page for sudo includes a detailed description of this command as well as a list of options available for customizing sudo's behavior.

- pam(8) - the manual page describing the use of Pluggable Authentication Modules for Linux.

Online Documentation

- Red Hat Enterprise Linux 6 Security Guide - The Security Guide describes in detail security risks and mitigating techniques related to programs for gaining privileges.

- Red Hat Enterprise Linux 6 Managing Single Sign-On and Smart Cards - This guide provides, among other things, a detailed description of Pluggable Authentication Modules (PAM), their configuration and usage.

Chapter 5. Console Access

- They can run certain programs that they otherwise cannot run.

- They can access certain files that they otherwise cannot access. These files normally include special device files used to access diskettes, CD-ROMs, and so on.

halt, poweroff, and reboot.

5.1. Disabling Console Program Access for Non-root Users

/etc/security/console.apps/ directory. To list these programs, run the following command:

~]$ ls /etc/security/console.apps

abrt-cli-root

config-util

eject

halt

poweroff

reboot

rhn_register

setup

subscription-manager

subscription-manager-gui

system-config-network

system-config-network-cmd

xserver

/etc/security/console.apps/ resides in the /etc/pam.d/ directory and is named the same as the program. Using this file, you can configure PAM to deny access to the program if the user is not root. To do that, insert line auth requisite pam_deny.so directly after the first uncommented line auth sufficient pam_rootok.so.

Example 5.1. Disabling Access to the Reboot Program

/etc/security/console.apps/reboot, insert line auth requisite pam_deny.so into the /etc/pam.d/reboot PAM configuration file:

#%PAM-1.0

auth sufficient pam_rootok.so

auth requisite pam_deny.so

auth required pam_console.so

#auth include system-auth

account required pam_permit.so

reboot utility is disabled.
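The insertion rule (place the pam_deny.so line directly after the first uncommented pam_rootok.so line) can be sketched as a small filter. This is an illustration under stated assumptions, not a tool shipped with the system: add_pam_deny is a hypothetical name, the sample file only resembles a PAM configuration file, and the result is printed rather than written back.

```shell
# add_pam_deny FILE (hypothetical helper): print FILE with
# "auth requisite pam_deny.so" inserted after the first uncommented
# "auth sufficient pam_rootok.so" line. The input file is not modified.
add_pam_deny() {
    awk '
        { print }
        !done && /^auth[ \t]+sufficient[ \t]+pam_rootok\.so/ {
            print "auth       requisite    pam_deny.so"
            done = 1
        }
    ' "$1"
}

# Try it on a scratch file shaped like a PAM configuration file.
sample=$(mktemp)
printf '%s\n' '#%PAM-1.0' \
    'auth       sufficient   pam_rootok.so' \
    'auth       required     pam_console.so' \
    'account    required     pam_permit.so' > "$sample"
add_pam_deny "$sample"
rm -f "$sample"
```

Printing to standard output keeps the original file untouched, so the result can be reviewed before it replaces anything under /etc/pam.d/.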

/etc/security/console.apps/ partially derive their PAM configuration from the /etc/pam.d/config-util configuration file. This allows you to change the configuration for all these programs at once by editing /etc/pam.d/config-util. To find all these programs, search for PAM configuration files that refer to the config-util file:

~]# grep -l "config-util" /etc/pam.d/*

/etc/pam.d/abrt-cli-root

/etc/pam.d/rhn_register

/etc/pam.d/subscription-manager

/etc/pam.d/subscription-manager-gui

/etc/pam.d/system-config-network

/etc/pam.d/system-config-network-cmd

halt, poweroff, reboot, and other programs, which by default are accessible from the console.

5.2. Disabling Rebooting Using Ctrl+Alt+Del

/etc/init/control-alt-delete.conf file. By default, the shutdown utility with the -r option is used to shut down and reboot the system.

exec true command, which does nothing. To do that, run the following command as root:

~]# echo "exec true" >> /etc/init/control-alt-delete.override
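Because the >> operator appends, running the command above more than once leaves duplicate lines in the override file. A minimal sketch of a guarded variant follows; disable_cad is a hypothetical helper name, and the demonstration writes to a scratch file instead of the real override file.

```shell
# disable_cad FILE (hypothetical helper): append "exec true" to FILE only
# if that exact line is not already present, so repeated runs are harmless.
disable_cad() {
    grep -qx 'exec true' "$1" 2>/dev/null || echo 'exec true' >> "$1"
}

# Demonstrate on a scratch file; on a real system FILE would be
# /etc/init/control-alt-delete.override and the call would need root.
override=$(mktemp)
disable_cad "$override"
disable_cad "$override"
cat "$override"    # the line appears once
rm -f "$override"
```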

Part II. Subscription and Support

Chapter 6. Registering the System and Managing Subscriptions

Note

6.1. Registering the System and Attaching Subscriptions

subscription-manager commands are supposed to be run as root.

- Run the following command to register your system. You will be prompted to enter your user name and password. Note that the user name and password are the same as your login credentials for Red Hat Customer Portal.

subscription-manager register

- Determine the pool ID of a subscription that you require. To do so, type the following at a shell prompt to display a list of all subscriptions that are available for your system:

subscription-manager list --available

For each available subscription, this command displays its name, unique identifier, expiration date, and other details related to your subscription. To list subscriptions for all architectures, add the --all option. The pool ID is listed on a line beginning with Pool ID.

- Attach the appropriate subscription to your system by entering a command as follows:

subscription-manager attach --pool=pool_id

Replace pool_id with the pool ID you determined in the previous step. To verify the list of subscriptions your system has currently attached, at any time, run:

subscription-manager list --consumed
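Since the pool ID sits on a line beginning with Pool ID, it can be pulled out of the listing with a one-line filter. The sketch below is illustrative only: pool_ids is a hypothetical helper, and the sample input (including the subscription name) is made up to resemble the listing described above.

```shell
# pool_ids (hypothetical helper): read `subscription-manager list --available`
# style output on stdin and print one pool ID per line.
pool_ids() {
    sed -n 's/^Pool ID:[[:space:]]*//p'
}

# Sample input shaped like the listing; the subscription name is made up.
printf '%s\n' \
    'Subscription Name: Example Subscription' \
    'Pool ID:           8a85f9894bba16dc014bccdd905a5e23' \
    'Quantity:          1' | pool_ids
```

On a registered system the same filter would be fed from the real command, for example `subscription-manager list --available | pool_ids`.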

Note

yum and subscription-manager to work correctly. Refer to the "Setting Firewall Access for Content Delivery" section of the Red Hat Enterprise Linux 6 Subscription Management guide if you use a firewall and to the "Using an HTTP Proxy" section if you use a proxy.

6.2. Managing Software Repositories

/etc/yum.repos.d/ directory. To verify that, use yum to list all enabled repositories:

yum repolist

subscription-manager repos --list

rhel-variant-rhscl-version-rpms

rhel-variant-rhscl-version-debug-rpms

rhel-variant-rhscl-version-source-rpms

server or workstation), and version is the Red Hat Enterprise Linux system version (6 or 7), for example:

rhel-server-rhscl-6-eus-rpms

rhel-server-rhscl-6-eus-source-rpms

rhel-server-rhscl-6-eus-debug-rpms

subscription-manager repos --enable repository

subscription-manager repos --disable repository

6.3. Removing Subscriptions

- Determine the serial number of the subscription you want to remove by listing information about already attached subscriptions:

subscription-manager list --consumed

The serial number is the number listed as serial. For instance, 744993814251016831 in the example below:

SKU: ES0113909

Contract: 01234567

Account: 1234567

Serial: 744993814251016831

Pool ID: 8a85f9894bba16dc014bccdd905a5e23

Active: False

Quantity Used: 1

Service Level: SELF-SUPPORT

Service Type: L1-L3

Status Details:

Subscription Type: Standard

Starts: 02/27/2015

Ends: 02/27/2016

System Type: Virtual

- Enter a command as follows to remove the selected subscription:

subscription-manager remove --serial=serial_number

Replace serial_number with the serial number you determined in the previous step.
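When several subscriptions are attached, picking the right serial number by eye is error-prone. The following sketch prints the serial numbers of entries that are no longer active; inactive_serials is a hypothetical helper, and it assumes that Serial precedes Active within each entry, as in the sample output earlier in this section.

```shell
# inactive_serials (hypothetical helper): read `subscription-manager list
# --consumed` style output on stdin and print the serial number of every
# entry whose "Active:" field is False.
# Assumption: "Serial:" precedes "Active:" within each entry.
inactive_serials() {
    awk '
        $1 == "Serial:" { serial = $2 }
        $1 == "Active:" && $2 == "False" { print serial }
    '
}

printf '%s\n' \
    'Serial: 744993814251016831' \
    'Pool ID: 8a85f9894bba16dc014bccdd905a5e23' \
    'Active: False' | inactive_serials
```

Each printed value can then be passed to the --serial option shown above.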

subscription-manager remove --all

6.4. Additional Resources

Installed Documentation

subscription-manager(8) — the manual page for Red Hat Subscription Management provides a complete list of supported options and commands.

Related Books

- Red Hat Subscription Management collection of guides - These guides contain detailed information on how to use Red Hat Subscription Management.

- Installation Guide — see the Firstboot chapter for detailed information on how to register during the firstboot process.

Online Resources

- Red Hat Access Labs — The Red Hat Access Labs includes a “Registration Assistant”.

See Also

- Chapter 4, Gaining Privileges documents how to gain administrative privileges by using the su and sudo commands.

- Chapter 8, Yum provides information about using the yum package manager to install and update software.

- Chapter 9, PackageKit provides information about using the PackageKit package manager to install and update software.

Chapter 7. Accessing Support Using the Red Hat Support Tool

SSH or from any terminal. It enables, for example, searching the Red Hat Knowledgebase from the command line, copying solutions directly on the command line, opening and updating support cases, and sending files to Red Hat for analysis.

7.1. Installing the Red Hat Support Tool

root:

~]# yum install redhat-support-tool

7.2. Registering the Red Hat Support Tool Using the Command Line

~]# redhat-support-tool config user username

Where username is the user name of the Red Hat Customer Portal account.

~]# redhat-support-tool config password

Please enter the password for username:

7.3. Using the Red Hat Support Tool in Interactive Shell Mode

~]$ redhat-support-tool

Welcome to the Red Hat Support Tool.

Command (? for help):

The tool can be run as an unprivileged user, with a consequently reduced set of commands, or as root.

? character. The program or menu selection can be exited by entering the q or e character. You will be prompted for your Red Hat Customer Portal user name and password when you first search the Knowledgebase or support cases. Alternatively, set the user name and password for your Red Hat Customer Portal account using interactive mode, and optionally save it to the configuration file.

7.4. Configuring the Red Hat Support Tool

config --help:

~]# redhat-support-tool

Welcome to the Red Hat Support Tool.

Command (? for help): config --help

Usage: config [options] config.option <new option value>

Use the 'config' command to set or get configuration file values.

Options:

-h, --help show this help message and exit

-g, --global Save configuration option in /etc/redhat-support-tool.conf.

-u, --unset Unset configuration option.

The configuration file options which can be set are:

user : The Red Hat Customer Portal user.

password : The Red Hat Customer Portal password.

debug : CRITICAL, ERROR, WARNING, INFO, or DEBUG

url : The support services URL. Default=https://api.access.redhat.com

proxy_url : A proxy server URL.

proxy_user: A proxy server user.

proxy_password: A password for the proxy server user.

ssl_ca : Path to certificate authorities to trust during communication.

kern_debug_dir: Path to the directory where kernel debug symbols should be downloaded and cached. Default=/var/lib/redhat-support-tool/debugkernels

Examples:

- config user

- config user my-rhn-username

- config --unset user

Procedure 7.1. Registering the Red Hat Support Tool Using Interactive Mode

- Start the tool by entering the following command:

~]# redhat-support-tool

- Enter your Red Hat Customer Portal user name:

Command (? for help): config user username

To save your user name to the global configuration file, add the -g option.

- Enter your Red Hat Customer Portal password:

Command (? for help): config password

Please enter the password for username:

7.4.1. Saving Settings to the Configuration Files

~/.redhat-support-tool/redhat-support-tool.conf configuration file. If required, it is recommended to save passwords to this file because it is only readable by that particular user. When the tool starts, it will read values from the global configuration file /etc/redhat-support-tool.conf and from the local configuration file. Locally stored values and options take precedence over globally stored settings.

Warning

/etc/redhat-support-tool.conf configuration file because the password is just base64 encoded and can easily be decoded. In addition, the file is world readable.
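To see why base64 offers no protection, consider the round trip below. It is a small illustration using a made-up password and the standard base64 utility; it does not read or write any Red Hat Support Tool configuration file.

```shell
# Encode and decode a made-up password with base64. No key or secret is
# involved, so anyone who can read the encoded text can recover the original.
encoded=$(printf '%s' 'example-password' | base64)
decoded=$(printf '%s' "$encoded" | base64 -d)
printf 'encoded: %s\ndecoded: %s\n' "$encoded" "$decoded"
```

The decoded value matches the input exactly, which is why a world-readable file is an unsuitable place for an encoded password.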

-g, --global option as follows:

Command (? for help): config setting -g value

Note

-g, --global option, the Red Hat Support Tool must be run as root because normal users do not have the permissions required to write to /etc/redhat-support-tool.conf.

-u, --unset option as follows:

Command (? for help): config setting -u value

This will clear, unset, the parameter from the tool and fall back to the equivalent setting in the global configuration file, if available.

Note

-u, --unset option, but they can be cleared, unset, from the current running instance of the tool by using the -g, --global option simultaneously with the -u, --unset option. If running as root, values and options can be removed from the global configuration file using -g, --global simultaneously with the -u, --unset option.

7.5. Opening and Updating Support Cases Using Interactive Mode

Procedure 7.2. Opening a New Support Case Using Interactive Mode

- Start the tool by entering the following command:

~]# redhat-support-tool

- Enter the opencase command:

Command (? for help): opencase

- Follow the on screen prompts to select a product and then a version.

- Enter a summary of the case.

- Enter a description of the case and press Ctrl+D on an empty line when complete.

- Select a severity of the case.

- Optionally choose to see if there is a solution to this problem before opening a support case.

- Confirm you would still like to open the support case.

Support case 0123456789 has successfully been opened

- Optionally choose to attach an SOS report.

- Optionally choose to attach a file.

Procedure 7.3. Viewing and Updating an Existing Support Case Using Interactive Mode

- Start the tool by entering the following command:

~]# redhat-support-tool

- Enter the getcase command:

Command (? for help): getcase case-number

Where case-number is the number of the case you want to view and update.

- Follow the on screen prompts to view the case, modify or add comments, and get or add attachments.

Procedure 7.4. Modifying an Existing Support Case Using Interactive Mode

- Start the tool by entering the following command:

~]# redhat-support-tool

- Enter the modifycase command:

Command (? for help): modifycase case-number

Where case-number is the number of the case you want to view and update.

- The modify selection list appears:

Type the number of the attribute to modify or 'e' to return to the previous menu.

1 Modify Type

2 Modify Severity

3 Modify Status

4 Modify Alternative-ID

5 Modify Product

6 Modify Version

End of options.

Follow the on screen prompts to modify one or more of the options.

- For example, to modify the status, enter 3:

Selection: 3

1 Waiting on Customer

2 Waiting on Red Hat

3 Closed

Please select a status (or 'q' to exit):

7.6. Viewing Support Cases on the Command Line

~]# redhat-support-tool getcase case-number

Where case-number is the number of the case you want to download.

7.7. Additional Resources

Part III. Installing and Managing Software

Chapter 8. Yum

Important

Note

yum to install, update or remove packages on your system. All examples in this chapter assume that you have already obtained superuser privileges by using either the su or sudo command.

8.1. Checking For and Updating Packages

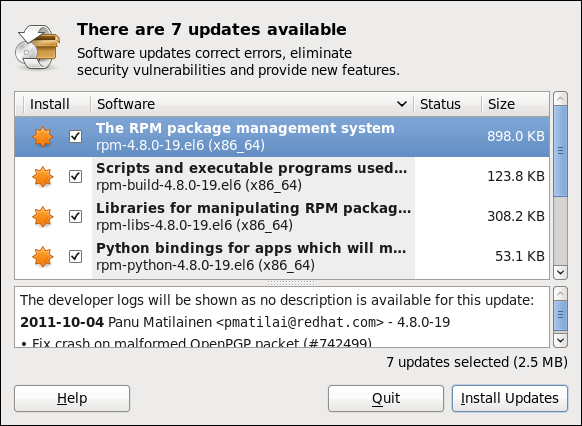

8.1.1. Checking For Updates

yum check-update

~]# yum check-update

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

PackageKit.x86_64 0.5.8-2.el6 rhel

PackageKit-glib.x86_64 0.5.8-2.el6 rhel

PackageKit-yum.x86_64 0.5.8-2.el6 rhel

PackageKit-yum-plugin.x86_64 0.5.8-2.el6 rhel

glibc.x86_64 2.11.90-20.el6 rhel

glibc-common.x86_64 2.10.90-22 rhel

kernel.x86_64 2.6.31-14.el6 rhel

kernel-firmware.noarch 2.6.31-14.el6 rhel

rpm.x86_64 4.7.1-5.el6 rhel

rpm-libs.x86_64 4.7.1-5.el6 rhel

rpm-python.x86_64 4.7.1-5.el6 rhel

udev.x86_64 147-2.15.el6 rhel

yum.noarch 3.2.24-4.el6 rhel

- PackageKit - the name of the package

- x86_64 - the CPU architecture the package was built for

- 0.5.8 - the version of the updated package to be installed

- rhel - the repository in which the updated package is located
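The fields of each output line can also be split apart mechanically. The following is a minimal sketch rather than anything yum provides: parse_updates is a hypothetical helper that assumes three-column lines of the form name.arch version-release repository, and it does not handle an epoch prefix or wrapped lines.

```shell
# parse_updates (hypothetical helper): read `yum check-update` style output
# on stdin and print "name arch version release repo" for each package line.
# Lines that do not look like "name.arch version-release repo" are skipped.
parse_updates() {
    awk 'NF == 3 && $1 ~ /\./ && $2 ~ /-/ {
        match($1, /\.[^.]*$/)              # last dot separates name and arch
        name = substr($1, 1, RSTART - 1)
        arch = substr($1, RSTART + 1)
        match($2, /-[^-]*$/)               # last hyphen separates version and release
        ver = substr($2, 1, RSTART - 1)
        rel = substr($2, RSTART + 1)
        print name, arch, ver, rel, $3
    }'
}

printf '%s\n' \
    'Loaded plugins: product-id, refresh-packagekit, subscription-manager' \
    'PackageKit.x86_64 0.5.8-2.el6 rhel' \
    'udev.x86_64 147-2.15.el6 rhel' | parse_updates
```

Splitting at the last dot rather than the first keeps package names that themselves contain dots intact.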

yum.

8.1.2. Updating Packages

Updating a Single Package

root:

yum update package_name

~]# yum update udev

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

Setting up Update Process

Resolving Dependencies

--> Running transaction check

---> Package udev.x86_64 0:147-2.15.el6 set to be updated

--> Finished Dependency Resolution

Dependencies Resolved

===========================================================================

Package Arch Version Repository Size

===========================================================================

Updating:

udev x86_64 147-2.15.el6 rhel 337 k

Transaction Summary

===========================================================================

Install 0 Package(s)

Upgrade 1 Package(s)

Total download size: 337 k

Is this ok [y/N]:

- Loaded plugins: product-id, refresh-packagekit, subscription-manager - yum always informs you which Yum plug-ins are installed and enabled. See Section 8.5, “Yum Plug-ins” for general information on Yum plug-ins, or Section 8.5.3, “Plug-in Descriptions” for descriptions of specific plug-ins.

- udev.x86_64 - you can download and install the new udev package.

- yum presents the update information and then prompts you as to whether you want it to perform the update; yum runs interactively by default. If you already know which transactions the yum command plans to perform, you can use the -y option to automatically answer yes to any questions that yum asks (in which case it runs non-interactively). However, you should always examine which changes yum plans to make to the system so that you can easily troubleshoot any problems that might arise.

If a transaction does go awry, you can view Yum's transaction history by using the yum history command as described in Section 8.3, “Working with Transaction History”.

Important

yum always installs a new kernel in the same sense that RPM installs a new kernel when you use the command rpm -i kernel. Therefore, you do not need to worry about the distinction between installing and upgrading a kernel package when you use yum: it will do the right thing, regardless of whether you are using the yum update or yum install command.

rpm -i kernel command (which installs a new kernel) instead of rpm -U kernel (which replaces the current kernel). See Section B.2.2, “Installing and Upgrading” for more information on installing/upgrading kernels with RPM.

Updating All Packages and Their Dependencies

yum update (without any arguments):

yum update

Updating Security-Related Packages

yum command with a set of highly-useful security-centric commands, subcommands and options. See Section 8.5.3, “Plug-in Descriptions” for specific information.

Updating Packages Automatically

cron daemon and downloads metadata from your package repositories. With the yum-cron service enabled, the user can schedule an automated daily Yum update as a cron job.

Note

~]# yum install yum-cron

~]# chkconfig yum-cron on

~]# service yum-cron start

~]# service yum-cron status

/etc/sysconfig/yum-cron file.

yum-cron can be found in the comments within /etc/sysconfig/yum-cron and at the yum-cron(8) manual page.

8.1.3. Preserving Configuration File Changes

8.1.4. Upgrading the System Off-line with ISO and Yum

yum update command with the Red Hat Enterprise Linux installation ISO image is an easy and quick way to upgrade systems to the latest minor version. The following steps illustrate the upgrading process:

- Create a target directory to mount your ISO image. This directory is not automatically created when mounting, so create it before proceeding to the next step. As root, type:

mkdir mount_dir

Replace mount_dir with a path to the mount directory. Typically, users create it as a subdirectory in the /media directory.

- Mount the Red Hat Enterprise Linux 6 installation ISO image to the previously created target directory. As root, type:

mount -o loop iso_name mount_dir

Replace iso_name with a path to your ISO image and mount_dir with a path to the target directory. Here, the -o loop option is required to mount the file as a block device.

- Copy the media.repo file from the mount directory to the /etc/yum.repos.d/ directory. Note that configuration files in this directory must have the .repo extension to function properly.

cp mount_dir/media.repo /etc/yum.repos.d/new.repo

This creates a configuration file for the yum repository. Replace new.repo with the filename, for example rhel6.repo.

- Edit the new configuration file so that it points to the Red Hat Enterprise Linux installation ISO. Add the following line into the /etc/yum.repos.d/new.repo file:

baseurl=file:///mount_dir

Replace mount_dir with a path to the mount point.

- Update all yum repositories including /etc/yum.repos.d/new.repo created in previous steps. As root, type:

yum update

This upgrades your system to the version provided by the mounted ISO image.

- After successful upgrade, you can unmount the ISO image. As root, type:

umount mount_dir

where mount_dir is a path to your mount directory. Also, you can remove the mount directory created in the first step. As root, type:

rmdir mount_dir

- If you will not use the previously created configuration file for another installation or update, you can remove it. As root, type:

rm /etc/yum.repos.d/new.repo

Example 8.1. Upgrading from Red Hat Enterprise Linux 6.3 to 6.4

RHEL6.4-Server-20130130.0-x86_64-DVD1.iso. A target directory created for mounting is /media/rhel6/. As root, change into the directory with your ISO image and type:

~]# mount -o loop RHEL6.4-Server-20130130.0-x86_64-DVD1.iso /media/rhel6/

media.repo file from the mount directory:

~]# cp /media/rhel6/media.repo /etc/yum.repos.d/rhel6.repo

/etc/yum.repos.d/rhel6.repo copied in the previous step:

baseurl=file:///media/rhel6/

RHEL6.4-Server-20130130.0-x86_64-DVD1.iso. As root, execute:

~]# yum update

~]# umount /media/rhel6/

~]# rmdir /media/rhel6/

~]# rm /etc/yum.repos.d/rhel6.repo
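The command sequence from this procedure can be collected in one place for review before anything is run. The sketch below only prints the commands and does not execute them; plan_iso_upgrade and its three arguments are illustrative names, and it assumes the mount directory is given as an absolute path.

```shell
# plan_iso_upgrade ISO MOUNT_DIR REPO_NAME (hypothetical helper): print the
# commands of the off-line upgrade procedure, in order, without running them.
# Assumption: MOUNT_DIR is an absolute path, so "file://" + MOUNT_DIR yields
# a file:/// URL.
plan_iso_upgrade() {
    iso=$1
    mount_dir=$2
    repo_file=/etc/yum.repos.d/$3
    cat <<EOF
mkdir $mount_dir
mount -o loop $iso $mount_dir
cp $mount_dir/media.repo $repo_file
echo "baseurl=file://$mount_dir" >> $repo_file
yum update
umount $mount_dir
rmdir $mount_dir
rm $repo_file
EOF
}

plan_iso_upgrade RHEL6.4-Server-20130130.0-x86_64-DVD1.iso /media/rhel6 rhel6.repo
```

Printing a plan first makes it easy to check paths before running the steps as root.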

8.2. Packages and Package Groups

8.2.1. Searching Packages

yum search term…

Example 8.2. Searching for packages matching a specific string

~]$ yum search vim gvim emacs

Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager

============================= N/S matched: vim ==============================

vim-X11.x86_64 : The VIM version of the vi editor for the X Window System

vim-common.x86_64 : The common files needed by any version of the VIM editor

[output truncated]

============================ N/S matched: emacs =============================

emacs.x86_64 : GNU Emacs text editor

emacs-auctex.noarch : Enhanced TeX modes for Emacs

[output truncated]

Name and summary matches mostly, use "search all" for everything.

Warning: No matches found for: gvim

The yum search command is useful for searching for packages you do not know the name of, but for which you know a related term. Note that by default, yum search returns matches in package name and summary, which makes the search faster. Use the yum search all command for a more exhaustive but slower search.

8.2.2. Listing Packages

yum list and related commands provide information about packages, package groups, and repositories.

* (which expands to match any character multiple times) and ? (which expands to match any one character).

Note

yum command, otherwise the Bash shell will interpret these expressions as pathname expansions, and potentially pass all files in the current directory that match the globs to yum. To make sure the glob expressions are passed to yum as intended, either:

- escape the wildcard characters by preceding them with a backslash character

- double-quote or single-quote the entire glob expression.
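The difference between an unquoted and a protected glob can be observed without yum at all. The following sketch uses a scratch directory and a hypothetical count_args helper to show how many arguments the shell passes in each case.

```shell
# count_args: print how many arguments the shell actually passed.
count_args() {
    echo "$#"
}

# Work in a scratch directory that contains two files matching the glob.
scratch=$(mktemp -d)
cd "$scratch" || exit 1
touch abrt-addon-ccpp abrt-addon-python

count_args abrt-addon*      # unquoted: the shell expands it to 2 file names
count_args abrt-addon\*     # escaped: 1 argument, the literal glob
count_args "abrt-addon*"    # quoted: 1 argument, the literal glob

cd / && rm -rf "$scratch"
```

In the unquoted case yum would receive the two file names instead of the pattern, which is the behavior the note warns about.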

- yum list glob_expression - Lists information on installed and available packages matching all glob expressions.

Example 8.3. Listing all ABRT add-ons and plug-ins using glob expressions

Packages with various ABRT add-ons and plug-ins either begin with “abrt-addon-”, or “abrt-plugin-”. To list these packages, type the following at a shell prompt:

~]# yum list abrt-addon\* abrt-plugin\*

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

Installed Packages

abrt-addon-ccpp.x86_64 1.0.7-5.el6 @rhel

abrt-addon-kerneloops.x86_64 1.0.7-5.el6 @rhel

abrt-addon-python.x86_64 1.0.7-5.el6 @rhel

abrt-plugin-bugzilla.x86_64 1.0.7-5.el6 @rhel

abrt-plugin-logger.x86_64 1.0.7-5.el6 @rhel

abrt-plugin-sosreport.x86_64 1.0.7-5.el6 @rhel

abrt-plugin-ticketuploader.x86_64 1.0.7-5.el6 @rhel

- yum list all - Lists all installed and available packages.

- yum list installed - Lists all packages installed on your system. The rightmost column in the output lists the repository from which the package was retrieved.

Example 8.4. Listing installed packages using a double-quoted glob expression

To list all installed packages that begin with “krb” followed by exactly one character and a hyphen, type:

~]# yum list installed "krb?-*"

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

Installed Packages

krb5-libs.x86_64 1.8.1-3.el6 @rhel

krb5-workstation.x86_64 1.8.1-3.el6 @rhel

- yum list available - Lists all available packages in all enabled repositories.

Example 8.5. Listing available packages using a single glob expression with escaped wildcard characters

To list all available packages with names that contain “gstreamer” and then “plugin”, run the following command:

~]# yum list available gstreamer\*plugin\*

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

Available Packages

gstreamer-plugins-bad-free.i686 0.10.17-4.el6 rhel

gstreamer-plugins-base.i686 0.10.26-1.el6 rhel

gstreamer-plugins-base-devel.i686 0.10.26-1.el6 rhel

gstreamer-plugins-base-devel.x86_64 0.10.26-1.el6 rhel

gstreamer-plugins-good.i686 0.10.18-1.el6 rhel

- yum grouplist - Lists all package groups.

- yum repolist - Lists the repository ID, name, and number of packages it provides for each enabled repository.

8.2.3. Displaying Package Information

yum info package_name

~]# yum info abrt

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

Installed Packages

Name : abrt

Arch : x86_64

Version : 1.0.7

Release : 5.el6

Size : 578 k

Repo : installed

From repo : rhel

Summary : Automatic bug detection and reporting tool

URL : https://fedorahosted.org/abrt/

License : GPLv2+

Description: abrt is a tool to help users to detect defects in applications

: and to create a bug report with all informations needed by

: maintainer to fix it. It uses plugin system to extend its

: functionality.

The yum info package_name command is similar to the rpm -q --info package_name command, but provides as additional information the ID of the Yum repository the RPM package is found in (look for the From repo: line in the output).

yumdb info package_name

user indicates it was installed by the user, and dep means it was brought in as a dependency). For example, to display additional information about the yum package, type:

~]# yumdb info yum

Loaded plugins: product-id, refresh-packagekit, subscription-manager

yum-3.2.27-4.el6.noarch

checksum_data = 23d337ed51a9757bbfbdceb82c4eaca9808ff1009b51e9626d540f44fe95f771

checksum_type = sha256

from_repo = rhel

from_repo_revision = 1298613159

from_repo_timestamp = 1298614288

installed_by = 4294967295

reason = user

releasever = 6.1

yumdb command, see the yumdb(8) manual page.

Listing Files Contained in a Package

repoquery --list package_name

repoquery command, see the repoquery manual page.

yum provides command, described in Finding which package owns a file

8.2.4. Installing Packages

Installing Individual Packages

yum install package_name

yum install package_name package_name

i686, type:

~]# yum install sqlite.i686

~]# yum install perl-Crypt-\*

yum install. If you know the name of the binary you want to install, but not its package name, you can give yum install the path name:

~]# yum install /usr/sbin/named

yum then searches through its package lists, finds the package which provides /usr/sbin/named, if any, and prompts you as to whether you want to install it.

Note

named binary, but you do not know in which bin or sbin directory the file is installed, use the yum provides command with a glob expression:

~]# yum provides "*bin/named"

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

32:bind-9.7.0-4.P1.el6.x86_64 : The Berkeley Internet Name Domain (BIND)

: DNS (Domain Name System) server

Repo : rhel

Matched from:

Filename : /usr/sbin/named

yum provides "*/file_name" is a common and useful trick to find the package(s) that contain file_name.

Installing a Package Group

yum grouplist -v command lists the names of all package groups, and, next to each of them, their groupid in parentheses. The groupid is always the term in the last pair of parentheses, such as kde-desktop in the following example:

~]# yum -v grouplist kde\*

Loading "product-id" plugin

Loading "refresh-packagekit" plugin

Loading "subscription-manager" plugin

Updating Red Hat repositories.

INFO:rhsm-app.repolib:repos updated: 0

Config time: 0.123

Yum Version: 3.2.29

Setting up Group Process

Looking for repo options for [rhel]

rpmdb time: 0.001

group time: 1.291

Available Groups:

KDE Desktop (kde-desktop)

Done

groupinstall:

yum groupinstall group_name

yum groupinstall groupid

install command if you prepend it with an @-symbol (which tells yum that you want to perform a groupinstall):

yum install @group

KDE Desktop group:

~]# yum groupinstall "KDE Desktop"

~]# yum groupinstall kde-desktop

~]# yum install @kde-desktop

8.2.5. Removing Packages

Removing Individual Packages

root:

yum remove package_name

~]# yum remove totem rhythmbox sound-juicer

install, remove can take these arguments:

- package names

- glob expressions

- file lists

- package provides

Warning

Removing a Package Group

install syntax:

yum groupremove group

yum remove @group

KDE Desktop group:

~]# yum groupremove "KDE Desktop"

~]# yum groupremove kde-desktop

~]# yum remove @kde-desktop

Important

yum to remove only those packages which are not required by any other packages or groups by adding the groupremove_leaf_only=1 directive to the [main] section of the /etc/yum.conf configuration file. For more information on this directive, see Section 8.4.1, “Setting [main] Options”.
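Whether the directive is already set can be checked by reading the [main] section of the configuration file. The following is a minimal sketch on a scratch file: main_option is a hypothetical helper, the [example-repo] section is made up to show that other sections are ignored, and entries are assumed to be written as key=value without spaces around the equals sign.

```shell
# main_option FILE KEY (hypothetical helper): print the value of KEY from
# the [main] section of a yum.conf-style file.
# Assumption: entries are written as key=value with no spaces around "=".
main_option() {
    awk -F= -v key="$2" '
        /^\[/ { in_main = ($0 == "[main]"); next }
        in_main && $1 == key { print $2 }
    ' "$1"
}

# Scratch file; the [example-repo] section is made up for illustration.
conf=$(mktemp)
printf '%s\n' '[main]' 'gpgcheck=1' 'groupremove_leaf_only=1' \
    '[example-repo]' 'groupremove_leaf_only=0' > "$conf"
main_option "$conf" groupremove_leaf_only    # prints 1
rm -f "$conf"
```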

8.3. Working with Transaction History

yum history command allows users to review information about a timeline of Yum transactions, the dates and times they occurred, the number of packages affected, whether transactions succeeded or were aborted, and if the RPM database was changed between transactions. Additionally, this command can be used to undo or redo certain transactions.

8.3.1. Listing Transactions

root, either run yum history with no additional arguments, or type the following at a shell prompt:

yum history list

all keyword:

yum history list all

yum history list start_id..end_id

yum history list glob_expression

~]# yum history list 1..5

Loaded plugins: product-id, refresh-packagekit, subscription-manager

ID | Login user | Date and time | Action(s) | Altered

-------------------------------------------------------------------------------

5 | Jaromir ... <jhradilek> | 2011-07-29 15:33 | Install | 1

4 | Jaromir ... <jhradilek> | 2011-07-21 15:10 | Install | 1

3 | Jaromir ... <jhradilek> | 2011-07-16 15:27 | I, U | 73

2 | System <unset> | 2011-07-16 15:19 | Update | 1

1 | System <unset> | 2011-07-16 14:38 | Install | 1106

The yum history list command produces tabular output with each row consisting of the following columns:

- ID - an integer value that identifies a particular transaction.

- Login user - the name of the user whose login session was used to initiate a transaction. This information is typically presented in the Full Name <username> form. For transactions that were not issued by a user (such as an automatic system update), System <unset> is used instead.

- Date and time - the date and time when a transaction was issued.

- Action(s) - a list of actions that were performed during a transaction as described in Table 8.1, “Possible values of the Action(s) field”.

- Altered - the number of packages that were affected by a transaction, possibly followed by additional information as described in Table 8.2, “Possible values of the Altered field”.

Table 8.1. Possible values of the Action(s) field

| Action | Abbreviation | Description |
|---|---|---|
| Downgrade | D | At least one package has been downgraded to an older version. |
| Erase | E | At least one package has been removed. |
| Install | I | At least one new package has been installed. |
| Obsoleting | O | At least one package has been marked as obsolete. |
| Reinstall | R | At least one package has been reinstalled. |
| Update | U | At least one package has been updated to a newer version. |
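When several actions occur in one transaction, the Action(s) column shows only the abbreviations, for example "I, U". The sketch below expands such a value using the abbreviations from the table; expand_actions is a hypothetical helper and not a yum feature.

```shell
# expand_actions LIST (hypothetical helper): expand a comma-separated list
# of action abbreviations, such as "I, U", into the full action names.
# Unknown codes are passed through unchanged.
expand_actions() {
    printf '%s\n' "$1" | tr -d ' ' | tr ',' '\n' | while read -r code; do
        case $code in
            D) echo Downgrade ;;
            E) echo Erase ;;
            I) echo Install ;;
            O) echo Obsoleting ;;
            R) echo Reinstall ;;
            U) echo Update ;;
            *) echo "$code" ;;
        esac
    done | paste -sd, -
}

expand_actions 'I, U'    # prints Install,Update
```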

Table 8.2. Possible values of the Altered field

| Symbol | Description |
|---|---|
| < | Before the transaction finished, the rpmdb database was changed outside Yum. |
| > | After the transaction finished, the rpmdb database was changed outside Yum. |
| * | The transaction failed to finish. |
| # | The transaction finished successfully, but yum returned a non-zero exit code. |
| E | The transaction finished successfully, but an error or a warning was displayed. |
| P | The transaction finished successfully, but problems already existed in the rpmdb database. |
| s | The transaction finished successfully, but the --skip-broken command-line option was used and certain packages were skipped. |
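The Altered column can be split into a package count and the symbols from the table above. The following Python sketch shows one way to do this. It assumes that the symbols follow the count and that each non-space character is one symbol; the function name parse_altered is hypothetical, and the descriptions are shortened from the table.

```python
import re

# Symbols copied from the table of Altered values above (descriptions shortened).
ALTERED_FLAGS = {
    "<": "rpmdb changed outside Yum before the transaction finished",
    ">": "rpmdb changed outside Yum after the transaction finished",
    "*": "transaction failed to finish",
    "#": "finished, but yum returned a non-zero exit code",
    "E": "finished, but an error or a warning was displayed",
    "P": "finished, but problems already existed in the rpmdb",
    "s": "finished, but --skip-broken skipped certain packages",
}


def parse_altered(field):
    """Split an Altered value into (package_count, [flag descriptions])."""
    match = re.match(r"^\s*(\d+)\s*(.*?)\s*$", field)
    if match is None:
        raise ValueError("unexpected Altered value: %r" % field)
    count = int(match.group(1))
    flags = []
    # Assumption: every remaining non-space character is one symbol.
    for symbol in match.group(2):
        if symbol.isspace():
            continue
        description = ALTERED_FLAGS.get(symbol, "unknown symbol " + symbol)
        if description not in flags:
            flags.append(description)
    return count, flags


print(parse_altered("73"))
print(parse_altered("1 <"))
```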

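To display a summary of all past transactions, type the following at a shell prompt as root:

yum history summary

To display only transactions in a given range, type:

yum history summary start_id..end_id

As with the yum history list command, you can also display a summary of transactions regarding a certain package or packages by supplying a package name or a glob expression:

yum history summary glob_expression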

~]# yum history summary 1..5

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Login user | Time | Action(s) | Altered

-------------------------------------------------------------------------------

Jaromir ... <jhradilek> | Last day | Install | 1

Jaromir ... <jhradilek> | Last week | Install | 1

Jaromir ... <jhradilek> | Last 2 weeks | I, U | 73

System <unset> | Last 2 weeks | I, U | 1107

All forms of the yum history summary command produce simplified tabular output similar to the output of yum history list.
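The command forms shown above (no argument, a start_id..end_id range, or a package name or glob expression) can be assembled programmatically. The following Python sketch only builds the argument list and does not run anything. The helper name history_args and its parameters are hypothetical.

```python
def history_args(subcommand, start_id=None, end_id=None, pattern=None):
    """Build the argument list for a yum history subcommand.

    Pass start_id and end_id for a start_id..end_id range, start_id alone
    for a single transaction, or pattern for a package name or glob
    expression. Nothing is executed here.
    """
    args = ["yum", "history", subcommand]
    if pattern is not None:
        args.append(pattern)
    elif start_id is not None and end_id is not None:
        args.append("%d..%d" % (start_id, end_id))
    elif start_id is not None:
        args.append(str(start_id))
    return args


print(history_args("summary", 1, 5))
print(history_args("list", pattern="subscription-manager*"))
```

If such a list is later passed to subprocess.run as root, it is not interpreted by a shell, so a glob expression reaches yum without being expanded first.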

yum history list and yum history summary are oriented towards transactions, and although they allow you to display only transactions related to a given package or packages, they lack important details, such as package versions. To list transactions from the perspective of a package, run the following command as root:

yum history package-list glob_expression

~]# yum history package-list subscription-manager\*

Loaded plugins: product-id, refresh-packagekit, subscription-manager

ID | Action(s) | Package

-------------------------------------------------------------------------------

3 | Updated | subscription-manager-0.95.11-1.el6.x86_64

3 | Update | 0.95.17-1.el6_1.x86_64

3 | Updated | subscription-manager-firstboot-0.95.11-1.el6.x86_64

3 | Update | 0.95.17-1.el6_1.x86_64

3 | Updated | subscription-manager-gnome-0.95.11-1.el6.x86_64

3 | Update | 0.95.17-1.el6_1.x86_64

1 | Install | subscription-manager-0.95.11-1.el6.x86_64

1 | Install | subscription-manager-firstboot-0.95.11-1.el6.x86_64

1 | Install | subscription-manager-gnome-0.95.11-1.el6.x86_64
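In the output above, each Updated row (the old version) is followed by an Update row that gives only the new version. The following Python sketch pairs these rows. It assumes the columns are separated by a vertical bar as shown in this guide; the function names are hypothetical, and the sample is a shortened copy of the output above.

```python
def parse_package_list(output):
    """Parse yum history package-list rows into dictionaries."""
    rows = []
    for line in output.splitlines():
        parts = [part.strip() for part in line.split("|")]
        # Keep only "ID | Action(s) | Package" rows with a numeric ID.
        if len(parts) != 3 or not parts[0].isdigit():
            continue
        rows.append({"id": int(parts[0]), "action": parts[1],
                     "package": parts[2]})
    return rows


def pair_updates(rows):
    """Pair each Updated row with the Update row that directly follows it."""
    pairs = []
    for previous, current in zip(rows, rows[1:]):
        if previous["action"] == "Updated" and current["action"] == "Update":
            pairs.append((previous["id"], previous["package"],
                          current["package"]))
    return pairs


SAMPLE = """\
ID | Action(s) | Package
-------------------------------------------------------------------------------
3 | Updated | subscription-manager-0.95.11-1.el6.x86_64
3 | Update | 0.95.17-1.el6_1.x86_64
1 | Install | subscription-manager-0.95.11-1.el6.x86_64
"""

for transaction_id, old, new in pair_updates(parse_package_list(SAMPLE)):
    print(transaction_id, old, "->", new)
```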

8.3.2. Examining Transactions

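To display the summary of a single transaction, as root, use the yum history summary command in the following form:

yum history summary id

To examine a particular transaction or transactions in more detail, run the following command as root:

yum history info id

The id argument is optional; when you omit it, yum automatically uses the last transaction. Note that when specifying more than one transaction, you can also use a range:

yum history info start_id..end_id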

~]# yum history info 4..5

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Transaction ID : 4..5

Begin time : Thu Jul 21 15:10:46 2011

Begin rpmdb : 1107:0c67c32219c199f92ed8da7572b4c6df64eacd3a

End time : 15:33:15 2011 (22 minutes)

End rpmdb : 1109:1171025bd9b6b5f8db30d063598f590f1c1f3242

User : Jaromir Hradilek <jhradilek>

Return-Code : Success

Command Line : install screen

Command Line : install yum-plugin-security

Transaction performed with:

Installed rpm-4.8.0-16.el6.x86_64

Installed yum-3.2.29-17.el6.noarch

Installed yum-metadata-parser-1.1.2-16.el6.x86_64

Packages Altered:

Install screen-4.0.3-16.el6.x86_64

Install yum-plugin-security-1.1.30-17.el6.noarch
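The key and value lines in the output above can be collected into a dictionary for further processing. The following Python sketch assumes each field has the form Key : Value, as shown in this guide, and keeps repeated keys such as Command Line as lists. The function names are hypothetical, and the sample is a shortened copy of the output above.

```python
from collections import defaultdict


def parse_history_info(output):
    """Collect "Key : Value" lines into a dict mapping keys to value lists."""
    fields = defaultdict(list)
    for line in output.splitlines():
        key, separator, value = line.partition(":")
        key, value = key.strip(), value.strip()
        # Skip lines without a colon and section headings with no value.
        if not separator or not key or not value:
            continue
        fields[key].append(value)
    return dict(fields)


def split_rpmdb(value):
    """Split an rpmdb value such as "1107:0c67..." into (number, checksum)."""
    number, _, checksum = value.partition(":")
    return int(number), checksum


SAMPLE = """\
Transaction ID : 4..5
Begin time : Thu Jul 21 15:10:46 2011
Begin rpmdb : 1107:0c67c32219c199f92ed8da7572b4c6df64eacd3a
End rpmdb : 1109:1171025bd9b6b5f8db30d063598f590f1c1f3242
Command Line : install screen
Command Line : install yum-plugin-security
Packages Altered:
Install screen-4.0.3-16.el6.x86_64
"""

info = parse_history_info(SAMPLE)
print(info["Command Line"])
print(split_rpmdb(info["Begin rpmdb"][0]))
```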

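To determine what additional information is available for a certain transaction, type the following at a shell prompt as root:

yum history addon-info id

As with yum history info, when no id is provided, yum automatically uses the latest transaction. Another way to refer to the latest transaction is to use the last keyword:

yum history addon-info last

For the transaction with ID 4, the yum history addon-info command would provide the following output: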

~]# yum history addon-info 4

Loaded plugins: product-id, refresh-packagekit, subscription-manager

Transaction ID: 4

Available additional history information:

config-main

config-repos

saved_tx

In this example, three types of additional information are available:

- config-main: global Yum options that were in use during the transaction. See Section 8.4.1, “Setting [main] Options” for information on how to change global options.
- config-repos: options for individual Yum repositories. See Section 8.4.2, “Setting [repository] Options” for information on how to change options for individual repositories.
- saved_tx: the data that can be used by the yum load-transaction command in order to repeat the transaction on another machine (see below).

To display a selected type of additional information, type the following at a shell prompt as root:

yum history addon-info id information

8.3.3. Reverting and Repeating Transactions

The yum history command also provides a means to revert or repeat a selected transaction. To revert a transaction, type the following at a shell prompt as root:

yum history undo id

To repeat a particular transaction, as root, run the following command:

yum history redo id

Both commands also accept the last keyword to undo or repeat the latest transaction.

Note that the yum history undo and yum history redo commands only revert or repeat the steps that were performed during a transaction. If the transaction installed a new package, the yum history undo command will uninstall it, and if the transaction uninstalled a package, the command will install it again. This command also attempts to downgrade all updated packages to their previous versions, if these older packages are still available.
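The behaviour described above can be modelled as a mapping from each action to its inverse. The following Python sketch is a conceptual model only: it does not call Yum or reproduce its implementation, and the names undo_plan and available_old_versions are hypothetical.

```python
# Inverse of each action, following the description above.
INVERSE = {
    "Install": "Erase",
    "Erase": "Install",
    "Update": "Downgrade",
}


def undo_plan(steps, available_old_versions):
    """Return (plan, skipped) for reverting a list of (action, package) steps.

    An Update is reverted by a Downgrade only if the older package is still
    available; otherwise the step is reported as skipped.
    """
    plan, skipped = [], []
    for action, package in steps:
        inverse = INVERSE.get(action)
        if inverse is None:
            skipped.append((action, package))
        elif inverse == "Downgrade" and package not in available_old_versions:
            skipped.append((action, package))
        else:
            plan.append((inverse, package))
    return plan, skipped


steps = [("Install", "screen"), ("Update", "yum"), ("Update", "rpm")]
print(undo_plan(steps, available_old_versions={"yum"}))
```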

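To store the details of a transaction in a file so that it can be repeated on another system, type the following at a shell prompt as root:

yum -q history addon-info id saved_tx > file_name

After copying this file to the target system, you can repeat the transaction by running the following command as root:

yum load-transaction file_name

Note, however, that the rpmdb version stored in the file must be identical to the version on the target system. You can verify the rpmdb version by using the yum version nogroups command.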

8.3.4. Completing Transactions

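To attempt to resume an incomplete or aborted transaction, run the following command as root:

yum-complete-transaction

By default, unfinished transactions are listed in the /var/lib/yum/transaction-all and /var/lib/yum/transaction-done files. If there are more unfinished transactions, yum-complete-transaction attempts to complete the most recent one first.

To clean up the transaction journal files without attempting to resume the aborted transactions, use the --cleanup-only option:

yum-complete-transaction --cleanup-only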

8.3.5. Starting New Transaction History

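To start a new transaction history, run the following command as root:

yum history new

This creates a new, empty database file in the /var/lib/yum/history/ directory. The old transaction history will be kept, but will not be accessible as long as a newer database file is present in the directory.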

8.4. Configuring Yum and Yum Repositories

The configuration file for yum and related utilities is located at /etc/yum.conf. This file contains one mandatory [main] section, which allows you to set Yum options that have global effect, and can also contain one or more [repository] sections, which allow you to set repository-specific options. However, it is recommended to define individual repositories in new or existing .repo files in the /etc/yum.repos.d/ directory. The values you define in individual [repository] sections of the /etc/yum.conf file override values set in the [main] section.
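The override rule described above can be illustrated with Python's standard configparser module. This is a sketch of the precedence idea only, not of how Yum itself reads its configuration. The repository names, the URLs, and the helper effective_option are hypothetical.

```python
import configparser

# Hypothetical configuration: the repository names and URLs are placeholders.
SAMPLE = """\
[main]
gpgcheck=1

[example-signed]
name=Example signed repository
baseurl=http://repo.example.com/signed/

[example-unsigned]
name=Example unsigned repository
baseurl=http://repo.example.com/unsigned/
gpgcheck=0
"""


def effective_option(parser, repo, option):
    """Return the repository's own value, else the value from [main]."""
    if parser.has_option(repo, option):
        return parser.get(repo, option)
    return parser.get("main", option, fallback=None)


parser = configparser.ConfigParser(interpolation=None)
parser.read_string(SAMPLE)
print(effective_option(parser, "example-unsigned", "gpgcheck"))  # 0
print(effective_option(parser, "example-signed", "gpgcheck"))    # 1
```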

This section shows you how to:

- set global Yum options by editing the [main] section of the /etc/yum.conf configuration file;
- set options for individual repositories by editing the [repository] sections in /etc/yum.conf and .repo files in the /etc/yum.repos.d/ directory;
- use Yum variables in /etc/yum.conf and files in the /etc/yum.repos.d/ directory so that dynamic version and architecture values are handled correctly;
- add, enable, and disable Yum repositories on the command line; and,
- set up your own custom Yum repository.
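To give a feel for what Yum variables do, the following Python sketch substitutes $basearch and $releasever in a configuration value using the standard string.Template class. Yum's own substitution rules may differ in detail. The helper name and the variable values shown are assumptions for illustration only.

```python
from string import Template


def expand_yum_variables(value, variables):
    """Substitute $name variables in a configuration value.

    Unknown variables are left untouched rather than raising an error.
    """
    return Template(value).safe_substitute(variables)


# Assumed example values; the real ones depend on the system.
variables = {"basearch": "x86_64", "releasever": "6Server"}
print(expand_yum_variables("/var/cache/yum/$basearch/$releasever", variables))
# /var/cache/yum/x86_64/6Server
```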

8.4.1. Setting [main] Options

The /etc/yum.conf configuration file contains exactly one [main] section, and while some of the key-value pairs in this section affect how yum operates, others affect how Yum treats repositories. You can add many additional options under the [main] section heading in /etc/yum.conf.
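Because the [main] section consists of plain key-value pairs, it can be read with a generic INI parser. The following Python sketch reads a shortened [main] section with the standard configparser module and converts some values to booleans and integers. It is an illustration only, and whether every real yum.conf parses cleanly this way is an assumption.

```python
import configparser

# A shortened [main] section using option names that appear in this guide.
SAMPLE = """\
[main]
cachedir=/var/cache/yum/$basearch/$releasever
keepcache=0
debuglevel=2
gpgcheck=1
installonly_limit=3
"""

# Interpolation is disabled so that values are returned exactly as written.
parser = configparser.ConfigParser(interpolation=None)
parser.read_string(SAMPLE)
main = parser["main"]

settings = {
    "cachedir": main.get("cachedir"),
    "keepcache": main.getboolean("keepcache"),
    "debuglevel": main.getint("debuglevel"),
    "gpgcheck": main.getboolean("gpgcheck"),
    "installonly_limit": main.getint("installonly_limit"),
}
print(settings)
```

On a real system, parser.read("/etc/yum.conf") could replace read_string.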

A sample /etc/yum.conf configuration file can look like this:

[main]

cachedir=/var/cache/yum/$basearch/$releasever

keepcache=0

debuglevel=2

logfile=/var/log/yum.log

exactarch=1

obsoletes=1

gpgcheck=1

plugins=1

installonly_limit=3

[comments abridged]

# PUT YOUR REPOS HERE OR IN separate files named file.repo

# in /etc/yum.repos.d

The following are the most commonly used options in the [main] section:

assumeyes=value

where value is one of:

- 0: yum should prompt for confirmation of critical actions it performs. This is the default.
- 1: Do not prompt for confirmation of critical yum actions. If assumeyes=1 is set, yum behaves in the same way that the command-line option -y does.

cachedir=directory

where directory is an absolute path to the directory where Yum should store its cache and database files. By default, Yum's cache directory is /var/cache/yum/$basearch/$releasever.

See Section 8.4.3, “Using Yum Variables” for descriptions of the $basearch and $releasever Yum variables.

debuglevel=value

where value is an integer between 0 and 10. Setting a higher debuglevel value causes yum to display more detailed debugging output. debuglevel=0 disables debugging output, while debuglevel=2 is the default.

exactarch=value

where value is one of:

- 0: Do not take into account the exact architecture when updating packages.
- 1: Consider the exact architecture when updating packages. With this setting, yum will not install an i686 package to update an i386 package already installed on the system. This is the default.

exclude=package_name [more_package_names]

This option allows you to exclude packages by keyword during installation or updates. To exclude multiple packages, quote a space-delimited list of packages. Shell globs using wildcards (for example, * and ?) are allowed.

gpgcheck=value

where value is one of:

- 0: Disable GPG signature-checking on packages in all repositories, including local package installation.
- 1: Enable GPG signature-checking on all packages in all repositories, including local package installation. gpgcheck=1 is the default, and thus all packages' signatures are checked.

If this option is set in the [main] section of the /etc/yum.conf file, it sets the GPG-checking rule for all repositories. However, you can also set gpgcheck=value for individual repositories instead; that is, you can enable GPG-checking on one repository while disabling it on another. Setting gpgcheck=value for an individual repository in its corresponding .repo file overrides the default if it is present in /etc/yum.conf.

For more information on GPG signature-checking, see Section B.3, “Checking a Package's Signature”.

groupremove_leaf_only=value

where value is one of:

- 0: yum should not check the dependencies of each package when removing a package group. With this setting, yum removes all packages in a package group, regardless of whether those packages are required by other packages or groups. groupremove_leaf_only=0 is the default.
- 1: yum should check the dependencies of each package when removing a package group, and remove only those packages which are not required by any other package or group.

For more information on removing packages, see Intelligent package group removal.

installonlypkgs=space separated list of packages

Here you can provide a space-separated list of packages which yum can install, but will never update. See the yum.conf(5) manual page for the list of packages which are install-only by default.

If you add the installonlypkgs directive to /etc/yum.conf, you should ensure that you list all of the packages that should be install-only, including any of those listed under the installonlypkgs section of yum.conf(5). In particular, kernel packages should always be listed in installonlypkgs (as they are by default), and installonly_limit should always be set to a value greater than 2 so that a backup kernel is always available in case the default one fails to boot.

installonly_limit=value

where value is an integer representing the maximum number of versions that can be installed simultaneously for any single package listed in the