High Availability Add-On Overview

Overview of the High Availability Add-On for Red Hat Enterprise Linux

Abstract

Introduction

- Red Hat Enterprise Linux Installation Guide — Provides information regarding installation of Red Hat Enterprise Linux 6.

- Red Hat Enterprise Linux Deployment Guide — Provides information regarding the deployment, configuration and administration of Red Hat Enterprise Linux 6.

- Configuring and Managing the High Availability Add-On — Provides information about configuring and managing the High Availability Add-On (also known as Red Hat Cluster) for Red Hat Enterprise Linux 6.

- Logical Volume Manager Administration — Provides a description of the Logical Volume Manager (LVM), including information on running LVM in a clustered environment.

- Global File System 2: Configuration and Administration — Provides information about installing, configuring, and maintaining Red Hat GFS2 (Red Hat Global File System 2), which is included in the Resilient Storage Add-On.

- DM Multipath — Provides information about using the Device-Mapper Multipath feature of Red Hat Enterprise Linux 6.

- Load Balancer Administration — Provides information on configuring high-performance systems and services with the Red Hat Load Balancer Add-On (formerly known as Linux Virtual Server [LVS]).

- Release Notes — Provides information about the current release of Red Hat products.

Note

Chapter 1. High Availability Add-On Overview

1.1. Cluster Basics

- Storage

- High availability

- Load balancing

- High performance

rgmanager.

Note

1.2. High Availability Add-On Introduction

- Cluster infrastructure — Provides fundamental functions for nodes to work together as a cluster: configuration-file management, membership management, lock management, and fencing.

- High Availability Service Management — Provides failover of services from one cluster node to another in case a node becomes inoperative.

- Cluster administration tools — Configuration and management tools for setting up, configuring, and managing the High Availability Add-On. The tools are for use with the Cluster Infrastructure components, the high availability and Service Management components, and storage.

- Red Hat GFS2 (Global File System 2) — Part of the Resilient Storage Add-On, this provides a cluster file system for use with the High Availability Add-On. GFS2 allows multiple nodes to share storage at a block level as if the storage were connected locally to each cluster node. The GFS2 cluster file system requires a cluster infrastructure.

- Cluster Logical Volume Manager (CLVM) — Part of the Resilient Storage Add-On, this provides volume management of cluster storage. CLVM support also requires cluster infrastructure.

- Load Balancer Add-On — Routing software that provides IP load balancing. The Load Balancer Add-On runs in a pair of redundant virtual servers that distribute client requests evenly to real servers that are behind the virtual servers.

1.3. Cluster Infrastructure

- Cluster management

- Lock management

- Fencing

- Cluster configuration management

Chapter 2. Cluster Management with CMAN

2.1. Cluster Quorum

Note

2.1.1. Quorum Disks

ccs administration in the Cluster Administration manual.

2.1.2. Tie-breakers

- Have a two node configuration with the fence devices on a different network path than the path used for cluster communication

- Have a two node configuration where fencing is at the fabric level - especially for SCSI reservations

Chapter 3. RGManager

httpd to one group of nodes while mysql can be restricted to a separate set of nodes.

- Failover Domains - How the RGManager failover domain system works

- Service Policies - RGManager's service startup and recovery policies

- Resource Trees - How RGManager's resource trees work, including start/stop orders and inheritance

- Service Operational Behaviors - How RGManager's operations work and what states mean

- Virtual Machine Behaviors - Special things to remember when running VMs in an RGManager cluster

- Resource Actions - The agent actions RGManager uses and how to customize their behavior from the cluster.conf file.

- Event Scripting - If RGManager's failover and recovery policies do not fit in your environment, you can customize your own using this scripting subsystem.

3.1. Failover Domains

- preferred node or preferred member: The preferred node is the member designated to run a given service if the member is online. We can emulate this behavior by specifying an unordered, unrestricted failover domain of exactly one member.

- restricted domain: Services bound to the domain may only run on cluster members which are also members of the failover domain. If no members of the failover domain are available, the service is placed in the stopped state. In a cluster with several members, using a restricted failover domain can ease configuration of a cluster service (such as httpd), which requires identical configuration on all members that run the service. Instead of setting up the entire cluster to run the cluster service, you must set up only the members in the restricted failover domain that you associate with the cluster service.

- unrestricted domain: The default behavior. Services bound to this domain may run on all cluster members, but will run on a member of the domain whenever one is available. This means that if a service is running outside of the domain and a member of the domain comes online, the service will migrate to that member, unless nofailback is set.

- ordered domain: The order specified in the configuration dictates the order of preference of members within the domain. The highest-ranking member of the domain will run the service whenever it is online. This means that if member A has a higher rank than member B and the service is running on B, the service will migrate to A when A transitions from offline to online.

- unordered domain: The default behavior. Members of the domain have no order of preference; any member may run the service. Even in an unordered domain, however, services will always migrate to members of their failover domain whenever possible.

- failback: Services on members of an ordered failover domain fail back to the node they were originally running on once that node returns. Setting nofailback disables this, which is useful when a node fails frequently, because it prevents frequent service shifts between the failing node and the failover node.

3.1.1. Behavior Examples

- Ordered, restricted failover domain {A, B, C}

- With nofailback unset: A service 'S' will always run on member 'A' whenever member 'A' is online and there is a quorum. If all members of {A, B, C} are offline, the service will not run. If the service is running on 'C' and 'A' transitions online, the service will migrate to 'A'.

- With nofailback set: A service 'S' will run on the highest priority cluster member when a quorum is formed. If all members of {A, B, C} are offline, the service will not run. If the service is running on 'C' and 'A' transitions online, the service will remain on 'C' unless 'C' fails, at which point it will fail over to 'A'.

- Unordered, restricted failover domain {A, B, C}

- A service 'S' will only run if there is a quorum and at least one member of {A, B, C} is online. If another member of the domain transitions online, the service does not relocate.

- Ordered, unrestricted failover domain {A, B, C}

- With nofailback unset: A service 'S' will run whenever there is a quorum. If a member of the failover domain is online, the service will run on the highest-priority member, otherwise a member of the cluster will be chosen at random to run the service. That is, the service will run on 'A' whenever 'A' is online, followed by 'B'.

- With nofailback set: A service 'S' will run whenever there is a quorum. If a member of the failover domain is online at quorum formation, the service will run on the highest-priority member of the failover domain. That is, if 'B' is online (but 'A' is not), the service will run on 'B'. If, at some later point, 'A' joins the cluster, the service will not relocate to 'A'.

- Unordered, unrestricted failover domain {A, B, C}

- This is also called a "Set of Preferred Members". When one or more members of the failover domain are online, the service will run on a nonspecific online member of the failover domain. If another member of the failover domain transitions online, the service does not relocate.
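The behavior examples above can be condensed into a small placement function. This is only an illustrative model under stated assumptions, not RGManager's implementation: the `FailoverDomain` class and `choose_node` function are hypothetical names, quorum is assumed to exist already, and where the text says a member is chosen at random or is "nonspecific", the sketch picks the first candidate so that results are repeatable.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class FailoverDomain:
    """Hypothetical model of a failover domain (not an RGManager type)."""
    members: Tuple[str, ...]      # listed highest priority first when ordered
    ordered: bool = False
    restricted: bool = False
    nofailback: bool = False


def choose_node(domain: FailoverDomain, online: List[str],
                current: Optional[str] = None) -> Optional[str]:
    """Return the node that should run the service, or None if it cannot run.

    `online` lists the online cluster members; `current` is the node that
    runs the service right now, if any.
    """
    candidates = [m for m in domain.members if m in online]
    if not candidates:
        if domain.restricted:
            return None                   # restricted: never leave the domain
        if current in online:
            return current                # keep running where it is
        # The text says "at random"; sorted() keeps the sketch deterministic.
        return sorted(online)[0] if online else None
    if current in candidates:
        # Inside the domain, only an ordered domain with failback enabled
        # moves the service to a higher-priority member.
        if domain.ordered and not domain.nofailback:
            return candidates[0]
        return current
    if current in online and not domain.restricted and domain.nofailback:
        return current                    # running outside the domain, no failback
    return candidates[0]                  # start in, or migrate into, the domain


ordered_restricted = FailoverDomain(("A", "B", "C"), ordered=True, restricted=True)
# Service runs on 'C' and 'A' comes online: it migrates to 'A'.
print(choose_node(ordered_restricted, ["A", "C"], current="C"))
```

Passing `nofailback=True` for the same call leaves the service on 'C', which matches the ordered, restricted example above.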

3.2. Service Policies

Note

3.2.1. Start Policy

- autostart (default) - start the service when RGManager boots and a quorum forms. If set to '0', the cluster will not start the service and instead places it into the disabled state.

3.2.2. Recovery Policy

- restart (default) - restart the service on the same node. If no other recovery policy is specified, this recovery policy is used. If restarting fails, RGManager falls back to relocating the service.

- relocate - Try to start the service on other node(s) in the cluster. If no other nodes successfully start the service, the service is then placed in the stopped state.

- disable - Do nothing. Place the service into the disabled state.

- restart-disable - Attempt to restart the service, in place. Place the service into the disabled state if restarting fails.
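The four recovery policies can be read as a small decision function. The sketch below is a simplified model, not RGManager code: `recover` is a hypothetical name, and the two boolean arguments stand in for whether a restart in place or a start on another node would succeed.

```python
def recover(policy: str, restart_ok: bool, relocate_ok: bool) -> str:
    """Return the service state that results from applying a recovery policy.

    restart_ok / relocate_ok are hypothetical stand-ins for the outcome of
    restarting in place and of starting on another node.
    """
    if policy == "disable":
        return "disabled"                 # do nothing, just disable
    if policy == "restart-disable":
        return "started" if restart_ok else "disabled"
    if policy == "restart":
        if restart_ok:
            return "started"
        policy = "relocate"               # restart falls back to relocate
    if policy == "relocate":
        return "started" if relocate_ok else "stopped"
    raise ValueError(f"unknown recovery policy: {policy}")


# A failed restart falls back to relocation; if that also fails, the
# service ends up in the stopped state.
print(recover("restart", restart_ok=False, relocate_ok=False))
```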

3.2.3. Restart Policy Extensions

<service name="myservice" max_restarts="3" restart_expire_time="300" ...>

...

</service>
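One way to read the two attributes in this example is as a restart budget inside a time window. The sketch below rests on an assumption that the surrounding text does not state: that up to `max_restarts` restarts are tolerated within `restart_expire_time` seconds, and that the next failure inside the window leads to relocation instead of another restart. The `RestartTracker` class is a hypothetical illustration, not part of RGManager.

```python
from collections import deque


class RestartTracker:
    """Hypothetical model of max_restarts / restart_expire_time (assumed semantics)."""

    def __init__(self, max_restarts: int, restart_expire_time: float) -> None:
        self.max_restarts = max_restarts
        self.restart_expire_time = restart_expire_time
        self._restarts = deque()          # timestamps of remembered restarts

    def on_failure(self, now: float) -> str:
        """Return 'restart' or 'relocate' for a service failure at time `now`."""
        # Forget restarts that are older than the expiry window.
        while self._restarts and now - self._restarts[0] >= self.restart_expire_time:
            self._restarts.popleft()
        if len(self._restarts) >= self.max_restarts:
            self._restarts.clear()        # budget used up: give up on this node
            return "relocate"
        self._restarts.append(now)
        return "restart"


tracker = RestartTracker(max_restarts=3, restart_expire_time=300)
# Three failures are restarted in place; a fourth inside 300 seconds relocates.
print([tracker.on_failure(t) for t in (0, 10, 20, 30)])
```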

Note

3.3. Resource Trees - Basics / Definitions

- Resource trees are XML representations of resources, their attributes, and their parent/child and sibling relationships. The root of a resource tree is almost always a special type of resource called a service. Resource tree, resource group, and service are usually used interchangeably in this document. From RGManager's perspective, a resource tree is an atomic unit. All components of a resource tree are started on the same cluster node.

- fs:myfs and ip:10.1.1.2 are siblings

- fs:myfs is the parent of script:script_child

- script:script_child is the child of fs:myfs

3.3.1. Parent / Child Relationships, Dependencies, and Start Ordering

- Parents are started before children

- Children must all stop (cleanly) before a parent may be stopped

- From these two, you could say that a child resource is dependent on its parent resource

- In order for a resource to be considered in good health, all of its dependent children must also be in good health
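The ordering rules above amount to a pre-order walk for start, its reverse for stop, and a recursive health check. The sketch below models that with the resource names used earlier in this section. The `Resource` class, the helper functions, and the service name `service:demo` are hypothetical, and siblings are simply taken in declaration order, which is a simplification.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Resource:
    """Hypothetical node of a resource tree."""
    name: str
    children: List["Resource"] = field(default_factory=list)


def start_order(node: Resource) -> List[str]:
    """Parents are started before their children (pre-order walk)."""
    order = [node.name]
    for child in node.children:
        order.extend(start_order(child))
    return order


def stop_order(node: Resource) -> List[str]:
    """Children stop before their parent: the reverse of the start order."""
    return list(reversed(start_order(node)))


def healthy(node: Resource, status: Dict[str, bool]) -> bool:
    """A resource is healthy only if it and all of its children are healthy."""
    return status[node.name] and all(healthy(c, status) for c in node.children)


tree = Resource("service:demo", [
    Resource("fs:myfs", [Resource("script:script_child")]),
    Resource("ip:10.1.1.2"),
])
print(start_order(tree))
print(stop_order(tree))
```

Because health is checked recursively, a failing `script:script_child` also makes `fs:myfs` and the whole service unhealthy.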

3.4. Service Operations and States

3.4.1. Service Operations

- enable — start the service, optionally on a preferred target and optionally according to failover domain rules. In absence of either, the local host where clusvcadm is run will start the service. If the original start fails, the service behaves as though a relocate operation was requested (see below). If the operation succeeds, the service is placed in the started state.

- disable — stop the service and place it into the disabled state. This is the only permissible operation when a service is in the failed state.

- relocate — move the service to another node. Optionally, the administrator may specify a preferred node to receive the service, but the inability of the service to run on that host (for example, if the service fails to start or the host is offline) does not prevent relocation, and another node is chosen. RGManager attempts to start the service on every permissible node in the cluster. If no permissible target node in the cluster successfully starts the service, the relocation fails and RGManager attempts to restart the service on the original owner. If the original owner cannot restart the service, the service is placed in the stopped state.

- stop — stop the service and place it into the stopped state.

- migrate — migrate the virtual machine to another node. The administrator must specify a target node. Depending on the failure, a failure to migrate may leave the virtual machine in the failed state or in the started state on the original owner.

3.4.1.1. The freeze Operation

3.4.1.1.1. Service Behaviors when Frozen

- status checks are disabled

- start operations are disabled

- stop operations are disabled

- Failover will not occur (even if you power off the service owner)

Important

- You must not stop all instances of RGManager when a service is frozen unless you plan to reboot the hosts prior to restarting RGManager.

- You must not unfreeze a service until the reported owner of the service rejoins the cluster and restarts RGManager.

3.4.2. Service States

- disabled — The service will remain in the disabled state until either an administrator re-enables the service or the cluster loses quorum (at which point, the autostart parameter is evaluated). An administrator may enable the service from this state.

- failed — The service is presumed dead. This state occurs whenever a resource's stop operation fails. An administrator must verify that there are no allocated resources (mounted file systems, and so on) prior to issuing a disable request. The only action which can take place from this state is disable.

- stopped — When in the stopped state, the service will be evaluated for starting after the next service or node transition. This is a very temporary measure. An administrator may disable or enable the service from this state.

- recovering — The cluster is trying to recover the service. An administrator may disable the service to prevent recovery if desired.

- started — If a service status check fails, recover it according to the service recovery policy. If the host running the service fails, recover it following failover domain and exclusive service rules. An administrator may relocate, stop, disable, and (with virtual machines) migrate the service from this state.

Note

starting and stopping are special transitional states of the started state.
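The administrator operations that the state descriptions above allow can be collected into a lookup table. This is a reading aid rather than RGManager's state machine: the `ALLOWED` table and `can_apply` function are hypothetical names, and only the operations explicitly named in the list above are included.

```python
# Operations an administrator may issue from each service state,
# as stated in the state descriptions above.
ALLOWED = {
    "disabled": {"enable"},
    "failed": {"disable"},
    "stopped": {"enable", "disable"},
    "recovering": {"disable"},
    "started": {"relocate", "stop", "disable", "migrate"},
}


def can_apply(state: str, operation: str, is_vm: bool = False) -> bool:
    """Return True if `operation` is listed as permissible from `state`."""
    if operation == "migrate" and not is_vm:
        return False                      # migrate applies to virtual machines only
    return operation in ALLOWED.get(state, set())


print(can_apply("failed", "enable"))      # only disable is permitted from failed
print(can_apply("started", "migrate", is_vm=True))
```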

3.5. Virtual Machine Behaviors

3.5.1. Normal Operations

- Starting (enabling)

- Stopping (disabling)

- Status monitoring

- Relocation

- Recovery

3.5.2. Migration

- live (default) — the virtual machine continues to run while most of its memory contents are copied to the destination host. This minimizes the inaccessibility of the VM (typically well under 1 second) at the expense of performance of the VM during the migration and total amount of time it takes for the migration to complete.

- pause - the virtual machine is frozen in memory while its memory contents are copied to the destination host. This minimizes the amount of time it takes for a virtual machine migration to complete.

Important

3.5.3. RGManager Virtual Machine Features

3.5.3.1. Virtual Machine Tracking

clusvcadm if the VM is already running will cause RGManager to search the cluster for the VM and mark the VM as started wherever it is found.

virsh will cause RGManager to search the cluster for the VM and mark the VM as started wherever it is found.

Note

3.5.3.2. Transient Domain Support

/etc/libvirt/qemu in sync across the cluster.

3.5.3.2.1. Management Features

3.5.4. Unhandled Behaviors

- Using a non-cluster-aware tool (such as virsh or xm) to manipulate a virtual machine's state or configuration while the cluster is managing the virtual machine. Checking the virtual machine's state is fine (for example, virsh list, virsh dumpxml).

- Migrating a cluster-managed VM to a non-cluster node or a node in the cluster which is not running RGManager. RGManager will restart the VM in the previous location, causing two instances of the VM to be running, resulting in file system corruption.

3.6. Resource Actions

- start - start the resource

- stop - stop the resource

- status - check the status of the resource

- metadata - report the OCF RA XML metadata

3.6.1. Return Values

- 0 - success

- stop after stop or stop when not running must return success

- start after start or start when running must return success

- nonzero - failure

- if the stop operation ever returns a nonzero value, the service enters the failed state and the service must be recovered manually.
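The return-value rules above describe idempotent start and stop actions. The sketch below shows the contract with an in-memory stand-in. `DemoAgent` and `needs_manual_recovery` are hypothetical names, real agents are separate programs invoked by RGManager, and the status value of 1 for "not running" is just one possible nonzero code.

```python
class DemoAgent:
    """Hypothetical in-memory agent illustrating the return-value contract."""

    def __init__(self) -> None:
        self.running = False

    def start(self) -> int:
        self.running = True               # start when already running still succeeds
        return 0

    def stop(self) -> int:
        self.running = False              # stop when not running still succeeds
        return 0

    def status(self) -> int:
        return 0 if self.running else 1   # 0 means success, nonzero means failure


def needs_manual_recovery(stop_rc: int) -> bool:
    """A nonzero stop result puts the service in the failed state."""
    return stop_rc != 0


agent = DemoAgent()
print(agent.stop(), agent.start(), agent.start(), agent.status())
```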

Chapter 4. Fencing

fenced.

fenced, when notified of the failure, fences the failed node. Other cluster-infrastructure components determine what actions to take — that is, they perform any recovery that needs to be done. For example, DLM and GFS2, when notified of a node failure, suspend activity until they detect that fenced has completed fencing the failed node. Upon confirmation that the failed node is fenced, DLM and GFS2 perform recovery. DLM releases locks of the failed node; GFS2 recovers the journal of the failed node.

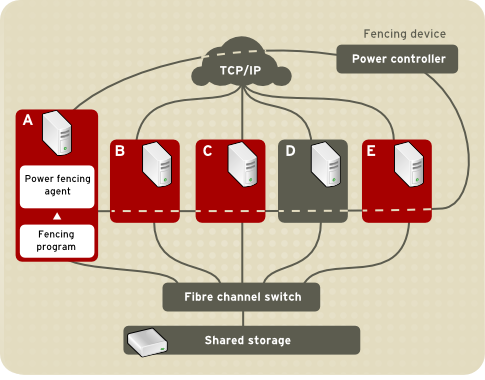

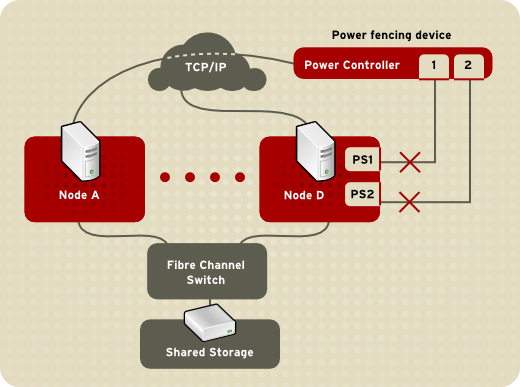

- Power fencing — A fencing method that uses a power controller to power off an inoperable node.

- Storage fencing — A fencing method that disables the Fibre Channel port that connects storage to an inoperable node.

- Other fencing — Several other fencing methods that disable I/O or power of an inoperable node, including IBM BladeCenter, PAP, DRAC/MC, HP iLO, IPMI, IBM RSA II, and others.

Figure 4.1. Power Fencing Example

Figure 4.2. Storage Fencing Example

Figure 4.3. Fencing a Node with Dual Power Supplies

Figure 4.4. Fencing a Node with Dual Fibre Channel Connections

Chapter 5. Lock Management

rgmanager uses DLM to synchronize service states.

5.1. DLM Locking Model

- Six locking modes that increasingly restrict access to a resource

- The promotion and demotion of locks through conversion

- Synchronous completion of lock requests

- Asynchronous completion

- Global data through lock value blocks

- The node is a part of a cluster.

- All nodes agree on cluster membership and have quorum.

- An IP address must communicate with the DLM on a node. Normally the DLM uses TCP/IP for inter-node communications which restricts it to a single IP address per node (though this can be made more redundant using the bonding driver). The DLM can be configured to use SCTP as its inter-node transport which allows multiple IP addresses per node.

5.2. Lock States

- Granted — The lock request succeeded and attained the requested mode.

- Converting — A client attempted to change the lock mode and the new mode is incompatible with an existing lock.

- Blocked — The request for a new lock could not be granted because conflicting locks exist.
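The three lock states can be illustrated with a mode-compatibility check. The six mode names (NL, CR, CW, PR, PW, EX) and the compatibility table are taken from the conventional VMS-style DLM model and do not appear in the text above, so treat them as an assumption. `request_state` is a hypothetical helper and ignores queue ordering.

```python
from typing import Iterable

# For each requested mode, the modes held by others that it can coexist with.
COMPATIBLE = {
    "NL": {"NL", "CR", "CW", "PR", "PW", "EX"},   # null
    "CR": {"NL", "CR", "CW", "PR", "PW"},         # concurrent read
    "CW": {"NL", "CR", "CW"},                     # concurrent write
    "PR": {"NL", "CR", "PR"},                     # protected read
    "PW": {"NL", "CR"},                           # protected write
    "EX": {"NL"},                                 # exclusive
}


def request_state(requested: str, held_by_others: Iterable[str],
                  converting: bool = False) -> str:
    """Return 'granted', 'converting', or 'blocked' for a lock request.

    `converting` marks a request that changes the mode of an existing lock
    rather than asking for a new one.
    """
    if all(mode in COMPATIBLE[requested] for mode in held_by_others):
        return "granted"
    return "converting" if converting else "blocked"


print(request_state("PR", ["CR", "PR"]))                 # readers can share
print(request_state("EX", ["PR"]))                       # new lock must wait
print(request_state("EX", ["CR"], converting=True))      # conversion must wait
```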

Chapter 6. Configuration and Administration Tools

/etc/cluster/cluster.conf specifies the High Availability Add-On configuration. The configuration file is an XML file that describes the following cluster characteristics:

- Cluster name — Specifies the cluster name, cluster configuration file revision level, and basic fence timing properties used when a node joins a cluster or is fenced from the cluster.

- Cluster — Specifies each node of the cluster, specifying node name, node ID, number of quorum votes, and fencing method for that node.

- Fence Device — Specifies fence devices in the cluster. Parameters vary according to the type of fence device. For example, for a power controller used as a fence device, the cluster configuration defines the name of the power controller, its IP address, login, and password.

- Managed Resources — Specifies resources required to create cluster services. Managed resources include the definition of failover domains, resources (for example, an IP address), and services. Together the managed resources define cluster services and failover behavior of the cluster services.

/usr/share/cluster/cluster.rng during startup time and when a configuration is reloaded. Also, you can validate a cluster configuration any time by using the ccs_config_validate command.

/usr/share/doc/cman-X.Y.ZZ/cluster_conf.html (for example /usr/share/doc/cman-3.0.12/cluster_conf.html).

- XML validity — Checks that the configuration file is a valid XML file.

- Configuration options — Checks to make sure that options (XML elements and attributes) are valid.

- Option values — Checks that the options contain valid data (limited).
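To make the configuration characteristics and the first check (XML validity) concrete, the sketch below parses a small sample and lists its main parts. The sample is illustrative only: the host names, addresses, and credentials are invented, it has not been validated against cluster.rng, and `summarize` is a hypothetical helper that checks well-formedness and nothing more. Use ccs_config_validate for real validation.

```python
import xml.etree.ElementTree as ET

# Illustrative cluster.conf content; every name and value here is made up.
SAMPLE_CONF = """<cluster name="demo" config_version="1">
  <clusternodes>
    <clusternode name="node1.example.com" nodeid="1" votes="1">
      <fence><method name="power"><device name="pdu1" port="1"/></method></fence>
    </clusternode>
    <clusternode name="node2.example.com" nodeid="2" votes="1">
      <fence><method name="power"><device name="pdu1" port="2"/></method></fence>
    </clusternode>
  </clusternodes>
  <fencedevices>
    <fencedevice name="pdu1" agent="fence_apc" ipaddr="192.0.2.10"
                 login="admin" passwd="secret"/>
  </fencedevices>
  <rm>
    <failoverdomains>
      <failoverdomain name="web" ordered="1" restricted="1">
        <failoverdomainnode name="node1.example.com" priority="1"/>
        <failoverdomainnode name="node2.example.com" priority="2"/>
      </failoverdomain>
    </failoverdomains>
    <resources><ip address="10.1.1.2"/></resources>
    <service name="myservice" domain="web"><ip ref="10.1.1.2"/></service>
  </rm>
</cluster>
"""


def summarize(conf_text: str) -> dict:
    """Parse the text (raises ET.ParseError if not well-formed) and summarize it."""
    root = ET.fromstring(conf_text)
    return {
        "name": root.get("name"),
        "config_version": root.get("config_version"),
        "nodes": [(n.get("name"), n.get("nodeid"), n.get("votes"))
                  for n in root.findall("clusternodes/clusternode")],
        "fence_devices": [d.get("name")
                          for d in root.findall("fencedevices/fencedevice")],
        "failover_domains": [f.get("name")
                             for f in root.findall("rm/failoverdomains/failoverdomain")],
        "services": [s.get("name") for s in root.findall("rm/service")],
    }


print(summarize(SAMPLE_CONF))
```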

6.1. Cluster Administration Tools

- Conga — This is a comprehensive user interface for installing, configuring, and managing Red Hat High Availability Add-On. Refer to Configuring and Managing the High Availability Add-On for information about configuring and managing High Availability Add-On with Conga.

- Luci — This is the application server that provides the user interface for Conga. It allows users to manage cluster services and provides access to help and online documentation when needed.

- Ricci — This is a service daemon that manages distribution of the cluster configuration. Users pass configuration details using the Luci interface, and the configuration is loaded into corosync for distribution to cluster nodes.

- As of the Red Hat Enterprise Linux 6.1 release and later, the Red Hat High Availability Add-On provides support for the ccs cluster configuration command, which allows an administrator to create, modify, and view the cluster.conf cluster configuration file. Refer to the Cluster Administration manual for information about configuring and managing the High Availability Add-On with the ccs command.

Note

system-config-cluster is not available in Red Hat Enterprise Linux 6.

Chapter 7. Virtualization and High Availability

- VMs as Highly Available Resources/Services

- Guest Clusters

7.1. VMs as Highly Available Resources/Services

- If a large number of VMs are being made HA across a large number of physical hosts, using RHEV may be the better solution as it has more sophisticated algorithms for managing VM placement that take into consideration things like host CPU, memory, and load information.

- If a small number of VMs are being made HA across a small number of physical hosts, using Red Hat Enterprise Linux HA may be the better solution because less additional infrastructure is required. The smallest Red Hat Enterprise Linux HA VM solution needs two physical hosts for a 2 node cluster. The smallest RHEV solution requires 4 nodes: 2 to provide HA for the RHEVM server and 2 to act as VM hosts.

- There is no strict guideline for how many hosts or VMs would be considered a 'large number'. But keep in mind that the maximum number of hosts in a single Red Hat Enterprise Linux HA Cluster is 16, and that any Cluster with 8 or more hosts will need an architecture review from Red Hat to determine supportability.

- If your HA VMs are providing services that are using shared infrastructure, either Red Hat Enterprise Linux HA or RHEV can be used.

- If you need to provide HA for a small set of critical services that are running inside of VMs, Red Hat Enterprise Linux HA or RHEV can be used.

- If you are looking to provide infrastructure to allow rapid provisioning of VMs, RHEV should be used.

- RHEV VM HA is meant to be dynamic. Addition of new VMs to the RHEV 'cluster' can be done easily and is fully supported.

- Red Hat Enterprise Linux VM HA is not meant to be a highly dynamic environment. A cluster with a fixed set of VMs should be set up, and adding or removing VMs during the lifetime of the cluster is not recommended.

- Red Hat Enterprise Linux HA should not be used to provide infrastructure for creating cloud-like environments due to the static nature of cluster configuration as well as the relatively low physical node count maximum (16 nodes).

- Red Hat Enterprise Linux 5.0+ supports Xen in conjunction with Red Hat Enterprise Linux AP Cluster

- Red Hat Enterprise Linux 5.4 introduced support for KVM virtual machines as managed resources in Red Hat Enterprise Linux AP Cluster as a Technology Preview.

- Red Hat Enterprise Linux 5.5+ elevates support for KVM virtual machines to fully supported.

- Red Hat Enterprise Linux 6.0+ supports KVM virtual machines as highly available resources in the Red Hat Enterprise Linux 6 High Availability Add-On.

- Red Hat Enterprise Linux 6.0+ does not support Xen virtual machines with the Red Hat Enterprise Linux 6 High Availability Add-On, since Red Hat Enterprise Linux 6 no longer supports Xen.

Note

7.1.1. General Recommendations

- In Red Hat Enterprise Linux 5.3 and below, RGManager utilized the native Xen interfaces for managing Xen domU's (guests). In Red Hat Enterprise Linux 5.4 this was changed to use libvirt for both the Xen and KVM hypervisors to provide a consistent interface between both hypervisor types. In addition to this architecture change there were numerous bug fixes released in Red Hat Enterprise Linux 5.4 and 5.4.z, so it is advisable to upgrade your host clusters to at least the latest Red Hat Enterprise Linux 5.5 packages before configuring Xen managed services.

- For KVM managed services you must upgrade to Red Hat Enterprise Linux 5.5 as this is the first version of Red Hat Enterprise Linux where this functionality is fully supported.

- Always check the latest Red Hat Enterprise Linux errata before deploying a Cluster to make sure that you have the latest fixes for known issues or bugs.

- Mixing hosts of different hypervisor types is not supported. The host cluster must either be all Xen or all KVM based.

- Host hardware should be provisioned so that hosts are capable of absorbing relocated guests from multiple other failed hosts without causing a host to overcommit memory or severely overcommit virtual CPUs. If enough failures occur to cause overcommit of either memory or virtual CPUs, this can lead to severe performance degradation and potentially cluster failure.

- Directly using the xm or libvirt tools (virsh, virt-manager) to manage (live migrate, stop, start) virtual machines that are under RGManager control is not supported or recommended since this would bypass the cluster management stack.

- Each VM name must be unique cluster wide, including local-only / non-cluster VMs. Libvirtd only enforces unique names on a per-host basis. If you clone a VM by hand, you must change the name in the clone's configuration file.

7.2. Guest Clusters

- Red Hat Enterprise Linux 5.3+ Xen hosts fully support running guest clusters where the guest operating systems are also Red Hat Enterprise Linux 5.3 or above:

- Xen guest clusters can use either fence_xvm or fence_scsi for guest fencing.

- Usage of fence_xvm/fence_xvmd requires a host cluster to be running to support fence_xvmd, and fence_xvm must be used as the guest fencing agent on all clustered guests.

- Shared storage can be provided by either iSCSI or Xen shared block devices backed either by host block storage or by file backed storage (raw images).

- Red Hat Enterprise Linux 5.5+ KVM hosts do not support running guest clusters.

- Red Hat Enterprise Linux 6.1+ KVM hosts support running guest clusters where the guest operating systems are either Red Hat Enterprise Linux 6.1+ or Red Hat Enterprise Linux 5.6+. Red Hat Enterprise Linux 4 guests are not supported.

- Mixing bare metal cluster nodes with cluster nodes that are virtualized is permitted.

- Red Hat Enterprise Linux 5.6+ guest clusters can use either fence_xvm or fence_scsi for guest fencing.

- Red Hat Enterprise Linux 6.1+ guest clusters can use either fence_xvm (in the fence-virt package) or fence_scsi for guest fencing.

- The Red Hat Enterprise Linux 6.1+ KVM Hosts must use fence_virtd if the guest cluster is using fence_virt or fence_xvm as the fence agent. If the guest cluster is using fence_scsi then fence_virtd on the hosts is not required.

- fence_virtd can operate in three modes:

- Standalone mode where the host to guest mapping is hard coded and live migration of guests is not allowed

- Using the Openais Checkpoint service to track live-migrations of clustered guests. This requires a host cluster to be running.

- Using the Qpid Management Framework (QMF) provided by the libvirt-qpid package. This utilizes QMF to track guest migrations without requiring a full host cluster to be present.

- Shared storage can be provided by either iSCSI or KVM shared block devices backed by either host block storage or by file backed storage (raw images).

- Red Hat Enterprise Virtualization Management (RHEV-M) versions 2.2+ and 3.0 currently support Red Hat Enterprise Linux 5.6+ and Red Hat Enterprise Linux 6.1+ clustered guests.

- Guest clusters must be homogeneous (either all Red Hat Enterprise Linux 5.6+ guests or all Red Hat Enterprise Linux 6.1+ guests).

- Mixing bare metal cluster nodes with cluster nodes that are virtualized is permitted.

- Fencing is provided by fence_scsi in RHEV-M 2.2+ and by both fence_scsi and fence_rhevm in RHEV-M 3.0. Fencing is supported using fence_scsi as described below:

- Use of fence_scsi with iSCSI storage is limited to iSCSI servers that support SCSI 3 Persistent Reservations with the PREEMPT AND ABORT command. Not all iSCSI servers support this functionality. Check with your storage vendor to ensure that your server is compliant with SCSI 3 Persistent Reservation support. Note that the iSCSI server shipped with Red Hat Enterprise Linux does not presently support SCSI 3 Persistent Reservations, so it is not suitable for use with fence_scsi.

- VMware vSphere 4.1, VMware vCenter 4.1, VMware ESX and ESXi 4.1 support running guest clusters where the guest operating systems are Red Hat Enterprise Linux 5.7+ or Red Hat Enterprise Linux 6.2+. Version 5.0 of VMware vSphere, vCenter, ESX and ESXi is also supported; however, due to an incomplete WSDL schema provided in the initial release of VMware vSphere 5.0, the fence_vmware_soap utility does not work on the default install. Refer to the Red Hat Knowledgebase https://access.redhat.com/knowledge/ for updated procedures to fix this issue.

- Guest clusters must be homogeneous (either all Red Hat Enterprise Linux 5.7+ guests or all Red Hat Enterprise Linux 6.1+ guests).

- Mixing bare metal cluster nodes with cluster nodes that are virtualized is permitted.

- The fence_vmware_soap agent requires the third-party VMware Perl APIs. This software package must be downloaded from VMware's web site and installed onto the Red Hat Enterprise Linux clustered guests.

- Alternatively, fence_scsi can be used to provide fencing as described below.

- Shared storage can be provided by either iSCSI or VMware raw shared block devices.

- Use of VMware ESX guest clusters is supported using either fence_vmware_soap or fence_scsi.

- Use of Hyper-V guest clusters is unsupported at this time.

7.2.1. Using fence_scsi and iSCSI Shared Storage

- In all of the above virtualization environments, fence_scsi and iSCSI storage can be used in place of native shared storage and the native fence devices.

- fence_scsi can be used to provide I/O fencing for shared storage provided over iSCSI if the iSCSI target properly supports SCSI 3 persistent reservations and the PREEMPT AND ABORT command. Check with your storage vendor to determine if your iSCSI solution supports the above functionality.

- The iSCSI server software shipped with Red Hat Enterprise Linux does not support SCSI 3 persistent reservations; therefore, it cannot be used with fence_scsi. It is suitable for use as a shared storage solution in conjunction with other fence devices like fence_vmware or fence_rhevm, however.

- If using fence_scsi on all guests, a host cluster is not required (in the Red Hat Enterprise Linux 5 Xen/KVM and Red Hat Enterprise Linux 6 KVM Host use cases).

- If fence_scsi is used as the fence agent, all shared storage must be over iSCSI. Mixing of iSCSI and native shared storage is not permitted.

7.2.2. General Recommendations

- As stated above it is recommended to upgrade both the hosts and guests to the latest Red Hat Enterprise Linux packages before using virtualization capabilities, as there have been many enhancements and bug fixes.

- Mixing virtualization platforms (hypervisors) underneath guest clusters is not supported. All underlying hosts must use the same virtualization technology.

- It is not supported to run all guests in a guest cluster on a single physical host as this provides no high availability in the event of a single host failure. This configuration can be used for prototype or development purposes, however.

- Best practices include the following:

- It is not necessary to have a single host per guest, but this configuration does provide the highest level of availability since a host failure only affects a single node in the cluster. If you have a 2-to-1 mapping (two guests in a single cluster per physical host) this means a single host failure results in two guest failures. Therefore it is advisable to get as close to a 1-to-1 mapping as possible.

- Mixing multiple independent guest clusters on the same set of physical hosts is not supported at this time when using the fence_xvm/fence_xvmd or fence_virt/fence_virtd fence agents.

- Mixing multiple independent guest clusters on the same set of physical hosts will work if using fence_scsi + iSCSI storage or if using fence_vmware + VMware (ESX/ESXi and vCenter).

- Running non-clustered guests on the same set of physical hosts as a guest cluster is supported, but since hosts will physically fence each other if a host cluster is configured, these other guests will also be terminated during a host fencing operation.

- Host hardware should be provisioned such that memory or virtual CPU overcommit is avoided. Overcommitting memory or virtual CPU will result in performance degradation. If the performance degradation becomes critical the cluster heartbeat could be affected, which may result in cluster failure.

Appendix A. Revision History

| Revision | Date |
|---|---|
| 4-4 | Tue Apr 18 2017 |
| 4-3 | Wed Mar 8 2017 |
| 4-1 | Fri Dec 16 2016 |
| 3-4 | Tue Apr 26 2016 |
| 3-3 | Wed Mar 9 2016 |
| 2-3 | Wed Jul 22 2015 |
| 2-2 | Wed Jul 8 2015 |
| 2-1 | Mon Apr 3 2015 |
| 1-13 | Wed Oct 8 2014 |
| 1-12 | Thu Aug 7 2014 |
| 1-7 | Wed Nov 20 2013 |
| 1-4 | Mon Feb 18 2013 |
| 1-3 | Mon Jun 18 2012 |
| 1-2 | Fri Aug 26 2011 |
| 1-1 | Wed Nov 10 2010 |