
Storage Administration Guide

Deploying and configuring single-node storage in RHEL 7

Abstract

Chapter 1. Overview

1.1. New Features and Enhancements in Red Hat Enterprise Linux 7

eCryptfs not included

System Storage Manager

XFS Is the Default File System

File System Restructure

/bin, /sbin, /lib, and /lib64 are now nested under /usr.

Snapper

Btrfs (Technology Preview)

Note

NFSv2 No Longer Supported

Part I. File Systems

Note

Chapter 2. File System Structure and Maintenance

- Shareable and unsharable files

- Shareable files can be accessed locally and by remote hosts. Unsharable files are only available locally.

- Variable and static files

- Variable files, such as documents, can be changed at any time. Static files, such as binaries, do not change without an action from the system administrator.

2.1. Overview of Filesystem Hierarchy Standard (FHS)

- Compatibility with other FHS-compliant systems

- The ability to mount a /usr/ partition as read-only. This is crucial, since /usr/ contains common executables and should not be changed by users. In addition, since /usr/ is mounted as read-only, it should be mountable from the CD-ROM drive or from another machine via a read-only NFS mount.

2.1.1. FHS Organization

Note

2.1.1.1. Gathering File System Information

df Command

The df command reports the system's disk space usage. Its output looks similar to the following:

Example 2.1. df Command Output

df shows the partition size in 1 kilobyte blocks and the amount of used and available disk space in kilobytes. To view the information in megabytes and gigabytes, use the command df -h. The -h argument stands for "human-readable" format. The output for df -h looks similar to the following:

Example 2.2. df -h Command Output

Note

/dev/shm represents the system's virtual memory file system.

du Command

The du command displays the estimated amount of space used by files in a directory, including the disk usage of each subdirectory. The last line in the output of du shows the total disk usage of the directory. To see only the total disk usage of a directory in human-readable format, use du -hs. For more options, see man du.
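The du output can be combined with sort to find what uses the most space. The following is a minimal sketch, assuming bash and GNU coreutils; the function name largest_entries is hypothetical. It summarizes each entry of a directory with du -s and prints the largest ones first:

```shell
# Hypothetical helper: print the N largest entries (by disk usage) in DIR.
# du -s prints one "<size in 1K blocks><TAB><path>" summary line per argument.
largest_entries() (
  # The body runs in a subshell, so these shell options do not leak out.
  shopt -s nullglob dotglob
  dir=${1:-.}
  n=${2:-10}
  entries=("$dir"/*)
  # An empty directory has nothing to report.
  if [ "${#entries[@]}" -gt 0 ]; then
    du -s -- "${entries[@]}" | sort -rn | head -n "$n"
  fi
)
```

For example, largest_entries /var 5 lists the five largest entries under /var.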

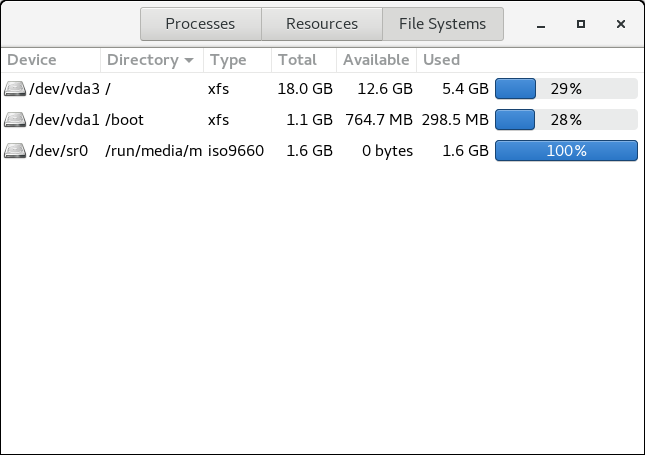

Gnome System Monitor

To display the system's partitions and disk space usage in a graphical format, run the command gnome-system-monitor. Select the File Systems tab to view the system's partitions. The following figure illustrates the File Systems tab.

Figure 2.1. File Systems Tab in GNOME System Monitor

2.1.1.2. The /boot/ Directory

The /boot/ directory contains static files required to boot the system, for example, the Linux kernel. These files are essential for the system to boot properly.

Warning

Do not remove the /boot/ directory. Doing so renders the system unbootable.

2.1.1.3. The /dev/ Directory

The /dev/ directory contains device nodes that represent the following device types:

- devices attached to the system;

- virtual devices provided by the kernel.

The udevd daemon creates and removes device nodes in /dev/ as needed.

Devices in the /dev/ directory and its subdirectories are either character devices (providing only a serial stream of input and output, for example, a mouse or keyboard) or block devices (accessible randomly, such as a hard drive or a floppy drive). If GNOME or KDE is installed, some storage devices are automatically detected when connected (such as with USB) or inserted (such as a CD or DVD drive), and a pop-up window displaying the contents appears.

| File | Description |
|---|---|
| /dev/hda | The master device on the primary IDE channel. |
| /dev/hdb | The slave device on the primary IDE channel. |
| /dev/tty0 | The first virtual console. |
| /dev/tty1 | The second virtual console. |
| /dev/sda | The first device on the primary SCSI or SATA channel. |
| /dev/lp0 | The first parallel port. |

- Mapped device

- A logical volume in a volume group, for example, /dev/mapper/VolGroup00-LogVol02.

- Static device

- A traditional storage volume, for example, /dev/sdbX, where sdb is a storage device name and X is the partition number. /dev/sdbX can also be /dev/disk/by-id/WWID, or /dev/disk/by-uuid/UUID. For more information, see Section 25.8, “Persistent Naming”.
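On systems managed by udev, the entries under /dev/disk/by-id/ and /dev/disk/by-uuid/ are symbolic links to the kernel device nodes, so each persistent name can be resolved to the current static name. A minimal sketch, assuming bash and GNU readlink; the function name show_persistent_names is hypothetical:

```shell
# Hypothetical helper: print "<persistent name> -> <resolved device node>"
# for every symbolic link in a directory such as /dev/disk/by-uuid.
show_persistent_names() {
  local dir=${1:-/dev/disk/by-uuid} link
  for link in "$dir"/*; do
    # Skip anything that is not a symbolic link (including an unmatched glob).
    [ -L "$link" ] || continue
    printf '%s -> %s\n' "${link##*/}" "$(readlink -f -- "$link")"
  done
}
```

For example, show_persistent_names /dev/disk/by-id shows which /dev/sdX node each WWID-based name currently points to.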

2.1.1.4. The /etc/ Directory

The /etc/ directory is reserved for configuration files that are local to the machine. It should not contain any binaries; if there are any binaries, move them to /usr/bin/ or /usr/sbin/.

The /etc/skel/ directory stores "skeleton" user files, which are used to populate a home directory when a user is first created. Applications also store their configuration files in the /etc/ directory and may reference them when executed. The /etc/exports file controls which file systems are exported to remote hosts.

2.1.1.5. The /mnt/ Directory

The /mnt/ directory is reserved for temporarily mounted file systems, such as NFS file system mounts. For all removable storage media, use the /var/run/media/user directory.

Important

The /mnt directory must not be used by installation programs.

2.1.1.6. The /opt/ Directory

The /opt/ directory is normally reserved for software and add-on packages that are not part of the default installation. A package that installs to /opt/ creates a directory bearing its name, for example, /opt/packagename/. In most cases, such packages follow a predictable subdirectory structure; most store their binaries in /opt/packagename/bin/ and their man pages in /opt/packagename/man/.

2.1.1.7. The /proc/ Directory

The /proc/ directory contains special files that either extract information from the kernel or send information to it. Examples of such information include system memory, CPU information, and hardware configuration. For more information about /proc/, see Section 2.3, “The /proc Virtual File System”.

2.1.1.8. The /srv/ Directory

The /srv/ directory contains site-specific data served by a Red Hat Enterprise Linux system. This directory gives users the location of data files for a particular service, such as FTP, WWW, or CVS. Data that only pertains to a specific user should go in the /home/ directory.

2.1.1.9. The /sys/ Directory

The /sys/ directory uses the sysfs virtual file system specific to the kernel. With the increased support for hot plug hardware devices in the kernel, the /sys/ directory contains information similar to that held by /proc/, but displays a hierarchical view of device information specific to hot plug devices.

2.1.1.10. The /usr/ Directory

The /usr/ directory is for files that can be shared across multiple machines. The /usr/ directory is often on its own partition and is mounted read-only. At a minimum, /usr/ should contain the following subdirectories:

/usr/bin - This directory is used for binaries.

/usr/etc - This directory is used for system-wide configuration files.

/usr/games - This directory stores games.

/usr/include - This directory is used for C header files.

/usr/kerberos - This directory is used for Kerberos-related binaries and files.

/usr/lib - This directory is used for object files and libraries that are not designed to be directly used by shell scripts or users. As of Red Hat Enterprise Linux 7.0, the /lib/ directory has been merged with /usr/lib. Now it also contains libraries needed to execute the binaries in /usr/bin/ and /usr/sbin/. These shared library images are used to boot the system or execute commands within the root file system.

/usr/libexec - This directory contains small helper programs called by other programs.

/usr/sbin - As of Red Hat Enterprise Linux 7.0, /sbin has been moved to /usr/sbin. This means that it contains all system administration binaries, including those essential for booting, restoring, recovering, or repairing the system. The binaries in /usr/sbin/ require root privileges to use.

/usr/share - This directory stores files that are not architecture-specific.

/usr/src - This directory stores source code.

/usr/tmp, linked to /var/tmp - This directory stores temporary files.

The /usr/ directory should also contain a /local/ subdirectory. As per the FHS, this subdirectory is used by the system administrator when installing software locally, and should be safe from being overwritten during system updates. The /usr/local directory has a structure similar to /usr/, and contains the following subdirectories:

- /usr/local/bin
- /usr/local/etc
- /usr/local/games
- /usr/local/include
- /usr/local/lib
- /usr/local/libexec
- /usr/local/sbin
- /usr/local/share
- /usr/local/src

Red Hat Enterprise Linux's usage of /usr/local/ differs slightly from the FHS. The FHS states that /usr/local/ should be used to store software that should remain safe from system software upgrades. Since the RPM Package Manager can perform software upgrades safely, it is not necessary to protect files by storing them in /usr/local/.

Instead, Red Hat Enterprise Linux uses /usr/local/ for software local to the machine. For instance, if the /usr/ directory is mounted as a read-only NFS share from a remote host, it is still possible to install a package or program under the /usr/local/ directory.

2.1.1.11. The /var/ Directory

Since the FHS requires Linux to mount /usr/ as read-only, any programs that write log files or need spool/ or lock/ directories should write them to the /var/ directory. The FHS states /var/ is for variable data, which includes spool directories and files, logging data, and transient and temporary files.

Some of the directories found within the /var/ directory are:

- /var/account/
- /var/arpwatch/
- /var/cache/
- /var/crash/
- /var/db/
- /var/empty/
- /var/ftp/
- /var/gdm/
- /var/kerberos/
- /var/lib/
- /var/local/
- /var/lock/
- /var/log/
- /var/mail, linked to /var/spool/mail/
- /var/mailman/
- /var/named/
- /var/nis/
- /var/opt/
- /var/preserve/
- /var/run/
- /var/spool/
- /var/tmp/
- /var/tux/
- /var/www/
- /var/yp/

Important

The /var/run/media/user directory contains subdirectories used as mount points for removable media such as USB storage media, DVDs, CD-ROMs, and Zip disks. Note that previously, the /media/ directory was used for this purpose.

System log files, such as messages and lastlog, go in the /var/log/ directory. The /var/lib/rpm/ directory contains RPM system databases. Lock files go in the /var/lock/ directory, usually in directories for the program using the file. The /var/spool/ directory has subdirectories that store data files for some programs. These subdirectories include:

- /var/spool/at/
- /var/spool/clientmqueue/
- /var/spool/cron/
- /var/spool/cups/
- /var/spool/exim/
- /var/spool/lpd/
- /var/spool/mail/
- /var/spool/mailman/
- /var/spool/mqueue/
- /var/spool/news/
- /var/spool/postfix/
- /var/spool/repackage/
- /var/spool/rwho/
- /var/spool/samba/
- /var/spool/squid/
- /var/spool/squirrelmail/
- /var/spool/up2date/
- /var/spool/uucp/
- /var/spool/uucppublic/
- /var/spool/vbox/

2.2. Special Red Hat Enterprise Linux File Locations

Most files pertaining to RPM are kept in the /var/lib/rpm/ directory. For more information on RPM, see man rpm.

The /var/cache/yum/ directory contains files used by the Package Updater, including RPM header information for the system. This location may also be used to temporarily store RPMs downloaded while updating the system. For more information about the Red Hat Network, see https://rhn.redhat.com/.

Another location specific to Red Hat Enterprise Linux is the /etc/sysconfig/ directory. This directory stores a variety of configuration information. Many scripts that run at boot time use the files in this directory.

2.3. The /proc Virtual File System

Unlike most file systems, /proc contains neither text nor binary files. Because it houses virtual files, /proc is referred to as a virtual file system. These virtual files are typically zero bytes in size, even if they contain a large amount of information.

The /proc file system is not used for storage. Its main purpose is to provide a file-based interface to hardware, memory, running processes, and other system components. Real-time information can be retrieved on many system components by viewing the corresponding /proc file. Some of the files within /proc can also be manipulated (by both users and applications) to configure the kernel.

The following /proc files are relevant to managing and monitoring system storage:

- /proc/devices

- Displays various character and block devices that are currently configured.

- /proc/filesystems

- Lists all file system types currently supported by the kernel.

- /proc/mdstat

- Contains current information on multiple-disk or RAID configurations on the system, if they exist.

- /proc/mounts

- Lists all mounts currently used by the system.

- /proc/partitions

- Contains partition block allocation information.

For more information about the /proc file system, see the Red Hat Enterprise Linux 7 Deployment Guide.
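Because these files are plain text, they can be queried with standard tools. The sketch below assumes bash and awk; the function names fs_supported and fs_type_at are hypothetical, and both accept an alternative file path so that they can be tried against a saved copy. Mount points containing spaces, which /proc/mounts escapes as \040, are not handled:

```shell
# Hypothetical helper: succeed if FS_TYPE appears in /proc/filesystems
# (or the file given as $2). Each line is an optional "nodev" flag
# followed by the file system name, so the name is always the last field.
fs_supported() {
  local fs=$1 file=${2:-/proc/filesystems}
  awk -v fs="$fs" '$NF == fs { found = 1 } END { exit !found }' "$file"
}

# Hypothetical helper: print the file system type mounted at MOUNT_POINT
# according to /proc/mounts (or $2). Fields: device, mount point, type, ...
fs_type_at() {
  local mp=$1 file=${2:-/proc/mounts}
  # The last matching line wins, because a later mount hides earlier ones.
  awk -v mp="$mp" '$2 == mp { type = $3 } END { if (type == "") exit 1; print type }' "$file"
}
```

For example, fs_supported xfs && fs_type_at /home reports the type of /home only if the kernel supports XFS.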

2.4. Discard Unused Blocks

There are two types of discard operations:

- Batch discard operations are run explicitly by the user with the fstrim command. This command discards all unused blocks in a file system that match the user's criteria.

- Online discard operations are specified at mount time, either with the -o discard option as part of a mount command or with the discard option in the /etc/fstab file. They run in real time without user intervention. Online discard operations only discard blocks that are transitioning from used to free.

Both types of discard operations are supported on a device only if the value in its /sys/block/device/queue/discard_max_bytes file is not zero.
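A minimal sketch of that check, assuming bash; the function name supports_discard and the SYSFS_ROOT override (used only to try the function against a scratch directory) are hypothetical. Pass the name of a whole disk, such as sda, because partitions do not have their own directory directly under /sys/block/:

```shell
# Hypothetical helper: succeed if block device DEV (for example, sda)
# reports a non-zero queue/discard_max_bytes value.
supports_discard() {
  local file="${SYSFS_ROOT:-/sys}/block/$1/queue/discard_max_bytes" value
  # No such file: the device is unknown or reports nothing about discard.
  [ -r "$file" ] || return 1
  read -r value < "$file"
  [ "${value:-0}" -ne 0 ]
}
```

For example, supports_discard sda && fstrim -v /mount/point runs a batch discard only when the underlying disk advertises discard support.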

If you run the fstrim command on:

- a device that does not support discard operations, or

- a logical device (LVM or MD) composed of multiple devices, where any one of the devices does not support discard operations

the following message displays:

# fstrim -v /mnt/non_discard
fstrim: /mnt/non_discard: the discard operation is not supported

Note

The mount command allows you to mount a device that does not support discard operations with the -o discard option.

Chapter 3. The XFS File System

- Main Features of XFS

- XFS supports metadata journaling, which facilitates quicker crash recovery.

- The XFS file system can be defragmented and enlarged while mounted and active.

- In addition, Red Hat Enterprise Linux 7 supports backup and restore utilities specific to XFS.

- Allocation Features

- XFS features the following allocation schemes:

- Extent-based allocation

- Stripe-aware allocation policies

- Delayed allocation

- Space pre-allocation

Delayed allocation and other performance optimizations affect XFS the same way that they do ext4. Namely, a program's writes to an XFS file system are not guaranteed to be on-disk unless the program issues an fsync() call afterwards. For more information on the implications of delayed allocation on a file system (ext4 and XFS), see Allocation Features in Chapter 5, The ext4 File System.

Note

Creating or expanding files occasionally fails with an unexpected ENOSPC write failure even though the disk space appears to be sufficient. This is due to XFS's performance-oriented design. In practice, it rarely becomes a problem, because it only occurs when just a few blocks of free space remain.

- Other XFS Features

- The XFS file system also supports the following:

- Extended attributes (xattr)

- This allows the system to associate several additional name/value pairs per file. It is enabled by default.

- Quota journaling

- This avoids the need for lengthy quota consistency checks after a crash.

- Project/directory quotas

- This allows quota restrictions over a directory tree.

- Subsecond timestamps

- This allows timestamps with subsecond precision.


- Default atime behavior is relatime

- Relatime is on by default for XFS. It has almost no overhead compared to noatime while still maintaining sane atime values.

3.1. Creating an XFS File System

- To create an XFS file system, use the following command:

# mkfs.xfs block_device

- Replace block_device with the path to a block device. For example, /dev/sdb1, /dev/disk/by-uuid/05e99ec8-def1-4a5e-8a9d-5945339ceb2a, or /dev/my-volgroup/my-lv.

- In general, the default options are optimal for common use.

- When using mkfs.xfs on a block device containing an existing file system, add the -f option to overwrite that file system.

Example 3.1. mkfs.xfs Command Output

Following is a sample output of the mkfs.xfs command:

Note

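After an XFS file system is created, its size cannot be reduced. However, it can still be enlarged using the xfs_growfs command. For more information, see Section 3.4, “Increasing the Size of an XFS File System”.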

Striped Block Devices

When creating file systems on LVM or MD volumes, mkfs.xfs chooses an optimal geometry. This may also be true on some hardware RAIDs that export geometry information to the operating system.

If the device exports stripe geometry information, the mkfs utility (for ext3, ext4, and xfs) will automatically use this geometry. If the mkfs utility does not detect stripe geometry even though the storage does, in fact, have stripe geometry, you can specify it manually when creating the file system using the following options:

- su=value

- Specifies a stripe unit or RAID chunk size. The value must be specified in bytes, with an optional k, m, or g suffix.

- sw=value

- Specifies the number of data disks in a RAID device, or the number of stripe units in the stripe.
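The su and sw values follow from the array layout. The sketch below is a hypothetical helper, assuming bash and the common layouts (RAID 5 stores one disk's worth of parity per stripe, RAID 6 two, and RAID 10 uses two-way mirrors), so sw is the number of disks that hold data. For example, a five-disk RAID 5 array with a 64 KiB chunk has four data disks, which gives -d su=64k,sw=4:

```shell
# Hypothetical helper: print mkfs.xfs data-section stripe options for an
# array at RAID LEVEL with TOTAL_DISKS disks and the given CHUNK size.
# Usage: xfs_stripe_opts LEVEL TOTAL_DISKS CHUNK
xfs_stripe_opts() {
  local level=$1 disks=$2 chunk=$3 data
  case $level in
    0)  data=$disks ;;            # striping only: every disk holds data
    5)  data=$((disks - 1)) ;;    # one disk's worth of parity per stripe
    6)  data=$((disks - 2)) ;;    # two disks' worth of parity per stripe
    10) data=$((disks / 2)) ;;    # two-way mirrors, striped
    *)  echo "unsupported RAID level: $level" >&2; return 1 ;;
  esac
  [ "$data" -ge 1 ] || { echo "too few disks for RAID $level" >&2; return 1; }
  # "--" stops printf from reading the leading "-d" as an option.
  printf -- '-d su=%s,sw=%d\n' "$chunk" "$data"
}
```

The output is meant to be word-split on the command line, for example: # mkfs.xfs $(xfs_stripe_opts 5 5 64k) /dev/block_device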

The following example specifies a chunk size of 64k on a RAID device containing 4 stripe units:

# mkfs.xfs -d su=64k,sw=4 /dev/block_device

Additional Resources

- The mkfs.xfs(8) man page

3.2. Mounting an XFS File System

An XFS file system can be mounted with no extra options, for example:

# mount /dev/device /mount/point

Note

Unlike mke2fs, mkfs.xfs does not use a configuration file; all options are specified on the command line.

Write Barriers

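By default, XFS uses write barriers to ensure file system integrity even when power is lost to a device with write caches enabled. For devices without write caches, or with battery-backed write caches, disable the barriers by using the nobarrier option: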

# mount -o nobarrier /dev/device /mount/point

Direct Access Technology Preview

Direct Access (DAX) is available as a Technology Preview on the ext4 and XFS file systems. It is a means for an application to directly map persistent memory into its address space. To use DAX, a system must have some form of persistent memory available, usually in the form of one or more Non-Volatile Dual Inline Memory Modules (NVDIMMs), and a file system that supports DAX must be created on the NVDIMM(s). Also, the file system must be mounted with the dax mount option. Then, an mmap of a file on the dax-mounted file system results in a direct mapping of storage into the application's address space.

3.3. XFS Quota Management

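XFS quotas are enabled at mount time, with specific mount options. Each mount option can also be specified as noenforce; this allows usage reporting without enforcing any limits. Valid quota mount options are: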

uquota/uqnoenforce: User quotas

gquota/gqnoenforce: Group quotas

pquota/pqnoenforce: Project quotas
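Which quota options are in effect on a mounted XFS file system can be read back from the options field of /proc/mounts. The sketch below assumes bash and awk, and assumes the kernel reports these options as usrquota, grpquota, and prjquota when enforced, as uqnoenforce, gqnoenforce, and pqnoenforce when only accounted, and as noquota when none are active; verify this against your kernel. The function name xfs_quota_opts is hypothetical:

```shell
# Hypothetical helper: print the quota-related options in effect for the
# XFS file system at MOUNT_POINT, one per line, from /proc/mounts (or $2).
xfs_quota_opts() {
  local mp=$1 file=${2:-/proc/mounts}
  awk -v mp="$mp" '$2 == mp && $3 == "xfs" { opts = $4 }
    END {
      n = split(opts, o, ",")
      for (i = 1; i <= n; i++)
        # Keep enforced and accounting-only quota options; skip "noquota".
        if (o[i] != "noquota" && o[i] ~ /quota$|noenforce$/) print o[i]
    }' "$file"
}
```

For example, after mounting with -o uquota,pquota, xfs_quota_opts /home is expected to print usrquota and prjquota.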

The xfs_quota tool can be used to set limits and report on disk usage. By default, xfs_quota runs interactively, in basic mode. Basic mode subcommands simply report usage, and are available to all users. Basic xfs_quota subcommands include:

quota username/userID - Shows usage and limits for the given username or numeric userID.

df - Shows free and used counts for blocks and inodes.

xfs_quota also has an expert mode. The subcommands of this mode allow actual configuration of limits, and are available only to users with elevated privileges. To use expert mode subcommands interactively, use the following command:

# xfs_quota -x

Expert mode subcommands include:

report /path - Reports quota information for a specific file system.

limit - Modifies quota limits.

For a complete list of either basic or expert mode subcommands, use the subcommand help.

All subcommands can also be run directly from a command line using the -c option, with -x for expert subcommands.

Example 3.2. Display a Sample Quota Report

For example, to display a sample quota report for /home (on /dev/blockdevice), use the command xfs_quota -x -c 'report -h' /home. This displays output similar to the following:

To set a soft and hard inode count limit of 500 and 700, respectively, for user john, whose home directory is /home/john, use the following command:

# xfs_quota -x -c 'limit isoft=500 ihard=700 john' /home/

By default, the limit subcommand recognizes targets as users. When configuring the limits for a group, use the -g option (as in Example 3.3, “Set a Soft and Hard Block Limit”). Similarly, use -p for projects.

Soft and hard block limits can also be configured using bsoft or bhard instead of isoft or ihard.

Example 3.3. Set a Soft and Hard Block Limit

For example, to set a soft and hard block limit of 1000m and 1200m, respectively, for group accounting on the /target/path file system, use the following command:

# xfs_quota -x -c 'limit -g bsoft=1000m bhard=1200m accounting' /target/path

Note

The bsoft and bhard limits are counted in bytes.
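Because block limits are counted in bytes, a suffix such as the m in 1000m scales the number. The sketch below shows that arithmetic, assuming bash and binary multiples (k = 1024 bytes, m = 1024 k, g = 1024 m, t = 1024 g); check man xfs_quota for the suffixes your version accepts. The helper name size_to_bytes is hypothetical and is not part of xfs_quota:

```shell
# Hypothetical helper: convert a size such as 1000m into bytes,
# assuming binary multiples for the k, m, g, and t suffixes.
size_to_bytes() {
  local size=$1 num unit
  num=${size%[kKmMgGtT]}   # strip one trailing unit letter, if present
  unit=${size#"$num"}
  case $num in ''|*[!0-9]*) echo "invalid size: $size" >&2; return 1 ;; esac
  # 10# forces base 10, so leading zeros are not read as octal.
  case $unit in
    '')   echo "$num" ;;
    [kK]) echo $((10#$num * 1024)) ;;
    [mM]) echo $((10#$num * 1024 ** 2)) ;;
    [gG]) echo $((10#$num * 1024 ** 3)) ;;
    [tT]) echo $((10#$num * 1024 ** 4)) ;;
  esac
}
```

Under that assumption, the bsoft=1000m limit in Example 3.3 corresponds to size_to_bytes 1000m, which prints 1048576000.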

Important

While real-time blocks (rtbhard/rtbsoft) are described in man xfs_quota as valid units when setting quotas, the real-time sub-volume is not enabled in this release. As such, the rtbhard and rtbsoft options are not applicable.

Setting Project Limits

- Add the project-controlled directories to /etc/projects. For example, the following adds the /var/log path with a unique ID of 11 to /etc/projects. Your project ID can be any numerical value mapped to your project.

# echo 11:/var/log >> /etc/projects

- Add project names to /etc/projid to map project IDs to project names. For example, the following associates a project called logfiles with the project ID of 11 as defined in the previous step.

# echo logfiles:11 >> /etc/projid

- Initialize the project directory. For example, the following initializes the directories of the logfiles project on the file system mounted at /var:

# xfs_quota -x -c 'project -s logfiles' /var

- Configure quotas for projects with initialized directories. For example, the following sets a hard block limit of 1g for the logfiles project:

# xfs_quota -x -c 'limit -p bhard=1g logfiles' /var
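The four steps above can be collected into one script. This is a sketch only, assuming bash: the function name setup_project_quota, the DRY_RUN variable, and the PROJECTS_FILE and PROJID_FILE overrides are hypothetical. It avoids adding duplicate lines if it is run twice, and with DRY_RUN set it prints the xfs_quota commands instead of running them:

```shell
# Hypothetical helper wrapping the four project quota steps.
# Usage: setup_project_quota ID NAME DIRECTORY MOUNT_POINT HARD_LIMIT
# PROJECTS_FILE and PROJID_FILE exist only for trying the script on scratch
# files; xfs_quota itself reads /etc/projects and /etc/projid.
setup_project_quota() {
  local id=$1 name=$2 dir=$3 mp=$4 bhard=$5
  local projects=${PROJECTS_FILE:-/etc/projects}
  local projid=${PROJID_FILE:-/etc/projid}
  local run=()
  [ -n "${DRY_RUN:-}" ] && run=(echo)

  # Steps 1 and 2: record ID:DIRECTORY and NAME:ID, skipping existing lines.
  grep -qxF -- "$id:$dir" "$projects" 2>/dev/null || echo "$id:$dir" >> "$projects"
  grep -qxF -- "$name:$id" "$projid" 2>/dev/null || echo "$name:$id" >> "$projid"

  # Steps 3 and 4: initialize the project, then set its hard block limit.
  "${run[@]}" xfs_quota -x -c "project -s $name" "$mp" &&
    "${run[@]}" xfs_quota -x -c "limit -p bhard=$bhard $name" "$mp"
}
```

For example, setup_project_quota 11 logfiles /var/log /var 1g performs the same steps as the procedure.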

Generic quota configuration tools (quota, repquota, and edquota, for example) may also be used to manipulate XFS quotas. However, these tools cannot be used with XFS project quotas.

Important

Red Hat recommends the use of xfs_quota over all other available tools.

For more information about setting XFS quotas, see man xfs_quota, man projid(5), and man projects(5).

3.4. Increasing the Size of an XFS File System

An XFS file system may be grown while mounted using the xfs_growfs command:

# xfs_growfs /mount/point -D size

The -D size option grows the file system to the specified size (expressed in file system blocks). Without the -D size option, xfs_growfs will grow the file system to the maximum size supported by the device.

Before growing an XFS file system with -D size, ensure that the underlying block device is of an appropriate size to hold the file system later. Use the appropriate resizing methods for the affected block device.
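Because -D takes a size in file system blocks rather than bytes, a target size in bytes must be divided by the block size, which xfs_info reports as bsize= on its data line. A sketch with hypothetical helper names, assuming bash, awk, and the usual xfs_info output layout; for example, 10 GiB with 4096-byte blocks is 2621440 blocks:

```shell
# Hypothetical helper: read xfs_info output on stdin and print the data
# block size (the bsize= value on the line that starts with "data").
xfs_data_bsize() {
  awk '/^data/ {
    for (i = 1; i <= NF; i++)
      if ($i ~ /^bsize=/) { sub(/^bsize=/, "", $i); print $i; exit }
  }'
}

# Hypothetical helper: convert a target size in bytes into the block count
# expected by xfs_growfs -D, rounding down to whole blocks.
bytes_to_fsblocks() {
  local bytes=$1 bsize=$2
  echo $((bytes / bsize))
}
```

For example, bsize=$(xfs_info /mount/point | xfs_data_bsize) followed by xfs_growfs /mount/point -D "$(bytes_to_fsblocks $((10 * 1024 ** 3)) "$bsize")" grows the file system to 10 GiB, provided the device is large enough.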

Note

For more information about growing a file system, see man xfs_growfs.

3.5. Repairing an XFS File System

To repair an XFS file system, use xfs_repair:

# xfs_repair /dev/device

The xfs_repair utility is highly scalable and is designed to repair even very large file systems with many inodes efficiently. Unlike the repair tools of other Linux file systems, xfs_repair does not run at boot time, even when an XFS file system was not cleanly unmounted. In the event of an unclean unmount, XFS simply replays the log at mount time, ensuring a consistent file system.

Warning

The xfs_repair utility cannot repair an XFS file system with a dirty log. To clear the log, mount and unmount the XFS file system. If the log is corrupt and cannot be replayed, use the -L option ("force log zeroing") to clear the log, that is, xfs_repair -L /dev/device. Be aware that this may result in further corruption or data loss.

For more information about repairing an XFS file system, see man xfs_repair.
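Because xfs_repair operates on an unmounted device, a cautious wrapper can refuse to proceed while the device is still listed in /proc/mounts, and can start with xfs_repair -n, which only reports problems without modifying the file system. A sketch assuming bash; the function names is_mounted and safe_xfs_repair are hypothetical, and a device can also appear in /proc/mounts under another name (for example, a /dev/mapper/ path), which this simple check does not resolve:

```shell
# Hypothetical helper: succeed if DEVICE appears as the source of a mount
# in /proc/mounts (or the file given as $2).
is_mounted() {
  local dev=$1 file=${2:-/proc/mounts}
  awk -v dev="$dev" '$1 == dev { found = 1 } END { exit !found }' "$file"
}

# Hypothetical wrapper: run a read-only check first; repair only if asked.
# Usage: safe_xfs_repair DEVICE [--repair]
safe_xfs_repair() {
  local dev=$1 mode=${2:-}
  if is_mounted "$dev"; then
    echo "$dev is mounted; unmount it before running xfs_repair" >&2
    return 1
  fi
  if [ "$mode" = "--repair" ]; then
    xfs_repair "$dev"
  else
    xfs_repair -n "$dev"   # no-modify mode: report problems only
  fi
}
```

For example, safe_xfs_repair /dev/device only checks the file system, while safe_xfs_repair /dev/device --repair performs the repair.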

3.6. Suspending an XFS File System

To suspend or resume write activity to a file system, use the xfs_freeze command:

# xfs_freeze [-f | -u] mount-point

Note

The xfs_freeze utility is provided by the xfsprogs package, which is only available on x86_64.

To suspend (that is, freeze) an XFS file system, use:

# xfs_freeze -f /mount/point

To unfreeze an XFS file system, use:

# xfs_freeze -u /mount/point

When taking an LVM snapshot, it is not necessary to use xfs_freeze to suspend the file system first. Rather, the LVM management tools will automatically suspend the XFS file system before taking the snapshot.

For more information about freezing and unfreezing an XFS file system, see man xfs_freeze.
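When another tool needs a consistent view of the file system, the freeze and unfreeze steps can be wrapped around it. A minimal sketch, assuming bash; the function name with_frozen_fs and the FREEZE_CMD override are hypothetical. The file system is unfrozen even if the command fails, but not if the shell itself is killed, so a production script may also want a trap:

```shell
# Hypothetical wrapper: freeze MOUNT_POINT, run a command, then unfreeze,
# even if the command fails. Returns the command's exit status.
# Usage: with_frozen_fs MOUNT_POINT COMMAND [ARGS...]
# FREEZE_CMD defaults to xfs_freeze; it can be overridden for a dry run.
with_frozen_fs() {
  local mp=$1 freeze=${FREEZE_CMD:-xfs_freeze} rc=0
  shift
  "$freeze" -f "$mp" || return
  "$@" || rc=$?
  "$freeze" -u "$mp"
  return "$rc"
}
```

For example, with_frozen_fs /mount/point sync runs sync while writes to /mount/point are suspended; substitute the command that needs the frozen state.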

3.7. Backing Up and Restoring XFS File Systems

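Red Hat Enterprise Linux 7 provides the following utilities for backing up and restoring XFS file systems:

- xfsdump for creating the backup

- xfsrestore for restoring from backup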

3.7.1. Features of XFS Backup and Restoration

Backup

You can use the xfsdump utility to:

- Perform backups to regular file images. Only one backup can be written to a regular file.

- Perform backups to tape drives. The xfsdump utility also allows you to write multiple backups to the same tape. A backup can span multiple tapes. To back up multiple file systems to a single tape device, simply write the backup to a tape that already contains an XFS backup. This appends the new backup to the previous one. By default, xfsdump never overwrites existing backups.

- Create incremental backups. The xfsdump utility uses dump levels to determine a base backup to which other backups are relative. Numbers from 0 to 9 refer to increasing dump levels. An incremental backup only backs up files that have changed since the last dump of a lower level:

  - To perform a full backup, perform a level 0 dump on the file system.

  - A level 1 dump is the first incremental backup after a full backup. The next incremental backup would be level 2, which only backs up files that have changed since the last level 1 dump; and so on, to a maximum of level 9.

- Exclude files from a backup using size, subtree, or inode flags to filter them.
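The rule that an incremental dump covers changes since the last dump of a lower level can be made concrete. The following is an illustrative sketch, assuming bash; the function name dump_base is hypothetical and it does not call xfsdump. Given the new dump level followed by the levels of earlier dumps in chronological order, it prints the 1-based position of the dump that the new one is relative to, and nothing for a level 0 (full) dump:

```shell
# Hypothetical helper illustrating xfsdump level semantics.
# Usage: dump_base NEW_LEVEL [PREVIOUS_LEVEL...]   (oldest first)
dump_base() {
  local new=$1 i base=
  shift
  for ((i = 1; i <= $#; i++)); do
    # ${!i} is the i-th remaining argument. Any earlier dump with a lower
    # level qualifies as a base; keep the most recent one.
    if [ "${!i}" -lt "$new" ]; then base=$i; fi
  done
  [ -n "$base" ] && echo "$base"
  return 0
}
```

For example, after dumps at levels 0, 1, 2, and 1, a new level 2 dump is relative to the fourth dump (the latest level 1 dump), while a new level 1 dump is relative to the first (the level 0 dump).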

Restoration

You can extract, add, or delete specific files from a backup using the xfsrestore interactive mode. The interactive mode provides a set of commands to manipulate the backup files.

3.7.2. Backing Up an XFS File System

Procedure 3.1. Backing Up an XFS File System

- Use the following command to back up an XFS file system:

# xfsdump -l level [-L label] -f backup-destination path-to-xfs-filesystem

- Replace level with the dump level of your backup. Use 0 to perform a full backup or 1 to 9 to perform subsequent incremental backups.

- Replace backup-destination with the path where you want to store your backup. The destination can be a regular file, a tape drive, or a remote tape device. For example, /backup-files/Data.xfsdump for a file or /dev/st0 for a tape drive.

- Replace path-to-xfs-filesystem with the mount point of the XFS file system you want to back up. For example, /mnt/data/. The file system must be mounted.

- When backing up multiple file systems and saving them on a single tape device, add a session label to each backup using the -L label option so that it is easier to identify them when restoring. Replace label with any name for your backup: for example, backup_data.

Example 3.4. Backing up Multiple XFS File Systems

- To back up the content of XFS file systems mounted on the /boot/ and /data/ directories and save them as files in the /backup-files/ directory:

# xfsdump -l 0 -f /backup-files/boot.xfsdump /boot
# xfsdump -l 0 -f /backup-files/data.xfsdump /data

- To back up multiple file systems on a single tape device, add a session label to each backup using the -L label option:

# xfsdump -l 0 -L "backup_boot" -f /dev/st0 /boot
# xfsdump -l 0 -L "backup_data" -f /dev/st0 /data

Additional Resources

- For more information about backing up XFS file systems, see the xfsdump(8) man page.

3.7.3. Restoring an XFS File System from Backup

Prerequisites

- You need a file or tape backup of XFS file systems, as described in Section 3.7.2, “Backing Up an XFS File System”.

Procedure 3.2. Restoring an XFS File System from Backup

- The command to restore the backup varies depending on whether you are restoring from a full backup or an incremental one, or are restoring multiple backups from a single tape device:

# xfsrestore [-r] [-S session-id] [-L session-label] [-i] -f backup-location restoration-path

- Replace backup-location with the location of the backup. This can be a regular file, a tape drive, or a remote tape device. For example, /backup-files/Data.xfsdump for a file or /dev/st0 for a tape drive.

- Replace restoration-path with the path to the directory where you want to restore the file system. For example, /mnt/data/.

- To restore a file system from an incremental (level 1 to level 9) backup, add the -r option.

- To restore a backup from a tape device that contains multiple backups, specify the backup using the -S or -L options. The -S option lets you choose a backup by its session ID, while the -L option lets you choose by the session label. To obtain the session ID and session labels, use the xfsrestore -I command. Replace session-id with the session ID of the backup. For example, b74a3586-e52e-4a4a-8775-c3334fa8ea2c. Replace session-label with the session label of the backup. For example, my_backup_session_label.

- To use xfsrestore interactively, use the -i option. The interactive dialog begins after xfsrestore finishes reading the specified device. Available commands in the interactive xfsrestore shell include cd, ls, add, delete, and extract; for a complete list of commands, use the help command.

Example 3.5. Restoring Multiple XFS File Systems

To restore the XFS backup files and save their content into directories under /mnt/:

# xfsrestore -f /backup-files/boot.xfsdump /mnt/boot/
# xfsrestore -f /backup-files/data.xfsdump /mnt/data/

To restore from a tape device containing multiple backups, specify each backup by its session label or session ID:

# xfsrestore -f /dev/st0 -L "backup_boot" /mnt/boot/
# xfsrestore -f /dev/st0 -S "45e9af35-efd2-4244-87bc-4762e476cbab" /mnt/data/

Informational Messages When Restoring a Backup from a Tape

When restoring a backup from a tape that contains backups of multiple file systems, the xfsrestore utility might issue messages. The messages inform you whether a match of the requested backup has been found when xfsrestore examines each backup on the tape in sequential order. For example:

Additional Resources

- For more information about restoring XFS file systems, see the xfsrestore(8) man page.

3.8. Configuring Error Behavior

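When an error occurs during an I/O operation, the XFS driver responds in one of two ways:

- Continue retries until either:

  - the I/O operation succeeds, or

  - an I/O operation retry count or time limit is exceeded.

- Consider the error permanent and halt the system.

You can configure the retry behavior separately for the following specific error conditions:

EIO: Error while trying to write to the device

ENOSPC: No space left on the device

ENODEV: Device cannot be found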

3.8.1. Configuration Files for Specific and Undefined Conditions

The files for configuring error behavior are located in the /sys/fs/xfs/device/error/ directory.

The /sys/fs/xfs/device/error/metadata/ directory contains subdirectories for each specific error condition:

/sys/fs/xfs/device/error/metadata/EIO/ for the EIO error condition

/sys/fs/xfs/device/error/metadata/ENODEV/ for the ENODEV error condition

/sys/fs/xfs/device/error/metadata/ENOSPC/ for the ENOSPC error condition

Each of these directories contains the following configuration files:

/sys/fs/xfs/device/error/metadata/condition/max_retries: controls the maximum number of times that XFS retries the operation.

/sys/fs/xfs/device/error/metadata/condition/retry_timeout_seconds: the time limit in seconds after which XFS stops retrying the operation.

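For all other, undefined error conditions, the following files in the /sys/fs/xfs/device/error/metadata/default/ directory apply:

/sys/fs/xfs/device/error/metadata/default/max_retries: controls the maximum number of retries

/sys/fs/xfs/device/error/metadata/default/retry_timeout_seconds: controls the time limit for retrying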

3.8.2. Setting File System Behavior for Specific and Undefined Conditions

To set the maximum number of retries, write the desired number to the max_retries file.

- For specific conditions:

# echo value > /sys/fs/xfs/device/error/metadata/condition/max_retries

- For undefined conditions:

# echo value > /sys/fs/xfs/device/error/metadata/default/max_retries

value is a number between -1 and the maximum possible value of int, the C signed integer type. This is 2147483647 on 64-bit Linux.

To set the time limit, write the desired number of seconds to the retry_timeout_seconds file.

- For specific conditions:

# echo value > /sys/fs/xfs/device/error/metadata/condition/retry_timeout_seconds

- For undefined conditions:

# echo value > /sys/fs/xfs/device/error/metadata/default/retry_timeout_seconds

value is a number between -1 and 86400, which is the number of seconds in a day.

In both the max_retries and retry_timeout_seconds options, -1 means to retry forever and 0 to stop immediately.

device is the name of the device, as found in the /dev/ directory; for example, sda.
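The value ranges for these files can be checked before anything is written to sysfs. A sketch, assuming bash; the function name set_xfs_error_param and the SYSFS_ROOT override (used only to try the function against a scratch directory) are hypothetical:

```shell
# Hypothetical helper: set an XFS metadata error-retry parameter after
# checking it against the documented range.
# Usage: set_xfs_error_param DEVICE CONDITION PARAM VALUE
#   CONDITION: EIO, ENOSPC, ENODEV, or default
#   PARAM:     max_retries (-1..2147483647) or retry_timeout_seconds (-1..86400)
set_xfs_error_param() {
  local dev=$1 cond=$2 param=$3 value=$4 max
  local re='^(-1|0|[1-9][0-9]{0,9})$'   # an integer without leading zeros
  case $param in
    max_retries) max=2147483647 ;;
    retry_timeout_seconds) max=86400 ;;
    *) echo "unknown parameter: $param" >&2; return 1 ;;
  esac
  if ! [[ $value =~ $re ]] || (( value > max )); then
    echo "value out of range for $param: $value" >&2
    return 1
  fi
  local file=${SYSFS_ROOT:-/sys}/fs/xfs/$dev/error/metadata/$cond/$param
  [ -w "$file" ] || { echo "no such setting: $file" >&2; return 1; }
  echo "$value" > "$file"
}
```

For example, set_xfs_error_param sda EIO max_retries 5 limits retries of failed writes on sda to five.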

Note

Some errors, such as ENODEV, are considered to be fatal and unrecoverable, regardless of the retry count, so their default value is 0.

3.8.3. Setting Unmount Behavior

If the fail_at_unmount option is set, the file system overrides all other error configurations during unmount and immediately unmounts the file system without retrying the I/O operation. This allows the unmount operation to succeed even in case of persistent errors.

To set the unmount behavior, write the desired value to the fail_at_unmount file:

# echo value > /sys/fs/xfs/device/error/fail_at_unmount

value is either 1 or 0:

- 1 means to cancel retrying immediately if an error is found.
- 0 means to respect the max_retries and retry_timeout_seconds options.

device is the name of the device, as found in the /dev/ directory; for example, sda.

Important

The fail_at_unmount option has to be set as desired before attempting to unmount the file system. After an unmount operation has started, the configuration files and directories may be unavailable.
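A similar sketch for the unmount setting uses the same assumptions: a hypothetical function name, and a SYSFS_ROOT variable that defaults to /sys. It accepts only the two documented values:

```shell
# Hypothetical helper: enable (1) or disable (0) fail_at_unmount for an
# XFS device. SYSFS_ROOT is an assumption used for testing; normally /sys.
: "${SYSFS_ROOT:=/sys}"

xfs_set_fail_at_unmount() {
    local dev="$1" value="$2"
    case $value in
        0|1) ;;
        *) echo "fail_at_unmount must be 0 or 1" >&2; return 1 ;;
    esac
    printf '%s\n' "$value" > "$SYSFS_ROOT/fs/xfs/$dev/error/fail_at_unmount"
}
```

The setting must be in place before the unmount starts, so a caller would run it first, for example xfs_set_fail_at_unmount sda 1 && umount /mount/point.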

3.9. Other XFS File System Utilities

- xfs_fsr

- Used to defragment mounted XFS file systems. When invoked with no arguments, xfs_fsr defragments all regular files in all mounted XFS file systems. This utility also allows users to suspend a defragmentation at a specified time and resume from where it left off later.

In addition, xfs_fsr allows the defragmentation of only one file, as in xfs_fsr /path/to/file. Red Hat advises against periodically defragmenting an entire file system, because XFS avoids fragmentation by default. System-wide defragmentation could cause fragmentation of free space as a side effect.

- xfs_bmap

- Prints the map of disk blocks used by files in an XFS filesystem. This map lists each extent used by a specified file, as well as regions in the file with no corresponding blocks (that is, holes).

- xfs_info

- Prints XFS file system information.

- xfs_admin

- Changes the parameters of an XFS file system. The xfs_admin utility can only modify parameters of unmounted devices or file systems.

- xfs_copy

- Copies the contents of an entire XFS file system to one or more targets in parallel.

- xfs_metadump

- Copies XFS file system metadata to a file. Red Hat only supports using the xfs_metadump utility to copy unmounted file systems or read-only mounted file systems; otherwise, generated dumps could be corrupted or inconsistent.

- xfs_mdrestore

- Restores an XFS metadump image (generated using xfs_metadump) to a file system image.

- xfs_db

- Debugs an XFS file system.

For more information about these utilities, see their respective man pages.

3.10. Migrating from ext4 to XFS

3.10.1. Differences Between Ext3/4 and XFS

- File system repair

- Ext3/4 runs e2fsck in userspace at boot time to recover the journal as needed. XFS, by comparison, performs journal recovery in kernelspace at mount time. An fsck.xfs shell script is provided but does not perform any useful action, as it is only there to satisfy initscript requirements.

When an XFS file system repair or check is requested, use the xfs_repair command. Use the -n option for a read-only check.

The xfs_repair command will not operate on a file system with a dirty log. To repair such a file system, first mount and unmount it to replay the log. If the log is corrupt and cannot be replayed, the -L option can be used to zero out the log.

For more information on file system repair of XFS file systems, see Section 12.2.2, “XFS”.

- Metadata error behavior

- The ext3/4 file system has configurable behavior when metadata errors are encountered, with the default being to simply continue. When XFS encounters a metadata error that is not recoverable, it will shut down the file system and return an EFSCORRUPTED error. The system logs will contain details of the error encountered and will recommend running xfs_repair if necessary.

- Quotas

- XFS quotas are not a remountable option. The -o quota option must be specified on the initial mount for quotas to be in effect.

While the standard tools in the quota package can perform basic quota administrative tasks (tools such as setquota and repquota), the xfs_quota tool can be used for XFS-specific features, such as Project Quota administration.

The quotacheck command has no effect on an XFS file system. The first time quota accounting is turned on, XFS does an automatic quotacheck internally. Because XFS quota metadata is a first-class, journaled metadata object, the quota system will always be consistent until quotas are manually turned off.

- File system resize

- The XFS file system has no utility to shrink a file system. XFS file systems can be grown online with the xfs_growfs command.

- Inode numbers

- For file systems larger than 1 TB with 256-byte inodes, or larger than 2 TB with 512-byte inodes, XFS inode numbers might exceed 2^32. Such large inode numbers cause 32-bit stat calls to fail with the EOVERFLOW return value. The described problem might occur when using the default Red Hat Enterprise Linux 7 configuration: non-striped with four allocation groups. A custom configuration, for example file system extension or changing XFS file system parameters, might lead to a different behavior.

Applications usually handle such larger inode numbers correctly. If needed, mount the XFS file system with the -o inode32 parameter to enforce inode numbers below 2^32. Note that using inode32 does not affect inodes that are already allocated with 64-bit numbers.

Important

Do not use the inode32 option unless it is required by a specific environment. The inode32 option changes allocation behavior. As a consequence, the ENOSPC error might occur if no space is available to allocate inodes in the lower disk blocks.

- Speculative preallocation

- XFS uses speculative preallocation to allocate blocks past EOF as files are written. This avoids file fragmentation due to concurrent streaming write workloads on NFS servers. By default, this preallocation increases with the size of the file and will be apparent in du output. If a file with speculative preallocation is not dirtied for five minutes, the preallocation is discarded. If the inode is cycled out of cache before that time, the preallocation is discarded when the inode is reclaimed.

If premature ENOSPC problems are seen due to speculative preallocation, a fixed preallocation amount may be specified with the -o allocsize=amount mount option.

- Fragmentation-related tools

- Fragmentation is rarely a significant issue on XFS file systems due to heuristics and behaviors such as delayed allocation and speculative preallocation. However, tools exist for measuring file system fragmentation as well as for defragmenting file systems. Their use is not encouraged.

The xfs_db frag command attempts to distill all file system allocations into a single fragmentation number, expressed as a percentage. Interpreting the output requires significant expertise. For example, a fragmentation factor of 75% means only an average of 4 extents per file. For this reason, the output of xfs_db's frag is not considered useful, and a more careful analysis of any fragmentation problems is recommended.

Warning

The xfs_fsr command may be used to defragment individual files, or all files on a file system. The latter is especially not recommended, as it may destroy locality of files and may fragment free space.
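The two numeric claims in this list can be checked with shell arithmetic. The sketch makes two assumptions:

- xfs_db's fragmentation factor is computed as (actual extents - ideal extents) / actual extents, with one extent per file as the ideal.
- An XFS inode number is roughly the inode's byte offset on disk divided by the inode size. The real encoding rounds each allocation group up to a power of two, so the resulting limits are approximate.

Both function names are hypothetical.

```shell
# Fragmentation factor, assuming xfs_db reports the share of extents
# beyond the ideal (one per file) as a percentage of all extents.
frag_factor() {
    local actual="$1" ideal="$2"
    if [ "$actual" -le 0 ]; then
        echo 0          # no extents at all: nothing is fragmented
        return
    fi
    echo $(( (actual - ideal) * 100 / actual ))
}

# Approximate file system size, in bytes, below which inode numbers stay
# under 2^32, assuming inode number ~= byte offset / inode size.
inode32_limit_bytes() {
    local inode_size="$1"
    echo $(( (1 << 32) * inode_size ))
}
```

Under these assumptions, frag_factor 400 100 prints 75 (four extents per file) and frag_factor 1000 100 prints 90 (ten extents per file), which shows how quickly the percentage approaches 100% for modest extent counts. inode32_limit_bytes 256 prints 1099511627776 (1 TiB) and inode32_limit_bytes 512 prints 2199023255552 (2 TiB), which match the thresholds given under Inode numbers.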

Commands Used with ext3 and ext4 Compared to XFS

| Task | ext3/4 | XFS |
|---|---|---|
| Create a file system | mkfs.ext4 or mkfs.ext3 | mkfs.xfs |
| File system check | e2fsck | xfs_repair |
| Resizing a file system | resize2fs | xfs_growfs |
| Save an image of a file system | e2image | xfs_metadump and xfs_mdrestore |
| Label or tune a file system | tune2fs | xfs_admin |
| Back up a file system | dump and restore | xfsdump and xfsrestore |
| Quota | quota | xfs_quota |
| File mapping | filefrag | xfs_bmap |

Chapter 4. The ext3 File System

- Availability

- After an unexpected power failure or system crash (also called an unclean system shutdown), each mounted ext2 file system on the machine must be checked for consistency by the e2fsck program. This is a time-consuming process that can delay system boot time significantly, especially with large volumes containing a large number of files. During this time, any data on the volumes is unreachable.

It is possible to run fsck -n on a live file system. However, it will not make any changes and may give misleading results if partially written metadata is encountered.

If LVM is used in the stack, another option is to take an LVM snapshot of the file system and run fsck on it instead.

Finally, there is the option to remount the file system as read-only. All pending metadata updates (and writes) are then forced to the disk prior to the remount. This ensures the file system is in a consistent state, provided there is no previous corruption. It is then possible to run fsck -n.

The journaling provided by the ext3 file system means that this sort of file system check is no longer necessary after an unclean system shutdown. The only time a consistency check occurs using ext3 is in certain rare hardware failure cases, such as hard drive failures. The time to recover an ext3 file system after an unclean system shutdown does not depend on the size of the file system or the number of files; rather, it depends on the size of the journal used to maintain consistency. The default journal size takes about a second to recover, depending on the speed of the hardware.

Note

The only journaling mode in ext3 supported by Red Hat is data=ordered (default).

- Data Integrity

- The ext3 file system prevents loss of data integrity in the event that an unclean system shutdown occurs. The ext3 file system allows you to choose the type and level of protection that your data receives. With regard to the state of the file system, ext3 volumes are configured to keep a high level of data consistency by default.

- Speed

- Despite writing some data more than once, ext3 has a higher throughput in most cases than ext2 because ext3's journaling optimizes hard drive head motion. You can choose from three journaling modes to optimize speed, but doing so means trade-offs with regard to data integrity if the system were to fail.

Note

The only journaling mode in ext3 supported by Red Hat is data=ordered (default).

- Easy Transition

- It is easy to migrate from ext2 to ext3 and gain the benefits of a robust journaling file system without reformatting. For more information on performing this task, see Section 4.2, “Converting to an ext3 File System”.

Note

Red Hat Enterprise Linux 7 uses a unified extN driver, ext4.ko, for the ext2, ext3, and ext4 on-disk formats. This means that kernel messages will always refer to ext4 regardless of the ext file system used.

4.1. Creating an ext3 File System

- Format the partition or LVM volume with the ext3 file system using the mkfs.ext3 utility:

# mkfs.ext3 block_device

- Replace block_device with the path to a block device. For example, /dev/sdb1, /dev/disk/by-uuid/05e99ec8-def1-4a5e-8a9d-5945339ceb2a, or /dev/my-volgroup/my-lv.

- Label the file system using the e2label utility:

# e2label block_device volume_label

Configuring UUID

To specify a UUID when creating a file system, use the -U option:

# mkfs.ext3 -U UUID device

- Replace UUID with the UUID you want to set: for example, 7cd65de3-e0be-41d9-b66d-96d749c02da7.
- Replace device with the path to the block device on which to create the ext3 file system: for example, /dev/sda8.

Additional Resources

- The mkfs.ext3(8) man page

- The e2label(8) man page

4.2. Converting to an ext3 File System

The tune2fs command converts an ext2 file system to ext3.

Note

Always use the e2fsck utility to check your file system before and after using tune2fs. Before trying to convert ext2 to ext3, back up all file systems in case any errors occur.

To convert an ext2 file system to ext3, log in as root and type the following command in a terminal:

# tune2fs -j block_device

Replace block_device with the block device that contains the ext2 file system to be converted. Use the df command to display mounted file systems.

4.3. Reverting to an Ext2 File System

The sample commands in this section use the following block device:

/dev/mapper/VolGroup00-LogVol02

Procedure 4.1. Revert from ext3 to ext2

- Unmount the partition by logging in as root and typing:

# umount /dev/mapper/VolGroup00-LogVol02

- Change the file system type to ext2 by typing the following command:

# tune2fs -O ^has_journal /dev/mapper/VolGroup00-LogVol02

- Check the partition for errors by typing the following command:

# e2fsck -y /dev/mapper/VolGroup00-LogVol02

- Then mount the partition again as an ext2 file system by typing:

# mount -t ext2 /dev/mapper/VolGroup00-LogVol02 /mount/point

Replace /mount/point with the mount point of the partition.

Note

If a .journal file exists at the root level of the partition, delete it.

To permanently change the partition to ext2, remember to update the /etc/fstab file; otherwise, it will revert after booting.

Chapter 5. The ext4 File System

Note

fsck. For more information, see Chapter 4, The ext3 File System.

- Main Features

- The ext4 file system uses extents (as opposed to the traditional block mapping scheme used by ext2 and ext3), which improves performance when using large files and reduces metadata overhead for large files. In addition, ext4 also labels unallocated block groups and inode table sections accordingly, which allows them to be skipped during a file system check. This makes for quicker file system checks, which becomes more beneficial as the file system grows in size.

- Allocation Features

- The ext4 file system features the following allocation schemes:

- Persistent pre-allocation

- Delayed allocation

- Multi-block allocation

- Stripe-aware allocation

Because of delayed allocation and other performance optimizations, ext4's behavior of writing files to disk is different from ext3. In ext4, when a program writes to the file system, the data is not guaranteed to be on-disk unless the program issues an fsync() call afterwards.

By default, ext3 automatically forces newly created files to disk almost immediately even without fsync(). This behavior hid bugs in programs that did not use fsync() to ensure that written data was on-disk. The ext4 file system, on the other hand, often waits several seconds to write out changes to disk, allowing it to combine and reorder writes for better disk performance than ext3.

Warning

Unlike ext3, the ext4 file system does not force data to disk on transaction commit. As such, it takes longer for buffered writes to be flushed to disk. As with any file system, use data integrity calls such as fsync() to ensure that data is written to permanent storage.

- Other ext4 Features

- The ext4 file system also supports the following:

- Extended attributes (xattr): This allows the system to associate several additional name and value pairs per file.

- Quota journaling: This avoids the need for lengthy quota consistency checks after a crash.

Note

The only supported journaling mode in ext4 is data=ordered (default).

- Subsecond timestamps: This gives timestamps to the subsecond.
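The allocation notes above advise calling fsync() when data must reach disk. From a shell script, one way to do that is GNU dd's conv=fsync conversion, which calls fsync() on the output file before dd exits. The durable_write function below is a hypothetical helper, not part of any package. It covers only the file's own data and metadata, not the directory entry of a newly created file.

```shell
# Hypothetical helper: durable_write PATH
# Copies standard input to PATH. conv=fsync makes GNU dd call fsync()
# on the output file before exiting, so the data has been flushed to
# disk when the command returns. dd's transfer statistics go to
# /dev/null; its exit status still reports failures.
durable_write() {
    dd of="$1" conv=fsync 2>/dev/null
}
```

For example, some_command | durable_write /path/to/file writes the command's output and flushes it before the pipeline completes.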

5.1. Creating an ext4 File System

- To create an ext4 file system, use the following command:

# mkfs.ext4 block_device

- Replace block_device with the path to a block device. For example, /dev/sdb1, /dev/disk/by-uuid/05e99ec8-def1-4a5e-8a9d-5945339ceb2a, or /dev/my-volgroup/my-lv.
- In general, the default options are optimal for most usage scenarios.

Example 5.1. mkfs.ext4 Command Output

Important

It is possible to use tune2fs to enable certain ext4 features on ext3 file systems. However, using tune2fs in this way has not been fully tested and is therefore not supported in Red Hat Enterprise Linux 7. As a result, Red Hat cannot guarantee consistent performance and predictable behavior for ext3 file systems converted or mounted by using tune2fs.

Striped Block Devices

When creating file systems on LVM or MD volumes, mkfs.ext4 chooses an optimal geometry. This may also be true on some hardware RAIDs that export geometry information to the operating system.

To specify stripe geometry manually, use the -E option of mkfs.ext4 (that is, extended file system options) with the following sub-options:

- stride=value

- Specifies the RAID chunk size, in file system blocks.

- stripe-width=value

- Specifies the stripe width, in file system blocks: typically the stride multiplied by the number of data disks in the RAID device.
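Both sub-options are counts of file system blocks, so they can be derived from three inputs: the RAID chunk size, the file system block size, and the number of data-bearing disks (for example, four in a five-disk RAID 5 array). The helper below is a hypothetical sketch of that arithmetic. It assumes the stripe width is the stride multiplied by the number of data disks, as the mke2fs(8) man page describes.

```shell
# Hypothetical helper: print the -E argument for mkfs.ext4 from the RAID
# chunk size and file system block size (both in bytes) and the number
# of data-bearing disks in the array.
ext4_stripe_opts() {
    local chunk="$1" block="$2" data_disks="$3" stride stripe_width
    if [ "$block" -le 0 ] || [ "$chunk" -lt "$block" ] ||
       [ $((chunk % block)) -ne 0 ] || [ "$data_disks" -lt 1 ]; then
        echo "chunk must be a positive multiple of the block size," \
             "and there must be at least one data disk" >&2
        return 1
    fi
    stride=$((chunk / block))               # blocks per chunk
    stripe_width=$((stride * data_disks))   # blocks per full stripe
    printf 'stride=%d,stripe-width=%d\n' "$stride" "$stripe_width"
}
```

With a 64 KiB chunk, 4 KiB blocks, and four data disks, ext4_stripe_opts 65536 4096 4 prints stride=16,stripe-width=64. These are the values used in the example that follows, so that command could also be written as mkfs.ext4 -E "$(ext4_stripe_opts 65536 4096 4)" /dev/block_device.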

For both sub-options, value must be specified in file system block units. For example, to create a file system with a 64k stride (that is, 16 x 4096) and four data disks (a stripe width of 16 x 4 = 64 blocks) on a 4k-block file system, use the following command:

# mkfs.ext4 -E stride=16,stripe-width=64 /dev/block_device

Configuring UUID

To specify a UUID when creating a file system, use the -U option:

# mkfs.ext4 -U UUID device

- Replace UUID with the UUID you want to set: for example, 7cd65de3-e0be-41d9-b66d-96d749c02da7.
- Replace device with the path to the block device on which to create the ext4 file system: for example, /dev/sda8.

Additional Resources

- The mkfs.ext4(8) man page

5.2. Mounting an ext4 File System

An ext4 file system can be mounted with no extra options:

# mount /dev/device /mount/point

The ext4 file system also supports several mount options. For example, the acl parameter enables access control lists, while the user_xattr parameter enables user extended attributes. To enable both options, use their respective parameters with -o, as in:

# mount -o acl,user_xattr /dev/device /mount/point

The data_err=abort option can be used to abort the journal if an error occurs in file data.

# mount -o data_err=abort /dev/device /mount/point

The tune2fs utility also allows administrators to set default mount options in the file system superblock. For more information on this, refer to man tune2fs.

Write Barriers

By default, ext4 uses write barriers. To disable them, use the nobarrier option, as in:

# mount -o nobarrier /dev/device /mount/point

Direct Access Technology Preview

Direct Access (DAX) provides, as a Technology Preview on the ext4 and XFS file systems, a means for an application to directly map persistent memory into its address space. To use DAX, a system must have some form of persistent memory available, usually in the form of one or more Non-Volatile Dual In-line Memory Modules (NVDIMMs), and a file system that supports DAX must be created on the NVDIMM(s). Also, the file system must be mounted with the dax mount option. Then, an mmap of a file on the dax-mounted file system results in a direct mapping of storage into the application's address space.

5.3. Resizing an ext4 File System

A mounted ext4 file system can be grown using the resize2fs command:

# resize2fs /dev/device size

The resize2fs command can also decrease the size of an unmounted ext4 file system:

# resize2fs /dev/device size

When resizing an ext4 file system, the resize2fs utility reads the size in units of the file system block size, unless a suffix indicating a specific unit is used. The following suffixes indicate specific units:

- s: 512-byte sectors
- K: kilobytes
- M: megabytes
- G: gigabytes
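The following hypothetical helper applies the rules above to show how a size argument maps to file system blocks. It assumes that the K, M, and G suffixes are 1024-based multiples, and it drops any partial block. It only converts the argument and does not call resize2fs.

```shell
# Hypothetical helper: convert a resize2fs size argument to a number of
# file system blocks. A bare number is already in blocks; s means
# 512-byte sectors; K, M, and G are assumed to be 1024-based.
resize2fs_size_to_blocks() {
    local size="$1" block="$2" num unit bytes
    case $size in
        *[sKMG]) num=${size%?}; unit=${size#"$num"} ;;
        *)       num=$size;     unit= ;;
    esac
    case $num in
        ''|*[!0-9]*) echo "invalid size: $size" >&2; return 1 ;;
    esac
    case $unit in
        '') echo "$num"; return 0 ;;          # already in blocks
        s)  bytes=$((num * 512)) ;;
        K)  bytes=$((num * 1024)) ;;
        M)  bytes=$((num * 1024 * 1024)) ;;
        G)  bytes=$((num * 1024 * 1024 * 1024)) ;;
    esac
    echo $((bytes / block))                   # partial blocks are dropped
}
```

For a 4096-byte block size, resize2fs_size_to_blocks 10G 4096 prints 2621440. A bare 1000 is returned unchanged because it is already in blocks.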

Note

If the size parameter is omitted when expanding, resize2fs automatically expands the file system to fill all available space of the container, usually a logical volume or partition.

For more information about resizing an ext4 file system, see man resize2fs.

5.4. Backing up ext2, ext3, or ext4 File Systems

Prerequisites

- If the system has been running for a long time, run the e2fsck utility on the partitions before backup:

# e2fsck /dev/device

Procedure 5.1. Backing up ext2, ext3, or ext4 File Systems

- Back up configuration information, including the content of the /etc/fstab file and the output of the fdisk -l command. This is useful for restoring the partitions.

To capture this information, run the sosreport or sysreport utilities. For more information about sosreport, see the What is a sosreport and how to create one in Red Hat Enterprise Linux 4.6 and later? Knowledgebase article.

- Depending on the role of the partition:

- If the partition you are backing up is an operating system partition, boot your system into the rescue mode. See the Booting to Rescue Mode section of the System Administrator's Guide.

- When backing up a regular data partition, unmount it. Although it is possible to back up a data partition while it is mounted, the results of backing up a mounted data partition can be unpredictable. If you need to back up a mounted file system using the dump utility, do so when the file system is not under a heavy load. The more activity there is on the file system during the backup, the higher the risk of backup corruption.

- Use the dump utility to back up the content of the partitions:

# dump -0uf backup-file /dev/device

Replace backup-file with a path to a file where you want to store the backup. Replace device with the name of the ext4 partition you want to back up. Make sure that you are saving the backup to a directory mounted on a different partition than the partition you are backing up.

Example 5.2. Backing up Multiple ext4 Partitions

To back up the content of the /dev/sda1, /dev/sda2, and /dev/sda3 partitions into backup files stored in the /backup-files/ directory, use the following commands:

# dump -0uf /backup-files/sda1.dump /dev/sda1
# dump -0uf /backup-files/sda2.dump /dev/sda2
# dump -0uf /backup-files/sda3.dump /dev/sda3

To do a remote backup, use the ssh utility or configure a password-less ssh login. For more information on ssh and password-less login, see the Using the ssh Utility and Using Key-based Authentication sections of the System Administrator's Guide.

For example, when using ssh:

Example 5.3. Performing a Remote Backup Using ssh

# dump -0u -f - /dev/device | ssh root@remoteserver.example.com dd of=backup-file

Note that if using standard redirection, you must pass the -f option separately.
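When several partitions are backed up as in Example 5.2, the dump commands can be generated in a loop. The backup_partitions function below is a hypothetical sketch. Setting the DRY_RUN variable, also an assumption, makes it print the commands instead of running them, which is a convenient way to review them before a real backup. The function does not check that the destination is on a different partition from the ones being backed up, so that remains the administrator's responsibility.

```shell
# Hypothetical helper: level-0 dump of each listed partition into
# DEST/<name>.dump, mirroring Example 5.2. With DRY_RUN set to a
# non-empty value, the commands are printed instead of executed.
backup_partitions() {
    local dest="$1" dev
    shift
    for dev in "$@"; do
        # ${dev##*/} strips the directory part: /dev/sda1 -> sda1
        ${DRY_RUN:+echo} dump -0uf "$dest/${dev##*/}.dump" "$dev" || return
    done
}
```

For example, DRY_RUN=1 backup_partitions /backup-files /dev/sda1 /dev/sda2 /dev/sda3 prints the three commands from Example 5.2.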

Additional Resources

- For more information, see the dump(8) man page.

5.5. Restoring ext2, ext3, or ext4 File Systems

Prerequisites

- You need a backup of partitions and their metadata, as described in Section 5.4, “Backing up ext2, ext3, or ext4 File Systems”.

Procedure 5.2. Restoring ext2, ext3, or ext4 File Systems

- If you are restoring an operating system partition, boot your system into Rescue Mode. See the Booting to Rescue Mode section of the System Administrator's Guide. This step is not required for ordinary data partitions.

- Rebuild the partitions you want to restore by using the fdisk or parted utilities. If the partitions no longer exist, recreate them. The new partitions must be large enough to contain the restored data. It is important to get the start and end numbers right; these are the starting and ending sector numbers of the partitions obtained from the fdisk utility when backing up. For more information on modifying partitions, see Chapter 13, Partitions.

- Use the mkfs utility to format the destination partition:

# mkfs.ext4 /dev/device

Important

Do not format the partition that stores your backup files.

- If you created new partitions, re-label all the partitions so they match their entries in the /etc/fstab file:

# e2label /dev/device label

- Create temporary mount points and mount the partitions on them:

# mkdir /mnt/device
# mount -t ext4 /dev/device /mnt/device

- Restore the data from backup on the mounted partition:

# cd /mnt/device
# restore -rf device-backup-file

If you want to restore on a remote machine or restore from a backup file that is stored on a remote host, you can use the ssh utility. For more information on ssh, see the Using the ssh Utility section of the System Administrator's Guide.

Note that you need to configure a password-less login for the following commands. For more information on setting up a password-less ssh login, see the Using Key-based Authentication section of the System Administrator's Guide.

- To restore a partition on a remote machine from a backup file stored on the same machine:

# ssh remote-address "cd /mnt/device && cat backup-file | /usr/sbin/restore -r -f -"

- To restore a partition on a remote machine from a backup file stored on a different remote machine:

# ssh remote-machine-1 "cd /mnt/device && RSH=/usr/bin/ssh /usr/sbin/restore -rf remote-machine-2:backup-file"

- Reboot:

# systemctl reboot

Example 5.4. Restoring Multiple ext4 Partitions

To restore the /dev/sda1, /dev/sda2, and /dev/sda3 partitions from Example 5.2, “Backing up Multiple ext4 Partitions”:

- Rebuild the partitions you want to restore by using the fdisk command.

- Format the destination partitions:

# mkfs.ext4 /dev/sda1
# mkfs.ext4 /dev/sda2
# mkfs.ext4 /dev/sda3

- Re-label all the partitions so they match the /etc/fstab file:

# e2label /dev/sda1 Boot1
# e2label /dev/sda2 Root
# e2label /dev/sda3 Data

- Prepare the working directories.

Mount the new partitions:

# mkdir /mnt/sda1
# mount -t ext4 /dev/sda1 /mnt/sda1
# mkdir /mnt/sda2
# mount -t ext4 /dev/sda2 /mnt/sda2
# mkdir /mnt/sda3
# mount -t ext4 /dev/sda3 /mnt/sda3

Mount the partition that contains backup files:

# mkdir /backup-files
# mount -t ext4 /dev/sda6 /backup-files

- Restore the data from backup to the mounted partitions:

# cd /mnt/sda1
# restore -rf /backup-files/sda1.dump
# cd /mnt/sda2
# restore -rf /backup-files/sda2.dump
# cd /mnt/sda3
# restore -rf /backup-files/sda3.dump

- Reboot:

# systemctl reboot

Additional Resources

- For more information, see the restore(8) man page.

5.6. Other ext4 File System Utilities

- e2fsck

- Used to repair an ext4 file system. Thanks to updates in the ext4 disk structure, this tool checks and repairs an ext4 file system more efficiently than an ext3 file system.

- e2label

- Changes the label on an ext4 file system. This tool also works on ext2 and ext3 file systems.

- quota

- Controls and reports on disk space (blocks) and file (inode) usage by users and groups on an ext4 file system. For more information on using quota, refer to man quota and Section 17.1, “Configuring Disk Quotas”.

- fsfreeze

- To suspend access to a file system, freeze it with the following command:

# fsfreeze -f mount-point

To unfreeze it, use:

# fsfreeze -u mount-point

Freezing halts access to the file system and creates a stable image on disk.

Note

It is unnecessary to use fsfreeze for device-mapper drives.

For more information, see the fsfreeze(8) man page.
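A common use of fsfreeze is to hold a file system still while another tool takes a snapshot. The hypothetical with_frozen_fs function below sketches that pattern. It freezes the file system, runs the given command, always attempts to unfreeze, and returns the command's exit status. It does not handle the script itself being killed while the file system is frozen; that would need a trap on EXIT.

```shell
# Hypothetical helper: freeze the file system mounted at MOUNT, run the
# given command, then always try to unfreeze it. Returns the command's
# exit status, or fsfreeze's status if freezing failed.
with_frozen_fs() {
    local mnt="$1" rc
    shift
    fsfreeze -f "$mnt" || return
    "$@"
    rc=$?
    if ! fsfreeze -u "$mnt"; then
        echo "warning: could not unfreeze $mnt" >&2
    fi
    return "$rc"
}
```

For example, with_frozen_fs /data some-snapshot-command, where some-snapshot-command stands in for whatever snapshot tool is used.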

The tune2fs utility can also adjust configurable file system parameters for ext2, ext3, and ext4 file systems. In addition, the following tools are also useful in debugging and analyzing ext4 file systems:

- debugfs

- Debugs ext2, ext3, or ext4 file systems.

- e2image

- Saves critical ext2, ext3, or ext4 file system metadata to a file.

For more information about these utilities, see their respective man pages.

Chapter 6. Btrfs (Technology Preview)

Note

6.1. Creating a btrfs File System

To create a basic btrfs file system, use the following command:

# mkfs.btrfs /dev/device

6.2. Mounting a btrfs file system

To mount a btrfs file system, use the following command:

# mount /dev/device /mount-point

Other useful mount options include:

- device=/dev/name

- Appending this option to the mount command tells btrfs to scan the named device for a btrfs volume. This is used to ensure the mount will succeed as attempting to mount devices that are not btrfs will cause the mount to fail.

Note

This does not mean all devices will be added to the file system; it only scans them.

- max_inline=number

- Use this option to set the maximum amount of space (in bytes) that can be used to inline data within a metadata B-tree leaf. The default is 8192 bytes. For 4k pages it is limited to 3900 bytes due to additional headers that need to fit into the leaf.

- alloc_start=number

- Use this option to set where in the disk allocations start.

- thread_pool=number

- Use this option to assign the number of worker threads allocated.

- discard

- Use this option to enable discard/TRIM on freed blocks.

- noacl

- Use this option to disable the use of ACLs.

- space_cache

- Use this option to store the free space data on disk to make caching a block group faster. This is a persistent change and is safe to boot into old kernels.

- nospace_cache

- Use this option to disable the above space_cache.

- clear_cache

- Use this option to clear all the free space caches during mount. This is a safe option but will trigger the space cache to be rebuilt. As such, leave the file system mounted in order to let the rebuild process finish. This mount option is intended to be used once and only after problems are apparent with the free space.

- enospc_debug

- This option is used to debug problems with "no space left".

- recovery

- Use this option to enable autorecovery upon mount.

6.3. Resizing a btrfs File System

Note

The unit suffix is not case-sensitive; it accepts both G and g for GiB.

The command does not accept t for terabytes or p for petabytes. It only accepts k, m, and g.

Enlarging a btrfs File System

To enlarge the file system on a single device, use the following command:

# btrfs filesystem resize amount /mount-point

For example:

# btrfs filesystem resize +200M /btrfssingle
Resize '/btrfssingle' of '+200M'

To enlarge a multi-device file system, first display the devices in the file system:

# btrfs filesystem show /mount-point

After identifying the devid of the device to be enlarged, use the following command:

# btrfs filesystem resize devid:amount /mount-point

For example:

# btrfs filesystem resize 2:+200M /btrfstest
Resize '/btrfstest/' of '2:+200M'

Note

The amount can also be max instead of a specified amount. This uses all remaining free space on the device.

Shrinking a btrfs File System

To shrink the file system on a single device, use the following command:

# btrfs filesystem resize amount /mount-point

For example:

# btrfs filesystem resize -200M /btrfssingle
Resize '/btrfssingle' of '-200M'

To shrink a multi-device file system, first display the devices in the file system:

# btrfs filesystem show /mount-point

After identifying the devid of the device to be shrunk, use the following command:

# btrfs filesystem resize devid:amount /mount-point

For example:

# btrfs filesystem resize 2:-200M /btrfstest
Resize '/btrfstest' of '2:-200M'

Set the File System Size

To set a file system on a single device to a specific size, use the following command:

# btrfs filesystem resize amount /mount-point

For example:

# btrfs filesystem resize 700M /btrfssingle
Resize '/btrfssingle' of '700M'

To set the size of one device in a multi-device file system, first show all devices that have a btrfs file system at the specified mount point:

# btrfs filesystem show /mount-point

After identifying the devid of the device to be changed, use the following command:

# btrfs filesystem resize devid:amount /mount-point

For example:

# btrfs filesystem resize 2:300M /btrfstest
Resize '/btrfstest' of '2:300M'
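The amount argument takes the same forms in all three operations: a + or - prefix to enlarge or shrink, no prefix to set an absolute size, an optional devid: prefix for multi-device file systems, or max. The following sketch checks an amount against those forms before calling btrfs. The function names are hypothetical, and the accepted unit suffixes (k, m, g, t, p, e, in either case) are an assumption based on the btrfs-filesystem(8) man page; confirm them for the installed btrfs-progs version.

```sh
# is_valid_resize_amount: hypothetical pre-flight check, not part of btrfs-progs.
# Accepts the forms used above:
#   [devid:][+|-]size[unit]   e.g. +200M, -200M, 700M, 2:+200M, 2:300M
#   [devid:]max               e.g. max, 2:max
# Unit suffixes are assumed to be k, m, g, t, p, e in either case.
is_valid_resize_amount() {
    printf '%s\n' "$1" | grep -Eq '^([0-9]+:)?([+-]?[0-9]+[kKmMgGtTpPeE]?|max)$'
}

# resize_btrfs: hypothetical wrapper that only runs the resize when the
# amount is well formed. Arguments: amount, mount point.
resize_btrfs() {
    amount=$1 mountpoint=$2
    if ! is_valid_resize_amount "$amount"; then
        echo "invalid resize amount: $amount" >&2
        return 1
    fi
    btrfs filesystem resize "$amount" "$mountpoint"
}
```

Rejecting a malformed amount early keeps a typo such as 200X from reaching btrfs at all; btrfs itself still performs its own validation.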

6.4. Integrated Volume Management of Multiple Devices

6.4.1. Creating a File System with Multiple Devices

The mkfs.btrfs command, as detailed in Section 6.1, “Creating a btrfs File System”, accepts the options -d for data and -m for metadata. Valid specifications are:

- raid0

- raid1

- raid10

- dup

- single

The -m single option instructs that no duplication of metadata is done. This may be desired when using hardware RAID.

Note

Example 6.1. Creating a RAID 10 btrfs File System

To create a file system across four devices:

# mkfs.btrfs /dev/device1 /dev/device2 /dev/device3 /dev/device4

For a file system with metadata striped but not mirrored:

# mkfs.btrfs -m raid0 /dev/device1 /dev/device2

To use raid10 for both data and metadata:

# mkfs.btrfs -m raid10 -d raid10 /dev/device1 /dev/device2 /dev/device3 /dev/device4

To avoid duplicating metadata on a single drive:

# mkfs.btrfs -m single /dev/device

Use the single option to use the full capacity of each drive when the drives are different sizes:

# mkfs.btrfs -d single /dev/device1 /dev/device2 /dev/device3

To add a new device to an existing multi-device file system, use the following command:

# btrfs device add /dev/device1 /mount-point

After rebooting or reloading the btrfs module, use the btrfs device scan command to discover all multi-device file systems. See Section 6.4.2, “Scanning for btrfs Devices” for more information.
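The choice of data and metadata profile determines how much of the raw device capacity is usable. As a rough rule of thumb, raid0 and single store one copy of each block, while raid1, raid10 and dup store two. The sketch below turns that rule into a hypothetical helper, estimate_usable_mib; it assumes equal-sized devices and ignores metadata, system chunks and allocation overhead, so it is an estimate only. On a real file system, btrfs filesystem df reports the actual allocation.

```sh
# estimate_usable_mib: hypothetical back-of-the-envelope helper.
# Arguments: data profile, number of devices, size of each device in MiB.
# Assumes equal-sized devices; ignores metadata and allocation overhead.
estimate_usable_mib() {
    profile=$1 ndev=$2 size_mib=$3
    raw=$((ndev * size_mib))
    case $profile in
        raid0|single) echo "$raw" ;;            # one copy of each block
        raid1|raid10|dup) echo $((raw / 2)) ;;  # two copies of each block
        *) echo "unknown profile: $profile" >&2; return 1 ;;
    esac
}
```

With devices of different sizes the estimate no longer holds: single still adds up the capacity of every drive, as described above, whereas the mirrored profiles can leave part of the largest drive unused.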

6.4.2. Scanning for btrfs Devices

Use btrfs device scan to scan all block devices under /dev and probe for btrfs volumes. This must be performed after loading the btrfs module if a file system spans more than one device.

To scan all block devices:

# btrfs device scan

To scan a single device:

# btrfs device scan /dev/device

6.4.3. Adding New Devices to a btrfs File System

Use the btrfs filesystem show command to list all the btrfs file systems and which devices they include.

The btrfs device add command is used to add new devices to a mounted file system.

The btrfs filesystem balance command balances (restripes) the allocated extents across all existing devices.

Example 6.2. Add a New Device to a btrfs File System

First, create and mount a btrfs file system:

# mkfs.btrfs /dev/device1
# mount /dev/device1 /mount-point

Next, add a second device to the mounted file system:

# btrfs device add /dev/device2 /mount-point

The existing metadata and data are still stored only on /dev/device1. The file system must now be balanced to spread them across all devices:

# btrfs filesystem balance /mount-point

6.4.4. Converting a btrfs File System

Example 6.3. Converting a btrfs File System

To convert an existing single-device file system, /dev/sdb1 in this case, into a two-device raid1 system in order to protect against a single disk failure, use the following commands:

# mount /dev/sdb1 /mnt
# btrfs device add /dev/sdc1 /mnt
# btrfs balance start -dconvert=raid1 -mconvert=raid1 /mnt

Important

6.4.5. Removing btrfs Devices

Use the btrfs device delete command to remove an online device. It redistributes any extents in use to other devices in the file system so that the device can be safely removed.

Example 6.4. Removing a Device on a btrfs File System

First, create and mount a btrfs file system across four devices:

# mkfs.btrfs /dev/sdb /dev/sdc /dev/sdd /dev/sde
# mount /dev/sdb /mnt

Then remove /dev/sdc from the mounted file system:

# btrfs device delete /dev/sdc /mnt

6.4.6. Replacing Failed Devices on a btrfs File System

The btrfs device delete missing command removes the first device that is described by the file system metadata but was not present when the file system was mounted.

Important

- mount in degraded mode,

- add a new device,

- and remove the missing device.
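The three steps in the list above can be wrapped in a small script. This is a minimal sketch with hypothetical function names (run, replace_missing_device); it assumes a surviving member device is known, and it uses mount -o degraded, btrfs device add and btrfs device delete missing in that order. Setting DRY_RUN=1 prints the commands instead of running them, which is a cheap way to check device names before touching any disk.

```sh
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# replace_missing_device: hypothetical wrapper for the steps above.
# Arguments: a surviving member device, the mount point, the new device.
replace_missing_device() {
    surviving=$1 mountpoint=$2 newdev=$3
    # 1. Mount in degraded mode so the file system comes up without the failed disk.
    run mount -o degraded "$surviving" "$mountpoint" || return
    # 2. Add the replacement device to the mounted file system.
    run btrfs device add "$newdev" "$mountpoint" || return
    # 3. Remove the device recorded in the metadata but no longer present.
    run btrfs device delete missing "$mountpoint"
}
```

For example, DRY_RUN=1 replace_missing_device /dev/sdb /mnt /dev/sdf prints the three commands without executing them; each step stops the sequence if the previous one fails.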

6.4.7. Registering a btrfs File System in /etc/fstab

If the system does not have an initrd, or its initrd does not perform a btrfs device scan, it is possible to mount a multi-volume btrfs file system by passing all the devices in the file system explicitly to the mount command.

Example 6.5. Example /etc/fstab Entry

An example of a suitable /etc/fstab entry would be:

/dev/sdb /mnt btrfs device=/dev/sdb,device=/dev/sdc,device=/dev/sdd,device=/dev/sde 0
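When a file system has many member devices, typing the device= list by hand is error-prone. The following hypothetical helper, btrfs_fstab_entry, prints a line in the same format as the entry above from a mount point and the list of devices. It is a sketch only: it does not check that the devices actually belong to one btrfs file system, and it keeps the trailing 0 of the example above (fstab fields omitted at the end of a line default to 0).

```sh
# btrfs_fstab_entry: hypothetical helper that prints an /etc/fstab line
# listing every member device explicitly.
# Arguments: mount point, then all devices of the file system;
# the first device is also used as the first fstab field.
btrfs_fstab_entry() {
    if [ "$#" -lt 2 ]; then
        echo "usage: btrfs_fstab_entry MOUNTPOINT DEVICE..." >&2
        return 1
    fi
    mountpoint=$1
    shift
    opts=""
    for dev in "$@"; do
        # Append ",device=DEV", omitting the comma before the first entry.
        opts="${opts:+$opts,}device=$dev"
    done
    printf '%s %s btrfs %s 0\n' "$1" "$mountpoint" "$opts"
}
```

Running btrfs_fstab_entry /mnt /dev/sdb /dev/sdc /dev/sdd /dev/sde reproduces the example entry exactly.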

6.5. SSD Optimization

When creating a file system, mkfs.btrfs turns off metadata duplication on a single device when /sys/block/device/queue/rotational is zero for the single specified device. This is equivalent to specifying -m single on the command line. It can be overridden, and duplicate metadata forced, by providing the -m dup option. Duplication is not required on SSDs, because the SSD firmware can potentially lose both copies anyway; duplicating the metadata therefore only wastes space and costs performance.
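The rotational flag that mkfs.btrfs consults can be read directly from sysfs. The sketch below uses a hypothetical helper, is_non_rotational, that prints yes when /sys/block/device/queue/rotational contains 0. The optional second argument, an alternative sysfs root, is an addition for testing and is not something the kernel or mkfs.btrfs uses.

```sh
# is_non_rotational: hypothetical helper. Prints "yes" when the kernel
# reports the block device as non-rotational (flag is 0), "no" otherwise.
# Arguments: device name such as sda; optional sysfs root (default /sys).
is_non_rotational() {
    dev=$1 sysfs=${2:-/sys}
    flag_file="$sysfs/block/$dev/queue/rotational"
    if [ ! -r "$flag_file" ]; then
        echo "cannot read $flag_file" >&2
        return 1
    fi
    if [ "$(cat "$flag_file")" = 0 ]; then
        echo yes
    else
        echo no
    fi
}
```

For example, is_non_rotational sda prints yes for a device the kernel reports as non-rotational; if that is the only device passed to mkfs.btrfs, metadata duplication is turned off by default as described above.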

SSD behavior can also be tuned with the mount options ssd, nossd, and ssd_spread.

The ssd option does several things:

- It allows larger metadata cluster allocation.

- It allocates data more sequentially where possible.

- It disables btree leaf rewriting to match key and block order.

- It commits log fragments without batching multiple processes.

Note

The ssd mount option only enables the ssd option. Use the nossd option to disable it.

The command mount -o ssd finds groupings of blocks where there are several free blocks that might have allocated blocks mixed in. The command mount -o ssd_spread ensures there are no allocated blocks mixed in. This improves performance on lower-end SSDs.

Note

The ssd_spread option enables both the ssd and the ssd_spread options. Use the nossd option to disable both of these options.

The ssd_spread option is never automatically set if none of the ssd options are provided and any of the devices are non-rotational.

6.6. btrfs References

The btrfs(8) man page covers all important management commands. In particular, this includes:

- All the subvolume commands for managing snapshots.

- The device commands for managing devices.

- The scrub, balance, and defragment commands.

The mkfs.btrfs(8) man page contains information on creating a btrfs file system, including all the options regarding it.

See the btrfsck(8) man page for information regarding fsck on btrfs systems.

Chapter 7. Global File System 2

Running the fsck command on a very large file system can take a long time and consume a large amount of memory. Additionally, in the event of a disk or disk-subsystem failure, recovery time is limited by the speed of backup media.

Cluster-wide volume management for GFS2 is provided by the cluster logical volume manager daemon, clvmd, running in a Red Hat Cluster Suite cluster. The daemon makes it possible to use LVM2 to manage logical volumes across a cluster, allowing all nodes in the cluster to share the logical volumes. For information on the Logical Volume Manager, see Red Hat's Logical Volume Manager Administration guide.

The gfs2.ko kernel module implements the GFS2 file system and is loaded on GFS2 cluster nodes.

Chapter 8. Network File System (NFS)

8.1. Introduction to NFS

- NFS version 3 (NFSv3) supports safe asynchronous writes and is more robust at error handling than the previous NFSv2. It also supports 64-bit file sizes and offsets, allowing clients to access more than 2 GB of file data.

- NFS version 4 (NFSv4) works through firewalls and on the Internet, no longer requires an rpcbind service, supports ACLs, and utilizes stateful operations.

NFS version 4.2 (NFSv4.2) adds the following features:

- Sparse Files: Verifies the space efficiency of a file and allows placeholders to improve storage efficiency. A sparse file is a file having one or more holes; holes are unallocated or uninitialized data blocks consisting only of zeroes. The lseek() operation in NFSv4.2 supports seek_hole() and seek_data(), which allow applications to map out the location of holes in the sparse file.

- Space Reservation: Permits storage servers to reserve free space, which prevents servers from running out of space. NFSv4.2 supports the allocate() operation to reserve space, the deallocate() operation to unreserve space, and the fallocate() operation to preallocate or deallocate space in a file.

- Labeled NFS: Enforces data access rights and enables SELinux labels between a client and a server for individual files on an NFS file system.

- Layout Enhancements: NFSv4.2 provides a new operation, layoutstats(), which the client can use to notify the metadata server about its communication with the layout.
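A sparse file is easy to create and inspect locally, which helps to see what the seek_hole() and seek_data() support maps out. This hypothetical demo, sparse_file_sizes, is not NFS-specific; it assumes GNU coreutils (truncate and stat -c) and a local file system that supports holes. It creates a 1 MiB file without writing any data and prints its apparent size next to the space actually allocated for it.

```sh
# sparse_file_sizes: hypothetical local demo, not NFS-specific.
# Creates a file whose entire contents are a hole and prints
# "<apparent bytes> <allocated bytes>". Assumes GNU coreutils.
sparse_file_sizes() {
    f=$(mktemp) || return
    # Extend the empty file to 1 MiB without writing any data blocks.
    truncate -s 1M "$f"
    apparent=$(stat -c %s "$f")                               # logical file size
    allocated=$(( $(stat -c %b "$f") * $(stat -c %B "$f") ))  # bytes allocated on disk
    rm -f "$f"
    echo "$apparent $allocated"
}
```

On file systems that support holes, the allocated figure is far smaller than the 1048576-byte apparent size, because no data blocks were ever written.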

NFS version 4.1 (NFSv4.1) adds the following features:

- Enhances the performance and security of the network, and includes client-side support for Parallel NFS (pNFS).

- No longer requires a separate TCP connection for callbacks, which allows an NFS server to grant delegations even when it cannot contact the client, for example when NAT or a firewall interferes.

- Provides exactly-once semantics (except for reboot operations), preventing a previous issue whereby certain operations could return an inaccurate result if a reply was lost and the operation was sent twice.

Note

NFSv4 has no interaction with the rpcbind [1], lockd, and rpc.statd daemons. The rpc.mountd daemon is still required on the NFS server to set up the exports, but is not involved in any over-the-wire operations.

Note

The RPC/NFS daemons have a '-p' command line option that can set the port, making firewall configuration easier.

The NFS server refers to the /etc/exports configuration file to determine whether the client is allowed to access any exported file systems. Once verified, all file and directory operations are available to the user.

Important

The rpc.nfsd process can now bind to any specified port during system start-up. However, this can be error-prone if the port is unavailable or if it conflicts with another daemon.

8.1.1. Required Services

RPC services under Red Hat Enterprise Linux 7 are controlled by the rpcbind service. To share or mount NFS file systems, the following services work together, depending on which version of NFS is implemented:

Note

The portmap service was used to map RPC program numbers to IP address and port number combinations in earlier versions of Red Hat Enterprise Linux. This service is now replaced by rpcbind in Red Hat Enterprise Linux 7 to enable IPv6 support.

- nfs

- systemctl start nfs starts the NFS server and the appropriate RPC processes to service requests for shared NFS file systems.

- nfslock

- systemctl start nfs-lock activates a mandatory service that starts the appropriate RPC processes allowing NFS clients to lock files on the server.

- rpcbind

- rpcbind accepts port reservations from local RPC services. These ports are then made available (or advertised) so the corresponding remote RPC services can access them. rpcbind responds to requests for RPC services and sets up connections to the requested RPC service. This is not used with NFSv4.

- rpc.mountd

- This process is used by an NFS server to process MOUNT requests from NFSv3 clients. It checks that the requested NFS share is currently exported by the NFS server, and that the client is allowed to access it. If the mount request is allowed, the rpc.mountd server replies with a Success status and provides the File-Handle for this NFS share back to the NFS client.

- rpc.nfsd

- rpc.nfsd allows the NFS versions and protocols that the server advertises to be explicitly defined. It works with the Linux kernel to meet the dynamic demands of NFS clients, such as providing server threads each time an NFS client connects. This process corresponds to the nfs service.

- lockd

- lockd is a kernel thread which runs on both clients and servers. It implements the Network Lock Manager (NLM) protocol, which allows NFSv3 clients to lock files on the server. It is started automatically whenever the NFS server is run and whenever an NFS file system is mounted.

- rpc.statd

- This process implements the Network Status Monitor (NSM) RPC protocol, which notifies NFS clients when an NFS server is restarted without being gracefully brought down. rpc.statd is started automatically by the nfslock service and does not require user configuration. This is not used with NFSv4.

- rpc.rquotad

- This process provides user quota information for remote users. rpc.rquotad is started automatically by the nfs service and does not require user configuration.

- rpc.idmapd

- rpc.idmapd provides NFSv4 client and server upcalls, which map between on-the-wire NFSv4 names (strings in the form of user@domain) and local UIDs and GIDs. For idmapd to function with NFSv4, the /etc/idmapd.conf file must be configured. At a minimum, the "Domain" parameter should be specified, which defines the NFSv4 mapping domain. If the NFSv4 mapping domain is the same as the DNS domain name, this parameter can be skipped. The client and server must agree on the NFSv4 mapping domain for ID mapping to function properly.

Note