Introducing Red Hat AMQ 7

Overview of Features and Components

Chapter 1. About Red Hat AMQ 7

Red Hat AMQ provides fast, lightweight, and secure messaging for Internet-scale applications. AMQ Broker supports multiple protocols and fast message persistence. AMQ Interconnect uses AMQP to distribute and scale your messaging resources across the network. AMQ Clients provides a suite of messaging APIs for multiple languages and platforms.

Think of the AMQ components as tools inside a toolbox. They can be used together or separately to build and maintain your messaging application, and AMQP is the glue in the toolbox that binds them together. AMQ components share a common management console, so you can manage them from a single interface.

Red Hat AMQ 7 also includes AMQ Streams, which is based on Apache Kafka and does not support AMQP.

1.1. Key features

AMQ enables developers to build messaging applications that are fast, reliable, and easy to administer.

Messaging at internet scale

AMQ contains the tools to build advanced, multi-datacenter messaging networks. It can connect clients, brokers, and stand-alone services in a seamless messaging fabric.

Top-tier security and performance

AMQ offers modern SSL/TLS encryption and extensible SASL authentication. AMQ delivers fast, high-volume messaging and class-leading JMS performance.

Broad platform and language support

AMQ works with multiple languages and operating systems, so your diverse application components can communicate. AMQ supports C++, Java, JavaScript, Python, Ruby, and .NET applications, as well as Linux, Windows, and JVM-based environments.

Focused on standards

AMQ implements the Java JMS 1.1 and 2.0 API specifications. Its components support the ISO-standard AMQP 1.0 and MQTT messaging protocols, as well as STOMP and WebSocket.

Centralized management

With AMQ, you can administer all AMQ components from a single management interface. You can use JMX or the REST interface to manage servers programmatically.

Chapter 2. Component overview

Red Hat AMQ consists of AMQ Broker, AMQ Interconnect, and AMQ Clients. They work together to enable network communication in distributed applications.

2.1. AMQ Broker

AMQ Broker is a full-featured, message-oriented middleware broker. It offers advanced addressing and queueing, fast message persistence, and high availability. AMQ Broker supports multiple protocols and operating environments, enabling you to use your existing assets. AMQ Broker supports integration with Red Hat JBoss Enterprise Application Platform.

For more information, see Getting Started with AMQ Broker.

2.2. AMQ Interconnect

AMQ Interconnect provides flexible routing of messages between AMQP-enabled endpoints, including clients, brokers, and standalone services. With a single connection into a network of AMQ Interconnect routers, a client can exchange messages with any other endpoint connected to the network.

AMQ Interconnect does not use master-slave clusters for high availability. It is typically deployed in topologies of multiple routers with redundant network paths, which it uses to provide reliable connectivity. AMQ Interconnect can distribute messaging workloads across the network and achieve new levels of scale with very low latency.

For more information, see Using AMQ Interconnect.

2.3. AMQ Clients

AMQ Clients is a suite of AMQP 1.0 and JMS clients, adapters, and libraries. It includes JMS 2.0 support and new, event-driven APIs to enable integration into existing applications.

For more information, see AMQ Clients Overview.

AMQP clients

- AMQ C++

- AMQ JavaScript

- AMQ .NET

- AMQ Python

- AMQ Ruby

JMS clients

- AMQ JMS (AMQP 1.0)

- AMQ Core Protocol JMS

- AMQ OpenWire JMS

Adapters and libraries

- AMQ JMS Pool

- AMQ Spring Boot Starter

2.4. Component compatibility

The following table lists the supported languages, platforms, and protocols of AMQ components. Note that any components supporting the same protocol can interoperate, even if their languages and platforms differ. For instance, AMQ Python can communicate with AMQ JMS.

| Component | Languages | Platforms | Protocols |
|---|---|---|---|
| AMQ Broker | - | JVM | AMQP 1.0, MQTT, OpenWire, STOMP, Core Protocol |
| AMQ Interconnect | - | Linux | AMQP 1.0 |
| AMQ C++ | C++ | Linux, Windows | AMQP 1.0 |
| AMQ JavaScript | JavaScript | Node.js, browsers | AMQP 1.0 |
| AMQ JMS | Java | JVM | AMQP 1.0 |
| AMQ .NET | C# | .NET | AMQP 1.0 |
| AMQ Python | Python | Linux | AMQP 1.0 |
| AMQ Ruby | Ruby | Linux | AMQP 1.0 |
| AMQ Spring Boot Starter | Java | JVM | AMQP 1.0 |
| AMQ Core Protocol JMS | Java | JVM | Core Protocol |
| AMQ OpenWire JMS | Java | JVM | OpenWire |
| AMQ JMS Pool | Java | JVM | - |

For more information, see Red Hat AMQ 7 Supported Configurations.
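The interoperability rule stated with the table (components that support the same protocol can interoperate) can be checked mechanically. The following is a minimal sketch that transcribes the protocol column into a Python dictionary. The names `PROTOCOLS`, `shared_protocols`, and `can_interoperate` are illustrative and are not part of any AMQ API, and the helper only tests whether two components list a protocol in common.

```python
# Protocols per component, transcribed from the compatibility table.
PROTOCOLS = {
    "AMQ Broker": {"AMQP 1.0", "MQTT", "OpenWire", "STOMP", "Core Protocol"},
    "AMQ Interconnect": {"AMQP 1.0"},
    "AMQ C++": {"AMQP 1.0"},
    "AMQ JavaScript": {"AMQP 1.0"},
    "AMQ JMS": {"AMQP 1.0"},
    "AMQ .NET": {"AMQP 1.0"},
    "AMQ Python": {"AMQP 1.0"},
    "AMQ Ruby": {"AMQP 1.0"},
    "AMQ Spring Boot Starter": {"AMQP 1.0"},
    "AMQ Core Protocol JMS": {"Core Protocol"},
    "AMQ OpenWire JMS": {"OpenWire"},
    "AMQ JMS Pool": set(),  # the table lists "-" for this component
}


def shared_protocols(a, b):
    """Return the protocols that both components list in the table."""
    return PROTOCOLS[a] & PROTOCOLS[b]


def can_interoperate(a, b):
    """True if the two components have at least one protocol in common."""
    return bool(shared_protocols(a, b))


if __name__ == "__main__":
    # The example from the text: AMQ Python and AMQ JMS both speak AMQP 1.0.
    print(can_interoperate("AMQ Python", "AMQ JMS"))
    print(shared_protocols("AMQ Broker", "AMQ OpenWire JMS"))
```

For instance, `can_interoperate("AMQ OpenWire JMS", "AMQ Interconnect")` is false under this check, because the table lists only OpenWire for the former and only AMQP 1.0 for the latter.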

Chapter 3. Common deployment patterns

Red Hat AMQ 7 can be set up in a large variety of topologies. The following are some of the common deployment patterns you can implement using AMQ components.

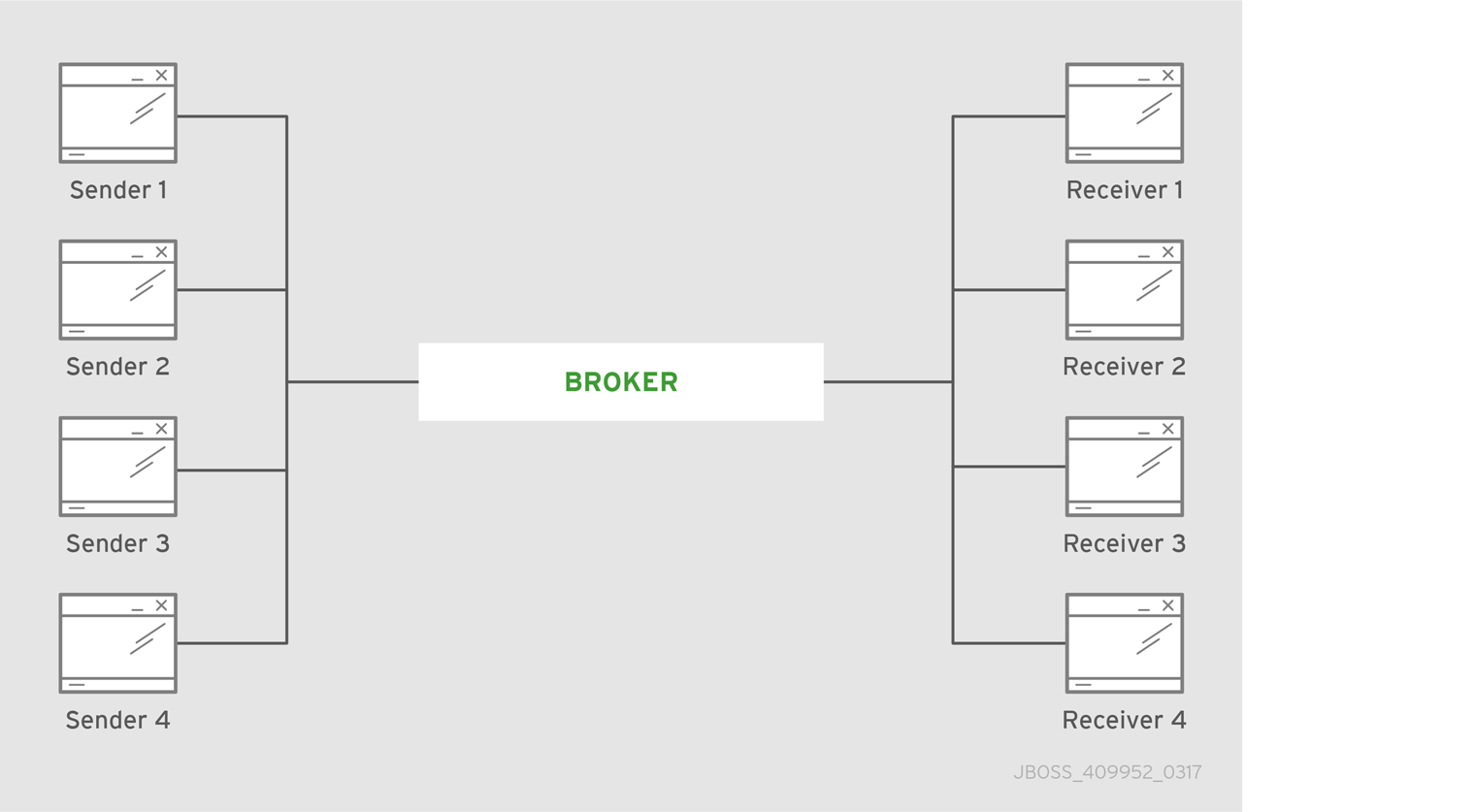

3.1. Central broker

The central broker pattern is relatively easy to set up and maintain, and it is also relatively robust. Routes are typically local, because the broker and its clients are always within one network hop of each other, no matter how many nodes are added. This pattern is also known as hub and spoke, with the central broker as the hub and the clients as the spokes.

Figure 3.1. Central broker pattern

The only critical element is the central broker node. The focus of your maintenance efforts is on keeping this broker available to its clients.

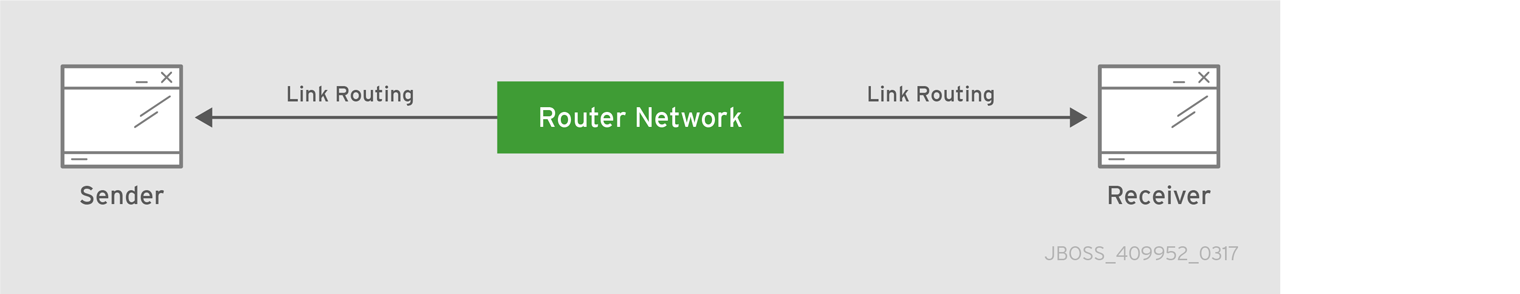

3.2. Routed messaging

When routing messages to remote destinations, the broker stores them in a local queue before forwarding them to their destination. However, sometimes an application requires sending request and response messages in real time, and having the broker store and forward messages is too costly. With AMQ you can use a router in place of a broker to avoid such costs. Unlike a broker, a router does not store messages before forwarding them to a destination. Instead, it works as a lightweight conduit and directly connects two endpoints.

Figure 3.2. Brokerless routed messaging pattern
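The difference between the two intermediaries can be illustrated with a toy model. This is a simplified sketch in plain Python, not AMQ code: the classes `Broker` and `Router` are hypothetical, and raising an error when no receiver is attached is an assumption of this model, chosen only to show that a router has no queue to fall back on.

```python
from collections import deque


class Broker:
    """Toy store-and-forward intermediary: keeps messages in a local queue."""

    def __init__(self):
        self.queue = deque()

    def send(self, message):
        # The message is stored first; the receiver can collect it later.
        self.queue.append(message)

    def receive(self):
        return self.queue.popleft() if self.queue else None


class Router:
    """Toy conduit: hands each message straight to the receiver and stores nothing."""

    def __init__(self):
        self.receiver = None

    def attach(self, receiver):
        self.receiver = receiver

    def send(self, message):
        if self.receiver is None:
            # Assumption of this model: without a receiver there is nowhere to put the message.
            raise RuntimeError("no receiver attached")
        self.receiver(message)


if __name__ == "__main__":
    broker = Broker()
    broker.send("request")          # held in the broker's queue
    print(broker.receive())

    delivered = []
    router = Router()
    router.attach(delivered.append)  # receiver must be connected at send time
    router.send("request")           # passed through immediately
    print(delivered)
```

In the broker case the sender and receiver are decoupled in time; in the router case the message travels end to end in one step, which is what removes the store-and-forward cost described above.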

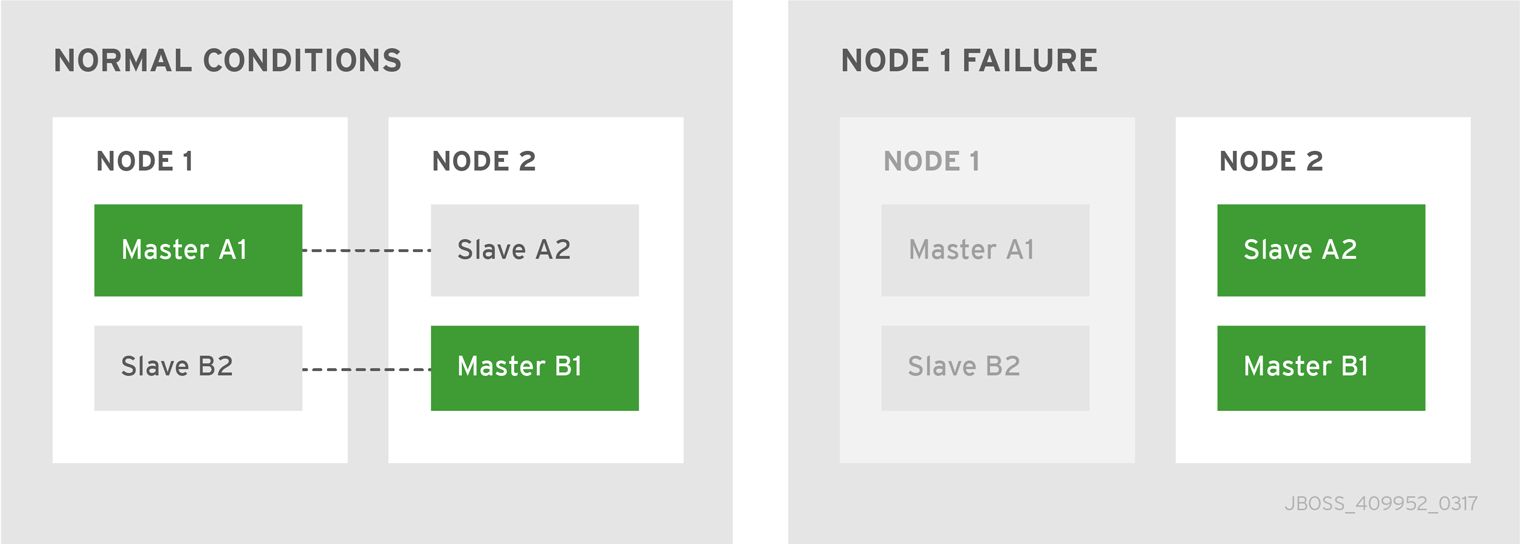

3.3. Highly available brokers

To ensure brokers are available for their clients, deploy a highly available (HA) master-slave pair to create a backup group. You might, for example, deploy two master-slave groups on two nodes. Such a deployment would provide a backup for each active broker, as seen in the following diagram.

Figure 3.3. Master-slave pair

Under normal operating conditions one master broker is active on each node, which can be either a physical server or a virtual machine. If one node fails, the slave on the other node takes over. The result is two active brokers residing on the same healthy node.
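The failover behaviour described above can be traced with a small simulation. This is a sketch under stated assumptions: the node names, broker names, and the `BrokerInstance` class are hypothetical, and the model ignores how a real slave detects that its master has failed.

```python
class BrokerInstance:
    """One broker process in the toy model."""

    def __init__(self, name, node, role, group, active=False):
        self.name = name
        self.node = node
        self.role = role      # "master" or "slave"
        self.group = group    # master and slave of one backup group share this value
        self.active = active


def build_two_node_deployment():
    # Each node hosts the master of one group and the slave of the other group.
    return [
        BrokerInstance("master-1", "node-A", "master", "group-1", active=True),
        BrokerInstance("slave-2", "node-A", "slave", "group-2"),
        BrokerInstance("master-2", "node-B", "master", "group-2", active=True),
        BrokerInstance("slave-1", "node-B", "slave", "group-1"),
    ]


def fail_node(brokers, node):
    """Stop every broker on the failed node and activate the matching slaves elsewhere."""
    lost_groups = set()
    for broker in brokers:
        if broker.node == node:
            if broker.active:
                lost_groups.add(broker.group)
            broker.active = False
    for broker in brokers:
        if broker.node != node and broker.role == "slave" and broker.group in lost_groups:
            broker.active = True


def active_on(brokers, node):
    return sorted(b.name for b in brokers if b.node == node and b.active)


if __name__ == "__main__":
    brokers = build_two_node_deployment()
    print(active_on(brokers, "node-A"), active_on(brokers, "node-B"))
    fail_node(brokers, "node-A")
    # Both active brokers now reside on the healthy node.
    print(active_on(brokers, "node-A"), active_on(brokers, "node-B"))
```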

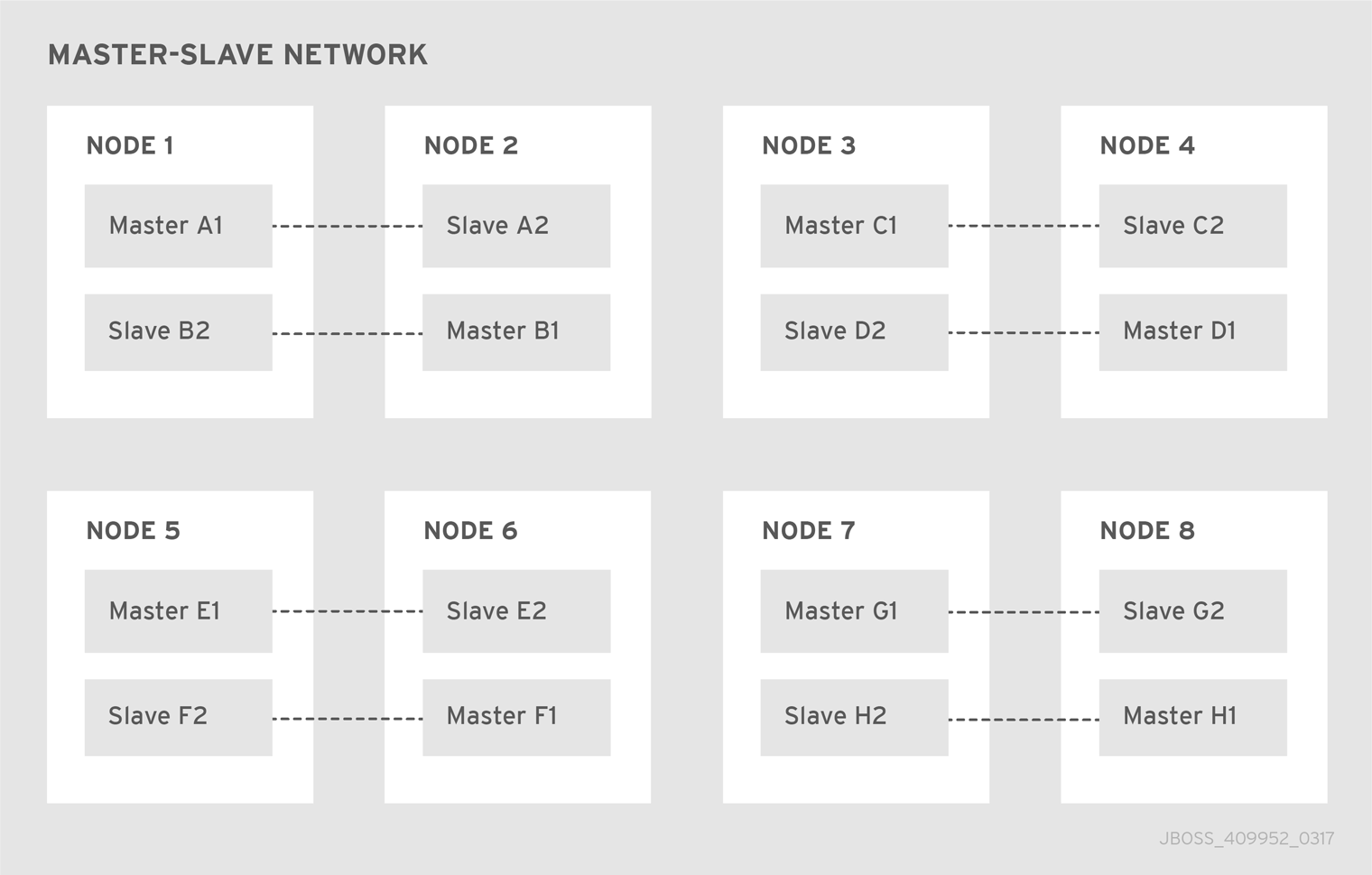

By deploying master-slave pairs, you can scale out an entire network of such backup groups. Larger deployments of this type are useful for distributing the message processing load across many brokers. The broker network in the following diagram consists of eight master-slave groups distributed over eight nodes.

Figure 3.4. Master-slave network

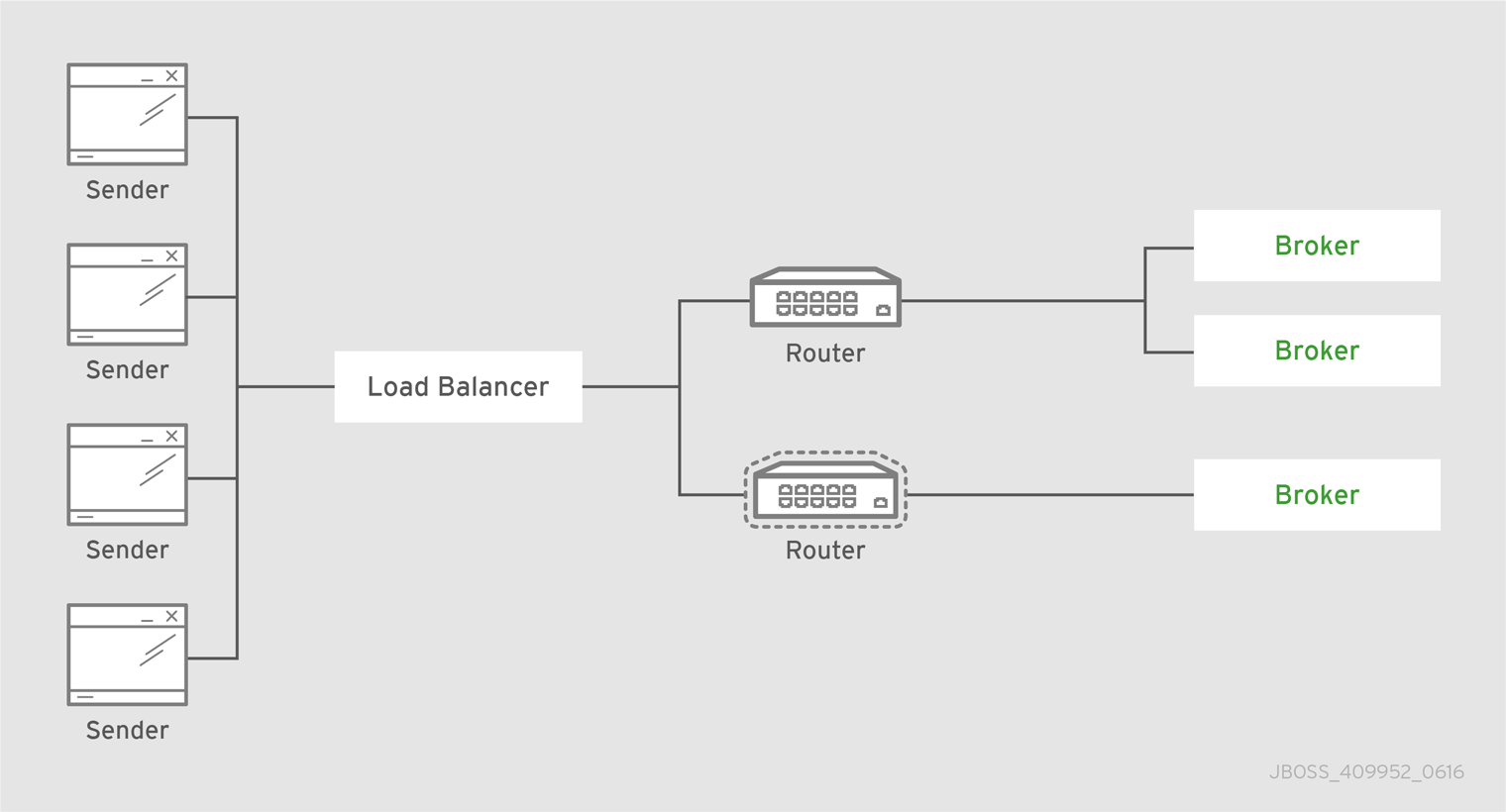

3.4. Router pair behind a load balancer

Deploying two routers behind a load balancer provides high availability, resiliency, and increased scalability for a single-datacenter deployment. Endpoints connect to a known URL served by the load balancer, which spreads the incoming connections among the routers so that the connection and messaging load is distributed. If one of the routers fails, the endpoints connected to it reconnect to the remaining active router.

Figure 3.5. Router pair behind a load balancer

For even greater scalability, you can use a larger number of routers, three or four for example. Each router connects directly to all of the others.
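The connection spreading and reconnect behaviour can be sketched as follows. This is an illustrative model only: the `LoadBalancer` class is hypothetical, and round-robin selection is an assumption, since the text says only that connections are spread among the routers.

```python
class LoadBalancer:
    """Toy load balancer that assigns endpoint connections to routers in turn."""

    def __init__(self, routers):
        self.routers = list(routers)
        self.connections = {}   # endpoint name -> router name
        self._next = 0

    def connect(self, endpoint):
        # Round-robin choice (an assumption of this sketch).
        router = self.routers[self._next % len(self.routers)]
        self._next += 1
        self.connections[endpoint] = router
        return router

    def fail(self, router):
        """Remove a failed router and let its endpoints reconnect through the same entry point."""
        self.routers.remove(router)
        orphans = [e for e, r in self.connections.items() if r == router]
        for endpoint in orphans:
            self.connect(endpoint)
        return orphans


if __name__ == "__main__":
    lb = LoadBalancer(["router-1", "router-2"])
    for endpoint in ["client-a", "client-b", "client-c", "client-d"]:
        lb.connect(endpoint)
    print(lb.connections)
    print(lb.fail("router-1"))   # endpoints that had to reconnect
    print(lb.connections)
```

Passing three or four router names to `LoadBalancer` models the larger deployments mentioned above without any other change.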

3.5. Router pair in a DMZ

In this deployment architecture, the router network provides a layer of protection and isolation between the clients in the outside world and the brokers backing an enterprise application.

Figure 3.6. Router pair in a DMZ

Important notes about the DMZ topology:

- Security for the connections within the deployment is separate from the security used for external clients. For example, your deployment might use a private Certificate Authority (CA) for internal security, issuing X.509 certificates to each router and broker for authentication, while external users might use a different, public CA.

- Inter-router connections between the enterprise and the DMZ are always established from the enterprise to the DMZ for security. Therefore, no connections are permitted from the outside into the enterprise. However, after a connection is established, AMQP enables bidirectional communication over it.
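The second note, that the connection is opened outward but then carries traffic in both directions, can be demonstrated with plain TCP sockets on the loopback interface. This is an analogy rather than AMQP: no AMQ component is involved, and the function name `demo_outbound_connection` and the message payloads are made up for the illustration.

```python
import socket


def demo_outbound_connection():
    """Open a connection from the 'enterprise' side and use it in both directions."""
    # Only the DMZ side listens; the enterprise side never accepts inbound connections.
    dmz_listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    dmz_listener.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    dmz_listener.listen(1)

    # The enterprise side dials out to the DMZ.
    enterprise_side = socket.create_connection(dmz_listener.getsockname())
    dmz_side, _ = dmz_listener.accept()

    try:
        # Once established, the same connection carries data DMZ -> enterprise ...
        dmz_side.sendall(b"request from external client")
        request = enterprise_side.recv(1024)
        # ... and enterprise -> DMZ.
        enterprise_side.sendall(b"response from enterprise broker")
        response = dmz_side.recv(1024)
    finally:
        enterprise_side.close()
        dmz_side.close()
        dmz_listener.close()
    return request, response


if __name__ == "__main__":
    print(demo_outbound_connection())
```

The design point is that a firewall between the two zones needs to allow only outbound connections from the enterprise, yet requests that originate outside can still reach the brokers over the established link.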

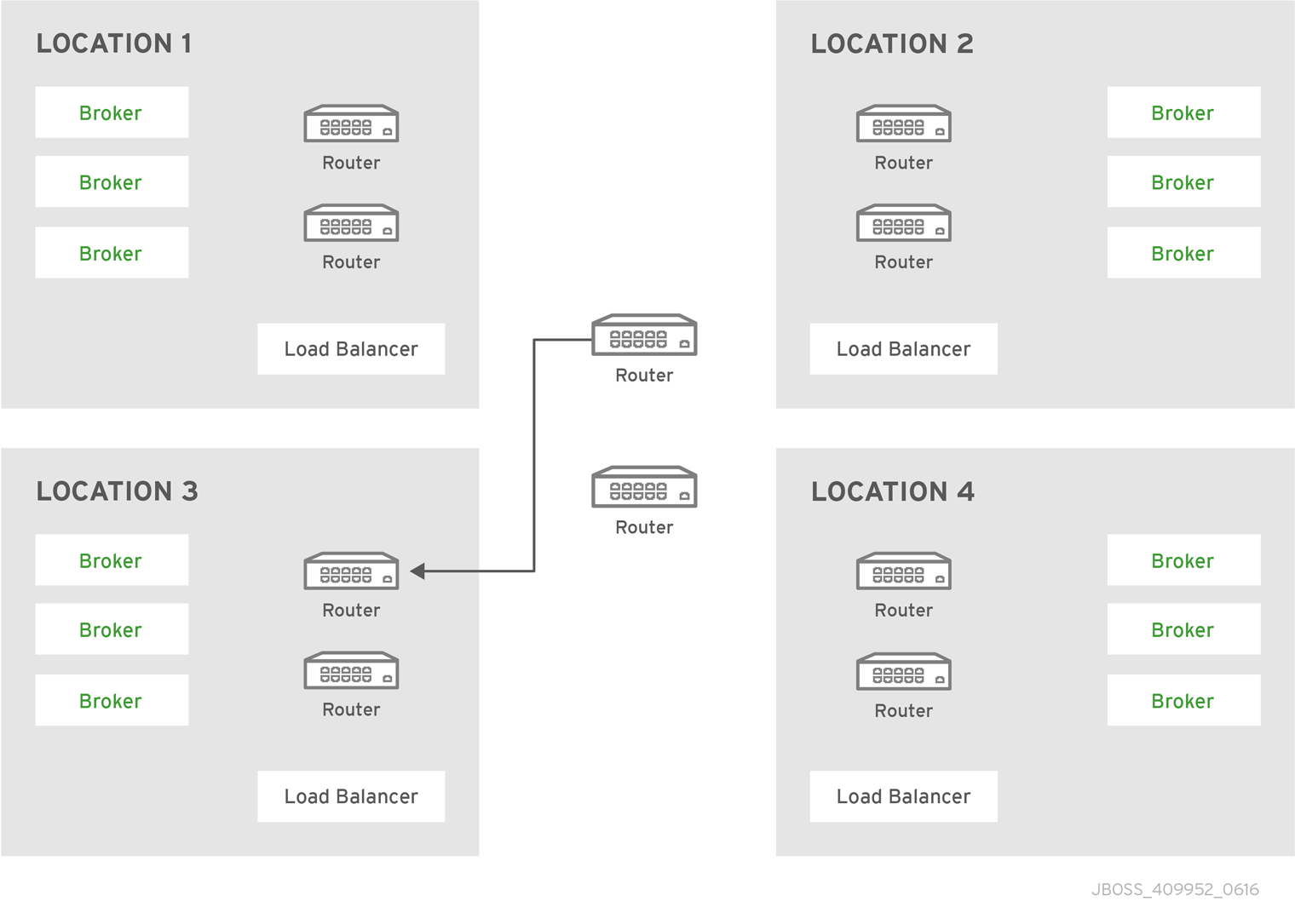

3.6. Router pairs in different data centers

You can use a more complex topology in a deployment of AMQ components that spans multiple locations. You can, for example, deploy a pair of load-balanced routers in each of four locations. You might include two backbone routers in the center to provide redundant connectivity between all locations. The following diagram is an example deployment spanning multiple locations.

Figure 3.7. Multiple interconnected routers
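The redundancy that the backbone routers provide can be checked with a simple reachability test. The sketch below builds one possible wiring of the topology described above: a router pair in each of four locations, with every location router linked to both backbone routers. That wiring, the router names, and the functions `build_topology` and `reachable` are assumptions made for illustration and are not taken from the diagram.

```python
from collections import deque


def build_topology(locations=("loc-1", "loc-2", "loc-3", "loc-4")):
    """Return the set of inter-router links as frozensets of two router names."""
    backbone = ["backbone-1", "backbone-2"]
    links = {frozenset(backbone)}
    for loc in locations:
        pair = [f"{loc}-router-a", f"{loc}-router-b"]
        links.add(frozenset(pair))
        for router in pair:
            for hub in backbone:
                links.add(frozenset((router, hub)))
    return links


def reachable(links, start, down=()):
    """Breadth-first search over the links, skipping routers listed in `down`."""
    down = set(down)
    neighbours = {}
    for link in links:
        a, b = tuple(link)
        if a in down or b in down:
            continue
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    seen = {start}
    todo = deque([start])
    while todo:
        node = todo.popleft()
        for nxt in neighbours.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                todo.append(nxt)
    return seen


if __name__ == "__main__":
    links = build_topology()
    # With one backbone router down, every other router is still reachable.
    print(sorted(reachable(links, "loc-1-router-a", down=["backbone-1"])))
    # With both down, a location can only reach its own pair.
    print(sorted(reachable(links, "loc-1-router-a", down=["backbone-1", "backbone-2"])))
```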

Revised on 2020-12-03 08:48:46 UTC