Configuring AMQ Broker

For Use with AMQ Broker 7.6

Chapter 1. Overview

AMQ Broker configuration files define important settings for a broker instance. By editing a broker’s configuration files, you can control how the broker operates in your environment.

1.1. AMQ Broker configuration files and locations

All of a broker’s configuration files are stored in <broker-instance-dir>/etc. You can configure a broker by editing the settings in these configuration files.

Each broker instance uses the following configuration files:

- broker.xml: The main configuration file. You use this file to configure most aspects of the broker, such as network connections, security settings, message addresses, and so on.

- bootstrap.xml: The file that AMQ Broker uses to start a broker instance. You use it to change the location of broker.xml, configure the web server, and set some security settings.

- logging.properties: You use this file to set logging properties for the broker instance.

- artemis.profile: You use this file to set environment variables used while the broker instance is running.

- login.config, artemis-users.properties, artemis-roles.properties: Security-related files. You use these files to set up authentication for user access to the broker instance.

1.2. Understanding the default broker configuration

You configure most of a broker’s functionality by editing the broker.xml configuration file. This file contains default settings, which are sufficient to start and operate a broker. However, you will likely need to change some of the default settings and add new settings to configure the broker for your environment.

By default, broker.xml contains default settings for the following functionality:

- Message persistence

- Acceptors

- Security

- Message addresses

Default message persistence settings

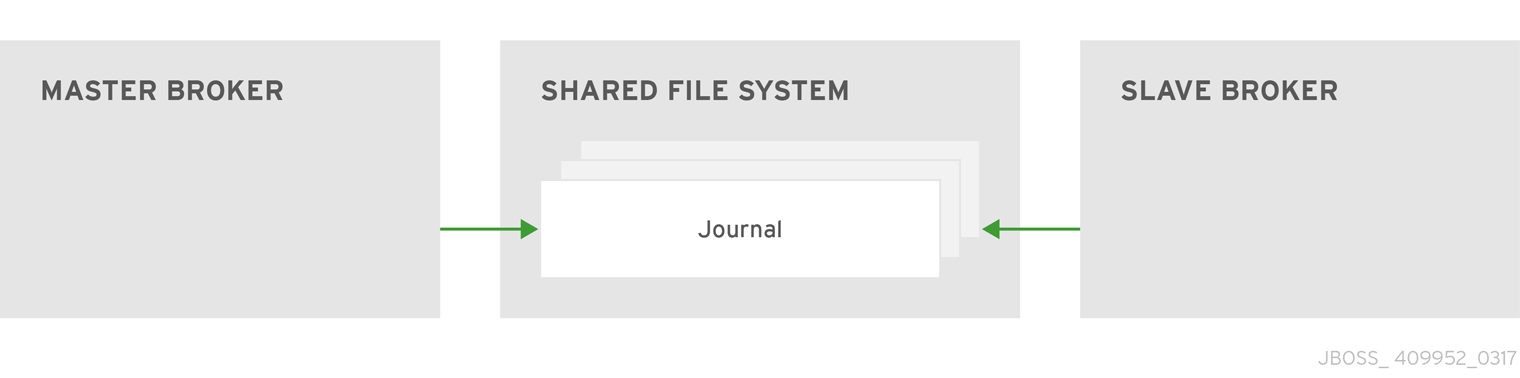

By default, AMQ Broker persistence uses an append-only file journal that consists of a set of files on disk. The journal saves messages, transactions, and other information.

<configuration ...>

<core ...>

...

<persistence-enabled>true</persistence-enabled>

<!-- this could be ASYNCIO, MAPPED, NIO

ASYNCIO: Linux Libaio

MAPPED: mmap files

NIO: Plain Java Files

-->

<journal-type>ASYNCIO</journal-type>

<paging-directory>data/paging</paging-directory>

<bindings-directory>data/bindings</bindings-directory>

<journal-directory>data/journal</journal-directory>

<large-messages-directory>data/large-messages</large-messages-directory>

<journal-datasync>true</journal-datasync>

<journal-min-files>2</journal-min-files>

<journal-pool-files>10</journal-pool-files>

<journal-file-size>10M</journal-file-size>

<!--

This value was determined through a calculation.

Your system could perform 8.62 writes per millisecond

on the current journal configuration.

That translates as a sync write every 115999 nanoseconds.

Note: If you specify 0 the system will perform writes directly to the disk.

We recommend this to be 0 if you are using journalType=MAPPED and journal-datasync=false.

-->

<journal-buffer-timeout>115999</journal-buffer-timeout>

<!--

When using ASYNCIO, this will determine the writing queue depth for libaio.

-->

<journal-max-io>4096</journal-max-io>

<!-- how often we are looking for how many bytes are being used on the disk in ms -->

<disk-scan-period>5000</disk-scan-period>

<!-- once the disk hits this limit the system will block, or close the connection in certain protocols

that won't support flow control. -->

<max-disk-usage>90</max-disk-usage>

<!-- should the broker detect dead locks and other issues -->

<critical-analyzer>true</critical-analyzer>

<critical-analyzer-timeout>120000</critical-analyzer-timeout>

<critical-analyzer-check-period>60000</critical-analyzer-check-period>

<critical-analyzer-policy>HALT</critical-analyzer-policy>

...

</core>

</configuration>

Default acceptor settings

Brokers listen for incoming client connections by using an acceptor configuration element to define the port and protocols a client can use to make connections. By default, AMQ Broker includes configuration for an acceptor for each supported messaging protocol.

<configuration ...>

<core ...>

...

<acceptors>

<!-- Acceptor for every supported protocol -->

<acceptor name="artemis">tcp://0.0.0.0:61616?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=CORE,AMQP,STOMP,HORNETQ,MQTT,OPENWIRE;useEpoll=true;amqpCredits=1000;amqpLowCredits=300</acceptor>

<!-- AMQP Acceptor. Listens on default AMQP port for AMQP traffic -->

<acceptor name="amqp">tcp://0.0.0.0:5672?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=AMQP;useEpoll=true;amqpCredits=1000;amqpLowCredits=300</acceptor>

<!-- STOMP Acceptor -->

<acceptor name="stomp">tcp://0.0.0.0:61613?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=STOMP;useEpoll=true</acceptor>

<!-- HornetQ Compatibility Acceptor. Enables HornetQ Core and STOMP for legacy HornetQ clients. -->

<acceptor name="hornetq">tcp://0.0.0.0:5445?anycastPrefix=jms.queue.;multicastPrefix=jms.topic.;protocols=HORNETQ,STOMP;useEpoll=true</acceptor>

<!-- MQTT Acceptor -->

<acceptor name="mqtt">tcp://0.0.0.0:1883?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=MQTT;useEpoll=true</acceptor>

</acceptors>

...

</core>

</configuration>

Default security settings

AMQ Broker contains a flexible role-based security model for applying security to queues, based on their addresses. The default configuration uses wildcards to apply the amq role to all addresses (represented by the number sign, #).

<configuration ...>

<core ...>

...

<security-settings>

<security-setting match="#">

<permission type="createNonDurableQueue" roles="amq"/>

<permission type="deleteNonDurableQueue" roles="amq"/>

<permission type="createDurableQueue" roles="amq"/>

<permission type="deleteDurableQueue" roles="amq"/>

<permission type="createAddress" roles="amq"/>

<permission type="deleteAddress" roles="amq"/>

<permission type="consume" roles="amq"/>

<permission type="browse" roles="amq"/>

<permission type="send" roles="amq"/>

<!-- we need this otherwise ./artemis data imp wouldn't work -->

<permission type="manage" roles="amq"/>

</security-setting>

</security-settings>

...

</core>

</configuration>

Default message address settings

AMQ Broker includes a default address that establishes a default set of configuration settings to be applied to any created queue or topic.

Additionally, the default configuration defines two queues: DLQ (Dead Letter Queue) handles messages that arrive with no known destination, and Expiry Queue holds messages that have lived past their expiration and therefore should not be routed to their original destination.

<configuration ...>

<core ...>

...

<address-settings>

...

<!--default for catch all-->

<address-setting match="#">

<dead-letter-address>DLQ</dead-letter-address>

<expiry-address>ExpiryQueue</expiry-address>

<redelivery-delay>0</redelivery-delay>

<!-- with -1 only the global-max-size is in use for limiting -->

<max-size-bytes>-1</max-size-bytes>

<message-counter-history-day-limit>10</message-counter-history-day-limit>

<address-full-policy>PAGE</address-full-policy>

<auto-create-queues>true</auto-create-queues>

<auto-create-addresses>true</auto-create-addresses>

<auto-create-jms-queues>true</auto-create-jms-queues>

<auto-create-jms-topics>true</auto-create-jms-topics>

</address-setting>

</address-settings>

<addresses>

<address name="DLQ">

<anycast>

<queue name="DLQ" />

</anycast>

</address>

<address name="ExpiryQueue">

<anycast>

<queue name="ExpiryQueue" />

</anycast>

</address>

</addresses>

</core>

</configuration>

1.3. Reloading configuration updates

By default, a broker checks for changes in the configuration files every 5000 milliseconds. If any are found, the configuration is reloaded to activate the changes. You can change the interval at which the broker checks for configuration changes.

If the configuration is changed, the broker reloads the following modules:

- Address settings and queues: When the configuration file is reloaded, the address settings determine how to handle addresses and queues that have been deleted from the configuration file. You can set this with the config-delete-addresses and config-delete-queues properties. For more information, see Appendix B, Address Setting Configuration Elements.

- Security settings: SSL/TLS keystores and truststores on an existing acceptor can be reloaded to establish new certificates without any impact to existing clients. Connected clients, even those with older or differing certificates, can continue to send and receive messages.
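For the address settings and queues case, the following is a minimal sketch of how the two properties might be set. The match pattern orders.# is a hypothetical placeholder, and the FORCE and OFF values are an assumption based on the upstream ActiveMQ Artemis address settings; confirm them in Appendix B before relying on this.

```xml
<configuration ...>
   <core ...>
      ...
      <address-settings>
         <!-- "orders.#" is a hypothetical match pattern used only for illustration -->
         <address-setting match="orders.#">
            <!-- Assumed values: FORCE deletes queues and addresses that were removed
                 from broker.xml when the file is reloaded; OFF keeps them -->
            <config-delete-queues>FORCE</config-delete-queues>
            <config-delete-addresses>FORCE</config-delete-addresses>
         </address-setting>
      </address-settings>
      ...
   </core>
</configuration>
```

Because a forced delete also discards any messages still held in the removed queues, a setting like this is probably best scoped to a narrow match rather than the catch-all #.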

Procedure

- Open the <broker-instance-dir>/etc/broker.xml configuration file.

- Within the <core> element, add the <configuration-file-refresh-period> element and set the refresh period (in milliseconds).

This example sets the configuration refresh period to be 60000 milliseconds:

<configuration>
   <core>
      ...
      <configuration-file-refresh-period>60000</configuration-file-refresh-period>
      ...
   </core>
</configuration>

1.4. Modularizing the broker configuration file

If you have multiple brokers that share common configuration settings, you can define the common configuration in separate files, and then include these files in each broker’s broker.xml configuration file.

The most common configuration settings that you might share between brokers include:

- Addresses

- Address settings

- Security settings

Procedure

- Create a separate XML file for each broker.xml section that you want to share.

Each XML file can only include a single section from broker.xml (for example, either addresses or address settings, but not both). The top-level element must also define the element namespace (xmlns="urn:activemq:core").

This example shows a security settings configuration defined in my-security-settings.xml:

my-security-settings.xml

<security-settings xmlns="urn:activemq:core">
   <security-setting match="a1">
      <permission type="createNonDurableQueue" roles="a1.1"/>
   </security-setting>
   <security-setting match="a2">
      <permission type="deleteNonDurableQueue" roles="a2.1"/>
   </security-setting>
</security-settings>

- Open the <broker-instance-dir>/etc/broker.xml configuration file for each broker that should use the common configuration settings.

- For each broker.xml file that you opened, do the following:

- In the <configuration> element at the beginning of broker.xml, verify that the following line appears:

xmlns:xi="http://www.w3.org/2001/XInclude"

- Add an XML inclusion for each XML file that contains shared configuration settings.

This example includes the my-security-settings.xml file.

broker.xml

<configuration ...>
   <core ...>
      ...
      <xi:include href="/opt/my-broker-config/my-security-settings.xml"/>
      ...
   </core>
</configuration>

- If desired, validate broker.xml to verify that the XML is valid against the schema.

You can use any XML validator program. This example uses xmllint to validate broker.xml against the artemis-server.xsd schema.

$ xmllint --noout --xinclude --schema /opt/redhat/amq-broker/amq-broker-7.2.0/schema/artemis-server.xsd /var/opt/amq-broker/mybroker/etc/broker.xml
/var/opt/amq-broker/mybroker/etc/broker.xml validates
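The same pattern should apply to the other sections that are commonly shared. As a sketch, a shared addresses file might look like the following. The file name my-addresses.xml and the address name shared.queue are hypothetical; the structure follows the addresses element shown in the default configuration, with the namespace declared on the top-level element as required above.

```xml
<!-- my-addresses.xml: hypothetical shared file containing only the addresses section -->
<addresses xmlns="urn:activemq:core">
   <address name="shared.queue">
      <anycast>
         <queue name="shared.queue"/>
      </anycast>
   </address>
</addresses>
```

Each broker would then reference this file with its own xi:include element inside core, in the same way as the security settings file.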

Additional resources

- For more information about XML Inclusions (XIncludes), see https://www.w3.org/TR/xinclude/.

1.5. Document conventions

This document uses the following conventions for the sudo command and file paths.

The sudo command

In this document, sudo is used for any command that requires root privileges. You should always exercise caution when using sudo, as any changes can affect the entire system.

For more information about using sudo, see The sudo Command.

About the use of file paths in this document

In this document, all file paths are valid for Linux, UNIX, and similar operating systems (for example, /home/...). If you are using Microsoft Windows, you should use the equivalent Microsoft Windows paths (for example, C:\Users\...).

Chapter 2. Network Connections: Acceptors and Connectors

There are two types of connections used in AMQ Broker: network and In-VM. Network connections are used when the two parties are located in different virtual machines, whether on the same server or physically remote. An In-VM connection is used when the client, whether an application or a server, resides within the same virtual machine as the broker.

Network connections rely on Netty. Netty is a high-performance, low-level network library that allows network connections to be configured in several different ways: using Java IO or NIO, TCP sockets, SSL/TLS, even tunneling over HTTP or HTTPS. Netty also allows for a single port to be used for all messaging protocols. A broker will automatically detect which protocol is being used and direct the incoming message to the appropriate handler for further processing.

The URI within a network connection’s configuration determines its type. For example, using vm in the URI will create an In-VM connection. In the example below, note that the URI of the acceptor starts with vm.

<acceptor name="in-vm-example">vm://0</acceptor>

Using tcp in the URI, alternatively, will create a network connection.

<acceptor name="network-example">tcp://localhost:61617</acceptor>

This chapter will first discuss the two configuration elements specific to network connections, Acceptors and Connectors. Next, configuration steps for TCP, HTTP, and SSL/TLS network connections, as well as In-VM connections, are explained.

2.1. About Acceptors

One of the most important concepts when discussing network connections in AMQ Broker is the acceptor. Acceptors define the way connections are made to the broker. Below is a typical configuration for an acceptor that might be found inside the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

<acceptors> <acceptor name="example-acceptor">tcp://localhost:61617</acceptor> </acceptors>

Note that each acceptor is grouped inside an acceptors element. There is no upper limit to the number of acceptors you can list per server.

Configuring an Acceptor

You configure an acceptor by appending key-value pairs to the query string of the URI defined for the acceptor. Use a semicolon (';') to separate multiple key-value pairs, as shown in the following example. It configures an acceptor for SSL/TLS by adding multiple key-value pairs at the end of the URI, starting with sslEnabled=true.

<acceptor name="example-acceptor">tcp://localhost:61617?sslEnabled=true;key-store-path=/path</acceptor>

For details on acceptor configuration parameters, see Acceptor and Connector Configuration Parameters.

2.2. About Connectors

Whereas acceptors define how a server accepts connections, a connector is used by clients to define how they can connect to a server.

Below is a typical connector as defined in the BROKER_INSTANCE_DIR/etc/broker.xml configuration file:

<connectors> <connector name="example-connector">tcp://localhost:61617</connector> </connectors>

Note that connectors are defined inside a connectors element. There is no upper limit to the number of connectors per server.

Although connectors are used by clients, they are configured on the server just like acceptors. There are a couple of important reasons why:

- A server itself can act as a client and therefore needs to know how to connect to other servers, for example when one server is bridged to another or when a server takes part in a cluster.

- A server is often used by JMS clients to look up connection factory instances. In these cases, JNDI needs to know details of the connection factories used to create client connections. The information is provided to the client when a JNDI lookup is performed. See Configuring a Connection on the Client Side for more information.
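As a sketch of the first case, the fragment below shows a broker that forwards messages to another broker through a core bridge, which is why the connector has to be defined on the server. The connector name, bridge name, queue, and address are hypothetical placeholders, and the bridge element names are an assumption based on the upstream ActiveMQ Artemis core bridge configuration; verify them against the bridge documentation for your version.

```xml
<configuration ...>
   <core ...>
      ...
      <connectors>
         <!-- Hypothetical connector that points at the remote broker -->
         <connector name="remote-broker-connector">tcp://10.10.10.2:61617</connector>
      </connectors>

      <bridges>
         <bridge name="example-bridge">
            <!-- Local queue to consume from (hypothetical name) -->
            <queue-name>source.queue</queue-name>
            <!-- Address on the remote broker to forward to (hypothetical name) -->
            <forwarding-address>target.address</forwarding-address>
            <static-connectors>
               <!-- The bridge acts as a client and reuses the connector defined above -->
               <connector-ref>remote-broker-connector</connector-ref>
            </static-connectors>
         </bridge>
      </bridges>
      ...
   </core>
</configuration>
```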

Configuring a Connector

Like acceptors, connectors have their configuration attached to the query string of their URI. Below is an example of a connector that has the tcpNoDelay parameter set to false, which leaves Nagle’s algorithm enabled for this connection (setting tcpNoDelay to true turns Nagle’s algorithm off).

<connector name="example-connector">tcp://localhost:61616?tcpNoDelay=false</connector>

For details on connector configuration parameters, see Acceptor and Connector Configuration Parameters.

2.3. Configuring a TCP Connection

AMQ Broker uses Netty to provide basic, unencrypted, TCP-based connectivity that can be configured to use blocking Java IO or the newer, non-blocking Java NIO. Java NIO is preferred for better scalability with many concurrent connections. However, using the old IO can sometimes give you better latency than NIO when you are less worried about supporting many thousands of concurrent connections.

If you are running connections across an untrusted network, remember that a TCP network connection is unencrypted. You may want to consider using an SSL or HTTPS configuration to encrypt messages sent over this connection if encryption is a priority. Refer to Configuring Transport Layer Security for details.

When using a TCP connection, all connections are initiated from the client side. In other words, the server does not initiate any connections to the client, which works well with firewall policies that force connections to be initiated from one direction.

For TCP connections, the host and the port of the connector’s URI define the address used for the connection.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

- Add or modify the connection and include a URI that uses tcp as the protocol. Be sure to include an IP address or hostname as well as a port.

In the example below, an acceptor is configured as a TCP connection. A broker configured with this acceptor will accept clients making TCP connections to the IP 10.10.10.1 and port 61617.

<acceptors> <acceptor name="tcp-acceptor">tcp://10.10.10.1:61617</acceptor> ... </acceptors>

You configure a connector to use TCP in much the same way.

<connectors> <connector name="tcp-connector">tcp://10.10.10.2:61617</connector> ... </connectors>

The connector above would be referenced by a client, or even the broker itself, when making a TCP connection to the specified IP and port, 10.10.10.2:61617.

For details on available configuration parameters for TCP connections, see Acceptor and Connector Configuration Parameters. Most parameters can be used either with acceptors or connectors, but some only work with acceptors.

2.4. Configuring an HTTP Connection

HTTP connections tunnel packets over the HTTP protocol and are useful in scenarios where firewalls allow only HTTP traffic. With single port support, AMQ Broker will automatically detect if HTTP is being used, so configuring a network connection for HTTP is the same as configuring a connection for TCP. For a full working example showing how to use HTTP, see the http-transport example, located under INSTALL_DIR/examples/features/standard/.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

- Add or modify the connection and include a URI that uses tcp as the protocol. Be sure to include an IP address or hostname as well as a port.

In the example below, the broker will accept HTTP communications from clients connecting to port 80 at the IP address 10.10.10.1. Furthermore, the broker will automatically detect that the HTTP protocol is in use and will communicate with the client accordingly.

<acceptors> <acceptor name="http-acceptor">tcp://10.10.10.1:80</acceptor> ... </acceptors>

Configuring a connector for HTTP is again the same as for TCP.

<connectors> <connector name="http-connector">tcp://10.10.10.2:80</connector> ... </connectors>

Using the configuration in the example above, a broker will create an outbound HTTP connection to port 80 at the IP address 10.10.10.2.

An HTTP connection uses the same configuration parameters as TCP, but it also has some of its own. For details on HTTP-related and other configuration parameters, see Acceptor and Connector Configuration Parameters.

2.5. Configuring an SSL/TLS Connection

You can also configure connections to use SSL/TLS. Refer to Configuring Transport Layer Security for details.
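As a rough sketch of what such a configuration might look like, the acceptor below enables SSL/TLS with the sslEnabled parameter used earlier in this chapter. The acceptor name, keystore path, and password are hypothetical placeholders, and keyStorePath and keyStorePassword are assumed parameter names taken from the upstream ActiveMQ Artemis Netty transport; confirm the exact names in Acceptor and Connector Configuration Parameters.

```xml
<acceptors>
   <!-- Hypothetical SSL/TLS acceptor; keyStorePath and keyStorePassword are assumed parameter names -->
   <acceptor name="ssl-acceptor-example">tcp://10.10.10.1:61617?sslEnabled=true;keyStorePath=/path/to/broker.ks;keyStorePassword=changeit</acceptor>
   ...
</acceptors>
```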

2.6. Configuring an In-VM Connection

An In-VM connection can be used when multiple brokers are co-located in the same virtual machine, as part of a high availability solution for example. In-VM connections can also be used by local clients running in the same JVM as the server. For an in-VM connection, the authority part of the URI defines a unique server ID. In fact, no other part of the URI is needed.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

- Add or modify the connection and include a URI that uses vm as the protocol.

<acceptors> <acceptor name="in-vm-acceptor">vm://0</acceptor> ... </acceptors>

The example acceptor above tells the broker to accept connections from the server with an ID of 0. The other server must be running in the same virtual machine as the broker.

Configuring a connector as an in-vm connection follows the same syntax.

<connectors> <connector name="in-vm-connector">vm://0</connector> ... </connectors>

The connector in the example above defines how clients establish an in-VM connection to the server with an ID of 0 that resides in the same virtual machine. The client can be an application or a broker.

2.7. Configuring a Connection from the Client Side

Connectors are also used indirectly in client applications. You can configure the JMS connection factory directly on the client side without having to define a connector on the server side:

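import java.util.HashMap;
import java.util.Map;
import javax.jms.Connection;
import javax.jms.ConnectionFactory;
import org.apache.activemq.artemis.api.core.TransportConfiguration;
import org.apache.activemq.artemis.api.jms.ActiveMQJMSClient;
import org.apache.activemq.artemis.api.jms.JMSFactoryType;

Map<String, Object> connectionParams = new HashMap<String, Object>();
connectionParams.put(org.apache.activemq.artemis.core.remoting.impl.netty.TransportConstants.PORT_PROP_NAME, 61617);

TransportConfiguration transportConfiguration =
    new TransportConfiguration(
        "org.apache.activemq.artemis.core.remoting.impl.netty.NettyConnectorFactory", connectionParams);

ConnectionFactory connectionFactory = ActiveMQJMSClient.createConnectionFactoryWithoutHA(JMSFactoryType.CF, transportConfiguration);
Connection jmsConnection = connectionFactory.createConnection();

Chapter 3. Network Connections: Protocols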

AMQ Broker has a pluggable protocol architecture, so that you can easily enable one or more protocols for a network connection.

The broker supports the following protocols:

- AMQP

- MQTT

- OpenWire

- STOMP

In addition to the protocols above, the broker also supports its own native protocol known as "Core". Past versions of this protocol were known as "HornetQ" and used by Red Hat JBoss Enterprise Application Platform.

3.1. Configuring a Network Connection to Use a Protocol

You must associate a protocol with a network connection before you can use it. (See Network Connections: Acceptors and Connectors for more information about how to create and configure network connections.) The default configuration, located in the file BROKER_INSTANCE_DIR/etc/broker.xml, includes several connections already defined. For convenience, AMQ Broker includes an acceptor for each supported protocol, plus a default acceptor that supports all protocols.

Overview of default acceptors

Shown below are the acceptors included by default in the broker.xml configuration file.

<configuration>

<core>

...

<acceptors>

<!-- All-protocols acceptor -->

<acceptor name="artemis">tcp://0.0.0.0:61616?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=CORE,AMQP,STOMP,HORNETQ,MQTT,OPENWIRE;useEpoll=true;amqpCredits=1000;amqpLowCredits=300</acceptor>

<!-- AMQP Acceptor. Listens on default AMQP port for AMQP traffic -->

<acceptor name="amqp">tcp://0.0.0.0:5672?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=AMQP;useEpoll=true;amqpCredits=1000;amqpLowCredits=300</acceptor>

<!-- STOMP Acceptor -->

<acceptor name="stomp">tcp://0.0.0.0:61613?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=STOMP;useEpoll=true</acceptor>

<!-- HornetQ Compatibility Acceptor. Enables HornetQ Core and STOMP for legacy HornetQ clients. -->

<acceptor name="hornetq">tcp://0.0.0.0:5445?anycastPrefix=jms.queue.;multicastPrefix=jms.topic.;protocols=HORNETQ,STOMP;useEpoll=true</acceptor>

<!-- MQTT Acceptor -->

<acceptor name="mqtt">tcp://0.0.0.0:1883?tcpSendBufferSize=1048576;tcpReceiveBufferSize=1048576;protocols=MQTT;useEpoll=true</acceptor>

</acceptors>

...

</core>

</configuration>

The only requirement to enable a protocol on a given network connection is to add the protocols parameter to the URI for the acceptor. The value of the parameter must be a comma-separated list of protocol names. If the protocols parameter is omitted from the URI, all protocols are enabled.

For example, to create an acceptor for receiving messages on port 3232 using the AMQP protocol, follow these steps:

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

- Add the following line to the <acceptors> stanza:

<acceptor name="ampq">tcp://0.0.0.0:3232?protocols=AMQP</acceptor>
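To illustrate the comma-separated form and the effect of omitting the parameter, the following sketch defines two further acceptors. The acceptor names and port numbers are hypothetical and chosen only to avoid clashing with the default acceptors.

```xml
<acceptors>
   <!-- Only AMQP and MQTT clients can connect on this (hypothetical) port -->
   <acceptor name="amqp-mqtt-example">tcp://0.0.0.0:3233?protocols=AMQP,MQTT</acceptor>

   <!-- No protocols parameter, so all protocols are enabled on this acceptor -->
   <acceptor name="all-protocols-example">tcp://0.0.0.0:3234</acceptor>
</acceptors>
```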

Additional parameters in default acceptors

In a minimal acceptor configuration, you specify a protocol as part of the connection URI. However, the default acceptors in the broker.xml configuration file have some additional parameters configured. The following table details the additional parameters configured for the default acceptors.

| Acceptor(s) | Parameter | Description |
|---|---|---|
| All-protocols acceptor, AMQP, STOMP | tcpSendBufferSize | Size of the TCP send buffer in bytes. The default acceptors set this parameter to 1048576. |
| All-protocols acceptor, AMQP, STOMP | tcpReceiveBufferSize | Size of the TCP receive buffer in bytes. The default acceptors set this parameter to 1048576. TCP buffer sizes should be tuned according to the bandwidth and latency of your network. In summary, TCP send/receive buffer sizes should be calculated as buffer_size = bandwidth * RTT, where bandwidth is in bytes per second and network round trip time (RTT) is in seconds. RTT can be measured with a standard network utility. For fast networks you may want to increase the buffer sizes from the defaults. |
| All-protocols acceptor, AMQP, STOMP, HornetQ, MQTT | useEpoll | Use Netty epoll if using a system (Linux) that supports it. The Netty native transport offers better performance than the NIO transport. The default acceptors set this option to true. |
| All-protocols acceptor, AMQP | amqpCredits | Maximum number of messages that an AMQP producer can send, regardless of the total message size. The default acceptors set this parameter to 1000. To learn more about how credits are used to control the flow of AMQP messages, see Section 11.2.3, “Blocking AMQP Messages”. |
| All-protocols acceptor, AMQP | amqpLowCredits | Lower threshold at which the broker replenishes producer credits. The default acceptors set this parameter to 300. To learn more about how credits are used to control the flow of AMQP messages, see Section 11.2.3, “Blocking AMQP Messages”. |
| HornetQ compatibility acceptor | anycastPrefix | Prefix that clients use to specify the anycast routing type. The HornetQ compatibility acceptor sets this parameter to jms.queue. (including the trailing period). For more information about configuring a prefix to enable clients to specify a routing type when connecting to an address, see Section 4.13, “Configuring a Prefix to Connect to a Specific Routing Type”. |
| HornetQ compatibility acceptor | multicastPrefix | Prefix that clients use to specify the multicast routing type. The HornetQ compatibility acceptor sets this parameter to jms.topic. (including the trailing period). For more information about configuring a prefix to enable clients to specify a routing type when connecting to an address, see Section 4.13, “Configuring a Prefix to Connect to a Specific Routing Type”. |
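As a rough worked example of the buffer sizing rule in the table above, assume a 1 Gbit/s link and a measured round trip time of 10 ms. Both figures are illustrative assumptions, not recommendations.

```latex
\begin{align*}
\text{bandwidth} &= 1\ \text{Gbit/s} = \frac{10^{9}\ \text{bits/s}}{8} = 125{,}000{,}000\ \text{bytes/s} \\
\text{RTT} &= 10\ \text{ms} = 0.01\ \text{s} \\
\text{buffer\_size} &= \text{bandwidth} \times \text{RTT} = 125{,}000{,}000 \times 0.01 = 1{,}250{,}000\ \text{bytes}
\end{align*}
```

Under these assumptions, values near tcpSendBufferSize=1250000 and tcpReceiveBufferSize=1250000 would match the link, which is slightly larger than the 1048576 bytes set in the default acceptors.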

Additional resources

- For information about other parameters that you can configure for Netty network connections, see Appendix A, Acceptor and Connector Configuration Parameters.

3.2. Using AMQP with a Network Connection

The broker supports the AMQP 1.0 specification. An AMQP link is a uni-directional protocol for messages between a source and a target, that is, a client and the broker.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

- Add or configure an acceptor to receive AMQP clients by including the protocols parameter with a value of AMQP as part of the URI, as shown in the following example:

<acceptors> <acceptor name="amqp-acceptor">tcp://localhost:5672?protocols=AMQP</acceptor> ... </acceptors>

In the preceding example, the broker accepts AMQP 1.0 clients on port 5672, which is the default AMQP port.

An AMQP link has two endpoints, a sender and a receiver. When senders transmit a message, the broker converts it into an internal format, so it can be forwarded to its destination on the broker. Receivers connect to the destination at the broker and convert the messages back into AMQP before they are delivered.

If an AMQP link is dynamic, a temporary queue is created and either the remote source or the remote target address is set to the name of the temporary queue. If the link is not dynamic, the address of the remote target or source is used for the queue. If the remote target or source does not exist, an exception is sent.

A link target can also be a Coordinator, which is used to handle the underlying session as a transaction, either rolling it back or committing it.

AMQP allows the use of multiple transactions per session (amqp:multi-txns-per-ssn); however, the current version of AMQ Broker supports only a single transaction per session.

The details of distributed transactions (XA) within AMQP are not provided in the 1.0 version of the specification. If your environment requires support for distributed transactions, it is recommended that you use the AMQ Core Protocol JMS.

See the AMQP 1.0 specification for more information about the protocol and its features.

3.2.1. Using an AMQP Link as a Topic

Unlike JMS, the AMQP protocol does not include topics. However, it is still possible to treat AMQP consumers or receivers as subscriptions rather than just consumers on a queue. By default, any receiving link that attaches to an address with the prefix jms.topic. is treated as a subscription, and a subscription queue is created. The subscription queue is made durable or volatile, depending on how the Terminus Durability is configured, as captured in the following table:

| To create this kind of subscription for a multicast-only queue… | Set Terminus Durability to this… |
|---|---|
| Durable | |
| Non-durable | |

The name of a durable queue is composed of the container ID and the link name, for example my-container-id:my-link-name.

AMQ Broker also supports the qpid-jms client and will respect its use of topics regardless of the prefix used for the address.

3.2.2. Configuring AMQP Security

The broker supports AMQP SASL Authentication. See Security for more information about how to configure SASL-based authentication on the broker.

3.3. Using MQTT with a Network Connection

The broker supports MQTT v3.1.1 (and also the older v3.1 code message format). MQTT is a lightweight, client-to-server, publish/subscribe messaging protocol. MQTT reduces messaging overhead and network traffic, as well as a client’s code footprint. For these reasons, MQTT is ideally suited to constrained devices such as sensors and actuators and is quickly becoming the de facto standard communication protocol for the Internet of Things (IoT).

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.

- Add an acceptor with the MQTT protocol enabled. For example:

<acceptors> <acceptor name="mqtt">tcp://localhost:1883?protocols=MQTT</acceptor> ... </acceptors>

MQTT comes with a number of useful features including:

- Quality of Service: Each message can define a quality of service that is associated with it. The broker will attempt to deliver messages to subscribers at the highest quality of service level defined.

- Retained Messages: Messages can be retained for a particular address. New subscribers to that address receive the last-sent retained message before any other messages, even if the retained message was sent before the client connected.

- Wild card subscriptions: MQTT addresses are hierarchical, similar to the hierarchy of a file system. Clients are able to subscribe to specific topics or to whole branches of a hierarchy.

- Will Messages: Clients are able to set a "will message" as part of their connect packet. If the client abnormally disconnects, the broker will publish the will message to the specified address. Other subscribers receive the will message and can react accordingly.

The best source of information about the MQTT protocol is in the specification. The MQTT v3.1.1 specification can be downloaded from the OASIS website.

3.4. Using OpenWire with a Network Connection

The broker supports the OpenWire protocol, which allows a JMS client to talk directly to a broker. Use this protocol to communicate with older versions of AMQ Broker.

Currently AMQ Broker supports OpenWire clients that use standard JMS APIs only.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.
- Add or modify an acceptor so that it includes OPENWIRE as part of the protocols parameter, as shown in the following example:

<acceptors>
  <acceptor name="openwire-acceptor">tcp://localhost:61616?protocols=OPENWIRE</acceptor>
  ...
</acceptors>

In the preceding example, the broker will listen on port 61616 for incoming OpenWire commands.

For more details, see the examples located under INSTALL_DIR/examples/protocols/openwire.

3.5. Using STOMP with a Network Connection

STOMP is a text-oriented wire protocol that allows STOMP clients to communicate with STOMP brokers. The broker supports STOMP 1.0, 1.1, and 1.2. STOMP clients are available for several languages and platforms, which makes STOMP a good choice for interoperability.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.
- Configure an existing acceptor or create a new one and include a protocols parameter with a value of STOMP, as below.

<acceptors>
  <acceptor name="stomp-acceptor">tcp://localhost:61613?protocols=STOMP</acceptor>
  ...
</acceptors>

In the preceding example, the broker accepts STOMP connections on the port 61613, which is the default.

See the stomp example located under INSTALL_DIR/examples/protocols for an example of how to configure a broker with STOMP.

3.5.1. Knowing the Limitations When Using STOMP

When using STOMP, the following limitations apply:

- The broker currently does not support virtual hosting, which means that the host header in CONNECT frames is ignored.
- Message acknowledgements are not transactional. The ACK frame cannot be part of a transaction, and it is ignored if its transaction header is set.

3.5.2. Providing IDs for STOMP Messages

When receiving STOMP messages through a JMS consumer or a QueueBrowser, the messages do not contain any JMS properties, for example JMSMessageID, by default. However, you can set a message ID on each incoming STOMP message by using a broker parameter.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.
- Set the stompEnableMessageId parameter to true for the acceptor used for STOMP connections, as shown in the following example:

<acceptors>
  <acceptor name="stomp-acceptor">tcp://localhost:61613?protocols=STOMP;stompEnableMessageId=true</acceptor>
  ...
</acceptors>

When the stompEnableMessageId parameter is set, each STOMP message sent using this acceptor has an extra property added. The property key is amq-message-id and the value is a String representation of an internal message ID prefixed with “STOMP”, as shown in the following example:

amq-message-id : STOMP12345

If stompEnableMessageId is not specified in the configuration, the default value is false.

3.5.3. Setting a Connection’s Time to Live (TTL)

STOMP clients must send a DISCONNECT frame before closing their connections. This allows the broker to close any server-side resources, such as sessions and consumers. However, if STOMP clients exit without sending a DISCONNECT frame, or if they fail, the broker has no way of knowing immediately whether the client is still alive. STOMP connections are therefore configured to have a "Time to Live" (TTL) of 1 minute. This means that the broker stops the connection to the STOMP client if it has been idle for more than one minute.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.
- Add the connectionTTL parameter to the URI of the acceptor used for STOMP connections, as shown in the following example:

<acceptors>
  <acceptor name="stomp-acceptor">tcp://localhost:61613?protocols=STOMP;connectionTTL=20000</acceptor>
  ...
</acceptors>

In the preceding example, any STOMP connection that uses the stomp-acceptor has its TTL set to 20 seconds.

Version 1.0 of the STOMP protocol does not contain any heartbeat frame. It is therefore the user’s responsibility to make sure that data is sent within the connection TTL, or the broker will assume that the client is dead and clean up server-side resources. With version 1.1, you can use heart-beats to maintain the life cycle of STOMP connections.
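As an illustration of the heart-beat option mentioned above, the following minimal Java sketch builds a STOMP 1.1 CONNECT frame that carries a heart-beat header. The class name, method name, and parameter names are hypothetical and exist only for this example. The sketch only shows the frame layout defined by the STOMP 1.1 specification; it does not open a connection, and how the broker combines negotiated heart-beats with connectionTTL is not covered here.

```java
final class StompConnectSketch {

    // Builds a STOMP 1.1 CONNECT frame as a string.
    // sendEveryMs:   smallest interval (ms) at which this client can send heart-beats (0 = cannot send)
    // expectEveryMs: desired interval (ms) between heart-beats from the server (0 = does not want any)
    static String connectFrame(String host, int sendEveryMs, int expectEveryMs) {
        return "CONNECT\n"
                + "accept-version:1.1\n"
                + "host:" + host + "\n"          // required by STOMP 1.1; the broker ignores its value
                + "heart-beat:" + sendEveryMs + "," + expectEveryMs + "\n"
                + "\n"                           // blank line ends the headers
                + "\0";                          // NULL octet ends the frame
    }

    public static void main(String[] args) {
        // Render the NULL terminator as ^@ so that the frame is readable on a console.
        System.out.println(connectFrame("localhost", 10000, 10000).replace("\0", "^@"));
    }
}
```

A client that negotiates heart-beats this way should choose intervals shorter than the TTL configured on the acceptor, so that the connection is not considered idle.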

Overriding the Broker’s Default Time to Live (TTL)

As noted, the default TTL for a STOMP connection is one minute. You can override this value by adding the connection-ttl-override element to the broker configuration.

Procedure

- Open the configuration file BROKER_INSTANCE_DIR/etc/broker.xml.
- Add the connection-ttl-override element inside the <core> stanza and provide a value in milliseconds for the new default, as below.

<configuration ...>
  ...
  <core ...>
    ...
    <connection-ttl-override>30000</connection-ttl-override>
    ...
  </core>
</configuration>

In the preceding example, the default Time to Live (TTL) for a STOMP connection is set to 30 seconds (30000 milliseconds).

3.5.4. Sending and Consuming STOMP Messages from JMS

STOMP is mainly a text-oriented protocol. To make it simpler to interoperate with JMS, the STOMP implementation checks for the presence of the content-length header to decide how to map a STOMP message to JMS.

| If you want a STOMP message to map to a … | The message should… |
|---|---|
| JMS TextMessage | Not include a content-length header. |
| JMS BytesMessage | Include a content-length header. |

The same logic applies when mapping a JMS message to STOMP. A STOMP client can confirm the presence of the content-length header to determine the type of the message body (string or bytes).

See the STOMP specification for more information about message headers.
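The mapping rule described above can be sketched in a few lines of Java. This is an illustrative model only, not broker code: the class and method names are hypothetical, and the JMS message types are represented as plain strings so that the example needs no JMS library.

```java
import java.util.Map;

final class StompToJmsMappingSketch {

    // Returns the name of the JMS message type that a STOMP message maps to.
    // Only the presence of the content-length header matters, not its value.
    static String jmsTypeFor(Map<String, String> stompHeaders) {
        return stompHeaders.containsKey("content-length") ? "BytesMessage" : "TextMessage";
    }

    public static void main(String[] args) {
        Map<String, String> textHeaders = Map.of("destination", "/my/stomp/queue");
        Map<String, String> bytesHeaders = Map.of(
                "destination", "/my/stomp/queue",
                "content-length", "13");

        System.out.println(jmsTypeFor(textHeaders));
        System.out.println(jmsTypeFor(bytesHeaders));
    }
}
```

A STOMP client can apply the same check in the other direction: if a received frame has a content-length header, treat the body as bytes; otherwise treat it as a string.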

3.5.5. Mapping STOMP Destinations to AMQ Broker Addresses and Queues

When sending messages and subscribing, STOMP clients typically include a destination header. Destination names are string values, which are mapped to a destination on the broker. In AMQ Broker, these destinations are mapped to addresses and queues. See the STOMP specification for more information about the destination frame.

Take for example a STOMP client that sends the following message (headers and body included):

SEND
destination:/my/stomp/queue

hello queue a
^@

In this case, the broker will forward the message to any queues associated with the address /my/stomp/queue.

For example, when a STOMP client sends a message (by using a SEND frame), the specified destination is mapped to an address.

It works the same way when the client sends a SUBSCRIBE or UNSUBSCRIBE frame, but in this case AMQ Broker maps the destination to a queue.

SUBSCRIBE
destination:/other/stomp/queue
ack:client

^@

In the preceding example, the broker will map the destination to the queue /other/stomp/queue.

Mapping STOMP Destinations to JMS Destinations

JMS destinations are also mapped to broker addresses and queues. If you want to use STOMP to send messages to JMS destinations, the STOMP destinations must follow the same convention:

- Send or subscribe to a JMS Queue by prepending jms.queue. to the queue name. For example, to send a message to the orders JMS Queue, the STOMP client must send the frame:

SEND
destination:jms.queue.orders

hello queue orders
^@

- Send or subscribe to a JMS Topic by prepending jms.topic. to the topic name. For example, to subscribe to the stocks JMS Topic, the STOMP client must send a frame similar to the following:

SUBSCRIBE
destination:jms.topic.stocks

^@
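The naming convention above can be captured in a small helper. The following Java sketch builds the two frames as strings; the class and method names are hypothetical, and the code does not send anything over the network. It mirrors the minimal frames shown in this section, so it omits headers (such as id on SUBSCRIBE) that later STOMP versions require.

```java
final class StompJmsDestinationSketch {

    static final String JMS_QUEUE_PREFIX = "jms.queue.";
    static final String JMS_TOPIC_PREFIX = "jms.topic.";

    // Builds a SEND frame addressed to a JMS Queue.
    static String sendToJmsQueue(String queueName, String body) {
        return "SEND\n"
                + "destination:" + JMS_QUEUE_PREFIX + queueName + "\n"
                + "\n"          // blank line separates headers from body
                + body
                + "\0";         // NULL octet terminates the frame
    }

    // Builds a SUBSCRIBE frame for a JMS Topic.
    static String subscribeToJmsTopic(String topicName) {
        return "SUBSCRIBE\n"
                + "destination:" + JMS_TOPIC_PREFIX + topicName + "\n"
                + "\n"
                + "\0";
    }

    public static void main(String[] args) {
        // Render the NULL terminator as ^@ for readability.
        System.out.println(sendToJmsQueue("orders", "hello queue orders").replace("\0", "^@"));
        System.out.println(subscribeToJmsTopic("stocks").replace("\0", "^@"));
    }
}
```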

Chapter 4. Addresses, Queues, and Topics

AMQ Broker has a unique addressing model that is both powerful and flexible and that offers great performance. The addressing model comprises three main concepts: addresses, queues and routing types.

An address represents a messaging endpoint. Within the configuration, a typical address is given a unique name, zero or more queues, and a routing type.

A queue is associated with an address. There can be multiple queues per address. Once an incoming message is matched to an address, the message is sent on to one or more of its queues, depending on the routing type configured. Queues can be configured to be automatically created and deleted.

A routing type determines how messages are sent to the queues associated with an address. An AMQ Broker address can be configured with two different routing types.

| If you want your messages routed to… | Use this routing type … |

|---|---|

| A single queue within the matching address, in a point-to-point manner. | anycast |

| Every queue within the matching address, in a publish-subscribe manner. | multicast |

An address must have at least one routing type.

It is possible to define more than one routing type per address, but this typically results in an anti-pattern and is therefore not recommended.

If an address does use both routing types, however, and the client does not show a preference for either one, the broker typically defaults to the anycast routing type. The one exception is when the client uses the MQTT protocol. In that case, the default routing type is multicast.
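The default described above can be summarized as a small decision function. This Java sketch is only a model of the rule as stated in this section; the enum, class, and method names are hypothetical and are not part of any AMQ Broker API.

```java
final class DefaultRoutingTypeSketch {

    // Local stand-in for the two routing types described in this chapter.
    enum RoutingType { ANYCAST, MULTICAST }

    // Returns the routing type that applies when an address has both routing types
    // and the client expresses no preference.
    static RoutingType defaultFor(String protocol) {
        // MQTT is the one exception; every other protocol defaults to anycast.
        return "MQTT".equalsIgnoreCase(protocol) ? RoutingType.MULTICAST : RoutingType.ANYCAST;
    }

    public static void main(String[] args) {
        for (String protocol : new String[] {"AMQP", "MQTT", "STOMP", "OPENWIRE"}) {
            System.out.println(protocol + " -> " + defaultFor(protocol));
        }
    }
}
```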

4.1. Address and Queue Naming Requirements

You should be aware of the following requirements when you configure addresses and queues:

- To ensure that a client can connect to a queue regardless of which wire protocol it uses, your address and queue names should not include any of the following characters:

  & :: , ? >

- The # and * characters are reserved for wildcard expressions. For more information, see the section called “AMQ Broker Wildcard Syntax”.
- Address and queue names should not include any spaces.
- To separate words in an address or queue name, use the configured delimiter character (the default is the . character). For more information, see the section called “AMQ Broker Wildcard Syntax”.
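If you generate address or queue names programmatically, a simple pre-check can catch names that violate the requirements above. The following Java sketch is a hypothetical helper, not part of AMQ Broker. It assumes that the restricted items are the single characters &, the comma, ?, and >, plus the two-character sequence ::, and it treats an empty name as invalid (an assumption made for this example).

```java
final class NamePortabilitySketch {

    private static final String FORBIDDEN_CHARS = "&,?>";   // assumed reading of the restricted list
    private static final String WILDCARD_CHARS = "#*";      // reserved for wildcard expressions

    // Returns true if the name avoids the restricted characters, wildcards, and whitespace.
    static boolean isPortableName(String name) {
        if (name == null || name.isEmpty()) {
            return false;                                   // assumption: empty names are rejected
        }
        if (name.contains("::")) {
            return false;                                   // :: separates address and queue in an FQQN
        }
        for (int i = 0; i < name.length(); i++) {
            char c = name.charAt(i);
            if (FORBIDDEN_CHARS.indexOf(c) >= 0
                    || WILDCARD_CHARS.indexOf(c) >= 0
                    || Character.isWhitespace(c)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        for (String name : new String[] {"orders.europe", "foo::q1", "my queue", "stocks.*"}) {
            System.out.println(name + " -> " + isPortableName(name));
        }
    }
}
```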

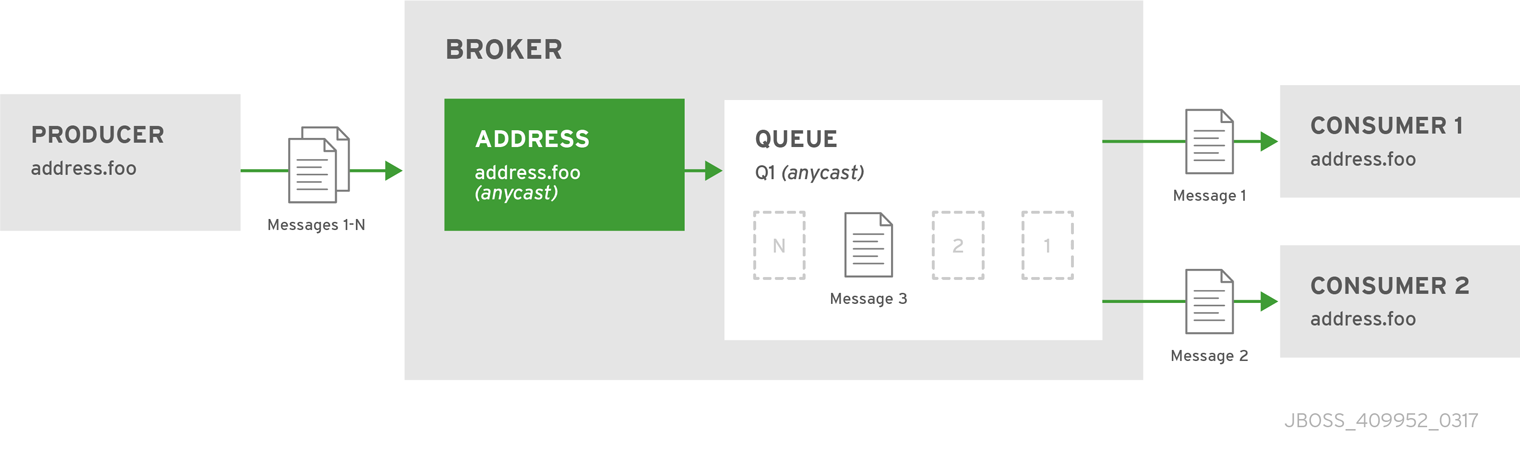

4.2. Configuring Point-to-Point Messaging

Point-to-point messaging is a common scenario in which a message sent by a producer has only one consumer. AMQP and JMS message producers and consumers can make use of point-to-point messaging queues, for example. Define an anycast routing type for an address so that its queues receive messages in a point-to-point manner.

When a message is received on an address using anycast, AMQ Broker locates the queue associated with the address and routes the message to it. When consumers request to consume from the address, the broker locates the relevant queue and associates this queue with the appropriate consumers. If multiple consumers are connected to the same queue, messages are distributed amongst each consumer equally, providing the consumers are equally able to handle them.

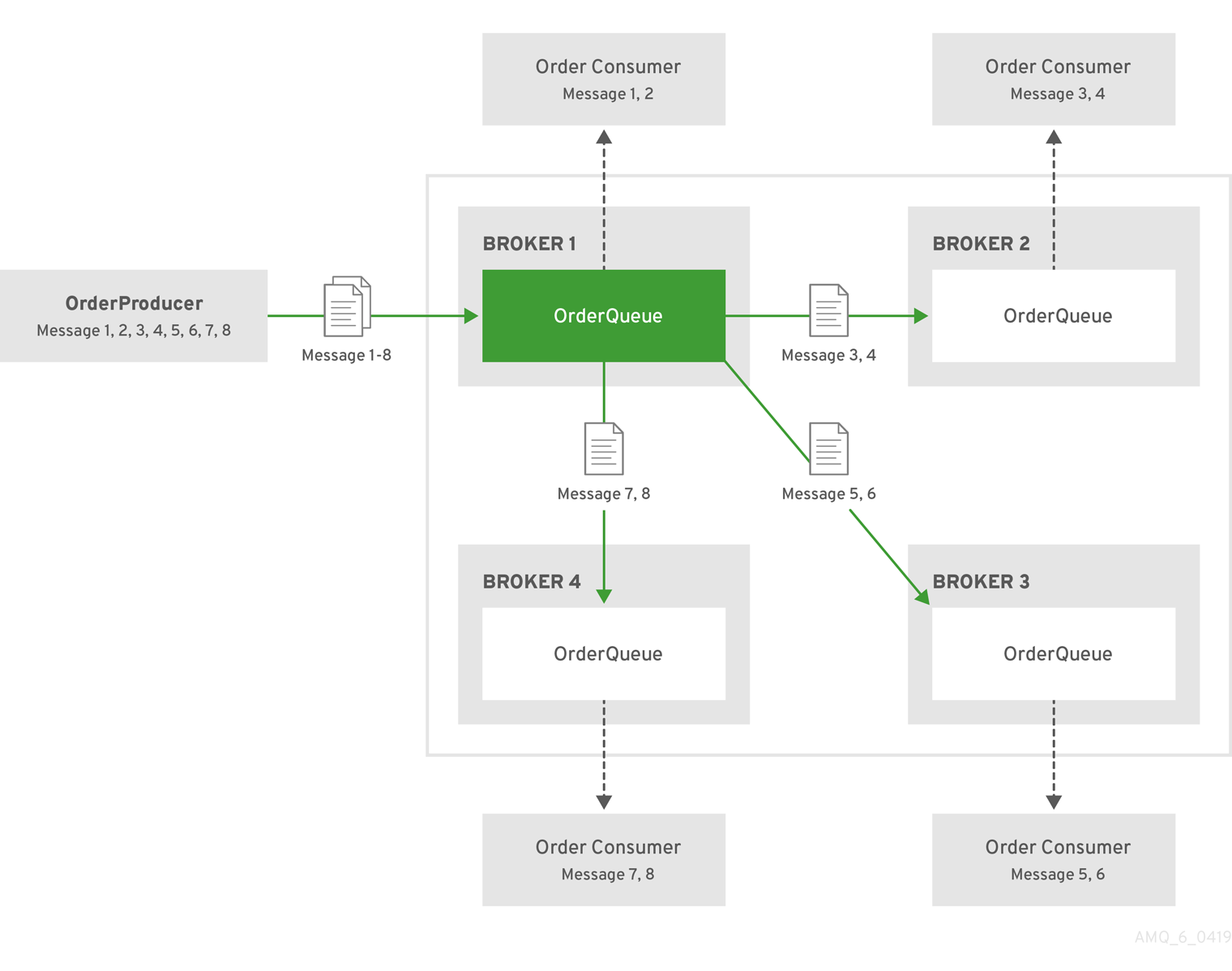

Figure 4.1. Point-to-Point

Procedure

- Open the file BROKER_INSTANCE_DIR/etc/broker.xml for editing.
- Wrap an anycast configuration element around the chosen queue element of an address. Ensure that the name values of the address and queue elements are the same.

<configuration ...>
  <core ...>
    ...
    <address name="durable">
      <anycast>
        <queue name="durable"/>
      </anycast>
    </address>
  </core>
</configuration>

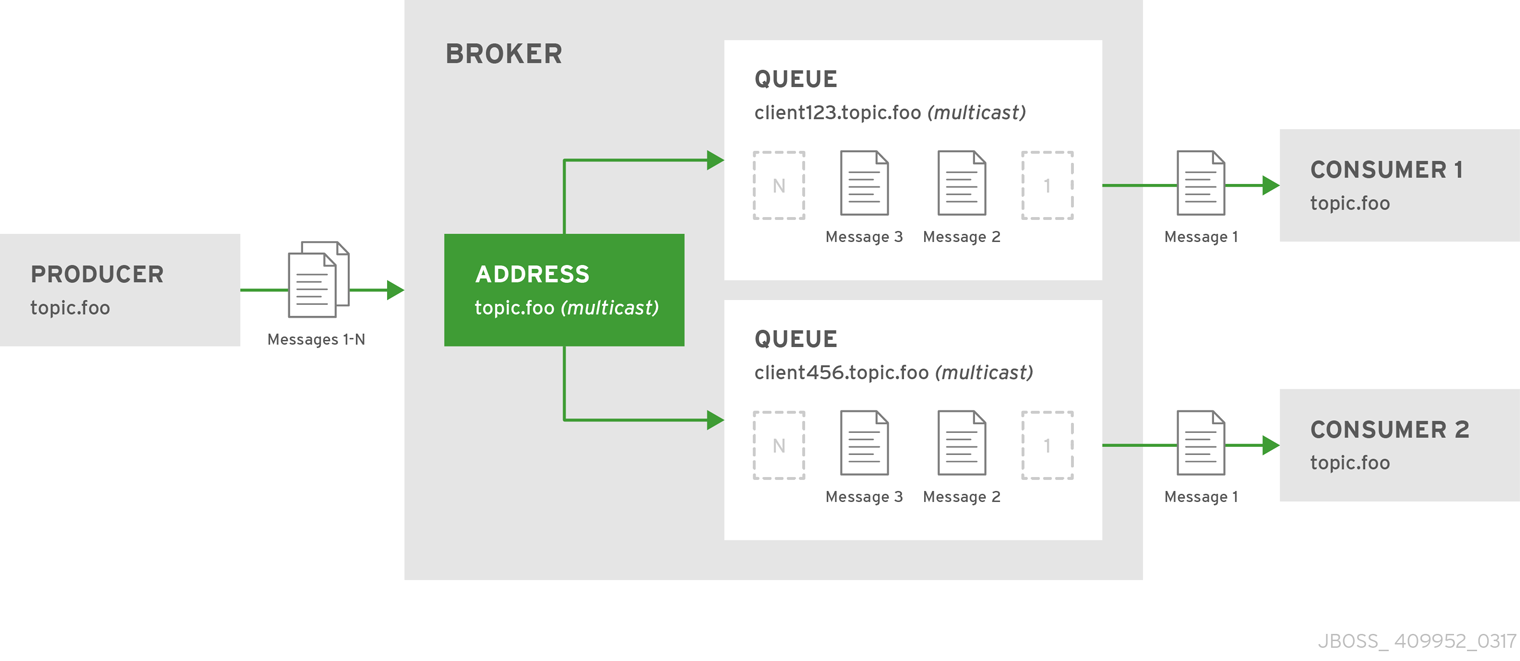

4.3. Configuring Publish-Subscribe Messaging

In a publish-subscribe scenario, messages are sent to every consumer subscribed to an address. JMS topics and MQTT subscriptions are two examples of publish-subscribe messaging. When a message is received on an address with a multicast routing type, AMQ Broker routes a copy of the message to each queue. To reduce the overhead of copying, each queue is sent only a reference to the message and not a full copy.

Figure 4.2. Publish-Subscribe

Procedure

- Open the file BROKER_INSTANCE_DIR/etc/broker.xml for editing.
- Add an empty multicast configuration element to the chosen address.

<configuration ...>
  <core ...>
    ...
    <address name="topic.foo">
      <multicast/>
    </address>
  </core>
</configuration>

- (Optional) Add one or more queue elements to the address and wrap the multicast element around them. This step is typically not needed since the broker automatically creates a queue for each subscription requested by a client.

<configuration ...>
  <core ...>
    ...
    <address name="topic.foo">
      <multicast>
        <queue name="client123.topic.foo"/>
        <queue name="client456.topic.foo"/>
      </multicast>
    </address>
  </core>
</configuration>

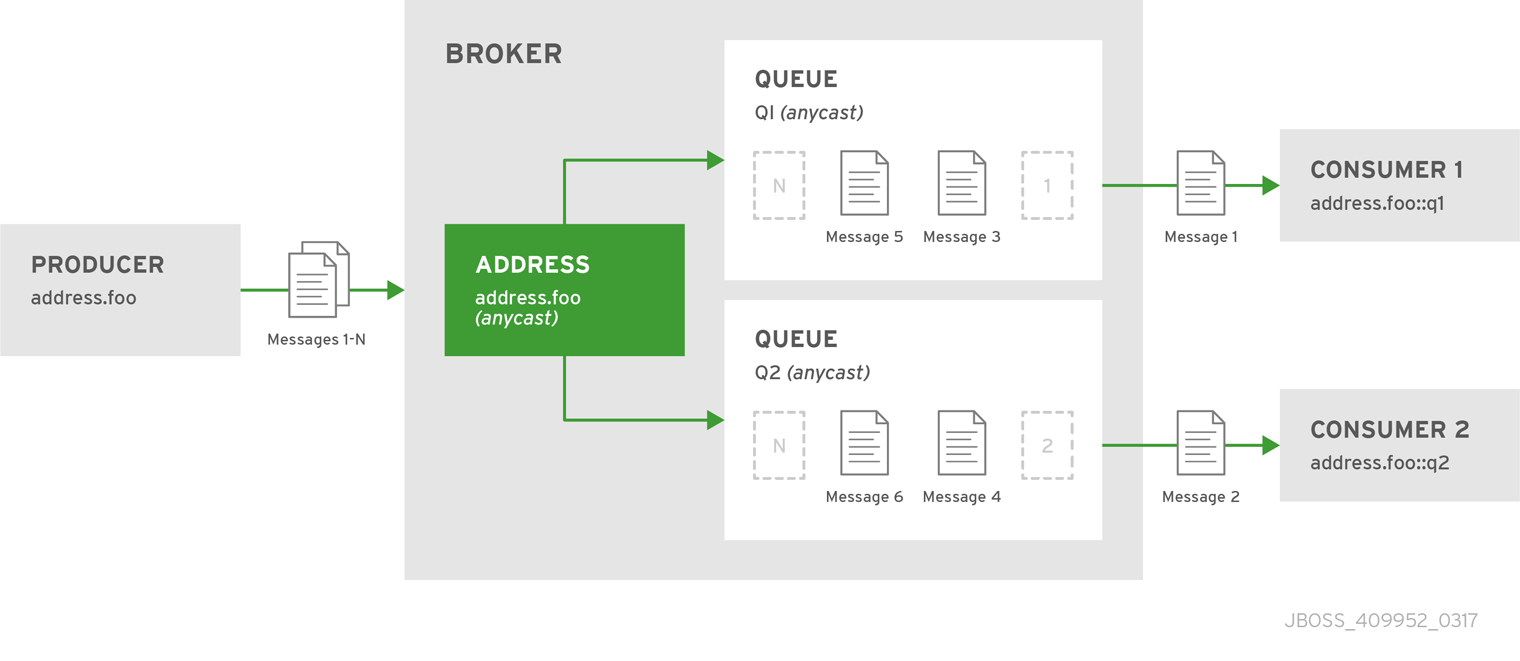

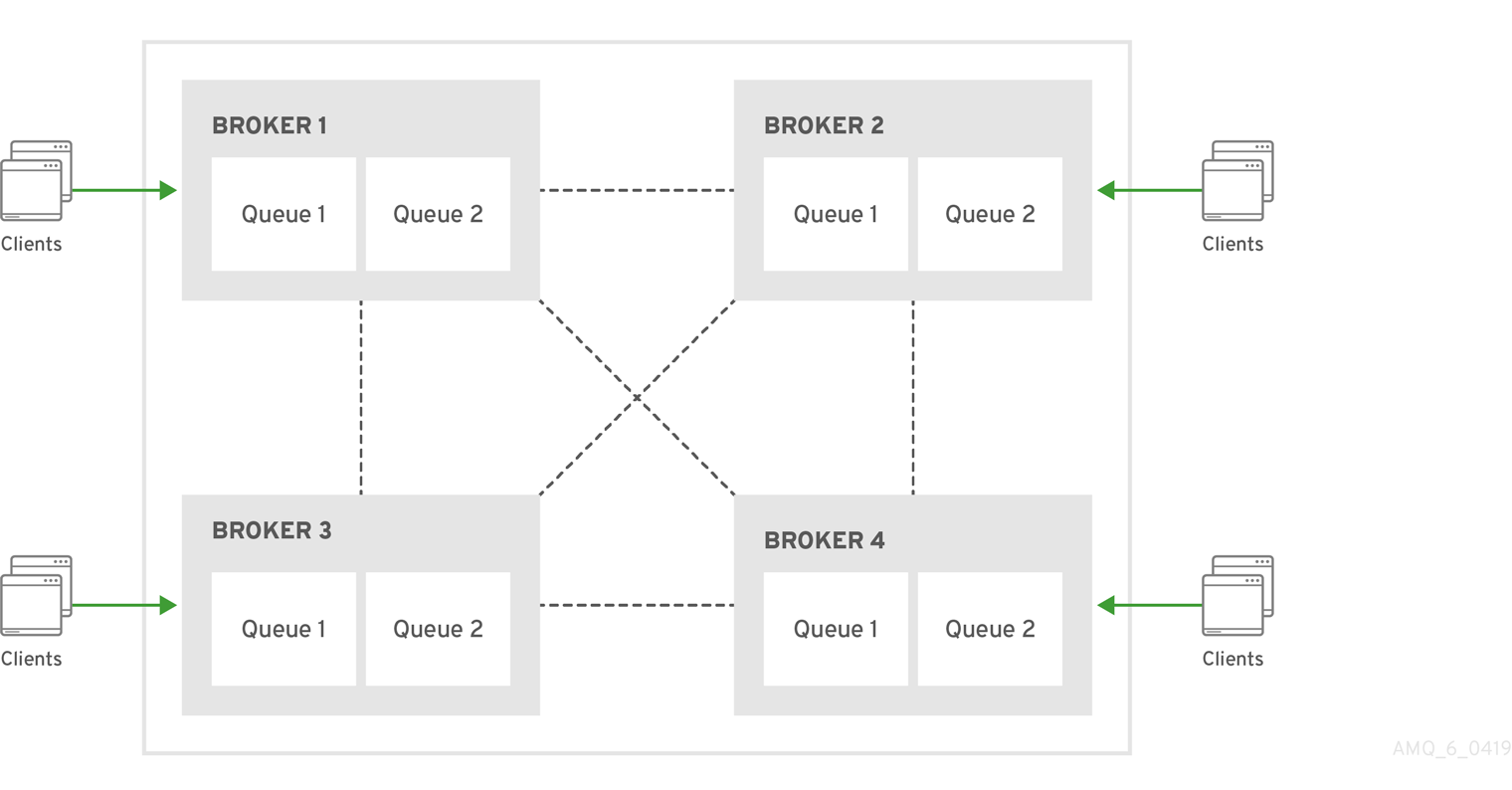

4.4. Configuring a Point-to-Point Using Two Queues

You can define more than one queue on an address by using an anycast routing type. Messages sent to an anycast address are distributed evenly across all associated queues. By using Fully Qualified Queue Names, which are described later, you can have clients connect to a specific queue. If more than one consumer connects to the same queue, AMQ Broker distributes messages between them.

Figure 4.3. Point-to-Point with Two Queues

This is how AMQ Broker handles load balancing of queues across multiple nodes in a cluster.

Procedure

- Open the file BROKER_INSTANCE_DIR/etc/broker.xml for editing.
- Wrap an anycast configuration element around the queue elements in the address.

<configuration ...>
  <core ...>
    ...
    <address name="address.foo">
      <anycast>
        <queue name="q1"/>
        <queue name="q2"/>
      </anycast>
    </address>
  </core>
</configuration>

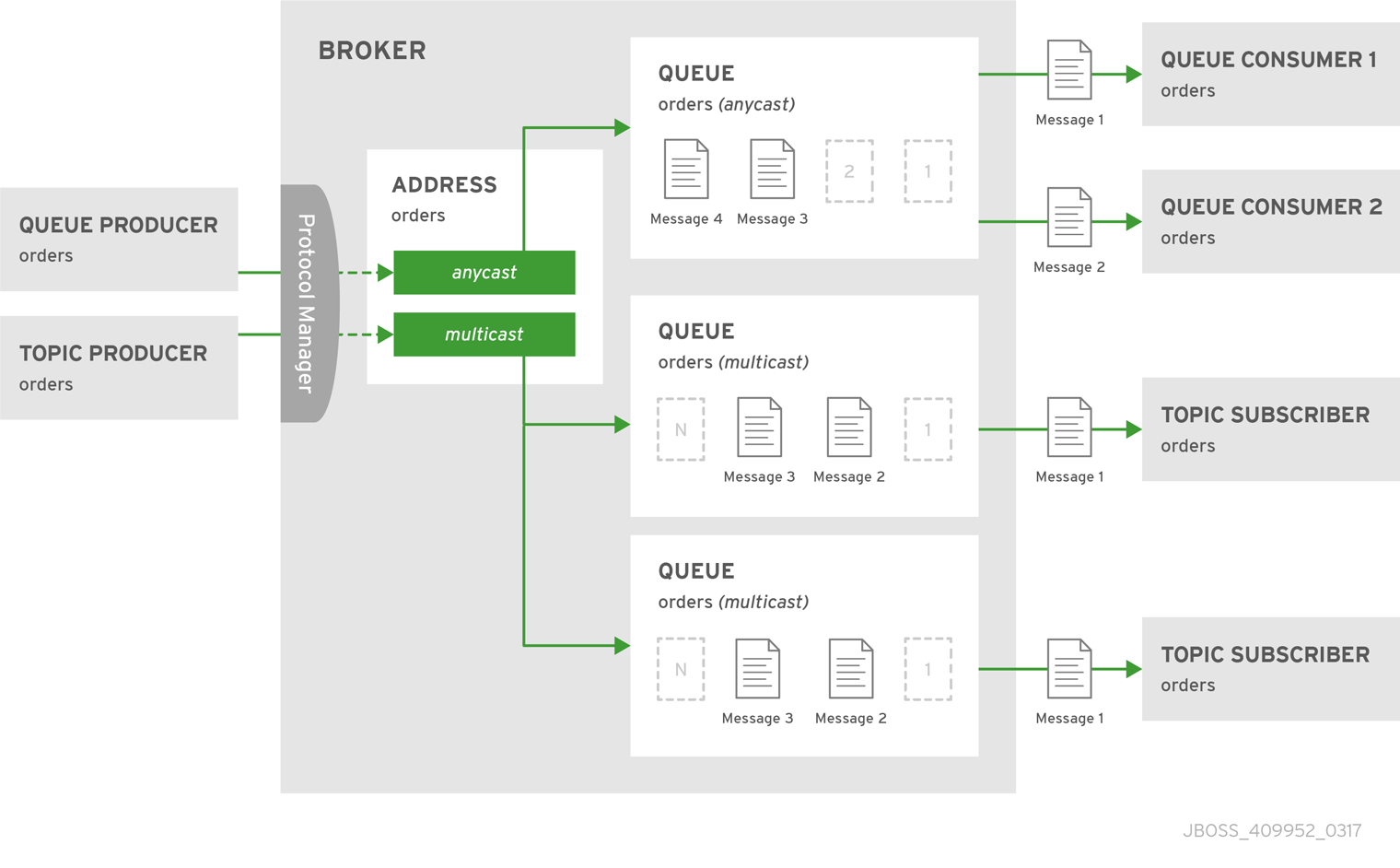

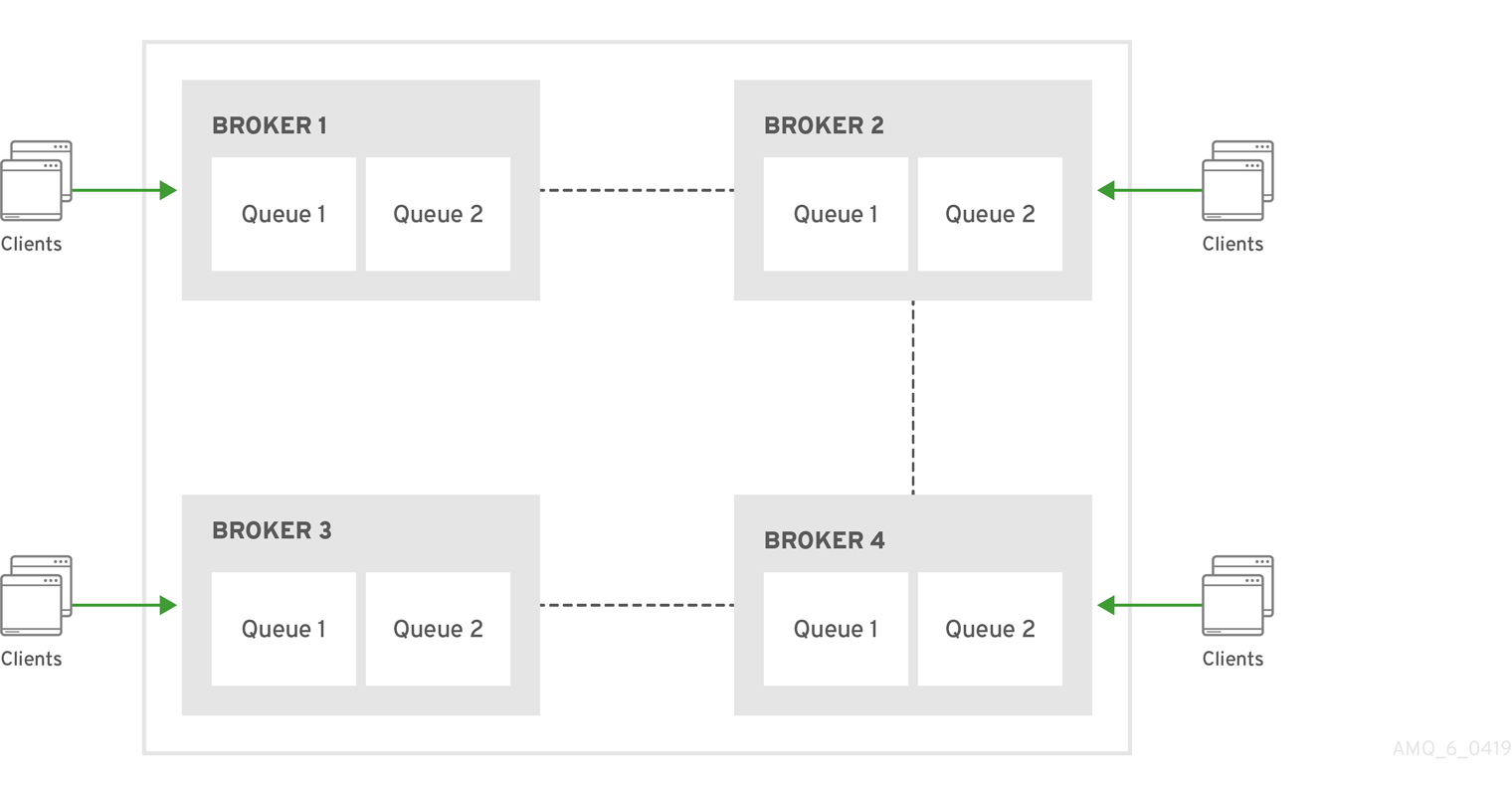

4.5. Using Point-to-Point and Publish-Subscribe Together

It is possible to define an address with both point-to-point and publish-subscribe semantics enabled. While not typically recommended, this can be useful when you want, for example, a JMS Queue named orders and a JMS topic named orders. The different routing types make the addresses appear to be distinct.

Using an example of JMS clients, the messages sent by a JMS queue producer are routed using the anycast routing type. Messages sent by a JMS topic producer use the multicast routing type. In addition, when a JMS topic consumer attaches, it is attached to its own subscription queue. The JMS queue consumer, however, is attached to the anycast queue.

Figure 4.4. Point-to-Point and Publish-Subscribe

The behavior in this scenario is dependent on the protocol being used. For JMS there is a clear distinction between topic and queue producers and consumers, which makes the logic straightforward. Other protocols like AMQP do not make this distinction. A message being sent via AMQP is routed by both anycast and multicast and consumers default to anycast. For more information, check the behavior of each protocol in the sections on protocols.

The XML excerpt below is an example of what the configuration for an address using both anycast and multicast routing types would look like in BROKER_INSTANCE_DIR/etc/broker.xml. Note that subscription queues are typically created on demand, so there is no need to list specific queue elements inside the multicast routing type.

<configuration ...>
  <core ...>
    ...
    <address name="foo.orders">
      <anycast>
        <queue name="orders"/>
      </anycast>
      <multicast/>
    </address>
  </core>
</configuration>

4.6. Configuring Subscription Queues

In most cases it is not necessary to pre-create subscription queues because protocol managers create subscription queues automatically when clients first request to subscribe to an address. See Protocol Managers and Addresses for more information. For durable subscriptions, the generated queue name is usually a concatenation of the client id and the address.

Configuring a Durable Subscription Queue

When a queue is configured as a durable subscription, the broker saves messages for any inactive subscribers and delivers them to the subscribers when they reconnect. Clients are therefore guaranteed to receive each message delivered to the queue after subscribing to it.

Procedure

- Open the file BROKER_INSTANCE_DIR/etc/broker.xml for editing.
- Add the durable configuration element to the chosen queue and assign it a value of true.

<configuration ...>
  <core ...>
    ...
    <address name="durable.foo">
      <multicast>
        <queue name="q1">
          <durable>true</durable>
        </queue>
      </multicast>
    </address>
  </core>
</configuration>

Configuring a Non-Shared Durable Subscription

The broker can be configured to prevent more than one consumer from connecting to a queue at any one time. The subscriptions to queues configured this way are therefore "non-shared".

Procedure

- Open the file BROKER_INSTANCE_DIR/etc/broker.xml for editing.
- Add the durable configuration element to each chosen queue.

<configuration ...>
  <core ...>
    ...
    <address name="non.shared.durable.foo">
      <multicast>
        <queue name="orders1">
          <durable>true</durable>
        </queue>
        <queue name="orders2">
          <durable>true</durable>
        </queue>
      </multicast>
    </address>
  </core>
</configuration>

- Add the max-consumers attribute to each chosen queue element and assign it a value of 1.

<configuration ...>
  <core ...>
    ...
    <address name="non.shared.durable.foo">
      <multicast>
        <queue name="orders1" max-consumers="1">
          <durable>true</durable>
        </queue>
        <queue name="orders2" max-consumers="1">
          <durable>true</durable>
        </queue>
      </multicast>
    </address>
  </core>
</configuration>

4.7. Using a Fully Qualified Queue Name

Internally, the broker maps a client’s request for an address to specific queues. The broker decides on behalf of the client to which queues to send messages, or from which queue to receive messages. However, more advanced use cases might require that the client specify a queue directly. In these situations, the client can use a Fully Qualified Queue Name (FQQN) by specifying both the address name and the queue name, separated by two colons (::).

Prerequisites

- An address is configured with two or more queues. In the example below the address foo has two queues, q1 and q2.

<configuration ...>
  <core ...>
    ...
    <addresses>
      <address name="foo">
        <anycast>
          <queue name="q1" />
          <queue name="q2" />
        </anycast>
      </address>
    </addresses>
  </core>
</configuration>

Procedure

- In the client code, use both the address name and the queue name when requesting a connection from the broker. Remember to use two colons, ::, to separate the names, as in the example Java code below.

String FQQN = "foo::q1";
Queue q1 = session.createQueue(FQQN);
MessageConsumer consumer = session.createConsumer(q1);
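When client code has to assemble or inspect fully qualified queue names, a small helper keeps the separator in one place. The following Java sketch is hypothetical utility code that uses only string handling; it does not call the JMS API, and the class and method names are not part of AMQ Broker.

```java
final class FqqnSketch {

    static final String SEPARATOR = "::";

    // Joins an address name and a queue name into an FQQN such as "foo::q1".
    static String toFqqn(String address, String queue) {
        return address + SEPARATOR + queue;
    }

    // Splits an FQQN into { address, queue }; rejects strings without the separator.
    static String[] split(String fqqn) {
        int index = fqqn.indexOf(SEPARATOR);
        if (index < 0) {
            throw new IllegalArgumentException("Not a fully qualified queue name: " + fqqn);
        }
        return new String[] {
                fqqn.substring(0, index),
                fqqn.substring(index + SEPARATOR.length())
        };
    }

    public static void main(String[] args) {
        String fqqn = toFqqn("foo", "q1");
        String[] parts = split(fqqn);
        System.out.println(fqqn + " -> address=" + parts[0] + ", queue=" + parts[1]);
    }
}
```

The resulting string can be passed to session.createQueue in the same way as the literal in the preceding example.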

4.8. Configuring Sharded Queues

A common pattern for processing of messages across a queue where only partial ordering is required is to use queue sharding. In AMQ Broker this can be achieved by creating an anycast address that acts as a single logical queue, but which is backed by many underlying physical queues.

Procedure

- Open BROKER_INSTANCE_DIR/etc/broker.xml and add an address with the desired name. In the example below the address named sharded is added to the configuration.

<configuration ...>
  <core ...>
    ...
    <addresses>
      <address name="sharded"></address>
    </addresses>
  </core>
</configuration>

- Add the anycast routing type and include the desired number of sharded queues. In the example below, the queues q1, q2, and q3 are added as anycast destinations.

<configuration ...>
  <core ...>
    ...
    <addresses>
      <address name="sharded">
        <anycast>
          <queue name="q1" />
          <queue name="q2" />
          <queue name="q3" />
        </anycast>
      </address>
    </addresses>
  </core>
</configuration>

Using the configuration above, messages sent to sharded are distributed equally across q1, q2 and q3. Clients are able to connect directly to a specific physical queue when using a fully qualified queue name and receive messages sent to that specific queue only.

To tie particular messages to a particular queue, clients can specify a message group for each message. The broker routes grouped messages to the same queue, and one consumer processes them all. See the chapter on Message Grouping for more information.

4.9. Configuring Last Value Queues

A last value queue is a type of queue that discards messages in the queue when a newer message with the same last value property name is placed in the queue. Through this behavior, last value queues retain only the last values for messages of the same property.

A simple use case for a last value queue is for monitoring stock prices, where only the latest value for a particular stock is of interest.

The broker delivers messages that are sent to a last value queue without a configured last value property as normal messages. Such messages are not purged from the queue when a new message with a configured last value property arrives.

You can configure last value queues using:

- The broker.xml configuration file
- The JMS client
- The Core API
- Address wildcards

For each of the above methods, apart from the Core API, you can specify a custom value for the last value key (also called the last value property), or leave this value unset, meaning that the key is set to the default value instead. The default value for the last value key is _AMQ_LVQ_NAME.

For the Core API, you create last value queues using the constant last value key Message.HDR_LAST_VALUE_NAME to identify last value messages.

4.9.1. Configuring Last Value Queues Using broker.xml

To specify a custom value for the last value key, include lines in your broker.xml configuration file that look like the following:

<address name="my.address"> <multicast> <queue name="prices1" last-value-key="stock_ticker"/> </multicast> </address>

Alternatively, you can configure a last value queue that uses the default last value key name of _AMQ_LVQ_NAME. To do this, set the last-value configuration parameter to true in your broker.xml configuration file, without specifying a value for the last value key. An example of this configuration is shown below.

<address name="my.address"> <multicast> <queue name="prices1" last-value="true"/> </multicast> </address>

4.9.2. Configuring Last Value Queues Using the JMS Client

When using the JMS Client to auto-create destinations used by a consumer, you can specify a last value key as part of the address settings. In this case, the auto-created queues are last value queues. An example of this configuration is shown below.

Queue queue = session.createQueue("my.destination.name?last-value-key=stock_ticker");

Topic topic = session.createTopic("my.destination.name?last-value-key=stock_ticker");

Alternatively, configure a last value queue that uses the default last value key name of _AMQ_LVQ_NAME. To do this, set the last-value configuration parameter to true, without specifying a value for the last value key. An example of this configuration is shown below.

Queue queue = session.createQueue("my.destination.name?last-value=true");

Topic topic = session.createTopic("my.destination.name?last-value=true");

4.9.3. Configuring Last Value Queues Using the Core API

To create a last value queue using the Core API, set the lastValue parameter to true when creating a queue. To do this, use the createQueue method of the ClientSession interface. The syntax for creating a last value queue using this method is shown below.

void createQueue(
    SimpleString address,
    RoutingType routingType,
    SimpleString queueName,
    SimpleString filter,
    Boolean durable,
    Boolean autoCreated,
    int maxConsumers,
    Boolean purgeOnNoConsumers,
    Boolean exclusive,
    Boolean lastValue
)

In this case, the API uses the constant Message.HDR_LAST_VALUE_NAME to identify last value messages delivered to the queue.

Additional Resources

- For more information about using the createQueue method of the Core API to create a last value queue, see createQueue.

4.9.4. Configuring Last Value Queues Using Address Wildcards

You can use address wildcards in the broker.xml configuration file to configure last value queues for a set of addresses. An example of this configuration is shown below.

<address-setting match="lastValueQueue"> <default-last-value-key>stock_ticker</default-last-value-key> </address-setting>

By default, the value of default-last-value-key is null.

When using address wildcards, you can also use the default last value key name. To do this, set the default-last-value-queue parameter to true, without specifying a value for the last value key.

4.9.5. Example of Last Value Queue Behavior

This example shows the configuration and behavior of a last value queue.

In your broker.xml configuration file, suppose that you have added configuration that looks like the following:

<address name="my.address"> <multicast> <queue name="prices1" last-value-key="stock_ticker"/> </multicast> </address>

The configuration shown above creates a last value queue called prices1, with a last value key of stock_ticker.

Now, suppose that a client sends two messages with the same last value property name to the prices1 queue, as shown below:

TextMessage message = session.createTextMessage("First message with last value property set");

message.setStringProperty("stock_ticker", "36.83");

producer.send(message);

TextMessage message = session.createTextMessage("Second message with last value property set");

message.setStringProperty("stock_ticker", "37.02");

producer.send(message);

When two messages with the same last value property name arrive at the last value queue prices1, only the latest message remains in the queue, with the first message being purged. To validate this behavior, you can consume from the queue by using client code such as the following:

TextMessage messageReceived = (TextMessage)messageConsumer.receive(5000);
System.out.format("Received message: %s\n", messageReceived.getText());

In this example, the output you see is the second message, since both messages use the last value property and the second message was received in the queue after the first.

4.9.6. Creating Non-Destructive Consumers

When a consumer attaches to a queue, the normal behavior is that messages sent to that consumer are acquired exclusively by the consumer. When the consumer acknowledges receipt of the messages, the broker removes the messages from the queue.

An alternative way to configure a consumer is to configure it as a queue browser. In this case, the queue still sends all messages to the consumer. However the browser does not prevent other consumers from receiving the messages. In addition, the messages remain in the queue once the browser has consumed the messages. A consumer configured as a browser is an instance of a non-destructive consumer.

In the case of a last value queue, configuring all consumers as non-destructive consumers ensures that the queue always retains the most up-to-date value for every last value key. The examples in the following sub-sections show how to configure a last value queue to ensure that all consumers are non-destructive.

4.9.6.1. Configuring Non-destructive Consumers Using broker.xml

In the broker.xml configuration file, to make the consumers of a last value queue non-destructive, set the non-destructive parameter of the queue to true. An example of this configuration is shown below.

<address name="my.address">
  <multicast>
    <queue name="orders1" last-value-key="stock_ticker" non-destructive="true" />
  </multicast>
</address>

4.9.6.2. Creating Non-destructive Consumers Using the JMS Client

When using the JMS Client to auto-create destinations used by a consumer, you configure last value queue behavior and non-destructive consumers as part of the address settings. An example of this configuration is shown below.

Queue queue = session.createQueue("my.destination.name?last-value-key=stock_ticker&non-destructive=true");

Topic topic = session.createTopic("my.destination.name?last-value-key=stock_ticker&non-destructive=true");

4.9.6.3. Configuring Non-destructive Consumers Using Address Wildcards

You can use address wildcards in the broker.xml configuration file to configure last value queues for a set of addresses. As part of this configuration, you can also specify that consumers are non destructive, by setting default-non-destructive to true. An example of this configuration is shown below.

<address-setting match="lastValueQueue">
  <default-last-value-key>stock_ticker</default-last-value-key>
  <default-non-destructive>true</default-non-destructive>
</address-setting>

By default, the value of default-non-destructive is false.

4.9.6.4. Configuring Message Expiry Delay

For a queue other than a last value queue, if you have only non-destructive consumers, the broker never deletes messages from the queue, causing the queue size to increase over time. To prevent this unconstrained growth in queue size, use the expiry-delay parameter to specify when messages expire.

Specify a message expiry delay in the address-setting element of your broker.xml configuration file, as shown in the following example:

<address-setting match="exampleQueue"> <expiry-delay>10</expiry-delay> </address-setting>

When you add the preceding lines to your broker.xml configuration file, the broker sends expired messages in exampleQueue to the expiry address expiryQueue.

expiry-delay defines the expiration time, in milliseconds, that will be applied to messages that are using the default expiration time. By default, messages have an expiration time of 0, meaning that they don’t expire. For messages with an expiration time greater than the default, expiry-delay has no effect.

For example, suppose you set expiry-delay on a queue to 10. If a message with the default expiration time of 0 arrives in the queue, then the broker changes the expiration time of the message from 0 to 10. However, if another message that is using an expiration time of 20 arrives in the queue, then its expiration time remains unchanged. If you set expiry-delay to -1, this feature is disabled. By default, expiry-delay is set to -1.
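The rule in the preceding paragraphs can be written as a small function. This Java sketch models only the logic as described in this section, using the same simplified numbers as the example; the class, method, and constant names are hypothetical and this is not broker code.

```java
final class ExpiryDelaySketch {

    static final long EXPIRY_DELAY_DISABLED = -1L;  // default value of expiry-delay
    static final long DEFAULT_EXPIRATION = 0L;      // message never expires

    // Returns the expiration time the message has after the expiry-delay rule is applied.
    static long effectiveExpiration(long messageExpiration, long expiryDelay) {
        if (expiryDelay == EXPIRY_DELAY_DISABLED) {
            return messageExpiration;               // feature disabled: message is unchanged
        }
        if (messageExpiration == DEFAULT_EXPIRATION) {
            return expiryDelay;                     // only default-expiration messages are changed
        }
        return messageExpiration;                   // an explicit expiration is left as-is
    }

    public static void main(String[] args) {
        System.out.println(effectiveExpiration(0, 10));   // message with default expiration
        System.out.println(effectiveExpiration(20, 10));  // message with its own expiration
        System.out.println(effectiveExpiration(0, -1));   // expiry-delay disabled
    }
}
```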

4.10. Limiting the Number of Consumers Connected to a Queue

Limit the number of consumers connected to a particular queue by using the max-consumers attribute. Create an exclusive consumer by setting the max-consumers attribute to 1. The default value is -1, which allows an unlimited number of consumers.

Procedure

- Open BROKER_INSTANCE_DIR/etc/broker.xml and add the max-consumers attribute to the desired queue. In the example below, only 20 consumers can connect to the queue q3 at the same time.

<configuration ...>
  <core ...>
    ...
    <addresses>
      <address name="foo">
        <anycast>
          <queue name="q3" max-consumers="20"/>
        </anycast>
      </address>
    </addresses>
  </core>
</configuration>

- (Optional) Create an exclusive consumer by setting max-consumers to 1, as in the example below.

<configuration ...>
  <core ...>
    ...
    <address name="foo">
      <anycast>
        <queue name="q3" max-consumers="1"/>
      </anycast>
    </address>
  </core>
</configuration>

- (Optional) Have an unlimited number of consumers by setting max-consumers to -1, as in the example below.

<configuration ...>
  <core ...>
    ...
    <address name="foo">
      <anycast>
        <queue name="q3" max-consumers="-1"/>
      </anycast>
    </address>
  </core>
</configuration>

4.11. Exclusive Queues

Exclusive queues are special queues that route all messages to only one consumer at a time. This is useful when you want all messages to be processed serially by the same consumer. If there are multiple consumers on a queue only one consumer will receive messages. If this consumer disconnects then another consumer is chosen.

4.11.1. Configuring Exclusive Queues

You configure exclusive queues in the broker.xml configuration file, as shown in the following example.

<configuration ...>
  <core ...>
    ...
    <address name="foo.bar">
      <multicast>
        <queue name="orders1" exclusive="true"/>
      </multicast>
    </address>
  </core>
</configuration>

Alternatively, when using the JMS Client to auto-create the destination used by a consumer, you can configure an exclusive queue by using address parameters.

Queue queue = session.createQueue("my.destination.name?exclusive=true");

Topic topic = session.createTopic("my.destination.name?exclusive=true");

4.11.2. Setting the Exclusive Queue Default

<address-setting match="myQueue">
  <default-exclusive-queue>true</default-exclusive-queue>
</address-setting>

The default value for default-exclusive-queue is false.

4.12. Configuring Ring Queues

Generally, queues in AMQ Broker use first-in, first-out (FIFO) semantics. This means that the broker adds messages to the tail of the queue and removes them from the head. A ring queue is a special type of queue that holds a specified, fixed number of messages. The broker maintains the fixed queue size by removing the message at the head of the queue when a new message arrives but the queue already holds the specified number of messages.

For example, consider a ring queue configured with a size of 3 and a producer that sequentially sends messages A, B, C, and D. Once message C arrives at the queue, the number of messages in the queue has reached the configured ring size. At this point, message A is at the head of the queue, while message C is at the tail. When message D arrives at the queue, the broker adds the message to the tail of the queue. To maintain the fixed queue size, the broker removes the message at the head of the queue (that is, message A). Message B is now at the head of the queue.
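The A, B, C, D example can be reproduced with a few lines of Python. This is a minimal sketch of the ring semantics only, not broker code. RING_SIZE and the variable names are illustrative.

```python
from collections import deque

RING_SIZE = 3  # the ring size used in the example

ring = deque()
removed = []

for message in ["A", "B", "C", "D"]:
    ring.append(message)              # new messages go to the tail
    while len(ring) > RING_SIZE:
        # Over the ring size: remove the message at the head.
        removed.append(ring.popleft())

print(list(ring))   # ['B', 'C', 'D']
print(removed)      # ['A']
```

After message D arrives, A has been removed and B is at the head of the queue.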

4.12.1. Configuring Ring Queues

This procedure shows how to configure a ring queue.

Procedure

- Open the BROKER_INSTANCE_DIR/etc/broker.xml configuration file.

- To define a default ring size for all queues on matching addresses that don’t have an explicit ring size set, specify a value for default-ring-size in the address-setting element. An example is shown below.

      <address-settings>
        <address-setting match="ring.#">
          <default-ring-size>3</default-ring-size>
        </address-setting>
      </address-settings>

  The default-ring-size parameter is especially useful for defining the default size of auto-created queues. The default value of default-ring-size is -1 (that is, no size limit).

- To define a ring size on a specific queue, add the ring-size parameter to the queue element and specify a value. An example is shown below.

      <addresses>
        <address name="myRing">
          <anycast>
            <queue name="myRing" ring-size="5" />
          </anycast>
        </address>
      </addresses>

You can update the value of ring-size while the broker is running. The broker dynamically applies the update. If the new ring-size value is lower than the previous value, the broker does not immediately delete messages from the head of the queue to enforce the new size. New messages sent to the queue still force the deletion of older messages, but the queue does not reach its new, reduced size until it does so naturally, through the normal consumption of messages by clients.
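The effect of lowering ring-size on a running queue can be sketched as follows. This is a toy model, not the broker's implementation. The RingQueue class is a hypothetical name, and the sketch assumes that each arriving message evicts at most one message from the head, which is one reading of the behavior described above.

```python
from collections import deque


class RingQueue:
    """Toy model of a ring queue whose size can change at runtime."""

    def __init__(self, ring_size):
        self.ring_size = ring_size
        self.messages = deque()

    def send(self, message):
        # Assumption: an arriving message evicts at most one message
        # from the head when the queue is at or over its ring size.
        if len(self.messages) >= self.ring_size:
            self.messages.popleft()
        self.messages.append(message)

    def consume(self):
        return self.messages.popleft()


queue = RingQueue(ring_size=5)
for i in range(1, 6):
    queue.send(f"m{i}")

queue.ring_size = 3                       # reduce the ring size
size_after_update = len(queue.messages)   # nothing is deleted yet

queue.send("m6")                          # evicts m1, queue still holds 5
size_after_send = len(queue.messages)

queue.consume()                           # normal consumption shrinks
queue.consume()                           # the queue to the new size
queue.send("m7")                          # evicts one, queue stays at 3
print(size_after_update, size_after_send, list(queue.messages))
```

In this model the queue only settles at three messages after clients have consumed the surplus.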

4.12.2. Troubleshooting Ring Queue Behavior

This section describes situations in which the behavior of a ring queue appears to differ from its configuration.

4.12.2.1. In-delivery messages and rollbacks

When a message is in delivery to a consumer, the message is in an "in-between" state, where the message is technically no longer on the queue, but is also not yet acknowledged. A message remains in an in-delivery state until acknowledged by the consumer. Messages that remain in an in-delivery state cannot be removed from the ring queue.

Because the broker cannot remove in-delivery messages, a client can send more messages to a ring queue than the ring size configuration seems to allow. For example, consider this scenario:

- A producer sends three messages to a ring queue configured with ring-size="3". All messages are immediately dispatched to a consumer.

  At this point, messageCount=3 and deliveringCount=3.

- The producer sends another message to the queue. The message is then dispatched to the consumer.

  Now, messageCount=4 and deliveringCount=4. The message count of 4 is greater than the configured ring size of 3. However, the broker is obliged to allow this situation because it cannot remove the in-delivery messages from the queue.

- Now, suppose that the consumer is closed without acknowledging any of the messages.

  In this case, the four in-delivery, unacknowledged messages are canceled back to the broker and added to the head of the queue in the reverse order from which they were consumed. This action puts the queue over its configured ring size. Because a ring queue prefers messages at the tail of the queue over messages at the head, the queue discards the first message sent by the producer, because this was the last message added back to the head of the queue. Transaction or core session rollbacks are treated in the same way.
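The scenario above can be traced with a short Python model. It is a sketch under stated assumptions, not broker code: the function names are hypothetical, and the counters only mirror the messageCount and deliveringCount values quoted in the text.

```python
from collections import deque

RING_SIZE = 3
queue = deque()      # messages waiting on the queue
delivering = []      # messages dispatched but not yet acknowledged


def message_count():
    # In-delivery messages are still reflected in the message count.
    return len(queue) + len(delivering)


def send_and_dispatch(message):
    # Only messages that are actually on the queue can be evicted.
    if message_count() >= RING_SIZE and queue:
        queue.popleft()
    queue.append(message)
    while queue:                      # dispatch immediately
        delivering.append(queue.popleft())


def cancel_all():
    # Return messages to the head in reverse order of consumption,
    # then enforce the ring size by discarding from the head.
    for message in reversed(delivering):
        queue.appendleft(message)
    delivering.clear()
    discarded = []
    while len(queue) > RING_SIZE:
        discarded.append(queue.popleft())
    return discarded


for m in ["m1", "m2", "m3", "m4"]:
    send_and_dispatch(m)
count_in_delivery = message_count()   # 4, above the ring size of 3

discarded = cancel_all()              # consumer closes without acking
print(count_in_delivery, discarded, list(queue))
```

The model discards m1, the first message sent, because it is the last message put back at the head of the queue.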

If you are using the core client directly, or using an AMQ Core Protocol JMS client, you can minimize the number of messages in delivery by reducing the value of the consumerWindowSize parameter (1024 * 1024 bytes by default).

4.12.2.2. Scheduled messages

When a scheduled message is sent to a queue, the message is not immediately added to the tail of the queue like a normal message. Instead, the broker holds the scheduled message in an intermediate buffer and schedules the message for delivery onto the head of the queue, according to the details of the message. However, scheduled messages are still reflected in the message count of the queue. As with in-delivery messages, this behavior can make it appear that the broker is not enforcing the ring queue size. For example, consider this scenario:

- At 12:00, a producer sends a message, A, to a ring queue configured with ring-size="3". The message is scheduled for 12:05.

  At this point, messageCount=1 and scheduledCount=1.

- At 12:01, the producer sends message B to the same ring queue.

  Now, messageCount=2 and scheduledCount=1.

- At 12:02, the producer sends message C to the same ring queue.

  Now, messageCount=3 and scheduledCount=1.

- At 12:03, the producer sends message D to the same ring queue.

  Now, messageCount=4 and scheduledCount=1.

The message count for the queue is now 4, one greater than the configured ring size of 3. However, the scheduled message is not technically on the queue yet (that is, it is on the broker and scheduled to be put on the queue). At the scheduled delivery time of 12:05, the broker puts the message on the head of the queue. However, since the ring queue has already reached its configured size, the scheduled message A is immediately removed.
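The timeline above can be modeled in a few lines of Python. This is an illustrative sketch rather than the broker's implementation. The function names and the scheduled_delivery flag are hypothetical, and clock times appear only as comments.

```python
from collections import deque

RING_SIZE = 3
queue = deque()     # messages actually on the queue
scheduled = []      # messages held until their delivery time


def message_count():
    # Scheduled messages are reflected in the message count.
    return len(queue) + len(scheduled)


def send(message, scheduled_delivery=False):
    if scheduled_delivery:
        scheduled.append(message)     # held off the queue for now
        return
    queue.append(message)
    while len(queue) > RING_SIZE:
        queue.popleft()


def deliver_scheduled():
    removed = []
    while scheduled:
        queue.appendleft(scheduled.pop(0))   # placed at the head
        while len(queue) > RING_SIZE:
            removed.append(queue.popleft())  # the head is evicted
    return removed


send("A", scheduled_delivery=True)   # 12:00, scheduled for 12:05
send("B")                            # 12:01
send("C")                            # 12:02
send("D")                            # 12:03
count_before = message_count()       # 4, one above the ring size
removed = deliver_scheduled()        # 12:05
print(count_before, removed, list(queue))
```

In this model, message A is evicted as soon as it reaches the head of the full queue, leaving B, C, and D.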

4.12.2.3. Paged messages

Similar to scheduled messages and messages in delivery, paged messages do not count towards the ring queue size enforced by the broker, because messages are actually paged at the address level, not the queue level. A paged message is not technically on a queue, although it is reflected in a queue’s messageCount value.

It is recommended that you do not use paging for addresses with ring queues. Instead, ensure that the entire address can fit into memory. Alternatively, set the address-full-policy parameter to DROP, BLOCK, or FAIL.

Additional resources

- The broker creates internal instances of ring queues when you configure retroactive addressing. To learn more, see Section 4.14, “Configuring Retroactive Addresses”.

4.13. Configuring a Prefix to Connect to a Specific Routing Type

Normally, if a message is received by an address that uses both anycast and multicast, one of the anycast queues receives the message, and so do all of the multicast queues. However, clients can specify a special prefix when connecting to an address to indicate whether to connect using anycast or multicast. The prefixes are custom values that are designated using the anycastPrefix and multicastPrefix parameters within the URL of an acceptor.

Configuring an Anycast Prefix

- In BROKER_INSTANCE_DIR/etc/broker.xml, add the anycastPrefix to the URL of the desired acceptor. In the example below, the acceptor is configured to use anycast:// for the anycastPrefix. Client code can specify anycast://foo/ if the client needs to send a message to only one of the anycast queues.

      <configuration ...>
        <core ...>
          ...
          <acceptors>
            <!-- Acceptor for every supported protocol -->
            <acceptor name="artemis">tcp://0.0.0.0:61616?protocols=AMQP;anycastPrefix=anycast://</acceptor>
          </acceptors>
          ...
        </core>
      </configuration>

Configuring a Multicast Prefix

- In BROKER_INSTANCE_DIR/etc/broker.xml, add the multicastPrefix to the URL of the desired acceptor. In the example below, the acceptor is configured to use multicast:// for the multicastPrefix. Client code can specify multicast://foo/ if the client needs the message sent to only the multicast queues of the address.

      <configuration ...>
        <core ...>
          ...
          <acceptors>
            <!-- Acceptor for every supported protocol -->
            <acceptor name="artemis">tcp://0.0.0.0:61616?protocols=AMQP;multicastPrefix=multicast://</acceptor>
          </acceptors>
          ...
        </core>
      </configuration>

4.14. Configuring Retroactive Addresses

Configuring an address as retroactive enables you to preserve messages sent to that address, including when there are no queues yet bound to the address. When queues are later created and bound to the address, the broker retroactively distributes messages to those queues. If an address is not configured as retroactive and does not yet have a queue bound to it, the broker discards messages sent to that address.

When you configure a retroactive address, the broker creates an internal instance of a type of queue known as a ring queue. A ring queue is a special type of queue that holds a specified, fixed number of messages. Once the queue has reached the specified size, the next message that arrives to the queue forces the oldest message out of the queue. When you configure a retroactive address, you indirectly specify the size of the internal ring queue. By default, the internal queue uses the multicast routing type.

The internal ring queue used by a retroactive address is exposed via the management API. You can inspect metrics and perform other common management operations, such as emptying the queue. The ring queue also contributes to the overall memory usage of the address, which affects behavior such as message paging.
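The idea behind a retroactive address can be sketched with a small Python model. This is not how the broker is implemented. RetroactiveAddress and its methods are hypothetical names, and the sketch assumes that the internal ring records every message sent to the address, whether or not queues are bound.

```python
from collections import deque


class RetroactiveAddress:
    """Toy model of an address that preserves recent messages."""

    def __init__(self, retroactive_message_count):
        # deque(maxlen=n) drops its oldest entry once n is exceeded,
        # which mimics the internal ring queue.
        self.ring = deque(maxlen=retroactive_message_count)
        self.queues = {}

    def send(self, message):
        self.ring.append(message)
        for messages in self.queues.values():
            messages.append(message)

    def bind_queue(self, name):
        # A newly bound queue first receives the preserved messages.
        self.queues[name] = list(self.ring)


orders = RetroactiveAddress(retroactive_message_count=2)
for message in ["o1", "o2", "o3"]:
    orders.send(message)       # no queue is bound yet

orders.bind_queue("q1")        # q1 receives the two preserved messages
orders.send("o4")
print(orders.queues["q1"])     # ['o2', 'o3', 'o4']
```

With a count of 2, message o1 is forced out of the ring before the queue is bound, so q1 never receives it.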

This procedure shows how to configure an address as retroactive.

Procedure

- Open the BROKER_INSTANCE_DIR/etc/broker.xml configuration file.

- Specify a value for the retroactive-message-count parameter in the address-setting element. The value you specify defines the number of messages you want the broker to preserve. For example:

      <configuration>
        <core>
          ...
          <address-settings>
            <address-setting match="orders">
              <retroactive-message-count>100</retroactive-message-count>
            </address-setting>
          </address-settings>
          ...
        </core>
      </configuration>

  Note: You can update the value of retroactive-message-count while the broker is running, in either the broker.xml configuration file or the management API. However, if you reduce the value of this parameter, an additional step is required, because retroactive addresses are implemented via ring queues. A ring queue whose ring-size parameter is reduced does not automatically delete messages from the queue to achieve the new ring-size value. This behavior is a safeguard against unintended message loss. In this case, you need to use the management API to manually reduce the number of messages in the ring queue.

Additional resources

- For more information about ring queues, see Section 4.12, “Configuring Ring Queues”.

4.15. Protocol Managers and Addresses

A protocol manager maps protocol-specific concepts down to the AMQ Broker address model concepts: queues and routing types. For example, when a client sends an MQTT subscription packet with the addresses /house/room1/lights and /house/room2/lights, the MQTT protocol manager understands that the two addresses require multicast semantics. The protocol manager therefore first checks that multicast is enabled for both addresses. If not, it attempts to dynamically create them. If successful, the protocol manager then creates special subscription queues for each subscription requested by the client.

Each protocol behaves slightly differently. The table below describes what typically happens when a client sends subscribe frames for various types of queue.

| If the queue is of this type… | The typical action for a protocol manager is to… |
|---|---|
| Durable Subscription Queue | Look for the appropriate address and ensure that multicast is enabled. The special name allows the protocol manager to quickly identify the required client subscription queues should the client disconnect and reconnect at a later date. When the client unsubscribes, the queue is deleted. |
| Temporary Subscription Queue | Look for the appropriate address and ensure that multicast is enabled. When the client disconnects, the queue is deleted. |