Technical Reference

The technical architecture of Red Hat Virtualization environments

Chapter 1. Introduction

1.1. Red Hat Virtualization Manager

The Red Hat Virtualization Manager provides centralized management for a virtualized environment. A number of different interfaces can be used to access the Red Hat Virtualization Manager. Each interface facilitates access to the virtualized environment in a different manner.

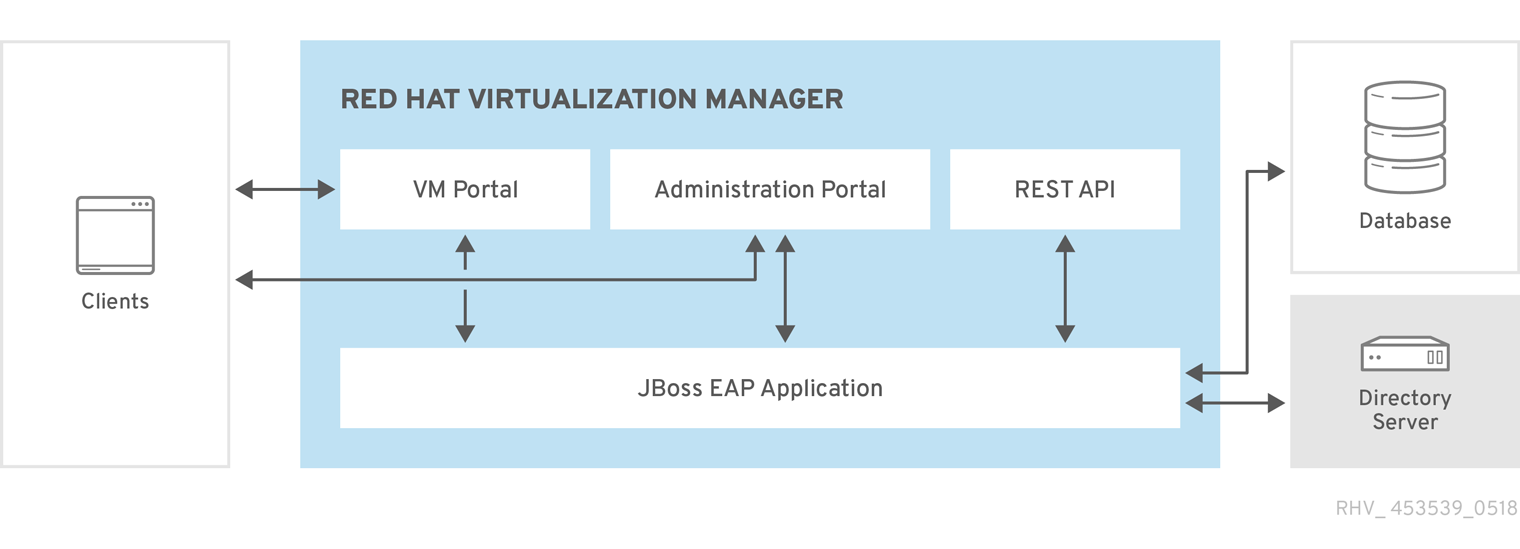

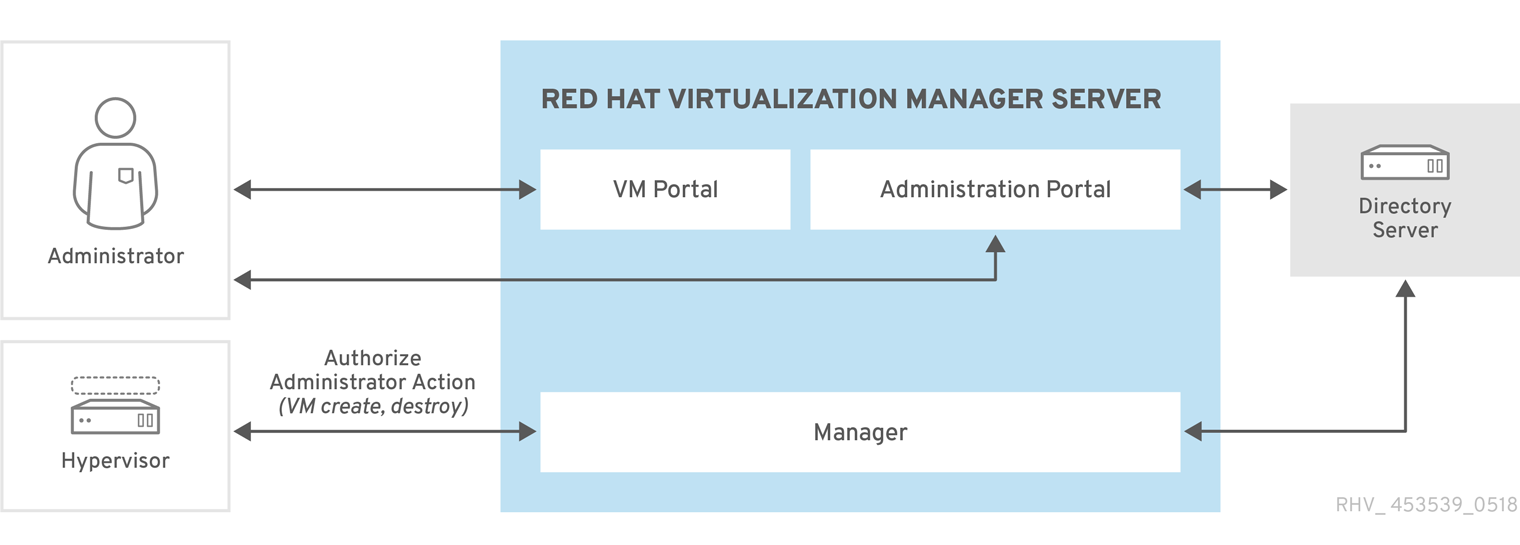

Figure 1.1. Red Hat Virtualization Manager Architecture

The Red Hat Virtualization Manager provides graphical interfaces and an Application Programming Interface (API). Each interface connects to the Manager, an application delivered by an embedded instance of the Red Hat JBoss Enterprise Application Platform. There are a number of other components which support the Red Hat Virtualization Manager in addition to Red Hat JBoss Enterprise Application Platform.

1.2. Red Hat Virtualization Host

A Red Hat Virtualization environment has one or more hosts attached to it. A host is a server that provides the physical hardware that virtual machines make use of.

Red Hat Virtualization Host (RHVH) runs an optimized operating system installed from special, customized installation media built specifically for creating virtualization hosts.

Red Hat Enterprise Linux hosts are servers running a standard Red Hat Enterprise Linux operating system that has been configured after installation to permit use as a host.

Both methods of host installation result in hosts that interact with the rest of the virtualized environment in the same way, so both are referred to as hosts.

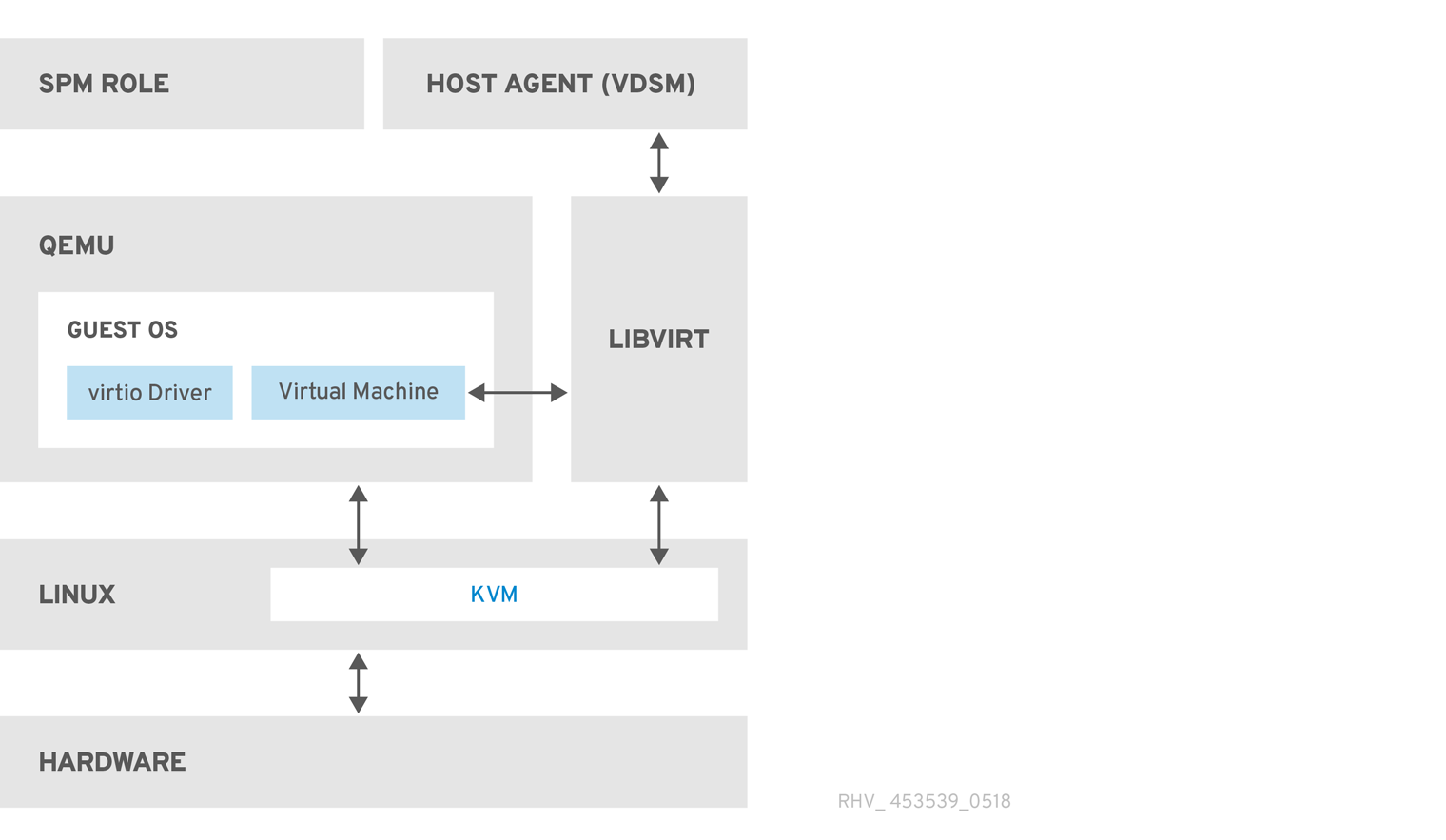

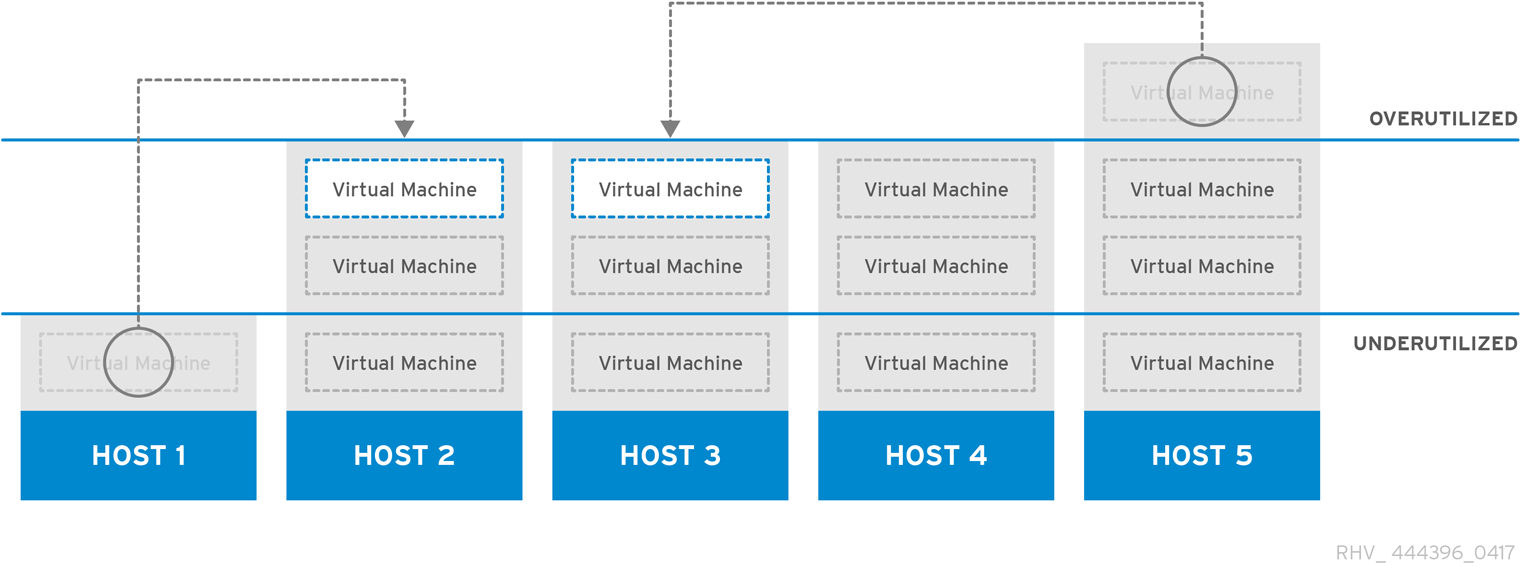

Figure 1.2. Host Architecture

- Kernel-based Virtual Machine (KVM)

- The Kernel-based Virtual Machine (KVM) is a loadable kernel module that provides full virtualization through the use of the Intel VT or AMD-V hardware extensions. Though KVM itself runs in kernel space, the guests running upon it run as individual QEMU processes in user space. KVM allows a host to make its physical hardware available to virtual machines.

- QEMU

- QEMU is a multi-platform emulator used to provide full system emulation. QEMU emulates a full system, for example a PC, including one or more processors, and peripherals. QEMU can be used to launch different operating systems or to debug system code. QEMU, working in conjunction with KVM and a processor with appropriate virtualization extensions, provides full hardware assisted virtualization.

- Red Hat Virtualization Manager Host Agent, VDSM

- In Red Hat Virtualization, VDSM initiates actions on virtual machines and storage. It also facilitates inter-host communication. VDSM monitors host resources such as memory, storage, and networking. Additionally, VDSM manages tasks such as virtual machine creation, statistics accumulation, and log collection. A VDSM instance runs on each host and receives management commands from the Red Hat Virtualization Manager using the re-configurable port 54321.

- VDSM-REG

- VDSM uses VDSM-REG to register each host with the Red Hat Virtualization Manager. VDSM-REG supplies information about itself and its host using port 80 or port 443.

- libvirt

- Libvirt facilitates the management of virtual machines and their associated virtual devices. When Red Hat Virtualization Manager initiates virtual machine life-cycle commands (start, stop, reboot), VDSM invokes libvirt on the relevant host machines to execute them.

- Storage Pool Manager, SPM

The Storage Pool Manager (SPM) is a role assigned to one host in a data center. The SPM host has sole authority to make all storage domain structure metadata changes for the data center. This includes creation, deletion, and manipulation of virtual disks, snapshots, and templates. It also includes allocation of storage for sparse block devices on a Storage Area Network (SAN). The role of SPM can be migrated to any host in a data center. As a result, all hosts in a data center must have access to all the storage domains defined in the data center.

Red Hat Virtualization Manager ensures that the SPM is always available. In case of storage connectivity errors, the Manager re-assigns the SPM role to another host.

- Guest Operating System

Guest operating systems do not need to be modified to be installed on virtual machines in a Red Hat Virtualization environment. The guest operating system, and any applications on the guest, are unaware of the virtualized environment and run normally.

Red Hat provides enhanced device drivers that allow faster and more efficient access to virtualized devices. You can also install the Red Hat Virtualization Guest Agent on guests, which provides enhanced guest information to the management console.

1.3. Components that Support the Manager

- Red Hat JBoss Enterprise Application Platform

- Red Hat JBoss Enterprise Application Platform is a Java application server. It provides a framework to support efficient development and delivery of cross-platform Java applications. The Red Hat Virtualization Manager is delivered using Red Hat JBoss Enterprise Application Platform.

The version of the Red Hat JBoss Enterprise Application Platform bundled with Red Hat Virtualization Manager is not to be used to serve other applications. It has been customized for the specific purpose of serving the Red Hat Virtualization Manager. Using the Red Hat JBoss Enterprise Application Platform that is included with the Manager for additional purposes adversely affects its ability to service the Red Hat Virtualization environment.

- Gathering Reports and Historical Data

The Red Hat Virtualization Manager includes a data warehouse that collects monitoring data about hosts, virtual machines, and storage. A number of pre-defined reports are available. Customers can analyze their environments and create reports using any query tools that support SQL.

The Red Hat Virtualization Manager installation process creates two databases. These databases are created on a Postgres instance which is selected during installation.

- The engine database is the primary data store used by the Red Hat Virtualization Manager. Information about the virtualization environment, such as its state, configuration, and performance, is stored in this database.

- The ovirt_engine_history database contains configuration information and statistical metrics which are collated over time from the engine operational database. The configuration data in the engine database is examined every minute, and changes are replicated to the ovirt_engine_history database. Tracking the changes to the database provides information on the objects in the database. This enables you to analyze and enhance the performance of your Red Hat Virtualization environment and resolve difficulties.

For more information on generating reports based on the ovirt_engine_history database see the History Database in the Red Hat Virtualization Data Warehouse Guide.

Important: The replication of data to the ovirt_engine_history database is performed by the RHEVM History Service, ovirt-engine-dwhd.

- Directory services

- Directory services provide centralized network-based storage of user and organizational information. Types of information stored include application settings, user profiles, group data, policies, and access control. The Red Hat Virtualization Manager supports Active Directory, Identity Management (IdM), OpenLDAP, and Red Hat Directory Server 9. There is also a local, internal domain for administration purposes only. This internal domain has only one user: the admin user.

1.4. Storage

Red Hat Virtualization uses a centralized storage system for virtual disks, templates, snapshots, and ISO files. Storage is logically grouped into storage pools, which are composed of storage domains. A storage domain is a combination of storage capacity and metadata that describes the internal structure of the storage. There are three types of storage domain: data, export, and ISO.

The data storage domain is the only one required by each data center. A data storage domain is exclusive to a single data center. Export and ISO domains are optional. Storage domains are shared resources, and must be accessible to all hosts in a data center.

Storage networking can be implemented using Network File System (NFS), Internet Small Computer System Interface (iSCSI), GlusterFS, Fibre Channel Protocol (FCP), or any POSIX compliant networked filesystem.

On NFS (and other POSIX-compliant filesystems) domains, all virtual disks, templates, and snapshots are simple files.

On SAN (iSCSI/FCP) domains, block devices are aggregated by Logical Volume Manager (LVM) into a Volume Group (VG). Each virtual disk, template and snapshot is a Logical Volume (LV) on the VG. See the Red Hat Enterprise Linux Logical Volume Manager Administration Guide for more information on LVM.

- Data storage domain

- Data domains hold the virtual hard disk images of all the virtual machines running in the environment. Templates and snapshots of the virtual machines are also stored in the data domain. A data domain cannot be shared across data centers.

- Export storage domain

- An export domain is a temporary storage repository that is used to copy and move images between data centers and Red Hat Virtualization environments. The export domain can be used to back up virtual machines and templates. An export domain can be moved between data centers, but can only be active in one data center at a time.

- ISO storage domain

- ISO domains store ISO files, which are logical CD-ROMs used to install operating systems and applications for the virtual machines. As a logical entity that replaces a library of physical CD-ROMs or DVDs, an ISO domain removes the data center’s need for physical media. An ISO domain can be shared across different data centers.
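The sharing rules for the three domain types can be summarized in a short sketch. The class and function names here are hypothetical and are not part of any Red Hat Virtualization API. The code only encodes the rules stated above, and it simplifies the export domain rule by treating "active in one data center" as "attached to one data center".

```python
from dataclasses import dataclass, field
from enum import Enum


class DomainType(Enum):
    DATA = "data"
    EXPORT = "export"
    ISO = "iso"


@dataclass
class StorageDomain:
    name: str
    type: DomainType
    # Names of the data centers the domain is currently attached to
    # (hypothetical field, for illustration only).
    attached_to: set = field(default_factory=set)


def can_attach(domain: StorageDomain, data_center: str) -> bool:
    """Return True if the sharing rules allow attaching the domain."""
    if data_center in domain.attached_to:
        return True  # already attached, nothing changes
    if domain.type is DomainType.ISO:
        return True  # ISO domains can be shared across data centers
    # Data domains are exclusive to one data center; export domains can
    # be active in only one data center at a time.
    return not domain.attached_to
```

Under these assumptions, a data domain already attached to one data center is rejected for a second one, while an ISO domain is accepted.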

1.5. Network

The Red Hat Virtualization network architecture facilitates connectivity between the different elements of the Red Hat Virtualization environment. The network architecture not only supports network connectivity, it also allows for network segregation.

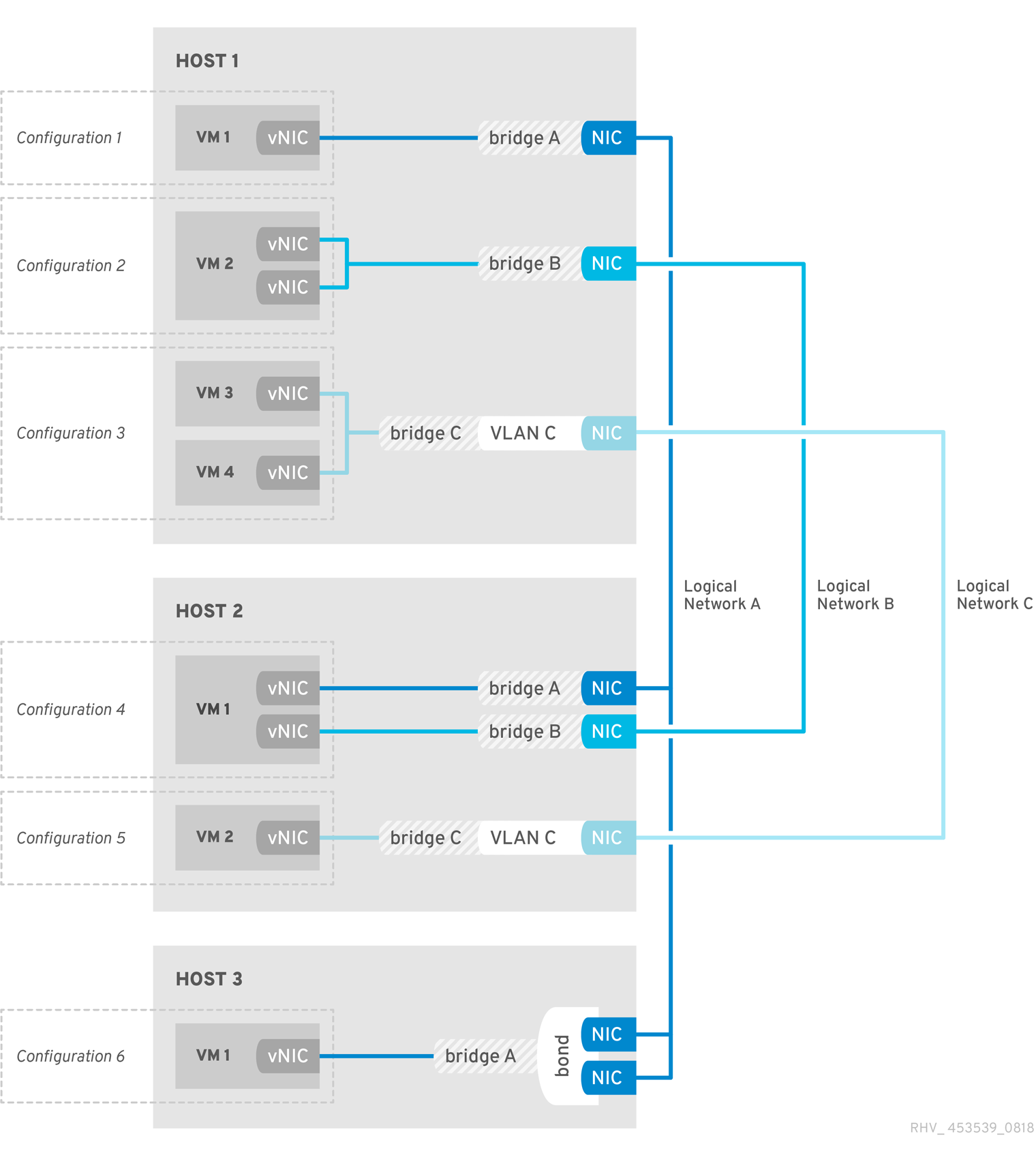

Figure 1.3. Network Architecture

Networking is defined in Red Hat Virtualization in several layers. The underlying physical networking infrastructure must be in place and configured to allow connectivity between the hardware and the logical components of the Red Hat Virtualization environment.

- Networking Infrastructure Layer

The Red Hat Virtualization network architecture relies on some common hardware and software devices:

- Network Interface Controllers (NICs) are physical network interface devices that connect a host to the network.

- Virtual NICs (VNICs) are logical NICs that operate using the host’s physical NICs. They provide network connectivity to virtual machines.

- Bonds bind multiple NICs into a single interface.

- Bridges are a packet-forwarding technique for packet-switching networks. They form the basis of virtual machine logical networks.

- Logical Networks

Logical networks allow segregation of network traffic based on environment requirements. The types of logical network are:

- logical networks that carry virtual machine network traffic,

- logical networks that do not carry virtual machine network traffic,

- optional logical networks,

- and required networks.

All logical networks can either be required or optional.

A logical network that carries virtual machine network traffic is implemented at the host level as a software bridge device. By default, one logical network is defined during the installation of the Red Hat Virtualization Manager: the ovirtmgmt management network.

Other logical networks that can be added by an administrator are: a dedicated storage logical network, and a dedicated display logical network. Logical networks that do not carry virtual machine traffic do not have an associated bridge device on hosts. They are associated with host network interfaces directly.

Red Hat Virtualization segregates management-related network traffic from migration-related network traffic. This makes it possible to use a dedicated network (without routing) for live migration, and ensures that the management network (ovirtmgmt) does not lose its connection to hypervisors during migrations.

- Explanation of logical networks on different layers

- Logical networks have different implications for each layer of the virtualization environment.

Data Center Layer

Logical networks are defined at the data center level. Each data center has the ovirtmgmt management network by default. Further logical networks are optional but recommended. Designation as a VM Network and a custom MTU can be set at the data center level. A logical network that is defined for a data center must also be added to the clusters that use the logical network.

Cluster Layer

Logical networks are made available from a data center, and must be added to the clusters that will use them. Each cluster is connected to the management network by default. You can optionally add to a cluster logical networks that have been defined for the cluster’s parent data center. When a required logical network has been added to a cluster, it must be implemented for each host in the cluster. Optional logical networks can be added to hosts as needed.

Host Layer

Virtual machine logical networks are implemented for each host in a cluster as a software bridge device associated with a given network interface. Non-virtual machine logical networks do not have associated bridges, and are associated with host network interfaces directly. Each host has the management network implemented as a bridge using one of its network devices as a result of being included in a Red Hat Virtualization environment. Further required logical networks that have been added to a cluster must be associated with network interfaces on each host to become operational for the cluster.

Virtual Machine Layer

Logical networks can be made available to virtual machines in the same way that a network can be made available to a physical machine. A virtual machine can have its virtual NIC connected to any virtual machine logical network that has been implemented on the host that runs it. The virtual machine then gains connectivity to any other devices or destinations that are available on the logical network it is connected to.
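The relationship between the layers can be illustrated with a minimal data model. This is a sketch only: the class names, fields, and helper functions are hypothetical and do not reflect the Manager's internal model or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LogicalNetwork:
    name: str
    vm_network: bool = True  # carries virtual machine traffic
    required: bool = True    # required or optional within a cluster


@dataclass
class Host:
    name: str
    # Names of the logical networks attached to this host's interfaces.
    attached: set = field(default_factory=set)


@dataclass
class Cluster:
    name: str
    networks: list = field(default_factory=list)  # added from the data center
    hosts: list = field(default_factory=list)


def missing_required_networks(cluster: Cluster, host: Host) -> list:
    """Required cluster networks that the host has not implemented yet."""
    return [n.name for n in cluster.networks
            if n.required and n.name not in host.attached]


def needs_bridge(network: LogicalNetwork) -> bool:
    """Only VM networks are implemented as a software bridge on a host."""
    return network.vm_network
```

For example, a host that has only ovirtmgmt attached in a cluster that also requires a storage network would be reported as missing that storage network, while an optional display network would not be reported.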

Example 1.1. Management Network

The management logical network, named ovirtmgmt, is created automatically when the Red Hat Virtualization Manager is installed. The ovirtmgmt network is dedicated to management traffic between the Red Hat Virtualization Manager and hosts. If no other specifically purposed bridges are set up, ovirtmgmt is the default bridge for all traffic.

1.6. Data Centers

A data center is the highest level of abstraction in Red Hat Virtualization. A data center contains three types of information:

- Storage

- This includes storage types, storage domains, and connectivity information for storage domains. Storage is defined for a data center, and available to all clusters in the data center. All host clusters within a data center have access to the same storage domains.

- Logical networks

- This includes details such as network addresses, VLAN tags and STP support. Logical networks are defined for a data center, and are optionally implemented at the cluster level.

- Clusters

- Clusters are groups of hosts with compatible processor cores, either AMD or Intel processors. Clusters are migration domains; virtual machines can be live-migrated to any host within a cluster, and not to other clusters. One data center can hold multiple clusters, and each cluster can contain multiple hosts.

Chapter 2. Storage

2.1. Storage Domains Overview

A storage domain is a collection of images that have a common storage interface. A storage domain contains complete images of templates and virtual machines (including snapshots), ISO files, and metadata about themselves. A storage domain can be made of either block devices (SAN - iSCSI or FCP) or a file system (NAS - NFS, GlusterFS, or other POSIX compliant file systems).

On NAS, all virtual disks, templates, and snapshots are files.

On SAN (iSCSI/FCP), each virtual disk, template or snapshot is a logical volume. Block devices are aggregated into a logical entity called a volume group, and then divided by LVM (Logical Volume Manager) into logical volumes for use as virtual hard disks. See the Red Hat Enterprise Linux Logical Volume Manager Administration Guide for more information on LVM.

Virtual disks can have one of two formats, either QCOW2 or raw. The type of storage can be either sparse or preallocated. Snapshots are always sparse but can be taken for disks of either format.

Virtual machines that share the same storage domain can be migrated between hosts that belong to the same cluster.

2.2. Types of Storage Backing Storage Domains

Storage domains can be implemented using block based and file based storage.

- File Based Storage

The file based storage types supported by Red Hat Virtualization are NFS, GlusterFS, other POSIX compliant file systems, and storage local to hosts.

File based storage is managed externally to the Red Hat Virtualization environment.

NFS storage is managed by a Red Hat Enterprise Linux NFS server, or other third party network attached storage server.

Hosts can manage their own local storage file systems.

- Block Based Storage

Block storage uses unformatted block devices. Block devices are aggregated into volume groups by the Logical Volume Manager (LVM). An instance of LVM runs on all hosts, unaware of the instances running on other hosts. VDSM adds clustering logic on top of LVM by scanning volume groups for changes. When changes are detected, VDSM updates individual hosts by telling them to refresh their volume group information. The hosts divide the volume group into logical volumes, writing logical volume metadata to disk. If more storage capacity is added to an existing storage domain, the Red Hat Virtualization Manager causes VDSM on each host to refresh volume group information.

A Logical Unit Number (LUN) is an individual block device. One of the supported block storage protocols, iSCSI or Fibre Channel, is used to connect to a LUN. The Red Hat Virtualization Manager manages software iSCSI connections to the LUNs. All other block storage connections are managed externally to the Red Hat Virtualization environment. Any changes in a block based storage environment, such as the creation, extension, or deletion of logical volumes and the addition of a new LUN, are handled by LVM on a specially selected host called the Storage Pool Manager. Changes are then synced by VDSM, which refreshes storage metadata across all hosts in the cluster.

2.3. Storage Domain Types

Red Hat Virtualization supports three types of storage domains. Each type supports a particular set of storage types.

- The Data Storage Domain stores the hard disk images of all virtual machines in the Red Hat Virtualization environment. Disk images may contain an installed operating system or data stored or generated by a virtual machine. Data storage domains support NFS, iSCSI, FCP, GlusterFS and POSIX compliant storage. A data domain cannot be shared between multiple data centers.

- The Export Storage Domain provides transitory storage for hard disk images and virtual machine templates being transferred between data centers. Additionally, export storage domains store backed up copies of virtual machines. Export storage domains support NFS storage. Multiple data centers can access a single export storage domain but only one data center can use it at a time.

- The ISO Storage Domain stores ISO files, also called images. ISO files are representations of physical CDs or DVDs. In the Red Hat Virtualization environment the common types of ISO files are operating system installation disks, application installation disks, and guest agent installation disks. These images can be attached to virtual machines and booted in the same way that physical disks are inserted into a disk drive and booted. ISO storage domains allow all hosts within the data center to share ISOs, eliminating the need for physical optical media.

2.4. Storage Formats for Virtual Disks

- QCOW2 Formatted Virtual Machine Storage

QCOW2 is a storage format for virtual disks. QCOW stands for QEMU copy-on-write. The QCOW2 format decouples the physical storage layer from the virtual layer by adding a mapping between logical and physical blocks. Each logical block is mapped to its physical offset, which enables storage over-commitment and virtual machine snapshots, where each QCOW volume only represents changes made to an underlying virtual disk.

The initial mapping points all logical blocks to the offsets in the backing file or volume. When a virtual machine writes data to a QCOW2 volume after a snapshot, the relevant block is read from the backing volume, modified with the new information and written into a new snapshot QCOW2 volume. Then the map is updated to point to the new place.

- Raw

The raw storage format has a performance advantage over QCOW2 in that no formatting is applied to virtual disks stored in the raw format. Virtual machine data operations on virtual disks stored in raw format require no additional work from hosts. When a virtual machine writes data to a given offset in its virtual disk, the I/O is written to the same offset on the backing file or logical volume.

Raw format requires that the entire space of the defined image be preallocated unless using externally managed thin provisioned LUNs from a storage array.
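The copy-on-write behavior described for QCOW2 can be sketched with a toy model. This is not the QCOW2 on-disk format: the `Volume` class, the dictionary-based map, and the `BLOCK_SIZE` value are assumptions chosen for illustration.

```python
BLOCK_SIZE = 4096  # arbitrary block size for this sketch


class Volume:
    """A toy volume: a sparse map from logical block number to data."""

    def __init__(self, backing=None):
        self.backing = backing  # parent volume in the chain, None for the base
        self.blocks = {}        # only the blocks written to this layer

    def read(self, block_no: int) -> bytes:
        # Walk down the chain until some layer holds the block.
        vol = self
        while vol is not None:
            if block_no in vol.blocks:
                return vol.blocks[block_no]
            vol = vol.backing
        return b"\x00" * BLOCK_SIZE  # never written: reads as zeros

    def write(self, block_no: int, offset: int, data: bytes) -> None:
        if offset < 0 or offset + len(data) > BLOCK_SIZE:
            raise ValueError("write must fit inside one block")
        # Copy-on-write: read the current block from the chain, modify it,
        # and store the result in this layer. The backing volume is untouched.
        block = bytearray(self.read(block_no))
        block[offset:offset + len(data)] = data
        self.blocks[block_no] = bytes(block)
```

A snapshot is then a new `Volume` whose `backing` is the previous one. Writes land only in the top layer, so each layer holds only the changes made since the snapshot. By contrast, the raw format needs no map at all, because a guest offset is also the offset on the backing file or logical volume.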

2.5. Virtual Disk Storage Allocation Policies

- Preallocated Storage

- All of the storage required for a virtual disk is allocated prior to virtual machine creation. If a 20 GB disk image is created for a virtual machine, the disk image uses 20 GB of storage domain capacity. Preallocated disk images cannot be enlarged. Preallocating storage can mean faster write times because no storage allocation takes place during runtime, at the cost of flexibility. Allocating storage this way reduces the capacity of the Red Hat Virtualization Manager to overcommit storage. Preallocated storage is recommended for virtual machines used for high intensity I/O tasks with less tolerance for latency in storage. Generally, server virtual machines fit this description.

If thin provisioning functionality provided by your storage back-end is being used, preallocated storage should still be selected from the Administration Portal when provisioning storage for virtual machines.

- Sparsely Allocated Storage

- The upper size limit for a virtual disk is set at virtual machine creation time. Initially, the disk image does not use any storage domain capacity. Usage grows as the virtual machine writes data to disk, until the upper limit is reached. Capacity is not returned to the storage domain when data in the disk image is removed. Sparsely allocated storage is appropriate for virtual machines with low or medium intensity I/O tasks with some tolerance for latency in storage. Generally, desktop virtual machines fit this description.

If thin provisioning functionality is provided by your storage back-end, it should be used as the preferred implementation of thin provisioning. Storage should be provisioned from the graphical user interface as preallocated, leaving thin provisioning to the back-end solution.
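The difference between the two allocation policies can be shown with a small accounting sketch. The `VirtualDisk` class is hypothetical, and it assumes that a guest reuses freed space before touching new blocks, which is a simplification.

```python
class VirtualDisk:
    """Tracks how much storage domain capacity a virtual disk consumes."""

    def __init__(self, virtual_size_gb: int, preallocated: bool):
        self.virtual_size_gb = virtual_size_gb
        self.preallocated = preallocated
        # Preallocated disks consume their full size at creation time;
        # sparse disks initially consume nothing.
        self.used_gb = virtual_size_gb if preallocated else 0
        self._guest_data_gb = 0  # data currently held by the guest

    def guest_write(self, gb: int) -> None:
        if self._guest_data_gb + gb > self.virtual_size_gb:
            raise ValueError("write exceeds the upper size limit of the disk")
        self._guest_data_gb += gb
        if not self.preallocated:
            # Sparse usage grows as data is written, up to the limit.
            self.used_gb = max(self.used_gb, self._guest_data_gb)

    def guest_delete(self, gb: int) -> None:
        self._guest_data_gb = max(0, self._guest_data_gb - gb)
        # used_gb is deliberately unchanged: capacity is not returned to
        # the storage domain when data in the disk image is removed.
```

With a 20 GB limit, a sparse disk starts at 0 GB used, grows to 5 GB after the guest writes 5 GB, and stays at 5 GB after the guest deletes data. A preallocated disk of the same size uses 20 GB from the start.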

2.6. Storage Metadata Versions in Red Hat Virtualization

Red Hat Virtualization stores information about storage domains as metadata on the storage domains themselves. Each major release of Red Hat Virtualization has seen improved implementations of storage metadata.

V1 metadata (Red Hat Virtualization 2.x series)

- Each storage domain contains metadata describing its own structure, and all of the names of physical volumes that are used to back virtual disks.

- Master domains additionally contain metadata for all the domains and physical volume names in the storage pool. The total size of this metadata is limited to 2 KB, limiting the number of storage domains that can be in a pool.

- Template and virtual machine base images are read only.

- V1 metadata is applicable to NFS, iSCSI, and FC storage domains.

V2 metadata (Red Hat Enterprise Virtualization 3.0)

- All storage domain and pool metadata is stored as logical volume tags rather than written to a logical volume. Metadata about virtual disk volumes is still stored in a logical volume on the domains.

- Physical volume names are no longer included in the metadata.

- Template and virtual machine base images are read only.

- V2 metadata is applicable to iSCSI, and FC storage domains.

V3 metadata (Red Hat Enterprise Virtualization 3.1 and later)

- All storage domain and pool metadata is stored as logical volume tags rather than written to a logical volume. Metadata about virtual disk volumes is still stored in a logical volume on the domains.

- Virtual machine and template base images are no longer read only. This change enables live snapshots, live storage migration, and clone from snapshot.

- Support for unicode metadata is added, for non-English volume names.

- V3 metadata is applicable to NFS, GlusterFS, POSIX, iSCSI, and FC storage domains.

V4 metadata (Red Hat Virtualization 4.1 and later)

- Support for QCOW2 compat levels - the QCOW image format includes a version number to allow introducing new features that change the image format so that it is incompatible with earlier versions. Newer QEMU versions (1.7 and above) support QCOW2 version 3, which is not backwards compatible, but introduces improvements such as zero clusters and improved performance.

- A new xleases volume to support VM leases - this feature adds the ability to acquire a lease per virtual machine on shared storage without attaching the lease to a virtual machine disk.

A VM lease offers two important capabilities:

- Avoiding split-brain.

- Starting a VM on another host if the original host becomes non-responsive, which improves the availability of HA VMs.

V5 metadata (Red Hat Virtualization 4.3 and later)

- Support for 4K (4096 byte) block storage.

- Support for variable SANLOCK alignments.

- Support for new properties:

  - BLOCK_SIZE - stores the block size of the storage domain in bytes.

  - ALIGNMENT - determines the formatting and size of the xlease volume (1MB to 8MB). It is determined by the maximum number of hosts to be supported (a value provided by the user) and the disk block size.

    For example, a 512b block size and support for 2000 hosts results in a 1MB xlease volume. A 4K block size with 2000 hosts results in an 8MB xlease volume. The default value of maximum hosts is 250, resulting in an xlease volume of 1MB for 4K disks.

- Deprecated properties:

  - The LOGBLKSIZE, PHYBLKSIZE, MTIME, and POOL_UUID fields were removed from the storage domain metadata.

  - The SIZE (size in blocks) field was replaced by CAP (size in bytes).

- You cannot boot from a 4K format disk, as the boot disk always uses a 512 byte emulation.

- The nfs format always uses 512 bytes.
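The relationship between block size, maximum hosts, and xlease volume size in V5 metadata can be expressed as a lookup. The function name is hypothetical. Only three data points come from the text above (512 byte blocks with 2000 hosts, 4K blocks with 2000 hosts, and 4K blocks with 250 hosts); the intermediate 2MB and 4MB steps are an assumption interpolated between them.

```python
MiB = 1024 * 1024

# (maximum hosts, alignment) steps for 4K block storage.
# The 250 and 2000 host rows are stated in the text; the 500 and 1000
# host rows are assumed for illustration.
_ALIGNMENT_4K = ((250, 1 * MiB), (500, 2 * MiB), (1000, 4 * MiB), (2000, 8 * MiB))


def xlease_alignment(block_size: int, max_hosts: int = 250) -> int:
    """Return the xlease volume alignment in bytes (sketch)."""
    if not 1 <= max_hosts <= 2000:
        raise ValueError("max_hosts must be between 1 and 2000")
    if block_size == 512:
        return 1 * MiB  # 512 byte blocks fit up to 2000 hosts in 1MB
    if block_size == 4096:
        for limit, alignment in _ALIGNMENT_4K:
            if max_hosts <= limit:
                return alignment
    raise ValueError("unsupported block size: %d" % block_size)
```

The default of 250 hosts mirrors the default maximum hosts value mentioned above.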

2.7. Storage Domain Autorecovery in Red Hat Virtualization

Hosts in a Red Hat Virtualization environment monitor storage domains in their data centers by reading metadata from each domain. A storage domain becomes inactive when all hosts in a data center report that they cannot access the storage domain.

Rather than disconnecting an inactive storage domain, the Manager assumes that the storage domain has become inactive temporarily, because of a temporary network outage for example. Once every 5 minutes, the Manager attempts to re-activate any inactive storage domains.

Administrator intervention may be required to remedy the cause of the storage connectivity interruption, but the Manager handles re-activating storage domains as connectivity is restored.
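The autorecovery behavior can be sketched as a periodic retry loop. The function names and the `try_activate` callback are hypothetical stand-ins for the Manager's internal logic; only the 5 minute interval and the "all hosts report no access" rule come from the text.

```python
import time

RECOVERY_INTERVAL_SECONDS = 5 * 60  # "once every 5 minutes"


def domain_is_inactive(host_reports: dict) -> bool:
    """host_reports maps host name to True if that host can access the domain.

    A domain becomes inactive only when every host reports no access.
    """
    return bool(host_reports) and not any(host_reports.values())


def autorecover(inactive_domains, try_activate, sleep=time.sleep, max_rounds=None):
    """Retry activation of inactive domains once per interval.

    try_activate(domain) is a hypothetical callback returning True on
    success. Returns the set of domains that are still inactive.
    """
    remaining = set(inactive_domains)
    rounds = 0
    while remaining:
        remaining = {d for d in remaining if not try_activate(d)}
        rounds += 1
        if not remaining or (max_rounds is not None and rounds >= max_rounds):
            break
        sleep(RECOVERY_INTERVAL_SECONDS)
    return remaining
```

Passing a no-op `sleep` makes the loop easy to exercise without waiting, for example `autorecover(["nfs1"], callback, sleep=lambda s: None)`.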

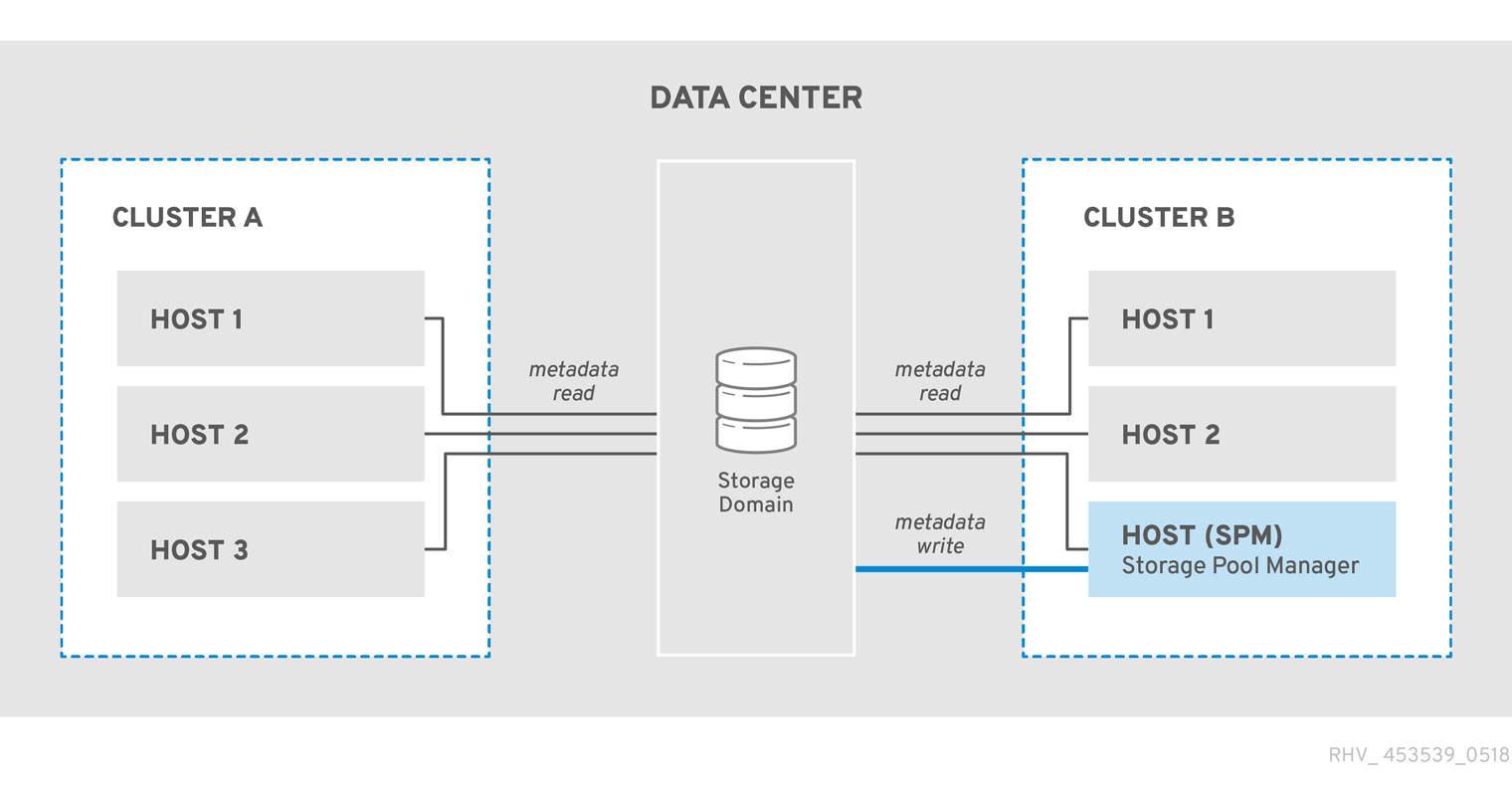

2.8. The Storage Pool Manager

Red Hat Virtualization uses metadata to describe the internal structure of storage domains. Structural metadata is written to a segment of each storage domain. Hosts work with the storage domain metadata based on a single writer, and multiple readers configuration. Storage domain structural metadata tracks image and snapshot creation and deletion, and volume and domain extension.

The host that can make changes to the structure of the data domain is known as the Storage Pool Manager (SPM). The SPM coordinates all metadata changes in the data center, such as creating and deleting disk images, creating and merging snapshots, copying images between storage domains, creating templates and storage allocation for block devices. There is one SPM for every data center. All other hosts can only read storage domain structural metadata.

A host can be manually selected as the SPM, or it can be assigned by the Red Hat Virtualization Manager. The Manager assigns the SPM role by causing a potential SPM host to attempt to assume a storage-centric lease. The lease allows the SPM host to write storage metadata. It is storage-centric because it is written to the storage domain rather than being tracked by the Manager or hosts. Storage-centric leases are written to a special logical volume in the master storage domain called leases. Metadata about the structure of the data domain is written to a special logical volume called metadata. The leases logical volume protects the metadata logical volume from changes.

The Manager uses VDSM to issue the spmStart command to a host, causing VDSM on that host to attempt to assume the storage-centric lease. If the host is successful it becomes the SPM and retains the storage-centric lease until the Red Hat Virtualization Manager requests that a new host assume the role of SPM.

The Manager moves the SPM role to another host if:

- The SPM host cannot access all storage domains, but can access the master storage domain

- The SPM host is unable to renew the lease, either because storage connectivity is lost or because the lease volume is full and no write operation can be performed

- The SPM host crashes

Figure 2.1. The Storage Pool Manager Exclusively Writes Structural Metadata.

2.9. Storage Pool Manager Selection Process

If a host has not been manually assigned the Storage Pool Manager (SPM) role, the SPM selection process is initiated and managed by the Red Hat Virtualization Manager.

First, the Red Hat Virtualization Manager requests that VDSM confirm which host has the storage-centric lease.

The Red Hat Virtualization Manager tracks the history of SPM assignment from the initial creation of a storage domain onward. The availability of the SPM role is confirmed in three ways:

- The "getSPMstatus" command: the Manager uses VDSM to check with the host that had SPM status last and receives one of "SPM", "Contending", or "Free".

- The metadata volume for a storage domain contains the last host with SPM status.

- The metadata volume for a storage domain contains the version of the last host with SPM status.

If an operational, responsive host retains the storage-centric lease, the Red Hat Virtualization Manager marks that host as SPM in the Administration Portal. No further action is taken.

If the SPM host does not respond, it is considered unreachable. If power management has been configured for the host, it is automatically fenced. If not, it requires manual fencing. The Storage Pool Manager role cannot be assigned to a new host until the previous Storage Pool Manager is fenced.

When the SPM role and storage-centric lease are free, the Red Hat Virtualization Manager assigns them to a randomly selected operational host in the data center.

If the SPM role assignment fails on a new host, the Red Hat Virtualization Manager adds the host to a list containing hosts the operation has failed on, marking these hosts as ineligible for the SPM role. This list is cleared at the beginning of the next SPM selection process so that all hosts are again eligible.

The Red Hat Virtualization Manager continues to request that the Storage Pool Manager role and storage-centric lease be assumed by a randomly selected host that is not on the list of failed hosts until the SPM selection succeeds.

Each time the current SPM is unresponsive or unable to fulfill its responsibilities, the Red Hat Virtualization Manager initiates the Storage Pool Manager selection process.
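The selection loop described in this section can be sketched roughly as follows. This is a minimal illustration, not Manager code: the `select_spm` function, the `try_assign` callback, and the host names are hypothetical, and the lease checks and fencing steps are left out.

```python
import random


def select_spm(hosts, try_assign, rng=random):
    """Pick an SPM among operational hosts, skipping hosts that already failed.

    hosts: list of operational host names (hypothetical identifiers).
    try_assign: callable(host) -> bool, a stand-in for asking a host to take
        the SPM role and the storage-centric lease.
    Returns the chosen host, or None if every host failed in this round.
    """
    failed = set()  # starts empty at the beginning of each selection process
    while True:
        candidates = [h for h in hosts if h not in failed]
        if not candidates:
            return None  # nothing left to try in this round
        host = rng.choice(candidates)  # random pick among eligible hosts
        if try_assign(host):
            return host
        failed.add(host)  # ineligible until the next selection process
```

In this sketch a caller that receives `None` would simply start a new selection process, at which point the list of failed hosts is empty again.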

2.10. Exclusive Resources and Sanlock in Red Hat Virtualization

Certain resources in the Red Hat Virtualization environment must be accessed exclusively.

The SPM role is one such resource. If more than one host were to become the SPM, there would be a risk of data corruption as the same data could be changed from two places at once.

Prior to Red Hat Enterprise Virtualization 3.1, SPM exclusivity was maintained and tracked using a VDSM feature called safelease. The lease was written to a special area on all of the storage domains in a data center. All of the hosts in an environment could track SPM status in a network-independent way. VDSM’s safelease maintained exclusivity of only one resource: the SPM role.

Sanlock provides the same functionality, but treats the SPM role as one of the resources that can be locked. Sanlock is more flexible because it allows additional resources to be locked.

Applications that require resource locking can register with Sanlock. Registered applications can then request that Sanlock lock a resource on their behalf, so that no other application can access it. For example, instead of VDSM locking the SPM status, VDSM now requests that Sanlock do so.

Locks are tracked on disk in a lockspace. There is one lockspace for every storage domain. In the case of the lock on the SPM resource, each host’s liveness is tracked in the lockspace by the host’s ability to renew the hostid it received from the Manager when it connected to storage, and to write a timestamp to the lockspace at a regular interval. The ids logical volume tracks the unique identifiers of each host, and is updated every time a host renews its hostid. The SPM resource can only be held by a live host.

Resources are tracked on disk in the leases logical volume. A resource is said to be taken when its representation on disk has been updated with the unique identifier of the process that has taken it. In the case of the SPM role, the SPM resource is updated with the hostid that has taken it.

The Sanlock process on each host only needs to check the resources once to see that they are taken. After an initial check, Sanlock can monitor the lockspaces until the timestamp of the host with a locked resource becomes stale.

Sanlock monitors the applications that use resources. For example, VDSM is monitored for SPM status and hostid. If the host is unable to renew its hostid from the Manager, it loses exclusivity on all resources in the lockspace. Sanlock updates the resource to show that it is no longer taken.

If the SPM host is unable to write a timestamp to the lockspace on the storage domain for a given amount of time, the host’s instance of Sanlock requests that the VDSM process release its resources. If the VDSM process responds, its resources are released, and the SPM resource in the lockspace can be taken by another host.

If VDSM on the SPM host does not respond to requests to release resources, Sanlock on the host kills the VDSM process. If the kill command is unsuccessful, Sanlock escalates by attempting to kill VDSM using SIGKILL. If the SIGKILL is unsuccessful, Sanlock depends on the watchdog daemon to reboot the host.

Every time VDSM on the host renews its hostid and writes a timestamp to the lockspace, the watchdog daemon receives a pet. When VDSM is unable to do so, the watchdog daemon is no longer being petted. After the watchdog daemon has not received a pet for a given amount of time, it reboots the host. This final level of escalation, if reached, guarantees that the SPM resource is released, and can be taken by another host.
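The escalation ladder and the watchdog timer can be pictured with a small model. The sketch below is illustrative only: `escalate`, `Watchdog`, and the step names are hypothetical and do not correspond to Sanlock's actual interfaces, and the timeout value is a placeholder rather than a real default.

```python
def escalate(steps):
    """Run escalation steps in order and return the name of the first that works.

    steps: list of (name, action) pairs, where action() returns True on success.
    If every step fails, the watchdog reboot is assumed to release the resource.
    """
    for name, action in steps:
        if action():
            return name
    return "watchdog reboot"


class Watchdog:
    """Toy watchdog: the host is rebooted if no pet arrives within `timeout`."""

    def __init__(self, timeout):
        self.timeout = timeout
        self.last_pet = 0.0

    def pet(self, now):
        # Called each time the hostid is renewed and the timestamp is written.
        self.last_pet = now

    def should_reboot(self, now):
        return now - self.last_pet > self.timeout
```

For example, passing three steps named "release request", "kill", and "SIGKILL" whose actions all return `False` makes `escalate` fall through to the watchdog reboot, which mirrors the final level of escalation described above.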

2.11. Thin Provisioning and Storage Over-Commitment

The Red Hat Virtualization Manager provides provisioning policies to optimize storage usage within the virtualization environment. A thin provisioning policy allows you to over-commit storage resources, provisioning storage based on the actual storage usage of your virtualization environment.

Storage over-commitment is the allocation of more storage to virtual machines than is physically available in the storage pool. Generally, virtual machines use less storage than what has been allocated to them. Thin provisioning allows a virtual machine to operate as if the storage defined for it has been completely allocated, when in fact only a fraction of the storage has been allocated.

While the Red Hat Virtualization Manager provides its own thin provisioning function, you should use the thin provisioning functionality of your storage back-end if it provides one.

To support storage over-commitment, VDSM defines a threshold which compares logical storage allocation with actual storage usage. This threshold is used to make sure that the data written to a disk image is smaller than the logical volume that backs the disk image. QEMU identifies the highest offset written to in a logical volume, which indicates the point of greatest storage use. VDSM monitors the highest offset marked by QEMU to ensure that the usage does not cross the defined threshold. So long as VDSM continues to indicate that the highest offset remains below the threshold, the Red Hat Virtualization Manager knows that the logical volume in question has sufficient storage to continue operations.

When QEMU indicates that usage has risen to exceed the threshold limit, VDSM communicates to the Manager that the disk image will soon reach the size of its logical volume. The Red Hat Virtualization Manager requests that the SPM host extend the logical volume. This process can be repeated as long as the data storage domain for the data center has available space. When the data storage domain runs out of available free space, you must manually add storage capacity to expand it.
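The threshold check can be expressed as a short calculation. The following sketch makes several assumptions for illustration: the threshold is modeled as a fraction of the logical volume size, the 0.5 default is a placeholder rather than the value VDSM uses, and the function name is hypothetical. The 1 GB extension step follows the figure given in the Logical Volume Extension section.

```python
GIB = 1024 ** 3


def extend_if_needed(highest_offset, lv_size, domain_free, fraction=0.5, chunk=GIB):
    """Return the (lv_size, domain_free) pair after one monitoring pass.

    highest_offset: highest offset written so far, in bytes (reported by QEMU).
    lv_size: current size of the backing logical volume, in bytes.
    domain_free: free space left in the data storage domain, in bytes.
    fraction: assumed threshold as a share of lv_size (placeholder value).
    chunk: assumed extension step.
    """
    threshold = lv_size * fraction
    if highest_offset <= threshold:
        return lv_size, domain_free  # usage is below the threshold
    if domain_free < chunk:
        # Corresponds to the case where capacity must be added manually.
        raise RuntimeError("storage domain has no free space left")
    return lv_size + chunk, domain_free - chunk
```

With these placeholder values, a 1 GiB volume whose highest written offset is 0.75 GiB grows to 2 GiB, and the storage domain loses 1 GiB of free space.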

2.12. Logical Volume Extension

The Red Hat Virtualization Manager uses thin provisioning to overcommit the storage available in a storage pool, and allocates more storage than is physically available. Virtual machines write data as they operate. A virtual machine with a thinly-provisioned disk image will eventually write more data than the logical volume backing its disk image can hold. When this happens, logical volume extension is used to provide additional storage and facilitate the continued operations for the virtual machine.

Red Hat Virtualization provides a thin provisioning mechanism over LVM. When using QCOW2 formatted storage, Red Hat Virtualization relies on the host system process qemu-kvm to map storage blocks on disk to logical blocks in a sequential manner. This allows, for example, the definition of a logical 100 GB disk backed by a 1 GB logical volume. When qemu-kvm crosses a usage threshold set by VDSM, the local VDSM instance makes a request to the SPM for the logical volume to be extended by another one gigabyte. VDSM on the host running a virtual machine in need of volume extension notifies the SPM VDSM that more space is required. The SPM extends the logical volume and the SPM VDSM instance causes the host VDSM to refresh volume group information and recognize that the extend operation is complete. The host can continue operations.

Logical Volume extension does not require that a host know which other host is the SPM; it could even be the SPM itself. The storage extension communication is done via a storage mailbox. The storage mailbox is a dedicated logical volume on the data storage domain. A host that needs the SPM to extend a logical volume writes a message in an area designated to that particular host in the storage mailbox. The SPM periodically reads the incoming mail, performs requested logical volume extensions, and writes a reply in the outgoing mail. After sending the request, a host monitors its incoming mail for responses every two seconds. When the host receives a successful reply to its logical volume extension request, it refreshes the logical volume map in device mapper to recognize the newly allocated storage.
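The mailbox exchange can be modeled in memory to make the message flow concrete. This is a toy sketch, not the on-disk format: the `StorageMailbox` class, its method names, and the per-host slot layout are assumptions made for illustration.

```python
class StorageMailbox:
    """In-memory stand-in for the mailbox logical volume."""

    def __init__(self, host_ids):
        # One request slot and one reply slot per host.
        self.requests = {h: None for h in host_ids}
        self.replies = {h: None for h in host_ids}

    def request_extend(self, host_id, volume):
        """Host side: write an extension request into this host's slot."""
        self.requests[host_id] = volume

    def spm_poll(self, extend):
        """SPM side: serve pending requests; extend(volume) returns True on success."""
        for host_id, volume in list(self.requests.items()):
            if volume is None:
                continue
            self.replies[host_id] = (volume, extend(volume))
            self.requests[host_id] = None

    def check_reply(self, host_id):
        """Host side: read and clear the reply slot; None means no reply yet."""
        reply = self.replies[host_id]
        self.replies[host_id] = None
        return reply
```

Because both sides only read and write slots, the requesting host never needs to know which host currently holds the SPM role, which is the property the mailbox design provides.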

When the physical storage available to a storage pool is nearly exhausted, multiple images can run out of usable storage with no means to replenish their resources. A storage pool that exhausts its storage causes QEMU to return an ENOSPC error, which indicates that the device no longer has any storage available. At this point, running virtual machines are automatically paused and manual intervention is required to add a new LUN to the volume group.

When a new LUN is added to the volume group, the Storage Pool Manager automatically distributes the additional storage to logical volumes that need it. The automatic allocation of additional resources allows the relevant virtual machines to automatically continue operations uninterrupted or resume operations if stopped.

2.13. The Effect of Storage Domain Actions on Storage Capacity

- Power on, power off, and reboot a stateless virtual machine

- These three processes affect the copy-on-write (COW) layer in a stateless virtual machine. For more information, see the Stateless row of the Virtual Machine General Settings table in the Virtual Machine Management Guide.

- Create a storage domain

Creating a block storage domain results in files with the same names as the seven LVs shown below, and initially should take less capacity.

- Delete a storage domain

- Deleting a storage domain frees up capacity on the disk by the same amount of capacity that the process deleted.

- Migrate a storage domain

- Migrating a storage domain does not use additional storage capacity. For more information about migrating storage domains, see Migrating Storage Domains Between Data Centers in the Same Environment in the Administration Guide.

- Move a virtual disk to another storage domain

Migrating a virtual disk requires enough free space to be available on the target storage domain. You can see the target domain’s approximate free space in the Administration Portal.

The storage types in the move process affect the visible capacity. For example, if you move a preallocated disk from block storage to file storage, the resulting free space may be considerably smaller than the initial free space.

Live migrating a virtual disk to another storage domain also creates a snapshot, which is automatically merged after the migration is complete. To learn more about moving virtual disks, see Moving a Virtual Disk in the Administration Guide.

- Pause a storage domain

- Pausing a storage domain does not use any additional storage capacity.

- Create a snapshot of a virtual machine

Creating a snapshot of a virtual machine can affect the storage domain capacity.

- Creating a live snapshot uses memory snapshots by default and generates two additional volumes per virtual machine. The first volume is the sum of the memory, video memory, and 200 MB of buffer. The second volume contains the virtual machine configuration, which is several MB in size. When using block storage, rounding up occurs to the nearest unit Red Hat Virtualization can provide.

- Creating an offline snapshot initially consumes 1 GB of block storage and is dynamic up to the size of the disk.

- Cloning a snapshot creates a new disk the same size as the original disk.

- Committing a snapshot removes all child volumes, depending on where in the chain the commit occurs.

- Deleting a snapshot eventually removes the child volume for each disk and is only supported with a running virtual machine.

- Previewing a snapshot creates a temporary volume per disk, so sufficient capacity must be available to allow the creation of the preview.

- Undoing a snapshot preview removes the temporary volume created by the preview.

- Attach and remove direct LUNs

- Attaching and removing direct LUNs does not affect the storage domain since they are not a storage domain component. For more information, see Overview of Live Storage Migration in the Administration Guide.

Chapter 3. Network

3.1. Network Architecture

Red Hat Virtualization networking can be discussed in terms of basic networking, networking within a cluster, and host networking configurations. Basic networking terms cover the basic hardware and software elements that facilitate networking. Networking within a cluster includes network interactions among cluster level objects such as hosts, logical networks and virtual machines. Host networking configurations covers supported configurations for networking within a host.

A well designed and built network ensures, for example, that high bandwidth tasks receive adequate bandwidth, that user interactions are not crippled by latency, and that virtual machines can be successfully migrated within a migration domain. A poorly built network can cause, for example, unacceptable latency, and migration and cloning failures resulting from network flooding.

An alternative method of managing your network is to integrate with Cisco Application Centric Infrastructure (ACI) by configuring Red Hat Virtualization on Cisco’s Application Policy Infrastructure Controller (APIC) version 3.1(1) and later, according to Cisco’s documentation. On the Red Hat Virtualization side, all that is required is connecting the hosts' NICs to the network and the virtual machines' vNICs to the required network. The remaining configuration tasks are managed by Cisco ACI.

3.2. Introduction: Basic Networking Terms

Red Hat Virtualization provides networking functionality between virtual machines, virtualization hosts, and wider networks using:

- A Network Interface Controller (NIC)

- A Bridge

- A Bond

- A Virtual NIC

- A Virtual LAN (VLAN)

NICs, bridges, and VNICs allow for network communication between hosts, virtual machines, local area networks, and the Internet. Bonds and VLANs are optionally implemented to enhance security, fault tolerance, and network capacity.

3.3. Network Interface Controller

The NIC (Network Interface Controller) is a network adapter or LAN adapter that connects a computer to a computer network. The NIC operates on both the physical and data link layers of the machine and allows network connectivity. All virtualization hosts in a Red Hat Virtualization environment have at least one NIC, though it is more common for a host to have two or more NICs.

One physical NIC can have multiple Virtual NICs (VNICs) logically connected to it. A virtual NIC acts as a physical network interface for a virtual machine. To distinguish between a VNIC and the NIC that supports it, the Red Hat Virtualization Manager assigns each VNIC a unique MAC address.

3.4. Bridge

A Bridge is a software device that uses packet forwarding in a packet-switched network. Bridging allows multiple network interface devices to share the connectivity of one NIC and appear on a network as separate physical devices. The bridge examines a packet’s source addresses to determine relevant target addresses. Once the target address is determined, the bridge adds the location to a table for future reference. This allows a host to redirect network traffic to virtual machine associated VNICs that are members of a bridge.
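The address-learning behavior can be sketched as a small forwarding table. The model below is a generic learning bridge written for illustration, not the Linux bridge implementation; the class name, port names, and shortened MAC strings are hypothetical.

```python
class LearningBridge:
    """Toy learning bridge: remembers which port each source address came from."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.table = {}  # MAC address -> port on which it was last seen

    def forward(self, in_port, src_mac, dst_mac):
        """Learn the source address, then return the ports that receive the frame."""
        self.table[src_mac] = in_port
        out_port = self.table.get(dst_mac)
        if out_port is None:
            # Unknown destination: send to every port except the incoming one.
            return self.ports - {in_port}
        if out_port == in_port:
            return set()  # destination is on the same port, nothing to forward
        return {out_port}
```

A first frame toward an unknown address is flooded to all other ports. Once the destination has sent a frame of its own, later frames are delivered only to the port where that address was learned.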

In Red Hat Virtualization a logical network is implemented using a bridge. It is the bridge rather than the physical interface on a host that receives an IP address. The IP address associated with the bridge is not required to be within the same subnet as the virtual machines that use the bridge for connectivity. If the bridge is assigned an IP address on the same subnet as the virtual machines that use it, the host is addressable within the logical network by virtual machines. As a rule it is not recommended to run network exposed services on a virtualization host. Guests are connected to a logical network by their VNICs, and the host is connected to remote elements of the logical network using its NIC. Each guest can have the IP address of its VNIC set independently, by DHCP or statically. Bridges can connect to objects outside the host, but such a connection is not mandatory.

Custom properties can be defined for both the bridge and the Ethernet connection. VDSM passes the network definition and custom properties to the setup network hook script.

3.5. Bonds

A bond is an aggregation of multiple network interface cards into a single software-defined device. Because bonded network interfaces combine the transmission capability of the network interface cards included in the bond to act as a single network interface, they can provide greater transmission speed than that of a single network interface card. Also, because all network interface cards in the bond must fail for the bond itself to fail, bonding provides increased fault tolerance. However, one limitation is that the network interface cards that form a bonded network interface must be of the same make and model to ensure that all network interface cards in the bond support the same options and modes.

The packet dispersal algorithm for a bond is determined by the bonding mode used.

Modes 1, 2, 3 and 4 support both virtual machine (bridged) and non-virtual machine (bridgeless) network types. Modes 0, 5 and 6 support non-virtual machine (bridgeless) networks only.

3.6. Bonding Modes

Red Hat Virtualization uses Mode 4 by default, but supports the following common bonding modes:

Mode 0 (round-robin policy)- Transmits packets through network interface cards in sequential order. Packets are transmitted in a loop that begins with the first available network interface card in the bond and ends with the last available network interface card in the bond. All subsequent loops then start with the first available network interface card. Mode 0 offers fault tolerance and balances the load across all network interface cards in the bond. However, Mode 0 cannot be used in conjunction with bridges, and is therefore not compatible with virtual machine logical networks.

Mode 1 (active-backup policy)- Sets all network interface cards to a backup state while one network interface card remains active. In the event of failure in the active network interface card, one of the backup network interface cards replaces that network interface card as the only active network interface card in the bond. The MAC address of the bond in Mode 1 is visible on only one port to prevent any confusion that might otherwise be caused if the MAC address of the bond changed to reflect that of the active network interface card. Mode 1 provides fault tolerance and is supported in Red Hat Virtualization.

Mode 2 (XOR policy)- Selects the network interface card through which to transmit packets based on the result of an XOR operation on the source and destination MAC addresses modulo network interface card slave count. This calculation ensures that the same network interface card is selected for each destination MAC address used. Mode 2 provides fault tolerance and load balancing and is supported in Red Hat Virtualization.

Mode 3 (broadcast policy)- Transmits all packets to all network interface cards. Mode 3 provides fault tolerance and is supported in Red Hat Virtualization.

Mode 4 (IEEE 802.3ad policy)- Creates aggregation groups in which the interfaces share the same speed and duplex settings. Mode 4 uses all network interface cards in the active aggregation group in accordance with the IEEE 802.3ad specification and is supported in Red Hat Virtualization.

Mode 5 (adaptive transmit load balancing policy)- Ensures the distribution of outgoing traffic accounts for the load on each network interface card in the bond and that the current network interface card receives all incoming traffic. If the network interface card assigned to receive traffic fails, another network interface card is assigned to the role of receiving incoming traffic. Mode 5 cannot be used in conjunction with bridges, therefore it is not compatible with virtual machine logical networks.

Mode 6 (adaptive load balancing policy)- Combines Mode 5 (adaptive transmit load balancing policy) with receive load balancing for IPv4 traffic without any special switch requirements. ARP negotiation is used for balancing the receive load. Mode 6 cannot be used in conjunction with bridges, therefore it is not compatible with virtual machine logical networks.
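Two of the selection rules above are simple enough to sketch directly. The code below illustrates the Mode 0 and Mode 2 descriptions as worded in this section; it is not the kernel bonding driver's hash, and the function names and the choice to XOR the full 48-bit addresses are assumptions made for the example.

```python
from itertools import cycle

# Modes usable with bridged (virtual machine) networks, as listed in the Bonds section.
VM_NETWORK_MODES = {1, 2, 3, 4}


def mac_to_int(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' to an integer."""
    return int(mac.replace(":", ""), 16)


def xor_slave(src_mac, dst_mac, slave_count):
    """Mode 2: (source MAC XOR destination MAC) modulo the number of slaves."""
    return (mac_to_int(src_mac) ^ mac_to_int(dst_mac)) % slave_count


def round_robin(slaves):
    """Mode 0: endless iterator that visits the slaves in order, then wraps."""
    return cycle(slaves)
```

Because `xor_slave` depends only on the two addresses and the slave count, the same source and destination pair always maps to the same interface, which matches the property described for Mode 2.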

3.7. Switch Configuration for Bonding

Switch configurations vary per the requirements of your hardware. Refer to the deployment and networking configuration guides for your operating system.

For every type of switch, it is important to set up the switch bonding with the Link Aggregation Control Protocol (LACP) and not the Cisco Port Aggregation Protocol (PAgP).

3.8. Virtual Network Interface Cards

Virtual network interface cards (vNICs) are virtual network interfaces that are based on the physical NICs of a host. Each host can have multiple NICs, and each NIC can be a base for multiple vNICs.

When you attach a vNIC to a virtual machine, the Red Hat Virtualization Manager creates several associations between the virtual machine to which the vNIC is being attached, the vNIC itself, and the physical host NIC on which the vNIC is based. Specifically, when a vNIC is attached to a virtual machine, a new vNIC and MAC address are created on the physical host NIC on which the vNIC is based. Then, the first time the virtual machine starts after that vNIC is attached, libvirt assigns the vNIC a PCI address. The MAC address and PCI address are then used to obtain the name of the vNIC (for example, eth0) in the virtual machine.

The process for assigning MAC addresses and associating those MAC addresses with PCI addresses is slightly different when creating virtual machines based on templates or snapshots:

- If PCI addresses have already been created for a template or snapshot, the vNICs on virtual machines created based on that template or snapshot are ordered in accordance with those PCI addresses. MAC addresses are then allocated to the vNICs in that order.

- If PCI addresses have not already been created for a template, the vNICs on virtual machines created based on that template are ordered alphabetically. MAC addresses are then allocated to the vNICs in that order.

- If PCI addresses have not already been created for a snapshot, the Red Hat Virtualization Manager allocates new MAC addresses to the vNICs on virtual machines based on that snapshot.
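The ordering rules for templates can be sketched as a sort followed by a sequential allocation. This is an illustration under stated assumptions: the dictionary keys `name` and `pci`, the string comparison of PCI addresses, and the MAC pool passed in as a list are all hypothetical simplifications, and the snapshot case in which entirely new MAC addresses are allocated is not modeled.

```python
def order_vnics(vnics):
    """Order vNICs by PCI address when every vNIC has one, otherwise by name.

    vnics: list of dicts with a 'name' key and an optional 'pci' key.
    """
    if all(v.get("pci") for v in vnics):
        return sorted(vnics, key=lambda v: v["pci"])
    return sorted(vnics, key=lambda v: v["name"])


def allocate_macs(vnics, mac_pool):
    """Hand out MAC addresses from mac_pool in the order given by order_vnics."""
    return {v["name"]: mac for v, mac in zip(order_vnics(vnics), mac_pool)}
```

With PCI addresses present, a vNIC named `nic2` at a lower PCI address receives the first MAC address even though `nic1` sorts first alphabetically.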

Once created, vNICs are added to a network bridge device. The network bridge devices are how virtual machines are connected to virtual machine logical networks.

Running the ip addr show command on a virtualization host shows all of the vNICs that are associated with virtual machines on that host. Also visible are any network bridges that have been created to back logical networks, and any NICs used by the host.

The console output from the command shows several devices: one loopback device (lo), one Ethernet device (eth0), one wireless device (wlan0), one VDSM dummy device (;vdsmdummy;), five bond devices (bond0, bond4, bond1, bond2, bond3), and one network bridge (ovirtmgmt).

vNICs are all members of a network bridge device and logical network. Bridge membership can be displayed using the brctl show command:

The console output from the brctl show command shows that the virtio vNICs are members of the ovirtmgmt bridge. All of the virtual machines that the vNICs are associated with are connected to the ovirtmgmt logical network. The eth0 NIC is also a member of the ovirtmgmt bridge. The eth0 device is cabled to a switch that provides connectivity beyond the host.

3.9. Virtual LAN (VLAN)

A VLAN (Virtual LAN) is an attribute that can be applied to network packets. Network packets can be "tagged" into a numbered VLAN. A VLAN is a security feature used to completely isolate network traffic at the switch level. VLANs are completely separate and mutually exclusive. The Red Hat Virtualization Manager is VLAN aware and able to tag and redirect VLAN traffic; however, VLAN implementation requires a switch that supports VLANs.

At the switch level, ports are assigned a VLAN designation. A switch applies a VLAN tag to traffic originating from a particular port, marking the traffic as part of a VLAN, and ensures that responses carry the same VLAN tag. A VLAN can extend across multiple switches. VLAN tagged network traffic on a switch is completely undetectable except by machines connected to a port designated with the correct VLAN. A given port can be tagged into multiple VLANs, which allows traffic from multiple VLANs to be sent to a single port, to be deciphered using software on the machine that receives the traffic.
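This section does not name the tagging format. On Ethernet networks, VLAN tags are commonly carried as IEEE 802.1Q headers: a 16-bit tag protocol identifier (0x8100) followed by 16 bits of tag control information, of which the low 12 bits hold the VLAN ID. The sketch below builds and parses such a tag; the function names are hypothetical, and nothing here is specific to Red Hat Virtualization.

```python
import struct

TPID_8021Q = 0x8100  # tag protocol identifier for IEEE 802.1Q


def vlan_tag(vlan_id, priority=0, dei=0):
    """Build the 4-byte 802.1Q tag for a VLAN ID in the usable range 1-4094."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_id(tag):
    """Extract the VLAN ID from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF
```

A machine that receives traffic for several VLANs on one port performs the equivalent of `parse_vlan_id` in software to sort frames by VLAN.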

3.10. Network Labels

Network labels can be used to greatly simplify several administrative tasks associated with creating and administering logical networks and associating those logical networks with physical host network interfaces and bonds.

A network label is a plain text, human readable label that can be attached to a logical network or a physical host network interface. There is no strict limit on the length of a label, but you must use a combination of lowercase and uppercase letters, underscores and hyphens; no spaces or special characters are allowed.

Attaching a label to a logical network or physical host network interface creates an association with other logical networks or physical host network interfaces to which the same label has been attached, as follows:

Network Label Associations

- When you attach a label to a logical network, that logical network will be automatically associated with any physical host network interfaces with the given label.

- When you attach a label to a physical host network interface, any logical networks with the given label will be automatically associated with that physical host network interface.

- Changing the label attached to a logical network or physical host network interface acts in the same way as removing a label and adding a new label. The association between related logical networks or physical host network interfaces is updated.
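The association rules above amount to matching items that carry the same label. The following sketch assumes, for simplicity, that each logical network and each host interface carries at most one label; the function name and the sample network and interface names are hypothetical.

```python
def label_associations(network_labels, nic_labels):
    """Return the set of (network, nic) pairs that share a label.

    network_labels: dict mapping logical network name -> label or None.
    nic_labels: dict mapping host interface name -> label or None.
    """
    pairs = set()
    for network, net_label in network_labels.items():
        if net_label is None:
            continue  # unlabeled networks are never associated automatically
        for nic, nic_label in nic_labels.items():
            if nic_label == net_label:
                pairs.add((network, nic))
    return pairs
```

Changing a label is then equivalent to recomputing this set: pairs that relied on the old label disappear, and pairs that match the new label appear.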

Network Labels and Clusters

- When a labeled logical network is added to a cluster and there is a physical host network interface in that cluster with the same label, the logical network is automatically added to that physical host network interface.

- When a labeled logical network is detached from a cluster and there is a physical host network interface in that cluster with the same label, the logical network is automatically detached from that physical host network interface.

Network Labels and Logical Networks With Roles

When a labeled logical network is assigned to act as a display network or migration network, that logical network is then configured on the physical host network interface using DHCP so that the logical network can be assigned an IP address.

Setting a label on a role network (for instance, "a migration network" or "a display network") causes a mass deployment of that network on all hosts. Such mass additions of networks are achieved through the use of DHCP. This method of mass deployment was chosen over a method of typing in static addresses, because of the unscalable nature of the task of typing in many static IP addresses.

3.11. Cluster Networking

Cluster level networking objects include:

- Clusters

- Logical Networks

Figure 3.1. Networking within a cluster

A data center is a logical grouping of multiple clusters and each cluster is a logical group of multiple hosts. Figure 3.1, “Networking within a cluster” depicts the contents of a single cluster.

Hosts in a cluster all have access to the same storage domains. Hosts in a cluster also have logical networks applied at the cluster level. For a virtual machine logical network to become operational for use with virtual machines, the network must be defined and implemented for each host in the cluster using the Red Hat Virtualization Manager. Other logical network types can be implemented on only the hosts that use them.

Multi-host network configuration automatically applies any updated network settings to all of the hosts within the data center to which the network is assigned.

3.12. Logical Networks

Logical networking allows the Red Hat Virtualization environment to separate network traffic by type. For example, the ovirtmgmt network is created by default during the installation of Red Hat Virtualization to be used for management communication between the Manager and hosts. A typical use for logical networks is to group network traffic with similar requirements and usage together. In many cases, a storage network and a display network are created by an administrator to isolate traffic of each respective type for optimization and troubleshooting.

The types of logical network are:

- logical networks that carry virtual machine network traffic,

- logical networks that do not carry virtual machine network traffic,

- optional logical networks,

- and required networks.

All logical networks can either be required or optional.

Logical networks are defined at the data center level, and added to a host. For a required logical network to be operational, it must be implemented for every host in a given cluster.

Each virtual machine logical network in a Red Hat Virtualization environment is backed by a network bridge device on a host. So when a new virtual machine logical network is defined for a cluster, a matching bridge device must be created on each host in the cluster before the logical network can become operational to be used by virtual machines. Red Hat Virtualization Manager automatically creates required bridges for virtual machine logical networks.

The bridge device created by the Red Hat Virtualization Manager to back a virtual machine logical network is associated with a host network interface. If the host network interface that is part of a bridge has network connectivity, then any network interfaces that are subsequently included in the bridge share the network connectivity of the bridge. When virtual machines are created and placed on a particular logical network, their virtual network cards are included in the bridge for that logical network. Those virtual machines can then communicate with each other and with other objects that are connected to the bridge.

Logical networks not used for virtual machine network traffic are associated with host network interfaces directly.

Example 3.1. Example usage of a logical network.

There are two hosts called Red and White in a cluster called Pink in a data center called Purple. Both Red and White have been using the default logical network, ovirtmgmt for all networking functions. The system administrator responsible for Pink decides to isolate network testing for a web server by placing the web server and some client virtual machines on a separate logical network. She decides to call the new logical network network_testing.

First, she defines the logical network for the Purple data center. She then applies it to the Pink cluster. Logical networks must be implemented on a host in maintenance mode. So, the administrator first migrates all running virtual machines to Red, and puts White in maintenance mode. Then she edits the Network associated with the physical network interface that will be included in the bridge. The Link Status for the selected network interface will change from Down to Non-Operational. The non-operational status is because the corresponding bridge must be set up on all hosts in the cluster by adding a physical network interface on each host in the Pink cluster to the network_testing network. Next she activates White, migrates all of the running virtual machines off of Red, and repeats the process for Red.

When both White and Red have the network_testing logical network bridged to a physical network interface, the network_testing logical network becomes Operational and is ready to be used by virtual machines.

3.13. Required Networks, Optional Networks, and Virtual Machine Networks

A required network is a logical network that must be available to all hosts in a cluster. When a host’s required network becomes non-operational, virtual machines running on that host are migrated to another host; the extent of this migration is dependent upon the chosen scheduling policy. This is beneficial if you have virtual machines running mission critical workloads.

An optional network is a logical network that has not been explicitly declared as Required. Optional networks can be implemented on only the hosts that use them. The presence or absence of optional networks does not affect the Operational status of a host. When a non-required network becomes non-operational, the virtual machines running on the network are not migrated to another host. This prevents unnecessary I/O overload caused by mass migrations. Note that when a logical network is created and added to clusters, the Required box is checked by default.
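The effect of the Required designation on host status can be sketched in Python. This is an illustrative model only, not the Manager's implementation; the `LogicalNetwork` class and the `host_stays_operational` function are hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalNetwork:
    name: str
    required: bool = True  # mirrors the Required box being checked by default


def host_stays_operational(cluster_networks, working_on_host):
    """Return True if every required network is working on the host.

    Optional networks are ignored: their presence or absence does not
    affect the Operational status of a host.
    """
    return all(
        net.name in working_on_host
        for net in cluster_networks
        if net.required
    )


networks = [
    LogicalNetwork("ovirtmgmt"),
    LogicalNetwork("network_testing", required=False),
]

# The optional network is down, the required one is up.
print(host_stays_operational(networks, {"ovirtmgmt"}))        # True
# The required network is down, so the host is affected.
print(host_stays_operational(networks, {"network_testing"}))  # False
```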

To change a network’s Required designation, from the Administration Portal, select a network, click the Cluster tab, and click the Manage Networks button.

A virtual machine network (called a VM network in the user interface) is a logical network designated to carry only virtual machine network traffic. Virtual machine networks can be required or optional. Virtual machines that use an optional virtual machine network will only start on hosts with that network.

3.14. Virtual Machine Connectivity

In Red Hat Virtualization, a virtual machine has its NIC put on a logical network at the time that the virtual machine is created. From that point, the virtual machine is able to communicate with any other destination on the same network.

From the host perspective, when a virtual machine is put on a logical network, the VNIC that backs the virtual machine’s NIC is added as a member to the bridge device for the logical network. For example, if a virtual machine is on the ovirtmgmt logical network, its VNIC is added as a member of the ovirtmgmt bridge of the host on which that virtual machine runs.
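On a host that uses standard Linux bridges, the members of a bridge are visible under `/sys/class/net/<bridge>/brif`. The following minimal sketch lists them; it assumes a Linux bridge (not another switch type), and the `bridge_members` helper is a hypothetical name introduced here, not part of VDSM.

```python
import os


def bridge_members(bridge, sysfs_root="/sys/class/net"):
    """List the interfaces attached to a Linux bridge.

    Returns an empty list if the device does not exist or is not a bridge.
    """
    brif = os.path.join(sysfs_root, bridge, "brif")
    if not os.path.isdir(brif):
        return []
    return sorted(os.listdir(brif))


# On a host, this would typically show the physical NIC plus one
# VNIC for each running virtual machine on the ovirtmgmt network.
print(bridge_members("ovirtmgmt"))
```

The `sysfs_root` parameter exists only so the function can be exercised against a test directory.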

3.15. Port Mirroring

Port mirroring copies layer 3 network traffic on a given logical network and host to a virtual interface on a virtual machine. This virtual machine can be used for network debugging and tuning, intrusion detection, and monitoring the behavior of other virtual machines on the same host and logical network.

The only traffic copied is internal to one logical network on one host. There is no increase in traffic on the network external to the host; however, a virtual machine with port mirroring enabled uses more host CPU and RAM than other virtual machines.

Port mirroring is enabled or disabled in the vNIC profiles of logical networks, and has the following limitations:

- Hot plugging vNICs with a profile that has port mirroring enabled is not supported.

- Port mirroring cannot be altered when the vNIC profile is attached to a virtual machine.

Given the above limitations, it is recommended that you enable port mirroring on an additional, dedicated vNIC profile.

Enabling port mirroring reduces the privacy of other network users.

3.16. Host Networking Configurations

Common types of networking configurations for virtualization hosts include:

Bridge and NIC configuration.

This configuration uses a bridge to connect one or more virtual machines (or guests) to the host’s NIC.

An example of this configuration is the automatic creation of the ovirtmgmt network when installing Red Hat Virtualization Manager. Then, during host installation, the Red Hat Virtualization Manager installs VDSM on the host. The VDSM installation process creates the ovirtmgmt bridge, which obtains the host’s IP address to enable communication with the Manager.

Important: Set all hosts in a cluster to use the same IP stack for their management network; either IPv4 or IPv6 only. Dual stack is not supported.

Bridge, VLAN, and NIC configuration.

A VLAN can be included in the bridge and NIC configuration to provide a secure channel for data transfer over the network and also to support the option to connect multiple bridges to a single NIC using multiple VLANs.

Bridge, Bond, and VLAN configuration.

A bond creates a logical link that combines the two (or more) physical Ethernet links. The resultant benefits include NIC fault tolerance and potential bandwidth extension, depending on the bonding mode.

Multiple Bridge, Multiple VLAN, and NIC configuration.

This configuration connects a NIC to multiple VLANs.

For example, to connect a single NIC to two VLANs, the network switch can be configured to pass network traffic that has been tagged into one of the two VLANs to one NIC on the host. The host uses two VNICs to separate VLAN traffic, one for each VLAN. Traffic tagged into either VLAN then connects to a separate bridge by having the appropriate VNIC as a bridge member. Each bridge, in turn, connects to multiple virtual machines.
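The layout in this example can be modeled as a small mapping from VLAN IDs to bridges. The sketch below is illustrative only: the `plan_vlan_bridges` function, the `eth0` NIC name, the network names, and the `<nic>.<vlan_id>` device naming are assumptions chosen for the example, not names that the Manager or VDSM is documented here to use.

```python
def plan_vlan_bridges(nic, vlan_networks):
    """Map each VLAN ID on one NIC to a (VLAN device, bridge) pair.

    vlan_networks: dict of VLAN ID -> logical network (bridge) name.
    """
    plan = []
    for vlan_id, network in sorted(vlan_networks.items()):
        # 802.1Q reserves VLAN IDs 0 and 4095.
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"invalid VLAN ID: {vlan_id}")
        plan.append({"vlan_device": f"{nic}.{vlan_id}", "bridge": network})
    return plan


# One NIC, two VLANs, one bridge per VLAN.
for entry in plan_vlan_bridges("eth0", {100: "web", 200: "db"}):
    print(entry)
```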

Note: You can also bond multiple NICs to facilitate a connection with multiple VLANs. Each VLAN in this configuration is defined over the bond comprising the multiple NICs. Each VLAN connects to an individual bridge and each bridge connects to one or more guests.

Chapter 4. Power Management

4.1. Introduction to Power Management and Fencing

The Red Hat Virtualization environment is most flexible and resilient when power management and fencing have been configured. Power management allows the Red Hat Virtualization Manager to control host power cycle operations, most importantly to reboot hosts on which problems have been detected. Fencing is used to isolate problem hosts from a functional Red Hat Virtualization environment by rebooting them, in order to prevent performance degradation. Fenced hosts can then be returned to responsive status through administrator action and be reintegrated into the environment.

Power management and fencing make use of special dedicated hardware in order to restart hosts independently of host operating systems. The Red Hat Virtualization Manager connects to power management devices using a network IP address or hostname. In the context of Red Hat Virtualization, a power management device and a fencing device are the same thing.

4.2. Power Management by Proxy in Red Hat Virtualization

The Red Hat Virtualization Manager does not communicate directly with fence agents. Instead, the Manager uses a proxy to send power management commands to a host power management device. The Manager uses VDSM to execute power management device actions, so another host in the environment is used as a fencing proxy.

You can select between:

- Any host in the same cluster as the host requiring fencing.

- Any host in the same data center as the host requiring fencing.

A viable fencing proxy host has a status of either UP or Maintenance.
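The proxy selection rules above can be sketched as follows. This is a hedged model, not the Manager's code: the `Host` class, the `pick_fence_proxy` function, and the host named Blue are hypothetical, and trying the cluster before the data center is an assumed preference order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    cluster: str
    data_center: str
    status: str


# A viable fencing proxy host has a status of either UP or Maintenance.
VIABLE_STATUSES = {"UP", "Maintenance"}


def pick_fence_proxy(target, hosts, preference=("cluster", "dc")):
    """Return the first viable proxy host for `target`, or None."""
    for scope in preference:
        for host in hosts:
            if host.name == target.name or host.status not in VIABLE_STATUSES:
                continue
            if host.data_center != target.data_center:
                continue
            if scope == "dc" or host.cluster == target.cluster:
                return host
    return None


red = Host("Red", "Pink", "Purple", "NonResponsive")
white = Host("White", "Pink", "Purple", "Maintenance")
blue = Host("Blue", "Green", "Purple", "UP")

print(pick_fence_proxy(red, [red, white, blue]).name)  # White
```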

4.3. Power Management

The Red Hat Virtualization Manager is capable of rebooting hosts that have entered a non-operational or non-responsive state, as well as preparing to power off under-utilized hosts to save power. This functionality depends on a properly configured power management device. The Red Hat Virtualization environment supports the following power management devices:

- American Power Conversion (apc)

- IBM Bladecenter (Bladecenter)

- Cisco Unified Computing System (cisco_ucs)

- Dell Remote Access Card 5 (drac5)

- Dell Remote Access Card 7 (drac7)

- Electronic Power Switch (eps)

- HP BladeSystem (hpblade)

- Integrated Lights Out (ilo, ilo2, ilo3, ilo4)

- Intelligent Platform Management Interface (ipmilan)

- Remote Supervisor Adapter (rsa)

- Fujitsu-Siemens RSB (rsb)

- Western Telematic, Inc (wti)

Red Hat recommends that HP servers use ilo3 or ilo4, Dell servers use drac5 or Integrated Dell Remote Access Controllers (idrac), and IBM servers use ipmilan. Integrated Management Module (IMM) uses the IPMI protocol, and therefore IMM users can use ipmilan.

APC 5.x power management devices are not supported by the apc fence agent. Use the apc_snmp fence agent instead.

In order to communicate with the listed power management devices, the Red Hat Virtualization Manager makes use of fence agents. The Red Hat Virtualization Manager allows administrators to configure a fence agent for the power management device in their environment with parameters the device will accept and respond to. Basic configuration options can be configured using the graphical user interface. Special configuration options can also be entered, and are passed unparsed to the fence device. Special configuration options are specific to a given fence device, while basic configuration options cover the functions provided by all supported power management devices. These basic functions are:

- Status: check the status of the host.

- Start: power on the host.

- Stop: power down the host.

- Restart: restart the host. This is implemented as the sequence stop, wait, status, start, wait, status.
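The restart sequence built from these basic functions can be sketched as below. The agent interface (`stop`, `start`, `status` methods returning `"on"` or `"off"`), the `FenceError` exception, and the wait length are assumptions made for illustration; real fence agents are separate programs with their own options.

```python
import time


class FenceError(RuntimeError):
    """Raised when the device does not reach the expected power state."""


def restart_host(agent, wait_seconds=5.0, sleep=time.sleep):
    """Restart a host as: stop, wait, status, start, wait, status."""
    agent.stop()
    sleep(wait_seconds)
    if agent.status() != "off":
        raise FenceError("host did not power off")
    agent.start()
    sleep(wait_seconds)
    if agent.status() != "on":
        raise FenceError("host did not power on")


class FakeAgent:
    """In-memory stand-in for a power management device."""

    def __init__(self):
        self.power = "on"
        self.calls = []

    def stop(self):
        self.calls.append("stop")
        self.power = "off"

    def start(self):
        self.calls.append("start")
        self.power = "on"

    def status(self):
        self.calls.append("status")
        return self.power


agent = FakeAgent()
# Record each wait instead of sleeping, so the order of steps is visible.
restart_host(agent, wait_seconds=0, sleep=lambda _s: agent.calls.append("wait"))
print(agent.calls)
# ['stop', 'wait', 'status', 'start', 'wait', 'status']
```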

Best practice is to test the power management configuration once when initially configuring it, and occasionally after that to ensure continued functionality.

Resilience is provided by properly configured power management devices in all of the hosts in an environment. Fencing agents allow the Red Hat Virtualization Manager to communicate with host power management devices to bypass the operating system on a problem host, and isolate the host from the rest of its environment by rebooting it. The Manager can then reassign the SPM role, if it was held by the problem host, and safely restart any highly available virtual machines on other hosts.

4.4. Fencing

In the context of the Red Hat Virtualization environment, fencing is a host reboot initiated by the Manager using a fence agent and performed by a power management device. Fencing allows a cluster to react to unexpected host failures as well as enforce power saving, load balancing, and virtual machine availability policies.

Fencing ensures that the role of Storage Pool Manager (SPM) is always assigned to a functional host. If the fenced host was the SPM, the SPM role is relinquished and reassigned to a responsive host. Because the host with the SPM role is the only host that is able to write data domain structure metadata, a non-responsive, un-fenced SPM host causes its environment to lose the ability to create and destroy virtual disks, take snapshots, extend logical volumes, and all other actions that require changes to data domain structure metadata.

When a host becomes non-responsive, all of the virtual machines that are currently running on that host can also become non-responsive. However, the non-responsive host retains the lock on the virtual machine hard disk images for virtual machines it is running. Attempting to start a virtual machine on a second host and assign the second host write privileges for the virtual machine hard disk image can cause data corruption.

Fencing allows the Red Hat Virtualization Manager to assume that the lock on a virtual machine hard disk image has been released; the Manager can use a fence agent to confirm that the problem host has been rebooted. When this confirmation is received, the Red Hat Virtualization Manager can start a virtual machine from the problem host on another host without risking data corruption. Fencing is the basis for highly-available virtual machines. A virtual machine that has been marked highly-available cannot be safely started on an alternate host without the certainty that doing so will not cause data corruption.

When a host becomes non-responsive, the Red Hat Virtualization Manager allows a grace period of thirty (30) seconds to pass before any action is taken, to allow the host to recover from any temporary errors. If the host has not become responsive by the time the grace period has passed, the Manager automatically begins to mitigate any negative impact from the non-responsive host. The Manager uses the fencing agent for the power management card on the host to stop the host, confirm it has stopped, start the host, and confirm that the host has been started. When the host finishes booting, it attempts to rejoin the cluster that it was a part of before it was fenced. If the issue that caused the host to become non-responsive has been resolved by the reboot, then the host is automatically set to Up status and is once again capable of starting and hosting virtual machines.
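The timing described above can be summarized in a short decision sketch. It is a simplified model under stated assumptions: the `fencing_outcome` function is hypothetical, a host that recovers exactly at the 30 second mark is treated as recovered, and the result for a host whose problem survives the reboot is simply reported as not Up, because the text does not say what status it receives.

```python
GRACE_PERIOD_SECONDS = 30


def fencing_outcome(recovered_after, reboot_fixes_issue,
                    grace=GRACE_PERIOD_SECONDS):
    """Return (fenced, up) for a host that became non-responsive.

    recovered_after: seconds until the host recovered on its own,
                     or None if it never did.
    reboot_fixes_issue: whether a reboot resolves the underlying problem.
    """
    if recovered_after is not None and recovered_after <= grace:
        # Temporary error: no action is taken during the grace period.
        return (False, True)
    # Grace period expired: stop, confirm stopped, start, confirm started.
    return (True, reboot_fixes_issue)


print(fencing_outcome(10, True))     # (False, True)
print(fencing_outcome(None, True))   # (True, True)
print(fencing_outcome(None, False))  # (True, False)
```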

4.5. Soft-Fencing Hosts

Hosts can sometimes become non-responsive due to an unexpected problem, and though VDSM is unable to respond to requests, the virtual machines that depend upon VDSM remain alive and accessible. In these situations, restarting VDSM returns VDSM to a responsive state and resolves this issue.

"SSH Soft Fencing" is a process where the Manager attempts to restart VDSM via SSH on non-responsive hosts. If the Manager fails to restart VDSM via SSH, the responsibility for fencing falls to the external fencing agent if an external fencing agent has been configured.

Soft-fencing over SSH works as follows. Fencing must be configured and enabled on the host, and a valid proxy host (a second host, in an UP state, in the data center) must exist. When the connection between the Manager and the host times out, the following happens:

- On the first network failure, the status of the host changes to "connecting".