Deploy a privacy-focused AI assistant

Build a healthcare AI assistant that protects your large language model with multiple layers of safeguards, including personally identifiable information (PII) detection and content moderation.

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Detailed description

This quickstart includes a healthcare AI assistant demo showing how guardrails could help protect HIPAA-compliant applications.

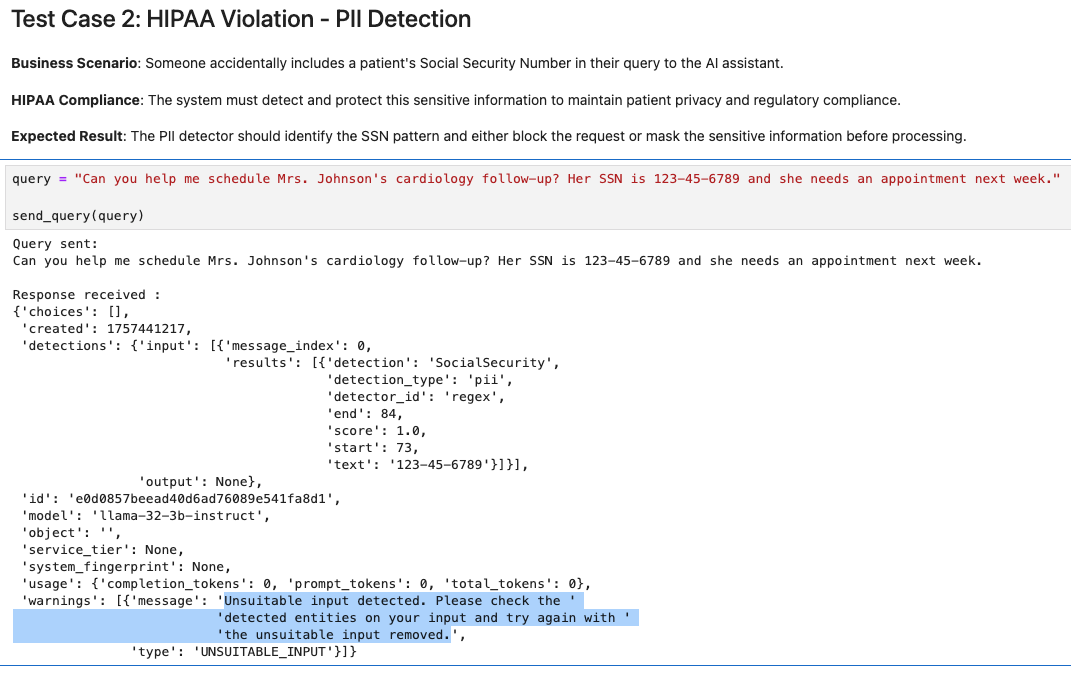

The demo tests a patient services AI with four protection layers:

- PII Detection - Protects Social Security Numbers and medical IDs

- Content Moderation - Blocks inappropriate language

- Prompt Injection Protection - Prevents system manipulation

- Gibberish Detection - Filters out nonsense queries

For example, here's how PII detection works in action:

Explore the complete interactive demo in docs/healthcare-guardrails.ipynb.

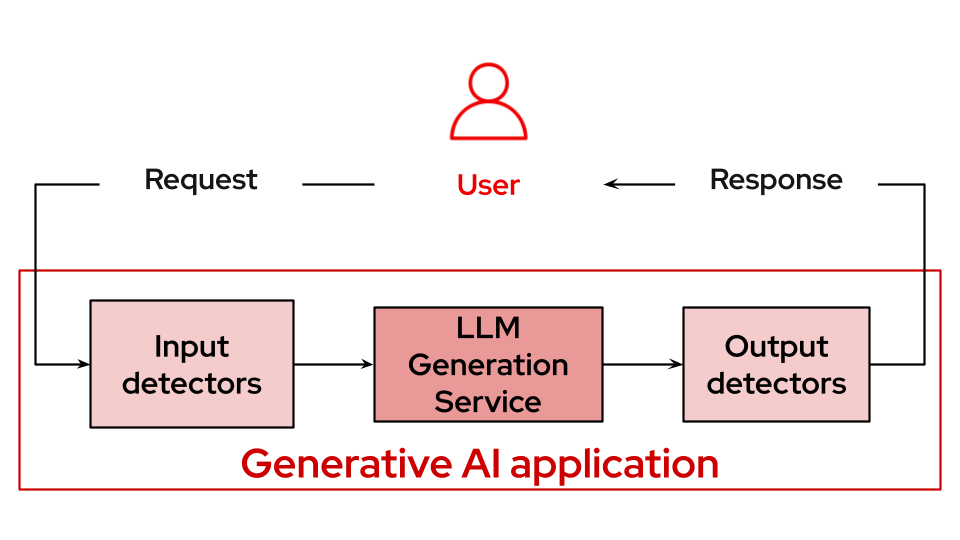
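The quickstart's detectors are services coordinated by the TrustyAI orchestrator, but the basic idea of the PII layer (inspect the prompt before it reaches the model and block it on a match) can be shown with a toy. The sketch below is hypothetical and not taken from the quickstart: `contains_ssn` flags text containing a string shaped like a US Social Security Number (`NNN-NN-NNNN`), and `check_prompt` uses it to decide whether to block a prompt.

```shell
# Toy illustration of a PII check (hypothetical helpers, not part of the quickstart).
# contains_ssn succeeds if the text contains an SSN-shaped string: NNN-NN-NNNN,
# not embedded in a longer run of digits.
contains_ssn() {
  printf '%s\n' "$1" | grep -Eq '(^|[^0-9])[0-9]{3}-[0-9]{2}-[0-9]{4}([^0-9]|$)'
}

# check_prompt decides whether a prompt may be forwarded to the model.
check_prompt() {
  if contains_ssn "$1"; then
    echo "blocked: possible SSN detected"
  else
    echo "allowed"
  fi
}
```

For example, `check_prompt "My SSN is 123-45-6789"` prints `blocked: possible SSN detected`. A single pattern like this misses many PII formats and can flag harmless numbers, so it only illustrates the idea; it is not a substitute for the detectors the quickstart deploys.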

The LLM Guardrails quickstart is a template for deploying multiple layers of protection for LLM applications, using the TrustyAI GuardrailsOrchestrator and specialized detector services.

This quickstart includes a Helm chart for deploying:

- A Llama 3.2 3B Instruct model with GPU acceleration.

- Multiple AI safety detectors: gibberish detection, prompt injection detection, and hate/profanity detection.

- TrustyAI GuardrailsOrchestrator for coordinating safety checks.

- Configurable detection thresholds and routing policies.
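Before installing, you may want to see what the chart will create. The sketch below assumes you have cloned the repository and are in its root, so the chart lives in `helm/`. `chart_kinds` is a hypothetical helper that renders the chart locally with `helm template` and counts the resource kinds it produces, without changing anything on the cluster.

```shell
# Hypothetical helper: summarize which resource kinds a chart renders.
# Usage: chart_kinds <release-name> <chart-dir>
chart_kinds() {
  # helm template renders the manifests locally; keep only top-level "kind:" lines
  # (indented "kind:" lines, such as RoleBinding subjects, are skipped).
  helm template "$1" "$2" | awk '/^kind:/ { print $2 }' | sort | uniq -c
}
```

For example, run `chart_kinds guardrails-demo helm/`. To look for the configurable thresholds, `helm show values helm/` prints the chart's default values; which keys control the detectors depends on the chart itself.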

Architecture diagrams

Requirements

Recommended hardware requirements

- GPU for the main LLM: 24+ GiB VRAM

- CPU cores: 12+ cores total (4 for the LLM + 8 for the detectors)

- Memory: 24+ GiB RAM total

- Storage: 10 GiB

Minimum hardware requirements

- GPU for the main LLM: 1 x NVIDIA GPU with 24 GiB VRAM

- CPU cores: 8+ cores total

- Memory: 16+ GiB RAM total

- Storage: 5 GiB
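To check the GPU requirement before installing, you can ask the cluster which nodes advertise allocatable NVIDIA GPUs. This sketch assumes the NVIDIA GPU Operator is installed and exposes GPUs as the `nvidia.com/gpu` resource; `gpu_nodes` is a hypothetical helper name. It only counts GPUs and does not check how much VRAM each one has.

```shell
# Hypothetical preflight helper: list nodes that advertise allocatable NVIDIA GPUs.
# Assumes GPUs are exposed as the extended resource "nvidia.com/gpu".
gpu_nodes() {
  # Print "<node-name><TAB><gpu-count>" per node; the dot in the key is escaped.
  oc get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.allocatable.nvidia\.com/gpu}{"\n"}{end}' |
    awk -F '\t' '$2 > 0'  # keep only nodes with at least one GPU
}
```

Each output line is a node name and its GPU count; empty output means no node offers a GPU for the LLM.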

Minimum software requirements

- Red Hat OpenShift 4.19.9

- Red Hat OpenShift Service Mesh 2

- Red Hat OpenShift AI 2.23.0

- KServe enabled in Red Hat OpenShift AI
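To confirm the KServe requirement, you can read the KServe management state from the OpenShift AI `DataScienceCluster` resource. This is a sketch that assumes a single `DataScienceCluster` exists, which is the usual setup; `require_kserve` is a hypothetical helper name.

```shell
# Hypothetical preflight helper: succeed only if KServe is enabled ("Managed")
# in the first DataScienceCluster resource found on the cluster.
require_kserve() {
  state=$(oc get datasciencecluster \
    -o jsonpath='{.items[0].spec.components.kserve.managementState}' 2>/dev/null)
  if [ "$state" = "Managed" ]; then
    echo "KServe is enabled"
  else
    echo "KServe is not enabled (managementState: ${state:-unknown})" >&2
    return 1
  fi
}
```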

Please note before you start

This example was tested on Red Hat OpenShift 4.19.9 and Red Hat OpenShift AI 2.23.0.

Required user permissions

- Cluster admin permissions are required
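A quick way to check for cluster admin rights is `oc auth can-i`, which prints `yes` or `no`. The hypothetical helper below asks whether you may perform any verb on any resource in all namespaces, which is what the cluster-admin role grants.

```shell
# Hypothetical helper: succeed only if the current user can do anything, anywhere.
is_cluster_admin() {
  [ "$(oc auth can-i '*' '*' --all-namespaces 2>/dev/null)" = "yes" ]
}
```

For example, `is_cluster_admin && echo "ok to install"`.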

Install

Clone the repository

git clone https://github.com/rh-ai-quickstart/guardrailing-llms.git && cd guardrailing-llms/

Create a new project

PROJECT="guardrails-demo"

oc new-project ${PROJECT}

Install with Helm

helm install ${PROJECT} helm/ --namespace ${PROJECT}

Wait for the pods to be ready
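Rather than re-running `oc get pod` by hand, you can block until the pods report Ready. This is a sketch built on `oc wait`; `wait_for_pods` is a hypothetical helper, and the 15-minute default timeout is an assumption meant to leave room for pulling images and loading the model. If the chart creates Jobs, their completed pods never become Ready, so in that case you would need to narrow the selector.

```shell
# Hypothetical helper: wait until every pod in the namespace is Ready,
# or fail after the timeout. Usage: wait_for_pods <namespace> [timeout]
wait_for_pods() {
  oc wait pod --all --for=condition=Ready -n "$1" --timeout="${2:-15m}"
}
```

For example, `wait_for_pods ${PROJECT}`, then confirm the result with `oc get pod`.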

oc get pod -n ${PROJECT}

You should see an output similar to:

Test

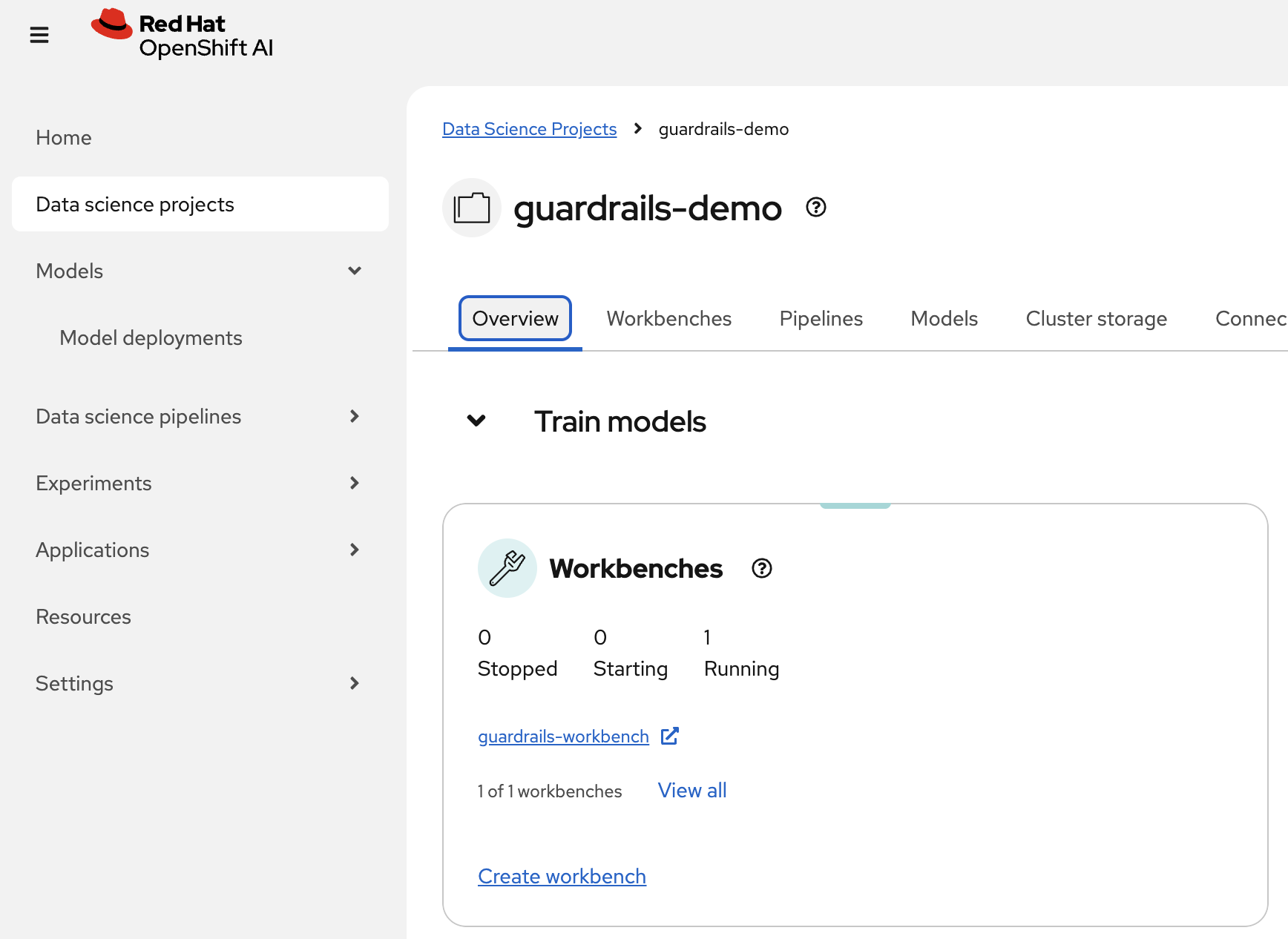

You can get the OpenShift AI dashboard URL by running:

oc get routes rhods-dashboard -n redhat-ods-applications
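The `oc get routes` command prints the route as a table. If you just want a URL to paste into a browser, a JSONPath query can extract the host. This sketch assumes the route is served over HTTPS; `dashboard_url` is a hypothetical helper name.

```shell
# Hypothetical helper: print the OpenShift AI dashboard as a browser-ready URL.
dashboard_url() {
  # Read only the route's host name instead of the table output.
  host=$(oc get route rhods-dashboard -n redhat-ods-applications \
    -o jsonpath='{.spec.host}') || return 1
  echo "https://${host}"
}
```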

In the dashboard, navigate to Data Science Projects -> guardrails-demo (or the name you set in ${PROJECT}, if you changed the default).

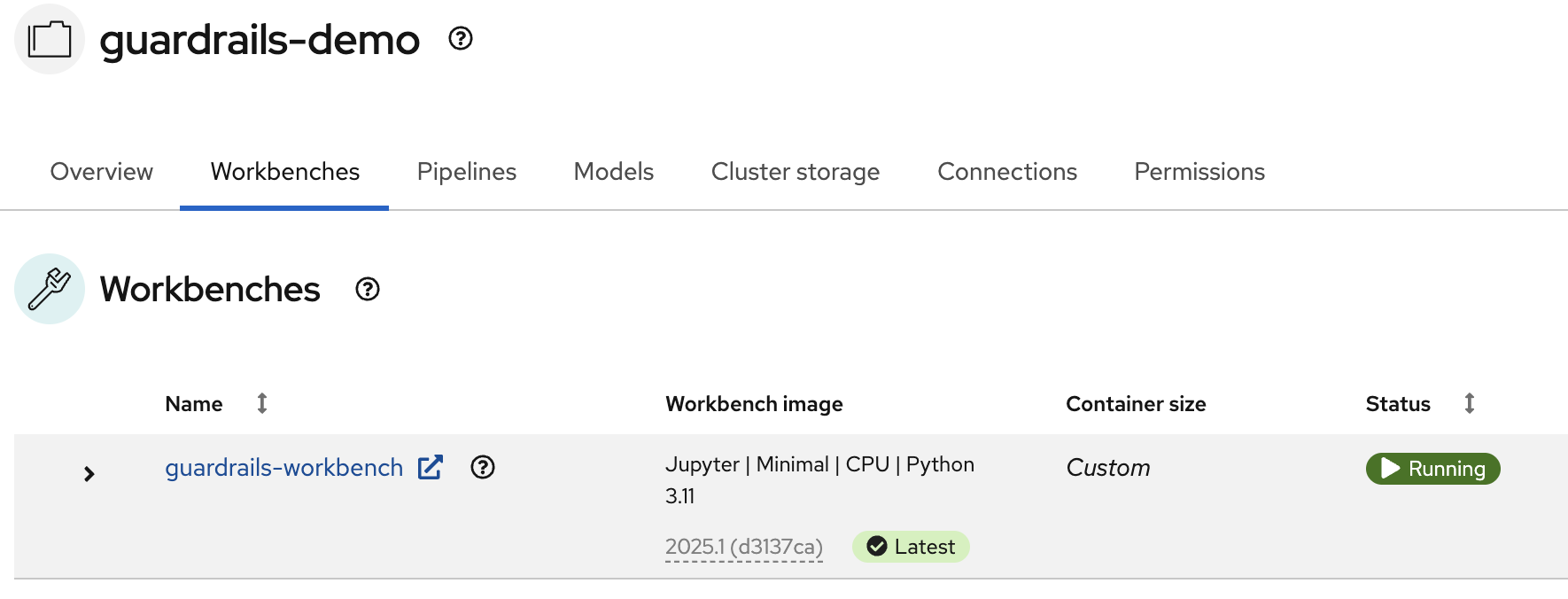

Inside the project, find guardrails-workbench under Workbenches and open it.

In the workbench's Jupyter file browser, the guardrailing-llms repository is already cloned. Go to docs/healthcare-guardrails.ipynb and follow the instructions in the notebook.

Enjoy!

Delete

helm uninstall ${PROJECT} --namespace ${PROJECT}
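`helm uninstall` removes the resources that belong to the release. The project itself was created separately with `oc new-project`, so it stays in place. If you created the project only for this demo, a cleanup sketch could remove both; `cleanup` is a hypothetical helper name.

```shell
# Hypothetical cleanup helper: uninstall the release, then delete the project.
# The project is deleted only if the uninstall succeeds. Usage: cleanup <project>
cleanup() {
  helm uninstall "$1" --namespace "$1" &&
    oc delete project "$1"
}
```

For example, `cleanup ${PROJECT}`.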