Este conteúdo não está disponível no idioma selecionado.

Performance Tuning Guide

Monitoring and optimizing subsystem throughput in RHEL 7

Abstract

Note

Chapter 1. Introduction

- Back up before you configure

- The default settings in Red Hat Enterprise Linux 7 are suitable for most services running under moderate loads. Improving the performance of a specific subsystem may affect another system adversely. Back up all data and configuration information before you begin tuning your system.

- Test configuration out of production

- The procedures documented in the Performance Tuning Guide are tested extensively by Red Hat engineers in both lab and field. Nonetheless, Red Hat recommends testing all planned configurations in a secure testing environment before applying those configurations to production systems.

Who Should Read This Book

- System administrators

- The Performance Tuning Guide documents the effects of each configuration option in detail so that system administrators can optimize Red Hat Enterprise Linux 7 for their specific purpose. Procedures in this guide are suitable for system administrators with Red Hat Certified Engineer (RHCE) certification or an equivalent amount of experience (3–5 years' experience deploying and managing Linux-based systems).

- System and business analysts

- This guide explains Red Hat Enterprise Linux 7 performance features at a high level. It provides information about how subsystems perform under specific workloads, allowing analysts to determine whether Red Hat Enterprise Linux 7 is suitable for their use case.Where possible, the Performance Tuning Guide also refers readers to more detailed feature documentation. This allows readers to develop the in-depth knowledge required to formulate the detailed deployment and optimization strategies necessary for infrastructure and deployment proposals.

Chapter 2. Performance Monitoring Tools

2.1. /proc

/proc "file system" is a directory that contains a hierarchy of files that represent the current state of the Linux kernel. It allows users and applications to see the kernel's view of the system.

/proc directory also contains information about system hardware and any currently running processes. Most files in the /proc file system are read-only, but some files (primarily those in /proc/sys) can be manipulated by users and applications to communicate configuration changes to the kernel.

/proc directory, refer to the Red Hat Enterprise Linux 7 System Administrator's Guide.

2.2. GNOME System Monitor

- System

- This tab displays basic information about the system's hardware and software.

- Processes

- This tab displays detailed information about active processes and the relationships between those processes. The processes displayed can be filtered to make certain processes easier to find. This tab also lets you perform some actions on the processes displayed, such as start, stop, kill, and change priority.

- Resources

- This tab displays the current CPU time usage, memory and swap space usage, and network usage.

- File Systems

- This tab lists all mounted file systems, and provides some basic information about each, such as the file system type, mount point, and memory usage.

2.3. Built-in Command-Line Tools

2.3.1. top

man top

$ man top2.3.2. ps

man ps

$ man ps2.3.3. Virtual Memory Statistics (vmstat)

man vmstat

$ man vmstat2.3.4. System Activity Reporter (sar)

-i option to set the interval time in seconds, for example, sar -i 60 tells sar to check CPU usage every minute.

man sar

$ man sar2.4. perf

2.5. turbostat

- invariant time stamp counters

- APERF model-specific registers

- MPERF model-specific registers

man turbostat

$ man turbostat2.6. iostat

await value and what can cause its values to be high, see the following Red Hat Knowledgebase article: What exactly is the meaning of value "await" reported by iostat?

2.7. irqbalance

man irqbalance

$ man irqbalance2.8. ss

ss -tmpie which displays detailed information (including internal information) about TCP sockets, memory usage, and processes using the socket.

man ss

$ man ss2.9. numastat

numa_hit values and low numa_miss values. Numastat also provides a number of command line options, which can show how system and process memory is distributed across NUMA nodes in the system.

man numastat

$ man numastat2.10. numad

/proc file system. It tries to maintain a specified resource usage level, and rebalances resource allocation when necessary by moving processes between NUMA nodes. numad attempts to achieve optimal NUMA performance by localizing and isolating significant processes on a subset of the system's NUMA nodes.

man numad

$ man numad2.11. SystemTap

2.12. OProfile

- Performance monitoring samples may not be precise. Because the processor may execute instructions out of order, samples can be recorded from a nearby instruction instead of the instruction that triggered the interrupt.

- OProfile expects processes to start and stop multiple times. As such, samples from multiple runs are allowed to accumulate. You may need to clear the sample data from previous runs.

- OProfile focuses on identifying problems with processes limited by CPU access. It is therefore not useful for identifying processes that are sleeping while they wait for locks on other events.

/usr/share/doc/oprofile-version.

2.13. Valgrind

man valgrind

$ man valgrind/usr/share/doc/valgrind-version when the valgrind package is installed.

2.14. pqos

- Monitoring

- Last Level Cache (LLC) usage and contention monitoring using the Cache Monitoring Technology (CMT)

- Per-thread memory bandwidth monitoring using the Memory Bandwidth Monitoring (MBM) technology

- Allocation

- Controlling the amount of LLC space that is available for specific threads and processes using the Cache Allocation Technology (CAT)

- Controlling code and data placement in the LLC using the Code and Data Prioritization (CDP) technology

pqos --show --verbose

# pqos --show --verboseAdditional Resources

- For more information about using pqos, see the pqos(8) man page.

- For detailed information on the CMT, MBM, CAT, and CDP processor features, see the official Intel documentation: Intel® Resource Director Technology (Intel® RDT).

Chapter 3. Tuned

3.1. Tuned Overview

udev to monitor connected devices and statically and dynamically tunes system settings according to a selected profile. Tuned is distributed with a number of predefined profiles for common use cases like high throughput, low latency, or powersave. It is possible to modify the rules defined for each profile and customize how to tune a particular device. To revert all changes made to the system settings by a certain profile, you can either switch to another profile or deactivate the tuned service.

Note

no-daemon mode, which does not require any resident memory. In this mode, tuned applies the settings and exits. The no-daemon mode is disabled by default because a lot of tuned functionality is missing in this mode, including D-Bus support, hot-plug support, or rollback support for settings. To enable no-daemon mode, set the following in the /etc/tuned/tuned-main.conf file: daemon = 0.

sysctl and sysfs settings and one-shot activation of several configuration tools like ethtool. Tuned also monitors the use of system components and tunes system settings dynamically based on that monitoring information.

/etc/tuned/tuned-main.conf file and changing the dynamic_tuning flag to 1.

3.1.1. Plug-ins

disk- Gets disk load (number of IO operations) per device and measurement interval.

net- Gets network load (number of transferred packets) per network card and measurement interval.

load- Gets CPU load per CPU and measurement interval.

cpu- Sets the CPU governor to the value specified by the

governorparameter and dynamically changes the PM QoS CPU DMA latency according to the CPU load. If the CPU load is lower than the value specified by theload_thresholdparameter, the latency is set to the value specified by thelatency_highparameter, otherwise it is set to value specified bylatency_low. Also the latency can be forced to a specific value without being dynamically changed further. This can be accomplished by setting theforce_latencyparameter to the required latency value. eeepc_she- Dynamically sets the FSB speed according to the CPU load; this feature can be found on some netbooks and is also known as the Asus Super Hybrid Engine. If the CPU load is lower or equal to the value specified by the

load_threshold_powersaveparameter, the plugin sets the FSB speed to the value specified by theshe_powersaveparameter (for details about the FSB frequencies and corresponding values, see the kernel documentation, the provided defaults should work for most users). If the CPU load is higher or equal to the value specified by theload_threshold_normalparameter, it sets the FSB speed to the value specified by theshe_normalparameter. Static tuning is not supported and the plugin is transparently disabled if the hardware support for this feature is not detected. net- Configures wake-on-lan to the values specified by the

wake_on_lanparameter (it uses same syntax as the ethtool utility). It also dynamically changes the interface speed according to the interface utilization. sysctl- Sets various

sysctlsettings specified by the plugin parameters. The syntax isname=value, wherenameis the same as the name provided by the sysctl tool. Use this plugin if you need to change settings that are not covered by other plugins (but prefer specific plugins if the settings are covered by them). usb- Sets autosuspend timeout of USB devices to the value specified by the

autosuspendparameter. The value 0 means that autosuspend is disabled. vm- Enables or disables transparent huge pages depending on the Boolean value of the

transparent_hugepagesparameter. audio- Sets the autosuspend timeout for audio codecs to the value specified by the

timeoutparameter. Currentlysnd_hda_intelandsnd_ac97_codecare supported. The value0means that the autosuspend is disabled. You can also enforce the controller reset by setting the Boolean parameterreset_controllertotrue. disk- Sets the elevator to the value specified by the

elevatorparameter. It also sets ALPM to the value specified by thealpmparameter, ASPM to the value specified by theaspmparameter, scheduler quantum to the value specified by thescheduler_quantumparameter, disk spindown timeout to the value specified by thespindownparameter, disk readahead to the value specified by thereadaheadparameter, and can multiply the current disk readahead value by the constant specified by thereadahead_multiplyparameter. In addition, this plugin dynamically changes the advanced power management and spindown timeout setting for the drive according to the current drive utilization. The dynamic tuning can be controlled by the Boolean parameterdynamicand is enabled by default.Note

Applying a tuned profile which stipulates a different disk readahead value overrides the disk readahead value settings if they have been configured using audevrule. Red Hat recommends using the tuned tool to adjust the disk readahead values. mounts- Enables or disables barriers for mounts according to the Boolean value of the

disable_barriersparameter. script- This plugin can be used for the execution of an external script that is run when the profile is loaded or unloaded. The script is called by one argument which can be

startorstop(it depends on whether the script is called during the profile load or unload). The script file name can be specified by thescriptparameter. Note that you need to correctly implement the stop action in your script and revert all setting you changed during the start action, otherwise the roll-back will not work. For your convenience, thefunctionsBash helper script is installed by default and allows you to import and use various functions defined in it. Note that this functionality is provided mainly for backwards compatibility and it is recommended that you use it as the last resort and prefer other plugins if they cover the required settings. sysfs- Sets various

sysfssettings specified by the plugin parameters. The syntax isname=value, wherenameis thesysfspath to use. Use this plugin in case you need to change some settings that are not covered by other plugins (please prefer specific plugins if they cover the required settings). video- Sets various powersave levels on video cards (currently only the Radeon cards are supported). The powersave level can be specified by using the

radeon_powersaveparameter. Supported values are:default,auto,low,mid,high, anddynpm. For details, refer to http://www.x.org/wiki/RadeonFeature#KMS_Power_Management_Options. Note that this plugin is experimental and the parameter may change in the future releases. bootloader- Adds parameters to the kernel boot command line. This plugin supports the legacy GRUB 1, GRUB 2, and also GRUB with Extensible Firmware Interface (EFI). Customized non-standard location of the grub2 configuration file can be specified by the

grub2_cfg_fileoption. The parameters are added to the current grub configuration and its templates. The machine needs to be rebooted for the kernel parameters to take effect.The parameters can be specified by the following syntax:cmdline=arg1 arg2 ... argn.

cmdline=arg1 arg2 ... argn.Copy to Clipboard Copied! Toggle word wrap Toggle overflow

3.1.2. Installation and Usage

yum install tuned

yum install tunedthroughput-performance- This is pre-selected on Red Hat Enterprise Linux 7 operating systems which act as compute nodes. The goal on such systems is the best throughput performance.

virtual-guest- This is pre-selected on virtual machines. The goal is best performance. If you are not interested in best performance, you would probably like to change it to the

balancedorpowersaveprofile (see bellow). balanced- This is pre-selected in all other cases. The goal is balanced performance and power consumption.

systemctl start tuned

systemctl start tunedsystemctl enable tuned

systemctl enable tunedtuned-adm

tuned-admtuned-adm list

tuned-adm listtuned-adm active

tuned-adm activetuned-adm profile profile

tuned-adm profile profiletuned-adm profile powersave

tuned-adm profile powersavehigh throughput by using the throughput-performance profile and concurrently setting the disk spindown to the low value by the spindown-disk profile. The following example optimizes the system for run in a virtual machine for the best performance and concurrently tune it for the low power consumption while the low power consumption is the priority:

tuned-adm profile virtual-guest powersave

tuned-adm profile virtual-guest powersavetuned-adm recommend

tuned-adm recommendtuned --help

tuned --help3.1.3. Custom Profiles

/usr/lib/tuned/ directory. Each profile has its own directory. The profile consists of the main configuration file called tuned.conf, and optionally other files, for example helper scripts.

/etc/tuned/ directory, which is used for custom profiles. If there are two profiles of the same name, the profile included in /etc/tuned/ is used.

/etc/tuned/ directory to use a profile included in /usr/lib/tuned/ with only certain parameters adjusted or overridden.

tuned.conf file contains several sections. There is one [main] section. The other sections are configurations for plugins instances. All sections are optional including the [main] section. Lines starting with the hash sign (#) are comments.

[main] section has the following option:

include=profile- The specified profile will be included, e.g.

include=powersavewill include thepowersaveprofile.

[NAME] type=TYPE devices=DEVICES

[NAME]

type=TYPE

devices=DEVICES

devices line can contain a list, a wildcard (*), and negation (!). You can also combine rules. If there is no devices line all devices present or later attached on the system of the TYPE will be handled by the plugin instance. This is same as using devices=*. If no instance of the plugin is specified, the plugin will not be enabled. If the plugin supports more options, they can be also specified in the plugin section. If the option is not specified, the default value will be used (if not previously specified in the included plugin). For the list of plugin options refer to Section 3.1.1, “Plug-ins”).

Example 3.1. Describing Plug-ins Instances

sd, such as sda or sdb, and does not disable barriers on them:

[data_disk] type=disk devices=sd* disable_barriers=false

[data_disk]

type=disk

devices=sd*

disable_barriers=false

sda1 and sda2:

[data_disk] type=disk devices=!sda1, !sda2 disable_barriers=false

[data_disk]

type=disk

devices=!sda1, !sda2

disable_barriers=false

[TYPE] devices=DEVICES

[TYPE]

devices=DEVICES

type line. The instance will then be referred to with a name, same as the type. The previous example could be then rewritten into:

[disk] devices=sdb* disable_barriers=false

[disk]

devices=sdb*

disable_barriers=false

include option, then the settings are merged. If they cannot be merged due to a conflict, the last conflicting definition overrides the previous settings in conflict. Sometimes, you do not know what was previously defined. In such cases, you can use the replace boolean option and set it to true. This will cause all the previous definitions with the same name to be overwritten and the merge will not happen.

enabled=false option. This has the same effect as if the instance was never defined. Disabling the plugin can be useful if you are redefining the previous definition from the include option and do not want the plugin to be active in your custom profile.

balanced profile and extends it the way that ALPM for all devices is set to the maximal powersaving.

isolcpus=2 to the kernel boot command line:

[bootloader] cmdline=isolcpus=2

[bootloader]

cmdline=isolcpus=2

3.1.4. Tuned-adm

tuned-adm utility. You can also create, modify, and delete profiles.

tuned-adm list

tuned-adm listtuned-adm active

tuned-adm activetuned-adm profile profile_name

tuned-adm profile profile_nametuned-adm profile latency-performance

tuned-adm profile latency-performancetuned-adm off

tuned-adm offNote

tuned-adm list

tuned-adm listyum search tuned-profiles

yum search tuned-profilesyum install tuned-profiles-profile-name

yum install tuned-profiles-profile-namebalanced- The default power-saving profile. It is intended to be a compromise between performance and power consumption. It tries to use auto-scaling and auto-tuning whenever possible. It has good results for most loads. The only drawback is the increased latency. In the current tuned release it enables the CPU, disk, audio and video plugins and activates the

conservativegovernor. Theradeon_powersaveis set toauto. powersave- A profile for maximum power saving performance. It can throttle the performance in order to minimize the actual power consumption. In the current tuned release it enables USB autosuspend, WiFi power saving and ALPM power savings for SATA host adapters. It also schedules multi-core power savings for systems with a low wakeup rate and activates the

ondemandgovernor. It enables AC97 audio power saving or, depending on your system, HDA-Intel power savings with a 10 seconds timeout. In case your system contains supported Radeon graphics card with enabled KMS it configures it to automatic power saving. On Asus Eee PCs a dynamic Super Hybrid Engine is enabled.Note

Thepowersaveprofile may not always be the most efficient. Consider there is a defined amount of work that needs to be done, for example a video file that needs to be transcoded. Your machine can consume less energy if the transcoding is done on the full power, because the task will be finished quickly, the machine will start to idle and can automatically step-down to very efficient power save modes. On the other hand if you transcode the file with a throttled machine, the machine will consume less power during the transcoding, but the process will take longer and the overall consumed energy can be higher. That is why thebalancedprofile can be generally a better option. throughput-performance- A server profile optimized for high throughput. It disables power savings mechanisms and enables sysctl settings that improve the throughput performance of the disk, network IO and switched to the

deadlinescheduler. CPU governor is set toperformance. latency-performance- A server profile optimized for low latency. It disables power savings mechanisms and enables sysctl settings that improve the latency. CPU governor is set to

performanceand the CPU is locked to the low C states (by PM QoS). network-latency- A profile for low latency network tuning. It is based on the

latency-performanceprofile. It additionally disables transparent hugepages, NUMA balancing and tunes several other network related sysctl parameters. network-throughput- Profile for throughput network tuning. It is based on the

throughput-performanceprofile. It additionally increases kernel network buffers. virtual-guest- A profile designed for Red Hat Enterprise Linux 7 virtual machines as well as VMware guests based on the enterprise-storage profile that, among other tasks, decreases virtual memory swappiness and increases disk readahead values. It does not disable disk barriers.

virtual-host- A profile designed for virtual hosts based on the

enterprise-storageprofile that, among other tasks, decreases virtual memory swappiness, increases disk readahead values and enables a more aggressive value of dirty pages. oracle- A profile optimized for Oracle databases loads based on

throughput-performanceprofile. It additionally disables transparent huge pages and modifies some other performance related kernel parameters. This profile is provided by tuned-profiles-oracle package. It is available in Red Hat Enterprise Linux 6.8 and later. desktop- A profile optimized for desktops, based on the

balancedprofile. It additionally enables scheduler autogroups for better response of interactive applications. cpu-partitioning- The

cpu-partitioningprofile partitions the system CPUs into isolated and housekeeping CPUs. To reduce jitter and interruptions on an isolated CPU, the profile clears the isolated CPU from user-space processes, movable kernel threads, interrupt handlers, and kernel timers.A housekeeping CPU can run all services, shell processes, and kernel threads.You can configure thecpu-partitioningprofile in the/etc/tuned/cpu-partitioning-variables.conffile. The configuration options are:isolated_cores=cpu-list- Lists CPUs to isolate. The list of isolated CPUs is comma-separated or the user can specify the range. You can specify a range using a dash, such as

3-5. This option is mandatory. Any CPU missing from this list is automatically considered a housekeeping CPU. no_balance_cores=cpu-list- Lists CPUs which are not considered by the kernel during system wide process load-balancing. This option is optional. This is usually the same list as

isolated_cores.

For more information oncpu-partitioning, see the tuned-profiles-cpu-partitioning(7) man page.

Note

Optional channel. These profiles are intended for backward compatibility and are no longer developed. The generalized profiles from the base package will mostly perform the same or better. If you do not have specific reason for using them, please prefer the above mentioned profiles from the base package. The compat profiles are following:

default- This has the lowest impact on power saving of the available profiles and only enables CPU and disk plugins of tuned.

desktop-powersave- A power-saving profile directed at desktop systems. Enables ALPM power saving for SATA host adapters as well as the CPU, Ethernet, and disk plugins of tuned.

laptop-ac-powersave- A medium-impact power-saving profile directed at laptops running on AC. Enables ALPM powersaving for SATA host adapters, Wi-Fi power saving, as well as the CPU, Ethernet, and disk plugins of tuned.

laptop-battery-powersave- A high-impact power-saving profile directed at laptops running on battery. In the current tuned implementation it is an alias for the

powersaveprofile. spindown-disk- A power-saving profile for machines with classic HDDs to maximize spindown time. It disables the tuned power savings mechanism, disables USB autosuspend, disables Bluetooth, enables Wi-Fi power saving, disables logs syncing, increases disk write-back time, and lowers disk swappiness. All partitions are remounted with the

noatimeoption. enterprise-storage- A server profile directed at enterprise-class storage, maximizing I/O throughput. It activates the same settings as the

throughput-performanceprofile, multiplies readahead settings, and disables barriers on non-root and non-boot partitions.

Note

atomic-host profile on physical machines, and the atomic-guest profile on virtual machines.

yum install tuned-profiles-atomic

yum install tuned-profiles-atomicatomic-host- A profile optimized for Red Hat Enterprise Linux Atomic Host, when used as a host system on a bare-metal server, using the throughput-performance profile. It additionally increases SELinux AVC cache, PID limit, and tunes netfilter connections tracking.

atomic-guest- A profile optimized for Red Hat Enterprise Linux Atomic Host, when used as a guest system based on the virtual-guest profile. It additionally increases SELinux AVC cache, PID limit, and tunes netfilter connections tracking.

Note

realtime, realtime-virtual-host and realtime-virtual-guest.

realtime profile, install the tuned-profiles-realtime package. Run, as root, the following command:

yum install tuned-profiles-realtime

yum install tuned-profiles-realtimerealtime-virtual-host and realtime-virtual-guest profiles, install the tuned-profiles-nfv package. Run, as root, the following command:

yum install tuned-profiles-nfv

yum install tuned-profiles-nfv3.1.5. powertop2tuned

yum install tuned-utils

yum install tuned-utilspowertop2tuned new_profile_name

powertop2tuned new_profile_name/etc/tuned directory and it bases it on the currently selected tuned profile. For safety reasons all PowerTOP tunings are initially disabled in the new profile. To enable them uncomment the tunings of your interest in the /etc/tuned/profile/tuned.conf. You can use the --enable or -e option that will generate the new profile with most of the tunings suggested by PowerTOP enabled. Some dangerous tunings like the USB autosuspend will still be disabled. If you really need them you will have to uncomment them manually. By default, the new profile is not activated. To activate it run the following command:

tuned-adm profile new_profile_name

tuned-adm profile new_profile_namepowertop2tuned --help

powertop2tuned --help3.2. Performance Tuning with tuned and tuned-adm

tuned Profiles Overview

throughput-performance.

- low latency for storage and network

- high throughput for storage and network

- virtual machine performance

- virtualization host performance

tuned Boot Loader plug-in

tuned Bootloader plug-in to add parameters to the kernel (boot or dracut) command line. Note that only the GRUB 2 boot loader is supported and a reboot is required to apply profile changes. For example, to add the quiet parameter to a tuned profile, include the following lines in the tuned.conf file:

[bootloader] cmdline=quiet

[bootloader]

cmdline=quiet

grub.cfg file.

Environment Variables and Expanding tuned Built-In Functions

tuned-adm profile profile_name and then grub2-mkconfig -o profile_path after updating GRUB 2 configuration, you can use Bash environment variables, which are expanded after running grub2-mkconfig. For example, the following environment variable is expanded to nfsroot=/root:

[bootloader] cmdline="nfsroot=$HOME"

[bootloader]

cmdline="nfsroot=$HOME"

tuned variables as an alternative to environment variables. In the following example, ${isolated_cores} expands to 1,2, so the kernel boots with the isolcpus=1,2 parameter:

${non_isolated_cores} expands to 0,3-5, and the cpulist_invert built-in function is called with the 0,3-5 arguments:

cpulist_invert function inverts the list of CPUs. For a 6-CPU machine, the inversion is 1,2, and the kernel boots with the isolcpus=1,2 command-line parameter.

tuned.conf:

isolated_cores=1,2 to the /etc/tuned/my-variables.conf file, the kernel boots with the isolcpus=1,2 parameter.

Modifying Default System tuned Profiles

Procedure 3.1. Creating a New Tuned Profile Directory

- In

/etc/tuned/, create a new directory named the same as the profile you want to create:/etc/tuned/my_profile_name/. - In the new directory, create a file named

tuned.conf, and include the following lines at the top:[main] include=profile_name

[main] include=profile_nameCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Include your profile modifications. For example, to use the settings from the

throughput-performanceprofile with the value ofvm.swappinessset to 5, instead of default 10, include the following lines:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - To activate the profile, run:

tuned-adm profile my_profile_name

# tuned-adm profile my_profile_nameCopy to Clipboard Copied! Toggle word wrap Toggle overflow

tuned.conf file enables you to keep all your profile modifications after system tuned profiles are updated.

/user/lib/tuned/ to /etc/tuned/. For example:

cp -r /usr/lib/tuned/throughput-performance /etc/tuned

# cp -r /usr/lib/tuned/throughput-performance /etc/tuned

/etc/tuned according to your needs. Note that if there are two profiles of the same name, the profile located in /etc/tuned/ is loaded. The disadvantage of this approach is that if a system profile is updated after a tuned upgrade, the changes will not be reflected in the now-outdated modified version.

Resources

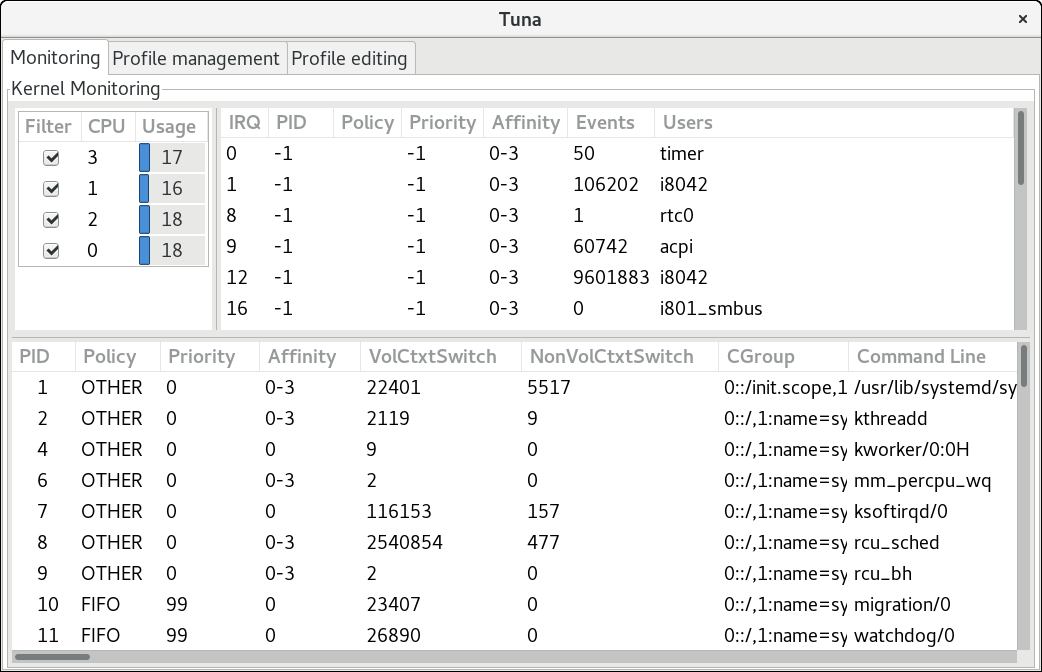

Chapter 4. Tuna

tuna command without any arguments to start the Tuna graphical user interface (GUI). Use the tuna -h command to display available command-line interface (CLI) options. Note that the tuna(8) manual page distinguishes between action and modifier options.

Important

tuna --save=filename command with a descriptive file name to save the current configuration. Note that this command does not save every option that Tuna can change, but saves the kernel thread changes only. Any processes that are not currently running when they are changed are not saved.

4.1. Reviewing the System with Tuna

tuna --show_threads command:

--threads option before --show_threads:

tuna --threads=pid_or_cmd_list --show_threads

# tuna --threads=pid_or_cmd_list --show_threadstuna --show_irqs command:

--irqs option before --show_irqs:

tuna --irqs=number_or_user_list --show_irqs

# tuna --irqs=number_or_user_list --show_irqs4.2. Tuning CPUs with Tuna

/proc/cpuinfo file for detailed information.

tuna --cpus=cpu_list --run=COMMAND

# tuna --cpus=cpu_list --run=COMMANDtuna --cpus=cpu_list --isolate

# tuna --cpus=cpu_list --isolatetuna --cpus=cpu_list --include

# tuna --cpus=cpu_list --include--cpus=0,2.

4.3. Tuning IRQs with Tuna

/proc/interrpupts file. You can also use the tuna --show_irqs command.

--irqs parameter:

tuna --irqs=irq_list --run=COMMAND

# tuna --irqs=irq_list --run=COMMAND--move parameter:

tuna --irqs=irq_list --cpus=cpu_list --move

# tuna --irqs=irq_list --cpus=cpu_list --move--cpus=0,2.

sfc1 and spread them over two CPUs:

tuna --irqs=sfc1\* --cpus=7,8 --move --spread

# tuna --irqs=sfc1\* --cpus=7,8 --move --spread--show_irqs parameter both before and after modifying the IRQs with the --move parameter:

Note

4.4. Tuning Tasks with Tuna

--priority parameter:

tuna --threads=pid_or_cmd_list --priority=[policy:]rt_priority

# tuna --threads=pid_or_cmd_list --priority=[policy:]rt_priority- The pid_or_cmd_list argument is a list of comma-separated PIDs or command-name patterns.

- Set the policy to

RRfor round-robin,FIFOfor first in, first out, orOTHERfor the default policy.For an overview of the scheduling policies, see Section 6.3.6, “Tuning Scheduling Policy”. - Set the rt_priority in the range 1–99. 1 is the lowest priority, and 99 is the highest priority.

tuna --threads=7861 --priority=RR:40

# tuna --threads=7861 --priority=RR:40--show_threads parameter both before and after the modifying --priority parameter:

4.5. Examples of Using Tuna

Example 4.1. Assigning Tasks to Specific CPUs

ssh threads run on CPU 0 and 1, and all http threads on CPU 2 and 3.

tuna --cpus=0,1 --threads=ssh\* --move --cpus=2,3 --threads=http\* --move

# tuna --cpus=0,1 --threads=ssh\* --move --cpus=2,3 --threads=http\* --move- Select CPUs 0 and 1.

- Select all threads that begin with

ssh. - Move the selected threads to the selected CPUs. Tuna sets the affinity mask of threads starting with

sshto the appropriate CPUs. The CPUs can be expressed numerically as 0 and 1, in hex mask as0x3, or in binary as11. - Reset the CPU list to 2 and 3.

- Select all threads that begin with

http. - Move the selected threads to the selected CPUs. Tuna sets the affinity mask of threads starting with

httpto the appropriate CPUs. The CPUs can be expressed numerically as 2 and 3, in hex mask as0xC, or in binary as1100.

Example 4.2. Viewing Current Configurations

--show_threads (-P) parameter to display the current configuration, and then tests if the requested changes were made as expected.

- Select all threads that begin with

gnome-sc. - Show the selected threads to enable the user to verify their affinity mask and RT priority.

- Select CPU 0.

- Move the

gnome-scthreads to the selected CPU (CPU 0). - Show the result of the move.

- Reset the CPU list to CPU 1.

- Move the

gnome-scthreads to the selected CPU (CPU 1). - Show the result of the move.

- Add CPU 0 to the CPU list.

- Move the

gnome-scthreads to the selected CPUs (CPUs 0 and 1). - Show the result of the move.

Chapter 5. Performance Co-Pilot (PCP)

5.1. PCP Overview and Resources

- the monitoring and management of real-time data

- the logging and retrieval of historical data

pmcd) is responsible for collecting performance data on the host system, and various client tools, such as pminfo or pmstat, can be used to retrieve, display, archive, and process this data on the same host or over the network. The pcp package provides the command-line tools and underlying functionality. The graphical tool requires the pcp-gui package.

Resources

- The manual page named PCPIntro serves as an introduction to Performance Co-Pilot. It provides a list of available tools as well as a description of available configuration options and a list of related manual pages. By default, comprehensive documentation is installed in the

/usr/share/doc/pcp-doc/directory, notably the Performance Co-Pilot User's and Administrator's Guide and Performance Co-Pilot Programmer's Guide. - For information on PCP, see the Index of Performance Co-Pilot (PCP) articles, solutions, tutorials and white papers on the Red Hat Customer Portal.

- If you need to determine what PCP tool has the functionality of an older tool you are already familiar with, see the Side-by-side comparison of PCP tools with legacy tools Red Hat Knowledgebase article.

- See the official PCP documentation for an in-depth description of the Performance Co-Pilot and its usage. If you want to start using PCP on Red Hat Enterprise Linux quickly, see the PCP Quick Reference Guide. The official PCP website also contains a list of frequently asked questions.

5.2. XFS File System Performance Analysis with Performance Co-Pilot

5.2.1. Installing XFS PMDA to Gather XFS Data with PCP

yum install pcp

# yum install pcpsystemctl enable pmcd.service

# systemctl enable pmcd.servicesystemctl start pmcd.service

# systemctl start pmcd.serviceInstalling XFS PMDA Manually

- The

collectorrole allows the collection of performance metrics on the current system

- The

monitorrole allows the system to monitor local systems, remote systems, or both.

both collector and monitor, which allows the XFS PMDA to operate correctly in most scenarios.

cd /var/lib/pcp/pmdas/xfs/

# cd /var/lib/pcp/pmdas/xfs/xfs directory, enter:

5.2.2. Configuring and Examining XFS Performance Metrics

Examining Metrics with pminfo

pminfo tool, which displays information about available performance metrics. The command displays a list of all available metrics provided by the XFS PMDA.

pminfo xfs

# pminfo xfs-t metric- Displays one-line help information describing the selected metric.

-T metric- Displays more verbose help text describing the selected metric.

-f metric- Displays the current reading of the performance value that corresponds to the metric.

-t, -T, and -f options with a group of metrics or an individual metric. Most metric data is provided for each mounted XFS file system on the system at time of probing.

.) as a separator. The leaf node semantics (dots) applies to all PCP metrics. For an overview of the types of metrics that are available in each of the groups, see Table A.3, “PCP Metric Groups for XFS”.

Example 5.1. Using the pminfo Tool to Examine XFS Read and Write Metrics

xfs.write_bytes metric:

pminfo -t xfs.write_bytes xfs.write_bytes [number of bytes written in XFS file system write operations]

# pminfo -t xfs.write_bytes

xfs.write_bytes [number of bytes written in XFS file system write operations]

xfs.read_bytes metric:

xfs.read_bytes metric:

pminfo -f xfs.read_bytes

xfs.read_bytes

value 4891346238

# pminfo -f xfs.read_bytes

xfs.read_bytes

value 4891346238

Configuring Metrics with pmstore

xfs.control.reset metric. To modify a metric value, use the pmstore tool.

Example 5.2. Using pmstore to Reset the xfs.control.reset Metric

pmstore with the xfs.control.reset metric to reset the recorded counter values for the XFS PMDA back to zero.

pminfo -f xfs.write

xfs.write

value 325262

$ pminfo -f xfs.write

xfs.write

value 325262

pmstore xfs.control.reset 1 xfs.control.reset old value=0 new value=1

# pmstore xfs.control.reset 1

xfs.control.reset old value=0 new value=1

pminfo -f xfs.write

xfs.write

value 0

$ pminfo -f xfs.write

xfs.write

value 0

5.2.3. Examining XFS Metrics Available per File System

Example 5.3. Obtaining per-Device XFS Metrics with pminfo

pminfo command provides per-device XFS metrics that give instance values for each mounted XFS file system.

5.2.4. Logging Performance Data with pmlogger

pmlogger tool to create archived logs of selected metrics on the system.

/var/lib/pcp/config/pmlogger/config.default. The configuration file specifies which metrics are logged by the primary logging instance.

pmlogger, start a primary logging instance:

systemctl start pmlogger.service

# systemctl start pmlogger.servicesystemctl enable pmlogger.service

# systemctl enable pmlogger.servicepmlogger is enabled and a default configuration file is set, a pmlogger line is included in the PCP configuration:

Modifying the pmlogger Configuration File with pmlogconf

pmlogger service is running, PCP logs a default set of metrics on the host. You can use the pmlogconf utility to check the default configuration, and enable XFS logging groups as needed. Important XFS groups to enable include the XFS information, XFS data, and log I/O traffic groups.

pmlogconf prompts to enable or disable groups of related performance metrics, and to control the logging interval for each enabled group. Group selection is made by pressing y (yes) or n (no) in response to the prompt. To create or modify the generic PCP archive logger configuration file with pmlogconf, enter:

pmlogconf -r /var/lib/pcp/config/pmlogger/config.default

# pmlogconf -r /var/lib/pcp/config/pmlogger/config.defaultModifying the pmlogger Configuration File Manually

pmlogger configuration file manually and add specific metrics with given intervals to create a tailored logging configuration.

Example 5.4. The pmlogger Configuration File with XFS Metrics

pmlogger config.default file with some specific XFS metrics added.

Replaying the PCP Log Archives

- You can export the logs to text files and import them into spreadsheets by using PCP utilities such as

pmdumptext,pmrep, orpmlogsummary. - You can replay the data in the PCP Charts application and use graphs to visualize the retrospective data alongside live data of the system. See Section 5.2.5, “Visual Tracing with PCP Charts”.

pmdumptext tool to view the log files. With pmdumptext, you can parse the selected PCP log archive and export the values into an ASCII table. The pmdumptext tool enables you to dump the entire archive log, or only select metric values from the log by specifying individual metrics on the command line.

Example 5.5. Displaying a Specific XFS Metric Log Information

xfs.perdev.log metric collected in an archive at a 5 second interval and display all headers:

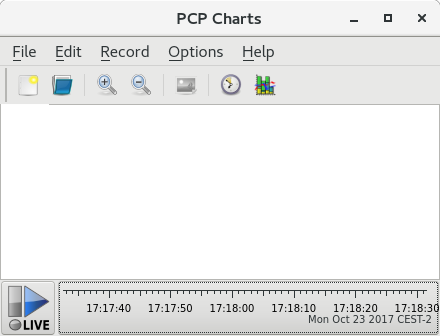

5.2.5. Visual Tracing with PCP Charts

yum install pcp-gui

# yum install pcp-guipmchart command.

pmtime server settings are located at the bottom. The and button allows you to control:

- The interval in which PCP polls the metric data

- The date and time for the metrics of historical data

- Click → to save an image of the current view.

- Click → to start a recording. Click → to stop the recording. After stopping the recording, the recorded metrics are archived to be viewed later.

- line plot

- bar graphs

- utilization graphs

view, allows the metadata associated with one or more charts to be saved. This metadata describes all chart aspects, including the metrics used and the chart columns. You can create a custom view configuration, save it by clicking → , and load the view configuration later. For more information about view configuration files and their syntax, see the pmchart(1) manual page.

Example 5.6. Stacking Chart Graph in PCP Charts View Configuration

loop1.

5.3. Performing Minimal PCP Setup to Gather File System Data

tar.gz archive of the pmlogger output can be analyzed by using various PCP tools, such as PCP Charts, and compared with other sources of performance information.

- Install the pcp package:

yum install pcp

# yum install pcpCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Start the

pmcdservice:systemctl start pmcd.service

# systemctl start pmcd.serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Run the

pmlogconfutility to update thepmloggerconfiguration and enable the XFS information, XFS data, and log I/O traffic groups:pmlogconf -r /var/lib/pcp/config/pmlogger/config.default

# pmlogconf -r /var/lib/pcp/config/pmlogger/config.defaultCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Start the

pmloggerservice:systemctl start pmlogger.service

# systemctl start pmlogger.serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Perform operations on the XFS file system.

- Stop the

pmloggerservice:systemctl stop pmcd.service

# systemctl stop pmcd.serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow systemctl stop pmlogger.service

# systemctl stop pmlogger.serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Collect the output and save it to a

tar.gzfile named based on the hostname and the current date and time:cd /var/log/pcp/pmlogger/

# cd /var/log/pcp/pmlogger/Copy to Clipboard Copied! Toggle word wrap Toggle overflow tar -czf $(hostname).$(date +%F-%Hh%M).pcp.tar.gz $(hostname)

# tar -czf $(hostname).$(date +%F-%Hh%M).pcp.tar.gz $(hostname)Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 6. CPU

6.1. Considerations

- How processors are connected to each other and to related resources like memory.

- How processors schedule threads for execution.

- How processors handle interrupts in Red Hat Enterprise Linux 7.

6.1.1. System Topology

- Symmetric Multi-Processor (SMP) topology

- SMP topology allows all processors to access memory in the same amount of time. However, because shared and equal memory access inherently forces serialized memory accesses from all the CPUs, SMP system scaling constraints are now generally viewed as unacceptable. For this reason, practically all modern server systems are NUMA machines.

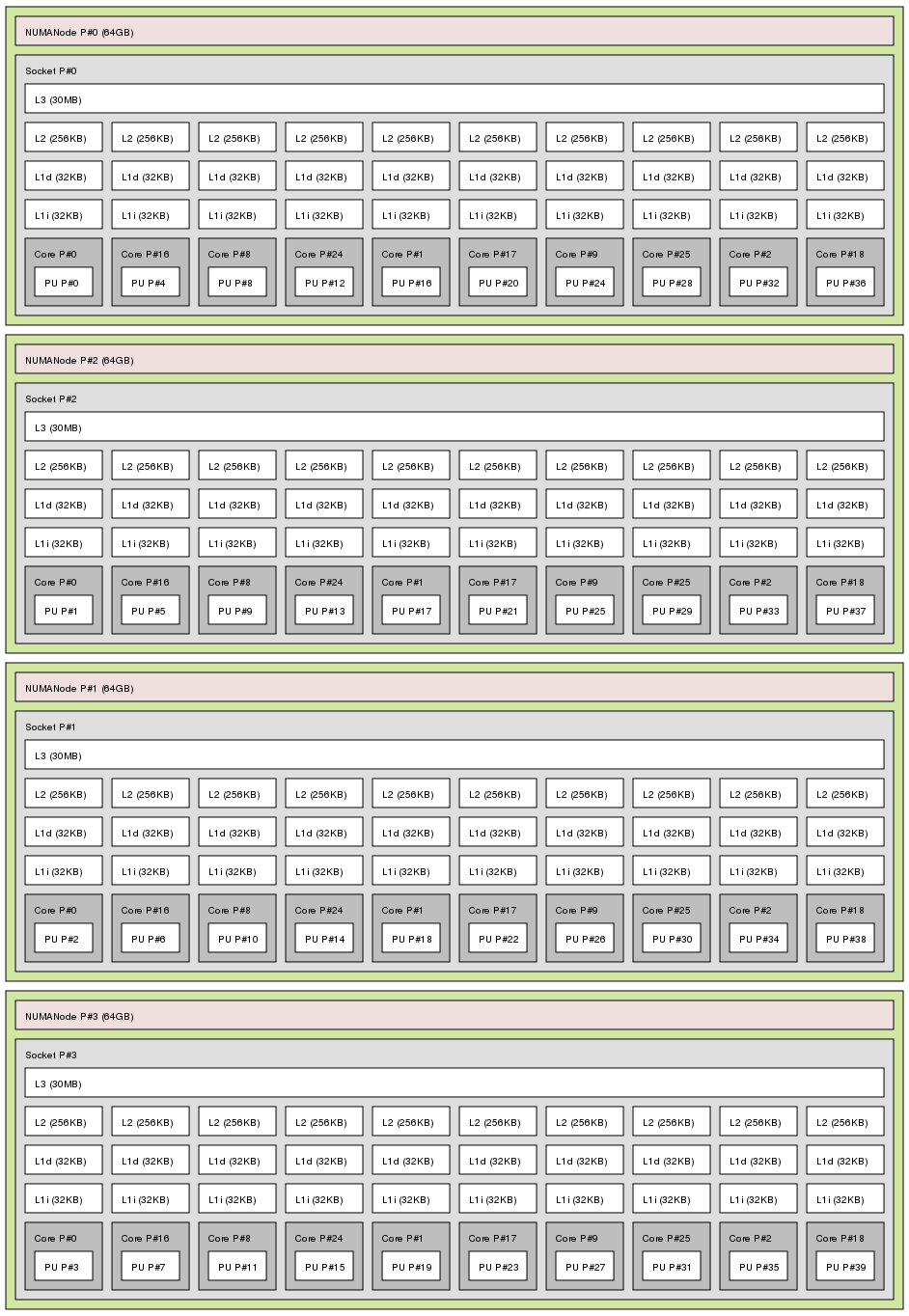

- Non-Uniform Memory Access (NUMA) topology

- NUMA topology was developed more recently than SMP topology. In a NUMA system, multiple processors are physically grouped on a socket. Each socket has a dedicated area of memory, and processors that have local access to that memory are referred to collectively as a node.Processors on the same node have high speed access to that node's memory bank, and slower access to memory banks not on their node. Therefore, there is a performance penalty to accessing non-local memory.Given this performance penalty, performance sensitive applications on a system with NUMA topology should access memory that is on the same node as the processor executing the application, and should avoid accessing remote memory wherever possible.When tuning application performance on a system with NUMA topology, it is therefore important to consider where the application is being executed, and which memory bank is closest to the point of execution.In a system with NUMA topology, the

/sysfile system contains information about how processors, memory, and peripheral devices are connected. The/sys/devices/system/cpudirectory contains details about how processors in the system are connected to each other. The/sys/devices/system/nodedirectory contains information about NUMA nodes in the system, and the relative distances between those nodes.

6.1.1.1. Determining System Topology

numactl --hardware command gives an overview of your system's topology.

lscpu command, provided by the util-linux package, gathers information about the CPU architecture, such as the number of CPUs, threads, cores, sockets, and NUMA nodes.

lstopo command, provided by the hwloc package, creates a graphical representation of your system. The lstopo-no-graphics command provides detailed textual output.

6.1.2. Scheduling

6.1.2.1. Kernel Ticks

nohz_full) to further improve determinism by reducing kernel interference with user-space tasks. This option can be enabled on specified cores with the nohz_full kernel parameter. When this option is enabled on a core, all timekeeping activities are moved to non-latency-sensitive cores. This can be useful for high performance computing and realtime computing workloads where user-space tasks are particularly sensitive to microsecond-level latencies associated with the kernel timer tick.

6.1.3. Interrupt Request (IRQ) Handling

6.2. Monitoring and Diagnosing Performance Problems

6.2.1. turbostat

man turbostat

$ man turbostat6.2.2. numastat

Important

man numastat

$ man numastat6.2.3. /proc/interrupts

/proc/interrupts file lists the number of interrupts sent to each processor from a particular I/O device. It displays the interrupt request (IRQ) number, the number of that type of interrupt request handled by each processor in the system, the type of interrupt sent, and a comma-separated list of devices that respond to the listed interrupt request.

6.2.4. Cache and Memory Bandwidth Monitoring with pqos

- The instructions per cycle (IPC).

- The count of last level cache MISSES.

- The size in kilobytes that the program executing in a given CPU occupies in the LLC.

- The bandwidth to local memory (MBL).

- The bandwidth to remote memory (MBR).

pqos --mon-top

# pqos --mon-topAdditional Resources

- For a general overview of the pqos utility and the related processor features, see Section 2.14, “pqos”.

- For an example of how using CAT can minimize the impact of a noisy neighbor virtual machine on the network performance of Data Plane Development Kit (DPDK), see the Increasing Platform Determinism with Platform Quality of Service for the Data Plane Development Kit Intel white paper.

6.3. Configuration Suggestions

6.3.1. Configuring Kernel Tick Time

nohz_full parameter. On a 16 core system, specifying nohz_full=1-15 enables dynamic tickless behavior on cores 1 through 15, moving all timekeeping to the only unspecified core (core 0). This behavior can be enabled either temporarily at boot time, or persistently via the GRUB_CMDLINE_LINUX option in the /etc/default/grub file. For persistent behavior, run the grub2-mkconfig -o /boot/grub2/grub.cfg command to save your configuration.

- When the system boots, you must manually move rcu threads to the non-latency-sensitive core, in this case core 0.

for i in `pgrep rcu[^c]` ; do taskset -pc 0 $i ; done

# for i in `pgrep rcu[^c]` ; do taskset -pc 0 $i ; doneCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Use the

isolcpusparameter on the kernel command line to isolate certain cores from user-space tasks. - Optionally, set CPU affinity for the kernel's write-back bdi-flush threads to the housekeeping core:

echo 1 > /sys/bus/workqueue/devices/writeback/cpumask

echo 1 > /sys/bus/workqueue/devices/writeback/cpumaskCopy to Clipboard Copied! Toggle word wrap Toggle overflow

perf stat -C 1 -e irq_vectors:local_timer_entry taskset -c 1 stress -t 1 -c 1

# perf stat -C 1 -e irq_vectors:local_timer_entry taskset -c 1 stress -t 1 -c 1while :; do d=1; done.

perf stat -C 1 -e irq_vectors:local_timer_entry taskset -c 1 stress -t 1 -c 1 1000 irq_vectors:local_timer_entry

# perf stat -C 1 -e irq_vectors:local_timer_entry taskset -c 1 stress -t 1 -c 1

1000 irq_vectors:local_timer_entryperf stat -C 1 -e irq_vectors:local_timer_entry taskset -c 1 stress -t 1 -c 1 1 irq_vectors:local_timer_entry

# perf stat -C 1 -e irq_vectors:local_timer_entry taskset -c 1 stress -t 1 -c 1

1 irq_vectors:local_timer_entry6.3.2. Setting Hardware Performance Policy (x86_energy_perf_policy)

performance mode. It requires processor support, which is indicated by the presence of CPUID.06H.ECX.bit3, and must be run with root privileges.

man x86_energy_perf_policy

$ man x86_energy_perf_policy6.3.3. Setting Process Affinity with taskset

Important

man taskset

$ man taskset6.3.4. Managing NUMA Affinity with numactl

man numactl

$ man numactlNote

libnuma library. This library offers a simple programming interface to the NUMA policy supported by the kernel, and can be used for more fine-grained tuning than the numactl application. For more information, see the man page:

man numa

$ man numa6.3.5. Automatic NUMA Affinity Management with numad

numad is an automatic NUMA affinity management daemon. It monitors NUMA topology and resource usage within a system in order to dynamically improve NUMA resource allocation and management.

man numad

$ man numad6.3.6. Tuning Scheduling Policy

6.3.6.1. Scheduling Policies

6.3.6.1.1. Static Priority Scheduling with SCHED_FIFO

SCHED_FIFO (also called static priority scheduling) is a realtime policy that defines a fixed priority for each thread. This policy allows administrators to improve event response time and reduce latency, and is recommended for time sensitive tasks that do not run for an extended period of time.

SCHED_FIFO is in use, the scheduler scans the list of all SCHED_FIFO threads in priority order and schedules the highest priority thread that is ready to run. The priority level of a SCHED_FIFO thread can be any integer from 1 to 99, with 99 treated as the highest priority. Red Hat recommends starting at a low number and increasing priority only when you identify latency issues.

Warning

SCHED_FIFO bandwidth to prevent realtime application programmers from initiating realtime tasks that monopolize the processor.

- /proc/sys/kernel/sched_rt_period_us

- This parameter defines the time period in microseconds that is considered to be one hundred percent of processor bandwidth. The default value is

1000000μs, or 1 second. - /proc/sys/kernel/sched_rt_runtime_us

- This parameter defines the time period in microseconds that is devoted to running realtime threads. The default value is

950000μs, or 0.95 seconds.

6.3.6.1.2. Round Robin Priority Scheduling with SCHED_RR

SCHED_RR is a round-robin variant of SCHED_FIFO. This policy is useful when multiple threads need to run at the same priority level.

SCHED_FIFO, SCHED_RR is a realtime policy that defines a fixed priority for each thread. The scheduler scans the list of all SCHED_RR threads in priority order and schedules the highest priority thread that is ready to run. However, unlike SCHED_FIFO, threads that have the same priority are scheduled round-robin style within a certain time slice.

sched_rr_timeslice_ms kernel parameter (/proc/sys/kernel/sched_rr_timeslice_ms). The lowest value is 1 millisecond.

6.3.6.1.3. Normal Scheduling with SCHED_OTHER

SCHED_OTHER is the default scheduling policy in Red Hat Enterprise Linux 7. This policy uses the Completely Fair Scheduler (CFS) to allow fair processor access to all threads scheduled with this policy. This policy is most useful when there are a large number of threads or data throughput is a priority, as it allows more efficient scheduling of threads over time.

6.3.6.2. Isolating CPUs

isolcpus boot parameter. This prevents the scheduler from scheduling any user-space threads on this CPU.

isolcpus=2,5-7

isolcpus=2,5-7isolcpus parameter, and does not currently achieve the performance gains associated with isolcpus. See Section 6.3.8, “Configuring CPU, Thread, and Interrupt Affinity with Tuna” for more details about this tool.

6.3.7. Setting Interrupt Affinity on AMD64 and Intel 64

smp_affinity, which defines the processors that will handle the interrupt request. To improve application performance, assign interrupt affinity and process affinity to the same processor, or processors on the same core. This allows the specified interrupt and application threads to share cache lines.

Important

Procedure 6.1. Balancing Interrupts Automatically

- If your BIOS exports its NUMA topology, the

irqbalanceservice can automatically serve interrupt requests on the node that is local to the hardware requesting service.For details on configuringirqbalance, see Section A.1, “irqbalance”.

Procedure 6.2. Balancing Interrupts Manually

- Check which devices correspond to the interrupt requests that you want to configure.Starting with Red Hat Enterprise Linux 7.5, the system configures the optimal interrupt affinity for certain devices and their drivers automatically. You can no longer configure their affinity manually. This applies to the following devices:

- Devices using the

be2iscsidriver - NVMe PCI devices

- Find the hardware specification for your platform. Check if the chipset on your system supports distributing interrupts.

- If it does, you can configure interrupt delivery as described in the following steps.Additionally, check which algorithm your chipset uses to balance interrupts. Some BIOSes have options to configure interrupt delivery.

- If it does not, your chipset will always route all interrupts to a single, static CPU. You cannot configure which CPU is used.

- Check which Advanced Programmable Interrupt Controller (APIC) mode is in use on your system.Only non-physical flat mode (

flat) supports distributing interrupts to multiple CPUs. This mode is available only for systems that have up to 8 CPUs.journalctl --dmesg | grep APIC

$ journalctl --dmesg | grep APICCopy to Clipboard Copied! Toggle word wrap Toggle overflow In the command output:- If your system uses a mode other than

flat, you can see a line similar toSetting APIC routing to physical flat. - If you can see no such message, your system uses

flatmode.

If your system usesx2apicmode, you can disable it by adding thenox2apicoption to the kernel command line in the bootloader configuration. - Calculate the

smp_affinitymask.Thesmp_affinityvalue is stored as a hexadecimal bit mask representing all processors in the system. Each bit configures a different CPU. The least significant bit is CPU 0.The default value of the mask isf, meaning that an interrupt request can be handled on any processor in the system. Setting this value to1means that only processor 0 can handle the interrupt.Procedure 6.3. Calculating the Mask

- In binary, use the value

1for CPUs that will handle the interrupts.For example, to handle interrupts by CPU 0 and CPU 7, use0000000010000001as the binary code:Expand Table 6.1. Binary Bits for CPUs CPU 15 14 13 12 11 10 9 8 7 6 5 4 3 2 1 0 Binary 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 1 - Convert the binary code to hexadecimal.For example, to convert the binary code using Python:

>>> hex(int('0000000010000001', 2)) '0x81'>>> hex(int('0000000010000001', 2)) '0x81'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

On systems with more than 32 processors, you must delimitsmp_affinityvalues for discrete 32 bit groups. For example, if you want only the first 32 processors of a 64 processor system to service an interrupt request, use0xffffffff,00000000. - Set the

smp_affinitymask.The interrupt affinity value for a particular interrupt request is stored in the associated/proc/irq/irq_number/smp_affinityfile.Write the calculated mask to the associated file:echo mask > /proc/irq/irq_number/smp_affinity

# echo mask > /proc/irq/irq_number/smp_affinityCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Additional Resources

- On systems that support interrupt steering, modifying the

smp_affinityproperty of an interrupt request sets up the hardware so that the decision to service an interrupt with a particular processor is made at the hardware level with no intervention from the kernel.For more information about interrupt steering, see Chapter 9, Networking.

6.3.8. Configuring CPU, Thread, and Interrupt Affinity with Tuna

Chapter 7. Memory

7.1. Considerations

7.1.1. Larger Page Size

HugeTLB feature, also called static huge pages in this guide, and the Transparent Huge Page feature.

7.1.2. Translation Lookaside Buffer Size

7.2. Monitoring and Diagnosing Performance Problems

7.2.1. Monitoring Memory Usage with vmstat

vmstat -s

$ vmstat -sman vmstat

$ man vmstat7.2.2. Profiling Application Memory Usage with Valgrind

yum install valgrind

# yum install valgrind7.2.2.1. Profiling Memory Usage with Memcheck

- Memory access that should not occur

- Undefined or uninitialized value use

- Incorrectly freed heap memory

- Pointer overlap

- Memory leaks

Note

valgrind --tool=memcheck application

# valgrind --tool=memcheck application- --leak-check

- After the application finishes executing, memcheck searches for memory leaks. The default value is

--leak-check=summary, which prints the number of memory leaks found. You can specify--leak-check=yesor--leak-check=fullto output details of each individual leak. To disable, specify--leak-check=no. - --undef-value-errors

- The default value is

--undef-value-errors=yes, which reports errors when undefined values are used. You can also specify--undef-value-errors=no, which will disable this report and slightly speed up Memcheck. - --ignore-ranges

- Specifies one or more ranges that memcheck should ignore when checking for memory addressability, for example,

--ignore-ranges=0xPP-0xQQ,0xRR-0xSS.

/usr/share/doc/valgrind-version/valgrind_manual.pdf.

7.2.2.2. Profiling Cache Usage with Cachegrind

valgrind --tool=cachegrind application

# valgrind --tool=cachegrind application- --I1

- Specifies the size, associativity, and line size of the first level instruction cache, like so:

--I1=size,associativity,line_size. - --D1

- Specifies the size, associativity, and line size of the first level data cache, like so:

--D1=size,associativity,line_size. - --LL

- Specifies the size, associativity, and line size of the last level cache, like so:

--LL=size,associativity,line_size. - --cache-sim

- Enables or disables the collection of cache access and miss counts. This is enabled (

--cache-sim=yes) by default. Disabling both this and--branch-simleaves cachegrind with no information to collect. - --branch-sim

- Enables or disables the collection of branch instruction and incorrect prediction counts. This is enabled (

--branch-sim=yes) by default. Disabling both this and--cache-simleaves cachegrind with no information to collect.Cachegrind writes detailed profiling information to a per-processcachegrind.out.pidfile, where pid is the process identifier. This detailed information can be further processed by the companion cg_annotate tool, like so:cg_annotate cachegrind.out.pid

# cg_annotate cachegrind.out.pidCopy to Clipboard Copied! Toggle word wrap Toggle overflow

cg_diff first second

# cg_diff first second/usr/share/doc/valgrind-version/valgrind_manual.pdf.

7.2.2.3. Profiling Heap and Stack Space with Massif

valgrind --tool=massif application

# valgrind --tool=massif application- --heap

- Specifies whether massif profiles the heap. The default value is

--heap=yes. Heap profiling can be disabled by setting this to--heap=no. - --heap-admin

- Specifies the number of bytes per block to use for administration when heap profiling is enabled. The default value is

8bytes. - --stacks

- Specifies whether massif profiles the stack. The default value is

--stack=no, as stack profiling can greatly slow massif. Set this option to--stack=yesto enable stack profiling. Note that massif assumes that the main stack starts with a size of zero in order to better indicate the changes in stack size that relate to the application being profiled. - --time-unit

- Specifies the interval at which massif gathers profiling data. The default value is

i(instructions executed). You can also specifyms(milliseconds, or realtime) andB(bytes allocated or deallocated on the heap and stack). Examining bytes allocated is useful for short run applications and for testing purposes, as it is most reproducible across different hardware.

massif.out.pid file, where pid is the process identifier of the specified application. The ms_print tool graphs this profiling data to show memory consumption over the execution of the application, as well as detailed information about the sites responsible for allocation at points of peak memory allocation. To graph the data from the massif.out.pid file, execute the following command:

ms_print massif.out.pid

# ms_print massif.out.pid/usr/share/doc/valgrind-version/valgrind_manual.pdf.

7.3. Configuring HugeTLB Huge Pages

boot time and at run time. Reserving at boot time increases the possibility of success because the memory has not yet been significantly fragmented. However, on NUMA machines, the number of pages is automatically split among NUMA nodes. The run-time method allows you to reserve huge pages per NUMA node. If the run-time reservation is done as early as possible in the boot process, the probability of memory fragmentation is lower.

7.3.1. Configuring Huge Pages at Boot Time

- hugepages

- Defines the number of persistent huge pages configured in the kernel at boot time. The default value is 0. It is only possible to allocate huge pages if there are sufficient physically contiguous free pages in the system. Pages reserved by this parameter cannot be used for other purposes.This value can be adjusted after boot by changing the value of the

/proc/sys/vm/nr_hugepagesfile.In a NUMA system, huge pages assigned with this parameter are divided equally between nodes. You can assign huge pages to specific nodes at runtime by changing the value of the node's/sys/devices/system/node/node_id/hugepages/hugepages-1048576kB/nr_hugepagesfile.For more information, read the relevant kernel documentation, which is installed in/usr/share/doc/kernel-doc-kernel_version/Documentation/vm/hugetlbpage.txtby default. - hugepagesz

- Defines the size of persistent huge pages configured in the kernel at boot time. Valid values are 2 MB and 1 GB. The default value is 2 MB.

- default_hugepagesz

- Defines the default size of persistent huge pages configured in the kernel at boot time. Valid values are 2 MB and 1 GB. The default value is 2 MB.

Procedure 7.1. Reserving 1 GB Pages During Early Boot

- Create a HugeTLB pool for 1 GB pages by appending the following line to the kernel command-line options in the

/etc/default/grubfile as root:default_hugepagesz=1G hugepagesz=1G

default_hugepagesz=1G hugepagesz=1GCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Regenerate the GRUB2 configuration using the edited default file. If your system uses BIOS firmware, execute the following command:

grub2-mkconfig -o /boot/grub2/grub.cfg

# grub2-mkconfig -o /boot/grub2/grub.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow On a system with UEFI firmware, execute the following command:grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Create a file named

/usr/lib/systemd/system/hugetlb-gigantic-pages.servicewith the following content:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Create a file named

/usr/lib/systemd/hugetlb-reserve-pages.shwith the following content:Copy to Clipboard Copied! Toggle word wrap Toggle overflow On the last line, replace number_of_pages with the number of 1GB pages to reserve and node with the name of the node on which to reserve these pages.Example 7.1. Reserving Pages on

node0andnode1For example, to reserve two 1GB pages onnode0and one 1GB page onnode1, replace the last line with the following code:reserve_pages 2 node0 reserve_pages 1 node1

reserve_pages 2 node0 reserve_pages 1 node1Copy to Clipboard Copied! Toggle word wrap Toggle overflow You can modify it to your needs or add more lines to reserve memory in other nodes. - Make the script executable:

chmod +x /usr/lib/systemd/hugetlb-reserve-pages.sh

# chmod +x /usr/lib/systemd/hugetlb-reserve-pages.shCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Enable early boot reservation:

systemctl enable hugetlb-gigantic-pages

# systemctl enable hugetlb-gigantic-pagesCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Note

nr_hugepages at any time. However, to prevent failures due to memory fragmentation, reserve 1GB pages early during the boot process.

7.3.2. Configuring Huge Pages at Run Time

- /sys/devices/system/node/node_id/hugepages/hugepages-size/nr_hugepages

- Defines the number of huge pages of the specified size assigned to the specified NUMA node. This is supported as of Red Hat Enterprise Linux 7.1. The following example moves adds twenty 2048 kB huge pages to

node2.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - /proc/sys/vm/nr_overcommit_hugepages

- Defines the maximum number of additional huge pages that can be created and used by the system through overcommitting memory. Writing any non-zero value into this file indicates that the system obtains that number of huge pages from the kernel's normal page pool if the persistent huge page pool is exhausted. As these surplus huge pages become unused, they are then freed and returned to the kernel's normal page pool.

7.4. Configuring Transparent Huge Pages

madvise() system call.

cat /sys/kernel/mm/transparent_hugepage/enabled

# cat /sys/kernel/mm/transparent_hugepage/enabled

echo always > /sys/kernel/mm/transparent_hugepage/enabled

# echo always > /sys/kernel/mm/transparent_hugepage/enabled

echo madvise > /sys/kernel/mm/transparent_hugepage/enabled

# echo madvise > /sys/kernel/mm/transparent_hugepage/enabled

echo never > /sys/kernel/mm/transparent_hugepage/enabled

# echo never > /sys/kernel/mm/transparent_hugepage/enabled

direct compaction can be disabled while leaving THP enabled.

echo madvise > /sys/kernel/mm/transparent_hugepage/defrag

# echo madvise > /sys/kernel/mm/transparent_hugepage/defrag

/usr/share/doc/kernel-doc-kernel_version/Documentation/vm/transhuge.txt file, which is available after installing the kernel-doc package.

7.5. Configuring System Memory Capacity

/proc file system. Once you have determined the values that produce optimal performance for your use case, you can set them permanently by using the sysctl command.

echo 1 > /proc/sys/vm/overcommit_memory

# echo 1 > /proc/sys/vm/overcommit_memorysysctl vm.overcommit_memory=1 in /etc/sysctl.conf then run the following command:

sysctl -p

# sysctl -pNote

7.5.1. Virtual Memory Parameters

/proc/sys/vm unless otherwise indicated.

- dirty_ratio

- A percentage value. When this percentage of total system memory is modified, the system begins writing the modifications to disk with the

pdflushoperation. The default value is20percent. - dirty_background_ratio

- A percentage value. When this percentage of total system memory is modified, the system begins writing the modifications to disk in the background. The default value is

10percent. - overcommit_memory

- Defines the conditions that determine whether a large memory request is accepted or denied.The default value is

0. By default, the kernel performs heuristic memory overcommit handling by estimating the amount of memory available and failing requests that are too large. However, since memory is allocated using a heuristic rather than a precise algorithm, overloading memory is possible with this setting.When this parameter is set to1, the kernel performs no memory overcommit handling. This increases the possibility of memory overload, but improves performance for memory-intensive tasks.When this parameter is set to2, the kernel denies requests for memory equal to or larger than the sum of total available swap space and the percentage of physical RAM specified inovercommit_ratio. This reduces the risk of overcommitting memory, but is recommended only for systems with swap areas larger than their physical memory. - overcommit_ratio

- Specifies the percentage of physical RAM considered when

overcommit_memoryis set to2. The default value is50. - max_map_count

- Defines the maximum number of memory map areas that a process can use. The default value (

65530) is appropriate for most cases. Increase this value if your application needs to map more than this number of files. - min_free_kbytes

- Specifies the minimum number of kilobytes to keep free across the system. This is used to determine an appropriate value for each low memory zone, each of which is assigned a number of reserved free pages in proportion to their size.

Warning

Extreme values can damage your system. Settingmin_free_kbytesto an extremely low value prevents the system from reclaiming memory, which can result in system hangs and OOM-killing processes. However, settingmin_free_kbytestoo high (for example, to 5–10% of total system memory) causes the system to enter an out-of-memory state immediately, resulting in the system spending too much time reclaiming memory. - oom_adj

- In the event that the system runs out of memory and the

panic_on_oomparameter is set to0, theoom_killerfunction kills processes until the system can recover, starting from the process with the highestoom_score.Theoom_adjparameter helps determine theoom_scoreof a process. This parameter is set per process identifier. A value of-17disables theoom_killerfor that process. Other valid values are from-16to15.Note

Processes spawned by an adjusted process inherit theoom_scoreof the process. - swappiness

- The swappiness value, ranging from

0to100, controls the degree to which the system favors anonymous memory or the page cache. A high value improves file-system performance while aggressively swapping less active processes out of RAM. A low value avoids swapping processes out of memory, which usually decreases latency at the cost of I/O performance. The default value is60.Warning

Settingswappiness==0will very aggressively avoids swapping out, which increase the risk of OOM killing under strong memory and I/O pressure.

7.5.2. File System Parameters

/proc/sys/fs unless otherwise indicated.

- aio-max-nr

- Defines the maximum allowed number of events in all active asynchronous input/output contexts. The default value is

65536. Modifying this value does not pre-allocate or resize any kernel data structures. - file-max

- Determines the maximum number of file handles for the entire system. The default value on Red Hat Enterprise Linux 7 is the maximum of either

8192, or one tenth of the free memory pages available at the time the kernel starts.Raising this value can resolve errors caused by a lack of available file handles.

7.5.3. Kernel Parameters

/proc/sys/kernel/ directory, can be calculated by the kernel at boot time depending on available system resources.

- msgmax

- Defines the maximum allowable size in bytes of any single message in a message queue. This value must not exceed the size of the queue (

msgmnb). To determine the currentmsgmaxvalue on your system, use:sysctl kernel.msgmax

# sysctl kernel.msgmaxCopy to Clipboard Copied! Toggle word wrap Toggle overflow - msgmnb

- Defines the maximum size in bytes of a single message queue. To determine the current

msgmnbvalue on your system, use:sysctl kernel.msgmnb

# sysctl kernel.msgmnbCopy to Clipboard Copied! Toggle word wrap Toggle overflow - msgmni

- Defines the maximum number of message queue identifiers, and therefore the maximum number of queues. To determine the current

msgmnivalue on your system, use:sysctl kernel.msgmni

# sysctl kernel.msgmniCopy to Clipboard Copied! Toggle word wrap Toggle overflow - shmall

- Defines the total amount of shared memory pages that can be used on the system at one time. A page is 4096 bytes on the AMD64 and Intel 64 architecture, for example.To determine the current

shmallvalue on your system, use:sysctl kernel.shmall

# sysctl kernel.shmallCopy to Clipboard Copied! Toggle word wrap Toggle overflow - shmmax

- Defines the maximum size (in bytes) of a single shared memory segment allowed by the kernel. To determine the current

shmmaxvalue on your system, use:sysctl kernel.shmmax

# sysctl kernel.shmmaxCopy to Clipboard Copied! Toggle word wrap Toggle overflow - shmmni

- Defines the system-wide maximum number of shared memory segments. The default value is

4096on all systems. - threads-max

- Defines the system-wide maximum number of threads available to the kernel at one time. To determine the current

threads-maxvalue on your system, use:The default value is the result of:sysctl kernel.threads-max

# sysctl kernel.threads-maxCopy to Clipboard Copied! Toggle word wrap Toggle overflow The minimum value ismempages / (8 * THREAD_SIZE / PAGE SIZE )

mempages / (8 * THREAD_SIZE / PAGE SIZE )Copy to Clipboard Copied! Toggle word wrap Toggle overflow 20.

Chapter 8. Storage and File Systems

8.1. Considerations

- Data write or read patterns

- Data alignment with underlying geometry

- Block size

- File system size

- Journal size and location

- Recording access times

- Ensuring data reliability

- Pre-fetching data

- Pre-allocating disk space

- File fragmentation

- Resource contention

8.1.1. I/O Schedulers

- deadline

- The default I/O scheduler for all block devices, except for SATA disks.

Deadlineattempts to provide a guaranteed latency for requests from the point at which requests reach the I/O scheduler. This scheduler is suitable for most use cases, but particularly those in which read operations occur more often than write operations.Queued I/O requests are sorted into a read or write batch and then scheduled for execution in increasing LBA order. Read batches take precedence over write batches by default, as applications are more likely to block on read I/O. After a batch is processed,deadlinechecks how long write operations have been starved of processor time and schedules the next read or write batch as appropriate. The number of requests to handle per batch, the number of read batches to issue per write batch, and the amount of time before requests expire are all configurable; see Section 8.4.4, “Tuning the Deadline Scheduler” for details. - cfq

- The default scheduler only for devices identified as SATA disks. The Completely Fair Queueing scheduler,