Securing Applications and Services Guide

For Use with Red Hat Single Sign-On 7.4

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Overview

Red Hat Single Sign-On supports both OpenID Connect (an extension to OAuth 2.0) and SAML 2.0. When securing clients and services, the first thing you need to decide is which of the two to use. If you want, you can also secure some with OpenID Connect and others with SAML.

To secure clients and services, you also need an adapter or library for the protocol you select. Red Hat Single Sign-On comes with its own adapters for selected platforms, but you can also use generic OpenID Connect Relying Party and SAML Service Provider libraries.

1.1. What are Client Adapters?

Red Hat Single Sign-On client adapters are libraries that make it easy to secure applications and services with Red Hat Single Sign-On. We call them adapters rather than libraries because they provide tight integration with the underlying platform and framework. This makes our adapters easy to use, and they require less boilerplate code than a typical library.

1.2. Supported Platforms

1.2.1. OpenID Connect

1.2.1.1. Java

1.2.1.2. JavaScript (client-side)

1.2.1.3. Node.js (server-side)

1.2.2. SAML

1.2.2.1. Java

1.2.2.2. Apache HTTP Server

1.3. Supported Protocols

1.3.1. OpenID Connect

OpenID Connect (OIDC) is an authentication protocol that is an extension of OAuth 2.0. While OAuth 2.0 is only a framework for building authorization protocols and is mainly incomplete, OIDC is a full-fledged authentication and authorization protocol. OIDC also makes heavy use of the JSON Web Token (JWT) set of standards. These standards define an identity token JSON format and ways to digitally sign and encrypt that data in a compact and web-friendly way.
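Here is a concrete view of the compact format. A signed JWT consists of three base64url-encoded segments (header, payload, signature) joined by dots. The following Java sketch uses only the JDK to split such a token and decode its header and payload. The class name JwtInspector and the sample claims are illustrative assumptions. The code deliberately does not verify the signature, so use it only to inspect tokens and never to make access decisions.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper for illustration: splits a compact JWT and decodes its parts.
// It does NOT verify the signature, so never base access decisions on its output.
class JwtInspector {

    // Returns the decoded JSON of segment 0 (header) or segment 1 (payload).
    static String decodeSegment(String compactJwt, int index) {
        if (index < 0 || index > 1) {
            throw new IllegalArgumentException("index must be 0 (header) or 1 (payload)");
        }
        String[] parts = compactJwt.split("\\.", -1); // -1 keeps an empty trailing segment
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected header.payload.signature");
        }
        // JWT segments are base64url-encoded without padding; the JDK URL decoder accepts that.
        byte[] json = Base64.getUrlDecoder().decode(parts[index]);
        return new String(json, StandardCharsets.UTF_8);
    }

    // Encodes one JSON segment the way a JWT does (base64url, no padding).
    static String encodeSegment(String json) {
        return Base64.getUrlEncoder().withoutPadding()
                .encodeToString(json.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Sample token with made-up claims and a placeholder (invalid) signature segment.
        String token = encodeSegment("{\"alg\":\"RS256\",\"typ\":\"JWT\"}") + "."
                + encodeSegment("{\"sub\":\"1234\",\"preferred_username\":\"alice\"}") + "."
                + "c2lnbmF0dXJl";
        System.out.println("header:  " + decodeSegment(token, 0));
        System.out.println("payload: " + decodeSegment(token, 1));
    }
}
```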

There are two types of use cases when using OIDC. The first is an application that asks the Red Hat Single Sign-On server to authenticate a user for it. After a successful login, the application receives an identity token and an access token. The identity token contains information about the user such as username, email, and other profile information. The access token is digitally signed by the realm and contains access information (like user role mappings) that the application can use to determine what resources the user is allowed to access on the application.
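The following Java sketch shows how an application might use the role mappings in an access token. It checks a role in claims that have already been decoded and signature-verified. It assumes the claim layout that Red Hat Single Sign-On access tokens typically use: realm roles under realm_access.roles and client roles under resource_access.<client-id>.roles. The class RoleCheck and the sample claims are hypothetical. The boolean flag mirrors the use-resource-role-mappings adapter option described in the Java Adapter Config section.

```java
import java.util.List;
import java.util.Map;

// Hypothetical helper: checks a role in the claims of an access token that has
// already been decoded and signature-verified (that step is out of scope here).
class RoleCheck {

    // Returns node[key] when node is a JSON-like object (Map), otherwise null.
    private static Object child(Object node, String key) {
        return (node instanceof Map) ? ((Map<?, ?>) node).get(key) : null;
    }

    // useResourceRoleMappings mirrors the adapter option of the same name:
    // true -> client (application) level roles, false -> realm level roles.
    static boolean hasRole(Map<String, Object> claims, String clientId, String role,
                           boolean useResourceRoleMappings) {
        Object roles = useResourceRoleMappings
                ? child(child(claims.get("resource_access"), clientId), "roles")
                : child(claims.get("realm_access"), "roles");
        return roles instanceof List && ((List<?>) roles).contains(role);
    }

    public static void main(String[] args) {
        // Made-up claims in the assumed realm_access / resource_access layout.
        Map<String, Object> claims = Map.of(
                "sub", "1234",
                "realm_access", Map.of("roles", List.of("user")),
                "resource_access", Map.of("customer-portal", Map.of("roles", List.of("admin"))));
        System.out.println(hasRole(claims, "customer-portal", "user", false));  // true
        System.out.println(hasRole(claims, "customer-portal", "admin", true));  // true
        System.out.println(hasRole(claims, "customer-portal", "admin", false)); // false
    }
}
```

Keeping the claims as nested maps leaves JSON parsing out of the example. A real application would obtain them from a verified token.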

The second type of use case is that of a client that wants to gain access to remote services. In this case, the client asks Red Hat Single Sign-On to obtain an access token it can use to invoke other remote services on behalf of the user. Red Hat Single Sign-On authenticates the user, then asks the user for consent to grant access to the client requesting it. The client then receives the access token, which is digitally signed by the realm. The client can make REST invocations on remote services using this access token. The REST service extracts the access token, verifies the signature of the token, then decides based on access information within the token whether or not to process the request.
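The signature check that the REST service performs can be sketched with the JDK's java.security API. This example assumes the realm signs tokens with RS256 (RSA with SHA-256), a common choice. A locally generated key pair stands in for the realm keys, and the class Rs256Sketch is hypothetical. The sketch shows that a genuine token is accepted and one with a swapped payload is rejected. Only after such a check should a service look at the access information in the token.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;
import java.util.Base64;

// Sketch of RS256 (SHA256withRSA) signing and verification of a compact JWT.
// A locally generated key pair stands in for the realm keys (assumption for the demo).
class Rs256Sketch {

    // base64url without padding, as used for JWT segments.
    static String b64(String json) {
        return Base64.getUrlEncoder().withoutPadding()
                .encodeToString(json.getBytes(StandardCharsets.UTF_8));
    }

    static KeyPair newKeyPair() {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(2048);
            return generator.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    // Server side: sign "base64url(header).base64url(payload)" and append the signature.
    static String sign(String headerJson, String payloadJson, PrivateKey privateKey) {
        String signingInput = b64(headerJson) + "." + b64(payloadJson);
        try {
            Signature signer = Signature.getInstance("SHA256withRSA");
            signer.initSign(privateKey);
            signer.update(signingInput.getBytes(StandardCharsets.US_ASCII));
            return signingInput + "."
                    + Base64.getUrlEncoder().withoutPadding().encodeToString(signer.sign());
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    // Service side: check the signature before trusting any claim in the token.
    static boolean verify(String compactJwt, PublicKey publicKey) {
        String[] parts = compactJwt.split("\\.", -1);
        if (parts.length != 3) {
            return false;
        }
        try {
            Signature verifier = Signature.getInstance("SHA256withRSA");
            verifier.initVerify(publicKey);
            verifier.update((parts[0] + "." + parts[1]).getBytes(StandardCharsets.US_ASCII));
            return verifier.verify(Base64.getUrlDecoder().decode(parts[2]));
        } catch (GeneralSecurityException | IllegalArgumentException e) {
            return false; // malformed signature or unusable key: treat as not verified
        }
    }

    public static void main(String[] args) {
        KeyPair realmKeys = newKeyPair();
        String token = sign("{\"alg\":\"RS256\",\"typ\":\"JWT\"}", "{\"sub\":\"alice\"}",
                realmKeys.getPrivate());
        System.out.println("genuine token verifies: " + verify(token, realmKeys.getPublic()));

        // Swapping in a different payload while keeping the old signature must fail.
        String[] parts = token.split("\\.");
        String forged = parts[0] + "." + b64("{\"sub\":\"mallory\"}") + "." + parts[2];
        System.out.println("forged token verifies:  " + verify(forged, realmKeys.getPublic()));
    }
}
```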

1.3.2. SAML 2.0

SAML 2.0 is a similar specification to OIDC but a lot older and more mature. It has its roots in SOAP and the plethora of WS-* specifications, so it tends to be a bit more verbose than OIDC. SAML 2.0 is primarily an authentication protocol that works by exchanging XML documents between the authentication server and the application. XML signatures and encryption are used to verify requests and responses.

In Red Hat Single Sign-On, SAML serves two types of use cases: browser applications and REST invocations.

The first is an application that asks the Red Hat Single Sign-On server to authenticate a user for it. After a successful login, the application receives an XML document that contains a SAML assertion specifying various attributes about the user. This XML document is digitally signed by the realm and contains access information (like user role mappings) that the application can use to determine what resources the user is allowed to access on the application.
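The following Java sketch shows what an XML document containing a SAML assertion looks like to an application. It reads attribute values with the JDK's DOM parser. It assumes the standard SAML 2.0 assertion namespace and its saml:Attribute and saml:AttributeValue elements. The class name, the Role attribute, and the sample XML are illustrative. The sketch does not validate the XML signature, which a real service provider must do before trusting anything in the assertion.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper: lists the values of one attribute in a SAML 2.0 assertion.
// It does NOT validate the XML signature; a real service provider must do that first.
class SamlAttributeReader {

    static final String SAML_NS = "urn:oasis:names:tc:SAML:2.0:assertion";

    static List<String> attributeValues(String assertionXml, String attributeName) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // SAML elements are matched by namespace URI
            // Refuse DOCTYPE declarations to block XML external entity (XXE) attacks.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder()
                    .parse(new InputSource(new StringReader(assertionXml)));

            List<String> values = new ArrayList<>();
            NodeList attributes = doc.getElementsByTagNameNS(SAML_NS, "Attribute");
            for (int i = 0; i < attributes.getLength(); i++) {
                Element attribute = (Element) attributes.item(i);
                if (!attributeName.equals(attribute.getAttribute("Name"))) {
                    continue; // not the attribute we are looking for
                }
                NodeList valueNodes = attribute.getElementsByTagNameNS(SAML_NS, "AttributeValue");
                for (int j = 0; j < valueNodes.getLength(); j++) {
                    values.add(valueNodes.item(j).getTextContent().trim());
                }
            }
            return values;
        } catch (Exception e) {
            throw new IllegalArgumentException("could not parse assertion", e);
        }
    }

    public static void main(String[] args) {
        // Made-up, unsigned assertion fragment for demonstration.
        String xml = "<saml:Assertion xmlns:saml=\"" + SAML_NS + "\">"
                + "<saml:AttributeStatement>"
                + "<saml:Attribute Name=\"Role\">"
                + "<saml:AttributeValue>admin</saml:AttributeValue>"
                + "<saml:AttributeValue>user</saml:AttributeValue>"
                + "</saml:Attribute>"
                + "</saml:AttributeStatement>"
                + "</saml:Assertion>";
        System.out.println(attributeValues(xml, "Role")); // [admin, user]
    }
}
```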

The second type of use case is that of a client that wants to gain access to remote services. In this case, the client asks Red Hat Single Sign-On to obtain a SAML assertion it can use to invoke other remote services on behalf of the user.

1.3.3. OpenID Connect vs. SAML

Choosing between OpenID Connect and SAML is not just a matter of using a newer protocol (OIDC) instead of the older, more mature protocol (SAML).

In most cases Red Hat Single Sign-On recommends using OIDC.

SAML tends to be a bit more verbose than OIDC.

Beyond verbosity of exchanged data, if you compare the specifications, you will find that OIDC was designed to work with the web while SAML was retrofitted to work on top of the web. For example, OIDC is better suited to HTML5/JavaScript applications because it is easier to implement on the client side than SAML. Because tokens are in JSON format, they are easier for JavaScript to consume. You will also find several features that make implementing security in your web applications easier, such as the iframe trick that the specification uses to easily determine whether a user is still logged in.

SAML has its uses, though. As the OIDC specifications evolve, they implement more and more features that SAML has had for years. People often pick SAML over OIDC because of the perception that it is more mature, and also because they already have existing applications that are secured with it.

Chapter 2. OpenID Connect

This section describes how you can secure applications and services with OpenID Connect using either Red Hat Single Sign-On adapters or generic OpenID Connect Relying Party libraries.

2.1. Java Adapters

Red Hat Single Sign-On comes with a range of different adapters for Java applications. Selecting the correct adapter depends on the target platform.

All Java adapters share a set of common configuration options, described in the Java Adapter Config section.

2.1.1. Java Adapter Config

Each Java adapter supported by Red Hat Single Sign-On can be configured by a simple JSON file. This is what one might look like:

{
  "realm" : "demo",
  "resource" : "customer-portal",
  "realm-public-key" : "MIGfMA0GCSqGSIb3D...31LwIDAQAB",
  "auth-server-url" : "https://localhost:8443/auth",
  "ssl-required" : "external",
  "use-resource-role-mappings" : false,
  "enable-cors" : true,
  "cors-max-age" : 1000,
  "cors-allowed-methods" : "POST, PUT, DELETE, GET",
  "cors-exposed-headers" : "WWW-Authenticate, My-custom-exposed-Header",
  "bearer-only" : false,
  "enable-basic-auth" : false,
  "expose-token" : true,
  "verify-token-audience" : true,
  "credentials" : {
    "secret" : "234234-234234-234234"
  },
  "connection-pool-size" : 20,
  "disable-trust-manager": false,
  "allow-any-hostname" : false,
  "truststore" : "path/to/truststore.jks",
  "truststore-password" : "geheim",
  "client-keystore" : "path/to/client-keystore.jks",
  "client-keystore-password" : "geheim",
  "client-key-password" : "geheim",
  "token-minimum-time-to-live" : 10,
  "min-time-between-jwks-requests" : 10,
  "public-key-cache-ttl": 86400,
  "redirect-rewrite-rules" : {
    "^/wsmaster/api/(.*)$" : "/api/$1"
  }
}
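The redirect-rewrite-rules entry in this example maps a regular expression to a replacement string that refers to a captured group with $1. Java's own regex replacement uses the same $n syntax, so the effect of the rule can be sketched as below. The class RedirectRewriteSketch is hypothetical. Applying only the first matching rule, and applying it to a path, are assumptions of this sketch rather than documented adapter behavior.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical helper illustrating redirect-rewrite-rules semantics: each key is a
// regular expression, each value a replacement that may use $1, $2, ... backreferences.
// Applying the first matching rule only is an assumption made for this sketch.
class RedirectRewriteSketch {

    static String rewrite(String redirectPath, Map<String, String> rules) {
        for (Map.Entry<String, String> rule : rules.entrySet()) {
            Pattern pattern = Pattern.compile(rule.getKey());
            if (pattern.matcher(redirectPath).find()) {
                // replaceFirst expands $n references to the captured groups.
                return pattern.matcher(redirectPath).replaceFirst(rule.getValue());
            }
        }
        return redirectPath; // no rule matched: leave the path unchanged
    }

    public static void main(String[] args) {
        Map<String, String> rules = new LinkedHashMap<>(); // keeps rule order stable
        rules.put("^/wsmaster/api/(.*)$", "/api/$1");       // the rule from the example config
        System.out.println(rewrite("/wsmaster/api/orders/42", rules)); // /api/orders/42
        System.out.println(rewrite("/other/path", rules));              // /other/path
    }
}
```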

You can use the ${…} syntax for system property replacement. For example, ${jboss.server.config.dir} would be replaced by /path/to/Red Hat Single Sign-On. Replacement of environment variables is also supported through the env prefix, for example ${env.MY_ENVIRONMENT_VARIABLE}.
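The following is a minimal sketch of the ${…} substitution. It assumes that ${name} is looked up as a Java system property and ${env.NAME} as an environment variable. The class PlaceholderSketch and the sample path are hypothetical. Leaving unresolvable references unchanged is a choice made here, not necessarily what the adapter does.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: replaces ${name} with the Java system property "name" and
// ${env.NAME} with the environment variable NAME. Leaving unresolvable references
// untouched is a choice made for this sketch, not documented adapter behaviour.
class PlaceholderSketch {

    private static final Pattern REFERENCE = Pattern.compile("\\$\\{([^}]+)\\}");

    static String resolve(String value) {
        Matcher m = REFERENCE.matcher(value);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String name = m.group(1);
            String replacement = name.startsWith("env.")
                    ? System.getenv(name.substring("env.".length()))
                    : System.getProperty(name);
            if (replacement == null) {
                replacement = m.group(); // keep the original ${...} text
            }
            // quoteReplacement stops '$' or '\' in the value from being interpreted.
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Illustrative value; on a real server JBoss EAP sets this property itself.
        System.setProperty("jboss.server.config.dir", "/opt/eap/standalone/configuration");
        System.out.println(resolve("${jboss.server.config.dir}/truststore.jks"));
        System.out.println(resolve("${env.MY_ENVIRONMENT_VARIABLE}"));
    }
}
```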

The initial config file can be obtained from the admin console: open the admin console, select Clients from the menu, and click the corresponding client. Once the page for the client is open, click the Installation tab and select Keycloak OIDC JSON.

Here is a description of each configuration option:

- realm

- Name of the realm. This is REQUIRED.

- resource

- The client-id of the application. Each application has a client-id that is used to identify the application. This is REQUIRED.

- realm-public-key

- PEM format of the realm public key. You can obtain this from the administration console. This is OPTIONAL, and setting it is not recommended. If it is not set, the adapter downloads the key from Red Hat Single Sign-On and re-downloads it whenever needed (for example, when Red Hat Single Sign-On rotates its keys). However, if realm-public-key is set, the adapter never downloads new keys from Red Hat Single Sign-On, so the adapter breaks when Red Hat Single Sign-On rotates its keys.

- auth-server-url

- The base URL of the Red Hat Single Sign-On server. All other Red Hat Single Sign-On pages and REST service endpoints are derived from this. It is usually of the form https://host:port/auth. This is REQUIRED.

- ssl-required

- Ensures that all communication to and from the Red Hat Single Sign-On server is over HTTPS. In production this should be set to all. This is OPTIONAL. The default value is external, meaning that HTTPS is required by default for external requests. Valid values are 'all', 'external', and 'none'.

- confidential-port

- The confidential port used by the Red Hat Single Sign-On server for secure connections over SSL/TLS. This is OPTIONAL. The default value is 8443.

- use-resource-role-mappings

- If set to true, the adapter will look inside the token for application level role mappings for the user. If false, it will look at the realm level for user role mappings. This is OPTIONAL. The default value is false.

- public-client

- If set to true, the adapter will not send credentials for the client to Red Hat Single Sign-On. This is OPTIONAL. The default value is false.

- enable-cors

- This enables CORS support. It will handle CORS preflight requests. It will also look into the access token to determine valid origins. This is OPTIONAL. The default value is false.

- cors-max-age

- If CORS is enabled, this sets the value of the Access-Control-Max-Age header. This is OPTIONAL. If not set, this header is not returned in CORS responses.

- cors-allowed-methods

- If CORS is enabled, this sets the value of the Access-Control-Allow-Methods header. This should be a comma-separated string. This is OPTIONAL. If not set, this header is not returned in CORS responses.

- cors-allowed-headers

- If CORS is enabled, this sets the value of the Access-Control-Allow-Headers header. This should be a comma-separated string. This is OPTIONAL. If not set, this header is not returned in CORS responses.

- cors-exposed-headers

- If CORS is enabled, this sets the value of the Access-Control-Expose-Headers header. This should be a comma-separated string. This is OPTIONAL. If not set, this header is not returned in CORS responses.

- bearer-only

- This should be set to true for services. If enabled, the adapter will not attempt to authenticate users, but only verify bearer tokens. This is OPTIONAL. The default value is false.

- autodetect-bearer-only

- This should be set to true if your application serves both a web application and web services (e.g. SOAP or REST). It allows you to redirect unauthenticated users of the web application to the Keycloak login page, but send an HTTP 401 status code to unauthenticated SOAP or REST clients instead, as they would not understand a redirect to the login page. Keycloak auto-detects SOAP or REST clients based on typical headers like X-Requested-With, SOAPAction, or Accept. The default value is false.

- enable-basic-auth

- This tells the adapter to also support basic authentication. If this option is enabled, then secret must also be provided. This is OPTIONAL. The default value is false.

- expose-token

- If true, an authenticated browser client (via a JavaScript HTTP invocation) can obtain the signed access token via the URL root/k_query_bearer_token. This is OPTIONAL. The default value is false.

- credentials

- Specify the credentials of the application. This is an object notation where the key is the credential type and the value is the value of the credential type. Currently password and jwt are supported. This is REQUIRED only for clients with 'Confidential' access type.

- connection-pool-size

- This config option defines how many connections to the Red Hat Single Sign-On server should be pooled. This is OPTIONAL. The default value is 20.

- disable-trust-manager

- If the Red Hat Single Sign-On server requires HTTPS and this config option is set to true, you do not have to specify a truststore. This setting should only be used during development and never in production, as it will disable verification of SSL certificates. This is OPTIONAL. The default value is false.

- allow-any-hostname

- If the Red Hat Single Sign-On server requires HTTPS and this config option is set to true, the Red Hat Single Sign-On server’s certificate is validated via the truststore, but host name validation is not done. This setting may be useful in test environments, but it should only be used during development and never in production, as it disables host name verification of SSL certificates. This is OPTIONAL. The default value is false.

- proxy-url

- The URL for the HTTP proxy if one is used.

- truststore

- The value is the file path to a truststore file. If you prefix the path with classpath:, then the truststore will be obtained from the deployment’s classpath instead. Used for outgoing HTTPS communications to the Red Hat Single Sign-On server. Clients making HTTPS requests need a way to verify the host of the server they are talking to. This is what the truststore does. The truststore contains one or more trusted host certificates or certificate authorities. You can create this truststore by extracting the public certificate of the Red Hat Single Sign-On server’s SSL keystore. This is REQUIRED unless ssl-required is none or disable-trust-manager is true.

- truststore-password

- Password for the truststore. This is REQUIRED if truststore is set and the truststore requires a password.

- client-keystore

- This is the file path to a keystore file. This keystore contains the client certificate for two-way SSL when the adapter makes HTTPS requests to the Red Hat Single Sign-On server. This is OPTIONAL.

- client-keystore-password

- Password for the client keystore. This is REQUIRED if client-keystore is set.

- client-key-password

- Password for the client’s key. This is REQUIRED if client-keystore is set.

- always-refresh-token

- If true, the adapter refreshes the token on every request. Warning: when enabled, this results in a request to Red Hat Single Sign-On for every request to your application.

- register-node-at-startup

- If true, the adapter sends a registration request to Red Hat Single Sign-On. It is false by default and is useful only when the application is clustered. See Application Clustering for details.

- register-node-period

- Period for re-registering the adapter with Red Hat Single Sign-On. Useful when the application is clustered. See Application Clustering for details.

- token-store

- Possible values are session and cookie. The default is session, which means that the adapter stores account info in the HTTP Session. The alternative, cookie, means that the info is stored in a cookie. See Application Clustering for details.

- token-cookie-path

- When using a cookie store, this option sets the path of the cookie used to store account info. If it’s a relative path, then it is assumed that the application is running in a context root, and is interpreted relative to that context root. If it’s an absolute path, then the absolute path is used to set the cookie path. Defaults to use paths relative to the context root.

- principal-attribute

- OpenID Connect ID Token attribute to populate the UserPrincipal name with. If the token attribute is null, defaults to sub. Possible values are sub, preferred_username, email, name, nickname, given_name, family_name.

- turn-off-change-session-id-on-login

- The session id is changed by default on a successful login on some platforms to plug a security attack vector. Change this to true if you want to turn this off. This is OPTIONAL. The default value is false.

- token-minimum-time-to-live

- Amount of time, in seconds, to preemptively refresh an active access token with the Red Hat Single Sign-On server before it expires. This is especially useful when the access token is sent to another REST client where it could expire before being evaluated. This value should never exceed the realm’s access token lifespan. This is OPTIONAL. The default value is 0 seconds, so the adapter refreshes the access token only if it has expired.

- min-time-between-jwks-requests

- Amount of time, in seconds, specifying the minimum interval between two requests to Red Hat Single Sign-On to retrieve new public keys. It is 10 seconds by default. The adapter always tries to download a new public key when it recognizes a token with an unknown kid. However, it will not try more than once per 10 seconds (by default). This prevents DoS when an attacker sends lots of tokens with a bad kid, forcing the adapter to send lots of requests to Red Hat Single Sign-On.

- public-key-cache-ttl

- Amount of time, in seconds, specifying the maximum interval between two requests to Red Hat Single Sign-On to retrieve new public keys. It is 86400 seconds (1 day) by default. The adapter always tries to download a new public key when it recognizes a token with an unknown kid. If it recognizes a token with a known kid, it just uses the previously downloaded public key. However, a new public key is always downloaded at least once per this configured interval (1 day by default), even if the kid of the token is already known.

- ignore-oauth-query-parameter

- Defaults to false. If set to true, processing of the access_token query parameter for bearer token processing is turned off, so users will not be able to authenticate if they only pass in an access_token.

- redirect-rewrite-rules

- If needed, specify the Redirect URI rewrite rule. This is an object notation where the key is the regular expression to which the Redirect URI is to be matched and the value is the replacement String. The $ character can be used for backreferences in the replacement String.

- verify-token-audience

- If set to true, then during authentication with the bearer token, the adapter will verify whether the token contains this client name (resource) as an audience. The option is especially useful for services, which primarily serve requests authenticated by the bearer token. This is set to false by default; however, for improved security, it is recommended to enable this. See Audience Support for more details about audience support.
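The interplay of min-time-between-jwks-requests and public-key-cache-ttl is easiest to see as a small state machine. The following Java sketch is a simplified model of the rules as described above, not the adapter's implementation. Keys are plain strings, downloading them from the server is modeled as a Supplier, and the class KeyCacheSketch is hypothetical. An unknown kid triggers a download at most once per minimum interval. A known kid is served from the cache until the TTL forces a refresh.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical model of the timing rules described for min-time-between-jwks-requests
// and public-key-cache-ttl. Keys are Strings and the download from the server is a
// Supplier; both are simplifications, and this is not the adapter's source code.
class KeyCacheSketch {
    private final long minTimeBetweenRequests; // seconds (documented default: 10)
    private final long publicKeyCacheTtl;      // seconds (documented default: 86400)
    private final Supplier<Map<String, String>> downloadKeys; // kid -> public key
    private Map<String, String> keys = new HashMap<>();
    private long lastRequestTime;
    private int requestCount;

    KeyCacheSketch(long minTimeBetweenRequests, long publicKeyCacheTtl,
                   Supplier<Map<String, String>> downloadKeys) {
        this.minTimeBetweenRequests = minTimeBetweenRequests;
        this.publicKeyCacheTtl = publicKeyCacheTtl;
        this.downloadKeys = downloadKeys;
    }

    // Returns the key for kid, or null if none is known (the token is then rejected).
    // The current time is a parameter so the behaviour can be shown deterministically.
    String keyFor(String kid, long nowSeconds) {
        boolean neverDownloaded = requestCount == 0;
        long sinceLastRequest = nowSeconds - lastRequestTime;
        boolean unknownKid = !keys.containsKey(kid);
        boolean throttled = !neverDownloaded && sinceLastRequest < minTimeBetweenRequests;
        boolean cacheExpired = neverDownloaded || sinceLastRequest >= publicKeyCacheTtl;
        if (cacheExpired || (unknownKid && !throttled)) {
            keys = new HashMap<>(downloadKeys.get());
            lastRequestTime = nowSeconds;
            requestCount++;
        }
        return keys.get(kid);
    }

    int requestCount() {
        return requestCount;
    }

    public static void main(String[] args) {
        KeyCacheSketch cache = new KeyCacheSketch(10, 86400, () -> Map.of("k1", "realm-key-1"));
        cache.keyFor("k1", 0);      // first use: download
        cache.keyFor("bogus", 5);   // unknown kid, only 5 s since last request: no download
        cache.keyFor("bogus", 15);  // unknown kid, 15 s after last request: download allowed
        cache.keyFor("k1", 100);    // known kid, cache still fresh: no download
        cache.keyFor("k1", 86415);  // 86400 s after the last request: forced refresh
        System.out.println("downloads: " + cache.requestCount()); // downloads: 3
    }
}
```

With the default values of 10 and 86400 seconds, the main method triggers three downloads: the first lookup, the retry for an unknown kid after 15 seconds, and the forced refresh a day later.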

2.1.2. JBoss EAP Adapter

To be able to secure WAR apps deployed on JBoss EAP, you must install and configure the Red Hat Single Sign-On adapter subsystem. You then have two options to secure your WARs.

You can provide an adapter config file in your WAR and change the auth-method to KEYCLOAK within web.xml.

Alternatively, you don’t have to modify your WAR at all and you can secure it via the Red Hat Single Sign-On adapter subsystem configuration in the configuration file, such as standalone.xml. Both methods are described in this section.

2.1.2.1. Installing the adapter

Adapters are available as a separate archive depending on what server version you are using.

Install on JBoss EAP 7:

You can install the EAP 7 adapters either by unzipping a ZIP file, or by using an RPM.

Install the EAP 7 Adapters from a ZIP File:

$ cd $EAP_HOME
$ unzip rh-sso-7.4.10.GA-eap7-adapter.zip

Install on JBoss EAP 6:

You can install the EAP 6 adapters either by unzipping a ZIP file, or by using an RPM.

Install the EAP 6 Adapters from a ZIP File:

$ cd $EAP_HOME
$ unzip rh-sso-7.4.10.GA-eap6-adapter.zip

This ZIP archive contains JBoss Modules specific to the Red Hat Single Sign-On adapter. It also contains JBoss CLI scripts to configure the adapter subsystem.

To configure the adapter subsystem if the server is not running, execute:

Alternatively, you can specify the server.config property while installing adapters from the command line to install adapters using a different config, for example: -Dserver.config=standalone-ha.xml.

JBoss EAP 7.1 or newer

$ ./bin/jboss-cli.sh --file=bin/adapter-elytron-install-offline.cli

The offline script is not available for JBoss EAP 6.4

Alternatively, if the server is running, execute:

JBoss EAP 7.1 or newer

$ ./bin/jboss-cli.sh -c --file=bin/adapter-elytron-install.cli

It is possible to use the legacy non-Elytron adapter on JBoss EAP 7.1 or newer as well, meaning you can use adapter-install-offline.cli.

JBoss EAP 6.4

$ ./bin/jboss-cli.sh -c --file=bin/adapter-install.cli

2.1.2.2. JBoss SSO

JBoss EAP has built-in support for single sign-on for web applications deployed to the same JBoss EAP instance. This should not be enabled when using Red Hat Single Sign-On.

2.1.2.3. Required Per WAR Configuration

This section describes how to secure a WAR directly by adding configuration and editing files within your WAR package.

The first thing you must do is create a keycloak.json adapter configuration file within the WEB-INF directory of your WAR.

The format of this configuration file is described in the Java adapter configuration section.

Next, you must set the auth-method to KEYCLOAK in web.xml. You also have to use standard servlet security to specify role-based constraints on your URLs.

Here’s an example:

<web-app xmlns="http://java.sun.com/xml/ns/javaee"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"
      version="3.0">

    <module-name>application</module-name>

    <security-constraint>
        <web-resource-collection>
            <web-resource-name>Admins</web-resource-name>
            <url-pattern>/admin/*</url-pattern>
        </web-resource-collection>
        <auth-constraint>
            <role-name>admin</role-name>
        </auth-constraint>
        <user-data-constraint>
            <transport-guarantee>CONFIDENTIAL</transport-guarantee>
        </user-data-constraint>
    </security-constraint>

    <security-constraint>
        <web-resource-collection>
            <web-resource-name>Customers</web-resource-name>
            <url-pattern>/customers/*</url-pattern>
        </web-resource-collection>
        <auth-constraint>
            <role-name>user</role-name>
        </auth-constraint>
        <user-data-constraint>
            <transport-guarantee>CONFIDENTIAL</transport-guarantee>
        </user-data-constraint>
    </security-constraint>

    <login-config>
        <auth-method>KEYCLOAK</auth-method>
        <realm-name>this is ignored currently</realm-name>
    </login-config>

    <security-role>
        <role-name>admin</role-name>
    </security-role>
    <security-role>
        <role-name>user</role-name>
    </security-role>
</web-app>

2.1.2.4. Securing WARs via Adapter Subsystem

You do not have to modify your WAR to secure it with Red Hat Single Sign-On. Instead you can externally secure it via the Red Hat Single Sign-On Adapter Subsystem. While you don’t have to specify KEYCLOAK as an auth-method, you still have to define the security-constraints in web.xml. You do not, however, have to create a WEB-INF/keycloak.json file. This metadata is instead defined within server configuration (i.e. standalone.xml) in the Red Hat Single Sign-On subsystem definition.

<extensions>
    <extension module="org.keycloak.keycloak-adapter-subsystem"/>
</extensions>

<profile>
    <subsystem xmlns="urn:jboss:domain:keycloak:1.1">
        <secure-deployment name="WAR MODULE NAME.war">
            <realm>demo</realm>
            <auth-server-url>http://localhost:8081/auth</auth-server-url>
            <ssl-required>external</ssl-required>
            <resource>customer-portal</resource>
            <credential name="secret">password</credential>
        </secure-deployment>
    </subsystem>
</profile>

The secure-deployment name attribute identifies the WAR you want to secure. Its value is the module-name defined in web.xml with .war appended. The rest of the configuration corresponds almost one-to-one with the keycloak.json configuration options defined in Java adapter configuration.

The exception is the credential element.

To make this easier, go to the Red Hat Single Sign-On Administration Console and open the Client/Installation tab of the application this WAR is aligned with. It provides an example XML file you can cut and paste.

If you have multiple deployments secured by the same realm, you can share the realm configuration in a separate element. For example:

<subsystem xmlns="urn:jboss:domain:keycloak:1.1">
    <realm name="demo">
        <auth-server-url>http://localhost:8080/auth</auth-server-url>
        <ssl-required>external</ssl-required>
    </realm>
    <secure-deployment name="customer-portal.war">
        <realm>demo</realm>
        <resource>customer-portal</resource>
        <credential name="secret">password</credential>
    </secure-deployment>
    <secure-deployment name="product-portal.war">
        <realm>demo</realm>
        <resource>product-portal</resource>
        <credential name="secret">password</credential>
    </secure-deployment>
    <secure-deployment name="database.war">
        <realm>demo</realm>
        <resource>database-service</resource>
        <bearer-only>true</bearer-only>
    </secure-deployment>
</subsystem>

2.1.2.5. Security Domain

The security context is propagated to the EJB tier automatically.

2.1.3. Installing JBoss EAP Adapter from an RPM

Install the EAP 7 Adapters from an RPM:

With Red Hat Enterprise Linux 7, the term channel was replaced with the term repository. In these instructions only the term repository is used.

You must subscribe to the JBoss EAP 7.3 repository before you can install the JBoss EAP 7 adapters from an RPM.

Prerequisites

- Ensure that your Red Hat Enterprise Linux system is registered to your account using Red Hat Subscription Manager. For more information see the Red Hat Subscription Management documentation.

- If you are already subscribed to another JBoss EAP repository, you must unsubscribe from that repository first.

For Red Hat Enterprise Linux 6, 7: Using Red Hat Subscription Manager, subscribe to the JBoss EAP 7.3 repository using the following command. Replace <RHEL_VERSION> with either 6 or 7 depending on your Red Hat Enterprise Linux version.

$ sudo subscription-manager repos --enable=jb-eap-7-for-rhel-<RHEL_VERSION>-server-rpms

For Red Hat Enterprise Linux 8: Using Red Hat Subscription Manager, subscribe to the JBoss EAP 7.3 repository using the following command:

$ sudo subscription-manager repos --enable=jb-eap-7.3-for-rhel-8-x86_64-rpms --enable=rhel-8-for-x86_64-baseos-rpms --enable=rhel-8-for-x86_64-appstream-rpms

Install the JBoss EAP 7 adapters for OIDC using the following command on Red Hat Enterprise Linux 6 or 7:

$ sudo yum install eap7-keycloak-adapter-sso7_4

Or use the following command on Red Hat Enterprise Linux 8:

$ sudo dnf install eap7-keycloak-adapter-sso7_4

The default EAP_HOME path for the RPM installation is /opt/rh/eap7/root/usr/share/wildfly.

Run the appropriate module installation script.

For the OIDC module, enter the following command:

$ $EAP_HOME/bin/jboss-cli.sh -c --file=$EAP_HOME/bin/adapter-install.cli

Your installation is complete.

Install the EAP 6 Adapters from an RPM:

With Red Hat Enterprise Linux 7, the term channel was replaced with the term repository. In these instructions only the term repository is used.

You must subscribe to the JBoss EAP 6 repository before you can install the EAP 6 adapters from an RPM.

Prerequisites

- Ensure that your Red Hat Enterprise Linux system is registered to your account using Red Hat Subscription Manager. For more information see the Red Hat Subscription Management documentation.

- If you are already subscribed to another JBoss EAP repository, you must unsubscribe from that repository first.

Using Red Hat Subscription Manager, subscribe to the JBoss EAP 6 repository using the following command. Replace <RHEL_VERSION> with either 6 or 7 depending on your Red Hat Enterprise Linux version.

$ sudo subscription-manager repos --enable=jb-eap-6-for-rhel-<RHEL_VERSION>-server-rpms

Install the EAP 6 adapters for OIDC using the following command:

$ sudo yum install keycloak-adapter-sso7_4-eap6

The default EAP_HOME path for the RPM installation is /opt/rh/eap6/root/usr/share/wildfly.

Run the appropriate module installation script.

For the OIDC module, enter the following command:

$ $EAP_HOME/bin/jboss-cli.sh -c --file=$EAP_HOME/bin/adapter-install.cli

Your installation is complete.

2.1.4. JBoss Fuse 6 Adapter

Red Hat Single Sign-On supports securing your web applications running inside JBoss Fuse 6.

The only supported version of Fuse 6 is the latest release. If you use earlier versions of Fuse 6, it is possible that some functions will not work correctly. In particular, the Hawtio integration will not work with earlier versions of Fuse 6.

Security for the following items is supported for Fuse:

- Classic WAR applications deployed on Fuse with Pax Web War Extender

- Servlets deployed on Fuse as OSGI services with Pax Web Whiteboard Extender

- Apache Camel Jetty endpoints running with the Camel Jetty component

- Apache CXF endpoints running on their own separate Jetty engine

- Apache CXF endpoints running on the default engine provided by the CXF servlet

- SSH and JMX admin access

- Hawtio administration console

2.1.4.1. Securing Your Web Applications Inside Fuse 6

You must first install the Red Hat Single Sign-On Karaf feature. Next you will need to perform the steps according to the type of application you want to secure. All referenced web applications require injecting the Red Hat Single Sign-On Jetty authenticator into the underlying Jetty server. The steps to achieve this depend on the application type. The details are described below.

2.1.4.2. Installing the Keycloak Feature

You must first install the keycloak feature in the JBoss Fuse environment. The keycloak feature includes the Fuse adapter and all third-party dependencies. You can install it either from the Maven repository or from an archive.

2.1.4.2.1. Installing from the Maven Repository

As a prerequisite, you must be online and have access to the Maven repository.

For Red Hat Single Sign-On, you first need to configure a proper Maven repository so that you can install the artifacts. For more information, see the JBoss Enterprise Maven repository page.

Assuming the Maven repository is https://maven.repository.redhat.com/ga/, add the following to the $FUSE_HOME/etc/org.ops4j.pax.url.mvn.cfg file and add the repository to the list of supported repositories. For example:

org.ops4j.pax.url.mvn.repositories= \
    https://maven.repository.redhat.com/ga/@id=redhat.product.repo, \
    http://repo1.maven.org/maven2@id=maven.central.repo, \
    ...

To install the keycloak feature using the Maven repository, complete the following steps:

Start JBoss Fuse 6.3.0; then in the Karaf terminal type:

features:addurl mvn:org.keycloak/keycloak-osgi-features/9.0.17.redhat-00001/xml/features
features:install keycloak

You might also need to install the Jetty 9 feature:

features:install keycloak-jetty9-adapter

- Ensure that the features were installed:

features:list | grep keycloak

2.1.4.2.2. Installing from the ZIP bundle

This is useful if you are offline or do not want to use Maven to obtain the JAR files and other artifacts.

To install the Fuse adapter from the ZIP archive, complete the following steps:

- Download the Red Hat Single Sign-On Fuse adapter ZIP archive.

Unzip it into the root directory of JBoss Fuse. The dependencies are then installed under the

systemdirectory. You can overwrite all existing jar files.Use this for JBoss Fuse 6.3.0:

cd /path-to-fuse/jboss-fuse-6.3.0.redhat-XXX
unzip -q /path-to-adapter-zip/rh-sso-7.4.10.GA-fuse-adapter.zip

Start Fuse and run these commands in the fuse/karaf terminal:

features:addurl mvn:org.keycloak/keycloak-osgi-features/9.0.17.redhat-00001/xml/features
features:install keycloak

Install the corresponding Jetty adapter. Since the artifacts are available directly in the JBoss Fuse system directory, you do not need to use the Maven repository.

2.1.4.3. Securing a Classic WAR Application

The needed steps to secure your WAR application are:

In the /WEB-INF/web.xml file, declare the necessary:

- security constraints in the <security-constraint> element
- login configuration in the <login-config> element
- security roles in the <security-role> element.

For example:

<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns="http://java.sun.com/xml/ns/javaee"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"
      version="3.0">
    <module-name>customer-portal</module-name>
    <welcome-file-list>
        <welcome-file>index.html</welcome-file>
    </welcome-file-list>
    <security-constraint>
        <web-resource-collection>
            <web-resource-name>Customers</web-resource-name>
            <url-pattern>/customers/*</url-pattern>
        </web-resource-collection>
        <auth-constraint>
            <role-name>user</role-name>
        </auth-constraint>
    </security-constraint>
    <login-config>
        <auth-method>BASIC</auth-method>
        <realm-name>does-not-matter</realm-name>
    </login-config>
    <security-role>
        <role-name>admin</role-name>
    </security-role>
    <security-role>
        <role-name>user</role-name>
    </security-role>
</web-app>

Add a /WEB-INF/jetty-web.xml file that configures the Red Hat Single Sign-On authenticator. For example:

<?xml version="1.0"?>
<!DOCTYPE Configure PUBLIC "-//Mort Bay Consulting//DTD Configure//EN" "http://www.eclipse.org/jetty/configure_9_0.dtd">
<Configure class="org.eclipse.jetty.webapp.WebAppContext">
    <Get name="securityHandler">
        <Set name="authenticator">
            <New class="org.keycloak.adapters.jetty.KeycloakJettyAuthenticator">
            </New>
        </Set>
    </Get>
</Configure>

Within the /WEB-INF/ directory of your WAR, create a new file, keycloak.json. The format of this configuration file is described in the Java Adapters Config section. It is also possible to make this file available externally as described in Configuring the External Adapter.

Ensure your WAR application imports org.keycloak.adapters.jetty, and possibly some more packages, in the META-INF/MANIFEST.MF file under the Import-Package header. Using maven-bundle-plugin in your project properly generates the OSGI headers in the manifest. Note that "*" resolution does not import the org.keycloak.adapters.jetty package, because the package is not used by the application or by the Blueprint or Spring descriptor, but rather by the jetty-web.xml file. The list of packages to import might look like this:

org.keycloak.adapters.jetty;version="9.0.17.redhat-00001",
org.keycloak.adapters;version="9.0.17.redhat-00001",
org.keycloak.constants;version="9.0.17.redhat-00001",
org.keycloak.util;version="9.0.17.redhat-00001",
org.keycloak.*;version="9.0.17.redhat-00001",
*;resolution:=optional
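The web.xml requirements described above (a security constraint, a login configuration, and declared security roles) can be checked before deployment. The following Python sketch is not part of Red Hat Single Sign-On or Fuse. It is a hypothetical helper, check_web_xml, that parses a Servlet 3.0 web.xml with the standard library and reports missing elements, an unexpected <auth-method>, and roles that are used in an <auth-constraint> without a matching <security-role>.

```python
import xml.etree.ElementTree as ET

# Namespace of the Servlet 3.0 web.xml shown above
NS = {"j": "http://java.sun.com/xml/ns/javaee"}


def check_web_xml(text, auth_method=None):
    """Return a list of problems found in a web.xml document (empty if none)."""
    root = ET.fromstring(text)
    problems = []
    for tag in ("security-constraint", "login-config", "security-role"):
        if root.find(f"j:{tag}", NS) is None:
            problems.append(f"missing <{tag}>")

    # Optionally require a specific <auth-method>, e.g. "BASIC" in the Jetty example
    if auth_method is not None:
        found = root.findtext("j:login-config/j:auth-method", default="", namespaces=NS).strip()
        if found != auth_method:
            problems.append(f"auth-method is {found!r}, expected {auth_method!r}")

    # Every role required by an <auth-constraint> should be declared as a <security-role>
    declared = {e.text.strip() for e in root.findall("j:security-role/j:role-name", NS)}
    required = {
        e.text.strip()
        for e in root.findall("j:security-constraint/j:auth-constraint/j:role-name", NS)
    }
    for role in sorted(required - declared):
        problems.append(f"role {role!r} is used in <auth-constraint> but not declared")
    return problems


# A deliberately incomplete sample: "user" is required but only "admin" is declared
sample = """<web-app xmlns="http://java.sun.com/xml/ns/javaee" version="3.0">
  <security-constraint>
    <web-resource-collection><url-pattern>/customers/*</url-pattern></web-resource-collection>
    <auth-constraint><role-name>user</role-name></auth-constraint>
  </security-constraint>
  <login-config><auth-method>BASIC</auth-method></login-config>
  <security-role><role-name>admin</role-name></security-role>
</web-app>"""

print(check_web_xml(sample, auth_method="BASIC"))
# ["role 'user' is used in <auth-constraint> but not declared"]
```

The auth_method parameter is optional so the same check can be reused wherever a particular login method is expected.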

2.1.4.3.1. Configuring the External Adapter

If you do not want the keycloak.json adapter configuration file to be bundled inside your WAR application, but instead made available externally and loaded based on naming conventions, use this configuration method.

To enable the functionality, add this section to your /WEB-INF/web.xml file:

<context-param>
    <param-name>keycloak.config.resolver</param-name>
    <param-value>org.keycloak.adapters.osgi.PathBasedKeycloakConfigResolver</param-value>
</context-param>

That component uses the keycloak.config or karaf.etc Java system properties to find a base folder for the configuration. Inside that folder, it searches for a file called <your_web_context>-keycloak.json.

So, for example, if your web application has context my-portal, then your adapter configuration is loaded from the $FUSE_HOME/etc/my-portal-keycloak.json file.
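The naming convention above can be sketched as follows. This is not the source of PathBasedKeycloakConfigResolver. It assumes that keycloak.config takes precedence over karaf.etc when both are set, and the resolve_adapter_config name and the /opt/fuse/etc path are hypothetical.

```python
import posixpath


def resolve_adapter_config(system_props, web_context):
    """Return the expected path of <web_context>-keycloak.json.

    Assumption: keycloak.config is consulted before karaf.etc.
    """
    base = system_props.get("keycloak.config") or system_props.get("karaf.etc")
    if not base:
        raise LookupError("neither keycloak.config nor karaf.etc is set")
    context = web_context.strip("/")  # accept "my-portal" or "/my-portal"
    return posixpath.join(base, f"{context}-keycloak.json")


print(resolve_adapter_config({"karaf.etc": "/opt/fuse/etc"}, "/my-portal"))
# /opt/fuse/etc/my-portal-keycloak.json
```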

2.1.4.4. Securing a Servlet Deployed as an OSGI Service

You can use this method if you have a servlet class inside your OSGI bundled project that is not deployed as a classic WAR application. Fuse uses Pax Web Whiteboard Extender to deploy such servlets as web applications.

To secure your servlet with Red Hat Single Sign-On, complete the following steps:

Red Hat Single Sign-On provides PaxWebIntegrationService, which allows injecting jetty-web.xml and configuring security constraints for your application. You need to declare such services in the OSGI-INF/blueprint/blueprint.xml file inside your application. Note that your servlet needs to depend on it. An example configuration:

<?xml version="1.0" encoding="UTF-8"?>
<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xsi:schemaLocation="http://www.osgi.org/xmlns/blueprint/v1.0.0 http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd">
    <!-- Using jetty bean just for the compatibility with other fuse services -->
    <bean id="servletConstraintMapping" class="org.eclipse.jetty.security.ConstraintMapping">
        <property name="constraint">
            <bean class="org.eclipse.jetty.util.security.Constraint">
                <property name="name" value="cst1"/>
                <property name="roles">
                    <list>
                        <value>user</value>
                    </list>
                </property>
                <property name="authenticate" value="true"/>
                <property name="dataConstraint" value="0"/>
            </bean>
        </property>
        <property name="pathSpec" value="/product-portal/*"/>
    </bean>
    <bean id="keycloakPaxWebIntegration" class="org.keycloak.adapters.osgi.PaxWebIntegrationService"
          init-method="start" destroy-method="stop">
        <property name="jettyWebXmlLocation" value="/WEB-INF/jetty-web.xml" />
        <property name="bundleContext" ref="blueprintBundleContext" />
        <property name="constraintMappings">
            <list>
                <ref component-id="servletConstraintMapping" />
            </list>
        </property>
    </bean>
    <bean id="productServlet" class="org.keycloak.example.ProductPortalServlet" depends-on="keycloakPaxWebIntegration">
    </bean>
    <service ref="productServlet" interface="javax.servlet.Servlet">
        <service-properties>
            <entry key="alias" value="/product-portal" />
            <entry key="servlet-name" value="ProductServlet" />
            <entry key="keycloak.config.file" value="/keycloak.json" />
        </service-properties>
    </service>
</blueprint>

You might need to have the WEB-INF directory inside your project (even if your project is not a web application) and create the /WEB-INF/jetty-web.xml and /WEB-INF/keycloak.json files as in the Classic WAR application section. Note that you do not need the web.xml file, as the security constraints are declared in the blueprint configuration file.

The Import-Package header in META-INF/MANIFEST.MF must contain at least these imports:

org.keycloak.adapters.jetty;version="9.0.17.redhat-00001",
org.keycloak.adapters;version="9.0.17.redhat-00001",
org.keycloak.constants;version="9.0.17.redhat-00001",
org.keycloak.util;version="9.0.17.redhat-00001",
org.keycloak.*;version="9.0.17.redhat-00001",
*;resolution:=optional
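As noted for the classic WAR application, a "*" import does not pull in org.keycloak.adapters.jetty, so that package has to be listed explicitly. The hypothetical Python helper below splits an Import-Package value into clauses and reports required packages that are not named literally. Version ranges such as "[9,10)" contain commas, so the split must ignore commas inside double quotes. The sketch does not model bnd's wildcard expansion.

```python
# Needed by jetty-web.xml but not detected by a "*" import
REQUIRED = ("org.keycloak.adapters.jetty",)


def split_osgi_header(header):
    """Split a manifest header on commas that are outside double quotes."""
    clauses, current, in_quotes = [], [], False
    for ch in header:
        if ch == '"':
            in_quotes = not in_quotes
        if ch == "," and not in_quotes:
            clauses.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    clauses.append("".join(current).strip())
    return [c for c in clauses if c]  # drop empties, e.g. from a trailing comma


def imported_packages(header):
    """Return the package names (text before the first ';') of every clause."""
    return {clause.split(";", 1)[0].strip() for clause in split_osgi_header(header)}


def missing_imports(header, required=REQUIRED):
    """List required packages that are not named explicitly in the header."""
    present = imported_packages(header)
    return [pkg for pkg in required if pkg not in present]


header = (
    'org.keycloak.adapters.jetty;version="9.0.17.redhat-00001", '
    'org.keycloak.adapters;version="9.0.17.redhat-00001", '
    'org.keycloak.*;version="9.0.17.redhat-00001", '
    '*;resolution:=optional'
)
print(missing_imports(header))  # []
```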

2.1.4.5. Securing an Apache Camel Application

You can secure Apache Camel endpoints implemented with the camel-jetty component by adding the securityHandler with KeycloakJettyAuthenticator and the proper security constraints injected. You can add the OSGI-INF/blueprint/blueprint.xml file to your Camel application with a similar configuration as below. The roles, security constraint mappings, and Red Hat Single Sign-On adapter configuration might differ slightly depending on your environment and needs.

For example:

<?xml version="1.0" encoding="UTF-8"?>
<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xmlns:camel="http://camel.apache.org/schema/blueprint"
           xsi:schemaLocation="
       http://www.osgi.org/xmlns/blueprint/v1.0.0 http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd
       http://camel.apache.org/schema/blueprint http://camel.apache.org/schema/blueprint/camel-blueprint.xsd">
    <bean id="kcAdapterConfig" class="org.keycloak.representations.adapters.config.AdapterConfig">
        <property name="realm" value="demo"/>
        <property name="resource" value="admin-camel-endpoint"/>
        <property name="bearerOnly" value="true"/>
        <property name="authServerUrl" value="http://localhost:8080/auth" />
        <property name="sslRequired" value="EXTERNAL"/>
    </bean>
    <bean id="keycloakAuthenticator" class="org.keycloak.adapters.jetty.KeycloakJettyAuthenticator">
        <property name="adapterConfig" ref="kcAdapterConfig"/>
    </bean>
    <bean id="constraint" class="org.eclipse.jetty.util.security.Constraint">
        <property name="name" value="Customers"/>
        <property name="roles">
            <list>
                <value>admin</value>
            </list>
        </property>
        <property name="authenticate" value="true"/>
        <property name="dataConstraint" value="0"/>
    </bean>
    <bean id="constraintMapping" class="org.eclipse.jetty.security.ConstraintMapping">
        <property name="constraint" ref="constraint"/>
        <property name="pathSpec" value="/*"/>
    </bean>
    <bean id="securityHandler" class="org.eclipse.jetty.security.ConstraintSecurityHandler">
        <property name="authenticator" ref="keycloakAuthenticator" />
        <property name="constraintMappings">
            <list>
                <ref component-id="constraintMapping" />
            </list>
        </property>
        <property name="authMethod" value="BASIC"/>
        <property name="realmName" value="does-not-matter"/>
    </bean>
    <bean id="sessionHandler" class="org.keycloak.adapters.jetty.spi.WrappingSessionHandler">
        <property name="handler" ref="securityHandler" />
    </bean>
    <bean id="helloProcessor" class="org.keycloak.example.CamelHelloProcessor" />
    <camelContext id="blueprintContext"
                  trace="false"
                  xmlns="http://camel.apache.org/schema/blueprint">
        <route id="httpBridge">
            <from uri="jetty:http://0.0.0.0:8383/admin-camel-endpoint?handlers=sessionHandler&amp;matchOnUriPrefix=true" />
            <process ref="helloProcessor" />
            <log message="The message from camel endpoint contains ${body}"/>
        </route>
    </camelContext>
</blueprint>

The Import-Package header in META-INF/MANIFEST.MF needs to contain these imports:

javax.servlet;version="[3,4)",
javax.servlet.http;version="[3,4)",
org.apache.camel.*,
org.apache.camel;version="[2.13,3)",
org.eclipse.jetty.security;version="[9,10)",
org.eclipse.jetty.server.nio;version="[9,10)",
org.eclipse.jetty.util.security;version="[9,10)",
org.keycloak.*;version="9.0.17.redhat-00001",
org.osgi.service.blueprint,
org.osgi.service.blueprint.container,
org.osgi.service.event
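In the Camel route above, the endpoint URI contains an ampersand, which must be written as &amp; inside an XML attribute; a bare & makes the Blueprint file malformed. The Python sketch below uses only the standard library (quoteattr, ElementTree, parse_qs) to show the escaping, that an XML parser hands back the original URI, and which options (handlers and matchOnUriPrefix) the endpoint receives. The helper names are illustrative only.

```python
import xml.etree.ElementTree as ET
from urllib.parse import parse_qs
from xml.sax.saxutils import quoteattr

ENDPOINT = ("jetty:http://0.0.0.0:8383/admin-camel-endpoint"
            "?handlers=sessionHandler&matchOnUriPrefix=true")


def from_element(uri):
    """Render a Camel <from> element; quoteattr escapes '&' as '&amp;'."""
    return f"<from uri={quoteattr(uri)} />"


def is_well_formed(xml_text):
    """True if the text parses as XML."""
    try:
        ET.fromstring(xml_text)
        return True
    except ET.ParseError:
        return False


def endpoint_options(uri):
    """Return the query options of an endpoint URI as a plain dict."""
    _, _, query = uri.partition("?")
    return {key: values[0] for key, values in parse_qs(query).items()}


escaped = from_element(ENDPOINT)
print(escaped)
print(is_well_formed(f'<from uri="{ENDPOINT}" />'))  # False: bare '&'
print(ET.fromstring(escaped).get("uri") == ENDPOINT)  # True: the parser unescapes it
print(endpoint_options(ENDPOINT))
```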

2.1.4.6. Camel RestDSL

Camel RestDSL is a Camel feature used to define your REST endpoints in a fluent way. However, you must still use specific implementation classes and provide instructions on how to integrate with Red Hat Single Sign-On.

The way to configure the integration mechanism depends on the Camel component for which you configure your RestDSL-defined routes.

The following example shows how to configure integration using the Jetty component, with references to some of the beans defined in the previous Blueprint example.

<bean id="securityHandlerRest" class="org.eclipse.jetty.security.ConstraintSecurityHandler">
    <property name="authenticator" ref="keycloakAuthenticator" />
    <property name="constraintMappings">
        <list>
            <ref component-id="constraintMapping" />
        </list>
    </property>
    <property name="authMethod" value="BASIC"/>
    <property name="realmName" value="does-not-matter"/>
</bean>
<bean id="sessionHandlerRest" class="org.keycloak.adapters.jetty.spi.WrappingSessionHandler">
    <property name="handler" ref="securityHandlerRest" />
</bean>
<camelContext id="blueprintContext"
              trace="false"
              xmlns="http://camel.apache.org/schema/blueprint">
    <restConfiguration component="jetty" contextPath="/restdsl"
                       port="8484">
        <!--the link with Keycloak security handlers happens here-->
        <endpointProperty key="handlers" value="sessionHandlerRest"></endpointProperty>
        <endpointProperty key="matchOnUriPrefix" value="true"></endpointProperty>
    </restConfiguration>
    <rest path="/hello" >
        <description>Hello rest service</description>
        <get uri="/{id}" outType="java.lang.String">
            <description>Just a hello</description>
            <to uri="direct:justDirect" />
        </get>
    </rest>
    <route id="justDirect">
        <from uri="direct:justDirect"/>
        <process ref="helloProcessor" />
        <log message="RestDSL correctly invoked ${body}"/>
        <setBody>
            <constant>(__This second sentence is returned from a Camel RestDSL endpoint__)</constant>
        </setBody>
    </route>
</camelContext>

2.1.4.7. Securing an Apache CXF Endpoint on a Separate Jetty Engine

To run your CXF endpoints secured by Red Hat Single Sign-On on separate Jetty engines, complete the following steps:

Add META-INF/spring/beans.xml to your application, and in it, declare httpj:engine-factory with a Jetty SecurityHandler that has KeycloakJettyAuthenticator injected. The configuration for a CXF JAX-WS application might resemble this one:

<?xml version="1.0" encoding="UTF-8"?>
<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:jaxws="http://cxf.apache.org/jaxws"
       xmlns:httpj="http://cxf.apache.org/transports/http-jetty/configuration"
       xsi:schemaLocation="
        http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
        http://cxf.apache.org/jaxws http://cxf.apache.org/schemas/jaxws.xsd
        http://www.springframework.org/schema/osgi http://www.springframework.org/schema/osgi/spring-osgi.xsd
        http://cxf.apache.org/transports/http-jetty/configuration http://cxf.apache.org/schemas/configuration/http-jetty.xsd">
    <import resource="classpath:META-INF/cxf/cxf.xml" />
    <bean id="kcAdapterConfig" class="org.keycloak.representations.adapters.config.AdapterConfig">
        <property name="realm" value="demo"/>
        <property name="resource" value="custom-cxf-endpoint"/>
        <property name="bearerOnly" value="true"/>
        <property name="authServerUrl" value="http://localhost:8080/auth" />
        <property name="sslRequired" value="EXTERNAL"/>
    </bean>
    <bean id="keycloakAuthenticator" class="org.keycloak.adapters.jetty.KeycloakJettyAuthenticator">
        <property name="adapterConfig">
            <ref local="kcAdapterConfig" />
        </property>
    </bean>
    <bean id="constraint" class="org.eclipse.jetty.util.security.Constraint">
        <property name="name" value="Customers"/>
        <property name="roles">
            <list>
                <value>user</value>
            </list>
        </property>
        <property name="authenticate" value="true"/>
        <property name="dataConstraint" value="0"/>
    </bean>
    <bean id="constraintMapping" class="org.eclipse.jetty.security.ConstraintMapping">
        <property name="constraint" ref="constraint"/>
        <property name="pathSpec" value="/*"/>
    </bean>
    <bean id="securityHandler" class="org.eclipse.jetty.security.ConstraintSecurityHandler">
        <property name="authenticator" ref="keycloakAuthenticator" />
        <property name="constraintMappings">
            <list>
                <ref local="constraintMapping" />
            </list>
        </property>
        <property name="authMethod" value="BASIC"/>
        <property name="realmName" value="does-not-matter"/>
    </bean>
    <httpj:engine-factory bus="cxf" id="kc-cxf-endpoint">
        <httpj:engine port="8282">
            <httpj:handlers>
                <ref local="securityHandler" />
            </httpj:handlers>
            <httpj:sessionSupport>true</httpj:sessionSupport>
        </httpj:engine>
    </httpj:engine-factory>
    <jaxws:endpoint
            implementor="org.keycloak.example.ws.ProductImpl"
            address="http://localhost:8282/ProductServiceCF" depends-on="kc-cxf-endpoint" />
</beans>

For the CXF JAX-RS application, the only difference might be in the configuration of the endpoint that depends on the engine-factory:

<jaxrs:server serviceClass="org.keycloak.example.rs.CustomerService" address="http://localhost:8282/rest"
              depends-on="kc-cxf-endpoint">
    <jaxrs:providers>
        <bean class="com.fasterxml.jackson.jaxrs.json.JacksonJsonProvider" />
    </jaxrs:providers>
</jaxrs:server>

The Import-Package header in META-INF/MANIFEST.MF must contain these imports:

META-INF.cxf;version="[2.7,3.2)",
META-INF.cxf.osgi;version="[2.7,3.2)";resolution:=optional,
org.apache.cxf.bus;version="[2.7,3.2)",
org.apache.cxf.bus.spring;version="[2.7,3.2)",
org.apache.cxf.bus.resource;version="[2.7,3.2)",
org.apache.cxf.transport.http;version="[2.7,3.2)",
org.apache.cxf.*;version="[2.7,3.2)",
org.springframework.beans.factory.config,
org.eclipse.jetty.security;version="[9,10)",
org.eclipse.jetty.util.security;version="[9,10)",
org.keycloak.*;version="9.0.17.redhat-00001"
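Because the adapter configuration above sets bearerOnly to true, the endpoint does not redirect callers to a login page; a client has to present an access token, obtained from Red Hat Single Sign-On, in the Authorization header. The following Python sketch only builds such a request with the standard library. The /customers resource path and the token value are placeholders, not part of the example application.

```python
import urllib.request


def bearer_request(url, access_token):
    """Build, without sending, a GET request that carries an OAuth 2.0 bearer token."""
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Bearer {access_token}")
    request.add_header("Accept", "application/json")
    return request


# Hypothetical resource path under the JAX-RS address, and a placeholder token
req = bearer_request("http://localhost:8282/rest/customers", "<access-token>")
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))
# Sending it against a running endpoint would be: urllib.request.urlopen(req)
```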

2.1.4.8. Securing an Apache CXF Endpoint on the Default Jetty Engine

Some services automatically come with deployed servlets on startup. One such service is the CXF servlet running in the http://localhost:8181/cxf context. Securing such endpoints can be complicated. One approach, which Red Hat Single Sign-On is currently using, is ServletReregistrationService, which undeploys a built-in servlet at startup, enabling you to redeploy it on a context secured by Red Hat Single Sign-On.

The configuration file OSGI-INF/blueprint/blueprint.xml inside your application might resemble the one below. Note that it adds the JAX-RS customerservice endpoint, which is endpoint-specific to your application, but more importantly, secures the entire /cxf context.

<?xml version="1.0" encoding="UTF-8"?>
<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xmlns:jaxrs="http://cxf.apache.org/blueprint/jaxrs"
           xsi:schemaLocation="
       http://www.osgi.org/xmlns/blueprint/v1.0.0 http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd
       http://cxf.apache.org/blueprint/jaxrs http://cxf.apache.org/schemas/blueprint/jaxrs.xsd">
    <!-- JAXRS Application -->
    <bean id="customerBean" class="org.keycloak.example.rs.CxfCustomerService" />
    <jaxrs:server id="cxfJaxrsServer" address="/customerservice">
        <jaxrs:providers>
            <bean class="com.fasterxml.jackson.jaxrs.json.JacksonJsonProvider" />
        </jaxrs:providers>
        <jaxrs:serviceBeans>
            <ref component-id="customerBean" />
        </jaxrs:serviceBeans>
    </jaxrs:server>
    <!-- Secure the whole /cxf context by unregistering the default CXF servlet from Pax Web and re-registering it with the security constraints applied -->
    <bean id="cxfConstraintMapping" class="org.eclipse.jetty.security.ConstraintMapping">
        <property name="constraint">
            <bean class="org.eclipse.jetty.util.security.Constraint">
                <property name="name" value="cst1"/>
                <property name="roles">
                    <list>
                        <value>user</value>
                    </list>
                </property>
                <property name="authenticate" value="true"/>
                <property name="dataConstraint" value="0"/>
            </bean>
        </property>
        <property name="pathSpec" value="/cxf/*"/>
    </bean>
    <bean id="cxfKeycloakPaxWebIntegration" class="org.keycloak.adapters.osgi.PaxWebIntegrationService"
          init-method="start" destroy-method="stop">
        <property name="bundleContext" ref="blueprintBundleContext" />
        <property name="jettyWebXmlLocation" value="/WEB-INF/jetty-web.xml" />
        <property name="constraintMappings">
            <list>
                <ref component-id="cxfConstraintMapping" />
            </list>
        </property>
    </bean>
    <bean id="defaultCxfReregistration" class="org.keycloak.adapters.osgi.ServletReregistrationService" depends-on="cxfKeycloakPaxWebIntegration"
          init-method="start" destroy-method="stop">
        <property name="bundleContext" ref="blueprintBundleContext" />
        <property name="managedServiceReference">
            <reference interface="org.osgi.service.cm.ManagedService" filter="(service.pid=org.apache.cxf.osgi)" timeout="5000" />
        </property>
    </bean>
</blueprint>

As a result, all other CXF services running on the default CXF HTTP destination are also secured. Similarly, when the application is undeployed, the entire /cxf context becomes unsecured as well. For this reason, using your own Jetty engine for your applications, as described in Securing an Apache CXF Endpoint on a Separate Jetty Engine, gives you more control over security for each individual application.

The WEB-INF directory might need to be inside your project (even if your project is not a web application). You might also need to edit the /WEB-INF/jetty-web.xml and /WEB-INF/keycloak.json files in a similar way as in the Classic WAR application section. Note that you do not need the web.xml file, as the security constraints are declared in the blueprint configuration file.

The Import-Package header in META-INF/MANIFEST.MF must contain these imports:

META-INF.cxf;version="[2.7,3.2)",
META-INF.cxf.osgi;version="[2.7,3.2)";resolution:=optional,
org.apache.cxf.transport.http;version="[2.7,3.2)",
org.apache.cxf.*;version="[2.7,3.2)",
com.fasterxml.jackson.jaxrs.json;version="[2.5,3)",
org.eclipse.jetty.security;version="[9,10)",
org.eclipse.jetty.util.security;version="[9,10)",
org.keycloak.*;version="9.0.17.redhat-00001",
org.keycloak.adapters.jetty;version="9.0.17.redhat-00001",
*;resolution:=optional
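To see why cxfConstraintMapping above affects every service on the default CXF destination, consider how a "/cxf/*" path specification matches request paths. The sketch below is a simplified, hypothetical Python model of servlet-style prefix matching that ignores extension patterns such as "*.jsp"; the /cxf/other-service path stands in for any other CXF service deployed on the same engine.

```python
def matches(path_spec, request_path):
    """Servlet-style URL pattern matching, limited to the pattern kinds used here.

    "/*" matches everything; "/prefix/*" matches "/prefix" and anything below it;
    any other pattern is treated as an exact match.
    """
    if path_spec == "/*":
        return True
    if path_spec.endswith("/*"):
        prefix = path_spec[:-2]
        return request_path == prefix or request_path.startswith(prefix + "/")
    return request_path == path_spec


paths = ["/cxf/customerservice/customers/123", "/cxf/other-service", "/cxf", "/hawtio"]
for p in paths:
    print(p, matches("/cxf/*", p))
```

Every path under /cxf, including services that belong to other applications, falls under the constraint, while paths outside the context (such as /hawtio) do not.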

2.1.4.9. Securing Fuse Administration Services

2.1.4.9.1. Using SSH Authentication to Fuse Terminal

Red Hat Single Sign-On mainly addresses use cases for authentication of web applications; however, if your other web services and applications are protected with Red Hat Single Sign-On, protecting non-web administration services such as SSH with Red Hat Single Sign-On credentials is a best practice. You can do this using the JAAS login module, which allows remote connection to Red Hat Single Sign-On and verifies credentials based on Resource Owner Password Credentials.

To enable SSH authentication, complete the following steps:

In Red Hat Single Sign-On, create a client (for example, ssh-jmx-admin-client) to be used for SSH authentication. This client needs to have Direct Access Grants Enabled set to On.

In the $FUSE_HOME/etc/org.apache.karaf.shell.cfg file, update or specify this property:

sshRealm=keycloak

Add the $FUSE_HOME/etc/keycloak-direct-access.json file with content similar to the following (based on your environment and Red Hat Single Sign-On client settings):

{
    "realm": "demo",
    "resource": "ssh-jmx-admin-client",
    "ssl-required" : "external",
    "auth-server-url" : "http://localhost:8080/auth",
    "credentials": {
        "secret": "password"
    }
}

This file specifies the client application configuration, which is used by JAAS DirectAccessGrantsLoginModule from the keycloak JAAS realm for SSH authentication.

Start Fuse and install the keycloak JAAS realm. The easiest way is to install the keycloak-jaas feature, which has the JAAS realm predefined. You can override the feature’s predefined realm by using your own keycloak JAAS realm with higher ranking. For details see the JBoss Fuse documentation.

Use these commands in the Fuse terminal:

features:addurl mvn:org.keycloak/keycloak-osgi-features/9.0.17.redhat-00001/xml/features
features:install keycloak-jaas

Log in using SSH as the admin user by typing the following in the terminal:

ssh -o PubkeyAuthentication=no -p 8101 admin@localhost

Log in with the password password.

On some newer operating systems, you might also need to add the SSH option -o HostKeyAlgorithms=+ssh-dss, because newer SSH clients do not allow the ssh-dss algorithm by default, while JBoss Fuse 6.3.0 currently uses it by default.

Note that the user needs to have realm role admin to perform all operations or another role to perform a subset of operations (for example, the viewer role that restricts the user to run only read-only Karaf commands). The available roles are configured in $FUSE_HOME/etc/org.apache.karaf.shell.cfg or $FUSE_HOME/etc/system.properties.
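The JAAS login module verifies SSH credentials with the Resource Owner Password Credentials grant, using the settings in keycloak-direct-access.json. The Python sketch below only assembles the kind of token request this grant uses, against the realm's OpenID Connect token endpoint. Sending the client secret in the form body is an assumption of this sketch; the login module itself may send it differently (for example, in an HTTP Basic header).

```python
import json
from urllib.parse import urlencode

# Same values as the keycloak-direct-access.json example above
CONFIG = json.loads("""{
    "realm": "demo",
    "resource": "ssh-jmx-admin-client",
    "ssl-required": "external",
    "auth-server-url": "http://localhost:8080/auth",
    "credentials": {"secret": "password"}
}""")


def password_grant_request(config, username, password):
    """Return (token_endpoint_url, form_body) for a password grant."""
    base = config["auth-server-url"].rstrip("/")
    url = f"{base}/realms/{config['realm']}/protocol/openid-connect/token"
    body = urlencode({
        "grant_type": "password",
        "client_id": config["resource"],
        # Assumption: client secret sent in the form body (client_secret_post)
        "client_secret": config["credentials"]["secret"],
        "username": username,
        "password": password,
    })
    return url, body


url, body = password_grant_request(CONFIG, "admin", "password")
print(url)  # http://localhost:8080/auth/realms/demo/protocol/openid-connect/token
print(body)
```

The body would be POSTed as application/x-www-form-urlencoded; a successful response contains the access token whose realm roles (for example admin or viewer) decide which Karaf commands the user may run.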

2.1.4.9.2. Using JMX Authentication

JMX authentication might be necessary if you want to use jconsole or another external tool to remotely connect to JMX through RMI. Otherwise it might be better to use hawt.io/jolokia, since the jolokia agent is installed in hawt.io by default. For more details see Hawtio Admin Console.

To use JMX authentication, complete the following steps:

In the $FUSE_HOME/etc/org.apache.karaf.management.cfg file, change the jmxRealm property to:

jmxRealm=keycloak

Install the keycloak-jaas feature and configure the $FUSE_HOME/etc/keycloak-direct-access.json file as described in the SSH section above.

In jconsole you can use a URL such as:

service:jmx:rmi://localhost:44444/jndi/rmi://localhost:1099/karaf-root

and credentials: admin/password (based on the user with admin privileges according to your environment).

2.1.4.10. Securing the Hawtio Administration Console

To secure the Hawtio Administration Console with Red Hat Single Sign-On, complete the following steps:

Add these properties to the $FUSE_HOME/etc/system.properties file:

hawtio.keycloakEnabled=true
hawtio.realm=keycloak
hawtio.keycloakClientConfig=file://${karaf.base}/etc/keycloak-hawtio-client.json
hawtio.rolePrincipalClasses=org.keycloak.adapters.jaas.RolePrincipal,org.apache.karaf.jaas.boot.principal.RolePrincipal

Create a client in the Red Hat Single Sign-On administration console in your realm. For example, in the Red Hat Single Sign-On demo realm, create a client hawtio-client, specify public as the Access Type, and specify a redirect URI pointing to Hawtio: http://localhost:8181/hawtio/*. You must also have a corresponding Web Origin configured (in this case, http://localhost:8181).

Create the keycloak-hawtio-client.json file in the $FUSE_HOME/etc directory using content similar to that shown in the example below. Change the realm, resource, and auth-server-url properties according to your Red Hat Single Sign-On environment. The resource property must point to the client created in the previous step. This file is used by the client (Hawtio JavaScript application) side.

{
    "realm" : "demo",
    "resource" : "hawtio-client",
    "auth-server-url" : "http://localhost:8080/auth",
    "ssl-required" : "external",
    "public-client" : true
}

Create the keycloak-hawtio.json file in the $FUSE_HOME/etc directory using content similar to that shown in the example below. Change the realm and auth-server-url properties according to your Red Hat Single Sign-On environment. This file is used by the adapters on the server (JAAS Login module) side.

{
    "realm" : "demo",
    "resource" : "jaas",
    "bearer-only" : true,
    "auth-server-url" : "http://localhost:8080/auth",
    "ssl-required" : "external",
    "use-resource-role-mappings": false,
    "principal-attribute": "preferred_username"
}

Start JBoss Fuse 6.3.0 and install the keycloak feature if you have not already done so. The commands in the Karaf terminal are similar to this example:

features:addurl mvn:org.keycloak/keycloak-osgi-features/9.0.17.redhat-00001/xml/features
features:install keycloak

Go to http://localhost:8181/hawtio and log in as a user from your Red Hat Single Sign-On realm.

Note that the user needs to have the proper realm role to successfully authenticate to Hawtio. The available roles are configured in the $FUSE_HOME/etc/system.properties file in hawtio.roles.
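The two Hawtio configuration files above are expected to refer to the same realm and server URL, one describing a public client and the other a bearer-only resource. A hypothetical Python helper can generate both from shared values so that they cannot drift apart. The values mirror the examples above; writing the output to $FUSE_HOME/etc is left to you.

```python
import json


def hawtio_config_pair(realm, auth_server_url, client_id="hawtio-client"):
    """Return (client_json, server_json) for keycloak-hawtio-client.json and keycloak-hawtio.json."""
    shared = {"realm": realm, "auth-server-url": auth_server_url, "ssl-required": "external"}
    # Client side: the Hawtio JavaScript application, a public client
    client = {**shared, "resource": client_id, "public-client": True}
    # Server side: the JAAS login module, which only validates bearer tokens
    server = {
        **shared,
        "resource": "jaas",
        "bearer-only": True,
        "use-resource-role-mappings": False,
        "principal-attribute": "preferred_username",
    }
    return json.dumps(client, indent=4), json.dumps(server, indent=4)


client_json, server_json = hawtio_config_pair("demo", "http://localhost:8080/auth")
print(client_json)
print(server_json)
```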

2.1.4.10.1. Securing Hawtio on JBoss EAP 6.4

To run Hawtio on the JBoss EAP 6.4 server, complete the following steps:

Set up Red Hat Single Sign-On as described in the previous section, Securing the Hawtio Administration Console. It is assumed that:

- you have a Red Hat Single Sign-On realm demo and client hawtio-client
- your Red Hat Single Sign-On is running on localhost:8080
- the JBoss EAP 6.4 server with deployed Hawtio will be running on localhost:8181. The directory with this server is referred to in the next steps as $EAP_HOME.

Copy the Hawtio web archive, matching your JBoss Fuse 6.3.0 version, to the $EAP_HOME/standalone/deployments directory. For more details about deploying Hawtio see the Fuse Hawtio documentation.

Copy the keycloak-hawtio.json and keycloak-hawtio-client.json files with the above content to the $EAP_HOME/standalone/configuration directory.

Install the Red Hat Single Sign-On adapter subsystem to your JBoss EAP 6.4 server as described in the JBoss adapter documentation.

In the $EAP_HOME/standalone/configuration/standalone.xml file, configure the system properties as in this example:

<extensions>
    ...
</extensions>
<system-properties>
    <property name="hawtio.authenticationEnabled" value="true" />
    <property name="hawtio.realm" value="hawtio" />
    <property name="hawtio.roles" value="admin,viewer" />
    <property name="hawtio.rolePrincipalClasses" value="org.keycloak.adapters.jaas.RolePrincipal" />
    <property name="hawtio.keycloakEnabled" value="true" />
    <property name="hawtio.keycloakClientConfig" value="${jboss.server.config.dir}/keycloak-hawtio-client.json" />
    <property name="hawtio.keycloakServerConfig" value="${jboss.server.config.dir}/keycloak-hawtio.json" />
</system-properties>

Add the Hawtio realm to the same file in the security-domains section:

<security-domain name="hawtio" cache-type="default">
    <authentication>
        <login-module code="org.keycloak.adapters.jaas.BearerTokenLoginModule" flag="required">
            <module-option name="keycloak-config-file" value="${hawtio.keycloakServerConfig}"/>
        </login-module>
    </authentication>
</security-domain>

Add a secure-deployment section for the Hawtio WAR to the adapter subsystem. This ensures that the Hawtio WAR is able to find the JAAS login module classes.

<subsystem xmlns="urn:jboss:domain:keycloak:1.1">
    <secure-deployment name="your-hawtio-webarchive.war" />
</subsystem>

Restart the JBoss EAP 6.4 server with Hawtio:

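cd $EAP_HOME/bin
./standalone.sh -Djboss.socket.binding.port-offset=101

The port offset of 101 moves the default HTTP port from 8080 to 8181, so the server does not clash with Red Hat Single Sign-On running on port 8080.

Access Hawtio at http://localhost:8181/hawtio. It is secured by Red Hat Single Sign-On.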

2.1.5. JBoss Fuse 7 Adapter

Red Hat Single Sign-On supports securing your web applications running inside JBoss Fuse 7.

JBoss Fuse 7 uses the Undertow adapter, which is essentially the same as the JBoss EAP 7 adapter, because JBoss Fuse 7.x bundles the Undertow HTTP engine and uses it to run various kinds of web applications.

The only supported version of Fuse 7 is the latest release. If you use earlier versions of Fuse 7, it is possible that some functions will not work correctly. In particular, integration will not work at all for versions of Fuse 7 lower than 7.0.1.

Security for the following items is supported for Fuse:

- Classic WAR applications deployed on Fuse with Pax Web War Extender

- Servlets deployed on Fuse as OSGI services with Pax Web Whiteboard Extender, and servlets registered through org.osgi.service.http.HttpService#registerServlet(), which is the standard OSGi Enterprise HTTP Service

- Apache Camel Undertow endpoints running with the Camel Undertow component

- Apache CXF endpoints running on their own separate Undertow engine

- Apache CXF endpoints running on the default engine provided by the CXF servlet

- SSH and JMX admin access

- Hawtio administration console

2.1.5.1. Securing Your Web Applications Inside Fuse 7

First, install the Red Hat Single Sign-On Karaf feature. Then perform the steps for the type of application you want to secure. All referenced web applications require injecting the Red Hat Single Sign-On Undertow authentication mechanism into the underlying web server. How you do this depends on the application type, as described below.

2.1.5.2. Installing the Keycloak Feature

You must first install the keycloak-pax-http-undertow and keycloak-jaas features in the JBoss Fuse environment. The keycloak-pax-http-undertow feature includes the Fuse adapter and all third-party dependencies. The keycloak-jaas feature contains the JAAS module used in the realm for SSH and JMX authentication. You can install these features either from the Maven repository or from an archive.

2.1.5.2.1. Installing from the Maven Repository

As a prerequisite, you must be online and have access to the Maven repository.

For Red Hat Single Sign-On you first need to configure a proper Maven repository, so you can install the artifacts. For more information see the JBoss Enterprise Maven repository page.

Assuming the Maven repository is https://maven.repository.redhat.com/ga/, add it to the list of supported repositories in the $FUSE_HOME/etc/org.ops4j.pax.url.mvn.cfg file. For example, run these commands in the Karaf terminal:

config:edit org.ops4j.pax.url.mvn
config:property-append org.ops4j.pax.url.mvn.repositories ,https://maven.repository.redhat.com/ga/@id=redhat.product.repo
config:update
feature:repo-refresh
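The config:property-append command above adds its argument to the end of the existing org.ops4j.pax.url.mvn.repositories value, which is why the argument starts with a comma. The following Python sketch models the resulting comma-separated list and the @id= option of each entry. It is not Karaf code, and unlike a plain append it skips an entry that is already present. It assumes repository URLs contain no @ character (that is, no embedded credentials).

```python
def append_repository(current, url, repo_id):
    """Append '<url>@id=<repo_id>' to a comma-separated repository list unless already present."""
    entry = f"{url}@id={repo_id}"
    entries = [e.strip() for e in current.split(",") if e.strip()]
    if entry not in entries:
        entries.append(entry)
    return ",".join(entries)


def repository_ids(value):
    """Map repository ids to URLs. Assumes URLs contain no '@'."""
    ids = {}
    for entry in value.split(","):
        url, _, options = entry.strip().partition("@")
        for option in options.split("@"):
            if option.startswith("id="):
                ids[option[len("id="):]] = url
    return ids


value = append_repository(
    "http://repo1.maven.org/maven2@id=maven.central.repo",
    "https://maven.repository.redhat.com/ga/",
    "redhat.product.repo",
)
print(value)
print(repository_ids(value))
```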

To install the keycloak feature using the Maven repository, complete the following steps:

- Start JBoss Fuse 7.x, then in the Karaf terminal type:

feature:repo-add mvn:org.keycloak/keycloak-osgi-features/9.0.17.redhat-00001/xml/features
feature:install keycloak-pax-http-undertow keycloak-jaas

You might also need to install the Undertow feature:

feature:install pax-http-undertow

- Ensure that the features were installed:

feature:list | grep keycloak

2.1.5.2.2. Installing from the ZIP bundle

This is useful if you are offline or do not want to use Maven to obtain the JAR files and other artifacts.

To install the Fuse adapter from the ZIP archive, complete the following steps:

- Download the Red Hat Single Sign-On Fuse adapter ZIP archive.

- Unzip it into the root directory of JBoss Fuse. The dependencies are then installed under the system directory. You can overwrite all existing JAR files.

  Use this for JBoss Fuse 7.x:

cd /path-to-fuse/fuse-karaf-7.x
unzip -q /path-to-adapter-zip/rh-sso-7.4.10.GA-fuse-adapter.zip

- Start Fuse and run these commands in the Fuse/Karaf terminal:

feature:repo-add mvn:org.keycloak/keycloak-osgi-features/9.0.17.redhat-00001/xml/features
feature:install keycloak-pax-http-undertow keycloak-jaas

- Install the corresponding Undertow adapter. Since the artifacts are available directly in the JBoss Fuse system directory, you do not need to use the Maven repository.

2.1.5.3. Securing a Classic WAR Application

To secure your WAR application, complete the following steps:

In the /WEB-INF/web.xml file, declare the necessary:

- security constraints in the <security-constraint> element
- login configuration in the <login-config> element. Make sure that the <auth-method> is KEYCLOAK.
- security roles in the <security-role> element

For example:

<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns="http://java.sun.com/xml/ns/javaee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"
         version="3.0">
    <module-name>customer-portal</module-name>
    <welcome-file-list>
        <welcome-file>index.html</welcome-file>
    </welcome-file-list>
    <security-constraint>
        <web-resource-collection>
            <web-resource-name>Customers</web-resource-name>
            <url-pattern>/customers/*</url-pattern>
        </web-resource-collection>
        <auth-constraint>
            <role-name>user</role-name>
        </auth-constraint>
    </security-constraint>
    <login-config>
        <auth-method>KEYCLOAK</auth-method>
        <realm-name>does-not-matter</realm-name>
    </login-config>
    <security-role>
        <role-name>admin</role-name>
    </security-role>
    <security-role>
        <role-name>user</role-name>
    </security-role>
</web-app>

Within the /WEB-INF/ directory of your WAR, create a new file, keycloak.json. The format of this configuration file is described in the Java Adapters Config section. It is also possible to make this file available externally as described in Configuring the External Adapter.

For example:

{
    "realm": "demo",
    "resource": "customer-portal",
    "auth-server-url": "http://localhost:8080/auth",
    "ssl-required": "external",
    "credentials": {
        "secret": "password"
    }
}

- Contrary to the Fuse 6 adapter, there are no special OSGi imports needed in MANIFEST.MF.
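With the keycloak.json example above, the adapter talks to the demo realm hosted under auth-server-url. In RH-SSO 7.4, realm endpoints live under <auth-server-url>/realms/<realm>, and the OpenID Connect discovery document is published at <auth-server-url>/realms/<realm>/.well-known/openid-configuration. Opening that URL is a quick way to confirm that the two values match your server. The following sketch derives both URLs. RealmUrlSketch is a hypothetical helper, not part of the adapter, and it assumes the realm name needs no URL encoding.

```java
// Hypothetical helper (not part of the Keycloak adapter): derive the realm URLs
// implied by the "auth-server-url" and "realm" values in keycloak.json.
// Assumes the realm name contains no characters that need URL encoding.
class RealmUrlSketch {

    // <auth-server-url>/realms/<realm>
    static String realmUrl(String authServerUrl, String realm) {
        // Drop a trailing slash so "http://localhost:8080/auth/" behaves the same.
        String base = authServerUrl.endsWith("/")
                ? authServerUrl.substring(0, authServerUrl.length() - 1)
                : authServerUrl;
        return base + "/realms/" + realm;
    }

    // OpenID Connect discovery document for the realm.
    static String discoveryUrl(String authServerUrl, String realm) {
        return realmUrl(authServerUrl, realm) + "/.well-known/openid-configuration";
    }

    public static void main(String[] args) {
        // Values taken from the keycloak.json example.
        System.out.println(discoveryUrl("http://localhost:8080/auth", "demo"));
    }
}
```

Running main prints http://localhost:8080/auth/realms/demo/.well-known/openid-configuration.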

2.1.5.3.1. Configuration Resolvers

The keycloak.json adapter configuration file can be stored inside a bundle, which is the default behavior, or in a directory on a filesystem. To specify the actual source of the configuration file, set the keycloak.config.resolver deployment parameter to the desired configuration resolver class. For example, in a classic WAR application, set the keycloak.config.resolver context parameter in the web.xml file like this:

<context-param>
    <param-name>keycloak.config.resolver</param-name>
    <param-value>org.keycloak.adapters.osgi.PathBasedKeycloakConfigResolver</param-value>
</context-param>

The following resolvers are available for keycloak.config.resolver:

- org.keycloak.adapters.osgi.BundleBasedKeycloakConfigResolver

  This is the default resolver. The configuration file is expected inside the OSGi bundle that is being secured. By default, it loads the file named WEB-INF/keycloak.json, but this file name can be configured via the configLocation property.

- org.keycloak.adapters.osgi.PathBasedKeycloakConfigResolver

  This resolver searches for a file called <your_web_context>-keycloak.json inside a folder that is specified by the keycloak.config system property. If keycloak.config is not set, the karaf.etc system property is used instead.

  For example, if your web application is deployed into context my-portal, then your adapter configuration would be loaded either from the ${keycloak.config}/my-portal-keycloak.json file, or from ${karaf.etc}/my-portal-keycloak.json.

- org.keycloak.adapters.osgi.HierarchicalPathBasedKeycloakConfigResolver

  This resolver is similar to PathBasedKeycloakConfigResolver above, where for a given URI path, configuration locations are checked from most to least specific.

  For example, for the /my/web-app/context URI, the following configuration locations are searched in order until the first one that exists is found:

  - ${karaf.etc}/my-web-app-context-keycloak.json
  - ${karaf.etc}/my-web-app-keycloak.json
  - ${karaf.etc}/my-keycloak.json
  - ${karaf.etc}/keycloak.json
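The lookup rules described for the two path-based resolvers can be illustrated with a small Java sketch. ConfigLookupSketch and its methods are hypothetical names. The sketch only mirrors the documented rules and is not the adapter's implementation. It also assumes that the hierarchical resolver uses the same configuration directory as PathBasedKeycloakConfigResolver (keycloak.config, falling back to karaf.etc), while the documented example only shows ${karaf.etc}.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of the documented lookup rules; this is NOT the
// Keycloak adapter's source code, and the class and method names are invented.
class ConfigLookupSketch {

    // PathBasedKeycloakConfigResolver rule: use the keycloak.config system
    // property if it is set, otherwise fall back to karaf.etc.
    static String configDir(String keycloakConfig, String karafEtc) {
        return (keycloakConfig != null && !keycloakConfig.isEmpty()) ? keycloakConfig : karafEtc;
    }

    // PathBasedKeycloakConfigResolver: <dir>/<your_web_context>-keycloak.json
    static String pathBasedFile(String dir, String webContext) {
        return dir + "/" + webContext + "-keycloak.json";
    }

    // HierarchicalPathBasedKeycloakConfigResolver: candidates from most to least
    // specific, e.g. /my/web-app/context -> my-web-app-context, my-web-app, my,
    // and finally plain keycloak.json.
    static List<String> hierarchicalCandidates(String dir, String uriPath) {
        List<String> segments = new ArrayList<>();
        for (String s : uriPath.split("/")) {
            if (!s.isEmpty()) {
                segments.add(s); // skip empty parts produced by leading or trailing slashes
            }
        }
        List<String> candidates = new ArrayList<>();
        for (int n = segments.size(); n > 0; n--) {
            String prefix = String.join("-", segments.subList(0, n));
            candidates.add(dir + "/" + prefix + "-keycloak.json");
        }
        candidates.add(dir + "/keycloak.json");
        return candidates;
    }

    public static void main(String[] args) {
        // "/opt/fuse/etc" is a hypothetical fallback used only when karaf.etc is unset.
        String dir = configDir(System.getProperty("keycloak.config"),
                System.getProperty("karaf.etc", "/opt/fuse/etc"));
        System.out.println(pathBasedFile(dir, "my-portal"));
        hierarchicalCandidates(dir, "/my/web-app/context").forEach(System.out::println);
    }
}
```

A real resolver would return the first candidate that exists on disk. The sketch only computes the order in which the candidates are checked.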

2.1.5.4. Securing a Servlet Deployed as an OSGI Service

You can use this method if you have a servlet class inside your OSGi bundle project that is not deployed as a classic WAR application. Fuse uses the Pax Web Whiteboard Extender to deploy such servlets as web applications.

To secure your servlet with Red Hat Single Sign-On, complete the following steps:

- Red Hat Single Sign-On provides org.keycloak.adapters.osgi.undertow.PaxWebIntegrationService, which allows configuring the authentication method and security constraints for your application. You need to declare such services in the OSGI-INF/blueprint/blueprint.xml file inside your application. Note that your servlet needs to depend on it. An example configuration:

<?xml version="1.0" encoding="UTF-8"?>
<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xsi:schemaLocation="http://www.osgi.org/xmlns/blueprint/v1.0.0 http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd">
    <bean id="servletConstraintMapping" class="org.keycloak.adapters.osgi.PaxWebSecurityConstraintMapping">
        <property name="roles">
            <list>
                <value>user</value>
            </list>
        </property>
        <property name="authentication" value="true"/>
        <property name="url" value="/product-portal/*"/>
    </bean>
    <!-- This handles the integration and setting the login-config and security-constraints parameters -->
    <bean id="keycloakPaxWebIntegration" class="org.keycloak.adapters.osgi.undertow.PaxWebIntegrationService"
          init-method="start" destroy-method="stop">
        <property name="bundleContext" ref="blueprintBundleContext" />
        <property name="constraintMappings">
            <list>
                <ref component-id="servletConstraintMapping" />
            </list>
        </property>
    </bean>
    <bean id="productServlet" class="org.keycloak.example.ProductPortalServlet" depends-on="keycloakPaxWebIntegration" />
    <service ref="productServlet" interface="javax.servlet.Servlet">
        <service-properties>
            <entry key="alias" value="/product-portal" />
            <entry key="servlet-name" value="ProductServlet" />
            <entry key="keycloak.config.file" value="/keycloak.json" />
        </service-properties>
    </service>
</blueprint>

- You might need to have the WEB-INF directory inside your project (even if your project is not a web application) and create the /WEB-INF/keycloak.json file as described in the Classic WAR application section. Note that you do not need the web.xml file, because the security constraints are declared in the blueprint configuration file.


- Contrary to the Fuse 6 adapter, there are no special OSGi imports needed in MANIFEST.MF.
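In the blueprint example, the servletConstraintMapping bean requires the user role for /product-portal/*. The effect of that single constraint can be sketched as follows, assuming the url value follows the standard servlet url-pattern rule for path prefixes. Under that rule, /product-portal/* matches /product-portal itself and everything below /product-portal/. ConstraintSketch is a hypothetical class, not Pax Web or Keycloak code, and it treats any pattern that does not end in /* as an exact match.

```java
import java.util.Set;

// Hypothetical sketch (not Pax Web or Keycloak code): how one "/prefix/*"
// constraint with a role list decides access for a request path.
class ConstraintSketch {

    // Servlet-style path-prefix rule: "/product-portal/*" matches
    // "/product-portal" and any path under "/product-portal/".
    static boolean matches(String urlPattern, String path) {
        if (!urlPattern.endsWith("/*")) {
            return urlPattern.equals(path); // simplification: exact match only
        }
        String prefix = urlPattern.substring(0, urlPattern.length() - 2);
        return path.equals(prefix) || path.startsWith(prefix + "/");
    }

    // The user needs at least one required role on paths the constraint covers.
    static boolean isAllowed(String urlPattern, Set<String> requiredRoles,
                             String path, Set<String> userRoles) {
        if (!matches(urlPattern, path)) {
            return true; // this particular constraint does not restrict the path
        }
        return requiredRoles.stream().anyMatch(userRoles::contains);
    }
}
```

For example, a user with only the user role passes for /product-portal/items, while an unauthenticated request (no roles) is rejected for the same path.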

2.1.5.5. Securing an Apache Camel Application

You can secure Apache Camel endpoints implemented with the camel-undertow component by injecting the proper security constraints via blueprint and switching the component in use to undertow-keycloak. Add the OSGI-INF/blueprint/blueprint.xml file to your Camel application with a configuration similar to the following. The roles, security constraint mappings, and adapter configuration might differ slightly depending on your environment and needs.

Compared to the standard undertow component, the undertow-keycloak component adds two new properties:

- configResolver is a resolver bean that supplies the Red Hat Single Sign-On adapter configuration. Available resolvers are listed in the Configuration Resolvers section.

- allowedRoles is a comma-separated list of roles. A user accessing the service must have at least one of these roles to be permitted access.
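The allowedRoles rule described above (the user needs at least one of the listed roles) can be sketched in plain Java. AllowedRolesSketch and its methods are hypothetical. This is not the undertow-keycloak implementation, and it does not model how the component behaves when allowedRoles is omitted.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical sketch of the documented allowedRoles semantics; not the
// undertow-keycloak source. Behavior for an omitted allowedRoles is not modeled.
class AllowedRolesSketch {

    // Split a value such as "admin,superadmin" into trimmed, non-empty role names.
    static Set<String> parseAllowedRoles(String allowedRoles) {
        return Arrays.stream(allowedRoles.split(","))
                .map(String::trim)
                .filter(r -> !r.isEmpty())
                .collect(Collectors.toSet());
    }

    // Access is granted when the user holds at least one allowed role.
    static boolean isPermitted(String allowedRoles, Set<String> userRoles) {
        return parseAllowedRoles(allowedRoles).stream().anyMatch(userRoles::contains);
    }
}
```

With allowedRoles=admin,superadmin, a user holding the roles user and admin is permitted, while a user holding only user is not.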

For example:

<?xml version="1.0" encoding="UTF-8"?>
<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xmlns:camel="http://camel.apache.org/schema/blueprint"
           xsi:schemaLocation="
       http://www.osgi.org/xmlns/blueprint/v1.0.0 http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd
       http://camel.apache.org/schema/blueprint http://camel.apache.org/schema/blueprint/camel-blueprint-2.17.1.xsd">
    <bean id="keycloakConfigResolver" class="org.keycloak.adapters.osgi.BundleBasedKeycloakConfigResolver" >
        <property name="bundleContext" ref="blueprintBundleContext" />
    </bean>
    <bean id="helloProcessor" class="org.keycloak.example.CamelHelloProcessor" />
    <camelContext id="blueprintContext"
                  trace="false"
                  xmlns="http://camel.apache.org/schema/blueprint">
        <route id="httpBridge">
            <!-- Inside an XML attribute, each & between URI query parameters must be written as &amp; -->
            <from uri="undertow-keycloak:http://0.0.0.0:8383/admin-camel-endpoint?matchOnUriPrefix=true&amp;configResolver=#keycloakConfigResolver&amp;allowedRoles=admin" />
            <process ref="helloProcessor" />
            <log message="The message from camel endpoint contains ${body}"/>
        </route>
    </camelContext>
</blueprint>
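Because the endpoint URI sits inside an XML attribute, every & that separates its query parameters has to be escaped as &amp;. The XML parser turns it back into & before the URI is used, and a raw & makes the blueprint file malformed. The following sketch demonstrates this with the JDK's built-in DOM parser only. EndpointUriEscapingSketch and readUriAttribute are hypothetical names and are not part of Camel or Keycloak.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.xml.sax.SAXException;
import org.xml.sax.helpers.DefaultHandler;

// Hypothetical helper (not Camel or Keycloak code): parse a one-element XML
// snippet and return its "uri" attribute as it looks after XML decoding.
class EndpointUriEscapingSketch {

    static String readUriAttribute(String xml) {
        try {
            DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
            // DefaultHandler rethrows fatal errors without printing them to stderr.
            builder.setErrorHandler(new DefaultHandler());
            Document doc = builder.parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            return doc.getDocumentElement().getAttribute("uri");
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("Not well-formed XML: " + e.getMessage(), e);
        }
    }

    public static void main(String[] args) {
        String escaped = "<from uri=\"undertow-keycloak:http://0.0.0.0:8383/admin-camel-endpoint"
                + "?matchOnUriPrefix=true&amp;allowedRoles=admin\"/>";
        // Prints the URI with a plain & between the parameters.
        System.out.println(readUriAttribute(escaped));
        try {
            readUriAttribute("<from uri=\"x?a=1&b=2\"/>"); // raw & is not well-formed XML
        } catch (IllegalArgumentException e) {
            System.out.println("raw & rejected");
        }
    }
}
```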

The Import-Package header in META-INF/MANIFEST.MF needs to contain these imports:

javax.servlet;version="[3,4)",
javax.servlet.http;version="[3,4)",
javax.net.ssl,
org.apache.camel.*,
org.apache.camel;version="[2.13,3)",
io.undertow.*,
org.keycloak.*;version="9.0.17.redhat-00001",
org.osgi.service.blueprint,
org.osgi.service.blueprint.container
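The version attributes in this list use OSGi version range syntax. For example, [3,4) accepts any 3.x version, with the square bracket inclusive and the parenthesis exclusive. A bare version such as 9.0.17.redhat-00001 is a lower bound with no upper limit. The following Java sketch illustrates these rules. VersionRangeSketch is hypothetical and compares only the major, minor, and micro parts, ignoring qualifiers, whereas a real OSGi framework also orders qualifiers.

```java
// Hypothetical sketch of OSGi Import-Package version matching:
// "[a,b)" means a <= v < b ("[" and "]" inclusive, "(" and ")" exclusive), and a
// bare version such as "9.0.17.redhat-00001" is a minimum with no upper bound.
// Simplification: only major.minor.micro are compared; qualifiers are ignored.
class VersionRangeSketch {

    // "2.13" -> {2, 13, 0}; "9.0.17.redhat-00001" -> {9, 0, 17}
    static int[] parse(String version) {
        String[] parts = version.trim().split("\\.");
        int[] out = new int[3];
        for (int i = 0; i < 3 && i < parts.length; i++) {
            out[i] = Integer.parseInt(parts[i]);
        }
        return out;
    }

    // Lexicographic comparison of {major, minor, micro}.
    static int compare(int[] a, int[] b) {
        for (int i = 0; i < 3; i++) {
            if (a[i] != b[i]) {
                return Integer.compare(a[i], b[i]);
            }
        }
        return 0;
    }

    static boolean includes(String range, String version) {
        String r = range.trim();
        int[] v = parse(version);
        char open = r.charAt(0);
        if (open != '[' && open != '(') {
            return compare(v, parse(r)) >= 0; // bare version: lower bound only
        }
        char close = r.charAt(r.length() - 1);
        String[] bounds = r.substring(1, r.length() - 1).split(",");
        int low = compare(v, parse(bounds[0]));
        int high = compare(v, parse(bounds[1]));
        boolean aboveLow = (open == '[') ? low >= 0 : low > 0;
        boolean belowHigh = (close == ']') ? high <= 0 : high < 0;
        return aboveLow && belowHigh;
    }

    public static void main(String[] args) {
        System.out.println(includes("[3,4)", "3.1.0"));     // servlet API 3.1 fits
        System.out.println(includes("[3,4)", "4.0.0"));     // upper bound is exclusive
        System.out.println(includes("[2.13,3)", "2.21.0")); // a Camel 2.x version fits
    }
}
```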

2.1.5.6. Camel RestDSL

Camel RestDSL is a Camel feature used to define your REST endpoints in a fluent way. However, you must still use specific implementation classes and provide instructions on how to integrate with Red Hat Single Sign-On.

The way to configure the integration mechanism depends on the Camel component for which you configure your RestDSL-defined routes.

The following example shows how to configure integration using the undertow-keycloak component, with references to some of the beans defined in the previous Blueprint example.

<camelContext id="blueprintContext"
              trace="false"
              xmlns="http://camel.apache.org/schema/blueprint">
    <!--the link with Keycloak security handlers happens by using undertow-keycloak component -->
    <restConfiguration apiComponent="undertow-keycloak" contextPath="/restdsl" port="8484">
        <endpointProperty key="configResolver" value="#keycloakConfigResolver" />
        <endpointProperty key="allowedRoles" value="admin,superadmin" />
    </restConfiguration>
    <rest path="/hello" >
        <description>Hello rest service</description>
        <get uri="/{id}" outType="java.lang.String">
            <description>Just a hello</description>
            <to uri="direct:justDirect" />
        </get>
    </rest>
    <route id="justDirect">
        <from uri="direct:justDirect"/>
        <process ref="helloProcessor" />
        <log message="RestDSL correctly invoked ${body}"/>
        <setBody>
            <constant>(__This second sentence is returned from a Camel RestDSL endpoint__)</constant>
        </setBody>
    </route>
</camelContext>

2.1.5.7. Securing an Apache CXF Endpoint on a Separate Undertow Engine

To run your CXF endpoints secured by Red Hat Single Sign-On on a separate Undertow engine, complete the following steps:

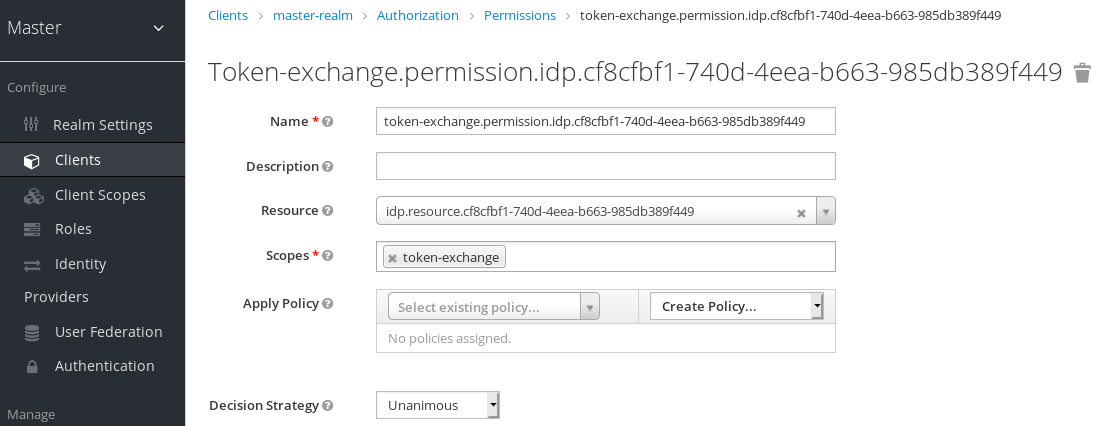

Add