Chapter 3. Configuring IPoIB

By default, InfiniBand does not use the internet protocol (IP) for communication. However, IP over InfiniBand (IPoIB) provides an IP network emulation layer on top of InfiniBand remote direct memory access (RDMA) networks. This allows existing, unmodified applications to transmit data over InfiniBand networks, but the performance is lower than if the application used RDMA natively.

On RHEL 8 and later, Mellanox devices from ConnectX-4 onward use Enhanced IPoIB mode by default (datagram only). Connected mode is not supported on these devices.

3.1. The IPoIB communication modes

An IPoIB device is configurable in either Datagram or Connected mode. The difference is the type of queue pair the IPoIB layer attempts to open with the machine at the other end of the communication:

- In the Datagram mode, the system opens an unreliable, disconnected queue pair. This mode does not support packets larger than the Maximum Transmission Unit (MTU) of the InfiniBand link layer. During transmission of data, the IPoIB layer adds a 4-byte IPoIB header on top of the IP packet. As a result, the IPoIB MTU is 4 bytes less than the InfiniBand link-layer MTU. As 2048 is a common InfiniBand link-layer MTU, the common IPoIB device MTU in Datagram mode is 2044.

- In the Connected mode, the system opens a reliable, connected queue pair. This mode allows messages larger than the InfiniBand link-layer MTU, because the host adapter handles packet segmentation and reassembly. As a result, messages sent from InfiniBand adapters in Connected mode have no size limits. However, IP packets are still limited by the size of the data field and the TCP/IP header fields. For this reason, the IPoIB MTU in Connected mode is 65520 bytes.

The Connected mode has higher performance but consumes more kernel memory.
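The MTU rules above reduce to simple arithmetic. The following POSIX shell function is a minimal sketch of that calculation; the name `ipoib_mtu` is hypothetical and is not part of any RHEL tool. It accepts only the link-layer MTU values defined by the InfiniBand specification (256, 512, 1024, 2048, and 4096 bytes).

```shell
#!/bin/sh
# ipoib_mtu MODE LINK_MTU
# Hypothetical helper: prints the IPoIB device MTU for a given transport mode
# ("datagram" or "connected") and InfiniBand link-layer MTU.
ipoib_mtu() {
    mode=$1
    link_mtu=$2
    case $link_mtu in
        256|512|1024|2048|4096) ;;   # InfiniBand link-layer MTU values
        *) echo "unsupported link-layer MTU: $link_mtu" >&2; return 1 ;;
    esac
    case $mode in
        datagram)
            # The 4-byte IPoIB header must fit into one link-layer packet
            echo $((link_mtu - 4)) ;;
        connected)
            # The adapter segments messages; the IPoIB MTU is fixed at 65520
            echo 65520 ;;
        *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
}

ipoib_mtu datagram 2048    # prints 2044
ipoib_mtu connected 2048   # prints 65520
```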

Even if a system is configured to use Connected mode, it still sends multicast traffic in Datagram mode, because InfiniBand switches and fabric cannot pass multicast traffic in Connected mode. Also, when the remote host is not configured to use Connected mode, the system falls back to Datagram mode.

If you run an application that sends multicast data up to the MTU of the interface, configure the interface in Datagram mode, or configure the application to cap the send size so that each packet fits into a datagram-sized packet.
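To cap the send size, an application needs the largest payload that still fits into a single Datagram-mode packet. The following is a minimal sketch, assuming IPv4 without header options (20 bytes) and UDP (8 bytes); `max_udp_payload` is a hypothetical helper name. For IPv6, the fixed header is 40 bytes instead of 20.

```shell
#!/bin/sh
# max_udp_payload IPOIB_MTU
# Hypothetical helper: largest UDP payload (in bytes) that fits into one IPv4
# packet on an interface with the given MTU, so the packet is not fragmented.
max_udp_payload() {
    mtu=$1
    ipv4_header=20   # assumption: IPv4 header without options
    udp_header=8
    echo $((mtu - ipv4_header - udp_header))
}

max_udp_payload 2044   # prints 2016 for the common Datagram-mode IPoIB MTU
```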

3.2. Understanding IPoIB hardware addresses

IPoIB devices have a 20-byte hardware address that consists of the following parts:

- The first 4 bytes are flags and queue pair numbers.

- The next 8 bytes are the subnet prefix. The default subnet prefix is 0xfe:80:00:00:00:00:00:00. After the device connects to the subnet manager, the device changes this prefix to match the configured subnet manager.

- The last 8 bytes are the Globally Unique Identifier (GUID) of the InfiniBand port that attaches to the IPoIB device.

As the first 12 bytes can change, do not use them in the udev device manager rules.
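The address layout above can be illustrated by splitting an address into its three parts. This is a minimal sketch; `ipoib_addr_fields` is a hypothetical helper, and the sample address is the one shown by `ip link show ib0` in the renaming procedure. Only the `guid` part is stable enough to use in udev rules.

```shell
#!/bin/sh
# ipoib_addr_fields ADDRESS
# Hypothetical helper: splits a 20-byte, colon-separated IPoIB hardware address
# into the flags/QPN, subnet prefix, and port GUID parts.
ipoib_addr_fields() {
    addr=$1
    # A valid address has exactly 20 colon-separated bytes
    n=$(printf '%s\n' "$addr" | awk -F: '{ print NF }')
    if [ "$n" -ne 20 ]; then
        echo "expected 20 bytes, got $n" >&2
        return 1
    fi
    printf 'flags_qpn=%s\n' "$(printf '%s\n' "$addr" | cut -d: -f1-4)"
    printf 'subnet_prefix=%s\n' "$(printf '%s\n' "$addr" | cut -d: -f5-12)"
    printf 'guid=%s\n' "$(printf '%s\n' "$addr" | cut -d: -f13-20)"
}

ipoib_addr_fields 80:00:02:00:fe:80:00:00:00:00:00:00:00:02:c9:03:00:31:78:f2
# flags_qpn=80:00:02:00
# subnet_prefix=fe:80:00:00:00:00:00:00
# guid=00:02:c9:03:00:31:78:f2
```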

3.3. Renaming IPoIB devices

By default, the kernel names Internet Protocol over InfiniBand (IPoIB) devices ib0, ib1, and so on. To avoid conflicts, Red Hat recommends creating a rule in the udev device manager to create persistent and meaningful names such as mlx4_ib0.

Prerequisites

- You have installed an InfiniBand device.

Procedure

Display the hardware address of the device ib0:

# ip link show ib0
8: ib0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65520 qdisc pfifo_fast state UP mode DEFAULT qlen 256
    link/infiniband 80:00:02:00:fe:80:00:00:00:00:00:00:00:02:c9:03:00:31:78:f2 brd 00:ff:ff:ff:ff:12:40:1b:ff:ff:00:00:00:00:00:00:ff:ff:ff:ff

The last eight bytes of the address are required to create a udev rule in the next step.

To configure a rule that renames the device with the 00:02:c9:03:00:31:78:f2 hardware address to mlx4_ib0, edit the /etc/udev/rules.d/70-persistent-ipoib.rules file and add an ACTION rule:

ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="32", ATTR{address}=="?*00:02:c9:03:00:31:78:f2", NAME="mlx4_ib0"

Reboot the host:

# reboot
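If you rename several devices, you can generate the rule line instead of typing the GUID by hand. The following is a minimal sketch; `ipoib_udev_rule` is a hypothetical helper that prints the same rule format as the procedure above, taking the full hardware address (for example, from `ip link show` or `/sys/class/net/<device>/address`) and the new device name.

```shell
#!/bin/sh
# ipoib_udev_rule ADDRESS NAME
# Hypothetical helper: prints a udev rule that renames the IPoIB device whose
# hardware address ends with the port GUID (the last 8 bytes of ADDRESS).
ipoib_udev_rule() {
    addr=$1
    name=$2
    if [ "$(printf '%s\n' "$addr" | awk -F: '{ print NF }')" -ne 20 ]; then
        echo "expected a 20-byte IPoIB hardware address: $addr" >&2
        return 1
    fi
    guid=$(printf '%s\n' "$addr" | cut -d: -f13-20)
    # "?*" matches the first 12 bytes, which can change
    printf 'ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="32", ATTR{address}=="?*%s", NAME="%s"\n' "$guid" "$name"
}

# Usage (as root), appending to the rules file from the procedure:
# ipoib_udev_rule 80:00:02:00:fe:80:00:00:00:00:00:00:00:02:c9:03:00:31:78:f2 mlx4_ib0 \
#     >> /etc/udev/rules.d/70-persistent-ipoib.rules
```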

Additional resources

- udev(7) man page on your system

- Understanding IPoIB hardware addresses

3.4. Configuring an IPoIB connection by using nmcli commands

The nmcli command-line utility controls NetworkManager and reports the network status.

Prerequisites

- An InfiniBand device is installed on the server

- The corresponding kernel module is loaded

Procedure

Create the InfiniBand connection to use the mlx4_ib0 interface in the Connected transport mode and the maximum MTU of 65520 bytes:

# nmcli connection add type infiniband con-name mlx4_ib0 ifname mlx4_ib0 transport-mode Connected mtu 65520

Set a P_Key, for example:

# nmcli connection modify mlx4_ib0 infiniband.p-key 0x8002

Configure the IPv4 settings:

To use DHCP, enter:

# nmcli connection modify mlx4_ib0 ipv4.method auto

Skip this step if ipv4.method is already set to auto (default).

To set a static IPv4 address, network mask, default gateway, DNS servers, and search domain, enter:

# nmcli connection modify mlx4_ib0 ipv4.method manual ipv4.addresses 192.0.2.1/24 ipv4.gateway 192.0.2.254 ipv4.dns 192.0.2.200 ipv4.dns-search example.com

Configure the IPv6 settings:

To use stateless address autoconfiguration (SLAAC), enter:

# nmcli connection modify mlx4_ib0 ipv6.method auto

Skip this step if ipv6.method is already set to auto (default).

To set a static IPv6 address, network mask, default gateway, DNS servers, and search domain, enter:

# nmcli connection modify mlx4_ib0 ipv6.method manual ipv6.addresses 2001:db8:1::1/64 ipv6.gateway 2001:db8:1::fffe ipv6.dns 2001:db8:1::ffbb ipv6.dns-search example.com

To customize other settings in the profile, use the following command:

# nmcli connection modify mlx4_ib0 <setting> <value>

Enclose values that contain spaces or semicolons in quotes.

Activate the profile:

# nmcli connection up mlx4_ib0
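A P_Key such as 0x8002 in the procedure above is a 16-bit value: the high bit (0x8000) marks full membership in the partition, and the low 15 bits are the partition number. The following is a minimal sketch that decodes a P_Key; `pkey_info` is a hypothetical helper. It also prints the `<parent>.<P_Key>` interface name, assuming the naming convention that the Ansible example later in this chapter shows (mlx4_ib0.8002); the actual name depends on your profile.

```shell
#!/bin/sh
# pkey_info PARENT PKEY
# Hypothetical helper: decodes a 16-bit InfiniBand partition key given in hex
# (for example 0x8002) and prints the assumed child interface name.
pkey_info() {
    parent=$1
    case $2 in
        0x*) hex=${2#0x} ;;
        *) echo "P_Key must be given in hex, for example 0x8002" >&2; return 1 ;;
    esac
    case $hex in
        ''|*[!0-9a-fA-F]*) echo "invalid P_Key: $2" >&2; return 1 ;;
    esac
    pkey=$((0x$hex))
    # 0x0000 and 0x8000 are not usable partition keys
    if [ "$pkey" -le 0 ] || [ "$pkey" -gt 65535 ] || [ "$pkey" -eq 32768 ]; then
        echo "invalid P_Key: $2" >&2
        return 1
    fi
    partition=$((pkey & 0x7fff))   # low 15 bits: partition number
    if [ $((pkey & 0x8000)) -ne 0 ]; then
        membership=full            # high bit set: full membership
    else
        membership=limited
    fi
    printf 'partition=0x%04x membership=%s interface=%s.%04x\n' \
        "$partition" "$membership" "$parent" "$pkey"
}

pkey_info mlx4_ib0 0x8002
# prints: partition=0x0002 membership=full interface=mlx4_ib0.8002
```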

Verification

Use the ping utility to send ICMP packets to the remote host's InfiniBand adapter, for example:

# ping -c5 192.0.2.2
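Besides ping, you can check which transport mode and MTU the kernel actually applied to the interface. The mode attribute under /sys/class/net/<device>/ is the same one that the Ansible verification in section 3.5 reads, and mtu is a standard network device attribute. This is also a quick way to confirm the mode on ConnectX-4 and later devices, where Connected mode is not supported. The helper name `ipoib_status` and its optional directory argument (used only for testing) are hypothetical.

```shell
#!/bin/sh
# ipoib_status DEVICE [SYSFS_NET_DIR]
# Hypothetical helper: prints the transport mode and MTU that the kernel
# reports for an IPoIB device. SYSFS_NET_DIR defaults to /sys/class/net.
ipoib_status() {
    dev=$1
    sysfs=${2:-/sys/class/net}
    if [ ! -r "$sysfs/$dev/mode" ] || [ ! -r "$sysfs/$dev/mtu" ]; then
        echo "$dev: no IPoIB mode/mtu attributes under $sysfs" >&2
        return 1
    fi
    printf '%s: mode=%s mtu=%s\n' "$dev" "$(cat "$sysfs/$dev/mode")" "$(cat "$sysfs/$dev/mtu")"
}

# On a host configured with the nmcli procedure above:
# ipoib_status mlx4_ib0
```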

3.5. Configuring an IPoIB connection by using the network RHEL system role

You can use IP over InfiniBand (IPoIB) to send IP packets over an InfiniBand interface. To configure IPoIB, create a NetworkManager connection profile. By using Ansible and the network system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

You can use the network RHEL system role to configure IPoIB and, if a connection profile for the parent InfiniBand device does not exist, the role can create it as well.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

- An InfiniBand device named mlx4_ib0 is installed in the managed nodes.

- The managed nodes use NetworkManager to configure the network.

Procedure

Create a playbook file, for example ~/playbook.yml, with the following content:

---
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: IPoIB connection profile with static IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          # InfiniBand connection mlx4_ib0
          - name: mlx4_ib0
            interface_name: mlx4_ib0
            type: infiniband

          # IPoIB device mlx4_ib0.8002 on top of mlx4_ib0
          - name: mlx4_ib0.8002
            type: infiniband
            autoconnect: yes
            infiniband:
              p_key: 0x8002
              transport_mode: datagram
            parent: mlx4_ib0
            ip:
              address:
                - 192.0.2.1/24
                - 2001:db8:1::1/64
            state: up

The settings specified in the example playbook include the following:

type: <profile_type> - Sets the type of the profile to create. The example playbook creates two connection profiles: one for the InfiniBand connection and one for the IPoIB device.

parent: <parent_device> - Sets the parent device of the IPoIB connection profile.

p_key: <value> - Sets the InfiniBand partition key. If you set this variable, do not set interface_name on the IPoIB device.

transport_mode: <mode> - Sets the IPoIB connection operation mode. You can set this variable to datagram (default) or connected.

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Display the IP settings of the mlx4_ib0.8002 device:

# ansible managed-node-01.example.com -m command -a 'ip address show mlx4_ib0.8002'
managed-node-01.example.com | CHANGED | rc=0 >>
...
inet 192.0.2.1/24 brd 192.0.2.255 scope global noprefixroute ib0.8002
   valid_lft forever preferred_lft forever
inet6 2001:db8:1::1/64 scope link tentative noprefixroute
   valid_lft forever preferred_lft forever

Display the partition key (P_Key) of the mlx4_ib0.8002 device:

# ansible managed-node-01.example.com -m command -a 'cat /sys/class/net/mlx4_ib0.8002/pkey'
managed-node-01.example.com | CHANGED | rc=0 >>
0x8002

Display the mode of the mlx4_ib0.8002 device:

# ansible managed-node-01.example.com -m command -a 'cat /sys/class/net/mlx4_ib0.8002/mode'
managed-node-01.example.com | CHANGED | rc=0 >>
datagram
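The two sysfs checks above can also be combined into a single pass/fail test that runs on the managed node, for example in a monitoring script. The following is a minimal sketch; `ipoib_check` and its optional directory argument (used only for testing) are hypothetical. The expected P_Key must use the same form that sysfs prints, such as 0x8002.

```shell
#!/bin/sh
# ipoib_check DEVICE EXPECTED_PKEY EXPECTED_MODE [SYSFS_NET_DIR]
# Hypothetical helper: returns 0 only if the device's P_Key and transport mode
# match the expected values; reports each mismatch on stderr.
ipoib_check() {
    dev=$1
    want_pkey=$2
    want_mode=$3
    sysfs=${4:-/sys/class/net}
    rc=0
    have_pkey=$(cat "$sysfs/$dev/pkey" 2>/dev/null) || { echo "$dev: cannot read pkey" >&2; return 1; }
    have_mode=$(cat "$sysfs/$dev/mode" 2>/dev/null) || { echo "$dev: cannot read mode" >&2; return 1; }
    if [ "$have_pkey" != "$want_pkey" ]; then
        echo "$dev: P_Key is $have_pkey, expected $want_pkey" >&2
        rc=1
    fi
    if [ "$have_mode" != "$want_mode" ]; then
        echo "$dev: mode is $have_mode, expected $want_mode" >&2
        rc=1
    fi
    return $rc
}

# Values from the example playbook:
# ipoib_check mlx4_ib0.8002 0x8002 datagram && echo "IPoIB settings OK"
```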

Additional resources

- /usr/share/ansible/roles/rhel-system-roles.network/README.md file

- /usr/share/doc/rhel-system-roles/network/ directory

3.6. Configuring an IPoIB connection by using nm-connection-editor

The nm-connection-editor application configures and manages network connections stored by NetworkManager by using a graphical user interface.

Prerequisites

- An InfiniBand device is installed on the server.

- The corresponding kernel module is loaded.

- The nm-connection-editor package is installed.

Procedure

Enter the command:

$ nm-connection-editor

- Click the + button to add a new connection.

- Select the InfiniBand connection type and click Create.

- On the InfiniBand tab:

  - Change the connection name if you want to.

  - Select the transport mode.

  - Select the device.

  - Set an MTU if needed.

-

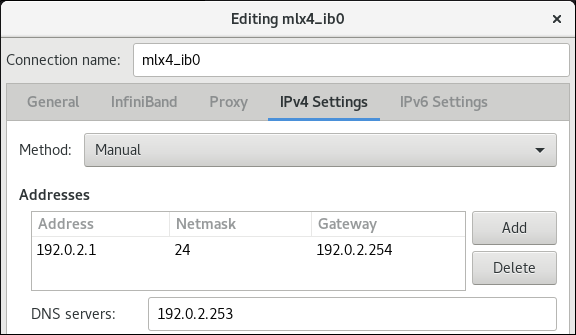

- On the IPv4 Settings tab, configure the IPv4 settings. For example, set a static IPv4 address, network mask, default gateway, and DNS server.

- On the IPv6 Settings tab, configure the IPv6 settings. For example, set a static IPv6 address, network mask, default gateway, and DNS server.

- Click Save to save the InfiniBand connection.

- Close nm-connection-editor.

You can also set a P_Key. Because this setting is not available in nm-connection-editor, you must set this parameter on the command line. For example, to set 0x8002 as the P_Key of the mlx4_ib0 connection:

# nmcli connection modify mlx4_ib0 infiniband.p-key 0x8002

3.7. Testing an RDMA network by using qperf after IPoIB is configured

The qperf utility measures RDMA and IP performance between two nodes in terms of bandwidth, latency, and CPU utilization.

Prerequisites

- You have installed the qperf package on both hosts.

- IPoIB is configured on both hosts.

Procedure

Start qperf on one of the hosts without any options to act as a server:

# qperf

Use the following commands on the client. The commands use port 1 of the mlx4_0 host channel adapter in the client to connect to IP address 192.0.2.1 assigned to the InfiniBand adapter in the server.

Display the configuration of the host channel adapter:

# qperf -v -i mlx4_0:1 192.0.2.1 conf
conf:
    loc_node   =  rdma-dev-01.lab.bos.redhat.com
    loc_cpu    =  12 Cores: Mixed CPUs
    loc_os     =  Linux 4.18.0-187.el8.x86_64
    loc_qperf  =  0.4.11
    rem_node   =  rdma-dev-00.lab.bos.redhat.com
    rem_cpu    =  12 Cores: Mixed CPUs
    rem_os     =  Linux 4.18.0-187.el8.x86_64
    rem_qperf  =  0.4.11

Display the Reliable Connection (RC) streaming two-way bandwidth:

# qperf -v -i mlx4_0:1 192.0.2.1 rc_bi_bw
rc_bi_bw:
    bw             =  10.7 GB/sec
    msg_rate       =  163 K/sec
    loc_id         =  mlx4_0
    rem_id         =  mlx4_0:1
    loc_cpus_used  =  65 % cpus
    rem_cpus_used  =  62 % cpus

Display the RC streaming one-way bandwidth:

# qperf -v -i mlx4_0:1 192.0.2.1 rc_bw
rc_bw:
    bw              =  6.19 GB/sec
    msg_rate        =  94.4 K/sec
    loc_id          =  mlx4_0
    rem_id          =  mlx4_0:1
    send_cost       =  63.5 ms/GB
    recv_cost       =  63 ms/GB
    send_cpus_used  =  39.5 % cpus
    recv_cpus_used  =  39 % cpus
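When you run these tests repeatedly, for example to compare hosts, it can help to extract single values from the verbose output. The following is a minimal sketch, assuming the `name = value unit` layout with one field per line shown above; `qperf_field` is a hypothetical helper, not a qperf option. It prints the value together with its unit, because qperf can report bandwidth in different units.

```shell
#!/bin/sh
# qperf_field FIELD
# Hypothetical helper: reads verbose qperf output on stdin and prints the value
# and unit of one field, for example "bw" or "msg_rate". Returns 1 if the field
# is not present.
qperf_field() {
    awk -v f="$1" '
        $1 == f && $2 == "=" {
            v = $3
            for (i = 4; i <= NF; i++) v = v " " $i   # keep the unit, if any
            print v
            found = 1
        }
        END { exit !found }'
}

# Usage on the client:
# qperf -v -i mlx4_0:1 192.0.2.1 rc_bw | qperf_field bw
```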

Additional resources

- qperf(1) man page on your system