
Chapter 4. Mounting NFS shares

As a system administrator, you can mount remote NFS shares on your system to access shared data.

4.1. Services required on an NFS client

Red Hat Enterprise Linux uses a combination of kernel modules and user-space processes to provide access to NFS file shares. The nfs-utils package provides the program files for user-space processes. Install the nfs-utils package to enable NFS client functionality. Principal services used by the NFS client include the following:

| Service name | NFS version | Description |
|---|---|---|
| | 4 | A program that services upcalls from the NFSv4 client mapping between NFSv4 names (strings in the form of […] |
| | 3 | A daemon that implements the Network Status Monitor protocol. The two main functions of […] Use the […] |
| | 3 | A […] |
| | 3 | A helper program that sends reboot notifications to remote peers that were monitored by […] |
| | 3 | A […] |
| | 3, 4 | A daemon that acts on behalf of the kernel to establish a Generic Security Services (GSS) context with a remote peer (typically initiated from the NFS client to the NFS server, but also initiated from the NFS server to the NFS client in the case of NFSv4 callbacks). This process is necessary for securing NFS using Kerberos V5. The […] |
| | 3, 4 | A […] |

4.2. Preparing an NFSv3 client to run behind a firewall

An NFS server notifies clients about file locks and the server status. To establish a connection back to the client, you must open the relevant ports in the firewall on the client.

Procedure

- By default, NFSv3 RPC services use random ports. To enable a firewall configuration, configure fixed port numbers in the /etc/nfs.conf file:

  - In the [lockd] section, set a fixed port number for the nlockmgr RPC service, for example:

        port=5555

    With this setting, the service automatically uses this port number for both the UDP and TCP protocol.

  - In the [statd] section, set a fixed port number for the rpc.statd service, for example:

        port=6666

    With this setting, the service automatically uses this port number for both the UDP and TCP protocol.

- Open the relevant ports in firewalld:

      # firewall-cmd --permanent --add-service=rpc-bind
      # firewall-cmd --permanent --add-port={5555/tcp,5555/udp,6666/tcp,6666/udp}
      # firewall-cmd --reload

- Restart the rpc-statd service:

      # systemctl restart rpc-statd nfs-server
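Before opening firewall ports, it can help to confirm which fixed ports are actually configured. The following is a minimal sketch, assuming an INI-style file with `[section]` headers and `port=` lines as described above; `get_nfs_port` is a hypothetical helper name and is not part of nfs-utils.

```shell
# Print the value of "port" from one section of an nfs.conf-style file.
# get_nfs_port is a hypothetical helper for illustration only.
# Usage: get_nfs_port <section> [<file>]   (file defaults to /etc/nfs.conf)
get_nfs_port() {
    section="$1"
    file="${2:-/etc/nfs.conf}"
    awk -v want="[$section]" '
        # A section header: remember whether it is the one we want.
        /^[[:space:]]*\[/ {
            gsub(/[[:space:]]/, "")
            in_section = ($0 == want)
            next
        }
        # An uncommented port line inside the wanted section.
        in_section && /^[[:space:]]*port[[:space:]]*=/ {
            sub(/^[^=]*=[[:space:]]*/, "")
            sub(/[[:space:]]+$/, "")
            print
            exit
        }
    ' "$file"
}
```

For example, `get_nfs_port lockd` and `get_nfs_port statd` print the configured ports, or nothing if no fixed port is set in that section.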

4.3. Preparing an NFSv4 client to run behind a firewall

An NFS server notifies clients about file locks and the server status. To establish a connection back to the client, you must open the relevant ports in the firewall on the client.

NFSv4.1 and later versions use the pre-existing client port for callbacks, so the callback port cannot be set separately. For more information, see the How do I set the NFS4 client callback port to a specific port? solution.

Prerequisites

- The server uses the NFS 4.0 protocol.

Procedure

- Open the relevant ports in firewalld:

      # firewall-cmd --permanent --add-port=<callback_port>/tcp
      # firewall-cmd --reload

4.4. Manually mounting an NFS share

If you do not require that an NFS share is automatically mounted at boot time, you can manually mount it.

If several NFS clients have the same short hostname, their NFSv4 clientid values can conflict and expire suddenly. To avoid this, use unique hostnames for NFS clients or configure an identifier on each container, depending on what system you are using. For more information, see the Red Hat Knowledgebase solution NFSv4 clientid was expired suddenly due to use same hostname on several NFS clients.

Procedure

- Use the following command to mount an NFS share on a client:

      # mount <nfs_server_ip_or_hostname>:/<exported_share> <mount_point>

  For example, to mount the /nfs/projects share from the server.example.com NFS server to /mnt, enter:

      # mount server.example.com:/nfs/projects/ /mnt/

Verification

- As a user who has permissions to access the NFS share, display the content of the mounted share:

      $ ls -l /mnt/
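In addition to listing the directory, you can check that the path is really backed by NFS and not by an empty local directory. The sketch below assumes the standard `/proc/mounts` layout (device, mount point, file system type, options); `is_nfs_mount` is a hypothetical helper name.

```shell
# Succeed if the given path is listed as an NFS mount point.
# is_nfs_mount is a hypothetical helper for illustration only.
# Usage: is_nfs_mount <mount_point> [<mounts_file>]
is_nfs_mount() {
    target="${1%/}"                 # drop one trailing slash, if any
    [ -n "$target" ] || target="/"  # "/" becomes empty above; restore it
    mounts="${2:-/proc/mounts}"
    awk -v mp="$target" '
        $2 == mp && ($3 == "nfs" || $3 == "nfs4") { found = 1 }
        END { exit !found }
    ' "$mounts"
}
```

For example, `is_nfs_mount /mnt/ && echo "NFS share is mounted"` prints the message only when `/mnt` appears with type `nfs` or `nfs4`.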

4.5. Mounting an NFS share automatically when the system boots

Automatic mounting of an NFS share during system boot ensures that critical services reliant on centralized data, such as /home directories hosted on the NFS server, have seamless and uninterrupted access from the moment the system starts up.

Procedure

- Edit the /etc/fstab file and add a line for the share that you want to mount:

      <nfs_server_ip_or_hostname>:/<exported_share> <mount_point> nfs defaults 0 0

  For example, to mount the /nfs/projects share from the server.example.com NFS server to /home, enter:

      server.example.com:/nfs/projects /home nfs defaults 0 0

- Mount the share:

      # mount /home

Verification

- As a user who has permissions to access the NFS share, display the content of the mounted share:

      $ ls -l /home/
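If you script the /etc/fstab change, it is worth avoiding duplicate entries for the same mount point. The following is a minimal sketch under that assumption; `add_fstab_entry` is a hypothetical helper name, and it only builds the six-field line format shown above.

```shell
# Append an NFS line to an fstab-style file unless the mount point is
# already listed. add_fstab_entry is a hypothetical helper.
# Usage: add_fstab_entry <server> <export> <mount_point> [<options>] [<file>]
add_fstab_entry() {
    entry="$1:$2 $3 nfs ${4:-defaults} 0 0"
    fstab="${5:-/etc/fstab}"
    # Look for an uncommented line whose second field is the mount point.
    if awk -v mp="$3" '
            !/^[[:space:]]*#/ && $2 == mp { found = 1 }
            END { exit !found }
        ' "$fstab"; then
        echo "mount point $3 is already listed in $fstab" >&2
        return 1
    fi
    printf '%s\n' "$entry" >> "$fstab"
}
```

For example, `add_fstab_entry server.example.com /nfs/projects /home` appends the example line from the procedure. Run it as root, and keep a backup of /etc/fstab before changing it.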

4.6. Connecting NFS mounts in the web console

Connect a remote directory to your file system by using NFS.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

  For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- NFS server name or the IP address.

- Path to the directory on the remote server.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

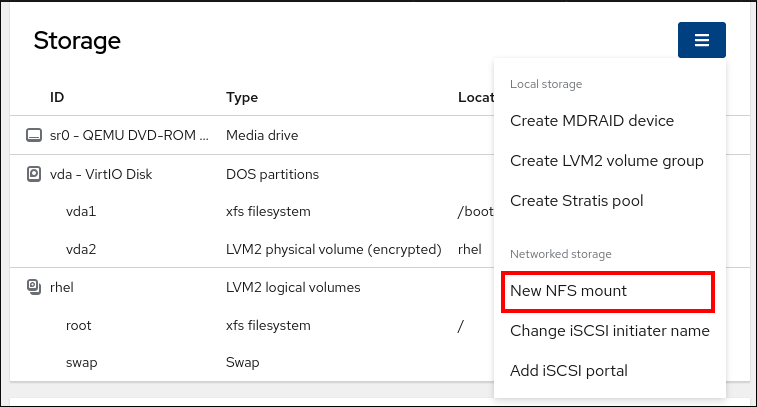

- Click Storage.

- In the Storage table, click the menu button.

From the drop-down menu, select New NFS mount.

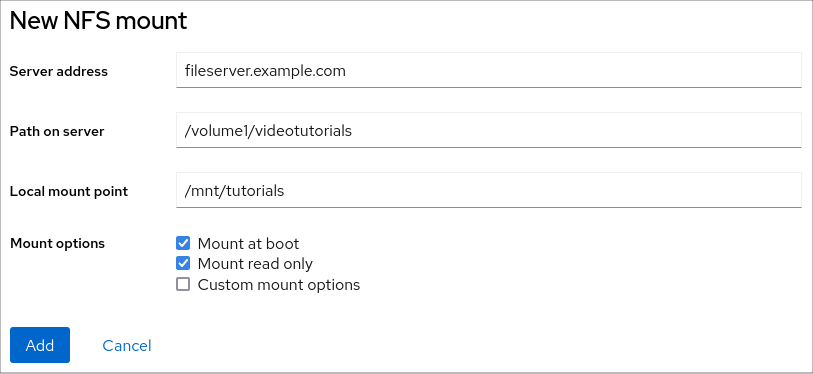

- In the New NFS Mount dialog box, enter the server or IP address of the remote server.

- In the Path on Server field, enter the path to the directory that you want to mount.

- In the Local Mount Point field, enter the path to the directory on your local system where you want to mount the NFS.

In the Mount options checkbox list, select how you want to mount the NFS. You can select multiple options depending on your requirements.

- Check the Mount at boot box if you want the directory to be reachable even after you restart the local system.

- Check the Mount read only box if you do not want to change the content of the NFS.

Check the Custom mount options box and add the mount options if you want to change the default mount option.

- Click Add.

Verification

- Open the mounted directory and verify that the content is accessible.

4.7. Customizing NFS mount options in the web console

Edit an existing NFS mount and add custom mount options.

Custom mount options can help you to troubleshoot the connection or change parameters of the NFS mount such as changing timeout limits or configuring authentication.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

  For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- An NFS mount is added to your system.

Procedure

- Log in to the RHEL 8 web console. For details, see Logging in to the web console.

- Click Storage.

- In the Storage table, click the NFS mount you want to adjust.

- If the remote directory is mounted, click Unmount.

  The directory must be unmounted while you configure custom mount options. Otherwise, the web console does not save the configuration and reports an error.

- Click Edit.

- In the NFS Mount dialog box, select Custom mount options.

- Enter mount options separated by a comma. For example:

  - nfsvers=4: The NFS protocol version number

  - soft: The type of recovery after an NFS request times out

  - sec=krb5: The files on the NFS server can be secured by Kerberos authentication. Both the NFS client and server have to support Kerberos authentication.

  For a complete list of the NFS mount options, enter man nfs in the command line.

- Click Apply.

- Click Mount.

Verification

- Open the mounted directory and verify that the content is accessible.

4.8. Setting up an NFS client with Kerberos in a Red Hat Enterprise Linux Identity Management domain

If the NFS server uses Kerberos and is enrolled in a Red Hat Enterprise Linux Identity Management (IdM) domain, your client must also be a member of the domain to be able to mount the shares. This enables you to centrally manage users and groups and to use Kerberos for authentication, integrity protection, and traffic encryption.

Prerequisites

- The NFS client is enrolled in a Red Hat Enterprise Linux Identity Management (IdM) domain.

- The exported NFS share uses Kerberos.

Procedure

- Obtain a Kerberos ticket as an IdM administrator:

      # kinit admin

- Retrieve the host principal, and store it in the /etc/krb5.keytab file:

      # ipa-getkeytab -s idm_server.idm.example.com -p host/nfs_client.idm.example.com -k /etc/krb5.keytab

  IdM automatically created the host principal when you joined the host to the IdM domain.

- Optional: Display the principals in the /etc/krb5.keytab file:

      # klist -k /etc/krb5.keytab
      Keytab name: FILE:/etc/krb5.keytab
      KVNO Principal
      ---- --------------------------------------------------------------------------
         6 host/nfs_client.idm.example.com@IDM.EXAMPLE.COM
         6 host/nfs_client.idm.example.com@IDM.EXAMPLE.COM
         6 host/nfs_client.idm.example.com@IDM.EXAMPLE.COM
         6 host/nfs_client.idm.example.com@IDM.EXAMPLE.COM

- Use the ipa-client-automount utility to configure mapping of IdM IDs:

      # ipa-client-automount
      Searching for IPA server...
      IPA server: DNS discovery
      Location: default
      Continue to configure the system with these values? [no]: yes
      Configured /etc/idmapd.conf
      Restarting sssd, waiting for it to become available.
      Started autofs

- Mount an exported NFS share, for example:

      # mount -o sec=krb5i server.idm.example.com:/nfs/projects/ /mnt/

  The -o sec option specifies the Kerberos security method.

Verification

- Log in as an IdM user who has permissions to write on the mounted share.

- Obtain a Kerberos ticket:

      $ kinit

- Create a file on the share, for example:

      $ touch /mnt/test.txt

- List the directory to verify that the file was created:

      $ ls -l /mnt/test.txt
      -rw-r--r--. 1 admin users 0 Feb 15 11:54 /mnt/test.txt
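If a Kerberos mount fails, a quick first check is whether the keytab actually contains the client's host principal. The sketch below assumes the `klist -k` output layout shown in the procedure (KVNO in the first column, principal in the second); `has_host_principal` is a hypothetical helper name.

```shell
# Succeed if `klist -k` output on stdin lists host/<fqdn>@<any realm>.
# has_host_principal is a hypothetical helper for illustration only.
# Usage: klist -k /etc/krb5.keytab | has_host_principal <client_fqdn>
has_host_principal() {
    awk -v p="host/$1@" '
        # The principal is the second field; match it as a prefix.
        index($2, p) == 1 { found = 1 }
        END { exit !found }
    '
}
```

For example, `klist -k /etc/krb5.keytab | has_host_principal nfs_client.idm.example.com` returns success for the keytab shown above.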

4.9. Configuring GNOME to store user settings on home directories hosted on an NFS share

If you use GNOME on a system with home directories hosted on an NFS server, you must change the keyfile backend of the dconf database. Otherwise, dconf might not work correctly.

This change affects all users on the host because it changes how dconf manages user settings and configurations stored in the home directories.

Note that the dconf keyfile backend only works if the glib2-fam package is installed. Without this package, notifications on configuration changes made on remote machines are not displayed properly.

In Red Hat Enterprise Linux 8, the glib2-fam package is available in the BaseOS repository.

Prerequisites

- The glib2-fam package is installed:

      # yum install glib2-fam

Procedure

- Add the following line to the beginning of the /etc/dconf/profile/user file. If the file does not exist, create it.

      service-db:keyfile/user

  With this setting, dconf polls the keyfile back end to determine whether updates have been made, so settings might not be updated immediately.

- The changes take effect when users log out and log back in.

4.10. Frequently used NFS mount options

The following are commonly used options when mounting NFS shares. You can use these options with mount commands, in /etc/fstab settings, and the autofs automapper.

- lookupcache=mode: Specifies how the kernel should manage its cache of directory entries for a given mount point. Valid arguments for mode are all, none, or positive.

- nfsvers=version: Specifies which version of the NFS protocol to use, where version is 3, 4, 4.0, 4.1, or 4.2. This is useful for hosts that run multiple NFS servers, or to disable retrying a mount with lower versions. If no version is specified, the client tries version 4.2 first, then negotiates down until it finds a version supported by the server.

  The option vers is identical to nfsvers, and is included in this release for compatibility reasons.

- noacl: Turns off all ACL processing. This can be needed when interfacing with old Red Hat Enterprise Linux versions that are not compatible with the recent ACL technology.

- nolock: Disables file locking. This setting can be required when you connect to very old NFS servers.

- noexec: Prevents execution of binaries on mounted file systems. This is useful if the system is mounting a non-Linux file system containing incompatible binaries.

- nosuid: Disables the set-user-identifier and set-group-identifier bits. This prevents remote users from gaining higher privileges by running a setuid program.

- retrans=num: The number of times the NFS client retries a request before it attempts further recovery action. If the retrans option is not specified, the NFS client tries each UDP request three times and each TCP request twice.

- timeo=num: The time in tenths of a second the NFS client waits for a response before it retries an NFS request. For NFS over TCP, the default timeo value is 600 (60 seconds). The NFS client performs linear backoff: After each retransmission the timeout is increased by timeo up to the maximum of 600 seconds.

- port=num: Specifies the numeric value of the NFS server port. For NFSv3, if num is 0 (the default value), or not specified, then mount queries the rpcbind service on the remote host for the port number to use. For NFSv4, if num is 0, then mount queries the rpcbind service, but if it is not specified, the standard NFS port number of TCP 2049 is used instead and the remote rpcbind is not checked anymore.

- rsize=num and wsize=num: These options set the maximum number of bytes to be transferred in a single NFS read or write operation.

  There is no fixed default value for rsize and wsize. By default, NFS uses the largest possible value that both the server and the client support. In Red Hat Enterprise Linux 9, the client and server maximum is 1,048,576 bytes. For more information, see the Red Hat Knowledgebase solution What are the default and maximum values for rsize and wsize with NFS mounts?.

- sec=options: Security options to use for accessing files on the mounted export. The options value is a colon-separated list of one or more security options.

  By default, the client attempts to find a security option that both the client and the server support. If the server does not support any of the selected options, the mount operation fails.

  Available options:

  - sec=sys uses local UNIX UIDs and GIDs. These use AUTH_SYS to authenticate NFS operations.

  - sec=krb5 uses Kerberos V5 instead of local UNIX UIDs and GIDs to authenticate users.

  - sec=krb5i uses Kerberos V5 for user authentication and performs integrity checking of NFS operations using secure checksums to prevent data tampering.

  - sec=krb5p uses Kerberos V5 for user authentication, integrity checking, and encrypts NFS traffic to prevent traffic sniffing. This is the most secure setting, but it also involves the most performance overhead.
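The linear backoff described for timeo can be worked through with a little arithmetic. The sketch below only applies the rule stated above (the timeout grows by timeo after each retransmission, capped at 600 seconds, that is 6000 tenths of a second). `timeo_schedule` is a hypothetical helper name, and the number of attempts to print is an illustrative parameter, not a statement about how many retries the client makes.

```shell
# Print the per-attempt timeouts, in tenths of a second, for the first
# <count> consecutive transmissions of one request under linear backoff.
# timeo_schedule is a hypothetical helper for illustration only.
# Usage: timeo_schedule <timeo> <count>
timeo_schedule() {
    timeo="$1"
    count="$2"
    max=6000            # 600 seconds, expressed in tenths of a second
    i=1
    out=""
    while [ "$i" -le "$count" ]; do
        t=$((timeo * i))
        if [ "$t" -gt "$max" ]; then
            t="$max"
        fi
        out="${out:+$out }$t"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}
```

For example, `timeo_schedule 100 3` prints `100 200 300`: with timeo=100, the client waits 10 seconds, then 20 seconds, then 30 seconds.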

4.11. Enabling client-side caching of NFS content

FS-Cache is a persistent local cache on the client that file systems can use to take data retrieved from over the network and cache it on the local disk. This helps to minimize network traffic.

4.11.1. How NFS caching works

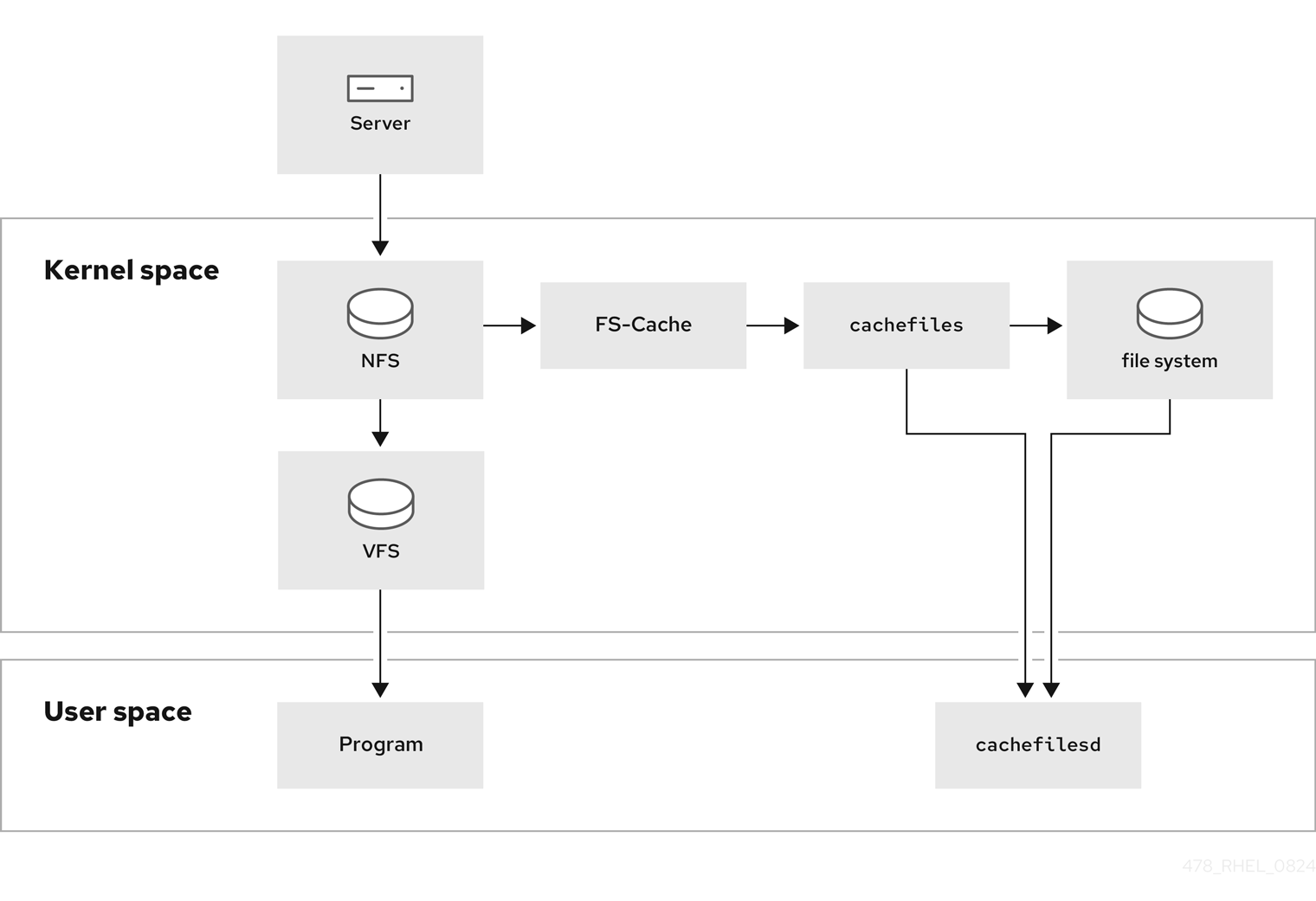

The following diagram is a high-level illustration of how FS-Cache works:

FS-Cache is designed to be as transparent as possible to the users and administrators of a system. FS-Cache allows a file system on a server to interact directly with a client’s local cache without creating an over-mounted file system. With NFS, a mount option instructs the client to mount the NFS share with FS-cache enabled. The mount point will cause automatic upload for two kernel modules: fscache and cachefiles. The cachefilesd daemon communicates with the kernel modules to implement the cache.

FS-Cache does not alter the basic operation of a file system that works over the network. It merely provides that file system with a persistent place in which it can cache data. For example, a client can still mount an NFS share whether or not FS-Cache is enabled. In addition, cached NFS can handle files that will not fit into the cache (whether individually or collectively) as files can be partially cached and do not have to be read completely up front. FS-Cache also hides all I/O errors that occur in the cache from the client file system driver.

To provide caching services, FS-Cache needs a cache back end, the cachefiles service. FS-Cache requires a mounted block-based file system, that supports block mapping (bmap) and extended attributes as its cache back end:

- XFS

- ext3

- ext4

FS-Cache cannot arbitrarily cache any file system, whether through the network or otherwise: the shared file system’s driver must be altered to allow interaction with FS-Cache, data storage or retrieval, and metadata setup and validation. FS-Cache needs indexing keys and coherency data from the cached file system to support persistence: indexing keys to match file system objects to cache objects, and coherency data to determine whether the cache objects are still valid.

Using FS-Cache is a compromise between various factors. If FS-Cache is being used to cache NFS traffic, it may slow the client down, but it can massively reduce the network and server load by satisfying read requests locally without consuming network bandwidth.

4.11.2. Installing and configuring the cachefilesd service

Red Hat Enterprise Linux provides only the cachefiles caching back end. The cachefilesd service initiates and manages cachefiles. The /etc/cachefilesd.conf file controls how cachefiles provides caching services.

Prerequisites

- The file system mounted under the /var/cache/fscache/ directory is ext3, ext4, or xfs.

- The file system mounted under /var/cache/fscache/ uses extended attributes, which is the default if you created the file system on RHEL 8 or later.

Procedure

- Install the cachefilesd package:

      # dnf install cachefilesd

- Enable and start the cachefilesd service:

      # systemctl enable --now cachefilesd

Verification

- Mount an NFS share with the fsc option to use the cache:

  - To mount a share temporarily, enter:

        # mount -o fsc server.example.com:/nfs/projects/ /mnt/

  - To mount a share permanently, add the fsc option to the entry in the /etc/fstab file:

        <nfs_server_ip_or_hostname>:/<exported_share> <mount_point> nfs fsc 0 0

- Display the FS-Cache statistics:

      # cat /proc/fs/fscache/stats
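The first prerequisite, a supported file system under the cache directory, can be checked from a script. The following is a minimal sketch: `cache_fs_supported` is a hypothetical helper name that only compares a file system type string against the three types listed above. It does not check for extended attribute support.

```shell
# Succeed if the given file system type is one that the prerequisites
# list for the cache directory. cache_fs_supported is a hypothetical helper.
# Usage: cache_fs_supported <fstype>
cache_fs_supported() {
    case "$1" in
        ext3|ext4|xfs) return 0 ;;
        *) return 1 ;;
    esac
}

# Example call, left commented out because it depends on the local system:
# findmnt prints the type of the file system that holds the directory.
# cache_fs_supported "$(findmnt -n -o FSTYPE -T /var/cache/fscache)" \
#     || echo "unsupported cache file system" >&2
```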

4.11.3. Sharing NFS cache

Because the cache is persistent, blocks of data in the cache are indexed on a sequence of four keys:

- Level 1: Server details

- Level 2: Some mount options; security type; FSID; a uniquifier string

- Level 3: File Handle

- Level 4: Page number in file

To avoid coherency management problems between superblocks, all NFS superblocks that need to cache data have unique level 2 keys. Normally, two NFS mounts with the same source volume and options share a superblock, and therefore share the caching, even if they mount different directories within that volume.

Example 4.1. NFS cache sharing:

The following two mounts likely share the superblock as they have the same mount options, especially if they come from the same partition on the NFS server:

    # mount -o fsc home0:/nfs/projects /projects
    # mount -o fsc home0:/nfs/home /home/

If the mount options are different, they do not share the superblock:

    # mount -o fsc,rsize=8192 home0:/nfs/projects /projects
    # mount -o fsc,rsize=65536 home0:/nfs/home /home/

The user cannot share caches between superblocks that have different communications or protocol parameters. For example, it is not possible to share caches between NFSv4.0 and NFSv3 or between NFSv4.1 and NFSv4.2 because they force different superblocks. Setting parameters such as the read size (rsize) also prevents cache sharing because it, too, forces a different superblock.

4.11.4. NFS cache limitations

There are some cache limitations with NFS:

- Opening a file from a shared file system for direct I/O automatically bypasses the cache. This is because this type of access must be direct to the server.

- Opening a file from a shared file system for either direct I/O or writing flushes the cached copy of the file. FS-Cache will not cache the file again until it is no longer opened for direct I/O or writing.

- Furthermore, this release of FS-Cache only caches regular NFS files. FS-Cache will not cache directories, symlinks, device files, FIFOs, and sockets.

4.11.5. How cache culling works

The cachefilesd service works by caching remote data from shared file systems to free space on the local disk. This could potentially consume all available free space, which could cause problems if the disk also contains the root partition. To control this, cachefilesd tries to maintain a certain amount of free space by discarding old objects, such as less-recently accessed objects, from the cache. This behavior is known as cache culling.

Cache culling is done on the basis of the percentage of blocks and the percentage of files available in the underlying file system. There are settings in /etc/cachefilesd.conf which control six limits:

- brun N% (percentage of blocks), frun N% (percentage of files)

  If the amount of free space and the number of available files in the cache rises above both these limits, then culling is turned off.

- bcull N% (percentage of blocks), fcull N% (percentage of files)

  If the amount of available space or the number of available files in the cache falls below either of these limits, then culling is started.

- bstop N% (percentage of blocks), fstop N% (percentage of files)

  If the amount of available space or the number of available files in the cache falls below either of these limits, then no further allocation of disk space or files is permitted until culling has raised things above these limits again.

The default value of N for each setting is as follows:

- brun/frun: 10%

- bcull/fcull: 7%

- bstop/fstop: 3%

When configuring these settings, the following must hold true:

- 0 ≤ bstop < bcull < brun < 100

- 0 ≤ fstop < fcull < frun < 100

These are the percentages of available space and available files and do not appear as 100 minus the percentage displayed by the df program.

Culling depends on both bxxx and fxxx pairs simultaneously; the user cannot treat them separately.
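The ordering rules above are easy to get wrong when editing the limits by hand. The following is a minimal sketch of a check, assuming each limit appears in the configuration file as a keyword followed by a percentage (for example, `brun 10%`) and that missing limits fall back to the defaults listed above. `check_cull_limits` is a hypothetical helper name and is not part of cachefilesd.

```shell
# Succeed if the culling limits satisfy
#   0 <= bstop < bcull < brun < 100  and  0 <= fstop < fcull < frun < 100.
# check_cull_limits is a hypothetical helper for illustration only.
# Usage: check_cull_limits [<file>]   (defaults to /etc/cachefilesd.conf)
check_cull_limits() {
    conf="${1:-/etc/cachefilesd.conf}"
    awk '
        BEGIN {
            # Defaults from the documentation, used when a limit is not set.
            v["brun"] = 10; v["bcull"] = 7; v["bstop"] = 3
            v["frun"] = 10; v["fcull"] = 7; v["fstop"] = 3
        }
        # A line such as "brun 10%": strip the percent sign and store it.
        $1 in v { x = $2; sub(/%$/, "", x); v[$1] = x + 0 }
        END {
            ok = (0 <= v["bstop"] && v["bstop"] < v["bcull"] &&
                  v["bcull"] < v["brun"] && v["brun"] < 100 &&
                  0 <= v["fstop"] && v["fstop"] < v["fcull"] &&
                  v["fcull"] < v["frun"] && v["frun"] < 100)
            exit !ok
        }
    ' "$conf"
}
```

For example, `check_cull_limits || echo "invalid culling limits" >&2` reports a file in which brun is set lower than bcull.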