
Console Administration Guide

System Administration of Red Hat Gluster Storage Environments using the Administration Portal

Abstract

Chapter 1. Introduction

- Support to quickly create and manage Red Hat Gluster Storage trusted storage pool and volumes.

- Multilevel administration to enable administration of physical infrastructure and virtual objects.

Note

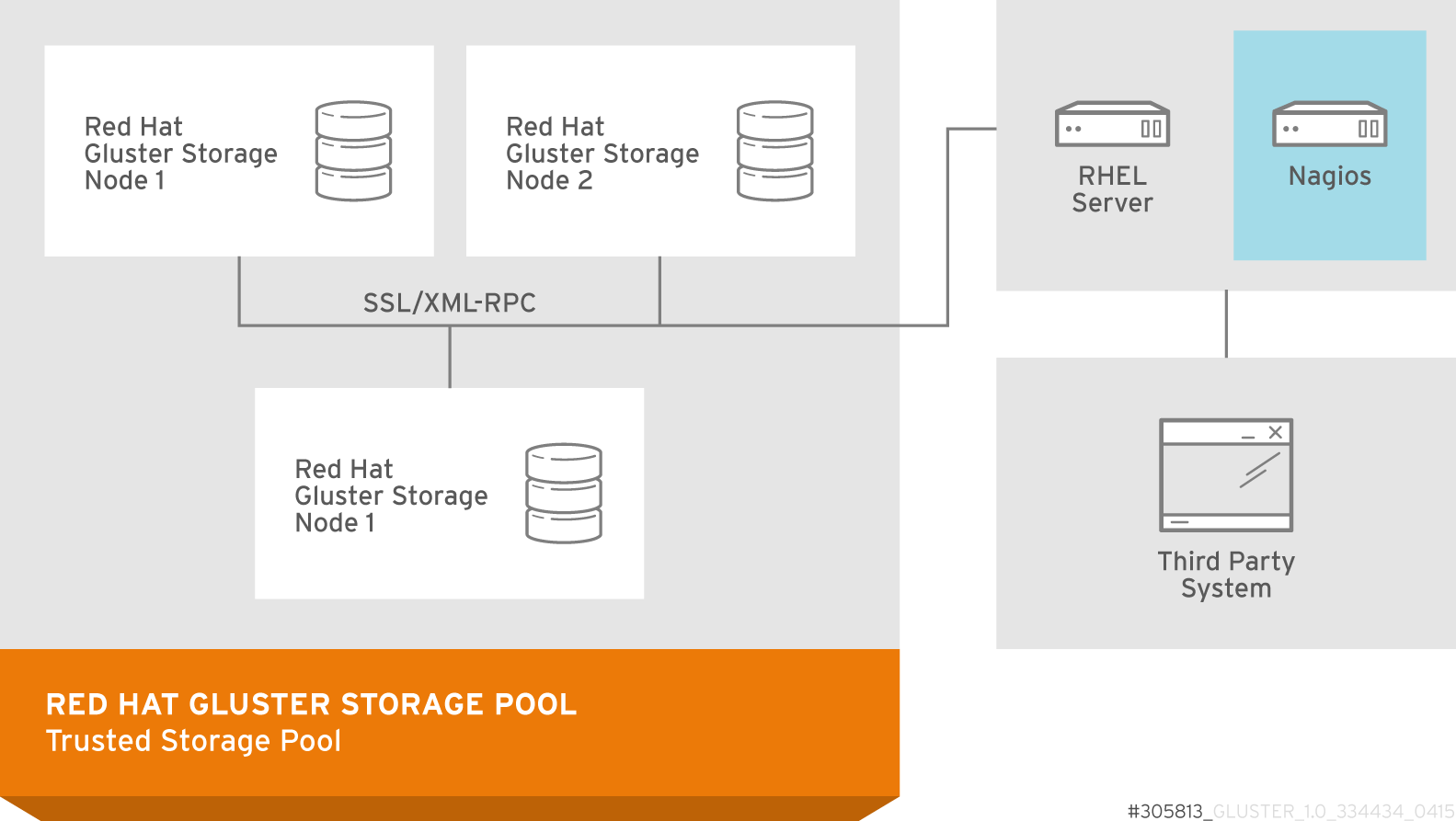

1.1. System Components

1.1.1. Components

1.1.2. The Console

1.1.3. Hosts

1.2. Red Hat Gluster Storage Console Resources

- Hosts - A host is a physical machine running Red Hat Gluster Storage 3.4. Hosts are grouped into storage clusters, and Red Hat Gluster Storage volumes are created on these clusters. The system and all its components are managed through a centralized management system.

- Clusters - A cluster is a group of linked computers that work together closely, thus in many respects forming a single computer. Hosts in a cluster share the same network infrastructure and the same storage.

- Users - Red Hat Gluster Storage supports multiple levels of administrators and users with distinct levels of permissions. System administrators can manage and administer objects of the physical infrastructure, such as clusters, hosts, and volumes.

- Events and Monitors - Alerts, warnings, and other notices about activities within the system help the administrator to monitor the performance and operation of various resources.

1.3. Administration of the Red Hat Gluster Storage Console

- Configuring a new logical cluster is the most important task of the system administrator. Designing a new cluster requires an understanding of capacity planning and definition of requirements. This is typically determined by the solution architect, who provides the requirements to the system architect. Preparing to set up the storage environment is a significant part of the setup, and is usually part of the system administrator's role.

- Maintaining the cluster, including performing updates and monitoring usage and performance to keep the cluster responsive to changing needs and loads.

1.3.1. Maintaining the Red Hat Gluster Storage Console

- Managing hosts and other physical resources.

- Managing the storage environment. This includes creating, deleting, expanding and shrinking volumes and clusters.

- Monitoring overall system resources for potential problems such as an extreme load on one of the hosts, insufficient memory or disk space, and taking any necessary actions.

- Managing user setup and access, and setting user and administrator permission levels. This includes assigning or customizing roles to suit the needs of the enterprise.

- Troubleshooting for specific users or hosts or for overall system functionality.

Part I. The Red Hat Gluster Storage Console Interface

Chapter 2. Getting Started

2.1. Graphical User Interface

Figure 2.1. Graphical User Interface Elements of the Administration Portal

Graphical User Interface Elements

- Header - The Header bar contains the name of the current logged-in user, the button, the button, and the button. The button provides access to version information. The button allows you to configure user roles.

- Search Bar - The Search bar allows you to quickly search for resources such as hosts and volumes. You can build queries to find the resources that you need. Queries can be as simple as a list of all the hosts in the system, or much more complex. As you type each part of the search query, you will be offered choices to assist you in building the search. The star icon can be used to save the search as a bookmark.

- Resource Tabs - All resources, such as hosts and clusters, can be managed using the appropriate tab. Additionally, the Events tab allows you to manage and view events across the entire system. Clicking a tab displays the results of the most recent search query on the selected object. For example, if you recently searched for all hosts starting with "M", clicking the Hosts tab displays a list of all hosts starting with "M". The Administration Portal provides the following tabs: Clusters, Hosts, Volumes, Users, and Events.

- Results List - Perform a task on an individual item, multiple items, or all the items in the results list, by selecting the items and then clicking the relevant action button. If multiple selection is not possible, the button is disabled. Details of a selected item display in the details pane.

- Details Pane - The Details pane displays detailed information about a selected item in the Results Grid. If multiple items are selected, the Details pane displays information on the first selected item only.

- Bookmarks Pane - Bookmarks are used to save frequently used or complicated searches for repeated use. Bookmarks can be added, edited, or removed.

- Alerts/Events Pane - The Alerts pane lists all events with a severity of Error or Warning. The system records all events, which are listed as audits in the Alerts section. Like events, alerts can also be viewed in the lowermost panel of the Events tab by resizing the panel and clicking the Alerts tab. This tabbed panel also appears in other tabs, such as the Hosts tab.

Important

2.1.1. Tree Mode and Flat Mode

Figure 2.2. Tree Mode

Figure 2.3. Flat Mode

2.2. Search

2.2.1. Search Syntax

result-type: {criteria} [sortby sort_spec]

The following examples describe how search queries are used, and help you to understand how Red Hat Gluster Storage Console assists with building search queries.

| Example | Result |

|---|---|

| Volumes: status = up | Displays a list of all volumes that are up. |

| Volumes: cluster = data | Displays a list of all volumes of the cluster data. |

| Events: severity > normal sortby time | Displays the list of all events whose severity is higher than Normal, sorted by time. |

2.2.1.1. Auto-Completion

Volumes: status = down

| Input | List Items Displayed | Action |
|---|---|---|
| v | Volumes (1 option only) | Select Volumes or type Volumes |
| Volumes: | All volumes properties | Type s |
| Volumes: s | volume properties starting with s | Select status or type status |
| Volumes: status | =, != | Select or type = |
| Volumes: status = | All status values | Select or type down |

2.2.1.2. Result-Type Options

- Host for a list of hosts

- Event for a list of events

- Users for a list of users

- Cluster for a list of clusters

- Volumes for a list of volumes

2.2.1.3. Search Criteria

{criteria} is as follows:

<prop> <operator> <value>

<obj-type>.<prop> <operator> <value>

The following table describes the parts of the syntax:

| Part | Description | Values | Example | Note |
|---|---|---|---|---|
| prop | The property of the searched-for resource. Can also be the property of a resource type (see obj-type), or tag (custom tag). | See the information for each of the search types in Section 2.2.1.3.1, “Wildcards and Multiple Criteria”. | Status | -- |
| obj-type | A resource type that can be associated with the searched-for resource. | See the explanation of each of the search types in Section 2.2.1.3.1, “Wildcards and Multiple Criteria”. | Users | -- |
| operator | Comparison operators. | =, != (not equal), >, <, >=, <= | -- | Value options depend on obj-type. |
| Value | What the expression is being compared to. | String, Integer, Ranking, Date (formatted according to regional settings) | Jones, 256, normal | |

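2.2.1.3.1. Wildcards and Multiple Criteria

Wildcards can be used in the <value> part of the syntax for strings. For example, to find all users beginning with m, enter m*.

You can search on two criteria by joining them with the Boolean operators AND and OR. For example:

Volumes: name = m* AND status = Up

If two criteria are specified without AND or OR, AND is implied. AND precedes OR, and OR precedes implied AND.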
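The precedence rule above can be made concrete with a short Python sketch. The function `group_criteria` is hypothetical and is not part of the Console. It assumes the query has already been split into well-formed tokens, where each criterion is a single string and the connectors are the literal strings AND and OR. It follows one reading of the rule: explicit AND binds tightest, OR binds next, and implied AND binds loosest.

```python
def _split(tokens, operator):
    """Split a token list into sub-lists at every occurrence of operator."""
    parts, current = [], []
    for token in tokens:
        if token == operator:
            parts.append(current)
            current = []
        else:
            current.append(token)
    parts.append(current)
    return parts


def group_criteria(tokens):
    """Group criteria by precedence: AND, then OR, then implied AND.

    Assumption: tokens is well formed, for example
    ["name = m*", "AND", "status = Up"].
    """
    # Two adjacent criteria with no connector mark an implied AND boundary.
    chunks = [[]]
    previous_was_criterion = False
    for token in tokens:
        is_operator = token in ("AND", "OR")
        if not is_operator and previous_was_criterion:
            chunks.append([])
        chunks[-1].append(token)
        previous_was_criterion = not is_operator

    grouped = []
    for chunk in chunks:
        or_terms = []
        for or_part in _split(chunk, "OR"):
            and_terms = [t for part in _split(or_part, "AND") for t in part]
            or_terms.append(("AND", and_terms) if len(and_terms) > 1 else and_terms[0])
        grouped.append(("OR", or_terms) if len(or_terms) > 1 else or_terms[0])
    return ("IMPLIED_AND", grouped) if len(grouped) > 1 else grouped[0]
```

Under these assumptions, the token list `["a", "b", "OR", "c", "AND", "d"]` groups `c AND d` first, then joins that group to `b` with OR, and finally combines the result with `a` through the implied AND.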

2.2.1.4. Determining Sort Order

You can determine the sort order of the returned information by using sortby. Sort direction (asc for ascending, desc for descending) can be included.

events: severity > normal sortby time desc
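The pieces of the search syntax can be assembled programmatically. The following Python sketch composes a query string in the form `result-type: {criteria} [sortby sort_spec]`. The function `build_query` and its parameters are hypothetical helpers for illustration; the Console itself only receives the finished string typed into the Search bar.

```python
def build_query(result_type, criteria=(), sort_by=None, direction=None):
    """Compose a search string: result-type: {criteria} [sortby sort_spec].

    Assumption: multiple criteria are joined with an explicit AND.
    """
    if direction not in (None, "asc", "desc"):
        raise ValueError("direction must be 'asc' or 'desc'")
    query = f"{result_type}:"
    if criteria:
        query += " " + " AND ".join(criteria)
    if sort_by:
        query += f" sortby {sort_by}"
        if direction:
            query += f" {direction}"
    return query


# Reproduces two of the example queries from this section.
events_query = build_query("Events", ["severity > normal"], "time", "desc")
volumes_query = build_query("Volumes", ["name = m*", "status = Up"])
```

Joining criteria with an explicit AND avoids relying on the implied AND, which has the lowest precedence.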

2.2.2. Saving Queries as Bookmarks

2.3. Tags

Procedure 2.1. Creating a tag

- In tree mode or flat mode, click the resource tab for which you wish to create a tag. For example, Hosts.

- Click the Tags tab. Select the node under which you wish to create the tag. For example, click the root node to create it at the highest level. The New button is enabled.

- Click New at the top of the Tags pane. The New Tag dialog box displays.

- Enter the Name and Description of the new tag.

- Click . The new tag is created and displays on the Tags tab.

Procedure 2.2. Modifying a tag

- Click the Tags tab. Select the tag that you wish to modify. The buttons on the Tags tab are enabled.

- Click Edit on the Tags pane. The Edit Tag dialog box displays.

- You can change the Name and Description of the tag.

- Click . The changes in the tag display on the Tags tab.

Procedure 2.3. Deleting a tag

- Click the Tags tab. The list of tags will display.

- Select the tags to be deleted and click . The Remove Tag(s) dialog box displays.

- The tags are displayed in the dialog box. Check that you are sure about the removal. The message warns you that removing the tags will also remove all descendants of the tags.

- Click . The tags are removed and no longer display on the Tags tab. The tags are also removed from all the objects to which they were attached.
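The effect of removing a tag, as described in the procedure above, can be sketched in Python: the tag and all of its descendants disappear, and they are detached from every object that carried them. The data layout (a `children` mapping for the tag tree and a `tagged` mapping from object to tags) and the function name are assumptions made for the example, not the Console's internal model.

```python
def remove_tag(children, tagged, tag):
    """Remove tag and all its descendants; return the set of removed tags.

    children: dict mapping a tag to the list of its child tags (assumed layout).
    tagged:   dict mapping an object name to the set of tags attached to it.
    """
    # Collect the tag and every descendant with an iterative traversal.
    doomed, stack = set(), [tag]
    while stack:
        current = stack.pop()
        if current not in doomed:
            doomed.add(current)
            stack.extend(children.get(current, []))

    # Drop the removed tags from the tree.
    for removed in doomed:
        children.pop(removed, None)
    for kids in children.values():
        kids[:] = [kid for kid in kids if kid not in doomed]

    # Detach the removed tags from all objects.
    for tags in tagged.values():
        tags -= doomed
    return doomed
```

Both mappings are modified in place, which mirrors the way a removal in the Tags pane immediately affects every tagged object.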

Procedure 2.4. Adding or removing a tag to or from one or more object instances

- Search for the objects that you wish to tag or untag so that they are among the objects displayed in the results list.

- Select one or more objects on the results list.

- Click the Assign Tags button on the tool bar or right-click menu option.

- A dialog box provides a list of tags. Select the check box to assign a tag to the object, or deselect the check box to detach the tag from the object.

- Click . The specified tag is now added or removed as a custom property of the selected objects.

- Follow the search instructions in Section 2.2, “Search”, and enter a search query using “tag” as the property and the desired value or set of values as criteria for the search. The objects tagged with the tag criteria that you specified are listed in the results list.

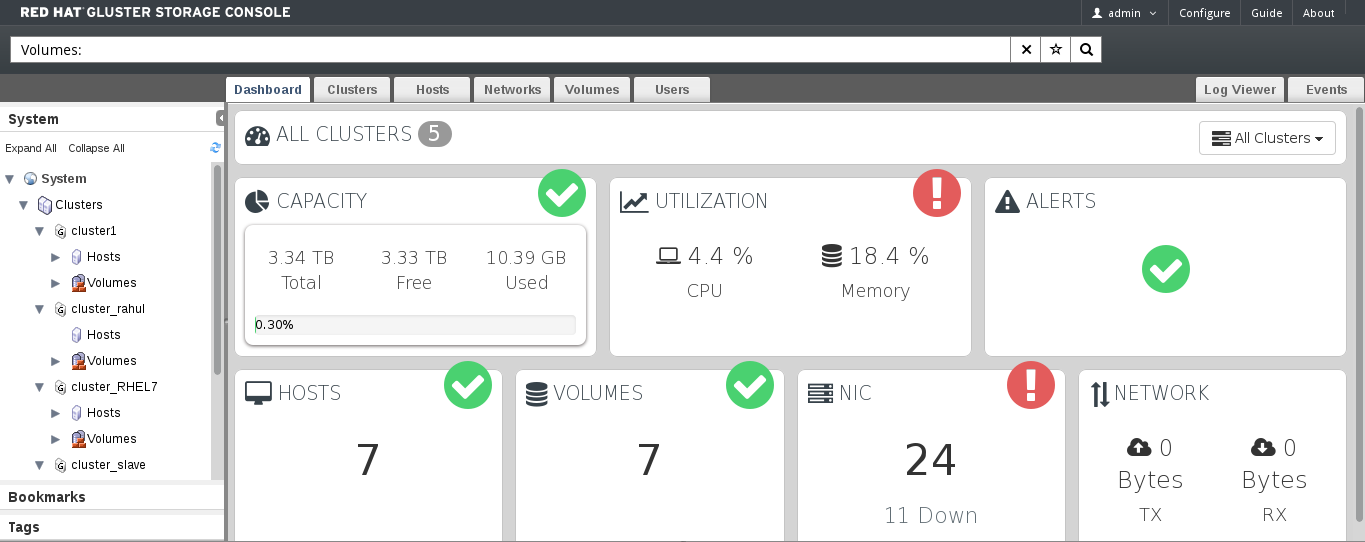

Chapter 3. Dashboard Overview

- Capacity: Displays the Total, Used, and Available storage capacity in the system. It is calculated by aggregating data from all the hosts in the system.

- Utilization: Displays the average usage percentage of CPU and memory, averaged across all the hosts in the system.

- Alerts: Displays the number of alerts in the system. The Alerts tab displays a red exclamation icon if there are one or more alerts. Click the alerts arrow icon to open the alerts dialog box. To delete alerts, click the cross icon on the right-hand side of the dialog box.

- Hosts: Displays the total number of hosts in the system and the number of hosts in the Down state.

- Volumes: Displays the total number of volumes in the system across all clusters and the number of them in UP, DOWN, Degraded, Partial, or Stopped status.

- NICs: Displays the number of network interfaces in the hosts.

- Network: Displays the transmission and receiving rate of the NICs.
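The way the dashboard figures are derived can be illustrated with a small Python sketch: capacity is summed over all hosts, while CPU and memory utilization are averaged. The `HostStats` record, its field names, and the use of GiB are assumptions for the example, as is computing available capacity as total minus used.

```python
from dataclasses import dataclass


@dataclass
class HostStats:
    """Per-host figures (hypothetical structure for illustration)."""
    name: str
    total_gib: float
    used_gib: float
    cpu_percent: float
    memory_percent: float
    is_up: bool


def summarize(hosts):
    """Aggregate capacity and average utilization across all hosts."""
    count = len(hosts)
    total = sum(h.total_gib for h in hosts)
    used = sum(h.used_gib for h in hosts)

    def average(values):
        return sum(values) / count if count else 0.0

    return {
        # Assumption: available capacity is total minus used.
        "capacity": {"total": total, "used": used, "available": total - used},
        "utilization": {
            "cpu": average([h.cpu_percent for h in hosts]),
            "memory": average([h.memory_percent for h in hosts]),
        },
        "hosts": {"total": count, "down": sum(1 for h in hosts if not h.is_up)},
    }
```

A plain average weights every host equally, regardless of its size, which matches the description of utilization as an average across hosts.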

Note

3.1. Viewing Cluster Summary

Procedure 3.1. Viewing Cluster Summary

- In the Dashboard tab, select the cluster name from the drop-down list to view the capacity details of a specific cluster. Select All Clusters to view the capacity details of all clusters.

Figure 3.1. Dashboard Overview

- View the cluster and volume details by hovering over each dashboard item.

- Click CAPACITY or VOLUMES to view the cluster and volume details respectively.

- Click UTILIZATION, HOST, NIC, or NETWORK to view the host and network details.

Note

Clicking each item takes you to the corresponding tab in Red Hat Gluster Storage Console.

Part II. Managing System Components

Chapter 4. Managing Clusters

4.1. Cluster Properties

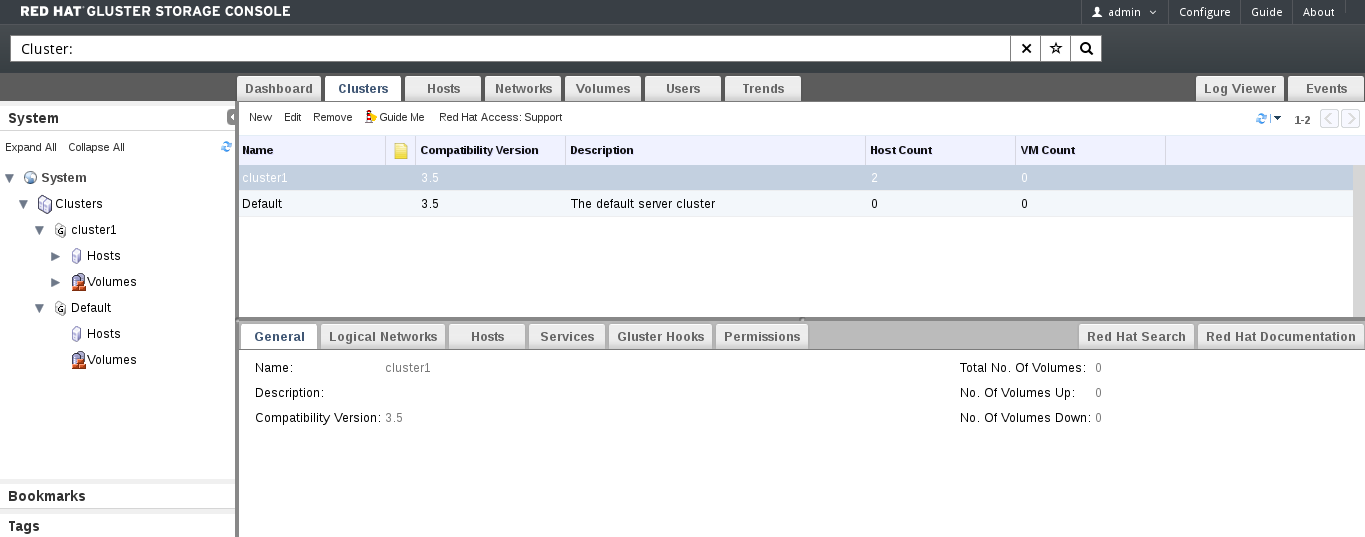

Figure 4.1. Clusters Tab

| Field | Description |
|---|---|
| Name | The name of the cluster. This must be a unique name and may use any combination of uppercase or lowercase letters, numbers, hyphens and underscores. Maximum length is 40 characters. The name can start with a number and this field is mandatory. |
| Description | The description of the cluster. This field is optional, but recommended. |
| Compatibility Version | The version of Red Hat Gluster Storage Console with which the cluster is compatible. All hosts in the cluster must support the indicated version. Note: The default compatibility version is 3.4. |
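As a rough illustration of the Name rules in the table above, the following Python sketch checks a candidate cluster name before it is submitted. The function name, the `existing` parameter, and the case-sensitive uniqueness check are assumptions made for the example; the Console performs its own validation.

```python
import re

# Letters, digits, hyphens, and underscores only, as stated for the Name field.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]+")


def is_valid_name(name, existing=(), max_length=40):
    """Return True if name satisfies the documented Name constraints.

    Assumption: uniqueness is checked case-sensitively against existing.
    """
    return (
        0 < len(name) <= max_length
        and NAME_PATTERN.fullmatch(name) is not None
        and name not in existing
    )
```

The `max_length` parameter is adjustable because other name fields in this guide state different limits, such as 15 characters for logical network names.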

| Feature | Compatibility Version 3.2 | Compatibility Version 3.3 | Compatibility Version 3.4 |

|---|---|---|---|

|

View advanced details of a particular brick of the volume through the Red Hat Gluster Storage Console.

|

Supported

|

Supported

|

Supported

|

|

Synchronize brick status with the engine database.

|

Supported

|

Supported

|

Supported

|

|

Manage glusterFS hooks through the Red Hat Gluster Storage Console. View the list of hooks available in the hosts, view the contents and status of hooks, enable or disable hooks, and resolve hook conflicts.

|

Supported

|

Supported

|

Supported

|

|

Display Services tab with NFS and SHD service status.

|

Supported

|

Supported

|

Supported

|

|

Manage volume rebalance through the Red Hat Gluster Storage Console. Rebalance volume, stop rebalance, and view rebalance status.

|

Not Supported

|

Supported

|

Supported

|

|

Manage remove-brick operations through the Red Hat Gluster Storage Console. Remove-brick, stop remove-brick, view remove-brick status, and retain the brick being removed.

|

Not Supported

|

Supported

|

Supported

|

|

Allow using system's root partition for bricks and and re-using the bricks by clearing the extended attributes.

|

Not Supported

|

Supported

|

Supported

|

|

Addition of RHS U2 nodes

|

Not Supported

|

Supported

|

Supported

|

|

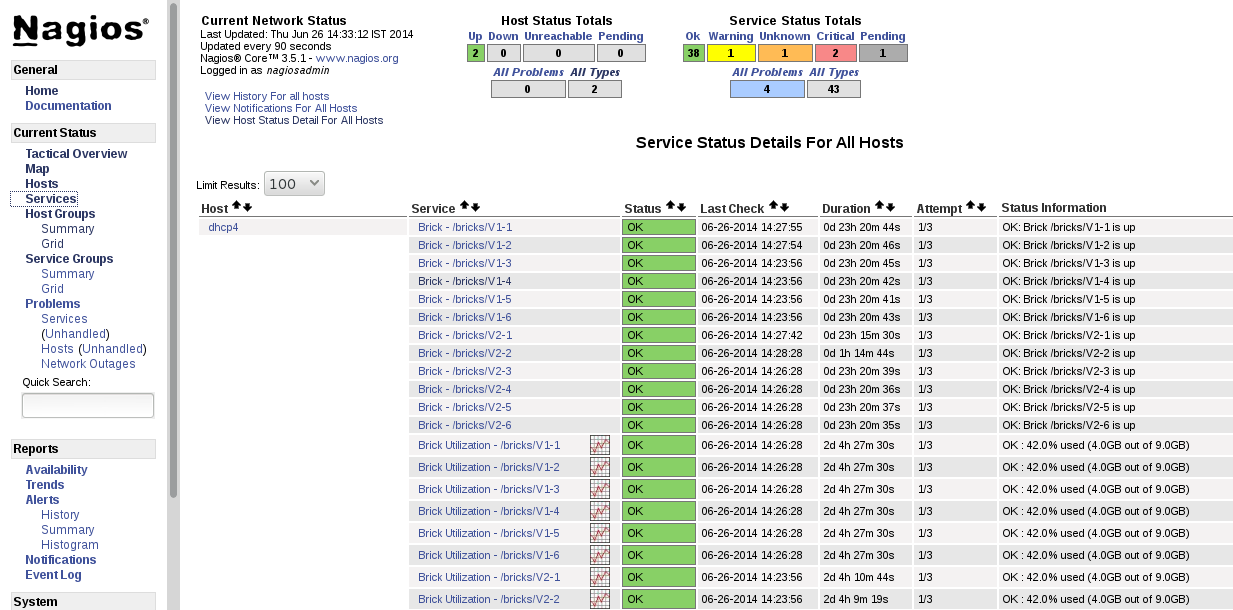

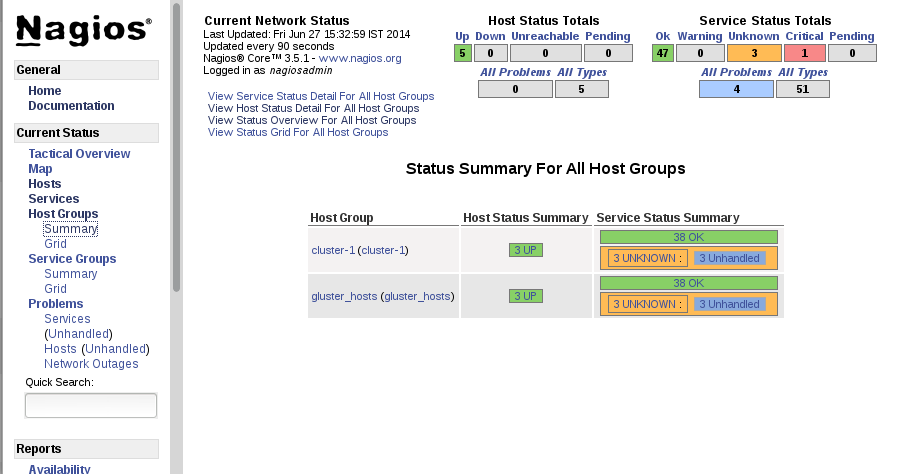

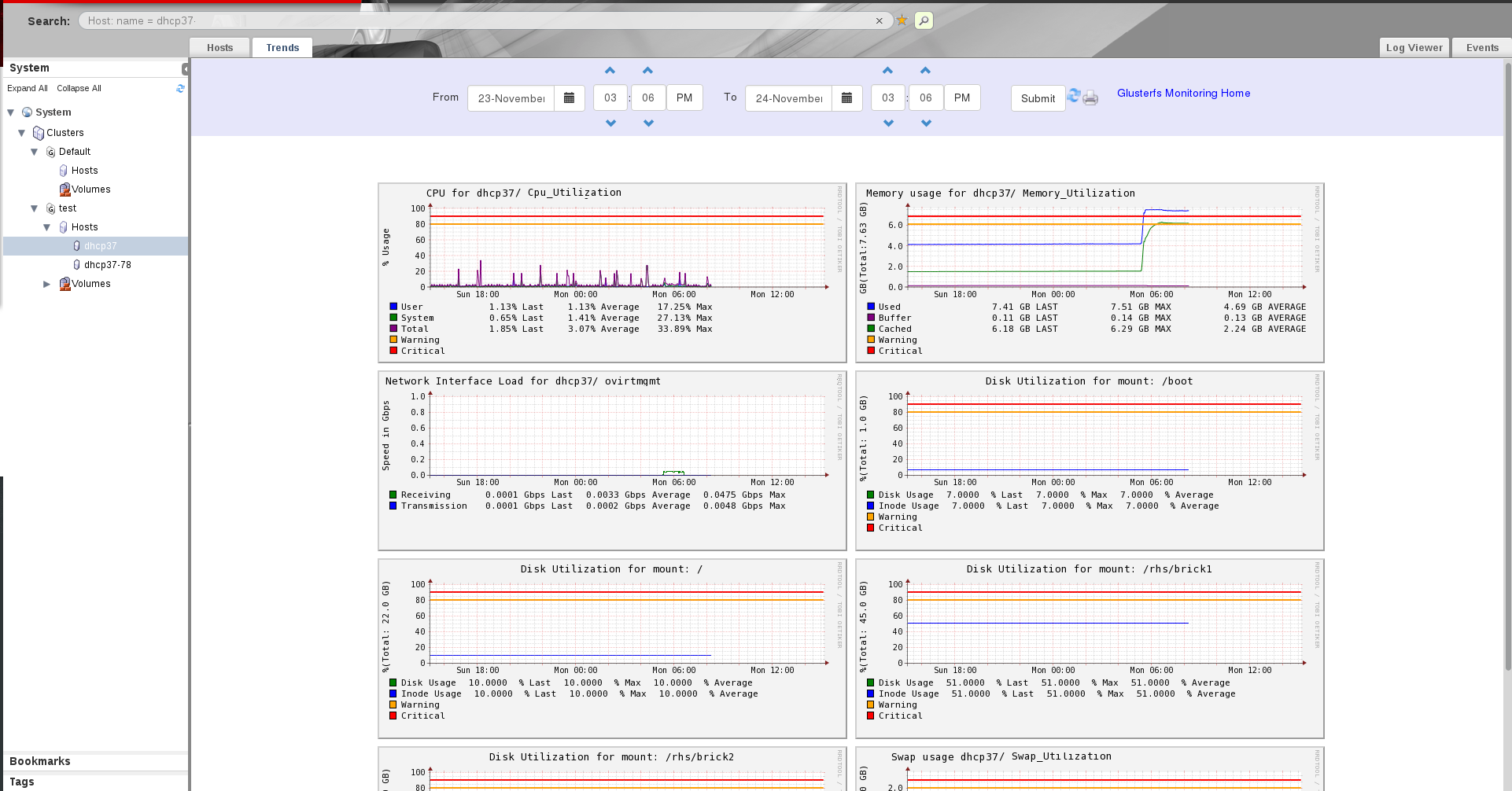

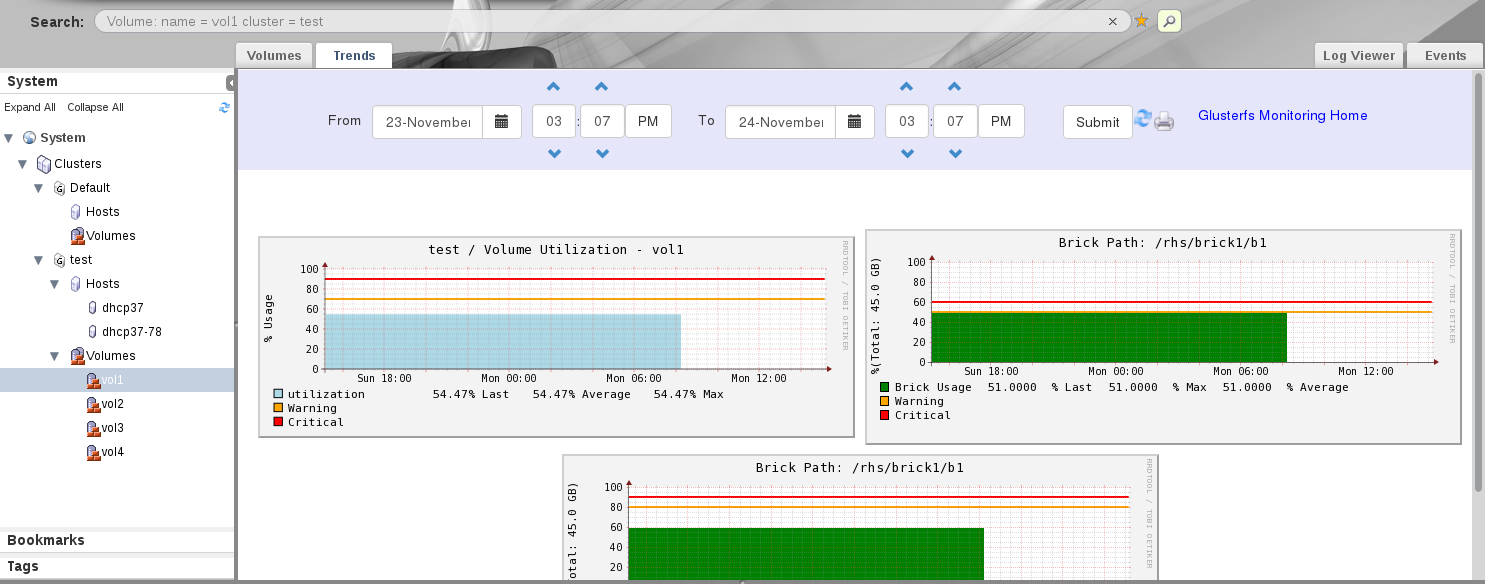

Viewing Nagios Monitoring Trends

|

Not Supported

|

Not Supported

|

Supported

|

4.2. Cluster Operations

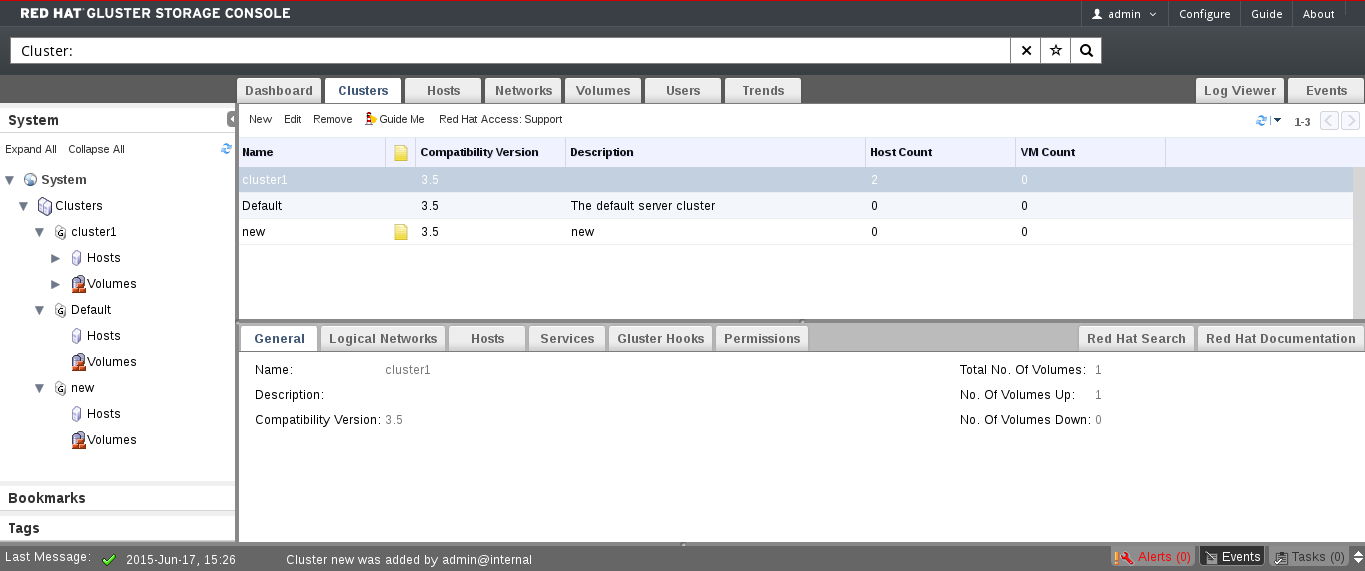

4.2.1. Creating a New Cluster

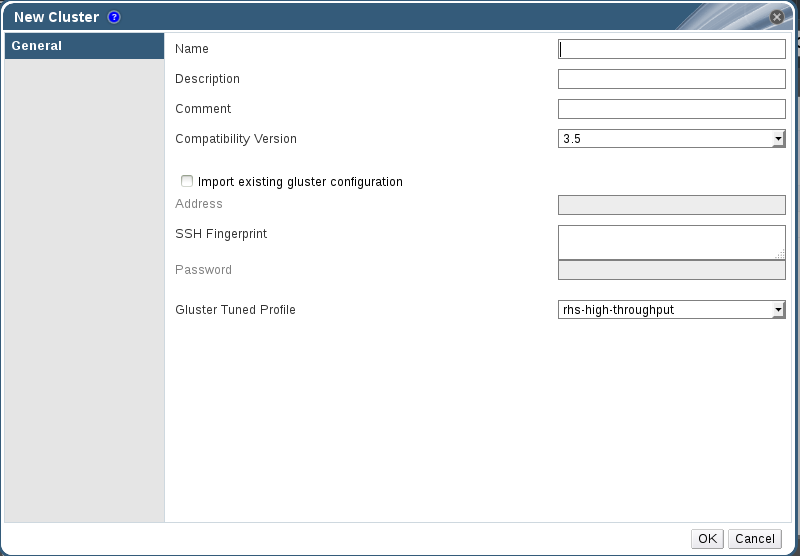

Procedure 4.1. To Create a New Cluster

- Open the Clusters view by expanding the System tab and selecting the Cluster tab in the Tree pane. Alternatively, select Clusters from the Details pane.

- Click to open the New Cluster dialog box.

Figure 4.2. New Cluster Dialog Box

- Enter the cluster Name, Description and Compatibility Version. The name cannot include spaces. When you select Import existing gluster configuration and enter the Address, the Red Hat Gluster Storage Console fetches the fingerprint automatically.

- Click to create the cluster. The new cluster displays in the Clusters tab.

- Click to configure the cluster. The Guide Me window lists the entities you need to configure for the cluster. Configure these entities or postpone configuration by clicking . You can resume the configuration process by selecting the cluster and clicking . To import an existing cluster, see Section 4.2.2, “Importing an Existing Cluster”.

Tuned profiles help to enhance the performance of the system by applying a predefined set of system parameters. Two tuned profiles are available for Red Hat Gluster Storage:

- rhs-high-throughput: The default profile, which is applied on the RHGS nodes. It helps to enhance the performance of RHGS volumes.

- rhs-virtualization: If the number of clients is greater than 100, you must switch to the rhs-virtualization tuned profile. For more information, see Number of Clients in the Red Hat Gluster Storage Administration Guide.
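The profile selection rule above amounts to a single threshold check, sketched here in Python. The function name is hypothetical; only the two profile names and the 100-client threshold come from the text.

```python
def choose_tuned_profile(client_count):
    """Pick a tuned profile name from the number of clients.

    More than 100 clients calls for rhs-virtualization; otherwise the
    default rhs-high-throughput profile stays in place.
    """
    if client_count < 0:
        raise ValueError("client_count must not be negative")
    return "rhs-virtualization" if client_count > 100 else "rhs-high-throughput"
```

Note that exactly 100 clients still maps to the default profile, because the rule applies only when the count is greater than 100.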

4.2.2. Importing an Existing Cluster

When you provide the address and root password of a host in the cluster, the gluster peer status command executes on that host through SSH, then displays a list of hosts that are part of the cluster. You must manually verify the fingerprint of each host and provide passwords for them. If some hosts are not reachable, the import does not add these hosts to the cluster.

Procedure 4.2. To Import an Existing Cluster

- In the Tree pane, click the System tab, then click the Clusters tab.

- Click to open the New Cluster dialog box.

- Enter the cluster Name, Description and Compatibility Version. The name cannot include spaces.

- Select to import the cluster.

- In the Address field, enter the host name or IP address of a host in the cluster. The host Fingerprint displays to indicate the connection host. If a host is unreachable or if there is a network error, Error in fetching fingerprint displays in the Fingerprint field.

- Enter the Root Password for the host in the Password field and click .

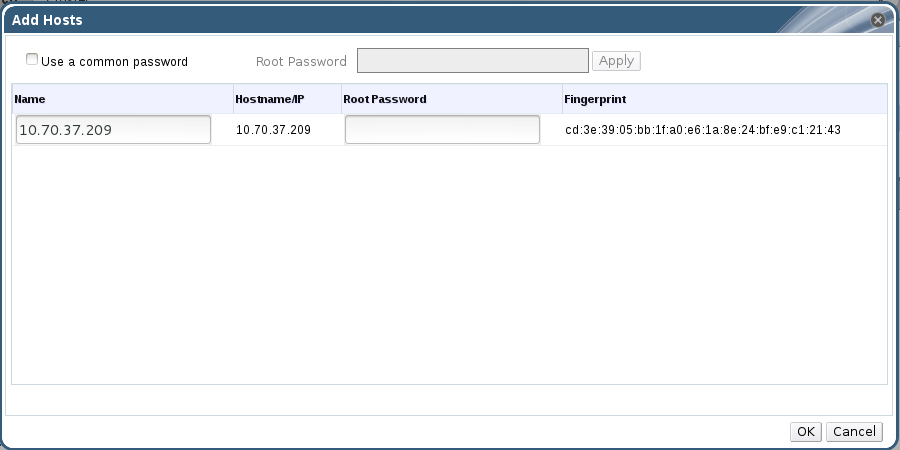

- The Add Hosts window opens, and a list of hosts that are part of the cluster displays.

- For each host, enter the Name and Root Password. If you wish to use the same password for all hosts, check Use a common password and enter a password.

- Click to set the password for all hosts then click to submit the changes.

4.2.3. Editing a Cluster

Procedure 4.3. To Edit a Cluster

- Click the Clusters tab to display the list of host clusters. Select the cluster that you want to edit.

- Click to open the Edit Cluster dialog box.

- Enter a Name and Description for the cluster and select the compatibility version from the Compatibility Version drop down list.

- Click to confirm the changes and display the host cluster details.

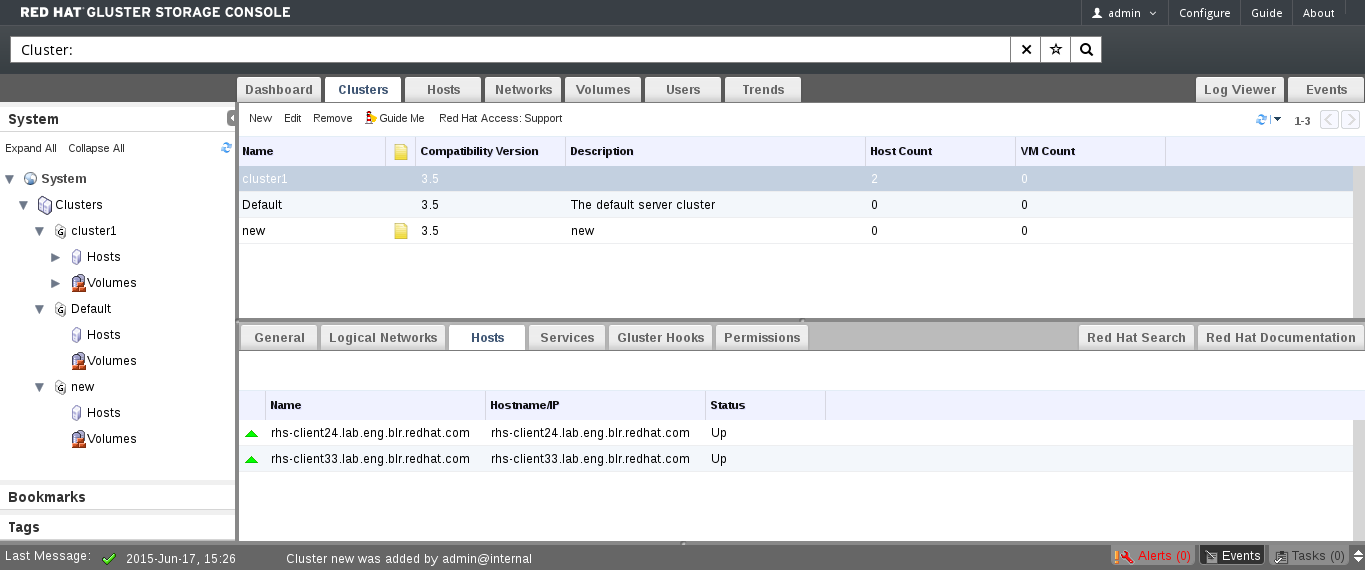

4.2.4. Viewing Hosts in a Cluster

Procedure 4.4. To View Hosts in a Cluster

- Click the Clusters tab to display a list of host clusters. Select the desired cluster to display the Details pane.

- Click the tab to display a list of hosts.

Figure 4.3. The Hosts tab on the Cluster Details pane

4.2.5. Removing a Cluster

Warning

Procedure 4.5. To Remove a Cluster

- Click the Clusters tab to display a list of clusters. If the required cluster is not visible, perform a search.

- Select the cluster to be removed. Ensure that there are no running hosts or volumes.

- Click the button.

- A dialog box lists all the clusters selected for removal. Click to confirm the removal.

4.3. Cluster Entities

A cluster is a collection of hosts. The Hosts tab displays all information related to the hosts in a cluster.

| Field | Description |
|---|---|
| Name | The name of the host. |
| Hostname/IP | The name of the host/IP address. |
| Status | The status of the host. |

Logical networks enable hosts to communicate with other hosts, and for the Console to communicate with cluster entities. You must define logical networks for each cluster.

| Field | Description |
|---|---|
| Name | The name of the logical networks in a cluster. |
| Status | The status of the logical networks. |
| Role | The hierarchical permissions available to the logical network. |
| Description | The description of the logical networks. |

Cluster permissions define which users and roles can work in a cluster, and what operations the users and roles can perform.

| Field | Description |
|---|---|
| User | The user name of an existing user in the directory services. |
| Role | The role of the user. The role comprises a user, a permission level, and an object. Roles can be default or customized roles. |
| Inherited Permissions | The hierarchical permissions available to the user. |

Gluster Hooks are volume lifecycle extensions. You can manage the Gluster Hooks from Red Hat Gluster Storage Console.

| Field | Description |
|---|---|
| Name | The name of the hook. |
| Volume Event | Events are instances in the execution of volume commands like create, start, stop, add-brick, remove-brick, set and so on. Each volume command has two instances during its execution, namely Pre and Post. Pre and Post refer to the time just before and just after the corresponding volume command has taken effect on a peer, respectively. |
| Stage | When the event should be executed. For example, if the event is Start Volume and the Stage is Post, the hook will be executed after the start of the volume. |
| Status | Status of the gluster hook. |
| Content Type | Content type of the gluster hook. |

The service running on a host can be searched using the Services tab.

| Field | Description |
|---|---|
| Host | The IP address of the host. |
| Service | The name of the service. |
| Port | The port number of the host. |
| Status | The status of the host. |
| Process Id | The process ID of the host. |

4.4. Cluster Permissions

- Creation and removal of specific clusters.

- Addition and removal of hosts.

- Permission to attach users to hosts within a single cluster.

Note

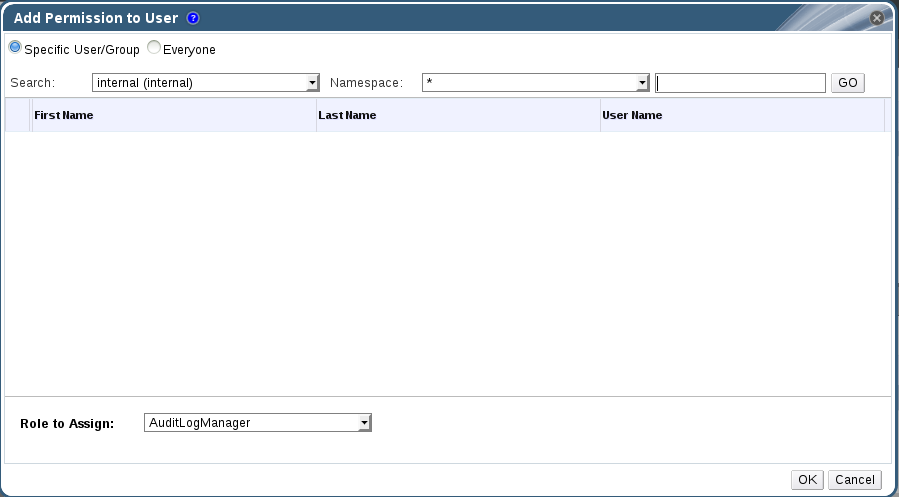

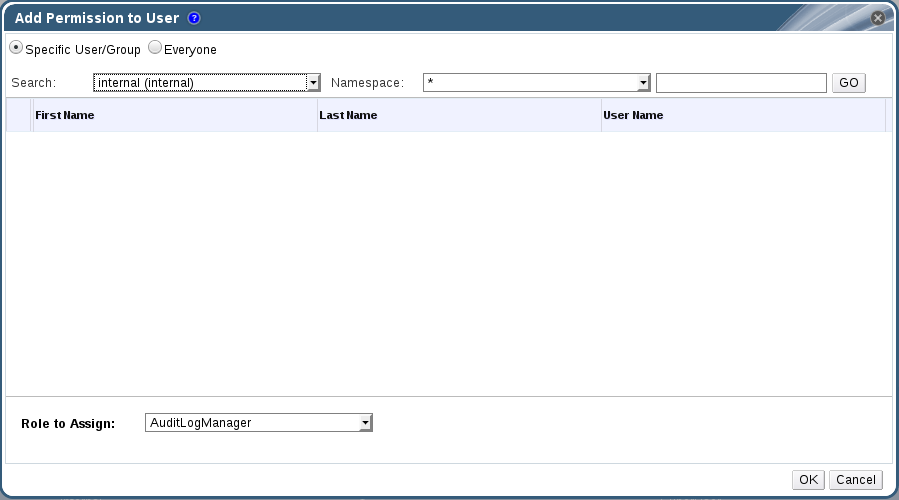

Procedure 4.6. To Add a Cluster Administrator Role

- Click the Clusters tab to display the list of clusters. If the required cluster is not visible, perform a search.

- Select the cluster that you want to edit. Click the tab in the Details pane to display a list of existing users and their current roles and inherited permissions.

- Click to display the Add Permission to User dialog box. Enter all or part of a name or user name in the Search box, then click . A list of possible matches displays in the results list.

- Select the user you want to modify. Scroll through the Role to Assign list and select .

- Click to display the name of the user and their assigned role in the Permissions tab.

Procedure 4.7. To Remove a Cluster Administrator Role

- Click the Clusters tab to display a list of clusters. If the required cluster is not visible, perform a search.

- Select the cluster that you want to edit. Click the tab in the Details pane to display a list of existing users and their current roles and inherited permissions.

- Select the user you want to modify and click . This removes the user from the Permissions tab and from associated hosts and volumes.

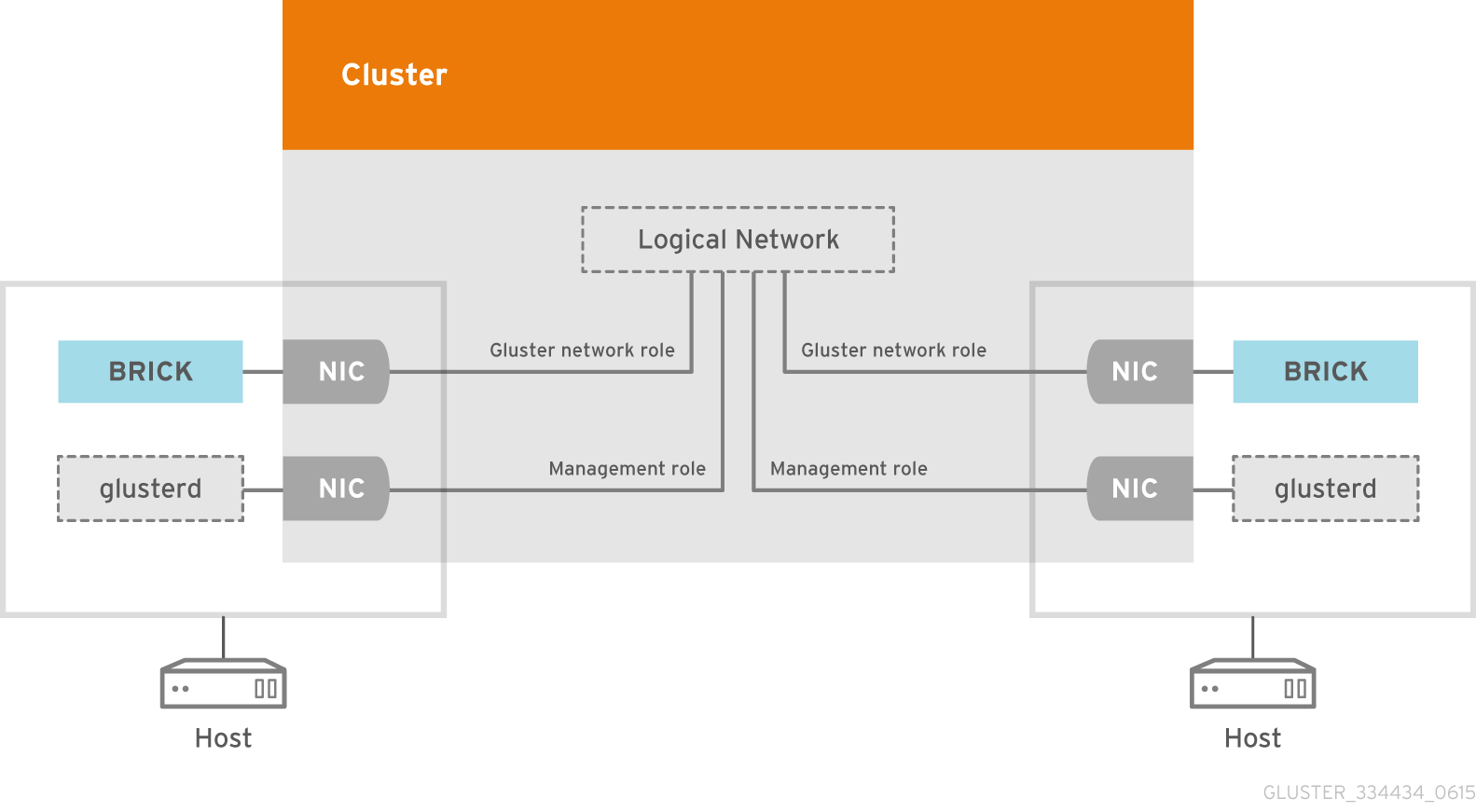

Chapter 5. Logical Networks

5.1. Introduction to Logical Networks

The ovirtmgmt network carries all traffic until another logical network is created. It is meant especially for management communication between the Red Hat Gluster Storage Console and hosts.

Figure 5.1. Logical Network architecture

Warning

5.2. Required Networks, Optional Networks

5.3. Logical Network Tasks

5.3.1. Using the Networks Tab

- Attaching or detaching the networks to clusters and hosts

- Adding and removing permissions for users to access and manage networks

5.3.2. Creating a New Logical Network in Cluster

Create a logical network and define its use in a cluster.

Procedure 5.1. Creating a New Logical Network in a Cluster

- Click the Networks or Clusters tab in tree mode and select a network or cluster.

- Click the Logical Networks tab of the details pane to list the existing logical networks.

- From the Clusters tab, select Logical Networks sub-tab and click to open the New Logical Network window.

- From the Networks tab, click to open the New Logical Network window.

Figure 5.2. New Logical Network

- Enter a Name, Description, and Comment for the logical network.

- From the Cluster tab, select the clusters to which the network will be assigned. You can also specify whether the logical network will be a required network.

Figure 5.3. New Logical Network - Cluster

- Click OK.

You have defined a logical network as a resource required by a cluster or clusters in the Network. If you entered a label for the logical network, it will be automatically added to all host network interfaces with that label.

5.3.3. Editing a Logical Network

Edit the settings of a logical network.

Procedure 5.2. Editing a Logical Network

- Click the Networks tab in tree mode and select a network.

- Select a logical network and click to open the Edit Logical Network window.

Figure 5.4. New Logical Network - Cluster

- Edit the necessary settings.

- Click OK to save the changes.

You have updated the settings of your logical network.

Note

5.3.4. Explanation of Settings and Controls in the New Logical Network and Edit Logical Network Windows

5.3.4.1. Logical Network General Settings Explained

| Field Name | Description |
|---|---|
| Name | The name of the logical network. This text field has a 15-character limit and must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores. |
| Description | The description of the logical network. This text field has a 40-character limit. |
| Comment | A field for adding plain text, human-readable comments regarding the logical network. |
| Network Label | Allows you to specify a new label for the network or select from existing labels already attached to host network interfaces. If you select an existing label, the logical network will be automatically assigned to all host network interfaces with that label. |

5.3.4.2. Logical Network Cluster Settings Explained

| Field Name | Description |
|---|---|
| Attach/Detach Network to/from Cluster(s) | Allows you to attach or detach the logical network from clusters and specify whether the logical network will be a required network for individual clusters. Name: The name of the cluster to which the settings will apply. This value cannot be edited. Attach All: Allows you to attach or detach the logical network to or from all clusters. Alternatively, select or clear the Attach check box next to the name of each cluster to attach or detach the logical network to or from a given cluster. Required All: Allows you to specify whether the logical network is a required network on all clusters. Alternatively, select or clear the Required check box next to the name of each cluster to specify whether the logical network is a required network for a given cluster. |

5.3.5. Designate a Specific Traffic Type for a Logical Network with the Manage Networks Window

Specify the traffic type for the logical network to optimize the network traffic flow.

Procedure 5.3. Specifying Traffic Types for Logical Networks

- Click the Clusters tab in tree mode and select the cluster in the results list.

- Select the Logical Networks tab in the details pane to list the logical networks assigned to the cluster.

- Click to open the Manage Networks window.

Figure 5.5. Manage Networks Window

- Select appropriate check boxes.

- Click to save the changes and close the window.

You have optimized the network traffic flow by assigning a specific type of traffic to be carried on a specific logical network.

5.3.6. Explanation of Settings in the Manage Networks Window

| Field | Description/Action |
|---|---|
| Assign | Assigns the logical network to all hosts in the cluster. |
| Required | A network marked "required" must remain operational in order for the hosts associated with it to function properly. If a required network ceases to function, any hosts associated with it become non-operational. |
| Gluster Network | A logical network marked "Gluster Network" carries gluster network traffic. |

5.3.7. Network Labels

Network Label Associations

- When you attach a label to a logical network, that logical network will be automatically associated with any physical host network interfaces with the given label.

- When you attach a label to a physical host network interface, any logical networks with the given label will be automatically associated with that physical host network interface.

- Changing the label attached to a logical network or physical host network interface acts in the same way as removing a label and adding a new label. The association between related logical networks or physical host network interfaces is updated.

Network Labels and Clusters

- When a labeled logical network is added to a cluster and there is a physical host network interface in that cluster with the same label, the logical network is automatically added to that physical host network interface.

- When a labeled logical network is detached from a cluster and there is a physical host network interface in that cluster with the same label, the logical network is automatically detached from that physical host network interface.

Network Labels and Logical Networks With Roles

- When a labeled logical network is assigned to act as a display network or migration network, that logical network is then configured on the physical host network interface using DHCP so that the logical network can be assigned an IP address.
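The label matching described in this section can be modeled in a few lines of Python: a logical network is associated with every host network interface that carries the same label. The dictionary layout and the function name are assumptions for illustration, and the sketch ignores cluster membership and network roles.

```python
def associate_by_label(networks, interfaces):
    """Map each host interface to the logical networks sharing its label.

    networks:   dict of logical network name -> label (or None if unlabeled).
    interfaces: dict of (host, nic) -> label (or None if unlabeled).
    Both layouts are hypothetical.
    """
    associations = {}
    for nic, nic_label in interfaces.items():
        associations[nic] = sorted(
            network
            for network, network_label in networks.items()
            if nic_label is not None and network_label == nic_label
        )
    return associations
```

Because the associations are recomputed from the current labels, changing a label behaves like removing the old label and adding a new one: calling the function again after the change yields the updated associations.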

5.4. Logical Networks and Permissions

5.4.1. Managing System Permissions for a Network

- Create, edit and remove networks.

- Edit the configuration of the network, including configuring port mirroring.

- Attach and detach networks from resources including clusters and hosts.

5.4.2. Network Administrator and User Roles Explained

The table below describes the administrator and user roles and privileges applicable to network administration.

| Role | Privileges | Notes |

|---|---|---|

| NetworkAdmin | Network Administrator for cluster or host. The user who creates a network is automatically assigned NetworkAdmin permissions on the created network. | Can configure and manage the network of a particular cluster or host. A network administrator of a cluster inherits network permissions for storage devices within the cluster. To configure port mirroring on a storage device network, apply the NetworkAdmin role on the network. |

| NetworkUser | Logical network and network interface user for virtual machine and template. | Can attach or detach network interfaces from specific logical networks. |

Chapter 6. Managing Red Hat Gluster Storage Hosts

- Must belong to only one cluster in the system.

- Can have an assigned system administrator with system permissions.

Important

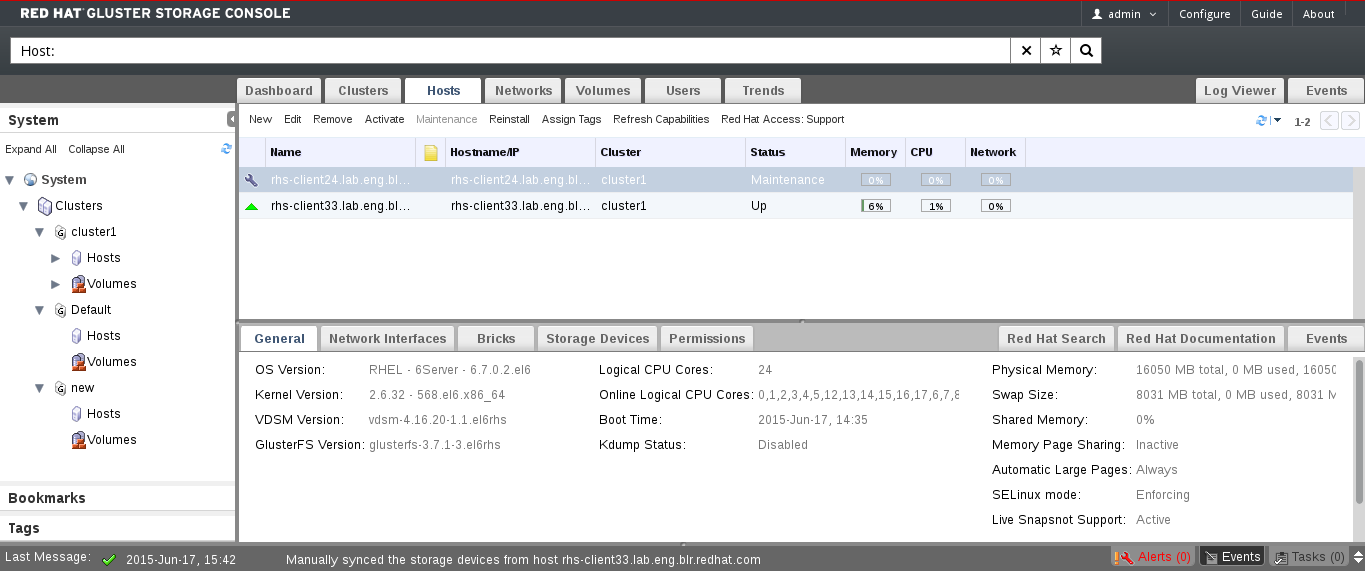

6.1. Hosts Properties

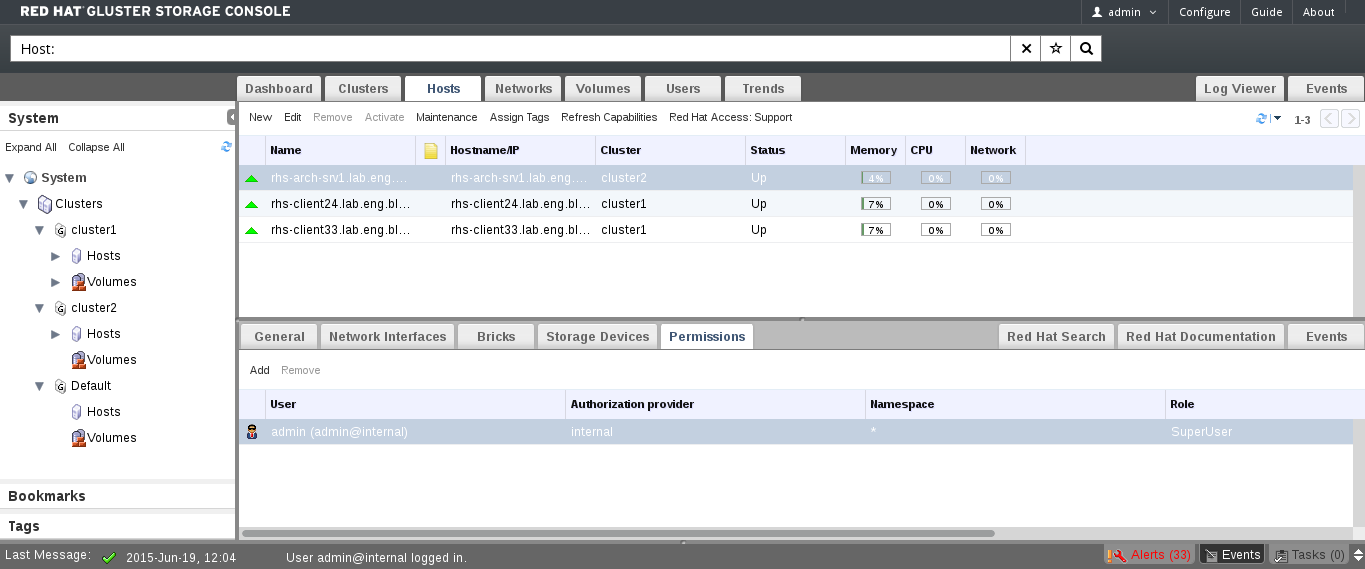

Figure 6.1. Hosts Details Pane

| Field | Description |
|---|---|
| Cluster | The selected cluster. |
| Name | The host name. |
| Address | The IP address or resolvable hostname of the host. |

6.2. Hosts Operations

6.2.1. Adding Hosts

Important

Before you can add a host to Red Hat Gluster Storage, ensure your environment meets the following criteria:

- The host hardware is Red Hat Enterprise Linux certified. See https://access.redhat.com/ecosystem/#certifiedHardware to confirm that the host has Red Hat certification.

- The host should have a resolvable hostname or static IP address.

- On Red Hat Enterprise Linux 7 nodes, register to the Red Hat Gluster Storage Server channels if the firewall needs to be configured automatically, because the iptables-service package is required.

Procedure 6.1. To Add a Host

- Click the Hosts tab to list available hosts.

- Click to open the New Host window.

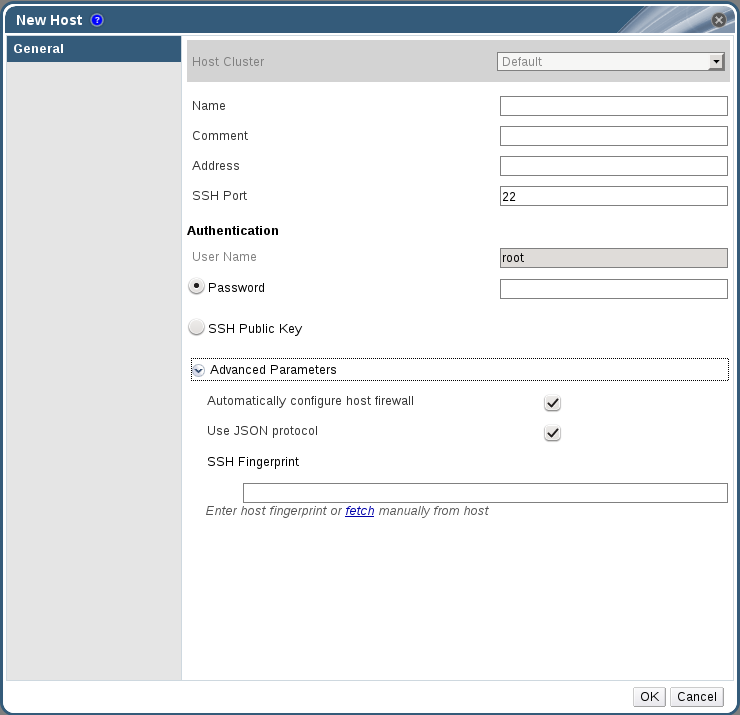

Figure 6.2. New Host Window

- Select the Host Cluster for the new host from the drop-down menu.

Table 6.2. Add Hosts Properties

| Field | Description |
|---|---|
| Host Cluster | The cluster to which the host belongs. |
| Name | The name of the host. This text field has a 40-character limit and must be a unique name with any combination of uppercase and lowercase letters, numbers, hyphens, and underscores. If Nagios is enabled, the host name given in the Name field of the Add Host window should match the host name given while configuring Nagios. |
| Address | The IP address or resolvable hostname of the host. |
| Root Password | The password of the host's root user. This can only be given when you add the host; it cannot be edited afterwards. |
| SSH Public Key | Copy the contents in the text box to the /root/.ssh/authorized_keys file on the host if you would like to use the Manager's SSH key instead of a password to authenticate with the host. |
| Automatically configure host firewall | When adding a new host, the Manager can open the required ports on the host's firewall. This is enabled by default. This is an Advanced Parameter. The required ports are opened if this option is selected. |
| SSH Fingerprint | You can fetch the host's SSH fingerprint, and compare it with the fingerprint you expect the host to return, ensuring that they match. This is an Advanced Parameter. |

Note

For Red Hat Enterprise Linux 7 hosts, iptables-service is used to manage the firewall, and existing firewalld configurations will not be enforced if "Automatically configure host firewall" is chosen.

- Enter the Name and Address of the new host.

- Select an authentication method to use with the host:

- Enter the root user's password to use password authentication.

- Copy the key displayed in the SSH Public Key field to /root/.ssh/authorized_keys on the host to use public key authentication.

- The mandatory steps for adding a Red Hat Gluster Storage host are complete. Click to show the advanced host settings:

- Optionally disable automatic firewall configuration.

- Optionally disable use of JSON protocol.

Note

With Red Hat Gluster Storage Console, the communication model between the engine and VDSM now uses JSON protocol, which reduces parsing time. As a result, the communication message format has changed from XML format to JSON format. Web requests have changed from synchronous HTTP requests to asynchronous TCP requests.

- Optionally add a host SSH fingerprint to increase security. You can add it manually, or fetch it automatically.

- Click to add the host and close the window. The new host displays in the list of hosts with a status of "Installing", then moves to the "Initialization" state, and then comes up.

Note

The host moves to Up status after passing through the "Installing" and "Initialization" states. The host has Non-Operational status when it is not compatible with the cluster compatibility version. The Non-Responsive status is displayed if the host is down or unreachable. You can view the progress of the host installation in the Details pane.
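The Name field rule from Table 6.2 can be checked before a host is submitted. The following is a minimal sketch, assuming the rule is exactly as described there (letters, numbers, hyphens, and underscores, up to 40 characters, unique). The function `is_valid_host_name` and its `existing_names` parameter are hypothetical and not part of the Console; the minimum length of one character and the case-sensitive uniqueness check are assumptions.

```python
import re

# Letters, digits, hyphens, and underscores; 1 to 40 characters.
# The lower bound of 1 is an assumption, only the 40-character limit is documented.
HOST_NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,40}")


def is_valid_host_name(name, existing_names=()):
    """Return True if name satisfies the documented Name field rules."""
    if not HOST_NAME_PATTERN.fullmatch(name):
        return False
    # Uniqueness check; exact (case-sensitive) comparison is an assumption.
    return name not in existing_names
```

For example, `is_valid_host_name("rhgs-node_01")` passes, while a name containing a space or a name longer than 40 characters is rejected.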

6.2.2. Activating Hosts

Procedure 6.2. To Activate a Host

- In the Hosts tab, select the host you want to activate.

- Click . The host status changes to Up.

6.2.3. Managing Host Network Interfaces

Note

6.2.3.1. Editing Host Network Interfaces

Procedure 6.3. To Edit a Host Network Interface

- Click the Hosts tab to display a list of hosts. Select the desired host to display the Details pane.

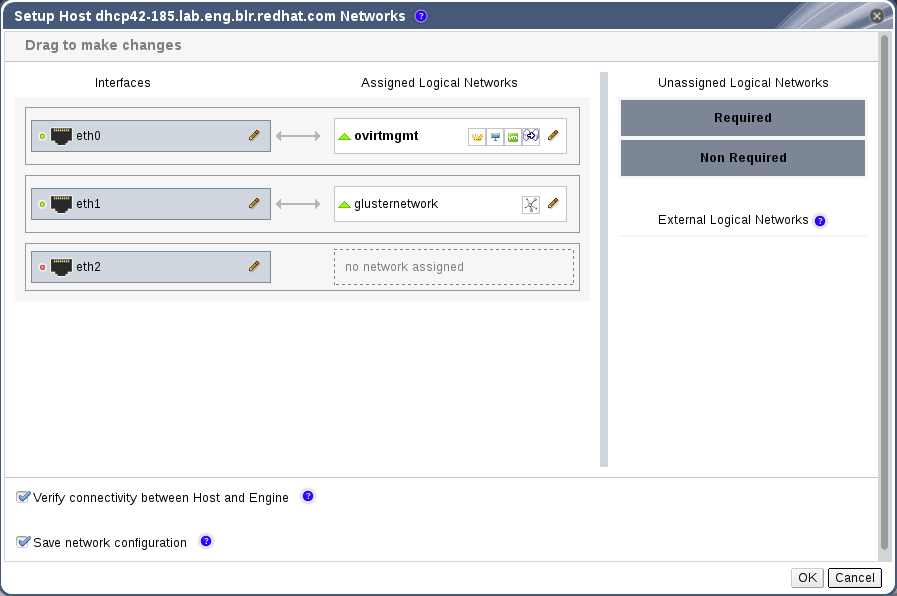

- Click to open the Setup Host Networks window.

Figure 6.3. Setup Host Networks Window

- Attach a logical network to a physical host network interface by selecting and dragging the logical network into the Assigned Logical Networks area next to the physical host network interface. Alternatively, right-click the logical network and select a network interface from the drop-down menu.

- If your logical network definition is not synchronized with the network configuration on the host, select the Sync network check box. A logical network cannot be edited or moved to another interface until it is synchronized.

- Configure the logical network:

- Hover your cursor over an assigned logical network and click the pencil icon to open the Edit Network window.

- Select a Boot Protocol:

- None

- DHCP

- Static - provide the IP and Subnet Mask.

- Click .

- Select Verify connectivity between Host and Engine to run a network check.

- Select Save network configuration if you want the network changes to be persistent when you reboot the environment.

- Click to implement the changes and close the window.
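When the Static boot protocol is chosen, the IP and Subnet Mask values can be sanity-checked before they are entered. This is a minimal sketch using Python's standard `ipaddress` module; the helper name `check_static_config` is hypothetical and the check is independent of whatever validation the Console itself performs.

```python
import ipaddress


def check_static_config(ip, subnet_mask):
    """Return the network the address belongs to, or raise ValueError."""
    # ip_interface accepts "address/netmask" and validates both parts.
    interface = ipaddress.ip_interface(f"{ip}/{subnet_mask}")
    return interface.network
```

For example, `check_static_config("192.168.1.10", "255.255.255.0")` returns the network `192.168.1.0/24`, and a malformed address or mask raises `ValueError`.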

6.2.3.2. Editing Management/Gluster Network Interfaces

Note

Important

Procedure 6.4. To Edit a Management Network Interface

- Click the Hosts tab to display a list of hosts. Select the desired host to display the Details pane.

- Edit the logical networks by hovering over an assigned logical network and clicking the pencil icon to open the Edit Management Network window.

Figure 6.4. Edit Management Network Dialog Box

- If your logical network definition is not synchronized with the network configuration on the host, select the Sync network check box.

- Select a Boot Protocol:

- None

- DHCP

- Static - provide the IP and Subnet Mask.

- Make the required changes to the management network interface:

- To attach the ovirtmgmt management network to a different network interface card, select a different interface from the drop-down list.

- Select the network setting from None, DHCP, or Static. For the Static setting, provide the IP, Subnet and Default Gateway information for the host.

- Click to confirm the changes.

- Select Verify connectivity between host and engine if required.

- Select Save network configuration to make the changes persistent when you reboot the environment.

- Click .

- Activate the host. See Section 6.2.2, “Activating Hosts”.

6.2.4. Managing Gluster Sync

Procedure 6.5. To Import a Host to a Cluster

- Click the Cluster tab and select a cluster to display the General tab with details of the cluster.

- In , click Import to display the Add Hosts window.

Figure 6.5. Add Hosts Window

- Enter the Name and Root Password. Select Use a common password if you want to use the same password for all hosts.

- Click .

- Click to add the host to the cluster.

Procedure 6.6. To Detach a Host from a Cluster

- Click the Cluster tab and select a cluster to display the General tab with details of the cluster.

- In , click Detach to display the Detach Hosts window.

- Select the host you want to detach and click . Select Force Detach if you want to perform force removal of the host from the cluster.

6.2.5. Deleting Hosts

Note

Procedure 6.7. To Delete a Host

- Click the Hosts tab to display a list of hosts. Select the host you want to remove. If the required host is not visible, perform a search.

- Click to place the host into maintenance. Click to confirm the action. The Status field of the host changes to Preparing for Maintenance, followed by Maintenance. The icon changes to indicate that the host is in maintenance mode.

Important

If you move a host into maintenance mode, all gluster processes on it, such as brick, self-heal, and geo-replication, are stopped. If you wish to reuse this host, manually remove the gluster-related information stored in /var/lib/glusterd.

- Click .

- Click to confirm.

6.2.6. Managing Storage Devices

Note

Important

Procedure 6.8. Creating Bricks

- Click the Hosts tab to display a list of hosts.

- Select a host and select the Storage Devices sub-tab. The list of storage devices is displayed.

- Select a storage device from the list and click Create Brick. The Create Brick page is displayed.

- Enter the Brick Name, Mount Point name, and the No. of Physical Disks in RAID Volume. The Mount Point is auto-suggested and can be edited.

- Confirm the Raid Type.

- Click OK. A new thinly provisioned logical volume is created with the recommended Red Hat Gluster Storage configuration using the selected storage devices. This logical volume is mounted at the specified mount point, and the mount point can then be used as a brick in a gluster volume.

Important

- semanage fcontext -a -t glusterd_brick_t '/rhgs/brick1(/.*)?'

- restorecon -Rv /rhgs/brick1
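The two commands above label the example mount point /rhgs/brick1 with the glusterd_brick_t SELinux type. For a different mount point, the same pair of commands can be generated as follows. This is a minimal sketch: `selinux_label_commands` is a hypothetical helper that only builds the command strings and does not run them.

```python
import shlex


def selinux_label_commands(mount_point):
    """Return the semanage and restorecon commands for a brick mount point."""
    path = mount_point.rstrip("/")
    # Same regular expression shape as the example: the path and everything below it.
    pattern = f"{path}(/.*)?"
    return [
        f"semanage fcontext -a -t glusterd_brick_t {shlex.quote(pattern)}",
        f"restorecon -Rv {shlex.quote(path)}",
    ]
```

Calling `selinux_label_commands("/rhgs/brick1")` reproduces the two commands shown above.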

6.3. Maintaining Hosts

Warning

6.3.1. Moving Hosts into Maintenance Mode

Important

Procedure 6.9. To Move a Host into Maintenance Mode

- Click the Hosts tab to display a list of hosts.

- Click to place the host into maintenance. Click to confirm the action. The Status field of the host changes to Preparing for Maintenance, followed by Maintenance. The icon changes to indicate that the host is in maintenance mode.

- Perform required tasks. When you are ready to reactivate the host, click .

- After the host reactivates, the Status field of the host changes to Up. If the Red Hat Gluster Storage Console is unable to contact or control the host, the Status field displays Non-responsive.
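The status changes described in this procedure can be summarized as a small lookup table. This is an illustrative sketch only: the table covers just the transitions named in the steps above, and `TRANSITIONS` and `statuses_after` are hypothetical names, not the Console's internal state machine.

```python
# Transitions taken from the procedure text; not an exhaustive list.
TRANSITIONS = {
    ("Up", "maintenance"): ["Preparing for Maintenance", "Maintenance"],
    ("Maintenance", "activate"): ["Up"],
}


def statuses_after(current, action, reachable=True):
    """Return the sequence of statuses shown after an action on a host."""
    if not reachable:
        # The Console cannot contact or control the host.
        return ["Non-responsive"]
    # Unknown combinations leave the status unchanged in this sketch.
    return TRANSITIONS.get((current, action), [current])
```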

6.3.2. Editing Host Details

Procedure 6.10. To Edit Host Details

- Click the Hosts tab to display a list of hosts.

- If you are moving the host to a different cluster, first place it in maintenance mode by clicking . Click to confirm the action. The Status field of the host changes to Preparing for Maintenance, followed by Maintenance. The icon changes to indicate that the host is in maintenance mode.

- Click to open the Edit Host dialog box.

- To move the host to a different cluster, select the cluster from the Host Cluster drop-down list.

- Make the required edits and click . Activate the host to start using it. See Section 6.2.2, “Activating Hosts”.

6.3.3. Customizing Hosts

Note

Procedure 6.11. To Tag a Host

- Click the Hosts tab to display a list of hosts. Select the desired host to display the Details pane.

- Click to open the Assign Tags dialog box.

- Select the required tags and click .

6.4. Hosts Entities

6.4.1. Viewing General Host Information

Procedure 6.12. To View General Host Information

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display general information, network interface information and host information in the Details pane.

- Click to display the following information:

- Version information for OS, Kernel, VDSM, and RHS.

- Status of memory page sharing (Active/Inactive) and automatic large pages (Always).

- CPU information: number of CPUs attached, CPU name and type, total physical memory allocated to the selected host, swap size, and shared memory.

- An alert if the host is in Non-Operational or Install-Failed state.

6.4.2. Viewing Network Interfaces on Hosts

Procedure 6.13. To View Network Interfaces on a Host

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display the Details pane.

- Click the Network Interfaces tab.

6.4.3. Viewing Permissions on Hosts

Procedure 6.14. To View Permissions on a Host

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display the Details pane.

- Click the Permissions tab.

6.4.4. Viewing Events from a Host

Procedure 6.15. To View Events from a Host

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display the Details pane.

- Click the Events tab.

6.4.5. Viewing Bricks

Procedure 6.16. To View Bricks on a Host

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display the Details pane.

- Click the Bricks tab.

6.5. Hosts Permissions

Note

Procedure 6.17. To Add a Host Administrator Role

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display the Details pane.

- Click the Permissions tab to display a list of users and their current roles.

Figure 6.6. Host Permissions Window

- Click to display the Add Permission to User dialog box. Enter all or part of a name or user name in the Search box, then click . A list of possible matches displays in the results list.

- Select the user you want to modify. Scroll through the Role to Assign list and select .

- Click to display the name of the user and their assigned role in the Permissions tab.

Procedure 6.18. To Remove a Host Administrator Role

- Click the Hosts tab to display a list of hosts. If the required host is not visible, perform a search.

- Select the desired host to display the Details pane.

- Click the Permissions tab to display a list of users and their current roles.

- Select the desired user and click

Chapter 7. Managing Volumes

Note

Note

7.1. Creating a Volume

Procedure 7.1. Creating a Volume

- Click the Volumes tab. The Volumes tab lists all volumes in the system.

- Click the . The New Volume window is displayed.

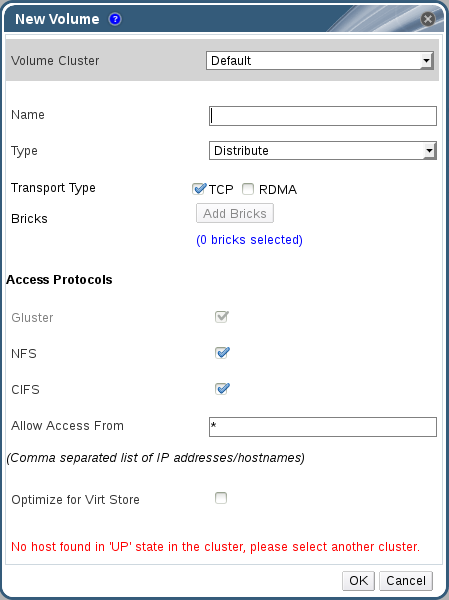

Figure 7.1. New Volume

- Select the cluster from the Volume Cluster drop-down list.

- In the Name field, enter the name of the volume.

Note

You cannot create a volume with the name volume.

- Select the type of the volume from the Type drop-down list. You can set the volume type to Distribute, Replicate, or Distributed Replicate.

Note

- Creating replicated volumes with replica count more than 3 is under technology preview.

- As necessary, click Add Bricks to add bricks to your volume.

Note

At least one brick is required to create a volume. The number of bricks required depends on the type of the volume. For more information on adding bricks to a volume, see Section 7.6.1, “Adding Bricks”.

- Configure the Access Protocol for the new volume by selecting the NFS check box, the CIFS check box, or both.

- In the Allow Access From field, specify the volume access control as a comma-separated list of IP addresses or hostnames. You can use wildcards to specify ranges of addresses. For example, an asterisk (*) specifies all IP addresses or hostnames. You need to use IP-based authentication for Gluster Filesystem and NFS exports. You can optimize volumes for virt-store by selecting .

- Click to create the volume. The new volume is added and displays on the Volume tab. The volume is configured, and group and storage-owner-gid options are set.
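The Allow Access From value described in this procedure is a comma-separated list that may contain wildcards. The following minimal sketch shows how such a list can be split and matched against a client address. It uses shell-style matching from Python's `fnmatch` module as an approximation; the exact matching rules applied by Red Hat Gluster Storage are not reproduced here, and both function names are hypothetical.

```python
from fnmatch import fnmatchcase


def parse_allow_list(value):
    """Split a comma-separated Allow Access From value into entries."""
    return [entry.strip() for entry in value.split(",") if entry.strip()]


def is_allowed(client, allow_list):
    """Return True if the client matches any entry (wildcards permitted)."""
    return any(fnmatchcase(client, pattern) for pattern in allow_list)
```

With the list `"192.168.1.*, server1.example.com"`, the client `192.168.1.25` matches and `10.0.0.1` does not. A list containing only `*` matches every client.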

7.2. Starting Volumes

Procedure 7.2. Starting a Volume

- In the Volumes tab, select the volume to be started. You can select multiple volumes to start by using the Shift or Ctrl key.

- Click the button.

7.3. Configuring Volume Options

Procedure 7.3. Configuring Volume Options

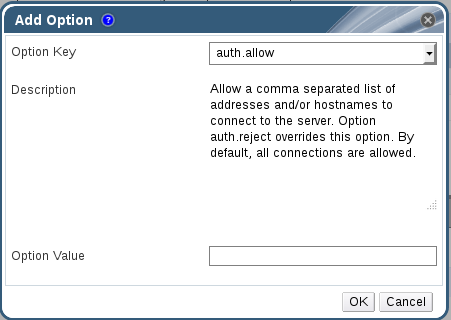

- Click the Volumes tab. A list of volumes displays.

- Select the volume to tune, and click the Volume Options tab from the Details pane. The Volume Options tab lists the options set for the volume.

- Click to set an option. The Add Option window is displayed. Select the option key from the drop-down list and enter the option value.

Figure 7.2. Add Option

- Click . The option is set and displays in the Volume Options tab.

7.3.1. Edit Volume Options

Procedure 7.4. Editing Volume Options

- Click the Volumes tab. A list of volumes displays.

- Select the volume to edit, and click the Volume Options tab from the Details pane. The Volume Options tab lists the options set for the volume.

- Select the option to edit. Click . The Edit Option window is displayed. Enter a new value for the option in the Option Value field.

- Click . The edited option displays in the Volume Options tab.

7.3.2. Resetting Volume Options

Procedure 7.5. Resetting Volume Options

- Click the Volumes tab. A list of volumes is displayed.

- Select the volume and click the Volume Options tab from the Details pane. The Volume Options tab lists the options set for the volume.

- Select the option to reset. Click Reset. The Reset Option window is displayed, prompting you to confirm the reset.

- Click . The selected option is reset. The name of the reset volume option is displayed in the Events tab.

Note

7.4. Stopping Volumes

Note

Procedure 7.6. Stopping a Volume

- In the Volumes tab, select the volume to be stopped. You can select multiple volumes to stop by using the Shift or Ctrl key.

- Click . A window is displayed, prompting you to confirm the stop.

Note

Stopping a volume makes its data inaccessible.

- Click OK.

7.5. Deleting Volumes

Procedure 7.7. Deleting a Volume

- In the Volumes tab, select the volume to be deleted.

- Click Stop. The volume stops.

- Click . A window is displayed, prompting to confirm the deletion. Click . The volume is removed from the cluster.

7.6. Managing Bricks

Note

7.6.1. Adding Bricks

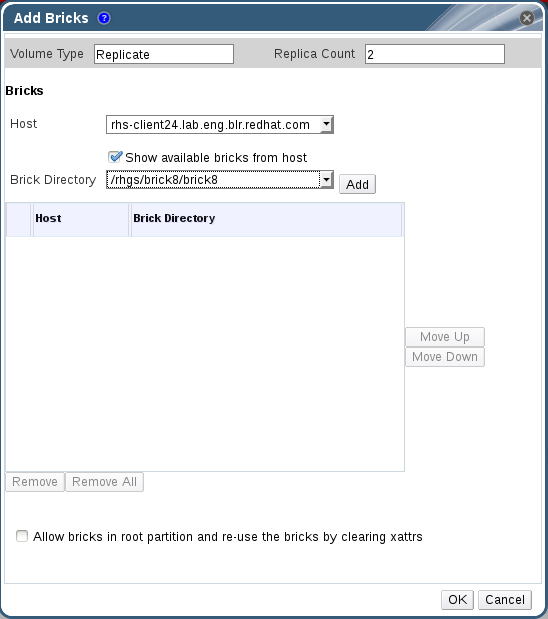

Procedure 7.8. Adding a Brick

- Click the Volumes tab. A list of volumes displays.

- Select the volume to which the new bricks are to be added. Click the Bricks tab from the Details pane. The Bricks tab lists the bricks of the selected volume.

- Click to add new bricks. The Add Bricks window is displayed.

Figure 7.3. Add Bricks

Table 7.1. Add Bricks Tab Properties

| Field/Tab | Description/Action |
|---|---|
| Volume Type | The type of volume. |
| Replica Count | Number of replicas to keep for each stored item. |
| Host | The selected host from which new bricks are to be added. |
| Brick Directory | The directory in the host. |

- Use the drop-down menu to select the host on which the brick resides.

- Select the brick directory from the drop-down menu.

Note

Uncheck Show available bricks from host to type the brick directory path when the brick is not shown in the brick directory drop-down.

- Select the Allow bricks in root partition and re-use the bricks by clearing xattrs check box to use the system's root partition for storage and to re-use existing bricks by clearing their extended attributes.

Note

It is not recommended to reuse bricks of a restored volume as is. To reuse such a brick, delete the logical volume and recreate it from the same or a different pool (the data on the logical volume will be lost). Otherwise, there is a copy-on-write performance penalty because the original brick and the restored brick share blocks.

Note

Using the system's root partition as a storage backend is not recommended. Using the original bricks of a snapshot-restored volume as new bricks is also not recommended.

- Click and click . The new bricks are added to the volume and are displayed in the Bricks tab.

7.6.2. Removing Bricks

Note

- When shrinking distributed replicated volumes, the number of bricks being removed must be a multiple of the replica count. For example, to shrink a distributed replicated volume with a replica count of 2, you need to remove bricks in multiples of 2 (such as 2, 4, 6, 8). In addition, the bricks you are removing must be from the same replica set. In a non-replicated volume, all bricks must be available in order to migrate data and perform the remove brick operation. In a replicated volume, at least one of the bricks in the replica must be available.

- You can monitor the status of Remove Bricks operation from the Tasks pane.

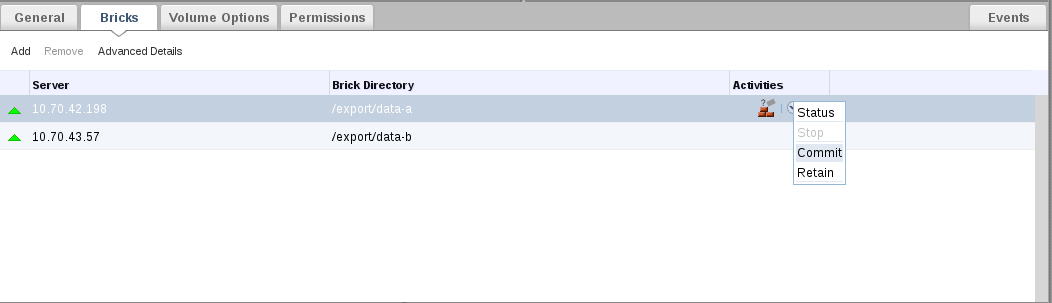

- You can perform Commit, Retain, view Status and Stop from remove-brick icon in the Activities column of Volumes and Bricks sub-tab.
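The brick-count rule in the note above can be checked before a removal is started. This is a minimal sketch, not the Console's own validation. It assumes that bricks are listed in volume order and that each consecutive group of `replica_count` bricks forms one replica set; the function name and the brick naming are hypothetical.

```python
def check_brick_removal(bricks, replica_count, to_remove):
    """Return a list of problems; an empty list means the selection passes."""
    problems = []
    if len(to_remove) % replica_count != 0:
        problems.append("count is not a multiple of the replica count")
    # Assumption: consecutive groups of replica_count bricks form a replica set.
    replica_sets = [
        set(bricks[i:i + replica_count])
        for i in range(0, len(bricks), replica_count)
    ]
    selected = set(to_remove)
    for replica_set in replica_sets:
        chosen = replica_set & selected
        # A replica set must be removed completely or not at all.
        if chosen and chosen != replica_set:
            problems.append("partial replica set: " + ", ".join(sorted(chosen)))
    return problems
```

For a replica count of 2 and the bricks `h1:/b1, h2:/b1, h1:/b2, h2:/b2`, removing `h1:/b2` and `h2:/b2` passes, while removing `h1:/b1` and `h1:/b2` is flagged because each selected brick leaves its replica partner behind.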

Procedure 7.9. Removing Bricks from an Existing Volume

- Click the Volumes tab. A list of volumes is displayed.

- Select the volume from which bricks are to be removed. Click the Bricks tab from the Details pane. The Bricks tab lists the bricks for the volume.

- Select the brick to remove. Click . The Remove Bricks window is displayed, prompting to confirm the removal of the bricks.

Warning

If the brick is removed without selecting the Migrate Data from the bricks check box, the data on the brick being removed will not be accessible on the glusterFS mount point. If the Migrate Data from the bricks check box is selected, the data is migrated to other bricks, and on a successful commit, the information about the removed bricks is deleted from the volume configuration. Data can still be accessed directly from the brick.

- Click . The remove brick operation starts.

Note

- Once the remove brick operation starts, the remove-brick icon is displayed in the Activities column of both the Volumes and Bricks sub-tabs.

- After completion of the remove brick operation, the remove brick icon disappears after 10 minutes.

- In the Activities column, ensure that data migration is completed and select the drop down of the remove-brick icon corresponding to the volume from which bricks are to be removed.

- Click Commit to perform the remove brick operation.

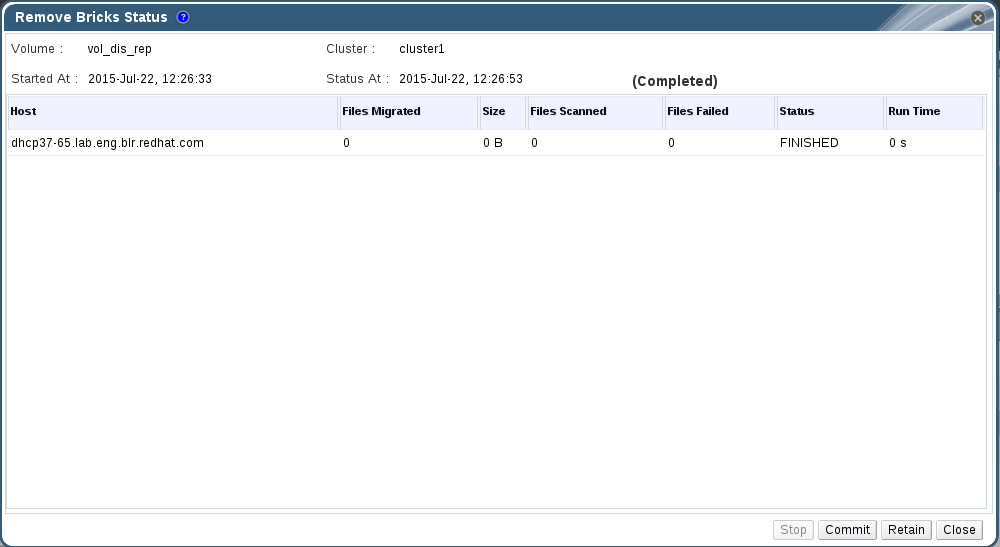

Figure 7.4. Remove Bricks Commit

Note

The Commit option is enabled only if the data migration is completed.

The remove brick operation is completed and the status is displayed in the Activities column. You can check the status of the remove brick operation by selecting Status from the Activities column.

7.6.2.1. Stopping a Remove Brick Operation

Note

- Stop remove-brick operation is a technology preview feature. Technology Preview features are not fully supported under Red Hat subscription level agreements (SLAs), may not be functionally complete, and are not intended for production use. However, these features provide early access to upcoming product innovations, enabling customers to test functionality and provide feedback during the development process. As Red Hat considers making future iterations of Technology Preview features generally available, we will provide commercially reasonable efforts to resolve any reported issues that customers experience when using these features.

- Files which were migrated during Remove Brick operation are not migrated to the same brick when the operation is stopped.

Procedure 7.10. Stopping a Remove Brick Operation

- Click the Volumes tab. A list of volumes displays.

- In the Activities column, select the drop down of the remove-brick icon corresponding to the volume to stop remove brick.

- Click Stop to stop the remove brick operation. The operation is stopped and the remove-brick icon in the Activities column is updated. The remove brick status is displayed after the operation stops. You can also view the status of the remove brick operation by selecting Status from the drop-down of the remove-brick icon in the Activities column of the Volumes and Bricks sub-tabs.

7.6.2.2. Viewing Remove Brick Status

Procedure 7.11. Viewing Remove Brick Status

- Click the Volumes tab. A list of volumes displays.

- In the Activities column, click the arrow corresponding to the volume.

- Click Status to view the status of the remove brick operation. The Remove Bricks Status window displays.

Figure 7.5. Remove Brick Status

- Click one of the options below for the corresponding results

- Stop to stop the remove brick operation

- Commit to commit the remove brick operation

- Retain to retain the brick selected for removal

- Close to close the remove-brick status popup

7.6.2.3. Retaining a brick selected for Removal

Note

Procedure 7.12. Retaining a Brick selected for Removal

- Click the Volumes tab. A list of volumes displays.

- In the Activities column, click the arrow corresponding to the volume.

- Click Retain to retain the brick selected for removal. The brick is not removed and the status of the operation is displayed in the remove-brick icon in the Activities column. You can also check the status by selecting the Status option from the drop-down of the remove-brick icon in the Activities column.

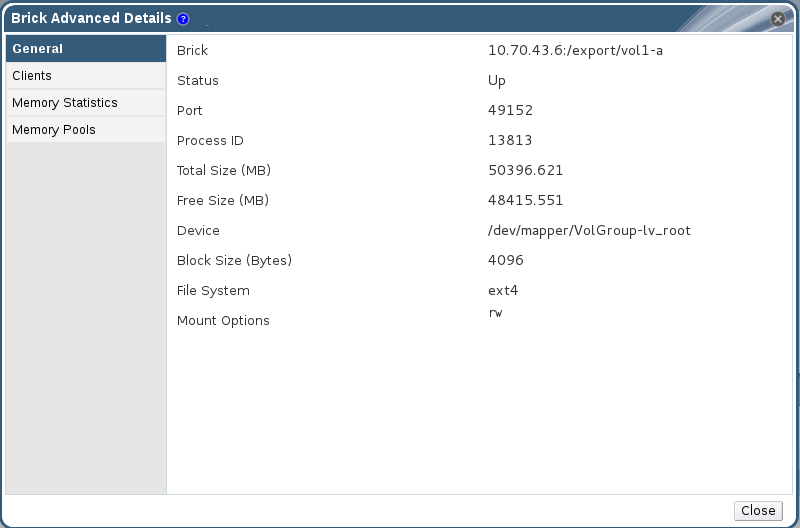

7.6.3. Viewing Advanced Details

Procedure 7.13. Viewing Advanced Details

- Click the Volumes tab. A list of volumes displays.

- Select the required volume and click the tab from the Details pane.

- Select the brick and click . The Brick Advanced Details window displays.

Figure 7.6. Brick Advanced Details

| Field/Tab | Description/Action |
|---|---|
| General | Displays additional information about the bricks. |
| Clients | Displays a list of clients accessing the volumes. |
| Memory Statistics/Memory Pool | Displays the details of memory usage and memory pool for the bricks. |

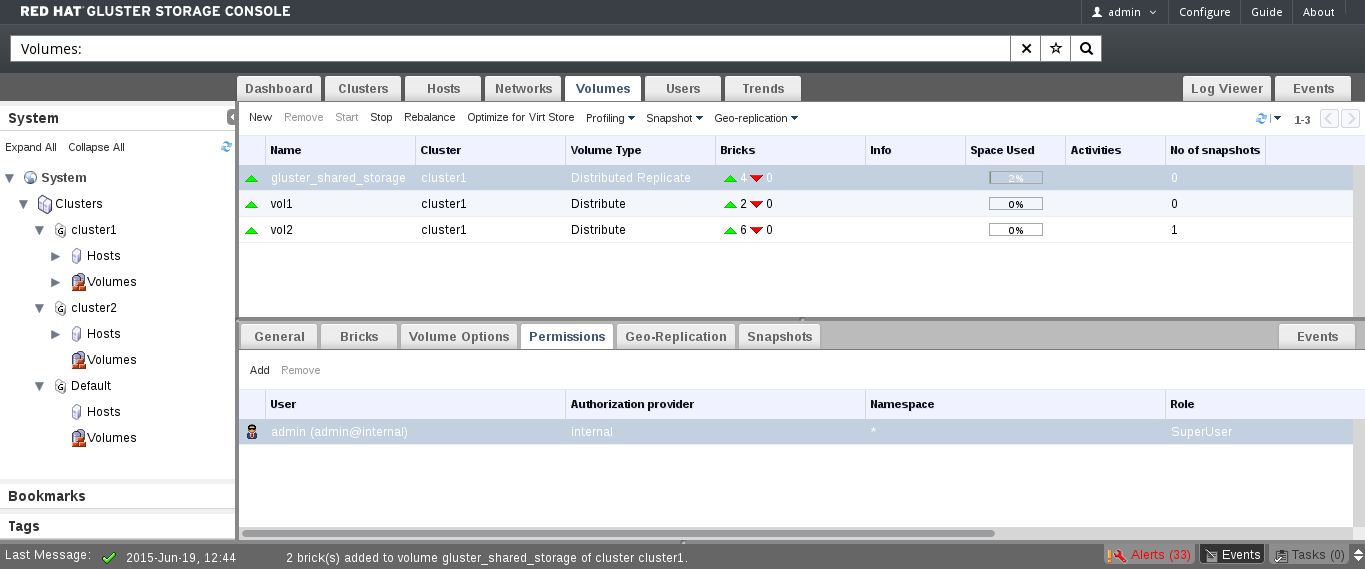

7.7. Volumes Permissions

Procedure 7.14. Assigning a System Administrator Role for a Volume

- Click the Volumes tab. A list of volumes displays.

- Select the volume to edit, and click the Permissions tab from the Details pane. The Permissions tab lists users and their current roles and permissions, if any.

Figure 7.7. Volume Permissions

- Click to add an existing user. The Add Permission to User window is displayed. Enter a name, a user name, or part thereof in the Search text box, and click . A list of possible matches displays in the results list.

- Select the check box of the user to be assigned the permissions. Scroll through the Role to Assign list and select GlusterAdmin.

Figure 7.8. Assign GlusterAdmin Permission

- Click . The name of the user displays in the Permissions tab, with an icon and the assigned role.

Note

Procedure 7.15. Removing a System Administrator Role

- Click the Volumes tab. A list of volumes displays.

- Select the required volume and click the Permissions tab from the Details pane. The Permissions tab lists users and their current roles and permissions, if any. The Super User and Cluster Administrator, if any, display in the Inherited Permissions tab. However, none of these higher level roles can be removed.

- Select the appropriate user.

- Click . A window is displayed, prompting to confirm removing the user. Click . The user is removed from the Permissions tab.

7.8. Rebalancing Volume

- Start Rebalance

- Stop Rebalance

- View Rebalance Status

Note

7.8.1. Start Rebalance

- Click the Volumes tab. The Volumes tab is displayed with the list of all volumes in the system.

- Select the volume that you want to Rebalance.

- Click Rebalance. The Rebalance process starts and the rebalance icon is displayed in the Activities column of the volume. A mouseover message indicates that the rebalance is in progress. You can view the rebalance status by selecting Status from the rebalance drop-down list.

Note

After completion of the rebalance operation, the rebalance icon disappears after 10 minutes.

7.8.2. Stop Rebalance

- Click the Volumes tab. The Volumes tab is displayed with the list of all volumes in the system.

- Select the volume on which Rebalance needs to be stopped.

Note

- You cannot stop rebalance for multiple volumes.

- Rebalance can be stopped for a volume only if it is in progress.

- In the Activities column, select the drop-down of the Rebalance icon corresponding to the volume.

- Click Stop. The Stop Rebalance window is displayed.

- Click OK to stop rebalance. The Rebalance is stopped and the status window is displayed. You can also check the status of the Rebalance operation by selecting the Status option from the drop-down of the Rebalance icon in the Activities column.

7.8.3. View Rebalance Status

- Click the Volumes tab. The Volumes tab is displayed with the list of all volumes in the system.

- Select the volume on which Rebalance is in progress, stopped, or completed.

- Click the Status option from the Rebalance icon drop-down list. The Rebalance Status page is displayed.

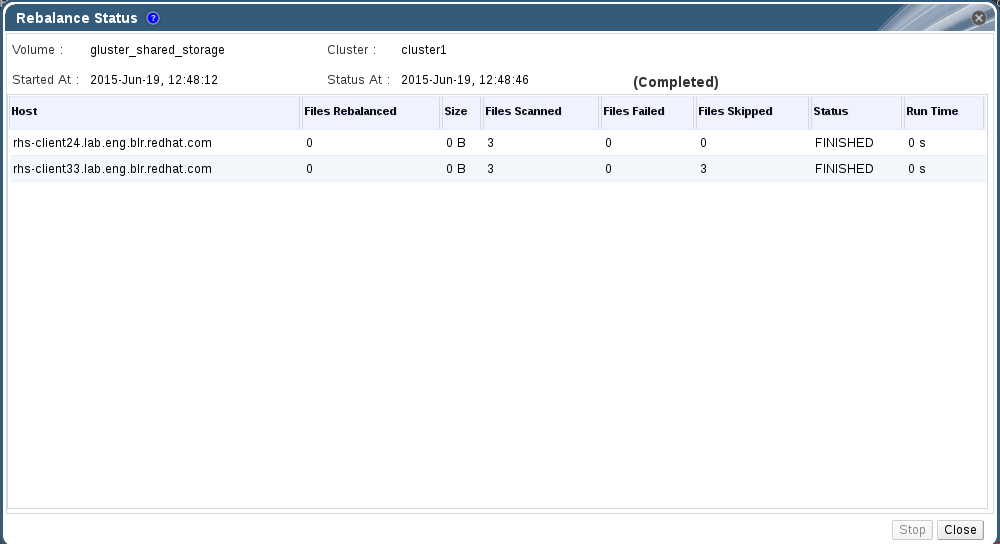

Figure 7.9. Rebalance Status

Note

If the Rebalance Status window is open while Rebalance is stopped using the CLI, the status is displayed as Stopped. If the Rebalance Status window is not open, the task status is displayed as Unknown, because the status update depends on the gluster CLI.

You can also stop the Rebalance operation by clicking Stop in the Rebalance Status window.

Chapter 8. Managing Gluster Hooks

- View a list of hooks available in the hosts.

- View the content and status of hooks.

- Enable or disable hooks.

- Resolve hook conflicts.

8.1. Viewing the list of Hooks

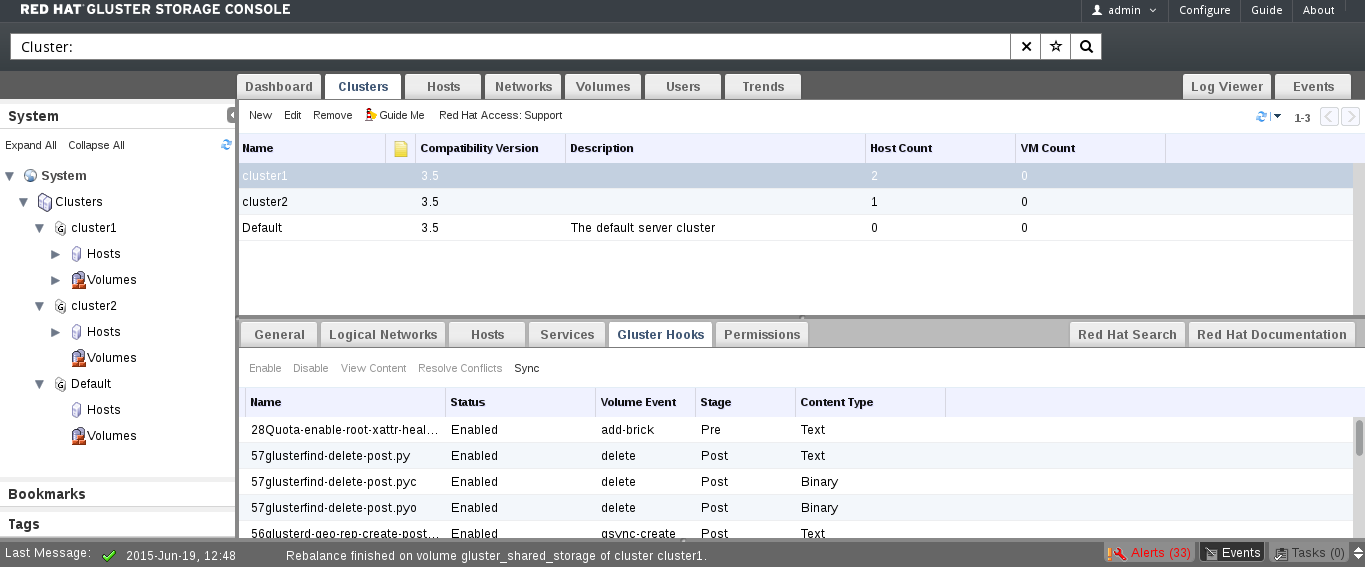

Figure 8.1. Gluster Hooks

8.2. Viewing the Content of Hooks

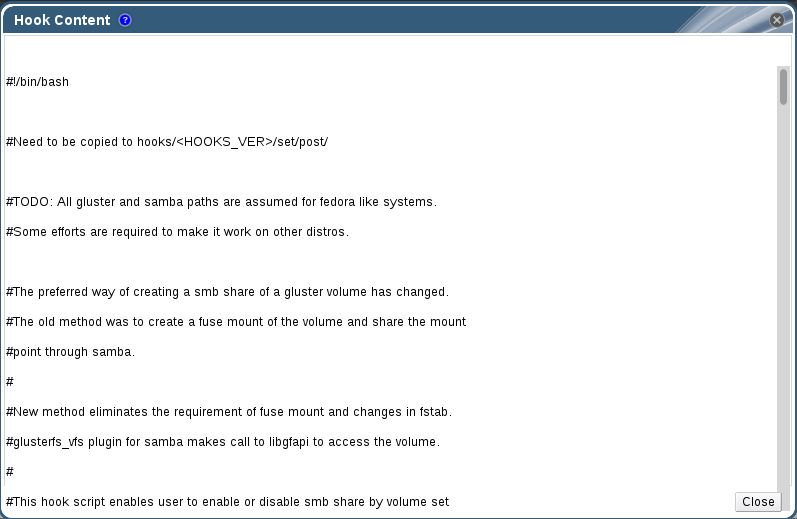

Procedure 8.1. Viewing the Content of a Hook

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select a hook with content type Text and click . The Hook Content window displays with the content of the hook.

Figure 8.2. Hook Content

8.3. Enabling or Disabling Hooks

Procedure 8.2. Enabling or Disabling a Hook

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select the hook and click or . If Disable is selected, the Disable Gluster Hooks dialog box displays, prompting you to confirm disabling the hook. Click OK to confirm. The hook is enabled or disabled on all nodes of the cluster, and the updated status displays in the Gluster Hooks sub-tab.

8.4. Refreshing Hooks

Procedure 8.3. Refreshing a Hook

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Click . The hooks are synchronized and displayed.

8.5. Resolving Conflicts

- Content Conflict - the content of the hook is different across servers.

- Status Conflict - the status of the hook is different across servers.

- Missing Conflict - one or more servers of the cluster do not have the hook.

- Content + Status Conflict - both the content and status of the hook are different across servers.

- Content + Status + Missing Conflict - both the content and status of the hook are different across servers, or one or more servers of the cluster do not have the hook.
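The conflict types listed above can be derived by comparing the copies of one hook across servers. The following is a minimal sketch of that comparison, not the Console's implementation. It assumes each server reports either a (content checksum, status) pair or nothing when the hook is absent; `classify_hook_conflicts` and the input shape are hypothetical.

```python
def classify_hook_conflicts(copies):
    """Return the set of conflict kinds for one hook.

    copies maps a server name to a (checksum, status) tuple,
    or to None when that server does not have the hook.
    """
    present = [copy for copy in copies.values() if copy is not None]
    conflicts = set()
    if len(present) < len(copies):
        conflicts.add("Missing")
    if len({checksum for checksum, _ in present}) > 1:
        conflicts.add("Content")
    if len({status for _, status in present}) > 1:
        conflicts.add("Status")
    return conflicts
```

An empty set means the hook is consistent across servers. A result containing both "Content" and "Status" corresponds to the Content + Status Conflict entry in the list above.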

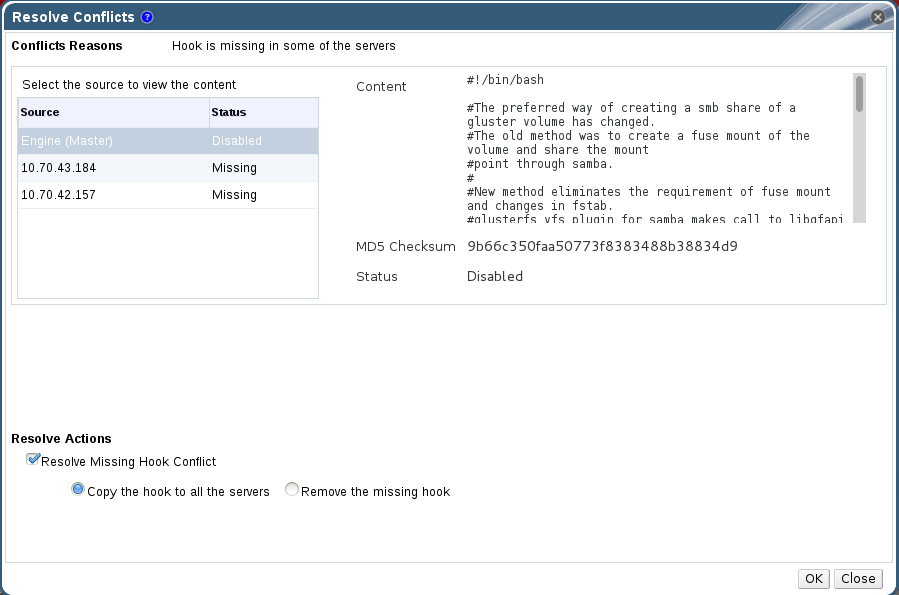

8.5.1. Resolving Missing Hook Conflicts

Procedure 8.4. Resolving a Missing Hook Conflict

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select a hook causing a conflict and click Resolve Conflicts. The Resolve Conflicts window displays.

Figure 8.3. Missing Hook Conflict

- Select one of the options given below:

- Copy the hook to all the servers to copy the hook to all servers.

- Remove the missing hook to remove the hook from all servers and the engine.

- Click OK. The conflict is resolved.

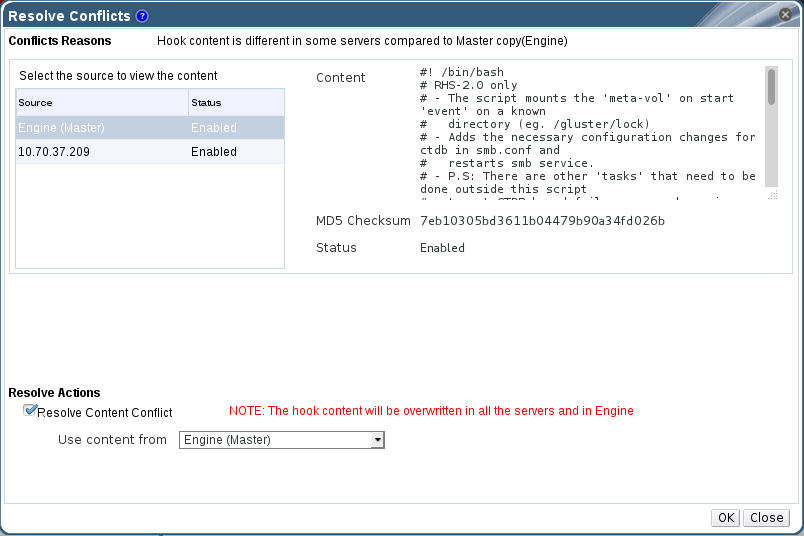

8.5.2. Resolving Content Conflicts

Procedure 8.5. Resolving a Content Conflict

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select the conflicted hook and click Resolve Conflicts. The Resolve Conflicts window displays.

Figure 8.4. Content Conflict

- Select an option from the drop-down list:

- Select a server to copy the content of the hook from the selected server.

Or

- Select Engine (Master) to copy the content of the hook from the engine copy.

Note

The content of the hook will be overwritten in all servers and in the engine.

- Click OK. The conflict is resolved.

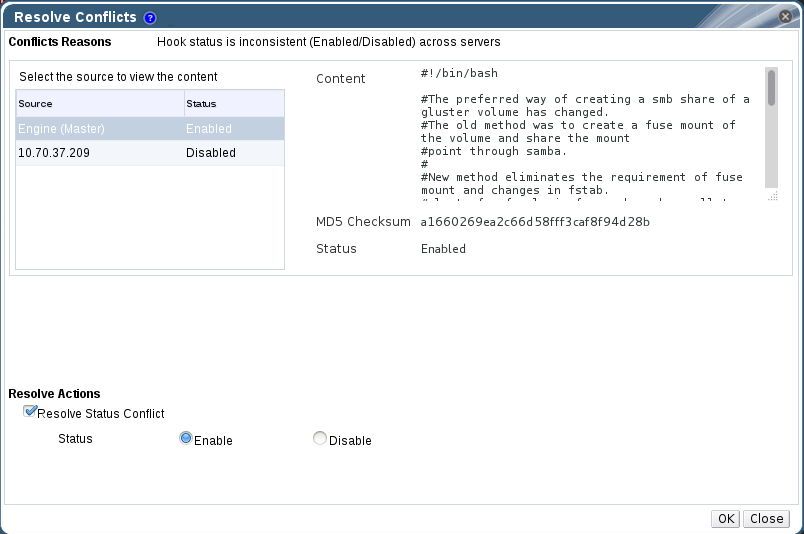

8.5.3. Resolving Status Conflicts

Procedure 8.6. Resolving a Status Conflict

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select the conflicted hook and click Resolve Conflicts. The Resolve Conflicts window displays.

Figure 8.5. Status Conflict

- Set Hook Status to Enable or Disable.

- Click OK. The conflict is resolved.

8.5.4. Resolving Content and Status Conflicts

Procedure 8.7. Resolving a Content and Status Conflict

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select a hook causing a conflict and click Resolve Conflicts. The Resolve Conflicts window displays.

- Select an option from the drop-down list to resolve the content conflict:

- Select a server to copy the content of the hook from the selected server.

Or

- Select Engine (Master) to copy the content of the hook from the engine copy.

Note

The content of the hook will be overwritten in all servers and in the engine.

- Set Hook Status to Enable or Disable to resolve the status conflict.

- Click OK. The conflict is resolved.

8.5.5. Resolving Content, Status, and Missing Conflicts

Procedure 8.8. Resolving a Content, Status and Missing Conflict

- Click the Cluster tab and select a cluster. A Gluster Hooks sub-tab displays, listing the hooks in the cluster.

- Select the conflicted hook and click Resolve Conflicts. The Resolve Conflicts window displays.

- Select one of the options given below to resolve the missing conflict:

- Copy the hook to all the servers.

- Remove the missing hook.

- Select an option from the drop-down list to resolve the content conflict:

- Select a server to copy the content of the hook from the selected server.

Or

- Select Engine (Master) to copy the content of the hook from the engine copy.

Note

The content of the hook will be overwritten in all servers and in the engine.

- Set Hook Status to Enable or Disable to resolve the status conflict.

- Click OK. The conflict is resolved.

Chapter 9. Managing Snapshots

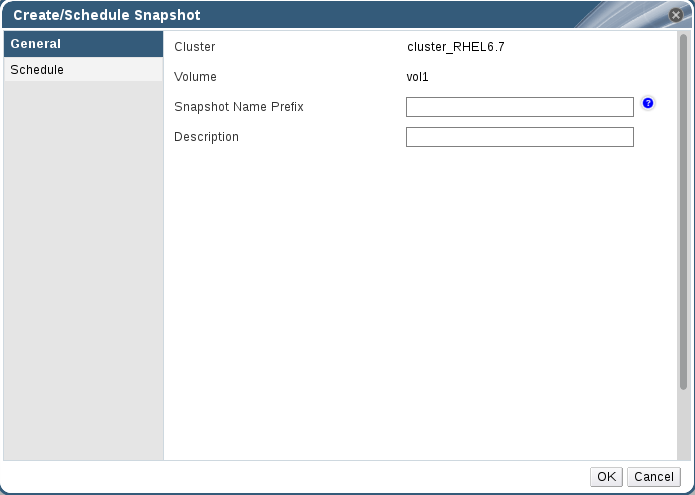

9.1. Creating Snapshots

Procedure 9.1. Creating Snapshots

- Click the Volumes tab. The list of all volumes is displayed.

- Select the volume for which you want to create a snapshot.

- Click Snapshot and click New to open the Create Snapshot page.

Figure 9.1. Creating Snapshots

- Enter the Snapshot Name Prefix and Description.

- Click OK to create the snapshot. The snapshot name has the following format: <Snapshot name prefix>_<Timezone of RHS node>-<yyyy>.<MM>.<dd>-<hh>.<mm>.<ss>
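The snapshot name format can be illustrated with a short sketch that builds a name from a prefix, a time zone label, and a timestamp. The function `build_snapshot_name` is hypothetical. The use of a 24-hour clock for the hour field and a short label such as GMT for the time zone are assumptions, because the format string does not specify them.

```python
from datetime import datetime


def build_snapshot_name(prefix, created, timezone_name):
    """Build a name of the form <prefix>_<timezone>-<yyyy>.<MM>.<dd>-<hh>.<mm>.<ss>."""
    # %H is a 24-hour hour field; this is an assumption about <hh>.
    timestamp = created.strftime("%Y.%m.%d-%H.%M.%S")
    return f"{prefix}_{timezone_name}-{timestamp}"
```

Under these assumptions, the prefix `snap`, the label `GMT`, and the time 2015-03-04 05:06:07 give `snap_GMT-2015.03.04-05.06.07`.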

9.2. Configuring Snapshots

- Hard Limit: When the snapshot count in a volume reaches this limit, no further snapshots can be created until existing snapshots are removed. The range is from 1 to 256. This limit can be set for the system or per volume. If both a system limit and a volume limit are configured, the effective maximum is the lower of the two values.

- Soft Limit: This is a percentage value; the default is 90%. This setting works together with the auto-delete feature. If auto-delete is enabled, the oldest snapshot is deleted when the snapshot count in a volume crosses this limit. If auto-delete is disabled, no snapshot is deleted, but a warning message is displayed to the user.

- Auto Deletion Flag: Enables or disables the auto-delete feature. Auto-delete is disabled by default. When it is enabled, the oldest snapshot is deleted when the snapshot count in a volume crosses the snap-max-soft-limit. When it is disabled, no snapshot is deleted, but a warning message is displayed to the user.

- Activate-on-Create: When enabled, volume snapshots are activated automatically after creation.
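How the limits interact can be sketched in a few lines. The function names are hypothetical, and the sketch rests on three assumptions that this guide does not state explicitly: the soft limit is a percentage of the effective hard limit, the resulting count is rounded down, and "crosses" means strictly greater than.

```python
def effective_hard_limit(system_limit, volume_limit=None):
    """The lower of the system and volume limits applies when both are set."""
    if volume_limit is None:
        return system_limit
    return min(system_limit, volume_limit)


def soft_limit_count(hard_limit, soft_percent=90):
    """Snapshot count that corresponds to the soft limit (assumed rounded down)."""
    return hard_limit * soft_percent // 100


def can_create_snapshot(count, hard_limit):
    """No further snapshots are allowed once the hard limit is reached."""
    return count < hard_limit


def soft_limit_action(count, hard_limit, soft_percent=90, auto_delete=False):
    """Return 'ok', 'warn', or 'delete-oldest' for the current snapshot count."""
    if count > soft_limit_count(hard_limit, soft_percent):
        return "delete-oldest" if auto_delete else "warn"
    return "ok"
```

With a system limit of 256 and a volume limit of 100, the effective hard limit is 100, the default soft limit corresponds to 90 snapshots, and the 91st snapshot triggers either a warning or deletion of the oldest snapshot, depending on the auto-delete setting.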

Procedure 9.2. Configuring Snapshots

- Click the Volumes tab. The list of all volumes in the system is displayed.

- Select the volume for which you want to configure snapshots. If a volume is not selected from the list, only the cluster-level parameters can be modified and set.

- Click Snapshot.

- Click Options - Clusters or Options - Volume to configure snapshots for the cluster or the volume, respectively.

- Click Snapshot and select Options - Clusters.

- Select the cluster from the drop-down list.

- Modify the Snapshot Options. You can set the hard limit and the soft limit percentage, and enable or disable auto-deletion of snapshots for the cluster.

- Click Update to update the details.

- Click Snapshot and select Options - Volume.

- Modify the Snapshot Options. You can set the maximum number of snapshots for the selected volume.

- Click Update to update the details.

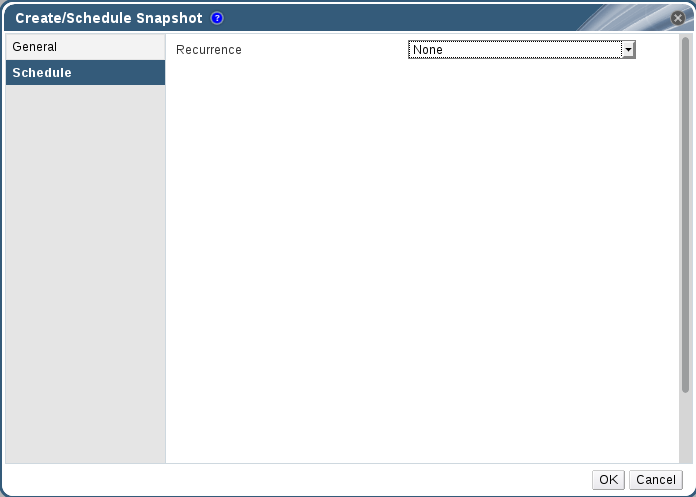

9.3. Scheduling Snapshots

Procedure 9.3. Scheduling Snapshots

- Click the Volumes tab. The list of all volumes in the system is displayed.

- Select the volume for which you want to schedule snapshots.

- Click Snapshot and click New to open the Create/Schedule Snapshot page.

- In the General tab, enter the Snapshot Name Prefix and Description.

- Click the Schedule tab.

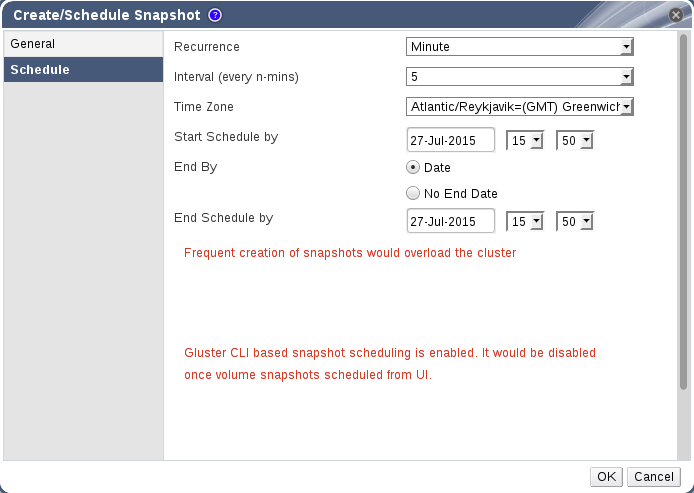

Figure 9.2. Scheduling Snapshots

- Select the recurrence schedule for the Snapshot. You can schedule the snapshot to recur at intervals of a specified number of minutes, hours, days, weeks, or months; either perpetually, or between specified dates.

Figure 9.3. Recurrence Schedule

Set Recurrence to the unit of time that you want to use as an interval between snapshots. If you do not want to set up recurring snapshots, leave this field set to None.

Minutes - Takes a snapshot every N minutes, where N is the value of the Interval field, with the first snapshot being taken at the time specified in the Start Schedule by field.

Hours - Takes a snapshot every N hours, where N is the value of the Interval field, with the first snapshot being taken after the time specified in the Start Schedule by field. Subsequent snapshots will be taken at the start of the hour. For example, if snapshots are to recur every 2 hours, and the first snapshot occurs at 2:20 PM, the next snapshot will occur at 4:00 PM.

Days - Takes a snapshot at the time specified in the Execute At field every N days, where N is the value of the Interval field, with the first snapshot being taken after the time specified in the Start Schedule by field.

Weeks - Takes a snapshot at the time specified in the Execute At field every N weeks, where N is the value of the Interval field, with the first snapshot being taken after the time specified in the Start Schedule by field.

Months - Takes a snapshot at the time specified in the Execute At field every N months, where N is the value of the Interval field, with the first snapshot being taken at the time specified in the Start Schedule by field.

The End by option determines whether snapshots will stop after a certain date. To set an end date, set End by to Date, and use the fields beside End Schedule By to enter a date and time at which snapshots should stop. To take snapshots continuously with no end date, set End by to No End Date.

- Click OK to set the snapshot recurrence schedule.
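The hourly example above (first snapshot at 2:20 PM, a 2-hour interval, next snapshot at 4:00 PM) can be reproduced with a short calculation. This is a sketch of the arithmetic only, under the assumption that "at the start of the hour" means the previous snapshot time is truncated to the hour before the interval is added; `next_hourly_snapshot` is a hypothetical helper, not the scheduler's actual implementation.

```python
from datetime import datetime, timedelta


def next_hourly_snapshot(previous, interval_hours):
    """Return the next snapshot time for an Hours recurrence.

    The previous snapshot time is truncated to the top of its hour, then
    the interval is added, so later snapshots land on the start of an hour.
    """
    top_of_hour = previous.replace(minute=0, second=0, microsecond=0)
    return top_of_hour + timedelta(hours=interval_hours)


if __name__ == "__main__":
    first = datetime(2024, 1, 1, 14, 20)  # 2:20 PM on an arbitrary date
    print(next_hourly_snapshot(first, 2))
```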

Important

echo "none" > /var/run/gluster/shared_storage/snaps/current_scheduler

9.4. Restoring Snapshots

Procedure 9.4. Restoring Snapshots

- Click the Volumes tab. The list of all volumes in the system is displayed.

- Select the volume for which you want to restore the Snapshot.

- Click the Snapshots sub-tab and select the snapshot.

- Click Restore and click OK to confirm the snapshot restore.

Note

Restoring a snapshot discards the current state of the volume: the volume is brought down and restored to the state of the selected snapshot.

9.5. Activating Snapshots

Procedure 9.5. Activating Snapshots

- Click the Volumes tab. The list of all volumes in the system is displayed.

- Select the volume for which you want to activate the Snapshot.

- Click the Snapshots sub-tab and select the snapshot.

- Click Activate and click OK to confirm activation of the Snapshot.

9.6. Deactivating Snapshots

Procedure 9.6. Deactivating Snapshots

- Click the Volumes tab. The list of all volumes in the system is displayed.

- Select the volume for which you want to deactivate the Snapshot.

- Click the Snapshots sub-tab and select the snapshot.

- Click Deactivate and click OK to confirm Snapshot deactivation.

9.7. Deleting Snapshots

Procedure 9.7. Deleting Snapshots

- Click the Volumes tab. The list of all volumes in the system is displayed.

- Select the volume for which you want to delete the Snapshot.

- Click the Snapshots sub-tab and select the snapshot.

- Click Delete and click OK to confirm deletion of the selected snapshot. To delete all snapshots for the selected volume, click Delete All.

Chapter 10. Managing Geo-replication

- Source - a Red Hat Gluster Storage volume.

- Destination - a Red Hat Gluster Storage volume.

10.1. Geo-replication Operations

Important

- Manually set the cluster option "cluster.enable-shared-storage" from the CLI.

- Set the option use_meta_volume to true.

- For every new node added to the cluster, ensure that the cluster option "cluster.enable-shared-storage" is set for the cluster and that the meta-volume is mounted.
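The two CLI settings in the list above might look as follows. This sketch only builds the argument lists and prints them; it does not run anything. The volume and host names are placeholders, the helper `shared_storage_commands` is hypothetical, and the geo-replication `config` command assumes a session between the two volumes already exists. Verify the exact syntax against the Red Hat Gluster Storage Administration Guide for your version before running it.

```python
import shlex


def shared_storage_commands(master_volume, slave_host, slave_volume):
    """Build (but do not run) the two gluster commands as argument lists."""
    # Enable the shared storage (meta-volume) for the whole cluster.
    enable_shared_storage = [
        "gluster", "volume", "set", "all",
        "cluster.enable-shared-storage", "enable",
    ]
    # Tell an existing geo-replication session to use the meta-volume.
    use_meta_volume = [
        "gluster", "volume", "geo-replication",
        master_volume, f"{slave_host}::{slave_volume}",
        "config", "use_meta_volume", "true",
    ]
    return [enable_shared_storage, use_meta_volume]


if __name__ == "__main__":
    # Placeholder names; substitute the real volumes and destination host.
    for argv in shared_storage_commands("mastervol", "slavehost.example.com", "slavevol"):
        print(shlex.join(argv))
```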

10.1.1. Creating a Geo-replication session

- Destination and source volumes should not be from the same cluster.

- Capacity of the destination volume should be greater than or equal to that of the source volume.

- Cluster Compatibility version of the destination and source volumes should be the same.

- Destination volume should not already be a part of another geo-replication session.

- Destination volume should be up.

- Destination volume should be empty.
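The conditions listed above amount to a checklist that can be expressed as a simple validation routine. The `Volume` fields and the function `eligibility_problems` below are hypothetical names chosen for illustration; they do not reflect how the Console stores or checks this information.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    """Hypothetical summary of the volume properties the checklist refers to."""
    name: str
    cluster: str
    capacity_gb: int
    compatibility_version: str
    is_up: bool = True
    is_empty: bool = True
    in_geo_rep_session: bool = False


def eligibility_problems(source, destination):
    """Return the list of conditions that the destination volume violates."""
    problems = []
    if destination.cluster == source.cluster:
        problems.append("destination and source are in the same cluster")
    if destination.capacity_gb < source.capacity_gb:
        problems.append("destination capacity is smaller than source capacity")
    if destination.compatibility_version != source.compatibility_version:
        problems.append("cluster compatibility versions differ")
    if destination.in_geo_rep_session:
        problems.append("destination is already part of a geo-replication session")
    if not destination.is_up:
        problems.append("destination volume is not up")
    if not destination.is_empty:
        problems.append("destination volume is not empty")
    return problems
```

An empty result means the destination satisfies every condition in the list; otherwise each entry names one condition to correct before creating the session.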

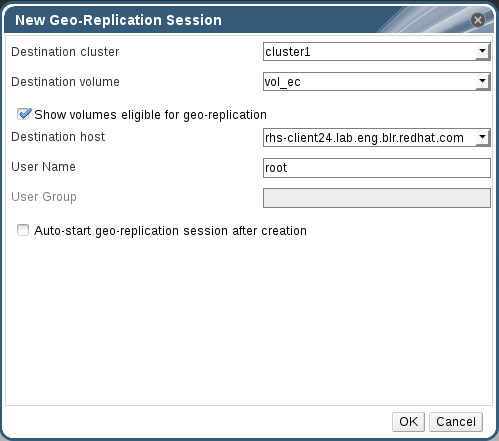

Procedure 10.1. Creating a Geo-replication session

- Click the Volumes tab. The list of volumes in the system is displayed.

- Select the volume for which the geo-replication is to be created and click the Geo-replication option.

- Click New. The New Geo-Replication Session page is displayed.

Note

- You can also create a geo-replication session from the Geo-Replication sub-tab.

Figure 10.1. New Geo-replication session

- Select the Destination Cluster, Destination Volume, and Destination Host.

- Select the Show volumes eligible for geo-replication option to view the list of volumes eligible for geo-replication.

- Enter the User Name. For a non-root user, enter the corresponding User Group.

- Select the Auto-start geo-replication session after creation option to start the session immediately after creation, and click OK.

10.1.2. Viewing Geo-replication session Details

- Initializing: This is the initial phase of the Geo-replication session; it remains in this state for a minute in order to make sure no abnormalities are present.

- Created: The geo-replication session is created, but not started.

- Active: The gsyncd daemon on this node is active and syncing the data.

- Passive: A replica pair of the active node. Data synchronization is handled by the active node, so this node does not sync any data.

- Faulty: The geo-replication session has experienced a problem, and the issue needs to be investigated further.

- Stopped: The geo-replication session has stopped, but has not been deleted.

- Crawl Status

- Changelog Crawl: The changelog translator has produced the changelog, which is being consumed by the gsyncd daemon to sync data.

- Hybrid Crawl: The gsyncd daemon is crawling the glusterFS file system and generating a pseudo changelog to sync data.

- Checkpoint Status: Displays the status of the checkpoint, if set. Otherwise, it displays N/A.

Procedure 10.2. Viewing Geo-replication session Details

- Click the Volumes tab. The list of volumes is displayed.

- Select the desired volume and click the Geo-Replication sub-tab.

- Select the session from the Geo-Replication sub-tab.

- Click View Details. The Geo-replication details, Destination Host, Destination Volume, User Name, and Status are displayed.

10.1.3. Starting or Stopping a Geo-replication session

Note

- any node that is a part of the volume is offline.

- if it is unable to stop the geo-replication session on any particular node.

- if the geo-replication session between the master and slave is not active.

Procedure 10.3. Starting or Stopping a Geo-replication session

- Click the Volumes tab. The list of volumes is displayed.

- Select the desired volume and click the Geo-Replication sub-tab.

- Select the session from the Geo-Replication sub-tab.

- Click Start or Stop to start or stop the session respectively.

Note

Click Force start session to force the operation on the geo-replication session on the nodes that are part of the master volume. Even if the operation fails on a node that is online and part of the master volume, it is still performed on as many nodes as possible. This option can also be used to repeat the operation on nodes where the session has died or where the operation has not been executed.

10.1.4. Pausing or Resuming a Geo-replication session

Procedure 10.4. Pausing or Resuming a Geo-replication session

- Click the Volumes tab. The list of volumes is displayed.

- Select the desired volume and click the Geo-Replication sub-tab.

- Select the session from the Geo-Replication sub-tab.

- Click Pause or Resume to pause or resume the Geo-replication session.

10.1.5. Removing a Geo-replication session

Procedure 10.5. Removing a Geo-replication session

- Click the Volumes tab. The list of volumes is displayed.

- Select the desired volume and click the Geo-Replication sub-tab.

- Select the session from the Geo-Replication sub-tab.

- Click Remove to remove the Geo-replication session.

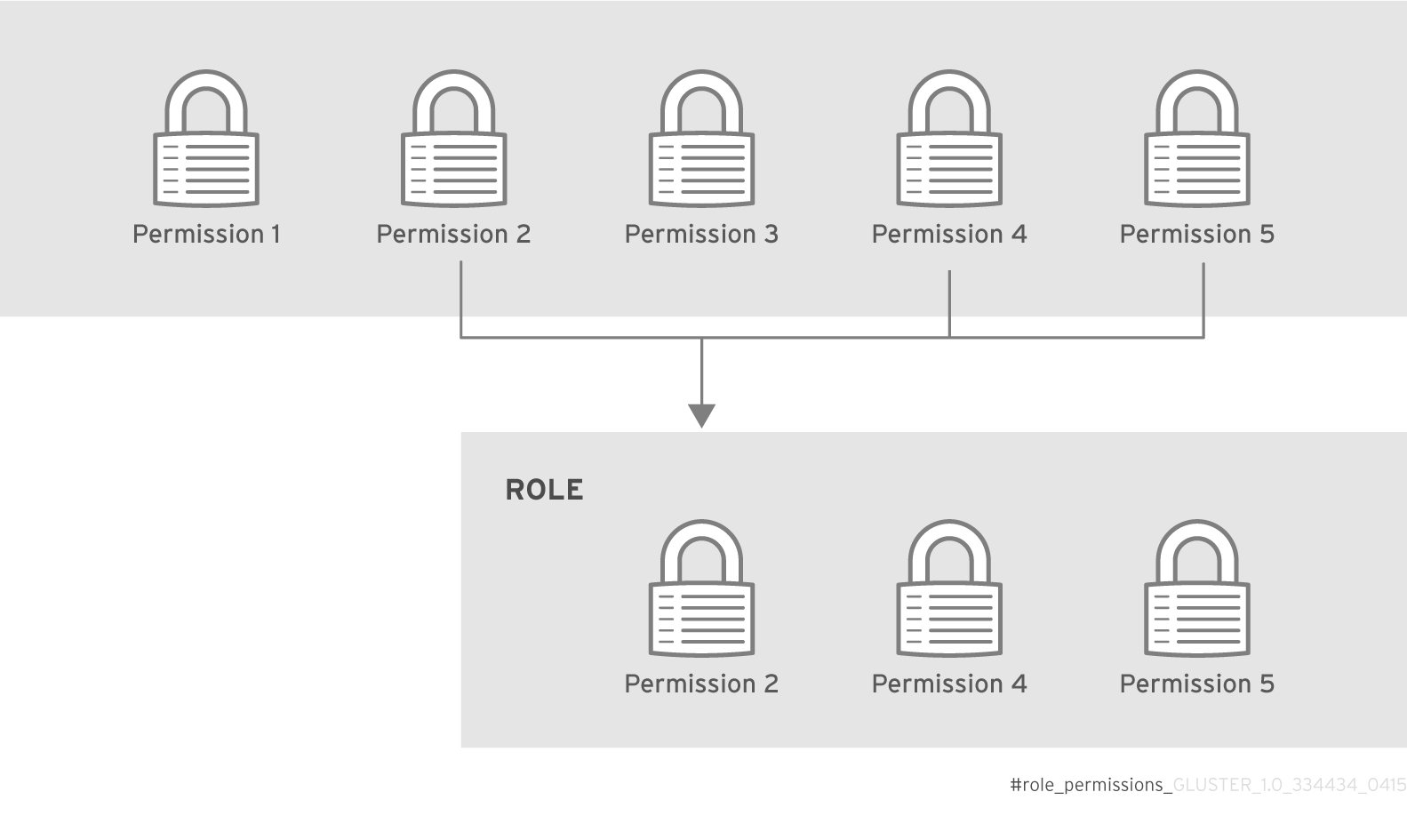

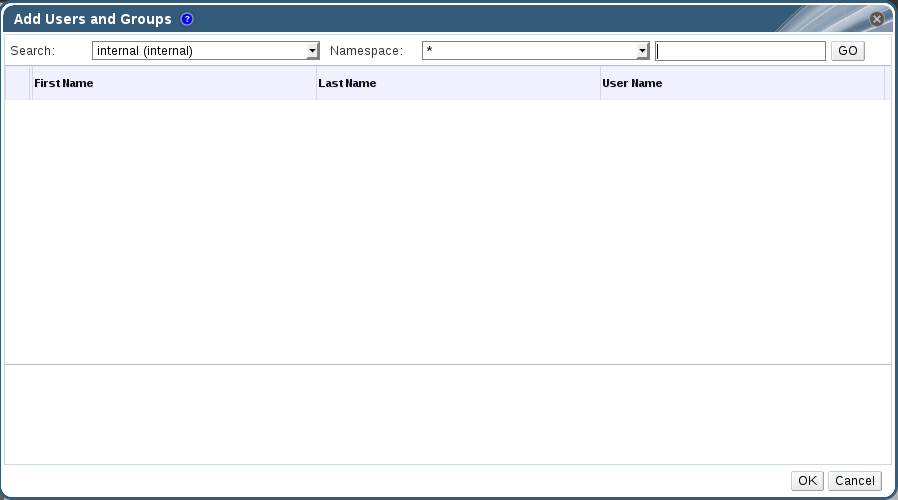

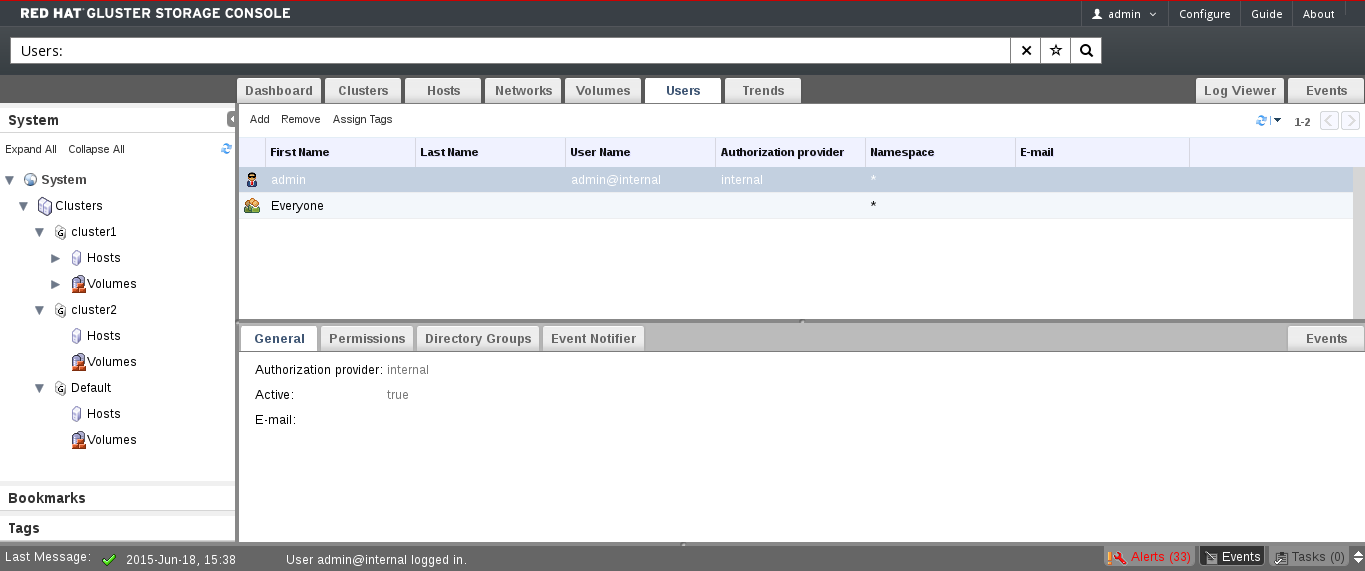

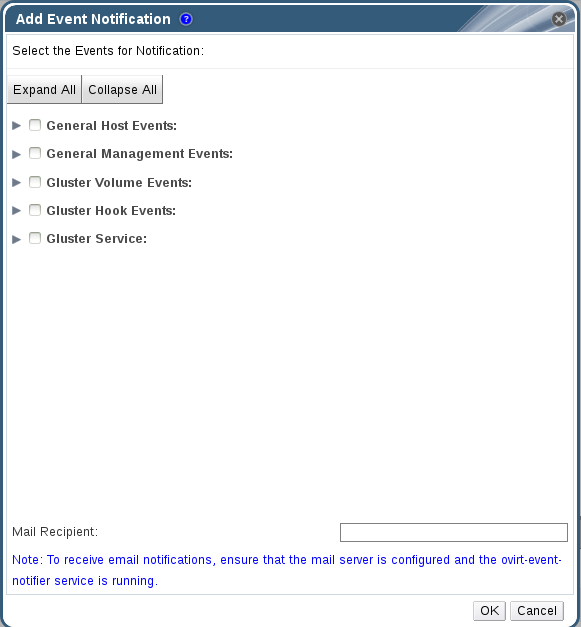

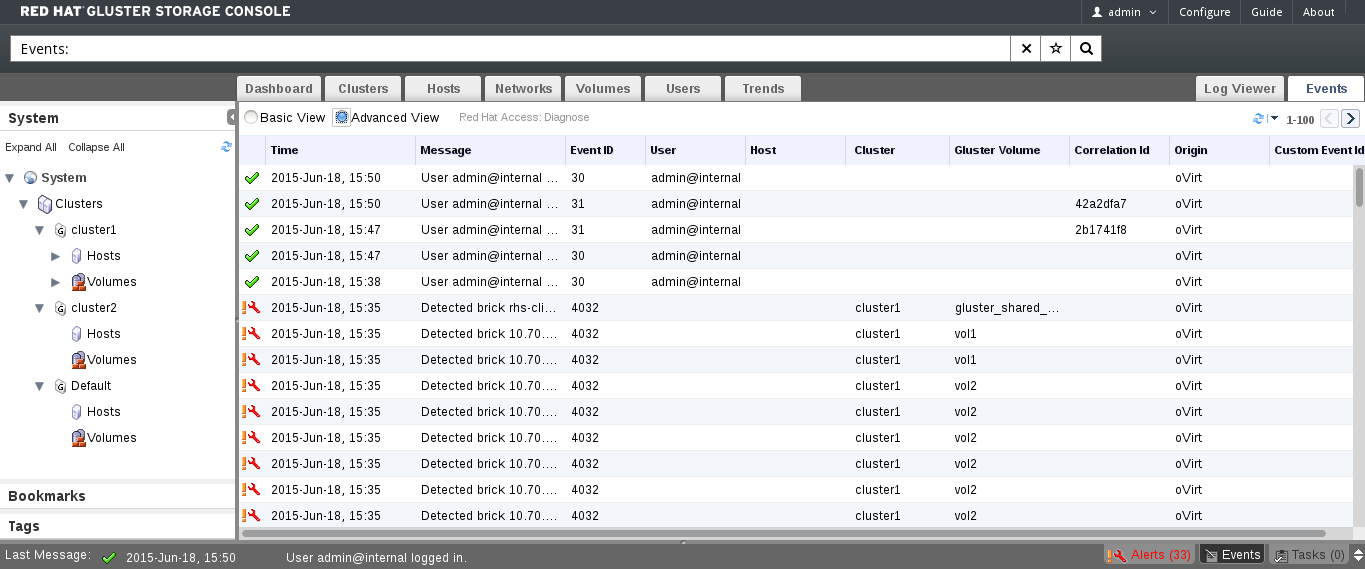

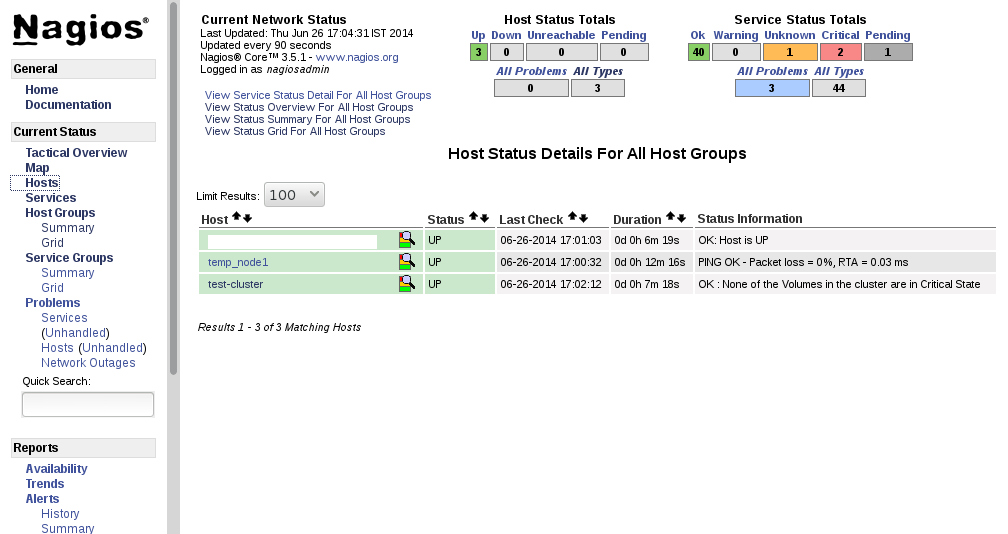

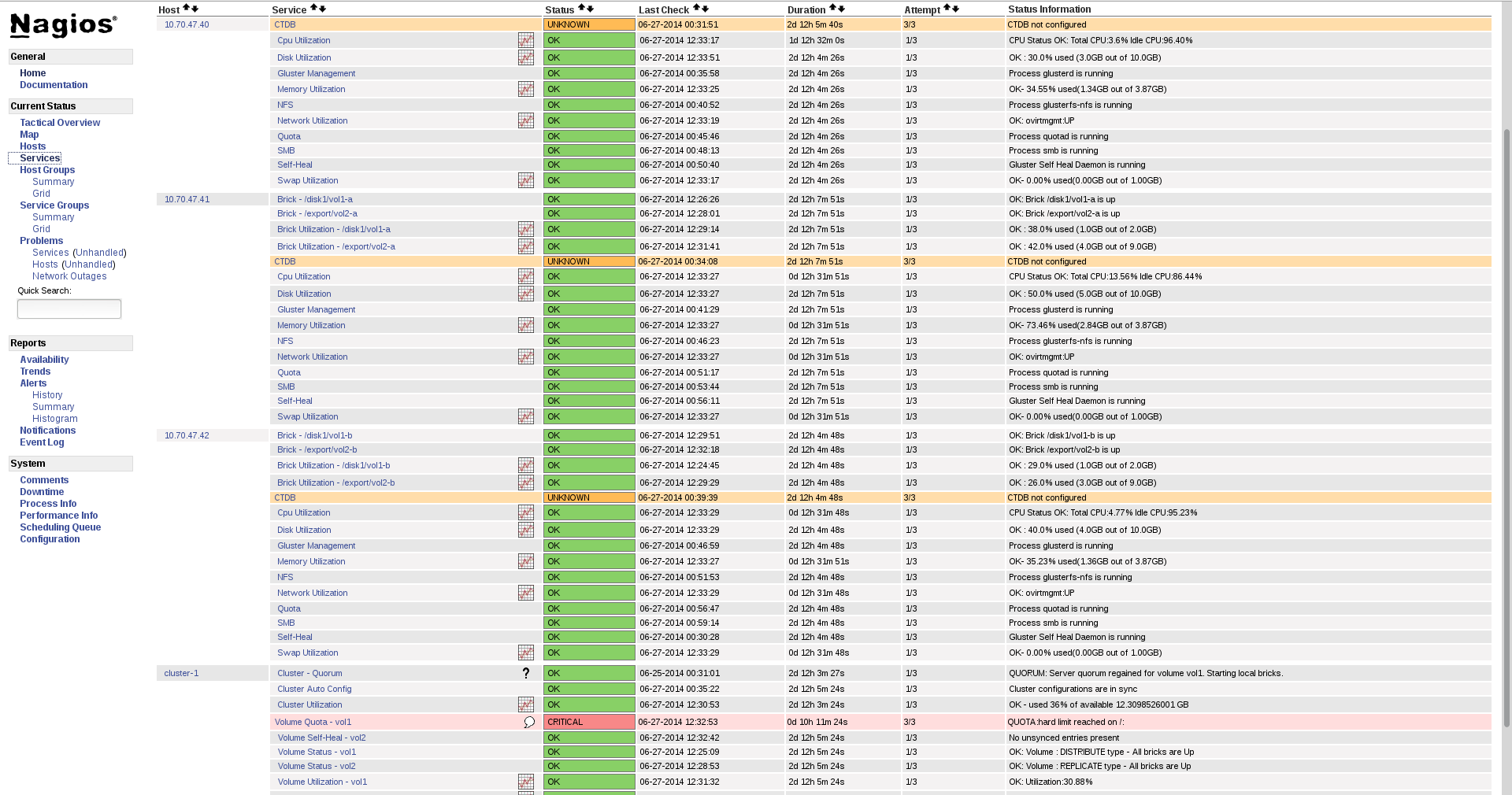

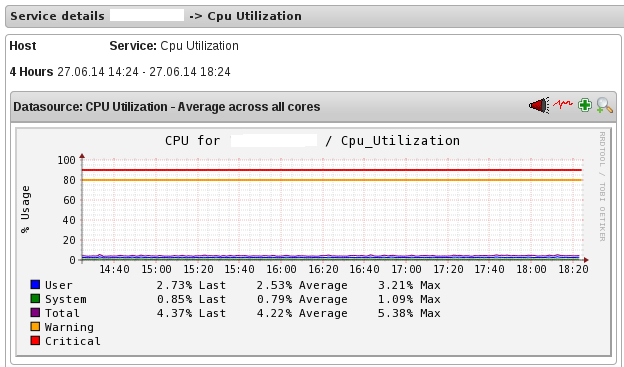

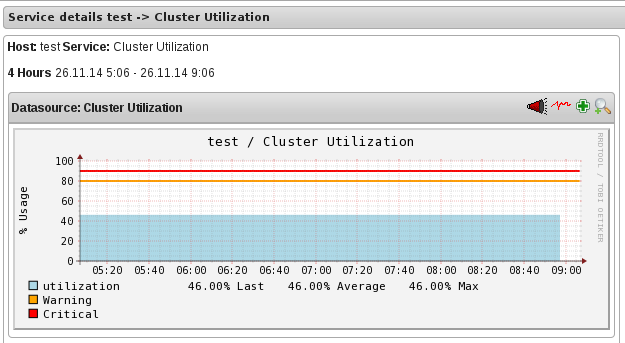

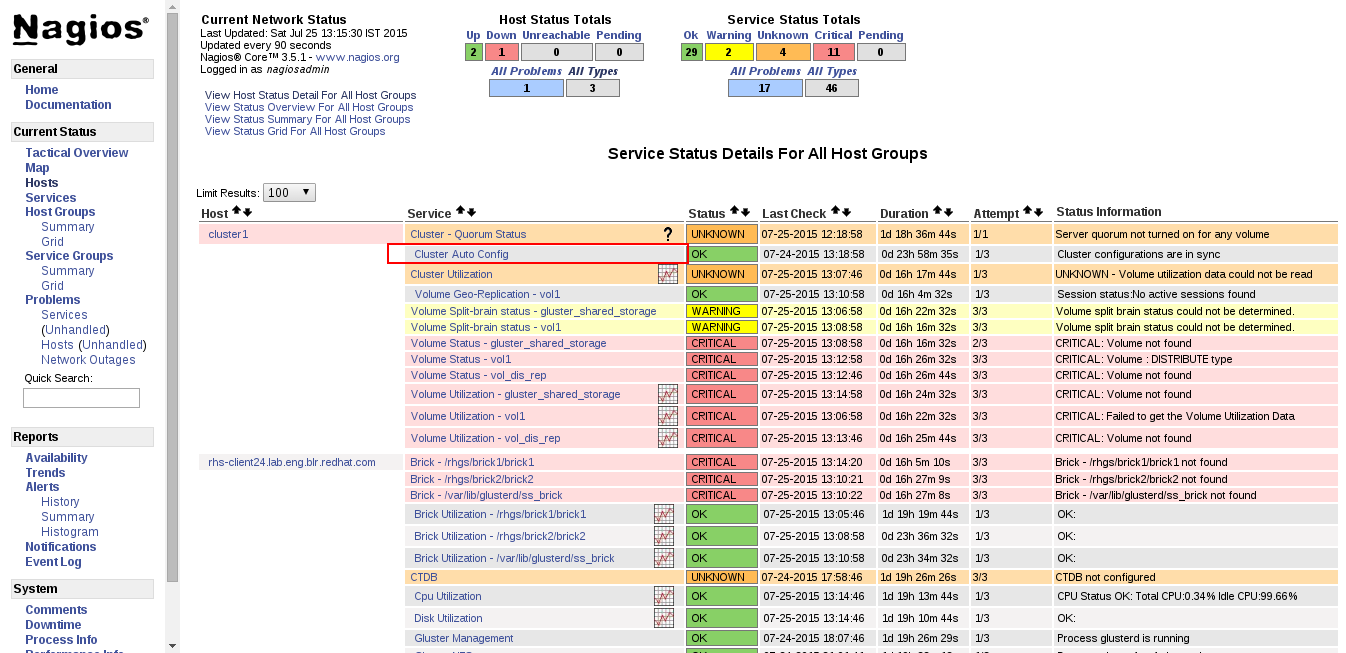

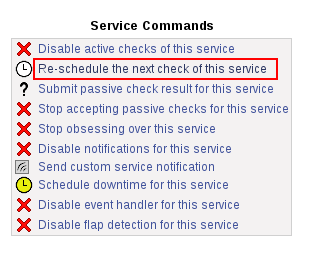

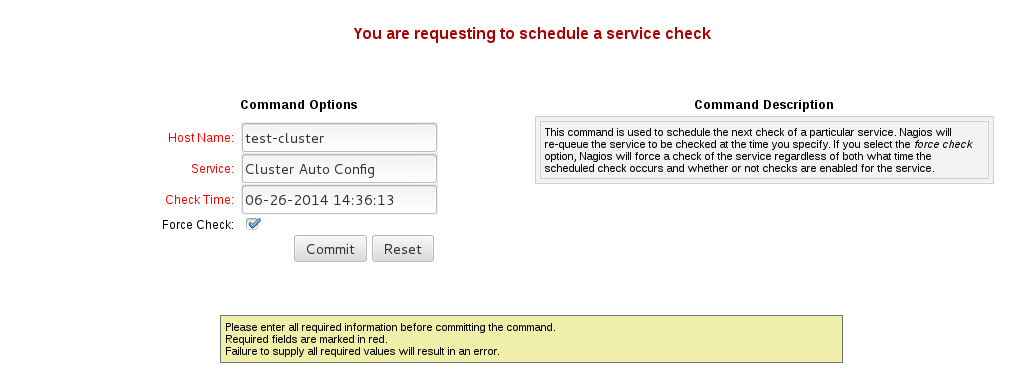

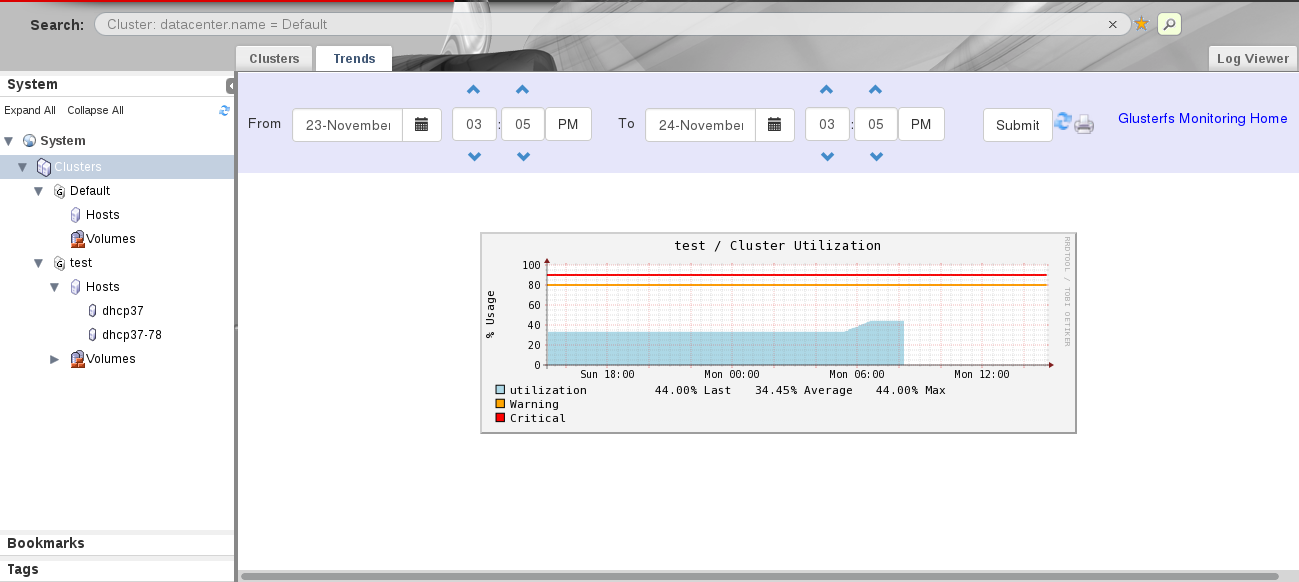

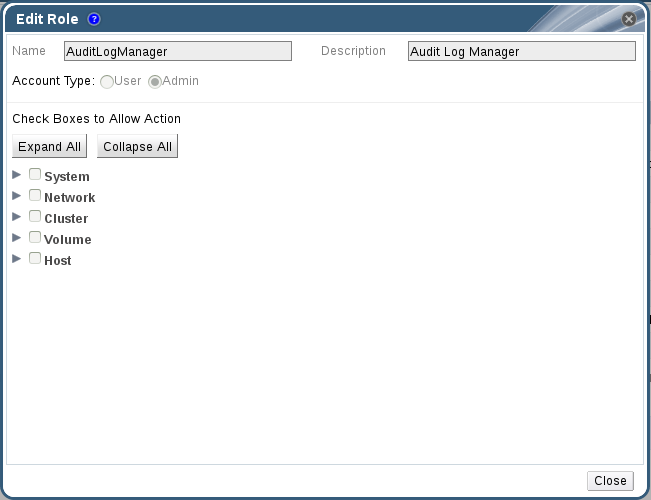

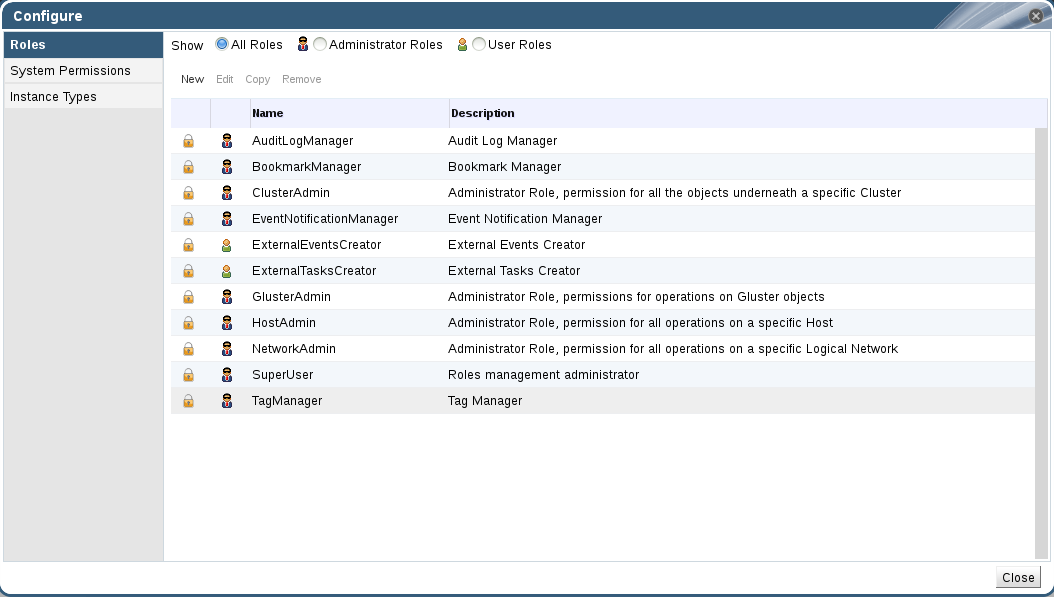

10.1.6. Synchronizing a Geo-replication session