
Chapter 6. Configuring Knative broker for Apache Kafka

The Knative broker implementation for Apache Kafka provides integration options for you to use supported versions of the Apache Kafka message streaming platform with OpenShift Serverless. Kafka provides options for event source, channel, broker, and event sink capabilities.

In addition to the Knative Eventing components that are provided as part of a core OpenShift Serverless installation, the KnativeKafka custom resource (CR) can be installed by:

- Cluster administrators, for OpenShift Container Platform

- Cluster or dedicated administrators, for Red Hat OpenShift Service on AWS or for OpenShift Dedicated

The KnativeKafka CR provides users with additional options, such as:

- Kafka source

- Kafka channel

- Kafka broker

- Kafka sink

6.1. Installing Knative broker for Apache Kafka

Knative broker for Apache Kafka functionality is available in an OpenShift Serverless installation if you have installed the KnativeKafka custom resource.

Prerequisites

- You have installed the OpenShift Serverless Operator and Knative Eventing on your cluster.

- You have access to a Red Hat AMQ Streams cluster.

- Install the OpenShift CLI (oc) if you want to use the verification steps.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

- You are logged in to the OpenShift Container Platform web console.

Procedure

- In the Administrator perspective, navigate to Operators → Installed Operators.

- Check that the Project dropdown at the top of the page is set to Project: knative-eventing.

- In the list of Provided APIs for the OpenShift Serverless Operator, find the Knative Kafka box and click Create Instance.

- Configure the KnativeKafka object in the Create Knative Kafka page.

  Important: To use the Kafka channel, source, broker, or sink on your cluster, you must toggle the enabled switch for the options you want to use to true. These switches are set to false by default. Additionally, to use the Kafka channel, broker, or sink, you must specify the bootstrap servers.

  - Use the form for simpler configurations that do not require full control of KnativeKafka object creation.

  - Edit the YAML for more complex configurations that require full control of KnativeKafka object creation. You can access the YAML by clicking the Edit YAML link on the Create Knative Kafka page.

Example KnativeKafka custom resource

The numbered callouts for the example describe the following settings:

- 1: Enables developers to use the KafkaChannel channel type in the cluster.

- 2: A comma-separated list of bootstrap servers from your Red Hat AMQ Streams cluster, for the Kafka channel.

- 3: Enables developers to use the KafkaSource event source type in the cluster.

- 4: Enables developers to use the Knative broker implementation for Apache Kafka in the cluster.

- 5: A comma-separated list of bootstrap servers from your Red Hat AMQ Streams cluster, for the Kafka broker.

- 6: Defines the number of partitions of the Kafka topics, backed by the Broker objects. The default is 10.

- 7: Defines the replication factor of the Kafka topics, backed by the Broker objects. The default is 3. The replicationFactor value must be less than or equal to the number of nodes of your Red Hat AMQ Streams cluster.

- 8: Enables developers to use a Kafka sink in the cluster.

- 9: Defines the log level of the Kafka data plane. Allowed values are TRACE, DEBUG, INFO, WARN, and ERROR. The default value is INFO.

Warning: Do not use DEBUG or TRACE as the logging level in production environments. The outputs from these logging levels are verbose and can degrade performance.

- Click Create after you have completed any of the optional configurations for Kafka. You are automatically directed to the Knative Kafka tab where knative-kafka is in the list of resources.
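As an alternative to the form in the web console, the same settings could be written to a manifest and applied with the CLI. The following is a minimal sketch, not the official example: the apiVersion, the field names (bootstrapServers, defaultConfig, numPartitions, logging.level), and the bootstrap server address my-cluster-kafka-bootstrap.kafka:9092 are assumptions that should be checked against the KnativeKafka CRD installed on your cluster. The `(1)` to `(9)` comments map to the numbered callouts in the procedure above.

```shell
#!/usr/bin/env bash
# Sketch: generate a KnativeKafka manifest that enables all four Kafka options.
set -euo pipefail

# Hypothetical bootstrap server address; replace with your AMQ Streams listeners.
BOOTSTRAP_SERVERS="${BOOTSTRAP_SERVERS:-my-cluster-kafka-bootstrap.kafka:9092}"
MANIFEST="${MANIFEST:-knative-kafka.yaml}"

# Field names below are assumptions; verify them against your cluster's CRD.
cat > "$MANIFEST" <<EOF
apiVersion: operator.serverless.openshift.io/v1alpha1
kind: KnativeKafka
metadata:
  name: knative-kafka
  namespace: knative-eventing
spec:
  channel:
    enabled: true                             # (1)
    bootstrapServers: ${BOOTSTRAP_SERVERS}    # (2)
  source:
    enabled: true                             # (3)
  broker:
    enabled: true                             # (4)
    defaultConfig:
      bootstrapServers: ${BOOTSTRAP_SERVERS}  # (5)
      numPartitions: 10                       # (6) documented default
      replicationFactor: 3                    # (7) documented default
  sink:
    enabled: true                             # (8)
  logging:
    level: INFO                               # (9) avoid DEBUG or TRACE in production
EOF

echo "Wrote $MANIFEST"

# Apply only when explicitly requested, for example: APPLY=yes ./script.sh
if [ "${APPLY:-no}" = "yes" ]; then
  oc apply -f "$MANIFEST"
fi
```

Writing the manifest to a file first makes it possible to review or version the configuration before it reaches the cluster; the apply step is opt-in for that reason.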

Verification

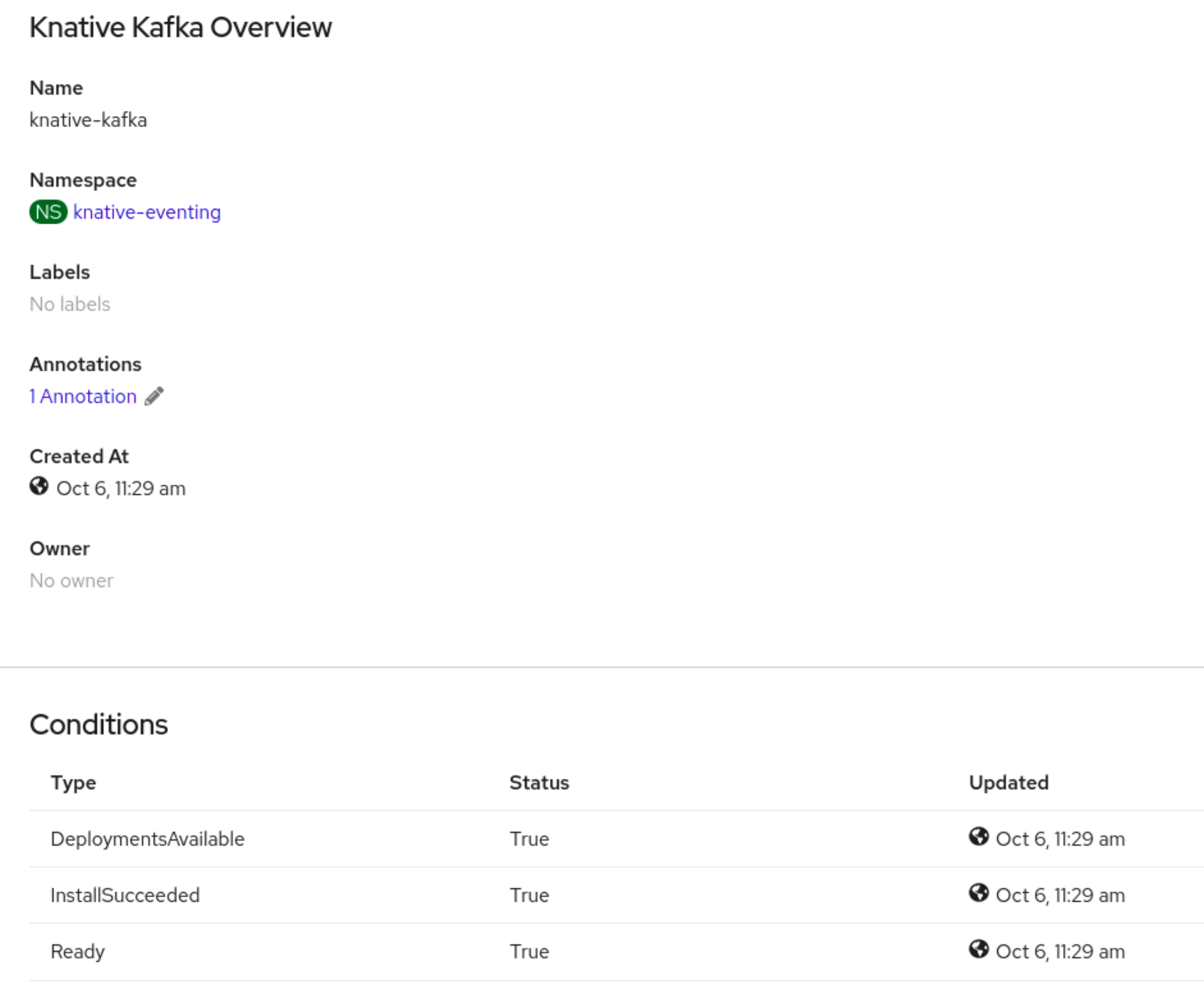

- Click on the knative-kafka resource in the Knative Kafka tab. You are automatically directed to the Knative Kafka Overview page.

- View the list of Conditions for the resource and confirm that they have a status of True.

  If the conditions have a status of Unknown or False, wait a few moments and then refresh the page.

- Check that the Knative broker for Apache Kafka resources have been created:

  $ oc get pods -n knative-eventing
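The condition check from the web console could also be performed from the CLI. The sketch below assumes that the KnativeKafka resource exposes a condition named Ready and that the resource can be addressed as knativekafka/knative-kafka; both are assumptions, so confirm them with `oc describe` on your cluster before relying on this.

```shell
#!/usr/bin/env bash
# Sketch: wait for the KnativeKafka resource to become ready, then list pods.
set -euo pipefail

NAMESPACE="knative-eventing"
RESOURCE="knativekafka/knative-kafka"   # assumed resource type and name
TIMEOUT="${TIMEOUT:-300s}"

verify_knative_kafka() {
  # Assumption: the custom resource reports a "Ready" condition.
  oc wait --for=condition=Ready "$RESOURCE" -n "$NAMESPACE" --timeout="$TIMEOUT"
  # Same pod listing as in the verification step above.
  oc get pods -n "$NAMESPACE"
}

if command -v oc >/dev/null 2>&1; then
  verify_knative_kafka
else
  echo "oc not found; install the OpenShift CLI to run this check" >&2
fi
```

Unlike refreshing the console page, `oc wait` blocks until the condition is met or the timeout expires, which makes it convenient in scripted installations.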