
Chapter 6. Custom resource API reference

6.1. Common configuration properties

Common configuration properties apply to more than one resource.

6.1.1. replicas

Use the replicas property to configure replicas.

The type of replication depends on the resource.

- KafkaTopic uses a replication factor to configure the number of replicas of each partition within a Kafka cluster.

- Kafka components use replicas to configure the number of pods in a deployment to provide better availability and scalability.

When running a Kafka component on OpenShift it may not be necessary to run multiple replicas for high availability. When the node where the component is deployed crashes, OpenShift will automatically reschedule the Kafka component pod to a different node. However, running Kafka components with multiple replicas can provide faster failover times as the other nodes will be up and running.

6.1.2. bootstrapServers

Use the bootstrapServers property to configure a list of bootstrap servers.

The bootstrap server lists can refer to Kafka clusters that are not deployed in the same OpenShift cluster. They can also refer to a Kafka cluster not deployed by AMQ Streams.

If the Kafka cluster is on the same OpenShift cluster, each list should ideally contain the Kafka cluster bootstrap service, which is named CLUSTER-NAME-kafka-bootstrap, and a port number. If the cluster is deployed by AMQ Streams but on a different OpenShift cluster, the list content depends on the approach used for exposing the cluster (routes, ingress, nodeports or loadbalancers).

When using Kafka with a Kafka cluster not managed by AMQ Streams, you can specify the bootstrap servers list according to the configuration of the given cluster.

6.1.3. ssl

You can incorporate SSL configuration and cipher suite specifications to further secure TLS-based communication between your client application and a Kafka cluster. In addition to the standard TLS configuration, you can specify a supported TLS version and enable cipher suites in the configuration for the Kafka broker. You can also add the configuration to your clients if you wish to limit the TLS versions and cipher suites they use. The configuration on the client must only use protocols and cipher suites that are enabled on the broker.

A cipher suite is a set of security mechanisms for secure connection and data transfer. For example, the cipher suite TLS_AES_256_GCM_SHA384 is composed of the following mechanisms, which are used in conjunction with the TLS protocol:

- AES (Advanced Encryption Standard) encryption (256-bit key)

- GCM (Galois/Counter Mode) authenticated encryption

- SHA384 (Secure Hash Algorithm) data integrity protection

The combination is encapsulated in the TLS_AES_256_GCM_SHA384 cipher suite specification.

The ssl.enabled.protocols property specifies the available TLS versions that can be used for secure communication between the cluster and its clients. The ssl.protocol property sets the default TLS version for all connections, and it must be chosen from the enabled protocols. Use the ssl.endpoint.identification.algorithm property to enable or disable hostname verification.

Example SSL configuration

1. Cipher suite specifications enabled.

2. TLS versions supported.

3. Default TLS version is TLSv1.3. If a client only supports TLSv1.2, it can still connect to the broker and communicate using that supported version, and vice versa if the configuration is on the client and the broker only supports TLSv1.2.

4. Hostname verification is enabled by setting to HTTPS. An empty string disables the verification.
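A minimal sketch of broker-side SSL settings that matches the four numbered notes. It assumes the properties are set under spec.kafka.config of a Kafka resource; the second cipher suite is an illustrative choice, not a recommendation.

```yaml
# Sketch only: values are illustrative assumptions.
spec:
  kafka:
    # ...
    config:
      # (1) Cipher suite specifications enabled
      ssl.cipher.suites: TLS_AES_256_GCM_SHA384, TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
      # (2) TLS versions supported
      ssl.enabled.protocols: TLSv1.3, TLSv1.2
      # (3) Default TLS version, chosen from the enabled protocols
      ssl.protocol: TLSv1.3
      # (4) Hostname verification enabled; an empty string disables it
      ssl.endpoint.identification.algorithm: HTTPS
# ...
```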

6.1.4. trustedCertificates

Having set tls to configure TLS encryption, use the trustedCertificates property to provide a list of secrets with key names under which the certificates are stored in X.509 format.

You can use the secrets created by the Cluster Operator for the Kafka cluster, or you can create your own TLS certificate file, then create a Secret from the file:

    oc create secret generic MY-SECRET \
      --from-file=MY-TLS-CERTIFICATE-FILE.crt

Example TLS encryption configuration

If certificates are stored in the same secret, it can be listed multiple times.
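As a sketch, a trustedCertificates list might look like the following. The secret name my-cluster-cluster-cert and the key names ca.crt and ca2.crt are assumptions for illustration; the same secret is listed twice because both certificates are stored in it.

```yaml
# Sketch only: secret and key names are hypothetical.
tls:
  trustedCertificates:
    - secretName: my-cluster-cluster-cert
      certificate: ca.crt
    - secretName: my-cluster-cluster-cert
      certificate: ca2.crt
```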

If you want to enable TLS encryption, but use the default set of public certification authorities shipped with Java, you can specify trustedCertificates as an empty array:

Example of enabling TLS with the default Java certificates

    tls:
      trustedCertificates: []

For information on configuring mTLS authentication, see the KafkaClientAuthenticationTls schema reference.

6.1.5. resources

Configure resource requests and limits to control resources for AMQ Streams containers. You can specify requests and limits for memory and cpu resources. The requests should be enough to ensure a stable performance of Kafka.

How you configure resources in a production environment depends on a number of factors. For example, applications are likely to be sharing resources in your OpenShift cluster.

For Kafka, the following aspects of a deployment can impact the resources you need:

- Throughput and size of messages

- The number of network threads handling messages

- The number of producers and consumers

- The number of topics and partitions

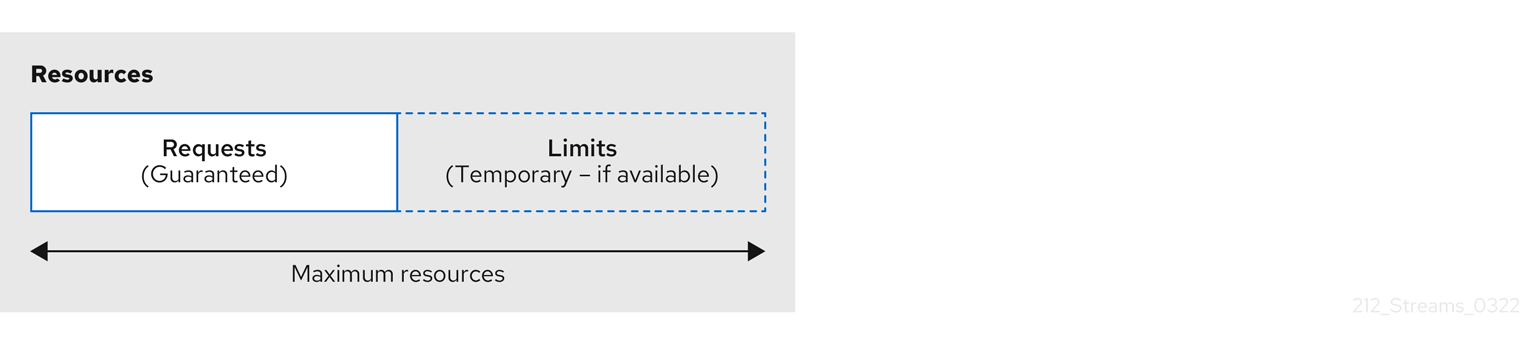

The values specified for resource requests are reserved and always available to the container. Resource limits specify the maximum resources that can be consumed by a given container. The amount between the request and limit is not reserved and might not be always available. A container can use the resources up to the limit only when they are available. Resource limits are temporary and can be reallocated.

Resource requests and limits

If you set limits without requests or vice versa, OpenShift uses the same value for both. Setting equal requests and limits for resources guarantees quality of service, as OpenShift will not kill containers unless they exceed their limits.

You can configure resource requests and limits for one or more supported resources.

Example resource configuration
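A minimal sketch of such a configuration, assuming a Kafka resource named my-cluster (an illustrative name) and arbitrary example values. Requests and limits are set to the same values here, following the quality-of-service guidance above, and the Topic Operator resources are set inside the same Kafka resource.

```yaml
# Sketch only: names and sizes are illustrative assumptions.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    resources:
      requests:
        memory: 64Gi
        cpu: "8"
      limits:
        memory: 64Gi
        cpu: "8"
  entityOperator:
    # ...
    topicOperator:
      # ...
      resources:
        requests:
          memory: 512Mi
          cpu: "1"
        limits:
          memory: 512Mi
          cpu: "1"
```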

Resource requests and limits for the Topic Operator and User Operator are set in the Kafka resource.

If the resource request is for more than the available free resources in the OpenShift cluster, the pod is not scheduled.

AMQ Streams uses the OpenShift syntax for specifying memory and cpu resources. For more information about managing computing resources on OpenShift, see Managing Compute Resources for Containers.

- Memory resources

  When configuring memory resources, consider the total requirements of the components.

  Kafka runs inside a JVM and uses an operating system page cache to store message data before writing to disk. The memory request for Kafka should fit the JVM heap and page cache. You can configure the jvmOptions property to control the minimum and maximum heap size.

  Other components don’t rely on the page cache. You can configure memory resources without configuring the jvmOptions to control the heap size.

  Memory requests and limits are specified in megabytes, gigabytes, mebibytes, and gibibytes. Use the following suffixes in the specification:

  - M for megabytes

  - G for gigabytes

  - Mi for mebibytes

  - Gi for gibibytes

  Example resources using different memory units

  For more details about memory specification and additional supported units, see Meaning of memory.
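A short sketch of the memory suffixes in use. The sizes are arbitrary; the point is that the request is given in mebibytes and the limit in gibibytes.

```yaml
# Sketch only: sizes are illustrative assumptions.
# ...
resources:
  requests:
    memory: 512Mi   # 512 mebibytes
  limits:
    memory: 2Gi     # 2 gibibytes
# ...
```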

- CPU resources

  A CPU request should be enough to give reliable performance at any time. CPU requests and limits are specified as cores or millicpus/millicores.

  CPU cores are specified as integers (5 CPU cores) or decimals (2.5 CPU cores). 1000 millicores is the same as 1 CPU core.

  Example CPU units

  The computing power of 1 CPU core may differ depending on the platform where OpenShift is deployed.

  For more information on CPU specification, see Meaning of CPU.
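A short sketch of the CPU units described above. The values are arbitrary: the request uses millicores and the limit uses a decimal number of cores.

```yaml
# Sketch only: values are illustrative assumptions.
# ...
resources:
  requests:
    cpu: 500m   # 500 millicores, that is, half a CPU core
  limits:
    cpu: 2.5    # two and a half CPU cores
# ...
```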

6.1.6. image

Use the image property to configure the container image used by the component.

Overriding container images is recommended only in special situations where you need to use a different container registry or a customized image.

For example, if your network does not allow access to the container repository used by AMQ Streams, you can copy the AMQ Streams images or build them from the source. However, if the configured image is not compatible with AMQ Streams images, it might not work properly.

A copy of the container image might also be customized and used for debugging.

You can specify which container image to use for a component using the image property in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

- Kafka.spec.entityOperator.topicOperator

- Kafka.spec.entityOperator.userOperator

- Kafka.spec.entityOperator.tlsSidecar

- KafkaConnect.spec

- KafkaMirrorMaker.spec

- KafkaMirrorMaker2.spec

- KafkaBridge.spec

Configuring the image property for Kafka, Kafka Connect, and Kafka MirrorMaker

Kafka, Kafka Connect, and Kafka MirrorMaker support multiple versions of Kafka. Each component requires its own image. The default images for the different Kafka versions are configured in the following environment variables:

- STRIMZI_KAFKA_IMAGES

- STRIMZI_KAFKA_CONNECT_IMAGES

- STRIMZI_KAFKA_MIRROR_MAKER_IMAGES

These environment variables contain mappings between the Kafka versions and their corresponding images. The mappings are used together with the image and version properties:

- If neither image nor version are given in the custom resource then the version will default to the Cluster Operator’s default Kafka version, and the image will be the one corresponding to this version in the environment variable.

- If image is given but version is not, then the given image is used and the version is assumed to be the Cluster Operator’s default Kafka version.

- If version is given but image is not, then the image that corresponds to the given version in the environment variable is used.

- If both version and image are given, then the given image is used. The image is assumed to contain a Kafka image with the given version.

The image and version for the different components can be configured in the following properties:

- For Kafka in spec.kafka.image and spec.kafka.version.

- For Kafka Connect and Kafka MirrorMaker in spec.image and spec.version.

It is recommended to provide only the version and leave the image property unspecified. This reduces the chance of making a mistake when configuring the custom resource. If you need to change the images used for different versions of Kafka, it is preferable to configure the Cluster Operator’s environment variables.

Configuring the image property in other resources

For the image property in the other custom resources, the given value will be used during deployment. If the image property is missing, the image specified in the Cluster Operator configuration will be used. If the image name is not defined in the Cluster Operator configuration, then the default value will be used.

For Topic Operator:

- Container image specified in the STRIMZI_DEFAULT_TOPIC_OPERATOR_IMAGE environment variable from the Cluster Operator configuration.

- registry.redhat.io/amq-streams/strimzi-rhel8-operator:2.4.0 container image.

For User Operator:

- Container image specified in the STRIMZI_DEFAULT_USER_OPERATOR_IMAGE environment variable from the Cluster Operator configuration.

- registry.redhat.io/amq-streams/strimzi-rhel8-operator:2.4.0 container image.

For Entity Operator TLS sidecar:

- Container image specified in the STRIMZI_DEFAULT_TLS_SIDECAR_ENTITY_OPERATOR_IMAGE environment variable from the Cluster Operator configuration.

- registry.redhat.io/amq-streams/kafka-34-rhel8:2.4.0 container image.

For Kafka Exporter:

- Container image specified in the STRIMZI_DEFAULT_KAFKA_EXPORTER_IMAGE environment variable from the Cluster Operator configuration.

- registry.redhat.io/amq-streams/kafka-34-rhel8:2.4.0 container image.

For Kafka Bridge:

- Container image specified in the STRIMZI_DEFAULT_KAFKA_BRIDGE_IMAGE environment variable from the Cluster Operator configuration.

- registry.redhat.io/amq-streams/bridge-rhel8:2.4.0 container image.

For Kafka broker initializer:

- Container image specified in the STRIMZI_DEFAULT_KAFKA_INIT_IMAGE environment variable from the Cluster Operator configuration.

- registry.redhat.io/amq-streams/strimzi-rhel8-operator:2.4.0 container image.

Example container image configuration
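A minimal sketch of overriding the image for the Kafka component. The resource name my-cluster and the image reference my-org/my-image:latest are placeholders, not real images.

```yaml
# Sketch only: the image reference is a hypothetical placeholder.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    image: my-org/my-image:latest
    # ...
  zookeeper:
    # ...
```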

6.1.7. livenessProbe and readinessProbe healthchecks

Use the livenessProbe and readinessProbe properties to configure healthcheck probes supported in AMQ Streams.

Healthchecks are periodic tests that verify the health of an application. When a healthcheck probe fails, OpenShift assumes that the application is not healthy and attempts to fix it.

For more details about the probes, see Configure Liveness and Readiness Probes.

Both livenessProbe and readinessProbe support the following options:

- initialDelaySeconds

- timeoutSeconds

- periodSeconds

- successThreshold

- failureThreshold

Example of liveness and readiness probe configuration
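A short sketch of probe settings using two of the options listed above. The timings are arbitrary values chosen for illustration.

```yaml
# Sketch only: timings are illustrative assumptions.
# ...
readinessProbe:
  initialDelaySeconds: 15
  timeoutSeconds: 5
livenessProbe:
  initialDelaySeconds: 15
  timeoutSeconds: 5
# ...
```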

For more information about the livenessProbe and readinessProbe options, see the Probe schema reference.

6.1.8. metricsConfig

Use the metricsConfig property to enable and configure Prometheus metrics.

The metricsConfig property contains a reference to a ConfigMap that has additional configurations for the Prometheus JMX Exporter. AMQ Streams supports Prometheus metrics using Prometheus JMX exporter to convert the JMX metrics supported by Apache Kafka and ZooKeeper to Prometheus metrics.

To enable Prometheus metrics export without further configuration, you can reference a ConfigMap containing an empty file under metricsConfig.valueFrom.configMapKeyRef.key. When referencing an empty file, all metrics are exposed as long as they have not been renamed.

Example ConfigMap with metrics configuration for Kafka

Example metrics configuration for Kafka
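The two pieces might fit together as sketched below: a ConfigMap holding the JMX Exporter configuration, and a Kafka resource that references it through metricsConfig.valueFrom.configMapKeyRef. The names my-config-map, my-key, and my-cluster are hypothetical, and the single rule is only an illustration of the JMX Exporter rule format.

```yaml
# Sketch only: names and the rule are illustrative assumptions.
kind: ConfigMap
apiVersion: v1
metadata:
  name: my-config-map
data:
  my-key: |
    lowercaseOutputName: true
    rules:
      - pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), topic=(.+), partition=(.*)><>Value
        name: kafka_server_$1_$2
        type: GAUGE
        labels:
          clientId: "$3"
          topic: "$4"
          partition: "$5"
---
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    metricsConfig:
      type: jmxPrometheusExporter
      valueFrom:
        configMapKeyRef:
          name: my-config-map
          key: my-key
    # ...
  zookeeper:
    # ...
```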

When metrics are enabled, they are exposed on port 9404.

When the metricsConfig (or deprecated metrics) property is not defined in the resource, the Prometheus metrics are disabled.

For more information about setting up and deploying Prometheus and Grafana, see Introducing Metrics to Kafka in the Deploying and Upgrading AMQ Streams on OpenShift guide.

6.1.9. jvmOptions

The following AMQ Streams components run inside a Java Virtual Machine (JVM):

- Apache Kafka

- Apache ZooKeeper

- Apache Kafka Connect

- Apache Kafka MirrorMaker

- AMQ Streams Kafka Bridge

To optimize their performance on different platforms and architectures, you configure the jvmOptions property in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

- Kafka.spec.entityOperator.userOperator

- Kafka.spec.entityOperator.topicOperator

- Kafka.spec.cruiseControl

- KafkaConnect.spec

- KafkaMirrorMaker.spec

- KafkaMirrorMaker2.spec

- KafkaBridge.spec

You can specify the following options in your configuration:

-Xms: Minimum initial allocation heap size when the JVM starts

-Xmx: Maximum heap size

-XX: Advanced runtime options for the JVM

javaSystemProperties: Additional system properties

gcLoggingEnabled: Enables garbage collector logging

The units accepted by JVM settings, such as -Xmx and -Xms, are the same units accepted by the JDK java binary in the corresponding image. Therefore, 1g or 1G means 1,073,741,824 bytes, and Gi is not a valid unit suffix. This is different from the units used for memory requests and limits, which follow the OpenShift convention where 1G means 1,000,000,000 bytes, and 1Gi means 1,073,741,824 bytes.

-Xms and -Xmx options

In addition to setting memory request and limit values for your containers, you can use the -Xms and -Xmx JVM options to set specific heap sizes for your JVM. Use the -Xms option to set an initial heap size and the -Xmx option to set a maximum heap size.

Specify heap size to have more control over the memory allocated to your JVM. Heap sizes should make the best use of a container’s memory limit (and request) without exceeding it. Heap size and any other memory requirements need to fit within a specified memory limit. If you don’t specify heap size in your configuration, but you configure a memory resource limit (and request), the Cluster Operator imposes default heap sizes automatically. The Cluster Operator sets default maximum and minimum heap values based on a percentage of the memory resource configuration.

The following table shows the default heap values.

| Component | Percent of available memory allocated to the heap | Maximum limit |
|---|---|---|
| Kafka | 50% | 5 GB |
| ZooKeeper | 75% | 2 GB |
| Kafka Connect | 75% | None |
| MirrorMaker 2 | 75% | None |
| MirrorMaker | 75% | None |
| Cruise Control | 75% | None |
| Kafka Bridge | 50% | 31 Gi |

If a memory limit (and request) is not specified, a JVM’s minimum heap size is set to 128M. The JVM’s maximum heap size is not defined to allow the memory to increase as needed. This is ideal for single node environments in test and development.

Setting an appropriate memory request can prevent the following:

- OpenShift killing a container if there is pressure on memory from other pods running on the node.

- OpenShift scheduling a container to a node with insufficient memory. If -Xms is set to -Xmx, the container will crash immediately; if not, the container will crash at a later time.

In this example, the JVM uses 2 GiB (=2,147,483,648 bytes) for its heap. Total JVM memory usage can be a lot more than the maximum heap size.

Example -Xmx and -Xms configuration

    # ...
    jvmOptions:
      "-Xmx": "2g"
      "-Xms": "2g"
    # ...

Setting the same value for initial (-Xms) and maximum (-Xmx) heap sizes avoids the JVM having to allocate memory after startup, at the cost of possibly allocating more heap than is really needed.

Containers performing lots of disk I/O, such as Kafka broker containers, require available memory for use as an operating system page cache. For such containers, the requested memory should be significantly higher than the memory used by the JVM.

-XX option

-XX options are used to configure the KAFKA_JVM_PERFORMANCE_OPTS option of Apache Kafka.

Example -XX configuration
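A sketch of a -XX map that would produce flags like those shown under "JVM options resulting from the -XX configuration". It assumes that boolean-like values are rendered as +Name or -Name flags and other values as Name=value; the values are written as quoted strings here.

```yaml
# Sketch only: assumes the -XX map is rendered into
# -XX:+Name / -XX:-Name / -XX:Name=value flags.
jvmOptions:
  "-XX":
    "UseG1GC": "true"
    "MaxGCPauseMillis": "20"
    "InitiatingHeapOccupancyPercent": "35"
    "ExplicitGCInvokesConcurrent": "true"
    "UseParNewGC": "false"
```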

JVM options resulting from the -XX configuration

-XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -XX:-UseParNewGC


When no -XX options are specified, the default Apache Kafka configuration of KAFKA_JVM_PERFORMANCE_OPTS is used.

javaSystemProperties

javaSystemProperties are used to configure additional Java system properties, such as debugging utilities.

Example javaSystemProperties configuration

    jvmOptions:
      javaSystemProperties:
        - name: javax.net.debug
          value: ssl

For more information about the jvmOptions, see the JvmOptions schema reference.

6.1.10. Garbage collector logging

The jvmOptions property also allows you to enable and disable garbage collector (GC) logging. GC logging is disabled by default. To enable it, set the gcLoggingEnabled property as follows:

Example GC logging configuration

    # ...
    jvmOptions:
      gcLoggingEnabled: true
    # ...

6.2. Schema properties

6.2.1. Kafka schema reference

| Property | Description |
|---|---|
| spec | The specification of the Kafka and ZooKeeper clusters, and Topic Operator. |
| status | The status of the Kafka and ZooKeeper clusters, and Topic Operator. |

6.2.2. KafkaSpec schema reference

Used in: Kafka

| Property | Description |
|---|---|
| kafka | Configuration of the Kafka cluster. |
| zookeeper | Configuration of the ZooKeeper cluster. |
| entityOperator | Configuration of the Entity Operator. |
| clusterCa | Configuration of the cluster certificate authority. |
| clientsCa | Configuration of the clients certificate authority. |
| cruiseControl | Configuration for Cruise Control deployment. Deploys a Cruise Control instance when specified. |
| kafkaExporter | Configuration of the Kafka Exporter. Kafka Exporter can provide additional metrics, for example lag of consumer group at topic/partition. |
| maintenanceTimeWindows | A list of time windows for maintenance tasks (that is, certificates renewal). Each time window is defined by a cron expression. |
| string array | |

6.2.3. KafkaClusterSpec schema reference

Used in: KafkaSpec

Full list of KafkaClusterSpec schema properties

Configures a Kafka cluster.

6.2.3.1. listeners

Use the listeners property to configure listeners to provide access to Kafka brokers.

Example configuration of a plain (unencrypted) listener without authentication

6.2.3.2. config

Use the config properties to configure Kafka broker options as keys.

Standard Apache Kafka configuration may be provided, restricted to those properties not managed directly by AMQ Streams.

Configuration options that cannot be configured relate to:

- Security (Encryption, Authentication, and Authorization)

- Listener configuration

- Broker ID configuration

- Configuration of log data directories

- Inter-broker communication

- ZooKeeper connectivity

The values can be one of the following JSON types:

- String

- Number

- Boolean

You can specify and configure the options listed in the Apache Kafka documentation with the exception of those options that are managed directly by AMQ Streams. Specifically, all configuration options with keys equal to or starting with one of the following strings are forbidden:

- listeners

- advertised.

- broker.

- listener.

- host.name

- port

- inter.broker.listener.name

- sasl.

- ssl.

- security.

- password.

- principal.builder.class

- log.dir

- zookeeper.connect

- zookeeper.set.acl

- authorizer.

- super.user

When a forbidden option is present in the config property, it is ignored and a warning message is printed to the Cluster Operator log file. All other supported options are passed to Kafka.

There are exceptions to the forbidden options. For client connection using a specific cipher suite for a TLS version, you can configure allowed ssl properties. You can also configure the zookeeper.connection.timeout.ms property to set the maximum time allowed for establishing a ZooKeeper connection.

Example Kafka broker configuration
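A minimal sketch of a config block that combines ordinary broker options with the permitted exceptions mentioned above (allowed ssl properties and zookeeper.connection.timeout.ms). The resource name and all values are illustrative assumptions.

```yaml
# Sketch only: values are illustrative assumptions.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    config:
      num.partitions: 1
      default.replication.factor: 3
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 1
      log.retention.hours: 168
      # Permitted exceptions to the forbidden prefixes
      zookeeper.connection.timeout.ms: 6000
      ssl.cipher.suites: TLS_AES_256_GCM_SHA384
      ssl.enabled.protocols: TLSv1.3
      ssl.protocol: TLSv1.3
    # ...
```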

6.2.3.3. brokerRackInitImage

When rack awareness is enabled, Kafka broker pods use an init container to collect the labels from the OpenShift cluster nodes. The container image used for this container can be configured using the brokerRackInitImage property. When the brokerRackInitImage field is missing, the following images are used in order of priority:

- Container image specified in the STRIMZI_DEFAULT_KAFKA_INIT_IMAGE environment variable in the Cluster Operator configuration.

- registry.redhat.io/amq-streams/strimzi-rhel8-operator:2.4.0 container image.

Example brokerRackInitImage configuration
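A minimal sketch of setting brokerRackInitImage alongside rack awareness. The image reference my-org/my-image:latest is a placeholder, and the topologyKey value is an assumption about which node label identifies the rack or zone.

```yaml
# Sketch only: image and topologyKey are illustrative assumptions.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    rack:
      topologyKey: topology.kubernetes.io/zone
    brokerRackInitImage: my-org/my-image:latest
    # ...
```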

Overriding container images is recommended only in special situations where you need to use a different container registry, for example because your network does not allow access to the container registry used by AMQ Streams. In this case, you should either copy the AMQ Streams images or build them from the source. If the configured image is not compatible with AMQ Streams images, it might not work properly.

6.2.3.4. logging

Kafka has its own configurable loggers:

- log4j.logger.org.I0Itec.zkclient.ZkClient

- log4j.logger.org.apache.zookeeper

- log4j.logger.kafka

- log4j.logger.org.apache.kafka

- log4j.logger.kafka.request.logger

- log4j.logger.kafka.network.Processor

- log4j.logger.kafka.server.KafkaApis

- log4j.logger.kafka.network.RequestChannel$

- log4j.logger.kafka.controller

- log4j.logger.kafka.log.LogCleaner

- log4j.logger.state.change.logger

- log4j.logger.kafka.authorizer.logger

Kafka uses the Apache log4j logger implementation.

Use the logging property to configure loggers and logger levels.

You can set the log levels by specifying the logger and level directly (inline) or use a custom (external) ConfigMap. If a ConfigMap is used, you set logging.valueFrom.configMapKeyRef.name property to the name of the ConfigMap containing the external logging configuration. Inside the ConfigMap, the logging configuration is described using log4j.properties. Both logging.valueFrom.configMapKeyRef.name and logging.valueFrom.configMapKeyRef.key properties are mandatory. A ConfigMap using the exact logging configuration specified is created with the custom resource when the Cluster Operator is running, then recreated after each reconciliation. If you do not specify a custom ConfigMap, default logging settings are used. If a specific logger value is not set, upper-level logger settings are inherited for that logger. For more information about log levels, see Apache logging services.

Here we see examples of inline and external logging.

Inline logging

External logging
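The two approaches can be sketched as follows. The first document sets logger levels inline; the second references an external ConfigMap through logging.valueFrom.configMapKeyRef. The ConfigMap name my-logging-config-map, the key kafka-log4j.properties, and the chosen levels are assumptions for illustration.

```yaml
# Sketch only: names and levels are illustrative assumptions.
# Inline logging
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    logging:
      type: inline
      loggers:
        kafka.root.logger.level: INFO
        log4j.logger.kafka.request.logger: DEBUG
        log4j.logger.kafka.log.LogCleaner: DEBUG
    # ...
---
# External logging
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    logging:
      type: external
      valueFrom:
        configMapKeyRef:
          name: my-logging-config-map
          key: kafka-log4j.properties
    # ...
```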

Any available loggers that are not configured have their level set to OFF.

If Kafka was deployed using the Cluster Operator, changes to Kafka logging levels are applied dynamically.

If you use external logging, a rolling update is triggered when logging appenders are changed.

Garbage collector (GC)

Garbage collector logging can also be enabled (or disabled) using the jvmOptions property.

6.2.3.5. KafkaClusterSpec schema properties

| Property | Description |
|---|---|
| version | The Kafka broker version. Defaults to 3.4.0. Consult the user documentation to understand the process required to upgrade or downgrade the version. |
| string | |
| replicas | The number of pods in the cluster. |
| integer | |
| image | The docker image for the pods. The default value depends on the configured |
| string | |
| listeners | Configures listeners of Kafka brokers. |
| config | Kafka broker config properties with the following prefixes cannot be set: listeners, advertised., broker., listener., host.name, port, inter.broker.listener.name, sasl., ssl., security., password., log.dir, zookeeper.connect, zookeeper.set.acl, zookeeper.ssl, zookeeper.clientCnxnSocket, authorizer., super.user, cruise.control.metrics.topic, cruise.control.metrics.reporter.bootstrap.servers, node.id, process.roles, controller. (with the exception of: zookeeper.connection.timeout.ms, sasl.server.max.receive.size, ssl.cipher.suites, ssl.protocol, ssl.enabled.protocols, ssl.secure.random.implementation, cruise.control.metrics.topic.num.partitions, cruise.control.metrics.topic.replication.factor, cruise.control.metrics.topic.retention.ms, cruise.control.metrics.topic.auto.create.retries, cruise.control.metrics.topic.auto.create.timeout.ms, cruise.control.metrics.topic.min.insync.replicas, controller.quorum.election.backoff.max.ms, controller.quorum.election.timeout.ms, controller.quorum.fetch.timeout.ms). |
| map | |
| storage | Storage configuration (disk). Cannot be updated. The type depends on the value of the |
| authorization | Authorization configuration for Kafka brokers. The type depends on the value of the |
| rack | Configuration of the |
| brokerRackInitImage | The image of the init container used for initializing the |
| string | |
| livenessProbe | Pod liveness checking. |
| readinessProbe | Pod readiness checking. |
| jvmOptions | JVM Options for pods. |
| jmxOptions | JMX Options for Kafka brokers. |
| resources | CPU and memory resources to reserve. For more information, see the external documentation for core/v1 resourcerequirements. |
| metricsConfig | Metrics configuration. The type depends on the value of the |
| logging | Logging configuration for Kafka. The type depends on the value of the |
| template | Template for Kafka cluster resources. The template allows users to specify how the |

6.2.4. GenericKafkaListener schema reference

Used in: KafkaClusterSpec

Full list of GenericKafkaListener schema properties

Configures listeners to connect to Kafka brokers within and outside OpenShift.

You configure the listeners in the Kafka resource.

Example Kafka resource showing listener configuration
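A sketch of a Kafka resource with several listeners defined together. The listener names, the resource name my-cluster, and the choice of TLS authentication on the second listener are assumptions for illustration; each listener has its own name and port.

```yaml
# Sketch only: names and ports are illustrative assumptions.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
        authentication:
          type: tls
      - name: external1
        port: 9094
        type: route
        tls: true
    # ...
```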

6.2.4.1. listeners

You configure Kafka broker listeners using the listeners property in the Kafka resource. Listeners are defined as an array.

Example listener configuration

    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false

The name and port must be unique within the Kafka cluster. The name can be up to 25 characters long, comprising lower-case letters and numbers. Allowed port numbers are 9092 and higher with the exception of ports 9404 and 9999, which are already used for Prometheus and JMX.

By specifying a unique name and port for each listener, you can configure multiple listeners.

6.2.4.2. type

The type is set as internal, or for external listeners, as route, loadbalancer, nodeport, or ingress. You can also configure a cluster-ip listener, a type of internal listener you can use to build custom access mechanisms.

- internal

You can configure internal listeners with or without encryption using the

tlsproperty.Example

internallistener configurationCopy to Clipboard Copied! Toggle word wrap Toggle overflow - route

Configures an external listener to expose Kafka using OpenShift

Routesand the HAProxy router.A dedicated

Routeis created for every Kafka broker pod. An additionalRouteis created to serve as a Kafka bootstrap address. Kafka clients can use theseRoutesto connect to Kafka on port 443. The client connects on port 443, the default router port, but traffic is then routed to the port you configure, which is9094in this example.Example

routelistener configurationCopy to Clipboard Copied! Toggle word wrap Toggle overflow - ingress

Configures an external listener to expose Kafka using Kubernetes

Ingressand the Ingress NGINX Controller for Kubernetes.A dedicated

Ingressresource is created for every Kafka broker pod. An additionalIngressresource is created to serve as a Kafka bootstrap address. Kafka clients can use theseIngressresources to connect to Kafka on port 443. The client connects on port 443, the default controller port, but traffic is then routed to the port you configure, which is9095in the following example.You must specify the hostnames used by the bootstrap and per-broker services using

GenericKafkaListenerConfigurationBootstrapandGenericKafkaListenerConfigurationBrokerproperties.Example

ingresslistener configurationCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteExternal listeners using

Ingressare currently only tested with the Ingress NGINX Controller for Kubernetes.- loadbalancer

Configures an external listener to expose Kafka using a

LoadbalancertypeService.A new loadbalancer service is created for every Kafka broker pod. An additional loadbalancer is created to serve as a Kafka bootstrap address. Loadbalancers listen to the specified port number, which is port

9094in the following example.You can use the

loadBalancerSourceRangesproperty to configure source ranges to restrict access to the specified IP addresses.Example

loadbalancerlistener configurationCopy to Clipboard Copied! Toggle word wrap Toggle overflow - nodeport

Configures an external listener to expose Kafka using a NodePort type Service.

Kafka clients connect directly to the nodes of OpenShift. An additional NodePort type of service is created to serve as a Kafka bootstrap address.

When configuring the advertised addresses for the Kafka broker pods, AMQ Streams uses the address of the node on which the given pod is running. You can use the preferredNodePortAddressType property to configure the first address type checked as the node address.

Example nodeport listener configuration

Note: TLS hostname verification is not currently supported when exposing Kafka clusters using node ports.

- cluster-ip

Configures an internal listener to expose Kafka using a per-broker ClusterIP type Service.

The listener does not use a headless service and its DNS names to route traffic to Kafka brokers. You can use this type of listener to expose a Kafka cluster when using the headless service is unsuitable. You might use it with a custom access mechanism, such as one that uses a specific Ingress controller or the OpenShift Gateway API.

A new ClusterIP service is created for each Kafka broker pod. The service is assigned a ClusterIP address to serve as a Kafka bootstrap address with a per-broker port number. For example, you can configure the listener to expose a Kafka cluster over an Nginx Ingress Controller with TCP port configuration.

Example cluster-ip listener configuration
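A cluster-ip listener might be declared as in the following minimal sketch. The resource name my-cluster, the kafka.strimzi.io/v1beta2 API version, the listener name clusterip, and port 9096 are placeholder assumptions, not values taken from this reference.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: clusterip                # placeholder listener name
        port: 9096                     # placeholder port (9092 or higher)
        type: cluster-ip
        tls: false
```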

6.2.4.3. port

The port number is the port used in the Kafka cluster, which might not be the same port used for access by a client.

- loadbalancer listeners use the specified port number, as do internal and cluster-ip listeners

- ingress and route listeners use port 443 for access

- nodeport listeners use the port number assigned by OpenShift

For client connection, use the address and port for the bootstrap service of the listener. You can retrieve this from the status of the Kafka resource.

Example command to retrieve the address and port for client connection

oc get kafka <kafka_cluster_name> -o=jsonpath='{.status.listeners[?(@.name=="<listener_name>")].bootstrapServers}{"\n"}'

Listeners cannot be configured to use the ports set aside for interbroker communication (9090 and 9091) and metrics (9404).

6.2.4.4. tls

The tls property is required.

By default, TLS encryption is not enabled. To enable it, set the tls property to true.

For route and ingress type listeners, TLS encryption must be enabled.

6.2.4.5. authentication

Authentication for the listener can be specified as:

- mTLS (tls)

- SCRAM-SHA-512 (scram-sha-512)

- Token-based OAuth 2.0 (oauth)

- Custom (custom)

6.2.4.6. networkPolicyPeers

Use networkPolicyPeers to configure network policies that restrict access to a listener at the network level. The following example shows a networkPolicyPeers configuration for a plain and a tls listener.

In the following example:

- Only application pods matching the labels app: kafka-sasl-consumer and app: kafka-sasl-producer can connect to the plain listener. The application pods must be running in the same namespace as the Kafka broker.

- Only application pods running in namespaces matching the labels project: myproject and project: myproject2 can connect to the tls listener.

The syntax of the networkPolicyPeers property is the same as the from property in NetworkPolicy resources.

Example network policy configuration
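The two rules described above could be expressed roughly as follows. This is a sketch rather than the reference configuration: the labels and listener names come from the description, while the resource name, API version, port numbers, and authentication types are assumptions.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: plain
        port: 9092                     # assumed port
        type: internal
        tls: false
        authentication:
          type: scram-sha-512          # assumed authentication type
        networkPolicyPeers:
          # Pods in the broker namespace carrying either label may connect
          - podSelector:
              matchLabels:
                app: kafka-sasl-consumer
          - podSelector:
              matchLabels:
                app: kafka-sasl-producer
      - name: tls
        port: 9093                     # assumed port
        type: internal
        tls: true
        authentication:
          type: tls                    # assumed authentication type
        networkPolicyPeers:
          # Pods in namespaces carrying either label may connect
          - namespaceSelector:
              matchLabels:
                project: myproject
          - namespaceSelector:
              matchLabels:
                project: myproject2
```

Because the peers in each list are combined with a logical OR, a connection is allowed when it matches any one entry.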

6.2.4.7. GenericKafkaListener schema properties

| Property | Property type | Description |
|---|---|---|
| name | string | Name of the listener. The name is used to identify the listener and the related OpenShift objects. The name must be unique within a given Kafka cluster. The name can consist of lowercase characters and numbers and be up to 11 characters long. |
| port | integer | Port number used by the listener inside Kafka. The port number must be unique within a given Kafka cluster. Allowed port numbers are 9092 and higher with the exception of ports 9404 and 9999, which are already used for Prometheus and JMX. Depending on the listener type, the port number might not be the same as the port number that connects Kafka clients. |
| type | string (one of [ingress, internal, route, loadbalancer, cluster-ip, nodeport]) | Type of the listener. The supported types are ingress, internal, route, loadbalancer, cluster-ip, and nodeport. |
| tls | boolean | Enables TLS encryption on the listener. This is a required property. |
| authentication | KafkaListenerAuthenticationTls, KafkaListenerAuthenticationScramSha512, KafkaListenerAuthenticationOAuth, KafkaListenerAuthenticationCustom | Authentication configuration for this listener. The type depends on the value of the authentication.type property, which must be one of tls, scram-sha-512, oauth, or custom. |
| configuration | GenericKafkaListenerConfiguration | Additional listener configuration. |
| networkPolicyPeers | NetworkPolicyPeer array | List of peers which should be able to connect to this listener. Peers in this list are combined using a logical OR operation. If this field is empty or missing, all connections are allowed for this listener. If this field is present and contains at least one item, the listener only allows the traffic which matches at least one item in this list. For more information, see the external documentation for networking.k8s.io/v1 networkpolicypeer. |

6.2.5. KafkaListenerAuthenticationTls schema reference

Used in: GenericKafkaListener

The type property is a discriminator that distinguishes use of the KafkaListenerAuthenticationTls type from KafkaListenerAuthenticationScramSha512, KafkaListenerAuthenticationOAuth, KafkaListenerAuthenticationCustom. It must have the value tls for the type KafkaListenerAuthenticationTls.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be tls. |

6.2.6. KafkaListenerAuthenticationScramSha512 schema reference

Used in: GenericKafkaListener

The type property is a discriminator that distinguishes use of the KafkaListenerAuthenticationScramSha512 type from KafkaListenerAuthenticationTls, KafkaListenerAuthenticationOAuth, KafkaListenerAuthenticationCustom. It must have the value scram-sha-512 for the type KafkaListenerAuthenticationScramSha512.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be scram-sha-512. |

6.2.7. KafkaListenerAuthenticationOAuth schema reference

Used in: GenericKafkaListener

The type property is a discriminator that distinguishes use of the KafkaListenerAuthenticationOAuth type from KafkaListenerAuthenticationTls, KafkaListenerAuthenticationScramSha512, KafkaListenerAuthenticationCustom. It must have the value oauth for the type KafkaListenerAuthenticationOAuth.

| Property | Property type | Description |
|---|---|---|
| accessTokenIsJwt | boolean | Configure whether the access token is treated as JWT. This must be set to |
| checkAccessTokenType | boolean | Configure whether the access token type check is performed or not. This should be set to |
| checkAudience | boolean | Enable or disable audience checking. Audience checks identify the recipients of tokens. If audience checking is enabled, the OAuth Client ID also has to be configured using the |
| checkIssuer | boolean | Enable or disable issuer checking. By default issuer is checked using the value configured by |
| clientAudience | string | The audience to use when making requests to the authorization server’s token endpoint. Used for inter-broker authentication and for configuring OAuth 2.0 over PLAIN using the |
| clientId | string | OAuth Client ID which the Kafka broker can use to authenticate against the authorization server and use the introspect endpoint URI. |
| clientScope | string | The scope to use when making requests to the authorization server’s token endpoint. Used for inter-broker authentication and for configuring OAuth 2.0 over PLAIN using the |
| clientSecret | GenericSecretSource | Link to OpenShift Secret containing the OAuth client secret which the Kafka broker can use to authenticate against the authorization server and use the introspect endpoint URI. |
| connectTimeoutSeconds | integer | The connect timeout in seconds when connecting to authorization server. If not set, the effective connect timeout is 60 seconds. |
| customClaimCheck | string | JsonPath filter query to be applied to the JWT token or to the response of the introspection endpoint for additional token validation. Not set by default. |
| disableTlsHostnameVerification | boolean | Enable or disable TLS hostname verification. Default value is |
| enableECDSA | boolean | The |
| enableMetrics | boolean | Enable or disable OAuth metrics. Default value is |
| enableOauthBearer | boolean | Enable or disable OAuth authentication over SASL_OAUTHBEARER. Default value is |
| enablePlain | boolean | Enable or disable OAuth authentication over SASL_PLAIN. There is no re-authentication support when this mechanism is used. Default value is |
| failFast | boolean | Enable or disable termination of Kafka broker processes due to potentially recoverable runtime errors during startup. Default value is |
| fallbackUserNameClaim | string | The fallback username claim to be used for the user id if the claim specified by |
| fallbackUserNamePrefix | string | The prefix to use with the value of |
| groupsClaim | string | JsonPath query used to extract groups for the user during authentication. Extracted groups can be used by a custom authorizer. By default no groups are extracted. |
| groupsClaimDelimiter | string | A delimiter used to parse groups when they are extracted as a single String value rather than a JSON array. Default value is ',' (comma). |
| httpRetries | integer | The maximum number of retries to attempt if an initial HTTP request fails. If not set, the default is to not attempt any retries. |
| httpRetryPauseMs | integer | The pause to take before retrying a failed HTTP request. If not set, the default is to not pause at all but to immediately repeat a request. |
| introspectionEndpointUri | string | URI of the token introspection endpoint which can be used to validate opaque non-JWT tokens. |
| jwksEndpointUri | string | URI of the JWKS certificate endpoint, which can be used for local JWT validation. |
| jwksExpirySeconds | integer | Configures how long the JWKS certificates are considered valid. The expiry interval has to be at least 60 seconds longer than the refresh interval specified in jwksRefreshSeconds. |
| jwksIgnoreKeyUse | boolean | Flag to ignore the 'use' attribute of |
| jwksMinRefreshPauseSeconds | integer | The minimum pause between two consecutive refreshes. When an unknown signing key is encountered the refresh is scheduled immediately, but will always wait for this minimum pause. Defaults to 1 second. |
| jwksRefreshSeconds | integer | Configures how often the JWKS certificates are refreshed. The refresh interval has to be at least 60 seconds shorter than the expiry interval specified in jwksExpirySeconds. |
| maxSecondsWithoutReauthentication | integer | Maximum number of seconds the authenticated session remains valid without re-authentication. This enables the Apache Kafka re-authentication feature, and causes sessions to expire when the access token expires. If the access token expires before max time or if max time is reached, the client has to re-authenticate, otherwise the server will drop the connection. Not set by default: the authenticated session does not expire when the access token expires. This option only applies to SASL_OAUTHBEARER authentication mechanism (when |
| readTimeoutSeconds | integer | The read timeout in seconds when connecting to authorization server. If not set, the effective read timeout is 60 seconds. |
| tlsTrustedCertificates | CertSecretSource array | Trusted certificates for TLS connection to the OAuth server. |
| tokenEndpointUri | string | URI of the Token Endpoint to use with SASL_PLAIN mechanism when the client authenticates with |
| type | string | Must be oauth. |
| userInfoEndpointUri | string | URI of the User Info Endpoint to use as a fallback to obtaining the user id when the Introspection Endpoint does not return information that can be used for the user id. |
| userNameClaim | string | Name of the claim from the JWT authentication token, Introspection Endpoint response or User Info Endpoint response which will be used to extract the user id. Defaults to |
| validIssuerUri | string | URI of the token issuer used for authentication. |
| validTokenType | string | Valid value for the |

6.2.8. GenericSecretSource schema reference

Used in: KafkaClientAuthenticationOAuth, KafkaListenerAuthenticationCustom, KafkaListenerAuthenticationOAuth

| Property | Property type | Description |
|---|---|---|
| key | string | The key under which the secret value is stored in the OpenShift Secret. |
| secretName | string | The name of the OpenShift Secret containing the secret value. |

6.2.9. CertSecretSource schema reference

Used in: ClientTls, KafkaAuthorizationKeycloak, KafkaAuthorizationOpa, KafkaClientAuthenticationOAuth, KafkaListenerAuthenticationOAuth

| Property | Property type | Description |
|---|---|---|
| certificate | string | The name of the file certificate in the Secret. |
| secretName | string | The name of the Secret containing the certificate. |

6.2.10. KafkaListenerAuthenticationCustom schema reference

Used in: GenericKafkaListener

Full list of KafkaListenerAuthenticationCustom schema properties

To configure custom authentication, set the type property to custom.

Custom authentication allows any type of Kafka-supported authentication to be used.

Example custom OAuth authentication configuration
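A custom listener of this kind might look like the following sketch. The listener name oauth-bespoke, port 9093, and the Secret name example mirror the mount path quoted in the secrets section below; check the name against the listener naming rules for your version. The callback handler class, the Secret key, the resource name, and the API version are hypothetical placeholders.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: oauth-bespoke
        port: 9093
        type: internal
        tls: true
        authentication:
          type: custom
          sasl: true                   # SASL = true and TLS = true maps to SASL_SSL
          listenerConfig:
            # Keys are prefixed with listener.name.<listener_name>-<port>.
            sasl.enabled.mechanisms: oauthbearer
            # Hypothetical class name; it must exist on the Kafka image
            oauthbearer.sasl.server.callback.handler.class: com.example.ServerCallbackHandler
          secrets:
            - secretName: example
              key: example-key         # hypothetical key within the Secret
```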

A protocol map is generated that uses the sasl and tls values to determine which protocol to map to the listener.

- SASL = True, TLS = True: SASL_SSL

- SASL = False, TLS = True: SSL

- SASL = True, TLS = False: SASL_PLAINTEXT

- SASL = False, TLS = False: PLAINTEXT

6.2.10.1. listenerConfig

Listener configuration specified using listenerConfig is prefixed with listener.name.<listener_name>-<port>. For example, sasl.enabled.mechanisms becomes listener.name.<listener_name>-<port>.sasl.enabled.mechanisms.

6.2.10.2. secrets

Secrets are mounted to /opt/kafka/custom-authn-secrets/custom-listener-<listener_name>-<port>/<secret_name> in the Kafka broker nodes' containers.

For example, the mounted secret (example) in the example configuration would be located at /opt/kafka/custom-authn-secrets/custom-listener-oauth-bespoke-9093/example.

6.2.10.3. Principal builder

You can set a custom principal builder in the Kafka cluster configuration. However, the principal builder is subject to the following requirements:

- The specified principal builder class must exist on the image. Before building your own, check if one already exists. You’ll need to rebuild the AMQ Streams images with the required classes.

- No other listener is using oauth type authentication. This is because an OAuth listener appends its own principal builder to the Kafka configuration.

- The specified principal builder is compatible with AMQ Streams.

Custom principal builders must support peer certificates for authentication, as AMQ Streams uses these to manage the Kafka cluster.

Kafka’s default principal builder class supports the building of principals based on the names of peer certificates. The custom principal builder should provide a principal of type user using the name of the SSL peer certificate.

The following example shows a custom principal builder that satisfies the OAuth requirements of AMQ Streams.

Example principal builder for custom OAuth configuration

6.2.10.4. KafkaListenerAuthenticationCustom schema properties

The type property is a discriminator that distinguishes use of the KafkaListenerAuthenticationCustom type from KafkaListenerAuthenticationTls, KafkaListenerAuthenticationScramSha512, KafkaListenerAuthenticationOAuth. It must have the value custom for the type KafkaListenerAuthenticationCustom.

| Property | Property type | Description |
|---|---|---|
| listenerConfig | map | Configuration to be used for a specific listener. All values are prefixed with listener.name.<listener_name>. |
| sasl | boolean | Enable or disable SASL on this listener. |
| secrets | GenericSecretSource array | Secrets to be mounted to /opt/kafka/custom-authn-secrets/custom-listener-<listener_name>-<port>/<secret_name>. |
| type | string | Must be custom. |

6.2.11. GenericKafkaListenerConfiguration schema reference

Used in: GenericKafkaListener

Full list of GenericKafkaListenerConfiguration schema properties

Configuration for Kafka listeners.

6.2.11.1. brokerCertChainAndKey

The brokerCertChainAndKey property is only used with listeners that have TLS encryption enabled. You can use the property to provide your own Kafka listener certificates.

Example configuration for a loadbalancer external listener with TLS encryption enabled
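A minimal sketch of such a listener follows. The listener name, Secret name, and file names are placeholders chosen for illustration, as are the resource name and API version.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external3                # placeholder listener name
        port: 9094
        type: loadbalancer
        tls: true                      # brokerCertChainAndKey requires TLS encryption
        configuration:
          brokerCertChainAndKey:
            secretName: my-secret                     # placeholder Secret
            certificate: my-listener-certificate.crt  # placeholder file name
            key: my-listener-key.key                  # placeholder file name
```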

6.2.11.2. externalTrafficPolicy

The externalTrafficPolicy property is used with loadbalancer and nodeport listeners. When exposing Kafka outside of OpenShift you can choose Local or Cluster. Local avoids hops to other nodes and preserves the client IP, whereas Cluster does neither. The default is Cluster.

6.2.11.3. loadBalancerSourceRanges

The loadBalancerSourceRanges property is only used with loadbalancer listeners. When exposing Kafka outside of OpenShift use source ranges, in addition to labels and annotations, to customize how a service is created.

Example source ranges configured for a loadbalancer listener
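The following sketch restricts a loadbalancer listener to two source ranges. The CIDR ranges, listener name, resource name, and API version are illustrative assumptions.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external3                # placeholder listener name
        port: 9094
        type: loadbalancer
        tls: true
        configuration:
          loadBalancerSourceRanges:    # placeholder CIDR ranges
            - 10.0.0.0/8
            - 88.208.76.87/32
```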

6.2.11.4. class

The class property is only used with ingress listeners. You can configure the Ingress class using the class property.

Example of an external listener of type ingress using Ingress class nginx-internal
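As a sketch, an ingress listener that selects the nginx-internal Ingress class might be written as follows. The hostnames, listener name, resource name, and API version are placeholders; host values are included because ingress listeners require them.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external2                # placeholder listener name
        port: 9094
        type: ingress
        tls: true                      # TLS must be enabled for ingress listeners
        configuration:
          class: nginx-internal
          bootstrap:
            host: bootstrap.myingress.com   # placeholder hostname
          brokers:
            - broker: 0
              host: broker-0.myingress.com  # placeholder hostname
```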

6.2.11.5. preferredNodePortAddressType

The preferredNodePortAddressType property is only used with nodeport listeners.

Use the preferredNodePortAddressType property in your listener configuration to specify the first address type checked as the node address. This property is useful, for example, if your deployment does not have DNS support, or you only want to expose a broker internally through an internal DNS or IP address. If an address of this type is found, it is used. If the preferred address type is not found, AMQ Streams proceeds through the types in the standard order of priority:

- ExternalDNS

- ExternalIP

- Hostname

- InternalDNS

- InternalIP

Example of an external listener configured with a preferred node port address type
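The following sketch prefers the InternalDNS address type for a nodeport listener. The listener name, port, resource name, and API version are placeholder assumptions.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external4                # placeholder listener name
        port: 9094
        type: nodeport
        tls: false
        configuration:
          # Checked first; other address types follow in the standard order
          preferredNodePortAddressType: InternalDNS
```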

6.2.11.6. useServiceDnsDomain

The useServiceDnsDomain property is only used with internal and cluster-ip listeners. It defines whether the fully-qualified DNS names that include the cluster service suffix (usually .cluster.local) are used. With useServiceDnsDomain set as false, the advertised addresses are generated without the service suffix; for example, my-cluster-kafka-0.my-cluster-kafka-brokers.myproject.svc. With useServiceDnsDomain set as true, the advertised addresses are generated with the service suffix; for example, my-cluster-kafka-0.my-cluster-kafka-brokers.myproject.svc.cluster.local. Default is false.

Example of an internal listener configured to use the Service DNS domain
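A minimal sketch of this setting is shown below, assuming a listener named plain on port 9092 and a Kafka resource named my-cluster (all placeholders).

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: plain                    # placeholder listener name
        port: 9092
        type: internal
        tls: false
        configuration:
          # Advertised addresses include the service suffix, such as .cluster.local
          useServiceDnsDomain: true
```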

If your OpenShift cluster uses a different service suffix than .cluster.local, you can configure the suffix using the KUBERNETES_SERVICE_DNS_DOMAIN environment variable in the Cluster Operator configuration.

6.2.11.7. GenericKafkaListenerConfiguration schema properties

| Property | Property type | Description |
|---|---|---|
| brokerCertChainAndKey | CertAndKeySecretSource | Reference to the |
| externalTrafficPolicy | string (one of [Local, Cluster]) | Specifies whether the service routes external traffic to node-local or cluster-wide endpoints. |
| loadBalancerSourceRanges | string array | A list of CIDR ranges (for example |
| bootstrap | GenericKafkaListenerConfigurationBootstrap | Bootstrap configuration. |
| brokers | GenericKafkaListenerConfigurationBroker array | Per-broker configurations. |
| ipFamilyPolicy | string (one of [RequireDualStack, SingleStack, PreferDualStack]) | Specifies the IP Family Policy used by the service. Available options are SingleStack, PreferDualStack, and RequireDualStack. |
| ipFamilies | string (one or more of [IPv6, IPv4]) array | Specifies the IP Families used by the service. Available options are IPv4 and IPv6. |
| createBootstrapService | boolean | Whether to create the bootstrap service or not. The bootstrap service is created by default (if not specified differently). This field can be used with the |
| class | string | Configures a specific class for |
| finalizers | string array | A list of finalizers which will be configured for the |
| maxConnectionCreationRate | integer | The maximum connection creation rate allowed in this listener at any time. New connections are throttled if the limit is reached. |
| maxConnections | integer | The maximum number of connections allowed for this listener in the broker at any time. New connections are blocked if the limit is reached. |
| preferredNodePortAddressType | string (one of [ExternalDNS, ExternalIP, Hostname, InternalIP, InternalDNS]) | Defines which address type should be used as the node address. Available types are ExternalDNS, ExternalIP, InternalDNS, InternalIP, and Hostname. This field is used to select the preferred address type, which is checked first. If no address is found for this address type, the other types are checked in the default order. This field can only be used with nodeport type listeners. |
| useServiceDnsDomain | boolean | Configures whether the OpenShift service DNS domain should be used or not. If set to |

6.2.12. CertAndKeySecretSource schema reference

Used in: GenericKafkaListenerConfiguration, KafkaClientAuthenticationTls

| Property | Property type | Description |
|---|---|---|
| certificate | string | The name of the file certificate in the Secret. |
| key | string | The name of the private key in the Secret. |
| secretName | string | The name of the Secret containing the certificate. |

6.2.13. GenericKafkaListenerConfigurationBootstrap schema reference

Used in: GenericKafkaListenerConfiguration

Full list of GenericKafkaListenerConfigurationBootstrap schema properties

Broker service equivalents of nodePort, host, loadBalancerIP and annotations properties are configured in the GenericKafkaListenerConfigurationBroker schema.

6.2.13.1. alternativeNames

You can specify alternative names for the bootstrap service. The names are added to the broker certificates and can be used for TLS hostname verification. The alternativeNames property is applicable to all types of listeners.

Example of an external route listener configured with an additional bootstrap address
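The sketch below adds two alternative bootstrap names to a route listener. The hostnames, listener name, resource name, and API version are placeholders.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external1                # placeholder listener name
        port: 9094
        type: route
        tls: true                      # TLS must be enabled for route listeners
        configuration:
          bootstrap:
            alternativeNames:          # added to the broker certificates
              - example.hostname1      # placeholder hostname
              - example.hostname2      # placeholder hostname
```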

6.2.13.2. host

The host property is used with route and ingress listeners to specify the hostnames used by the bootstrap and per-broker services.

A host property value is mandatory for ingress listener configuration, as the Ingress controller does not assign any hostnames automatically. Make sure that the hostnames resolve to the Ingress endpoints. AMQ Streams will not perform any validation that the requested hosts are available and properly routed to the Ingress endpoints.

Example of host configuration for an ingress listener
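As an illustration, host values for the bootstrap service and three brokers might be set as follows. All hostnames, the listener name, the resource name, and the API version are placeholder assumptions.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external2                # placeholder listener name
        port: 9094
        type: ingress
        tls: true
        configuration:
          bootstrap:
            host: bootstrap.myingress.com    # must resolve to the Ingress endpoints
          brokers:
            - broker: 0
              host: broker-0.myingress.com
            - broker: 1
              host: broker-1.myingress.com
            - broker: 2
              host: broker-2.myingress.com
```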

By default, route listener hosts are automatically assigned by OpenShift. However, you can override the assigned route hosts by specifying hosts.

AMQ Streams does not perform any validation that the requested hosts are available. You must ensure that they are free and can be used.

Example of host configuration for a route listener
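A route listener with overridden hosts could be sketched like this. The hostnames, listener name, resource name, and API version are placeholders, and the requested hosts are assumed to be free.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external1                # placeholder listener name
        port: 9094
        type: route
        tls: true
        configuration:
          bootstrap:
            host: bootstrap.myrouter.com     # overrides the host assigned by OpenShift
          brokers:
            - broker: 0
              host: broker-0.myrouter.com
            - broker: 1
              host: broker-1.myrouter.com
            - broker: 2
              host: broker-2.myrouter.com
```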

6.2.13.3. nodePort

By default, the port numbers used for the bootstrap and broker services are automatically assigned by OpenShift. You can override the assigned node ports for nodeport listeners by specifying the requested port numbers.

AMQ Streams does not perform any validation on the requested ports. You must ensure that they are free and available for use.

Example of an external listener configured with overrides for node ports
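The following sketch requests specific node ports for the bootstrap service and three brokers. The port numbers, listener name, resource name, and API version are illustrative; the ports are assumed to be free on the cluster.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external4                # placeholder listener name
        port: 9094
        type: nodeport
        tls: true
        configuration:
          bootstrap:
            nodePort: 32100            # placeholder node port
          brokers:
            - broker: 0
              nodePort: 32000
            - broker: 1
              nodePort: 32001
            - broker: 2
              nodePort: 32002
```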

6.2.13.4. loadBalancerIP

Use the loadBalancerIP property to request a specific IP address when creating a loadbalancer. Use this property when you need to use a loadbalancer with a specific IP address. The loadBalancerIP field is ignored if the cloud provider does not support the feature.

Example of an external listener of type loadbalancer with specific loadbalancer IP address requests
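A sketch of such requests follows. The IP addresses, listener name, resource name, and API version are placeholders, and the requests only take effect if the cloud provider supports them.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external3                # placeholder listener name
        port: 9094
        type: loadbalancer
        tls: true
        configuration:
          bootstrap:
            loadBalancerIP: 172.29.3.10   # placeholder address
          brokers:
            - broker: 0
              loadBalancerIP: 172.29.3.1
            - broker: 1
              loadBalancerIP: 172.29.3.2
            - broker: 2
              loadBalancerIP: 172.29.3.3
```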

6.2.13.5. annotations

Use the annotations property to add annotations to OpenShift resources related to the listeners. You can use these annotations, for example, to instrument DNS tooling such as External DNS, which automatically assigns DNS names to the loadbalancer services.

Example of an external listener of type loadbalancer using annotations
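The sketch below attaches External DNS annotations to the bootstrap service and one broker service. The domain names, TTL value, listener name, resource name, and API version are assumptions made for illustration.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external3                # placeholder listener name
        port: 9094
        type: loadbalancer
        tls: true
        configuration:
          bootstrap:
            annotations:
              external-dns.alpha.kubernetes.io/hostname: kafka-bootstrap.mydomain.com.
              external-dns.alpha.kubernetes.io/ttl: "60"
          brokers:
            - broker: 0
              annotations:
                external-dns.alpha.kubernetes.io/hostname: kafka-broker-0.mydomain.com.
                external-dns.alpha.kubernetes.io/ttl: "60"
```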

6.2.13.6. GenericKafkaListenerConfigurationBootstrap schema properties

| Property | Property type | Description |
|---|---|---|
| alternativeNames | string array | Additional alternative names for the bootstrap service. The alternative names will be added to the list of subject alternative names of the TLS certificates. |
| host | string | The bootstrap host. This field will be used in the Ingress resource or in the Route resource to specify the desired hostname. This field can be used only with route (optional) or ingress (required) type listeners. |
| nodePort | integer | Node port for the bootstrap service. This field can be used only with nodeport type listeners. |
| loadBalancerIP | string | The loadbalancer is requested with the IP address specified in this field. This feature depends on whether the underlying cloud provider supports specifying the loadBalancerIP when a loadbalancer is created. This field is ignored if the cloud provider does not support the feature. |
| annotations | map | Annotations that will be added to the |
| labels | map | Labels that will be added to the |

6.2.14. GenericKafkaListenerConfigurationBroker schema reference

Used in: GenericKafkaListenerConfiguration

Full list of GenericKafkaListenerConfigurationBroker schema properties

You can see example configuration for the nodePort, host, loadBalancerIP and annotations properties in the GenericKafkaListenerConfigurationBootstrap schema, which configures bootstrap service overrides.

Advertised addresses for brokers

By default, AMQ Streams tries to automatically determine the hostnames and ports that your Kafka cluster advertises to its clients. This is not sufficient in all situations, because the infrastructure on which AMQ Streams is running might not provide the right hostname or port through which Kafka can be accessed.

You can specify a broker ID and customize the advertised hostname and port in the configuration property of the listener. AMQ Streams will then automatically configure the advertised address in the Kafka brokers and add it to the broker certificates so it can be used for TLS hostname verification. Overriding the advertised host and ports is available for all types of listeners.

Example of an external route listener configured with overrides for advertised addresses
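Per-broker overrides of the advertised address might be sketched as follows. The hostnames, port numbers, listener name, resource name, and API version are placeholders.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    listeners:
      - name: external1                # placeholder listener name
        port: 9094
        type: route
        tls: true
        configuration:
          brokers:
            - broker: 0
              advertisedHost: example.hostname.0   # placeholder hostname
              advertisedPort: 12340                # placeholder port
            - broker: 1
              advertisedHost: example.hostname.1
              advertisedPort: 12341
            - broker: 2
              advertisedHost: example.hostname.2
              advertisedPort: 12342
```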

6.2.14.1. GenericKafkaListenerConfigurationBroker schema properties

| Property | Property type | Description |
|---|---|---|
| broker | integer | ID of the Kafka broker (broker identifier). Broker IDs start from 0 and correspond to the number of broker replicas. |
| advertisedHost | string | The host name which will be used in the brokers' |
| advertisedPort | integer | The port number which will be used in the brokers' |
| host | string | The broker host. This field will be used in the Ingress resource or in the Route resource to specify the desired hostname. This field can be used only with route (optional) or ingress (required) type listeners. |
| nodePort | integer | Node port for the per-broker service. This field can be used only with nodeport type listeners. |
| loadBalancerIP | string | The loadbalancer is requested with the IP address specified in this field. This feature depends on whether the underlying cloud provider supports specifying the loadBalancerIP when a loadbalancer is created. This field is ignored if the cloud provider does not support the feature. |
| annotations | map | Annotations that will be added to the |
| labels | map | Labels that will be added to the |

6.2.15. EphemeralStorage schema reference

Used in: JbodStorage, KafkaClusterSpec, ZookeeperClusterSpec

The type property is a discriminator that distinguishes use of the EphemeralStorage type from PersistentClaimStorage. It must have the value ephemeral for the type EphemeralStorage.

| Property | Property type | Description |
|---|---|---|
| id | integer | Storage identification number. It is mandatory only for storage volumes defined in a storage of type 'jbod'. |
| sizeLimit | string | When type=ephemeral, defines the total amount of local storage required for this EmptyDir volume (for example 1Gi). |
| type | string | Must be ephemeral. |

6.2.16. PersistentClaimStorage schema reference

Used in: JbodStorage, KafkaClusterSpec, ZookeeperClusterSpec

The type property is a discriminator that distinguishes use of the PersistentClaimStorage type from EphemeralStorage. It must have the value persistent-claim for the type PersistentClaimStorage.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be persistent-claim. |
| size | string | When type=persistent-claim, defines the size of the persistent volume claim (for example, 1Gi). Mandatory when type=persistent-claim. |
| selector | map | Specifies a specific persistent volume to use. It contains key:value pairs representing labels for selecting such a volume. |
| deleteClaim | boolean | Specifies if the persistent volume claim has to be deleted when the cluster is un-deployed. |
| class | string | The storage class to use for dynamic volume allocation. |
| id | integer | Storage identification number. It is mandatory only for storage volumes defined in a storage of type 'jbod'. |
| overrides | PersistentClaimStorageOverride array | Overrides for individual brokers. The |

6.2.17. PersistentClaimStorageOverride schema reference

Used in: PersistentClaimStorage

| Property | Property type | Description |
|---|---|---|
| class | string | The storage class to use for dynamic volume allocation for this broker. |
| broker | integer | ID of the Kafka broker (broker identifier). |

6.2.18. JbodStorage schema reference

Used in: KafkaClusterSpec

The type property is a discriminator that distinguishes use of the JbodStorage type from EphemeralStorage, PersistentClaimStorage. It must have the value jbod for the type JbodStorage.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be jbod. |
| volumes | EphemeralStorage, PersistentClaimStorage array | List of volumes as Storage objects representing the JBOD disks array. |

6.2.19. KafkaAuthorizationSimple schema reference

Used in: KafkaClusterSpec

Full list of KafkaAuthorizationSimple schema properties

Simple authorization in AMQ Streams uses the AclAuthorizer plugin, the default Access Control Lists (ACLs) authorization plugin provided with Apache Kafka. ACLs allow you to define which users have access to which resources at a granular level.

Configure the Kafka custom resource to use simple authorization. Set the type property in the authorization section to the value simple, and configure a list of super users.

Access rules are configured for the KafkaUser, as described in the ACLRule schema reference.

6.2.19.1. superUsers

A list of user principals treated as super users, so that they are always allowed without querying ACL rules.

An example of simple authorization configuration
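A minimal sketch of simple authorization with three super users follows. The principal names, resource name, and API version are placeholders.

```yaml
apiVersion: kafka.strimzi.io/v1beta2   # assumed API version
kind: Kafka
metadata:
  name: my-cluster                     # placeholder cluster name
spec:
  kafka:
    # ...
    authorization:
      type: simple
      superUsers:                      # placeholder user principals
        - CN=client_1
        - user_2
        - CN=client_3
```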

The super.user configuration option in the config property in Kafka.spec.kafka is ignored. Designate super users in the authorization property instead. For more information, see Kafka broker configuration.

6.2.19.2. KafkaAuthorizationSimple schema properties

The type property is a discriminator that distinguishes use of the KafkaAuthorizationSimple type from KafkaAuthorizationOpa, KafkaAuthorizationKeycloak, KafkaAuthorizationCustom. It must have the value simple for the type KafkaAuthorizationSimple.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be simple. |
| superUsers | string array | List of super users. Should contain a list of user principals which should get unlimited access rights. |

6.2.20. KafkaAuthorizationOpa schema reference

Used in: KafkaClusterSpec

Full list of KafkaAuthorizationOpa schema properties

To use Open Policy Agent authorization, set the type property in the authorization section to the value opa, and configure OPA properties as required. AMQ Streams uses Open Policy Agent plugin for Kafka authorization as the authorizer. For more information about the format of the input data and policy examples, see Open Policy Agent plugin for Kafka authorization.

6.2.20.1. url

The URL used to connect to the Open Policy Agent server. The URL has to include the policy which will be queried by the authorizer. Required.

6.2.20.2. allowOnError

Defines whether a Kafka client is allowed or denied by default when the authorizer fails to query the Open Policy Agent, for example, when it is temporarily unavailable. Defaults to false, which means that all actions are denied.

6.2.20.3. initialCacheCapacity

Initial capacity of the local cache used by the authorizer to avoid querying the Open Policy Agent for every request. Defaults to 5000.

6.2.20.4. maximumCacheSize

Maximum capacity of the local cache used by the authorizer to avoid querying the Open Policy Agent for every request. Defaults to 50000.

6.2.20.5. expireAfterMs

The expiration of the records kept in the local cache to avoid querying the Open Policy Agent for every request. Defines how often the cached authorization decisions are reloaded from the Open Policy Agent server. In milliseconds. Defaults to 3600000 milliseconds (1 hour).

6.2.20.6. tlsTrustedCertificates

Trusted certificates for TLS connection to the OPA server.

6.2.20.7. superUsers

A list of user principals treated as super users, so that they are always allowed without querying the Open Policy Agent policy.

An example of Open Policy Agent authorizer configuration
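The following is a minimal sketch of such a configuration, not a definitive listing. The apiVersion value, the cluster name, the OPA URL and policy path, and the principal names are illustrative assumptions. The cache settings simply restate the defaults documented above.

```yaml
# Sketch only: apiVersion, metadata.name, url, and the principals are assumed placeholders.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ... other broker settings omitted
    authorization:
      type: opa                                   # selects KafkaAuthorizationOpa
      url: http://opa:8181/v1/data/kafka/allow    # hypothetical server and policy path
      allowOnError: false                         # deny when OPA cannot be queried
      initialCacheCapacity: 5000
      maximumCacheSize: 50000
      expireAfterMs: 3600000                      # 1 hour
      superUsers:
        - CN=client_1
        - user_2
```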

6.2.20.8. KafkaAuthorizationOpa schema properties

The type property is a discriminator that distinguishes use of the KafkaAuthorizationOpa type from KafkaAuthorizationSimple, KafkaAuthorizationKeycloak, KafkaAuthorizationCustom. It must have the value opa for the type KafkaAuthorizationOpa.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be opa. |
| url | string | The URL used to connect to the Open Policy Agent server. The URL must include the policy that the authorizer queries. This option is required. |
| allowOnError | boolean | Defines whether a Kafka client is allowed or denied by default when the authorizer fails to query the Open Policy Agent, for example, when it is temporarily unavailable. Defaults to false, which means that all actions are denied. |
| initialCacheCapacity | integer | Initial capacity of the local cache used by the authorizer to avoid querying the Open Policy Agent for every request. Defaults to 5000. |
| maximumCacheSize | integer | Maximum capacity of the local cache used by the authorizer to avoid querying the Open Policy Agent for every request. Defaults to 50000. |
| expireAfterMs | integer | The expiration of the records kept in the local cache to avoid querying the Open Policy Agent for every request. Defines how often the cached authorization decisions are reloaded from the Open Policy Agent server. In milliseconds. Defaults to 3600000. |
| tlsTrustedCertificates | CertSecretSource array | Trusted certificates for TLS connection to the OPA server. |
| superUsers | string array | List of super users: user principals that are granted unlimited access rights. |
| enableMetrics | boolean | Defines whether the Open Policy Agent authorizer plugin should provide metrics. Defaults to false. |

6.2.21. KafkaAuthorizationKeycloak schema reference

Used in: KafkaClusterSpec

The type property is a discriminator that distinguishes use of the KafkaAuthorizationKeycloak type from KafkaAuthorizationSimple, KafkaAuthorizationOpa, KafkaAuthorizationCustom. It must have the value keycloak for the type KafkaAuthorizationKeycloak.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be keycloak. |
| clientId | string | OAuth Client ID which the Kafka client can use to authenticate against the OAuth server and use the token endpoint URI. |
| tokenEndpointUri | string | Authorization server token endpoint URI. |
| tlsTrustedCertificates | CertSecretSource array | Trusted certificates for TLS connection to the OAuth server. |
| disableTlsHostnameVerification | boolean | Enable or disable TLS hostname verification. Default value is false. |
| delegateToKafkaAcls | boolean | Whether the authorization decision is delegated to the simple authorizer if it is denied by Red Hat Single Sign-On Authorization Services policies. Default value is false. |
| grantsRefreshPeriodSeconds | integer | The time between two consecutive grants refresh runs, in seconds. The default value is 60. |
| grantsRefreshPoolSize | integer | The number of threads to use to refresh grants for active sessions. More threads give more parallelism, so the job completes sooner. However, using more threads places a heavier load on the authorization server. The default value is 5. |
| superUsers | string array | List of super users: user principals that are granted unlimited access rights. |
| connectTimeoutSeconds | integer | The connect timeout in seconds when connecting to the authorization server. If not set, the effective connect timeout is 60 seconds. |
| readTimeoutSeconds | integer | The read timeout in seconds when connecting to the authorization server. If not set, the effective read timeout is 60 seconds. |
| httpRetries | integer | The maximum number of retries to attempt if an initial HTTP request fails. If not set, no retries are attempted. |
| enableMetrics | boolean | Enable or disable OAuth metrics. Default value is false. |
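
To show how the properties in this table fit together, the following is a hedged sketch of a keycloak authorization section. The apiVersion value, the cluster name, the client ID, the token endpoint host and realm, the secret name, and the principal name are all hypothetical placeholders. Adapt them to your authorization server.

```yaml
# Sketch only: every concrete value below is an assumed placeholder.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ... other broker settings omitted
    authorization:
      type: keycloak                    # selects KafkaAuthorizationKeycloak
      clientId: kafka                   # hypothetical OAuth client ID
      tokenEndpointUri: https://sso.example.com/auth/realms/my-realm/protocol/openid-connect/token
      tlsTrustedCertificates:
        - secretName: oauth-server-cert # hypothetical Secret holding the CA certificate
          certificate: ca.crt
      delegateToKafkaAcls: false
      grantsRefreshPeriodSeconds: 60
      grantsRefreshPoolSize: 5
      superUsers:
        - CN=client_1
```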

6.2.22. KafkaAuthorizationCustom schema reference

Used in: KafkaClusterSpec

Full list of KafkaAuthorizationCustom schema properties

To use custom authorization in AMQ Streams, you can configure your own Authorizer plugin to define Access Control Lists (ACLs).

ACLs allow you to define which users have access to which resources at a granular level.

Configure the Kafka custom resource to use custom authorization. Set the type property in the authorization section to the value custom, and set the following properties.

The custom authorizer must implement the org.apache.kafka.server.authorizer.Authorizer interface, and must support configuration of super users through the super.users configuration property.

6.2.22.1. authorizerClass

(Required) Java class that implements the org.apache.kafka.server.authorizer.Authorizer interface to support custom ACLs.

6.2.22.2. superUsers

A list of user principals treated as super users, so that they are always allowed without querying ACL rules.

You can add configuration for initializing the custom authorizer using Kafka.spec.kafka.config.

An example of custom authorization configuration under Kafka.spec
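The following is a minimal sketch of such a configuration, not a definitive listing. The apiVersion value, the cluster name, the class name io.mycompany.CustomAuthorizer, the principal names, and the two authorization.custom.* keys under config are hypothetical placeholders for whatever your authorizer expects.

```yaml
# Sketch only: the class name, principals, and config keys are assumed placeholders.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ... other broker settings omitted
    authorization:
      type: custom                                   # selects KafkaAuthorizationCustom
      authorizerClass: io.mycompany.CustomAuthorizer # must be on the broker classpath
      superUsers:
        - CN=client_1
        - user_2
    config:
      # Hypothetical initialization properties read by the custom authorizer
      authorization.custom.property1: value1
      authorization.custom.property2: value2
```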

In addition to the Kafka custom resource configuration, the JAR file containing the custom authorizer class along with its dependencies must be available on the classpath of the Kafka broker.

The AMQ Streams Maven build process provides a mechanism to add custom third-party libraries to the generated Kafka broker container image by adding them as dependencies in the pom.xml file under the docker-images/kafka/kafka-thirdparty-libs directory. The directory contains different folders for different Kafka versions. Choose the appropriate folder. Before modifying the pom.xml file, the third-party library must be available in a Maven repository, and that Maven repository must be accessible to the AMQ Streams build process.

The super.users configuration option in the config property in Kafka.spec.kafka is ignored. Designate super users in the authorization property instead. For more information, see Kafka broker configuration.

Custom authorization can make use of group membership information extracted from the JWT token during authentication, provided that you use oauth authentication and configure the groupsClaim configuration attribute. The groups are available on the OAuthKafkaPrincipal object during the authorize() call.

6.2.22.3. KafkaAuthorizationCustom schema properties

The type property is a discriminator that distinguishes use of the KafkaAuthorizationCustom type from KafkaAuthorizationSimple, KafkaAuthorizationOpa, KafkaAuthorizationKeycloak. It must have the value custom for the type KafkaAuthorizationCustom.

| Property | Property type | Description |
|---|---|---|
| type | string | Must be custom. |
| authorizerClass | string | Authorization implementation class, which must be available on the classpath. |
| superUsers | string array | List of super users, which are user principals with unlimited access rights. |
| supportsAdminApi | boolean | Indicates whether the custom authorizer supports the APIs for managing ACLs using the Kafka Admin API. Defaults to false. |

6.2.23. Rack schema reference

Used in: KafkaBridgeSpec, KafkaClusterSpec, KafkaConnectSpec, KafkaMirrorMaker2Spec

Full list of Rack schema properties

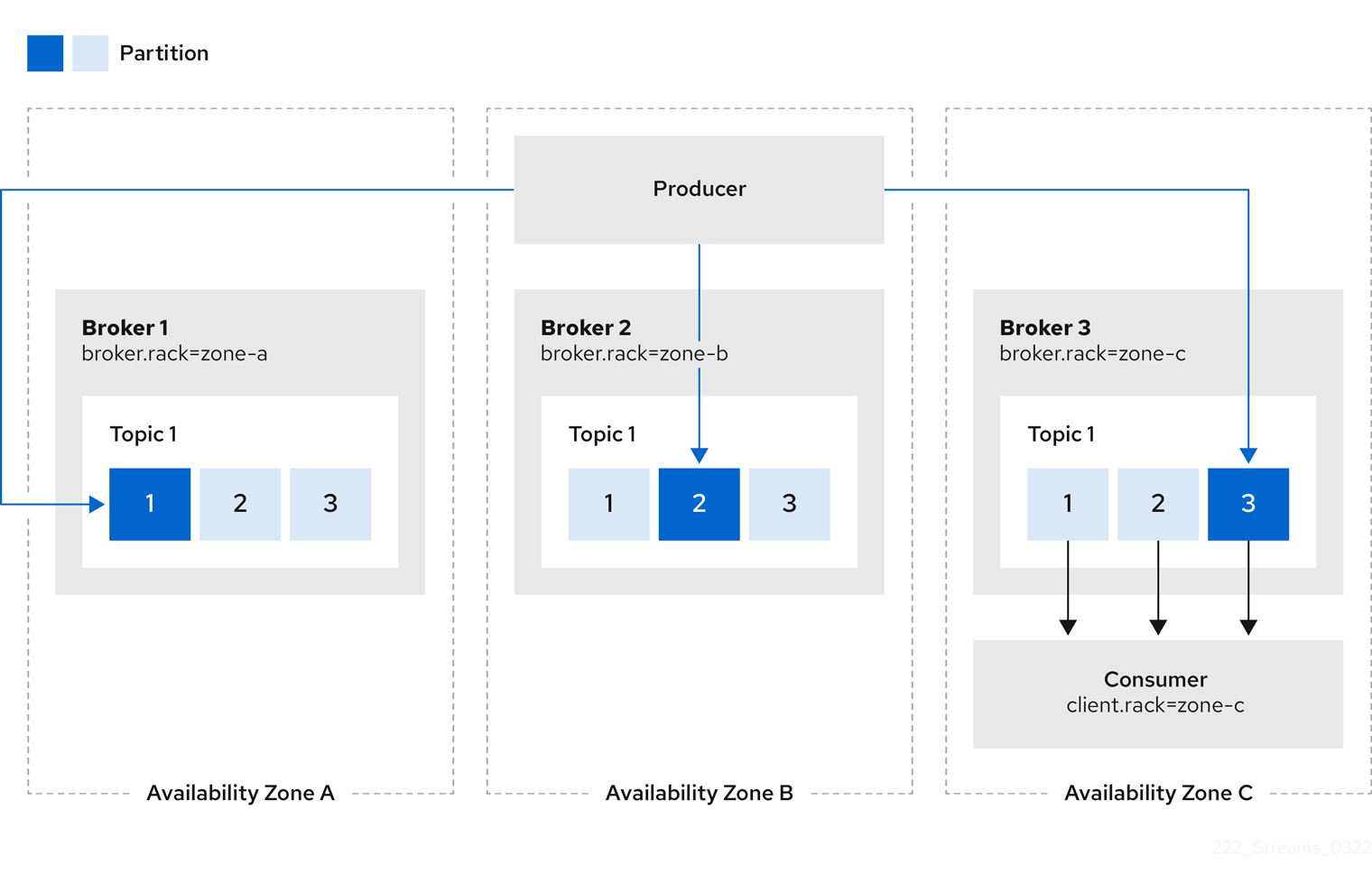

The rack option configures rack awareness. A rack can represent an availability zone, data center, or an actual rack in your data center. The rack is configured through a topologyKey. topologyKey identifies a label on OpenShift nodes that contains the name of the topology in its value. An example of such a label is topology.kubernetes.io/zone (or failure-domain.beta.kubernetes.io/zone on older OpenShift versions), which contains the name of the availability zone in which the OpenShift node runs. You can configure your Kafka cluster to be aware of the rack in which it runs, and enable additional features such as spreading partition replicas across different racks or consuming messages from the closest replicas.

For more information about OpenShift node labels, see Well-Known Labels, Annotations and Taints. Consult your OpenShift administrator regarding the node label that represents the zone or rack into which the node is deployed.

6.2.23.1. Spreading partition replicas across racks

When rack awareness is configured, AMQ Streams sets the broker.rack configuration for each Kafka broker. The broker.rack configuration assigns a rack ID to each broker. When broker.rack is configured, Kafka brokers spread partition replicas across as many different racks as possible. When replicas are spread across multiple racks, the probability that multiple replicas fail at the same time is lower than if they were in the same rack. Spreading replicas improves resiliency, and is important for availability and reliability. To enable rack awareness in Kafka, add the rack option to the .spec.kafka section of the Kafka custom resource.

Example rack configuration for Kafka
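The following is a minimal sketch of such a configuration. The apiVersion value and the cluster name are illustrative assumptions. The topologyKey uses the topology.kubernetes.io/zone label mentioned above, so substitute the label that your OpenShift nodes actually carry.

```yaml
# Sketch only: apiVersion and metadata.name are assumed placeholders.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ... other broker settings omitted
    rack:
      # Node label whose value names the zone or rack of each node
      topologyKey: topology.kubernetes.io/zone
```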

The rack in which brokers are running can change in some cases when the pods are deleted or restarted. As a result, the replicas running in different racks might then share the same rack. Use Cruise Control and the KafkaRebalance resource with the RackAwareGoal to make sure that replicas remain distributed across different racks.