教程

Red Hat OpenShift Service on AWS 指南

摘要

第 1 章 教程概述

使用红帽专家的逐步教程,充分利用您的受管 OpenShift 集群。

此内容由红帽专家编写,但尚未测试每个支持的配置。

第 2 章 教程:Red Hat OpenShift Service on AWS 激活和帐户链接

本教程介绍了在部署第一个集群前激活 Red Hat OpenShift Service on AWS 并链接到 AWS 帐户的过程。

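If you have received a private offer for the product, follow the instructions provided with the private offer before you follow this tutorial. A private offer is designed either for a product that is already activated, in which case it replaces the active subscription, or for a first-time activation.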

2.1. 先决条件

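- Log in to the Red Hat account that you want to associate with the AWS account that activates the Red Hat OpenShift Service on AWS product subscription.

- The AWS account used for service billing can be associated with only a single Red Hat account. Typically, an AWS payer account is the one that subscribes to Red Hat OpenShift Service on AWS and is used for account linking and billing.

- All team members who belong to the same Red Hat organization can use the linked AWS account for service billing when they create Red Hat OpenShift Service on AWS clusters.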

2.2. 订阅启用和 AWS 帐户设置

点 Get started 按钮在 AWS 控制台页面中激活 Red Hat OpenShift Service on AWS 产品:

图 2.1. 开始使用

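If you previously activated Red Hat OpenShift Service on AWS but did not complete the process, click the button and complete the account linking as described in the following steps.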

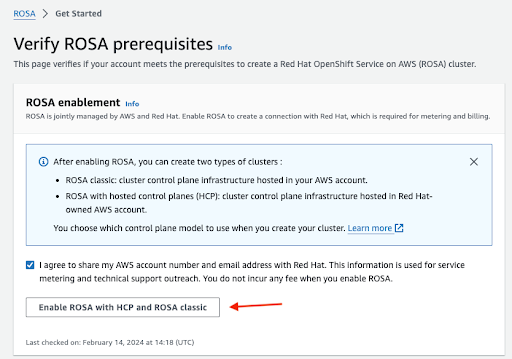

确认您希望您的联系信息与红帽共享并启用该服务:

图 2.2. 启用 Red Hat OpenShift Service on AWS

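- Enabling the service in this step does not incur charges. The connection is made for billing and metering, which take place only after you deploy your first cluster. This might take a few minutes.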

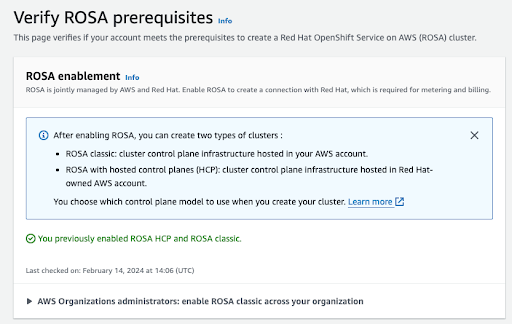

过程完成后,您会看到确认:

图 2.3. Red Hat OpenShift Service on AWS 启用确认

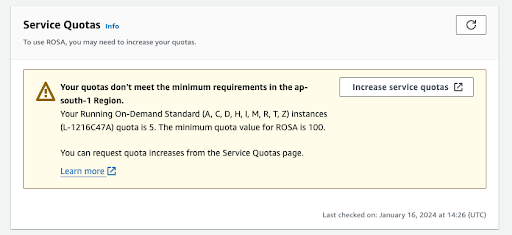

此验证页面中的其他部分显示额外先决条件的状态。如果没有满足任何这些先决条件,则会显示对应的消息。以下是所选区域中配额不足的示例:

图 2.4. 服务配额

- 点 增加服务配额 按钮,或使用 了解更多 链接获取有关如何管理服务配额的更多信息。如果配额不足,请注意配额是特定于区域的配额。您可以使用 Web 控制台右上角的区域切换器来重新运行您感兴趣的任何区域的配额检查,然后根据需要提交服务配额增加请求。

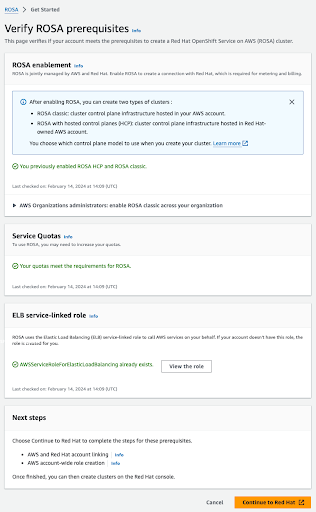

如果满足所有先决条件,该页面类似如下:

图 2.5. 验证 Red Hat OpenShift Service on AWS 的先决条件

The ELB service-linked role is created for you automatically. You can click any of the small blue Info links to get contextual help and resources.

2.3. AWS 和红帽帐户及订阅链接

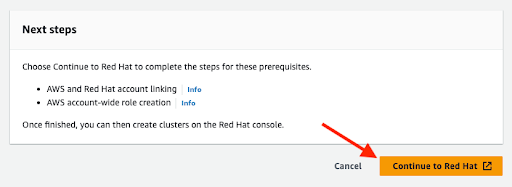

Click the orange Continue to Red Hat button to proceed with account linking:

Figure 2.6. Continue to Red Hat

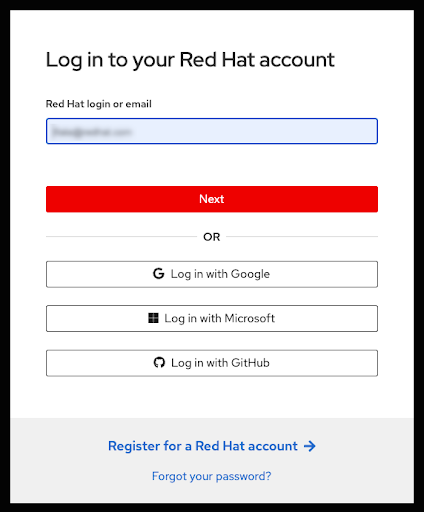

如果您还没有在当前浏览器的会话中登录到您的红帽帐户,则需要登录到您的帐户:

Note: Your AWS account must be linked to a single Red Hat organization.

图 2.7. 登录到您的红帽帐户

- 您还可以注册新的红帽帐户,或在此页面上重置密码。

- 登录到您要与 AWS 帐户关联的红帽帐户,该帐户已激活了 Red Hat OpenShift Service on AWS 产品订阅。

- 用于服务账单的 AWS 帐户只能与单个红帽帐户关联。通常,AWS 付费帐户是用于订阅 AWS 上的 Red Hat OpenShift Service,用于帐户链接和计费。

- 所有属于同一红帽机构的团队成员,可在创建 Red Hat OpenShift Service on AWS 集群时使用链接的 AWS 帐户进行服务账单。

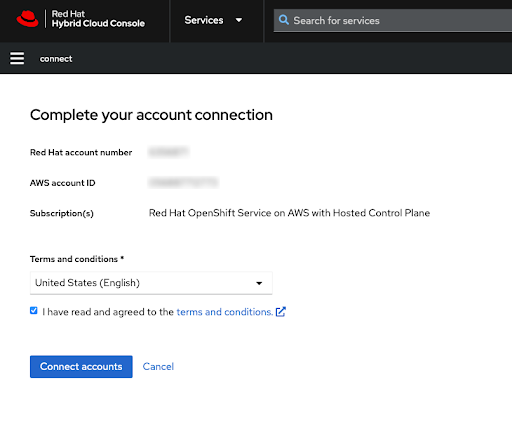

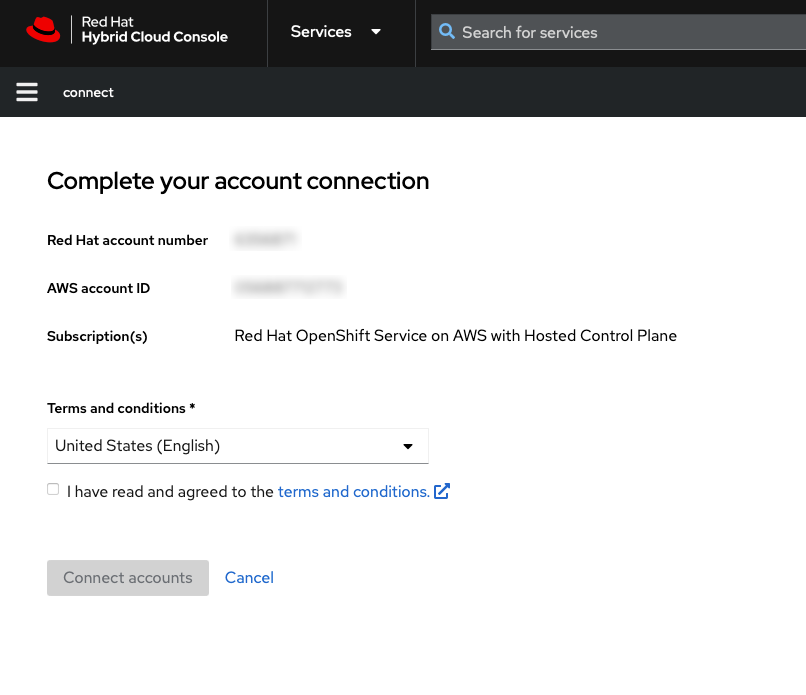

在查看条款和条件后完成红帽帐户链接:

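Note: This step is available only if the AWS account was not previously linked to any Red Hat account.

This step is skipped if the AWS account is already linked to the Red Hat account that the user is logged in to.

If the AWS account is linked to a different Red Hat account, an error is displayed. See Correcting Billing Account Information for HCP clusters for troubleshooting.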

图 2.8. 完成您的帐户连接

红帽和 AWS 帐号都会在此屏幕中显示。

如果您同意服务条款,请单击 Connect account 按钮。

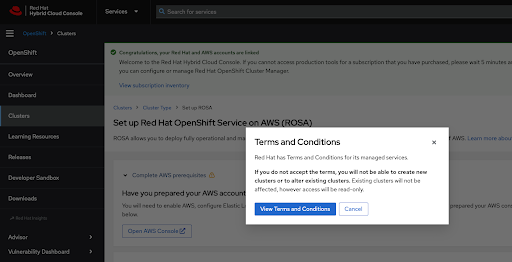

如果您是首次使用 Red Hat Hybrid Cloud 控制台,在能够创建第一个集群前,您需要同意常规受管服务条款和条件:

图 2.9. 条款和条件

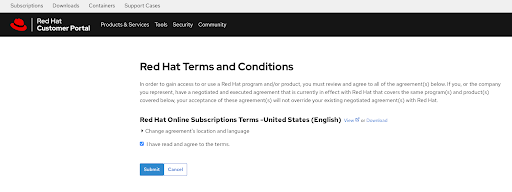

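Additional terms that need to be reviewed and accepted are shown after you click the View Terms and Conditions button:

Figure 2.10. Red Hat terms and conditions

After you have reviewed any additional terms shown at this point, submit your agreement.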

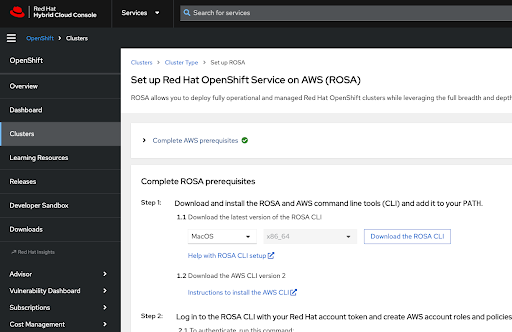

The Hybrid Cloud Console confirms that the AWS account setup is complete and lists the prerequisites for cluster deployment:

Figure 2.11. Complete Red Hat OpenShift Service on AWS prerequisites

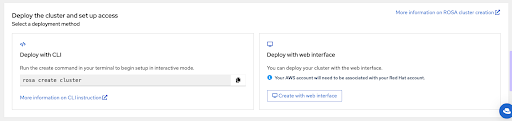

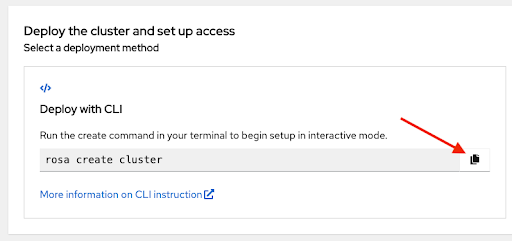

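The last section of this page shows the cluster deployment options, either using the rosa CLI or through the web console:

Figure 2.12. Deploy the cluster and set up access

Ensure that you have the most recent ROSA command-line interface (CLI) and AWS CLI installed and that you have completed the Red Hat OpenShift Service on AWS prerequisites covered in the previous section. For more information, see Help with ROSA CLI setup and Instructions to install the AWS CLI.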

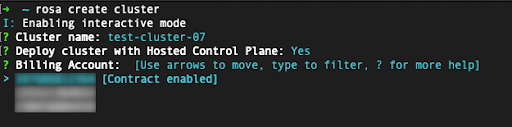

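Initiate the cluster deployment using the rosa create cluster command. You can click the copy button on the Set up Red Hat OpenShift Service on AWS (ROSA) console page and paste the command in your terminal. This launches the cluster creation process in interactive mode:

Figure 2.13. Deploy the cluster and set up access

- To use a custom AWS profile, one of the non-default profiles specified in your ~/.aws/credentials file, add the --profile <profile_name> selector to the rosa create cluster command so that the command looks like rosa create cluster --profile stage. If no AWS CLI profile is specified using this option, the default AWS CLI profile determines the AWS infrastructure profile into which the cluster is deployed. The billing AWS profile is selected in one of the following steps.

When deploying a Red Hat OpenShift Service on AWS cluster, the billing AWS account needs to be specified: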

图 2.14. 指定 Billing 帐户

- 只有链接到用户登录的红帽帐户的 AWS 帐户才会显示。

- 指定的 AWS 帐户使用 Red Hat OpenShift Service on AWS 服务。

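An indicator shows whether the Red Hat OpenShift Service on AWS contract is enabled for a given AWS billing account.

- If you select an AWS billing account that shows the Contract enabled label, on-demand consumption rates are charged only after the capacity of your pre-paid contract is consumed.

- AWS accounts without the Contract enabled label are charged the applicable on-demand consumption rates.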
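As an illustration of the --profile selector described earlier in this section, the following minimal sketch assembles the rosa create cluster command line with or without a named profile. The helper name rosa_create_cmd is hypothetical and not part of the rosa CLI; it only prints the command so that it can be reviewed before it is run.

```shell
# Hypothetical helper: print the rosa command for an optional AWS CLI profile.
# $1 = profile name (leave empty to use the default AWS CLI profile).
rosa_create_cmd() {
  if [ -n "${1:-}" ]; then
    echo "rosa create cluster --profile $1"
  else
    echo "rosa create cluster"
  fi
}

rosa_create_cmd stage   # a non-default profile from ~/.aws/credentials
rosa_create_cmd         # the default profile
```

Printing the command first makes it easy to confirm which AWS infrastructure profile the cluster would be deployed with.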

其他资源

- 详细的集群部署步骤不在本教程范围内。如需有关如何使用 CLI 完成 Red Hat OpenShift Service on AWS 集群的详细信息,请参阅使用默认选项创建 Red Hat OpenShift Service on AWS 集群。

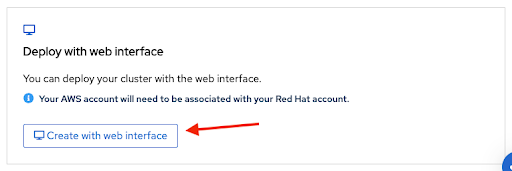

通过在简介 设置 Red Hat OpenShift Service on AWS 页面的底部部分选择第二个选项,可以使用 Web 控制台创建集群:

图 2.15. 使用 Web 界面部署

注意在开始 Web 控制台部署过程前,请完成先决条件。

某些任务(如创建帐户角色)需要

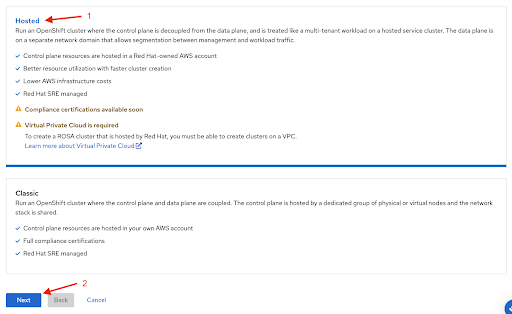

rosaCLI。如果您要首次部署 Red Hat OpenShift Service on AWS,请在启动 Web 控制台部署步骤前执行此 CLI 步骤,直到运行rosa whoami命令为止。使用 Web 控制台创建 Red Hat OpenShift Service on AWS 集群时的第一步是 control plane 选择。在点 Next 按钮前,确保选择了 Hosted 选项:

图 2.16. 选择托管选项

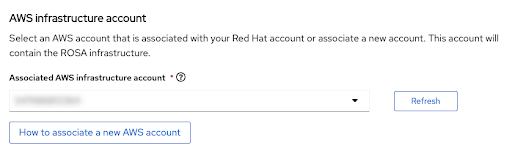

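The next step, Accounts and roles, lets you specify the infrastructure AWS account into which the Red Hat OpenShift Service on AWS cluster is deployed and where its resources are consumed and managed:

Figure 2.17. AWS infrastructure account

- If you do not see the account into which you want to deploy the Red Hat OpenShift Service on AWS cluster, click How to associate a new AWS account for detailed information on how to create or link account roles for this association.

- The rosa CLI is used for this.

- If you use multiple AWS accounts and have their profiles configured for the AWS CLI, you can use the --profile selector to specify the AWS profile when working with the rosa CLI commands.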

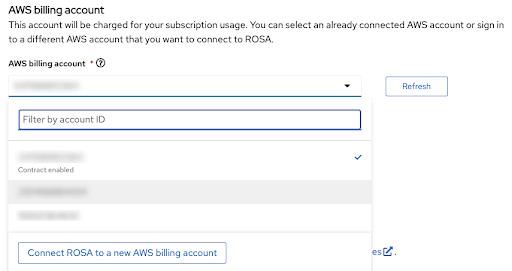

The billing AWS account is selected in the section that immediately follows:

图 2.18. AWS 账单帐户

- 只有链接到用户登录的红帽帐户的 AWS 帐户才会显示。

- 指定的 AWS 帐户使用 Red Hat OpenShift Service on AWS 服务。

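An indicator shows whether the Red Hat OpenShift Service on AWS contract is enabled for a given AWS billing account.

- If you select an AWS billing account that shows the Contract enabled label, on-demand consumption rates are charged only after the capacity of your pre-paid contract is consumed.

- AWS accounts without the Contract enabled label are charged the applicable on-demand consumption rates.

The steps that follow the billing AWS account selection are beyond the scope of this tutorial.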

其他资源

- 有关使用 CLI 创建集群的详情,请参考使用 CLI 创建 Red Hat OpenShift Service on AWS 集群。

- 如需有关如何使用 Web 控制台完成集群部署的更多详细信息,请参阅此学习路径。

第 3 章 教程:Red Hat OpenShift Service on AWS 私有提供验收和共享

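This guide describes how to accept a private offer for Red Hat OpenShift Service on AWS and how to ensure that all team members can use the private offer for the clusters they provision.

Red Hat OpenShift Service on AWS costs are composed of the AWS infrastructure costs and the Red Hat OpenShift Service on AWS service costs. AWS infrastructure costs, such as the EC2 instances that run the needed workloads, are charged to the AWS account where the infrastructure is deployed. Red Hat OpenShift Service on AWS service costs are charged to the AWS account specified as the "AWS billing account" when deploying a cluster.

The cost components can be billed to different AWS accounts. For a detailed description of how the service cost and the AWS infrastructure costs are calculated, see the Red Hat OpenShift Service on AWS Pricing page.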

3.1. 接受私有服务

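When you get a private offer for Red Hat OpenShift Service on AWS, you are provided with a unique URL that is accessible only by a specific AWS account ID that was specified by the seller.

Note: Verify that you are logged in using the AWS account that was specified as the buyer. Attempting to access the offer using another AWS account produces a "page not found" error message, as shown in Figure 3.11 in the troubleshooting section below.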

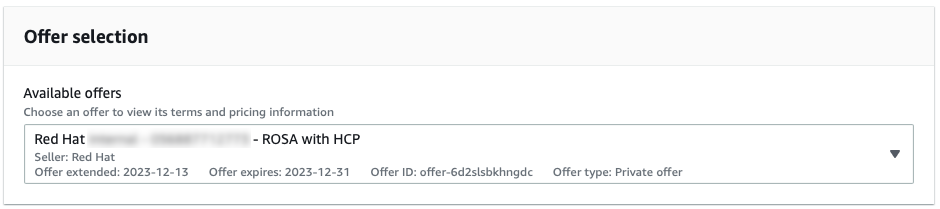

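Figure 3.1 shows the offer selection drop-down menu with a regular private offer preselected. This type of offer can be accepted only if Red Hat OpenShift Service on AWS was not activated before using the public offer or another private offer.

Figure 3.1. Regular private offer

Figure 3.2 shows a private offer that was created for an AWS account that previously activated Red Hat OpenShift Service on AWS using the public offer. It shows the product name and the selected private offer labeled as "Upgrade", which replaces the currently running contract for Red Hat OpenShift Service on AWS.

Figure 3.2. Private offer selection screen

The drop-down menu allows selecting between multiple offers, if available. Figure 3.3 shows the previously activated public offer together with the newly provided agreement-based offer, which is labeled as "Upgrade".

Figure 3.3. Private offer selection dropdown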

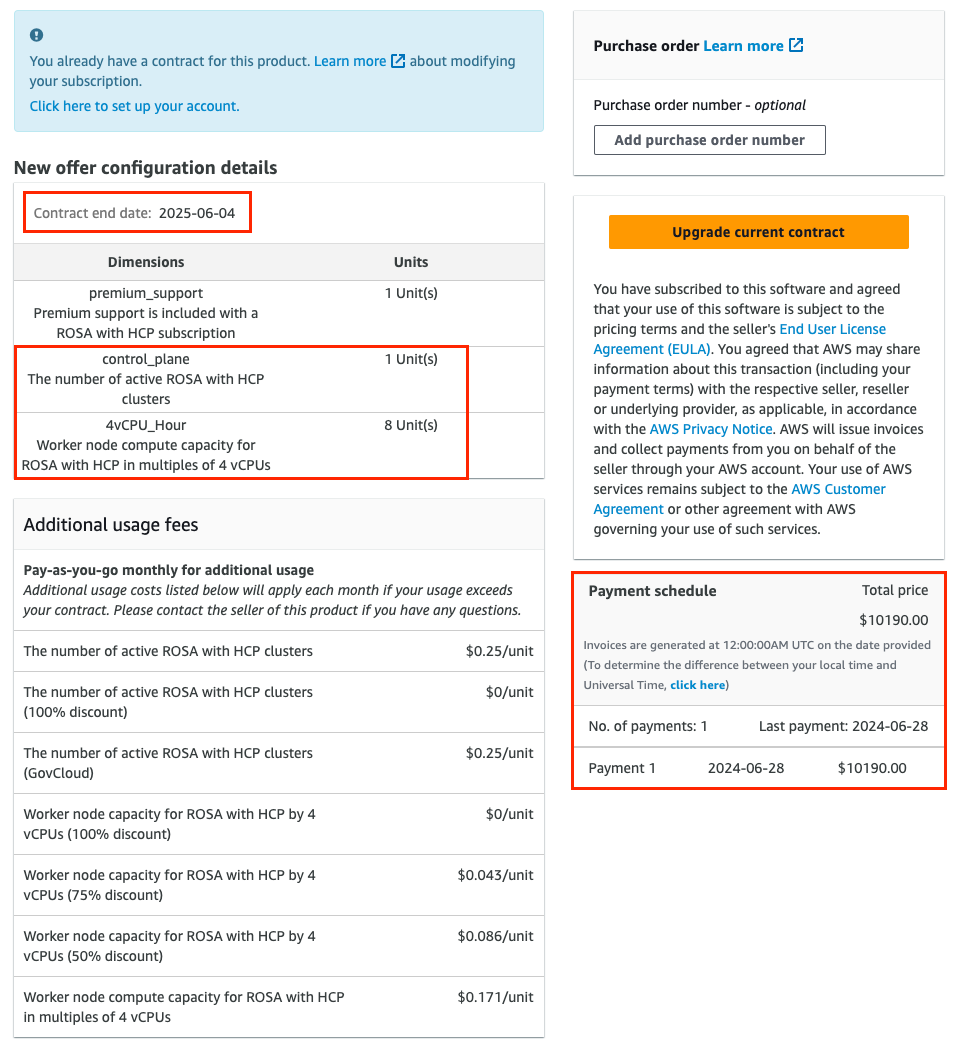

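Verify that your offer configuration is selected. Figure 3.4 shows the bottom part of the offer page with the offer details.

Note: Review the contract end date, the number of units included with the offer, and the payment schedule. In this example, 1 cluster is included, along with nodes that use up to 4 vCPUs.

Figure 3.4. Private offer details

Optional: You can add your own purchase order (PO) number to the subscription being purchased so that it is included on your subsequent AWS invoices. Also check the Additional usage fees that are charged for any usage above the scope of the New offer configuration details.

Note: Private offers have several available configurations.

- The private offer you are accepting might be set up with a fixed future start date.

- If you do not have another active Red Hat OpenShift Service on AWS subscription, either a public offer or an older private offer entitlement, at the time of accepting the private offer, accept the private offer itself and continue with the account linking and cluster deployment steps after the specified service start date.

You must have an active Red Hat OpenShift Service on AWS entitlement to complete these steps. Service start dates are always reported in the UTC time zone.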

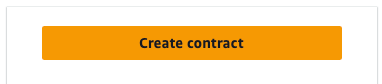

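Create or upgrade your contract.

For private offers accepted by an AWS account that has not yet activated Red Hat OpenShift Service on AWS and is creating the first contract for the service, click the Create contract button.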

图 3.5. 创建合同按钮

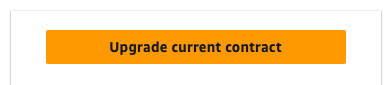

For agreement-based offers, click the Upgrade current contract button shown in Figures 3.4 and 3.6.

图 3.6. 升级合同按钮

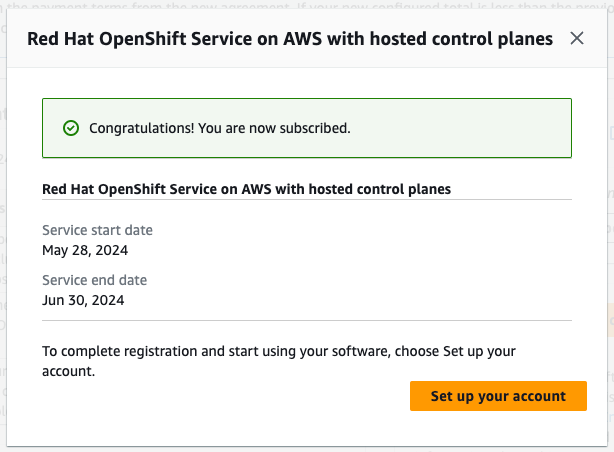

单击 Confirm。

图 3.7. 私有提供接受确认窗口

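If the service start date of the accepted private offer is set to immediately follow the offer acceptance, click the Set up your account button in the confirmation modal window.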

图 3.8. 订阅确认

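If the accepted private offer has a future start date specified, return to the private offer page after the service start date and click the Set up your account button to proceed with the Red Hat and AWS account linking.

Note: With no active agreement in place, the account linking described below is not triggered, and the account setup process can be done only after the service start date. Service start dates are always in the UTC time zone.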

3.2. 共享私有产品

点击上一步中的 Set up your account 按钮可进入 AWS 和红帽帐户链接步骤。目前,您已使用接受提供的 AWS 帐户登录。如果您没有使用 Red Hat 帐户登录,系统会提示您这样做。

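The Red Hat OpenShift Service on AWS entitlement is shared with other team members through your Red Hat account. All existing users in the same Red Hat organization can select the billing AWS account that accepted the private offer by following the steps described above. When logged in as a Red Hat organization administrator, you can manage users in your Red Hat organization and invite or create new users.

Note: Red Hat OpenShift Service on AWS private offers cannot be shared with AWS linked accounts through AWS License Manager.

- Add any users that you want to deploy Red Hat OpenShift Service on AWS clusters. For more details about Red Hat account user management tasks, check this user management FAQ.

- Verify that the Red Hat account you are logged in to includes all users who are meant to deploy Red Hat OpenShift Service on AWS clusters and benefit from the accepted private offer.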

验证红帽帐户号和 AWS 帐户 ID 是要链接的所需帐户。这个链接是唯一的,红帽帐户只能与一个 AWS (计费)帐户连接。

图 3.9. AWS 和红帽帐户连接

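If you want to link the AWS account with a Red Hat account other than the one shown in Figure 3.9, log out of the Red Hat Hybrid Cloud Console before connecting the accounts, and repeat the account setup step by returning to the private offer URL that is already accepted.

An AWS account can be connected with only a single Red Hat account. After the Red Hat and AWS accounts are connected, users cannot change the connection. If a change is needed, the user must create a support ticket.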

- 同意条款和条件,然后单击 连接帐户。

3.3. AWS 账单帐户选择

- 在 AWS 集群上部署 Red Hat OpenShift Service 时,请验证最终用户是否选择了接受私有产品的 AWS 账单帐户。

当使用 Web 界面在 AWS 上部署 Red Hat OpenShift Service 时,相关的 AWS 基础架构帐户通常设置为正在创建的集群的管理员使用的 AWS 帐户 ID。

- 这可以与账单 AWS 帐户相同。

AWS 资源部署到此帐户中,并相应地处理与这些资源关联的所有计费。

图 3.10. 在 Red Hat OpenShift Service on AWS 集群部署期间选择基础架构和账单 AWS 帐户

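- The AWS billing account drop-down in the preceding screenshot should be set to the AWS account that accepted the private offer, so that the purchased quota is used by the cluster being created. If different AWS accounts are selected in the infrastructure and billing roles, the blue informational note visible in Figure 3.10 is shown.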

3.4. 故障排除

This section covers the most frequent issues associated with private offer acceptance and Red Hat account linking.

3.4.1. 使用其他 AWS 帐户访问私有服务

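If you try to access the private offer while logged in under an AWS account ID that is not defined in the offer and you see the message shown in Figure 3.11, verify that you are logged in as the desired AWS billing account.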

图 3.11. 使用私有提供 URL 时的 HTTP 404 错误

- 如果您需要将私有产品扩展到另一个 AWS 帐户,请联系销售人员。

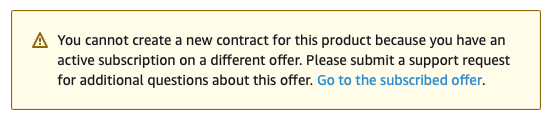

3.4.2. 由于有效订阅,无法接受私人优惠

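If you try to access a private offer that was created for a first-time Red Hat OpenShift Service on AWS activation while you already have the service activated using another public or private offer, and you see the following notice, contact the seller who provided you with the offer.

The seller can provide you with a new offer that seamlessly replaces your current agreement, without a need to cancel your previous subscription.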

图 3.12. 现有订阅可防止私有提供接受

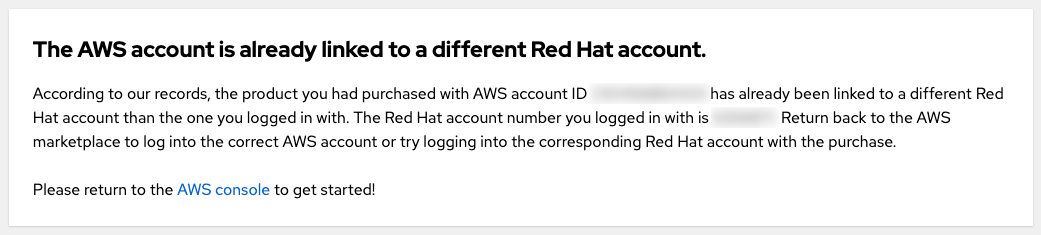

3.4.3. AWS 帐户已链接到不同的红帽帐户

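If you see the error message "AWS account is already linked to a different Red Hat account" when you try to connect the AWS account that accepted the private offer, the AWS account is already connected to a different Red Hat user.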

图 3.13. AWS 帐户已链接到不同的红帽帐户

您可以使用另一个红帽帐户或其他 AWS 帐户登录。

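- However, because this guide pertains to private offers, it assumes that you are logged in with the AWS account that was specified as the buyer and that already accepted the private offer, so that account is intended to be used as the billing account. Logging in as another AWS account is not expected after a private offer was accepted.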

- 您仍然可以使用已连接到接受私有产品的 AWS 帐户的另外一个红帽用户登录。属于同一红帽机构的其他红帽用户能够在创建集群时将链接的 AWS 帐户用作 Red Hat OpenShift Service on AWS 账单帐户,如图 10 所示。

- 如果您认为现有帐户链接可能不正确,请参阅下面的"我的团队成员属于不同的红帽机构"问题,了解如何继续操作。

3.4.4. 我的团队成员属于不同的红帽机构

- AWS 帐户只能连接到单个红帽帐户。任何要创建集群的用户并从授予此 AWS 帐户的私有提供中受益都需要位于同一红帽帐户中。这可以通过将用户移至同一红帽帐户并创建新的红帽用户来实现。

3.4.5. 创建集群时选择了不正确的 AWS 账单帐户

- 如果用户选择了不正确的 AWS 账单帐户,修复它的最快方法是删除集群并创建一个新集群,同时选择正确的 AWS 账单帐户。

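- If this is a production cluster that cannot be easily deleted, contact Red Hat Support to change the billing account for the existing cluster. Expect some turnaround time for this to be resolved.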

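Chapter 4. Tutorial: Deploying Red Hat OpenShift Service on AWS with a custom DNS resolver

A custom DHCP option set lets you customize your VPC with your own DNS server, domain name, and more. Red Hat OpenShift Service on AWS clusters support using custom DHCP option sets. By default, Red Hat OpenShift Service on AWS clusters require the "domain name servers" option to be set to AmazonProvidedDNS to ensure successful cluster creation and operation. Customers who want to use custom DNS servers for DNS resolution must do additional configuration to ensure successful cluster creation and operation.

In this tutorial, we configure our DNS server to forward DNS lookups for specific DNS zones (detailed below) to an Amazon Route 53 Inbound Resolver.

This tutorial uses the open-source BIND DNS server (named) to demonstrate the configuration necessary to forward DNS lookups to an Amazon Route 53 Inbound Resolver located in the VPC into which you plan to deploy a Red Hat OpenShift Service on AWS cluster. Refer to the documentation of your preferred DNS server for how to configure zone forwarding.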

4.1. 先决条件

- ROSA CLI (rosa)

- AWS CLI (aws)

- A manually created AWS VPC

- A DHCP option set that is configured to point to a custom DNS server and is set as the default for your VPC

4.2. 设置您的环境

Configure the following environment variables:

$ export VPC_ID=<vpc_ID>
$ export REGION=<region>
$ export VPC_CIDR=<vpc_CIDR>

Ensure that all fields output correctly before moving to the next section:

$ echo "VPC ID: ${VPC_ID}, VPC CIDR Range: ${VPC_CIDR}, Region: ${REGION}"
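The check above relies on reading the echoed values by eye. As a minimal sketch, assuming a POSIX shell, the same verification can be made to fail loudly when a variable is empty. The helper name require_vars is hypothetical and is not part of the ROSA or AWS tooling.

```shell
# Hypothetical helper: report every named variable that is unset or empty.
# Returns 0 when all are set, 1 otherwise.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

require_vars VPC_ID REGION VPC_CIDR || echo "Set the variables above before continuing."
```

Running this before the resolver steps avoids passing an empty --vpc-id or --region to later aws commands.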

4.3. 创建 Amazon Route 53 Inbound Resolver

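Use the following procedure to deploy an Amazon Route 53 Inbound Resolver into the VPC that you plan to deploy the cluster into.

In this example, we deploy the Amazon Route 53 Inbound Resolver into the same VPC that the cluster will use. If you want to deploy it into a separate VPC, you must manually associate the private hosted zones detailed below once cluster creation has started. You cannot associate the zones before the cluster creation process begins. Failure to associate the private hosted zones during the cluster creation process results in cluster creation failures.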

Create a security group and allow access to ports 53/tcp and 53/udp from the VPC:

$ SG_ID=$(aws ec2 create-security-group --group-name rosa-inbound-resolver \
    --description "Security group for ROSA inbound resolver" \
    --vpc-id ${VPC_ID} --region ${REGION} --query GroupId --output text)
$ aws ec2 authorize-security-group-ingress --group-id ${SG_ID} --protocol tcp --port 53 --cidr ${VPC_CIDR} --region ${REGION}
$ aws ec2 authorize-security-group-ingress --group-id ${SG_ID} --protocol udp --port 53 --cidr ${VPC_CIDR} --region ${REGION}

Create an Amazon Route 53 Inbound Resolver in your VPC.

Note: The resolver creation command attaches Amazon Route 53 Inbound Resolver endpoints to all subnets in the provided VPC using dynamically allocated IP addresses. If you prefer to specify the subnets or IP addresses manually, use the alternative form of the command, replacing <subnet_ID> with the subnet IDs and <endpoint_IP> with the static IP addresses that you want the inbound resolver endpoints added to.

Get the IP addresses of your inbound resolver endpoints to configure in your DNS server configuration:

$ aws route53resolver list-resolver-endpoint-ip-addresses \
    --resolver-endpoint-id ${RESOLVER_ID} \
    --region=${REGION} \
    --query 'IpAddresses[*].Ip'

Example output

[
    "10.0.45.253",
    "10.0.23.131",
    "10.0.148.159"
]
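The resolver creation step in this section sets the RESOLVER_ID variable that the lookup above depends on. The following is a minimal sketch of how that command might be assembled with aws route53resolver create-resolver-endpoint. The helper name build_ip_args, the SUBNET_IDS variable, the placeholder subnet IDs, and the creator request ID format are assumptions for illustration. The command is printed rather than executed so that it can be reviewed first.

```shell
# Hypothetical helper: turn subnet IDs into the SubnetId=... arguments
# that the --ip-addresses option expects (one endpoint per subnet).
build_ip_args() {
  for subnet in "$@"; do
    printf 'SubnetId=%s ' "$subnet"
  done
}

# Assumption: SUBNET_IDS lists the subnets of ${VPC_ID}; placeholders are used here.
SUBNET_IDS="${SUBNET_IDS:-subnet-aaa subnet-bbb}"
IP_ARGS=$(build_ip_args $SUBNET_IDS)

# Print the command for review; remove the leading echo and wrap it in
# RESOLVER_ID=$(...) to capture the endpoint ID when running it for real.
echo aws route53resolver create-resolver-endpoint \
  --name rosa-inbound-resolver \
  --creator-request-id "rosa-$(date +%Y-%m-%d)" \
  --security-group-ids "${SG_ID:-<sg_ID>}" \
  --direction INBOUND \
  --ip-addresses $IP_ARGS \
  --region "${REGION:-<region>}" \
  --query ResolverEndpoint.Id --output text
```

To pin static addresses, each argument would take the form SubnetId=<subnet_ID>,Ip=<endpoint_IP> instead.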

4.4. 配置 DNS 服务器

使用以下步骤配置 DNS 服务器,将必要的私有托管区转发到 Amazon Route 53 Inbound Resolver。

4.4.1. Red Hat OpenShift Service on AWS

Red Hat OpenShift Service on AWS 集群需要您为两个私有托管区配置 DNS 转发:

- <cluster-name>.hypershift.local

- rosa.<domain-prefix>.<unique-ID>.p3.openshiftapps.com

这些 Amazon Route 53 私有托管区在集群创建过程中创建。cluster-name 和 domain-prefix 是客户指定的值,但 unique-ID 在集群创建过程中随机生成,且无法预先选择。因此,您必须在为 p3.openshiftapps.com 私有托管区配置转发前等待集群创建过程开始。

Before you create your cluster, configure your DNS server to forward all DNS requests for <cluster-name>.hypershift.local to your Amazon Route 53 Inbound Resolver endpoints. For BIND DNS servers, edit your /etc/named.conf file in your preferred text editor and add a new forward zone for that domain.

- Create your cluster.

After your cluster has begun the creation process, locate the newly created private hosted zone:

$ aws route53 list-hosted-zones-by-vpc \
    --vpc-id ${VPC_ID} \
    --vpc-region ${REGION} \
    --query 'HostedZoneSummaries[*].Name' \
    --output table

Note: It might take a few minutes for the cluster creation process to create the private hosted zones in Route 53. If you do not see a p3.openshiftapps.com domain, wait a few minutes and run the command again.

After you know the unique ID of the cluster domain, configure your DNS server to forward all DNS requests for rosa.<domain-prefix>.<unique-ID>.p3.openshiftapps.com to your Amazon Route 53 Inbound Resolver endpoints. For BIND DNS servers, edit your /etc/named.conf file in your preferred text editor and add a new forward zone for that domain.
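Both forwarding steps in this section add a forward-only zone to /etc/named.conf. As a minimal sketch, the zone stanza can be generated from the resolver endpoint IP addresses. The helper name forward_zone and the cluster name my-cluster are hypothetical; the IP addresses are the ones from the example output earlier in this chapter.

```shell
# Hypothetical helper: print a BIND forward-only zone stanza.
# $1 = zone name, remaining arguments = resolver endpoint IP addresses.
forward_zone() {
  zone="$1"
  shift
  printf 'zone "%s" {\n' "$zone"
  printf '  type forward;\n'
  printf '  forward only;\n'
  printf '  forwarders {\n'
  for ip in "$@"; do
    printf '    %s;\n' "$ip"
  done
  printf '  };\n'
  printf '};\n'
}

# Review the output, then append it to /etc/named.conf and reload named.
forward_zone "my-cluster.hypershift.local" 10.0.45.253 10.0.23.131 10.0.148.159
```

The same helper can be reused for the rosa.<domain-prefix>.<unique-ID>.p3.openshiftapps.com zone once the unique ID is known.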

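Chapter 5. Tutorial: Using AWS WAF and Amazon CloudFront to protect Red Hat OpenShift Service on AWS workloads

AWS WAF is a web application firewall that lets you monitor the HTTP and HTTPS requests that are forwarded to your protected web application resources.

You can use Amazon CloudFront to add a Web Application Firewall (WAF) to your Red Hat OpenShift Service on AWS workloads. Using an external solution protects Red Hat OpenShift Service on AWS resources from experiencing denial of service due to handling the WAF.

WAFv1, also known as WAF classic, is no longer supported. Use WAFv2.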

5.1. 先决条件

- A Red Hat OpenShift Service on AWS cluster.

- You have access to the OpenShift CLI (oc).

- You have access to the AWS CLI (aws).

5.1.1. 环境设置

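Prepare the environment variables.

- Replace the domain placeholder with the custom domain that you want to use for the IngressController. Later steps read this value from the DOMAIN variable.

Note: The "Cluster" output from the previous command might be the name of your cluster, the internal ID of your cluster, or the cluster's domain prefix. If you prefer to use another identifier, you can manually set this value by running the following command:

$ export CLUSTER=my-custom-value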

5.2. 设置二级入口控制器

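A secondary ingress controller must be configured to segment your external WAF-protected traffic from your standard (and default) cluster ingress controller.

Prerequisites

- A publicly trusted SAN or wildcard certificate for your custom domain, such as CN=*.apps.example.com

Important: Amazon CloudFront uses HTTPS to communicate with your cluster's secondary ingress controller. As explained in the Amazon CloudFront documentation, you cannot use a self-signed certificate for HTTPS communication between CloudFront and your cluster. Amazon CloudFront verifies that the certificate was issued by a trusted certificate authority.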

流程

Create a new TLS secret from a private key and a public certificate, where fullchain.pem is your full wildcard certificate chain (including any intermediaries) and privkey.pem is your wildcard certificate's private key.

Example

$ oc -n openshift-ingress create secret tls waf-tls --cert=fullchain.pem --key=privkey.pem

Create a new IngressController resource and save it as waf-ingress-controller.yaml.

Apply the IngressController:

Example

$ oc apply -f waf-ingress-controller.yaml

Verify that your IngressController has successfully created an external load balancer:

$ oc -n openshift-ingress get service/router-cloudfront-waf

Example output

NAME                    TYPE           CLUSTER-IP      EXTERNAL-IP                                                                     PORT(S)                      AGE
router-cloudfront-waf   LoadBalancer   172.30.16.141   a68a838a7f26440bf8647809b61c4bc8-4225395f488830bd.elb.us-east-1.amazonaws.com   80:30606/TCP,443:31065/TCP   2m19s
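The waf-ingress-controller.yaml file applied in the procedure above might look like the following minimal sketch. The resource name cloudfront-waf, the waf-tls certificate, and the route=waf label are inferred from the service name, secret, and route label used elsewhere in this chapter. The remaining fields, including the NLB provider parameters and the fallback domain, are assumptions and should be checked against your cluster.

```shell
# Assumption: DOMAIN holds the custom domain from the environment setup step.
DOMAIN="${DOMAIN:-apps.example.com}"

# Write the manifest; apply it afterwards with: oc apply -f waf-ingress-controller.yaml
cat > waf-ingress-controller.yaml <<EOF
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: cloudfront-waf
  namespace: openshift-ingress-operator
spec:
  domain: ${DOMAIN}
  defaultCertificate:
    name: waf-tls
  endpointPublishingStrategy:
    type: LoadBalancerService
    loadBalancer:
      scope: External
      providerParameters:
        type: AWS
        aws:
          type: NLB
  routeSelector:
    matchLabels:
      route: waf
EOF
echo "wrote waf-ingress-controller.yaml for domain ${DOMAIN}"
```

The routeSelector restricts this ingress controller to routes that carry the route=waf label, which keeps WAF-protected traffic separate from the default ingress controller.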

5.2.1. 配置 AWS WAF

AWS WAF 服务是一个 Web 应用程序防火墙,可让您监控、保护和控制转发到您受保护的 Web 应用程序资源的 HTTP 和 HTTPS 请求,如 Red Hat OpenShift Service on AWS。

Create an AWS WAF rules file to apply to the web ACL. The rules enable the Core (Common) and SQL AWS Managed Rule Sets.

Create an AWS WAF web ACL using the rules specified above.
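As a minimal sketch of the two steps above, the following writes a rules file that references the AWS managed Core (Common) and SQL injection rule groups, then prints a matching aws wafv2 create-web-acl command. The file name waf-rules.json, the rule names, and the metric names are assumptions. The web ACL name cloudfront-waf matches the one selected later in the CloudFront configuration.

```shell
# Rules file referencing two AWS managed rule groups.
cat > waf-rules.json <<'EOF'
[
  {
    "Name": "AWS-AWSManagedRulesCommonRuleSet",
    "Priority": 0,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesCommonRuleSet"
      }
    },
    "OverrideAction": { "None": {} },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesCommonRuleSet"
    }
  },
  {
    "Name": "AWS-AWSManagedRulesSQLiRuleSet",
    "Priority": 1,
    "Statement": {
      "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesSQLiRuleSet"
      }
    },
    "OverrideAction": { "None": {} },
    "VisibilityConfig": {
      "SampledRequestsEnabled": true,
      "CloudWatchMetricsEnabled": true,
      "MetricName": "AWS-AWSManagedRulesSQLiRuleSet"
    }
  }
]
EOF

# Printed for review; CloudFront-scoped web ACLs are created in us-east-1.
echo aws wafv2 create-web-acl \
  --name cloudfront-waf \
  --scope CLOUDFRONT \
  --region us-east-1 \
  --default-action 'Allow={}' \
  --visibility-config SampledRequestsEnabled=true,CloudWatchMetricsEnabled=true,MetricName=cloudfront-waf \
  --rules file://waf-rules.json
```

The default action allows requests that match no rule, so only traffic flagged by the managed rule groups is blocked.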

5.3. Configure Amazon CloudFront

Retrieve the NLB hostname of the newly created custom ingress controller:

$ NLB=$(oc -n openshift-ingress get service router-cloudfront-waf \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

Import your certificate into AWS Certificate Manager (ACM), where cert.pem is your wildcard certificate, fullchain.pem is your wildcard certificate's chain, and privkey.pem is your wildcard certificate's private key.

Note: Regardless of the region in which your cluster is deployed, you must import this certificate into us-east-1 because Amazon CloudFront is a global AWS service.

Example

$ aws acm import-certificate --certificate file://cert.pem \
    --certificate-chain file://fullchain.pem \
    --private-key file://privkey.pem \
    --region us-east-1

- Log in to the AWS console to create a CloudFront distribution.

Configure the CloudFront distribution by using the following information:

Note: If an option is not specified in the table below, leave it at its default value (which might be blank).

| Option | Value |
| --- | --- |
| Origin domain | Output from the previous command [1] |
| Name | rosa-waf-ingress [2] |
| Viewer protocol policy | Redirect HTTP to HTTPS |
| Allowed HTTP methods | GET, HEAD, OPTIONS, PUT, POST, PATCH, DELETE |
| Cache policy | CachingDisabled |
| Origin request policy | AllViewer |
| Web Application Firewall (WAF) | Enable security protections |
| Use existing WAF configuration | true |
| Choose a web ACL | cloudfront-waf |
| Alternate domain name (CNAME) | *.apps.example.com [3] |
| Custom SSL certificate | Select the certificate you imported in the step above [4] |

- [1] Run echo ${NLB} to get the origin domain.
- [2] If you have multiple clusters, ensure that the origin name is unique.
- [3] This should match the wildcard domain that you used to create the custom ingress controller.
- [4] This should match the alternate domain name entered above.

Retrieve the Amazon CloudFront distribution endpoint:

$ aws cloudfront list-distributions --query "DistributionList.Items[?Origins.Items[?DomainName=='${NLB}']].DomainName" --output text

Update the DNS of your custom wildcard domain with a CNAME to the Amazon CloudFront distribution endpoint from the step above.

Example

*.apps.example.com CNAME d1b2c3d4e5f6g7.cloudfront.net

5.4. 部署示例应用程序

Create a new project for the example application by running the following command:

$ oc new-project hello-world

Deploy a hello world application:

$ oc -n hello-world new-app --image=docker.io/openshift/hello-openshift

Create a route for the application that specifies your custom domain name:

Example

$ oc -n hello-world create route edge --service=hello-openshift hello-openshift-tls \
    --hostname hello-openshift.${DOMAIN}

Label the route to admit it to your custom ingress controller:

$ oc -n hello-world label route.route.openshift.io/hello-openshift-tls route=waf

5.5. 测试 WAF

Test that the application is accessible behind Amazon CloudFront:

Example

$ curl "https://hello-openshift.${DOMAIN}"

Example output

Hello OpenShift!

Test that the WAF denies a bad request:

Example

$ curl -X POST "https://hello-openshift.${DOMAIN}" \
    -F "user='<script><alert>Hello></alert></script>'"

The expected result is a 403 ERROR, which means that the AWS WAF is protecting your application.
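When this check is scripted, it can be easier to compare HTTP status codes than to read the response body. The following minimal sketch classifies a status code. The helper name waf_verdict is hypothetical, and the curl invocation is shown only as a comment because it needs a live cluster.

```shell
# Hypothetical helper: interpret the HTTP status returned through the WAF.
waf_verdict() {
  case "$1" in
    403) echo "blocked" ;;      # the WAF rejected the request
    2??) echo "allowed" ;;      # the request reached the application
    *)   echo "unexpected" ;;   # anything else needs a closer look
  esac
}

# Against a live cluster, the status could be captured like this:
#   status=$(curl -s -o /dev/null -w '%{http_code}' -X POST \
#     "https://hello-openshift.${DOMAIN}" -F "user='<script>...</script>'")
#   waf_verdict "$status"
waf_verdict 403
waf_verdict 200
```

A verdict of allowed for the malicious POST request would suggest that the web ACL is not yet attached to the distribution.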

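Chapter 6. Tutorial: Using AWS WAF and AWS ALBs to protect Red Hat OpenShift Service on AWS workloads

AWS WAF is a web application firewall that lets you monitor the HTTP and HTTPS requests that are forwarded to your protected web application resources.

You can use an AWS Application Load Balancer (ALB) to add a Web Application Firewall (WAF) to your Red Hat OpenShift Service on AWS workloads. Using an external solution protects Red Hat OpenShift Service on AWS resources from experiencing denial of service due to handling the WAF.

It is recommended that you use the more flexible CloudFront method unless you absolutely must use an ALB-based solution.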

6.1. 先决条件

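- A multiple availability zone (AZ) Red Hat OpenShift Service on AWS cluster.

Note: AWS ALBs require at least two public subnets across AZs, per the AWS documentation. For this reason, only multiple AZ Red Hat OpenShift Service on AWS clusters can be used with ALBs.

- You have access to the OpenShift CLI (oc).

- You have access to the AWS CLI (aws).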

6.1.1. 环境设置

准备环境变量:


6.1.2. AWS VPC 和子网

本节只适用于部署到现有 VPC 的集群。如果您没有将集群部署到现有的 VPC 中,请跳过本节并继续进行下面的安装部分。

Set the following variables to the proper values for your Red Hat OpenShift Service on AWS deployment:

$ export VPC_ID=<vpc-id>
$ export PUBLIC_SUBNET_IDS=(<space-separated-list-of-ids>)
$ export PRIVATE_SUBNET_IDS=(<space-separated-list-of-ids>)

- Replace <vpc-id> with the VPC ID of the cluster, for example: export VPC_ID=vpc-04c429b7dbc4680ba.

- Replace the PUBLIC_SUBNET_IDS placeholder with a space-separated list of the public subnet IDs of the cluster, making sure to preserve the (). For example: export PUBLIC_SUBNET_IDS=(subnet-056fd6861ad332ba2 subnet-08ce3b4ec753fe74c subnet-071aa28228664972f).

- Replace the PRIVATE_SUBNET_IDS placeholder with a space-separated list of the private subnet IDs of the cluster, making sure to preserve the (). For example: export PRIVATE_SUBNET_IDS=(subnet-0b933d72a8d72c36a subnet-0817eb72070f1d3c2 subnet-0806e64159b66665a).

Add a tag to your cluster's VPC with the cluster identifier:

$ aws ec2 create-tags --resources ${VPC_ID} \
    --tags Key=kubernetes.io/cluster/${CLUSTER},Value=shared --region ${REGION}

Add a tag to your public subnets:

$ aws ec2 create-tags \
    --resources "${PUBLIC_SUBNET_IDS[@]}" \
    --tags Key=kubernetes.io/role/elb,Value='1' \
    Key=kubernetes.io/cluster/${CLUSTER},Value=shared \
    --region ${REGION}

Add a tag to your private subnets:

$ aws ec2 create-tags \
    --resources "${PRIVATE_SUBNET_IDS[@]}" \
    --tags Key=kubernetes.io/role/internal-elb,Value='1' \
    Key=kubernetes.io/cluster/${CLUSTER},Value=shared \
    --region ${REGION}

6.2. 部署 AWS Load Balancer Operator

AWS Load Balancer Operator 用于在 Red Hat OpenShift Service on AWS 集群上安装、管理和配置 aws-load-balancer-controller 实例。要在 Red Hat OpenShift Service on AWS 中部署 ALB,我们需要首先部署 AWS Load Balancer Operator。

Create a new project to deploy the AWS Load Balancer Operator into by running the following command:

$ oc new-project aws-load-balancer-operator

Create an AWS IAM policy for the AWS Load Balancer Controller if one does not already exist by running the following command:

Note: The policy is sourced from the upstream AWS Load Balancer Controller policy. The Operator requires it to function.

$ POLICY_ARN=$(aws iam list-policies --query \
    "Policies[?PolicyName=='aws-load-balancer-operator-policy'].{ARN:Arn}" \
    --output text)

Create an AWS IAM trust policy for the AWS Load Balancer Operator.

Create an AWS IAM role for the AWS Load Balancer Operator:

$ ROLE_ARN=$(aws iam create-role --role-name "${CLUSTER}-alb-operator" \
    --assume-role-policy-document "file://${SCRATCH}/trust-policy.json" \
    --query Role.Arn --output text)

Attach the AWS Load Balancer Operator policy to the IAM role you created previously by running the following command:

$ aws iam attach-role-policy --role-name "${CLUSTER}-alb-operator" \
    --policy-arn ${POLICY_ARN}

Create a secret for the AWS Load Balancer Operator to assume the newly created AWS IAM role.

Install the AWS Load Balancer Operator.

Deploy an instance of the AWS Load Balancer Controller using the Operator.

Note: If you get an error here, wait a minute and try again. It means that the Operator has not finished installing yet.

Check that the Operator and controller pods are both running:

$ oc -n aws-load-balancer-operator get pods

You should see the following output. If not, wait a moment and retry:

NAME                                                             READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-cluster-6ddf658785-pdp5d            1/1     Running   0          99s
aws-load-balancer-operator-controller-manager-577d9ffcb9-w6zqn   2/2     Running   0          2m4s
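The trust policy file that the create-role command in this section reads from ${SCRATCH}/trust-policy.json might look like the following minimal sketch. The AWS_ACCOUNT_ID and OIDC_ENDPOINT variables and their placeholder values are assumptions, as are the two service account names, which are inferred from the pod names shown above. Verify all of them against your cluster before use.

```shell
# Assumptions: these values normally come from the environment setup step.
SCRATCH="${SCRATCH:-$(mktemp -d)}"
AWS_ACCOUNT_ID="${AWS_ACCOUNT_ID:-123456789012}"            # placeholder
OIDC_ENDPOINT="${OIDC_ENDPOINT:-oidc.example.com/abc123}"   # placeholder

# Allow the Operator's service accounts to assume the role through the
# cluster's OIDC provider.
cat > "${SCRATCH}/trust-policy.json" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_ENDPOINT}:sub": [
            "system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-operator-controller-manager",
            "system:serviceaccount:aws-load-balancer-operator:aws-load-balancer-controller-cluster"
          ]
        }
      }
    }
  ]
}
EOF
echo "wrote ${SCRATCH}/trust-policy.json"
```

The StringEquals condition limits role assumption to the listed service accounts, so other workloads on the cluster cannot use the role.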

6.3. 部署示例应用程序

Create a new project for the example application:

$ oc new-project hello-world

Deploy a hello world application:

$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Convert the pre-created service resource to a NodePort service type:

$ oc -n hello-world patch service hello-openshift -p '{"spec":{"type":"NodePort"}}'

Deploy an AWS ALB using the AWS Load Balancer Operator.

Curl the AWS ALB Ingress endpoint to verify that the hello world application is accessible:

Note: AWS ALB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ INGRESS=$(oc -n hello-world get ingress hello-openshift-alb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

6.3.1. 配置 AWS WAF

AWS WAF 服务是一个 Web 应用程序防火墙,可让您监控、保护和控制转发到您受保护的 Web 应用程序资源的 HTTP 和 HTTPS 请求,如 Red Hat OpenShift Service on AWS。

Create an AWS WAF rules file to apply to the web ACL. The rules enable the Core (Common) and SQL AWS Managed Rule Sets.

Create an AWS WAF web ACL using the rules specified above.

Annotate the Ingress resource with the AWS WAF web ACL ARN:

$ oc annotate -n hello-world ingress.networking.k8s.io/hello-openshift-alb \
    alb.ingress.kubernetes.io/wafv2-acl-arn=${WAF_ARN}

Wait 10 seconds for the rules to propagate, then test that the application still works:

$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

Test that the WAF denies a bad request:

$ curl -X POST "http://${INGRESS}" \
    -F "user='<script><alert>Hello></alert></script>'"

Note: Activation of the AWS WAF integration can sometimes take several minutes. If you do not receive a 403 Forbidden error, wait a few seconds and try again.

The expected result is a 403 Forbidden error, which means that the AWS WAF is protecting your application.

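Chapter 7. Tutorial: Deploying OpenShift API for Data Protection on a Red Hat OpenShift Service on AWS cluster

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.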

环境

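Prepare the environment variables.

Note: Change the cluster name to match your Red Hat OpenShift Service on AWS cluster and ensure that you are logged in to the cluster as an administrator. Ensure that all fields output correctly before moving on.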

7.1. 准备 AWS 帐户

Create an IAM policy to allow S3 access.

Create an IAM role trust policy for the cluster.

Attach the IAM policy to the IAM role:

$ aws iam attach-role-policy --role-name "${ROLE_NAME}" \
    --policy-arn ${POLICY_ARN}

7.2. 在集群中部署 OADP

Create a namespace for OADP:

$ oc create namespace openshift-adp

Create the credentials secret. Replace <aws_region> with the AWS region to use for the Security Token Service (STS) endpoint.

Deploy the OADP Operator.

Note: There is currently an issue with version 1.1 of the Operator in which backups have a PartiallyFailed status. This does not seem to affect the backup and restore process, but be aware of it.

Wait for the Operator to be ready:

$ watch oc -n openshift-adp get pods

Example output

NAME                                                READY   STATUS    RESTARTS   AGE
openshift-adp-controller-manager-546684844f-qqjhn   1/1     Running   0          22s

Create the cloud storage.

Check your application's storage default storage class:

$ oc get pvc -n <namespace>

- Replace <namespace> with your application's namespace.

Example output

NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
applog   Bound    pvc-351791ae-b6ab-4e8b-88a4-30f73caf5ef8   1Gi        RWO            gp3-csi        4d19h
mysql    Bound    pvc-16b8e009-a20a-4379-accc-bc81fedd0621   1Gi        RWO            gp3-csi        4d19h

$ oc get storageclass

Example output

NAME                PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
gp2                 kubernetes.io/aws-ebs   Delete          WaitForFirstConsumer   true                   4d21h
gp2-csi             ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h
gp3                 ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h
gp3-csi (default)   ebs.csi.aws.com         Delete          WaitForFirstConsumer   true                   4d21h

Using either gp3-csi, gp2-csi, gp3, or gp2 will work. If the applications being backed up all use PVs with CSI, include the CSI plugin in the OADP DPA configuration.

CSI only: Deploy a Data Protection Application.

Note: If you run this command for CSI volumes, you can skip the next step.

Non-CSI volumes: Deploy a Data Protection Application.

- In OADP 1.1.x Red Hat OpenShift Service on AWS STS environments, the container image backup and restore (spec.backupImages) value must be set to false because it is not supported.

- The Restic feature (restic.enable=false) is disabled and not supported in Red Hat OpenShift Service on AWS STS environments.

- The DataMover feature (dataMover.enable=false) is disabled and not supported in Red Hat OpenShift Service on AWS STS environments.

7.3. Performing a backup

The following example hello-world application has no persistent volumes attached. Either DPA configuration will work.

Create a workload to back up:

$ oc create namespace hello-world
$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Expose the route:

$ oc expose service/hello-openshift -n hello-world

Check that the application is working:

$ curl `oc get route/hello-openshift -n hello-world -o jsonpath='{.spec.host}'`

Example output

Hello OpenShift!

Back up the workload:
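The backup step can be sketched as follows. This is a minimal sketch, not the tutorial's own manifest: it assumes the Velero Backup resource is created in the openshift-adp namespace and that the backup storage location is named ${CLUSTER_NAME}-dpa-1, a guess derived from the DPA name used in the cleanup section (confirm it with `oc -n openshift-adp get backupstoragelocations`). The file name hello-world-backup.yaml and the 720h TTL are illustrative. The script only writes the manifest so that it can be reviewed before it is applied.

```shell
# Assumption: CLUSTER_NAME is already exported; fall back to a placeholder otherwise.
CLUSTER_NAME="${CLUSTER_NAME:-example-cluster}"

# Write a Velero Backup manifest that covers only the hello-world namespace.
cat > hello-world-backup.yaml <<EOF
apiVersion: velero.io/v1
kind: Backup
metadata:
  name: hello-world
  namespace: openshift-adp
spec:
  includedNamespaces:
    - hello-world
  # Assumed backup storage location name; adjust it to match your cluster.
  storageLocation: ${CLUSTER_NAME}-dpa-1
  ttl: 720h0m0s
EOF

echo "Review hello-world-backup.yaml, then run: oc create -f hello-world-backup.yaml"
```

Writing to a file instead of piping straight into `oc create` keeps the step repeatable and makes the manifest easy to inspect.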

Wait until the backup is complete:

$ watch "oc -n openshift-adp get backup hello-world -o json | jq .status"

Delete the demo workload:

$ oc delete ns hello-world

Restore from the backup:
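The restore step can be sketched in the same way. This is a minimal sketch under stated assumptions: the Restore resource is created in the openshift-adp namespace and refers to the backup by the name hello-world, which is the name used by the watch commands in this section. The file name hello-world-restore.yaml is illustrative.

```shell
# Write a Velero Restore manifest that restores from the hello-world backup.
cat > hello-world-restore.yaml <<'EOF'
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: hello-world
  namespace: openshift-adp
spec:
  # Name of the Backup resource to restore from.
  backupName: hello-world
EOF

echo "Review hello-world-restore.yaml, then run: oc create -f hello-world-restore.yaml"
```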

Wait for the restore to complete:

$ watch "oc -n openshift-adp get restore hello-world -o json | jq .status"

Check that the workload is restored:

$ oc -n hello-world get pods

Example output

NAME                              READY   STATUS    RESTARTS   AGE
hello-openshift-9f885f7c6-kdjpj   1/1     Running   0          90s

$ curl `oc get route/hello-openshift -n hello-world -o jsonpath='{.spec.host}'`

Example output

Hello OpenShift!

- For troubleshooting tips, see the OADP team's troubleshooting documentation.

- Additional sample applications can be found in the OADP team's sample applications directory.

7.4. Cleanup

Delete the workload:

$ oc delete ns hello-world

If they are no longer needed, remove the backup and restore resources from the cluster:

$ oc delete backups.velero.io hello-world
$ oc delete restores.velero.io hello-world

Delete the backup, the restore, and the remote objects in S3:

$ velero backup delete hello-world
$ velero restore delete hello-world

Delete the Data Protection Application:

$ oc -n openshift-adp delete dpa ${CLUSTER_NAME}-dpa

Delete the cloud storage:

$ oc -n openshift-adp delete cloudstorage ${CLUSTER_NAME}-oadp

Warning: If this command hangs, you might need to delete the finalizer:

$ oc -n openshift-adp patch cloudstorage ${CLUSTER_NAME}-oadp -p '{"metadata":{"finalizers":null}}' --type=merge

If the Operator is no longer required, remove it:

$ oc -n openshift-adp delete subscription oadp-operator

Remove the namespace for the Operator:

$ oc delete ns redhat-openshift-adp

If they are no longer required, remove the custom resource definitions from the cluster:

$ for CRD in `oc get crds | grep velero | awk '{print $1}'`; do oc delete crd $CRD; done
$ for CRD in `oc get crds | grep -i oadp | awk '{print $1}'`; do oc delete crd $CRD; done

Delete the AWS S3 bucket:

$ aws s3 rm s3://${CLUSTER_NAME}-oadp --recursive
$ aws s3api delete-bucket --bucket ${CLUSTER_NAME}-oadp

Detach the policy from the role:

$ aws iam detach-role-policy --role-name "${ROLE_NAME}" \
    --policy-arn "${POLICY_ARN}"

Delete the role:

$ aws iam delete-role --role-name "${ROLE_NAME}"

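This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Load balancers created by the AWS Load Balancer Operator cannot be used for OpenShift Routes, and should only be used for individual services or ingress resources that do not need the full layer 7 capabilities of an OpenShift Route.

The AWS Load Balancer Controller manages AWS Elastic Load Balancers for a Red Hat OpenShift Service on AWS cluster. The controller provisions AWS Application Load Balancers (ALB) when you create Kubernetes Ingress resources, and AWS Network Load Balancers (NLB) when you use Kubernetes Service resources of type LoadBalancer.

Compared with the default AWS in-tree load balancer provider, this controller is developed with advanced annotations for both ALBs and NLBs. Some advanced use cases are:

- Using native Kubernetes Ingress objects with ALBs

- Integrating ALBs with the AWS Web Application Firewall (WAF) service

  Note: WAFv1 (WAF Classic) is no longer supported. Use WAFv2.

- Specifying custom NLB source IP ranges

- Specifying custom NLB internal IP addresses

The AWS Load Balancer Operator is used to install, manage, and configure an instance of aws-load-balancer-controller in a Red Hat OpenShift Service on AWS cluster.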

8.1. Prerequisites

AWS ALBs require a multi-AZ cluster, as well as three public subnets split across three AZs in the same VPC as the cluster. This makes ALBs unsuitable for many PrivateLink clusters. AWS NLBs do not have this restriction.

- A multi-AZ Red Hat OpenShift Service on AWS cluster

- BYO VPC cluster

- AWS CLI

- OC CLI

8.1.1. Environment

Prepare the environment variables:

8.1.2. AWS VPC and subnets

This section only applies to clusters that were deployed into an existing VPC. If you did not deploy your cluster into an existing VPC, skip this section and proceed to the installation section below.

Set the following variables to the proper values for your cluster deployment:

$ export VPC_ID=<vpc-id>
$ export PUBLIC_SUBNET_IDS=<public-subnets>
$ export PRIVATE_SUBNET_IDS=<private-subnets>
$ export CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}")

Add a tag to your cluster's VPC with the cluster name:

$ aws ec2 create-tags --resources ${VPC_ID} --tags Key=kubernetes.io/cluster/${CLUSTER_NAME},Value=owned --region ${REGION}

Add a tag to your public subnets:

$ aws ec2 create-tags \
    --resources ${PUBLIC_SUBNET_IDS} \
    --tags Key=kubernetes.io/role/elb,Value='' \
    --region ${REGION}

Add a tag to your private subnets:

$ aws ec2 create-tags \
    --resources "${PRIVATE_SUBNET_IDS}" \
    --tags Key=kubernetes.io/role/internal-elb,Value='' \
    --region ${REGION}

8.2. Installation

Create an AWS IAM policy for the AWS Load Balancer Controller:

Note: The policy is sourced from the upstream AWS Load Balancer Controller policy, plus permission to create tags on subnets. The Operator requires this permission to function.

Create an AWS IAM trust policy for the AWS Load Balancer Operator:

Create an AWS IAM role for the AWS Load Balancer Operator:

Create a secret for the AWS Load Balancer Operator to assume the newly created AWS IAM role:

Install the AWS Load Balancer Operator:

Deploy an instance of the AWS Load Balancer Controller by using the Operator:

Note: If you get an error here, wait a minute and try again. The error means that the Operator has not finished installing yet.

Check that the Operator and controller pods are both running:

$ oc -n aws-load-balancer-operator get pods

You should see the following output. If you do not, wait a moment and retry:

NAME                                                             READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-cluster-6ddf658785-pdp5d            1/1     Running   0          99s
aws-load-balancer-operator-controller-manager-577d9ffcb9-w6zqn   2/2     Running   0          2m4s

8.3. Validating the deployment

Create a new project:

$ oc new-project hello-world

Deploy a hello world application:

$ oc new-app -n hello-world --image=docker.io/openshift/hello-openshift

Configure a NodePort service for the AWS ALB to connect to:

Deploy an AWS ALB by using the AWS Load Balancer Operator:
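The NodePort service and the ALB-backed Ingress from the two steps above might look like the following sketch. It is not the tutorial's own manifest. Assumptions: the pods created by `oc new-app` carry the label deployment=hello-openshift, the application listens on port 8080, and the controller registers an ingress class named alb. The service name hello-openshift-nodeport and the file name hello-openshift-alb.yaml are illustrative; the Ingress name hello-openshift-alb matches the name queried by the curl step in this section.

```shell
# Write a NodePort Service and an internet-facing ALB Ingress to one file.
cat > hello-openshift-alb.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hello-openshift-nodeport
  namespace: hello-world
spec:
  type: NodePort
  selector:
    deployment: hello-openshift   # assumed pod label set by oc new-app
  ports:
    - port: 80
      targetPort: 8080            # assumed container port
      protocol: TCP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-openshift-alb
  namespace: hello-world
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
spec:
  ingressClassName: alb           # assumed ingress class name
  rules:
    - http:
        paths:
          - path: /
            pathType: Exact
            backend:
              service:
                name: hello-openshift-nodeport
                port:
                  number: 80
EOF

echo "Review hello-openshift-alb.yaml, then run: oc apply -f hello-openshift-alb.yaml"
```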

Curl the AWS ALB Ingress endpoint to verify that the hello world application is accessible:

Note: AWS ALB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ INGRESS=$(oc -n hello-world get ingress hello-openshift-alb \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${INGRESS}"

Example output

Hello OpenShift!

Deploy an AWS NLB for your hello world application:
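An NLB-backed service for this step could be sketched as below. This is an illustration under assumptions rather than the tutorial's own manifest: the annotations are the AWS Load Balancer Controller's standard service annotations, the selector assumes the deployment=hello-openshift label, and the service name hello-openshift-nlb is taken from the test command that follows. The file name is illustrative.

```shell
# Write a LoadBalancer Service that asks the controller for an internet-facing NLB.
cat > hello-openshift-nlb.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hello-openshift-nlb
  namespace: hello-world
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: external
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
spec:
  type: LoadBalancer
  selector:
    deployment: hello-openshift   # assumed pod label set by oc new-app
  ports:
    - port: 80
      targetPort: 8080            # assumed container port
      protocol: TCP
EOF

echo "Review hello-openshift-nlb.yaml, then run: oc apply -f hello-openshift-nlb.yaml"
```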

Test the AWS NLB endpoint:

Note: NLB provisioning takes a few minutes. If you receive an error that says curl: (6) Could not resolve host, wait and try again.

$ NLB=$(oc -n hello-world get service hello-openshift-nlb \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
$ curl "http://${NLB}"

Example output

Hello OpenShift!

8.4. Cleaning up

Delete the hello world application namespace (and all the resources in the namespace):

$ oc delete project hello-world

Delete the AWS Load Balancer Operator and the AWS IAM roles:

Delete the AWS IAM policy:

$ aws iam delete-policy --policy-arn $POLICY_ARN

You can configure Microsoft Entra ID (formerly Azure Active Directory) as the cluster identity provider in Red Hat OpenShift Service on AWS.

This tutorial guides you through the following tasks:

- Register a new application in Entra ID for authentication.

- Configure the application registration in Entra ID to include optional and group claims in tokens.

- Configure the Red Hat OpenShift Service on AWS cluster to use Entra ID as the identity provider.

- Grant additional permissions to individual groups.

9.1. Prerequisites

- You created a set of security groups and assigned users by following the Microsoft documentation.

9.2. Registering a new application in Entra ID for authentication

To register your application in Entra ID, first create the OAuth callback URL, then register your application.

Procedure

Create the cluster's OAuth callback URL by changing the specified variables and running the following command:

Note: Remember to save this callback URL; it is required later in the process.

$ domain=$(rosa describe cluster -c <cluster_name> | grep "DNS" | grep -oE '\S+.openshiftapps.com')
$ echo "OAuth callback URL: https://oauth.${domain}/oauth2callback/AAD"

The "AAD" directory at the end of the OAuth callback URL must match the OAuth identity provider name that you set up later in this process.
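Because the last path segment must stay in sync with the identity provider name, it can help to build the URL from both values in one place. The following is a small sketch; the function name oauth_callback_url and the sample domain are hypothetical and used only for illustration.

```shell
# Build the OAuth callback URL from the cluster DNS domain and the
# identity provider name, so the two cannot drift apart.
# $1: cluster DNS domain, $2: identity provider name
oauth_callback_url() {
  printf 'https://oauth.%s/oauth2callback/%s\n' "$1" "$2"
}

# Example with a made-up domain and the IDP name used in this tutorial.
oauth_callback_url "example.abcd.p1.openshiftapps.com" "AAD"
```

If you later choose a different provider name, pass that name as the second argument and register the resulting URL in Entra ID.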

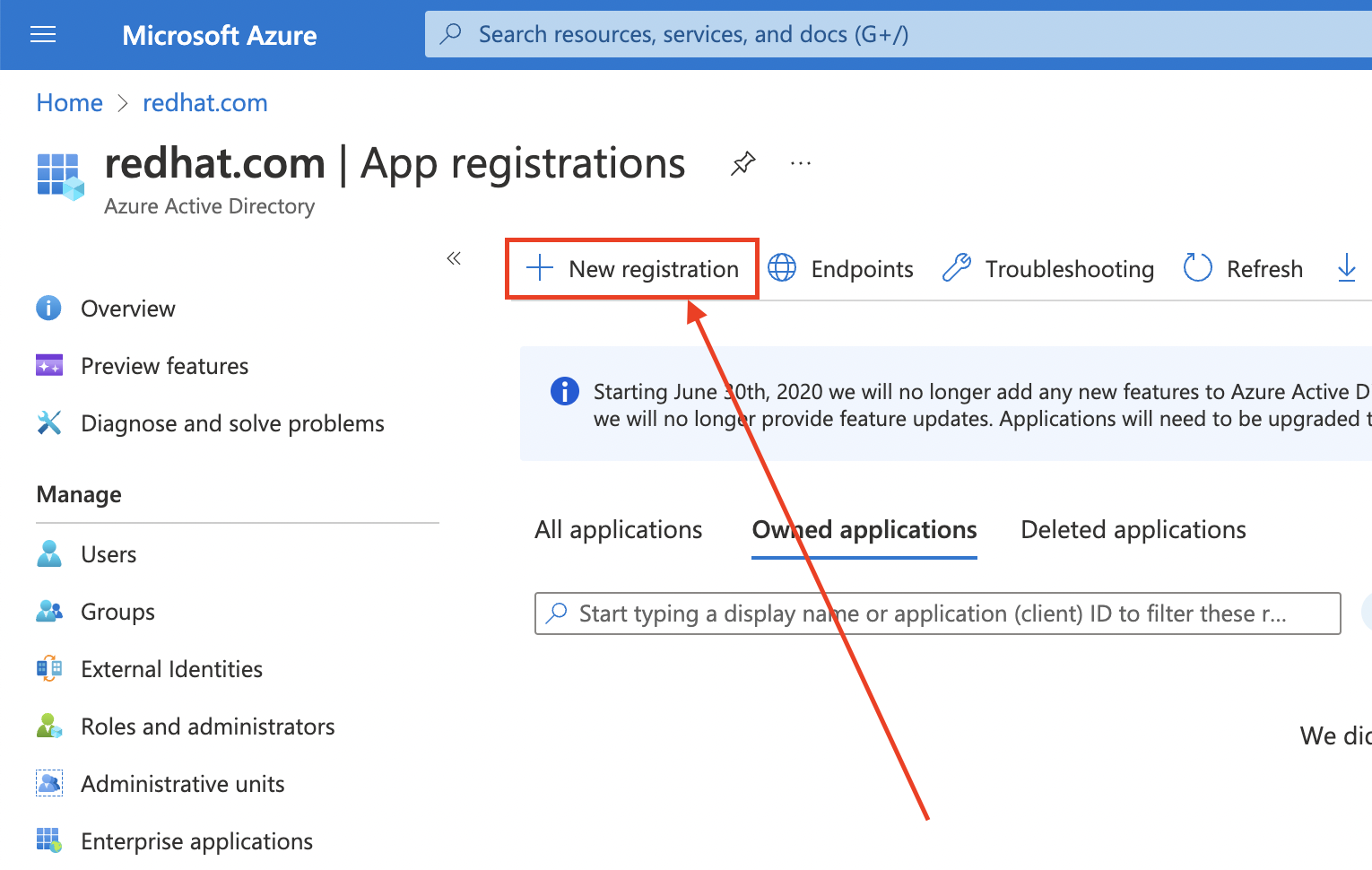

Create the Entra ID application by logging in to the Azure portal and selecting the App registrations blade. Then select New registration to create a new application.

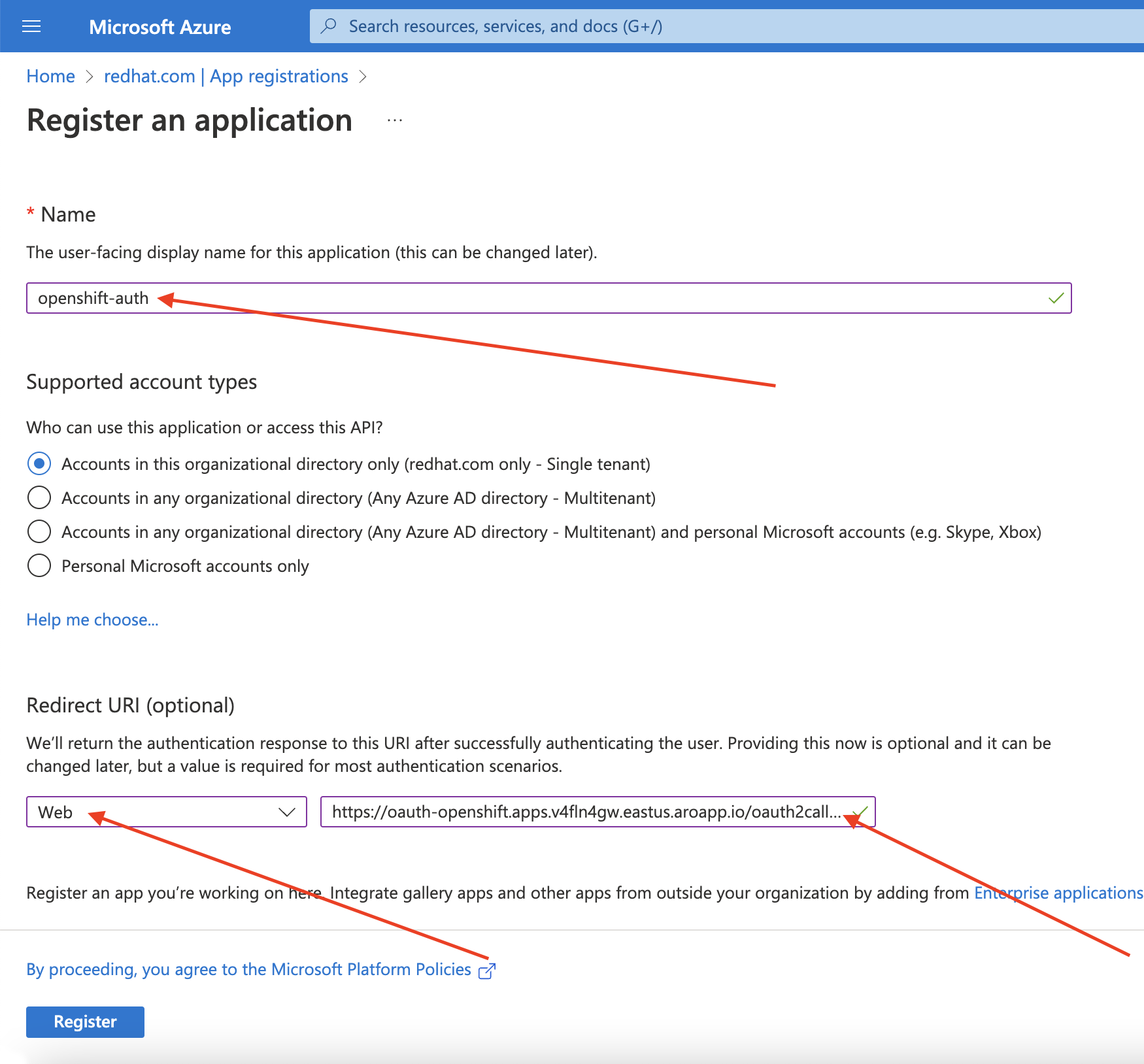

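- Name the application, for example openshift-auth.

- Select Web from the Redirect URI drop-down menu and enter the value of the OAuth callback URL that you retrieved in the previous step.

After providing the required information, click Register to create the application.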

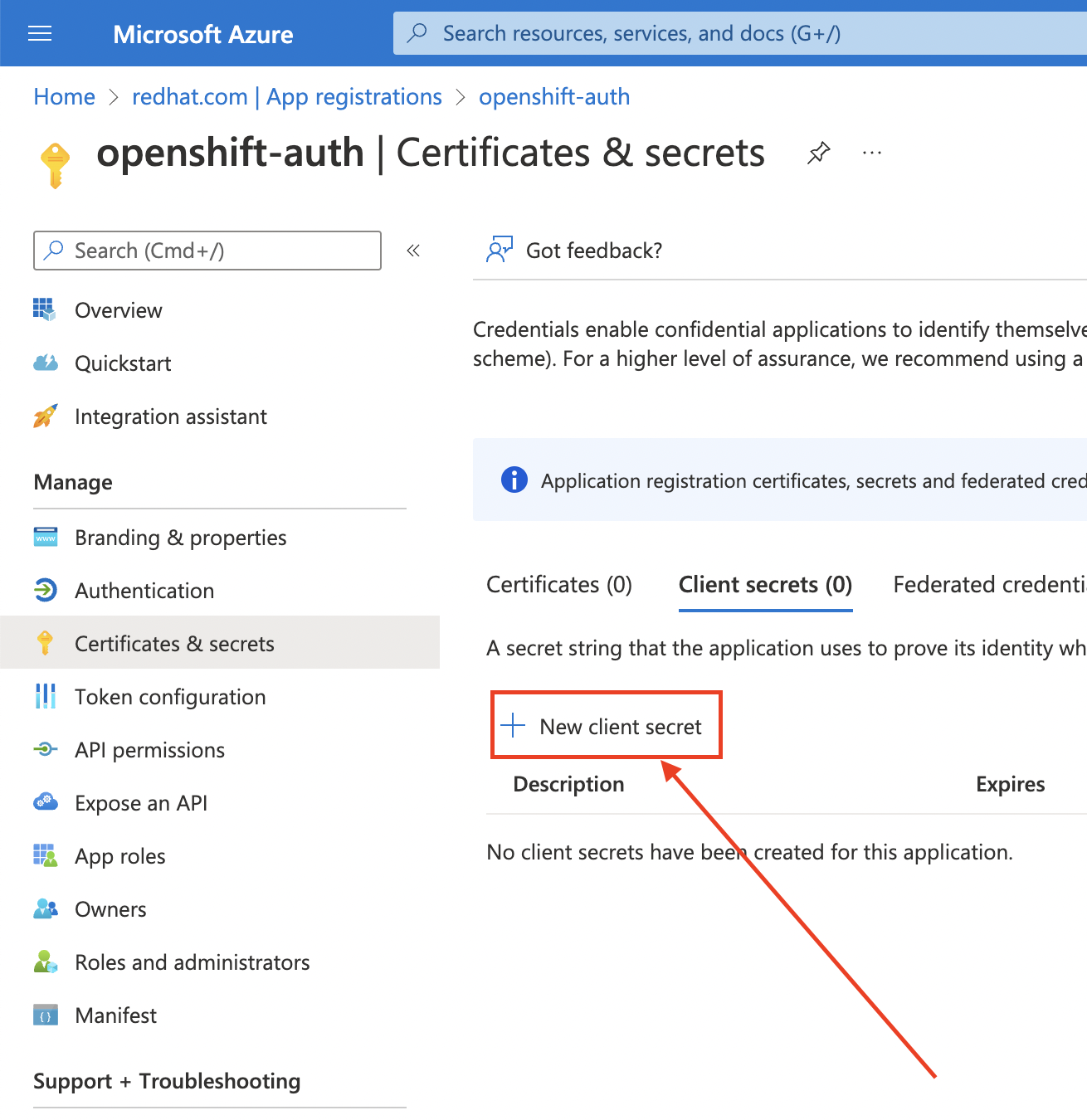

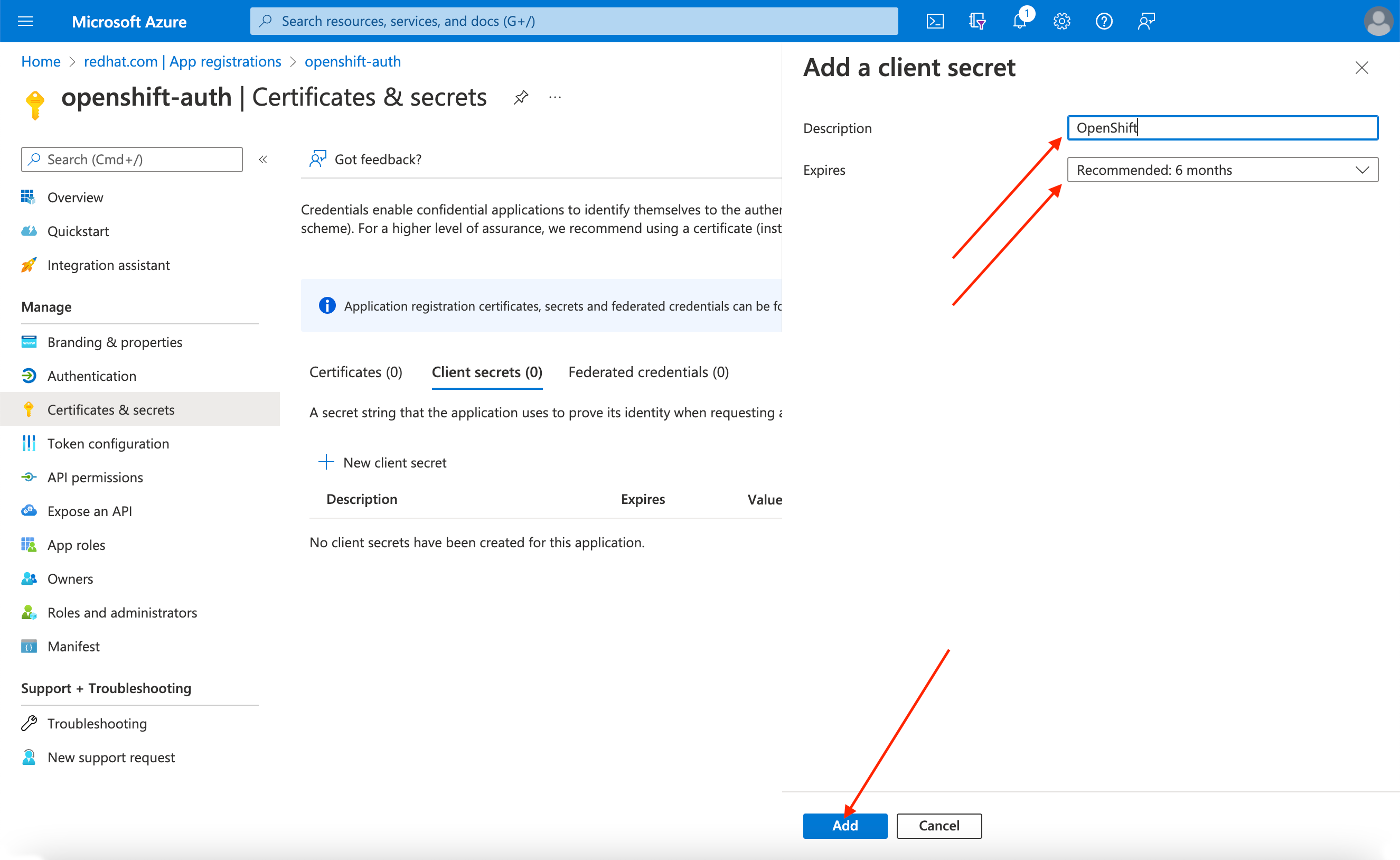

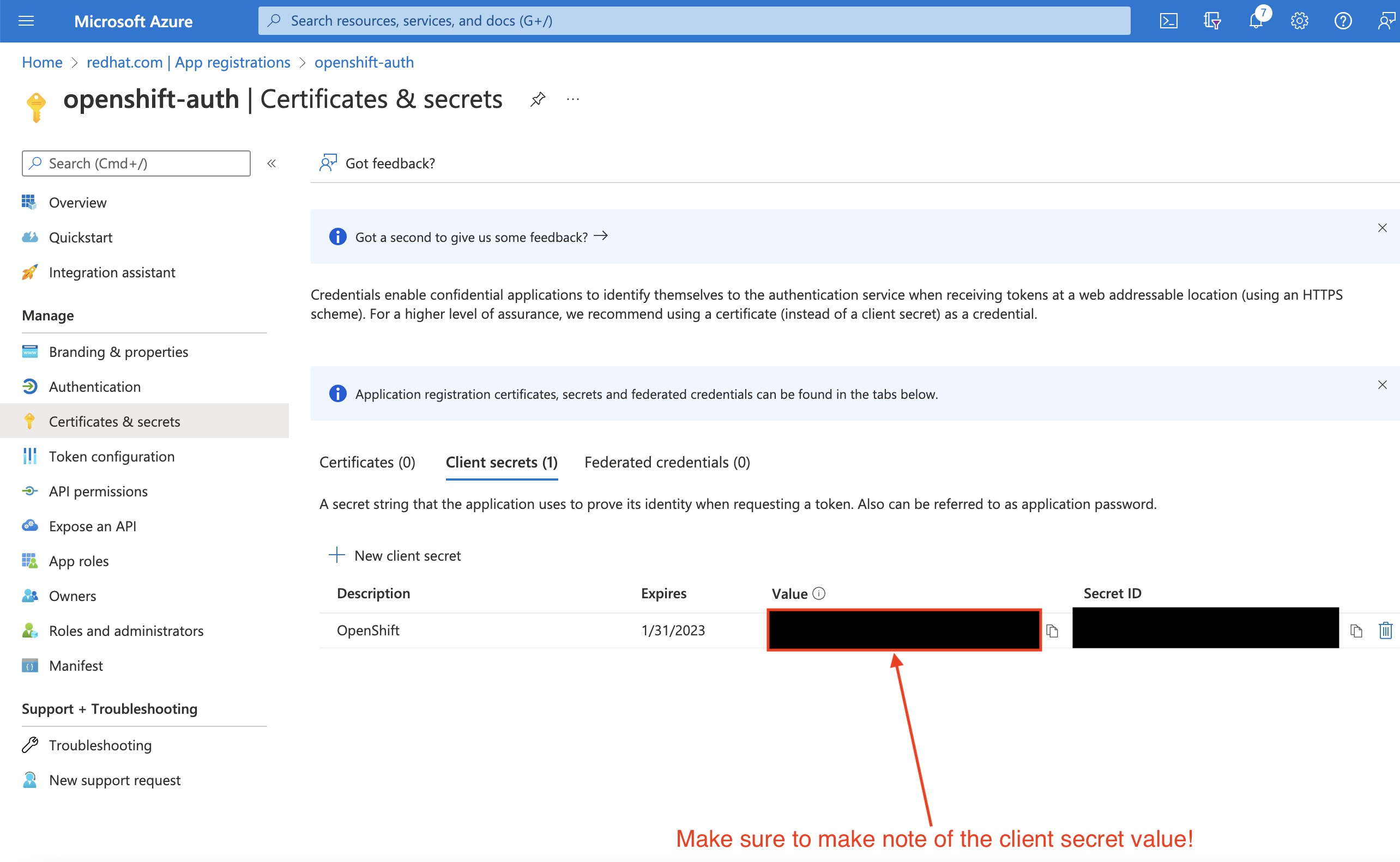

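Select the Certificates & secrets sub-blade and select New client secret.

Complete the requested details and store the generated client secret value. This secret is required later in this process.

Important: After initial setup, you cannot see the client secret. If you did not record the client secret, you must generate a new one.

Select the Overview sub-blade and note the Application (client) ID and Directory (tenant) ID. You will need these values in a later step.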

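9.3. Configuring the application registration in Entra ID to include optional and group claims

So that Red Hat OpenShift Service on AWS has enough information to create the user's account, you must configure Entra ID to provide two optional claims: email and preferred_username. For more information about optional claims in Entra ID, see the Microsoft documentation.

In addition to individual user authentication, Red Hat OpenShift Service on AWS provides group claim functionality. This functionality allows an OpenID Connect (OIDC) identity provider, such as Entra ID, to offer a user's group membership for use within Red Hat OpenShift Service on AWS.

9.3.1. Configuring optional claims

You can configure the optional claims in Entra ID.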

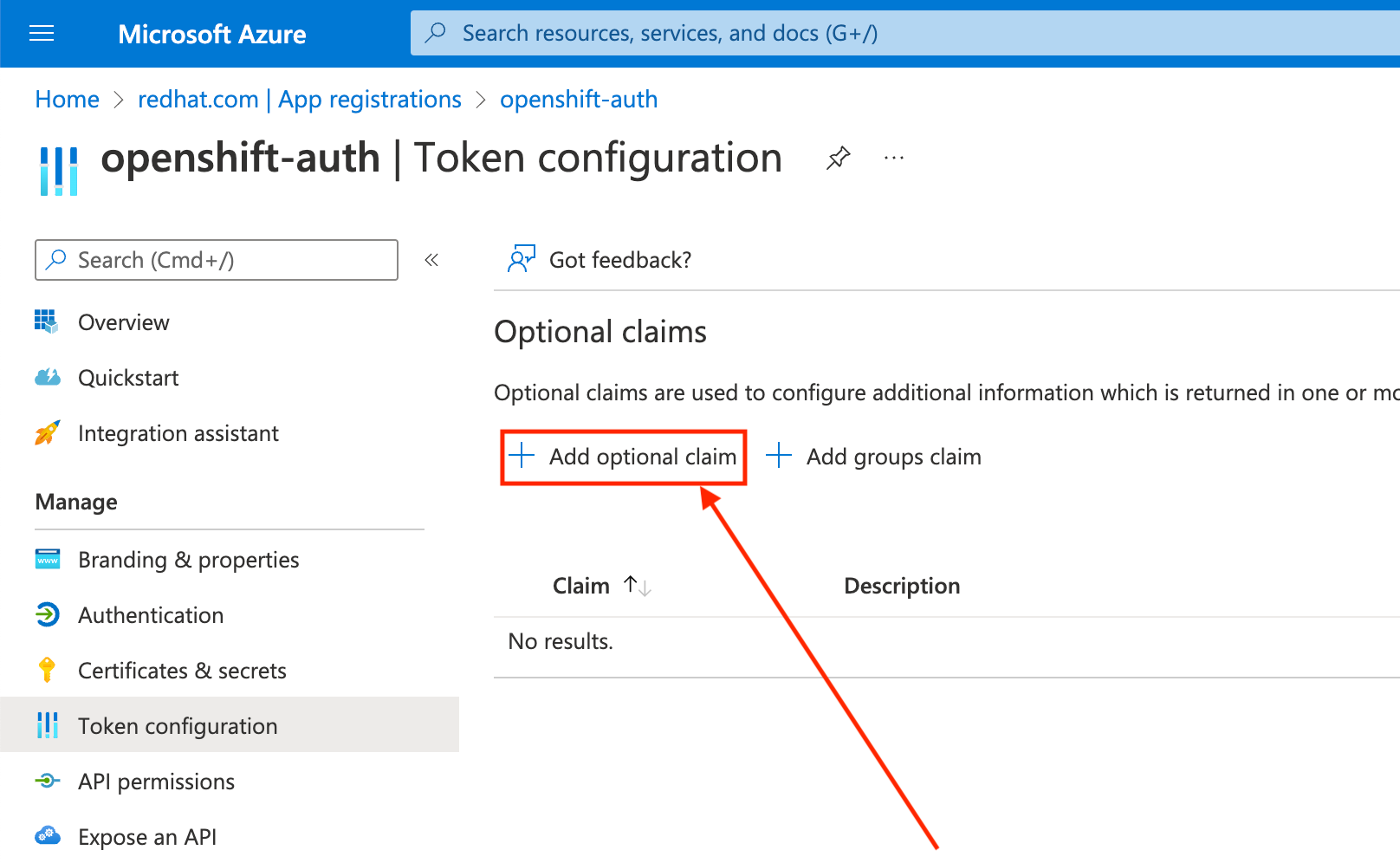

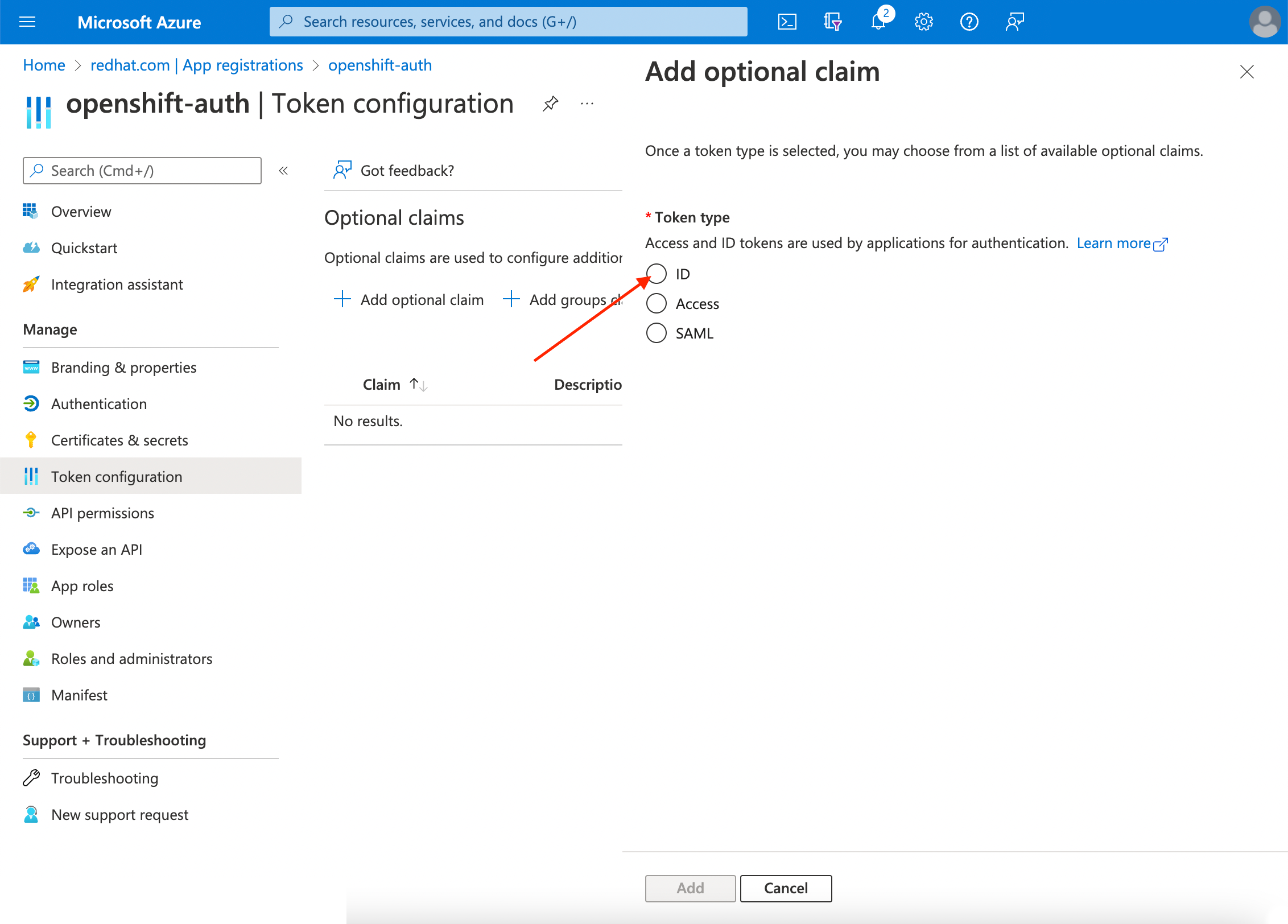

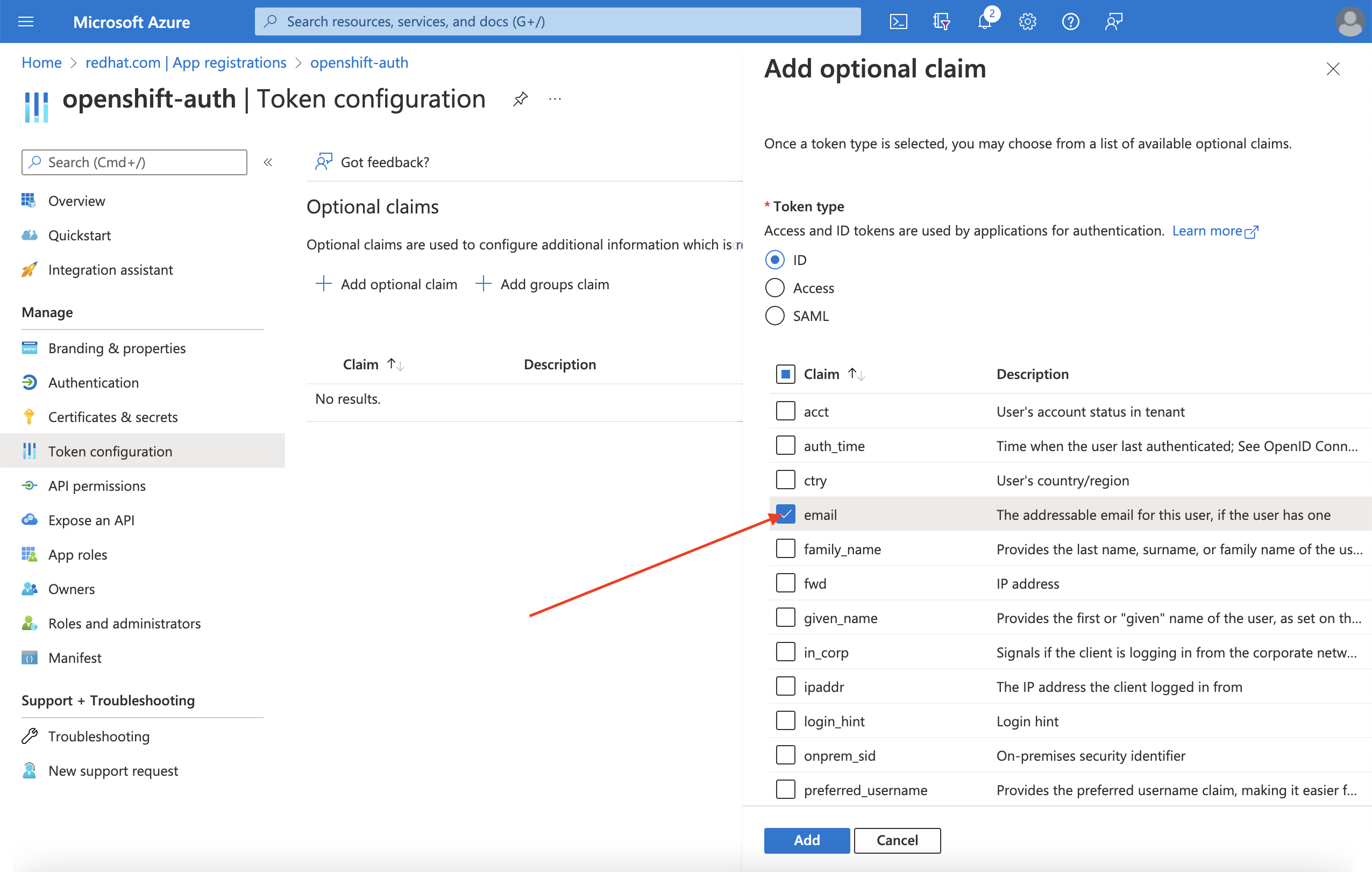

点 Token configuration sub-blade 并选择 Add optional claim 按钮。

选择 ID 单选按钮。

选中 电子邮件 声明复选框。

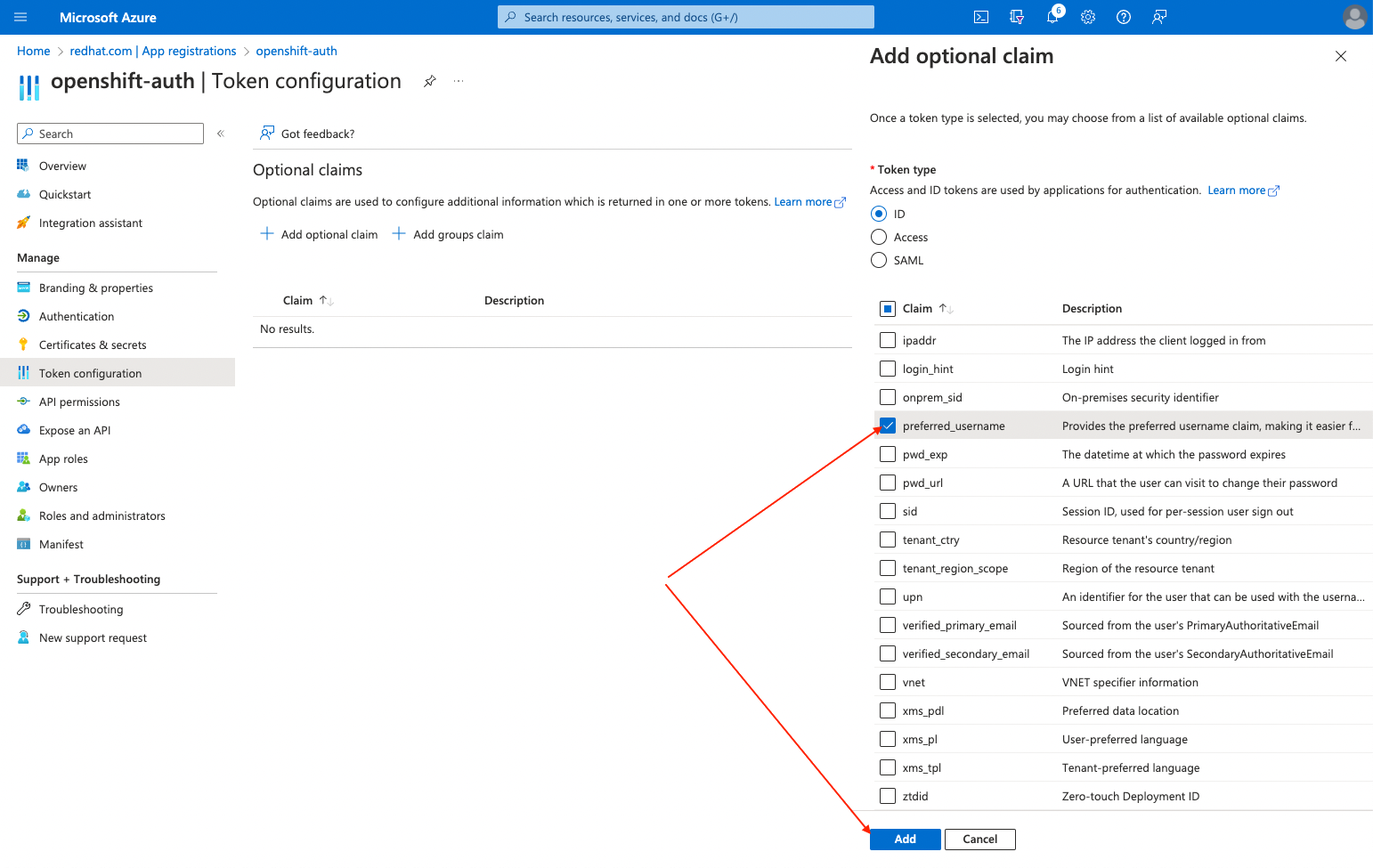

选择

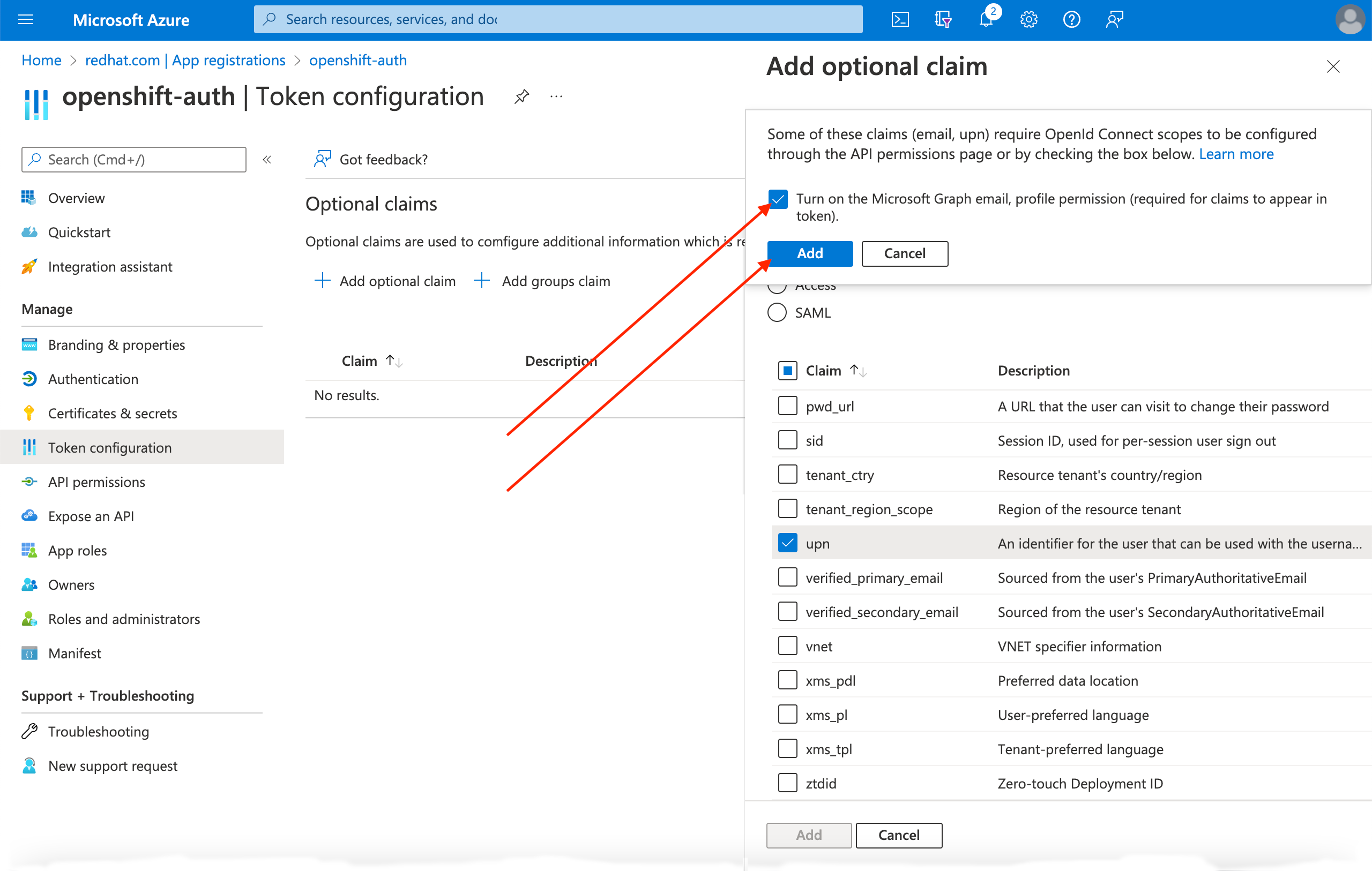

preferred_username声明复选框。然后,点 Add 来配置 电子邮件和 preferred_username 来声明您的 Entra ID 应用程序。

页面顶部会出现一个对话框。按照提示启用所需的 Microsoft Graph 权限。

9.3.2. Configuring group claims (optional)

Configure Entra ID to offer a groups claim.

Procedure

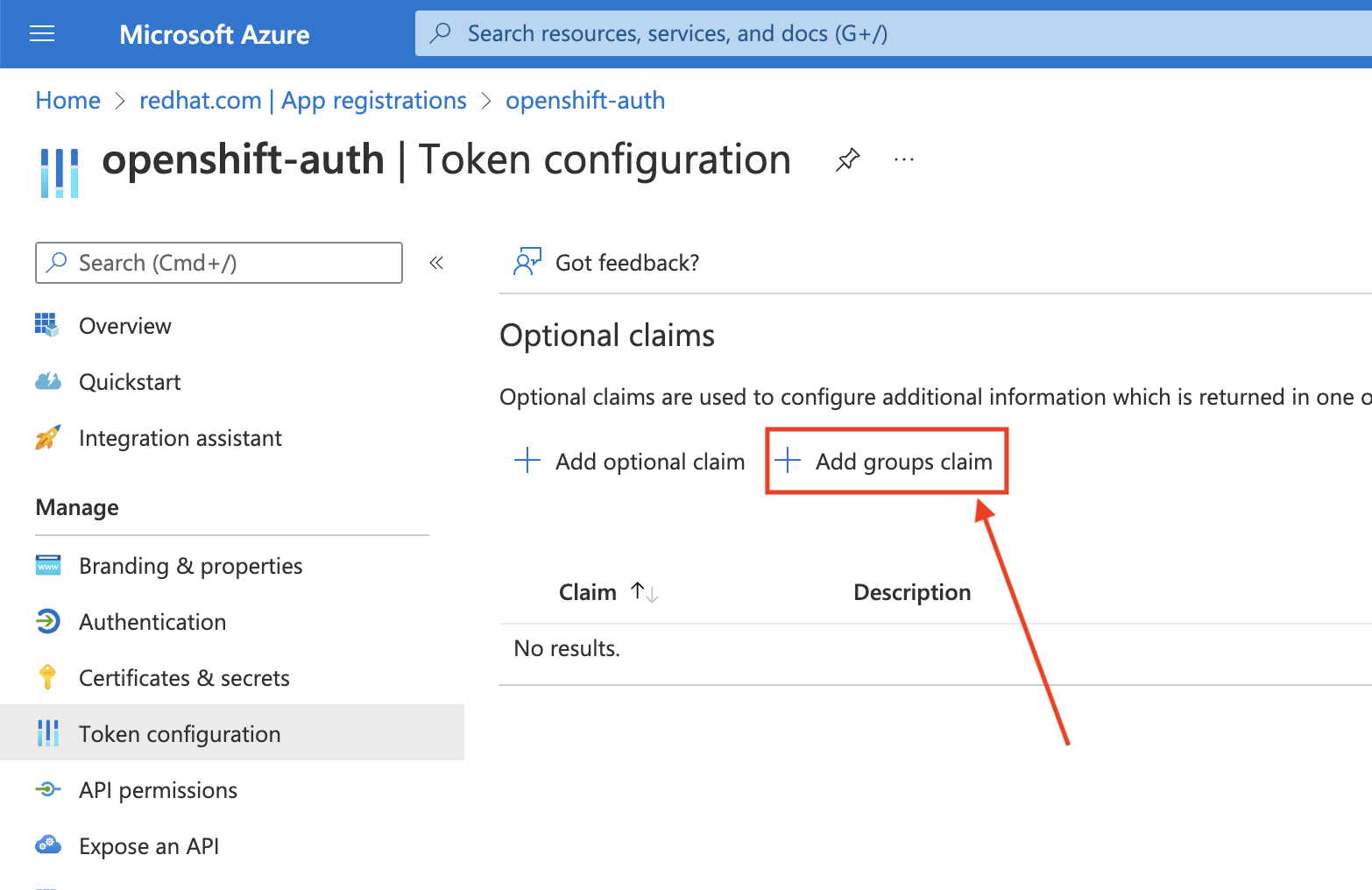

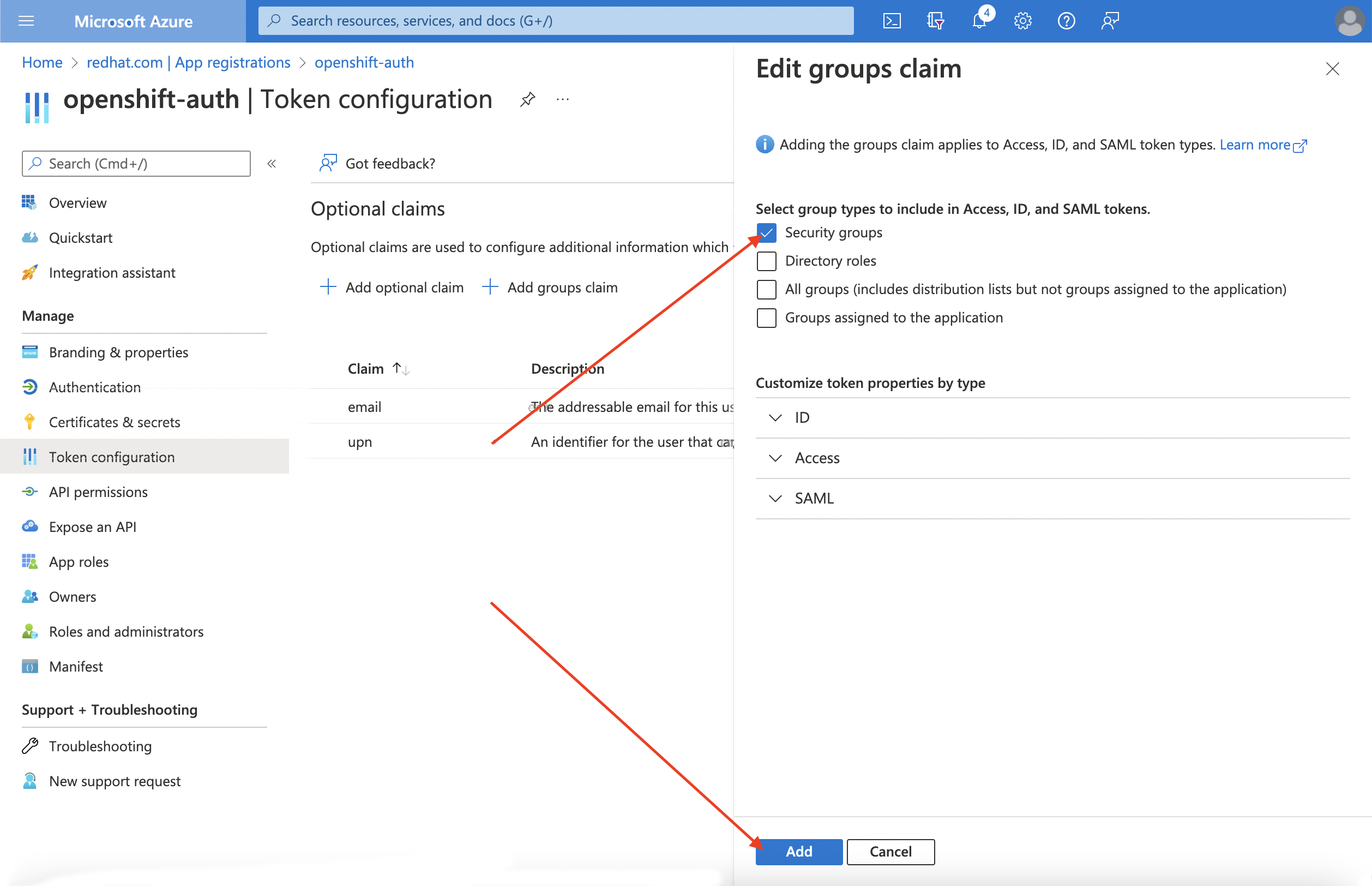

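In the Token configuration sub-blade, click Add groups claim.

To configure group claims for your Entra ID application, select Security groups and then click Add.

Note: In this example, the group claim includes all of the security groups that a user is a member of. In a real production environment, ensure that the group claim only includes groups that apply to Red Hat OpenShift Service on AWS.

9.4. Configuring the Red Hat OpenShift Service on AWS cluster to use Entra ID as the identity provider

You must configure Red Hat OpenShift Service on AWS to use Entra ID as its identity provider.

Although Red Hat OpenShift Service on AWS offers the ability to configure identity providers by using OpenShift Cluster Manager, use the ROSA CLI to configure the cluster's OAuth provider to use Entra ID as its identity provider. Before configuring the identity provider, set the necessary variables for the identity provider configuration.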

Procedure

Create the variables by running the following command:

$ CLUSTER_NAME=example-cluster
$ IDP_NAME=AAD
$ APP_ID=yyyyyyyy-yyyy-yyyy-yyyy-yyyyyyyyyyyy
$ CLIENT_SECRET=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
$ TENANT_ID=zzzzzzzz-zzzz-zzzz-zzzz-zzzzzzzzzzzz

Configure the cluster's OAuth provider by running one of the following commands. If you enabled group claims, ensure that you use the --group-claims groups argument.

If you enabled group claims, run the following command:

If you did not enable group claims, run the following command:

After a few minutes, the cluster authentication Operator reconciles your changes, and you can log in to the cluster by using Entra ID.

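9.5. Granting additional permissions to individual users and groups

When you first log in, you might notice that you have very limited permissions. By default, Red Hat OpenShift Service on AWS only grants you the ability to create new projects, or namespaces, in the cluster. Other projects are restricted from view.

You must grant these additional abilities to individual users and groups.

9.5.1. Granting additional permissions to individual users

Red Hat OpenShift Service on AWS includes a significant number of preconfigured roles, including the cluster-admin role, which grants full access and control over the cluster.

Procedure

Grant a user access to the cluster-admin role by running the following command:

$ rosa grant user cluster-admin \
    --user=<USERNAME> --cluster=${CLUSTER_NAME}

Replace <USERNAME> with the Entra ID username that you want to have cluster admin permissions.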

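9.5.2. Granting additional permissions to individual groups

If you opted to enable group claims, the cluster OAuth provider automatically creates or updates the user's group memberships by using the group ID. The cluster OAuth provider does not automatically create RoleBindings and ClusterRoleBindings for the groups that are created; you are responsible for creating those bindings by using your own processes.

To grant an automatically generated group access to the cluster-admin role, you must create a ClusterRoleBinding to the group ID.

Procedure

Create the ClusterRoleBinding by running the following command:

$ oc create clusterrolebinding cluster-admin-group \
    --clusterrole=cluster-admin \
    --group=<GROUP_ID>

Replace <GROUP_ID> with the Entra ID group ID that you want to have cluster admin permissions.

Now, any user in the specified group automatically receives cluster-admin access.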

The AWS Secrets and Configuration Provider (ASCP) provides a way to expose AWS secrets as Kubernetes storage volumes. With the ASCP, you can store and manage your secrets in Secrets Manager and then retrieve them through your workloads running on Red Hat OpenShift Service on AWS.

10.1. Prerequisites

Ensure that you have the following resources and tools before starting this process:

- A Red Hat OpenShift Service on AWS cluster deployed with STS

- Helm 3

- aws CLI

- oc CLI

- jq CLI

10.1.1. Additional environment requirements

Log in to your Red Hat OpenShift Service on AWS cluster by running the following command:

$ oc login --token=<your-token> --server=<your-server-url>

You can find your login token by accessing your cluster through the pull secret page in Red Hat OpenShift Cluster Manager.

Validate that your cluster has STS by running the following command:

$ oc get authentication.config.openshift.io cluster -o json \
    | jq .spec.serviceAccountIssuer

Example output

"https://xxxxx.cloudfront.net/xxxxx"

If your output is different, do not proceed. See the Red Hat documentation on creating an STS cluster before continuing this process.

Set the SecurityContextConstraints permission to allow the CSI driver to run by running the following commands:

$ oc new-project csi-secrets-store
$ oc adm policy add-scc-to-user privileged \
    system:serviceaccount:csi-secrets-store:secrets-store-csi-driver
$ oc adm policy add-scc-to-user privileged \
    system:serviceaccount:csi-secrets-store:csi-secrets-store-provider-aws

Create the environment variables to use later in this process by running the following commands:

$ export REGION=$(oc get infrastructure cluster -o=jsonpath="{.status.platformStatus.aws.region}")
$ export OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster \
    -o jsonpath='{.spec.serviceAccountIssuer}' | sed 's|^https://||')
$ export AWS_ACCOUNT_ID=`aws sts get-caller-identity --query Account --output text`
$ export AWS_PAGER=""

10.2. Deploying the AWS Secrets and Configuration Provider

Register the Secrets Store CSI driver with Helm by running the following command:

$ helm repo add secrets-store-csi-driver \
    https://kubernetes-sigs.github.io/secrets-store-csi-driver/charts

Update your Helm repositories by running the following command:

$ helm repo update

Install the Secrets Store CSI driver by running the following command:

$ helm upgrade --install -n csi-secrets-store \
    csi-secrets-store-driver secrets-store-csi-driver/secrets-store-csi-driver

Deploy the AWS provider by running the following command:

$ oc -n csi-secrets-store apply -f \
    https://raw.githubusercontent.com/rh-mobb/documentation/main/content/misc/secrets-store-csi/aws-provider-installer.yaml

Check that both daemon sets are running by running the following command:

$ oc -n csi-secrets-store get ds \
    csi-secrets-store-provider-aws \
    csi-secrets-store-driver-secrets-store-csi-driver

Label the Secrets Store CSI driver to allow use with the restricted pod security profile by running the following command:

$ oc label csidriver.storage.k8s.io/secrets-store.csi.k8s.io security.openshift.io/csi-ephemeral-volume-profile=restricted

10.3. Creating a secret and IAM access policies

Create a secret in Secrets Manager by running the following command:

$ SECRET_ARN=$(aws --region "$REGION" secretsmanager create-secret \
    --name MySecret --secret-string \
    '{"username":"shadowman", "password":"hunter2"}' \
    --query ARN --output text); echo $SECRET_ARN

Create an IAM access policy document by running the following command:
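The access policy document for this step can be sketched as follows. This is a minimal sketch, assuming the workload only needs to read and describe the single secret created above; the two actions shown are the Secrets Manager permissions the ASCP typically needs, and the fallback ARN is a made-up placeholder. The file name policy.json matches the file referenced by the create-policy command in this section.

```shell
# Assumption: SECRET_ARN was set by the create-secret step; otherwise use a placeholder.
SECRET_ARN="${SECRET_ARN:-arn:aws:secretsmanager:us-east-1:123456789012:secret:MySecret-abc123}"

# Write an IAM policy that grants read access to that one secret only.
cat > policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "secretsmanager:GetSecretValue",
        "secretsmanager:DescribeSecret"
      ],
      "Resource": ["${SECRET_ARN}"]
    }
  ]
}
EOF

echo "Wrote policy.json for ${SECRET_ARN}"
```

Scoping the Resource to one ARN, rather than a wildcard, keeps the role from reading any other secret in the account.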

Create an IAM access policy by running the following command:

$ POLICY_ARN=$(aws --region "$REGION" --query Policy.Arn \
    --output text iam create-policy \
    --policy-name openshift-access-to-mysecret-policy \
    --policy-document file://policy.json); echo $POLICY_ARN

Create an IAM role trust policy document by running the following command:

Note: The trust policy is locked down to the default service account of a namespace that you create later in this process.

Create an IAM role by running the following command:

$ ROLE_ARN=$(aws iam create-role --role-name openshift-access-to-mysecret \
    --assume-role-policy-document file://trust-policy.json \
    --query Role.Arn --output text); echo $ROLE_ARN

Attach the policy to the role by running the following command:

$ aws iam attach-role-policy --role-name openshift-access-to-mysecret \
    --policy-arn $POLICY_ARN

10.4. Creating an application to use this secret

Create an OpenShift project by running the following command:

$ oc new-project my-application

Annotate the default service account to use the STS role by running the following command:

$ oc annotate -n my-application serviceaccount default \
    eks.amazonaws.com/role-arn=$ROLE_ARN

Create a secret provider class to access the secret by running the following command:
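A secret provider class for this step might look like the sketch below. It is an illustration under assumptions: the resource name my-application-aws-secrets and the file name are hypothetical, while the object name MySecret is the secret created earlier in this chapter and is also the file name read under /mnt/secrets-store in the verification step.

```shell
# Write a SecretProviderClass that mounts the Secrets Manager secret "MySecret".
cat > secret-provider-class.yaml <<'EOF'
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: my-application-aws-secrets   # hypothetical name
  namespace: my-application
spec:
  provider: aws
  parameters:
    objects: |
      - objectName: "MySecret"
        objectType: "secretsmanager"
EOF

echo "Review secret-provider-class.yaml, then run: oc apply -f secret-provider-class.yaml"
```

A pod then refers to this class by name in a CSI volume that uses the secrets-store.csi.k8s.io driver.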

Create a deployment that uses the secret by running the following command:

Verify that the pod has the secret mounted by running the following command:

$ oc exec -it my-application -- cat /mnt/secrets-store/MySecret

10.5. Cleaning up

Delete the application by running the following command:

$ oc delete project my-application

Delete the Secrets Store CSI driver by running the following command:

$ helm delete -n csi-secrets-store csi-secrets-store-driver

Delete the security context constraints by running the following commands:

$ oc adm policy remove-scc-from-user privileged \
    system:serviceaccount:csi-secrets-store:secrets-store-csi-driver
$ oc adm policy remove-scc-from-user privileged \
    system:serviceaccount:csi-secrets-store:csi-secrets-store-provider-aws

Delete the AWS provider by running the following command:

$ oc -n csi-secrets-store delete -f \
    https://raw.githubusercontent.com/rh-mobb/documentation/main/content/misc/secrets-store-csi/aws-provider-installer.yaml

Delete the AWS role and policy by running the following commands:

$ aws iam detach-role-policy --role-name openshift-access-to-mysecret \
    --policy-arn $POLICY_ARN
$ aws iam delete-role --role-name openshift-access-to-mysecret
$ aws iam delete-policy --policy-arn $POLICY_ARN

Delete the Secrets Manager secret by running the following command:

$ aws secretsmanager --region $REGION delete-secret --secret-id $SECRET_ARN

AWS Controller for Kubernetes (ACK) lets you define and use AWS service resources directly from Red Hat OpenShift Service on AWS. With ACK, you can take advantage of AWS-managed services for your applications without needing to define resources outside of the cluster or run services that provide supporting capabilities, such as databases or message queues.

You can install various ACK Operators directly from the software catalog. This makes it easy to get started and use the Operators with your applications. This controller is a component of the AWS Controller for Kubernetes project, which is currently in developer preview.

Use this tutorial to deploy the ACK S3 Operator. You can also adapt it for any other ACK Operator in the software catalog of your cluster.

11.1. Prerequisites

- A Red Hat OpenShift Service on AWS cluster

- A user account with cluster-admin privileges

- The OpenShift CLI (oc)

- The Amazon Web Services (AWS) CLI (aws)

11.2. Setting up your environment

Configure the following environment variables, changing the cluster name to suit your cluster:

Ensure that all fields output correctly before moving to the next section:

$ echo "Cluster: ${ROSA_CLUSTER_NAME}, Region: ${REGION}, OIDC Endpoint: ${OIDC_ENDPOINT}, AWS Account ID: ${AWS_ACCOUNT_ID}"

11.3. Preparing your AWS account

Create an AWS Identity and Access Management (IAM) trust policy for the ACK Operator:

Create an AWS IAM role for the ACK Operator to assume, with the AmazonS3FullAccess policy attached:

Note: You can find the recommended policy in each project's GitHub repository, for example https://github.com/aws-controllers-k8s/s3-controller/blob/main/config/iam/recommended-policy-arn.

11.4. Installing the ACK S3 Controller

Create a project to install the ACK S3 Operator into:

$ oc new-project ack-system

Create a file with the ACK S3 Operator configuration:

Note: ACK_WATCH_NAMESPACE is purposefully left blank so that the controller can properly watch all namespaces in the cluster.

Use the file from the previous step to create a ConfigMap:

$ oc -n ack-system create configmap \
    --from-env-file=${SCRATCH}/config.txt ack-${ACK_SERVICE}-user-config

Install the ACK S3 Operator from the software catalog:

Annotate the ACK S3 Operator service account with the AWS IAM role to assume, and restart the deployment:

$ oc -n ack-system annotate serviceaccount ${ACK_SERVICE_ACCOUNT} \
    eks.amazonaws.com/role-arn=${ROLE_ARN} && \
    oc -n ack-system rollout restart deployment ack-${ACK_SERVICE}-controller

Verify that the ACK S3 Operator is running:

$ oc -n ack-system get pods

Example output

NAME                                 READY   STATUS    RESTARTS   AGE
ack-s3-controller-585f6775db-s4lfz   1/1     Running   0          51s

11.5. Validating the deployment

Deploy an S3 bucket resource:

Verify that the S3 bucket was created in AWS:

$ aws s3 ls | grep ${CLUSTER_NAME}-bucket

Example output

2023-10-04 14:51:45 mrmc-test-maz-bucket

11.6. Cleaning up

Delete the S3 bucket resource:

$ oc -n ack-system delete bucket.s3.services.k8s.aws/${CLUSTER_NAME}-bucket

Delete the ACK S3 Operator and the AWS IAM roles:

Delete the ack-system project:

$ oc delete project ack-system

Chapter 12. Tutorial: Assigning a consistent egress IP for external traffic

You can assign a consistent IP address for traffic that leaves your cluster, for example to satisfy security groups that require an IP-based configuration to meet security standards.

By default, Red Hat OpenShift Service on AWS uses the OVN-Kubernetes container network interface (CNI) to assign random IP addresses from a pool. This can make security lockdowns either unpredictable to configure or overly open.

For more information, see Configuring an egress IP address.

Objectives

- Learn how to configure a set of predictable IP addresses for egress cluster traffic.

Prerequisites

- A Red Hat OpenShift Service on AWS cluster deployed with OVN-Kubernetes

- The OpenShift CLI (oc)

- The ROSA CLI (rosa)

- jq

12.1. Setting your environment variables

Set your environment variables by running the following commands:

Note: Replace the value of the ROSA_MACHINE_POOL_NAME variable to target a different machine pool.

$ export ROSA_CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\{5\}$//')
$ export ROSA_MACHINE_POOL_NAME=worker
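The sed expression in the first command removes the trailing five-character suffix from the infrastructure name to recover the cluster name. The following sketch shows that step in isolation; the function name strip_infra_suffix and the sample infrastructure name are hypothetical.

```shell
# Strip a trailing "-xxxxx" suffix (five lowercase letters or digits)
# from an infrastructure name, using the same sed expression as above.
strip_infra_suffix() {
  printf '%s\n' "$1" | sed 's/-[a-z0-9]\{5\}$//'
}

# Example with a made-up infrastructure name.
strip_infra_suffix "my-cluster-x7k2p"
```

Because the expression is anchored at the end of the string, hyphens earlier in the cluster name are left untouched.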

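12.2. Ensuring capacity

Each public cloud provider limits the number of IP addresses that can be assigned to each node.

Verify that there is sufficient capacity by running the following command:

12.3. Creating the egress IP rules

Before creating the egress IP rules, identify which egress IPs you will use.

Note: The egress IPs that you select should exist as a part of the subnets in which the worker nodes are provisioned.

Optional: Reserve the egress IPs that you requested to avoid conflicts with the AWS Virtual Private Cloud (VPC) Dynamic Host Configuration Protocol (DHCP) service.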

12.4. Assigning an egress IP to a namespace

Create a new project by running the following command:

$ oc new-project demo-egress-ns

Create the egress rule for all pods within the namespace by running the following command:
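A namespace-wide egress rule could be sketched as below. This is an illustration, not the tutorial's own manifest: it assumes the OVN-Kubernetes EgressIP resource, the address 10.10.100.253 shown for demo-egress-ns in the example output later in this chapter, and the automatic kubernetes.io/metadata.name namespace label. The file name is illustrative.

```shell
# Write an EgressIP that applies to every pod in the demo-egress-ns namespace.
cat > demo-egress-ns.yaml <<'EOF'
apiVersion: k8s.ovn.org/v1
kind: EgressIP
metadata:
  name: demo-egress-ns
spec:
  # Assumed address; choose one from the subnet of your worker nodes.
  egressIPs:
    - 10.10.100.253
  namespaceSelector:
    matchLabels:
      kubernetes.io/metadata.name: demo-egress-ns
EOF

echo "Review demo-egress-ns.yaml, then run: oc apply -f demo-egress-ns.yaml"
```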

12.5. Assigning an egress IP to a pod

Create a new project by running the following command:

$ oc new-project demo-egress-pod

Create the egress rule for the pod by running the following command:

Note: spec.namespaceSelector is a mandatory field.
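A pod-scoped egress rule combines the mandatory namespaceSelector with a podSelector. The sketch below is an illustration under assumptions: the address 10.10.100.254 is the one shown for demo-egress-pod in the example output later in this chapter, and the pod label run=demo-egress-pod is hypothetical (it is the label that `oc run demo-egress-pod` would set). The file name is illustrative.

```shell
# Write an EgressIP that applies only to pods labeled run=demo-egress-pod
# inside the demo-egress-pod namespace.
cat > demo-egress-pod.yaml <<'EOF'
apiVersion: k8s.ovn.org/v1
kind: EgressIP
metadata:
  name: demo-egress-pod
spec:
  # Assumed address; choose one from the subnet of your worker nodes.
  egressIPs:
    - 10.10.100.254
  # Mandatory: limits the rule to one namespace.
  namespaceSelector:
    matchLabels:
      kubernetes.io/metadata.name: demo-egress-pod
  # Optional: narrows the rule to matching pods (assumed label).
  podSelector:
    matchLabels:
      run: demo-egress-pod
EOF

echo "Review demo-egress-pod.yaml, then run: oc apply -f demo-egress-pod.yaml"
```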

12.5.1. Labeling the nodes

Obtain your pending egress IP assignments by running the following command:

$ oc get egressips

Example output

NAME              EGRESSIPS       ASSIGNED NODE   ASSIGNED EGRESSIPS
demo-egress-ns    10.10.100.253
demo-egress-pod   10.10.100.254

The egress IP rules that you created only apply to nodes with the k8s.ovn.org/egress-assignable label. Make sure that the label is only on a specific machine pool.

Assign the label to your machine pool by using the following command:

Warning: If you rely on node labels for your machine pool, this command replaces those labels. Be sure to enter your desired labels in the --labels field to ensure that your node labels remain.

$ rosa update machinepool ${ROSA_MACHINE_POOL_NAME} \
    --cluster="${ROSA_CLUSTER_NAME}" \
    --labels "k8s.ovn.org/egress-assignable="

12.5.2. Reviewing the egress IPs

Review the egress IP assignments by running the following command:

$ oc get egressips

Example output

NAME              EGRESSIPS       ASSIGNED NODE                   ASSIGNED EGRESSIPS
demo-egress-ns    10.10.100.253   ip-10-10-156-122.ec2.internal   10.10.150.253
demo-egress-pod   10.10.100.254   ip-10-10-156-122.ec2.internal   10.10.150.254

12.6. Verification

12.6.1. Deploying a sample application

To test the egress IP rules, create a service that is restricted to the egress IP addresses that you specified. This simulates an external service that expects traffic from a small subset of IP addresses.

Run the echoserver to replicate a request:

$ oc -n default run demo-service --image=gcr.io/google_containers/echoserver:1.4

Expose the pod as a service and limit the ingress to the egress IP addresses that you specified by running the following command:

Retrieve the load balancer hostname and save it as an environment variable by running the following command:

$ export LOAD_BALANCER_HOSTNAME=$(oc get svc -n default demo-service -o json | jq -r '.status.loadBalancer.ingress[].hostname')

12.6.2. Testing the namespace egress

Start an interactive shell to test the namespace egress rule:
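A sketch of how such a shell could be started, under assumptions: the namespace and pod are both named demo-egress-ns (matching the egress rule name in the oc get egressips output), the UBI 9 image is used as a general-purpose image with curl available, and LOAD_BALANCER_HOSTNAME is passed into the pod so that the curl step can use it. The helper egress_shell_cmd is hypothetical and only prints the oc run command for review.

```shell
# Build (but do not run) an `oc run` command that opens a test shell.
# $1 = namespace, $2 = pod name, $3 = optional pod labels (key=value).
egress_shell_cmd() {
  ns="$1"
  pod="$2"
  labels="${3:-}"
  cmd="oc run ${pod} -it --namespace=${ns}"
  if [ -n "${labels}" ]; then
    cmd="${cmd} --labels=${labels}"
  fi
  # Pass the load balancer hostname into the pod's environment.
  cmd="${cmd} --env=LOAD_BALANCER_HOSTNAME=${LOAD_BALANCER_HOSTNAME:-}"
  cmd="${cmd} --image=registry.access.redhat.com/ubi9/ubi -- bash"
  echo "${cmd}"
}

# Assumed names for the namespace-scoped test.
egress_shell_cmd demo-egress-ns demo-egress-ns
```

The optional third argument adds a --labels flag, which could be useful when a rule selects pods by label rather than by namespace.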

Send a request to the load balancer and make sure that you can connect successfully:

$ curl -s http://$LOAD_BALANCER_HOSTNAME

Check the output for a successful connection.

Note: The client_address is the internal IP address of the load balancer, not your egress IP. You can verify that the client address is configured correctly by connecting while your service is restricted to .spec.loadBalancerSourceRanges.

Run the following command to exit the pod:

$ exit

12.6.3. Testing the pod egress

Start an interactive shell to test the pod egress rule:

Run the following command to send a request to the load balancer:

$ curl -s http://$LOAD_BALANCER_HOSTNAME

Check the output for a successful connection.

Note: The client_address is the internal IP address of the load balancer, not your egress IP. You can verify that the client address is configured correctly by connecting while your service is restricted to .spec.loadBalancerSourceRanges.

Run the following command to exit the pod:

$ exit

12.6.4. Optional: Testing blocked egress

Optional: Test that traffic is blocked when the egress rules do not apply by running the following command:

Run the following command to send a request to the load balancer:

$ curl -s http://$LOAD_BALANCER_HOSTNAME

- If the command fails, egress is successfully blocked.
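A blocked request may hang until it times out, so it can help to bound the wait and turn the exit status into an explicit message. The following is a minimal sketch. The helper name expect_blocked and the 5-second limit in the usage note are assumptions, while --max-time is a standard curl option.

```shell
# Run a command and report whether it failed (blocked) or succeeded.
# Returns 0 when the command fails, which is the expected outcome here.
expect_blocked() {
  if "$@" >/dev/null 2>&1; then
    echo "NOT blocked: request succeeded"
    return 1
  else
    echo "blocked: request failed as expected"
    return 0
  fi
}

# Demonstration with a command that always fails.
RESULT="$(expect_blocked false)"
echo "$RESULT"
```

Pasted into the pod's shell, the helper could wrap the request as `expect_blocked curl -s --max-time 5 "http://$LOAD_BALANCER_HOSTNAME"`.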

Run the following command to exit the pod:

$ exit

12.7. Cleaning up your cluster

Run the following commands to clean up your cluster:
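A sketch of what the cleanup could cover, assuming only the resources named in this chapter's commands and output: the demo-service pod and service in the default namespace, and the demo-egress-ns and demo-egress-pod egress IP rules. Any projects created for the test pods would need to be deleted as well. The run helper and the DRY_RUN variable are hypothetical, and the block only prints the commands unless DRY_RUN=0 is set.

```shell
# Dry run by default: print each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "${DRY_RUN}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Delete the demo service, the demo pod, and both egress IP rules.
cleanup_demo() {
  run oc delete svc demo-service -n default
  run oc delete pod demo-service -n default
  run oc delete egressip demo-egress-ns demo-egress-pod
}

CLEANUP_PLAN="$(cleanup_demo)"
echo "${CLEANUP_PLAN}"
```

Printing the plan first gives a chance to confirm the resource names before anything is deleted.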

Run the following command to clean up the assigned node labels:

Warning: If you rely on node labels for your machine pool, this command replaces those labels. Enter the labels that you need in the --labels field so that your node labels are preserved.

$ rosa update machinepool ${ROSA_MACHINE_POOL_NAME} \
  --cluster="${ROSA_CLUSTER_NAME}" \
  --labels ""

Legal Notice

Copyright © Red Hat

OpenShift documentation is licensed under the Apache License 2.0 (https://www.apache.org/licenses/LICENSE-2.0).

Modified versions must remove all Red Hat trademarks.

Portions adapted from https://github.com/kubernetes-incubator/service-catalog/ with modifications by Red Hat.

Red Hat, Red Hat Enterprise Linux, the Red Hat logo, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries.

Linux® is the registered trademark of Linus Torvalds in the United States and other countries.

Java® is a registered trademark of Oracle and/or its affiliates.

XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

Node.js® is an official trademark of the OpenJS Foundation.

The OpenStack® Word Mark and OpenStack logo are either registered trademarks/service marks or trademarks/service marks of the OpenStack Foundation, in the United States and other countries and are used with the OpenStack Foundation’s permission. We are not affiliated with, endorsed or sponsored by the OpenStack Foundation, or the OpenStack community.

All other trademarks are the property of their respective owners.