Appendix A. The Device Mapper

The Device Mapper is a kernel driver that provides a framework for volume management. It provides a generic way of creating mapped devices, which may be used as logical volumes. It does not specifically know about volume groups or metadata formats.

The Device Mapper provides the foundation for a number of higher-level technologies. In addition to LVM, Device-Mapper multipath and the dmraid command use the Device Mapper. The application interface to the Device Mapper is the ioctl system call. The user interface is the dmsetup command.

LVM logical volumes are activated using the Device Mapper. Each logical volume is translated into a mapped device. Each segment translates into a line in the mapping table that describes the device. The Device Mapper supports a variety of mapping targets, including linear mapping, striped mapping, and error mapping. So, for example, two disks may be concatenated into one logical volume with a pair of linear mappings, one for each disk. When LVM creates a volume, it creates an underlying device-mapper device that can be queried with the dmsetup command. For information about the format of devices in a mapping table, see Section A.1, “Device Table Mappings”. For information about using the dmsetup command to query a device, see Section A.2, “The dmsetup Command”.

A.1. Device Table Mappings

A mapped device is defined by a table that specifies how to map each range of logical sectors of the device using a supported Device Table mapping. The table for a mapped device is constructed from a list of lines of the form:

start length mapping [mapping_parameters...]

In the first line of a Device Mapper table, the start parameter must equal 0. The start + length parameters on one line must equal the start on the next line. Which mapping parameters are specified in a line of the mapping table depends on which mapping type is specified on the line.

Sizes in the Device Mapper are always specified in sectors (512 bytes).
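Because every size in a table is a sector count, converting between bytes and sectors is a frequent first step. The following is a minimal sketch of that arithmetic; the function names are illustrative and are not part of any Device Mapper tool.

```python
SECTOR_SIZE = 512  # bytes per Device Mapper sector, as stated above


def sectors_to_bytes(sectors: int) -> int:
    """Convert a sector count from a mapping table into bytes."""
    return sectors * SECTOR_SIZE


def bytes_to_sectors(size_bytes: int) -> int:
    """Convert a byte size into sectors, rejecting partial sectors."""
    if size_bytes % SECTOR_SIZE:
        raise ValueError("size is not a whole number of 512-byte sectors")
    return size_bytes // SECTOR_SIZE
```

For example, a 1 GiB device corresponds to `bytes_to_sectors(1024 ** 3)`, which is 2097152 sectors.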

When a device is specified as a mapping parameter in the Device Mapper, it can be referenced by the device name in the filesystem (for example, /dev/hda) or by the major and minor numbers in the format major:minor. The major:minor format is preferred because it avoids pathname lookups.

The following shows a sample mapping table for a device. In this table there are four linear targets:

0 35258368 linear 8:48 65920

35258368 35258368 linear 8:32 65920

70516736 17694720 linear 8:16 17694976

88211456 17694720 linear 8:16 256

The first 2 parameters of each line are the segment starting block and the length of the segment. The next keyword is the mapping target, which in all of the cases in this example is linear. The rest of the line consists of the parameters for a linear target.
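The two table rules (the first start is 0, and each start equals the previous start plus length) can be checked mechanically. The following sketch, with hypothetical helper names, parses a table and verifies that its segments are contiguous; it inspects only the first three fields of each line and does not validate target parameters.

```python
SAMPLE_TABLE = """\
0 35258368 linear 8:48 65920
35258368 35258368 linear 8:32 65920
70516736 17694720 linear 8:16 17694976
88211456 17694720 linear 8:16 256
"""


def parse_table(text):
    """Split each non-empty line into (start, length, target, params)."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if len(fields) < 3:
            raise ValueError(f"too few fields: {line!r}")
        rows.append((int(fields[0]), int(fields[1]), fields[2], fields[3:]))
    return rows


def check_contiguous(rows):
    """Return the total size in sectors, or raise if segments do not line up."""
    expected_start = 0
    for start, length, _target, _params in rows:
        if start != expected_start:
            raise ValueError(f"expected start {expected_start}, found {start}")
        expected_start = start + length
    return expected_start
```

Calling `check_contiguous(parse_table(SAMPLE_TABLE))` on the sample table returns its total size, 105906176 sectors.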

The following subsections describe these mapping formats:

- linear

- striped

- mirror

- snapshot and snapshot-origin

- error

- zero

- multipath

- crypt

- device-mapper RAID

- thin

- thin-pool

A.1.1. The linear Mapping Target

A linear mapping target maps a continuous range of blocks onto another block device. The format of a linear target is as follows:

start length linear device offset

start- starting block in virtual device

length- length of this segment

device- block device, referenced by the device name in the filesystem or by the major and minor numbers in the format major:minor

offset- starting offset of the mapping on the device

The following example shows a linear target with a starting block in the virtual device of 0, a segment length of 16384000, a major:minor number pair of 8:2, and a starting offset for the device of 41156992.

0 16384000 linear 8:2 41156992

The following example shows a linear target with the device parameter specified as the device /dev/hda.

0 20971520 linear /dev/hda 384
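To make the linear arithmetic concrete, the following sketch resolves a sector of the virtual device to a sector on an underlying device: the physical sector is the target's offset plus the distance from the start of the segment. The tuple layout and the function name are assumptions made for illustration; the data is the four-target sample table from Section A.1.

```python
# (start, length, device, offset) for each linear segment of the sample table
LINEAR_TABLE = [
    (0, 35258368, "8:48", 65920),
    (35258368, 35258368, "8:32", 65920),
    (70516736, 17694720, "8:16", 17694976),
    (88211456, 17694720, "8:16", 256),
]


def resolve_linear(table, sector):
    """Map a virtual sector to (device, physical sector) for linear targets."""
    for start, length, device, offset in table:
        if start <= sector < start + length:
            return device, offset + (sector - start)
    raise ValueError(f"sector {sector} is outside the mapped device")
```

For instance, `resolve_linear(LINEAR_TABLE, 35258368)` falls on the first sector of the second segment and yields `("8:32", 65920)`.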

A.1.2. The striped Mapping Target

The striped mapping target supports striping across physical devices. It takes as arguments the number of stripes and the striping chunk size followed by a list of pairs of device name and sector. The format of a striped target is as follows:

start length striped #stripes chunk_size device1 offset1 ... deviceN offsetN

There is one set of device and offset parameters for each stripe.

start- starting block in virtual device

length- length of this segment

#stripes- number of stripes for the virtual device

chunk_size- number of sectors written to each stripe before switching to the next; must be a power of 2 at least as big as the kernel page size

device- block device, referenced by the device name in the filesystem or by the major and minor numbers in the format major:minor.

offset- starting offset of the mapping on the device

The following example shows a striped target with three stripes and a chunk size of 128:

0 73728 striped 3 128 8:9 384 8:8 384 8:7 9789824

0- starting block in virtual device

73728- length of this segment

striped 3 128- stripe across three devices with chunk size of 128 blocks

8:9- major:minor numbers of first device

384- starting offset of the mapping on the first device

8:8- major:minor numbers of second device

384- starting offset of the mapping on the second device

8:7- major:minor numbers of third device

9789824- starting offset of the mapping on the third device

The following example shows a striped target for 2 stripes with 256 KiB chunks, with the device parameters specified by the device names in the file system rather than by the major and minor numbers.

0 65536 striped 2 512 /dev/hda 0 /dev/hdb 0
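The following sketch shows how a virtual sector could be located on a striped target, assuming conventional round-robin striping: consecutive chunks go to consecutive stripes, and each full pass over the stripes advances every device by one chunk. It is an illustration of the arithmetic rather than a copy of the kernel implementation, and the function name is hypothetical. The page-size requirement on the chunk size is not checked here.

```python
def resolve_striped(sector, start, chunk_size, stripes):
    """Map a virtual sector to (device, physical sector) on a striped target.

    stripes is a list of (device, offset) pairs, one per stripe.
    """
    if chunk_size <= 0 or chunk_size & (chunk_size - 1):
        raise ValueError("chunk_size must be a power of 2")
    relative = sector - start
    if relative < 0:
        raise ValueError("sector lies before the start of this segment")
    chunk_index, within_chunk = divmod(relative, chunk_size)
    row, stripe_index = divmod(chunk_index, len(stripes))
    device, offset = stripes[stripe_index]
    return device, offset + row * chunk_size + within_chunk


# Stripes from the three-stripe example above (chunk size 128 sectors)
THREE_STRIPES = [("8:9", 384), ("8:8", 384), ("8:7", 9789824)]
```

With these values, virtual sector 130 lands two sectors into the first chunk of the second stripe, so `resolve_striped(130, 0, 128, THREE_STRIPES)` gives `("8:8", 386)`.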

A.1.3. The mirror Mapping Target

The mirror mapping target supports the mapping of a mirrored logical device. The format of a mirrored target is as follows:

start length mirror log_type #logargs logarg1 ... logargN #devs device1 offset1 ... deviceN offsetN

start- starting block in virtual device

length- length of this segment

log_type- The possible log types and their arguments are as follows:

- core- The mirror is local and the mirror log is kept in core memory. This log type takes 1 - 3 arguments: regionsize [[no]sync] [block_on_error]

- disk- The mirror is local and the mirror log is kept on disk. This log type takes 2 - 4 arguments: logdevice regionsize [[no]sync] [block_on_error]

- clustered_core- The mirror is clustered and the mirror log is kept in core memory. This log type takes 2 - 4 arguments: regionsize UUID [[no]sync] [block_on_error]

- clustered_disk- The mirror is clustered and the mirror log is kept on disk. This log type takes 3 - 5 arguments: logdevice regionsize UUID [[no]sync] [block_on_error]

LVM maintains a small log which it uses to keep track of which regions are in sync with the mirror or mirrors. The regionsize argument specifies the size of these regions.

In a clustered environment, the UUID argument is a unique identifier associated with the mirror log device so that the log state can be maintained throughout the cluster.

The optional [no]sync argument can be used to specify the mirror as "in-sync" or "out-of-sync". The block_on_error argument is used to tell the mirror to respond to errors rather than ignoring them.

#logargs- number of log arguments that will be specified in the mapping

logargs- the log arguments for the mirror; the number of log arguments provided is specified by the #logargs parameter and the valid log arguments are determined by the log_type parameter.

#devs- the number of legs in the mirror; a device and an offset are specified for each leg

device- block device for each mirror leg, referenced by the device name in the filesystem or by the major and minor numbers in the format major:minor. A block device and offset are specified for each mirror leg, as indicated by the #devs parameter.

offset- starting offset of the mapping on the device. A block device and offset are specified for each mirror leg, as indicated by the #devs parameter.

The following example shows a mirror mapping target for a clustered mirror with a mirror log kept on disk.

0 52428800 mirror clustered_disk 4 253:2 1024 UUID block_on_error 3 253:3 0 253:4 0 253:5 0

0- starting block in virtual device

52428800- length of this segment

mirror clustered_disk- mirror target with a log type specifying that mirror is clustered and the mirror log is maintained on disk

4- 4 mirror log arguments will follow

253:2- major:minor numbers of log device

1024- region size the mirror log uses to keep track of what is in sync

UUID- UUID of mirror log device to maintain log information throughout a cluster

block_on_error- mirror should respond to errors

3- number of legs in mirror

253:3 0 253:4 0 253:5 0- major:minor numbers and offset for devices constituting each leg of mirror
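Because the mirror line contains two counted lists (the log arguments and the legs), it can be split by reading each count and then consuming that many fields. The sketch below does this for the format given above; the function name and the returned dictionary keys are illustrative choices, and no check is made that the log arguments are valid for the log type.

```python
def parse_mirror(line):
    """Split a mirror table line into its log settings and mirror legs."""
    fields = line.split()
    start, length, target = int(fields[0]), int(fields[1]), fields[2]
    if target != "mirror":
        raise ValueError(f"not a mirror target: {target}")
    log_type = fields[3]
    log_arg_count = int(fields[4])
    log_args = fields[5:5 + log_arg_count]
    position = 5 + log_arg_count
    leg_count = int(fields[position])
    leg_fields = fields[position + 1:]
    if len(leg_fields) != 2 * leg_count:
        raise ValueError("each mirror leg needs a device and an offset")
    legs = [(leg_fields[i], int(leg_fields[i + 1]))
            for i in range(0, len(leg_fields), 2)]
    return {"start": start, "length": length, "log_type": log_type,
            "log_args": log_args, "legs": legs}


EXAMPLE = ("0 52428800 mirror clustered_disk 4 253:2 1024 UUID "
           "block_on_error 3 253:3 0 253:4 0 253:5 0")
```

Parsing `EXAMPLE` yields the log type `clustered_disk`, four log arguments, and three legs, matching the field-by-field breakdown above.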

A.1.4. The snapshot and snapshot-origin Mapping Targets

When you create the first LVM snapshot of a volume, four Device Mapper devices are used:

1. A device with a linear mapping containing the original mapping table of the source volume.

2. A device with a linear mapping used as the copy-on-write (COW) device for the source volume; for each write, the original data is saved in the COW device of each snapshot to keep its visible content unchanged (until the COW device fills up).

3. A device with a snapshot mapping combining #1 and #2, which is the visible snapshot volume.

4. The "original" volume (which uses the device number used by the original source volume), whose table is replaced by a "snapshot-origin" mapping from device #1.

A fixed naming scheme is used to create these devices. For example, you might use the following commands to create an LVM volume named base and a snapshot volume named snap based on that volume.

# lvcreate -L 1G -n base volumeGroup

# lvcreate -L 100M --snapshot -n snap volumeGroup/base

This yields four devices, which you can view with the following commands:

The format for the snapshot-origin target is as follows:

start length snapshot-origin origin

start- starting block in virtual device

length- length of this segment

origin- base volume of snapshot

The snapshot-origin will normally have one or more snapshots based on it. Reads will be mapped directly to the backing device. For each write, the original data will be saved in the COW device of each snapshot to keep its visible content unchanged until the COW device fills up.

The format for the snapshot target is as follows:

start length snapshot origin COW-device P|N chunksize

start- starting block in virtual device

length- length of this segment

origin- base volume of snapshot

COW-device- Device on which changed chunks of data are stored

P|N- P (Persistent) or N (Not persistent); indicates whether the snapshot will survive a reboot. For transient snapshots (N), less metadata must be saved on disk; it can be kept in memory by the kernel.

chunksize- Size in sectors of changed chunks of data that will be stored on the COW device

The following example shows a snapshot-origin target with an origin device of 254:11.

0 2097152 snapshot-origin 254:11

The following example shows a snapshot target with an origin device of 254:11 and a COW device of 254:12. This snapshot device is persistent across reboots and the chunk size for the data stored on the COW device is 16 sectors.

0 2097152 snapshot 254:11 254:12 P 16
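The copy-on-write behavior described in this section can be illustrated with a toy in-memory model. This sketch is only an analogy: the class names are invented, chunks are Python strings, and it ignores the on-disk COW format and the case where the COW device fills up. It shows that a write to the origin first saves the old chunk into every snapshot that has not yet saved it, so each snapshot keeps seeing the content from the time it was taken.

```python
class ToySnapshot:
    """Sees the origin as it was when the snapshot was taken."""

    def __init__(self, origin_chunks):
        self._origin_chunks = origin_chunks  # shared with the origin
        self.cow = {}                        # chunk index -> saved old data

    def read(self, index):
        # Prefer the saved copy; otherwise the origin chunk is still unchanged.
        return self.cow.get(index, self._origin_chunks[index])


class ToyOrigin:
    """Plays the role of the snapshot-origin device."""

    def __init__(self, chunks):
        self.chunks = list(chunks)
        self.snapshots = []

    def snapshot(self):
        snap = ToySnapshot(self.chunks)
        self.snapshots.append(snap)
        return snap

    def write(self, index, data):
        # Save the original data once per snapshot before overwriting it.
        for snap in self.snapshots:
            snap.cow.setdefault(index, self.chunks[index])
        self.chunks[index] = data
```

After `origin = ToyOrigin(["a", "b"])`, `snap = origin.snapshot()`, and `origin.write(0, "X")`, the origin holds the new data in chunk 0 while `snap.read(0)` still returns the original chunk.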

A.1.5. The error Mapping Target

With an error mapping target, any I/O operation to the mapped sector fails.

An error mapping target can be used for testing. To test how a device behaves in failure, you can create a device mapping with a bad sector in the middle of a device, or you can swap out the leg of a mirror and replace the leg with an error target.

An error target can be used in place of a failing device, as a way of avoiding timeouts and retries on the actual device. It can serve as an intermediate target while you rearrange LVM metadata during failures.

The error mapping target takes no additional parameters besides the start and length parameters.

The following example shows an error target.

0 65536 error
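As a sketch of the testing scenario mentioned above, the following function builds table lines for a device that passes through to an underlying device except for one failing range in the middle. The function name and its parameters are hypothetical; the output follows the linear and error formats described in this appendix and would still need to be loaded with a tool such as dmsetup.

```python
def table_with_bad_range(device, total_sectors, bad_start, bad_length):
    """Return table lines mapping `device` linearly except for an error range."""
    if bad_start < 0 or bad_length <= 0:
        raise ValueError("bad range must have a non-negative start and positive length")
    if bad_start + bad_length > total_sectors:
        raise ValueError("bad range extends past the end of the device")
    lines = []
    if bad_start > 0:
        # Sectors before the bad range map straight through.
        lines.append(f"0 {bad_start} linear {device} 0")
    lines.append(f"{bad_start} {bad_length} error")
    tail_start = bad_start + bad_length
    if tail_start < total_sectors:
        # Sectors after the bad range continue at the same device offset.
        lines.append(
            f"{tail_start} {total_sectors - tail_start} linear {device} {tail_start}"
        )
    return lines
```

For example, `table_with_bad_range("8:16", 65536, 32768, 8)` produces a linear segment, an 8-sector error segment, and a second linear segment whose starts and lengths are contiguous.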

A.1.6. The zero Mapping Target

The zero mapping target is a block device equivalent of /dev/zero. A read operation to this mapping returns blocks of zeros. Data written to this mapping is discarded, but the write succeeds. The zero mapping target takes no additional parameters besides the start and length parameters.

The following example shows a zero target for a 32 MiB device (65536 sectors).

0 65536 zero

A.1.7. The multipath Mapping Target

The multipath mapping target supports the mapping of a multipathed device. The format for the multipath target is as follows:

start length multipath #features [feature1 ... featureN] #handlerargs [handlerarg1 ... handlerargN] #pathgroups pathgroup pathgroupargs1 ... pathgroupargsN

There is one set of pathgroupargs parameters for each path group.

start- starting block in virtual device

length- length of this segment

#features- The number of multipath features, followed by those features. If this parameter is zero, then there is no feature parameter and the next device mapping parameter is #handlerargs. Currently there is one supported feature that can be set with the features attribute in the multipath.conf file, queue_if_no_path. This indicates that this multipathed device is currently set to queue I/O operations if there is no path available.

In the following example, the no_path_retry attribute in the multipath.conf file has been set to queue I/O operations only until all paths have been marked as failed after a set number of attempts have been made to use the paths. In this case, the mapping appears as follows until all the path checkers have failed the specified number of checks.

0 71014400 multipath 1 queue_if_no_path 0 2 1 round-robin 0 2 1 66:128 \

1000 65:64 1000 round-robin 0 2 1 8:0 1000 67:192 1000

After all the path checkers have failed the specified number of checks, the mapping would appear as follows.

0 71014400 multipath 0 0 2 1 round-robin 0 2 1 66:128 1000 65:64 1000 \

round-robin 0 2 1 8:0 1000 67:192 1000

#handlerargs- The number of hardware handler arguments, followed by those arguments. A hardware handler specifies a module that will be used to perform hardware-specific actions when switching path groups or handling I/O errors. If this is set to 0, then the next parameter is #pathgroups.

#pathgroups- The number of path groups. A path group is the set of paths over which a multipathed device will load balance. There is one set of pathgroupargs parameters for each path group.

pathgroup- The next path group to try.

pathgroupargs- Each path group consists of the following arguments:

pathselector #selectorargs #paths #pathargs device1 ioreqs1 ... deviceN ioreqsN

There is one set of path arguments for each path in the path group.

- pathselector- Specifies the algorithm in use to determine what path in this path group to use for the next I/O operation.

- #selectorargs- The number of path selector arguments which follow this argument in the multipath mapping. Currently, the value of this argument is always 0.

- #paths- The number of paths in this path group.

- #pathargs- The number of path arguments specified for each path in this group. Currently this number is always 1, the ioreqs argument.

- device- The block device number of the path, referenced by the major and minor numbers in the format major:minor

- ioreqs- The number of I/O requests to route to this path before switching to the next path in the current group.

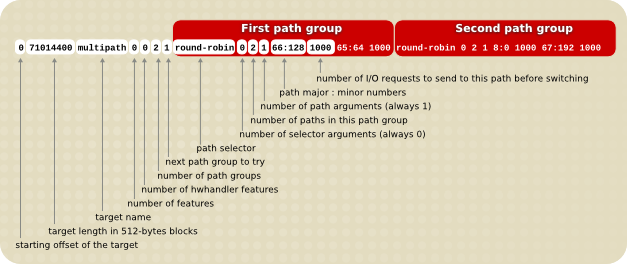

Figure A.1, “Multipath Mapping Target” shows the format of a multipath target with two path groups.

Figure A.1. Multipath Mapping Target

The following example shows a pure failover target definition for the same multipath device. In this target there are four path groups, with only one open path per path group so that the multipathed device will use only one path at a time.

0 71014400 multipath 0 0 4 1 round-robin 0 1 1 66:112 1000 \

round-robin 0 1 1 67:176 1000 round-robin 0 1 1 68:240 1000 \

round-robin 0 1 1 65:48 1000

The following example shows a full spread (multibus) target definition for the same multipathed device. In this target there is only one path group, which includes all of the paths. In this setup, multipath spreads the load evenly out to all of the paths.

0 71014400 multipath 0 0 1 1 round-robin 0 4 1 66:112 1000 \

67:176 1000 68:240 1000 65:48 1000
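The multipath line is a sequence of counted lists, so it can be read left to right by taking a count and then that many fields. The following sketch parses a line into its features, hardware handler arguments, and path groups according to the format described in this section. The function name and dictionary keys are illustrative assumptions, and line-continuation backslashes are treated as whitespace.

```python
def parse_multipath(line):
    """Parse a multipath table line into features, handler args, and path groups."""
    tokens = line.replace("\\", " ").split()
    start, length, target = int(tokens[0]), int(tokens[1]), tokens[2]
    if target != "multipath":
        raise ValueError(f"not a multipath target: {target}")
    position = 3

    def take(count):
        nonlocal position
        chunk = tokens[position:position + count]
        if len(chunk) != count:
            raise ValueError("table line ends before all arguments are read")
        position += count
        return chunk

    def take_int():
        return int(take(1)[0])

    features = take(take_int())
    handler_args = take(take_int())
    group_count = take_int()
    next_group = take_int()
    groups = []
    for _ in range(group_count):
        selector = take(1)[0]
        selector_args = take(take_int())
        path_count = take_int()
        path_arg_count = take_int()
        paths = []
        for _ in range(path_count):
            device = take(1)[0]
            paths.append((device, take(path_arg_count)))
        groups.append({"selector": selector, "selector_args": selector_args,
                       "paths": paths})
    if position != len(tokens):
        raise ValueError("unexpected trailing fields")
    return {"start": start, "length": length, "features": features,
            "handler_args": handler_args, "next_group": next_group,
            "groups": groups}
```

Applied to the failover example above, the result contains four groups with one path each; applied to the multibus example, it contains a single group with four paths.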

For further information about multipathing, see the Using Device Mapper Multipath document.

A.1.8. The crypt Mapping Target

The crypt target encrypts the data passing through the specified device. It uses the kernel Crypto API.

The format for the crypt target is as follows:

start length crypt cipher key IV-offset device offset

start- starting block in virtual device

length- length of this segment

cipher- Cipher consists of cipher[-chainmode]-ivmode[:iv options].

- cipher- Ciphers available are listed in /proc/crypto (for example, aes).

- chainmode- Always use cbc. Do not use ecb; it does not use an initialization vector (IV).

- ivmode[:iv options]- IV is an initialization vector used to vary the encryption. The IV mode is plain or essiv:hash. An ivmode of -plain uses the sector number (plus IV offset) as the IV. An ivmode of -essiv is an enhancement avoiding a watermark weakness.

key- Encryption key, supplied in hex

IV-offset- Initialization Vector (IV) offset

device- block device, referenced by the device name in the filesystem or by the major and minor numbers in the format major:minor

offset- starting offset of the mapping on the device

The following is an example of a crypt target.

0 2097152 crypt aes-plain 0123456789abcdef0123456789abcdef 0 /dev/hda 0
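The cipher field packs up to four pieces into one string. The following sketch splits it according to the cipher[-chainmode]-ivmode[:iv options] pattern given above and also reports the key length implied by the hex key. The function names are hypothetical, and the code checks only the shape of the string, not whether the kernel supports the named cipher or mode.

```python
def parse_cipher_spec(spec):
    """Split cipher[-chainmode]-ivmode[:iv options] into its parts."""
    head, _, iv_options = spec.partition(":")
    parts = head.split("-")
    if len(parts) == 2:
        cipher, ivmode = parts
        chainmode = None
    elif len(parts) == 3:
        cipher, chainmode, ivmode = parts
    else:
        raise ValueError(f"unrecognized cipher specification: {spec!r}")
    return {"cipher": cipher, "chainmode": chainmode, "ivmode": ivmode,
            "iv_options": iv_options or None}


def key_bits(hex_key):
    """Return the key length in bits for a key supplied in hex."""
    return len(bytes.fromhex(hex_key)) * 8
```

For the example line above, `parse_cipher_spec("aes-plain")` reports the cipher `aes` with IV mode `plain` and no chain mode, and the 32-digit hex key is 128 bits long. A specification such as `aes-cbc-essiv:sha256` splits into all four parts.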

A.1.9. The device-mapper RAID Mapping Target

The device-mapper RAID (dm-raid) target provides a bridge from DM to MD. It allows the MD RAID drivers to be accessed using a device-mapper interface. The format of the dm-raid target is as follows:

start length raid raid_type #raid_params raid_params #raid_devs metadata_dev0 dev0 [.. metadata_devN devN]

start- starting block in virtual device

length- length of this segment

raid_type- The RAID type can be one of the following:

- raid1- RAID1 mirroring

- raid4- RAID4 dedicated parity disk

- raid5_la- RAID5 left asymmetric: rotating parity 0 with data continuation

- raid5_ra- RAID5 right asymmetric: rotating parity N with data continuation

- raid5_ls- RAID5 left symmetric: rotating parity 0 with data restart

- raid5_rs- RAID5 right symmetric: rotating parity N with data restart

- raid6_zr- RAID6 zero restart: rotating parity 0 (left to right) with data restart

- raid6_nr- RAID6 N restart: rotating parity N (right to left) with data restart

- raid6_nc- RAID6 N continue: rotating parity N (right to left) with data continuation

- raid10- Various RAID10-inspired algorithms selected by further optional arguments: RAID 10, striped mirrors (striping on top of mirrors); RAID 1E, integrated adjacent striped mirroring; RAID 1E, integrated offset striped mirroring; other similar RAID10 variants

#raid_params- The number of parameters that follow

raid_params- Mandatory parameters:

- chunk_size- Chunk size in sectors. This parameter is often known as "stripe size". It is the only mandatory parameter and is placed first.

Followed by optional parameters (in any order):

- [sync|nosync]- Force or prevent RAID initialization.

- rebuild idx- Rebuild drive number idx (first drive is 0).

- daemon_sleep ms- Interval between runs of the bitmap daemon that clear bits. A longer interval means less bitmap I/O but resyncing after a failure is likely to take longer.

- min_recovery_rate KB/sec/disk- Throttle RAID initialization

- max_recovery_rate KB/sec/disk- Throttle RAID initialization

- write_mostly idx- Mark drive index idx write-mostly.

- max_write_behind sectors- See the description of --write-behind in the mdadm man page.

- stripe_cache sectors- Stripe cache size (RAID 4/5/6 only)

- region_size sectors- The region_size multiplied by the number of regions is the logical size of the array. The bitmap records the device synchronization state for each region.

- raid10_copies #copies- The number of RAID10 copies. This parameter is used in conjunction with the raid10_format parameter to alter the default layout of a RAID10 configuration. The default value is 2.

- raid10_format near|far|offset- This parameter is used in conjunction with the raid10_copies parameter to alter the default layout of a RAID10 configuration. The default value is near, which specifies a standard mirroring layout.

If the raid10_copies and raid10_format are left unspecified, or raid10_copies 2 and/or raid10_format near is specified, then the layouts for 2, 3 and 4 devices are as follows:

The 2-device layout is equivalent to 2-way RAID1. The 4-device layout is what a traditional RAID10 would look like. The 3-device layout is what might be called a 'RAID1E - Integrated Adjacent Stripe Mirroring'.

If raid10_copies 2 and raid10_format far are specified, then the layouts for 2, 3 and 4 devices are as follows:

If raid10_copies 2 and raid10_format offset are specified, then the layouts for 2, 3 and 4 devices are as follows:

These layouts closely resemble the layouts for 'RAID1E - Integrated Offset Stripe Mirroring'.

#raid_devs- The number of devices composing the array

Each device consists of two entries. The first is the device containing the metadata (if any); the second is the one containing the data.

If a drive has failed or is missing at creation time, a '-' can be given for both the metadata and data drives for a given position.

The following example shows a RAID4 target with a starting block of 0 and a segment length of 1960893648. There are 4 data drives and 1 parity drive, with no metadata devices specified to hold superblock/bitmap info, and a chunk size of 1 MiB.

0 1960893648 raid raid4 1 2048 5 - 8:17 - 8:33 - 8:49 - 8:65 - 8:81

The following example shows a RAID4 target with a starting block of 0 and a segment length of 1960893648. There are 4 data drives and 1 parity drive, with metadata devices, a chunk size of 1 MiB, forced RAID initialization, and a min_recovery_rate of 20 KiB/sec/disk.

0 1960893648 raid raid4 4 2048 sync min_recovery_rate 20 5 8:17 8:18 8:33 8:34 8:49 8:50 8:65 8:66 8:81 8:82
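The two RAID4 examples differ only in their counted parameter list and in whether metadata devices are given. The following sketch separates those parts for a raid line in the format above; the function name and returned keys are illustrative, and optional parameters are returned as raw tokens rather than interpreted.

```python
def parse_raid(line):
    """Split a raid table line into type, parameters, and device pairs."""
    fields = line.split()
    start, length, target, raid_type = (int(fields[0]), int(fields[1]),
                                        fields[2], fields[3])
    if target != "raid":
        raise ValueError(f"not a raid target: {target}")
    param_count = int(fields[4])
    if param_count < 1:
        raise ValueError("chunk_size is mandatory")
    params = fields[5:5 + param_count]
    position = 5 + param_count
    device_count = int(fields[position])
    device_fields = fields[position + 1:]
    if len(device_fields) != 2 * device_count:
        raise ValueError("each device needs a metadata entry and a data entry")
    # Each pair is (metadata device, data device); '-' means not present.
    devices = [(device_fields[i], device_fields[i + 1])
               for i in range(0, len(device_fields), 2)]
    return {"start": start, "length": length, "raid_type": raid_type,
            "chunk_size": int(params[0]), "optional": params[1:],
            "devices": devices}
```

For the second example above, the result has a chunk size of 2048 sectors, the optional tokens `sync`, `min_recovery_rate`, and `20`, and five device pairs beginning with `("8:17", "8:18")`.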

A.1.10. The thin and thin-pool Mapping Targets

The format of a thin-pool target is as follows:

start length thin-pool metadata_dev data_dev data_block_size low_water_mark [#feature_args [arg*] ]

start- starting block in virtual device

length- length of this segment

metadata_dev- The metadata device

data_dev- The data device

data_block_size- The data block size (in sectors). The data block size gives the smallest unit of disk space that can be allocated at a time, expressed in units of 512-byte sectors. Data block size must be between 64KB (128 sectors) and 1GB (2097152 sectors) inclusive, and it must be a multiple of 128 (64KB).

low_water_mark- The low water mark, expressed in blocks of size data_block_size. If free space on the data device drops below this level then a device-mapper event will be triggered which a user-space daemon should catch allowing it to extend the pool device. Only one such event will be sent. Resuming a device with a new table itself triggers an event so the user-space daemon can use this to detect a situation where a new table already exceeds the threshold.

A low water mark for the metadata device is maintained in the kernel and will trigger a device-mapper event if free space on the metadata device drops below it.

#feature_args- The number of feature arguments

arg- The thin pool feature arguments are as follows:

- skip_block_zeroing- Skip the zeroing of newly-provisioned blocks.

- ignore_discard- Disable discard support.

- no_discard_passdown- Do not pass discards down to the underlying data device, but just remove the mapping.

- read_only- Do not allow any changes to be made to the pool metadata.

- error_if_no_space- Fail I/O operations, instead of queuing them, if there is no space.

The following example shows a thin-pool target with a starting block in the virtual device of 0 and a segment length of 16384000. /dev/sdc1 is a small metadata device and /dev/sdc2 is a larger data device. The data block size is 64 KiB (128 sectors), the low_water_mark is 0, and there are no features.

0 16384000 thin-pool /dev/sdc1 /dev/sdc2 128 0 0
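The constraints on data_block_size stated above (between 128 and 2097152 sectors inclusive, and a multiple of 128) are simple to check before building a table line. The following is a minimal sketch with a hypothetical function name; it validates only the block size and returns the size in bytes.

```python
SECTOR_SIZE = 512            # bytes per sector
MIN_BLOCK_SECTORS = 128      # 64KB
MAX_BLOCK_SECTORS = 2097152  # 1GB


def validate_data_block_size(sectors):
    """Check a thin-pool data_block_size and return its size in bytes."""
    if not MIN_BLOCK_SECTORS <= sectors <= MAX_BLOCK_SECTORS:
        raise ValueError("data_block_size must be between 128 and 2097152 sectors")
    if sectors % MIN_BLOCK_SECTORS:
        raise ValueError("data_block_size must be a multiple of 128 sectors")
    return sectors * SECTOR_SIZE
```

For the example above, `validate_data_block_size(128)` returns 65536 bytes, and a pool of 16384000 sectors holds 16384000 // 128 = 128000 such blocks.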

The format of a thin target is as follows:

start length thin pool_dev dev_id [external_origin_dev]

start- starting block in virtual device

length- length of this segment

pool_dev- The thin-pool device, for example /dev/mapper/my_pool or 253:0

dev_id- The internal device identifier of the device to be activated.

external_origin_dev- An optional block device outside the pool to be treated as a read-only snapshot origin. Reads to unprovisioned areas of the thin target will be mapped to this device.

The following example shows a 1 GiB thin LV that uses /dev/mapper/pool as its backing store (thin-pool). The target has a starting block in the virtual device of 0 and a segment length of 2097152.

0 2097152 thin /dev/mapper/pool 1
