Chapter 6. Clustering

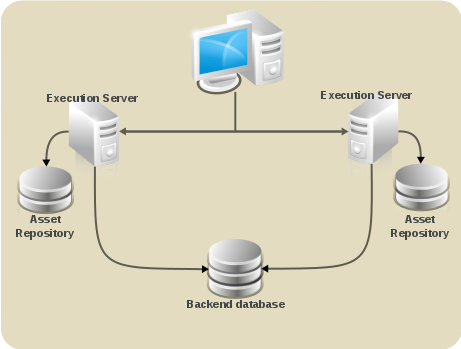

- Git repository: the virtual file system (VFS) repository that holds the business assets, so that all cluster nodes use the same repository

- Execution Server and web applications: the runtime server that resides in the container (such as Red Hat JBoss EAP) along with the BRMS and BPM Suite web applications, so that nodes share the same runtime data. For instructions on clustering the application, refer to the container clustering documentation.

- Back-end database: the database with the state data, such as process instances, KIE sessions, and history logs, for fail-over purposes

Figure 6.1. Schema of Red Hat JBoss BPM Suite system with individual system components

Git repository clustering mechanism

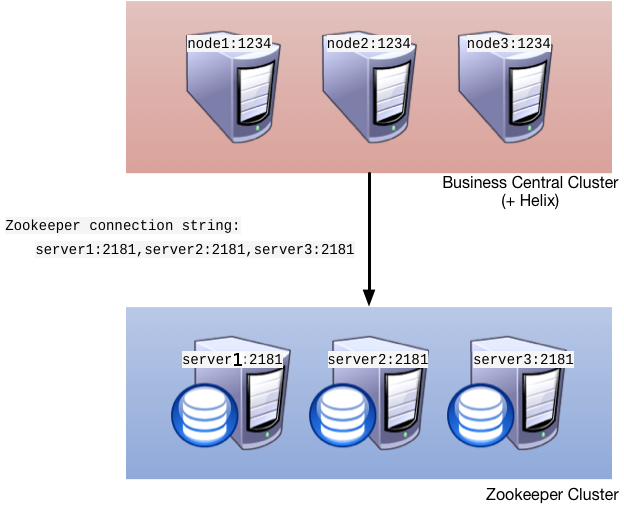

- Apache ZooKeeper ties all the parts together: the ZooKeeper ensemble stores the cluster configuration and shares it among the nodes.

- Apache Helix is the cluster management component that registers all cluster details (the cluster itself, its nodes, and its resources).

- The UberFire framework provides the backbone of the web applications.

Figure 6.2. Clustering schema with Helix and Zookeeper

- Setting up the cluster itself using ZooKeeper and Helix

- Setting up the back-end database with Quartz tables and configuration

- Configuring clustering on your container (this documentation provides clustering instructions only for Red Hat JBoss EAP 6)

Clustering Maven Repositories

rsync.
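Only the reference to rsync survives in this subsection. Assuming the intent is to keep each node's local Maven repository directory identical to that of one source node, a push from that node could be sketched as follows. The function name sync_maven_repo, the directory paths, the node host names, and the DRY_RUN switch (which prints the commands instead of running them) are all hypothetical and not taken from the product.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: mirror this node's Maven repository directory to the
# other cluster nodes with rsync over SSH. Paths and host names are assumptions.

# sync_maven_repo SRC_DIR DEST_DIR NODE...
#   -a       preserve permissions, timestamps, and symlinks (archive mode)
#   -z       compress data in transit
#   --delete remove files on the target that no longer exist in SRC_DIR
sync_maven_repo() {
  local src=$1 dest=$2 node run=""
  shift 2
  [ "${DRY_RUN:-0}" = 1 ] && run=echo   # DRY_RUN=1: print instead of executing
  for node in "$@"; do
    # The trailing slash on the source copies the directory's contents.
    $run rsync -az --delete "$src/" "$node:$dest/"
  done
}
```

For example, sync_maven_repo /path/to/maven/repo /path/to/maven/repo server2 server3 would push the (hypothetical) repository directory from the current node to server2 and server3. Because --delete removes files on the targets, run it only from the node whose repository you treat as authoritative.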

6.1. Setting up a Cluster

- Download jboss-bpmsuite-brms-VERSION-supplementary-tools.zip, which contains Apache ZooKeeper, Apache Helix, and the Quartz DDL scripts. After downloading, unzip the archive; this creates the ZooKeeper directory ($ZOOKEEPER_HOME) and the Helix directory ($HELIX_HOME).

- Configure ZooKeeper:

- In the ZooKeeper directory, go to the conf directory and create a configuration file from the sample:

cp zoo_sample.cfg zoo.cfg

- Open zoo.cfg for editing and adjust the settings, including the following:

Make sure the dataDir location exists and is accessible.

- Assign a node ID to each member that will run ZooKeeper, for example, "1", "2", and "3" for node 1, node 2, and node 3. Run an odd number of ZooKeeper instances, at least three, so that the ensemble can recover from a failure: with three instances, the two remaining nodes still form a majority if one node fails.

The node ID is specified in a file called myid under the ZooKeeper data directory on each node. For example, on node 1, run:

$ echo "1" > /zookeeper/data/myid

- Start ZooKeeper so that you can use it when creating the cluster with Helix:

- On each ZooKeeper node, go to the $ZOOKEEPER_HOME/bin/ directory and start ZooKeeper:

./zkServer.sh start

You can check the ZooKeeper log in the $ZOOKEEPER_HOME/bin/zookeeper.out file. Check this log to ensure that the ensemble (cluster) has formed successfully: one of the nodes should be elected leader, with the other two nodes following it.

- Once the ZooKeeper ensemble has started, configure and start Helix. Helix needs to be configured only once, from a single node; the configuration is then stored by the ZooKeeper ensemble and shared with the other nodes. Set up the cluster with the ZooKeeper server as the master of the configuration:

- Create the cluster, providing the ZooKeeper hosts and ports as a comma-separated list:

$HELIX_HOME/bin/helix-admin.sh --zkSvr ZOOKEEPER_HOST:ZOOKEEPER_PORT --addCluster CLUSTER_NAME

- Add your nodes to the cluster:

$HELIX_HOME/bin/helix-admin.sh --zkSvr ZOOKEEPER_HOST:ZOOKEEPER_PORT --addNode CLUSTER_NAME NODE_NAME:UNIQUE_ID

Example 6.1. Adding three cluster nodes

./helix-admin.sh --zkSvr server1:2181,server2:2181,server3:2181 --addNode bpms-cluster nodeOne:12345
./helix-admin.sh --zkSvr server1:2181,server2:2181,server3:2181 --addNode bpms-cluster nodeTwo:12346
./helix-admin.sh --zkSvr server1:2181,server2:2181,server3:2181 --addNode bpms-cluster nodeThree:12347

- Add resources to the cluster.

Example 6.2. Adding vfs-repo as resource

./helix-admin.sh --zkSvr server1:2181,server2:2181,server3:2181 --addResource bpms-cluster vfs-repo 1 LeaderStandby AUTO_REBALANCE

- Rebalance the cluster with the three nodes.

Example 6.3. Rebalancing the bpms-cluster

./helix-admin.sh --zkSvr server1:2181,server2:2181,server3:2181 --rebalance bpms-cluster vfs-repo 3

In the above command, 3 is the number of replicas of the vfs-repo resource, one for each of the three cluster nodes.

- Start the Helix controller on all the nodes in the cluster.

Example 6.4. Starting the Helix controller

./run-helix-controller.sh --zkSvr server1:2181,server2:2181,server3:2181 --cluster bpms-cluster > /tmp/controller.log 2>&1 &
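The ZooKeeper and Helix steps above can be collected into a small shell script. This is a sketch under stated assumptions, not a script shipped with the supplementary tools: the host names server1 to server3, client port 2181, and data directory /zookeeper/data follow the examples, while the function names, the DRY_RUN switch, the tickTime/initLimit/syncLimit values, and the 2888:3888 peer and leader-election ports (ZooKeeper's conventional choices) are assumptions. write_zoo_cfg, write_myid, and start_helix_controller run on every node; helix_setup runs once, from a single node.

```shell
#!/usr/bin/env bash
# Sketch of the cluster setup steps. Host names, ports, and function names
# are assumptions; adjust them to your environment.

ZK_HOSTS="server1 server2 server3"   # ensemble members, as in the examples
ZK_CLIENT_PORT=2181

# Comma-separated connect string for --zkSvr: server1:2181,server2:2181,...
zk_connect_string() {
  local host out=""
  for host in $ZK_HOSTS; do
    out="${out:+$out,}$host:$ZK_CLIENT_PORT"
  done
  echo "$out"
}

# write_zoo_cfg CONF_DIR DATA_DIR: write a zoo.cfg for the ensemble
# (normally into $ZOOKEEPER_HOME/conf). Ports 2888:3888 are assumed.
write_zoo_cfg() {
  local conf_dir=$1 data_dir=$2 host id=1
  mkdir -p "$conf_dir" "$data_dir"   # dataDir must exist and be accessible
  {
    echo "tickTime=2000"
    echo "initLimit=10"
    echo "syncLimit=5"
    echo "dataDir=$data_dir"
    echo "clientPort=$ZK_CLIENT_PORT"
    for host in $ZK_HOSTS; do
      echo "server.$id=$host:2888:3888"
      id=$((id + 1))
    done
  } > "$conf_dir/zoo.cfg"
}

# write_myid DATA_DIR NODE_ID: record this member's ID (1, 2, 3, ...).
write_myid() {
  mkdir -p "$1"
  echo "$2" > "$1/myid"
}

# helix_setup CLUSTER NODE_NAME:UNIQUE_ID...: one-time Helix configuration.
# The rebalance replica count equals the number of nodes (3 in Example 6.3).
# DRY_RUN=1 prints the commands instead of executing them.
helix_setup() {
  local cluster=$1 zk node run=""
  shift
  zk=$(zk_connect_string)
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  $run "$HELIX_HOME/bin/helix-admin.sh" --zkSvr "$zk" --addCluster "$cluster"
  for node in "$@"; do
    $run "$HELIX_HOME/bin/helix-admin.sh" --zkSvr "$zk" --addNode "$cluster" "$node"
  done
  $run "$HELIX_HOME/bin/helix-admin.sh" --zkSvr "$zk" \
    --addResource "$cluster" vfs-repo 1 LeaderStandby AUTO_REBALANCE
  $run "$HELIX_HOME/bin/helix-admin.sh" --zkSvr "$zk" \
    --rebalance "$cluster" vfs-repo "$#"
}

# start_helix_controller CLUSTER [LOG]: run on every node. Because "> log"
# comes before "2>&1", both stdout and stderr end up in the log file.
start_helix_controller() {
  local cluster=$1 log=${2:-/tmp/controller.log}
  "$HELIX_HOME/bin/run-helix-controller.sh" --zkSvr "$(zk_connect_string)" \
    --cluster "$cluster" > "$log" 2>&1 &
}
```

On node 1, for instance, you would run write_zoo_cfg "$ZOOKEEPER_HOME/conf" /zookeeper/data and write_myid /zookeeper/data 1, start ZooKeeper, then (from one node only) helix_setup bpms-cluster nodeOne:12345 nodeTwo:12346 nodeThree:12347, and finally start_helix_controller bpms-cluster on every node. Running helix_setup with DRY_RUN=1 first lets you review the generated helix-admin.sh commands before they modify the cluster configuration stored in ZooKeeper.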

Note

Stopping Helix and ZooKeeper

Procedure 6.1. Stopping Helix and ZooKeeper

- Stop the JBoss EAP server processes.

- Stop the Helix controller process started by run-helix-controller.sh, for example, kill -15 <pid of HelixControllerMain>.

- Stop the ZooKeeper server using the zkServer.sh stop command.
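Procedure 6.1 can be scripted in the same order on each node: application server first, then the Helix controller, then ZooKeeper. This sketch assumes a standalone JBoss EAP server stopped through its management CLI (jboss-cli.sh --connect --command=:shutdown) and that the controller can be located by matching HelixControllerMain on its command line with pkill -f. JBOSS_HOME, ZOOKEEPER_HOME, the function name stop_cluster_node, and the DRY_RUN switch (print instead of execute) are assumptions, not part of the product.

```shell
#!/usr/bin/env bash
# Sketch of Procedure 6.1, run on each node. Assumes a standalone EAP server
# managed through jboss-cli.sh and that pkill is available.

stop_cluster_node() {
  local run=""
  [ "${DRY_RUN:-0}" = 1 ] && run=echo   # DRY_RUN=1: print instead of executing
  # 1. Stop the JBoss EAP server through the management CLI.
  $run "$JBOSS_HOME/bin/jboss-cli.sh" --connect --command=:shutdown
  # 2. Send SIGTERM (kill -15) to the Helix controller started by
  #    run-helix-controller.sh, matched on its full command line.
  $run pkill -15 -f HelixControllerMain
  # 3. Stop the local ZooKeeper server.
  $run "$ZOOKEEPER_HOME/bin/zkServer.sh" stop
}
```

Stopping the application server first means the web applications stop using the cluster before the Helix controller and the ZooKeeper ensemble that coordinate it go away.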