Migration Toolkit for Containers

Migrating to OpenShift Container Platform 4

Abstract

Chapter 1. About the Migration Toolkit for Containers

The Migration Toolkit for Containers (MTC) enables you to migrate stateful application workloads between OpenShift Container Platform 4 clusters at the granularity of a namespace.

If you are migrating from OpenShift Container Platform 3, see About migrating from OpenShift Container Platform 3 to 4 and Installing the legacy Migration Toolkit for Containers Operator on OpenShift Container Platform 3.

You can migrate applications within the same cluster or between clusters by using state migration.

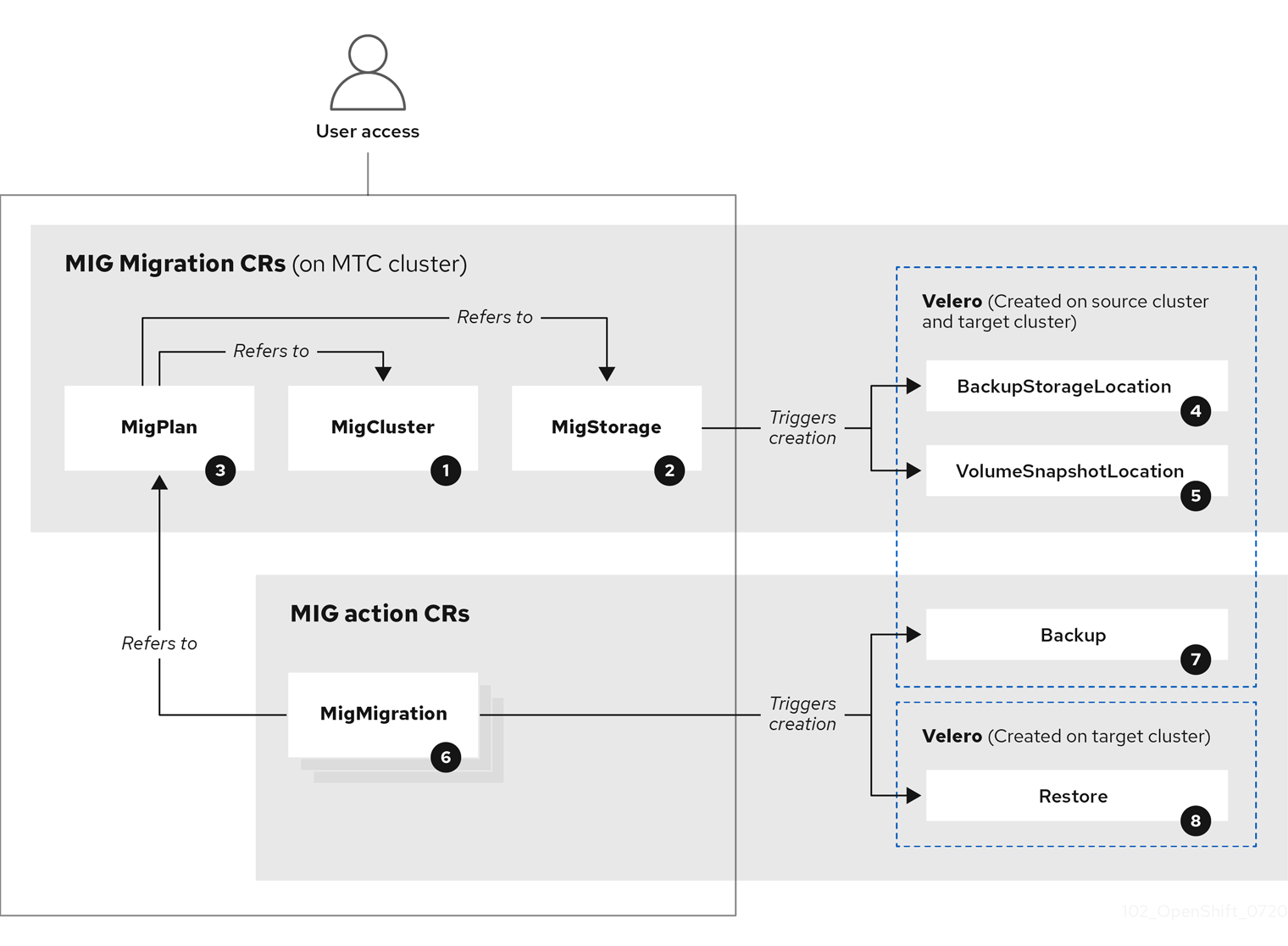

MTC provides a web console and an API, based on Kubernetes custom resources, to help you control the migration and minimize application downtime.

The MTC console is installed on the target cluster by default. You can configure the Migration Toolkit for Containers Operator to install the console on a remote cluster.

See Advanced migration options for information about the following topics:

- Automating your migration with migration hooks and the MTC API.

- Configuring your migration plan to exclude resources, support large-scale migrations, and enable automatic PV resizing for direct volume migration.

1.1. MTC 1.8 support

- MTC 1.8.3 and earlier using OADP 1.3.z is supported on all OpenShift Container Platform versions 4.15 and earlier.

- MTC 1.8.4 and later using OADP 1.3.z is currently supported on all OpenShift Container Platform versions 4.15 and earlier.

- MTC 1.8.4 and later using OADP 1.4.z is currently supported on all supported OpenShift Container Platform versions 4.13 and later.
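The three support statements above can be read as a small decision rule. The following Python sketch encodes only those statements; the function name `is_supported` and the integer encoding of versions are illustrative assumptions, not part of MTC, and any combination not listed above is treated as "not listed" rather than as a verified incompatibility.

```python
def is_supported(mtc_patch: int, oadp_minor: int, ocp_minor: int) -> bool:
    """Check MTC 1.8.<mtc_patch> with OADP 1.<oadp_minor>.z on OCP 4.<ocp_minor>.

    Hypothetical helper: it mirrors only the three statements in this
    section and returns False for anything that is not listed there.
    """
    if oadp_minor == 3:
        # Both "1.8.3 and earlier" and "1.8.4 and later" pair OADP 1.3.z
        # with OpenShift Container Platform 4.15 and earlier.
        return ocp_minor <= 15
    if oadp_minor == 4:
        # OADP 1.4.z is listed only for MTC 1.8.4 and later,
        # on OpenShift Container Platform 4.13 and later.
        return mtc_patch >= 4 and ocp_minor >= 13
    return False
```

For example, `is_supported(4, 4, 13)` evaluates to `True`, while `is_supported(3, 4, 14)` evaluates to `False` because OADP 1.4.z is listed only for MTC 1.8.4 and later.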

1.1.1. Support for Migration Toolkit for Containers (MTC)

| MTC version | OpenShift Container Platform version | General availability | Full support ends | Maintenance ends | Extended Update Support (EUS) |
|---|---|---|---|---|---|
| 1.8 | | 02 Oct 2023 | Release of 1.9 | Release of 1.10 | N/A |
| 1.7 | | 01 Mar 2022 | 01 Jul 2024 | 01 Jul 2025 | N/A |

For more details about EUS, see Extended Update Support.

1.2. Terminology

| Term | Definition |

|---|---|

| Source cluster | Cluster from which the applications are migrated. |

| Destination cluster[1] | Cluster to which the applications are migrated. |

| Replication repository | Object storage used for copying images, volumes, and Kubernetes objects during indirect migration or for Kubernetes objects during direct volume migration or direct image migration. The replication repository must be accessible to all clusters. |

| Host cluster | The host cluster does not require an exposed registry route for direct image migration. |

| Remote cluster | A remote cluster is usually the source cluster but this is not required. A remote cluster requires an exposed secure registry route for direct image migration. |

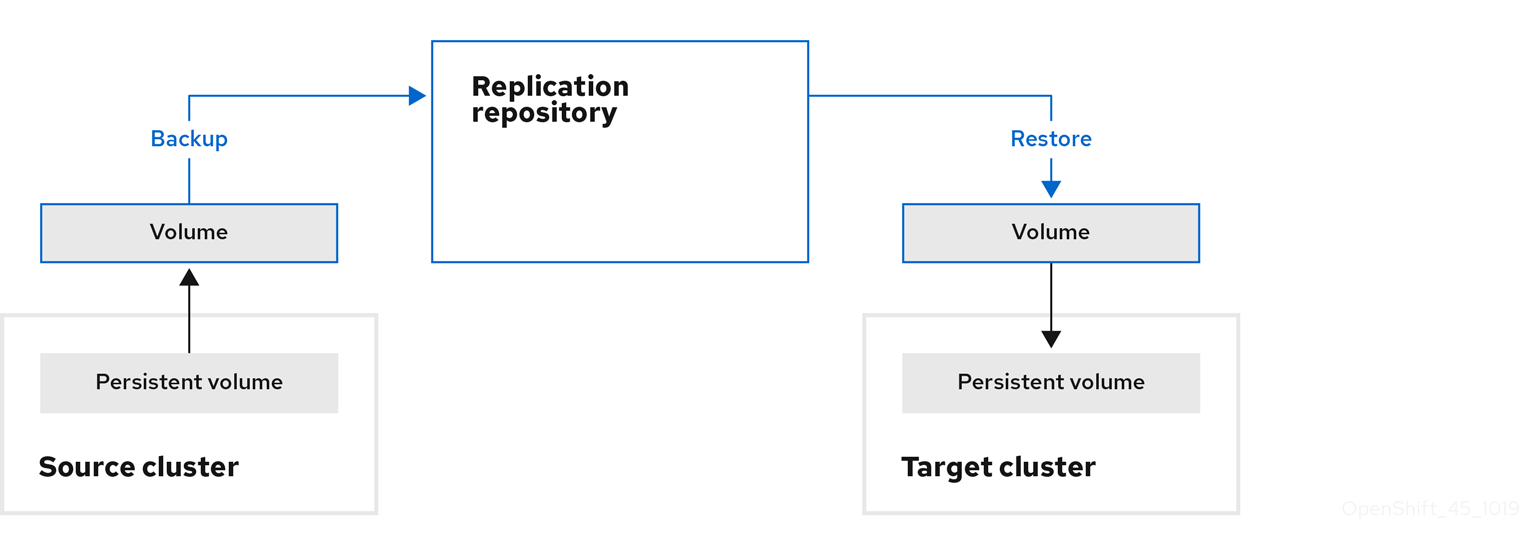

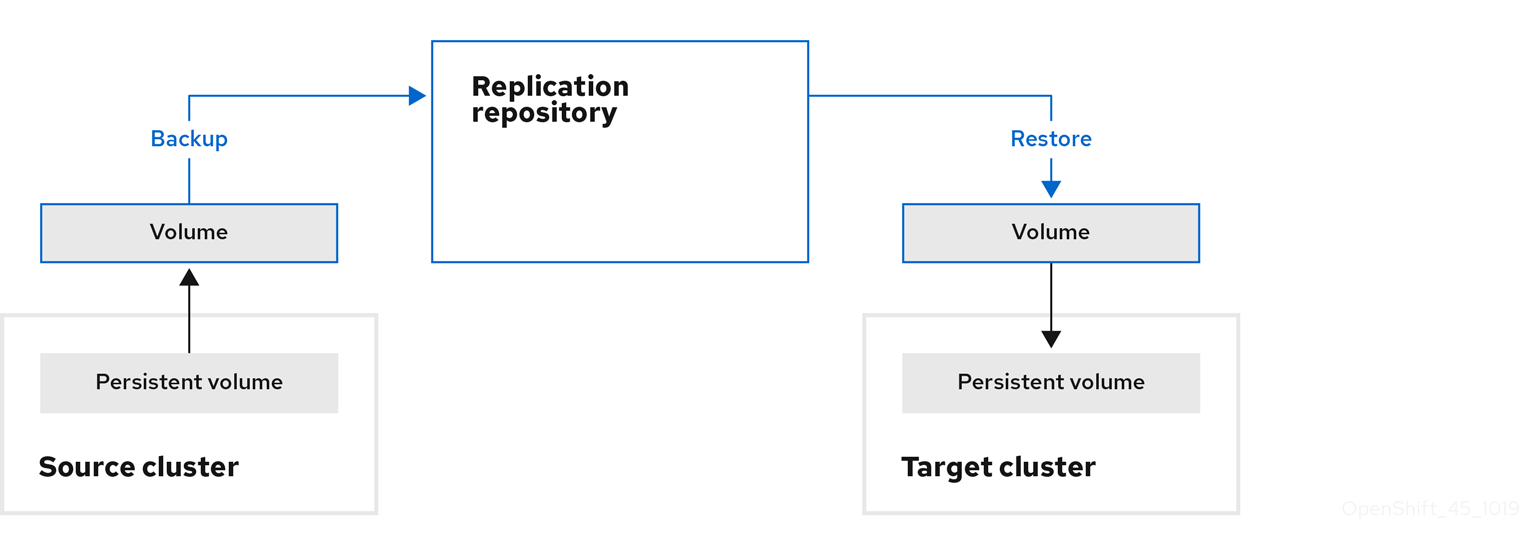

| Indirect migration | Images, volumes, and Kubernetes objects are copied from the source cluster to the replication repository and then from the replication repository to the destination cluster. |

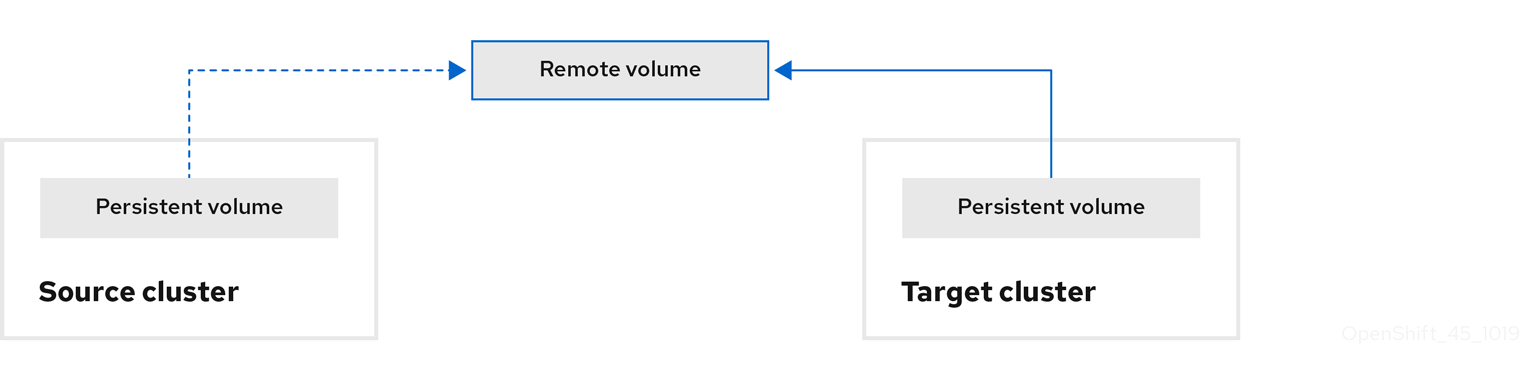

| Direct volume migration | Persistent volumes are copied directly from the source cluster to the destination cluster. |

| Direct image migration | Images are copied directly from the source cluster to the destination cluster. |

| Stage migration | Data is copied to the destination cluster without stopping the application. Running a stage migration multiple times reduces the duration of the cutover migration. |

| Cutover migration | The application is stopped on the source cluster and its resources are migrated to the destination cluster. |

| State migration | Application state is migrated by copying specific persistent volume claims to the destination cluster. |

| Rollback migration | Rollback migration rolls back a completed migration. |

[1] Called the target cluster in the MTC web console.

1.3. MTC workflow

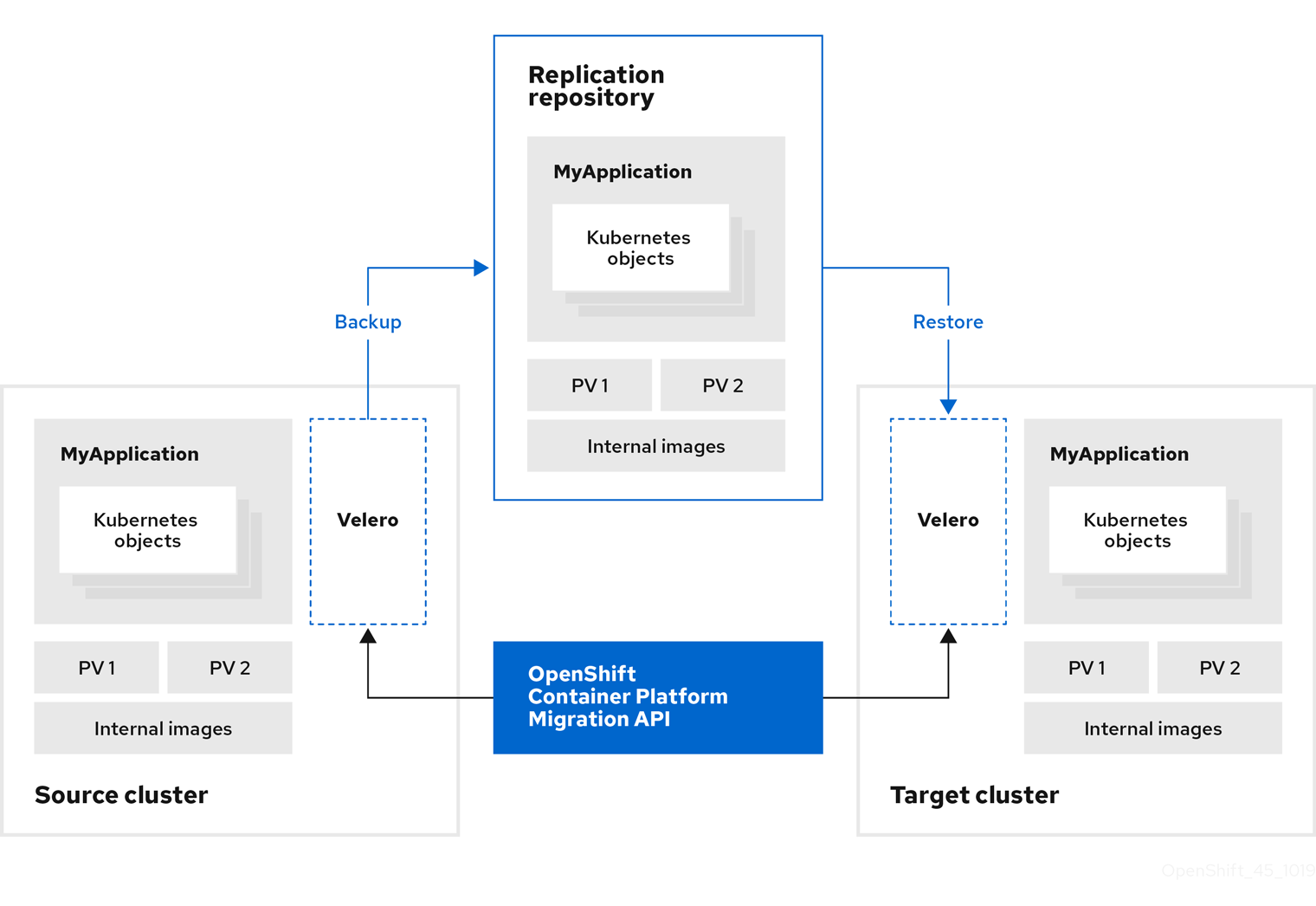

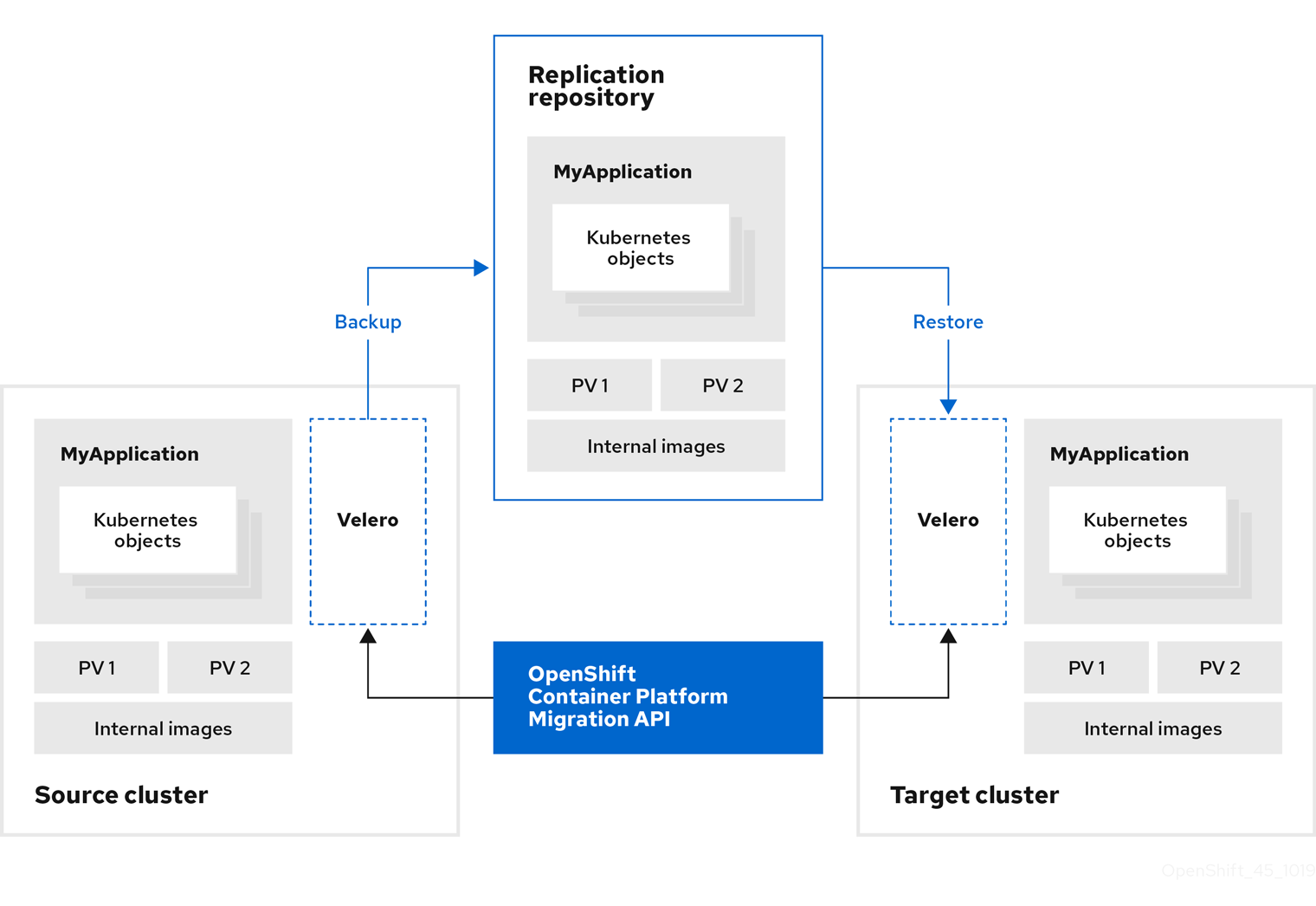

You can migrate Kubernetes resources, persistent volume data, and internal container images to OpenShift Container Platform 4.14 by using the Migration Toolkit for Containers (MTC) web console or the Kubernetes API.

MTC migrates the following resources:

- A namespace specified in a migration plan.

- Namespace-scoped resources: When the MTC migrates a namespace, it migrates all the objects and resources associated with that namespace, such as services or pods. Additionally, if a resource that exists in the namespace but not at the cluster level depends on a resource that exists at the cluster level, the MTC migrates both resources.

  For example, a security context constraint (SCC) is a resource that exists at the cluster level and a service account (SA) is a resource that exists at the namespace level. If an SA exists in a namespace that the MTC migrates, the MTC automatically locates any SCCs that are linked to the SA and also migrates those SCCs. Similarly, the MTC migrates persistent volumes that are linked to the persistent volume claims of the namespace.

  Note: Cluster-scoped resources might have to be migrated manually, depending on the resource.

- Custom resources (CRs) and custom resource definitions (CRDs): MTC automatically migrates CRs and CRDs at the namespace level.

Migrating an application with the MTC web console involves the following steps:

Install the Migration Toolkit for Containers Operator on all clusters.

You can install the Migration Toolkit for Containers Operator in a restricted environment with limited or no internet access. The source and target clusters must have network access to each other and to a mirror registry.

Configure the replication repository, an intermediate object storage that MTC uses to migrate data.

The source and target clusters must have network access to the replication repository during migration. If you are using a proxy server, you must configure it to allow network traffic between the replication repository and the clusters.

- Add the source cluster to the MTC web console.

- Add the replication repository to the MTC web console.

Create a migration plan, with one of the following data migration options:

Copy: MTC copies the data from the source cluster to the replication repository, and from the replication repository to the target cluster.

NoteIf you are using direct image migration or direct volume migration, the images or volumes are copied directly from the source cluster to the target cluster.

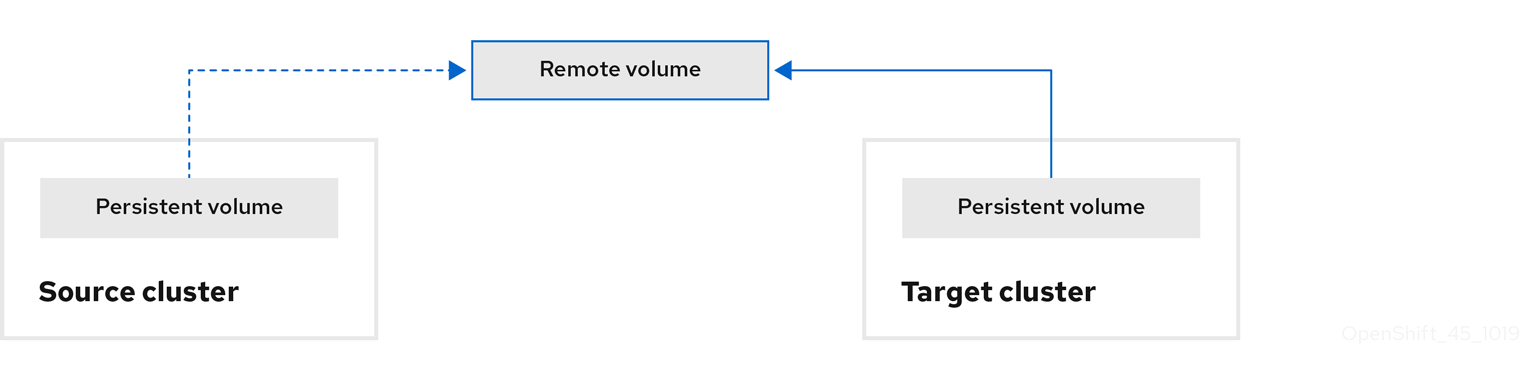

Move: MTC unmounts a remote volume, for example, NFS, from the source cluster, creates a PV resource on the target cluster pointing to the remote volume, and then mounts the remote volume on the target cluster. Applications running on the target cluster use the same remote volume that the source cluster was using. The remote volume must be accessible to the source and target clusters.

NoteAlthough the replication repository does not appear in this diagram, it is required for migration.

Run the migration plan, with one of the following options:

Stage copies data to the target cluster without stopping the application.

A stage migration can be run multiple times so that most of the data is copied to the target before migration. Running one or more stage migrations reduces the duration of the cutover migration.

Cutover stops the application on the source cluster and moves the resources to the target cluster.

Optional: You can clear the Halt transactions on the source cluster during migration checkbox.
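The difference between running a plan as a stage migration and as a cutover migration can also be expressed through the Kubernetes API. The following Python sketch builds a `MigMigration` manifest as a plain dictionary. The API group `migration.openshift.io/v1alpha1`, the `openshift-migration` namespace, and the `migPlanRef`, `stage`, and `quiescePods` fields are assumptions based on the MTC custom resources and should be checked against the CRDs installed on your cluster. The function name and the plan name are hypothetical.

```python
def mig_migration(name: str, plan: str, stage: bool, halt_transactions: bool = True) -> dict:
    """Build a MigMigration manifest for a stage or cutover run.

    Assumed field names; verify them against the installed CRD before use.
    """
    namespace = "openshift-migration"  # assumed default MTC namespace
    return {
        "apiVersion": "migration.openshift.io/v1alpha1",
        "kind": "MigMigration",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "migPlanRef": {"name": plan, "namespace": namespace},
            # stage=True copies data without stopping the application.
            "stage": stage,
            # Only a cutover stops the application; clearing the
            # "Halt transactions" option corresponds to False here.
            "quiescePods": (not stage) and halt_transactions,
        },
    }


stage_run = mig_migration("demo-stage-1", "demo-plan", stage=True)
cutover_run = mig_migration("demo-cutover", "demo-plan", stage=False)
```

In this sketch a stage run never quiesces pods, which matches the description of stage migration above; a cutover quiesces them unless `halt_transactions` is set to `False`.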

1.4. About data copy methods

The Migration Toolkit for Containers (MTC) supports the file system and snapshot data copy methods for migrating data from the source cluster to the target cluster. You can select a method that is suited for your environment and is supported by your storage provider.

1.4.1. File system copy method

MTC copies data files from the source cluster to the replication repository, and from there to the target cluster.

The file system copy method uses Restic for indirect migration or Rsync for direct volume migration.

| Benefits | Limitations |

|---|---|

|

|

Restic and Rsync PV migration supports only PVs with volumeMode=Filesystem. Using volumeMode=Block for file system migration is not supported.
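Before choosing the file system copy method, it can help to check which persistent volume claims use block mode. The following Python sketch sorts PVC manifests, given as dictionaries, by their `spec.volumeMode` value. It relies on the Kubernetes behavior that an omitted `volumeMode` defaults to `Filesystem`; the function name and the sample data are hypothetical.

```python
def split_by_volume_mode(pvcs: list) -> tuple:
    """Return (filesystem_pvcs, other_pvcs) as two lists of PVC names."""
    filesystem, other = [], []
    for pvc in pvcs:
        name = pvc.get("metadata", {}).get("name", "<unnamed>")
        # Kubernetes treats a missing volumeMode as "Filesystem".
        mode = pvc.get("spec", {}).get("volumeMode", "Filesystem")
        (filesystem if mode == "Filesystem" else other).append(name)
    return filesystem, other


sample = [
    {"metadata": {"name": "db-data"}, "spec": {"volumeMode": "Filesystem"}},
    {"metadata": {"name": "raw-disk"}, "spec": {"volumeMode": "Block"}},
    {"metadata": {"name": "logs"}, "spec": {}},
]
ok, blocked = split_by_volume_mode(sample)
```

Here `ok` contains `db-data` and `logs`, and `blocked` contains `raw-disk`, which would need a different migration approach.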

1.4.2. Snapshot copy method

MTC copies a snapshot of the source cluster data to the replication repository of a cloud provider. The data is restored on the target cluster.

The snapshot copy method can be used with Amazon Web Services, Google Cloud, and Microsoft Azure.

| Benefits | Limitations |

|---|---|

|

|

1.5. Direct volume migration and direct image migration

You can use direct image migration (DIM) and direct volume migration (DVM) to migrate images and data directly from the source cluster to the target cluster.

If you run DVM with nodes that are in different availability zones, the migration might fail because the migrated pods cannot access the persistent volume claim.
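A quick way to spot this risk is to look at the zone labels of the nodes involved. The sketch below assumes that nodes carry the well-known Kubernetes label `topology.kubernetes.io/zone` and that node manifests are available as dictionaries, for example from `oc get nodes -o json`. The function names are hypothetical, and the check only flags a possible problem; it does not predict whether a given migration fails.

```python
ZONE_LABEL = "topology.kubernetes.io/zone"  # well-known Kubernetes node label


def zones_of(nodes: list) -> set:
    """Collect the distinct zone labels found on the given node manifests."""
    zones = set()
    for node in nodes:
        zone = node.get("metadata", {}).get("labels", {}).get(ZONE_LABEL)
        if zone is not None:
            zones.add(zone)
    return zones


def spans_multiple_zones(nodes: list) -> bool:
    """Return True if the nodes are spread over more than one zone."""
    return len(zones_of(nodes)) > 1
```

If `spans_multiple_zones` returns `True` for the nodes that host the migrated workloads, review the placement of pods and volumes before running DVM.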

DIM and DVM have significant performance benefits because the intermediate steps of backing up files from the source cluster to the replication repository and restoring files from the replication repository to the target cluster are skipped. The data is transferred with Rsync.

DIM and DVM have additional prerequisites.

Chapter 2. MTC release notes

2.1. Migration Toolkit for Containers 1.8 release notes

The release notes for Migration Toolkit for Containers (MTC) describe new features and enhancements, deprecated features, and known issues.

The MTC enables you to migrate application workloads between OpenShift Container Platform clusters at the granularity of a namespace.

MTC provides a web console and an API, based on Kubernetes custom resources, to help you control the migration and minimize application downtime.

For information on the support policy for MTC, see OpenShift Application and Cluster Migration Solutions, part of the Red Hat OpenShift Container Platform Life Cycle Policy.

2.1.1. Migration Toolkit for Containers 1.8.13 release notes

Migration Toolkit for Containers (MTC) 1.8.13 is a Container Grade Only (CGO) release, which is released to refresh the health grades of the containers. No code was changed in the product itself compared to that of MTC 1.8.12.

2.1.2. Migration Toolkit for Containers 1.8.12 release notes

The Migration Toolkit for Containers (MTC) 1.8.12 section lists known and resolved issues.

2.1.2.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.12 has the following resolved issues:

Live migration of virtual machine instance with RWO configuration works in MigMigration

With this release, the live migration of virtual machine instances (VMIs) no longer fails for MigMigration when the persistent volume claims (PVCs) of VMIs are not shared with the access mode configuration ReadWriteMany. The migration plan does not prevent you from migrating the VMIs because of the RWO volumes. As a result, you can migrate VMs with ReadWriteOnce volumes.

2.1.3. Migration Toolkit for Containers 1.8.11 release notes

Migration Toolkit for Containers (MTC) 1.8.11 is a Container Grade Only (CGO) release, which is released to refresh the health grades of the containers. No code was changed in the product itself compared to that of MTC 1.8.10.

2.1.4. Migration Toolkit for Containers 1.8.10 release notes

2.1.4.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.10 has the following major resolved issues:

migmigration failed to clean up stale virt-handler pods for stopped VMs

With this release, the migmigration object no longer fails to clean up stale virt-handler pods for stopped virtual machines (VMs) in an Azure Red Hat OpenShift (ARO) cluster with MTC Operator and Red Hat OpenShift Data Foundation (ODF) storage cluster-CEPH RBD virtualization. This resolution addresses an issue where migration failure occurred due to an attempt to clean up non-existent virt-handler pods during live migration when the VM was stopped and no VMI existed. As a result, stopped VMs no longer prevent successful migration. (MIG-1762)

2.1.5. Migration Toolkit for Containers 1.8.9 release notes

2.1.5.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.9 has the following major resolved issues:

VM restarts migration after the MigPlan deletion

Before this update, deleting the MigPlan triggered unnecessary virtual machine (VM) migrations. This caused the VMs to resume, leading to migration failures. This release introduces a fix that prevents VMs from migrating when the MigPlan is actively removed. As a result, VMs no longer unintentionally migrate when MigPlans are deleted, ensuring a stable environment for users. (MIG-1749)

Virt-launcher pod remains in pending state after migrating the VM from hostpath-csi-basic to hostpath-csi-pvc-block

Before this update, the virt-launcher pod stalled after migrating a VM due to pod anti-affinity conflicts with bound volumes. This resulted in a failed VM migration, causing the virt-launcher pod to get stuck. This release resolves the pod anti-affinity issue, allowing for scheduling on different nodes. As a result, you can now migrate VMs from hostpath-csi-basic to hostpath-csi-pvc-block without pods getting stuck in a pending state, thereby improving the overall migration process efficiency. (MIG-1750)

Migration-controller panics due to LimitRange "NIL" found in source project

Before this update, a missing Min spec in a LimitRange within the openshift-migration namespace caused a panic in the migration-controller pod, disrupting the user experience and preventing successful volume migration. With this release, the migration-controller pod no longer crashes when encountering a LimitRange set to Nil. As a result, rsync pods now comply with the specified limits, improving the migration process stability and eliminating CrashLoopBackOff errors in source namespaces.

2.1.6. Migration Toolkit for Containers 1.8.8 release notes

2.1.6.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.8 has the following major resolved issues:

The detection of storage live migration status failed

There was an error in detecting the status of storage live migration. This update resolves the issue. (MIG-1746)

2.1.7. Migration Toolkit for Containers 1.8.7 release notes

2.1.7.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.7 has the following major resolved issues:

MTC migration stuck in a Prepare phase on OpenShift Container Platform 4.19 due to incompatible OADP version and outdated DPA specification

When running migrations using MTC 1.8.7 on OpenShift Container Platform 4.19, the process halts in the Prepare phase and the migration plan enters the Suspended phase.

The root cause is the deployment of an incompatible OADP version, earlier than 1.5.0, whose DataProtectionApplication (DPA) specification format is incompatible with OpenShift Container Platform. (MIG-1735)

Velero backup fails with a file already closed error when using the new AWS plugin in MTC

During stage or full migrations, the backup process intermittently fails for MTC with OADP on OpenShift Container Platform clusters configured with the new Amazon Web Services (AWS) plugin. You can see the following error in Velero logs:

error="read |0: file already closed"

As a workaround, use the legacy AWS plugin by performing the following actions:

- Set velero_use_legacy_aws: true in the MigrationController custom resource (CR).

- Restart the MTC Operator to apply changes.

- Validate the AWS credentials for the cloud-credentials secret.
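The first step of the workaround can be expressed as a JSON merge patch. The following Python sketch only builds the patch string. It assumes that `velero_use_legacy_aws` is set under `spec` of the `MigrationController` CR, which is where other MigrationController parameters live; confirm this against your installed CR before applying it. The function name is hypothetical.

```python
import json


def legacy_aws_patch(enabled: bool = True) -> str:
    """Return a JSON merge patch that toggles the legacy AWS plugin.

    Assumes the parameter belongs under `spec` of the MigrationController CR.
    """
    return json.dumps({"spec": {"velero_use_legacy_aws": enabled}})


patch = legacy_aws_patch()
```

The resulting string could then be passed to `oc patch` with `--type=merge -p`, targeting the `MigrationController` CR in the namespace where MTC is installed. The CR name and namespace depend on your installation and are not shown here.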

2.1.8. Migration Toolkit for Containers 1.8.6 release notes

2.1.8.1. Technical changes

Multiple migration plans for a namespace are not supported

MTC versions 1.8.6 and later do not support multiple migration plans for a single namespace.
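Because a namespace can belong to only one migration plan, it can be useful to check existing plans for overlaps before upgrading. The sketch below takes a mapping of plan names to namespace lists and reports source namespaces that appear in more than one plan. It assumes that namespace entries may use a `source:target` mapping form, in which case only the source part is compared. The function name and sample plans are hypothetical.

```python
from collections import defaultdict


def namespaces_in_multiple_plans(plans: dict) -> dict:
    """Map each source namespace used by more than one plan to those plans."""
    owners = defaultdict(set)
    for plan_name, namespaces in plans.items():
        for entry in namespaces:
            # Assumed "source:target" mapping syntax; keep the source part.
            source = entry.split(":", 1)[0]
            owners[source].add(plan_name)
    return {ns: sorted(names) for ns, names in owners.items() if len(names) > 1}


conflicts = namespaces_in_multiple_plans({
    "plan-a": ["shop", "blog"],
    "plan-b": ["shop:shop-new"],
    "plan-c": ["wiki"],
})
```

In this example `conflicts` reports that `shop` is claimed by both `plan-a` and `plan-b`.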

VM storage migration

The VM storage migration feature has moved from Technology Preview (TP) status to General Availability (GA).

2.1.8.2. Resolved issues

MTC 1.8.6 has the following major resolved issues:

UI fails during a namespace search in the migration plan wizard

When searching for a namespace in the Select Namespace step of the migration plan wizard, the user interface (UI) fails and disappears after clicking Search. The browser console shows a JavaScript error indicating that an undefined value has been accessed. This issue has been resolved in MTC 1.8.6. (MIG-1704)

Unable to create a migration plan due to a reconciliation failure

In MTC, when creating a migration plan, the UI remains on Persistent Volumes and you cannot continue. This issue occurs due to a critical reconciliation failure and returns a 404 API error when you attempt to fetch the migration plan from the backend. These issues cause the migration plan to remain in a Not Ready state, and you are prevented from continuing. This issue has been resolved in MTC 1.8.6. (MIG-1705)

Migration process fails to complete after the StageBackup phase

When migrating a Django and PostgreSQL application, the migration fails to complete after the StageBackup phase. Even though all the pods in the source namespace are healthy before the migration begins, after the migration and when terminating the pods on the source cluster, the Django pod fails with a CrashLoopBackOff error. This issue has been resolved in MTC 1.8.6. (MIG-1707)

Migration shown as succeeded despite a failed phase due to a misleading UI status

After running a migration using MTC, the UI incorrectly indicates that the migration was successful, with the status shown as Migration succeeded. However, the Direct Volume Migration (DVM) phase failed. This misleading status appears on both the Migration and the Migration Details pages. This issue has been resolved in MTC 1.8.6. (MIG-1711)

Persistent Volumes page hangs indefinitely for namespaces without persistent volume claims

When a migration plan includes a namespace that does not have any persistent volume claims (PVCs), the Persistent Volumes selection page remains indefinitely with the following message shown: Discovering persistent volumes attached to source projects…. The page never completes loading, preventing you from proceeding with the migration. This issue has been resolved in MTC 1.8.6. (MIG-1713)

2.1.8.3. Known issues

MTC 1.8.6 has the following known issues:

Inconsistent reporting of migration failure status

There is a discrepancy in the reporting of namespace migration status following a rollback and subsequent re-migration attempt when the migration plan is deliberately faulted. Although the Direct Volume Migration (DVM) phase correctly registers a failure, this failure is not consistently reflected in the user interface (UI) or the migration plan’s YAML representation.

This issue is not limited to unusual or unexpected cases. In certain circumstances, such as when network restrictions are applied that cause the DVM phase to fail, the UI still reports the migration status as successful. This behavior is similar to what was previously observed in MIG-1711 but occurs under different conditions. (MIG-1719)

2.1.9. Migration Toolkit for Containers 1.8.5 release notes

2.1.9.1. Technical changes

Migration Toolkit for Containers (MTC) 1.8.5 has the following technical changes:

Federal Information Processing Standard (FIPS)

FIPS is a set of computer security standards developed by the United States federal government in accordance with the Federal Information Security Management Act (FISMA).

Starting with version 1.8.5, MTC is designed for FIPS compliance.

2.1.9.2. Resolved issues

For more information, see the list of MTC 1.8.5 resolved issues in Jira.

2.1.9.3. Known issues

MTC 1.8.5 has the following known issues:

The associated SCC for service account cannot be migrated in OpenShift Container Platform 4.12

The associated Security Context Constraints (SCCs) for service accounts in OpenShift Container Platform 4.12 cannot be migrated. This issue is planned to be resolved in a future release of MTC. (MIG-1454)

MTC does not patch statefulset.spec.volumeClaimTemplates[].spec.storageClassName on storage class conversion

While running a Storage Class conversion for a StatefulSet application, MTC updates the persistent volume claims (PVC) references in .spec.volumeClaimTemplates[].metadata.name to use the migrated PVC names. MTC does not update spec.volumeClaimTemplates[].spec.storageClassName, which causes the application to scale up. Additionally, new replicas consume PVCs created under the old Storage Class instead of the migrated Storage Class. (MIG-1660)
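To see whether a StatefulSet is affected after a conversion, you can compare the storage class in each volume claim template with the migrated storage class. The following Python sketch works on a StatefulSet manifest given as a dictionary and uses the standard `spec.volumeClaimTemplates[].spec.storageClassName` path. The function name, the sample manifest, and the storage class names are hypothetical.

```python
def stale_claim_templates(statefulset: dict, target_storage_class: str) -> list:
    """List claim template names that do not use the target storage class."""
    templates = statefulset.get("spec", {}).get("volumeClaimTemplates", [])
    return [
        template.get("metadata", {}).get("name", "<unnamed>")
        for template in templates
        if template.get("spec", {}).get("storageClassName") != target_storage_class
    ]


sts = {
    "spec": {
        "volumeClaimTemplates": [
            {"metadata": {"name": "data-new"}, "spec": {"storageClassName": "old-sc"}},
            {"metadata": {"name": "cache"}, "spec": {"storageClassName": "new-sc"}},
        ]
    }
}
stale = stale_claim_templates(sts, "new-sc")
```

Here `stale` contains `data-new`, the template whose storage class would still be used for new replicas.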

Performing a StorageClass conversion triggers the scale-down of all applications in the namespace

When running a StorageClass conversion on more than one application, MTC scales down all the applications in the cutover phase, including those not involved in the migration. (MIG-1661)

MigPlan cannot be edited to have the same target namespace as the source cluster after it is changed

After changing the target namespace to something different from the source namespace while creating a MigPlan in the MTC UI, you cannot edit the MigPlan again to make the target namespace the same as the source namespace. (MIG-1600)

Migrated builder pod fails to push to the image registry

When migrating an application that includes BuildConfig from the source to the target cluster, the builder pod encounters an error, failing to push the image to the image registry. (BZ#2234781)

Conflict condition clears briefly after it is displayed

When creating a new state migration plan that results in a conflict error, the error is cleared shortly after it is displayed. (BZ#2144299)

PvCapacityAdjustmentRequired warning not displayed after setting pv_resizing_threshold

The PvCapacityAdjustmentRequired warning does not appear in the migration plan after the pv_resizing_threshold is adjusted. (BZ#2270160)

For a complete list of all known issues, see the list of MTC 1.8.5 known issues in Jira.

2.1.10. Migration Toolkit for Containers 1.8.4 release notes

2.1.10.1. Technical changes

Migration Toolkit for Containers (MTC) 1.8.4 has the following technical changes:

- MTC 1.8.4 extends its dependency resolution to include support for using OpenShift API for Data Protection (OADP) 1.4.

Support for KubeVirt Virtual Machines with DirectVolumeMigration

MTC 1.8.4 adds support for KubeVirt Virtual Machines (VMs) with Direct Volume Migration (DVM).

2.1.10.2. Resolved issues

MTC 1.8.4 has the following major resolved issues:

Ansible Operator is broken when OpenShift Virtualization is installed

There is a bug in the python3-openshift package that installing OpenShift Virtualization exposes. An exception, ValueError: too many values to unpack, is returned during the task. Earlier versions of MTC are impacted, while MTC 1.8.4 has implemented a workaround. Updating to MTC 1.8.4 means you are no longer affected by this issue. (OCPBUGS-38116)

UI stuck at Namespaces while creating a migration plan

When trying to create a migration plan from the MTC UI, the migration plan wizard becomes stuck at the Namespaces step. This issue has been resolved in MTC 1.8.4. (MIG-1597)

Migration fails with error of no matches for kind Virtual machine in version kubevirt/v1

During the migration of an application, all the necessary steps, including the backup, DVM, and restore, are successfully completed. However, the migration is marked as unsuccessful with the error message no matches for kind Virtual machine in version kubevirt/v1. (MIG-1594)

Direct Volume Migration fails when migrating to a namespace different from the source namespace

On performing a migration from source cluster to target cluster, with the target namespace different from the source namespace, the DVM fails. (MIG-1592)

Direct Image Migration does not respect label selector on migplan

When using Direct Image Migration (DIM), if a label selector is set on the migration plan, DIM does not respect it and attempts to migrate all imagestreams in the namespace. (MIG-1533)

2.1.10.3. Known issues

MTC 1.8.4 has the following known issues:

The associated SCC for service account cannot be migrated in OpenShift Container Platform 4.12

The associated Security Context Constraints (SCCs) for service accounts in OpenShift Container Platform 4.12 cannot be migrated. This issue is planned to be resolved in a future release of MTC. (MIG-1454)

Rsync pod fails to start causing the DVM phase to fail

The DVM phase fails due to the Rsync pod failing to start, because of a permission issue.

Migrated builder pod fails to push to image registry

When migrating an application that includes BuildConfig from the source to the target cluster, the builder pod encounters an error, failing to push the image to the image registry.

Conflict condition gets cleared briefly after it is created

When creating a new state migration plan that results in a conflict error, that error is cleared shortly after it is displayed.

PvCapacityAdjustmentRequired warning not displayed after setting pv_resizing_threshold

The PvCapacityAdjustmentRequired warning fails to appear in the migration plan after the pv_resizing_threshold is adjusted.

2.1.11. Migration Toolkit for Containers 1.8.3 release notes

2.1.11.1. Technical changes

Migration Toolkit for Containers (MTC) 1.8.3 has the following technical changes:

OADP 1.3 is now supported

MTC 1.8.3 adds support for OpenShift API for Data Protection (OADP) 1.3 as a dependency of MTC 1.8.z.

2.1.11.2. Resolved issues

MTC 1.8.3 has the following major resolved issues:

CVE-2024-24786: Flaw in Golang protobuf module causes unmarshal function to enter infinite loop

In previous releases of MTC, a vulnerability was found in Golang’s protobuf module, where the unmarshal function entered an infinite loop while processing certain invalid inputs. Consequently, an attacker could provide carefully constructed invalid inputs that caused the function to enter an infinite loop.

With this update, the unmarshal function works as expected.

For more information, see CVE-2024-24786.

CVE-2023-45857: Axios Cross-Site Request Forgery Vulnerability

In previous releases of MTC, a vulnerability was discovered in Axios 1.5.1 that inadvertently revealed a confidential XSRF-TOKEN stored in cookies by including it in the HTTP header X-XSRF-TOKEN for every request made to the host, allowing attackers to view sensitive information.

For more information, see CVE-2023-45857.

Restic backup does not work properly when the source workload is not quiesced

In previous releases of MTC, some files did not migrate when deploying an application with a route. The Restic backup did not function as expected when the quiesce option was unchecked for the source workload.

This issue has been resolved in MTC 1.8.3.

For more information, see BZ#2242064.

The Migration Controller fails to install due to an unsupported value error in Velero

The MigrationController failed to install due to an unsupported value error in Velero. Updating OADP 1.3.0 to OADP 1.3.1 resolves this problem. For more information, see BZ#2267018.

This issue has been resolved in MTC 1.8.3.

For a complete list of all resolved issues, see the list of MTC 1.8.3 resolved issues in Jira.

2.1.11.3. Known issues

Migration Toolkit for Containers (MTC) 1.8.3 has the following known issues:

Ansible Operator is broken when OpenShift Virtualization is installed

There is a bug in the python3-openshift package that installing OpenShift Virtualization exposes. An exception, ValueError: too many values to unpack, is returned during the task. MTC 1.8.4 has implemented a workaround. Updating to MTC 1.8.4 means you are no longer affected by this issue. (OCPBUGS-38116)

The associated SCC for service account cannot be migrated in OpenShift Container Platform 4.12

The associated Security Context Constraints (SCCs) for service accounts in OpenShift Container Platform version 4.12 cannot be migrated. This issue is planned to be resolved in a future release of MTC. (MIG-1454)

For a complete list of all known issues, see the list of MTC 1.8.3 known issues in Jira.

2.1.12. Migration Toolkit for Containers 1.8.2 release notes

2.1.12.1. Resolved issues

This release has the following major resolved issues:

Backup phase fails after setting custom CA replication repository

In previous releases of Migration Toolkit for Containers (MTC), after editing the replication repository, adding a custom CA certificate, successfully connecting the repository, and triggering a migration, a failure occurred during the backup phase.

CVE-2023-26136: Versions of the tough-cookie package before 4.1.3 are vulnerable to Prototype Pollution

In previous releases of MTC, versions before 4.1.3 of the tough-cookie package used in MTC were vulnerable to prototype pollution. This vulnerability occurred because CookieJar did not handle cookies properly when the value of rejectPublicSuffixes was set to false.

For more details, see CVE-2023-26136.

CVE-2022-25883 openshift-migration-ui-container: nodejs-semver: Regular expression denial of service

In previous releases of MTC, versions of the semver package before 7.5.2, used in MTC, were vulnerable to Regular Expression Denial of Service (ReDoS) from the function newRange, when untrusted user data was provided as a range.

For more details, see CVE-2022-25883.

2.1.12.2. Known issues

MTC 1.8.2 has the following known issues:

Ansible Operator is broken when OpenShift Virtualization is installed

There is a bug in the python3-openshift package that installing OpenShift Virtualization exposes. An exception, ValueError: too many values to unpack, is returned during the task. MTC 1.8.4 has implemented a workaround. Updating to MTC 1.8.4 means you are no longer affected by this issue. (OCPBUGS-38116)

2.1.13. Migration Toolkit for Containers 1.8.1 release notes

2.1.13.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.1 has the following major resolved issues:

CVE-2023-39325: golang: net/http, x/net/http2: rapid stream resets can cause excessive work

A flaw was found in handling multiplexed streams in the HTTP/2 protocol, which is used by MTC. A client could repeatedly make a request for a new multiplex stream and immediately send an RST_STREAM frame to cancel it. This creates additional workload for the server in terms of setting up and dismantling streams, while avoiding any server-side limitations on the maximum number of active streams per connection, resulting in a denial of service due to server resource consumption. (BZ#2245079)

It is advised to update to MTC 1.8.1 or later, which resolves this issue.

For more details, see CVE-2023-39325 and CVE-2023-44487.

2.1.13.2. Known issues

Migration Toolkit for Containers (MTC) 1.8.1 has the following known issues:

Ansible Operator is broken when OpenShift Virtualization is installed

There is a bug in the python3-openshift package that installing OpenShift Virtualization exposes. An exception, ValueError: too many values to unpack, is returned during the task. MTC 1.8.4 has implemented a workaround. Updating to MTC 1.8.4 means you are no longer affected by this issue. (OCPBUGS-38116)

2.1.14. Migration Toolkit for Containers 1.8.0 release notes

2.1.14.1. Resolved issues

Migration Toolkit for Containers (MTC) 1.8.0 has the following resolved issues:

Indirect migration is stuck on backup stage

In previous releases, an indirect migration became stuck at the backup stage, due to InvalidImageName error. ((BZ#2233097))

PodVolumeRestore remain In Progress keeping the migration stuck at Stage Restore

In previous releases, on performing an indirect migration, the migration became stuck at the Stage Restore step, waiting for the podvolumerestore to be completed. ((BZ#2233868))

Migrated application unable to pull image from internal registry on target cluster

In previous releases, on migrating an application to the target cluster, the migrated application failed to pull the image from the internal image registry resulting in an application failure. ((BZ#2233103))

Migration failing on Azure due to authorization issue

In previous releases, on an Azure cluster, when backing up to Azure storage, the migration failed at the Backup stage. (BZ#2238974)

2.1.14.2. Known issues

MTC 1.8.0 has the following known issues:

Ansible Operator is broken when OpenShift Virtualization is installed

There is a bug in the python3-openshift package that installing OpenShift Virtualization exposes, with an exception ValueError: too many values to unpack returned during the task. MTC 1.8.4 has implemented a workaround. Updating to MTC 1.8.4 means you are no longer affected by this issue. (OCPBUGS-38116)

Old Restic pods are not getting removed on upgrading MTC 1.7.x → 1.8.x

In this release, when upgrading the MTC Operator from 1.7.x to 1.8.x, the old Restic pods are not removed. Therefore, after the upgrade, both Restic and node-agent pods are visible in the namespace. (BZ#2236829)

Migrated builder pod fails to push to image registry

In this release, when migrating an application that includes a BuildConfig from a source cluster to a target cluster, the builder pod results in an error and fails to push the image to the image registry. (BZ#2234781)

[UI] CA bundle file field is not properly cleared

In this release, after enabling Require SSL verification and adding content to the CA bundle file for an MCG NooBaa bucket in MigStorage, the connection fails as expected. However, when reverting these changes by removing the CA bundle content and clearing Require SSL verification, the connection still fails. The issue is only resolved by deleting and re-adding the repository. (BZ#2240052)

Backup phase fails after setting custom CA replication repository

In MTC, after editing the replication repository, adding a custom CA certificate, successfully connecting the repository, and triggering a migration, a failure occurs during the backup phase.

This issue is resolved in MTC 1.8.2.

CVE-2023-26136: tough-cookie package versions before 4.1.3 are vulnerable to Prototype Pollution

Versions before 4.1.3 of the tough-cookie package, used in MTC, are vulnerable to prototype pollution. This vulnerability occurs because CookieJar does not handle cookies properly when rejectPublicSuffixes is set to false.

This issue is resolved in MTC 1.8.2.

For more details, see (CVE-2023-26136).

CVE-2022-25883 openshift-migration-ui-container: nodejs-semver: Regular expression denial of service

In previous releases of MTC, versions of the semver package before 7.5.2, used in MTC, are vulnerable to Regular Expression Denial of Service (ReDoS) from the function newRange, when untrusted user data is provided as a range.

This issue is resolved in MTC 1.8.2.

For more details, see (CVE-2022-25883).

2.1.14.3. Technical changes

This release has the following technical changes:

- Migration from OpenShift Container Platform 3 to OpenShift Container Platform 4 requires a legacy Migration Toolkit for Containers Operator and Migration Toolkit for Containers 1.7.x.

- Migration from MTC 1.7.x to MTC 1.8.x is not supported.

You must use MTC 1.7.x to migrate anything with a source of OpenShift Container Platform 4.9 or earlier.

- MTC 1.7.x must be used on both source and destination.

Migration Toolkit for Containers (MTC) 1.8.x only supports migrations from OpenShift Container Platform 4.10 or later to OpenShift Container Platform 4.10 or later. For migrations only involving cluster versions 4.10 and later, either 1.7.x or 1.8.x might be used. However, it must be the same MTC 1.Y.z on both source and destination.

- Migration from source MTC 1.7.x to destination MTC 1.8.x is unsupported.

- Migration from source MTC 1.8.x to destination MTC 1.7.x is unsupported.

- Migration from source MTC 1.7.x to destination MTC 1.7.x is supported.

- Migration from source MTC 1.8.x to destination MTC 1.8.x is supported.

- MTC 1.8.x by default installs OADP 1.2.x.

- Upgrading from MTC 1.7.x to MTC 1.8.0 requires manually changing the OADP channel to 1.2. If this is not done, the upgrade of the Operator fails.
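The compatibility rules in this list can be summarized as a small check. The following Python sketch is illustrative only and is not part of MTC. The function names `usable_mtc_streams` and `is_supported_pair` are hypothetical, and OpenShift Container Platform versions are assumed to be passed as `(major, minor)` tuples.

```python
"""Illustrative encoding of the MTC 1.7.x / 1.8.x compatibility rules.

The function names and the tuple-based version format are assumptions
made for this sketch; they are not part of MTC.
"""


def usable_mtc_streams(ocp_version):
    """Return the MTC minor streams that can be used with a cluster version."""
    if ocp_version < (4, 10):
        # OpenShift Container Platform 3 and 4.9 or earlier require MTC 1.7.x.
        return {"1.7"}
    # OpenShift Container Platform 4.10 or later can use either stream.
    return {"1.7", "1.8"}


def is_supported_pair(source_ocp, dest_ocp, source_mtc, dest_mtc):
    """Check a source/destination combination against the rules above."""
    # The same MTC 1.Y stream must be installed on both clusters.
    if source_mtc != dest_mtc:
        return False
    usable = usable_mtc_streams(source_ocp) & usable_mtc_streams(dest_ocp)
    return source_mtc in usable


if __name__ == "__main__":
    # A 4.9 source cluster forces MTC 1.7.x on both sides.
    print(is_supported_pair((4, 9), (4, 14), "1.7", "1.7"))
    print(is_supported_pair((4, 9), (4, 14), "1.8", "1.8"))
    # Mixed streams are never supported.
    print(is_supported_pair((4, 12), (4, 14), "1.7", "1.8"))
```

The sketch models only the minor stream (1.7 or 1.8). It does not model the OADP channel change that is required when upgrading the Operator.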

2.2. Migration Toolkit for Containers 1.7 release notes

The release notes for Migration Toolkit for Containers (MTC) describe new features and enhancements, deprecated features, and known issues.

The MTC enables you to migrate application workloads between OpenShift Container Platform clusters at the granularity of a namespace.

You can migrate from OpenShift Container Platform 3 to 4.14 and between OpenShift Container Platform 4 clusters.

MTC provides a web console and an API, based on Kubernetes custom resources, to help you control the migration and minimize application downtime.

For information on the support policy for MTC, see OpenShift Application and Cluster Migration Solutions, part of the Red Hat OpenShift Container Platform Life Cycle Policy.

2.2.1. Migration Toolkit for Containers 1.7.19 release notes

Migration Toolkit for Containers (MTC) 1.7.19 is a Container Grade Only (CGO) release, which is released to refresh the health grades of the containers. No code was changed in the product itself compared to that of MTC 1.7.18.

2.2.1.1. Resolved issues

This release has the following resolved issues:

python3-requests package vulnerability

A bug was introduced when building the MTC Operator for the 1.7.19 release. During QE testing, attempts to install MTC 1.7.19 from the OperatorHub caused the migration-operator pod to fail during startup. The logs indicated that the Ansible Runner was crashing with an unhandled KeyError: 'http+unix' issue. The MTC Operator was left in an incomplete state, and the required controller pods were never deployed, which rendered the Operator non-functional. This issue was caused by the python3-requests package.

The workaround was to downgrade the python3-requests package used in MTC 1.7.19 to the last known working version, python3-requests-2.20.0-3.el8_8.noarch. However, this downgrade means exposure to the vulnerability described in RHSA-2023:4520.

2.2.1.2. Known issues

The restore fails due to a missing backup storage location

Currently, if the backup storage location is not specified, application migration fails. (MIG-1649)

Workaround: Roll back and then restart the migration.

2.2.2. Migration Toolkit for Containers 1.7.18 release notes

Migration Toolkit for Containers (MTC) 1.7.18 is a Container Grade Only (CGO) release, which is released to refresh the health grades of the containers. No code was changed in the product itself compared to that of MTC 1.7.17.

2.2.2.1. Technical changes

Migration Toolkit for Containers (MTC) 1.7.18 has the following technical changes:

Federal Information Processing Standard (FIPS)

FIPS is a set of computer security standards developed by the United States federal government in accordance with the Federal Information Security Management Act (FISMA).

Starting with version 1.7.18, MTC is designed for FIPS compliance.

2.2.3. Migration Toolkit for Containers 1.7.17 release notes

Migration Toolkit for Containers (MTC) 1.7.17 is a Container Grade Only (CGO) release, which is released to refresh the health grades of the containers. No code was changed in the product itself compared to that of MTC 1.7.16.

2.2.4. Migration Toolkit for Containers 1.7.16 release notes

2.2.4.1. Resolved issues

This release has the following resolved issues:

CVE-2023-45290: Golang: net/http: Memory exhaustion in the Request.ParseMultipartForm method

A flaw was found in the net/http Golang standard library package, which impacts earlier versions of MTC. When parsing a multipart form, either explicitly with Request.ParseMultipartForm or implicitly with Request.FormValue, Request.PostFormValue, or Request.FormFile methods, limits on the total size of the parsed form are not applied to the memory consumed while reading a single form line. This permits a maliciously crafted input containing long lines to cause the allocation of arbitrarily large amounts of memory, potentially leading to memory exhaustion.

To resolve this issue, upgrade to MTC 1.7.16.

For more details, see CVE-2023-45290.

CVE-2024-24783: Golang: crypto/x509: Verify panics on certificates with an unknown public key algorithm

A flaw was found in the crypto/x509 Golang standard library package, which impacts earlier versions of MTC. Verifying a certificate chain that contains a certificate with an unknown public key algorithm causes Certificate.Verify to panic. This affects all crypto/tls clients and servers that set Config.ClientAuth to VerifyClientCertIfGiven or RequireAndVerifyClientCert. The default behavior is for TLS servers to not verify client certificates.

To resolve this issue, upgrade to MTC 1.7.16.

For more details, see CVE-2024-24783.

CVE-2024-24784: Golang: net/mail: Comments in display names are incorrectly handled

A flaw was found in the net/mail Golang standard library package, which impacts earlier versions of MTC. The ParseAddressList function incorrectly handles comments, text in parentheses, and display names. As this is a misalignment with conforming address parsers, it can result in different trust decisions being made by programs using different parsers.

To resolve this issue, upgrade to MTC 1.7.16.

For more details, see CVE-2024-24784.

CVE-2024-24785: Golang: html/template: Errors returned from MarshalJSON methods may break template escaping

A flaw was found in the html/template Golang standard library package, which impacts earlier versions of MTC. If errors returned from MarshalJSON methods contain user-controlled data, they could be used to break the contextual auto-escaping behavior of the html/template package, allowing subsequent actions to inject unexpected content into templates.

To resolve this issue, upgrade to MTC 1.7.16.

For more details, see CVE-2024-24785.

CVE-2024-29180: webpack-dev-middleware: Lack of URL validation may lead to file leak

A flaw was found in the webpack-dev-middleware package, which impacts earlier versions of MTC. This flaw fails to validate the supplied URL address sufficiently before returning local files, which could allow an attacker to craft URLs to return arbitrary local files from the developer’s machine.

To resolve this issue, upgrade to MTC 1.7.16.

For more details, see CVE-2024-29180.

CVE-2024-30255: envoy: HTTP/2 CPU exhaustion due to CONTINUATION frame flood

A flaw was found in how the envoy proxy implements the HTTP/2 codec, which impacts earlier versions of MTC. There are insufficient limitations placed on the number of CONTINUATION frames that can be sent within a single stream, even after exceeding the header map limits of envoy. This flaw could allow an unauthenticated remote attacker to send packets to vulnerable servers. These packets could consume compute resources and cause a denial of service (DoS).

To resolve this issue, upgrade to MTC 1.7.16.

For more details, see CVE-2024-30255.

2.2.4.2. Known issues

This release has the following known issues:

Direct Volume Migration is failing as the Rsync pod on the source cluster goes into an Error state

On migrating any application with a Persistent Volume Claim (PVC), the Stage migration operation succeeds with warnings, but the Direct Volume Migration (DVM) fails with the rsync pod on the source namespace moving into an error state. (BZ#2256141)

The conflict condition is briefly cleared after it is created

When creating a new state migration plan that returns a conflict error message, the error message is cleared very shortly after it is displayed. (BZ#2144299)

Migration fails when there are multiple Volume Snapshot Locations of different provider types configured in a cluster

When there are multiple Volume Snapshot Locations (VSLs) in a cluster with different provider types, but you have not set any of them as the default VSL, Velero results in a validation error that causes migration operations to fail. (BZ#2180565)

2.2.5. Migration Toolkit for Containers 1.7.15 release notes

2.2.5.1. Resolved issues

This release has the following resolved issues:

CVE-2024-24786: A flaw was found in Golang’s protobuf module, where the unmarshal function can enter an infinite loop

A flaw was found in the protojson.Unmarshal function that could cause the function to enter an infinite loop when unmarshaling certain forms of invalid JSON messages. This condition could occur when unmarshaling into a message that contained a google.protobuf.Any value or when the UnmarshalOptions.DiscardUnknown option was set in a JSON-formatted message.

To resolve this issue, upgrade to MTC 1.7.15.

For more details, see (CVE-2024-24786).

CVE-2024-28180: jose-go improper handling of highly compressed data

A vulnerability was found in Jose due to improper handling of highly compressed data. An attacker could send a JSON Web Encryption (JWE) encrypted message that contained compressed data that used large amounts of memory and CPU when decompressed by the Decrypt or DecryptMulti functions.

To resolve this issue, upgrade to MTC 1.7.15.

For more details, see (CVE-2024-28180).

2.2.5.2. Known issues

This release has the following known issues:

Direct Volume Migration is failing as the Rsync pod on the source cluster goes into an Error state

On migrating any application with a Persistent Volume Claim (PVC), the Stage migration operation succeeds with warnings, and Direct Volume Migration (DVM) fails with the rsync pod on the source namespace going into an error state. (BZ#2256141)

The conflict condition is briefly cleared after it is created

When creating a new state migration plan that results in a conflict error message, the error message is cleared shortly after it is displayed. (BZ#2144299)

Migration fails when there are multiple Volume Snapshot Locations (VSLs) of different provider types configured in a cluster with no specified default VSL

When there are multiple VSLs in a cluster with different provider types, and you set none of them as the default VSL, Velero results in a validation error that causes migration operations to fail. (BZ#2180565)

2.2.6. Migration Toolkit for Containers 1.7.14 release notes

2.2.6.1. Resolved issues

This release has the following resolved issues:

CVE-2023-39325 CVE-2023-44487: various flaws

A flaw was found in the handling of multiplexed streams in the HTTP/2 protocol, which is utilized by Migration Toolkit for Containers (MTC). A client could repeatedly make a request for a new multiplex stream then immediately send an RST_STREAM frame to cancel those requests. This activity created additional workloads for the server in terms of setting up and dismantling streams, but avoided any server-side limitations on the maximum number of active streams per connection. As a result, a denial of service occurred due to server resource consumption.

To resolve this issue, upgrade to MTC 1.7.14.

For more details, see (CVE-2023-44487) and (CVE-2023-39325).

CVE-2023-39318 CVE-2023-39319 CVE-2023-39321: various flaws

- (CVE-2023-39318): A flaw was discovered in Golang, utilized by MTC. The html/template package did not properly handle HTML-like "<!--" and "-->" comment tokens, or the hashbang "#!" comment tokens, in <script> contexts. This flaw could cause the template parser to improperly interpret the contents of <script> contexts, causing actions to be improperly escaped.

- (CVE-2023-39319): A flaw was discovered in Golang, utilized by MTC. The html/template package did not apply the proper rules for handling occurrences of "<script", "<!--", and "</script" within JavaScript literals in <script> contexts. This could cause the template parser to improperly consider script contexts to be terminated early, causing actions to be improperly escaped.

- (CVE-2023-39321): A flaw was discovered in Golang, utilized by MTC. Processing an incomplete post-handshake message for a QUIC connection could cause a panic.

- (CVE-2023-39322): A flaw was discovered in Golang, utilized by MTC. Connections using the QUIC transport protocol did not set an upper bound on the amount of data buffered when reading post-handshake messages, allowing a malicious QUIC connection to cause unbounded memory growth.

To resolve these issues, upgrade to MTC 1.7.14.

For more details, see (CVE-2023-39318), (CVE-2023-39319), and (CVE-2023-39321).

2.2.6.2. Known issues

There are no major known issues in this release.

2.2.7. Migration Toolkit for Containers 1.7.13 release notes

2.2.7.1. Resolved issues

There are no major resolved issues in this release.

2.2.7.2. Known issues

There are no major known issues in this release.

2.2.8. Migration Toolkit for Containers 1.7.12 release notes

2.2.8.1. Resolved issues

There are no major resolved issues in this release.

2.2.8.2. Known issues

This release has the following known issues:

Error code 504 is displayed on the Migration details page

On the Migration details page, at first, the migration details are displayed without any issues. However, after some time, the details disappear, and a 504 error is returned. (BZ#2231106)

Old restic pods are not removed when upgrading Migration Toolkit for Containers 1.7.x to Migration Toolkit for Containers 1.8

On upgrading the Migration Toolkit for Containers (MTC) operator from 1.7.x to 1.8.x, the old restic pods are not removed. After the upgrade, both restic and node-agent pods are visible in the namespace. (BZ#2236829)

2.2.9. Migration Toolkit for Containers 1.7.11 release notes

2.2.9.1. Resolved issues

There are no major resolved issues in this release.

2.2.9.2. Known issues

There are no known issues in this release.

2.2.10. Migration Toolkit for Containers 1.7.10 release notes

2.2.10.1. Resolved issues

This release has the following major resolved issue:

Adjust rsync options in DVM

In this release, you can prevent absolute symlinks from being manipulated by Rsync in the course of direct volume migration (DVM). Running DVM in privileged mode preserves absolute symlinks inside the persistent volume claims (PVCs). To switch to privileged mode, in the MigrationController CR, set the migration_rsync_privileged spec to true. (BZ#2204461)
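As a minimal sketch of the setting described above, the following Python snippet builds a JSON merge patch that sets `migration_rsync_privileged` to `true` under `spec`. The helper name `build_rsync_privileged_patch` is hypothetical, and the snippet only prints the patch; it does not contact a cluster.

```python
import json


def build_rsync_privileged_patch(enabled=True):
    """Return a JSON merge patch for the MigrationController CR.

    Hypothetical helper for illustration: it only builds the patch body
    that sets spec.migration_rsync_privileged.
    """
    return {"spec": {"migration_rsync_privileged": bool(enabled)}}


if __name__ == "__main__":
    # Print the patch so that it can be reviewed before it is applied.
    print(json.dumps(build_rsync_privileged_patch()))
```

The printed JSON could be supplied to `oc patch` with `--type merge` against the MigrationController CR. The CR name and namespace depend on your installation and are not assumed here.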

2.2.10.2. Known issues

There are no known issues in this release.

2.2.11. Migration Toolkit for Containers 1.7.9 release notes

2.2.11.1. Resolved issues

There are no major resolved issues in this release.

2.2.11.2. Known issues

This release has the following known issue:

Adjust rsync options in DVM

In this release, users are unable to prevent absolute symlinks from being manipulated by rsync during direct volume migration (DVM). (BZ#2204461)

2.2.12. Migration Toolkit for Containers 1.7.8 release notes

2.2.12.1. Resolved issues

This release has the following major resolved issues:

Velero image cannot be overridden in the Migration Toolkit for Containers (MTC) operator

In previous releases, it was not possible to override the velero image using the velero_image_fqin parameter in the MigrationController Custom Resource (CR). (BZ#2143389)

Adding a MigCluster from the UI fails when the domain name has more than six characters

In previous releases, adding a MigCluster from the UI failed when the domain name had more than six characters. The UI code expected a domain name of between two and six characters. (BZ#2152149)

UI fails to render the Migrations' page: Cannot read properties of undefined (reading 'name')

In previous releases, the UI failed to render the Migrations' page, returning Cannot read properties of undefined (reading 'name'). (BZ#2163485)

Creating DPA resource fails on Red Hat OpenShift Container Platform 4.6 clusters

In previous releases, when deploying MTC on an OpenShift Container Platform 4.6 cluster, the DPA failed to be created according to the logs, which resulted in some pods missing. The logs in the migration-controller in the OpenShift Container Platform 4.6 cluster indicated that an unexpected null value was passed, which caused the error. (BZ#2173742)

2.2.12.2. Known issues

There are no known issues in this release.

2.2.13. Migration Toolkit for Containers 1.7.7 release notes

2.2.13.1. Resolved issues

There are no major resolved issues in this release.

2.2.13.2. Known issues

There are no known issues in this release.

2.2.14. Migration Toolkit for Containers 1.7.6 release notes

2.2.14.1. New features

Implement proposed changes for DVM support with PSA in Red Hat OpenShift Container Platform 4.12

With the incoming enforcement of Pod Security Admission (PSA) in OpenShift Container Platform 4.12, pods run with a restricted profile by default. Under this restricted profile, the workloads to migrate would violate the policy and no longer work. This enhancement implements the changes required for direct volume migration (DVM) to remain compatible with OpenShift Container Platform 4.12. (MIG-1240)

2.2.14.2. Resolved issues

This release has the following major resolved issues:

Unable to create Storage Class Conversion plan due to missing cronjob error in Red Hat OpenShift Platform 4.12

In previous releases, on the persistent volumes page, an error was thrown that a CronJob is not available in version batch/v1beta1, and when clicking Cancel, the migplan was created with the status Not ready. (BZ#2143628)

2.2.14.3. Known issues

This release has the following known issue:

Conflict conditions are cleared briefly after they are created

When creating a new state migration plan that results in a conflict error, that error is cleared shortly after it is displayed. (BZ#2144299)

2.2.15. Migration Toolkit for Containers 1.7.5 release notes

2.2.15.1. Resolved issues

This release has the following major resolved issue:

Direct Volume Migration is failing as the rsync pod on the source cluster moves into an Error state

In previous releases, migration succeeded with warnings, but Direct Volume Migration failed with the rsync pod on the source namespace going into an error state. (BZ#2132978)

2.2.15.2. Known issues

This release has the following known issues:

Velero image cannot be overridden in the Migration Toolkit for Containers (MTC) operator

In this release, it is not possible to override the velero image using the velero_image_fqin parameter in the MigrationController Custom Resource (CR). (BZ#2143389)

When editing a MigHook in the UI, the page might fail to reload

The UI might fail to reload when editing a hook if there is a network connection issue. After the network connection is restored, the page will fail to reload until the cache is cleared. (BZ#2140208)

2.2.16. Migration Toolkit for Containers 1.7.4 release notes

2.2.16.1. Resolved issues

There are no major resolved issues in this release.

2.2.16.2. Known issues

Rollback missing out deletion of some resources from the target cluster

When rolling back an application from the Migration Toolkit for Containers (MTC) UI, some resources are not deleted from the target cluster, but the rollback shows a status of successfully completed. (BZ#2126880)

2.2.17. Migration Toolkit for Containers 1.7.3 release notes

2.2.17.1. Resolved issues

This release has the following major resolved issues:

Correct DNS validation for destination namespace

In previous releases, the MigPlan could not be validated if the destination namespace started with a non-alphabetic character. (BZ#2102231)
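Kubernetes namespace names follow the RFC 1123 label rule, which allows a name to start with a digit, whereas the stricter RFC 1035 label rule requires a leading letter. The Python sketch below contrasts the two rules. It is an assumption that the earlier validation behaved like the stricter rule; the sketch is not taken from the MTC source code, and the function names are hypothetical.

```python
import re

# RFC 1123 label: lowercase alphanumerics or '-', starting and ending with
# an alphanumeric character. Kubernetes applies this rule to namespace names.
DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")

# RFC 1035 label: same as above, but the first character must be a letter.
DNS1035_LABEL = re.compile(r"[a-z]([-a-z0-9]*[a-z0-9])?")

MAX_LABEL_LENGTH = 63


def is_dns1123_label(name):
    """Return True if name is a valid RFC 1123 label."""
    return len(name) <= MAX_LABEL_LENGTH and DNS1123_LABEL.fullmatch(name) is not None


def is_dns1035_label(name):
    """Return True if name is a valid RFC 1035 label."""
    return len(name) <= MAX_LABEL_LENGTH and DNS1035_LABEL.fullmatch(name) is not None


if __name__ == "__main__":
    # A namespace that starts with a digit passes one rule but not the other.
    for candidate in ("my-project", "1st-project"):
        print(candidate, is_dns1123_label(candidate), is_dns1035_label(candidate))
```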

Deselecting all PVCs from UI still results in an attempted PVC transfer

In previous releases, while doing a full migration, deselecting the persistent volume claims (PVCs) did not skip them, and MTC still tried to migrate them. (BZ#2106073)

Incorrect DNS validation for destination namespace

In previous releases, MigPlan could not be validated because the destination namespace started with a non-alphabetic character. (BZ#2102231)

2.2.17.2. Known issues

There are no known issues in this release.

2.2.18. Migration Toolkit for Containers 1.7.2 release notes

2.2.18.1. Resolved issues

This release has the following major resolved issues:

MTC UI does not display logs correctly

In previous releases, the Migration Toolkit for Containers (MTC) UI did not display logs correctly. (BZ#2062266)

StorageClass conversion plan adding migstorage reference in migplan

In previous releases, StorageClass conversion plans had a migstorage reference even though it was not being used. (BZ#2078459)

Velero pod log missing from downloaded logs

In previous releases, when downloading a compressed (.zip) folder for all logs, the velero pod was missing. (BZ#2076599)

Velero pod log missing from UI drop down

In previous releases, after a migration was performed, the velero pod log was not included in the logs provided in the dropdown list. (BZ#2076593)

Rsync options logs not visible in log-reader pod

In previous releases, when trying to set any valid or invalid rsync options in the migrationcontroller, the log-reader was not showing any logs regarding the invalid options or about the rsync command being used. (BZ#2079252)

Default CPU requests on Velero/Restic are too demanding and fail in certain environments

In previous releases, the default CPU requests on Velero/Restic were too demanding and failed in certain environments. Default CPU requests for Velero and Restic pods were set to 500m. These values were high. (BZ#2088022)

2.2.18.2. Known issues

This release has the following known issues:

Updating the replication repository to a different storage provider type is not respected by the UI

After updating the replication repository to a different type and clicking Update Repository, it shows connection successful, but the UI is not updated with the correct details. When clicking on the Edit button again, it still shows the old replication repository information.

Furthermore, when trying to update the replication repository again, it still shows the old replication details. When selecting the new repository, it also shows all the information you entered previously and the Update repository is not enabled, as if there are no changes to be submitted. (BZ#2102020)

Migration fails because the backup is not found

Migration fails at the restore stage because the initial backup is not found. (BZ#2104874)

Update Cluster button is not enabled when updating Azure resource group

When updating the remote cluster, selecting the Azure resource group checkbox and adding a resource group does not enable the Update cluster option. (BZ#2098594)

Error pop-up in UI on deleting migstorage resource

When creating a backupStorage credential secret in OpenShift Container Platform, if the migstorage is removed from the UI, a 404 error is returned and the underlying secret is not removed. (BZ#2100828)

Miganalytic resource displaying resource count as 0 in UI

After creating a migplan from the backend, the Miganalytic resource displays the resource count as 0 in the UI. (BZ#2102139)

Registry validation fails when two trailing slashes are added to the Exposed route host to image registry

After adding two trailing slashes, meaning //, to the exposed registry route, the MigCluster resource shows the status as connected. When creating a migplan from the backend with direct image migration (DIM), the plans move to the unready status. (BZ#2104864)

Service Account Token not visible while editing source cluster

When editing the source cluster that has been added and is in Connected state, in the UI, the service account token is not visible in the field. To save the wizard, you have to fetch the token again and provide details inside the field. (BZ#2097668)

2.2.19. Migration Toolkit for Containers 1.7.1 release notes

2.2.19.1. Resolved issues

There are no major resolved issues in this release.

2.2.19.2. Known issues

This release has the following known issues:

Incorrect DNS validation for destination namespace

MigPlan cannot be validated because the destination namespace starts with a non-alphabetic character. (BZ#2102231)

Cloud propagation phase in migration controller is not functioning due to missing labels on Velero pods

The Cloud propagation phase in the migration controller is not functioning due to missing labels on Velero pods. The EnsureCloudSecretPropagated phase in the migration controller waits until replication repository secrets are propagated on both sides. As this label is missing on Velero pods, the phase is not functioning as expected. (BZ#2088026)

Default CPU requests on Velero/Restic are too demanding, making scheduling fail in certain environments

Default CPU requests on Velero/Restic are too demanding, which makes scheduling fail in certain environments. Default CPU requests for Velero and Restic pods are set to 500m. These values are high. The resources can be configured in the DPA by using the podConfig field for Velero and Restic. The Migration Operator should set CPU requests to a lower value, such as 100m, so that Velero and Restic pods can be scheduled in the resource-constrained environments that Migration Toolkit for Containers (MTC) often operates in. (BZ#2088022)
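To make the difference between a 500m and a 100m request concrete, the following Python sketch converts Kubernetes CPU quantities to millicores and checks whether a set of requests fits into the CPU that is free on a node. It also builds a patch body that lowers the requests through `podConfig`. The function names are hypothetical, and the nesting under `spec.configuration` with a `resourceAllocations` key is an assumption about the DPA layout that should be verified against the installed OADP version.

```python
def parse_cpu_millicores(quantity):
    """Convert a Kubernetes CPU quantity such as "500m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def fits(free_cpu, requests):
    """Return True if the summed CPU requests fit into the free CPU."""
    total = sum(parse_cpu_millicores(request) for request in requests)
    return total <= parse_cpu_millicores(free_cpu)


def _pod_config(cpu_request):
    # Assumed layout of the podConfig field; verify against your OADP version.
    return {"resourceAllocations": {"requests": {"cpu": cpu_request}}}


def build_dpa_cpu_patch(cpu_request="100m"):
    """Build a merge-patch body that lowers Velero and Restic CPU requests."""
    return {
        "spec": {
            "configuration": {
                "velero": {"podConfig": _pod_config(cpu_request)},
                "restic": {"podConfig": _pod_config(cpu_request)},
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical node with 800m of unreserved CPU.
    print(fits("800m", ["500m", "500m"]))  # Velero and Restic at the default
    print(fits("800m", ["100m", "100m"]))  # Velero and Restic at 100m
```

In this hypothetical case, two pods that each request 500m do not fit on a node with 800m free, while two pods that each request 100m do.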

Warning is displayed on persistentVolumes page after editing storage class conversion plan

A warning is displayed on the persistentVolumes page after editing the storage class conversion plan. When editing the existing migration plan, the following warning is displayed in the UI: At least one PVC must be selected for Storage Class Conversion. (BZ#2079549)

Velero pod log missing from downloaded logs

When downloading a compressed (.zip) folder for all logs, the velero pod is missing. (BZ#2076599)

Velero pod log missing from UI drop down

After a migration is performed, the velero pod log is not included in the logs provided in the dropdown list. (BZ#2076593)

2.2.20. Migration Toolkit for Containers 1.7.0 release notes

2.2.20.1. New features and enhancements

This release has the following new features and enhancements:

- The Migration Toolkit for Containers (MTC) Operator now depends upon the OpenShift API for Data Protection (OADP) Operator. When you install the MTC Operator, the Operator Lifecycle Manager (OLM) automatically installs the OADP Operator in the same namespace.

- You can migrate from a source cluster that is behind a firewall to a cloud-based destination cluster by establishing a network tunnel between the two clusters by using the crane tunnel-api command.

- Converting storage classes in the MTC web console: You can convert the storage class of a persistent volume (PV) by migrating it within the same cluster.

2.2.20.2. Known issues

This release has the following known issues:

- MigPlan custom resource does not display a warning when an AWS gp2 PVC has no available space. (BZ#1963927)

- Direct and indirect data transfers do not work if the destination storage is a PV that is dynamically provisioned by the AWS Elastic File System (EFS). This is due to limitations of the AWS EFS Container Storage Interface (CSI) driver. (BZ#2085097)

- Block storage for IBM Cloud must be in the same availability zone. See the IBM FAQ for block storage for virtual private cloud.

- MTC 1.7.6 cannot migrate cron jobs from source clusters that support v1beta1 cron jobs to clusters of OpenShift Container Platform 4.12 and later, which do not support v1beta1 cron jobs. (BZ#2149119)
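The API version mismatch behind the cron job issue can be illustrated with a short Python sketch that rewrites a CronJob manifest from `batch/v1beta1` to `batch/v1`. This is not a documented MTC workaround. It assumes that the manifest is available as a Python dictionary and that the manifest uses only fields that exist in both API versions; the function name `to_batch_v1` is hypothetical.

```python
import copy


def to_batch_v1(manifest):
    """Return a copy of a CronJob manifest that uses apiVersion batch/v1.

    Manifests that are not batch/v1beta1 CronJobs are returned unchanged.
    The input dictionary is never modified.
    """
    converted = copy.deepcopy(manifest)
    if converted.get("kind") == "CronJob" and converted.get("apiVersion") == "batch/v1beta1":
        converted["apiVersion"] = "batch/v1"
    return converted


if __name__ == "__main__":
    # Hypothetical manifest used only for illustration.
    old = {
        "apiVersion": "batch/v1beta1",
        "kind": "CronJob",
        "metadata": {"name": "example"},
        "spec": {"schedule": "*/5 * * * *"},
    }
    print(to_batch_v1(old)["apiVersion"])
```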

2.3. Migration Toolkit for Containers 1.6 release notes

The release notes for Migration Toolkit for Containers (MTC) describe new features and enhancements, deprecated features, and known issues.

The MTC enables you to migrate application workloads between OpenShift Container Platform clusters at the granularity of a namespace.

You can migrate from OpenShift Container Platform 3 to 4.14 and between OpenShift Container Platform 4 clusters.

MTC provides a web console and an API, based on Kubernetes custom resources, to help you control the migration and minimize application downtime.

For information on the support policy for MTC, see OpenShift Application and Cluster Migration Solutions, part of the Red Hat OpenShift Container Platform Life Cycle Policy.

2.3.1. Migration Toolkit for Containers 1.6 release notes

2.3.1.1. New features and enhancements

This release has the following new features and enhancements:

- State migration: You can perform repeatable, state-only migrations by selecting specific persistent volume claims (PVCs).

- "New operator version available" notification: The Clusters page of the MTC web console displays a notification when a new Migration Toolkit for Containers Operator is available.

2.3.1.2. Deprecated features

The following features are deprecated:

- MTC version 1.4 is no longer supported.

2.3.1.3. Known issues

This release has the following known issues:

- On OpenShift Container Platform 3.10, the MigrationController pod takes too long to restart. The Bugzilla report contains a workaround. (BZ#1986796)

- Stage pods fail during direct volume migration from a classic OpenShift Container Platform source cluster on IBM Cloud. The IBM block storage plugin does not allow the same volume to be mounted on multiple pods of the same node. As a result, the PVCs cannot be mounted on the Rsync pods and on the application pods simultaneously. To resolve this issue, stop the application pods before migration. (BZ#1887526)

- The MigPlan custom resource does not display a warning when an AWS gp2 PVC has no available space. (BZ#1963927)

- Block storage for IBM Cloud must be in the same availability zone. See the IBM FAQ for block storage for virtual private cloud.

2.4. Migration Toolkit for Containers 1.5 release notes

The release notes for Migration Toolkit for Containers (MTC) describe new features and enhancements, deprecated features, and known issues.

The MTC enables you to migrate application workloads between OpenShift Container Platform clusters at the granularity of a namespace.

You can migrate from OpenShift Container Platform 3 to 4.14 and between OpenShift Container Platform 4 clusters.

MTC provides a web console and an API, based on Kubernetes custom resources, to help you control the migration and minimize application downtime.

For information on the support policy for MTC, see OpenShift Application and Cluster Migration Solutions, part of the Red Hat OpenShift Container Platform Life Cycle Policy.

2.4.1. Migration Toolkit for Containers 1.5 release notes

2.4.1.1. New features and enhancements

This release has the following new features and enhancements:

- The Migration resource tree on the Migration details page of the web console has been enhanced with additional resources, Kubernetes events, and live status information for monitoring and debugging migrations.

- The web console can support hundreds of migration plans.

- A source namespace can be mapped to a different target namespace in a migration plan. Previously, the source namespace was mapped to a target namespace with the same name.

- Hook phases with status information are displayed in the web console during a migration.

- The number of Rsync retry attempts is displayed in the web console during direct volume migration.

- Persistent volume (PV) resizing can be enabled for direct volume migration to ensure that the target cluster does not run out of disk space.

- The threshold that triggers PV resizing is configurable. Previously, PV resizing occurred when the disk usage exceeded 97%.

- Velero has been updated to version 1.6, which provides numerous fixes and enhancements.

- Cached Kubernetes clients can be enabled to provide improved performance.

2.4.1.2. Deprecated features

The following features are deprecated:

- MTC versions 1.2 and 1.3 are no longer supported.

- The procedure for updating deprecated APIs has been removed from the troubleshooting section of the documentation because the oc convert command is deprecated.

2.4.1.3. Known issues

This release has the following known issues:

- Microsoft Azure storage is unavailable if you create more than 400 migration plans. The MigStorage custom resource displays the following message: The request is being throttled as the limit has been reached for operation type. (BZ#1977226)

- If a migration fails, the migration plan does not retain custom persistent volume (PV) settings for quiesced pods. You must manually roll back the migration, delete the migration plan, and create a new migration plan with your PV settings. (BZ#1784899)

- PV resizing does not work as expected for AWS gp2 storage unless the pv_resizing_threshold is 42% or greater. (BZ#1973148)

- PV resizing does not work with OpenShift Container Platform 3.7 and 3.9 source clusters in the following scenarios:

  - The application was installed after MTC was installed.

  - An application pod was rescheduled on a different node after MTC was installed.

  OpenShift Container Platform 3.7 and 3.9 do not support the Mount Propagation feature that enables Velero to mount PVs automatically in the Restic pod. The MigAnalytic custom resource (CR) fails to collect PV data from the Restic pod and reports the resources as 0. The MigPlan CR status reflects this failure.

  To enable PV resizing, you can manually restart the Restic daemonset on the source cluster or restart the Restic pods on the same nodes as the application. If you do not restart Restic, you can run the direct volume migration without PV resizing. (BZ#1982729)

2.4.1.4. Technical changes

This release has the following technical changes:

- The legacy Migration Toolkit for Containers Operator version 1.5.1 is installed manually on OpenShift Container Platform versions 3.7 to 4.5.

- The Migration Toolkit for Containers Operator version 1.5.1 is installed on OpenShift Container Platform versions 4.6 and later by using the Operator Lifecycle Manager.

Chapter 3. Installing the Migration Toolkit for Containers

You can install the Migration Toolkit for Containers (MTC) on OpenShift Container Platform 4.

To install MTC on OpenShift Container Platform 3, see Installing the legacy Migration Toolkit for Containers Operator on OpenShift Container Platform 3.

By default, the MTC web console and the Migration Controller pod run on the target cluster. You can configure the Migration Controller custom resource manifest to run the MTC web console and the Migration Controller pod on a remote cluster.

After you have installed MTC, you must configure an object storage to use as a replication repository.

To uninstall MTC, see Uninstalling MTC and deleting resources.

3.1. Compatibility guidelines

You must install the Migration Toolkit for Containers (MTC) Operator that is compatible with your OpenShift Container Platform version.

Definitions

- legacy platform: OpenShift Container Platform 4.5 and earlier.

- modern platform: OpenShift Container Platform 4.6 and later.

- legacy operator: The MTC Operator designed for legacy platforms.

- modern operator: The MTC Operator designed for modern platforms.

- control cluster: The cluster that runs the MTC controller and GUI.

- remote cluster: A source or destination cluster for a migration that runs Velero. The control cluster communicates with remote clusters via the Velero API to drive migrations.

You must use a compatible MTC version to migrate your OpenShift Container Platform clusters. For the migration to succeed, both the source cluster and the destination cluster must use the same version of MTC.

MTC 1.7 supports migrations from OpenShift Container Platform 3.11 to 4.9.

MTC 1.8 only supports migrations from OpenShift Container Platform 4.10 and later.

| Details | OpenShift Container Platform 3.11 | OpenShift Container Platform 4.0 to 4.5 | OpenShift Container Platform 4.6 to 4.9 | OpenShift Container Platform 4.10 or later |
|---|---|---|---|---|
| Stable MTC version | MTC v.1.7.z | MTC v.1.7.z | MTC v.1.7.z | MTC v.1.8.z |
| Installation | Legacy MTC v.1.7.z operator: Install manually. [IMPORTANT] This cluster cannot be the control cluster. | Legacy MTC v.1.7.z operator: Install manually. [IMPORTANT] This cluster cannot be the control cluster. | Install with OLM, release channel | Install with OLM, release channel |
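The compatibility table can also be read as a small lookup from platform version to MTC version and installation method. The following shell function is only an illustrative sketch of that reading. The function name mtc_install_info and the labels legacy-manual and olm are hypothetical and are not part of MTC or the oc CLI.

```shell
# Hypothetical helper: prints "<stable MTC version> <install method>" for an
# OpenShift Container Platform version given as MAJOR.MINOR (for example 4.8).
# The mapping mirrors the compatibility table; versions that the table does
# not list print "unsupported" and return 1.
mtc_install_info() {
  # Accept only digits and dots, with at least one dot between two numbers.
  case "$1" in
    *[!0-9.]*|.*|*.|*..*) ocp_valid=no ;;
    *.*) ocp_valid=yes ;;
    *) ocp_valid=no ;;
  esac
  if [ "$ocp_valid" != yes ]; then
    echo "usage: mtc_install_info MAJOR.MINOR" >&2
    return 2
  fi

  ocp_major=${1%%.*}          # text before the first dot
  ocp_minor=${1#*.}           # text after the first dot
  ocp_minor=${ocp_minor%%.*}  # drop any patch component

  if [ "$ocp_major" = 3 ] && [ "$ocp_minor" = 11 ]; then
    echo "1.7.z legacy-manual"
  elif [ "$ocp_major" = 4 ] && [ "$ocp_minor" -le 5 ]; then
    echo "1.7.z legacy-manual"
  elif [ "$ocp_major" = 4 ] && [ "$ocp_minor" -le 9 ]; then
    echo "1.7.z olm"
  elif [ "$ocp_major" = 4 ]; then
    echo "1.8.z olm"
  else
    echo "unsupported"
    return 1
  fi
}
```

For example, mtc_install_info 4.8 prints 1.7.z olm, and mtc_install_info 4.12 prints 1.8.z olm. Because both clusters in a migration must use the same MTC version, comparing the first field of the output for the source and destination clusters is a quick sanity check before installing.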

Edge cases exist in which network restrictions prevent modern clusters from connecting to other clusters involved in the migration. For example, this can occur when you migrate from an on-premises OpenShift Container Platform 3.11 cluster to a modern OpenShift Container Platform cluster in the cloud, and the modern cluster cannot connect to the OpenShift Container Platform 3.11 cluster.

With MTC v.1.7.z, if one of the remote clusters is unable to communicate with the control cluster because of network restrictions, use the crane tunnel-api command.

With the stable MTC release, although you should always designate the most modern cluster as the control cluster, in this specific case it is possible to designate the legacy cluster as the control cluster and push workloads to the remote cluster.

3.2. Installing the legacy Migration Toolkit for Containers Operator on OpenShift Container Platform 4.2 to 4.5

You can install the legacy Migration Toolkit for Containers Operator manually on OpenShift Container Platform versions 4.2 to 4.5.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

- You must have access to registry.redhat.io.

- You must have podman installed.

Procedure

- Log in to registry.redhat.io with your Red Hat Customer Portal credentials:

  $ podman login registry.redhat.io

- Download the operator.yml file by entering the following command:

  $ podman cp $(podman create registry.redhat.io/rhmtc/openshift-migration-legacy-rhel8-operator:v1.7):/operator.yml ./

- Download the controller.yml file by entering the following command:

  $ podman cp $(podman create registry.redhat.io/rhmtc/openshift-migration-legacy-rhel8-operator:v1.7):/controller.yml ./

- Log in to your OpenShift Container Platform source cluster.

- Verify that the cluster can authenticate with registry.redhat.io:

  $ oc run test --image registry.redhat.io/ubi9 --command sleep infinity

- Create the Migration Toolkit for Containers Operator object:

  $ oc create -f operator.yml

  You can ignore Error from server (AlreadyExists) messages in the output. They are caused by the Migration Toolkit for Containers Operator creating resources for earlier versions of OpenShift Container Platform 4 that are provided in later releases.

- Create the MigrationController object:

  $ oc create -f controller.yml

- Verify that the MTC pods are running:

  $ oc get pods -n openshift-migration
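The final verification step can be scripted instead of checked by eye. The following is a minimal sketch, assuming the default column layout of oc get pods (NAME, READY, STATUS, RESTARTS, AGE). The function name all_pods_running is hypothetical, and it reads the pod listing from standard input so that the logic can be tried without a cluster.

```shell
# Hypothetical helper: reads `oc get pods --no-headers` output on stdin and
# succeeds only if at least one pod is listed and every pod has STATUS Running.
all_pods_running() {
  awk '
    # Column 3 is STATUS in the default output layout.
    NF >= 3 { total++; if ($3 != "Running") not_running++ }
    END {
      if (total > 0 && not_running == 0) exit 0
      exit 1
    }
  '
}

# Intended use against a live cluster (requires a logged-in oc session):
#   oc get pods -n openshift-migration --no-headers | all_pods_running \
#     && echo "All MTC pods are running"
```

The check deliberately fails on an empty listing, so a missing openshift-migration namespace or an installation that has not started any pods is not reported as success.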

3.3. Installing the Migration Toolkit for Containers Operator on OpenShift Container Platform 4.14

You install the Migration Toolkit for Containers Operator on OpenShift Container Platform 4.14 by using the Operator Lifecycle Manager.

Prerequisites

- You must be logged in as a user with cluster-admin privileges on all clusters.

Procedure

- In the OpenShift Container Platform web console, click Operators → OperatorHub.

- Use the Filter by keyword field to find the Migration Toolkit for Containers Operator.

- Select the Migration Toolkit for Containers Operator and click Install.

- Click Install.

  On the Installed Operators page, the Migration Toolkit for Containers Operator appears in the openshift-migration project with the status Succeeded.

- Click Migration Toolkit for Containers Operator.

- Under Provided APIs, locate the Migration Controller tile, and click Create Instance.

- Click Create.

- Click Workloads → Pods to verify that the MTC pods are running.

3.4. Proxy configuration

For OpenShift Container Platform 4.1 and earlier versions, you must configure proxies in the MigrationController custom resource (CR) manifest after you install the Migration Toolkit for Containers Operator because these versions do not support a cluster-wide proxy object.