Virtualization

OpenShift Virtualization installation, usage, and release notes

Abstract

Chapter 1. About

1.1. About OpenShift Virtualization

Learn about OpenShift Virtualization’s capabilities and support scope.

1.1.1. What you can do with OpenShift Virtualization

OpenShift Virtualization is an add-on to OpenShift Container Platform that allows you to run and manage virtual machine workloads alongside container workloads.

OpenShift Virtualization adds new objects into your OpenShift Container Platform cluster by using Kubernetes custom resources to enable virtualization tasks. These tasks include:

- Creating and managing Linux and Windows virtual machines (VMs)

- Running pod and VM workloads alongside each other in a cluster

- Connecting to virtual machines through a variety of consoles and CLI tools

- Importing and cloning existing virtual machines

- Managing network interface controllers and storage disks attached to virtual machines

- Live migrating virtual machines between nodes

An enhanced web console provides a graphical portal to manage these virtualized resources alongside the OpenShift Container Platform cluster containers and infrastructure.

OpenShift Virtualization is designed and tested to work well with Red Hat OpenShift Data Foundation features.

When you deploy OpenShift Virtualization with OpenShift Data Foundation, you must create a dedicated storage class for Windows virtual machine disks. See Optimizing ODF PersistentVolumes for Windows VMs for details.

You can use OpenShift Virtualization with OVN-Kubernetes, OpenShift SDN, or one of the other certified network plugins listed in Certified OpenShift CNI Plug-ins.

You can check your OpenShift Virtualization cluster for compliance issues by installing the Compliance Operator and running a scan with the ocp4-moderate and ocp4-moderate-node profiles. The Compliance Operator uses OpenSCAP, a NIST-certified tool, to scan and enforce security policies.
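The scan described above might be requested with a ScanSettingBinding like the following sketch. It assumes that the Compliance Operator is installed in the openshift-compliance namespace and that a ScanSetting named default exists there. The binding name and the file name are hypothetical.

```shell
# Hypothetical file name for the binding manifest.
manifest="scan-setting-binding.yaml"

# Bind the two profiles named in the text to the "default" ScanSetting,
# which is assumed to exist in the openshift-compliance namespace.
cat > "$manifest" <<'EOF'
apiVersion: compliance.openshift.io/v1alpha1
kind: ScanSettingBinding
metadata:
  name: ocp4-moderate-virt   # hypothetical name
  namespace: openshift-compliance
profiles:
- name: ocp4-moderate
  kind: Profile
  apiGroup: compliance.openshift.io/v1alpha1
- name: ocp4-moderate-node
  kind: Profile
  apiGroup: compliance.openshift.io/v1alpha1
settingsRef:
  name: default
  kind: ScanSetting
  apiGroup: compliance.openshift.io/v1alpha1
EOF

# Apply the manifest only when a logged-in oc client is available.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  oc apply -f "$manifest"
else
  echo "oc is not available or not logged in; wrote $manifest only"
fi
```

Writing the manifest to a file first lets you review it before it reaches the cluster.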

1.1.1.1. OpenShift Virtualization supported cluster version

The latest stable release of OpenShift Virtualization 4.14 is 4.14.16.

OpenShift Virtualization 4.14 is supported for use on OpenShift Container Platform 4.14 clusters. To use the latest z-stream release of OpenShift Virtualization, you must first upgrade to the latest version of OpenShift Container Platform.

1.1.2. About volume and access modes for virtual machine disks

If you use the storage API with known storage providers, the volume and access modes are selected automatically. However, if you use a storage class that does not have a storage profile, you must configure the volume and access mode.

For best results, use the ReadWriteMany (RWX) access mode and the Block volume mode. This is important for the following reasons:

- ReadWriteMany (RWX) access mode is required for live migration.

- The Block volume mode performs significantly better than the Filesystem volume mode. This is because the Filesystem volume mode uses more storage layers, including a file system layer and a disk image file. These layers are not necessary for VM disk storage.

For example, if you use Red Hat OpenShift Data Foundation, Ceph RBD volumes are preferable to CephFS volumes.

You cannot live migrate virtual machines with the following configurations:

- Storage volume with ReadWriteOnce (RWO) access mode

- Passthrough features such as GPUs

Do not set the evictionStrategy field to LiveMigrate for these virtual machines.
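For a storage class whose volume and access modes are not selected automatically, the recommended values could be set with a merge patch like the following sketch. It assumes that the storage profile exposes the modes under spec.claimPropertySets. The storage class name is hypothetical.

```shell
# Hypothetical storage class name; replace it with your own.
storage_class="my-block-storage"

# Merge patch that sets the recommended RWX access mode and Block volume mode.
patch='{"spec":{"claimPropertySets":[{"accessModes":["ReadWriteMany"],"volumeMode":"Block"}]}}'

echo "$patch"

# Patch the storage profile only when a logged-in oc client is available.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  oc patch storageprofile "$storage_class" --type merge -p "$patch"
else
  echo "oc is not available or not logged in; nothing was patched"
fi
```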

1.1.3. Single-node OpenShift differences

You can install OpenShift Virtualization on single-node OpenShift.

However, single-node OpenShift does not support the following features:

- High availability

- Pod disruption

- Live migration

- Virtual machines or templates that have an eviction strategy configured

1.2. Supported limits

You can refer to tested object maximums when planning your OpenShift Container Platform environment for OpenShift Virtualization. However, approaching the maximum values can reduce performance and increase latency. Ensure that you plan for your specific use case and consider all factors that can impact cluster scaling.

For more information about cluster configuration and options that impact performance, see the OpenShift Virtualization - Tuning & Scaling Guide in the Red Hat Knowledgebase.

1.2.1. Tested maximums for OpenShift Virtualization

The following limits apply to a large-scale OpenShift Virtualization 4.x environment. They are based on a single cluster of the largest possible size. When you plan an environment, remember that multiple smaller clusters might be the best option for your use case.

1.2.1.1. Virtual machine maximums

The following maximums apply to virtual machines (VMs) running on OpenShift Virtualization. These values are subject to the limits specified in Virtualization limits for Red Hat Enterprise Linux with KVM.

| Objective (per VM) | Tested limit | Theoretical limit |
|---|---|---|
| Virtual CPUs | 216 vCPUs | 255 vCPUs |
| Memory | 6 TB | 16 TB |
| Single disk size | 20 TB | 100 TB |
| Hot-pluggable disks | 255 disks | N/A |

Each VM must have at least 512 MB of memory.
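When you plan VM sizes, a small check against these values can help. The following sketch classifies a planned size as ok, untested (above a tested limit), or invalid (below the minimum memory or above the theoretical vCPU limit). The function name is hypothetical, and the script treats 1 TB as 1024 × 1024 MiB, which is an assumption about the units in the table.

```shell
# Per-VM limits taken from the table and the minimum memory stated above.
TESTED_VCPUS=216
THEORETICAL_VCPUS=255
MIN_MEMORY_MIB=512
TESTED_MEMORY_MIB=$((6 * 1024 * 1024))   # 6 TB, treated here as 6 TiB (assumption)

# check_vm_size VCPUS MEMORY_MIB
# Prints "ok", "untested", or "invalid" for a planned VM size.
check_vm_size() {
  vcpus=$1
  memory_mib=$2
  if [ "$memory_mib" -lt "$MIN_MEMORY_MIB" ] || [ "$vcpus" -gt "$THEORETICAL_VCPUS" ]; then
    echo "invalid"
  elif [ "$vcpus" -gt "$TESTED_VCPUS" ] || [ "$memory_mib" -gt "$TESTED_MEMORY_MIB" ]; then
    echo "untested"
  else
    echo "ok"
  fi
}

check_vm_size 4 8192      # within the tested limits
check_vm_size 240 8192    # above the tested vCPU limit
check_vm_size 2 256       # below the minimum memory
```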

1.2.1.2. Host maximums

The following maximums apply to the OpenShift Container Platform hosts used for OpenShift Virtualization.

| Objective (per host) | Tested limit | Theoretical limit |
|---|---|---|
| Logical CPU cores or threads | Same as Red Hat Enterprise Linux (RHEL) | N/A |
| RAM | Same as RHEL | N/A |
| Simultaneous live migrations | Defaults to 2 outbound migrations per node, and 5 concurrent migrations per cluster | Depends on NIC bandwidth |
| Live migration bandwidth | No default limit | Depends on NIC bandwidth |

1.2.1.3. Cluster maximums

The following maximums apply to objects defined in OpenShift Virtualization.

| Objective (per cluster) | Tested limit | Theoretical limit |
|---|---|---|
| Number of attached PVs per node | N/A | CSI storage provider dependent |
| Maximum PV size | N/A | CSI storage provider dependent |
| Hosts | 500 hosts (100 or fewer recommended) [1] | Same as OpenShift Container Platform |
| Defined VMs | 10,000 VMs [2] | Same as OpenShift Container Platform |

[1] If you use more than 100 nodes, consider using Red Hat Advanced Cluster Management (RHACM) to manage multiple clusters instead of scaling out a single control plane. Larger clusters add complexity, require longer updates, and, depending on node size and total object density, can increase control plane stress.

Using multiple clusters can be beneficial in areas like per-cluster isolation and high availability.

[2] The maximum number of VMs per node depends on the host hardware and resource capacity. It is also limited by the following parameters:

- Settings that limit the number of pods that can be scheduled to a node. For example: maxPods.

- The default number of KVM devices. For example: devices.kubevirt.io/kvm: 1k.

1.3. Security policies

Learn about OpenShift Virtualization security and authorization.

Key points

- OpenShift Virtualization adheres to the restricted Kubernetes pod security standards profile, which aims to enforce the current best practices for pod security.

- Virtual machine (VM) workloads run as unprivileged pods.

- Security context constraints (SCCs) are defined for the kubevirt-controller service account.

- TLS certificates for OpenShift Virtualization components are renewed and rotated automatically.

1.3.1. About workload security

By default, virtual machine (VM) workloads do not run with root privileges in OpenShift Virtualization, and there are no supported OpenShift Virtualization features that require root privileges.

For each VM, a virt-launcher pod runs an instance of libvirt in session mode to manage the VM process. In session mode, the libvirt daemon runs as a non-root user account and only permits connections from clients that are running under the same user identifier (UID). Therefore, VMs run as unprivileged pods, adhering to the security principle of least privilege.

1.3.2. TLS certificates

TLS certificates for OpenShift Virtualization components are renewed and rotated automatically. You are not required to refresh them manually.

Automatic renewal schedules

TLS certificates are automatically deleted and replaced according to the following schedule:

- KubeVirt certificates are renewed daily.

- Containerized Data Importer controller (CDI) certificates are renewed every 15 days.

- MAC pool certificates are renewed every year.

Automatic TLS certificate rotation does not disrupt any operations. For example, the following operations continue to function without any disruption:

- Migrations

- Image uploads

- VNC and console connections

1.3.3. Authorization

OpenShift Virtualization uses role-based access control (RBAC) to define permissions for human users and service accounts. The permissions defined for service accounts control the actions that OpenShift Virtualization components can perform.

You can also use RBAC roles to manage user access to virtualization features. For example, an administrator can create an RBAC role that provides the permissions required to launch a virtual machine. The administrator can then restrict access by binding the role to specific users.
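The role-and-binding pattern described above might look like the following sketch. The namespace, role name, and user are hypothetical. The rule set is an assumption about which permissions are enough to start a VM, based on the KubeVirt start subresource, so verify it against your cluster before relying on it.

```shell
# Hypothetical file name for the role and binding manifests.
manifest="vm-launcher-rbac.yaml"

cat > "$manifest" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: vm-launcher          # hypothetical role name
  namespace: my-vms          # hypothetical namespace
rules:
# Read access to VM objects so that the user can find the VM to start.
- apiGroups: ["kubevirt.io"]
  resources: ["virtualmachines"]
  verbs: ["get", "list", "watch"]
# Assumed permission for the start action (KubeVirt start subresource).
- apiGroups: ["subresources.kubevirt.io"]
  resources: ["virtualmachines/start"]
  verbs: ["update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: vm-launcher-binding  # hypothetical binding name
  namespace: my-vms
subjects:
- kind: User
  name: alice                # hypothetical user
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: vm-launcher
  apiGroup: rbac.authorization.k8s.io
EOF

# Apply the manifest only when a logged-in oc client is available.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  oc apply -f "$manifest"
else
  echo "oc is not available or not logged in; wrote $manifest only"
fi
```

Binding the role in a single namespace restricts the user to starting VMs in that project only.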

1.3.3.1. Default cluster roles for OpenShift Virtualization

By using cluster role aggregation, OpenShift Virtualization extends the default OpenShift Container Platform cluster roles to include permissions for accessing virtualization objects.

| Default cluster role | OpenShift Virtualization cluster role | OpenShift Virtualization cluster role description |
|---|---|---|
| | | A user that can view all OpenShift Virtualization resources in the cluster but cannot create, delete, modify, or access them. For example, the user can see that a virtual machine (VM) is running but cannot shut it down or gain access to its console. |
| | | A user that can modify all OpenShift Virtualization resources in the cluster. For example, the user can create VMs, access VM consoles, and delete VMs. |
| | | A user that has full permissions to all OpenShift Virtualization resources, including the ability to delete collections of resources. The user can also view and modify the OpenShift Virtualization runtime configuration, which is located in the |

1.3.3.2. RBAC roles for storage features in OpenShift Virtualization

The following permissions are granted to the Containerized Data Importer (CDI), including the cdi-operator and cdi-controller service accounts.

1.3.3.2.1. Cluster-wide RBAC roles

| CDI cluster role | Resources | Verbs |

|---|---|---|

| API group | Resources | Verbs |

|---|---|---|

| API group | Resources | Verbs |

|---|---|---|

1.3.3.2.2. Namespaced RBAC roles

| API group | Resources | Verbs |

|---|---|---|

| API group | Resources | Verbs |

|---|---|---|

1.3.3.3. Additional SCCs and permissions for the kubevirt-controller service account

Security context constraints (SCCs) control permissions for pods. These permissions include actions that a pod, a collection of containers, can perform and what resources it can access. You can use SCCs to define a set of conditions that a pod must run with to be accepted into the system.

The virt-controller is a cluster controller that creates the virt-launcher pods for virtual machines in the cluster.

By default, virt-launcher pods run with the default service account in the namespace. If your compliance controls require a unique service account, assign one to the VM. The setting applies to the VirtualMachineInstance object and the virt-launcher pod.

The kubevirt-controller service account is granted additional SCCs and Linux capabilities so that it can create virt-launcher pods with the appropriate permissions. These extended permissions allow virtual machines to use OpenShift Virtualization features that are beyond the scope of typical pods.

The kubevirt-controller service account is granted the following SCCs:

- scc.AllowHostDirVolumePlugin = true

  This allows virtual machines to use the hostpath volume plugin.

- scc.AllowPrivilegedContainer = false

  This ensures the virt-launcher pod is not run as a privileged container.

- scc.AllowedCapabilities = []corev1.Capability{"SYS_NICE", "NET_BIND_SERVICE"}

  - SYS_NICE allows setting the CPU affinity.

  - NET_BIND_SERVICE allows DHCP and Slirp operations.

Viewing the SCC and RBAC definitions for the kubevirt-controller

You can view the SecurityContextConstraints definition for the kubevirt-controller by using the oc tool:

$ oc get scc kubevirt-controller -o yaml

You can view the RBAC definition for the kubevirt-controller clusterrole by using the oc tool:

$ oc get clusterrole kubevirt-controller -o yaml

1.4. OpenShift Virtualization Architecture

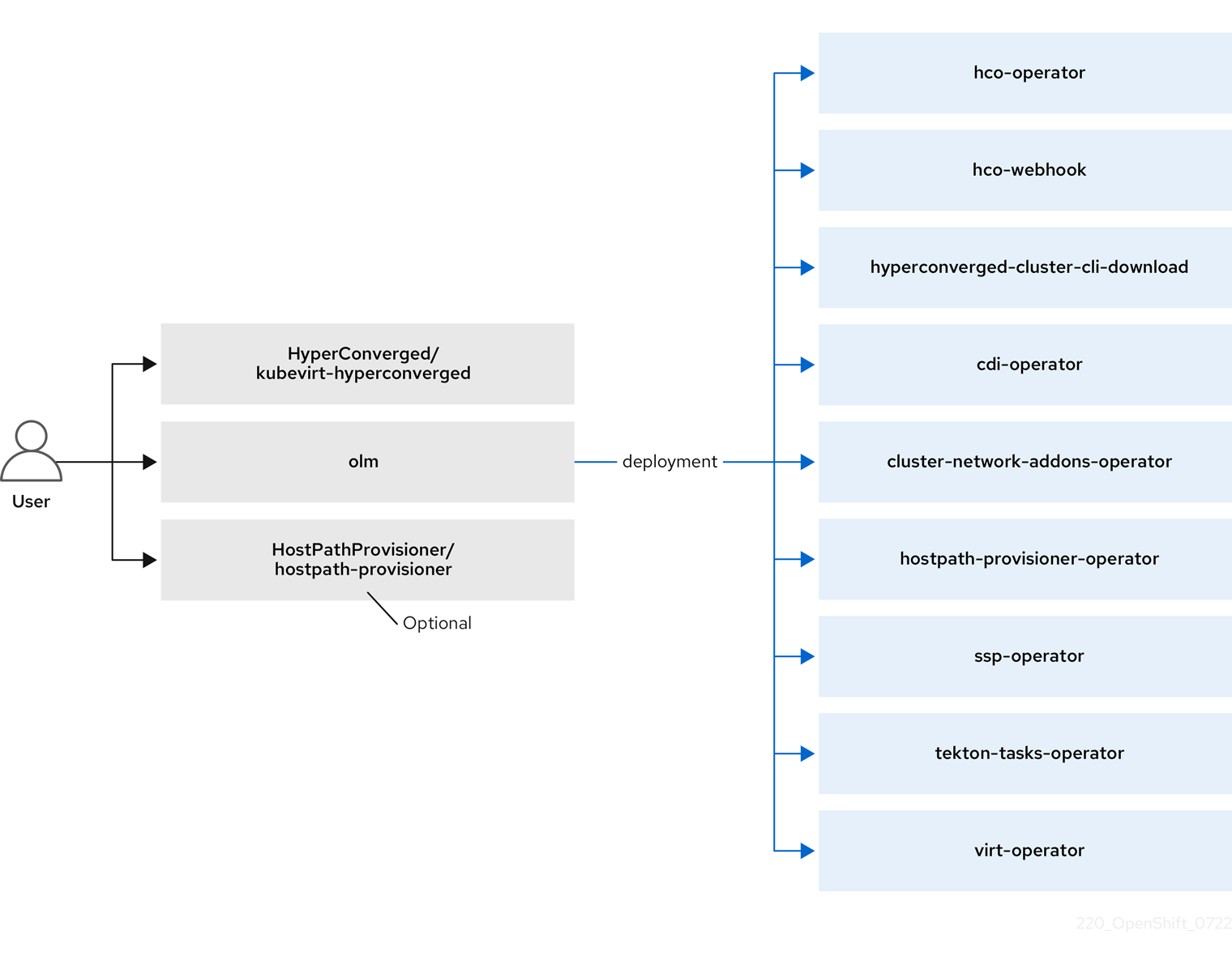

The Operator Lifecycle Manager (OLM) deploys operator pods for each component of OpenShift Virtualization:

- Compute: virt-operator

- Storage: cdi-operator

- Network: cluster-network-addons-operator

- Scaling: ssp-operator

- Templating: tekton-tasks-operator

OLM also deploys the hyperconverged-cluster-operator pod, which is responsible for the deployment, configuration, and life cycle of other components, and several helper pods: hco-webhook and hyperconverged-cluster-cli-download.

After all operator pods are successfully deployed, you should create the HyperConverged custom resource (CR). The configurations set in the HyperConverged CR serve as the single source of truth and the entrypoint for OpenShift Virtualization, and guide the behavior of the CRs.

The HyperConverged CR creates corresponding CRs for the operators of all other components within its reconciliation loop. Each operator then creates resources such as daemon sets, config maps, and additional components for the OpenShift Virtualization control plane. For example, when the HyperConverged Operator (HCO) creates the KubeVirt CR, the OpenShift Virtualization Operator reconciles it and creates additional resources such as virt-controller, virt-handler, and virt-api.
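A minimal HyperConverged CR might look like the following sketch. The name kubevirt-hyperconverged and the namespace openshift-cnv are the values commonly used in default installations, but they are assumptions here because this section does not state them. An empty spec accepts the Operator defaults.

```shell
# Hypothetical file name for the CR manifest.
manifest="hyperconverged.yaml"

# Name and namespace are assumed defaults; adjust them to match your installation.
cat > "$manifest" <<'EOF'
apiVersion: hco.kubevirt.io/v1beta1
kind: HyperConverged
metadata:
  name: kubevirt-hyperconverged
  namespace: openshift-cnv
spec: {}
EOF

# Create the CR only when a logged-in oc client is available.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  oc apply -f "$manifest"
else
  echo "oc is not available or not logged in; wrote $manifest only"
fi
```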

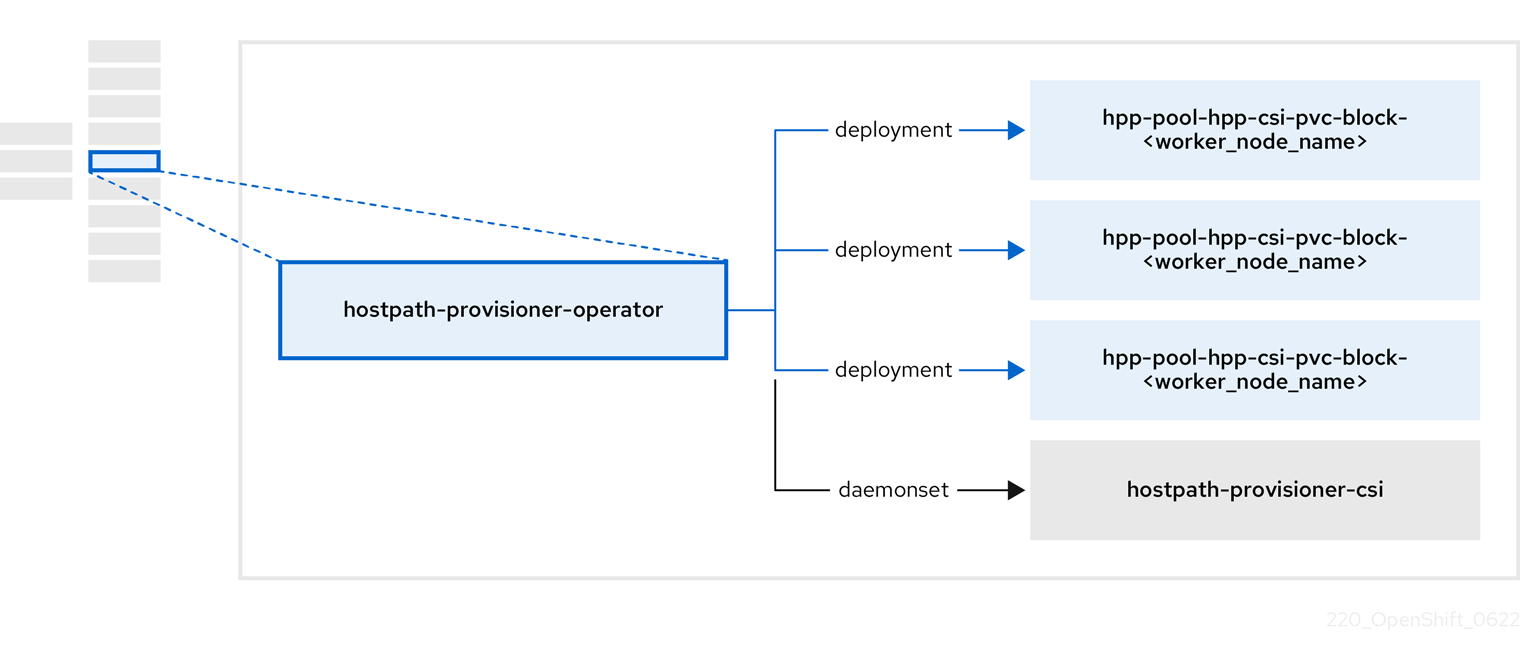

The OLM deploys the Hostpath Provisioner (HPP) Operator, but it is not functional until you create a hostpath-provisioner CR.

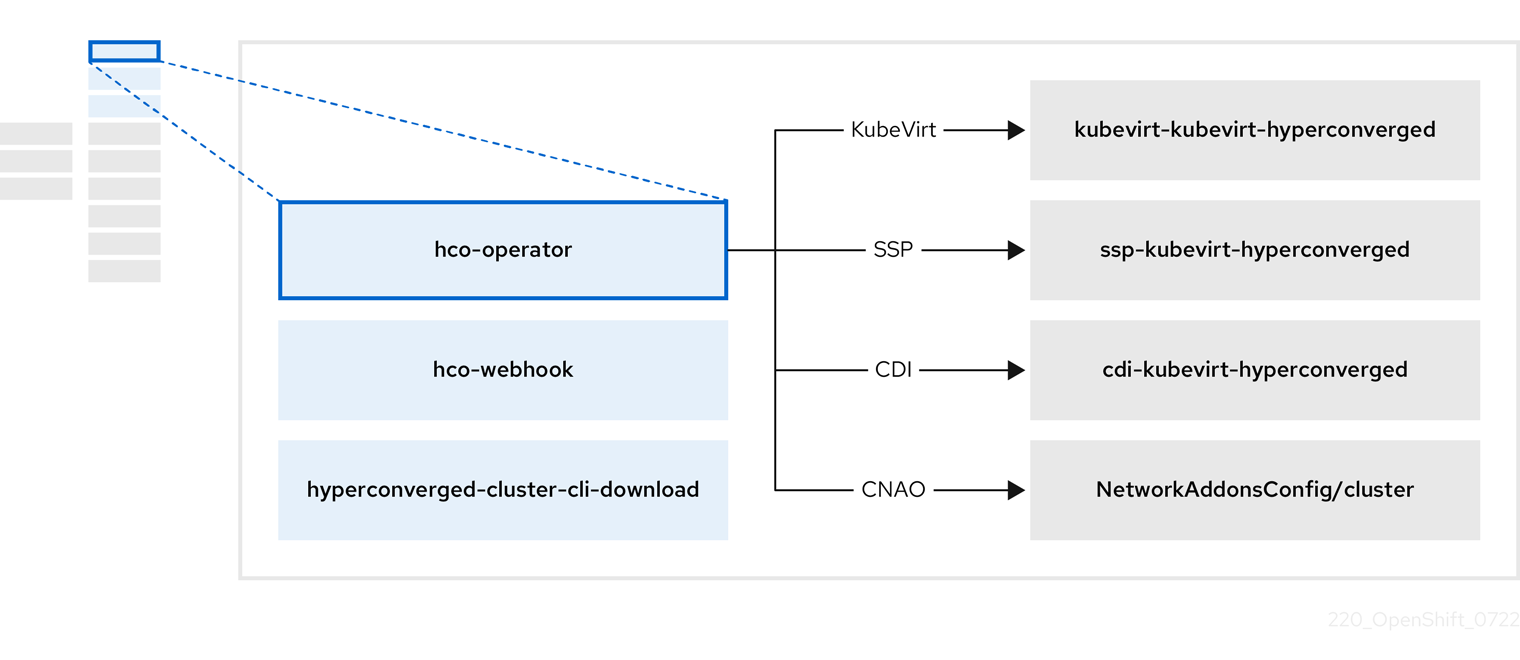

1.4.1. About the HyperConverged Operator (HCO)

The HCO, hco-operator, provides a single entry point for deploying and managing OpenShift Virtualization and several helper operators with opinionated defaults. It also creates custom resources (CRs) for those operators.

| Component | Description |
|---|---|
| | Validates the |
| | Provides the |
| | Contains all operators, CRs, and objects needed by OpenShift Virtualization. |
| | A Scheduling, Scale, and Performance (SSP) CR. This is automatically created by the HCO. |
| | A Containerized Data Importer (CDI) CR. This is automatically created by the HCO. |
| | A CR that instructs and is managed by the |

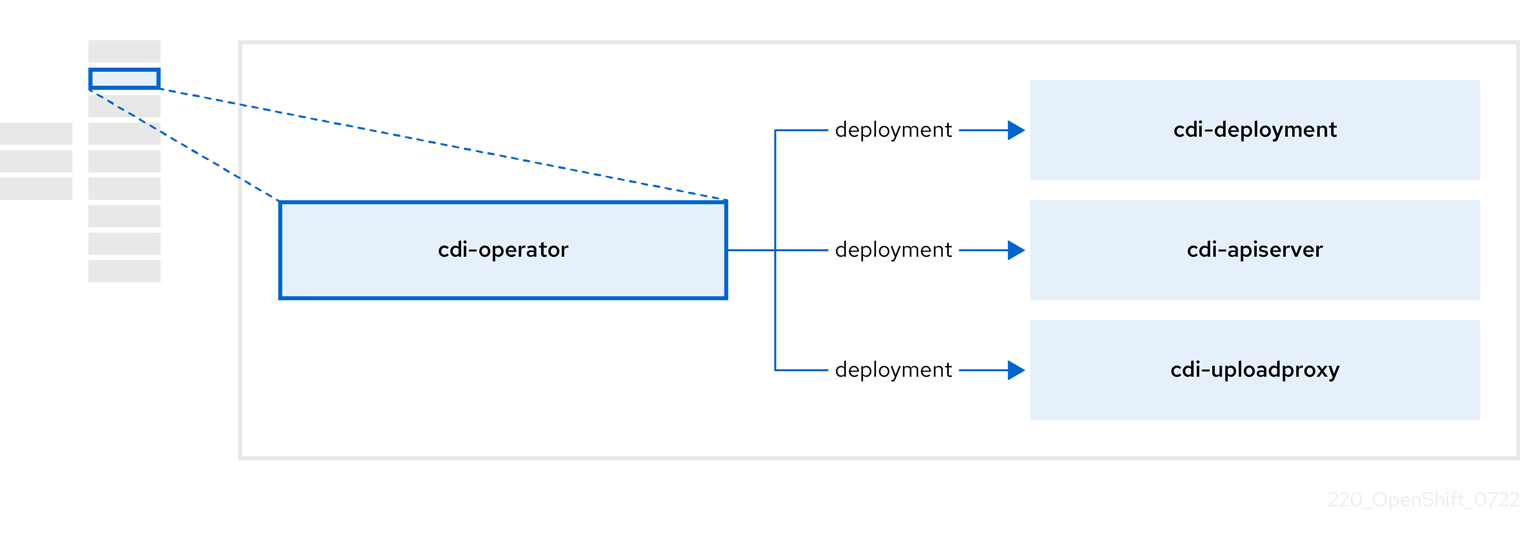

1.4.2. About the Containerized Data Importer (CDI) Operator

The CDI Operator, cdi-operator, manages CDI and its related resources. CDI imports a virtual machine (VM) image into a persistent volume claim (PVC) by using a data volume.

| Component | Description |
|---|---|
| | Manages the authorization to upload VM disks into PVCs by issuing secure upload tokens. |
| | Directs external disk upload traffic to the appropriate upload server pod so that it can be written to the correct PVC. Requires a valid upload token. |
| | Helper pod that imports a virtual machine image into a PVC when creating a data volume. |

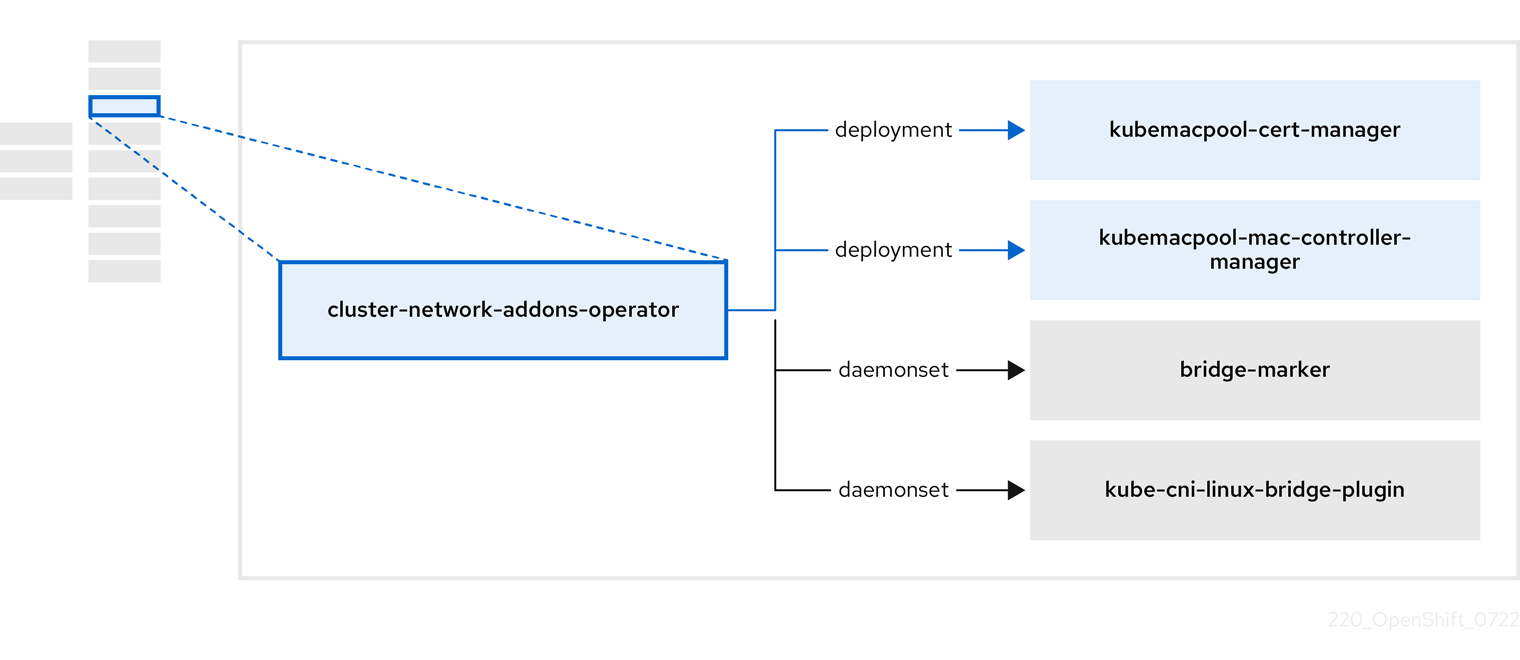

1.4.3. About the Cluster Network Addons Operator

The Cluster Network Addons Operator, cluster-network-addons-operator, deploys networking components on a cluster and manages the related resources for extended network functionality.

| Component | Description |
|---|---|
| | Manages TLS certificates of Kubemacpool’s webhooks. |
| | Provides a MAC address pooling service for virtual machine (VM) network interface cards (NICs). |
| | Marks network bridges available on nodes as node resources. |
| | Installs Container Network Interface (CNI) plugins on cluster nodes, enabling the attachment of VMs to Linux bridges through network attachment definitions. |

1.4.4. About the Hostpath Provisioner (HPP) Operator

The HPP Operator, hostpath-provisioner-operator, deploys and manages the multi-node HPP and related resources.

| Component | Description |
|---|---|
| | Provides a worker for each node where the HPP is designated to run. The pods mount the specified backing storage on the node. |
| | Implements the Container Storage Interface (CSI) driver interface of the HPP. |
| | Implements the legacy driver interface of the HPP. |

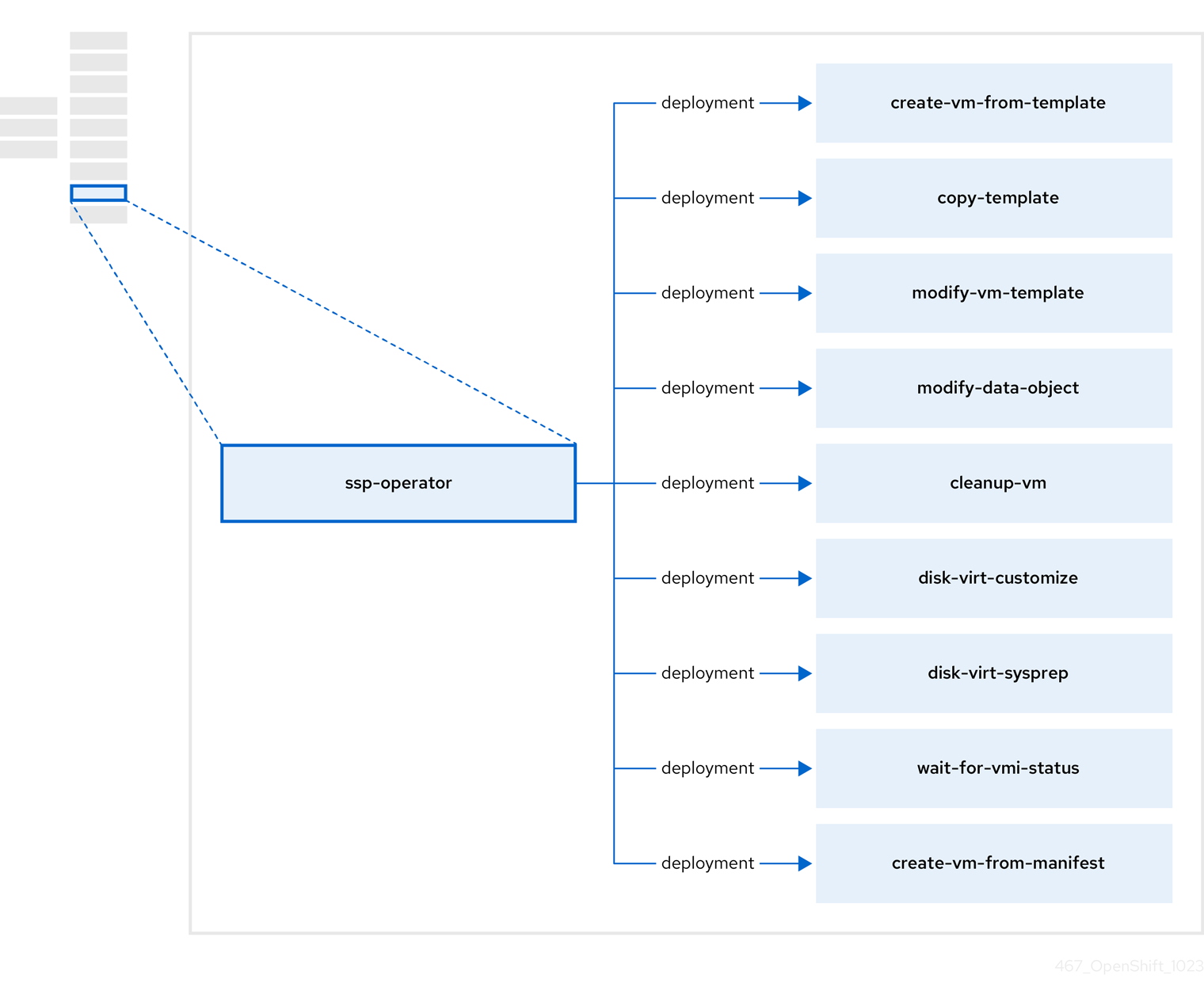

1.4.5. About the Scheduling, Scale, and Performance (SSP) Operator

The SSP Operator, ssp-operator, deploys the common templates, the related default boot sources, the pipeline tasks, and the template validator.

| Component | Description |
|---|---|
| | Creates a VM from a template. |
| | Copies a VM template. |
| | Creates or removes a VM template. |
| | Creates or removes data volumes or data sources. |
| | Runs a script or a command on a VM, then stops or deletes the VM afterward. |
| | Runs a |
| | Runs a |
| | Waits for a specific virtual machine instance (VMI) status, then fails or succeeds according to that status. |
| | Creates a VM from a manifest. |

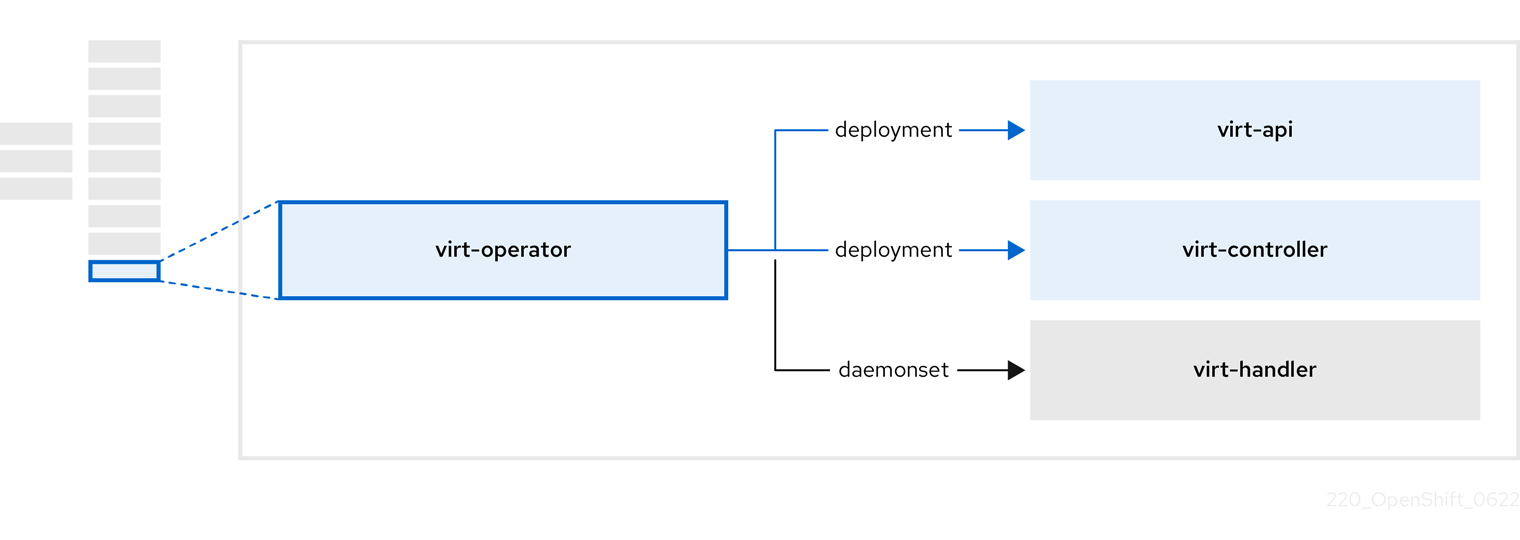

1.4.6. About the OpenShift Virtualization Operator

The OpenShift Virtualization Operator, virt-operator, deploys, upgrades, and manages OpenShift Virtualization without disrupting current virtual machine (VM) workloads.

| Component | Description |
|---|---|
| | HTTP API server that serves as the entry point for all virtualization-related flows. |
| | Observes the creation of a new VM instance object and creates a corresponding pod. When the pod is scheduled on a node, |
| | Monitors any changes to a VM and instructs |
| | Contains the VM that was created by the user as implemented by |

Chapter 2. Release notes

2.1. OpenShift Virtualization release notes

2.1.1. Providing documentation feedback

To report an error or to improve our documentation, log in to your Red Hat Jira account and submit a Jira issue.

2.1.2. About Red Hat OpenShift Virtualization

Red Hat OpenShift Virtualization enables you to bring traditional virtual machines (VMs) into OpenShift Container Platform where they run alongside containers, and are managed as native Kubernetes objects.

You can use OpenShift Virtualization with either the OVN-Kubernetes or the OpenShift SDN default Container Network Interface (CNI) network provider.

Learn more about what you can do with OpenShift Virtualization.

Learn more about OpenShift Virtualization architecture and deployments.

Prepare your cluster for OpenShift Virtualization.

2.1.2.1. OpenShift Virtualization supported cluster version

The latest stable release of OpenShift Virtualization 4.14 is 4.14.16.

OpenShift Virtualization 4.14 is supported for use on OpenShift Container Platform 4.14 clusters. To use the latest z-stream release of OpenShift Virtualization, you must first upgrade to the latest version of OpenShift Container Platform.

2.1.2.2. Supported guest operating systems

To view the supported guest operating systems for OpenShift Virtualization, see Certified Guest Operating Systems in Red Hat OpenStack Platform, Red Hat Virtualization, OpenShift Virtualization and Red Hat Enterprise Linux with KVM.

2.1.3. New and changed features

- OpenShift Virtualization is certified in Microsoft’s Windows Server Virtualization Validation Program (SVVP) to run Windows Server workloads.

  The SVVP Certification applies to:

  - Red Hat Enterprise Linux CoreOS workers. In the Microsoft SVVP Catalog, they are named Red Hat OpenShift Container Platform 4.14.

  - Intel and AMD CPUs.

- Creating hosted control plane clusters on OpenShift Virtualization was previously Technology Preview and is now generally available. For more information, see Managing hosted control plane clusters on OpenShift Virtualization in the Red Hat Advanced Cluster Management (RHACM) documentation.

- Using OpenShift Virtualization on Amazon Web Services (AWS) bare-metal OpenShift Container Platform clusters was previously Technology Preview and is now generally available.

  In addition, OpenShift Virtualization is now supported on Red Hat OpenShift Service on AWS Classic clusters.

  For more information, see OpenShift Virtualization on AWS bare metal.

- Using the NVIDIA GPU Operator to provision worker nodes for GPU-enabled VMs was previously Technology Preview and is now generally available. For more information, see Configuring the NVIDIA GPU Operator.

- As a cluster administrator, you can back up and restore applications running on OpenShift Virtualization by using the OpenShift API for Data Protection (OADP).

- You can add a static authorized SSH key to a project by using the web console. The key is then added to all VMs that you create in the project.

- OpenShift Virtualization now supports persisting the virtual Trusted Platform Module (vTPM) device state by using Persistent Volume Claims (PVCs) for VMs. You must specify the storage class to be used by the PVC by setting the vmStateStorageClass attribute in the HyperConverged custom resource (CR).
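The vmStateStorageClass attribute could be set with a merge patch like the following sketch. It assumes that the attribute sits directly under spec and that the HyperConverged CR is named kubevirt-hyperconverged in the openshift-cnv namespace. The storage class name is hypothetical.

```shell
# Hypothetical storage class name; replace it with a class available in your cluster.
storage_class="my-rwx-storage"

# Build a merge patch that sets spec.vmStateStorageClass (assumed field location).
patch=$(printf '{"spec":{"vmStateStorageClass":"%s"}}' "$storage_class")

echo "$patch"

# CR name and namespace are assumed defaults; patch only with a logged-in oc client.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  oc patch hyperconverged kubevirt-hyperconverged -n openshift-cnv --type merge -p "$patch"
else
  echo "oc is not available or not logged in; nothing was patched"
fi
```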

- You can enable dynamic SSH key injection for RHEL 9 VMs. Then, you can update the authorized SSH keys at runtime.

- You can now enable volume snapshots as boot sources.

The access mode and volume mode fields in storage profiles are populated automatically with their optimal values for the following additional Container Storage Interface (CSI) provisioners:

- Dell PowerFlex

- Dell PowerMax

- Dell PowerScale

- Dell Unity

- Dell PowerStore

- Hitachi Virtual Storage Platform

- IBM Fusion Hyper-Converged Infrastructure

- IBM Fusion HCI with Fusion Data Foundation or Fusion Global Data Platform

- IBM Fusion Software-Defined Storage

- IBM FlashSystems

- Hewlett Packard Enterprise 3PAR

- Hewlett Packard Enterprise Nimble

- Hewlett Packard Enterprise Alletra

- Hewlett Packard Enterprise Primera

- You can use a custom scheduler to schedule a virtual machine (VM) on a node.

- Garbage collection for data volumes is disabled by default.

The following runbooks have been changed:

- SingleStackIPv6Unsupported and VMStorageClassWarning have been added.

- KubeMacPoolDown has been renamed to KubemacpoolDown.

- KubevirtHyperconvergedClusterOperatorInstallationNotCompletedAlert has been renamed to HCOInstallationIncomplete.

- KubevirtHyperconvergedClusterOperatorCRModification has been renamed to KubeVirtCRModified.

- KubevirtHyperconvergedClusterOperatorUSModification has been renamed to UnsupportedHCOModification.

- SSPOperatorDown has been renamed to SSPDown.

2.1.3.1. Quick starts

- Quick start tours are available for several OpenShift Virtualization features. To view the tours, click the Help icon ? in the menu bar on the header of the OpenShift Virtualization console and then select Quick Starts. You can filter the available tours by entering the virtualization keyword in the Filter field.

2.1.3.2. Networking

- You can connect a virtual machine (VM) to an OVN-Kubernetes secondary network by using the web console or the CLI.

2.1.3.3. Web console

- Cluster administrators can now enable automatic subscription for Red Hat Enterprise Linux (RHEL) virtual machines in the OpenShift Virtualization web console.

- You can now force stop an unresponsive VM from the action menu. To force stop a VM, select Stop and then Force stop from the action menu.

- The DataSources and the Bootable volumes pages have been merged into the Bootable volumes page so that you can manage these similar resources in a single location.

- Cluster administrators can enable or disable Technology Preview features on the Settings tab on the Virtualization → Overview page.

- You can now generate a temporary token to access the VNC of a VM.

2.1.4. Deprecated and removed features

2.1.4.1. Deprecated features

Deprecated features are included in the current release and supported. However, they will be removed in a future release and are not recommended for new deployments.

- The RHEL 8 kubevirt-virtctl RPM is deprecated. Download the virtctl binary from the OpenShift Container Platform web console instead of using the command line. The RPM will be removed in a future release.

- The tekton-tasks-operator is deprecated and Tekton tasks and example pipelines are now deployed by the ssp-operator.

- The copy-template, modify-vm-template, and create-vm-from-template tasks are deprecated.

- Many OpenShift Virtualization metrics have changed or will change in a future version. These changes could affect your custom dashboards. See OpenShift Virtualization 4.14 metric changes for details. (BZ#2179660)

- Support for Windows Server 2012 R2 templates is deprecated.

2.1.4.2. Removed features

Removed features are not supported in the current release.

- Support for the legacy HPP custom resource, and the associated storage class, has been removed for all new deployments. In OpenShift Virtualization 4.14, the HPP Operator uses the Kubernetes Container Storage Interface (CSI) driver to configure local storage. A legacy HPP custom resource is supported only if it had been installed on a previous version of OpenShift Virtualization.

- Installing the virtctl client as an RPM is no longer supported for Red Hat Enterprise Linux (RHEL) 7 and RHEL 9.

- CentOS 7 and CentOS Stream 8 are now in the End of Life phase. As a consequence, the container images for these operating systems have been removed from OpenShift Virtualization and are no longer community supported.

2.1.5. Technology Preview features

Some features in this release are currently in Technology Preview. These experimental features are not intended for production use. Note the following scope of support on the Red Hat Customer Portal for these features:

Technology Preview Features Support Scope

- You can now install and edit customized instance types and preferences to create a VM from a volume or PersistentVolumeClaim (PVC).

- You can now configure a VM eviction strategy for the entire cluster.

- You can hot plug a bridge network interface to a running virtual machine (VM). Hot plugging and hot unplugging is supported only for VMs created with OpenShift Virtualization 4.14 or later.

2.1.6. Bug fixes

- The mediated devices configuration API in the HyperConverged custom resource (CR) has been updated to improve consistency. The field that was previously named mediatedDevicesTypes is now named mediatedDeviceTypes to align with the naming convention used for the nodeMediatedDeviceTypes field. (BZ#2054863)

- Virtual machines created from common templates on a Single Node OpenShift (SNO) cluster no longer display a VMCannotBeEvicted alert when the cluster-level eviction strategy is None for SNO. (BZ#2092412)

- Windows 11 virtual machines now boot on clusters running in FIPS mode. (BZ#2089301)

- When you use two pods with different SELinux contexts, VMs with the ocs-storagecluster-cephfs storage class no longer fail to migrate. (BZ#2092271)

- If you stop a node on a cluster and then use the Node Health Check Operator to bring the node back up, connectivity to Multus is retained. (OCPBUGS-8398)

- When restoring a VM snapshot for storage whose binding mode is WaitForFirstConsumer, the restored PVCs no longer remain in the Pending state and the restore operation proceeds. (BZ#2149654)

2.1.7. Known issues

Monitoring

- The Pod Disruption Budget (PDB) prevents pod disruptions for migratable virtual machine images. If the PDB detects pod disruption, then openshift-monitoring sends a PodDisruptionBudgetAtLimit alert every 60 minutes for virtual machine images that use the LiveMigrate eviction strategy. (BZ#2026733)

  - As a workaround, silence alerts.

Networking

- If your OpenShift Container Platform cluster uses OVN-Kubernetes as the default Container Network Interface (CNI) provider, you cannot attach a Linux bridge or bonding device to a host’s default interface because of a change in the host network topology of OVN-Kubernetes. (BZ#1885605)

  - As a workaround, you can use a secondary network interface connected to your host, or switch to the OpenShift SDN default CNI provider.

- You cannot SSH into a VM when using the networkType: OVNKubernetes option in your install-config.yaml file. (BZ#2165895)

- You cannot run OpenShift Virtualization on a single-stack IPv6 cluster. (BZ#2193267)

- When you update from OpenShift Container Platform 4.12 to a newer minor version, VMs that use the cnv-bridge Container Network Interface (CNI) fail to live migrate. (https://access.redhat.com/solutions/7069807)

  - As a workaround, change the spec.config.type field in your NetworkAttachmentDefinition manifest from cnv-bridge to bridge before performing the update.
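The cnv-bridge workaround above can be rehearsed on a local copy of the manifest before you touch the cluster. The following sketch uses a hypothetical NetworkAttachmentDefinition; the attachment name, bridge name, and file names are illustrative only.

```shell
# Hypothetical NetworkAttachmentDefinition that still uses the cnv-bridge type.
cat > nad-before.yaml <<'EOF'
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: bridge-network
spec:
  config: |
    {
      "cniVersion": "0.3.1",
      "name": "bridge-network",
      "type": "cnv-bridge",
      "bridge": "br1"
    }
EOF

# Rewrite only the CNI type; every other line is copied unchanged.
sed 's/"type": "cnv-bridge"/"type": "bridge"/' nad-before.yaml > nad-after.yaml

# Show the rewritten type line for review.
grep '"type"' nad-after.yaml
```

After you review nad-after.yaml, you can apply it with oc apply -f nad-after.yaml before starting the update.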

Nodes

- Uninstalling OpenShift Virtualization does not remove the feature.node.kubevirt.io node labels created by OpenShift Virtualization. You must remove the labels manually. (CNV-22036)

- In a heterogeneous cluster with different compute nodes, virtual machines that have HyperV reenlightenment enabled cannot be scheduled on nodes that do not support timestamp-counter scaling (TSC) or have the appropriate TSC frequency. (BZ#2151169)
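Removing the leftover node labels by hand can be tedious on larger clusters. The following sketch shows one possible approach: a helper (hypothetical name) turns label keys into the key- removal arguments that oc label accepts, and a guarded loop applies them to every node. Matching any key that contains feature.node.kubevirt.io is an assumption about the label format, and the sample keys are made up.

```shell
# Reads label keys on stdin and prints "key-" arguments (the oc label syntax
# for removing a label) for keys that contain feature.node.kubevirt.io.
label_removal_args() {
  grep 'feature\.node\.kubevirt\.io' | sed 's/$/-/'
}

# Demonstration with made-up label keys; only the second key is selected.
printf '%s\n' \
  'kubernetes.io/hostname' \
  'feature.node.kubevirt.io/example-feature' | label_removal_args

# Apply to the cluster only when a logged-in oc client is available.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  for node in $(oc get nodes -o name); do
    args=$(oc get "$node" \
      -o go-template='{{range $k, $v := .metadata.labels}}{{$k}}{{"\n"}}{{end}}' \
      | label_removal_args)
    if [ -n "$args" ]; then
      # $args is left unquoted on purpose so that each key becomes its own argument.
      oc label "$node" $args
    fi
  done
fi
```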

Storage

- In some instances, multiple virtual machines can mount the same PVC in read-write mode, which might result in data corruption. (BZ#1992753)

  - As a workaround, avoid using a single PVC in read-write mode with multiple VMs.

- If you clone more than 100 VMs using the csi-clone cloning strategy, then the Ceph CSI might not purge the clones. Manually deleting the clones might also fail. (BZ#2055595)

  - As a workaround, you can restart the ceph-mgr to purge the VM clones.

- If you use Portworx as your storage solution on AWS and create a VM disk image, the created image might be smaller than expected due to the filesystem overhead being accounted for twice. (BZ#2237287)

  - As a workaround, you can manually expand the Persistent Volume Claim (PVC) to increase the available space after the initial provisioning process completes.

- If you simultaneously clone more than 1000 VMs using the provided DataSources in the openshift-virtualization-os-images namespace, it is possible that not all of the VMs will move to a running state. (BZ#2216038)

  - As a workaround, deploy VMs in smaller batches.

- Live migration cannot be enabled for a virtual machine instance (VMI) after a hotplug volume has been added and removed. (BZ#2247593)

Virtualization

- Live migration fails if the VM name exceeds 47 characters. (CNV-61066)

OpenShift Virtualization links a service account token in use by a pod to that specific pod. OpenShift Virtualization implements a service account volume by creating a disk image that contains a token. If you migrate a VM, then the service account volume becomes invalid. (BZ#2037611)

- As a workaround, use user accounts rather than service accounts because user account tokens are not bound to a specific pod.

With the release of the RHSA-2023:3722 advisory, the TLS Extended Master Secret (EMS) extension (RFC 7627) is mandatory for TLS 1.2 connections on FIPS-enabled RHEL 9 systems. This is in accordance with FIPS-140-3 requirements. TLS 1.3 is not affected. (BZ#2157951)

Legacy OpenSSL clients that do not support EMS or TLS 1.3 now cannot connect to FIPS servers running on RHEL 9. Similarly, RHEL 9 clients in FIPS mode cannot connect to servers that only support TLS 1.2 without EMS. This in practice means that these clients cannot connect to servers on RHEL 6, RHEL 7 and non-RHEL legacy operating systems. This is because the legacy 1.0.x versions of OpenSSL do not support EMS or TLS 1.3. For more information, see TLS Extension "Extended Master Secret" enforced with Red Hat Enterprise Linux 9.2.

- As a workaround, upgrade legacy OpenSSL clients to a version that supports TLS 1.3 and configure OpenShift Virtualization to use TLS 1.3, with the Modern TLS security profile type, for FIPS mode.

Web console

If you upgrade OpenShift Container Platform 4.13 to 4.14 without upgrading OpenShift Virtualization, the Virtualization pages of the web console crash. (OCPBUGS-22853)

You must upgrade the OpenShift Virtualization Operator to 4.14 manually or set your subscription approval strategy to "Automatic."

Chapter 3. Getting started

3.1. Getting started with OpenShift Virtualization

You can explore the features and functionalities of OpenShift Virtualization by installing and configuring a basic environment.

Cluster configuration procedures require cluster-admin privileges.

3.1.1. Planning and installing OpenShift Virtualization

Plan and install OpenShift Virtualization on an OpenShift Container Platform cluster:

Planning and installation resources

3.1.2. Creating and managing virtual machines

Create a virtual machine (VM):

Create a VM from a Red Hat image.

You can create a VM by using a Red Hat template or an instance type.

Important: Creating a VM from an instance type is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Create a VM from a custom image.

You can create a VM by importing a custom image from a container registry or a web page, by uploading an image from your local machine, or by cloning a persistent volume claim (PVC).

Connect a VM to a secondary network:

- Linux bridge network.

- Open Virtual Network (OVN)-Kubernetes secondary network.

- Single Root I/O Virtualization (SR-IOV) network.

Note: VMs are connected to the pod network by default.

Connect to a VM:

- Connect to the serial console or VNC console of a VM.

- Connect to a VM by using SSH.

- Connect to the desktop viewer for Windows VMs.

Manage a VM:

3.1.3. Next steps

3.2. Using the virtctl and libguestfs CLI tools

You can manage OpenShift Virtualization resources by using the virtctl command-line tool.

You can access and modify virtual machine (VM) disk images by using the libguestfs command-line tool. You deploy libguestfs by using the virtctl guestfs command.

3.2.1. Installing virtctl

To install virtctl on Red Hat Enterprise Linux (RHEL) 9, other Linux distributions, Windows, or macOS, you download and install the virtctl binary file.

To install virtctl on RHEL 8, you enable the OpenShift Virtualization repository and then install the kubevirt-virtctl package.

3.2.1.1. Installing the virtctl binary on RHEL 9, Linux, Windows, or macOS

You can download the virtctl binary for your operating system from the OpenShift Container Platform web console and then install it.

Procedure

- Navigate to the Virtualization → Overview page in the web console.

- Click the Download virtctl link to download the virtctl binary for your operating system.

- Install virtctl:

For RHEL 9 and other Linux operating systems:

- Decompress the archive file:

$ tar -xvf <virtctl-version-distribution.arch>.tar.gz

- Run the following command to make the virtctl binary executable:

$ chmod +x <path/virtctl-file-name>

- Move the virtctl binary to a directory in your PATH environment variable.

You can check your path by running the following command:

$ echo $PATH

- Set the KUBECONFIG environment variable:

$ export KUBECONFIG=/home/<user>/clusters/current/auth/kubeconfig

For Windows:

- Decompress the archive file.

- Navigate the extracted folder hierarchy and double-click the virtctl executable file to install the client.

- Move the virtctl binary to a directory in your PATH environment variable.

You can check your path by running the following command:

C:\> path

For macOS:

- Decompress the archive file.

- Move the virtctl binary to a directory in your PATH environment variable.

You can check your path by running the following command:

$ echo $PATH
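On Linux and macOS, the PATH check in the steps above can also be scripted. The following is a minimal sketch, not part of the documented procedure; the function name dir_in_path and the example directories are hypothetical.

```shell
# Succeed if <dir> is one of the colon-separated entries of a PATH value.
# The second argument is optional and defaults to the current $PATH.
# Usage: dir_in_path <dir> [path_value]
dir_in_path() {
  case ":${2:-$PATH}:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `dir_in_path /usr/local/bin && echo "ok"` prints ok only if /usr/local/bin is already an entry in your PATH, which tells you whether a binary moved there can be found by name.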

3.2.1.2. Installing the virtctl RPM on RHEL 8

You can install the virtctl RPM package on Red Hat Enterprise Linux (RHEL) 8 by enabling the OpenShift Virtualization repository and installing the kubevirt-virtctl package.

Prerequisites

- Each host in your cluster must be registered with Red Hat Subscription Manager (RHSM) and have an active OpenShift Container Platform subscription.

Procedure

- Enable the OpenShift Virtualization repository by using the subscription-manager CLI tool to run the following command:

# subscription-manager repos --enable cnv-4.14-for-rhel-8-x86_64-rpms

- Install the kubevirt-virtctl package by running the following command:

# yum install kubevirt-virtctl

3.2.2. virtctl commands

The virtctl client is a command-line utility for managing OpenShift Virtualization resources.

The virtual machine (VM) commands also apply to virtual machine instances (VMIs) unless otherwise specified.

3.2.2.1. virtctl information commands

You use virtctl information commands to view information about the virtctl client.

| Command | Description |

|---|---|

|

|

View the |

|

|

View a list of |

|

| View a list of options for a specific command. |

|

|

View a list of global command options for any |

3.2.2.2. VM information commands

You can use virtctl to view information about virtual machines (VMs) and virtual machine instances (VMIs).

| Command | Description |

|---|---|

|

| View the file systems available on a guest machine. |

|

| View information about the operating systems on a guest machine. |

|

| View the logged-in users on a guest machine. |

3.2.2.3. VM management commands

You use virtctl virtual machine (VM) management commands to manage and migrate virtual machines (VMs) and virtual machine instances (VMIs).

| Command | Description |

|---|---|

|

|

Create a |

|

| Start a VM. |

|

| Start a VM in a paused state. This option enables you to interrupt the boot process from the VNC console. |

|

| Stop a VM. |

|

| Force stop a VM. This option might cause data inconsistency or data loss. |

|

| Pause a VM. The machine state is kept in memory. |

|

| Unpause a VM. |

|

| Migrate a VM. |

|

| Cancel a VM migration. |

|

| Restart a VM. |

|

|

Create an |

|

|

Create a |

3.2.2.4. VM connection commands

You use virtctl connection commands to expose ports and connect to virtual machines (VMs) and virtual machine instances (VMIs).

| Command | Description |

|---|---|

|

| Connect to the serial console of a VM. |

|

| Create a service that forwards a designated port of a VM and expose the service on the specified port of the node.

Example: |

|

| Copy a file from your machine to a VM. This command uses the private key of an SSH key pair. The VM must be configured with the public key. |

|

| Copy a file from a VM to your machine. This command uses the private key of an SSH key pair. The VM must be configured with the public key. |

|

| Open an SSH connection with a VM. This command uses the private key of an SSH key pair. The VM must be configured with the public key. |

|

| Connect to the VNC console of a VM.

You must have |

|

| Display the port number and connect manually to a VM by using any viewer through the VNC connection. |

|

| Specify a port number to run the proxy on the specified port, if that port is available. If a port number is not specified, the proxy runs on a random port. |

3.2.2.5. VM export commands

Use virtctl vmexport commands to create, download, or delete a volume exported from a VM, VM snapshot, or persistent volume claim (PVC). Certain manifests also contain a header secret, which grants access to the endpoint to import a disk image in a format that OpenShift Virtualization can use.

| Command | Description |

|---|---|

|

|

Create a

|

|

|

Delete a |

|

|

Download the volume defined in a

Optional:

|

|

|

Create a |

|

| Retrieve the manifest for an existing export. The manifest does not include the header secret. |

|

| Create a VM export for a VM example, and retrieve the manifest. The manifest does not include the header secret. |

|

| Create a VM export for a VM snapshot example, and retrieve the manifest. The manifest does not include the header secret. |

|

| Retrieve the manifest for an existing export. The manifest includes the header secret. |

|

| Retrieve the manifest for an existing export in JSON format. The manifest does not include the header secret. |

|

| Retrieve the manifest for an existing export. The manifest includes the header secret and writes it to the file specified. |

3.2.2.6. VM memory dump commands

You can use the virtctl memory-dump command to output a VM memory dump on a PVC. You can specify an existing PVC or use the --create-claim flag to create a new PVC.

Prerequisites

- The PVC volume mode must be FileSystem.

- The PVC must be large enough to contain the memory dump.

The formula for calculating the PVC size is (VMMemorySize + 100Mi) * FileSystemOverhead, where 100Mi is the memory dump overhead.

- You must enable the hot plug feature gate in the HyperConverged custom resource by running the following command:

$ oc patch hyperconverged kubevirt-hyperconverged -n openshift-cnv \
  --type json -p '[{"op": "add", "path": "/spec/featureGates", "value": "HotplugVolumes"}]'
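The sizing formula in the prerequisites can be worked through with a short helper. This is a sketch under stated assumptions: memory is given in Mi, and FileSystemOverhead is treated as a multiplier such as 1.055. Both the function name memory_dump_pvc_size_mi and that example multiplier are hypothetical, so use the overhead value that applies to your storage.

```shell
# Compute the minimum PVC size in Mi for a memory dump:
#   (VMMemorySize + 100Mi) * FileSystemOverhead
# Assumption: the overhead is passed as a multiplier (for example 1.055).
# Usage: memory_dump_pvc_size_mi <vm_memory_mi> <overhead_multiplier>
memory_dump_pvc_size_mi() {
  awk -v mem="$1" -v overhead="$2" 'BEGIN {
    size = (mem + 100) * overhead
    rounded = int(size)
    if (rounded < size) rounded++   # round up to a whole Mi
    print rounded
  }'
}
```

Under these assumptions, a VM with 4096 Mi of memory and a multiplier of 1.055 needs (4096 + 100) * 1.055 = 4426.78 Mi, which the helper rounds up to 4427.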

Downloading the memory dump

You must use the virtctl vmexport download command to download the memory dump:

$ virtctl vmexport download <vmexport_name> --vm|pvc=<object_name> \
  --volume=<volume_name> --output=<output_file>

| Command | Description |

|---|---|

|

|

Save the memory dump of a VM on a PVC. The memory dump status is displayed in the Optional:

|

|

|

Rerun the This command overwrites the previous memory dump. |

|

| Remove a memory dump. You must remove a memory dump manually if you want to change the target PVC.

This command removes the association between the VM and the PVC, so that the memory dump is not displayed in the |
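In the download command shown earlier in this section, the --vm|pvc=<object_name> notation means that you pass exactly one of --vm or --pvc. The following sketch builds the command line for either case and only prints it. The function name vmexport_download_command and all argument values are hypothetical.

```shell
# Print a virtctl vmexport download command line for a VM or a PVC source.
# Usage: vmexport_download_command <export_name> <vm|pvc> <object_name> <volume_name> <output_file>
vmexport_download_command() {
  case "$2" in
    vm|pvc) ;;
    *)
      echo "error: source kind must be vm or pvc" >&2
      return 1
      ;;
  esac
  printf 'virtctl vmexport download %s --%s=%s --volume=%s --output=%s\n' \
    "$1" "$2" "$3" "$4" "$5"
}
```

Printing the command instead of running it lets you review the flags before you start the download.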

3.2.2.7. Hot plug and hot unplug commands

You use virtctl to add or remove resources from running virtual machines (VMs) and virtual machine instances (VMIs).

| Command | Description |

|---|---|

|

| Hot plug a data volume or persistent volume claim (PVC). Optional:

|

|

| Hot unplug a virtual disk. |

|

| Hot plug a Linux bridge network interface. |

|

| Hot unplug a Linux bridge network interface. |

3.2.2.8. Image upload commands

You use the virtctl image-upload commands to upload a VM image to a data volume.

| Command | Description |

|---|---|

|

| Upload a VM image to a data volume that already exists. |

|

| Upload a VM image to a new data volume of a specified requested size. |

3.2.3. Deploying libguestfs by using virtctl

You can use the virtctl guestfs command to deploy an interactive container with libguestfs-tools and a persistent volume claim (PVC) attached to it.

Procedure

- To deploy a container with libguestfs-tools, mount the PVC, and attach a shell to it, run the following command:

$ virtctl guestfs -n <namespace> <pvc_name>

Important: The <pvc_name> argument is required. If you do not include it, an error message appears.
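Because the PVC name is mandatory, a wrapper script can check for it before calling virtctl. The following is a minimal sketch that only prints the command. The function name guestfs_command and the error text are hypothetical and are not the message that virtctl itself reports.

```shell
# Print the virtctl guestfs command for a PVC, or fail if the PVC name is missing.
# Usage: guestfs_command <namespace> <pvc_name>
guestfs_command() {
  if [ -z "${2:-}" ]; then
    echo "error: <pvc_name> is required" >&2
    return 1
  fi
  printf 'virtctl guestfs -n %s %s\n' "$1" "$2"
}
```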

3.2.3.1. Libguestfs and virtctl guestfs commands

Libguestfs tools help you access and modify virtual machine (VM) disk images. You can use libguestfs tools to view and edit files in a guest, clone and build virtual machines, and format and resize disks.

You can also use the virtctl guestfs command and its sub-commands to modify, inspect, and debug VM disks on a PVC. To see a complete list of possible sub-commands, enter virt- on the command line and press the Tab key. For example:

| Command | Description |

|---|---|

|

| Edit a file interactively in your terminal. |

|

| Inject an ssh key into the guest and create a login. |

|

| See how much disk space is used by a VM. |

|

| See the full list of all RPMs installed on a guest by creating an output file containing the full list. |

|

|

Display the output file list of all RPMs created using the |

|

| Seal a virtual machine disk image to be used as a template. |

By default, virtctl guestfs creates a session with everything needed to manage a VM disk. However, the command also supports several flag options if you want to customize the behavior:

| Flag Option | Description |

|---|---|

|

|

Provides help for |

|

| To use a PVC from a specific namespace.

If you do not use the

If you do not include a |

|

|

Lists the

You can configure the container to use a custom image by using the |

|

|

Indicates that

By default,

If a cluster does not have any

If not set, the |

|

|

Shows the pull policy for the

You can also overwrite the image’s pull policy by setting the |

The command also checks if a PVC is in use by another pod, in which case an error message appears. However, once the libguestfs-tools process starts, the setup cannot avoid a new pod using the same PVC. You must verify that there are no active virtctl guestfs pods before starting the VM that accesses the same PVC.

The virtctl guestfs command accepts only a single PVC attached to the interactive pod.

3.3. Web console overview

The Virtualization section of the OpenShift Container Platform web console contains the following pages for managing and monitoring your OpenShift Virtualization environment.

| Page | Description |

|---|---|

| Overview page | Manage and monitor the OpenShift Virtualization environment. |

| Catalog page | Create virtual machines from a catalog of templates. |

| VirtualMachines page | Create and manage virtual machines. |

| Templates page | Create and manage templates. |

| InstanceTypes page | Create and manage virtual machine instance types. |

| Preferences page | Create and manage virtual machine preferences. |

| Bootable volumes page | Create and manage DataSources for bootable volumes. |

| MigrationPolicies page | Create and manage migration policies for workloads. |

| Icon | Description |

|---|---|

|

| Edit icon |

|

| Link icon |

3.3.1. Overview page

The Overview page displays resources, metrics, migration progress, and cluster-level settings.

Example 3.1. Overview page

| Element | Description |

|---|---|

| Download virtctl | Download the virtctl command-line tool. |

| Overview tab | Resources, usage, alerts, and status. |

| Top consumers tab | Top consumers of CPU, memory, and storage resources. |

| Migrations tab | Status of live migrations. |

| Settings tab | The Settings tab contains the Cluster tab and the User tab. |

| Settings → Cluster tab | OpenShift Virtualization version, update status, live migration, templates project, preview features, and load balancer service settings. |

| Settings → User tab | Authorized SSH keys, user permissions, and welcome information settings. |

3.3.1.1. Overview tab

The Overview tab displays resources, usage, alerts, and status.

Example 3.2. Overview tab

| Element | Description |

|---|---|

| Getting started resources card |

|

| Memory tile | Memory usage, with a chart showing the last 7 days' trend. |

| Storage tile | Storage usage, with a chart showing the last 7 days' trend. |

| VirtualMachines tile | Number of virtual machines, with a chart showing the last 7 days' trend. |

| vCPU usage tile | vCPU usage, with a chart showing the last 7 days' trend. |

| VirtualMachine statuses tile | Number of virtual machines, grouped by status. |

| Alerts tile | OpenShift Virtualization alerts, grouped by severity. |

| VirtualMachines per resource chart | Number of virtual machines created from templates and instance types. |

3.3.1.2. Top consumers tab

The Top consumers tab displays the top consumers of CPU, memory, and storage.

Example 3.3. Top consumers tab

| Element | Description |

|---|---|

| View virtualization dashboard | Link to Observe → Dashboards, which displays the top consumers for OpenShift Virtualization. |

| Time period list | Select a time period to filter the results. |

| Top consumers list | Select the number of top consumers to filter the results. |

| CPU chart | Virtual machines with the highest CPU usage. |

| Memory chart | Virtual machines with the highest memory usage. |

| Memory swap traffic chart | Virtual machines with the highest memory swap traffic. |

| vCPU wait chart | Virtual machines with the highest vCPU wait periods. |

| Storage throughput chart | Virtual machines with the highest storage throughput usage. |

| Storage IOPS chart | Virtual machines with the highest storage input/output operations per second usage. |

3.3.1.3. Migrations tab

The Migrations tab displays the status of virtual machine migrations.

Example 3.4. Migrations tab

| Element | Description |

|---|---|

| Time period list | Select a time period to filter virtual machine migrations. |

| VirtualMachineInstanceMigrations information table | List of virtual machine migrations. |

3.3.1.4. Settings tab

The Settings tab displays cluster-wide settings.

Example 3.5. Tabs on the Settings tab

| Tab | Description |

|---|---|

| Cluster tab | OpenShift Virtualization version and update status, live migration, templates project, preview features, and load balancer service settings. |

| User tab | Authorized SSH key management, user permissions, and welcome information settings. |

3.3.1.4.1. Cluster tab

The Cluster tab displays the OpenShift Virtualization version and update status. You configure preview features, live migration, and other settings on the Cluster tab.

Example 3.6. Cluster tab

| Element | Description |

|---|---|

| Installed version | OpenShift Virtualization version. |

| Update status | OpenShift Virtualization update status. |

| Channel | OpenShift Virtualization update channel. |

| Preview features section | Expand this section to manage preview features. Preview features are disabled by default and must not be enabled in production environments. |

| Live Migration section | Expand this section to configure live migration settings. |

| Live Migration → Max. migrations per cluster field | Select the maximum number of live migrations per cluster. |

| Live Migration → Max. migrations per node field | Select the maximum number of live migrations per node. |

| Live Migration → Live migration network list | Select a dedicated secondary network for live migration. |

| Automatic subscription of new RHEL VirtualMachines section | Expand this section to enable automatic subscription for Red Hat Enterprise Linux (RHEL) virtual machines. To enable this feature, you need cluster administrator permissions, an organization ID, and an activation key. |

| LoadBalancer section | Expand this section to enable the creation of load balancer services for SSH access to virtual machines. The cluster must have a load balancer configured. |

| Template project section |

Expand this section to select a project for Red Hat templates. The default project is To store Red Hat templates in multiple projects, clone the template and then select a project for the cloned template. |

3.3.1.4.2. User tab

You view user permissions and manage authorized SSH keys and welcome information on the User tab.

Example 3.7. User tab

| Element | Description |

|---|---|

| Manage SSH keys section | Expand this section to add authorized SSH keys to a project. The keys are added automatically to all virtual machines that you subsequently create in the selected project. |

| Permissions section | Expand this section to view cluster-wide user permissions. |

| Welcome information section | Expand this section to show or hide the Welcome information dialog. |

3.3.2. Catalog page

You create a virtual machine from a template or instance type on the Catalog page.

Example 3.8. Catalog page

| Element | Description |

|---|---|

| Template catalog tab | Displays a catalog of templates for creating a virtual machine. |

| InstanceTypes tab | Displays bootable volumes and instance types for creating a virtual machine. |

3.3.2.1. Template catalog tab

You select a template on the Template catalog tab to create a virtual machine.

Example 3.9. Template catalog tab

| Element | Description |

|---|---|

| Template project list | Select the project in which Red Hat templates are located.

By default, Red Hat templates are stored in the |

| All items|Default templates | Click All items to display all available templates. |

| Boot source available checkbox | Select the checkbox to display templates with an available boot source. |

| Operating system checkboxes | Select checkboxes to display templates with selected operating systems. |

| Workload checkboxes | Select checkboxes to display templates with selected workloads. |

| Search field | Search templates by keyword. |

| Template tiles | Click a template tile to view template details and to create a virtual machine. |

3.3.2.2. InstanceTypes tab

You create a virtual machine from an instance type on the InstanceTypes tab.

Creating a virtual machine from an instance type is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

| Element | Description |

|---|---|

| Volumes project field |

Project in which bootable volumes are stored. The default is |

| Add volume button | Click to upload a new volume or to use an existing persistent volume claim. |

| Filter field | Filter boot sources by operating system or resource. |

| Search field | Search boot sources by name. |

| Manage columns icon | Select up to 9 columns to display in the table. |

| Volume table | Select a bootable volume for your virtual machine. |

| Red Hat provided tab | Select an instance type provided by Red Hat. |

| User provided tab | Select an instance type that you created on the InstanceType page. |

| VirtualMachine details pane | Displays the virtual machine settings. |

| Name field | Optional: Enter the virtual machine name. |

| SSH key name | Click the edit icon to add a public SSH key. |

| Start this VirtualMachine after creation checkbox | Clear this checkbox to prevent the virtual machine from starting automatically. |

| Create VirtualMachine button | Creates a virtual machine. |

| YAML & CLI button |

Displays the YAML configuration file and the |

3.3.3. VirtualMachines page

You create and manage virtual machines on the VirtualMachines page.

Example 3.10. VirtualMachines page

| Element | Description |

|---|---|

| Create button | Create a virtual machine from a template, volume, or YAML configuration file. |

| Filter field | Filter virtual machines by status, template, operating system, or node. |

| Search field | Search for virtual machines by name or by label. |

| Manage columns icon | Select up to 9 columns to display in the table. The Namespace column is only displayed when All Projects is selected from the Projects list. |

| Virtual machines table | List of virtual machines.

Click the actions menu

Click a virtual machine to navigate to the VirtualMachine details page. |

3.3.3.1. VirtualMachine details page

You configure a virtual machine on the VirtualMachine details page.

Example 3.11. VirtualMachine details page

| Element | Description |

|---|---|

| Actions menu | Click the Actions menu to select Stop, Restart, Pause, Clone, Migrate, Copy SSH command, Edit labels, Edit annotations, or Delete. If you select Stop, Force stop replaces Stop in the action menu. Use Force stop to initiate an immediate shutdown if the operating system becomes unresponsive. |

| Overview tab | Resource usage, alerts, disks, and devices. |

| Details tab | Virtual machine details and configurations. |

| Metrics tab | Memory, CPU, storage, network, and migration metrics. |

| YAML tab | Virtual machine YAML configuration file. |

| Configuration tab | Contains the Disks, Network interfaces, Scheduling, Environment, and Scripts tabs. |

| Configuration → Disks tab | Disks. |

| Configuration → Network interfaces tab | Network interfaces. |

| Configuration → Scheduling tab | Scheduling a virtual machine to run on specific nodes. |

| Configuration → Environment tab | Config map, secret, and service account management. |

| Configuration → Scripts tab | Cloud-init settings, authorized SSH key and dynamic key injection for Linux virtual machines, Sysprep settings for Windows virtual machines. |

| Events tab | Virtual machine event stream. |

| Console tab | Console session management. |

| Snapshots tab | Snapshot management. |

| Diagnostics tab | Status conditions and volume snapshot status. |

3.3.3.1.1. Overview tab

The Overview tab displays resource usage, alerts, and configuration information.

Example 3.12. Overview tab

| Element | Description |

|---|---|

| Details tile | General virtual machine information. |

| Utilization tile | CPU, Memory, Storage, and Network transfer charts. By default, Network transfer displays the sum of all networks. To view the breakdown for a specific network, click Breakdown by network. |

| Hardware devices tile | GPU and host devices. |

| Alerts tile | OpenShift Virtualization alerts, grouped by severity. |

| Snapshots tile |

Take snapshot |

| Network interfaces tile | Network interfaces table. |

| Disks tile | Disks table. |

3.3.3.1.2. Details tab

You view information about the virtual machine and edit labels, annotations, and other metadata on the Details tab.

Example 3.13. Details tab

| Element | Description |

|---|---|

| YAML switch | Set to ON to view your live changes in the YAML configuration file. |

| Name | Virtual machine name. |

| Namespace | Virtual machine namespace or project. |

| Labels | Click the edit icon to edit the labels. |

| Annotations | Click the edit icon to edit the annotations. |

| Description | Click the edit icon to enter a description. |

| Operating system | Operating system name. |

| CPU|Memory | Click the edit icon to edit the CPU|Memory request. Restart the virtual machine to apply the change.

The number of CPUs is calculated by using the following formula: |

| Machine type | Machine type. |

| Boot mode | Click the edit icon to edit the boot mode. Restart the virtual machine to apply the change. |

| Start in pause mode | Click the edit icon to enable this setting. Restart the virtual machine to apply the change. |

| Template | Name of the template used to create the virtual machine. |

| Created at | Virtual machine creation date. |

| Owner | Virtual machine owner. |

| Status | Virtual machine status. |

| Pod |

|

| VirtualMachineInstance | Virtual machine instance name. |

| Boot order | Click the edit icon to select a boot source. Restart the virtual machine to apply the change. |

| IP address | IP address of the virtual machine. |

| Hostname | Hostname of the virtual machine. Restart the virtual machine to apply the change. |

| Time zone | Time zone of the virtual machine. |

| Node | Node on which the virtual machine is running. |

| Workload profile | Click the edit icon to edit the workload profile. |

| SSH access | These settings apply to Linux. |

| SSH using virtctl |

Click the copy icon to copy the |

| SSH service type | Select SSH over LoadBalancer. After you create a service, the SSH command is displayed. Click the copy icon to copy the command to the clipboard. |

| GPU devices | Click the edit icon to add a GPU device. Restart the virtual machine to apply the change. |

| Host devices | Click the edit icon to add a host device. Restart the virtual machine to apply the change. |

| Headless mode | Click the edit icon to set headless mode to ON and to disable VNC console. Restart the virtual machine to apply the change. |

| Services | Displays a list of services if QEMU guest agent is installed. |

| Active users | Displays a list of active users if QEMU guest agent is installed. |

3.3.3.1.3. Metrics tab

The Metrics tab displays memory, CPU, storage, network, and migration usage charts.

Example 3.14. Metrics tab

| Element | Description |

|---|---|

| Time range list | Select a time range to filter the results. |

| Virtualization dashboard | Link to the Workloads tab of the current project. |

| Utilization | Memory and CPU charts. |

| Storage | Storage total read/write and Storage IOPS total read/write charts. |

| Network | Network in, Network out, Network bandwidth, and Network interface charts. Select All networks or a specific network from the Network interface list. |

| Migration | Migration and KV data transfer rate charts. |

3.3.3.1.4. YAML tab

You configure the virtual machine by editing the YAML file on the YAML tab.

Example 3.15. YAML tab

| Element | Description |

|---|---|

| Save button | Save changes to the YAML file. |

| Reload button | Discard your changes and reload the YAML file. |

| Cancel button | Exit the YAML tab. |

| Download button | Download the YAML file to your local machine. |

3.3.3.1.5. Configuration tab

You configure scheduling, network interfaces, disks, and other options on the Configuration tab.

Example 3.16. Tabs on the Configuration tab

| Element | Description |

|---|---|

| YAML switch | Set to ON to view your live changes in the YAML configuration file. |

| Disks tab | Disks. |

| Network interfaces tab | Network interfaces. |

| Scheduling tab | Scheduling and resource requirements. |

| Environment tab | Config maps, secrets, and service accounts. |

| Scripts tab | Cloud-init settings, authorized SSH key for Linux virtual machines, Sysprep answer file for Windows virtual machines. |

3.3.3.1.5.1. Disks tab

You manage disks on the Disks tab.

Example 3.17. Disks tab

| Setting | Description |

|---|---|

| Add disk button | Add a disk to the virtual machine. |

| Filter field | Filter by disk type. |

| Search field | Search for a disk by name. |

| Mount Windows drivers disk checkbox |

Select to mount a |

| Disks table | List of virtual machine disks. Click the actions menu |

| File systems table | List of virtual machine file systems. |
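
The disks listed on this tab correspond to entries in the virtual machine manifest. The following fragment is a minimal sketch, not a complete manifest, of how two disks and their backing volumes might appear in the YAML configuration. All names (`rootdisk`, `datadisk`, `example-vm-rootdisk`, `example-data-pvc`) are hypothetical.

```yaml
# Fragment of a VirtualMachine manifest; all names are illustrative.
spec:
  template:
    spec:
      domain:
        devices:
          disks:
            - name: rootdisk
              bootOrder: 1          # boot from this disk first
              disk:
                bus: virtio
            - name: datadisk
              disk:
                bus: virtio
      volumes:
        # Each volume name must match a disk name above.
        - name: rootdisk
          dataVolume:
            name: example-vm-rootdisk     # hypothetical DataVolume
        - name: datadisk
          persistentVolumeClaim:
            claimName: example-data-pvc   # hypothetical PVC
```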

3.3.3.1.5.2. Network interfaces tab

You manage network interfaces on the Network interfaces tab.

Example 3.18. Network interfaces tab

| Setting | Description |

|---|---|

| Add network interface button | Add a network interface to the virtual machine. |

| Filter field | Filter by interface type. |

| Search field | Search for a network interface by name or by label. |

| Network interface table | List of network interfaces. Click the actions menu |
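
Each interface on this tab pairs an entry under `interfaces` with an entry under `networks` in the virtual machine manifest. The following fragment is a sketch that assumes a default pod network plus one secondary network. The network attachment definition name `example-nad` and the interface name `secondary` are hypothetical.

```yaml
# Fragment of a VirtualMachine manifest; all names are illustrative.
spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
            - name: default
              masquerade: {}      # NAT binding to the pod network
            - name: secondary
              bridge: {}          # bridge binding to a secondary network
      networks:
        # Each network name must match an interface name above.
        - name: default
          pod: {}
        - name: secondary
          multus:
            networkName: example-nad   # hypothetical network attachment definition
```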

3.3.3.1.5.3. Scheduling tab

You configure virtual machines to run on specific nodes on the Scheduling tab.

Restart the virtual machine to apply changes.

Example 3.19. Scheduling tab

| Setting | Description |

|---|---|

| Node selector | Click the edit icon to add a label to specify qualifying nodes. |

| Tolerations | Click the edit icon to add a toleration to specify qualifying nodes. |

| Affinity rules | Click the edit icon to add an affinity rule. |

| Descheduler switch | Enable or disable the descheduler. The descheduler evicts a running pod so that the pod can be rescheduled onto a more suitable node. This field is disabled if the virtual machine cannot be live migrated. |

| Dedicated resources | Click the edit icon to select Schedule this workload with dedicated resources (guaranteed policy). |

| Eviction strategy | Click the edit icon to select LiveMigrate as the virtual machine eviction strategy. |
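
The scheduling settings in this table map to fields in the virtual machine manifest. The following fragment is a sketch of how they might look together. The label keys, taint key, and values (`example.io/...`) are hypothetical, and your cluster might require different settings.

```yaml
# Fragment of a VirtualMachine manifest; label keys and values are illustrative.
spec:
  template:
    metadata:
      annotations:
        # Descheduler switch: allows the descheduler to evict the VM pod.
        descheduler.alpha.kubernetes.io/evict: "true"
    spec:
      # Node selector: only nodes with this label qualify.
      nodeSelector:
        example.io/zone: zone-a
      # Tolerations: allow scheduling onto nodes with a matching taint.
      tolerations:
        - key: example.io/dedicated
          operator: Equal
          value: virtualization
          effect: NoSchedule
      # Affinity rules: require nodes whose label value is in the list.
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: example.io/cpu-family
                    operator: In
                    values:
                      - family-a
      # Eviction strategy: live migrate the VM when its node is drained.
      evictionStrategy: LiveMigrate
      domain:
        cpu:
          # Dedicated resources (guaranteed policy).
          dedicatedCpuPlacement: true
```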

3.3.3.1.5.4. Environment tab

You manage config maps, secrets, and service accounts on the Environment tab.

Example 3.20. Environment tab

| Element | Description |

|---|---|

| Add Config Map, Secret or Service Account | Click the link and select a config map, secret, or service account from the resource list. |
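
Resources added on this tab are attached to the virtual machine as disks. The following fragment is a sketch of how a config map, a secret, and a service account might appear in the manifest. The resource names (`app-config`, `app-secret`) and disk names are hypothetical.

```yaml
# Fragment of a VirtualMachine manifest; all names are illustrative.
spec:
  template:
    spec:
      domain:
        devices:
          disks:
            - name: app-config-disk
              disk: {}
            - name: app-secret-disk
              disk: {}
            - name: sa-disk
              disk: {}
      volumes:
        - name: app-config-disk
          configMap:
            name: app-config            # hypothetical ConfigMap
        - name: app-secret-disk
          secret:
            secretName: app-secret      # hypothetical Secret
        - name: sa-disk
          serviceAccount:
            serviceAccountName: default
```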

3.3.3.1.5.5. Scripts tab

You manage cloud-init settings, add SSH keys, or configure Sysprep for Windows virtual machines on the Scripts tab.

Restart the virtual machine to apply changes.

Example 3.21. Scripts tab

| Element | Description |

|---|---|

| Cloud-init | Click the edit icon to edit the cloud-init settings. |

| Authorized SSH key | Click the edit icon to add a public SSH key to a Linux virtual machine. The key is added as a cloud-init data source at first boot. |

| Dynamic SSH key injection switch | Set Dynamic SSH key injection to on to enable dynamic public SSH key injection. Then, you can add or revoke the key at runtime. Dynamic SSH key injection is only supported by Red Hat Enterprise Linux (RHEL) 9. If you manually disable this setting, the virtual machine inherits the SSH key settings of the image from which it was created. |

| Sysprep | Click the edit icon to upload an |
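
The cloud-init and SSH key settings on this tab are stored in the virtual machine manifest. The following fragment is a sketch that assumes a RHEL 9 guest with the QEMU guest agent installed. The secret name `example-ssh-key` and the user `cloud-user` are hypothetical, and the matching `cloudinitdisk` entry under `disks` is omitted for brevity.

```yaml
# Fragment of a VirtualMachine manifest; all names are illustrative.
spec:
  template:
    spec:
      accessCredentials:
        - sshPublicKey:
            source:
              secret:
                secretName: example-ssh-key   # hypothetical Secret holding the public key
            propagationMethod:
              # Dynamic injection: the guest agent updates the key at runtime.
              qemuGuestAgent:
                users:
                  - cloud-user
      volumes:
        - name: cloudinitdisk
          cloudInitNoCloud:
            userData: |
              #cloud-config
              user: cloud-user
```

For a key that is applied only at first boot, the `qemuGuestAgent` propagation method would be replaced by a cloud-init data source such as `noCloud: {}`.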

3.3.3.1.6. Events tab

The Events tab displays a list of virtual machine events.

3.3.3.1.7. Console tab

You can open a console session to the virtual machine on the Console tab.

Example 3.22. Console tab

| Element | Description |

|---|---|

| Guest login credentials section | Expand Guest login credentials to view the credentials created with |

| Console list | Select VNC console or Serial console. The Desktop viewer option is displayed for Windows virtual machines. You must install an RDP client on a machine on the same network. |

| Send key list | Select a key-stroke combination to send to the console. |

| Disconnect button | Disconnect the console connection. You must manually disconnect the console connection if you open a new console session. Otherwise, the first console session continues to run in the background. |

| Paste button | Paste a string from your clipboard to the VNC console. |

3.3.3.1.8. Snapshots tab

You create snapshots and restore virtual machines from snapshots on the Snapshots tab.

Example 3.23. Snapshots tab

| Element | Description |

|---|---|

| Take snapshot button | Create a snapshot. |

| Filter field | Filter snapshots by status. |

| Search field | Search for snapshots by name or by label. |

| Snapshot table | List of snapshots. Click the snapshot name to edit the labels or annotations. Click the actions menu |
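
The snapshot and restore actions on this tab can also be expressed as custom resources. The following manifests are a sketch that assumes a virtual machine named `example-vm`. All object names are hypothetical, and the API version differs between releases (older releases use `v1alpha1`), so check the versions that your cluster serves before you apply them.

```yaml
# Snapshot of a hypothetical virtual machine named example-vm.
apiVersion: snapshot.kubevirt.io/v1beta1   # assumption: may be v1alpha1 on older releases
kind: VirtualMachineSnapshot
metadata:
  name: example-snapshot
spec:
  source:
    apiGroup: kubevirt.io
    kind: VirtualMachine
    name: example-vm
---
# Restore the same virtual machine from that snapshot.
apiVersion: snapshot.kubevirt.io/v1beta1   # assumption: may be v1alpha1 on older releases
kind: VirtualMachineRestore
metadata:
  name: example-restore
spec:
  target:
    apiGroup: kubevirt.io
    kind: VirtualMachine
    name: example-vm
  virtualMachineSnapshotName: example-snapshot
```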

3.3.3.1.9. Diagnostics tab

You view the status conditions and volume snapshot status on the Diagnostics tab.

Example 3.24. Diagnostics tab

| Element | Description |

|---|---|

| Status conditions table | Display a list of conditions that are reported for the virtual machine. |

| Filter field | Filter status conditions by category and condition. |

| Search field | Search status conditions by reason. |

| Manage columns icon | Select up to 9 columns to display in the table. |

| Volume snapshot status table | List of volumes, their snapshot enablement status, and reason. |

3.3.4. Templates page

You create, edit, and clone virtual machine templates on the VirtualMachine Templates page.

You cannot edit a Red Hat template. However, you can clone a Red Hat template and edit it to create a custom template.

Example 3.25. VirtualMachine Templates page

| Element | Description |

|---|---|

| Create Template button | Create a template by editing a YAML configuration file. |

| Filter field | Filter templates by type, boot source, template provider, or operating system. |

| Search field | Search for templates by name or by label. |

| Manage columns icon | Select up to 9 columns to display in the table. The Namespace column is only displayed when All Projects is selected from the Projects list. |

| Virtual machine templates table | List of virtual machine templates. Click the actions menu |

3.3.4.1. Template details page

You view template settings and edit custom templates on the Template details page.

Example 3.26. Template details page

| Element | Description |

|---|---|

| YAML switch | Set to ON to view your live changes in the YAML configuration file. |

| Actions menu | Click the Actions menu to select Edit, Clone, Edit boot source, Edit boot source reference, Edit labels, Edit annotations, or Delete. |

| Details tab | Template settings and configurations. |

| YAML tab | YAML configuration file. |

| Scheduling tab | Scheduling configurations. |

| Network interfaces tab | Network interface management. |

| Disks tab | Disk management. |

| Scripts tab | Cloud-init, SSH key, and Sysprep management. |

| Parameters tab | Name and cloud user password management. |

3.3.4.1.1. Details tab

You configure a custom template on the Details tab.

Example 3.27. Details tab

| Element | Description |

|---|---|

| Name | Template name. |

| Namespace | Template namespace. |

| Labels | Click the edit icon to edit the labels. |

| Annotations | Click the edit icon to edit the annotations. |

| Display name | Click the edit icon to edit the display name. |

| Description | Click the edit icon to enter a description. |

| Operating system | Operating system name. |

| CPU\|Memory | Click the edit icon to edit the CPU\|Memory request. The number of CPUs is calculated by using the following formula: |

| Machine type | Template machine type. |

| Boot mode | Click the edit icon to edit the boot mode. |

| Base template | Name of the base template used to create this template. |

| Created at | Template creation date. |

| Owner | Template owner. |

| Boot order | Template boot order. |

| Boot source | Boot source availability. |

| Provider | Template provider. |

| Support | Template support level. |

| GPU devices | Click the edit icon to add a GPU device. |

| Host devices | Click the edit icon to add a host device. |

| Headless mode | Click the edit icon to set headless mode to ON and to disable VNC console. |
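
The CPU|Memory and Headless mode settings correspond to fields in the `domain` section of the virtual machine definition inside the template. The following fragment is a sketch with illustrative values. It assumes that the CPU count is the product of the sockets, cores, and threads values, which is the usual CPU topology convention and is not confirmed by this section.

```yaml
# Fragment of the VirtualMachine definition inside a template; values are illustrative.
spec:
  template:
    spec:
      domain:
        cpu:
          # Assumption: CPU count = sockets * cores * threads = 2 * 4 * 1 = 8
          sockets: 2
          cores: 4
          threads: 1
        memory:
          guest: 8Gi
        devices:
          # Headless mode ON: no graphics device, so the VNC console is disabled.
          autoattachGraphicsDevice: false
```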

3.3.4.1.2. YAML tab

You configure a custom template by editing the YAML file on the YAML tab.

Example 3.28. YAML tab

| Element | Description |

|---|---|

| Save button | Save changes to the YAML file. |

| Reload button | Discard your changes and reload the YAML file. |

| Cancel button | Exit the YAML tab. |

| Download button | Download the YAML file to your local machine. |

3.3.4.1.3. Scheduling tab

You configure scheduling on the Scheduling tab.

Example 3.29. Scheduling tab

| Setting | Description |

|---|---|

| Node selector | Click the edit icon to add a label to specify qualifying nodes. |

| Tolerations | Click the edit icon to add a toleration to specify qualifying nodes. |

| Affinity rules | Click the edit icon to add an affinity rule. |

| Descheduler switch | Enable or disable the descheduler. The descheduler evicts a running pod so that the pod can be rescheduled onto a more suitable node. |

| Dedicated resources | Click the edit icon to select Schedule this workload with dedicated resources (guaranteed policy). |

| Eviction strategy | Click the edit icon to select LiveMigrate as the virtual machine eviction strategy. |

3.3.4.1.4. Network interfaces tab

You manage network interfaces on the Network interfaces tab.

Example 3.30. Network interfaces tab

| Setting | Description |

|---|---|

| Add network interface button | Add a network interface to the template. |

| Filter field | Filter by interface type. |

| Search field | Search for a network interface by name or by label. |

| Network interface table | List of network interfaces. Click the actions menu |

3.3.4.1.5. Disks tab

You manage disks on the Disks tab.

Example 3.31. Disks tab

| Setting | Description |