OVN-Kubernetes network plugin

In-depth configuration and troubleshooting for the OVN-Kubernetes network plugin in OpenShift Container Platform

Abstract

Chapter 1. About the OVN-Kubernetes network plugin

The OpenShift Container Platform cluster uses a virtualized network for pod and service networks.

Part of Red Hat OpenShift Networking, the OVN-Kubernetes network plugin is the default network provider for OpenShift Container Platform. OVN-Kubernetes is based on Open Virtual Network (OVN) and provides an overlay-based networking implementation. A cluster that uses the OVN-Kubernetes plugin also runs Open vSwitch (OVS) on each node. OVN configures OVS on each node to implement the declared network configuration.

OVN-Kubernetes is the default networking solution for OpenShift Container Platform and single-node OpenShift deployments.

OVN-Kubernetes, which arose from the OVS project, uses many of the same constructs, such as OpenFlow rules, to decide how packets travel through the network. For more information, see the Open Virtual Network website.

OVN-Kubernetes is a series of daemons for OVS that transform virtual network configurations into OpenFlow rules. OpenFlow is a protocol for communicating with network switches and routers, providing a means for remotely controlling the flow of network traffic on a network device. This means that network administrators can configure, manage, and watch the flow of network traffic.

OVN-Kubernetes provides advanced functionality that is not available with OpenFlow alone. OVN supports distributed virtual routing, distributed logical switches, access control, Dynamic Host Configuration Protocol (DHCP), and DNS. OVN implements distributed virtual routing within logical flows that equate to OpenFlow flows. For example, if a pod sends a DHCP request to the DHCP server on the network, a logical flow rule that matches the request helps OVN-Kubernetes handle the packet. This means that the server can respond with gateway, DNS server, IP address, and other information.

OVN-Kubernetes runs a daemon on each node. There are daemon sets for the databases and for the OVN controller that run on every node. The OVN controller programs the Open vSwitch daemon on the nodes to support the following network provider features:

- Egress IPs

- Firewalls

- Hardware offloading

- Hybrid networking

- Internet Protocol Security (IPsec) encryption

- IPv6

- Multicast

- Network policy and network policy logs

- Routers

1.1. OVN-Kubernetes purpose

The OVN-Kubernetes network plugin is an open-source, fully-featured Kubernetes CNI plugin that uses Open Virtual Network (OVN) to manage network traffic flows. OVN is a community developed, vendor-agnostic network virtualization solution. The OVN-Kubernetes network plugin uses the following technologies:

- OVN to manage network traffic flows.

- Kubernetes network policy support and logs, including ingress and egress rules.

- The Generic Network Virtualization Encapsulation (Geneve) protocol, rather than Virtual Extensible LAN (VXLAN), to create an overlay network between nodes.

The OVN-Kubernetes network plugin supports the following capabilities:

- Hybrid clusters that can run both Linux and Microsoft Windows workloads. This environment is known as hybrid networking.

- Offloading of network data processing from the host central processing unit (CPU) to compatible network cards and data processing units (DPUs). This is known as hardware offloading.

- IPv4-primary dual-stack networking on bare-metal, VMware vSphere, IBM Power®, IBM Z®, and Red Hat OpenStack Platform (RHOSP) platforms.

- IPv6 single-stack networking on RHOSP and bare metal platforms.

- IPv6-primary dual-stack networking for a cluster running on a bare-metal, a VMware vSphere, or an RHOSP platform.

- Egress firewall devices and egress IP addresses.

- Egress router devices that operate in redirect mode.

- IPsec encryption of intracluster communications.

Red Hat does not support the following postinstallation configurations that use the OVN-Kubernetes network plugin:

- Configuring the primary network interface, including using the NMState Operator to configure bonding for the interface.

- Configuring a sub-interface or additional network interface on a network device that uses the Open vSwitch (OVS) or an OVN-Kubernetes br-ex bridge network.

- Creating additional virtual local area networks (VLANs) on the primary network interface.

- Using the primary network interface, such as eth0 or bond0, that you created for a node during cluster installation to create additional secondary networks.

Red Hat does support the following postinstallation configurations that use the OVN-Kubernetes network plugin:

- Creating additional VLANs from the base physical interface, such as eth0.100, where you configured the primary network interface as a VLAN for a node during cluster installation. This works because the Open vSwitch (OVS) bridge attaches to the initial VLAN sub-interface, such as eth0.100, leaving the base physical interface available for new configurations.

- Creating an additional OVN secondary network with a localnet topology network requires that you define the secondary network in a NodeNetworkConfigurationPolicy (NNCP) object. After you create the network, pods or virtual machines (VMs) can then attach to the network. These secondary networks give a dedicated connection to the physical network, which might or might not use VLAN tagging. You cannot access these networks from the host network of a node where the host does not have the required setup, such as the required network settings.

- Defining additional VLANs in the vlanID parameter of the NetworkAttachmentDefinition (NAD) custom resource (CR). By mapping the br-ex bridge to a physical interface, the interface acts as a trunk port that can carry multiple VLANs. OVN-Kubernetes can then manage tagging and untagging of network packets, also known as Ethernet frames. To ensure strong connectivity, configure the physical switch ports as trunks.

1.2. OVN-Kubernetes IPv6 and dual-stack limitations

The OVN-Kubernetes network plugin has the following limitations:

For clusters configured for dual-stack networking, both IPv4 and IPv6 traffic must use the same network interface as the default gateway.

If this requirement is not met, pods on the host in the ovnkube-node daemon set enter the CrashLoopBackOff state.

If you display a pod with a command such as oc get pod -n openshift-ovn-kubernetes -l app=ovnkube-node -o yaml, the status field has more than one message about the default gateway, as shown in the following output:

I1006 16:09:50.985852 60651 helper_linux.go:73] Found default gateway interface br-ex 192.168.127.1
I1006 16:09:50.985923 60651 helper_linux.go:73] Found default gateway interface ens4 fe80::5054:ff:febe:bcd4
F1006 16:09:50.985939 60651 ovnkube.go:130] multiple gateway interfaces detected: br-ex ens4

The only resolution is to reconfigure the host networking so that both IP families use the same network interface for the default gateway.

For clusters configured for dual-stack networking, both the IPv4 and IPv6 routing tables must contain the default gateway.

If this requirement is not met, pods on the host in the ovnkube-node daemon set enter the CrashLoopBackOff state.

If you display a pod with a command such as oc get pod -n openshift-ovn-kubernetes -l app=ovnkube-node -o yaml, the status field has more than one message about the default gateway, as shown in the following output:

I0512 19:07:17.589083 108432 helper_linux.go:74] Found default gateway interface br-ex 192.168.123.1
F0512 19:07:17.589141 108432 ovnkube.go:133] failed to get default gateway interface

The only resolution is to reconfigure the host networking so that both IP families contain the default gateway.

If you set the ipv6.disable parameter to 1 in the kernelArgument section of the MachineConfig custom resource (CR) for your cluster, OVN-Kubernetes pods enter a CrashLoopBackOff state. Additionally, updating your cluster to a later version of OpenShift Container Platform fails because the Network Operator remains in a Degraded state. Red Hat does not support disabling IPv6 addresses for your cluster, so do not set the ipv6.disable parameter to 1.
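The two dual-stack failures above can be told apart by the fatal message in the pod status. The following is a minimal sketch, not a supported tool: the function name classify_gateway_log and its result strings are made up for illustration, and it assumes the messages appear with the same wording as in the sample output above.

```shell
# classify_gateway_log: read ovnkube-node status or log text on stdin and
# print a short diagnosis. The name and result strings are hypothetical.
classify_gateway_log() {
  log=$(cat)
  case "$log" in
    *"multiple gateway interfaces detected"*)
      # IPv4 and IPv6 default routes use different interfaces.
      # Print the interface names that follow the colon, such as "br-ex ens4".
      ifaces=$(printf '%s\n' "$log" |
        sed -n 's/.*multiple gateway interfaces detected: //p' | head -n 1)
      echo "split-gateway: $ifaces"
      ;;
    *"failed to get default gateway interface"*)
      # One of the IP families has no default route.
      echo "missing-default-route"
      ;;
    *)
      echo "ok"
      ;;
  esac
}
```

One possible use is to pipe the output of the oc get pod command shown above into the function, for example oc get pod -n openshift-ovn-kubernetes -l app=ovnkube-node -o yaml | classify_gateway_log. Either non-ok result points back to the host network configuration, which is where the fix has to be made.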

1.3. Session affinity

Session affinity is a feature that applies to Kubernetes Service objects. You can use session affinity if you want to ensure that each time you connect to a <service_VIP>:<Port>, the traffic is always load balanced to the same back end. For more information, including how to set session affinity based on a client’s IP address, see Session affinity.

1.3.1. Stickiness timeout for session affinity

The OVN-Kubernetes network plugin for OpenShift Container Platform calculates the stickiness timeout for a session from a client based on the last packet. For example, if you run a curl command 10 times, the sticky session timer starts from the tenth packet, not the first. As a result, if the client continuously contacts the service, the session never times out. The session times out only when the service has not received a packet for the amount of time set by the timeoutSeconds parameter.
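As an illustration of where the timeoutSeconds parameter lives, the following sketch prints a Service manifest that enables client-IP session affinity. The function name print_sticky_service, the service name, the selector, and the ports are placeholders chosen for this example. The sessionAffinity and sessionAffinityConfig fields are part of the Kubernetes Service API, where timeoutSeconds defaults to 10800 seconds.

```shell
# print_sticky_service: emit a Service manifest with client-IP session affinity.
# The first argument is the stickiness timeout in seconds (default 10800).
# The name, selector, and ports below are placeholders, not values from a real cluster.
print_sticky_service() {
  timeout_seconds=${1:-10800}
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: example-service
spec:
  selector:
    app: example
  ports:
  - port: 80
    targetPort: 8080
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: ${timeout_seconds}
EOF
}
```

For example, print_sticky_service 600 | oc apply -f - would create a service whose sessions expire 600 seconds after the last packet from a client, consistent with the last-packet behavior described above.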

Chapter 2. OVN-Kubernetes architecture

2.1. Introduction to OVN-Kubernetes architecture

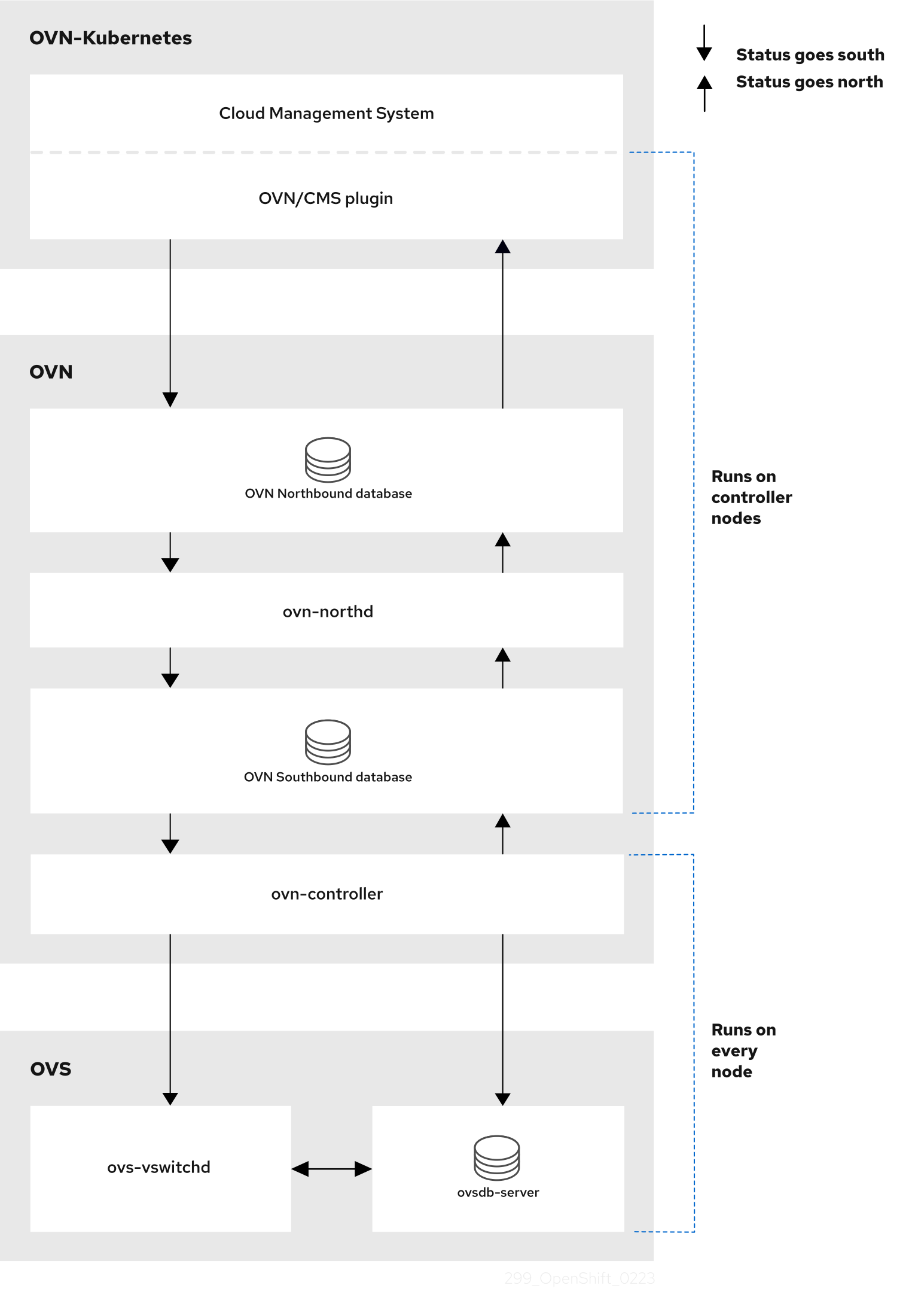

The following diagram shows the OVN-Kubernetes architecture.

Figure 2.1. OVK-Kubernetes architecture

The key components are:

- Cloud Management System (CMS) - A platform specific client for OVN that provides a CMS specific plugin for OVN integration. The plugin translates the cloud management system’s concept of the logical network configuration, stored in the CMS configuration database in a CMS-specific format, into an intermediate representation understood by OVN.

- OVN Northbound database (nbdb) container - Stores the logical network configuration passed by the CMS plugin.

- OVN Southbound database (sbdb) container - Stores the physical and logical network configuration state for the Open vSwitch (OVS) system on each node, including tables that bind them.

- OVN north daemon (ovn-northd) - This is the intermediary client between the nbdb container and the sbdb container. It translates the logical network configuration in terms of conventional network concepts, taken from the nbdb container, into logical data path flows in the sbdb container. The container name for the ovn-northd daemon is northd and it runs in the ovnkube-node pods.

- ovn-controller - This is the OVN agent that interacts with OVS and hypervisors for any information or update that is needed for the sbdb container. The ovn-controller reads logical flows from the sbdb container, translates them into OpenFlow flows, and sends them to the node's OVS daemon. The container name is ovn-controller and it runs in the ovnkube-node pods.

The OVN northd, northbound database, and southbound database run on each node in the cluster and mostly contain and process information that is local to that node.

The OVN northbound database has the logical network configuration passed down to it by the cloud management system (CMS). The OVN northbound database contains the current desired state of the network, presented as a collection of logical ports, logical switches, logical routers, and more. The ovn-northd (northd container) connects to the OVN northbound database and the OVN southbound database. It translates the logical network configuration in terms of conventional network concepts, taken from the OVN northbound database, into logical data path flows in the OVN southbound database.

The OVN southbound database has physical and logical representations of the network and binding tables that link them together. It contains the chassis information of the node and other constructs, such as remote transit switch ports, that are required to connect to the other nodes in the cluster. The OVN southbound database also contains all the logical flows. The logical flows are shared with the ovn-controller process that runs on each node, and the ovn-controller turns them into OpenFlow rules to program Open vSwitch (OVS).

The Kubernetes control plane nodes contain two ovnkube-control-plane pods on separate nodes, which perform the central IP address management (IPAM) allocation for each node in the cluster. At any given time, a single ovnkube-control-plane pod is the leader.

2.2. Listing all resources in the OVN-Kubernetes project

Finding the resources and containers that run in the OVN-Kubernetes project is important to help you understand the OVN-Kubernetes networking implementation.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

Procedure

Run the following command to get all resources, endpoints, and ConfigMaps in the OVN-Kubernetes project:

$ oc get all,ep,cm -n openshift-ovn-kubernetes

There is one ovnkube-node pod for each node in the cluster. The ovnkube-config config map has the OpenShift Container Platform OVN-Kubernetes configurations.

List all of the containers in the ovnkube-node pods by running the following command:

$ oc get pods ovnkube-node-bcvts -o jsonpath='{.spec.containers[*].name}' -n openshift-ovn-kubernetes

Expected output

ovn-controller ovn-acl-logging kube-rbac-proxy-node kube-rbac-proxy-ovn-metrics northd nbdb sbdb ovnkube-controller

The ovnkube-node pod is made up of several containers. It is responsible for hosting the northbound database (nbdb container), the southbound database (sbdb container), the north daemon (northd container), ovn-controller, and the ovnkube-controller container. The ovnkube-controller container watches for API objects like pods, egress IPs, namespaces, services, endpoints, egress firewall, and network policies. It is also responsible for allocating pod IP addresses from the available subnet pool for that node.

List all the containers in the ovnkube-control-plane pods by running the following command:

$ oc get pods ovnkube-control-plane-65c6f55656-6d55h -o jsonpath='{.spec.containers[*].name}' -n openshift-ovn-kubernetes

Expected output

kube-rbac-proxy ovnkube-cluster-manager

The ovnkube-control-plane pod has a container (ovnkube-cluster-manager) that resides on each OpenShift Container Platform node. The ovnkube-cluster-manager container allocates pod subnet, transit switch subnet IP and join switch subnet IP to each node in the cluster. The kube-rbac-proxy container monitors metrics for the ovnkube-cluster-manager container.
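When comparing several nodes, it can help to check the container list mechanically. The following sketch takes the space-separated names printed by the jsonpath query for an ovnkube-node pod and prints any core OVN container that is absent. The function name missing_containers is hypothetical, and the expected list is copied from the example output in this section, so it might differ between releases.

```shell
# missing_containers: print each expected ovnkube-node container that does not
# appear in the space-separated list passed as the first argument.
# The expected names come from the example output above (an assumption).
missing_containers() {
  actual=" $1 "
  for name in ovn-controller northd nbdb sbdb ovnkube-controller; do
    case "$actual" in
      *" $name "*) ;;          # container is present, print nothing
      *) echo "$name" ;;       # container is absent
    esac
  done
}
```

A possible invocation is missing_containers "$(oc get pods ovnkube-node-bcvts -o jsonpath='{.spec.containers[*].name}' -n openshift-ovn-kubernetes)". Empty output means that every expected container is defined in the pod.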

2.3. Listing the OVN-Kubernetes northbound database contents

Each node is controlled by the ovnkube-controller container running in the ovnkube-node pod on that node. To understand the OVN logical networking entities, examine the northbound database, which runs as a container inside the ovnkube-node pod on that node, to see which objects exist on the node that you want to inspect.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

Procedure

To run ovn-nbctl or ovn-sbctl commands in a cluster, you must open a remote shell into the nbdb or sbdb containers on the relevant node.

List pods by running the following command:

$ oc get po -n openshift-ovn-kubernetes

Optional: To list the pods with node information, run the following command:

$ oc get pods -n openshift-ovn-kubernetes -owide

Navigate into a pod to look at the northbound database by running the following command:

$ oc rsh -c nbdb -n openshift-ovn-kubernetes ovnkube-node-55xs2

Run the following command to show all the objects in the northbound database:

$ ovn-nbctl show

The output is too long to list here. The list includes the NAT rules, logical switches, load balancers and so on.

You can narrow down and focus on specific components by using some of the following optional commands:

Run the following command to show the list of logical routers:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-node-55xs2 \
  -c northd -- ovn-nbctl lr-list

Example output

45339f4f-7d0b-41d0-b5f9-9fca9ce40ce6 (GR_ci-ln-t487nnb-72292-mdcnq-master-2)
96a0a0f0-e7ed-4fec-8393-3195563de1b8 (ovn_cluster_router)

Note: From this output you can see there is a router on each node plus an ovn_cluster_router.

Run the following command to show the list of logical switches:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-node-55xs2 \
  -c nbdb -- ovn-nbctl ls-list

Example output

bdd7dc3d-d848-4a74-b293-cc15128ea614 (ci-ln-t487nnb-72292-mdcnq-master-2)
b349292d-ee03-4914-935f-1940b6cb91e5 (ext_ci-ln-t487nnb-72292-mdcnq-master-2)
0aac0754-ea32-4e33-b086-35eeabf0a140 (join)
992509d7-2c3f-4432-88db-c179e43592e5 (transit_switch)

Note: From this output you can see there is an ext switch for each node plus switches with the node name itself and a join switch.

Run the following command to show the list of load balancers:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-node-55xs2 \
  -c nbdb -- ovn-nbctl lb-list

Note: The output lists many OVN-Kubernetes load balancers. Load balancers in OVN-Kubernetes are representations of services.

Run the following command to display the options available with the command ovn-nbctl:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-node-55xs2 \
  -c nbdb -- ovn-nbctl --help
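The lr-list output pairs each router UUID with a name, and gateway router names carry a GR_ prefix followed by the node name. As a small sketch of how that output could be post-processed, the following function prints the node name behind each gateway router. The function name gateway_router_nodes is made up for this example, and the sketch assumes the UUID (name) line format shown in the example output above.

```shell
# gateway_router_nodes: read `ovn-nbctl lr-list` output on stdin and print the
# node name for each gateway router, that is, each name that starts with GR_.
# Other routers, such as ovn_cluster_router, are skipped.
gateway_router_nodes() {
  # Keep only the text between "(GR_" and the closing parenthesis.
  # Trailing whitespace, including a carriage return from a TTY, is ignored.
  sed -n 's/^[^(]*(GR_\(.*\))[[:space:]]*$/\1/p'
}
```

For example, the lr-list command shown above can be piped into gateway_router_nodes to confirm which node a given ovnkube-node pod holds gateway router state for.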

2.4. Command-line arguments for ovn-nbctl to examine northbound database contents

The following table describes the command-line arguments that can be used with ovn-nbctl to examine the contents of the northbound database.

Open a remote shell in the pod you want to view the contents of and then run the ovn-nbctl commands.

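| Argument | Description |
|---|---|
| ovn-nbctl show | An overview of the northbound database contents as seen from a specific node. |
| ovn-nbctl show <switch_or_router> | Show the details associated with the specified switch or router. |
| ovn-nbctl lr-list | Show the logical routers. |
| ovn-nbctl lrp-list <router> | Using the router information from ovn-nbctl lr-list to show the router ports. |
| ovn-nbctl lr-nat-list <router> | Show network address translation details for the specified router. |
| ovn-nbctl ls-list | Show the logical switches. |
| ovn-nbctl lsp-list <switch> | Using the switch information from ovn-nbctl ls-list to show the switch port. |
| ovn-nbctl lsp-get-type <port> | Get the type for the logical port. |
| ovn-nbctl lb-list | Show the load balancers. |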

2.5. Listing the OVN-Kubernetes southbound database contents

Each node is controlled by the ovnkube-controller container running in the ovnkube-node pod on that node. To understand the OVN logical networking entities, examine the southbound database, which runs as a container inside the ovnkube-node pod on that node, to see which objects exist on the node that you want to inspect.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

Procedure

To run ovn-nbctl or ovn-sbctl commands in a cluster, you must open a remote shell into the nbdb or sbdb containers on the relevant node.

List the pods by running the following command:

$ oc get po -n openshift-ovn-kubernetes

Optional: To list the pods with node information, run the following command:

$ oc get pods -n openshift-ovn-kubernetes -owide

Navigate into a pod to look at the southbound database:

$ oc rsh -c sbdb -n openshift-ovn-kubernetes ovnkube-node-55xs2

Run the following command to show all the objects in the southbound database:

$ ovn-sbctl show

The detailed output shows the chassis and the ports that are attached to the chassis, which in this case are all of the router ports and anything that runs on the host network. Pods communicate with the wider network by using source network address translation (SNAT). Their IP address is translated into the IP address of the node that the pod is running on and then sent out into the network.

In addition to the chassis information, the southbound database has all the logical flows. Those logical flows are sent to the ovn-controller running on each of the nodes. The ovn-controller translates the logical flows into OpenFlow rules and ultimately programs Open vSwitch so that traffic from your pods follows the OpenFlow rules and makes it out of the network.

Run the following command to display the options available with the command ovn-sbctl:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-node-55xs2 \
  -c sbdb -- ovn-sbctl --help

2.6. Command-line arguments for ovn-sbctl to examine southbound database contents

The following table describes the command-line arguments that can be used with ovn-sbctl to examine the contents of the southbound database.

Open a remote shell in the pod you wish to view the contents of and then run the ovn-sbctl commands.

| Argument | Description |
|---|---|
| ovn-sbctl show | An overview of the southbound database contents as seen from a specific node. |
| ovn-sbctl list Port_Binding <port> | List the contents of the southbound database for the specified port. |
| ovn-sbctl dump-flows | List the logical flows. |

2.7. OVN-Kubernetes logical architecture

OVN is a network virtualization solution. It creates logical switches and routers, which can be interconnected to create any network topology. When you run ovnkube-trace with the log level set to 2 or 5, the OVN-Kubernetes logical components are exposed. The following diagram shows how the routers and switches are connected in OpenShift Container Platform.

Figure 2.2. OVN-Kubernetes router and switch components

The key components involved in packet processing are:

- Gateway routers

- Gateway routers, sometimes called L3 gateway routers, are typically used between the distributed routers and the physical network. Gateway routers, including their logical patch ports, are bound to a physical location (not distributed), or chassis. The patch ports on this router are known as l3gateway ports in the ovn-southbound database (ovn-sbdb).

- Distributed logical routers

- Distributed logical routers and the logical switches behind them, to which virtual machines and containers attach, effectively reside on each hypervisor.

- Join local switch

- Join local switches are used to connect the distributed router and gateway routers. They reduce the number of IP addresses needed on the distributed router.

- Logical switches with patch ports

- Logical switches with patch ports are used to virtualize the network stack. They connect remote logical ports through tunnels.

- Logical switches with localnet ports

- Logical switches with localnet ports are used to connect OVN to the physical network. They connect remote logical ports by bridging the packets to directly connected physical L2 segments using localnet ports.

- Patch ports

- Patch ports represent connectivity between logical switches and logical routers and between peer logical routers. A single connection has a pair of patch ports at each such point of connectivity, one on each side.

- l3gateway ports

- l3gateway ports are the port binding entries in the ovn-sbdb for logical patch ports used in the gateway routers. They are called l3gateway ports rather than patch ports to portray the fact that these ports are bound to a chassis just like the gateway router itself.

- localnet ports

- localnet ports are present on the bridged logical switches that allow a connection to a locally accessible network from each ovn-controller instance. This helps model the direct connectivity to the physical network from the logical switches. A logical switch can only have a single localnet port attached to it.

2.7.1. Installing network-tools on local host

Install network-tools on your local host to make a collection of tools available for debugging OpenShift Container Platform cluster network issues.

Procedure

Clone the network-tools repository onto your workstation with the following command:

$ git clone git@github.com:openshift/network-tools.git

Change into the directory for the repository you just cloned:

$ cd network-tools

Optional: List all available commands:

$ ./debug-scripts/network-tools -h

2.7.2. Running network-tools

Get information about the logical switches and routers by running network-tools.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster as a user with cluster-admin privileges.

- You have installed network-tools on local host.

Procedure

List the routers by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command ovn-nbctl lr-list

Example output

944a7b53-7948-4ad2-a494-82b55eeccf87 (GR_ci-ln-54932yb-72292-kd676-worker-c-rzj99)
84bd4a4c-4b0b-4a47-b0cf-a2c32709fc53 (ovn_cluster_router)

List the localnet ports by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command \
  ovn-sbctl find Port_Binding type=localnet

List the l3gateway ports by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command \
  ovn-sbctl find Port_Binding type=l3gateway

List the patch ports by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command \
  ovn-sbctl find Port_Binding type=patch

Chapter 3. Troubleshooting OVN-Kubernetes

OVN-Kubernetes has many sources of built-in health checks and logs. Follow the instructions in these sections to examine your cluster. If a support case is necessary, follow the support guide to collect additional information through a must-gather. Only use the -- gather_network_logs when instructed by support.

3.1. Monitoring OVN-Kubernetes health by using readiness probes

The ovnkube-control-plane and ovnkube-node pods have containers configured with readiness probes.

Prerequisites

- Access to the OpenShift CLI (oc).

- You have access to the cluster with cluster-admin privileges.

- You have installed jq.

Procedure

Review the details of the ovnkube-node readiness probe by running the following command:

$ oc get pods -n openshift-ovn-kubernetes -l app=ovnkube-node \
    -o json | jq '.items[0].spec.containers[] | .name,.readinessProbe'

The readiness probe for the northbound and southbound database containers in the ovnkube-node pod checks for the health of the databases and the ovnkube-controller container.

The ovnkube-controller container in the ovnkube-node pod has a readiness probe to verify the presence of the OVN-Kubernetes CNI configuration file, the absence of which would indicate that the pod is not running or is not ready to accept requests to configure pods.

Show all events, including the probe failures, for the namespace by using the following command:

$ oc get events -n openshift-ovn-kubernetes

Show the events for just a specific pod:

$ oc describe pod ovnkube-node-9lqfk -n openshift-ovn-kubernetes

Show the messages and statuses from the cluster network operator:

$ oc get co/network -o json | jq '.status.conditions[]'

Show the ready status of each container in ovnkube-node pods by running the following script:

$ for p in $(oc get pods --selector app=ovnkube-node -n openshift-ovn-kubernetes \
    -o jsonpath='{range.items[*]}{" "}{.metadata.name}'); do echo === $p ===; \
    oc get pods -n openshift-ovn-kubernetes $p -o json | jq '.status.containerStatuses[] | .name, .ready'; \
    done

Note: The expectation is that all container statuses are reporting as true. Failure of a readiness probe sets the status to false.
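When many containers are listed, it can help to print only the ones that are not ready. The following is a minimal sketch, not part of the product tooling: report_not_ready is a hypothetical helper name, and it assumes its input is the alternating name and ready lines that the jq filter above prints.

```shell
# report_not_ready: reads alternating name/ready lines on stdin, in the form
# printed by jq '.name, .ready', and prints the names whose ready value is false.
report_not_ready() {
  awk '
    NR % 2 == 1 { name = $0; gsub(/"/, "", name); next }  # odd lines: container name
    $0 == "false" { print name }                          # even lines: ready status
  '
}

# Hypothetical usage against a live cluster (pod name taken from the example above):
#   oc get pods -n openshift-ovn-kubernetes ovnkube-node-9lqfk -o json \
#     | jq '.status.containerStatuses[] | .name, .ready' | report_not_ready
```

An empty result means that every container in the pod reported true.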

3.2. Viewing OVN-Kubernetes alerts in the console

The Alerting UI provides detailed information about alerts and their governing alerting rules and silences.

Prerequisites

- You have access to the cluster as a developer or as a user with view permissions for the project that you are viewing metrics for.

Procedure (UI)

- In the Administrator perspective, select Observe → Alerting. The three main pages in the Alerting UI in this perspective are the Alerts, Silences, and Alerting Rules pages.

- View the rules for OVN-Kubernetes alerts by selecting Observe → Alerting → Alerting Rules.

3.3. Viewing OVN-Kubernetes alerts in the CLI

You can get information about alerts and their governing alerting rules and silences from the command line.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

- You have installed jq.

Procedure

View active or firing alerts by running the following commands.

Set the alert manager route environment variable by running the following command:

$ ALERT_MANAGER=$(oc get route alertmanager-main -n openshift-monitoring \
    -o jsonpath='{@.spec.host}')

Issue a curl request to the alert manager route API by running the following command, replacing $ALERT_MANAGER with the URL of your Alertmanager instance:

$ curl -s -k -H "Authorization: Bearer $(oc create token prometheus-k8s -n openshift-monitoring)" https://$ALERT_MANAGER/api/v1/alerts | jq '.data[] | "\(.labels.severity) \(.labels.alertname) \(.labels.pod) \(.labels.container) \(.labels.endpoint) \(.labels.instance)"'

View alerting rules by running the following command:

$ oc -n openshift-monitoring exec -c prometheus prometheus-k8s-0 -- curl -s 'http://localhost:9090/api/v1/rules' | jq '.data.groups[].rules[] | select(((.name|contains("ovn")) or (.name|contains("OVN")) or (.name|contains("Ovn")) or (.name|contains("North")) or (.name|contains("South"))) and .type=="alerting")'

3.4. Viewing the OVN-Kubernetes logs using the CLI

You can view the logs for each of the ovnkube-master and ovnkube-node pods by using the OpenShift CLI (oc).

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- Access to the OpenShift CLI (oc).

- You have installed jq.

Procedure

View the log for a specific pod:

$ oc logs -f <pod_name> -c <container_name> -n <namespace>

where:

-f: Optional. Specifies that the output follows what is being written into the logs.

<pod_name>: Specifies the name of the pod.

<container_name>: Optional. Specifies the name of a container. When a pod has more than one container, you must specify the container name.

<namespace>: Specifies the namespace in which the pod is running.

For example:

$ oc logs ovnkube-node-5dx44 -n openshift-ovn-kubernetes

$ oc logs -f ovnkube-node-5dx44 -c ovnkube-controller -n openshift-ovn-kubernetes

The contents of log files are printed out.

Examine the most recent entries in all the containers in the ovnkube-node pods:

$ for p in $(oc get pods --selector app=ovnkube-node -n openshift-ovn-kubernetes \
    -o jsonpath='{range.items[*]}{" "}{.metadata.name}'); \
    do echo === $p ===; for container in $(oc get pods -n openshift-ovn-kubernetes $p \
    -o json | jq -r '.status.containerStatuses[] | .name');do echo ---$container---; \
    oc logs -c $container $p -n openshift-ovn-kubernetes --tail=5; done; done

View the last 5 lines of every log in every container in an ovnkube-node pod using the following command:

$ oc logs -l app=ovnkube-node -n openshift-ovn-kubernetes --all-containers --tail 5

3.5. Viewing the OVN-Kubernetes logs using the web console

You can view the logs for each of the ovnkube-master and ovnkube-node pods in the web console.

Prerequisites

- Access to the OpenShift CLI (oc).

Procedure

- In the OpenShift Container Platform console, navigate to Workloads → Pods or navigate to the pod through the resource you want to investigate.

- Select the openshift-ovn-kubernetes project from the drop-down menu.

- Click the name of the pod you want to investigate.

- Click Logs. By default for the ovnkube-master pod, the logs associated with the northd container are displayed.

- Use the drop-down menu to select logs for each container in turn.

3.5.1. Changing the OVN-Kubernetes log levels

The default log level for OVN-Kubernetes is 4. To debug OVN-Kubernetes, set the log level to 5. Follow this procedure to increase the log level of the OVN-Kubernetes to help you debug an issue.

Prerequisites

- You have access to the cluster with cluster-admin privileges.

- You have access to the OpenShift Container Platform web console.

Procedure

Run the following command to get detailed information for all pods in the OVN-Kubernetes project:

$ oc get po -o wide -n openshift-ovn-kubernetes

Create a ConfigMap file that sets the log level and use a filename such as env-overrides.yaml.

Apply the ConfigMap file by using the following command:

$ oc apply -n openshift-ovn-kubernetes -f env-overrides.yaml

Example output

configmap/env-overrides.yaml created

Restart the ovnkube pods to apply the new log level by using the following commands:

$ oc delete pod -n openshift-ovn-kubernetes \
    --field-selector spec.nodeName=ci-ln-3njdr9b-72292-5nwkp-master-0 -l app=ovnkube-node

$ oc delete pod -n openshift-ovn-kubernetes \
    --field-selector spec.nodeName=ci-ln-3njdr9b-72292-5nwkp-master-2 -l app=ovnkube-node

$ oc delete pod -n openshift-ovn-kubernetes -l app=ovnkube-node

To verify that the ConfigMap file has been applied to all nodes for a specific pod, run the following command:

$ oc logs -n openshift-ovn-kubernetes --all-containers --prefix ovnkube-node-<xxxx> | grep -E -m 10 '(Logging config:|vconsole|DBG)'

where:

<xxxx>: Specifies the random sequence of letters for a pod from the previous step.

Optional: Check that the ConfigMap file has been applied by running the following command:

$ for f in $(oc -n openshift-ovn-kubernetes get po -l 'app=ovnkube-node' --no-headers -o custom-columns=N:.metadata.name) ; do echo "---- $f ----" ; oc -n openshift-ovn-kubernetes exec -c ovnkube-controller $f -- pgrep -a -f init-ovnkube-controller | grep -P -o '^.*loglevel\s+\d' ; done
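The ConfigMap file that this procedure applies might be generated as in the following sketch. Everything in it is an assumption rather than confirmed product syntax: write_env_overrides is a hypothetical helper, the ConfigMap name env-overrides and the per-node data key are assumed conventions, and the OVN_KUBE_LOG_LEVEL and OVN_LOG_LEVEL variable names should be checked against the documentation for your release before use.

```shell
# write_env_overrides NODE [FILE]: writes a ConfigMap manifest that raises the
# OVN-Kubernetes log level for one node. Key and variable names are assumptions.
write_env_overrides() {
  node="$1"
  file="${2:-env-overrides.yaml}"
  cat > "$file" <<EOF
kind: ConfigMap
apiVersion: v1
metadata:
  name: env-overrides
  namespace: openshift-ovn-kubernetes
data:
  ${node}: |
    # Assumed variable: log level for the ovnkube-node process
    OVN_KUBE_LOG_LEVEL=5
    # Assumed variable: debug logging for ovn-controller
    OVN_LOG_LEVEL=dbg
EOF
}

# Hypothetical usage with a node name from the procedure above:
#   write_env_overrides ci-ln-3njdr9b-72292-5nwkp-master-0 env-overrides.yaml
```

Generating the file from a function keeps the node name in one place, which is convenient when the same override is needed for several nodes.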

3.6. Checking the OVN-Kubernetes pod network connectivity

The connectivity check controller, in OpenShift Container Platform 4.10 and later, orchestrates connection verification checks in your cluster. These include the Kubernetes API, the OpenShift API, and individual nodes. The results for the connection tests are stored in PodNetworkConnectivityCheck objects in the openshift-network-diagnostics namespace. Connection tests are performed every minute in parallel.

Prerequisites

- Access to the OpenShift CLI (oc).

- Access to the cluster as a user with the cluster-admin role.

- You have installed jq.

Procedure

To list the current PodNetworkConnectivityCheck objects, enter the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics

View the most recent success for each connection object by using the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
    -o json | jq '.items[]| .spec.targetEndpoint,.status.successes[0]'

View the most recent failures for each connection object by using the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
    -o json | jq '.items[]| .spec.targetEndpoint,.status.failures[0]'

View the most recent outages for each connection object by using the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
    -o json | jq '.items[]| .spec.targetEndpoint,.status.outages[0]'

The connectivity check controller also logs metrics from these checks into Prometheus.

View all the metrics by running the following command:

$ oc exec prometheus-k8s-0 -n openshift-monitoring -- \
    promtool query instant http://localhost:9090 \
    '{component="openshift-network-diagnostics"}'

View the latency between the source pod and the OpenShift API service for the last 5 minutes:

$ oc exec prometheus-k8s-0 -n openshift-monitoring -- \
    promtool query instant http://localhost:9090 \
    '{component="openshift-network-diagnostics"}'

3.7. Checking OVN-Kubernetes network traffic with OVS sampling using the CLI

Checking OVN-Kubernetes network traffic with OVS sampling is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

OVN-Kubernetes network traffic can be viewed with OVS sampling via the CLI for the following network APIs:

- NetworkPolicy

- AdminNetworkPolicy

- BaselineNetworkPolicy

- UserDefinedNetwork isolation

- EgressFirewall

- Multicast ACLs

Scripts for these networking events are found in the /usr/bin/ovnkube-observ path of each OVN-Kubernetes node.

Although both the Network Observability Operator and checking OVN-Kubernetes network traffic with OVS sampling are good for debuggability, the Network Observability Operator is intended for observing network events. Alternatively, checking OVN-Kubernetes network traffic with OVS sampling using the CLI is intended to help with packet tracing; it can also be used while the Network Observability Operator is installed, however that is not a requirement.

Administrators can add the -add-ovs-collector option to view network traffic across the node, or pass in additional flags to filter results for specific pods. Additional flags can be found in the "OVN-Kubernetes network traffic with OVS sampling flags" section.

Use the following procedure to view OVN-Kubernetes network traffic using the CLI.

Prerequisites

- You are logged in to the cluster as a user with cluster-admin privileges.

- You have created a source pod and a destination pod and run traffic between them.

- You have created at least one of the following network APIs: NetworkPolicy, AdminNetworkPolicy, BaselineNetworkPolicy, UserDefinedNetwork isolation, multicast, or egress firewalls.

Procedure

To enable the OVNObservability with OVS sampling feature, enable the TechPreviewNoUpgrade feature set in the FeatureGate CR named cluster by entering the following command:

$ oc patch --type=merge --patch '{"spec": {"featureSet": "TechPreviewNoUpgrade"}}' featuregate/cluster

Example output

featuregate.config.openshift.io/cluster patched

Confirm that the OVNObservability feature is enabled by entering the following command:

$ oc get featuregate cluster -o yaml

Example output

featureGates:
# ...
  enabled:
  - name: OVNObservability

Obtain a list of the pods inside of the namespace in which you have created one of the relevant network APIs by entering the following command. Note the NODE name of the pods, as they are used in the following step.

$ oc get pods -n <namespace> -o wide

Example output

NAME              READY   STATUS    RESTARTS   AGE   IP            NODE                                       NOMINATED NODE   READINESS GATES
destination-pod   1/1     Running   0          53s   10.131.0.23   ci-ln-1gqp7b2-72292-bb9dv-worker-a-gtmpc   <none>           <none>
source-pod        1/1     Running   0          56s   10.131.0.22   ci-ln-1gqp7b2-72292-bb9dv-worker-a-gtmpc   <none>           <none>

Obtain a list of OVN-Kubernetes pods and locate the pod that shares the same NODE as the pods from the previous step by entering the following command:

$ oc get pods -n openshift-ovn-kubernetes -o wide

Example output

NAME                 ... READY   STATUS    RESTARTS      AGE   IP           NODE                                       NOMINATED NODE
ovnkube-node-jzn5b   8/8     Running   1 (34m ago)   37m   10.0.128.2   ci-ln-1gqp7b2-72292-bb9dv-worker-a-gtmpc   <none>
...

Open a bash shell inside of the ovnkube-node pod by entering the following command:

$ oc exec -it <pod_name> -n openshift-ovn-kubernetes -- bash

While inside of the ovnkube-node pod, you can run the ovnkube-observ -add-ovs-collector script to show network events using the OVS collector. For example:

# /usr/bin/ovnkube-observ -add-ovs-collector

You can filter the content by type, such as source pods, by entering the following command with the -filter-src-ip flag and your pod's IP address. For example:

# /usr/bin/ovnkube-observ -add-ovs-collector -filter-src-ip <pod_ip_address>

For a full list of flags that can be passed in with /usr/bin/ovnkube-observ, see "OVN-Kubernetes network traffic with OVS sampling flags".

3.7.1. OVN-Kubernetes network traffic with OVS sampling flags

The following flags are available to view OVN-Kubernetes network traffic by using the CLI. Append these flags to the following syntax in your terminal after you have opened a bash shell inside of the ovnkube-node pod:

Command syntax

# /usr/bin/ovnkube-observ <flag>

| Flag | Description |
|---|---|
| | Returns a complete list of flags that can be used with the command. |
| -add-ovs-collector | Add OVS collector to enable sampling. Use with caution. Make sure no one else is using observability. |
| | Enrich samples with NBDB data. Defaults to |
| | Filter only packets to a given destination IP. |
| -filter-src-ip | Filters only packets from a given source IP. |
| | Print raw sample cookie with psample group_id. |
| | Output file to write the samples to. |
| | Print full received packet. When false, only source and destination IPs are printed with every sample. |

Chapter 4. Tracing OpenFlow with ovnkube-trace

OVN and OVS traffic flows can be simulated in a single utility called ovnkube-trace. The ovnkube-trace utility runs ovn-trace, ovs-appctl ofproto/trace and ovn-detrace and correlates that information in a single output.

You can execute the ovnkube-trace binary from a dedicated container. For releases after OpenShift Container Platform 4.7, you can also copy the binary to a local host and execute it from that host.

4.1. Installing the ovnkube-trace on local host

The ovnkube-trace tool traces packet simulations for arbitrary UDP or TCP traffic between points in an OVN-Kubernetes driven OpenShift Container Platform cluster. Copy the ovnkube-trace binary to your local host making it available to run against the cluster.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

Procedure

Create a pod variable by using the following command:

$ POD=$(oc get pods -n openshift-ovn-kubernetes -l app=ovnkube-control-plane -o name | head -1 | awk -F '/' '{print $NF}')

Run the following command on your local host to copy the binary from the ovnkube-control-plane pods:

$ oc cp -n openshift-ovn-kubernetes $POD:/usr/bin/ovnkube-trace -c ovnkube-cluster-manager ovnkube-trace

Note: If you are using Red Hat Enterprise Linux (RHEL) 8 to run the ovnkube-trace tool, you must copy the file /usr/lib/rhel8/ovnkube-trace to your local host.

Make ovnkube-trace executable by running the following command:

$ chmod +x ovnkube-trace

Display the options available with ovnkube-trace by running the following command:

$ ./ovnkube-trace -help

The command-line arguments supported are familiar Kubernetes constructs, such as namespaces, pods, and services, so you do not need to find the MAC address, the IP address of the destination nodes, or the ICMP type.

The log levels are:

- 0 (minimal output)

- 2 (more verbose output showing results of trace commands)

- 5 (debug output)

4.2. Running ovnkube-trace

Run ovn-trace to simulate packet forwarding within an OVN logical network.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You have installed ovnkube-trace on your local host.

Example: Testing that DNS resolution works from a deployed pod

This example illustrates how to test the DNS resolution from a deployed pod to the core DNS pod that runs in the cluster.

Procedure

Start a web service in the default namespace by entering the following command:

$ oc run web --namespace=default --image=quay.io/openshifttest/nginx --labels="app=web" --expose --port=80

List the pods running in the openshift-dns namespace:

$ oc get pods -n openshift-dns

Run ovnkube-trace from the web pod to a DNS pod on UDP port 53 to verify that DNS resolution is working. The output differs depending on whether the source and destination pods land on the same node or on different nodes.

The output indicates success from the deployed pod to the DNS port and also indicates success in the other direction. This confirms that bidirectional traffic is supported on UDP port 53 if the web pod needs to perform DNS resolution from core DNS.

If, for example, that did not work and you wanted to get the ovn-trace, ovs-appctl ofproto/trace, and ovn-detrace output, along with more debug information, increase the log level to 2 and run the command again.

The output from this increased log level is too much to list here. In a failure situation, the output of this command shows which flow is dropping that traffic. For example, an egress or ingress network policy might be configured on the cluster that does not allow that traffic.
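The trace invocation for this example can be assembled as in the following sketch. The flag names (-src-namespace, -src, -dst-namespace, -dst, -udp, -dst-port, -loglevel) are assumptions based on the ovnkube-trace help text and should be confirmed with ./ovnkube-trace -help; trace_cmd is a hypothetical helper that only prints the command line and does not run it.

```shell
# trace_cmd SRC_NS SRC_POD DST_NS DST_POD PROTO PORT [LOGLEVEL]
# Prints an ovnkube-trace command line; it does not execute anything.
# PROTO is expected to be udp or tcp; LOGLEVEL defaults to 0 (minimal output).
trace_cmd() {
  printf './ovnkube-trace -src-namespace %s -src %s -dst-namespace %s -dst %s -%s -dst-port %s -loglevel %s\n' \
    "$1" "$2" "$3" "$4" "$5" "$6" "${7:-0}"
}

# Hypothetical usage: print the DNS check at log level 2 for a DNS pod name
# taken from the oc get pods -n openshift-dns output.
#   trace_cmd default web openshift-dns <dns_pod_name> udp 53 2
```

Printing the command first makes it easy to review the source and destination before running it, and to repeat the same trace at a higher log level.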

Example: Verifying by using debug output a configured default deny

This example illustrates how to identify by using the debug output that an ingress default deny policy blocks traffic.

Procedure

Create YAML that defines a deny-by-default policy to deny ingress from all pods in all namespaces. Save the YAML in the deny-by-default.yaml file.

Apply the policy by entering the following command:

$ oc apply -f deny-by-default.yaml

Example output

networkpolicy.networking.k8s.io/deny-by-default created

Start a web service in the default namespace by entering the following command:

$ oc run web --namespace=default --image=quay.io/openshifttest/nginx --labels="app=web" --expose --port=80

Run the following command to create the prod namespace:

$ oc create namespace prod

Run the following command to label the prod namespace:

$ oc label namespace/prod purpose=production

Run the following command to deploy an alpine image in the prod namespace and start a shell:

$ oc run test-6459 --namespace=prod --rm -i -t --image=alpine -- sh

Open another terminal session.

In this new terminal session, run ovnkube-trace to verify the failure in communication between the source pod test-6459 running in the prod namespace and the destination pod running in the default namespace.

Example output

ovn-trace source pod to destination pod indicates failure from test-6459 to web

Increase the log level to 2 and run the command again to expose the reason for the failure. In the output, ingress traffic is blocked due to the default deny policy being in place.

Create a policy that allows traffic from all pods in namespaces with the label purpose=production. Save the YAML in the web-allow-prod.yaml file.

Apply the policy by entering the following command:

$ oc apply -f web-allow-prod.yaml

Run ovnkube-trace again to verify that traffic is now allowed.

Run the following command in the shell that you opened in the prod namespace to connect to the nginx web server:

$ wget -qO- --timeout=2 http://web.default
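The two policy files used in this example could be written as in the following sketch. It uses the standard Kubernetes NetworkPolicy API; the exact manifests are assumptions reconstructed from the descriptions above (a default deny for ingress in the default namespace, and an allow rule for pods labeled app=web from namespaces labeled purpose=production), and write_policies is a hypothetical helper name.

```shell
# write_policies [DIR]: writes deny-by-default.yaml and web-allow-prod.yaml
# into DIR (default: current directory). Manifests are illustrative assumptions.
write_policies() {
  dir="${1:-.}"
  # Deny all ingress to every pod in the default namespace.
  cat > "$dir/deny-by-default.yaml" <<'EOF'
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-by-default
  namespace: default
spec:
  podSelector: {}
  ingress: []
EOF
  # Allow ingress to app=web pods from namespaces labeled purpose=production.
  cat > "$dir/web-allow-prod.yaml" <<'EOF'
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-allow-prod
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          purpose: production
EOF
}
```

After running write_policies, the files can be applied with the oc apply -f commands shown in the procedure.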

Chapter 5. Converting to IPv4/IPv6 dual-stack networking

As a cluster administrator, you can convert your IPv4 single-stack cluster to a dual-stack cluster network that supports IPv4 and IPv6 address families. After converting to dual-stack networking, new and existing pods have dual-stack networking enabled.

When using dual-stack networking where IPv6 is required, you cannot use IPv4-mapped IPv6 addresses, such as ::FFFF:198.51.100.1.

5.1. Converting to a dual-stack cluster network

As a cluster administrator, you can convert your single-stack cluster network to a dual-stack cluster network.

After converting your cluster to use dual-stack networking, you must re-create any existing pods for them to receive IPv6 addresses, because only new pods are assigned IPv6 addresses.

Converting a single-stack cluster network to a dual-stack cluster network consists of creating patches and applying them to the network and infrastructure of the cluster. You can convert to a dual-stack cluster network for a cluster that runs on either installer-provisioned infrastructure or user-provisioned infrastructure.

Each patch operation that changes clusterNetwork, serviceNetwork, apiServerInternalIPs, and ingressIP objects triggers a restart of the cluster. Changing the MachineNetworks object does not cause a reboot of the cluster.

On installer-provisioned infrastructure only, if you need to add IPv6 virtual IPs (VIPs) for API and Ingress services to an existing dual-stack-configured cluster, you need to patch only the infrastructure and not the network for the cluster.

If you already upgraded your cluster to OpenShift Container Platform 4.16 or later and you need to convert the single-stack cluster network to a dual-stack cluster network, you must specify an existing IPv4 machineNetwork network configuration from the install-config.yaml file for API and Ingress services in the YAML configuration patch file. This configuration ensures that IPv4 traffic exists in the same network interface as the default gateway.

Example YAML configuration file with an added IPv4 address block for the machineNetwork network

- op: add
  path: /spec/platformSpec/baremetal/machineNetworks/-
  value: 192.168.1.0/24
  # ...

Ensure that you specify an address block for the machineNetwork network where your machines operate. You must select both API and Ingress IP addresses for the machine network.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- Your cluster uses the OVN-Kubernetes network plugin.

- The cluster nodes have IPv6 addresses.

- You have configured an IPv6-enabled router based on your infrastructure.

Procedure

To specify IPv6 address blocks for cluster and service networks, create a YAML configuration patch file that meets the following requirements:

- For the cluster network, specify an object with the cidr and hostPrefix parameters. The host prefix must be 64 or greater. The IPv6 Classless Inter-Domain Routing (CIDR) block must be large enough to accommodate the specified host prefix.

- For the service network, specify an IPv6 CIDR with a prefix of 112. Kubernetes uses only the lowest 16 bits. For a prefix of 112, IP addresses are assigned from bits 112 to 128.
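The constraints on the cluster and service network address blocks can be validated in code before patching. The following sketch checks the rules stated above and emits a JSON patch document. The JSON patch paths /spec/clusterNetwork/- and /spec/serviceNetwork/- are assumptions modeled on the machineNetworks path shown earlier, and the fd01::/48 and fd02::/112 values are hypothetical placeholders.

```python
import ipaddress
import json


def build_ipv6_patch(cluster_cidr: str, host_prefix: int, service_cidr: str) -> list[dict]:
    """Validate the IPv6 blocks and return JSON patch operations."""
    cluster = ipaddress.ip_network(cluster_cidr)
    service = ipaddress.ip_network(service_cidr)
    if cluster.version != 6 or service.version != 6:
        raise ValueError("both address blocks must be IPv6")
    if host_prefix < 64:
        raise ValueError("hostPrefix must be 64 or greater")
    if cluster.prefixlen > host_prefix:
        raise ValueError("cluster CIDR is too small for the host prefix")
    if service.prefixlen != 112:
        raise ValueError("service CIDR must use a /112 prefix")
    return [
        # Assumed path: appends an entry to the cluster network list.
        {"op": "add", "path": "/spec/clusterNetwork/-",
         "value": {"cidr": str(cluster), "hostPrefix": host_prefix}},
        # Assumed path: appends an entry to the service network list.
        {"op": "add", "path": "/spec/serviceNetwork/-", "value": str(service)},
    ]


patch = build_ipv6_patch("fd01::/48", 64, "fd02::/112")
print(json.dumps(patch, indent=2))
```

Because a /112 prefix leaves 16 host bits, the service network in this sketch holds 2^16 = 65,536 addresses, which matches the statement that Kubernetes uses only the lowest 16 bits.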

Patch the cluster network configuration by entering the following command in your CLI:

$ oc patch network.config.openshift.io cluster \
    --type='json' --patch-file <file>.yaml

where:

<file>
Specifies the name of your created YAML file.

Example output

network.config.openshift.io/cluster patched

On installer-provisioned infrastructure where you added IPv6 VIPs for API and Ingress services, complete the following steps:

Specify IPv6 VIPs for API and Ingress services for your cluster. Create a YAML configuration patch file that meets the following requirements:

- Ensure that you specify an address block for the machineNetwork network where your machines operate. You must select both API and Ingress IP addresses for the machine network.

- Ensure that you specify each path according to your platform. For example, a bare-metal platform uses paths under /spec/platformSpec/baremetal.

Patch the infrastructure by entering the following command in your CLI:

$ oc patch infrastructure cluster \
    --type='json' --patch-file <file>.yaml

where:

<file>
Specifies the name of your created YAML file.

Example output

infrastructure/cluster patched

Verification

Show the cluster network configuration by entering the following command in your CLI:

$ oc describe network

Verify the successful installation of the patch on the network configuration by checking that the cluster network configuration recognizes the IPv6 address blocks that you specified in the YAML file.

Complete the following additional tasks for a cluster that runs on installer-provisioned infrastructure:

Show the cluster infrastructure configuration by entering the following command in your CLI:

$ oc describe infrastructure

Verify the successful installation of the patch on the cluster infrastructure by checking that the infrastructure recognizes the IPv6 address blocks that you specified in the YAML file.


5.2. Converting to a single-stack cluster network

As a cluster administrator, you can convert your dual-stack cluster network to a single-stack cluster network.

If you originally converted your IPv4 single-stack cluster network to a dual-stack cluster network, you can convert back only to an IPv4 single-stack cluster network, not to an IPv6 single-stack cluster network. The same restriction applies in the other direction: a cluster that was originally an IPv6 single-stack cluster can convert back only to an IPv6 single-stack cluster network.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- Your cluster uses the OVN-Kubernetes network plugin.

- The cluster nodes have IPv6 addresses.

- You have enabled dual-stack networking.

Procedure

Edit the networks.config.openshift.io custom resource (CR) by running the following command:

$ oc edit networks.config.openshift.io

Remove the IPv4 or IPv6 configuration that you added to the cidr and the hostPrefix parameters when you completed the "Converting to a dual-stack cluster network" procedure.

Chapter 6. Configuring OVN-Kubernetes internal IP address subnets

As a cluster administrator, you can change the IP address ranges that the OVN-Kubernetes network plugin uses for the join and transit subnets.

6.1. Configuring the OVN-Kubernetes join subnet

You can change the join subnet used by OVN-Kubernetes to avoid conflicting with any existing subnets already in use in your environment.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in to the cluster with a user with cluster-admin privileges.

- Ensure that the cluster uses the OVN-Kubernetes network plugin.

Procedure

To change the OVN-Kubernetes join subnet, enter the following command:

$ oc patch network.operator.openshift.io cluster --type='merge' \
    -p='{"spec":{"defaultNetwork":{"ovnKubernetesConfig":
    {"ipv4":{"internalJoinSubnet": "<join_subnet>"},
    "ipv6":{"internalJoinSubnet": "<join_subnet>"}}}}}'

where:

<join_subnet>
Specifies an IP address subnet for internal use by OVN-Kubernetes. The subnet must contain more addresses than the number of nodes in the cluster so that it can accommodate one IP address per node. This subnet cannot overlap with any other subnets used by OpenShift Container Platform or on the host itself. The default value for IPv4 is 100.64.0.0/16 and the default value for IPv6 is fd98::/64.
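The sizing rule for the join subnet is simple arithmetic on the prefix length. The following sketch, with a hypothetical function name and hypothetical node counts, compares the number of addresses in a candidate subnet against the number of nodes, as the description above requires.

```python
import ipaddress


def join_subnet_fits(join_cidr: str, node_count: int) -> bool:
    """Return True if the subnet holds more addresses than there are nodes."""
    subnet = ipaddress.ip_network(join_cidr)
    return subnet.num_addresses > node_count


# The default IPv4 join subnet, a /16, holds 65,536 addresses.
print(join_subnet_fits("100.64.0.0/16", 500))
# A /29 holds only 8 addresses, which is too small for 10 nodes.
print(join_subnet_fits("100.64.0.0/29", 10))
```

This check covers capacity only. The candidate subnet must also be checked for overlap with the other subnets in use.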

Example output

network.operator.openshift.io/cluster patched

Verification

To confirm that the configuration is active, enter the following command:

$ oc get network.operator.openshift.io \
    -o jsonpath="{.items[0].spec.defaultNetwork}"

It can take up to 30 minutes for this change to take effect.


6.2. Configuring the OVN-Kubernetes masquerade subnet as a post-installation operation

You can change the masquerade subnet used by OVN-Kubernetes as a post-installation operation to avoid conflicts with any existing subnets that are already in use in your environment.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in to the cluster as a user with cluster-admin privileges.

Procedure

Change your cluster’s masquerade subnet:

For dual-stack clusters that use IPv6, run the following command:

$ oc patch networks.operator.openshift.io cluster --type=merge -p '{"spec":{"defaultNetwork":{"ovnKubernetesConfig":{"gatewayConfig":{"ipv4":{"internalMasqueradeSubnet": "<ipv4_masquerade_subnet>"},"ipv6":{"internalMasqueradeSubnet": "<ipv6_masquerade_subnet>"}}}}}}'

where:

<ipv4_masquerade_subnet>
Specifies an IP address range to be used as the IPv4 masquerade subnet. This range cannot overlap with any other subnets used by OpenShift Container Platform or on the host itself. In versions of OpenShift Container Platform earlier than 4.17, the default value for IPv4 was 169.254.169.0/29, and clusters that were upgraded to version 4.17 maintain this value. For new clusters starting from version 4.17, the default value is 169.254.0.0/17.

<ipv6_masquerade_subnet>
Specifies an IP address range to be used as the IPv6 masquerade subnet. This range cannot overlap with any other subnets used by OpenShift Container Platform or on the host itself. The default value for IPv6 is fd69::/125.

For clusters that use IPv4, run the following command:

$ oc patch networks.operator.openshift.io cluster --type=merge -p '{"spec":{"defaultNetwork":{"ovnKubernetesConfig":{"gatewayConfig":{"ipv4":{"internalMasqueradeSubnet": "<ipv4_masquerade_subnet>"}}}}}}'

where:

<ipv4_masquerade_subnet>
Specifies an IP address range to be used as the IPv4 masquerade subnet. This range cannot overlap with any other subnets used by OpenShift Container Platform or on the host itself. In versions of OpenShift Container Platform earlier than 4.17, the default value for IPv4 was 169.254.169.0/29, and clusters that were upgraded to version 4.17 maintain this value. For new clusters starting from version 4.17, the default value is 169.254.0.0/17.
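The deeply nested merge patch in the commands above is easy to mistype by hand. As a rough sketch, the patch body can be generated from a dictionary so that the braces always balance. The function name is hypothetical, the nesting copies the commands above, and the subnet values are the documented defaults used only as sample input.

```python
import ipaddress
import json
from typing import Optional


def masquerade_patch(ipv4_subnet: str, ipv6_subnet: Optional[str] = None) -> str:
    """Build the merge patch body for the masquerade subnet settings."""
    # Parsing with ipaddress rejects malformed CIDR strings early.
    gateway = {
        "ipv4": {"internalMasqueradeSubnet": str(ipaddress.IPv4Network(ipv4_subnet))}
    }
    if ipv6_subnet is not None:
        gateway["ipv6"] = {
            "internalMasqueradeSubnet": str(ipaddress.IPv6Network(ipv6_subnet))
        }
    body = {
        "spec": {"defaultNetwork": {"ovnKubernetesConfig": {"gatewayConfig": gateway}}}
    }
    return json.dumps(body)


# IPv4-only cluster.
print(masquerade_patch("169.254.0.0/17"))
# Dual-stack cluster.
print(masquerade_patch("169.254.0.0/17", "fd69::/125"))
```

The printed string is intended to be passed as the -p argument of the oc patch command in place of the hand-written JSON.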

6.3. Configuring the OVN-Kubernetes transit subnet

You can change the transit subnet used by OVN-Kubernetes to avoid conflicting with any existing subnets already in use in your environment.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in to the cluster with a user with cluster-admin privileges.

- Ensure that the cluster uses the OVN-Kubernetes network plugin.

Procedure

To change the OVN-Kubernetes transit subnet, enter the following command:

$ oc patch network.operator.openshift.io cluster --type='merge' \
    -p='{"spec":{"defaultNetwork":{"ovnKubernetesConfig":
    {"ipv4":{"internalTransitSwitchSubnet": "<transit_subnet>"},
    "ipv6":{"internalTransitSwitchSubnet": "<transit_subnet>"}}}}}'

where:

<transit_subnet>
Specifies an IP address subnet for the distributed transit switch that enables east-west traffic. This subnet cannot overlap with any other subnets used by OVN-Kubernetes or on the host itself. The default value for IPv4 is 100.88.0.0/16 and the default value for IPv6 is fd97::/64.
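The no-overlap requirement can be tested before you apply the patch. The sketch below compares a candidate transit subnet against a list of subnets already in use. The function name is hypothetical, and the in-use list is an illustrative sample built from default values given in this chapter, so substitute the subnets from your own environment.

```python
import ipaddress


def overlapping(candidate: str, in_use: list[str]) -> list[str]:
    """Return the in-use subnets that overlap the candidate subnet."""
    cand = ipaddress.ip_network(candidate)
    hits = []
    for cidr in in_use:
        other = ipaddress.ip_network(cidr)
        # Only compare subnets of the same IP version.
        if other.version == cand.version and cand.overlaps(other):
            hits.append(cidr)
    return hits


# Sample list: default join subnets and the default IPv4 masquerade subnet.
in_use = ["100.64.0.0/16", "fd98::/64", "169.254.0.0/17"]

print(overlapping("100.88.0.0/16", in_use))    # default transit subnet
print(overlapping("100.64.128.0/17", in_use))  # falls inside the join subnet
```

An empty list means that the candidate does not collide with any listed subnet. A non-empty list names the conflicts to resolve.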

Example output

network.operator.openshift.io/cluster patched

Verification

To confirm that the configuration is active, enter the following command:

$ oc get network.operator.openshift.io \
    -o jsonpath="{.items[0].spec.defaultNetwork}"

It can take up to 30 minutes for this change to take effect.


Chapter 7. Configuring a gateway

As a cluster administrator, you can configure the gatewayConfig object to manage how external traffic leaves the cluster. You do so by setting the routingViaHost parameter to one of the following values:

- true means that egress traffic routes through a specific local gateway on the node that hosts the pod. Egress traffic routes through the host and this traffic applies to the routing table of the host.

- false means that egress traffic routes through a dedicated node, and a group of nodes shares the same gateway. Egress traffic does not route through the host. Open vSwitch (OVS) outputs traffic directly to the node IP interface.
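The two values map to a single Boolean field in the cluster network configuration. The following sketch builds a merge patch body for that field. It assumes that gatewayConfig sits under spec.defaultNetwork.ovnKubernetesConfig, as in the masquerade subnet commands earlier in this document, and the function name is hypothetical.

```python
import json


def gateway_patch(routing_via_host: bool) -> str:
    """Build a merge patch body that sets routingViaHost."""
    # Reject strings such as "true" so that the JSON carries a real Boolean.
    if not isinstance(routing_via_host, bool):
        raise TypeError("routing_via_host must be a bool")
    body = {
        "spec": {
            "defaultNetwork": {
                "ovnKubernetesConfig": {
                    "gatewayConfig": {"routingViaHost": routing_via_host}
                }
            }
        }
    }
    return json.dumps(body)


# Route egress traffic through the host networking stack.
print(gateway_patch(True))
```

Passing a Python Boolean ensures that the output contains the JSON literal true or false rather than a quoted string.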

7.1. Configuring egress routing policies

As a cluster administrator, you can configure egress routing policies by using the gatewayConfig specification in the Cluster Network Operator (CNO). You can use the following procedure to set the routingViaHost field to true or false.