This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Scalability and performance

Scaling your OpenShift Container Platform cluster and tuning performance in production environments

Abstract

Chapter 1. Recommended practices for installing large clusters

Apply the following practices when installing large clusters or scaling clusters to larger node counts.

1.1. Recommended practices for installing large scale clusters

When installing large clusters or scaling the cluster to larger node counts, set the cluster network CIDR accordingly in your install-config.yaml file before you install the cluster.

The default clusterNetwork CIDR 10.128.0.0/14 cannot be used if the cluster size is more than 500 nodes. It must be set to 10.128.0.0/12 or 10.128.0.0/10 to support node counts beyond 500.
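The 500-node threshold follows from how the cluster network is divided into per-node subnets. The following Python sketch assumes that each node receives a /23 slice of the cluster network (hostPrefix: 23, the install-config default, which this section does not show); the helper name max_nodes is hypothetical. It counts how many per-node subnets each CIDR can hold:

```python
import ipaddress

# Assumption: each node receives one /23 slice of the cluster network
# (hostPrefix: 23, the install-config default).
HOST_PREFIX = 23


def max_nodes(cluster_cidr: str, host_prefix: int = HOST_PREFIX) -> int:
    """Return how many per-node subnets fit into the cluster network CIDR."""
    network = ipaddress.ip_network(cluster_cidr)
    if host_prefix < network.prefixlen:
        raise ValueError("hostPrefix must be at least as long as the CIDR prefix")
    # Each extra prefix bit between the CIDR and hostPrefix doubles the subnet count.
    return 2 ** (host_prefix - network.prefixlen)


for cidr in ("10.128.0.0/14", "10.128.0.0/12", "10.128.0.0/10"):
    print(f"{cidr}: room for {max_nodes(cidr)} nodes")
```

Under this assumption, a /14 leaves room for 512 per-node subnets, which is why it runs out just above the 500-node guidance, while each step to /12 and then /10 multiplies the room by four. If your install-config.yaml uses a different hostPrefix, pass it as the second argument.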

Chapter 2. Recommended host practices

This topic provides recommended host practices for OpenShift Container Platform.

2.1. Recommended node host practices

The OpenShift Container Platform node configuration file contains important options. For example, two parameters control the maximum number of pods that can be scheduled to a node: podsPerCore and maxPods.

When both options are in use, the lower of the two values limits the number of pods on a node. Exceeding these values can result in:

- Increased CPU utilization.

- Slow pod scheduling.

- Potential out-of-memory scenarios, depending on the amount of memory in the node.

- Exhausting the pool of IP addresses.

- Resource overcommitting, leading to poor user application performance.

In Kubernetes, a pod that is holding a single container actually uses two containers. The second container is used to set up networking prior to the actual container starting. Therefore, a system running 10 pods will actually have 20 containers running.

podsPerCore sets the number of pods the node can run based on the number of processor cores on the node. For example, if podsPerCore is set to 10 on a node with 4 processor cores, the maximum number of pods allowed on the node will be 40.

kubeletConfig:
  podsPerCore: 10

Setting podsPerCore to 0 disables this limit. The default is 0. podsPerCore cannot exceed maxPods.
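The rule for combining podsPerCore with maxPods can be written as a small function. This is a sketch of the behavior described above (the lower limit wins, and podsPerCore: 0 disables the per-core limit), not kubelet code; effective_max_pods is a hypothetical name.

```python
def effective_max_pods(cores: int, pods_per_core: int, max_pods: int) -> int:
    """Pods a node may run when both podsPerCore and maxPods are set.

    podsPerCore == 0 disables the per-core limit, so only maxPods applies.
    Otherwise the lower of the two limits wins.
    """
    if pods_per_core == 0:
        return max_pods
    return min(cores * pods_per_core, max_pods)


# The 4-core node from the podsPerCore example, with maxPods set to 250.
print(effective_max_pods(cores=4, pods_per_core=10, max_pods=250))  # 40
print(effective_max_pods(cores=4, pods_per_core=0, max_pods=250))   # 250
```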

maxPods sets the number of pods the node can run to a fixed value, regardless of the properties of the node.

kubeletConfig:
  maxPods: 250

2.2. Create a KubeletConfig CRD to edit kubelet parameters

The kubelet configuration is currently serialized as an Ignition configuration, so it can be directly edited. However, there is also a new kubelet-config-controller added to the Machine Config Controller (MCC). This allows you to create a KubeletConfig custom resource (CR) to edit the kubelet parameters.

Procedure

Run:

oc get machineconfig

This provides a list of the available machine configuration objects you can select. By default, the two kubelet-related configs are 01-master-kubelet and 01-worker-kubelet.

To check the current value of max Pods per node, run:

oc describe node <node-ip> | grep Allocatable -A6

Look for value: pods: <value>. For example:

oc describe node ip-172-31-128-158.us-east-2.compute.internal | grep Allocatable -A6

To set the max Pods per node on the worker nodes, create a custom resource file, for example change-maxPods-cr.yaml, that contains the kubelet configuration.

The rate at which the kubelet talks to the API server depends on queries per second (QPS) and burst values. The default values, 50 for kubeAPIQPS and 100 for kubeAPIBurst, are good enough if there are limited pods running on each node. Updating the kubelet QPS and burst rates is recommended if there are enough CPU and memory resources on the node.

Run:

oc label machineconfigpool worker custom-kubelet=large-pods

Run:

oc create -f change-maxPods-cr.yaml

Run:

oc get kubeletconfig

This should return set-max-pods.

Depending on the number of worker nodes in the cluster, wait for the worker nodes to be rebooted one by one. For a cluster with 3 worker nodes, this could take about 10 to 15 minutes.

Check for maxPods changing for the worker nodes:

oc describe node

Verify the change by running:

oc get kubeletconfigs set-max-pods -o yaml

This should show a status of True and type:Success.
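kubeAPIQPS and kubeAPIBurst can be thought of as the two parameters of a token bucket: the bucket holds at most burst tokens and refills at QPS tokens per second, so a short spike of up to burst requests goes through immediately, while sustained traffic is held to the QPS rate. The following Python sketch models that behavior with a simulated clock. It assumes a token-bucket limiter and is an illustration, not the kubelet's client code; the class and function names and the raised values are hypothetical.

```python
class TokenBucket:
    """Simplified token bucket: refills at `qps` tokens per second, holds at most `burst`."""

    def __init__(self, qps: float, burst: int) -> None:
        self.qps = qps
        self.burst = burst
        self.tokens = float(burst)  # start full, so an initial burst is allowed
        self.last = 0.0             # simulated clock, in seconds

    def try_acquire(self, now: float) -> bool:
        # Refill for the time elapsed since the previous call, capped at `burst`.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.qps)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def allowed_at_once(qps: float, burst: int, requests: int) -> int:
    """How many of `requests` simultaneous calls (all at t=0) pass without waiting."""
    bucket = TokenBucket(qps, burst)
    return sum(bucket.try_acquire(0.0) for _ in range(requests))


# Defaults from the text: kubeAPIQPS=50, kubeAPIBurst=100.
print(allowed_at_once(qps=50, burst=100, requests=300))   # 100
# Hypothetical raised values for a node with spare CPU and memory.
print(allowed_at_once(qps=100, burst=200, requests=300))  # 200
```

In this model, the requests that do not pass immediately have to wait for the bucket to refill at the QPS rate, which is why raising both values helps nodes that run many pods.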

Procedure

By default, only one machine is allowed to be unavailable when applying the kubelet-related configuration to the available worker nodes. For a large cluster, it can take a long time for the configuration change to be reflected. At any time, you can adjust the number of machines that are updating to speed up the process.

Run:

oc edit machineconfigpool worker

Set maxUnavailable to the desired value:

spec:
  maxUnavailable: <node_count>

Important: When setting the value, consider the number of worker nodes that can be unavailable without affecting the applications running on the cluster.
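To reason about how maxUnavailable affects rollout time, you can treat the update as a series of waves of at most maxUnavailable nodes each. The sketch below is a rough estimate under two assumptions: every wave takes about the same time, and a per-node time of about 5 minutes, which is inferred from the 10 to 15 minutes quoted above for 3 workers and is not a measured value. The function name is hypothetical.

```python
import math


def estimated_rollout_minutes(workers: int, max_unavailable: int,
                              minutes_per_node: float) -> float:
    """Rough time for a MachineConfigPool to roll a change across all workers.

    Nodes are updated in waves of at most `max_unavailable`; each wave is
    assumed to take `minutes_per_node` (drain, reboot, and rejoin).
    """
    if max_unavailable < 1:
        raise ValueError("maxUnavailable must be at least 1")
    waves = math.ceil(workers / max_unavailable)
    return waves * minutes_per_node


# Assumption: about 5 minutes per node.
print(estimated_rollout_minutes(3, 1, 5))     # 15
print(estimated_rollout_minutes(120, 1, 5))   # 600
print(estimated_rollout_minutes(120, 10, 5))  # 60
```

A larger maxUnavailable shortens the rollout roughly in proportion, at the cost of more worker capacity being offline at the same time.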

2.3. Master node sizing

The master node resource requirements depend on the number of nodes in the cluster. The following master node size recommendations are based on the results of control plane density focused testing.

| Number of worker nodes | CPU cores | Memory (GB) |
|---|---|---|
| 25 | 4 | 16 |
| 100 | 8 | 32 |
| 250 | 16 | 64 |
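Because the master size is fixed at install time, it can help to encode the table as a lookup against your projected worker count. This sketch interprets each row as the largest worker count that the size covers, which is an assumption about how to read the tested values; it refuses counts above 250 because the table gives no guidance there. The function name is hypothetical.

```python
# Rows from the master node sizing table: (worker nodes, CPU cores, memory in GB).
MASTER_SIZING = [
    (25, 4, 16),
    (100, 8, 32),
    (250, 16, 64),
]


def recommended_master_size(worker_nodes: int) -> tuple[int, int]:
    """Return (cpu_cores, memory_gb) for the smallest tested row that fits.

    Raises ValueError above the largest tested size, because the table
    gives no guidance there.
    """
    for max_workers, cores, memory_gb in MASTER_SIZING:
        if worker_nodes <= max_workers:
            return cores, memory_gb
    raise ValueError(f"{worker_nodes} workers exceeds the tested range")


print(recommended_master_size(80))   # (8, 32)
print(recommended_master_size(250))  # (16, 64)
```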

Because you cannot modify the master node size in a running OpenShift Container Platform 4.3 cluster, you must estimate your total node count and use the suggested master size during installation.

In OpenShift Container Platform 4.3, half of a CPU core (500 millicores) is now reserved by the system by default, a change from OpenShift Container Platform 3.11 and previous versions. The recommended sizes take this reservation into account.

2.4. Recommended etcd practices

For large and dense clusters, etcd can suffer from poor performance if the keyspace grows excessively large and exceeds the space quota. Periodic maintenance of etcd, including defragmentation, needs to be done to free up space in the data store. It is highly recommended that you monitor etcd metrics in Prometheus and defragment etcd when needed, before etcd raises a cluster-wide alarm that puts the cluster into a maintenance mode that only accepts key reads and deletes. Some of the key metrics to monitor are:

- etcd_server_quota_backend_bytes, which is the current quota limit.

- etcd_mvcc_db_total_size_in_use_in_bytes, which indicates the actual database usage after a history compaction.

- etcd_debugging_mvcc_db_total_size_in_bytes, which shows the database size, including free space waiting for defragmentation.
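The three metrics can be combined into a simple check. The sketch below computes the reclaimable space (total size minus in-use size) and how much of the quota the database occupies, assuming the quota is compared against the total database size including free space. The 50% threshold and the function name are hypothetical, and the example numbers are illustrative rather than measurements; choose thresholds that fit your own alerting.

```python
GiB = 1024 ** 3


def etcd_space_report(quota_bytes: int, in_use_bytes: int, total_bytes: int,
                      defrag_threshold: float = 0.5) -> dict:
    """Summarize etcd space usage from the three metrics named above.

    quota_bytes:  etcd_server_quota_backend_bytes
    in_use_bytes: etcd_mvcc_db_total_size_in_use_in_bytes
    total_bytes:  etcd_debugging_mvcc_db_total_size_in_bytes
    defrag_threshold: hypothetical cut-off, as a fraction of the quota.
    """
    reclaimable = total_bytes - in_use_bytes  # free space that defragmentation can return
    quota_used = total_bytes / quota_bytes    # assumption: quota applies to the total size
    return {
        "reclaimable_bytes": reclaimable,
        "quota_used_fraction": quota_used,
        "defragment": quota_used > defrag_threshold and reclaimable > 0,
    }


# Illustrative numbers only: 8 GiB quota, 5 GiB on disk, 2 GiB actually in use.
report = etcd_space_report(8 * GiB, 2 * GiB, 5 * GiB)
print(report["reclaimable_bytes"] / GiB)  # 3.0
print(report["quota_used_fraction"])      # 0.625
print(report["defragment"])               # True
```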

2.5. Additional resources

Chapter 3. Recommended cluster scaling practices

The guidance in this section is only relevant for installations with cloud provider integration.

Apply the following best practices to scale the number of worker machines in your OpenShift Container Platform cluster. You scale the worker machines by increasing or decreasing the number of replicas that are defined in the worker MachineSet.

3.1. Recommended practices for scaling the cluster

When scaling up the cluster to higher node counts:

- Spread nodes across all of the available zones for higher availability.

- Scale up by no more than 25 to 50 machines at once.

- Consider creating new MachineSets in each available zone with alternative instance types of similar size to help mitigate any periodic provider capacity constraints. For example, on AWS, use m5.large and m5d.large.

Cloud providers might implement a quota for API services. Therefore, gradually scale the cluster.

The controller might not be able to create the machines if the replicas in the MachineSets are set to higher numbers all at one time. The process is limited by the number of requests that the underlying cloud platform can handle: while it creates, checks, and updates the status of the machines, the controller issues more and more queries. Because the cloud platform on which OpenShift Container Platform is deployed has API request limits, excessive queries might lead to machine creation failures.
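The guidance to scale up by no more than 25 to 50 machines at once can be turned into a plan of intermediate replica counts. In the hypothetical helper below, each value would be applied with oc scale --replicas=<n> machineset <machineset> -n openshift-machine-api, waiting for the new machines to become ready before moving to the next value. The batch size is a parameter, so you can pick any value in the recommended range.

```python
def scale_up_plan(current: int, target: int, batch: int = 25) -> list[int]:
    """Intermediate replica counts when growing a MachineSet from current to target.

    Each step adds at most `batch` machines.
    """
    if batch < 1:
        raise ValueError("batch must be positive")
    steps = []
    replicas = current
    while replicas < target:
        replicas = min(replicas + batch, target)
        steps.append(replicas)
    return steps


print(scale_up_plan(10, 100, batch=25))  # [35, 60, 85, 100]
```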

Enable machine health checks when scaling to large node counts. In case of failures, the health checks monitor the condition and automatically repair unhealthy machines.

3.2. Modifying a MachineSet

To make changes to a MachineSet, edit the MachineSet YAML. Then, remove all machines associated with the MachineSet by deleting each machine or scaling down the MachineSet to 0 replicas. Then, scale the replicas back to the desired number. Changes you make to a MachineSet do not affect existing machines.

If you need to scale a MachineSet without making other changes, you do not need to delete the machines.

By default, the OpenShift Container Platform router pods are deployed on workers. Because the router is required to access some cluster resources, including the web console, do not scale the worker MachineSet to 0 unless you first relocate the router pods.

Prerequisites

- Install an OpenShift Container Platform cluster and the oc command line.

- Log in to oc as a user with cluster-admin permission.

Procedure

Edit the MachineSet:

oc edit machineset <machineset> -n openshift-machine-api

Scale down the MachineSet to 0:

oc scale --replicas=0 machineset <machineset> -n openshift-machine-api

Or:

oc edit machineset <machineset> -n openshift-machine-api

Wait for the machines to be removed.

Scale up the MachineSet as needed:

oc scale --replicas=2 machineset <machineset> -n openshift-machine-api

Or:

oc edit machineset <machineset> -n openshift-machine-api

Wait for the machines to start. The new Machines contain changes you made to the MachineSet.

3.3. About MachineHealthChecks

A MachineHealthCheck automatically repairs unhealthy Machines in a particular MachinePool.

To monitor machine health, you create a resource to define the configuration for a controller. You set a condition to check for, such as staying in the NotReady status for 15 minutes or displaying a permanent condition in the node-problem-detector, and a label for the set of machines to monitor.

You cannot apply a MachineHealthCheck to a machine with the master role.

The controller that observes a MachineHealthCheck resource checks for the status that you defined. If a machine fails the health check, it is automatically deleted and a new one is created to take its place. When a machine is deleted, you see a machine deleted event. To limit the disruptive impact of machine deletion, the controller drains and deletes only one node at a time. If there are more unhealthy machines than the maxUnhealthy threshold allows for in the targeted pool of machines, remediation stops so that manual intervention can take place.

To stop the check, you remove the resource.
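The maxUnhealthy check can be sketched as follows. maxUnhealthy accepts an integer or a percentage; this sketch assumes a percentage is applied to the size of the targeted pool and rounded down, which may differ from the controller's exact rounding. The function name is hypothetical.

```python
def remediation_allowed(total: int, unhealthy: int, max_unhealthy) -> bool:
    """Return True if remediation may proceed, False if it stops for manual intervention.

    max_unhealthy is an int (absolute count) or a string such as "40%".
    Assumption: a percentage is applied to the targeted pool size and rounded down.
    """
    if isinstance(max_unhealthy, str) and max_unhealthy.endswith("%"):
        limit = total * int(max_unhealthy[:-1]) // 100
    else:
        limit = int(max_unhealthy)
    # Remediation stops when more machines are unhealthy than the threshold allows.
    return unhealthy <= limit


print(remediation_allowed(total=10, unhealthy=3, max_unhealthy=2))      # False
print(remediation_allowed(total=10, unhealthy=3, max_unhealthy="40%"))  # True
```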

3.4. Sample MachineHealthCheck resource

The MachineHealthCheck resource resembles the following YAML file:

MachineHealthCheck

- 1: Specify the name of the MachineHealthCheck to deploy.

- 2, 3: Specify a label for the machine pool that you want to check.

- 4: Specify the MachineSet to track in <cluster_name>-<label>-<zone> format. For example, prod-node-us-east-1a.

- 5, 6: Specify the timeout duration for a node condition. If a condition is met for the duration of the timeout, the Machine will be remediated. Long timeouts can result in long periods of downtime for the workload on the unhealthy Machine.

- 7: Specify the number of unhealthy machines allowed in the targeted pool of machines. This can be set as a percentage or an integer.

The matchLabels are examples only; you must map your machine groups based on your specific needs.
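The timeout behavior described for callouts 5 and 6 can be modeled as follows: a machine becomes a remediation candidate once a node condition has held an unhealthy status for at least the timeout. The sketch below is a simplified model with hypothetical names; its test data is the NotReady-for-15-minutes example from the previous section, expressed as the Ready condition with status False.

```python
from datetime import datetime, timedelta


def needs_remediation(status: str, since: datetime, now: datetime,
                      unhealthy_status: str, timeout: timedelta) -> bool:
    """True once a node condition has held `unhealthy_status` for at least `timeout`.

    status: current status of the watched condition (for example, Ready).
    since:  when the condition last changed to its current status.
    """
    return status == unhealthy_status and now - since >= timeout


start = datetime(2020, 1, 1, 10, 0)
fifteen_minutes = timedelta(minutes=15)
# Ready=False for 20 minutes: past the timeout, so the machine is remediated.
print(needs_remediation("False", start, start + timedelta(minutes=20), "False", fifteen_minutes))  # True
# Ready=False for only 10 minutes: still within the timeout.
print(needs_remediation("False", start, start + timedelta(minutes=10), "False", fifteen_minutes))  # False
```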

3.5. Creating a MachineHealthCheck resource

You can create a MachineHealthCheck resource for all MachinePools in your cluster except the master pool.

Prerequisites

- Install the oc command line interface.

Procedure

- Create a healthcheck.yml file that contains the definition of your MachineHealthCheck.

- Apply the healthcheck.yml file to your cluster:

oc apply -f healthcheck.yml

Chapter 4. Using the Node Tuning Operator

Learn about the Node Tuning Operator and how you can use it to manage node-level tuning by orchestrating the tuned daemon.

4.1. About the Node Tuning Operator

The Node Tuning Operator helps you manage node-level tuning by orchestrating the Tuned daemon. The majority of high-performance applications require some level of kernel tuning. The Node Tuning Operator provides a unified management interface to users of node-level sysctls and more flexibility to add custom tuning specified by user needs. The Operator manages the containerized Tuned daemon for OpenShift Container Platform as a Kubernetes DaemonSet. It ensures the custom tuning specification is passed to all containerized Tuned daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

Node-level settings applied by the containerized Tuned daemon are rolled back on an event that triggers a profile change or when the containerized Tuned daemon is terminated gracefully by receiving and handling a termination signal.

The Node Tuning Operator is part of a standard OpenShift Container Platform installation in version 4.1 and later.

4.2. Accessing an example Node Tuning Operator specification

Use this process to access an example Node Tuning Operator specification.

Procedure

Run:

oc get Tuned/default -o yaml -n openshift-cluster-node-tuning-operator

The default CR is meant for delivering standard node-level tuning for OpenShift Container Platform, and it can only be modified to set the Operator Management state. Any other custom changes to the default CR will be overwritten by the Operator. For custom tuning, create your own Tuned CRs. Newly created CRs will be combined with the default CR, and custom tuning is applied to OpenShift Container Platform nodes based on node or Pod labels and profile priorities.

4.3. Default profiles set on a cluster

The following are the default profiles set on a cluster.

4.4. Verifying that the Tuned profiles are applied

Use this procedure to check which Tuned profiles are applied on every node.

Procedure

Check which Tuned Pods are running on each node:

oc get pods -n openshift-cluster-node-tuning-operator -o wide

Extract the profile applied from each Pod and match them against the previous list:

for p in `oc get pods -n openshift-cluster-node-tuning-operator -l openshift-app=tuned -o=jsonpath='{range .items[*]}{.metadata.name} {end}'`; do
  printf "\n*** $p ***\n"
  oc logs pod/$p -n openshift-cluster-node-tuning-operator | grep applied
done

Custom profiles for custom tuning specification is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.

4.5. Custom tuning specification

The custom resource (CR) for the operator has two major sections. The first section, profile:, is a list of Tuned profiles and their names. The second, recommend:, defines the profile selection logic.

Multiple custom tuning specifications can co-exist as multiple CRs in the operator’s namespace. The existence of new CRs or the deletion of old CRs is detected by the Operator. All existing custom tuning specifications are merged and appropriate objects for the containerized Tuned daemons are updated.

Profile data

The profile: section lists Tuned profiles and their names.

Recommended profiles

The profile: selection logic is defined by the recommend: section of the CR:

If <match> is omitted, a profile match (that is, true) is assumed.

<match> is an optional array recursively defined as follows:

- label: <label_name>    # node or Pod label name
  value: <label_value>   # optional node or Pod label value; if omitted, the presence of <label_name> is enough to match
  type: <label_type>     # optional node or Pod type (`node` or `pod`); if omitted, `node` is assumed
  <match>                # an optional <match> array

If <match> is not omitted, all nested <match> sections must also evaluate to true. Otherwise, false is assumed and the profile with the respective <match> section will not be applied or recommended. Therefore, the nesting (child <match> sections) works as a logical AND operator. Conversely, if any item of the <match> array matches, the entire <match> array evaluates to true. Therefore, the array acts as a logical OR operator.
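The AND/OR semantics of <match> can be expressed as two mutually recursive functions. This Python sketch is an illustration, not the Operator's code: it represents each <match> element as a dictionary with the label, value, type, and match keys described above, and assumes the labels of the Pods on a node are available as one dictionary per Pod.

```python
def item_matches(item: dict, node_labels: dict, pod_labels: list) -> bool:
    """Evaluate one element of a <match> array.

    node_labels: labels of the node the Tuned daemon runs on.
    pod_labels:  one label dict per Pod running on that node (assumption).
    """
    name, value = item["label"], item.get("value")

    def has_label(labels: dict) -> bool:
        # Without a value, the presence of the label name is enough.
        return name in labels and (value is None or labels[name] == value)

    if item.get("type", "node") == "pod":
        found = any(has_label(p) for p in pod_labels)
    else:
        found = has_label(node_labels)
    # A nested <match> must also be true: logical AND.
    return found and match_evaluates(item.get("match"), node_labels, pod_labels)


def match_evaluates(match, node_labels: dict, pod_labels: list) -> bool:
    """An omitted <match> is true; otherwise any array element may match (logical OR)."""
    if match is None:
        return True
    return any(item_matches(i, node_labels, pod_labels) for i in match)


# The openshift-control-plane-es rule, as described in the example that follows.
es_rule = [{
    "label": "tuned.openshift.io/elasticsearch",
    "type": "pod",
    "match": [
        {"label": "node-role.kubernetes.io/master"},
        {"label": "node-role.kubernetes.io/infra"},
    ],
}]
infra_node = {"node-role.kubernetes.io/infra": ""}
es_pod = {"tuned.openshift.io/elasticsearch": ""}
print(match_evaluates(es_rule, infra_node, [es_pod]))  # True
print(match_evaluates(es_rule, infra_node, []))        # False
print(match_evaluates(None, {}, []))                   # True
```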

Example

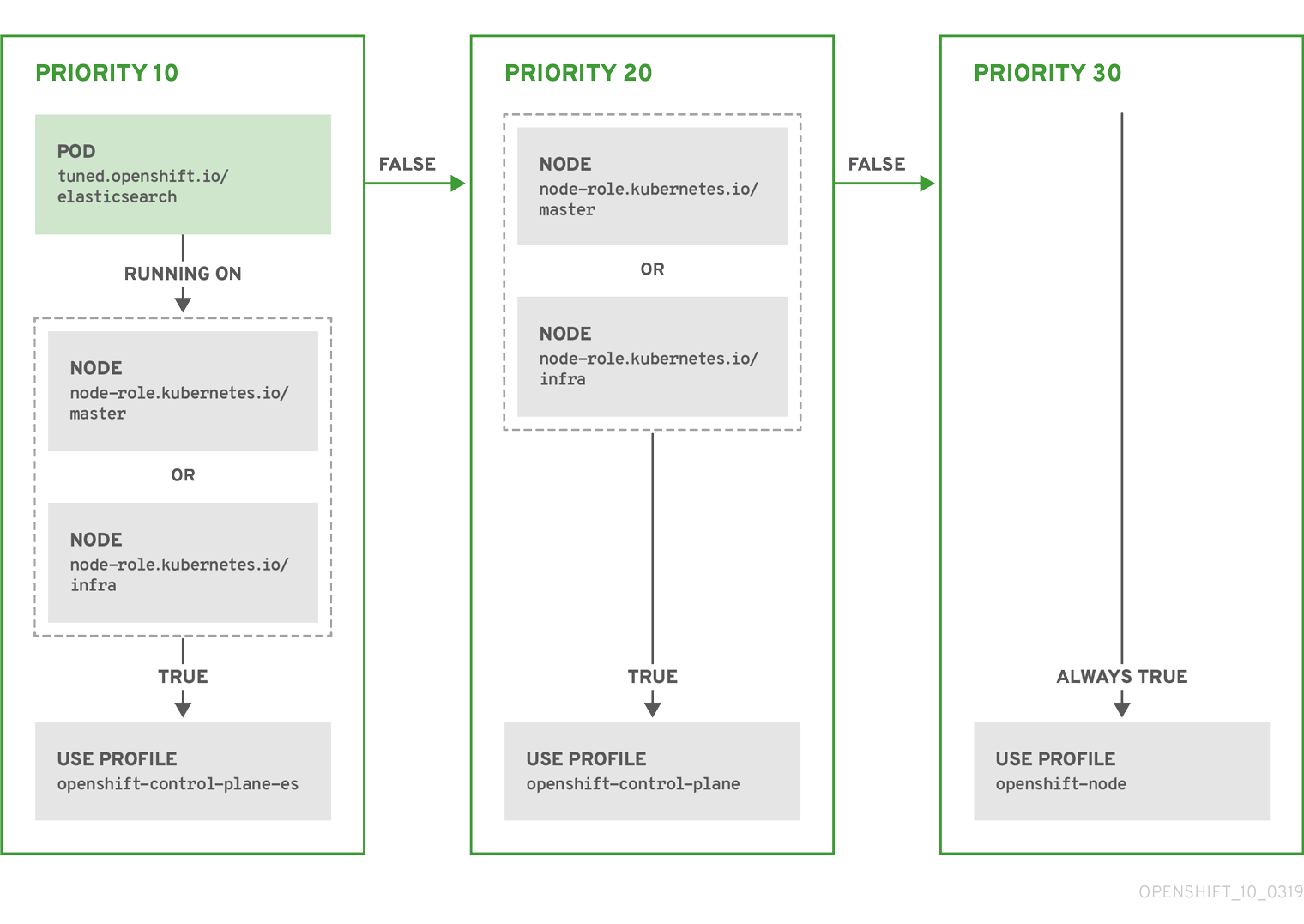

The CR above is translated for the containerized Tuned daemon into its recommend.conf file based on the profile priorities. The profile with the highest priority (10) is openshift-control-plane-es and, therefore, it is considered first. The containerized Tuned daemon running on a given node looks to see if there is a Pod running on the same node with the tuned.openshift.io/elasticsearch label set. If not, the entire <match> section evaluates as false. If there is such a Pod with the label, in order for the <match> section to evaluate to true, the node label also needs to be node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

If the labels for the profile with priority 10 matched, openshift-control-plane-es profile is applied and no other profile is considered. If the node/Pod label combination did not match, the second highest priority profile (openshift-control-plane) is considered. This profile is applied if the containerized Tuned Pod runs on a node with labels node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

Finally, the profile openshift-node has the lowest priority of 30. It lacks the <match> section and, therefore, will always match. It acts as a catch-all that sets the openshift-node profile if no other profile with a higher priority matches on a given node.
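The priority-based selection in this example can be sketched as: sort the profiles by priority (a lower number means a higher priority) and pick the first one whose <match> evaluates to true. In this Python sketch, the <match> sections are replaced by hand-written predicates, the presence of an Elasticsearch Pod on the node is passed in as a boolean, and the priority 20 for openshift-control-plane is an assumption, because the text only states that it ranks between 10 and 30.

```python
from typing import Callable, Optional

# (priority, profile name, predicate over node labels and "Elasticsearch Pod present").
Rule = tuple[int, str, Callable[[dict, bool], bool]]


def is_control_plane(node: dict) -> bool:
    return ("node-role.kubernetes.io/master" in node
            or "node-role.kubernetes.io/infra" in node)


RULES: list[Rule] = [
    (10, "openshift-control-plane-es", lambda node, es_pod: es_pod and is_control_plane(node)),
    (20, "openshift-control-plane", lambda node, es_pod: is_control_plane(node)),  # 20 is assumed
    (30, "openshift-node", lambda node, es_pod: True),  # no <match>: catch-all
]


def recommend(node: dict, es_pod_on_node: bool) -> Optional[str]:
    """Return the first profile, in priority order, whose predicate is true."""
    for _, name, predicate in sorted(RULES, key=lambda rule: rule[0]):
        if predicate(node, es_pod_on_node):
            return name
    return None


print(recommend({"node-role.kubernetes.io/master": ""}, True))   # openshift-control-plane-es
print(recommend({"node-role.kubernetes.io/master": ""}, False))  # openshift-control-plane
print(recommend({"node-role.kubernetes.io/worker": ""}, True))   # openshift-node
```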

4.6. Custom tuning example

The following CR applies custom node-level tuning for OpenShift Container Platform nodes that run an ingress Pod with label tuned.openshift.io/ingress-pod-label=ingress-pod-label-value. As an administrator, use the following command to create a custom Tuned CR.

Example

4.7. Supported Tuned daemon plug-ins

Excluding the [main] section, the following Tuned plug-ins are supported when using custom profiles defined in the profile: section of the Tuned CR:

- audio

- cpu

- disk

- eeepc_she

- modules

- mounts

- net

- scheduler

- scsi_host

- selinux

- sysctl

- sysfs

- usb

- video

- vm

Some of these plug-ins provide dynamic tuning functionality that is not supported. The following Tuned plug-ins are currently not supported:

- bootloader

- script

- systemd

See Available Tuned Plug-ins and Getting Started with Tuned for more information.
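Before applying a custom profile, you might want to check the plug-ins it uses against the lists above. Tuned profiles use an INI-style format, so the following Python sketch parses one with configparser and classifies each section, taking the plug-in name from a section's type option if present and otherwise from the section name. The sample profile and the check_profile helper are hypothetical.

```python
import configparser

SUPPORTED = {
    "audio", "cpu", "disk", "eeepc_she", "modules", "mounts", "net",
    "scheduler", "scsi_host", "selinux", "sysctl", "sysfs", "usb", "video", "vm",
}
UNSUPPORTED = {"bootloader", "script", "systemd"}


def check_profile(profile_text: str) -> dict:
    """Classify the sections of a Tuned profile by the plug-in they use."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(profile_text)
    result = {"supported": [], "unsupported": [], "unknown": []}
    for section in parser.sections():
        if section == "main":
            continue  # [main] is not a plug-in section
        plugin = parser[section].get("type", section)
        if plugin in SUPPORTED:
            result["supported"].append(section)
        elif plugin in UNSUPPORTED:
            result["unsupported"].append(section)
        else:
            result["unknown"].append(section)
    return result


profile = """\
[main]
summary=Hypothetical custom profile
include=openshift-node

[sysctl]
net.ipv4.ip_forward=1

[my_script]
type=script
script=tune.sh
"""
print(check_profile(profile))
# {'supported': ['sysctl'], 'unsupported': ['my_script'], 'unknown': []}
```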

Chapter 5. Using Cluster Loader

Cluster Loader is a tool that deploys large numbers of various user-defined objects to a cluster. Build, configure, and run Cluster Loader to measure performance metrics of your OpenShift Container Platform deployment at various cluster states.

5.1. Installing Cluster Loader

Procedure

To pull the container image, run:

sudo podman pull quay.io/openshift/origin-tests:4.3

5.2. Running Cluster Loader

Prerequisites

- The repository will prompt you to authenticate. The registry credentials allow you to access the image, which is not publicly available. Use your existing authentication credentials from installation.

Procedure

Execute Cluster Loader using the built-in test configuration, which deploys five template builds and waits for them to complete:

sudo podman run -v ${LOCAL_KUBECONFIG}:/root/.kube/config:z -i \
quay.io/openshift/origin-tests:4.3 /bin/bash -c 'export KUBECONFIG=/root/.kube/config && \
openshift-tests run-test "[sig-scalability][Feature:Performance] Load cluster \
should populate the cluster [Slow][Serial] [Suite:openshift]"'

Alternatively, execute Cluster Loader with a user-defined configuration by setting the environment variable for VIPERCONFIG.

In this example, ${LOCAL_KUBECONFIG} refers to the path to the kubeconfig on your local file system. Also, a directory called ${LOCAL_CONFIG_FILE_PATH}, which contains a configuration file called test.yaml, is mounted into the container. Additionally, if test.yaml references any external template files or podspec files, they should also be mounted into the container.

5.3. Configuring Cluster Loader

The tool creates multiple namespaces (projects), which contain multiple templates or Pods.

5.3.1. Example Cluster Loader configuration file

Cluster Loader’s configuration file is a basic YAML file:

- 1: Optional setting for end-to-end tests. Set to local to avoid extra log messages.

- 2: The tuning sets allow rate limiting and stepping, the ability to create several batches of Pods while pausing in between sets. Cluster Loader monitors completion of the previous step before continuing.

- 3: Stepping will pause for M seconds after each N objects are created.

- 4: Rate limiting will wait M milliseconds between the creation of objects.

This example assumes that references to any external template files or podspec files are also mounted into the container.

If you are running Cluster Loader on Microsoft Azure, then you must set the AZURE_AUTH_LOCATION variable to a file that contains the output of terraform.azure.auto.tfvars.json, which is present in the installer directory.

5.3.2. Configuration fields

| Field | Description |
|---|---|
| | Set to |
| | A sub-object with one or many definition(s). Under |
| | A sub-object with one definition per configuration. |
| | An optional sub-object with one definition per configuration. Adds synchronization possibilities during object creation. |

| Field | Description |
|---|---|
| | An integer. One definition of the count of how many projects to create. |
| | A string. One definition of the base name for the project. The count of identical namespaces will be appended to |
| | A string. One definition of what tuning set you want to apply to the objects, which you deploy inside this namespace. |
| | A string containing either |
| | A list of key-value pairs. The key is the ConfigMap name and the value is a path to a file from which you create the ConfigMap. |
| | A list of key-value pairs. The key is the secret name and the value is a path to a file from which you create the secret. |
| | A sub-object with one or many definition(s) of Pods to deploy. |
| | A sub-object with one or many definition(s) of templates to deploy. |

| Field | Description |
|---|---|
| | An integer. The number of Pods or templates to deploy. |
| | A string. The docker image URL to a repository where it can be pulled. |
| | A string. One definition of the base name for the template (or pod) that you want to create. |
| | A string. The path to a local file, which is either a PodSpec or template to be created. |
| | Key-value pairs. Under |

| Field | Description |
|---|---|
| | A string. The name of the tuning set which will match the name specified when defining a tuning in a project. |
| | A sub-object identifying the |
| | A sub-object identifying the |

| Field | Description |
|---|---|
| | A sub-object. A stepping configuration used if you want to create an object in a step creation pattern. |
| | A sub-object. A rate-limiting tuning set configuration to limit the object creation rate. |

| Field | Description |
|---|---|
| | An integer. How many objects to create before pausing object creation. |
| | An integer. How many seconds to pause after creating the number of objects defined in |
| | An integer. How many seconds to wait before failure if the object creation is not successful. |
| | An integer. How many milliseconds (ms) to wait between creation requests. |
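The stepping and rate-limiting settings add up to a minimum amount of waiting per test run. The Python sketch below estimates that lower bound. Because the field names in the tables above are not shown, the parameter names are hypothetical, and the sketch assumes a pause after every completed step, including the last one. Object creation time and the wait for the previous step to complete are ignored.

```python
def creation_delay_seconds(objects: int, step_size: int = 0, pause_s: float = 0,
                           delay_ms: float = 0) -> float:
    """Lower bound on the time Cluster Loader spends waiting while creating `objects`.

    Stepping: pause `pause_s` seconds after every `step_size` objects.
    Rate limiting: wait `delay_ms` milliseconds between creation requests.
    """
    waits = 0.0
    if delay_ms:
        waits += max(objects - 1, 0) * delay_ms / 1000  # gaps between requests
    if step_size:
        waits += (objects // step_size) * pause_s       # one pause per completed step
    return waits


print(creation_delay_seconds(100, step_size=20, pause_s=10))               # 50.0
print(creation_delay_seconds(100, step_size=20, pause_s=10, delay_ms=500))  # 99.5
```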

| Field | Description |
|---|---|
| | A sub-object with |
| | A boolean. Wait for Pods with labels matching |
| | A boolean. Wait for Pods with labels matching |
| | A list of selectors to match Pods in |
| | A string. The synchronization timeout period to wait for Pods in |

5.4. Known issues

- Cluster Loader fails when called without configuration. (BZ#1761925)

- If the IDENTIFIER parameter is not defined in user templates, template creation fails with error: unknown parameter name "IDENTIFIER". If you deploy templates, add this parameter to your template to avoid this error:

  {
    "name": "IDENTIFIER",
    "description": "Number to append to the name of resources",
    "value": "1"
  }

  If you deploy Pods, adding the parameter is unnecessary.

Chapter 6. Using CPU Manager

CPU Manager manages groups of CPUs and constrains workloads to specific CPUs.

CPU Manager is useful for workloads that have some of these attributes:

- Require as much CPU time as possible.

- Are sensitive to processor cache misses.

- Are low-latency network applications.

- Coordinate with other processes and benefit from sharing a single processor cache.

6.1. Setting up CPU Manager

Procedure

Optional: Label a node:

oc label node perf-node.example.com cpumanager=true

# oc label node perf-node.example.com cpumanager=trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow Edit the

MachineConfigPoolof the nodes where CPU Manager should be enabled. In this example, all workers have CPU Manager enabled:oc edit machineconfigpool worker

# oc edit machineconfigpool workerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add a label to the worker

MachineConfigPool:metadata: creationTimestamp: 2019-xx-xxx generation: 3 labels: custom-kubelet: cpumanager-enabledmetadata: creationTimestamp: 2019-xx-xxx generation: 3 labels: custom-kubelet: cpumanager-enabledCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a

KubeletConfig,cpumanager-kubeletconfig.yaml, custom resource (CR). Refer to the label created in the previous step to have the correct nodes updated with the newKubeletConfig. See themachineConfigPoolSelectorsection:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the dynamic

KubeletConfig:oc create -f cpumanager-kubeletconfig.yaml

# oc create -f cpumanager-kubeletconfig.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow This adds the CPU Manager feature to the

KubeletConfigand, if needed, the Machine Config Operator (MCO) reboots the node. To enable CPU Manager, a reboot is not needed.Check for the merged

KubeletConfig:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Check the worker for the updated

kubelet.conf:oc debug node/perf-node.example.com sh-4.4# cat /host/etc/kubernetes/kubelet.conf | grep cpuManager cpuManagerPolicy: static cpuManagerReconcilePeriod: 5s

# oc debug node/perf-node.example.com sh-4.4# cat /host/etc/kubernetes/kubelet.conf | grep cpuManager cpuManagerPolicy: static1 cpuManagerReconcilePeriod: 5s2 Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a Pod that requests a core or multiple cores. Both limits and requests must have their CPU value set to a whole integer. That is the number of cores that will be dedicated to this Pod:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create the Pod:

oc create -f cpumanager-pod.yaml

# oc create -f cpumanager-pod.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Verify that the Pod is scheduled to the node that you labeled:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Verify that the

cgroupsare set up correctly. Get the process ID (PID) of thepauseprocess:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Pods of quality of service (QoS) tier

Guaranteedare placed within thekubepods.slice. Pods of other QoS tiers end up in childcgroupsofkubepods:cd /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-pod69c01f8e_6b74_11e9_ac0f_0a2b62178a22.slice/crio-b5437308f1ad1a7db0574c542bdf08563b865c0345c86e9585f8c0b0a655612c.scope for i in `ls cpuset.cpus tasks` ; do echo -n "$i "; cat $i ; done cpuset.cpus 1 tasks 32706

# cd /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-pod69c01f8e_6b74_11e9_ac0f_0a2b62178a22.slice/crio-b5437308f1ad1a7db0574c542bdf08563b865c0345c86e9585f8c0b0a655612c.scope # for i in `ls cpuset.cpus tasks` ; do echo -n "$i "; cat $i ; done cpuset.cpus 1 tasks 32706Copy to Clipboard Copied! Toggle word wrap Toggle overflow Check the allowed CPU list for the task:


# grep ^Cpus_allowed_list /proc/32706/status
Cpus_allowed_list: 1

Verify that another pod (in this case, the pod in the BestEffort QoS tier) on the system cannot run on the core allocated for the Guaranteed pod:

# cat /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podc494a073_6b77_11e9_98c0_06bba5c387ea.slice/crio-c56982f57b75a2420947f0afc6cafe7534c5734efc34157525fa9abbf99e3849.scope/cpuset.cpus
0
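The two checks above compare CPU lists by eye. As a minimal sketch (not part of any OpenShift tooling), the Python below parses the Linux CPU list format used by cpuset.cpus and Cpus_allowed_list (for example 0-3,8) and confirms that the Guaranteed pod's CPUs and the other pod's CPUs do not overlap. The function names are illustrative, and the sample values are the ones shown above; reading the files from a node is left out.

```python
from typing import Set


def parse_cpu_list(text: str) -> Set[int]:
    """Parse a Linux CPU list such as "1", "0-3" or "0-3,8,10-11" into CPU IDs."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpuset is written as an empty string
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus


def parse_allowed_list(status_line: str) -> Set[int]:
    """Extract the CPU set from a /proc/<pid>/status line such as "Cpus_allowed_list: 1"."""
    key, _, value = status_line.partition(":")
    if key.strip() != "Cpus_allowed_list":
        raise ValueError(f"unexpected line: {status_line!r}")
    return parse_cpu_list(value)


if __name__ == "__main__":
    guaranteed = parse_allowed_list("Cpus_allowed_list: 1")  # pause task of the Guaranteed pod
    other = parse_cpu_list("0")  # cpuset.cpus of the BestEffort pod
    # An empty intersection means the other pod cannot run on the dedicated core.
    print("isolated" if guaranteed.isdisjoint(other) else "overlap")
```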

This VM has two CPU cores. You set kube-reserved to 500 millicores, meaning half of one core is subtracted from the total capacity of the node to arrive at the Node Allocatable amount. You can see that Allocatable CPU is 1500 millicores. This means you can run one of the CPU Manager pods since each will take one whole core. A whole core is equivalent to 1000 millicores. If you try to schedule a second pod, the system will accept the pod, but it will never be scheduled:

NAME               READY   STATUS    RESTARTS   AGE
cpumanager-6cqz7   1/1     Running   0          33m
cpumanager-7qc2t   0/1     Pending   0          11s
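The capacity reasoning above is plain millicore arithmetic. The short Python sketch below reproduces it with the values from this example (2 cores, 500 millicores of kube-reserved, one whole core per CPU Manager pod). The function names are illustrative, and the sketch assumes no other pod on the node requests CPU.

```python
MILLICORES_PER_CORE = 1000


def allocatable_millicores(cores: int, kube_reserved_m: int, system_reserved_m: int = 0) -> int:
    """Node Allocatable CPU: capacity minus the reserved amounts, all in millicores."""
    return cores * MILLICORES_PER_CORE - kube_reserved_m - system_reserved_m


def whole_core_pods_that_fit(allocatable_m: int, cores_per_pod: int = 1) -> int:
    """CPU Manager pods request whole cores, so only complete 1000m units count."""
    return allocatable_m // (cores_per_pod * MILLICORES_PER_CORE)


if __name__ == "__main__":
    alloc = allocatable_millicores(cores=2, kube_reserved_m=500)
    print(f"Allocatable CPU: {alloc}m")  # 1500m
    print("CPU Manager pods that fit:", whole_core_pods_that_fit(alloc))  # 1
```

The leftover 500 millicores can still serve pods with fractional CPU requests, but not a second pod that needs a dedicated core, which is why the second pod stays Pending.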

Chapter 7. Using Topology Manager

Topology Manager is a Kubelet component that collects hints from CPU Manager and Device Manager to align pod CPU and device resources on the same non-uniform memory access (NUMA) node.

Topology Manager uses topology information from collected hints to decide if the pod can be accepted on or rejected from the node, based on the configured Topology Manager policy and the Pod resources requested.

Topology Manager is useful for workloads that use hardware accelerators to support latency-critical execution and high throughput parallel computation.

Topology Manager is an alpha feature in OpenShift Container Platform.

7.1. Setting up Topology Manager

Prerequisites

- Configure the CPU Manager policy to be static. Refer to Using CPU Manager in the Scalability and Performance section.

Procedure

Enable the LatencySensitive FeatureGate:

# oc edit featuregate/cluster

Add featureSet: LatencySensitive to the spec:

Configure the Topology Manager policy with KubeletConfig.

The example YAML file shows a single-numa-node policy specified. Specify your selected Topology Manager policy.

Create the KubeletConfig:

# oc create -f topologymanager-kubeletconfig.yaml
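As an illustration of what such a file contains, the following Python sketch generates a KubeletConfig as JSON, which oc create -f also accepts. It is a sketch under assumptions, not the file from this procedure: the object name and the custom-kubelet: cpumanager-enabled pool label are hypothetical and must match a label on your MachineConfigPool, and it repeats the static CPU Manager settings because Topology Manager depends on them.

```python
import json

# The four policies described in "Topology Manager policies".
TOPOLOGY_POLICIES = ("none", "best-effort", "restricted", "single-numa-node")


def topology_kubeletconfig(policy: str, pool_labels: dict, name: str) -> dict:
    """Build a KubeletConfig object that sets the Topology Manager policy.

    `name` and `pool_labels` are hypothetical; pool_labels must match the
    labels on the MachineConfigPool whose nodes you want to configure.
    """
    if policy not in TOPOLOGY_POLICIES:
        raise ValueError(f"unknown Topology Manager policy: {policy!r}")
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "KubeletConfig",
        "metadata": {"name": name},
        "spec": {
            "machineConfigPoolSelector": {"matchLabels": pool_labels},
            "kubeletConfig": {
                # Topology Manager requires the static CPU Manager policy.
                "cpuManagerPolicy": "static",
                "cpuManagerReconcilePeriod": "5s",
                "topologyManagerPolicy": policy,
            },
        },
    }


if __name__ == "__main__":
    config = topology_kubeletconfig(
        "single-numa-node",
        {"custom-kubelet": "cpumanager-enabled"},  # hypothetical pool label
        name="topologymanager-enabled",  # hypothetical object name
    )
    print(json.dumps(config, indent=2))
```

For example, redirect the output to topologymanager-kubeletconfig.json and pass that file to oc create -f. Rejecting unknown policy names in the script catches typos before the Machine Config Operator ever sees the object.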

7.2. Topology Manager policies

Topology Manager works on Nodes and Pods that meet the following conditions:

- The Node’s CPU Manager Policy is configured as static.

- The Pods are in the Guaranteed QoS class.

When the above conditions are met, Topology Manager will align CPU and device requests for the Pod.

Topology Manager supports 4 allocation policies. These policies are set via a Kubelet flag, --topology-manager-policy. The policies are:

- none (default)

- best-effort

- restricted

- single-numa-node

7.2.1. none policy

This is the default policy and does not perform any topology alignment.

7.2.2. best-effort policy

For each container in a Guaranteed Pod with the best-effort topology management policy, kubelet calls each Hint Provider to discover their resource availability. Using this information, the Topology Manager stores the preferred NUMA Node affinity for that container. If the affinity is not preferred, Topology Manager will store this and admit the pod to the node anyway.

7.2.3. restricted policy

For each container in a Guaranteed Pod with the restricted topology management policy, kubelet calls each Hint Provider to discover their resource availability. Using this information, the Topology Manager stores the preferred NUMA Node affinity for that container. If the affinity is not preferred, Topology Manager will reject this pod from the node. This will result in a pod in a Terminated state with a pod admission failure.

7.2.4. single-numa-node policy

For each container in a Guaranteed Pod with the single-numa-node topology management policy, kubelet calls each Hint Provider to discover their resource availability. Using this information, the Topology Manager determines if a single NUMA Node affinity is possible. If it is, the pod will be admitted to the node. If this is not possible then the Topology Manager will reject the pod from the node. This will result in a pod in a Terminated state with a pod admission failure.
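To make the differences between the policies concrete, the following Python sketch models them in a deliberately simplified way; it is not the kubelet's implementation. Each hint provider (for example CPU Manager or Device Manager) is assumed to return a list of (NUMA bitmask, preferred) hints. The model intersects one hint from every provider, keeps the best non-empty result (preferred first, then the fewest NUMA nodes), and applies each policy's admit or reject rule.

```python
from itertools import product
from typing import List, Optional, Tuple

# A hint is (NUMA bitmask, preferred). Bit i set means NUMA node i can satisfy the request.
Hint = Tuple[int, bool]


def _popcount(mask: int) -> int:
    return bin(mask).count("1")


def merge_hints(providers: List[List[Hint]], numa_nodes: int) -> Optional[Hint]:
    """Combine one hint from every provider; keep the best non-empty intersection."""
    best: Optional[Hint] = None
    for combo in product(*providers):
        mask, preferred = (1 << numa_nodes) - 1, True
        for hint_mask, hint_preferred in combo:
            mask &= hint_mask
            preferred = preferred and hint_preferred
        if mask == 0:
            continue  # no NUMA node satisfies every provider in this combination
        candidate = (mask, preferred)
        # Preferred hints win; among equals, the narrower affinity wins.
        if (best is None
                or (candidate[1] and not best[1])
                or (candidate[1] == best[1] and _popcount(mask) < _popcount(best[0]))):
            best = candidate
    return best


def admit(policy: str, providers: List[List[Hint]], numa_nodes: int) -> bool:
    """Admission decision for one container under each Topology Manager policy."""
    if policy in ("none", "best-effort"):
        return True  # none does no alignment; best-effort admits even without a preferred hint
    best = merge_hints(providers, numa_nodes)
    if best is None:
        return False
    mask, preferred = best
    if policy == "restricted":
        return preferred
    if policy == "single-numa-node":
        return preferred and _popcount(mask) == 1
    raise ValueError(f"unknown policy: {policy!r}")


if __name__ == "__main__":
    # Two NUMA nodes. CPUs are free on either node; the device sits on NUMA node 1.
    cpu_hints = [(0b01, True), (0b10, True), (0b11, False)]
    device_hints = [(0b10, True), (0b11, False)]
    for policy in ("none", "best-effort", "restricted", "single-numa-node"):
        print(policy, admit(policy, [cpu_hints, device_hints], numa_nodes=2))
```

If the free CPUs were only on NUMA node 0 while the device stayed on node 1, the best merged hint would no longer be preferred, so restricted and single-numa-node would reject the pod while best-effort would still admit it.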

7.3. Pod interactions with Topology Manager policies

The example Pod specs below help illustrate Pod interactions with Topology Manager.

The following Pod runs in the BestEffort QoS class because no resource requests or limits are specified.

spec:
  containers:
  - name: nginx
    image: nginx

The next Pod runs in the Burstable QoS class because requests are less than limits.

If the selected policy is anything other than none, Topology Manager would not consider either of these Pod specifications.

The last example Pod below runs in the Guaranteed QoS class because requests are equal to limits.

Topology Manager would consider this Pod. The Topology Manager consults the CPU Manager static policy, which returns the topology of available CPUs. Topology Manager also consults Device Manager to discover the topology of available devices for example.com/device.

Topology Manager will use this information to store the best topology for this container. In the case of this Pod, CPU Manager and Device Manager will use this stored information at the resource allocation stage.
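Because Topology Manager only acts on Guaranteed Pods, it helps to see how the QoS class follows from requests and limits. The Python sketch below applies the standard Kubernetes rules for CPU and memory to simplified container dictionaries with hypothetical top-level requests and limits keys (a real Pod spec nests them under resources). It compares quantities as literal strings, so 1 and 1000m would be treated as different; a real implementation would parse them first.

```python
from typing import List

TRACKED = ("cpu", "memory")  # only CPU and memory determine the QoS class


def qos_class(containers: List[dict]) -> str:
    """Return "Guaranteed", "Burstable" or "BestEffort" for a list of containers."""
    any_set = False
    guaranteed = True
    for container in containers:
        requests = {k: v for k, v in container.get("requests", {}).items() if k in TRACKED}
        limits = {k: v for k, v in container.get("limits", {}).items() if k in TRACKED}
        if requests or limits:
            any_set = True
        # An omitted request defaults to the limit.
        effective_requests = {**limits, **requests}
        if set(limits) != set(TRACKED) or effective_requests != limits:
            guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    print(qos_class([{"name": "nginx"}]))  # BestEffort
    print(qos_class([{"name": "nginx",
                      "limits": {"memory": "200Mi", "cpu": "2", "example.com/device": "1"},
                      "requests": {"memory": "200Mi", "cpu": "2", "example.com/device": "1"}}]))  # Guaranteed
```

Extended resources such as example.com/device are ignored when classifying, which matches the idea that the Guaranteed example above qualifies because of its CPU and memory settings.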

Chapter 8. Scaling the Cluster Monitoring Operator

OpenShift Container Platform exposes metrics that the Cluster Monitoring Operator collects and stores in the Prometheus-based monitoring stack. As an administrator, you can view metrics for system resources, containers, and components in one dashboard interface, Grafana.

8.1. Prometheus database storage requirements

Red Hat performed various tests for different scale sizes.

| Number of Nodes | Number of Pods | Prometheus storage growth per day | Prometheus storage growth per 15 days | RAM Space (per scale size) | Network (per tsdb chunk) |

|---|---|---|---|---|---|

| 50 | 1800 | 6.3 GB | 94 GB | 6 GB | 16 MB |

| 100 | 3600 | 13 GB | 195 GB | 10 GB | 26 MB |

| 150 | 5400 | 19 GB | 283 GB | 12 GB | 36 MB |

| 200 | 7200 | 25 GB | 375 GB | 14 GB | 46 MB |

Approximately 20 percent of the expected size was added as overhead to ensure that the storage requirements do not exceed the calculated value.

The above calculation is for the default OpenShift Container Platform Cluster Monitoring Operator.
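The table can be turned into a rough estimate for other cluster sizes. The Python sketch below linearly interpolates the daily growth column and multiplies it by a retention period, which is reasonable because the 15-day column is roughly 15 times the daily column. Treat it as a planning aid under those assumptions: the function names are illustrative, growth outside the tested 50 to 200 node range is extrapolated, and the tests used the default Cluster Monitoring Operator configuration.

```python
# (nodes, Prometheus storage growth per day in GB) from the table above.
# The text states that about 20 percent overhead was already added, so none is added here.
TESTED_GROWTH = [(50, 6.3), (100, 13.0), (150, 19.0), (200, 25.0)]


def daily_growth_gb(nodes: int) -> float:
    """Linearly interpolate daily growth between the tested node counts.

    Outside 50-200 nodes the nearest segment is extended, which is an
    untested extrapolation.
    """
    segments = list(zip(TESTED_GROWTH, TESTED_GROWTH[1:]))
    (n0, g0), (n1, g1) = segments[-1]
    for (a, ga), (b, gb) in segments:
        if nodes <= b:
            (n0, g0), (n1, g1) = (a, ga), (b, gb)
            break
    return g0 + (g1 - g0) * (nodes - n0) / (n1 - n0)


def storage_for_retention_gb(nodes: int, retention_days: int) -> float:
    """Approximate Prometheus storage needed to keep `retention_days` of data."""
    return daily_growth_gb(nodes) * retention_days


if __name__ == "__main__":
    for nodes in (50, 120, 200):
        print(nodes, "nodes:", round(storage_for_retention_gb(nodes, 15)), "GB for 15 days")
```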

CPU utilization has minor impact. The ratio is approximately 1 core out of 40 per 50 nodes and 1800 pods.

Lab environment

In a previous release, all experiments were performed in an OpenShift Container Platform on RHOSP environment:

- Infra nodes (VMs) - 40 cores, 157 GB RAM.

- CNS nodes (VMs) - 16 cores, 62 GB RAM, NVMe drives.

Recommendations for OpenShift Container Platform

- Use at least three infrastructure (infra) nodes.

- Use at least three openshift-container-storage nodes with non-volatile memory express (NVMe) drives.

8.2. Configuring cluster monitoring

Procedure

To increase the storage capacity for Prometheus:

Create a YAML configuration file, cluster-monitoring-config.yml. For example:

- A typical value is PROMETHEUS_RETENTION_PERIOD=15d. Units are measured in time using one of these suffixes: s, m, h, d.

- A typical value is PROMETHEUS_STORAGE_SIZE=2000Gi. Storage values can be a plain integer or a fixed-point number using one of these suffixes: E, P, T, G, M, K. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki.

- A typical value is ALERTMANAGER_STORAGE_SIZE=20Gi. Storage values can be a plain integer or a fixed-point number using one of these suffixes: E, P, T, G, M, K. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki.

- Set the values, such as the retention period and storage sizes.

Apply the changes by running:


$ oc create -f cluster-monitoring-config.yml
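The suffix rules above can be checked with a small Python sketch. It converts the example values to bytes and seconds so that you can compare a planned PROMETHEUS_STORAGE_SIZE with the growth figures in the storage requirements table. The helper names are illustrative, and the parser covers only the suffixes listed in this section, not every notation that Prometheus or Kubernetes accepts.

```python
import re
from fractions import Fraction

# Multipliers for the storage suffixes listed above. Binary suffixes are powers of two.
STORAGE_FACTORS = {
    "": 1,
    "K": 10**3, "k": 10**3,  # Kubernetes itself spells decimal kilo as lowercase "k"
    "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
}
DURATION_FACTORS = {"s": 1, "m": 60, "h": 3600, "d": 86400}

_STORAGE_RE = re.compile(r"(\d+(?:\.\d+)?)(Ki|Mi|Gi|Ti|Pi|Ei|k|K|M|G|T|P|E)?")
_DURATION_RE = re.compile(r"(\d+)([smhd])")


def storage_bytes(value: str) -> int:
    """Convert a storage value such as "2000Gi" or "20G" to bytes."""
    match = _STORAGE_RE.fullmatch(value)
    if not match:
        raise ValueError(f"invalid storage value: {value!r}")
    number, suffix = match.group(1), match.group(2) or ""
    return int(Fraction(number) * STORAGE_FACTORS[suffix])  # Fraction keeps "1.5Gi" exact


def duration_seconds(value: str) -> int:
    """Convert a retention period such as "15d" to seconds (s, m, h, d only)."""
    match = _DURATION_RE.fullmatch(value)
    if not match:
        raise ValueError(f"invalid duration: {value!r}")
    return int(match.group(1)) * DURATION_FACTORS[match.group(2)]


if __name__ == "__main__":
    print(storage_bytes("2000Gi") / storage_bytes("2000G"))  # binary suffix is ~7.4% larger
    print(duration_seconds("15d"))  # 1296000
```

The ratio printed first is a reminder that Gi and G are not interchangeable: a volume sized in G holds about 7 percent less than the same number in Gi.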

Chapter 9. Planning your environment according to object maximums

Consider the following tested object maximums when you plan your OpenShift Container Platform cluster.

These guidelines are based on the largest possible cluster. For smaller clusters, the maximums are lower. There are many factors that influence the stated thresholds, including the etcd version or storage data format.

In most cases, exceeding these numbers results in lower overall performance. It does not necessarily mean that the cluster will fail.

9.1. OpenShift Container Platform Tested cluster maximums for major releases

Tested Cloud Platforms for OpenShift Container Platform 3.x: Red Hat OpenStack Platform (RHOSP), Amazon Web Services and Microsoft Azure. Tested Cloud Platforms for OpenShift Container Platform 4.x: Amazon Web Services, Microsoft Azure and Google Cloud Platform.

| Maximum type | 3.x tested maximum | 4.x tested maximum |

|---|---|---|

| Number of Nodes | 2,000 | 2,000 |

| Number of Pods [a] | 150,000 | 150,000 |

| Number of Pods per node | 250 | 500 [b] |

| Number of Pods per core | There is no default value. | There is no default value. |

| Number of Namespaces [c] | 10,000 | 10,000 |

| Number of Builds | 10,000 (Default pod RAM 512 Mi) - Pipeline Strategy | 10,000 (Default pod RAM 512 Mi) - Source-to-Image (S2I) build strategy |

| Number of Pods per namespace [d] | 25,000 | 25,000 |

| Number of Services [e] | 10,000 | 10,000 |

| Number of Services per Namespace | 5,000 | 5,000 |

| Number of Back-ends per Service | 5,000 | 5,000 |

| Number of Deployments per Namespace [d] | 2,000 | 2,000 |

[a]

The Pod count displayed here is the number of test Pods. The actual number of Pods depends on the application’s memory, CPU, and storage requirements.

[b]

This was tested on a cluster with 100 worker nodes with 500 Pods per worker node. The default maxPods is still 250. To get to 500 maxPods, the cluster must be created with a hostPrefix of 22 in the install-config.yaml file and maxPods set to 500 using a custom KubeletConfig. The maximum number of Pods with attached Persistent Volume Claims (PVCs) depends on the storage back end from which the PVCs are allocated. In our tests, only OpenShift Container Storage v4 (OCS v4) was able to satisfy the number of Pods per node discussed in this document.

[c]

When there are a large number of active projects, etcd might suffer from poor performance if the keyspace grows excessively large and exceeds the space quota. Periodic maintenance of etcd, including defragmentation, is highly recommended to free etcd storage.

[d]

There are a number of control loops in the system that must iterate over all objects in a given namespace as a reaction to some changes in state. Having a large number of objects of a given type in a single namespace can make those loops expensive and slow down processing given state changes. The limit assumes that the system has enough CPU, memory, and disk to satisfy the application requirements.

[e]

Each Service port and each Service back-end has a corresponding entry in iptables. The number of back-ends of a given Service impacts the size of the endpoints objects, which impacts the size of data that is being sent all over the system.

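Footnote [b] and the cluster network guidance in Chapter 1 are both subnet arithmetic: each node receives a /hostPrefix slice of the clusterNetwork cidr. The Python sketch below, which uses only the standard ipaddress module, computes how many node subnets a cidr provides and how many addresses each node subnet has. The function name is illustrative, and the comparison with a hostPrefix of 23 assumes that value is the installer default; check the value in your own install-config.yaml.

```python
import ipaddress
from typing import Tuple


def cluster_network_capacity(cidr: str, host_prefix: int) -> Tuple[int, int]:
    """Return (node subnets available, addresses per node subnet)."""
    network = ipaddress.ip_network(cidr)
    if not network.prefixlen <= host_prefix <= network.max_prefixlen:
        raise ValueError("hostPrefix must lie between the cidr prefix length and the address width")
    node_subnets = 2 ** (host_prefix - network.prefixlen)
    addresses_per_node = 2 ** (network.max_prefixlen - host_prefix)
    return node_subnets, addresses_per_node


if __name__ == "__main__":
    for cidr, host_prefix in [("10.128.0.0/14", 23), ("10.128.0.0/14", 22),
                              ("10.128.0.0/12", 22), ("10.128.0.0/10", 22)]:
        nodes, addrs = cluster_network_capacity(cidr, host_prefix)
        print(f"{cidr} hostPrefix {host_prefix}: {nodes} node subnets x {addrs} addresses")
```

The trade-off is visible in the output: a hostPrefix of 22 gives each node 1024 addresses, about twice the 500-Pod target, but halves the number of node subnets, so a 10.128.0.0/14 network would then cover only 256 nodes and a wider cidr such as /12 or /10 is needed for large clusters.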

9.2. OpenShift Container Platform tested cluster maximums

| Maximum type | 3.11 tested maximum | 4.1 tested maximum | 4.2 tested maximum | 4.3 tested maximum |

|---|---|---|---|---|

| Number of Nodes | 2,000 | 2,000 | 2,000 | 2,000 |

| Number of Pods [a] | 150,000 | 150,000 | 150,000 | 150,000 |

| Number of Pods per node | 250 | 250 | 250 | 500 |

| Number of Pods per core | There is no default value. | There is no default value. | There is no default value. | There is no default value. |

| Number of Namespaces [b] | 10,000 | 10,000 | 10,000 | 10,000 |

| Number of Builds | 10,000 (Default pod RAM 512 Mi) - Pipeline Strategy | 10,000 (Default pod RAM 512 Mi) - Pipeline Strategy | 10,000 (Default pod RAM 512 Mi) - Pipeline Strategy | 10,000 (Default pod RAM 512 Mi) - Source-to-Image (S2I) build strategy |

| Number of Pods per Namespace [c] | 25,000 | 25,000 | 25,000 | 25,000 |

| Number of Services [d] | 10,000 | 10,000 | 10,000 | 10,000 |

| Number of Services per Namespace | 5,000 | 5,000 | 5,000 | 5,000 |

| Number of Back-ends per Service | 5,000 | 5,000 | 5,000 | 5,000 |

| Number of Deployments per Namespace [c] | 2,000 | 2,000 | 2,000 | 2,000 |

[a]

The Pod count displayed here is the number of test Pods. The actual number of Pods depends on the application’s memory, CPU, and storage requirements.

[b]

When there are a large number of active projects, etcd might suffer from poor performance if the keyspace grows excessively large and exceeds the space quota. Periodic maintenance of etcd, including defragmentation, is highly recommended to free etcd storage.

[c]

There are a number of control loops in the system that must iterate over all objects in a given namespace as a reaction to some changes in state. Having a large number of objects of a given type in a single namespace can make those loops expensive and slow down processing given state changes. The limit assumes that the system has enough CPU, memory, and disk to satisfy the application requirements.

[d]

Each service port and each service back-end has a corresponding entry in iptables. The number of back-ends of a given service impacts the size of the endpoints objects, which impacts the size of data that is being sent all over the system.


In OpenShift Container Platform 4.3, half of a CPU core (500 millicores) is now reserved by the system, compared to OpenShift Container Platform 3.11 and previous versions.

9.3. OpenShift Container Platform environment and configuration on which the cluster maximums are tested

AWS cloud platform:

| Node | Flavor | vCPU | RAM(GiB) | Disk type | Disk size(GiB)/IOPS | Count | Region |

|---|---|---|---|---|---|---|---|

| Master/etcd [a] | r5.4xlarge | 16 | 128 | io1 | 220 / 3000 | 3 | us-west-2 |

| Infra [b] | m5.12xlarge | 48 | 192 | gp2 | 100 | 3 | us-west-2 |

| Workload [c] | m5.4xlarge | 16 | 64 | gp2 | 500 [d] | 1 | us-west-2 |

| Worker | m5.2xlarge | 8 | 32 | gp2 | 100 | 3/25/250/2000 [e] | us-west-2 |

[a]

io1 disks with 3000 IOPS are used for master/etcd nodes as etcd is I/O intensive and latency sensitive.

[b]

Infra nodes are used to host Monitoring, Ingress and Registry components to make sure they have enough resources to run at large scale.

[c]

The workload node is dedicated to running performance and scalability workload generators.

[d]

A larger disk size is used so that there is enough space to store the large amounts of data that are collected during the performance and scalability test run.

[e]

The cluster is scaled in iterations, and performance and scalability tests are executed at the specified node counts.


Azure cloud platform:

| Node | Flavor | vCPU | RAM(GiB) | Disk type | Disk size(GiB)/IOPS | Count | Region |

|---|---|---|---|---|---|---|---|

| Master/etcd [a] | Standard_D8s_v3 | 8 | 32 | Premium SSD | 1024 ( P30 ) | 3 | centralus |

| Infra [b] | Standard_D16s_v3 | 16 | 64 | Premium SSD | 1024 ( P30 ) | 3 | centralus |

| Worker | Standard_D4s_v3 | 4 | 16 | Premium SSD | 1024 ( P30 ) | 3/25/100/110 [c] | centralus |

[a]

For a higher IOPS and throughput cap, 1024 GB disks are used for master/etcd nodes because etcd is I/O intensive and latency sensitive.

[b]

Infra nodes are used to host Monitoring, Ingress and Registry components to make sure they have enough resources to run at large scale.

[c]

The cluster is scaled in iterations and performance and scalability tests are executed at the specified node counts.


9.4. How to plan your environment according to tested cluster maximums

Oversubscribing the physical resources on a node affects resource guarantees the Kubernetes scheduler makes during pod placement. Learn what measures you can take to avoid memory swapping.

Some of the tested maximums are stretched only in a single dimension. They will vary when many objects are running on the cluster.

The numbers noted in this documentation are based on Red Hat’s test methodology, setup, configuration, and tunings. These numbers can vary based on your own individual setup and environments.

While planning your environment, determine how many pods are expected to fit per node:

Required Pods per Cluster / Pods per Node = Total Number of Nodes Needed

The default maximum number of pods per node is 250. However, the number of pods that fit on a node is dependent on the application itself. Consider the application’s memory, CPU, and storage requirements, as described in How to plan your environment according to application requirements.

Example scenario

If you want to scope your cluster at 2200 pods, assuming the 250 maximum pods per node, you would need at least nine nodes:

2200 / 250 = 8.8

If you increase the number of nodes to 20, then the pod distribution changes to 110 pods per node:

2200 / 20 = 110

Where:

Required Pods per Cluster / Total Number of Nodes = Expected Pods per Node
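The two formulas above translate directly into code. This small Python sketch reproduces the example scenario; the function names are illustrative, and it covers only the Pod-count dimension, so memory, CPU, and storage limits can still require more nodes.

```python
import math


def nodes_needed(required_pods: int, pods_per_node: int) -> int:
    """Total Number of Nodes Needed, rounded up because a partial node is still a node."""
    return math.ceil(required_pods / pods_per_node)


def expected_pods_per_node(required_pods: int, total_nodes: int) -> float:
    """Expected Pods per Node for a given node count."""
    return required_pods / total_nodes


if __name__ == "__main__":
    print(nodes_needed(2200, 250))  # 9, because 2200 / 250 = 8.8
    print(expected_pods_per_node(2200, 20))  # 110.0
```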

9.5. How to plan your environment according to application requirements

Consider an example application environment:

| Pod type | Pod quantity | Max memory | CPU cores | Persistent storage |

|---|---|---|---|---|

| apache | 100 | 500 MB | 0.5 | 1 GB |

| node.js | 200 | 1 GB | 1 | 1 GB |

| postgresql | 100 | 1 GB | 2 | 10 GB |

| JBoss EAP | 100 | 1 GB | 1 | 1 GB |

Extrapolated requirements: 550 CPU cores, 450GB RAM, and 1.4TB storage.
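The extrapolated requirements are the quantity-weighted sums of each column in the table. The Python sketch below recomputes them and prints the aggregate capacity of the three node options that follow. The class and function names are illustrative, and it assumes 500 MB is 0.5 GB and 1 TB is 1000 GB.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PodType:
    name: str
    quantity: int
    max_memory_gb: float
    cpu_cores: float
    storage_gb: float


# Values from the example application table (500 MB is treated as 0.5 GB).
APPS = [
    PodType("apache", 100, 0.5, 0.5, 1),
    PodType("node.js", 200, 1, 1, 1),
    PodType("postgresql", 100, 1, 2, 10),
    PodType("JBoss EAP", 100, 1, 1, 1),
]


def totals(apps: List[PodType]) -> Tuple[float, float, float]:
    """Return (CPU cores, memory GB, storage GB) summed over all Pods."""
    cpu = sum(a.quantity * a.cpu_cores for a in apps)
    memory = sum(a.quantity * a.max_memory_gb for a in apps)
    storage = sum(a.quantity * a.storage_gb for a in apps)
    return cpu, memory, storage


if __name__ == "__main__":
    cpu, memory, storage = totals(APPS)
    print(f"{cpu:g} CPU cores, {memory:g} GB RAM, {storage / 1000:g} TB storage")
    # Each node option in the next table provides the same aggregate capacity.
    for count, cpus, ram_gb in [(100, 4, 16), (50, 8, 32), (25, 16, 64)]:
        print(f"{count} nodes: {count * cpus} CPUs, {count * ram_gb} GB RAM")
```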

Instance size for nodes can be modulated up or down, depending on your preference. Nodes are often resource overcommitted. In this deployment scenario, you can choose to run additional smaller nodes or fewer larger nodes to provide the same amount of resources. Factors such as operational agility and cost-per-instance should be considered.

| Node type | Quantity | CPUs | RAM (GB) |

|---|---|---|---|

| Nodes (option 1) | 100 | 4 | 16 |

| Nodes (option 2) | 50 | 8 | 32 |

| Nodes (option 3) | 25 | 16 | 64 |

Some applications lend themselves well to overcommitted environments, and some do not. Most Java applications and applications that use huge pages are examples of applications that would not allow for overcommitment. That memory cannot be used for other applications. In the example above, the environment would be roughly 30 percent overcommitted, a common ratio.

Chapter 10. Optimizing storage

Optimizing storage helps to minimize storage use across all resources. By optimizing storage, administrators help ensure that existing storage resources are working in an efficient manner.

10.1. Available persistent storage options

Understand your persistent storage options so that you can optimize your OpenShift Container Platform environment.

| Storage type | Description | Examples |

|---|---|---|

| Block | | AWS EBS and VMware vSphere support dynamic persistent volume (PV) provisioning natively in OpenShift Container Platform. |

| File | | RHEL NFS, NetApp NFS [a], and Vendor NFS |

| Object | | AWS S3 |

[a]

NetApp NFS supports dynamic PV provisioning when using the Trident plug-in.


Currently, CNS is not supported in OpenShift Container Platform 4.3.

10.2. Recommended configurable storage technology

The following table summarizes the recommended and configurable storage technologies for the given OpenShift Container Platform cluster application.

| Storage type | ROX [1] | RWX [2] | Registry | Scaled registry | Metrics [3] | Logging | Apps |

|---|---|---|---|---|---|---|---|

| Block | Yes [4] | No | Configurable | Not configurable | Recommended | Recommended | Recommended |

| File | Yes [4] | Yes | Configurable | Configurable | Configurable [5] | Configurable [6] | Recommended |

| Object | Yes | Yes | Recommended | Recommended | Not configurable | Not configurable | Not configurable [7] |

[1] ReadOnlyMany

[2] ReadWriteMany

[3] Prometheus is the underlying technology used for metrics.

[4] This does not apply to physical disk, VM physical disk, VMDK, loopback over NFS, AWS EBS, and Azure Disk.

[5] For metrics, using file storage with the ReadWriteMany (RWX) access mode is unreliable. If you use file storage, do not configure the RWX access mode on any PersistentVolumeClaims that are configured for use with metrics.

[6] For logging, using any shared storage would be an anti-pattern. One volume per Elasticsearch instance is required.

[7] Object storage is not consumed through OpenShift Container Platform’s PVs/persistent volume claims (PVCs). Apps must integrate with the object storage REST API.

A scaled registry is an OpenShift Container Platform registry where two or more Pod replicas are running.

10.2.1. Specific application storage recommendations

Testing shows issues with using the NFS server on RHEL as storage backend for core services. This includes the OpenShift Container Registry and Quay, Prometheus for monitoring storage, and Elasticsearch for logging storage. Therefore, using RHEL NFS to back PVs used by core services is not recommended.

Other NFS implementations on the marketplace might not have these issues. Contact the individual NFS implementation vendor for more information on any testing that was possibly completed against these OpenShift Container Platform core components.

10.2.1.1. Registry

In a non-scaled/high-availability (HA) OpenShift Container Platform registry cluster deployment:

- The storage technology does not have to support RWX access mode.

- The storage technology must ensure read-after-write consistency.

- The preferred storage technology is object storage followed by block storage.

- File storage is not recommended for OpenShift Container Platform registry cluster deployment with production workloads.

10.2.1.2. Scaled registry

In a scaled/HA OpenShift Container Platform registry cluster deployment:

- The storage technology must support RWX access mode and must ensure read-after-write consistency.

- The preferred storage technology is object storage.

- Amazon Simple Storage Service (Amazon S3), Google Cloud Storage (GCS), Microsoft Azure Blob Storage, and OpenStack Swift are supported.

- Storage should be S3 or Swift compliant.

- File storage is not recommended for a scaled/HA OpenShift Container Platform registry cluster deployment with production workloads.

- For non-cloud platforms, such as vSphere and bare metal installations, the only configurable technology is file storage.

- Block storage is not configurable.

10.2.1.3. Metrics

In an OpenShift Container Platform hosted metrics cluster deployment:

- The preferred storage technology is block storage.

- Object storage is not configurable.

It is not recommended to use file storage for a hosted metrics cluster deployment with production workloads.

10.2.1.4. Logging

In an OpenShift Container Platform hosted logging cluster deployment:

- The preferred storage technology is block storage.

- File storage is not recommended for a hosted logging cluster deployment with production workloads.

- Object storage is not configurable.

Testing shows issues with using the NFS server on RHEL as storage backend for core services. This includes Elasticsearch for logging storage. Therefore, using RHEL NFS to back PVs used by core services is not recommended.

Other NFS implementations on the marketplace might not have these issues. Contact the individual NFS implementation vendor for more information on any testing that was possibly completed against these OpenShift Container Platform core components.

10.2.1.5. Applications

Application use cases vary from application to application, as described in the following examples:

- Storage technologies that support dynamic PV provisioning have low mount time latencies, and are not tied to nodes to support a healthy cluster.

- Application developers are responsible for knowing and understanding the storage requirements for their application, and how it works with the provided storage to ensure that issues do not occur when an application scales or interacts with the storage layer.

10.2.2. Other specific application storage recommendations

- OpenShift Container Platform Internal etcd: For the best etcd reliability, the lowest consistent latency storage technology is preferable.

- It is highly recommended that you use etcd with storage that handles serial writes (fsync) quickly, such as NVMe or SSD. Ceph, NFS, and spinning disks are not recommended.

- Red Hat OpenStack Platform (RHOSP) Cinder: RHOSP Cinder tends to be adept in ROX access mode use cases.

- Databases: Databases (RDBMSs, NoSQL DBs, etc.) tend to perform best with dedicated block storage.

10.3. Data storage management

The following table summarizes the main directories that OpenShift Container Platform components write data to.

| Directory | Notes | Sizing | Expected growth |

|---|---|---|---|

| /var/log | Log files for all components. | 10 to 30 GB. | Log files can grow quickly; size can be managed by growing disks or by using log rotate. |

| /var/lib/etcd | Used for etcd storage when storing the database. | Less than 20 GB. Database can grow up to 8 GB. | Will grow slowly with the environment. Only storing metadata. Additional 20-25 GB for every additional 8 GB of memory. |

| /var/lib/containers | This is the mount point for the CRI-O runtime. Storage used for active container runtimes, including pods, and storage of local images. Not used for registry storage. | 50 GB for a node with 16 GB memory. Note that this sizing should not be used to determine minimum cluster requirements. Additional 20-25 GB for every additional 8 GB of memory. | Growth is limited by capacity for running containers. |

| /var/lib/kubelet | Ephemeral volume storage for pods. This includes anything external that is mounted into a container at runtime. Includes environment variables, kube secrets, and data volumes not backed by persistent volumes. | Varies | Minimal if pods requiring storage are using persistent volumes. If using ephemeral storage, this can grow quickly. |
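The /var/lib/containers guidance in the table is a linear rule, sketched in Python below. Like the table, it is only a starting estimate for sizing disks and, as the table notes, should not be used to determine minimum cluster requirements. The function name is illustrative, and rounding a partial 8 GB step up is an assumption of this sketch.

```python
import math
from typing import Tuple


def containers_storage_gb(node_memory_gb: float) -> Tuple[int, int]:
    """Estimate a (low, high) size in GB for /var/lib/containers.

    Baseline: 50 GB for a node with 16 GB of memory, plus 20-25 GB for every
    additional 8 GB. A partial 8 GB step is rounded up (a conservative reading),
    and nodes with 16 GB or less simply get the baseline.
    """
    extra_steps = max(0, math.ceil((node_memory_gb - 16) / 8))
    return 50 + 20 * extra_steps, 50 + 25 * extra_steps


if __name__ == "__main__":
    for memory in (16, 32, 64):
        low, high = containers_storage_gb(memory)
        print(f"{memory} GB node: {low}-{high} GB for /var/lib/containers")
```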

Chapter 11. Optimizing routing

The OpenShift Container Platform HAProxy router scales to optimize performance.

11.1. Baseline router performance

The OpenShift Container Platform router is the Ingress point for all external traffic destined for OpenShift Container Platform services.

When evaluating the performance of a single HAProxy router in terms of HTTP requests handled per second, the performance varies depending on many factors. In particular:

- HTTP keep-alive/close mode

- route type

- TLS session resumption client support

- number of concurrent connections per target route

- number of target routes

- back end server page size

- underlying infrastructure (network/SDN solution, CPU, and so on)

While performance in your specific environment will vary, in Red Hat lab tests on a public cloud instance of size 4 vCPU/16GB RAM, a single HAProxy router handling 100 routes terminated by back ends serving 1kB static pages was able to handle the following number of transactions per second.

In HTTP keep-alive mode scenarios:

| Encryption | LoadBalancerService | HostNetwork |

|---|---|---|

| none | 21515 | 29622 |

| edge | 16743 | 22913 |

| passthrough | 36786 | 53295 |

| re-encrypt | 21583 | 25198 |

In HTTP close (no keep-alive) scenarios:

| Encryption | LoadBalancerService | HostNetwork |

|---|---|---|

| none | 5719 | 8273 |

| edge | 2729 | 4069 |

| passthrough | 4121 | 5344 |

| re-encrypt | 2320 | 2941 |

The default router configuration with ROUTER_THREADS=4 was used, and two different endpoint publishing strategies (LoadBalancerService/HostNetwork) were tested. TLS session resumption was used for encrypted routes. With HTTP keep-alive, a single HAProxy router is capable of saturating a 1 Gbit NIC at page sizes as small as 8 kB.
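The 1 Gbit NIC remark follows from bandwidth arithmetic. The Python sketch below estimates how many transactions per second are needed to fill a link for a given page size, counting only the page bodies (an assumption that ignores headers and protocol overhead) and treating kB as KiB. At 1 kB pages the required rate is far above the measured results in the tables, which is why saturation is only reached with larger pages.

```python
def tps_to_saturate(link_gbit_per_s: float, page_kib: float) -> float:
    """Responses per second needed to fill a link with page bodies alone.

    Ignores HTTP headers, TLS records, TCP/IP framing, and request traffic,
    so real saturation happens at a somewhat lower transaction rate.
    """
    bits_per_response = page_kib * 1024 * 8
    return link_gbit_per_s * 1e9 / bits_per_response


if __name__ == "__main__":
    for page_kib in (1, 8, 64):
        print(f"{page_kib} KiB pages: ~{tps_to_saturate(1, page_kib):,.0f} transactions/s to fill 1 Gbit/s")
```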

When running on bare metal with modern processors, you can expect roughly twice the performance of the public cloud instance above. This overhead is introduced by the virtualization layer in place on public clouds and holds mostly true for private cloud-based virtualization as well. The following table is a guide on how many applications to use behind the router:

| Number of applications | Application type |

|---|---|

| 5-10 | static file/web server or caching proxy |

| 100-1000 | applications generating dynamic content |

In general, HAProxy can support routes for 5 to 1000 applications, depending on the technology in use. Router performance might be limited by the capabilities and performance of the applications behind it, such as language or static versus dynamic content.

Router sharding should be used to serve more routes towards applications and help horizontally scale the routing tier.

11.2. Router performance optimizations

OpenShift Container Platform no longer supports modifying router deployments by setting environment variables such as ROUTER_THREADS, ROUTER_DEFAULT_TUNNEL_TIMEOUT, ROUTER_DEFAULT_CLIENT_TIMEOUT, ROUTER_DEFAULT_SERVER_TIMEOUT, and RELOAD_INTERVAL.

You can modify the router deployment, but if the Ingress Operator is enabled, the configuration is overwritten.

Chapter 12. What huge pages do and how they are consumed by applications

12.1. What huge pages do

Memory is managed in blocks known as pages. On most systems, a page is 4Ki. 1Mi of memory is equal to 256 pages; 1Gi of memory is 262,144 pages, and so on. CPUs have a built-in memory management unit that manages a list of these pages in hardware. The Translation Lookaside Buffer (TLB) is a small hardware cache of virtual-to-physical page mappings. If the virtual address passed in a hardware instruction can be found in the TLB, the mapping can be determined quickly. If not, a TLB miss occurs, and the system falls back to slower, software-based address translation, resulting in performance issues. Since the size of the TLB is fixed, the only way to reduce the chance of a TLB miss is to increase the page size.
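The page arithmetic above is easy to check. This Python sketch counts how many pages, and therefore how many page mappings, a region of memory needs with 4Ki base pages and with the 2Mi and 1Gi huge page sizes described next. It illustrates the math only and does not query the hardware; the function name is illustrative.

```python
PAGE_SIZE = 4 * 1024  # 4Ki base page


def pages(size_bytes: int, page_size: int = PAGE_SIZE) -> int:
    """Number of pages of `page_size` bytes needed to cover `size_bytes`."""
    return -(-size_bytes // page_size)  # ceiling division


if __name__ == "__main__":
    MI, GI = 2**20, 2**30
    print(pages(MI))          # 256
    print(pages(GI))          # 262144
    print(pages(GI, 2 * MI))  # 512 huge pages of 2Mi
    print(pages(GI, GI))      # 1 huge page of 1Gi
```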

A huge page is a memory page that is larger than 4Ki. On x86_64 architectures, there are two common huge page sizes: 2Mi and 1Gi. Sizes vary on other architectures. In order to use huge pages, code must be written so that applications are aware of them. Transparent Huge Pages (THP) attempt to automate the management of huge pages without application knowledge, but they have limitations. In particular, they are limited to 2Mi page sizes. THP can lead to performance degradation on nodes with high memory utilization or fragmentation due to defragmenting efforts of THP, which can lock memory pages. For this reason, some applications may be designed to use (or may recommend) pre-allocated huge pages instead of THP.

In OpenShift Container Platform, applications in a pod can allocate and consume pre-allocated huge pages.

12.2. How huge pages are consumed by apps

Nodes must pre-allocate huge pages in order for the node to report its huge page capacity. A node can only pre-allocate huge pages for a single size.

Huge pages can be consumed through container-level resource requirements using the resource name hugepages-<size>, where <size> is the page size written in the most compact binary notation that uses an integer value, for a page size supported on that particular node. For example, if a node supports 2048KiB page sizes, it exposes a schedulable resource named hugepages-2Mi. Unlike CPU or memory, huge pages do not support overcommitment.
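The naming rule and the capacity a node reports can be illustrated with a small shell sketch that reads `/proc/meminfo`-style input (`Hugepagesize` and `HugePages_Total`). This only approximates what a node advertises: the function names are hypothetical, and the kubelet's actual discovery code may read different kernel interfaces.

```shell
#!/bin/sh
# Sketch: derive the huge page resource name and capacity from
# /proc/meminfo-style input. Approximation only; not the kubelet's code.

# Print a byte count using the largest binary suffix that keeps it an integer.
binary_quantity() {
  if [ "$1" -eq 0 ]; then
    echo 0
    return 0
  fi
  for pair in Ti:1099511627776 Gi:1073741824 Mi:1048576 Ki:1024; do
    suffix=${pair%%:*}
    factor=${pair#*:}
    if [ $(( $1 % factor )) -eq 0 ]; then
      echo "$(( $1 / factor ))${suffix}"
      return 0
    fi
  done
  echo "$1"
}

# Print "<resource name> <capacity>" for a meminfo file (default: /proc/meminfo).
hugepage_capacity() {
  meminfo=${1:-/proc/meminfo}
  size_kib=$(awk '/^Hugepagesize:/ {print $2}' "$meminfo")
  total=$(awk '/^HugePages_Total:/ {print $2}' "$meminfo")
  echo "hugepages-$(binary_quantity $(( size_kib * 1024 ))) $(binary_quantity $(( total * size_kib * 1024 )))"
}
```

For a node whose `/proc/meminfo` reports `HugePages_Total: 512` and `Hugepagesize: 2048 kB`, `hugepage_capacity` prints `hugepages-2Mi 1Gi`.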

When you request huge pages in a pod specification, specify the amount of memory for hugepages as the exact amount to be allocated; do not multiply that amount by the size of the page. For example, given a huge page size of 2Mi, if you want to use 100Mi of huge-page-backed RAM for your application, then you would allocate 50 huge pages. OpenShift Container Platform handles the math for you, so you can specify 100Mi directly.
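A pod that requests huge pages this way might look like the following sketch, written as a shell here-document so it can be saved and created in one step. The pod name, container name, image, and the memory and CPU limits are hypothetical placeholders. Only limits are set, so the requests default to the same values, and the huge page request is expressed as total memory (100Mi), not as a page count.

```shell
#!/bin/sh
# Sketch: write an example pod that requests 100Mi of 2Mi huge pages.
# The pod name, container name, image, and cpu/memory values are hypothetical.
cat > hugepages-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hugepages-example
spec:
  containers:
  - name: example
    image: registry.example.com/example-app:latest
    command: ["sleep", "3600"]
    volumeMounts:
    - mountPath: /dev/hugepages
      name: hugepage
    resources:
      limits:
        hugepages-2Mi: 100Mi  # total huge-page memory, not a page count
        memory: 1Gi
        cpu: "1"
  volumes:
  - name: hugepage
    emptyDir:
      medium: HugePages
EOF

# 100Mi of 2Mi pages: the pod consumes 100 / 2 = 50 of the node's pre-allocated pages.
echo "2Mi pages consumed: $(( 100 / 2 ))"
```

The file can then be created with `$ oc create -f hugepages-pod.yaml`.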

Allocating huge pages of a specific size

Some platforms support multiple huge page sizes. To allocate huge pages of a specific size, precede the huge pages boot command parameter (hugepages=<count>) with a huge page size selection parameter, hugepagesz=<size>. The <size> value must be specified in bytes with an optional scale suffix [kKmMgG]. The default huge page size can be defined with the default_hugepagesz=<size> boot parameter.
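The following shell sketch shows how these boot parameters combine: each hugepagesz=<size> selects the page size for the hugepages=<count> that follows it, and the reserved memory is count × size. The command line is a hypothetical example (sixteen 1G pages, with 1G as the default size), and the 2Mi fallback used when no hugepagesz= precedes a count is an assumption based on the common x86_64 default.

```shell
#!/bin/sh
# Sketch: interpret huge page boot parameters.
# The command line below is a hypothetical example, not a recommended value.
cmdline="default_hugepagesz=1G hugepagesz=1G hugepages=16"

# Convert a <size> value (bytes with an optional k/K/m/M/g/G suffix) to bytes.
size_to_bytes() {
  case $1 in
    *[kK]) echo $(( ${1%?} * 1024 )) ;;
    *[mM]) echo $(( ${1%?} * 1024 * 1024 )) ;;
    *[gG]) echo $(( ${1%?} * 1024 * 1024 * 1024 )) ;;
    *)     echo $(( $1 )) ;;
  esac
}

# Assumed fallback (x86_64 default) when no hugepagesz= precedes a count.
page_bytes=$(( 2 * 1024 * 1024 ))
for arg in $cmdline; do
  case $arg in
    hugepagesz=*) page_bytes=$(size_to_bytes "${arg#hugepagesz=}") ;;
    hugepages=*)  count=${arg#hugepages=}
                  echo "$count pages of $page_bytes bytes = $(( count * page_bytes )) bytes" ;;
  esac
done
```

For the example command line, this reports 16 pages of 1073741824 bytes, or 16Gi of huge page memory.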

Huge page requirements

- Huge page requests must equal the limits. If you specify limits but not requests, the requests default to the limits.

- Huge pages are isolated at a pod scope. Container isolation is planned in a future iteration.

- EmptyDir volumes backed by huge pages must not consume more huge page memory than the pod request.

- Applications that consume huge pages via shmget() with SHM_HUGETLB must run with a supplemental group that matches /proc/sys/vm/hugetlb_shm_group.
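The last requirement can be checked from inside a container with a small shell sketch that compares the process's group IDs (`id -G`) against the group ID in /proc/sys/vm/hugetlb_shm_group. In a pod, the group is typically added through `spec.securityContext.supplementalGroups`. Note that the kernel also permits SHM_HUGETLB for callers with the CAP_IPC_LOCK capability, which this check does not detect. The function name is hypothetical, and its optional argument only exists so the check can be pointed at another file.

```shell
#!/bin/sh
# Sketch: report whether the current process has the group ID listed in
# /proc/sys/vm/hugetlb_shm_group. Does not account for CAP_IPC_LOCK.
# The optional argument overrides the file path (hypothetical helper, for testing).
in_hugetlb_shm_group() {
  allowed=$(cat "${1:-/proc/sys/vm/hugetlb_shm_group}") || return 2
  for gid in $(id -G); do
    if [ "$gid" = "$allowed" ]; then
      return 0
    fi
  done
  return 1
}

if in_hugetlb_shm_group; then
  echo "a group ID matches hugetlb_shm_group"
else
  echo "no group ID matches hugetlb_shm_group (or the file is unavailable)"
fi
```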


12.3. Configuring huge pages

Nodes must pre-allocate huge pages used in an OpenShift Container Platform cluster. Use the Node Tuning Operator to allocate huge pages on a specific node.

Procedure

Label the node so that the Node Tuning Operator knows on which node to apply the tuned profile, which describes how many huge pages should be allocated:

$ oc label node <node_using_hugepages> hugepages=true

Create a file named hugepages_tuning.yaml that contains the Tuned custom resource for the huge pages profile.

Create the custom hugepages tuned profile by using the hugepages_tuning.yaml file:

$ oc create -f hugepages_tuning.yaml

After the profile is created, the Operator applies it to the correct node and allocates huge pages. To verify, check the logs of a tuned pod on a node that uses huge pages:

$ oc logs <tuned_pod_on_node_using_hugepages> \
    -n openshift-cluster-node-tuning-operator | grep 'applied$' | tail -n1

Example output:

2019-08-08 07:20:41,286 INFO tuned.daemon.daemon: static tuning from profile 'node-hugepages' applied
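The procedure relies on a hugepages_tuning.yaml file that defines a Tuned custom resource. A minimal sketch of such a file, written with a shell here-document, follows. The profile name node-hugepages and the node label hugepages match the commands and log output in the procedure; the page count (vm.nr_hugepages=1024), the disabled THP setting, and the priority of 30 are assumed example values to adapt to your workload.

```shell
#!/bin/sh
# Sketch of hugepages_tuning.yaml. node-hugepages and the hugepages label match
# the procedure; vm.nr_hugepages=1024 and priority 30 are assumed example values.
cat > hugepages_tuning.yaml <<'EOF'
apiVersion: tuned.openshift.io/v1
kind: Tuned
metadata:
  name: hugepages
  namespace: openshift-cluster-node-tuning-operator
spec:
  profile:
  - data: |
      [main]
      summary=Configuration for hugepages
      include=openshift-node

      [vm]
      transparent_hugepages=never

      [sysctl]
      vm.nr_hugepages=1024
    name: node-hugepages
  recommend:
  - match:
    - label: hugepages
    priority: 30
    profile: node-hugepages
EOF

# Assuming the default 2Mi huge page size on x86_64, report the reserved memory.
nr=$(sed -n 's/^ *vm\.nr_hugepages=//p' hugepages_tuning.yaml)
echo "reserved: $(( nr * 2 ))Mi"
```

With these values, the profile reserves 1024 pages of 2Mi, or 2048Mi (2Gi), on each matching node.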

Legal Notice

Copyright © Red Hat

OpenShift documentation is licensed under the Apache License 2.0 (https://www.apache.org/licenses/LICENSE-2.0).

Modified versions must remove all Red Hat trademarks.

Portions adapted from https://github.com/kubernetes-incubator/service-catalog/ with modifications by Red Hat.

Red Hat, Red Hat Enterprise Linux, the Red Hat logo, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries.

Linux® is the registered trademark of Linus Torvalds in the United States and other countries.

Java® is a registered trademark of Oracle and/or its affiliates.

XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

Node.js® is an official trademark of the OpenJS Foundation.

The OpenStack® Word Mark and OpenStack logo are either registered trademarks/service marks or trademarks/service marks of the OpenStack Foundation, in the United States and other countries and are used with the OpenStack Foundation’s permission. We are not affiliated with, endorsed or sponsored by the OpenStack Foundation, or the OpenStack community.

All other trademarks are the property of their respective owners.