This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Chapter 4. Using Container Storage Interface (CSI)

4.1. Configuring CSI volumes

The Container Storage Interface (CSI) allows OpenShift Container Platform to consume storage from storage back ends that implement the CSI interface as persistent storage.

4.1.1. CSI Architecture

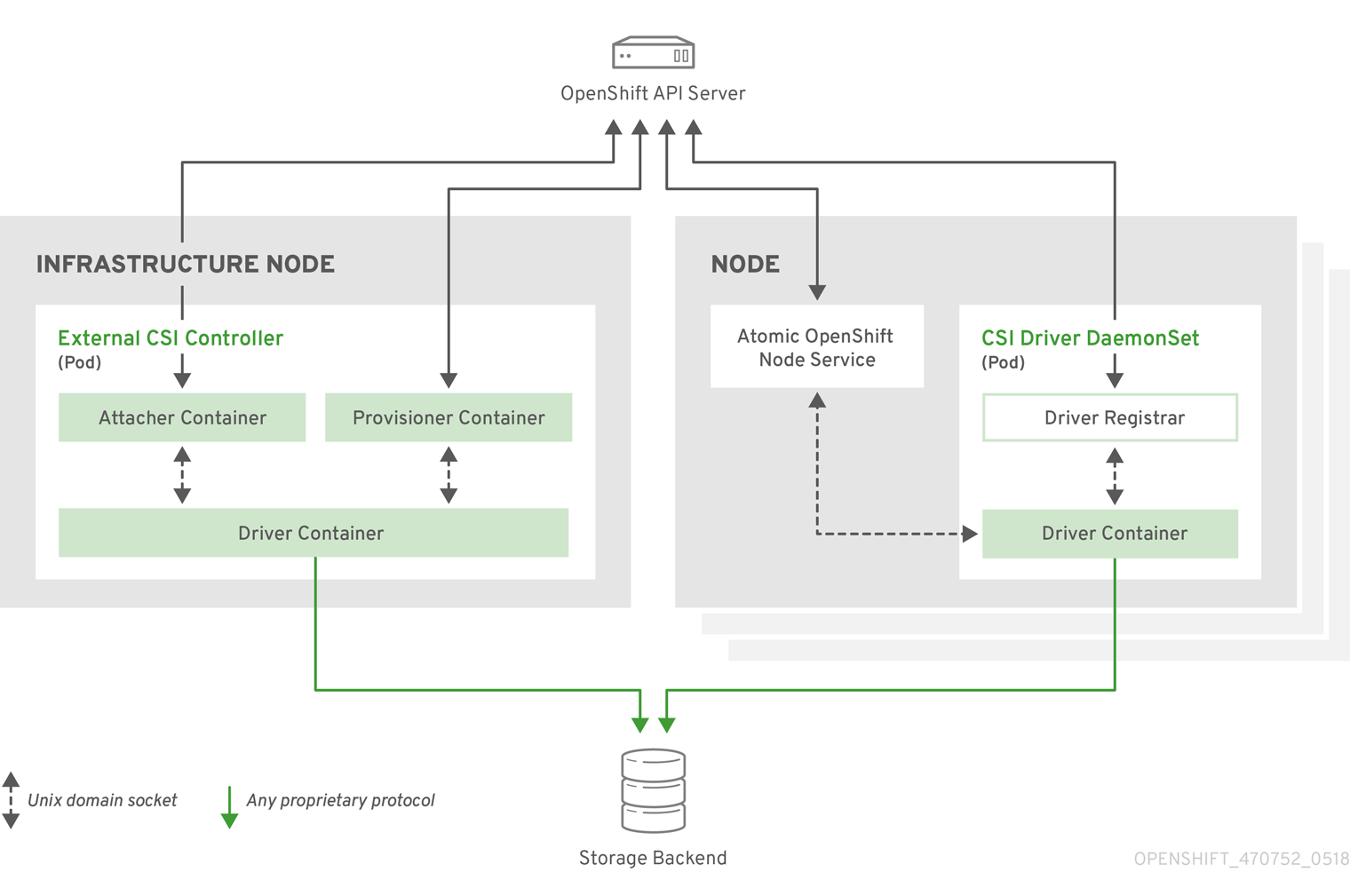

CSI drivers are typically shipped as container images. These containers are not aware of OpenShift Container Platform where they run. To use CSI-compatible storage back end in OpenShift Container Platform, the cluster administrator must deploy several components that serve as a bridge between OpenShift Container Platform and the storage driver.

The following diagram provides a high-level overview about the components running in pods in the OpenShift Container Platform cluster.

It is possible to run multiple CSI drivers for different storage back ends. Each driver needs its own external controllers deployment and daemon set with the driver and CSI registrar.

4.1.1.1. External CSI controllers

External CSI Controllers is a deployment that deploys one or more pods with three containers:

- An external CSI attacher container that translates attach and detach calls from OpenShift Container Platform to respective ControllerPublish and ControllerUnpublish calls to the CSI driver.

- An external CSI provisioner container that translates provision and delete calls from OpenShift Container Platform to respective CreateVolume and DeleteVolume calls to the CSI driver.

- A CSI driver container.

The CSI attacher and CSI provisioner containers communicate with the CSI driver container using UNIX Domain Sockets, ensuring that no CSI communication leaves the pod. The CSI driver is not accessible from outside of the pod.

The attach, detach, provision, and delete operations typically require the CSI driver to use credentials for the storage back end. Run the CSI controller pods on infrastructure nodes so the credentials are never leaked to user processes, even in the event of a catastrophic security breach on a compute node.

The external attacher must also run for CSI drivers that do not support third-party attach or detach operations. The external attacher will not issue any ControllerPublish or ControllerUnpublish operations to the CSI driver. However, it still must run to implement the necessary OpenShift Container Platform attachment API.

4.1.1.2. CSI driver daemon set

The CSI driver daemon set runs a pod on every node that allows OpenShift Container Platform to mount storage provided by the CSI driver to the node and use it in user workloads (pods) as persistent volumes (PVs). The pod with the CSI driver installed contains the following containers:

- A CSI driver registrar, which registers the CSI driver into the openshift-node service running on the node. The openshift-node process running on the node then directly connects with the CSI driver using the UNIX Domain Socket available on the node.

- A CSI driver.

The CSI driver deployed on the node should have as few credentials to the storage back end as possible. OpenShift Container Platform will only use the node plug-in set of CSI calls such as NodePublish/NodeUnpublish and NodeStage/NodeUnstage, if these calls are implemented.

4.1.2. CSI drivers supported by OpenShift Container Platform

OpenShift Container Platform supports certain CSI drivers that give users storage options that are not possible with in-tree volume plug-ins.

To create CSI-provisioned persistent volumes that mount to these supported storage assets, you can install and configure the CSI driver Operator, which will install the necessary CSI driver and storage class. For more details about installing the Operator and driver, see the documentation for the specific CSI Driver Operator.

The following table describes the CSI drivers that are available with OpenShift Container Platform and which CSI features they support, such as volume snapshots, cloning, and resize.

| CSI driver | CSI volume snapshots | CSI cloning | CSI resize |
|---|---|---|---|
| AWS EBS (Tech Preview) | ✅ | - | ✅ |
| OpenStack Manila | ✅ | ✅ | ✅ |

If your CSI driver is not listed in the preceding table, you must follow the installation instructions provided by your CSI storage vendor to use their supported CSI features.

4.1.3. Dynamic provisioning

Dynamic provisioning of persistent storage depends on the capabilities of the CSI driver and underlying storage back end. The provider of the CSI driver should document how to create a storage class in OpenShift Container Platform and the parameters available for configuration.

The created storage class can be configured to enable dynamic provisioning.

Procedure

Create a default storage class that ensures all PVCs that do not require any special storage class are provisioned by the installed CSI driver.
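A default storage class for this step might look like the following minimal sketch. The storageclass.kubernetes.io/is-default-class annotation is the standard Kubernetes marker for a default storage class. The class name and the provisioner value are placeholders, not values taken from this document; use the values documented by the provider of your CSI driver.

```yaml
# Sketch only: a default storage class backed by a CSI driver.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: <storage_class_name>          # placeholder: any name you choose
  annotations:
    # Marks this class as the cluster default, so PVCs that do not
    # request a specific storage class are provisioned by it.
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: <csi_driver_name>        # placeholder: name registered by your CSI driver
parameters: {}                        # driver-specific; see the driver documentation
```

Saving the definition to a file and running oc create -f on that file creates the storage class.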


4.1.4. Example using the CSI driver

The following example installs a default MySQL template without any changes to the template.

Prerequisites

- The CSI driver has been deployed.

- A storage class has been created for dynamic provisioning.

Procedure

Create the MySQL template:

    # oc new-app mysql-persistent

Example output

    --> Deploying template "openshift/mysql-persistent" to project default ...

    # oc get pvc

Example output

    NAME    STATUS   VOLUME                                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    mysql   Bound    kubernetes-dynamic-pv-3271ffcb4e1811e8   1Gi        RWO            cinder         3s

4.2. CSI inline ephemeral volumes

Container Storage Interface (CSI) inline ephemeral volumes allow you to define a Pod spec that creates inline ephemeral volumes when a pod is deployed and deletes them when the pod is destroyed.

This feature is only available with supported Container Storage Interface (CSI) drivers.

CSI inline ephemeral volumes is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.

4.2.1. Overview of CSI inline ephemeral volumes

Traditionally, volumes that are backed by Container Storage Interface (CSI) drivers can only be used with a PersistentVolume and PersistentVolumeClaim object combination.

This feature allows you to specify CSI volumes directly in the Pod specification, rather than in a PersistentVolume object. Inline volumes are ephemeral and do not persist across pod restarts.

4.2.1.1. Support limitations

By default, OpenShift Container Platform supports CSI inline ephemeral volumes with these limitations:

- Support is only available for CSI drivers. In-tree and FlexVolumes are not supported.

- OpenShift Container Platform does not include any CSI drivers. Use the CSI drivers provided by community or storage vendors. Follow the installation instructions provided by the CSI driver.

- CSI drivers might not have implemented the inline volume functionality, including Ephemeral capacity. For details, see the CSI driver documentation.

4.2.2. Embedding a CSI inline ephemeral volume in the Pod specification

You can embed a CSI inline ephemeral volume in the Pod specification in OpenShift Container Platform. At runtime, nested inline volumes follow the ephemeral lifecycle of their associated pods so that the CSI driver handles all phases of volume operations as pods are created and destroyed.

Procedure

- Create the Pod object definition and save it to a file named my-csi-app.yaml. Embed the CSI inline ephemeral volume in the file. In the volume definition, the name field is the name of the volume that is used by pods.

- Create the object definition file that you saved in the previous step.

    $ oc create -f my-csi-app.yaml
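The my-csi-app.yaml file from the procedure above might look like the following sketch. The csi volume source with its driver and volumeAttributes fields is part of the Kubernetes Pod API. The pod name, container image, mount path, driver name, and attribute shown here are hypothetical and depend on the CSI driver that you installed.

```yaml
# Sketch only: a pod that embeds a CSI inline ephemeral volume.
kind: Pod
apiVersion: v1
metadata:
  name: my-csi-app                     # hypothetical pod name
spec:
  containers:
    - name: my-frontend
      image: <container_image>         # placeholder: any image with a shell
      command: ["sleep", "100000"]
      volumeMounts:
        - mountPath: /data             # hypothetical mount path
          name: my-csi-inline-vol      # must match the volume name below
  volumes:
    - name: my-csi-inline-vol          # the name of the volume that is used by pods
      csi:
        driver: <csi_driver_name>      # placeholder: driver that supports inline volumes
        volumeAttributes:
          foo: bar                     # hypothetical driver-specific attribute
```

Because the volume is declared inline, no PersistentVolume or PersistentVolumeClaim object is needed, and the volume is removed when the pod is destroyed.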

4.3. CSI volume snapshots

This document describes how to use volume snapshots with supported Container Storage Interface (CSI) drivers to help protect against data loss in OpenShift Container Platform. Familiarity with persistent volumes is suggested.

CSI volume snapshot is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.

4.3.1. Overview of CSI volume snapshots

A snapshot represents the state of the storage volume in a cluster at a particular point in time. Volume snapshots can be used to provision a new volume.

OpenShift Container Platform supports CSI volume snapshots by default. However, a specific CSI driver is required.

With CSI volume snapshots, a cluster administrator can:

- Deploy a third-party CSI driver that supports snapshots.

- Create a new persistent volume claim (PVC) from an existing volume snapshot.

- Take a snapshot of an existing PVC.

- Restore a snapshot as a different PVC.

- Delete an existing volume snapshot.

With CSI volume snapshots, an app developer can:

- Use volume snapshots as building blocks for developing application- or cluster-level storage backup solutions.

- Rapidly roll back to a previous development version.

- Use storage more efficiently by not having to make a full copy each time.

Be aware of the following when using volume snapshots:

- Support is only available for CSI drivers. In-tree and FlexVolumes are not supported.

- OpenShift Container Platform only ships with select CSI drivers. For CSI drivers that are not provided by an OpenShift Container Platform Driver Operator, it is recommended to use the CSI drivers provided by community or storage vendors. Follow the installation instructions provided by the CSI driver.

- CSI drivers may or may not have implemented the volume snapshot functionality. CSI drivers that have provided support for volume snapshots will likely use the csi-external-snapshotter sidecar. See documentation provided by the CSI driver for details.

- OpenShift Container Platform 4.5 supports version 1.1.0 of the CSI specification.

4.3.2. CSI snapshot controller and sidecar

OpenShift Container Platform provides a snapshot controller that is deployed into the control plane. In addition, your CSI driver vendor provides the CSI snapshot sidecar as a helper container that is installed during the CSI driver installation.

The CSI snapshot controller and sidecar provide volume snapshotting through the OpenShift Container Platform API. These external components run in the cluster.

The external controller is deployed by the CSI Snapshot Controller Operator.

4.3.2.1. External controller

The CSI snapshot controller binds VolumeSnapshot and VolumeSnapshotContent objects. The controller manages dynamic provisioning by creating and deleting VolumeSnapshotContent objects.

4.3.2.2. External sidecar

Your CSI driver vendor provides the csi-external-snapshotter sidecar. This is a separate helper container that is deployed with the CSI driver. The sidecar manages snapshots by triggering CreateSnapshot and DeleteSnapshot operations. Follow the installation instructions provided by your vendor.

4.3.3. About the CSI Snapshot Controller Operator

The CSI Snapshot Controller Operator runs in the openshift-cluster-storage-operator namespace. It is installed by the Cluster Version Operator (CVO) in all clusters by default.

The CSI Snapshot Controller Operator installs the CSI snapshot controller, which runs in the openshift-cluster-storage-operator namespace.

4.3.3.1. Volume snapshot CRDs

During OpenShift Container Platform installation, the CSI Snapshot Controller Operator creates the following snapshot custom resource definitions (CRDs) in the snapshot.storage.k8s.io/v1beta1 API group:

VolumeSnapshotContent

A snapshot taken of a volume in the cluster that has been provisioned by a cluster administrator.

Similar to the PersistentVolume object, the VolumeSnapshotContent CRD is a cluster resource that points to a real snapshot in the storage back end.

For manually pre-provisioned snapshots, a cluster administrator creates a number of VolumeSnapshotContent CRDs. These carry the details of the real volume snapshot in the storage system.

The VolumeSnapshotContent CRD is not namespaced and is for use by a cluster administrator.

VolumeSnapshot

Similar to the PersistentVolumeClaim object, the VolumeSnapshot CRD defines a developer request for a snapshot. The CSI Snapshot Controller Operator runs the CSI snapshot controller, which handles the binding of a VolumeSnapshot CRD with an appropriate VolumeSnapshotContent CRD. The binding is a one-to-one mapping.

The VolumeSnapshot CRD is namespaced. A developer uses the CRD as a distinct request for a snapshot.

VolumeSnapshotClass

Allows a cluster administrator to specify different attributes belonging to a VolumeSnapshot CRD. These attributes may differ among snapshots taken of the same volume on the storage system, in which case they would not be expressed by using the same storage class of a persistent volume claim.

The VolumeSnapshotClass CRD defines the parameters for the csi-external-snapshotter sidecar to use when creating a snapshot. This allows the storage back end to know what kind of snapshot to dynamically create if multiple options are supported.

Dynamically provisioned snapshots use the VolumeSnapshotClass CRD to specify storage-provider-specific parameters to use when creating a snapshot.

The VolumeSnapshotClass CRD is not namespaced and is for use by a cluster administrator to enable global configuration options for their storage back end.

4.3.4. Volume snapshot provisioning

There are two ways to provision snapshots: dynamically and manually.

4.3.4.1. Dynamic provisioning

Instead of using a preexisting snapshot, you can request that a snapshot be taken dynamically from a persistent volume claim. Parameters are specified using a VolumeSnapshotClass CRD.

4.3.4.2. Manual provisioning

As a cluster administrator, you can manually pre-provision a number of VolumeSnapshotContent objects. These carry the real volume snapshot details available to cluster users.

4.3.5. Creating a volume snapshot

When you create a VolumeSnapshot object, OpenShift Container Platform creates a volume snapshot.

Prerequisites

- Logged in to a running OpenShift Container Platform cluster.

- A PVC created using a CSI driver that supports VolumeSnapshot objects.

- A storage class to provision the storage back end.

- No pods are using the persistent volume claim (PVC) that you want to take a snapshot of.

Note: Do not create a volume snapshot of a PVC if a pod is using it. Doing so might cause data corruption because the PVC is not quiesced (paused). Be sure to first tear down a running pod to ensure consistent snapshots.

Procedure

To dynamically create a volume snapshot:

- Create a file named volumesnapshotclass.yaml that contains a VolumeSnapshotClass object. The VolumeSnapshotClass object allows you to specify different attributes belonging to a volume snapshot.

- Create the object you saved in the previous step by entering the following command:

    $ oc create -f volumesnapshotclass.yaml

- Create a file named volumesnapshot-dynamic.yaml that contains a VolumeSnapshot object. Set the following fields in the object:

    volumeSnapshotClassName: The request for a particular class by the volume snapshot. If volumeSnapshotClassName is empty, then no snapshot is created.

    persistentVolumeClaimName: The name of the PersistentVolumeClaim object bound to a persistent volume. This defines what you want to create a snapshot of. Required for dynamically provisioning a snapshot.

- Create the object you saved in the previous step by entering the following command:

    $ oc create -f volumesnapshot-dynamic.yaml
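The two objects used in the dynamic procedure above might look like the following sketch, shown here as one multi-document YAML listing for brevity (in the procedure they are saved as volumesnapshotclass.yaml and volumesnapshot-dynamic.yaml). The API version is the v1beta1 group named earlier in this chapter, and mysnap is the snapshot name used in the verification steps. The class name, driver name, and PVC name are hypothetical.

```yaml
# Sketch only: volumesnapshotclass.yaml
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: csi-hostpath-snap              # hypothetical class name
driver: <csi_driver_name>              # placeholder: driver that supports snapshots
deletionPolicy: Delete
---
# Sketch only: volumesnapshot-dynamic.yaml
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: mysnap
spec:
  volumeSnapshotClassName: csi-hostpath-snap   # must name an existing VolumeSnapshotClass
  source:
    persistentVolumeClaimName: myclaim         # hypothetical PVC to take a snapshot of
```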

To manually provision a snapshot:

- Provide a value for the volumeSnapshotContentName parameter as the source for the snapshot, in addition to defining the volume snapshot class as shown above. Save the object in a file named volumesnapshot-manual.yaml. The volumeSnapshotContentName parameter is required for pre-provisioned snapshots.

- Create the object you saved in the previous step by entering the following command:

    $ oc create -f volumesnapshot-manual.yaml
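For a pre-provisioned snapshot, the volumesnapshot-manual.yaml file might look like the following sketch. It assumes that a cluster administrator has already created a VolumeSnapshotContent object; the snapshot name and the content name shown here are hypothetical.

```yaml
# Sketch only: volumesnapshot-manual.yaml
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: snapshot-demo                       # hypothetical snapshot name
spec:
  source:
    # Name of an existing, pre-provisioned VolumeSnapshotContent object.
    # Required for pre-provisioned snapshots.
    volumeSnapshotContentName: mycontent    # hypothetical content name
```

The source takes either persistentVolumeClaimName (dynamic provisioning) or volumeSnapshotContentName (manual provisioning), not both.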

Verification

After the snapshot has been created in the cluster, additional details about the snapshot are available.

- To display details about the volume snapshot that was created, enter the following command:

    $ oc describe volumesnapshot mysnap

The output displays details about the mysnap volume snapshot, including the following status fields:

    boundVolumeSnapshotContentName: The pointer to the actual storage content that was created by the controller.

    creationTime: The time when the snapshot was created. The snapshot contains the volume content that was available at this indicated time.

    readyToUse: If the value is set to true, the snapshot can be used to restore as a new PVC. If the value is set to false, the snapshot was created. However, the storage back end needs to perform additional tasks to make the snapshot usable so that it can be restored as a new volume. For example, Amazon Elastic Block Store data might be moved to a different, less expensive location, which can take several minutes.

- To verify that the volume snapshot was created, enter the following command:

    $ oc get volumesnapshotcontent

The pointer to the actual content is displayed. If the boundVolumeSnapshotContentName field is populated, a VolumeSnapshotContent object exists and the snapshot was created.

- To verify that the snapshot is ready, confirm that the VolumeSnapshot object has readyToUse: true.
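Viewed as YAML, a VolumeSnapshot object that is bound and ready might resemble the following sketch. The values are illustrative placeholders rather than captured output; only the field names (boundVolumeSnapshotContentName, creationTime, readyToUse) are the ones discussed in the verification steps above, and the class and PVC names are hypothetical.

```yaml
# Sketch only: illustrative values, not real command output.
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshot
metadata:
  name: mysnap
spec:
  volumeSnapshotClassName: csi-hostpath-snap      # hypothetical class name
  source:
    persistentVolumeClaimName: myclaim            # hypothetical PVC name
status:
  boundVolumeSnapshotContentName: <snapshot_content_name>   # pointer to the storage content
  creationTime: "<creation_timestamp>"                       # when the snapshot was taken
  readyToUse: true                                           # snapshot can be restored as a new PVC
```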

4.3.6. Deleting a volume snapshot

You can configure how OpenShift Container Platform deletes volume snapshots.

Procedure

- Specify the deletion policy that you require in the VolumeSnapshotClass object, saved in this example as volumesnapshot.yaml.

When deleting the volume snapshot, if the Delete value is set, the underlying snapshot is deleted along with the VolumeSnapshotContent object. If the Retain value is set, both the underlying snapshot and VolumeSnapshotContent object remain.

If the Retain value is set and the VolumeSnapshot object is deleted without deleting the corresponding VolumeSnapshotContent object, the content remains. The snapshot itself is also retained in the storage back end.

- Delete the volume snapshot by entering the following command:

    $ oc delete volumesnapshot <volumesnapshot_name>

Example output

    volumesnapshot.snapshot.storage.k8s.io "mysnapshot" deleted

- If the deletion policy is set to Retain, delete the volume snapshot content by entering the following command:

    $ oc delete volumesnapshotcontent <volumesnapshotcontent_name>

- Optional: If the VolumeSnapshot object is not successfully deleted, enter the following command to remove any finalizers for the leftover resource so that the delete operation can continue:

Important: Only remove the finalizers if you are confident that there are no existing references from either persistent volume claims or volume snapshot contents to the VolumeSnapshot object. Even with the --force option, the delete operation does not delete snapshot objects until all finalizers are removed.

    $ oc patch -n $PROJECT volumesnapshot/$NAME --type=merge -p '{"metadata": {"finalizers":null}}'

Example output

    volumesnapshotclass.snapshot.storage.k8s.io "csi-ocs-rbd-snapclass" deleted

The finalizers are removed and the volume snapshot is deleted.
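A VolumeSnapshotClass object that sets the deletion policy might look like the following sketch. The deletionPolicy field accepts Delete or Retain, as described in the procedure above; the class name and driver name are hypothetical.

```yaml
# Sketch only: a snapshot class with an explicit deletion policy.
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: csi-hostpath-snap          # hypothetical class name
driver: <csi_driver_name>          # placeholder: driver that supports snapshots
# Delete: the underlying snapshot is removed together with the
#         VolumeSnapshotContent object.
# Retain: the underlying snapshot and the VolumeSnapshotContent object remain.
deletionPolicy: Delete
```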

4.3.7. Restoring a volume snapshot

The VolumeSnapshot CRD content can be used to restore the existing volume to a previous state.

After your VolumeSnapshot CRD is bound and the readyToUse value is set to true, you can use that resource to provision a new volume that is pre-populated with data from the snapshot.

Prerequisites

- Logged in to a running OpenShift Container Platform cluster.

- A persistent volume claim (PVC) created using a Container Storage Interface (CSI) driver that supports volume snapshots.

- A storage class to provision the storage back end.

Procedure

- Specify a VolumeSnapshot data source on a PVC and save the definition in a file named pvc-restore.yaml.

- Create a PVC by entering the following command:

    $ oc create -f pvc-restore.yaml

- Verify that the restored PVC has been created by entering the following command:

    $ oc get pvc

Two different PVCs are displayed.
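The pvc-restore.yaml file from the procedure above might look like the following sketch. The dataSource field with kind VolumeSnapshot and the snapshot.storage.k8s.io API group is the standard way for a PVC to reference a snapshot. The PVC name, storage class name, and requested size are hypothetical; mysnap is the snapshot name used earlier in this chapter.

```yaml
# Sketch only: pvc-restore.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim-restore                  # hypothetical name for the restored PVC
spec:
  storageClassName: <storage_class_name> # placeholder: class served by the same CSI driver
  dataSource:
    name: mysnap                         # VolumeSnapshot to restore from
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi                       # hypothetical size; at least the snapshot's restore size
```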

4.4. CSI volume cloning

Volume cloning duplicates an existing persistent volume to help protect against data loss in OpenShift Container Platform. This feature is only available with supported Container Storage Interface (CSI) drivers. You should be familiar with persistent volumes before you provision a CSI volume clone.

4.4.1. Overview of CSI volume cloning

A Container Storage Interface (CSI) volume clone is a duplicate of an existing persistent volume at a particular point in time.

Volume cloning is similar to volume snapshots, although it is more efficient. For example, a cluster administrator can duplicate a cluster volume by creating another instance of the existing cluster volume.

Cloning creates an exact duplicate of the specified volume on the back-end device, rather than creating a new empty volume. After dynamic provisioning, you can use a volume clone just as you would use any standard volume.

No new API objects are required for cloning. The existing dataSource field in the PersistentVolumeClaim object is expanded so that it can accept the name of an existing PersistentVolumeClaim in the same namespace.

4.4.1.1. Support limitations

By default, OpenShift Container Platform supports CSI volume cloning with these limitations:

- The destination persistent volume claim (PVC) must exist in the same namespace as the source PVC.

- The source and destination storage class must be the same.

- Support is only available for CSI drivers. In-tree and FlexVolumes are not supported.

- OpenShift Container Platform does not include any CSI drivers. Use the CSI drivers provided by community or storage vendors. Follow the installation instructions provided by the CSI driver.

- CSI drivers might not have implemented the volume cloning functionality. For details, see the CSI driver documentation.

- OpenShift Container Platform 4.5 supports version 1.1.0 of the CSI specification.

4.4.2. Provisioning a CSI volume clone

When you create a cloned persistent volume claim (PVC) API object, you trigger the provisioning of a CSI volume clone. The clone pre-populates with the contents of another PVC, adhering to the same rules as any other persistent volume. The one exception is that you must add a dataSource that references an existing PVC in the same namespace.

Prerequisites

- You are logged in to a running OpenShift Container Platform cluster.

- Your PVC is created using a CSI driver that supports volume cloning.

- Your storage back end is configured for dynamic provisioning. Cloning support is not available for static provisioners.

Procedure

To clone a PVC from an existing PVC:

- Create and save a file named pvc-clone.yaml with the PersistentVolumeClaim object. In the object, storageClassName is the name of the storage class that provisions the storage back end. The default storage class can be used and storageClassName can be omitted in the spec.

- Create the object you saved in the previous step by running the following command:

    $ oc create -f pvc-clone.yaml

A new PVC pvc-1-clone is created.

- Verify that the volume clone was created and is ready by running the following command:

    $ oc get pvc pvc-1-clone

The pvc-1-clone shows that it is Bound.

You are now ready to use the newly cloned PVC to configure a pod.

- Create and save a file with the Pod object. In the Pod object, reference the cloned PVC that was created during the CSI volume cloning operation.

The created Pod object is now ready to consume, clone, snapshot, or delete your cloned PVC independently of its original dataSource PVC.
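The two objects from the cloning procedure above might look like the following sketch, shown as one multi-document YAML listing. The pvc-1-clone name comes from the procedure. The source PVC name pvc-1, the storage class, the size, the pod name, the image, and the mount path are assumptions for illustration.

```yaml
# Sketch only: pvc-clone.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-1-clone
spec:
  storageClassName: <storage_class_name>   # placeholder: same class as the source PVC; can be omitted to use the default
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi                         # hypothetical; at least the size of the source PVC
  dataSource:
    kind: PersistentVolumeClaim
    name: pvc-1                            # assumed source PVC in the same namespace
---
# Sketch only: a pod that consumes the cloned PVC.
kind: Pod
apiVersion: v1
metadata:
  name: mypod                              # hypothetical pod name
spec:
  containers:
    - name: myfrontend
      image: <container_image>             # placeholder
      volumeMounts:
        - mountPath: /var/www/html         # hypothetical mount path
          name: mypd
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: pvc-1-clone             # the cloned PVC
```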

4.5. AWS Elastic Block Store CSI Driver Operator

4.5.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for AWS Elastic Block Store (EBS).

Familiarity with PVs, persistent volume claims (PVCs), dynamic provisioning, and RBAC authorization is recommended.

Before PVCs can be created, you must install the AWS EBS CSI Driver Operator. The Operator provides a default StorageClass that you can use to create PVCs. You also have the option to create the EBS StorageClass as described in Persistent Storage Using AWS Elastic Block Store.

After the Operator is installed, you must also create the AWS EBS CSI custom resource (CR) that is required in the OpenShift Container Platform cluster.

AWS EBS CSI Driver Operator is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.

4.5.2. About CSI

Storage vendors have traditionally provided storage drivers as part of Kubernetes. With the implementation of the Container Storage Interface (CSI), third-party providers can instead deliver storage plug-ins using a standard interface without ever having to change the core Kubernetes code.

CSI Operators give OpenShift Container Platform users storage options, such as volume snapshots, that are not possible with in-tree volume plug-ins.

OpenShift Container Platform defaults to using an in-tree, or non-CSI, driver to provision AWS EBS storage. This in-tree driver will be removed in a subsequent update of OpenShift Container Platform. Volumes provisioned using the existing in-tree driver are planned for migration to the CSI driver at that time.

For information about dynamically provisioning AWS EBS persistent volumes in OpenShift Container Platform, see Persistent storage using AWS Elastic Block Store.

4.5.3. Installing the AWS Elastic Block Store CSI Driver Operator

The AWS Elastic Block Store (EBS) Container Storage Interface (CSI) Driver Operator enables the replacement of the existing AWS EBS in-tree storage plug-in.

AWS EBS CSI Driver Operator is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production.

Installing the AWS EBS CSI Driver Operator provides the CSI driver that allows you to use CSI volumes with the PersistentVolumeClaims, PersistentVolumes, and StorageClasses API objects in OpenShift Container Platform. It also deploys the StorageClass that you can use to create persistent volume claims (PVCs).

The AWS EBS CSI Driver Operator is not installed in OpenShift Container Platform by default. Use the following procedure to install and configure this Operator to enable the AWS EBS CSI driver in your cluster.

Prerequisites

- Access to the OpenShift Container Platform web console.

Procedure

To install the AWS EBS CSI Driver Operator from the web console:

- Log in to the web console.

- Navigate to Operators → OperatorHub.

- To locate the AWS EBS CSI Driver Operator, type AWS EBS CSI into the filter box.

- Click Install.

- On the Install Operator page, be sure that All namespaces on the cluster (default) is selected. Select openshift-aws-ebs-csi-driver-operator from the Installed Namespace drop-down menu.

- Adjust the values for Update Channel and Approval Strategy to the values that you want.

- Click Install.

Once finished, the AWS EBS CSI Driver Operator is listed in the Installed Operators section of the web console.

4.5.4. Installing the AWS Elastic Block Store CSI driver

The AWS Elastic Block Store (EBS) Container Storage Interface (CSI) driver is a custom resource (CR) that enables you to create and mount AWS EBS persistent volumes.

The driver is not installed in OpenShift Container Platform by default, and must be installed after the AWS EBS CSI Driver Operator has been installed.

Prerequisites

- The AWS EBS CSI Driver Operator has been installed.

- You have access to the OpenShift Container Platform web console.

Procedure

To install the AWS EBS CSI driver from the web console, complete the following steps:

- Log in to the OpenShift Container Platform web console.

- Navigate to Operators → Installed Operators.

- Locate the AWS EBS CSI Driver Operator from the list and click on the Operator link.

- Create the driver:

    - From the Details tab, click Create Instance.

    - Optional: Select YAML view to make modifications, such as adding notations, to the driver object template.

    - Click Create to finalize.

Important: Renaming the cluster and specifying a certain namespace are not supported functions.

4.5.5. Uninstalling the AWS Elastic Block Store CSI Driver Operator

Before you uninstall the AWS EBS CSI Driver Operator, you must delete all persistent volume claims (PVCs) that are in use by the Operator.

Prerequisites

- Access to the OpenShift Container Platform web console.

Procedure

To uninstall the AWS EBS CSI Driver Operator from the web console:

- Log in to the web console.

- Navigate to Storage → Persistent Volume Claims.

- Select any PVCs that are in use by the AWS EBS CSI Driver Operator and click Delete.

- From the Operators → Installed Operators page, scroll or type AWS EBS CSI into the Filter by name field to find the Operator. Then, click on it.

- On the right-hand side of the Installed Operators details page, select Uninstall Operator from the Actions drop-down menu.

- When prompted by the Uninstall Operator window, click the Uninstall button to remove the Operator from the namespace. Any applications deployed by the Operator on the cluster will need to be cleaned up manually.

Once finished, the AWS EBS CSI Driver Operator is no longer listed in the Installed Operators section of the web console.

4.6. OpenStack Manila CSI Driver Operator

4.6.1. Overview

OpenShift Container Platform is capable of provisioning persistent volumes (PVs) using the Container Storage Interface (CSI) driver for the OpenStack Manila shared file system service.

Familiarity with PVs, persistent volume claims (PVCs), dynamic provisioning, and RBAC authorization is recommended.

Before PVCs can be created, you must install the Manila CSI Driver Operator. The Operator creates the required storage classes for all available Manila share types needed for dynamic provisioning.

After the Operator is installed, you must also create the ManilaDriver Custom Resource (CR) that is required in the OpenShift Container Platform cluster.

4.6.2. Installing the Manila CSI Driver Operator

The Manila Container Storage Interface (CSI) Driver Operator is not installed in OpenShift Container Platform by default. Use the following procedure to install and configure this Operator to enable the OpenStack Manila CSI driver in your cluster.

Prerequisites

- You have access to the OpenShift Container Platform web console.

- The underlying Red Hat OpenStack Platform (RHOSP) infrastructure cloud deploys Manila serving NFS shares.

Procedure

To install the Manila CSI Driver Operator from the web console, follow these steps:

- Log in to the OpenShift Container Platform web console.

- Navigate to Operators → OperatorHub.

- Type Manila CSI Driver Operator into the filter box to locate the Operator.

- Click Install.

- On the Install Operator page, select openshift-manila-csi-driver-operator from the Installed Namespace drop-down menu.

- Adjust the values for Update Channel and Approval Strategy to the values that you want. The only supported Installation Mode is All namespaces on the cluster.

- Click Install.

Once finished, the Manila CSI Driver Operator is listed in the Installed Operators section of the web console.

4.6.3. Installing the OpenStack Manila CSI driver

The OpenStack Manila Container Storage Interface (CSI) driver is a custom resource (CR) that enables you to create and mount OpenStack Manila shares. It also supports creating snapshots, and recovering shares from snapshots.

The driver is not installed in OpenShift Container Platform by default, and must be installed after the Manila CSI Driver Operator has been installed.

Prerequisites

- The Manila CSI Driver Operator has been installed.

- Access to the OpenShift Container Platform web console or command-line interface (CLI).

UI procedure

To install the Manila CSI driver from the web console, complete the following steps:

- Log in to the OpenShift Container Platform web console.

- Navigate to Operators → Installed Operators.

- Locate the Manila CSI Driver Operator from the list and click on the Operator link.

- Create the driver:

  - From the Details tab, click Create Instance.

  - Optional: Select YAML view to make modifications, such as adding notations, to the ManilaDriver object template.

  - Click Create to finalize.

Important: Renaming the cluster and specifying a certain namespace are not supported functions.

CLI procedure

To install the Manila CSI driver from the CLI, complete the following steps:

Create an object YAML file, such as maniladriver.yaml, to define the ManilaDriver:

Example maniladriver

apiVersion: csi.openshift.io/v1alpha1
kind: ManilaDriver
metadata:
  name: cluster  # (1)

(1) Renaming the cluster and specifying a certain namespace are not supported functions.

Create the ManilaDriver CR object in your OpenShift Container Platform cluster by specifying the file you created in the previous step:

$ oc create -f maniladriver.yaml

When the Operator installation is finished, the Manila CSI driver is deployed on OpenShift Container Platform for dynamic provisioning of RWX persistent volumes on Red Hat OpenStack Platform (RHOSP).

Verification

Verify that the ManilaDriver CR was created successfully by entering the following command:

$ oc get all -n openshift-manila-csi-driver

Verify that the storage class was created successfully by entering the following command:

$ oc get storageclasses | grep -E "NAME|csi-manila-"

Example output

NAME              PROVISIONER                RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
csi-manila-gold   manila.csi.openstack.org   Delete          Immediate           false                  18h
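For orientation, the columns in the storage class listing correspond to fields of the StorageClass object. The following is only a sketch that restates the example output as YAML. Any parameters that the Operator sets on the real object are omitted because they are not shown in this section, so treat it as a reading aid and do not apply it to a cluster.

```yaml
# Sketch only: field values are copied from the example output above.
# Parameters set by the Operator are not shown in this section and are omitted.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-manila-gold                   # NAME column
provisioner: manila.csi.openstack.org     # PROVISIONER column
reclaimPolicy: Delete                     # RECLAIMPOLICY column
volumeBindingMode: Immediate              # VOLUMEBINDINGMODE column
allowVolumeExpansion: false               # ALLOWVOLUMEEXPANSION column
```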

4.6.4. Dynamically provisioning Manila CSI volumes

OpenShift Container Platform installs a StorageClass for each available Manila share type.

The YAML files that are created are completely decoupled from Manila and from its Container Storage Interface (CSI) plug-in. As an application developer, you can dynamically provision ReadWriteMany (RWX) storage and deploy Pods with applications that safely consume the storage using YAML manifests. You can also provision other access modes, such as ReadWriteOnce (RWO).

You can use the same Pod and persistent volume claim (PVC) definitions on premises that you use with OpenShift Container Platform on AWS, GCP, Azure, and other platforms, with the exception of the storage class reference in the PVC definition.

Prerequisites

- Red Hat OpenStack Platform (RHOSP) is deployed with appropriate Manila share infrastructure so that it can be used to dynamically provision and mount volumes in OpenShift Container Platform.

Procedure (UI)

To dynamically create a Manila CSI volume using the web console:

- In the OpenShift Container Platform console, click Storage → Persistent Volume Claims.

- In the persistent volume claims overview, click Create Persistent Volume Claim.

- Define the required options on the resulting page.

  - Select the appropriate StorageClass.

  - Enter a unique name for the storage claim.

  - Select the access mode to specify read and write access for the PVC you are creating.

    Important:

    - Use RWX if you want the persistent volume (PV) that fulfills this PVC to be mounted to multiple Pods on multiple nodes in the cluster.

    - Use RWO if the PV should be mounted read-write by a single node only.

  - Define the size of the storage claim.

- Click Create to create the persistent volume claim and generate a persistent volume.

Procedure (CLI)

To dynamically create a Manila CSI volume using the command-line interface (CLI):

Create and save a file with the PersistentVolumeClaim object described by the following YAML:

pvc-manila.yaml

(1) Access mode. Use RWX if you want the persistent volume (PV) that fulfills this PVC to be mounted to multiple Pods on multiple nodes in the cluster. Use ReadWriteOnce (RWO) if the PV should be mounted read-write by a single node only.

(2) Storage class name. The name of the storage class that provisions the storage back end. Manila StorageClasses are provisioned by the Operator and have the csi-manila- prefix.
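The manifest body for pvc-manila.yaml is not reproduced in this section. A minimal sketch that is consistent with the two callouts might look like the following. The claim name pvc-manila matches the verification command used later in this procedure. The csi-manila-gold storage class is taken from the example output earlier in this chapter, and the 10Gi request is an assumed value chosen only for illustration.

```yaml
# Sketch of pvc-manila.yaml under the assumptions stated above.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-manila
spec:
  accessModes:
    - ReadWriteMany                     # (1) RWX; use ReadWriteOnce for single-node access
  resources:
    requests:
      storage: 10Gi                     # assumed size, adjust to your needs
  storageClassName: csi-manila-gold     # (2) assumed; use a csi-manila- class from your cluster
```

Replace the storage class name with one that `oc get storageclasses` reports in your own cluster.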

Create the object you saved in the previous step by running the following command:

$ oc create -f pvc-manila.yaml

A new PVC is created.

To verify that the volume was created and is ready, run the following command:

$ oc get pvc pvc-manila

The pvc-manila shows that it is Bound.

You can now use the new PVC to configure a Pod.
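As an illustration of that last step, the following sketch shows a Pod that mounts the claim. Only the claim name pvc-manila comes from this procedure. The Pod name, container name, image, command, volume name, and mount path are all hypothetical placeholders that you would replace with your own application's values.

```yaml
# Sketch of a Pod that consumes the pvc-manila claim.
# Everything except claimName is a placeholder chosen for illustration.
apiVersion: v1
kind: Pod
metadata:
  name: manila-demo                     # hypothetical Pod name
spec:
  containers:
    - name: app                         # hypothetical container name
      image: registry.access.redhat.com/ubi8/ubi-minimal   # assumed image
      command: ["sleep", "infinity"]    # keeps the container running for inspection
      volumeMounts:
        - name: shared-data
          mountPath: /data              # hypothetical mount path
  volumes:
    - name: shared-data
      persistentVolumeClaim:
        claimName: pvc-manila           # the PVC created in this procedure
```

If the claim was created with the RWX access mode, several Pods on different nodes can reference the same claimName and share the data.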

4.6.5. Uninstalling the Manila CSI Driver Operator

Before you uninstall the Manila Container Storage Interface (CSI) Driver Operator, you must delete all persistent volume claims (PVCs) that are in use by the Operator.

Prerequisites

- Access to the OpenShift Container Platform web console.

Procedure

To uninstall the Manila CSI Driver Operator from the web console:

- Log in to the web console.

- Navigate to Storage → Persistent Volume Claims.

- Select any PVCs that are in use by the Manila CSI Driver Operator and click Delete.

- From the Operators → Installed Operators page, scroll or type Manila CSI into the Filter by name field to find the Operator. Then, click on it.

- On the right-hand side of the Installed Operators details page, select Uninstall Operator from the Actions drop-down menu.

- When prompted by the Uninstall Operator window, click the Uninstall button to remove the Operator from the namespace. Any applications deployed by the Operator on the cluster will need to be cleaned up manually.

Once finished, the Manila CSI Driver Operator is no longer listed in the Installed Operators section of the web console.

Additional resources