Chapter 2. Installing 3scale on OpenShift

This section walks you through the steps to deploy Red Hat 3scale API Management 2.13 on OpenShift.

The 3scale solution for on-premises deployment is composed of:

- Two API gateways: embedded APIcast

- One 3scale Admin Portal and Developer Portal with persistent storage

- When deploying 3scale, you must first configure registry authentication to the Red Hat container registry. See Configuring container registry authentication.

- The 3scale Istio Adapter is available as an optional adapter that lets you label a service running within Red Hat OpenShift Service Mesh and integrate that service with 3scale. Refer to the 3scale adapter documentation for more information.

Prerequisites

- You must configure 3scale servers for UTC (Coordinated Universal Time).

- Create user credentials using the steps in Creating a registry service account.

To install 3scale on OpenShift, perform the steps outlined in the following sections:

- System requirements for installing 3scale on OpenShift

- Deploying 3scale using the operator

- External databases for 3scale using the operator

- Deployment configuration options for 3scale on OpenShift using the operator

- Installing 3scale with the operator using Oracle as the system database

- Troubleshooting common 3scale installation issues

2.1. System requirements for installing 3scale on OpenShift

This section lists the system requirements for installing 3scale on OpenShift.

2.1.1. Environment requirements

Red Hat 3scale API Management requires an environment specified in supported configurations.

The requirements for persistent volumes vary between different deployment types. When deploying with external databases, persistent volumes are not necessary. For some deployment types, an Amazon S3 bucket can serve as a substitute for persistent volumes. If you use local file system storage, consider the specific deployment type and its associated requirements for persistent volumes.

Persistent volumes

- 3 RWO (ReadWriteOnce) persistent volumes for Redis and MySQL persistence

- 1 RWX (ReadWriteMany) persistent volume for Developer Portal content and System-app Assets

Configure the RWX persistent volume to be group writable. For a list of persistent volume types that support the required access modes, see the OpenShift documentation.

Network File System (NFS) is supported on 3scale for the RWX volume only.

For IBM Power (ppc64le) and IBM Z (s390x), provision local storage using the following:

Storage

- NFS

If you are using an Amazon Simple Storage Service (Amazon S3) bucket for content management system (CMS) storage:

Persistent volumes

- 3 RWO (ReadWriteOnce) persistent volumes for Redis and MySQL persistence

Storage

- 1 Amazon S3 bucket

- NFS

2.1.2. Hardware requirements

Hardware requirements depend on your usage needs. Red Hat recommends that you test and configure your environment to meet your specific requirements. The following are the recommendations when configuring your environment for 3scale on OpenShift:

- Compute optimized nodes for deployments on cloud environments (AWS c4.2xlarge or Azure Standard_F8).

- Very large installations may require a separate node (AWS M4 series or Azure Av2 series) for Redis if memory requirements exceed your current node’s available RAM.

- Separate nodes between routing and compute tasks.

- Dedicated computing nodes for 3scale specific tasks.


2.2. Installing the 3scale Operator on OpenShift

3scale supports the last two general availability (GA) releases of OpenShift Container Platform (OCP). For more information, see the Red Hat 3scale API Management Supported Configurations page.

This documentation shows you how to:

- Create a new project.

- Deploy a Red Hat 3scale API Management instance.

- Install the 3scale operator through Operator Lifecycle Manager (OLM).

- Deploy the custom resources once the operator has been deployed.

Prerequisites

Access to a supported version of an OpenShift Container Platform 4 cluster using an account with administrator privileges.

- For more information about supported configurations, see the Red Hat 3scale API Management Supported Configurations page.

Deploy the 3scale operator and custom resource definitions (CRDs) in a separate newly created, empty project. If you deploy them in an existing project containing infrastructure, it could alter or delete existing elements.

To install the 3scale operator on OpenShift, perform the steps outlined in the following sections:

2.2.1. Creating a new OpenShift project

This procedure explains how to create a new OpenShift project named 3scale-project. Replace this project name with your own.

Procedure

To create a new OpenShift project:

Indicate a valid name using alphanumeric characters and dashes. As an example, run the command below to create 3scale-project:

$ oc new-project 3scale-project

This creates the new OpenShift project where the operator, the APIManager custom resource (CR), and the Capabilities custom resources will be installed. The operator manages the custom resources through OLM in that project.

2.2.2. Installing and configuring the 3scale operator using the OLM

Use Operator Lifecycle Manager (OLM) to install the 3scale operator on an OpenShift Container Platform (OCP) 4.8 (or above) cluster through the OperatorHub in the OCP console. You can install the 3scale operator using the following installation modes:

- Cluster-wide, in which the operator is available in all namespaces on the cluster.

- A specific namespace on the cluster

If you are using the OpenShift Container Platform on a restricted network or a disconnected cluster, the Operator Lifecycle Manager can no longer use the OperatorHub. Follow the instructions for setting up and using the OLM in the guide titled Using Operator Lifecycle Manager on restricted networks.

Prerequisites

- You must install and deploy the 3scale operator in the project that you defined in Creating a new OpenShift project.

Procedure

In the OpenShift Container Platform console, log in using an account with administrator privileges.

Note: The menu structure depends on the version of OpenShift you are using.

- Click Operators > OperatorHub

- In the Filter by keyword box, type 3scale operator to find Red Hat Integration - 3scale.

- Click Red Hat Integration - 3scale. Information about the operator is displayed.

- Read the information about the operator and click Install. The Install Operator page opens.

- On the Install Operator page, select the desired channel to update in the Update channel section.

In the Installation mode section, select where to install the operator.

- All namespaces on the cluster (default) - The operator will be available in all namespaces on the cluster.

- A specific namespace on the cluster - The operator will only be available in the specific single namespace on the cluster that you have selected.

- Click Install.

- After the installation is complete, the system displays a confirmation message indicating that the operator is ready for use.

Verify that the 3scale operator ClusterServiceVersion (CSV) is correctly installed. Also check if it reports that the installation of the operator has been successful:

- Click Operators > Installed Operators.

- Click on the Red Hat Integration - 3scale operator.

- In the Details tab, scroll down to the Conditions section, where the Succeeded condition should read InstallSucceeded under the Reason column.
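The console procedure above has a command-line counterpart in OLM. As a rough sketch only, the following writes an OperatorGroup and a Subscription manifest for the single-namespace installation mode. The package name 3scale-operator, the channel threescale-2.13, the catalog source redhat-operators, and both object names are assumptions rather than values stated in this guide; check them against the catalog on your cluster before using them.

```shell
# Write OLM manifests for a namespace-scoped install.
# Every name below is an assumption made for illustration.
cat > 3scale-operator-subscription.yaml <<'EOF'
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: threescale-operator-group      # hypothetical name
  namespace: 3scale-project
spec:
  targetNamespaces:
    - 3scale-project                   # restricts the operator to this namespace
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: 3scale-operator                # hypothetical name
  namespace: 3scale-project
spec:
  name: 3scale-operator                # assumed package name
  channel: threescale-2.13             # assumed update channel
  source: redhat-operators             # assumed catalog source
  sourceNamespace: openshift-marketplace
EOF
echo "wrote 3scale-operator-subscription.yaml"
```

You could then apply the file with oc apply -f 3scale-operator-subscription.yaml and list the resulting ClusterServiceVersion with oc get csv -n 3scale-project.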

Besides the indicated procedure, create a list of the allowed domains you intend to use in the 3scale Developer Portal while using OCP on restricted networks. Consider the following examples:

- Any link you intend to add to the Developer Portal.

- SSO integrations through third party SSO providers such as GitHub.

- Billing.

- Webhooks that trigger an external URL.

2.2.2.1. Restrictions in disconnected environments

The following list outlines current restrictions in a disconnected environment for 3scale 2.13:

- The GitHub login to the Developer Portal is not available.

- Support links are not operational.

- Links to external documentation are not operational.

- The validator for the OpenAPI Specification (OAS) in the Developer Portal is not operational, affecting links to external services.

In the product Overview page in ActiveDocs, links to OAS are not operational.

- It is also necessary to check the option Skip swagger validations when you create a new ActiveDocs specification.

Additional resources

- For troubleshooting information, see the OpenShift Container Platform documentation.

- For more information about using the OLM on restricted networks, see Using Operator Lifecycle Manager on restricted networks.

- For more information about preparing your installation on restricted networks, see Mirroring images for a disconnected installation.

- For more information about supported configurations, see the Red Hat 3scale API Management Supported Configurations page.

2.2.3. Upgrading the 3scale operator using the OLM

To upgrade the 3scale operator from a single namespace to a cluster-wide installation in all namespaces on an operator-based deployment, you must remove the 3scale operator from the namespace and then reinstall the operator on the cluster.

Cluster administrators can delete installed operators from a selected namespace by using the web console. Uninstalling the operator does not uninstall an existing 3scale instance.

After the 3scale operator is uninstalled from the namespace, you can use OLM to install the operator in the cluster-wide mode.

Prerequisites

- 3scale administrator permissions or an OpenShift role that has delete permissions for the namespace.

Procedure

In the OpenShift Container Platform console, log in using an account with administrator privileges.

Note: The menu structure depends on the version of OpenShift you are using.

- Click Operators > Installed Operators. The Installed Operators page is displayed.

- Enter 3scale into the Filter by name box to find the operator, and then click it.

- On the Operator Details page, select Uninstall Operator from the Actions drop-down menu to remove it from a specific namespace.

An Uninstall Operator? dialog box is displayed, reminding you that:

Removing the operator will not remove any of its custom resource definitions or managed resources. If your operator has deployed applications on the cluster or configured off-cluster resources, these will continue to run and need to be cleaned up manually. This action removes the operator as well as the Operator deployments and pods, if any. Any operands and resources managed by the operator, including CRDs and CRs, are not removed. The web console enables dashboards and navigation items for some operators. To remove these after uninstalling the operator, you might need to manually delete the operator CRDs.

- Select Uninstall. This operator stops running and no longer receives updates.

- In the OpenShift Container Platform console click Operators > OperatorHub.

- In the Filter by keyword box, type 3scale operator to find Red Hat Integration - 3scale.

- Click Red Hat Integration - 3scale. Information about the operator is displayed.

- Click Install. The Install Operator page opens.

- On the Install Operator page, select the desired channel to update in the Update channel section.

- In the Installation mode section, select All namespaces on the cluster (default). The operator will be available in all namespaces on the cluster.

- Click Subscribe. The 3scale operator details page is displayed and you can see the Subscription Overview.

- Confirm that the subscription Upgrade Status is displayed as Up to date.

- Verify that the 3scale operator ClusterServiceVersion (CSV) is displayed.

Additional Resources

- For more information about installing the 3scale operator, see Install and configure 3scale API Management.

2.2.3.1. Configuring automated application of micro releases

To get automatic updates, the 3scale operator must have its approval strategy set to Automatic. This allows it to apply micro release updates automatically. The following describes the differences between Automatic and Manual settings, and outlines the steps in a procedure to change from one to the other.

Automatic and manual:

- During installation, the Automatic setting is the selected option by default. Installation of new updates occurs as they become available. You can change this during the install or at any time afterwards.

- If you select the Manual option during installation or at any time afterwards, you are notified when updates are available. You must then approve the Install Plan and apply it yourself.

Procedure

- Click Operators > Installed Operators.

- Click 3scale API Management from the list of Installed Operators.

- Click the Subscription tab. Under the Subscription Details heading, you will see the subheading Approval.

- Click the link below Approval. The link is set to Automatic by default. A modal with the heading Change Update Approval Strategy will pop up.

- Choose the option of your preference: Automatic (default) or Manual, and then click Save.
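The same setting is stored on the operator's OLM Subscription object in the installPlanApproval field. As a hedged sketch, the following writes a merge patch that switches the approval strategy to Manual; the file name is hypothetical, and the name of your Subscription object depends on how the operator was installed.

```shell
# Merge patch for the Subscription approval strategy.
# Use "Automatic" instead of "Manual" to switch back.
cat > approval-patch.yaml <<'EOF'
spec:
  installPlanApproval: Manual
EOF
cat approval-patch.yaml
```

A command such as oc patch subscription <subscription-name> -n <namespace> --type merge --patch-file approval-patch.yaml would apply it, assuming a recent oc client that supports the --patch-file option; both placeholders must be replaced with your own values.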

Additional resources

- See Approval Strategy under Installing Operators in your namespace.

2.3. Installing the APIcast operator on OpenShift

This guide provides steps for installing the APIcast operator through the OpenShift Container Platform (OCP) console.

Prerequisites

- OCP 4.x or later with administrator privileges.

Procedure

- Create new project operator-test in Projects > Create Project.

- Click Operators > OperatorHub

- In the Filter by keyword box, type apicast operator to find Red Hat Integration - 3scale APIcast gateway.

- Click Red Hat Integration - 3scale APIcast gateway. Information about the APIcast operator is displayed.

- Click Install. The Create Operator Subscription page opens.

Click Install to accept all of the default selections on the Create Operator Subscription page.

Note: You can select different operator versions and installation modes, such as cluster-wide or namespace-specific options. There can only be one cluster-wide installation per cluster.

- The subscription upgrade status is shown as Up to date.

- Click Operators > Installed Operators to verify that the APIcast operator ClusterServiceVersion (CSV) status displays InstallSucceeded in the operator-test project.

2.4. Deploying 3scale using the operator

This section takes you through installing and deploying the 3scale solution via the 3scale operator, using the APIManager CR.

Wildcard routes have been removed since 3scale 2.6.

- This functionality is handled by Zync in the background.

- When API providers are created, updated, or deleted, routes automatically reflect those changes.

Prerequisites

- Configuring container registry authentication

- To make sure you receive automatic updates of micro releases for 3scale, you must have enabled the automatic approval functionality in the 3scale operator. Automatic is the default approval setting. To change this at any time based on your specific needs, use the steps for Configuring automated application of micro releases.

- Deploying 3scale using the operator first requires that you follow the steps in Installing the 3scale Operator on OpenShift

OpenShift Container Platform 4

- A user account with administrator privileges in the OpenShift cluster.

- For more information about supported configurations, see the Red Hat 3scale API Management Supported Configurations page.

Follow these procedures to deploy 3scale using the operator:

2.4.1. Deploying the APIManager custom resource

If you decide to use Amazon Simple Storage Service (Amazon S3), see Amazon Simple Storage Service 3scale FileStorage installation.

The operator watches for APIManager CRs and deploys your required 3scale solution as specified in the APIManager CR.

Procedure

Click Operators > Installed Operators.

- From the list of Installed Operators, click Red Hat Integration - 3scale.

- Click the API Manager tab.

- Click Create APIManager.

- Clear the sample content and add the following YAML definitions to the editor, then click Create.

Before 3scale 2.8, you could configure the automatic addition of replicas by setting the highAvailability field to true. From 3scale 2.8, the addition of replicas is controlled through the replicas field in the APIManager CR as shown in the following example.

Note: The value of the wildcardDomain parameter must be a valid domain name that resolves to the address of your OpenShift Container Platform (OCP) router. For example, apps.mycluster.example.com.

APIManager CR with minimum requirements:
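A minimal sketch of such a CR, assuming the apps.3scale.net/v1alpha1 API version and a hypothetical resource name. The block writes the definition to a file so that you can paste its content into the editor.

```shell
# Minimal APIManager CR: only wildcardDomain is set.
cat > apimanager-minimal.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical name
spec:
  wildcardDomain: apps.mycluster.example.com   # must resolve to your OCP router
EOF
cat apimanager-minimal.yaml
```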

APIManager CR with replicas configured:
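As an illustration of the replicas field, the following sketch sets one replica per component. The component layout (system, zync, backend, apicast) and the resource name are assumptions based on the operator's APIManager schema; adjust the counts to your own availability needs.

```shell
# APIManager CR with an explicit replica count per component.
cat > apimanager-replicas.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical name
spec:
  wildcardDomain: apps.mycluster.example.com
  system:
    appSpec:
      replicas: 1
    sidekiqSpec:
      replicas: 1
  zync:
    appSpec:
      replicas: 1
    queSpec:
      replicas: 1
  backend:
    cronSpec:
      replicas: 1
    listenerSpec:
      replicas: 1
    workerSpec:
      replicas: 1
  apicast:
    productionSpec:
      replicas: 1
    stagingSpec:
      replicas: 1
EOF
grep -c 'replicas:' apimanager-replicas.yaml
```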

2.4.2. Getting the Admin Portal URL

When you deploy 3scale using the operator, a default tenant is created, with a fixed URL: 3scale-admin.${wildcardDomain}

The 3scale Dashboard shows the new portal URL of the tenant. As an example, if the <wildCardDomain> is 3scale-project.example.com, the Admin Portal URL is: https://3scale-admin.3scale-project.example.com.

The wildcardDomain is the <wildCardDomain> parameter you provided during installation. Open this unique URL in a browser using this command:

xdg-open https://3scale-admin.3scale-project.example.com

Optionally, you can create new tenants on the MASTER portal URL: master.${wildcardDomain}.
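The URL patterns in this section can be sketched as plain shell variable expansion. The variable names are hypothetical, and the https scheme for the master portal is an assumption that mirrors the Admin Portal example.

```shell
# Derive the portal URLs from the wildcardDomain used at installation time.
WILDCARD_DOMAIN="3scale-project.example.com"
ADMIN_PORTAL_URL="https://3scale-admin.${WILDCARD_DOMAIN}"
MASTER_PORTAL_URL="https://master.${WILDCARD_DOMAIN}"

echo "${ADMIN_PORTAL_URL}"    # prints https://3scale-admin.3scale-project.example.com
echo "${MASTER_PORTAL_URL}"   # prints https://master.3scale-project.example.com
```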

2.4.3. Getting the APIManager Admin Portal and Master Admin Portal credentials

To log in to either the 3scale Admin Portal or Master Admin Portal after the operator-based deployment, you need the credentials for each separate portal. To get these credentials:

- Run the following commands to get the Admin Portal credentials:

oc get secret system-seed -o json | jq -r .data.ADMIN_USER | base64 -d
oc get secret system-seed -o json | jq -r .data.ADMIN_PASSWORD | base64 -d

- Log in as the Admin Portal administrator to verify these credentials are working.

- Run the following commands to get the Master Admin Portal credentials:

oc get secret system-seed -o json | jq -r .data.MASTER_USER | base64 -d
oc get secret system-seed -o json | jq -r .data.MASTER_PASSWORD | base64 -d

- Log in as the Master Admin Portal administrator to verify these credentials are working.

Additional resources

For more information about the APIManager fields, refer to the Reference documentation.

2.4.4. External databases for 3scale using the operator

Externalizing databases from a Red Hat 3scale API Management deployment provides isolation from the application and resilience against service disruptions at the database level. The resilience to service disruptions depends on the service level agreements (SLAs) provided by the infrastructure or platform provider where you host the databases. This is not offered by 3scale. For more details on externalizing of databases offered by your chosen deployment, see the associated documentation.

When you use external databases for 3scale using the operator, the aim is to provide uninterrupted uptime if, for example, one or more databases were to fail.

If you use external databases in your 3scale operator-based deployment, note the following:

- Configure and deploy 3scale critical databases externally. Critical databases include the system database, system redis, and backend redis components. Ensure that you deploy and configure these components in a way that makes them highly available.

Specify the connection endpoints to those components for 3scale by creating their corresponding Kubernetes secrets before deploying 3scale.

- See External databases installation for more information.

- See Enabling Pod Disruption Budgets for more information about non-database deployment configurations.

In the APIManager CR, set the .spec.externalComponents attribute to specify that the system database, system redis, and backend redis are external.

Additionally, if you want the zync database to be highly available to avoid zync potentially losing queue jobs data on restart, note the following:

- Deploy and configure the zync database externally. Make sure you deploy and configure the database in a way that it is highly available.

Specify the connection endpoint to the zync database for 3scale by creating its corresponding Kubernetes secret before deploying 3scale.

- See Zync database secret.

- Configure 3scale by setting the .spec.externalComponents.zync.database attribute in the APIManager CR to true to specify that the zync database is an external database.

2.5. Deployment configuration options for 3scale on OpenShift using the operator

This section provides information about the deployment configuration options for Red Hat 3scale API Management on OpenShift using the operator.

Prerequisites

- Configuring container registry authentication

- Deploying 3scale using the operator first requires that you follow the steps in Installing the 3scale Operator on OpenShift

OpenShift Container Platform 4.x

- A user account with administrator privileges in the OpenShift cluster.

2.5.1. Configuring proxy parameters for embedded APIcast

As a 3scale administrator, you can configure proxy parameters for embedded APIcast staging and production. This section provides reference information for specifying proxy parameters in an APIManager custom resource (CR). In other words, you are using the 3scale operator and an APIManager CR to deploy 3scale on OpenShift.

You can specify these parameters when you deploy an APIManager CR for the first time or you can update a deployed APIManager CR and the operator will reconcile the update. See Deploying the APIManager custom resource.

There are four proxy-related configuration parameters for embedded APIcast:

- allProxy

- httpProxy

- httpsProxy

- noProxy

allProxy

The allProxy parameter specifies an HTTP or HTTPS proxy to be used for connecting to services when a request does not specify a protocol-specific proxy.

After you set up a proxy, configure APIcast by setting the allProxy parameter to the address of the proxy. Authentication is not supported for the proxy. In other words, APIcast does not send authenticated requests to the proxy.

The value of the allProxy parameter is a string, there is no default, and the parameter is not required. Use this format to set the spec.apicast.productionSpec.allProxy parameter or the spec.apicast.stagingSpec.allProxy parameter:

<scheme>://<host>:<port>

For example:
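A sketch of how allProxy might be set for both environments, assuming a hypothetical proxy host named forward-proxy and a hypothetical resource name:

```shell
# APIManager fragment that sets allProxy for production and staging APIcast.
cat > apimanager-allproxy.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: example-apimanager             # hypothetical name
spec:
  apicast:
    productionSpec:
      allProxy: http://forward-proxy:80   # hypothetical proxy address
    stagingSpec:
      allProxy: http://forward-proxy:81   # hypothetical proxy address
EOF
cat apimanager-allproxy.yaml
```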

httpProxy

The httpProxy parameter specifies an HTTP proxy to be used for connecting to HTTP services.

After you set up a proxy, configure APIcast by setting the httpProxy parameter to the address of the proxy. Authentication is not supported for the proxy. In other words, APIcast does not send authenticated requests to the proxy.

The value of the httpProxy parameter is a string, there is no default, and the parameter is not required. Use this format to set the spec.apicast.productionSpec.httpProxy parameter or the spec.apicast.stagingSpec.httpProxy parameter:

http://<host>:<port>

For example:
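A comparable sketch for httpProxy, again assuming a hypothetical forward-proxy host:

```shell
# APIManager fragment that sets httpProxy for production and staging APIcast.
cat > apimanager-httpproxy.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: example-apimanager             # hypothetical name
spec:
  apicast:
    productionSpec:
      httpProxy: http://forward-proxy:80   # hypothetical proxy address
    stagingSpec:
      httpProxy: http://forward-proxy:81   # hypothetical proxy address
EOF
cat apimanager-httpproxy.yaml
```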

httpsProxy

The httpsProxy parameter specifies an HTTPS proxy to be used for connecting to services.

After you set up a proxy, configure APIcast by setting the httpsProxy parameter to the address of the proxy. Authentication is not supported for the proxy. In other words, APIcast does not send authenticated requests to the proxy.

The value of the httpsProxy parameter is a string, there is no default, and the parameter is not required. Use this format to set the spec.apicast.productionSpec.httpsProxy parameter or the spec.apicast.stagingSpec.httpsProxy parameter:

https://<host>:<port>

For example:
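A comparable sketch for httpsProxy, using the https scheme from the format above and a hypothetical forward-proxy host:

```shell
# APIManager fragment that sets httpsProxy for production and staging APIcast.
cat > apimanager-httpsproxy.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: example-apimanager             # hypothetical name
spec:
  apicast:
    productionSpec:
      httpsProxy: https://forward-proxy:80   # hypothetical proxy address
    stagingSpec:
      httpsProxy: https://forward-proxy:81   # hypothetical proxy address
EOF
cat apimanager-httpsproxy.yaml
```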

noProxy

The noProxy parameter specifies a comma-separated list of hostnames and domain names. When a request contains one of these names, APIcast does not proxy the request.

If you need to stop access to the proxy, for example during maintenance operations, set the noProxy parameter to an asterisk (*). This matches all hosts specified in all requests and effectively disables any proxies.

The value of the noProxy parameter is a string, there is no default, and the parameter is not required. Specify a comma-separated string to set the spec.apicast.productionSpec.noProxy parameter or the spec.apicast.stagingSpec.noProxy parameter. For example:
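A sketch of a noProxy setting, with made-up host and domain names standing in for the services that should bypass the proxy:

```shell
# APIManager fragment that excludes some hosts and domains from proxying.
cat > apimanager-noproxy.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: example-apimanager             # hypothetical name
spec:
  apicast:
    productionSpec:
      noProxy: theStore,company.com,big.red.com   # hypothetical names
    stagingSpec:
      noProxy: foo,bar.com,.extra.dot.com         # hypothetical names
EOF
cat apimanager-noproxy.yaml
```

Setting the value to "*" instead (quoted, so that YAML reads it as a string) would correspond to the maintenance case described above.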

2.5.2. Injecting custom environments with the 3scale operator

In a 3scale installation that uses embedded APIcast, you can use the 3scale operator to inject custom environments. Embedded APIcast is also referred to as managed or hosted APIcast. A custom environment defines behavior that APIcast applies to all upstream APIs that the gateway serves. To create a custom environment, define a global configuration in Lua code.

You can inject a custom environment before or after 3scale installation. After injecting a custom environment and after 3scale installation, you can remove a custom environment. The 3scale operator reconciles the changes.

Prerequisites

- The 3scale operator is installed.

Procedure

- Write Lua code that defines the custom environment that you want to inject. For example, an env1.lua file can define a custom logging policy that the 3scale operator loads for all services.

- Create a secret from the Lua file that defines the custom environment. For example:

$ oc create secret generic custom-env-1 --from-file=./env1.lua

A secret can contain multiple custom environments. Specify the --from-file option for each file that defines a custom environment. The operator loads each custom environment.

- Define an APIManager custom resource (CR) that references the secret you just created. An APIManager CR can reference multiple secrets that define custom environments. The operator loads each custom environment.

- Create the APIManager CR that adds the custom environment. For example:

$ oc apply -f apimanager.yaml
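The two files used in this procedure could look like the following sketch. The Lua part assumes APIcast's apicast.policy_chain module and its built-in logging policy, and the log format string and metrics port are illustrative choices. The CR part assumes the customEnvironments[].secretRef.name field and a hypothetical resource name.

```shell
# env1.lua: inserts a logging policy at the head of the global policy chain.
cat > env1.lua <<'EOF'
local cjson = require('cjson')
local PolicyChain = require('apicast.policy_chain')
local policy_chain = context.policy_chain

local logging_policy_config = cjson.decode([[
{
  "enable_access_logs": false,
  "custom_logging": "\"{{request}}\" to service {{service.id}} and {{service.name}}"
}
]])

policy_chain:insert(PolicyChain.load_policy('logging', 'builtin', logging_policy_config), 1)

return {
  policy_chain = policy_chain,
  port = { metrics = 9421 },
}
EOF

# apimanager.yaml: references the secret for both APIcast environments.
cat > apimanager.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-apicast-custom-environment   # hypothetical name
spec:
  wildcardDomain: example.com                   # placeholder domain
  apicast:
    productionSpec:
      customEnvironments:
        - secretRef:
            name: custom-env-1
    stagingSpec:
      customEnvironments:
        - secretRef:
            name: custom-env-1
EOF
ls env1.lua apimanager.yaml
```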

Next steps

You cannot update the content of a secret that defines a custom environment. If you need to update the custom environment you can do either of the following:

- The recommended option is to create a secret with a different name and update the APIManager CR field, customEnvironments[].secretRef.name. The operator triggers a rolling update and loads the updated custom environment.

- Alternatively, you can update the existing secret, redeploy APIcast by setting spec.apicast.productionSpec.replicas or spec.apicast.stagingSpec.replicas to 0, and then redeploy APIcast again by setting spec.apicast.productionSpec.replicas or spec.apicast.stagingSpec.replicas back to its previous value.

2.5.3. Injecting custom policies with the 3scale operator

In a 3scale installation that uses embedded APIcast, you can use the 3scale operator to inject custom policies. Embedded APIcast is also referred to as managed or hosted APIcast. Injecting a custom policy adds the policy code to APIcast. You can then use either of the following to add the custom policy to an API product’s policy chain:

- 3scale API

- Product custom resource (CR)

To use the 3scale Admin Portal to add the custom policy to a product’s policy chain, you must also register the custom policy’s schema with a CustomPolicyDefinition CR. Custom policy registration is a requirement only when you want to use the Admin Portal to configure a product’s policy chain.

You can inject a custom policy as part of or after 3scale installation. After injecting a custom policy and after 3scale installation, you can remove a custom policy by removing its specification from the APIManager CR. The 3scale operator reconciles the changes.

Prerequisites

- You are installing or you previously installed the 3scale operator.

- You have defined a custom policy as described in Write your own policy. That is, you have already created, for example, the my-policy.lua, apicast-policy.json, and init.lua files that define a custom policy.

Procedure

- Create a secret from the files that define one custom policy. For example:

$ oc create secret generic my-first-custom-policy-secret \
 --from-file=./apicast-policy.json \
 --from-file=./init.lua \
 --from-file=./my-first-custom-policy.lua

If you have more than one custom policy, create a secret for each custom policy. A secret can contain only one custom policy.

- Define an APIManager CR that references each secret that contains a custom policy. You can specify the same secret for APIcast staging and APIcast production. An APIManager CR can reference multiple secrets that define different custom policies. The operator loads each custom policy.

- Create the APIManager CR that references the secrets that contain the custom policies. For example:

$ oc apply -f apimanager.yaml
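An APIManager CR for this procedure could be sketched as follows. The customPolicies entries assume name, version, and secretRef fields. The policy name and version shown are hypothetical and would need to match the values in your apicast-policy.json file.

```shell
# APIManager fragment that loads one custom policy in staging and production.
cat > apimanager.yaml <<'EOF'
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-apicast-custom-policy   # hypothetical name
spec:
  wildcardDomain: example.com              # placeholder domain
  apicast:
    stagingSpec:
      customPolicies:
        - name: my-first-custom-policy     # hypothetical policy name
          version: "0.1"                   # hypothetical policy version
          secretRef:
            name: my-first-custom-policy-secret
    productionSpec:
      customPolicies:
        - name: my-first-custom-policy
          version: "0.1"
          secretRef:
            name: my-first-custom-policy-secret
EOF
cat apimanager.yaml
```

To load a second policy, you would add another list entry under customPolicies that points at its own secret.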

Next steps

You cannot update the content of a secret that defines a custom policy. If you need to update the custom policy you can do either of the following:

- The recommended option is to create a secret with a different name and update the APIManager CR customPolicies section to refer to the new secret. The operator triggers a rolling update and loads the updated custom policy.

- Alternatively, you can update the existing secret, redeploy APIcast by setting spec.apicast.productionSpec.replicas or spec.apicast.stagingSpec.replicas to 0, and then redeploy APIcast again by setting spec.apicast.productionSpec.replicas or spec.apicast.stagingSpec.replicas back to its previous value.

2.5.4. Configuring OpenTracing with the 3scale operator

In a 3scale installation that uses embedded APIcast, you can use the 3scale operator to configure OpenTracing. You can configure OpenTracing in the staging or production environments or both environments. By enabling OpenTracing, you get more insight and better observability on the APIcast instance.

Prerequisites

- The 3scale operator is installed or you are in the process of installing it.

- Prerequisites listed in Configuring APIcast to use OpenTracing.

- Jaeger is installed.

Procedure

Define a secret that contains your OpenTracing configuration details in stringData.config. This is the only valid value for the attribute that contains your OpenTracing configuration details. Any other specification prevents APIcast from receiving your OpenTracing configuration details. The following example shows a valid secret definition:

Create the secret. For example, if you saved the previous secret definition in the myjaeger.yaml file, you would run the following command:

$ oc create -f myjaeger.yaml

Define an APIManager custom resource (CR) that specifies OpenTracing attributes. In the CR definition, set the openTracing.tracingConfigSecretRef.name attribute to the name of the secret that contains your OpenTracing configuration details. The following example shows only content relevant to configuring OpenTracing.

Create the APIManager CR that configures OpenTracing. For example, if you saved the APIManager CR in the apimanager1.yaml file, you would run the following command:

$ oc apply -f apimanager1.yaml
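The two files used in this procedure can be sketched as follows. This is an illustration under assumptions rather than a reference configuration: the secret name myjaeger, the Jaeger agent address, the sampler settings, and the domain are placeholders, and the JSON keys follow the Jaeger client configuration format as commonly documented. The enabled and tracingLibrary attributes are assumed from the operator's APIManager CRD.

```yaml
# myjaeger.yaml: tracer configuration stored under stringData.config
apiVersion: v1
kind: Secret
metadata:
  name: myjaeger                       # hypothetical secret name
stringData:
  config: |-
    {
      "service_name": "apicast",
      "sampler": { "type": "const", "param": 1 },
      "reporter": { "localAgentHostPort": "jaeger-agent.example.svc:6831" }
    }
type: Opaque
---
# apimanager1.yaml: only the OpenTracing-related content is shown
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager1
spec:
  wildcardDomain: example.com          # placeholder domain
  apicast:
    stagingSpec:
      openTracing:
        enabled: true
        tracingLibrary: jaeger
        tracingConfigSecretRef:
          name: myjaeger               # must match the secret name above
    productionSpec:
      openTracing:
        enabled: true
        tracingLibrary: jaeger
        tracingConfigSecretRef:
          name: myjaeger
```

To enable tracing in only one environment, omit the openTracing block from the other spec.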

Next steps

Depending on how OpenTracing is installed, you should see the traces in the Jaeger service user interface.

Additional resource

2.5.5. Enabling TLS at the pod level with the 3scale operator

3scale deploys two APIcast instances, one for production and the other for staging. TLS can be enabled for only production or only staging, or for both instances.

Prerequisites

- A valid certificate for enabling TLS.

Procedure

Create a secret from your valid certificate, for example:

$ oc create secret tls mycertsecret --cert=server.crt --key=server.key

The configuration exposes secret references in the APIManager custom resource (CR). You create the secret and then reference the name of the secret in the APIManager CR as follows:

- Production: The APIManager CR exposes the certificate in the .spec.apicast.productionSpec.httpsCertificateSecretRef field.

- Staging: The APIManager CR exposes the certificate in the .spec.apicast.stagingSpec.httpsCertificateSecretRef field.

Optionally, you can configure the following:

- httpsPort indicates which port APIcast should start listening on for HTTPS connections. If this clashes with the HTTP port, APIcast uses this port for HTTPS only.

- httpsVerifyDepth defines the maximum length of the client certificate chain.

Note: Provide a valid certificate and reference it from the APIManager CR. If the configuration can access httpsPort but not httpsCertificateSecretRef, APIcast uses an embedded self-signed certificate. This is not recommended.

- Click Operators > Installed Operators.

- From the list of Installed Operators, click 3scale Operator.

- Click the API Manager tab.

- Click Create APIManager.

Add the following YAML definitions to the editor.

If enabling for production, configure the following YAML definitions:

If enabling for staging, configure the following YAML definitions:

- Click Create.
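As a rough sketch, the YAML entered in the editor might look like the following when TLS is enabled for both environments. The secret name mycertsecret comes from the earlier oc create secret tls step; the resource name, domain, port 8443, and verify depth 1 are illustrative assumptions.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  apicast:
    productionSpec:
      httpsPort: 8443                  # assumed port value
      httpsVerifyDepth: 1              # assumed chain depth
      httpsCertificateSecretRef:
        name: mycertsecret
    stagingSpec:
      httpsPort: 8443
      httpsVerifyDepth: 1
      httpsCertificateSecretRef:
        name: mycertsecret
```

Keep only the productionSpec or the stagingSpec entries to enable TLS for a single environment.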

2.5.6. Proof of concept for evaluation deployment

The following sections describe the configuration options applicable to the proof of concept for an evaluation deployment of 3scale. This deployment uses internal databases as default.

The configuration for external databases is the standard deployment option for production environments.

2.5.6.1. Default deployment configuration

- Containers will have Kubernetes resource limits and requests.

  - This ensures a minimum performance level.

  - It limits resources to allow external services and allocation of solutions.

- Deployment of internal databases.

- File storage will be based on Persistent Volumes (PV).

  - One will require ReadWriteMany (RWX) access mode.

  - OpenShift configured to provide them upon request.

- Deploy MySQL as the internal relational database.

The default configuration option is suitable for proof of concept (PoC) or evaluation by a customer.

One, many, or all of the default configuration options can be overridden with specific field values in the APIManager CR. The 3scale operator allows all available combinations.

2.5.6.2. Evaluation installation

For an evaluation installation, containers will not have Kubernetes resource limits and requests specified. This gives, for example:

- Small memory footprint

- Fast startup

- Runnable on laptop

- Suitable for presale/sales demos
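A minimal sketch of an evaluation CR, assuming that the resourceRequirementsEnabled attribute of the APIManager spec controls whether default limits and requests are applied. The resource name and domain are placeholders.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-eval                # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  resourceRequirementsEnabled: false   # no default limits or requests on containers
```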

Additional resources

- See APIManager CR for more information.

2.5.7. External databases installation

Externalizing databases from a Red Hat 3scale API Management deployment provides isolation from the application and resilience against service disruptions at the database level. The resilience to service disruptions depends on the service level agreements (SLAs) provided by the infrastructure or platform provider where you host the databases. This is not offered by 3scale. For more details on the database externalization offered by your chosen deployment, see the associated documentation.

An external databases installation is suitable for production where you want to provide uninterrupted uptime or where you plan to reuse your own databases.

When enabling the 3scale external databases installation mode, you can configure one or more of the following databases as external to 3scale:

- backend-redis

- system-redis

- system-database (mysql, postgresql, or oracle)

- zync-database

Before creating an APIManager CR to deploy 3scale, you must provide the following connection settings for the external databases by using OpenShift secrets.

Additional resources

2.5.7.1. Backend Redis secret

Deploy two external Redis instances and fill in the connection settings as shown in the following example:

The Secret name must be backend-redis.
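A sketch of the backend-redis secret, assuming the key names REDIS_STORAGE_URL and REDIS_QUEUES_URL used by the 3scale operator. The host names are placeholders for your two external Redis instances; verify the keys against the operator documentation for your version.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: backend-redis
stringData:
  REDIS_STORAGE_URL: "redis://backend-redis-storage.example.com:6379"   # placeholder host
  REDIS_QUEUES_URL: "redis://backend-redis-queues.example.com:6379"     # placeholder host
type: Opaque
```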

2.5.7.2. System Redis secret

Deploy two external Redis instances and fill in the connection settings as shown in the following example:

The Secret name must be system-redis.
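A sketch of the system-redis secret. The single URL key is an assumption based on the operator's secret layout, and the host is a placeholder; check the operator documentation for any additional keys your version expects.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: system-redis
stringData:
  URL: "redis://system-redis.example.com:6379/1"   # placeholder host and database index
type: Opaque
```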

2.5.7.3. System database secret

- The Secret name must be system-database.

When you are deploying 3scale, you have three alternatives for your system database. Configure different attributes and values for each alternative’s related secret.

- MySQL

- PostgreSQL

- Oracle Database

To deploy a MySQL, PostgreSQL, or an Oracle Database system database secret, fill in the connection settings as shown in the following examples:

MySQL system database secret

If you use MySQL 8.0 with 3scale 2.12, you must set the authentication plugin to mysql_native_password. Add the following to the MySQL configuration file:

[mysqld]

default_authentication_plugin=mysql_native_password

PostgreSQL system database secret

Oracle system database secret

- {DB_USER} and {DB_PASSWORD} are the username and password of the regular non-system user.

- {DB_NAME} is the Oracle Database service name.

- ORACLE_SYSTEM_PASSWORD is optional, see Configure a database user.
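A sketch of the Oracle variant of the secret, using the URL and ORACLE_SYSTEM_PASSWORD fields and the oracle-enhanced connection string format named in this chapter. {SYSTEM_PASSWORD} is a hypothetical placeholder. For MySQL or PostgreSQL, the assumption is that only the URL scheme changes (mysql2:// or postgresql://) and ORACLE_SYSTEM_PASSWORD is omitted.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: system-database
stringData:
  # Replace every {PLACEHOLDER} with a real value before creating the secret.
  URL: "oracle-enhanced://{DB_USER}:{DB_PASSWORD}@{DB_HOST}:{DB_PORT}/{DB_NAME}"
  ORACLE_SYSTEM_PASSWORD: "{SYSTEM_PASSWORD}"    # optional; hypothetical placeholder
type: Opaque
```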

2.5.7.4. Zync database secret

In a zync database setup, when the spec.externalComponents.zync.database field is set to true, you must create a secret named zync before you deploy 3scale. In this secret, set the DATABASE_URL and DATABASE_PASSWORD fields to the values that point to your external zync database, for example:

The zync database must be in high-availability mode.
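A sketch of the zync secret, using the DATABASE_URL and DATABASE_PASSWORD field names given in the paragraph above. The connection string format and the database name zync_production are assumptions, and the angle-bracket values are placeholders.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: zync
stringData:
  DATABASE_URL: "postgresql://<zync-db-user>:<zync-db-password>@<zync-db-host>:<zync-db-port>/zync_production"
  DATABASE_PASSWORD: "<zync-db-password>"
type: Opaque
```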

2.5.7.5. APIManager custom resources to deploy 3scale

- When you enable external components, you must create a secret for each external component (backend-redis, system-redis, system-database, zync) before you deploy 3scale.

- For an external system-database, choose only one type of database to externalize.

Configuration of the APIManager custom resource (CR) depends on whether or not your choice of database is external to your 3scale deployment.

If backend-redis, system-redis, or system-database is external to 3scale, populate the APIManager CR externalComponents object as shown in the following example:
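A sketch of a CR with all four databases externalized, assuming the boolean layout of the externalComponents object implied by the spec.externalComponents.zync.database field mentioned earlier. The resource name and domain are placeholders.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  externalComponents:
    backend:
      redis: true                      # requires the backend-redis secret
    system:
      database: true                   # requires the system-database secret
      redis: true                      # requires the system-redis secret
    zync:
      database: true                   # requires the zync secret
```

Set a component to false, or omit it, to keep that database internal.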

Additional resources

2.5.8. Enabling pod affinity in the 3scale operator

You can enable pod affinities in the 3scale operator for every component. This ensures distribution of pod replicas from each deploymentConfig across different nodes of the cluster, so they will be evenly balanced across different availability zones (AZ).

2.5.8.1. Customizing node affinity and tolerations at component level

Customize Kubernetes affinity and tolerations in your 3scale solution through the APIManager CR attributes. This lets you control how the different 3scale components are scheduled onto Kubernetes nodes.

For example, to set a custom node affinity for backend-listener and custom tolerations for system-memcached, do the following:

Custom affinity and tolerations
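A sketch of such a CR, assuming the listenerSpec.affinity and memcachedTolerations attributes of the APIManager CRD. The node name, taint key, and taint value are hypothetical.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  backend:
    listenerSpec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/hostname
                    operator: In
                    values:
                      - worker-1.example.com   # hypothetical node name
  system:
    memcachedTolerations:
      - key: key1                      # hypothetical taint key
        value: value1                  # hypothetical taint value
        operator: Equal
        effect: NoSchedule
```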

Add the following affinity block to apicastProductionSpec or to any non-database deploymentConfig. This adds a soft podAntiAffinity configuration using preferredDuringSchedulingIgnoredDuringExecution. The scheduler tries to run this set of apicast-production pods on different hosts in different AZs. If that is not possible, the scheduler allows them to run elsewhere:

Soft podAntiAffinity
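A sketch of a soft anti-affinity block using the standard Kubernetes pod anti-affinity schema. The deploymentConfig: apicast-production label and the weights are assumptions; adjust the selector to the labels your pods actually carry.

```yaml
affinity:
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100                    # prefer spreading across hosts first
        podAffinityTerm:
          labelSelector:
            matchLabels:
              deploymentConfig: apicast-production   # assumed pod label
          topologyKey: kubernetes.io/hostname
      - weight: 99                     # then across availability zones
        podAffinityTerm:
          labelSelector:
            matchLabels:
              deploymentConfig: apicast-production
          topologyKey: topology.kubernetes.io/zone
```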

In the following example, a hard podAntiAffinity configuration is set using requiredDuringSchedulingIgnoredDuringExecution. Conditions must be met to schedule a pod onto a node. A risk exists, for example, that you will not be able to schedule new pods on a cluster with low free resources:

Hard podAntiAffinity
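A sketch of the hard variant, again using the standard Kubernetes schema and the assumed deploymentConfig: apicast-production label. With this block, a pod stays pending if no node satisfies both rules.

```yaml
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            deploymentConfig: apicast-production   # assumed pod label
        topologyKey: kubernetes.io/hostname
      - labelSelector:
          matchLabels:
            deploymentConfig: apicast-production
        topologyKey: topology.kubernetes.io/zone
```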

Additional resources

See APIManager CRD reference for a full list of attributes related to affinity and tolerations.

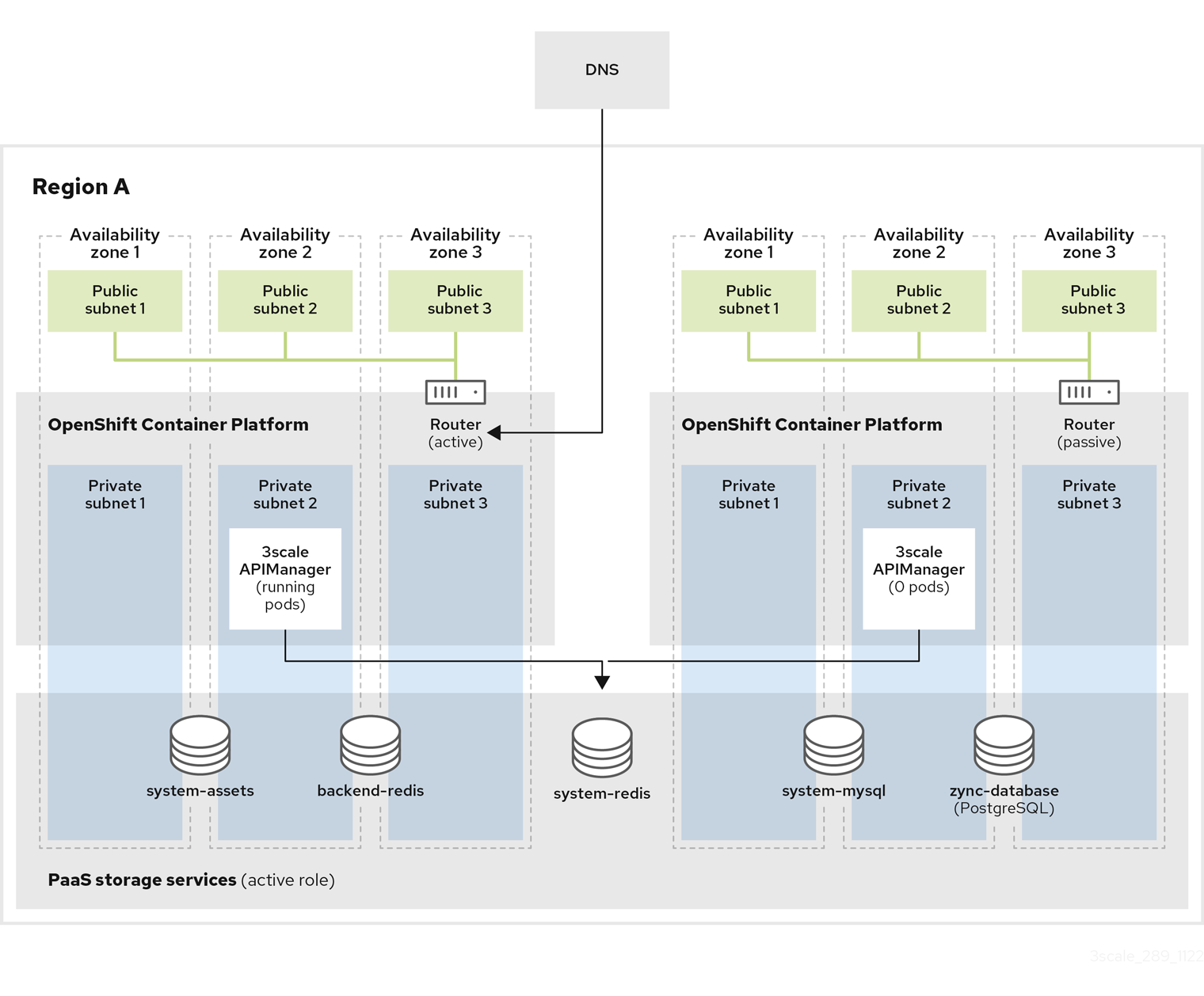

2.5.9. Multiple clusters in multiple availability zones

In case of failure, bringing a passive cluster into active mode disrupts the provision of the service until the procedure finishes. Due to this disruption, be sure to have a maintenance window.

This documentation focuses on deployment using Amazon Web Services (AWS). The same configuration options apply to other public cloud vendors where the provider’s managed database services offer, for example, support for multiple availability zones and multiple regions.

When you want to install 3scale on several OpenShift clusters and high availability (HA) zones, there are options available which you can refer to here.

In multiple cluster installation options, clusters work in an active/passive configuration, with the failover procedure involving a few manual steps.

2.5.9.1. Prerequisites for multiple clusters installations

Use the following in 3scale installations that involve using several OpenShift clusters:

- Use pod affinities with both kubernetes.io/hostname and topology.kubernetes.io/zone rules in the APIManager custom resource (CR).

- Use pod disruption budgets in the APIManager CR.

- A 3scale installation over multiple clusters must use the same shared wildcardDomain attribute specifications in the APIManager CR. The use of a different domain for each cluster is not allowed in this installation mode, as the information stored in the database would be conflicting.

- You must manually deploy the secrets containing credentials, such as tokens and passwords, in all clusters with the same values. The 3scale operator creates them with secure random values on every cluster. In this case, you need to have the same credentials in both clusters. You will find the list of secrets and how to configure them in the 3scale operator documentation. The following is the list of secrets you must mirror in both clusters:

  - backend-internal-api

  - system-app

  - system-events-hook

  - system-master-apicast

  - system-seed

- You must manually deploy secrets with the database connection strings for backend-redis, system-redis, system-database and zync. See External databases installation.

  - Databases shared among clusters must use the same values on all clusters.

  - If each cluster has its own databases, they must use different values on each cluster.
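The CR-level prerequisites above can be sketched as follows, assuming the podDisruptionBudget.enabled attribute of the APIManager CRD. The resource name and domain are placeholders; per-component affinity blocks are omitted for brevity.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder; must be identical on every cluster
  podDisruptionBudget:
    enabled: true
```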

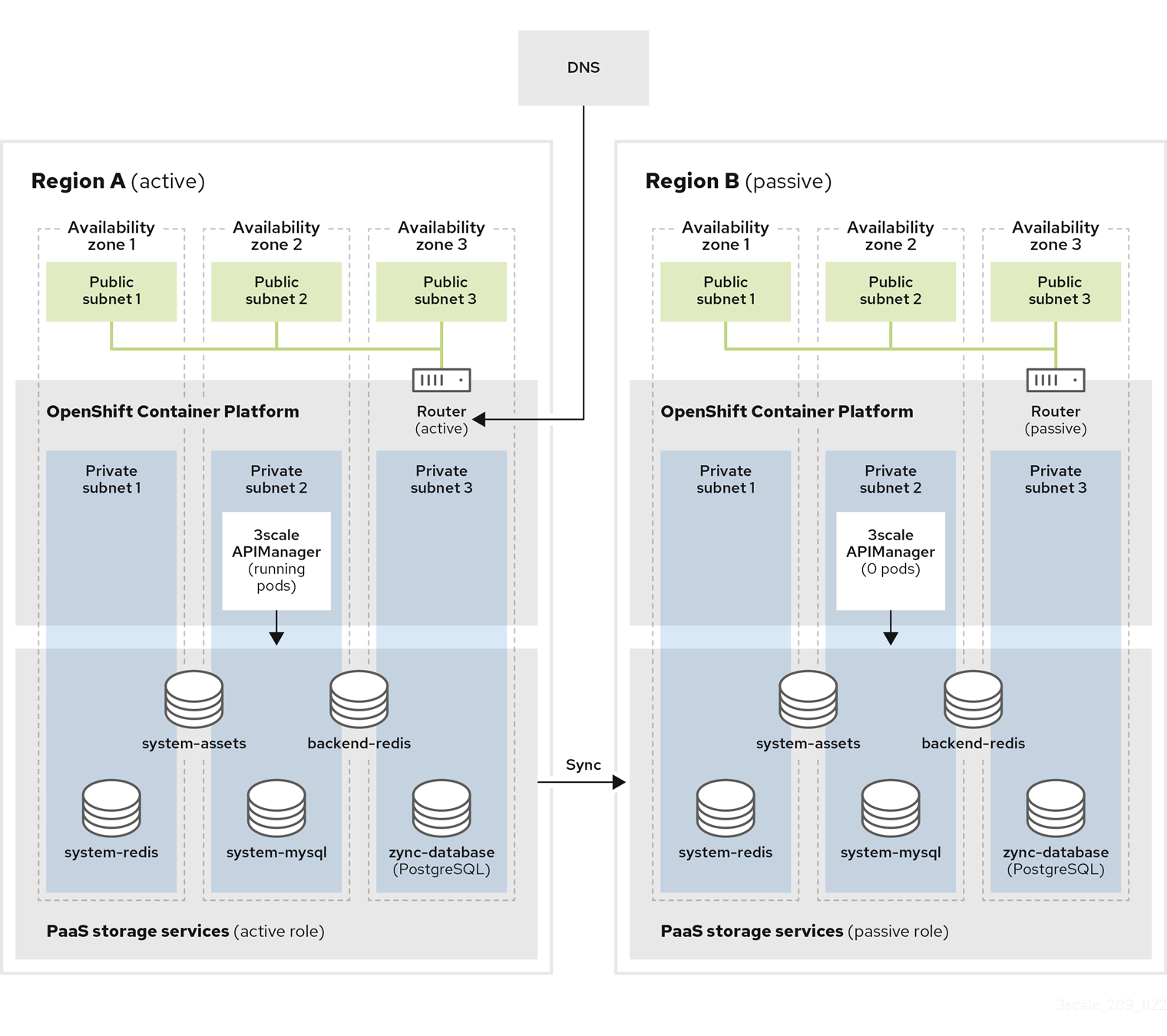

2.5.9.5. Active-passive clusters on different regions with synced databases

This setup consists of having two or more clusters in different regions and deploying 3scale in active-passive mode. One cluster is active and receives traffic. The others are passive: they stay in standby mode without receiving traffic, but are prepared to assume the active role if the active cluster fails.

To ensure good database access latency, each cluster has its own database instances. The databases from the active 3scale installation are replicated to the read-replica databases of the 3scale passive installations so the data is available and up to date in all regions for a possible failover.

2.5.9.6. Configuring and installing synced databases

Procedure

- Create two or more OpenShift clusters in different regions using different availability zones. A minimum of three zones is recommended.

Create all required AWS ElastiCache (EC) instances with Multi-AZ enabled on every region:

- Two AWS EC for Backend Redis database: one per region.

- Two AWS EC for System Redis database: one per region.

- Use the cross-region replication with the Global Datastore feature enabled, so the databases from passive regions are read-replicas from the master databases at the active region.

Create all required AWS RDS instances with Amazon RDS Multi-AZ enabled on every region:

- Two AWS RDS for the System database.

- Two AWS RDS for Zync database.

- Use cross-region replication, so the databases from passive regions are read-replicas from the master databases at the active region.

- Configure an AWS S3 bucket for the system assets on every region using cross-region replication.

- Create a custom domain in AWS Route53 or your DNS provider and point it to the OpenShift Router of the active cluster. This must coincide with the wildcardDomain attribute from APIManager CR.

Install 3scale in the passive cluster. The APIManager CR should be identical to the one used in the previous step. When all pods are running, change the APIManager to deploy 0 replicas for all the backend, system, zync, and APIcast pods.

- Set replicas to 0 to avoid consuming jobs from the active database. Deployment fails due to pod dependencies if each replica is set to 0 at first, because some pods check that others are running. First deploy as normal, then set replicas to 0.

2.5.9.7. Manual failover synced databases

Procedure

Do steps 1, 2 and 3 from Manual Failover shared databases.

- Every cluster has its own independent databases: read-replicas from the master at the active region.

- You must manually execute a failover on every database to select the new master on the passive region, which then becomes the active region.

Manual failovers of the databases to execute are:

- AWS RDS: System and Zync.

- AWS ElastiCaches: Backend and System.

- Do step 4 from Manual Failover shared databases.

2.5.10. Amazon Simple Storage Service 3scale fileStorage installation

Before creating the APIManager custom resource (CR) to deploy 3scale, provide connection settings for the Amazon Simple Storage Service (Amazon S3) service by using an OpenShift secret.

- Skip this section if you are deploying 3scale with the local filesystem storage.

- The name you choose for a secret can be any name as long as it is not an existing secret name and it will be referenced in the APIManager CR.

- If AWS_REGION is not provided for S3 compatible storage, use default or the deployment will fail.

- Disclaimer: Links contained herein to external website(s) are provided for convenience only. Red Hat has not reviewed the links and is not responsible for the content or its availability. The inclusion of any link to an external website does not imply endorsement by Red Hat of the website or their entities, products or services. You agree that Red Hat is not responsible or liable for any loss or expenses that may result due to your use of (or reliance on) the external site or content.

2.5.10.1. Amazon S3 bucket creation

Prerequisites

- You must have an Amazon Web Services (AWS) account.

Procedure

- Create a bucket for storing the system assets.

- Disable the public access blocker of S3 when using the Logo feature of the Developer Portal.

Create an Identity and Access Management (IAM) policy with the following minimum permissions:

Create a CORS configuration with the following rules:

2.5.10.2. Create an OpenShift secret

The following examples show 3scale fileStorage using Amazon S3 instead of persistent volume claim (PVC).

An AWS S3 compatible provider can be configured in the S3 secret with AWS_HOSTNAME, AWS_PATH_STYLE, and AWS_PROTOCOL optional keys. See the fileStorage S3 credentials secret fields table for more details.

In the following example, the Secret name can be anything, as long as it is referenced in the APIManager CR.
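A sketch of an S3 secret for IAM authentication, using the required field names from the fileStorage S3 credentials table in this section. The secret name aws-auth and all values are placeholders.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: aws-auth                       # hypothetical secret name
stringData:
  AWS_ACCESS_KEY_ID: "<access-key-id>"
  AWS_SECRET_ACCESS_KEY: "<secret-access-key>"
  AWS_BUCKET: "<bucket-name>"
  AWS_REGION: "<region>"
type: Opaque
```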

Lastly, create the APIManager CR to deploy 3scale.

Check APIManager SystemS3Spec.
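A sketch of a CR that switches fileStorage to Amazon S3, assuming the simpleStorageService.configurationSecretRef attribute of the APIManager CRD and a secret named aws-auth (a hypothetical name). The resource name and domain are placeholders.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  system:
    fileStorage:
      simpleStorageService:
        configurationSecretRef:
          name: aws-auth               # must match the name of your S3 secret
```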

The following table shows the fileStorage Amazon S3 credentials secret field requirements for Identity and Access Management (IAM) and Security Token Service (STS) settings:

- The S3 authentication method using Security Token Service (STS) is for short-term, limited-privilege security credentials.

- S3 Identity and Access Management (IAM) is for long-term privilege security credentials.

| Field | Description | Required for IAM | Required for STS |
|---|---|---|---|
| AWS_ACCESS_KEY_ID | AWS Access Key ID to use in S3 Storage for system’s | Yes | No |
| AWS_SECRET_ACCESS_KEY | AWS Access Key Secret to use in S3 Storage for system’s | Yes | No |
| AWS_BUCKET | The S3 bucket to be used as system’s | Yes | Yes |
| AWS_REGION | The region of the S3 bucket to be used as system’s | Yes | Yes |
| AWS_HOSTNAME | Default: Amazon endpoints - An AWS S3 compatible provider endpoint hostname | No | No |
| AWS_PROTOCOL | Default: | No | No |
| AWS_PATH_STYLE | Default: | No | No |
| AWS_ROLE_ARN | ARN of the Role which has a policy attached to authenticate using AWS STS | No | Yes |
| AWS_WEB_IDENTITY_TOKEN_FILE | Path to mounted token file location. For example: | No | Yes |

Additional resources

2.5.11. PostgreSQL installation

A MySQL internal relational database is the default deployment. This deployment configuration can be overridden to use PostgreSQL instead.
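A sketch of the override, assuming the system.database.postgresql attribute of the APIManager CRD. An empty postgresql object selects PostgreSQL with default settings; the resource name and domain are placeholders.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  system:
    database:
      postgresql: {}                   # use internal PostgreSQL instead of MySQL
```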

Additional resources

- See APIManager DatabaseSpec for more information.

2.5.12. Configuring SMTP variables (optional)

3scale uses email to send notifications and invite new users. If you intend to use these features, you must provide your own SMTP server and configure SMTP variables in the system-smtp secret.

Perform the following steps to configure the SMTP variables in the system-smtp secret.

Procedure

If you are not already logged in, log in to OpenShift:

$ oc login

Using the oc patch command, specify the secret type where system-smtp is the name of the secret, followed by the -p option, and write the new values in JSON for the following variables:

Table 2.2. system-smtp

| Field | Description | Default value |
|---|---|---|
| address | This is the address (hostname or IP) of the remote mail server to use. If this is set to a value different than "", system will use the mail server to send mails related to events that happen in the API management solution. | "" |
| port | This is the port of the remote mail server to use. | "" |
| domain | Use domain if the mail server requires a HELO domain. | "" |
| authentication | Use if the mail server requires authentication. Set the authentication types: plain to send the password in the clear, login to send password Base64 encoded, or cram_md5 to combine a challenge/response mechanism based on the HMAC-MD5 algorithm. | "" |
| username | Use username if the mail server requires authentication and the authentication type requires it. | "" |
| password | Use password if the mail server requires authentication and the authentication type requires it. | "" |
| openssl.verify.mode | When using TLS, you can set how OpenSSL checks the certificate. This is useful if you need to validate a self-signed and/or a wildcard certificate. You can use the name of an OpenSSL verify constant: none or peer. | "" |
| from_address | from address value for the no-reply mail. | "" |

Examples

$ oc patch secret system-smtp -p '{"stringData":{"address":"<your_address>"}}'

$ oc patch secret system-smtp -p '{"stringData":{"username":"<your_username>"}}'

$ oc patch secret system-smtp -p '{"stringData":{"password":"<your_password>"}}'

After you have set the secret variables, redeploy the system-app and system-sidekiq pods:

$ oc rollout latest dc/system-app

$ oc rollout latest dc/system-sidekiq

Check the status of the rollout to ensure it has finished:

$ oc rollout status dc/system-app

$ oc rollout status dc/system-sidekiq

2.5.13. Customizing compute resource requirements at component level

Customize Kubernetes compute resource requirements in your 3scale solution through the APIManager custom resource (CR) attributes. Do this to customize the compute resource requirements, that is, CPU and memory, assigned to a specific APIManager component.

The following example outlines how to customize compute resource requirements for the system-master’s system-provider container, for the backend-listener and for the zync-database:
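A sketch of such a CR, assuming the providerContainerResources, listenerSpec.resources, and databaseResources attributes of the APIManager CRD. All CPU and memory values are illustrative only.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  backend:
    listenerSpec:
      resources:
        requests:
          cpu: "300m"
          memory: "150Mi"
        limits:
          cpu: "1000m"
          memory: "500Mi"
  system:
    appSpec:
      providerContainerResources:
        requests:
          cpu: "222m"
          memory: "111Mi"
        limits:
          cpu: "444m"
          memory: "333Mi"
  zync:
    databaseResources:
      requests:
        cpu: "222m"
        memory: "111Mi"
      limits:
        cpu: "444m"
        memory: "333Mi"
```

Each resources block follows the standard Kubernetes requests and limits layout, so components not listed keep their defaults.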

Additional resources

See APIManager CRD reference for more information about how to specify component-level CR requirements.

2.5.13.1. Default APIManager components compute resources

When you configure the APIManager spec.resourceRequirementsEnabled attribute as true, the default compute resources are set for the APIManager components.

The specific compute resources default values that are set for the APIManager components are shown in the following table.

2.5.13.1.1. CPU and memory units

The following list explains the units you will find mentioned in the compute resources default values table. For more information on CPU and memory units, see Managing Resources for Containers.

Resource units explanation

- m - milliCPU or millicore

- Mi - mebibytes

- Gi - gibibyte

- G - gigabyte

| Component | CPU requests | CPU limits | Memory requests | Memory limits |
|---|---|---|---|---|
| system-app’s system-master | 50m | 1000m | 600Mi | 800Mi |
| system-app’s system-provider | 50m | 1000m | 600Mi | 800Mi |
| system-app’s system-developer | 50m | 1000m | 600Mi | 800Mi |
| system-sidekiq | 100m | 1000m | 500Mi | 2Gi |
| system-sphinx | 80m | 1000m | 250Mi | 512Mi |
| system-redis | 150m | 500m | 256Mi | 32Gi |
| system-mysql | 250m | No limit | 512Mi | 2Gi |
| system-postgresql | 250m | No limit | 512Mi | 2Gi |
| backend-listener | 500m | 1000m | 550Mi | 700Mi |
| backend-worker | 150m | 1000m | 50Mi | 300Mi |
| backend-cron | 50m | 150m | 40Mi | 80Mi |
| backend-redis | 1000m | 2000m | 1024Mi | 32Gi |
| apicast-production | 500m | 1000m | 64Mi | 128Mi |
| apicast-staging | 50m | 100m | 64Mi | 128Mi |
| zync | 150m | 1 | 250M | 512Mi |
| zync-que | 250m | 1 | 250M | 512Mi |
| zync-database | 50m | 250m | 250M | 2G |

2.5.14. Customizing node affinity and tolerations at component level

Customize Kubernetes Affinity and Tolerations in your Red Hat 3scale API Management solution through the APIManager CR attributes to customize where and how the different 3scale components of an installation are scheduled onto Kubernetes Nodes.

The following example sets a custom node affinity for the backend listener and custom tolerations for the system-memcached:

Additional resources

See APIManager CRD reference for a full list of attributes related to affinity and tolerations.

2.5.15. Reconciliation

Once 3scale has been installed, the 3scale operator enables updating a given set of parameters from the custom resource (CR) to modify system configuration options. Modifications are made by hot swapping, that is, without stopping or shutting down the system.

Not all the parameters of the APIManager custom resource definitions (CRDs) are reconcilable.

The following is a list of reconcilable parameters:

2.5.15.1. Resources

Resource limits and requests for all 3scale components.

2.5.15.2. Backend replicas

Backend components pod count.

2.5.15.3. APIcast replicas

APIcast staging and production components pod count.

2.5.15.4. System replicas

System app and system sidekiq components pod count

2.5.15.5. Zync replicas

Zync app and que components pod count
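As an illustration of reconcilable parameters, the following sketch changes pod counts on an existing CR. The replicas attributes under each component spec are assumed from the APIManager CRD, and the counts are arbitrary example values.

```yaml
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: apimanager-sample              # hypothetical resource name
spec:
  wildcardDomain: example.com          # placeholder domain
  backend:
    listenerSpec:
      replicas: 2
    workerSpec:
      replicas: 2
  apicast:
    productionSpec:
      replicas: 2
    stagingSpec:
      replicas: 1
  system:
    appSpec:
      replicas: 2
    sidekiqSpec:
      replicas: 2
  zync:
    appSpec:
      replicas: 1
    queSpec:
      replicas: 1
```

Applying the updated CR with oc apply lets the operator adjust the running deployment without a reinstall.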

2.6. Installing 3scale with the operator using Oracle as the system database

As a Red Hat 3scale API Management administrator, you can install 3scale with the operator using Oracle Database. By default, 3scale 2.13 has a component called system that stores configuration data in a MySQL database. You can override the default database and store your information in an external Oracle Database.

- The Oracle Database is not supported with OpenShift Container Platform (OCP) versions 4.2 and 4.3 when you are performing an operator-only installation of 3scale. For more information, refer to the Red Hat 3scale API Management Supported Configurations page.

- In this documentation myregistry.example.com is used as an example of the registry URL. Replace it with your registry URL.

Prerequisites

- A container registry to push container images, accessible by the OCP cluster where 3scale is installed.

- An installation of the 3scale operator.

  - Do not install the APIManager CR, as it will be created in the following procedure.

- A Registry service account for 3scale.

- A supported version of the Oracle Database accessible from your OpenShift cluster.

- Access to the Oracle Database SYSTEM user for installation procedures.

To install 3scale with the operator using Oracle as the system database, use the following steps:

2.6.1. Preparing the Oracle Database

As a 3scale administrator, you must fully prepare the Oracle Database for your 3scale installation when you decide to use it for the System component.

Procedure

- Create a new database.

Apply the following settings:

ALTER SYSTEM SET max_string_size=extended SCOPE=SPFILE;

Configure a database user

There are two options for setting up Oracle Database integration in 3scale: with or without providing the Oracle SYSTEM user password.

3scale uses the SYSTEM user only for the initial setup, which consists of creating a regular user and granting it the required privileges. The following SQL commands will set up a regular user with proper permissions. ({DB_USER} and {DB_PASSWORD} are placeholders that need to be replaced with actual values):

Using the SYSTEM user:

- Provide the SYSTEM user password in the ORACLE_SYSTEM_PASSWORD field of the system-database secret.

- The regular user does not need to exist before the installation. It will be created by the 3scale initialization script.

- Provide the desired username and password for the regular user in the connection string (e.g. oracle-enhanced://{DB_USER}:{DB_PASSWORD}@{DB_HOST}:{DB_PORT}/{DB_NAME}) in the URL field of the system-database secret.

- The password for the regular Oracle Database non-system user must be unique and not match the SYSTEM user password.

- If the user with the specified username already exists, the 3scale initialization script will attempt to update the password using the following command:

ALTER USER {DB_USER} IDENTIFIED BY {DB_PASSWORD}

Your database configuration might prevent this command from completing successfully if the parameters PASSWORD_REUSE_TIME and PASSWORD_REUSE_MAX are set in a way that restricts reusing the same password.

Manual setup of the regular database user:

- You don’t need to provide the ORACLE_SYSTEM_PASSWORD in the system-database secret.

- The regular database user (not SYSTEM) specified in the connection string in the URL field of the system-database secret needs to exist prior to the 3scale installation.

- The regular user used for the installation must have all the privileges listed above.

Additional resources

- For information on creating a new database, see the Oracle Database 19c documentation.

2.6.2. Building a custom system container image

Procedure

Download 3scale OpenShift templates from the GitHub repository and extract the archive:

tar -xzf 3scale-2.13.0-GA.tar.gz

tar -xzf 3scale-2.13.0-GA.tar.gzCopy to Clipboard Copied! Toggle word wrap Toggle overflow From the Instant Client Downloads page, download:

- A client: It can be either basic-lite or basic.

- The ODBC driver.

The SDK for Oracle Database 19c.

- For 3scale, use Instant Client Downloads for Linux x86-64 (64-bit)

- For ppc64le and 3scale, use Oracle Instant Client Downloads for Linux on Power Little Endian (64-bit)

Check the table for the following Oracle software component versions:

- Oracle Instant Client Package: Basic or Basic Light

- Oracle Instant Client Package: SDK

Oracle Instant Client Package: ODBC

Expand Table 2.4. Oracle 19c example packages for 3scale Oracle 19c package name Compressed file name Basic

instantclient-basic-linux.x64-19.8.0.0.0dbru.zip

Basic Light

instantclient-basiclite-linux.x64-19.8.0.0.0dbru.zip

SDK

instantclient-sdk-linux.x64-19.8.0.0.0dbru.zip

ODBC

instantclient-odbc-linux.x64-19.8.0.0.0dbru.zip

Expand Table 2.5. Oracle 19c example packages for ppc64le and 3scale Oracle 19c package name Compressed file name Basic

instantclient-basic-linux.leppc64.c64-19.3.0.0.0dbru.zip

Basic Light

instantclient-basiclite-linux.leppc64.c64-19.3.0.0.0dbru.zip

SDK

instantclient-sdk-linux.leppc64.c64-19.3.0.0.0dbru.zip

ODBC

instantclient-odbc-linux.leppc64.c64-19.3.0.0.0dbru.zip

NoteIf the client packages versions downloaded and stored locally do not match with the ones 3scale expects, 3scale will automatically download and use the appropriate ones in the following steps.

Place your Oracle Database Instant Client Package files into the system-oracle-3scale-2.13.0-GA/oracle-client-files directory.

Log in to your registry.redhat.io account using the credentials you created in Creating a Registry Service Account:

$ docker login registry.redhat.io

Build the custom system Oracle-based image. The image tag must be a fixed image tag, as in the following example:

$ docker build . --tag myregistry.example.com/system-oracle:2.13.0-1

Push the system Oracle-based image to a container registry accessible by the OCP cluster. This container registry is where your 3scale solution is going to be installed:

$ docker push myregistry.example.com/system-oracle:2.13.0-1
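The build and push steps can be guarded with a small tag check. This sketch assumes that "fixed image tag" means an explicit tag other than `latest`; that reading, and the function name `has_fixed_tag`, are assumptions rather than something the procedure defines.

```shell
# Hypothetical helper: returns 0 when the image reference carries an
# explicit tag other than "latest" (an assumed reading of "fixed tag").
has_fixed_tag() {
    image="$1"
    # Look only at the last path component so that a registry port
    # (for example myregistry:5000/name) is not mistaken for a tag.
    name="${image##*/}"
    case "$name" in
        *:*) tag="${name##*:}" ;;
        *)   return 1 ;;   # no tag at all
    esac
    [ -n "$tag" ] && [ "$tag" != "latest" ]
}

# Example usage (requires docker and a prior registry login):
# IMAGE=myregistry.example.com/system-oracle:2.13.0-1
# has_fixed_tag "$IMAGE" && docker build . --tag "$IMAGE" && docker push "$IMAGE"
```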

2.6.3. Installing 3scale with Oracle using the operator

Procedure

Set up the Oracle Database URL connection string and Oracle Database system password by creating the system-database secret with the corresponding fields. See External databases installation for the Oracle Database.

Install your 3scale solution by creating an APIManager CR. Follow the instructions in Deploying 3scale using the operator.

The APIManager CR must specify the .spec.system.image field set to the system's Oracle-based image you previously built.
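As an illustration of where the image reference goes, the sketch below writes a minimal APIManager manifest with `.spec.system.image` set. The function name `write_apimanager_manifest` is hypothetical, the wildcard domain is a placeholder, and the resource name `example-apimanager` is borrowed from the troubleshooting section; a real CR also needs the external database and registry settings described in the referenced sections.

```shell
# Hypothetical helper: write a minimal APIManager manifest.
#   $1 = Oracle-based system image, $2 = wildcard domain, $3 = output file
# Only the fields shown are set; this is not a complete production CR.
write_apimanager_manifest() {
    image="$1"
    domain="$2"
    out="$3"
    cat > "$out" <<EOF
apiVersion: apps.3scale.net/v1alpha1
kind: APIManager
metadata:
  name: example-apimanager
spec:
  wildcardDomain: $domain
  system:
    image: "$image"
EOF
}

# Example usage (placeholder domain; requires oc and cluster access):
# write_apimanager_manifest myregistry.example.com/system-oracle:2.13.0-1 \
#     example.com apimanager.yaml
# oc create -f apimanager.yaml
```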

2.7. Troubleshooting common 3scale installation issues

This section contains a list of common installation issues and provides guidance for their resolution.

- Previous deployment leaving dirty persistent volume claims

- Wrong or missing credentials of the authenticated image registry

- Incorrectly pulling from the Docker registry

- Permission issues for MySQL when persistent volumes are mounted locally

- Unable to upload logo or images

- Test calls not working on OpenShift

- APIcast on a different project from 3scale failing to deploy

2.7.1. Previous deployment leaving dirty persistent volume claims

Problem

A previous deployment attempt leaves a dirty Persistent Volume Claim (PVC) causing the MySQL container to fail to start.

Cause

Deleting a project in OpenShift does not clean the PVCs associated with it.

Solution

Procedure

Find the PVC containing the erroneous MySQL data with the oc get pvc command.

Stop the deployment of the system-mysql pod by clicking cancel deployment in the OpenShift UI.

Delete everything under the MySQL path to clean the volume.

Start a new system-mysql deployment.
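To narrow the `oc get pvc` listing to likely candidates, the output can be filtered by name. This is a sketch under the assumption that the affected claim has "mysql" in its name; the function name `mysql_pvcs` is hypothetical.

```shell
# Hypothetical filter: reads `oc get pvc` output on stdin and prints the
# names of claims whose name contains "mysql". The first line is skipped
# because it is the column header.
mysql_pvcs() {
    awk 'NR > 1 && $1 ~ /mysql/ { print $1 }'
}

# Example usage (requires oc and access to the project):
# oc get pvc | mysql_pvcs
```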

2.7.2. Wrong or missing credentials of the authenticated image registry

Problem

Pods are not starting. ImageStreams show the following error:

! error: Import failed (InternalError): ...unauthorized: Please login to the Red Hat Registry

Cause

While installing 3scale on OpenShift 4.x, OpenShift fails to start pods because ImageStreams cannot pull the images they reference. This happens because the pods cannot authenticate against the registries they point to.

Solution

Procedure

Type the following command to verify the configuration of your container registry authentication:

$ oc get secret

If your secret exists, you will see the following output in the terminal:

threescale-registry-auth kubernetes.io/dockerconfigjson 1 4m9s

However, if you do not see the output, you must do the following:

- Use the credentials you previously set up while Creating a registry service account to create your secret.

- Use the steps in Configuring registry authentication in OpenShift, replacing <your-registry-service-account-username> and <your-registry-service-account-password> in the oc create secret command provided.

- Generate the threescale-registry-auth secret in the same namespace as the APIManager resource. You must run the oc create secret command inside the <project-name>.

- Delete and recreate the APIManager resource:

$ oc delete -f apimanager.yaml
apimanager.apps.3scale.net "example-apimanager" deleted

$ oc create -f apimanager.yaml
apimanager.apps.3scale.net/example-apimanager created
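The secret check can also be scripted. The sketch below parses `oc get secret` output for a secret of type kubernetes.io/dockerconfigjson; the function name `has_registry_secret` is hypothetical, and the default secret name is the one shown in the example output.

```shell
# Hypothetical check: reads `oc get secret` output on stdin and succeeds
# only if a secret named $1 (default: threescale-registry-auth) of type
# kubernetes.io/dockerconfigjson is listed.
has_registry_secret() {
    awk -v name="${1:-threescale-registry-auth}" '
        $1 == name && $2 == "kubernetes.io/dockerconfigjson" { found = 1 }
        END { exit !found }'
}

# Example usage (requires oc and access to the project):
# oc get secret | has_registry_secret || echo "registry secret not found"
```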

Verification

Confirm that the deployments have a status of Starting or Ready. The pods then begin to spawn.

Check the status of each pod.
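One way to follow pod status from the command line is to filter the pod listing for pods that are not fully ready. This sketch assumes the default `oc get pods --no-headers` column order (NAME, READY, STATUS, ...); the function name `pods_not_ready` is hypothetical.

```shell
# Hypothetical filter: reads `oc get pods --no-headers` output on stdin and
# prints pods whose READY column (for example 2/3) shows fewer ready
# containers than expected. Completed pods, such as finished hooks, are
# skipped because they are not expected to be ready.
pods_not_ready() {
    awk '{
        split($2, ready, "/")
        if ($3 != "Completed" && ready[1] != ready[2]) print $1
    }'
}

# Example usage (requires oc and access to the project):
# oc get pods --no-headers | pods_not_ready
```

An empty result suggests that every listed pod is ready.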

2.7.3. Incorrectly pulling from the Docker registry

Problem

The following error occurs during installation:

svc/system-redis - 1EX.AMP.LE.IP:6379

dc/system-redis deploys docker.io/rhscl/redis-32-rhel7:3.2-5.3

deployment #1 failed 13 minutes ago: config change

Cause

OpenShift searches for and pulls container images by issuing the docker command. This command refers to the docker.io Docker registry instead of the registry.redhat.io Red Hat Ecosystem Catalog.

This occurs when the system contains an unexpected version of the Docker containerized environment.

Solution

Procedure

Use the appropriate version of the Docker containerized environment.

2.7.4. Permission issues for MySQL when persistent volumes are mounted locally

Problem

The system-mysql pod crashes and does not deploy, causing other systems dependent on it to fail deployment. The pod log displays the following error:

[ERROR] Cannot start server : on unix socket: Permission denied

[ERROR] Do you already have another mysqld server running on socket: /var/lib/mysql/mysql.sock ?

[ERROR] Aborting

Cause

The MySQL process is started with inappropriate user permissions.

Solution

Procedure

The directories used for the persistent volumes MUST have the write permissions for the root group. Having read-write permissions for the root user is not enough as the MySQL service runs as a different user in the root group. Execute the following command as the root user:

chmod -R g+w /path/for/pvs

Execute the following command to prevent SELinux from blocking access:

chcon -Rt svirt_sandbox_file_t /path/for/pvs
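To see which paths still lack group write permission before or after running chmod, a find expression can list them. This is a sketch only; the function name `list_not_group_writable` is hypothetical, and it checks permission bits, not group ownership or SELinux labels.

```shell
# Hypothetical helper: print every path under $1 (including $1 itself)
# that does not have the group write bit set.
list_not_group_writable() {
    find "$1" ! -perm -g+w
}

# Example usage, as the root user:
# list_not_group_writable /path/for/pvs
```

An empty result means every file and directory under the path is group writable.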

2.7.5. Unable to upload logo or images

Problem

Unable to upload a logo - system-app logs display the following error:

Errno::EACCES (Permission denied @ dir_s_mkdir - /opt/system/public//system/provider-name/2

Cause

Persistent volumes are not writable by OpenShift.

Solution

Procedure

Ensure your persistent volume is writable by OpenShift. It should be owned by the root group and be group writable.

2.7.6. Test calls not working on OpenShift

Problem

Test calls do not work after creation of a new service and routes on OpenShift. Direct calls via curl also fail, stating: service not available.

Cause

3scale requires HTTPS routes by default, and OpenShift routes are not secured.

Solution

Procedure

Ensure the secure route checkbox is selected in your OpenShift router settings.

2.7.7. APIcast on a different project from 3scale failing to deploy

Problem

APIcast deploy fails (pod does not turn blue). You see the following error in the logs:

update acceptor rejected apicast-3: pods for deployment "apicast-3" took longer than 600 seconds to become ready

You see the following error in the pod:

Error synching pod, skipping: failed to "StartContainer" for "apicast" with RunContainerError: "GenerateRunContainerOptions: secrets \"apicast-configuration-url-secret\" not found"

Cause

The secret was not properly set up.

Solution

Procedure

When creating a secret with APIcast v3, specify apicast-configuration-url-secret:

oc create secret generic apicast-configuration-url-secret --from-literal=password=https://<ACCESS_TOKEN>@<TENANT_NAME>-admin.<WILDCARD_DOMAIN>
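The URL stored in the secret is assembled from three values, which is easy to get wrong by hand. The sketch below builds it from the access token, tenant name, and wildcard domain, following the pattern in the command above; the function name `apicast_config_url` and the sample values are hypothetical.

```shell
# Hypothetical helper: compose the configuration URL in the form
# https://<ACCESS_TOKEN>@<TENANT_NAME>-admin.<WILDCARD_DOMAIN>
#   $1 = access token, $2 = tenant name, $3 = wildcard domain
apicast_config_url() {
    printf 'https://%s@%s-admin.%s\n' "$1" "$2" "$3"
}

# Example usage (placeholder values; requires oc and access to the project):
# oc create secret generic apicast-configuration-url-secret \
#     --from-literal=password="$(apicast_config_url "$ACCESS_TOKEN" mytenant example.com)"
```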