Chapter 2. Governance and risk

Enterprises must meet internal standards for software engineering, secure engineering, resiliency, security, and regulatory compliance for workloads hosted on private, multicloud, and hybrid clouds. Red Hat Advanced Cluster Management for Kubernetes governance provides an extensible policy framework for enterprises to introduce their own security policies.

2.1. Governance architecture

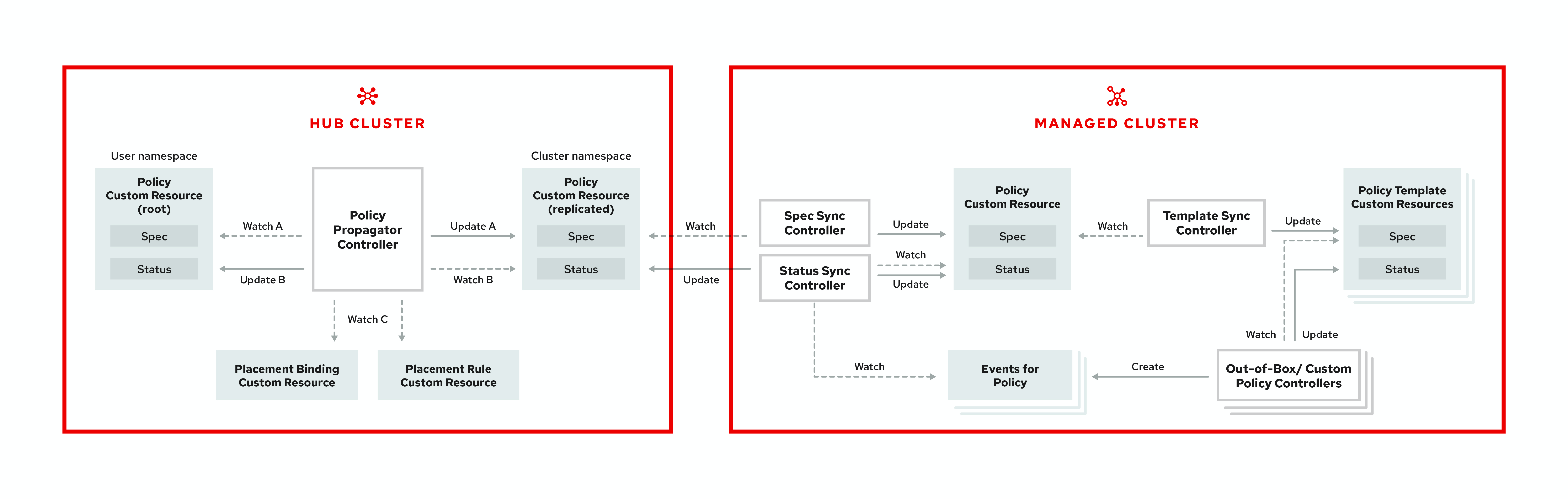

Enhance the security for your cluster with the Red Hat Advanced Cluster Management for Kubernetes governance lifecycle. The product governance lifecycle is based on defined policies, processes, and procedures to manage security and compliance from a central interface page. View the following diagram of the governance architecture:

The governance architecture is composed of the following components:

- Governance and risk dashboard: Provides a summary of your cloud governance and risk details, which include policy and cluster violations.

- Policy-based governance framework controllers: Support policy creation and deployment to various managed clusters based on attributes associated with clusters, such as a geographical region. See the policy-collection repository to view examples of the predefined policies and instructions on deploying policies to your cluster. You can also contribute custom policy controllers and policies.

- Policy controller: Evaluates one or more policies on the managed cluster against your specified control and generates Kubernetes events for violations. Violations are propagated to the hub cluster. The policy controllers that are included in your installation are the Kubernetes configuration, certificate, and IAM policy controllers. You can also create a custom policy controller.

You can customize your Summary view by filtering the violations by categories or standards. Collapse the summary to see less information. You can also search for policies.

Note:

- When a policy is propagated to a managed cluster, the replicated policy is named namespaceName.policyName. When you create a policy, make sure that the length of namespaceName.policyName is less than 63 characters due to the Kubernetes limit for object names.

- When you search for a policy in the hub cluster, you might also receive the name of the replicated policy on your managed cluster. For example, if you search for policy-dhaz-cert, the following policy name from the hub cluster might appear: default.policy-dhaz-cert.
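Because the replicated name combines two values, it is easy to exceed the limit without noticing. The following Python sketch checks the combined name before you create a policy. It is illustrative only: the helper names replicated_policy_name and check_policy_name are hypothetical, and the sketch simply applies the 63-character rule from the note.

```python
# Limit from the note: the replicated name must be shorter than this.
NAME_LENGTH_LIMIT = 63


def replicated_policy_name(namespace: str, policy_name: str) -> str:
    """Return the name that the replicated policy gets on a managed cluster."""
    return f"{namespace}.{policy_name}"


def check_policy_name(namespace: str, policy_name: str) -> str:
    """Return the replicated name, or raise ValueError if it is too long."""
    name = replicated_policy_name(namespace, policy_name)
    if len(name) >= NAME_LENGTH_LIMIT:
        raise ValueError(
            f"{name!r} is {len(name)} characters long; "
            f"it must be shorter than {NAME_LENGTH_LIMIT}"
        )
    return name


print(check_policy_name("default", "policy-dhaz-cert"))  # default.policy-dhaz-cert
```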

You can view a table of violations. The table provides the following details: description, resources, severity, cluster, standards, controls, categories, and update time. You can filter the violation table view by policies or cluster violations.

Learn about the structure of a Red Hat Advanced Cluster Management for Kubernetes policy, and how to use the Red Hat Advanced Cluster Management for Kubernetes Governance and risk dashboard.

2.2. Policy overview

Use the Red Hat Advanced Cluster Management for Kubernetes security policy framework to create custom policy controllers and other policies. Policies are created as Kubernetes CustomResourceDefinition (CRD) instances. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions.

Each Red Hat Advanced Cluster Management for Kubernetes policy can have one or more templates. For more details about the policy elements, see the Policy YAML table section on this page.

The policy requires a PlacementRule that defines the clusters that the policy document is applied to, and a PlacementBinding that binds the Red Hat Advanced Cluster Management for Kubernetes policy to the placement rule.
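The relationship between a policy, its PlacementRule, and its PlacementBinding can be sketched as plain data. The following Python sketch builds the two placement resources for an existing policy and prints them as a JSON List, a format that kubectl apply -f also accepts. The API groups and field names (clusterSelector, placementRef, subjects) reflect the open-cluster-management v1 APIs as we understand them; the function name, the environment=dev label, and the resource names are hypothetical, so check the fields against the CRDs installed on your hub cluster.

```python
import json


def placement_resources(policy_name: str, namespace: str, match_labels: dict) -> list:
    """Build a PlacementRule and a PlacementBinding for an existing policy."""
    rule_name = f"placement-{policy_name}"
    placement_rule = {
        "apiVersion": "apps.open-cluster-management.io/v1",
        "kind": "PlacementRule",
        "metadata": {"name": rule_name, "namespace": namespace},
        # Select managed clusters by their labels, for example a region label.
        "spec": {"clusterSelector": {"matchLabels": match_labels}},
    }
    placement_binding = {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "PlacementBinding",
        "metadata": {"name": f"binding-{policy_name}", "namespace": namespace},
        # The binding points at the placement rule...
        "placementRef": {
            "name": rule_name,
            "kind": "PlacementRule",
            "apiGroup": "apps.open-cluster-management.io",
        },
        # ...and at the policies that the rule places.
        "subjects": [
            {"name": policy_name, "kind": "Policy", "apiGroup": "policy.open-cluster-management.io"}
        ],
    }
    return [placement_rule, placement_binding]


# In this sketch, both resources share the policy's hub namespace.
resources = placement_resources("policy-namespace", "default", {"environment": "dev"})
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": resources}, indent=2))
```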

Important:

- You must create a PlacementRule to apply your policies to the managed cluster, and bind the PlacementRule with a PlacementBinding.

- You can create a policy in any namespace on the hub cluster except the cluster namespace. If you create a policy in the cluster namespace, it is deleted by Red Hat Advanced Cluster Management for Kubernetes.

- Each client and provider is responsible for ensuring that their managed cloud environment meets internal enterprise security standards for software engineering, secure engineering, resiliency, security, and regulatory compliance for workloads hosted on Kubernetes clusters. Use the governance and security capability to gain visibility and remediate configurations to meet standards.

2.2.1. Policy YAML structure

When you create a policy, you must include the required parameter fields and values. Depending on your policy controller, you might need to include other optional fields and values. View the following YAML structure for an explanation of the parameter fields:
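The following Python sketch assembles a dict with the fields from the Policy YAML table and reports which required fields are missing. It is a sketch under stated assumptions, not the product's validation logic: the apiVersion value policy.open-cluster-management.io/v1, the objectDefinition wrapper for each template, and the default remediationAction of inform reflect the open-cluster-management API as we understand it, and the helper names are hypothetical.

```python
import json

# Dotted paths of the fields that the Policy YAML table marks as required.
REQUIRED_FIELDS = ["apiVersion", "kind", "metadata.name", "spec.policy-templates", "spec.disabled"]
ANNOTATION_PREFIX = "policy.open-cluster-management.io/"


def build_policy(name, namespace, templates, *, standards=None, categories=None,
                 controls=None, remediation_action="inform", disabled=False):
    """Return a dict shaped like a Policy resource; empty annotations are omitted."""
    annotations = {
        ANNOTATION_PREFIX + key: value
        for key, value in (("standards", standards), ("categories", categories),
                           ("controls", controls))
        if value
    }
    return {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "Policy",
        "metadata": {"name": name, "namespace": namespace, "annotations": annotations},
        "spec": {
            "remediationAction": remediation_action,
            "disabled": disabled,
            # Each template wraps the object, such as a ConfigurationPolicy, to deliver.
            "policy-templates": [{"objectDefinition": t} for t in templates],
        },
    }


def missing_required_fields(policy):
    """Return the REQUIRED_FIELDS paths that are absent from the policy dict."""
    missing = []
    for path in REQUIRED_FIELDS:
        node = policy
        for part in path.split("."):
            if not isinstance(node, dict) or part not in node:
                missing.append(path)
                break
            node = node[part]
    return missing


policy = build_policy(
    "policy-example", "default",
    templates=[{"apiVersion": "policy.open-cluster-management.io/v1",
                "kind": "ConfigurationPolicy", "metadata": {"name": "policy-example-config"}}],
    standards="NIST SP 800-53", categories="SI System and Information Integrity",
)
print(missing_required_fields(policy))  # []
print(json.dumps(policy, indent=2))
```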

2.2.2. Policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.annotations | Optional. Used to specify a set of security details that describes the set of standards the policy is trying to validate. Note: You can view policy violations based on the standards and categories that you define for your policy on the Policies page, from the console. |
| annotations.policy.open-cluster-management.io/standards | The name or names of security standards the policy is related to. For example, National Institute of Standards and Technology (NIST) and Payment Card Industry (PCI). |
| annotations.policy.open-cluster-management.io/categories | A security control category represents specific requirements for one or more standards. For example, a System and Information Integrity category might indicate that your policy contains a data transfer protocol to protect personal information, as required by the HIPAA and PCI standards. |
| annotations.policy.open-cluster-management.io/controls | The name of the security control that is being checked. For example, certificate policy controller. |
| spec.policy-templates | Required. Used to create one or more policies to apply to a managed cluster. |
| spec.disabled | Required. Set the value to true or false. |
| spec.remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |

2.2.3. Policy sample file

See Managing security policies to create and update a policy. You can also enable and update Red Hat Advanced Cluster Management policy controllers to validate the compliance of your policies. See Policy controllers. See Governance and risk for more policy topics.

2.3. Policy controllers

Policy controllers monitor and report whether your cluster is compliant with a policy. Use the Red Hat Advanced Cluster Management for Kubernetes policy framework and its out-of-the-box policy templates to apply predefined policy controllers and policies. Policies are Kubernetes CustomResourceDefinition (CRD) instances. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions. Policy controllers that support the enforce feature remediate policy violations so that the cluster becomes compliant.

You can create custom policies and policy controllers with the product policy framework. See Creating a custom policy controller for more information.

Important: Only the configuration policy controller supports the enforce feature. For policies whose controller does not support the enforce feature, you must remediate violations manually.

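View the following topics to learn more about the Red Hat Advanced Cluster Management for Kubernetes policy controllers:

- Kubernetes configuration policy controller

- Certificate policy controller

- IAM policy controller

- Creating a custom policy controller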

Refer to Governance and risk for more topics about managing your policies.

2.3.1. Kubernetes configuration policy controller

The configuration policy controller can be used to configure any Kubernetes resource and apply security policies across your clusters.

The configuration policy controller communicates with the local Kubernetes API server to get the list of your configurations that are in your cluster. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions.

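The configuration policy controller is created on the hub cluster during installation. The configuration policy controller supports the enforce feature and monitors the compliance of the following policies:

- Memory usage policy

- Namespace policy

- Image vulnerability policy

- Pod nginx policy

- Pod security policy

- Role policy

- Rolebinding policy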

When the remediationAction for the configuration policy is set to enforce, the controller creates a replicated policy on the target managed clusters.

2.3.1.1. Configuration policy controller YAML structure
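The following Python sketch shows one plausible shape of a configuration policy, built as a dict. It assumes, based on the open-cluster-management ConfigurationPolicy CRD as we understand it, that severity sits directly under spec and that each entry in object-templates carries its own complianceType and objectDefinition; the table in this section lists these fields under remediationAction, so verify the layout against the CRD on your cluster. The policy name, the namespaces, and the prod namespace object are hypothetical.

```python
import json


def build_configuration_policy(name, include, exclude, object_templates,
                               remediation_action="inform", severity="low"):
    """Return a dict shaped like a ConfigurationPolicy."""
    return {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "ConfigurationPolicy",
        "metadata": {"name": name},
        "spec": {
            "remediationAction": remediation_action,  # inform or enforce
            "severity": severity,
            "namespaceSelector": {"include": include, "exclude": exclude},
            # Each entry pairs a complianceType with the object to check.
            "object-templates": object_templates,
        },
    }


namespace_template = {
    "complianceType": "musthave",  # the object must exist on the managed cluster
    "objectDefinition": {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "prod"}},
}
policy = build_configuration_policy(
    "policy-namespace-example", include=["default"], exclude=["kube-*"],
    object_templates=[namespace_template],
)
print(json.dumps(policy, indent=2))
```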

2.3.1.2. Configuration policy sample

2.3.1.3. Configuration policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name of the policy. |
| spec | Required. Specifications of which configuration policy to monitor and how to remediate them. |
| spec.namespaceSelector | Required. The namespaces within the hub cluster that the policy is applied to. Enter at least one namespace for the |
| spec.remediationAction | Required. Specifies the remediation of your policy. Enter |
| remediationAction.severity | Required. Specifies the severity when the policy is non-compliant. Use the following parameter values: |
| remediationAction.complianceType | Required. Used to list expected behavior for roles and other Kubernetes objects that must be evaluated or applied to the managed clusters. You must use the following verbs as parameter values: |
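The include and exclude lists in spec.namespaceSelector narrow down which namespaces a policy checks. The following Python sketch is a simplified model of that selection, not the controller's code. It assumes that entries can use shell-style wildcards such as kube-* and that an excluded namespace is skipped even when it also matches include; the namespace names are hypothetical.

```python
from fnmatch import fnmatchcase


def selected_namespaces(all_namespaces, include, exclude):
    """Return the sorted namespaces that a namespaceSelector applies to.

    Assumptions for this sketch: entries may use shell-style wildcards such as
    "kube-*", and a namespace that matches exclude is skipped even if it also
    matches include.
    """
    def matches(namespace, patterns):
        return any(fnmatchcase(namespace, pattern) for pattern in patterns)

    return sorted(
        ns for ns in all_namespaces
        if matches(ns, include) and not matches(ns, exclude)
    )


cluster_namespaces = ["default", "kube-system", "kube-public", "prod", "dev"]
print(selected_namespaces(cluster_namespaces, include=["*"], exclude=["kube-*"]))
# ['default', 'dev', 'prod']
```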

Learn about how policies are applied on your hub cluster. See Policy samples for more details. To learn how to create and customize policies, see Manage security policies.

See Policy controllers for more information about controllers.

2.3.2. Certificate policy controller

The certificate policy controller can be used to detect certificates that are close to expiring. Configure and customize the certificate policy controller by updating the minimum duration parameter in your certificate policy. When a certificate expires in less than the minimum duration, the policy becomes non-compliant. The certificate policy controller is created on your hub cluster.

The certificate policy controller communicates with the local Kubernetes API server to get the list of secrets that contain certificates and determine all non-compliant certificates. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions.

The certificate policy controller does not support the enforce feature.
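The minimum duration rule reduces to comparing the time a certificate has left with the configured threshold. The following Python sketch models that comparison, taking minimumDuration as a number of hours as the certificate policy YAML table describes. Reading the expiration time from a certificate secret is out of scope here, and the function name is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def certificate_compliant(not_after, minimum_duration_hours, now=None):
    """Return True if the certificate stays valid for at least minimum_duration_hours.

    not_after is the certificate's expiration time as a timezone-aware datetime.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    remaining = not_after - now
    # Non-compliant when the certificate expires in less than the minimum duration.
    return remaining >= timedelta(hours=minimum_duration_hours)


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
expires = now + timedelta(days=10)  # 240 hours of validity left
print(certificate_compliant(expires, minimum_duration_hours=100, now=now))  # True
print(certificate_compliant(expires, minimum_duration_hours=300, now=now))  # False
```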

2.3.2.1. Certificate policy controller YAML structure

View the following example of a certificate policy and review the elements in the YAML table:

2.3.2.1.1. Certificate policy controller YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name to identify the policy. |
| metadata.namespace | Required. The namespace within the managed cluster where the policy is created. |
| metadata.labels | Optional. In a certificate policy, the |
| spec | Required. Specifications of which certificates to monitor and refresh. |
| spec.namespaceSelector | Required. Managed cluster namespace to which you want to apply the policy. Enter parameter values for • When you create multiple certificate policies and apply them to the same managed cluster, each policy • If the |
| spec.remediationAction | Required. Specifies the remediation of your policy. Set the parameter value to |
| spec.severity | Optional. Specifies the severity when the policy is non-compliant. Use the following parameter values: |
| spec.minimumDuration | Required. Specifies the smallest duration (in hours) before a certificate is considered non-compliant. When the certificate expiration is greater than the |

2.3.2.2. Certificate policy sample

When your certificate policy is created on your hub cluster, a replicated policy is created on your managed cluster. Your certificate policy on your managed cluster might resemble the following file:

To learn how to manage a certificate policy, see Managing certificate policies. Refer to Policy controllers for more topics.

2.3.3. IAM policy controller

Identity and Access Management (IAM) policy controller can be used to receive notifications about IAM policies that are non-compliant. The compliance check is based on the parameters that you configure in the IAM policy.

The IAM policy controller checks whether the number of cluster administrators in your cluster is within the limit that you allow. The IAM policy controller communicates with the local Kubernetes API server. For more information, see Extend the Kubernetes API with CustomResourceDefinitions.

The IAM policy controller runs on your managed cluster.
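The following Python sketch models the IAM compliance check on plain ClusterRoleBinding data. It assumes, for illustration only, that the controller counts the distinct users bound to the cluster-admin ClusterRole and compares that count with spec.maxClusterRoleBindingUsers; the real controller's counting rules might differ, and the binding data and function names are hypothetical.

```python
def cluster_admin_users(cluster_role_bindings, role_name="cluster-admin"):
    """Return the distinct user names bound to role_name by ClusterRoleBindings."""
    users = set()
    for binding in cluster_role_bindings:
        if binding.get("roleRef", {}).get("name") != role_name:
            continue
        # Only User subjects are counted; groups and service accounts are skipped.
        for subject in binding.get("subjects") or []:
            if subject.get("kind") == "User":
                users.add(subject["name"])
    return users


def iam_policy_compliant(cluster_role_bindings, max_cluster_role_binding_users):
    """Compliant while the number of cluster-admin users does not exceed the maximum."""
    return len(cluster_admin_users(cluster_role_bindings)) <= max_cluster_role_binding_users


bindings = [
    {"roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
     "subjects": [{"kind": "User", "name": "alice"}, {"kind": "Group", "name": "ops"}]},
    {"roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
     "subjects": [{"kind": "User", "name": "bob"}, {"kind": "User", "name": "alice"}]},
    {"roleRef": {"kind": "ClusterRole", "name": "view"},
     "subjects": [{"kind": "User", "name": "carol"}]},
]
print(sorted(cluster_admin_users(bindings)))  # ['alice', 'bob']
print(iam_policy_compliant(bindings, max_cluster_role_binding_users=2))  # True
print(iam_policy_compliant(bindings, max_cluster_role_binding_users=1))  # False
```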

2.3.3.1. IAM policy YAML structure

2.3.3.2. IAM policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| spec | Required. Add configuration details for your policy. |
| spec.namespaceSelector | Required. The namespaces within the hub cluster that the policy is applied to. Enter at least one namespace for the |
| spec.remediationAction | Optional. Specifies the remediation of your policy. Enter |
| spec.maxClusterRoleBindingUsers | Required. Maximum number of IAM role bindings that are available before a policy is considered non-compliant. |

2.3.3.3. IAM policy sample

To learn how to manage an IAM policy, see Managing IAM policies. Refer to Policy controllers for more topics.

2.3.4. Creating a custom policy controller

Learn to write, apply, view, and update your custom policy controllers. You can create a YAML file for your policy controller to deploy onto your cluster. View the following sections to create a policy controller:

2.3.4.1. Writing a policy controller

Use the policy controller framework that is in the multicloud-operators-policy-controller repository. Complete the following steps to create a policy controller:

Clone the multicloud-operators-policy-controller repository by running the following command:

git clone git@github.com:open-cluster-management/multicloud-operators-policy-controller.git

Customize the controller policy by updating the policy schema definition. Your policy might resemble the following content:

Update the policy controller to watch for the SamplePolicy kind. Run the following commands. As written, each sed expression maps the sample name to itself, so the commands change nothing until you replace the second name in each expression with your own kind or controller name:

for file in $(find . -name "*.go" -type f); do sed -i "" "s/SamplePolicy/SamplePolicy/g" "$file"; done

for file in $(find . -name "*.go" -type f); do sed -i "" "s/samplepolicy-controller/samplepolicy-controller/g" "$file"; done

The sed -i "" syntax is for BSD sed on macOS. With GNU sed on Linux, use sed -i without the empty string argument.

Recompile and run the policy controller by completing the following steps:

- Log in to your cluster.

- Select the user icon, then click Configure client.

- Copy and paste the configuration information into your command line, and press Enter.

Run the following commands to apply your policy CRD and start the controller:

export GO111MODULE=on

kubectl apply -f deploy/crds/policy.open-cluster-management.io_samplepolicies_crd.yaml

operator-sdk run --local --verbose

You might receive the following output that indicates that your controller runs:

{"level":"info","ts":1578503280.511274,"logger":"controller-runtime.manager","msg":"starting metrics server","path":"/metrics"}
{"level":"info","ts":1578503281.215883,"logger":"controller-runtime.controller","msg":"Starting Controller","controller":"samplepolicy-controller"}
{"level":"info","ts":1578503281.3203468,"logger":"controller-runtime.controller","msg":"Starting workers","controller":"samplepolicy-controller","worker count":1}
Waiting for policies to be available for processing…

Create a policy and verify that the controller retrieves it and applies the policy onto your cluster. Run the following command:

kubectl apply -f deploy/crds/policy.open-cluster-management.io_samplepolicies_crd.yaml

When the policy is applied, a message appears to indicate that the policy is detected and monitored by your custom controller. The message might resemble the following content:

Check the status field for compliance details by running the following command:

kubectl describe SamplePolicy example-samplepolicy -n default

Your output might resemble the following content:

Change the policy rules and policy logic to introduce new rules for your policy controller. Complete the following steps:

- Add new fields in your YAML file by updating the SamplePolicySpec. Your specification might resemble the following content:

- Update the SamplePolicySpec structure in the samplepolicy_controller.go file with the new fields.

- Update the PeriodicallyExecSamplePolicies function in the samplepolicy_controller.go file with new logic to run the policy controller. For an example of the PeriodicallyExecSamplePolicies function, see open-cluster-management/multicloud-operators-policy-controller.

- Recompile and run the policy controller. See Writing a policy controller.
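To make the role of a periodic evaluation function such as PeriodicallyExecSamplePolicies concrete, the following Python sketch shows the same loop shape: gather cluster state, evaluate each policy, and write a compliance status back onto the policy. It is a hypothetical analogue, not a translation of the Go code in the repository. The maxPods field, the evaluate rule, and the Compliant and NonCompliant status strings are assumptions for illustration.

```python
import time


def evaluate(policy, cluster_state):
    """Hypothetical rule: compliant while the pod count is within spec.maxPods."""
    return len(cluster_state["pods"]) <= policy["spec"]["maxPods"]


def periodically_exec(policies, get_cluster_state, interval_seconds, iterations):
    """Re-evaluate every policy on a fixed interval and record its compliance status.

    A real controller loops until it is stopped; iterations bounds the loop here.
    """
    for i in range(iterations):
        state = get_cluster_state()
        for policy in policies:
            compliant = evaluate(policy, state)
            policy["status"] = {"compliant": "Compliant" if compliant else "NonCompliant"}
        if i < iterations - 1:
            time.sleep(interval_seconds)


policies = [{"spec": {"maxPods": 3}}, {"spec": {"maxPods": 1}}]
periodically_exec(policies, lambda: {"pods": ["web-1", "web-2"]}, interval_seconds=0, iterations=1)
print([p["status"]["compliant"] for p in policies])  # ['Compliant', 'NonCompliant']
```

Bounding the loop with an iterations count keeps the sketch testable; a long-running controller would instead loop until it is shut down.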

Your policy controller is functional.

2.3.4.2. Deploying your controller to the cluster

Deploy your custom policy controller to your cluster and integrate the policy controller with the Governance and risk dashboard. Complete the following steps:

Build the policy controller image by running the following command:

operator-sdk build <username>/multicloud-operators-policy-controller:latest

Push the image to a repository of your choice. For example, run the following commands to push the image to Docker Hub:

docker login

docker push <username>/multicloud-operators-policy-controller

Configure kubectl to point to a cluster managed by Red Hat Advanced Cluster Management for Kubernetes. Update the operator manifest to use the image name that you built, and update the namespace to watch for policies. The namespace must be the cluster namespace. Run the following commands to update your manifest:

sed -i "" 's|open-cluster-management/multicloud-operators-policy-controller|<username>/multicloud-operators-policy-controller|g' deploy/operator.yaml

sed -i "" 's|value: default|value: <namespace>|g' deploy/operator.yaml

Update the RBAC role by running the following commands:

sed -i "" 's|samplepolicies|testpolicies|g' deploy/cluster_role.yaml

sed -i "" 's|namespace: default|namespace: <namespace>|g' deploy/cluster_role_binding.yaml

Deploy your policy controller to your cluster:

Set up a service account for the cluster by running the following command:

kubectl apply -f deploy/service_account.yaml -n <namespace>

Set up RBAC for the operator by running the following commands:

kubectl apply -f deploy/role.yaml -n <namespace>

kubectl apply -f deploy/role_binding.yaml -n <namespace>

Set up RBAC for your policy controller. Run the following commands:

kubectl apply -f deploy/cluster_role.yaml

kubectl apply -f deploy/cluster_role_binding.yaml

Set up a CustomResourceDefinition (CRD) by running the following command:

kubectl apply -f deploy/crds/policy.open-cluster-management.io_samplepolicies_crd.yaml

Deploy the multicloud-operators-policy-controller by running the following command:

kubectl apply -f deploy/operator.yaml -n <namespace>

Verify that the controller is functional by running the following command:

kubectl get pod -n <namespace>

You must integrate your policy controller by creating a policy-template for the controller to monitor. For more information, see Creating a cluster security policy from the console.

2.3.4.2.1. Scaling your controller deployment

Policy controller deployments do not support deletion or removal. Instead, you can scale your deployment to change the number of pods that run the controller. Complete the following steps:

- Log in to your managed cluster.

- Navigate to the deployment for your custom policy controller.

- Scale the deployment. When you scale your deployment to zero pods, the policy controller deployment is disabled.

For more information on deployments, see OpenShift Container Platform Deployments.

Your policy controller is deployed and integrated on your cluster. To view the product policy controllers, see Policy controllers.

2.4. Policy samples

Create and manage policies in Red Hat Advanced Cluster Management for Kubernetes to define rules, processes, and controls on the hub cluster. View the following policy samples to see how specific policies are applied:

- Kubernetes configuration policy controller sample

- Image vulnerability policy sample

- Memory usage policy sample

- Namespace policy sample

- Pod nginx policy sample

- Pod security policy sample

- Role policy sample

- Rolebinding policy sample

- Security context constraints policy sample

- Certificate policy sample

- IAM policy sample

Refer to Governance and risk for more topics.

2.4.1. Memory usage policy

Kubernetes configuration policy controller monitors the status of the memory usage policy. Use the memory usage policy to limit or restrict your memory and compute usage. For more information, see Limit Ranges in the Kubernetes documentation. Learn more details about the memory usage policy structure in the following sections.
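A memory usage policy typically wraps a Kubernetes LimitRange in an object template. The following Python sketch builds such an entry. The LimitRange fields (type, default, defaultRequest) are standard Kubernetes API fields; the template name, the 512Mi and 256Mi values, and the choice of musthave as the compliance type are hypothetical.

```python
import json


def memory_limit_object_template(name, default_limit, default_request):
    """Return an object-templates entry that requires a memory LimitRange."""
    return {
        "complianceType": "musthave",
        "objectDefinition": {
            "apiVersion": "v1",
            "kind": "LimitRange",
            "metadata": {"name": name},
            "spec": {
                "limits": [
                    {
                        "type": "Container",
                        # Limit applied to containers that do not set their own.
                        "default": {"memory": default_limit},
                        # Request applied to containers that do not set their own.
                        "defaultRequest": {"memory": default_request},
                    }
                ]
            },
        },
    }


print(json.dumps(memory_limit_object_template("mem-limit-range", "512Mi", "256Mi"), indent=2))
```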

2.4.1.1. Memory usage policy YAML structure

Your memory usage policy might resemble the following YAML file:

2.4.1.2. Memory usage policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to true or false. |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.1.3. Memory usage policy sample

See Managing memory usage policies for more information. To view other configuration policies that are monitored by the controller, see the Kubernetes configuration policy controller page.

2.4.2. Namespace policy

Kubernetes configuration policy controller monitors the status of your namespace policy. Apply the namespace policy to define specific rules for your namespace. Learn more details about the namespace policy structure in the following sections.
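The following Python sketch models how a namespace object template might be evaluated under each remediation action. It is a simplified illustration: it assumes that musthave means the namespace must exist, mustnothave means it must not exist, and that only an enforce policy acts on a violation while an inform policy only reports it. The function name and return format are hypothetical.

```python
def evaluate_namespace_template(existing, name, compliance_type, remediation_action):
    """Return (compliant, action) for a Namespace object template.

    action is what an enforcing controller would do: "create", "delete", or None.
    """
    exists = name in existing
    if compliance_type == "musthave":
        compliant, fix = exists, "create"
    elif compliance_type == "mustnothave":
        compliant, fix = not exists, "delete"
    else:
        raise ValueError(f"unsupported complianceType: {compliance_type!r}")
    if compliant or remediation_action != "enforce":
        return compliant, None  # inform only reports; nothing to fix when compliant
    return compliant, fix


cluster = {"default", "dev"}
print(evaluate_namespace_template(cluster, "prod", "musthave", "inform"))     # (False, None)
print(evaluate_namespace_template(cluster, "prod", "musthave", "enforce"))    # (False, 'create')
print(evaluate_namespace_template(cluster, "dev", "mustnothave", "enforce"))  # (False, 'delete')
```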

2.4.2.1. Namespace policy YAML structure

2.4.2.2. Namespace policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to true or false. |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.2.3. Namespace policy sample

Your namespace policy might resemble the following YAML file:

Manage your namespace policy. See Managing namespace policies for more information. See Kubernetes configuration policy controller to learn about other configuration policies.

2.4.3. Image vulnerability policy

Apply the image vulnerability policy to detect whether container images have vulnerabilities by using the Container Security Operator. The policy installs the Container Security Operator on your managed cluster if it is not already installed.

The image vulnerability policy is checked by the Kubernetes configuration policy controller. For more information about the Container Security Operator, see the Container Security Operator from the Quay repository.

Note: The image vulnerability policy does not work in a disconnected installation.
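As we understand it, the Container Security Operator records findings as ImageManifestVuln objects, and the image vulnerability policy uses a mustnothave check on those objects. The following Python sketch models that check on plain dicts. The object names and the function name are hypothetical, and the sketch omits the part of the real policy that installs the operator.

```python
def image_vulnerability_status(manifest_vulns, namespaces):
    """Model a mustnothave check on ImageManifestVuln objects.

    Returns (compliant, names): compliant is True only when no ImageManifestVuln
    object exists in any of the given namespaces.
    """
    found = sorted(
        vuln["metadata"]["name"]
        for vuln in manifest_vulns
        if vuln["metadata"]["namespace"] in namespaces
    )
    return not found, found


vulns = [
    {"kind": "ImageManifestVuln", "metadata": {"name": "sha256.abc123", "namespace": "prod"}},
    {"kind": "ImageManifestVuln", "metadata": {"name": "sha256.def456", "namespace": "dev"}},
]
print(image_vulnerability_status(vulns, {"default"}))      # (True, [])
print(image_vulnerability_status(vulns, {"prod", "dev"}))  # (False, ['sha256.abc123', 'sha256.def456'])
```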

2.4.3.1. Image vulnerability policy YAML structure

2.4.3.2. Image vulnerability policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to true or false. |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.3.3. Image vulnerability policy sample

See Managing image vulnerability policies for more information. To view other configuration policies that are monitored by the configuration controller, see Kubernetes configuration policy controller.

2.4.4. Pod nginx policy

Kubernetes configuration policy controller monitors the status of your pod nginx policies. Apply the pod policy to define the container rules for your pods. An nginx pod must exist in your cluster.
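A musthave check passes when the live object contains at least the fields in the template, even if Kubernetes has added other fields. The following Python sketch models that partial match for an nginx pod. It is a simplified assumption about the controller's matching rules (for example, list items are matched in any order), and the pod name and nginx:1.18.0 image are hypothetical.

```python
def is_subset(expected, actual):
    """Return True if every field in expected is present with the same value in actual."""
    if isinstance(expected, dict):
        return isinstance(actual, dict) and all(
            key in actual and is_subset(value, actual[key]) for key, value in expected.items()
        )
    if isinstance(expected, list):
        # Every expected item must match at least one item in the actual list.
        return isinstance(actual, list) and all(
            any(is_subset(item, candidate) for candidate in actual) for item in expected
        )
    return expected == actual


def object_compliant(expected, actual, compliance_type):
    """Simplified musthave/mustnothave check; actual is None when the object is missing."""
    if compliance_type == "musthave":
        return actual is not None and is_subset(expected, actual)
    if compliance_type == "mustnothave":
        return actual is None or not is_subset(expected, actual)
    raise ValueError(f"unsupported complianceType: {compliance_type!r}")


expected_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "sample-nginx-pod"},
    "spec": {"containers": [{"name": "nginx", "image": "nginx:1.18.0",
                             "ports": [{"containerPort": 80}]}]},
}
# The live object carries extra fields that the template does not mention.
live_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "sample-nginx-pod", "namespace": "default", "uid": "1234"},
    "spec": {"containers": [{"name": "nginx", "image": "nginx:1.18.0",
                             "ports": [{"containerPort": 80, "protocol": "TCP"}]}],
             "restartPolicy": "Always"},
}
print(object_compliant(expected_pod, live_pod, "musthave"))     # True
print(object_compliant(expected_pod, live_pod, "mustnothave"))  # False
```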

2.4.4.1. Pod nginx policy YAML structure

2.4.4.2. Pod nginx policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to true or false. |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.4.3. Pod nginx policy sample

Your pod nginx policy might resemble the following YAML file:

To learn how to manage a pod nginx policy, see Managing pod nginx policies. To view other configuration policies that are monitored by the configuration controller, see Kubernetes configuration policy controller. See Manage security policies to manage other policies.

2.4.5. Pod security policy

Kubernetes configuration policy controller monitors the status of the pod security policy. Apply a pod security policy to secure pods and containers. For more information, see Pod Security Policies in the Kubernetes documentation. Learn more details about the pod security policy structure in the following sections.
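A pod security policy sample usually carries a PodSecurityPolicy object in its object template. The following Python sketch builds a restrictive PodSecurityPolicy (policy/v1beta1 API) and lists the settings that would allow elevated access. The field names and defaults follow the Kubernetes PodSecurityPolicy API, which Kubernetes removed in version 1.25; the object name, the volume list, and the helper names are hypothetical.

```python
def restricted_psp(name):
    """Return a restrictive PodSecurityPolicy object (policy/v1beta1)."""
    return {
        "apiVersion": "policy/v1beta1",
        "kind": "PodSecurityPolicy",
        "metadata": {"name": name},
        "spec": {
            "privileged": False,
            "allowPrivilegeEscalation": False,
            "hostNetwork": False,
            "hostPID": False,
            "hostIPC": False,
            # Strategy fields that every PodSecurityPolicy spec must set.
            "runAsUser": {"rule": "MustRunAsNonRoot"},
            "seLinux": {"rule": "RunAsAny"},
            "supplementalGroups": {"rule": "RunAsAny"},
            "fsGroup": {"rule": "RunAsAny"},
            "volumes": ["configMap", "emptyDir", "projected", "secret", "persistentVolumeClaim"],
        },
    }


# Value Kubernetes assumes for each field when it is omitted from the spec.
FIELD_DEFAULTS = {
    "privileged": False,
    "allowPrivilegeEscalation": True,  # omitted means escalation is allowed
    "hostNetwork": False,
    "hostPID": False,
    "hostIPC": False,
}


def permissive_settings(spec):
    """Return the fields that, explicitly or by default, grant elevated access."""
    return [field for field, default in FIELD_DEFAULTS.items() if spec.get(field, default)]


print(permissive_settings(restricted_psp("restricted-psp")["spec"]))  # []
print(permissive_settings({"privileged": True}))  # ['privileged', 'allowPrivilegeEscalation']
```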

2.4.5.1. Pod security policy YAML structure

2.4.5.2. Pod security policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to true or false. |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.5.3. Pod security policy sample

Your pod security policy might resemble the following YAML file:

See Managing pod security policies for more information. To view other configuration policies that are monitored by the controller, see the Kubernetes configuration policy controller page.

2.4.6. Role policy

Kubernetes configuration policy controller monitors the status of role policies. Define roles in the object-template to set rules and permissions for specific roles in your cluster. Learn more details about the role policy structure in the following sections.
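The following Python sketch checks whether a Role grants a given verb on a resource, which is the kind of question a role policy answers. It follows the Kubernetes RBAC rule fields (apiGroups, resources, and verbs, with * as a wildcard) but ignores resourceNames and subresources for brevity; the role and function names are hypothetical.

```python
def role_allows(role, verb, resource, api_group=""):
    """Return True if any rule in the Role grants verb on resource in api_group.

    "" is the core API group; "*" in a rule list matches anything.
    """
    def covers(values, wanted):
        return wanted in values or "*" in values

    return any(
        covers(rule.get("apiGroups", []), api_group)
        and covers(rule.get("resources", []), resource)
        and covers(rule.get("verbs", []), verb)
        for rule in role.get("rules", [])
    )


deployments_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "deployments-role", "namespace": "default"},
    "rules": [
        {"apiGroups": ["apps"], "resources": ["deployments"],
         "verbs": ["get", "list", "watch", "delete", "patch"]},
    ],
}
print(role_allows(deployments_role, "delete", "deployments", "apps"))  # True
print(role_allows(deployments_role, "create", "deployments", "apps"))  # False
print(role_allows(deployments_role, "get", "pods"))                    # False
```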

2.4.6.1. Role policy YAML structure

2.4.6.2. Role policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to true or false. |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.6.3. Role policy sample

Apply a role policy to set rules and permissions for specific roles in your cluster. For more information on roles, see Role-based access control. Your role policy might resemble the following YAML file:
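As a minimal sketch, the following fragment shows only the `object-templates` entry that a role policy might contain, assuming the `ConfigurationPolicy` template structure (`policy.open-cluster-management.io/v1`) used in the policy-collection repository; the table above calls this field `spec.object-template`. The Role name and its rules are hypothetical.

```yaml
# Fragment of a ConfigurationPolicy spec (sketch): require a read-only Role
# for pods in each selected namespace. Name and rules are hypothetical.
object-templates:
  - complianceType: musthave
    objectDefinition:
      apiVersion: rbac.authorization.k8s.io/v1
      kind: Role
      metadata:
        name: sample-pod-reader
      rules:
        - apiGroups: [""]              # "" is the core API group
          resources: ["pods"]
          verbs: ["get", "list", "watch"]
```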

See Managing role policies for more information. To view other configuration policies that the controller monitors, see the Kubernetes configuration policy controller page. To learn more about Red Hat Advanced Cluster Management RBAC, see Role-based access control.

2.4.7. Rolebinding policy

The Kubernetes configuration policy controller monitors the status of your rolebinding policy. Apply a rolebinding policy to bind a policy to a namespace in your managed cluster. Learn more details about the rolebinding policy structure in the following sections.

2.4.7.1. Rolebinding policy YAML structure

2.4.7.2. Rolebinding policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name to identify the policy resource. |
| metadata.namespaces | Required. The namespace within the managed cluster where the policy is created. |
| spec | Required. Specifications of how compliance violations are identified and fixed. |
| spec.complianceType | Required. Set the value to |
| spec.namespace | Required. Managed cluster namespace to which you want to apply the policy. Enter parameter values for |
| spec.remediationAction | Required. Specifies the remediation of your policy. The parameter values are |
| spec.object-template | Required. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.7.3. Rolebinding policy sample

Your role binding policy might resemble the following YAML file:
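As a minimal sketch, the following fragment shows only the `object-templates` entry that a rolebinding policy might contain, assuming the `ConfigurationPolicy` template structure (`policy.open-cluster-management.io/v1`) used in the policy-collection repository. The binding, user, and Role names are hypothetical.

```yaml
# Fragment of a ConfigurationPolicy spec (sketch): require a RoleBinding that
# grants a hypothetical Role to a hypothetical user in each selected namespace.
object-templates:
  - complianceType: musthave
    objectDefinition:
      apiVersion: rbac.authorization.k8s.io/v1
      kind: RoleBinding
      metadata:
        name: sample-pod-reader-binding
      subjects:
        - kind: User
          name: sample-user              # hypothetical user
          apiGroup: rbac.authorization.k8s.io
      roleRef:
        kind: Role
        name: sample-pod-reader          # hypothetical Role in the same namespace
        apiGroup: rbac.authorization.k8s.io
```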

To learn how to manage a rolebinding policy, see Managing rolebinding policies. See Kubernetes configuration policy controller to learn about other configuration policies. See Manage security policies to manage other policies.

2.4.8. Security Context Constraints policy

The Kubernetes configuration policy controller monitors the status of your Security Context Constraints (SCC) policy. Apply an SCC policy to control permissions for pods by defining conditions in the policy. Learn more details about SCC policies in the following sections.

2.4.8.1. SCC policy YAML structure

2.4.8.2. SCC policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name to identify the policy resource. |
| metadata.namespace | Required. The namespace within the managed cluster where the policy is created. |
| spec.complianceType | Required. Set the value to |
| spec.remediationAction | Required. Specifies the remediation of your policy. The parameter values are |
| spec.namespace | Required. Managed cluster namespace to which you want to apply the policy. Enter parameter values for |
| spec.object-template | Required. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

For an explanation of the contents of an SCC policy, see About Security Context Constraints in the OpenShift Container Platform documentation.

2.4.8.3. SCC policy sample

Apply a Security Context Constraints (SCC) policy to control permissions for pods by defining conditions in the policy. For more information, see Managing Security Context Constraints (SCC). Your SCC policy might resemble the following YAML file:
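As a minimal sketch, the following fragment shows only the `object-templates` entry that an SCC policy might contain, assuming the `ConfigurationPolicy` template structure (`policy.open-cluster-management.io/v1`) used in the policy-collection repository. `SecurityContextConstraints` objects (`security.openshift.io/v1`) exist only on OpenShift Container Platform clusters, and SCC settings sit at the top level of the object rather than under `spec`. The SCC name and its settings are illustrative, not a recommended baseline.

```yaml
# Fragment of a ConfigurationPolicy spec (sketch): require a restrictive SCC.
# The name and settings are illustrative only.
object-templates:
  - complianceType: musthave
    objectDefinition:
      apiVersion: security.openshift.io/v1
      kind: SecurityContextConstraints
      metadata:
        name: sample-restricted-scc
      allowHostDirVolumePlugin: false
      allowHostIPC: false
      allowHostNetwork: false
      allowHostPID: false
      allowHostPorts: false
      allowPrivilegeEscalation: false
      allowPrivilegedContainer: false
      allowedCapabilities: []
      defaultAddCapabilities: []
      priority: 10
      readOnlyRootFilesystem: false
      requiredDropCapabilities: ["KILL", "MKNOD", "SETUID", "SETGID"]
      runAsUser:
        type: MustRunAsRange
      seLinuxContext:
        type: MustRunAs
      fsGroup:
        type: MustRunAs
      supplementalGroups:
        type: RunAsAny
      users: []
      groups: []
      volumes: ["configMap", "downwardAPI", "emptyDir", "persistentVolumeClaim", "projected", "secret"]
```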

To learn how to manage an SCC policy, see Managing Security Context Constraints policies for more details. See Kubernetes configuration policy controller to learn about other configuration policies. See Manage security policies to manage other policies.

2.5. Manage security policies

Use the Governance and risk dashboard to create, view, and manage your security policies and policy violations. You can create YAML files for your policies from the CLI and console.

From the Policies page, you can customize your Summary view by filtering the violations by categories or standards, collapse the summary to see less information, and search for policies. You can also filter the violation table view by policies or cluster violations.

The table of policies lists the following details for each policy: Policy name, Namespace, Remediation, Cluster violation, Standards, Categories, and Controls. You can apply, edit, disable, or remove a policy by selecting the Options icon.

When you select a policy in the table list, the following tabs of information are displayed from the console:

- Details: Select the Details tab to view Policy details, Placement details, and a table list of Policy templates.

- Violations: Select the Violations tab to view a table list of violations.

- YAML: Select the YAML tab to view or edit your policy in the editor.

Review the following topics to learn more about creating and updating your security policies:

- Managing security policies

- Managing configuration policies

- Managing image vulnerability policies

- Managing memory usage policies

- Managing namespace policies

- Managing pod nginx policies

- Managing pod security policies

- Managing role policies

- Managing rolebinding policies

- Managing Security Context Constraints policies

- Managing certificate policies

- Managing IAM policies

Refer to Governance and risk for more topics.

2.5.1. Managing security policies

Create a security policy to report and validate your cluster compliance based on your specified security standards, categories, and controls. To create a policy for Red Hat Advanced Cluster Management for Kubernetes, you must create a YAML file on your hub cluster; the policy is then propagated to the managed clusters that its placement selects.

2.5.1.1. Creating a security policy

You can create a security policy from the command line interface (CLI) or from the console. Cluster administrator access is required.

The following objects are required for your Red Hat Advanced Cluster Management for Kubernetes policy:

- PlacementRule: Defines a cluster selector where the policy must be deployed.

- PlacementBinding: Binds the placement rule to a policy.

View more descriptions of the policy YAML files in the Policy overview.

2.5.1.1.1. Creating a security policy from the command line interface

Complete the following steps to create a policy from the command line interface (CLI):

Create a policy by running the following command:

kubectl create -f policy.yaml -n <namespace>

Define the template that the policy uses. Edit your .yaml file by adding a templates field to define a template. Your policy might resemble the following YAML file:

Define a PlacementRule. Be sure to change the PlacementRule to specify the clusters where the policy must be applied, either by clusterNames or clusterLabels. See Creating and managing placement rules. Your PlacementRule might resemble the following content:

Define a PlacementBinding to bind your policy and your PlacementRule. Your PlacementBinding might resemble the following YAML sample:
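The three objects described in the steps above might fit together as in the following sketch. It assumes the `Policy` and `PlacementBinding` kinds from `policy.open-cluster-management.io/v1` and the `PlacementRule` kind from `apps.open-cluster-management.io/v1`, and it uses the `policy-templates` field name from the policy-collection repository for the template. The `PlacementRule` selects clusters by label through `clusterSelector`; the `environment: dev` label and all resource names are hypothetical.

```yaml
# Sketch: a policy, the placement rule that selects clusters for it, and the
# binding that connects the two. All names and labels are hypothetical.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-sample
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-sample-config
        spec:
          remediationAction: inform
          namespaceSelector:
            include: ["default"]
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: ConfigMap
                metadata:
                  name: sample-config
---
apiVersion: apps.open-cluster-management.io/v1
kind: PlacementRule
metadata:
  name: placement-policy-sample
spec:
  clusterSelector:               # select managed clusters by label
    matchExpressions:
      - key: environment
        operator: In
        values: ["dev"]
---
apiVersion: policy.open-cluster-management.io/v1
kind: PlacementBinding
metadata:
  name: binding-policy-sample
placementRef:                    # the PlacementRule above
  name: placement-policy-sample
  kind: PlacementRule
  apiGroup: apps.open-cluster-management.io
subjects:                        # the Policy above
  - name: policy-sample
    kind: Policy
    apiGroup: policy.open-cluster-management.io
```

Because no object sets `metadata.namespace`, all three are created in the namespace passed with `-n`. The binding refers to the policy and the placement rule by name only, so all three must be in the same namespace.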

2.5.1.1.1.1. Viewing your security policy from the CLI

Complete the following steps to view your security policy from the CLI:

View details for a specific security policy by running the following command:

kubectl get policy <policy-name> -n <namespace> -o yaml

View a description of your security policy by running the following command:

kubectl describe policy <name> -n <namespace>

2.5.1.1.2. Creating a cluster security policy from the console

As you create your new policy from the console, a YAML file is also created in the YAML editor.

- From the navigation menu, click Govern risk.

- To create a policy, click Create policy.

From the Create policy page, enter the appropriate values for the following policy fields:

- Name

- Specifications

- Cluster selector

- Enforce (remediation action)

- Standards

- Categories

- Controls

NOTE: You can copy and paste an existing policy into the Policy YAML. The values for the parameter fields are automatically entered when you paste your existing policy. You can search the contents of your policy YAML file with the search feature.

View the example Red Hat Advanced Cluster Management for Kubernetes security policy definition. Copy and paste the YAML file for your policy.

Important:

- You must define a PlacementRule and PlacementBinding to apply your policy to a specific cluster. Enter a value for the Cluster selector field to define a PlacementRule and PlacementBinding.

- Be sure to add values for the policy.mcm.ibm.com/controls and policy.mcm.ibm.com/standards to display modal cards of what controls and standards are violated in the Policy Overview section.

Your YAML file might resemble the following policy:

- Click Create Policy.

Your policy is enabled by default. You can disable your policy by selecting the Disabled check box.

A security policy is created from the console.

2.5.1.1.2.1. Viewing your security policy from the console

You can view any security policy and its status from the console.

- Log in to your cluster from the console.

- From the navigation menu, click Governance and risk to view a table list of your policies.

Note: You can filter the table list of your policies by selecting the All policies tab or Cluster violations tab.

- Select one of your policies to view more details.

- View the policy violations by selecting the Violations tab.

2.5.1.2. Updating security policies

Learn to update security policies by viewing the following section.

2.5.1.2.1. Disabling security policies

Complete the following steps to disable your security policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.
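The console's Disable action is assumed here to set the policy's `disabled` field, the same setting as the Disabled check box on the Create policy page. As a sketch assuming the `policy.open-cluster-management.io/v1` `Policy` kind, the relevant part of a disabled policy might look like the following; the policy name is hypothetical and the remaining spec fields are omitted.

```yaml
# Sketch: only the fields relevant to disabling a policy are shown.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-sample   # hypothetical policy name
spec:
  disabled: true        # false (the default) keeps the policy enabled
```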

2.5.1.2.2. Deleting a security policy

Delete a security policy from the CLI or the console.

Delete a security policy from the CLI:

Delete a security policy by running the following command:

kubectl delete policy <securitypolicy-name> -n <open-cluster-management-namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <securitypolicy-name> -n <open-cluster-management-namespace>

Delete a security policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

To manage other policies, see Managing security policies for more information. Refer to Governance and risk for more topics about policies.

2.5.2. Managing configuration policies

Learn to create, apply, view, and update your configuration policies.

2.5.2.1. Creating a configuration policy

You can create a YAML file for your configuration policy from the command line interface (CLI) or from the console. View the following sections to create a configuration policy:

2.5.2.1.1. Creating a configuration policy from the CLI

Complete the following steps to create a configuration policy from the CLI:

Create a YAML file for your configuration policy. Run the following command:

kubectl create -f configpolicy-1.yaml

Your configuration policy might resemble the following policy:

Apply the policy by running the following command:

kubectl apply -f <policy-file-name> --namespace=<namespace>

Verify and list the policies by running the following command:

kubectl get policy --namespace=<namespace>

Your configuration policy is created.
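As a minimal sketch, the following shows the `policy-templates` entry that a configuration policy might carry, assuming the `ConfigurationPolicy` kind (`policy.open-cluster-management.io/v1`) and the `musthave` and `mustnothave` compliance types used in the policy-collection repository. It illustrates that one template can both require and forbid objects. All object names and data values are hypothetical.

```yaml
# Sketch of a policy-templates entry with two object templates.
# All names and values are hypothetical.
policy-templates:
  - objectDefinition:
      apiVersion: policy.open-cluster-management.io/v1
      kind: ConfigurationPolicy
      metadata:
        name: sample-configpolicy
      spec:
        remediationAction: inform
        namespaceSelector:
          include: ["default"]
          exclude: ["kube-*"]
        object-templates:
          # The ConfigMap must exist with this data.
          - complianceType: musthave
            objectDefinition:
              apiVersion: v1
              kind: ConfigMap
              metadata:
                name: sample-settings
              data:
                log-level: info
          # A Pod with this name must not exist.
          - complianceType: mustnothave
            objectDefinition:
              apiVersion: v1
              kind: Pod
              metadata:
                name: sample-debug-pod
```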

2.5.2.1.1.1. Viewing your configuration policy from the CLI

Complete the following steps to view your configuration policy from the CLI:

View details for a specific configuration policy by running the following command:

kubectl get policy <policy-name> -n <namespace> -o yaml

View a description of your configuration policy by running the following command:

kubectl describe policy <name> -n <namespace>

2.5.2.1.2. Creating a configuration policy from the console

As you create a configuration policy from the console, a YAML file is also created in the YAML editor. Complete the following steps to create a configuration policy from the console:

- Log in to your cluster from the console.

- From the navigation menu, click Governance and risk.

- Click Create policy.

Enter or select the appropriate values for the following fields:

- Name

- Namespace

- Specifications

- Cluster selector

- Remediation action

- Standards

- Categories

- Controls

- Disabled

- Click Create.

2.5.2.1.2.1. Viewing your configuration policy from the console

You can view any configuration policy and its status from the console.

- Log in to your cluster from the console.

- From the navigation menu, click Govern risk to view a table list of your policies.

Note: You can filter the table list of your policies by selecting the All policies tab or Cluster violations tab.

- Select one of your policies.

- View the policy violations by selecting the Violations tab.

2.5.2.2. Updating configuration policies

Learn to update configuration policies by viewing the following section.

2.5.2.2.1. Disabling configuration policies

Complete the following steps to disable your configuration policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.

2.5.2.3. Deleting a configuration policy

Delete a configuration policy from the CLI or the console.

Delete a configuration policy from the CLI:

Delete a configuration policy by running the following command:

kubectl delete policy <policy-name> -n <mcm namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <policy-name> -n <mcm namespace>

Delete a configuration policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

Your policy is deleted.

To view configuration policy samples, see Policy samples. See Managing security policies to manage other policies.

2.5.3. Managing image vulnerability policies

Configuration policy controller monitors the status of image vulnerability policies. Image vulnerability policies are applied to check if your containers have vulnerabilities. Learn to create, apply, view, and update your image vulnerability policy.
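As a minimal sketch, assuming the `Policy` and `ConfigurationPolicy` kinds (`policy.open-cluster-management.io/v1`) from the policy-collection repository, and assuming that the Red Hat Quay Container Security Operator runs on the managed clusters and creates `ImageManifestVuln` objects (`secscan.quay.redhat.com/v1alpha1`) for vulnerable images, an image vulnerability policy can report a violation whenever such objects exist. A `mustnothave` check is consistent with the violation message shown later in this section (`imagemanifestvulns exist and should be deleted`).

```yaml
# Sketch: report a violation while any ImageManifestVuln objects exist in the
# selected namespaces. Assumes the Container Security Operator creates them.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-imagemanifestvulnpolicy
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-imagemanifestvulnpolicy-example
        spec:
          remediationAction: inform
          namespaceSelector:
            include: ["*"]
            exclude: ["kube-*"]
          object-templates:
            - complianceType: mustnothave
              objectDefinition:          # no name: matches any object of this kind
                apiVersion: secscan.quay.redhat.com/v1alpha1
                kind: ImageManifestVuln
```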

2.5.3.1. Creating an image vulnerability policy

You can create a YAML file for your image vulnerability policy from the command line interface (CLI) or from the console. View the following sections to create an image vulnerability policy:

2.5.3.1.1. Creating an image vulnerability policy from the CLI

Complete the following steps to create an image vulnerability policy from the CLI:

Create a YAML file for your image vulnerability policy by running the following command:

kubectl create -f imagevulnpolicy-1.yaml

Apply the policy by running the following command:

kubectl apply -f <imagevuln-policy-file-name> --namespace=<namespace>

List and verify the policies by running the following command:

kubectl get policy --namespace=<namespace>

Your image vulnerability policy is created.

2.5.3.1.1.1. Viewing your image vulnerability policy from the CLI

Complete the following steps to view your image vulnerability policy from the CLI:

View details for a specific image vulnerability policy by running the following command:

kubectl get policy <policy-name> -n <namespace> -o yaml

View a description of your image vulnerability policy by running the following command:

kubectl describe policy <name> -n <namespace>

2.5.3.2. Creating an image vulnerability policy from the console

As you create an image vulnerability policy from the console, a YAML file is also created in the YAML editor. Complete the following steps to create the image vulnerability policy from the console:

- Log in to your cluster from the console.

- From the navigation menu, click Governance and risk.

- Click Create policy.

- Select ImageManifestVulnPolicy from the Specifications field. Parameter values are automatically set. You can edit your values.

- Click Create.

An image vulnerability policy is created.

2.5.3.3. Viewing image vulnerability violations from the console

- From the navigation menu, click Govern risk to view a table list of your policies.

- Select the policy-imagemanifestvulnpolicy policy > Violations tab to view the cluster location of the violation. Your image vulnerability violation might resemble the following:

imagemanifestvulns exist and should be deleted: [sha256.7ac7819e1523911399b798309025935a9968b277d86d50e5255465d6592c0266] in namespace default; [sha256.4109631e69d1d562f014dd49d5166f1c18b4093f4f311275236b94b21c0041c0] in namespace calamari; [sha256.573e9e0a1198da4e29eb9a8d7757f7afb7ad085b0771bc6aa03ef96dedc5b743, sha256.a56d40244a544693ae18178a0be8af76602b89abe146a43613eaeac84a27494e, sha256.b25126b194016e84c04a64a0ad5094a90555d70b4761d38525e4aed21d372820] in namespace open-cluster-management-agent-addon; [sha256.64320fbf95d968fc6b9863581a92d373bc75f563a13ae1c727af37450579f61a] in namespace openshift-cluster-version

- Navigate to your OpenShift Container Platform console by selecting the Cluster link.

- From the navigation menu on the OpenShift Container Platform console, click Administration > Custom Resource Definitions.

- Select imagemanifestvulns > Instances tab to view all of the imagemanifestvulns instances.

- Select an entry to view more details.

2.5.3.4. Updating image vulnerability policies

Learn to update image vulnerability policies by viewing the following section.

2.5.3.4.1. Disabling image vulnerability policies

Complete the following steps to disable your image vulnerability policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.

2.5.3.4.2. Deleting an image vulnerability policy

Delete the image vulnerability policy from the CLI or the console.

Delete an image vulnerability policy from the CLI:

Delete an image vulnerability policy by running the following command:

kubectl delete policy <imagevulnpolicy-name> -n <mcm namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <imagevulnpolicy-name> -n <mcm namespace>

Delete an image vulnerability policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

Your image vulnerability policy is deleted.

To view a sample of an image vulnerability policy, see Image vulnerability policy sample on the Image vulnerability policy page. See Kubernetes configuration policy controller to learn about other policies that are monitored by the Kubernetes configuration policy controller. See Managing security policies to manage other policies.

2.5.4. Managing memory usage policies

Apply a memory usage policy to limit or restrict your memory and compute usage. Learn to create, apply, view, and update your memory usage policy in the following sections.
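As a minimal sketch, assuming the `ConfigurationPolicy` template structure (`policy.open-cluster-management.io/v1`) used in the policy-collection repository, a memory usage policy can require a standard Kubernetes `LimitRange` in each selected namespace. The following fragment shows only the `object-templates` entry; the object name and memory values are hypothetical.

```yaml
# Fragment of a ConfigurationPolicy spec (sketch): require a LimitRange that
# sets default container memory limits and requests. Values are hypothetical.
object-templates:
  - complianceType: musthave
    objectDefinition:
      apiVersion: v1
      kind: LimitRange
      metadata:
        name: sample-mem-limit-range
      spec:
        limits:
          - type: Container
            default:          # limit applied to containers that set none
              memory: 512Mi
            defaultRequest:   # request applied to containers that set none
              memory: 256Mi
```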

2.5.4.1. Creating a memory usage policy

You can create a YAML file for your memory usage policy from the command line interface (CLI) or from the console. View the following sections to create a memory usage policy:

2.5.4.1.1. Creating a memory usage policy from the CLI

Complete the following steps to create a memory usage policy from the CLI:

Create a YAML file for your memory usage policy by running the following command:

kubectl create -f memorypolicy-1.yaml

Apply the policy by running the following command:

kubectl apply -f <memory-policy-file-name> --namespace=<namespace>

List and verify the policies by running the following command:

kubectl get policy --namespace=<namespace>

Your memory usage policy is created from the CLI.

2.5.4.1.1.1. Viewing your policy from the CLI

Complete the following steps to view your memory usage policy from the CLI:

View details for a specific memory usage policy by running the following command:

kubectl get policy <policy-name> -n <namespace> -o yaml

View a description of your memory usage policy by running the following command:

kubectl describe policy <name> -n <namespace>

2.5.4.1.2. Creating a memory usage policy from the console

As you create a memory usage policy from the console, a YAML file is also created in the YAML editor. Complete the following steps to create the memory usage policy from the console:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Governance and risk.

- Click Create policy.

- Select Limitrange from the Specifications field. Parameter values are automatically set. You can edit your values.

- Click Create.

2.5.4.1.2.1. Viewing your memory usage policy from the console

You can view any memory usage policy and its status from the console.

- Log in to your cluster from the console.

- From the navigation menu, click Govern risk to view a table list of your policies.

Note: You can filter the table list of your policies by selecting the All policies tab or Cluster violations tab.

- Select one of your policies to view more details.

- View the policy violations by selecting the Violations tab.

2.5.4.2. Updating memory usage policies

Learn to update memory usage policies by viewing the following section.

2.5.4.2.1. Disabling memory usage policies

Complete the following steps to disable your memory usage policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.

2.5.4.2.2. Deleting a memory usage policy

Delete the memory usage policy from the CLI or the console.

Delete a memory usage policy from the CLI:

Delete a memory usage policy by running the following command:

kubectl delete policy <memorypolicy-name> -n <mcm namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <memorypolicy-name> -n <mcm namespace>

Delete a memory usage policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

Your memory usage policy is deleted.

To view a sample of a memory usage policy, see Memory usage policy sample on the Memory usage policy page. See Kubernetes configuration policy controller to learn about other configuration policies. See Managing security policies to manage other policies.

2.5.5. Managing namespace policies

Namespace policies are applied to define specific rules for your namespace. Learn to create, apply, view, and update your namespace policy in the following sections.
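As a minimal sketch, assuming the `ConfigurationPolicy` template structure (`policy.open-cluster-management.io/v1`) used in the policy-collection repository, a namespace policy can require that a namespace exists on each selected managed cluster. The following fragment shows only the `object-templates` entry; the namespace name `prod` is hypothetical.

```yaml
# Fragment of a ConfigurationPolicy spec (sketch): require a namespace.
# The namespace name is hypothetical.
object-templates:
  - complianceType: musthave
    objectDefinition:
      apiVersion: v1
      kind: Namespace     # a cluster-scoped object
      metadata:
        name: prod
```

Using `mustnothave` instead would report a violation while the namespace exists.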

2.5.5.1. Creating a namespace policy

You can create a YAML file for your namespace policy from the command line interface (CLI) or from the console. View the following sections to create a namespace policy:

2.5.5.1.1. Creating a namespace policy from the CLI

Complete the following steps to create a namespace policy from the CLI:

Create a YAML file for your namespace policy by running the following command:

kubectl create -f namespacepolicy-1.yaml

Apply the policy by running the following command:

kubectl apply -f <namespace-policy-file-name> --namespace=<namespace>

List and verify the policies by running the following command:

kubectl get policy --namespace=<namespace>

Your namespace policy is created from the CLI.

2.5.5.1.1.1. Viewing your namespace policy from the CLI

Complete the following steps to view your namespace policy from the CLI:

View details for a specific namespace policy by running the following command:

kubectl get policy <policy-name> -n <namespace> -o yaml

View a description of your namespace policy by running the following command:

kubectl describe policy <name> -n <namespace>

2.5.5.1.2. Creating a namespace policy from the console

As you create a namespace policy from the console, a YAML file is also created in the YAML editor. Complete the following steps to create a namespace policy from the console:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Governance and risk.

- Click Create policy.

- Select Namespace from the Specifications field. Parameter values are automatically set. You can edit your values.

- Click Create.

2.5.5.1.2.1. Viewing your namespace policy from the console

You can view any namespace policy and its status from the console.

- Log in to your cluster from the console.

- From the navigation menu, click Governance and risk to view a table list of your policies.

Note: You can filter the table list of your policies by selecting the All policies tab or Cluster violations tab.

- Select one of your policies to view more details.

- View the policy violations by selecting the Violations tab.

2.5.5.2. Updating namespace policies

Learn to update namespace policies by viewing the following section.

2.5.5.2.1. Disabling namespace policies

Complete the following steps to disable your namespace policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.

2.5.5.2.2. Deleting a namespace policy

Delete a namespace policy from the CLI or the console.

Delete a namespace policy from the CLI:

Delete a namespace policy by running the following command:

kubectl delete policy <namespace-policy-name> -n <mcm namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <namespace-policy-name> -n <mcm namespace>

Delete a namespace policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

Your namespace policy is deleted.

To view a sample of a namespace policy, see Namespace policy sample on the Namespace policy page. See Kubernetes configuration policy controller to learn about other configuration policies. See Managing security policies to manage other policies.
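For orientation, the following is a minimal sketch of a namespace policy file, modeled on the samples in the policy-collection repository and assuming the `policy.open-cluster-management.io/v1` API. It pairs the policy with a placement binding and a placement rule, which determine the target clusters that the policy is propagated to. All resource names, the `prod` namespace, and the `environment: dev` cluster label are hypothetical; the Namespace policy sample page remains the reference for supported fields.

```yaml
# Sketch only: resource names, the checked namespace, and the cluster label are hypothetical.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-namespace
  namespace: <namespace>                 # namespace on the hub cluster that holds the policy
spec:
  remediationAction: inform              # report violations without changing the cluster
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-namespace-prod-ns
        spec:
          remediationAction: inform
          severity: low
          object-templates:
            - complianceType: musthave   # the namespace must exist on each managed cluster
              objectDefinition:
                apiVersion: v1
                kind: Namespace
                metadata:
                  name: prod             # hypothetical namespace to check for
---
# Binds the policy above to the placement rule below.
apiVersion: policy.open-cluster-management.io/v1
kind: PlacementBinding
metadata:
  name: binding-policy-namespace
  namespace: <namespace>
placementRef:
  name: placement-policy-namespace
  kind: PlacementRule
  apiGroup: apps.open-cluster-management.io
subjects:
  - name: policy-namespace
    kind: Policy
    apiGroup: policy.open-cluster-management.io
---
# Selects the available managed clusters that carry the (hypothetical) environment=dev label.
apiVersion: apps.open-cluster-management.io/v1
kind: PlacementRule
metadata:
  name: placement-policy-namespace
  namespace: <namespace>
spec:
  clusterConditions:
    - status: "True"
      type: ManagedClusterConditionAvailable
  clusterSelector:
    matchExpressions:
      - {key: environment, operator: In, values: ["dev"]}
```

Keeping the three resources in one file means a single `kubectl apply -f` command creates them together.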

2.5.6. Managing pod nginx policies

Kubernetes configuration policy controller monitors the status of your pod nginx policies. Apply a pod nginx policy to define the container rules for your pods. Learn to create, apply, view, and update your pod nginx policy.

2.5.6.1. Creating a pod nginx policy

You can create a YAML file for your pod nginx policy from the command line interface (CLI) or from the console. View the following sections to create a pod nginx policy:

2.5.6.1.1. Creating a pod nginx policy from the CLI

Complete the following steps to create a pod nginx policy from the CLI:

Create your pod nginx policy from its YAML file by running the following command:

kubectl create -f podnginxpolicy-1.yaml

Apply the policy by running the following command:

kubectl apply -f <podnginx-policy-file-name> --namespace=<namespace>

List and verify the policies by running the following command:

kubectl get podnginxpolicy --namespace=<namespace>

Your pod nginx policy is created from the CLI.
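The contents of `podnginxpolicy-1.yaml` are not shown in this section. As a hedged sketch, assuming the same `policy.open-cluster-management.io/v1` Policy format as the policy-collection samples, a pod nginx policy might wrap a configuration policy that requires an nginx pod to exist in the selected namespaces. The policy name, pod name, namespace selection, and image tag are hypothetical; see the Pod nginx policy sample for the supported fields.

```yaml
# Hypothetical contents of podnginxpolicy-1.yaml; names and the image tag are placeholders.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-pod-nginx
  namespace: <namespace>
spec:
  remediationAction: inform          # report a violation if the pod is missing
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-pod-sample-nginx-pod
        spec:
          remediationAction: inform
          severity: low
          namespaceSelector:
            include: ["default"]     # managed-cluster namespaces to check
            exclude: ["kube-*"]
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: Pod
                metadata:
                  name: sample-nginx-pod
                spec:
                  containers:
                    - name: nginx
                      image: nginx:1.18.0   # hypothetical image tag
                      ports:
                        - containerPort: 80
```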

2.5.6.1.1.1. Viewing your pod nginx policy from the CLI

Complete the following steps to view your pod nginx policy from the CLI:

View details for a specific pod nginx policy by running the following command:

kubectl get podnginxpolicy <policy-name> -n <namespace> -o yaml

View a description of your pod nginx policy by running the following command:

kubectl describe podnginxpolicy <name> -n <namespace>

2.5.6.2. Creating a pod nginx policy from the console

As you create a pod nginx policy from the console, a YAML file is also created in the YAML editor. Complete the following steps to create the pod nginx policy from the console:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk.

- Click Create policy.

- Select Pod from the Specifications field. Parameter values are automatically set. You can edit your values.

- Click Create.

2.5.6.2.1. Viewing your pod nginx policy from the console

You can view any pod nginx policy and its status from the console.

- Log in to your cluster from the console.

- From the navigation menu, click Govern risk to view a table list of your policies.

Note: You can filter the table list of your policies by selecting the All policies tab or Cluster violations tab.

- Select one of your policies to view more details.

- View the policy violations by selecting the Violations tab.

2.5.6.3. Updating pod nginx policies

Learn to update pod nginx policies by viewing the following section.

2.5.6.3.1. Disabling pod nginx policies

Complete the following steps to disable your pod nginx policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.

2.5.6.3.2. Deleting a pod nginx policy

Delete the pod nginx policy from the CLI or the console.

Delete a pod nginx policy from the CLI:

Delete a pod nginx policy by running the following command:

kubectl delete policy <podnginxpolicy-name> -n <namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <podnginxpolicy-name> -n <namespace>

Delete a pod nginx policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

Your pod nginx policy is deleted.

To view a sample of a pod nginx policy, see Pod nginx policy sample on the Pod nginx policy page. See Kubernetes configuration policy controller to learn about other configuration policies. See Managing security policies to manage other policies.

2.5.7. Managing pod security policies

Apply a pod security policy to secure pods and containers. Learn to create, apply, view, and update your pod security policy in the following sections.

2.5.7.1. Creating a pod security policy

You can create a YAML file for your pod security policy from the command line interface (CLI) or from the console. View the following sections to create a pod security policy:

2.5.7.1.1. Creating a pod security policy from the CLI

Complete the following steps to create a pod security policy from the CLI:

Create your pod security policy from its YAML file by running the following command:

kubectl create -f podsecuritypolicy-1.yaml

Apply the policy by running the following command:

kubectl apply -f <podsecurity-policy-file-name> --namespace=<namespace>

List and verify the policies by running the following command:

kubectl get podsecuritypolicy --namespace=<namespace>

Your pod security policy is created from the CLI.
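The contents of `podsecuritypolicy-1.yaml` are not shown in this section. The following is a minimal sketch, assuming the `policy.open-cluster-management.io/v1` Policy format from the policy-collection samples, of a policy that checks for a restrictive Kubernetes PodSecurityPolicy object. The names and the specific restrictions are hypothetical; the embedded object uses the `policy/v1beta1` PodSecurityPolicy API, which Kubernetes removed in version 1.25.

```yaml
# Hypothetical contents of podsecuritypolicy-1.yaml; names and restrictions are placeholders.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-podsecuritypolicy
  namespace: <namespace>
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-podsecuritypolicy-restricted-psp
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: policy/v1beta1     # PodSecurityPolicy API, removed in Kubernetes 1.25
                kind: PodSecurityPolicy
                metadata:
                  name: sample-restricted-psp  # cluster-scoped, so no namespace
                spec:
                  privileged: false            # no privileged containers
                  allowPrivilegeEscalation: false
                  hostNetwork: false
                  hostPID: false
                  hostIPC: false
                  volumes: ["configMap", "emptyDir", "projected", "secret", "downwardAPI", "persistentVolumeClaim"]
                  runAsUser:
                    rule: MustRunAsNonRoot     # containers must not run as root
                  seLinux:
                    rule: RunAsAny
                  supplementalGroups:
                    rule: MustRunAs
                    ranges:
                      - min: 1
                        max: 65535
                  fsGroup:
                    rule: MustRunAs
                    ranges:
                      - min: 1
                        max: 65535
```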

2.5.7.1.1.1. Viewing your pod security policy from the CLI

Complete the following steps to view your pod security policy from the CLI:

View details for a specific pod security policy by running the following command:

kubectl get podsecuritypolicy <policy-name> -n <namespace> -o yaml

View a description of your pod security policy by running the following command:

kubectl describe podsecuritypolicy <name> -n <namespace>

2.5.7.1.2. Creating a pod security policy from the console

As you create a pod security policy from the console, a YAML file is also created in the YAML editor. Complete the following steps to create the pod security policy from the console:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk.

- Click Create policy.

- Select Podsecuritypolicy from the Specifications field. Parameter values are automatically set. You can edit your values.

- Click Create.

2.5.7.1.2.1. Viewing your pod security policy from the console

You can view any pod security policy and its status from the console.

- Log in to your cluster from the console.

- From the navigation menu, click Govern risk to view a table list of your policies.

Note: You can filter the table list of your policies by selecting the All policies tab or Cluster violations tab.

- Select one of your policies to view more details.

- View the policy violations by selecting the Violations tab.

2.5.7.2. Updating pod security policies

Learn to update pod security policies by viewing the following section.

2.5.7.2.1. Disabling pod security policies

Complete the following steps to disable your pod security policy:

- Log in to your Red Hat Advanced Cluster Management for Kubernetes console.

- From the navigation menu, click Govern risk to view a table list of your policies.

- Disable your policy by clicking the Options icon > Disable. The Disable Policy dialog box appears.

- Click Disable policy.

Your policy is disabled.

2.5.7.2.2. Deleting a pod security policy

Delete the pod security policy from the CLI or the console.

Delete a pod security policy from the CLI:

Delete a pod security policy by running the following command:

kubectl delete policy <podsecurity-policy-name> -n <mcm namespace>

After your policy is deleted, it is removed from your target cluster or clusters.

Verify that your policy is removed by running the following command:

kubectl get policy <podsecurity-policy-name> -n <mcm namespace>

Delete a pod security policy from the console:

- From the navigation menu, click Govern risk to view a table list of your policies.

- Click the Options icon for the policy you want to delete in the policy violation table.

- Click Remove.

- From the Remove policy dialog box, click Remove policy.

Your pod security policy is deleted.

To view a sample of a pod security policy, see Pod security policy sample on the Pod security policy page. See Kubernetes configuration policy controller to learn about other configuration policies. See Managing security policies to manage other policies.

2.5.8. Managing role policies

Kubernetes configuration policy controller monitors the status of role policies. Apply a role policy to set rules and permissions for specific roles in your cluster. Learn to create, apply, view, and update your role policy in the following sections.
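As an illustration of what a role policy file such as `rolepolicy-1.yaml` might contain, the following is a minimal sketch assuming the `policy.open-cluster-management.io/v1` Policy format from the policy-collection samples. It checks that a Kubernetes RBAC Role with specific rules exists on the managed clusters. The policy name, role name, and rules are hypothetical.

```yaml
# Hypothetical contents of rolepolicy-1.yaml; the role name and rules are placeholders.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-role
  namespace: <namespace>
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-role-sample-role
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: mustonlyhave   # the role must match these rules, with nothing extra
              objectDefinition:
                apiVersion: rbac.authorization.k8s.io/v1
                kind: Role
                metadata:
                  name: sample-role
                  namespace: default
                rules:
                  - apiGroups: ["apps"]
                    resources: ["deployments"]
                    verbs: ["get", "list", "watch"]   # read-only access to deployments
```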

2.5.8.1. Creating a role policy

You can create a YAML file for your role policy from the command line interface (CLI) or from the console. View the following sections to create a role policy:

2.5.8.1.1. Creating a role policy from the CLI

Complete the following steps to create a role policy from the CLI:

Create your role policy from its YAML file by running the following command:

kubectl create -f rolepolicy-1.yaml

Apply the policy by running the following command: