Chapter 1. Managing applications

Review the following topics to learn more about creating, deploying, and managing your applications. This guide assumes familiarity with Kubernetes concepts and terminology. Key Kubernetes terms and components are not defined. For more information about Kubernetes concepts, see Kubernetes Documentation.

The application management functions provide you with unified and simplified options for constructing and deploying applications and application updates. With these functions, your developers and DevOps personnel can create and manage applications across environments through channel and subscription-based automation.

Important: An application name cannot exceed 37 characters.

See the following topics:

- Application model and definitions

- Application console

- Managing application resources

- Managing apps with Git repositories

- Managing apps with Helm repositories

- Managing apps with Object storage repositories

- Application advanced configuration

- Subscribing Git resources

- Granting subscription admin privilege

- Creating an allow and deny list as subscription administrator

- Adding reconcile options

- Configuring application channel and subscription for a secure Git connection

- Setting up Ansible Tower tasks

- Configuring GitOps on managed clusters

- Scheduling a deployment

- Configuring package overrides

- Channel samples

- Subscription samples

- Placement rule samples

- Application samples

1.1. Application model and definitions

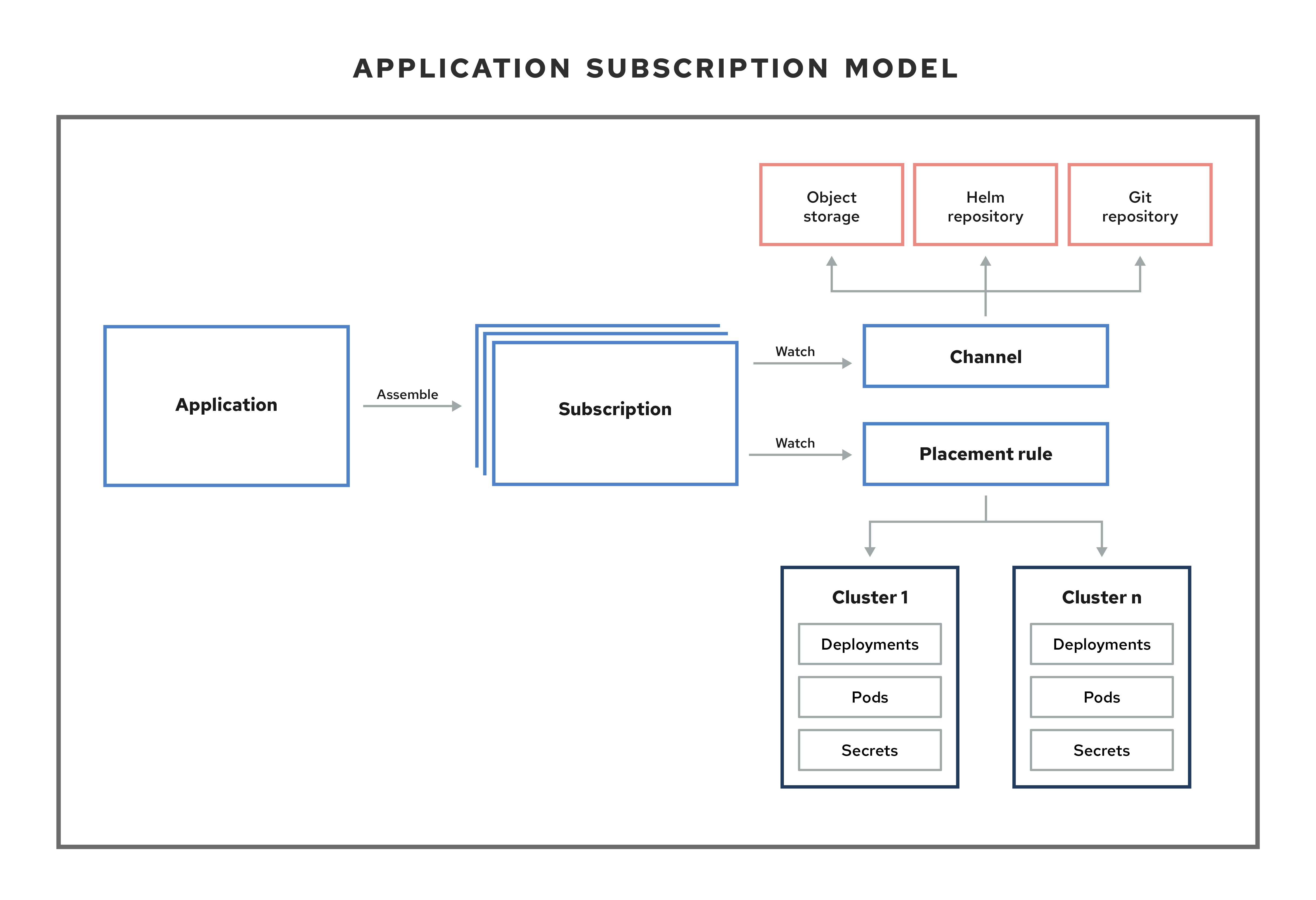

The application model is based on subscribing to one or more Kubernetes resource repositories (channel resources) that contains resources that are deployed on managed clusters. Both single and multicluster applications use the same Kubernetes specifications, but multicluster applications involve more automation of the deployment and application management lifecycle.

See the following image to understand more about the application model:

View the following application resource sections:

1.1.1. Applications

Applications (application.app.k8s.io) in Red Hat Advanced Cluster Management for Kubernetes are used for grouping Kubernetes resources that make up an application.

All of the application component resources for Red Hat Advanced Cluster Management for Kubernetes applications are defined in YAML file spec sections. When you need to create or update an application component resource, you need to create or edit the appropriate spec section to include the labels for defining your resource.

You can also work with Discovered applications, which are applications that are discovered by the OpenShift Container Platform GitOps or an Argo CD operator that is installed in your clusters. Applications that share the same repository are grouped together in this view.
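
To make the grouping described in this section concrete, the following is a minimal sketch of an Application resource, assuming the upstream app.k8s.io/v1beta1 schema. The names sample-app and sample-ns and the app label are hypothetical placeholders, not values from this guide.

```yaml
# Hypothetical example: groups the Subscription resources labeled app=sample-app.
apiVersion: app.k8s.io/v1beta1
kind: Application
metadata:
  name: sample-app        # placeholder; an application name cannot exceed 37 characters
  namespace: sample-ns    # placeholder namespace
spec:
  componentKinds:
    - group: apps.open-cluster-management.io
      kind: Subscription
  descriptor: {}
  selector:
    matchExpressions:
      - key: app
        operator: In
        values:
          - sample-app
```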

1.1.2. Subscriptions

Subscriptions (subscription.apps.open-cluster-management.io) allow clusters to subscribe to a source repository (channel) that can be the following types: Git repository, Helm release registry, or Object storage repository.

Note: Self-managing the hub cluster is not recommended because the resources might impact the hub cluster.

Subscriptions can deploy application resources locally to the hub cluster, if the hub cluster is self-managed. You can then view the local-cluster subscription in the topology. Resource requirements might adversely impact hub cluster performance.

Subscriptions can point to a channel or storage location for identifying new or updated resource templates. The subscription operator can then download directly from the storage location and deploy to targeted managed clusters without checking the hub cluster first. With a subscription, the subscription operator can monitor the channel for new or updated resources instead of the hub cluster.
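
As an illustration of how a subscription ties a channel to target clusters, the following is a minimal sketch. The resource names, namespaces, and label are hypothetical; only the apiVersion, kind, and field layout are intended to reflect the subscription API.

```yaml
# Hypothetical example: subscribes to a channel and selects clusters through a placement rule.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: sample-subscription
  namespace: sample-ns
  labels:
    app: sample-app                            # placeholder label
spec:
  channel: sample-channel-ns/sample-channel    # <channel namespace>/<channel name>
  placement:
    placementRef:
      kind: PlacementRule
      name: sample-placement                   # placeholder placement rule in the same namespace
```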

See the following subscription architecture image:

1.1.2.1. Channels

Channels (channel.apps.open-cluster-management.io) define the source repositories that a cluster can subscribe to with a subscription, and can be the following types: Git, Helm release, and Object storage repositories, and resource templates on the hub cluster.

If you have applications that require Kubernetes resources or Helm charts from channels that require authorization, such as entitled Git repositories, you can use secrets to provide access to these channels. Your subscriptions can access Kubernetes resources and Helm charts for deployment from these channels, while maintaining data security.

Channels use a namespace within the hub cluster and point to a physical place where resources are stored for deployment. Clusters can subscribe to channels for identifying the resources to deploy to each cluster.

Notes: It is best practice to create each channel in a unique namespace. However, a Git channel can share a namespace with another type of channel, including Git, Helm, and Object storage.

Resources within a channel can be accessed by only the clusters that subscribe to that channel.
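
The following sketch shows one way that a Git channel and its access secret might be defined together. It assumes a private Git repository; the names, namespace, and repository URL are hypothetical placeholders, and the credential values are left for you to supply.

```yaml
# Hypothetical example: a secret that holds Git credentials for the channel.
apiVersion: v1
kind: Secret
metadata:
  name: sample-git-secret
  namespace: sample-channel-ns
stringData:
  user: <git user name>
  accessToken: <git access token>
---
# Hypothetical example: a Git channel that references the secret.
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: sample-channel
  namespace: sample-channel-ns    # best practice: a unique namespace for each channel
spec:
  type: Git
  pathname: https://github.com/<org>/<repo>.git
  secretRef:
    name: sample-git-secret
```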

1.1.2.1.1. Supported Git repository servers

- GitHub

- GitLab

- Bitbucket

- Gogs

1.1.2.2. Placement rules

Placement rules (placementrule.apps.open-cluster-management.io) define the target clusters where resource templates can be deployed. Use placement rules to help you facilitate the multicluster deployment of your deployables. Placement rules are also used for governance and risk policies. For more information, see Governance.
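
As a minimal sketch of a placement rule, the following example selects available managed clusters by label. The name, namespace, and environment: dev label are hypothetical and stand in for whatever labels your clusters carry.

```yaml
# Hypothetical example: targets available clusters that are labeled environment=dev.
apiVersion: apps.open-cluster-management.io/v1
kind: PlacementRule
metadata:
  name: sample-placement
  namespace: sample-ns
spec:
  clusterConditions:
    - type: ManagedClusterConditionAvailable
      status: "True"
  clusterSelector:
    matchLabels:
      environment: dev    # placeholder label
```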

1.1.3. ApplicationSet (Technology Preview)

ApplicationSet (Technology Preview) is a sub-project of Argo CD that adds multicluster support for Argo CD applications. You can create an ApplicationSet from the product console editor.

1.1.4. Application documentation

Learn more from the following documentation:

- Application console

- Managing application resources

- Managing apps with Git repositories

- Managing apps with Helm repositories

- Managing apps with Object storage repositories

- Application advanced configuration

- Subscribing Git resources

- Setting up Ansible Tower tasks

- Channel samples

- Subscription samples

- Placement rule samples

- Application samples

1.2. Application console

The console includes a dashboard for managing the application lifecycle. You can use the console dashboard to create and manage applications and view the status of applications. Enhanced capabilities help your developers and operations personnel create, deploy, update, manage, and visualize applications across your clusters.

See the following application console capabilities:

Important: Available actions are based on your assigned role. Learn about access requirements from the Role-based access control documentation.

- Visualize deployed applications across your clusters, including any associated resource repositories, subscriptions, and placement configurations.

- Create and edit applications, and subscribe resources. By default, the hub cluster can manage itself and is named the local cluster. You can choose to deploy application resources to this local cluster, though deploying applications on the local cluster is not best practice.

- From the Overview, click on an application name to view details and topology, which encompasses application resources, including resource repositories, subscriptions, placements, placement rules, and deployed resources, including any optional predeployment and postdeployment hooks using Ansible Tower tasks (for Git repositories). Access the Editor tab from this same view.

- From the Overview, use the Advanced configuration tab to view or edit subscriptions, placement rules, and channels.

- View individual status in the context of an application, including deployments, updates, and subscriptions.

The console includes tools that each provide different application management capabilities. These capabilities allow you to easily create, find, update, and deploy application resources.

1.2.1. Applications overview

From the main Overview tab, see the following:

- A table that lists all applications

- The Search box to filter the applications that are listed

- The application name and other details, such as the number of remote and local clusters where resources are deployed through a subscription, including Argo applications

- The links to repositories where the definitions for the resources that are deployed by the application are located

- Time window constraints, if any were created

- Any ApplicationSet types that represent Argo applications that are generated from the controller

- More actions that are available for your assigned role

From the Overview, you can click Create application and choose a type to create.

Technology Preview: You can choose to create an Argo CD ApplicationSet. Modify the required fields and then click Create. You receive a notification when the ApplicationSet is created.

For an Argo CD ApplicationSet to be created, you need to enable Automatically sync when cluster state changes from the Sync policy.

You can also edit your Argo CD ApplicationSet. From the table, click on your ApplicationSet. By default, the YAML Editor should display alongside the fields. You can turn on the editor if it does not appear. Modify your application as needed in each of the options, then save.

1.2.2. Advanced configuration

Click the Advanced configuration tab to view terminology and tables of resources for all applications. You can find resources and you can filter subscriptions, placement rules, and channels. If you have access, you can also click multiple Actions, such as Edit, Search, and Delete.

Select a resource to view or edit the YAML.

1.2.2.1. Single applications overview

From the main Overview, when you click on an application name in the table to view details about a single application, you can see the following information:

- Cluster details, such as resource status

- Resource topology

- Subscription details

- Access to the Editor tab to edit

The Editor tab is only available for Red Hat Advanced Cluster Management applications. Click to edit your application and related resources.

1.2.3. Resource topology

The topology provides a visual representation of the selected application, including the resources that this application deploys on target clusters.

- You can select any component from the Topology view to view more details.

- View the deployment details for any resource deployed by this application by clicking on the resource node to open the properties view.

- If you selected Argo applications, you can view your Argo applications and launch to the Argo editor.

- View cluster CPU and memory from the cluster node, on the properties dialog.

Notes: The cluster CPU and memory percentage that is displayed is the percentage that is currently utilized. This value is rounded down, so a very small value might display as 0.

For Helm subscriptions, see Configuring package overrides to define the appropriate packageName and the packageAlias to get an accurate topology display.

- View a successful Ansible Tower deployment if you are using Ansible tasks as prehook or posthook for the deployed application.

Click the name of the resource node or select Actions > View application to see the details about the Ansible task deployment, including Ansible Tower Job URL and template name. Additionally, you can see errors if your Ansible Tower deployment is not successful.

- Click Launch resource in Search to search for related resources.

Use Search to find application resources by the component kind for each resource. To search for resources, use the following values:

| Application resource | Kind (search parameter) |
|---|---|
| Subscription | Subscription |
| Channel | Channel |
| Secret | Secret |
| Placement rule | PlacementRule |
| Application | Application |

You can also search by other fields, including name, namespace, cluster, label, and more.

From the search results, you can view identifying details for each resource, including the name, namespace, cluster, labels, and creation date.

If you have access, you can also click Actions in the search results and select to delete that resource.

Click the resource name in the search results to open the YAML editor and make changes. Changes that you save are applied to the resource immediately.

For more information about using search, see Search in the console.

1.3. Managing application resources

From the console, you can create applications by using Git repositories, Helm repositories, and Object storage repositories.

Important: Git channels can share a namespace with all other channel types: Helm, Object storage, and other Git channels.

See the following topics to start managing apps:

1.3.1. Managing apps with Git repositories

When you deploy Kubernetes resources using an application, the resources are located in specific repositories. Learn how to deploy resources from Git repositories in the following procedure. Learn more about the application model at Application model and definitions.

User required access: A user role that can create applications. You can perform only the actions that are assigned to your role. Learn about access requirements from the Role-based access control documentation.

- From the console navigation menu, click Manage applications.

Click Create application.

For the following steps, select YAML: On to view the YAML in the console as you create your application. See the YAML samples later in the topic.

Enter the following values in the correct fields:

- Name: Enter a valid Kubernetes name for the application.

- Namespace: Select a namespace from the list. You can also create a namespace by using a valid Kubernetes name if you are assigned the correct access role.

- Choose Git from the list of repositories that you can use.

Enter the required URL path or select an existing path.

If you select an existing Git repository path, you do not need to specify connection information if this is a private repository. The connection information is pre-set and you do not need to view these values.

If you enter a new Git repository path, you can optionally enter Git connection information if this is a private Git repository.

- Enter values for the optional fields, such as branch, path, and commit hash. Optional fields are defined in the fields or in the hover text.

Notice the reconcile option. The merge option is the default selection, which means that new fields are added and existing fields are updated in the resource. You can choose to replace. With the replace option, the existing resource is replaced with the Git source.

When the subscription reconcile rate is set to low, it can take up to one hour for the subscribed application resources to reconcile. On the card on the single application view, click Sync to reconcile manually. If set to off, it never reconciles.

Set any optional pre-deployment and post-deployment tasks.

Set the Ansible Tower secret if you have Ansible Tower jobs that you want to run before or after the subscription deploys the application resources. The Ansible Tower tasks that define Ansible jobs must be placed within prehook and posthook folders in this repository.

From the Credential section of the console, click the drop-down menu to select an Ansible credential. If you need to add a credential, see more information at Managing credentials overview.

From Select clusters to deploy, you can update the placement rule information for your application. Choose from the following:

- Deploy on local cluster

- Deploy to all online clusters and local cluster

- Deploy application resources only on clusters matching specified labels

- You have the option to Select existing placement configuration if you create an application in an existing namespace with placement rules already defined.

- From Settings, you can specify application behavior. To block or activate changes to your deployment during a specific time window, choose an option for Deployment window and your Time window configuration.

- You can either choose another repository or click Save.

- You are redirected to the Overview page where you can view the details and topology.

1.3.1.1. GitOps pattern

Learn best practices for organizing a Git repository to manage clusters.

1.3.1.1.1. GitOps example

Folders in this example are defined and named, with each folder containing applications or configurations that are run on managed clusters:

- Root folder managed-subscriptions: Contains subscriptions that target the common-managed folder.

- Subfolder apps/: Used to subscribe applications in the common-managed folder with placement to managed-clusters.

- Subfolder config/: Used to subscribe configurations in the common-managed folder with placement to managed-clusters.

- Subfolder policies/: Used to apply policies with placement to managed-clusters.

- Folder root-subscription/: The initial subscription for the hub cluster that subscribes the managed-subscriptions folder.

See the example of a directory:

1.3.1.1.2. GitOps flow

Your directory structure is created for the following subscription flow: root-subscription > managed-subscriptions > common-managed.

- A single subscription in root-subscription/ is applied from the CLI terminal to the hub cluster.

- Subscriptions and policies are downloaded and applied to the hub cluster from the managed-subscriptions folder.

- The subscriptions and policies in the managed-subscriptions folder then perform work on the managed clusters based on the placement.

- Placement determines which managed-clusters each subscription or policy affects.

- The subscriptions or policies define what is on the clusters that match their placement.

- Subscriptions apply content from the common-managed folder to managed-clusters that match the placement rules. This also applies common applications and configurations to all managed-clusters that match the placement rules.

1.3.1.1.3. More examples

- For an example of root-subscription/, see application-subscribe-all.

- For examples of subscriptions that point to other folders in the same repository, see subscribe-all.

- See an example of the common-managed folder with application artifacts in the nginx-apps repository.

- See policy examples in Policy collection.

1.3.2. Managing apps with Helm repositories

When you deploy Kubernetes resources using an application, the resources are located in specific repositories. Learn how to deploy resources from Helm repositories in the following procedure. Learn more about the application model at Application model and definitions.

User required access: A user role that can create applications. You can perform only the actions that are assigned to your role. Learn about access requirements from the Role-based access control documentation.

- From the console navigation menu, click Manage applications.

Click Create application.

For the following steps, select YAML: On to view the YAML in the console as you create your application. See YAML samples later in the topic.

Enter the following values in the correct fields:

- Name: Enter a valid Kubernetes name for the application.

- Namespace: Select a namespace from the list. You can also create a namespace by using a valid Kubernetes name if you are assigned the correct access role.

- Choose Helm from the list of repositories that you can use.

Enter the required URL path or select an existing path, then enter the package version.

If you select an existing Helm repository path, you do not need to specify connection information if this is a private repository. The connection information is pre-set and you do not need to view these values.

If you enter a new Helm repository path, you can optionally enter Helm connection information if this is a private Helm repository.

- Enter your Chart name and Package alias.

- Enter values for the optional fields. Optional fields are defined in the fields or in the hover text.

- Enter a Repository reconcile rate to control reconcile frequency.

From Select clusters to deploy, you can update the placement rule information for your application. Choose from the following:

- Deploy on local cluster

- Deploy to all online clusters and local cluster

- Deploy application resources only on clusters matching specified labels

- You have the option to Select existing placement configuration if you create an application in an existing namespace with placement rules already defined.

- From Settings, you can specify application behavior. To block or activate changes to your deployment during a specific time window, choose an option for Deployment window and your Time window configuration.

- You can either choose another repository or click Save.

- You are redirected to the Overview page where you can view the details and topology.

Note: By default, when a subscription deploys subscribed applications to target clusters, the applications are deployed to that subscription namespace.

1.3.2.1. Sample YAML

The following example channel definition abstracts a Helm repository as a channel:
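
As a minimal sketch of such a definition, a Helm repository channel might look like the following. The namespace, channel name, and chart repository URL are hypothetical placeholders; the HelmRepo channel type is the value that the channel API uses for Helm repositories.

```yaml
# Hypothetical example: a namespace that is dedicated to the channel.
apiVersion: v1
kind: Namespace
metadata:
  name: sample-helm-ns
---
# Hypothetical example: a channel that points to a Helm chart repository.
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: sample-helm-channel
  namespace: sample-helm-ns
spec:
  type: HelmRepo
  pathname: https://charts.example.com/    # placeholder; must be a valid chart repository URL
```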

Note: For Helm, all Kubernetes resources contained within the Helm chart must have the label release: {{ .Release.Name }} for the application topology to be displayed properly.

The following channel definition shows another example of a Helm repository channel:

Note: To see REST API, use the APIs.

1.3.3. Managing apps with Object storage repositories

When you deploy Kubernetes resources using an application, the resources are located in specific repositories. Learn more about the application model at Application model and definitions.

User required access: Cluster administrator, administrator. (A user role that can create applications.)

You can perform only the actions that are assigned to your role. Learn about access requirements from the Role-based access control documentation.

1.3.3.1. Deploy resources from object storage

When you deploy Kubernetes resources using an application, the resources are located in specific repositories. Learn how to deploy resources from object storage repositories in the following procedure:

- From the console navigation menu, click Applications.

Click Create application.

For the following steps, select YAML: On to view the YAML in the console as you create your application. See YAML samples later in the topic.

Enter the following values in the correct fields:

- Name: Enter a valid Kubernetes name for the application.

- Namespace: Select a namespace from the list. You can also create a namespace by using a valid Kubernetes name if you are assigned the correct access role.

- Choose Object storage from the list of repositories that you can use.

Enter the required URL path or select an existing path.

If you select an existing Object storage repository path, you do not need to specify connection information if this is a private repository. The connection information is pre-set and you do not need to view these values.

If you enter a new Object storage repository path, you can optionally enter Object storage connection information if this is a private Object storage repository.

- Enter values for the optional fields. Optional fields are defined in the fields or in the hover text.

- Set any optional pre and post-deployment tasks.

From Select clusters to deploy, you can update the placement rule information for your application. Choose from the following:

- Deploy on local cluster

- Deploy to all online clusters and local cluster

- Deploy application resources only on clusters matching specified labels

- You have the option to Select existing placement configuration if you create an application in an existing namespace with placement rules already defined.

- From Settings, you can specify application behavior. To block or activate changes to your deployment during a specific time window, choose an option for Deployment window and your Time window configuration.

- You can either choose another repository or click Save.

- You are redirected to the Overview page where you can view the details and topology.

1.3.3.2. Sample YAML

The following example channel definition abstracts an object storage as a channel:
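
As a minimal sketch of such a definition, an object storage channel might look like the following. The names, namespace, endpoint, and secret are hypothetical placeholders; ObjectBucket is the channel type that the console labels as Object storage.

```yaml
# Hypothetical example: a channel that points to a bucket in an object store.
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: dev
  namespace: sample-obj-ns
spec:
  type: ObjectBucket
  pathname: http://<object store host>:<port>/dev    # placeholder URL that ends with the bucket name
  secretRef:
    name: sample-obj-secret                          # placeholder secret with the access credentials
```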

Note: To see REST API, use the APIs.

1.3.3.3. Creating your Amazon Web Services (AWS) S3 object storage bucket

You can set up subscriptions to subscribe resources that are defined in the Amazon Simple Storage Service (Amazon S3) object storage service. See the following procedure:

- Log in to the AWS console with your AWS account, user name, and password.

- Navigate to Amazon S3 > Buckets to the bucket home page.

- Click Create Bucket to create your bucket.

- Select the AWS region, which is essential for connecting your AWS S3 object bucket.

- Create the bucket access token.

- Navigate to your user name in the navigation bar, then from the drop-down menu, select My Security Credentials.

- Navigate to Access keys for CLI, SDK, & API access in the AWS IAM credentials tab and click on Create access key.

- Save your Access key ID and Secret access key.

- Upload your object YAML files to the bucket.

1.3.3.4. Subscribing to the object in the AWS bucket

- Create an object bucket type channel with a secret to specify the AccessKeyID, SecretAccessKey, and Region for connecting the AWS bucket. The three fields are created when the AWS bucket is created.

Add the URL. The URL identifies the channel in an AWS S3 bucket if the URL contains s3:// or the s3 and aws keywords. For example, all of the following bucket URLs have AWS S3 bucket identifiers:

- https://s3.console.aws.amazon.com/s3/buckets/sample-bucket-1

- s3://sample-bucket-1/

- https://sample-bucket-1.s3.amazonaws.com/

Note: The AWS S3 object bucket URL is not necessary to connect the bucket with the AWS S3 API.

1.3.3.5. Sample AWS subscription

See the following complete AWS S3 object bucket channel sample YAML file:
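
As a hedged sketch of such a file, the following pairs a secret that carries the AccessKeyID, SecretAccessKey, and Region fields with an ObjectBucket channel. The resource names and namespace are hypothetical, and the credential values are placeholders that you replace with your own.

```yaml
# Hypothetical example: AWS credentials for the bucket.
apiVersion: v1
kind: Secret
metadata:
  name: sample-aws-secret
  namespace: sample-aws-ns
stringData:
  AccessKeyID: <your AWS bucket access key ID>
  SecretAccessKey: <your AWS bucket secret access key>
  Region: <your AWS bucket region>
type: Opaque
---
# Hypothetical example: a channel that points to the AWS S3 bucket.
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: sample-aws-channel
  namespace: sample-aws-ns
spec:
  type: ObjectBucket
  pathname: https://s3.console.aws.amazon.com/s3/buckets/sample-bucket-1
  secretRef:
    name: sample-aws-secret
```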

You can continue to create other AWS subscription and placement rule objects, as you see in the following sample YAML with kind: PlacementRule and kind: Subscription added:

You can also subscribe to objects within a specific subfolder in the object bucket. Add the subfolder annotation to the subscription, which forces the object bucket subscription to apply only the resources in the subfolder path.

See the annotation with subfolder-1 as the bucket-path:

annotations:
  apps.open-cluster-management.io/bucket-path: <subfolder-1>

See the following complete sample for a subfolder:
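
A minimal sketch of a subscription that carries the bucket-path annotation might look like the following. The subscription name, namespaces, channel reference, and placement rule name are hypothetical placeholders.

```yaml
# Hypothetical example: applies only the resources under subfolder-1 in the bucket.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: sample-obj-subscription
  namespace: sample-sub-ns
  annotations:
    apps.open-cluster-management.io/bucket-path: subfolder-1
spec:
  channel: sample-aws-ns/sample-aws-channel    # <channel namespace>/<channel name>
  placement:
    placementRef:
      kind: PlacementRule
      name: sample-placement
```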

1.4. Application advanced configuration

Within Red Hat Advanced Cluster Management for Kubernetes, applications are composed of multiple application resources. You can use channel, subscription, and placement rule resources to help you deploy, update, and manage your overall applications.

Both single and multicluster applications use the same Kubernetes specifications, but multicluster applications involve more automation of the deployment and application management lifecycle.

All of the application component resources for Red Hat Advanced Cluster Management for Kubernetes applications are defined in YAML file spec sections. When you need to create or update an application component resource, you need to create or edit the appropriate spec section to include the labels for defining your resource.

View the following application advanced configuration topics:

- Subscribing Git resources

- Granting subscription admin privilege

- Creating an allow and deny list as subscription administrator

- Adding reconcile options

- Configuring application channel and subscription for a secure Git connection

- Setting up Ansible Tower tasks

- Configuring GitOps on managed clusters

- Configuring package overrides

- Channel samples overview

- Subscription samples overview

- Placement rule samples overview

- Application samples overview

1.4.1. Subscribing Git resources

By default, when a subscription deploys subscribed applications to target clusters, the applications are deployed to that subscription namespace, even if the application resources are associated with other namespaces. A subscription administrator can change default behavior, as described in Granting subscription admin privilege.

Additionally, if an application resource exists in the cluster and was not created by the subscription, the subscription cannot apply a new resource on that existing resource. See the following processes to change default settings as the subscription administrator:

Required access: Cluster administrator

1.4.1.1. Creating application resources in Git

You need to specify the full group and version for apiVersion in resource YAML when you subscribe. For example, if you subscribe to apiVersion: v1, the subscription controller fails to validate the subscription and you receive an error: Resource /v1, Kind=ImageStream is not supported.

If the apiVersion is changed to image.openshift.io/v1, as in the following sample, it passes the validation in the subscription controller and the resource is applied successfully.
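
As a minimal sketch of a resource that passes this validation, the following ImageStream uses the full group and version. The resource name and namespace are hypothetical placeholders.

```yaml
# Hypothetical example: the full group and version, not only v1.
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: sample-imagestream
  namespace: default
spec:
  lookupPolicy:
    local: true
```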

Next, see more useful examples of how a subscription administrator can change default behavior.

1.4.1.2. Application namespace example

In the following examples, you are logged in as a subscription administrator.

1.4.1.2.1. Application to different namespaces

Create a subscription to subscribe the sample resource YAML file from a Git repository. The example file contains subscriptions that are located within the following different namespaces:

Applicable channel types: Git

- ConfigMap test-configmap-1 gets created in the multins namespace.

- ConfigMap test-configmap-2 gets created in the default namespace.

- ConfigMap test-configmap-3 gets created in the subscription namespace.

If the subscription was created by other users, all the ConfigMaps get created in the same namespace as the subscription.
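
As an illustration, a resource file in the Git repository that produces the three ConfigMaps described in this section might look like the following sketch. The data entries are hypothetical, and the sketch assumes that the multins namespace already exists on the target cluster.

```yaml
# Created in the multins namespace when a subscription administrator owns the subscription.
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-configmap-1
  namespace: multins
data:
  path: resource1
---
# Created in the default namespace when a subscription administrator owns the subscription.
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-configmap-2
  namespace: default
data:
  path: resource2
---
# No namespace is set, so this ConfigMap is created in the subscription namespace.
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-configmap-3
data:
  path: resource3
```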

1.4.1.2.2. Application to same namespace

As a subscription administrator, you might want to deploy all application resources into the same namespace.

You can deploy all application resources into the subscription namespace by Creating an allow and deny list as subscription administrator.

Add the apps.open-cluster-management.io/current-namespace-scoped: true annotation to the subscription YAML. For example, when a subscription administrator creates the following subscription, all three ConfigMaps in the previous example are created in the subscription-ns namespace.
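
A minimal sketch of such a subscription follows. The subscription name, channel reference, and placement rule name are hypothetical placeholders, and the annotation value is quoted because Kubernetes annotation values must be strings.

```yaml
# Hypothetical example: deploys all subscribed resources into subscription-ns.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: sample-subscription
  namespace: subscription-ns
  annotations:
    apps.open-cluster-management.io/current-namespace-scoped: "true"
spec:
  channel: sample-channel-ns/sample-channel
  placement:
    placementRef:
      kind: PlacementRule
      name: sample-placement
```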

1.4.1.3. Resource overwrite example

Applicable channel types: Git, ObjectBucket (Object storage in the console)

Note: The resource overwrite option is not applicable to Helm charts from the Git repository because the Helm chart resources are managed by Helm.

In this example, the following ConfigMap already exists in the target cluster.

Subscribe the following sample resource YAML file from a Git repository and replace the existing ConfigMap. See the change in the data specification:

1.4.1.3.1. Default merge option

See the following sample with the default apps.open-cluster-management.io/reconcile-option: merge annotation:

When this subscription is created by a subscription administrator and subscribes the ConfigMap resource, the existing ConfigMap is merged, as you can see in the following example:

When the merge option is used, entries from subscribed resource are either created or updated in the existing resource. No entry is removed from the existing resource.

Important: If the existing resource you want to overwrite with a subscription is automatically reconciled by another operator or controller, the resource configuration is updated by both subscription and the controller or operator. Do not use this method in this case.
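
To illustrate the merge behavior with hypothetical values, assume that a ConfigMap named test-configmap already exists on the target cluster with the data entries name: user1 and age: "19". The following sketch shows a subscribed ConfigMap that defines only age, together with a subscription that uses the merge option. All names and namespaces are placeholders.

```yaml
# Hypothetical ConfigMap in the Git repository; it defines only the age entry.
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-configmap
  namespace: sample-sub-ns
data:
  age: "20"
---
# Hypothetical subscription that uses the default merge option.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: sample-subscription
  namespace: sample-sub-ns
  annotations:
    apps.open-cluster-management.io/reconcile-option: merge
spec:
  channel: sample-channel-ns/sample-channel
  placement:
    placementRef:
      kind: PlacementRule
      name: sample-placement
# Expected result under these assumptions: the existing ConfigMap keeps
# name: user1, and age is updated to "20". No entry is removed.
```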

1.4.1.3.2. Replace option

Log in as a subscription administrator and create a subscription with the apps.open-cluster-management.io/reconcile-option: replace annotation. See the following example:

When this subscription is created by a subscription administrator and subscribes the ConfigMap resource, the existing ConfigMap is replaced by the following:
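As a sketch of the replace option, assume that a ConfigMap with the entries name: user1 and age: "19" already exists on the target cluster and that the Git repository contains the same ConfigMap with only age: "20". All names and values here are hypothetical.

```yaml
# Subscription that requests the replace behavior.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: subscription-example            # hypothetical name
  namespace: sub-ns
  annotations:
    apps.open-cluster-management.io/git-path: sample-resources   # hypothetical path
    apps.open-cluster-management.io/reconcile-option: replace
spec:
  channel: channel-ns/somechannel       # hypothetical channel
  placement:
    placementRef:
      kind: PlacementRule
      name: dev-clusters
---
# Resulting ConfigMap on the target cluster: the existing name entry is gone
# because the whole resource is replaced by the subscribed version.
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-configmap-1
  namespace: sub-ns
data:
  age: "20"
```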

1.4.1.4. Subscribing specific Git elements

You can subscribe to a specific Git branch, commit, or tag.

1.4.1.4.1. Subscribing to a specific branch

The subscription operator that is included in the multicloud-operators-subscription repository subscribes to the default branch of a Git repository. If you want to subscribe to a different branch, you need to specify the branch name annotation in the subscription.

The following YAML example shows how to specify a different branch with apps.open-cluster-management.io/git-branch: <branch1>:
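A minimal sketch of the metadata section follows. The subscription name and Git path are hypothetical; replace <branch1> with your branch name.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: git-sample-subscription         # hypothetical name
  annotations:
    apps.open-cluster-management.io/git-path: sample-resources   # hypothetical path
    apps.open-cluster-management.io/git-branch: <branch1>
```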

1.4.1.4.2. Subscribing to a specific commit

The subscription operator that is included in the multicloud-operators-subscription repository subscribes to the latest commit of the specified branch of a Git repository by default. If you want to subscribe to a specific commit, you need to specify the desired commit annotation with the commit hash in the subscription.

The following YAML example shows how to specify a different commit with apps.open-cluster-management.io/git-desired-commit: <full commit number>:
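A minimal sketch of the metadata section follows. The subscription name, Git path, and the clone depth of 100 are hypothetical; the clone depth is quoted because annotation values must be strings.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: git-sample-subscription         # hypothetical name
  annotations:
    apps.open-cluster-management.io/git-path: sample-resources   # hypothetical path
    apps.open-cluster-management.io/git-desired-commit: <full commit number>
    apps.open-cluster-management.io/git-clone-depth: "100"       # optional, hypothetical value
```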

The git-clone-depth annotation is optional and set to 20 by default, which means that the subscription controller retrieves the 20 most recent commits from the Git repository. If you specify a much older git-desired-commit, you need to increase git-clone-depth so that the clone includes the desired commit.

1.4.1.4.3. Subscribing to a specific tag

The subscription operator that is included in the multicloud-operators-subscription repository subscribes to the latest commit of the specified branch of a Git repository by default. If you want to subscribe to a specific tag, you need to specify the tag annotation in the subscription.

The following YAML example shows how to specify a different tag with apps.open-cluster-management.io/git-tag: <v1.0>:
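A minimal sketch of the metadata section follows. The subscription name, Git path, and clone depth are hypothetical.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: git-sample-subscription         # hypothetical name
  annotations:
    apps.open-cluster-management.io/git-path: sample-resources   # hypothetical path
    apps.open-cluster-management.io/git-tag: <v1.0>
    apps.open-cluster-management.io/git-clone-depth: "100"       # optional, hypothetical value
```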

Note: If both Git desired commit and tag annotations are specified, the tag is ignored.

The git-clone-depth annotation is optional and set to 20 by default, which means that the subscription controller retrieves the 20 most recent commits from the Git repository. If you specify a much older git-tag, you need to increase git-clone-depth so that the clone includes the commit that the tag points to.

1.4.2. Granting subscription administrator privilege

Learn how to grant subscription administrator access. A subscription administrator can change default behavior. Learn more in the following process:

- From the console, log in to your Red Hat OpenShift Container Platform cluster.

- Create one or more users. See Preparing for users for information about creating users. You can also prepare groups or service accounts.

  Users that you create are administrators for the app.open-cluster-management.io/subscription application. With OpenShift Container Platform, a subscription administrator can change default behavior. You can group these users to represent a subscription administrative group, which is demonstrated in later examples.

- From the terminal, log in to your Red Hat Advanced Cluster Management cluster.

- If the open-cluster-management:subscription-admin ClusterRoleBinding does not exist, you need to create it.

- Add subjects, such as users, groups, or service accounts, to the open-cluster-management:subscription-admin ClusterRoleBinding with the following command:

  oc edit clusterrolebinding open-cluster-management:subscription-admin

  Note: Initially, the open-cluster-management:subscription-admin ClusterRoleBinding has no subject.
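The ClusterRoleBinding from the previous procedure might look like the following sketch after subjects are added. The binding and role names come from the procedure; the user, group, and service account names are hypothetical placeholders.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: open-cluster-management:subscription-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: open-cluster-management:subscription-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: example-name                      # hypothetical user
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: example-group-name                # hypothetical group
- kind: ServiceAccount
  name: my-service-account                # hypothetical service account
  namespace: my-service-account-namespace
```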

1.4.2.1. Creating an allow and deny list as subscription administrator

As a subscription administrator, you can create an application from a Git repository or Object storage application subscription that contains an allow list to allow deployment of only specified Kubernetes kind resources. You can also create a deny list in the application subscription to deny deployment of specific Kubernetes kind resources.

By default, policy.open-cluster-management.io/v1 resources are not deployed by an application subscription. To avoid this default behavior, the application subscription needs to be deployed by a subscription administrator.

See the following example of allow and deny specifications:

The following application subscription YAML specifies that when the application is deployed from the myapplication directory from the source repository, it deploys only v1/Deployment resources, even if there are other resources in the source repository:
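A sketch of an allow list follows. The subscription name, namespace, and channel are hypothetical. The sketch assumes that each allow entry pairs an apiVersion with a list of kinds, and it uses apps/v1 because that is the API version that serves the Kubernetes Deployment kind.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: demo-subscription               # hypothetical name
  namespace: demo-ns                    # hypothetical namespace
  annotations:
    apps.open-cluster-management.io/git-path: myapplication
spec:
  channel: demo-ns/somechannel          # hypothetical channel
  allow:
  - apiVersion: apps/v1
    kinds:
    - Deployment
  placement:
    local: true
```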

This example application subscription YAML specifies deployments of all valid resources except v1/Service and v1/ConfigMap resources. Instead of listing individual resource kinds within an API group, you can add "*" to allow or deny all resource kinds in the API Group.
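A sketch of a deny list follows, under the same assumptions about the entry format. The subscription name, namespace, and channel are hypothetical.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: demo-subscription               # hypothetical name
  namespace: demo-ns                    # hypothetical namespace
  annotations:
    apps.open-cluster-management.io/git-path: myapplication
spec:
  channel: demo-ns/somechannel          # hypothetical channel
  deny:
  - apiVersion: v1
    kinds:
    - Service
    - ConfigMap
    # To deny every kind in the API group, list "*" instead of individual kinds.
  placement:
    local: true
```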

1.4.3. Adding reconcile options

You can use the apps.open-cluster-management.io/reconcile-option annotation in individual resources to override the subscription-level reconcile option.

For example, if you add apps.open-cluster-management.io/reconcile-option: replace annotation in the subscription and add apps.open-cluster-management.io/reconcile-option: merge annotation in a resource YAML in the subscribed Git repository, the resource is merged on the target cluster while other resources are replaced.
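The combination that is described above can be sketched as follows. All resource names, namespaces, and data values are hypothetical.

```yaml
# Subscription on the hub cluster: the subscription-level option is replace.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: subscription-example            # hypothetical name
  namespace: sub-ns
  annotations:
    apps.open-cluster-management.io/git-path: sample-resources   # hypothetical path
    apps.open-cluster-management.io/reconcile-option: replace
spec:
  channel: channel-ns/somechannel       # hypothetical channel
  placement:
    placementRef:
      kind: PlacementRule
      name: dev-clusters
---
# Resource file in the subscribed Git repository: this one resource is merged
# instead of replaced because of its own annotation.
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-configmap-1
  namespace: sub-ns
  annotations:
    apps.open-cluster-management.io/reconcile-option: merge
data:
  age: "20"
```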

1.4.3.1. Reconcile frequency Git channel

You can select one of the reconcile frequency options (high, medium, low, or off) in the channel configuration to avoid unnecessary resource reconciliations and therefore prevent overload on the subscription operator.

Required access: Administrator and cluster administrator

See the following definitions of the settings:attribute:<value>:

- Off: The deployed resources are not automatically reconciled. A change in the Subscription custom resource initiates a reconciliation. You can add or update a label or annotation.

- Low: The deployed resources are automatically reconciled every hour, even if there is no change in the source Git repository.

- Medium: This is the default setting. The subscription operator compares the currently deployed commit ID to the latest commit ID of the source repository every 3 minutes, and applies changes to target clusters. Every 15 minutes, all resources are reapplied from the source Git repository to the target clusters, even if there is no change in the repository.

- High: The deployed resources are automatically reconciled every two minutes, even if there is no change in the source Git repository.

You can set this by using the apps.open-cluster-management.io/reconcile-rate annotation in the channel custom resource that is referenced by subscription.

See the following name: git-channel example:
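A sketch of such a channel and a subscription that uses it follows. The channel name git-channel and namespace sample come from the text; the subscription name, Git path, and URL placeholder are assumptions.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: git-channel
  namespace: sample
  annotations:
    apps.open-cluster-management.io/reconcile-rate: low
spec:
  type: Git
  pathname: <Git URL>
---
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: git-subscription
  annotations:
    apps.open-cluster-management.io/git-path: <application1>
    apps.open-cluster-management.io/git-branch: <branch1>
spec:
  channel: sample/git-channel
  placement:
    local: true
```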

In the previous example, all subscriptions that use sample/git-channel are assigned low reconciliation frequency.

When the subscription reconcile rate is set to low, it can take up to one hour for the subscribed application resources to reconcile. On the card on the single application view, click Sync to reconcile manually. If set to off, it never reconciles.

Regardless of the reconcile-rate setting in the channel, a subscription can turn the auto-reconciliation off by specifying apps.open-cluster-management.io/reconcile-rate: off annotation in the Subscription custom resource.

See the following git-channel example:
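A sketch of this override follows. The channel and subscription names come from the surrounding text; the Git path and URL placeholders are assumptions. The value off is quoted here because an unquoted off can be parsed as a boolean by YAML 1.1 parsers, and annotation values must be strings.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: git-channel
  namespace: sample
  annotations:
    apps.open-cluster-management.io/reconcile-rate: high
spec:
  type: Git
  pathname: <Git URL>
---
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: git-subscription
  annotations:
    apps.open-cluster-management.io/git-path: <application1>
    apps.open-cluster-management.io/git-branch: <branch1>
    apps.open-cluster-management.io/reconcile-rate: "off"
spec:
  channel: sample/git-channel
  placement:
    local: true
```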

See that the resources deployed by git-subscription are never automatically reconciled even if the reconcile-rate is set to high in the channel.

1.4.3.2. Reconcile frequency Helm channel

Every 15 minutes, the subscription operator compares the currently deployed hash of your Helm chart to the hash from the source repository. Changes are applied to target clusters. The frequency of resource reconciliation impacts the performance of other application deployments and updates.

For example, if there are hundreds of application subscriptions and you want to reconcile all subscriptions more frequently, the response time of reconciliation is slower.

Depending on the Kubernetes resources of the application, appropriate reconciliation frequency can improve performance.

- Off: The deployed resources are not automatically reconciled. A change in the Subscription custom resource initiates a reconciliation. You can add or update a label or annotation.

- Low: The subscription operator compares the currently deployed hash to the hash of the source repository every hour and applies changes to target clusters when there is a change.

- Medium: This is the default setting. The subscription operator compares the currently deployed hash to the hash of the source repository every 15 minutes and applies changes to target clusters when there is a change.

- High: The subscription operator compares the currently deployed hash to the hash of the source repository every 2 minutes and applies changes to target clusters when there is a change.

You can set this by using the apps.open-cluster-management.io/reconcile-rate annotation in the Channel custom resource that is referenced by the subscription. See the following helm-channel example:
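A sketch of such a channel and a subscription that uses it follows. The channel name helm-channel and namespace sample come from the text; the chart name, subscription name, and URL placeholder are assumptions.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: helm-channel
  namespace: sample
  annotations:
    apps.open-cluster-management.io/reconcile-rate: low
spec:
  type: HelmRepo
  pathname: <Helm repo URL>
---
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: helm-subscription
spec:
  channel: sample/helm-channel
  name: nginx-ingress                   # hypothetical chart name
  placement:
    local: true
```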

In this example, all subscriptions that use sample/helm-channel are assigned a low reconciliation frequency.

Regardless of the reconcile-rate setting in the channel, a subscription can turn the auto-reconciliation off by specifying apps.open-cluster-management.io/reconcile-rate: off annotation in the Subscription custom resource, as displayed in the following example:
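A sketch of this override follows. The channel and subscription names come from the surrounding text; the chart name and URL placeholder are assumptions. The value off is quoted so that it is stored as a string.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: helm-channel
  namespace: sample
  annotations:
    apps.open-cluster-management.io/reconcile-rate: high
spec:
  type: HelmRepo
  pathname: <Helm repo URL>
---
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: helm-subscription
  annotations:
    apps.open-cluster-management.io/reconcile-rate: "off"
spec:
  channel: sample/helm-channel
  name: nginx-ingress                   # hypothetical chart name
  placement:
    local: true
```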

In this example, the resources deployed by helm-subscription are never automatically reconciled, even if the reconcile-rate is set to high in the channel.

1.4.4. Configuring application channel and subscription for a secure Git connection

Git channels and subscriptions connect to the specified Git repository through HTTPS or SSH. The following application channel configurations can be used for secure Git connections:

1.4.4.1. Connecting to a private repo with user and access token

You can connect to a Git server using channel and subscription. See the following procedures for connecting to a private repository with a user and access token:

- Create a secret in the same namespace as the channel. Set the user field to a Git user ID and the accessToken field to a Git personal access token. The values should be base64 encoded.

- Configure the channel with the secret by populating the secretRef field.
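The two steps can be sketched as follows. The user, accessToken, and secretRef fields come from the procedure; the secret name, channel name, and namespace are hypothetical, and the angle-bracket values are placeholders that you replace.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: my-git-secret                   # hypothetical name
  namespace: channel-ns                 # same namespace as the channel
data:
  user: <base64-encoded Git user ID>
  accessToken: <base64-encoded Git personal access token>
---
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: sample-channel                  # hypothetical name
  namespace: channel-ns
spec:
  type: Git
  pathname: <Git HTTPS URL>
  secretRef:
    name: my-git-secret
```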

1.4.4.2. Making an insecure HTTPS connection to a Git server

You can use the following connection method in a development environment to connect to a privately-hosted Git server with SSL certificates that are signed by custom or self-signed certificate authority. However, this procedure is not recommended for production:

Specify insecureSkipVerify: true in the channel specification. Otherwise, the connection to the Git server fails with an error similar to the following:

x509: certificate is valid for localhost.com, not localhost

See the following sample with the channel specification addition for this method:
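A minimal channel sketch with this setting follows. The channel name and namespace are hypothetical.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: sample-channel                  # hypothetical name
  namespace: sample                     # hypothetical namespace
spec:
  type: Git
  pathname: <Git HTTPS URL>
  insecureSkipVerify: true              # development environments only
```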

1.4.4.3. Using custom CA certificates for a secure HTTPS connection

You can use this connection method to securely connect to a privately-hosted Git server with SSL certificates that are signed by custom or self-signed certificate authority.

- Create a ConfigMap to contain the Git server root and intermediate CA certificates in PEM format. The ConfigMap must be in the same namespace as the channel CR. The field name must be caCerts and use |. Notice that caCerts can contain multiple certificates, such as root and intermediate CAs.

- Configure the channel with this ConfigMap. The channel references the ConfigMap by name, such as git-ca.
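The two steps can be sketched as follows. The caCerts field and the git-ca name come from the procedure. The sketch assumes that the channel references the ConfigMap through a configMapRef field; the channel name and namespace are hypothetical, and the certificate bodies are placeholders.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: git-ca
  namespace: channel-ns                 # same namespace as the channel
data:
  caCerts: |
    # Git server root CA
    -----BEGIN CERTIFICATE-----
    <root CA certificate body>
    -----END CERTIFICATE-----
    # Git server intermediate CA
    -----BEGIN CERTIFICATE-----
    <intermediate CA certificate body>
    -----END CERTIFICATE-----
---
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: my-channel                      # hypothetical name
  namespace: channel-ns
spec:
  type: Git
  pathname: <Git HTTPS URL>
  configMapRef:
    name: git-ca
```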

1.4.4.4. Making an SSH connection to a Git server

- Create a secret to contain your private SSH key in the sshKey field in data. If the key is passphrase-protected, specify the password in the passphrase field. This secret must be in the same namespace as the channel CR. Create this secret by running the oc create secret generic git-ssh-key --from-file=sshKey=./.ssh/id_rsa command, then add the base64-encoded passphrase.

- Configure the channel with the secret.

- The subscription controller does an ssh-keyscan with the provided Git hostname to build the known_hosts list to prevent a man-in-the-middle (MITM) attack in the SSH connection. If you want to skip this and make an insecure connection, use insecureSkipVerify: true in the channel configuration. This is not best practice, especially in production environments.
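The SSH setup can be sketched as follows. The sshKey and passphrase fields and the git-ssh-key name come from the procedure; the channel name and namespace are hypothetical, and the angle-bracket values are placeholders.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: git-ssh-key
  namespace: channel-ns                 # same namespace as the channel
type: Opaque
data:
  sshKey: <base64-encoded private SSH key>
  passphrase: <base64-encoded passphrase>   # only if the key is passphrase-protected
---
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: my-channel                      # hypothetical name
  namespace: channel-ns
spec:
  type: Git
  pathname: <Git SSH URL>
  secretRef:
    name: git-ssh-key
  # insecureSkipVerify: true            # skips the ssh-keyscan check; not for production
```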

1.4.4.5. Updating certificates and SSH keys

If a Git channel connection configuration requires an update, such as CA certificates, credentials, or SSH key, you need to create a new secret and ConfigMap in the same namespace and update the channel to reference that new secret and ConfigMap. For more information, see Using custom CA certificates for a secure HTTPS connection.

1.4.5. Setting up Ansible Tower tasks

Red Hat Advanced Cluster Management is integrated with Ansible Tower automation so that you can create prehook and posthook AnsibleJob instances for Git subscription application management. With Ansible Tower jobs, you can automate tasks and integrate with external services, such as Slack and PagerDuty services. Your Git repository resource root path will contain prehook and posthook directories for Ansible Tower jobs that run as part of deploying the app, updating the app, or removing the app from a cluster.

Required access: Cluster administrator

1.4.5.1. Prerequisites

- OpenShift Container Platform 4.6 or later

- You must have Ansible Tower version 3.7.3 or a later version installed. It is best practice to install the latest supported version of Ansible Tower. See Red Hat Ansible Tower documentation for more details.

- Install the Ansible Automation Platform Resource Operator to connect Ansible jobs to the lifecycle of Git subscriptions. For best results when using the AnsibleJob to launch Ansible Tower jobs, the Ansible Tower job template should be idempotent when it is run.

Check PROMPT ON LAUNCH on the template for both INVENTORY and EXTRA VARIABLES. See Job templates for more information.

1.4.5.2. Install Ansible Automation Platform Resource Operator

- Log in to your OpenShift Container Platform cluster console.

- Click OperatorHub in the console navigation.

- Search for and install the Ansible Automation Platform Resource Operator.

Note: To submit prehook and posthook AnsibleJobs, install the Ansible Automation Platform Resource Operator with the version that corresponds to your OpenShift Container Platform version:

- OpenShift Container Platform 4.6 needs (AAP) Resource Operator early-access

- OpenShift Container Platform 4.7 needs (AAP) Resource Operator early-access, stable-2.1

- OpenShift Container Platform 4.8 needs (AAP) Resource Operator early-access, stable-2.1, stable-2.2

- OpenShift Container Platform 4.9 needs (AAP) Resource Operator early-access, stable-2.1, stable-2.2

- OpenShift Container Platform 4.10 needs (AAP) Resource Operator stable-2.1, stable-2.2

1.4.5.3. Set up credential

You can create the credential you need from the Credentials page in the console. Click Add credential or access the page from the navigation. See Creating a credential for Ansible Automation Platform for credential information.

1.4.5.4. Ansible integration

You can integrate Ansible Tower jobs into Git subscriptions. For instance, for a database front-end and back-end application, the database is required to be instantiated using Ansible Tower with an Ansible Job, and the application is installed by a Git subscription. The database is instantiated before you deploy the front-end and back-end application with the subscription.

The application subscription operator is enhanced to define two subfolders: prehook and posthook. Both folders are in the Git repository resource root path and contain all prehook and posthook Ansible jobs, respectively.

When the Git subscription is created, all of the pre and post AnsibleJob resources are parsed and stored in memory as an object. The application subscription controller decides when to create the pre and post AnsibleJob instances.

1.4.5.5. Ansible operator components

When you create a subscription CR, the Git-branch and Git-path point to a Git repository root location. In the Git root location, the two subfolders prehook and posthook should contain at least one Kind:AnsibleJob resource.

1.4.5.5.1. Prehook

The application subscription controller searches all the Kind:AnsibleJob CRs in the prehook folder as the prehook AnsibleJob objects, then generates a new prehook AnsibleJob instance. The new instance name is the prehook AnsibleJob object name and a random suffix string.

See an example instance name: database-sync-1-2913063.

The application subscription controller queues the reconcile request again in a 1 minute loop, where it checks the prehook AnsibleJob status.ansibleJobResult. When the prehook status.ansibleJobResult.status is successful, the application subscription continues to deploy the main subscription.

1.4.5.5.2. Posthook

When the app subscription status is updated, if the subscription status is subscribed or propagated to all target clusters in subscribed status, the app subscription controller searches all of the AnsibleJob Kind CRs in the posthook folder as the posthook AnsibleJob objects. Then, it generates new posthook AnsibleJob instances. The new instance name is the posthook AnsibleJob object name and a random suffix string.

See an example instance name: service-ticket-1-2913849.

1.4.5.5.3. Ansible placement rules

With a valid prehook AnsibleJob, the subscription launches the prehook AnsibleJob regardless of the decision from the placement rule. For example, you can have a prehook AnsibleJob that failed to propagate a placement rule subscription. When the placement rule decision changes, new prehook and posthook AnsibleJob instances are created.

1.4.5.6. Ansible configuration

You can configure Ansible Tower configurations with the following tasks:

1.4.5.6.1. Ansible secrets

You must create an Ansible Tower secret CR in the same subscription namespace. The Ansible Tower secret is limited to the same subscription namespace.

Create the secret from the console by filling in the Ansible Tower secret name section. To create the secret by using the terminal, edit and apply the following YAML.

Run the following command to add your YAML file:

oc apply -f

See the following YAML sample:
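A sketch of the secret follows. The stringData token and host fields come from the note in this section; the secret name and the placeholder values are assumptions.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: toweraccess                     # hypothetical name
  namespace: <subscription namespace>   # must match the subscription namespace
type: Opaque
stringData:
  token: <Ansible Tower API token>
  host: <Ansible Tower host URL>
```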

Note: The namespace is the same namespace as the subscription namespace. The stringData:token and host are from the Ansible Tower.

When the app subscription controller creates prehook and posthook AnsibleJobs, if the secret from subscription spec.hooksecretref is available, then it is sent to the AnsibleJob CR spec.tower_auth_secret and the AnsibleJob can access the Ansible Tower.
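A sketch of a subscription that references the secret follows. The sketch assumes that spec.hooksecretref takes the secret name in a name field; the subscription name, namespace, channel, Git path, and secret name are hypothetical.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: sub-with-hooks                  # hypothetical name
  namespace: demo-ns                    # the Ansible Tower secret is in this namespace
  annotations:
    apps.open-cluster-management.io/git-path: <path that contains prehook and posthook>
spec:
  channel: demo-ns/somechannel          # hypothetical channel
  hooksecretref:
    name: toweraccess                   # hypothetical Ansible Tower secret name
  placement:
    local: true
```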

1.4.5.7. Set secret reconciliation

For a main-sub subscription with prehook and posthook AnsibleJobs, the main-sub subscription should be reconciled after all prehook and posthook AnsibleJobs or main subscription are updated in the Git repository.

Prehook AnsibleJobs and the main subscription continuously reconcile and relaunch a new pre-AnsibleJob instance.

- After the pre-AnsibleJob is done, re-run the main subscription.

- If there is any specification change in the main subscription, re-deploy the subscription. The main subscription status should be updated to align with the redeployment procedure.

- Reset the hub subscription status to nil. The subscription is refreshed along with the subscription deployment on target clusters.

  When the deployment is finished on the target cluster, the subscription status on the target cluster is updated to "subscribed" or "failed", and is synced to the hub cluster subscription status.

- After the main subscription is done, relaunch a new post-AnsibleJob instance.

- Verify that the DONE subscription is updated. See the following output:

  - subscription.status == "subscribed"

  - subscription.status == "propagated" with all of the target clusters "subscribed"

When an AnsibleJob CR is created, a Kubernetes job CR is created to launch an Ansible Tower job by communicating to the target Ansible Tower. When the job is complete, the final status for the job is returned to AnsibleJob status.ansibleJobResult.

Notes:

The AnsibleJob status.conditions is reserved by the Ansible Job operator for storing the creation of Kubernetes job result. The status.conditions does not reflect the actual Ansible Tower job status.

The subscription controller checks the Ansible Tower job status by the AnsibleJob.status.ansibleJobResult instead of AnsibleJob.status.conditions.

As previously mentioned in the prehook and posthook AnsibleJob workflow, when the main subscription is updated in Git repository, a new prehook and posthook AnsibleJob instance is created. As a result, one main subscription can link to multiple AnsibleJob instances.

Four fields are defined in subscription.status.ansibleJobs:

- lastPrehookJobs: The most recent prehook AnsibleJobs

- prehookJobsHistory: All the prehook AnsibleJobs history

- lastPosthookJobs: The most recent posthook AnsibleJobs

- posthookJobsHistory: All the posthook AnsibleJobs history

1.4.5.8. Ansible sample YAML

See the following sample of an AnsibleJob .yaml file in a Git prehook and posthook folder:
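A sketch of an AnsibleJob resource follows. The tower_auth_secret field is named in this section; the job name, namespace, job template name, and extra variables are hypothetical values for illustration.

```yaml
apiVersion: tower.ansible.com/v1alpha1
kind: AnsibleJob
metadata:
  name: demo-job-001                    # hypothetical name
  namespace: default
spec:
  tower_auth_secret: toweraccess        # hypothetical Ansible Tower secret name
  job_template_name: Demo Job Template  # hypothetical template in Ansible Tower
  extra_vars:                           # hypothetical variables passed to the template
    cost: 6.88
    is_enable: false
    targets_list:
    - aaa
    - bbb
    - ccc
    version: 1.23.45
```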

1.4.6. Configuring Managed Clusters for OpenShift GitOps operator and Argo CD

To configure GitOps, you can register a set of one or more Red Hat Advanced Cluster Management for Kubernetes managed clusters to an instance of Argo CD or Red Hat OpenShift Container Platform GitOps operator. After registering, you can deploy applications to those clusters. Set up a continuous GitOps environment to automate application consistency across clusters in development, staging, and production environments.

1.4.6.1. Prerequisites

- You need to install Argo CD or the Red Hat OpenShift GitOps operator on your Red Hat Advanced Cluster Management for Kubernetes.

- Import one or more managed clusters.

1.4.6.2. Registering managed clusters to GitOps

- Create managed cluster sets and add managed clusters to those managed cluster sets. See the example for managed cluster sets in the multicloud-integrations managedclusterset.

  See the Creating and managing ManagedClusterSets documentation for more information.

- Create managed cluster set binding to the namespace where Argo CD or OpenShift GitOps is deployed.

  See the example in the repository at multicloud-integrations managedclustersetbinding, which binds to the openshift-gitops namespace.

  See the Creating a ManagedClusterSetBinding resource documentation for more information.

- In the namespace that is used in managed cluster set binding, create a placement custom resource to select a set of managed clusters to register to an ArgoCD or OpenShift Container Platform GitOps operator instance. You can use the example in the repository at multicloud-integration placement.

  See Using ManagedClustersSets with Placement for placement information.

  Note: Only OpenShift Container Platform clusters are registered to an Argo CD or OpenShift GitOps operator instance, not other Kubernetes clusters.

- Create a GitOpsCluster custom resource to register the set of managed clusters from the placement decision to the specified instance of Argo CD or Red Hat OpenShift Container Platform GitOps. This enables the Argo CD instance to deploy applications to any of those Red Hat Advanced Cluster Management managed clusters. Use the example in the repository at multicloud-integrations gitops cluster.

  Note: The referenced Placement resource must be in the same namespace as the GitOpsCluster resource.

  For example, when placementRef.name is all-openshift-clusters, those clusters are specified as the target clusters for the GitOps instance that is installed in argoNamespace: openshift-gitops. The argoServer.cluster specification requires the local-cluster value.

- Save your changes. You can now follow the GitOps workflow to manage your applications. See About GitOps to learn more.
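A sketch of a GitOpsCluster resource follows. The placementRef.name, argoNamespace, and argoServer.cluster values come from the procedure. The resource name is hypothetical, and the two apiVersion values are assumptions that can differ between releases, so check the versions that your cluster serves.

```yaml
apiVersion: apps.open-cluster-management.io/v1beta1   # assumed API version
kind: GitOpsCluster
metadata:
  name: gitops-cluster-sample           # hypothetical name
  namespace: openshift-gitops           # same namespace as the Placement resource
spec:
  argoServer:
    cluster: local-cluster
    argoNamespace: openshift-gitops
  placementRef:
    kind: Placement
    apiVersion: cluster.open-cluster-management.io/v1alpha1   # assumed API version
    name: all-openshift-clusters
```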

1.4.7. Scheduling a deployment

If you need to deploy new or changed Helm charts or other resources only during specific times, you can define subscriptions for those resources to begin deployments only during those specific times. Alternatively, you can restrict deployments.

For instance, you can define time windows between 10:00 PM and 11:00 PM each Friday to serve as scheduled maintenance windows for applying patches or other application updates to your clusters.

You can restrict or block deployments from beginning during specific time windows, such as to avoid unexpected deployments during peak business hours. For instance, to avoid peak hours you can define a time window for a subscription to avoid beginning deployments between 8:00 AM and 8:00 PM.

By defining time windows for your subscriptions, you can coordinate updates for all of your applications and clusters. For instance, you can define subscriptions to deploy only new application resources between 6:01 PM and 11:59 PM and define other subscriptions to deploy only updated versions of existing resources between 12:00 AM and 7:59 AM.

When a time window is defined for a subscription, the time ranges when a subscription is active change. As part of defining a time window, you can define the subscription to be active or blocked during that window.

The deployment of new or changed resources begins only when the subscription is active. Regardless of whether a subscription is active or blocked, the subscription continues to monitor for any new or changed resource. The active and blocked setting affects only deployments.

When a new or changed resource is detected, the time window definition determines the next action for the subscription.

- For subscriptions to HelmRepo, ObjectBucket, and Git type channels:

  - If the resource is detected during the time range when the subscription is active, the resource deployment begins.

  - If the resource is detected while the subscription is blocked from running deployments, the request to deploy the resource is cached. When the next time range that the subscription is active occurs, the cached requests are applied and any related deployments begin.

  - When a time window is blocked, all resources that were previously deployed by the application subscription remain. Any new update is blocked until the time window is active again.

Note: A blocked time window does not remove deployed resources. It is a common misconception that resources are removed while the application subscription time window is blocked and are restored when the time window becomes active again.

If a deployment begins during a defined time window and is running when the defined end of the time window elapses, the deployment continues to run to completion.

To define a time window for a subscription, you need to add the required fields and values to the subscription resource definition YAML.

- As part of defining a time window, you can define the days and hours for the time window.

- You can also define the time window type, which determines whether the time window when deployments can begin occurs during, or outside, the defined time frame.

  - If the time window type is active, deployments can begin only during the defined time frame. You can use this setting when you want deployments to occur within only specific maintenance windows.

  - If the time window type is block, deployments cannot begin during the defined time frame, but can begin at any other time. You can use this setting when you have critical updates that are required, but still need to avoid deployments during specific time ranges. For instance, you can use this type to define a time window to allow security-related updates to be applied at any time except between 10:00 AM and 2:00 PM.

- You can define multiple time windows for a subscription, such as to define a time window every Monday and Wednesday.
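A sketch of an active time window for the Friday maintenance example follows. It assumes that the subscription specification accepts a timewindow section with windowtype, location, daysofweek, and hours fields; all names, the time zone, and the channel are hypothetical.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: nginx                           # hypothetical name
  namespace: ns-sub-1                   # hypothetical namespace
spec:
  channel: ns-ch/predev-ch              # hypothetical channel
  name: nginx-ingress                   # hypothetical chart name
  placement:
    placementRef:
      kind: PlacementRule
      name: towhichcluster              # hypothetical placement rule
  timewindow:
    windowtype: active                  # deployments begin only inside the window
    location: "America/Los_Angeles"     # hypothetical time zone for the hours
    daysofweek: ["Friday"]
    hours:
    - start: "10:00PM"
      end: "11:00PM"
```

Add more entries to daysofweek or hours to define multiple windows for the same subscription.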

1.4.8. Configuring package overrides

Configure package overrides for a subscription to override values for the Helm chart or Kubernetes resource that the subscription subscribes to.

To configure a package override, specify the field within the Kubernetes resource spec to override as the value for the path field. Specify the replacement value as the value for the value field.

For example, if you need to override the values field within the spec for a Helm release for a subscribed Helm chart, you need to set the value for the path field in your subscription definition to spec.

packageOverrides:
- packageName: nginx-ingress
  packageOverrides:
  - path: spec
    value: my-override-values

The contents for the value field are used to override the values within the spec field of the Helm spec.

- For a Helm release, override values for the spec field are merged into the Helm release values.yaml file to override the existing values. This file is used to retrieve the configurable variables for the Helm release.

- If you need to override the release name for a Helm release, include the packageOverride section within your definition. Define the packageAlias for the Helm release by including the following fields:

  - packageName to identify the Helm chart.

  - packageAlias to indicate that you are overriding the release name.

  By default, if no Helm release name is specified, the Helm chart name is used to identify the release. In some cases, such as when there are multiple releases subscribed to the same chart, conflicts can occur. The release name must be unique among the subscriptions within a namespace. If the release name for a subscription that you are creating is not unique, an error occurs. You must set a different release name for your subscription by defining a packageOverride. If you want to change the name within an existing subscription, you must first delete that subscription and then recreate the subscription with the preferred release name.

  packageOverrides:
  - packageName: nginx-ingress
    packageAlias: my-helm-release-name
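A sketch that combines a release name override with a values override in one subscription follows. It assumes that the value field can hold a YAML map of Helm values; the subscription name, namespace, channel, and the defaultBackend.replicaCount value are hypothetical.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: nginx-subscription              # hypothetical name
  namespace: ns-sub-1                   # hypothetical namespace
spec:
  channel: ns-ch/predev-ch              # hypothetical channel
  name: nginx-ingress
  packageOverrides:
  - packageName: nginx-ingress
    packageAlias: my-helm-release-name  # overrides the Helm release name
    packageOverrides:
    - path: spec
      value:                            # merged into the release values
        defaultBackend:
          replicaCount: 3               # hypothetical chart value
  placement:
    local: true
```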

1.4.9. Channel samples overview

View samples and YAML definitions that you can use to build your files. Channels (channel.apps.open-cluster-management.io) provide you with improved continuous integration and continuous delivery capabilities for creating and managing your Red Hat Advanced Cluster Management for Kubernetes applications.

To use the OpenShift CLI tool, see the following procedure:

- Compose and save your application YAML file with your preferred editing tool.

- Run the following command to apply your file to an API server. Replace filename with the name of your file:

  oc apply -f filename.yaml

- Verify that your application resource is created by running the following command:

  oc get application.app

1.4.9.1. Channel YAML structure

For application samples that you can deploy, see the stolostron repository.

The following YAML structures show the required fields for a channel and some of the common optional fields. Your YAML structure needs to include some required fields and values. Depending on your application management requirements, you might need to include other optional fields and values. You can compose your own YAML content with any tool and in the product console.
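A skeleton of that structure, assembled from the fields that the channel YAML table describes, might look like the following sketch. Empty values are placeholders for you to fill in, and the `apiVersion` value assumes the `v1` version of the `apps.open-cluster-management.io` API group:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name:                  # required
  namespace:             # required; unique per channel, except for Git channels
  labels:                # optional
spec:
  sourceNamespaces:      # optional
  type:                  # required
  pathname:              # required for repository-backed channel types
  secretRef:
    name:                # optional
  gates:
    annotations:         # optional
  insecureSkipVerify:    # optional
```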

1.4.9.2. Channel YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to `apps.open-cluster-management.io/v1`. |
| kind | Required. Set the value to `Channel` to indicate that the resource is a channel. |
| metadata.name | Required. The name of the channel. |
| metadata.namespace | Required. The namespace for the channel. Each channel needs a unique namespace, except the Git channel. |
| spec.sourceNamespaces | Optional. Identifies the namespace that the channel controller monitors for new or updated deployables to retrieve and promote to the channel. |
| spec.type | Required. The channel type. The supported types are: `Namespace`, `HelmRepo`, `Git`, and `ObjectBucket`. |
| spec.pathname | Required for `HelmRepo`, `Git`, and `ObjectBucket` channels. Set the value to the URL of the Helm repository, Git repository, or Object storage bucket. |
| spec.secretRef.name | Optional. Identifies a Kubernetes Secret resource to use for authentication, such as for accessing a repository or chart. You can use a secret for authentication with only `HelmRepo`, `ObjectBucket`, and `Git` type channels. |
| spec.gates | Optional. Defines requirements for promoting a deployable within the channel. If no requirements are set, any deployable that is added to the channel namespace or source is promoted to the channel. The `gates` value applies only to `ObjectBucket` channel types and does not apply to `HelmRepo` and `Git` channel types. |
| spec.gates.annotations | Optional. The annotations for the channel. Deployables must have matching annotations to be included in the channel. |
| metadata.labels | Optional. The labels for the channel. |
| spec.insecureSkipVerify | Optional. Default value is `false`. If set to `true`, the channel connection is built without verifying the SSL certificate of the repository. |

The definition structure for a channel can resemble the following YAML content:
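For instance, a minimal Helm repository channel could be written as follows. This is a sketch only: the channel name, namespace, label, and repository URL are hypothetical placeholders.

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: predev-ch                        # hypothetical channel name
  namespace: ns-ch                       # hypothetical namespace, unique to this channel
  labels:
    app: nginx-app-details               # hypothetical label
spec:
  type: HelmRepo
  pathname: https://charts.example.com/  # hypothetical Helm repository URL
```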

1.4.9.3. Object storage bucket (ObjectBucket) channel

The following example channel definition abstracts an Object storage bucket as a channel:
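A sketch of such a definition follows. The channel name, namespace, bucket URL, secret name, and the `dev-ready` annotation are hypothetical placeholders, and the convention that the URL ends with the bucket name is an assumption to confirm for your Object storage provider:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: dev                               # hypothetical channel name
  namespace: ch-obj                       # hypothetical namespace
spec:
  type: ObjectBucket
  pathname: https://objectstore.example.com/dev   # hypothetical URL, assumed to end with the bucket name
  secretRef:
    name: objectstore-secret              # hypothetical Secret that holds the bucket credentials
  gates:
    annotations:
      dev-ready: "true"                   # only deployables with this annotation are promoted
```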

1.4.9.4. Helm repository (HelmRepo) channel

The following example channel definition abstracts a Helm repository as a channel:

Deprecation notice: As of 2.4, specifying `insecureSkipVerify: "true"` in the ConfigMap that a channel references, to skip Helm repository SSL certificate verification, is deprecated. Use `spec.insecureSkipVerify: true` in the channel instead, as in the following current sample:
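A sketch of the current form is shown here. The namespace, channel name, and chart URL are hypothetical; only the placement of `insecureSkipVerify` directly under `spec` is taken from the notice above:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: hub-repo                   # hypothetical namespace for the channel
---
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: helm                       # hypothetical channel name
  namespace: hub-repo
spec:
  type: HelmRepo
  pathname: https://helm.example.com/helm-repo/charts   # hypothetical chart repository URL
  insecureSkipVerify: true         # skips SSL certificate verification for this repository
```

Skipping certificate verification is generally suited to test environments with self-signed certificates rather than to production repositories.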

The following channel definition shows another example of a Helm repository channel:
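One possible variant, sketched here under the assumption that the repository requires authentication, adds a `secretRef` to the channel. The names and URL are hypothetical, and the referenced Secret is assumed to exist already in the channel namespace:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: private-charts             # hypothetical channel name
  namespace: ns-private-charts     # hypothetical namespace
spec:
  type: HelmRepo
  pathname: https://private-charts.example.com/   # hypothetical private Helm repository URL
  secretRef:
    name: helm-repo-credentials    # hypothetical Secret with the repository credentials
```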

Note: For Helm, all Kubernetes resources contained within the Helm chart must have the label `release: {{ .Release.Name }}` for the application topology to display properly.
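In a chart template, that label could be set as in the following fragment. The file name, the `Deployment` kind, and the resource name are hypothetical; only the `release` label comes from the note above, and the rest of the resource is omitted:

```yaml
# templates/deployment.yaml (hypothetical chart template file)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-web    # hypothetical resource name
  labels:
    release: {{ .Release.Name }}   # label that the application topology relies on
```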

1.4.9.5. Git (Git) repository channel

The following example channel definition shows a channel for a Git repository. In the example, `secretRef` refers to the user identity that is used to access the Git repo that is specified in the `pathname`. If you have a public repo, you do not need the `secretRef` field and value:
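A sketch of a Git channel with its credentials Secret follows. All names and the repository URL are hypothetical, the Secret key names `user` and `accessToken` are an assumption to verify against your product version, and the angle-bracket values are placeholders for Base64-encoded strings:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: sample-git-channel         # hypothetical channel name
  namespace: sample-git-ns         # hypothetical namespace
spec:
  type: Git
  pathname: https://github.com/example-org/example-repo.git   # hypothetical private repo
  secretRef:
    name: git-credentials          # omit secretRef for a public repo
---
apiVersion: v1
kind: Secret
metadata:
  name: git-credentials
  namespace: sample-git-ns         # same namespace as the channel
data:
  user: <base64-encoded-user-name>            # assumed key name
  accessToken: <base64-encoded-access-token>  # assumed key name
```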

1.4.10. Secret samples

See more samples in the open-cluster-management repository.

Secrets (Secret) are Kubernetes resources that you can use to store authorization and other sensitive information, such as passwords, OAuth tokens, and SSH keys. By storing this information as secrets, you can separate the information from the application components that require the information to improve your data security.

To use the OpenShift CLI tool, see the following procedure:

- Compose and save your application YAML file with your preferred editing tool.
- Run the following command to apply your file to an API server. Replace `filename` with the name of your file:

      oc apply -f filename.yaml

- Verify that your application resource is created by running the following command:

      oc get application.app

The definition structure for a secret can resemble the following YAML content:

1.4.10.1. Secret YAML structure
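As an illustration, a secret for an Object storage channel might be structured as in the following sketch. The Secret name and namespace are hypothetical, the key names `AccessKeyID` and `SecretAccessKey` are an assumption to confirm for your channel type, and the angle-bracket values are placeholders for Base64-encoded strings:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: objectstore-secret         # hypothetical; referenced from spec.secretRef.name of a channel
  namespace: ch-obj                # hypothetical; same namespace as the channel
data:
  AccessKeyID: <base64-encoded-access-key-id>          # assumed key name
  SecretAccessKey: <base64-encoded-secret-access-key>  # assumed key name
```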

1.4.11. Subscription samples overview

View samples and YAML definitions that you can use to build your files. As with channels, subscriptions (subscription.apps.open-cluster-management.io) provide you with improved continuous integration and continuous delivery capabilities for application management.

To use the OpenShift CLI tool, see the following procedure:

- Compose and save your application YAML file with your preferred editing tool.
- Run the following command to apply your file to an API server. Replace `filename` with the name of your file:

      oc apply -f filename.yaml

- Verify that your application resource is created by running the following command:

      oc get application.app

1.4.11.1. Subscription YAML structure

The following YAML structure shows the required fields for a subscription and some of the common optional fields. Your YAML structure needs to include certain required fields and values.

Depending on your application management requirements, you might need to include other optional fields and values. You can compose your own YAML content with any tool:
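A skeleton assembled from the fields in the subscription YAML table might look like the following sketch. Empty values are placeholders, the list shapes under `clusters` and `overrides` are assumptions, and the time window settings are left out:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name:                    # required
  namespace:               # required
  labels:                  # optional
spec:
  channel:                 # "Namespace/Name"; define only one of channel, source, sourceNamespace
  sourceNamespace:
  source:
  name:
  packageFilter:
    version:
    annotations:
  packageOverrides:
  - packageName:
    packageAlias:
    packageOverrides:
  placement:               # use only one of local, clusters, clusterSelector, placementRef
    local:
    clusters:
    - name:
    clusterSelector:
    placementRef:
      name:
      kind: PlacementRule
  overrides:
  - clusterName:
    clusterOverrides:
```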

1.4.11.2. Subscription YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to `apps.open-cluster-management.io/v1`. |
| kind | Required. Set the value to `Subscription` to indicate that the resource is a subscription. |
| metadata.name | Required. The name for identifying the subscription. |
| metadata.namespace | Required. The namespace resource to use for the subscription. |
| metadata.labels | Optional. The labels for the subscription. |
| spec.channel | Optional. The namespace name ("Namespace/Name") that defines the channel for the subscription. Define either the `channel`, `source`, or `sourceNamespace` field. |
| spec.sourceNamespace | Optional. The source namespace where deployables are stored on the hub cluster. Use this field only for namespace channels. Define either the `channel`, `source`, or `sourceNamespace` field. |
| spec.source | Optional. The path name ("URL") to the Helm repository where deployables are stored. Use this field for only Helm repository channels. Define either the `channel`, `source`, or `sourceNamespace` field. |
| spec.name | Required for `HelmRepo` type channels, but optional for `ObjectBucket` type channels. The specific name for the target Helm chart or deployable within the channel. |
| spec.packageFilter | Optional. Defines the parameters to use to find target deployables or a subset of deployables. If multiple filter conditions are defined, a deployable must meet all filter conditions. |
| spec.packageFilter.version | Optional. The version or versions for the deployable. You can use a range of versions in the form `>1.0` or `<3.0`. |
| spec.packageFilter.annotations | Optional. The annotations for the deployable. |
| spec.packageOverrides | Optional. Section for defining overrides for the Kubernetes resource that is subscribed to by the subscription, such as a Helm chart, deployable, or other Kubernetes resource within a channel. |
| spec.packageOverrides.packageName | Optional, but required for setting an override. Identifies the Kubernetes resource that is being overwritten. |
| spec.packageOverrides.packageAlias | Optional. Gives an alias to the Kubernetes resource that is being overwritten. |
| spec.packageOverrides.packageOverrides | Optional. The configuration of parameters and replacement values to use to override the Kubernetes resource. |
| spec.placement | Required. Identifies the subscribing clusters where deployables need to be placed, or the placement rule that defines the clusters. Use the placement configuration to define values for multicluster deployments. |
| spec.placement.local | Optional, but required for a stand-alone cluster or cluster that you want to manage directly. Defines whether the subscription must be deployed locally. Set the value to `true` to have the subscription synchronize with the specified channel. |
| spec.placement.clusters | Optional. Defines the clusters where the subscription is to be placed. Only one of `clusters`, `clusterSelector`, or `placementRef` is used to define where your subscription is placed in a multicluster environment. |
| spec.placement.clusters.name | Optional, but required for defining the subscribing clusters. The name or names of the subscribing clusters. |
| spec.placement.clusterSelector | Optional. Defines the label selector to use to identify the clusters where the subscription is to be placed. Use only one of `clusters`, `clusterSelector`, or `placementRef` to define where your subscription is placed in a multicluster environment. |
| spec.placement.placementRef | Optional. Defines the placement rule to use for the subscription. Use only one of `clusters`, `clusterSelector`, or `placementRef` to define where your subscription is placed in a multicluster environment. |
| spec.placement.placementRef.name | Optional, but required for using a placement rule. The name of the placement rule for the subscription. |
| spec.placement.placementRef.kind | Optional, but required for using a placement rule. Set the value to `PlacementRule` to indicate that a placement rule is used for deployments with the subscription. |
| spec.overrides | Optional. Any parameters and values that need to be overridden, such as cluster-specific settings. |
| spec.overrides.clusterName | Optional. The name of the cluster or clusters where parameters and values are being overridden. |
| spec.overrides.clusterOverrides | Optional. The configuration of parameters and values to override. |
| spec.timeWindow | Optional. Defines the settings for configuring a time window when the subscription is active or blocked. |
| spec.timeWindow.type | Optional, but required for configuring a time window. Indicates whether the subscription is active or blocked during the configured time window. Deployments for the subscription occur only when the subscription is active. |
| spec.timeWindow.location | Optional, but required for configuring a time window. The time zone of the configured time range for the time window. All time zones must use the Time Zone (tz) database name format. For more information, see Time Zone Database. |
| spec.timeWindow.daysofweek | Optional, but required for configuring a time window. Indicates the days of the week when the time range is applied to create a time window. The list of days must be defined as an array, such as `daysofweek: ["Monday", "Wednesday", "Friday"]`. |
| spec.timeWindow.hours | Optional, but required for configuring a time window. Defines the time range for the time window. A start time and end time for the hour range must be defined for each time window. You can define multiple time window ranges for a subscription. |
| spec.timeWindow.hours.start | Optional, but required for configuring a time window. The timestamp that defines the beginning of the time window. The timestamp must use the Go programming language Kitchen format (`3:04PM`), such as `10:20AM`. |
| spec.timeWindow.hours.end | Optional, but required for configuring a time window. The timestamp that defines the ending of the time window. The timestamp must use the Go programming language Kitchen format (`3:04PM`), such as `10:30AM`. |
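To make the time window fields concrete, the following sketch defines a subscription that is active only during two ranges on three days of the week. The names, channel reference, and time zone are hypothetical. The lowercase key spellings `timewindow` and `windowtype` are an assumption that differs from the field names in the table, so verify them against the subscription definition that is installed on your hub cluster:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: nginx                      # hypothetical subscription name
  namespace: ns-sub-1              # hypothetical namespace
spec:
  channel: ns-ch/predev-ch         # hypothetical channel, in "Namespace/Name" form
  name: nginx-ingress
  placement:
    placementRef:
      kind: PlacementRule
      name: towhichcluster         # hypothetical placement rule
  timewindow:                      # assumed key spelling
    windowtype: "active"           # assumed key spelling; "active" or "blocked"
    location: "America/Los_Angeles"   # tz database name
    daysofweek: ["Monday", "Wednesday", "Friday"]
    hours:
    - start: "10:20AM"             # Kitchen format; start occurs before end
      end: "10:30AM"
    - start: "12:40PM"             # second range does not overlap the first
      end: "1:40PM"
```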

Notes:

- When you are defining your YAML, a subscription can use `packageFilters` to point to multiple Helm charts, deployables, or other Kubernetes resources. The subscription, however, only deploys the latest version of one chart, deployable, or other resource.
- For time windows, when you are defining the time range for a window, the start time must be set to occur before the end time. If you are defining multiple time windows for a subscription, the time ranges for the windows cannot overlap. The actual time ranges are based on the `subscription-controller` container time, which can be set to a different time and location than the time and location that you are working within.
- Within your subscription specification, you can also define the placement of a Helm release as part of the subscription definition. Each subscription can reference an existing placement rule, or define a placement rule directly within the subscription definition.
- When you are defining where to place your subscription in the `spec.placement` section, use only one of `clusters`, `clusterSelector`, or `placementRef` for a multicluster environment. If you include more than one placement setting, one setting is used and others are ignored. The following priority is used to determine which setting the subscription operator uses:

  1. `placementRef`
  2. `clusters`
  3. `clusterSelector`

Your subscription can resemble the following YAML content:
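For example, a subscription to a Helm chart that is placed through an existing placement rule could be sketched as follows. The names, label, channel reference, and version filter are hypothetical placeholders:

```yaml
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: nginx                      # hypothetical subscription name
  namespace: ns-sub-1              # hypothetical namespace
  labels:
    app: nginx-app-details         # hypothetical label
spec:
  channel: ns-ch/predev-ch         # hypothetical channel, in "Namespace/Name" form
  name: nginx-ingress              # target Helm chart within the channel
  packageFilter:
    version: "1.36.x"              # hypothetical version filter
  placement:
    placementRef:                  # only one placement setting is defined
      kind: PlacementRule
      name: towhichcluster         # hypothetical placement rule name
```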

1.4.11.3. Subscription file samples

For application samples that you can deploy, see the stolostron repository.

1.4.11.4. Secondary channel sample