Deploying and Upgrading AMQ Streams on OpenShift

For use with AMQ Streams 1.5 on OpenShift Container Platform

Abstract

Chapter 1. Deployment overview

AMQ Streams simplifies the process of running Apache Kafka in an OpenShift cluster.

This guide provides instructions on all the options available for deploying and upgrading AMQ Streams, describing what is deployed, and the order of deployment required to run Apache Kafka in an OpenShift cluster.

As well as describing the deployment steps, the guide also provides pre- and post-deployment instructions to prepare for and verify a deployment. Additional deployment options described include the steps to introduce metrics. Upgrade instructions are provided for AMQ Streams and Kafka upgrades.

AMQ Streams is designed to work on all types of OpenShift cluster regardless of distribution, from public and private clouds to local deployments intended for development.

1.1. How AMQ Streams supports Kafka

AMQ Streams provides container images and Operators for running Kafka on OpenShift. AMQ Streams Operators are fundamental to the running of AMQ Streams. The Operators provided with AMQ Streams are purpose-built with specialist operational knowledge to effectively manage Kafka.

Operators simplify the process of:

- Deploying and running Kafka clusters

- Deploying and running Kafka components

- Configuring access to Kafka

- Securing access to Kafka

- Upgrading Kafka

- Managing brokers

- Creating and managing topics

- Creating and managing users

1.2. AMQ Streams Operators

AMQ Streams supports Kafka using Operators to deploy and manage the components and dependencies of Kafka to OpenShift.

Operators are a method of packaging, deploying, and managing an OpenShift application. AMQ Streams Operators extend OpenShift functionality, automating common and complex tasks related to a Kafka deployment. Because AMQ Streams Operators implement knowledge of Kafka operations in code, Kafka administration tasks are simplified and require less manual intervention.

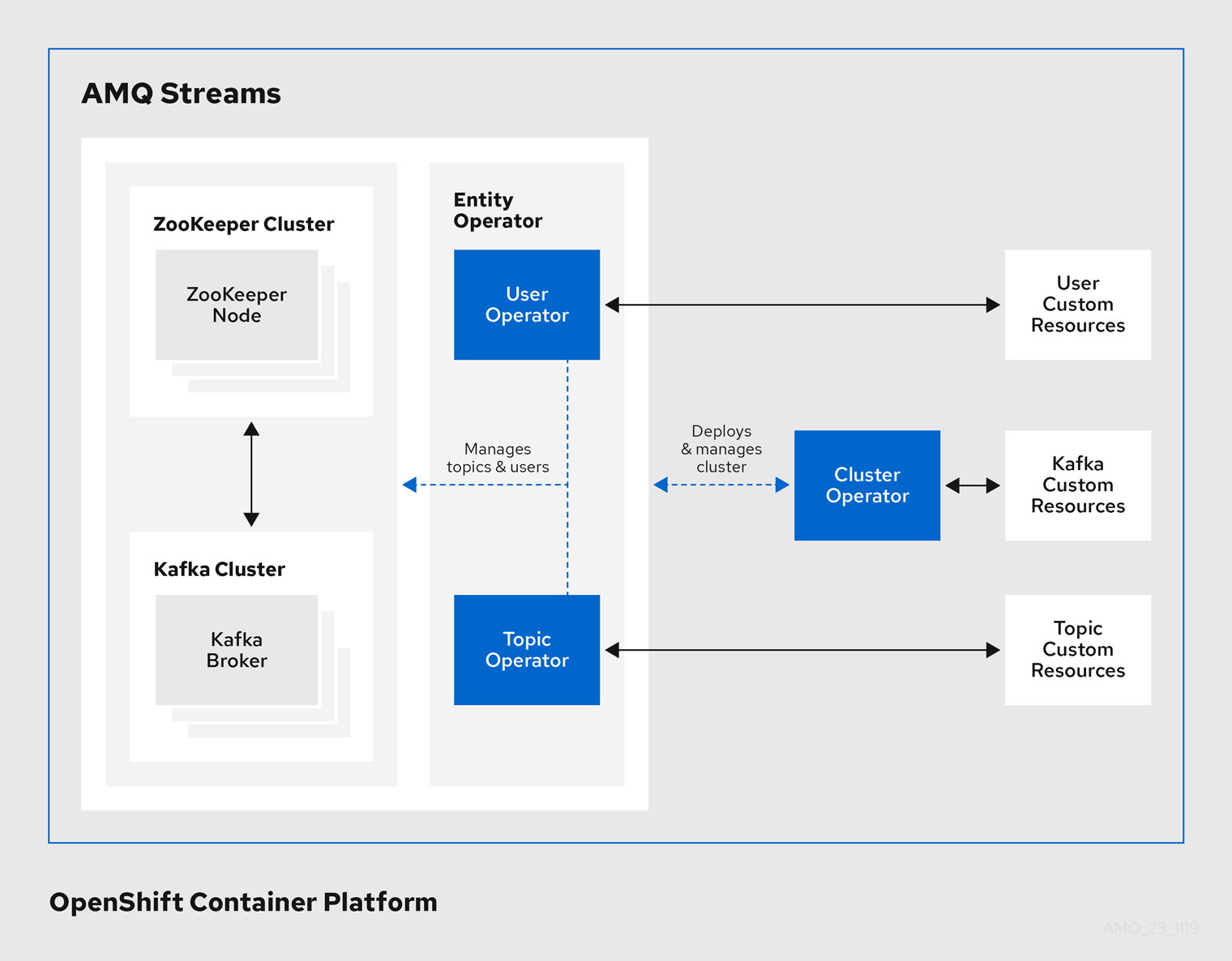

Operators

AMQ Streams provides Operators for managing a Kafka cluster running within an OpenShift cluster.

- Cluster Operator: Deploys and manages Apache Kafka clusters, Kafka Connect, Kafka MirrorMaker, Kafka Bridge, Kafka Exporter, and the Entity Operator

- Entity Operator: Comprises the Topic Operator and User Operator

- Topic Operator: Manages Kafka topics

- User Operator: Manages Kafka users

The Cluster Operator can deploy the Topic Operator and User Operator as part of an Entity Operator configuration at the same time as a Kafka cluster.

Operators within the AMQ Streams architecture

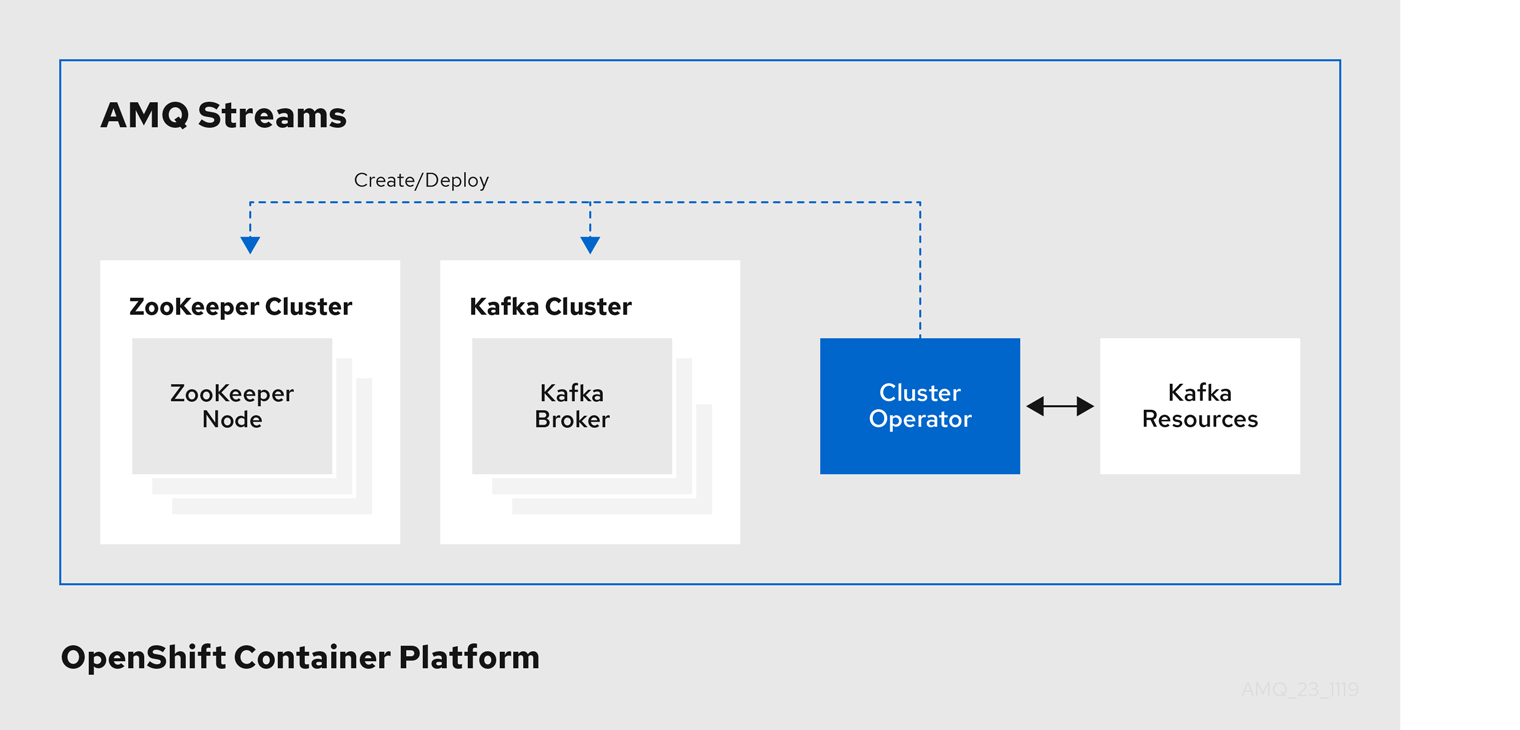

1.2.1. Cluster Operator

AMQ Streams uses the Cluster Operator to deploy and manage clusters for:

- Kafka (including ZooKeeper, Entity Operator, Kafka Exporter, and Cruise Control)

- Kafka Connect

- Kafka MirrorMaker

- Kafka Bridge

Custom resources are used to deploy the clusters.

For example, to deploy a Kafka cluster:

- A Kafka resource with the cluster configuration is created within the OpenShift cluster.

- The Cluster Operator deploys a corresponding Kafka cluster, based on what is declared in the Kafka resource.
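Below is a minimal sketch of such a Kafka resource. It assumes the kafka.strimzi.io/v1beta1 API used elsewhere in this guide, ephemeral storage, and the illustrative cluster name my-cluster. Ephemeral storage does not survive pod deletion or rescheduling, so it suits development only. Treat the example files in examples/kafka/ as the reference for your release.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster          # illustrative cluster name
spec:
  kafka:
    replicas: 3             # number of Kafka brokers
    listeners:
      plain: {}             # internal listener without encryption
      tls: {}               # internal listener with TLS encryption
    storage:
      type: ephemeral       # emptyDir volumes; data is lost if a pod is deleted or rescheduled
  zookeeper:
    replicas: 3             # number of ZooKeeper nodes
    storage:
      type: ephemeral
```

The Cluster Operator reads this declaration and creates the StatefulSets, Services, and other OpenShift resources that make up the cluster.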

The Cluster Operator can also deploy (through configuration of the Kafka resource):

- A Topic Operator to provide operator-style topic management through KafkaTopic custom resources

- A User Operator to provide operator-style user management through KafkaUser custom resources

The Topic Operator and User Operator function within the Entity Operator on deployment.
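The Topic Operator and User Operator are requested through the entityOperator property of the Kafka resource. The sketch below assumes default settings for both operators. Empty objects ask the Cluster Operator to deploy each one with its default configuration. The surrounding fields are elided.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
  zookeeper:
    # ...
  entityOperator:
    topicOperator: {}   # deploy the Topic Operator with default settings
    userOperator: {}    # deploy the User Operator with default settings
```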

Example architecture for the Cluster Operator

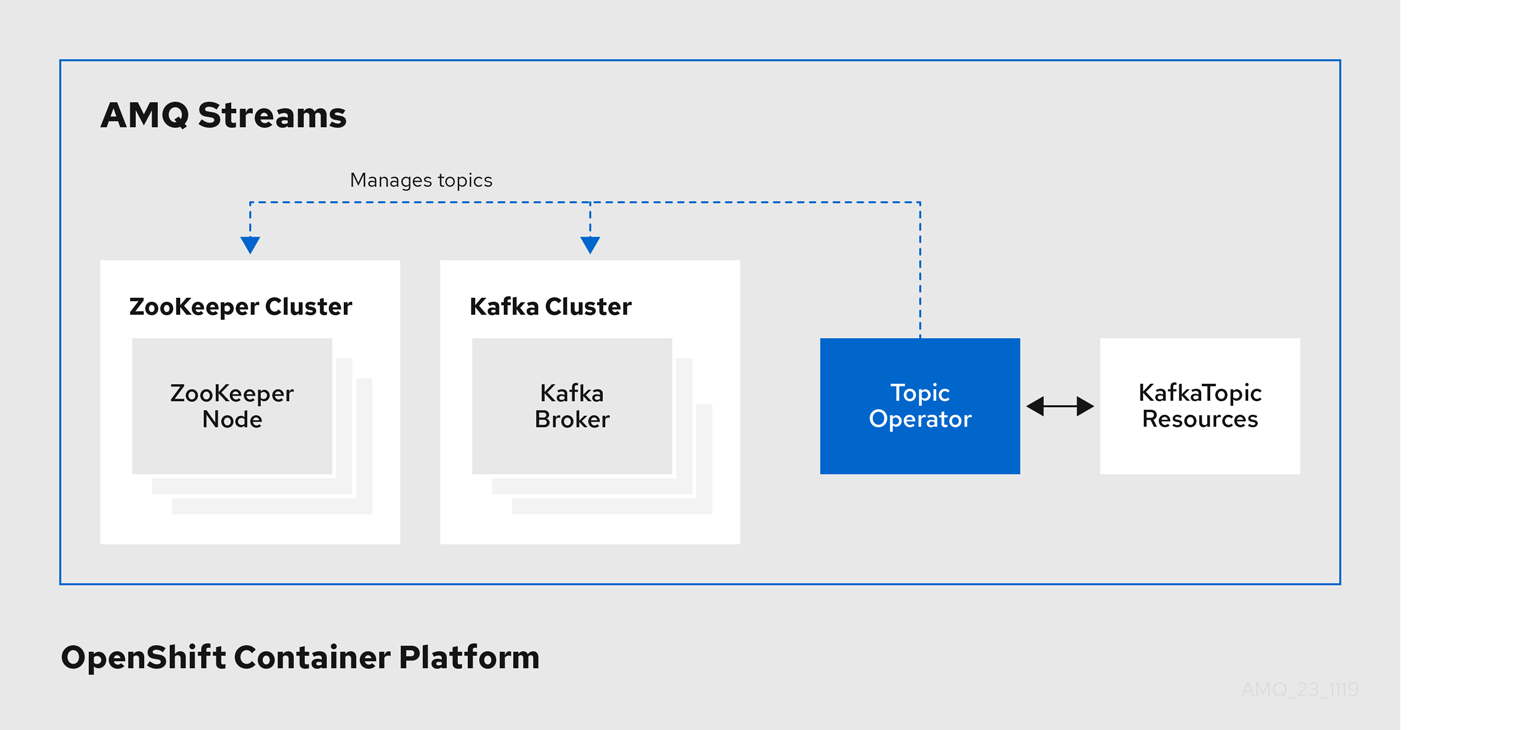

1.2.2. Topic Operator

The Topic Operator provides a way of managing topics in a Kafka cluster through OpenShift resources.

Example architecture for the Topic Operator

The role of the Topic Operator is to keep a set of KafkaTopic OpenShift resources describing Kafka topics in sync with the corresponding Kafka topics.

Specifically, if a KafkaTopic is:

- Created, the Topic Operator creates the topic

- Deleted, the Topic Operator deletes the topic

- Changed, the Topic Operator updates the topic

Working in the other direction, if a topic is:

- Created within the Kafka cluster, the Operator creates a KafkaTopic

- Deleted from the Kafka cluster, the Operator deletes the KafkaTopic

- Changed in the Kafka cluster, the Operator updates the KafkaTopic

This allows you to declare a KafkaTopic as part of your application’s deployment, and the Topic Operator takes care of creating the topic for you. Your application only needs to produce to or consume from the necessary topics.

If the topic is reconfigured or reassigned to different Kafka nodes, the KafkaTopic will always be up to date.

1.2.3. User Operator

The User Operator manages Kafka users for a Kafka cluster by watching for KafkaUser resources that describe Kafka users, and ensuring that they are configured properly in the Kafka cluster.

For example, if a KafkaUser is:

- Created, the User Operator creates the user it describes

- Deleted, the User Operator deletes the user it describes

- Changed, the User Operator updates the user it describes

Unlike the Topic Operator, the User Operator does not sync any changes from the Kafka cluster with the OpenShift resources. Applications can create Kafka topics directly in Kafka, but users are not expected to be managed directly in the Kafka cluster in parallel with the User Operator.

The User Operator allows you to declare a KafkaUser resource as part of your application’s deployment. You can specify the authentication and authorization mechanism for the user. You can also configure user quotas that control usage of Kafka resources to ensure, for example, that a user does not monopolize access to a broker.

When the user is created, the user credentials are created in a Secret. Your application needs to use the user and its credentials for authentication and to produce or consume messages.

In addition to managing credentials for authentication, the User Operator also manages authorization rules by including a description of the user’s access rights in the KafkaUser declaration.
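The following KafkaUser is a sketch that combines authentication, authorization rules, and quotas. It assumes the v1beta1 KafkaUser schema and a Kafka cluster named my-cluster with simple authorization enabled. The user, topic, and group names and the quota values are illustrative. The User Operator writes the generated credentials to a Secret with the same name as the KafkaUser.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaUser
metadata:
  name: my-user
  labels:
    strimzi.io/cluster: my-cluster   # name of the Kafka resource this user belongs to
spec:
  authentication:
    type: tls                        # TLS client certificate, stored in the Secret "my-user"
  authorization:
    type: simple                     # ACL rules enforced by the simple authorizer
    acls:
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Read
        host: "*"
      - resource:
          type: group
          name: my-group
          patternType: literal
        operation: Read
        host: "*"
  quotas:                            # limits so one user cannot monopolize a broker
    producerByteRate: 1048576        # bytes per second
    consumerByteRate: 2097152        # bytes per second
    requestPercentage: 55
```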

1.3. AMQ Streams custom resources

A deployment of Kafka components to an OpenShift cluster using AMQ Streams is highly configurable through the application of custom resources. Custom resources are created as instances of APIs added by custom resource definitions (CRDs) to extend OpenShift resources.

CRDs act as configuration instructions to describe the custom resources in an OpenShift cluster, and are provided with AMQ Streams for each Kafka component used in a deployment, as well as users and topics. CRDs and custom resources are defined as YAML files. Example YAML files are provided with the AMQ Streams distribution.

CRDs also allow AMQ Streams resources to benefit from native OpenShift features like CLI accessibility and configuration validation.

Additional resources

1.3.1. AMQ Streams custom resource example

CRDs require a one-time installation in a cluster to define the schemas used to instantiate and manage AMQ Streams-specific resources.

After a new custom resource type is added to your cluster by installing a CRD, you can create instances of the resource based on its specification.

Depending on the cluster setup, installation typically requires cluster admin privileges.

Access to manage custom resources is limited to AMQ Streams administrators.

A CRD defines a new kind of resource, such as kind: Kafka, within an OpenShift cluster.

The Kubernetes API server allows custom resources to be created based on the kind and understands from the CRD how to validate and store the custom resource when it is added to the OpenShift cluster.

When CRDs are deleted, custom resources of that type are also deleted. Additionally, the resources created by the custom resource, such as pods and StatefulSets, are also deleted.

Each AMQ Streams-specific custom resource conforms to the schema defined by the CRD for the resource’s kind. The custom resources for AMQ Streams components have common configuration properties, which are defined under spec.

To understand the relationship between a CRD and a custom resource, let’s look at a sample of the CRD for a Kafka topic.

Kafka topic CRD

```yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata: # (1)
  name: kafkatopics.kafka.strimzi.io
  labels:
    app: strimzi
spec: # (2)
  group: kafka.strimzi.io
  versions:
    - name: v1beta1
      served: true
      storage: true
  scope: Namespaced
  names:
    # ...
    singular: kafkatopic
    plural: kafkatopics
    shortNames:
    - kt # (3)
  additionalPrinterColumns: # (4)
  # ...
  subresources:
    status: {} # (5)
  validation: # (6)
    openAPIV3Schema:
      properties:
        spec:
          type: object
          properties:
            partitions:
              type: integer
              minimum: 1
            replicas:
              type: integer
              minimum: 1
              maximum: 32767
  # ...
```

- (1) The metadata for the topic CRD: its name and a label to identify the CRD.

- (2) The specification for this CRD, including the group (domain) name, the plural name, and the supported schema version, which are used in the URL to access the API of the topic. The other names are used to identify instance resources in the CLI. For example, oc get kafkatopic my-topic or oc get kafkatopics.

- (3) The short name can be used in CLI commands. For example, oc get kt can be used as an abbreviation instead of oc get kafkatopic.

- (4) The information presented when using a get command on the custom resource.

- (5) Enables the status subresource, so that each custom resource of this kind reports its current status, as described in the schema reference for the resource.

- (6) openAPIV3Schema validation provides validation for the creation of topic custom resources. For example, a topic requires at least one partition and one replica.

You can identify the CRD YAML files supplied with the AMQ Streams installation files, because the file names contain an index number followed by ‘Crd’.

Here is a corresponding example of a KafkaTopic custom resource.

Kafka topic custom resource

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaTopic # (1)
metadata:
  name: my-topic
  labels:
    strimzi.io/cluster: my-cluster # (2)
spec: # (3)
  partitions: 1
  replicas: 1
  config:
    retention.ms: 7200000
    segment.bytes: 1073741824
status:
  conditions: # (4)
    - lastTransitionTime: "2019-08-20T11:37:00.706Z"
      status: "True"
      type: Ready
  observedGeneration: 1
  # ...
```

- (1) The kind and apiVersion identify the CRD of which the custom resource is an instance.

- (2) A label, applicable only to KafkaTopic and KafkaUser resources, that defines the name of the Kafka cluster (which is the same as the name of the Kafka resource) to which a topic or user belongs.

- (3) The spec shows the number of partitions and replicas for the topic as well as the configuration parameters for the topic itself. In this example, the retention period for a message to remain in the topic and the segment file size for the log are specified.

- (4) Status conditions for the KafkaTopic resource. The condition of type Ready changed to status True at the lastTransitionTime.

Custom resources can be applied to a cluster through the platform CLI. When the custom resource is created, it uses the same validation as the built-in resources of the Kubernetes API.

After a KafkaTopic custom resource is created, the Topic Operator is notified and the corresponding Kafka topic is created in the Kafka cluster.

1.4. Prometheus support in AMQ Streams

The Prometheus server and the CoreOS Prometheus Operator are not supported as part of the AMQ Streams distribution. However, the Prometheus endpoint and the Prometheus JMX Exporter used to expose the metrics are supported. For more information about these supported components, see Section 6.1.2, “Exposing Prometheus metrics”.

For your convenience, we supply detailed instructions and example metrics configuration files should you wish to use Prometheus with AMQ Streams for monitoring.

1.5. AMQ Streams installation methods

There are two ways to install AMQ Streams on OpenShift.

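| Installation method | Description | Supported versions |
|---|---|---|
| Installation artifacts (YAML files) | Download the amq-streams-<version>-ocp-install-examples.zip file from the AMQ Streams download site, then deploy the YAML installation artifacts to your OpenShift cluster using oc, starting with the Cluster Operator. | OpenShift 3.11 and later |
| OperatorHub | Use the AMQ Streams Operator in the OperatorHub to deploy the Cluster Operator to a single namespace or all namespaces. | OpenShift 4.x only |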

For the greatest flexibility, choose the installation artifacts method. Choose the OperatorHub method if you want to install AMQ Streams to OpenShift 4 in a standard configuration using the OpenShift 4 web console. The OperatorHub also allows you to take advantage of automatic updates.

In the case of both methods, the Cluster Operator is deployed to your OpenShift cluster, ready for you to deploy the other components of AMQ Streams, starting with a Kafka cluster, using the YAML example files provided.

AMQ Streams installation artifacts

The AMQ Streams installation artifacts contain various YAML files that can be deployed to OpenShift, using oc, to create OpenShift resources, including:

- Deployments

- Custom resource definitions (CRDs)

- Roles and role bindings

- Service accounts

YAML installation files are provided for the Cluster Operator, Topic Operator, User Operator, and the Strimzi Admin role.

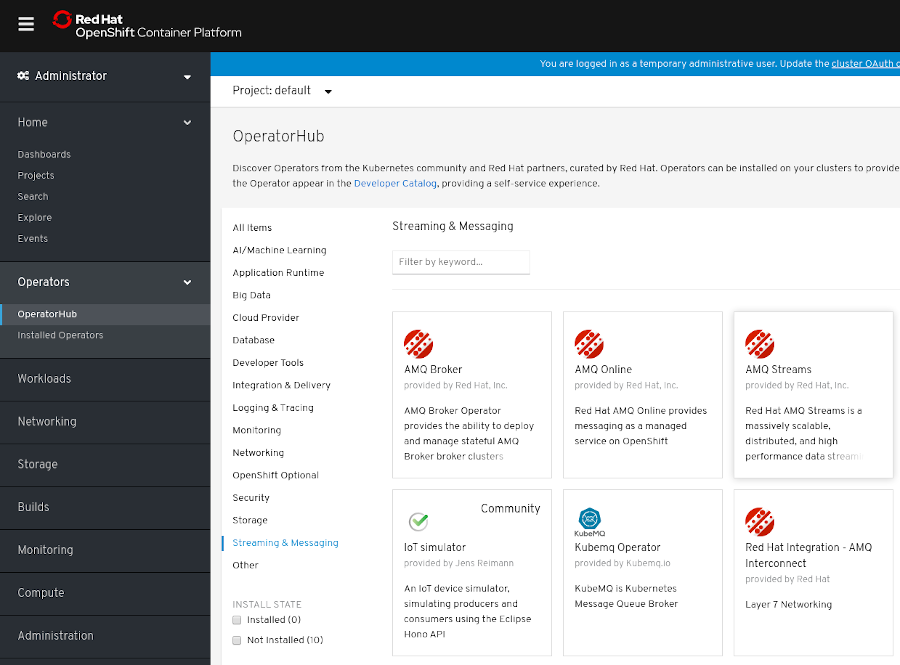

OperatorHub

In OpenShift 4, the Operator Lifecycle Manager (OLM) helps cluster administrators to install, update, and manage the lifecycle of all Operators and their associated services running across their clusters. The OLM is part of the Operator Framework, an open source toolkit designed to manage Kubernetes-native applications (Operators) in an effective, automated, and scalable way.

The OperatorHub is part of the OpenShift 4 web console. Cluster administrators can use it to discover, install, and upgrade Operators. Operators can be pulled from the OperatorHub, installed on the OpenShift cluster to a single (project) namespace or all (projects) namespaces, and managed by the OLM. Engineering teams can then independently manage the software in development, test, and production environments using the OLM.

The OperatorHub is not available in versions of OpenShift earlier than version 4.

AMQ Streams Operator

The AMQ Streams Operator is available to install from the OperatorHub. Once installed, the AMQ Streams Operator deploys the Cluster Operator to your OpenShift cluster, along with the necessary CRDs and role-based access control (RBAC) resources.

Additional resources

Installing AMQ Streams using the installation artifacts:

Installing AMQ Streams from the OperatorHub:

- Section 4.1.1.5, “Deploying the Cluster Operator from the OperatorHub”

- Operators guide in the OpenShift documentation.

Chapter 2. What is deployed with AMQ Streams

Apache Kafka components are provided for deployment to OpenShift with the AMQ Streams distribution. The Kafka components are generally run as clusters for availability.

A typical deployment incorporating Kafka components might include:

- Kafka cluster of broker nodes

- ZooKeeper cluster of replicated ZooKeeper instances

- Kafka Connect cluster for external data connections

- Kafka MirrorMaker cluster to mirror the Kafka cluster in a secondary cluster

- Kafka Exporter to extract additional Kafka metrics data for monitoring

- Kafka Bridge to make HTTP-based requests to the Kafka cluster

Not all of these components are mandatory, though you need Kafka and ZooKeeper as a minimum. Some components can be deployed without Kafka, such as MirrorMaker or Kafka Connect.
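For example, a KafkaConnect resource only needs the bootstrap address of a reachable Kafka cluster. That cluster does not have to be deployed by AMQ Streams. Below is a minimal sketch, assuming the v1beta1 API. The bootstrap address, group ID, and topic names are hypothetical placeholders.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnect
metadata:
  name: my-connect-cluster
spec:
  replicas: 1
  bootstrapServers: my-kafka.example.com:9092   # hypothetical address of an existing Kafka cluster
  config:
    # Kafka Connect worker settings; the group ID and the three internal
    # topics must be unique to this Connect cluster
    group.id: connect-cluster
    offset.storage.topic: connect-cluster-offsets
    config.storage.topic: connect-cluster-configs
    status.storage.topic: connect-cluster-status
```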

2.1. Order of deployment

The required order of deployment to an OpenShift cluster is as follows:

- Deploy the Cluster Operator to manage your Kafka cluster

- Deploy the Kafka cluster with the ZooKeeper cluster, and include the Topic Operator and User Operator in the deployment

Optionally deploy:

- The Topic Operator and User Operator standalone if you did not deploy them with the Kafka cluster

- Kafka Connect

- Kafka MirrorMaker

- Kafka Bridge

- Components for the monitoring of metrics

2.2. Additional deployment configuration options

The deployment procedures in this guide describe a deployment using the example installation YAML files provided with AMQ Streams. The procedures highlight any important configuration considerations, but they do not describe all the configuration options available.

You can use custom resources to refine your deployment.

You may wish to review the configuration options available for Kafka components before you deploy AMQ Streams. For more information on the configuration through custom resources, see Deployment configuration.

2.2.1. Securing Kafka

On deployment, the Cluster Operator automatically sets up TLS certificates for data encryption and authentication within your cluster.

AMQ Streams provides additional configuration options for encryption, authentication and authorization:

- Secure data exchange between the Kafka cluster and clients by configuration of Kafka resources.

- Configure your deployment to use an authorization server to provide OAuth 2.0 authentication and OAuth 2.0 authorization.

- Secure Kafka using your own certificates.
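The Kafka resource can require TLS client authentication on a listener and enable ACL-based authorization. The sketch below uses the listener format of the v1beta1 API in this release. Later Strimzi versions changed the listener format, so check the schema for your version. The cluster name is illustrative and other fields are elided.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    listeners:
      tls:                  # internal listener with TLS encryption
        authentication:
          type: tls         # clients authenticate with TLS certificates
    authorization:
      type: simple          # enforce ACL rules, for example those declared in KafkaUser resources
  # ...
```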

2.2.2. Monitoring your deployment

AMQ Streams supports additional deployment options to monitor your deployment.

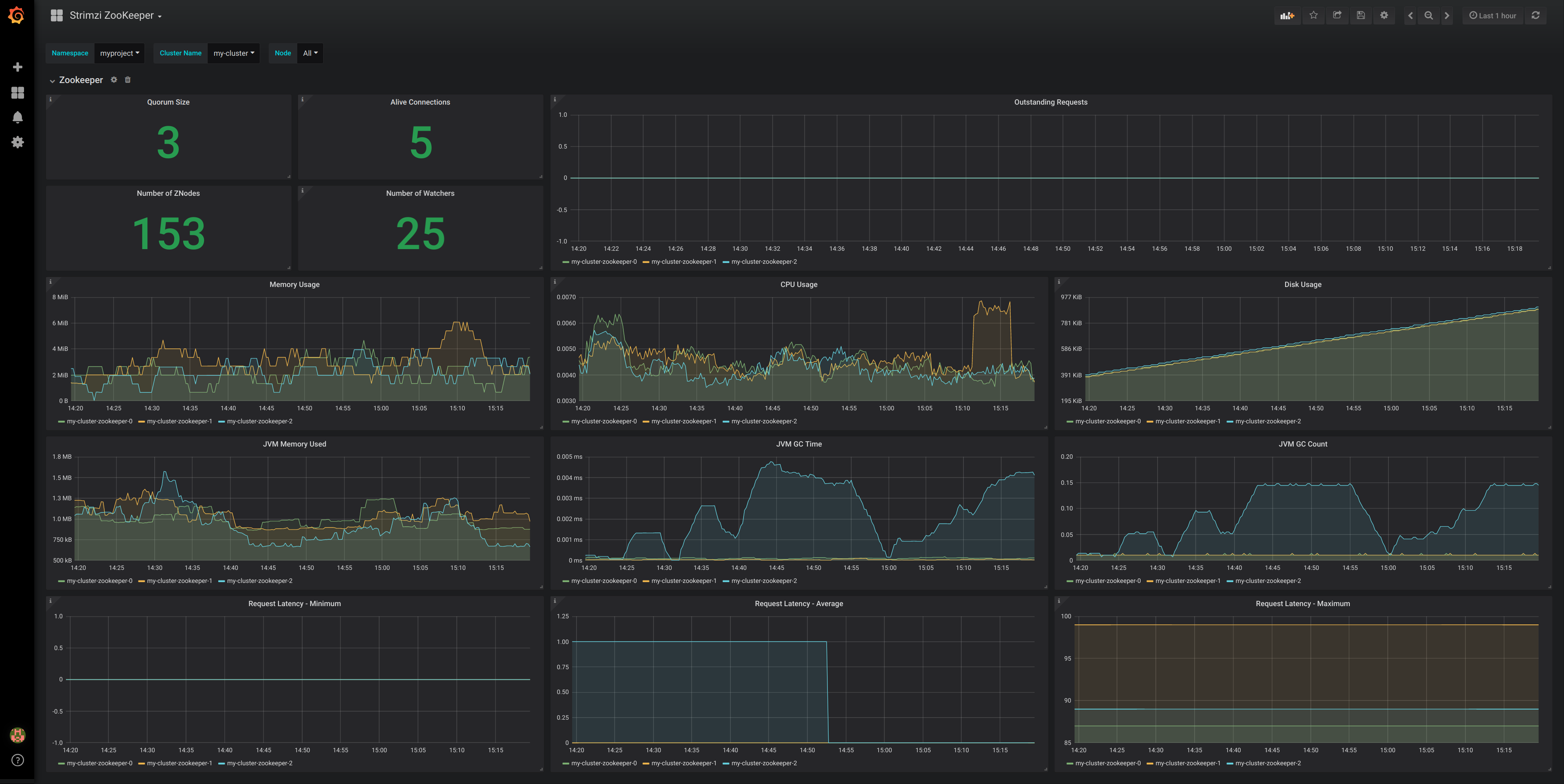

- Extract metrics and monitor Kafka components by deploying Prometheus and Grafana with your Kafka cluster.

- Extract additional metrics, particularly related to monitoring consumer lag, by deploying Kafka Exporter with your Kafka cluster.

- Track messages end-to-end by setting up distributed tracing.
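Kafka Exporter is enabled by adding a kafkaExporter property to the Kafka resource. The sketch below assumes the v1beta1 schema. The regular expressions, which here match every topic and consumer group, are illustrative and can be narrowed.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  # ...
  kafkaExporter:
    topicRegex: ".*"   # topics to report metrics for
    groupRegex: ".*"   # consumer groups to report consumer lag for
```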

Chapter 3. Preparing for your AMQ Streams deployment

This section shows how you prepare for an AMQ Streams deployment, describing:

- The prerequisites you need before you can deploy AMQ Streams

- How to download the AMQ Streams release artifacts to use in your deployment

- How to push the AMQ Streams container images into your own registry (if required)

- How to set up admin roles for configuration of custom resources used in deployment

To run the commands in this guide, your cluster user must have the rights to manage role-based access control (RBAC) and CRDs.

3.1. Deployment prerequisites

To deploy AMQ Streams, make sure:

- An OpenShift 3.11 or later cluster is available. AMQ Streams is based on Strimzi 0.18.x.

- The oc command-line tool is installed and configured to connect to the running cluster.

AMQ Streams supports some features that are specific to OpenShift, where such integration benefits OpenShift users and there is no equivalent implementation using standard Kubernetes.

3.2. Downloading AMQ Streams release artifacts

To install AMQ Streams, download and extract the release artifacts from the amq-streams-<version>-ocp-install-examples.zip file from the AMQ Streams download site.

AMQ Streams release artifacts include sample YAML files to help you deploy the components of AMQ Streams to OpenShift, perform common operations, and configure your Kafka cluster.

You deploy AMQ Streams to an OpenShift cluster using the oc command-line tool.

Additionally, AMQ Streams container images are available through the Red Hat Ecosystem Catalog. However, we recommend that you use the YAML files provided to deploy AMQ Streams.

3.3. Pushing container images to your own registry

Container images for AMQ Streams are available in the Red Hat Ecosystem Catalog. The installation YAML files provided by AMQ Streams will pull the images directly from the Red Hat Ecosystem Catalog.

If you do not have access to the Red Hat Ecosystem Catalog or want to use your own container repository:

- Pull all container images listed here

- Push them into your own registry

- Update the image names in the installation YAML files

Each Kafka version supported for the release has a separate image.

| Container image | Namespace/Repository | Description |
|---|---|---|
| Kafka | | AMQ Streams image for running Kafka, including: |
| Operator | | AMQ Streams image for running the operators: |
| Kafka Bridge | | AMQ Streams image for running the AMQ Streams Kafka Bridge |
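To update the image names after pushing the images to your own registry, edit the Cluster Operator Deployment. The sketch below assumes the layout of install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml. registry.example.com is a hypothetical registry. Image names for the components the operator deploys are also set in environment variables. STRIMZI_DEFAULT_TOPIC_OPERATOR_IMAGE is one of them. Check your copy of the file for the complete list.

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  # ...
  template:
    spec:
      containers:
      - name: strimzi-cluster-operator
        # Hypothetical private registry; keep the repository path and tag you pushed
        image: registry.example.com/amq7/amq-streams-rhel7-operator:1.5.0
        env:
        # Each *_IMAGE environment variable in the file must also point at your registry
        - name: STRIMZI_DEFAULT_TOPIC_OPERATOR_IMAGE
          value: registry.example.com/amq7/amq-streams-rhel7-operator:1.5.0
        # ...
```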

3.4. Designating AMQ Streams administrators

AMQ Streams provides custom resources for configuration of your deployment. By default, permission to view, create, edit, and delete these resources is limited to OpenShift cluster administrators. AMQ Streams provides two cluster roles that you can use to assign these rights to other users:

- strimzi-view allows users to view and list AMQ Streams resources.

- strimzi-admin allows users to also create, edit, or delete AMQ Streams resources.

When you install these roles, they will automatically aggregate (add) these rights to the default OpenShift cluster roles. strimzi-view aggregates to the view role, and strimzi-admin aggregates to the edit and admin roles. Because of the aggregation, you might not need to assign these roles to users who already have similar rights.

The following procedure shows how to assign a strimzi-admin role that allows non-cluster administrators to manage AMQ Streams resources.

A system administrator can designate AMQ Streams administrators after the Cluster Operator is deployed.

Prerequisites

- The AMQ Streams Custom Resource Definitions (CRDs) and role-based access control (RBAC) resources to manage the CRDs have been deployed with the Cluster Operator.

Procedure

Create the strimzi-view and strimzi-admin cluster roles in OpenShift.

```shell
oc apply -f install/strimzi-admin
```

If needed, assign the roles that provide access rights to users that require them.

```shell
oc create clusterrolebinding strimzi-admin --clusterrole=strimzi-admin --user=user1 --user=user2
```
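If you manage RBAC declaratively, the following ClusterRoleBinding is a sketch equivalent to the oc create clusterrolebinding command. user1 and user2 are the same example user names. Apply it with oc apply -f.

```yaml
# Equivalent of:
# oc create clusterrolebinding strimzi-admin --clusterrole=strimzi-admin --user=user1 --user=user2
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: strimzi-admin
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: user1
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: user2
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: strimzi-admin
```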

Chapter 4. Deploying AMQ Streams

Having prepared your environment for a deployment of AMQ Streams, this section shows:

- How to create the Kafka cluster

Optional procedures to deploy other Kafka components according to your requirements:

The procedures assume an OpenShift cluster is available and running.

4.1. Create the Kafka cluster

In order to create your Kafka cluster, you deploy the Cluster Operator to manage the Kafka cluster, then deploy the Kafka cluster.

When deploying the Kafka cluster using the Kafka resource, you can deploy the Topic Operator and User Operator at the same time. Alternatively, if you are using a non-AMQ Streams Kafka cluster, you can deploy the Topic Operator and User Operator as standalone components.

Deploying a Kafka cluster with the Topic Operator and User Operator

Perform these deployment steps if you want to use the Topic Operator and User Operator with a Kafka cluster managed by AMQ Streams.

- Deploy the Cluster Operator

Use the Cluster Operator to deploy the:

Deploying a standalone Topic Operator and User Operator

Perform these deployment steps if you want to use the Topic Operator and User Operator with a Kafka cluster that is not managed by AMQ Streams.

4.1.1. Deploying the Cluster Operator

The Cluster Operator is responsible for deploying and managing Apache Kafka clusters within an OpenShift cluster.

The procedures in this section show:

How to deploy the Cluster Operator to watch:

Alternative deployment options:

4.1.1.1. Watch options for a Cluster Operator deployment

When the Cluster Operator is running, it starts to watch for updates of Kafka resources.

You can choose to deploy the Cluster Operator to watch Kafka resources from:

- A single namespace (the same namespace containing the Cluster Operator)

- Multiple namespaces

- All namespaces

AMQ Streams provides example YAML files to make the deployment process easier.
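The watch scope is controlled by the STRIMZI_NAMESPACE environment variable of the Cluster Operator Deployment, as the procedures in this section show. The sketch below assumes the layout of install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml. By default, it takes the operator's own namespace from the Downward API. The other two modes replace this with a literal value, as the comments note.

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  # ...
  template:
    spec:
      containers:
      - name: strimzi-cluster-operator
        env:
        # Single namespace: resolve the namespace the operator pod runs in
        - name: STRIMZI_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # Multiple namespaces: value: watched-namespace-1,watched-namespace-2
        # All namespaces:      value: "*"
```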

The Cluster Operator watches for changes to the following resources:

- Kafka for the Kafka cluster.

- KafkaConnect for the Kafka Connect cluster.

- KafkaConnectS2I for the Kafka Connect cluster with Source2Image support.

- KafkaConnector for creating and managing connectors in a Kafka Connect cluster.

- KafkaMirrorMaker for the Kafka MirrorMaker instance.

- KafkaBridge for the Kafka Bridge instance.

When one of these resources is created in the OpenShift cluster, the operator gets the cluster description from the resource and starts creating a new cluster for the resource by creating the necessary OpenShift resources, such as StatefulSets, Services and ConfigMaps.

Each time a Kafka resource is updated, the operator performs corresponding updates on the OpenShift resources that make up the cluster for the resource.

Resources are either patched or deleted, and then recreated in order to make the cluster for the resource reflect the desired state of the cluster. This operation might cause a rolling update that might lead to service disruption.

When a resource is deleted, the operator undeploys the cluster and deletes all related OpenShift resources.

4.1.1.2. Deploying the Cluster Operator to watch a single namespace

This procedure shows how to deploy the Cluster Operator to watch AMQ Streams resources in a single namespace in your OpenShift cluster.

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles, and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.

Procedure

Edit the AMQ Streams installation files to use the namespace the Cluster Operator is going to be installed into.

For example, in this procedure the Cluster Operator is installed into the namespace my-cluster-operator-namespace.

On Linux, use:

```shell
sed -i 's/namespace: .*/namespace: my-cluster-operator-namespace/' install/cluster-operator/*RoleBinding*.yaml
```

On macOS, use:

```shell
sed -i '' 's/namespace: .*/namespace: my-cluster-operator-namespace/' install/cluster-operator/*RoleBinding*.yaml
```

Deploy the Cluster Operator:

```shell
oc apply -f install/cluster-operator -n my-cluster-operator-namespace
```

Verify that the Cluster Operator was successfully deployed:

```shell
oc get deployments -n my-cluster-operator-namespace
```
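To check what the sed command changed, inspect one of the edited RoleBinding files. The sketch below assumes the layout of install/cluster-operator/020-RoleBinding-strimzi-cluster-operator.yaml in this release, so compare it with your copy. The sed expression rewrites every namespace: line. In this file, that line is the namespace of the ServiceAccount subject.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: strimzi-cluster-operator
  labels:
    app: strimzi
subjects:
  - kind: ServiceAccount
    name: strimzi-cluster-operator
    namespace: my-cluster-operator-namespace   # rewritten by the sed command
roleRef:
  kind: ClusterRole
  name: strimzi-cluster-operator-namespaced
  apiGroup: rbac.authorization.k8s.io
```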

4.1.1.3. Deploying the Cluster Operator to watch multiple namespaces

This procedure shows how to deploy the Cluster Operator to watch AMQ Streams resources across multiple namespaces in your OpenShift cluster.

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles, and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.

Procedure

Edit the AMQ Streams installation files to use the namespace the Cluster Operator is going to be installed into.

For example, in this procedure the Cluster Operator is installed into the namespace my-cluster-operator-namespace.

On Linux, use:

```shell
sed -i 's/namespace: .*/namespace: my-cluster-operator-namespace/' install/cluster-operator/*RoleBinding*.yaml
```

On macOS, use:

```shell
sed -i '' 's/namespace: .*/namespace: my-cluster-operator-namespace/' install/cluster-operator/*RoleBinding*.yaml
```

Edit the install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml file to add a list of all the namespaces the Cluster Operator will watch to the STRIMZI_NAMESPACE environment variable.

For example, in this procedure the Cluster Operator will watch the namespaces watched-namespace-1, watched-namespace-2, and watched-namespace-3.

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  # ...
  template:
    spec:
      serviceAccountName: strimzi-cluster-operator
      containers:
      - name: strimzi-cluster-operator
        image: registry.redhat.io/amq7/amq-streams-rhel7-operator:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: STRIMZI_NAMESPACE
          value: watched-namespace-1,watched-namespace-2,watched-namespace-3
```

For each namespace listed, install the RoleBindings.

In this example, replace watched-namespace in these commands with the namespaces listed in the previous step, repeating them for watched-namespace-1, watched-namespace-2, and watched-namespace-3:

```shell
oc apply -f install/cluster-operator/020-RoleBinding-strimzi-cluster-operator.yaml -n watched-namespace
oc apply -f install/cluster-operator/031-RoleBinding-strimzi-cluster-operator-entity-operator-delegation.yaml -n watched-namespace
oc apply -f install/cluster-operator/032-RoleBinding-strimzi-cluster-operator-topic-operator-delegation.yaml -n watched-namespace
```

Deploy the Cluster Operator:

```shell
oc apply -f install/cluster-operator -n my-cluster-operator-namespace
```

Verify that the Cluster Operator was successfully deployed:

```shell
oc get deployments -n my-cluster-operator-namespace
```

4.1.1.4. Deploying the Cluster Operator to watch all namespaces

This procedure shows how to deploy the Cluster Operator to watch AMQ Streams resources across all namespaces in your OpenShift cluster.

When running in this mode, the Cluster Operator automatically manages clusters in any new namespaces that are created.

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles, and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.

Procedure

Edit the AMQ Streams installation files to use the namespace the Cluster Operator is going to be installed into.

For example, in this procedure the Cluster Operator is installed into the namespace my-cluster-operator-namespace.

On Linux, use:

```shell
sed -i 's/namespace: .*/namespace: my-cluster-operator-namespace/' install/cluster-operator/*RoleBinding*.yaml
```

On macOS, use:

```shell
sed -i '' 's/namespace: .*/namespace: my-cluster-operator-namespace/' install/cluster-operator/*RoleBinding*.yaml
```

Edit the install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml file to set the value of the STRIMZI_NAMESPACE environment variable to *.

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  # ...
  template:
    spec:
      # ...
      serviceAccountName: strimzi-cluster-operator
      containers:
      - name: strimzi-cluster-operator
        image: registry.redhat.io/amq7/amq-streams-rhel7-operator:1.5.0
        imagePullPolicy: IfNotPresent
        env:
        - name: STRIMZI_NAMESPACE
          value: "*"
        # ...
```

Create ClusterRoleBindings that grant the Cluster Operator cluster-wide access to all namespaces.

```shell
oc create clusterrolebinding strimzi-cluster-operator-namespaced --clusterrole=strimzi-cluster-operator-namespaced --serviceaccount my-cluster-operator-namespace:strimzi-cluster-operator
oc create clusterrolebinding strimzi-cluster-operator-entity-operator-delegation --clusterrole=strimzi-entity-operator --serviceaccount my-cluster-operator-namespace:strimzi-cluster-operator
oc create clusterrolebinding strimzi-cluster-operator-topic-operator-delegation --clusterrole=strimzi-topic-operator --serviceaccount my-cluster-operator-namespace:strimzi-cluster-operator
```

Replace my-cluster-operator-namespace with the namespace you want to install the Cluster Operator into.

Deploy the Cluster Operator to your OpenShift cluster.

```shell
oc apply -f install/cluster-operator -n my-cluster-operator-namespace
```

Verify that the Cluster Operator was successfully deployed:

```shell
oc get deployments -n my-cluster-operator-namespace
```
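If you prefer to keep the ClusterRoleBindings under version control, the first oc create clusterrolebinding command corresponds to the following resource. This is a sketch that uses the example namespace my-cluster-operator-namespace. The other two bindings differ only in metadata.name and roleRef.name (strimzi-entity-operator and strimzi-topic-operator).

```yaml
# Equivalent of:
# oc create clusterrolebinding strimzi-cluster-operator-namespaced \
#   --clusterrole=strimzi-cluster-operator-namespaced \
#   --serviceaccount my-cluster-operator-namespace:strimzi-cluster-operator
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: strimzi-cluster-operator-namespaced
subjects:
  - kind: ServiceAccount
    name: strimzi-cluster-operator
    namespace: my-cluster-operator-namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: strimzi-cluster-operator-namespaced
```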

4.1.1.5. Deploying the Cluster Operator from the OperatorHub

You can deploy the Cluster Operator to your OpenShift cluster by installing the AMQ Streams Operator from the OperatorHub. The OperatorHub is available in OpenShift 4 only.

Prerequisites

- The Red Hat Operators OperatorSource is enabled in your OpenShift cluster. If you can see Red Hat Operators in the OperatorHub, the correct OperatorSource is enabled. For more information, see the Operators guide.

- Installation requires a user with sufficient privileges to install Operators from the OperatorHub.

Procedure

- In the OpenShift 4 web console, click Operators > OperatorHub.

Search or browse for the AMQ Streams Operator, in the Streaming & Messaging category.

- Click the AMQ Streams tile and then, in the sidebar on the right, click Install.

On the Create Operator Subscription screen, choose from the following installation and update options:

- Installation Mode: Choose to install the AMQ Streams Operator to all (projects) namespaces in the cluster (the default option) or a specific (project) namespace. It is good practice to use namespaces to separate functions. We recommend that you dedicate a specific namespace to the Kafka cluster and other AMQ Streams components.

- Approval Strategy: By default, the AMQ Streams Operator is automatically upgraded to the latest AMQ Streams version by the Operator Lifecycle Manager (OLM). Optionally, select Manual if you want to manually approve future upgrades. For more information, see the Operators guide in the OpenShift documentation.

Click Subscribe; the AMQ Streams Operator is installed to your OpenShift cluster.

The AMQ Streams Operator deploys the Cluster Operator, CRDs, and role-based access control (RBAC) resources to the selected namespace, or to all namespaces.

On the Installed Operators screen, check the progress of the installation. The AMQ Streams Operator is ready to use when its status changes to InstallSucceeded.

Next, you can deploy the other components of AMQ Streams, starting with a Kafka cluster, using the YAML example files.
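If you prefer to manage the installation declaratively rather than through the web console, the installation and update options map onto an Operator Lifecycle Manager (OLM) Subscription resource. The following is only a sketch under assumptions: the package name amq-streams, the catalog source redhat-operators in openshift-marketplace, and the channel placeholder must be checked against the OperatorHub listing in your cluster. Installing into a specific namespace typically also needs an OperatorGroup in that namespace, which is not shown here.

```yaml
# Sketch of an OLM Subscription for the AMQ Streams Operator.
# Package name, channel, and catalog source values are assumptions; verify them in your cluster.
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: amq-streams
  namespace: my-cluster-operator-namespace  # namespace chosen under Installation Mode
spec:
  name: amq-streams                # assumed package name in the catalog
  channel: <channel>               # placeholder: use a channel listed for the Operator
  source: redhat-operators         # assumed catalog source providing Red Hat Operators
  sourceNamespace: openshift-marketplace
  installPlanApproval: Manual      # Manual approval strategy; OLM defaults to Automatic
```

With installPlanApproval set to Manual, OLM creates install plans for new versions but waits for you to approve them, which corresponds to selecting Manual as the Approval Strategy.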

4.1.2. Deploying Kafka

Apache Kafka is an open-source distributed publish-subscribe messaging system for fault-tolerant real-time data feeds.

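The procedures in this section show:

- How to use the Cluster Operator to deploy:

  - An ephemeral or persistent Kafka cluster

  - The Topic Operator and User Operator by configuring the Kafka custom resource:

    - Deploying the Topic Operator using the Cluster Operator

    - Deploying the User Operator using the Cluster Operator

- Alternative standalone deployment procedures for the Topic Operator and User Operator:

  - Deploying the standalone Topic Operator

  - Deploying the standalone User Operator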

When installing Kafka, AMQ Streams also installs a ZooKeeper cluster and adds the necessary configuration to connect Kafka with ZooKeeper.

4.1.2.1. Deploying the Kafka cluster

This procedure shows how to deploy a Kafka cluster to your OpenShift cluster using the Cluster Operator.

The deployment uses a YAML file to provide the specification to create a Kafka resource.

AMQ Streams provides example YAML files for deployment in examples/kafka/:

- kafka-persistent.yaml - Deploys a persistent cluster with three ZooKeeper and three Kafka nodes.

- kafka-jbod.yaml - Deploys a persistent cluster with three ZooKeeper and three Kafka nodes (each using multiple persistent volumes).

- kafka-persistent-single.yaml - Deploys a persistent cluster with a single ZooKeeper node and a single Kafka node.

- kafka-ephemeral.yaml - Deploys an ephemeral cluster with three ZooKeeper and three Kafka nodes.

- kafka-ephemeral-single.yaml - Deploys an ephemeral cluster with three ZooKeeper nodes and a single Kafka node.

In this procedure, we use the examples for an ephemeral and persistent Kafka cluster deployment:

- Ephemeral cluster: In general, an ephemeral (or temporary) Kafka cluster is suitable for development and testing purposes, not for production. This deployment uses emptyDir volumes for storing broker information (for ZooKeeper) and topics or partitions (for Kafka). Using an emptyDir volume means that its content is strictly related to the pod life cycle and is deleted when the pod goes down.

- Persistent cluster: A persistent Kafka cluster uses PersistentVolumes to store ZooKeeper and Kafka data. The PersistentVolume is acquired using a PersistentVolumeClaim to make it independent of the actual type of the PersistentVolume. For example, it can use Amazon EBS volumes in Amazon AWS deployments without any changes in the YAML files. The PersistentVolumeClaim can use a StorageClass to trigger automatic volume provisioning.
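The difference between the two kinds of cluster comes down to the storage configuration in the Kafka resource. The following fragment is a sketch of a persistent configuration, not a copy of the example files: the sizes and the StorageClass name my-storage-class are hypothetical. For an ephemeral cluster, the storage type is ephemeral instead, as shown in the trailing comment.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    # ...
    storage:
      type: persistent-claim    # data is stored on a PersistentVolume claimed through a PVC
      size: 100Gi               # hypothetical size
      class: my-storage-class   # hypothetical StorageClass used for automatic provisioning
      deleteClaim: false        # keep the PVC if the cluster is deleted
  zookeeper:
    # ...
    storage:
      type: persistent-claim
      size: 10Gi                # hypothetical size
      deleteClaim: false
    # For an ephemeral cluster, use this instead:
    # storage:
    #   type: ephemeral         # emptyDir volume, deleted when the pod goes down
```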

The example clusters are named my-cluster by default. The cluster name is defined by the name of the resource and cannot be changed after the cluster has been deployed. To change the cluster name before you deploy the cluster, edit the Kafka.metadata.name property of the Kafka resource in the relevant YAML file.

apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
# ...

For more information about configuring the Kafka resource, see Kafka cluster configuration.

Prerequisites

Procedure

Create and deploy an ephemeral or persistent cluster.

For development or testing, you might prefer to use an ephemeral cluster. You can use a persistent cluster in any situation.

To create and deploy an ephemeral cluster:

oc apply -f examples/kafka/kafka-ephemeral.yaml

To create and deploy a persistent cluster:

oc apply -f examples/kafka/kafka-persistent.yaml

Verify that the Kafka cluster was successfully deployed:

oc get deployments

4.1.2.2. Deploying the Topic Operator using the Cluster Operator

This procedure describes how to deploy the Topic Operator using the Cluster Operator.

You configure the entityOperator property of the Kafka resource to include the topicOperator.

If you want to use the Topic Operator with a Kafka cluster that is not managed by AMQ Streams, you must deploy the Topic Operator as a standalone component.

For more information about configuring the entityOperator and topicOperator properties, see Entity Operator.

Prerequisites

Procedure

Edit the entityOperator properties of the Kafka resource to include topicOperator:

apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  #...
  entityOperator:
    topicOperator: {}
    userOperator: {}

Configure the Topic Operator spec using the properties described in the EntityTopicOperatorSpec schema reference.

Use an empty object ({}) if you want all properties to use their default values.

Create or update the resource using oc apply:

oc apply -f <your-file>
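As an illustration of a non-default configuration, the following sketch sets a few EntityTopicOperatorSpec properties instead of using an empty object. The namespace my-topic-namespace and the values shown are hypothetical; check the schema reference for the full list of properties and their defaults.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  # ...
  entityOperator:
    topicOperator:
      watchedNamespace: my-topic-namespace   # hypothetical namespace watched for KafkaTopic resources
      reconciliationIntervalSeconds: 60      # interval between periodic reconciliations
      zookeeperSessionTimeoutSeconds: 20     # ZooKeeper session timeout
      topicMetadataMaxAttempts: 6            # attempts at getting topic metadata from Kafka
    userOperator: {}                         # User Operator with default settings
```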

4.1.2.3. Deploying the User Operator using the Cluster Operator

This procedure describes how to deploy the User Operator using the Cluster Operator.

You configure the entityOperator property of the Kafka resource to include the userOperator.

If you want to use the User Operator with a Kafka cluster that is not managed by AMQ Streams, you must deploy the User Operator as a standalone component.

For more information about configuring the entityOperator and userOperator properties, see Entity Operator.

Prerequisites

Procedure

Edit the entityOperator properties of the Kafka resource to include userOperator:

apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  #...
  entityOperator:
    topicOperator: {}
    userOperator: {}

Configure the User Operator spec using the properties described in the EntityUserOperatorSpec schema reference.

Use an empty object ({}) if you want all properties to use their default values.

Create or update the resource:

oc apply -f <your-file>
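Similarly, the User Operator can be given explicit settings. This sketch uses a hypothetical namespace and illustrative resource requests and limits; the property names follow the EntityUserOperatorSpec schema, but the values are not recommendations.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  # ...
  entityOperator:
    topicOperator: {}
    userOperator:
      watchedNamespace: my-user-namespace   # hypothetical namespace watched for KafkaUser resources
      reconciliationIntervalSeconds: 60     # interval between periodic reconciliations
      zookeeperSessionTimeoutSeconds: 20    # ZooKeeper session timeout
      resources:                            # illustrative container requests and limits
        requests:
          cpu: 100m
          memory: 256Mi
        limits:
          cpu: 500m
          memory: 512Mi
```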

4.1.3. Alternative standalone deployment options for AMQ Streams Operators

When deploying a Kafka cluster using the Cluster Operator, you can also deploy the Topic Operator and User Operator. Alternatively, you can perform a standalone deployment.

A standalone deployment means the Topic Operator and User Operator can operate with a Kafka cluster that is not managed by AMQ Streams.

4.1.3.1. Deploying the standalone Topic Operator

This procedure shows how to deploy the Topic Operator as a standalone component.

A standalone deployment requires configuration of environment variables, and is more complicated than deploying the Topic Operator using the Cluster Operator. However, a standalone deployment is more flexible as the Topic Operator can operate with any Kafka cluster, not necessarily one deployed by the Cluster Operator.

Prerequisites

- You need an existing Kafka cluster for the Topic Operator to connect to.

Procedure

Edit the Deployment.spec.template.spec.containers[0].env properties in the install/topic-operator/05-Deployment-strimzi-topic-operator.yaml file by setting:

- STRIMZI_KAFKA_BOOTSTRAP_SERVERS to list the bootstrap brokers in your Kafka cluster, given as a comma-separated list of hostname:port pairs.

- STRIMZI_ZOOKEEPER_CONNECT to list the ZooKeeper nodes, given as a comma-separated list of hostname:port pairs. This should be the same ZooKeeper cluster that your Kafka cluster is using.

- STRIMZI_NAMESPACE to the OpenShift namespace in which you want the operator to watch for KafkaTopic resources.

- STRIMZI_RESOURCE_LABELS to the label selector used to identify the KafkaTopic resources managed by the operator.

- STRIMZI_FULL_RECONCILIATION_INTERVAL_MS to specify the interval between periodic reconciliations, in milliseconds.

- STRIMZI_TOPIC_METADATA_MAX_ATTEMPTS to specify the number of attempts at getting topic metadata from Kafka. The time between each attempt is defined as an exponential back-off. Consider increasing this value when topic creation could take more time due to the number of partitions or replicas. Default 6.

- STRIMZI_ZOOKEEPER_SESSION_TIMEOUT_MS to the ZooKeeper session timeout, in milliseconds. For example, 10000. Default 20000 (20 seconds).

- STRIMZI_TOPICS_PATH to the ZooKeeper node path where the Topic Operator stores its metadata. Default /strimzi/topics.

- STRIMZI_TLS_ENABLED to enable TLS support for encrypting the communication with Kafka brokers. Default true.

- STRIMZI_TRUSTSTORE_LOCATION to the path to the truststore containing certificates for enabling TLS-based communication. Mandatory only if TLS is enabled through STRIMZI_TLS_ENABLED.

- STRIMZI_TRUSTSTORE_PASSWORD to the password for accessing the truststore defined by STRIMZI_TRUSTSTORE_LOCATION. Mandatory only if TLS is enabled through STRIMZI_TLS_ENABLED.

- STRIMZI_KEYSTORE_LOCATION to the path to the keystore containing private keys for enabling TLS-based communication. Mandatory only if TLS is enabled through STRIMZI_TLS_ENABLED.

- STRIMZI_KEYSTORE_PASSWORD to the password for accessing the keystore defined by STRIMZI_KEYSTORE_LOCATION. Mandatory only if TLS is enabled through STRIMZI_TLS_ENABLED.

- STRIMZI_LOG_LEVEL to the level for printing logging messages. The value can be ERROR, WARNING, INFO, DEBUG, or TRACE. Default INFO.

- STRIMZI_JAVA_OPTS (optional) to the Java options used for the JVM running the Topic Operator. An example is -Xmx512M -Xms256M.

- STRIMZI_JAVA_SYSTEM_PROPERTIES (optional) to list the -D options that are passed to the Topic Operator. An example is -Djavax.net.debug=verbose -DpropertyName=value.
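To show how these settings fit together, here is a sketch of the env section of the Topic Operator Deployment. It is not the shipped file: the container name, host names, namespace, and label value are hypothetical, and other fields are omitted. Environment variable values must be strings, so numbers and booleans are quoted.

```yaml
# Excerpt of a Topic Operator Deployment (other fields omitted); values are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: strimzi-topic-operator
spec:
  template:
    spec:
      containers:
        - name: strimzi-topic-operator        # assumed container name
          # ...
          env:
            - name: STRIMZI_KAFKA_BOOTSTRAP_SERVERS
              value: my-kafka-0:9092,my-kafka-1:9092,my-kafka-2:9092
            - name: STRIMZI_ZOOKEEPER_CONNECT
              value: my-zookeeper-0:2181,my-zookeeper-1:2181,my-zookeeper-2:2181
            - name: STRIMZI_NAMESPACE
              value: my-topic-namespace
            - name: STRIMZI_RESOURCE_LABELS
              value: "strimzi.io/cluster=my-cluster"
            - name: STRIMZI_FULL_RECONCILIATION_INTERVAL_MS
              value: "120000"                 # 2 minutes
            - name: STRIMZI_ZOOKEEPER_SESSION_TIMEOUT_MS
              value: "20000"
            - name: STRIMZI_TLS_ENABLED
              value: "false"                  # plain connection; if true, also set the truststore and keystore variables
            - name: STRIMZI_LOG_LEVEL
              value: INFO
```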

Deploy the Topic Operator:

oc apply -f install/topic-operator

Verify that the Topic Operator has been deployed successfully:

oc describe deployment strimzi-topic-operator

The Topic Operator is deployed when the Replicas: entry shows 1 available.

Note: You may experience a delay with the deployment if you have a slow connection to the OpenShift cluster and the images have not been downloaded before.

4.1.3.2. Deploying the standalone User Operator

This procedure shows how to deploy the User Operator as a standalone component.

A standalone deployment requires configuration of environment variables, and is more complicated than deploying the User Operator using the Cluster Operator. However, a standalone deployment is more flexible as the User Operator can operate with any Kafka cluster, not necessarily one deployed by the Cluster Operator.

Prerequisites

- You need an existing Kafka cluster for the User Operator to connect to.

Procedure

Edit the following Deployment.spec.template.spec.containers[0].env properties in the install/user-operator/05-Deployment-strimzi-user-operator.yaml file by setting:

- STRIMZI_KAFKA_BOOTSTRAP_SERVERS to list the Kafka brokers, given as a comma-separated list of hostname:port pairs.

- STRIMZI_ZOOKEEPER_CONNECT to list the ZooKeeper nodes, given as a comma-separated list of hostname:port pairs. This must be the same ZooKeeper cluster that your Kafka cluster is using. Connecting to ZooKeeper nodes with TLS encryption is not supported.

- STRIMZI_NAMESPACE to the OpenShift namespace in which you want the operator to watch for KafkaUser resources.

- STRIMZI_LABELS to the label selector used to identify the KafkaUser resources managed by the operator.

- STRIMZI_FULL_RECONCILIATION_INTERVAL_MS to specify the interval between periodic reconciliations, in milliseconds.

- STRIMZI_ZOOKEEPER_SESSION_TIMEOUT_MS to the ZooKeeper session timeout, in milliseconds. For example, 10000. Default 20000 (20 seconds).

- STRIMZI_CA_CERT_NAME to point to an OpenShift Secret that contains the public key of the Certificate Authority for signing new user certificates for TLS client authentication. The Secret must contain the public key of the Certificate Authority under the key ca.crt.

- STRIMZI_CA_KEY_NAME to point to an OpenShift Secret that contains the private key of the Certificate Authority for signing new user certificates for TLS client authentication. The Secret must contain the private key of the Certificate Authority under the key ca.key.

- STRIMZI_CLUSTER_CA_CERT_SECRET_NAME to point to an OpenShift Secret containing the public key of the Certificate Authority used for signing Kafka broker certificates for enabling TLS-based communication. The Secret must contain the public key of the Certificate Authority under the key ca.crt. This environment variable is optional and should be set only if the communication with the Kafka cluster is TLS-based.

- STRIMZI_EO_KEY_SECRET_NAME to point to an OpenShift Secret containing the private key and related certificate for TLS client authentication against the Kafka cluster. The Secret must contain the keystore with the private key and certificate under the key entity-operator.p12, and the related password under the key entity-operator.password. This environment variable is optional and should be set only if TLS client authentication is needed when the communication with the Kafka cluster is TLS-based.

- STRIMZI_CA_VALIDITY to the validity period for the Certificate Authority. Default is 365 days.

- STRIMZI_CA_RENEWAL to the renewal period for the Certificate Authority. Default is 30 days to initiate certificate renewal before the old certificates expire.

- STRIMZI_LOG_LEVEL to the level for printing logging messages. The value can be ERROR, WARNING, INFO, DEBUG, or TRACE. Default INFO.

- STRIMZI_GC_LOG_ENABLED to enable garbage collection (GC) logging. Default true.

- STRIMZI_JAVA_OPTS (optional) to the Java options used for the JVM running the User Operator. An example is -Xmx512M -Xms256M.

- STRIMZI_JAVA_SYSTEM_PROPERTIES (optional) to list the -D options that are passed to the User Operator. An example is -Djavax.net.debug=verbose -DpropertyName=value.
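A corresponding sketch for the User Operator Deployment follows. The container name, host names, namespace, label value, and Secret names are all hypothetical placeholders; the CA Secrets must contain ca.crt and ca.key as described above. Numeric values are quoted because environment variable values are strings.

```yaml
# Excerpt of a User Operator Deployment (other fields omitted); values are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: strimzi-user-operator
spec:
  template:
    spec:
      containers:
        - name: strimzi-user-operator         # assumed container name
          # ...
          env:
            - name: STRIMZI_KAFKA_BOOTSTRAP_SERVERS
              value: my-kafka-0:9092,my-kafka-1:9092,my-kafka-2:9092
            - name: STRIMZI_ZOOKEEPER_CONNECT
              value: my-zookeeper-0:2181,my-zookeeper-1:2181,my-zookeeper-2:2181
            - name: STRIMZI_NAMESPACE
              value: my-user-namespace
            - name: STRIMZI_LABELS
              value: "strimzi.io/cluster=my-cluster"
            - name: STRIMZI_CA_CERT_NAME
              value: my-clients-ca-cert       # Secret holding the CA public key under ca.crt
            - name: STRIMZI_CA_KEY_NAME
              value: my-clients-ca            # Secret holding the CA private key under ca.key
            - name: STRIMZI_CA_VALIDITY
              value: "365"                    # days
            - name: STRIMZI_CA_RENEWAL
              value: "30"                     # days before expiry to start renewal
            - name: STRIMZI_LOG_LEVEL
              value: INFO
```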

Deploy the User Operator:

oc apply -f install/user-operator

Verify that the User Operator has been deployed successfully:

oc describe deployment strimzi-user-operator

The User Operator is deployed when the Replicas: entry shows 1 available.

Note: You may experience a delay with the deployment if you have a slow connection to the OpenShift cluster and the images have not been downloaded before.

4.2. Deploy Kafka Connect

Kafka Connect is a tool for streaming data between Apache Kafka and external systems.

In AMQ Streams, Kafka Connect is deployed in distributed mode. Kafka Connect can also work in standalone mode, but this is not supported by AMQ Streams.

Using the concept of connectors, Kafka Connect provides a framework for moving large amounts of data into and out of your Kafka cluster while maintaining scalability and reliability.

Kafka Connect is typically used to integrate Kafka with external databases and storage and messaging systems.

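The procedures in this section show how to:

- Deploy a Kafka Connect cluster to your OpenShift cluster

- Extend Kafka Connect with connector plug-ins

- Create and manage connectors

- Deploy a KafkaConnector resource to Kafka Connect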

The term connector is used interchangeably to mean a connector instance running within a Kafka Connect cluster, or a connector class. In this guide, the term connector is used when the meaning is clear from the context.

4.2.1. Deploying Kafka Connect to your OpenShift cluster

This procedure shows how to deploy a Kafka Connect cluster to your OpenShift cluster using the Cluster Operator.

A Kafka Connect cluster is implemented as a Deployment with a configurable number of nodes (also called workers) that distribute the workload of connectors as tasks so that the message flow is highly scalable and reliable.

The deployment uses a YAML file to provide the specification to create a KafkaConnect resource.

In this procedure, we use the example file provided with AMQ Streams:

- examples/kafka-connect/kafka-connect.yaml
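For orientation, a KafkaConnect resource broadly takes the following shape. This is a sketch, not a copy of the example file: the replica count, the TLS bootstrap address on port 9093 (which assumes a Kafka cluster named my-cluster), and the internal topic names are assumptions. Workers that share the same group.id form one Kafka Connect cluster and store their state in the three internal topics.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnect
metadata:
  name: my-connect-cluster
spec:
  replicas: 3                                         # number of Kafka Connect workers
  bootstrapServers: my-cluster-kafka-bootstrap:9093   # assumed TLS bootstrap address
  tls:
    trustedCertificates:
      - secretName: my-cluster-cluster-ca-cert        # Secret holding the cluster CA certificate
        certificate: ca.crt
  config:
    group.id: connect-cluster                         # shared by all workers in this cluster
    offset.storage.topic: connect-cluster-offsets     # connector offsets
    config.storage.topic: connect-cluster-configs     # connector configurations
    status.storage.topic: connect-cluster-status      # connector and task status
```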

For more information about configuring the KafkaConnect resource, see:

Prerequisites

Procedure

Deploy Kafka Connect to your OpenShift cluster.

oc apply -f examples/kafka-connect/kafka-connect.yaml

Verify that Kafka Connect was successfully deployed:

oc get deployments

4.2.2. Extending Kafka Connect with connector plug-ins

The AMQ Streams container images for Kafka Connect include two built-in file connectors for moving file-based data into and out of your Kafka cluster.

| File Connector | Description |
|---|---|
| FileStreamSourceConnector | Transfers data to your Kafka cluster from a file (the source). |
| FileStreamSinkConnector | Transfers data from your Kafka cluster to a file (the sink). |

The Cluster Operator can also use images that you have created to deploy a Kafka Connect cluster to your OpenShift cluster.

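The procedures in this section show how to add your own connector classes to connector images by:

- Creating a Docker image from the Kafka Connect base image

- Creating a container image using OpenShift builds and Source-to-Image (S2I)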

You create the configuration for connectors directly using the Kafka Connect REST API or KafkaConnector custom resources.

4.2.2.1. Creating a Docker image from the Kafka Connect base image

This procedure shows how to create a custom image with your connector plug-ins added to the /opt/kafka/plugins directory.

You can use the Kafka container image on Red Hat Ecosystem Catalog as a base image for creating your own custom image with additional connector plug-ins.

At startup, the AMQ Streams version of Kafka Connect loads any third-party connector plug-ins contained in the /opt/kafka/plugins directory.

Prerequisites

Procedure

Create a new Dockerfile using registry.redhat.io/amq7/amq-streams-kafka-25-rhel7:1.5.0 as the base image:

FROM registry.redhat.io/amq7/amq-streams-kafka-25-rhel7:1.5.0
USER root:root
COPY ./my-plugins/ /opt/kafka/plugins/
USER 1001

Example plug-in file

$ tree ./my-plugins/
./my-plugins/
├── debezium-connector-mongodb
│   ├── bson-3.4.2.jar
│   ├── CHANGELOG.md
│   ├── CONTRIBUTE.md
│   ├── COPYRIGHT.txt
│   ├── debezium-connector-mongodb-0.7.1.jar
│   ├── debezium-core-0.7.1.jar
│   ├── LICENSE.txt
│   ├── mongodb-driver-3.4.2.jar
│   ├── mongodb-driver-core-3.4.2.jar
│   └── README.md
├── debezium-connector-mysql
│   ├── CHANGELOG.md
│   ├── CONTRIBUTE.md
│   ├── COPYRIGHT.txt
│   ├── debezium-connector-mysql-0.7.1.jar
│   ├── debezium-core-0.7.1.jar
│   ├── LICENSE.txt
│   ├── mysql-binlog-connector-java-0.13.0.jar
│   ├── mysql-connector-java-5.1.40.jar
│   ├── README.md
│   └── wkb-1.0.2.jar
└── debezium-connector-postgres
    ├── CHANGELOG.md
    ├── CONTRIBUTE.md
    ├── COPYRIGHT.txt
    ├── debezium-connector-postgres-0.7.1.jar
    ├── debezium-core-0.7.1.jar
    ├── LICENSE.txt
    ├── postgresql-42.0.0.jar
    ├── protobuf-java-2.6.1.jar
    └── README.md

- Build the container image.

- Push your custom image to your container registry.

Point to the new container image.

You can either:

- Edit the KafkaConnect.spec.image property of the KafkaConnect custom resource. If set, this property overrides the STRIMZI_KAFKA_CONNECT_IMAGES variable in the Cluster Operator.

apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnect
metadata:
  name: my-connect-cluster
spec:
  #...
  image: my-new-container-image
  config:
    #...

or

- In the install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml file, edit the STRIMZI_KAFKA_CONNECT_IMAGES variable to point to the new container image, and then reinstall the Cluster Operator.
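The STRIMZI_KAFKA_CONNECT_IMAGES variable maps Kafka versions to container images, one version=image pair per line. A sketch of the edited entry follows; the container name and the image reference my-registry.example.com/my-connect:latest are hypothetical, and the version list should match the Kafka versions supported by your installation.

```yaml
# Excerpt of the Cluster Operator Deployment (other fields omitted); the image name is hypothetical.
spec:
  template:
    spec:
      containers:
        - name: strimzi-cluster-operator     # assumed container name
          env:
            # ...
            - name: STRIMZI_KAFKA_CONNECT_IMAGES
              value: |
                2.5.0=my-registry.example.com/my-connect:latest
```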

Additional resources

-

- For more information on the KafkaConnect.spec.image property, see Container images.

- For more information on the STRIMZI_KAFKA_CONNECT_IMAGES variable, see Cluster Operator Configuration.

4.2.2.2. Creating a container image using OpenShift builds and Source-to-Image

This procedure shows how to use OpenShift builds and the Source-to-Image (S2I) framework to create a new container image.

An OpenShift build takes a builder image with S2I support, together with source code and binaries provided by the user, and uses them to build a new container image. Once built, container images are stored in OpenShift’s local container image repository and are available for use in deployments.

A Kafka Connect builder image with S2I support is provided on the Red Hat Ecosystem Catalog as part of the registry.redhat.io/amq7/amq-streams-kafka-25-rhel7:1.5.0 image. This S2I image takes your binaries (with plug-ins and connectors) and stores them in the /tmp/kafka-plugins/s2i directory. It creates a new Kafka Connect image from this directory, which can then be used with the Kafka Connect deployment. When started using the enhanced image, Kafka Connect loads any third-party plug-ins from the /tmp/kafka-plugins/s2i directory.

Procedure

On the command line, use the oc apply command to create and deploy a Kafka Connect S2I cluster:

oc apply -f examples/connect/kafka-connect-s2i.yaml

Create a directory with Kafka Connect plug-ins:

$ tree ./my-plugins/
./my-plugins/
├── debezium-connector-mongodb
│   ├── bson-3.4.2.jar
│   ├── CHANGELOG.md
│   ├── CONTRIBUTE.md
│   ├── COPYRIGHT.txt
│   ├── debezium-connector-mongodb-0.7.1.jar
│   ├── debezium-core-0.7.1.jar
│   ├── LICENSE.txt
│   ├── mongodb-driver-3.4.2.jar
│   ├── mongodb-driver-core-3.4.2.jar
│   └── README.md
├── debezium-connector-mysql
│   ├── CHANGELOG.md
│   ├── CONTRIBUTE.md
│   ├── COPYRIGHT.txt
│   ├── debezium-connector-mysql-0.7.1.jar
│   ├── debezium-core-0.7.1.jar
│   ├── LICENSE.txt
│   ├── mysql-binlog-connector-java-0.13.0.jar
│   ├── mysql-connector-java-5.1.40.jar
│   ├── README.md
│   └── wkb-1.0.2.jar
└── debezium-connector-postgres
    ├── CHANGELOG.md
    ├── CONTRIBUTE.md
    ├── COPYRIGHT.txt
    ├── debezium-connector-postgres-0.7.1.jar
    ├── debezium-core-0.7.1.jar
    ├── LICENSE.txt
    ├── postgresql-42.0.0.jar
    ├── protobuf-java-2.6.1.jar
    └── README.md

Use the oc start-build command to start a new build of the image using the prepared directory:

oc start-build my-connect-cluster-connect --from-dir ./my-plugins/

Note: The name of the build is derived from the name of the deployed Kafka Connect cluster. For a cluster named my-connect-cluster, the build is named my-connect-cluster-connect.

- When the build has finished, the new image is used automatically by the Kafka Connect deployment.
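The build name used above follows from the name of the KafkaConnectS2I resource. A minimal sketch of such a resource follows; it is not a copy of kafka-connect-s2i.yaml, and the plain bootstrap address (which assumes a Kafka cluster named my-cluster) and topic names are assumptions.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnectS2I
metadata:
  name: my-connect-cluster   # the build for this cluster is my-connect-cluster-connect
spec:
  replicas: 1
  bootstrapServers: my-cluster-kafka-bootstrap:9092   # assumed plain bootstrap address
  config:
    group.id: connect-cluster
    offset.storage.topic: connect-cluster-offsets
    config.storage.topic: connect-cluster-configs
    status.storage.topic: connect-cluster-status
```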

4.2.3. Creating and managing connectors

When you have created a container image for your connector plug-in, you need to create a connector instance in your Kafka Connect cluster. You can then configure, monitor, and manage a running connector instance.

A connector is an instance of a particular connector class that knows how to communicate with the relevant external system in terms of messages. Connectors are available for many external systems, or you can create your own.

You can create source and sink types of connector.

- Source connector: A source connector is a runtime entity that fetches data from an external system and feeds it to Kafka as messages.

- Sink connector: A sink connector is a runtime entity that fetches messages from Kafka topics and feeds them to an external system.

AMQ Streams provides two APIs for creating and managing connectors:

- KafkaConnector resources (referred to as KafkaConnectors)

- Kafka Connect REST API

Using the APIs, you can:

- Check the status of a connector instance

- Reconfigure a running connector

- Increase or decrease the number of tasks for a connector instance

- Restart failed tasks (not supported by the KafkaConnector resource)

- Pause a connector instance

- Resume a previously paused connector instance

- Delete a connector instance

4.2.3.1. KafkaConnector resources

KafkaConnectors allow you to create and manage connector instances for Kafka Connect in an OpenShift-native way, so an HTTP client such as cURL is not required. Like other Kafka resources, you declare a connector’s desired state in a KafkaConnector YAML file that is deployed to your OpenShift cluster to create the connector instance.

You manage a running connector instance by updating its corresponding KafkaConnector, and then applying the updates. You remove a connector by deleting its corresponding KafkaConnector.

To ensure compatibility with earlier versions of AMQ Streams, KafkaConnectors are disabled by default. To enable them for a Kafka Connect cluster, you must use annotations on the KafkaConnect resource. For instructions, see Enabling KafkaConnector resources.
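As a sketch, the annotation is added to the metadata of the KafkaConnect resource. strimzi.io/use-connector-resources is the annotation Strimzi uses for this purpose, but confirm it in Enabling KafkaConnector resources for your version.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaConnect
metadata:
  name: my-connect-cluster
  annotations:
    strimzi.io/use-connector-resources: "true"   # enables KafkaConnectors; the value is the string "true"
spec:
  # ...
```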

When KafkaConnectors are enabled, the Cluster Operator begins to watch for them. It updates the configurations of running connector instances to match the configurations defined in their KafkaConnectors.

AMQ Streams includes an example KafkaConnector, named examples/connect/source-connector.yaml. You can use this example to create and manage a FileStreamSourceConnector.

4.2.3.2. Availability of the Kafka Connect REST API

The Kafka Connect REST API is available on port 8083 as the <connect-cluster-name>-connect-api service.

If KafkaConnectors are enabled, manual changes made directly using the Kafka Connect REST API are reverted by the Cluster Operator.

The operations supported by the REST API are described in the Apache Kafka documentation.

4.2.4. Deploying a KafkaConnector resource to Kafka Connect

This procedure describes how to deploy the example KafkaConnector to a Kafka Connect cluster.

The example YAML will create a FileStreamSourceConnector to send each line of the license file to Kafka as a message in a topic named my-topic.

Prerequisites

- A Kafka Connect deployment in which KafkaConnectors are enabled

- A running Cluster Operator

Procedure

Edit the examples/connect/source-connector.yaml file:

apiVersion: kafka.strimzi.io/v1alpha1
kind: KafkaConnector
metadata:
  name: my-source-connector # (1)
  labels:
    strimzi.io/cluster: my-connect-cluster # (2)
spec:
  class: org.apache.kafka.connect.file.FileStreamSourceConnector # (3)
  tasksMax: 2 # (4)
  config: # (5)
    file: "/opt/kafka/LICENSE"
    topic: my-topic
    # ...

(1) Enter a name for the KafkaConnector resource. This will be used as the name of the connector within Kafka Connect. You can choose any name that is valid for an OpenShift resource.

(2) Enter the name of the Kafka Connect cluster in which to create the connector.

(3) The name or alias of the connector class. This should be present in the image being used by the Kafka Connect cluster.

(4) The maximum number of tasks that the connector can create.

(5) Configuration settings for the connector. Available configuration options depend on the connector class.

Create the KafkaConnector in your OpenShift cluster:

oc apply -f examples/connect/source-connector.yaml

Check that the resource was created:

oc get kctr --selector strimzi.io/cluster=my-connect-cluster -o name
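The same approach works for a sink connector. The following hypothetical KafkaConnector would use the built-in FileStreamSinkConnector to write messages from my-topic to a file; the resource name my-sink-connector and the output path /tmp/my-file.txt are assumptions. Note that sink connectors take the topics to read from in the topics property rather than topic.

```yaml
apiVersion: kafka.strimzi.io/v1alpha1
kind: KafkaConnector
metadata:
  name: my-sink-connector                   # hypothetical connector name
  labels:
    strimzi.io/cluster: my-connect-cluster  # Kafka Connect cluster that runs the connector
spec:
  class: org.apache.kafka.connect.file.FileStreamSinkConnector
  tasksMax: 1
  config:
    file: "/tmp/my-file.txt"                # hypothetical output file inside the Connect pod
    topics: my-topic                        # topics the sink connector consumes from
```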

4.3. Deploy Kafka MirrorMaker

The Cluster Operator deploys one or more Kafka MirrorMaker replicas to replicate data between Kafka clusters. This process is called mirroring to avoid confusion with the Kafka partition replication concept. MirrorMaker consumes messages from the source cluster and republishes those messages to the target cluster.
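The consume-and-republish model maps directly onto the consumer and producer sections of a KafkaMirrorMaker resource. The sketch below is illustrative only: the bootstrap addresses and consumer group name are hypothetical, and the whitelist value ".*" mirrors every topic.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaMirrorMaker
metadata:
  name: my-mirror-maker
spec:
  version: 2.5.0
  replicas: 1
  consumer:
    bootstrapServers: my-source-cluster-kafka-bootstrap:9092   # hypothetical source cluster
    groupId: my-mirror-maker-group                            # consumer group used for mirroring
  producer:
    bootstrapServers: my-target-cluster-kafka-bootstrap:9092   # hypothetical target cluster
  whitelist: ".*"                                              # regular expression of topics to mirror
```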

4.3.1. Deploying Kafka MirrorMaker to your OpenShift cluster

This procedure shows how to deploy a Kafka MirrorMaker cluster to your OpenShift cluster using the Cluster Operator.

The deployment uses a YAML file to provide the specification to create a KafkaMirrorMaker or KafkaMirrorMaker2 resource depending on the version of MirrorMaker deployed.

In this procedure, we use the example files provided with AMQ Streams:

- examples/mirror-maker/kafka-mirror-maker.yaml

- examples/mirror-maker/kafka-mirror-maker-2.yaml

For information about configuring KafkaMirrorMaker or KafkaMirrorMaker2 resources, see Kafka MirrorMaker configuration.
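MirrorMaker 2.0 is configured differently: instead of a consumer and a producer, a KafkaMirrorMaker2 resource lists cluster aliases and the mirrors between them. The following sketch assumes two clusters at hypothetical bootstrap addresses; the replication factors of 1 suit only a small test setup, and the API version shown is the one assumed for this release.

```yaml
apiVersion: kafka.strimzi.io/v1alpha1
kind: KafkaMirrorMaker2
metadata:
  name: my-mirror-maker-2
spec:
  version: 2.5.0
  replicas: 1
  connectCluster: "my-cluster-target"     # alias of the cluster used by the underlying Kafka Connect
  clusters:
    - alias: "my-cluster-source"
      bootstrapServers: my-cluster-source-kafka-bootstrap:9092   # hypothetical
    - alias: "my-cluster-target"
      bootstrapServers: my-cluster-target-kafka-bootstrap:9092   # hypothetical
      config:
        config.storage.replication.factor: 1
        offset.storage.replication.factor: 1
        status.storage.replication.factor: 1
  mirrors:
    - sourceCluster: "my-cluster-source"
      targetCluster: "my-cluster-target"
      sourceConnector:
        config:
          replication.factor: 1
          offset-syncs.topic.replication.factor: 1
      topicsPattern: ".*"                 # topics to mirror
      groupsPattern: ".*"                 # consumer groups to mirror
```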

Prerequisites

Procedure

Deploy Kafka MirrorMaker to your OpenShift cluster:

For MirrorMaker:

oc apply -f examples/mirror-maker/kafka-mirror-maker.yaml

For MirrorMaker 2.0:

oc apply -f examples/mirror-maker/kafka-mirror-maker-2.yaml

Verify that MirrorMaker was successfully deployed:

oc get deployments

4.4. Deploy Kafka Bridge

The Cluster Operator deploys one or more Kafka Bridge replicas to send data between Kafka clusters and clients through an HTTP API.

4.4.1. Deploying Kafka Bridge to your OpenShift cluster

This procedure shows how to deploy a Kafka Bridge cluster to your OpenShift cluster using the Cluster Operator.

The deployment uses a YAML file to provide the specification to create a KafkaBridge resource.

In this procedure, we use the example file provided with AMQ Streams:

- examples/bridge/kafka-bridge.yaml

For information about configuring the KafkaBridge resource, see Kafka Bridge configuration.
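A minimal KafkaBridge resource is small. This sketch assumes a Kafka cluster named my-cluster with a plain listener on port 9092; the HTTP port is the port on which clients reach the bridge.

```yaml
apiVersion: kafka.strimzi.io/v1alpha1
kind: KafkaBridge
metadata:
  name: my-bridge
spec:
  replicas: 1
  bootstrapServers: my-cluster-kafka-bootstrap:9092   # assumed plain bootstrap address
  http:
    port: 8080                                        # port exposing the HTTP API
```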

Prerequisites

Procedure

Deploy Kafka Bridge to your OpenShift cluster:

oc apply -f examples/bridge/kafka-bridge.yaml

Verify that Kafka Bridge was successfully deployed:

oc get deployments

Chapter 5. Verifying the AMQ Streams deployment

After you have deployed AMQ Streams, you can use the procedure in this section to deploy example producer and consumer clients.

The procedure assumes that AMQ Streams is available and running in an OpenShift cluster.

5.1. Deploying example clients

This procedure shows how to deploy example producer and consumer clients that use the Kafka cluster you created to send and receive messages.

Prerequisites

- The Kafka cluster is available for the clients.

Procedure

Deploy a Kafka producer.

oc run kafka-producer -ti --image=registry.redhat.io/amq7/amq-streams-kafka-25-rhel7:1.5.0 --rm=true --restart=Never -- bin/kafka-console-producer.sh --broker-list cluster-name-kafka-bootstrap:9092 --topic my-topic

- Type a message into the console where the producer is running.

- Press Enter to send the message.

Deploy a Kafka consumer.

oc run kafka-consumer -ti --image=registry.redhat.io/amq7/amq-streams-kafka-25-rhel7:1.5.0 --rm=true --restart=Never -- bin/kafka-console-consumer.sh --bootstrap-server cluster-name-kafka-bootstrap:9092 --topic my-topic --from-beginning

- Confirm that you see the incoming messages in the consumer console.
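The example clients write to and read from my-topic. If automatic topic creation is disabled on your brokers, you might create the topic first through the Topic Operator. A minimal sketch, assuming a Kafka cluster named my-cluster; the partition and replica counts are illustrative.

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaTopic
metadata:
  name: my-topic
  labels:
    strimzi.io/cluster: my-cluster   # must match the Kafka cluster managed by the Topic Operator
spec:
  partitions: 3
  replicas: 3
```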

Chapter 6. Introducing Metrics to Kafka

This section describes setup options for monitoring your AMQ Streams deployment.

Depending on your requirements, you can:

When you have Prometheus and Grafana enabled, Kafka Exporter provides additional monitoring related to consumer lag.

Additionally, you can configure your deployment to track messages end-to-end by setting up distributed tracing.

Additional resources

- For more information about Prometheus, see the Prometheus documentation.

- For more information about Grafana, see the Grafana documentation.

- Apache Kafka Monitoring describes JMX metrics exposed by Apache Kafka.

- ZooKeeper JMX describes JMX metrics exposed by Apache ZooKeeper.

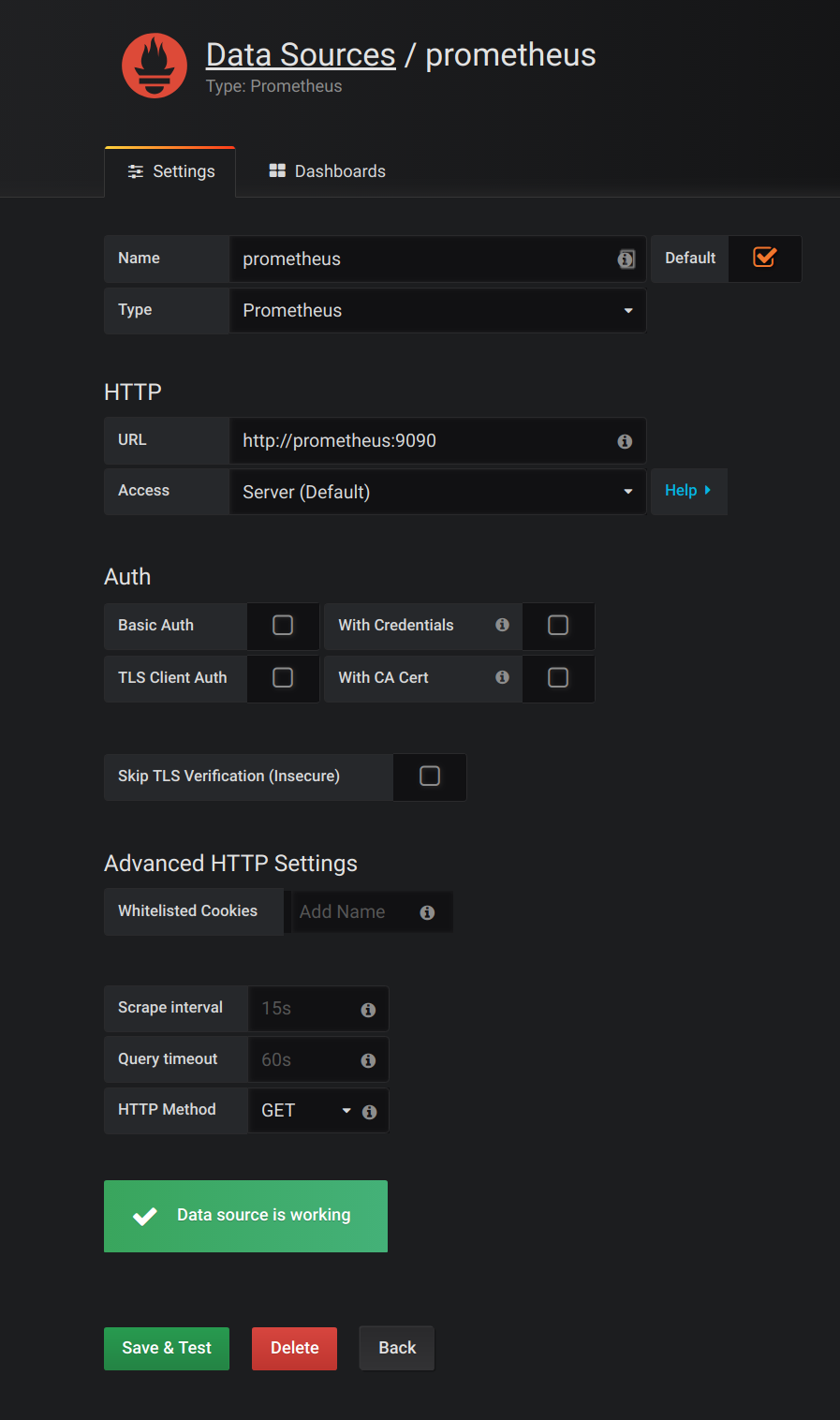

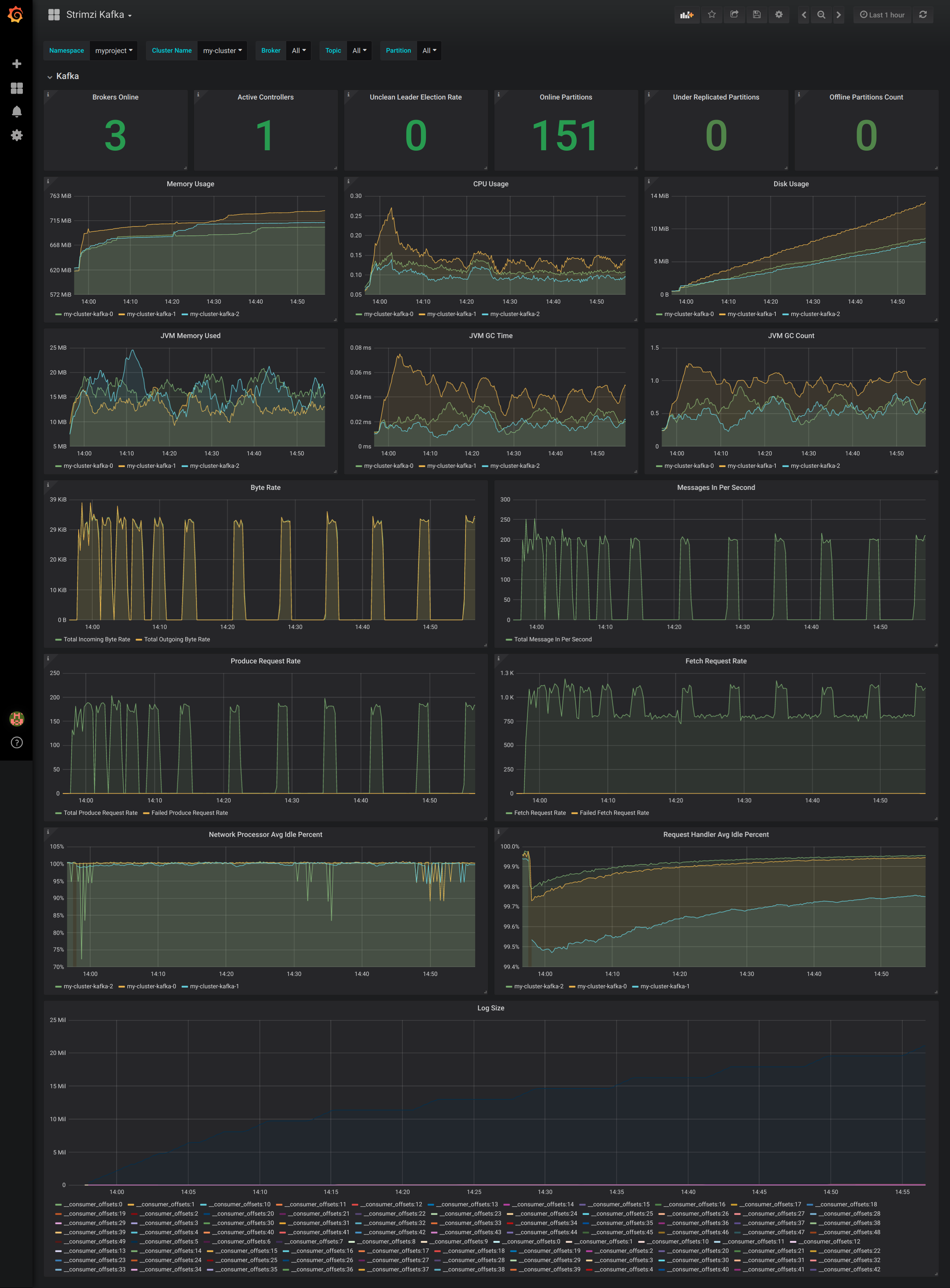

6.1. Add Prometheus and Grafana

This section describes how to monitor AMQ Streams Kafka, ZooKeeper, Kafka Connect, and Kafka MirrorMaker and MirrorMaker 2.0 clusters using Prometheus to provide monitoring data for example Grafana dashboards.

Prometheus and Grafana can also be used to monitor the operators. The example Grafana dashboard for operators provides:

- Information about the operators, such as the number of reconciliations or the number of custom resources they are processing

- JVM metrics from the operators

In order to run the example Grafana dashboards, you must:

The resources referenced in this section are intended as a starting point for setting up monitoring, but they are provided as examples only. If you require further support on configuring and running Prometheus or Grafana in production, try reaching out to their respective communities.

6.1.1. Example Metrics files

You can find the example metrics configuration files in the examples/metrics directory.

metrics
├── grafana-install
│   ├── grafana.yaml (1)
├── grafana-dashboards (2)
│   ├── strimzi-kafka-connect.json
│   ├── strimzi-kafka.json
│   ├── strimzi-zookeeper.json
│   ├── strimzi-kafka-mirror-maker-2.json
│   ├── strimzi-operators.json
│   └── strimzi-kafka-exporter.json (3)
├── kafka-connect-metrics.yaml (4)
├── kafka-metrics.yaml (5)
├── prometheus-additional-properties
│   └── prometheus-additional.yaml (6)
├── prometheus-alertmanager-config
│   └── alert-manager-config.yaml (7)
└── prometheus-install
    ├── alert-manager.yaml (8)
    ├── prometheus-rules.yaml (9)
    ├── prometheus.yaml (10)
    ├── strimzi-pod-monitor.yaml (11)
    └── strimzi-service-monitor.yaml (12)

(1) Installation file for the Grafana image

(2) Grafana dashboards

(3) Grafana dashboard specific to Kafka Exporter

(4) Metrics configuration that defines Prometheus JMX Exporter relabeling rules for Kafka Connect

(5) Metrics configuration that defines Prometheus JMX Exporter relabeling rules for Kafka and ZooKeeper

(6) Configuration to add roles for service monitoring

(7) Hook definitions for sending notifications through Alertmanager

(8) Resources for deploying and configuring Alertmanager

(9) Alerting rules examples for use with Prometheus Alertmanager (deployed with Prometheus)

(10) Installation file for the Prometheus image

(11) Prometheus job definitions to scrape metrics data from pods

(12) Prometheus job definitions to scrape metrics data from services

6.1.2. Exposing Prometheus metrics

AMQ Streams uses the Prometheus JMX Exporter to expose JMX metrics from Kafka and ZooKeeper using an HTTP endpoint, which is then scraped by the Prometheus server.

6.1.2.1. Prometheus metrics configuration

AMQ Streams provides example configuration files for Grafana.

Grafana dashboards are dependent on Prometheus JMX Exporter relabeling rules, which are defined for:

- Kafka and ZooKeeper as a Kafka resource configuration in the example kafka-metrics.yaml file
- Kafka Connect as KafkaConnect and KafkaConnectS2I resources in the example kafka-connect-metrics.yaml file

A label is a name-value pair. Relabeling is the process of writing a label dynamically. For example, the value of a label may be derived from the name of a Kafka server and client ID.

We show metrics configuration using kafka-metrics.yaml in this section, but the process is the same when configuring Kafka Connect using the kafka-connect-metrics.yaml file.
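To illustrate relabeling, the following sketch shows one Prometheus JMX Exporter rule of the kind found in kafka-metrics.yaml. It is an illustrative fragment, not a copy of the shipped file. The rule matches a Kafka server MBean whose object name carries clientId, topic, and partition keys. It then reuses the regex capture groups to build the metric name and to set label values, so the clientId label is derived dynamically from the client ID of the MBean.

```yaml
# Illustrative JMX Exporter rules fragment (a sketch, not copied from kafka-metrics.yaml).
lowercaseOutputName: true          # emit metric names in lower case
rules:
  - pattern: "kafka.server<type=(.+), name=(.+), clientId=(.+), topic=(.+), partition=(.*)><>Value"
    name: "kafka_server_$1_$2"     # metric name built from capture groups 1 and 2
    type: GAUGE
    labels:
      clientId: "$3"               # label value taken from the MBean's clientId key
      topic: "$4"
      partition: "$5"
```

Each rule is evaluated against the MBean attributes exposed by the broker. The first matching rule determines the metric name and labels that Prometheus scrapes.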

Additional resources

For more information on the use of relabeling, see Configuration in the Prometheus documentation.

6.1.2.2. Prometheus metrics deployment options

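To apply the example metrics configuration of relabeling rules to your Kafka cluster, do one of the following:

- Copy the example metrics configuration to your own Kafka resource definition
- Deploy an example Kafka cluster with the metrics configuration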

6.1.2.3. Copying Prometheus metrics configuration to a Kafka resource

To use Grafana dashboards for monitoring, you can copy the example metrics configuration to a Kafka resource.

Procedure

Execute the following steps for each Kafka resource in your deployment.

1. Update the Kafka resource in an editor:

oc edit kafka my-cluster

2. Copy the example configuration in kafka-metrics.yaml to your own Kafka resource definition.

3. Save the file, exit the editor, and wait for the updated resource to be reconciled.
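The following sketch shows where the copied configuration sits in a Kafka resource. It assumes the inline metrics property used by the v1beta1 Kafka API. Metrics are configured separately for the brokers (spec.kafka.metrics) and for ZooKeeper (spec.zookeeper.metrics). The replica counts, listeners, storage, and the single placeholder rule in each section are assumptions. Take the real rule lists from kafka-metrics.yaml.

```yaml
# Sketch of metrics placement in a Kafka resource; rules shown are placeholders.
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    replicas: 3
    listeners:
      plain: {}
      tls: {}
    storage:
      type: ephemeral
    metrics:                        # JMX Exporter configuration for the Kafka brokers
      lowercaseOutputName: true
      rules:
        - pattern: "kafka.server<type=(.+), name=(.+)><>Value"
          name: "kafka_server_$1_$2"
  zookeeper:
    replicas: 3
    storage:
      type: ephemeral
    metrics:                        # JMX Exporter configuration for ZooKeeper
      lowercaseOutputName: true
      rules:
        - pattern: "org.apache.ZooKeeperService<name0=(.+)><>(\\w+)"
          name: "zookeeper_$2"
  entityOperator:
    topicOperator: {}
    userOperator: {}
```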

6.1.2.4. Deploying a Kafka cluster with Prometheus metrics configuration

To use Grafana dashboards for monitoring, you can deploy an example Kafka cluster with metrics configuration.

Procedure

Deploy the Kafka cluster with the metrics configuration:

oc apply -f kafka-metrics.yaml

6.1.3. Setting up Prometheus

Prometheus provides an open source set of components for systems monitoring and alert notification.

We describe here how you can use the CoreOS Prometheus Operator to run and manage a Prometheus server that is suitable for use in production environments, but with the correct configuration you can run any Prometheus server.

The Prometheus server configuration uses service discovery to discover the pods in the cluster from which it gets metrics. For this feature to work correctly, the service account used for running the Prometheus service pod must have access to the API server so it can retrieve the pod list.

For more information, see Discovering services.
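The API server access described above is granted through OpenShift RBAC. The following is a minimal sketch. It assumes a hypothetical ServiceAccount named prometheus-server in my-namespace, bound to a ClusterRole that can read the objects used for service discovery. The example Prometheus configuration defines its own equivalents, so treat these names and the exact rule list as assumptions.

```yaml
# Hypothetical RBAC sketch for Prometheus service discovery.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus-server
  namespace: my-namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-server
rules:
  # Read access to the objects Prometheus discovers targets from;
  # nodes/proxy allows kubelet and cAdvisor metrics to be read through the API server
  - apiGroups: [""]
    resources: ["nodes", "nodes/proxy", "services", "endpoints", "pods"]
    verbs: ["get", "list", "watch"]
  # GET on non-resource /metrics endpoints
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus-server
subjects:
  - kind: ServiceAccount
    name: prometheus-server
    namespace: my-namespace
```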

6.1.3.1. Prometheus configuration

AMQ Streams provides example configuration files for the Prometheus server.

A Prometheus image is provided for deployment:

- prometheus.yaml

Additional Prometheus-related configuration is also provided in the following files:

- prometheus-additional.yaml
- prometheus-rules.yaml
- strimzi-pod-monitor.yaml
- strimzi-service-monitor.yaml

For Prometheus to obtain monitoring data:

- Deploy the Prometheus Operator

Then use the configuration files to:

- Deploy Prometheus

Alerting rules

The prometheus-rules.yaml file provides example alerting rules for use with Alertmanager.

6.1.3.2. Prometheus resources

When you apply the Prometheus configuration, the following resources are created in your OpenShift cluster and managed by the Prometheus Operator:

- A ClusterRole that grants permissions to Prometheus to read the health endpoints exposed by the Kafka and ZooKeeper pods, cAdvisor, and the kubelet for container metrics.
- A ServiceAccount for the Prometheus pods to run under.
- A ClusterRoleBinding that binds the ClusterRole to the ServiceAccount.
- A Deployment to manage the Prometheus Operator pod.
- A ServiceMonitor to manage the configuration of the Prometheus pod.
- A Prometheus resource to manage the configuration of the Prometheus pod.
- A PrometheusRule to manage alerting rules for the Prometheus pod.
- A Secret to manage additional Prometheus settings.
- A Service to allow applications running in the cluster to connect to Prometheus (for example, Grafana using Prometheus as a data source).
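The Prometheus resource ties the other resources together. The following is a sketch of how such a resource might look. The label values (app: strimzi, role: alert-rules), the ServiceAccount name prometheus-server, the Alertmanager Service name and port (alertmanager, web), and the namespace are all assumptions, so compare them with the example prometheus.yaml. The selectors control which ServiceMonitor, PodMonitor, and PrometheusRule resources the Prometheus Operator loads into the Prometheus pod.

```yaml
# Sketch of a Prometheus custom resource; names and label values are hypothetical.
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: prometheus
  namespace: my-namespace
spec:
  replicas: 1
  serviceAccountName: prometheus-server   # ServiceAccount bound to the ClusterRole
  serviceMonitorSelector:                 # ServiceMonitor resources to load
    matchLabels:
      app: strimzi
  podMonitorSelector:                     # PodMonitor resources to load
    matchLabels:
      app: strimzi
  ruleSelector:                           # PrometheusRule resources to load
    matchLabels:
      role: alert-rules
      app: strimzi
  alerting:
    alertmanagers:                        # where firing alerts are sent
      - namespace: my-namespace
        name: alertmanager
        port: web
  additionalScrapeConfigs:                # Secret key holding extra scrape jobs
    name: additional-scrape-configs
    key: prometheus-additional.yaml
```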

6.1.3.3. Deploying the CoreOS Prometheus Operator

To deploy the Prometheus Operator to your Kafka cluster, apply the YAML bundle resources file from the CoreOS Prometheus Operator repository.

Procedure

1. Download the bundle.yaml resources file from the repository.

On Linux or MacOS, use:

curl -s https://raw.githubusercontent.com/coreos/prometheus-operator/master/bundle.yaml | sed -e 's/namespace: .*/namespace: my-namespace/' > prometheus-operator-deployment.yaml

Replace the example namespace with your own.

Note: If using OpenShift, specify a release of the OpenShift fork of the Prometheus Operator repository.

2. (Optional) If it is not required, you can manually remove the spec.template.spec.securityContext property from the prometheus-operator-deployment.yaml file.

3. Deploy the Prometheus Operator:

oc apply -f prometheus-operator-deployment.yaml

6.1.3.4. Deploying Prometheus

To deploy Prometheus to your Kafka cluster to obtain monitoring data, apply the example resource file for the Prometheus docker image and the YAML files for Prometheus-related resources.

The deployment process creates a ClusterRoleBinding and discovers an Alertmanager instance in the namespace specified for the deployment.

By default, the Prometheus Operator only supports jobs that include an endpoints role for service discovery. Targets are discovered and scraped for each endpoint port address. For endpoint discovery, the port address may be derived from service (role: service) or pod (role: pod) discovery.
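To use a role other than the endpoints role, you supply a plain Prometheus scrape job through additional configuration. The following is a sketch of what prometheus-additional.yaml might contain for pod-role discovery. The job name, the namespace, and the container port name metrics used in the keep rule are hypothetical. Adjust the port name to match the pods you want to scrape.

```yaml
# Hypothetical additional scrape job using pod-role service discovery.
- job_name: kubernetes-pods-example
  kubernetes_sd_configs:
    - role: pod                     # discover pods; "service" discovers services instead
      namespaces:
        names:
          - my-namespace
  relabel_configs:
    # Keep only targets whose container port is named "metrics" (assumed port name)
    - source_labels: [__meta_kubernetes_pod_container_port_name]
      action: keep
      regex: metrics
    # Copy the discovered pod name into a regular label
    - source_labels: [__meta_kubernetes_pod_name]
      target_label: kubernetes_pod_name
```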

Prerequisites

- Check the example alerting rules provided

Procedure

1. Modify the Prometheus installation file (prometheus.yaml) according to the namespace Prometheus is going to be installed into.

On Linux, use:

sed -i 's/namespace: .*/namespace: my-namespace/' prometheus.yaml

On MacOS, use:

sed -i '' 's/namespace: .*/namespace: my-namespace/' prometheus.yaml

2. Edit the ServiceMonitor resource in strimzi-service-monitor.yaml to define Prometheus jobs that will scrape the metrics data from services. ServiceMonitor is used to scrape metrics through services and is used for Apache Kafka and ZooKeeper.

3. Edit the PodMonitor resource in strimzi-pod-monitor.yaml to define Prometheus jobs that will scrape the metrics data from pods. PodMonitor is used to scrape data directly from pods and is used for Operators.

4. To use another role:

a. Create a Secret resource:

oc create secret generic additional-scrape-configs --from-file=prometheus-additional.yaml

b. Edit the additionalScrapeConfigs property in the prometheus.yaml file to include the name of the Secret and the YAML file (prometheus-additional.yaml) that contains the additional configuration.

5. Deploy the Prometheus resources:

oc apply -f strimzi-service-monitor.yaml
oc apply -f strimzi-pod-monitor.yaml
oc apply -f prometheus-rules.yaml
oc apply -f prometheus.yaml
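As a reference for the editing steps, the following is a sketch of a ServiceMonitor for the Kafka services and a PodMonitor for an operator pod. The selector labels, the port names (metrics, http), and the namespace are assumptions. Check them against strimzi-service-monitor.yaml and strimzi-pod-monitor.yaml. The app: strimzi label is assumed to match the monitor selectors in your Prometheus resource.

```yaml
# Sketch of a ServiceMonitor and a PodMonitor; labels and port names are assumptions.
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: kafka-service-monitor
  labels:
    app: strimzi                    # matched by the Prometheus serviceMonitorSelector
spec:
  selector:
    matchLabels:
      strimzi.io/kind: Kafka        # services created for a Kafka resource
  namespaceSelector:
    matchNames:
      - my-namespace
  endpoints:
    - port: metrics                 # named service port exposing the JMX Exporter endpoint
      interval: 10s
      honorLabels: true
---
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: cluster-operator-metrics
  labels:
    app: strimzi                    # matched by the Prometheus podMonitorSelector
spec:
  selector:
    matchLabels:
      strimzi.io/kind: cluster-operator
  namespaceSelector:
    matchNames:
      - my-namespace
  podMetricsEndpoints:
    - port: http                    # named container port of the operator pod
      path: /metrics
```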

6.1.4. Setting up Prometheus Alertmanager

Prometheus Alertmanager is a plugin for handling alerts and routing them to a notification service. Alertmanager supports an essential aspect of monitoring, which is to be notified of conditions that indicate potential issues based on alerting rules.

6.1.4.1. Alertmanager configuration

AMQ Streams provides example configuration files for Prometheus Alertmanager.

A configuration file defines the resources for deploying Alertmanager:

- alert-manager.yaml

An additional configuration file provides the hook definitions for sending notifications from your Kafka cluster:

- alert-manager-config.yaml

For Alertmanager to handle Prometheus alerts, use the configuration files to:

- Deploy Alertmanager

6.1.4.2. Alerting rules

Alerting rules provide notifications about specific conditions observed in the metrics. Rules are declared on the Prometheus server, but Prometheus Alertmanager is responsible for alert notifications.

Prometheus alerting rules describe conditions using PromQL expressions that are continuously evaluated.

When an alert expression becomes true, the condition is met and the Prometheus server sends alert data to the Alertmanager. Alertmanager then sends out a notification using the communication method configured for its deployment.

Alertmanager can be configured to use email, chat messages or other notification methods.
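The following sketch shows how a hook definition file such as alert-manager-config.yaml might route every alert to a chat channel. It uses the Slack receiver as an example. The webhook URL and channel name are placeholders. With the Prometheus Operator, this kind of file is usually supplied to Alertmanager through a Secret named alertmanager-<Alertmanager name>, under the key alertmanager.yaml. An email_configs or webhook_configs receiver could replace slack_configs for other notification methods.

```yaml
# Sketch of an Alertmanager configuration; URL and channel are placeholders.
global:
  slack_api_url: https://hooks.slack.com/services/REPLACE/WITH/WEBHOOK
route:
  receiver: slack                   # default receiver for all alerts
  group_by: ['alertname']           # batch alerts that share an alert name
  group_wait: 30s
  repeat_interval: 4h               # resend a still-firing alert at most every 4 hours
receivers:
  - name: slack
    slack_configs:
      - channel: '#kafka-alerts'
        send_resolved: true         # also notify when the condition clears
        title: '{{ .CommonLabels.alertname }}'
        text: '{{ range .Alerts }}{{ .Annotations.description }} {{ end }}'
```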

Additional resources

For more information about setting up alerting rules, see Configuration in the Prometheus documentation.

6.1.4.3. Alerting rule examples

Example alerting rules for Kafka and ZooKeeper metrics are provided with AMQ Streams for use in a Prometheus deployment.

General points about the alerting rule definitions:

- A for property is used with the rules to determine the period of time a condition must persist before an alert is triggered.
- A tick is a basic ZooKeeper time unit, which is measured in milliseconds and configured using the tickTime parameter of Kafka.spec.zookeeper.config. For example, if ZooKeeper tickTime=3000, 3 ticks (3 x 3000) equals 9000 milliseconds.
- The availability of the ZookeeperRunningOutOfSpace metric and alert is dependent on the OpenShift configuration and storage implementation used. Storage implementations for certain platforms may not be able to supply the information on available space required for the metric to provide an alert.
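The following sketch shows how two of the Kafka rules described in this section, UnderReplicatedPartitions and AbnormalControllerState, might be declared in a PrometheusRule resource. The metric names assume the JMX Exporter naming produced by the example relabeling rules (lowercased kafka_server_... and kafka_controller_... names). The strimzi_io_name grouping label, the selector labels, the severities, and the 10s durations are assumptions for illustration.

```yaml
# Sketch of a PrometheusRule with two Kafka alerts; labels and durations are illustrative.
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: kafka-alert-rules
  labels:
    role: alert-rules               # assumed to match the Prometheus ruleSelector
    app: strimzi
spec:
  groups:
    - name: kafka
      rules:
        - alert: UnderReplicatedPartitions
          expr: kafka_server_replicamanager_underreplicatedpartitions > 0
          for: 10s                  # condition must persist this long before firing
          labels:
            severity: warning
          annotations:
            summary: 'Kafka under replicated partitions'
            description: 'There are {{ $value }} under replicated partitions on {{ $labels.kubernetes_pod_name }}'
        - alert: AbnormalControllerState
          # Sum across brokers of each cluster; anything other than 1 is abnormal
          expr: sum(kafka_controller_kafkacontroller_activecontrollercount) by (strimzi_io_name) != 1
          for: 10s
          labels:
            severity: warning
          annotations:
            summary: 'Kafka abnormal controller state'
            description: 'There are {{ $value }} active controllers in the cluster'
```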

Kafka alerting rules

UnderReplicatedPartitions

Gives the number of partitions for which the current broker is the lead replica but which have fewer in-sync replicas than assigned replicas. This metric provides insights about brokers that host the follower replicas: those followers are not keeping up with the leader. Reasons for this could include being (or having been) offline, and over-throttled interbroker replication. An alert is raised when this value is greater than zero, providing information on the under-replicated partitions for each broker.

AbnormalControllerState

Indicates whether the current broker is the controller for the cluster. The metric can be 0 or 1. During the life of a cluster, only one broker should be the controller, and the cluster always needs to have an active controller. Having two or more brokers saying that they are controllers indicates a problem. If the condition persists, an alert is raised when the sum of all the values for this metric on all brokers is not equal to 1, meaning that there is no active controller (the sum is 0) or more than one controller (the sum is greater than 1).

UnderMinIsrPartitionCount

Indicates that the minimum number of in-sync replicas (ISRs) for a lead Kafka broker, specified using