Chapter 12. Security

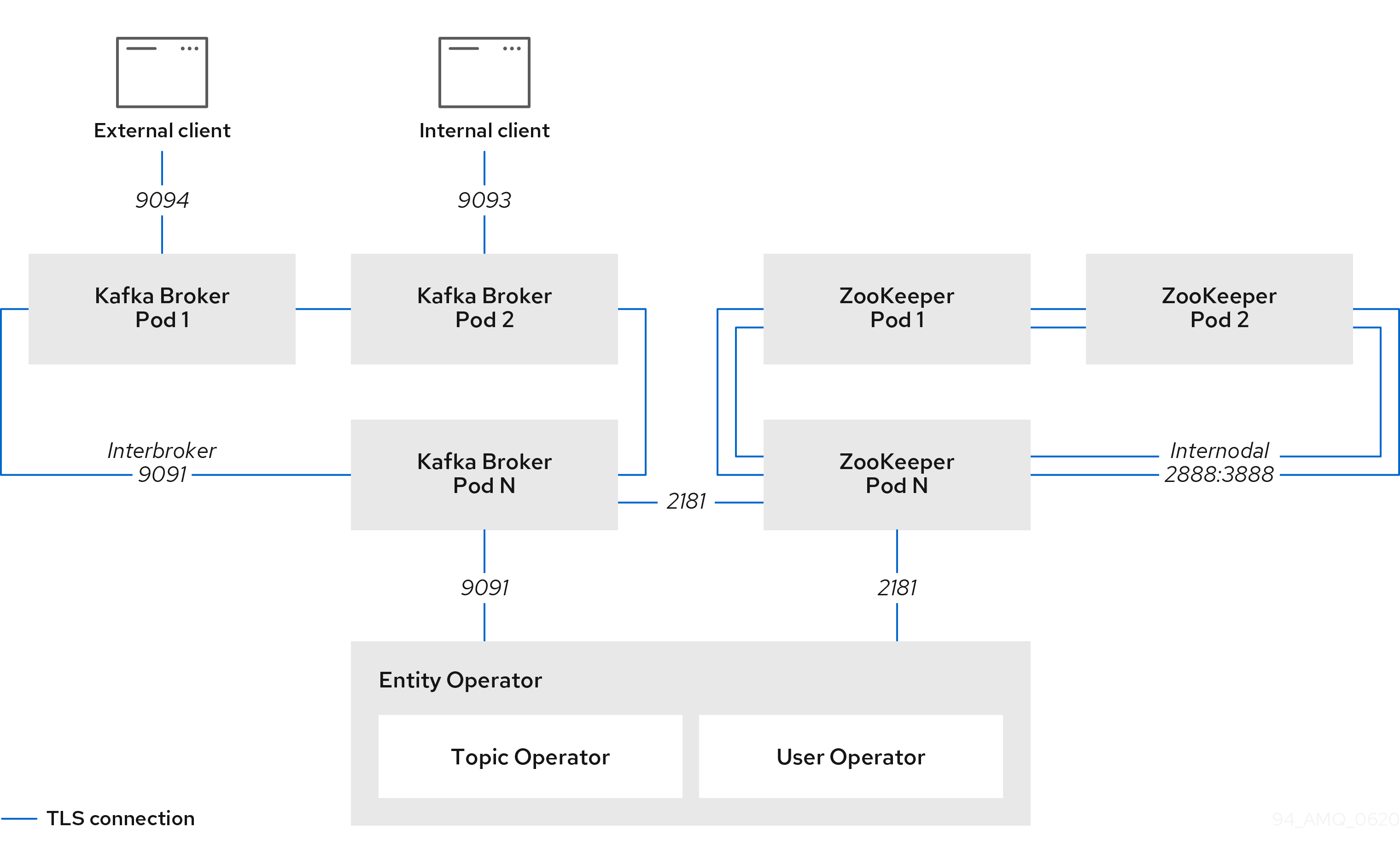

AMQ Streams supports encrypted communication between the Kafka and AMQ Streams components using the TLS protocol. Communication between Kafka brokers (interbroker communication), between ZooKeeper nodes (internodal communication), and between these and the AMQ Streams operators is always encrypted. Communication between Kafka clients and Kafka brokers is encrypted according to how the cluster is configured. For the Kafka and AMQ Streams components, TLS certificates are also used for authentication.

The Cluster Operator automatically sets up and renews TLS certificates to enable encryption and authentication within your cluster. It also sets up other TLS certificates if you want to enable encryption or TLS authentication between Kafka brokers and clients. Certificates provided by users are not renewed.

You can provide your own server certificates, called Kafka listener certificates, for TLS listeners or external listeners which have TLS encryption enabled. For more information, see Section 12.8, “Kafka listener certificates”.

Figure 12.1. Example architecture diagram of the communication secured by TLS.

12.1. Certificate Authorities

To support encryption, each AMQ Streams component needs its own private keys and public key certificates. All component certificates are signed by an internal Certificate Authority (CA) called the cluster CA.

Similarly, each Kafka client application connecting to AMQ Streams using TLS client authentication needs to provide private keys and certificates. A second internal CA, named the clients CA, is used to sign certificates for the Kafka clients.

12.1.1. CA certificates

Both the cluster CA and clients CA have a self-signed public key certificate.

Kafka brokers are configured to trust certificates signed by either the cluster CA or clients CA. Components that clients do not need to connect to, such as ZooKeeper, only trust certificates signed by the cluster CA. Unless TLS encryption for external listeners is disabled, client applications must trust certificates signed by the cluster CA. This is also true for client applications that perform mutual TLS authentication.

By default, AMQ Streams automatically generates and renews CA certificates issued by the cluster CA or clients CA. You can configure the management of these CA certificates in the Kafka.spec.clusterCa and Kafka.spec.clientsCa objects. Certificates provided by users are not renewed.

You can provide your own CA certificates for the cluster CA or clients CA. For more information, see Section 12.1.3, “Installing your own CA certificates”. If you provide your own certificates, you must manually renew them when needed.

12.1.2. Validity periods of CA certificates

CA certificate validity periods are expressed as a number of days after certificate generation. You can configure the validity period of:

- Cluster CA certificates in Kafka.spec.clusterCa.validityDays

- Clients CA certificates in Kafka.spec.clientsCa.validityDays

12.1.3. Installing your own CA certificates

This procedure describes how to install your own CA certificates and private keys instead of using CA certificates and private keys generated by the Cluster Operator.

Prerequisites

- The Cluster Operator is running.

- A Kafka cluster is not yet deployed.

- Your own X.509 certificates and keys in PEM format for the cluster CA or clients CA.

  - If you want to use a cluster or clients CA which is not a Root CA, you have to include the whole chain in the certificate file. The chain should be in the following order:

    - The cluster or clients CA

    - One or more intermediate CAs

    - The root CA

  - All CAs in the chain should be configured as a CA in the X509v3 Basic Constraints.
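The chain order above can be sketched as a plain concatenation of PEM files. This is a minimal sketch and not part of AMQ Streams: the function name build_ca_chain and the file names in the usage note are hypothetical.

```shell
#!/bin/sh
# build_ca_chain OUTPUT CA_CERT [INTERMEDIATE_CERT...] ROOT_CERT
# Concatenates PEM files in the order given: the cluster or clients CA first,
# then any intermediate CAs, then the root CA last.
build_ca_chain() {
  out=$1
  shift
  # Start from an empty output file, then append each certificate in order.
  : > "$out"
  for cert in "$@"; do
    cat "$cert" >> "$out"
    # If the file does not end with a newline, add one so that consecutive
    # PEM blocks do not run together.
    if [ -n "$(tail -c 1 "$cert")" ]; then
      printf '\n' >> "$out"
    fi
  done
}
```

For example, `build_ca_chain ca-chain.crt cluster-ca.crt intermediate-ca.crt root-ca.crt` would produce a single file that could then be supplied as the CA certificate file when creating the Secret.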

Procedure

1. Put your CA certificate in the corresponding Secret (<cluster>-cluster-ca-cert for the cluster CA or <cluster>-clients-ca-cert for the clients CA). Run the following commands:

   ```shell
   # Delete any existing secret (ignore "Not Exists" errors)
   oc delete secret <ca-cert-secret>
   # Create the new secret
   oc create secret generic <ca-cert-secret> --from-file=ca.crt=<ca-cert-file>
   ```

2. Put your CA key in the corresponding Secret (<cluster>-cluster-ca for the cluster CA or <cluster>-clients-ca for the clients CA):

   ```shell
   # Delete the existing secret
   oc delete secret <ca-key-secret>
   # Create the new one
   oc create secret generic <ca-key-secret> --from-file=ca.key=<ca-key-file>
   ```

3. Label both Secrets with the labels strimzi.io/kind=Kafka and strimzi.io/cluster=<my-cluster>:

   ```shell
   oc label secret <ca-cert-secret> strimzi.io/kind=Kafka strimzi.io/cluster=<my-cluster>
   oc label secret <ca-key-secret> strimzi.io/kind=Kafka strimzi.io/cluster=<my-cluster>
   ```

4. Create the Kafka resource for your cluster, configuring either the Kafka.spec.clusterCa or the Kafka.spec.clientsCa object to not use generated CAs. To do so, set the generateCertificateAuthority property of that object to false, so that the cluster uses the certificates you supply yourself.
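As an illustration of the last step, the following sketch prints a fragment of a Kafka resource that turns off CA generation through the generateCertificateAuthority property. The helper name ca_override_fragment is hypothetical, and the fragment deliberately omits every other part of the resource (including apiVersion and metadata), so treat it as something to merge into your own Kafka resource rather than apply as is.

```shell
#!/bin/sh
# ca_override_fragment CA_OBJECT
# Prints a fragment of a Kafka resource spec that disables CA generation for
# CA_OBJECT, which must be either "clusterCa" or "clientsCa".
ca_override_fragment() {
  case $1 in
    clusterCa|clientsCa) ;;
    *) echo "usage: ca_override_fragment clusterCa|clientsCa" >&2; return 1 ;;
  esac
  cat <<EOF
spec:
  # ... the rest of the Kafka spec is unchanged
  $1:
    generateCertificateAuthority: false
EOF
}

# Fragment for a cluster CA that you supply yourself.
ca_override_fragment clusterCa
```

Passing clientsCa instead produces the equivalent fragment for the clients CA.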

Additional resources

- For the procedure for renewing CA certificates you have previously installed, see Section 12.3.4, “Renewing your own CA certificates”.

- Section 12.8.1, “Providing your own Kafka listener certificates”.

12.2. Secrets

AMQ Streams uses Secrets to store private keys and certificates for Kafka cluster components and clients. Secrets are used for establishing TLS encrypted connections between Kafka brokers, and between brokers and clients. They are also used for mutual TLS authentication.

- A Cluster Secret contains a cluster CA certificate to sign Kafka broker certificates, and is used by a connecting client to establish a TLS encrypted connection with the Kafka cluster to validate broker identity.

- A Client Secret contains a client CA certificate for a user to sign its own client certificate to allow mutual authentication against the Kafka cluster. The broker validates the client identity through the client CA certificate itself.

- A User Secret contains a private key and certificate, which are generated and signed by the client CA certificate when a new user is created. The key and certificate are used for authentication and authorization when accessing the cluster.

Secrets provide private keys and certificates in PEM and PKCS #12 formats. Using private keys and certificates in PEM format means that users have to get them from the Secrets, and generate a corresponding truststore (or keystore) to use in their Java applications. PKCS #12 storage provides a truststore (or keystore) that can be used directly.

All keys are 2048 bits in size.

12.2.1. PKCS #12 storage

PKCS #12 defines an archive file format (.p12) for storing cryptography objects into a single file with password protection. You can use PKCS #12 to manage certificates and keys in one place.

Each Secret contains fields specific to PKCS #12.

- The .p12 field contains the certificates and keys.

- The .password field is the password that protects the archive.

12.2.2. Cluster CA Secrets

| Secret name | Field within Secret | Description |
|---|---|---|
| <cluster>-cluster-ca | ca.key | The current private key for the cluster CA. |
| <cluster>-cluster-ca-cert | ca.p12 | PKCS #12 archive file for storing certificates and keys. |
| <cluster>-cluster-ca-cert | ca.password | Password for protecting the PKCS #12 archive file. |
| <cluster>-cluster-ca-cert | ca.crt | The current certificate for the cluster CA. |
| | | PKCS #12 archive file for storing certificates and keys. |
| | | Password for protecting the PKCS #12 archive file. |
| | | Certificate for Kafka broker pod <num>. Signed by a current or former cluster CA private key in <cluster>-cluster-ca. |
| | | Private key for Kafka broker pod <num>. |
| | | PKCS #12 archive file for storing certificates and keys. |
| | | Password for protecting the PKCS #12 archive file. |
| | | Certificate for ZooKeeper node <num>. Signed by a current or former cluster CA private key in <cluster>-cluster-ca. |
| | | Private key for ZooKeeper pod <num>. |
| | | PKCS #12 archive file for storing certificates and keys. |
| | | Password for protecting the PKCS #12 archive file. |
| | | Certificate for TLS communication between the Entity Operator and Kafka or ZooKeeper. Signed by a current or former cluster CA private key in <cluster>-cluster-ca. |
| | | Private key for TLS communication between the Entity Operator and Kafka or ZooKeeper. |

The CA certificates in <cluster>-cluster-ca-cert must be trusted by Kafka client applications so that they validate the Kafka broker certificates when connecting to Kafka brokers over TLS.

Only <cluster>-cluster-ca-cert needs to be used by clients. All other Secrets in the table above only need to be accessed by the AMQ Streams components. You can enforce this using OpenShift role-based access controls if necessary.

12.2.3. Client CA Secrets

| Secret name | Field within Secret | Description |
|---|---|---|
| <cluster>-clients-ca | ca.key | The current private key for the clients CA. |
| <cluster>-clients-ca-cert | ca.p12 | PKCS #12 archive file for storing certificates and keys. |
| <cluster>-clients-ca-cert | ca.password | Password for protecting the PKCS #12 archive file. |
| <cluster>-clients-ca-cert | ca.crt | The current certificate for the clients CA. |

The certificates in <cluster>-clients-ca-cert are those which the Kafka brokers trust.

<cluster>-clients-ca is used to sign certificates of client applications. It needs to be accessible to the AMQ Streams components and for administrative access if you are intending to issue application certificates without using the User Operator. You can enforce this using OpenShift role-based access controls if necessary.

12.2.4. User Secrets

| Secret name | Field within Secret | Description |
|---|---|---|
| <user-name> | | PKCS #12 archive file for storing certificates and keys. |
| <user-name> | | Password for protecting the PKCS #12 archive file. |
| <user-name> | | Certificate for the user, signed by the clients CA. |
| <user-name> | | Private key for the user. |

12.3. Certificate renewal

The cluster CA and clients CA certificates are only valid for a limited time period, known as the validity period. This is usually defined as a number of days since the certificate was generated. For auto-generated CA certificates, you can configure the validity period in Kafka.spec.clusterCa.validityDays and Kafka.spec.clientsCa.validityDays. The default validity period for both certificates is 365 days. Manually-installed CA certificates should have their own validity period defined.

When a CA certificate expires, components and clients which still trust that certificate will not accept TLS connections from peers whose certificates were signed by the CA private key. The components and clients need to trust the new CA certificate instead.

To allow the renewal of CA certificates without a loss of service, the Cluster Operator will initiate certificate renewal before the old CA certificates expire. You can configure the renewal period in Kafka.spec.clusterCa.renewalDays and Kafka.spec.clientsCa.renewalDays (both default to 30 days). The renewal period is measured backwards, from the expiry date of the current certificate.

```
Not Before                                     Not After
    |                                              |
    |<--------------- validityDays --------------->|
                              <--- renewalDays --->|
```
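The relationship between validityDays and renewalDays can be worked through with simple date arithmetic. The following is a minimal sketch that assumes GNU date (for the -d option); the function name renewal_window and the start date are hypothetical, and the values 365 and 30 are the defaults stated in this section.

```shell
#!/bin/sh
# renewal_window NOT_BEFORE VALIDITY_DAYS RENEWAL_DAYS
# Prints the expiry date (Not After) and the date on which the renewal period
# starts, both as YYYY-MM-DD in UTC. Requires GNU date.
renewal_window() {
  not_before=$1
  validity_days=$2
  renewal_days=$3
  # Not After = Not Before + validityDays
  not_after=$(date -u -d "$not_before +$validity_days days" +%Y-%m-%d)
  # The renewal period is measured backwards from Not After, so it starts
  # (validityDays - renewalDays) days after Not Before.
  renewal_start=$(date -u -d "$not_before +$((validity_days - renewal_days)) days" +%Y-%m-%d)
  echo "not_after=$not_after renewal_start=$renewal_start"
}

# Default settings: validityDays=365, renewalDays=30.
renewal_window 2023-01-01 365 30
```

With the default settings, the Cluster Operator would therefore begin renewal 335 days after the certificate was generated.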

The behavior of the Cluster Operator during the renewal period depends on whether the relevant setting is enabled, in either Kafka.spec.clusterCa.generateCertificateAuthority or Kafka.spec.clientsCa.generateCertificateAuthority.

12.3.1. Renewal process with generated CAs

The Cluster Operator performs the following process to renew CA certificates:

1. Generate a new CA certificate, but retain the existing key. The new certificate replaces the old one with the name ca.crt within the corresponding Secret.

2. Generate new client certificates (for ZooKeeper nodes, Kafka brokers, and the Entity Operator). This is not strictly necessary because the signing key has not changed, but it keeps the validity period of the client certificate in sync with the CA certificate.

3. Restart ZooKeeper nodes so that they will trust the new CA certificate and use the new client certificates.

4. Restart Kafka brokers so that they will trust the new CA certificate and use the new client certificates.

5. Restart the Topic and User Operators so that they will trust the new CA certificate and use the new client certificates.

12.3.2. Client applications

The Cluster Operator is not aware of the client applications using the Kafka cluster.

When connecting to the cluster, and to ensure they operate correctly, client applications must:

- Trust the cluster CA certificate published in the <cluster>-cluster-ca-cert Secret.

- Use the credentials published in their <user-name> Secret to connect to the cluster.

The User Secret provides credentials in PEM and PKCS #12 format, or it can provide a password when using SCRAM-SHA authentication. The User Operator creates the user credentials when a user is created.

For workloads running inside the same OpenShift cluster and namespace, Secrets can be mounted as a volume so the client Pods construct their keystores and truststores from the current state of the Secrets. For more details on this procedure, see Configuring internal clients to trust the cluster CA.

12.3.2.1. Client certificate renewal

You must ensure clients continue to work after certificate renewal. The renewal process depends on how the clients are configured.

If you are provisioning client certificates and keys manually, you must generate new client certificates and ensure the new certificates are used by clients within the renewal period. Failure to do this by the end of the renewal period could result in client applications being unable to connect to the cluster.
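When client certificates are provisioned manually, it can help to check how close a certificate is to expiry. The following is a minimal sketch that assumes GNU date; the function names days_until_expiry and in_renewal_period are hypothetical. The expiry date is given in the form printed by `openssl x509 -enddate -noout`, without the leading "notAfter=".

```shell
#!/bin/sh
# days_until_expiry NOT_AFTER [NOW]
# Prints the number of whole days between NOW (default: the current time)
# and the certificate expiry date NOT_AFTER. Requires GNU date.
days_until_expiry() {
  not_after_s=$(date -u -d "$1" +%s)
  now_s=$(date -u -d "${2:-now}" +%s)
  echo $(( (not_after_s - now_s) / 86400 ))
}

# in_renewal_period NOT_AFTER RENEWAL_DAYS [NOW]
# Succeeds if the certificate expires within RENEWAL_DAYS days of NOW.
in_renewal_period() {
  [ "$(days_until_expiry "$1" "${3:-now}")" -le "$2" ]
}

# Example with fixed dates so that the result is reproducible.
days_until_expiry "Sep 27 17:32:00 2018 GMT" "2018-09-01"
```

A scheduled job could call in_renewal_period with the renewalDays value configured for the cluster and alert when it succeeds.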

12.3.3. Renewing CA certificates manually

Unless the Kafka.spec.clusterCa.generateCertificateAuthority and Kafka.spec.clientsCa.generateCertificateAuthority objects are set to false, the cluster and clients CA certificates will auto-renew at the start of their respective certificate renewal periods. You can manually renew one or both of these certificates before the certificate renewal period starts, if required for security reasons. A renewed certificate uses the same private key as the old certificate.

Prerequisites

- The Cluster Operator is running.

- A Kafka cluster in which CA certificates and private keys are installed.

Procedure

Apply the strimzi.io/force-renew annotation to the Secret that contains the CA certificate that you want to renew.

| Certificate | Secret | Annotate command |
|---|---|---|
| Cluster CA | <cluster-name>-cluster-ca-cert | oc annotate secret <cluster-name>-cluster-ca-cert strimzi.io/force-renew=true |
| Clients CA | <cluster-name>-clients-ca-cert | oc annotate secret <cluster-name>-clients-ca-cert strimzi.io/force-renew=true |

At the next reconciliation the Cluster Operator will generate a new CA certificate for the Secret that you annotated. If maintenance time windows are configured, the Cluster Operator will generate the new CA certificate at the first reconciliation within the next maintenance time window.

Client applications must reload the cluster and clients CA certificates that were renewed by the Cluster Operator.

12.3.4. Renewing your own CA certificates

This procedure describes how to renew CA certificates and private keys that you previously installed. You will need to follow this procedure during the renewal period in order to replace CA certificates which will soon expire.

Prerequisites

- The Cluster Operator is running.

- A Kafka cluster in which you previously installed your own CA certificates and private keys.

- New cluster and clients X.509 certificates and keys in PEM format. These could be generated using openssl with a command such as:

  ```shell
  openssl req -x509 -new -days <validity> -nodes -out ca.crt -keyout ca.key
  ```

Procedure

1. Establish what CA certificates already exist in the Secret:

   ```shell
   oc describe secret <ca-cert-secret>
   ```

2. Prepare a directory containing the existing CA certificates in the secret.

   ```shell
   mkdir new-ca-cert-secret
   cd new-ca-cert-secret
   ```

   For each certificate <ca-certificate> from the previous step, run the following command. Escape any dot in the certificate name inside the jsonpath expression (for example, ca\.crt).

   ```shell
   # Fetch the existing secret
   oc get secret <ca-cert-secret> -o 'jsonpath={.data.<ca-certificate>}' | base64 -d > <ca-certificate>
   ```

3. Rename the old ca.crt file to ca-DATE.crt, where DATE is the certificate expiry date in the format <year>-<month>-<day>T<hour>-<minute>-<second>Z, for example ca-2018-09-27T17-32-00Z.crt.

   ```shell
   mv ca.crt ca-$(date -u -d "$(openssl x509 -enddate -noout -in ca.crt | sed 's/.*=//')" +'%Y-%m-%dT%H-%M-%SZ').crt
   ```

4. Copy the new CA certificate into the directory, naming it ca.crt:

   ```shell
   cp <path-to-new-cert> ca.crt
   ```

5. Replace the CA certificate Secret (<cluster>-cluster-ca-cert or <cluster>-clients-ca-cert). This can be done using the following commands:

   ```shell
   # Delete the existing secret
   oc delete secret <ca-cert-secret>
   # Re-create the secret with the old and new certificates
   oc create secret generic <ca-cert-secret> --from-file=.
   ```

   You can now delete the directory you created:

   ```shell
   cd ..
   rm -r new-ca-cert-secret
   ```

6. Replace the CA key Secret (<cluster>-cluster-ca or <cluster>-clients-ca). This can be done using the following commands:

   ```shell
   # Delete the existing secret
   oc delete secret <ca-key-secret>
   # Re-create the secret with the new private key
   oc create secret generic <ca-key-secret> --from-file=ca.key=<ca-key-file>
   ```
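The renaming step of this procedure depends on turning the expiry date reported by openssl into a file name. The following minimal sketch isolates that conversion so it can be checked without a real certificate. It assumes GNU date, and the function name ca_archive_name is hypothetical.

```shell
#!/bin/sh
# ca_archive_name ENDDATE_LINE
# Converts one line of "openssl x509 -enddate -noout" output into the file
# name used for the old CA certificate. Requires GNU date.
ca_archive_name() {
  # Drop everything up to "=", leaving for example "Sep 27 17:32:00 2018 GMT".
  not_after=$(printf '%s\n' "$1" | sed 's/.*=//')
  # Quote the date string: it contains spaces, so an unquoted expansion
  # would be split into several arguments.
  stamp=$(date -u -d "$not_after" +'%Y-%m-%dT%H-%M-%SZ')
  printf 'ca-%s.crt\n' "$stamp"
}

ca_archive_name "notAfter=Sep 27 17:32:00 2018 GMT"
```

In practice the argument would come from `openssl x509 -enddate -noout -in ca.crt`, and the printed name would be the target of the mv command.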

12.4. Replacing private keys

You can replace the private keys used by the cluster CA and clients CA certificates. When a private key is replaced, the Cluster Operator generates a new CA certificate for the new private key.

Prerequisites

- The Cluster Operator is running.

- A Kafka cluster in which CA certificates and private keys are installed.

Procedure

Apply the strimzi.io/force-replace annotation to the Secret that contains the private key that you want to replace.

| Private key for | Secret | Annotate command |
|---|---|---|
| Cluster CA | <cluster-name>-cluster-ca | oc annotate secret <cluster-name>-cluster-ca strimzi.io/force-replace=true |
| Clients CA | <cluster-name>-clients-ca | oc annotate secret <cluster-name>-clients-ca strimzi.io/force-replace=true |

At the next reconciliation the Cluster Operator will:

- Generate a new private key for the Secret that you annotated

- Generate a new CA certificate

If maintenance time windows are configured, the Cluster Operator will generate the new private key and CA certificate at the first reconciliation within the next maintenance time window.

Client applications must reload the cluster and clients CA certificates that were renewed by the Cluster Operator.


12.5. TLS connections

12.5.1. ZooKeeper communication

ZooKeeper does not support TLS itself. By deploying a TLS sidecar within every ZooKeeper pod, the Cluster Operator is able to provide data encryption and authentication between ZooKeeper nodes in a cluster. ZooKeeper only communicates with the TLS sidecar over the loopback interface. The TLS sidecar then proxies all ZooKeeper traffic, TLS decrypting data upon entry into a ZooKeeper pod, and TLS encrypting data upon departure from a ZooKeeper pod.

This TLS encrypting stunnel proxy is instantiated from the spec.zookeeper.stunnelImage specified in the Kafka resource.

12.5.2. Kafka interbroker communication

Communication between Kafka brokers is done through an internal listener on port 9091, which is encrypted by default and not accessible to Kafka clients.

Communication between Kafka brokers and ZooKeeper nodes uses a TLS sidecar, as described above.

12.5.3. Topic and User Operators

Like the Cluster Operator, the Topic and User Operators each use a TLS sidecar when communicating with ZooKeeper. The Topic Operator connects to Kafka brokers on port 9091.

12.5.4. Kafka Client connections

Encrypted communication between Kafka brokers and clients running within the same OpenShift cluster can be provided by configuring the spec.kafka.listeners.tls listener, which listens on port 9093.

Encrypted communication between Kafka brokers and clients running outside the same OpenShift cluster can be provided by configuring the spec.kafka.listeners.external listener (the port of the external listener depends on its type).

Unencrypted client communication with brokers can be configured by spec.kafka.listeners.plain, which listens on port 9092.

12.6. Configuring internal clients to trust the cluster CA

This procedure describes how to configure a Kafka client that resides inside the OpenShift cluster — connecting to the tls listener on port 9093 — to trust the cluster CA certificate.

The easiest way to achieve this for an internal client is to use a volume mount to access the Secrets containing the necessary certificates and keys.

Follow the steps to configure trust certificates that are signed by the cluster CA for Java-based Kafka Producer, Consumer, and Streams APIs.

Choose the steps to follow according to the certificate format of the cluster CA: PKCS #12 (.p12) or PEM (.crt).

The steps describe how to mount the Cluster Secret that verifies the identity of the Kafka cluster to the client pod.

Prerequisites

- The Cluster Operator must be running.

- There needs to be a Kafka resource within the OpenShift cluster.

- You need a Kafka client application inside the OpenShift cluster that will connect using TLS, and needs to trust the cluster CA certificate.

- The client application must be running in the same namespace as the Kafka resource.

Using PKCS #12 format (.p12)

1. Mount the cluster Secret as a volume when defining the client pod, so that:

   - The PKCS #12 file is mounted into an exact path, which can be configured

   - The password is mounted into an environment variable, where it can be used for Java configuration

2. Configure the Kafka client with the following properties:

   - A security protocol option:

     - security.protocol: SSL when using TLS for encryption (with or without TLS authentication).

     - security.protocol: SASL_SSL when using SCRAM-SHA authentication over TLS.

   - ssl.truststore.location with the truststore location where the certificates were imported.

   - ssl.truststore.password with the password for accessing the truststore.

   - ssl.truststore.type=PKCS12 to identify the truststore type.
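The client properties listed above could be written to a properties file as follows. This is a minimal sketch: the function name write_truststore_properties, the file name, the mount path, and the CA_PASSWORD environment variable in the usage note are all hypothetical.

```shell
#!/bin/sh
# write_truststore_properties OUTPUT_FILE TRUSTSTORE_PATH TRUSTSTORE_PASSWORD [PROTOCOL]
# Writes the Kafka client truststore settings for a PKCS #12 truststore.
# PROTOCOL is SSL (default) or SASL_SSL (SCRAM-SHA authentication over TLS).
write_truststore_properties() {
  protocol=${4:-SSL}
  case $protocol in
    SSL|SASL_SSL) ;;
    *) echo "protocol must be SSL or SASL_SSL" >&2; return 1 ;;
  esac
  cat > "$1" <<EOF
security.protocol=$protocol
ssl.truststore.location=$2
ssl.truststore.password=$3
ssl.truststore.type=PKCS12
EOF
  # The file contains the truststore password, so restrict access to it.
  chmod 600 "$1"
}
```

For example, `write_truststore_properties client.properties /etc/kafka/ca.p12 "$CA_PASSWORD"` would produce a file that a Java client could load, assuming the PKCS #12 file is mounted at that path and the password is available in that environment variable.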

Using PEM format (.crt)

1. Mount the cluster Secret as a volume when defining the client pod.

2. Use the certificate with clients that use certificates in X.509 format.

12.7. Configuring external clients to trust the cluster CA

This procedure describes how to configure a Kafka client that resides outside the OpenShift cluster – connecting to the external listener on port 9094 – to trust the cluster CA certificate. Follow this procedure when setting up the client and during the renewal period, when the old clients CA certificate is replaced.

Follow the steps to configure trust certificates that are signed by the cluster CA for Java-based Kafka Producer, Consumer, and Streams APIs.

Choose the steps to follow according to the certificate format of the cluster CA: PKCS #12 (.p12) or PEM (.crt).

The steps describe how to obtain the certificate from the Cluster Secret that verifies the identity of the Kafka cluster.

The <cluster-name>-cluster-ca-cert Secret will contain more than one CA certificate during the CA certificate renewal period. Clients must add all of them to their truststores.

Prerequisites

- The Cluster Operator must be running.

- There needs to be a Kafka resource within the OpenShift cluster.

- You need a Kafka client application outside the OpenShift cluster that will connect using TLS, and needs to trust the cluster CA certificate.

Using PKCS #12 format (.p12)

1. Extract the cluster CA certificate and password from the generated <cluster-name>-cluster-ca-cert Secret.

   ```shell
   oc get secret <cluster-name>-cluster-ca-cert -o jsonpath='{.data.ca\.p12}' | base64 -d > ca.p12
   ```

   ```shell
   oc get secret <cluster-name>-cluster-ca-cert -o jsonpath='{.data.ca\.password}' | base64 -d > ca.password
   ```

2. Configure the Kafka client with the following properties:

   - A security protocol option:

     - security.protocol: SSL when using TLS for encryption (with or without TLS authentication).

     - security.protocol: SASL_SSL when using SCRAM-SHA authentication over TLS.

   - ssl.truststore.location with the truststore location where the certificates were imported.

   - ssl.truststore.password with the password for accessing the truststore. This property can be omitted if it is not needed by the truststore.

   - ssl.truststore.type=PKCS12 to identify the truststore type.

Using PEM format (.crt)

1. Extract the cluster CA certificate from the generated <cluster-name>-cluster-ca-cert Secret.

   ```shell
   oc get secret <cluster-name>-cluster-ca-cert -o jsonpath='{.data.ca\.crt}' | base64 -d > ca.crt
   ```

2. Use the certificate with clients that use certificates in X.509 format.
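The extraction commands in this section all follow the same pattern, so they could be wrapped in a small helper. This is a minimal sketch: the function name fetch_secret_field is hypothetical, and it only relies on the `oc get secret ... -o jsonpath` and `base64 -d` calls already used above.

```shell
#!/bin/sh
# fetch_secret_field SECRET FIELD
# Prints the decoded value of FIELD from SECRET.
fetch_secret_field() {
  # jsonpath treats "." as a path separator, so dots inside the field name
  # (for example ca.crt) must be escaped as "\.".
  escaped=$(printf '%s' "$2" | sed 's/\./\\./g')
  oc get secret "$1" -o jsonpath="{.data.$escaped}" | base64 -d
}
```

For example, `fetch_secret_field <cluster-name>-cluster-ca-cert ca.crt > ca.crt` would be equivalent to the PEM extraction command. During the CA certificate renewal period, the helper would need to be called once for each certificate in the Secret.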

12.8. Kafka listener certificates

You can provide your own server certificates and private keys for the following types of listeners:

- TLS listeners for inter-cluster communication

- External listeners (route, loadbalancer, ingress, and nodeport types) which have TLS encryption enabled, for communication between Kafka clients and Kafka brokers

These user-provided certificates are called Kafka listener certificates.

Providing Kafka listener certificates for external listeners allows you to leverage existing security infrastructure, such as your organization’s private CA or a public CA. Kafka clients will connect to Kafka brokers using Kafka listener certificates rather than certificates signed by the cluster CA or clients CA.

You must manually renew Kafka listener certificates when needed.

12.8.1. Providing your own Kafka listener certificates

This procedure shows how to configure a listener to use your own private key and server certificate, called a Kafka listener certificate.

Your client applications should use the CA public key as a trusted certificate in order to verify the identity of the Kafka broker.

Prerequisites

- An OpenShift cluster.

- The Cluster Operator is running.

- For each listener, a compatible server certificate signed by an external CA.

  - Provide an X.509 certificate in PEM format.

  - Specify the correct Subject Alternative Names (SANs) for each listener. For more information, see Section 12.8.2, “Alternative subjects in server certificates for Kafka listeners”.

  - You can provide a certificate that includes the whole CA chain in the certificate file.

Procedure

1. Create a Secret containing your private key and server certificate:

   ```shell
   oc create secret generic my-secret --from-file=my-listener-key.key --from-file=my-listener-certificate.crt
   ```

2. Edit the Kafka resource for your cluster. Configure the listener to use your Secret, certificate file, and private key file in the configuration.brokerCertChainAndKey property. This applies both to an external listener with TLS encryption enabled (for example, of type loadbalancer) and to a TLS listener.

3. Apply the new configuration to create or update the resource:

   ```shell
   oc apply -f kafka.yaml
   ```

   The Cluster Operator starts a rolling update of the Kafka cluster, which updates the configuration of the listeners.

   Note: A rolling update is also started if you update a Kafka listener certificate in a Secret that is already used by a TLS or external listener.

12.8.2. Alternative subjects in server certificates for Kafka listeners

In order to use TLS hostname verification with your own Kafka listener certificates, you must use the correct Subject Alternative Names (SANs) for each listener. The certificate SANs must specify hostnames for:

- All of the Kafka brokers in your cluster

- The Kafka cluster bootstrap service

You can use wildcard certificates if they are supported by your CA.

12.8.2.1. TLS listener SAN examples

Use the following examples to help you specify hostnames of the SANs in your certificates for TLS listeners.

Wildcards example

Non-wildcards example

12.8.2.2. External listener SAN examples

For external listeners which have TLS encryption enabled, the hostnames you need to specify in certificates depends on the external listener type.

| External listener type | In the SANs, specify… |
|---|---|
| | Addresses of all Kafka broker You can use a matching wildcard name. |
| | Addresses of all Kafka broker You can use a matching wildcard name. |
| | Addresses of all OpenShift worker nodes that the Kafka broker pods might be scheduled to. You can use a matching wildcard name. |
