Chapter 5. Managing snapshots

As a storage administrator, you can use Ceph's snapshotting feature to manage the snapshots and clones of images stored in the Red Hat Ceph Storage cluster.

Prerequisites

- A running Red Hat Ceph Storage cluster.

5.1. Ceph block device snapshots

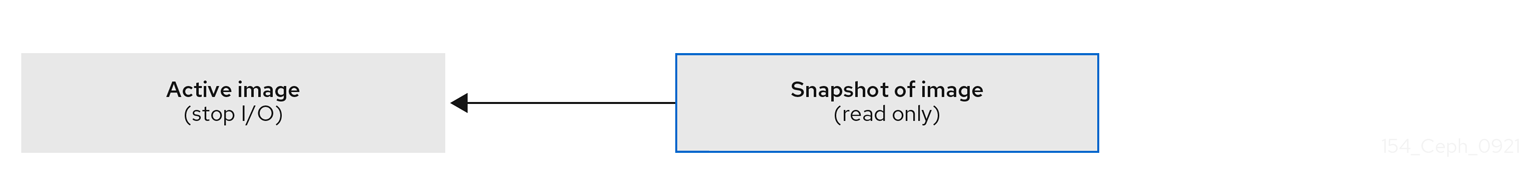

A snapshot is a read-only copy of the state of an image at a particular point in time. One of the advanced features of Ceph block devices is that you can create snapshots of images to retain a history of an image's state. Ceph also supports snapshot layering, which allows you to clone images, such as virtual machine images, quickly and easily. Ceph supports block device snapshots through the rbd command and many higher-level interfaces, including QEMU, libvirt, OpenStack, and CloudStack.

If a snapshot is taken while I/O is occurring, the snapshot might not contain the exact or latest data of the image, and the snapshot might have to be cloned to a new image to be mountable. Red Hat recommends stopping I/O before taking a snapshot of an image. If the image contains a filesystem, the filesystem must be in a consistent state before you take the snapshot. To stop I/O, you can use the fsfreeze command. For virtual machines, the qemu-guest-agent can automatically freeze filesystems when a snapshot is created.

Figure 5.1. Ceph Block device snapshots
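The freeze-then-snapshot approach described above can be sketched as a small shell function. This is a minimal sketch, assuming the image is already mapped and its filesystem is mounted; the function name snap_frozen and the mount point /mnt/image1 are hypothetical. It thaws the filesystem even when snapshot creation fails, so a failed snapshot does not leave the filesystem blocked.

```shell
#!/usr/bin/env bash
# Hypothetical helper: take a consistent snapshot of a mounted RBD image.
# Usage: snap_frozen MOUNT_POINT POOL_NAME/IMAGE_NAME@SNAP_NAME
snap_frozen() {
    local mnt="$1" spec="$2" rc

    # Flush dirty data and block new writes to the filesystem.
    fsfreeze --freeze "$mnt" || return 1

    # Take the snapshot while the filesystem is quiesced.
    rbd snap create "$spec"
    rc=$?

    # Always thaw the filesystem, even if the snapshot failed.
    fsfreeze --unfreeze "$mnt"
    return "$rc"
}

# Example invocation (mount point is a placeholder):
# snap_frozen /mnt/image1 pool1/image1@snap1
```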

Additional Resources

- See the fsfreeze(8) man page for more details.

5.2. The Ceph user and keyring

When cephx is enabled, you must specify a user name or ID and a path to the keyring containing the corresponding key for the user.

cephx is enabled by default.

You can also use the CEPH_ARGS environment variable to avoid re-entering the following parameters:

Syntax

rbd --id USER_ID --keyring=/path/to/secret [commands]
rbd --name USERNAME --keyring=/path/to/secret [commands]

Example

[root@rbd-client ~]# rbd --id admin --keyring=/etc/ceph/ceph.keyring [commands]
[root@rbd-client ~]# rbd --name client.admin --keyring=/etc/ceph/ceph.keyring [commands]

Add the user and secret to the CEPH_ARGS environment variable so that you do not need to enter them each time.
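As a sketch, assuming the admin user and the keyring path from the example above, you can export CEPH_ARGS once per shell session. Ceph command-line tools, including rbd, read additional arguments from this variable, so later commands no longer need --id and --keyring.

```shell
#!/usr/bin/env bash
# Set the user and keyring once for this shell session.
# The user ID and keyring path are the example values from this section.
export CEPH_ARGS="--id admin --keyring=/etc/ceph/ceph.keyring"

# Subsequent rbd commands pick up these arguments, for example:
# rbd snap ls pool1/image1
```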

5.3. Creating a block device snapshot

Create a snapshot of a Ceph block device.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the snap create option, the pool name, the image name, and the snapshot name:

Method 1:

Syntax

rbd --pool POOL_NAME snap create --snap SNAP_NAME IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap create --snap snap1 image1

Method 2:

Syntax

rbd snap create POOL_NAME/IMAGE_NAME@SNAP_NAME

Example

[root@rbd-client ~]# rbd snap create pool1/image1@snap1
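If you take snapshots regularly, embedding a timestamp in the snapshot name keeps names unique and sortable. The following sketch wraps the Method 2 syntax; the function name snap_create_dated and the snap- prefix are illustrative assumptions, not a Ceph naming convention.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a snapshot named after the current UTC time.
# Usage: snap_create_dated POOL_NAME IMAGE_NAME
# Prints the snapshot name on success.
snap_create_dated() {
    local pool="$1" image="$2" name
    # Produces a name such as snap-20240731-022849.
    name="snap-$(date -u +%Y%m%d-%H%M%S)"
    rbd snap create "${pool}/${image}@${name}" && echo "$name"
}

# Example:
# snap_create_dated pool1 image1
```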

5.4. Listing the block device snapshots

List the block device snapshots.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the pool name and the image name:

Syntax

rbd --pool POOL_NAME --image IMAGE_NAME snap ls
rbd snap ls POOL_NAME/IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 --image image1 snap ls
[root@rbd-client ~]# rbd snap ls pool1/image1

5.5. Rolling back a block device snapshot

Roll back a block device snapshot.

Rolling back an image to a snapshot means overwriting the current version of the image with data from the snapshot. The time it takes to execute a rollback increases with the size of the image. Cloning from a snapshot is faster than rolling back an image to a snapshot, and it is the preferred method of returning to a pre-existing state.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the snap rollback option, the pool name, the image name, and the snapshot name:

Syntax

rbd --pool POOL_NAME snap rollback --snap SNAP_NAME IMAGE_NAME
rbd snap rollback POOL_NAME/IMAGE_NAME@SNAP_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap rollback --snap snap1 image1
[root@rbd-client ~]# rbd snap rollback pool1/image1@snap1
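Because cloning is faster than rolling back, the following sketch returns to a pre-existing state by cloning the snapshot into a new image instead of overwriting the original. It uses the protect and clone commands described later in this chapter. The function name restore_by_clone and the child name image1-restored are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restore an image state by cloning instead of rolling back.
# Usage: restore_by_clone POOL_NAME IMAGE_NAME SNAP_NAME CHILD_IMAGE_NAME
restore_by_clone() {
    local pool="$1" image="$2" snap="$3" child="$4"
    local spec="${pool}/${image}@${snap}"

    # Clone v1 requires a protected parent snapshot. Ignore the error if the
    # snapshot is already protected; a real problem surfaces in the clone step.
    rbd snap protect "$spec" 2>/dev/null || true

    # The clone references the snapshot, so no image data is copied up front.
    rbd clone "$spec" "${pool}/${child}"
}

# Example:
# restore_by_clone pool1 image1 snap1 image1-restored
```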

5.6. Deleting a block device snapshot

Delete a snapshot for Ceph block devices.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To delete a block device snapshot, specify the snap rm option, the pool name, the image name, and the snapshot name:

Syntax

rbd --pool POOL_NAME snap rm --snap SNAP_NAME IMAGE_NAME
rbd snap rm POOL_NAME/IMAGE_NAME@SNAP_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap rm --snap snap1 image1
[root@rbd-client ~]# rbd snap rm pool1/image1@snap1

If an image has any clones, the cloned images retain a reference to the parent image snapshot. To delete the parent image snapshot, you must flatten the child images first.

Ceph OSD daemons delete data asynchronously, so deleting a snapshot does not free up the disk space immediately.
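The ordering described in this chapter (flatten every clone, unprotect the snapshot, then remove it) can be combined into one sketch. It assumes rbd children prints one child image spec per line; the function name delete_snapshot is hypothetical. Flattening copies data into each child, so expect it to take time and consume space.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete a snapshot that may have clones and be protected.
# Usage: delete_snapshot POOL_NAME/IMAGE_NAME@SNAP_NAME
delete_snapshot() {
    local spec="$1" children child

    # 1. Flatten every child so that none of them references the snapshot.
    children=$(rbd children "$spec") || return 1
    while read -r child; do
        [ -n "$child" ] || continue
        rbd flatten "$child" </dev/null || return 1
    done <<< "$children"

    # 2. Unprotect the snapshot; the error is ignored if it is not protected.
    rbd snap unprotect "$spec" 2>/dev/null || true

    # 3. Remove the snapshot. The OSDs reclaim the space asynchronously.
    rbd snap rm "$spec"
}

# Example:
# delete_snapshot pool1/image1@snap1
```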

Additional Resources

- See the Flattening cloned images section in the Red Hat Ceph Storage Block Device Guide for details.

5.7. Purging the block device snapshots

Purge block device snapshots.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify the snap purge option and the image name on a specific pool:

Syntax

rbd --pool POOL_NAME snap purge IMAGE_NAME
rbd snap purge POOL_NAME/IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap purge image1
[root@rbd-client ~]# rbd snap purge pool1/image1

5.8. Renaming a block device snapshot

Rename a block device snapshot.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To rename a snapshot:

Syntax

rbd snap rename POOL_NAME/IMAGE_NAME@ORIGINAL_SNAPSHOT_NAME POOL_NAME/IMAGE_NAME@NEW_SNAPSHOT_NAME

Example

[root@rbd-client ~]# rbd snap rename data/dataset@snap1 data/dataset@snap2

This renames the snap1 snapshot of the dataset image on the data pool to snap2.

Execute the rbd help snap rename command to display additional details on renaming snapshots.

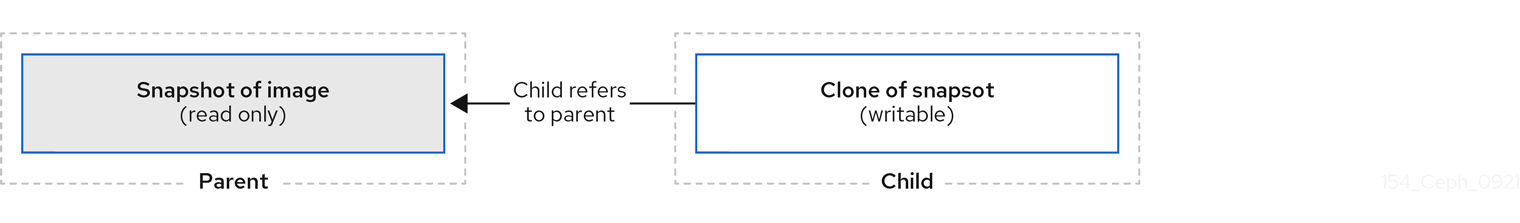

5.9. Ceph block device layering

Ceph supports the ability to create many copy-on-write (COW) or copy-on-read (COR) clones of a block device snapshot. Snapshot layering enables Ceph block device clients to create images very quickly. For example, you might create a block device image with a Linux VM written to it. Then, snapshot the image, protect the snapshot, and create as many clones as you like. A snapshot is read-only, so cloning a snapshot simplifies semantics—making it possible to create clones rapidly.

Figure 5.2. Ceph Block device layering

The terms parent and child refer to a Ceph block device snapshot (the parent) and the corresponding image cloned from the snapshot (the child). These terms are important for the command-line usage below.

Each cloned image, the child, stores a reference to its parent image, which enables the cloned image to open the parent snapshot and read it. This reference is removed when the clone is flattened, that is, when the information from the snapshot is completely copied to the clone.

A clone of a snapshot behaves exactly like any other Ceph block device image. You can read from, write to, clone, and resize cloned images. There are no special restrictions with cloned images. However, the clone of a snapshot refers to the snapshot, so you MUST protect the snapshot before you clone it, unless you use clone v2, which does not require snapshot protection.

A clone of a snapshot can be a copy-on-write (COW) or copy-on-read (COR) clone. COW is always enabled for clones, while COR has to be enabled explicitly. COW copies data from the parent to the clone when a write targets an unallocated object within the clone. COR copies data from the parent to the clone when a read targets an unallocated object within the clone. A read from a clone only reads data from the parent if the object does not yet exist in the clone. The RADOS Block Device (RBD) breaks large images up into multiple objects. The default object size is 4 MB, and all COW and COR operations act on a full object; that is, writing 1 byte to a clone results in a 4 MB object being read from the parent and written to the clone if the destination object does not already exist in the clone from a previous COW or COR operation.
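To make the object arithmetic concrete, the following sketch computes which object a byte offset falls into and the byte range that a first COW write (or COR read) at that offset copies from the parent. It is illustrative arithmetic only and does not contact a cluster. It assumes the default object size of 4 MiB (4,194,304 bytes); an image created with a different object size would need a different value.

```shell
#!/usr/bin/env bash
# Illustrative arithmetic only; it does not talk to a Ceph cluster.
OBJECT_SIZE=$((4 * 1024 * 1024))   # assumed default object size: 4 MiB

# Print the object index and the byte range of the whole object that
# contains OFFSET. A first COW write or COR read at OFFSET copies this
# whole range from the parent into the clone.
object_range() {
    local offset="$1"
    local index=$(( offset / OBJECT_SIZE ))
    local start=$(( index * OBJECT_SIZE ))
    local end=$(( start + OBJECT_SIZE - 1 ))
    echo "$index $start $end"
}

# Writing 1 byte at offset 10 MiB lands in object 2, bytes 8388608-12582911.
object_range $((10 * 1024 * 1024))
```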

Whether or not copy-on-read (COR) is enabled, any read that cannot be satisfied from an underlying object in the clone is rerouted to the parent. Because there is practically no limit to the number of parents (you can clone a clone), this rerouting continues until the object is found or the base parent image is reached. If COR is enabled, any read that cannot be satisfied directly from the clone results in a full object read from the parent, and that data is written to the clone so that future reads of the same extent can be served by the clone itself without reading from the parent.

This is essentially an on-demand, object-by-object flatten operation. It is especially useful when the clone is separated from its parent by a high-latency connection, for example, when the parent is in a different pool in another geographical location. Copy-on-read (COR) reduces the amortized latency of reads. The first few reads have high latency because they cause extra data to be read from the parent (for example, reading 1 byte from the clone causes 4 MB to be read from the parent and written to the clone), but all future reads are served from the clone itself.

To create copy-on-read (COR) clones from a snapshot, you must explicitly enable this feature by adding rbd_clone_copy_on_read = true under the [global] or [client] section of the ceph.conf file.

Additional Resources

- For more information on flattening, see the Flattening cloned images section in the Red Hat Ceph Storage Block Device Guide.

5.10. Protecting a block device snapshot

Clones access the parent snapshots. All clones would break if a user inadvertently deleted the parent snapshot. To prevent this, protect the parent snapshot before you clone it.

Alternatively, you can use the set-require-min-compat-client command to require clients of the mimic release of Ceph or later.

Example

ceph osd set-require-min-compat-client mimic

With this setting, new clones use the clone v2 format by default. However, clients older than mimic cannot access those block device images.

Clone v2 does not require protection of snapshots.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Specify POOL_NAME, IMAGE_NAME, and SNAPSHOT_NAME in the following command:

Syntax

rbd --pool POOL_NAME snap protect --image IMAGE_NAME --snap SNAPSHOT_NAME
rbd snap protect POOL_NAME/IMAGE_NAME@SNAPSHOT_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap protect --image image1 --snap snap1
[root@rbd-client ~]# rbd snap protect pool1/image1@snap1

Note

You cannot delete a protected snapshot.

5.11. Cloning a block device snapshot

Cloning block device snapshots provides an efficient way to create writable copies of existing block device snapshots without duplicating data.

You can clone either a single block device snapshot or a group snapshot, depending on your requirements.

5.11.1. Cloning a single block device snapshot

Clone a block device snapshot to create a writable child image of the snapshot within the same pool or in another pool. For example, you might maintain read-only images and snapshots as templates in one pool, and writable clones in another pool.

Clone v2 does not require protection of snapshots.

Prerequisites

Before you begin, make sure that you have the following prerequisites in place:

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To clone a snapshot, specify the parent pool, parent image, snapshot, child pool, and child image name:

Syntax

rbd clone --pool POOL_NAME --image PARENT_IMAGE --snap SNAP_NAME --dest-pool POOL_NAME --dest CHILD_IMAGE_NAME
rbd clone POOL_NAME/PARENT_IMAGE@SNAP_NAME POOL_NAME/CHILD_IMAGE_NAME

Example

[root@rbd-client ~]# rbd clone --pool pool1 --image image1 --snap snap1 --dest-pool pool1 --dest childimage1
[root@rbd-client ~]# rbd clone pool1/image1@snap1 pool1/childimage1
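Following the template use case above, this sketch creates several writable clones of one template snapshot in a separate pool by using the positional rbd clone syntax. The pool names templates and vms, the template spec rhel9@base, the vm-disk- prefix, and the function name clone_from_template are all hypothetical. Unless you use clone v2, protect the template snapshot first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create COUNT clones of a template snapshot in DEST_POOL.
# Usage: clone_from_template POOL_NAME/PARENT_IMAGE@SNAP_NAME DEST_POOL PREFIX COUNT
clone_from_template() {
    local parent="$1" dest_pool="$2" prefix="$3" count="$4" i

    for (( i = 1; i <= count; i++ )); do
        # Each clone starts thin and reads unmodified data from the parent.
        rbd clone "$parent" "${dest_pool}/${prefix}${i}" || return 1
    done
}

# Example: three VM disks from a protected template snapshot.
# clone_from_template templates/rhel9@base vms vm-disk- 3
```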

5.11.2. Cloning block device group snapshot

You can clone images from group snapshots, which are created with the rbd group snap create command, by using the --snap-id option of the rbd clone command.

Prerequisites

Before you begin, make sure that you have the following prerequisites in place:

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

- A group snapshot.

Cloning from group snapshots is supported only with clone v2 (--rbd-default-clone-format 2).

Example

[root@rbd-client]# rbd clone --snap-id 4 pool1/image1 pool1/i1clone1 --rbd-default-clone-format 2

Procedure

Get the snap ID of the group snapshot.

Syntax

rbd snap ls --all POOL_NAME/PARENT_IMAGE_NAME

The following example has a group snapshot, indicated by SNAPID 4 and the NAMESPACE group.

Example

[root@rbd-client]# rbd snap ls --all pool1/image1
SNAPID  NAME          SIZE    PROTECTED  TIMESTAMP                 NAMESPACE
3       snap1         10 GiB  yes        Thu Jul 25 06:21:33 2024  user
4       .group.2_39d  10 GiB             Wed Jul 31 02:28:49 2024  group (pool1/group1@p1g1snap1)

Create a clone of the group snapshot by using the --snap-id option.

Syntax

rbd clone --snap-id SNAP_ID POOL_NAME/IMAGE_NAME POOL_NAME/CLONE_IMAGE_NAME --rbd-default-clone-format 2

Example

[root@rbd-client]# rbd clone --snap-id 4 pool1/image1 pool2/clone2 --rbd-default-clone-format 2

Verification steps

Use the rbd ls command to verify that the clone image of the group snapshot was created successfully.

Example

[root@rbd-client]# rbd ls -p pool2
clone2
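Getting the snap ID of a group snapshot can be scripted. The following sketch extracts the SNAPID of each group snapshot by matching the .group. name prefix shown in the rbd snap ls --all example output in this section. It parses the human-readable table, whose layout could change between releases, so treat the column positions as an assumption. The function name group_snap_ids is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SNAPID of each group snapshot of an image.
# Usage: group_snap_ids POOL_NAME/PARENT_IMAGE_NAME
group_snap_ids() {
    # Skip the header row; print column 1 (SNAPID) when column 2 (NAME)
    # starts with ".group.". Column layout is assumed from example output.
    rbd snap ls --all "$1" | awk 'NR > 1 && $2 ~ /^\.group\./ { print $1 }'
}

# Example: clone the first group snapshot found (names are placeholders).
# id=$(group_snap_ids pool1/image1 | head -n 1)
# rbd clone --snap-id "$id" pool1/image1 pool2/clone2 --rbd-default-clone-format 2
```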

5.12. Unprotecting a block device snapshot

Before you can delete a snapshot, you must unprotect it. Additionally, you cannot delete snapshots that are referenced by clones. You must flatten each clone of a snapshot before you can delete the snapshot.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

Run the following commands:

Syntax

rbd --pool POOL_NAME snap unprotect --image IMAGE_NAME --snap SNAPSHOT_NAME
rbd snap unprotect POOL_NAME/IMAGE_NAME@SNAPSHOT_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 snap unprotect --image image1 --snap snap1
[root@rbd-client ~]# rbd snap unprotect pool1/image1@snap1
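Because a snapshot that is still referenced by clones cannot be deleted, you might check for children before unprotecting it. This is a minimal sketch that combines rbd children (described in the next section) with rbd snap unprotect; the function name unprotect_if_unused is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unprotect a snapshot only if no clone references it.
# Usage: unprotect_if_unused POOL_NAME/IMAGE_NAME@SNAPSHOT_NAME
unprotect_if_unused() {
    local spec="$1" children

    children=$(rbd children "$spec") || return 1
    if [ -n "$children" ]; then
        # Report the clones that must be flattened first.
        echo "not unprotecting $spec; flatten these clones first:" >&2
        echo "$children" >&2
        return 1
    fi
    rbd snap unprotect "$spec"
}

# Example:
# unprotect_if_unused pool1/image1@snap1
```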

5.13. Listing the children of a snapshot

List the children of a snapshot.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To list the children of a snapshot, execute the following:

Syntax

rbd --pool POOL_NAME children --image IMAGE_NAME --snap SNAP_NAME
rbd children POOL_NAME/IMAGE_NAME@SNAPSHOT_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 children --image image1 --snap snap1
[root@rbd-client ~]# rbd children pool1/image1@snap1

5.14. Flattening cloned images

Cloned images retain a reference to the parent snapshot. Flattening a clone copies the information from the snapshot to the clone and removes the reference from the child clone to the parent snapshot. The time it takes to flatten a clone increases with the size of the snapshot. Because a flattened image contains all the information from the snapshot, a flattened image uses more storage space than a layered clone.

If the deep flatten feature is enabled on an image, the image clone is dissociated from its parent by default.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the node.

Procedure

To delete a parent image snapshot associated with child images, you must flatten the child images first:

Syntax

rbd --pool POOL_NAME flatten --image IMAGE_NAME
rbd flatten POOL_NAME/IMAGE_NAME

Example

[root@rbd-client ~]# rbd --pool pool1 flatten --image childimage1
[root@rbd-client ~]# rbd flatten pool1/childimage1
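To confirm that a flatten finished, you can check whether the image still reports a parent. The sketch below assumes that rbd info prints an indented line beginning with parent: only for images that are still clones; verify this against the output of your release. The function name is_flattened is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if IMAGE no longer references a parent snapshot.
# Usage: is_flattened POOL_NAME/IMAGE_NAME
# Returns 0 if flattened, 1 if still a clone, 2 if rbd info fails.
is_flattened() {
    local info
    info=$(rbd info "$1") || return 2
    # Assumption: clones show an indented "parent: POOL/IMAGE@SNAP" line.
    ! grep -Eq '^[[:space:]]*parent:' <<< "$info"
}

# Example:
# rbd flatten pool1/childimage1 && is_flattened pool1/childimage1 && echo "flattened"
```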