Configuring and managing high availability clusters

Using the Red Hat High Availability Add-On to create and maintain Pacemaker clusters

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. High Availability Add-On overview

The High Availability Add-On is a clustered system that provides reliability, scalability, and availability to critical production services.

A cluster is two or more computers (called nodes or members) that work together to perform a task. Clusters can be used to provide highly available services or resources. The redundancy of multiple machines is used to guard against failures of many types.

High availability clusters provide highly available services by eliminating single points of failure and by failing over services from one cluster node to another in case a node becomes inoperative. Typically, services in a high availability cluster read and write data (by means of read-write mounted file systems). Therefore, a high availability cluster must maintain data integrity as one cluster node takes over control of a service from another cluster node. Node failures in a high availability cluster are not visible from clients outside the cluster. (High availability clusters are sometimes referred to as failover clusters.) The High Availability Add-On provides high availability clustering through its high availability service management component, Pacemaker.

Red Hat provides a variety of documentation for planning, configuring, and maintaining a Red Hat high availability cluster. For a listing of articles that provide guided indexes to the various areas of Red Hat cluster documentation, see the Red Hat High Availability Add-On Documentation Guide.

1.1. High Availability Add-On components

The Red Hat High Availability Add-On consists of several components that provide the high availability service.

The major components of the High Availability Add-On are as follows:

- Cluster infrastructure - Provides fundamental functions for nodes to work together as a cluster: configuration file management, membership management, lock management, and fencing.

- High availability service management - Provides failover of services from one cluster node to another in case a node becomes inoperative.

- Cluster administration tools - Configuration and management tools for setting up, configuring, and managing the High Availability Add-On. The tools are for use with the cluster infrastructure components, the high availability and service management components, and storage.

You can supplement the High Availability Add-On with the following components:

- Red Hat GFS2 (Global File System 2) - Part of the Resilient Storage Add-On, this provides a cluster file system for use with the High Availability Add-On. GFS2 allows multiple nodes to share storage at a block level as if the storage were connected locally to each cluster node. GFS2 cluster file system requires a cluster infrastructure.

- LVM Locking Daemon (lvmlockd) - Part of the Resilient Storage Add-On, this provides volume management of cluster storage. lvmlockd support also requires cluster infrastructure.

- HAProxy - Routing software that provides high availability load balancing and failover in layer 4 (TCP) and layer 7 (HTTP, HTTPS) services.

1.2. High Availability Add-On concepts

Some of the key concepts of a Red Hat High Availability Add-On cluster are as follows.

1.2.1. Fencing

If communication with a single node in the cluster fails, then other nodes in the cluster must be able to restrict or release access to resources that the failed cluster node may have access to. This cannot be accomplished by contacting the cluster node itself, because the cluster node may not be responsive. Instead, you must provide an external method, which is called fencing with a fence agent. A fence device is an external device that can be used by the cluster to restrict access to shared resources by an errant node, or to issue a hard reboot on the cluster node.

Without a fence device configured, you do not have a way to know that the resources previously used by the disconnected cluster node have been released, and this could prevent the services from running on any of the other cluster nodes. Conversely, the system may assume erroneously that the cluster node has released its resources, and this can lead to data corruption and data loss. Without a fence device configured, data integrity cannot be guaranteed and the cluster configuration will be unsupported.

While fencing is in progress, no other cluster operation is allowed to run. Normal operation of the cluster cannot resume until fencing has completed or the cluster node rejoins the cluster after the cluster node has been rebooted.

For more information about fencing, see the Red Hat Knowledgebase solution Fencing in a Red Hat High Availability Cluster.

1.2.2. Quorum

In order to maintain cluster integrity and availability, cluster systems use a concept known as quorum to prevent data corruption and loss. A cluster has quorum when more than half of the cluster nodes are online. To mitigate the chance of data corruption due to failure, Pacemaker by default stops all resources if the cluster does not have quorum.

Quorum is established using a voting system. When a cluster node does not function as it should or loses communication with the rest of the cluster, the majority of working nodes can vote to isolate and, if needed, fence the node for servicing.

For example, in a 6-node cluster, quorum is established when at least 4 cluster nodes are functioning. If fewer than 4 nodes remain available, which includes the case where exactly half of the nodes go offline, the cluster no longer has quorum and Pacemaker stops clustered services.

The quorum features in Pacemaker prevent what is also known as split-brain, a phenomenon where the cluster is separated from communication but each part continues working as separate clusters, potentially writing to the same data and possibly causing corruption or loss. For more information about what it means to be in a split-brain state, and on quorum concepts in general, see the Red Hat Knowledgebase article Exploring Concepts of RHEL High Availability Clusters - Quorum.

A Red Hat Enterprise Linux High Availability Add-On cluster uses the votequorum service, in conjunction with fencing, to avoid split brain situations. A number of votes is assigned to each system in the cluster, and cluster operations are allowed to proceed only when a majority of votes is present.
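The majority rule can be checked with simple arithmetic. The following is a minimal sketch, assuming that every node carries exactly one vote. The function names quorum_threshold and has_quorum are illustrative and are not part of pcs, corosync, or the votequorum service.

```shell
#!/bin/sh
# Illustrative helpers only; these are not pcs or corosync commands.
# Assumption: every node carries exactly one vote.

# Smallest number of votes that is more than half of the total.
quorum_threshold() {
    echo $(( $1 / 2 + 1 ))
}

# Succeeds (exit status 0) when the online nodes form a majority.
# Usage: has_quorum ONLINE_NODES TOTAL_NODES
has_quorum() {
    [ "$1" -ge "$(quorum_threshold "$2")" ]
}

# Walk through a few states of the 6-node example.
for online in 6 4 3; do
    if has_quorum "$online" 6; then
        echo "$online of 6 nodes online: quorate"
    else
        echo "$online of 6 nodes online: not quorate"
    fi
done
```

With three of six nodes online, the surviving nodes hold exactly half of the votes, which is not a majority, so the sketch reports the cluster as not quorate.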

1.2.3. Cluster resources

A cluster resource is an instance of a program, data, or application to be managed by the cluster service. These resources are abstracted by agents that provide a standard interface for managing the resource in a cluster environment.

To ensure that resources remain healthy, you can add a monitoring operation to a resource’s definition. If you do not specify a monitoring operation for a resource, one is added by default.

You can determine the behavior of a resource in a cluster by configuring constraints. You can configure the following categories of constraints:

- location constraints - A location constraint determines which nodes a resource can run on.

- ordering constraints - An ordering constraint determines the order in which the resources run.

- colocation constraints - A colocation constraint determines where resources will be placed relative to other resources.

One of the most common elements of a cluster is a set of resources that need to be located together, start sequentially, and stop in the reverse order. To simplify this configuration, Pacemaker supports the concept of groups.
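As a sketch of how these constraint categories and groups map to pcs commands, the following script prints one command per category and executes it only when APPLY=1 is set. The resource names VirtualIP and WebSite, the group name webgroup, and the node name are assumptions chosen for illustration, and the run helper is hypothetical and not part of pcs.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the command, execute only if APPLY=1.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# Location: prefer running WebSite on z1.example.com.
run pcs constraint location WebSite prefers z1.example.com

# Ordering: start VirtualIP before WebSite.
run pcs constraint order VirtualIP then WebSite

# Colocation: keep WebSite on the same node as VirtualIP.
run pcs constraint colocation add WebSite with VirtualIP

# Alternative to the ordering and colocation constraints above: a group
# keeps its members together, starts them in the listed order, and stops
# them in the reverse order.
run pcs resource group add webgroup VirtualIP WebSite
```

In practice you would choose either the explicit ordering and colocation constraints or the group for a given set of resources, not both.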

1.3. Pacemaker overview

Pacemaker is a cluster resource manager. It achieves maximum availability for your cluster services and resources by making use of the cluster infrastructure’s messaging and membership capabilities to deter and recover from node and resource-level failure.

1.3.1. Pacemaker architecture components

A cluster configured with Pacemaker comprises separate component daemons that monitor cluster membership, scripts that manage the services, and resource management subsystems that monitor the disparate resources.

The following components form the Pacemaker architecture:

- Cluster Information Base (CIB)

  The Pacemaker information daemon, which uses XML internally to distribute and synchronize current configuration and status information from the Designated Coordinator (DC) - a node assigned by Pacemaker to store and distribute cluster state and actions by means of the CIB - to all other cluster nodes.

- Cluster Resource Management Daemon (CRMd)

  Pacemaker cluster resource actions are routed through this daemon. Resources managed by CRMd can be queried by client systems, moved, instantiated, and changed when needed.

  Each cluster node also includes a local resource manager daemon (LRMd) that acts as an interface between CRMd and resources. LRMd passes commands from CRMd to agents, such as starting and stopping and relaying status information.

- Shoot the Other Node in the Head (STONITH)

  STONITH is the Pacemaker fencing implementation. It acts as a cluster resource in Pacemaker that processes fence requests, forcefully shutting down nodes and removing them from the cluster to ensure data integrity. STONITH is configured in the CIB and can be monitored as a normal cluster resource.

- corosync

  corosync is the component - and a daemon of the same name - that serves the core membership and member-communication needs for high availability clusters. It is required for the High Availability Add-On to function.

  In addition to those membership and messaging functions, corosync also:

  - Manages quorum rules and determination.

  - Provides messaging capabilities for applications that coordinate or operate across multiple members of the cluster and thus must communicate stateful or other information between instances.

  - Uses the kronosnet library as its network transport to provide multiple redundant links and automatic failover.

1.3.2. Pacemaker configuration and management tools

The High Availability Add-On features two configuration tools for cluster deployment, monitoring, and management.

- pcs

  The pcs command-line interface controls and configures Pacemaker and the corosync heartbeat daemon. A command-line based program, pcs can perform the following cluster management tasks:

  - Create and configure a Pacemaker/Corosync cluster

  - Modify configuration of the cluster while it is running

  - Remotely configure both Pacemaker and Corosync as well as start, stop, and display status information of the cluster

- pcsd Web UI

  A graphical user interface to create and configure Pacemaker/Corosync clusters.

1.3.3. The cluster and Pacemaker configuration files

The configuration files for the Red Hat High Availability Add-On are corosync.conf and cib.xml.

The corosync.conf file provides the cluster parameters used by corosync, the cluster manager that Pacemaker is built on. In general, you should not edit the corosync.conf directly but, instead, use the pcs or pcsd interface.

The cib.xml file is an XML file that represents both the cluster’s configuration and the current state of all resources in the cluster. This file is used by Pacemaker’s Cluster Information Base (CIB). The contents of the CIB are automatically kept in sync across the entire cluster. Do not edit the cib.xml file directly; use the pcs or pcsd interface instead.

Chapter 2. Getting started with Pacemaker

To familiarize yourself with the tools and processes you use to create a Pacemaker cluster, you can run the following procedures. They are intended for users who are interested in seeing what the cluster software looks like and how it is administered, without needing to configure a working cluster.

These procedures do not create a supported Red Hat cluster, which requires at least two nodes and the configuration of a fencing device. For full information about Red Hat’s support policies, requirements, and limitations for RHEL High Availability clusters, see Support Policies for RHEL High Availability Clusters.

2.1. Learning to use Pacemaker

By working through this procedure, you will learn how to use Pacemaker to set up a cluster, how to display cluster status, and how to configure a cluster service. This example creates an Apache HTTP server as a cluster resource and shows how the cluster responds when the resource fails.

In this example:

- The node is z1.example.com.

- The floating IP address is 192.168.122.120.

Prerequisites

- A single node running RHEL 8

- A floating IP address that resides on the same network as one of the node’s statically assigned IP addresses

- The name of the node on which you are running is in your /etc/hosts file

Procedure

Install the Red Hat High Availability Add-On software packages from the High Availability channel, and start and enable the pcsd service.

# yum install pcs pacemaker fence-agents-all
...
# systemctl start pcsd.service
# systemctl enable pcsd.service

If you are running the firewalld daemon, enable the ports that are required by the Red Hat High Availability Add-On.

# firewall-cmd --permanent --add-service=high-availability
# firewall-cmd --reload

Set a password for user hacluster on each node in the cluster and authenticate user hacluster for each node in the cluster on the node from which you will be running the pcs commands. This example uses only a single node, the node from which you are running the commands, but this step is included here because it is a necessary step in configuring a supported Red Hat High Availability multi-node cluster.

# passwd hacluster
...
# pcs host auth z1.example.com

Create a cluster named my_cluster with one member and check the status of the cluster. The following command creates and starts the cluster in one step.

# pcs cluster setup my_cluster --start z1.example.com

A Red Hat High Availability cluster requires that you configure fencing for the cluster. The reasons for this requirement are described in the Red Hat Knowledgebase solution Fencing in a Red Hat High Availability Cluster. For this introduction, however, which is intended to show only how to use the basic Pacemaker commands, disable fencing by setting the stonith-enabled cluster option to false.

Warning: The use of stonith-enabled=false is completely inappropriate for a production cluster. It tells the cluster to simply pretend that failed nodes are safely fenced.

# pcs property set stonith-enabled=false

Configure a web server on your system and create a web page to display a simple text message. If you are running the firewalld daemon, enable the ports that are required by httpd.

Note: Do not use systemctl enable to enable any services that will be managed by the cluster to start at system boot.

In order for the Apache resource agent to get the status of Apache, add a section to the existing configuration that enables the status server URL.

Create IPaddr2 and apache resources for the cluster to manage. The IPaddr2 resource is a floating IP address that must not be one already associated with a physical node. If the IPaddr2 resource’s NIC device is not specified, the floating IP must reside on the same network as the statically assigned IP address used by the node.

You can display a list of all available resource types with the pcs resource list command. You can use the pcs resource describe resourcetype command to display the parameters you can set for the specified resource type. For example, the following command displays the parameters you can set for a resource of type apache:

# pcs resource describe apache
...

In this example, the IP address resource and the apache resource are both configured as part of a group named apachegroup, which ensures that the resources are kept together to run on the same node when you are configuring a working multi-node cluster.

After you have configured a cluster resource, you can use the pcs resource config command to display the options that are configured for that resource.

Point your browser to the website you created using the floating IP address you configured. This should display the text message you defined.

Stop the apache web service and check the cluster status. Using killall -9 simulates an application-level crash.

# killall -9 httpd

Check the cluster status. You should see that stopping the web service caused a failed action, but that the cluster software restarted the service, and you should still be able to access the website.

Once the service is up and running again, you can clear the failure status on the resource that failed. The failed action notice then no longer appears when you view the cluster status.

# pcs resource cleanup WebSite

When you are finished looking at the cluster and the cluster status, stop the cluster services on the node. Even though you have only started services on one node for this introduction, the --all parameter is included because it would stop cluster services on all nodes on an actual multi-node cluster.

# pcs cluster stop --all
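The resource creation step in this procedure can be sketched as follows. The group name apachegroup, the resource name WebSite, and the floating IP address come from this example. The resource name ClusterIP, the configfile path, and the statusurl value are assumptions, as is the hypothetical run helper, which only prints each command unless APPLY=1 is set. Check the parameters against the output of pcs resource describe before relying on them.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the command, execute only if APPLY=1.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

FLOATING_IP=192.168.122.120   # floating IP address from this example
GROUP=apachegroup             # group name from this example

# Floating IP address resource (the name ClusterIP is an assumption).
run pcs resource create ClusterIP ocf:heartbeat:IPaddr2 \
    ip="$FLOATING_IP" --group "$GROUP"

# Apache resource; the configfile and statusurl values are assumptions
# based on a default httpd layout and a local server-status URL.
run pcs resource create WebSite ocf:heartbeat:apache \
    configfile=/etc/httpd/conf/httpd.conf \
    statusurl="http://localhost/server-status" --group "$GROUP"

# Display the options configured for the new resources.
run pcs resource config
```

Creating both resources in the same group is what keeps the floating IP address and the web server on the same node.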

2.2. Learning to configure failover

The following procedure provides an introduction to creating a Pacemaker cluster running a service that will fail over from one node to another when the node on which the service is running becomes unavailable. By working through this procedure, you can learn how to create a service in a two-node cluster and you can then observe what happens to that service when it fails on the node on which it is running.

This example procedure configures a two-node Pacemaker cluster running an Apache HTTP server. You can then stop the Apache service on one node to see how the service remains available.

In this example:

- The nodes are z1.example.com and z2.example.com.

- The floating IP address is 192.168.122.120.

Prerequisites

- Two nodes running RHEL 8 that can communicate with each other

- A floating IP address that resides on the same network as one of the node’s statically assigned IP addresses

- The name of the node on which you are running is in your /etc/hosts file

Procedure

On both nodes, install the Red Hat High Availability Add-On software packages from the High Availability channel, and start and enable the pcsd service.

# yum install pcs pacemaker fence-agents-all
...
# systemctl start pcsd.service
# systemctl enable pcsd.service

If you are running the firewalld daemon, on both nodes enable the ports that are required by the Red Hat High Availability Add-On.

# firewall-cmd --permanent --add-service=high-availability
# firewall-cmd --reload

On both nodes in the cluster, set a password for user hacluster.

# passwd hacluster

Authenticate user hacluster for each node in the cluster on the node from which you will be running the pcs commands.

# pcs host auth z1.example.com z2.example.com

Create a cluster named my_cluster with both nodes as cluster members. This command creates and starts the cluster in one step. You only need to run this from one node in the cluster because pcs configuration commands take effect for the entire cluster.

On one node in the cluster, run the following command.

# pcs cluster setup my_cluster --start z1.example.com z2.example.com

A Red Hat High Availability cluster requires that you configure fencing for the cluster. The reasons for this requirement are described in the Red Hat Knowledgebase solution Fencing in a Red Hat High Availability Cluster. For this introduction, however, to show only how failover works in this configuration, disable fencing by setting the stonith-enabled cluster option to false.

Warning: The use of stonith-enabled=false is completely inappropriate for a production cluster. It tells the cluster to simply pretend that failed nodes are safely fenced.

# pcs property set stonith-enabled=false

After creating a cluster and disabling fencing, check the status of the cluster.

Note: When you run the pcs cluster status command, it may show output that temporarily differs slightly from the examples as the system components start up.

On both nodes, configure a web server and create a web page to display a simple text message. If you are running the firewalld daemon, enable the ports that are required by httpd.

Note: Do not use systemctl enable to enable any services that will be managed by the cluster to start at system boot.

In order for the Apache resource agent to get the status of Apache, on each node in the cluster add a section to the existing configuration that enables the status server URL.

Create IPaddr2 and apache resources for the cluster to manage. The IPaddr2 resource is a floating IP address that must not be one already associated with a physical node. If the IPaddr2 resource’s NIC device is not specified, the floating IP must reside on the same network as the statically assigned IP address used by the node.

You can display a list of all available resource types with the pcs resource list command. You can use the pcs resource describe resourcetype command to display the parameters you can set for the specified resource type. For example, the following command displays the parameters you can set for a resource of type apache:

# pcs resource describe apache
...

In this example, the IP address resource and the apache resource are both configured as part of a group named apachegroup, which ensures that the resources are kept together to run on the same node.

Run the resource creation commands from one node in the cluster. Note that in this instance, the apachegroup service is running on node z1.example.com.

Access the website you created, stop the service on the node on which it is running, and note how the service fails over to the second node.

Point a browser to the website you created using the floating IP address you configured. This should display the text message you defined, displaying the name of the node on which the website is running.

Stop the apache web service. Using killall -9 simulates an application-level crash.

# killall -9 httpd

Check the cluster status. You should see that stopping the web service caused a failed action, but that the cluster software restarted the service on the node on which it had been running, and you should still be able to access the website.

Clear the failure status once the service is up and running again.

# pcs resource cleanup WebSite

Put the node on which the service is running into standby mode. Note that because fencing is disabled, a node-level failure (such as pulling a power cable) cannot be effectively simulated: fencing is required for the cluster to recover from such situations.

# pcs node standby z1.example.com

Check the status of the cluster and note where the service is now running.

Access the website. There should be no loss of service, although the display message should indicate the node on which the service is now running.

To restore cluster services to the first node, take the node out of standby mode. This will not necessarily move the service back to that node.

# pcs node unstandby z1.example.com

For final cleanup, stop the cluster services on both nodes.

# pcs cluster stop --all

Chapter 3. The pcs command-line interface

The pcs command-line interface controls and configures cluster services such as corosync, pacemaker, booth, and sbd by providing an easier interface to their configuration files.

Note that you should not edit the cib.xml configuration file directly. In most cases, Pacemaker will reject a directly modified cib.xml file.

3.1. pcs help display

You use the -h option of pcs to display the parameters of a pcs command and a description of those parameters.

The following command displays the parameters of the pcs resource command.

# pcs resource -h

3.2. Viewing the raw cluster configuration

Although you should not edit the cluster configuration file directly, you can view the raw cluster configuration with the pcs cluster cib command.

You can save the raw cluster configuration to a specified file with the pcs cluster cib filename command. If you have previously configured a cluster and there is already an active CIB, you use the following command to save the raw xml file.

pcs cluster cib filename

For example, the following command saves the raw xml from the CIB into a file named testfile.

# pcs cluster cib testfile

3.3. Saving a configuration change to a working file

When configuring a cluster, you can save configuration changes to a specified file without affecting the active CIB. This allows you to specify configuration updates without immediately updating the currently running cluster configuration with each individual update.

For information about saving the CIB to a file, see Viewing the raw cluster configuration. Once you have created that file, you can save configuration changes to that file rather than to the active CIB by using the -f option of the pcs command. When you have completed the changes and are ready to update the active CIB file, you can push those file updates with the pcs cluster cib-push command.

Procedure

The following is the recommended procedure for pushing changes to the CIB file. This procedure creates a copy of the original saved CIB file and makes changes to that copy. When pushing those changes to the active CIB, this procedure specifies the diff-against option of the pcs cluster cib-push command so that only the changes between the original file and the updated file are pushed to the CIB. This allows users to make changes in parallel that do not overwrite each other, and it reduces the load on Pacemaker which does not need to parse the entire configuration file.

Save the active CIB to a file. This example saves the CIB to a file named original.xml.

# pcs cluster cib original.xml

Copy the saved file to the working file you will be using for the configuration updates.

# cp original.xml updated.xml

Update your configuration as needed. The following command creates a resource in the file updated.xml but does not add that resource to the currently running cluster configuration.

# pcs -f updated.xml resource create VirtualIP ocf:heartbeat:IPaddr2 ip=192.168.0.120 op monitor interval=30s

Push the updated file to the active CIB, specifying that you are pushing only the changes you have made to the original file.

# pcs cluster cib-push updated.xml diff-against=original.xml

Alternatively, you can push the entire current content of a CIB file with the following command.

pcs cluster cib-push filename

When pushing the entire CIB file, Pacemaker checks the version and does not allow you to push a CIB file which is older than the one already in a cluster. If you need to update the entire CIB file with a version that is older than the one currently in the cluster, you can use the --config option of the pcs cluster cib-push command.

pcs cluster cib-push --config filename
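
The version check described above can be pictured as a field-by-field comparison. The sketch below assumes that a CIB version is the triple admin_epoch, epoch, num_updates, compared in that order. Those attribute names come from Pacemaker's CIB format rather than from this section, the dotted notation is invented for the sketch, and the cib_is_older function is illustrative and is not a pcs command.

```shell
#!/bin/sh
# Illustrative only: compare two CIB versions written as
# "admin_epoch.epoch.num_updates" (an assumed notation for this sketch).

# Succeeds (exit status 0) when version $1 is older than version $2.
cib_is_older() {
    old_ifs=$IFS
    IFS=.
    # Split both versions on "." into six positional fields.
    set -- $1 $2
    IFS=$old_ifs
    # Compare admin_epoch first, then epoch, then num_updates.
    if [ "$1" -ne "$4" ]; then [ "$1" -lt "$4" ]; return; fi
    if [ "$2" -ne "$5" ]; then [ "$2" -lt "$5" ]; return; fi
    [ "$3" -lt "$6" ]
}

# Example: a saved file at 0.12.3 compared with an active CIB at 0.15.0.
if cib_is_older 0.12.3 0.15.0; then
    echo "saved file is older than the active CIB"
fi
```

A saved file that compares as older in this sense is the case in which a push of the entire CIB file is refused.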

3.4. Displaying cluster status

There are a variety of commands you can use to display the status of a cluster and its components.

You can display the status of the cluster and the cluster resources with the following command.

# pcs status

You can display the status of a particular cluster component with the commands parameter of the pcs status command, specifying resources, cluster, nodes, or pcsd.

pcs status commands

For example, the following command displays the status of the cluster resources.

# pcs status resources

The following command displays the status of the cluster, but not the cluster resources.

# pcs cluster status

3.5. Displaying the full cluster configuration

Use the following command to display the full current cluster configuration.

# pcs config

3.6. Modifying the corosync.conf file with the pcs command

In Red Hat Enterprise Linux 8.4 and later, you can use the pcs command to modify the parameters in the corosync.conf file.

The following command modifies the parameters in the corosync.conf file.

pcs cluster config update [transport transport options] [compression compression options] [crypto crypto options] [totem totem options] [--corosync_conf path]

The following example command udates the knet_pmtud_interval transport value and the token and join totem values.

pcs cluster config update transport knet_pmtud_interval=35 totem token=10000 join=100

# pcs cluster config update transport knet_pmtud_interval=35 totem token=10000 join=1003.7. Displaying the corosync.conf file with the pcs command

The following command displays the contents of the corosync.conf cluster configuration file.

# pcs cluster corosync

In Red Hat Enterprise Linux 8.4 and later, you can print the contents of the corosync.conf file in a human-readable format with the pcs cluster config command.

The output for this command includes the UUID for the cluster if the cluster was created in RHEL 8.7 or later or if the UUID was added manually as described in Identifying clusters by UUID.

In RHEL 8.4 and later, you can run the pcs cluster config show command with the --output-format=cmd option to display the pcs configuration commands that can be used to recreate the existing corosync.conf file.

Chapter 4. Creating a Red Hat High-Availability cluster with Pacemaker

Create a Red Hat High Availability two-node cluster using the pcs command-line interface with the following procedure.

Configuring the cluster in this example requires that your system include the following components:

- 2 nodes, which will be used to create the cluster. In this example, the nodes used are z1.example.com and z2.example.com.

- Network switches for the private network. We recommend but do not require a private network for communication among the cluster nodes and other cluster hardware such as network power switches and Fibre Channel switches.

- A fencing device for each node of the cluster. This example uses two ports of the APC power switch with a host name of zapc.example.com.

You must ensure that your configuration conforms to Red Hat’s support policies. For full information about Red Hat’s support policies, requirements, and limitations for RHEL High Availability clusters, see Support Policies for RHEL High Availability Clusters.

4.1. Installing cluster software

Install the cluster software and configure your system for cluster creation with the following procedure.

Procedure

On each node in the cluster, enable the repository for high availability that corresponds to your system architecture. For example, to enable the high availability repository for an x86_64 system, you can enter the following subscription-manager command:

# subscription-manager repos --enable=rhel-8-for-x86_64-highavailability-rpms

On each node in the cluster, install the Red Hat High Availability Add-On software packages along with all available fence agents from the High Availability channel.

# yum install pcs pacemaker fence-agents-all

Alternatively, you can install the Red Hat High Availability Add-On software packages along with only the fence agent that you require with the following command.

# yum install pcs pacemaker fence-agents-model

The following command displays a list of the available fence agents.

# rpm -q -a | grep fence

fence-agents-rhevm-4.0.2-3.el7.x86_64

fence-agents-ilo-mp-4.0.2-3.el7.x86_64

fence-agents-ipmilan-4.0.2-3.el7.x86_64

...

Warning: After you install the Red Hat High Availability Add-On packages, you should ensure that your software update preferences are set so that nothing is installed automatically. Installation on a running cluster can cause unexpected behaviors. For more information, see Recommended Practices for Applying Software Updates to a RHEL High Availability or Resilient Storage Cluster.

If you are running the firewalld daemon, execute the following commands to enable the ports that are required by the Red Hat High Availability Add-On.

Note: You can determine whether the firewalld daemon is installed on your system with the rpm -q firewalld command. If it is installed, you can determine whether it is running with the firewall-cmd --state command.

# firewall-cmd --permanent --add-service=high-availability

# firewall-cmd --add-service=high-availability

Note: The ideal firewall configuration for cluster components depends on the local environment, where you may need to take into account such considerations as whether the nodes have multiple network interfaces or whether off-host firewalling is present. The example here, which opens the ports that are generally required by a Pacemaker cluster, should be modified to suit local conditions. Enabling ports for the High Availability Add-On shows the ports to enable for the Red Hat High Availability Add-On and provides an explanation for what each port is used for.

In order to use pcs to configure the cluster and communicate among the nodes, you must set a password on each node for the user ID hacluster, which is the pcs administration account. It is recommended that the password for user hacluster be the same on each node.

# passwd hacluster

Changing password for user hacluster.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

Before the cluster can be configured, the pcsd daemon must be started and enabled to start up on boot on each node. This daemon works with the pcs command to manage configuration across the nodes in the cluster.

On each node in the cluster, execute the following commands to start the pcsd service and to enable pcsd at system start.

# systemctl start pcsd.service

# systemctl enable pcsd.service

4.2. Installing the pcp-zeroconf package (recommended)

When you set up your cluster, it is recommended that you install the pcp-zeroconf package for the Performance Co-Pilot (PCP) tool. PCP is Red Hat’s recommended resource-monitoring tool for RHEL systems. Installing the pcp-zeroconf package allows you to have PCP running and collecting performance-monitoring data for the benefit of investigations into fencing, resource failures, and other events that disrupt the cluster.

Cluster deployments where PCP is enabled will need sufficient space available for PCP’s captured data on the file system that contains /var/log/pcp/. Typical space usage by PCP varies across deployments, but 10 GB is usually sufficient when using the pcp-zeroconf default settings, and some environments may require less. Monitoring usage in this directory over a 14-day period of typical activity can provide a more accurate usage expectation.

Procedure

To install the pcp-zeroconf package, run the following command.

# yum install pcp-zeroconf

This package enables pmcd and sets up data capture at a 10-second interval.

For information about reviewing PCP data, see the Red Hat Knowledgebase solution Why did a RHEL High Availability cluster node reboot - and how can I prevent it from happening again?.

4.3. Creating a high availability cluster

Create a Red Hat High Availability Add-On cluster with the following procedure. This example procedure creates a cluster that consists of the nodes z1.example.com and z2.example.com.

Procedure

Authenticate the pcs user hacluster for each node in the cluster on the node from which you will be running pcs.

The following command authenticates user hacluster on z1.example.com for both of the nodes in a two-node cluster that will consist of z1.example.com and z2.example.com.

[root@z1 ~]# pcs host auth z1.example.com z2.example.com

Username: hacluster

Password:

z1.example.com: Authorized

z2.example.com: Authorized

Execute the following command from z1.example.com to create the two-node cluster my_cluster that consists of nodes z1.example.com and z2.example.com. This will propagate the cluster configuration files to both nodes in the cluster. This command includes the --start option, which will start the cluster services on both nodes in the cluster.

[root@z1 ~]# pcs cluster setup my_cluster --start z1.example.com z2.example.com

Enable the cluster services to run on each node in the cluster when the node is booted.

Note: For your particular environment, you may choose to leave the cluster services disabled by skipping this step. This allows you to ensure that if a node goes down, any issues with your cluster or your resources are resolved before the node rejoins the cluster. If you leave the cluster services disabled, you will need to manually start the services when you reboot a node by executing the pcs cluster start command on that node.

[root@z1 ~]# pcs cluster enable --all

You can display the current status of the cluster with the pcs cluster status command. Because there may be a slight delay before the cluster is up and running when you start the cluster services with the --start option of the pcs cluster setup command, you should ensure that the cluster is up and running before performing any subsequent actions on the cluster and its configuration.
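One way to confirm that the cluster is up before you continue is to poll a status command until it succeeds. The following is a minimal sketch rather than part of the product: the function name wait_for_cluster, the retry count, and the delay are illustrative assumptions, and the sketch assumes that pcs cluster status exits with a nonzero status while the cluster is not yet running on the node.

```shell
# Hypothetical helper: retry a command until it exits with status 0.
# Usage: wait_for_cluster MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_for_cluster() {
    tries=$1
    delay=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        # Discard the command output; only the exit status matters here.
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}

# Example (run as root on a cluster node):
#   wait_for_cluster 30 2 pcs cluster status && echo "cluster is up"
```

Passing the status command as arguments keeps the helper independent of pcs, so the same loop can wrap any other readiness check.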

4.4. Creating a high availability cluster with multiple links

You can use the pcs cluster setup command to create a Red Hat High Availability cluster with multiple links by specifying all of the links for each node.

The format for the basic command to create a two-node cluster with two links is as follows.

pcs cluster setup cluster_name node1_name addr=node1_link0_address addr=node1_link1_address node2_name addr=node2_link0_address addr=node2_link1_address

For the full syntax of this command, see the pcs(8) man page.

When creating a cluster with multiple links, you should take the following into account.

- The order of the addr=address parameters is important. The first address specified after a node name is for link0, the second one for link1, and so forth.

- By default, if link_priority is not specified for a link, the link’s priority is equal to the link number. The link priorities are then 0, 1, 2, 3, and so forth, according to the order specified, with 0 being the highest link priority.

- The default link mode is passive, meaning the active link with the lowest-numbered link priority is used.

- With the default values of link_mode and link_priority, the first link specified will be used as the highest priority link, and if that link fails the next link specified will be used.

- It is possible to specify up to eight links using the knet transport protocol, which is the default transport protocol.

- All nodes must have the same number of addr= parameters.

- In RHEL 8.1 and later, it is possible to add, remove, and change links in an existing cluster using the pcs cluster link add, the pcs cluster link remove, the pcs cluster link delete, and the pcs cluster link update commands.

- As with single-link clusters, do not mix IPv4 and IPv6 addresses in one link, although you can have one link running IPv4 and the other running IPv6.

- As with single-link clusters, you can specify addresses as IP addresses or as names as long as the names resolve to IPv4 or IPv6 addresses for which IPv4 and IPv6 addresses are not mixed in one link.

The following example creates a two-node cluster named my_twolink_cluster with two nodes, rh80-node1 and rh80-node2. rh80-node1 has two interfaces, IP address 192.168.122.201 as link0 and 192.168.123.201 as link1. rh80-node2 has two interfaces, IP address 192.168.122.202 as link0 and 192.168.123.202 as link1.

# pcs cluster setup my_twolink_cluster rh80-node1 addr=192.168.122.201 addr=192.168.123.201 rh80-node2 addr=192.168.122.202 addr=192.168.123.202

To set a link priority to a different value than the default value, which is the link number, you can set the link priority with the link_priority option of the pcs cluster setup command. Each of the following two example commands creates a two-node cluster with two interfaces where the first link, link 0, has a link priority of 1 and the second link, link 1, has a link priority of 0. Link 1 will be used first and link 0 will serve as the failover link. Since link mode is not specified, it defaults to passive.

These two commands are equivalent. If you do not specify a link number following the link keyword, the pcs interface automatically adds a link number, starting with the lowest unused link number.

# pcs cluster setup my_twolink_cluster rh80-node1 addr=192.168.122.201 addr=192.168.123.201 rh80-node2 addr=192.168.122.202 addr=192.168.123.202 transport knet link link_priority=1 link link_priority=0

# pcs cluster setup my_twolink_cluster rh80-node1 addr=192.168.122.201 addr=192.168.123.201 rh80-node2 addr=192.168.122.202 addr=192.168.123.202 transport knet link linknumber=1 link_priority=0 link link_priority=1

For information about adding nodes to an existing cluster with multiple links, see Adding a node to a cluster with multiple links.

For information about changing the links in an existing cluster with multiple links, see Adding and modifying links in an existing cluster.
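The passive-mode rule described in this section (the active link with the lowest-numbered link priority is used) can be illustrated with a small shell sketch. This is only a model of the selection rule, not a description of how the knet transport is implemented. The function name pick_active_link and the LINKNUMBER:PRIORITY:STATE argument format are invented for this example.

```shell
# Hypothetical model of passive link mode: among the links that are up,
# choose the one with the lowest link_priority value.
# Each argument has the form LINKNUMBER:PRIORITY:STATE (STATE is up or down).
pick_active_link() {
    best_link=""
    best_prio=""
    for entry in "$@"; do
        link=${entry%%:*}      # text before the first colon
        rest=${entry#*:}       # PRIORITY:STATE
        prio=${rest%%:*}
        state=${rest#*:}
        [ "$state" = "up" ] || continue
        if [ -z "$best_prio" ] || [ "$prio" -lt "$best_prio" ]; then
            best_link=$link
            best_prio=$prio
        fi
    done
    [ -n "$best_link" ] && echo "$best_link"
}

# Link 0 has priority 1 and link 1 has priority 0, as in the example commands.
pick_active_link 0:1:up 1:0:up      # prints 1
pick_active_link 0:1:up 1:0:down    # prints 0 (link 0 takes over)
```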

4.5. Configuring fencing

You must configure a fencing device for each node in the cluster. For information about the fence configuration commands and options, see Configuring fencing in a Red Hat High Availability cluster.

For general information about fencing and its importance in a Red Hat High Availability cluster, see the Red Hat Knowledgebase solution Fencing in a Red Hat High Availability Cluster.

When configuring a fencing device, attention should be given to whether that device shares power with any nodes or devices in the cluster. If a node and its fence device do share power, then the cluster may be at risk of being unable to fence that node if the power to it and its fence device should be lost. Such a cluster should either have redundant power supplies for fence devices and nodes, or redundant fence devices that do not share power. Alternative methods of fencing such as SBD or storage fencing may also bring redundancy in the event of isolated power losses.

Procedure

This example uses the APC power switch with a host name of zapc.example.com to fence the nodes, and it uses the fence_apc_snmp fencing agent. Because both nodes will be fenced by the same fencing agent, you can configure both fencing devices as a single resource, using the pcmk_host_map option.

You create a fencing device by configuring the device as a stonith resource with the pcs stonith create command. The following command configures a stonith resource named myapc that uses the fence_apc_snmp fencing agent for nodes z1.example.com and z2.example.com. The pcmk_host_map option maps z1.example.com to port 1, and z2.example.com to port 2. The login value and password for the APC device are both apc. By default, this device will use a monitor interval of sixty seconds for each node.

Note that you can use an IP address when specifying the host name for the nodes.

[root@z1 ~]# pcs stonith create myapc fence_apc_snmp ipaddr="zapc.example.com" pcmk_host_map="z1.example.com:1;z2.example.com:2" login="apc" passwd="apc"

The following command displays the parameters of an existing fencing device.

[root@rh7-1 ~]# pcs stonith config myapc

Resource: myapc (class=stonith type=fence_apc_snmp)

Attributes: ipaddr=zapc.example.com pcmk_host_map=z1.example.com:1;z2.example.com:2 login=apc passwd=apc

Operations: monitor interval=60s (myapc-monitor-interval-60s)

After configuring your fence device, you should test the device. For information about testing a fence device, see Testing a fence device.

Do not test your fence device by disabling the network interface, as this will not properly test fencing.

Once fencing is configured and a cluster has been started, a network restart will trigger fencing for the node which restarts the network even when the timeout is not exceeded. For this reason, do not restart the network service while the cluster service is running because it will trigger unintentional fencing on the node.
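The pcmk_host_map value used in the procedure above is a semicolon-separated list of node:port pairs. As a sketch of how such a value could be assembled for a longer node list, the following helper joins its arguments with semicolons and prints the resulting pcs stonith create command for review instead of running it. The function name build_host_map is an assumption made for this example.

```shell
# Hypothetical helper: join NODE:PORT arguments with semicolons to form
# a pcmk_host_map value.
build_host_map() {
    map=""
    for pair in "$@"; do
        if [ -z "$map" ]; then
            map=$pair
        else
            map="$map;$pair"
        fi
    done
    printf '%s\n' "$map"
}

host_map=$(build_host_map z1.example.com:1 z2.example.com:2)

# Print the command for review; it is not executed here.
echo "pcs stonith create myapc fence_apc_snmp ipaddr=\"zapc.example.com\" pcmk_host_map=\"$host_map\" login=\"apc\" passwd=\"apc\""
```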

4.6. Backing up and restoring a cluster configuration

The following commands back up a cluster configuration in a tar archive and restore the cluster configuration files on all nodes from the backup.

Procedure

Use the following command to back up the cluster configuration in a tar archive. If you do not specify a file name, the standard output will be used.

pcs config backup filename

The pcs config backup command backs up only the cluster configuration itself as configured in the CIB; the configuration of resource daemons is out of the scope of this command. For example, if you have configured an Apache resource in the cluster, the resource settings (which are in the CIB) will be backed up, while the Apache daemon settings (as set in /etc/httpd) and the files it serves will not be backed up. Similarly, if there is a database resource configured in the cluster, the database itself will not be backed up, while the database resource configuration (CIB) will be.

Use the following command to restore the cluster configuration files on all cluster nodes from the backup. Specifying the --local option restores the cluster configuration files only on the node from which you run this command. If you do not specify a file name, the standard input will be used.

pcs config restore [--local] [filename]

4.7. Enabling ports for the High Availability Add-On

The ideal firewall configuration for cluster components depends on the local environment, where you may need to take into account such considerations as whether the nodes have multiple network interfaces or whether off-host firewalling is present.

If you are running the firewalld daemon, execute the following commands to enable the ports that are required by the Red Hat High Availability Add-On.

# firewall-cmd --permanent --add-service=high-availability

# firewall-cmd --add-service=high-availability

You may need to modify which ports are open to suit local conditions.

You can determine whether the firewalld daemon is installed on your system with the rpm -q firewalld command. If the firewalld daemon is installed, you can determine whether it is running with the firewall-cmd --state command.

The following table shows the ports to enable for the Red Hat High Availability Add-On and explains what each port is used for.

| Port | When Required |
|---|---|
| TCP 2224 | Default It is crucial to open port 2224 in such a way that |
| TCP 3121 | Required on all nodes if the cluster has any Pacemaker Remote nodes Pacemaker’s |
| TCP 5403 | Required on the quorum device host when using a quorum device with |
| UDP 5404-5412 | Required on corosync nodes to facilitate communication between nodes. It is crucial to open ports 5404-5412 in such a way that |
| TCP 21064 | Required on all nodes if the cluster contains any resources requiring DLM (such as |
| TCP 9929, UDP 9929 | Required to be open on all cluster nodes and Booth arbitrator nodes to connections from any of those same nodes when the Booth ticket manager is used to establish a multi-site cluster. |
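If the high-availability firewalld service does not suit your environment, ports from the table can be opened individually with the --add-port option of firewall-cmd. The following sketch only prints the commands so that you can review them before running anything. The function name print_port_commands is an assumption, and the two ports passed to it are examples taken from the table; include only the ports that your cluster actually requires.

```shell
# Hypothetical helper: print one firewall-cmd command per PORT/PROTOCOL
# argument, followed by a reload. Nothing is executed.
print_port_commands() {
    for port in "$@"; do
        echo "firewall-cmd --permanent --add-port=$port"
    done
    echo "firewall-cmd --reload"
}

# TCP 2224 and UDP 5404-5412 from the table.
print_port_commands 2224/tcp 5404-5412/udp
```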

Chapter 5. Configuring an active/passive Apache HTTP server in a Red Hat High Availability cluster

Configure an active/passive Apache HTTP server in a two-node Red Hat Enterprise Linux High Availability Add-On cluster with the following procedure. In this use case, clients access the Apache HTTP server through a floating IP address. The web server runs on one of two nodes in the cluster. If the node on which the web server is running becomes inoperative, the web server starts up again on the second node of the cluster with minimal service interruption.

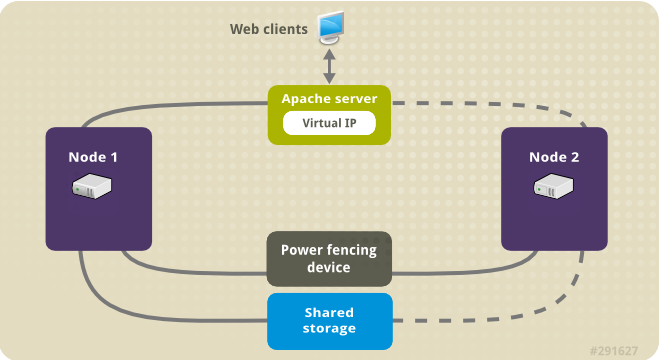

The following illustration shows a high-level overview of the cluster in which the cluster is a two-node Red Hat High Availability cluster which is configured with a network power switch and with shared storage. The cluster nodes are connected to a public network, for client access to the Apache HTTP server through a virtual IP. The Apache server runs on either Node 1 or Node 2, each of which has access to the storage on which the Apache data is kept. In this illustration, the web server is running on Node 1 while Node 2 is available to run the server if Node 1 becomes inoperative.

Figure 5.1. Apache in a Red Hat High Availability Two-Node Cluster

This use case requires that your system include the following components:

- A two-node Red Hat High Availability cluster with power fencing configured for each node. We recommend but do not require a private network. This procedure uses the cluster example provided in Creating a Red Hat High-Availability cluster with Pacemaker.

- A public virtual IP address, required for Apache.

- Shared storage for the nodes in the cluster, using iSCSI, Fibre Channel, or other shared network block device.

The cluster is configured with an Apache resource group, which contains the cluster components that the web server requires: an LVM resource, a file system resource, an IP address resource, and a web server resource. This resource group can fail over from one node of the cluster to the other, allowing either node to run the web server. Before creating the resource group for this cluster, you will be performing the following procedures:

- Configure an XFS file system on the logical volume my_lv.

- Configure a web server.

After performing these steps, you create the resource group and the resources it contains.

5.1. Configuring an LVM volume with an XFS file system in a Pacemaker cluster

Create an LVM logical volume on storage that is shared between the nodes of the cluster with the following procedure.

LVM volumes and the corresponding partitions and devices used by cluster nodes must be connected to the cluster nodes only.

The following procedure creates an LVM logical volume and then creates an XFS file system on that volume for use in a Pacemaker cluster. In this example, the shared partition /dev/sdb1 is used to store the LVM physical volume from which the LVM logical volume will be created.

Procedure

On both nodes of the cluster, perform the following steps to set the value for the LVM system ID to the value of the uname identifier for the system. The LVM system ID will be used to ensure that only the cluster is capable of activating the volume group.

Set the system_id_source configuration option in the /etc/lvm/lvm.conf configuration file to uname.

# Configuration option global/system_id_source.

system_id_source = "uname"

Verify that the LVM system ID on the node matches the uname for the node.

# lvm systemid

system ID: z1.example.com

# uname -n

z1.example.com

Create the LVM volume and create an XFS file system on that volume. Since the /dev/sdb1 partition is storage that is shared, you perform this part of the procedure on one node only.

[root@z1 ~]# pvcreate /dev/sdb1

Physical volume "/dev/sdb1" successfully created

Note: If your LVM volume group contains one or more physical volumes that reside on remote block storage, such as an iSCSI target, Red Hat recommends that you ensure that the service starts before Pacemaker starts. For information about configuring startup order for a remote physical volume used by a Pacemaker cluster, see Configuring startup order for resource dependencies not managed by Pacemaker.

Create the volume group my_vg that consists of the physical volume /dev/sdb1.

For RHEL 8.5 and later, specify the --setautoactivation n flag to ensure that volume groups managed by Pacemaker in a cluster will not be automatically activated on startup. If you are using an existing volume group for the LVM volume you are creating, you can reset this flag with the vgchange --setautoactivation n command for the volume group.

[root@z1 ~]# vgcreate --setautoactivation n my_vg /dev/sdb1

Volume group "my_vg" successfully created

For RHEL 8.4 and earlier, create the volume group with the following command.

[root@z1 ~]# vgcreate my_vg /dev/sdb1

Volume group "my_vg" successfully created

For information about ensuring that volume groups managed by Pacemaker in a cluster will not be automatically activated on startup for RHEL 8.4 and earlier, see Ensuring a volume group is not activated on multiple cluster nodes.

Verify that the new volume group has the system ID of the node on which you are running and from which you created the volume group.

[root@z1 ~]# vgs -o+systemid

VG #PV #LV #SN Attr VSize VFree System ID

my_vg 1 0 0 wz--n- <1.82t <1.82t z1.example.com

Create a logical volume using the volume group my_vg.

[root@z1 ~]# lvcreate -L450 -n my_lv my_vg

Rounding up size to full physical extent 452.00 MiB

Logical volume "my_lv" created

You can use the lvs command to display the logical volume.

[root@z1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

my_lv my_vg -wi-a---- 452.00m

...

Create an XFS file system on the logical volume my_lv.

[root@z1 ~]# mkfs.xfs /dev/my_vg/my_lv

meta-data=/dev/my_vg/my_lv isize=512 agcount=4, agsize=28928 blks

= sectsz=512 attr=2, projid32bit=1

...

(RHEL 8.5 and later) If you have enabled the use of a devices file by setting use_devicesfile = 1 in the lvm.conf file, add the shared device to the devices file on the second node in the cluster. By default, the use of a devices file is not enabled.

[root@z2 ~]# lvmdevices --adddev /dev/sdb1
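The system ID check from the first step of this procedure can also be scripted. The following sketch compares the value reported by lvm systemid with the node name reported by uname -n. The function name check_system_id is hypothetical, and the sketch assumes that the lvm systemid output has the "system ID: name" form shown in the procedure.

```shell
# Hypothetical helper: succeed when the system ID in the "lvm systemid"
# output ($1) equals the node name from "uname -n" ($2).
check_system_id() {
    # Strip everything up to and including "system ID: ".
    id=${1#*system ID: }
    [ "$id" = "$2" ]
}

# Demonstration with the sample values from the procedure.
if check_system_id "  system ID: z1.example.com" "z1.example.com"; then
    echo "system ID matches uname"
fi

# On a cluster node (as root), the live values could be compared with:
#   check_system_id "$(lvm systemid)" "$(uname -n)"
```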

5.2. Ensuring a volume group is not activated on multiple cluster nodes (RHEL 8.4 and earlier)

You can ensure that volume groups that are managed by Pacemaker in a cluster will not be automatically activated on startup with the following procedure. If a volume group is automatically activated on startup rather than by Pacemaker, there is a risk that the volume group will be active on multiple nodes at the same time, which could corrupt the volume group’s metadata.

For RHEL 8.5 and later, you can disable autoactivation for a volume group when you create the volume group by specifying the --setautoactivation n flag for the vgcreate command, as described in Configuring an LVM volume with an XFS file system in a Pacemaker cluster.

This procedure modifies the auto_activation_volume_list entry in the /etc/lvm/lvm.conf configuration file. The auto_activation_volume_list entry is used to limit autoactivation to specific logical volumes. Setting auto_activation_volume_list to an empty list disables autoactivation entirely.

Any local volumes that are not shared and are not managed by Pacemaker should be included in the auto_activation_volume_list entry, including volume groups related to the node’s local root and home directories. All volume groups managed by the cluster manager must be excluded from the auto_activation_volume_list entry.

Procedure

Perform the following procedure on each node in the cluster.

Determine which volume groups are currently configured on your local storage with the following command. This will output a list of the currently-configured volume groups. If you have space allocated in separate volume groups for root and for your home directory on this node, you will see those volumes in the output, as in this example.

# vgs --noheadings -o vg_name

my_vg

rhel_home

rhel_root

Add the volume groups other than my_vg (the volume group you have just defined for the cluster) as entries to auto_activation_volume_list in the /etc/lvm/lvm.conf configuration file.

For example, if you have space allocated in separate volume groups for root and for your home directory, you would uncomment the auto_activation_volume_list line of the lvm.conf file and add these volume groups as entries to auto_activation_volume_list as follows. Note that the volume group you have just defined for the cluster (my_vg in this example) is not in this list.

auto_activation_volume_list = [ "rhel_root", "rhel_home" ]

Note: If no local volume groups are present on a node to be activated outside of the cluster manager, you must still initialize the auto_activation_volume_list entry as auto_activation_volume_list = [].

Rebuild the initramfs boot image to guarantee that the boot image will not try to activate a volume group controlled by the cluster. Update the initramfs device with the following command. This command may take up to a minute to complete.

# dracut -H -f /boot/initramfs-$(uname -r).img $(uname -r)

Reboot the node.

Note: If you have installed a new Linux kernel since booting the node on which you created the boot image, the new initrd image will be for the kernel that was running when you created it and not for the new kernel that is running when you reboot the node. You can ensure that the correct initrd device is in use by running the uname -r command before and after the reboot to determine the kernel release that is running. If the releases are not the same, update the initrd file after rebooting with the new kernel and then reboot the node.

When the node has rebooted, check whether the cluster services have started up again on that node by executing the pcs cluster status command on that node. If this yields the message Error: cluster is not currently running on this node then enter the following command.

# pcs cluster start

Alternatively, you can wait until you have rebooted each node in the cluster and start cluster services on all of the nodes in the cluster with the following command.

# pcs cluster start --all
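The auto_activation_volume_list entry described in this procedure can be derived from the vgs output rather than typed by hand. The following is a minimal sketch under that assumption; build_activation_list is a hypothetical helper, not part of LVM or pcs, and it only prints the line for you to review before you edit /etc/lvm/lvm.conf.

```shell
#!/bin/sh
# Sketch: print an auto_activation_volume_list line naming every volume
# group read from standard input except the cluster-managed one.
# build_activation_list is a hypothetical helper (an assumption).
build_activation_list() {
    cluster_vg=$1
    entries=""
    while read -r vg; do
        # Skip blank lines and the volume group that the cluster controls.
        [ -z "$vg" ] && continue
        [ "$vg" = "$cluster_vg" ] && continue
        if [ -z "$entries" ]; then
            entries="\"$vg\""
        else
            entries="$entries, \"$vg\""
        fi
    done
    if [ -z "$entries" ]; then
        # No local volume groups: the entry must still be initialized.
        echo "auto_activation_volume_list = []"
    else
        echo "auto_activation_volume_list = [ $entries ]"
    fi
}
```

On a node, this could be fed from the command shown in the procedure, for example vgs --noheadings -o vg_name | build_activation_list my_vg. The order of the entries follows the order of the vgs output.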

5.3. Configuring an Apache HTTP Server

Configure an Apache HTTP Server with the following procedure.

Procedure

Ensure that the Apache HTTP Server is installed on each node in the cluster. You also need the wget tool installed on the cluster to be able to check the status of the Apache HTTP Server.

On each node, execute the following command.

# yum install -y httpd wget

If you are running the firewalld daemon, on each node in the cluster enable the ports that are required by the Red Hat High Availability Add-On and enable the ports you will require for running httpd. This example enables the httpd ports for public access, but the specific ports to enable for httpd may vary for production use.

# firewall-cmd --permanent --add-service=http
# firewall-cmd --permanent --zone=public --add-service=http
# firewall-cmd --reload

In order for the Apache resource agent to get the status of Apache, on each node in the cluster create an addition to the existing configuration that enables the status server URL.

Create a web page for Apache to serve up.

On one node in the cluster, ensure that the logical volume you created in Configuring an LVM volume with an XFS file system is activated, mount the file system that you created on that logical volume, create the file index.html on that file system, and then unmount the file system.
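As a rough illustration of the last two steps, the following sketch writes a status handler and a sample page. The file contents are assumptions, chosen to match the statusurl value http://127.0.0.1/server-status and the "Hello" display used later in this chapter; the helper names write_status_conf and write_index_page are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers. The file contents below are assumptions and are
# not taken from this procedure.

# Write an Apache status handler that answers local requests only.
write_status_conf() {
    cat > "$1" <<'END'
<Location /server-status>
    SetHandler server-status
    Require local
</Location>
END
}

# Write a minimal page whose body is the word "Hello".
write_index_page() {
    cat > "$1" <<'END'
<html>
<body>Hello</body>
</html>
END
}
```

On each node, the first helper might be called as write_status_conf /etc/httpd/conf.d/status.conf (an assumed path). On the one node where the file system is mounted, assuming the mount point /var/www and an existing /var/www/html directory, the second might be called as write_index_page /var/www/html/index.html before the file system is unmounted.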

5.4. Creating the resources and resource groups

Create the resources for your cluster with the following procedure. To ensure these resources all run on the same node, they are configured as part of the resource group apachegroup. The resources to create are as follows, listed in the order in which they will start.

- An LVM-activate resource named my_lvm that uses the LVM volume group you created in Configuring an LVM volume with an XFS file system.

- A Filesystem resource named my_fs, that uses the file system device /dev/my_vg/my_lv you created in Configuring an LVM volume with an XFS file system.

- An IPaddr2 resource, which is a floating IP address for the apachegroup resource group. The IP address must not be one already associated with a physical node. If the IPaddr2 resource’s NIC device is not specified, the floating IP must reside on the same network as one of the node’s statically assigned IP addresses, otherwise the NIC device to assign the floating IP address cannot be properly detected.

- An apache resource named Website that uses the index.html file and the Apache configuration you defined in Configuring an Apache HTTP server.

The following procedure creates the resource group apachegroup and the resources that the group contains. The resources will start in the order in which you add them to the group, and they will stop in the reverse order in which they are added to the group. Run this procedure from one node of the cluster only.

Procedure

The following command creates the LVM-activate resource my_lvm. Because the resource group apachegroup does not yet exist, this command creates the resource group.

Note: Do not configure more than one LVM-activate resource that uses the same LVM volume group in an active/passive HA configuration, as this could cause data corruption. Additionally, do not configure an LVM-activate resource as a clone resource in an active/passive HA configuration.

[root@z1 ~]# pcs resource create my_lvm ocf:heartbeat:LVM-activate vgname=my_vg vg_access_mode=system_id --group apachegroup

When you create a resource, the resource is started automatically. You can use the following command to confirm that the resource was created and has started.

# pcs resource status
 Resource Group: apachegroup
     my_lvm (ocf::heartbeat:LVM-activate): Started

You can manually stop and start an individual resource with the pcs resource disable and pcs resource enable commands.

The following commands create the remaining resources for the configuration, adding them to the existing resource group apachegroup.

[root@z1 ~]# pcs resource create my_fs Filesystem device="/dev/my_vg/my_lv" directory="/var/www" fstype="xfs" --group apachegroup
[root@z1 ~]# pcs resource create VirtualIP IPaddr2 ip=198.51.100.3 cidr_netmask=24 --group apachegroup
[root@z1 ~]# pcs resource create Website apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group apachegroup

After creating the resources and the resource group that contains them, you can check the status of the cluster. Note that all four resources are running on the same node.

Note that if you have not configured a fencing device for your cluster, by default the resources do not start.

Once the cluster is up and running, you can point a browser to the IP address you defined as the IPaddr2 resource to view the sample display, consisting of the simple word "Hello".

Hello

If you find that the resources you configured are not running, you can run the pcs resource debug-start resource command to test the resource configuration.

When you use the apache resource agent to manage Apache, it does not use systemd. Because of this, you must edit the logrotate script supplied with Apache so that it does not use systemctl to reload Apache.

Remove the following line in the /etc/logrotate.d/httpd file on each node in the cluster.

/bin/systemctl reload httpd.service > /dev/null 2>/dev/null || true

For RHEL 8.6 and later, replace the line you removed with the following three lines, specifying /var/run/httpd-website.pid as the PID file path where website is the name of the Apache resource. In this example, the Apache resource name is Website.

/usr/bin/test -f /var/run/httpd-Website.pid >/dev/null 2>/dev/null &&
/usr/bin/ps -q $(/usr/bin/cat /var/run/httpd-Website.pid) >/dev/null 2>/dev/null &&
/usr/sbin/httpd -f /etc/httpd/conf/httpd.conf -c "PidFile /var/run/httpd-Website.pid" -k graceful > /dev/null 2>/dev/null || true

For RHEL 8.5 and earlier, replace the line you removed with the following three lines.

/usr/bin/test -f /run/httpd.pid >/dev/null 2>/dev/null &&
/usr/bin/ps -q $(/usr/bin/cat /run/httpd.pid) >/dev/null 2>/dev/null &&
/usr/sbin/httpd -f /etc/httpd/conf/httpd.conf -c "PidFile /run/httpd.pid" -k graceful > /dev/null 2>/dev/null || true
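Because the PID file path for RHEL 8.6 and later depends on the name of the Apache resource, it can help to generate the three replacement lines from that name. The following is a minimal sketch; print_postrotate is a hypothetical helper, and it only prints the lines so that you can paste them into /etc/logrotate.d/httpd yourself.

```shell
#!/bin/sh
# Sketch: print the three logrotate replacement lines for RHEL 8.6 and
# later, given the name of the Apache resource as the first argument.
# print_postrotate is a hypothetical helper (an assumption).
print_postrotate() {
    pidfile="/var/run/httpd-$1.pid"
    # \$ keeps the command substitution literal in the printed text.
    cat <<END
/usr/bin/test -f $pidfile >/dev/null 2>/dev/null &&
/usr/bin/ps -q \$(/usr/bin/cat $pidfile) >/dev/null 2>/dev/null &&
/usr/sbin/httpd -f /etc/httpd/conf/httpd.conf -c "PidFile $pidfile" -k graceful > /dev/null 2>/dev/null || true
END
}
```

For the resource in this example, print_postrotate Website prints the same three lines that are shown above for RHEL 8.6 and later.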

5.5. Testing the resource configuration

Test the resource configuration in a cluster with the following procedure.

In the cluster status display shown in Creating the resources and resource groups, all of the resources are running on node z1.example.com. You can test whether the resource group fails over to node z2.example.com by using the following procedure to put the first node in standby mode, after which the node will no longer be able to host resources.

Procedure

The following command puts node z1.example.com in standby mode.

[root@z1 ~]# pcs node standby z1.example.com

After putting node z1 in standby mode, check the cluster status. Note that the resources should now all be running on z2.

The web site at the defined IP address should still display, without interruption.

To remove z1 from standby mode, enter the following command.

[root@z1 ~]# pcs node unstandby z1.example.com

Note: Removing a node from standby mode does not in itself cause the resources to fail back over to that node. This will depend on the resource-stickiness value for the resources. For information about the resource-stickiness meta attribute, see Configuring a resource to prefer its current node.
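The check that all resources moved to the second node can be scripted. The sketch below assumes that each resource line in the status output has the form "name (agent): Started node", that is, the pcs resource status line shown earlier in this chapter followed by a node name. That line format and the helper name all_started_on are assumptions, so compare them with the output on your own cluster before relying on the result.

```shell
#!/bin/sh
# Sketch: read status lines from standard input and succeed only if at
# least one resource is started and every started resource is on the
# node given as the first argument.
# all_started_on is a hypothetical helper; the line format is assumed.
all_started_on() {
    awk -v node="$1" '
        /\):[ \t]*Started/ {
            seen = 1
            # The node name is assumed to be the last field on the line.
            if ($NF != node) bad = 1
        }
        END { if (seen && !bad) exit 0; exit 1 }
    '
}
```

After putting z1.example.com in standby mode, this might be used as pcs resource status | all_started_on z2.example.com, with a zero exit status indicating that the group failed over.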

Chapter 6. Configuring an active/passive NFS server in a Red Hat High Availability cluster

The Red Hat High Availability Add-On provides support for running a highly available active/passive NFS server on a Red Hat Enterprise Linux High Availability Add-On cluster using shared storage. In the following example, you are configuring a two-node cluster in which clients access the NFS file system through a floating IP address. The NFS server runs on one of the two nodes in the cluster. If the node on which the NFS server is running becomes inoperative, the NFS server starts up again on the second node of the cluster with minimal service interruption.

This use case requires that your system include the following components:

- A two-node Red Hat High Availability cluster with power fencing configured for each node. We recommend but do not require a private network. This procedure uses the cluster example provided in Creating a Red Hat High-Availability cluster with Pacemaker.

- A public virtual IP address, required for the NFS server.

- Shared storage for the nodes in the cluster, using iSCSI, Fibre Channel, or other shared network block device.

Configuring a highly available active/passive NFS server on an existing two-node Red Hat Enterprise Linux High Availability cluster requires that you perform the following steps:

- Configure a file system on an LVM logical volume on the shared storage for the nodes in the cluster.

- Configure an NFS share on the shared storage on the LVM logical volume.

- Create the cluster resources.

- Test the NFS server you have configured.

6.1. Configuring an LVM volume with an XFS file system in a Pacemaker cluster

Create an LVM logical volume on storage that is shared between the nodes of the cluster with the following procedure.

LVM volumes and the corresponding partitions and devices used by cluster nodes must be connected to the cluster nodes only.

The following procedure creates an LVM logical volume and then creates an XFS file system on that volume for use in a Pacemaker cluster. In this example, the shared partition /dev/sdb1 is used to store the LVM physical volume from which the LVM logical volume will be created.

Procedure

On both nodes of the cluster, perform the following steps to set the value for the LVM system ID to the value of the uname identifier for the system. The LVM system ID will be used to ensure that only the cluster is capable of activating the volume group.

Set the system_id_source configuration option in the /etc/lvm/lvm.conf configuration file to uname.

# Configuration option global/system_id_source.
system_id_source = "uname"

Verify that the LVM system ID on the node matches the uname for the node.

# lvm systemid
  system ID: z1.example.com
# uname -n
  z1.example.com
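The comparison in the verification step can be automated on each node. The following is a minimal sketch, assuming that lvm systemid prints a line of the form "system ID: name" as in the listing above; system_id_matches is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: succeed only if the system ID reported by LVM equals the node name.
# $1 is the output of "lvm systemid"; $2 is the output of "uname -n".
# system_id_matches is a hypothetical helper (an assumption).
system_id_matches() {
    # Strip the "system ID:" label and surrounding spaces from the LVM output.
    id=$(printf '%s\n' "$1" | sed -n 's/^ *system ID: *//p')
    [ -n "$id" ] && [ "$id" = "$2" ]
}
```

On a node, this might be called as system_id_matches "$(lvm systemid)" "$(uname -n)", with a non-zero exit status indicating that system_id_source still needs to be set.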

Create the LVM volume and create an XFS file system on that volume. Since the /dev/sdb1 partition is storage that is shared, you perform this part of the procedure on one node only.

pvcreate /dev/sdb1