Configuring basic system settings

Set up the essential functions of your system and customize your system environment

Abstract

Providing feedback on Red Hat documentation

We are committed to providing high-quality documentation and value your feedback. To help us improve, you can submit suggestions or report errors through the Red Hat Jira tracking system.

Procedure

Log in to the Jira website.

If you do not have an account, select the option to create one.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Configuring and managing basic network access

NetworkManager creates a connection profile for each Ethernet adapter that is installed in a host. By default, this profile uses DHCP for both IPv4 and IPv6 connections. Modify this automatically-created profile or add a new one in the following cases:

- The network requires custom settings, such as a static IP address configuration.

- You require multiple profiles because the host roams among different networks.

Red Hat Enterprise Linux provides administrators with different options to configure Ethernet connections. For example:

- Use nmcli to configure connections on the command line.

- Use nmtui to configure connections in a text-based user interface.

- Use the GNOME Settings menu or nm-connection-editor application to configure connections in a graphical interface.

- Use nmstatectl to configure connections through the Nmstate API.

- Use RHEL system roles to automate the configuration of connections on one or multiple hosts.

1.1. Configuring the network and host name in the graphical installation mode

Follow the steps in this procedure to configure your network and host name.

Procedure

- From the Installation Summary window, click .

- From the list in the left-hand pane, select an interface. The details are displayed in the right-hand pane.

Toggle the switch to enable or disable the selected interface.

You cannot add or remove interfaces manually.

- Click to add a virtual network interface, which can be either: Team, Bond, Bridge, or VLAN.

- Click to remove a virtual interface.

- Click to change settings such as IP addresses, DNS servers, or routing configuration for an existing interface (both virtual and physical).

Type a host name for your system in the Host Name field.

The host name can be either a fully qualified domain name (FQDN) in the format hostname.domainname, or a short host name without the domain. Many networks have a Dynamic Host Configuration Protocol (DHCP) service that automatically supplies connected systems with a domain name. To allow the DHCP service to assign the domain name to this system, specify only the short host name.

Host names can contain only alphanumeric characters, hyphens (-), and periods (.). A host name should be 64 characters or fewer, and it cannot start or end with a hyphen or a period. To be compliant with DNS, each part of an FQDN should be 63 characters or fewer, and the total length of the FQDN, including dots, should not exceed 255 characters.

The value localhost means that no specific static host name for the target system is configured, and the actual host name of the installed system is configured during the processing of the network configuration, for example, by NetworkManager using DHCP or DNS.

When using static IP and host name configuration, it depends on the planned system use case whether to use a short name or FQDN. Red Hat Identity Management configures FQDN during provisioning, but some third-party software products may require a short name. In either case, to ensure availability of both forms in all situations, add an entry for the host in /etc/hosts in the format IP FQDN short-alias.

- Click to apply the host name to the installer environment.

- Alternatively, in the Network and Hostname window, you can choose the Wireless option. Click in the right-hand pane to select your wifi connection, enter the password if required, and click .
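The host name rules in this procedure can be checked before you enter a name in the Host Name field. The following is a minimal sketch and not part of the installer: the function name is_valid_hostname is hypothetical, and it checks only the character and length rules stated above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a host name against the rules in this section.
is_valid_hostname() {
    local name=$1 label
    # Not empty, and at most 64 characters in total.
    [ -n "$name" ] && [ "${#name}" -le 64 ] || return 1
    # Only alphanumeric characters, "-" and "." are allowed.
    case $name in
        *[!A-Za-z0-9.-]*) return 1 ;;
    esac
    # The name must not start or end with "-" or ".".
    case $name in
        [-.]*|*[-.]) return 1 ;;
    esac
    # DNS compliance: each dot-separated part has at most 63 characters.
    local IFS=.
    for label in $name; do
        [ "${#label}" -le 63 ] || return 1
    done
    return 0
}

for candidate in server.example.com server -server bad_name; do
    if is_valid_hostname "$candidate"; then
        echo "$candidate: valid"
    else
        echo "$candidate: invalid"
    fi
done
```

Because the sketch enforces the 64-character limit on the whole name, the 255-character FQDN limit is satisfied implicitly.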

1.2. Configuring an Ethernet connection by using nmcli

If you connect a host to the network over Ethernet, you can manage the connection’s settings on the command line by using the nmcli utility.

Prerequisites

- A physical or virtual Ethernet Network Interface Controller (NIC) exists in the server’s configuration.

Procedure

List the NetworkManager connection profiles:

    # nmcli connection show
    NAME                UUID                                  TYPE      DEVICE
    Wired connection 1  a5eb6490-cc20-3668-81f8-0314a27f3f75  ethernet  enp1s0

By default, NetworkManager creates a profile for each NIC in the host. If you plan to connect this NIC only to a specific network, adapt the automatically-created profile. If you plan to connect this NIC to networks with different settings, create individual profiles for each network.

If you want to create an additional connection profile, enter:

    # nmcli connection add con-name <connection-name> ifname <device-name> type ethernet

Skip this step to modify an existing profile.

Optional: Rename the connection profile:

    # nmcli connection modify "Wired connection 1" connection.id "Internal-LAN"

On hosts with multiple profiles, a meaningful name makes it easier to identify the purpose of a profile.

Display the current settings of the connection profile:
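One way to perform this step, assuming the profile was renamed to Internal-LAN as in the previous step: when given a profile name, nmcli connection show prints all settings of that profile. The guard around the call is only there so that the sketch also runs on a host without NetworkManager.

```shell
# Assumption: the profile is named Internal-LAN; adjust to your profile name.
profile="Internal-LAN"

if command -v nmcli >/dev/null 2>&1; then
    # Print every setting of the profile, for example ipv4.method and ipv6.method.
    nmcli connection show "$profile" || echo "Profile '$profile' not found" >&2
else
    echo "nmcli is not installed on this host" >&2
fi
```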

Configure the IPv4 settings:

To use DHCP, enter:

    # nmcli connection modify Internal-LAN ipv4.method auto

Skip this step if ipv4.method is already set to auto (default).

To set a static IPv4 address, network mask, default gateway, DNS servers, and search domain, enter:

    # nmcli connection modify Internal-LAN ipv4.method manual ipv4.addresses 192.0.2.1/24 ipv4.gateway 192.0.2.254 ipv4.dns 192.0.2.200 ipv4.dns-search example.com

Configure the IPv6 settings:

To use stateless address autoconfiguration (SLAAC), enter:

    # nmcli connection modify Internal-LAN ipv6.method auto

Skip this step if ipv6.method is already set to auto (default).

To set a static IPv6 address, network mask, default gateway, DNS servers, and search domain, enter:

    # nmcli connection modify Internal-LAN ipv6.method manual ipv6.addresses 2001:db8:1::1/64 ipv6.gateway 2001:db8:1::fffe ipv6.dns 2001:db8:1::ffbb ipv6.dns-search example.com

To customize other settings in the profile, use the following command:

    # nmcli connection modify <connection-name> <setting> <value>

Enclose values with spaces or semicolons in quotes.

Activate the profile:

    # nmcli connection up Internal-LAN

Verification

Display the IP settings of the NIC:
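A minimal sketch of this check, assuming the NIC is enp1s0, the device name used in the other examples of this section. The ip address show command lists the IPv4 (inet) and IPv6 (inet6) addresses assigned to a device.

```shell
# Assumption: the NIC is enp1s0; replace it with your device name.
device="enp1s0"

if command -v ip >/dev/null 2>&1; then
    # Show link state and the addresses assigned to the device.
    ip address show "$device" || echo "Device '$device' not found" >&2
else
    echo "The ip utility is not installed on this host" >&2
fi
```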

Display the IPv4 default gateway:

    # ip route show default
    default via 192.0.2.254 dev enp1s0 proto static metric 102

Display the IPv6 default gateway:

    # ip -6 route show default
    default via 2001:db8:1::fffe dev enp1s0 proto static metric 102 pref medium

Display the DNS settings:

    # cat /etc/resolv.conf
    search example.com
    nameserver 192.0.2.200
    nameserver 2001:db8:1::ffbb

If multiple connection profiles are active at the same time, the order of nameserver entries depends on the DNS priority values in these profiles and the connection types.

Use the ping utility to verify that this host can send packets to other hosts:

    # ping <host-name-or-IP-address>

Troubleshooting

- Verify that the network cable is plugged in to the host and a switch.

- Check whether the link failure exists only on this host or also on other hosts connected to the same switch.

- Verify that the network cable and the network interface are working as expected. Perform hardware diagnosis steps and replace defective cables and network interface cards.

- If the configuration on the disk does not match the configuration on the device, starting or restarting NetworkManager creates an in-memory connection that reflects the configuration of the device. For further details and how to avoid this problem, see the Red Hat Knowledgebase solution NetworkManager duplicates a connection after restart of NetworkManager service.

1.3. Configuring an Ethernet connection by using nmtui

If you connect a host to an Ethernet network, you can manage the connection’s settings in a text-based user interface. Use the nmtui application to create new profiles and to update existing ones on a host without a graphical interface.

In nmtui:

- Navigate by using the cursor keys.

- Press a button by selecting it and hitting Enter.

- Select and clear checkboxes by using Space.

- To return to the previous screen, use ESC.

Prerequisites

- A physical or virtual Ethernet Network Interface Controller (NIC) exists in the server’s configuration.

Procedure

If you do not know the network device name you want to use in the connection, display the available devices:

    # nmcli device status
    DEVICE  TYPE      STATE        CONNECTION
    enp1s0  ethernet  unavailable  --
    ...

Start nmtui:

    # nmtui

- Select Edit a connection, and press Enter.

Choose whether to add a new connection profile or to modify an existing one:

To create a new profile:

- Press Add.

- Select Ethernet from the list of network types, and press Enter.

- To modify an existing profile, select the profile from the list, and press Enter.

Optional: Update the name of the connection profile.

On hosts with multiple profiles, a meaningful name makes it easier to identify the purpose of a profile.

- If you create a new connection profile, enter the network device name into the Device field.

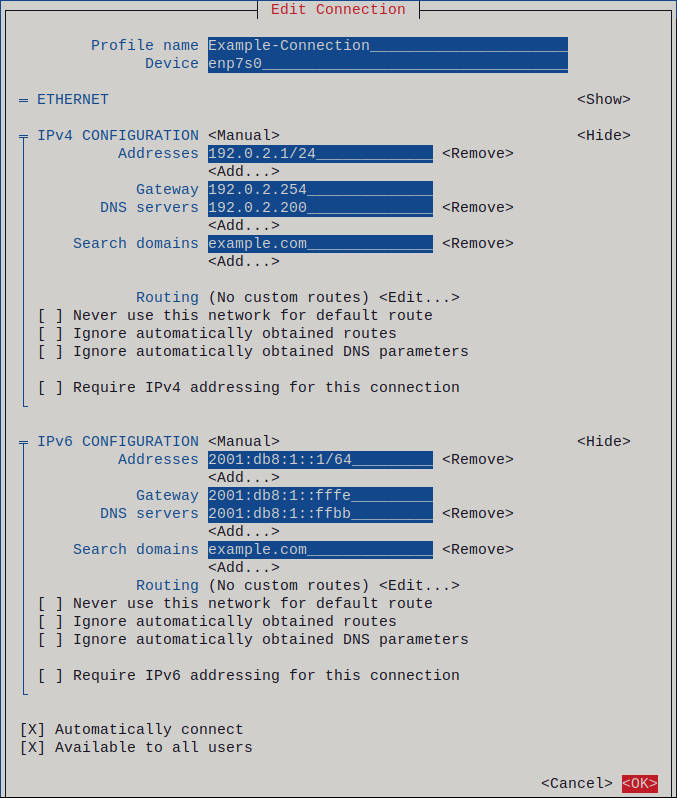

Depending on your environment, configure the IP address settings in the IPv4 configuration and IPv6 configuration areas accordingly. For this, press the button next to these areas, and select:

- Disabled, if this connection does not require an IP address.

- Automatic, if a DHCP server dynamically assigns an IP address to this NIC.

- Manual, if the network requires static IP address settings. In this case, you must fill in further fields:

- Press Show next to the protocol you want to configure to display additional fields.

Press Add next to Addresses, and enter the IP address and the subnet mask in Classless Inter-Domain Routing (CIDR) format.

If you do not specify a subnet mask, NetworkManager sets a /32 subnet mask for IPv4 addresses and /64 for IPv6 addresses.

- Enter the address of the default gateway.

- Press Add next to DNS servers, and enter the DNS server address.

- Press Add next to Search domains, and enter the DNS search domain.

Figure 1.1. Example of an Ethernet connection with static IP address settings
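In CIDR format, the subnet mask is written as a prefix length after the address, so 192.0.2.1/24 corresponds to the mask 255.255.255.0, and the /32 default covers only the single address. The following sketch converts an IPv4 prefix length to a dotted mask; the function name prefix_to_netmask is hypothetical and is not part of nmtui or NetworkManager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert an IPv4 prefix length (0-32) to a dotted netmask.
prefix_to_netmask() {
    local prefix=$1 mask="" bits i
    for i in 1 2 3 4; do
        # Each octet takes up to 8 of the remaining prefix bits.
        if [ "$prefix" -ge 8 ]; then bits=8; else bits=$prefix; fi
        prefix=$((prefix - bits))
        # An octet with "bits" leading one-bits has the value 256 - 2^(8 - bits).
        mask="${mask}${mask:+.}$((256 - (1 << (8 - bits))))"
    done
    echo "$mask"
}

prefix_to_netmask 24   # 255.255.255.0
prefix_to_netmask 32   # 255.255.255.255
```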

- Press OK to create and automatically activate the new connection.

- Press Back to return to the main menu.

- Select Quit, and press Enter to close the nmtui application.

Verification

Display the IP settings of the NIC:

Display the IPv4 default gateway:

    # ip route show default
    default via 192.0.2.254 dev enp1s0 proto static metric 102

Display the IPv6 default gateway:

    # ip -6 route show default
    default via 2001:db8:1::fffe dev enp1s0 proto static metric 102 pref medium

Display the DNS settings:

    # cat /etc/resolv.conf
    search example.com
    nameserver 192.0.2.200
    nameserver 2001:db8:1::ffbb

If multiple connection profiles are active at the same time, the order of nameserver entries depends on the DNS priority values in these profiles and the connection types.

Use the ping utility to verify that this host can send packets to other hosts:

    # ping <host-name-or-IP-address>

Troubleshooting

- Verify that the network cable is plugged in to the host and a switch.

- Check whether the link failure exists only on this host or also on other hosts connected to the same switch.

- Verify that the network cable and the network interface are working as expected. Perform hardware diagnosis steps and replace defective cables and network interface cards.

- If the configuration on the disk does not match the configuration on the device, starting or restarting NetworkManager creates an in-memory connection that reflects the configuration of the device. For further details and how to avoid this problem, see the Red Hat Knowledgebase solution NetworkManager duplicates a connection after restart of NetworkManager service.

1.4. Configuring an Ethernet connection with a dynamic IP address by using the network RHEL system role with an interface name

You can use the network RHEL system role to configure an Ethernet connection that retrieves its IP addresses, gateways, and DNS settings from a DHCP server and IPv6 stateless address autoconfiguration (SLAAC). With this role you can assign the connection profile to the specified interface name.

To connect a Red Hat Enterprise Linux host to an Ethernet network, create a NetworkManager connection profile for the network device. By using Ansible and the network RHEL system role, you can automate this process and remotely configure connection profiles on the hosts defined in a playbook.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

- A physical or virtual Ethernet device exists in the servers' configuration.

- A DHCP server and SLAAC are available in the network.

- The managed nodes use the NetworkManager service to configure the network.

Procedure

Create a playbook file, for example, ~/playbook.yml. The settings specified in the example playbook include the following:

dhcp4: yes

- Enables automatic IPv4 address assignment from DHCP, PPP, or similar services.

auto6: yes

- Enables IPv6 auto-configuration. By default, NetworkManager uses Router Advertisements. If the router announces the managed flag, NetworkManager requests an IPv6 address and prefix from a DHCPv6 server.
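A playbook that is consistent with the dhcp4 and auto6 settings described above might look like the following sketch. The host name managed-node-01.example.com and the interface name enp1s0 are assumptions for illustration; the variable names follow the README of the network RHEL system role, which remains the authoritative reference.

```shell
# Assumptions: managed-node-01.example.com and enp1s0 are placeholder names.
playbook="${PLAYBOOK:-$HOME/playbook.yml}"

# Write the playbook; the quoted 'EOF' prevents any shell expansion in the YAML.
cat > "$playbook" <<'EOF'
---
- name: Configure the network
  hosts: managed-node-01.example.com
  tasks:
    - name: Ethernet connection profile with dynamic IP address settings
      ansible.builtin.include_role:
        name: rhel-system-roles.network
      vars:
        network_connections:
          - name: enp1s0
            interface_name: enp1s0
            type: ethernet
            autoconnect: yes
            ip:
              dhcp4: yes
              auto6: yes
            state: up
EOF

echo "Wrote $playbook"
```

Setting interface_name binds the connection profile to the named device, which is the variant this section describes.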

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.network/README.md file on the control node.

Validate the playbook syntax:

    $ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

    $ ansible-playbook ~/playbook.yml

Verification

Query the Ansible facts of the managed node and verify that the interface received IP addresses and DNS settings:


Chapter 2. Registering the system and managing subscriptions

Subscriptions cover products installed on Red Hat Enterprise Linux, including the operating system itself. If you have not registered the system, you have no access to the RHEL repositories and cannot install software updates, such as security and bug fixes. Even if you have a self-support subscription, it grants access to the knowledge base only, while other resources remain unavailable without subscriptions. By purchasing subscriptions and using Red Hat Content Delivery Network (CDN), you can track:

- Registered systems

- Products installed on registered systems

- Subscriptions attached to the installed products

2.1. Registering a system by using the command line

Subscriptions cover products installed on Red Hat Enterprise Linux, including the operating system itself. If you have not registered the system, you have no access to the RHEL repositories and cannot install software updates, such as security and bug fixes. Even if you have a self-support subscription, it grants access to the knowledge base only, while other resources remain unavailable without subscriptions. You need to register the system to activate and manage the Red Hat Enterprise Linux subscription for your Red Hat account.

To register the system with Red Hat Lightspeed, you can use the rhc connect utility. For details, see Setting up remote host configuration.

Prerequisites

- You have an active subscription of the Red Hat Enterprise Linux system.

Procedure

Register and subscribe the system:

The command prompts you to enter the user name and password of your Red Hat Customer Portal account.

If the registration process fails, you can register the system with a specific pool. For details, proceed with the following steps:

Determine the pool ID of a subscription:

    # subscription-manager list --available --all

This command displays all available subscriptions for your Red Hat account. For every subscription, various characteristics are displayed, including the pool ID.

Attach the appropriate subscription to your system by replacing <example_pool_id> with the pool ID determined in the previous step:

    # subscription-manager attach --pool=<example_pool_id>

Verification

- Verify the system under → in the Hybrid Cloud Console.

2.2. Registering a system by using the web console

Subscriptions cover products installed on Red Hat Enterprise Linux, including the operating system itself. If you have not registered the system, you have no access to the RHEL repositories and cannot install software updates, such as security and bug fixes. Even if you have a self-support subscription, it grants access to the Red Hat Knowledgebase portal only, while other resources remain unavailable without subscriptions. You can register a newly installed Red Hat Enterprise Linux system with your account credentials in the Red Hat Enterprise Linux web console.

Prerequisites

- You have an active subscription of the RHEL system.

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- In the Health field on the Overview page, click the Not registered warning, or click Subscriptions in the main menu to move to the page with your subscription information.

- In the Overview field, click .

In the Register system dialog, select the registration method.

Optional: Enter your organization’s name or ID. If your account belongs to more than one organization on the Red Hat Customer Portal, you must add the organization name or ID. To get the organization ID, check with your Technical Account Manager at Red Hat.

- If you do not want to connect your system to Red Hat Lightspeed, clear the Insights checkbox.

- Click .

Verification

- Check details of your subscription in the Hybrid Cloud Console.

2.3. Registering a system in the GNOME desktop environment

Subscriptions cover products installed on Red Hat Enterprise Linux, including the operating system itself. If you have not registered the system, you have no access to the RHEL repositories and cannot install software updates, such as security and bug fixes. Even if you have a self-support subscription, it grants access to the knowledge base only, while other resources remain unavailable without subscriptions. Follow the steps in this procedure to enroll the system with your Red Hat account.

Prerequisites

- You have created a Red Hat account.

- You are a root user and logged in to the GNOME desktop environment. For details, see Register and subscribe RHEL system to Red Hat Subscription Manager.

Procedure

Open the system menu, which is accessible from the upper-right screen corner, and click the Settings icon.

- In the → section, click .

- Select Registration Server.

- If you are not using the Red Hat server, enter the server address in the URL field.

- In the Registration Type menu, select Red Hat Account.

Under Registration Details:

- Enter your Red Hat account user name in the Login field.

- Enter your Red Hat account password in the Password field.

- Enter the name of your organization in the Organization field.

- Click .

2.4. Registering RHEL 8 using the installer GUI

You can register a Red Hat Enterprise Linux 8 system by using the RHEL installer GUI.

Prerequisites

- You have a valid user account on the Red Hat Customer Portal. See the Create a Red Hat Login page.

- You have a valid Activation Key and Organization ID.

Procedure

- From the Installation Summary screen, under Software, click Connect to Red Hat.

- Authenticate your Red Hat account using the Account or Activation Key option.

Optional: In the Set System Purpose field select the Role, SLA, and Usage attribute that you want to set from the drop-down menu.

At this point, your Red Hat Enterprise Linux 8 system has been successfully registered.

Chapter 3. Accessing the Red Hat Support

If you require help with troubleshooting a problem, you can contact Red Hat Support.

Procedure

Log in to the Red Hat Support web site and choose one of the following options:

- Open a new support case.

- Initiate a live chat with a Red Hat expert.

- Contact a Red Hat expert by making a call or sending an email.

3.1. Using the sosreport utility to collect diagnostic information about a system to attach it to a support ticket

The sosreport command collects configuration details, system information and diagnostic information from a Red Hat Enterprise Linux system.

The following section describes how to use the sosreport command to produce reports for your support cases.

Prerequisites

- A valid user account on the Red Hat Customer Portal. See Create a Red Hat Login.

- An active subscription for the RHEL system.

- A support-case number.

Procedure

Install the sos package:

    # yum install sos

Generate a report:

    # sosreport

Optionally, pass the --upload option to the command to automatically upload and attach the report to a support case. This requires internet access and your Customer Portal credentials.

Optional: Manually attach the report to your support case.

See the Red Hat Knowledgebase solution How can I attach a file to a Red Hat support case? for more information.

Chapter 4. Changing basic environment settings

Configuration of basic environment settings is a part of the installation process. The following sections guide you when you change them later. The basic configuration of the environment includes:

- Date and time

- System locales

- Keyboard layout

- Language

4.1. Configuring the date and time

Accurate timekeeping is important for several reasons. In Red Hat Enterprise Linux, timekeeping is ensured by the NTP protocol, which is implemented by a daemon running in user space. The user-space daemon updates the system clock running in the kernel. The system clock can keep time by using various clock sources.

Red Hat Enterprise Linux 8 uses the chronyd daemon to implement NTP. chronyd is available from the chrony package. For more information, see Using the chrony suite to configure NTP.

4.1.1. Manually configuring the date, time, and timezone settings

To set the date, time, and time zone manually, use the timedatectl utility.

Procedure

Optional: List the timezones:

    # timedatectl list-timezones
    Europe/Berlin

Set the time zone:

    # timedatectl set-timezone <time_zone>

Set the date and time. Enclose the value in quotes because it contains a space:

    # timedatectl set-time "<YYYY-mm-dd HH:MM:SS>"

Verification

Display the date, time, and timezone:

    # date
    Mon Mar 30 16:02:59 CEST 2020

To see more details, use the timedatectl command:


4.2. Configuring time settings by using the web console

You can set a time zone and synchronize the system time with a Network Time Protocol (NTP) server in the RHEL web console.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click the current system time in Overview.

- Click System time.

- In the Change System Time dialog box, change the time zone if necessary.

In the Set Time drop-down menu, select one of the following:

- Manually

- Use this option if you need to set the time manually, without an NTP server.

- Automatically using NTP server

- This is the default option, which synchronizes time automatically with the preset NTP servers.

- Automatically using specific NTP servers

- Use this option only if you need to synchronize the system with a specific NTP server. Specify the DNS name or the IP address of the server.

- Click Change.

Verification

- Check the system time displayed in the System tab.

4.3. Configuring the system locale

System-wide locale settings are stored in the /etc/locale.conf file that is read at early boot by the systemd daemon. Every service or user inherits the locale settings configured in /etc/locale.conf, unless individual programs or individual users override them.

Procedure

Optional: Display the current system locale settings:

    # localectl status
       System Locale: LANG=en_US.UTF-8
           VC Keymap: de-nodeadkeys
          X11 Layout: de
         X11 Variant: nodeadkeys

List available system locale settings:
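One way to list the locales is the localectl list-locales command. The following sketch additionally filters the list for UTF-8 locales; the filter is an addition for illustration and the variable name pattern is hypothetical.

```shell
# Hypothetical filter: match locale names that end in UTF-8 or utf8.
pattern='utf-?8'

if command -v localectl >/dev/null 2>&1; then
    # List the available locales and keep only the UTF-8 variants.
    localectl list-locales | grep -iE "$pattern" || echo "No UTF-8 locales found" >&2
else
    echo "localectl is not installed on this host" >&2
fi
```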

- Update the system locale setting:

For example:

    # localectl set-locale LANG=en_US.UTF-8

The GNOME Terminal does not support non-UTF8 system locales. For more information, see the Red Hat Knowledgebase solution The gnome-terminal application fails to start when the system locale is set to non-UTF8.

4.4. Configuring the keyboard layout

The keyboard layout settings control the layout used on the text console and graphical user interfaces.

Procedure

To list available keymaps:
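One way to list the keymaps is the localectl list-keymaps command. Because the list is long, the following sketch narrows it to names that start with a given prefix; the prefix us is an assumption chosen to match the example keymap in this section.

```shell
# Assumption: you are looking for keymaps whose names start with "us".
prefix="us"

if command -v localectl >/dev/null 2>&1; then
    # Print all keymaps and keep those that begin with the prefix.
    localectl list-keymaps | grep "^$prefix" || echo "No keymap starts with '$prefix'" >&2
else
    echo "localectl is not installed on this host" >&2
fi
```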

To display the current status of keymaps settings:

    $ localectl status
    ...
    VC Keymap: us
    ...

To set or change the default system keymap, enter, for example:

    # localectl set-keymap us

4.5. Changing the font size in text console mode

You can change the font size in the virtual console.

Procedure

Display the currently-used font file:

    # cat /etc/vconsole.conf
    FONT="eurlatgr"

List the available font files:
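A minimal sketch of this step, assuming the console fonts are stored in /usr/lib/kbd/consolefonts/, the location used by the kbd package on RHEL. The file names without the .psfu.gz suffix are the values that the FONT variable accepts.

```shell
# Assumption: console fonts live in the kbd package's default directory.
font_dir="/usr/lib/kbd/consolefonts"

if [ -d "$font_dir" ]; then
    # List one font file per line; Unicode-capable fonts end in .psfu.gz.
    ls -1 "$font_dir"/*.psfu.gz 2>/dev/null || echo "No .psfu.gz fonts in $font_dir" >&2
else
    echo "Font directory $font_dir not found" >&2
fi
```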

Select a font file that supports your character set and code page.

Optional: To test a font file, load it temporarily:

    # setfont LatArCyrHeb-16.psfu.gz

The setfont utility applies the font file immediately, and terminals use the new font and font size until you reboot or apply a different font file.

- To return to the font file defined in /etc/vconsole.conf, enter setfont without any parameters.

Edit the /etc/vconsole.conf file and set the FONT variable to the font file RHEL should load at boot time, for example:

    FONT=LatArCyrHeb-16

Reboot the host:

    # reboot

Chapter 5. Using secure communications between two systems with OpenSSH

SSH (Secure Shell) is a protocol which provides secure communications between two systems using a client-server architecture and allows users to log in to server host systems remotely. Unlike other remote communication protocols, such as FTP or Telnet, SSH encrypts the login session, which prevents intruders from collecting unencrypted passwords from the connection.

5.1. Generating SSH key pairs

You can log in to an OpenSSH server without entering a password by generating an SSH key pair on a local system and copying the generated public key to the OpenSSH server. Each user who wants to create a key must run this procedure.

To preserve previously generated key pairs after you reinstall the system, back up the ~/.ssh/ directory before you create new keys. After reinstalling, copy it back to your home directory. You can do this for all users on your system, including root.

Prerequisites

- You are logged in as a user who wants to connect to the OpenSSH server by using keys.

- The OpenSSH server is configured to allow key-based authentication.

Procedure

- Generate an ECDSA key pair:

$ ssh-keygen -t ecdsa

You can also generate an RSA key pair by using the ssh-keygen command without any parameter or an Ed25519 key pair by entering the ssh-keygen -t ed25519 command. Note that the Ed25519 algorithm is not FIPS-140-compliant, and OpenSSH does not work with Ed25519 keys in FIPS mode.

- Copy the public key to a remote machine:

$ ssh-copy-id <username>@<ssh-server-example.com>

Replace <username>@<ssh-server-example.com> with your credentials.

If you do not use the ssh-agent program in your session, the previous command copies the most recently modified ~/.ssh/id*.pub public key if it is not yet installed. To specify another public-key file or to prioritize keys in files over keys cached in memory by ssh-agent, use the ssh-copy-id command with the -i option.

Verification

- Log in to the OpenSSH server by using the key file:

$ ssh -o PreferredAuthentications=publickey <username>@<ssh-server-example.com>

5.2. Setting key-based authentication as the only method on an OpenSSH server

To improve system security, enforce key-based authentication by disabling password authentication on your OpenSSH server.

Prerequisites

- The openssh-server package is installed.

- The sshd daemon is running on the server.

- You can already connect to the OpenSSH server by using a key.

See the Generating SSH key pairs section for details.

Procedure

- Open the /etc/ssh/sshd_config configuration in a text editor, for example:

# vi /etc/ssh/sshd_config

- Change the PasswordAuthentication option to no:

PasswordAuthentication no

- On a system other than a new default installation, check that the PubkeyAuthentication parameter is either not set or set to yes.

- Set the ChallengeResponseAuthentication directive to no.

Note that the corresponding entry is commented out in the configuration file and the default value is yes.

- To use key-based authentication with NFS-mounted home directories, enable the use_nfs_home_dirs SELinux boolean:

# setsebool -P use_nfs_home_dirs 1

- If you are connected remotely, not using console or out-of-band access, test the key-based login process before disabling password authentication.

- Reload the sshd daemon to apply the changes:

# systemctl reload sshd
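If you prefer to script the two directive changes instead of editing the file by hand, the following is a minimal sketch. It works on a scratch copy with stand-in content rather than on the real /etc/ssh/sshd_config. The set_option helper and the sample file are assumptions made for illustration, not part of OpenSSH.

```shell
#!/bin/sh
# Sketch: apply the two directives to a scratch copy of sshd_config.
# The sample content below is a stand-in, not a real sshd_config.
set -eu

workdir=$(mktemp -d)
conf="$workdir/sshd_config"

cat > "$conf" <<'EOF'
#PubkeyAuthentication yes
PasswordAuthentication yes
#ChallengeResponseAuthentication yes
EOF

# set_option NAME VALUE (hypothetical helper): rewrite an existing,
# possibly commented-out, directive, or append it if it is missing.
set_option() {
    if grep -Eq "^[#[:space:]]*$1[[:space:]]" "$conf"; then
        sed -E "s|^[#[:space:]]*$1[[:space:]].*|$1 $2|" "$conf" > "$conf.new"
        mv "$conf.new" "$conf"
    else
        printf '%s %s\n' "$1" "$2" >> "$conf"
    fi
}

set_option PasswordAuthentication no
set_option ChallengeResponseAuthentication no

# Show the result so that it can be reviewed before it is used anywhere.
cat "$conf"
```

The pattern also matches comment lines that merely start with the directive name, so review the result before you copy it over the real file. Running sshd -t afterwards checks the configuration syntax before you reload the daemon.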

5.3. Caching your SSH credentials by using ssh-agent

To avoid entering a passphrase each time you initiate an SSH connection, you can use the ssh-agent utility to cache the private SSH key for a login session. If the agent is running and your keys are unlocked, you can log in to SSH servers by using these keys but without having to enter the key’s password again. The private key and the passphrase remain secure.

Prerequisites

- You have a remote host with the SSH daemon running and reachable through the network.

- You know the IP address or hostname and credentials to log in to the remote host.

- You have generated an SSH key pair with a passphrase and transferred the public key to the remote machine.

See the Generating SSH key pairs section for details.

Procedure

- Add the command for automatically starting ssh-agent in your session to the ~/.bashrc file:

  - Open ~/.bashrc in a text editor of your choice, for example:

$ vi ~/.bashrc

  - Add the following line to the file:

eval $(ssh-agent)

  - Save the changes, and quit the editor.

- Add the following line to the ~/.ssh/config file:

AddKeysToAgent yes

With this option and ssh-agent started in your session, the agent prompts for a password only for the first time when you connect to a host.

Verification

- Log in to a host which uses the corresponding public key of the cached private key in the agent, for example:

$ ssh <example.user>@<ssh-server-example.com>

Note that you did not have to enter the passphrase.
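The two file edits in this procedure can also be scripted so that repeated runs do not add duplicate lines. The following is a minimal sketch under the assumption that a scratch directory stands in for your home directory. DEMO_HOME and append_once are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Sketch: add the two lines only if they are not already present.
# DEMO_HOME is a hypothetical scratch directory standing in for $HOME.
set -eu

DEMO_HOME=$(mktemp -d)
mkdir -p "$DEMO_HOME/.ssh"
chmod 700 "$DEMO_HOME/.ssh"

# append_once FILE LINE: append LINE to FILE unless an identical line exists.
append_once() {
    touch "$1"
    grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

# Run twice to show that the second pass changes nothing.
for pass in 1 2; do
    append_once "$DEMO_HOME/.bashrc" 'eval $(ssh-agent)'
    append_once "$DEMO_HOME/.ssh/config" 'AddKeysToAgent yes'
done

cat "$DEMO_HOME/.bashrc" "$DEMO_HOME/.ssh/config"
```

To apply the same idea to a real account, point the helper at ~/.bashrc and ~/.ssh/config instead of the scratch directory.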

5.4. Authenticating by SSH keys stored on a smart card

You can create and store ECDSA and RSA keys on a smart card and authenticate by the smart card on an OpenSSH client. Smart-card authentication replaces the default password authentication.

Prerequisites

- On the client side, the opensc package is installed and the pcscd service is running.

Procedure

- List all keys provided by the OpenSC PKCS #11 module including their PKCS #11 URIs and save the output to the keys.pub file:

$ ssh-keygen -D pkcs11: > keys.pub

- Transfer the public key to the remote server. Use the ssh-copy-id command with the keys.pub file created in the previous step:

$ ssh-copy-id -f -i keys.pub <username@ssh-server-example.com>

- Connect to <ssh-server-example.com> by using the ECDSA key. You can use just a subset of the URI, which uniquely references your key, for example:

$ ssh -i "pkcs11:id=%01?module-path=/usr/lib64/pkcs11/opensc-pkcs11.so" <ssh-server-example.com>
Enter PIN for 'SSH key':
[ssh-server-example.com] $

Because OpenSSH uses the p11-kit-proxy wrapper and the OpenSC PKCS #11 module is registered to the p11-kit tool, you can simplify the previous command:

$ ssh -i "pkcs11:id=%01" <ssh-server-example.com>
Enter PIN for 'SSH key':
[ssh-server-example.com] $

If you skip the id= part of a PKCS #11 URI, OpenSSH loads all keys that are available in the proxy module. This can reduce the amount of typing required:

$ ssh -i pkcs11: <ssh-server-example.com>
Enter PIN for 'SSH key':
[ssh-server-example.com] $

- Optional: You can use the same URI string in the ~/.ssh/config file to make the configuration permanent:

$ cat ~/.ssh/config
IdentityFile "pkcs11:id=%01?module-path=/usr/lib64/pkcs11/opensc-pkcs11.so"
$ ssh <ssh-server-example.com>
Enter PIN for 'SSH key':
[ssh-server-example.com] $

The ssh client utility now automatically uses this URI and the key from the smart card.

Chapter 6. Configuring logging

Most services in Red Hat Enterprise Linux log status messages, warnings, and errors. You can use the rsyslogd service to log these entries to local files or to a remote logging server.

6.1. Configuring a remote logging solution

To ensure that logs from various machines in your environment are recorded centrally on a logging server, you can configure the Rsyslog application to record logs that fit specific criteria from the client system to the server.

6.1.1. The Rsyslog logging service

The Rsyslog application, in combination with the systemd-journald service, provides local and remote logging support in Red Hat Enterprise Linux. The rsyslogd daemon continuously reads syslog messages received by the systemd-journald service from the Journal. rsyslogd then filters and processes these syslog events and records them to rsyslog log files or forwards them to other services according to its configuration.

The rsyslogd daemon also provides extended filtering, encryption-protected relaying of messages, input and output modules, and support for transport over the TCP and UDP protocols.

In /etc/rsyslog.conf, which is the main configuration file for rsyslog, you can specify the rules according to which rsyslogd handles the messages. Generally, you can classify messages by their source and topic (facility) and urgency (priority), and then assign an action that should be performed when a message fits these criteria.

In /etc/rsyslog.conf, you can also see a list of log files maintained by rsyslogd. Most log files are located in the /var/log/ directory. Some applications, such as httpd and samba, store their log files in a subdirectory within /var/log/.

6.1.2. Installing Rsyslog documentation

The Rsyslog application has extensive online documentation that is available at https://www.rsyslog.com/doc/, but you can also install the rsyslog-doc documentation package locally.

Prerequisites

- You have activated the AppStream repository on your system.

- You are authorized to install new packages using sudo.

Procedure

- Install the rsyslog-doc package:

# yum install rsyslog-doc

Verification

- Open the /usr/share/doc/rsyslog/html/index.html file in a browser of your choice, for example:

$ firefox /usr/share/doc/rsyslog/html/index.html &

6.1.3. Configuring a server for remote logging over TCP

The Rsyslog application enables you to both run a logging server and configure individual systems to send their log files to the logging server. To use remote logging through TCP, configure both the server and the client. The server collects and analyzes the logs sent by one or more client systems.

With the Rsyslog application, you can maintain a centralized logging system where log messages are forwarded to a server over the network. To avoid message loss when the server is not available, you can configure an action queue for the forwarding action. This way, messages that failed to be sent are stored locally until the server is reachable again. Note that such queues cannot be configured for connections using the UDP protocol.

The omfwd plug-in provides forwarding over UDP or TCP. The default protocol is UDP. Because the plug-in is built in, it does not have to be loaded.

By default, rsyslog uses TCP on port 514.

Prerequisites

- Rsyslog is installed on the server system.

- You are logged in as root on the server.

- The policycoreutils-python-utils package is installed for the optional step using the semanage command.

- The firewalld service is running.

Procedure

- Optional: To use a different port for rsyslog traffic, add the syslogd_port_t SELinux type to the port. For example, enable port 30514:

# semanage port -a -t syslogd_port_t -p tcp 30514

- Optional: To use a different port for rsyslog traffic, configure firewalld to allow incoming rsyslog traffic on that port. For example, allow TCP traffic on port 30514:

# firewall-cmd --zone=<zone-name> --permanent --add-port=30514/tcp
success
# firewall-cmd --reload

- Create a new file in the /etc/rsyslog.d/ directory named, for example, remotelog.conf, and insert the configuration for receiving logs over TCP.

- Save the changes to the /etc/rsyslog.d/remotelog.conf file.

- Test the syntax of the /etc/rsyslog.conf file:

# rsyslogd -N 1
rsyslogd: version 8.1911.0-2.el8, config validation run...
rsyslogd: End of config validation run. Bye.

- Make sure the rsyslog service is running and enabled on the logging server:

# systemctl status rsyslog

- Restart the rsyslog service:

# systemctl restart rsyslog

- Optional: If rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog

Your log server is now configured to receive and store log files from the other systems in your environment.
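For orientation, the content of the remotelog.conf file from this procedure might look like the following sketch. It writes a candidate file into a scratch directory so that you can review it before you copy it to /etc/rsyslog.d/. The template name TmplMsg and the ruleset name remote1 are assumptions. The target path is chosen to match the /var/log/remote/msg/hostname/root.log example used in the verification steps of this chapter.

```shell
#!/bin/sh
# Sketch: write a candidate remotelog.conf into a scratch directory.
set -eu

workdir=$(mktemp -d)
conf="$workdir/remotelog.conf"

cat > "$conf" <<'EOF'
# Per-host file name template for messages from remote systems
# (TmplMsg is an illustrative name)
template(name="TmplMsg" type="list") {
    constant(value="/var/log/remote/msg/")
    property(name="hostname")
    constant(value="/")
    property(name="programname" SecurePath="replace")
    constant(value=".log")
}

# Provide TCP syslog reception
module(load="imtcp")

# Ruleset that processes only the remote messages
# (remote1 is an illustrative name)
ruleset(name="remote1") {
    *.info action(type="omfile" DynaFile="TmplMsg")
}

# Listen on the example port and bind the listener to the ruleset
input(type="imtcp" port="30514" ruleset="remote1")
EOF

echo "Wrote $conf"
```

Binding the listener to its own ruleset keeps remote messages out of the local log files. Port 30514 is the example port used in the optional SELinux and firewalld steps.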

6.1.4. Configuring remote logging to a server over TCP

You can configure a system for forwarding log messages to a server over the TCP protocol. The omfwd plug-in provides forwarding over UDP or TCP. The default protocol is UDP. Because the plug-in is built in, you do not have to load it.

Prerequisites

- The rsyslog package is installed on the client systems that should report to the server.

- You have configured the server for remote logging.

- The specified port is permitted in SELinux and open in firewall.

- The system contains the policycoreutils-python-utils package, which provides the semanage command for adding a non-standard port to the SELinux configuration.

Procedure

- Create a new file in the /etc/rsyslog.d/ directory named, for example, 10-remotelog.conf, and insert the forwarding configuration. In that configuration:

  - The queue.type="linkedlist" setting enables a LinkedList in-memory queue.

  - The queue.filename setting defines a disk storage. The backup files are created with the example_fwd prefix in the working directory specified by the preceding global workDirectory directive.

  - The action.resumeRetryCount -1 setting prevents rsyslog from dropping messages when retrying to connect if the server is not responding.

  - The queue.saveOnShutdown="on" setting saves in-memory data if rsyslog shuts down.

  - The last line forwards all received messages to the logging server. Port specification is optional.

With this configuration, rsyslog sends messages to the server but keeps messages in memory if the remote server is not reachable. A file on disk is created only if rsyslog runs out of the configured memory queue space or needs to shut down, which benefits the system performance.

Note: Rsyslog processes configuration files in /etc/rsyslog.d/ in the lexical order.

- Restart the rsyslog service:

# systemctl restart rsyslog
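The forwarding action that this procedure describes can be sketched as follows. The script writes a candidate 10-remotelog.conf into a scratch directory for review. The target example.com and port 30514 are placeholders, not values required by rsyslog.

```shell
#!/bin/sh
# Sketch: write a candidate 10-remotelog.conf into a scratch directory.
set -eu

workdir=$(mktemp -d)
conf="$workdir/10-remotelog.conf"

cat > "$conf" <<'EOF'
# Forward every message over TCP and buffer it while the server is down.
# target and port are placeholders for your logging server.
*.* action(type="omfwd"
    queue.type="linkedlist"
    queue.filename="example_fwd"
    action.resumeRetryCount="-1"
    queue.saveOnShutdown="on"
    target="example.com" port="30514" protocol="tcp"
)
EOF

echo "Wrote $conf"
```

The 10- prefix in the file name matters because rsyslog reads the files in /etc/rsyslog.d/ in lexical order, so this action is set up before rules in files that sort later.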

Verification

To verify that the client system sends messages to the server, follow these steps:

- On the client system, send a test message:

# logger test

- On the server system, view the log that the server writes for the client, for example:

# cat /var/log/remote/msg/hostname/root.log
Feb 25 03:53:17 hostname root[6064]: test

Where hostname is the host name of the client system. Note that the log contains the user name of the user that entered the logger command, in this case root.

6.1.5. Configuring TLS-encrypted remote logging

By default, Rsyslog sends remote-logging communication in plain text. If your scenario requires you to secure this communication channel, you can encrypt it by using TLS.

To use encrypted transport through TLS, configure both the server and the client. The server collects and analyzes the logs sent by one or more client systems.

You can use either the ossl network stream driver (OpenSSL) or the gtls stream driver (GnuTLS).

If you have a separate system with higher security, for example, a system that is not connected to any network or has stricter authorizations, use the separate system as the certifying authority (CA).

You can customize your connection settings with stream drivers on the server side on the global, module, and input levels, and on the client side on the global and action levels. The more specific configuration overrides the more general configuration. This means, for example, that you can use ossl in global settings for most connections and gtls on the input and action settings only for specific connections.

Prerequisites

- You have root access to both the client and server systems.

- The following packages are installed on the server and the client systems:

  - The rsyslog package.

  - For the ossl network stream driver, the rsyslog-openssl package.

  - For the gtls network stream driver, the rsyslog-gnutls package.

  - For generating certificates by using the certtool command, the gnutls-utils package.

- On your logging server, the following certificates are in the /etc/pki/ca-trust/source/anchors/ directory and your system configuration is updated by using the update-ca-trust command:

  - ca-cert.pem - a CA certificate that can verify keys and certificates on logging servers and clients.

  - server-cert.pem - a public key of the logging server.

  - server-key.pem - a private key of the logging server.

- On your logging clients, the following certificates are in the /etc/pki/ca-trust/source/anchors/ directory and your system configuration is updated by using update-ca-trust:

  - ca-cert.pem - a CA certificate that can verify keys and certificates on logging servers and clients.

  - client-cert.pem - a public key of a client.

  - client-key.pem - a private key of a client.

Procedure

- Configure the server for receiving encrypted logs from your client systems:

  - Create a new file in the /etc/rsyslog.d/ directory named, for example, securelogser.conf.

  - To encrypt the communication, the configuration file must contain paths to certificate files on your server, a selected authentication method, and a stream driver that supports TLS encryption. Add the corresponding lines to the /etc/rsyslog.d/securelogser.conf file.

Note: If you prefer the GnuTLS driver, use the StreamDriver.Name="gtls" configuration option. See the documentation installed with the rsyslog-doc package for more information about less strict authentication modes than x509/name.

  - Save the changes to the /etc/rsyslog.d/securelogser.conf file.

  - Verify the syntax of the /etc/rsyslog.conf file and any files in the /etc/rsyslog.d/ directory:

# rsyslogd -N 1
rsyslogd: version 8.1911.0-2.el8, config validation run (level 1)...
rsyslogd: End of config validation run. Bye.

  - Make sure the rsyslog service is running and enabled on the logging server:

# systemctl status rsyslog

  - Restart the rsyslog service:

# systemctl restart rsyslog

  - Optional: If Rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog

- Configure clients for sending encrypted logs to the server:

  - On a client system, create a new file in the /etc/rsyslog.d/ directory named, for example, securelogcli.conf.

  - Add the lines that set the certificate files and the TLS forwarding action to the /etc/rsyslog.d/securelogcli.conf file.

Note: If you prefer the GnuTLS driver, use the StreamDriver.Name="gtls" configuration option.

  - Save the changes to the /etc/rsyslog.d/securelogcli.conf file.

  - Verify the syntax of the /etc/rsyslog.conf file and other files in the /etc/rsyslog.d/ directory:

# rsyslogd -N 1
rsyslogd: version 8.1911.0-2.el8, config validation run (level 1)...
rsyslogd: End of config validation run. Bye.

  - Make sure the rsyslog service is running and enabled on the client system:

# systemctl status rsyslog

  - Restart the rsyslog service:

# systemctl restart rsyslog

  - Optional: If Rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog
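As an illustration of what the two TLS configuration files in this procedure need to contain, the following sketch writes candidate versions of both into a scratch directory. The certificate file names come from the prerequisites. The peer names client1.example.com, client2.example.com, and server.example.com, and port 514, are placeholders chosen for this example.

```shell
#!/bin/sh
# Sketch: write candidate TLS configurations for server and client.
set -eu

workdir=$(mktemp -d)
anchors=/etc/pki/ca-trust/source/anchors

# Server side: certificate files plus a TLS-only TCP listener.
cat > "$workdir/securelogser.conf" <<EOF
global(
    DefaultNetstreamDriverCAFile="$anchors/ca-cert.pem"
    DefaultNetstreamDriverCertFile="$anchors/server-cert.pem"
    DefaultNetstreamDriverKeyFile="$anchors/server-key.pem"
)

# Peer names are placeholders for your client host names
module(
    load="imtcp"
    PermittedPeer=["client1.example.com", "client2.example.com"]
    StreamDriver.AuthMode="x509/name"
    StreamDriver.Mode="1"
    StreamDriver.Name="ossl"
)

input(type="imtcp" port="514")
EOF

# Client side: certificate files plus a TLS forwarding action.
cat > "$workdir/securelogcli.conf" <<EOF
global(
    DefaultNetstreamDriverCAFile="$anchors/ca-cert.pem"
    DefaultNetstreamDriverCertFile="$anchors/client-cert.pem"
    DefaultNetstreamDriverKeyFile="$anchors/client-key.pem"
)

# server.example.com is a placeholder for your logging server
*.* action(
    type="omfwd"
    StreamDriver="ossl"
    StreamDriverMode="1"
    StreamDriverPermittedPeers="server.example.com"
    StreamDriverAuthMode="x509/name"
    target="server.example.com" port="514" protocol="tcp"
)
EOF

ls "$workdir"
```

Stream driver mode 1 restricts the connection to TLS, and the x509/name authentication mode makes each side check the certificate name of its peer against the permitted names.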

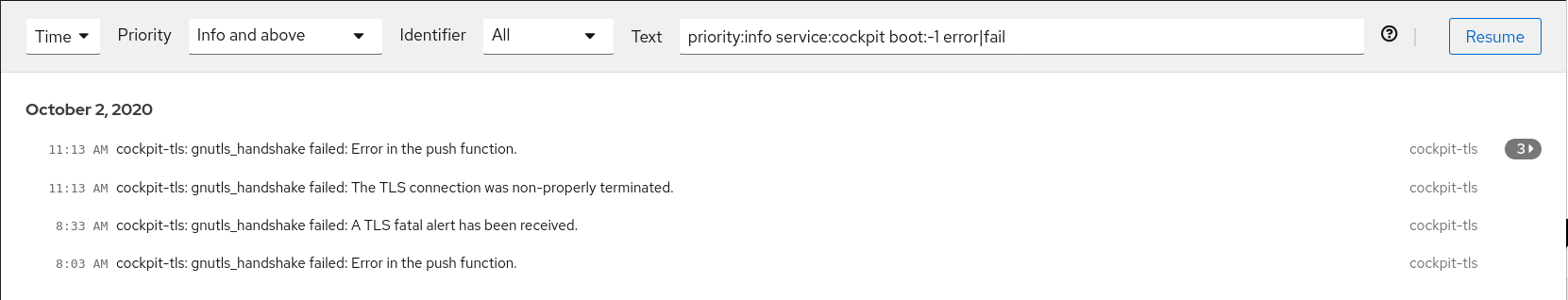

Verification

To verify that the client system sends messages to the server, follow these steps:

- On the client system, send a test message:

# logger test

- On the server system, view the log that the server writes for the client, for example:

# cat /var/log/remote/msg/<hostname>/root.log
Feb 25 03:53:17 <hostname> root[6064]: test

Where <hostname> is the hostname of the client system. Note that the log contains the user name of the user that entered the logger command, in this case root.

6.1.6. Configuring a server for receiving remote logging information over UDP

The Rsyslog application enables you to configure a system to receive logging information from remote systems. To use remote logging through UDP, configure both the server and the client. The receiving server collects and analyzes the logs sent by one or more client systems. By default, rsyslog uses UDP on port 514 to receive log information from remote systems.

Follow this procedure to configure a server for collecting and analyzing logs sent by one or more client systems over the UDP protocol.

Prerequisites

- Rsyslog is installed on the server system.

- You are logged in as root on the server.

- The policycoreutils-python-utils package is installed for the optional step using the semanage command.

- The firewalld service is running.

Procedure

- Optional: To use a different port for rsyslog traffic than the default port 514:

  - Add the syslogd_port_t SELinux type to the SELinux policy configuration, replacing portno with the port number you want rsyslog to use:

# semanage port -a -t syslogd_port_t -p udp portno

  - Configure firewalld to allow incoming rsyslog traffic, replacing portno with the port number and zone with the zone you want rsyslog to use:

# firewall-cmd --zone=zone --permanent --add-port=portno/udp
success

  - Reload the firewall rules:

# firewall-cmd --reload

- Create a new .conf file in the /etc/rsyslog.d/ directory, for example, remotelogserv.conf, and insert the configuration for receiving logs over UDP. In that configuration, 514 is the port number rsyslog uses by default. You can specify a different port instead.

- Verify the syntax of the /etc/rsyslog.conf file and all .conf files in the /etc/rsyslog.d/ directory:

# rsyslogd -N 1
rsyslogd: version 8.1911.0-2.el8, config validation run...

- Restart the rsyslog service:

# systemctl restart rsyslog

- Optional: If rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog
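A UDP listener configuration for this procedure could be generated as in the following sketch, which takes the port from a PORT environment variable and falls back to the default 514. The variable, the template name TmplMsg, and the ruleset name remote1 are assumptions made for this example.

```shell
#!/bin/sh
# Sketch: write a candidate remotelogserv.conf for a chosen UDP port.
set -eu

PORT=${PORT:-514}            # stands in for portno in the procedure
workdir=$(mktemp -d)
conf="$workdir/remotelogserv.conf"

cat > "$conf" <<EOF
# Per-host file name template for messages from remote systems
template(name="TmplMsg" type="list") {
    constant(value="/var/log/remote/msg/")
    property(name="hostname")
    constant(value="/")
    property(name="programname" SecurePath="replace")
    constant(value=".log")
}

# Provide UDP syslog reception
module(load="imudp")

# Ruleset that processes only the remote messages
ruleset(name="remote1") {
    *.info action(type="omfile" DynaFile="TmplMsg")
}

# Listen on the selected port and bind the listener to the ruleset
input(type="imudp" port="$PORT" ruleset="remote1")
EOF

echo "Wrote $conf for UDP port $PORT"
```

For example, running the script with PORT=30514 in the environment produces a listener on that port, which must then match the port you allowed in SELinux and firewalld.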

6.1.7. Configuring remote logging to a server over UDP

You can configure a system for forwarding log messages to a server over the UDP protocol. The omfwd plug-in provides forwarding over UDP or TCP. The default protocol is UDP. Because the plug-in is built in, you do not have to load it.

Prerequisites

- The rsyslog package is installed on the client systems that should report to the server.

- You have configured the server for remote logging as described in Configuring a server for receiving remote logging information over UDP.

Procedure

- Create a new .conf file in the /etc/rsyslog.d/ directory, for example, 10-remotelogcli.conf, and insert the forwarding configuration. In that configuration:

  - The queue.type="linkedlist" setting enables a LinkedList in-memory queue.

  - The queue.filename setting defines a disk storage. The backup files are created with the example_fwd prefix in the working directory specified by the preceding global workDirectory directive.

  - The action.resumeRetryCount -1 setting prevents rsyslog from dropping messages when retrying to connect if the server is not responding.

  - The queue.saveOnShutdown="on" setting saves in-memory data if rsyslog shuts down.

  - The portno value is the port number you want rsyslog to use. The default value is 514.

  - The last line forwards all received messages to the logging server. Port specification is optional.

With this configuration, rsyslog sends messages to the server but keeps messages in memory if the remote server is not reachable. A file on disk is created only if rsyslog runs out of the configured memory queue space or needs to shut down, which benefits the system performance.

Note: Rsyslog processes configuration files in /etc/rsyslog.d/ in the lexical order.

- Restart the rsyslog service:

# systemctl restart rsyslog

- Optional: If rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog

Verification

To verify that the client system sends messages to the server, follow these steps:

- On the client system, send a test message:

# logger test

- On the server system, view the /var/log/remote/msg/hostname/root.log log, for example:

# cat /var/log/remote/msg/hostname/root.log
Feb 25 03:53:17 hostname root[6064]: test

Where hostname is the host name of the client system. Note that the log contains the user name of the user that entered the logger command, in this case root.

6.1.8. Load balancing helper in Rsyslog

When you use Rsyslog in a cluster, you can improve load balancing by modifying the RebindInterval setting.

RebindInterval specifies an interval at which the current connection is broken and is re-established. This setting applies to TCP, UDP, and RELP traffic. The load balancers perceive it as a new connection and forward the messages to another physical target system.

RebindInterval is helpful in scenarios when a target system has changed its IP address. The Rsyslog application caches the IP address when the connection is established, therefore, the messages are sent to the same server. If the IP address changes, the UDP packets are lost until the Rsyslog service restarts. Re-establishing the connection ensures the IP is resolved by DNS again.

Example usage of RebindInterval for TCP, UDP, and RELP traffic

action(type="omfwd" protocol="tcp" RebindInterval="250" target="example.com" port="514" …)

action(type="omfwd" protocol="udp" RebindInterval="250" target="example.com" port="514" …)

action(type="omrelp" RebindInterval="250" target="example.com" port="6514" …)

6.1.9. Configuring reliable remote logging

With the Reliable Event Logging Protocol (RELP), you can send and receive syslog messages over TCP with a much reduced risk of message loss. RELP provides reliable delivery of event messages, which makes it useful in environments where message loss is not acceptable. To use RELP, configure the imrelp input module, which runs on the server and receives the logs, and the omrelp output module, which runs on the client and sends logs to the logging server.

Prerequisites

- You have installed the rsyslog, librelp, and rsyslog-relp packages on the server and the client systems.

- The specified port is permitted in SELinux and open in the firewall.

Procedure

- Configure the client system for reliable remote logging:

  - On the client system, create a new .conf file in the /etc/rsyslog.d/ directory named, for example, relpclient.conf, and insert the following content:

module(load="omrelp")
*.* action(type="omrelp" target="_target_IP_" port="_target_port_")

Where:

    - target_IP is the IP address of the logging server.

    - target_port is the port of the logging server.

  - Save the changes to the /etc/rsyslog.d/relpclient.conf file.

  - Restart the rsyslog service:

# systemctl restart rsyslog

  - Optional: If rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog

- Configure the server system for reliable remote logging:

  - On the server system, create a new .conf file in the /etc/rsyslog.d/ directory named, for example, relpserv.conf, and insert the configuration for the RELP listener. In that configuration:

    - log_path specifies the path for storing messages.

    - target_port is the port of the logging server. Use the same value as in the client configuration file.

  - Save the changes to the /etc/rsyslog.d/relpserv.conf file.

  - Restart the rsyslog service:

# systemctl restart rsyslog

  - Optional: If rsyslog is not enabled, ensure the rsyslog service starts automatically after reboot:

# systemctl enable rsyslog
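The server-side RELP configuration from this procedure can be sketched as follows. The script fills log_path and target_port from two environment variables and writes a candidate relpserv.conf into a scratch directory. The variable names, the default values, and the ruleset name relp are assumptions made for this example.

```shell
#!/bin/sh
# Sketch: write a candidate relpserv.conf into a scratch directory.
set -eu

LOG_PATH=${LOG_PATH:-/var/log/remote/relp.log}   # hypothetical log_path
TARGET_PORT=${TARGET_PORT:-20514}                # hypothetical target_port

workdir=$(mktemp -d)
conf="$workdir/relpserv.conf"

cat > "$conf" <<EOF
# Store everything that arrives through the RELP listener
ruleset(name="relp") {
    *.* action(type="omfile" file="$LOG_PATH")
}

# Load the RELP input module and bind the listener to the ruleset
module(load="imrelp")
input(type="imrelp" port="$TARGET_PORT" ruleset="relp")
EOF

echo "Wrote $conf"
```

Whatever port you choose here must equal the port value in the client's omrelp action, and it must be permitted in SELinux and open in the firewall as stated in the prerequisites.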

Verification

To verify that the client system sends messages to the server, follow these steps:

- On the client system, send a test message:

# logger test

- On the server system, view the log at the specified log_path, for example:

# cat /var/log/remote/msg/hostname/root.log
Feb 25 03:53:17 hostname root[6064]: test

Where hostname is the host name of the client system. Note that the log contains the user name of the user that entered the logger command, in this case root.

6.1.10. Supported Rsyslog modules

To expand the functionality of the Rsyslog application, you can use specific modules. Modules provide additional inputs (Input Modules), outputs (Output Modules), and other functionalities. A module can also provide additional configuration directives that become available after you load the module.

You can list the input and output modules installed on your system by entering the following command:

# ls /usr/lib64/rsyslog/{i,o}m*

You can view the list of all available rsyslog modules in the /usr/share/doc/rsyslog/html/configuration/modules/idx_output.html file after you install the rsyslog-doc package.
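If you only need the module names rather than full paths, a small wrapper around the same directory listing can help. The following is a minimal sketch; the function name list_rsyslog_modules is hypothetical, and it assumes that module files use the .so suffix.

```shell
# Print the names of rsyslog input (im*) and output (om*) modules found in a
# directory, one per line, without the path or the .so suffix.
list_rsyslog_modules() {
    moddir="${1:-/usr/lib64/rsyslog}"
    for f in "$moddir"/im*.so "$moddir"/om*.so; do
        # Skip unmatched glob patterns when no module of a kind is installed.
        [ -e "$f" ] || continue
        basename "$f" .so
    done
}

list_rsyslog_modules
```

Passing a different directory as the first argument lists the modules found there instead.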

6.1.11. Configuring the netconsole service to log kernel messages to a remote host

When logging to disk or using a serial console is not possible, you can use the netconsole kernel module and the same-named service to log kernel messages over a network to a remote rsyslog service.

Prerequisites

- A system log service, such as rsyslog, is installed on the remote host.

- The remote system log service is configured to receive incoming log entries from this host.

Procedure

Install the netconsole-service package:

# yum install netconsole-service

Edit the /etc/sysconfig/netconsole file and set the SYSLOGADDR parameter to the IP address of the remote host:

SYSLOGADDR=192.0.2.1

Enable and start the netconsole service:

# systemctl enable --now netconsole
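If you prefer to script the edit of the SYSLOGADDR parameter, the following sketch shows one way to do it with GNU sed. The function name set_syslogaddr is hypothetical, and the function assumes that an existing assignment, if any, starts at the beginning of a line and might be commented out.

```shell
# Set SYSLOGADDR in a netconsole configuration file.
# Usage: set_syslogaddr FILE ADDRESS
set_syslogaddr() {
    conf="$1"
    addr="$2"
    if grep -q '^#\{0,1\} *SYSLOGADDR=' "$conf"; then
        # Replace an existing (possibly commented-out) assignment in place.
        sed -i "s|^#\{0,1\} *SYSLOGADDR=.*|SYSLOGADDR=$addr|" "$conf"
    else
        # Otherwise append the assignment.
        printf 'SYSLOGADDR=%s\n' "$addr" >> "$conf"
    fi
}

# Example call, to be run as root:
# set_syslogaddr /etc/sysconfig/netconsole 192.0.2.1
```

Restart the netconsole service afterwards if it is already running, so that the new address takes effect.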

Verification

- Display the /var/log/messages file on the remote system log server.

6.2. Using the logging system role

As a system administrator, you can use the logging system role to configure a Red Hat Enterprise Linux host as a logging server to collect logs from many client systems.

6.2.1. Filtering local log messages by using the logging RHEL system role

You can use the property-based filter of the logging RHEL system role to filter your local log messages based on various conditions.

For example, filtering can help you achieve the following:

- Log clarity: In a high-traffic environment, logs can grow rapidly. Focusing on specific messages, such as errors, helps you identify problems faster.

- Optimized system performance: An excessive amount of logs usually degrades system performance. Logging only the important events can prevent resource depletion, which enables your systems to run more efficiently.

- Enhanced security: Efficient filtering of security messages, such as system errors and failed logins, helps to capture only the relevant logs. This is important for detecting breaches and meeting compliance standards.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Create a playbook file, for example, ~/playbook.yml. The playbook uses the following settings:

logging_inputs

Defines a list of logging input dictionaries. The type: basics option covers inputs from the systemd journal or a Unix socket.

logging_outputs

Defines a list of logging output dictionaries. The type: files option supports storing logs in local files, usually in the /var/log/ directory. The property: msg, property_op: contains, and property_value: error options specify that all logs that contain the error string are stored in the /var/log/errors.log file. The property: msg, property_op: !contains, and property_value: error options specify that all other logs are put in the /var/log/others.log file. You can replace the error value with the string by which you want to filter.

logging_flows

Defines a list of logging flow dictionaries to specify relationships between logging_inputs and logging_outputs. The inputs: [files_input] option specifies a list of inputs from which processing of logs starts. The outputs: [files_output0, files_output1] option specifies a list of outputs to which the logs are sent.
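To illustrate how these settings might fit together, the following sketch writes a candidate playbook to a scratch directory instead of overwriting ~/playbook.yml. The host name managed-node-01.example.com, the play, task, and flow names, and the path key for the output file are placeholders or assumptions, as is the property_op key for the filter operation. Check the role's README.md before relying on any of them.

```shell
# Write a sketch of the filtering playbook to a scratch directory.
# Host, play, task, and flow names are placeholders (assumptions).
workdir="$(mktemp -d)"
playbook="$workdir/playbook.yml"

cat > "$playbook" <<'EOF'
---
- name: Deploy the logging solution
  hosts: managed-node-01.example.com
  tasks:
    - name: Filter logs based on a specific value they contain
      ansible.builtin.include_role:
        name: rhel-system-roles.logging
      vars:
        logging_inputs:
          - name: files_input
            type: basics
        logging_outputs:
          # Messages that contain "error" go to errors.log.
          - name: files_output0
            type: files
            property: msg
            property_op: contains
            property_value: error
            path: /var/log/errors.log
          # All other messages go to others.log.
          - name: files_output1
            type: files
            property: msg
            property_op: "!contains"
            property_value: error
            path: /var/log/others.log
        logging_flows:
          - name: flow0
            inputs: [files_input]
            outputs: [files_output0, files_output1]
EOF

echo "Sketch written to $playbook"
```

The "!contains" value is quoted because an unquoted leading exclamation mark has a special meaning in YAML.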

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.logging/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

On the managed node, test the syntax of the /etc/rsyslog.conf file:

# rsyslogd -N 1
rsyslogd: version 8.1911.0-6.el8, config validation run...
rsyslogd: End of config validation run. Bye.

On the managed node, verify that the system sends messages that contain the error string to the log:

Send a test message:

# logger error

View the /var/log/errors.log log, for example:

# cat /var/log/errors.log
Aug 5 13:48:31 hostname root[6778]: error

Where hostname is the host name of the client system. Note that the log contains the user name of the user that entered the logger command, in this case root.

6.2.2. Applying a remote logging solution by using the logging RHEL system role

You can use the logging RHEL system role to configure centralized log management across multiple systems. The server receives remote input from the remote_rsyslog and remote_files configurations, and outputs the logs to local files in directories named by remote host names.

As a result, you can cover use cases such as the following:

- Centralized log management: Collecting, accessing, and managing log messages of multiple machines from a single storage point simplifies day-to-day monitoring and troubleshooting tasks. It also reduces the need to log in to individual machines to check the log messages.

- Enhanced security: Storing log messages in one central place increases the chance that they are kept in a secure and tamper-proof environment. Such an environment makes it easier to detect and respond to security incidents and to meet audit requirements.

- Improved efficiency in log analysis: Correlating log messages from multiple systems is important for fast troubleshooting of complex problems that span multiple machines or services. That way you can quickly analyze and cross-reference events from different sources.

Define the ports in the SELinux policy of the server or client system and open the firewall for those ports. The default SELinux policy includes ports 601, 514, 6514, 10514, and 20514. To use a different port, modify the SELinux policy on the client and server systems.
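For a port outside the default list, the SELinux and firewall changes could look roughly like the commands that the following sketch prints. The sketch only prints the commands so that you can review them first. The port 30514 is a hypothetical example, the function name print_port_commands is made up for illustration, and the sketch assumes that syslogd_port_t is the appropriate SELinux port type for your rsyslog setup.

```shell
# Print (rather than run) the commands that would allow rsyslog to use a
# non-default port. Usage: print_port_commands PORT PROTOCOL
print_port_commands() {
    port="$1"
    proto="$2"
    # Label the port in the SELinux policy (assumed type: syslogd_port_t).
    echo "semanage port -a -t syslogd_port_t -p $proto $port"
    # Open the port permanently in firewalld, then reload the rules.
    echo "firewall-cmd --permanent --add-port=$port/$proto"
    echo "firewall-cmd --reload"
}

print_port_commands 30514 tcp
```

Run the printed commands as root on each system that uses the port.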

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Create a playbook file, for example, ~/playbook.yml, that contains two plays. The first play uses the following settings:

logging_inputs

Defines a list of logging input dictionaries. The type: remote option covers remote inputs from other logging systems over the network. The udp_ports: [ 601 ] option defines a list of UDP port numbers to monitor. The tcp_ports: [ 601 ] option defines a list of TCP port numbers to monitor. If both udp_ports and tcp_ports are set, udp_ports is used and tcp_ports is dropped.

logging_outputs

Defines a list of logging output dictionaries. The type: remote_files option stores logs in local files per remote host and per program name that originated the logs.

logging_flows

Defines a list of logging flow dictionaries to specify relationships between logging_inputs and logging_outputs. The inputs: [remote_udp_input, remote_tcp_input] option specifies a list of inputs from which processing of logs starts. The outputs: [remote_files_output] option specifies a list of outputs to which the logs are sent.

The second play uses the following settings:

logging_inputs

Defines a list of logging input dictionaries. The type: basics option covers inputs from the systemd journal or a Unix socket.

logging_outputs

Defines a list of logging output dictionaries. The type: forwards option supports sending logs to the remote logging server over the network. The severity: info option refers to log messages of informative importance. The facility: mail option refers to the type of system program that is generating the log message. The target: <host1.example.com> option specifies the host name of the remote logging server. The udp_port: 601 and tcp_port: 601 options define the UDP and TCP ports on which the remote logging server listens.

logging_flows

Defines a list of logging flow dictionaries to specify relationships between logging_inputs and logging_outputs. The inputs: [basic_input] option specifies a list of inputs from which processing of logs starts. The outputs: [forward_output0, forward_output1] option specifies a list of outputs to which the logs are sent.
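As a sketch of how the two plays described above might be laid out, the following commands write a candidate playbook to a scratch directory. The host names managed-node-01.example.com (server) and managed-node-02.example.com (client), the play, task, and flow names, and the target host1.example.com are placeholders. The assignment of severity: info to the UDP output and facility: mail to the TCP output is also an assumption.

```shell
# Write a sketch of the two-play remote logging playbook to a scratch directory.
# Host names, task names, and flow names are placeholders (assumptions).
workdir="$(mktemp -d)"
playbook="$workdir/playbook.yml"

cat > "$playbook" <<'EOF'
---
# First play: the server receives remote input and stores it per host.
- name: Deploy the logging server
  hosts: managed-node-01.example.com
  tasks:
    - name: Configure the server to receive remote input
      ansible.builtin.include_role:
        name: rhel-system-roles.logging
      vars:
        logging_inputs:
          - name: remote_udp_input
            type: remote
            udp_ports: [ 601 ]
          - name: remote_tcp_input
            type: remote
            tcp_ports: [ 601 ]
        logging_outputs:
          - name: remote_files_output
            type: remote_files
        logging_flows:
          - name: flow_0
            inputs: [remote_udp_input, remote_tcp_input]
            outputs: [remote_files_output]

# Second play: the client forwards local logs to the server.
- name: Deploy the logging client
  hosts: managed-node-02.example.com
  tasks:
    - name: Configure the client to forward logs to the server
      ansible.builtin.include_role:
        name: rhel-system-roles.logging
      vars:
        logging_inputs:
          - name: basic_input
            type: basics
        logging_outputs:
          - name: forward_output0
            type: forwards
            severity: info
            target: host1.example.com   # placeholder for the server host name
            udp_port: 601
          - name: forward_output1
            type: forwards
            facility: mail
            target: host1.example.com   # placeholder for the server host name
            tcp_port: 601
        logging_flows:
          - name: flows0
            inputs: [basic_input]
            outputs: [forward_output0, forward_output1]
EOF

echo "Sketch written to $playbook"
```

Each input is defined as a separate dictionary with either udp_ports or tcp_ports, because setting both in one input causes tcp_ports to be dropped.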

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.logging/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

On both the client and the server system, test the syntax of the /etc/rsyslog.conf file:

# rsyslogd -N 1
rsyslogd: version 8.1911.0-6.el8, config validation run (level 1), master config /etc/rsyslog.conf
rsyslogd: End of config validation run. Bye.

Verify that the client system sends messages to the server:

On the client system, send a test message:

# logger test

On the server system, view the /var/log/<host2.example.com>/messages log, for example:

# cat /var/log/<host2.example.com>/messages
Aug 5 13:48:31 <host2.example.com> root[6778]: test

Where <host2.example.com> is the host name of the client system. Note that the log contains the user name of the user that entered the logger command, in this case root.

6.2.3. Using the logging RHEL system role with TLS

You can use the logging RHEL system role to configure a secure transfer of log messages, where one or more clients take logs from the systemd-journal service and transfer them to a remote server while using TLS.