Chapter 4. Getting Started for Developers

4.1. Preparing Development Environment

The fundamental requirement for developing and testing Fuse on OpenShift projects is having access to an OpenShift Server. You have the following basic alternatives:

4.1.1. Installing Container Development Kit (CDK) on Your Local Machine

As a developer, if you want to get started quickly, the most practical alternative is to install Red Hat CDK on your local machine. Using CDK, you can boot a virtual machine (VM) instance that runs an image of OpenShift on Red Hat Enterprise Linux (RHEL) 7. An installation of CDK consists of the following key components:

- A virtual machine (libvirt, VirtualBox, or Hyper-V)

- Minishift to start and manage the Container Development Environment

Red Hat CDK is intended for development purposes only. It is not intended for other purposes, such as production environments, and may not address known security vulnerabilities. For full support of running mission-critical applications inside of docker-formatted containers, you need an active RHEL 7 or RHEL Atomic subscription. For more details, see Support for Red Hat Container Development Kit (CDK).

Prerequisites

Java Version

On your developer machine, make sure you have installed a Java version that is supported by Fuse 7.4. For details of the supported Java versions, see Supported Configurations.

Procedure

To install the CDK on your local machine:

- For Fuse on OpenShift, we recommend that you install version 3.9 of CDK. Detailed instructions for installing and using CDK 3.9 are provided in the Red Hat CDK 3.9 Getting Started Guide.

- Configure your OpenShift credentials to gain access to the Red Hat container registry by following the instructions in Configuring Red Hat Container Registry authentication.

- Install the Fuse on OpenShift images and templates manually as described in Chapter 2, Getting Started for Administrators.

Note: Your version of CDK might have Fuse on OpenShift images and templates pre-installed. However, you must install (or update) the Fuse on OpenShift images and templates after you configure your OpenShift credentials.

- Before you proceed with the examples in this chapter, you should read and thoroughly understand the contents of the Red Hat CDK 3.9 Getting Started Guide.

4.1.2. Getting Remote Access to an Existing OpenShift Server

Your IT department might already have set up an OpenShift cluster on some server machines. In this case, the following requirements must be satisfied for getting started with Fuse on OpenShift:

- The server machines must be running a supported version of OpenShift Container Platform (as documented in the Supported Configurations page). The examples in this guide have been tested against version 3.11.

- Ask the OpenShift administrator to install the latest Fuse on OpenShift container base images and the Fuse on OpenShift templates on the OpenShift servers.

- Ask the OpenShift administrator to create a user account for you, having the usual developer permissions (enabling you to create, deploy, and run OpenShift projects).

- Ask the administrator for the URL of the OpenShift Server (which you can use either to browse to the OpenShift console or to connect to OpenShift using the oc command-line client) and the login credentials for your account.

4.1.3. Installing Client-Side Tools

We recommend that you have the following tools installed on your developer machine:

- Apache Maven 3.6.x: Required for local builds of OpenShift projects. Download the appropriate package from the Apache Maven download page. Make sure that you have version 3.6.x or later installed; otherwise, Maven might have problems resolving dependencies when you build your project.

- Git: Required for the OpenShift S2I source workflow and generally recommended for source control of your Fuse on OpenShift projects. Download the appropriate package from the Git Downloads page.

- OpenShift client: If you are using CDK, you can add the oc binary to your PATH using minishift oc-env, which displays the command you need to type into your shell (the output of oc-env differs depending on the OS and shell type):

$ minishift oc-env
export PATH="/Users/john/.minishift/cache/oc/v1.5.0:$PATH"
# Run this command to configure your shell:
# eval $(minishift oc-env)

For more details, see Using the OpenShift Client Binary in CDK 3.9 Getting Started Guide.

If you are not using CDK, follow the instructions in the CLI Reference to install the oc client tool.

- (Optional) Docker client: Advanced users might find it convenient to have the Docker client tool installed (to communicate with the docker daemon running on an OpenShift server). For information about specific binary installations for your operating system, see the Docker installation site.

For more details, see Reusing the docker Daemon in CDK 3.9 Getting Started Guide.

Important: Make sure that you install versions of the oc tool and the docker tool that are compatible with the version of OpenShift running on the OpenShift Server.
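As a quick sanity check on the client-side tools listed above, the following shell sketch reports which of them are currently on your PATH. The helper name check_tool is an illustrative assumption and is not part of any Red Hat tooling. The sketch only checks for presence; it does not verify versions.

```shell
#!/bin/sh
# Report whether a given client-side tool is on the PATH.
# check_tool is a hypothetical helper name used only in this sketch.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
  fi
}

# Tools recommended in this section (docker is optional).
for tool in mvn git oc docker; do
  check_tool "$tool"
done
```

For any tool reported as found, you can then confirm the installed version with the tool's own version command (for example, mvn -version or git --version).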

Additional Resources

(Optional) Red Hat JBoss CodeReady Studio: Red Hat JBoss CodeReady Studio is an Eclipse-based development environment that includes support for developing Fuse on OpenShift applications. For details about how to install this development environment, see Install Red Hat JBoss CodeReady Studio.

4.1.4. Configuring Maven Repositories

Configure the Maven repositories, which hold the archetypes and artifacts that you will need for building a Fuse on OpenShift project on your local machine.

Procedure

- Open your Maven settings.xml file, which is usually located in ~/.m2/settings.xml (on Linux or macOS) or Documents and Settings\<USER_NAME>\.m2\settings.xml (on Windows).

- Add the following Maven repositories:

  - Maven central: https://repo1.maven.org/maven2

  - Red Hat GA repository: https://maven.repository.redhat.com/ga

  - Red Hat EA repository: https://maven.repository.redhat.com/earlyaccess/all

  You must add the preceding repositories to both the dependency repositories section and the plug-in repositories section of your settings.xml file.
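The repository configuration described above could look roughly like the following. This is a minimal sketch, not the definitive file: the profile id (fuse-repos), the repository ids (maven.central, redhat.ga, redhat.ea), and the output file name are illustrative assumptions, while the three URLs are the ones listed in the procedure. The script writes to a scratch file so that it does not overwrite an existing ~/.m2/settings.xml.

```shell
#!/bin/sh
# Write a sample settings.xml to a scratch location for review.
# The profile id, repository ids, and file name are assumptions for this sketch.
SETTINGS_FILE="${SETTINGS_FILE:-./settings-fuse-example.xml}"

cat > "$SETTINGS_FILE" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
  <profiles>
    <profile>
      <id>fuse-repos</id>
      <!-- Dependency repositories -->
      <repositories>
        <repository>
          <id>maven.central</id>
          <url>https://repo1.maven.org/maven2</url>
        </repository>
        <repository>
          <id>redhat.ga</id>
          <url>https://maven.repository.redhat.com/ga</url>
        </repository>
        <repository>
          <id>redhat.ea</id>
          <url>https://maven.repository.redhat.com/earlyaccess/all</url>
        </repository>
      </repositories>
      <!-- Plug-in repositories (same URLs, as the procedure requires) -->
      <pluginRepositories>
        <pluginRepository>
          <id>maven.central</id>
          <url>https://repo1.maven.org/maven2</url>
        </pluginRepository>
        <pluginRepository>
          <id>redhat.ga</id>
          <url>https://maven.repository.redhat.com/ga</url>
        </pluginRepository>
        <pluginRepository>
          <id>redhat.ea</id>
          <url>https://maven.repository.redhat.com/earlyaccess/all</url>
        </pluginRepository>
      </pluginRepositories>
    </profile>
  </profiles>
  <activeProfiles>
    <activeProfile>fuse-repos</activeProfile>
  </activeProfiles>
</settings>
EOF

echo "Wrote $SETTINGS_FILE"
```

After reviewing the generated file, you can either merge the profile into your own settings.xml or pass the file to Maven explicitly with the -s option.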

4.2. Creating and Deploying Applications on Fuse on OpenShift

You can start using Fuse on OpenShift by creating an application and deploying it to OpenShift using one of the following OpenShift Source-to-Image (S2I) application development workflows:

- S2I binary workflow: S2I with build input from a binary source. This workflow is characterized by the fact that the build is partly executed on the developer’s own machine. After building a binary package locally, this workflow hands off the binary package to OpenShift. For more details, see Binary Source from the OpenShift 3.5 Developer Guide.

- S2I source workflow: S2I with build input from a Git source. This workflow is characterized by the fact that the build is executed entirely on the OpenShift server. For more details, see Git Source from the OpenShift 3.5 Developer Guide.

4.2.1. Creating and Deploying a Project Using the S2I Binary Workflow

In this section, you will use the OpenShift S2I binary workflow to create, build, and deploy a Fuse on OpenShift project.

Procedure

Create a new Fuse on OpenShift project using a Maven archetype. For this example, we use an archetype that creates a sample Spring Boot Camel project. Open a new shell prompt and enter the following Maven command:

mvn org.apache.maven.plugins:maven-archetype-plugin:2.4:generate \
  -DarchetypeCatalog=https://maven.repository.redhat.com/ga/io/fabric8/archetypes/archetypes-catalog/2.2.0.fuse-740017-redhat-00003/archetypes-catalog-2.2.0.fuse-740017-redhat-00003-archetype-catalog.xml \
  -DarchetypeGroupId=org.jboss.fuse.fis.archetypes \
  -DarchetypeArtifactId=spring-boot-camel-xml-archetype \
  -DarchetypeVersion=2.2.0.fuse-740017-redhat-00003

The archetype plug-in switches to interactive mode to prompt you for the remaining fields. When prompted, enter org.example.fis for the groupId value and fuse74-spring-boot for the artifactId value. Accept the defaults for the remaining fields.

- If the previous command exited with the BUILD SUCCESS status, you should now have a new Fuse on OpenShift project under the fuse74-spring-boot subdirectory. You can inspect the XML DSL code in the fuse74-spring-boot/src/main/resources/spring/camel-context.xml file. The demonstration code defines a simple Camel route that continuously sends a message containing a random number to the log.

In preparation for building and deploying the Fuse on OpenShift project, log in to the OpenShift Server as follows.

oc login -u developer -p developer https://OPENSHIFT_IP_ADDR:8443

Where OPENSHIFT_IP_ADDR is a placeholder for the OpenShift server’s IP address, because this IP address is not always the same.

Note: The developer user (with developer password) is a standard account that is automatically created on the virtual OpenShift Server by CDK. If you are accessing a remote server, use the URL and credentials provided by your OpenShift administrator.

Run the following command to ensure that Fuse on OpenShift images and templates are already installed and you can access them.

oc get template -n openshift

If the images and templates are not pre-installed, or if the provided versions are out of date, install (or update) the Fuse on OpenShift images and templates manually. For more information on how to install Fuse on OpenShift images, see Chapter 2, Getting Started for Administrators.

Create a new project namespace called test (assuming it does not already exist), as follows.

oc new-project test

If the test project namespace already exists, you can switch to it using the following command.

oc project test

You are now ready to build and deploy the fuse74-spring-boot project. Assuming you are still logged in to OpenShift, change to the directory of the fuse74-spring-boot project, and then build and deploy the project, as follows.

cd fuse74-spring-boot
mvn fabric8:deploy -Popenshift

At the end of a successful build, Maven reports the BUILD SUCCESS status.

Note: The first time you run this command, Maven has to download a lot of dependencies, which takes several minutes. Subsequent builds will be faster.

-

Navigate to the OpenShift console in your browser and log in to the console with your credentials (for example, with username

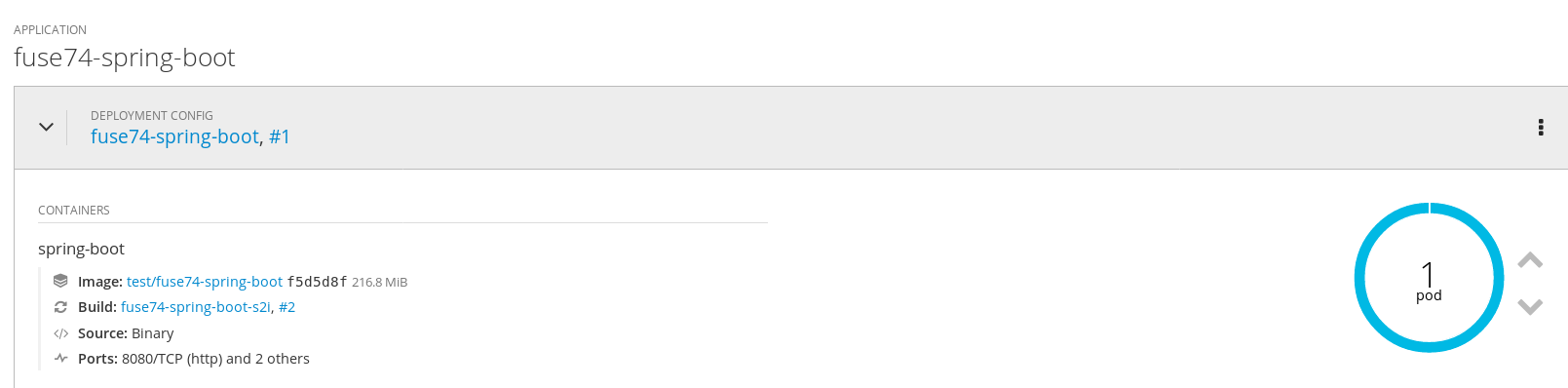

developerand password,developer). - In the OpenShift console, scroll down to find the test project namespace. Click the test project to open the test project namespace. The Overview tab of the test project opens, showing the fuse74-spring-boot application.

Click the arrow on the left of the fuse74-spring-boot deployment to expand and view the details of this deployment, as shown.

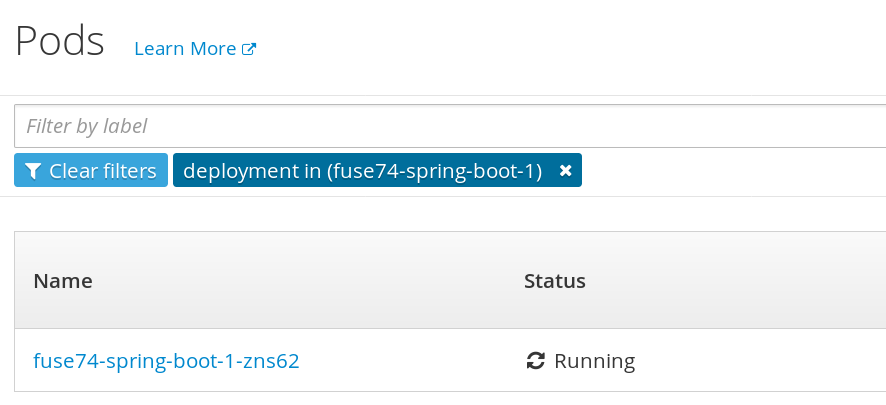

Click in the center of the pod icon (blue circle) to view the list of pods for fuse74-spring-boot.

Click on the pod Name (in this example, fuse74-spring-boot-1-kxdjm) to view the details of the running pod.

Click on the Logs tab to view the application log and scroll down the log to find the random number log messages generated by the Camel application.

- Click Overview on the left-hand navigation bar to return to the applications overview in the test namespace. To shut down the running pod, click the down arrow beside the pod icon. When a dialog prompts you with the question Scale down deployment fuse74-spring-boot-1?, click Scale Down.

(Optional) If you are using CDK, you can shut down the virtual OpenShift Server completely by returning to the shell prompt and entering the following command:

minishift stop

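The command-line steps of the procedure above (log in, check the templates, select the project namespace, then build and deploy) can be combined into one script. The following is a minimal sketch under stated assumptions: the variable names (OPENSHIFT_URL, PROJECT, APP_DIR, RUN_DEPLOY) and the function names (use_project, deploy_binary_workflow) are hypothetical, and the developer credentials are the CDK defaults mentioned in the procedure. Every oc and mvn command is taken from the steps above, except oc get project, which is used here only to test whether the namespace already exists.

```shell
#!/bin/sh
# Sketch of the command-line part of the S2I binary workflow.
# All variable and function names are assumptions made for this example.
OPENSHIFT_URL="${OPENSHIFT_URL:-https://OPENSHIFT_IP_ADDR:8443}"  # replace the placeholder
PROJECT="${PROJECT:-test}"
APP_DIR="${APP_DIR:-fuse74-spring-boot}"

# Switch to the project namespace if it exists; otherwise create it.
use_project() {
  if oc get project "$1" >/dev/null 2>&1; then
    oc project "$1"
  else
    oc new-project "$1"
  fi
}

# Log in, confirm that templates are visible, select the project, then deploy.
deploy_binary_workflow() {
  oc login -u developer -p developer "$OPENSHIFT_URL" || return 1
  oc get template -n openshift || return 1
  use_project "$PROJECT" || return 1
  (cd "$APP_DIR" && mvn fabric8:deploy -Popenshift)
}

# Nothing is deployed unless you opt in explicitly.
if [ "${RUN_DEPLOY:-no}" = "yes" ]; then
  deploy_binary_workflow
else
  echo "Set RUN_DEPLOY=yes to run the deployment"
fi
```

Keeping the deployment behind an explicit opt-in lets you source the script to reuse use_project without triggering a login or a build.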

4.2.2. Undeploying and Redeploying the Project

You can undeploy or redeploy your projects, as follows:

Procedure

To undeploy the project, enter the command:

mvn fabric8:undeploy

To redeploy the project, enter the commands:

mvn fabric8:undeploy
mvn fabric8:deploy -Popenshift
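If you redeploy often, the two commands can be wrapped in a small shell function. This is only a convenience sketch; the function name redeploy is hypothetical. Chaining with && means that the deploy step is skipped when the undeploy step fails, which is a design choice of this sketch rather than something the guide prescribes.

```shell
#!/bin/sh
# Undeploy, then deploy again; stop if the undeploy step fails.
# redeploy is a hypothetical helper name used only in this sketch.
redeploy() {
  mvn fabric8:undeploy && mvn fabric8:deploy -Popenshift
}

# Usage (run from the project directory, for example fuse74-spring-boot):
#   redeploy
```

After a redeploy, oc get pods lists the pods in the current project, so you can confirm that a new pod has started.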

4.2.3. Set up Fuse Console on OpenShift

In OpenShift, you can access the Fuse Console in two ways:

- From a specific pod so that you can monitor that single running Fuse container.

- By adding the centralized Fuse Console catalog item to your project so that you can monitor all the running Fuse containers in your project.

You can deploy the Fuse Console either from the OpenShift Console or from the command line.

- On OpenShift 4, if you want to manage Fuse 7.4 services with the Fuse Console, you must install the community version (Hawtio) as described in the Fuse 7.4 release notes.

- Security and user management for the Fuse Console is handled by OpenShift.

- The Fuse Console templates configure end-to-end encryption by default so that your Fuse Console requests are secured end-to-end, from the browser to the in-cluster services.

- Role-based access control (for users accessing the Fuse Console after it is deployed) is not yet available for Fuse on OpenShift.

Prerequisites

- Install the Fuse on OpenShift image streams and the templates for the Fuse Console as described in Fuse on OpenShift Guide.

- If you want to deploy the Fuse Console in cluster mode on the OpenShift Container Platform environment, you need the cluster admin role and the cluster mode template. Run the following command:

oc adm policy add-cluster-role-to-user cluster-admin system:serviceaccount:openshift-infra:template-instance-controller

The cluster mode template is only available, by default, on the latest version of the OpenShift Container Platform. It is not provided with the OpenShift Online default catalog.

4.2.3.1. Monitoring a single Fuse pod from the Fuse Console

You can open the Fuse Console for a Fuse pod running on OpenShift:

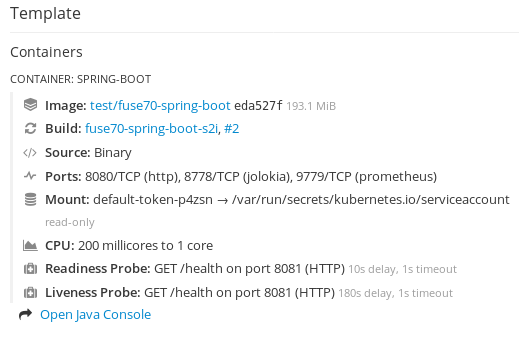

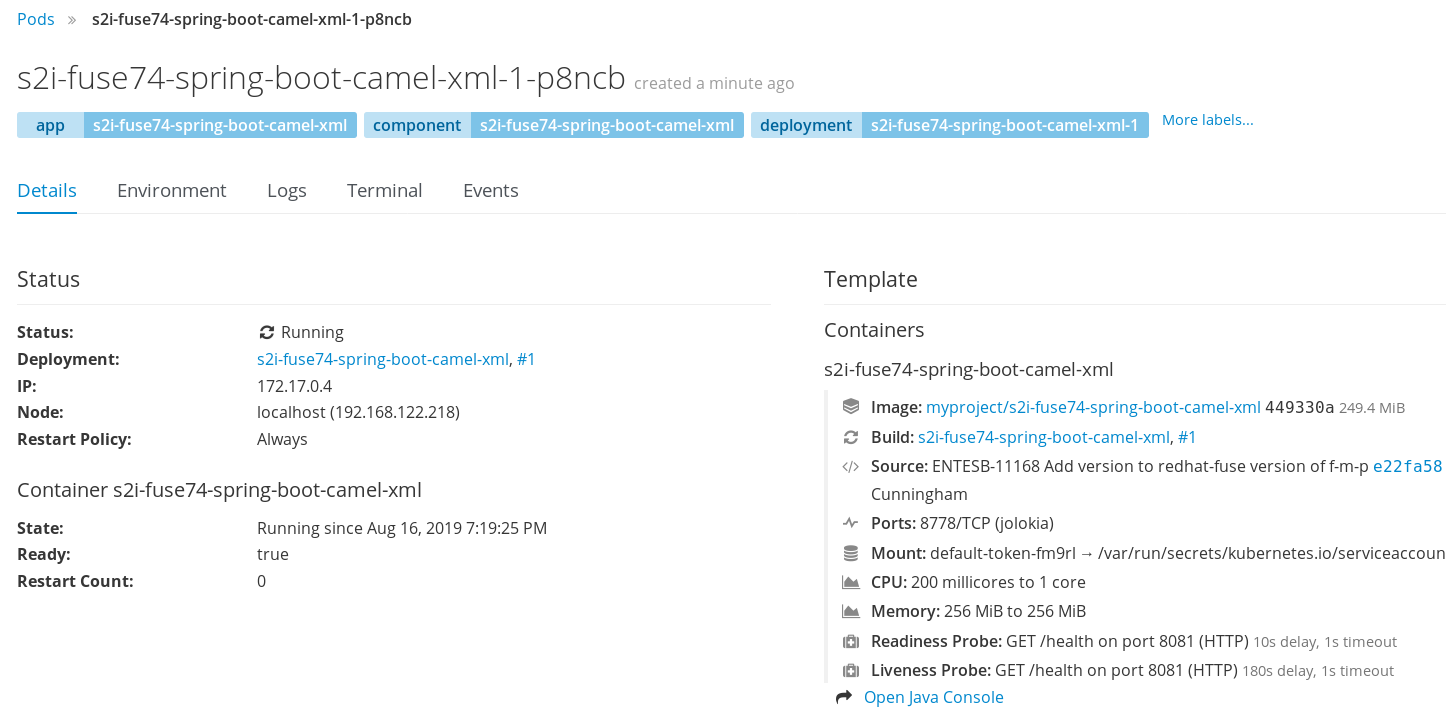

From the Applications > Pods view in your OpenShift project, click on the pod name to view the details of the running Fuse pod. On the right-hand side of this page, you see a summary of the container template:

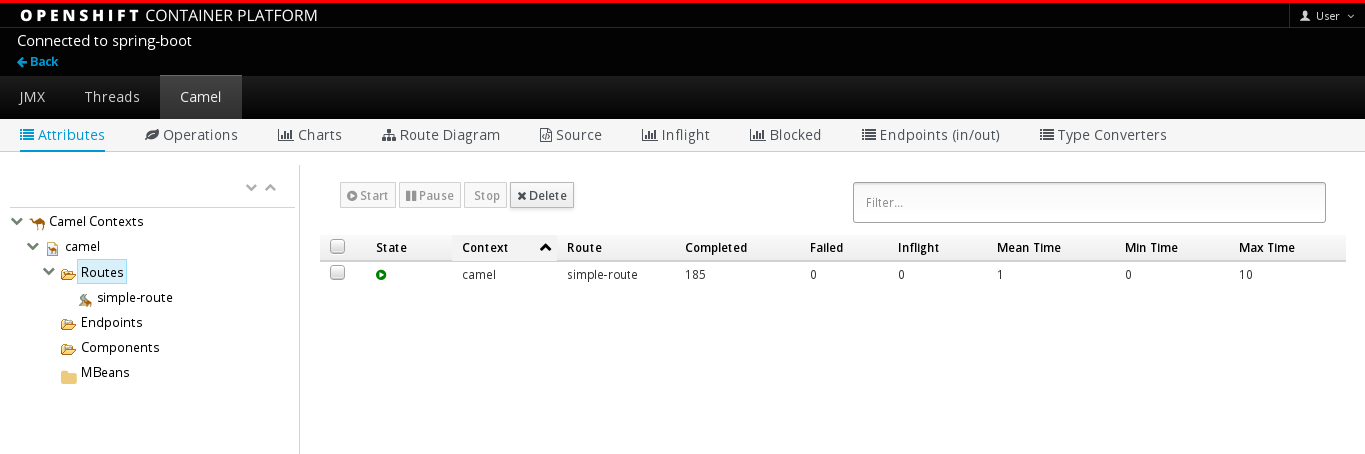

From this view, click on the Open Java Console link to open the Fuse Console.

Note: To configure OpenShift to display a link to the Fuse Console in the pod view, the pod running a Fuse on OpenShift image must declare a TCP port with a name attribute set to jolokia.
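One way to check from the command line whether a pod declares such a port is to query the pod specification with oc get and a JSONPath expression. The following is a sketch, not the guide's own example: the function name jolokia_ports is hypothetical, and the pod name in the usage comment is the one from the earlier example in this chapter, which you must replace with one of your own.

```shell
#!/bin/sh
# Print the container port(s) named "jolokia" that a pod declares.
# Empty output means that no container in the pod declares a port with that name.
# jolokia_ports is a hypothetical helper name used only in this sketch.
jolokia_ports() {
  oc get pod "$1" \
    -o jsonpath='{.spec.containers[*].ports[?(@.name=="jolokia")].containerPort}'
}

# Usage (the pod name is a placeholder):
#   jolokia_ports fuse74-spring-boot-1-kxdjm
```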

4.2.3.2. Deploying the Fuse Console from the OpenShift Console

To deploy the Fuse Console on your OpenShift cluster from the OpenShift Console, follow these steps.

Procedure

- In the OpenShift console, open an existing project or create a new project.

Add the Fuse Console to your OpenShift project:

Select Add to Project > Browse Catalog. The Select an item to add to the current project page opens.

In the Search field, type Fuse Console.

The Red Hat Fuse 7.x Console and Red Hat Fuse 7.x Console (cluster) items should appear as the search result.

If the Red Hat Fuse Console items do not appear as the search result, or if the items that appear are not the latest version, you can install the Fuse Console templates manually as described in the "Prepare the OpenShift server" section of the Fuse on OpenShift Guide.

Click one of the Red Hat Fuse Console items:

- Red Hat Fuse 7.x Console - This version of the Fuse Console discovers and connects to Fuse applications deployed in the current OpenShift project.

- Red Hat Fuse 7.x Console (cluster) - This version of the Fuse Console can discover and connect to Fuse applications deployed across multiple projects on the OpenShift cluster.

In the Red Hat Fuse Console wizard, click Next. The Configuration page of the wizard opens.

Optionally, you can change the default values of the configuration parameters.

Click Create.

The Results page of the wizard indicates that the Red Hat Fuse Console has been created.

- Click the Continue to the project overview link to verify that the Fuse Console application is added to the project.

To open the Fuse Console, click the provided URL link and then log in.

An Authorize Access page opens in the browser listing the required permissions.

Click Allow selected permissions.

The Fuse Console opens in the browser and shows the Fuse pods running in the project.

Click Connect for the application that you want to view.

A new browser window opens showing the application in the Fuse Console.

4.2.3.3. Deploying the Fuse Console from the command line

Table 4.1, “Fuse Console templates” describes the two OpenShift templates that you can use to access the Fuse Console from the command line, depending on the type of Fuse application deployment.

| Type | Description |
|---|---|
| cluster | Use an OAuth client that requires the cluster-admin role to be created. The Fuse Console can discover and connect to Fuse applications deployed across multiple namespaces or projects. |
| namespace | Use a service account as OAuth client, which only requires the admin role in a project to be created. This restricts the Fuse Console access to this single project, and as such acts as a single tenant deployment. |

Optionally, you can view a list of the template parameters by running the following command:

oc process --parameters -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-namespace-template.json

Procedure

To deploy the Fuse Console from the command line:

Create a new application based on a Fuse Console template by running one of the following commands (where myproject is the name of your project):

For the Fuse Console cluster template, where myhost is the hostname to access the Fuse Console:

oc new-app -n myproject -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-cluster-template.json -p ROUTE_HOSTNAME=myhost

For the Fuse Console namespace template:

oc new-app -n myproject -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-namespace-template.json

Note: You can omit the ROUTE_HOSTNAME parameter for the namespace template because OpenShift automatically generates one.

Obtain the status and the URL of your Fuse Console deployment by running this command:

oc status

- To access the Fuse Console from a browser, use the provided URL (for example, https://fuse-console.192.168.64.12.nip.io).
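As an alternative to reading the URL from the oc status output, you can list the route hostnames of the project directly. The following sketch assumes that the Fuse Console is exposed through an OpenShift route in the project and that it is served over HTTPS (the templates configure end-to-end encryption by default, as noted earlier). The function name console_urls is hypothetical, and the output includes every route in the project, not only the Fuse Console route.

```shell
#!/bin/sh
# Print an https URL for each route host in the given project.
# console_urls is a hypothetical helper name used only in this sketch.
console_urls() {
  oc get routes -n "$1" \
    -o jsonpath='{range .items[*]}https://{.spec.host}{"\n"}{end}'
}

# Usage (myproject is the project name used in the commands above):
#   console_urls myproject
```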

4.2.3.4. Ensuring that data displays correctly in the Fuse Console

If the display of the queues and connections in the Fuse Console is missing queues, missing connections, or displaying inconsistent icons, adjust the Jolokia collection size parameter that specifies the maximum number of elements in an array that Jolokia marshals in a response.

Procedure

In the upper right corner of the Fuse Console, click the user icon and then click Preferences.

- Increase the value of the Maximum collection size option (the default is 50,000).

- Click Close.

4.2.4. Creating and Deploying a Project Using the S2I Source Workflow

In this section, you will use the OpenShift S2I source workflow to build and deploy a Fuse on OpenShift project based on a template. The starting point for this demonstration is a quickstart project stored in a remote Git repository. Using the OpenShift console, you will download, build, and deploy this quickstart project in the OpenShift server.

Procedure

-

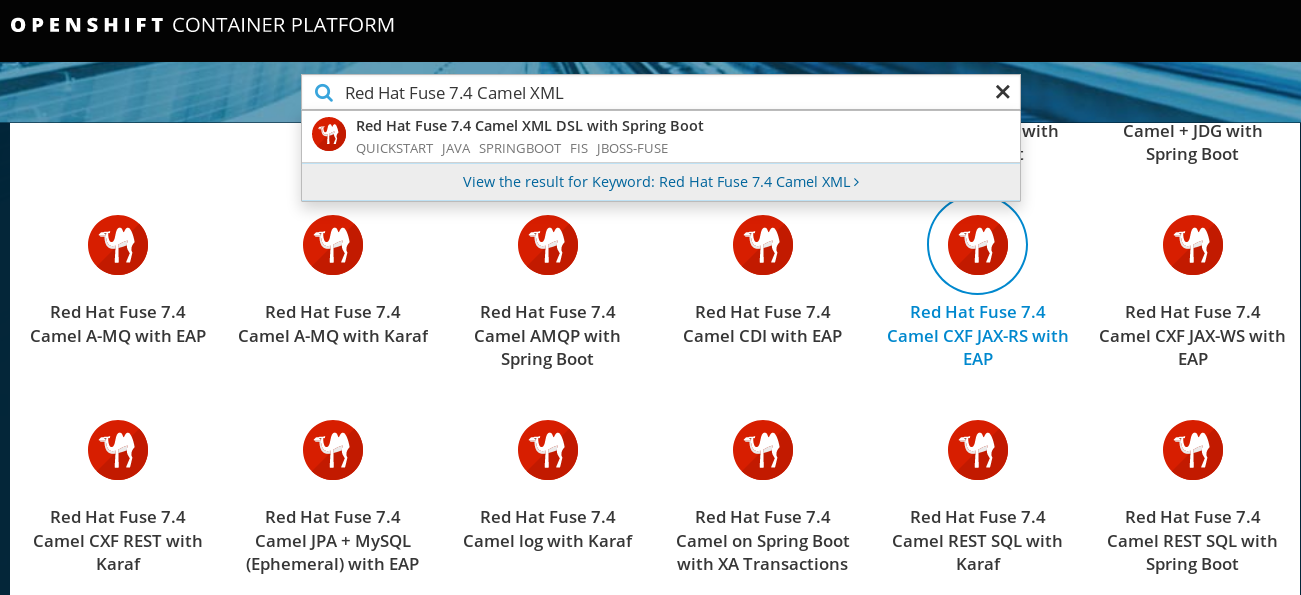

Navigate to the OpenShift console in your browser (https://OPENSHIFT_IP_ADDR:8443, replace

OPENSHIFT_IP_ADDRwith the IP address that was displayed in the case of CDK) and log in to the console with your credentials (for example, with usernamedeveloperand password,developer). In the Catalog search field, enter

Red Hat Fuse 7.4 Camel XMLas the search string and select the Red Hat Fuse 7.4 Camel XML DSL with Spring Boot template.

- The Information step of the template wizard opens. Click Next.

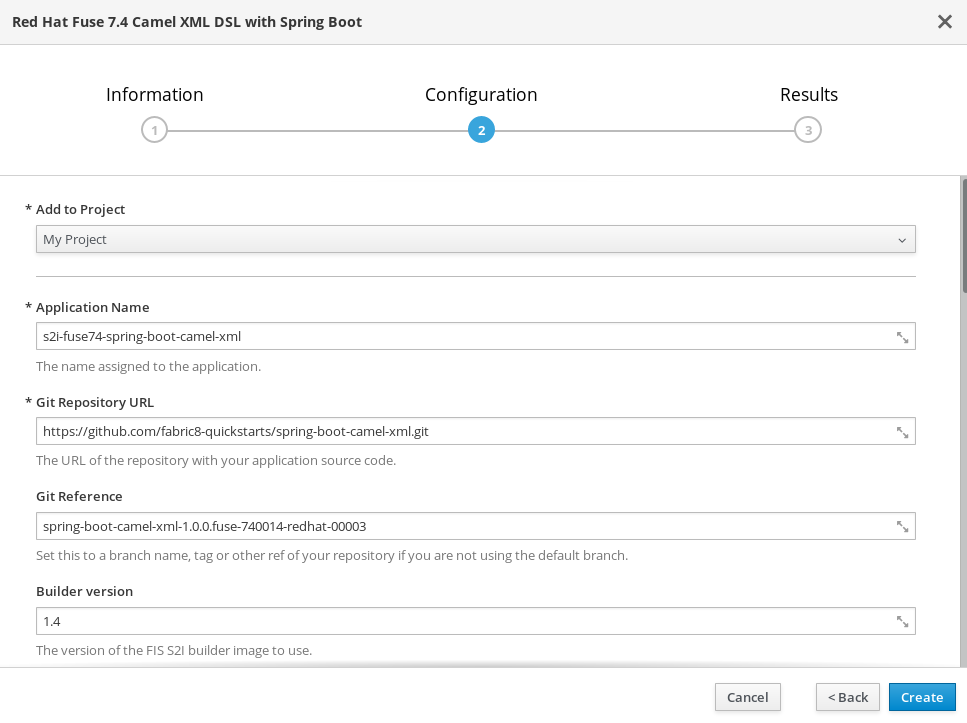

The Configuration step of the template wizard opens, as shown. From the Add to Project dropdown, select My Project.

Note: Alternatively, if you prefer to create a new project for this example, select Create Project from the Add to Project dropdown. A Project Name field then appears for you to fill in the name of the new project.

You can accept the default values for the rest of the settings in the Configuration step. Click Create.

Note: If you want to modify the application code (instead of just running the quickstart as is), you need to fork the original quickstart Git repository and fill in the appropriate values in the Git Repository URL and Git Reference fields.

- The Results step of the template wizard opens. Click Close.

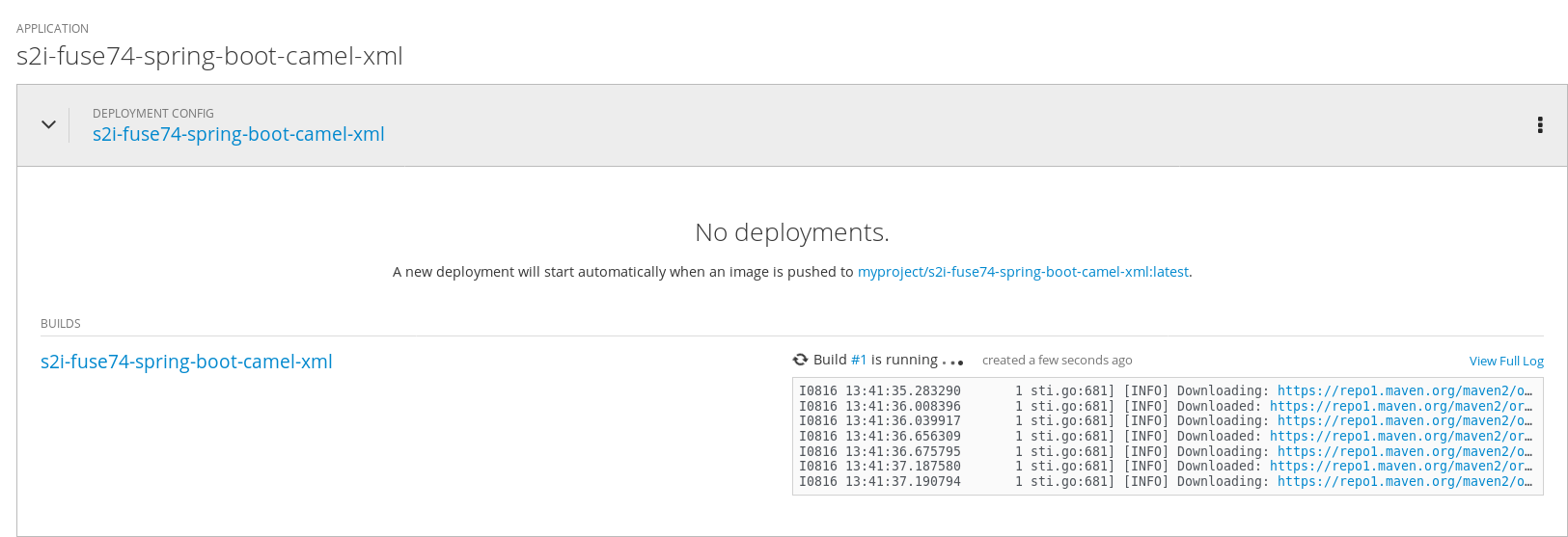

- In the right-hand My Projects pane, click My Project. The Overview tab of the My Project project opens, showing the s2i-fuse74-spring-boot-camel-xml application.

Click the arrow on the left of the s2i-fuse74-spring-boot-camel-xml deployment to expand and view the details of this deployment, as shown.

In this view, you can see the build log. If the build should fail for any reason, the build log can help you to diagnose the problem.

Note: The build can take several minutes to complete, because a lot of dependencies must be downloaded from remote Maven repositories. To speed up build times, we recommend you deploy a Nexus server on your local network.

If the build completes successfully, the pod icon shows as a blue circle with 1 pod running. Click in the center of the pod icon (blue circle) to view the list of pods for s2i-fuse74-spring-boot-camel-xml.

Note: If multiple pods are running, you see a list of running pods at this point. Otherwise (if there is just one pod), you go straight to the details view of the running pod.

The pod details view opens. Click on the Logs tab to view the application log and scroll down the log to find the log messages generated by the Camel application.

- Click Overview on the left-hand navigation bar to return to the overview of the applications in the My Project namespace. To shut down the running pod, click the down arrow beside the pod icon. When a dialog prompts you with the question Scale down deployment s2i-fuse74-spring-boot-camel-xml-1?, click Scale Down.

(Optional) If you are using CDK, you can shut down the virtual OpenShift Server completely by returning to the shell prompt and entering the following command:

minishift stop

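Two of the console actions in this procedure, viewing the build log and scaling down the pod, have command-line counterparts in the oc client. The sketch below assumes that the template creates a build configuration and a deployment configuration that are both named after the application (s2i-fuse74-spring-boot-camel-xml in this example). That naming is an assumption, so check the actual names with oc get bc and oc get dc first. The function names follow_build and scale_down are hypothetical.

```shell
#!/bin/sh
# Command-line counterparts of two console actions in the S2I source workflow.
# APP and both function names are assumptions made for this sketch.
APP="${APP:-s2i-fuse74-spring-boot-camel-xml}"

# Stream the log of the latest build of the given build configuration.
follow_build() {
  oc logs -f "bc/$1"
}

# Scale the given deployment configuration down to zero pods.
scale_down() {
  oc scale "dc/$1" --replicas=0
}

# Usage (after logging in and switching to the project):
#   follow_build "$APP"
#   scale_down "$APP"
```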