Chapter 1. Introduction to Service Registry

This chapter introduces Service Registry concepts and features and provides details on the supported artifact types that are stored in the registry:

- Section 1.1, “Service Registry overview”

- Section 1.2, “Service Registry artifacts”

- Section 1.3, “Service Registry web console”

- Section 1.4, “Registry REST API”

- Section 1.5, “Storage options”

- Section 1.6, “Kafka client serializers/deserializers”

- Section 1.7, “Kafka Connect converters”

- Section 1.8, “Registry demonstration examples”

- Section 1.9, “Available distributions”

1.1. Service Registry overview

Service Registry is a datastore for sharing standard event schemas and API designs across API and event-driven architectures. You can use Service Registry to decouple the structure of your data from your client applications, and to share and manage your data types and API descriptions at runtime using a REST interface.

For example, client applications can dynamically push or pull the latest schema updates to or from Service Registry at runtime without needing to redeploy. Developer teams can query the registry for existing schemas required for services already deployed in production, and can register new schemas required for new services in development.

You can enable client applications to use schemas and API designs stored in Service Registry by specifying the registry URL in your client application code. For example, the registry can store schemas used to serialize and deserialize messages, which can then be referenced from your client applications to ensure that the messages that they send and receive are compatible with those schemas.

Using Service Registry to decouple your data structure from your applications reduces costs by decreasing overall message size, and creates efficiencies by increasing consistent reuse of schemas and API designs across your organization. Service Registry provides a web console to make it easy for developers and administrators to manage registry content.

In addition, you can configure optional rules to govern the evolution of your registry content. For example, rules can ensure that uploaded content is syntactically and semantically valid, or that it is backward and forward compatible with other versions. Any configured rules must pass before new versions can be uploaded to the registry, which ensures that time is not wasted on invalid or incompatible schemas or API designs.
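The upload gate described above can be sketched as follows. This is a simplified illustration, not Service Registry's rule implementation. The function names are hypothetical. The validity check only confirms that the content parses as a JSON object. The compatibility check only examines fields added to an Avro record: each added field must declare a default so that a reader using the new schema can still decode data written with the old one.

```python
import json
from typing import Optional


def validity_rule(content: str) -> bool:
    """Hypothetical validity rule: content must parse as a JSON object."""
    try:
        parsed = json.loads(content)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict)


def backward_compatible(old_schema: dict, new_schema: dict) -> bool:
    """Simplified backward check for Avro record schemas.

    Data written with the old schema stays readable with the new schema if
    every field added in the new schema declares a default value. Field type
    changes and nested records are ignored in this sketch.
    """
    old_names = {field["name"] for field in old_schema.get("fields", [])}
    return all(
        field["name"] in old_names or "default" in field
        for field in new_schema.get("fields", [])
    )


def can_upload(new_content: str, latest_content: Optional[str]) -> bool:
    """Every configured rule must pass before the new version is accepted."""
    if not validity_rule(new_content):
        return False
    if latest_content is None:  # first version: nothing to compare against
        return True
    return backward_compatible(json.loads(latest_content), json.loads(new_content))


v1 = json.dumps({"type": "record", "name": "price",
                 "fields": [{"name": "symbol", "type": "string"}]})
v2_ok = json.dumps({"type": "record", "name": "price",
                    "fields": [{"name": "symbol", "type": "string"},
                               {"name": "currency", "type": "string", "default": "USD"}]})
v2_bad = json.dumps({"type": "record", "name": "price",
                     "fields": [{"name": "symbol", "type": "string"},
                                {"name": "currency", "type": "string"}]})

print(can_upload(v2_ok, v1))   # True
print(can_upload(v2_bad, v1))  # False
```

A fuller compatibility rule would also compare field types, handle nested records, and optionally check against every earlier version rather than only the latest.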

Service Registry is based on the Apicurio Registry open source community project. For details, see https://github.com/apicurio/apicurio-registry.

Service Registry features

Service Registry provides the following main features:

- Support for multiple payload formats for standard event schemas and API specifications

- Pluggable storage options including AMQ Streams, embedded Infinispan, or PostgreSQL database

- Registry content management using a web console, REST API commands, a Maven plug-in, or a Java client

- Rules for content validation and version compatibility to govern how registry content evolves over time

- Full Apache Kafka schema registry support, including integration with Kafka Connect for external systems

- Client serializers/deserializers (Serdes) to validate Kafka and other message types at runtime

- Cloud-native Quarkus Java runtime for low memory footprint and fast deployment times

- Compatibility with existing Confluent schema registry client applications

- Operator-based installation of Service Registry on OpenShift

1.2. Service Registry artifacts

The items stored in Service Registry, such as event schemas and API specifications, are known as registry artifacts. The following shows an example of an Apache Avro schema artifact in JSON format for a simple share price application:
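As a minimal sketch, assuming a record named `price` in a hypothetical `com.example` namespace with `symbol` and `price` string fields, the artifact content might look like the following. It is held in a Python string and parsed to list the record's fields:

```python
import json
from typing import List

# Illustrative share price schema; the name, namespace, and fields are assumptions.
SHARE_PRICE_SCHEMA = """
{
  "type": "record",
  "name": "price",
  "namespace": "com.example",
  "fields": [
    {"name": "symbol", "type": "string"},
    {"name": "price", "type": "string"}
  ]
}
"""


def field_names(schema_text: str) -> List[str]:
    """Parse the artifact content and return the Avro record's field names."""
    schema = json.loads(schema_text)
    if schema.get("type") != "record":
        raise ValueError("expected an Avro record schema")
    return [field["name"] for field in schema["fields"]]


print(field_names(SHARE_PRICE_SCHEMA))  # ['symbol', 'price']
```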

When a schema or API contract is added as an artifact in the registry, client applications can then use that schema or API contract to validate that client messages conform to the correct data structure at runtime.

Service Registry supports a wide range of message payload formats for standard event schemas and API specifications. For example, supported formats include Apache Avro, Google Protocol Buffers, GraphQL, AsyncAPI, OpenAPI, and others. For more details, see Section 8.1, “Service Registry artifact types”.

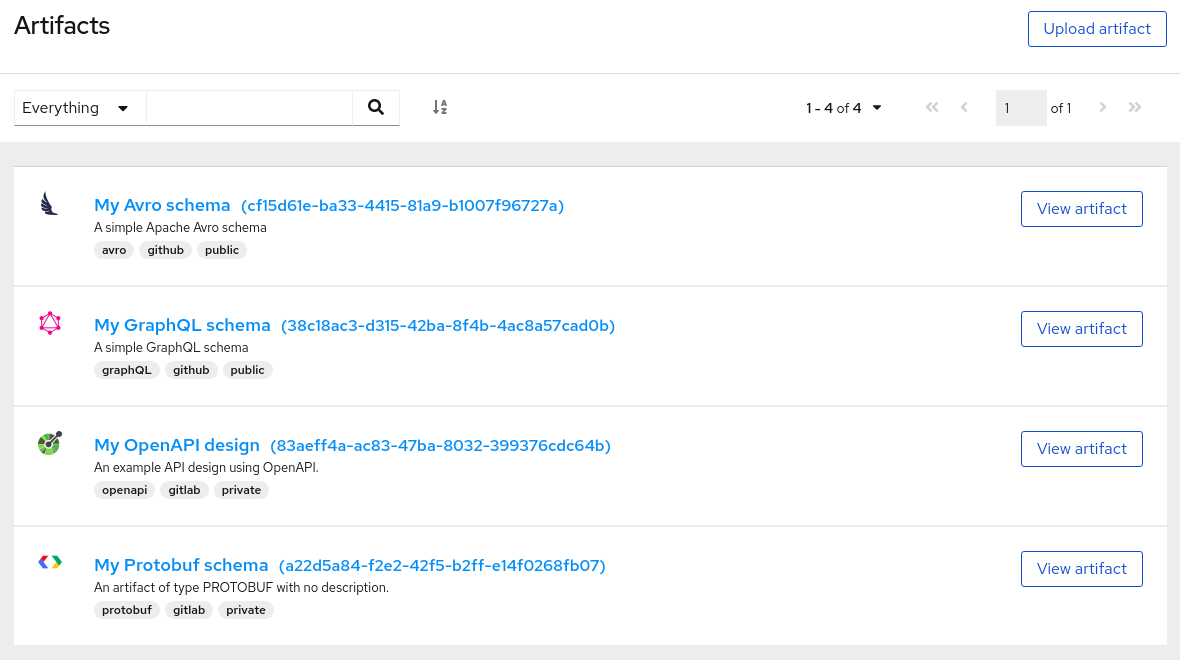

1.3. Service Registry web console

You can use the Service Registry web console to browse and search the artifacts stored in the registry, and to upload new artifacts and artifact versions. You can search for artifacts by label, name, and description. You can also view an artifact’s content, view all of its available versions, or download an artifact file locally.

You can also use the Service Registry web console to configure optional rules for registry content, both globally and for each artifact. These optional rules for content validation and compatibility are applied when new artifacts or artifact versions are uploaded to the registry. For more details, see Section 2.1, “Rules for registry content”.

Figure 1.1. Service Registry web console

The Service Registry web console is available from the main endpoint of your Service Registry deployment, for example, on http://MY-REGISTRY-URL/ui. For more details, see Chapter 5, Managing Service Registry content using the web console.

1.4. Registry REST API

Using the Registry REST API, client applications can manage the artifacts in Service Registry. This API provides create, read, update, and delete operations for:

- Artifacts: Manage the schema and API design artifacts stored in the registry. This includes browsing or searching for artifacts, for example, by name, ID, description, or label. You can also manage the lifecycle state of an artifact: enabled, disabled, or deprecated.

- Artifact versions: Manage the versions that are created when artifact content is updated. This includes browsing or searching for versions, for example, by name, ID, description, or label. You can also manage the lifecycle state of a version: enabled, disabled, or deprecated.

- Artifact metadata: Manage details about artifacts, such as when an artifact was created or modified, its current state, and so on. Some metadata is editable and some is read-only. For example, the artifact name, description, and labels are editable, but the creation and modification timestamps are read-only.

- Global rules: Configure rules that govern the content evolution of all artifacts, to prevent invalid or incompatible content from being added to the registry. Global rules are applied only if an artifact does not have its own artifact-specific rules configured.

- Artifact rules: Configure rules that govern the content evolution of a specific artifact, to prevent invalid or incompatible content from being added to the registry. Artifact rules override any configured global rules.
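A sketch of how a client might build requests for some of these operations with the Python standard library. The paths and headers (`/artifacts`, `/artifacts/{id}/versions`, `/rules`, `X-Registry-ArtifactId`, `X-Registry-ArtifactType`) and the rule body format are assumptions modeled on the Apicurio Registry 1.x REST API; check them against the REST API documentation for your deployment. The requests are built but not sent.

```python
import json
from urllib.request import Request

# Assumed base URL and paths (Apicurio Registry 1.x layout); verify for your version.
BASE_URL = "http://MY-REGISTRY-URL/api"


def create_artifact(artifact_id: str, artifact_type: str, content: str) -> Request:
    """Build (but do not send) a request that creates an artifact."""
    return Request(
        f"{BASE_URL}/artifacts",
        data=content.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Registry-ArtifactId": artifact_id,
            "X-Registry-ArtifactType": artifact_type,
        },
        method="POST",
    )


def list_versions(artifact_id: str) -> Request:
    """Build a request that lists the versions of an artifact."""
    return Request(f"{BASE_URL}/artifacts/{artifact_id}/versions", method="GET")


def add_global_rule(rule_type: str, config: str) -> Request:
    """Build a request that configures a global rule, for example VALIDITY=FULL."""
    body = json.dumps({"type": rule_type, "config": config}).encode("utf-8")
    return Request(
        f"{BASE_URL}/rules",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing one of these `Request` objects to `urllib.request.urlopen()` would send it to a running registry.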

Compatibility with other schema registries

The Registry REST API is compatible with the Confluent schema registry REST API, which includes support for Apache Avro, Google Protocol Buffers, and JSON Schema artifact types. Applications using Confluent client libraries can use Service Registry as a drop-in replacement. For more details, see Replacing Confluent Schema Registry with Red Hat Integration Service Registry.
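To illustrate what drop-in compatibility means at the HTTP level, the following sketch builds a Confluent-style "register a schema under a subject" request. The Confluent API sends the schema as a JSON-encoded string inside a `schema` field, using the `application/vnd.schemaregistry.v1+json` media type. The `/api/ccompat` base path is an assumption based on the Apicurio Registry 1.x layout, and `prices-value` is a hypothetical subject name; verify the path for your version.

```python
import json
from urllib.request import Request

# Assumed Confluent-compatible base path; verify it for your Service Registry version.
CCOMPAT_URL = "http://MY-REGISTRY-URL/api/ccompat"


def register_schema(subject: str, schema: dict) -> Request:
    """Build (but do not send) a Confluent-style schema registration request.

    The schema itself is serialized to a string and embedded in the
    "schema" field, as Confluent clients do.
    """
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return Request(
        f"{CCOMPAT_URL}/subjects/{subject}/versions",
        data=body,
        headers={"Content-Type": "application/vnd.schemaregistry.v1+json"},
        method="POST",
    )


request = register_schema("prices-value", {"type": "string"})
```

Under this assumption, an existing Confluent client only needs its registry base URL changed; the request shape stays the same.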

Additional resources

- For detailed information, see the Apicurio Registry REST API documentation.

- The Registry REST API documentation is also available from the main endpoint of your Service Registry deployment, for example, on http://MY-REGISTRY-URL/api.

1.5. Storage options

Service Registry provides the following underlying storage implementations for registry artifacts:

| Storage option | Release |
|---|---|
| Kafka Streams-based storage in AMQ Streams 1.5 | General Availability |
| Cache-based storage in embedded Infinispan 10 | Technology Preview only |
| Java Persistence API-based storage in PostgreSQL 12 database | Technology Preview only |

Service Registry storage in Infinispan or PostgreSQL is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production.

These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview.

Additional resources

- For details on how to install Service Registry with your preferred storage option, see Chapter 3, Installing Service Registry on OpenShift.

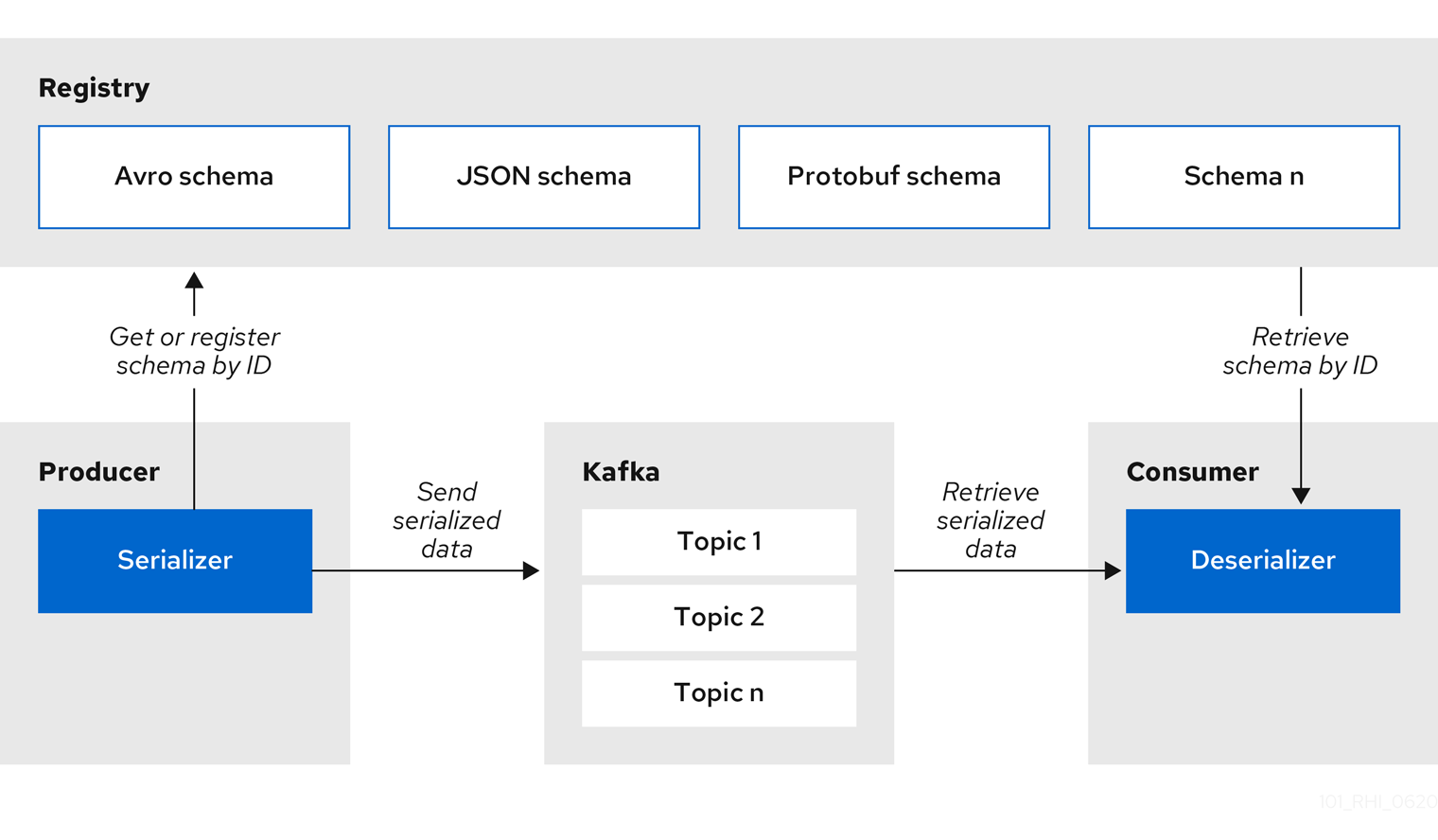

1.6. Kafka client serializers/deserializers

Kafka producer applications can use serializers to encode messages that conform to a specific event schema. Kafka consumer applications can then use deserializers to validate that messages have been serialized using the correct schema, based on a specific schema ID.

Figure 1.2. Service Registry and Kafka client serializer/deserializer architecture

Service Registry provides Kafka client serializers/deserializers (Serdes) to validate the following message types at runtime:

- Apache Avro

- Google Protocol Buffers

- JSON Schema

The Service Registry Maven repository and source code distributions include the Kafka serializer/deserializer implementations for these message types, which Kafka client developers can use to integrate with the registry. These implementations include custom io.apicurio.registry.utils.serde Java classes for each supported message type, which client applications can use to pull schemas from the registry at runtime for validation.
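The following hypothetical sketch shows the serializer/deserializer round trip in miniature. It does not use the `io.apicurio.registry.utils.serde` classes. An in-memory dictionary stands in for the registry, and the payload is JSON checked only against a JSON Schema `required` list. The framing (one magic byte followed by a 4-byte big-endian schema ID) is the Confluent-style wire format, assumed here for illustration; Service Registry's own serdes may encode the ID differently.

```python
import json
import struct
from typing import Dict, Tuple


class InMemoryRegistry:
    """Hypothetical stand-in for the registry: maps schema IDs to schemas."""

    def __init__(self) -> None:
        self._schemas: Dict[int, dict] = {}

    def register(self, schema: dict) -> int:
        schema_id = len(self._schemas) + 1
        self._schemas[schema_id] = schema
        return schema_id

    def get(self, schema_id: int) -> dict:
        return self._schemas[schema_id]


MAGIC_BYTE = 0
HEADER = struct.Struct(">BI")  # assumed framing: magic byte + 4-byte big-endian ID


def check(schema: dict, value: dict) -> None:
    """Tiny subset of JSON Schema validation: only the "required" keyword."""
    missing = set(schema.get("required", [])) - set(value)
    if missing:
        raise ValueError(f"missing required fields: {sorted(missing)}")


def serialize(registry: InMemoryRegistry, schema_id: int, value: dict) -> bytes:
    """Producer side: validate against the schema, then prefix the schema ID."""
    check(registry.get(schema_id), value)
    return HEADER.pack(MAGIC_BYTE, schema_id) + json.dumps(value).encode("utf-8")


def deserialize(registry: InMemoryRegistry, data: bytes) -> Tuple[int, dict]:
    """Consumer side: read the schema ID, fetch that schema, validate the payload."""
    magic, schema_id = HEADER.unpack_from(data)
    if magic != MAGIC_BYTE:
        raise ValueError("unexpected magic byte")
    value = json.loads(data[HEADER.size:])
    check(registry.get(schema_id), value)
    return schema_id, value


registry = InMemoryRegistry()
price_id = registry.register({"type": "object", "required": ["symbol", "price"]})
message = serialize(registry, price_id, {"symbol": "ABC", "price": "10.50"})
print(deserialize(registry, message))  # (1, {'symbol': 'ABC', 'price': '10.50'})
```

A production serde would also cache schemas fetched from the registry so that each message does not trigger a REST lookup.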

Additional resources

- For instructions on how to use the Service Registry Kafka client serializer/deserializer for Apache Avro in AMQ Streams producer and consumer applications, see Using AMQ Streams on OpenShift.

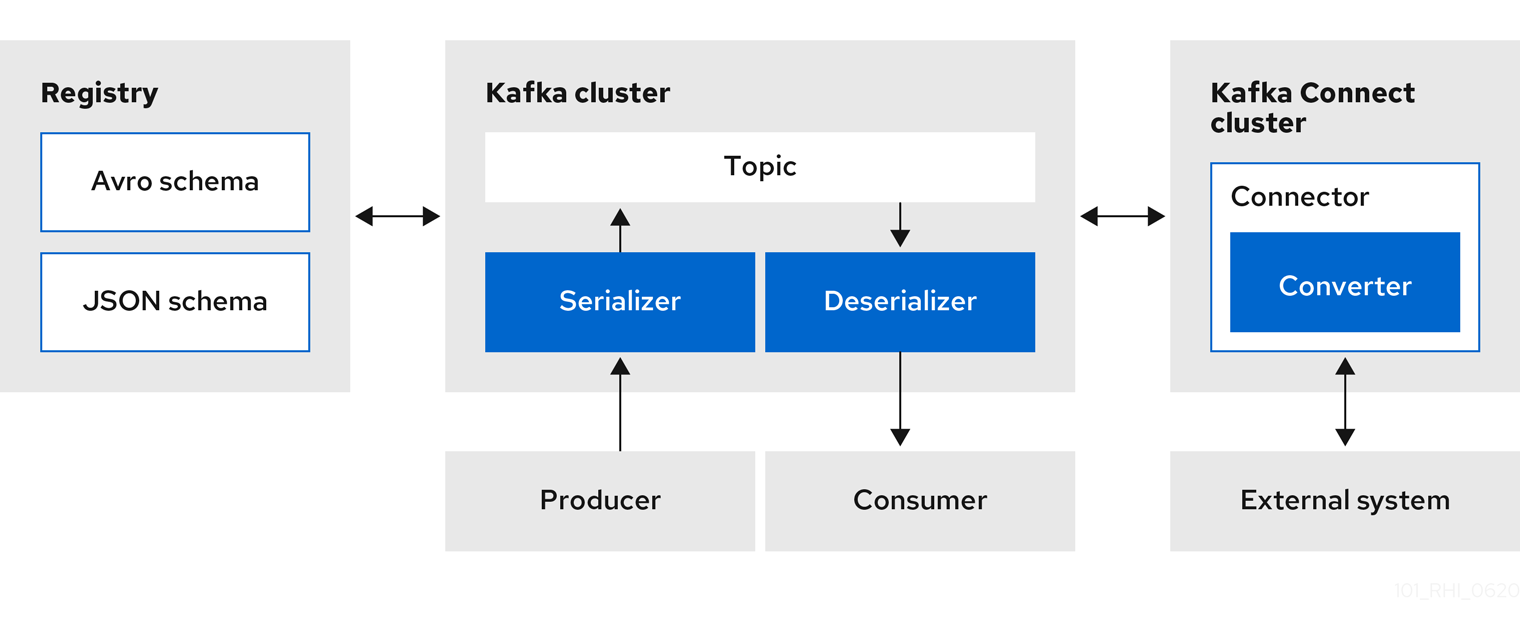

1.7. Kafka Connect converters

You can use Service Registry with Apache Kafka Connect to stream data between Kafka and external systems. Using Kafka Connect, you can define connectors for different systems to move large volumes of data into and out of Kafka-based systems.

Figure 1.3. Service Registry and Kafka Connect architecture

Service Registry provides the following features for Kafka Connect:

- Storage for Kafka Connect schemas

- Kafka Connect converters for Apache Avro and JSON Schema

- Registry REST API to manage schemas

You can use the Avro and JSON Schema converters to map Kafka Connect schemas into Avro or JSON schemas. Those schemas can then serialize message keys and values into the compact Avro binary format or human-readable JSON format. The converted JSON is also less verbose because the messages do not contain the schema information, only the schema ID.

Service Registry can manage and track the Avro and JSON schemas used in the Kafka topics. Because the schemas are stored in Service Registry and decoupled from the message content, each message needs to include only a small schema identifier. For an I/O-bound system like Kafka, this means more total throughput for producers and consumers.
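The size difference can be estimated with a short calculation. This sketch uses a hypothetical schema and payload, and assumes a 5-byte header (magic byte plus 4-byte schema ID) for the ID-only message; actual savings depend on your schemas, payloads, and the serde's ID encoding.

```python
import json

# Hypothetical schema and payload, used only to compare message sizes.
schema = {
    "type": "record",
    "name": "price",
    "fields": [
        {"name": "symbol", "type": "string"},
        {"name": "price", "type": "string"},
    ],
}
payload = {"symbol": "ABC", "price": "10.50"}

# Option 1: embed the full schema in every message.
with_schema = json.dumps({"schema": schema, "payload": payload}).encode("utf-8")

# Option 2: send only a 5-byte header (assumed: magic byte + 4-byte schema ID).
with_id = bytes(5) + json.dumps(payload).encode("utf-8")

saved_per_message = len(with_schema) - len(with_id)
messages = 1_000_000
print(f"{saved_per_message} bytes saved per message, "
      f"{saved_per_message * messages / 1e6:.1f} MB per {messages:,} messages")
```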

The Avro and JSON Schema serializers and deserializers (Serdes) provided by Service Registry are also used by Kafka producers and consumers in this use case. Kafka consumer applications that you write to consume change events can use the Avro or JSON Serdes to deserialize these change events. You can install these Serdes into any Kafka-based system and use them along with Kafka Connect, or with Kafka Connect-based systems such as Debezium and Camel Kafka Connector.
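Kafka Connect selects converters through worker or connector properties. The following minimal sketch renders key and value converter settings in Java properties syntax. It assumes the `io.apicurio.registry.utils.converter.AvroConverter` class and the `apicurio.registry.url` property used in Apicurio Registry 1.x-era examples; verify both for your version. Additional settings, such as how schema IDs are looked up or registered, may also be required.

```python
from typing import Dict

# Assumed class and property names from Apicurio Registry 1.x-era examples.
AVRO_CONVERTER = "io.apicurio.registry.utils.converter.AvroConverter"


def converter_config(registry_url: str) -> Dict[str, str]:
    """Use the registry-backed Avro converter for both message keys and values."""
    conf: Dict[str, str] = {}
    for side in ("key", "value"):
        conf[f"{side}.converter"] = AVRO_CONVERTER
        conf[f"{side}.converter.apicurio.registry.url"] = registry_url
    return conf


def to_properties(conf: Dict[str, str]) -> str:
    """Render the settings as key=value lines for a Connect properties file."""
    return "\n".join(f"{key}={value}" for key, value in sorted(conf.items()))


print(to_properties(converter_config("http://MY-REGISTRY-URL/api")))
```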

1.8. Registry demonstration examples

Service Registry provides an open source demonstration example of Apache Avro serialization/deserialization with storage in Apache Kafka Streams. This example shows how the serializer/deserializer obtains the Avro schema from the registry at runtime and uses it to serialize and deserialize Kafka messages. For more details, see https://github.com/Apicurio/apicurio-registry-demo.

This demonstration also provides simple examples of both Avro and JSON Schema serialization/deserialization with storage in Apache Kafka.

For another open source demonstration example with detailed instructions on Avro serialization/deserialization with storage in Apache Kafka, see the Red Hat Developer article on Getting Started with Red Hat Integration Service Registry.

1.9. Available distributions

Service Registry includes the following distributions:

| Distribution | Location | Release |
|---|---|---|
| Service Registry Operator | OpenShift web console under Operators | General Availability |
| Container image for Service Registry Operator | | General Availability |
| Container image for Kafka storage in AMQ Streams | | General Availability |
| Container image for embedded Infinispan storage | | Technology Preview only |
| Container image for JPA storage in PostgreSQL | | Technology Preview only |
| Example custom resource definitions for installation | | General Availability and Technology Preview |
| Kafka Connect converters | | General Availability |
| Maven repository | | General Availability |
| Source code | | General Availability |

Service Registry storage in Infinispan or PostgreSQL is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production.

These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview.

You must have a subscription for Red Hat Integration and be logged in to the Red Hat Customer Portal to access the available Service Registry distributions.