OpenShift Container Storage is now OpenShift Data Foundation starting with version 4.9.

Chapter 2. Deploying OpenShift Container Storage using local storage devices

Use this section to deploy OpenShift Container Storage on bare metal, Amazon EC2 storage optimized I3, and VMware infrastructures where OpenShift Container Platform is already installed.

Installing OpenShift Container Storage on Amazon EC2 storage optimized I3 instances using the Local Storage Operator is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

Red Hat OpenShift Container Storage deployment assumes a new cluster, without any application or other workload running on the 3 worker nodes. Applications should run on additional worker nodes.

To deploy OpenShift Container Storage using local storage devices, perform the following steps:

- Understand the requirements for installing OpenShift Container Storage using local storage devices.

- Install Red Hat OpenShift Container Storage Operator.

- Install Local Storage Operator.

- Find the available storage devices.

- Create the OpenShift Container Storage cluster for your infrastructure:

- For Amazon EC2, follow the instructions in Creating OpenShift Container Storage cluster on Amazon EC2.

- For VMware, follow the instructions in Creating OpenShift Container Storage cluster on VMware.

- For bare metal, follow the instructions in Creating OpenShift Container Storage cluster on bare metal.

2.1. Requirements for installing OpenShift Container Storage using local storage devices

You must have at least three OpenShift Container Platform worker nodes in the cluster with locally attached storage devices on each of them.

- Each of the three worker nodes must have at least one raw block device available to be used by OpenShift Container Storage.

- For minimum starting node requirements, see Node Requirements section in Planning guide.

- The devices to be used must be empty, that is, there should be no PVs, VGs, or LVs remaining on the disks.

You must have a minimum of three labeled nodes.

Each worker node that has local storage devices to be used by OpenShift Container Storage must have a specific label to deploy OpenShift Container Storage pods. To label the nodes, use the following command:

    $ oc label nodes <NodeName> cluster.ocs.openshift.io/openshift-storage=''

- There should not be any storage providers managing locally mounted storage on the storage nodes that would conflict with the use of Local Storage Operator for Red Hat OpenShift Container Storage.

- Local Storage Operator version must match with the Red Hat OpenShift Container Platform version in order to have the Local Storage Operator fully supported with Red Hat OpenShift Container Storage. Local Storage Operator does not get upgraded when Red Hat OpenShift Container Platform is upgraded.
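The labeling requirement above can be scripted when several nodes are involved. The following is a minimal sketch, assuming the `oc` client is already logged in to the cluster. The function names `label_storage_nodes` and `count_storage_nodes` are hypothetical helpers, not part of any Red Hat tooling.

```shell
#!/bin/sh
# Label that OpenShift Container Storage uses to select storage nodes.
OCS_LABEL="cluster.ocs.openshift.io/openshift-storage"

# Apply the label (with an empty value) to every node name given as an argument.
label_storage_nodes() {
    for node in "$@"; do
        oc label nodes "$node" "${OCS_LABEL}=" || return 1
    done
}

# Print how many nodes currently carry the label.
count_storage_nodes() {
    oc get nodes -l "$OCS_LABEL" -o name | wc -l | tr -d ' '
}
```

After calling `label_storage_nodes <NodeName-1> <NodeName-2> <NodeName-3>`, `count_storage_nodes` should print at least 3 before you continue with the deployment.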

2.2. Installing Red Hat OpenShift Container Storage Operator using the Operator Hub

You can install Red Hat OpenShift Container Storage using the Red Hat OpenShift Container Platform Operator Hub on Amazon Web Services (AWS) and VMware vSphere platforms. For information about the hardware and software requirements, see Planning your deployment guide.

Prerequisites

- Log in to OpenShift Container Platform cluster.

- You must have at least three worker nodes in the OpenShift Container Platform cluster.

- You must create a namespace called openshift-storage as follows:

  - Click Administration → Namespaces in the left pane of the OpenShift Web Console.

  - Click Create Namespace.

  - In the Create Namespace dialog box, enter openshift-storage for Name and openshift.io/cluster-monitoring=true for Labels. This label is required to get the dashboards.

  - Select the No restrictions option for Default Network Policy.

  - Click Create.
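The namespace can also be created from the command line instead of the web console. The following is a minimal sketch, assuming the `oc` client is logged in with permission to create namespaces. The function name `create_monitored_namespace` is hypothetical, and the sketch does not create or change any network policy.

```shell
#!/bin/sh
# Create a namespace and add the cluster-monitoring label to it.
# $1: namespace name (this procedure uses openshift-storage).
create_monitored_namespace() {
    ns="$1"
    oc create namespace "$ns" || return 1
    oc label namespace "$ns" openshift.io/cluster-monitoring=true
}
```

For this procedure the call would be `create_monitored_namespace openshift-storage`.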

When you need to override the cluster-wide default node selector for OpenShift Container Storage, you can use the following command in command line interface to specify a blank node selector for the openshift-storage namespace:

    $ oc annotate namespace openshift-storage openshift.io/node-selector=

Procedure

- Click Operators → OperatorHub in the left pane of the OpenShift Web Console.

Figure 2.1. List of operators in the Operator Hub

- Search for OpenShift Container Storage from the list of operators and click on it.

- On the OpenShift Container Storage operator page, click Install.

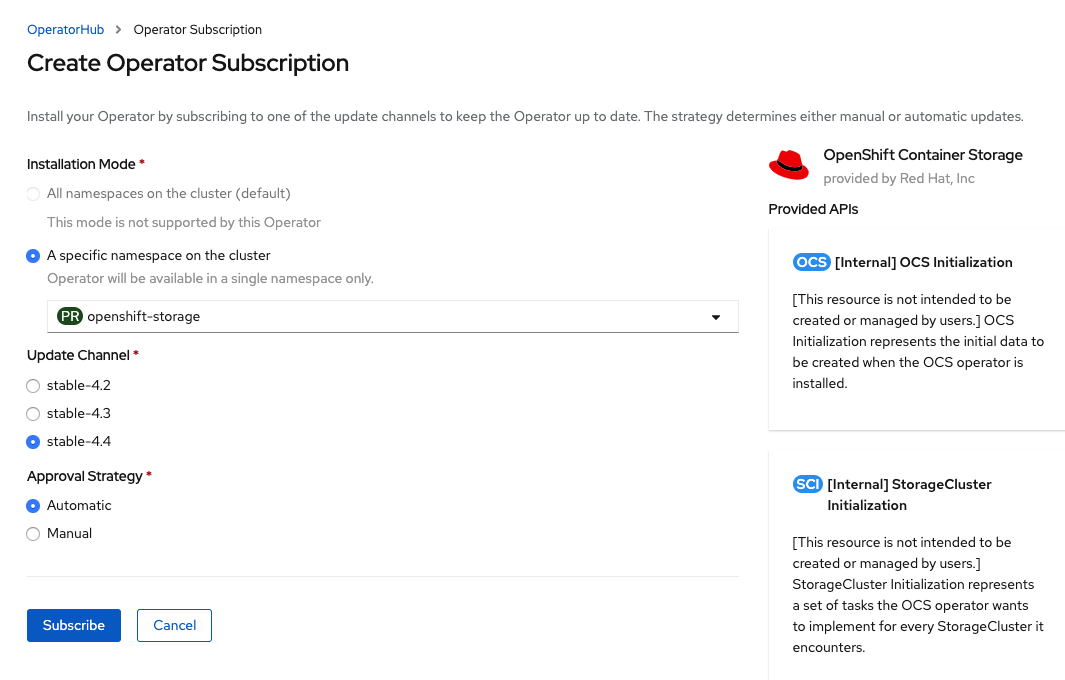

On the Create Operator Subscription page:

Figure 2.2. Create Operator Subscription page

- Select A specific namespace on the cluster for the Installation Mode option.

  - Select the openshift-storage namespace from the drop-down menu.

- Select stable-4.4 as the update channel.

- Select an Approval Strategy:

  - Automatic specifies that you want OpenShift Container Platform to upgrade OpenShift Container Storage automatically.

  - Manual specifies that you want to have control to upgrade OpenShift Container Storage manually.

- Click Subscribe.

Figure 2.3. Installed operators

The Installed Operators page is displayed with the status of the operator.

Verification steps

- Verify that the OpenShift Container Storage Operator shows the Status as Succeeded.

2.3. Installing Local Storage Operator

Use this procedure to install Local Storage Operator from the Operator Hub before creating OpenShift Container Storage clusters on local storage devices in Amazon EC2 I3, VMware, and bare metal infrastructures.

Prerequisites

- Create a namespace called local-storage as follows:

  - Click Administration → Namespaces in the left pane of the OpenShift Web Console.

  - Click Create Namespace.

  - In the Create Namespace dialog box, enter local-storage for Name.

  - Select the No restrictions option for Default Network Policy.

  - Click Create.

Procedure

- Click Operators → OperatorHub in the left pane of the OpenShift Web Console.

- Search for Local Storage Operator from the list of operators and click on it.

- Click Install.

Figure 2.4. Create Operator Subscription page

- Select A specific namespace on the cluster for the Installation Mode option.

  - Select the local-storage namespace from the drop-down menu.

- Select a desired value for the Update Channel option.

- Select the desired Approval Strategy.

- Click Subscribe.

- Verify that the Local Storage Operator shows the Status as Succeeded.
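The Succeeded status shown in the console can also be checked from the command line by reading the phase of the ClusterServiceVersion (CSV) objects in the operator namespace. The following is a minimal sketch, assuming the `oc` client is logged in. The function name `operators_succeeded` is hypothetical.

```shell
#!/bin/sh
# Succeed only if at least one ClusterServiceVersion exists in the
# namespace and every one of them reports the phase "Succeeded".
# $1: namespace, for example openshift-storage or local-storage.
operators_succeeded() {
    phases=$(oc get csv -n "$1" -o jsonpath='{.items[*].status.phase}') || return 1
    [ -n "$phases" ] || return 1
    for phase in $phases; do
        [ "$phase" = "Succeeded" ] || return 1
    done
}
```

For example, `operators_succeeded local-storage && echo ready` prints `ready` only after the installation in that namespace has finished.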

2.4. Finding available storage devices

Use this procedure to identify the device name for each of the three or more worker nodes that you have labeled with OpenShift Container Storage label, cluster.ocs.openshift.io/openshift-storage='' before creating PVs for bare metal, Amazon EC2 I3, or VMware storage devices.

Procedure

- List and verify the name of the worker nodes with the OpenShift Container Storage label.

    $ oc get nodes -l cluster.ocs.openshift.io/openshift-storage=

Example output:

    NAME                                         STATUS   ROLES    AGE     VERSION
    ip-10-0-135-71.us-east-2.compute.internal    Ready    worker   6h45m   v1.16.2
    ip-10-0-145-125.us-east-2.compute.internal   Ready    worker   6h45m   v1.16.2
    ip-10-0-160-91.us-east-2.compute.internal    Ready    worker   6h45m   v1.16.2

- Log in to each worker node that is used for OpenShift Container Storage resources and find the unique by-id device name for each available raw block device.

    $ oc debug node/<Nodename>

In this example, the local devices available are nvme1n1 and nvme2n1.

- Find the unique by-id device name depending on the hardware serial number for each device.

    sh-4.4# ls -l /dev/disk/by-id/ | grep Storage
    lrwxrwxrwx. 1 root root 13 Jun 26 07:29 nvme-Amazon_EC2_NVMe_Instance_Storage_AWS1924C57D4F1FC5236 -> ../../nvme2n1
    lrwxrwxrwx. 1 root root 13 Jun 26 07:29 nvme-Amazon_EC2_NVMe_Instance_Storage_AWS22ABDB45A3BC9028F -> ../../nvme1n1

In this example, the local devices available are nvme1n1 and nvme2n1 with a size of 2.3 TiB.

For each worker node that has the OpenShift Container Storage label (a minimum of three), you need to find the unique by-id device names. In this example, the by-id device names are:

  - nvme-Amazon_EC2_NVMe_Instance_Storage_AWS1924C57D4F1FC5236

  - nvme-Amazon_EC2_NVMe_Instance_Storage_AWS22ABDB45A3BC9028F

- You must repeat finding the device name by-id for all the other nodes that have the storage devices to be used by OpenShift Container Storage. See https://access.redhat.com/solutions/4928841 for more details.
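Because the by-id lookup has to be repeated on every storage node, it can help to reduce the `ls -l /dev/disk/by-id/` listing to just the by-id name and the kernel device it points to. The following is a minimal sketch that only post-processes the listing text. The function name `list_by_id_devices` is hypothetical, and it assumes `awk` is available where the filter runs.

```shell
#!/bin/sh
# Read `ls -l /dev/disk/by-id/` output on stdin and print one line per
# symlink in the form "<by-id name> <kernel device>".
# $1: text to match in the by-id name (the example above uses "Storage").
list_by_id_devices() {
    awk -v pat="$1" '
        NF >= 3 && $(NF-1) == "->" && index($(NF-2), pat) > 0 {
            n = split($NF, parts, "/")   # "../../nvme2n1" -> last path part
            print $(NF-2), parts[n]
        }'
}
```

One way to use it from a workstation is `oc debug node/<Nodename> -- chroot /host ls -l /dev/disk/by-id/ | list_by_id_devices Storage`, so that the filtering runs locally rather than inside the debug pod.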

2.5. Creating OpenShift Container Storage cluster on Amazon EC2 storage optimized - i3en.2xlarge instance type

Use this procedure to create OpenShift Container Storage cluster on Amazon EC2 (storage optimized - i3en.2xlarge instance type) infrastructure, which involves:

- Creating PVs by using the LocalVolume CR

- Creating a new StorageClass

Installing OpenShift Container Storage on Amazon EC2 storage optimized - i3en.2xlarge instances using the Local Storage Operator is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

Red Hat OpenShift Container Storage deployment assumes a new cluster, without any application or other workload running on the 3 worker nodes. Applications should run on additional worker nodes.

The Amazon EC2 storage optimized - i3en.2xlarge instance type includes two non-volatile memory express (NVMe) disks. The example in this procedure illustrates the use of both the disks that the instance type comes with.

Using the ephemeral storage of Amazon EC2 I3 for OpenShift Container Storage persistent data is not recommended, because stopping all three nodes can cause data loss. Use ephemeral storage only in scenarios such as the following:

- Cloud burst where data is copied from another location for a specific data crunching, which is limited in time

- Development or testing environment

When you are using the ephemeral storage of Amazon EC2 I3, it is recommended to:

- Use three availability zones to decrease the risk of losing all the data

- Limit the number of users with ec2:StopInstances permissions to avoid instance shutdown by mistake

Prerequisites

- Ensure that all the requirements in the Requirements for installing OpenShift Container Storage using local storage devices section are met.

- Verify your OpenShift Container Platform worker nodes are labeled for OpenShift Container Storage, which is used as the nodeSelector.

    $ oc get nodes -l cluster.ocs.openshift.io/openshift-storage -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}'

Example output:

    ip-10-0-135-71.us-east-2.compute.internal
    ip-10-0-145-125.us-east-2.compute.internal
    ip-10-0-160-91.us-east-2.compute.internal

Procedure

- Create local persistent volumes (PVs) on the storage nodes using the LocalVolume custom resource (CR).

Example of LocalVolume CR local-storage-block.yaml using the OpenShift Container Storage label as node selector and by-id device identifier.

Each Amazon EC2 i3en.2xlarge instance has two disks and this example uses both the disks.
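The example manifest referred to above is not reproduced in this text. As a stand-in, the following sketch prints a LocalVolume manifest of the general shape this step describes. It assumes the `local.storage.openshift.io/v1` API, the resource name `local-block`, the `local-storage` namespace, and the `localblock` StorageClass name used elsewhere in this procedure. The function name `write_local_volume` is hypothetical, and the device paths are whatever by-id names you found on your own nodes.

```shell
#!/bin/sh
# Print a LocalVolume manifest on stdout.
# Arguments: one or more /dev/disk/by-id/... paths to expose as block PVs.
write_local_volume() {
    cat <<'EOF'
apiVersion: local.storage.openshift.io/v1
kind: LocalVolume
metadata:
  name: local-block
  namespace: local-storage
spec:
  nodeSelector:
    nodeSelectorTerms:
    - matchExpressions:
      - key: cluster.ocs.openshift.io/openshift-storage
        operator: In
        values:
        - ""
  storageClassDevices:
  - storageClassName: localblock
    volumeMode: Block
    devicePaths:
EOF
    # One list entry per device path passed on the command line.
    for path in "$@"; do
        printf '    - %s\n' "$path"
    done
}
```

Redirecting the output, for example `write_local_volume /dev/disk/by-id/<id-1> /dev/disk/by-id/<id-2> > local-storage-block.yaml`, produces a file that should be reviewed against your operator version before it is applied.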

- Create the LocalVolume CR.

    $ oc create -f local-storage-block.yaml

Example output:

    localvolume.local.storage.openshift.io/local-block created

- Check if the pods are created.

    $ oc -n local-storage get pods

- Check if the PVs are created.

You must see a new PV for each of the local storage devices on the three worker nodes. Refer to the example in the Finding available storage devices section that shows two available storage devices per worker node, each with a size of 2328Gi.

    $ oc get pv

- Check if a new StorageClass is created due to the creation of LocalVolume CR. This StorageClass is used while creating StorageCluster to create PVCs.

    $ oc get sc | grep localblock

Example output:

    NAME         PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    localblock   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  3h47m

- Create StorageCluster CR that uses the localblock StorageClass and the PVs that are created.

Example of StorageCluster CR ocs-cluster-service.yaml using monDataDirHostPath and localblock StorageClass.

Important: To ensure that the OSDs have a guaranteed size across the nodes, the storage size for storageDeviceSets must be specified as less than or equal to the size of the desired PVs created on the nodes.

- Create StorageCluster CR.

    $ oc create -f ocs-cluster-service.yaml

Example output:

    storagecluster.ocs.openshift.io/ocs-cluster-service created
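The StorageCluster manifest used in this procedure is not reproduced in this text. The following sketch prints a manifest of the kind the procedure describes, built around the `monDataDirHostPath` and `storageDeviceSets` fields and the `localblock` StorageClass named above. Everything else is an assumption made for illustration: the `ocs.openshift.io/v1` API version, the resource name `ocs-storagecluster`, the `/var/lib/rook` host path, the device set name, and the hypothetical function name `write_storage_cluster`.

```shell
#!/bin/sh
# Print a StorageCluster manifest on stdout.
# $1: number of device sets (each set is replicated across three nodes)
# $2: requested size per OSD, at most the PV size (for example 2328Gi)
write_storage_cluster() {
    cat <<EOF
apiVersion: ocs.openshift.io/v1
kind: StorageCluster
metadata:
  name: ocs-storagecluster
  namespace: openshift-storage
spec:
  manageNodes: false
  monDataDirHostPath: /var/lib/rook
  storageDeviceSets:
  - name: ocs-deviceset
    count: $1
    replica: 3
    portable: false
    dataPVCTemplate:
      spec:
        accessModes:
        - ReadWriteOnce
        volumeMode: Block
        storageClassName: localblock
        resources:
          requests:
            storage: $2
EOF
}
```

With two disks per node, as in this example, a call such as `write_storage_cluster 2 2328Gi > ocs-cluster-service.yaml` would request one OSD per local PV. Check the generated file against the StorageCluster schema of your installed operator before creating it.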

Verification steps

2.6. Creating OpenShift Container Storage cluster on VMware

Use this procedure to create a storage cluster on VMware infrastructure.

VMware supports the following three types of local storage:

- Virtual machine disk (VMDK)

- Raw device mapping (RDM)

- VMDirectPath I/O

Prerequisites

- Ensure that all the requirements in the Requirements for installing OpenShift Container Storage using local storage devices section are met.

- You must have three worker nodes with the same storage type and size attached to each node to use local storage devices on VMware.

- For VMs on VMware, ensure the disk.EnableUUID option is set to TRUE. You need to have vCenter account privileges to configure the VMs. For more information, see Required vCenter account privileges. To set the disk.EnableUUID option, use the Advanced option of the VM Options in the Customize hardware tab. For more information, see Creating Red Hat Enterprise Linux CoreOS (RHCOS) machines in vSphere.

- Verify your OpenShift Container Platform worker nodes are labeled for OpenShift Container Storage:

    $ oc get nodes -l cluster.ocs.openshift.io/openshift-storage -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}'

To identify storage devices on each node, refer to Finding available storage devices.

Procedure

- Create the LocalVolume CR for block PVs.

Example of LocalVolume CR local-storage-block.yaml using OpenShift Container Storage label as node selector.

- Create LocalVolume CR for block PVs.

    $ oc create -f local-storage-block.yaml

Example output:

    localvolume.local.storage.openshift.io/local-block created

- Check if the pods are created.

    $ oc -n local-storage get pods

- Check the new localblock StorageClass.

    $ oc get sc | grep localblock

Example output:

    NAME         PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    localblock   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  3h47m

- Check the PVs that are created with the Available status.

    $ oc get pv

Example output:

    NAME                CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    local-pv-150fdc87   2TiB       RWO            Delete           Available           localblock              2m11s
    local-pv-183bfc0a   2TiB       RWO            Delete           Available           localblock              2m11s
    local-pv-b2f5cb25   2TiB       RWO            Delete           Available           localblock              2m21s

In this example, three PVs are used for OSD storage.

- Create StorageCluster CR ocs-cluster-service-VMware.yaml that uses the monDataDirHostPath and localblock StorageClass.

Important: To ensure that the OSDs have a guaranteed size across the nodes, the storage size for storageDeviceSets must be specified as less than or equal to the size of the desired PVs created on the nodes.

- Create StorageCluster CR.

    $ oc create -f ocs-cluster-service-VMware.yaml

Example output:

    storagecluster.ocs.openshift.io/ocs-storagecluster created
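Before creating the StorageCluster CR it can be useful to confirm how many PVs of the expected StorageClass are still Available, as the procedure above checks by eye. The following is a minimal sketch that counts them from the default `oc get pv` table. The function name `count_available_pvs` is hypothetical, and the column positions assume the default table layout with an empty CLAIM column for unbound PVs.

```shell
#!/bin/sh
# Read `oc get pv` output on stdin and print the number of PVs whose
# STATUS is "Available" and whose STORAGECLASS matches $1.
# For an unbound PV the CLAIM column is empty, so the storage class
# is the sixth whitespace-separated field.
count_available_pvs() {
    awk -v sc="$1" '
        NR > 1 && $5 == "Available" && $6 == sc { n++ }
        END { print n + 0 }'
}
```

For the example in this section, `oc get pv | count_available_pvs localblock` would print 3.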

Verification steps

2.7. Creating OpenShift Container Storage cluster on bare metal

Prerequisites

- Ensure that all the requirements in the Requirements for installing OpenShift Container Storage using local storage devices section are met.

- You must have three worker nodes with the same storage type and size attached to each node (for example, 2TB NVMe hard drive) to use local storage devices on bare metal.

- Verify your OpenShift Container Platform worker nodes are labeled for OpenShift Container Storage:

    $ oc get nodes -l cluster.ocs.openshift.io/openshift-storage -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}'

To identify storage devices on each node, refer to Finding available storage devices.

Procedure

- Create LocalVolume CR for block PVs.

Example of LocalVolume CR local-storage-block.yaml using OCS label as node selector.

- Create the LocalVolume CR for block PVs.

    $ oc create -f local-storage-block.yaml

- Check if the pods are created.

    $ oc -n local-storage get pods

- Check if the PVs are created.

    $ oc get pv

Example output:

    NAME                CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    local-pv-150fdc87   2Ti        RWO            Delete           Available           localblock              2m11s
    local-pv-183bfc0a   2Ti        RWO            Delete           Available           localblock              2m11s
    local-pv-b2f5cb25   2Ti        RWO            Delete           Available           localblock              2m21s

- Check for the new localblock StorageClass.

    $ oc get sc | grep -E "localblock|NAME"

Example output:

    NAME         PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    localblock   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  4d23h

- Create StorageCluster CR that uses the localblock StorageClass and the three PVs that are created.

Example of StorageCluster CR cluster-service-metal.yaml using monDataDirHostPath and localblock StorageClass.

Important: To ensure that the OSDs have a guaranteed size across the nodes, the storage size for storageDeviceSets must be specified as less than or equal to the size of the desired PVs created on the nodes.

- Create the StorageCluster CR.

    $ oc create -f cluster-service-metal.yaml

Example output:

    storagecluster.ocs.openshift.io/ocs-storagecluster created
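The sizing rule in the Important note above (the storageDeviceSets size must not exceed the PV size) can be checked with simple arithmetic before the manifest is created. The following is a minimal sketch that handles only whole-number quantities with a `Gi` or `Ti` suffix. The function names `to_gib` and `fits_in_pv` are hypothetical.

```shell
#!/bin/sh
# Convert a whole-number quantity with a Gi or Ti suffix to GiB.
to_gib() {
    case "$1" in
        *Ti) echo $(( ${1%Ti} * 1024 )) ;;
        *Gi) echo "${1%Gi}" ;;
        *)   echo "unsupported quantity: $1" >&2; return 1 ;;
    esac
}

# Succeed if the requested storage size ($1) fits in the PV size ($2).
fits_in_pv() {
    req=$(to_gib "$1") || return 1
    pv=$(to_gib "$2") || return 1
    [ "$req" -le "$pv" ]
}
```

With the 2Ti PVs from the example output, `fits_in_pv 2Ti 2Ti` succeeds, while `fits_in_pv 2328Gi 2Ti` fails because 2328 GiB is larger than 2048 GiB.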

Verification steps