OpenShift Container Storage is now OpenShift Data Foundation starting with version 4.9.

Chapter 7. Infrastructure requirements

7.1. Platform requirements

Red Hat OpenShift Data Foundation 4.9 is supported only on OpenShift Container Platform version 4.9 and its next minor version.

Bug fixes for previous versions of Red Hat OpenShift Data Foundation will be released as bug fix versions. For details, see the Red Hat OpenShift Container Platform Life Cycle Policy.

For external cluster subscription requirements, see this Red Hat Knowledgebase article.

For a complete list of supported platform versions, see the Red Hat OpenShift Data Foundation and Red Hat OpenShift Container Platform interoperability matrix.
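As a rough illustration, the support rule stated at the start of this section (Red Hat OpenShift Data Foundation 4.9 on OpenShift Container Platform 4.9 or its next minor version) can be written as a small check. This is only a sketch. The function names are hypothetical, it assumes that "next minor version" means the same major version with the minor number incremented by one, and the interoperability matrix remains the authoritative source.

```python
from typing import Tuple


def parse_minor(version: str) -> Tuple[int, int]:
    """Return (major, minor) from a version string such as "4.9" or "4.10.3"."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def ocp_supported_for_odf(odf_version: str, ocp_version: str) -> bool:
    """Check the rule from 7.1: the same OCP minor version as ODF, or the next one.

    Assumption: "next minor version" means same major version, minor + 1.
    """
    odf_major, odf_minor = parse_minor(odf_version)
    ocp_major, ocp_minor = parse_minor(ocp_version)
    return ocp_major == odf_major and ocp_minor in (odf_minor, odf_minor + 1)


if __name__ == "__main__":
    for ocp in ("4.8", "4.9", "4.10", "4.11"):
        print(f"ODF 4.9 on OCP {ocp}: {ocp_supported_for_odf('4.9', ocp)}")
```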

7.1.1. Amazon EC2

Supports internal Red Hat OpenShift Data Foundation clusters only.

An internal cluster must meet the storage device requirements and have a storage class that provides:

- EBS storage via the aws-ebs provisioner

7.1.2. Bare Metal

Supports internal clusters and consuming external clusters.

An internal cluster must meet the storage device requirements and have a storage class that provides local SSD (NVMe/SATA/SAS, SAN) via the Local Storage Operator.

7.1.3. VMware vSphere

Supports internal clusters and consuming external clusters.

Recommended versions:

- vSphere 6.7, Update 2 or later

- vSphere 7.0 or later

See VMware vSphere infrastructure requirements for details.

If VMware ESXi does not recognize its devices as flash, mark them as flash devices before deploying Red Hat OpenShift Data Foundation. For the procedure, see Mark Storage Devices as Flash.

Additionally, an internal cluster must meet the storage device requirements and have a storage class that provides either:

- vSAN or VMFS datastore via the vsphere-volume provisioner

- VMDK, RDM, or DirectPath storage devices via the Local Storage Operator.

7.1.4. Microsoft Azure

Supports internal Red Hat OpenShift Data Foundation clusters only.

An internal cluster must meet the storage device requirements and have a storage class that provides:

- Azure disk via the azure-disk provisioner

7.1.5. Google Cloud [Technology Preview]

Supports internal Red Hat OpenShift Data Foundation clusters only.

An internal cluster must meet the storage device requirements and have a storage class that provides:

- GCE Persistent Disk via the gce-pd provisioner

7.1.6. Red Hat Virtualization Platform

Supports internal Red Hat OpenShift Data Foundation clusters only.

An internal cluster must meet the storage device requirements and have a storage class that provides local SSD (NVMe/SATA/SAS, SAN) via the Local Storage Operator.

7.1.7. Red Hat OpenStack Platform [Technology Preview]

Supports internal Red Hat OpenShift Data Foundation clusters and consuming external clusters.

An internal cluster must meet the storage device requirements and have a storage class that provides:

- standard disk via the Cinder provisioner

7.1.8. IBM Power

Supports internal Red Hat OpenShift Data Foundation clusters only.

An internal cluster must meet the storage device requirements and have a storage class that provides local SSD (NVMe/SATA/SAS, SAN) via the Local Storage Operator.

7.1.9. IBM Z and LinuxONE

Supports internal Red Hat OpenShift Data Foundation clusters only.

An internal cluster must meet the storage device requirements and have a storage class that provides local SSD (NVMe/SATA/SAS, SAN) via the Local Storage Operator.

7.2. External mode requirement

7.2.1. Red Hat Ceph Storage

Red Hat Ceph Storage (RHCS) version 4.2z1 or later is required. For more information about supported versions, see this Knowledgebase article on Red Hat Ceph Storage releases and corresponding Ceph package versions.

For instructions on how to install an RHCS 4 cluster, see the Installation guide.
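The minimum RHCS version for external mode (4.2z1) mixes a minor version and a z-stream suffix, which is easy to compare incorrectly as plain text. The sketch below assumes version strings of the form `<major>.<minor>` with an optional `z<n>` suffix and treats a missing suffix as z-stream 0; the function names and that format are assumptions for illustration, not a Red Hat tool.

```python
import re
from typing import Tuple

# Assumed format: "<major>.<minor>" with an optional "z<n>" suffix, as in "4.2z1".
_RHCS_VERSION = re.compile(r"(\d+)\.(\d+)(?:z(\d+))?")


def parse_rhcs_version(text: str) -> Tuple[int, int, int]:
    """Convert a string such as "4.2z1" into (major, minor, z); "4.2" becomes (4, 2, 0)."""
    match = _RHCS_VERSION.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"unrecognized RHCS version string: {text!r}")
    major, minor, z = match.groups()
    return int(major), int(minor), int(z or 0)


def meets_external_mode_minimum(version: str, minimum: str = "4.2z1") -> bool:
    """Compare versions as integer tuples so that 4.10 sorts after 4.9."""
    return parse_rhcs_version(version) >= parse_rhcs_version(minimum)


if __name__ == "__main__":
    for candidate in ("4.1z3", "4.2", "4.2z1", "4.3", "5.0"):
        print(candidate, meets_external_mode_minimum(candidate))
```

Comparing tuples of integers rather than strings avoids the classic mistake where "4.10" sorts before "4.9" lexically.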

7.2.2. IBM FlashSystem

To use IBM FlashSystem as pluggable external storage on other providers, you must deploy it before you deploy OpenShift Data Foundation, which uses the IBM FlashSystem storage class as backing storage.

For the latest supported FlashSystem products and versions, see Reference > Red Hat OpenShift Data Foundation support summary within your Spectrum Virtualize family product documentation on IBM Documentation.

For instructions about how to deploy OpenShift Data Foundation, see Creating an OpenShift Data Foundation Cluster for external IBM FlashSystem storage.

7.3. Resource requirements

Red Hat OpenShift Data Foundation services consist of an initial set of base services and can be extended with additional device sets. Kubernetes schedules all of these Red Hat OpenShift Data Foundation service pods on OpenShift Container Platform nodes according to resource requirements. Expanding the cluster in multiples of three, one node in each failure domain, is an easy way to satisfy pod placement rules.

These requirements relate to OpenShift Data Foundation services only, and not to any other services, operators or workloads that are running on these nodes.

| Deployment Mode | Base services | Additional device Set |
|---|---|---|
| Internal | | |
| External | | Not applicable |

Example: For a 3 node cluster in an internal mode deployment with a single device set, a minimum of 3 x 10 = 30 units of CPU are required.

For more information, see Chapter 6, Subscriptions and CPU units.

For additional guidance with designing your Red Hat OpenShift Data Foundation cluster, see the ODF Sizing Tool.

CPU units

In this section, 1 CPU Unit maps to the Kubernetes concept of 1 CPU unit.

- 1 unit of CPU is equivalent to 1 core for non-hyperthreaded CPUs.

- 2 units of CPU are equivalent to 1 core for hyperthreaded CPUs.

- Red Hat OpenShift Data Foundation core-based subscriptions always come in pairs (2 cores).
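The conversion rules above can be sketched as follows. The function names are hypothetical, and two choices are assumptions rather than stated policy: partial cores are rounded up, and the core count is rounded up to whole 2-core subscription pairs. Under those assumptions, 30 CPU units on hyperthreaded CPUs correspond to 15 cores, which would need 8 two-core subscriptions.

```python
import math


def cpu_units_to_cores(cpu_units: int, hyperthreaded: bool) -> int:
    """Convert Kubernetes CPU units to cores.

    1 unit = 1 core without hyperthreading; 2 units = 1 core with hyperthreading.
    Assumption: a partial core is rounded up to a whole core.
    """
    units_per_core = 2 if hyperthreaded else 1
    return math.ceil(cpu_units / units_per_core)


def core_subscriptions_needed(cores: int) -> int:
    """Number of 2-core subscription pairs covering the given cores (rounded up)."""
    return math.ceil(cores / 2)


if __name__ == "__main__":
    for units, ht in ((30, True), (30, False), (48, True)):
        cores = cpu_units_to_cores(units, ht)
        print(f"{units} units, hyperthreaded={ht}: {cores} cores, "
              f"{core_subscriptions_needed(cores)} subscription pairs")
```

See Chapter 6, Subscriptions and CPU units, for how subscriptions are actually counted; this sketch only restates the unit arithmetic.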

| Deployment Mode | Base services |
|---|---|
| Internal | |
| External | |

Example: For a 3 node cluster in an internal-attached devices mode deployment, a minimum of 3 x 16 = 48 units of CPU and 3 x 64 = 192 GB of memory is required.
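Both worked examples in this section follow the same arithmetic: multiply the per-node requirement by the number of nodes. A minimal sketch, with a hypothetical helper name and resource keys chosen for illustration; the three-node floor comes from the pod placement rules in section 7.4.

```python
from typing import Dict


def aggregate(per_node: Dict[str, int], nodes: int) -> Dict[str, int]:
    """Multiply each per-node requirement by the number of nodes."""
    if nodes < 3:
        raise ValueError("internal mode needs at least three nodes")
    return {resource: amount * nodes for resource, amount in per_node.items()}


if __name__ == "__main__":
    # Internal mode with a single device set: 10 CPU units per node.
    print(aggregate({"cpu_units": 10}, 3))  # {'cpu_units': 30}
    # Internal-attached devices: 16 CPU units and 64 GB of memory per node.
    print(aggregate({"cpu_units": 16, "memory_gb": 64}, 3))  # {'cpu_units': 48, 'memory_gb': 192}
```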

7.3.1. Resource requirements for IBM Z and LinuxONE infrastructure

Red Hat OpenShift Data Foundation services consist of an initial set of base services and can be extended with additional device sets.

Kubernetes schedules all of these Red Hat OpenShift Data Foundation service pods on OpenShift Container Platform nodes according to the resource requirements.

Expanding the cluster in multiples of three, one node in each failure domain, is an easy way to satisfy the pod placement rules.

| Deployment Mode | Base services | Additional device Set | IBM Z and LinuxONE minimum hardware requirements |
|---|---|---|---|
| Internal | | | 1 IFL |
| External | | Not applicable | Not applicable |

- CPU: the number of virtual cores defined in the hypervisor, IBM z/VM, Kernel Virtual Machine (KVM), or both.

- IFL (Integrated Facility for Linux): the physical core for IBM Z and LinuxONE.

Minimum system environment

- To operate a minimal cluster with 1 logical partition (LPAR), one additional IFL is required on top of the 6 IFLs. OpenShift Container Platform consumes these IFLs.

7.3.2. Minimum deployment resource requirements [Technology Preview]

An OpenShift Data Foundation cluster is deployed with the minimum configuration when the standard deployment resource requirements are not met.

These requirements relate to OpenShift Data Foundation services only, and not to any other services, operators or workloads that are running on these nodes.

| Deployment Mode | Base services |
|---|---|
| Internal | |

If you want to add device sets, we recommend converting your minimum deployment to a standard deployment.

Deployment of OpenShift Data Foundation with minimum configuration is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information, see Technology Preview Features Support Scope.

7.3.3. Compact deployment resource requirements

Red Hat OpenShift Data Foundation can be installed on a three-node OpenShift compact bare metal cluster, where all the workloads run on three strong master nodes. There are no worker or storage nodes.

These requirements relate to OpenShift Data Foundation services only, and not to any other services, operators or workloads that are running on these nodes.

| Deployment Mode | Base services | Additional device Set |
|---|---|---|
| Internal | | |

To configure OpenShift Container Platform on a compact bare metal cluster, see Configuring a three-node cluster and Delivering a Three-node Architecture for Edge Deployments.

7.3.4. Resource requirements for MCG only deployment

An OpenShift Data Foundation cluster deployed only with the Multicloud Object Gateway (MCG) component provides deployment flexibility and helps reduce resource consumption.

| Deployment Mode | Core | Database (DB) | Endpoint |
|---|---|---|---|
| Internal | | | Note: The default autoscale range is 1 to 2. |

7.4. Pod placement rules

Kubernetes is responsible for pod placement based on declarative placement rules. The Red Hat OpenShift Data Foundation base service placement rules for an internal cluster can be summarized as follows:

- Nodes are labeled with the cluster.ocs.openshift.io/openshift-storage key

- Nodes are sorted into pseudo failure domains if none exist

- Components requiring high availability are spread across failure domains

- A storage device must be accessible in each failure domain

This leads to the requirement that there be at least three nodes and, where topology labels already exist, that the nodes be in three distinct rack or zone failure domains.

For additional device sets, there must be a storage device, and sufficient resources for the pod consuming it, in each of the three failure domains. Manual placement rules can be used to override default placement rules, but generally this approach is only suitable for bare metal deployments.
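The placement rules above can be checked offline against a snapshot of node labels. This is a simplified sketch, not the operator's actual logic: it only considers the standard `topology.kubernetes.io/zone` label (rack labels are omitted for brevity), and when no zone labels exist it spreads storage nodes round-robin over three pseudo domains, which is an assumption made for illustration. The storage label key is taken from the rules above; the function names are hypothetical.

```python
from typing import Dict, List, Mapping

STORAGE_LABEL = "cluster.ocs.openshift.io/openshift-storage"
ZONE_LABEL = "topology.kubernetes.io/zone"


def failure_domains(nodes: Mapping[str, Mapping[str, str]]) -> Dict[str, List[str]]:
    """Group nodes that carry the storage label into failure domains.

    nodes maps node name -> label dict. If any storage node has a zone label,
    every storage node is expected to have one (a missing label raises KeyError).
    Otherwise nodes are assigned round-robin to three pseudo domains (assumption).
    """
    storage = sorted(name for name, labels in nodes.items() if STORAGE_LABEL in labels)
    use_zones = any(ZONE_LABEL in nodes[name] for name in storage)
    domains: Dict[str, List[str]] = {}
    for index, name in enumerate(storage):
        key = nodes[name][ZONE_LABEL] if use_zones else f"pseudo-{index % 3}"
        domains.setdefault(key, []).append(name)
    return domains


def satisfies_placement(nodes: Mapping[str, Mapping[str, str]]) -> bool:
    """Three distinct failure domains, which also implies at least three storage nodes."""
    return len(failure_domains(nodes)) >= 3
```

For example, three labeled nodes that all report the same zone fail the check, even though the node count is sufficient.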

7.5. Storage device requirements

Use this section to understand the different storage capacity requirements that you can consider when planning internal mode deployments and upgrades. We generally recommend 9 or fewer devices per node. This recommendation keeps nodes below cloud provider limits on dynamic storage device attachments and limits the recovery time after node failures with local storage devices. Expanding the cluster in multiples of three, one node in each failure domain, is an easy way to satisfy pod placement rules.
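The two sizing guidelines in this paragraph, at most 9 devices per node and node counts in multiples of three, can be expressed as advisory checks. A minimal sketch with hypothetical names; it flags a layout rather than rejecting it, since both are recommendations rather than hard limits.

```python
from typing import List

MAX_RECOMMENDED_DEVICES_PER_NODE = 9


def round_up_to_multiple_of_three(nodes: int) -> int:
    """Smallest multiple of three that is >= nodes (one node per failure domain)."""
    return -(-nodes // 3) * 3  # integer ceiling division, then scale back up


def layout_warnings(nodes: int, devices_per_node: int) -> List[str]:
    """Return advisory messages for a proposed internal-mode layout."""
    warnings = []
    if devices_per_node > MAX_RECOMMENDED_DEVICES_PER_NODE:
        warnings.append(f"{devices_per_node} devices per node exceeds the recommended 9")
    if nodes % 3 != 0:
        warnings.append(
            f"{nodes} nodes is not a multiple of three; "
            f"consider {round_up_to_multiple_of_three(nodes)}"
        )
    return warnings
```

For instance, `layout_warnings(4, 10)` reports both issues and suggests 6 nodes.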

You can expand the storage capacity only in increments of the capacity selected at the time of installation.
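One reading of this rule, assumed here for illustration, is that any capacity you add must be a whole multiple of the capacity chosen at installation. The helper below is hypothetical; it uses `Decimal` so that sizes such as 0.5 TiB divide exactly instead of accumulating binary floating-point error.

```python
from decimal import Decimal


def expansion_steps(selected_tib: float, added_tib: float) -> int:
    """Return how many install-time increments added_tib represents.

    Raises ValueError if added_tib is not a positive whole multiple of selected_tib.
    """
    selected = Decimal(str(selected_tib))
    added = Decimal(str(added_tib))
    if added <= 0 or added % selected != 0:
        raise ValueError(
            f"{added_tib} TiB is not a multiple of the {selected_tib} TiB increment"
        )
    return int(added / selected)
```

With a 2 TiB install-time selection, `expansion_steps(2, 6)` returns 3, while `expansion_steps(2, 3)` is rejected.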

7.5.1. Dynamic storage devices

Red Hat OpenShift Data Foundation lets you select 0.5 TiB, 2 TiB, or 4 TiB as the request size for dynamic storage devices. The number of dynamic storage devices that can run per node is a function of the node size, underlying provisioner limits, and resource requirements.
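Because only three request sizes are permitted, a planning script can validate the choice up front and express it in Kubernetes quantity notation (binary suffixes such as `Gi` and `Ti`). This is a hypothetical helper for illustration; it does not claim that OpenShift Data Foundation itself writes sizes in exactly this form.

```python
ALLOWED_DYNAMIC_SIZES_TIB = (0.5, 2, 4)


def dynamic_request_quantity(size_tib: float) -> str:
    """Validate a dynamic device size and return it as a Kubernetes quantity string."""
    if size_tib not in ALLOWED_DYNAMIC_SIZES_TIB:
        raise ValueError(f"{size_tib} TiB is not one of {ALLOWED_DYNAMIC_SIZES_TIB}")
    gib = int(size_tib * 1024)  # 1 TiB = 1024 GiB
    # Use the larger unit when the size is a whole number of TiB.
    return f"{gib // 1024}Ti" if gib % 1024 == 0 else f"{gib}Gi"
```

With this helper, 0.5 TiB becomes `512Gi` and 2 TiB becomes `2Ti`.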

7.5.2. Local storage devices

For local storage deployment, any disk size of 4 TiB or less can be used, and all disks should be of the same size and type. The number of local storage devices that can run per node is a function of the node size and resource requirements. Expanding the cluster in multiples of three, one node in each failure domain, is an easy way to satisfy pod placement rules.

Disk partitioning is not supported.
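The local device rules in this section (4 TiB or less, uniform size and type, no partitions) lend themselves to a pre-deployment check over a disk inventory. The `LocalDisk` record and its fields are hypothetical; how you gather the inventory (for example from the Local Storage Operator's discovery results) is outside this sketch.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LocalDisk:
    name: str
    size_tib: float
    disk_type: str  # illustrative values such as "nvme" or "ssd"
    partitioned: bool


def local_disk_problems(disks: List[LocalDisk]) -> List[str]:
    """Return rule violations for local storage devices; an empty list means none found."""
    problems = []
    for disk in disks:
        if disk.size_tib > 4:
            problems.append(f"{disk.name}: {disk.size_tib} TiB exceeds 4 TiB")
        if disk.partitioned:
            problems.append(f"{disk.name}: partitioned disks are not supported")
    if len({disk.size_tib for disk in disks}) > 1:
        problems.append("disks are not all the same size")
    if len({disk.disk_type for disk in disks}) > 1:
        problems.append("disks are not all the same type")
    return problems
```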

7.5.3. Capacity planning

Always ensure that available storage capacity stays ahead of consumption. Recovery is difficult if available storage capacity is completely exhausted, and requires more intervention than simply adding capacity or deleting or migrating content.

Capacity alerts are issued when cluster storage capacity reaches 75% (near-full) and 85% (full) of total capacity. Always address capacity warnings promptly, and review your storage regularly to ensure that you do not run out of storage space. When you reach 75% (near-full), either free up space or expand the cluster. When you receive the 85% (full) alert, you have run out of storage space completely and cannot free up space using standard commands. At this point, contact Red Hat Customer Support.
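The two alert levels translate directly into a classification that a monitoring script could apply to utilization figures. This sketch assumes an alert applies once usage is at or above its threshold; the function name and return values are hypothetical and are not the names of the actual alerts.

```python
NEAR_FULL_RATIO = 0.75
FULL_RATIO = 0.85


def capacity_status(used: float, total: float) -> str:
    """Classify utilization against the near-full (75%) and full (85%) levels."""
    if total <= 0:
        raise ValueError("total capacity must be positive")
    ratio = used / total
    if ratio >= FULL_RATIO:
        return "full"  # out of space: contact Red Hat Customer Support
    if ratio >= NEAR_FULL_RATIO:
        return "near-full"  # free up space or expand the cluster
    return "ok"
```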

The following tables show example node configurations for Red Hat OpenShift Data Foundation with dynamic storage devices.

| Storage Device size | Storage Devices per node | Total capacity | Usable storage capacity |
|---|---|---|---|
| 0.5 TiB | 1 | 1.5 TiB | 0.5 TiB |
| 2 TiB | 1 | 6 TiB | 2 TiB |
| 4 TiB | 1 | 12 TiB | 4 TiB |

| Storage Device size (D) | Storage Devices per node (M) | Total capacity (D * M * N) | Usable storage capacity (D*M*N/3) |
|---|---|---|---|
| 0.5 TiB | 3 | 45 TiB | 15 TiB |
| 2 TiB | 6 | 360 TiB | 120 TiB |
| 4 TiB | 9 | 1080 TiB | 360 TiB |
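The capacity tables follow Total = D × M × N and Usable = Total / 3, where N is the number of nodes. Working backwards from the figures, the first table corresponds to N = 3 and the second to N = 30; the division by 3 reflects the three copies kept of each piece of data. A minimal sketch of the formula (the function name is hypothetical):

```python
from typing import Tuple


def capacity_tib(device_size_tib: float, devices_per_node: int, nodes: int) -> Tuple[float, float]:
    """Return (total, usable) capacity in TiB using D * M * N and D * M * N / 3."""
    total = device_size_tib * devices_per_node * nodes
    usable = total / 3  # three replicas of each piece of data
    return total, usable


if __name__ == "__main__":
    for d, m, n in ((0.5, 1, 3), (0.5, 3, 30), (2, 6, 30), (4, 9, 30)):
        total, usable = capacity_tib(d, m, n)
        print(f"D={d} TiB, M={m}, N={n}: total {total} TiB, usable {usable} TiB")
```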

7.6. Multi network plug-in (Multus) support [Technology Preview]

By default, Red Hat OpenShift Data Foundation is configured to use the Red Hat OpenShift Software Defined Network (SDN). In this default configuration the SDN carries the following types of traffic:

- Pod to pod traffic

- Pod to OpenShift Data Foundation traffic, known as the OpenShift Data Foundation public network traffic

- OpenShift Data Foundation replication and rebalancing, known as the OpenShift Data Foundation cluster network traffic

However, OpenShift Data Foundation 4.8 and later supports, as a Technology Preview, the use of Multus to improve security and performance by isolating the different types of network traffic.

Multus support is a Technology Preview feature that has been tested and is supported only on bare metal and VMware deployments. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information, see Technology Preview Features Support Scope.

7.6.1. Understanding multiple networks

In Kubernetes, container networking is delegated to networking plug-ins that implement the Container Network Interface (CNI).

OpenShift Container Platform uses the Multus CNI plug-in to allow chaining of CNI plug-ins. During cluster installation, you configure your default pod network. The default network handles all ordinary network traffic for the cluster. You can define an additional network based on the available CNI plug-ins and attach one or more of these networks to your pods. You can define more than one additional network for your cluster, depending on your needs. This gives you flexibility when you configure pods that deliver network functionality, such as switching or routing.

7.6.1.1. Usage scenarios for an additional network

You can use an additional network in situations where network isolation is needed, including data plane and control plane separation. Isolating network traffic is useful for the following performance and security reasons:

- Performance: You can send traffic on two different planes to manage how much traffic is carried on each plane.

- Security: You can send sensitive traffic onto a network plane that is managed specifically for security considerations, and you can separate private data that must not be shared between tenants or customers.

All of the pods in the cluster still use the cluster-wide default network to maintain connectivity across the cluster. Every pod has an eth0 interface that is attached to the cluster-wide pod network. You can view the interfaces for a pod by using the oc exec -it <pod_name> -- ip a command. If you add additional network interfaces that use Multus CNI, they are named net1, net2, …, netN.

To attach additional network interfaces to a pod, you must create configurations that define how the interfaces are attached. You specify each interface by using a NetworkAttachmentDefinition custom resource (CR). A CNI configuration inside each of these CRs defines how that interface is created.
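To make the structure of a NetworkAttachmentDefinition concrete, the sketch below builds one as a Python dictionary and prints it as JSON, which `oc apply -f` accepts. The `macvlan` CNI plug-in with `whereabouts` IPAM is one common choice, not necessarily the one your environment requires. The NAD name, host interface (`ens2`), and address range are hypothetical placeholders; follow the linked procedures for the values to use.

```python
import json
from typing import Any, Dict


def network_attachment_definition(
    name: str, namespace: str, master_interface: str, ip_range: str
) -> Dict[str, Any]:
    """Build a NetworkAttachmentDefinition CR using a macvlan CNI configuration."""
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",
        "master": master_interface,  # host interface the macvlan attaches to
        "mode": "bridge",
        "ipam": {"type": "whereabouts", "range": ip_range},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # The CNI configuration is embedded as a JSON string, not a nested object.
        "spec": {"config": json.dumps(cni_config)},
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    nad = network_attachment_definition(
        "public-net", "openshift-storage", "ens2", "192.168.1.0/24"
    )
    print(json.dumps(nad, indent=2))
```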

7.6.2. Segregating storage traffic using Multus

To use Multus, you must create network attachment definitions (NADs) before deploying the OpenShift Data Foundation cluster; these NADs are later attached to the cluster. For more information, see:

- Creating network attachment definitions for bare metal

- Creating network attachment definitions for VMware

Using Multus, the following configurations are possible depending on your hardware setup or your VMware instance network setup:

Nodes with a dual network interface recommended configuration

Segregated storage traffic

- Configure one interface for OpenShift SDN (pod to pod traffic)

- Configure one interface for all OpenShift Data Foundation traffic

Nodes with a triple network interface recommended configuration

Full traffic segregation

- Configure one interface for OpenShift SDN (pod to pod traffic)

- Configure one interface for all pod to OpenShift Data Foundation traffic (OpenShift Data Foundation public traffic)

- Configure one interface for all OpenShift Data Foundation replication and rebalancing traffic (OpenShift Data Foundation cluster traffic)
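The two recommended layouts differ only in whether OpenShift Data Foundation public and cluster traffic share an interface. A minimal sketch that maps traffic types to interfaces for each layout; the interface names passed in are hypothetical, and the mapping keys are labels chosen for this example.

```python
from typing import Dict, List


def traffic_plan(interfaces: List[str]) -> Dict[str, str]:
    """Assign each traffic type to a node interface.

    Two interfaces: segregated storage traffic (SDN, plus one for all ODF traffic).
    Three interfaces: full segregation (SDN, ODF public, ODF cluster).
    """
    if len(interfaces) == 2:
        sdn, odf = interfaces
        return {"pod-to-pod": sdn, "odf-public": odf, "odf-cluster": odf}
    if len(interfaces) == 3:
        sdn, public, cluster = interfaces
        return {"pod-to-pod": sdn, "odf-public": public, "odf-cluster": cluster}
    raise ValueError("the recommended layouts use two or three interfaces")
```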

7.6.3. Recommended network configuration and requirements for a Multus configuration

If you decide to leverage a Multus configuration, the following prerequisites must be met:

- All the nodes used to deploy OpenShift Data Foundation must have the same network interface configuration to guarantee a fully functional Multus configuration. The network interface names on all nodes must be the same, and the interfaces must be connected to the same underlying switching mechanism for the Multus public network and the Multus cluster network.

- All the worker nodes used to deploy applications that leverage OpenShift Data Foundation for persistent storage must have the same network interface configuration to guarantee a fully functional Multus configuration. One of the two interfaces must have the same name as the interface used to configure the Multus public network on the storage nodes. All worker network interfaces must be connected to the same underlying switching mechanism as the storage nodes' Multus public network.
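The interface-name prerequisites above can be checked from an inventory of interface names per node before creating the NADs. This sketch is hypothetical. It checks only naming; switch connectivity cannot be verified this way. Because only storage nodes running OSDs need the cluster network, worker nodes are checked for the public interface only.

```python
from typing import Dict, List, Optional, Set


def multus_config_problems(
    storage_nodes: Dict[str, Set[str]],
    worker_nodes: Dict[str, Set[str]],
    public_iface: str,
    cluster_iface: Optional[str] = None,
) -> List[str]:
    """Return interface-naming problems; an empty list means none were found."""
    problems = []
    # Storage nodes must all expose the same set of interface names.
    if len({frozenset(ifaces) for ifaces in storage_nodes.values()}) > 1:
        problems.append("storage nodes do not share the same interface names")
    required = {public_iface} | ({cluster_iface} if cluster_iface else set())
    for node, ifaces in sorted(storage_nodes.items()):
        missing = required - ifaces
        if missing:
            problems.append(f"storage node {node} lacks {sorted(missing)}")
    # Worker nodes need an interface named like the storage nodes' public network.
    for node, ifaces in sorted(worker_nodes.items()):
        if public_iface not in ifaces:
            problems.append(f"worker node {node} lacks public interface {public_iface}")
    return problems
```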

Dual network interface segregated configuration schematic example:

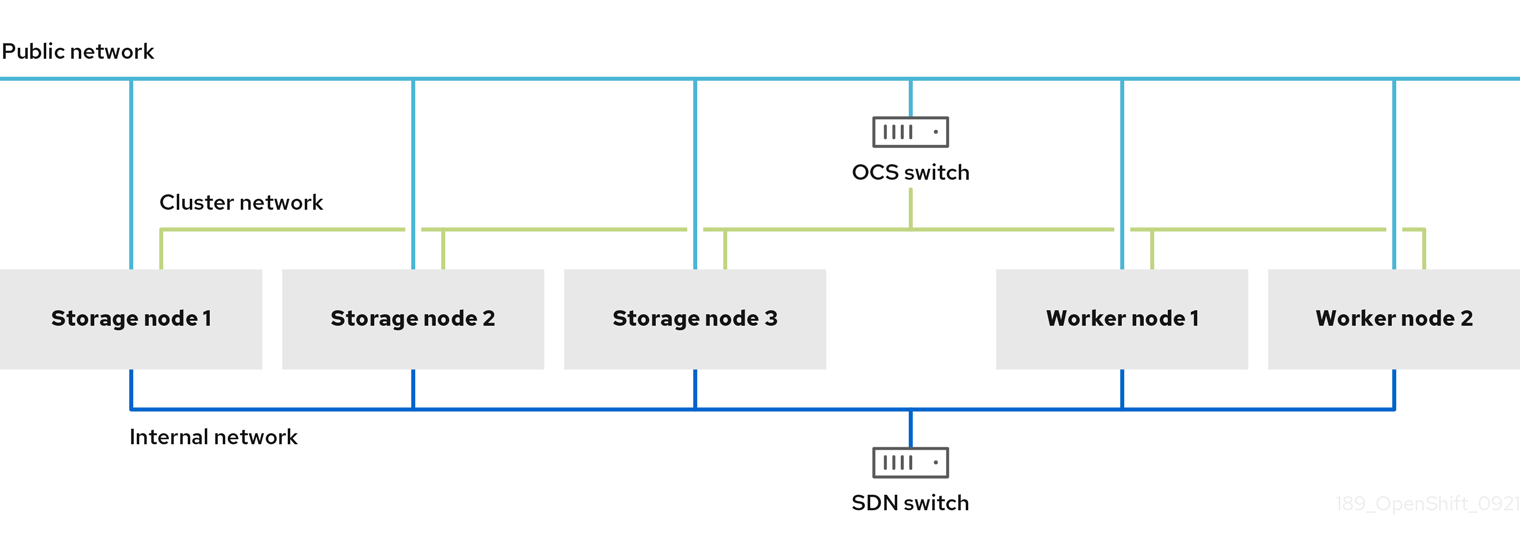

Triple network interface full segregated configuration schematic example:

Only the Storage nodes where OpenShift Data Foundation OSDs are running require access to the OpenShift Data Foundation cluster network configured via Multus.

See Creating Multus networks for the necessary steps to configure a Multus based configuration on bare metal.

See Creating Multus networks for the necessary steps to configure a Multus based configuration on VMware.