Chapter 4. Knative Eventing CLI commands

4.1. kn source commands

You can use the following commands to list, create, and manage Knative event sources.

4.1.1. Listing available event source types by using the Knative CLI

You can list event source types that can be created and used on your cluster by using the kn source list-types CLI command.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- You have installed the Knative (kn) CLI.

Procedure

List the available event source types in the terminal:

$ kn source list-types

Example output

TYPE              NAME                                   DESCRIPTION
ApiServerSource   apiserversources.sources.knative.dev   Watch and send Kubernetes API events to a sink
PingSource        pingsources.sources.knative.dev        Periodically send ping events to a sink
SinkBinding       sinkbindings.sources.knative.dev       Binding for connecting a PodSpecable to a sink

Optional: On OpenShift Container Platform, you can also list the available event source types in YAML format:

$ kn source list-types -o yaml

4.1.2. Knative CLI sink flag

When you create an event source by using the Knative (kn) CLI, you can use the --sink flag to specify the sink that events from that source are sent to. The sink can be any addressable or callable resource that can receive incoming events from other resources.

The following example creates a sink binding that uses a service, http://event-display.svc.cluster.local, as the sink:

Example command using the sink flag

$ kn source binding create bind-heartbeat \
    --namespace sinkbinding-example \
    --subject "Job:batch/v1:app=heartbeat-cron" \
    --sink http://event-display.svc.cluster.local \
    --ce-override "sink=bound"

In the --sink value, svc in http://event-display.svc.cluster.local determines that the sink is a Knative service. Other default sink prefixes include channel and broker.
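Other examples in this chapter pass sinks in prefixed form, such as ksvc:event-display and broker:<broker_name>, as well as a plain URL or a bare name. As a rough illustration of how those forms differ, the following shell sketch classifies a sink string by its prefix. The function name classify_sink and its output labels are hypothetical and are not part of kn; only the ksvc:, broker:, and channel: prefixes and the URL form are taken from this chapter.

```shell
#!/bin/sh
# Hypothetical helper: report which form a --sink value uses.
# It only inspects the string; it does not contact a cluster or call kn.
classify_sink() {
  case "$1" in
    ksvc:?*)              echo "knative-service" ;;
    broker:?*)            echo "broker" ;;
    channel:?*)           echo "channel" ;;
    http://?*|https://?*) echo "uri" ;;
    # No recognised prefix, for example a bare name such as event-display.
    *)                    echo "unprefixed" ;;
  esac
}

classify_sink "ksvc:event-display"
classify_sink "broker:default"
classify_sink "http://event-display.svc.cluster.local"
```

A check like this can be useful in wrapper scripts that want to reject a mistyped prefix before calling kn.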

4.1.3. Creating and managing container sources by using the Knative CLI

You can use the kn source container commands to create and manage container sources by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Create a container source

$ kn source container create <container_source_name> --image <image_uri> --sink <sink>

Delete a container source

$ kn source container delete <container_source_name>

Describe a container source

$ kn source container describe <container_source_name>

List existing container sources

$ kn source container list

List existing container sources in YAML format

$ kn source container list -o yaml

Update a container source

This command updates the image URI for an existing container source:

$ kn source container update <container_source_name> --image <image_uri>
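The container source commands can be chained into a small script. The following is a minimal sketch, assuming a hypothetical source name, hypothetical image URIs, and a ksvc:event-display sink. By default it only prints the kn commands (KN defaults to echo kn), so the sequence can be reviewed before anything is run against a cluster.

```shell
#!/bin/sh
# Sketch only: the name, image URIs, and sink below are placeholder assumptions.
# KN defaults to "echo kn", so each command is printed instead of executed.
# Set KN=kn in the environment to run the commands against a cluster.
KN="${KN:-echo kn}"

SOURCE_NAME="my-container-source"              # hypothetical
IMAGE_URI="quay.io/example/heartbeats:v1"      # hypothetical
NEW_IMAGE_URI="quay.io/example/heartbeats:v2"  # hypothetical
SINK="ksvc:event-display"                      # assumed sink

container_source_lifecycle() {
  # $KN is intentionally unquoted so that "echo kn" splits into two words.
  $KN source container create "$SOURCE_NAME" --image "$IMAGE_URI" --sink "$SINK"
  $KN source container describe "$SOURCE_NAME"
  $KN source container update "$SOURCE_NAME" --image "$NEW_IMAGE_URI"
  $KN source container list
  $KN source container delete "$SOURCE_NAME"
}

container_source_lifecycle
```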

4.1.4. Creating an API server source by using the Knative CLI

You can use the kn source apiserver create command to create an API server source by using the kn CLI. Using the kn CLI to create an API server source provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have installed the OpenShift CLI (oc).

- You have installed the Knative (kn) CLI.

Procedure

If you want to reuse an existing service account, you can modify your existing ServiceAccount resource to include the required permissions instead of creating a new resource.

Create a service account, role, and role binding for the event source in a YAML file.
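A minimal sketch of such a file follows, written from the shell as authentication.yaml (the file name used by the cleanup step later in this section). The resource names events-sa, event-watcher, and k8s-ra-event-watcher and the default namespace are assumptions for illustration. Under these assumptions, the Role grants only get, list, and watch on events, which should be sufficient for a source that watches event:v1 resources.

```shell
#!/bin/sh
# Sketch only: resource names and the "default" namespace are assumptions.
# Writes a ServiceAccount, a Role, and a RoleBinding to authentication.yaml.
cat > authentication.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: events-sa
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: event-watcher
  namespace: default
rules:
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: k8s-ra-event-watcher
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: event-watcher
subjects:
  - kind: ServiceAccount
    name: events-sa
    namespace: default
EOF
```

If you use different names or another namespace, pass the matching service account name to the --service-account option when you create the API server source.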

Apply the YAML file:

$ oc apply -f <filename>

Create an API server source that has an event sink. In the following example, the sink is a broker:

$ kn source apiserver create <event_source_name> --sink broker:<broker_name> --resource "event:v1" --service-account <service_account_name> --mode Resource

To check that the API server source is set up correctly, create a Knative service that dumps incoming messages to its log:

$ kn service create event-display --image quay.io/openshift-knative/showcase

If you used a broker as an event sink, create a trigger to filter events from the default broker to the service:

$ kn trigger create <trigger_name> --sink ksvc:event-display

Create events by launching a pod in the default namespace:

$ oc create deployment event-origin --image quay.io/openshift-knative/showcase

Check that the controller is mapped correctly by inspecting the output generated by the following command:

$ kn source apiserver describe <source_name>

Verification

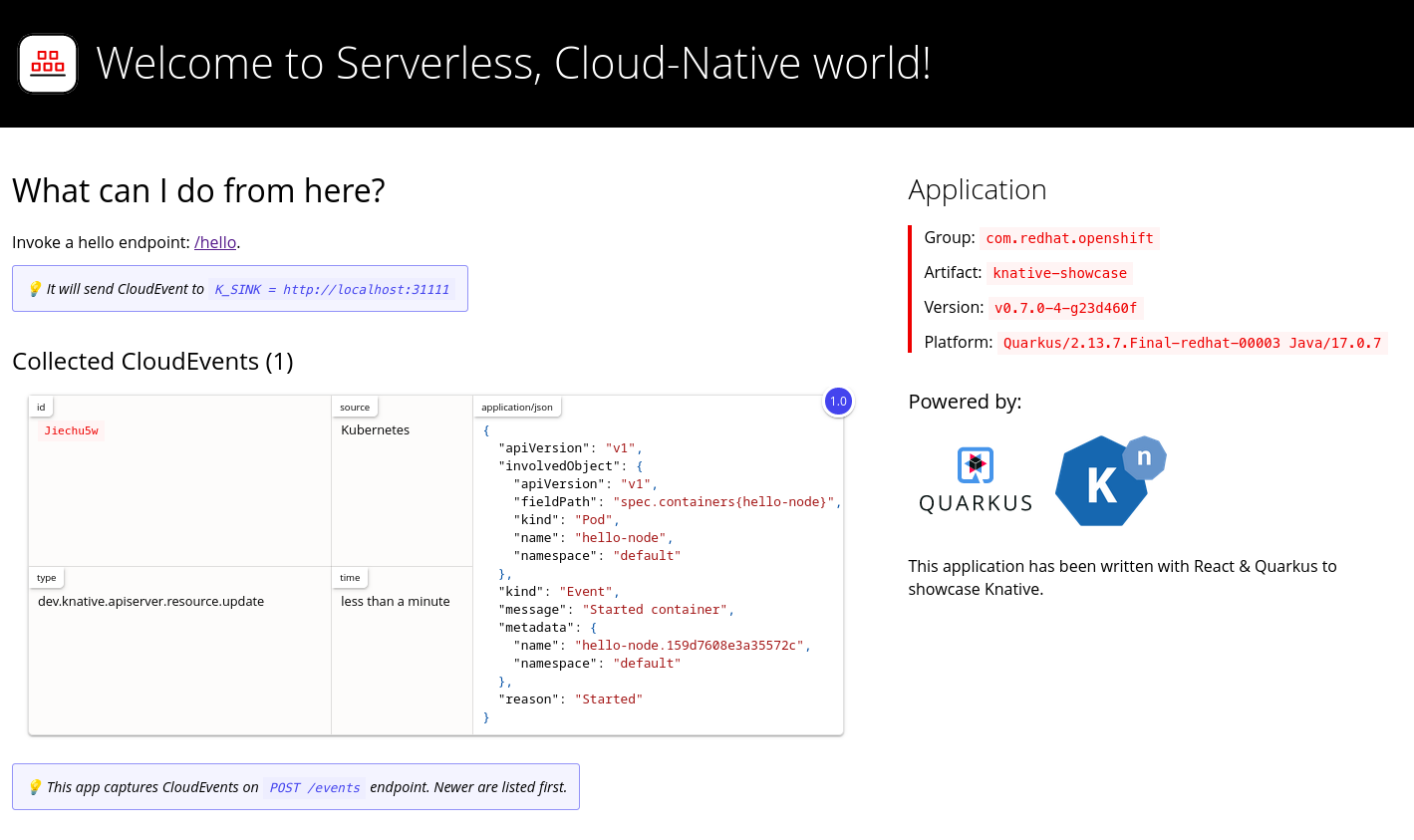

To verify that the Kubernetes events were sent to Knative, look at the event-display logs or use web browser to see the events.

To view the events in a web browser, open the link returned by the following command:

kn service describe event-display -o url

$ kn service describe event-display -o urlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Figure 4.1. Example browser page

Alternatively, to see the logs in the terminal, view the event-display logs for the pods by entering the following command:

oc logs $(oc get pod -o name | grep event-display) -c user-container

$ oc logs $(oc get pod -o name | grep event-display) -c user-containerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Deleting the API server source

Delete the trigger:

$ kn trigger delete <trigger_name>

Delete the event source:

$ kn source apiserver delete <source_name>

Delete the service account, role, and role binding:

$ oc delete -f authentication.yaml

4.1.5. Creating a ping source by using the Knative CLI

You can use the kn source ping create command to create a ping source by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator, Knative Serving and Knative Eventing are installed on the cluster.

- You have installed the Knative (kn) CLI.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- Optional: If you want to use the verification steps for this procedure, install the OpenShift CLI (oc).

Procedure

To verify that the ping source is working, create a simple Knative service that dumps incoming messages to the service logs:

$ kn service create event-display \
    --image quay.io/openshift-knative/showcase

For each set of ping events that you want to request, create a ping source in the same namespace as the event consumer:

$ kn source ping create test-ping-source \
    --schedule "*/2 * * * *" \
    --data '{"message": "Hello world!"}' \
    --sink ksvc:event-display

Check that the controller is mapped correctly by entering the following command and inspecting the output:

$ kn source ping describe test-ping-source

Verification

You can verify that the ping events were sent to the Knative event sink by looking at the logs of the sink pod.

By default, Knative services terminate their pods if no traffic is received within a 60-second period. The example shown in this guide creates a ping source that sends a message every 2 minutes, so each message should be observed in a newly created pod.

Watch for new pods created:

$ watch oc get pods

Cancel watching the pods using Ctrl+C, then look at the logs of the created pod:

$ oc logs $(oc get pod -o name | grep event-display) -c user-container

Deleting the ping source

Delete the ping source:

$ kn source ping delete <ping_source_name>

4.1.6. Creating an Apache Kafka event source by using the Knative CLI

You can use the kn source kafka create command to create a Kafka source by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, Knative Serving, and the KnativeKafka custom resource (CR) are installed on your cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have access to a Red Hat AMQ Streams (Kafka) cluster that produces the Kafka messages you want to import.

- You have installed the Knative (kn) CLI.

- Optional: You have installed the OpenShift CLI (oc) if you want to use the verification steps in this procedure.

Procedure

To verify that the Kafka event source is working, create a Knative service that dumps incoming events into the service logs:

$ kn service create event-display \
    --image quay.io/openshift-knative/showcase

Create a KafkaSource CR:

$ kn source kafka create <kafka_source_name> \
    --servers <cluster_kafka_bootstrap>.kafka.svc:9092 \
    --topics <topic_name> --consumergroup my-consumer-group \
    --sink event-display

Note: Replace the placeholder values in this command with values for your source name, bootstrap servers, and topics.

The --servers, --topics, and --consumergroup options specify the connection parameters to the Kafka cluster. The --consumergroup option is optional.

Optional: View details about the KafkaSource CR you created:

$ kn source kafka describe <kafka_source_name>

Verification steps

Trigger the Kafka instance to send a message to the topic:

$ oc -n kafka run kafka-producer \
    -ti --image=quay.io/strimzi/kafka:latest-kafka-2.7.0 --rm=true \
    --restart=Never -- bin/kafka-console-producer.sh \
    --broker-list <cluster_kafka_bootstrap>:9092 --topic my-topic

Enter the message in the prompt. This command assumes that:

- The Kafka cluster is installed in the kafka namespace.

- The KafkaSource object has been configured to use the my-topic topic.

Verify that the message arrived by viewing the logs:

$ oc logs $(oc get pod -o name | grep event-display) -c user-container