Chapter 15. Configure Load Balancing-as-a-Service (LBaaS)

Load Balancing-as-a-Service (LBaaS) enables OpenStack Networking to distribute incoming requests evenly between designated instances. This step-by-step guide configures OpenStack Networking to use LBaaS with the Open vSwitch (OVS) plug-in.

LBaaS was introduced in Red Hat OpenStack Platform 5. It ensures that the workload is shared predictably among instances and allows more effective use of system resources. Incoming requests are distributed using one of these load balancing methods:

- Round robin - Rotates requests evenly between multiple instances.

- Source IP - Requests from a unique source IP address are consistently directed to the same instance.

- Least connections - Allocates requests to the instance with the least number of active connections.
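In the LBaaS v2 API, the method is selected per pool when the pool is created. The following is a minimal sketch, assuming the neutron CLI with the LBaaS v2 extension is installed and a listener already exists. The helper names `lb_algorithm` and `create_pool` and the pool and listener names are hypothetical.

```shell
# Map the method names used in this chapter to LBaaS v2 algorithm constants.
lb_algorithm() {
  case "$1" in
    "round robin")       echo "ROUND_ROBIN" ;;
    "source ip")         echo "SOURCE_IP" ;;
    "least connections") echo "LEAST_CONNECTIONS" ;;
    *) echo "unknown method: $1" >&2; return 1 ;;
  esac
}

# Create an HTTP pool on an existing listener with the chosen method.
# Usage: create_pool <pool-name> <listener-name> <method>
create_pool() {
  algo=$(lb_algorithm "$3") || return 1
  neutron lbaas-pool-create --name "$1" --listener "$2" \
    --protocol HTTP --lb-algorithm "$algo"
}
```

For example, `create_pool pool1 listener1 "least connections"` would request a pool that sends each new request to the member with the fewest active connections.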

| Feature | Description |
|---|---|
| Monitors | LBaaS provides availability monitoring with the ping, TCP, HTTP and HTTPS GET methods. Monitors are implemented to determine whether pool members are available to handle requests. |
| Management | LBaaS is managed using a variety of tool sets. The REST API is available for programmatic administration and scripting. Users perform administrative management of load balancers through either the CLI (neutron) or the OpenStack dashboard. |
| Connection limits | Ingress traffic can be shaped with connection limits. This feature allows workload control and can also assist with mitigating DoS (Denial of Service) attacks. |
| Session persistence | LBaaS supports session persistence by ensuring incoming requests are routed to the same instance within a pool of multiple instances. LBaaS supports routing decisions based on cookies and source IP address. |
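The features in the table map onto options of the neutron LBaaS v2 CLI. The sketch below wraps three of them in helper functions. The function names, the port, and the timing values are illustrative assumptions, not recommended settings.

```shell
# Monitors: probe pool members with HTTP GET every 5 seconds.
# Usage: add_http_monitor <pool>
add_http_monitor() {
  neutron lbaas-healthmonitor-create --type HTTP --delay 5 \
    --timeout 3 --max-retries 3 --pool "$1"
}

# Connection limits: cap concurrent connections on a listener.
# Usage: create_limited_listener <name> <loadbalancer> <limit>
create_limited_listener() {
  neutron lbaas-listener-create --name "$1" --loadbalancer "$2" \
    --protocol HTTP --protocol-port 80 --connection-limit "$3"
}

# Session persistence: keep each source IP on the same pool member.
# Usage: create_sticky_pool <name> <listener>
create_sticky_pool() {
  neutron lbaas-pool-create --name "$1" --listener "$2" --protocol HTTP \
    --lb-algorithm ROUND_ROBIN --session-persistence type=SOURCE_IP
}
```

A cookie-based variant would pass a cookie persistence type instead of `type=SOURCE_IP`. Check `neutron help lbaas-pool-create` on your release for the accepted values.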

LBaaS is currently supported only with IPv4 addressing.

LBaaSv1 has been removed in Red Hat OpenStack Platform 11 (Ocata) and replaced by LBaaSv2.

15.1. OpenStack Networking and LBaaS Topology

OpenStack Networking (neutron) services can be broadly classified into two categories.

1. Neutron API server - This service runs the OpenStack Networking API server, which has the main responsibility of providing an API for end users and services to interact with OpenStack Networking. This server also has the responsibility of interacting with the underlying database to store and retrieve tenant network, router, and loadbalancer details, among others.

2. Neutron Agents - These are the services that deliver various network functionality for OpenStack Networking.

   - neutron-dhcp-agent - manages DHCP IP addressing for tenant private networks.

   - neutron-l3-agent - facilitates layer 3 routing between tenant private networks, the external network, and others.

   - neutron-lbaasv2-agent - provisions the LBaaS routers created by tenants.

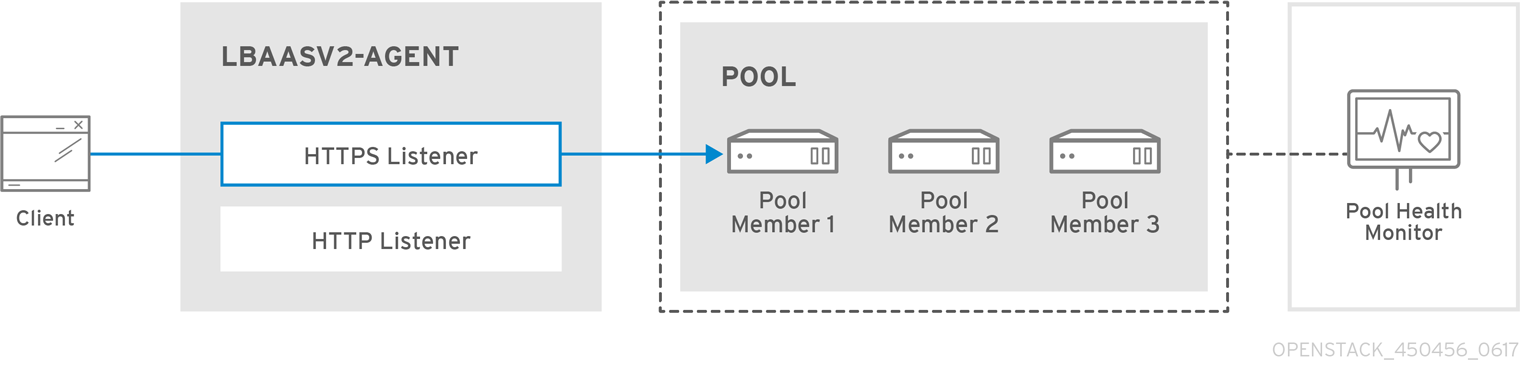

The following diagram describes the flow of HTTPS traffic through to a pool member:

15.1.1. Support Status of LBaaS

- LBaaS v1 API was removed in version 10.

- LBaaS v2 API was introduced in Red Hat OpenStack Platform 7, and is the only available LBaaS API as of version 10.

- LBaaS deployment is not currently supported in Red Hat OpenStack Platform director.

15.1.2. Service Placement

The OpenStack Networking services can either run together on the same physical server, or on separate dedicated servers.

Red Hat OpenStack Platform 11 added support for composable roles, allowing you to separate network services into a custom role. However, for simplicity, this guide assumes that a deployment uses the default controller role.

The server that runs the API server is usually called the Controller node, whereas the server that runs the OpenStack Networking agents is called the Network node. An ideal production environment would separate these components onto their own dedicated nodes for performance and scalability reasons, but a testing or PoC deployment might have them all running on the same node. This chapter covers both of these scenarios: the steps under Controller node configuration need to be performed on the API server, whereas the steps for the Network node are performed on the server that runs the LBaaS agent.

If both the Controller and Network roles are on the same physical node, then the steps must be performed on that server.

15.2. Configure LBaaS

This procedure configures OpenStack Networking (neutron) to use LBaaS with the Open vSwitch (OVS) plug-in. Perform these steps on nodes running the neutron-server service.

These steps are not intended for deployments based on Octavia. For more information, see https://access.redhat.com/documentation/en-us/red_hat_openstack_platform/11/html-single/advanced_overcloud_customization/#Composable_Service_Reference-New_Services

On the Controller node (API Server):

Install HAProxy:

    # yum install haproxy

Add the LBaaS tables to the neutron database:

    $ neutron-db-manage --subproject neutron-lbaas --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head

Change the service provider in /etc/neutron/neutron_lbaas.conf. In the [service_providers] section, comment out (#) all entries except for:

    service_provider=LOADBALANCERV2:Haproxy:neutron_lbaas.drivers.haproxy.plugin_driver.HaproxyOnHostPluginDriver:default

In /etc/neutron/neutron.conf, confirm that you have the LBaaS v2 plugin configured in service_plugins:

    service_plugins=neutron_lbaas.services.loadbalancer.plugin.LoadBalancerPluginv2

You can also expect to see any other plugins you have previously added.

Note: If you have lbaasv1 configured, replace it with the above setting for lbaasv2.

In /etc/neutron/lbaas_agent.ini, add the following to the [DEFAULT] section:

    ovs_use_veth = False
    interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

In /etc/neutron/services_lbaas.conf, add the following to the [haproxy] section:

    user_group = haproxy

Comment out any other device_driver entries.

Note: If the l3-agent is in a failed mode, see the l3_agent log files. You may need to edit /etc/neutron/neutron.conf and comment out certain values in [DEFAULT], and uncomment the corresponding values in oslo_messaging_rabbit, as described in the log file.
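Before restarting any services, the service provider edit can be spot-checked from the shell. This is a minimal sketch that only inspects uncommented `service_provider` lines. The function names are hypothetical and the check does not validate the rest of the file.

```shell
# Count the uncommented service_provider entries in a config file.
active_provider_count() {
  grep -Ec '^[[:space:]]*service_provider[[:space:]]*=' "$1" || true
}

# Succeed only if exactly one provider is active and it is the HAProxy driver.
haproxy_is_only_provider() {
  [ "$(active_provider_count "$1")" -eq 1 ] &&
    grep -Eq '^[[:space:]]*service_provider[[:space:]]*=[[:space:]]*LOADBALANCERV2:Haproxy:' "$1"
}
```

For example: `haproxy_is_only_provider /etc/neutron/neutron_lbaas.conf && echo "provider OK"`.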

Configure the LBaaS services, and review their status:

Stop the lbaasv1 services and start lbaasv2:

    # systemctl disable neutron-lbaas-agent.service
    # systemctl stop neutron-lbaas-agent.service
    # systemctl mask neutron-lbaas-agent.service
    # systemctl enable neutron-lbaasv2-agent.service
    # systemctl start neutron-lbaasv2-agent.service

Review the status of lbaasv2:

    # systemctl status neutron-lbaasv2-agent.service

Restart neutron-server and check the status:

    # systemctl restart neutron-server.service
    # systemctl status neutron-server.service

Check the Loadbalancerv2 agent:

    $ neutron agent-list
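On a deployment with many agents, the list can be narrowed to the LBaaS rows. This sketch assumes the agent type is reported as "Loadbalancerv2 agent", which is the string that appears in the neutron-server log later in this chapter. The function name is hypothetical.

```shell
# Show only the LBaaS v2 agent rows from the agent table.
lbaas_agents() {
  neutron agent-list | grep "Loadbalancerv2 agent"
}
```

If `lbaas_agents` prints nothing, the agent has not registered with neutron-server. In that case, review the `systemctl status` output from the previous steps.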

15.3. Automatically Reschedule Load Balancers

You can configure neutron to automatically reschedule load balancers from failed LBaaS agents. Previously, load balancers could be scheduled across multiple LBaaS agents; however, if a hypervisor failed, the load balancers scheduled to that node would cease operation. Now these load balancers can be automatically rescheduled to a different agent. This feature is turned off by default and is controlled using the allow_automatic_lbaas_agent_failover option.

15.3.1. Enable Automatic Failover

You will need at least two nodes running the Loadbalancerv2 agent.

On all nodes running the Loadbalancerv2 agent, edit /etc/neutron/neutron_lbaas.conf:

    allow_automatic_lbaas_agent_failover=True

Restart the LBaaS agents and neutron-server:

    # systemctl restart neutron-lbaasv2-agent
    # systemctl restart neutron-server.service

Review the state of the agents.
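Because the option must be set on every node that runs the agent, a quick per-node check can catch a missed edit. This is a minimal sketch with a hypothetical function name. It only confirms that an uncommented line sets the option to True, and it does not check which section the line is in.

```shell
# Succeed if the file enables allow_automatic_lbaas_agent_failover
# on an uncommented line (value matched case-insensitively).
failover_enabled() {
  grep -Eiq '^[[:space:]]*allow_automatic_lbaas_agent_failover[[:space:]]*=[[:space:]]*true[[:space:]]*$' "$1"
}
```

For example: `failover_enabled /etc/neutron/neutron_lbaas.conf || echo "failover is not enabled on $(hostname)"`.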

15.3.2. Sample Failover Configuration

Create a new loadbalancer.

Create a listener so that haproxy is spawned.

Check which LBaaS agent the loadbalancer was scheduled to.

Verify that haproxy runs on that node. In addition, verify that it is not currently running on the other node:

    stack@node2:~/$ ps -ef | grep "haproxy -f" | grep lbaas
    nobody 14503 1 0 17:14 ? 00:00:00 haproxy -f /opt/openstack/data/neutron/lbaas/v2/b130e956-b8d1-4290-ab83-febc19797683/haproxy.conf -p /opt/openstack/data/neutron/lbaas/v2/b130e956-b8d1-4290-ab83-febc19797683/haproxy.pid
    stack@node1:~/$ ps -ef | grep "haproxy -f" | grep lbaas
    stack@node1:~/$

Kill the hosting lbaas agent process, then wait for neutron-server to recognize this event and consequently reschedule the loadbalancer.

Review the q-svc log:

    2016-12-08 17:17:48.427 WARNING neutron.db.agents_db [req-1518b1ee-1ce3-4813-9999-9e9323666df7 None None] Agent healthcheck: found 1 dead agents out of 7:
    Type                  Last heartbeat       host
    Loadbalancerv2 agent  2016-12-08 17:15:11  node2
    2016-12-08 17:18:06.000 WARNING neutron.db.agentschedulers_db [req-d0c689d4-434b-4db7-8140-27d3d3442dec None None] Rescheduling loadbalancer b130e956-b8d1-4290-ab83-febc19797683 from agent 88f4c436-7152-4d30-a9e8-a793750bcbba because the agent did not report to the server in the last 150 seconds.

Verify that the loadbalancer was actually rescheduled to the live lbaas agent, and that haproxy is running.

Review the state of the haproxy process:

    stack@node1:~/$ ps -ef | grep "haproxy -f" | grep lbaas
    nobody 768 1 0 17:18 ? 00:00:00 haproxy -f /opt/openstack/data/neutron/lbaas/v2/b130e956-b8d1-4290-ab83-febc19797683/haproxy.conf -p /opt/openstack/data/neutron/lbaas/v2/b130e956-b8d1-4290-ab83-febc19797683/haproxy.pid

Revive the lbaas agent that you killed earlier. Verify that haproxy is no longer running on that node and that neutron-server recognizes the agent:

    stack@node2:~/$ ps -ef | grep "haproxy -f" | grep lbaas
    stack@node2:~/$

Review the agent list.
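The first three steps of this sample (create a loadbalancer, create a listener, and find the hosting agent) can be sketched with the neutron LBaaS v2 CLI as follows. The function names, the HTTP protocol, and port 80 are assumptions chosen for illustration. Substitute the names and subnet used in your environment.

```shell
# Create a load balancer on a subnet.
# Usage: create_lb <lb-name> <subnet>
create_lb() {
  neutron lbaas-loadbalancer-create --name "$1" "$2"
}

# Create a listener on the load balancer so that haproxy is spawned.
# Usage: create_listener <listener-name> <lb-name>
create_listener() {
  neutron lbaas-listener-create --name "$1" --loadbalancer "$2" \
    --protocol HTTP --protocol-port 80
}

# Show which LBaaS agent currently hosts the load balancer.
# Usage: hosting_agent <lb-name>
hosting_agent() {
  neutron lbaas-agent-hosting-loadbalancer "$1"
}
```

Running `hosting_agent` again after the agent is killed and the load balancer is rescheduled should report the other node as the host.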