Chapter 9. Using Composable Networks

With composable networks, you are no longer constrained by the predefined network segments (Internal API, Storage, Storage Management, Tenant, External, Control Plane). Instead, you can create your own networks and assign them to any role, default or custom. For example, if you have a network dedicated to NFS traffic, you can present it to multiple different roles.

Director supports the creation of custom networks during the deployment and update phases. These additional networks can be used for ironic bare metal nodes, system management, or to create separate networks for different roles. They can also be used to create multiple sets of networks for split deployments, where traffic is routed between networks.

A single data file (network_data.yaml) manages the list of networks that will be deployed; the role definition process then assigns the networks to the required roles through network isolation (see Chapter 8, Isolating Networks for more information).

9.1. Defining a Composable Network

To create composable networks, edit a local copy of the /usr/share/openstack-tripleo-heat-templates/network_data.yaml Heat template. Each network entry accepts the following values:

- name is the only mandatory value. However, you can also use name_lower to normalize names for readability, for example, changing InternalApi to internal_api.

- vip: true creates a virtual IP address (VIP) on the new network. The remaining parameters set the defaults for the new network.

- ip_subnet and allocation_pools set the default IPv4 subnet and IP range for the network.

- ipv6_subnet and ipv6_allocation_pools set the default IPv6 subnet and IP range for the network.
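As an illustration only, a hypothetical StorageBackup entry appended to the local copy of network_data.yaml might look like the following sketch. The network name, subnets, and allocation pools are assumed values; choose ranges that do not overlap any existing network.

```yaml
# Hypothetical entry appended to a local copy of network_data.yaml.
- name: StorageBackup            # mandatory
  name_lower: storage_backup     # normalized name used elsewhere (for example, ServiceNetMap)
  vip: true                      # create a VIP on this network
  # Default IPv4 subnet and address range (illustrative values)
  ip_subnet: '172.21.1.0/24'
  allocation_pools: [{'start': '172.21.1.4', 'end': '172.21.1.250'}]
  # Default IPv6 subnet and address range (illustrative values)
  ipv6_subnet: 'fd00:fd00:fd00:7000::/64'
  ipv6_allocation_pools: [{'start': 'fd00:fd00:fd00:7000::10', 'end': 'fd00:fd00:fd00:7000:ffff:ffff:ffff:fffe'}]
```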

You can override these defaults using an environment file (usually named network-environment.yaml). After modifying the network_data.yaml file, you can create a sample network-environment.yaml file by running the following command from the root of the core Heat templates that director uses (your local copy of /usr/share/openstack-tripleo-heat-templates/):

[stack@undercloud ~/templates] $ ./tools/process-templates.py
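A minimal sketch of such an override in network-environment.yaml follows. It assumes the generated parameter names follow the <Name>NetCidr, <Name>AllocationPools, and <Name>NetworkVlanID pattern used for the built-in networks; confirm the exact names in the file that the command generates. The values are hypothetical.

```yaml
parameter_defaults:
  # Assumed parameter names for the custom StorageBackup network; they
  # override the defaults taken from network_data.yaml.
  StorageBackupNetCidr: '172.21.2.0/24'
  StorageBackupAllocationPools: [{'start': '172.21.2.10', 'end': '172.21.2.200'}]
  StorageBackupNetworkVlanID: 60
```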

9.1.1. Define Network Interface Configuration for Composable Networks

When using composable networks, the parameter definition for the network IP address must be added to the NIC configuration template used for each role, even if the network is not used on the role. See the directories in /usr/share/openstack-tripleo-heat-templates/network/config for examples of these NIC configurations. For instance, if a StorageBackup network is added only to the Ceph nodes, the following must be added to the parameters section of the NIC configuration templates for all roles:

StorageBackupIpSubnet:
  default: ''
  description: IP address/subnet on the storage backup network
  type: string

You can also define parameters for the VLAN ID and gateway IP address, if needed.
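For example, definitions like the following could sit next to StorageBackupIpSubnet in each NIC template. The StorageBackupNetworkVlanID and StorageBackupInterfaceDefaultRoute names, the VLAN ID, and the empty gateway default are assumptions for this sketch; set the real values in network-environment.yaml.

```yaml
StorageBackupNetworkVlanID:
  default: 60            # hypothetical VLAN ID; override in network-environment.yaml
  description: VLAN ID for the storage backup network traffic.
  type: number
StorageBackupInterfaceDefaultRoute:
  default: ''            # gateway IP address, set per deployment
  description: Gateway IP address on the storage backup network.
  type: string
```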

The IpSubnet parameter for the custom network appears in the parameter definitions for each role. However, since the Ceph role is the only role that makes use of the StorageBackup network in our example, only the NIC configuration template for the Ceph role would make use of the StorageBackup parameters in the network_config section of the template.
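A sketch of how only the Ceph role's NIC template might consume these parameters inside its network_config list. It assumes a bond1 device and a declared StorageBackupNetworkVlanID parameter, both hypothetical; adapt the device and interface type to your hardware.

```yaml
# Fragment of the network_config list in the Ceph role's NIC template.
# Other roles declare StorageBackupIpSubnet but do not reference it here.
- type: vlan
  device: bond1                                       # assumed bond name
  vlan_id: {get_param: StorageBackupNetworkVlanID}
  addresses:
    - ip_netmask: {get_param: StorageBackupIpSubnet}
```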

9.1.2. Assign Composable Networks to Services

If vip: true is specified in the custom network definition, you can assign services to the network using the ServiceNetMap parameters. The custom network chosen for a service must exist on the role that hosts the service. To change the default network for a service, override the ServiceNetMap defined in /usr/share/openstack-tripleo-heat-templates/network/service_net_map.j2.yaml in your network-environment.yaml file (or in a different environment file).
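A minimal override is sketched below. It assumes your release merges a partial ServiceNetMap with the defaults in service_net_map.j2.yaml; check that file, and if it does not merge, copy the whole map into your environment file. The example moves Ceph cluster traffic to the hypothetical storage_backup network; note that the value is the network's name_lower.

```yaml
parameter_defaults:
  ServiceNetMap:
    # Move Ceph cluster (replication) traffic from the default
    # storage_mgmt network to the custom network.
    CephClusterNetwork: storage_backup
```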

9.1.3. Define the Routed Networks

When using composable networks to deploy routed networks, you define routes and router gateways for use in the network configuration. You can create network routes and supernet routes to define which interface to use when routing traffic between subnets. For example, in a deployment where traffic is routed between the Compute and Controller roles, you may want to define supernets for sets of isolated networks. For instance, 172.17.0.0/16 is a supernet that contains all networks beginning with 172.17, so the Internal API network used on the controllers might use 172.17.1.0/24 and the Internal API network used on the Compute nodes might use 172.17.2.0/24. On both roles, you would define a route to the 172.17.0.0/16 supernet through the router gateway that is specific to the network used on the role.

The supernet and router gateway values are defined as parameters in network-environment.yaml.
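For the 172.17.0.0/16 example above, the values might be set as follows. InternalApiSupernet, InternalApiGateway, and InternalApi2Gateway are custom parameter names assumed for this sketch, as is the .1 gateway address on each subnet. Each parameter must also be declared in the parameters section of the NIC templates that reference it.

```yaml
parameter_defaults:
  InternalApiSupernet: '172.17.0.0/16'   # contains every Internal API subnet
  InternalApiGateway: '172.17.1.1'       # router on the controllers' 172.17.1.0/24
  InternalApi2Gateway: '172.17.2.1'      # router on the Compute nodes' 172.17.2.0/24
```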

These parameters can be used in the NIC configuration templates for the roles.

The controller uses the parameters for the InternalApi network in controller.yaml.
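A sketch of the controller's Internal API interface, assuming a VLAN on a bond1 device. The route sends traffic for anything in the supernet through the controller's own Internal API router.

```yaml
# Fragment of the network_config list in controller.yaml (bond1 is assumed).
- type: vlan
  device: bond1
  vlan_id: {get_param: InternalApiNetworkVlanID}
  addresses:
    - ip_netmask: {get_param: InternalApiIpSubnet}
  routes:
    # Reach every Internal API subnet through this interface's router
    # instead of the default gateway.
    - ip_netmask: {get_param: InternalApiSupernet}
      next_hop: {get_param: InternalApiGateway}
```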

The Compute role uses the parameters for the InternalApi2 network in compute.yaml.
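The corresponding sketch for compute.yaml, under the same bond1 assumption. The route targets the same supernet, but the next hop is the router on the Compute nodes' InternalApi2 subnet.

```yaml
# Fragment of the network_config list in compute.yaml (bond1 is assumed).
- type: vlan
  device: bond1
  vlan_id: {get_param: InternalApi2NetworkVlanID}
  addresses:
    - ip_netmask: {get_param: InternalApi2IpSubnet}
  routes:
    # Same supernet as on the controller, different local next hop.
    - ip_netmask: {get_param: InternalApiSupernet}
      next_hop: {get_param: InternalApi2Gateway}
```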

If specific network routes are not applied on isolated networks, all traffic to non-local networks uses the default gateway. This is generally undesirable from both a security and a performance standpoint, since it mixes different kinds of traffic and puts all outbound traffic on the same interface. In addition, if the routing is asymmetric (traffic is sent through a different interface than the one it was received on), services might become unreachable. Using a route to the supernet on both the client and the server directs traffic to use the correct interface on both sides.

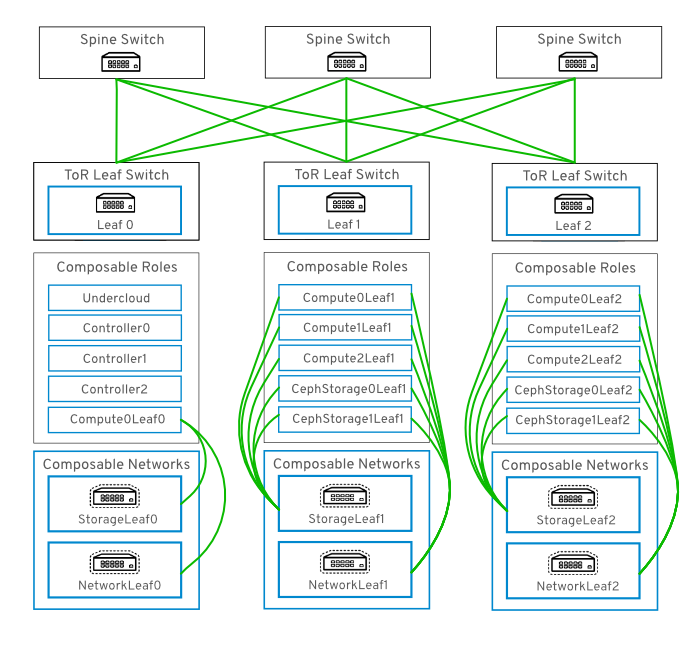

9.2. Networking with Routed Spine-Leaf

Composable networks allow you to adapt your OpenStack Networking deployment to the popular routed spine-leaf data center topology. In a practical application of routed spine-leaf, a leaf is represented as a composable Compute or Storage role, usually in a data center rack, as shown in Figure 9.1, “Routed spine-leaf example”. The leaf 0 rack has an undercloud node, controllers, and Compute nodes. The composable networks are presented to the nodes, which have been assigned to composable roles. In this diagram, the StorageLeaf networks are presented to the Ceph storage and Compute nodes; the NetworkLeaf represents an example of any network you may want to compose.

Figure 9.1. Routed spine-leaf example

9.3. Hardware Provisioning with Routed Spine-Leaf

This section describes an example hardware provisioning use case and explains how to deploy an evaluation environment to demonstrate the functionality of routed spine-leaf with composable networks. The resulting deployment has multiple sets of networks with routing available.

To use a provisioning network in a routed spine-leaf network, two options are available: a VXLAN tunnel configured in the switch fabric, or an extended VLAN trunked to each ToR switch.

In a future release, it is expected that DHCP relays can be used to make DHCPOFFER broadcasts traverse across the routed layer 3 domains.

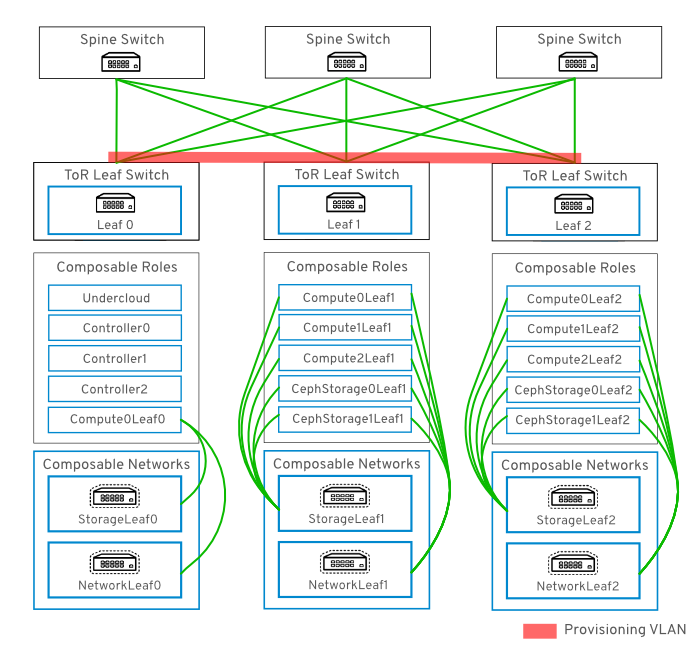

9.3.1. Example VLAN Provisioning Network

In this example, new overcloud nodes are deployed through the provisioning network. The provisioning network cannot be composed, and there cannot be more than one. Instead, a VLAN tunnel is used to span across the layer 3 topology (see Figure 9.2, “VLAN provisioning network topology”). This allows DHCPOFFER broadcasts to be sent to any leaf. This tunnel is established by trunking a VLAN between the Top-of-Rack (ToR) leaf switches. In this diagram, the StorageLeaf networks are presented to the Ceph storage and Compute nodes; the NetworkLeaf represents an example of any network you may want to compose.

Figure 9.2. VLAN provisioning network topology

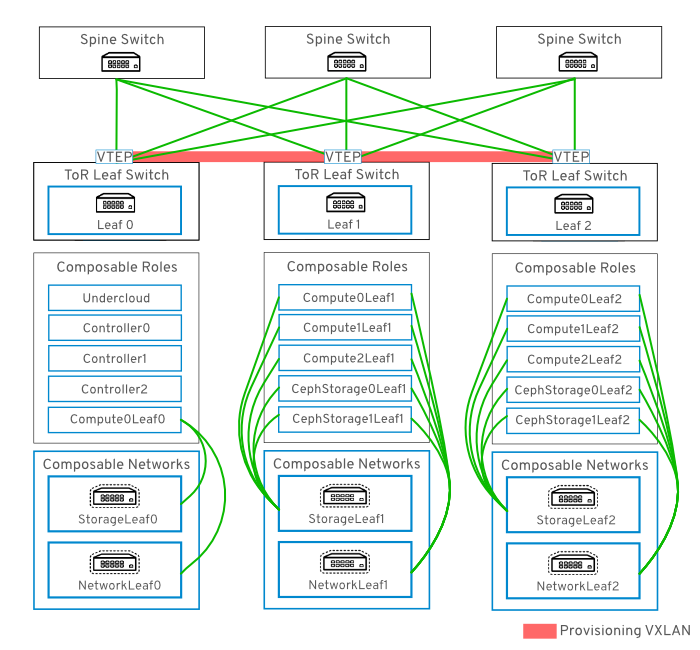

9.3.2. Example VXLAN Provisioning Network

In this example, new overcloud nodes are deployed through the provisioning network. The provisioning network cannot be composed, and there cannot be more than one. Instead, a VXLAN tunnel is used to span across the layer 3 topology (see Figure 9.3, “VXLAN provisioning network topology”). This allows DHCPOFFER broadcasts to be sent to any leaf. This tunnel is established using VXLAN endpoints configured on the Top-of-Rack (ToR) leaf switches.

Figure 9.3. VXLAN provisioning network topology

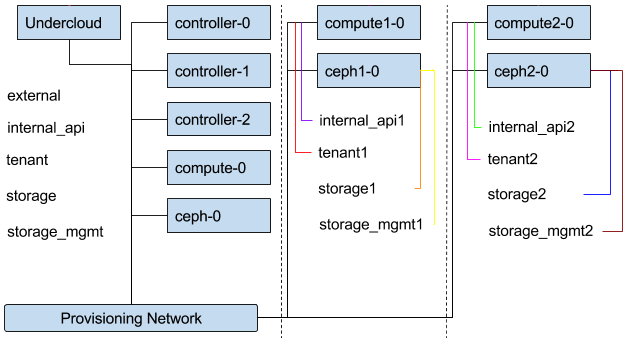

9.3.3. Network Topology for Provisioning

The routed spine-leaf bare metal environment has one or more layer 3 capable switches, which route traffic between the isolated VLANs in the separate layer 2 broadcast domains.

The intention of this design is to isolate the traffic according to function. For example, if the controller nodes host an API on the Internal API network, when a compute node accesses the API it should use its own version of the Internal API network. For this routing to work, you need routes that force traffic destined for the Internal API network to use the required interface. This can be configured using supernet routes. For example, if you use 172.18.0.0/24 as the Internal API network for the controller nodes, you can use 172.18.1.0/24 for the second Internal API network, and 172.18.2.0/24 for the third, and so on. As a result, you can have a route pointing to the larger 172.18.0.0/16 supernet that uses the gateway IP on the local Internal API network for each role in each layer 2 domain.

The following networks could be used in an environment that was deployed using director:

| Network | Roles attached | Interface | Bridge | Subnet |
|---|---|---|---|---|
| Provisioning | All | UC - nic2 and Other - nic1 | UC: br-ctlplane | |
| External | Controller | nic7, OC: nic6 | br-ex | 192.168.24.0/24 |
| Storage | Controller | nic3, OC: nic2 | | 172.16.0.0/24 |
| Storage Mgmt | Controller | nic4, OC: nic3 | | 172.17.0.0/24 |
| Internal API | Controller | nic5, OC: nic4 | | 172.18.0.0/24 |
| Tenant | Controller | nic6, OC: nic5 | | 172.19.0.0/24 |
| Storage1 | Compute1, Ceph1 | nic8, OC: nic7 | | 172.16.1.0/24 |
| Storage Mgmt1 | Ceph1 | nic9, OC: nic8 | | 172.17.1.0/24 |
| Internal API1 | Compute1 | nic10, OC: nic9 | | 172.18.1.0/24 |
| Tenant1 | Compute1 | nic11, OC: nic10 | | 172.19.1.0/24 |
| Storage2 | Compute2, Ceph2 | nic12, OC: nic11 | | 172.16.2.0/24 |
| Storage Mgmt2 | Ceph2 | nic13, OC: nic12 | | 172.17.2.0/24 |
| Internal API2 | Compute2 | nic14, OC: nic13 | | 172.18.2.0/24 |
| Tenant2 | Compute2 | nic15, OC: nic14 | | 172.19.2.0/24 |

The undercloud must also be attached to an uplink for external/Internet connectivity. Typically, the undercloud would be the only node attached to the uplink network. This is likely to be an infrastructure VLAN, separate from the OpenStack deployment.

9.3.4. Topology Diagram

Figure 9.4. Composable Network Topology

9.3.5. Assign IP Addresses to the Custom Roles

The roles require routes for each of the isolated networks. Each role has its own NIC configs and you have to customize the TCP/IP settings to support the custom networks. You can also parameterize or hard-code the gateway IP addresses and routes into the role NIC configs.

For example, using the existing NIC configs as a basic template, you must add the network-specific parameters to all NIC configs.
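For instance, the definitions for the Storage Mgmt1 network from the table above might look like this sketch. The parameter names, the VLAN ID 51, and the 172.17.1.1 gateway are hypothetical; repeat the block with matching names and values for Storage Mgmt2 and the other leaf networks.

```yaml
# Added to the parameters section of every role's NIC template.
StorageMgmt1IpSubnet:
  default: ''
  description: IP address/subnet on the Storage Mgmt1 network
  type: string
StorageMgmt1NetworkVlanID:
  default: 51                  # hypothetical VLAN ID
  description: VLAN ID for the Storage Mgmt1 network traffic.
  type: number
StorageMgmt1InterfaceDefaultRoute:
  default: '172.17.1.1'        # assumed router address on 172.17.1.0/24
  description: Gateway IP address on the Storage Mgmt1 network.
  type: string
```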

Perform this for each of the custom networks, for each role used in the deployment.

9.3.6. Assign Routes for the Roles

Each isolated network should have a supernet route applied. Using the 172.18.0.0/16 supernet suggested above, you apply the same route to each interface, but with the local gateway as the next hop.
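A sketch of the corresponding parameter_defaults in network-environment.yaml. The InternalApiSupernet and <Network>InterfaceDefaultRoute names, and the use of the .1 address as each subnet's router, are assumptions for illustration.

```yaml
parameter_defaults:
  # One supernet covering 172.18.0.0/24, 172.18.1.0/24, 172.18.2.0/24, ...
  InternalApiSupernet: '172.18.0.0/16'
  # Local router on each Internal API subnet (assumed .1 addresses)
  InternalApiInterfaceDefaultRoute: '172.18.0.1'
  InternalApi1InterfaceDefaultRoute: '172.18.1.1'
  InternalApi2InterfaceDefaultRoute: '172.18.2.1'
```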


Each role requires routes on each isolated network, pointing to the other subnets used for the same function. So when a Compute1 node contacts a controller on the InternalApi VIP, the traffic should target the InternalApi1 interface through the InternalApi1 gateway. As a result, the return traffic from the controller to the InternalApi1 network should go through the InternalApi network gateway.

Controller configuration:
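A sketch of the routes entry on the controller's Internal API interface. InternalApiSupernet and InternalApiInterfaceDefaultRoute are hypothetical parameter names that must be declared in the template.

```yaml
# routes entry on the controller's Internal API interface
routes:
  - ip_netmask: {get_param: InternalApiSupernet}
    next_hop: {get_param: InternalApiInterfaceDefaultRoute}
```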

Compute1 configuration:
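The Compute1 equivalent, on its InternalApi1 interface. The supernet is the same, and only the next hop changes, here to a hypothetical InternalApi1InterfaceDefaultRoute parameter.

```yaml
# routes entry on the Compute1 node's InternalApi1 interface
routes:
  - ip_netmask: {get_param: InternalApiSupernet}
    next_hop: {get_param: InternalApi1InterfaceDefaultRoute}
```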


The supernet routes apply to all isolated networks on each role to avoid sending traffic through the default gateway, which by default is the Control Plane network on non-controllers, and the External network on the controllers.

You need to configure these routes on the isolated networks because Red Hat Enterprise Linux by default implements strict reverse path filtering on inbound traffic. If an API is listening on the Internal API interface and a request comes in to that API, it only accepts the request if the return path route is on the Internal API interface. If the server is listening on the Internal API network but the return path to the client is through the Control Plane, then the server drops the requests due to the reverse path filter.

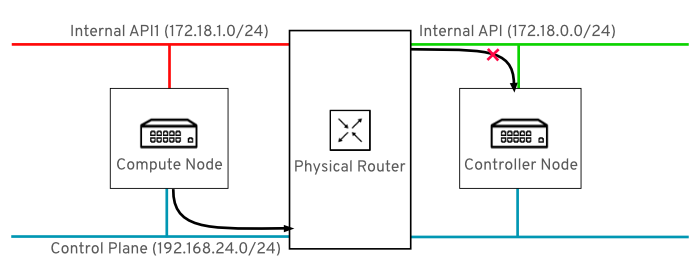

For example, this diagram shows an attempt to route traffic through the control plane, which will not succeed. The return route from the router to the controller node does not match the interface where the VIP is listening, so the packet is dropped. 192.168.24.0/24 is directly connected to the controller, so it is considered local to the Control Plane network.

Figure 9.5. Routed traffic through Control Plane

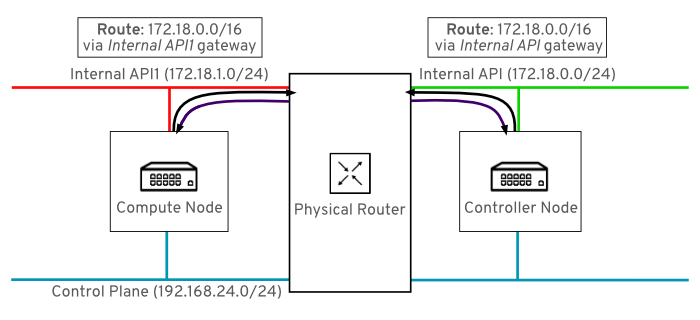

For comparison, this diagram shows routing running through the Internal API networks:

Figure 9.6. Routed traffic through Internal API

In this diagram, the return route to 172.18.1.0 matches the interface where the virtual IP address (VIP) is listening. As a result, packets are not dropped and the API connectivity works as expected.

The following ExtraConfig settings address the issue described above. Note that the InternalApi1 value is ultimately represented by the internal_api1 value and is case-sensitive.

- CephAnsibleExtraConfig - The public_network setting lists all the storage network leaves. The cluster_network setting lists the storage management networks (one per leaf).
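A sketch of these ExtraConfig settings for the two Compute leaves and Ceph, using the subnets from the example network table. The Compute1ExtraConfig and Compute2ExtraConfig role parameters, the internal_api1 and tenant1 style hiera keys, and the choice of Puppet parameters are assumptions; verify them against your roles and release before use.

```yaml
parameter_defaults:
  Compute1ExtraConfig:
    # Bind to the leaf-local addresses; hiera keys are the networks'
    # name_lower values and are case-sensitive.
    nova::compute::vncserver_proxyclient_address: "%{hiera('internal_api1')}"
    neutron::agents::ml2::ovs::local_ip: "%{hiera('tenant1')}"
  Compute2ExtraConfig:
    nova::compute::vncserver_proxyclient_address: "%{hiera('internal_api2')}"
    neutron::agents::ml2::ovs::local_ip: "%{hiera('tenant2')}"
  CephAnsibleExtraConfig:
    # Every storage leaf (public) and storage management leaf (cluster)
    public_network: '172.16.0.0/24,172.16.1.0/24,172.16.2.0/24'
    cluster_network: '172.17.0.0/24,172.17.1.0/24,172.17.2.0/24'
```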

9.3.7. Custom NIC definitions

The following custom definitions were applied in the nic-config template for the nodes. Adapt them to suit your deployment:

Review the network_data.yaml values.

Note: There is currently no validation performed for the network subnet and allocation_pools values. Be certain you have defined these consistently and that there is no conflict with existing networks.

Review the /home/stack/roles_data.yaml values.

Review the nic-config template for the Compute node.

Run the openstack overcloud deploy command to apply the changes.
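As a hedged illustration of the roles_data.yaml review step, a Compute1 role for leaf 1 might be defined as follows. The role name, hostname format, and network names (which must match the name values in network_data.yaml) are assumptions, and the ServicesDefault list is abbreviated; start from the full service list of the default Compute role.

```yaml
# Hypothetical Compute1 role in /home/stack/roles_data.yaml (leaf 1).
- name: Compute1
  description: |
    Compute node role for leaf 1
  CountDefault: 1
  HostnameFormatDefault: '%stackname%-compute1-%index%'
  # Leaf 1 networks attached to this role (see the network table)
  networks:
    - InternalApi1
    - Tenant1
    - Storage1
  ServicesDefault:
    # Abbreviated; copy the complete list from the default Compute role.
    - OS::TripleO::Services::NovaCompute
    - OS::TripleO::Services::NovaLibvirt
    - OS::TripleO::Services::ComputeNeutronOvsAgent
    - OS::TripleO::Services::CephClient
```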