Chapter 15. Load Balancing-as-a-Service (LBaaS) with Octavia

The OpenStack Load-balancing service (Octavia) provides a Load Balancing-as-a-Service (LBaaS) version 2 implementation for Red Hat OpenStack Platform director installations. This section contains information about enabling Octavia and assumes that Octavia services are hosted on the same nodes as the Networking API server. By default, the load-balancing services are on the Controller nodes.

Red Hat does not support a migration path from Neutron-LBaaS to Octavia. However, there are some unsupported open source tools that are available. For more information, see https://github.com/nmagnezi/nlbaas2octavia-lb-replicator/tree/stable_1.

15.1. Overview of Octavia

Octavia uses a set of instances called amphorae, which run on Compute nodes, and communicates with the amphorae over a load-balancing management network (lb-mgmt-net).

Octavia includes the following services:

- API Controller (octavia_api container) - Communicates with the controller worker for configuration updates and to deploy, monitor, or remove amphora instances.

- Controller Worker (octavia_worker container) - Sends configuration and configuration updates to amphorae over the LB network.

- Health Manager - Monitors the health of individual amphorae and handles failover events if amphorae fail unexpectedly.

  Health monitors of type PING only check whether the member is reachable and responds to ICMP echo requests. PING does not detect whether the application running on that instance is healthy. Use PING only in specific cases where an ICMP echo request is a valid health check.

- Housekeeping Manager - Cleans up stale (deleted) database records, manages the spares pool, and manages amphora certificate rotation.

- Loadbalancer - The top API object that represents the load balancing entity. The VIP address is allocated when the loadbalancer is created. When you create the loadbalancer, an amphora instance launches on a Compute node.

- Amphora - The instance that performs the load balancing. Amphorae are typically instances running on Compute nodes and are configured with load balancing parameters according to the listener, pool, health monitor, L7 policies, and members configuration. Amphorae send a periodic heartbeat to the Health Manager.

- Listener - The listening endpoint, for example HTTP, of a load balanced service. A listener might refer to several pools and switch between pools using layer 7 rules.

- Pool - A group of members that handle client requests from the load balancer (amphora). A pool is associated with only one listener.

- Member - Compute instances that serve traffic behind the load balancer (amphora) in a pool.

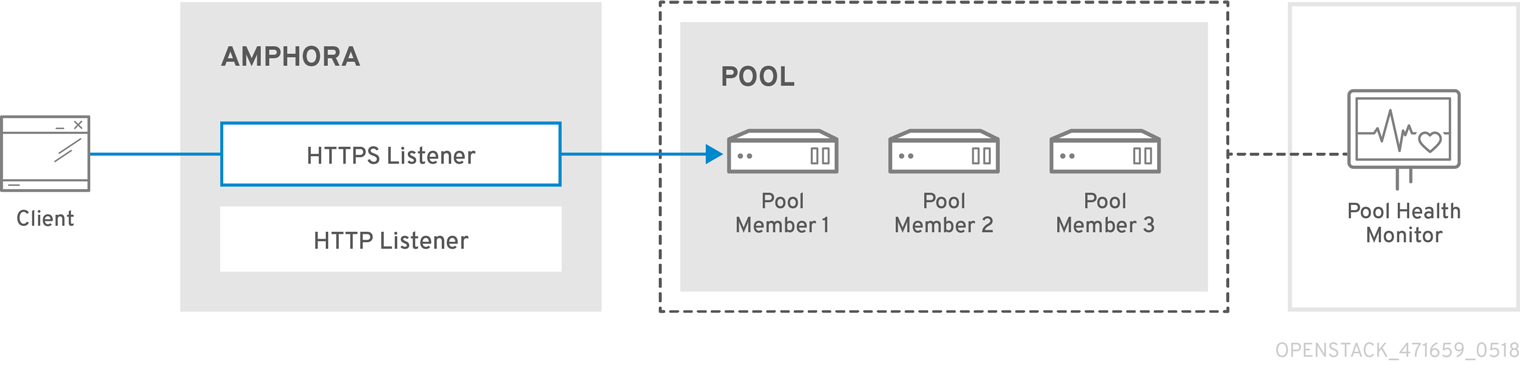

The following diagram shows the flow of HTTPS traffic through to a pool member:

15.2. Octavia software requirements

Octavia requires that you configure the following core OpenStack components:

- Compute (nova)

- Networking (enable allowed_address_pairs)

- Image (glance)

- Identity (keystone)

- RabbitMQ

- MySQL

15.3. Prerequisites for the undercloud

This section makes the following assumptions:

- Your undercloud is already installed and ready to deploy an overcloud with Octavia enabled.

- Only container deployments are supported.

- Octavia runs on your Controller node.

If you want to enable the Octavia service on an existing overcloud deployment, you must prepare the undercloud. Failure to do so results in the overcloud installation being reported as successful yet without Octavia running. To prepare the undercloud, see the Transitioning to Containerized Services guide.

15.3.1. Octavia feature support matrix

| Feature | Support level in RHOSP 16.0 |
|---|---|
| ML2/OVS L3 HA | Full support |
| ML2/OVS DVR | Full support |
| ML2/OVS L3 HA + composable network node [1] | Full support |
| ML2/OVS DVR + composable network node [1] | Full support |
| ML2/OVN L3 HA | Full support |
| ML2/OVN DVR | Full support |
| Amphora active-standby | |
| Terminated HTTPS load balancers | Full support |
| Amphora spare pool | Technology Preview only |
| UDP | Technology Preview only |
| Backup members | Technology Preview only |
| Provider framework | Technology Preview only |
| TLS client authentication | Technology Preview only |
| TLS backend encryption | Technology Preview only |
| Octavia flavors | Full support |
| Object tags | Technology Preview only |
| Listener API timeouts | Full support |
| Log offloading | Technology Preview only |
| VIP access control list | Full support |
| Volume-based amphora | No support |

[1] Network node with OVS, metadata, DHCP, L3, and Octavia (worker, health manager, housekeeping).

15.4. Planning your Octavia deployment

Red Hat OpenStack Platform provides a workflow task to simplify the post-deployment steps for the Load-balancing service. This Octavia workflow runs a set of Ansible playbooks to provide the following post-deployment steps as the last phase of the overcloud deployment:

- Configure certificates and keys.

- Configure the load-balancing management network between the amphorae and the Octavia Controller worker and health manager.

Do not modify the OpenStack heat templates directly. Create a custom environment file (for example, octavia-environment.yaml) to override default parameter values.

Amphora Image

On pre-provisioned servers, you must install the amphora image on the undercloud before deploying Octavia:

$ sudo dnf install octavia-amphora-image-x86_64.noarch

On servers that are not pre-provisioned, Red Hat OpenStack Platform director automatically downloads the default amphora image, uploads it to the overcloud Image service, and then configures Octavia to use this amphora image. During a stack update or upgrade, director updates this image to the latest amphora image.

Custom amphora images are not supported.

15.4.1. Configuring Octavia certificates and keys

Octavia containers require secure communication with load balancers and with each other. You can specify your own certificates and keys or allow these to be automatically generated by Red Hat OpenStack Platform director. We recommend you allow director to automatically create the required private certificate authorities and issue the necessary certificates.

If you must use your own certificates and keys, complete the following steps:

1. On the machine on which you run the openstack overcloud deploy command, create a custom YAML environment file:

   $ vi /home/stack/templates/octavia-environment.yaml

2. In the YAML environment file, add the following parameters with values appropriate for your site:

   - OctaviaCaCert: The certificate for the CA Octavia will use to generate certificates.

   - OctaviaCaKey: The private CA key used to sign the generated certificates.

   - OctaviaClientCert: The client certificate and un-encrypted key issued by the Octavia CA for the controllers.

   - OctaviaCaKeyPassphrase: The passphrase used with the private CA key above.

   - OctaviaGenerateCerts: The Boolean that instructs director to enable (true) or disable (false) automatic certificate and key generation.

Here is an example:

Note: The certificates and keys are multi-line values, and you must indent all of the lines to the same level.
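As a minimal sketch of such an environment file, the following shell function writes the five parameters listed above under parameter_defaults:. The function name write_octavia_cert_env, the default file name, and every value (the PEM placeholders in angle brackets and the passphrase) are hypothetical stand-ins, not values from a real deployment. Substitute your own certificates and keys before you use the file.

```shell
#!/usr/bin/env bash
# Sketch only: writes a skeleton environment file for user-supplied
# Octavia certificates. Every value below is a placeholder (assumption).

write_octavia_cert_env() {
    # $1: path of the environment file to create (hypothetical default name)
    local env_file="${1:-octavia-environment.yaml}"

    # The quoted heredoc delimiter stops the shell from expanding
    # anything inside the YAML text.
    cat > "$env_file" <<'EOF'
parameter_defaults:
    OctaviaCaCert: |
      -----BEGIN CERTIFICATE-----
      <PEM-encoded CA certificate lines, all indented to the same level>
      -----END CERTIFICATE-----
    OctaviaCaKey: |
      -----BEGIN RSA PRIVATE KEY-----
      <PEM-encoded private CA key lines>
      -----END RSA PRIVATE KEY-----
    OctaviaClientCert: |
      -----BEGIN CERTIFICATE-----
      <client certificate issued by the Octavia CA>
      -----END CERTIFICATE-----
      -----BEGIN RSA PRIVATE KEY-----
      <un-encrypted client key>
      -----END RSA PRIVATE KEY-----
    OctaviaCaKeyPassphrase: '<passphrase for the private CA key>'
    OctaviaGenerateCerts: false
EOF
}

# Example call (path taken from the procedure above):
#   write_octavia_cert_env /home/stack/templates/octavia-environment.yaml
```

The YAML block scalar indicator (|) keeps each certificate or key as one multi-line value, which is why every line under it shares the same indentation.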


If you use the default certificates that director generates, and if you set the OctaviaGenerateCerts parameter to false, then your certificates do not renew automatically when they expire.

15.5. Deploying Octavia

You deploy Octavia using the Red Hat OpenStack Platform (RHOSP) director. Director uses heat templates to deploy Octavia (and other RHOSP components).

Ensure that your environment has access to the Octavia image. For more information about image registry methods, see the Containerization section of the Director Installation and Usage guide.

To deploy Octavia in the overcloud:

$ openstack overcloud deploy --templates -e \
    /usr/share/openstack-tripleo-heat-templates/environments/services/octavia.yaml

The director updates the amphora image to the latest amphora image during a stack update or upgrade.

15.6. Changing Octavia default settings

If you want to override the default parameters that director uses to deploy Octavia, you can specify your values in one or more custom, YAML-formatted environment files (for example, octavia-environment.yaml).

As a best practice, always make Octavia configuration changes to the appropriate Heat templates and re-run Red Hat OpenStack Platform director. You risk losing ad hoc configuration changes when you manually change individual files and do not use director.

The parameters that director uses to deploy and configure Octavia are straightforward. Here are a few examples:

- OctaviaControlNetwork: The name for the neutron network used for the amphora control network.

- OctaviaControlSubnetCidr: The subnet for the amphora control subnet in CIDR form.

- OctaviaMgmtPortDevName: The name of the Octavia management network interface used for communication between the Octavia worker and health manager and the amphora machine.

- OctaviaConnectionLogging: A Boolean that enables (true) or disables (false) connection flow logging in load-balancing instances (amphorae). As the amphorae have log rotation on, logs are unlikely to fill up disks. When disabled, performance is marginally impacted.

For the list of Octavia parameters that director uses, consult the following file on the undercloud:

/usr/share/openstack-tripleo-heat-templates/deployment/octavia/octavia-deployment-config.j2.yaml


Your environment file must contain the keyword parameter_defaults:. Put your parameter value pairs after the parameter_defaults: keyword. Here is an example:

YAML files are extremely sensitive about where in the file a parameter is placed. Make sure that parameter_defaults: starts in the first column (no leading whitespace characters), and your parameter value pairs start in column five (each parameter has four whitespace characters in front of it).
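The layout rule above can be sketched as a file plus a quick check. The shell functions below write an environment file that sets the four parameters described earlier, and then test that parameter_defaults: starts in the first column and that each parameter is indented by four spaces. The function names, the default file name, and the values (o-hm0, lb-mgmt-net, 172.24.0.0/16) are illustrative assumptions; replace them with values for your site.

```shell
#!/usr/bin/env bash
# Sketch only: the function names and all parameter values are assumptions.

write_octavia_env() {
    # $1: path of the environment file to create (hypothetical default name)
    local env_file="${1:-octavia-environment.yaml}"
    cat > "$env_file" <<'EOF'
parameter_defaults:
    OctaviaMgmtPortDevName: "o-hm0"
    OctaviaControlNetwork: 'lb-mgmt-net'
    OctaviaControlSubnetCidr: '172.24.0.0/16'
    OctaviaConnectionLogging: true
EOF
}

# Checks the layout of a simple "key: value" environment file.
# It does not understand multi-line values such as certificates.
check_env_layout() {
    local env_file="$1"
    # parameter_defaults: must start in the first column.
    grep -q '^parameter_defaults:' "$env_file" || return 1
    # Every other non-blank line must begin with exactly four spaces
    # followed by a non-space character.
    if grep -v '^parameter_defaults:' "$env_file" \
        | grep -v '^[[:space:]]*$' \
        | grep -qv '^    [^[:space:]]'; then
        return 1
    fi
    return 0
}

# Example:
#   write_octavia_env /home/stack/templates/octavia-environment.yaml
#   check_env_layout /home/stack/templates/octavia-environment.yaml && echo "layout ok"
```

The check is deliberately narrow: it only catches indentation mistakes, not misspelled parameter names or invalid values.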

15.7. Secure a load balancer with an access control list

You can use the Octavia API to create an access control list (ACL) to limit incoming traffic to a listener to a set of allowed source IP addresses. Any other incoming traffic is rejected.

Prerequisites

This document uses the following assumptions for demonstrating how to secure an Octavia load balancer:

- The back-end servers, 192.0.2.10 and 192.0.2.11, are on a subnet named private-subnet, and have been configured with a custom application on TCP port 80.

- The subnet, public-subnet, is a shared external subnet created by the cloud operator that is reachable from the internet.

- The load balancer is a basic load balancer that is accessible from the internet and distributes requests to the back-end servers.

- The application on TCP port 80 is accessible only to a limited set of source IP addresses (192.0.2.0/24 and 198.51.100.0/24).

Procedure

1. Create a load balancer (lb1) on the subnet (public-subnet):

   Note: Replace the names in parentheses () with names that your site uses.

   $ openstack loadbalancer create --name lb1 --vip-subnet-id public-subnet

2. Re-run the following command until the load balancer (lb1) shows a status that is ACTIVE and ONLINE:

   $ openstack loadbalancer show lb1

3. Create a listener (listener1) with the allowed CIDRs:

   $ openstack loadbalancer listener create --name listener1 --protocol TCP --protocol-port 80 --allowed-cidr 192.0.2.0/24 --allowed-cidr 198.51.100.0/24 lb1

4. Create the default pool (pool1) for the listener (listener1):

   $ openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol TCP

5. Add members 192.0.2.10 and 192.0.2.11 on the subnet (private-subnet) to the pool (pool1) that you created:

   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.10 --protocol-port 80 pool1
   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.11 --protocol-port 80 pool1
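The "re-run until ACTIVE and ONLINE" step in this procedure can be scripted. The following is a minimal sketch, assuming that the openstack client accepts the generic output options -f value and -c <column> for loadbalancer show and that the fields are named provisioning_status and operating_status. The function name wait_for_lb and its default retry count and delay are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: wait_for_lb and its defaults are hypothetical helpers.

wait_for_lb() {
    local lb="$1"
    local attempts="${2:-60}"   # number of polls before giving up (assumed default)
    local delay="${3:-5}"       # seconds between polls (assumed default)
    local prov oper i=0

    while [ "$i" -lt "$attempts" ]; do
        # -f value -c <column> prints only the requested field.
        prov="$(openstack loadbalancer show "$lb" -f value -c provisioning_status)"
        oper="$(openstack loadbalancer show "$lb" -f value -c operating_status)"
        if [ "$prov" = "ACTIVE" ] && [ "$oper" = "ONLINE" ]; then
            return 0
        fi
        # Stop early on ERROR; waiting longer is unlikely to help.
        if [ "$prov" = "ERROR" ]; then
            echo "load balancer $lb is in ERROR state" >&2
            return 1
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "timed out waiting for load balancer $lb" >&2
    return 1
}

# Example: poll lb1 up to 60 times, 5 seconds apart.
#   wait_for_lb lb1 && echo "lb1 is ready"
```

Bounding the number of polls keeps a script from hanging indefinitely if the amphora never finishes booting.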

Verification steps

1. Enter the following command:

   $ openstack loadbalancer listener show listener1

   In the output, the parameter allowed_cidrs is set to allow traffic only from 192.0.2.0/24 and 198.51.100.0/24.

2. To verify that the load balancer is secure, try to make a request to the listener from a client whose CIDR is not in the allowed_cidrs list; the request should not succeed. You should see output similar to the following:

   curl: (7) Failed to connect to 10.0.0.226 port 80: Connection timed out
   curl: (7) Failed to connect to 10.0.0.226 port 80: Connection timed out
   curl: (7) Failed to connect to 10.0.0.226 port 80: Connection timed out
   curl: (7) Failed to connect to 10.0.0.226 port 80: Connection timed out
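The negative test above can be wrapped in a small helper that succeeds only when the request fails. This is a sketch under stated assumptions: the function name expect_blocked and the default timeout are hypothetical, and the address in the example is the one from the sample output. Run it from a client whose address is outside the allowed CIDRs.

```shell
#!/usr/bin/env bash
# Sketch only: expect_blocked is a hypothetical helper name.

expect_blocked() {
    local url="$1"
    local timeout="${2:-10}"   # seconds before curl gives up (assumed default)

    # --silent hides the progress meter, --output /dev/null discards the
    # body, and --max-time bounds the whole request.
    if curl --silent --output /dev/null --max-time "$timeout" "$url"; then
        echo "unexpected: $url answered" >&2
        return 1
    fi
    # curl failed (for example, connection refused or timed out),
    # which is the expected result for a blocked client.
    return 0
}

# Example:
#   expect_blocked http://10.0.0.226/ && echo "request was blocked as expected"
```

Note that the helper treats any curl failure as "blocked", including unrelated problems such as a routing error, so confirm separately that an allowed client can reach the listener.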

15.8. Configuring an HTTP load balancer

To configure a simple HTTP load balancer, complete the following steps:

1. Create the load balancer on a subnet:

   $ openstack loadbalancer create --name lb1 --vip-subnet-id private-subnet

2. Monitor the state of the load balancer:

   $ openstack loadbalancer show lb1

   When you see a status of ACTIVE and ONLINE, the load balancer is created and running and you can go to the next step.

   Note: To check load balancer status from the Compute service (nova), use the openstack server list --all | grep amphora command. Creating load balancers can be a slow process (status displaying as PENDING) because load balancers are virtual machines (VMs) and not containers.

3. Create a listener:

   $ openstack loadbalancer listener create --name listener1 --protocol HTTP --protocol-port 80 lb1

4. Create the listener default pool:

   $ openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTP

5. Create a health monitor on the pool to test the "/healthcheck" path:

   $ openstack loadbalancer healthmonitor create --delay 5 --max-retries 4 --timeout 10 --type HTTP --url-path /healthcheck pool1

6. Add load balancer members to the pool:

   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.10 --protocol-port 80 pool1
   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.11 --protocol-port 80 pool1

7. Create a floating IP address on a public subnet:

   $ openstack floating ip create public

8. Associate this floating IP with the load balancer VIP port:

   $ openstack floating ip set --port <LOAD_BALANCER_VIP_PORT> <FLOATING_IP>

   Tip: To locate LOAD_BALANCER_VIP_PORT, run the openstack loadbalancer show lb1 command.
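The last two actions, looking up the VIP port and associating the floating IP with it, can be combined. The following is a minimal sketch, assuming that loadbalancer show exposes the port in a field named vip_port_id and accepts the generic -f value and -c options. The function name attach_floating_ip and the address in the example are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: attach_floating_ip is a hypothetical helper name.

attach_floating_ip() {
    local lb="$1"    # load balancer name or ID, for example lb1
    local fip="$2"   # an existing floating IP address or ID
    local port

    # vip_port_id is the Networking port that holds the load balancer VIP.
    port="$(openstack loadbalancer show "$lb" -f value -c vip_port_id)" || return 1
    if [ -z "$port" ]; then
        echo "no VIP port found for load balancer $lb" >&2
        return 1
    fi
    openstack floating ip set --port "$port" "$fip"
}

# Example (203.0.113.50 stands in for the address that
# "openstack floating ip create public" returned):
#   attach_floating_ip lb1 203.0.113.50
```

Checking for an empty port ID before calling floating ip set avoids passing a blank --port value when the load balancer does not exist yet.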

15.9. Verifying the load balancer

To verify the load balancer, complete the following steps:

1. Run the openstack loadbalancer show command to verify the load balancer settings.

2. Run the amphora list command to find the UUID of the amphora associated with load balancer lb1:

   (overcloud) [stack@undercloud-0 ~]$ openstack loadbalancer amphora list | grep <UUID of loadbalancer lb1>

3. Run the amphora show command with the amphora UUID to view amphora information.

4. Run the openstack loadbalancer listener show command to view the listener details.

5. Run the openstack loadbalancer pool show command to view the pool and load-balancer members.

6. Run the openstack floating ip list command to verify the floating IP address.

7. Verify that HTTPS traffic flows across the load balancer.

15.10. Overview of TLS-terminated HTTPS load balancer

When a TLS-terminated HTTPS load balancer is implemented, web clients communicate with the load balancer over Transport Layer Security (TLS) protocols. The load balancer terminates the TLS session and forwards the decrypted requests to the back end servers.

Terminating the TLS session on the load balancer offloads the CPU-intensive encryption operations to the load balancer and allows the load balancer to use advanced features such as Layer 7 inspection.

15.11. Creating a TLS-terminated HTTPS load balancer

This procedure describes how to configure a TLS-terminated HTTPS load balancer that is accessible from the internet through Transport Layer Security (TLS) and distributes requests to the back end servers over the non-encrypted HTTP protocol.

Prerequisites

- A private subnet that contains back end servers that host non-secure HTTP applications on TCP port 80.

- A shared external (public) subnet that is reachable from the internet.

TLS public-key cryptography has been configured with the following characteristics:

- A TLS certificate, key, and intermediate certificate chain have been obtained from an external certificate authority (CA) for the DNS name assigned to the load balancer VIP address (for example, www.example.com).

- The certificate, key, and intermediate certificate chain reside in separate files in the current directory.

- The key and certificate are PEM-encoded.

- The key is not encrypted with a passphrase.

- The intermediate certificate chain contains multiple certificates that are PEM-encoded and concatenated together.

- You must configure the Load-balancing service (octavia) to use the Key Manager service (barbican). For more information, see the Manage Secrets with OpenStack Key Manager guide.

Procedure

1. Combine the key (server.key), certificate (server.crt), and intermediate certificate chain (ca-chain.crt) into a single PKCS12 file (server.p12).

   Note: The values in parentheses are provided as examples. Replace these with values appropriate for your site.

   $ openssl pkcs12 -export -inkey server.key -in server.crt -certfile ca-chain.crt -passout pass: -out server.p12

2. Using the Key Manager service, create a secret resource (tls_secret1) for the PKCS12 file.

   $ openstack secret store --name='tls_secret1' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < server.p12)"

3. Create a load balancer (lb1) on the public subnet (public-subnet).

   $ openstack loadbalancer create --name lb1 --vip-subnet-id public-subnet

4. Before you can proceed, the load balancer that you created (lb1) must be in an active and online state. Run the openstack loadbalancer show command until the load balancer responds with an ACTIVE and ONLINE status. (You might need to run this command more than once.)

   $ openstack loadbalancer show lb1

5. Create a TERMINATED_HTTPS listener (listener1), and reference the secret resource as the default TLS container for the listener.

   $ openstack loadbalancer listener create --protocol-port 443 --protocol TERMINATED_HTTPS --name listener1 --default-tls-container=$(openstack secret list | awk '/ tls_secret1 / {print $2}') lb1

6. Create a pool (pool1) and make it the default pool for the listener.

   $ openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTP

7. Add the non-secure HTTP back end servers (192.0.2.10 and 192.0.2.11) on the private subnet (private-subnet) to the pool.

   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.10 --protocol-port 80 pool1
   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.11 --protocol-port 80 pool1
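The listener step in this procedure resolves the secret reference with openstack secret list piped through awk. As a hedged sketch, the same lookup can be wrapped in a function that refuses to continue unless exactly one secret matches, which guards against the command substitution expanding to nothing or to several references. The function name secret_href is hypothetical, and the sketch assumes the default table output in which the secret href is the first column.

```shell
#!/usr/bin/env bash
# Sketch only: secret_href is a hypothetical helper name.

secret_href() {
    local name="$1"
    local refs count

    # Same lookup as in the procedure: print the second whitespace-separated
    # field (the href) of every table row that contains " <name> ".
    refs="$(openstack secret list \
        | awk -v n="$name" 'index($0, " " n " ") {print $2}')"

    # Count the non-empty lines that matched.
    count="$(printf '%s\n' "$refs" | grep -c . || true)"
    if [ "$count" -ne 1 ]; then
        echo "expected exactly one secret named $name, found $count" >&2
        return 1
    fi
    printf '%s\n' "$refs"
}

# Example: resolve the reference first, then create the listener.
#   ref="$(secret_href tls_secret1)" || exit 1
#   openstack loadbalancer listener create --protocol-port 443 \
#       --protocol TERMINATED_HTTPS --name listener1 \
#       --default-tls-container="$ref" lb1
```

Resolving the reference in a separate assignment lets the script stop before listener creation if the lookup is ambiguous.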

15.12. Creating a TLS-terminated HTTPS load balancer with SNI

This procedure describes how to configure a TLS-terminated HTTPS load balancer that is accessible from the internet through Transport Layer Security (TLS) and distributes requests to the back end servers over the non-encrypted HTTP protocol. In this configuration, there is one listener that contains multiple TLS certificates and implements Server Name Indication (SNI) technology.

Prerequisites

- A private subnet that contains back end servers that host non-secure HTTP applications on TCP port 80.

- A shared external (public) subnet that is reachable from the internet.

TLS public-key cryptography has been configured with the following characteristics:

- Multiple TLS certificates, keys, and intermediate certificate chains have been obtained from an external certificate authority (CA) for the DNS names assigned to the load balancer VIP address (for example, www.example.com and www2.example.com).

- The certificates, keys, and intermediate certificate chains reside in separate files in the current directory.

- The keys and certificates are PEM-encoded.

- The keys are not encrypted with passphrases.

- The intermediate certificate chains contain multiple certificates that are PEM-encoded and are concatenated together.

- You must configure the Load-balancing service (octavia) to use the Key Manager service (barbican). For more information, see the Manage Secrets with OpenStack Key Manager guide.

Procedure

1. For each of the TLS certificates in the SNI list, combine the key (server.key), certificate (server.crt), and intermediate certificate chain (ca-chain.crt) into a single PKCS12 file (server.p12). In this example, you create two PKCS12 files (server.p12 and server2.p12), one for each certificate (www.example.com and www2.example.com).

   Note: The values in parentheses are provided as examples. Replace these with values appropriate for your site.

   $ openssl pkcs12 -export -inkey server.key -in server.crt -certfile ca-chain.crt -passout pass: -out server.p12
   $ openssl pkcs12 -export -inkey server2.key -in server2.crt -certfile ca-chain2.crt -passout pass: -out server2.p12

2. Using the Key Manager service, create secret resources (tls_secret1 and tls_secret2) for the PKCS12 files.

   $ openstack secret store --name='tls_secret1' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < server.p12)"
   $ openstack secret store --name='tls_secret2' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < server2.p12)"

3. Create a load balancer (lb1) on the public subnet (public-subnet).

   $ openstack loadbalancer create --name lb1 --vip-subnet-id public-subnet

4. Before you can proceed, the load balancer that you created (lb1) must be in an active and online state. Run the openstack loadbalancer show command until the load balancer responds with an ACTIVE and ONLINE status. (You might need to run this command more than once.)

   $ openstack loadbalancer show lb1

5. Create a TERMINATED_HTTPS listener (listener1), and reference both the secret resources using SNI. (Reference tls_secret1 as the default TLS container for the listener.)

   $ openstack loadbalancer listener create --protocol-port 443 \
       --protocol TERMINATED_HTTPS --name listener1 \
       --default-tls-container=$(openstack secret list | awk '/ tls_secret1 / {print $2}') \
       --sni-container-refs $(openstack secret list | awk '/ tls_secret1 / {print $2}') \
       $(openstack secret list | awk '/ tls_secret2 / {print $2}') -- lb1

6. Create a pool (pool1) and make it the default pool for the listener.

   $ openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTP

7. Add the non-secure HTTP back end servers (192.0.2.10 and 192.0.2.11) on the private subnet (private-subnet) to the pool.

   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.10 --protocol-port 80 pool1
   $ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.11 --protocol-port 80 pool1

15.13. Creating HTTP and TLS-terminated HTTPS load balancers on the same back end

This procedure describes how to configure a non-secure listener and a TLS-terminated HTTPS listener on the same load balancer (and same IP address). Use this configuration when you want to respond to web clients with exactly the same content, regardless of whether the client connects over the secure or the non-secure HTTP protocol.

Prerequisites

- A private subnet that contains back end servers that host non-secure HTTP applications on TCP port 80.

- A shared external (public) subnet that is reachable from the internet.

TLS public-key cryptography has been configured with the following characteristics:

- A TLS certificate, key, and optional intermediate certificate chain have been obtained from an external certificate authority (CA) for the DNS name assigned to the load balancer VIP address (for example, www.example.com).

- The certificate, key, and intermediate certificate chain reside in separate files in the current directory.

- The key and certificate are PEM-encoded.

- The key is not encrypted with a passphrase.

- The intermediate certificate chain contains multiple certificates that are PEM-encoded and concatenated together.

- You must configure the Load-balancing service (octavia) to use the Key Manager service (barbican). For more information, see the Manage Secrets with OpenStack Key Manager guide.

- The non-secure HTTP listener is configured with the same pool as the HTTPS TLS-terminated load balancer.

Procedure

Combine the key (

server.key), certificate (server.crt), and intermediate certificate chain (ca-chain.crt) into a single PKCS12 file (server.p12).NoteThe values in parentheses are provided as examples. Replace these with values appropriate for your site.

openssl pkcs12 -export -inkey server.key -in server.crt -certfile ca-chain.crt -passout pass: -out server.p12

$ openssl pkcs12 -export -inkey server.key -in server.crt -certfile ca-chain.crt -passout pass: -out server.p12Copy to Clipboard Copied! Toggle word wrap Toggle overflow Using the Key Manager service, create a secret resource (

tls_secret1) for the PKCS12 file.openstack secret store --name='tls_secret1' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < server.p12)"

$ openstack secret store --name='tls_secret1' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < server.p12)"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a load balancer (

lb1) on the public subnet (public-subnet).openstack loadbalancer create --name lb1 --vip-subnet-id public-subnet

Before you can proceed, the load balancer that you created (lb1) must be in an active and online state. Run the openstack loadbalancer show command until the load balancer responds with an ACTIVE and ONLINE status. (You might need to run this command more than once.)

$ openstack loadbalancer show lb1
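Instead of rerunning the show command by hand, you can poll it in a loop. The following is a minimal sketch: the function name `wait_for_lb` and the default interval and attempt count are assumptions, and it relies on the `provisioning_status` and `operating_status` fields that `openstack loadbalancer show` reports.

```shell
# Hypothetical helper: wait until load balancer $1 is ACTIVE and ONLINE.
# $2 = seconds between polls (default 5), $3 = maximum polls (default 60).
wait_for_lb() {
    lb="$1"
    interval="${2:-5}"
    attempts="${3:-60}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        prov="$(openstack loadbalancer show "$lb" -c provisioning_status -f value)"
        oper="$(openstack loadbalancer show "$lb" -c operating_status -f value)"
        if [ "$prov" = "ACTIVE" ] && [ "$oper" = "ONLINE" ]; then
            return 0
        fi
        if [ "$prov" = "ERROR" ]; then
            echo "load balancer $lb entered ERROR state" >&2
            return 1
        fi
        sleep "$interval"
        i=$((i + 1))
    done
    echo "timed out waiting for load balancer $lb" >&2
    return 1
}
```

Calling `wait_for_lb lb1` returns success once both statuses match, and returns failure on ERROR or after the attempts run out, so it can gate the listener creation step in a script.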

Create a TERMINATED_HTTPS listener (listener1), and reference the secret resource as the default TLS container for the listener.

$ openstack loadbalancer listener create --protocol-port 443 --protocol TERMINATED_HTTPS --name listener1 --default-tls-container=$(openstack secret list | awk '/ tls_secret1 / {print $2}') lb1

Create a pool (pool1) and make it the default pool for the listener.

$ openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTP

Add the non-secure HTTP back end servers (192.0.2.10 and 192.0.2.11) on the private subnet (private-subnet) to the pool.

$ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.10 --protocol-port 80 pool1
$ openstack loadbalancer member create --subnet-id private-subnet --address 192.0.2.11 --protocol-port 80 pool1
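When a pool has more than a couple of back end servers, repeating the member create command becomes error-prone. The sketch below wraps the same command in a loop; the function name `add_members` and its argument order are hypothetical.

```shell
# Hypothetical helper: add several members to one pool.
# Usage: add_members <pool> <subnet> <port> <address> [<address> ...]
add_members() {
    pool="$1"
    subnet="$2"
    port="$3"
    shift 3
    for address in "$@"; do
        # Stop at the first failure so a bad address is not silently skipped.
        openstack loadbalancer member create \
            --subnet-id "$subnet" --address "$address" \
            --protocol-port "$port" "$pool" || return 1
    done
}
```

With the example values from this procedure, the call would be `add_members pool1 private-subnet 80 192.0.2.10 192.0.2.11`.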

Create a non-secure HTTP listener (listener2), and make its default pool the same as the default pool of the secure listener.

$ openstack loadbalancer listener create --protocol-port 80 --protocol HTTP --name listener2 --default-pool pool1 lb1

15.14. Accessing Amphora logs

Amphora is the instance that performs load balancing. You can view Amphora logging information in the systemd journal.

Start the ssh-agent, and add your user identity key to the agent:

[stack@undercloud-0] $ eval `ssh-agent -s`
[stack@undercloud-0] $ ssh-add

Use SSH to connect to the Amphora instance:

[stack@undercloud-0] $ ssh -A -t heat-admin@<controller node IP address> ssh cloud-user@<IP address of Amphora in load-balancing management network>
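The SSH command above needs the Amphora address on the load-balancing management network. One way to look it up is sketched below, assuming that you have sourced administrator credentials for the overcloud and that your client provides `openstack loadbalancer amphora list` with the `lb_network_ip` column; the function name `amphora_mgmt_ips` is hypothetical.

```shell
# Hypothetical helper: print the management-network IP address of every
# amphora that backs load balancer $1 (name or ID).
# Assumes admin credentials, because amphora listings are usually admin-only.
amphora_mgmt_ips() {
    openstack loadbalancer amphora list --loadbalancer "$1" \
        -c lb_network_ip -f value
}
```

For example, `amphora_mgmt_ips lb1` prints one address per amphora, which you can substitute into the SSH command.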

View the systemd journal:

[cloud-user@amphora-f60af64d-570f-4461-b80a-0f1f8ab0c422 ~] $ sudo journalctl

Refer to the journalctl man page for information about filtering journal output.
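As an illustration of filtering, the sketch below wraps two common journalctl filters: by priority and time, and by systemd unit. The function names are hypothetical, and the default unit name `amphora-agent` is an assumption about the amphora image; run `systemctl list-units` on the instance to confirm the unit names it actually uses.

```shell
# Hypothetical helpers, meant to be run on the Amphora instance.

# Show entries of priority "err" or worse since the time given in $1,
# for example "1 hour ago" or "2024-01-01 00:00:00".
amphora_errors_since() {
    sudo journalctl --no-pager -p err --since "$1"
}

# Show entries for one systemd unit; defaults to amphora-agent,
# which is an assumed unit name, not one confirmed by this guide.
amphora_unit_log() {
    sudo journalctl --no-pager -u "${1:-amphora-agent}"
}
```

For example, `amphora_errors_since "1 hour ago"` narrows the journal to recent errors, which is usually a faster starting point than reading the full output.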

When you are finished viewing the journal, and have closed your connections to the Amphora instance and the Controller node, make sure that you stop the SSH agent:

[stack@undercloud-0] $ exit


15.15. Updating running amphora instances

15.15.1. Overview

Periodically, you must update a running load balancing instance (amphora) with a newer image. For example, you must update an amphora instance during the following events:

- An update or upgrade of Red Hat OpenStack Platform.

- A security update to your system.

- A change to a different flavor for the underlying virtual machine.

To update an amphora image, you must fail over the load balancer and then wait for the load balancer to regain an active state. When the load balancer is again active, it is running the new image.

15.15.2. Prerequisites

New images for amphora are available during an OpenStack update or upgrade.

15.15.3. Update amphora instances with new images

During an OpenStack update or upgrade, director automatically downloads the default amphora image, uploads it to the overcloud Image service (glance), and then configures Octavia to use the new image. When you fail over the load balancer, you force Octavia to start a new amphora instance from the new image.

- Make sure that you have reviewed the prerequisites before you begin updating amphora.

List the IDs for all of the load balancers that you want to update:

openstack loadbalancer list -c id -f value

Fail over each load balancer:

openstack loadbalancer failover <loadbalancer_id>

Note: When you start failing over the load balancers, monitor system utilization, and as needed, adjust the rate at which you perform failovers. A load balancer failover creates new virtual machines and ports, which might temporarily increase the load on OpenStack Networking.

Monitor the state of the failed over load balancer:

openstack loadbalancer show <loadbalancer_id>

The update is complete when the load balancer status is ACTIVE.
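The list, fail over, and monitor steps above can be combined so that only one load balancer fails over at a time, which keeps the extra load on OpenStack Networking bounded. The following is a minimal sketch under stated assumptions: the function names and default timings are hypothetical, and it assumes that the failover command moves the load balancer out of ACTIVE before it returns, so that a later ACTIVE reading means the failover has finished.

```shell
# Hypothetical helper: fail over load balancer $1, then poll until ACTIVE.
# $2 = seconds between polls (default 10), $3 = maximum polls (default 90).
failover_and_wait() {
    target="$1"
    interval="${2:-10}"
    attempts="${3:-90}"
    openstack loadbalancer failover "$target" || return 1
    i=0
    while [ "$i" -lt "$attempts" ]; do
        status="$(openstack loadbalancer show "$target" -c provisioning_status -f value)"
        case "$status" in
            ACTIVE) return 0 ;;
            ERROR)  echo "failover of $target ended in ERROR" >&2; return 1 ;;
        esac
        sleep "$interval"
        i=$((i + 1))
    done
    echo "timed out waiting for $target" >&2
    return 1
}

# Hypothetical helper: fail over every load balancer, one at a time.
# Optional arguments are passed through as the poll interval and count.
failover_all() {
    for id in $(openstack loadbalancer list -c id -f value); do
        failover_and_wait "$id" "$@" || return 1
    done
}
```

Running `failover_all` stops at the first load balancer that fails or times out, so you can investigate before continuing. To slow the rate further, add a pause between iterations.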