Chapter 3. Design

- Section 3.1, “Planning Models”

- Section 3.2, “Compute Resources”

- Section 3.3, “Storage Resources”

- Section 3.4, “Network Resources”

- Section 3.6, “Maintenance and Support”

- Section 3.9, “Additional Software”

This chapter describes the technical and operational considerations to account for when you design your Red Hat OpenStack Platform deployment.

All architecture examples in this guide assume that you deploy OpenStack Platform on Red Hat Enterprise Linux 7.2 with the KVM hypervisor.

3.1. Planning Models

When you design a Red Hat OpenStack Platform deployment, the duration of the project can affect the configuration and resource allocation of the deployment. Each planning model might aim to achieve different goals, and therefore requires different considerations.

3.1.1. Short-term model (3 months)

To perform short-term capacity planning and forecasting, consider capturing a record of the following metrics:

- Total vCPU number

- Total vRAM allocation

- I/O mean latency

- Network traffic

- Compute load

- Storage allocation

The vCPU, vRAM, and latency metrics are the most accessible for capacity planning. With these details, you can apply a standard second-order regression and receive a usable capacity estimate covering the following three months. Use this estimate to determine whether you need to deploy additional hardware.
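As an illustration, the following minimal sketch fits a second-order (quadratic) least-squares regression to monthly samples of one metric, such as total allocated vCPUs, and extrapolates it three months ahead. The function names, the monthly sampling interval, and the pure-Python solver are assumptions made to keep the example self-contained; in practice a statistics library would normally do the fitting.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x**2; returns (a, b, c)."""
    # Sums of x**k and y*x**k used by the normal equations.
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    # Augmented 3x4 matrix: row i is sum_j s[i+j] * coef_j = t[i].
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))


def forecast(samples, months_ahead=3):
    """Fit samples taken at months 0..n-1 and extrapolate the next months."""
    n = len(samples)
    a, b, c = fit_quadratic(list(range(n)), samples)
    return [a + b * x + c * x * x for x in range(n, n + months_ahead)]


# Hypothetical monthly totals of allocated vCPUs over the last year.
allocated_vcpus = [410, 425, 445, 470, 500, 530, 565, 600, 640, 685, 730, 780]
print(forecast(allocated_vcpus))
```

Comparing the forecast values with the capacity of the current hardware indicates whether you might need to add nodes within the planning window.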

3.1.2. Middle-term model (6 months)

This model requires review iterations, and you must estimate the deviations between the forecast trends and the actual usage. You can analyze this information with standard statistical tools or with specialized measures such as the Nash-Sutcliffe efficiency coefficient. You can also calculate trends by using second-order regression.
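A minimal sketch of the Nash-Sutcliffe efficiency (NSE) follows, assuming that the observed usage and the earlier forecast are lists of equal length that cover the same review period. An NSE of 1.0 means that the forecast matched the observed usage exactly, and a value of 0 or less means that the forecast did no better than the mean of the observed data. The function name and the sample values are illustrative.

```python
def nash_sutcliffe(observed, forecasted):
    """Nash-Sutcliffe efficiency of a forecast against observed values.

    1.0 is a perfect forecast; values <= 0 mean the forecast is no better
    than predicting the mean of the observed data.
    """
    if len(observed) != len(forecasted) or not observed:
        raise ValueError("need two non-empty series of equal length")
    mean_obs = sum(observed) / len(observed)
    squared_error = sum((o - f) ** 2 for o, f in zip(observed, forecasted))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - squared_error / variance


# Hypothetical: six months of observed vCPU usage and the earlier forecast.
observed = [520, 540, 575, 600, 640, 690]
forecasted = [515, 545, 570, 610, 650, 680]
print(round(nash_sutcliffe(observed, forecasted), 3))
```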

In deployments with multiple instance flavors, you can correlate vRAM and vCPU usage more easily if you treat the vCPU and vRAM metrics as a single metric.

3.1.3. Long-term model (1 year)

Total resource usage can vary over the course of a year, and actual usage normally deviates from the original long-term capacity estimate. Therefore, second-order regression might be an insufficient measure for capacity forecasting, especially in cases where usage is cyclical.

When planning for a long-term deployment, a capacity-planning model based on data that spans over a year must fit at least the first derivative. Depending on the usage pattern, frequency analysis might also be required.
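Frequency analysis can reveal cyclical usage that a regression curve misses. The following sketch assumes evenly spaced samples (for example, weekly totals over two years) and uses a plain discrete Fourier transform from the Python standard library to return the period of the strongest cycle in the series. In practice you would remove the long-term trend first and use an FFT library for large data sets; the function name and data are hypothetical.

```python
import cmath
import math


def dominant_period(samples):
    """Return the period, in samples, of the strongest cycle in `samples`.

    Uses a direct O(n^2) discrete Fourier transform of the mean-removed series.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    best_k, best_magnitude = 0, 0.0
    # Skip k = 0 (the mean, already removed); stop at the Nyquist frequency n // 2.
    for k in range(1, n // 2 + 1):
        coefficient = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered)
        )
        if abs(coefficient) > best_magnitude:
            best_k, best_magnitude = k, abs(coefficient)
    return n / best_k if best_k else None


# Hypothetical weekly vCPU usage over two years with a yearly (52-week) cycle.
weekly_usage = [500 + 50 * math.sin(2 * math.pi * week / 52) for week in range(104)]
print(dominant_period(weekly_usage))
```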

3.2. Compute Resources

Compute resources are the core of the OpenStack cloud. Therefore, consider physical and virtual resource allocation, distribution, failover, and additional devices when you design your Red Hat OpenStack Platform deployment.

3.2.1. General considerations

- Number of processors, memory, and storage in each hypervisor

The number of processor cores and threads directly affects the number of worker threads that can run on a Compute node. Therefore, base the design on the requirements of each service and on a balanced infrastructure for all services.

Depending on the workload profile, additional Compute resource pools can be added to the cloud later. In some cases, the demand on certain instance flavors might not justify individual hardware design, with preference instead given to commoditized systems.

In either case, start the design by allocating hardware resources that can service common instance requests. You can add further hardware designs to the overall architecture later.

- Processor type

Processor selection is an extremely important consideration in hardware design, especially when comparing the features and performance characteristics of different processors.

Processors can include features specifically for virtualized compute hosts, such as hardware-assisted virtualization and memory paging, or EPT shadowing technology. These features can have a significant impact on the performance of your cloud VMs.

- Resource nodes

You must take into account Compute requirements of non-hypervisor resource nodes in the cloud. Resource nodes include the controller node and nodes that run Object Storage, Block Storage, and Networking services.

- Resource pools

Use a Compute design that allocates multiple pools of resources to be provided on-demand. This design maximizes application resource usage in the cloud. Each resource pool should service specific flavors of instances or groups of flavors.

Designing multiple resource pools helps to ensure that whenever instances are scheduled to Compute hypervisors, each set of node resources is allocated to maximize usage of available hardware. This is commonly referred to as bin packing.
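To illustrate bin packing, the following sketch places instances on identical hosts with the first-fit decreasing heuristic, checking both vCPUs and RAM. It is not the algorithm that the Compute scheduler uses; it only shows why consistent host sizes and flavors that divide evenly into them leave little unused capacity. The instance names and host sizes are hypothetical.

```python
def first_fit_decreasing(instances, host_vcpus, host_ram_mb):
    """Place (name, vcpus, ram_mb) instances on identical hosts.

    Returns one list of instance names per host that was needed.
    """
    hosts = []  # each entry: [free_vcpus, free_ram_mb, [names]]
    # Largest instances first (by RAM, then vCPUs) tends to leave less waste.
    ordered = sorted(instances, key=lambda i: (i[2], i[1]), reverse=True)
    for name, vcpus, ram in ordered:
        if vcpus > host_vcpus or ram > host_ram_mb:
            raise ValueError(f"{name} does not fit on an empty host")
        for host in hosts:
            if host[0] >= vcpus and host[1] >= ram:
                host[0] -= vcpus
                host[1] -= ram
                host[2].append(name)
                break
        else:
            # No existing host has room: open a new one.
            hosts.append([host_vcpus - vcpus, host_ram_mb - ram, [name]])
    return [host[2] for host in hosts]


placement = first_fit_decreasing(
    [("web1", 2, 4096), ("db1", 4, 8192), ("web2", 2, 4096), ("db2", 4, 8192),
     ("cache1", 4, 8192)],
    host_vcpus=8, host_ram_mb=16384,
)
print(placement)
```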

Using a consistent hardware design across nodes in a resource pool also helps to support bin packing. Hardware nodes selected for a Compute resource pool should share a common processor, memory, and storage layout. Choosing a common hardware design simplifies deployment, support, and node lifecycle maintenance.

- Over-commit ratios

OpenStack enables users to over-commit CPU and RAM on Compute nodes, which helps to increase the number of instances that run in the cloud. However, over-committing can reduce the performance of the instances.

The over-commit ratio is the ratio of available virtual resources compared to the available physical resources.

- The default CPU allocation ratio of 16:1 means that the scheduler allocates up to 16 virtual cores for every physical core. For example, if a physical node has 12 cores, the scheduler can allocate up to 192 virtual cores. With typical flavor definitions of 4 virtual cores per instance, this ratio can provide 48 instances on the physical node.

- The default RAM allocation ratio of 1.5:1 means that the scheduler allocates instances to a physical node if the total amount of RAM associated with the instances is less than 1.5 times the amount of RAM available on the physical node.
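The following sketch applies both default ratios to a single node to show which resource runs out first. It is a simplified calculation rather than the scheduler's implementation, and the node sizes are hypothetical.

```python
import math


def max_instances(cores, ram_mb, flavor_vcpus, flavor_ram_mb,
                  cpu_ratio=16.0, ram_ratio=1.5):
    """Number of identical instances that fit on one node under the
    CPU and RAM over-commit ratios; the smaller limit wins."""
    by_cpu = math.floor(cores * cpu_ratio / flavor_vcpus)
    by_ram = math.floor(ram_mb * ram_ratio / flavor_ram_mb)
    return min(by_cpu, by_ram)


# 12 cores and 256 GB: the CPU ratio is the limit (192 vCPUs / 4 = 48).
print(max_instances(12, 262144, 4, 4096))
# 12 cores and 32 GB: the RAM ratio is the limit (32 GB x 1.5 / 4 GB = 12).
print(max_instances(12, 32768, 4, 4096))
```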

Tuning the over-commit ratios for CPU and memory during the design phase is important because it has a direct impact on the hardware layout of your Compute nodes. When designing a hardware node as a Compute resource pool to service instances, consider the number of processor cores available on the node, as well as the required disk and memory to service instances running at full capacity.

For example, an m1.small instance uses 1 vCPU, 20 GB of ephemeral storage, and 2,048 MB of RAM. For a server with 2 CPUs of 10 cores each, with hyperthreading turned on:

- The default CPU over-commit ratio of 16:1 allows for 640 (2 × 10 × 2 × 16) total m1.small instances.

- The default memory over-commit ratio of 1.5:1 means that the server needs at least 853 GB (640 × 2,048 MB / 1.5) of RAM.

When sizing nodes for memory, it is also important to consider the additional memory required to service operating system and service needs.
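The arithmetic in the m1.small example can be sketched as follows. The `host_reserved_mb` parameter is a hypothetical addition that stands in for the operating system and service memory mentioned above; choose its value from your own measurements. As in the text, 1 GB is treated as 1,024 MB.

```python
def size_node(sockets, cores_per_socket, threads_per_core,
              flavor_vcpus, flavor_ram_mb,
              cpu_ratio=16.0, ram_ratio=1.5, host_reserved_mb=0):
    """Return (instances, minimum RAM in GB) so that RAM does not become
    the bottleneck before the CPU over-commit limit is reached."""
    threads = sockets * cores_per_socket * threads_per_core
    instances = int(threads * cpu_ratio // flavor_vcpus)
    # host_reserved_mb: hypothetical allowance for the host OS and services.
    ram_mb = instances * flavor_ram_mb / ram_ratio + host_reserved_mb
    return instances, ram_mb / 1024


# Two 10-core CPUs with hyperthreading, m1.small (1 vCPU, 2,048 MB).
instances, ram_gib = size_node(2, 10, 2, flavor_vcpus=1, flavor_ram_mb=2048)
print(instances, round(ram_gib))  # 640 853
```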

3.2.2. Flavors

Each created instance is given a flavor, or resource template, which determines the instance size and capacity. Flavors can also specify secondary ephemeral storage, swap disk, metadata to restrict usage, or special project access. Default flavors do not have these additional attributes defined. Instance flavors make capacity forecasting easier, because common use cases are predictably sized rather than sized ad hoc.

To facilitate packing virtual machines onto physical hosts, the default selection of flavors makes the second-largest flavor half the size of the largest flavor in every dimension: it has half the vCPUs, half the vRAM, and half the ephemeral disk space. Each subsequent smaller flavor is half the size of the previous flavor.
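The halving pattern can be sketched as follows. Starting from a hypothetical largest flavor of 8 vCPUs, 16,384 MB of RAM, and 160 GB of ephemeral disk, each step halves every dimension, so two instances of one size occupy exactly the slot of one instance of the next larger size. Because the sketch uses integer division, choose `steps` so that the smallest flavor still has at least one vCPU.

```python
def halving_ladder(vcpus, ram_mb, disk_gb, steps):
    """Return `steps` flavor sizes as (vcpus, ram_mb, disk_gb) tuples,
    starting with the largest and halving every dimension at each step."""
    ladder = []
    for _ in range(steps):
        ladder.append((vcpus, ram_mb, disk_gb))
        vcpus, ram_mb, disk_gb = vcpus // 2, ram_mb // 2, disk_gb // 2
    return ladder


for flavor in halving_ladder(8, 16384, 160, steps=4):
    print(flavor)
```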

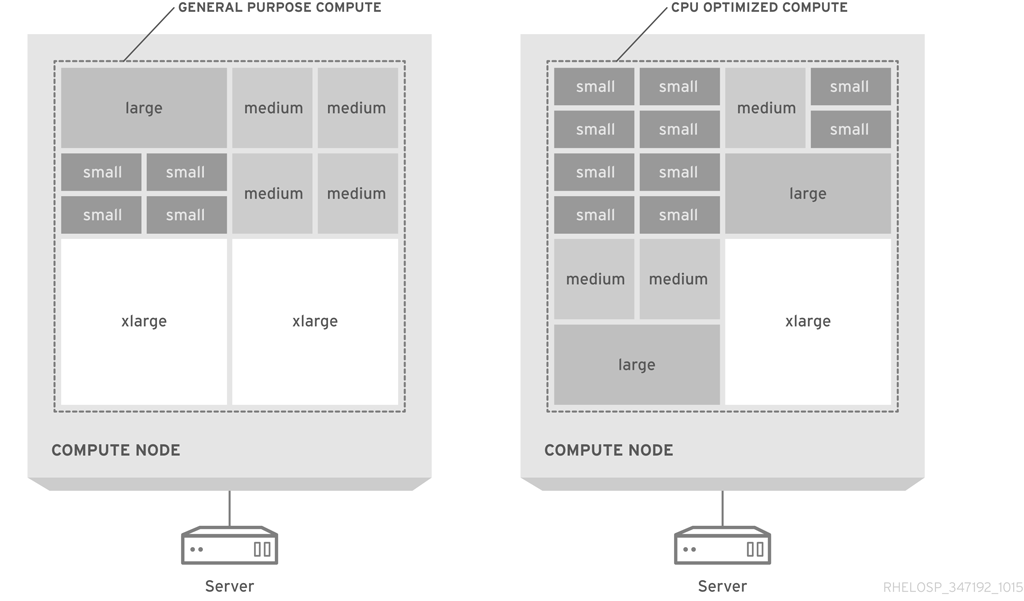

The following diagram shows a visual representation of flavor assignments in a general-purpose computing design and for a CPU-optimized, packed server:

The default flavors are recommended for typical configurations of commodity server hardware. To maximize utilization, you might need to customize the flavors or to create new flavors to align instance sizes to available hardware.

If possible, limit your flavors to one vCPU for each flavor. It is important to note that Type 1 hypervisors can schedule CPU time more easily to VMs that are configured with one vCPU. For example, a hypervisor that schedules CPU time to a VM that is configured with 4 vCPUs must wait until four physical cores are available, even if the task to perform requires only one vCPU.

Workload characteristics can also influence hardware choices and flavor configuration, especially where the tasks present different ratios of CPU, RAM, or HDD requirements. For information about flavors, see Managing Instances and Images.

3.2.3. vCPU-to-physical CPU core ratio

The default allocation ratio in Red Hat OpenStack Platform is 16 vCPUs per physical, or hyperthreaded, core.

The following table lists the maximum number of VMs that can be suitably run on a physical host based on the total available memory, including 4 GB reserved for the system:

| Total RAM | VMs | Total vCPU |
|---|---|---|
| 64 GB | 14 | 56 |
| 96 GB | 20 | 80 |
| 128 GB | 29 | 116 |

For example, planning an initial greenfield deployment of 60 instances requires 3+1 Compute nodes. Memory bottlenecks are usually more common than CPU bottlenecks. However, if needed, you can lower the allocation ratio to 8 vCPUs per physical core.
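The 3+1 figure follows from the table. A minimal sketch, assuming hosts that can each run 20 VMs (the 96 GB row) and one spare node for failover:

```python
import math


def compute_nodes_needed(instances, vms_per_node, spare_nodes=1):
    """Nodes needed to run `instances` VMs, plus spare nodes for N+1 capacity."""
    return math.ceil(instances / vms_per_node) + spare_nodes


print(compute_nodes_needed(60, vms_per_node=20))  # 4, that is, 3+1
```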

3.2.4. Memory overhead

The KVM hypervisor requires a small amount of VM memory overhead, including non-shareable memory. Shareable memory for QEMU/KVM systems can be rounded to 200 MB for each hypervisor.

| vRAM (MB) | Average physical memory usage (MB) |
|---|---|
| 256 | 310 |
| 512 | 610 |
| 1024 | 1080 |
| 2048 | 2120 |
| 4096 | 4180 |

Typically, you can estimate a hypervisor overhead of 100 MB per VM.
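Combining the figures above, the following sketch estimates the physical memory that a set of VMs consumes on one KVM host: each VM's vRAM plus a 100 MB per-VM overhead, plus the 200 MB shareable-memory allowance. It is a rough planning estimate only, it does not include the memory reserved for the host operating system, and the function name is illustrative.

```python
def host_memory_mb(vm_ram_mb, per_vm_overhead_mb=100, shared_mb=200):
    """Rough physical memory estimate, in MB, for the VMs on one KVM host."""
    return shared_mb + sum(ram + per_vm_overhead_mb for ram in vm_ram_mb)


# Ten hypothetical VMs with 2,048 MB of vRAM each.
print(host_memory_mb([2048] * 10))  # 21680
```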

3.2.5. Over-subscription

Memory is a limiting factor for hypervisor deployment. The number of VMs that you can run on each physical host is limited by the amount of memory that the host can access. For example, deploying a quad-core CPU with 256 GB of RAM and more than 200 1 GB instances leads to poor performance. Therefore, you must carefully determine the optimal ratio of CPU cores and memory to distribute across the instances.

3.2.6. Density

- Instance density

In a compute-focused architecture, instance density is lower, which means that CPU and RAM over-subscription ratios are also lower. If instance density is lower, you might require more hosts to support the anticipated scale, especially if the design uses dual-socket hardware.

- Host density

You can address the higher host count of dual-socket designs by using a quad-socket platform. This platform decreases host density, which then increases the rack count. This configuration can affect the network requirements, the number of power connections, and might also impact the cooling requirements.

- Power and cooling density

Reducing power and cooling density is an important consideration for data centers with older infrastructure. For example, the power and cooling density requirements for 2U, 3U, or even 4U server designs might be lower than for blade, sled, or 1U server designs due to lower host density.

3.2.7. Compute hardware

- Blade servers

Most blade servers support dual-socket, multi-core CPUs. To avoid this dual-socket limit, select full-width or full-height blades. These blade types also decrease server density. For example, high-density blade servers, such as HP BladeSystem or Dell PowerEdge M1000e, support up to 16 half-height servers in only ten rack units. Because half-height blades are twice as dense as full-height blades, full-height blades fit only eight servers in ten rack units.

- 1U servers

1U rack-mounted servers that occupy only a single rack unit might offer higher server density than a blade server solution. You can install 40 1U servers in one rack and still leave space for the top-of-rack (ToR) switches. In comparison, one rack holds only 32 full-width blade servers.

However, 1U servers from major vendors have only dual-socket, multi-core CPU configurations. To support a higher CPU configuration in a 1U rack-mount form factor, purchase systems from original design manufacturers (ODMs) or second-tier manufacturers.

- 2U servers

2U rack-mounted servers provide quad-socket, multi-core CPU support, but with a corresponding decrease in server density. 2U rack-mounted servers offer half of the density that 1U rack-mounted servers offer.

- Larger servers

Larger rack-mounted servers, such as 4U servers, often provide higher CPU capacity and typically support four or even eight CPU sockets. These servers have greater expandability, but have much lower server density and are often more expensive.

- Sled servers

Sled servers are rack-mounted servers that support multiple independent servers in a single 2U or 3U enclosure. These servers deliver higher density than typical 1U or 2U rack-mounted servers.

For example, many sled servers offer four independent dual-socket nodes in 2U for a total of eight CPU sockets. However, the dual-socket limitation on individual nodes might not be sufficient to offset the additional cost and configuration complexity.

3.2.8. Additional devices

You might consider the following additional devices for Compute nodes:

- Graphics processing units (GPUs) for high-performance computing jobs.

- Hardware-based random number generators to avoid entropy starvation for cryptographic routines. You can add a random number generator device to an instance by using the instance image properties. /dev/random is the default entropy source.

- SSDs for ephemeral storage to maximize read/write performance for database management systems.

Host aggregates group together hosts that share similar characteristics, such as similar hardware. The addition of specialized hardware to a cloud deployment might add to the cost of each node, so consider whether the additional customization is needed for all Compute nodes, or just a subset.

3.3. Storage Resources

When you design your cloud, the storage solution you choose impacts critical aspects of the deployment, such as performance, capacity, availability, and interoperability.

Consider the following factors when you choose your storage solution:

3.3.1. General Considerations

- Applications

Applications should be aware of underlying storage subsystems to use cloud storage solutions effectively. If native replication is not available, operations personnel must be able to configure the application to provide replication.

An application that can detect underlying storage systems can function in a wide variety of infrastructures and still have the same basic behavior regardless of the differences in the underlying infrastructure.

- I/O

Benchmarks for input/output performance provide a baseline for expected performance levels. Benchmark results can help you model behavior under different loads and design a suitable architecture.

Smaller, scripted benchmarks during the lifecycle of the architecture can help to record the system health at different times. Data from these scripted benchmarks can help you scope and better understand the needs of the organization.

- Interoperability

Ensure that any hardware or storage management platform that you select is interoperable with OpenStack components, such as the KVM hypervisor, which affects whether you can use it for short-term instance storage.

- Security

Data security design can focus on different aspects based on SLAs, legal requirements, industry regulations, and required certifications for systems or personnel. Consider compliance with HIPAA, ISO 9000, or SOX based on the type of data. For certain organizations, also consider access control levels.

3.3.2. OpenStack Object Storage (swift)

- Availability

Design your object storage resource pools to provide the level of availability that you need for your object data. Consider rack-level and zone-level designs to accommodate the number of necessary replicas. The default number of replicas is three. Each replica of data should exist in a separate availability zone with independent power, cooling, and network resources that service the specific zone.

The OpenStack Object Storage service places a specific number of data replicas as objects on resource nodes. These replicas are distributed across the cluster based on a consistent hash ring, which exists on all nodes in the cluster. In addition, a pool of Object Storage proxy servers that provide access to data stored on the object nodes should service each availability zone.

Design the Object Storage system with a sufficient number of zones to provide the minimum required successful responses for the number of replicas. For example, if you configure three replicas in the Swift cluster, the recommended number of zones to configure inside the Object Storage cluster is five.

Although you can deploy a solution with fewer zones, some data may not be available and API requests to some objects stored in the cluster might fail. Therefore, ensure you account for the number of zones in the Object Storage cluster.

Object proxies in each region should leverage local read and write affinity so that local storage resources facilitate access to objects wherever possible. You should deploy upstream load balancing to ensure that proxy services are distributed across multiple zones. In some cases, you might need third-party solutions to assist with the geographical distribution of services.

A zone within an Object Storage cluster is a logical division, and can consist of a single disk, a node, a collection of nodes, multiple racks, or multiple data centers. You must allow the Object Storage cluster to scale while providing an available and redundant storage system. You might need to configure storage policies with different requirements for replicas, retention, and other factors that could affect the design of storage in a specific zone.

- Node storage

When designing hardware resources for OpenStack Object Storage, the primary goal is to maximize the amount of storage in each resource node while also ensuring that the cost per terabyte is kept to a minimum. This often involves utilizing servers that can hold a large number of spinning disks. You might use 2U server form factors with attached storage or with an external chassis that holds a larger number of drives.

The consistency and partition tolerance characteristics of OpenStack Object Storage ensure that data stays current and survives hardware faults without requiring specialized data-replication devices.

- Performance

Design object storage nodes so that the number of requests does not hinder the performance of the cluster. Object Storage uses a chatty protocol, so multiple processors with higher core counts help ensure that I/O requests do not overwhelm the server.

- Weighting and cost

OpenStack Object Storage lets you mix and match drives by using weighting inside the swift ring. When designing your swift storage cluster, you can use the most cost-effective storage solution.

Many server chassis can hold 60 or more drives in 4U of rack space. Therefore, you can maximize the amount of storage for each rack unit at the best cost per terabyte. However, it is not recommended to use RAID controllers in an object storage node.
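As a rough illustration of weighting, the following sketch estimates how many partition replicas each drive receives when the ring assigns them in proportion to drive weight. It assumes the common convention of setting the weight proportional to drive capacity and ignores the dispersion rules that the real ring builder also applies; the device names are hypothetical.

```python
def expected_assignments(weights, part_power, replicas=3):
    """Approximate partition replicas per device, proportional to device weight."""
    total_assignments = (2 ** part_power) * replicas
    total_weight = sum(weights.values())
    return {device: total_assignments * weight / total_weight
            for device, weight in weights.items()}


# A 4 TB drive and an 8 TB drive, weighted by capacity in GB.
print(expected_assignments({"sdb": 4000, "sdc": 8000}, part_power=10))
```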

- Scaling

When you design your storage solution, you must determine the maximum partition power required by the Object Storage service, which then determines the maximum number of partitions that you can create. Object Storage distributes data across the entire storage cluster, but no partition can span more than one disk. Therefore, the number of disks cannot exceed the maximum number of partitions.

For example, a system with an initial single disk and a partition power of three can hold eight (2^3) partitions. Adding a second disk means that each disk can hold four partitions. The one-disk-per-partition limit means that this system cannot have more than eight disks and limits its scalability. However, a system with an initial single disk and a partition power of 10 can have up to 1024 (2^10) partitions.
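The relationship between partition power, partitions, and disks in this example can be expressed as a short sketch. Like the example, it ignores replicas; the function name is illustrative.

```python
def partition_limits(part_power, disks):
    """Return (total partitions, average partitions per disk) for a ring."""
    partitions = 2 ** part_power
    if disks > partitions:
        # Each partition lives on one disk, so extra disks would hold nothing.
        raise ValueError(f"{disks} disks exceed {partitions} partitions")
    return partitions, partitions / disks


print(partition_limits(3, 1))   # (8, 8.0)
print(partition_limits(3, 2))   # (8, 4.0)
print(partition_limits(10, 1))  # (1024, 1024.0)
```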

Whenever you increase the system backend storage capacity, the partition maps redistribute data across the storage nodes. In some cases, this replication consists of extremely large data sets. In those cases, you should use backend replication links that do not conflict with tenant access to data.

If more tenants begin to access data in the cluster and the data sets grow, you must add front-end bandwidth to service data access requests. Adding front-end bandwidth to an Object Storage cluster requires designing Object Storage proxies that tenants can use to gain access to the data, along with the high availability solutions that enable scaling of the proxy layer.

You should design a front-end load balancing layer that tenants and consumers use to gain access to data stored within the cluster. This load balancing layer can be distributed across zones, regions or even across geographic boundaries.

In some cases, you must add bandwidth and capacity to the network resources that service requests between proxy servers and storage nodes. Therefore, the network architecture that provides access to storage nodes and proxy servers should be scalable.

3.3.3. OpenStack Block Storage (cinder)

- Availability and Redundancy

The input/output operations per second (IOPS) demand of your application determines whether you should use a RAID controller and which RAID level is required. For redundancy, use a redundant RAID configuration, such as RAID 5 or RAID 6. Some specialized features, such as automated replication of block storage volumes, might require third-party plug-ins or enterprise block storage solutions to handle the higher demand.
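To compare RAID levels against an IOPS requirement, a common rule of thumb divides the raw IOPS of the disks by the read fraction plus the write fraction multiplied by a RAID write penalty (2 for RAID 1 and RAID 10, 4 for RAID 5, 6 for RAID 6). The sketch below applies that rule; the disk count, per-disk IOPS, and read/write mix are hypothetical, and the rule ignores controller caching.

```python
# Rule-of-thumb backend I/Os per front-end write for each RAID level.
WRITE_PENALTY = {"raid0": 1, "raid1": 2, "raid10": 2, "raid5": 4, "raid6": 6}


def effective_iops(disks, iops_per_disk, read_fraction, raid_level):
    """Front-end IOPS an array can sustain for a given read/write mix."""
    raw = disks * iops_per_disk
    write_fraction = 1.0 - read_fraction
    return raw / (read_fraction + write_fraction * WRITE_PENALTY[raid_level])


# Eight disks at 150 IOPS each, 70% reads.
for level in ("raid10", "raid5", "raid6"):
    print(level, round(effective_iops(8, 150, read_fraction=0.7, raid_level=level)))
```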

In environments with extreme demand on Block Storage, you should use multiple storage pools. Each device pool should have a similar hardware design and disk configuration across all hardware nodes in that pool. This design provides applications with access to a wide variety of Block Storage pools with various redundancy, availability, and performance characteristics.

The network architecture should also take into account the amount of East-West bandwidth required for instances to use available storage resources. The selected network devices should support jumbo frames to transfer large blocks of data. In some cases, you might need to create an additional dedicated backend storage network to provide connectivity between instances and Block Storage resources to reduce load on network resources.

When you deploy multiple storage pools, you must consider the impact on the Block Storage scheduler, which provisions storage across resource nodes. Ensure that applications can schedule volumes in multiple regions with specific network, power, and cooling infrastructure. This design allows tenants to build fault-tolerant applications distributed across multiple availability zones.

In addition to the Block Storage resource nodes, it is important to design for high availability and redundancy of APIs and related services that are responsible for provisioning and providing access to the storage nodes. You should design a layer of hardware or software load balancers to achieve high availability of the REST API services to provide uninterrupted service.

In some cases, you might need to deploy an additional load balancing layer to provide access to backend database services that are responsible for servicing and storing the state of Block Storage volumes. You should design a highly available database solution to store the Block Storage databases, such as MariaDB with Galera.

- Attached storage

The Block Storage service can take advantage of enterprise storage solutions using a plug-in driver developed by the hardware vendor. A large number of enterprise plug-ins ship out-of-the-box with OpenStack Block Storage, and others are available through third-party channels.

General-purpose clouds typically use directly-attached storage in the majority of Block Storage nodes. If you need to provide additional levels of service to tenants, you might need enterprise storage solutions, because some of these levels might only be available from such solutions.

- Performance

If you need higher performance, you can use high-performance RAID volumes. For extreme performance, you can use solid-state drives (SSDs).

- Pools

Block Storage pools should allow tenants to choose appropriate storage solutions for their applications. By creating multiple storage pools of different types and configuring an advanced storage scheduler for the Block Storage service, you can provide tenants a large catalog of storage services with a variety of performance levels and redundancy options.

- Scaling

You can upgrade Block Storage pools to add storage capacity without interruption to the overall Block Storage service. Add nodes to the pool by installing and configuring the appropriate hardware and software. You can then configure the new nodes to report to the proper storage pool through the message bus.

Because Block Storage nodes report the node availability to the scheduler service, when a new node is online and available, tenants can use the new storage resources immediately.

In some cases, the demand on Block Storage from instances might exhaust the available network bandwidth. Therefore, you should design the network infrastructure to service Block Storage resources to allow you to add capacity and bandwidth seamlessly.

This often involves dynamic routing protocols or advanced networking solutions to add capacity to downstream devices. The front-end and backend storage network designs should include the ability to quickly and easily add capacity and bandwidth.

3.3.4. Storage Hardware

- Capacity

Node hardware should support enough storage for the cloud services, and should ensure that capacity can be added after deployment. Hardware nodes should support a large number of inexpensive disks with no reliance on RAID controller cards.

Hardware nodes should also be capable of supporting high-speed storage solutions and RAID controller cards to provide hardware-based storage performance and redundancy. Selecting hardware RAID controllers that automatically repair damaged arrays assists with the replacement and repair of degraded or destroyed storage devices.

- Connectivity

If you use non-Ethernet storage protocols in the storage solution, ensure that the hardware can handle these protocols. If you select a centralized storage array, ensure that the hypervisor can connect to that storage array for image storage.

- Cost

Storage can be a significant portion of the overall system cost. If you need vendor support, a commercial storage solution is recommended, but it is more expensive. If you need to minimize the initial financial investment, you can design a system based on commodity hardware. However, the initial savings might lead to higher ongoing support costs and higher incompatibility risks.

- Directly-Attached Storage

Directly-attached storage (DAS) impacts the server hardware choice and affects host density, instance density, power density, OS-hypervisor, and management tools.

- Scalability

Scalability is a major consideration in any OpenStack cloud. It can sometimes be difficult to predict the final intended size of the implementation; consider expanding the initial deployment in order to accommodate growth and user demand.

- Expandability

Expandability is a major architecture factor for storage solutions. A storage solution that expands to 50 PB is considered more expandable than a solution that only expands to 10 PB. This metric is different from scalability, which is a measure of the solution’s performance as its workload increases.

For example, the storage architecture for a development platform cloud might not require the same expandability and scalability as a commercial product cloud.

- Fault tolerance

Object Storage resource nodes do not require hardware fault tolerance or RAID controllers. You do not need to plan for fault tolerance in the Object Storage hardware, because the Object Storage service provides replication between zones by default.

Block Storage nodes, Compute nodes, and cloud controllers should have fault tolerance built-in at the hardware level with hardware RAID controllers and varying levels of RAID configuration. The level of RAID should be consistent with the performance and availability requirements of the cloud.

- Location

The geographical location of instance and image storage might impact your architecture design.

- Performance

Disks that run Object Storage services do not need to be fast-performing disks. You can therefore maximize the cost efficiency per terabyte for storage. However, disks that run Block Storage services should use performance-boosting features that might require SSDs or flash storage to provide high-performance Block Storage pools.

You should also consider the storage performance of the short-term disks that you use for instances. If Compute pools need high utilization of short-term storage, or require very high performance, deploy hardware similar to the hardware that you deploy for Block Storage.

- Server type

Scaled-out storage architecture that includes DAS affects the server hardware selection. This architecture can also affect host density, instance density, power density, OS-hypervisor, management tools, and so on.

3.3.5. Ceph Storage

If you consider Ceph for your external storage, the Ceph cluster backend must be sized to handle the expected number of concurrent VMs with reasonable latency. An acceptable service level can maintain 99% of I/O operations in under 20ms for write operations and in under 10ms for read operations.
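The following sketch checks a set of measured latencies against that service level by using the nearest-rank 99th percentile. The latency samples and function names are hypothetical; in practice the samples would come from your own benchmark or monitoring tools.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_ceph_target(read_ms, write_ms, read_limit_ms=10, write_limit_ms=20):
    """True if 99% of reads and writes complete under their latency limits."""
    return (percentile(read_ms, 99) < read_limit_ms
            and percentile(write_ms, 99) < write_limit_ms)


reads = [2.0] * 99 + [45.0]         # one slow outlier in 100 reads
writes = [6.0] * 98 + [35.0, 35.0]  # two slow writes in 100
print(meets_ceph_target(reads, writes))  # False: the write p99 is 35 ms
```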

You can isolate I/O spikes from other VMs by configuring the maximum bandwidth for each RADOS Block Device (RBD) or by setting a minimum guaranteed commitment.

3.4. Network Resources

Network availability is critical to the hypervisors in your cloud deployment. For example, if the hypervisors support only a few virtual machines (VMs) for each node and your applications do not require high-speed networking, you can use one or two 1 Gb Ethernet links. However, if your applications require high-speed networking or your hypervisors support many VMs for each node, one or two 10 Gb Ethernet links are recommended.

A typical cloud deployment uses more peer-to-peer communication than a traditional core network topology normally requires. Although VMs are provisioned randomly across the cluster, these VMs need to communicate with each other as if they are on the same network. This requirement might slow down the network and cause packet loss on traditional core network topologies, due to oversubscribed links between the edges and the core of the network.
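Edge-to-core oversubscription can be estimated as the ratio of server-facing bandwidth to uplink bandwidth on an edge switch. When many VMs on different edge switches communicate at once, a ratio above 1:1 means that the uplinks can become the bottleneck. The port counts and speeds below are hypothetical.

```python
from fractions import Fraction


def oversubscription(downlink_ports, downlink_gbps, uplink_ports, uplink_gbps):
    """Ratio of server-facing (edge) bandwidth to uplink (core) bandwidth."""
    return Fraction(downlink_ports * downlink_gbps, uplink_ports * uplink_gbps)


# 48 server ports at 10 Gb/s, 4 uplinks at 40 Gb/s: 480 / 160 = 3:1.
print(oversubscription(48, 10, 4, 40))  # 3
```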

3.4.1. Segregate Your Services

OpenStack clouds traditionally have multiple network segments. Each segment provides access to resources in the cloud to operators and tenants. The network services also require network communication paths separated from the other networks. Segregating services to separate networks helps to secure sensitive data and protects against unauthorized access to services.

The minimum recommended segregation involves the following network segments:

- A public network segment used by tenants and operators to access the cloud REST APIs. Normally, only the controller nodes and swift proxies in the cloud are required to connect to this network segment. In some cases, this network segment might also be serviced by hardware load balancers and other network devices.

- An administrative network segment used by cloud administrators to manage hardware resources and by configuration management tools to deploy software and services to new hardware. In some cases, this network segment might also be used for internal services, including the message bus and database services that need to communicate with each other.

Because this network segment carries administrative and internal service traffic, secure it from unauthorized access. This network segment usually needs to communicate with every hardware node in the cloud.

- An application network segment used by applications and consumers to provide access to the physical network and by users to access applications running in the cloud. This network needs to be segregated from the public network segment and should not communicate directly with the hardware resources in the cloud.

This network segment can be used for communication by Compute resource nodes and network gateway services that transfer application data to the physical network outside of the cloud.

3.4.2. Choose Your Network Type

The network type that you choose plays a critical role in the design of the cloud network architecture.

- OpenStack Networking (neutron) is the core software-defined networking (SDN) component of the OpenStack forward-looking roadmap and is under active development.

- Compute networking (nova-network) is deprecated in the OpenStack technology roadmap, but is still available.

There is no migration path between Compute networking and OpenStack Networking. Therefore, if you deploy Compute networking, you cannot upgrade to OpenStack Networking, and any migration between the network types must be performed manually and requires a network outage.

3.4.2.1. When to choose OpenStack Networking (neutron)

Choose OpenStack Networking if you require any of the following functionality:

- Overlay network solution. OpenStack Networking supports GRE and VXLAN tunneling for virtual machine traffic isolation. GRE and VXLAN do not require VLAN configuration on the network fabric and only require the physical network to provide IP connectivity between the nodes.

VXLAN and GRE also raise the theoretical scale limit to 16 million unique IDs, compared to the limit of 4094 unique IDs on an 802.1Q VLAN. Compute networking bases the network segregation on 802.1Q VLANs and does not support tunneling with GRE or VXLAN.

- Overlapping IP addresses between tenants. OpenStack Networking uses the network namespace capabilities in the Linux kernel, which allow different tenants to use the same subnet range, such as 192.168.100.0/24, on the same Compute node without the risk of overlap or interference. This capability is appropriate for large multi-tenancy deployments.

By comparison, Compute networking offers only flat topologies, which must account for the subnets that all tenants use.

- Red Hat-certified third-party OpenStack Networking plug-in. By default, Red Hat OpenStack Platform uses the open source ML2 core plug-in with the Open vSwitch (OVS) mechanism driver. The modular structure of OpenStack Networking allows you to deploy third-party OpenStack Networking plug-ins instead of the default ML2/Open vSwitch driver, based on the physical network fabric and other network requirements.

Red Hat is expanding the Partner Certification Program to certify more OpenStack Networking plug-ins for Red Hat OpenStack Platform. You can learn more about the Certification Program and view a list of certified OpenStack Networking plug-ins at: http://marketplace.redhat.com

- VPN-as-a-service (VPNaaS), Firewall-as-a-service (FWaaS), or Load-Balancing-as-a-service (LBaaS). These network services are available only in OpenStack Networking and are not available in Compute networking. The dashboard allows tenants to manage these services without administrator intervention.
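The ID limits mentioned in the list above follow from header field sizes: an 802.1Q tag carries a 12-bit VLAN ID, in which the values 0 and 4095 are reserved, and a VXLAN header carries a 24-bit VXLAN Network Identifier (VNI). A short Python sketch of the arithmetic:

```python
VLAN_ID_BITS = 12    # 802.1Q VLAN ID field
VXLAN_VNI_BITS = 24  # VXLAN Network Identifier field

# VLAN IDs 0 and 4095 are reserved, which leaves 4094 usable IDs.
usable_vlans = 2**VLAN_ID_BITS - 2
vxlan_ids = 2**VXLAN_VNI_BITS

print(usable_vlans)  # 4094
print(vxlan_ids)     # 16777216
```

The VXLAN figure is the "16 million" theoretical limit. In practice, physical switches often support far fewer active VLANs than 4094.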

3.4.2.2. When to choose Compute Networking (nova-network)

The Compute networking (nova-network) service is primarily a layer-2 networking service that functions in two modes. These modes differ in their use of VLANs:

- Flat-network mode. All network hardware nodes and devices across the cloud are connected to a single layer-2 network segment that provides access to application data.

- VLAN segmentation mode. If network devices in the cloud support segmentation by VLANs, each tenant in the cloud is assigned a network subnet mapped to a VLAN on the physical network. If your cloud design needs to support multiple tenants and you want to use Compute networking, you should use this mode.

Compute networking is managed only by the cloud operator. Tenants cannot control network resources. If tenants need to manage and create network resources, such as network segments and subnets, you must install the OpenStack Networking service to provide network access to instances.

Choose Compute Networking in the following cases:

- If your deployment requires flat untagged networking or tagged VLAN 802.1Q networking. Keep in mind that this networking topology restricts the theoretical scale to 4094 VLAN IDs, and physical switches normally support a much lower number. This topology also limits management and provisioning. You must manually configure the network to trunk the required set of VLANs between the nodes.

- If your deployment does not require overlapping IP addresses between tenants. This is usually suitable only for small, private deployments.

- If you do not need a software-defined networking (SDN) solution or the ability to interact with the physical network fabric.

- If you do not need self-service VPN, Firewall, or Load-Balancing services.

3.4.3. General Considerations

- Security

Ensure that you segregate your network services and that traffic flows to the correct destinations without crossing through unnecessary locations.

Consider the following example factors:

- Firewalls

- Overlay interconnects for joining separated tenant networks

- Routing through or avoiding specific networks

The way that networks attach to hypervisors can expose security vulnerabilities. To mitigate the risk of hypervisor breakout exploits, separate these networks from other systems and schedule the instances that use them on dedicated Compute nodes. This separation prevents attackers from gaining access to the networks from a compromised instance.

- Capacity planning

- Cloud networks require capacity and growth management. Capacity planning can include the purchase of network circuits and hardware with lead times that are measurable in months or years.

- Complexity

- A complex network design can be difficult to maintain and troubleshoot. Although device-level configuration can ease maintenance concerns and automated tools can handle overlay networks, avoid non-traditional interconnects between functions and specialized hardware, or document them, to prevent outages.

- Configuration errors

- Configuring incorrect IP addresses, VLANs, or routers can cause outages in areas of the network or even in the entire cloud infrastructure. Automate network configuration to minimize the operator errors that can disrupt network availability.

- Non-standard features

Configuring the cloud network to take advantage of vendor-specific features might create additional risks.

For example, you might use multi-chassis link aggregation (MLAG) to provide redundancy at the aggregator switch level of the network. MLAG is not a standard aggregation format, and each vendor implements a proprietary flavor of the feature. MLAG architectures are not interoperable across switch vendors, which leads to vendor lock-in and can cause delays or problems when you upgrade network components.

- Single Point of Failure

- If your network has a single point of failure (SPOF), such as only one upstream link or only one power supply, a failure of that component can cause a network outage.

- Tuning

- Configure cloud networks to minimize link loss, packet loss, packet storms, broadcast storms, and loops.
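The Configuration errors item above recommends automating network configuration. Part of that automation can be a pre-deployment check that rejects obviously wrong values before they reach the switches or nodes. The following Python sketch is hypothetical (the `PortConfig` data model and host names are illustrative, not a Red Hat tool) and checks three common mistakes: out-of-range VLAN IDs, addresses outside their subnet, and duplicate addresses.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class PortConfig:
    host: str
    vlan_id: int
    ip: str
    subnet: str


def validate(configs: list[PortConfig]) -> list[str]:
    """Return human-readable problems found in the planned configuration."""
    problems = []
    seen_ips = {}
    for cfg in configs:
        # 802.1Q allows VLAN IDs 1-4094; 0 and 4095 are reserved.
        if not 1 <= cfg.vlan_id <= 4094:
            problems.append(f"{cfg.host}: VLAN {cfg.vlan_id} out of range")
        addr = ipaddress.ip_address(cfg.ip)
        if addr not in ipaddress.ip_network(cfg.subnet):
            problems.append(f"{cfg.host}: {cfg.ip} not in {cfg.subnet}")
        if addr in seen_ips:
            problems.append(f"{cfg.host}: {cfg.ip} already used by {seen_ips[addr]}")
        else:
            seen_ips[addr] = cfg.host
    return problems


configs = [
    PortConfig("compute-0", 100, "10.0.0.10", "10.0.0.0/24"),
    PortConfig("compute-1", 5000, "10.0.0.10", "10.0.0.0/24"),
    PortConfig("compute-2", 100, "10.0.1.5", "10.0.0.0/24"),
]
problems = validate(configs)
for problem in problems:
    print(problem)
```

Running such a check in the same pipeline that renders the configuration catches errors before they cause an outage, rather than after.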

3.4.4. Networking Hardware

There is no single best-practice architecture for networking hardware to support an OpenStack cloud that you can apply to all implementations. Key considerations for the selection of networking hardware include:

- Availability

To ensure uninterrupted cloud node access, the network architecture should identify any single points of failure and provide adequate redundancy or fault-tolerance:

- Network redundancy can be achieved by adding redundant power supplies or paired switches.

- For the network infrastructure, networking protocols such as LACP, VRRP or similar can be used to achieve a highly available network connection.

- To ensure that the OpenStack APIs and any other services in the cloud are highly available, you should design a load-balancing solution within the network architecture.

- Connectivity

- All nodes within an OpenStack cloud require network connectivity. In some cases, nodes require access to multiple network segments. The design must include sufficient network capacity and bandwidth to ensure that all north-south and east-west traffic in the cloud has sufficient resources.

- Ports

Any design requires networking hardware that has the required ports:

- Ensure that you have the physical space required to provide the ports. A higher port density is preferred, because it leaves more rack space for Compute or storage components. Port availability also affects fault domains and power density. However, higher-density switches are more expensive, so avoid over-designing the network beyond your requirements.

- The networking hardware must support the proposed network speed. For example: 1 GbE, 10 GbE, or 40 GbE (or even 100 GbE).

- Power

- Ensure that the physical data center provides the necessary power for the selected network hardware. For example, the available power might be insufficient for spine switches in a leaf-and-spine fabric or for end-of-row (EoR) switches.

- Scalability

- The network design should include a scalable physical and logical network design. Network hardware should offer the types of interfaces and speeds that are required by the hardware nodes.

3.5. Performance

The performance of an OpenStack deployment depends on multiple factors that are related to the infrastructure and controller services. User requirements can be divided into general network performance, Compute resource performance, and storage system performance.

Ensure that you retain a historical performance baseline of your systems, even when these systems perform consistently with no slow-downs. Available baseline information is a useful reference when you encounter performance issues and require data for comparison purposes.

In addition to Section 1.5.2, “OpenStack Telemetry (ceilometer)”, external software can also be used to track performance. The Operational Tools repository for Red Hat OpenStack Platform includes the following tools:

3.5.1. Network Performance

The network requirements help to determine performance capabilities. For example, smaller deployments might employ 1 Gigabit Ethernet (GbE) networking, and larger installations that serve multiple departments or many users should use 10 GbE networking.

The performance of running instances might be limited by these network speeds. You can design OpenStack environments that run a mix of networking capabilities. By utilizing the different interface speeds, the users of the OpenStack environment can choose networks that fit their purposes.

For example, web application instances can run on a 1 GbE public network managed by OpenStack Networking, and the backend database can use a 10 GbE OpenStack Networking network to replicate its data. In some cases, the design can incorporate link aggregation to increase throughput.
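The difference between the two interface speeds is easy to quantify for a bulk transfer such as database replication. The following sketch computes the theoretical best-case transfer time; the 100 GB data set is hypothetical, and the optional `efficiency` factor is an assumption you can lower to account for protocol overhead.

```python
def transfer_seconds(data_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move data_gb gigabytes over a link_gbps link.

    efficiency < 1.0 models protocol overhead; 1.0 is the theoretical best case.
    """
    bits = data_gb * 8  # gigabytes -> gigabits
    return bits / (link_gbps * efficiency)


# Replicating a hypothetical 100 GB database:
print(transfer_seconds(100, 1))   # 800.0 seconds on 1 GbE
print(transfer_seconds(100, 10))  # 80.0 seconds on 10 GbE
```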

Network performance can be boosted by implementing hardware load balancers that provide front-end services to the cloud APIs. The hardware load balancers can also perform SSL termination if necessary. When implementing SSL offloading, it is important to verify the SSL offloading capabilities of the selected devices.

3.5.2. Compute Nodes Performance

Hardware specifications of the Compute nodes, including CPU, memory, and disk type, directly affect the performance of the instances. Tunable parameters in the OpenStack services can also directly affect performance.

For example, the default over-commit ratio for OpenStack Compute is 16:1 for CPU and 1.5:1 for memory. These high ratios can lead to an increase in "noisy-neighbor" activity. You must carefully size your Compute environment to avoid this scenario and monitor your environment as usage increases.
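To see how much the default ratios inflate schedulable capacity, the following Python sketch assumes that the scheduler multiplies each host resource, minus any reserved amount, by its allocation ratio. The host size and the more conservative ratios are hypothetical examples, not recommendations.

```python
def schedulable_capacity(host_cpus: int, host_ram_gb: float,
                         cpu_ratio: float = 16.0, ram_ratio: float = 1.5,
                         reserved_ram_gb: float = 0.0) -> tuple[float, float]:
    """Return the (vCPUs, RAM in GB) that may be allocated on one host.

    RAM capacity is (host RAM - reserved RAM) x ratio; CPU reservation
    is ignored here for brevity.
    """
    vcpus = host_cpus * cpu_ratio
    ram_gb = (host_ram_gb - reserved_ram_gb) * ram_ratio
    return vcpus, ram_gb


# Hypothetical host: 24 logical CPUs and 256 GB of RAM.
default = schedulable_capacity(24, 256)
conservative = schedulable_capacity(24, 256, cpu_ratio=4.0, ram_ratio=1.0)
print(default)       # (384.0, 384.0)
print(conservative)  # (96.0, 256.0)
```

With the default 16:1 CPU ratio and every vCPU busy, each vCPU receives roughly one sixteenth of a physical CPU, which is the contention that noisy neighbors cause.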

3.5.3. Block Storage Hosts Performance

Block Storage can use enterprise backend systems such as NetApp or EMC, scale-out storage such as Ceph, or utilize the capabilities of directly-attached storage in the Block Storage nodes.

Block Storage traffic can be deployed to traverse the host network, where it can affect, and be adversely affected by, front-side API traffic. Therefore, consider using a dedicated data storage network with dedicated interfaces on the controller and Compute hosts.

3.5.4. Object Storage Hosts Performance

Users typically access Object Storage through the proxy services, which run behind hardware load balancers. By default, highly resilient storage systems replicate stored data, which can affect overall system performance. Therefore, 10 GbE or higher networking capacity is recommended across the storage network architecture.
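Replication multiplies write traffic on the storage network. The following sketch assumes that the proxy streams one full copy of each object to every replica node, and uses three replicas as an example count; background replication, auditing, and rebalancing traffic are not included.

```python
def storage_write_gbps(client_ingest_gbps: float, replicas: int = 3) -> float:
    """Storage-network bandwidth needed to write every replica of new data.

    Assumes the proxy sends one full copy of each object to every replica
    node, so client ingest is multiplied by the replica count.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return client_ingest_gbps * replicas


# Hypothetical workload: clients upload at a sustained 2 Gb/s.
print(storage_write_gbps(2.0))  # 6.0
```

A sustained upload rate that fits easily on the client-facing network can therefore exceed a 1 GbE storage network several times over, which is why 10 GbE or faster links are recommended there.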

3.5.5. Controller Nodes

Controller nodes provide management services to the end-user and provide services internally for the cloud operation. It is important to carefully design the hardware that is used to run the controller infrastructure.

The controllers run message-queuing services for system messaging between the services. Performance issues in messaging can lead to delays in operational functions such as spinning up and deleting instances, provisioning new storage volumes, and managing network resources. These delays can also adversely affect the ability of the application to react to some conditions, especially when using auto-scaling features.

You also need to ensure that controller nodes can handle the workload of multiple concurrent users. Ensure that the APIs and Horizon services are load-tested to improve service reliability for your customers.

It is important to consider the OpenStack Identity Service (keystone), which provides authentication and authorization for all services, internally to OpenStack and to end-users. This service can lead to a degradation of overall performance if it is not sized appropriately.

Metrics that are critically important to monitor include:

- Image disk utilization

- Response time to the Compute API
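API response time is most useful when you measure it under concurrent load and track percentiles rather than averages. The following Python sketch runs a request function with a thread pool and reports latency percentiles. The `fake_compute_api_call` function is a hypothetical stand-in; in a real test, it would send an authenticated HTTP request to the Compute API endpoint.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def load_test(request: Callable[[], None], total: int, concurrency: int) -> dict[str, float]:
    """Call `request` `total` times with `concurrency` workers and return
    latency statistics in milliseconds."""

    def timed_call(_: int) -> float:
        start = time.perf_counter()
        request()
        return (time.perf_counter() - start) * 1000

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total)))

    # "inclusive" keeps every percentile within the observed range.
    pct = statistics.quantiles(latencies, n=100, method="inclusive")
    return {"p50": pct[49], "p95": pct[94], "max": max(latencies)}


def fake_compute_api_call() -> None:
    time.sleep(0.01)  # hypothetical stand-in for a real API request


stats = load_test(fake_compute_api_call, total=50, concurrency=10)
print(stats)
```

Recording these values regularly also provides the historical performance baseline that this section recommends keeping.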

3.6. Maintenance and Support

To support and maintain an installation, OpenStack cloud management requires the operations staff to understand the architecture design. The skill level and role separation between the operations and engineering staff depends on the size and purpose of the installation.

- Installations at large cloud service providers or telecom providers are more likely to be managed by a specially trained, dedicated operations organization.

- Smaller implementations are more likely to rely on support staff that need to take on combined engineering, design and operations functions.

If you incorporate features that reduce the operations overhead in the design, you might be able to automate some operational functions.

Your design is also directly affected by the terms of Service Level Agreements (SLAs). SLAs define levels of service availability and usually include penalties if you do not meet contractual obligations. SLA terms that affect the design include:

- API availability guarantees that imply multiple infrastructure services and highly available load balancers.

- Network uptime guarantees that affect switch design and might require redundant switching and power.

- Network security policy requirements that imply network segregation or additional security mechanisms.
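An availability guarantee translates directly into a downtime budget, which determines how much redundancy the design needs. The following sketch computes the downtime allowed in a 30-day period; the targets shown are common examples, not values from any specific SLA.

```python
def allowed_downtime_minutes(availability_pct: float, period_days: float = 30) -> float:
    """Maximum downtime permitted in a period for a given availability target."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - availability_pct / 100)


for target in (99.0, 99.9, 99.99):
    print(f"{target}% -> {allowed_downtime_minutes(target):.1f} min per 30 days")
# 99.0% -> 432.0 min per 30 days
# 99.9% -> 43.2 min per 30 days
# 99.99% -> 4.3 min per 30 days
```

A budget of a few minutes per month usually rules out any manual recovery procedure, which is why stricter SLAs imply redundant switching, power, and load balancers.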

3.6.1. Backups

Your design might be affected by your backup and restore strategy, data valuation or hierarchical storage management, retention strategy, data placement, and workflow automation.

3.6.2. Downtime

An effective cloud architecture should support the following:

- Planned downtime (maintenance)

- Unplanned downtime (system faults)

For example, if a compute host fails, instances might be restored from a snapshot or by re-spawning an instance. However, for high availability you might need to deploy additional support services such as shared storage or design reliable migration paths.

3.7. Availability

OpenStack can provide a highly available deployment when you use at least two servers. The servers can run all services, from the RabbitMQ message queuing service to the MariaDB database service.

When you scale services in the cloud, backend services also need to scale. Monitoring and reporting server utilization and response times, as well as load testing your systems, can help determine scaling decisions.

- To avoid a single point of failure, OpenStack services should be deployed across multiple servers. API availability can be achieved by placing these services behind highly-available load balancers with multiple OpenStack servers as members.

- Ensure that your deployment has adequate backup capabilities. For example, in a deployment with two infrastructure controller nodes using high availability, if you lose one controller you can still run the cloud services from the other.

- OpenStack infrastructure is integral to provide services and should always be available, especially when operating with SLAs. Consider the number of switches, routes and redundancies of power that are necessary for the core infrastructure, as well as the associated bonding of networks to provide diverse routes to a highly available switch infrastructure. Pay special attention to the type of networking backend to use. For information about how to choose a networking backend, see Chapter 2, Networking In-Depth.

- If you do not configure your Compute hosts for live migration and a Compute host fails, the Compute instance and any data stored on that instance might be lost. To ensure the uptime of your Compute instances, you can use shared file systems on enterprise storage or OpenStack Block Storage.
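The benefit of deploying services across multiple servers, and the need to make the load balancers highly available as well, can be estimated with basic availability arithmetic. The following sketch assumes that members fail independently, which shared power, shared switches, or common software bugs can violate; the availability figures are hypothetical.

```python
def parallel_availability(member_availability: float, members: int) -> float:
    """Availability of a service that stays up while at least one member is up.

    Assumes members fail independently.
    """
    return 1 - (1 - member_availability) ** members


def serial_availability(*components: float) -> float:
    """Availability of a chain in which every component must be up."""
    result = 1.0
    for a in components:
        result *= a
    return result


# Two API servers at 99% each, behind a single load balancer at 99.9%.
api_pool = parallel_availability(0.99, 2)
print(f"{api_pool:.4f}")                               # 0.9999
print(f"{serial_availability(api_pool, 0.999):.4f}")   # 0.9989
```

The redundant pool is far more available than either server, but a single load balancer in front of it caps the end-to-end result, which is why the load balancers must also be highly available.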

External software can be used to check service availability or threshold limits and to set appropriate alarms. The Operational Tools repository for Red Hat OpenStack Platform includes:

For a reference architecture that uses high availability in OpenStack, see: Deploying Highly Available Red Hat OpenStack Platform 6 with Ceph Storage

3.8. Security

A security domain includes users, applications, servers, or networks that share common trust requirements and expectations in a single system. Security domains typically share the same authentication and authorization requirements and users.

Typical security domain categories are Public, Guest, Management, and Data. The domains can be mapped to an OpenStack deployment individually or combined. For example, some deployment topologies combine guest and data domains in one physical network, and in other cases the networks are physically separated. Each case requires the cloud operator to be aware of the relevant security concerns.

Security domains should be mapped to your specific OpenStack deployment topology. The domains and the domain trust requirements depend on whether the cloud instance is public, private, or hybrid.

- Public domain

- Entirely untrusted area of the cloud infrastructure. A public domain can refer to the Internet as a whole or networks over which you have no authority. This domain should always be considered untrusted.

- Guest domain

Typically used for Compute instance-to-instance traffic and handles Compute data generated by instances on the cloud, but not by services that support the operation of the cloud, such as API calls.

Public cloud providers, and private cloud providers that do not have strict controls on instance usage or that allow unrestricted Internet access to instances, should consider this domain untrusted. Private cloud providers might consider this network internal and trusted, but only if controls are in place that assert trust in instances and all cloud tenants.

- Management domain

- The domain where services interact with each other. This domain is sometimes known as the control plane. Networks in this domain transfer confidential data, such as configuration parameters, user names, and passwords. In most deployments, this domain is considered trusted.

- Data domain

- The domain where storage services transfer data. Most data that crosses this domain has high integrity and confidentiality requirements and, depending on the type of deployment, might also have strong availability requirements. The trust level of this network is dependent on other deployment decisions.

When you deploy OpenStack in an enterprise as a private cloud, the deployment is usually behind a firewall and inside the trusted network with existing systems. Users of the cloud are typically employees that are bound by the security requirements that the company defines. This deployment implies that most of the security domains can be trusted.

However, when you deploy OpenStack in a public facing role, no assumptions can be made regarding the trust level of the domains, and the attack vectors significantly increase. For example, the API endpoints and the underlying software become vulnerable to bad actors that want to gain unauthorized access or prevent access to services. These attacks might lead to loss of data, functionality, and reputation. These services must be protected using auditing and appropriate filtering.

You must also exercise caution when you manage users of the system for both public and private clouds. The Identity service can use external identity backends such as LDAP, which can ease user management inside OpenStack. Because user authentication requests include sensitive information such as user names, passwords, and authentication tokens, you should place the API services behind hardware that performs SSL termination.

3.9. Additional Software

A typical OpenStack deployment includes OpenStack-specific components and third-party components (see Section 1.6.1, “Third-party Components”). Supplemental software can include software for clustering, logging, monitoring, and alerting. The deployment design must therefore account for additional resource consumption, such as CPU, RAM, storage, and network bandwidth.

When you design your cloud, consider the following factors:

- Databases and messaging

The underlying message queue provider might affect the required number of controller services, as well as the technology to provide highly resilient database functionality. For example, if you use MariaDB with Galera, the replication of services relies on quorum. Therefore, the underlying database should consist of at least three nodes to account for the recovery of a failed Galera node.

When you increase the number of nodes to support a software feature, consider both rack space and switch port density.
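The three-node minimum for Galera follows from majority quorum: a cluster stays operational only while a strict majority of its nodes can communicate. The following sketch assumes simple majority voting with equal votes per node, ignoring features such as node weights or a Galera arbitrator:

```python
def quorum(nodes: int) -> int:
    """Smallest strict majority of a cluster."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Nodes that can fail while the remaining nodes still hold quorum."""
    return nodes - quorum(nodes)


for n in (2, 3, 4, 5):
    print(n, quorum(n), tolerated_failures(n))
# 2 2 0
# 3 2 1
# 4 3 1
# 5 3 2
```

A two-node cluster cannot lose any node without losing quorum, and a four-node cluster tolerates no more failures than a three-node cluster, which is why odd node counts are the usual choice.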

- External caching

Memcached is a distributed memory object caching system, and Redis is a key-value store. Both systems can be deployed in the cloud to reduce load on the Identity service. For example, the memcached service caches tokens, and uses a distributed caching system to help reduce some bottlenecks from the underlying authentication system.

Using memcached or Redis does not affect the overall design of your architecture, because these services are typically deployed to the infrastructure nodes that provide the OpenStack services.
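The effect of token caching on the Identity service can be sketched with a time-limited cache in front of a slow validation call. In the following Python sketch, an in-process dictionary stands in for memcached, and the `validate` callback is a hypothetical stand-in for a request to the Identity service; this is an illustration of the technique, not the Identity service's own caching code.

```python
import time
from typing import Callable


class TokenCache:
    """Cache token validation results for ttl seconds so that repeated
    checks of the same token skip the slower backend call."""

    def __init__(self, validate: Callable[[str], bool], ttl: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._validate = validate
        self._ttl = ttl
        self._clock = clock
        self._entries: dict[str, tuple[bool, float]] = {}
        self.backend_calls = 0

    def is_valid(self, token: str) -> bool:
        now = self._clock()
        cached = self._entries.get(token)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: no backend call
        self.backend_calls += 1
        result = self._validate(token)
        self._entries[token] = (result, now + self._ttl)
        return result


clock = [0.0]  # fake clock so that the example is deterministic
cache = TokenCache(lambda token: token == "valid-token", ttl=60, clock=lambda: clock[0])
cache.is_valid("valid-token")
cache.is_valid("valid-token")
print(cache.backend_calls)  # 1: the second check is served from the cache
clock[0] = 61.0
cache.is_valid("valid-token")
print(cache.backend_calls)  # 2: the entry expired, so the backend is consulted again
```

The trade-off of caching is that a revoked token can remain accepted until its cache entry expires, so the time-to-live balances load reduction against revocation latency.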

- Load balancing

Although many general-purpose deployments use hardware load balancers to provide highly available API access and SSL termination, software solutions such as HAProxy can also be considered. You must ensure that software-defined load balancing implementations are also highly available.

You can configure software-defined high availability with solutions such as Keepalived or Pacemaker with Corosync. Pacemaker and Corosync can provide active-active or active-passive highly available configuration based on the specific service in the OpenStack environment.

These applications might affect the design because they require a deployment with at least two nodes, where one of the controller nodes can run services in standby mode.
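The core behavior expected from a load balancer in front of the OpenStack APIs is to rotate requests across members and to stop sending requests to members that fail health checks. The following Python sketch illustrates that logic with a round-robin pool; it is a conceptual model rather than HAProxy's implementation, and the controller names are hypothetical.

```python
from itertools import cycle


class RoundRobinPool:
    """Rotate requests across backend members, skipping unhealthy ones."""

    def __init__(self, members: list[str]):
        self._members = members
        self._healthy = set(members)
        self._ring = cycle(members)

    def mark_down(self, member: str) -> None:
        self._healthy.discard(member)  # for example, after a failed health check

    def mark_up(self, member: str) -> None:
        if member in self._members:
            self._healthy.add(member)

    def next_member(self) -> str:
        # At most one full rotation is needed to find a healthy member.
        for _ in range(len(self._members)):
            member = next(self._ring)
            if member in self._healthy:
                return member
        raise RuntimeError("no healthy members")


pool = RoundRobinPool(["controller-0", "controller-1", "controller-2"])
pool.mark_down("controller-1")
print([pool.next_member() for _ in range(4)])
# ['controller-0', 'controller-2', 'controller-0', 'controller-2']
```

This sketch covers only the balancing logic. The load balancer process itself remains a single point of failure unless it runs on at least two nodes with a solution such as Keepalived or Pacemaker, as described above.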

- Logging and monitoring

Logs should be stored in a centralized location to make analytics easier. Log data analytics engines can also provide automation and issue notification with mechanisms to alert and fix common issues.
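Once logs are centralized, even a simple rule can turn them into notifications. The following Python sketch counts ERROR lines per service and reports services that reach a threshold. The log format (`<timestamp> <service> <LEVEL> <message>`) and the threshold are hypothetical; real OpenStack log lines use a different layout, so the regular expression would need adjusting.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical log format: "<timestamp> <service> <LEVEL> <message>"
LINE = re.compile(r"^\S+ (?P<service>\S+) (?P<level>[A-Z]+) ")


def services_to_alert(lines: Iterable[str], threshold: int = 3) -> list[str]:
    """Return the services whose ERROR count reaches the threshold."""
    errors = Counter()
    for line in lines:
        match = LINE.match(line)
        if match and match.group("level") == "ERROR":
            errors[match.group("service")] += 1
    return sorted(service for service, count in errors.items() if count >= threshold)


logs = [
    "2016-05-01T10:00:00Z nova-api ERROR timeout contacting identity service",
    "2016-05-01T10:00:05Z nova-api INFO request completed",
    "2016-05-01T10:00:09Z nova-api ERROR timeout contacting identity service",
    "2016-05-01T10:00:12Z neutron-server ERROR agent heartbeat missed",
    "2016-05-01T10:00:15Z nova-api ERROR timeout contacting identity service",
]
print(services_to_alert(logs))  # ['nova-api']
```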

You can use external logging or monitoring software in addition to the basic OpenStack logs, as long as the tools support existing software and hardware in your architectural design. The Operational Tools repository for Red Hat OpenStack Platform includes the following tools:

3.10. Planning Tool

The Cloud Resource Calculator tool can help you calculate capacity requirements.

To use the tool, enter your hardware details into the spreadsheet. The tool then shows a calculated estimate of the number of instances available to you, including flavor variations.
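The core of such an estimate can be sketched in a few lines. The following Python sketch is not the Cloud Resource Calculator itself; it assumes identical nodes, requires each instance to fit on a single node, and takes the per-node vCPU and RAM capacity after over-commit as input. Disk and reserved host resources are ignored, and the flavor names and sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Flavor:
    name: str
    vcpus: int
    ram_gb: int


def instances_per_flavor(nodes: int, node_vcpus: int, node_ram_gb: int,
                         flavors: list[Flavor]) -> dict[str, int]:
    """Estimate how many instances of each flavor fit if the cloud ran only
    that flavor. The per-node count is limited by whichever resource
    (vCPU or RAM) runs out first."""
    estimate = {}
    for f in flavors:
        per_node = min(node_vcpus // f.vcpus, node_ram_gb // f.ram_gb)
        estimate[f.name] = nodes * per_node
    return estimate


# Hypothetical cloud: 10 identical nodes, each with 384 schedulable vCPUs
# (24 CPUs at 16:1) and 384 GB of schedulable RAM (256 GB at 1.5:1).
flavors = [Flavor("small", 1, 2), Flavor("medium", 2, 4), Flavor("large", 4, 8)]
result = instances_per_flavor(10, 384, 384, flavors)
print(result)  # {'small': 1920, 'medium': 960, 'large': 480}
```

In this example, RAM is the binding constraint for every flavor, so adding memory rather than CPUs would increase the instance count.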

This tool is provided only for your convenience. It is not officially supported by Red Hat.