Chapter 2. Architecture

The director advocates the use of native OpenStack APIs to configure, deploy, and manage OpenStack environments. This means that integration with the director requires integrating with these native OpenStack APIs and supporting components. The major benefit of using such APIs is that they are well documented and mature, and they undergo extensive integration testing upstream. This makes it easier for those who have a foundational knowledge of OpenStack to understand how the director works. It also means the director automatically inherits core OpenStack feature enhancements, security patches, and bug fixes.

The Red Hat OpenStack Platform director is a toolset for installing and managing a complete OpenStack environment. It is based primarily on the OpenStack project TripleO, which is an abbreviation for "OpenStack-On-OpenStack". This project takes advantage of OpenStack components to install a fully operational OpenStack environment. This includes new OpenStack components that provision and control bare metal systems to use as OpenStack nodes. This provides a simple method for installing a complete Red Hat OpenStack Platform environment that is both lean and robust.

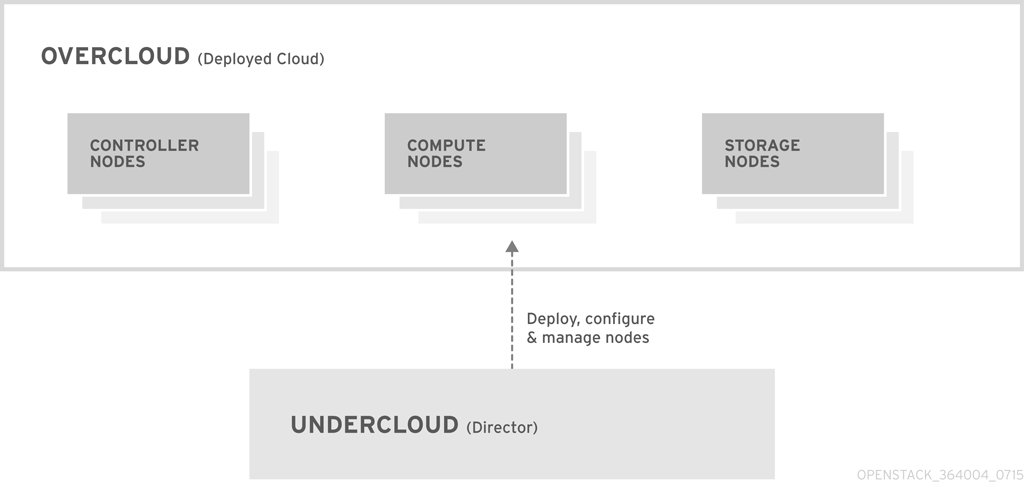

The Red Hat OpenStack Platform director uses two main concepts: an Undercloud and an Overcloud. The director itself comprises a subset of OpenStack components that form a single-system OpenStack environment, otherwise known as the Undercloud. The Undercloud acts as a management system that can create a production-level cloud for workloads to run on. This production-level cloud is the Overcloud. For more information on the Overcloud and the Undercloud, see the Director Installation and Usage guide.

The director ships with tools, utilities, and example templates for creating an Overcloud configuration. The director captures configuration data, parameters, and network topology information, then uses this information in conjunction with components such as Ironic, Heat, and Puppet to orchestrate an Overcloud installation.

Partners have varied requirements. Understanding the director’s architecture aids in understanding which components matter for a given integration effort.

2.1. Core Components

This section examines some of the core components of the Red Hat OpenStack Platform director and describes how they contribute to Overcloud creation.

2.1.1. Ironic

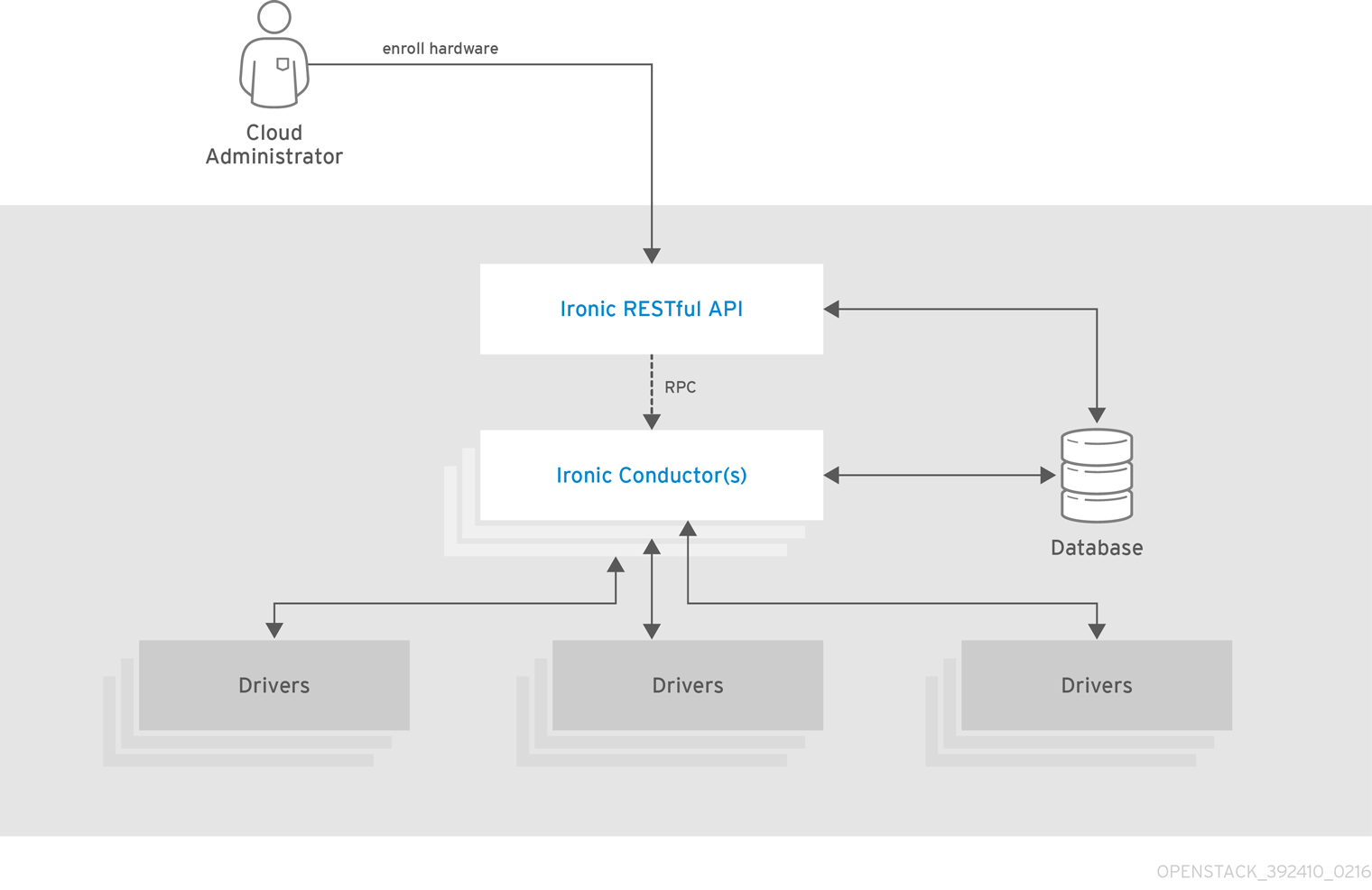

Ironic provides dedicated bare metal hosts to end users through self-service provisioning. The director uses Ironic to manage the lifecycle of the bare metal hardware in the Overcloud. Ironic has its own native API for defining bare metal nodes. Administrators aiming to provision OpenStack environments with the director must register their nodes with Ironic using a specific driver. The main supported driver is the Intelligent Platform Management Interface (IPMI), as most hardware contains some support for IPMI power management functions. However, Ironic also contains vendor-specific equivalents such as HP iLO, Cisco UCS, or Dell DRAC. Ironic controls the power management of the nodes and gathers hardware information, or facts, using a discovery mechanism. The director uses the information obtained from the discovery process to match nodes to various OpenStack environment roles, such as Controller nodes, Compute nodes, and storage nodes. For example, a discovered node with 10 disks will more than likely be provisioned as a storage node.
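The idea of matching discovered nodes to roles can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `NodeFacts` fields, the `suggest_role` function, and the thresholds are hypothetical, and they do not reflect how Ironic or the director actually implements role matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeFacts:
    """Hardware facts for one discovered node (hypothetical, simplified)."""
    name: str
    cpus: int
    memory_mb: int
    disks: int


def suggest_role(facts: NodeFacts) -> str:
    """Suggest a role from hardware facts using made-up thresholds."""
    if facts.disks >= 10:
        # Many disks: most likely a storage node, as in the example above.
        return "storage"
    if facts.cpus >= 16:
        # Many CPUs: assume the node is best suited to run workloads.
        return "compute"
    return "controller"


# Hypothetical discovery results for three nodes.
nodes = [
    NodeFacts("node-a", cpus=8, memory_mb=32768, disks=2),
    NodeFacts("node-b", cpus=32, memory_mb=131072, disks=2),
    NodeFacts("node-c", cpus=8, memory_mb=65536, disks=10),
]
roles = {node.name: suggest_role(node) for node in nodes}
print(roles)
```

In this sketch the disk count is checked first, so a node with many disks is suggested as a storage node regardless of its CPU count.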

Partners wishing to have director support for their hardware will need to have driver coverage in Ironic.

2.1.2. Heat

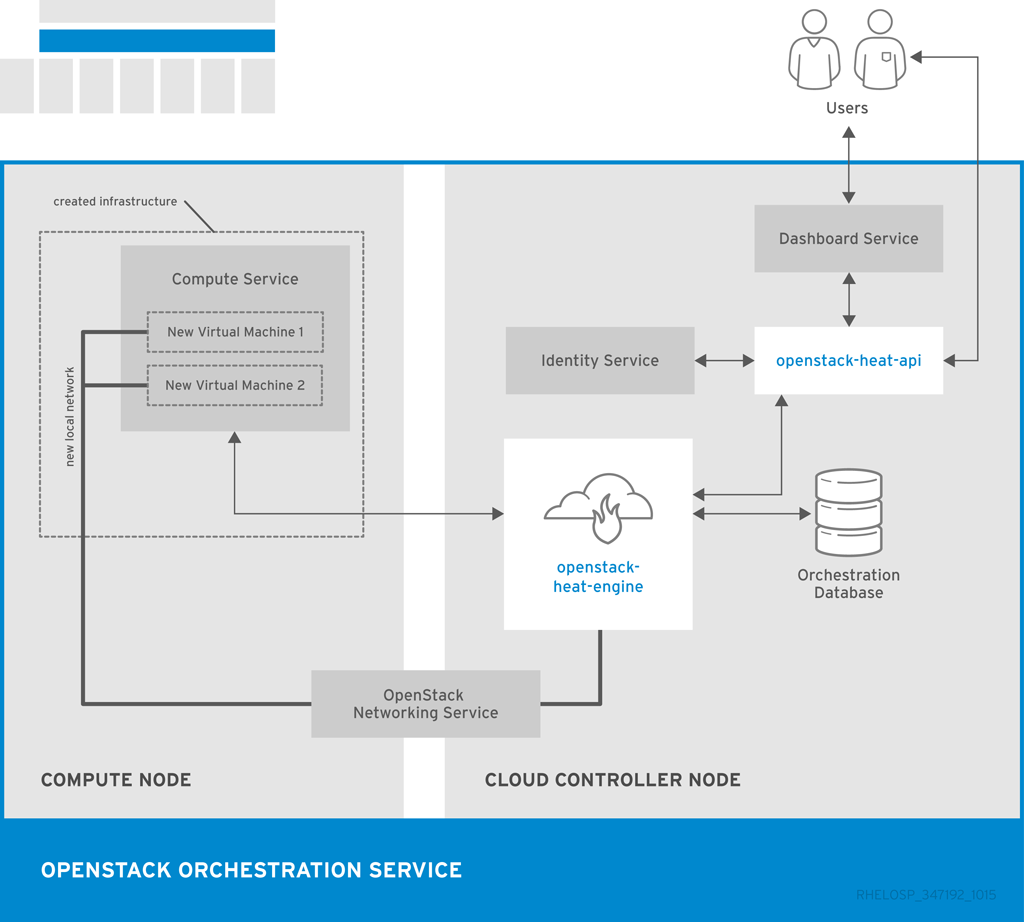

Heat acts as an application stack orchestration engine. This allows organizations to define elements for a given application before deploying it to a cloud. This involves creating a stack template that includes a number of infrastructure resources (for example, instances, networks, storage volumes, and elastic IPs) along with a set of parameters for configuration. Heat creates these resources based on a given dependency chain, monitors them for availability, and scales them where necessary. These templates enable application stacks to become portable and achieve repeatability with expected results.
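Creating resources along a dependency chain amounts to ordering them so that each resource comes after everything it depends on. The following Python sketch shows that idea with the standard library's `graphlib` module (Python 3.9 or later). The resource names and their dependencies are hypothetical, and this is not Heat's own implementation.

```python
from graphlib import TopologicalSorter

# Hypothetical resources, each mapped to the set of resources it depends on.
dependencies = {
    "network": set(),
    "volume": set(),
    "instance": {"network", "volume"},
    "floating_ip": {"instance"},
}


def creation_order(deps):
    """Return one valid creation order in which dependencies come first."""
    return list(TopologicalSorter(deps).static_order())


order = creation_order(dependencies)
print(order)
```

Resources with no dependency on each other, such as `network` and `volume` here, may appear in either order; only the relative order imposed by the dependencies is guaranteed.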

The director uses the native OpenStack Heat APIs to provision and manage the resources associated with deploying an Overcloud. This includes precise details such as defining the number of nodes to provision per node role, the software components to configure for each node, and the order in which the director configures these components and node types. The director also uses Heat for troubleshooting a deployment and making changes post-deployment with ease.

The following example is a snippet from a Heat template that defines parameters of a Controller node:

Heat consumes templates included with the director to facilitate the creation of an Overcloud, which includes calling Ironic to power the nodes. You can view the resources (and their status) of an in-progress Overcloud using the standard Heat tools. For example, you can use the Heat tools to display the Overcloud as a nested application stack.

Heat provides a comprehensive and powerful syntax for declaring and creating production OpenStack clouds. However, it requires some prior understanding and proficiency for partner integration. Every partner integration use case requires Heat templates.

2.1.3. Puppet

Puppet is a configuration management and enforcement tool. It is used as a mechanism to describe the end state of a machine and keep it that way. You define this end state in a Puppet manifest. Puppet supports two models:

- A standalone mode in which instructions in the form of manifests are run locally.

- A server mode in which Puppet retrieves its manifests from a central server, called a Puppet Master.

Administrators make changes in two ways: either uploading new manifests to a node and executing them locally, or in the client/server model by making modifications on the Puppet Master.

We use Puppet in many areas of director:

- We use Puppet on the Undercloud host locally to install and configure packages as per the configuration laid out in undercloud.conf.

- We inject the openstack-puppet-modules package into the base Overcloud image. These Puppet modules are ready for post-deployment configuration. By default, we create an image that contains all OpenStack services and use it for each node.

- We provide additional Puppet manifests and parameters to the nodes via Heat, and apply the configuration after the Overcloud’s deployment. This includes the services to enable and start and the OpenStack configuration to apply, which are dependent on the node type.

We provide Puppet hieradata to the nodes. The Puppet modules and manifests are free from site or node-specific parameters to keep the manifests consistent. The hieradata acts as a form of parameterized values that you can push to a Puppet module and reference in other areas. For example, to reference the MySQL password inside of a manifest, save this information as hieradata and reference it within the manifest.

Viewing the hieradata:

    [root@localhost ~]# grep mysql_root_password hieradata.yaml # View the data in the hieradata file
    openstack::controller::mysql_root_password: 'redhat123'

Referencing it in the Puppet manifest:

    [root@localhost ~]# grep mysql_root_password example.pp # Now referenced in the Puppet manifest
    mysql_root_password => hiera('openstack::controller::mysql_root_password')
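The separation between hieradata and manifests shown above can be sketched in Python as a key lookup. This is a simplified stand-in under stated assumptions: the `hiera` function here is a hypothetical helper over a flat dictionary, whereas Puppet's Hiera resolves keys through a configurable hierarchy of data sources.

```python
# Hypothetical hieradata, keyed the same way as the example above.
hieradata = {
    "openstack::controller::mysql_root_password": "redhat123",
}


def hiera(key, default=None):
    """Look up a key in the hieradata (simplified stand-in for hiera())."""
    if key in hieradata:
        return hieradata[key]
    if default is not None:
        return default
    raise KeyError(f"no hieradata value for {key!r}")


# The "manifest" contains no site-specific values; it resolves them by key.
controller_settings = {
    "mysql_root_password": hiera("openstack::controller::mysql_root_password"),
}
print(controller_settings)
```

Because the manifest side only refers to the key, the same manifest can be reused across sites by supplying different hieradata.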

Partner-integrated services that need package installation and service enablement should consider creating Puppet modules to meet their requirements. For information on how to obtain current OpenStack Puppet modules, see Section 4.2, “Obtaining OpenStack Puppet Modules”.

2.1.4. TripleO and TripleO Heat Templates

As mentioned previously, the director is based on the upstream TripleO project. This project combines a set of OpenStack services that:

- Store Overcloud images (Glance)

- Orchestrate the Overcloud (Heat)

- Provision bare metal machines (Ironic)

TripleO also includes a Heat template collection that defines a Red Hat-supported Overcloud environment. The director, using Heat, reads this template collection and orchestrates the Overcloud stack. Heat also launches software configuration for certain resources in these core Heat templates. This software configuration usually takes the form of either Bash scripts or a Puppet manifest.

A typical software configuration relies on two main Heat resources:

- A resource to define the configuration (OS::Heat::SoftwareConfig)

- A resource to implement the configuration on the node (OS::Heat::SoftwareDeployment)

For example, in the Heat template collection, the post deployment template for Compute nodes (puppet/compute-post.yaml) contains the following section:

The ComputePuppetConfig resource loads a Puppet manifest (puppet/manifests/overcloud_compute.pp), which contains the configuration for Compute nodes. The ComputePuppetDeployment resource applies the configuration from ComputePuppetConfig to a list of servers (servers: {get_param: servers}), which the parent Heat template defines as the Compute nodes. The node then reports back whether ComputePuppetDeployment succeeded or failed, depending on whether Puppet successfully applied the complete manifest.
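The relationship between a configuration resource and a deployment resource can be modeled with a short Python sketch. The classes below only borrow the names `SoftwareConfig` and `SoftwareDeployment` for readability. The fields, the `fake_puppet_apply` function, the server names, and the status strings are all hypothetical and are not the Heat resource schema or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SoftwareConfig:
    """Stand-in for a configuration definition (what to apply)."""
    name: str
    apply: Callable[[str], bool]  # takes a server name, returns True on success


@dataclass
class SoftwareDeployment:
    """Stand-in for a deployment (which config goes to which servers)."""
    config: SoftwareConfig
    servers: List[str]

    def run(self) -> Dict[str, str]:
        """Apply the config to each server and record the reported status."""
        return {
            server: "success" if self.config.apply(server) else "failure"
            for server in self.servers
        }


def fake_puppet_apply(server: str) -> bool:
    """Hypothetical: pretend the manifest fails to apply on one server."""
    return server != "compute-2"


config = SoftwareConfig("ComputePuppetConfig", fake_puppet_apply)
deployment = SoftwareDeployment(config, ["compute-0", "compute-1", "compute-2"])
statuses = deployment.run()
print(statuses)
```

The point of the split is that the configuration is defined once and the deployment decides where it is applied, with each server reporting its own result.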

This software configuration data flow is important to understanding how to integrate a third-party solution through the director. This guide uses this data flow to show how to include custom configuration on an Overcloud both before and after the core configuration. For examples of the software configuration data flow used to implement custom configuration, see: