Red Hat Quay Operator features

Advanced Red Hat Quay Operator features

Abstract

Chapter 1. Federal Information Processing Standard (FIPS) readiness and compliance

The Federal Information Processing Standard (FIPS), developed by the National Institute of Standards and Technology (NIST), is widely regarded as the standard for securing and encrypting sensitive data, notably in highly regulated sectors such as banking, healthcare, and the public sector. Red Hat Enterprise Linux (RHEL) and OpenShift Container Platform support FIPS by providing a FIPS mode, in which the system allows only specific FIPS-validated cryptographic modules, such as OpenSSL. This ensures FIPS compliance.

1.1. Enabling FIPS compliance

Use the following procedure to enable FIPS compliance on your Red Hat Quay deployment.

Prerequisites

- If you are running a standalone deployment of Red Hat Quay, your Red Hat Enterprise Linux (RHEL) deployment is version 8 or later and FIPS-enabled.

- If you are deploying Red Hat Quay on OpenShift Container Platform, OpenShift Container Platform is version 4.10 or later.

- Your Red Hat Quay version is 3.5.0 or later.

- If you are using Red Hat Quay on OpenShift Container Platform on an IBM Power or IBM Z cluster:

  - OpenShift Container Platform version 4.14 or later is required.

  - Red Hat Quay version 3.10 or later is required.

- You have administrative privileges for your Red Hat Quay deployment.

Procedure

In your Red Hat Quay config.yaml file, set the FEATURE_FIPS configuration field to true. For example:

FEATURE_FIPS: true

With FEATURE_FIPS set to true, Red Hat Quay runs using FIPS-compliant hash functions.
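For a standalone deployment, where config.yaml is a local file, the following minimal sketch sets the field idempotently and then reports whether the host kernel is in FIPS mode. It assumes that CONFIG (a hypothetical variable name) points at your config.yaml. For Operator-based deployments, config.yaml is stored in the configuration bundle secret, so the script does not apply there as written.

```shell
#!/bin/sh
# Minimal sketch: set FEATURE_FIPS in a local Quay config.yaml, then report
# whether the host kernel is running in FIPS mode.
# Assumption: CONFIG points at the config.yaml of a standalone deployment.
CONFIG="${CONFIG:-./config.yaml}"
touch "$CONFIG"

if grep -q '^FEATURE_FIPS:' "$CONFIG"; then
  # Rewrite any existing top-level FEATURE_FIPS line through a temporary file.
  awk '/^FEATURE_FIPS:/ { print "FEATURE_FIPS: true"; next } { print }' \
    "$CONFIG" > "$CONFIG.tmp" && mv "$CONFIG.tmp" "$CONFIG"
else
  # Ensure the file ends with a newline before appending the key.
  if [ -s "$CONFIG" ] && [ -n "$(tail -c 1 "$CONFIG")" ]; then
    echo >> "$CONFIG"
  fi
  echo 'FEATURE_FIPS: true' >> "$CONFIG"
fi

# The kernel reports 1 in this file when FIPS mode is enabled.
if [ "$(cat /proc/sys/crypto/fips_enabled 2>/dev/null)" = "1" ]; then
  echo "host FIPS mode: enabled"
else
  echo "host FIPS mode: not enabled or not detectable"
fi
```

Running the script twice leaves exactly one FEATURE_FIPS line in the file. Restart Red Hat Quay afterwards so that it reads the updated configuration.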

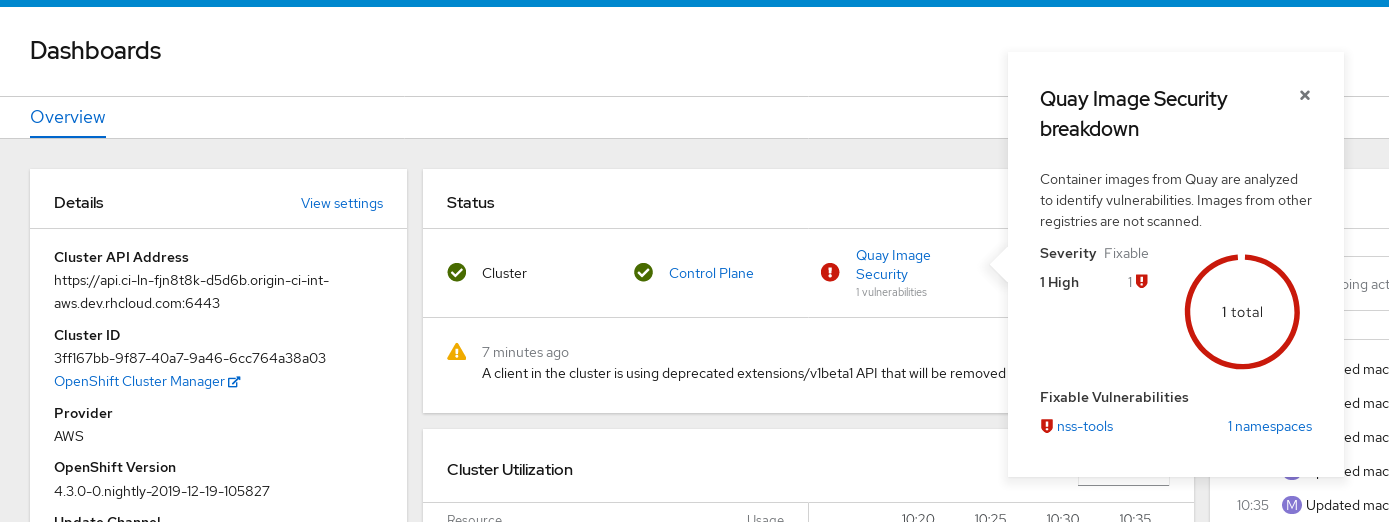

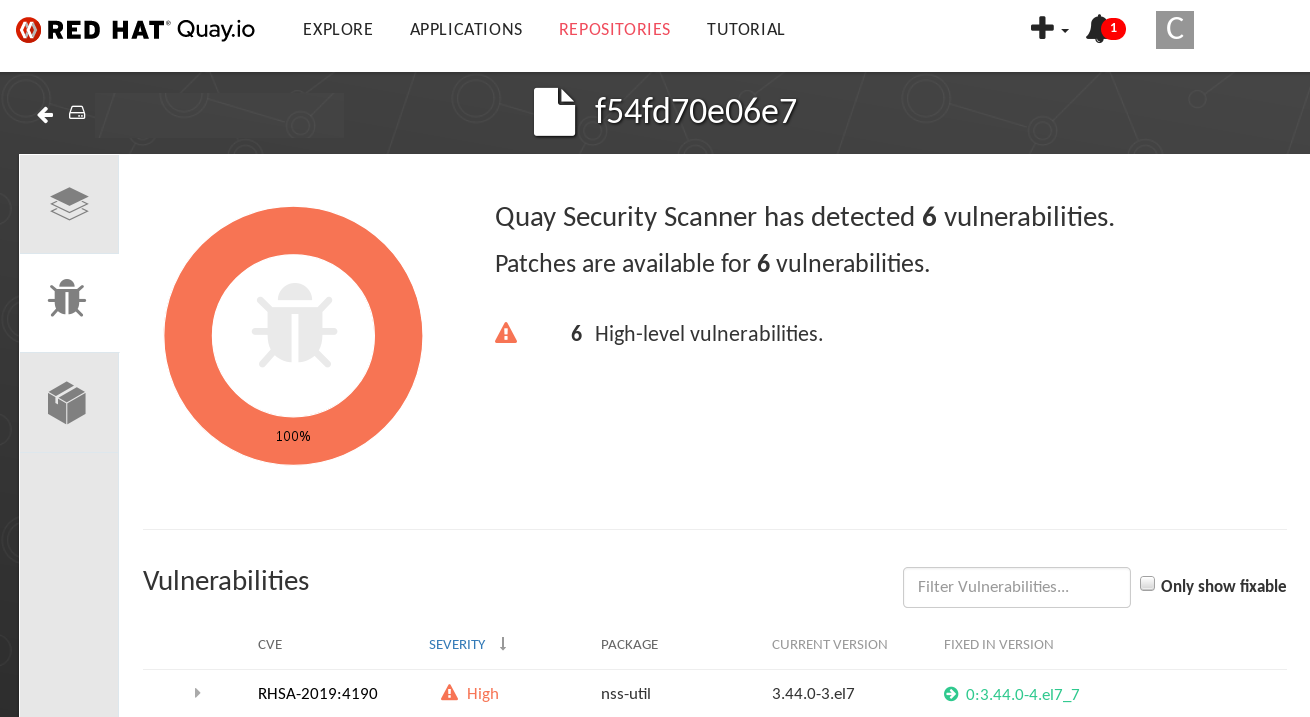

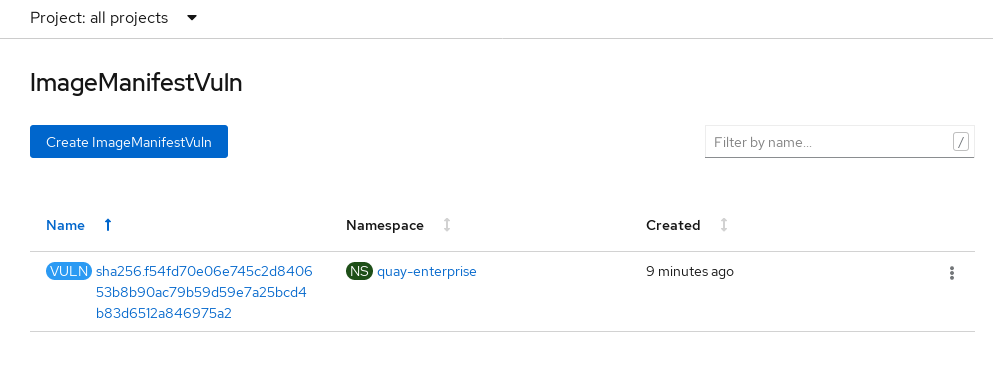

Chapter 2. Console monitoring and alerting

Red Hat Quay supports monitoring instances that were deployed by using the Red Hat Quay Operator from inside the OpenShift Container Platform console. The monitoring features include a Grafana dashboard, access to individual metrics, and an alert that is raised when Quay pods restart frequently.

To enable the monitoring features, you must select All namespaces on the cluster as the installation mode when installing the Red Hat Quay Operator.

2.1. Dashboard

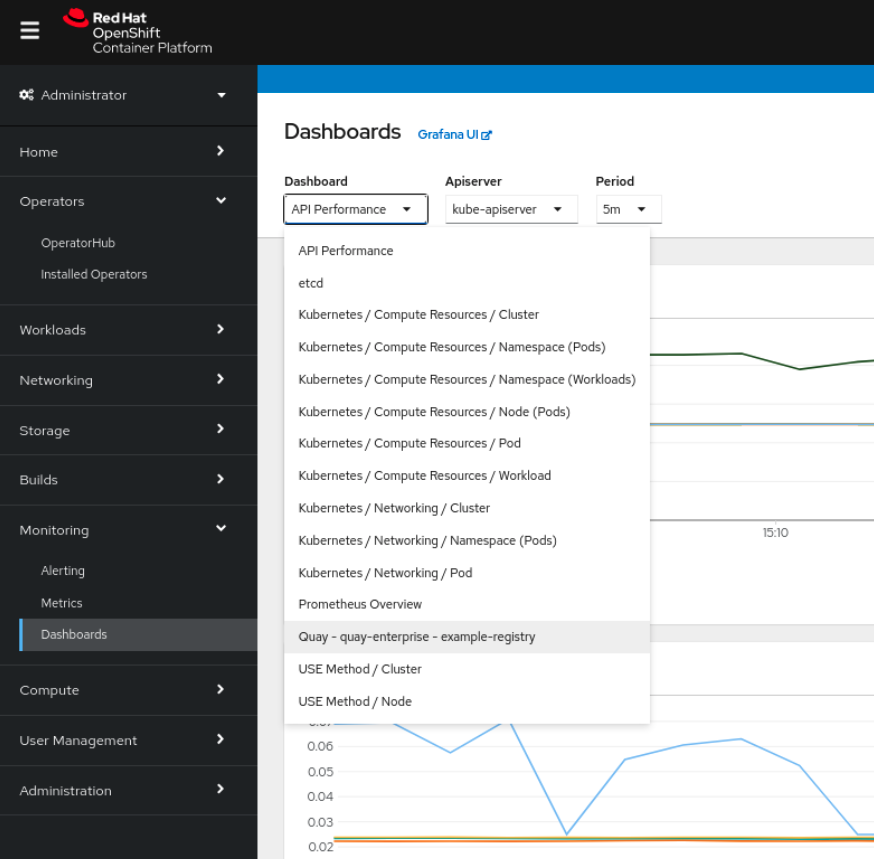

On the OpenShift Container Platform console, click Monitoring → Dashboards and search for the dashboard of your desired Red Hat Quay registry instance:

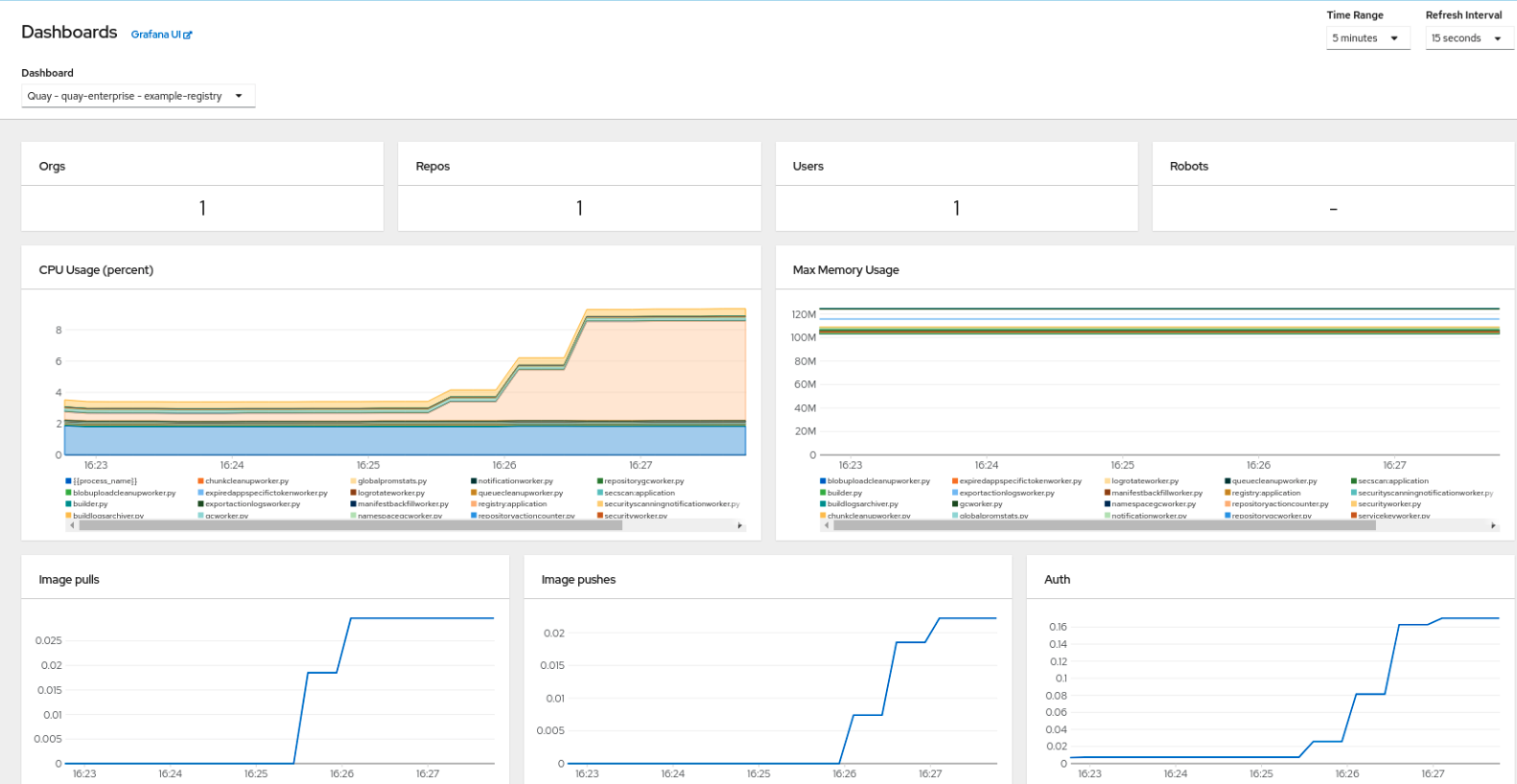

The dashboard shows various statistics including the following:

- The number of Organizations, Repositories, Users, and Robot accounts

- CPU usage

- Maximum memory usage

- Rates of pulls and pushes, and authentication requests

- API request rate

- Latencies

2.2. Metrics

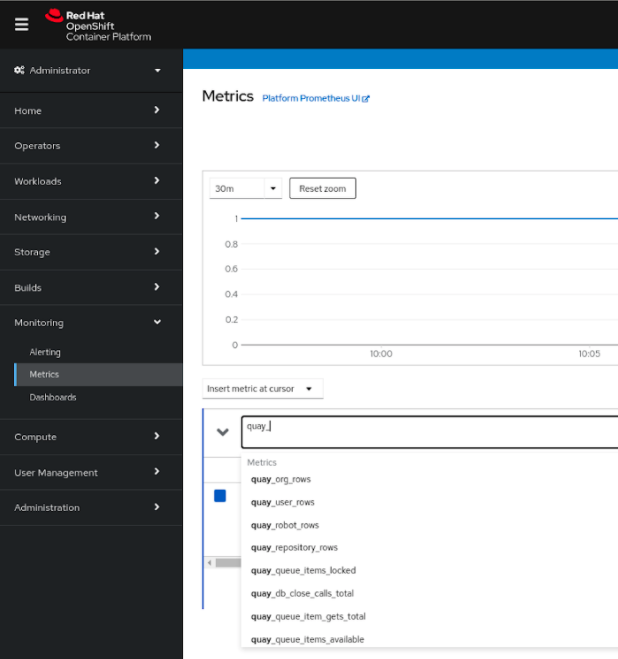

You can see the underlying metrics behind the Red Hat Quay dashboard by accessing Monitoring → Metrics in the UI. In the Expression field, enter the text quay_ to see the list of metrics available:

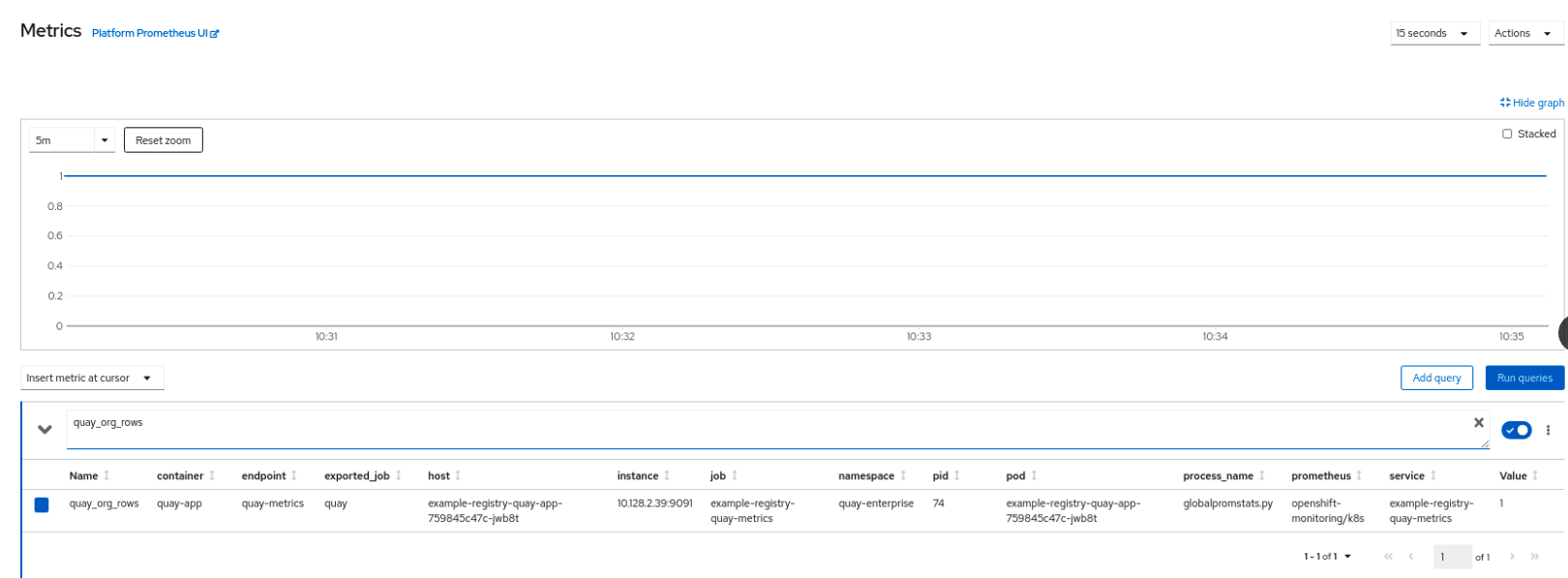

Select a sample metric, for example, quay_org_rows.

This metric shows the number of organizations in the registry. It is also directly surfaced in the dashboard.

2.3. Alerting

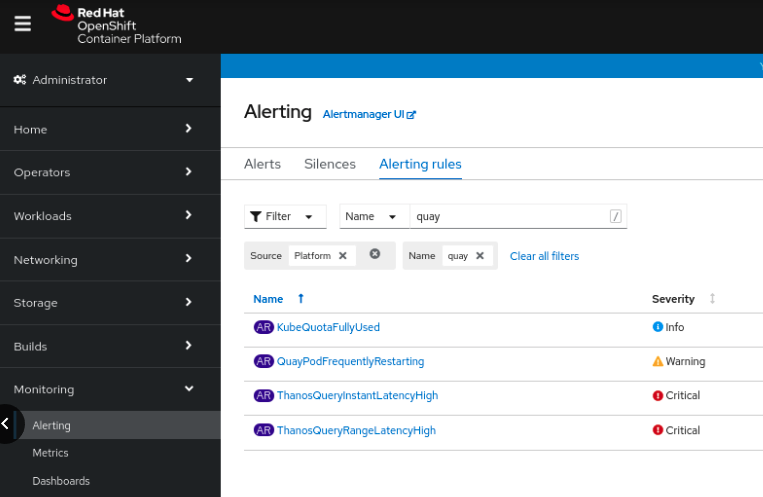

An alert is raised if the Quay pods restart too often. The alert can be configured by accessing the Alerting rules tab from Monitoring → Alerting in the console UI and searching for the Quay-specific alert:

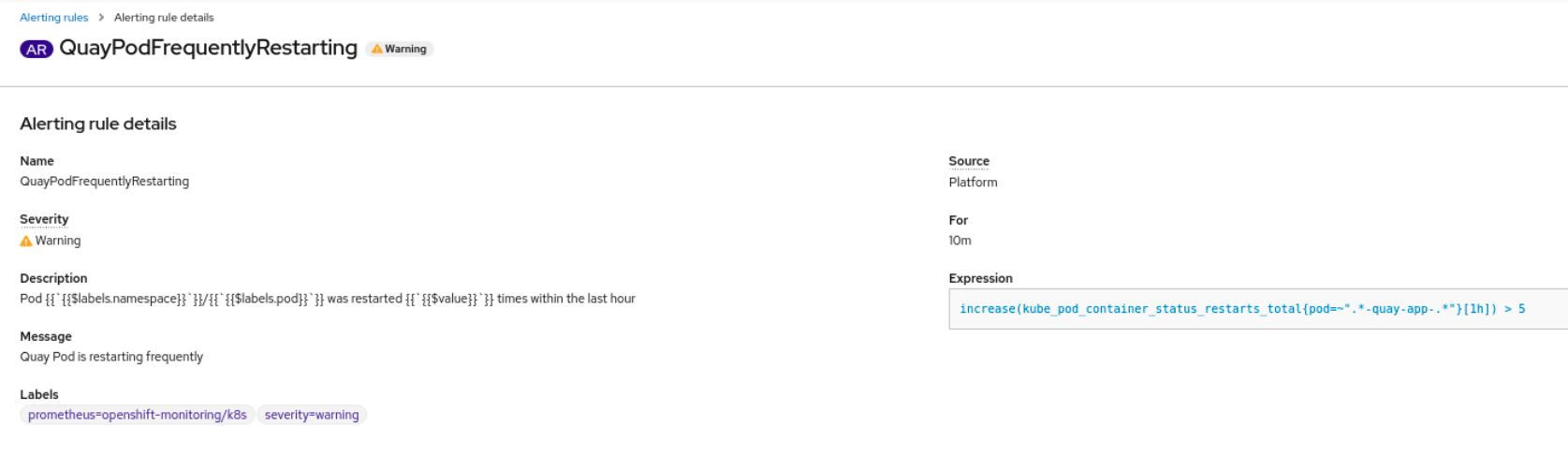

Select the QuayPodFrequentlyRestarting rule detail to configure the alert.

Chapter 3. Clair security scanner

3.1. Clair vulnerability databases

Clair uses the following vulnerability databases to report issues in your images:

- Ubuntu OVAL database

- Debian Security Tracker

- Red Hat Enterprise Linux (RHEL) OVAL database

- SUSE OVAL database

- Oracle OVAL database

- Alpine SecDB database

- VMware Photon OS database

- Amazon Web Services (AWS) UpdateInfo

- Open Source Vulnerability (OSV) Database

For information about how Clair does security mapping with the different databases, see Claircore Severity Mapping.

3.1.1. Information about Open Source Vulnerability (OSV) database for Clair

Open Source Vulnerability (OSV) is a vulnerability database and monitoring service that focuses on tracking and managing security vulnerabilities in open source software.

OSV provides a comprehensive and up-to-date database of known security vulnerabilities in open source projects. It covers a wide range of open source software, including libraries, frameworks, and other components that are used in software development. For a full list of included ecosystems, see defined ecosystems.

Clair also reports vulnerability and security information for the Go, Java, and Ruby ecosystems through the Open Source Vulnerability (OSV) database.

By leveraging OSV, developers and organizations can proactively monitor and address security vulnerabilities in open source components that they use, which helps to reduce the risk of security breaches and data compromises in projects.

For more information about OSV, see the OSV website.

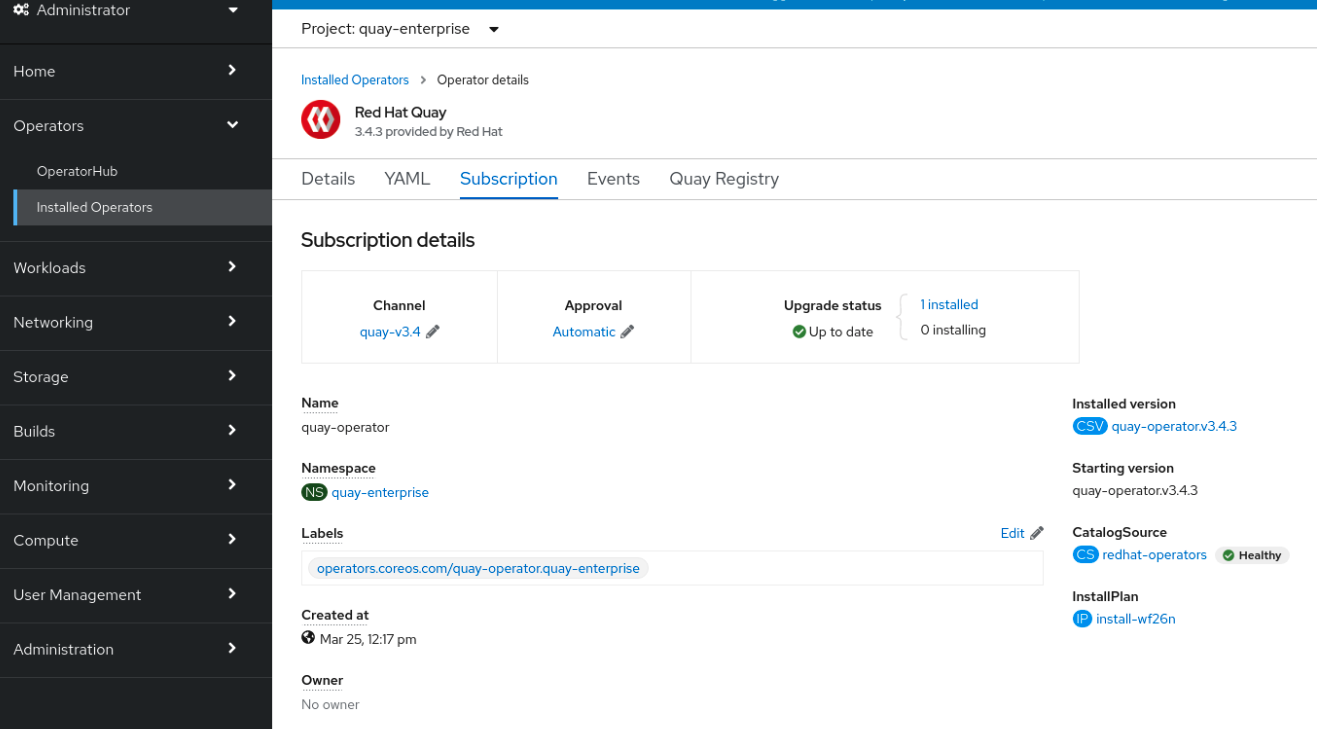

3.2. Clair on OpenShift Container Platform

To set up Clair v4 (Clair) on a Red Hat Quay deployment on OpenShift Container Platform, use of the Red Hat Quay Operator is recommended. By default, the Red Hat Quay Operator installs or upgrades a Clair deployment along with your Red Hat Quay deployment and configures Clair automatically.

3.3. Testing Clair

Use the following procedure to test Clair on either a standalone Red Hat Quay deployment, or on an OpenShift Container Platform Operator-based deployment.

Prerequisites

- You have deployed the Clair container image.

Procedure

Pull a sample image by entering the following command:

$ sudo podman pull docker.io/library/ubuntu:20.04

Tag the image to your registry by entering the following command:

$ sudo podman tag docker.io/library/ubuntu:20.04 <quay-server.example.com>/<user-name>/ubuntu:20.04

Push the image to your Red Hat Quay registry by entering the following command:

$ sudo podman push --tls-verify=false <quay-server.example.com>/<user-name>/ubuntu:20.04

- Log in to your Red Hat Quay deployment through the UI.

- Click the repository name, for example, quayadmin/ubuntu.

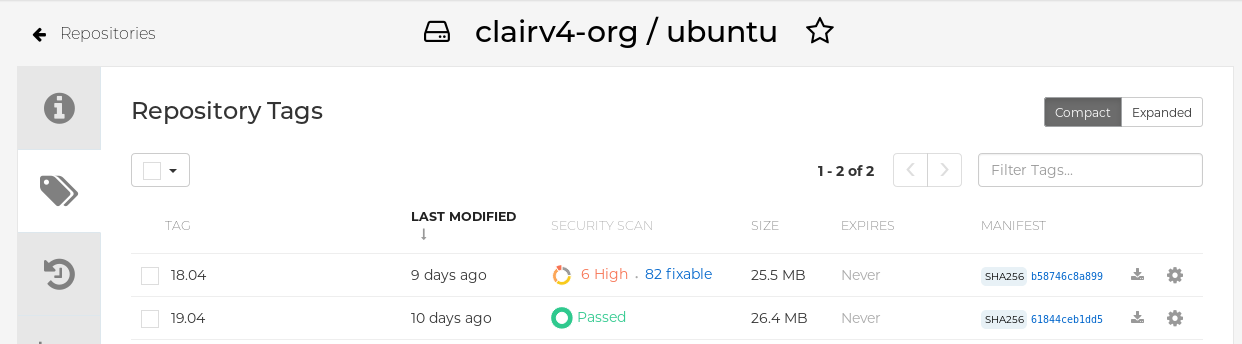

In the navigation pane, click Tags.

Report summary

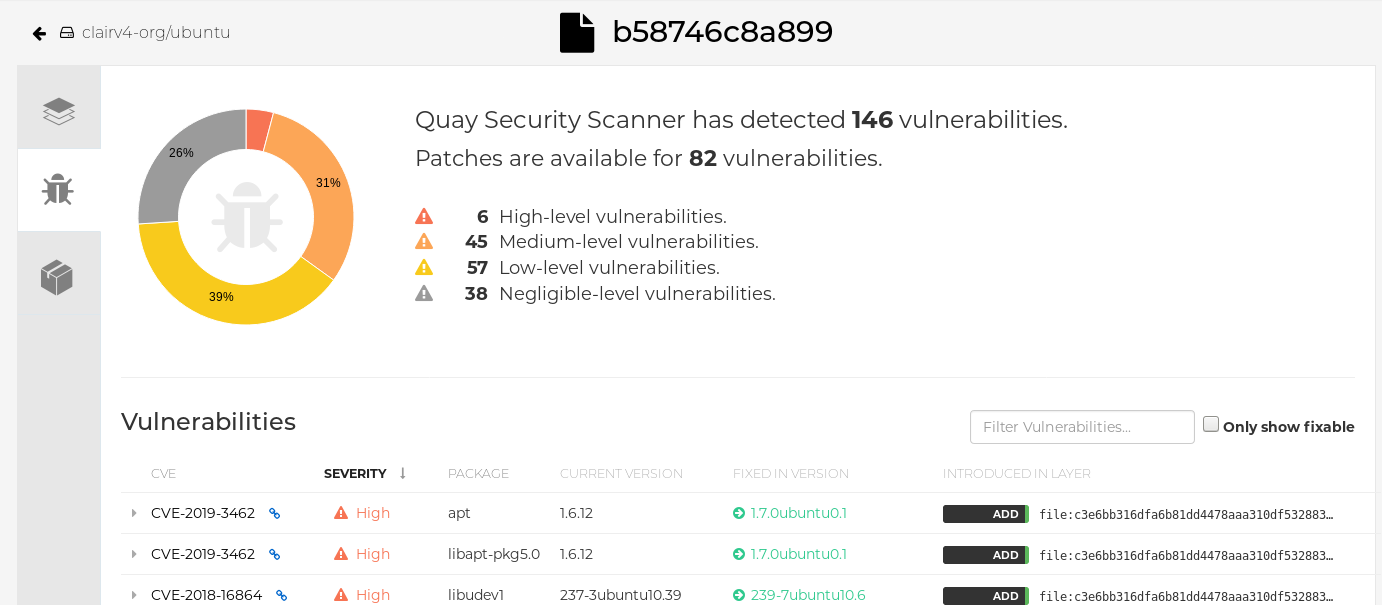

Click the image report, for example, 45 medium, to show a more detailed report:

Report details

Note

In some cases, Clair shows duplicate reports on images, for example, ubi8/nodejs-12 or ubi8/nodejs-16. This occurs because vulnerabilities with the same name are reported for different packages. This behavior is expected with Clair vulnerability reporting and will not be addressed as a bug.
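The pull, tag, and push steps of this procedure can be scripted. This sketch assumes that QUAY_HOST and QUAY_USER (hypothetical variable names) hold your registry host name and user or organization. It only prints the commands unless DRY_RUN=0 is set, so you can review them first.

```shell
#!/bin/sh
# Minimal sketch of the pull, tag, and push steps in this procedure.
# Assumptions: QUAY_HOST and QUAY_USER are placeholders for your registry
# host and user or organization. By default the commands are only printed;
# set DRY_RUN=0 to execute them.
QUAY_HOST="${QUAY_HOST:-quay-server.example.com}"
QUAY_USER="${QUAY_USER:-quayadmin}"
DRY_RUN="${DRY_RUN:-1}"
SRC="docker.io/library/ubuntu:20.04"

# Builds the destination reference in your registry.
image_ref() {
  printf '%s/%s/ubuntu:20.04\n' "$1" "$2"
}

# Prints the command when DRY_RUN=1, otherwise runs it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

DEST="$(image_ref "$QUAY_HOST" "$QUAY_USER")"
run sudo podman pull "$SRC"
run sudo podman tag "$SRC" "$DEST"
# --tls-verify=false mirrors the example; omit it once this host trusts
# the registry certificate.
run sudo podman push --tls-verify=false "$DEST"
```

Use sudo consistently for all three commands or for none of them. Rootful and rootless Podman use separate image stores, so an image pulled without sudo is not visible to sudo podman tag.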

3.4. Advanced Clair configuration

Use the procedures in the following sections to configure advanced Clair settings.

3.4.1. Unmanaged Clair configuration

Red Hat Quay users can run an unmanaged Clair configuration with the Red Hat Quay OpenShift Container Platform Operator. This feature allows users to create an unmanaged Clair database, or to run a custom Clair configuration with a managed database.

An unmanaged Clair database allows the Red Hat Quay Operator to work in a geo-replicated environment, where multiple instances of the Operator must communicate with the same database. An unmanaged Clair database can also be used when a user requires a highly available (HA) Clair database that exists outside of a cluster.

3.4.1.1. Running a custom Clair configuration with an unmanaged Clair database

Use the following procedure to set your Clair database to unmanaged.

You must not use the same externally managed PostgreSQL database for both Red Hat Quay and Clair deployments. Your PostgreSQL database must also not be shared with other workloads, as it might exhaust the natural connection limit on the PostgreSQL side when connection-intensive workloads, like Red Hat Quay or Clair, contend for resources. Additionally, pgBouncer is not supported with Red Hat Quay or Clair, so it is not an option to resolve this issue.

Procedure

In the Quay Operator, set the clairpostgres component of the QuayRegistry custom resource to managed: false.
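A minimal QuayRegistry manifest with an unmanaged Clair database might look like the following sketch. The registry name example-registry, the namespace quay-enterprise, and the secret name config-bundle-secret are placeholders, and every component that is not listed is left to the Operator's defaults.

```shell
# Writes an illustrative QuayRegistry manifest; adjust the names before applying.
# Assumptions: registry "example-registry" in namespace "quay-enterprise"
# and a config bundle secret named "config-bundle-secret".
cat <<'EOF' > quayregistry-unmanaged-clairpostgres.yaml
apiVersion: quay.redhat.com/v1
kind: QuayRegistry
metadata:
  name: example-registry
  namespace: quay-enterprise
spec:
  configBundleSecret: config-bundle-secret
  components:
    - kind: clairpostgres
      managed: false
EOF
```

Apply the manifest with:

$ oc apply -f quayregistry-unmanaged-clairpostgres.yaml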

3.4.1.2. Configuring a custom Clair database with an unmanaged Clair database

Red Hat Quay on OpenShift Container Platform allows users to provide their own Clair database.

Use the following procedure to create a custom Clair database.

The following procedure sets up Clair with SSL/TLS certificates. To view a similar procedure that does not set up Clair with SSL/TLS certificates, see "Configuring a custom Clair database with a managed Clair configuration".

Procedure

Create a Quay configuration bundle secret that includes the clair-config.yaml file by entering the following command:

$ oc create secret generic --from-file config.yaml=./config.yaml --from-file extra_ca_cert_rds-ca-2019-root.pem=./rds-ca-2019-root.pem --from-file clair-config.yaml=./clair-config.yaml --from-file ssl.cert=./ssl.cert --from-file ssl.key=./ssl.key config-bundle-secret

Note

- The database certificate is mounted under /run/certs/rds-ca-2019-root.pem on the Clair application pod in the clair-config.yaml. It must be specified when configuring your clair-config.yaml.

- An example clair-config.yaml can be found at Clair on OpenShift config.
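The following sketch writes one possible clair-config.yaml for this procedure. In it, the indexer, matcher, and notifier all connect to an external PostgreSQL database over TLS. The values in angle brackets and port 5432 are placeholders for your own database. sslrootcert uses the /run/certs/rds-ca-2019-root.pem mount path, and sslmode=verify-ca makes the client verify the server certificate against that CA. The script does not overwrite an existing clair-config.yaml.

```shell
# Illustrative clair-config.yaml for an external PostgreSQL database reached
# over TLS. <db-host>, <clair-db>, <db-user>, and <db-password> are placeholders.
CONN='host=<db-host> port=5432 dbname=<clair-db> user=<db-user> password=<db-password> sslrootcert=/run/certs/rds-ca-2019-root.pem sslmode=verify-ca'

if [ -e clair-config.yaml ]; then
  echo "clair-config.yaml already exists; not overwriting it" >&2
else
  # Each Clair service gets the same connection string.
  cat <<EOF > clair-config.yaml
log_level: info
indexer:
  connstring: ${CONN}
  migrations: true
matcher:
  connstring: ${CONN}
  migrations: true
notifier:
  connstring: ${CONN}
  migrations: true
metrics:
  name: prometheus
EOF
fi
```

Fields that this sketch omits are filled in with defaults when the file is mounted into the Clair pod.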

Add the clair-config.yaml file to your bundle secret.

Note

When updated, the provided clair-config.yaml file is mounted into the Clair pod. Any fields not provided are automatically populated with defaults using the Clair configuration module.

You can check the status of your Clair pod by clicking the commit in the Build History page, or by running oc get pods -n <namespace>. For example:

$ oc get pods -n <namespace>

Example output

NAME                                               READY   STATUS    RESTARTS   AGE
f192fe4a-c802-4275-bcce-d2031e635126-9l2b5-25lg2   1/1     Running   0          7s
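If you prefer to keep the bundle secret as a manifest instead of creating it with oc create secret, the following sketch renders an equivalent Secret from the same local files. The function name make_bundle_secret is hypothetical. The data keys are whatever you pass on the command line, so you can mirror the --from-file keys used in the oc create secret command.

```shell
# Hypothetical helper: print a Secret manifest built from local files.
# Usage: make_bundle_secret <secret-name> <namespace> <key>=<path> ...
make_bundle_secret() {
  local name="$1" ns="$2" pair key file
  shift 2
  printf 'apiVersion: v1\nkind: Secret\nmetadata:\n  name: %s\n  namespace: %s\ntype: Opaque\ndata:\n' "$name" "$ns"
  for pair in "$@"; do
    key="${pair%%=*}"     # text before the first "="
    file="${pair#*=}"     # text after the first "="
    if [ ! -r "$file" ]; then
      echo "cannot read $file" >&2
      return 1
    fi
    # Secret data values are base64 encoded without line breaks.
    printf '  %s: %s\n' "$key" "$(base64 < "$file" | tr -d '\n')"
  done
}
```

For example:

$ make_bundle_secret config-bundle-secret quay-enterprise config.yaml=./config.yaml clair-config.yaml=./clair-config.yaml > config-bundle-secret.yaml
$ oc apply -f config-bundle-secret.yaml

Base64 is an encoding, not encryption, so treat the generated file as sensitive.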

3.4.2. Running a custom Clair configuration with a managed Clair database

In some cases, users might want to run a custom Clair configuration with a managed Clair database. This is useful in the following scenarios:

- When a user wants to disable specific updater resources.

- When a user is running Red Hat Quay in a disconnected environment. For more information about running Clair in a disconnected environment, see Clair in disconnected environments.

Note

- If you are running Red Hat Quay in a disconnected environment, the airgap parameter of your clair-config.yaml must be set to True.

- If you are running Red Hat Quay in a disconnected environment, you should disable all updater components.

3.4.2.1. Setting a Clair database to managed

Use the following procedure to set your Clair database to managed.

Procedure

In the Quay Operator, set the clairpostgres component of the QuayRegistry custom resource to managed: true.

3.4.2.2. Configuring a custom Clair database with a managed Clair configuration

Red Hat Quay on OpenShift Container Platform allows users to provide their own Clair database.

Use the following procedure to create a custom Clair database.

Procedure

Create a Quay configuration bundle secret that includes the clair-config.yaml file by entering the following command:

$ oc create secret generic --from-file config.yaml=./config.yaml --from-file extra_ca_cert_rds-ca-2019-root.pem=./rds-ca-2019-root.pem --from-file clair-config.yaml=./clair-config.yaml config-bundle-secret

Note

- The database certificate is mounted under /run/certs/rds-ca-2019-root.pem on the Clair application pod in the clair-config.yaml. It must be specified when configuring your clair-config.yaml.

- An example clair-config.yaml can be found at Clair on OpenShift config.

Add the clair-config.yaml file to your bundle secret.

Note

When updated, the provided clair-config.yaml file is mounted into the Clair pod. Any fields not provided are automatically populated with defaults using the Clair configuration module.

You can check the status of your Clair pod by clicking the commit in the Build History page, or by running oc get pods -n <namespace>. For example:

$ oc get pods -n <namespace>

Example output

NAME                                               READY   STATUS    RESTARTS   AGE
f192fe4a-c802-4275-bcce-d2031e635126-9l2b5-25lg2   1/1     Running   0          7s
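To script the status check, the following sketch reads oc get pods output on standard input. It succeeds only when every listed pod is Running and shows all containers ready in the READY column. The function name all_pods_ready is hypothetical, and the function relies only on the default column order in the example output (NAME, READY, STATUS, ...). Completed pods from one-off jobs are reported as not ready, so filter them out if your namespace contains any.

```shell
# Hypothetical helper: exit 0 only if every pod in `oc get pods` output is
# Running and its READY column shows all containers ready (for example 1/1).
# Pods that fail the check are printed as "not ready: <name>".
all_pods_ready() {
  awk '
    NR == 1 { next }                         # skip the header row
    {
      seen = 1
      split($2, r, "/")                      # READY column, for example 1/1
      if ($3 != "Running" || r[1] != r[2]) { bad = 1; print "not ready: " $1 }
    }
    END { exit (seen && !bad) ? 0 : 1 }      # empty input also counts as failure
  '
}
```

For example, to wait until the pods in a namespace are ready:

$ until oc get pods -n <namespace> | all_pods_ready; do sleep 10; done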

3.4.3. Clair in disconnected environments

Clair uses a set of components called updaters to handle the fetching and parsing of data from various vulnerability databases. By default, updaters pull vulnerability data directly from the internet and work out of the box. However, some users might require Red Hat Quay to run in a disconnected environment, or an environment without direct access to the internet. Clair supports disconnected environments by working with different types of update workflows that take network isolation into consideration. This works by using the clairctl command line interface tool, which obtains updater data from the internet by using an open host, securely transferring the data to an isolated host, and then importing the updater data on the isolated host into Clair.

Use this guide to deploy Clair in a disconnected environment.

Currently, Clair enrichment data consists of CVSS data. Enrichment data is not supported in disconnected environments.

For more information about Clair updaters, see "Clair updaters".

3.4.3.1. Setting up Clair in a disconnected OpenShift Container Platform cluster

Use the following procedures to set up an OpenShift Container Platform provisioned Clair pod in a disconnected OpenShift Container Platform cluster.

3.4.3.1.1. Installing the clairctl command line utility tool for OpenShift Container Platform deployments

Use the following procedure to install the clairctl CLI tool for OpenShift Container Platform deployments.

Procedure

Install the clairctl program for a Clair deployment in an OpenShift Container Platform cluster by entering the following command:

$ oc -n quay-enterprise exec example-registry-clair-app-64dd48f866-6ptgw -- cat /usr/bin/clairctl > clairctl

Note

Unofficially, the clairctl tool can be downloaded

Set the permissions of the clairctl file so that it can be executed and run by the user, for example:

$ chmod u+x ./clairctl
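The pod name in the oc exec command (example-registry-clair-app-64dd48f866-6ptgw) belongs to one specific cluster. The following minimal sketch looks up your own Clair application pod name. It assumes the <registry-name>-clair-app-<hash> naming pattern seen in that example, and the function name is hypothetical.

```shell
# Hypothetical helper: print the first Clair application pod name from
# `oc get pods -o name` output (lines such as "pod/<name>") read on stdin.
# The Clair database pod (-clair-postgres-) does not match the pattern.
first_clair_app_pod() {
  grep -E '^pod/.+-clair-app-' | head -n 1 | sed 's|^pod/||'
}
```

For example:

$ POD="$(oc -n quay-enterprise get pods -o name | first_clair_app_pod)"
$ oc -n quay-enterprise exec "$POD" -- cat /usr/bin/clairctl > clairctl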

3.4.3.1.2. Retrieving and decoding the Clair configuration secret for Clair deployments on OpenShift Container Platform

Use the following procedure to retrieve and decode the configuration secret for an OpenShift Container Platform provisioned Clair instance on OpenShift Container Platform.

Prerequisites

- You have installed the clairctl command line utility tool.

Procedure

Enter the following command to retrieve and decode the configuration secret, and then save it to a Clair configuration YAML:

$ oc get secret -n quay-enterprise example-registry-clair-config-secret -o "jsonpath={$.data['config\.yaml']}" | base64 -d > clair-config.yaml

Update the clair-config.yaml file so that the disable_updaters and airgap parameters are set to True.
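In Clair's configuration file, airgap is a key of the indexer section and disable_updaters is a key of the matcher section. The following sketch shows that shape in its comments and performs a quick check on the edited file. It is a coarse grep, not a YAML parser, so it cannot confirm which section a key sits in. The function name is hypothetical.

```shell
# Expected shape of the relevant part of clair-config.yaml:
#
#   indexer:
#     airgap: true
#   matcher:
#     disable_updaters: true
#
# Hypothetical helper: coarse check that both keys are set to true
# (case-insensitive, so True also matches). It does not parse YAML.
check_airgap_config() {
  local rc=0
  grep -Eiq '^[[:space:]]+airgap:[[:space:]]*true[[:space:]]*$' "$1" \
    || { echo "missing or false: indexer.airgap" >&2; rc=1; }
  grep -Eiq '^[[:space:]]+disable_updaters:[[:space:]]*true[[:space:]]*$' "$1" \
    || { echo "missing or false: matcher.disable_updaters" >&2; rc=1; }
  return "$rc"
}
```

For example, run check_airgap_config clair-config.yaml before you continue. A non-zero exit status means at least one key still needs to be updated.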

3.4.3.1.3. Exporting the updaters bundle from a connected Clair instance

Use the following procedure to export the updaters bundle from a Clair instance that has access to the internet.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have retrieved and decoded the Clair configuration secret, and saved it to a Clair config.yaml file.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

Procedure

From a Clair instance that has access to the internet, use the clairctl CLI tool with your configuration file to export the updaters bundle. For example:

$ ./clairctl --config ./config.yaml export-updaters updates.gz
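Before you move updates.gz to the isolated host, you can record a checksum so that the copy can be verified after transfer. This integrity check is an optional addition and not part of the documented clairctl workflow. It assumes that the sha256sum utility from GNU coreutils is available on both hosts, and the function names are hypothetical.

```shell
# Hypothetical helpers around sha256sum (GNU coreutils).
# Run both from the directory that contains the bundle.

# On the connected host: writes <file>.sha256 next to the bundle.
record_checksum() {
  sha256sum "$1" > "$1.sha256"
}

# On the isolated host: exits non-zero if the bundle changed in transit.
verify_checksum() {
  sha256sum -c "$1.sha256"
}
```

For example, run record_checksum updates.gz on the connected host and transfer both updates.gz and updates.gz.sha256. Then run verify_checksum updates.gz on the isolated host before importing.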

3.4.3.1.4. Configuring access to the Clair database in the disconnected OpenShift Container Platform cluster

Use the following procedure to configure access to the Clair database in your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have retrieved and decoded the Clair configuration secret, and saved it to a Clair config.yaml file.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

Procedure

Determine your Clair database service by using the oc CLI tool, for example:

$ oc get svc -n quay-enterprise

Example output

NAME                              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
example-registry-clair-app        ClusterIP   172.30.224.93   <none>        80/TCP,8089/TCP   4d21h
example-registry-clair-postgres   ClusterIP   172.30.246.88   <none>        5432/TCP          4d21h
...

Forward the Clair database port so that it is accessible from the local machine. For example:

$ oc port-forward -n quay-enterprise service/example-registry-clair-postgres 5432:5432

Update your Clair config.yaml file:

- Replace the value of the host in the multiple connstring fields with localhost.

- For more information about the rhel-repository-scanner parameter, see "Mapping repositories to Common Product Enumeration information".

- For more information about the rhel_containerscanner parameter, see "Mapping repositories to Common Product Enumeration information".
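While the port-forward is running, the host replacement in the connstring fields can be scripted as in the following sketch. It assumes that each connstring sits on a single line in key=value form, for example connstring: host=example-registry-clair-postgres port=5432 .... It keeps the original file as a .bak copy, and the function name is hypothetical.

```shell
# Hypothetical helper: point every connstring in a Clair config file at
# localhost, keeping the original as <file>.bak.
# Only the value after "host=" on lines containing "connstring:" changes.
connstrings_to_localhost() {
  cp "$1" "$1.bak" &&
    sed -E '/connstring:/ s/host=[^[:space:]"]+/host=localhost/' "$1.bak" > "$1"
}
```

For example, run connstrings_to_localhost clair-config.yaml, and then pass the same file to clairctl with --config ./clair-config.yaml.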

3.4.3.1.5. Importing the updaters bundle into the disconnected OpenShift Container Platform cluster

Use the following procedure to import the updaters bundle into your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have retrieved and decoded the Clair configuration secret, and saved it to a Clair config.yaml file.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

- You have transferred the updaters bundle into your disconnected environment.

Procedure

Use the clairctl CLI tool to import the updaters bundle into the Clair database that is deployed by OpenShift Container Platform. For example:

$ ./clairctl --config ./clair-config.yaml import-updaters updates.gz

3.4.3.2. Setting up a self-managed deployment of Clair for a disconnected OpenShift Container Platform cluster

Use the following procedures to set up a self-managed deployment of Clair for a disconnected OpenShift Container Platform cluster.

3.4.3.2.1. Installing the clairctl command line utility tool for a self-managed Clair deployment on OpenShift Container Platform

Use the following procedure to install the clairctl CLI tool for self-managed Clair deployments on OpenShift Container Platform.

Procedure

Install the clairctl program for a self-managed Clair deployment by using the podman cp command, for example:

$ sudo podman cp clairv4:/usr/bin/clairctl ./clairctl

Set the permissions of the clairctl file so that it can be executed and run by the user, for example:

$ chmod u+x ./clairctl

3.4.3.2.2. Deploying a self-managed Clair container for disconnected OpenShift Container Platform clusters

Use the following procedure to deploy a self-managed Clair container for disconnected OpenShift Container Platform clusters.

Prerequisites

- You have installed the clairctl command line utility tool.

Procedure

Create a folder for your Clair configuration file, for example:

$ sudo mkdir -p /etc/clairv4/config/

Create a Clair configuration file with the disable_updaters parameter set to True.

Start Clair by using the container image, mounting in the configuration from the file you created.
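As an illustration only, the following sketch writes a minimal Clair configuration with airgap and disable_updaters enabled, and prints a podman run command that mounts it. The connection string values, ports 8081 and 8088, and the image tag are placeholders, and the function names are hypothetical. CLAIR_CONF and CLAIR_MODE are the environment variables that Clair reads for its configuration path and run mode.

```shell
# Hypothetical helpers for a self-managed Clair container.
# Placeholders: <db-host>, <db-user>, <db-password>, and the image tag.

# Usage: write_clair_config <config-dir>
write_clair_config() {
  local conn='host=<db-host> port=5432 dbname=clair user=<db-user> password=<db-password> sslmode=disable'
  mkdir -p "$1" && cat <<EOF > "$1/config.yaml"
http_listen_addr: :8081
introspection_addr: :8088
log_level: info
indexer:
  connstring: ${conn}
  migrations: true
  airgap: true
matcher:
  connstring: ${conn}
  migrations: true
  disable_updaters: true
notifier:
  connstring: ${conn}
  migrations: true
EOF
}

# Usage: clair_run_cmd <config-dir> <image>
# Prints the command instead of running it, so you can review it first.
clair_run_cmd() {
  printf 'podman run -d --name clairv4 -p 8081:8081 -p 8088:8088 -e CLAIR_CONF=/clair/config.yaml -e CLAIR_MODE=combo -v %s:/clair:Z %s\n' "$1" "$2"
}
```

For example, as root, run write_clair_config /etc/clairv4/config, then run clair_run_cmd /etc/clairv4/config registry.redhat.io/quay/clair-rhel8:<version>. The image name is an assumption, so check it against your subscription. Review the printed command, fill in the tag, and run it with sudo. The container name clairv4 matches the podman cp command used to install clairctl.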

3.4.3.2.3. Exporting the updaters bundle from a connected Clair instance

Use the following procedure to export the updaters bundle from a Clair instance that has access to the internet.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have deployed Clair.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

Procedure

From a Clair instance that has access to the internet, use the clairctl CLI tool with your configuration file to export the updaters bundle. For example:

$ ./clairctl --config ./config.yaml export-updaters updates.gz

3.4.3.2.4. Configuring access to the Clair database in the disconnected OpenShift Container Platform cluster

Use the following procedure to configure access to the Clair database in your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have deployed Clair.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

Procedure

Determine your Clair database service by using the oc CLI tool, for example:

$ oc get svc -n quay-enterprise

Example output

NAME                              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
example-registry-clair-app        ClusterIP   172.30.224.93   <none>        80/TCP,8089/TCP   4d21h
example-registry-clair-postgres   ClusterIP   172.30.246.88   <none>        5432/TCP          4d21h
...

Forward the Clair database port so that it is accessible from the local machine. For example:

$ oc port-forward -n quay-enterprise service/example-registry-clair-postgres 5432:5432

Update your Clair config.yaml file:

- Replace the value of the host in the multiple connstring fields with localhost.

- For more information about the rhel-repository-scanner parameter, see "Mapping repositories to Common Product Enumeration information".

- For more information about the rhel_containerscanner parameter, see "Mapping repositories to Common Product Enumeration information".

3.4.3.2.5. Importing the updaters bundle into the disconnected OpenShift Container Platform cluster

Use the following procedure to import the updaters bundle into your disconnected OpenShift Container Platform cluster.

Prerequisites

- You have installed the clairctl command line utility tool.

- You have deployed Clair.

- The disable_updaters and airgap parameters are set to True in your Clair config.yaml file.

- You have exported the updaters bundle from a Clair instance that has access to the internet.

- You have transferred the updaters bundle into your disconnected environment.

Procedure

Use the clairctl CLI tool to import the updaters bundle into the Clair database that is deployed by OpenShift Container Platform:

$ ./clairctl --config ./clair-config.yaml import-updaters updates.gz

3.4.4. Mapping repositories to Common Product Enumeration information

Clair’s Red Hat Enterprise Linux (RHEL) scanner relies on a Common Product Enumeration (CPE) file to map RPM packages to the corresponding security data to produce matching results. Red Hat Product Security maintains and regularly updates these files.

The CPE file must be present, or access to the file must be allowed, for the scanner to properly process RPM packages. If the file is not present, RPM packages installed in the container image will not be scanned.

| CPE | Link to JSON mapping file |
|---|---|
| repos2cpe | Red Hat Repository-to-CPE JSON |
| names2repos | Red Hat Name-to-Repos JSON |

By default, Clair’s indexer includes the repos2cpe and names2repos data files within the Clair container. This means that you can reference /data/repository-to-cpe.json and /data/container-name-repos-map.json in your clair-config.yaml file without the need for additional configuration.

Although Red Hat Product Security updates the repos2cpe and names2repos files regularly, the versions included in the Clair container are only updated with Red Hat Quay releases (for example, version 3.14.1 → 3.14.2). This can lead to discrepancies between the latest CPE files and those bundled with Clair.

3.4.4.1. Mapping repositories to Common Product Enumeration example configuration

Use the repo2cpe_mapping_file and name2repos_mapping_file fields in your Clair configuration to include the CPE JSON mapping files.
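The two fields belong to the RHEL scanners under the indexer section. The following sketch writes that fragment with the /data paths of the files bundled in the Clair container, so that you can merge it into the indexer section of your clair-config.yaml. The fragment file name is arbitrary.

```shell
# Writes the scanner fragment to merge into the indexer section of
# clair-config.yaml. The /data paths point at the copies bundled in Clair.
cat <<'EOF' > cpe-mapping-fragment.yaml
indexer:
  scanner:
    repo:
      rhel-repository-scanner:
        repo2cpe_mapping_file: /data/repository-to-cpe.json
    package:
      rhel_containerscanner:
        name2repos_mapping_file: /data/container-name-repos-map.json
EOF
```

To use newer mapping files than the bundled copies, point these fields at files that you mount into the Clair container yourself.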

For more information, see How to accurately match OVAL security data to installed RPMs.

3.5. Resizing Managed Storage

When deploying Red Hat Quay on OpenShift Container Platform, three distinct persistent volume claims (PVCs) are deployed:

- One for the PostgreSQL 13 registry.

- One for the Clair PostgreSQL 13 registry.

- One that uses NooBaa as backend storage.

The connection between Red Hat Quay and NooBaa is done through the S3 API and ObjectBucketClaim API in OpenShift Container Platform. Red Hat Quay uses that API group to create a bucket in NooBaa, obtain access keys, and automatically set everything up. On the backend, or NooBaa, side, that bucket is created inside the backing store. As a result, NooBaa PVCs are not mounted or connected to Red Hat Quay pods.

The default size for the PostgreSQL 13 and Clair PostgreSQL 13 PVCs is set to 50 GiB. You can expand storage for these PVCs on the OpenShift Container Platform console by using the following procedure.

The following procedure shares commonality with Expanding Persistent Volume Claims on Red Hat OpenShift Data Foundation.

3.5.1. Resizing PostgreSQL 13 PVCs on Red Hat Quay

Use the following procedure to resize the PostgreSQL 13 and Clair PostgreSQL 13 PVCs.

Prerequisites

- You have cluster admin privileges on OpenShift Container Platform.

Procedure

- Log in to the OpenShift Container Platform console and select Storage → Persistent Volume Claims.

- Select the desired PersistentVolumeClaim for either PostgreSQL 13 or Clair PostgreSQL 13, for example, example-registry-quay-postgres-13.

- From the Action menu, select Expand PVC.

- Enter the new size of the Persistent Volume Claim and select Expand.

After a few minutes, the expanded size should be reflected in the PVC’s Capacity field.
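The same expansion can be requested from the CLI by patching spec.resources.requests.storage on the claim. This is a sketch. It assumes that the StorageClass of the PVC has allowVolumeExpansion set to true, and the helper name is hypothetical.

```shell
# Hypothetical helper: print a merge patch that requests a new size for a PVC.
# Accepts whole-number sizes in Gi only, to keep validation simple.
pvc_resize_patch() {
  if ! printf '%s\n' "$1" | grep -Eq '^[1-9][0-9]*Gi$'; then
    echo "expected a size such as 100Gi, got: $1" >&2
    return 1
  fi
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$1"
}
```

For example:

$ oc patch pvc example-registry-quay-postgres-13 -n <namespace> --type merge -p "$(pvc_resize_patch 100Gi)"

Kubernetes allows a PVC to grow but not to shrink, so choose a size larger than the current 50 GiB default.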

3.6. Customizing Default Operator Images

Currently, customizing default Operator images is not supported on IBM Power and IBM Z.

In certain circumstances, it might be useful to override the default images used by the Red Hat Quay Operator. This can be done by setting one or more environment variables in the Red Hat Quay Operator ClusterServiceVersion.

Using this mechanism is not supported for production Red Hat Quay environments and is recommended only for development or testing purposes. There is no guarantee your deployment will work correctly when using non-default images with the Red Hat Quay Operator.

3.6.1. Environment Variables

The following environment variables are used in the Red Hat Quay Operator to override component images:

| Environment Variable | Component |
|---|---|

Overridden images must be referenced by manifest (@sha256:) and not by tag (:latest).

3.6.2. Applying overrides to a running Operator

When the Red Hat Quay Operator is installed in a cluster through the Operator Lifecycle Manager (OLM), the managed component container images can be easily overridden by modifying the ClusterServiceVersion object.

Use the following procedure to apply overrides to a running Red Hat Quay Operator.

Procedure

The ClusterServiceVersion object is Operator Lifecycle Manager’s representation of a running Operator in the cluster. Find the Red Hat Quay Operator’s ClusterServiceVersion by using a Kubernetes UI or the kubectl/oc CLI tool. For example:

$ oc get clusterserviceversions -n <your-namespace>

Using the UI, oc edit, or another method, modify the Red Hat Quay ClusterServiceVersion to include the environment variables outlined above to point to the override images:

JSONPath: spec.install.spec.deployments[0].spec.template.spec.containers[0].env

This is done at the Operator level, so every QuayRegistry will be deployed using these same overrides.
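As an alternative to editing the ClusterServiceVersion interactively, the change can be expressed as a JSON Patch (RFC 6902) and applied with `oc patch clusterserviceversion <csv_name> -n <your-namespace> --type json -p '<patch>'`. The following Python sketch builds such a patch for the JSONPath above. It is a sketch under assumptions: the helper name is hypothetical, the Operator container is assumed to already have an `env` list (the `/-` suffix appends to an existing array), and `RELATED_IMAGE_COMPONENT_QUAY` is an assumed example name; use the variable names from the environment variable table.

```python
import json

# The JSONPath from the procedure above, written as a JSON Pointer (RFC 6901).
ENV_POINTER = "/spec/install/spec/deployments/0/spec/template/spec/containers/0/env"


def build_env_override_patch(overrides: dict[str, str]) -> list[dict]:
    """Return JSON Patch operations that append one env var per override image."""
    return [
        {"op": "add", "path": f"{ENV_POINTER}/-", "value": {"name": name, "value": image}}
        for name, image in sorted(overrides.items())
    ]


if __name__ == "__main__":
    # Hypothetical variable name and digest-pinned image.
    patch = build_env_override_patch(
        {"RELATED_IMAGE_COMPONENT_QUAY": "registry.example.com/quay/quay@sha256:" + "0" * 64}
    )
    print(json.dumps(patch))
```

Appending does not replace a variable that is already set. If the variable already exists in the list, edit its value instead.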

3.7. AWS S3 CloudFront

Use the following procedure if you are using AWS S3 CloudFront for your backend registry storage.

Procedure

Enter the following command to create a config bundle secret that contains your config.yaml file and the CloudFront signing key:

$ oc create secret generic --from-file config.yaml=./config_awss3cloudfront.yaml --from-file default-cloudfront-signing-key.pem=./default-cloudfront-signing-key.pem test-config-bundle

Chapter 4. Virtual builds with Red Hat Quay on OpenShift Container Platform

Documentation for the builds feature has been moved to Builders and image automation. This chapter will be removed in a future version of Red Hat Quay.

Chapter 5. Geo-replication

Geo-replication allows multiple, geographically distributed Red Hat Quay deployments to work as a single registry from the perspective of a client or user. It significantly improves push and pull performance in a globally distributed Red Hat Quay setup. Image data is asynchronously replicated in the background with transparent failover and redirect for clients.

Geo-replication is supported on both standalone and Operator-based deployments of Red Hat Quay.

Additional resources

- For more information about the geo-replication feature’s architecture, see the architecture guide, which includes technical diagrams and a high-level overview.

5.1. Geo-replication features

- When geo-replication is configured, container image pushes will be written to the preferred storage engine for that Red Hat Quay instance. This is typically the nearest storage backend within the region.

- After the initial push, image data will be replicated in the background to other storage engines.

- The list of replication locations is configurable and those can be different storage backends.

- An image pull will always use the closest available storage engine, to maximize pull performance.

- If replication has not been completed yet, the pull will use the source storage backend instead.

5.2. Geo-replication requirements and constraints

- In geo-replicated setups, Red Hat Quay requires that all regions are able to read from and write to the object storage of all other regions. Object storage must be geographically accessible by all other regions.

- In case of an object storage system failure of one geo-replicating site, that site’s Red Hat Quay deployment must be shut down so that clients are redirected to the remaining site with intact storage systems by a global load balancer. Otherwise, clients will experience pull and push failures.

- Red Hat Quay has no internal awareness of the health or availability of the connected object storage system. You must configure a global load balancer (LB) to monitor the health of your distributed system and to route traffic to different sites based on their storage status.

- To check the status of your geo-replication deployment, you must use the /health/endtoend endpoint, which is used for global health monitoring. You must configure the redirect manually using the /health/endtoend endpoint. The /health/instance endpoint only checks local instance health.

- If the object storage system of one site becomes unavailable, there is no automatic redirect to the remaining storage system, or systems, of the remaining site, or sites.

- Geo-replication is asynchronous. The permanent loss of a site incurs the loss of the data that has been saved in that site’s object storage system but has not yet been replicated to the remaining sites at the time of failure.

A single database, and therefore all metadata and Red Hat Quay configuration, is shared across all regions.

Geo-replication does not replicate the database. In the event of an outage, Red Hat Quay with geo-replication enabled will not failover to another database.

- A single Redis cache is shared across the entire Red Hat Quay setup and needs to be accessible by all Red Hat Quay pods.

- The exact same configuration should be used across all regions, with the exception of the storage backend, which can be configured explicitly using the QUAY_DISTRIBUTED_STORAGE_PREFERENCE environment variable.

- Geo-replication requires object storage in each region. It does not work with local storage.

- Each region must be able to access every storage engine in each region, which requires a network path.

- Alternatively, the storage proxy option can be used.

- The entire storage backend, for example, all blobs, is replicated. Repository mirroring, by contrast, can be limited to a repository, or an image.

- All Red Hat Quay instances must share the same entrypoint, typically through a load balancer.

- All Red Hat Quay instances must have the same set of superusers, as they are defined inside the common configuration file.

In geo-replication environments, your Clair configuration can be set to unmanaged. An unmanaged Clair database allows the Red Hat Quay Operator to work in a geo-replicated environment where multiple instances of the Operator must communicate with the same database. For more information, see Advanced Clair configuration.

If you keep your Clair configuration managed, you must retrieve the configuration file for the Clair instance that is deployed by the Operator. For more information, see Retrieving and decoding the Clair configuration secret for Clair deployments on OpenShift Container Platform.

- Geo-replication requires SSL/TLS certificates and keys. For more information, see Proof of concept deployment using SSL/TLS certificates.

If the above requirements cannot be met, you should instead use two or more distinct Red Hat Quay deployments and take advantage of repository mirroring functions.
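Health checking is performed by the global load balancer; Red Hat Quay itself does not redirect clients. The following Python sketch only illustrates the decision such a probe makes. It assumes each site can also be reached on its own, hypothetical, per-site hostname in addition to the shared entry point, and treats an HTTP 200 response from /health/endtoend as healthy. The function names are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Callable, Optional


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url, or 0 if the site cannot be reached."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # Error responses such as 503 are raised as HTTPError.
        return err.code
    except OSError:
        # URLError, timeouts, and connection failures are all OSError subclasses.
        return 0


def healthy_sites(site_urls: dict[str, str],
                  fetch: Callable[[str], Optional[int]] = fetch_status) -> list[str]:
    """Return the names of sites whose /health/endtoend endpoint answers HTTP 200."""
    return [name for name, base_url in site_urls.items()
            if fetch(base_url.rstrip("/") + "/health/endtoend") == 200]
```

Passing `fetch` as a parameter keeps the selection logic separate from the network call, so the same function can be exercised with recorded responses.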

5.2.1. Setting up geo-replication on OpenShift Container Platform

Use the following procedure to set up geo-replication on OpenShift Container Platform.

Procedure

- Deploy a postgres instance for Red Hat Quay.

Log in to the database by entering the following command:

$ psql -U <username> -h <hostname> -p <port> -d <database_name>

Create a database for Red Hat Quay named quay. For example:

CREATE DATABASE quay;

Enable the pg_trgm extension inside the database:

\c quay
CREATE EXTENSION IF NOT EXISTS pg_trgm;

Deploy a Redis instance:

Note
- Deploying a Redis instance might be unnecessary if your cloud provider has its own service.

- Deploying a Redis instance is required if you are leveraging Builders.

- Deploy a VM for Redis

- Verify that it is accessible from the clusters where Red Hat Quay is running

- Port 6379/TCP must be open

Run Redis inside the instance:

$ sudo dnf install -y podman
$ podman run -d --name redis -p 6379:6379 redis

- Create two object storage backends, one for each cluster. Ideally, one object storage bucket will be close to the first, or primary, cluster, and the other will run closer to the second, or secondary, cluster.

- Deploy the clusters with the same config bundle, using environment variable overrides to select the appropriate storage backend for an individual cluster.

- Configure a load balancer to provide a single entry point to the clusters.

5.2.1.1. Configuring geo-replication for Red Hat Quay on OpenShift Container Platform

Use the following procedure to configure geo-replication for Red Hat Quay on OpenShift Container Platform.

Procedure

Create a config.yaml file that is shared between clusters. This config.yaml file contains the details for the common PostgreSQL, Redis, and storage backends. A proper SERVER_HOSTNAME must be used for the route and must match the hostname of the global load balancer.
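The shared configuration must define every storage location that the clusters refer to. The following Python sketch checks a few of the constraints described in this section against a config loaded into a dictionary. The field names are Red Hat Quay configuration fields, but the example values, the shortened S3Storage parameters, and the helper name are placeholders and assumptions; consult the storage configuration reference for the full set of driver parameters.

```python
# Example shared config as a dictionary; values in <...> are placeholders.
EXAMPLE_CONFIG = {
    "SERVER_HOSTNAME": "<global_load_balancer_hostname>",
    "FEATURE_STORAGE_REPLICATION": True,
    "DB_URI": "postgresql://<user>:<password>@<db_host>:5432/quay",
    "BUILDLOGS_REDIS": {"host": "<redis_host>", "port": 6379},
    "USER_EVENTS_REDIS": {"host": "<redis_host>", "port": 6379},
    "DISTRIBUTED_STORAGE_CONFIG": {
        # Driver parameters shortened; see the storage configuration reference.
        "usstorage": ["S3Storage", {"s3_bucket": "<us_bucket>", "storage_path": "/datastorage/registry"}],
        "eustorage": ["S3Storage", {"s3_bucket": "<eu_bucket>", "storage_path": "/datastorage/registry"}],
    },
    "DISTRIBUTED_STORAGE_PREFERENCE": ["usstorage", "eustorage"],
    "DISTRIBUTED_STORAGE_DEFAULT_LOCATIONS": ["usstorage", "eustorage"],
}


def check_georep_config(config: dict) -> list[str]:
    """Return human-readable problems found in a shared geo-replication config."""
    problems = []
    storage = config.get("DISTRIBUTED_STORAGE_CONFIG", {})
    if not config.get("FEATURE_STORAGE_REPLICATION"):
        problems.append("FEATURE_STORAGE_REPLICATION is not enabled")
    if len(storage) < 2:
        problems.append("fewer than two storage locations are defined")
    for name, (driver, _params) in storage.items():
        if driver == "LocalStorage":
            problems.append(f"location {name!r} uses local storage; object storage is required")
    for key in ("DISTRIBUTED_STORAGE_PREFERENCE", "DISTRIBUTED_STORAGE_DEFAULT_LOCATIONS"):
        for name in config.get(key, []):
            if name not in storage:
                problems.append(f"{key} references undefined location {name!r}")
    if not config.get("SERVER_HOSTNAME"):
        problems.append("SERVER_HOSTNAME is missing; it must match the global load balancer")
    return problems
```

Because JSON is valid YAML, a dictionary like this can also be written out with `json.dumps` and used as the config.yaml content.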

Create the configBundleSecret by entering the following command:

$ oc create secret generic --from-file config.yaml=./config.yaml georep-config-bundle

In each of the clusters, set the configBundleSecret and use the QUAY_DISTRIBUTED_STORAGE_PREFERENCE environment variable override to configure the appropriate storage for that cluster, for example, the US cluster and the European cluster.

Note
The config.yaml file must match between both deployments. If you make a change to one cluster, you must also make it in the other.

Note
Because SSL/TLS is unmanaged, and the route is managed, you must supply the certificates directly in the config bundle for both clusters. For more information, see Configuring SSL/TLS and Routes.
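Because the QuayRegistry resources for the two clusters differ only in the storage preference, they can be generated from one template. The following Python sketch emits a QuayRegistry manifest as JSON, which `oc apply -f` accepts. The component list is an assumption based on this section (external PostgreSQL, Redis, and object storage; unmanaged TLS; a managed route), and the registry name and namespace are hypothetical.

```python
import json

STORAGE_ENV = "QUAY_DISTRIBUTED_STORAGE_PREFERENCE"


def quayregistry_for_region(name: str, namespace: str, storage_location: str,
                            config_bundle: str = "georep-config-bundle") -> dict:
    """Build a QuayRegistry manifest that prefers one storage location for this cluster."""
    # Components provided outside the Operator (assumed for this setup).
    components = [{"kind": kind, "managed": False}
                  for kind in ("objectstorage", "postgres", "redis", "tls")]
    # The route is managed by the Operator.
    components.append({"kind": "route", "managed": True})
    # Quay and the mirror workers get the per-cluster storage preference.
    for kind in ("quay", "mirror"):
        components.append({
            "kind": kind,
            "managed": True,
            "overrides": {"env": [{"name": STORAGE_ENV, "value": storage_location}]},
        })
    return {
        "apiVersion": "quay.redhat.com/v1",
        "kind": "QuayRegistry",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"configBundleSecret": config_bundle, "components": components},
    }


if __name__ == "__main__":
    # Hypothetical registry name and namespace for the US cluster.
    print(json.dumps(quayregistry_for_region("registry", "quay-enterprise", "usstorage"), indent=2))
```

For the European cluster, the same call with "eustorage" produces the matching manifest.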

5.2.2. Mixed storage for geo-replication

Red Hat Quay geo-replication supports the use of different and multiple replication targets, for example, using AWS S3 storage on public cloud and using Ceph storage on premise. This complicates the key requirement of granting access to all storage backends from all Red Hat Quay pods and cluster nodes. As a result, it is recommended that you use the following:

- A VPN to prevent visibility of the internal storage, or

- A token pair that only allows access to the specified bucket used by Red Hat Quay

This results in the public cloud instance of Red Hat Quay having access to on-premise storage, but the network will be encrypted, protected, and will use ACLs, thereby meeting security requirements.

If you cannot implement these security measures, it might be preferable to deploy two distinct Red Hat Quay registries and to use repository mirroring as an alternative to geo-replication.

5.3. Upgrading a geo-replication deployment of Red Hat Quay on OpenShift Container Platform

Use the following procedure to upgrade your geo-replicated Red Hat Quay on OpenShift Container Platform deployment.

- When upgrading a geo-replicated Red Hat Quay on OpenShift Container Platform deployment to the next y-stream release (for example, Red Hat Quay 3.7 → Red Hat Quay 3.8), you must stop operations before upgrading.

- There is intermittent downtime when upgrading from one y-stream release to the next.

- It is highly recommended to back up your Red Hat Quay on OpenShift Container Platform deployment before upgrading.

This procedure assumes that you are running the Red Hat Quay registry on three or more systems. For this procedure, we will assume three systems named System A, System B, and System C. System A will serve as the primary system in which the Red Hat Quay Operator is deployed.

On System B and System C, scale down your Red Hat Quay registry. This is done by disabling autoscaling and overriding the replica count for Red Hat Quay, mirror workers, and Clair if it is managed, in the quayregistry.yaml file on each system.

Note
You must keep the Red Hat Quay registry running on System A. Do not update the quayregistry.yaml file on System A.

Wait for the registry-quay-app, registry-quay-mirror, and registry-clair-app pods to disappear. Enter the following command to check their status:

$ oc get pods -n <quay-namespace>

Example output

quay-operator.v3.7.1-6f9d859bd-p5ftc 1/1 Running 0 12m
quayregistry-clair-postgres-7487f5bd86-xnxpr 1/1 Running 1 (12m ago) 12m
quayregistry-quay-app-upgrade-xq2v6 0/1 Completed 0 12m
quayregistry-quay-redis-84f888776f-hhgms 1/1 Running 0 12m

- On System A, initiate a Red Hat Quay upgrade to the latest y-stream version. This is a manual process. For more information about upgrading installed Operators, see Upgrading installed Operators. For more information about Red Hat Quay upgrade paths, see Upgrading the Red Hat Quay Operator.

- After the new Red Hat Quay registry is installed, the necessary upgrades on the cluster are automatically completed. Afterwards, new Red Hat Quay pods are started with the latest y-stream version. Additionally, new Quay pods are scheduled and started. Confirm that the update has properly worked by navigating to the Red Hat Quay UI:

In the OpenShift console, navigate to Operators → Installed Operators, and click the Registry Endpoint link.

Important
Do not execute the following step until the Red Hat Quay UI is available. Do not upgrade the Red Hat Quay registry on System B and on System C until the UI is available on System A.

After confirming that the update has worked properly on System A, initiate the Red Hat Quay upgrade on System B and on System C. The Operator upgrade results in an upgraded Red Hat Quay installation, and the pods are restarted.

Note
Because the database schema is correct for the new y-stream installation, the new pods on System B and on System C should start quickly.

After updating, revert the changes made in the first step of this procedure by removing the overrides for the components. If the horizontalpodautoscaler component was set to managed: true before the upgrade procedure, or if you want Red Hat Quay to scale in case of a resource shortage, set it back to true.
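The scale-down and revert steps edit the same spec.components list in the QuayRegistry resource. The following Python sketch shows both edits on a list loaded from `oc get quayregistry <name> -n <namespace> -o json`. It assumes the component kinds horizontalpodautoscaler, quay, mirror, and clair and a `replicas` field under `overrides`; the helper names are hypothetical. Pass `kinds=("quay", "mirror")` if Clair is not managed.

```python
import copy

DEFAULT_KINDS = ("quay", "mirror", "clair")


def _component(components: list[dict], kind: str) -> dict:
    """Return the entry for kind, appending a managed entry if it is missing."""
    for comp in components:
        if comp.get("kind") == kind:
            return comp
    comp = {"kind": kind, "managed": True}
    components.append(comp)
    return comp


def scale_down(components: list[dict], kinds=DEFAULT_KINDS) -> list[dict]:
    """Disable autoscaling and override replicas to 0, keeping other overrides."""
    result = copy.deepcopy(components)
    _component(result, "horizontalpodautoscaler")["managed"] = False
    for kind in kinds:
        _component(result, kind).setdefault("overrides", {})["replicas"] = 0
    return result


def revert(components: list[dict], autoscale: bool = True, kinds=DEFAULT_KINDS) -> list[dict]:
    """Remove the replica overrides and restore the autoscaler setting."""
    result = copy.deepcopy(components)
    _component(result, "horizontalpodautoscaler")["managed"] = autoscale
    for comp in result:
        if comp.get("kind") in kinds and "overrides" in comp:
            comp["overrides"].pop("replicas", None)
            if not comp["overrides"]:
                del comp["overrides"]
    return result
```

The resulting list could be applied with `oc patch quayregistry <name> -n <namespace> --type merge -p '{"spec": {"components": [...]}}'`. A JSON merge patch replaces the whole list, so always send the complete list.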

5.3.1. Removing a geo-replicated site from your Red Hat Quay on OpenShift Container Platform deployment

By using the following procedure, Red Hat Quay administrators can remove sites in a geo-replicated setup.

Prerequisites

- You are logged into OpenShift Container Platform.

- You have configured Red Hat Quay geo-replication with at least two sites, for example, usstorage and eustorage.

- Each site has its own Organization, Repository, and image tags.

Procedure

Sync the blobs between all of your defined sites by running the following command:

$ python -m util.backfillreplication

Warning
Prior to removing storage engines from your Red Hat Quay config.yaml file, you must ensure that all blobs are synced between all defined sites.

When running this command, replication jobs are created which are picked up by the replication worker. If there are blobs that need to be replicated, the script returns UUIDs of blobs that will be replicated. If you run this command multiple times and the output from the script is empty, it does not mean that the replication process is done; it means that there are no more blobs to be queued for replication. Customers should use appropriate judgement before proceeding, as the time that replication takes depends on the number of blobs detected.

Alternatively, you could use a third party cloud tool, such as Microsoft Azure, to check the synchronization status.

This step must be completed before proceeding.

- In your Red Hat Quay config.yaml file for site usstorage, remove the DISTRIBUTED_STORAGE_CONFIG entry for the eustorage site.

Enter the following command to identify your Quay application pods:

$ oc get pod -n <quay_namespace>

Example output

quay390usstorage-quay-app-5779ddc886-2drh2
quay390eustorage-quay-app-66969cd859-n2ssm

Enter the following command to open an interactive shell session in the usstorage pod:

$ oc rsh quay390usstorage-quay-app-5779ddc886-2drh2

Enter the following command to permanently remove the eustorage site:

Important
The following action cannot be undone. Use with caution.

sh-4.4$ python -m util.removelocation eustorage
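The config.yaml edit in this procedure can be scripted. The following Python sketch works on a config loaded into a dictionary and removes one storage location. Besides dropping the DISTRIBUTED_STORAGE_CONFIG entry, as the procedure requires, it also removes the location from DISTRIBUTED_STORAGE_PREFERENCE and DISTRIBUTED_STORAGE_DEFAULT_LOCATIONS; that second part is an assumption about how your config references the site, so compare the result with your file before using it. The helper name is hypothetical.

```python
import copy

LOCATION_LIST_KEYS = ("DISTRIBUTED_STORAGE_PREFERENCE", "DISTRIBUTED_STORAGE_DEFAULT_LOCATIONS")


def remove_location(config: dict, location: str) -> dict:
    """Return a copy of config with every reference to location removed."""
    updated = copy.deepcopy(config)
    # Required by the procedure: drop the storage engine definition itself.
    updated.get("DISTRIBUTED_STORAGE_CONFIG", {}).pop(location, None)
    # Assumed clean-up: drop the name from the location lists as well.
    for key in LOCATION_LIST_KEYS:
        if key in updated:
            updated[key] = [name for name in updated[key] if name != location]
    return updated
```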

Chapter 6. Backing up and restoring Red Hat Quay managed by the Red Hat Quay Operator

Use the content within this section to back up and restore Red Hat Quay when managed by the Red Hat Quay Operator on OpenShift Container Platform.

6.1. Optional: Enabling read-only mode for Red Hat Quay on OpenShift Container Platform

Enabling read-only mode for your Red Hat Quay on OpenShift Container Platform deployment allows you to manage the registry’s operations. Administrators can enable read-only mode to restrict write access to the registry, which helps ensure data integrity, mitigate risks during maintenance windows, and provide a safeguard against unintended modifications to registry data. It also helps to ensure that your Red Hat Quay registry remains online and available to serve images to users.

When backing up and restoring, you are required to scale down your Red Hat Quay on OpenShift Container Platform deployment. This results in service unavailability during the backup period which, in some cases, might be unacceptable. Enabling read-only mode ensures service availability during the backup and restore procedure for Red Hat Quay on OpenShift Container Platform deployments.

In some cases, a read-only option for Red Hat Quay is not possible since it requires inserting a service key and other manual configuration changes. As an alternative to read-only mode, Red Hat Quay administrators might consider enabling the DISABLE_PUSHES feature. When this field is set to True, users are unable to push images or image tags to the registry when using the CLI. Enabling DISABLE_PUSHES differs from read-only mode because the database is not set as read-only when it is enabled.

This field might be useful in some situations, such as when Red Hat Quay administrators want to calculate their registry’s quota and disable image pushing until after the calculation has completed. With this method, administrators can avoid putting the whole registry in read-only mode, which affects the database, so that most operations can still be done.

For information about enabling this configuration field, see Miscellaneous configuration fields.

Prerequisites

If you are using Red Hat Enterprise Linux (RHEL) 7.x:

- You have enabled the Red Hat Software Collections List (RHSCL).

- You have installed Python 3.6.

- You have downloaded the virtualenv package.

- You have installed the git CLI.

If you are using Red Hat Enterprise Linux (RHEL) 8:

- You have installed Python 3 on your machine.

- You have downloaded the python3-virtualenv package.

- You have installed the git CLI.

- You have cloned the https://github.com/quay/quay.git repository.

- You have installed the oc CLI.

- You have access to the cluster with cluster-admin privileges.

6.1.1. Creating service keys for Red Hat Quay on OpenShift Container Platform

Red Hat Quay uses service keys to communicate with various components. These keys are used to sign completed requests, such as requesting to scan images, login, storage access, and so on.

Procedure

Enter the following command to obtain a list of Red Hat Quay pods:

$ oc get pods -n <namespace>

Open a remote shell session to the Quay container by entering the following command:

$ oc rsh example-registry-quay-app-76c8f55467-52wjz

Enter the following command to create the necessary service keys:

sh-4.4$ python3 tools/generatekeypair.py quay-readonly

Example output

Writing public key to quay-readonly.jwk
Writing key ID to quay-readonly.kid
Writing private key to quay-readonly.pem

6.1.2. Adding keys to the PostgreSQL database

Use the following procedure to add your service keys to the PostgreSQL database.

Prerequisites

- You have created the service keys.

Procedure

Enter the following command to enter your Red Hat Quay database environment:

$ oc rsh example-registry-quay-database-76c8f55467-52wjz psql -U <database_username> -d <database_name>

Display the approval types and associated notes of the servicekeyapproval table by entering the following command:

quay=# select * from servicekeyapproval;

Add the service key to your Red Hat Quay database by entering the following query:

Example output

INSERT 0 1

Next, add the key approval with the following query:

quay=# INSERT INTO servicekeyapproval (approval_type, approved_date, notes) VALUES ('ServiceKeyApprovalType.SUPERUSER', CURRENT_DATE, '<include_notes_here_on_why_this_is_being_added>');

Example output

INSERT 0 1

Set the approval_id field on the created service key row to the id field from the created service key approval. You can use the following UPDATE statement, which uses a nested SELECT to look up the necessary ID:

quay=# UPDATE servicekey SET approval_id = (SELECT id FROM servicekeyapproval WHERE approval_type = 'ServiceKeyApprovalType.SUPERUSER') WHERE name = 'quay-readonly';

Example output

UPDATE 1
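To see how the nested SELECT links the two rows, the following Python sketch runs the same INSERT and UPDATE pattern against an in-memory SQLite database. The two tables are a simplified, hypothetical stand-in that contains only the columns this procedure uses; they are not the Red Hat Quay schema, and the sketch is not meant to be pointed at your registry database. The helper names are hypothetical.

```python
import sqlite3

APPROVAL_TYPE = "ServiceKeyApprovalType.SUPERUSER"


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway database with simplified, hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE servicekeyapproval (
            id INTEGER PRIMARY KEY, approval_type TEXT, approved_date TEXT, notes TEXT);
        CREATE TABLE servicekey (
            id INTEGER PRIMARY KEY, name TEXT, approval_id INTEGER);
        INSERT INTO servicekey (name) VALUES ('quay-readonly');
        """
    )
    return conn


def link_readonly_key(conn: sqlite3.Connection, notes: str) -> int:
    """Insert a SUPERUSER approval, point quay-readonly at it, and return approval_id."""
    conn.execute(
        "INSERT INTO servicekeyapproval (approval_type, approved_date, notes) "
        "VALUES (?, CURRENT_DATE, ?)",
        (APPROVAL_TYPE, notes),
    )
    conn.execute(
        "UPDATE servicekey SET approval_id = "
        "(SELECT id FROM servicekeyapproval WHERE approval_type = ?) "
        "WHERE name = 'quay-readonly'",
        (APPROVAL_TYPE,),
    )
    conn.commit()
    row = conn.execute("SELECT approval_id FROM servicekey WHERE name = 'quay-readonly'").fetchone()
    return row[0]


if __name__ == "__main__":
    print(link_readonly_key(make_demo_db(), "read-only mode for backup"))
```

One difference matters in practice: if servicekeyapproval already contains more than one row with the SUPERUSER approval type, SQLite silently uses the first matching row, whereas PostgreSQL rejects the UPDATE because the subquery returns more than one row. Check the output of the earlier select * from servicekeyapproval; query and narrow the subquery, for example by id, if needed.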

6.1.3. Configuring read-only mode Red Hat Quay on OpenShift Container Platform

After the service keys have been created and added to your PostgreSQL database, you must restart the Quay container on your OpenShift Container Platform deployment.

Deploying Red Hat Quay on OpenShift Container Platform in read-only mode requires you to modify the secrets stored inside of your OpenShift Container Platform cluster. It is highly recommended that you create a backup of the secret prior to making changes to it.

Prerequisites

- You have created the service keys and added them to your PostgreSQL database.

Procedure

Enter the following command to read the secret name of your Red Hat Quay on OpenShift Container Platform deployment:

$ oc get deployment -o yaml <quay_main_app_deployment_name>

Use the base64 command to encode the quay-readonly.kid and quay-readonly.pem files:

$ base64 -w0 quay-readonly.kid

Example output

ZjUyNDFm...

$ base64 -w0 quay-readonly.pem

Example output

LS0tLS1CRUdJTiBSU0E...

Obtain the current configuration bundle and secret by entering the following command:

$ oc get secret quay-config-secret-name -o json | jq '.data."config.yaml"' | cut -d '"' -f2 | base64 -d -w0 > config.yaml

Edit the config.yaml file and add the following information:

# ...
REGISTRY_STATE: readonly
INSTANCE_SERVICE_KEY_KID_LOCATION: 'conf/stack/quay-readonly.kid'
INSTANCE_SERVICE_KEY_LOCATION: 'conf/stack/quay-readonly.pem'
# ...

Save the file and base64 encode it by running the following command:

$ base64 -w0 config.yaml

Scale down the Red Hat Quay Operator pods to 0. This ensures that the Operator does not reconcile the secret after editing it.

$ oc scale --replicas=0 deployment quay-operator -n openshift-operators

Edit the secret to include the new content, that is, the base64-encoded config.yaml file and the base64-encoded quay-readonly.kid and quay-readonly.pem files:

$ oc edit secret quay-config-secret-name -n quay-namespace

With your Red Hat Quay on OpenShift Container Platform deployment in read-only mode, you can safely manage your registry’s operations and perform such actions as backup and restore.
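The config.yaml edits and the base64 encoding in this procedure, and in the scale-up procedure that follows, can also be scripted. The following Python sketch adds or removes the three read-only settings as plain text lines and builds a JSON merge patch for the config bundle secret, which could be applied with `oc patch secret <quay-config-secret-name> -n <quay-namespace> --type merge -p '<patch>'` instead of `oc edit`. The helper names are hypothetical, and the secret key names quay-readonly.kid and quay-readonly.pem are an assumption, chosen so that the files appear at the conf/stack/ paths named in the config.

```python
import base64
import json

# Settings from the procedure above.
READONLY_LINES = [
    "REGISTRY_STATE: readonly",
    "INSTANCE_SERVICE_KEY_KID_LOCATION: 'conf/stack/quay-readonly.kid'",
    "INSTANCE_SERVICE_KEY_LOCATION: 'conf/stack/quay-readonly.pem'",
]
# Top-level key prefixes, for example "REGISTRY_STATE:".
READONLY_PREFIXES = tuple(line.split(":", 1)[0] + ":" for line in READONLY_LINES)


def b64(data: bytes) -> str:
    """Encode bytes as single-line base64, like `base64 -w0`."""
    return base64.b64encode(data).decode("ascii")


def _without_readonly(config_text: str) -> list[str]:
    """Return config lines minus any top-level read-only settings."""
    return [line for line in config_text.splitlines() if not line.startswith(READONLY_PREFIXES)]


def enable_readonly(config_text: str) -> str:
    """Add the read-only settings, replacing any earlier copies."""
    return "\n".join(_without_readonly(config_text) + READONLY_LINES) + "\n"


def disable_readonly(config_text: str) -> str:
    """Remove the read-only settings, as in the scale-up procedure."""
    return "\n".join(_without_readonly(config_text)) + "\n"


def secret_patch(config_text: str, kid: bytes, pem: bytes) -> str:
    """Build a JSON merge patch for the data section of the config bundle secret."""
    data = {
        "config.yaml": b64(config_text.encode("utf-8")),
        # Assumed key names so that the files land at the conf/stack/ paths above.
        "quay-readonly.kid": b64(kid),
        "quay-readonly.pem": b64(pem),
    }
    return json.dumps({"data": data})
```

Because the Operator is scaled down while the secret is edited, a merge patch like this only changes the listed keys and leaves the other entries in the secret untouched.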

6.1.3.1. Scaling up the Red Hat Quay on OpenShift Container Platform from a read-only deployment

When you no longer want Red Hat Quay on OpenShift Container Platform to be in read-only mode, you can scale the deployment back up and remove the content added to the secret.

Procedure

Edit the config.yaml file and remove the following information:

# ...
REGISTRY_STATE: readonly
INSTANCE_SERVICE_KEY_KID_LOCATION: 'conf/stack/quay-readonly.kid'
INSTANCE_SERVICE_KEY_LOCATION: 'conf/stack/quay-readonly.pem'
# ...

Scale the Red Hat Quay Operator back up by entering the following command:

$ oc scale --replicas=1 deployment quay-operator -n openshift-operators

6.2. Backing up Red Hat Quay

Database backups should be performed regularly using either the supplied tools on the PostgreSQL image or your own backup infrastructure. The Red Hat Quay Operator does not ensure that the PostgreSQL database is backed up.

This procedure covers backing up your Red Hat Quay PostgreSQL database. It does not cover backing up the Clair PostgreSQL database. Strictly speaking, backing up the Clair PostgreSQL database is not needed because it can be recreated. If you opt to recreate it from scratch, you must wait for the information to be repopulated after all images inside of your Red Hat Quay deployment are scanned. During this downtime, security reports are unavailable.

If you are considering backing up the Clair PostgreSQL database, you must consider that its size is dependent upon the number of images stored inside of Red Hat Quay. As a result, the database can be extremely large.

This procedure describes how to create a backup of Red Hat Quay on OpenShift Container Platform using the Operator.

Prerequisites

- A healthy Red Hat Quay deployment on OpenShift Container Platform using the Red Hat Quay Operator. The status condition Available is set to True.

- The components quay, postgres and objectstorage are set to managed: true

- If the component clair is set to managed: true, the component clairpostgres is also set to managed: true (starting with Red Hat Quay v3.7 or later)

If your deployment contains partially unmanaged database or storage components and you are using external services for PostgreSQL or S3-compatible object storage to run your Red Hat Quay deployment, you must refer to the service provider or vendor documentation to create a backup of the data. You can refer to the tools described in this guide as a starting point on how to back up your external PostgreSQL database or object storage.

6.2.1. Red Hat Quay configuration backup

Use the following procedure to back up your Red Hat Quay configuration.

Procedure

To back the

QuayRegistrycustom resource by exporting it, enter the following command:oc get quayregistry <quay_registry_name> -n <quay_namespace> -o yaml > quay-registry.yaml

$ oc get quayregistry <quay_registry_name> -n <quay_namespace> -o yaml > quay-registry.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Edit the resulting

quayregistry.yamland remove the status section and the following metadata fields:metadata.creationTimestamp metadata.finalizers metadata.generation metadata.resourceVersion metadata.uid

Back up the managed keys secret by entering the following command:

Note: If you are running a version older than Red Hat Quay 3.7.0, you can skip this step. Some secrets are automatically generated when Red Hat Quay is deployed for the first time. These are stored in a secret called <quay_registry_name>-quay-registry-managed-secret-keys in the namespace of the QuayRegistry resource.

$ oc get secret -n <quay_namespace> <quay_registry_name>-quay-registry-managed-secret-keys -o yaml > managed_secret_keys.yaml

Edit the resulting managed_secret_keys.yaml file and remove the metadata.ownerReferences entry. All information under the data property must remain unchanged.

Export the current Quay configuration bundle secret by entering the following command:

$ oc get secret -n <quay_namespace> $(oc get quayregistry <quay_registry_name> -n <quay_namespace> -o jsonpath='{.spec.configBundleSecret}') -o yaml > config-bundle.yaml

Back up the /conf/stack/config.yaml file mounted inside the Quay pods. Do not pass -it to oc exec here, because an allocated terminal can add carriage returns to the redirected file:

$ oc exec -n <quay_namespace> <quay_pod_name> -- cat /conf/stack/config.yaml > quay_config.yaml

Obtain the Quay database name:

$ oc -n <quay_namespace> rsh $(oc get pod -l app=quay -o NAME -n <quay_namespace> | head -n 1) cat /conf/stack/config.yaml | awk -F"/" '/^DB_URI/ {print $4}'

Example output

quayregistry-quay-database
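The configuration backup steps above can be collected into one helper. This is a sketch, assuming `oc` is already logged in to the cluster and that you run it in the directory where the backup files should be written; the function names `backup_quay_config` and `db_name_from_config` are hypothetical. One difference from the manual command is deliberate: if `spec.configBundleSecret` is empty, the unquoted `$(...)` in the manual command expands to nothing, and `oc get secret -n <quay_namespace> -o yaml` then exports every secret in the namespace, so the sketch stops instead.

```shell
# Hypothetical helper: print the database name from a Quay config.yaml on
# stdin, using the same awk split as the manual command. With "/" as the
# separator, field 4 of "DB_URI: postgresql://user:pw@host:5432/dbname" is
# "dbname". Assumes no "/" in the credentials and no query string.
db_name_from_config() {
  awk -F"/" '/^DB_URI/ {print $4}'
}

# Hypothetical helper: backup_quay_config NAMESPACE REGISTRY_NAME
# Writes quay-registry.yaml, managed_secret_keys.yaml, and config-bundle.yaml
# to the current directory. The managed keys secret only exists on
# Red Hat Quay 3.7.0 and later.
backup_quay_config() {
  local ns=$1 name=$2 bundle
  oc get quayregistry "$name" -n "$ns" -o yaml > quay-registry.yaml || return 1
  oc get secret -n "$ns" "$name-quay-registry-managed-secret-keys" -o yaml \
    > managed_secret_keys.yaml || return 1
  bundle=$(oc get quayregistry "$name" -n "$ns" \
           -o jsonpath='{.spec.configBundleSecret}') || return 1
  # Refuse to continue with an empty name, which would export all secrets.
  if [ -z "$bundle" ]; then
    echo "spec.configBundleSecret is empty; not exporting config bundle" >&2
    return 1
  fi
  oc get secret -n "$ns" "$bundle" -o yaml > config-bundle.yaml
}
```

For example, run `backup_quay_config <quay_namespace> <quay_registry_name>`, then `db_name_from_config < quay_config.yaml` to read the database name from the copy of /conf/stack/config.yaml. The metadata fields still have to be removed from the exported files afterward.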

6.2.2. Scaling down your Red Hat Quay deployment

Use the following procedure to scale down your Red Hat Quay deployment.

This step is required to create a consistent backup of the state of your Red Hat Quay deployment. Do not skip it, even in setups where PostgreSQL databases or S3-compatible object storage are provided by external services that are not managed by the Red Hat Quay Operator.

Procedure

Depending on the version of your Red Hat Quay deployment, scale down your deployment using one of the following options.

For Operator version 3.7 and newer: Scale down the Red Hat Quay deployment by disabling autoscaling and overriding the replica count for Red Hat Quay, mirror workers, and Clair (if managed). In your QuayRegistry resource, set the horizontalpodautoscaler component to managed: false, and set overrides.replicas to 0 for the quay, mirror, and clair components.

For Operator version 3.6 and earlier: Scale down the Red Hat Quay deployment by scaling down the Red Hat Quay Operator first and then the managed Red Hat Quay resources:

$ oc scale --replicas=0 deployment $(oc get deployment -n <quay_operator_namespace> | awk '/^quay-operator/ {print $1}') -n <quay_operator_namespace>

$ oc scale --replicas=0 deployment $(oc get deployment -n <quay_namespace> | awk '/quay-app/ {print $1}') -n <quay_namespace>

$ oc scale --replicas=0 deployment $(oc get deployment -n <quay_namespace> | awk '/quay-mirror/ {print $1}') -n <quay_namespace>

$ oc scale --replicas=0 deployment $(oc get deployment -n <quay_namespace> | awk '/clair-app/ {print $1}') -n <quay_namespace>

Wait for the registry-quay-app, registry-quay-mirror, and registry-clair-app pods (depending on which components you set to be managed by the Red Hat Quay Operator) to disappear. You can check their status by running the following command:

$ oc get pods -n <quay_namespace>

Example output:

quay-operator.v3.7.1-6f9d859bd-p5ftc            1/1   Running     0             12m
quayregistry-clair-postgres-7487f5bd86-xnxpr    1/1   Running     1 (12m ago)   12m
quayregistry-quay-app-upgrade-xq2v6             0/1   Completed   0             12m
quayregistry-quay-database-859d5445ff-cqthr     1/1   Running     0             12m
quayregistry-quay-redis-84f888776f-hhgms        1/1   Running     0             12m
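Instead of rerunning `oc get pods` by hand, the wait can be scripted. This is a sketch that matches pods by name with the same quay-app, quay-mirror, and clair-app patterns used in the scale-down commands; that naming is an assumption, so adjust the patterns if your pods are named differently. Pods of the quay-app-upgrade job are excluded because, as the example output shows, they remain in the Completed state. The function names `remaining_app_pods` and `wait_for_scale_down` are hypothetical.

```shell
# Hypothetical helper: count lines of `oc get pods` output (stdin) that
# belong to the Quay, mirror, or Clair app deployments. Pods of the
# quay-app-upgrade job are excluded because they stay Completed.
remaining_app_pods() {
  grep -E -- '-(quay-app|quay-mirror|clair-app)-' | grep -vc -- '-quay-app-upgrade-'
}

# Hypothetical helper: wait_for_scale_down NAMESPACE [TIMEOUT_SECONDS]
# Checks immediately and then every 5 seconds until no matching pods
# remain, or fails after TIMEOUT_SECONDS (default 300).
wait_for_scale_down() {
  local ns=$1 timeout=${2:-300} waited=0 pods
  while :; do
    pods=$(oc get pods -n "$ns" --no-headers) || return 1
    if [ "$(printf '%s\n' "$pods" | remaining_app_pods)" -eq 0 ]; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s; app pods still present" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For the example output above, `remaining_app_pods` reports 0, so `wait_for_scale_down <quay_namespace>` returns immediately.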

6.2.3. Backing up the Red Hat Quay managed database

Use the following procedure to back up the Red Hat Quay managed database.

If your Red Hat Quay deployment is configured with external, or unmanaged, PostgreSQL databases, refer to your vendor’s documentation on how to create a consistent backup of these databases.

Procedure

Identify the Quay PostgreSQL pod name:

$ oc get pod -l quay-component=postgres -n <quay_namespace> -o jsonpath='{.items[0].metadata.name}'

Example output:

quayregistry-quay-database-59f54bb7-58xs7

Download a backup of the database. Replace the pod name with the output of the previous command and the database name with the name you obtained earlier:

$ oc -n <quay_namespace> exec quayregistry-quay-database-59f54bb7-58xs7 -- /usr/bin/pg_dump -C quayregistry-quay-database > backup.sql
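The two database steps can be combined so that the pod name does not have to be copied by hand. This is a sketch, assuming the same `quay-component=postgres` label and `/usr/bin/pg_dump` path as the commands above; the function name `backup_quay_db` is hypothetical. It also treats an empty dump file as a failure, which catches cases where `pg_dump` printed only an error to stderr.

```shell
# Hypothetical helper: backup_quay_db NAMESPACE DB_NAME [OUTPUT_FILE]
# DB_NAME is the database name obtained from DB_URI earlier in this section.
backup_quay_db() {
  local ns=$1 db=$2 out=${3:-backup.sql} pod
  # Same label selector as the manual command above.
  pod=$(oc get pod -l quay-component=postgres -n "$ns" \
        -o jsonpath='{.items[0].metadata.name}') || return 1
  if [ -z "$pod" ]; then
    echo "no pod labeled quay-component=postgres in namespace $ns" >&2
    return 1
  fi
  # -C makes pg_dump include a CREATE DATABASE statement in the dump.
  oc -n "$ns" exec "$pod" -- /usr/bin/pg_dump -C "$db" > "$out" || return 1
  # Treat an empty dump file as a failure.
  if [ ! -s "$out" ]; then
    echo "pg_dump produced an empty file: $out" >&2
    return 1
  fi
}
```

For example: `backup_quay_db <quay_namespace> quayregistry-quay-database backup.sql`.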

6.2.3.1. Backing up the Red Hat Quay managed object storage

Use the following procedure to back up the Red Hat Quay managed object storage. The instructions in this section apply to the following configurations:

- Standalone, multi-cloud object gateway configurations

- OpenShift Data Foundation storage, which requires that the Red Hat Quay Operator provisioned an S3 object storage bucket through the ObjectBucketClaim API

If your Red Hat Quay deployment is configured with external (unmanaged) object storage, refer to your vendor’s documentation on how to create a copy of the content of Quay’s storage bucket.

Procedure

Decode and export the AWS_ACCESS_KEY_ID by entering the following command:

$ export AWS_ACCESS_KEY_ID=$(oc get secret -l app=noobaa -n <quay_namespace> -o jsonpath='{.items[0].data.AWS_ACCESS_KEY_ID}' | base64 -d)

Decode and export the AWS_SECRET_ACCESS_KEY by entering the following command:

$ export AWS_SECRET_ACCESS_KEY=$(oc get secret -l app=noobaa -n <quay_namespace> -o jsonpath='{.items[0].data.AWS_SECRET_ACCESS_KEY}' | base64 -d)

Create a new directory:

$ mkdir blobs

Copy all blobs to the directory by entering the following command:

$ aws s3 sync --no-verify-ssl --endpoint-url https://$(oc get route s3 -n openshift-storage -o jsonpath='{.spec.host}') s3://$(oc get cm -l app=noobaa -n <quay_namespace> -o jsonpath='{.items[0].data.BUCKET_NAME}') ./blobs
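After the sync finishes, a quick sanity check is to compare the number of objects in the bucket with the number of files in ./blobs. This is a sketch, assuming the AWS credentials exported above are still set and that the bucket contains no zero-byte "directory marker" objects, which would inflate the remote count; the function name `verify_blob_copy` is hypothetical. `--endpoint-url`, `--no-verify-ssl`, and `aws s3 ls --recursive` are standard AWS CLI options.

```shell
# Hypothetical helper: verify_blob_copy ENDPOINT_URL BUCKET [DIR]
# ENDPOINT_URL and BUCKET are the values the sync command derives from the
# s3 route and the noobaa config map.
verify_blob_copy() {
  local endpoint=$1 bucket=$2 dir=${3:-./blobs} listing remote copied
  listing=$(aws s3 ls --recursive --no-verify-ssl \
            --endpoint-url "$endpoint" "s3://$bucket") || return 1
  # A recursive listing prints one line per object; grep -c . skips blanks.
  remote=$(printf '%s\n' "$listing" | grep -c .)
  copied=$(find "$dir" -type f | grep -c .)
  echo "objects in bucket: $remote, files in $dir: $copied"
  [ "$remote" -eq "$copied" ]
}
```

For example: `verify_blob_copy "https://$(oc get route s3 -n openshift-storage -o jsonpath='{.spec.host}')" "$(oc get cm -l app=noobaa -n <quay_namespace> -o jsonpath='{.items[0].data.BUCKET_NAME}')"`. Matching counts do not prove the contents are identical; they only catch an incomplete copy.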

6.2.4. Scale the Red Hat Quay deployment back up

Depending on the version of your Red Hat Quay deployment, scale up your deployment using one of the following options.

For Operator version 3.7 and newer: Scale up the Red Hat Quay deployment by re-enabling autoscaling, if desired, and removing the replica overrides for Quay, mirror workers, and Clair, as applicable. In your QuayRegistry resource, set the horizontalpodautoscaler component back to managed: true if you want autoscaling, and remove the overrides sections that you added to the quay, mirror, and clair components.

For Operator version 3.6 and earlier: Scale up the Red Hat Quay deployment by scaling the Red Hat Quay Operator back up:

$ oc scale --replicas=1 deployment $(oc get deployment -n <quay_operator_namespace> | awk '/^quay-operator/ {print $1}') -n <quay_operator_namespace>

Check the status of the Red Hat Quay deployment by entering the following command:

$ oc wait quayregistry <quay_registry_name> --for=condition=Available=true -n <quay_namespace>

Example output:

quayregistry.quay.redhat.com/<quay_registry_name> condition met
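`oc wait` gives up after 30 seconds by default, which can be shorter than the time the Operator needs to bring all components back. The following sketch passes a longer `--timeout` and, if the registry still is not available, prints the QuayRegistry status conditions to help locate the failing component. The function name `wait_for_quay_available` and the 10 minute default are hypothetical choices, and the sketch assumes the conditions carry the usual `type`, `status`, `reason`, and `message` fields.

```shell
# Hypothetical helper: wait_for_quay_available NAMESPACE REGISTRY_NAME [TIMEOUT]
wait_for_quay_available() {
  local ns=$1 name=$2 timeout=${3:-10m}
  if oc wait quayregistry "$name" -n "$ns" \
       --for=condition=Available=true --timeout="$timeout"; then
    return 0
  fi
  # On failure, list each status condition on its own line (to stderr).
  echo "QuayRegistry $name is not Available; current conditions:" >&2
  oc get quayregistry "$name" -n "$ns" \
    -o jsonpath='{range .status.conditions[*]}{.type}={.status} {.reason}: {.message}{"\n"}{end}' >&2
  return 1
}
```

For example: `wait_for_quay_available <quay_namespace> <quay_registry_name> 15m`.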

6.3. Restoring Red Hat Quay

Use the following procedures to restore Red Hat Quay when the Red Hat Quay Operator manages the database. Perform these procedures only after you have backed up your Red Hat Quay registry. See Backing up Red Hat Quay for more information.

Prerequisites

- Red Hat Quay is deployed on OpenShift Container Platform using the Red Hat Quay Operator.

- A backup of the Red Hat Quay configuration managed by the Red Hat Quay Operator has been created by following the instructions in the Backing up Red Hat Quay section.

- Your Red Hat Quay database has been backed up.

- The object storage bucket used by Red Hat Quay has been backed up.

- The components quay, postgres, and objectstorage are set to managed: true.

- If the component clair is set to managed: true, the component clairpostgres is also set to managed: true (Red Hat Quay v3.7 or later).

- There is no running Red Hat Quay deployment managed by the Red Hat Quay Operator in the target namespace on your OpenShift Container Platform cluster.

If your deployment contains partially unmanaged database or storage components and you are using external services for PostgreSQL or S3-compatible object storage, refer to the service provider or vendor documentation to restore their data from a backup before you restore Red Hat Quay.

6.3.1. Restoring Red Hat Quay and its configuration from a backup

Use the following procedure to restore Red Hat Quay and its configuration files from a backup.

These instructions assume that you followed the process in the Backing up Red Hat Quay guide and created the backup files with the same names.

Procedure

Restore the backed up Red Hat Quay configuration by entering the following command:

$ oc create -f ./config-bundle.yaml

Important: If you receive the following error, you must delete your existing resource and recreate it:

Error from server (AlreadyExists): error when creating "./config-bundle.yaml": secrets "config-bundle-secret" already exists

$ oc delete secret config-bundle-secret -n <quay_namespace>

$ oc create -f ./config-bundle.yaml

Restore the generated keys from the backup by entering the following command:

$ oc create -f ./managed_secret_keys.yaml

Restore the QuayRegistry custom resource:

$ oc create -f ./quay-registry.yaml

Check the status of the Red Hat Quay deployment and wait for it to be available:

$ oc wait quayregistry <quay_registry_name> --for=condition=Available=true -n <quay_namespace>
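The restore steps can be run in order by one helper. This is a sketch, assuming it is run from the directory that holds the backup files created during the backup procedure (config-bundle.yaml, managed_secret_keys.yaml, and quay-registry.yaml); the function name `restore_quay` and the 15 minute timeout are hypothetical. It handles the AlreadyExists case from the Important note by deleting the secret described in config-bundle.yaml and creating it again, and stops on any other error.

```shell
# Hypothetical helper: restore_quay NAMESPACE REGISTRY_NAME
restore_quay() {
  local ns=$1 name=$2 err
  # Create the config bundle secret; if it already exists, delete and
  # recreate it from the backup file.
  if ! err=$(oc create -f ./config-bundle.yaml 2>&1); then
    case "$err" in
      *AlreadyExists*)
        oc delete -f ./config-bundle.yaml || return 1
        oc create -f ./config-bundle.yaml || return 1 ;;
      *)
        echo "$err" >&2
        return 1 ;;
    esac
  fi
  oc create -f ./managed_secret_keys.yaml || return 1
  oc create -f ./quay-registry.yaml || return 1
  # Allow more time than the default oc wait timeout of 30 seconds.
  oc wait quayregistry "$name" -n "$ns" --for=condition=Available=true --timeout=15m
}
```

For example: `restore_quay <quay_namespace> <quay_registry_name>`.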

6.3.2. Scaling down your Red Hat Quay deployment

Use the following procedure to scale down your Red Hat Quay deployment.

Procedure

Depending on the version of your Red Hat Quay deployment, scale down your deployment using one of the following options.

For Operator version 3.7 and newer: Scale down the Red Hat Quay deployment by disabling autoscaling and overriding the replica count for Quay, mirror workers, and Clair (if managed). In your QuayRegistry resource, set the horizontalpodautoscaler component to managed: false, and set overrides.replicas to 0 for the quay, mirror, and clair components.

For Operator version 3.6 and earlier: Scale down the Red Hat Quay deployment by scaling down the Red Hat Quay Operator first and then the managed Red Hat Quay resources: